Abstract

The subspace minimization conjugate gradient (SMCG) methods proposed by Yuan and Stoer are efficient iterative methods for unconstrained optimization, in which the search directions are generated by minimizing quadratic approximate models of the objective function at the current iterate. Although the SMCG methods have shown excellent numerical performance, they have so far only been used to solve unconstrained optimization problems. In this paper, we extend the SMCG methods and present an efficient SMCG method for solving nonlinear monotone equations with convex constraints by combining it with the projection technique, where the search direction satisfies the sufficient descent condition. Under mild conditions, we establish the global convergence and R-linear convergence rate of the proposed method. Numerical experiments indicate that the proposed method is very promising.

Keywords:

nonlinear monotone equations; subspace minimization conjugate gradient method; convex constraints; global convergence; R-linear convergence rate

MSC:

90C06; 65K

1. Introduction

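We consider the following nonlinear equations with convex constraints:

$$F(x) = 0, \quad x \in \Omega, \tag{1}$$

where $\Omega \subseteq \mathbb{R}^n$ is a non-empty closed convex set and $F: \mathbb{R}^n \to \mathbb{R}^n$ is a continuous mapping that satisfies the monotonicity condition

$$(F(x) - F(y))^T (x - y) \ge 0 \tag{2}$$

for all $x, y \in \mathbb{R}^n$. It is easy to verify that the solution set of problem (1) is convex under condition (2).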
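Condition (2) can be probed numerically. The sketch below uses the hypothetical mapping $F_i(x) = e^{x_i} - 1$, whose components are increasing in their own variables, so (2) holds; random sampling of this kind can only expose a violation, it cannot prove monotonicity.

```python
import math
import random


def dot(u, v):
    """Euclidean inner product of two equal-length lists."""
    return sum(a * b for a, b in zip(u, v))


def F(x):
    # Hypothetical example mapping: F_i(x) = exp(x_i) - 1 (componentwise increasing).
    return [math.exp(xi) - 1.0 for xi in x]


def monotonicity_gap(F, x, y):
    """Return (F(x) - F(y))^T (x - y); condition (2) requires this to be >= 0."""
    Fx, Fy = F(x), F(y)
    return dot([a - b for a, b in zip(Fx, Fy)], [a - b for a, b in zip(x, y)])


random.seed(0)
gaps = []
for _ in range(1000):
    x = [random.uniform(-3.0, 3.0) for _ in range(5)]
    y = [random.uniform(-3.0, 3.0) for _ in range(5)]
    gaps.append(monotonicity_gap(F, x, y))

print(min(gaps) >= 0.0)  # True: no violation of (2) found for this F
```

By contrast, the decreasing mapping $F(x) = -x$ violates (2): for $x = 1$, $y = 0$ the gap is $-1$.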

Nonlinear equations have numerous practical applications, e.g., machinery manufacturing problems [1], neural networks [2], economic equilibrium problems [3], and image recovery problems [4]. Motivated by these applications, many researchers have proposed effective iterative methods for problem (1), such as Newton’s method, quasi-Newton methods, trust region methods, Levenberg–Marquardt methods, and their variants ([5,6,7,8,9]). Although these methods are very popular and converge fast from a sufficiently good initial point, they are not suitable for large-scale nonlinear equations because they require computing and storing the Jacobian matrix or an approximation of it.

Owing to their simple form and low memory requirements, conjugate gradient (CG) methods have been combined with the projection technique proposed by Solodov and Svaiter [10] to solve problem (1) (see [11,12]). Because of its effectiveness, Xiao and Zhu [13] extended the well-known CG_DESCENT method [14] to nonlinear monotone equations with convex constraints. Liu and Li [15] presented an efficient projection method for convex constrained monotone nonlinear equations, which can be viewed as another extension of the CG_DESCENT method [14], and applied it to sparse signal reconstruction in compressive sensing. Based on the Dai–Yuan (DY) method [16], Liu and Feng [17] presented an efficient derivative-free iterative method and established its Q-linear convergence rate under the local error bound condition. By minimizing the distance between the relevant matrix and the self-scaling memoryless BFGS method in the Frobenius norm, Gao et al. [18] proposed an adaptive projection method for solving nonlinear equations and applied it to recover a sparse signal from incomplete and contaminated sampling measurements. Based on [19], Li and Zheng [20] proposed two effective derivative-free methods for solving large-scale nonsmooth monotone nonlinear equations. Waziri and Ahmed [21] proposed two DY-type iterative methods for solving (1). By using the projection method [10], Ibrahim et al. [22] introduced two classes of three-term methods for solving (1) and established their global convergence under a weaker monotonicity condition.

The subspace minimization conjugate gradient (SMCG) methods proposed by Yuan and Stoer [23] generalize the traditional CG methods and form a class of iterative methods for unconstrained optimization. The SMCG methods have shown excellent numerical performance and have received much attention recently. However, they have so far only been used to solve unconstrained optimization problems. It is therefore of interest to study SMCG methods for nonlinear equations with convex constraints. In this paper, we propose an efficient SMCG method for solving nonlinear monotone equations with convex constraints by combining it with the projection technique, where the search direction satisfies the sufficient descent condition. Under suitable conditions, the global convergence and the convergence rate of the proposed method are established. Numerical experiments indicate that the proposed method is superior to some efficient conjugate gradient methods.

The remainder of this paper is organized as follows. In Section 2, an efficient SMCG method for solving nonlinear monotone equations with convex constraints is presented. We prove the global convergence and the convergence rate of the proposed method in Section 3. In Section 4, numerical experiments are reported to verify the effectiveness of the proposed method. Conclusions are given in Section 5.

2. The SMCG Method for Solving Nonlinear Monotone Equations with Convex Constraints

In this section, we first review the SMCG methods for unconstrained optimization, and then propose an efficient SMCG method for solving (1) by combining it with the projection technique and exploit some of its important properties.

2.1. The SMCG Method for Unconstrained Optimization

We review the SMCG methods here.

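The SMCG methods were proposed by Yuan and Stoer [23] to solve the unconstrained optimization problem

$$\min_{x \in \mathbb{R}^n} f(x),$$

where $f: \mathbb{R}^n \to \mathbb{R}$ is continuously differentiable. The SMCG methods are of the form $x_{k+1} = x_k + \alpha_k d_k$, where $\alpha_k$ is the stepsize and $d_k$ is the search direction, which is generated by minimizing a quadratic approximate model of the objective function $f$ at the current iterate $x_k$ in the subspace $\mathrm{Span}\{g_k, s_{k-1}\}$, namely

$$\min_{d \in \mathrm{Span}\{g_k, s_{k-1}\}} \; g_k^T d + \frac{1}{2} d^T B_k d, \tag{3}$$

where $g_k = \nabla f(x_k)$, and $B_k$ is an approximation to the Hessian matrix that is required to satisfy the quasi-Newton equation $B_k s_{k-1} = y_{k-1}$, with $s_{k-1} = x_k - x_{k-1}$ and $y_{k-1} = g_k - g_{k-1}$.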

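In the following, we consider the case where $g_k$ and $s_{k-1}$ are not collinear. Since any vector $d$ in $\mathrm{Span}\{g_k, s_{k-1}\}$ can be expressed as

$$d = \mu g_k + \nu s_{k-1}, \tag{4}$$

where $\mu, \nu \in \mathbb{R}$, substituting (4) into (3) and using $B_k s_{k-1} = y_{k-1}$ gives

$$\min_{\mu, \nu \in \mathbb{R}} \; \begin{pmatrix} \|g_k\|^2 \\ g_k^T s_{k-1} \end{pmatrix}^T \begin{pmatrix} \mu \\ \nu \end{pmatrix} + \frac{1}{2} \begin{pmatrix} \mu \\ \nu \end{pmatrix}^T \begin{pmatrix} \rho_k & g_k^T y_{k-1} \\ g_k^T y_{k-1} & s_{k-1}^T y_{k-1} \end{pmatrix} \begin{pmatrix} \mu \\ \nu \end{pmatrix}, \tag{5}$$

where $\rho_k \approx g_k^T B_k g_k$. When $B_k$ is positive definite, setting the gradient of the objective in (5) with respect to $(\mu, \nu)$ to zero yields the optimal solution of subproblem (5) (for more details, see [23]):

$$\mu_k = \frac{1}{\Delta_k}\left(g_k^T y_{k-1}\, g_k^T s_{k-1} - s_{k-1}^T y_{k-1}\, \|g_k\|^2\right), \qquad \nu_k = \frac{1}{\Delta_k}\left(g_k^T y_{k-1}\, \|g_k\|^2 - \rho_k\, g_k^T s_{k-1}\right), \tag{6}$$

where $\Delta_k = \rho_k\, s_{k-1}^T y_{k-1} - (g_k^T y_{k-1})^2$.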
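The closed-form solution (6) of the two-dimensional subproblem (5) can be checked in a few lines. The sketch below (pure Python; the vectors and the value of $\rho_k$ are hypothetical test data) computes $(\mu_k, \nu_k)$ and confirms that the gradient of the model in (5) vanishes there.

```python
def dot(u, v):
    """Euclidean inner product of two equal-length lists."""
    return sum(a * b for a, b in zip(u, v))


def subspace_coefficients(g, s, y, rho):
    """Closed-form minimizer (mu_k, nu_k) of the 2x2 model (5), i.e. formula (6).

    g: g_k, s: s_{k-1}, y: y_{k-1}, rho: rho_k (estimate of g_k^T B_k g_k).
    The search direction is then d = mu * g + nu * s.
    """
    gg, gs, gy, sy = dot(g, g), dot(g, s), dot(g, y), dot(s, y)
    delta = rho * sy - gy ** 2  # Delta_k, determinant of the 2x2 model matrix
    if rho <= 0.0 or delta <= 0.0:
        raise ValueError("model matrix in (5) is not positive definite")
    mu = (gy * gs - sy * gg) / delta
    nu = (gy * gg - rho * gs) / delta
    return mu, nu


def model_gradient(mu, nu, g, s, y, rho):
    """Gradient of the objective of (5) with respect to (mu, nu)."""
    gg, gs, gy, sy = dot(g, g), dot(g, s), dot(g, y), dot(s, y)
    return gg + rho * mu + gy * nu, gs + gy * mu + sy * nu


g, s, y, rho = [1.0, 2.0], [1.0, 0.0], [2.0, 0.5], 10.0
mu, nu = subspace_coefficients(g, s, y, rho)
print(mu, nu)  # approximately -7/11 and 5/11
print(model_gradient(mu, nu, g, s, y, rho))  # approximately (0.0, 0.0)
```

Positive definiteness of the 2×2 matrix is checked through $\rho_k > 0$ and $\Delta_k > 0$, which is exactly when (6) gives the unique minimizer.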

An important property of the SMCG methods was given by Dai and Kou [24] in 2016. They established the two-dimensional finite termination property of the SMCG methods and presented several Barzilai–Borwein conjugate gradient (BBCG) methods with different values of $\rho_k$, the most efficient of which (BBCG3) uses

$$\rho_k = \frac{3}{2}\,\frac{\|y_{k-1}\|^2}{s_{k-1}^T y_{k-1}}\,\|g_k\|^2. \tag{7}$$

Motivated by the SMCG methods [23] and the BBCG methods [24], Liu and Liu [25] extended the BBCG3 method to general unconstrained optimization and presented an efficient subspace minimization conjugate gradient method (SMCG_BB). Since then, many SMCG methods [26,27,28] have been proposed for unconstrained optimization; they are very efficient and have received much attention.
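Assuming (7) takes the BBCG3 form $\rho_k = \frac{3}{2}\frac{\|y_{k-1}\|^2}{s_{k-1}^T y_{k-1}}\|g_k\|^2$, one useful consequence is that $\Delta_k$ in (6) stays positive whenever $s_{k-1}^T y_{k-1} > 0$: by the Cauchy–Schwarz inequality, $\Delta_k = \frac{3}{2}\|y_{k-1}\|^2\|g_k\|^2 - (g_k^T y_{k-1})^2 \ge \frac{1}{2}\|y_{k-1}\|^2\|g_k\|^2$. The sketch below computes $\rho_k$ and checks this bound on random (hypothetical) data.

```python
import random


def dot(u, v):
    """Euclidean inner product of two equal-length lists."""
    return sum(a * b for a, b in zip(u, v))


def rho_bbcg3(g, s, y):
    """rho_k = 1.5 * ||y||^2 / (s^T y) * ||g||^2, assuming this is the form of (7)."""
    sy = dot(s, y)
    if sy <= 0.0:
        raise ValueError("rho_k in (7) requires s^T y > 0")
    return 1.5 * dot(y, y) / sy * dot(g, g)


random.seed(1)
worst_margin = float("inf")
trials = 0
while trials < 500:
    g, s, y = ([random.gauss(0.0, 1.0) for _ in range(4)] for _ in range(3))
    if dot(s, y) <= 1e-3:
        continue  # only test pairs with s^T y > 0, as (7) requires
    rho = rho_bbcg3(g, s, y)
    delta = rho * dot(s, y) - dot(g, y) ** 2
    # Margin of Delta_k over the lower bound 0.5 * ||y||^2 * ||g||^2
    worst_margin = min(worst_margin, delta - 0.5 * dot(y, y) * dot(g, g))
    trials += 1

print(worst_margin >= -1e-9)  # True
```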

2.2. The SMCG Method for Solving (1) and Some of Its Important Properties

In this subsection, we extend the SMCG methods for unconstrained optimization to solve (1) by combining them with the projection technique, and we exploit some important properties of the search direction. Our motivation for this extension is that the SMCG methods have the following characteristics. (i) When the exact line search is performed, the search directions of the SMCG methods are parallel to those of the traditional CG methods, so the SMCG methods reduce to the traditional CG methods. This implies that the SMCG methods inherit the finite termination property of the traditional CG methods for convex quadratic minimization. (ii) The search directions of the SMCG methods are generated by solving (3) over the whole two-dimensional subspace $\mathrm{Span}\{g_k, s_{k-1}\}$, while those of the traditional CG methods are $d_k = -g_k + \beta_k d_{k-1}$, where $\beta_k$ is called the conjugate parameter. Clearly, the search directions of the traditional CG methods are restricted to a special subset of $\mathrm{Span}\{g_k, s_{k-1}\}$ so that they possess the conjugacy property. As a result, the SMCG methods have more freedom in choosing the search direction and thus more potential in both theoretical properties and numerical performance. In theory, the SMCG methods without the exact line search possess the finite termination property when solving two-dimensional strictly convex quadratic minimization problems [24], whereas this is impossible for the traditional CG methods when the line search is inexact. In practice, the numerical results in [25,26,27,28] indicate that the SMCG methods are very efficient.
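Property (i) can be checked directly from (6). As a short worked derivation, assume that the exact line search gives $g_k^T s_{k-1} = 0$, that $s_{k-1} = \alpha_{k-1} d_{k-1}$ with $\alpha_{k-1} > 0$ and $s_{k-1}^T y_{k-1} > 0$, and that $\Delta_k > 0$. Then the SMCG direction is a positive multiple of the Hestenes–Stiefel (HS) direction with $\beta_k^{HS} = g_k^T y_{k-1} / (d_{k-1}^T y_{k-1})$:

$$
\begin{aligned}
\mu_k &= -\frac{s_{k-1}^T y_{k-1}\,\|g_k\|^2}{\Delta_k}, \qquad
\nu_k = \frac{g_k^T y_{k-1}\,\|g_k\|^2}{\Delta_k}, \\
d_k &= \mu_k g_k + \nu_k s_{k-1}
= \frac{\|g_k\|^2\, s_{k-1}^T y_{k-1}}{\Delta_k}\left(-g_k + \frac{g_k^T y_{k-1}}{s_{k-1}^T y_{k-1}}\, s_{k-1}\right)
= \frac{\|g_k\|^2\, s_{k-1}^T y_{k-1}}{\Delta_k}\left(-g_k + \beta_k^{HS} d_{k-1}\right).
\end{aligned}
$$

The last equality uses $s_{k-1} = \alpha_{k-1} d_{k-1}$, so the factor $\alpha_{k-1}$ cancels. Since the scalar in front is positive, $d_k$ points in the same direction as the HS direction.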

For simplicity, we abbreviate $F(x_k)$ as $F_k$ in the following. We are particularly interested in the SMCG methods proposed by Yuan and Stoer [23], where the search directions are given by

$$d_k = \mu_k g_k + \nu_k s_{k-1}, \quad k \ge 1, \tag{8}$$

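where $\mu_k$ and $\nu_k$ are determined by (6). For the choice of $\rho_k$ in (6), we adopt the form (7) because of its effectiveness [24]. Therefore, based on (8) and (7), the search direction of the SMCG method for solving problem (1) can be written as

$$d_k = \mu_k F_k + \nu_k s_{k-1}, \tag{9}$$

where $\mu_k = \frac{1}{\Delta_k}\left(F_k^T y_{k-1}\, F_k^T s_{k-1} - s_{k-1}^T y_{k-1}\,\|F_k\|^2\right)$, $\nu_k = \frac{1}{\Delta_k}\left(F_k^T y_{k-1}\,\|F_k\|^2 - \rho_k\, F_k^T s_{k-1}\right)$, $\Delta_k = \rho_k\, s_{k-1}^T y_{k-1} - (F_k^T y_{k-1})^2$, and $\rho_k = \frac{3}{2}\frac{\|y_{k-1}\|^2}{s_{k-1}^T y_{k-1}}\|F_k\|^2$.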
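Direction (9) needs only a handful of inner products. The sketch below assumes $\rho_k$ takes the BBCG3-type form (7) with $g_k$ replaced by $F_k$, and it falls back to $-F_k$ when $s_{k-1}^T y_{k-1} \le 0$ or $\Delta_k \le 0$; that fallback is a hypothetical safeguard, not the truncation rule (10). When the fallback is not triggered, the 2×2 model matrix $M$ in (5) is positive definite, so $F_k^T d_k = -c^T M^{-1} c < 0$ with $c = (\|F_k\|^2, F_k^T s_{k-1})^T$.

```python
def dot(u, v):
    """Euclidean inner product of two equal-length lists."""
    return sum(a * b for a, b in zip(u, v))


def smcg_direction(F_k, s, y):
    """Direction (9) with a BBCG3-type rho_k; -F_k fallback is a hypothetical safeguard."""
    sy = dot(s, y)
    FF = dot(F_k, F_k)
    if sy <= 0.0 or FF == 0.0:
        return [-f for f in F_k]
    rho = 1.5 * dot(y, y) / sy * FF  # rho_k as in (7), with g_k replaced by F_k
    Fy, Fs = dot(F_k, y), dot(F_k, s)
    delta = rho * sy - Fy ** 2       # Delta_k
    if delta <= 0.0:
        return [-f for f in F_k]
    mu = (Fy * Fs - sy * FF) / delta
    nu = (Fy * FF - rho * Fs) / delta
    return [mu * f + nu * si for f, si in zip(F_k, s)]


F_k, s, y = [1.0, 2.0], [1.0, 0.0], [2.0, 0.5]
d = smcg_direction(F_k, s, y)
print(dot(F_k, d) < 0.0)  # True: d is a descent direction for this data
```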

In order to analyze some properties of the search direction, the search direction will be reset as when , where . Therefore, the search direction is truncated as

where is given by (9).

The projection technique, which will be used in the proposed method, is described as follows.

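By setting $z_k = x_k + \alpha_k d_k$ as a trial point, we define a hyperplane

$$H_k = \{x \in \mathbb{R}^n : F(z_k)^T (x - z_k) = 0\},$$

which strictly separates $x_k$ from the zeros of $F$ in (1). The projection operator $P_\Omega$ is a mapping from $\mathbb{R}^n$ to the non-empty closed convex subset $\Omega$:

$$P_\Omega(x) = \arg\min\{\|x - y\| : y \in \Omega\},$$

which enjoys the non-expansive property

$$\|P_\Omega(x) - P_\Omega(y)\| \le \|x - y\|, \quad \forall x, y \in \mathbb{R}^n.$$

Solodov and Svaiter [10] showed that the next iterate should be the projection of $x_k$ onto $H_k$; in the constrained setting this yields

$$x_{k+1} = P_\Omega\left[x_k - \xi_k F(z_k)\right], \qquad \xi_k = \frac{F(z_k)^T (x_k - z_k)}{\|F(z_k)\|^2}.$$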
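One projection step can be sketched as follows, assuming for illustration that $\Omega$ is the nonnegative orthant (so $P_\Omega$ is a componentwise maximum with 0) and that $F_i(x) = e^{x_i} - 1$, whose solution is $x^* = 0$. Both choices, the direction $d_k = -F(x_k)$, and the stepsize $\alpha_k = 0.1$ are hypothetical; the point is that $x_k - \xi_k F(z_k)$ is the orthogonal projection of $x_k$ onto $H_k$, and the new iterate moves closer to the solution.

```python
import math


def dot(u, v):
    """Euclidean inner product of two equal-length lists."""
    return sum(a * b for a, b in zip(u, v))


def F(x):
    # Hypothetical monotone test mapping with solution x* = 0.
    return [math.exp(xi) - 1.0 for xi in x]


def project_nonneg(x):
    # P_Omega for the hypothetical choice Omega = nonnegative orthant.
    return [max(xi, 0.0) for xi in x]


def projection_step(x, z, F, project):
    """x_{k+1} = P_Omega[x_k - xi_k F(z_k)], xi_k = F(z_k)^T (x_k - z_k) / ||F(z_k)||^2."""
    Fz = F(z)
    xi = dot(Fz, [a - b for a, b in zip(x, z)]) / dot(Fz, Fz)
    return project([a - xi * f for a, f in zip(x, Fz)])


x = [1.0, 2.0]
d = [-f for f in F(x)]                   # d_k = -F(x_k), for illustration only
z = [a + 0.1 * b for a, b in zip(x, d)]  # trial point z_k with alpha_k = 0.1
x_next = projection_step(x, z, F, project_nonneg)
print(math.sqrt(dot(x_next, x_next)) < math.sqrt(dot(x, x)))  # True: closer to x* = 0
```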

By combining (10) with the projection technique, we present an SMCG method for solving (1), which is described in detail as follows.

The following lemma indicates that the search direction satisfies the sufficient descent property.

Lemma 1.

The search direction $d_k$ generated by Algorithm 1 always satisfies the sufficient descent condition

$$F_k^T d_k \le -C\|F_k\|^2 \tag{11}$$

for all $k \ge 0$, where $C > 0$ is a constant.

Proof.

According to (10), we know that (11) holds with if . We next consider the opposite situation. It follows that

where the inequality comes from the fact that treating as a one variable function of and minimizing it can yield Consequently, by the choice of and (10), it holds that

In sum, (11) holds with . The proof is completed. □

Algorithm 1 Subspace Minimization Conjugate Gradient Method for Solving (1)

Lemma 2.

Let the sequences and be generated by Algorithm 1, then there always exists a stepsize satisfying the line search (13).
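To show how the pieces fit together, the loop below is a minimal sketch of an SMCG-plus-projection iteration under stated assumptions; it is not a transcription of Algorithm 1, whose steps are not reproduced here. Hypothetical choices, labeled in the comments: the backtracking rule $-F(z_k)^T d_k \ge \sigma \alpha_k \|d_k\|^2$ (a common derivative-free line search, used in place of (13)), $s_{k-1} = x_k - x_{k-1}$, $y_{k-1} = F_k - F_{k-1}$, and the $-F_k$ fallback inside the direction.

```python
import math


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def smcg_direction(F_k, s, y):
    """Direction (9) with a BBCG3-type rho_k; -F_k fallback is a hypothetical safeguard."""
    sy, FF = dot(s, y), dot(F_k, F_k)
    if sy <= 0.0 or FF == 0.0:
        return [-f for f in F_k]
    rho = 1.5 * dot(y, y) / sy * FF
    Fy, Fs = dot(F_k, y), dot(F_k, s)
    delta = rho * sy - Fy ** 2
    if delta <= 0.0:
        return [-f for f in F_k]
    mu = (Fy * Fs - sy * FF) / delta
    nu = (Fy * FF - rho * Fs) / delta
    return [mu * f + nu * si for f, si in zip(F_k, s)]


def smcg_projection_sketch(F, project, x0, tol=1e-6, max_iter=10000,
                           beta=0.5, sigma=1e-4):
    """Hypothetical SMCG + projection loop; returns (x, number of iterations)."""
    x = project(list(x0))
    Fx = F(x)
    d = [-f for f in Fx]
    for k in range(max_iter):
        if math.sqrt(dot(Fx, Fx)) <= tol:
            return x, k
        # Backtracking: -F(z)^T d >= sigma * alpha * ||d||^2 (assumed rule, not (13)).
        alpha = 1.0
        while True:
            z = [xi + alpha * di for xi, di in zip(x, d)]
            Fz = F(z)
            if -dot(Fz, d) >= sigma * alpha * dot(d, d) or alpha < 1e-12:
                break
            alpha *= beta
        FzFz = dot(Fz, Fz)
        if FzFz == 0.0:
            return z, k + 1  # F(z_k) = 0; feasibility of z_k is not re-checked here
        # Project x_k onto the hyperplane H_k, then onto Omega.
        xi = dot(Fz, [a - b for a, b in zip(x, z)]) / FzFz
        x_new = project([a - xi * f for a, f in zip(x, Fz)])
        F_new = F(x_new)
        s = [a - b for a, b in zip(x_new, x)]   # hypothetical s_{k-1}
        y = [a - b for a, b in zip(F_new, Fx)]  # hypothetical y_{k-1}
        d = smcg_direction(F_new, s, y)
        x, Fx = x_new, F_new
    return x, max_iter


def F_exp(x):
    # Hypothetical monotone test mapping F_i(x) = exp(x_i) - 1, solution x* = 0.
    return [math.exp(t) - 1.0 for t in x]


x_sol, iters = smcg_projection_sketch(
    F_exp, lambda v: [max(t, 0.0) for t in v], [1.0, 2.0, 3.0, 0.5, 1.5])
print(iters, math.sqrt(dot(F_exp(x_sol), F_exp(x_sol))))
```

The backtracking loop terminates because a sufficient descent direction gives $-F_k^T d_k > 0$, so the test holds for all sufficiently small $\alpha$ by continuity of $F$; this mirrors the role Lemma 2 plays for (13).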

3. Convergence Analysis

In this section, we will establish the global convergence and the convergence rate of Algorithm 1.

3.1. Global Convergence

We first make the following assumptions.

Assumption 1.

There is a solution such that

Assumption 2.

The mapping F is continuous and monotone.

By utilizing (2), we can obtain

The next lemma shows that the sequence generated by Algorithm 1 is Fejér monotone with respect to the solution set of (1).

Lemma 3.

Suppose that Assumptions 1 and 2 hold, and and are generated by Algorithm 1. Then, it holds that

Moreover, the sequence is bounded and

Proof.

It follows that the sequence is non-increasing, and thus the sequence is bounded. We also have

By the definition of , we can determine that

□

The following lemma relies only on the continuity of F.

Lemma 4.

Suppose that is generated by Algorithm 1. Then, for all , we have

where .

Proof.

From (11) and the Cauchy–Schwarz inequality, it follows that

In the following, we consider two cases: when holds, we have

Therefore, by (10), (16) and (22), as well as the Cauchy–Schwarz inequality, we obtain

or , . In sum, (20) holds for all with and C in (11). The proof is completed. □

In the following theorem, we establish the global convergence of Algorithm 1.

Theorem 1.

Suppose that Assumption 2 holds, and the sequences and are generated by Algorithm 1. Then, the following holds:

Proof.

We prove it by contradiction. Suppose that (24) does not hold, i.e., there exists a constant such that , . Together with (21), it implies that

By utilizing (19) and (25), we can determine that . By and the line search (13), for a large enough k, we can determine that

It follows from (20) and that the sequence is bounded. Together with the boundedness of , we know that there exist convergent subsequences for both and . Without loss of generality, we assume that the two sequences and are convergent. Hence, taking limits in (26) yields

where and are the corresponding limit points. By taking limits on both sides of (11), we obtain

It follows from (27) and (28) that , which contradicts . Therefore, we obtain (24). The proof is completed. □

3.2. R-Linear Convergence Rate

We now analyze the Q-linear and R-linear convergence of Algorithm 1. A sequence $\{x_k\}$ converging to $x^*$ is said to converge Q-linearly if there exists $q \in (0,1)$ such that $\|x_{k+1} - x^*\| \le q\|x_k - x^*\|$ for all sufficiently large $k$; it is said to converge R-linearly if there exist two constants $a > 0$ and $b \in (0,1)$ such that $\|x_k - x^*\| \le a b^k$ holds for all $k$ (see [29]).
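Q-linear convergence implies R-linear convergence, but not conversely: an R-linearly convergent error may stall on some iterations. The hypothetical error sequence $e_k = 4^{-\lfloor k/2 \rfloor}$ below (not produced by Algorithm 1) has ratio $e_{k+1}/e_k = 1$ on every other step, so it is not Q-linearly convergent, yet $e_k \le 2 \cdot (1/2)^k$ for all $k$.

```python
def errors(kmax):
    """e_k = 4^(-floor(k/2)): the error stalls on every other iteration."""
    return [4.0 ** (-(k // 2)) for k in range(kmax)]


e = errors(20)
ratios = [e[k + 1] / e[k] for k in range(len(e) - 1)]

# Q-linear convergence would need e_{k+1} / e_k <= q < 1 for all large k.
print(max(ratios))  # 1.0, so the sequence is not Q-linearly convergent

# R-linear convergence only needs e_k <= a * b^k; a = 2, b = 1/2 works here.
print(all(ek <= 2.0 * 0.5 ** k for k, ek in enumerate(e)))  # True
```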

Assumption 3.

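For any $x^* \in \Omega^*$, where $\Omega^*$ denotes the solution set of (1), there exist constants $\kappa > 0$ and $\delta > 0$ such that

$$\kappa\, \mathrm{dist}(x, \Omega^*) \le \|F(x)\|, \quad \forall x \in N(x^*, \delta),$$

where $\mathrm{dist}(x, \Omega^*)$ denotes the distance from $x$ to the solution set $\Omega^*$, and $N(x^*, \delta) = \{x \in \mathbb{R}^n : \|x - x^*\| \le \delta\}$.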

Theorem 2.

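Suppose that Assumptions 2 and 3 hold, and let the sequence $\{x_k\}$ be generated by Algorithm 1. Then, the sequence $\{\mathrm{dist}(x_k, \Omega^*)\}$, where $\Omega^*$ is the solution set of (1), converges Q-linearly to 0, and the sequence $\{x_k\}$ converges R-linearly to a solution of (1).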

4. Numerical Experiments

In this section, numerical experiments are conducted to compare the performance of Algorithm 1 with that of the HTTCGP method [30], the PDY method [17], the MPRPA method [18], and the PCG method [15], which are effective projection algorithms for solving (1). All codes of the test methods were implemented in MATLAB R2019a and run on an HP desktop computer with an Intel(R) Core(TM) i5-10500 CPU at 3.10 GHz, 8.00 GB RAM, and the Windows 10 operating system.

In Algorithm 1, we choose the following parameter values:

The parameters of the other four test algorithms take the default values from [15,17,18,30], respectively. In the numerical experiment, all test methods are terminated if the number of iterations exceeds 10,000, or if the function value at the current iterate satisfies the condition .

Denote

The test problems are given as follows.

Problem 1.

This problem is a logarithmic function with [17], i.e.,

Problem 2.

This problem is a discrete boundary value problem with [17], i.e.,

where ,

Problem 3.

This problem is a trigexp function with [17], i.e.,

where

Problem 4.

This problem is a tridiagonal exponential problem with [17], i.e.,

where

Problem 5.

This problem is problem 4.6 in [17] with , i.e.,

where

Problem 6.

This problem is problem 4.7 in [17], i.e.,

where ,

Problem 7.

This problem is problem 4.8 in [17], i.e.,

where ,

Problem 8.

This problem is problem 3 in [31], i.e.,

where ,

Problem 9.

This problem is problem 4.3 in [32], i.e.,

where ,

Problem 10.

This problem is problem 4.8 in [32], i.e.,

where ,

Problem 11.

This problem is problem 4.5 in [32], i.e.,

where ,

Problem 12.

This problem is problem 5 in [31], i.e.,

where ,

Problem 13.

This problem is problem 6 in [31], i.e.,

where ,

Problem 14.

This problem is problem 4.3 in [20], i.e.,

where ,

Problem 15.

This problem is a complementarity problem in [20], i.e.,

where ,

The above 15 problems, with different dimensions and different initial points, are used to test the five methods. Some of the numerical results are listed in Table 1, where “Al” represents Algorithm 1, “Pi” stands for the i-th test problem listed above, and “Ni” and “NF” denote the number of iterations and the number of function evaluations, respectively. Other numerical results are available at https://www.cnblogs.com/888-0516-2333/p/18026523 (accessed on 6 January 2024).

Table 1.

The numerical results (n = 10,000).

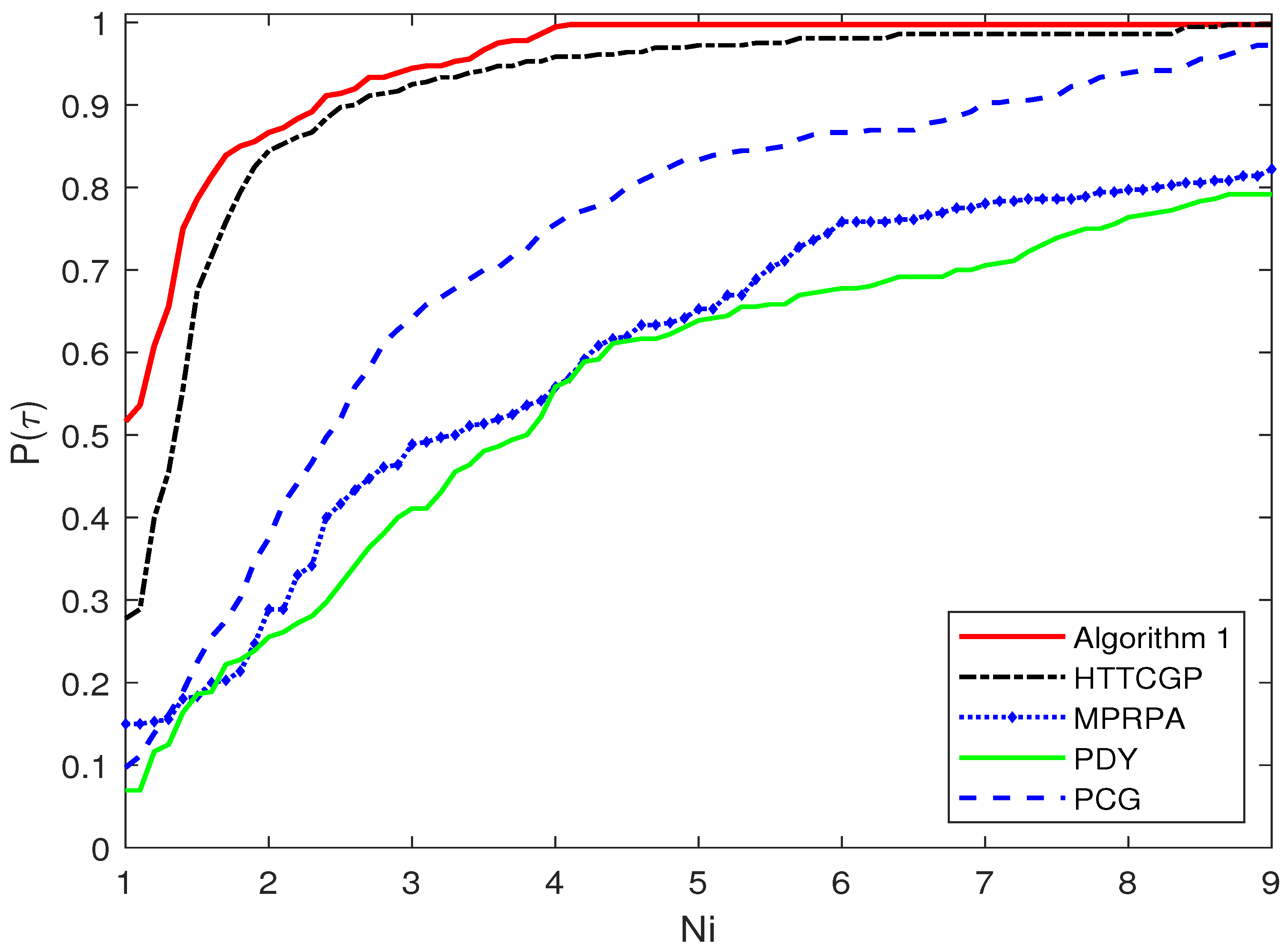

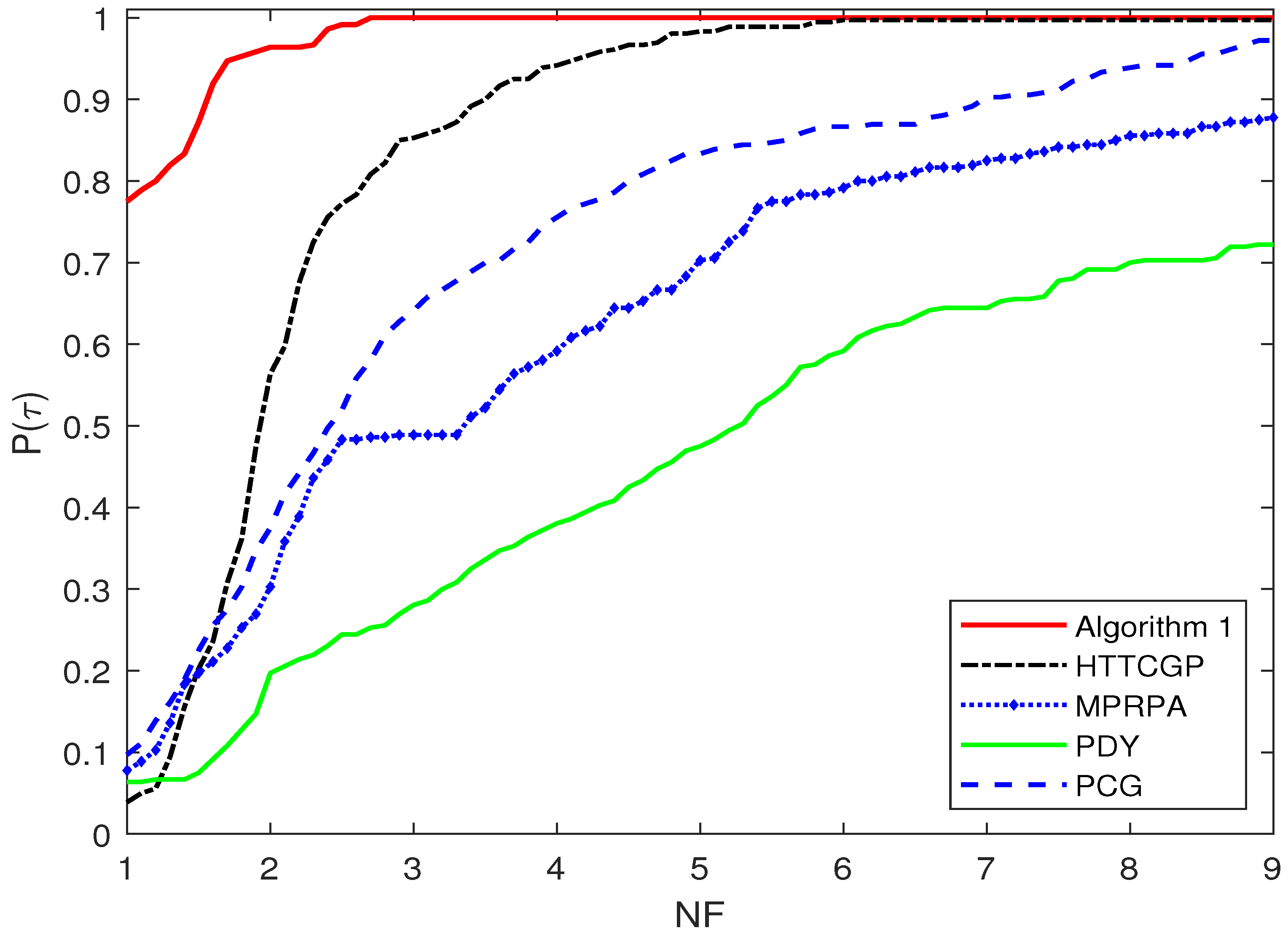

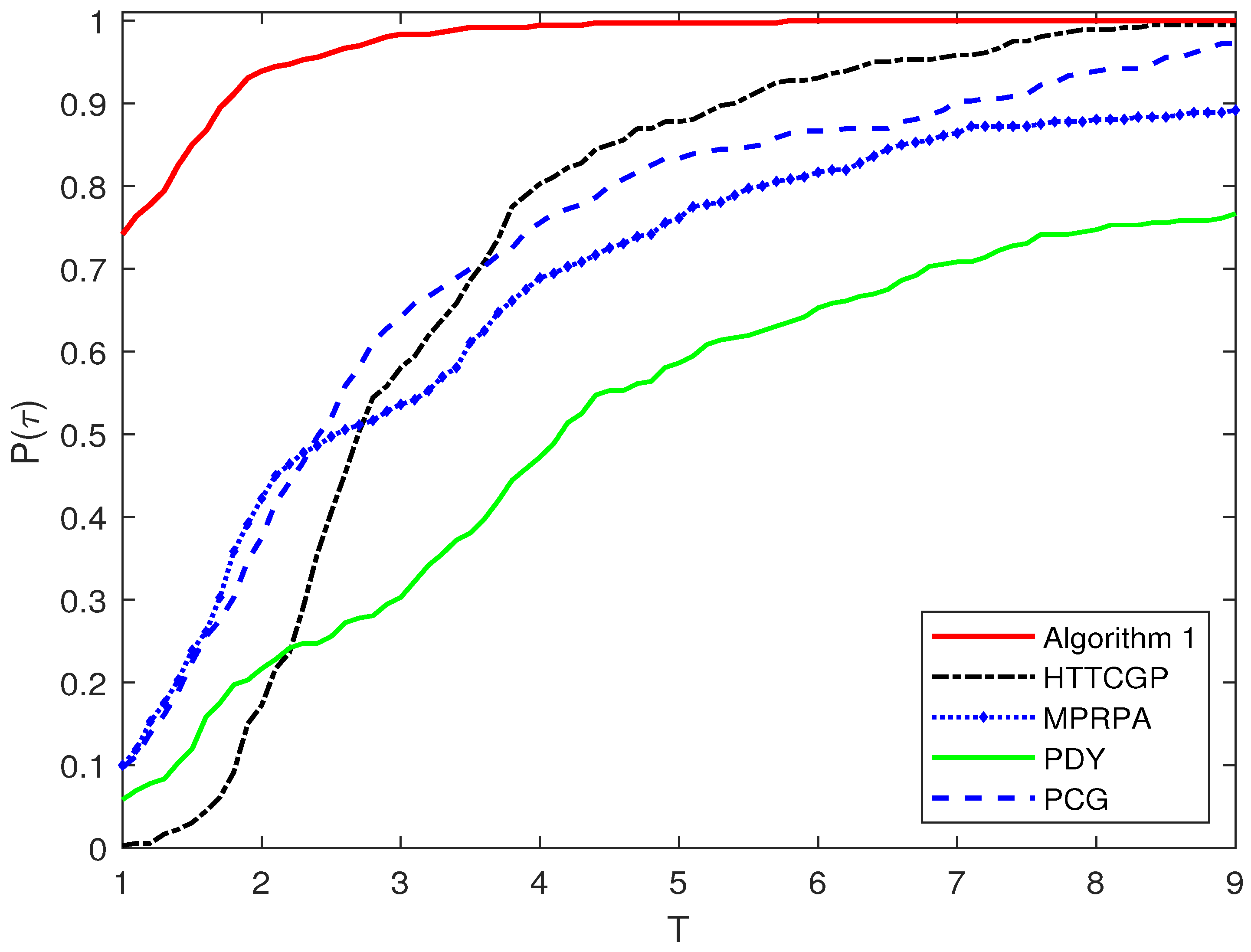

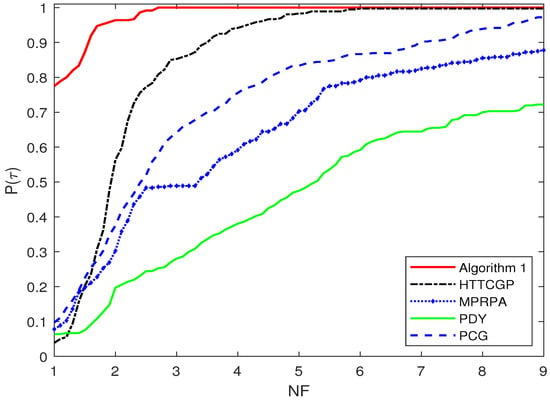

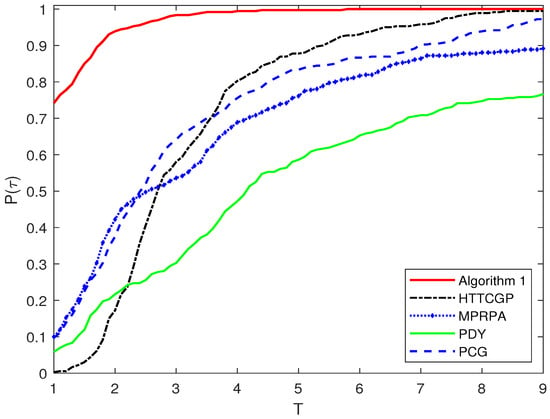

The performance profiles proposed by Dolan and Moré [33] are used to compare the numerical performance of the test methods in terms of Ni, NF, and T, respectively. We explain the performance profile by taking the number of iterations as an example. Denote the test set and the set of algorithms by P and A, respectively. We assume that there are $n_a$ algorithms and $n_p$ problems. For each problem $p \in P$ and algorithm $a \in A$, $t_{p,a}$ represents the number of iterations required to solve problem $p$ by algorithm $a$. We use the performance ratio

$$r_{p,a} = \frac{t_{p,a}}{\min\{t_{p,a} : a \in A\}}$$

to compare the performance of algorithm $a$ on problem $p$ with the best performance of any algorithm on this problem. To obtain an overall assessment of the performance of each algorithm, we define

$$\rho_a(\tau) = \frac{1}{n_p}\,\mathrm{size}\{p \in P : r_{p,a} \le \tau\},$$

which is the probability for algorithm $a \in A$ that its performance ratio $r_{p,a}$ is within a factor $\tau \in \mathbb{R}$ of the best possible ratio, and which reflects the numerical performance of algorithm $a$ relative to the other test algorithms in A. Clearly, algorithms with a large probability $\rho_a(\tau)$ are preferred. Therefore, in a plot of $\rho_a(\tau)$ for the test methods, the higher the curve, the better the corresponding algorithm performs.
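The profile $\rho_a(\tau)$ is straightforward to compute from a table of costs. The sketch below uses hypothetical costs for four problems and two algorithms (not data from Table 1) and marks a failure with `math.inf`, so that its ratio is infinite and never counted as solved.

```python
import math


def performance_profile(t, taus):
    """Dolan-More profiles rho_a(tau).

    t[p][a] is the (positive) cost, e.g. Ni, of algorithm a on problem p;
    use math.inf for a failure. Returns one list of rho_a(tau) per algorithm.
    """
    n_p, n_a = len(t), len(t[0])
    ratios = []
    for row in t:
        best = min(row)
        ratios.append([c / best for c in row])  # r_{p,a}
    return [
        [sum(1 for p in range(n_p) if ratios[p][a] <= tau) / n_p for tau in taus]
        for a in range(n_a)
    ]


# Hypothetical costs: rows are problems, columns are algorithms.
t = [[10, 20], [30, 15], [5, 5], [8, math.inf]]
print(performance_profile(t, [1.0, 2.0]))  # [[0.75, 1.0], [0.5, 0.75]]
```

$\rho_a(1)$ is the fraction of problems on which algorithm $a$ is (jointly) best, which is how figures such as the "about 78%" below are read off the curves.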

As shown in Figure 1, in terms of the number of iterations, Algorithm 1 performs best, followed by the HTTCGP, MPRPA, and PCG methods, while the PDY method performs worst. Figure 2 indicates that Algorithm 1 improves significantly over the other four test methods in terms of the number of function evaluations: it solves about 78% of the test problems with the fewest function evaluations, while the corresponding percentages of the other four methods are all below 10%. This significant improvement in NF is attributable to the fact that the search direction of Algorithm 1 is generated by minimizing the quadratic approximate model in the two-dimensional subspace, which gives the search direction additional free parameters and, as a result, requires fewer function evaluations in Step 2. This is also an advantage of the SMCG methods over other CG methods. Figure 3 shows that Algorithm 1 is much faster than the other four test methods.

Figure 1.

Performance profiles of the five algorithms with respect to number of iterations (Ni).

Figure 2.

Performance profiles of the five algorithms with respect to number of function evaluations (NF).

Figure 3.

Performance profiles of the five algorithms with respect to CPU time (T).

The numerical experiment indicates that Algorithm 1 is superior to the other four test methods.

5. Conclusions

In this paper, an efficient SMCG method is presented for solving nonlinear monotone equations with convex constraints. The sufficient descent property of the search direction is analyzed, and the global convergence and convergence rate of the proposed algorithm are established under suitable assumptions. The numerical results confirm the effectiveness of the proposed method.

The SMCG method has shown good numerical performance for solving nonlinear monotone equations with convex constraints. Much remains to be studied regarding SMCG methods for such problems, including exploiting suitable quadratic or non-quadratic approximate models to derive new search directions. This will be the focus of our future research.

Author Contributions

Conceptualization, T.S. and Z.L.; methodology, T.S. and Z.L.; software, T.S. and Z.L.; validation, T.S. and Z.L.; formal analysis, T.S. and Z.L.; investigation, T.S. and Z.L.; resources, T.S. and Z.L.; data curation, T.S. and Z.L.; writing—original draft preparation, T.S.; writing—review and editing, Z.L.; visualization, T.S. and Z.L.; supervision, T.S. and Z.L.; project administration, T.S. and Z.L.; funding acquisition, Z.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research was supported by National Science Foundation of China (No. 12261019), Guizhou Science Foundation (No. QHKJC-ZK[2022]YB084).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data are contained within the article and corresponding link.

Acknowledgments

We would like to thank the Associate Editor and the anonymous referees for their valuable comments and suggestions.

Conflicts of Interest

The authors declare no competing interests.

References

- Guo, D.S.; Nie, Z.Y.; Yan, L.C. The application of noise-tolerant ZD design formula to robots’ kinematic control via time-varying nonlinear equations solving. IEEE Trans. Syst. Man Cybern. Syst. 2017, 48, 2188–2197.

- Shi, Y.; Zhang, Y. New discrete-time models of zeroing neural network solving systems of time-variant linear and nonlinear inequalities. IEEE Trans. Syst. Man Cybern. Syst. 2017, 50, 565–576.

- Dirkse, S.P.; Ferris, M.C. MCPLIB: A collection of nonlinear mixed complementarity problems. Optim. Methods Softw. 1995, 5, 319–345.

- Xiao, Y.H.; Wang, Q.Y.; Hu, Q.J. Non-smooth equations based methods for l1-norm problems with applications to compressed sensing. Nonlinear Anal. 2011, 74, 3570–3577.

- Yuan, Y.X. Subspace methods for large scale nonlinear equations and nonlinear least squares. Optim. Eng. 2009, 10, 207–218.

- Ahmad, F.; Tohidi, E.; Carrasco, J.A. A parameterized multi-step Newton method for solving systems of nonlinear equations. Numer. Algorithms 2016, 71, 631–653.

- Lukšan, L.; Vlček, J. New quasi-Newton method for solving systems of nonlinear equations. Appl. Math. 2017, 62, 121–134.

- Yu, Z. On the global convergence of a Levenberg–Marquardt method for constrained nonlinear equations. JAMC 2004, 16, 183–194.

- Zhang, J.L.; Wang, Y. A new trust region method for nonlinear equations. Math. Methods Oper. Res. 2003, 58, 283–298.

- Solodov, M.V.; Svaiter, B.F. A globally convergent inexact Newton method for systems of monotone equations. In Reformulation: Nonsmooth, Piecewise Smooth, Semismooth and Smoothing Methods; Fukushima, M., Qi, L., Eds.; Kluwer Academic: Boston, MA, USA, 1998; pp. 355–369.

- Zheng, Y.; Zheng, B. Two new Dai–Liao-type conjugate gradient methods for unconstrained optimization problems. J. Optim. Theory Appl. 2017, 175, 502–509.

- Li, M.; Liu, H.W.; Liu, Z.X. A new family of conjugate gradient methods for unconstrained optimization. J. Appl. Math. Comput. 2018, 58, 219–234.

- Xiao, Y.H.; Zhu, H. A conjugate gradient method to solve convex constrained monotone equations with applications in compressive sensing. J. Math. Anal. Appl. 2013, 405, 310–319.

- Hager, W.W.; Zhang, H. A new conjugate gradient method with guaranteed descent and an efficient line search. SIAM J. Optim. 2005, 16, 170–192.

- Liu, J.K.; Li, S.J. A projection method for convex constrained monotone nonlinear equations with applications. Comput. Math. Appl. 2015, 70, 2442–2453.

- Dai, Y.H.; Yuan, Y.X. A nonlinear conjugate gradient method with a strong global convergence property. SIAM J. Optim. 1999, 10, 177–182.

- Liu, J.K.; Feng, Y.M. A derivative-free iterative method for nonlinear monotone equations with convex constraints. Numer. Algorithms 2019, 82, 245–262.

- Gao, P.T.; He, C.J.; Liu, Y. An adaptive family of projection methods for constrained monotone nonlinear equations with applications. Appl. Math. Comput. 2019, 359, 1–16.

- Bojari, S.; Eslahchi, M.R. Two families of scaled three-term conjugate gradient methods with sufficient descent property for nonconvex optimization. Numer. Algorithms 2020, 83, 901–933.

- Li, Q.; Zheng, B. Scaled three-term derivative-free methods for solving large-scale nonlinear monotone equations. Numer. Algorithms 2021, 87, 1343–1367.

- Waziri, M.Y.; Ahmed, K. Two descent Dai–Yuan conjugate gradient methods for systems of monotone nonlinear equations. J. Sci. Comput. 2022, 90, 36.

- Ibrahim, A.H.; Alshahrani, M.; Al-Homidan, S. Two classes of spectral three-term derivative-free method for solving nonlinear equations with application. Numer. Algorithms 2023.

- Yuan, Y.X.; Stoer, J. A subspace study on conjugate gradient algorithms. Z. Angew. Math. Mech. 1995, 75, 69–77.

- Dai, Y.H.; Kou, C.X. A Barzilai–Borwein conjugate gradient method. Sci. China Math. 2016, 59, 1511–1524.

- Liu, H.W.; Liu, Z.X. An efficient Barzilai–Borwein conjugate gradient method for unconstrained optimization. J. Optim. Theory Appl. 2019, 180, 879–906.

- Li, Y.F.; Liu, Z.X.; Liu, H.W. A subspace minimization conjugate gradient method based on conic model for unconstrained optimization. Comput. Appl. Math. 2019, 38, 16.

- Zhao, T.; Liu, H.W.; Liu, Z.X. New subspace minimization conjugate gradient methods based on regularization model for unconstrained optimization. Numer. Algorithms 2021, 87, 1501–1534.

- Wang, T.; Liu, Z.; Liu, H. A new subspace minimization conjugate gradient method based on tensor model for unconstrained optimization. Int. J. Comput. Math. 2019, 96, 1924–1942.

- Ortega, J.M.; Rheinboldt, W.C. Iterative Solution of Nonlinear Equations in Several Variables; Academic Press: New York, NY, USA; London, UK, 1970.

- Yin, J.H.; Jian, J.B.; Jiang, X.Z.; Liu, M.X.; Wang, L.Z. A hybrid three-term conjugate gradient projection method for constrained nonlinear monotone equations with applications. Numer. Algorithms 2021, 88, 389–418.

- Ou, Y.G.; Li, J.Y. A new derivative-free SCG-type projection method for nonlinear monotone equations with convex constraints. J. Appl. Math. Comput. 2018, 56, 195–216.

- Ma, G.D.; Jin, J.C.; Jian, J.B.; Yin, J.H.; Han, D.L. A modified inertial three-term conjugate gradient projection method for constrained nonlinear equations with applications in compressed sensing. Numer. Algorithms 2023, 92, 1621–1653.

- Dolan, E.D.; Moré, J.J. Benchmarking optimization software with performance profiles. Math. Program. 2002, 91, 201–213.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).