Abstract

Synthetic Aperture Radar (SAR) target detection faces significant challenges including speckle noise interference, weak small object features, and multi-category imbalance. To address these issues, this paper proposes LSDA-YOLO, an enhanced SAR target detection framework built upon the YOLO architecture that integrates Large Kernel Attention and SimAM dual attention mechanisms. Our method effectively overcomes these challenges by synergistically combining global context modeling and local detail enhancement to improve robustness and accuracy. Notably, this framework leverages the inherent symmetry properties of typical SAR targets (e.g., geometric symmetry of ships and bridges) to strengthen feature consistency, thereby reducing interference from asymmetric background clutter. By replacing the baseline C2PSA module with Deformable Large Kernel Attention and incorporating parameter-free SimAM attention throughout the detection network, our approach achieves improved detection accuracy while maintaining computational efficiency. The deformable large kernel attention module expands the receptive field through synergistic integration of deformable and dilated convolutions, enhancing geometric modeling for complex-shaped targets. Simultaneously, the SimAM attention mechanism enables adaptive feature enhancement across channel and spatial dimensions based on visual neuroscience principles, effectively improving discriminability for small targets in noisy SAR environments. Experimental results on the RSAR dataset demonstrate that LSDA-YOLO achieves 80.8% mAP50, 53.2% mAP50-95, and 77.6% F1 score, with computational complexity of 7.3 GFLOPS, showing significant improvement over baseline models and other attention variants while maintaining lightweight characteristics suitable for real-time applications.

1. Introduction

Synthetic Aperture Radar (SAR) has become a vital remote sensing technology owing to its all-weather and day-night imaging capabilities. It plays a crucial role in military reconnaissance, disaster assessment, and maritime surveillance. However, automatic target detection in SAR imagery remains challenging due to inherent speckle noise, unstable scattering characteristics, and complex background interference [1,2]. These issues are particularly pronounced in multi-category detection tasks. Large scale variations and diverse target morphologies hinder the balance between detection accuracy and robustness.

Recent advances in deep learning have brought remarkable progress to SAR image interpretation [3,4]. Convolutional Neural Networks (CNNs) demonstrate powerful feature learning capabilities. They effectively reduce the dimensionality of high-volume SAR data while preserving discriminative information, thus significantly improving detection accuracy. The hierarchical structure of CNNs enables automatic learning of representations from low-level edge features to high-level semantic features. This end-to-end learning approach avoids the complex manual feature design required by traditional methods.

Among various deep learning architectures, the You Only Look Once (YOLO) series has emerged as a leading framework for real-time object detection. It offers an optimal balance between inference speed and detection accuracy. The continuous evolution from YOLOv1 to YOLOv10 [5,6,7,8,9,10,11,12,13] has consistently advanced the state of the art in real-time detection. This makes YOLO-based models particularly suitable for SAR applications where computational efficiency and rapid inference are critical.

However, when applied to SAR imagery, standard YOLO architectures still face significant challenges. Various improvement attempts have been made to adapt YOLO to the specificity of SAR data. For example, some approaches embed non-local attention modules to enhance global perception capability [14]. Others introduce multi-scale attention mechanisms in feature pyramid networks to handle target scale variations [15]. Nevertheless, these approaches often struggle to achieve an ideal balance between model complexity and detection accuracy. Either the computational overhead becomes too large, compromising real-time performance, or the feature extraction remains insufficient to effectively suppress coherent speckle noise interference.

Consequently, traditional YOLO detectors exhibit clear limitations in handling SAR-specific characteristics. Fixed-scale convolutional kernels are unable to capture long-range spatial dependencies. This limits the model’s global perception of large structural targets. Meanwhile, coherent speckle noise and weak small-target features often overwhelm local details. This leads to missed detections and false alarms. Although attention mechanisms have been introduced to alleviate these problems [16], most existing methods rely on general-purpose modules (such as SE [17] or CBAM [18]) originally designed for optical imagery. These modules lack adaptation to the unique statistical and physical characteristics of SAR data. They generally do not incorporate a deep understanding of SAR image scattering mechanisms, resulting in limited generalization capability under complex scenarios.

Particularly when targets and backgrounds exhibit similar scattering characteristics, such as small ships in marine environments or military facilities in complex terrain, traditional YOLO architectures often struggle to distinguish targets from background clutter. This severely constrains their practical value in real-world combat environments.

To overcome these limitations, this work presents LSDA-YOLO, an enhanced YOLO-based SAR target detection framework. It integrates dual-attention mechanisms in a synergistic architecture to achieve improved detection performance. Building upon the YOLO foundation, our approach specifically addresses SAR-specific challenges through specialized attention modules. The principal contributions of this study are summarized as follows:

- A deformable large kernel attention module is introduced within the YOLO architecture, expanding the receptive field through synergistic integration of deformable and dilated convolutions while preserving computational efficiency, thereby enhancing geometric modeling for complex-shaped targets;

- A parameter-free attention mechanism is incorporated into the YOLO framework, enabling adaptive feature enhancement across channel and spatial dimensions grounded in visual neuroscience principles, effectively improving discriminability for small targets in noisy SAR environments;

- A dual-attention collaborative architecture is constructed, establishing complementary interactions between local details and global context via feature-level fusion mechanisms within the YOLO backbone, substantially boosting multi-scale detection capability while maintaining operational efficiency;

- Comprehensive evaluations on large-scale SAR datasets demonstrate that our YOLO-based LSDA framework achieves improved detection accuracy and robustness, providing an effective solution for SAR target detection in complex scenarios.

2. Related Work

2.1. Object Detection

Object detection algorithms identify potential target regions in images through predicted bounding boxes and subsequently perform object classification using features extracted from these areas. Traditional methods such as the Deformable Part Model (DPM) [19] and Histogram of Oriented Gradients (HOG) [20] possess inherent limitations. For instance, they often struggle with efficient region selection using sliding windows, leading to increased processing time and window redundancy. Nevertheless, despite these constraints, traditional algorithms have played a crucial role in the advancement of object detection, and their influence persists in some of the most recent algorithms.

With the progression of deep learning, object detection algorithms based on deep neural networks (DNNs) [21] have gradually become the mainstream approach, as they can dynamically learn features and enhance detection robustness for diverse objects, marking a significant leap forward in the field.

Deep neural networks stack large numbers of nonlinear units (artificial neurons), loosely modeled on the human brain, which allows a wide variety of network architectures to be constructed. The field of object detection is characterized by two predominant methodologies: one-stage detectors, which include SSD [22], the YOLO series, and RetinaNet [23]; and two-stage detectors, such as R-CNN [24], Faster R-CNN [25], and Cascade R-CNN [26]. While one-stage detectors are typically somewhat less accurate than their two-stage counterparts, they offer significant advantages in terms of speed and accessibility.

2.2. Object Detection in SAR Images

Target detection in synthetic aperture radar (SAR) image interpretation plays a vital role in applications such as resource exploration, environmental monitoring, and defense security. However, these tasks face challenges including limited resolution, large scale variations among targets, complex background clutter, and strong coherent speckle noise interference. Particularly in complex terrain scenarios, the similarity between target and background scattering characteristics often leads to frequent missed detections and false alarms in traditional detection methods.

Early SAR target detection approaches primarily relied on statistical modeling and constant false alarm rate (CFAR) detectors, as reviewed by Dai et al. [27]. Although these methods are computationally efficient, they often struggle to cope with complex background clutter and target heterogeneity. The development of feature-based methods incorporating texture analysis and polarimetric decomposition provided improved discrimination capabilities but remained limited in handling large-scale datasets [28].

The emergence of deep learning has revolutionized SAR target detection, with Li et al. [1] demonstrating significant performance improvements using convolutional neural networks. Recent advancements have explored innovative approaches to address specific SAR challenges. For instance, Wan et al. proposed an automatic pruning method based on multitask reinforcement learning to optimize model architecture for SAR target detection [29]. Additionally, Wan et al. developed an orientation detector for ship targets based on semantic flow feature alignment and Gaussian label matching [30]. In the area of SAR image preprocessing, Nurmamat et al. introduced an interpretable SAR image filtering algorithm to enhance image quality [31]. These works represent important contributions to SAR-specific algorithm development.

While early approaches mainly adapted generic object detection frameworks to SAR imagery, subsequent studies have proposed SAR-specific architectures that account for speckle noise and complex scattering mechanisms. For instance, Ma et al. proposed the adaptive multi-hierarchical attention network (AMANet), whose attention module performs multi-level feature mapping and achieves enhanced detection robustness under cluttered maritime scenes [32]. Further developments include multi-scale feature fusion techniques, such as the C-AFBiFPN structure by Meng et al. [33], which integrates convolutional feature enhancement with attention-guided bidirectional feature pyramid networks, and the scale-sensitive cross-attention mechanism by Wang and Kim [34], which improves cross-scale feature interactions. Attention mechanisms have also been optimized for small targets: Miao et al. introduced the CSC-Net framework based on YOLO11, achieving an accuracy-efficiency balance through structural reparameterization and contextual feature fusion [35].

With the advancement of vision Transformer technology, hybrid attention-based detection architectures have demonstrated strong potential in remote sensing image interpretation. Rocha and Figueiredo proposed the BRA-CBAM-Swin fusion model that integrates bi-level routing attention with convolutional block attention modules and the Swin Transformer [36]. Yang et al. developed the Hybrid-DETR architecture, which enhances multi-category target detection capabilities in remote sensing images through modular decoding and hierarchical feature aggregation mechanisms [37]. To address the challenge of limited annotated SAR data, recent research has focused on large-scale benchmark construction and transfer learning. Li et al. introduced the SARDet-100K dataset and a multi-stage pre-training framework to advance large-scale SAR object detection research [38]. Additionally, Boutayeb et al. proposed a deformable attention mechanism tailored for remote sensing object detection, demonstrating improved accuracy and efficiency in Transformer-based architectures [39].

These research findings demonstrate that the systematic integration of multi-scale feature fusion, attention mechanism optimization, and lightweight design can significantly enhance SAR image target detection performance in complex environments. Building upon these technical insights, this study aims to advance SAR target detection in challenging scenarios through innovative improvements to the YOLO11 framework [40]. Specifically, we propose a novel dual-attention cooperative architecture that integrates deformable large kernel attention with parameter-free SimAM attention mechanisms, leveraging the complementary advantages of global context modeling and local detail enhancement to effectively address key challenges in SAR imagery including speckle noise interference, weak small target features, and multi-scale object detection. Our methodological innovations include replacing the baseline C2PSA module with deformable large kernel attention to enhance geometric modeling capabilities for large and complex-shaped targets, while embedding parameter-free SimAM attention throughout the network to improve discriminative power for small targets and low-contrast features. This approach achieves significant detection accuracy improvements while maintaining lightweight characteristics suitable for practical deployment.

3. Materials and Methods

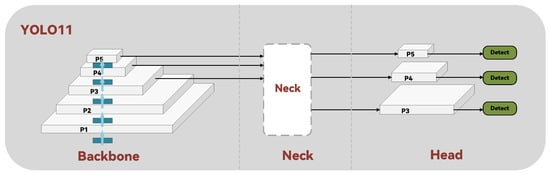

YOLO11 employs a multi-scale feature fusion architecture that processes input images through three main stages: the backbone network extracts hierarchical features at different scales, the neck network fuses these features through feature pyramid structures to enhance multi-scale representation, and the head network performs final classification and bounding box regression. As shown in Figure 1, this architecture achieves a balance between detection speed and precision. However, when applied to SAR target detection, several limitations become apparent: the fixed-scale convolutional kernels struggle to capture long-range spatial dependencies required for large structural targets like ports and bridges; the absence of specialized noise suppression mechanisms makes the model vulnerable to speckle noise that overwhelms weak small target features; the baseline attention mechanisms demonstrate insufficient adaptability to SAR’s unique scattering characteristics and complex backgrounds; and the multi-scale fusion lacks adequate handling of extreme scale variations among different target categories. To address these challenges while maintaining computational efficiency, we propose LSDA-YOLO, an enhanced framework incorporating dual attention mechanisms that significantly improves detection accuracy for SAR imagery. This approach effectively handles SAR-specific difficulties including speckle noise interference, weak small objects, and multi-category imbalance, making it particularly suitable for defense security, emergency response, and environmental monitoring applications where both accuracy and efficiency are critical.

Figure 1. General framework of YOLO11.

3.1. Deformable Large Kernel Attention Module

The conventional C2PSA module [41] provides high-level semantic features for large-scale targets in standard architectures but exhibits several limitations in SAR detection scenarios. Fixed-size convolutional kernels struggle to adapt to geometric deformations of targets, while limited receptive fields hinder comprehensive global semantic modeling. Additionally, the absence of dedicated noise suppression mechanisms degrades feature quality in speckle-intensive environments, and insufficient multi-scale interaction constrains performance in complex scenes.

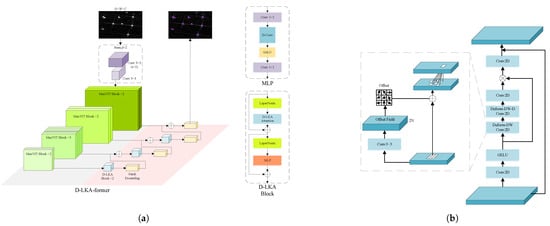

To address these limitations, we propose the Deformable Large Kernel Attention (DLKA) module [42], whose overall structure is illustrated in Figure 2. This module employs a hierarchical architecture comprising feature preprocessing, deformable attention, and feature refinement stages, maintaining a residual structure while incorporating several SAR-specific enhancements.

Figure 2. Schematic diagram of the DLKA module structure. (a) DLKAnet; (b) Architecture of the deformable LKA module.

Deformable Convolution Unit utilizes learnable sampling offsets to enable kernel adaptation to target geometries. The offset calculation is implemented through a dedicated convolutional branch that learns deformation information from normalized input features. For input features $X \in \mathbb{R}^{C \times H \times W}$, the offset field is computed as:

$$\Delta P = \mathrm{Conv}_{\mathrm{offset}}\left(\mathrm{LN}(X)\right)$$

where $\mathrm{LN}(\cdot)$ denotes layer normalization, and $\mathrm{Conv}_{\mathrm{offset}}$ is the offset prediction convolutional layer with $2n^{2}$ output channels, corresponding to x and y offsets for each sampling point of an $n \times n$ convolution kernel.

The deformable convolution operation using the learned offsets can be expressed as:

$$Y(p) = \sum_{k=1}^{n^{2}} w_k \cdot X\left(p + p_k + \Delta p_k\right)$$

where p denotes the output position, $p_k$ represents the predefined sampling position for the k-th kernel weight $w_k$, and $\Delta p_k$ is the learned offset corresponding to $p_k$.
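As a rough illustration of the deformable sampling rule described above (each kernel weight multiplies the input read at its regular grid position shifted by a learned offset), the following pure-Python sketch evaluates a single output position of a 3 × 3 deformable convolution on a single-channel map. The function names, the zero padding outside the map, and the use of bilinear interpolation for fractional positions are assumptions made for illustration; they are not taken from the authors' implementation.

```python
import math

def bilinear_sample(fmap, y, x):
    """Bilinearly interpolate a 2-D map (list of rows) at a fractional (y, x).

    Positions outside the map contribute zero (assumed zero padding).
    """
    height, width = len(fmap), len(fmap[0])
    y0, x0 = math.floor(y), math.floor(x)
    value = 0.0
    for yy in (y0, y0 + 1):
        for xx in (x0, x0 + 1):
            if 0 <= yy < height and 0 <= xx < width:
                weight = (1 - abs(y - yy)) * (1 - abs(x - xx))
                value += weight * fmap[yy][xx]
    return value

def deformable_conv_at(fmap, weights, offsets, p):
    """Sum over k of w_k * x(p + p_k + dp_k) for a 3 x 3 kernel.

    weights: 9 floats in row-major kernel order.
    offsets: 9 (dy, dx) pairs, i.e. 2 * 3 * 3 = 18 offset values per position.
    p:       (row, col) of the output position.
    """
    grid = [(dy, dx) for dy in (-1, 0, 1) for dx in (-1, 0, 1)]
    total = 0.0
    for w_k, (gy, gx), (oy, ox) in zip(weights, grid, offsets):
        total += w_k * bilinear_sample(fmap, p[0] + gy + oy, p[1] + gx + ox)
    return total

fmap = [[0.0, 1.0, 2.0], [3.0, 4.0, 5.0], [6.0, 7.0, 8.0]]
zero_offsets = [(0.0, 0.0)] * 9
# With zero offsets the operation reduces to an ordinary 3 x 3 convolution.
plain = deformable_conv_at(fmap, [1.0] * 9, zero_offsets, (1, 1))
# Shifting only the centre tap half a pixel to the right reads between 4 and 5.
centre_only = [0.0] * 4 + [1.0] + [0.0] * 4
shifted_offsets = [(0.0, 0.0)] * 4 + [(0.0, 0.5)] + [(0.0, 0.0)] * 4
shifted = deformable_conv_at(fmap, centre_only, shifted_offsets, (1, 1))
```

In a trained network the offsets come from the offset prediction branch rather than being set by hand; the hand-picked values here only show how an offset moves a sampling point off the regular grid.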

Large Receptive Field Module employs large-size convolutional kernels combined with dilated convolutions to significantly expand the effective receptive field. In practice, large kernels are decomposed into a combination of depthwise convolution, dilated depthwise convolution, and 1 × 1 convolution to reduce computational complexity. For constructing a $K \times K$ convolution kernel, the following decomposition approach is used:

$$DW = (2d - 1) \times (2d - 1), \qquad DW\text{-}D = \left\lceil \frac{K}{d} \right\rceil \times \left\lceil \frac{K}{d} \right\rceil$$

where $DW$ represents the depthwise convolution kernel size, $DW\text{-}D$ represents the dilated depthwise convolution kernel size, and d is the dilation rate. For feature maps with input dimensions $H \times W$ and channel number C, the parameter count and computational cost are:

$$P(K, d) = C\left(\left\lceil \frac{K}{d} \right\rceil^{2} + (2d - 1)^{2} + 3 + C\right)$$

$$F(K, d) = P(K, d) \times H \times W$$

In our implementation, we set and , optimizing the dilation rate to minimize parameter count while preserving the large receptive field advantage.
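To make the cost of the large-kernel decomposition concrete, the sketch below computes the two depthwise kernel sizes and the resulting parameter and operation counts. It assumes the commonly cited large kernel attention expressions: a depthwise kernel of size 2d − 1, a dilated depthwise kernel of size ⌈K/d⌉, and a parameter count of C(⌈K/d⌉² + (2d − 1)² + 3 + C). The values K = 21, d = 3, and C = 64 are hypothetical and are not the settings used in this paper.

```python
import math

def lka_kernel_sizes(K, d):
    """Return (depthwise size, dilated depthwise size) for a K x K target kernel."""
    dw = 2 * d - 1           # depthwise convolution kernel size
    dw_d = math.ceil(K / d)  # dilated depthwise convolution kernel size
    return dw, dw_d

def lka_param_count(K, d, C):
    """Parameters of the decomposed large kernel for C channels (assumed formula)."""
    dw, dw_d = lka_kernel_sizes(K, d)
    return C * (dw_d ** 2 + dw ** 2 + 3 + C)

def lka_flops(K, d, C, H, W):
    """Operation count for an H x W feature map: parameters times spatial size."""
    return lka_param_count(K, d, C) * H * W

# Hypothetical example values, chosen only to illustrate the arithmetic.
sizes = lka_kernel_sizes(21, 3)       # (5, 7)
params = lka_param_count(21, 3, 64)   # 64 * (49 + 25 + 3 + 64) = 9024
flops = lka_flops(21, 3, 64, 20, 20)  # 9024 * 400 = 3609600
```

Sweeping d for a fixed K with `lka_param_count` is one simple way to find the dilation rate that minimizes the parameter count.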

Noise Suppression Branch is designed specifically for speckle noise characteristic of SAR images, implementing adaptive gating through statistical normalization. This mechanism is based on statistical differences between target and background regions in the feature map:

where and represent the intensity means of foreground and background regions, respectively, denotes the standard deviation of background regions, and is a numerical stability constant. Foreground and background regions are determined through adaptive thresholding:

where A is the attention weight map and T is the adaptive threshold. The purified features are computed as:

where ⊙ denotes element-wise multiplication.

Multi-scale Hybrid Attention Block combines local window attention with cross-block global attention. Local window attention partitions the feature map into non-overlapping windows, computing self-attention within each window:

$$\mathrm{Attention}(Q, K, V) = \mathrm{softmax}\left(\frac{Q K^{\top}}{\sqrt{d_k}}\right) V$$

where Q, K, and V represent the query, key, and value matrices, respectively, and $d_k$ is the key dimension. Cross-block global attention captures long-range dependencies through strided sampling:

where denotes strided convolution and s is the sampling stride.
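The local window attention step can be sketched in a few lines of pure Python: the feature map is split into non-overlapping windows, and standard scaled dot-product attention is applied to the tokens of each window. This is a minimal illustration that assumes the map height and width are divisible by the window size and that omits the learned query, key, and value projections; the helper names are hypothetical.

```python
import math

def softmax(row):
    """Numerically stable softmax over a list of floats."""
    peak = max(row)
    exps = [math.exp(v - peak) for v in row]
    total = sum(exps)
    return [e / total for e in exps]

def partition_windows(fmap, win):
    """Split an H x W grid of feature vectors into non-overlapping win x win windows.

    Each window is returned as a flat list of tokens in row-major order.
    Assumes H and W are divisible by win.
    """
    height, width = len(fmap), len(fmap[0])
    windows = []
    for top in range(0, height, win):
        for left in range(0, width, win):
            windows.append([fmap[r][c]
                            for r in range(top, top + win)
                            for c in range(left, left + win)])
    return windows

def window_attention(Q, K, V):
    """softmax(Q K^T / sqrt(d_k)) V for the tokens of a single window."""
    d_k = len(K[0])
    out = []
    for q in Q:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in K]
        weights = softmax(scores)
        out.append([sum(w * v[j] for w, v in zip(weights, V))
                    for j in range(len(V[0]))])
    return out

# A 4 x 4 map of 1-D features split into four 2 x 2 windows.
fmap = [[[float(r * 4 + c)] for c in range(4)] for r in range(4)]
windows = partition_windows(fmap, 2)
# Self-attention inside the first window (tokens serve as Q, K, and V).
attended = window_attention(windows[0], windows[0], windows[0])
```

Because attention is computed per window, the cost grows with the number of windows times the square of the window size, rather than with the square of the full map size.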

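Complete Computational Flow of Deformable Large Kernel Attention can be expressed as:

$$\mathrm{Attn} = \mathrm{Conv}_{1 \times 1}\left(\mathrm{DDW\text{-}D\text{-}Conv}\left(\mathrm{DDW\text{-}Conv}(X)\right)\right)$$

$$\mathrm{Out} = \mathrm{Attn} \otimes X$$

where $\mathrm{DDW\text{-}Conv}$ denotes deformable depthwise convolution, $\mathrm{DDW\text{-}D\text{-}Conv}$ denotes deformable dilated depthwise convolution, and ⊗ denotes element-wise multiplication.

The final output of the module is fused with input features through residual connections:

$$Y = X + \mathcal{F}_{\mathrm{DLKA}}(X)$$

where $\mathcal{F}_{\mathrm{DLKA}}(\cdot)$ represents the complete DLKA processing pipeline. This design ensures effective gradient propagation while enhancing the module’s representational capacity.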

3.2. SimAM-Based Attention Enhancement Module

The conventional C3k2 modules face significant challenges in SAR image target detection: their fixed local receptive fields struggle to capture subtle features of weak small targets, the standard convolution operations lack specific suppression mechanisms for speckle noise, and they demonstrate limited discriminative capability for low-contrast targets under complex background interference. These limitations severely impact the detection performance of small targets and low-contrast features in SAR imagery.

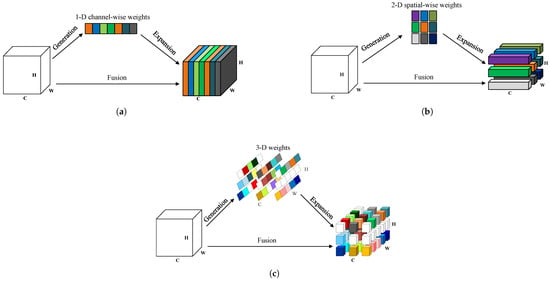

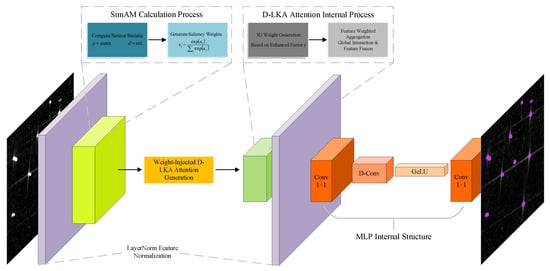

To overcome these deficiencies, we introduce an enhanced structure based on SimAM (Simple Attention Module) [43], whose overall architecture is illustrated in Figure 3. This neuroscience-inspired attention mechanism employs a parameter-free attention reweighting mechanism rather than conventional weight-injection fusion, enabling effective local feature enhancement without introducing learnable parameters during deployment.

Figure 3. Comparisons of different attention mechanisms. (a) Channel-wise weights; (b) Spatial-wise weights; (c) Full 3-D weights for attention.

SimAM’s innovation fundamentally lies in its unique three-dimensional attention weight generation mechanism, which overcomes the limitations of traditional attention modules. As shown in Figure 3, SimAM differs from conventional approaches in three key aspects:

Channel-wise attention (Figure 3a) generates one-dimensional weights that assign a uniform scalar weight to each channel, with all spatial positions within the same channel sharing this weight. The process involves generating 1-D weights (corresponding to C channels, one value per channel) from the input feature map X (dimension C × H × W), expanding these weights by replicating them to all spatial positions within each channel, and finally fusing them with the original feature map. The critical limitation is that all neurons within the same channel (across different spatial positions) share identical weights (represented by the same color in the figure), making it impossible to distinguish the importance of different spatial locations within a channel. The SE module represents a typical example of this approach in literature comparisons.

Spatial-wise attention (Figure 3b) generates two-dimensional weights that assign a uniform scalar weight to each spatial position (H × W), with all channels at the same spatial location sharing this weight. The process involves generating 2-D weights (corresponding to H×W spatial positions, one value per position) from the input feature map X, expanding these weights by replicating them across all channels, and then fusing them with the original feature map. The critical limitation is that all channel neurons at the same spatial position share identical weights (represented by the same color in the figure), making it impossible to distinguish the importance of different channels at the same location. The spatial attention branch of CBAM exemplifies this approach.

In contrast, SimAM’s full 3-D attention (Figure 3c) generates three-dimensional weights that assign a unique scalar weight to each individual neuron (the intersection of channel and spatial dimensions, i.e., each point in C × H × W), eliminating the need for expansion steps. The process directly generates 3-D weights (one dedicated value per neuron) from the input feature map X and fuses them with the original feature map without any weight replication or expansion. The critical advantage is that each neuron’s weight is independent (represented by different colors in the figure), enabling the distinction of both channel importance and spatial location importance within each channel. This approach directly matches the 3-D structure of feature maps without requiring the “generate single-dimensional weights → replicate and expand” process of traditional methods, resulting in more refined and non-redundant weighting.

The design of SimAM is motivated by the key neuron hypothesis in visual neuroscience, which posits that important neurons exhibit significant differences from their surrounding neurons. Unlike conventional weight-injection fusion mechanisms that rely on learnable parameters, SimAM employs a parameter-free attention reweighting mechanism based on energy minimization. This approach evaluates the importance of each neuron by minimizing an energy function that measures the distinguishability between target neurons and their neighbors, eliminating the need for additional trainable parameters. The computational process comprises three core stages: energy function construction, closed-form attention estimation, and feature reweighting.

First, the energy function is defined to measure the separability between a target neuron t and its neighboring neurons in the same channel:

$$e_t(w_t, b_t) = \frac{1}{M - 1} \sum_{i=1}^{M-1} \left(-1 - (w_t x_i + b_t)\right)^{2} + \left(1 - (w_t t + b_t)\right)^{2} + \lambda w_t^{2}$$

where $x_i$ denotes the i-th neighboring neuron, $w_t$ and $b_t$ are auxiliary scalar variables introduced for derivation, $\lambda$ is a regularization coefficient, and M denotes the total number of neurons in the channel. The first term encourages neighboring neurons to be mapped near $-1$, while the second term pushes the target neuron toward $+1$, with the regularizer preventing degenerate solutions.

By substituting the closed-form solutions of the auxiliary variables, we obtain the simplified energy expression:

$$e_t^{*} = \frac{4\left(\hat{\sigma}^{2} + \lambda\right)}{(t - \hat{\mu})^{2} + 2\hat{\sigma}^{2} + 2\lambda}$$

where $\hat{\mu}$ and $\hat{\sigma}^{2}$ represent the mean and variance of neurons in the same channel. Since $w_t$ and $b_t$ are analytically eliminated, the resulting energy depends only on local statistics and thus introduces no trainable parameters.

Subsequently, attention weights are generated from the inverse of the energy map to emphasize neurons with lower energy values (i.e., higher distinctiveness). Let $E$ denote the tensor composed of all $e_t^{*}$ values; the attention tensor is computed as:

$$A = \mathrm{sigmoid}\left(\frac{1}{E + \epsilon}\right)$$

where all operations are element-wise, and numerical stabilization (adding a small $\epsilon$) is applied to prevent division by zero.

Finally, feature refinement is achieved through element-wise reweighting:

$$\tilde{X} = A \odot X$$

where ⊙ denotes element-wise multiplication. This unified channel-spatial attention mechanism emphasizes locally discriminative neurons without introducing learnable parameters, distinguishing it from traditional weight-injection approaches.
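The three stages (channel statistics, closed-form inverse energy, sigmoid reweighting) can be sketched without any learnable parameters. The pure-Python version below operates on a feature map stored as nested lists of shape [C][H][W] and follows the widely used SimAM formulation, in which the inverse energy of a neuron is (t − μ)² / (4(σ² + λ)) + 0.5. The value λ = 1e-4, the M − 1 variance divisor, and the function names are assumptions for illustration rather than the authors' exact settings.

```python
import math

def simam_weights(x, lam=1e-4):
    """Per-neuron attention weights for a feature map given as [C][H][W] lists."""
    weights = []
    for channel in x:
        values = [v for row in channel for v in row]
        count = len(values)
        mean = sum(values) / count
        # Channel variance with an M - 1 divisor (assumed convention).
        variance = sum((v - mean) ** 2 for v in values) / max(count - 1, 1)
        denom = 4.0 * (variance + lam)
        channel_weights = []
        for row in channel:
            row_weights = []
            for v in row:
                # Inverse of the minimal energy: large when v stands out from its channel.
                inverse_energy = (v - mean) ** 2 / denom + 0.5
                row_weights.append(1.0 / (1.0 + math.exp(-inverse_energy)))
            channel_weights.append(row_weights)
        weights.append(channel_weights)
    return weights

def simam(x, lam=1e-4):
    """Reweight every neuron by its attention weight (element-wise product)."""
    weights = simam_weights(x, lam)
    return [[[v * w for v, w in zip(row, w_row)]
             for row, w_row in zip(channel, w_channel)]
            for channel, w_channel in zip(x, weights)]

# One channel with a single bright neuron on a flat background.
feature = [[[0.0, 0.0, 0.0],
            [0.0, 1.0, 0.0],
            [0.0, 0.0, 0.0]]]
attention = simam_weights(feature)
refined = simam(feature)
```

In this toy input the isolated bright neuron receives a larger weight than the uniform background, which is the behavior the energy formulation is designed to produce for distinctive neurons.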

In practical deployment, we replace all C3k2 modules [44] in both the backbone and neck with C3k2_SimAM modules (eight replacements in total), enhancing the local feature capture capability for small targets while maintaining the CSP structure [45]. The module offers four main advantages: lightweight and parameter-free deployment, effective suppression of speckle-like activations, improved recall for small and low-contrast targets, and a biologically inspired unified weighting mechanism for channel-spatial attention.

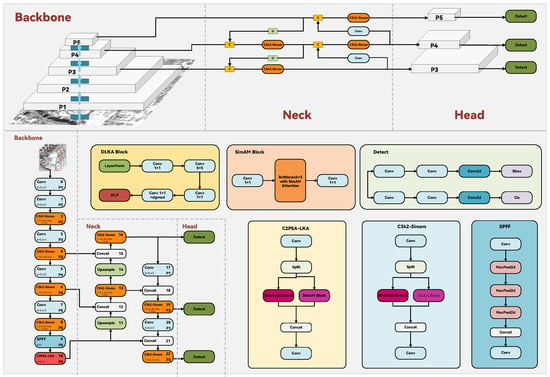

3.3. The Structure of LSDA-YOLO

Building on DLKA and SimAM enhancements, we propose LSDA-YOLO (Large Kernel and SimAM Dual Attention YOLO), which integrates global spatial modeling with local detail enhancement to achieve precise detection of multi-scale targets in SAR images. The overall framework is shown in Figure 4, with core fusion details in Figure 5.

Figure 4. Overall architecture of LSDA-YOLO.

Figure 5. Detailed structure of SimAM-DLKA fusion module.

LSDA-YOLO systematically incorporates dual attention mechanisms while maintaining the basic YOLO11 architecture. The selection of SimAM and DLKA is grounded in their complementary scientific properties and specific adaptation to SAR imaging characteristics. SimAM’s parameter-free attention mechanism originates from visual neuroscience principles, automatically identifying important neurons through energy minimization, effectively addressing the statistical characteristics of speckle noise and weak features of small targets in SAR images. DLKA addresses the geometric deformations and long-range contextual dependencies of large-scale structural targets through deformable convolutions and large receptive field design. Functionally, these two mechanisms complement each other: SimAM provides fine-grained neuron-level feature enhancement, while DLKA focuses on macroscopic geometric structures and spatial context. This combination aligns with the scientific principle of balancing local details and global structure in SAR image processing, providing mechanism-level support for addressing scientific problems such as multi-scale target coexistence and complex background interference.

Specifically, we replace the terminal C2PSA module with C2PSA_LKA to enhance global feature extraction for large-scale targets, and substitute all C3k2 modules with C3k2_SimAM to improve local detail perception for small targets. This design creates complementary feature processing pathways: the DLKA module effectively captures global features of large structural targets such as ports and bridges through its expanded receptive field, while the SimAM module provides precise feature enhancement for small targets like vehicles and tanks through its neuron-level attention mechanism.

The core innovation of this architecture lies in the SimAM-DLKA fusion module, which achieves deep integration at the mechanism level rather than simple sequential connection. This integration embodies a structural and functional symmetry: DLKA expands the receptive field outward to model global spatial contexts, while SimAM focuses inward to refine local neuronal responses. The two mechanisms form a symmetric pair that processes features from “macro-geometry” to “micro-detail,” establishing a balanced computational pathway consistent with the inherent symmetry in visual information processing—where local features aggregate into global structures, and global contexts guide local discrimination. As shown in Figure 5, this fusion module implements the “local guidance for global attention” mechanism through four key stages: first, feature normalization establishes the computational foundation; then, the SimAM-based weighting process generates neuron-level saliency maps; these saliency maps guide the DLKA global attention computation through enhanced 3D weights; finally, multi-layer perceptron refinement produces output features that integrate local precision with global semantics. This fusion mechanism scientifically embodies the interaction and synergy between local features and global context, adhering to the hierarchical principle of visual information processing.

LSDA-YOLO demonstrates significant advantages in three aspects: achieving multi-scale coverage through complementary attention mechanisms, enhancing robustness to speckle noise interference through cooperative suppression, and maintaining lightweight characteristics to ensure deployment efficiency. The architecture deploys DLKA modules in the deep layers of the backbone network to capture global contextual information, widely embeds SimAM modules in the shallow and middle layers to enhance local feature representation, and fuses multi-scale features through the feature pyramid network. This design enables the model to address targets of varying scales and complexities in SAR images, improving detection accuracy while maintaining computational efficiency.

4. Experiments and Discussion

4.1. Dataset

We evaluated our method on a large-scale Synthetic Aperture Radar (SAR) image benchmark, constructed by integrating and re-annotating imagery from the public RSAR dataset [46].

The dataset comprises a total of 89,082 images. For our experiments, we partitioned the entire dataset into training, validation, and test sets with an 8:1:1 ratio, resulting in 71,266 images for training, 8,908 images for validation, and 8,908 images for testing. The random partition is stratified by category so that the category distribution remains consistent across subsets, in line with standard evaluation protocols for fair comparison.
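The reported subset sizes can be checked with a few lines of arithmetic. The sketch below assumes that the validation and test sets each take the floor of one tenth of the images and that the training set receives the remainder, a rounding convention that reproduces the numbers above but is not stated in the text. The seeded shuffle is likewise a hypothetical stand-in for the actual partitioning script, and it does not perform any stratification by category.

```python
import random

def split_counts(total, ratios=(8, 1, 1)):
    """Return (train, val, test) sizes; the training set absorbs rounding remainders."""
    unit = sum(ratios)
    n_val = total * ratios[1] // unit
    n_test = total * ratios[2] // unit
    return total - n_val - n_test, n_val, n_test

def random_split(items, ratios=(8, 1, 1), seed=0):
    """Shuffle with a fixed seed and cut into train, val, and test lists."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    n_train, n_val, _ = split_counts(len(shuffled), ratios)
    train = shuffled[:n_train]
    val = shuffled[n_train:n_train + n_val]
    test = shuffled[n_train + n_val:]
    return train, val, test

counts = split_counts(89082)
train_ids, val_ids, test_ids = random_split(range(89082))
```

Fixing the seed keeps the partition reproducible across runs, which matters when several model variants are compared on the same test set.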

This benchmark covers six major target categories: ships, aircraft, cars, oil tanks, ports, and bridges. All target instances are annotated with oriented bounding boxes, following the DOTA dataset format. The detailed category distribution, including the number of instances per category, is summarized in Table 1.

Table 1.

Target categories and instance distribution in the SAR dataset.

4.2. Experimental Setup

All experiments were conducted on a Linux system with an NVIDIA GeForce RTX 4090D GPU (24 GB memory), 64 GB RAM, and an Intel Core i9-14900K CPU. The software environment included CUDA 12.1, Python 3.10.18, and PyTorch 2.5.1+cu121.

Input images were resized to 640 × 640, and a batch size of 16 was used. Mosaic and MixUp augmentations were enabled for the first 90 epochs. The full configuration is listed in Table 2.

Table 2.

Training configuration and hyperparameters.

4.3. Evaluation Metrics

We evaluated model performance using precision (P), recall (R), mean average precision (mAP), and F1 score. The primary metrics were mAP50 (mAP at an IoU threshold of 0.50) and mAP50-95 (mAP averaged over IoU thresholds from 0.50 to 0.95 in steps of 0.05). Additionally, we report computational cost in giga floating-point operations (GFLOPS) to assess model complexity and deployment feasibility. The definitions are as follows:

where $\Pr(\text{Object})$ indicates the probability that an anchor box contains an object, and $\mathrm{IoU}^{\text{truth}}_{\text{pred}}$ is the IoU between the predicted and ground-truth bounding boxes.
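For reference, the conventional definitions of these metrics can be written as below. This is a sketch in standard notation rather than a reproduction of the original equations: $TP$, $FP$, and $FN$ (true positives, false positives, false negatives), $N$ (number of categories), and $AP_i$ (average precision of category $i$) are the usual symbols and are assumed here. The last line is the usual YOLO confidence definition, which appears to be what the preceding sentence describes.

```latex
\begin{align}
P &= \frac{TP}{TP + FP}, \\
R &= \frac{TP}{TP + FN}, \\
F1 &= \frac{2 \cdot P \cdot R}{P + R}, \\
AP &= \int_{0}^{1} P(R)\,\mathrm{d}R, \\
mAP &= \frac{1}{N}\sum_{i=1}^{N} AP_i, \\
\text{Confidence} &= \Pr(\text{Object}) \times \mathrm{IoU}^{\text{truth}}_{\text{pred}}.
\end{align}
```

Under these definitions, mAP50 evaluates $AP_i$ with a matching threshold of $\mathrm{IoU} \geq 0.50$, and mAP50-95 averages the resulting mAP over the ten thresholds $0.50, 0.55, \ldots, 0.95$.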

4.4. Results and Discussion

4.4.1. YOLO11 Experiment

YOLO11 provides five scalable models with adjustable width and depth parameters: YOLO11x, YOLO11l, YOLO11m, YOLO11s, and YOLO11n. We evaluated all five variants, with results shown in Table 3.

Table 3.

Experimental results of different YOLO11 scaled models.

The results reveal distinct trade-offs across model scales. YOLO11n requires only 6.40 GFLOPS, making it well suited to resource-constrained SAR applications, while still achieving 77.0% mAP50 and 50.0% mAP50-95. Given this balance between computational cost and accuracy, we selected YOLO11n as the baseline for further enhancements targeting SAR-specific challenges.

4.4.2. Ablation Studies and Comparative Analysis

This section presents ablation studies that validate the individual components of LSDA-YOLO (Table 4), followed by a comparison against mainstream detection frameworks (Table 5). The starting point is the YOLO11n baseline (6.40 GFLOPS, 77.0% mAP50, 50.0% mAP50-95).

Table 4.

Experimental results of attention mechanism comparisons.

Integrating the Deformable Large Kernel Attention (DLKA) module raises precision to 81.6% and mAP50 to 78.6% (+1.6 percentage points over the baseline) at essentially unchanged computational cost (6.3 GFLOPS). We attribute these gains to enhanced global feature modeling through deformable and dilated convolutions, which expand the receptive field and better capture the geometry of large-scale targets. Complementarily, the SimAM module improves recall to 72.1% (+2.3 percentage points) and achieves 80.3% mAP50 with 52.4% mAP50-95. We attribute this improvement to its neuroscience-inspired energy function, which automatically identifies important features while suppressing speckle noise.

The LSDA-YOLO hybrid model combines these strengths, achieving the best accuracy among the compared variants (80.8% mAP50, 53.2% mAP50-95) at a modest computational cost (7.3 GFLOPS). The progression shows distinct contribution patterns: DLKA alone raises mAP50 to 78.6% through enhanced global feature modeling, SimAM alone raises it to 80.3% through strengthened local detail perception, and combining them yields a further gain. This indicates that the two mechanisms address complementary aspects of SAR target detection.
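The gains quoted in this subsection can be checked with a few lines of arithmetic. The sketch below uses only the figures stated in the text; the dictionary layout, the key names, and the helper functions are assumptions for illustration, and `None` marks a value that the text does not state.

```python
# Values quoted in the text; None marks a figure the text does not state.
RESULTS = {
    "YOLO11n":   {"map50": 77.0, "map50_95": 50.0, "gflops": 6.40},
    "+DLKA":     {"map50": 78.6, "map50_95": None, "gflops": 6.3},
    "+SimAM":    {"map50": 80.3, "map50_95": 52.4, "gflops": None},
    "LSDA-YOLO": {"map50": 80.8, "map50_95": 53.2, "gflops": 7.3},
}


def delta_points(value, baseline):
    """Absolute difference in percentage points, or None if a value is missing."""
    if value is None or baseline is None:
        return None
    return round(value - baseline, 1)


def relative_change(value, baseline):
    """Relative change in percent, or None if a value is missing."""
    if value is None or baseline is None:
        return None
    return round((value - baseline) / baseline * 100.0, 1)


base = RESULTS["YOLO11n"]
summary = {
    name: {
        "d_map50": delta_points(r["map50"], base["map50"]),
        "d_map50_95": delta_points(r["map50_95"], base["map50_95"]),
        "gflops_change_pct": relative_change(r["gflops"], base["gflops"]),
    }
    for name, r in RESULTS.items()
}
for name, row in summary.items():
    print(name, row)
```

For LSDA-YOLO this gives +3.8 points in mAP50, +3.2 points in mAP50-95, and a 14.1% increase in GFLOPS relative to the baseline.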

The comparison with mainstream detection frameworks (Table 5) shows that LSDA-YOLO outperforms established paradigms. Faster RCNN-R50 and RT-DETR-R50 represent mature two-stage and transformer-based approaches, respectively, yet our method holds an advantage across all metrics. Notably, LSDA-YOLO improves mAP50 by 16.7 and 19.9 percentage points over Faster RCNN-R50 and RT-DETR-R50, respectively, while reducing computational complexity by approximately 27× and 18×. Compared to the latest YOLO variant, YOLO12-ppa, LSDA-YOLO still maintains a 3.0 percentage point improvement in mAP50 while further reducing computational cost (FLOPs) by approximately 64.6% (i.e., by a factor of about 2.8). This performance gap highlights the effectiveness of our dual-attention design in addressing SAR-specific challenges compared to conventional and state-of-the-art detection frameworks.
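The two ways of stating a cost saving used above (a percentage reduction and an "n×" factor) are related by a simple conversion, sketched below. The function names are illustrative assumptions, and the implied GFLOPS figure printed for the compared model is derived from the quoted 64.6% reduction and the reported 7.3 GFLOPS, not a value reported in the text.

```python
def reduction_to_factor(reduction_pct):
    """Convert 'cost reduced by X%' into 'the other model costs k times as much'."""
    if not 0.0 <= reduction_pct < 100.0:
        raise ValueError("reduction must be in [0, 100)")
    return 1.0 / (1.0 - reduction_pct / 100.0)


def factor_to_reduction(factor):
    """Inverse conversion: a k-fold cost ratio expressed as a percentage reduction."""
    if factor < 1.0:
        raise ValueError("factor must be >= 1")
    return (1.0 - 1.0 / factor) * 100.0


LSDA_GFLOPS = 7.3  # reported for LSDA-YOLO

factor = reduction_to_factor(64.6)
print(round(factor, 2))                 # 2.82
print(round(LSDA_GFLOPS * factor, 1))   # implied cost of the compared model (derived)
print(round(factor_to_reduction(27), 1), round(factor_to_reduction(18), 1))
```

A 64.6% reduction therefore corresponds to a factor of roughly 2.8, consistent with the figure quoted above, and the 27× and 18× factors correspond to reductions of well over 90%.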

Table 5.

Comparison with mainstream detection frameworks.

Detailed per-class analysis (Tables 6 and 7) shows consistent gains across all target categories. Vehicle detection remains strong, with cars achieving 97.3% AP50, while structural targets improve substantially: bridges rise from 61.0% to 66.6% AP50 and harbors from 74.0% to 81.7% AP50. For geometrically complex targets, tank detection improves from 40.0% to 43.1% AP50:95 and bridge detection from 34.7% to 38.6% AP50:95, indicating better boundary regression. These results validate the method’s effectiveness across diverse target characteristics and complexity levels, with balanced improvements regardless of target type.

Table 6.

Per-class AP50 performance comparison.

Table 7.

Per-class AP50:95 performance comparison.

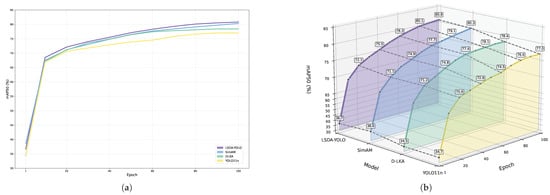

4.4.3. Training Dynamics and Convergence

The training curves in Figure 6 show that LSDA-YOLO converges faster and reaches a higher performance plateau than its counterparts. This suggests that the architectural design promotes more effective gradient flow and feature learning from the early stages of training, leading to a sustained performance advantage. Figure 6 presents mAP50 from two complementary views: a 2D trend projection (a) and a 3D hierarchical representation (b). The 3D comparison shows the performance differences among models at each epoch, with LSDA-YOLO achieving the best mAP50 (80.8%) at the end of training, ahead of SimAM (80.3%), DLKA (78.6%), and the YOLO11n baseline (77.0%). The 2D projection confirms these findings at the level of trend detail: LSDA-YOLO leads throughout training, with stable performance gaps at critical training stages (e.g., reaching 75.5% at epoch 40).

Figure 6.

Comparison of mAP50 Performance Among SAR Target Detection Models Across Training Epochs. (a) 2D Projection of mAP50 Performance Trend. (b) 3D Comparison of mAP50 Performance.

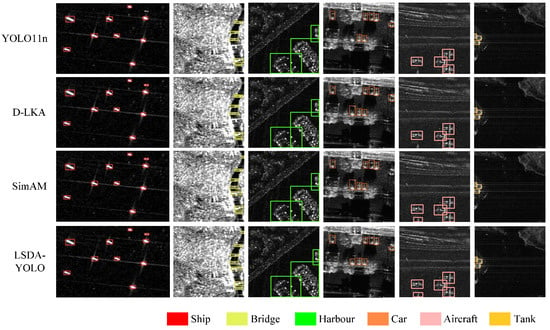

4.4.4. Qualitative Analysis and Visualization

The detection results in Figure 7 show progressive improvements across model architectures. The baseline YOLO11n misses many targets across several categories, including ships, bridges, cars, and aircraft. DLKA recovers the ships and cars but still misses bridges and aircraft, and its bounding boxes remain imprecise. SimAM further reduces missed detections for aircraft and bridges and produces more compact bounding boxes that fit the target contours more closely. LSDA-YOLO achieves the most precise bounding box localization across all target categories, with tighter boxes and the fewest missed detections, particularly in complex scenes with overlapping targets and heterogeneous backgrounds. These visualizations are consistent with the quantitative analysis and together demonstrate the effectiveness of the dual attention mechanism in enhancing SAR target detection performance.

Figure 7.

Visualization of detection results for SAR targets across different models.

The overall evaluation shows that the proposed attention mechanisms achieve a favorable balance between detection performance and computational efficiency. LSDA-YOLO delivers the highest detection accuracy among the compared models while remaining lightweight (7.3 GFLOPS), making it well suited to real-world SAR applications where both accuracy and efficiency matter. Compared to larger models in the YOLO11 family, our approach achieves superior performance with significantly reduced computational complexity.

5. Conclusions

In SAR target detection scenarios, challenges such as speckle noise interference, weak small object features, significant scale variations among targets, and multi-category imbalance are prevalent. Lightweight detection models often struggle to achieve satisfactory detection accuracy while maintaining computational efficiency. This paper proposes LSDA-YOLO, an enhanced SAR target detection framework built upon the lightweight YOLO11n architecture. The LSDA-YOLO model integrates a Deformable Large Kernel Attention (DLKA) module, which enhances global feature modeling and geometric adaptability for complex-shaped targets. Additionally, we incorporate a parameter-free SimAM attention mechanism throughout the network to strengthen local detail perception and effectively suppress speckle noise. The synergistic dual-attention architecture establishes a complementary interaction between global context and local features, significantly improving multi-scale detection capability while maintaining lightweight characteristics.

Comprehensive evaluations on a large-scale SAR dataset demonstrate that LSDA-YOLO achieves a remarkable improvement in detection accuracy compared to the YOLO11n baseline, with an increase of 3.8 percentage points in mAP50 (from 77.0% to 80.8%) and 3.2 percentage points in mAP50-95 (from 50.0% to 53.2%). The proposed architecture maintains excellent computational efficiency with only 7.3 GFLOPS, representing a minimal 14.1% increase compared to the baseline, while achieving substantial performance gains. This optimal balance makes LSDA-YOLO particularly suitable for real-time SAR applications and deployment on resource-constrained platforms. Robustness tests further validate the model’s superior detection stability and accuracy across diverse and challenging SAR scenarios.

To address the practical application demands in areas such as defense security, emergency response, and environmental monitoring, future research will focus on advancing the LSDA-YOLO framework into more complex operational environments. Key research directions will include multi-view detection, 3D localization, and overcoming the challenges associated with real-time model deployment on edge computing devices. These efforts aim to comprehensively enhance the practical value and robustness of SAR target detection systems in real-world scenarios while maintaining the lightweight characteristics essential for practical deployment.

Author Contributions

Conceptualization, J.Y. and L.Z.; methodology, J.Y.; software, J.Y.; validation, J.Y. and L.Z.; formal analysis, J.Y.; investigation, J.Y.; resources, J.Y. and L.Z.; data curation, J.Y.; writing—original draft preparation, J.Y.; writing—review and editing, J.Y. and L.Z.; visualization, J.Y.; supervision, J.Y.; project administration, J.Y.; funding acquisition, J.Y. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

The data presented in this study are available upon request from the corresponding author.

Acknowledgments

The authors would like to thank the providers of the SAR dataset used in this study. During the preparation of this manuscript, the authors used ChatGPT: GPT-5 for the purposes of language polishing and refinement of this manuscript. The authors have reviewed and edited the output and take full responsibility for the content of this publication.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Li, J.; Yu, Z.; Yu, L.; Cheng, P.; Chen, J.; Chi, C. A Comprehensive Survey on SAR ATR in the Deep-Learning Era. Remote Sens. 2023, 15, 1454.

- Zhang, Y.; Hao, Y. A Survey of SAR Image Target Detection Based on Convolutional Neural Networks. Remote Sens. 2022, 14, 6240.

- Huang, Z.; Yao, X.; Liu, Y.; Dumitru, C.O.; Datcu, M.; Han, J. Physically Explainable CNN for SAR Image Classification. ISPRS J. Photogramm. Remote Sens. 2022, 190, 25–37.

- Perera, M.V.; Bandara, W.G.C.; Valanarasu, J.M.J.; Patel, V.M. SAR Despeckling Using Overcomplete Convolutional Networks. In Proceedings of the IEEE International Geoscience and Remote Sensing Symposium, Kuala Lumpur, Malaysia, 17–22 July 2022; pp. 401–404.

- Redmon, J.; Farhadi, A. YOLO9000: Better, Faster, Stronger. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 7263–7271.

- Redmon, J.; Farhadi, A. YOLOv3: An Incremental Improvement. arXiv 2018, arXiv:1804.02767.

- Wang, C.Y.; Bochkovskiy, A.; Liao, H.Y.M. Scaled-YOLOv4: Scaling Cross Stage Partial Network. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 13029–13038.

- Jocher, G.; Chaurasia, A.; Stoken, A.; Borovec, J.; Kwon, Y.; Michael, K.; Fang, J.; Yifu, Z.; Wong, C.; Montes, D.; et al. Ultralytics/YOLOv5: v7.0-YOLOv5 SOTA Real-Time Instance Segmentation. Zenodo. 2022. Available online: https://zenodo.org/records/7347926 (accessed on 10 November 2025).

- Li, C.; Li, L.; Jiang, H.; Weng, K.; Geng, Y.; Li, L.; Ke, Z.; Li, Q.; Cheng, M.; Nie, W.; et al. YOLOv6: A Single-Stage Object Detection Framework for Industrial Applications. arXiv 2022, arXiv:2209.02976.

- Wang, C.Y.; Bochkovskiy, A.; Liao, H.Y.M. YOLOv7: Trainable Bag-of-Freebies Sets New State-of-the-Art for Real-Time Object Detectors. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 7464–7475.

- Varghese, R.; Sambath, M. YOLOv8: A Novel Object Detection Algorithm with Enhanced Performance and Robustness. In Proceedings of the 2024 International Conference on Advances in Data Engineering and Intelligent Computing Systems, Chennai, India, 15–17 March 2024; pp. 1–6.

- Wang, C.Y.; Yeh, I.H.; Liao, H.Y.M. YOLOv9: Learning What You Want to Learn Using Programmable Gradient Information. In Proceedings of the European Conference on Computer Vision, Milan, Italy, 29 September–4 October 2024; pp. 1–21.

- Wang, A.; Chen, H.; Liu, L.; Chen, K.; Lin, Z.; Han, J.; Ding, G. YOLOv10: Real-Time End-to-End Object Detection. Adv. Neural Inf. Process. Syst. 2024, 37, 107984–108011.

- Wang, X.; Girshick, R.; Gupta, A.; He, K. Non-Local Neural Networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 7794–7803.

- Liangjun, Z.; Feng, N.; Yubin, X.; Gang, L.; Zhongliang, H.; Yuanyang, Z. MSFA-YOLO: A Multi-Scale SAR Ship Detection Algorithm Based on Fused Attention. IEEE Access 2024, 12, 24554–24568.

- Wei, D.; Du, Y.; Du, L.; Li, L. Target Detection Network for SAR Images Based on Semi-Supervised Learning and Attention Mechanism. Remote Sens. 2021, 13, 2686.

- Hu, J.; Shen, L.; Sun, G. Squeeze-and-Excitation Networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 7132–7141.

- Woo, S.; Park, J.; Lee, J.Y.; Kweon, I.S. CBAM: Convolutional Block Attention Module. In Proceedings of the European Conference on Computer Vision, Munich, Germany, 8–14 September 2018; pp. 3–19.

- Felzenszwalb, P.; McAllester, D.; Ramanan, D. A Discriminatively Trained, Multiscale, Deformable Part Model. In Proceedings of the 2008 IEEE Conference on Computer Vision and Pattern Recognition, Anchorage, AK, USA, 23–28 June 2008; pp. 1–8.

- Dalal, N.; Triggs, B. Histograms of Oriented Gradients for Human Detection. In Proceedings of the 2005 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, San Diego, CA, USA, 20–25 June 2005; Volume 1, pp. 886–893.

- Samek, W.; Montavon, G.; Lapuschkin, S.; Anders, C.J.; Müller, K.R. Explaining Deep Neural Networks and Beyond: A Review of Methods and Applications. Proc. IEEE 2021, 109, 247–278.

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.Y.; Berg, A.C. SSD: Single Shot MultiBox Detector. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 8–16 October 2016; pp. 21–37.

- Lin, T.Y.; Goyal, P.; Girshick, R.; He, K.; Dollár, P. Focal Loss for Dense Object Detection. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2980–2988.

- Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Region-Based Convolutional Networks for Accurate Object Detection and Segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 38, 142–158.

- Girshick, R. Fast R-CNN. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 1440–1448.

- Cai, Z.; Vasconcelos, N. Cascade R-CNN: Delving into High Quality Object Detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 6154–6162.

- Dai, Z.; Mu, G.; Zha, W.; Xu, R. A Review on SAR Image Denoising Algorithm Using Deep Learning Theory. In Proceedings of the Sixteenth International Conference on Digital Image Processing, Beijing, China, 24–26 May 2024; Volume 13274, pp. 400–417.

- Sheng, Y.; Zhao, Z.; Chen, H. Building Extraction from Polarimetric SAR Images Using Associated Features and SVM. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2020, 43, 587–592.

- Wan, H.; Chen, J.; Nurmamat, P.; Zeng, H.; Yang, W.; Xu, T.; Wu, B.; Chen, J.; Huang, Z.; Diniz, P.S.R. An Automatic Pruning Method for SAR Target Detection Based on Multitask Reinforcement Learning. Int. J. Appl. Earth Obs. Geoinf. 2025, 140, 104553.

- Wan, H.; Chen, J.; Huang, Z.; Du, W.; Xu, F.; Wang, F.; Wu, B. Orientation Detector for Ship Targets in SAR Images Based on Semantic Flow Feature Alignment and Gaussian Label Matching. IEEE Trans. Geosci. Remote Sens. 2023, 61, 5218616.

- Nurmamat, P.; Wan, H.; Chen, J.; Huang, Z.; Yang, L.; Li, M.; Yang, W.; Zeng, H.; Chen, J.; Diniz, P.S.R. An Interpretable SAR Image Filtering Algorithm. IEEE Trans. Geosci. Remote Sens. 2025, 63, 5210817.

- Ma, X.; Cheng, J.; Li, A.; Zhang, Y.; Lin, Z. AMANet: Advancing SAR Ship Detection with Adaptive Multi-Hierarchical Attention Network. IEEE Access 2024, 12, 105952–105967.

- Meng, L.; Li, D.; He, J.; Ma, L.; Li, Z. Convolutional Feature Enhancement and Attention Fusion BiFPN for Ship Detection in SAR Images. arXiv 2025, arXiv:2506.15231.

- Wang, S.; Kim, B. Scale-Sensitive Attention for Multi-Scale Maritime Vessel Detection Using EO/IR Cameras. Appl. Sci. 2024, 14, 11604.

- Miao, H.; Zhang, J.; Yan, J.; Zhou, J. Maritime Remote Sensing Ship Detection in UAV Image via Contextual Feature-Guided Shared Convolutional Networks. Complex Intell. Syst. 2025, 11, 456.

- Rocha, R.D.L.; Figueiredo, F.A.D. Enhancing YOLO-Based SAR Ship Detection with Attention Mechanisms. Remote Sens. 2025, 17, 3170.

- Yang, M.; Xu, R.; Yang, C.; Wu, H.; Wang, A. Hybrid-DETR: A Differentiated Module-Based Model for Object Detection in Remote Sensing Images. Electronics 2024, 13, 5014.

- Li, Y.; Li, X.; Li, W.; Hou, Q.; Liu, L.; Cheng, M.M.; Yang, J. Sardet-100k: Towards Open-Source Benchmark and Toolkit for Large-Scale SAR Object Detection. Adv. Neural Inf. Process. Syst. 2024, 37, 128430–128461.

- Boutayeb, A.; Lahsen-Cherif, I.; Khadimi, A.E. Deformable Attention Mechanisms Applied to Object Detection, Case of Remote Sensing. arXiv 2025, arXiv:2505.24489.

- Khanam, R.; Hussain, M. YOLOv11: An Overview of the Key Architectural Enhancements. arXiv 2024, arXiv:2410.17725.

- Wang, K.; Liu, J.; Cai, X. C2PSA-Enhanced YOLOv11 Architecture: A Novel Approach for Small Target Detection in Cotton Disease Diagnosis. arXiv 2025, arXiv:2508.12219.

- Azad, R.; Niggemeier, L.; Hüttemann, M.; Kazerouni, A.; Aghdam, E.K.; Velichko, Y.; Merhof, D. Beyond Self-Attention: Deformable Large Kernel Attention for Medical Image Segmentation. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Waikoloa, HI, USA, 3–8 January 2024; pp. 1287–1297.

- Yang, L.; Zhang, R.Y.; Li, L.; Xie, X. SimAM: A Simple, Parameter-Free Attention Module for Convolutional Neural Networks. In Proceedings of the International Conference on Machine Learning, Virtual, 18–24 July 2021; pp. 11863–11874.

- Chen, X.; Jiang, N.; Yu, Z.; Qian, W.; Huang, T. Citrus Leaf Disease Detection Based on Improved YOLO11 with C3K2. In Proceedings of the International Conference on Computer Graphics, Artificial Intelligence, and Data Processing, Singapore, 18–20 April 2024; Volume 13560, pp. 746–751.

- Wang, C.Y.; Liao, H.Y.M.; Wu, Y.H.; Chen, P.Y.; Hsieh, J.W.; Yeh, I.H. CSPNet: A New Backbone That Can Enhance Learning Capability of CNN. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, Seattle, WA, USA, 14–19 June 2020; pp. 390–391.

- Zhang, X.; Yang, X.; Li, Y.; Yang, J.; Cheng, M.M.; Li, X. RSAR: Restricted State Angle Resolver and Rotated SAR Benchmark. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 16–22 June 2025; pp. 7416–7426.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license.