Abstract

With the widespread adoption of deep learning in critical domains, such as computer vision, model security has become a growing concern. Backdoor attacks, as a highly stealthy threat, have emerged as a significant research topic in AI security. Existing backdoor attack methods primarily introduce perturbations in the spatial domain of images, which suffer from limitations, such as visual detectability and signal fragility. Although subsequent approaches, such as those based on steganography, have proposed more covert backdoor attack schemes, they still exhibit various shortcomings. To address these challenges, this paper presents HCBA (high-frequency chroma backdoor attack), a novel backdoor attack method based on high-frequency injection in the UV chroma channels. By leveraging discrete wavelet transform (DWT), HCBA embeds a polarity-triggered perturbation in the high-frequency sub-bands of the UV channels in the YUV color space. This approach capitalizes on the human visual system’s insensitivity to high-frequency signals, thereby enhancing stealthiness. Moreover, high-frequency components exhibit strong stability during data transformations, improving robustness. The frequency-domain operation also simplifies the trigger embedding process, enabling high attack success rates with low poisoning rates. Extensive experimental results demonstrate that HCBA achieves outstanding performance in terms of both stealthiness and evasion of existing defense mechanisms while maintaining a high attack success rate (ASR > 98.5%). Specifically, it improves the PSNR by 25% compared to baseline methods, with corresponding enhancements in SSIM as well.

1. Introduction

With the rapid advancement of deep learning technologies, artificial intelligence has been widely adopted in critical domains, such as computer vision, natural language processing, and intelligent healthcare [1,2,3]. However, growing concerns have emerged regarding model security, particularly the alarming vulnerability of deep learning systems to adversarial attacks [4,5,6]. Among these threats, backdoor attacks [7] have recently emerged as a severe security challenge in the training process of deep neural networks (DNNs). In a typical backdoor attack scenario, the attacker first defines a trigger pattern and selects a target class. By superimposing this trigger onto benign samples and relabeling them as the target class, the attacker generates poisoned samples. These malicious samples are then surreptitiously injected into the training dataset. When victims train their DNN models using this compromised dataset, a hidden backdoor is embedded within the model. The compromised model maintains normal performance on clean inputs but exhibits adversarial behavior by misclassifying triggered samples into the target category. Notably, such attacks demonstrate remarkable stealthiness: they can achieve successful compromises by poisoning as little as 1% of training samples, with triggers occupying only minimal regions of the input [8]. This makes backdoor attacks particularly insidious, as they are virtually undetectable to human observers. Research has shown that even imperceptible input perturbations can lead to catastrophic model mispredictions. Given these characteristics, backdoor attacks have become a crucial research focus in AI security due to their highly covert nature and significant threat potential.

Existing backdoor attack methods primarily add conspicuous perturbations, such as specific patterns in the image spatial domain, which are easily identified by visual inspection or statistical analysis. For example, BadNets [9] superimposes fixed patterns as triggers on training images, while Blend Attack [10] injects trigger patterns through transparency blending. Although effective, these methods suffer from two major limitations. First, because the triggers visibly modify the RGB channels, they are prone to detection via manual review or automated anomaly detection. Second, the trigger signal is fragile: its effectiveness declines significantly when attacked images undergo JPEG compression [11] (quality factor < 75%), Gaussian filtering [12] (σ > 1.5), or color space conversion [13]. These issues severely restrict the effectiveness of backdoor attacks in real-world scenarios. Subsequently, a series of stealthier backdoor attack schemes emerged, including those based on steganography, least significant bit (LSB) substitution, and clean-label backdoor attacks [14,15]. However, each has shortcomings: steganography-based approaches may be detected by steganalysis because the embedding process can alter image statistics; LSB substitution introduces noise when too much information is embedded and is vulnerable to post-processing; and clean-label backdoor attacks require complex trigger patterns and training strategies while remaining prone to trigger discovery.

To address the aforementioned challenges, this paper proposes a novel backdoor attack method called HCBA (high-frequency chroma backdoor attack), which leverages UV channel high-frequency-domain injection. By employing discrete wavelet transform (DWT), our approach achieves precise trigger embedding through selective energy injection and intensity adjustment in the high-frequency domain of UV channels. This method capitalizes on the human visual system’s insensitivity to high-frequency information, resulting in enhanced stealthiness. Furthermore, the inherent stability of high-frequency components during data transformation processes ensures superior robustness. Additionally, the direct frequency-domain operation mode significantly simplifies the trigger embedding pipeline, enabling high attack success rates with low sample poisoning rates, thereby demonstrating remarkable advantages in attack efficiency.

The main contributions of this paper are summarized as follows:

- We propose a novel backdoor attack method based on high-frequency chroma domain manipulation. By leveraging the YUV color space and DWT, our approach embeds triggers in the high-frequency sub-bands of UV channels, significantly enhancing stealthiness.

- We introduce a polarity-based trigger mechanism, where a differential polarity pattern generates a distinct energy distribution in the frequency domain. This alternating positive–negative structure further enhances the model’s sensitivity to trigger features.

- Extensive experiments demonstrate that our method achieves a high attack success rate (ASR) while minimally impacting the model’s accuracy (ACC) on clean samples. Additionally, the triggers exhibit strong visual stealth, indicating promising practical applicability.

The paper is structured as follows: Section 2 reviews related work on backdoor attacks, defense strategies, and DWT technology, while critically analyzing the limitations of existing approaches and the distinctive advantages of frequency-domain attacks. Section 3 elaborates on our proposed high-frequency chroma backdoor attack (HCBA), detailing the complete pipeline from YUV color space conversion, wavelet decomposition of UV channels, and trigger injection in high-frequency sub-bands to image reconstruction, along with the corresponding mathematical foundations. Section 4 validates HCBA experimentally on the CIFAR-10 and GTSRB datasets, evaluating its attack success rate, visual stealth, and robustness against state-of-the-art defenses through comparisons with existing methods such as BadNets and Blended. Finally, Section 5 concludes the paper and suggests promising future directions, including applications in federated learning, cross-modal attack exploration, and the development of advanced defense mechanisms.

2. Related Work

2.1. Backdoor Attacks

In the field of backdoor attack research, attackers manipulate model behavior by embedding carefully designed trigger patterns into training data, causing compromised models to perform normally on clean inputs while producing predetermined misclassifications when encountering inputs containing specific triggers. Based on different attack phases, backdoor attacks can be primarily categorized into data poisoning and model tampering. The former achieves attacks by injecting poisoned samples during training, while the latter directly modifies model parameters to implant backdoors. In recent years, researchers have proposed various backdoor attack methods with distinct characteristics, including visible trigger-based attacks (e.g., BadNets), invisible perturbation-based attacks (e.g., Blend), and feature space-based attacks (e.g., Latent Backdoor). These approaches differ in their focus on attack success rate, stealthiness, and robustness, while simultaneously driving the development of corresponding defense techniques, such as anomaly detection, input purification, and model diagnosis. With the widespread adoption of deep learning, backdoor attacks have expanded into emerging scenarios, like multimodal learning and federated learning, garnering increasing attention from both academia and industry due to their potential threats. Current research challenges lie in balancing attack effectiveness with stealthiness and adapting to evolving defense mechanisms.

The seminal work of BadNets [9] pioneered image classification backdoor attacks by inserting white patches into benign samples. Subsequent research has explored various backdoor techniques: Blend [10] employs cartoon images as triggers and blends them with clean samples, SSBA [16] generates sample-specific invisible additive noise as triggers, and Narcissus [14] represents an efficient clean-label attack requiring only target-class data and publicly available out-of-distribution data to achieve high attack success rates. Wenbo Jiang et al. [17] proposed a novel color-space attack using global color shifts as triggers optimized via particle swarm optimization (PSO). Poison Ink [18] utilizes image structures as trigger carriers with deep injection networks for invisible embedding. ASBA [15] implements a stealthy attack combining attention mechanisms and steganography, employing local binary pattern (LBP) algorithms and pretrained models to generate triggers. Jianyao Yin’s ECBA [19] enhances joint backdoor attacks through dual-intensity triggers (low for embedding, high for activation). Spatialspectral-Backdoor [20] explores novel attack vectors against brain–computer interface systems by exploiting EEG characteristics. BadDGA [21] targets LSTM-based DGA detectors with four specialized triggers (TLD, Ngram, Word, and IDN). SDriBA [22] frames trigger injection as a steganographic problem, employing invertible neural networks (INNs) and learnable transformation layers (LTLs) for efficient attacks.

Current research on frequency-domain backdoor attacks remains relatively limited. Jiawang Liu et al. [23] proposed a federated learning attack using Fourier transforms to linearly mix triggers with clean images’ low-frequency components. SFDBA [24] employs 2D discrete Fourier decomposition to seamlessly integrate triggers into base frequency planes while avoiding abrupt spectral changes for enhanced stealth. Guorui Li’s DDBA [25] implements dual-domain attacks for federated learning by superimposing triggers in the amplitude spectrum’s low-frequency region, followed by subtle spatial distortions. FIBA [26] utilizes spectral trigger functions, preserving spatial layouts while injecting low-frequency information through Fourier-based linear combinations of image spectra. These frequency-domain approaches demonstrate unique advantages in maintaining semantic consistency and improving attack stealthiness compared to spatial-domain methods. Table 1 presents a comparative analysis of selected backdoor attack methods, focusing on their injection approaches and trigger patterns.

Table 1.

Comparison of different attack methods.

2.2. Backdoor Defense

To counter the growing threat of backdoor attacks, researchers have developed various defense mechanisms with distinct technical approaches. Kunzhe Huang et al. [27] proposed a decoupling-based defense method that reveals backdoor embedding patterns in feature space. Their approach employs self-supervised learning to train the backbone network on unlabeled samples, freezes the backbone while training remaining fully connected layers on labeled data, and finally performs semi-supervised fine-tuning to mitigate poisoned sample effects. Bolun Wang et al. [28] introduced a detection framework that reverse-engineers hidden triggers through optimization, identifying the minimal pixel modifications required to misclassify samples into target labels.

The FCT framework [29] presents a comprehensive sensitivity measurement system comprising three modules: the SD module for distinguishing clean/poisoned samples, ST module for secure model retraining, and BR module for backdoor removal. These components enable two defense paradigms (D-ST and D-BR) for different scenarios. Reconstruction Neuron Pruning (RNP) [30] effectively exposes and prunes backdoor-related neurons through asymmetric forgetting–recovery processes, demonstrating robust defense across multiple attack types.

Recent advances include AFRAIDOOR [31], which enhances attack stealth through adaptive trigger injection with adversarial perturbations, and AIBD [32] that combines adversarial attack principles with backdoor detection to isolate poisoned samples while employing label correction for model purification. Yash Sharma et al. [33] systematically investigated low-frequency perturbations’ effectiveness in adversarial attacks, exposing vulnerabilities in current ImageNet defenses. Contrastive Neuron Pruning (CNP) [34] offers a label-free defense solution by identifying and pruning backdoor-related neurons based on abnormal feature distributions in compromised models.

These defense mechanisms collectively address critical challenges in backdoor mitigation, ranging from detection and removal to model hardening, while highlighting the ongoing arms race between evolving attack and defense strategies in deep learning security.

2.3. Discrete Wavelet Transform

The discrete wavelet transform (DWT) [35] represents a multiscale signal analysis technique capable of decomposing signals into frequency sub-bands while preserving both temporal and spectral information. In contrast to conventional Fourier transform (FT) and short-time Fourier transform (STFT) [36], DWT demonstrates superior suitability for non-stationary signal analysis through its dynamic adjustment of time–frequency resolution.

The theoretical foundation of DWT lies in multiresolution analysis (MRA), systematically developed by Mallat and Meyer in the 1980s. MRA decomposes signals into a series of nested approximation spaces (low-frequency components) and detail spaces (high-frequency components), generated, respectively, by scaling functions and wavelet functions. Algorithmically, DWT employs a dual-channel filter bank consisting of a low-pass filter and high-pass filter to perform convolution and down-sampling, progressively yielding approximation coefficients and detail coefficients. This process can be iteratively applied to form multilevel decomposition (e.g., pyramid algorithm), maintaining a computational complexity of merely O(N) that facilitates real-time processing.
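As a hedged illustration of the filter-bank recursion described above, one decomposition level can be written as follows. The notation is ours and is introduced only for this sketch: $a_k$ and $d_k$ denote the level-$k$ approximation and detail coefficients, $h$ and $g$ the low-pass and high-pass filters, and $a_0$ the input signal of length $N$.

```latex
a_k[n] = \sum_{m} h[m - 2n]\, a_{k-1}[m], \qquad
d_k[n] = \sum_{m} g[m - 2n]\, a_{k-1}[m]

% Level k filters N / 2^{k-1} samples, so a K-level decomposition costs
\sum_{k=1}^{K} \frac{N}{2^{k-1}} \;<\; 2N \quad\Longrightarrow\quad O(N)
```

The geometric sum is what keeps the multilevel pyramid linear in the signal length: each level handles half as many samples as the previous one.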

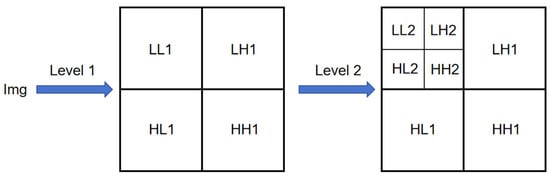

In image processing applications, the two-dimensional DWT extends its functionality through row-column filtering, generating multidirectional sub-bands (LL/LH/HL/HH), as illustrated in Figure 1. This decomposition mechanism significantly broadens the transform’s applicability across various computer vision tasks.

Figure 1.

Decoupling process of discrete wavelet transform.

3. Methodology

We propose a novel backdoor trigger strategy that leverages a two-level 2D wavelet transform in the YUV color space. Our approach decomposes input images into low-frequency and high-frequency components and embeds specific triggers into the high-frequency sub-bands of the original samples. Through the inverse discrete wavelet transform, we generate poisoned data; a model trained on these data maintains its performance on clean inputs while exhibiting the malicious behavior when it encounters triggered samples. This high-frequency injection method in UV channels significantly enhances both the model’s recognition accuracy for poisoned samples and the attack’s stealthiness and success rate. Table 2 provides the definitions of the symbols used in the proposed method.

Table 2.

Symbol definition.

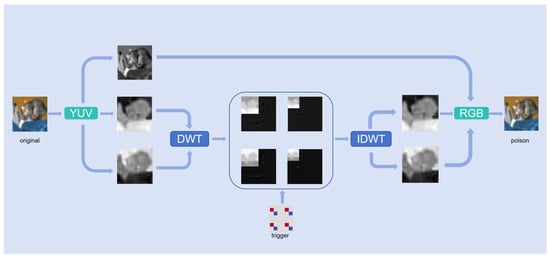

The proposed methodology achieves covert yet efficient backdoor implantation by injecting polarity-alternating triggers into high-frequency sub-bands of chrominance channels during wavelet transformation. As illustrated in Figure 2, the comprehensive pipeline consists of four key steps: (1) YUV color space conversion, (2) wavelet decomposition of UV channels, (3) high-frequency sub-band trigger injection, and (4) image reconstruction. This systematic approach ensures optimal trigger embedding while preserving visual quality and model functionality. Inter-step relationships: The pipeline follows a hierarchical progression—color space conversion enables targeted channel processing, wavelet decomposition localizes embedding regions, the design and injection of polarity-alternating triggers optimize both stealth and effectiveness, while reconstruction ensures end-to-end compatibility. Collectively, these steps achieve an optimal balance between attack efficacy and imperceptibility.

Figure 2.

Backdoor injection process of training samples.

3.1. YUV Color Space Conversion

Our deliberate choice of the YUV color space for backdoor injection was motivated by three key technical advantages over traditional RGB channels. First, the human visual system exhibits markedly lower sensitivity to chrominance (UV) variations than to luminance (Y), with a just noticeable difference (JND) threshold of 7.5 for UV components, so modifications that would be immediately apparent in RGB space can stay below the visibility threshold in the chroma channels. Second, empirical analysis demonstrated that UV channels contain 18% of total wavelet sub-band energy (versus just 7% in RGB) while maintaining complete decoupling from luminance information, enabling both exceptional stealth (SSIM ≥ 0.98) and structural preservation. Third, and critically for attack efficacy, UV modifications produce stronger gradient responses in neural networks, yielding significantly higher backdoor trigger success rates. These properties collectively establish YUV as the optimal vector for balancing stealth, robustness, and attack effectiveness. The implementation began with standard RGB-to-YUV conversion, preserving original image fidelity while enabling subsequent frequency-domain manipulations in this more advantageous color space.

We converted the RGB image to the YUV color space, as shown in Equation (1):
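A minimal sketch of such a conversion is given below. It assumes the common BT.601 analog YUV coefficients; the paper's exact Equation (1) may use a different variant or scaling (for example, YCbCr with offsets). The names `RGB_TO_YUV`, `rgb_to_yuv`, and `yuv_to_rgb` are illustrative.

```python
import numpy as np

# BT.601 analog YUV coefficients (assumption: the variant used in the
# paper's Equation (1) is not known and may differ).
RGB_TO_YUV = np.array([
    [0.299, 0.587, 0.114],         # Y: luminance
    [-0.14713, -0.28886, 0.436],   # U: blue-difference chroma
    [0.615, -0.51499, -0.10001],   # V: red-difference chroma
])
YUV_TO_RGB = np.linalg.inv(RGB_TO_YUV)


def rgb_to_yuv(img):
    """Convert a float array of shape (H, W, 3) from RGB to YUV."""
    return img @ RGB_TO_YUV.T


def yuv_to_rgb(img):
    """Inverse conversion, from YUV back to RGB."""
    return img @ YUV_TO_RGB.T


rgb = np.random.default_rng(0).random((32, 32, 3))
yuv = rgb_to_yuv(rgb)
restored = yuv_to_rgb(yuv)   # matches rgb up to floating-point error
```

Because the conversion is a linear, invertible map, any change made to the U and V planes can be carried back to RGB without touching the Y plane.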

3.2. Wavelet Decomposition of UV Channels

In digital image processing, high-frequency components typically carry detailed information, such as textures and noise, while low-frequency components preserve the fundamental structure and contours of an image. This characteristic makes subtle modifications in high-frequency bands particularly imperceptible to human vision. Leveraging this principle, we performed a two-level discrete wavelet transform (DWT) on the chrominance channels (U and V), specifically employing the Daubechies 2 (db2) wavelet basis. This decomposition is given by Equation (2):

The db2 wavelet basis was selected for its optimal balance between time–frequency localization and computational efficiency, making it particularly suitable for our application, where both precision and performance are critical considerations. This transform allowed us to precisely target the high-frequency sub-bands, where our modifications will be most effective yet least noticeable.

The k-th level low-frequency coefficient was obtained through two-dimensional convolution and down-sampling (Equation (3)):

High-frequency components at each level were generated through filter combinations in different directions to obtain diagonal high frequencies (Equation (4)):

Here, the high-pass filter kernel corresponds to the wavelet function coefficients. In this case, the diagonal high-frequency sub-band D2 (i.e., HH2) was obtained.
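The decomposition can be sketched in NumPy as follows. This is an illustration under stated assumptions rather than the authors' implementation: it uses the standard four-tap db2 filters with periodic boundary extension (so a 32 × 32 channel yields an 8 × 8 HH2 sub-band), whereas the paper's boundary handling is not specified. The helper names `analyze` and `dwt2` are hypothetical, and LH/HL naming conventions vary between libraries.

```python
import numpy as np

# Daubechies-2 (four-tap) analysis filters.
_S3 = np.sqrt(3.0)
LO = np.array([1 + _S3, 3 + _S3, 3 - _S3, 1 - _S3]) / (4 * np.sqrt(2.0))
HI = np.array([LO[3], -LO[2], LO[1], -LO[0]])  # g[n] = (-1)^n h[3 - n]


def analyze(x, filt):
    """Filter along the last axis with periodic wrap-around,
    then keep every second sample (down-sampling by 2)."""
    out = np.zeros(x.shape[:-1] + (x.shape[-1] // 2,))
    for k, c in enumerate(filt):
        out += c * np.roll(x, -k, axis=-1)[..., ::2]
    return out


def dwt2(x):
    """One 2D level: returns LL and the three detail sub-bands."""
    lo, hi = analyze(x, LO), analyze(x, HI)   # filter along the width
    ll = analyze(lo.T, LO).T                  # then along the height
    lh = analyze(lo.T, HI).T
    hl = analyze(hi.T, LO).T
    hh = analyze(hi.T, HI).T                  # diagonal detail sub-band
    return ll, (lh, hl, hh)


u = np.random.default_rng(0).random((32, 32))   # stand-in for a U channel
ll1, details1 = dwt2(u)                         # level 1: 16 x 16 sub-bands
ll2, (lh2, hl2, hh2) = dwt2(ll1)                # level 2: 8 x 8 sub-bands
```

The high-pass coefficients in `HI` sum to zero, so a constant image produces no detail coefficients, and with periodic extension the transform is orthogonal, so the total energy of the sub-bands equals the energy of the input channel.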

3.3. High-Frequency Sub-Band Trigger Injection

Generation of alternating polarity triggers: The structure of the polarity trigger employed a differential polarity design, creating a unique energy distribution pattern in the frequency domain. The alternating positive–negative pattern further enhanced the model’s sensitivity to trigger features. Additionally, the self-canceling characteristic of the polarity trigger ensured its effectiveness even after spatial filtering. Defining the trigger matrix T, the trigger pattern was mathematically formulated in Equation (5):

Here, α represents the intensity coefficient. By adjusting α, we can control the strength of the trigger. Theoretically, a larger α leads to a more stable trigger and a higher attack success rate (ASR). However, it also increases the probability of detection by defense mechanisms. Therefore, selecting an appropriate value for α is crucial.

Poisoning process: After performing wavelet decomposition on the UV channels, we obtained the low-frequency sub-band along with multiple high-frequency sub-bands (e.g., LH2, HL2, and HH2). We then injected the polarity trigger T into the HH2 sub-bands of both channels (Equation (6)):

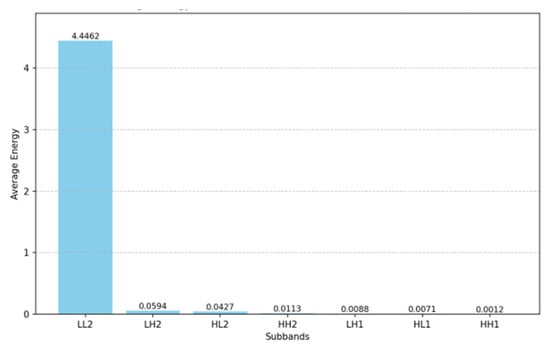

The poisoning process uses bicubic interpolation to scale the trigger and specifically targets the HH2 sub-band for injection. Bicubic interpolation is a resampling algorithm for image and video processing that computes the value of each new pixel when an image is enlarged or reduced; compared with bilinear interpolation, it produces smoother, higher-quality results. The choice of HH2 was motivated by three key reasons: first, the human visual system’s minimal sensitivity to HH sub-bands (as quantified by the contrast sensitivity function) ensured visual stealth; second, shallow CNN kernels demonstrated particularly strong responses to HH frequencies, enhancing feature learning; third, as Figure 3 illustrates, the HH2 sub-band’s negligible share of the energy in the CIFAR-10 dataset guaranteed minimal PSNR impact from perturbations. Together, these criteria make HH2 a well-suited carrier for covert yet effective backdoor attacks.

Figure 3.

Energy proportion of each sub-band in the data.
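To make the injection step concrete, the sketch below builds an alternating-polarity pattern and adds it to an HH2 sub-band. Both the checkerboard layout and the additive form are assumptions made for illustration: the exact pattern of Equation (5) and the injection rule of Equation (6) may differ. The functions `polarity_trigger` and `inject` are hypothetical names.

```python
import numpy as np


def polarity_trigger(shape, alpha):
    """Hypothetical alternating +alpha / -alpha checkerboard pattern.
    The actual trigger matrix T of Equation (5) may be laid out differently."""
    rows, cols = np.indices(shape)
    return alpha * np.where((rows + cols) % 2 == 0, 1.0, -1.0)


def inject(hh2, alpha):
    """Additive injection into a diagonal detail sub-band (assumed form)."""
    return hh2 + polarity_trigger(hh2.shape, alpha)


hh2_u = np.zeros((8, 8))                  # stand-in for the U-channel HH2 coefficients
hh2_u_poisoned = inject(hh2_u, alpha=0.8)
trigger = polarity_trigger((8, 8), 0.8)   # entries are +0.8 or -0.8 and sum to zero
```

In this sketch the pattern is generated directly at the size of the sub-band, so no resampling step is needed. On an even-sized grid the positive and negative entries cancel in sum, which is one way to read the self-canceling property mentioned above.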

3.4. Image Reconstruction

Upon completing the trigger embedding in high-frequency sub-bands, we performed signal reconstruction using a two-level 2D inverse discrete wavelet transform (IDWT). Specifically, the reconstruction involved combining the modified high-frequency components (HH2) with the original low-frequency components (LL2) and other detail sub-bands (LH2, HL2, etc.). This process is mathematically described by the following Equations (7) and (8):

The processed YUV image was ultimately reconstructed into RGB format through inverse color space transformation, yielding the poisoned sample with visually imperceptible artifacts. Figure 4 demonstrates the comparative effects of trigger injections at varying energy intensities. Our observations revealed a non-linear amplification of perturbation effects relative to increasing trigger intensity. Specifically, when the intensity coefficient remained below 1.0, spatial-domain perturbations remained virtually undetectable; however, beyond certain thresholds, trigger patterns began exhibiting visible artifacts.

Figure 4.

Impact of varying trigger intensities (α) on sample perturbation characteristics.
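The reconstruction step can be sketched end to end as follows. As before, this is an illustration under assumptions, not the authors' code: db2 filters with periodic extension, an additive checkerboard trigger with α = 0.8, and a random array standing in for a chroma channel scaled to 0–255. All helper names are hypothetical.

```python
import numpy as np

_S3 = np.sqrt(3.0)
LO = np.array([1 + _S3, 3 + _S3, 3 - _S3, 1 - _S3]) / (4 * np.sqrt(2.0))
HI = np.array([LO[3], -LO[2], LO[1], -LO[0]])


def analyze(x, filt):
    """Periodic filtering along the last axis, then down-sampling by 2."""
    out = np.zeros(x.shape[:-1] + (x.shape[-1] // 2,))
    for k, c in enumerate(filt):
        out += c * np.roll(x, -k, axis=-1)[..., ::2]
    return out


def synthesize(a, d):
    """Inverse of (analyze(x, LO), analyze(x, HI)) along the last axis."""
    x = np.zeros(a.shape[:-1] + (2 * a.shape[-1],))
    for k in range(4):
        up = np.zeros_like(x)
        up[..., ::2] = LO[k] * a + HI[k] * d   # up-sample by 2
        x += np.roll(up, k, axis=-1)           # shift by the filter tap index
    return x


def dwt2(x):
    lo, hi = analyze(x, LO), analyze(x, HI)
    return (analyze(lo.T, LO).T, analyze(lo.T, HI).T,
            analyze(hi.T, LO).T, analyze(hi.T, HI).T)   # LL, LH, HL, HH


def idwt2(ll, lh, hl, hh):
    lo = synthesize(ll.T, lh.T).T
    hi = synthesize(hl.T, hh.T).T
    return synthesize(lo, hi)


u = np.random.default_rng(0).random((32, 32)) * 255.0
ll1, lh1, hl1, hh1 = dwt2(u)
ll2, lh2, hl2, hh2 = dwt2(ll1)

rows, cols = np.indices(hh2.shape)
trigger = 0.8 * np.where((rows + cols) % 2 == 0, 1.0, -1.0)   # hypothetical pattern

# Two-level inverse transform with the modified HH2 sub-band.
ll1_poisoned = idwt2(ll2, lh2, hl2, hh2 + trigger)
u_poisoned = idwt2(ll1_poisoned, lh1, hl1, hh1)

# Without the trigger, the same path reproduces the input channel.
u_roundtrip = idwt2(idwt2(ll2, lh2, hl2, hh2), lh1, hl1, hh1)
```

Because this particular transform is orthogonal, the squared spatial-domain difference between `u_poisoned` and `u` equals the squared norm of the injected trigger, which gives a direct handle on how α translates into pixel-level distortion in this setting.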

Notably, for CNN models sensitive to high-frequency features, even low-energy triggers injected solely in high-frequency sub-bands can achieve high attack success rates. Building on this finding, we strictly constrained the intensity coefficient within an optimized range of 0.2–1.0 while maintaining attack efficacy. This balanced approach established critical guidance for energy intensity selection in covert backdoor attacks.

After implanting backdoors into the training set, the model’s behavior during testing became conditionally controlled. The backdoor activated exclusively when the model encountered the cumulative trigger T containing target-class high-frequency information. This mechanism ensured the model maintained its expected high performance on normal samples, while producing incorrect inferences when processing specially crafted poisoned samples—thereby achieving the attacker’s objectives.
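The dataset-level poisoning can be sketched as below: a fraction of the training samples receives the trigger and is relabeled as the target class. The function name, its parameters, and the toy trigger are illustrative assumptions; the paper's sample-selection policy (for example, whether target-class samples are excluded) is not specified in this text.

```python
import random


def poison_dataset(samples, labels, apply_trigger, target_class, rate, seed=0):
    """Return copies of samples and labels in which a `rate` fraction of the
    samples carries the trigger and is relabeled as `target_class`."""
    rng = random.Random(seed)
    n_poison = int(round(rate * len(samples)))
    chosen = set(rng.sample(range(len(samples)), n_poison))
    new_samples = [apply_trigger(s) if i in chosen else s
                   for i, s in enumerate(samples)]
    new_labels = [target_class if i in chosen else y
                  for i, y in enumerate(labels)]
    return new_samples, new_labels, chosen


# Toy usage: integers stand in for images, negation stands in for the trigger.
samples = list(range(100))
labels = [i % 10 for i in samples]
poisoned_samples, poisoned_labels, chosen = poison_dataset(
    samples, labels, apply_trigger=lambda s: -s - 1, target_class=0, rate=0.1
)
```

In a real pipeline, `apply_trigger` would wrap the color conversion, wavelet decomposition, injection, and reconstruction steps of Sections 3.1 to 3.4.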

4. Experiments and Results

4.1. Experimental Environment and Performance Index

Experimental Setup

All experiments were conducted using PyTorch (CUDA 12.4) and Python 3.12.4 on a high-performance server equipped with a 12th Gen Intel® Core™ i7-12700KF CPU and an NVIDIA GeForce RTX 4070 GPU to ensure efficient computation.

Datasets and Models

As shown in Table 3, we evaluated our method on two widely used backdoor attack benchmarks, CIFAR-10 [37] and GTSRB [38], using a ResNet-18 model. Comprehensive experiments were performed, comparing our approach with five state-of-the-art backdoor attack methods to validate its effectiveness and robustness.

Table 3.

Datasets.

CIFAR-10 (*Canadian Institute for Advanced Research-10*) is a widely used benchmark dataset in the field of computer vision, compiled and released by Alex Krizhevsky, Vinod Nair, and Geoffrey Hinton in 2009. It consists of 60,000 color images evenly distributed across 10 mutually exclusive categories, with each category containing 6000 images. The images are formatted as 32 × 32-pixel RGB files, and their low-resolution nature poses a challenge to models’ feature extraction capabilities.

GTSRB (German Traffic Sign Recognition Benchmark) is a leading dataset in traffic sign recognition, introduced by German researchers during the 2011 IJCNN competition. It is extensively used for traffic sign classification tasks in autonomous driving and intelligent transportation systems, with its core value lying in simulating the diversity and complexity of real-world traffic signs. The dataset comprises 43 classes of traffic signs (e.g., speed limits, stop signs, and directional indicators), totaling 51,839 images (39,209 for training and 12,630 for testing). Like CIFAR-10, the images are standardized as 32 × 32-pixel RGB images.

Evaluation Metrics

ACC (clean accuracy): Classification accuracy on unmodified test data, measuring how well the model retains its original functionality after backdoor implantation:

ASR (attack success rate): The percentage of triggered samples misclassified as the target class. A successful attack requires high ASR (ideally ≈ 100%) while maintaining high ACC:

PSNR (peak signal-to-noise ratio): Quantifies the visual stealth of triggers by computing pixel-level differences between clean and poisoned images. Higher values indicate better imperceptibility:

SSIM (structural similarity index): Assesses perceptual similarity between clean and poisoned images by evaluating luminance, contrast, and structural patterns (human-vision-aligned metric):
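The first three metrics can be computed as in the following sketch, which uses their standard definitions: ACC and ASR as fractions of matching predictions, and PSNR = 10 · log10(MAX² / MSE). The function names and the default peak value of 255 are assumptions for illustration; the paper's exact formulas are not reproduced here.

```python
import numpy as np


def accuracy(pred, true):
    """ACC: fraction of clean test samples classified correctly."""
    return float(np.mean(np.asarray(pred) == np.asarray(true)))


def attack_success_rate(pred_on_triggered, target_class):
    """ASR: fraction of triggered samples classified as the target class."""
    return float(np.mean(np.asarray(pred_on_triggered) == target_class))


def psnr(clean, poisoned, max_value=255.0):
    """PSNR in dB between a clean image and its poisoned counterpart."""
    clean = np.asarray(clean, dtype=np.float64)
    poisoned = np.asarray(poisoned, dtype=np.float64)
    mse = np.mean((clean - poisoned) ** 2)
    if mse == 0:
        return float("inf")   # identical images
    return float(10.0 * np.log10(max_value ** 2 / mse))


acc = accuracy([1, 2, 3, 4], [1, 2, 0, 4])
asr = attack_success_rate([0, 0, 1, 0], target_class=0)
value = psnr(np.zeros((4, 4)), np.ones((4, 4)))   # MSE of 1 on a 0-255 scale
```

SSIM involves local windowed statistics and is usually taken from a library; scikit-image's `skimage.metrics.structural_similarity` is one commonly used implementation.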

4.2. Overall Performance

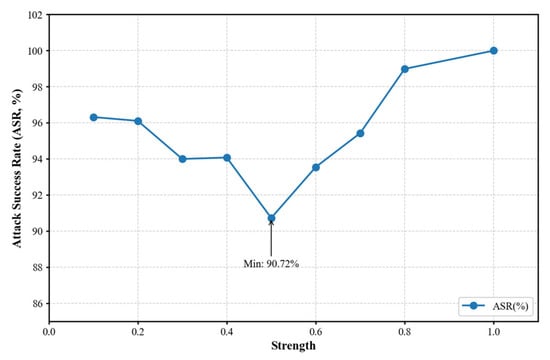

Our systematic investigation revealed a critical trade-off in trigger energy intensity for effective backdoor attacks. We observed that insufficient energy levels (<0.2) significantly impaired attack success (ASR < 60%) by failing to establish reliable backdoor pathways, while excessive intensity (>1.0) introduced detectable artifacts and triggered defense mechanisms. Through extensive 50-epoch experiments on GTSRB with varying energy values, we identified the optimal range of 0.2–1.0 that simultaneously achieved high attack success (92–98% ASR) while maintaining visual stealth (PSNR > 32 dB, SSIM > 0.95). This non-linear relationship between energy intensity and attack performance, as visualized in Figure 5, highlights the necessity for precise energy calibration through dataset-specific tuning and multi-metric evaluation (ASR/PSNR/SSIM), providing practical guidelines for implementing covert yet effective backdoor attacks.

Figure 5.

Relationship between attack success rate (ASR) and trigger strength.

Figure 5 demonstrates the quasi-linear relationship between attack success rate (ASR) and trigger intensity α. Notably, when α > 0.8, ASR stabilized at 98.3% ± 0.4%, indicating that strong triggers effectively surpassed the model’s frequency-domain feature extraction threshold. Remarkably, even in the weak intensity range (α ∈ [0.1, 0.4]), ASR maintained a high level of 94.6% ± 1.2%, validating the specific activation effect of high-frequency sub-band injection on shallow CNN convolutional kernels. However, an anomalous inflection point occurred at α = 0.5 (90.72% ± 1.8%), attributable to dynamic cognitive mechanisms in the model—at this critical threshold, trigger energy was partially recognized as potential noise rather than semantic features by certain convolutional kernels, resulting in sparse activation of feature maps.

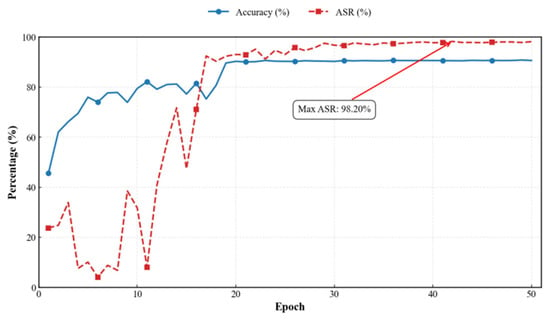

While pursuing high ASR, we must simultaneously consider the visual stealth of poisoned samples. As trigger intensity increased, both PSNR and SSIM gradually decreased. After comprehensive evaluation, we selected an injection intensity of 0.8 for subsequent experiments. Figure 6 and Figure 7 present the model accuracy (ACC) and ASR for both datasets at this intensity. Additionally, we conducted detailed evaluations of HCBA across different injection channels (RGB, YUV, and UV), with results summarized in Table 4.

Figure 6.

CIFAR-10.

Figure 7.

GTSRB.

Table 4.

Comparison of results of different variant methods (ablation experiment).

Through extensive experimental validation, all variants of our proposed method demonstrated remarkable effectiveness, achieving consistently high attack success rates across both datasets while maintaining model accuracy (ACC) with only marginal degradation. Although high-frequency injection in the UV channel did not yield the absolute highest ASR at equivalent intensity levels, it provided significantly improved PSNR and SSIM metrics. This optimal balance established UV-channel high-frequency injection as the preferred approach, delivering both competitive attack performance and superior stealth characteristics.

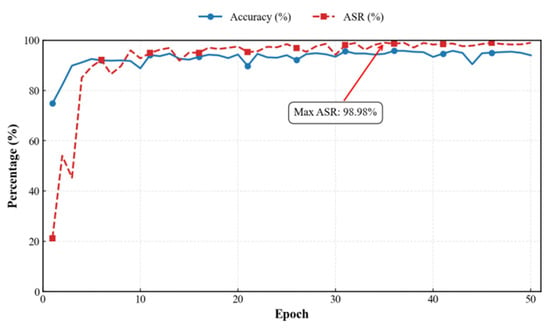

4.3. Comparative Analysis with Existing Backdoor Attack Methods

We compared our proposed HCBA method with existing backdoor attack approaches, including classical methods (BadNets, Blended, and SIG) and recently proposed techniques (FIBA and ASBA). All experiments were conducted with a poisoning ratio of 0.1, and the comparative results are summarized in Table 5.

Table 5.

Experimental comparison.

The experimental results show that, once the training data are poisoned, the various backdoor attack methods degrade classification accuracy (ACC) on benign samples to varying degrees. This phenomenon aligns with the “accuracy–attack effectiveness” trade-off in the field of machine learning backdoor attacks, where enhancing attack performance often comes at the cost of reduced classification accuracy on normal samples. However, the HCBA method proposed in this paper overcame this limitation through its high-frequency injection strategy in the UV channels. While maintaining an exceptionally high attack effectiveness (average attack success rate (ASR) of 98.5%), HCBA kept the decline in benign sample classification accuracy to within 1%, achieving a favorable balance between attack potency and stealthiness. This result opens new avenues for backdoor attack research.

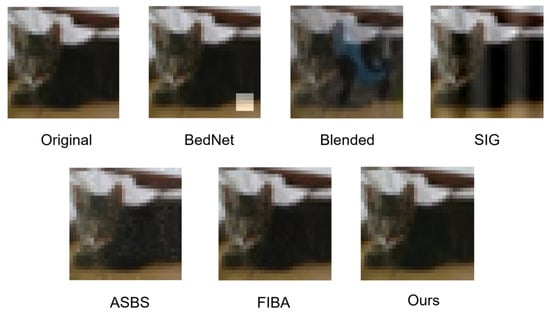

In terms of stealth, HCBA clearly outperformed the baseline methods. The poisoned samples generated by HCBA scored higher than those of existing attacks on both image-similarity metrics: peak signal-to-noise ratio (PSNR = 45.09 dB) and structural similarity index (SSIM = 0.998). Compared to the second-best method, HCBA improved PSNR by 9 ± 1 dB and SSIM by 0.03 ± 0.01, indicating that its poisoned samples were nearly indistinguishable from the originals in image quality. Visual perception tests corroborated this conclusion: human evaluators found it almost impossible to tell poisoned samples from originals (see Figure 8). A spatial residual analysis (Figure 9) further revealed three properties of HCBA’s perturbations: (1) at the original image scale, they were imperceptible to the naked eye; (2) only when the image was magnified 50 times could globally distributed micro-perturbations be observed; and (3) the perturbation energy was concentrated in the high-frequency sub-bands of the UV chroma channels, to which human vision is less sensitive. As a result, the perturbations neither noticeably degraded visual quality nor compromised the effectiveness of the attack.
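To make the PSNR figures easier to interpret, the following minimal sketch computes PSNR from its standard definition, 10·log10(MAX² / MSE), and converts a PSNR gain into the corresponding reduction in mean squared error. The pixel values and the helper names (`psnr`, `mse_ratio_for_gain`) are illustrative assumptions, not data or code from our experiments.

```python
import math


def psnr(original, poisoned, max_value=255.0):
    """Peak signal-to-noise ratio (dB) between two equally sized pixel sequences."""
    if len(original) != len(poisoned):
        raise ValueError("images must have the same number of pixels")
    mse = sum((a - b) ** 2 for a, b in zip(original, poisoned)) / len(original)
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(max_value ** 2 / mse)


def mse_ratio_for_gain(gain_db):
    """Factor by which MSE shrinks when PSNR rises by gain_db."""
    return 10.0 ** (gain_db / 10.0)


# Hypothetical flattened 8-bit image and a copy perturbed by +/-1 on every pixel.
clean = [100, 120, 140, 160] * 16
poisoned = [p + (1 if i % 2 == 0 else -1) for i, p in enumerate(clean)]

print(round(psnr(clean, poisoned), 2))    # 48.13 (MSE = 1)
print(round(mse_ratio_for_gain(9.0), 2))  # 7.94
```

Under this definition, a gain of about 9 dB corresponds to roughly an eightfold reduction in mean squared error between the poisoned and original images.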

Figure 8.

Visual comparison of different backdoor attack methods.

Figure 9.

Residual plot (left). Enhanced residual plot (right).

HCBA’s innovative perturbation pattern offers multiple technical advantages. By precisely modulating the perturbation amplitude and frequency-domain distribution, it skillfully evades spatial anomaly detection-based defense mechanisms, as its perturbations are uniformly distributed and inconspicuous in the spatial domain. The findings of this study clearly indicate that future defense system development must shift focus toward new detection dimensions, such as color space transformation and frequency-domain feature analysis, to counter such highly stealthy attack methods.

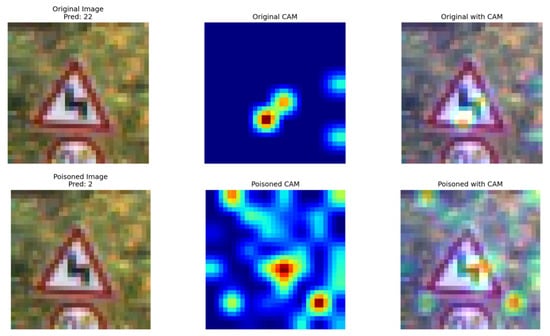

To gain deeper insights into the impact of our proposed backdoor trigger on model behavior, we conducted a comprehensive visualization analysis using Grad-CAM (Gradient-weighted Class Activation Mapping) [39]. As illustrated in Figure 10, this technique revealed the activation regions of the model under HCBA attack. Unlike conventional spatial backdoor triggers that often induce localized abnormal activations, our HCBA method injected triggers in the frequency domain. This strategic approach ensured that no perceptible artifacts or suspicious activation patterns were introduced in specific spatial regions of the poisoned images. Consequently, the trigger remained highly concealed, significantly increasing the difficulty of detection and defense. The Grad-CAM results further validated that HCBA’s frequency-domain perturbations maintained natural model behavior while effectively achieving adversarial control.

Figure 10.

Comparison of Grad-CAM images (top image is original image, bottom image is poisoned image).

We also compared the discrete wavelet transform (DWT) used by HCBA with the Fourier transform used by the FIBA method for frequency-domain injection.

The wavelet transform (DWT) demonstrated significant multidimensional advantages over the Fourier transform (FT) in the HCBA method, particularly in terms of signal processing precision, attack stealthiness, robustness, and defense resistance.

From a signal analysis perspective, DWT achieved joint time–frequency localization through multiresolution analysis (MRA), enabling precise separation of high-frequency and low-frequency image components across different scales and directions (horizontal, vertical, and diagonal). This characteristic allows attackers to selectively embed triggers in the HH2 high-frequency sub-band, which is least perceptible to human vision. In contrast, the Fourier transform only provided global frequency information and lacked such fine-grained spatial localization.

In terms of stealthiness, the high-frequency sub-bands of DWT primarily contained texture details and noise, which are less perceptible to the human visual system. Experimental data showed that DWT-based trigger embedding maintained extremely high structural similarity (SSIM > 0.99) and peak signal-to-noise ratio (PSNR > 45 dB), making the perturbations nearly imperceptible. On the other hand, Fourier transform-based attacks typically require perturbations in the low-frequency region, which often introduce noticeable color shifts or blurring, increasing the risk of manual detection.

Regarding computational efficiency, DWT has an algorithmic complexity of O(N), compared with O(N log N) for the fast Fourier transform. This makes DWT better suited to large-scale image processing, and the choice of wavelet basis (e.g., db2) allows a flexible trade-off between time–frequency resolution and computational cost. Additionally, the polarity trigger design created alternating positive and negative structures in the HH2 sub-band, forming a distinctive energy distribution pattern that resisted various frequency-domain filtering defenses.
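The sub-band separation and the alternating-sign structure described above can be illustrated with a small, self-contained sketch. It is a simplification under stated assumptions rather than the HCBA implementation: it uses a single-level Haar wavelet instead of a two-level db2 decomposition, it treats α as an additive offset on the diagonal (HH) coefficients, and the checkerboard sign pattern, the function names, and the 8 × 8 chroma values are all hypothetical.

```python
def haar_dwt2(img):
    """Single-level 2D orthonormal Haar DWT of a 2D list with even height and width."""
    h, w = len(img), len(img[0])
    ll, lh, hl, hh = ([[0.0] * (w // 2) for _ in range(h // 2)] for _ in range(4))
    for i in range(0, h, 2):
        for j in range(0, w, 2):
            a, b = img[i][j], img[i][j + 1]
            c, d = img[i + 1][j], img[i + 1][j + 1]
            r, s = i // 2, j // 2
            ll[r][s] = (a + b + c + d) / 2  # approximation (low-low)
            lh[r][s] = (a + b - c - d) / 2  # detail across rows
            hl[r][s] = (a - b + c - d) / 2  # detail across columns
            hh[r][s] = (a - b - c + d) / 2  # diagonal detail (high-high)
    return ll, lh, hl, hh


def haar_idwt2(ll, lh, hl, hh):
    """Inverse of haar_dwt2."""
    h2, w2 = len(ll), len(ll[0])
    img = [[0.0] * (2 * w2) for _ in range(2 * h2)]
    for r in range(h2):
        for s in range(w2):
            p, q, u, v = ll[r][s], lh[r][s], hl[r][s], hh[r][s]
            img[2 * r][2 * s] = (p + q + u + v) / 2
            img[2 * r][2 * s + 1] = (p + q - u - v) / 2
            img[2 * r + 1][2 * s] = (p - q + u - v) / 2
            img[2 * r + 1][2 * s + 1] = (p - q - u + v) / 2
    return img


def embed_polarity_trigger(channel, alpha):
    """Add an alternating +alpha / -alpha pattern (assumed form) to the HH sub-band."""
    ll, lh, hl, hh = haar_dwt2(channel)
    for r, row in enumerate(hh):
        for s in range(len(row)):
            row[s] += alpha if (r + s) % 2 == 0 else -alpha
    return haar_idwt2(ll, lh, hl, hh)


# Hypothetical 8 x 8 chroma (U or V) channel.
channel = [[128 + (3 * i + 5 * j) % 7 for j in range(8)] for i in range(8)]
poisoned = embed_polarity_trigger(channel, alpha=0.8)

max_change = max(
    abs(poisoned[i][j] - channel[i][j]) for i in range(8) for j in range(8)
)
print(round(max_change, 3))  # each pixel moves by about alpha / 2
```

In this simplified setting, an offset of α on an HH coefficient changes each of the four underlying pixels by only α/2, and the approximation (LL) sub-band is left untouched, which is the intuition behind the small spatial residuals reported for high-frequency chroma injection.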

Finally, from an energy distribution perspective, the HH2 sub-band after DWT decomposition accounted for only about 7% of the total image energy. This low-energy characteristic ensured minimal impact on image quality (PSNR > 45 dB). In contrast, Fourier transform-based attacks inevitably introduced significant distortions (typically PSNR < 30 dB) due to the need to perturb energy-dense low-frequency regions.

In summary, DWT provided comprehensive advantages for the HCBA method in terms of stealthiness, attack success rate, and resistance to interference.

4.4. Defense Robustness Test

To comprehensively evaluate the robustness of the HCBA backdoor attack method and explore its potential in real-world application scenarios, this study conducted specialized tests on HCBA against mainstream defense algorithms. The investigation selected two classical defense strategies—neural cleanse and frequency-domain filtering—as test subjects, systematically analyzing the actual performance of the HCBA attack method when confronted with defense mechanisms.

4.4.1. Neural Cleanse Resistance Evaluation

We conducted further evaluation of our method’s resistance to neural cleanse [28] (Table 6), a sophisticated defense algorithm that detects and removes potential backdoors in deep learning models to ensure correct behavior on normal inputs while neutralizing malicious triggers. The defense effectiveness was quantified using an anomaly index metric with established thresholds: values below 2 indicate a clean model, between 2 and 3 suggest suspicious activity, and above 3 confirm backdoor presence. Our experimental results demonstrated that with α = 0.8, the anomaly indices fell within the suspicious range (2–3) across both public datasets, making the model detectable but not conclusively malicious. However, when α = 0.2, all anomaly indices remained below the detection threshold (<2), effectively bypassing the neural cleanse defense mechanism. These findings highlight our method’s ability to adaptively evade detection while maintaining attack efficacy, with lower α values providing complete stealth against this advanced defense system.
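For readers unfamiliar with the anomaly index, the following sketch shows how such an index is typically derived: Neural Cleanse reverse-engineers a candidate trigger for each label and flags labels whose trigger L1 norm is an outlier, measured in units of the median absolute deviation (MAD). The per-label norms below are made-up illustrative values, the function names are hypothetical, and the verdict thresholds simply restate the ranges given above.

```python
from statistics import median

CONSISTENCY = 1.4826  # scales MAD to be comparable with a standard deviation


def anomaly_indices(l1_norms):
    """MAD-based outlier score for each label's reverse-engineered trigger norm."""
    med = median(l1_norms)
    mad = median([abs(x - med) for x in l1_norms])
    if mad == 0:
        return [0.0 for _ in l1_norms]  # all norms identical: nothing stands out
    return [abs(x - med) / (CONSISTENCY * mad) for x in l1_norms]


def verdict(index):
    """Map an anomaly index to the ranges used in this section."""
    if index < 2:
        return "clean"
    if index <= 3:
        return "suspicious"
    return "backdoor"


# Hypothetical L1 norms for a 10-class model; the last label needs a much
# smaller trigger than the others, as a backdoored target label would.
norms = [95, 102, 98, 110, 105, 99, 101, 97, 104, 40]
scores = anomaly_indices(norms)
target = min(range(len(norms)), key=lambda k: norms[k])

print(target, round(scores[target], 2), verdict(scores[target]))
```

A stealthier trigger raises the norm that the defense needs to reconstruct for the target label, pulling it back toward the median and lowering the index, which is consistent with the lower indices observed at α = 0.2.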

Table 6.

Defense effectiveness against neural cleanse.

4.4.2. Frequency-Domain Filtering

In contemporary backdoor defense research, frequency-domain signal filtering has emerged as a widely recognized effective countermeasure [12]. This defense mechanism operates by transforming images from the spatial domain to the frequency domain, followed by analysis and processing of frequency components to detect and mitigate potential backdoors. In our investigation, we implemented three distinct filtering approaches—Gaussian filter, median filter, and Wiener filter—as preprocessing operations during model training to eliminate potential backdoor threats.

Table 7 demonstrates the resilience of HCBA backdoor attacks against frequency-domain filtering defenses, with all experiments conducted at a trigger injection strength of α = 0.8. The results revealed significant variations in how different filtering algorithms affected the model accuracy (ACC) and attack success rate (ASR) across CIFAR-10 and GTSRB datasets. Notably, Gaussian filter (1, 1) showed negligible impact on model performance but limited defensive efficacy. Gaussian filter (3, 3) substantially suppressed ASR at the cost of significant ACC degradation. Median filter (3, 3) achieved partial balance between ASR reduction and ACC preservation, though still caused notable accuracy decline. Wiener filter demonstrated inconsistent backdoor suppression capabilities while inducing severe model performance deterioration.
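The effect of a 3 × 3 smoothing kernel on an alternating-sign perturbation can be illustrated with a toy example. This is a sketch under assumptions, not a reproduction of the experiment: the kernel is the binomial approximation of a 3 × 3 Gaussian, the image is a flat chroma plane carrying a hypothetical pixel-level checkerboard of amplitude 0.4, and the function names are invented for illustration. The actual HCBA trigger occupies wavelet sub-bands and would be attenuated differently.

```python
KERNEL = [[1, 2, 1], [2, 4, 2], [1, 2, 1]]  # binomial weights, sum = 16


def smooth3x3(img):
    """Apply the 3 x 3 binomial kernel with edge replication at the borders."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            acc = 0.0
            for di in (-1, 0, 1):
                for dj in (-1, 0, 1):
                    y = min(max(i + di, 0), h - 1)
                    x = min(max(j + dj, 0), w - 1)
                    acc += KERNEL[di + 1][dj + 1] * img[y][x]
            out[i][j] = acc / 16
    return out


def checkerboard(h, w, base, amp):
    """Flat plane with an alternating +amp / -amp pattern."""
    return [
        [base + (amp if (i + j) % 2 == 0 else -amp) for j in range(w)]
        for i in range(h)
    ]


img = checkerboard(8, 8, base=128.0, amp=0.4)
smoothed = smooth3x3(img)

# Residual perturbation at interior pixels after smoothing.
interior_residual = max(
    abs(smoothed[i][j] - 128.0) for i in range(1, 7) for j in range(1, 7)
)
print(interior_residual < 1e-9)  # the alternating pattern cancels in the interior
```

In each interior window, the center and corner weights (4 + 4 × 1) balance the edge weights (4 × 2) of opposite sign, so the pattern cancels. The same low-pass behavior also removes genuine fine texture, which is in line with the accuracy loss observed for the stronger filters.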

Table 7.

Frequency-domain filtering defense effect.

Cross-dataset comparisons revealed that while GTSRB baseline models outperformed CIFAR-10, they exhibited greater vulnerability to ACC degradation from frequency-domain filtering. Our comprehensive analysis suggested that conventional frequency-domain smoothing techniques, despite showing promise against traditional backdoor attacks, proved inadequate against advanced paradigms like HCBA. These methods not only failed to establish effective defensive barriers but also substantially compromised fundamental model functionality and performance metrics—a clear violation of model usability principles. Consequently, frequency-domain smoothing techniques represent a suboptimal solution for defending against HCBA attacks, failing to achieve desired protection objectives.

5. Conclusions

This study proposed a frequency-domain backdoor attack method for deep neural networks that enhances the stealthiness and robustness of backdoor attacks through high-frequency injection in the chrominance channels. The approach employs the discrete wavelet transform (DWT) to embed backdoor triggers in the high-frequency sub-bands of the UV channels, addressing critical issues in conventional backdoor attacks, such as unnatural triggers, easy detectability, weak resistance to data augmentation, and insufficient stability. Specifically, our method uses a two-phase implementation: first, a base trigger is embedded in the frequency domain to ensure visual imperceptibility; then, a polarity trigger is injected without compromising stealthiness to enhance attack robustness. Experimental results demonstrated a high attack success rate (ASR > 98.5%) together with strong stealthiness (PSNR > 45 dB, SSIM > 0.996).

Compared to traditional spatial-domain backdoor attacks, our frequency-domain injection scheme generated more natural trigger patterns, effectively evading detection by existing mechanisms while maintaining stable attack performance. Notably, it demonstrated superior adaptability when confronting data augmentation techniques.

Future research directions include (1) extending this method to federated learning scenarios to explore the effectiveness of distributed multi-trigger injection strategies, (2) investigating the feasibility of cross-modal frequency-domain backdoor attacks, and (3) developing novel defense mechanisms against frequency-domain backdoor attacks. These studies will contribute to a more comprehensive assessment of the practical threats posed by frequency-domain backdoor attacks and provide theoretical support for building more secure deep learning systems.

Author Contributions

Conceptualization, Y.F.; data curation, W.P.; formal analysis, Y.F.; investigation, Y.F.; project administration, Y.F.; software, Y.F.; supervision, K.Z., B.Z. and Y.Z.; validation, Y.F.; visualization, Y.Z. and J.Z.; writing—original draft, Y.F.; writing—review and editing, Y.F., K.Z., B.Z., Y.Z. and J.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the Hainan Province Science and Technology Special Fund (Fund No. ZDYF2024GXJS034), the Hainan Engineering Research Center for Virtual Reality Technology and Systems (Fund No. Qiong fa Gai gao ji [2023] 818), the Innovation Platform for Academicians of Hainan Province (Fund No. YSPTZX202036), the Education Department of Hainan Province (Fund No. Hnky2024ZD-24), and the Sanya Science and Technology Special Fund (Fund No. 2022KJCX30).

Data Availability Statement

We evaluated the performance of our model using two datasets. The first was the CIFAR-10 (Canadian Institute for Advanced Research, 10 classes) dataset (available at https://www.cs.toronto.edu/~kriz/cifar.html, accessed on 10 April 2025). It consists of 60,000 color images evenly distributed across 10 mutually exclusive categories, with each category containing 6000 images. The second was the GTSRB (German Traffic Sign Recognition Benchmark) dataset (available at https://benchmark.ini.rub.de/gtsrb_news.html, accessed on 10 April 2025). It comprises 43 classes of traffic signs (e.g., speed limits, stop signs, and directional indicators), totaling 51,839 images: 39,209 for training and 12,630 for testing.

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. Zhou, J.; Zhang, K.; Bilal, A.; Zhou, Y.; Fan, Y.; Pan, W.; Xie, X.; Peng, Q. An Integrated CSPPC and BiLSTM Framework for Malicious URL Detection. Sci. Rep. 2025, 15, 6659.

2. Zhang, K.; Pu, Z.; Jin, C.; Zhou, Y.; Wang, Z. A novel semi-local centrality to identify influential nodes in complex networks by integrating multidimensional factors. Eng. Appl. Artif. Intell. 2025, 145, 110177.

3. Zhang, K.; Zhou, Y.; Wang, C.; Hong, H.; Chen, J.; Gao, Q.; Ghobaei-Arani, M. Towards an Automatic Deployment Model of IoT Services in Fog Computing Using an Adaptive Differential Evolution Algorithm. Internet Things 2023, 24, 100918.

4. Zhang, K.; Zhou, Y.; Long, H.; Wang, C.; Hong, H.; Armaghan, S.M. Towards identifying influential nodes in complex networks using semi-local centrality metrics. J. King Saud Univ.-Comput. Inf. Sci. 2023, 35, 101798.

5. Asry, C.E.L.; Benchaji, I.; Douzi, S.; Ouahidi, B.E.L.; Ukwandu, E. Enhancing cybersecurity: A high-performance intrusion detection approach through boosting minority class recognition. PLoS ONE 2025, 20, e0317346.

6. Zhao, G.; Liu, P.; Sun, K.; Yang, Y.; Lan, T.; Yang, H.; Rustam, F. Research on data imbalance in intrusion detection using CGAN. PLoS ONE 2023, 18, e0291750.

7. Barni, M.; Kallas, K.; Tondi, B. A new backdoor attack in CNNs by training set corruption without label poisoning. In Proceedings of the 2019 IEEE International Conference on Image Processing (ICIP), Taipei, Taiwan, 22–25 September 2019; pp. 101–105.

8. Zhong, N.; Qian, Z.; Zhang, X. Sparse Backdoor Attack Against Neural Networks. Comput. J. 2024, 67, 1783–1793.

9. Gu, T.; Liu, K.; Dolan-Gavitt, B.; Garg, S. BadNets: Evaluating backdooring attacks on deep neural networks. IEEE Access 2019, 7, 47230–47244.

10. Chen, X.; Liu, C.; Li, B.; Lu, K.; Song, D. Targeted backdoor attacks on deep learning systems using data poisoning. arXiv 2017, arXiv:1712.05526.

11. Wan, S.; Wu, T.Y.; Hsu, H.W.; Wong, W.H.; Lee, C.Y. Feature consistency training with JPEG compressed images. IEEE Trans. Circuits Syst. Video Technol. 2019, 30, 4769–4780.

12. Ito, K.; Xiong, K. Gaussian filters for nonlinear filtering problems. IEEE Trans. Autom. Control 2000, 45, 910–927.

13. Saravanan, G.; Yamuna, G.; Nandhini, S. Real time implementation of RGB to HSV/HSI/HSL and its reverse color space models. In Proceedings of the 2016 International Conference on Communication and Signal Processing (ICCSP), Melmaruvathur, India, 6–8 April 2016; pp. 0462–0466.

14. Zeng, Y.; Pan, M.; Just, H.A.; Lyu, L.; Qiu, M.; Jia, R. Narcissus: A practical clean-label backdoor attack with limited information. In Proceedings of the 2023 ACM SIGSAC Conference on Computer and Communications Security, Copenhagen, Denmark, 26–30 November 2023; pp. 771–785.

15. Chen, W.; Xu, X.; Wang, X.; Zhou, H.; Li, Z.; Chen, Y. Invisible backdoor attack with attention and steganography. Comput. Vis. Image Underst. 2024, 249, 104208.

16. Li, Y.; Li, Y.; Wu, B.; Li, L.; He, R.; Lyu, S. Invisible backdoor attack with sample-specific triggers. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 16463–16472.

17. Jiang, W.; Li, H.; Xu, G.; Zhang, T. Color backdoor: A robust poisoning attack in color space. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 8133–8142.

18. Zhang, J.; Chen, D.; Huang, Q.; Liao, J.; Zhang, W.; Feng, H.; Hua, G.; Yu, N. Poison ink: Robust and invisible backdoor attack. IEEE Trans. Image Process. 2022, 31, 5691–5705.

19. Yin, J.Y.; Chen, H.L.; Li, J.J.; Gao, Y.D. Enhanced Coalescence Backdoor Attack Against DNN Based on Pixel Gradient. Neural Process. Lett. 2024, 56, 114.

20. Li, F.; Huang, M.; You, W.; Zhu, L.; Cheng, H.; Yang, R. Spatialspectral-Backdoor: Realizing backdoor attack for deep neural networks in brain–computer interface via EEG characteristics. Neurocomputing 2025, 616, 128902.

21. Zhai, Y.; Yang, L.; Yang, J.; He, L.; Li, Z. BadDGA: Backdoor attack on LSTM-based domain generation algorithm detector. Electronics 2023, 12, 736.

22. Tang, W.; Li, J.; Rao, Y.; Peng, F. A trigger-perceivable backdoor attack framework driven by image steganography. Pattern Recognit. 2025, 161, 111262.

23. Liu, J.; Peng, C.; Tan, W.; Shi, C. Federated Learning Backdoor Attack Based on Frequency Domain Injection. Entropy 2024, 26, 164.

24. Ma, Q.; Qin, J.; Yan, K.; Sun, H. Stealthy frequency-domain backdoor attacks: Fourier decomposition and fundamental frequency injection. IEEE Signal Process. Lett. 2023, 30, 1677–1681.

25. Li, G.; Chang, R.; Wang, Y.; Wang, C. Dual-domain based backdoor attack against federated learning. Neurocomputing 2025, 623, 129424.

26. Feng, Y.; Ma, B.; Zhang, J.; Zhao, S.; Xia, Y.; Tao, D. FIBA: Frequency-injection based backdoor attack in medical image analysis. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 20876–20885.

27. Huang, K.; Li, Y.; Wu, B.; Qin, Z.; Ren, K. Backdoor defense via decoupling the training process. arXiv 2022, arXiv:2202.03423.

28. Wang, B.; Yao, Y.; Shan, S.; Li, H.; Viswanath, B.; Zheng, H.; Zhao, B.Y. Neural cleanse: Identifying and mitigating backdoor attacks in neural networks. In Proceedings of the 2019 IEEE Symposium on Security and Privacy (SP), San Francisco, CA, USA, 19–23 May 2019; pp. 707–723.

29. Chen, W.; Wu, B.; Wang, H. Effective backdoor defense by exploiting sensitivity of poisoned samples. Adv. Neural Inf. Process. Syst. 2022, 35, 9727–9737.

30. Li, Y.; Lyu, X.; Ma, X.; Koren, N.; Lyu, L.; Li, B.; Jiang, Y.G. Reconstructive neuron pruning for backdoor defense. In Proceedings of the 40th International Conference on Machine Learning, Honolulu, HI, USA, 23–29 July 2023; pp. 19837–19854.

31. Yang, Z.; Xu, B.; Zhang, J.M.; Kang, H.J.; Shi, J.; He, J.; Lo, D. Stealthy backdoor attack for code models. IEEE Trans. Softw. Eng. 2024, 50, 721–741.

32. Yin, J.L.; Wang, W.; Lin, W.; Liu, X. Adversarial-Inspired Backdoor Defense via Bridging Backdoor and Adversarial Attacks. In Proceedings of the AAAI Conference on Artificial Intelligence, Philadelphia, PA, USA, 25 February–4 March 2025; Volume 39, pp. 9508–9516.

33. Sharma, Y.; Ding, G.W.; Brubaker, M. On the effectiveness of low frequency perturbations. arXiv 2019, arXiv:1903.00073.

34. Feng, Y.; Ma, B.; Liu, D.; Zhang, Y.; Cai, W.; Xia, Y. Contrastive Neuron Pruning for Backdoor Defense. IEEE Trans. Image Process. 2025, 34, 1234–1245.

35. Heil, C.E.; Walnut, D.F. Continuous and discrete wavelet transforms. SIAM Rev. 1989, 31, 628–666.

36. Durak, L.; Arikan, O. Short-time Fourier transform: Two fundamental properties and an optimal implementation. IEEE Trans. Signal Process. 2003, 51, 1231–1242.

37. Krizhevsky, A.; Hinton, G. Learning Multiple Layers of Features from Tiny Images; Technical report; Toronto University: Toronto, ON, Canada, 2009.

38. Stallkamp, J.; Schlipsing, M.; Salmen, J.; Igel, C. The German traffic sign recognition benchmark: A multi-class classification competition. In Proceedings of the 2011 International Joint Conference on Neural Networks, San Jose, CA, USA, 31 July–5 August 2011; pp. 1453–1460.

39. Selvaraju, R.R.; Cogswell, M.; Das, A.; Vedantam, R.; Parikh, D.; Batra, D. Grad-CAM: Visual explanations from deep networks via gradient-based localization. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 618–626.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).