Abstract

In recent years, there has been increasing interest in progressive censoring as a means of reducing both the cost and the duration of life-testing experiments. In the absence of explanatory variables, this study develops statistical inference for the inverse Weibull distribution under a joint progressive type II censoring scheme with two independent samples. Maximum likelihood estimates are derived, and approximate confidence intervals are obtained from the Fisher information matrix. Interval estimates are also computed by the bootstrap method. Bayesian estimates of the model parameters are obtained under both the squared error and LINEX loss functions, and these estimates and the corresponding credible intervals are calculated via Markov chain Monte Carlo (MCMC). Finally, simulation studies and real data analyses are carried out to assess the precision of the proposed estimation methods.

1. Introduction

The modeling and analysis of lifetimes play a crucial role in many scientific and technological fields. In practice, observing a complete sample is often impractical because improved product reliability leads to longer lifetimes (see [1]). To save time and reduce costs, various censoring schemes have been proposed in reliability and survival analysis, of which type II censoring is among the most commonly applied. In industrial settings, however, products are often manufactured on several production lines, which calls for comparative lifetime analyses. When the reliability of two populations produced by different lines is evaluated, joint censoring schemes are more appropriate. Balakrishnan et al. [2] introduced a joint type II censoring scheme and provided a framework for statistical inference on two exponential distributions.

Unlike conventional type II censoring, in which units are withdrawn only when the experiment ends, progressive type II censoring, introduced in [3], removes units at each failure. This strategy saves time by withdrawing units that would otherwise have excessively long lifetimes, so that parameters can be estimated without observing every unit until it fails. For a detailed review of work on progressive censoring, see [4].

Relying solely on censoring methods for a single population poses several limitations. While progressive type II censoring removes some data, obtaining sufficient observations remains costly. Moreover, experiments based on one population cannot capture the interaction between different groups. To overcome these drawbacks, Rasouli and Balakrishnan [5] proposed the joint progressive type II censoring (JPC) scheme. This method records failures from two distinct populations, reducing the time needed to collect comparable data and enabling direct comparison of failure times under identical conditions.

Within this framework, two independent samples of sizes m and n are drawn, one from each production line, and both undergo simultaneous life-testing experiments. The scheme is particularly efficient because the life test ends once a specified number of failures has been observed.

Several studies have investigated JPC and its related inference methods. Balakrishnan et al. [6] proposed likelihood-based inference for k exponential distributions under JPC, while Doostparast et al. [7] examined Bayesian estimation using a linear exponential loss function with JPC data. Mondal and Kundu [8] focused on point and interval estimation for Weibull parameters under JPC. Goel and Krishna [9] studied likelihood and Bayesian inference for k Lindley populations under joint type II censoring, with Krishna and Goel [10] extending this framework to JPC. Hassan et al. [11] addressed inference for the Burr type III distribution under JPC, while Kumar and Kumari [12] explored likelihood and Bayesian estimation for two inverse Pareto populations. More recently, Hasaballah et al. [13] analyzed reliability for two Nadarajah–Haghighi populations, and Abo-Kasem et al. [14] investigated inference for two Gompertz populations under similar censoring schemes.

The two-parameter inverse Weibull distribution (IWD) was first proposed by Keller and Kamath [15] to model the degradation of diesel engine components. Since its introduction, the IWD has gained widespread recognition as a suitable model for lifetime data analysis. Langlands et al. [16] demonstrated its effectiveness in modeling breast cancer mortality data. Abhijit and Anindya [17] showed that the IWD outperforms the normal distribution in analyzing ultrasonic pulse velocity data on concrete structures. Elio et al. [18] introduced a mixed IWD model to address extreme wind speed scenarios.

The IWD is widely used in reliability research. Azm et al. [19] explored the estimation of unknown IWD parameters under competing risks using an adaptive type I progressive hybrid censoring scheme. They employed both maximum likelihood and Bayesian estimation methods, deriving asymptotic and bootstrap confidence intervals as well as highest posterior density credible intervals, and validated their approach with two real datasets. Bi and Gui [20] investigated stress–strength reliability estimation based on the IWD. They proposed an approximate maximum likelihood approach for point estimation and confidence interval construction, alongside Bayesian estimation and highest posterior density intervals derived via Gibbs sampling.

In another study, Alslman and Helu [21] estimated the stress–strength reliability for the IWD under adaptive type II progressive hybrid censoring. Shawky and Khan [22] employed maximum likelihood estimation for a multi-component IWD stress–strength model, confirming its effectiveness via Monte Carlo simulations. Ren et al. [23] demonstrated that Bayesian estimation outperforms other methods for the IWD under progressive type II censoring. However, statistical inference for the IWD under the JPC model has not yet been studied, and this paper addresses that gap.

This study applies the JPC scheme to estimate two independent IWD samples in scenarios where no explanatory variables are available. Point and interval estimators are constructed using maximum likelihood estimation (MLE) and Bayesian methods. Asymptotic confidence intervals (ACIs) are derived from the observed information matrix, while Bootstrap-P (Boot-P) and Bootstrap-T (Boot-T) methods yield bootstrap confidence intervals (CIs). Gamma priors are assumed for both shape and scale parameters, with Bayes estimates computed via the Metropolis–Hastings (M-H) algorithm under squared error (SE) and linear exponential (LINEX) loss functions. Monte Carlo simulations and a real-data analysis are conducted to assess the performance of these estimators.

The structure of the paper is as follows. Section 2 derives the maximum likelihood estimators for the IWD parameters. Section 3 discusses approximate confidence intervals based on these estimators, while Section 4 focuses on bootstrap-based confidence intervals. The Bayesian analysis is presented in Section 5, followed by the simulation results in Section 6. Section 7 applies the proposed methods to real datasets and Section 8 concludes the paper.

2. The Joint Progressive Type II Censor Scheme and Maximum Likelihood Estimation

2.1. Model Description

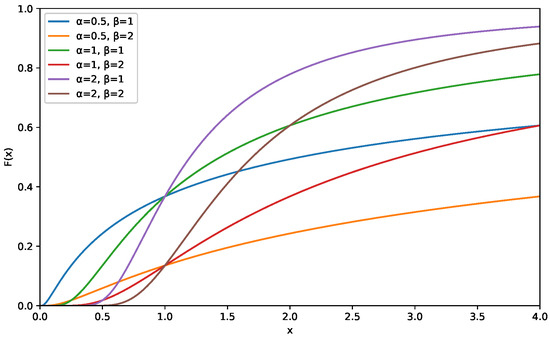

We begin by introducing the IWD, which is frequently employed to model lifetime data. The cumulative distribution function (CDF) of the IWD is given by

Figure 1 illustrates this CDF.

Figure 1.

CDF of the inverse Weibull distribution.

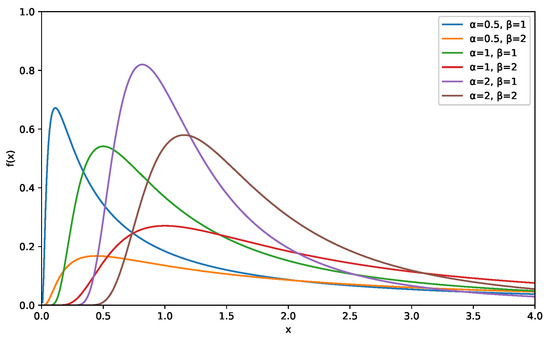

The associated probability density function (PDF) is

as shown in Figure 2, and it can be observed that the IWD exhibits a right-skewed, unimodal PDF with a heavy right tail, making it suitable for modeling extreme events and late-life failures.

Figure 2.

PDF of the inverse Weibull distribution.
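As a minimal illustrative sketch, the following Python functions evaluate the CDF, PDF, and quantile function of an IWD. The parametrization F(x) = exp(-scale * x^(-shape)) for x > 0 is an assumption made here for illustration (it is one common form of the IWD and may differ from the form used in this paper), and the function names `iw_cdf`, `iw_pdf`, and `iw_quantile` are hypothetical.

```python
import math


def iw_cdf(x, shape, scale):
    """Assumed IWD CDF: F(x) = exp(-scale * x**(-shape)) for x > 0."""
    if x <= 0:
        return 0.0
    return math.exp(-scale * x ** (-shape))


def iw_pdf(x, shape, scale):
    """Derivative of the assumed CDF with respect to x."""
    if x <= 0:
        return 0.0
    return shape * scale * x ** (-(shape + 1)) * math.exp(-scale * x ** (-shape))


def iw_quantile(u, shape, scale):
    """Inverse CDF for 0 < u < 1, obtained by solving F(x) = u for x."""
    return (-math.log(u) / scale) ** (-1.0 / shape)
```

Under this assumed form, the quantile function gives a direct way to simulate lifetimes by inverse-transform sampling, that is, by applying `iw_quantile` to a uniform random number in (0, 1).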

This paper focuses on inference of the two-sample IWD under the JPC model. Following the idea of [24], for product A, let denote the lifetimes of m units. These are assumed to be independent and identically distributed (i.i.d.) according to the IWD with a shape parameter and scale parameter . Similarly, for product B, let be the lifetimes of n units, which are also i.i.d. following the IWD with a shape parameter and the same scale parameter .

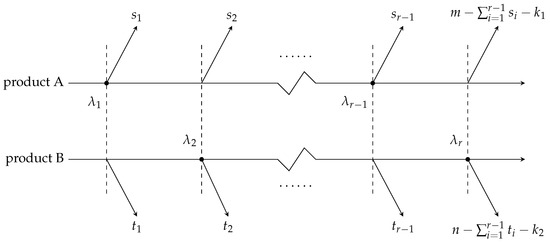

Let be the total sample size, with representing the order statistics of the random variables . In the JPC method, after the first failure, units are randomly removed from the remaining units. At the second failure, units are randomly withdrawn from the remaining surviving units, and so forth. The process continues until exactly r failures have been observed, which matches the termination criterion of type II censoring. At the r-th failure, all remaining units are withdrawn, and their number is given by . The scheme is denoted by , where r is the predetermined number of observed failures.

Let for , where and denote the number of units withdrawn at the i-th failure for the X and Y samples, respectively. Both and are random variables with unknown values. The observed data consist of , where indicates the source of failure, with if corresponds to an X failure and if it corresponds to a Y failure. The vector contains the failure times, and represents the number of units withdrawn from the X sample at each failure time. A concise overview of this type II censoring method is provided in Figure 3.

Figure 3.

JPC scheme.

2.2. Maximum Likelihood Estimation

The likelihood function can be expressed based on the joint progressive censoring scheme as follows:

where , , , and with

Therefore, the likelihood function becomes

By taking the logarithm of both sides of Function (1), we obtain the following log-likelihood function:

To obtain the MLEs of , we take the first derivative of the log-likelihood Function (2) with respect to each parameter as follows:

Remark 1.

MLEs are computed only for cases where as this ensures that the likelihood function contains useful information for parameter estimation.

These likelihood equations have no closed-form solution, so the parameter estimates are obtained numerically, for example by Newton–Raphson iteration.
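A numerical optimizer needs the log-likelihood as a function it can evaluate. The sketch below writes it in the generic JPC form, in which each observed failure contributes a log-density term and each withdrawn unit contributes a log-survival term, up to an additive constant that does not involve the parameters. It is only an illustration: the IWD parametrization F(x) = exp(-scale * x^(-shape)) is an assumption, each population is given its own (shape, scale) pair so that the caller can tie whichever parameter the two populations share, and all function and argument names are hypothetical.

```python
import math


def iw_logpdf(x, shape, scale):
    """Log-density of the assumed IWD form F(x) = exp(-scale * x**(-shape))."""
    return (math.log(shape) + math.log(scale)
            - (shape + 1) * math.log(x) - scale * x ** (-shape))


def iw_logsf(x, shape, scale):
    """Log-survival log(1 - F(x)), computed with expm1 for numerical stability."""
    return math.log(-math.expm1(-scale * x ** (-shape)))


def jpc_loglik(w, z, s, t, par_x, par_y):
    """JPC log-likelihood up to an additive constant.

    w: ordered failure times; z[i] = 1 for an X failure, 0 for a Y failure;
    s[i], t[i]: numbers of X and Y units withdrawn at the i-th failure;
    par_x, par_y: (shape, scale) tuples for the two populations.
    """
    total = 0.0
    for wi, zi, si, ti in zip(w, z, s, t):
        total += iw_logpdf(wi, *par_x) if zi == 1 else iw_logpdf(wi, *par_y)
        if si:
            total += si * iw_logsf(wi, *par_x)
        if ti:
            total += ti * iw_logsf(wi, *par_y)
    return total
```

Maximizing `jpc_loglik` over the free parameters with any general-purpose routine (Newton–Raphson on the score equations, or a derivative-free method) would then give numerical MLEs under these assumptions.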

3. Approximate Confidence Interval

Proposition 1.

The asymptotic normality of the MLEs allows us to compute the ACIs for as follows:

Here, is the percentile of the standard normal distribution corresponding to a right-tail probability of .

Proof.

The asymptotic distribution of the MLE is utilized to derive confidence intervals for the unknown parameters , , and . The second partial derivatives of the log-likelihood Function (2) are expressed as follows:

Taking the expectation of the negative second derivatives in Equations (6)–(11) gives the Fisher information matrix (FIM), defined as , where and . The observed information matrix is obtained by replacing each expected value, such as , with . Therefore, the observed Fisher information is given by the following:

The asymptotic variance–covariance matrix of the MLEs is then obtained by inverting the observed Fisher information matrix, as shown below:

□
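To make the final step concrete, the sketch below computes a normal-approximation interval from a point estimate and its asymptotic variance (the corresponding diagonal entry of the inverted observed information matrix). The function name `asymptotic_ci` and its arguments are hypothetical, and the numerical values in the usage note are purely illustrative.

```python
import math
from statistics import NormalDist


def asymptotic_ci(estimate, variance, level=0.95):
    """Two-sided normal-approximation CI: estimate +/- z * sqrt(variance)."""
    # z is the standard normal quantile with right-tail probability (1 - level) / 2
    z = NormalDist().inv_cdf(1.0 - (1.0 - level) / 2.0)
    half_width = z * math.sqrt(variance)
    return estimate - half_width, estimate + half_width
```

For example, `asymptotic_ci(2.0, 0.04)` returns an interval centered at 2.0 whose half-width is the 97.5% standard normal quantile times 0.2. Nothing in this construction prevents the lower limit of a positive parameter from falling below zero.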

4. Bootstrap Confidence Intervals

Bootstrap confidence intervals are an effective technique for measuring uncertainty in statistical analysis, especially when the underlying data distribution is complex or unknown. The method works by repeatedly resampling the observed data with replacement to create several “bootstrap samples”, each of which yields a new estimate of the parameter of interest. By studying the spread and distribution of these estimates, bootstrap confidence intervals provide a range that likely contains the true value of the parameter. A key strength of the bootstrap method is its flexibility, as it does not rely on assumptions about the specific form of the data distribution. This makes it particularly useful in real-world applications, where data may not follow standard parametric distributions.

We present bootstrap methods [25,26], which are particularly effective for small sample sizes. The steps outlined in Algorithms 1 and 2 are used to construct the Boot-P and Boot-T CI for , and .

Algorithm 1. Generation process of Boot-P CIs.

Algorithm 2. Generation process of Boot-T CIs.
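The two interval types can be sketched as follows, given bootstrap replicates that have already been computed. This uses the textbook percentile and studentized (bootstrap-t) constructions, which may differ in detail from Algorithms 1 and 2; the order-statistic quantile convention and all function names are assumptions made for illustration.

```python
import math


def empirical_quantile(sorted_vals, p):
    """Return the ceil(p * B)-th smallest value (1-based) of B sorted values."""
    b = len(sorted_vals)
    # round() guards against floating-point noise such as 94.99999999999999
    k = min(max(math.ceil(round(p * b, 9)), 1), b)
    return sorted_vals[k - 1]


def boot_p_ci(boot_estimates, level=0.95):
    """Percentile interval: empirical quantiles of the bootstrap estimates."""
    vals = sorted(boot_estimates)
    g = 1.0 - level
    return empirical_quantile(vals, g / 2), empirical_quantile(vals, 1 - g / 2)


def boot_t_ci(estimate, se, boot_estimates, boot_ses, level=0.95):
    """Studentized interval built from T* = (estimate* - estimate) / se*."""
    t_stats = sorted((b - estimate) / s for b, s in zip(boot_estimates, boot_ses))
    g = 1.0 - level
    t_lo = empirical_quantile(t_stats, g / 2)
    t_hi = empirical_quantile(t_stats, 1 - g / 2)
    return estimate - t_hi * se, estimate - t_lo * se
```

Here `boot_estimates` would hold the estimates recomputed on each bootstrap sample, and `boot_ses` their standard errors (for instance, from the inverted observed information matrix of each bootstrap sample).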

5. Bayesian Inference

5.1. Prior and Posterior Distribution

We assume that the parameters , , and of the IWD follow independent gamma priors with hyperparameters ; , and , respectively. Therefore, their joint prior distribution is expressed as

The joint posterior distribution can be formulated as

where represents the likelihood function, indicates the source of failure, with if corresponds to an X failure and if it corresponds to a Y failure. The vector contains the failure times, and represents the number of units withdrawn from the X sample at each failure time.

Moreover, using the joint prior distribution and the likelihood function (1), the joint posterior distribution is given by

5.2. Loss Functions

In this subsection, we emphasize the significance of selecting appropriate loss functions for parameter estimation within Bayesian inference. Specifically, we consider two types of loss functions: the SE loss function and the asymmetric LINEX loss function.

The SE loss function is represented by

In this equation, , is any function of , and represents the SE estimate of . Let denote the Bayesian estimate of under the SE function, which is given by

The SE loss function is widely used in the literature and is one of the most common loss functions. It is symmetric, treating overestimation and underestimation of parameters equally. However, in life-testing situations, one type of estimation error may have more severe consequences. To address this, we use the LINEX loss function, defined as follows:

Here, is as previously defined, and represents the LINEX estimate of .

The shape parameter a controls the direction and degree of asymmetry in the loss function. When , overestimation is penalized more heavily than underestimation, whereas the reverse is true when . For values of a near zero, the LINEX loss function becomes similar to the SE loss function. When , the function is highly asymmetric, with overestimation costing more than underestimation. If , the loss increases almost exponentially for and decreases nearly linearly for .

The Bayes estimate is obtained to be

These estimates have no closed-form expressions. The Metropolis–Hastings algorithm is therefore used to approximate and , as well as to construct the associated credible intervals.
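Once posterior draws are available, both estimates reduce to simple averages. The sketch below computes the posterior mean (the Bayes estimate under SE loss) and the standard LINEX form, -(1/a) log E[exp(-a θ)], from a list of draws after discarding a burn-in period. The function names and the `burn_in` argument are hypothetical.

```python
import math


def bayes_se(draws, burn_in=0):
    """Bayes estimate under squared error loss: mean of the retained draws."""
    kept = draws[burn_in:]
    return sum(kept) / len(kept)


def bayes_linex(draws, a, burn_in=0):
    """Bayes estimate under LINEX loss: -(1/a) * log(mean(exp(-a * theta)))."""
    kept = draws[burn_in:]
    mean_exp = sum(math.exp(-a * d) for d in kept) / len(kept)
    return -math.log(mean_exp) / a
```

By Jensen's inequality, the LINEX estimate never exceeds the posterior mean when a > 0 and is never below it when a < 0, which reflects the heavier penalty on overestimation or underestimation, respectively.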

5.3. Metropolis–Hastings Algorithm

Proposition 2.

The Bayes estimates based on the SE loss function are as follows:

and the Bayes estimates based on the LINEX loss function are

where N denotes the total MCMC sample size and is the number of burn-in iterative values.

Proof.

From Function (13), the conditional posterior density function of can be obtained as the following proportionality:

It is crucial to highlight that the conditional posterior distributions for , , and , as given in Functions (14)–(16), do not reduce to any familiar standard forms. This complexity makes direct sampling using conventional methods rather challenging. However, these distributions appear to resemble the normal distribution.

The proposal distribution for is taken to be multivariate normal, and the MCMC sample is generated using the Metropolis–Hastings method outlined in Algorithm 3. □

Sort in ascending order to obtain . The credible interval of the unknown parameter is then constructed as

Among all the possible intervals with the length , the shortest one is the highest posterior density credible interval.
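The search for the shortest interval can be sketched as follows. The code sorts the retained draws, slides a window that spans a fixed number of order statistics, and keeps the narrowest window. The choice of floor(level × n) steps for the window and the function name `hpd_interval` are assumptions made for illustration.

```python
import math


def hpd_interval(draws, level=0.95):
    """Shortest interval (theta_(j), theta_(j + k)) over sorted draws."""
    vals = sorted(draws)
    n = len(vals)
    # k = number of order-statistic steps spanned; round() guards float noise
    k = math.floor(round(level * n, 9))
    if k < 1 or k >= n:
        raise ValueError("need 1 <= floor(level * n) < n")
    best = min(range(n - k), key=lambda j: vals[j + k] - vals[j])
    return vals[best], vals[best + k]
```

For a skewed posterior this interval is typically shifted toward the mode relative to the equal-tailed interval with the same level.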

Algorithm 3. Generating samples following the posterior distribution.
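A generic random-walk Metropolis–Hastings sampler of this kind can be sketched as follows. This is not a reproduction of Algorithm 3: the proposal here uses independent normal increments (a multivariate normal with diagonal covariance), the target is supplied by the caller as a log-posterior function, and the names `metropolis_hastings` and `log_gamma_target` are hypothetical. The gamma target is only a stand-in that makes the example runnable.

```python
import math
import random


def metropolis_hastings(log_post, start, step, n_iter, seed=0):
    """Random-walk M-H with a symmetric normal proposal; returns all states."""
    rng = random.Random(seed)
    current = list(start)
    current_lp = log_post(current)
    if not math.isfinite(current_lp):
        raise ValueError("start must have a finite log-posterior")
    chain = []
    for _ in range(n_iter):
        proposal = [c + rng.gauss(0.0, s) for c, s in zip(current, step)]
        proposal_lp = log_post(proposal)
        # Symmetric proposal: the acceptance ratio is the posterior ratio.
        log_u = math.log(1.0 - rng.random())  # 1 - U lies in (0, 1]
        if log_u < proposal_lp - current_lp:
            current, current_lp = proposal, proposal_lp
        chain.append(list(current))
    return chain


def log_gamma_target(theta):
    """Stand-in target: Gamma(shape=3, rate=2) log-density up to a constant."""
    x = theta[0]
    if x <= 0:
        return float("-inf")  # proposals outside the support are rejected
    return 2.0 * math.log(x) - 2.0 * x


chain = metropolis_hastings(log_gamma_target, [1.0], [1.0], 20000, seed=1)
kept = [state[0] for state in chain[2000:]]  # discard burn-in draws
```

In the setting of this paper, `log_post` would be the sum of the log-likelihood and the log of the gamma priors, returning negative infinity whenever any parameter is non-positive.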

6. Simulation Study

In this section, we assess the performance of the methods by simulating parameter estimates across different JPC schemes. The simulation follows a structured approach, including data generation, parameter estimation, and result evaluation. We reference the approach outlined in [27].

First, we drew samples from two populations, namely, population A and population B, which follow the distributions and , respectively. The true values of the parameters are set as , and . For the sample sizes , we select the combinations , , , , and , while the numbers of failures r are chosen as 20, 30, 40, 50, and 60. The procedure for generating JPC data is described in Algorithm 4.

Algorithm 4. The algorithm to generate samples under the JPC scheme.
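The data-generating mechanism can be sketched directly from the description of the JPC scheme: simulate both samples, record the smallest surviving lifetime and its source at each stage, then withdraw the prescribed number of survivors at random. The code below is an illustration rather than a transcription of Algorithm 4; the IWD parametrization F(x) = exp(-scale * x^(-shape)), the function names, and the parameter values in the usage note are all assumptions.

```python
import math
import random


def iw_sample(rng, shape, scale):
    """Inverse-transform draw from the assumed IWD form."""
    u = rng.random()
    while u == 0.0:  # avoid log(0); random() returns values in [0, 1)
        u = rng.random()
    return (-math.log(u) / scale) ** (-1.0 / shape)


def generate_jpc(m, n, par_x, par_y, removals, seed=0):
    """Return (w, z, s, t) for removal counts (R_1, ..., R_r)."""
    r = len(removals)
    if min(removals) < 0 or sum(removals) + r != m + n:
        raise ValueError("need R_i >= 0 and sum(R) + r == m + n")
    rng = random.Random(seed)
    # Each unit is (lifetime, label), with label 1 for X and 0 for Y.
    units = [(iw_sample(rng, *par_x), 1) for _ in range(m)]
    units += [(iw_sample(rng, *par_y), 0) for _ in range(n)]
    w, z, s, t = [], [], [], []
    for r_i in removals:
        units.sort()
        time, label = units.pop(0)  # next failure among surviving units
        w.append(time)
        z.append(label)
        withdrawn = set(rng.sample(range(len(units)), r_i))
        s_i = sum(units[j][1] for j in withdrawn)  # withdrawn X units
        s.append(s_i)
        t.append(r_i - s_i)
        units = [u for j, u in enumerate(units) if j not in withdrawn]
    return w, z, s, t
```

For instance, `generate_jpc(20, 20, (2.0, 1.0), (2.0, 1.5), [2] * 9 + [12])` produces r = 10 ordered failure times together with their source indicators and the numbers of X and Y units withdrawn at each failure.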

Once the censored data are obtained, the MLEs of , and are calculated using Function (1), and their mean squared errors (MSEs) are reported. The bootstrap method is also used to compute CIs for the parameters. The experiment was carried out in R using RStudio version 2024.12.0+467 on Windows 10 (64-bit); the computer was equipped with an AMD Ryzen 7 5800H with Radeon Graphics and an NVIDIA GeForce GTX 1650 graphics card.

Here, means . For interval estimates, we construct ACIs, Boot-T CIs, and Boot-P CIs. Then, based on 1000 repetitions, we calculate the average lengths (ALs) and coverage probabilities (CPs) of these intervals. Tables 1 and 2 show that the average estimates (AMLEs) of and exhibit bias for small sample sizes . As the sample size increases, the estimates converge to the true values. Table 3 indicates that the MSEs of are smaller than those of and in most cases. The ALs of the ACIs are similar to those of the two bootstrap CIs. Since the coverage probability of the bootstrap intervals consistently reaches 100%, these results are not included in the tables.
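The summary measures reported in the tables can be computed from the replications as in the following sketch, where the function name `summarize` and the dictionary keys are hypothetical. It takes the point estimates and interval limits from all repetitions together with the true parameter value.

```python
def summarize(estimates, intervals, true_value):
    """Average estimate, MSE, average interval length, and coverage probability."""
    n = len(estimates)
    average = sum(estimates) / n
    mse = sum((e - true_value) ** 2 for e in estimates) / n
    k = len(intervals)
    avg_length = sum(hi - lo for lo, hi in intervals) / k
    # Coverage: share of intervals that contain the true value
    coverage = sum(1 for lo, hi in intervals if lo <= true_value <= hi) / k
    return {"average": average, "mse": mse, "al": avg_length, "cp": coverage}
```

With 1000 repetitions, a CP of 95% corresponds to 950 of the 1000 intervals containing the true value.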

Table 1.

AMLEs, MSEs, ACIs, Boot-T CIs, and Boot-P CIs of .

Table 2.

AMLEs, MSEs, ACIs, Boot-T CIs, and Boot-P CIs of .

Table 3.

AMLEs, MSEs, ACIs, Boot-T CIs, and Boot-P CIs of .

Under the Bayesian framework, we compute both the parameter estimates and their corresponding MSEs using the SE and LINEX loss functions, employing either gamma informative priors (IPs) or non-informative priors (NIPs).

To compare the performance of these Bayesian estimates, the hyperparameters for the IPs are set as . We select these hyperparameters to ensure that the prior expectations of the two populations align closely with the true values. For NIPs, we assign .

Tables 4 and 5 present the average Bayesian estimates (ABEs), MSEs, and ALs of the 95% credible intervals for under both IPs and NIPs. The corresponding results for and are provided in Tables A1–A4 in Appendix A. In particular, the MSEs for remain consistently the lowest among the unknown parameters. Moreover, the Bayesian estimates under IPs tend to slightly underestimate the parameters, while those under NIPs tend to slightly overestimate them. For the LINEX loss function with , the credible intervals have the shortest average lengths, although the ALs of the credible intervals are quite similar across all three loss settings. Furthermore, under both IPs and NIPs, the CPs for are consistently close to the nominal level of 95%, indicating reliable interval estimation for this parameter. However, the CPs for and remain around 80%, suggesting that the current approach may not fully capture the uncertainty associated with these parameters. Future research should explore methods that improve these CPs and yield more reliable interval estimation.

Table 4.

Bayesian estimations of parameter supposing informative priors .

Table 5.

Bayesian estimations of parameter supposing non-informative priors .

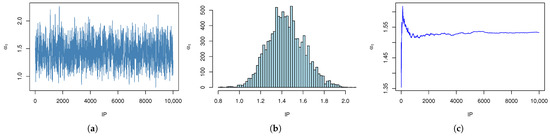

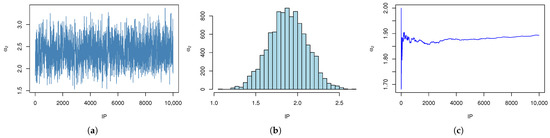

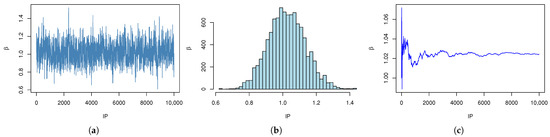

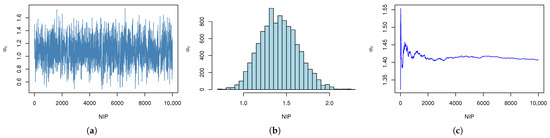

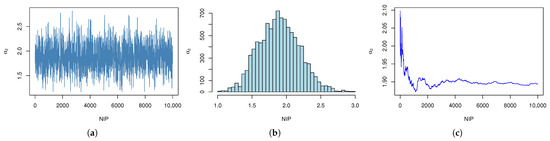

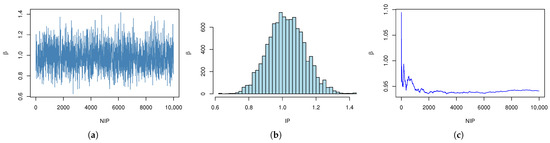

Trace plots, histograms, and cumulative mean plots of the parameters produced by the MCMC method under gamma IPs and NIPs are shown in Figures 4–9 (taking , as an example). These plots indicate good convergence behavior, as evidenced by the stable cumulative means and well-mixed chains.

Figure 4.

Simulation of with IPs. (a) The trace plot of MCMC samples of with IPs. (b) Histogram of with IPs. (c) Cumulative mean plots of with IPs.

Figure 5.

Simulation of with IPs. (a) The trace plot of MCMC samples of with IPs. (b) Histogram of with IPs. (c) Cumulative mean plots of with IPs.

Figure 6.

Simulation of with IPs. (a) The trace plot of MCMC samples of with IPs. (b) Histogram of with IPs. (c) Cumulative mean plots of with IPs.

Figure 7.

Simulation of with NIPs. (a) The trace plot of MCMC samples of with NIPs. (b) Histogram of with NIPs. (c) Cumulative mean plots of with NIPs.

Figure 8.

Simulation of with NIPs. (a) The trace plot of MCMC samples of with NIPs. (b) Histogram of with NIPs. (c) Cumulative mean plots of with NIPs.

Figure 9.

Simulation of with NIPs. (a) The trace plot of MCMC samples of with NIPs. (b) Histogram of with NIPs. (c) Cumulative mean plots of with NIPs.

7. Real-Data Analysis

7.1. Example 1

The following section presents an analysis of real data obtained from Balakrishnan et al. [28], which documents the breakdown time of electrical current under varying voltage levels. A detailed statistical examination of these data was later conducted by Ding and Gui [29]. For clearer demonstration, this study focuses on breakdown times recorded at voltage levels of 32 kV and 34 kV. The corresponding data are summarized in Table 6 for reference.

Table 6.

Breakdown times at voltages of 32 and 34 kV.

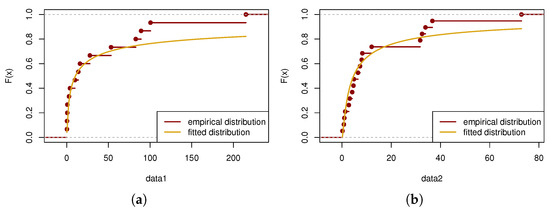

The goodness of fit of the samples to the IWD is assessed using the Kolmogorov–Smirnov (K-S) test. For the first sample, the test yields a p-value of 0.2391, while the second sample produces a p-value of 0.5941. The test results are visually illustrated in Figure 10.

Figure 10.

Fit between the fitted distribution and the empirical distribution for example 1. (a) Fit of dataset 1. (b) Fit of dataset 2.
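The K-S statistic underlying such a test is the largest gap between the empirical CDF and the fitted CDF. A minimal sketch of its computation is given below; the IWD parametrization F(x) = exp(-scale * x^(-shape)) and the function names are assumptions, and the p-values reported above are not reproduced here because they depend on the fitted parameters and on the reference distribution used for the statistic.

```python
import math


def iw_cdf(x, shape, scale):
    """Assumed IWD CDF: F(x) = exp(-scale * x**(-shape)) for x > 0."""
    if x <= 0:
        return 0.0
    return math.exp(-scale * x ** (-shape))


def ks_statistic(data, cdf):
    """Kolmogorov-Smirnov distance between the empirical CDF and cdf."""
    xs = sorted(data)
    n = len(xs)
    d = 0.0
    for i, x in enumerate(xs, start=1):
        f = cdf(x)
        # Compare the fitted CDF with the empirical CDF just after and
        # just before the jump at x.
        d = max(d, i / n - f, f - (i - 1) / n)
    return d
```

A fitted model would be passed in as, for example, `ks_statistic(sample, lambda x: iw_cdf(x, shape_hat, scale_hat))`, where `shape_hat` and `scale_hat` denote the estimated parameters.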

Using the datasets above, we generated the JPC sample based on the censoring scheme. Suppose that the first sample has a size of and for the second sample. By implementing JPC, where represents the total sample size, and setting , with ,, and , we proceed with the analysis.

The estimation results under different censoring schemes are presented in Table A5. The MLEs, MSEs, and AL for , , and demonstrate variations across the censoring schemes, with noticeable differences in the estimated values and corresponding MSEs. In particular, the estimates of and show sensitivity to the censoring structure, while remains relatively stable.

Tables A6 and A7 in Appendix A further examine the influence of prior settings on parameter estimation, comparing the IP and NIP approaches. The hyperparameters for IPs are chosen based on the MLE results, so that the prior means approximate the MLEs. Table A6 presents the results under IPs with , , and . In contrast, Table A7 reports the results using NIPs with .

7.2. Example 2

The two datasets, each consisting of 30 observations , pertain to the breaking strength of jute fiber glass laminate (JFGL) with diameters of 10 mm and 20 mm. The measurements are given in units of fiber strength (MPa). These data are from [30], and are presented in Table 7.

Table 7.

Breaking strength of JFGL with 10 mm and 20 mm.

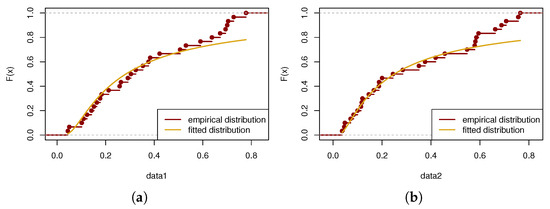

A rescaling factor of 1000 is applied to the data to prevent the estimates from becoming excessively large or small. The K-S test is also employed to evaluate the fit of the samples to the IWD. The resulting p-values are 0.6781 for the first sample and 0.8081 for the second. A visual representation of the test outcomes is provided in Figure 11.

Figure 11.

Fit between the fitted distribution and the empirical distribution for example 2. (a) Fit of dataset 1. (b) Fit of dataset 2.

Based on the datasets described above, JPC samples are constructed under a specified censoring scheme. The analysis is conducted using different censoring settings: setting with , , and .

Table A8 displays the parameter estimates obtained under various censoring schemes. To assess the impact of prior selection, Appendix A includes Table A9 and Table A10, which present results from the IP and NIP settings. Specifically, Table A9 uses , , and . In comparison, Table A10 in Appendix A reports the results using NIPs with .

8. Conclusions

This paper investigates statistical inference for two inverse Weibull distributions under a joint progressive type II censoring scheme, assuming a common shape parameter with differing scale parameters. The Newton–Raphson method is used to derive maximum likelihood estimates, and asymptotic confidence intervals are constructed. Additionally, bootstrap techniques are applied to improve interval estimation. The foundation of our Bayesian analysis is the use of independent priors for the model parameters. We employ the MCMC algorithm to obtain Bayesian estimates under the squared error and LINEX loss functions, along with corresponding credible intervals. Through these procedures, we conduct simulations under various joint censoring schemes. Finally, we apply the proposed method to real datasets to demonstrate its practical implementation.

In this study, the populations are assumed to follow an inverse Weibull distribution with a common shape parameter. However, in practical applications, the shape parameters may vary across populations, highlighting the need for further investigation. Additionally, alternative approaches, such as the Jackknife method, could be explored by future researchers to further evaluate estimation performance and variability. Future research will also examine statistical inference methods for multiple populations, considering both identical and distinct lifetime distributions.

Author Contributions

Conceptualization and methodology, J.X. and Y.W.; software, J.X.; investigation, J.X.; writing (original draft preparation), J.X.; writing (review and editing), W.G.; supervision, W.G. All authors have read and agreed to the published version of the manuscript.

Funding

This research was supported by Project 202510004188 of the National Training Program of Innovation and Entrepreneurship for Undergraduates. Wenhao’s work was partially supported by the Science and Technology Research and Development Project of China State Railway Group Company, Ltd. (No. N2023Z020).

Data Availability Statement

The data presented in this study are openly available in [28,30].

Conflicts of Interest

The authors declare no conflicts of interest.

Appendix A

Table A1.

Bayesian estimations of parameter supposing informative priors .

| (m, n) | r | Scheme | SE: ABE (MSE) | SE: AL (CP) | LINEX (a = −2): ABE (MSE) | LINEX (a = −2): AL (CP) | LINEX (a = 2): ABE (MSE) | LINEX (a = 2): AL (CP) |
|---|---|---|---|---|---|---|---|---|
| (20, 20) | 20 | (, 20) | 2.0450 (0.4506) | 1.0578 (89.8%) | 2.0809 (0.4816) | 1.0693 (79.6%) | 1.9500 (0.4609) | 1.0111 (81.5%) |
| | | (, 5, 15) | 2.1021 (0.4411) | 1.0609 (87.8%) | 2.1178 (0.4668) | 1.0927 (81.6%) | 2.1783 (0.4431) | 1.0453 (83.7%) |
| | 30 | (, 10) | 1.9845 (0.4671) | 1.0349 (83.7%) | 2.0268 (0.4497) | 1.0534 (81.6%) | 2.0539 (0.4357) | 1.0484 (91.8%) |
| | | (, 8, 2) | 2.0108 (0.4473) | 1.0205 (81.6%) | 2.0720 (0.4538) | 1.0617 (79.6%) | 2.0798 (0.4315) | 1.0027 (83.6%) |
| (20, 30) | 30 | (, 20) | 1.9817 (0.4466) | 0.9924 (87.6%) | 1.9778 (0.4440) | 0.9908 (82.6%) | 2.0006 (0.4557) | 1.0493 (81.6%) |
| | | (, 15, 5) | 2.0685 (0.4478) | 1.0816 (87.8%) | 2.0401 (0.4863) | 1.1127 (79.6%) | 2.1827 (0.4652) | 1.0594 (79.9%) |
| | 40 | (, 10) | 2.04347 (0.4413) | 1.0405 (83.7%) | 2.0923 (0.4240) | 0.9916 (79.6%) | 1.9950 (0.4425) | 1.0081 (81.6%) |
| | | (, 5, 5) | 2.1766 (0.4463) | 1.0795 (89.8%) | 2.1314 (0.4631) | 1.0499 (83.7%) | 2.1675 (0.4570) | 1.0308 (83.7%) |
| (30, 30) | 30 | (, 30) | 2.0053 (0.4272) | 0.9472 (75.51%) | 1.9902 (0.4457) | 1.0067 (77.55%) | 1.9895 (0.4396) | 0.9612 (75.51%) |
| | | (, 20, 10) | 2.0856 (0.4360) | 1.0137 (86.6%) | 2.0936 (0.4442) | 1.0367 (81.6%) | 2.1222 (0.4659) | 1.0803 (81.6%) |
| | 40 | (, 20) | 1.9899 (0.4474) | 0.9520 (79.4%) | 2.0082 (0.4364) | 0.9922 (83.3%) | 2.0309 (0.4418) | 1.0350 (79.6%) |
| | | (, 20, 0) | 2.0348 (0.4396) | 1.0415 (75.5%) | 1.9278 (0.4337) | 1.0335 (79.6%) | 2.0416 (0.4442) | 1.0677 (87.8%) |
| (30, 40) | 40 | (, 30) | 1.9494 (0.4650) | 1.0339 (83.7%) | 2.0150 (0.4368) | 1.0022 (85.7%) | 1.9939 (0.4475) | 1.0178 (79.5%) |
| | | (, 29, 1) | 2.0865 (0.4341) | 1.0234 (77.5%) | 2.0493 (0.4269) | 1.0191 (83.7%) | 1.9992 (0.4478) | 1.0813 (85.5%) |
| | 50 | (, 20) | 1.9888 (0.4689) | 1.0524 (77.6%) | 2.0223 (0.4222) | 0.9736 (81.4%) | 1.9990 (0.4347) | 1.0195 (83.7%) |
| | | (, 15, 5) | 2.0936 (0.4542) | 1.0050 (83.5%) | 2.0224 (0.4452) | 1.0218 (83.7%) | 2.0535 (0.4511) | 0.9626 (87.3%) |
| (40, 50) | 50 | (, 40) | 1.9598 (0.4476) | 1.0426 (81.6%) | 2.0210 (0.4403) | 1.0241 (82.6%) | 2.0131 (0.4434) | 1.0456 (83.7%) |
| | | (, 35, 5) | 2.0447 (0.4362) | 1.0120 (89.6%) | 2.0411 (0.4293) | 1.0143 (87.6%) | 2.1582 (0.4232) | 1.0381 (85.7%) |
| | 60 | (, 30) | 1.9892 (0.4316) | 0.9726 (82.3%) | 2.0064 (0.4224) | 0.9821 (81.6%) | 2.0210 (0.4438) | 1.0211 (81.6%) |
| | | (, 25, 5) | 2.1037 (0.4493) | 1.0502 (79.5%) | 2.1364 (0.4643) | 1.1039 (85.7%) | 2.0656 (0.4398) | 1.0532 (85.7%) |

Table A2.

Bayesian estimations of parameter supposing informative priors .

| (m, n) | r | Scheme | SE: ABE (MSE) | SE: AL (CP) | LINEX (a = −2): ABE (MSE) | LINEX (a = −2): AL (CP) | LINEX (a = 2): ABE (MSE) | LINEX (a = 2): AL (CP) |
|---|---|---|---|---|---|---|---|---|
| (20, 20) | 20 | (, 20) | 0.9937 (0.5189) | 1.0954 (83.7%) | 1.0039 (0.4993) | 1.0611 (79.4%) | 1.0180 (0.4800) | 1.0334 (76.4%) |
| | | (, 5, 15) | 0.9781 (0.4930) | 1.0944 (83.7%) | 0.9458 (0.5209) | 1.0991 (77.6%) | 1.0212 (0.6462) | 1.1931 (79.3%) |
| | 30 | (, 10) | 0.9949 (0.4900) | 1.0573 (77.6%) | 1.0050 (0.4175) | 0.9868 (77.3%) | 0.9992 (0.4891) | 1.0741 (75.5%) |
| | | (, 8, 2) | 1.0452 (0.4417) | 0.9798 (79.5%) | 0.9985 (0.5132) | 1.0683 (81.4%) | 0.9623 (0.4756) | 1.0461 (83.7%) |
| (20, 30) | 30 | (, 20) | 1.0068 (0.4847) | 1.0603 (75.5%) | 1.0278 (0.4776) | 1.0255 (85.3%) | 1.0388 (0.4477) | 0.9640 (79.2%) |
| | | (, 15, 5) | 0.9781 (0.4930) | 1.0944 (83.7%) | 0.9458 (0.5209) | 1.0991 (77.6%) | 1.0212 (0.6462) | 1.1931 (79.3%) |
| | 40 | (, 10) | 0.9963 (0.5050) | 1.0729 (79.4%) | 1.0238 (0.4489) | 1.0067 (79.4%) | 1.0040 (0.4339) | 0.9967 (85.7%) |
| | | (, 5, 5) | 0.9807 (0.5575) | 1.1703 (79.5%) | 1.0075 (0.4910) | 1.0950 (81.6%) | 1.0316 (0.4717) | 1.0621 (83.5%) |
| (30, 30) | 30 | (, 30) | 1.0107 (0.4328) | 1.0005 (81.6%) | 1.0055 (0.4670) | 1.0291 (77.3%) | 0.9876 (0.4569) | 1.0327 (79.6%) |
| | | (, 20, 10) | 0.9806 (0.5004) | 1.0525 (79.2%) | 1.0169 (0.4542) | 1.0173 (83.5%) | 1.0068 (0.5093) | 1.0439 (82.4%) |
| | 40 | (, 20) | 0.9948 (0.3782) | 0.9146 (85.5%) | 1.0206 (0.4180) | 0.9266 (81.2%) | 1.0067 (0.4759) | 1.0614 (83.5%) |
| | | (, 20, 0) | 1.0030 (0.4935) | 1.0366 (80.1%) | 1.0168 (0.4827) | 1.0279 (85.2%) | 1.0014 (0.4877) | 1.0452 (83.4%) |
| (30, 40) | 40 | (, 30) | 0.985 (0.5126) | 1.098 (79.6%) | 1.026 (0.4305) | 0.990 (71.4%) | 1.019 (0.4581) | 1.019 (69.4%) |
| | | (, 29, 1) | 1.0223 (0.4671) | 1.0315 (77.3%) | 1.0313 (0.4496) | 0.9991 (81.2%) | 1.0161 (0.4723) | 1.0187 (75.3%) |
| | 50 | (, 20) | 0.9933 (0.4293) | 0.9981 (82.6%) | 0.9999 (0.4293) | 1.0085 (83.6%) | 1.0143 (0.4359) | 0.9847 (80.2%) |
| | | (, 15, 5) | 0.9964 (0.4780) | 1.0625 (71.4%) | 1.0076 (0.4708) | 1.0580 (79.6%) | 0.9790 (0.4960) | 1.0990 (73.5%) |
| (40, 50) | 50 | (, 40) | 0.9993 (0.4184) | 0.9993 (79.5%) | 1.0055 (0.4306) | 1.0000 (77.6%) | 0.9928 (0.4404) | 1.0416 (85.7%) |
| | | (, 35, 5) | 0.9947 (0.4617) | 1.0350 (79.6%) | 0.9744 (0.4355) | 1.0048 (86.4%) | 0.9747 (0.5212) | 1.1015 (78.4%) |
| | 60 | (, 30) | 1.0000 (0.4469) | 1.0013 (85.3%) | 1.0016 (0.4297) | 0.9956 (79.5%) | 1.0090 (0.4790) | 1.0714 (83.5%) |
| | | (, 25, 5) | 0.9860 (0.4726) | 1.0654 (79.6%) | 0.9819 (0.5216) | 1.1362 (81.6%) | 1.0007 (0.4794) | 1.0541 (83.7%) |

Table A3.

Bayesian estimations of parameter supposing non-informative priors .

| | r | | SE | | LINEX (a = −2) | | LINEX (a = 2) | |
|---|---|---|---|---|---|---|---|---|
| | | | ABE (MSE) | AL (CP) | ABE (MSE) | AL (CP) | ABE (MSE) | AL (CP) |
| (20, 20) | 20 | (, 20) | 2.1369 | 1.1184 | 2.1172 | 1.0649 | 2.1362 | 1.0704 |
| | | | (0.5073) | (83.7%) | (0.5054) | (78.6%) | (0.5017) | (83.5%) |
| | | (, 5, 15) | 2.2611 | 1.2195 | 2.2837 | 1.0999 | 2.2413 | 1.2725 |
| | | | (0.5829) | (87.8%) | (0.5677) | (85.7%) | (0.5045) | (95.9%) |
| | 30 | (, 10) | 2.1079 | 1.1118 | 2.0560 | 1.0222 | 1.9763 | 1.0789 |
| | | | (0.4709) | (87.8%) | (0.4957) | (83.5%) | (0.4851) | (85.5%) |
| | | (, 8, 2) | 2.1212 | 1.1070 | 2.2686 | 1.0659 | 2.1452 | 1.0980 |
| | | | (0.4977) | (77.6%) | (0.4464) | (83.7%) | (0.4805) | (83.7%) |
| (20, 30) | 30 | (, 20) | 1.9937 | 1.0379 | 2.0300 | 0.9538 | 2.1100 | 1.0113 |
| | | | (0.4733) | (83.5%) | (0.4905) | (80.1%) | (0.4708) | (79.6%) |
| | | (, 15, 5) | 2.1332 | 1.1378 | 2.2554 | 1.0695 | 2.2903 | 1.1828 |
| | | | (0.4858) | (89.8%) | (0.4885) | (83.7%) | (0.5124) | (83.7%) |
| | 40 | (, 10) | 1.9805 | 1.0449 | 1.9661 | 1.0533 | 2.0159 | 1.0594 |
| | | | (0.4789) | (81.4%) | (0.4404) | (83.7%) | (0.4919) | (75.5%) |
| | | (, 5, 5) | 2.1549 | 1.0898 | 2.2024 | 1.1304 | 2.2637 | 1.1337 |
| | | | (0.4618) | (83.7%) | (0.5000) | (83.7%) | (0.5218) | (81.6%) |
| (30, 30) | 30 | (, 30) | 2.0202 | 1.1449 | 2.0038 | 1.0791 | 2.0269 | 1.0245 |
| | | | (0.5005) | (83.7%) | (0.4847) | (77.6%) | (0.4466) | (81.6%) |
| | | (, 20, 10) | 2.2779 | 1.0953 | 2.1466 | 1.0463 | 2.1271 | 1.0983 |
| | | | (0.4688) | (79.6%) | (0.4722) | (80.3%) | (0.4939) | (79.6%) |
| | 40 | (, 20) | 2.0315 | 1.0394 | 2.0478 | 1.0209 | 1.9222 | 1.0062 |
| | | | (0.4481) | (83.7%) | (0.4735) | (79.4%) | (0.4616) | (85.6%) |
| | | (, 20, 0) | 2.0522 | 1.0282 | 2.0611 | 1.0041 | 2.0038 | 1.0683 |
| | | | (0.4450) | (86.6%) | (0.4517) | (79.6%) | (0.4733) | (79.6%) |
| (30, 40) | 40 | (, 30) | 2.1336 | 1.0689 | 2.0433 | 1.0620 | 2.0762 | 1.0605 |
| | | | (0.4662) | (77.6%) | (0.4636) | (81.6%) | (0.4551) | (85.7%) |
| | | (, 29, 1) | 2.0341 | 0.9790 | 2.1279 | 1.0885 | 2.0263 | 1.0263 |
| | | | (0.4332) | (75.5%) | (0.4606) | (91.8%) | (0.4820) | (85.3%) |
| | 50 | (, 20) | 2.0932 | 1.0310 | 1.9805 | 0.9660 | 2.0880 | 1.0606 |
| | | | (0.4436) | (79.6%) | (0.4270) | (63.3%) | (0.4548) | (75.5%) |
| | | (, 15, 5) | 2.1114 | 1.0367 | 2.0779 | 1.0468 | 2.1596 | 1.0724 |
| | | | (0.4478) | (73.5%) | (0.4443) | (87.8%) | (0.4442) | (89.8%) |
| (40, 50) | 50 | (, 40) | 1.9494 | 0.9671 | 1.9803 | 0.9992 | 2.0339 | 1.0119 |
| | | | (0.4416) | (81.4%) | (0.4323) | (77.5%) | (0.4348) | (81.6%) |
| | | (, 35, 5) | 2.0959 | 1.0594 | 2.0835 | 1.0281 | 2.2055 | 1.0858 |
| | | | (0.4488) | (83.7%) | (0.4599) | (81.4%) | (0.4593) | (81.6%) |
| | 60 | (, 30) | 2.0526 | 0.9574 | 2.0140 | 1.0195 | 1.9820 | 0.9500 |
| | | | (0.4237) | (79.5%) | (0.4510) | (81.4%) | (0.4167) | (85.3%) |
| | | (, 25, 5) | 2.0987 | 1.0704 | 2.0992 | 1.0280 | 2.0760 | 0.9836 |
| | | | (0.4684) | (87.6%) | (0.4454) | (83.5%) | (0.4272) | (79.5%) |

Table A4.

Bayesian estimations of parameter supposing non-informative priors .

| | r | | SE | | LINEX (a = −2) | | LINEX (a = 2) | |
|---|---|---|---|---|---|---|---|---|
| | | | ABE (MSE) | AL (CP) | ABE (MSE) | AL (CP) | ABE (MSE) | AL (CP) |
| (20, 20) | 20 | (, 20) | 1.0156 | 1.0443 | 1.0320 | 1.1796 | 1.0249 | 1.1103 |
| | | | (0.5076) | (79.3%) | (0.6132) | (79.6%) | (0.6268) | (81.4%) |
| | | (, 5, 15) | 0.9660 | 1.2940 | 1.0027 | 1.3100 | 0.9979 | 1.2091 |
| | | | (0.7323) | (79.6%) | (0.7311) | (83.7%) | (0.6159) | (81.4%) |
| | 30 | (, 10) | 1.0064 | 1.0180 | 0.9852 | 1.0247 | 0.9832 | 1.0145 |
| | | | (0.4826) | (79.3%) | (0.4801) | (80.3%) | (0.4279) | (81.6%) |
| | | (, 8, 2) | 0.9484 | 1.1744 | 0.9751 | 1.0691 | 0.9762 | 1.1375 |
| | | | (0.5875) | (83.4%) | (0.4949) | (81.6%) | (0.5884) | (82.5%) |
| (20, 30) | 30 | (, 20) | 0.9960 | 1.0205 | 1.0545 | 0.9728 | 0.9643 | 1.0074 |
| | | | (0.4698) | (80.3%) | (0.4499) | (79.3%) | (0.4287) | (82.5%) |
| | | (, 15, 5) | 1.0009 | 1.1950 | 1.0081 | 1.1463 | 0.9424 | 1.2428 |
| | | | (0.6132) | (79.6%) | (0.5497) | (75.5%) | (0.6540) | (85.7%) |
| | 40 | (, 10) | 0.9990 | 1.0970 | 1.0058 | 1.0530 | 0.9919 | 1.0736 |
| | | | (0.4729) | (83.7%) | (0.4792) | (81.6%) | (0.5184) | (89.4%) |
| | | (, 5, 5) | 0.9667 | 1.1002 | 0.9694 | 1.1500 | 0.9487 | 1.2435 |
| | | | (0.5513) | (77.5%) | (0.5426) | (79.4%) | (0.6024) | (89.8%) |
| (30, 30) | 30 | (, 30) | 1.0125 | 1.1329 | 0.9953 | 1.0773 | 1.0510 | 1.0657 |
| | | | (0.5555) | (80.3%) | (0.5020) | (81.4%) | (0.4924) | (85.3%) |
| | | (, 20, 10) | 1.0012 | 1.1287 | 1.0190 | 1.0838 | 1.0283 | 1.1435 |
| | | | (0.5624) | (83.5%) | (0.5212) | (83.3%) | (0.5709) | (85.5%) |
| | 40 | (, 20) | 1.0067 | 1.0527 | 0.9995 | 1.0600 | 1.0186 | 1.0202 |
| | | | (0.4831) | (81.4%) | (0.5084) | (85.3%) | (0.4666) | (83.3%) |
| | | (, 20, 0) | 0.9617 | 1.0318 | 0.9682 | 1.0478 | 1.0071 | 1.0864 |
| | | | (0.4498) | (80.6%) | (0.4720) | (79.4%) | (0.5181) | (79.5%) |
| (30, 40) | 40 | (, 30) | 0.9770 | 1.0718 | 1.0035 | 1.0689 | 1.0279 | 1.0498 |
| | | | (0.5480) | (69.4%) | (0.4782) | (77.6%) | (0.5072) | (71.4%) |
| | | (, 29, 1) | 1.0140 | 1.0168 | 0.9713 | 1.1256 | 0.9921 | 1.0243 |
| | | | (0.4600) | (80.3%) | (0.5242) | (85.7%) | (0.4839) | (81.2%) |
| | 50 | (, 20) | 1.0204 | 1.0357 | 1.0045 | 1.0224 | 0.9779 | 1.0610 |
| | | | (0.4712) | (85.5%) | (0.4547) | (83.5%) | (0.4787) | (89.4%) |
| | | (, 15, 5) | 0.9783 | 1.0282 | 0.9942 | 1.1204 | 0.9883 | 1.0798 |
| | | | (0.4586) | (79.2%) | (0.5215) | (80.6%) | (0.4825) | (85.6%) |
| (40, 50) | 50 | (, 40) | 1.0244 | 0.9667 | 1.0162 | 0.9796 | 0.9989 | 1.0231 |
| | | | (0.4103) | (79.4%) | (0.4253) | (82.4%) | (0.4731) | (82.3%) |
| | | (, 35, 5) | 1.0069 | 1.0699 | 1.0181 | 1.0126 | 0.9549 | 1.1400 |
| | | | (0.5131) | (81.4%) | (0.4623) | (78.5%) | (0.5417) | (881.4%) |
| | 60 | (, 30) | 0.9760 | 1.0166 | 1.0039 | 1.0264 | 0.9957 | 0.9585 |
| | | | (0.4538) | (81.2%) | (0.4558) | (76.5%) | (0.4469) | (81.0%) |
| | | (, 25, 5) | 0.9938 | 1.0719 | 0.9900 | 1.0500 | 0.9988 | 1.0178 |
| | | | (0.4795) | (79.6%) | (0.4908) | (77.6%) | (0.4484) | (82.5%) |

Table A5.

The AMLEs, MSEs, and AL for , , and for example 1.

| | Parameters | AMLE | MSE | AL |
|---|---|---|---|---|
| | | 0.4303 | 0.1201 | 0.1410 |
| | | 0.4557 | 0.1330 | 0.1692 |
| | | 1.8739 | 0.0323 | 0.0282 |
| | | 0.3807 | 0.1057 | 0.0674 |
| | | 0.6013 | 0.0389 | 0.0654 |
| | | 1.8549 | 0.0302 | 0.0252 |
| | | 0.3614 | 0.1029 | 0.0977 |
| | | 0.6315 | 0.0385 | 0.1165 |
| | | 1.8434 | 0.0302 | 0.0532 |

Table A6.

The estimation of parameters when , , , , , and for example 1.

| | Parameters | SE | | LINEX (a = −2) | | LINEX (a = 2) | |
|---|---|---|---|---|---|---|---|
| | | ABE | AL | ABE | AL | ABE | AL |
| | | 0.3583 | 1.4459 | 0.3740 | 1.4435 | 0.3704 | 1.4537 |
| | | 0.4593 | 1.4486 | 0.4450 | 1.4369 | 0.4479 | 1.4557 |
| | | 1.8047 | 1.4725 | 1.8080 | 1.4691 | 1.8068 | 1.4822 |
| | | 0.3454 | 1.4207 | 0.3426 | 1.4378 | 0.3446 | 1.4406 |
| | | 0.5427 | 1.4220 | 0.5458 | 1.4435 | 0.5456 | 1.4522 |
| | | 1.7788 | 1.4013 | 1.7828 | 1.4349 | 1.7774 | 1.4672 |
| | | 0.3357 | 1.4365 | 0.3352 | 1.4280 | 0.3315 | 1.4307 |
| | | 0.5615 | 1.4061 | 0.5643 | 1.4416 | 0.5656 | 1.4258 |
| | | 1.7727 | 1.4140 | 1.7705 | 1.4241 | 1.7733 | 1.4259 |

Table A7.

The estimation of parameters when for example 1.

| | Parameters | SE | | LINEX (a = −2) | | LINEX (a = 2) | |
|---|---|---|---|---|---|---|---|
| | | ABE | AL | ABE | AL | ABE | AL |
| | | 0.3552 | 1.4329 | 0.3593 | 1.4559 | 0.3588 | 1.4208 |
| | | 0.3670 | 1.4563 | 0.3618 | 1.4725 | 0.3669 | 1.4119 |
| | | 1.8170 | 1.4699 | 1.8179 | 1.4617 | 1.8181 | 1.4513 |
| | | 0.3267 | 1.4206 | 0.3273 | 1.4313 | 0.3211 | 1.4573 |
| | | 0.5601 | 1.4617 | 0.5601 | 1.4343 | 0.5643 | 1.4491 |
| | | 1.7684 | 1.4706 | 1.7671 | 1.4723 | 1.7661 | 1.4453 |
| | | 0.3071 | 1.4249 | 0.3119 | 1.4415 | 0.3062 | 1.4312 |
| | | 0.5951 | 1.4528 | 0.5920 | 1.4109 | 0.5932 | 1.4449 |
| | | 1.7560 | 1.4423 | 1.7514 | 1.4251 | 1.7537 | 1.4490 |

Table A8.

The AMLEs, MSEs, and AL for , , and for example 2.

| | Parameters | AMLE | MSE | AL |
|---|---|---|---|---|
| | | 0.9900 | 0.0206 | 0.0901 |
| | | 0.8269 | 0.0235 | 0.0456 |
| | | 0.2267 | 0.0926 | 0.0364 |
| | | 1.0212 | 0.0016 | 0.0321 |
| | | 0.8943 | 0.0017 | 0.0185 |
| | | 0.2011 | 0.0076 | 0.0032 |
| | | 1.0300 | 0.0157 | 0.0391 |
| | | 0.8820 | 0.0178 | 0.0218 |
| | | 0.2020 | 0.0758 | 0.0046 |

Table A9.

The estimation of parameters when , , , , , and for example 2.

| | Parameters | SE | | LINEX (a = −2) | | LINEX (a = 2) | |
|---|---|---|---|---|---|---|---|
| | | ABE | AL | ABE | AL | ABE | AL |
| | | 0.9785 | 0.5554 | 0.9843 | 0.5435 | 0.9811 | 0.6022 |
| | | 0.8224 | 0.3200 | 0.8217 | 0.3233 | 0.8217 | 0.3236 |
| | | 0.2301 | 1.2663 | 0.2284 | 1.2292 | 0.2294 | 1.2336 |
| | | 0.9978 | 0.5047 | 0.9981 | 0.5820 | 0.9976 | 0.5234 |
| | | 0.8770 | 0.2483 | 0.8759 | 0.3219 | 0.8772 | 0.2867 |
| | | 0.2093 | 1.2158 | 0.2099 | 1.2429 | 0.2096 | 1.2140 |
| | | 1.0070 | 0.5455 | 1.0072 | 0.5462 | 1.0057 | 0.6085 |
| | | 0.8647 | 0.2887 | 0.8643 | 0.3071 | 0.8674 | 0.2868 |
| | | 0.2103 | 1.2218 | 0.2102 | 1.2214 | 0.2101 | 1.2162 |

Table A10.

The estimation of parameters when for example 2.

| | Parameters | SE | | LINEX (a = −2) | | LINEX (a = 2) | |
|---|---|---|---|---|---|---|---|
| | | ABE | AL | ABE | AL | ABE | AL |
| | | 0.9601 | 0.5763 | 0.9604 | 0.6113 | 0.9554 | 0.5686 |
| | | 0.8019 | 0.2969 | 0.8002 | 0.3879 | 0.8003 | 0.3585 |
| | | 0.2443 | 1.2265 | 0.2447 | 1.2309 | 0.2463 | 1.2479 |
| | | 0.9991 | 0.5726 | 1.0008 | 0.5406 | 1.0012 | 0.5898 |
| | | 0.8743 | 0.3209 | 0.8755 | 0.2863 | 0.8744 | 0.3129 |
| | | 0.2120 | 1.2390 | 0.2109 | 1.2166 | 0.2114 | 1.2064 |
| | | 1.0066 | 0.5894 | 1.0108 | 0.6059 | 1.0100 | 0.5671 |
| | | 0.8626 | 0.3331 | 0.8610 | 0.3510 | 0.8635 | 0.2783 |
| | | 0.2129 | 1.2100 | 0.2123 | 1.2099 | 0.2119 | 1.2343 |

References

1. Kumar, S.; Kumari, A.; Kumar, K. Bayesian and Classical Inferences in Two Inverse Chen Populations Based on Joint Type-II Censoring. Am. J. Theor. Appl. Stat. 2022, 11, 150–159.

2. Balakrishnan, N.; Rasouli, A. Exact likelihood inference for two exponential populations under joint Type-II censoring. Comput. Stat. Data Anal. 2008, 52, 2725–2738.

3. Balakrishnan, N.; Burkschat, M.; Cramer, E.; Hofmann, G. Fisher information based progressive censoring plans. Comput. Stat. Data Anal. 2008, 53, 366–380.

4. Balakrishnan, N.; Cramer, E. The Art of Progressive Censoring: Applications to Reliability and Quality; Statistics for Industry and Technology; Springer: New York, NY, USA, 2014.

5. Rasouli, A.; Balakrishnan, N. Exact likelihood inference for two exponential populations under joint progressive type-II censoring. Commun. Stat. Methods 2010, 39, 2172–2191.

6. Balakrishnan, N.; Su, F.; Liu, K.Y. Exact likelihood inference for k exponential populations under joint progressive type-II censoring. Commun. Stat.-Simul. Comput. 2015, 44, 902–923.

7. Doostparast, M.; Ahmadi, M.V.; Ahmadi, J. Bayes estimation based on joint progressive type II censored data under LINEX loss function. Commun. Stat.-Simul. Comput. 2013, 42, 1865–1886.

8. Mondal, S.; Kundu, D. Point and interval estimation of Weibull parameters based on joint progressively censored data. Sankhya B 2019, 81, 1–25.

9. Goel, R.; Krishna, H. Likelihood and Bayesian inference for k Lindley populations under joint type-II censoring scheme. Commun. Stat.-Simul. Comput. 2023, 52, 3475–3490.

10. Krishna, H.; Goel, R. Inferences for two Lindley populations based on joint progressive type-II censored data. Commun. Stat.-Simul. Comput. 2022, 51, 4919–4936.

11. Hassan, A.S.; Elsherpieny, E.; Aghel, W.E. Statistical inference of the Burr Type III distribution under joint progressively Type-II censoring. Sci. Afr. 2023, 21, e01770.

12. Kumar, K.; Kumari, A. Bayesian and likelihood estimation in two inverse Pareto populations under joint progressive censoring. J. Indian Soc. Probab. Stat. 2023, 24, 283–310.

13. Hasaballah, M.M.; Tashkandy, Y.A.; Balogun, O.S.; Bakr, M. Reliability analysis for two populations Nadarajah-Haghighi distribution under Joint progressive type-II censoring. AIMS Math. 2024, 9, 10333–10352.

14. Abo-Kasem, O.; Almetwally, E.M.; Abu El Azm, W.S. Reliability analysis of two Gompertz populations under joint progressive type-II censoring scheme based on binomial removal. Int. J. Model. Simul. 2024, 44, 290–310.

15. Keller, A.Z.; Kamath, A.R.R. Alternate Reliability Models for Mechanical Systems. In Proceedings of the 3rd International Conference on Reliability and Maintainability (Fiabilité et Maintenabilité), Toulouse, France, 18–21 October 1982; pp. 411–415.

16. Langlands, A.O.; Pocock, S.J.; Kerr, G.R.; Gore, S.M. Long-term survival of patients with breast cancer: A study of the curability of the disease. Br. Med. J. 1979, 2, 1247–1251.

17. Chatterjee, A.; Chatterjee, A. Use of the Fréchet distribution for UPV measurements in concrete. NDT E Int. 2012, 52, 122–128.

18. Chiodo, E.; Falco, P.D.; Noia, L.P.D.; Mottola, F. Inverse Log-logistic distribution for Extreme Wind Speed modeling: Genesis, identification and Bayes estimation. AIMS Energy 2018, 6, 926–948.

19. El Azm, W.A.; Aldallal, R.; Aljohani, H.M.; Nassr, S.G. Estimations of competing lifetime data from inverse Weibull distribution under adaptive progressively hybrid censored. Math. Biosci. Eng. 2022, 19, 6252–6276.

20. Bi, Q.; Gui, W. Bayesian and classical estimation of stress-strength reliability for inverse Weibull lifetime models. Algorithms 2017, 10, 71.

21. Alslman, M.; Helu, A. Estimation of the stress-strength reliability for the inverse Weibull distribution under adaptive type-II progressive hybrid censoring. PLoS ONE 2022, 17, e0277514.

22. Shawky, A.I.; Khan, K. Reliability estimation in multicomponent stress-strength based on inverse Weibull distribution. Processes 2022, 10, 226.

23. Ren, H.; Hu, X. Estimation for inverse Weibull distribution under progressive type-II censoring scheme. AIMS Math. 2023, 8, 22808–22829.

24. Kumar, K.; Kumar, I. Estimation in inverse Weibull distribution based on randomly censored data. Statistica 2019, 79, 47–74.

25. Hall, P. Theoretical comparison of bootstrap confidence intervals. Ann. Stat. 1988, 16, 927–953.

26. Efron, B. The Jackknife, the Bootstrap and Other Resampling Plans; SIAM: Philadelphia, PA, USA, 1982.

27. El-Saeed, A.R.; Almetwally, E.M. On Algorithms and Approximations for Progressively Type-I Censoring Schemes. Stat. Anal. Data Mining: ASA Data Sci. J. 2024, 17, e11717.

28. Balakrishnan, N.; Iliopoulos, G. Stochastic monotonicity of the MLE of exponential mean under different censoring schemes. Ann. Inst. Stat. Math. 2009, 61, 753–772.

29. Ding, L.; Gui, W. Statistical inference of two gamma distributions under the joint type-II censoring scheme. Mathematics 2023, 11, 2003.

30. Xia, Z.; Yu, J.; Cheng, L.; Liu, L.; Wang, W. Study on the breaking strength of jute fibres using modified Weibull distribution. Compos. Part A Appl. Sci. Manuf. 2009, 40, 54–59.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).