Abstract

To address the nonlinear state estimation problem, the generalized conversion filter (GCF) was proposed, which uses a general conversion of the measurement under the minimum mean square error (MMSE) criterion. However, the performance of the GCF deteriorates significantly in the presence of complex non-Gaussian noise, because the symmetry assumed by the MMSE criterion is compromised. To address this issue, this paper proposes a new GCF, named the generalized loss-based GCF (GLGCF), which uses the generalized loss (GL) as the loss function instead of the MMSE criterion. In contrast to other robust loss functions, the GL adjusts its shape through a shape parameter, allowing it to adapt to various complex noise environments. Meanwhile, a linear regression model is developed to obtain residual vectors, and the negative log-likelihood of the GL is introduced to avoid manual selection of the shape parameter. The proposed GLGCF not only retains the advantage of the GCF in handling strong measurement nonlinearity, but also exhibits robust performance against non-Gaussian noise. Finally, simulations on the target-tracking problem validate the strong robustness and high filtering accuracy of the proposed nonlinear state estimation algorithm in the presence of non-Gaussian noise.

1. Introduction

State estimation is a key problem in engineering and scientific fields, involving the estimation of a system’s internal state from noisy measurements [1,2]. The Kalman filter (KF) provides an optimal recursive solution for linear systems under Gaussian noise [3]. However, in real-world applications, nonlinear dynamics and non-Gaussian noise are common. Under such conditions, the standard KF may no longer minimize the mean squared error (MSE) of the estimated state. Thus, it is crucial to extend the KF so that it deals efficiently with the state estimation problem of nonlinear systems in the presence of non-Gaussian noise.

For nonlinear filtering problems, obtaining an exact or analytical solution is often impractical. Many approaches have been explored to address this problem, including the extended Kalman filter (EKF) [4], unscented Kalman filter (UKF) [5], cubature Kalman filter (CKF) [6], and Gauss–Hermite Kalman filter (GHKF) [7]. The EKF uses a first-order Taylor series expansion to linearize the system equations. However, the EKF suffers from poor approximation accuracy due to linearization errors, and if the system is highly nonlinear, the EKF may even diverge [8]. To address this issue, the UKF, CKF, and GHKF have been proposed, which approximate the conditional probability distribution of the state using deterministic sampling (DS). Although the UKF avoids the linearization step and offers more accurate estimates for nonlinear systems, it demands more computational resources and careful adjustment of parameters. Moreover, in high-dimensional nonlinear systems, the UKF may encounter negative weights, leading to filter instability and potential divergence. While the CKF addresses the numerical instability issue of the UKF, it introduces the problem of nonlocal sampling. The GHKF may encounter the curse of dimensionality in high-dimensional systems, resulting in a significant computational burden. Based on the Monte Carlo method, the particle filter (PF) uses random sampling of particles to represent the probability density [9]. Since the PF does not assume a particular form for the prior or posterior probability density, it requires considerable computation to attain accurate state estimation. Thus, depending on the system and specific requirements, it is important to balance accuracy and computational demands when selecting a suitable filter.

Generally, the standard KF and its variants are based on the framework of linear minimum mean square error (LMMSE) estimation [10]. LMMSE estimation focuses on finding the optimal linear estimator in the original measurement space. When the measurement and the state are related to a lower degree of nonlinearity, the LMMSE estimation is expected to yield more accurate results [10]. Furthermore, by considering a wider or optimized set of measurements that are uncorrelated with the original ones but still contain relevant information about the system state, LMMSE estimation can achieve better estimation accuracy. The uncorrelated conversion-based filter (UCF) improves the LMMSE estimator in a similar manner, by incorporating new measurements through nonlinear transformations [11]. Moreover, the optimized conversion-sample filter (OCF) simplifies the optimization of the nonlinear transformation function and limits the errors introduced by the DS [12]. In contrast to the UCF and OCF, the generalized conversion filter (GCF) optimizes both the conversion’s dimension and its sample points, providing a generalized transformation of measurements using DS [13].

Although the GCF, designed under the minimum mean square error (MMSE) criterion, handles Gaussian noise effectively, it is highly sensitive to non-Gaussian noise [14]. In many practical scenarios, the measurement noise follows a non-Gaussian distribution and may contain outliers. Recently, numerous advancements have been made in enhancing filter performance under non-Gaussian noise. One approach augments the system model with quadratic or polynomial functions to improve estimation accuracy [15,16]. The Student’s t filter is another technique, which uses the Student’s t distribution to model measurement noise [17]. Moreover, information-theoretic learning (ITL) has been utilized to combat non-Gaussian noise in signal processing [18]; for instance, the maximum correntropy Kalman filter [19], the maximum correntropy GCF (MCGCF) [20], and the minimum error entropy Kalman filter [21] have been proposed. Unlike the previously discussed techniques, the generalized loss (GL) [22] provides flexibility in adjusting the shape of the loss function. It integrates various loss functions, such as the squared loss, Charbonnier loss [23], Cauchy loss [24], Geman–McClure loss [25], and Welsch loss [26]. Therefore, by acting as a robust loss function that does not rely on the symmetry of Gaussian distributions, the GL improves both filtering accuracy and robustness, effectively capturing higher-order statistical characteristics and mitigating the impact of symmetry disruption in non-Gaussian environments. Several GL-based algorithms have been proposed to tackle different estimation problems. A variational Bayesian-based generalized loss cubature Kalman filter was proposed to handle unknown measurement noise and potential outliers simultaneously [27]. The iterative unscented Kalman filter with a general robust loss function [28] and the geometric unscented Kalman filter with the GL function [29] have been used to enhance state estimation in power systems, alleviating the impact of non-Gaussian noise.

In this paper, a new nonlinear filter named generalized loss-based generalized conversion filter (GLGCF) is proposed, which employs the GL to reformulate the measurement update process of GCF. By leveraging the GCF’s ability to utilize higher-order information from transformed measurements and the GL’s robustness in dealing with various types of noise, the GLGCF outperforms both the GCF and MCGCF in non-Gaussian noise environments. The main contributions of this paper are summarized as follows:

- To combat non-Gaussian noises, the GLGCF employs a robust nonlinear regression based on GL, and the posterior estimate and its covariance matrix are updated using a fixed-point iteration.

- To solve the problem of manually setting the shape parameter in the GL function, the residual error is integrated into negative log-likelihood (NLL) of GL, and the optimal shape parameter is determined through minimizing the NLL.

- Simulations on the target-tracking models in non-Gaussian noise environments demonstrate the superiority of the GLGCF. Additionally, its recursive structure makes it suitable for online implementation.

2. Preliminaries

2.1. Generalized Loss

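The following GL function was proposed in [22]:

$$f(e,\alpha,c)=\frac{|\alpha-2|}{\alpha}\left[\left(\frac{(e/c)^{2}}{|\alpha-2|}+1\right)^{\alpha/2}-1\right] \tag{1}$$

where $\alpha$ represents the shape parameter, and $c>0$ denotes the scale parameter. By adjusting the value of $\alpha$ in Equation (1), $f(e,\alpha,c)$ can be specialized to a range of loss functions, e.g., squared loss ($\alpha \to 2$), Charbonnier loss [23] ($\alpha = 1$), Cauchy loss [24] ($\alpha \to 0$), Geman–McClure loss [25] ($\alpha = -2$), and Welsch loss [26] ($\alpha \to -\infty$).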
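The special cases listed above can be checked numerically. The following is a minimal sketch, assuming the GL takes the form given in [22]; the function name `general_loss` and the explicit handling of the limiting values of the shape parameter are illustrative choices, not part of the original algorithm.

```python
import numpy as np

def general_loss(e, alpha, c=1.0):
    """Generalized loss of [22] for residual e, shape alpha, scale c > 0."""
    z = (np.asarray(e, dtype=float) / c) ** 2
    if alpha == 2.0:                 # limit alpha -> 2: squared loss
        return 0.5 * z
    if alpha == 0.0:                 # limit alpha -> 0: Cauchy loss
        return np.log1p(0.5 * z)
    if np.isneginf(alpha):           # limit alpha -> -inf: Welsch loss
        return 1.0 - np.exp(-0.5 * z)
    b = abs(alpha - 2.0)
    return (b / alpha) * ((z / b + 1.0) ** (alpha / 2.0) - 1.0)
```

Smaller values of the shape parameter flatten the tails of the loss, so a large residual contributes less to the cost than it would under the squared loss.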

Let X and Y be two arbitrary scalar random variables, and GL can be defined as follows:

where represents the joint distribution of X and Y, and with . Given the constraint of limited data, is commonly unknown. Thus, Equation (2) is calculated by

where and are M samples sampled from . Since Equation (3) incorporates , GL provides information regarding higher-order moments. This feature offers greater resilience to outliers and noise, making it particularly effective in situations where the data are subjected to non-Gaussian noise or outliers.
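To illustrate the resilience to outliers mentioned above, the sketch below compares a sample-mean GL with the ordinary MSE on a small data set containing one gross outlier. It assumes the empirical GL is the average of the loss over the M paired samples; the names `empirical_gl`, `y_clean`, and `y_outlier`, and the choice of shape parameter −2, are hypothetical.

```python
import numpy as np

def general_loss(e, alpha, c=1.0):
    # Compact form of the GL, valid for alpha not equal to 0 or 2
    z = (np.asarray(e, dtype=float) / c) ** 2
    b = abs(alpha - 2.0)
    return (b / alpha) * ((z / b + 1.0) ** (alpha / 2.0) - 1.0)

def empirical_gl(x, y, alpha, c=1.0):
    """Sample-mean estimate of the GL between paired samples x and y."""
    e = np.asarray(x, dtype=float) - np.asarray(y, dtype=float)
    return float(np.mean(general_loss(e, alpha, c)))

x = np.array([1.0, 2.0, 3.0, 4.0])
y_clean = x + np.array([0.1, -0.1, 0.1, -0.1])
y_outlier = y_clean.copy()
y_outlier[0] += 50.0                      # one gross outlier

mse_clean = float(np.mean((x - y_clean) ** 2))
mse_outlier = float(np.mean((x - y_outlier) ** 2))
gl_clean = empirical_gl(x, y_clean, alpha=-2.0)
gl_outlier = empirical_gl(x, y_outlier, alpha=-2.0)
```

With the shape parameter set to −2 each sample contributes at most 2 to the sum, so the single outlier cannot dominate the empirical GL, whereas it inflates the MSE by several orders of magnitude.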

2.2. Generalized Conversion Filter

By applying a DS technique, GCF effectively transforms the sample points, resulting in accurate estimations for nonlinear systems influenced by Gaussian noise. Consider a discrete-time nonlinear system as follows:

where represents the system state; stands for the measurement vector; the functions f (·) and h (·) signify the process model and measurement model, respectively; and denote the process noise and measurement noise, respectively. Generally, for the nonlinear dynamic system described by Equations (4) and (5), the GCF includes three steps, namely prediction, constraint generation and update [13].

2.2.1. Prediction

The prior state and its covariance matrix can be calculated by generating sample points with DS methods, such as the unscented transformation [5], Gauss–Hermite quadrature (GHQ) [7], and cubature rules [6]. First, we define a combined state vector as follows:

and the estimate of , MSE of given the measurements , denoted by , is expressed as

where is the covariance matrix of , and . Using the previous estimate and covariance matrix , we generate a weight vector and a sample set of by a DS method with being the number of sampling points. The sample consists of the state sample and the white process noise sample .

The transformed samples set can be obtained using the process model as

Thus, the predicted state and its covariance matrix are computed by
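The prediction step can be sketched as follows. This is an illustrative example, not the authors' implementation: it assumes the third-degree cubature rule as the DS method, a process model callable as `f(x, w)`, and zero-mean process noise with covariance `Q`; all function and variable names are hypothetical.

```python
import numpy as np

def cubature_points(mean, cov):
    """Third-degree cubature rule: 2n equally weighted sample points."""
    n = mean.size
    S = np.linalg.cholesky(cov)                  # cov = S @ S.T
    offsets = np.sqrt(n) * np.hstack([S, -S])    # shape (n, 2n)
    points = mean[:, None] + offsets
    weights = np.full(2 * n, 1.0 / (2 * n))
    return points, weights

def ds_predict(x_est, P, Q, f):
    """Predict mean and covariance by sampling the stacked vector [x; w]."""
    nx, nw = x_est.size, Q.shape[0]
    aug_mean = np.concatenate([x_est, np.zeros(nw)])
    aug_cov = np.block([[P, np.zeros((nx, nw))],
                        [np.zeros((nw, nx)), Q]])
    pts, w = cubature_points(aug_mean, aug_cov)
    # Propagate each (state sample, noise sample) pair through the process model
    prop = np.column_stack([f(p[:nx], p[nx:]) for p in pts.T])
    x_pred = prop @ w
    dev = prop - x_pred[:, None]
    P_pred = (dev * w) @ dev.T                   # weighted sample covariance
    return x_pred, P_pred
```

For a linear process model with additive noise, this rule reproduces the standard Kalman prediction exactly, which provides a convenient sanity check.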

2.2.2. Constraint Generation

With respect to the measurement function Equation (5), the combined state vector is defined as

and the estimate of , MSE of given the measurements , represented by , can be written as

where is the predicted state, , and is the covariance matrix of .

Similar to the previous prediction step, based on and , we can generate a weight vector and a sample set of by a DS method with being the number of sampling points. The sample consists of the state sample and the white measurement noise sample .

The transformed samples set can be derived using the measurement model

Thus, the samples of and can be obtained as follows:

Following the similar approach to that in Table I in [13], where is substituted by , the constraint matrices can be derived for all . By applying the constraint matrices , the higher-order terms of the transformed samples can be neglected, which effectively restricts the higher-order errors and achieves the optimal transformation.

2.2.3. Update

To obtain the optimal sample points, we apply the QR decomposition to the constraint matrices for all .

where is without its last column.

Finally, the estimated state and its covariance matrix can be obtained by

where

3. Generalized Loss-Based Generalized Conversion Filter

Since the MMSE depends only on the second-order statistics of the errors, the performance of the GCF deteriorates in non-Gaussian noise [30]. To improve the robustness of the GCF, we propose integrating the GL cost function into the GCF framework, resulting in a new variant of the GCF, namely GLGCF. This variant is expected to perform better in non-Gaussian noise environments, as the GL incorporates second- and higher-order moments of the errors.

To combine the GL with the GCF, we first define a linear model that combines the state estimate and the measurement as follows:

where and the covariance matrix of can be obtained by

with . Multiplying both sides of Equation (23) by yields

where , and .
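The whitening step can be illustrated with a small example. The sketch below assumes a linear measurement matrix `H` in place of the measurement function, purely to keep the example verifiable; the names `whitened_regression`, `D`, `W`, and `B` are illustrative. Under this assumption, ordinary least squares on the whitened model reproduces the standard Kalman update.

```python
import numpy as np

def whitened_regression(x_pred, P_pred, z, H, R):
    """Stack prior and measurement, then whiten with a Cholesky factor."""
    n, m = x_pred.size, z.size
    Theta = np.block([[P_pred, np.zeros((n, m))],
                      [np.zeros((m, n)), R]])
    B = np.linalg.cholesky(Theta)                       # Theta = B @ B.T
    D = np.linalg.solve(B, np.concatenate([x_pred, z]))  # whitened data
    W = np.linalg.solve(B, np.vstack([np.eye(n), H]))    # whitened regressor
    return D, W, B

x_pred = np.array([1.0, 0.5])
P_pred = np.array([[1.0, 0.2], [0.2, 0.5]])
H = np.array([[1.0, 0.0]])
R = np.array([[0.25]])
z = np.array([1.4])

D, W, B = whitened_regression(x_pred, P_pred, z, H, R)
x_ls = np.linalg.lstsq(W, D, rcond=None)[0]             # least-squares estimate

# Standard Kalman update for comparison
K = P_pred @ H.T @ np.linalg.inv(H @ P_pred @ H.T + R)
x_kf = x_pred + K @ (z - H @ x_pred)
```

After whitening, the stacked error has identity covariance, so each residual element can be weighted independently by a scalar loss function.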

The GL-based cost function is defined as

where is the ith element of , and is the ith element of , is the ith row of .

Next, the optimal estimate of can be obtained by

The solution to Equation (27) can be obtained by solving the following equation:

According to [19], the updated Equation (25) can be applied with a single iteration to yield similar results within the GCF framework by employing to modify the measurement data. As noted in [31], two methods can be used to achieve this: the first modifies the residual error covariance using , and the second reconstructs a ‘pseudo-observation’. Both methods are equivalent in their final outcome; for simplicity, this paper presents the algorithm based on the first approach.
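The fixed-point solution of a GL-based regression can be sketched as an iteratively reweighted least-squares loop. This is a minimal illustration under stated assumptions: the whitened model is linear, `D = W x + e`; the weight of each residual is the derivative of the GL divided by the residual; and the names `gl_weight` and `gl_fixed_point` are hypothetical.

```python
import numpy as np

def gl_weight(e, alpha, c=1.0):
    """Weight rho'(e)/e of the generalized loss for finite alpha."""
    z = (np.asarray(e, dtype=float) / c) ** 2
    if alpha == 2.0:                       # squared loss: constant weight
        return np.full_like(z, 1.0 / c**2)
    return (z / abs(alpha - 2.0) + 1.0) ** (alpha / 2.0 - 1.0) / c**2

def gl_fixed_point(D, W, alpha, c=1.0, x0=None, iters=20, tol=1e-8):
    """Solve the stationarity condition by reweighted least squares."""
    x = np.linalg.lstsq(W, D, rcond=None)[0] if x0 is None else x0.copy()
    for _ in range(iters):
        psi = gl_weight(D - W @ x, alpha, c)
        WtC = W.T * psi                    # W^T diag(psi)
        x_new = np.linalg.solve(WtC @ W, WtC @ D)
        if np.linalg.norm(x_new - x) < tol:
            return x_new
        x = x_new
    return x

# Location estimate from five samples, one of which is a gross outlier
W = np.ones((5, 1))
D = np.array([1.0, 1.1, 0.9, 1.0, 30.0])
x_ls = gl_fixed_point(D, W, alpha=2.0)     # equals the sample mean
x_rob = gl_fixed_point(D, W, alpha=-2.0)   # outlier is down-weighted
```

In this toy problem the squared-loss estimate is pulled to the sample mean of 6.8, while the robust estimate stays close to 1 because the outlier receives a weight near zero after the first iteration.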

Let denote the modified covariance, which can be expressed as

In the following analysis, we express in two parts such that

Given that the actual state is unknown, we set . Under this condition, it is straightforward to observe that

The modified measurement noise covariance matrix can be obtained as

The GL-based cost function characterizes the error properties by weighting on the measurement uncertainty, which is reflected in the modified measurement noise covariance matrix . This procedure allows for a more accurate representation of the error dynamics by incorporating the uncertainty in the measurements, thus refining the covariance matrix to accurately capture the true noise characteristics.
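The effect of the weighting on the measurement noise covariance can be sketched as follows. The example assumes the modified covariance has the form `B_r @ inv(C_y) @ B_r.T`, where `B_r` is the Cholesky factor of the nominal covariance and `C_y` is a diagonal matrix of GL weights evaluated at the whitened measurement residuals; these symbol names and the function name are assumptions made for illustration.

```python
import numpy as np

def modified_measurement_cov(R, e_meas, alpha, c=1.0):
    """Inflate R as B_r @ inv(C_y) @ B_r.T with C_y = diag(GL weights)."""
    B_r = np.linalg.cholesky(R)                    # R = B_r @ B_r.T
    z = (np.asarray(e_meas, dtype=float) / c) ** 2
    if alpha == 2.0:                               # squared loss: unit weights for c = 1
        psi = np.full_like(z, 1.0 / c**2)
    else:
        psi = (z / abs(alpha - 2.0) + 1.0) ** (alpha / 2.0 - 1.0) / c**2
    return B_r @ np.diag(1.0 / psi) @ B_r.T

R = np.diag([4.0, 9.0])
e_meas = np.array([0.1, 8.0])      # second whitened residual is an outlier
R_sq = modified_measurement_cov(R, e_meas, alpha=2.0)
R_gl = modified_measurement_cov(R, e_meas, alpha=0.0)
```

With the squared loss the covariance is unchanged, whereas the Cauchy-type weight leaves the well-behaved channel almost untouched and inflates the variance of the outlying channel, which reduces its influence on the subsequent update.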

Next, we replace in Equation (13) with to obtain

Based on and , we can generate a weight vector and a sample set of by a DS method. Thus, the samples of and can be obtained as Equation (15).

By employing the approach from Table I in [13], where is substituted by , we obtain the constraint matrix for . Subsequently, is calculated by for , as shown in Equations (16) and (17).

Thus, the filter estimated state and its covariance matrix can be computed by

where

Algorithm 1: GLGCF

The shape of the GL function is determined by the parameter , with its value influencing the level of outlier suppression. Given that directly affects filtering performance, finding the optimal is essential. To address this issue, we formulate the negative log-likelihood (NLL) of GL’s probability distribution function as follows:

where is an approximate integral, with t representing the truncation limit [32]. Subsequently, we find the optimal by minimizing the NLL of the residual error as follows:

Since obtaining an analytical solution for the partition function in Equation (45) is not feasible and is a scalar, a 1-D grid search within can be used to minimize Equation (45). The choice of < 2 ensures the stability of the loss function and reduces computational complexity. When the system is affected by measurement outliers, the symmetric loss function MMSE becomes sensitive to symmetry disruption, resulting in biased state estimation. By optimizing the shape parameter, the GL adapts more effectively to the characteristic of non-Gaussian noise, thereby enhancing the robustness of the GCF in complex noise environments.
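The grid search over the shape parameter can be sketched as below. This is an illustrative implementation under stated assumptions: the density induced by the GL is taken to be `exp(-loss)` divided by `c` times a partition function, the partition function is approximated by the trapezoid rule on the truncated interval [−t, t], and the default lower bound `alpha_min=-8.0`, the grid size, and all function names are hypothetical.

```python
import numpy as np

def general_loss(e, alpha, c=1.0):
    z = (np.asarray(e, dtype=float) / c) ** 2
    if np.isclose(alpha, 2.0):
        return 0.5 * z
    if np.isclose(alpha, 0.0):
        return np.log1p(0.5 * z)
    b = abs(alpha - 2.0)
    return (b / alpha) * ((z / b + 1.0) ** (alpha / 2.0) - 1.0)

def log_partition(alpha, t=10.0, n_sub=2000):
    """Log of the truncated integral of exp(-loss) over [-t, t] for c = 1."""
    grid = np.linspace(-t, t, n_sub + 1)
    vals = np.exp(-general_loss(grid, alpha))
    h = grid[1] - grid[0]
    return np.log(h * (vals.sum() - 0.5 * (vals[0] + vals[-1])))  # trapezoid rule

def select_alpha(residuals, c=1.0, alpha_min=-8.0, n_grid=41, t=10.0):
    """1-D grid search for the shape in [alpha_min, 2] minimizing the NLL."""
    residuals = np.asarray(residuals, dtype=float)
    alphas = np.linspace(alpha_min, 2.0, n_grid)
    nll = []
    for a in alphas:
        data_term = np.sum(general_loss(residuals, a, c))
        norm_term = residuals.size * (np.log(c) + log_partition(a, t))
        nll.append(data_term + norm_term)
    return float(alphas[int(np.argmin(nll))])
```

The normalization term is what prevents the search from always choosing the most robust shape: a flatter loss lowers the data term but raises the log-partition term, so nearly Gaussian residuals favor a shape close to 2 and heavy-tailed residuals favor a smaller one.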

Finally, the proposed GLGCF algorithm is summarized in Algorithm 1.

Computational Complexity

The computational complexity of the proposed GLGCF is presented. Note that n and m are dimensions of and , respectively. represents the number of integration subintervals. represents the number of sampling points, which is determined by the selected DS method. In this paper, we utilize the GHQ rule as the DS method. denotes the computational complexity of for calculation with the sampling points.

As shown in Table 1, different DS methods exhibit distinct computational complexities. In scenarios with constrained computational resources, opting for a DS method that requires fewer sampling points can effectively balance computational efficiency and accuracy. Furthermore, a small truncation limit t and a small search interval can achieve high accuracy at limited complexity.

Table 1.

Computational complexity of GLGCF.

4. Simulation Results

In order to analyze the performance of the proposed algorithm, the results of the simulation are provided in this section. The proposed GLGCF algorithm is compared with several existing algorithms: CKF [33], UKF [8], PF [34], GCF [13], and MCGCF [20]. To evaluate the filtering accuracy, the root mean square error (RMSE) and average RMSE (ARMSE) are defined as follows:

where denotes the real state, and represents the estimate of for jth Monte Carlo run at discrete time k, n is the number of iterations and M denotes the number of Monte Carlo runs.
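The two metrics can be computed as in the sketch below. It assumes the RMSE at each time step is taken over the Monte Carlo runs for a single scalar component, and that the ARMSE is the time average of the per-step RMSE; both the array layout and the function names are assumptions.

```python
import numpy as np

def rmse_per_step(x_true, x_est):
    """x_true: (n_steps,), x_est: (n_runs, n_steps) -> RMSE at each step."""
    err = np.asarray(x_est, dtype=float) - np.asarray(x_true, dtype=float)[None, :]
    return np.sqrt(np.mean(err ** 2, axis=0))

def armse(x_true, x_est):
    """Time average of the per-step RMSE."""
    return float(np.mean(rmse_per_step(x_true, x_est)))

x_true = np.array([0.0, 0.0])
x_est = np.array([[3.0, 0.0],
                  [4.0, 0.0]])       # two Monte Carlo runs, two time steps
rmse = rmse_per_step(x_true, x_est)
avg = armse(x_true, x_est)
```

For a two-dimensional position, the squared errors of both coordinates would be summed before averaging over the runs.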

4.1. Constant Velocity Tracking Model

In this simulation, a constant velocity (CV) surface-target-tracking model is used to evaluate the filtering performance of the proposed robust GLGCF algorithm in the presence of high maneuvering speed and non-Gaussian noise. The state and measurement equations for the CV model are defined as follows:

where with position and velocity . T represents the sampling period. denotes the process noise with its covariance matrix , and stands for the measurement noise with its covariance matrix .
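A noise-free CV trajectory can be generated as in the following sketch. The state ordering `[x, vx, y, vy]` is an assumption, since other orderings are equally common, and the function names are hypothetical.

```python
import numpy as np

def cv_transition(T):
    """Constant-velocity transition for the assumed state [x, vx, y, vy]."""
    block = np.array([[1.0, T], [0.0, 1.0]])
    return np.kron(np.eye(2), block)     # block-diagonal, one block per axis

def simulate_cv(x0, T, n_steps):
    """Noise-free CV trajectory, returned with shape (n_steps + 1, 4)."""
    F = cv_transition(T)
    traj = [np.asarray(x0, dtype=float)]
    for _ in range(n_steps):
        traj.append(F @ traj[-1])
    return np.array(traj)

traj = simulate_cv([0.0, 2.0, 10.0, -1.0], T=1.0, n_steps=5)
```

Process noise would be added to each step of the loop when generating the ground truth for a Monte Carlo run.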

We consider two scenarios with different measurement noise models, as follows.

4.1.1. Heavy-Tailed Noise

Scenario 1: Following the non-Gaussian noise simulation method in [21], the heavy-tailed non-Gaussian measurement noise is modeled by a Gaussian mixture distribution as described below:

The initial state is generated from , where is a Gaussian distribution with mean and with covariance . The sampling period T = 1 s. The process noise covariance is with . For GLGCF, the parameters t and c are set as 10 and 1, respectively. The minimum shape parameter is set as . The results of state estimate are obtained after 50 Monte Carlo runs.
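Heavy-tailed measurement noise of this kind can be emulated with a two-component zero-mean Gaussian mixture, as sketched below. The mixture probability and the two standard deviations used here are placeholder values chosen for illustration, not the settings of this scenario, and the function name is hypothetical.

```python
import numpy as np

def gaussian_mixture_noise(rng, size, p_outlier, sigma_nominal, sigma_outlier):
    """Draw zero-mean noise from (1 - p) N(0, s1^2) + p N(0, s2^2)."""
    is_outlier = rng.random(size) < p_outlier
    sigma = np.where(is_outlier, sigma_outlier, sigma_nominal)
    return sigma * rng.standard_normal(size)

rng = np.random.default_rng(1)
v = gaussian_mixture_noise(rng, 200_000, p_outlier=0.1,
                           sigma_nominal=1.0, sigma_outlier=10.0)
sample_var = float(v.var())
sample_kurtosis = float(np.mean(v ** 4) / sample_var ** 2)
```

The sample kurtosis of such a mixture is far above the Gaussian value of 3, which is the heavy-tail property that degrades filters based on the MMSE criterion.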

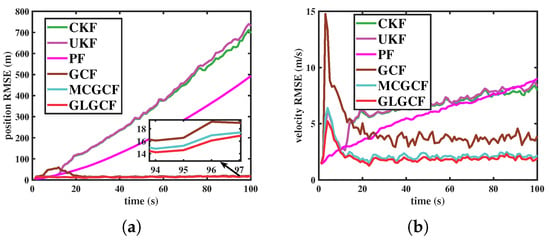

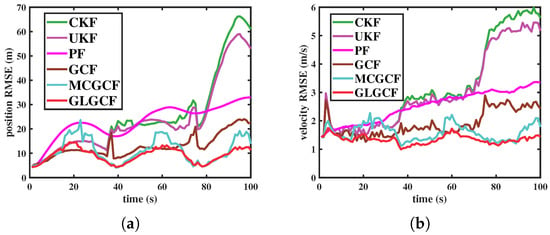

Figure 1 shows the RMSE of GLGCF against other nonlinear filters. As can be seen from Figure 1, GLGCF and MCGCF are superior to GCF. This finding shows that, under a heavy-tailed noise environment, replacing the MMSE criterion with the maximum correntropy criterion (MCC) or GL in the GCF improves both the accuracy and robustness of the filter. Furthermore, the comparison shows that the GLGCF outperforms the MCGCF, indicating that GL is more effective than MCC in handling heavy-tailed noise.

Figure 1.

RMSEs of different filters in estimating the CV model under the scenario 1. (a) Position (m); (b) velocity (m/s).

4.1.2. Mixed Noise

Scenario 2: In this scenario, the measurement noise is assumed to follow an additive combination of two Gaussian noise distributions.

The initial state is generated from , where is a Gaussian distribution with mean and with covariance . The sampling period T = 1 s. The process noise covariance is with . For GLGCF, the parameters t and c are set as 10 and 1, respectively. The minimum shape parameter is set as . The results of state estimate are obtained after 50 Monte Carlo runs.

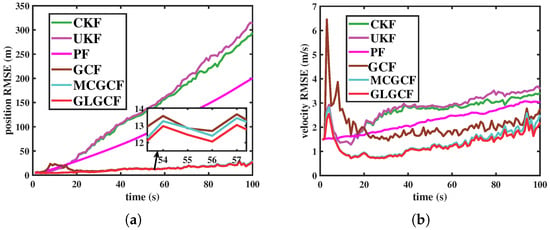

The comparative results of different filters in the presence of mixed measurement noise are shown in Figure 2, Table 2 and Table 3. In comparison to the UKF, CKF, GCF, and PF, both the MCGCF and the GLGCF exhibit better performance. This is due to the use of the generalized transformation, which limits the higher-order errors associated with the DS technique, resulting in improved accuracy. Furthermore, as can be seen in Figure 2, Table 2 and Table 3, the proposed GLGCF algorithm achieves the highest estimation accuracy, demonstrating its superior performance in estimating both position and velocity in the mixed-noise environment.

Figure 2.

RMSEs of different filters in estimating the CV model under the scenario 2. (a) Position (m); (b) velocity (m/s).

Table 2.

ARMSE of position (m) in the CV model under the different scenarios.

Table 3.

ARMSE of velocity (m/s) in the CV model under the different scenarios.

4.2. Cooperative Turning Tracking Model

In this simulation, the cooperative turning (CT) target-tracking model is used to evaluate the filtering performance of the proposed GLGCF algorithm under heavy-tailed and mixed-noise environments. The CT model is well known as a fundamental maneuvering model in surface target tracking and is especially prevalent in describing the dynamics of maneuvering targets in unmanned surface vehicle tracking. The state and measurement equations for the CT model are presented below:

where with position and velocity . T represents the sampling period, and represents the turn rate. denotes the process noise with its covariance matrix , and represents the measurement noise with covariance matrix .
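For reference, the transition matrix of a constant-turn-rate model (commonly called the coordinated turn model) can be written as in the sketch below. The state ordering `[x, vx, y, vy]`, a known nonzero turn rate `omega`, and the function name are assumptions made for illustration.

```python
import numpy as np

def ct_transition(T, omega):
    """Turn-model transition for the assumed state [x, vx, y, vy]."""
    s, c = np.sin(omega * T), np.cos(omega * T)
    return np.array([
        [1.0, s / omega,         0.0, -(1.0 - c) / omega],
        [0.0, c,                 0.0, -s],
        [0.0, (1.0 - c) / omega, 1.0, s / omega],
        [0.0, s,                 0.0, c],
    ])

F = ct_transition(T=1.0, omega=2.0 * np.pi / 8.0)
x0 = np.array([0.0, 3.0, 0.0, 4.0])
x1 = F @ x0
speed0 = float(np.hypot(x0[1], x0[3]))
speed1 = float(np.hypot(x1[1], x1[3]))
```

The velocity is rotated by the angle `omega * T` at every step, so the speed is preserved and, without noise, the target returns to its starting state after one full revolution.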

We consider four scenarios with different measurement noise models below.

4.2.1. Heavy-Tailed Noise

Scenario 1: The robustness of the GLGCF algorithm against non-Gaussian noise is demonstrated by assuming the measurement noise in Equation (53) to be heavy-tailed, generated by a mixture of Gaussian distributions with different variances:

Scenario 2: The probability of large outliers in the measurement noise is increased as follows:

The initial state is generated from , where is a Gaussian distribution with mean and with covariance . The sampling period T = 1 s and turn rate rad. The process noise covariance is with . For GLGCF, the parameters t and c are set as 10 and 1, respectively. The minimum shape parameter is set as . The results of the state estimate are obtained after 50 Monte Carlo runs.

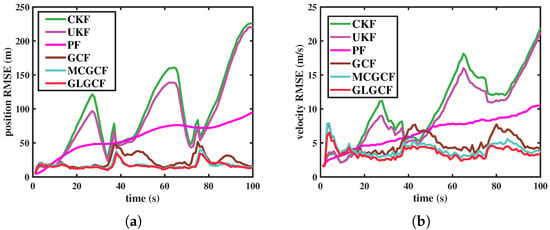

The RMSEs for different algorithms under heavy-tailed noise in scenario 1 and scenario 2 are depicted in Figure 3 and Figure 4, respectively. The ARMSEs of position and velocity estimates in scenarios 1 and 2 are summarized in Table 4 and Table 5, respectively. As shown in Figure 3 and Figure 4, the filtering accuracy of the GLGCF is superior to that of the GCF, MCGCF, CKF, and UKF. This confirms that incorporating the GL into the GCF improves the estimation accuracy and robustness of the filter when dealing with heavy-tailed noise. In the presence of measurement noise that includes outliers, the GCF suffers from performance degradation. However, the use of the MCC and the GL function stabilizes the GCF by performing nonlinear iterations on the anomalous measurements. Both the MCGCF and GLGCF maintain stable performance, with the GLGCF achieving superior performance. The results indicate that, in non-Gaussian noise environments, the form of the loss function can be determined automatically from the error distribution, thanks to the residual-based optimization of the shape parameter.

Figure 3.

RMSEs of different filters in estimating the CT model under the scenario 1. (a) Position (m); (b) velocity (m/s).

Figure 4.

RMSEs of different filters in estimating the CT model under the scenario 2. (a) Position (m); (b) velocity (m/s).

Table 4.

ARMSE of position (m) in the CT model under the different scenarios.

Table 5.

ARMSE of velocity (m/s) in the CT model under the different scenarios.

4.2.2. Mixed Noise

Scenario 3: The measurement noise is characterized by a mixture of Gaussian distributions, with different variances for the position and velocity:

Scenario 4: In this scenario, the measurement noise is also a mixture of two Gaussian distributions, with the following mixture weights:

The initial state is generated from , where is a Gaussian distribution with mean and with covariance . The sampling period T = 1 s and turn rate rad. The process noise covariance is with . For GLGCF, the parameters t and c are set as 10 and 1, respectively. The minimum shape parameter is set as . The results of the state estimate are obtained after 50 Monte Carlo runs.

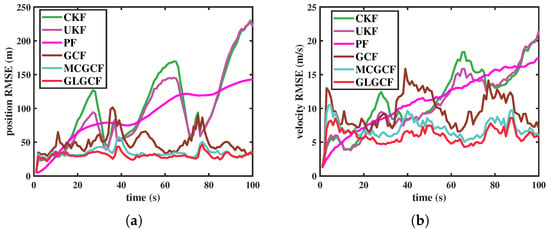

Figure 5 and Figure 6 show the RMSE curves of the UKF, CKF, GCF, MCGCF, and GLGCF under mixed measurement noise. Table 4 and Table 5 summarize the ARMSEs of the position and velocity estimates in scenario 3 and scenario 4. As can be seen in Figure 5, Figure 6, Table 4, and Table 5, the RMSE and ARMSE achieved by the GLGCF algorithm are smaller than those of the other compared algorithms. In the mixed-noise environment, the estimation accuracies of the MCGCF and GLGCF become closer, with the GLGCF still providing the best filtering accuracy. Compared to the heavy-tailed environment, the advantage of the GLGCF is less distinct in the mixed-noise environment. This can be attributed to the absence of large outliers in the measurement noise, in which case both the GCF and MCGCF offer good filtering results. Therefore, the GLGCF is robust against the impact of outliers and provides superior performance in more complex environments.

Figure 5.

RMSEs of different filters in estimating the CT model under the scenario 3. (a) Position (m); (b) velocity (m/s).

Figure 6.

RMSEs of different filters in estimating the CT model under the scenario 4. (a) Position (m); (b) velocity (m/s).

5. Conclusions

In this paper, an extended version of the generalized conversion filter (GCF), namely the generalized loss-based generalized conversion filter (GLGCF), is proposed. The GCF performs well in Gaussian noise environments. However, its performance deteriorates significantly when the system encounters non-Gaussian noise, primarily due to the characteristics of the minimum mean square error (MMSE) criterion. To tackle this challenge, the proposed GLGCF algorithm is reformulated using a nonlinear regression model, employing the generalized loss (GL) instead of the MMSE criterion to estimate the system state. Moreover, the residual vectors are incorporated into the negative log-likelihood of the GL to optimize the shape parameter, thereby reducing the burden of manual tuning. Finally, two simulations on target-tracking models are carried out to verify the effectiveness and robustness of the proposed GLGCF algorithm. The results indicate that the proposed GLGCF algorithm exhibits excellent performance when the system is affected by non-Gaussian noise.

Author Contributions

Conceptualization, Z.K. and S.W.; methodology, S.W.; software, Z.K.; validation, S.W. and Y.Z.; formal analysis, S.W.; investigation, Y.Z.; resources, S.W.; data curation, Z.K. and Y.Z.; writing—original draft preparation, Z.K.; writing—review and editing, S.W. and Y.Z.; visualization, Z.K.; supervision, S.W. and Y.Z.; project administration, S.W.; funding acquisition, S.W. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Natural Science Foundation of China (Grant: 62471406).

Data Availability Statement

Data are contained within the article.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
| --- | --- |
| KF | Kalman filter |
| GCF | generalized conversion filter |
| GL | generalized loss |
| MCC | maximum correntropy criterion |
| ITL | information-theoretic learning |
| MCGCF | maximum correntropy generalized conversion filter |
| GLGCF | generalized loss generalized conversion filter |
| DS | deterministic sampling |
| NLL | negative log-likelihood |
| UCF | uncorrelated conversion-based filter |
| OCF | optimized conversion-sample filter |
| MMSE | minimum mean square error |
| LMMSE | linear minimum mean square error |
| EKF | extended Kalman filter |
| UKF | unscented Kalman filter |
| PF | particle filter |
| GHKF | Gauss–Hermite Kalman filter |
| GHQ | Gauss–Hermite quadrature |
| CV | constant velocity |
| CT | cooperative turning |

References

1. Xia, X.; Xiong, L.; Huang, Y.; Liu, Y.; Gao, L.; Xu, N.; Yu, Z. Estimation on IMU yaw misalignment by fusing information of automotive onboard sensors. Mech. Syst. Signal Process. 2022, 162, 107993.

2. Duník, J.; Biswas, S.K.; Dempster, A.G.; Pany, T.; Closas, P. State estimation methods in navigation: Overview and application. IEEE Aerosp. Electron. Syst. Mag. 2020, 35, 16–31.

3. Kalman, R.E. A new approach to linear filtering and prediction problems. Trans. ASME, Ser. D J. Basic Eng. 1960, 82, 35–45.

4. Ding, L.; Wen, C. High-Order extended Kalman filter for state estimation of nonlinear systems. Symmetry 2024, 16, 617.

5. Garcia, R.V.; Pardal, P.C.P.M.; Kuga, H.K.; Zanardi, M.C. Nonlinear filtering for sequential spacecraft attitude estimation with real data: Cubature Kalman Filter, Unscented Kalman Filter and Extended Kalman Filter. Adv. Space Res. 2019, 63, 1038–1050.

6. Wan, J.; Ren, P.; Guo, Q. Application of interactive multiple model adaptive five-degree cubature Kalman algorithm based on fuzzy logic in target tracking. Symmetry 2019, 11, 767.

7. Klokov, A.; Kanouj, M.; Mironchev, A. A novel carrier tracking approach for GPS signals based on Gauss–Hermite Kalman filter. Electronics 2022, 11, 2215.

8. Julier, S.J.; Uhlmann, J.K. Unscented filtering and nonlinear estimation. Proc. IEEE 2004, 92, 401–422.

9. Jouin, M.; Gouriveau, R.; Hissel, D.; Péra, M.C.; Zerhouni, N. Particle filter-based prognostics: Review, discussion and perspectives. Mech. Syst. Signal Process. 2016, 72–73, 2–31.

10. Liu, Y.; Li, X.R.; Chen, H. Linear estimation with transformed measurement for nonlinear estimation. IEEE Trans. Aerosp. Electron. Syst. 2016, 52, 221–236.

11. Lan, J.; Li, X.R. Nonlinear estimation by LMMSE-based estimation with optimized uncorrelated augmentation. IEEE Trans. Signal Process. 2015, 63, 4270–4283.

12. Lan, J.; Li, X.R. Nonlinear estimation based on conversion-sample optimization. Automatica 2020, 121, 109160.

13. Lan, J. Generalized-conversion-based nonlinear filtering using deterministic sampling for target tracking. IEEE Trans. Aerosp. Electron. Syst. 2023, 59, 7295–7307.

14. Schick, I.C.; Mitter, S.K. Robust recursive estimation in the presence of heavy-tailed observation noise. Ann. Statist. 1994, 22, 1045–1080.

15. Wang, S.; Wang, Z.; Dong, H.; Chen, Y.; Alsaadi, F.E. Recursive quadratic filtering for linear discrete non-Gaussian systems over time-correlated fading channels. IEEE Trans. Signal Process. 2022, 70, 3343–3356.

16. Battilotti, S.; Cacace, F.; d’Angelo, M.; Germani, A. The polynomial approach to the LQ non-Gaussian regulator problem through output injection. IEEE Trans. Autom. Control 2019, 64, 538–552.

17. Huang, Y.; Zhang, Y.; Xu, B.; Wu, Z.; Chambers, J. A new outlier-robust Student’s t based Gaussian approximate filter for cooperative localization. IEEE/ASME Trans. Mechatronics 2017, 22, 2380–2386.

18. Chen, B.; Dang, L.; Zheng, N.; Príncipe, J.C. Kalman Filtering Under Information Theoretic Criteria; Springer: Cham, Switzerland, 2023.

19. Chen, B.; Liu, X.; Zhao, H.; Príncipe, J.C. Maximum correntropy Kalman filter. Automatica 2017, 76, 70–77.

20. Dang, L.; Jin, S.; Ma, W.; Chen, B. Maximum correntropy generalized conversion-based nonlinear filtering. IEEE Sens. J. 2024, 24, 37300–37310.

21. Chen, B.; Dang, L.; Gu, Y.; Zheng, N.; Príncipe, J.C. Minimum error entropy Kalman filter. IEEE Trans. Syst. Man Cybern. Syst. 2019, 51, 5819–5829.

22. Barron, J.T. A general and adaptive robust loss function. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 4331–4339.

23. Charbonnier, P.; Blanc-Feraud, L.; Aubert, G.; Barlaud, M. Two deterministic half-quadratic regularization algorithms for computed imaging. In Proceedings of the 1st International Conference on Image Processing, Austin, TX, USA, 13–16 November 1994; Volume 2, pp. 168–172.

24. Qi, L.; Shen, M.; Wang, D.; Wang, S. Robust Cauchy kernel conjugate gradient algorithm for non-Gaussian noises. IEEE Signal Process. Lett. 2021, 28, 1011–1015.

25. Lu, L.; Wang, W.; Yang, X.; Wu, W.; Zhu, G. Recursive Geman–McClure estimator for implementing second-order Volterra filter. IEEE Trans. Circuits Syst. II Express Briefs 2019, 66, 1272–1276.

26. Dennis, J.E.; Welsch, R.E. Techniques for nonlinear least squares and robust regression. Commun. Statist.-Simul. Comput. 1978, 7, 345–359.

27. Yan, W.; Chen, S.; Lin, D.; Wang, S. Variational Bayesian-based generalized loss cubature Kalman Filter. IEEE Trans. Circuits Syst. II Express Briefs 2024, 71, 2874–2878.

28. Zhao, H.; Hu, J. Iterative unscented Kalman filter with general robust loss function for power system forecasting-aided state estimation. IEEE Trans. Instrum. Meas. 2023, 73, 9503809.

29. Chen, S.; Zhang, Q.; Lin, D.; Wang, S. Generalized loss based geometric unscented Kalman filter for robust power system forecasting-aided state estimation. IEEE Signal Process. Lett. 2022, 29, 2353–2357.

30. Liu, W.; Pokharel, P.P.; Principe, J.C. Correntropy: Properties, and applications in non-Gaussian signal processing. IEEE Trans. Signal Process. 2007, 55, 5286–5298.

31. Zhu, B.; Chang, L.; Xu, J.; Zha, F.; Li, J. Huber-based adaptive unscented Kalman filter with non-Gaussian measurement noise. Circuits, Syst. Signal Process. 2018, 37, 3842–3861.

32. Chebrolu, N.; Labe, T.; Vysotska, O.; Behley, J.; Stachniss, C. Adaptive robust kernels for non-linear least squares problems. IEEE Robot. Autom. Lett. 2021, 6, 2240–2247.

33. Arasaratnam, I.; Haykin, S. Cubature Kalman filters. IEEE Trans. Autom. Control 2009, 54, 1254–1269.

34. Arulampalam, M.S.; Maskell, S.; Gordon, N.; Clapp, T. A tutorial on particle filters for online nonlinear/non-Gaussian Bayesian tracking. IEEE Trans. Signal Process. 2002, 50, 174–188.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).