Abstract

Black smoke emitted from diesel vehicles contains substantial amounts of hazardous substances. With the increasing annual levels of such emissions, there is growing concern over their detrimental effects on both the environment and human health. Therefore, it is imperative to strengthen the supervision and control of black smoke emissions. An effective approach is to analyze the smoke emission status of vehicles. Conventional object detection models often exhibit limitations in detecting black smoke, including challenges related to multi-scale target sizes, complex backgrounds, and insufficient localization accuracy. To address these issues, this study proposes a multi-dimensional detection algorithm. First, a multi-scale feature extraction method was introduced by replacing the conventional C2F module with a mechanism that employs parallel convolutional kernels of varying sizes. This design enables the extraction of features at different receptive fields, significantly improving the capability to capture black smoke patterns. To further enhance the network’s performance, a four-layer adaptive feature fusion detection head was proposed. This component dynamically adjusts the fusion weights assigned to each feature layer, thereby leveraging the unique advantages of different hierarchical representations. Additionally, to improve localization accuracy affected by the highly irregular shapes of black smoke edges, the Inner-IoU loss function was incorporated. This loss effectively alleviates the oversensitivity of CIoU to bounding box regression near image boundaries. Experiments conducted on a custom dataset, named Smoke-X, demonstrated that the proposed algorithm achieves a 4.8% increase in precision, a 5.9% improvement in recall, and a 5.6% gain in mAP50, compared to baseline methods. These improvements indicate that the model exhibits stronger adaptability to complex environments, suggesting considerable practical value for real-world applications.

1. Introduction

According to the China Mobile Source Environmental Management Annual Report (2024) [], by early 2024, China’s vehicle population had reached 336 million units, accounting for 77.24% of the total motor vehicle population in the country. Vehicle emissions of CO, HC, NOx, and PM accounted for 89.9%, 90.8%, 97.8%, and 93.2% of total national motor vehicle emissions, respectively. Diesel vehicles, which account for less than 22.76% of the total vehicle population, contribute 14.0%, 7.6%, 87.8%, and 99% of the total automotive emissions for these four pollutants, respectively. The primary cause of this phenomenon is the higher proportion of black smoke-emitting vehicles among diesel vehicles, where the pollutant concentrations in black smoke significantly exceed those in normal exhaust emissions []. A black smoke-emitting vehicle is defined as any motor vehicle that emits visibly detectable smoke or whose smoke density exceeds Level 1 on the Ringelmann scale []. Schmidt’s research indicates that 50% of total BC, PN23, and NOx emissions are caused by 4–8%, 10–14%, and 13–24% of high-emission heavy-duty diesel vehicles, respectively [].

Black smoke contains substantial amounts of harmful substances, including PM2.5, PM10, nitrogen oxides, polycyclic aromatic hydrocarbons, and other hazardous gases []. Some of these primary pollutants undergo chemical reactions with other substances in the atmosphere, ultimately forming secondary pollutants such as nitrates and ammonium salts. In Chen’s study [], a significant association was identified between PM2.5 chemical composition and lung cancer mortality rates. For each interquartile range increase in the concentrations of ammonium salts (NH4+), nitrate (NO3−), black carbon (BC), and organic matter (OM), the hazard ratios (HR) become 1.967, 1.633, 1.622, and 1.539, respectively. This indicates that the risk of lung cancer mortality among residents increases to 1.967, 1.633, 1.622, and 1.539 times the original level. Ammonium salts demonstrate the highest contribution to overall PM2.5 impact at 35.1%, followed by chloride ions at 19.3%, sulfate at 17.6%, black carbon at 14.7%, and organic matter at 13.2%. Song’s study demonstrated [] that elevated concentrations of PM2.5 and its components were significantly associated with increased breast cancer risk. For each 1 μg/m3 increase in PM2.5, BC, NH4+, NO3−, OM, and SO42−, the corresponding hazard ratios were 1.02, 1.39, 1.28, 1.15, 1.05, and 1.15 respectively. The mixture exposure model identified black carbon and sulfate as key risk drivers.

These findings demonstrate that emissions from black smoke pose serious threats to human health, necessitating immediate implementation of stringent measures to reduce the incidence of related cancers []. Promoting green energy conservation and emission reduction in this field has become a crucial measure []. Within this context, adopting intelligent monitoring and precision regulatory approaches will enable rapid identification and phase-out of high-emission vehicles. This strategy will directly reduce total pollutant emissions and play a crucial role in safeguarding public health.

Currently, black smoke vehicle detection methods are primarily categorized into manual detection, traditional black smoke detection, and deep learning-based detection approaches. Manual detection methods cannot meet the requirements for large-scale rapid operational demands []. Traditional smoke detection approaches primarily rely on manually designed features, which transform raw data into specific feature representations []. Prema et al. [] combined static and dynamic texture analysis for smoke detection, while Filonenko et al. [] primarily relied on color and shape features to conduct black smoke detection research. However, these methods exhibit significant limitations, as different scenarios require the design of new features. To address this issue, some studies have shifted toward more complex dynamic texture analysis. For instance, Dimitropoulos et al. [] proposed an H-LDS descriptor that combines spatiotemporal modeling with particle swarm optimization algorithms to improve the accuracy of black smoke recognition. Additionally, Sun et al. [] leveraged the dynamic characteristics of smoke by integrating adaptive learning rates with Gaussian mixture models (GMM) to extract moving smoke regions. Tao et al. [] localized vehicle targets using Vibe technology and extracted features such as LBP, HOG, and integral projections from tail regions, which were then fed into neural networks for classification. Alamgir et al. [] adopted a similar approach by combining LBP and RGB texture features for black smoke classification. Despite the effectiveness of these traditional methods in specific scenarios, they generally face two major challenges: First, manual feature design relies heavily on prior knowledge, making it difficult to adapt to the diversity of smoke and complex environmental conditions (e.g., illumination variations and occlusions). 
Second, the limited generalization capability of feature combinations and classifiers results in high false positive and false negative rates.

In contrast, deep learning-based methods can automatically learn multi-level feature representations, thereby enhancing robustness and detection performance. Bewley et al. [] and Yin et al. [] employed convolutional neural networks (CNNs) for smoke detection. Subsequently, research on deep learning approaches has gradually increased. Khan et al. [] improved the lightweight SqueezeNet architecture to enhance computational efficiency while maintaining detection accuracy, making it more suitable for real-time monitoring scenarios. To further strengthen spatiotemporal modeling capabilities, Cao et al. [] innovatively combined Inception V3 and LSTM networks to capture the dynamic evolution patterns of smoke.

These studies, while demonstrating the potential of deep learning in smoke detection, still face limitations in comprehensive feature extraction. To address this, Gu et al. [] designed a dual-channel deep neural network to separately learn smoke localization and semantic features. Zhan et al. [] proposed a recursive feature pyramid-based network to enhance feature fusion capabilities. Concurrently, to improve object detection performance, Lin et al. [] developed the Feature Pyramid Network (FPN), while Liu et al. [] introduced the Path Aggregation Network (PAN). Ba et al. [] leveraged spatial and channel attention mechanisms to identify critical regions in smoke scenes. Liu et al. [] employed feature enhancers and label enhancers to extract cross-scale features.

Despite these advances, existing methods have not adequately addressed the combined challenges of multi-scale variation, edge diffusion, and real-time processing constraints in practical diesel vehicle monitoring scenarios. Therefore, this paper intends to design a network specifically for multi-dimensional black smoke detection. Since the YOLO series algorithms have become a research hotspot in recent years [,], this paper improves the model based on YOLOv8, which balances speed and accuracy well and is suitable as the basic framework for real-time black smoke detection.

This study addresses key challenges in black smoke detection, including large variations in target size, complex backgrounds, and low bounding box accuracy caused by edge diffusion. To overcome the limitations of existing methods in practical applications, we developed a multi-dimensional black smoke detection algorithm by optimizing feature extraction and fusion mechanisms. The term “multi-dimensional black smoke” refers to the diverse manifestations of black smoke across multiple dimensions, which can be characterized by attributes such as smoke density, diffusion pattern, and size. Examples include light vs. heavy concentration, diffused vs. non-diffused edges, and large vs. small scale. The research focuses on the following three aspects:

- (1) A multi-dimensional feature extraction method was proposed. To address the significant variation in target dimensions of black smoke, the original C2F module in the baseline network was replaced with a multi-dimensional feature extraction structure. This method enhances the model’s ability to capture multi-scale features by expanding the receptive field, significantly improving detection performance for black smoke targets. By integrating contextual features from both smoke patterns and background information, it effectively mitigates the low localization accuracy caused by the high variability in target dimensions and complex edge shapes of black smoke.

- (2) An adaptive feature fusion detection head was constructed. To further improve detection accuracy, the feature fusion mechanism in the real-time object detection network was enhanced by introducing an adaptive feature fusion detection head. This component automatically adjusts the fusion strategy for features across different dimensions, filters out conflicting information, and suppresses interference between feature channels, thereby improving the network’s invariance in multi-dimensional feature extraction. In addition, a four-head design was implemented to strengthen the detection capability for small black smoke targets.

- (3) Low-loss bounding box regression. Traditional CIoU loss heavily relies on geometric measures for bounding box matching, leading to suboptimal detection performance for irregular targets such as black smoke. To tackle this issue, the Inner-IoU loss function was introduced to refine the bounding box matching strategy. This loss significantly improves localization accuracy, particularly for diffuse and irregularly shaped smoke patterns, by incorporating more precise matching principles during bounding box prediction. Consequently, it reduces precision degradation caused by conventional geometric measures and enhances the network’s ability to accurately localize black smoke.

The remainder of this paper is organized as follows. Section 2 briefly describes the architecture of the baseline network and the proposed improvements. Section 3 provides a detailed introduction to the dataset used, evaluation metrics, and experimental validation and analysis. Section 4 summarizes the work and outlines directions for future research.

The design of this study also embodies the concept of symmetry. Here, “symmetry” refers to the property of a system remaining unchanged under certain transformations, as well as the unity and diversity revealed by this property. Specifically, the multi-scale feature extraction structure achieves functional symmetry through parallel convolution kernels, enabling it to capture black smoke features at different scales without introducing deviations; the adaptive fusion mechanism balances shallow details and deep semantic information through dynamic weights, achieving “weighted symmetry” in the contribution of features at different levels; and the Inner-IoU loss function optimizes the symmetry of the bounding box regression process, providing balanced gradient supervision for irregular targets.

2. Materials and Methods

2.1. Overview of the YOLOv8 Detection Algorithm

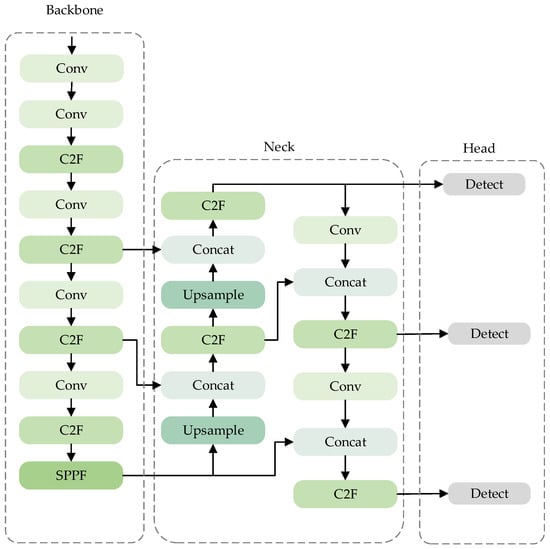

YOLOv8, a classic algorithm in the YOLO series, combines high accuracy with real-time detection capabilities, making it suitable for black smoke detection tasks with strict speed requirements. As shown in Figure 1 [], its structure consists of three core components: the Backbone for feature extraction, the Neck for feature pooling and fusion, and the Head that outputs the final detection results.

Figure 1. YOLOv8 Network Architecture.

- (1) Backbone Network

The backbone of YOLOv8 is built from C2F modules (as shown in Figure 2), which use split gradient-flow connections to achieve efficient feature extraction while keeping the parameter count under control. The Conv module adjusts the resolution and channel number of the feature map, and the SPPF module enhances the model’s receptive field and robustness through multi-scale pooling operations.

Figure 2. Backbone Network Architecture.

- (2)

- Neck Network

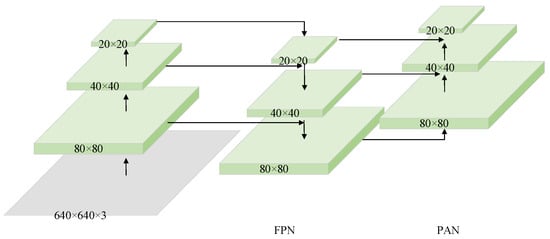

The neck part adopts a simplified PAN structure, and achieves bidirectional fusion of deep and shallow layer features through the feature pyramid architecture. This not only expands the receptive field but also enhances the adaptability to multi-scale targets. Its network structure is shown in Figure 3.

Figure 3. FPN+PAN Structure.

- (3) Head

The Head employs an anchor-free detection mechanism. The detection branch outputs bounding boxes and confidence scores, while the classification branch uses global average pooling to achieve efficient multi-class classification. The overall structure enhances detection accuracy and real-time performance for diverse target types such as black smoke. Its network structure is shown in Figure 4.

Figure 4. Head Network Architecture.

2.2. Algorithm Improvements

2.2.1. Design of Multi-Dimensional Feature Extraction Method

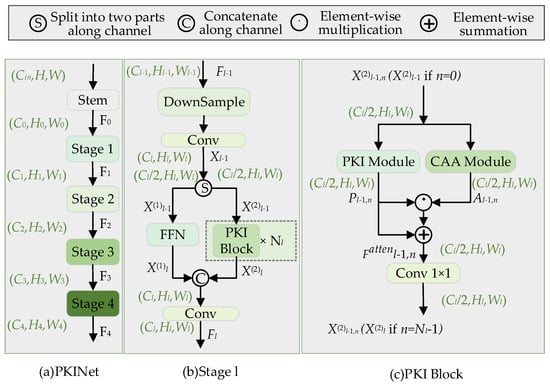

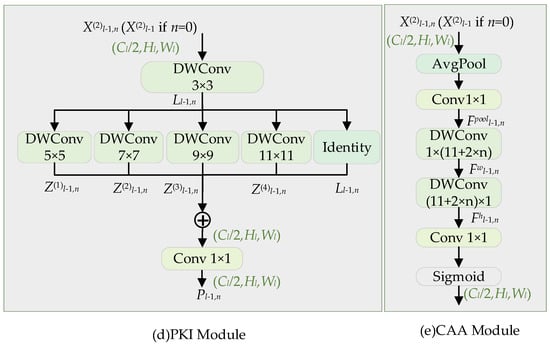

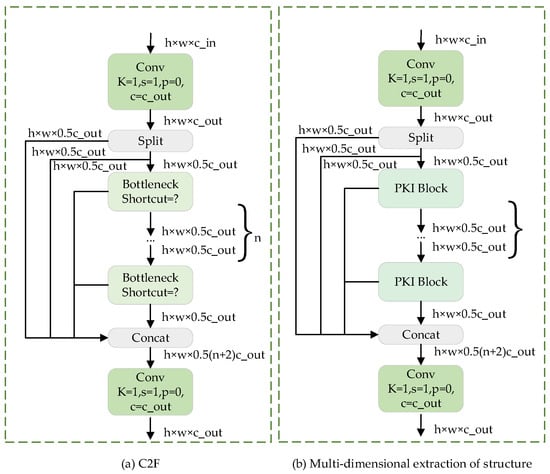

Conventional large-kernel and dilated convolutions often struggle with the significant scale variations in black smoke detection, typically introducing noise or sparse features. To overcome this, we introduce a multi-scale feature extraction method that replaces the standard C2F module’s Bottleneck with a PKI-Block. This enhancement allows the network to better capture multi-scale smoke characteristics, thereby improving the detection of targets with blurred edges and diverse shapes. The core component PKI-Block (consisting of the PKI Module and the CAA module) is elaborated in detail in Figure 5. The proposed architecture of PKI-C2F is shown in Figure 6.

Figure 5. PKI-Net Network.

Figure 6. Comparison Before and After C2F Improvement.

- (1) Stem and Stage Layers: The PKI-Net architecture consists of one stem layer followed by four stage layers.

- (2) Stage structure: Each stage first applies downsampling and convolution to its input. The resulting feature is split along the channel dimension into two spatially aligned branches, an FFN path and a PKI-Block path, each with half of the channels and unchanged spatial dimensions so that the two can be processed in parallel. The feature dimensions are given in Figure 5; the same applies below. The smoke feature on the first path is processed by a feed-forward network (FFN). The second path passes through N_l PKI-Blocks, all of which keep the feature dimensions unchanged so that the black smoke information on both paths stays dimensionally consistent. The outputs of the two paths are then concatenated along the channel dimension, which reverses the initial split and restores the original number of channels. Finally, a convolution is applied to the concatenated features to produce the output feature of the stage.

- (3) PKI Module: The module first processes its input with a 3 × 3 small-kernel deformable convolution to extract local details of black smoke, generating an initial local feature. Parallel large-kernel deformable depthwise convolutions (DWConv) of sizes 5 × 5, 7 × 7, 9 × 9, and 11 × 11 are then applied to capture multi-scale contextual features, one per branch, alongside an identity branch. This allows simultaneous extraction of contextual features across varying receptive fields, from broad semantics (large kernels) to local details (small kernels), while the identity branch preserves the original information and aids gradient flow. Finally, the local feature and the contextual features obtained by the depthwise convolutions are fused using a 1 × 1 convolutional layer, whose output captures the inter-channel relationships. In this way, the PKI structure can capture multi-dimensional features without compromising the integrity of local texture characteristics.

- (4) CAA Module: The CAA Module receives the same input as the PKI Module (n indexes the PKI-Block within the stage, starting from n = 0). This input undergoes average pooling followed by a 1 × 1 convolution to extract localized regional features. Two depthwise separable convolutions are subsequently employed to approximate a standard large-kernel depthwise convolution; these operations are designed to capture long-range contextual information. The convolutional layers generate feature maps enriched with contextual details of black smoke. These specialized feature maps are then fused with the original features to integrate multi-scale contextual representations. Finally, the CAA module produces attention weights from the processed feature maps using a Sigmoid function, which keeps the values of the attention map within the range (0, 1). The attention map is combined with the features through element-wise multiplication (⊙) and element-wise addition (⊕) to produce the enhanced features.

The CAA module enhances the feature representation of sparse black smoke in complex scenes. Together with PKI, it utilizes residual connections to ensure effective information flow. The simplified convolution design of this method controls the computational cost. The detailed configuration of the improved C2F module is shown in Table 1, and the parameters (A–G) are as follows: hierarchical index, input feature map size, output channels, convolution kernel size, dilation rate, and output size (for an input of 640 × 640).
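For readers who prefer a symbolic summary of the PKI Module and CAA Module described above, the following sketch follows the published Poly Kernel Inception and Context Anchor Attention formulation, using the kernel sizes stated in this section. All symbols ($X$ for the block input, $L$ for the local feature, $Z^{(m)}$ for the m-th contextual feature, $P$ for the PKI Module output, $A$ for the attention map, $k_b$ for the strip kernel width) are assumed notation and may differ from the paper's own equations. Likewise, Conv and DWConv stand for whichever convolution variants (including deformable ones) the network actually uses.

```latex
% Assumed notation; a sketch, not the paper's original equations.
\begin{aligned}
L &= \mathrm{Conv}_{3\times 3}(X), \\
Z^{(m)} &= \mathrm{DWConv}_{k^{(m)}\times k^{(m)}}(L), \qquad k^{(m)} = 2(m+1)+1,\quad m = 1,\dots,4, \\
P &= \mathrm{Conv}_{1\times 1}\Big(L + \sum_{m=1}^{4} Z^{(m)}\Big), \\
F^{\mathrm{pool}} &= \mathrm{Conv}_{1\times 1}\big(\mathcal{P}_{\mathrm{avg}}(X)\big), \\
F^{w} &= \mathrm{DWConv}_{1\times k_b}\big(F^{\mathrm{pool}}\big), \qquad
F^{h} = \mathrm{DWConv}_{k_b\times 1}\big(F^{w}\big), \\
A &= \mathrm{Sigmoid}\big(\mathrm{Conv}_{1\times 1}(F^{h})\big), \\
F^{\mathrm{attn}} &= (A \odot P) \oplus X .
\end{aligned}
```

With $m = 1,\dots,4$ the kernel rule gives 5, 7, 9, and 11, matching the branch sizes listed above. The term $L$ inside the sum plays the role of the identity branch.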

Table 1. Specific Configuration of C2F Network.

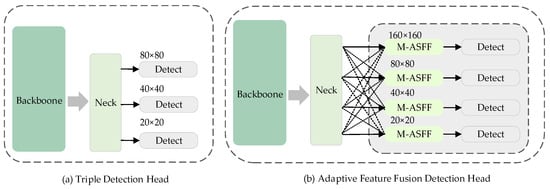

2.2.2. Adaptive Feature Fusion Detection Head

Given the requirements of multi-dimensional feature extraction, the original detection head has two shortcomings: insufficient capability for small targets and difficulty in handling multi-scale black smoke detection. Therefore, this paper redesigns the detection head network by adding feature layers and introducing the ASFF fusion strategy, forming a four-head detection structure.

- (1) Reconstruction of the detection head network structure

Black smoke targets vary in size and are small and blurry in the initial stage of emission. To account for this, a shallow detection head with a resolution of 160 × 160 is added. This choice follows from the characteristics of convolutional networks: shallow features have high resolution and rich details, which help locate small targets, whereas deep features carry strong semantic information, which helps identify large targets. By integrating features at different levels, the model can better utilize both the positional details and the semantic information of black smoke features. The comparison of the improved network structure is shown in Figure 7.
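The link between input size and the resolution seen by each detection head can be checked with a short calculation. The sketch below assumes the usual YOLOv8 strides of 8, 16, and 32 for the three original heads and a stride of 4 for the added shallow head. The stride values and the function name are illustrative assumptions, not settings reported in this paper.

```python
def head_grid_sizes(input_size, strides):
    """Return the feature-map side length seen by each detection head."""
    return {stride: input_size // stride for stride in strides}


# Assumed strides: 4 for the added shallow head, 8/16/32 for the original heads.
grids = head_grid_sizes(640, strides=(4, 8, 16, 32))
for stride, side in grids.items():
    print(f"stride {stride:>2}: {side} x {side} grid, {side * side} cells")
```

Under these assumptions, a 640 × 640 input gives the shallow head a 160 × 160 grid, four times as many cells as the 80 × 80 grid of the finest original head, which is why it is better placed to localize small smoke plumes.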

Figure 7. Comparison Before and After Head Improvement.

- (2) Adaptive Spatial Feature Fusion Strategy

To address the limited accuracy and poor scale adaptability of the original detection head in smoke detection, we introduced the Multi-Dimensional Adaptive Spatial Feature Fusion (M-ASFF) module. This module expands the three-layer ASFF into a four-layer architecture (as shown in Figure 8). By fusing the feature maps of different resolutions from the first to the fourth layer (ASFF-1 to ASFF-4), it significantly enhances the model’s scale-invariant detection capability for multi-scale targets.

Figure 8. Internal Feature Fusion Process of ASFF.

The feature generation process operates as follows. When the inputs X1–X4 undergo hierarchical feature extraction, they produce the level-1 to level-4 feature maps. Because these feature maps differ in resolution and channel number, the maps from the other levels must be resized to match the target level before the output feature layer can be generated; for example, the first three are resized to match the fourth. For a source level n and a target level l (n, l ∈ {1, 2, 3, 4} and n ≠ l), resizing adjusts feature map n to both the channel dimension and the resolution of feature map l, and the result is indexed by position (i, j). During scaling, a 1 × 1 convolution adjusts the channel number to match that of the target feature map l, followed by interpolation to align the spatial dimensions. Through convolutional operations and feature fusion, the network adaptively learns a spatial weight for each resized feature map.

The fused feature layer at level l is obtained, at every position (i, j), by combining the resized feature vectors, each of which matches the channel number and resolution of feature layer l, according to these weights. The weights are the spatial fusion weights generated when the different feature layers are scaled to layer l.
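The per-position fusion can be illustrated with a small numerical sketch. It assumes, as in the original ASFF design, that the fusion weights at each position are obtained by a softmax over the levels, so that they are positive and sum to one. Using four weights (one per level, including the target level itself) is also an assumption made here for the four-layer case, and all names and values are hypothetical.

```python
import math


def softmax(logits):
    """Numerically stable softmax: positive weights that sum to 1."""
    peak = max(logits)
    exps = [math.exp(v - peak) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def fuse_position(features, weight_logits):
    """Fuse resized feature vectors from several levels at one (i, j) position.

    features: one vector per source level, already resized to the target level.
    weight_logits: one learned scalar per source level at this position.
    """
    weights = softmax(weight_logits)
    channels = len(features[0])
    fused = [
        sum(w * vec[c] for w, vec in zip(weights, features))
        for c in range(channels)
    ]
    return fused, weights


# Hypothetical 2-channel feature vectors from levels 1-4 at one position.
features = [[1.0, 0.0], [0.0, 1.0], [2.0, 2.0], [4.0, 0.0]]
fused, weights = fuse_position(features, weight_logits=[0.0, 0.0, 0.0, 0.0])
print(weights)  # equal logits give equal weights of 0.25
print(fused)    # plain average of the four vectors
```

When one logit dominates, the fused vector approaches that level's features, which is the sense in which the fusion is selective rather than a fixed sum.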

The comparison diagram of the improved network structure is shown in Figure 9. Unlike PANet or BiFPN, the core innovation of M-ASFF (which extends ASFF to four layers) lies in its learnable, spatially adaptive weighting mechanism, which enables selective rather than blind fusion. It exploits the advantages of each layer’s features while suppressing conflicts and noise from different scales. This strategy improves the detection accuracy of multi-dimensional targets (especially small targets) and handles the scale variation of black smoke.

Figure 9. Overall Network Structure Comparison Before and After Improvement.

2.2.3. Low-Loss Anchor Box Reconstruction

The concentration of black smoke is mainly determined by its central area and is insensitive to small offsets of the bounding box. However, the CIoU loss function adopted by the original network focuses heavily on geometric indicators (such as center-point distance and size variation) and adapts poorly to irregular shapes. Because black smoke diffuses, has blurred boundaries, and takes irregular shapes, using CIoU leads to abnormally high loss values and reduces detection performance. CIoU is therefore not well suited to the black smoke detection task.

To improve the CIoU loss, this paper introduces Inner-IoU, which is achieved by using an auxiliary bounding box that is scaled according to a “ratio” (typically ranging from 0.5 to 1.5), as shown in Figure 10. The specific principle is as follows: When the predicted box is close to the real box (high IoU), a smaller auxiliary box is used to provide more precise gradients, promoting convergence; while when the predicted box deviates significantly (low IoU), a larger auxiliary box can broaden the effective learning area, ensuring more gentle and effective gradients. This mechanism reduces sensitivity to minor geometric errors, enabling it to exhibit superior regression stability and adaptability when detecting targets with blurred boundaries and variable scales, such as black smoke.

In the Inner-IoU formulation, $b^{gt}$ and $b$ denote the ground truth box and the anchor box, respectively; $(x_c^{gt}, y_c^{gt})$ is the center of the ground truth box, which is shared by its inner (auxiliary) box; $(x_c, y_c)$ is the center of the anchor box and of its inner counterpart; $w^{gt}$ and $h^{gt}$ are the width and height of the ground truth box; and $w$ and $h$ are the width and height of the anchor box.
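The effect of the scaling ratio can be seen in a short sketch of the inner IoU computation. It follows the standard Inner-IoU definition: both boxes are scaled about their own centers by `ratio`, and the IoU is computed on the scaled boxes. The function name, the (x_center, y_center, width, height) box layout, and the example values are assumptions for illustration, and the boxes are assumed to have positive width and height.

```python
def inner_iou(box_gt, box_pred, ratio=1.0):
    """IoU of the auxiliary boxes obtained by scaling both boxes by `ratio`.

    Boxes are (x_center, y_center, width, height) with positive size.
    """
    xg, yg, wg, hg = box_gt
    xp, yp, wp, hp = box_pred

    # Edges of the scaled auxiliary boxes, which keep the original centers.
    g_left, g_right = xg - wg * ratio / 2, xg + wg * ratio / 2
    g_top, g_bottom = yg - hg * ratio / 2, yg + hg * ratio / 2
    p_left, p_right = xp - wp * ratio / 2, xp + wp * ratio / 2
    p_top, p_bottom = yp - hp * ratio / 2, yp + hp * ratio / 2

    # Overlap of the two auxiliary boxes (zero if they do not intersect).
    inter_w = max(0.0, min(g_right, p_right) - max(g_left, p_left))
    inter_h = max(0.0, min(g_bottom, p_bottom) - max(g_top, p_top))
    inter = inter_w * inter_h

    union = wg * hg * ratio ** 2 + wp * hp * ratio ** 2 - inter
    return inter / union


gt = (0.0, 0.0, 2.0, 2.0)
pred = (1.0, 0.0, 2.0, 2.0)  # same size, shifted right by half a width
for r in (0.5, 1.0, 1.5):
    print(r, round(inner_iou(gt, pred, ratio=r), 4))
```

For this shifted pair, a ratio of 0.5 yields no overlap, a ratio of 1.0 reproduces the ordinary IoU of 1/3, and a ratio of 1.5 raises the value to 0.5. This illustrates how a larger auxiliary box keeps a useful signal for poorly aligned predictions, while a smaller one is stricter.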

Figure 10. Schematic Diagram of Inner-IoU Loss Function.

2.3. Multi-Dimensional Black Smoke Detection Network Architecture

The improved network structure is shown in Figure 11. To better illustrate the interaction between the modules, Figure 12 describes its working mechanism. The network first processes the input black smoke features through the convolutional layer, and then extracts multi-scale features from the source using parallel convolution. These features are sent to the expanded four-layer adaptive fusion detection head to detect multi-scale black smoke. This structure fuses features from different depths through a dynamic weight mechanism and selects the optimal output. At the same time, the original loss function is replaced with Inner-IoU, which is more suitable for black smoke detection. The adaptive detection head combines with the real labels and achieves precise positioning through low-loss anchor boxes during training, thereby improving the detection accuracy of irregular black smoke bounding boxes. The test results are shown in the red-marked box in the "output" section of Figure 12. All modules jointly construct a complete process of “multi-scale feature extraction—adaptive fusion—precise regression”.

Figure 11. Multi-dimensional Black Smoke Detection Network Architecture.

Figure 12. Network module operation process.

3. Results

3.1. Dataset

3.1.1. Data Sample Augmentation

Since no public smoke dataset is available for deep learning-based smoke detection, a custom dataset was constructed in this study. Various smoke images and videos were collected from online sources and expanded to 5000 images through data augmentation techniques. This dataset is referred to as the Smoke-X dataset. To meet practical monitoring requirements, images were collected according to the following criteria. First, the dataset includes scenes under various lighting conditions to simulate real-world environments. Second, the collected smoke images cover diverse and representative scenarios that align with actual situations. Following these criteria, images were collected under various lighting conditions, including large-target smoke, small-target smoke, blurred-edge smoke, clear-edge smoke, light smoke, moderate smoke, and heavy smoke.

Multiple data augmentation techniques were employed. Considering the practical application scenarios of the dataset, brightness transformation, slight rotation, occlusion, and horizontal/vertical flipping operations were selected to simulate smoke under diverse lighting conditions and viewing angles.

- (1) Brightness transformation: The brightness of images was modified to simulate different lighting environments. This enhancement improves the robustness and generalization capability of the trained model, enabling it to adapt to various illumination conditions encountered in practical applications. Denoting the pixel value of an image as I(x, y), the transformed pixel value can be expressed through a linear function:

$$I'(x, y) = \alpha \, I(x, y) + \beta$$

where I(x, y) represents the pixel value of the original image, I′(x, y) denotes the transformed pixel value, α is the scaling factor controlling brightness, and β is the bias parameter. The augmented dataset is illustrated in Figure 13b,c.

Figure 13. Examples from the Augmented Dataset.

- (2) Rotation: Angle variations simulate scene changes from different shooting perspectives, enabling the model to learn smoke features across various viewpoints. The original images were randomly rotated by 5 to 10 degrees. Sample augmented images are shown in Figure 13d,e.

- (3) Random occlusion: Random occlusion simulates real-life partial obscuration of smoke emissions, contributing to improved dataset quality by mimicking practical scenarios. A sample augmented image is displayed in Figure 13f.

- (4) Flipping: Horizontal flipping simulates mirror-view transformations, enhancing the model’s ability to recognize smoke features from different orientations. Vertical flipping improves the model’s adaptability to complex environments. The augmented images are presented in Figure 13g,h.
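Two of the augmentations above are simple enough to sketch directly. The example assumes 8-bit grayscale pixel values stored as nested lists and clips the brightness result to [0, 255]. It also assumes that bounding boxes are stored in normalized (x_center, y_center, width, height) form, so that flipping an image only mirrors the box center. The clipping step, the data layout, and all names are illustrative assumptions rather than details reported in this paper.

```python
def adjust_brightness(image, alpha, beta):
    """Apply I'(x, y) = alpha * I(x, y) + beta, clipped to the 8-bit range."""
    return [
        [min(255, max(0, round(alpha * pixel + beta))) for pixel in row]
        for row in image
    ]


def hflip_box(box):
    """Mirror a normalized (x_center, y_center, w, h) box horizontally."""
    x, y, w, h = box
    return (1.0 - x, y, w, h)


def vflip_box(box):
    """Mirror a normalized (x_center, y_center, w, h) box vertically."""
    x, y, w, h = box
    return (x, 1.0 - y, w, h)


image = [[10, 100], [200, 250]]
brighter = adjust_brightness(image, alpha=1.2, beta=10)
darker = adjust_brightness(image, alpha=0.5, beta=0)
print(brighter)  # the brightest pixel saturates at 255
print(darker)

box = (0.25, 0.5, 0.2, 0.3)
print(hflip_box(box), vflip_box(box))
```

Whenever an image is flipped or rotated, its annotations have to be transformed in the same way; otherwise the boxes no longer cover the smoke region.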

After the above expansion, the dataset contains 5000 images in total. The images were collected from publicly available online sources, including vehicle emission monitoring databases, and extracted from surveillance videos. Collection criteria included diverse lighting conditions ranging from daylight to dusk, various smoke densities from light to heavy, and multiple viewing angles to ensure dataset representativeness. All collected images underwent quality screening to remove blurred, corrupted, or ambiguous samples before annotation. The data were divided into training, validation, and test sets in a ratio of 70:15:15. Note that the Chinese characters in the second row of column (a) of Figure 13 are unrelated to the content of this article; they are advertisements captured during image collection.
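A 70:15:15 division of 5000 images corresponds to 3500 training, 750 validation, and 750 test images. The sketch below shows one reproducible way to produce such a split. The file names, the fixed seed, and the function itself are hypothetical and do not describe the procedure actually used for Smoke-X.

```python
import random


def split_dataset(items, ratios=(0.70, 0.15, 0.15), seed=0):
    """Shuffle items reproducibly and split them into train/val/test lists."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    n_train = round(len(shuffled) * ratios[0])
    n_val = round(len(shuffled) * ratios[1])
    train = shuffled[:n_train]
    val = shuffled[n_train:n_train + n_val]
    test = shuffled[n_train + n_val:]  # remainder goes to the test set
    return train, val, test


# Hypothetical file names standing in for the 5000 images.
names = [f"img_{i:04d}.jpg" for i in range(5000)]
train, val, test = split_dataset(names)
print(len(train), len(val), len(test))  # 3500 750 750
```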

3.1.2. Dataset Preparation

Here, LabelImg is used for annotation. The annotation process is as follows:

- (1) First, create a new folder named A on the desktop containing two subfolders, B and C. Place the black smoke dataset images in folder B and leave folder C empty. Install and open LabelImg. Click the “Open Dir” button on the interface to open folder A, then click the “Change Save Dir” button and select folder C.

- (2) Select “YOLO” as the format. Press the shortcut key “W” to start annotating. Click and drag the mouse to draw a rectangular box enclosing the black smoke area and give it a label, for example “smoke”. Click the “OK” button to confirm. The annotation process is shown in Figure 14. After annotating each image, click the “Save” button to save the annotation, then use the “Next Image” button to move to the next image.

Figure 14. Example of the LabelImg annotation process.

- (3) Repeat step (2) until all images are annotated. When annotation is complete, folder B contains the dataset images, and folder C contains the corresponding .xml files, in the same order as the images in folder B.
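For reference, a label in the YOLO convention is a text line holding the class index followed by the box center, width, and height, all normalized by the image size. The sketch below converts a pixel-coordinate box into such a line. The function name, the example box, and the image size are hypothetical.

```python
def to_yolo_line(class_id, box, image_width, image_height):
    """Convert a pixel box (x_min, y_min, x_max, y_max) into a YOLO label line."""
    x_min, y_min, x_max, y_max = box
    x_center = (x_min + x_max) / 2 / image_width
    y_center = (y_min + y_max) / 2 / image_height
    width = (x_max - x_min) / image_width
    height = (y_max - y_min) / image_height
    return f"{class_id} {x_center:.6f} {y_center:.6f} {width:.6f} {height:.6f}"


# Class 0 stands for the single "smoke" category.
line = to_yolo_line(0, (160, 120, 480, 360), image_width=640, image_height=480)
print(line)  # 0 0.500000 0.500000 0.500000 0.500000
```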

3.1.3. Dataset Distribution

To evaluate the dataset quality, the augmented dataset was visualized as shown in Figure 15. Figure 15a presents the category distribution histogram. Since this study involves only one category, the issue of label class distribution imbalance does not arise. Figure 15b displays the distribution of bounding box sizes, indicating that the smoke targets cover small, medium, and large spatial ranges within the images, demonstrating a reasonable size distribution. Figure 15c shows the distribution of label center positions, revealing that the smoke targets are relatively evenly distributed across various regions of the images. Figure 15d illustrates the distribution of bounding box areas, demonstrating that the dataset includes small, medium, and large smoke targets.

Figure 15.

Training Set Information Visualization.

3.2. Experimental Configuration

The experimental conditions used in this study are summarized in Table 2. The specific settings for the number of iterations, batch size, image size, optimizer, learning rate, and early stopping parameters are shown in Table 3. All other unspecified parameters retained their default values.

Table 2.

Experimental Environment Configuration.

Table 3.

Experimental Parameter Settings.

3.3. Evaluation Metrics

The evaluation of network performance necessitates appropriate metrics, with different models requiring assessment through specific indicators. Standard evaluation metrics comprise precision (P), recall (R), mean average precision (mAP), and F1-score. Precision quantifies the ratio of correctly predicted positive instances to all positive predictions. Recall measures the proportion of actual positive cases that are accurately detected. The F1-score, as the harmonic mean of precision and recall, provides a balanced evaluation by incorporating both metrics. Mean average precision serves to quantify the model’s comprehensive detection capability.

Based on Table 4, the definitions of TP, FN, FP, and TN are as follows:

Table 4.

Metric Categories and Their Definitions.

True Positive (TP): Samples containing smoke that are correctly predicted as smoke-positive.

False Negative (FN): Samples containing smoke that are incorrectly predicted as smoke-negative.

False Positive (FP): Samples without smoke that are incorrectly predicted as smoke-positive.

True Negative (TN): Samples without smoke that are correctly predicted as smoke-negative.

Among these metrics, AP represents the average precision for a single target category, and N denotes the number of categories. Since this study involves only one detection target, mAP equals AP.
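The standard definitions of these metrics can be written compactly in code: precision is TP/(TP + FP), recall is TP/(TP + FN), F1 is the harmonic mean of the two, and mAP is the mean of the per-category AP values. The sketch below uses these textbook definitions. The function names and the TP/FP/FN counts are hypothetical, while the final check uses the precision and recall reported for the full model later in the paper (84.1% and 79.1%).

```python
def precision(tp, fp):
    """Fraction of positive predictions that are correct."""
    return tp / (tp + fp) if (tp + fp) else 0.0


def recall(tp, fn):
    """Fraction of actual positives that are detected."""
    return tp / (tp + fn) if (tp + fn) else 0.0


def f1_score(p, r):
    """Harmonic mean of precision and recall."""
    return 2 * p * r / (p + r) if (p + r) else 0.0


def mean_average_precision(ap_per_class):
    """Mean of per-category AP values; equals AP when there is one category."""
    return sum(ap_per_class) / len(ap_per_class)


# Hypothetical counts, for illustration only.
p = precision(tp=80, fp=20)
r = recall(tp=80, fn=20)
print(p, r)  # 0.8 0.8

# Consistency check with the reported precision and recall of the full model.
print(round(f1_score(0.841, 0.791), 3))  # 0.815
```

The last line reproduces the 81.5% F1-score reported for the full model, and with a single category `mean_average_precision([ap])` simply returns `ap`.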

3.4. Experiments and Analysis

3.4.1. Ratio Numerical Ablation Experiment

To determine the optimal ratio value, we conducted experiments over the range 0.5 to 1.5 with a step size of 0.1. The results are shown in Figure 16. When the ratio is low, the model performs better in terms of recall. As the ratio increases, precision gradually improves but recall decreases, and the imbalance between the two becomes more pronounced. For example, when the ratio is 1.3 or above, precision reaches 0.853 to 0.860, but recall falls to 0.709–0.716, indicating that the model is more inclined to reduce false positives but may miss more positive samples.

Figure 16.

Comparison of various numerical performance indicators.

This behavior means that, depending on the requirements of the actual task, one can choose flexibly among several well-performing and balanced configurations. In practical applications, parameter selection should follow the task objective: if higher precision is required, the ratio can be adjusted to suppress false positives; if recall is the priority, false negatives should be reduced first; if mAP50 is the core indicator, a balance between precision and recall should be sought. Considering overall performance and common practice, we set the benchmark ratio value to 0.8. This setting provides stable and balanced performance in most scenarios, maintaining high precision and recall while remaining competitive on mAP50, which meets this project’s requirements for generality and stability.
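To make the role of the ratio concrete, the sketch below computes the IoU of auxiliary boxes obtained by scaling the predicted and ground-truth boxes about their own centers, which is the core quantity in Inner-IoU. It is a simplified illustration rather than the training code used in this study; the function name and the two sample boxes are hypothetical.

```python
def inner_iou(box_a, box_b, ratio=1.0):
    """IoU of auxiliary boxes scaled by `ratio` about each box's center.

    Boxes are given as (x_center, y_center, width, height).
    """
    def scaled_corners(box):
        xc, yc, w, h = box
        half_w, half_h = w * ratio / 2, h * ratio / 2
        return xc - half_w, yc - half_h, xc + half_w, yc + half_h

    ax1, ay1, ax2, ay2 = scaled_corners(box_a)
    bx1, by1, bx2, by2 = scaled_corners(box_b)
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h
    union = (ax2 - ax1) * (ay2 - ay1) + (bx2 - bx1) * (by2 - by1) - inter
    return inter / union if union > 0 else 0.0


# Hypothetical prediction and ground truth that overlap by half a box width.
pred = (5.0, 5.0, 10.0, 10.0)
gt = (10.0, 5.0, 10.0, 10.0)
for step in range(5, 16):  # ratio from 0.5 to 1.5 in steps of 0.1
    ratio = step / 10
    print(f"ratio={ratio:.1f}  inner_iou={inner_iou(pred, gt, ratio):.3f}")
```

For this pair of boxes, a ratio of 1.0 reproduces the ordinary IoU (1/3), a ratio of 0.5 shrinks the auxiliary boxes until they no longer overlap (0), and a ratio of 1.5 enlarges them and raises the value to 0.5. A ratio below 1 is therefore stricter, and a ratio above 1 is more lenient.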

3.4.2. Ablation Study

To assess the contributions of individual improvement modules to network performance, the multi-scale feature extraction method, adaptive feature fusion detection head, and Inner-IoU loss are designated as components A, B, and C, respectively. Experimental validation was performed on the Smoke-X dataset using precision, recall, mAP50, and F1-score as evaluation criteria. The experimental matrix utilizes “√” to indicate inclusion of the specified component in the detection network and “—” to represent its exclusion. The comprehensive experimental results are documented in Table 5.

Table 5.

Ablation Study Performance Comparison.

Table 5 shows that with component A, precision increased by 2.8%, recall by 4.5%, and mAP50 by 3.2%, with an F1-score of 79.8%. This indicates that the method enhances the network’s ability to extract black smoke features in multiple dimensions and improves overall performance.

With component B, precision increased by 3.4%, recall by 0.8%, and mAP50 by 3.5%, with an F1-score of 78.1%. This detection head maintains feature stability and invariance through feature fusion, which improves the network’s precision.

With component C, precision increased by 3.9%, recall by 2.7%, and mAP50 by 3.9%, with an F1-score of 79.4%. This is because the bounding box loss used by the network is better suited to the black smoke detection task, especially at the edges of black smoke, and enhances the model’s ability to detect black smoke targets.

When A and B are combined, precision and recall improve relative to adding either component alone, but mAP50 decreases slightly, owing to the balance problem between feature extraction and fusion.

When B and C are combined, precision, recall, and mAP50 all increase relative to adding either component alone. The feature fusion performance of B combined with the loss function optimization of C yields a more balanced improvement across all indicators.

When A and C are combined, precision, recall, and mAP50 all increase relative to adding either component alone. This indicates that the combination of A and C improves the recall and overall detection performance of the model, enabling it to capture the target more comprehensively.

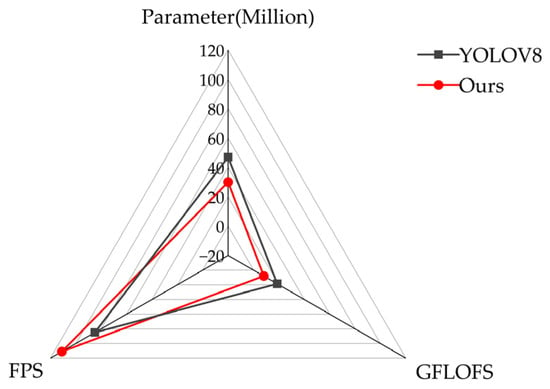

With A, B, and C added simultaneously, precision, mAP50, and F1 reach their highest values. The temporary mAP50 reduction when combining A and B (77.2%) likely stems from initial feature fusion instability before the addition of component C’s improved localization, which resolves this issue in the full model (82.9%). Overall, adding A, B, and C together is the better scheme. Additionally, we compared the original benchmark network with the model that introduces A, B, and C simultaneously; these are denoted YOLOv8 and Ours, respectively. Figure 17 shows that the improved network outperforms the basic network in all aspects. The curve of Ours in the figure indicates that A, B, and C complement one another, which demonstrates the effectiveness of the method proposed in this paper. Each set of experiments was repeated five times and the results were averaged. The variance of each set of data was less than 0.2, which indicates the stability of our data []. To further verify this stability, we conducted a t-test on the results before and after the improvement and obtained the standard deviations, confidence intervals, and p-values shown in Table 6. All p-values were 0.00001, indicating that the performance improvements are statistically significant at the p < 0.001 level.

Figure 17.

Performance Comparison Before and After Network Improvement.

Table 6.

T-test Information Before and After Improvement.

The improved model achieves higher precision, recall, and mAP50. However, under the experimental configuration in Table 2, we also obtained the results shown in Table 7. To present these differences more intuitively, we plot them in Figure 18. Note that these data were measured after network training was completed. This experiment was conducted on an NVIDIA GeForce RTX 4090 GPU, whereas the results in Table 8 were obtained on an RTX 4060 GPU.

Table 7.

Comparison of network parameters before and after improvement.

Figure 18.

Comparison of some indicators between YOLOv8 and the improved network.

Table 8.

Inference speed on different graphics cards.

We observed that the number of parameters and computational complexity increased, while the inference speed decreased. This clearly indicates the direction of our future work: on the basis of consolidating the performance advantages, we will focus on the lightweight design of the network to convert the excellent detection performance into more efficient practical applications.

3.4.3. Performance Evaluation and Detection Analysis

To systematically evaluate the performance of the model, we conducted a comparative experiment on the Smoke-X dataset between the baseline detection network and the improved model. The experiment used the bounding box loss during training and mAP50 as evaluation metrics, which together reflect the convergence behavior and final accuracy of the model. As shown in Figure 19, both the baseline model and the improved model exhibited a rapid decrease in loss at the beginning of training, indicating that both converge quickly. However, as training progressed, the improved model continued to reduce the bounding box loss and stabilized at a lower value than the original model, demonstrating stronger fitting ability and optimization stability. The mAP50 curves (Figure 20) show an overall upward trend for both models, confirming the effectiveness of the training. Although there was some fluctuation in the early training stage, the improved model grew faster and more stably in the middle and later stages and ultimately reached a higher accuracy plateau, verifying the positive effect of the introduced improvements on detection accuracy.

Figure 19.

Variation in Box Loss During Training.

Figure 20.

Variation in mAP50 During Training.

In conclusion, the improved model consistently outperforms the baseline model in both the bounding box regression loss and the detection accuracy mAP50 metrics, demonstrating that it not only has a more stable convergence behavior but also achieves an effective improvement in the overall detection performance.

Black smoke instances of different sizes were selected for inference, and the results are shown in Figure 21 and Figure 22. These figures report the number of detected targets, frames per second (FPS), and the mean intersection-over-union (mIoU). Each figure contains six comparison groups (left: before improvement; right: after improvement). As shown in the first group of Figure 21 (ordered from left to right, top to bottom), the improved network detected black smoke targets that the original algorithm missed, thereby reducing the number of missed detections. In the second and third groups of Figure 21, the improved network correctly rejects the non-target objects that the original algorithm misjudged as black smoke. However, detection accuracy and IoU did not increase in every case. This indicates that in some complex scenarios there is a trade-off between high-confidence predictions and high IoU, which might be caused by inconsistencies in position or feature extraction. Overall, the enhanced network shows significant improvements in reducing missed detections and false alarms. In all other groups of Figure 21 and Figure 22, both detection accuracy and IoU scores improved. These results indicate that the enhanced network detects light smoke, heavy smoke, and smoke with diffuse edges effectively, and has multi-dimensional detection capabilities.

Figure 21.

Partial Inference Results of the Improved Network (Set 1).

Figure 22.

Partial Inference Results of the Improved Network (Set 2).

For practical deployment in vehicle emission monitoring systems, our experiments demonstrate that the proposed model requires mid-range GPU hardware to achieve acceptable real-time performance. Testing across multiple configurations showed that entry-level hardware (MX230) achieves only 5 FPS, which is suitable for offline analysis; mid-range cards (GTX 1650 Ti, 6–10 FPS) approach real-time thresholds; and high-performance GPUs (RTX 4060, 50+ FPS) enable robust real-time monitoring. These results indicate that deployment is feasible with commercially available hardware, though optimization remains necessary for resource-constrained edge devices. The specific experimental results are shown in Table 8.

3.4.4. Network Comparison Experiments and Analysis

To fully demonstrate the performance improvement of the proposed network model, comparative experiments were conducted under identical conditions with mainstream algorithms: Faster R-CNN, SSD, YOLOv3, YOLOv4-tiny, YOLOv5, YOLOv8, YOLOv9, YOLOv10 and YOLOv11. All comparison models were trained from scratch on the Smoke-X dataset using identical training protocols (300 epochs, batch size 16, SGD optimizer) to ensure fair comparison. The experimental results are presented in Table 9.

Table 9.

Comparative Experiments of Different Networks.

This paper compares single-stage and two-stage object detection algorithms. As Table 9 shows, compared with Faster R-CNN, SSD, YOLOv3, YOLOv4-tiny, YOLOv5s, YOLOv8, YOLOv9, YOLOv10, and YOLOv11, the improved network is better on all indicators, demonstrating the effectiveness of the improved method. The SSD model performs relatively poorly overall, making it unsuitable for the black smoke detection task. Faster R-CNN performs well in terms of precision and recall, but its F1-score still falls short of that of the proposed model. The YOLO series (YOLOv3, YOLOv4-tiny, YOLOv5, YOLOv8, YOLOv9, YOLOv10, YOLOv11) has certain advantages in precision and recall, but a gap remains in comprehensive performance compared with the proposed model. This indicates that the YOLO series may face challenges when dealing with complex targets. Overall, the performance of the proposed model is relatively balanced and has broad application potential.

3.5. Network Deployment Analysis

This section provides an in-depth analysis of the deployment feasibility of the improved model, covering hardware requirements, minimum computational resources, and planned optimization strategies to enhance inference speed.

To meet real-time detection requirements in practical scenarios, it is recommended to perform inference on hardware such as an NVIDIA GeForce RTX 4060 or higher. The model performance reported in this study was measured in the following environment: a 16 vCPU Intel(R) Xeon(R) Platinum 8481C CPU, CUDA version 11.8, and 24 GB of RAM. Accordingly, the minimum recommended computational resources for deploying the model are as follows:

- (1) GPU: A consumer-grade or workstation-grade GPU with at least 24 GB of VRAM (e.g., NVIDIA RTX 3060/4060 Ti or equivalent professional-grade cards);

- (2) Memory: System RAM should be no less than 16 GB to ensure smooth data preprocessing and loading;

- (3) Storage: Solid-state drive (SSD) is recommended to accelerate model loading and dataset reading;

- (4) Software dependencies: CUDA version 11.8, with Ubuntu or Windows 10/11 as the operating system.

To enhance inference speed and deployment adaptability, this section explores possible optimization strategies and lightweight approaches. The following optimization methods are planned:

Model quantization will be applied. Frameworks such as TensorRT or ONNX Runtime are intended to be used to convert the trained model from FP32 precision to FP16 or INT8. INT8 quantization will adopt a static approach, using a calibration dataset to determine quantization parameters. This strategy is expected to significantly reduce model size and memory usage, increase inference speed, and enhance deployment potential in resource-constrained environments.
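The idea of static INT8 quantization can be illustrated with a small sketch in which the quantization scale is fixed once from calibration data and then reused at inference time. This is a simplified symmetric per-tensor scheme with a maximum-absolute-value calibrator, chosen here as an assumption for clarity; production toolchains offer more elaborate calibrators. All function names and sample values are hypothetical.

```python
def calibrate_scale(calibration_values, num_bits=8):
    """Derive a symmetric quantization scale from calibration data."""
    qmax = 2 ** (num_bits - 1) - 1  # 127 for INT8
    max_abs = max(abs(v) for v in calibration_values)
    return max_abs / qmax if max_abs > 0 else 1.0


def quantize(values, scale, num_bits=8):
    """Map floats to integers in [-qmax, qmax], clipping out-of-range values."""
    qmax = 2 ** (num_bits - 1) - 1
    return [max(-qmax, min(qmax, round(v / scale))) for v in values]


def dequantize(q_values, scale):
    """Map quantized integers back to approximate float values."""
    return [q * scale for q in q_values]


# Hypothetical activations observed on a calibration set.
calibration = [-1.2, 0.4, 0.9, 2.54, -0.3]
scale = calibrate_scale(calibration)  # 2.54 / 127, about 0.02

# Hypothetical inference-time values; 3.0 lies outside the calibrated range.
x = [0.5, -1.0, 2.0, 3.0]
q = quantize(x, scale)
x_hat = dequantize(q, scale)
print(q)  # [25, -50, 100, 127]
```

Values inside the calibrated range are recovered to within half a quantization step, while the out-of-range value 3.0 is clipped to about 2.54. This is why the calibration set should be representative of the deployment data.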

Structured pruning will be employed targeting the four-branch structure introduced in the model. By pruning redundant channels or filters with lower contributions in the backbone and neck networks of YOLOv8, the number of parameters and computational complexity (FLOPs) can be effectively reduced while maintaining structural integrity, thereby improving inference efficiency.
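One common way to rank filters by contribution is the L1 norm of their weights, with the lowest-ranked filters removed as whole units. The sketch below shows this selection step only. The L1 criterion, the function names, and the toy weights are assumptions made for illustration, since the contribution measure is not specified here.

```python
def l1_norms(filters):
    """L1 norm of each filter, given as a flat list of weights."""
    return [sum(abs(w) for w in f) for f in filters]


def select_filters_to_keep(filters, prune_ratio):
    """Return indices of filters kept after pruning the lowest-norm ones."""
    norms = l1_norms(filters)
    n_prune = int(len(filters) * prune_ratio)
    order = sorted(range(len(filters)), key=lambda i: norms[i])
    pruned = set(order[:n_prune])  # filters with the smallest L1 norms
    return [i for i in range(len(filters)) if i not in pruned]


# Four hypothetical filters with three weights each.
filters = [
    [0.9, -0.8, 0.7],
    [0.01, -0.02, 0.0],
    [0.5, 0.4, -0.6],
    [0.05, 0.0, -0.03],
]
keep = select_filters_to_keep(filters, prune_ratio=0.5)
print(keep)  # [0, 2]
```

Because entire filters are removed, the pruned layer stays a dense convolution with fewer output channels. In a real network, the next layer's input channels must be reduced to match, and the model is typically fine-tuned afterwards.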

Knowledge distillation will also be introduced for further lightweighting. The final improved model from this study serves as the teacher model, transferring its knowledge to a structurally lighter student model (e.g., YOLOv8n). Specifically, soft labels generated by the teacher model using high-temperature Softmax (which contain rich inter-class relational information) and intermediate feature alignment (via 1 × 1 convolutional adapters to adjust channel dimensions) will be utilized to guide the student model’s learning. The loss function for distillation is preliminarily designed as a weighted combination of student loss, distillation loss, and feature mimicry loss. During training, the teacher model’s weights remain fixed, and only the student model’s parameters are updated. The outcome is a lightweight model that achieves a favorable balance between accuracy and efficiency, making it more suitable for deployment on edge computing devices.
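A weighted distillation objective of this kind can be sketched as follows. The soft-label term uses a temperature-scaled softmax and a KL divergence multiplied by T², as is conventional, and the feature term is a mean squared error between features assumed to be already aligned in dimension (the 1 × 1 adapter is omitted). The function names, the loss weights, and the sample logits are hypothetical and are not the configuration planned by the authors.

```python
import math


def softmax(logits, temperature=1.0):
    """Temperature-scaled softmax; a higher temperature gives softer outputs."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the maximum for numerical stability
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def kl_divergence(p, q):
    """KL(p || q) for two discrete distributions."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def distillation_loss(student_logits, teacher_logits, temperature):
    """Soft-label loss between teacher and student, scaled by T^2."""
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    return temperature ** 2 * kl_divergence(p_teacher, p_student)


def feature_mimicry_loss(student_feat, teacher_feat):
    """Mean squared error between already-aligned feature vectors."""
    return sum((s - t) ** 2 for s, t in zip(student_feat, teacher_feat)) / len(teacher_feat)


def total_loss(student_loss, kd_loss, feat_loss, alpha=1.0, beta=0.5, gamma=0.5):
    """Weighted combination; alpha, beta, gamma are hypothetical weights."""
    return alpha * student_loss + beta * kd_loss + gamma * feat_loss


# Hypothetical logits from the fixed teacher and the trainable student.
teacher_logits = [4.0, 1.0, 0.5]
student_logits = [2.5, 1.5, 0.2]
kd = distillation_loss(student_logits, teacher_logits, temperature=4.0)
feat = feature_mimicry_loss([0.2, 0.8], [0.0, 1.0])
print(total_loss(student_loss=1.0, kd_loss=kd, feat_loss=feat))
```

Only the student's outputs would receive gradients in training; the teacher's logits and features act as fixed targets, which matches the fixed teacher weights described above.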

Among these strategies, we anticipate that INT8 quantization combined with structured pruning of the four-branch architecture will yield the most significant efficiency gains while maintaining detection accuracy above 80% mAP50, based on preliminary analyses of parameter redundancy in the PKI-Blocks and M-ASFF modules. This represents our immediate priority for future optimization work. Through the combined strategies of quantization, pruning, and distillation, we expect to effectively reduce computational overhead, gradually overcome deployment bottlenecks, and ultimately enable large-scale application on low-cost embedded devices and edge computing platforms.

4. Discussion

To validate our method’s effectiveness, we compare it with existing techniques. We first highlight our innovations and key advantages through comparative analysis with representative works. Then we summarize our contributions, describe applicable scenarios, and discuss limitations. Finally, we outline future research directions.

Our multi-dimensional feature extraction structure aligns with YOLOv5’s CSPNet [] and YOLOv9’s GELAN [] in enhancing multi-scale representation, but differs in implementation. While CSPNet reduces gradient redundancy via cross-stage partial connections and GELAN improves information flow through dual gradient paths, we replace the C2F module with parallel convolutions of varying dilation rates. This design, conceptually similar to DeepLab’s ASPP [], is deployed in the early and middle backbone stages to efficiently extract multi-scale smoke features from low to high levels. It alleviates the scale bias that may occur in a serial structure (FPN in YOLOv3 []) or a hybrid structure (PANet in YOLOv4 []) and enhances the model’s ability to recognize smoke at different scales. The experimental results confirm this, with an mAP50 of 80.5%, addressing the shortcomings of earlier models (such as SSD [], YOLOv3, and YOLOv4) in this regard.

For feature fusion, our adaptive detection head advances beyond FPN, PANet, and BiFPN []. Unlike YOLOv8’s task-aligned assignment [] without explicit fusion weights and YOLOv10’s simplified head [] lacking conflict suppression, our design incorporates dynamic weight learning. It not only learns feature importance like BiFPN but also suppresses conflicts between shallow and deep features while reducing channel interference. This enhances focus on smoke in complex backgrounds (e.g., forests, building edges), achieving 82.7% precision with 74% recall. The four-detector design offers denser sampling points than YOLOv5/YOLOv8’s three-head structure, improving detection of small smoke targets.
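The dynamic weighting idea can be illustrated with a small sketch in which per-level scores are normalized by a softmax so that the fusion weights are positive and sum to one, in the style of adaptive spatial feature fusion. In a real detection head the scores would be predicted by learned layers at every spatial position and the features would first be resized to a common resolution. Here the scores, the feature vectors, and the function names are hypothetical placeholders.

```python
import math


def fusion_weights(level_scores):
    """Softmax over per-level scores, giving weights that sum to 1."""
    m = max(level_scores)
    exps = [math.exp(s - m) for s in level_scores]
    total = sum(exps)
    return [e / total for e in exps]


def fuse(level_features, level_scores):
    """Weighted sum of same-length feature vectors from each level."""
    weights = fusion_weights(level_scores)
    length = len(level_features[0])
    return [
        sum(w * feat[i] for w, feat in zip(weights, level_features))
        for i in range(length)
    ]


# Four hypothetical feature levels, already resized to a common shape.
features = [[1.0, 0.0], [0.0, 1.0], [2.0, 2.0], [4.0, 0.0]]

# Equal scores give equal weights (0.25 each), i.e. a plain average.
print(fuse(features, [0.0, 0.0, 0.0, 0.0]))  # [1.75, 0.75]

# A much higher score for the third level makes it dominate the fusion.
print(fuse(features, [0.0, 0.0, 10.0, 0.0]))
```

Because the weights are normalized, raising the score of one level necessarily lowers the influence of the others, which is one way a head can suppress conflicting shallow and deep features.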

Black smoke has blurred boundaries and irregular shapes, and for such targets traditional CIoU suffers from reduced localization accuracy due to its over-reliance on geometric metrics. Unlike GIoU [], which resolves gradient vanishing for non-overlapping boxes, and DIoU [], which accelerates center-point convergence, our adopted Inner-IoU [] introduces an adaptive regression mechanism. It employs smaller auxiliary boxes for high-IoU samples to enhance gradients for precise refinement, and larger boxes for low-IoU samples to expand the regression area and improve convergence. This approach enables accurate multi-scale black smoke detection, improving the localization accuracy to 83.2%.

These three core improvements together form a complete pipeline of “feature extraction to fusion optimization to precise localization,” collectively enhancing overall model performance. The final results (84.1% precision, 79.1% recall, and 82.9% mAP) demonstrate that our approach addresses the long-standing issue of insufficient localization accuracy in YOLO series models for smoke detection.

In summary, the main academic contributions of this work are: (1) proposing a parallel multi-dimensional feature extraction structure that offers an efficient solution for detecting smoke across varying scales; (2) designing an adaptive feature fusion mechanism with dynamic filtering and balancing capabilities; and (3) validating the superiority of the Inner-IoU loss function in detecting blurred and irregular objects, providing a new technical approach for accurate localization of such targets.

The method performs well in black smoke detection under normal lighting (morning to evening), reliably identifying smoke at Ringelmann level 1 and above. It demonstrates practical value in applications like diesel emission monitoring. Under standard testing, the model achieves 82.9% mAP and 81.5% F1-score while maintaining real-time performance (50 FPS on RTX 4060). However, limitations exist in complex scenarios. First, the model has 56.67% more parameters than YOLOv8, hindering deployment on resource-constrained edge devices (e.g., GPUs below 1650 Ti level). Second, as shown in Section 3.4.3, it misclassifies backlit building shadows as smoke, indicating poor discrimination of visually similar objects. This is likely due to insufficient suppression of background interference (e.g., shadow textures) during multi-scale feature integration. Moreover, generalization to extreme weather (snowstorms, fog, dust) remains unverified due to data unavailability.

Future work will address current limitations through two main directions. For real-time deployment, we will apply the techniques of Section 3.5 (model pruning, quantization, and knowledge distillation) to reduce computation by over 20%, achieve 20 FPS on edge devices like Jetson Nano, and begin real-road pilot deployments. For cross-scene generalization, we will build a specialized dataset covering extreme weather (snowstorms, fog, dust) and smoke-like interference objects. To overcome data scarcity, we will use GANs to generate synthetic images and manually collect challenging scenes (fog, shadows, vehicle vapor, dust) to enhance model robustness and generalization.

5. Conclusions

This study achieved significant performance improvement through three core innovations: a multi-dimensional feature extraction module, an adaptive feature fusion detection head, and the Inner-IoU bounding box loss. As a result, the model’s precision increased by 4.8%, recall rose by 5.9%, and mAP50 improved by 5.6%. These improvements significantly enhanced the model’s ability to accurately monitor diesel vehicle black smoke emissions in real-world scenarios.

Based on this, our next step is to develop a lightweight version suitable for embedded devices to facilitate deployment in practical applications. We plan to adopt model pruning and INT8 quantization, targeting real-time inference of no less than 20 FPS on the Jetson Orin series platform. We then plan to deploy and verify the system at typical traffic intersections and port areas, ultimately forming a practical automatic black smoke monitoring solution that provides complete technical support for precise supervision of mobile source emissions.

Author Contributions

Conceptualization, X.X.; Data curation, X.X. and M.Z.; Formal analysis, X.X. and B.L.; Funding acquisition, B.L.; Methodology, B.L.; Investigation, X.X.; Project administration, B.L. and M.Z.; Resources, B.L.; Validation, X.X.; Visualization, X.X. and M.Z.; Writing—original draft, X.X. and B.L.; Writing—review and editing, B.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Heilongjiang Provincial Natural Science Foundation (grant number E2017001) and the National Key Research and Development Program of China (grant number 2017YFC0803901-2).

Data Availability Statement

The dataset can be found at the following link: https://pan.baidu.com/s/1PGctYPge7N5sQxx10qJWkg?pwd=i7jz (accessed on 22 October 2025). Extraction code: i7jz.

Acknowledgments

We thank the anonymous reviewers for their critical feedback, which was instrumental in improving this manuscript.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Ministry of Ecology and Environment of the People’s Republic of China. China Mobile Source Environmental Management Annual Report. 2024; [26 March 2025]. Available online: https://www.mee.gov.cn/hjzl/sthjzk/ydyhjgl/ (accessed on 18 July 2025).

- Huang, C.; Lou, D.; Hu, Z.; Feng, Q.; Chen, Y.; Chen, C.; Tan, P.; Yao, D. A PEMS study of the emissions of gaseous pollutants and ultrafine particles from gasoline- and diesel-fueled vehicles. Atmos. Environ. 2013, 77, 703–710. [Google Scholar] [CrossRef]

- Huizhou Municipal People’s Government. Notice of Huizhou Municipal People’s Government on Prohibiting Black Smoke Vehicles from Driving on the Road. [24 May 2021]. Available online: http://hzgajj.huizhou.gov.cn/gkmlpt/content/4/4289/mpost_4289389.html#433 (accessed on 19 July 2025).

- Schmidt, C.; Pöhler, D.; Knoll, M.; Bernard, Y.; Frateur, T.; Ligterink, N.E.; Vojtíšek, M.; Bergmann, A.; Platt, U. Identification of high emitting heavy duty vehicles using Plume Chasing: European case study for enforcement. Sci. Total Environ. 2025, 994, 179844. [Google Scholar] [CrossRef]

- Taha, S.S.; Idoudi, S.; Alhamdan, N.; Ibrahim, R.H.; Surkatti, R.; Amhamed, A.; Alrebei, O.F. Comprehensive review of health impacts of the exposure to nitrogen oxides (NOx), carbon dioxide (CO2), and particulate matter (PM). J. Hazard. Mater. Adv. 2025, 19, 100771. [Google Scholar] [CrossRef]

- Chen, S.M.; Ye, P.; Wei, J.; Cheng, H.H.; Zhang, Y.Q.; Ma, Y.J.; Chen, S.R.; Wu, W.J.; Guo, T.; Li, Z.Q.; et al. Causal links between long-term exposure to the chemical constituents of PM2.5 and lung cancer mortality in southern China. J. Hazard. Mater. 2025, 495, 139096. [Google Scholar] [CrossRef] [PubMed]

- Song, Y.T.; Yang, L.; Kang, N.; Wang, N.; Zhang, X.; Liu, S.; Li, H.C.; Xue, T.; Ji, J.F. Associations of incident female breast cancer with long-term exposure to PM2.5 and its constituents: Findings from a prospective cohort study in Beijing, China. J. Hazard. Mater. 2024, 473, 134614. [Google Scholar] [CrossRef]

- Brewer, T.L. Black carbon emissions and regulatory policies in transportation. Energy Policy. 2019, 129, 1047–1055. [Google Scholar] [CrossRef]

- Liu, Y.; Tian, G.; Sheng, H.; Zhang, X.; Yuan, G.; Zhang, C. Batch EOL products human-robot collaborative remanufacturing process planning and scheduling for industry 5.0. Comput.-Integr. Manuf. 2026, 97, 103098. [Google Scholar] [CrossRef]

- Wang, J.; Zhang, X.; Jing, K.; Zhang, C. Learning precise feature via self-attention and self-cooperation YOLOX for smoke detection. Expert Syst. Appl. 2023, 228, 120330. [Google Scholar] [CrossRef]

- Jing, K.; Zhang, X.; Xu, X. Double-Laplacian mixture-error model-based supervised group-sparse coding for robust palmprint recognition. IEEE Trans. Circuits Syst. Video Technol. 2022, 32, 3125–3140. [Google Scholar] [CrossRef]

- Prema, C.E.; Vinsley, S.; Suresh, S. Efficient flame detection based on static and dynamic texture analysis in forest fire detection. Fire Technol. 2018, 54, 255–288. [Google Scholar] [CrossRef]

- Filonenko, A.; Hernández, D.C.; Jo, K. Fast smoke detection for video surveillance using CUDA. IEEE Trans. Ind. Inform. 2018, 14, 725–733. [Google Scholar] [CrossRef]

- Dimitropoulos, K.; Barmpoutis, P.; Grammalidis, N. Higher order linear dynamical systems for smoke detection in video surveillance applications. IEEE Trans. Circuits Syst. Video Technol. 2016, 27, 1143–1154. [Google Scholar] [CrossRef]

- Sun, R.; Chen, X.; Chen, B. Smoke detection for videos based on adaptive learning rate and linear fitting algorithm. In Proceedings of the 2018 Chinese Automation Congress (CAC), Xi’an, China, 30 November–2 December 2018; pp. 1948–1954. [Google Scholar] [CrossRef]

- Tao, H.; Lu, X. Smoky vehicle detection based on range filtering on three orthogonal planes and motion orientation histogram. IEEE Access 2018, 6, 57180–57190. [Google Scholar] [CrossRef]

- Alamgir, N.; Nguyen, K.; Chandran, V.; Boles, W. Combining multi-channel color space with local binary co-occurrence feature descriptors for accurate smoke detection from surveillance videos. Fire Saf. J. 2018, 102, 1–10. [Google Scholar] [CrossRef]

- Bewley, A.; Ge, Z.; Ott, L.; Ramos, F.; Upcroft, B. Simple Online and Realtime Tracking. In Proceedings of the 2016 IEEE International Conference on Image Processing (ICIP), Phoenix, AZ, USA, 25–28 September 2016; pp. 3464–3468. [Google Scholar] [CrossRef]

- Yin, Z.; Wan, B.; Yuan, F.; Xia, X.; Shi, J. A deep normalization and convolutional neural network for image smoke detection. IEEE Access 2017, 5, 18429–18438. [Google Scholar] [CrossRef]

- Khan, M.; Ahmad, J.; Lv, Z.; Bellavista, P.; Yang, P.; Baik, S.W. Efficient deep CNN-based fire detection and localization in video surveillance applications. IEEE Trans. Syst. Man Cybern. Syst. 2019, 49, 1419–1434. [Google Scholar] [CrossRef]

- Cao, Y.; Lu, X. Learning spatial-temporal representation for smoke vehicle detection. Multimed. Tools Appl. 2019, 78, 27871–27889. [Google Scholar] [CrossRef]

- Gu, K.; Xia, Z.; Qiao, J.; Lin, W. Deep dual-channel neural network for image-based smoke detection. IEEE Trans. Multimed. 2020, 22, 311–323.

- Zhan, J.; Hu, Y.; Zhou, G.; Wang, Y.; Cai, W.; Li, L. A high-precision forest fire smoke detection approach based on ARGNet. Comput. Electron. Agric. 2022, 196, 106874.

- Lin, T.; Dollár, P.; Girshick, R.B.; He, K.; Hariharan, B.; Belongie, S.J. Feature pyramid networks for object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 936–944.

- Liu, S.; Qi, L.; Qin, H.; Shi, J.; Jia, J. Path aggregation network for instance segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018; pp. 8759–8768.

- Ba, R.; Chen, C.; Yuan, J.; Song, W.; Lo, S. SmokeNet: Satellite smoke scene detection using convolutional neural network with spatial and channel-wise attention. Remote Sens. 2019, 11, 1702.

- Liu, Y.; Ji, S. CleftNet: Augmented deep learning for synaptic cleft detection from brain electron microscopy. IEEE Trans. Med. Imaging 2021, 40, 3507–3518.

- Yuan, L.; Tong, S.; Lu, X. Smoky Vehicle Detection Based on Improved Vision Transformer. In Proceedings of the 5th International Conference on Computer Science and Application Engineering (CSAE), Sanya, China, 19–21 October 2021; pp. 1–5.

- Baidya, R.; Jeong, H. YOLOv5 with ConvMixer prediction heads for precise object detection in drone imagery. Sensors 2022, 22, 8424.

- Jocher, G.; Chaurasia, A.; Qiu, J. Ultralytics YOLOv8, Version 8.0.0. [10 January 2023]. Available online: https://github.com/ultralytics/ultralytics (accessed on 18 July 2025).

- Novak, D.; Kozhubaev, Y.; Potekhin, V.; Cheng, H.; Ershov, R. Asymmetric Object Recognition Process for Miners’ Safety Based on Improved YOLOv10 Technology. Symmetry 2025, 17, 1435.

- Jocher, G. YOLOv5. [2024]. Available online: https://github.com/ultralytics/yolov5 (accessed on 26 October 2025).

- Wang, C.Y.; Yeh, I.H.; Liao, H.Y. YOLOv9: Learning What You Want to Learn Using Programmable Gradient Information; Springer: Berlin/Heidelberg, Germany, 2024; pp. 1–20.

- Chen, L.C.; Papandreou, G.; Kokkinos, I.; Murphy, K.; Yuille, A.L. DeepLab: Semantic Image Segmentation with Deep Convolutional Nets, Atrous Convolution, and Fully Connected CRFs. IEEE Trans. Pattern Anal. Mach. Intell. 2018, 40, 834–848.

- Redmon, J.; Farhadi, A. YOLOv3: An Incremental Improvement. arXiv 2018, arXiv:1804.02767.

- Wang, C.Y.; Bochkovskiy, A.; Liao, H.-Y.M. Scaled-YOLOv4: Scaling Cross Stage Partial Network. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 13024–13033.

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.-Y.; Berg, A.C. SSD: Single Shot MultiBox Detector. In Computer Vision—ECCV 2016; Springer: Berlin/Heidelberg, Germany, 2016; Volume 9905, pp. 21–37.

- Tan, M.; Pang, R.; Le, Q.V. EfficientDet: Scalable and Efficient Object Detection. arXiv 2020, arXiv:1911.09070.

- Wang, A.; Chen, H.; Liu, L.; Chen, K.; Lin, Z.; Han, J.; Ding, G. YOLOv10: Real-Time End-to-End Object Detection. Adv. Neural Inf. Process. Syst. 2024, 37, 13024–13033.

- Rezatofighi, H.; Tsoi, N.; Gwak, J.; Sadeghian, A.; Reid, I.; Savarese, S. Generalized Intersection over Union: A Metric and A Loss for Bounding Box Regression. arXiv 2019, arXiv:1902.09630.

- Zheng, Z.; Wang, P.; Liu, W.; Li, J.; Ye, R.; Ren, D. Distance-IoU Loss: Faster and Better Learning for Bounding Box Regression. arXiv 2019, arXiv:1911.08287.

- Zhang, H.; Xu, C.; Zhang, S. Inner-IoU: More Effective Intersection over Union Loss with Auxiliary Bounding Box. arXiv 2023, arXiv:2311.02877.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).