Abstract

Kötter and Kschischang proposed a coding algorithm for network error correction based on subspace codes; however, it has a high communication overhead (100%). This paper improves upon their algorithm and presents a coding algorithm for network error correction whose communication overhead is lower and comparable to that of classical random network coding. In particular, we utilize the inherent symmetry in subspace codes to optimize the construction process, leading to a more efficient algorithm. This paper also studies the construction problem of constant dimension subspace codes, utilizing parallel construction and multilevel construction. By exploiting the symmetry in these methods, we generalize previous results and derive new lower bounds for constant dimension subspace codes.

Keywords:

network coding; constant dimension subspace codes; parallel construction; Ferrers diagram; multilevel construction

MSC:

94B05

1. Introduction

Let q be a prime power and $\mathbb{F}_q$ be the finite field with q elements, which serves as the field of scalars. The projective space of order n over $\mathbb{F}_q$, denoted as $\mathcal{P}_q(n)$, is the set of all subspaces of the vector space $\mathbb{F}_q^n$. Projective geometry possesses a natural symmetry, which can influence how subspaces are selected and constructed. Let $k, n$ denote two positive integers with $k \le n$ and let $\mathcal{G}_q(n,k)$ be the family of all k-dimensional subspaces of $\mathbb{F}_q^n$. For any $U, V \in \mathcal{G}_q(n,k)$, the subspace distance of $U$ and $V$ is defined as follows:

$$d_S(U,V) = \dim(U+V) - \dim(U \cap V) = 2k - 2\dim(U \cap V).$$

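A nonempty set $\mathcal{C}$ of k-dimensional subspaces of $\mathbb{F}_q^n$ is called a constant dimension subspace code with parameters $(n, M, d, k)_q$ if $|\mathcal{C}| = M$ and the subspace distance between any two distinct codewords of $\mathcal{C}$ is at least $d$.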
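The subspace distance can be computed from ranks alone, since $\dim(U+V)$ is the rank of the stacked generator matrices and $\dim(U \cap V) = \dim U + \dim V - \dim(U+V)$. The following is a minimal sketch over $\mathbb{F}_2$, assuming each basis vector is stored as a Python integer bitmask; the function names `rank_gf2` and `subspace_distance` are illustrative and do not come from the paper.

```python
def rank_gf2(rows):
    """Rank over GF(2) of a matrix whose rows are given as integer bitmasks."""
    basis = []  # independent vectors with pairwise distinct leading bits
    for v in rows:
        for b in basis:
            # v ^ b is smaller than v exactly when v contains b's leading bit
            v = min(v, v ^ b)
        if v:
            basis.append(v)
    return len(basis)


def subspace_distance(U, V):
    """d_S(U, V) = dim(U + V) - dim(U ∩ V) = 2 dim(U + V) - dim U - dim V."""
    return 2 * rank_gf2(U + V) - rank_gf2(U) - rank_gf2(V)


# Two 2-dimensional subspaces of F_2^4 sharing a 1-dimensional intersection.
U = [0b1000, 0b0100]
V = [0b1000, 0b0010]
print(subspace_distance(U, V))  # 2
```

For subspaces of equal dimension k the result is always even, in agreement with the identity $d_S(U,V) = 2k - 2\dim(U \cap V)$.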

Constant dimension subspace codes are used to control errors in random linear network coding. They are analogous to the well-known linear $[n,k]$-code, which is a k-dimensional subspace of $\mathbb{F}_q^n$. Here, the ambient set is the family of all k-dimensional subspaces of $\mathbb{F}_q^n$, and each codeword is a subspace. Determining bounds on the maximum possible size $A_q(n,d,k)$ of an $(n, M, d, k)_q$ constant dimension subspace code is one of the main problems. An online table of lower and upper bounds on $A_q(n,d,k)$ can be found at: https://arxiv.org/abs/1601.02864 (accessed on 1 December 2017) (see [1]).

A rank metric code $\mathcal{M}$ is a subset of the matrix space $\mathbb{F}_q^{m \times n}$ endowed with the rank metric $d_R(A,B) = \operatorname{rank}(A-B)$. It is well known that the number of codewords in a rank metric code $\mathcal{M}$ with minimum rank distance $d$ is upper bounded by

$$|\mathcal{M}| \le q^{\max\{m,n\}(\min\{m,n\}-d+1)}$$

(see Refs. [2,3]). A code attaining this bound is referred to as a maximum rank-distance (MRD) code. Lifted MRD codes, parallel construction and multilevel construction are effective techniques for constructing constant dimension subspace codes, and many results on such constructions have been presented in the literature (see [4,5,6,7,8,9,10,11,12,13]).
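As a quick numerical companion to the MRD bound, the sketch below evaluates $q^{\max\{m,n\}(\min\{m,n\}-d+1)}$ and, under the standard assumption that lifting a $k \times (n-k)$ MRD code with rank distance $d/2$ yields a constant dimension code with subspace distance $d$, the size of a lifted MRD code. The function names are illustrative and not taken from the paper.

```python
def mrd_size(q, m, n, d):
    """Upper bound q^(max(m,n) * (min(m,n) - d + 1)) on a rank metric code
    in F_q^{m x n} with minimum rank distance d (attained by MRD codes)."""
    if not 1 <= d <= min(m, n):
        raise ValueError("rank distance must satisfy 1 <= d <= min(m, n)")
    return q ** (max(m, n) * (min(m, n) - d + 1))


def lifted_mrd_size(q, n, k, d):
    """Size of a lifted MRD code in G_q(n, k) with subspace distance d.

    Assumption: the lifted code uses k x (n - k) matrices with rank
    distance d / 2, so d must be even.
    """
    if d % 2:
        raise ValueError("subspace distance of a constant dimension code is even")
    return mrd_size(q, k, n - k, d // 2)


print(lifted_mrd_size(2, 6, 3, 4))  # 64
```

The printed value gives only the baseline that the constructions surveyed here aim to improve on.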

(1) In [14], Etzion and Silberstein introduced the generalized lifted rank metric codes, referred to as lifted Ferrers diagram rank metric codes. They demonstrated the application of the union of these lifted codes in constructing some constant dimension subspace codes with significantly larger sizes.

(2) In [7], Xianmang He et al. refined the linkage construction and presented a new construction known as the parallel linkage construction. Many new lower bounds on $A_q(n,d,k)$ were proved from the parallel linkage construction.

(3) In [15], Fagang Li proposed the multilevel linkage construction as an effective improvement on the linkage construction. By combining the multilevel construction and linkage construction, he was able to obtain new lower bounds of constant dimension subspace codes for small parameters.

(4) In [16], Shuangqing Liu et al. introduced a family of new codes called rank metric codes with given ranks, which represent a generalization of both the parallel construction and the classic multilevel construction. Furthermore, many constant dimension subspace codes have been identified with larger size than those of previously best known codes.

In view of the above results, we integrate the parallel and multilevel construction to propose an enhanced version of constant dimension subspace codes in this paper. The aim of this study is to improve the bounds of the previously best known size of the construction for constant dimension subspace codes. In Section 2, we present the essential results that underpin our main results, which are subsequently detailed in Section 3 and Section 4. The sizes of some constant dimension subspace codes are larger than the previously best known bounds in [1]. In Section 5, we offer our concluding remarks.

2. Preliminaries

This section first presents Kötter and Kschischang’s network coding scheme, including the encoding algorithm for the source and the decoding algorithm for the sink. It then introduces some definitions and conclusions related to the construction of constant dimension subspace codes. For more details, please refer to [2,14,17,18].

2.1. Encoding Algorithm for the Source

In the same way that the components of a codeword of a traditional Reed–Solomon code are obtained by evaluating a regular message polynomial, the basis of the transmitted vector space in the scheme of Kötter and Kschischang [19] is obtained by evaluating a linearized message polynomial. Please refer to Algorithm 1 for the specific encoding steps. Let $\mathbb{F}_q$ be a finite field, and $\mathbb{F}_{q^m}$ be a finite extension of $\mathbb{F}_q$. We can view $\mathbb{F}_{q^m}$ as an m-dimensional vector space over $\mathbb{F}_q$.

Firstly, let be a set of linearly independent elements in the vector space , then the elements in A span an l-dimensional vector space over . Using the direct sum, we define an -dimensional vector space over as follows:

All the information packets transmitted in the network are vectors in W. Let denote all the subspaces of W, and let denote all the subspaces of W with dimension l. The linear space transmitted in the network of this section is the subspace of W generated by the messages U.

Then, let be a set of message symbols, which can be viewed as k symbols over or as symbols over . Let , where is the set of linear polynomials of degree at most over and is a linear polynomial with the original source message U as its coefficients.

Finally, let , then each pair can be considered as a vector in W. Since is a linearly independent set, the set is also a linearly independent set, and the space generated by this set, denoted as V, is an l-dimensional subspace of W.
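The encoding just described can be sketched in a few lines: a linearized polynomial $f(x) = \sum_i u_i x^{q^i}$ is evaluated on linearly independent elements $\alpha_j$, and the pairs $(\alpha_j, f(\alpha_j))$ form a basis of the transmitted space. The example below is only an illustration under stated assumptions: it takes $q = 2$, represents $\mathbb{F}_{2^4}$ with the field polynomial $x^4 + x + 1$ (an arbitrary choice, not taken from the paper), and uses a hypothetical two-symbol message.

```python
M = 4          # extension degree: we work in GF(2^4)
MOD = 0b10011  # assumed field polynomial x^4 + x + 1


def gf_mul(a, b):
    """Multiply two elements of GF(2^4) stored as 4-bit integers."""
    r = 0
    while b:
        if b & 1:
            r ^= a
        b >>= 1
        a <<= 1
        if a & (1 << M):  # reduce as soon as the degree reaches M
            a ^= MOD
    return r


def frobenius_power(x, i):
    """Return x^(2^i), computed by squaring i times."""
    for _ in range(i):
        x = gf_mul(x, x)
    return x


def eval_linearized(u, x):
    """Evaluate f(x) = sum_i u[i] * x^(2^i) in GF(2^4)."""
    acc = 0
    for i, coeff in enumerate(u):
        acc ^= gf_mul(coeff, frobenius_power(x, i))
    return acc


def encode(u, alphas):
    """Basis of the transmitted space: the pairs (alpha_j, f(alpha_j))."""
    return [(a, eval_linearized(u, a)) for a in alphas]


u = [0b0010, 0b0001]               # hypothetical message: f(x) = alpha*x + x^2
alphas = [0b0001, 0b0010, 0b0100]  # 1, alpha, alpha^2 (independent over GF(2))
print(encode(u, alphas))           # [(1, 3), (2, 0), (4, 11)]
```

Because $f$ is additive over $\mathbb{F}_2$, the span of these pairs consists exactly of the pairs $(x, f(x))$ with $x$ in the span of the $\alpha_j$; this is the property on which the injectivity and distance statements rely.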

Algorithm 1.

Encoding steps.

The mapping from the message polynomial to the linear space is denoted as . It has been proven in [19] that this mapping is injective, and the codewords under this mapping form a metric space that satisfies .

Lemma 1

([19]). Let be the image set of the mapping , where is the set of all -dimensional subspaces in W, and satisfies . Then, is a subspace code with parameters .

2.2. Decoding Algorithm for the Sink

Let be transmitted over a network, which defines the number of nodes, links, and their connections in this network. Its output is , where is the space spanned by the pollution vector, and ⊕ denotes the sum of spaces. The expression is defined as , assuming that E and V intersect trivially.

Let the dimension of the space R received by the destination be , where r is the maximum flow of the network and . In this case, , where t is the dimension of the error space . From the proof in [19], it is known that when the received space and , the minimum distance decoding for C can retrieve the space V, thereby obtaining the original source message U.

Lemma 2

([19]). The communication overhead of the network error-correcting coding system by Kötter is 100%.

2.3. Constant Dimension Subspace Codes

For a constant dimension subspace code , each codeword U of can be represented by a generator matrix whose k rows form a basis for U. The Gaussian elimination algorithm gives a unique generator matrix in reduced row echelon form denoted by . A binary row vector of length n and weight k is called the identifying vector of U, where the k ones of are exactly the pivots of . The Ferrers tableau of U, denoted by , is acquired by removing the columns which contain the pivots of and the zeroes from each row of to the left of the pivots. The Ferrers diagram of U, which only depends on the identifying vector , arises from by replacing the entries of with dots.
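To make these definitions concrete, the sketch below computes the reduced row echelon form over $\mathbb{F}_2$, the identifying vector, and the Ferrers tableau of a subspace given by an arbitrary generator matrix. The generator matrix is a hypothetical example and the helper names are illustrative; they are not taken from the paper.

```python
def rref_gf2(matrix):
    """Reduced row echelon form over GF(2).

    Returns (nonzero rows, pivot column indices); rows are lists of 0/1.
    """
    rows = [r[:] for r in matrix]
    pivots, r = [], 0
    for c in range(len(rows[0])):
        p = next((i for i in range(r, len(rows)) if rows[i][c]), None)
        if p is None:
            continue  # no pivot in this column
        rows[r], rows[p] = rows[p], rows[r]
        for i in range(len(rows)):
            if i != r and rows[i][c]:
                rows[i] = [x ^ y for x, y in zip(rows[i], rows[r])]
        pivots.append(c)
        r += 1
        if r == len(rows):
            break
    return rows[:r], pivots


def identifying_vector(pivots, n):
    """Binary vector of length n with ones exactly at the pivot positions."""
    return [1 if c in pivots else 0 for c in range(n)]


def ferrers_tableau(rref_rows, pivots):
    """Remove pivot columns and the entries to the left of each row's pivot."""
    return [[row[c] for c in range(p + 1, len(row)) if c not in pivots]
            for row, p in zip(rref_rows, pivots)]


# Hypothetical generator matrix of a 3-dimensional subspace of F_2^7.
G = [[1, 0, 0, 0, 1, 1, 0],
     [1, 0, 1, 0, 0, 1, 1],
     [0, 0, 0, 1, 0, 1, 1]]
E, piv = rref_gf2(G)
print(identifying_vector(piv, 7))  # [1, 0, 1, 1, 0, 0, 0]
print(ferrers_tableau(E, piv))     # [[0, 1, 1, 0], [1, 0, 1], [0, 1, 1]]
```

Replacing every entry of the tableau by a dot gives the Ferrers diagram, here with rows of 4, 3 and 3 dots aligned to the right.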

Example 1.

Let U be a -dimensional subspace of with the following generator matrix in reduced row echelon form:

Then, the identifying vector of U is and the corresponding Ferrers tableau is

and the corresponding Ferrers diagram of U is

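Let $\mathcal{F}$ be a Ferrers diagram. A linear rank metric code $\mathcal{C}_{\mathcal{F}}$ is called a Ferrers diagram rank metric code if every matrix $M \in \mathcal{C}_{\mathcal{F}}$ has shape $\mathcal{F}$, that is, all entries of $M$ not in $\mathcal{F}$ are zeroes. A Ferrers diagram rank metric code $\mathcal{C}_{\mathcal{F}}$ is called an $[\mathcal{F}, \rho, \delta]_q$-code if $\operatorname{rank}(M) \ge \delta$ for an arbitrary nonzero codeword $M \in \mathcal{C}_{\mathcal{F}}$ and $\dim \mathcal{C}_{\mathcal{F}} = \rho$.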

2.4. Related Works

T. Etzion et al. [14] showed that the Hamming distance between identifying vectors gives a lower bound on the subspace distance, which plays an important role in obtaining the desired subspace distance. Specifically, for any $U, V \in \mathcal{G}_q(n,k)$ with identifying vectors $v(U)$ and $v(V)$,

$$d_S(U,V) \ge d_H(v(U), v(V)),$$

where $d_H(v(U), v(V))$ denotes the Hamming distance between $v(U)$ and $v(V)$.

Lemma 3.

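Let $\mathcal{C}_{\mathcal{F}}$ be an $[\mathcal{F}, \rho, \delta]_q$-code. Then, the cardinality of $\mathcal{C}_{\mathcal{F}}$ is upper bounded by $q^{\min_{0 \le i \le \delta-1} \nu_i}$, where $\nu_i$ is the number of dots in $\mathcal{F}$ that are contained neither in the first $i$ rows nor in the last $\delta-1-i$ columns, for $0 \le i \le \delta-1$.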
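The quantities in Lemma 3 are easy to evaluate by direct counting. The sketch below assumes a Ferrers diagram is described by its list of row lengths, with dots aligned to the right and rows listed from top to bottom; this representation and the function name `ferrers_dim_bound` are illustrative choices, not notation from the paper.

```python
def ferrers_dim_bound(row_lengths, delta):
    """Return (min_i nu_i, [nu_0, ..., nu_{delta-1}]) for a Ferrers diagram.

    nu_i counts the dots that lie neither in the first i rows nor in the
    last delta - 1 - i columns.
    """
    n_cols = max(row_lengths)
    # Dots of row r occupy the rightmost row_lengths[r] columns.
    dots = [(r, c)
            for r, length in enumerate(row_lengths)
            for c in range(n_cols - length, n_cols)]
    nus = []
    for i in range(delta):
        cut = n_cols - (delta - 1 - i)  # columns >= cut are discarded
        nus.append(sum(1 for r, c in dots if r >= i and c < cut))
    return min(nus), nus


print(ferrers_dim_bound([4, 3, 3], 2))  # (6, [7, 6])
print(ferrers_dim_bound([4, 4, 4], 2))  # (8, [9, 8])
```

For the full $3 \times 4$ rectangle the bound reduces to the MRD exponent $\max\{m,n\}(\min\{m,n\}-\delta+1) = 8$, as expected.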

An $[\mathcal{F}, \rho, \delta]_q$-code is called an optimal Ferrers diagram rank metric code if it attains the upper bound in Lemma 3. The existence of optimal Ferrers diagram rank metric codes has been proven in many cases, but the general existence problem is still widely open (see [17,20,21,22]). The following lemma is a criterion for checking the existence of an optimal Ferrers diagram rank metric code.

Lemma 4.

Let be a Ferrers diagram and be the number of dots in the i-th column of . If each of the rightmost columns of has at least n dots, then there exists an optimal code for any prime power q.

For a Ferrers diagram of size , one can transpose it to obtain a Ferrers diagram of size , where the rightmost column of is the first row of and so on. Thus, if there exists an optimal Ferrers diagram rank metric code for , then so does an optimal Ferrers diagram rank metric code for .

T. Etzion et al. [14] also provided the relevant theorem for a lifted constant dimension subspace code.

Lemma 5.

Let be an Ferrers diagram rank metric code. Then, the lifted constant dimension subspace code defined by

is an constant dimension subspace code.

Lemma 6.

Let Λ be an index set and be the set of identifying vectors with , where is the Hamming weight. For each , let be an constant dimension subspace code; then, is an constant dimension subspace code with cardinality

Lemma 7.

For a matrix , the row space of M over is denoted by . A set of matrices over is called an - of a constant dimension subspace code in if the following conditions are satisfied.

- (a)

- For each , .

- (b)

- For any two distinct matrices , where is an -subspace of generated by all row vectors of .

X. He et al. [7] refined the linkage construction and presented a new construction known as the parallel linkage construction. Many new lower bounds on $A_q(n,d,k)$ were proved from the parallel linkage construction.

Lemma 8.

Let and be SC-representations of two, and , constant dimension subspace codes, respectively. Let be a code with rank distance d and elements. Let be a code with rank distance d and elements such that the rank of each element in is at most . Consider the set defined by , where

Then, is an constant dimension subspace code.

F. Li [15] proposed the multilevel linkage construction as an effective improvement on the linkage construction.

Lemma 9.

Let be integers with and . Let and be SC-representations of two, and , constant dimension subspace codes, respectively. Let be a code with rank distance d and elements. Let Λ be an index set and be the set of identifying vectors with such that the Hamming weight of each satisfy , where and . Let be a Ferrers diagram rank metric code with . Consider the constant dimension subspace code defined by

where is the same as Equation (3), , and is the lifted code of . Then, is an constant dimension subspace code with .

Let ; the of is defined by

The rank distribution of an MRD code is completely determined by its parameters. The following results can be referred to in Corollary 26 in [18] or Theorem 5.6 in [2]. The Delsarte Theorem is essential to calculating the final result in this paper.

Lemma 10.

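Let $\mathcal{M} \subseteq \mathbb{F}_q^{m \times n}$ with $m \ge n$ be an MRD code with rank distance d; then, its rank distribution is given by

$$A_r(\mathcal{M}) = \begin{bmatrix} n \\ r \end{bmatrix}_q \sum_{i=0}^{r-d} (-1)^i q^{\binom{i}{2}} \begin{bmatrix} r \\ i \end{bmatrix}_q \left( q^{m(r-d-i+1)} - 1 \right), \qquad d \le r \le n,$$

where $A_r(\mathcal{M})$ is the number of codewords of rank $r$ and $\begin{bmatrix} n \\ r \end{bmatrix}_q$ denotes the Gaussian binomial coefficient.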
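For numerical work, the rank distribution of an MRD code can be tabulated directly. The following sketch assumes the standard form of Delsarte's formula for a code in $\mathbb{F}_q^{m \times n}$ with $m \ge n$, as stated in the docstring; the function names are illustrative and not taken from the paper.

```python
def gaussian_binomial(n, k, q):
    """Number of k-dimensional subspaces of an n-dimensional space over F_q."""
    if k < 0 or k > n:
        return 0
    num = den = 1
    for i in range(k):
        num *= q ** (n - i) - 1
        den *= q ** (i + 1) - 1
    return num // den  # the quotient is always an integer


def mrd_rank_distribution(q, m, n, d):
    """Rank distribution {r: A_r} of an MRD code in F_q^{m x n}, m >= n.

    Assumed formula (Delsarte), for d <= r <= n:
        A_r = [n, r]_q * sum_{i=0}^{r-d} (-1)^i q^(i(i-1)/2) [r, i]_q
                                         * (q^(m(r-d-i+1)) - 1)
    """
    dist = {0: 1}  # the zero matrix
    for r in range(d, n + 1):
        total = 0
        for i in range(r - d + 1):
            total += ((-1) ** i * q ** (i * (i - 1) // 2)
                      * gaussian_binomial(r, i, q)
                      * (q ** (m * (r - d - i + 1)) - 1))
        dist[r] = gaussian_binomial(n, r, q) * total
    return dist


print(mrd_rank_distribution(2, 4, 3, 2))  # {0: 1, 2: 105, 3: 150}
```

A useful sanity check is that the values sum to the MRD size $q^{m(n-d+1)}$; in the example, $1 + 105 + 150 = 256 = 2^{4 \cdot 2}$.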

3. Subspace Codes with Low Communication Overhead

In this section, we assume that the source and the destination share a public random number generator. Let be a finite field, and let and be finite extensions of , satisfying , i.e., . We can view and as m-dimensional and l-dimensional vector spaces over , respectively.

As a preparatory step for the source coding algorithm, the source first selects a set of linearly independent vectors in the finite field .

Let

The vector space that spans over is given by , where G is the set of vectors composed of . It is obvious that is the entire space . We define an -dimensional vector space over using the direct sum:

All the information packets transmitted in the network are vectors in W. Let denote all the subspaces of W, and let denote all the subspaces of W with dimension l. The linear space transmitted in the network of this section is the subspace of W generated by the messages U.

3.1. Encoding Algorithm for the Source

The source generates a vector space from the original source message U through the following steps:

Step 1: First, select a permutation matrix P of size over the finite field .

Step 2: Generate the matrix A as follows:

where $I_l$ is the identity matrix of order l, and is the zero matrix with size . Obviously, must be a set of linearly independent vectors.

Step 3: Generate a set of vectors with length m from matrices G and A as follows:

Let the mapping from to be denoted as ; then, this mapping is injective.

Step 4: Let be a linear polynomial with the original source message as its coefficient.

Step 5: Let ; then, each pair can be considered as a vector in W. The set is a linearly independent set, and the space generated by this set, denoted as V, is an l-dimensional subspace of W. The space V is the vector space that the source ultimately needs to transmit over the network.

Let the mapping from to the linear space be denoted as . Let be a new mapping defined by the functions and that map the original source message to the vector space . Obviously, the mapping can be constructed by the function , where and z is a vector of length l over .

The following is a case study that analyzes the specific process of subspace codes in network transmission through a designed network. Let , , , and . The finite field is generated by the fourth-degree polynomial over . Let be a set of information symbols containing two symbols in . The analysis is conducted in two aspects: encoding steps and transmission steps.

3.1.1. Encoding Steps

- Let the linear polynomial corresponding to the information symbols be .

- Let be a linearly independent set of elements in the vector space with the appropriate matrix ; then, .

- .

Thus, the linear space V is given as follows:

The elements in V are expanded over , with the least significant bit placed in the leftmost position. For example, , , , etc. Therefore, the subspace codeword actually transmitted is as follows:

3.1.2. Transmission Steps

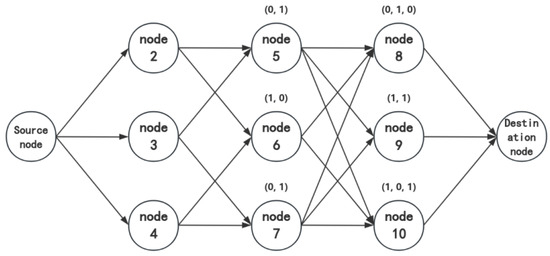

A communication network is set up as shown in Figure 1. Assume that any node in the network can perform network coding and that the error probability on each network link is 0. The encoder-generated codeword is a subspace, and in fact, only the row vectors of the generator matrix of this codeword are transmitted. Each row of the generator matrix is treated as a packet and the source transmits one packet on each outgoing link. The transmitted codeword V contains three packets: .

Figure 1.

Communication network.

Now let us analyze how packets are transmitted in the network and received by the sink. Assume that transmission occurs one bit at a time.

Step 1: The source sends the packets , , and to intermediate nodes 2, 3, and 4, respectively.

Step 2: Node 2 forwards the received packet to nodes 5 and 6; node 3 forwards the received packet to nodes 5 and 7; and node 4 forwards the received packet to nodes 6 and 7.

Step 3: The local encoding kernels for nodes 5, 6, and 7 are , , and , respectively. Therefore, node 5 will receive the packets and and, after encoding, obtain the new packet , which it sends to nodes 8, 9, and 10. Node 6 will obtain and send it to nodes 8 and 10. Node 7 will obtain and send it to nodes 8, 9, and 10.

Step 4: The local encoding kernels for nodes 8, 9, and 10 are , , and , respectively. Thus, node 8 will obtain and send it to the sink node; node 9 will obtain and send it to the sink node; and node 10 will obtain and send it to the sink node. The three packets received by the sink node form the following matrix:

The matrix M formed by the packets received at the sink is a generator matrix of the received codeword U, where . A basis of the received codeword U can be read off directly as . Using the decoding algorithm, we can recover the original information .
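The reason the sink can work with whatever packets arrive is that error-free linear network coding multiplies the generator matrix by a transfer matrix, and an invertible transfer matrix leaves the row space unchanged. The sketch below illustrates this over $\mathbb{F}_2$; the generator matrix `G` and the transfer matrices `T` and `T_bad` are hypothetical and do not correspond to the network in Figure 1.

```python
def mat_mul_gf2(T, G):
    """Matrix product T * G over GF(2); matrices are lists of 0/1 rows."""
    return [[sum(t * g for t, g in zip(row, col)) % 2 for col in zip(*G)]
            for row in T]


def row_space(G):
    """All vectors of the GF(2) row space of G, as a frozenset of tuples."""
    space = {tuple([0] * len(G[0]))}
    for row in G:
        space |= {tuple(a ^ b for a, b in zip(v, row)) for v in space}
    return frozenset(space)


# Hypothetical generator matrix of the transmitted 3-dimensional codeword.
G = [[1, 0, 0, 0, 1, 1, 0],
     [0, 0, 1, 0, 1, 0, 1],
     [0, 0, 0, 1, 0, 1, 1]]
T = [[1, 1, 0], [0, 1, 1], [1, 1, 1]]      # invertible over GF(2)
T_bad = [[1, 1, 0], [0, 1, 1], [1, 0, 1]]  # singular: row 3 = row 1 + row 2

print(row_space(mat_mul_gf2(T, G)) == row_space(G))  # True
print(len(row_space(mat_mul_gf2(T_bad, G))))         # 4
```

With the singular transfer matrix the sink sees only a 2-dimensional subspace of the codeword, which corresponds to an erasure in the sense of Section 3.2.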

Lemma 11.

The mapping is injective.

Proof.

Using proof by contradiction, assume there exists another vector y of length l, where and , such that . Then, we have

The above equation can be converted into Since is a set of linearly independent vectors, the above equation holds if and only if for . □

Lemma 12.

Let , then the mapping is injective.

Proof.

Since we have already proven that the mapping is injective, we only need to show that the mapping is also injective.

Using proof by contradiction, assume there exists another linear polynomial , such that . Let . Clearly, for . Since are linearly independent and is a polynomial of a degree less than , the assumption holds if and only if , that is, . □

Lemma 13.

Let be a set of r linearly independent vectors that satisfy , where . Then, are also linearly independent.

Proof.

Using proof by contradiction, assume there exists a set of coefficients such that . The above equation can be rearranged as follows:

Given that are linearly independent, the assumption holds if and only if . □

Theorem 1.

Let C be the message space obtained from the mapping that satisfies . Then, under the subspace metric, it holds that .

Proof.

Assume and are two distinct linear polynomials in such that . The corresponding vector spaces under the mapping are and . Assume the dimension of the space is r, meaning there exist r linearly independent vectors in , such that By Lemma 13, is also a set of linearly independent vectors, thus they span an r-dimensional linear space, denoted as B. In the space B, all vectors satisfy . If , then the polynomials and would have more than k common roots, and since they are both polynomials of a degree less than k, then we have , which contradicts our assumption. Therefore, , leading to

□

3.2. Decoding Algorithm for the Sink

Let the vector space received by the destination be , where is the space spanned by the pollution vector, and ⊕ denotes the sum of spaces. The expression is defined as , where E and V intersect trivially. Let the dimension of the space R received by the destination be , where r is the maximum flow of the network and . In this case, , where t is the dimension of the error space .

The following theorem is a special case of Theorem 2 in [19].

Theorem 2.

Let C be the set of vector spaces to be transmitted, and let be the vector space to be transmitted. Let be the vector space received by the receiver, where . If , then the minimum distance decoding on C can recover the original source message U from the received vector space R.

Proof.

For the space C, we have . Assume is another vector space in C. Then, we have , which implies If holds, then , meaning that minimum distance decoding can recover the space V.

Given the vector space V, let be a basis for the space V. Then, we have The receiver selects the first k equations , where , and rewrites them as follows:

where

Then, the original source message U can be solved as follows:

□
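Minimum distance decoding, as used in Theorem 2, can be illustrated by brute force on a toy codebook. The sketch below works over $\mathbb{F}_2^4$, enumerates each subspace explicitly, and measures dimensions through the number of elements; the codebook, the received spaces and the function names are hypothetical, and exhaustive search is only practical for very small parameters.

```python
def span(vectors):
    """All elements of the GF(2) span of the given bitmask vectors."""
    space = {0}
    for v in vectors:
        space |= {x ^ v for x in space}
    return frozenset(space)


def dim(space):
    """Dimension of a subspace with 2^dim elements."""
    return len(space).bit_length() - 1


def subspace_distance(A, B):
    """d_S(A, B) = dim A + dim B - 2 dim(A ∩ B)."""
    return dim(A) + dim(B) - 2 * dim(A & B)


def decode(codebook, received):
    """Return the codeword closest to the received space."""
    return min(codebook, key=lambda V: subspace_distance(V, received))


# Toy codebook: three 2-dimensional subspaces with pairwise trivial
# intersection, hence minimum subspace distance 4.
V1 = span([0b1000, 0b0100])
V2 = span([0b0010, 0b0001])
V3 = span([0b1010, 0b0101])
codebook = [V1, V2, V3]

erased = span([0b1000])                     # one dimension of V1 lost
polluted = span([0b1000, 0b0100, 0b0011])   # V1 plus one error vector
print(decode(codebook, erased) == V1)    # True
print(decode(codebook, polluted) == V1)  # True
```

Both received spaces involve a single erasure or a single error, which is within the guaranteed decoding radius for minimum distance 4.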

Corollary 1.

The communication overhead of the above network error correction coding system is .

Proof.

In the source encoding algorithm, the communication overhead introduced by the matrix G is given by . In particular, the matrix G can be chosen to be an identity matrix. □

4. Subspace Codes with Larger Size

In this section, we combine the parallel construction and multilevel construction to propose an improved version. As a consequence, we improve the bounds of the previously best known construction for constant dimension subspace codes in [1].

Theorem 3.

Let be integers with and Λ be an index set. Let

be the set of identifying vectors with , where is the zero vector with length n. Let be a Ferrers diagram rank metric code with and be the lifted code of . Consider the constant dimension subspace code defined by

where is the same as in Equation (4). Then, is an constant dimension subspace code with .

Proof.

It is clear that and , , are pairwise disjoint. According to Equation (4) and the definition of , it is easy to verify that

Now we determine the minimum distance of in the following four cases.

Case 1: Let and be two subspaces in , Since and Equation (2),

Case 2: For two distinct vectors and in S, let and . By Equation (1),

Case 3: Let and be two subspaces in . Then,

- If , it is easy to check that Then,

- If , then by and . Hence,

Case 4: Let , , and . Let and be identifying vectors corresponding to and , respectively, where and . By Equation (1), we have .

In summary, the minimum distance of is This completes the proof. □

According to the above theorem, we can draw the following corollary.

Corollary 2.

Let be integers with . Then,

Proof.

Using Theorem 3, is an constant dimension subspace code, and is an constant dimension subspace code. Let be an MRD code with rank distance d and elements, such that the rank of each matrix in is at most . By the Delsarte theorem, . It is clear that the maximal size of is . Using the above theorem, the conclusion holds. □

Below, we will provide two examples to demonstrate that our results are better.

Example 2.

Assume , and . Let be the set of identifying vectors, which are listed in Table 1. By the Delsarte theorem, . It is clear that

Table 1.

Construction for .

Remark 1.

Table 2.

Construction for .

5. Concluding Remarks

Constant dimension subspace codes have a wide range of applications in various fields. Firstly, in wireless communication systems, they can improve the reliability and efficiency of data transmission, especially in the presence of interference and channel fading. By using subspace coding, lost data packets can be recovered, achieving higher transmission rates and lower error rates. Secondly, in network coding, constant dimension subspace codes effectively handle packet loss and errors by treating data packets as elements in a vector space, enabling efficient data transmission and reconstruction, particularly in multicast and broadcast scenarios. Additionally, in distributed storage systems, subspace codes are used for data redundancy and recovery, allowing the original data to be restored even when some nodes fail, thus enhancing the system’s fault tolerance. In video streaming, constant dimension subspace codes improve video quality and smoothness, ensuring continuous playback and clarity even when network bandwidth is limited or unstable. Finally, they can also be applied to data encryption and secure transmission; by encoding data as vectors in a subspace, only the receiver with the correct decoding information can recover the original data, thereby enhancing the security of data transmission.

In this paper, we reduced the communication overhead and obtained new lower bounds of the sizes of subspace codes. This construction gives improved bounds for the multilevel linkage construction. According to the data in reference [1] and Equation (5), which provides comprehensive tables of the presently best known lower and upper bounds for subspace codes, we have notably improved the following lower bounds: and , as documented in Table 3 and Table 4.

Table 3.

Lower bounds of .

Table 4.

Lower bounds of .

Author Contributions

Conceptualization, L.W.; methodology, L.W. and Y.N.; investigation, L.W.; writing—original draft, Y.N. All authors have read and agreed to the published version of the manuscript.

Funding

This work was funded by the National Natural Science Foundation of China (grant no. 12301665), the Basic Science (Natural Science) Research Projects of Universities in Jiangsu Province (under grant agreement no. 22KJB110009), and the Applied Basic Research Support Project of Changzhou Science and Technology (grant no. CJ20235031).

Data Availability Statement

The original contributions presented in this study are included in the article; further inquiries can be directed to the corresponding author.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Heinlein, D.; Kiermaier, M.; Kurz, S.; Wassermann, A. Tables of Subspace Codes. Available online: https://arxiv.org/abs/1601.02864 (accessed on 1 December 2017).

- Delsarte, P. Bilinear forms over a finite field with applications to coding theory. J. Combin. Theory Ser. A 1978, 25, 226–241.

- Gabidulin, È.M. Theory of codes with maximum rank distance. Probl. Inf. Transm. 1985, 21, 3–16.

- Chen, H.; He, X.; Weng, J.; Xu, L. New Constructions of Subspace Codes Using Subsets of MRD codes in Several Blocks. IEEE Trans. Inform. Theory 2020, 66, 5317–5321.

- Etzion, T.; Silberstein, N. Codes and designs related to lifted MRD codes. IEEE Trans. Inform. Theory 2013, 59, 1004–1017.

- Gluesing-Luerssen, H.; Troha, C. Construction of subspace codes through linkage. Adv. Math. Commun. 2016, 10, 525–540.

- He, X. Construction of constant dimension code from two parallel versions of linkage construction. IEEE Commun. Lett. 2020, 24, 2392–2395.

- He, X.; Chen, Y.; Zhang, Z. Improving the Linkage Construction with Echelon-Ferrers for Constant-Dimension Codes. IEEE Commun. Lett. 2020, 24, 1875–1879.

- Niu, Y.; Yue, Q.; Huang, D. New constant dimension subspace codes from generalized inserting construction. IEEE Commun. Lett. 2021, 25, 1066–1069.

- Niu, Y.; Yue, Q.; Huang, D. New constant dimension subspace codes from parallel linkage construction and multilevel construction. Cryptogr. Commun. 2022, 14, 201–214.

- Niu, Y.; Yue, Q.; Huang, D. Construction of constant dimension codes via improved inserting construction. Appl. Algebra Eng. Commun. Comput. 2023, 34, 1045–1062.

- Xu, L.; Chen, H. New constant-dimension subspace codes from maximum rank distance codes. IEEE Trans. Inform. Theory 2018, 64, 6315–6319.

- Heinlein, D.; Kurz, S. Coset construction for subspace codes. IEEE Trans. Inform. Theory 2017, 63, 7651–7660.

- Etzion, T.; Silberstein, N. Error-correcting codes in projective spaces via rank-metric codes and Ferrers diagrams. IEEE Trans. Inform. Theory 2009, 55, 2909–2919.

- Li, F. Construction of constant dimension subspace codes by modifying linkage construction. IEEE Trans. Inform. Theory 2020, 66, 2760–2764.

- Liu, S.; Chang, Y.; Feng, T. Parallel multilevel constructions for constant dimension codes. IEEE Trans. Inform. Theory 2020, 66, 6884–6897.

- Antrobus, J.; Gluesing-Luerssen, H. Maximal Ferrers diagram codes: Constructions and genericity considerations. IEEE Trans. Inform. Theory 2019, 65, 6204–6223.

- Cruz, J.; Gorla, E.; Lopez, H.; Ravagnani, A. Weight distribution of rank-metric codes. Des. Codes Cryptogr. 2018, 86, 1–16.

- Kötter, R.; Kschischang, F.R. Coding for Errors and Erasures in Random Network Coding. IEEE Trans. Inform. Theory 2008, 54, 3579–3591.

- Etzion, T.; Gorla, E.; Ravagnani, A.; Wachter, A. Optimal Ferrers diagram rank-metric codes. IEEE Trans. Inform. Theory 2016, 62, 1616–1630.

- Liu, S.; Chang, Y.; Feng, T. Constructions for optimal Ferrers diagram rank-metric codes. IEEE Trans. Inform. Theory 2019, 65, 4115–4130.

- Zhang, T.; Ge, G. Constructions of optimal Ferrers diagram rank metric codes. Des. Codes Cryptogr. 2019, 87, 107–121.


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).