Abstract

In recent years, many new metrics highly correlated with the Mean Opinion Score (MOS) have been proposed for assessing image quality through Full-Reference Image Quality Assessment (FR-IQA) methods, such as MDSI, HPSI, and GMSD. Eight of these selected metrics, which compare reference and distorted images in a symmetrical manner, are briefly described in this article, and their performance is evaluated using correlation criteria (PLCC, SROCC, and KROCC), as well as RMSE. The aim of this paper is to develop a new, efficient quality index based on a combination of several high-performance metrics already utilized in the field of Image Quality Assessment (IQA). The study was conducted on four benchmark image databases (TID2008, TID2013, KADID-10k, and PIPAL) and identified the three best-performing metrics for each database. The paper introduces a New Combined Metric (NCM), which is a weighted sum of three component metrics, and demonstrates its superiority over each of its component metrics across all the examined databases. An optimization method for determining the weights of the NCM is also presented. Additionally, an alternative version of the combined metric, based on the fastest metrics and employing symmetric calculations for pairs of compared images, is discussed. This version also demonstrates strong performance.

1. Introduction

Every day, a huge number of digital cameras generate a vast stream of images. Due to the multitude of applications of imaging devices in areas such as vision-based quality control of components in manufacturing processes, security monitoring, object detection systems in automotive applications, and analysis of diagnostic images in medicine, there has been a strong increase in the demand for Image Quality Assessment (IQA) methods.

Image quality can be assessed either subjectively or objectively. Subjective methods rely on the perceptual evaluation of image quality by human observers, so they are costly and require a large number of participants. In contrast, objective methods use mathematical models to compute the values of various metrics related to image quality. Among IQA methods, the most advanced are those that perform a symmetrical comparison between distorted images and their originals, referred to as Full-Reference IQA (FR-IQA) techniques. The scores generated by each IQA metric can be evaluated against subjective assessments derived from human viewers, such as the Mean Opinion Score (MOS) or the Differential Mean Opinion Score (DMOS). For FR-IQA methods, the correlation coefficients obtained from comparisons with MOS indicate the effectiveness of the metric: the higher the coefficient, the more closely the metric aligns with human perception. For many years, efforts have been made in the field of FR-IQA to improve and refine existing quality metrics. Particular attention has been paid to combining one metric with other quality measures in order to increase the correlation of the resulting quality index with MOS. Meanwhile, the rapid development of machine-learning and deep-learning techniques provides new methods for image quality assessment. The FR-IQA problem can thus be understood as the challenge of developing mathematical models that assess image quality in agreement with human judgment.

Over the years, numerous metrics for FR-IQA have been proposed that take various aspects of the Human Visual System (HVS) into account. Recently, attempts have been made to enhance the effectiveness of FR-IQA by combining existing metrics to create a “super” index. Theoretical foundations for such metric fusion can be found in Liu’s work [1], where it is applied to classical metrics such as PSNR, VSNR, SSIM, and VIF. In the paper by Okarma [2], the properties of three FR-IQA metrics (MS-SSIM, VIF, and R-SVD) were analyzed, and a combined quality metric based on their product was proposed. It is named the Combined Quality Metric (CQM), and its three correlation coefficients with MOS are higher than those of the individual factors of the product.

Later, this concept was further developed using optimization or regression techniques to determine the optimal weights or exponents in the products of existing FR-IQA indices [3,4]. The Combined Image Similarity Index (CISI) proposed by Okarma in [3] employs metrics similar to those used in the CQM. However, instead of the R-SVD metric, it utilizes the FSIMc metric. The CISI index demonstrates a higher correlation with Mean Opinion Score (MOS) compared to the CQM index. In the 2013 article by Okarma [4], a new EHIS metric is introduced, which is based on the product of four multipliers: two familiar from CQM (MS-SSIM and VIF) and two new ones (WFSIMc and RFSIM). This approach improves the correlation with MOS over the combined CISI metric.

Another metric fusion strategy was proposed by the author of [5], who presented several versions of a linear combination (weighted sum) based on metrics selected from a dozen FR-IQA metrics. He referred to these new combined metrics as Linearly Combined Similarity Measures (LCSIM). Using a linear combination requires determining the weighting factors for the FR-IQA metrics, which is achieved by minimizing the RMSE with a genetic algorithm.

Another line of work in creating methods based on the fusion of metrics utilizes machine-learning techniques. One example can be seen in [6], where the results of six traditional FR-IQA metrics (FSIMc, PSNR-HMA, PSNR-HVS, SFF, SR-SIM, and VIF) were used as a feature vector for training and testing a four-layer neural network. The output results produced by the neural network demonstrate a significant improvement over those achieved by the input metrics. Currently, deep neural networks, particularly CNNs, can learn the best combinations of metrics to optimize image quality assessment [7,8].

Combining metrics leads to increased computational complexity, which can be an issue in the context of real-time applications. Nevertheless, the fusion of quality metrics for FR-IQA is a promising research direction that could significantly improve the correlation of objective quality assessments with MOS.

In this paper, we consider a new combined metric for FR-IQA, based on metrics that are highly correlated with MOS and well-known from the literature. The structure of this paper is as follows: following the introduction, Section 2 provides an overview of eight relatively new and promising FR-IQA metrics. Section 3 presents their linear combination, along with the experimental results. Finally, Section 4 concludes the paper.

3. The New Combined Metric (NCM) and Its Experimental Research

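The New Combined Metric (NCM) is defined as a weighted sum of three component metrics:

$$\mathrm{NCM} = w_1 M_1 + w_2 M_2 + w_3 M_3, \tag{61}$$

where $M_1$, $M_2$, and $M_3$ are the selected FR-IQA metrics for a given dataset and $w_1$, $w_2$, and $w_3$ are the optimized weights.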
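As a minimal illustration of the weighted sum described above, the following Python sketch evaluates a combined score for one image pair. The function name `combined_metric`, the example scores, and the example weights are hypothetical and are not taken from the tables of this paper.

```python
from typing import Sequence


def combined_metric(scores: Sequence[float], weights: Sequence[float]) -> float:
    """Return the weighted sum of component metric scores for one image pair."""
    if len(scores) != len(weights):
        raise ValueError("scores and weights must have the same length")
    return sum(w * s for w, s in zip(weights, scores))


# Hypothetical scores of three component metrics for one distorted image,
# and hypothetical weights (assumptions, not values from the paper).
example_scores = [0.25, 0.5, 0.75]
example_weights = [0.5, 0.25, 0.25]
ncm_value = combined_metric(example_scores, example_weights)
```

In practice the same function would be applied to every distorted image in a database, producing one combined score per image that can then be compared with the MOS values.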

3.1. Selected IQA Databases

Four benchmark databases, TID2008 [21], TID2013 [22], KADID-10k [23], and PIPAL [24], were chosen for the research. These databases are distinguished by a large set of reference images, diverse distortion types, and varying levels of their presence in the images. For each image in the databases, Mean Opinion Scores (MOSs) are experimentally gathered by collecting assessments from multiple human observers.

The TID2008 image database consists of 1700 distorted images, generated using 17 different distortion types, each applied at four levels to 25 reference images (Figure 1). MOS was provided based on the work of 838 human observers and 256,428 comparisons. All images have a resolution of 512 × 384 pixels.

Figure 1.

Reference images of the TID2008 and TID2013 databases [21].

The TID2013 image database is an updated and expanded version of TID2008. It retains the same set of reference images (Figure 1), but the number of distortion types has been increased to 24, and the distortion levels have been raised to five. The database includes 3000 distorted digital images. Additionally, the size of the research group from which the average subjective ratings were derived has been enlarged. MOS ratings were collected from 524,340 comparisons made by 971 observers. The image resolution remains unchanged.

Online crowdsourcing for image assessment has enabled the creation of larger databases. One such large database, KADID-10k (Konstanz Artificially Distorted Image Quality Database) [23], contains 10,125 distorted images with subjective quality scores (MOSs), which were collected from 2209 crowd workers. This database includes a limited selection of reference images (81) (Figure 2), a restricted number of artificial distortion types (25), and five levels for each distortion type. The artificial distortions present in the KADID-10k database include spatial distortions, noise, blurs, and more. Recently, KADID-10k has become widely used for developing deep-learning models for image quality assessment [25]. The image resolution is the same as in the TID databases.

Figure 2.

Reference images of the KADID-10k database [23].

PIPAL is a large IQA dataset introduced in 2020 [24]. It increases the number of reference images to 250; these are 288 × 288 fragments of images from the DIV2K and Flickr2K high-resolution image collections (Figure 3). The number of distortion types is increased to 40 and the number of distorted images to 29,000, and the dataset contains 1,130,000 human ratings. In this image database, the Elo rating system was used to assign the Mean Opinion Scores (MOSs). Currently, the PIPAL dataset is used in many challenges as a benchmark for IQA algorithms.
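For readers unfamiliar with the Elo rating system mentioned above, the sketch below shows the standard Elo update for a single pairwise comparison. The K-factor of 16 and the starting rating of 1400 are assumptions chosen only for illustration; the exact settings used when building PIPAL are not given in this text.

```python
from typing import Tuple


def elo_expected(r_a: float, r_b: float) -> float:
    """Expected score of image A against image B under the standard Elo model."""
    return 1.0 / (1.0 + 10.0 ** ((r_b - r_a) / 400.0))


def elo_update(r_a: float, r_b: float, score_a: float, k: float = 16.0) -> Tuple[float, float]:
    """Return updated ratings after one comparison.

    score_a is 1.0 if A was preferred, 0.0 if B was preferred, 0.5 for a draw.
    The K-factor k is an assumed value.
    """
    delta = k * (score_a - elo_expected(r_a, r_b))
    return r_a + delta, r_b - delta


# Two images with equal (assumed) starting ratings; the observer prefers A.
new_a, new_b = elo_update(1400.0, 1400.0, 1.0)
```

Repeating this update over many pairwise judgments gives each distorted image a rating that can serve as its subjective score.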

Figure 3.

Examples of reference images from the PIPAL database [24].

The key information regarding the selected IQA benchmark databases is presented in Table 1.

Table 1.

Comparison of the selected IQA databases.
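The figures quoted in this subsection can be cross-checked with a short sketch. The class name `IqaDatabase` and the method `is_full_grid` are hypothetical; the numbers are those stated in the text above. The check asks whether the number of distorted images equals the product of reference images, distortion types, and distortion levels.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class IqaDatabase:
    name: str
    reference_images: int
    distortion_types: int
    distorted_images: int
    levels: Optional[int] = None  # None when the text gives no per-type level count

    def is_full_grid(self) -> bool:
        """True if every reference image appears with every type at every level."""
        if self.levels is None:
            return False
        expected = self.reference_images * self.distortion_types * self.levels
        return expected == self.distorted_images


databases = [
    IqaDatabase("TID2008", 25, 17, 1700, levels=4),
    IqaDatabase("TID2013", 25, 24, 3000, levels=5),
    IqaDatabase("KADID-10k", 81, 25, 10125, levels=5),
    IqaDatabase("PIPAL", 250, 40, 29000),
]
full_grid = {db.name: db.is_full_grid() for db in databases}
```

The three databases with a stated number of levels are consistent with a full grid of reference images, distortion types, and levels; for PIPAL no level count is given here, so the check is not applicable.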

3.2. Experimental Tests

The experimental study began with the determination of the PLCC, SROCC, and KROCC correlation coefficients, and RMSE values for eight selected highly correlated metrics. The results of these tests for the four study datasets are included in Table 2. The three highest correlation coefficients and the three lowest RMSE values are shown in different colors (the best results in red, the second results in green, and the third results in blue).

Table 2.

Values of correlation coefficients and RMSE for FR-IQA metrics.
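The four evaluation criteria can be sketched in plain Python as follows. This is an illustrative implementation under stated assumptions: the Kendall coefficient is computed in its tau-a form, which assumes no tied scores, and no nonlinear mapping is applied to the metric scores before computing PLCC and RMSE (whether such a mapping was used in the experiments is not stated in this section). The toy data are hypothetical.

```python
import math
from typing import List, Sequence


def plcc(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson linear correlation coefficient."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def average_ranks(values: Sequence[float]) -> List[float]:
    """Ranks starting at 1; tied values share the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return ranks


def srocc(x: Sequence[float], y: Sequence[float]) -> float:
    """Spearman rank-order correlation: Pearson correlation of the ranks."""
    return plcc(average_ranks(x), average_ranks(y))


def krocc(x: Sequence[float], y: Sequence[float]) -> float:
    """Kendall rank correlation (tau-a form, which assumes no ties)."""
    n = len(x)
    s = 0
    for i in range(n):
        for j in range(i + 1, n):
            prod = (x[i] - x[j]) * (y[i] - y[j])
            s += (prod > 0) - (prod < 0)  # +1 concordant, -1 discordant
    return s / (n * (n - 1) / 2)


def rmse(pred: Sequence[float], target: Sequence[float]) -> float:
    """Root mean squared error between predicted and subjective scores."""
    return math.sqrt(sum((p - t) ** 2 for p, t in zip(pred, target)) / len(pred))


# Hypothetical toy data: five MOS values and the scores of one metric.
mos = [1.0, 2.0, 3.0, 4.0, 5.0]
metric = [1.2, 1.9, 3.5, 3.4, 5.1]
results = (plcc(metric, mos), srocc(metric, mos), krocc(metric, mos), rmse(metric, mos))
```

For real databases with thousands of images, the quadratic-time Kendall loop would be slow, and a library routine would be the more practical choice.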

The three correlation coefficients and the RMSE value were aggregated into one score. A point scale with values from 1 to 8 was adopted, where the highest points were awarded to the highest correlation coefficients and the lowest RMSE values. This ranking is shown in Table 3, where the point values for each dataset are also summarized in the columns. The number of points determined the three component metrics for each dataset. The three highest scores are highlighted in bold.

Table 3.

Ranking of FR-IQA metrics.
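The point-based aggregation described above can be sketched as follows. The function names `rank_points` and `top_three`, the placeholder metric names A to D, and all numeric values are hypothetical; the sketch also does not handle ties, since the tie-breaking rule is not described in the text.

```python
from typing import Dict, List


def rank_points(values: Dict[str, float], higher_is_better: bool) -> Dict[str, int]:
    """Give 1..N points per metric; the best value receives N points."""
    # Sort from worst to best: ascending for correlations, descending for RMSE.
    ordered = sorted(values, key=lambda name: values[name], reverse=not higher_is_better)
    return {name: points for points, name in enumerate(ordered, start=1)}


def top_three(table: Dict[str, Dict[str, float]]) -> List[str]:
    """table maps criterion name -> {metric name -> value}; returns the 3 best metrics."""
    totals: Dict[str, int] = {}
    for criterion, values in table.items():
        points = rank_points(values, higher_is_better=(criterion != "RMSE"))
        for name, p in points.items():
            totals[name] = totals.get(name, 0) + p
    return sorted(totals, key=lambda name: totals[name], reverse=True)[:3]


# Hypothetical values for four placeholder metrics (not results from Table 2).
toy = {
    "PLCC": {"A": 0.90, "B": 0.88, "C": 0.85, "D": 0.80},
    "SROCC": {"A": 0.89, "B": 0.90, "C": 0.84, "D": 0.79},
    "KROCC": {"A": 0.72, "B": 0.71, "C": 0.66, "D": 0.60},
    "RMSE": {"A": 0.55, "B": 0.58, "C": 0.62, "D": 0.70},
}
selected = top_three(toy)
```

With eight metrics, as in this study, the same functions would award between 1 and 8 points per criterion.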

The three metrics selected from Table 3 served as the components of the linear combination that determines the New Combined Metric, as defined in Formula (61).

Determining the NCM value requires calculating the three weights present in this formula. These weights are optimized in the MATLAB environment, with the PLCC linear correlation coefficient as the objective to be maximized. The obtained values of the weights for each dataset are given in Table 4. Based on these weights and component metrics, the values of the combined NCM metric were determined. The results are shown in Table 5, where the results for the three component metrics are shown in red and the best score, achieved by the combined NCM metric in each case, is shown in green.

Table 4.

Optimized weight values for the NCM metric.
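The idea of choosing weights that maximize PLCC can be illustrated with a small Python sketch. Note that this is not the optimization routine used in the study: a brute-force grid search stands in for the MATLAB optimizer, and the function name `optimize_weights`, the grid range of [-1, 1], the step size, and the toy data are all assumptions. Negative weights are allowed so that components whose scores decrease as quality increases can still contribute.

```python
import itertools
import math
from typing import List, Sequence, Tuple


def plcc(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation; returns 0.0 when either input is constant."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    denom = math.sqrt(sxx * syy)
    return sxy / denom if denom > 0 else 0.0


def optimize_weights(
    components: Sequence[Sequence[float]], mos: Sequence[float], steps: int = 10
) -> Tuple[Tuple[float, ...], float]:
    """Exhaustive search over weights in [-1, 1] that maximizes PLCC with MOS."""
    grid = [i / steps for i in range(-steps, steps + 1)]
    best_w: Tuple[float, ...] = (0.0,) * len(components)
    best_r = -1.0
    for w in itertools.product(grid, repeat=len(components)):
        combined = [
            sum(wk * c[i] for wk, c in zip(w, components)) for i in range(len(mos))
        ]
        r = plcc(combined, mos)
        if r > best_r:
            best_w, best_r = w, r
    return best_w, best_r


# Hypothetical component scores for eight images; the toy MOS is constructed
# as an exact weighted sum so that a perfect correlation is attainable.
m1 = [0.9, 0.7, 0.8, 0.4, 0.6, 0.3, 0.5, 0.2]
m2 = [0.8, 0.9, 0.6, 0.5, 0.4, 0.45, 0.2, 0.3]
m3 = [0.95, 0.6, 0.7, 0.55, 0.35, 0.4, 0.3, 0.1]
toy_mos: List[float] = [0.5 * a + 0.3 * b + 0.2 * c for a, b, c in zip(m1, m2, m3)]
best_weights, best_plcc = optimize_weights([m1, m2, m3], toy_mos)
```

Because PLCC is invariant to a positive rescaling of the combined score, only the ratios of the weights matter here, so several grid points can attain the same maximum. The grid contains the weight vectors that select a single component, so the combined result cannot be worse than the best individual component on the data used for the search.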

Table 5.

Results of NCM metric for the three best component metrics.

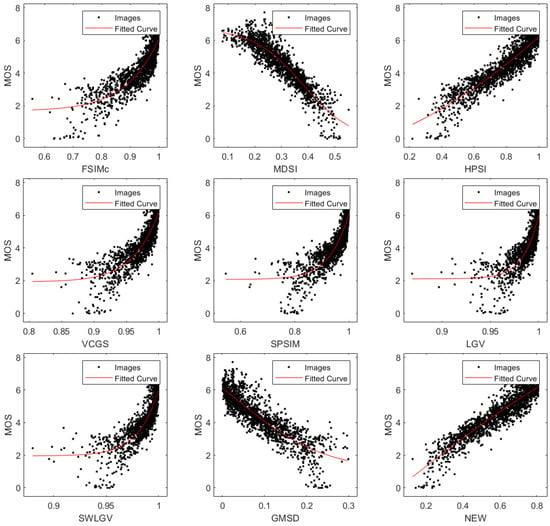

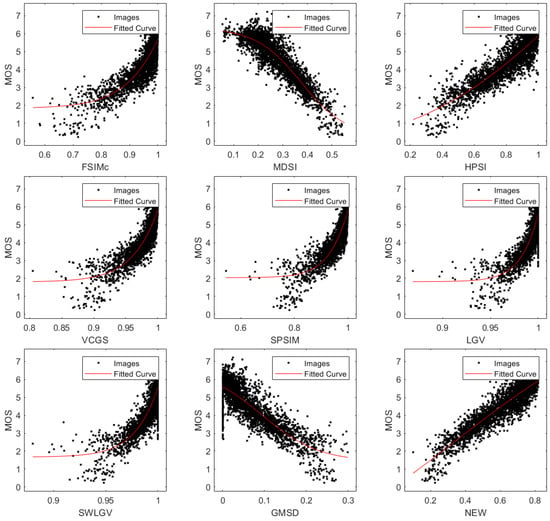

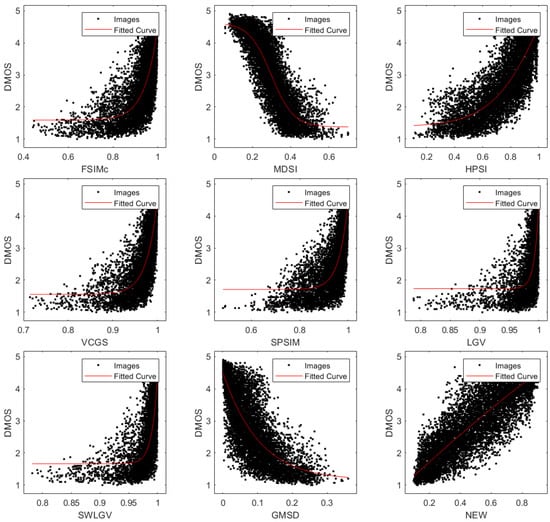

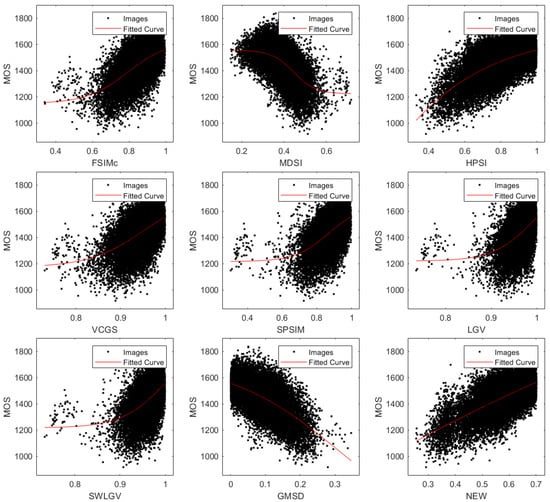

To illustrate the quality of the proposed NCM index, scatter plots of the eight considered metrics and the NCM for the tested databases are shown in Figures 4–7. The scatter plots and their fitted curves show that the proposed combined NCM metric closely matches the MOS estimates for each of the databases.

Figure 4.

Scatter plots of subjective MOS against IQA metrics obtained from the TID2008 database.

Figure 5.

Scatter plots of subjective MOS against IQA metrics obtained from the TID2013 database.

Figure 6.

Scatter plots of subjective MOS against IQA metrics obtained from the KADID-10k database.

Figure 7.

Scatter plots of subjective MOS against IQA metrics obtained from the PIPAL database.

A study of computation times for the considered FR-IQA metrics was also conducted. The average computation times for each of the databases are shown in Table 6. The three fastest metrics are highlighted in bold. The NCM computation time, which is not included in Table 6, is approximately equal to the sum of the computation times of its component metrics.

Table 6.

Computation times (s) for IQA metrics.
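A measurement of average computation time per image pair could be organized along the following lines. This is only a sketch: the helper `average_time`, the placeholder `toy_metric`, and the synthetic data are hypothetical and do not correspond to any of the metrics or images used in the study.

```python
import time
from typing import Callable, List, Sequence, Tuple

Pair = Tuple[List[float], List[float]]


def average_time(metric: Callable[[List[float], List[float]], float],
                 pairs: Sequence[Pair], repeats: int = 3) -> float:
    """Mean wall-clock seconds per image pair, averaged over `repeats` passes."""
    start = time.perf_counter()
    for _ in range(repeats):
        for ref, dist in pairs:
            metric(ref, dist)
    return (time.perf_counter() - start) / (repeats * len(pairs))


def toy_metric(ref: List[float], dist: List[float]) -> float:
    """Placeholder metric (mean absolute difference), not one of the paper's metrics."""
    return sum(abs(a - b) for a, b in zip(ref, dist)) / len(ref)


# Synthetic stand-ins for flattened reference and distorted images.
reference = [0.1 * i for i in range(1000)]
distorted = [0.1 * i + 0.01 for i in range(1000)]
pairs = [(reference, distorted)] * 5
seconds_per_pair = average_time(toy_metric, pairs)
```

Timing each component metric this way and adding the three averages gives the approximate cost of the combined metric, in line with the remark above that the NCM time is roughly the sum of its components' times.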

For the three fastest FR-IQA metrics, highlighted in red in Table 7, a linear combination was formed by redetermining the three optimal weights (see Table 5) so as to maximize the PLCC. The resulting NCM metric based on the three fastest metrics achieved the best performance, as marked in green in Table 7.

Table 7.

Results of the NCM metric for the three fastest component metrics.

The study was conducted in the MATLAB R2024a programming environment on a computer with the specifications provided in Table 8.

Table 8.

Parameters of desktop computer used for experiments.

The best results obtained using the NCM were additionally compared with those of other combined metrics presented in the literature [26,27]. The comparison is shown in Table 9, with highlighting in bold. The authors of [26] used combined metrics (MFMOGP3, MFMOGP4) based on an additive combination of 8 to 10 component metrics. In [27], combined metrics (OFIQA) in product form, involving between 4 and 17 factor metrics, were proposed. For Table 9, we selected the best results from both of the above-mentioned works. For the TID2008 database, the results are comparable, while for the TID2013 database the proposed NCM index achieves the highest correlation coefficients. The comparison was limited to the TID2008 and TID2013 databases because neither of these earlier works on combined metrics considered the newer databases (KADID-10k, PIPAL).

Table 9.

Comparison of the proposed NCM metric with other combined metrics.

4. Conclusions

The existing literature on FR-IQA metrics shows that no single metric significantly outperforms the others. This motivates the idea of creating a linear or nonlinear combination of several top metrics. The proposed approach uses an additive combination of the three metrics with the highest correlation coefficients and the lowest RMSE. The component metrics of the resulting combined NCM metric depend on the selected database. NCM achieved the best results among all tested metrics across all tested databases. In addition, a case was examined where the three fastest metrics, i.e., MDSI, HPSI, and GMSD, were selected as components. The combined metric obtained in this case also achieved the best results compared to all the tested metrics. Potential extensions of the proposed approach include replacing the linear combination of metrics with a nonlinear one and exploring alternative methods for optimizing the weights.

Author Contributions

Conceptualization, M.F. and H.P.; methodology, M.F.; software, Ł.M.; validation, M.F. and H.P.; investigation, M.F. and Ł.M.; resources, M.F.; data curation, M.F.; writing—original draft preparation, M.F.; writing—review and editing, M.F.; visualization, M.F.; supervision, H.P. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the Polish Ministry for Science and Education under internal grant 02/070/BK_24/0055 for the Department of Data Science and Engineering, Silesian University of Technology, Gliwice, Poland.

Data Availability Statement

Data are contained within the article.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Meaning |
| --- | --- |
| IQA | Image Quality Assessment |
| FR-IQA | Full-Reference Image Quality Assessment |
| MOS | Mean Opinion Score |
| FSIMc | Feature SIMilarity (color version) |
| MDSI | Mean Deviation Similarity Index |
| HPSI | Haar wavelet Perceptual Similarity Index |
| VCGS | Visual saliency with Color appearance and Gradient Similarity |
| SPSIM | SuperPixel SIMilarity |
| LGV | Local Global Variation |
| SWLGV | Saliency Weighted Local Global Variation |
| GMSD | Gradient Magnitude Similarity Deviation |
| NCM | New Combined Metric |
| PSNR | Peak Signal-to-Noise Ratio |
| VSNR | Visual Signal-to-Noise Ratio |
| VIF | Visual Information Fidelity index |
| MS-SSIM | Multi Scale Structural SIMilarity index |
| PLCC | Pearson Linear Correlation Coefficient |
| SROCC | Spearman Rank Order Correlation Coefficient |
| KROCC | Kendall Rank Order Correlation Coefficient |
| RMSE | Root Mean Squared Error |
| SVD | Singular Value Decomposition |
| HVS | Human Visual System |
| DMOS | Differential Mean Opinion Score |
| TID | Tampere Image Database |
| KADID-10k | Konstanz Artificially Distorted Image quality Database |
| PIPAL | Perceptual Image Processing ALgorithms database |
| WFSIMc | Weighted FSIM (color version) index |
| RFSIM | Riesz-transform-based Feature SIMilarity index |
| CQM | Combined Quality Metric |
| CISI | Combined Image Similarity Index |
| LCSIM | Linearly Combined Similarity Measures |

References

1. Liu, M.; Yang, X. A new image quality approach based on decision fusion. In Proceedings of the 2008 Fifth International Conference on Fuzzy Systems and Knowledge Discovery, Jinan, China, 18–20 October 2008; Volume 4, pp. 10–14.

2. Okarma, K. Combined full-reference image quality metric linearly correlated with subjective assessment. In Proceedings of the Artificial Intelligence and Soft Computing: 10th International Conference, ICAISC 2010, Zakopane, Poland, 13–17 June 2010; Part I 10. Springer: Berlin/Heidelberg, Germany, 2010; pp. 539–546.

3. Okarma, K. Combined image similarity index. Opt. Rev. 2012, 19, 349–354.

4. Okarma, K. Extended hybrid image similarity–combined full-reference image quality metric linearly correlated with subjective scores. Elektron. Ir Elektrotech. 2013, 19, 129–132.

5. Oszust, M. Full-reference image quality assessment with linear combination of genetically selected quality measures. PLoS ONE 2016, 11, e0158333.

6. Lukin, V.; Ponomarenko, N.; Ieremeiev, O.; Egiazarian, K.; Astola, J. Combining full-reference image visual quality metrics by neural network. In Proceedings of the Human Vision and Electronic Imaging XX, San Francisco, CA, USA, 9–12 February 2015; Volume 9394, pp. 172–183.

7. Bosse, S.; Maniry, D.; Müller, K.R.; Wiegand, T.; Samek, W. Deep neural networks for no-reference and full-reference image quality assessment. IEEE Trans. Image Process. 2017, 27, 206–219.

8. Varga, D. A combined full-reference image quality assessment method based on convolutional activation maps. Algorithms 2020, 13, 313.

9. Zhang, L.; Zhang, L.; Mou, X.; Zhang, D. FSIM: A feature similarity index for image quality assessment. IEEE Trans. Image Process. 2011, 20, 2378–2386.

10. Kovesi, P. Image features from phase congruency. Videre J. Comput. Vis. Res. 1999, 1, 1–26.

11. Nafchi, H.Z.; Shahkolaei, A.; Hedjam, R.; Cheriet, M. Mean deviation similarity index: Efficient and reliable full-reference image quality evaluator. IEEE Access 2016, 4, 5579–5590.

12. Reisenhofer, R.; Bosse, S.; Kutyniok, G.; Wiegand, T. A Haar wavelet-based perceptual similarity index for image quality assessment. Signal Process. Image Commun. 2018, 61, 33–43.

13. Shi, C.; Lin, Y. Full Reference Image Quality Assessment Based on Visual Salience with Color Appearance and Gradient Similarity. IEEE Access 2020, 8, 97310–97320.

14. Sun, W.; Liao, Q.; Xue, J.H.; Zhou, F. SPSIM: A superpixel-based similarity index for full-reference image quality assessment. IEEE Trans. Image Process. 2018, 27, 4232–4244.

15. Stutz, D.; Hermans, A.; Leibe, B. Superpixels: An evaluation of the state-of-the-art. Comput. Vis. Image Underst. 2018, 166, 1–27.

16. Achanta, R.; Shaji, A.; Smith, K.; Lucchi, A.; Fua, P.; Süsstrunk, S. SLIC superpixels compared to state-of-the-art superpixel methods. IEEE Trans. Pattern Anal. Mach. Intell. 2012, 34, 2274–2282.

17. Varga, D. Full-Reference Image Quality Assessment Based on Grünwald–Letnikov Derivative, Image Gradients, and Visual Saliency. Electronics 2022, 11, 559.

18. Imamoglu, N.; Lin, W.; Fang, Y. A saliency detection model using low-level features based on wavelet transform. IEEE Trans. Multimed. 2012, 15, 96–105.

19. Xue, W.; Zhang, L.; Mou, X.; Bovik, A.C. Gradient magnitude similarity deviation: A highly efficient perceptual image quality index. IEEE Trans. Image Process. 2013, 23, 684–695.

20. Sheikh, H.R.; Sabir, M.F.; Bovik, A.C. A statistical evaluation of recent full reference image quality assessment algorithms. IEEE Trans. Image Process. 2006, 15, 3440–3451.

21. Ponomarenko, N.; Lukin, V.; Zelensky, A.; Egiazarian, K.; Carli, M.; Battisti, F. TID2008 - A database for evaluation of full-reference visual quality assessment metrics. Adv. Mod. Radioelectron. 2009, 10, 30–45.

22. Ponomarenko, N.; Jin, L.; Ieremeiev, O.; Lukin, V.; Egiazarian, K.; Astola, J.; Vozel, B.; Chehdi, K.; Carli, M.; Battisti, P.; et al. Image database TID2013: Peculiarities, results and perspectives. Signal Process. Image Commun. 2015, 30, 57–77.

23. Lin, H.; Hosu, V.; Saupe, D. KADID-10k: A large-scale artificially distorted IQA database. In Proceedings of the 2019 Eleventh International Conference on Quality of Multimedia Experience (QoMEX), Berlin, Germany, 5–7 June 2019; pp. 1–3.

24. Gu, J.; Cai, H.; Chen, H.; Ye, X.; Ren, J.; Dong, C. PIPAL: A large-scale image quality assessment dataset for perceptual image restoration. In European Conference on Computer Vision (ECCV); Springer: Cham, Switzerland, 2020; pp. 633–651.

25. Varga, D. Composition-preserving deep approach to full-reference image quality assessment. Signal Image Video Process. 2020, 14, 1265–1272.

26. Merzougui, N.; Djerou, L. Multi-measures fusion based on multi-objective genetic programming, for full-reference image quality assessment. arXiv 2017, arXiv:1801.06030.

27. Varga, D. An optimization-based family of predictive, fusion-based models for full-reference image quality assessment. J. Imaging 2023, 9, 116.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).