Abstract

A Lie system is a nonautonomous system of first-order ordinary differential equations whose general solution can be written via an autonomous function, the so-called (nonlinear) superposition rule, of a finite number of particular solutions and some parameters related to initial conditions. This superposition rule can be obtained using the geometric features of the Lie system, its symmetries, and the symmetric properties of certain morphisms involved. Even if a superposition rule for a Lie system is known, the explicit analytic expression of its solutions frequently is not. This is why this article focuses on a novel geometric approach to integrate Lie systems analytically and numerically. We focus on two families of methods based on Magnus expansions and on Runge–Kutta–Munthe–Kaas methods, which are here adapted, in a geometric manner, to Lie systems. To illustrate the accuracy of our techniques, we analyze Lie systems related to Lie groups of the form $SL(n,\mathbb{R})$, which play a very relevant role in mechanics. In particular, we study an optimal control problem for a vehicle with a quadratic cost function. Particular numerical solutions of the studied examples are given.

MSC:

MSC 2020 classes: 34A26; 53A70; (primary) 37M15; 49M25 (secondary)

1. Introduction

The analytic integration of differential equations is possible on many relevant occasions, but it is not the usual case. Sometimes the geometric and symmetry properties of a Lie system are not enough to integrate the system completely, and this is why numerical methods are so important to study solutions of differential equations. In particular, this paper devises geometric numerical methods adapted to a particular class of nonautonomous first-order systems of ordinary differential equations (ODEs): the so-called Lie systems [1,2,3].

A Lie system is a nonautonomous first-order system of ODEs that admits a general solution in terms of an autonomous function, the so-called superposition rule, a family of generic particular solutions and certain constants of integration related to the initial conditions [4,5,6]. It is worth noting that a superposition rule for a Lie system may be explicitly known even when the explicit expression of its analytic solution is not [5]. Although obtaining a superposition rule reduces the integration of Lie systems to obtaining some particular solutions, such particular solutions are not easy to describe explicitly [3,5]. This is why we consider that geometric numerical methods for Lie systems should be developed. There is an extensive literature on numerical algorithms in geometric mechanics (see [7,8,9,10,11,12] and references therein) but, as far as we know, only a few methods have been specifically designed for Lie systems [13,14]. This manuscript, therefore, provides a novel application of geometric analytical and numerical methods to Lie systems, leading to some interesting consequences.

Our interest in Lie systems is twofold. On the one hand, it is rooted in their geometric background. In short, the origin of Lie systems goes back to the nineteenth century, when Sophus Lie proved that a nonautonomous system of first-order ODEs admits a superposition rule if and only if it describes the integral curves of a t-dependent vector field taking values in a finite-dimensional Lie algebra of vector fields, known as a Vessiot–Guldberg Lie algebra (VG henceforth) of the Lie system. The symmetries of a Lie system are in direct correlation with the underlying VG Lie algebra. The theory of Lie systems has been widely studied in the last two decades and its research involves projective foliations, generalized distributions, Lie group theory, Poisson coalgebras, etc. (see [1,2,15] and references therein). In particular, the coalgebra method is based on symmetric properties of certain operators that allow us to obtain superposition rules with the aid of a finite-dimensional Poisson algebra of functions. On the other hand, Lie systems have many remarkable applications in relevant scientific fields (see [2] and references therein). For instance, Lie systems are used in the study of the integrability of Riccati equations [16], quantum mechanics [17], stochastic mechanics [18], superequations [19], and in biology and cosmology [2]. Recently, the theory of Lie systems has been generalized to higher-order ordinary differential equations, such as higher-order Riccati equations [20], second- and third-order Kummer–Schwarz equations [5], and Milne–Pinney equations [21], among others. Additionally, the theory of Lie systems is also extensible to systems of partial differential equations [15,22].

In the past few decades, discrete methods have made significant progress in faithfully simulating physical systems. For instance, the interest of numerical analysis in the research on Lie systems was already stressed by Winternitz [3], who remarked that superposition rules allow us to study all solutions of a Lie system from the knowledge of some of them, which can be derived numerically. This is why the discretization of Lie systems and their numerical integration have caught our attention. Since Lie systems are geometrically described in terms of an underlying VG Lie algebra, a Lie system can be solved by studying a Lie system of a specific type, a so-called automorphic Lie system [2], on a Lie group associated with the VG Lie algebra. Two automorphic Lie systems are two Lie systems that are equivalent under automorphic transformations. In this way, automorphic Lie systems can be claimed to be symmetric Lie systems. One can then propose a numerical method for the automorphic Lie system, giving rise to numerical methods for a plethora of Lie systems that are related to the initial one through an automorphic map that preserves the properties of the Lie group, also known as a symmetry group transformation. Our perspective here on numerical methods specifically designed for Lie systems proposes numerical schemes on the Lie group. Some numerical methods designed to work on Lie groups already exist, but our aim is to adapt them to Lie systems. In particular, we will focus on two classes of methods: the so-called Magnus methods [8,23,24] and Runge–Kutta–Munthe–Kaas (RKMK) methods [25,26], the latter being based on the classical Runge–Kutta (RK) schemes.

Summarizing, this manuscript presents a novel procedure for the integration of Lie systems by applying geometric numerical methods to one of their associated automorphic Lie systems, which is defined on a Lie group (we may refer to it as a VG Lie group). We aim to provide a quantitative and qualitative analysis of our numerical methods on the Lie group and to compare them with the results obtained from the numerical integration of the system of ODEs that defines the Lie system. This resolves, at the same time, all Lie systems that are related to the same automorphic Lie system, i.e., those Lie systems that have isomorphic VG Lie algebras and that are determined by an equivalent curve within them (see [1] for details). We apply our numerical methods to automorphic Lie systems defined on Lie groups $SL(n,\mathbb{R})$, which appear in many physical applications (cf. [27]). We are particularly interested in control theory, which involves matrix Riccati equations [28]. We present an application of matrix Riccati equations in optimal control with quadratic cost functions and solve it numerically with our adapted Magnus and RKMK methods.

The structure of the paper goes as follows. Section 2 surveys the basic theory of Lie systems and develops their analytical resolution based on the geometric structure they are built on; this analytical solution is summarized in the procedure of Section 2.3.1. Section 3 is concerned with the novel discretization we propose for Lie systems, enclosed in Definition 3. An application of our methods to two special linear groups is provided in Section 4. Finally, an optimal control problem for a vehicle with a quadratic cost function is presented in Section 5 and solved using the techniques developed here.

2. Geometric Fundamentals and Lie Systems

This section establishes the notation and geometric fundamentals on Lie systems and related concepts that we will be using throughout the manuscript. Unless otherwise stated, we hereafter assume all structures to be smooth, real, and globally defined. This will simplify the presentation, while stressing its main points. From now on, $\mathbb{K}$ stands for the field $\mathbb{R}$ or $\mathbb{C}$.

2.1. Geometric Fundamentals

A key concept in the theory of Lie systems is that of t-dependent vector fields. Let us describe this geometric concept. Consider an n-dimensional manifold N and its natural tangent bundle projection $\tau_N: TN\to N$. Let us define the projection $\pi_2: (t,x)\in\mathbb{R}\times N\mapsto x\in N$, where t is the natural coordinate on $\mathbb{R}$. A t-dependent vector field on N is a map $X: \mathbb{R}\times N\to TN$ such that $\tau_N\circ X = \pi_2$, i.e., $X(t,x)\in T_xN$ for every $(t,x)\in\mathbb{R}\times N$. In other words, a t-dependent vector field X on N amounts to a t-parametrized family $\{X_t\}_{t\in\mathbb{R}}$ of standard vector fields on N, where $X_t := X(t,\cdot)$ (see [5] for details). We write $\mathfrak{X}_t(N)$ for the space of t-dependent vector fields on N, while $\mathfrak{X}(N)$ stands for the space of vector fields on N.

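An integral curve of a t-dependent vector field X on N is a curve of the form $t\mapsto(t,x(t))$ in $\mathbb{R}\times N$ that, up to reparametrization, is an integral curve $\gamma(\bar t) = (t(\bar t),x(\bar t))$ of the so-called autonomization of X, namely the vector field $\widetilde{X} = \partial/\partial t + X$ on $\mathbb{R}\times N$, which is also a section of the natural projection $T(\mathbb{R}\times N)\to\mathbb{R}\times N$. More precisely, if $X = \sum_{i=1}^{n}X^i(t,x)\,\partial/\partial x^i$ on a local coordinate system $\{x^1,\ldots,x^n\}$ on N, then

$$\widetilde{X} = \frac{\partial}{\partial t} + \sum_{i=1}^{n}X^i(t,x)\frac{\partial}{\partial x^i},$$

and $\gamma$ is a solution of the system of differential equations

$$\frac{dt}{d\bar t} = 1,\qquad \frac{dx^i}{d\bar t} = X^i(t,x),\qquad i = 1,\ldots,n.$$

The reparametrization $t = \bar t + t_0$ shows that $x(t)$ is a solution to

$$\frac{dx^i}{dt} = X^i(t,x),\qquad i = 1,\ldots,n. \quad (1)$$

System (1) is called the system associated with X. Conversely, a first-order system of ODEs in normal form (1) gives rise to a t-dependent vector field on N of the form

$$X = \sum_{i=1}^{n}X^i(t,x)\frac{\partial}{\partial x^i},$$

whose integral curves are of the form $t\mapsto(t,x(t))$, where $x(t)$ is a particular solution to (1). This fact justifies identifying X with the t-dependent first-order system of ordinary differential equations (1).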

For our purposes, it is important to relate t-dependent vector fields to Lie algebras. A Lie algebra is a pair $(V,[\cdot,\cdot])$, where V is a vector space and $[\cdot,\cdot]: V\times V\to V$ is a bilinear and antisymmetric map that satisfies the Jacobi identity. The minimal Lie algebra, $\mathrm{Lie}(\mathcal{B},V,[\cdot,\cdot])$, of a subset $\mathcal{B}$ of a Lie algebra $(V,[\cdot,\cdot])$ is the smallest Lie subalgebra (in the sense of inclusion) in V that contains $\mathcal{B}$. If it does not lead to misunderstanding, $\mathrm{Lie}(\mathcal{B},V,[\cdot,\cdot])$ will simply be denoted by $\mathrm{Lie}(\mathcal{B})$. Given a t-dependent vector field X on N, we call minimal Lie algebra of X the smallest Lie algebra, $V^X$, of vector fields on N that contains all the vector fields $\{X_t\}_{t\in\mathbb{R}}$.

2.2. Lie Groups and Matrix Lie Groups

Let G be a Lie group and let e be its neutral element. Every $g\in G$ defines a right-translation $R_g: h\in G\mapsto hg\in G$ and a left-translation $L_g: h\in G\mapsto gh\in G$ on G. A vector field $X^R$ on G is right-invariant if $X^R(hg) = T_hR_g\,X^R(h)$ for every $h,g\in G$, where $T_hR_g$ is the tangent map to $R_g$ at h. The value of a right-invariant vector field $X^R$ at every point of G is determined by its value at e since, by definition, $X^R(g) = T_eR_g\,X^R(e)$ for every $g\in G$. Hence, each right-invariant vector field on G gives rise to a unique $X^R(e)\in T_eG$ and vice versa. Then, the space of right-invariant vector fields on G is a finite-dimensional Lie algebra. Similarly, one may define left-invariant vector fields on G, establish a Lie algebra structure on the space of left-invariant vector fields, and set an isomorphism between the space of left-invariant vector fields on G and $T_eG$. The Lie algebra of left-invariant vector fields on G, with the Lie bracket of vector fields $[\cdot,\cdot]$, induces a Lie algebra structure on $T_eG$ via the identification of left-invariant vector fields with their values at e. We denote this Lie algebra by $\mathfrak{g}$ and will frequently identify $\mathfrak{g}$ with $T_eG$ to simplify the terminology.

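There is a natural mapping from $\mathfrak{g}$ to G, the so-called exponential map, of the form $\exp: v\in\mathfrak{g}\mapsto\gamma_v(1)\in G$, where $\gamma_v:\mathbb{R}\to G$ is the integral curve of the right-invariant vector field $X^R_v$ on G satisfying $X^R_v(e) = v$ and $\gamma_v(0) = e$. If $\mathfrak{g} = \mathfrak{gl}(n,\mathbb{K})$, where $\mathfrak{gl}(n,\mathbb{K})$ is the Lie algebra of $n\times n$ square matrices with entries in a field $\mathbb{K}$ relative to the Lie bracket given by the commutator of matrices, then $\mathfrak{gl}(n,\mathbb{K})$ can be considered as the Lie algebra of the Lie group $GL(n,\mathbb{K})$ of $n\times n$ invertible matrices with entries in $\mathbb{K}$. It can be proved that, in this case, $\exp$ retrieves the standard expression of the exponential of a matrix [29], namely

$$\exp(M) = \sum_{k=0}^{\infty}\frac{M^k}{k!} = I_n + M + \frac{M^2}{2} + \frac{M^3}{3!} + \cdots,\qquad M\in\mathfrak{gl}(n,\mathbb{K}),$$

where $I_n$ stands for the $n\times n$ identity matrix.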

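From the definition of the exponential map, it follows that $\exp(sv) = \gamma_v(s)$ for each $v\in\mathfrak{g}$ and $s\in\mathbb{R}$. Let us show this. Indeed, given the right-invariant vector field $X^R_{sv}$, where $s\in\mathbb{R}$, then

$$X^R_{sv}(g) = T_eR_g(sv) = s\,T_eR_g(v) = s\,X^R_v(g),\qquad \forall g\in G.$$

In particular, $X^R_{sv} = sX^R_v$ for every $s\in\mathbb{R}$. Hence, if $\gamma_v$ and $\gamma_{sv}$ are the integral curves of $X^R_v$ and $X^R_{sv}$ with initial condition e, respectively, then the curve $\tilde\gamma(t) := \gamma_v(st)$ satisfies

$$\frac{d\tilde\gamma}{dt}(t) = s\,\frac{d\gamma_v}{dt}(st) = s\,X^R_v\big(\gamma_v(st)\big) = X^R_{sv}\big(\tilde\gamma(t)\big),$$

and $\tilde\gamma$ is the integral curve of $X^R_{sv}$ with initial condition e. Hence, $\gamma_{sv}(t) = \gamma_v(st)$. Therefore, $\exp(sv) = \gamma_{sv}(1) = \gamma_v(s)$. It is worth stressing that Ado's theorem [30] shows that every finite-dimensional Lie algebra admits a faithful matrix representation; consequently, every Lie group is locally isomorphic, around its neutral element, to a matrix Lie group.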
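As a small numerical illustration of $\exp(sv) = \gamma_v(s)$, the following pure-Python sketch integrates the right-invariant vector field $X^R_M(g) = Mg$ from $I$ with the classical RK4 scheme and compares the result with a truncated-series matrix exponential. Matrices are nested lists, and all helper names are our own and hypothetical. The matrix $M$ is an arbitrary choice in $\mathfrak{gl}(2,\mathbb{R})$, and the series-based `expm` is only a sketch, not a production routine.

```python
# Illustrative helpers: matrices are nested Python lists (no external libraries).


def identity(n):
    return [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]


def matmul(A, B):
    return [[sum(A[i][k] * B[k][j] for k in range(len(B))) for j in range(len(B[0]))]
            for i in range(len(A))]


def axpy(A, B, s):
    """Return A + s*B."""
    return [[a + s * b for a, b in zip(ra, rb)] for ra, rb in zip(A, B)]


def scale(s, A):
    return [[s * a for a in row] for row in A]


def expm(M, terms=20):
    """exp(M) via scaling and squaring plus a truncated Taylor series."""
    norm = max(sum(abs(x) for x in row) for row in M)
    s = 0
    while norm > 0.5:
        norm /= 2.0
        s += 1
    A = scale(1.0 / 2 ** s, M)
    E, T = identity(len(M)), identity(len(M))
    for k in range(1, terms):
        T = scale(1.0 / k, matmul(T, A))  # T = A^k / k!
        E = axpy(E, T, 1.0)
    for _ in range(s):
        E = matmul(E, E)
    return E


def integral_curve(M, s, steps=400):
    """RK4 approximation of gamma(s), where gamma' = X^R_M(gamma) = M gamma and gamma(0) = I."""
    h, g = s / steps, identity(len(M))
    for _ in range(steps):
        k1 = matmul(M, g)
        k2 = matmul(M, axpy(g, k1, h / 2))
        k3 = matmul(M, axpy(g, k2, h / 2))
        k4 = matmul(M, axpy(g, k3, h))
        incr = axpy(axpy(axpy(k1, k2, 2.0), k3, 2.0), k4, 1.0)  # k1 + 2k2 + 2k3 + k4
        g = axpy(g, incr, h / 6)
    return g


def max_diff(A, B):
    return max(abs(a - b) for ra, rb in zip(A, B) for a, b in zip(ra, rb))


M = [[0.3, 1.0], [-2.0, -0.3]]  # an arbitrary element of gl(2, R)
curve_vs_exp = max_diff(integral_curve(M, 0.7), expm(scale(0.7, M)))
group_law = max_diff(matmul(expm(scale(0.3, M)), expm(scale(0.4, M))), expm(scale(0.7, M)))
print(curve_vs_exp, group_law)
```

Both printed discrepancies should be at round-off or RK4-truncation level. The second one checks the one-parameter subgroup property $\exp(0.3M)\exp(0.4M) = \exp(0.7M)$.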

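The exponential map establishes a diffeomorphism from an open neighborhood $U_0$ of 0 in $\mathfrak{g}$ onto $\exp(U_0)\subset G$. More in detail, every basis $\{v_1,\ldots,v_r\}$ of $\mathfrak{g}$ gives rise to the so-called canonical coordinates of the second kind related to $\{v_1,\ldots,v_r\}$, defined by the local diffeomorphism

$$\psi: (\lambda_1,\ldots,\lambda_r)\in U\mapsto\exp(\lambda_1v_1)\cdots\exp(\lambda_rv_r)\in G,$$

for an appropriate open neighborhood U of 0 in $\mathbb{R}^r$.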
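Canonical coordinates of the second kind can be made explicit for $SL(2,\mathbb{R})$. The sketch below assumes the basis $M_1 = \begin{pmatrix}0&1\\0&0\end{pmatrix}$, $M_2 = \tfrac12\mathrm{diag}(1,-1)$, $M_3 = \begin{pmatrix}0&0\\-1&0\end{pmatrix}$ of $\mathfrak{sl}(2,\mathbb{R})$. This basis is our own choice and is not prescribed by the text. The code multiplies out $\exp(\lambda_1M_1)\exp(\lambda_2M_2)\exp(\lambda_3M_3)$ by hand and inverts it on the open set $\{g_{22}>0\}$, which contains the identity. The function names are hypothetical.

```python
import math

# Hypothetical basis of sl(2, R) (our choice):
#   M1 = [[0, 1], [0, 0]],  M2 = [[1/2, 0], [0, -1/2]],  M3 = [[0, 0], [-1, 0]].
# M1 and M3 are nilpotent and M2 is diagonal, so their exponentials are explicit.


def exp_M1(l):
    return [[1.0, l], [0.0, 1.0]]


def exp_M2(l):
    return [[math.exp(l / 2), 0.0], [0.0, math.exp(-l / 2)]]


def exp_M3(l):
    return [[1.0, 0.0], [-l, 1.0]]


def matmul(A, B):
    return [[sum(A[i][k] * B[k][j] for k in range(2)) for j in range(2)] for i in range(2)]


def second_kind(l1, l2, l3):
    """g = exp(l1 M1) exp(l2 M2) exp(l3 M3), multiplied out by hand."""
    a = math.exp(l2 / 2)
    return [[a - l1 * l3 / a, l1 / a], [-l3 / a, 1 / a]]


def second_kind_inverse(g):
    """Coordinates (l1, l2, l3) of g in SL(2, R); the chart is {g : g[1][1] > 0}."""
    g22 = g[1][1]
    if g22 <= 0:
        raise ValueError("g lies outside this chart (need g[1][1] > 0)")
    return g[0][1] / g22, -2.0 * math.log(g22), -g[1][0] / g22


coords = (0.4, -0.3, 1.2)
g = second_kind(*coords)
print(g, second_kind_inverse(g))
```

Elements such as $-I$ have $g_{22}<0$ and are not reached by this chart, which illustrates that these coordinates are only local.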

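In matrix Lie groups, right-invariant vector fields take a simple and useful form. In fact, let G be a matrix Lie group. It can then be considered as a Lie subgroup of $GL(n,\mathbb{K})$. Moreover, it can be proved that $T_gG$, for any $g\in G$, can be identified with a linear subspace of the space $\mathcal{M}_n(\mathbb{K})$ of $n\times n$ square matrices with entries in $\mathbb{K}$.

Since $R_g(h) = hg$, then $T_hR_g(M) = Mg$ for all $h,g\in G$ and $M\in T_hG$. As a consequence, if $X^R(e) = M$ at the neutral element e of the matrix Lie group G, namely the identity $I_n$, then $X^R(g) = T_eR_g(M) = Mg$. It follows that, at any $g\in G$, every tangent vector $v_g\in T_gG$ can be written as $v_g = Mg$ for a unique $M\in\mathfrak{g}$ [31,32].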

Let us describe some basic facts on Lie group actions on manifolds induced by Lie algebras of vector fields. It is known that every finite-dimensional Lie algebra V of vector fields on a manifold N gives rise to a (local) Lie group action

$$\Phi: G\times N\to N, \quad (2)$$

whose fundamental vector fields are given by the elements of V, where G is a connected and simply connected Lie group whose Lie algebra is isomorphic to V. If the vector fields of V are complete, then the Lie group action (2) is globally defined on $G\times N$. Let us show how to obtain $\Phi$ from V, which will be of crucial importance in this work.

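Let us restrict ourselves to an open neighborhood of the neutral element of G, where we can use canonical coordinates of the second kind related to a basis $\{v_1,\ldots,v_r\}$ of $\mathfrak{g}$. Then, each g in this neighborhood can be expressed as

$$g = \exp(\lambda_1v_1)\cdots\exp(\lambda_rv_r), \quad (3)$$

for certain uniquely defined parameters $\lambda_1,\ldots,\lambda_r\in\mathbb{R}$. To determine $\Phi$, we determine the curves

$$\gamma^\alpha_x: t\in\mathbb{R}\mapsto\Phi\big(\exp(tv_\alpha),x\big)\in N,\qquad \alpha = 1,\ldots,r,$$

where $\gamma^\alpha_x$ must be the integral curve, with initial condition x, of the vector field $X_\alpha\in V$ associated with $v_\alpha$, for $\alpha = 1,\ldots,r$. Indeed, for any element g expressed as in (3), using the intrinsic properties of a Lie group action,

$$\Phi(g,x) = \Phi\Big(\exp(\lambda_1v_1),\Phi\big(\exp(\lambda_2v_2),\ldots,\Phi(\exp(\lambda_rv_r),x)\ldots\big)\Big), \quad (4)$$

the action is completely defined for any such g.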

In this work we will deal with some particular matrix Lie groups, starting from the general linear matrix group $GL(n,\mathbb{K})$, where we recall that $\mathbb{K}$ may be $\mathbb{R}$ or $\mathbb{C}$. As is well known, any closed subgroup of $GL(n,\mathbb{K})$ is also a matrix Lie group ([29], Theorem 15.29, p. 392). In the forthcoming pages we will work with some of those subgroups, such as $SL(n,\mathbb{R})$, the Lie group formed by $n\times n$ real matrices with unit determinant. Moreover, for future reference, we recall that the Lie algebra of $SL(n,\mathbb{R})$, i.e., $\mathfrak{sl}(n,\mathbb{R})$, is the space of $n\times n$ traceless real matrices [32,33].

2.3. Lie Systems

The Lie Theorem [15] states that a Lie system is a t-dependent system of (first-order) ordinary differential equations that describes the integral curves of a t-dependent vector field that takes values in a finite-dimensional Lie algebra of vector fields, namely the aforementioned Vessiot–Guldberg Lie algebra (VG) [1,5]. As we also mentioned previously, one of the most important characteristics of Lie systems is that they admit (generally nonlinear) superposition rules and a plethora of mathematical properties mediated by the Lie theorem [15]. Furthermore, some Lie systems can be studied via a Hamiltonian formulation [2,20].

In this section we introduce some of these fundamental concepts in the theory of Lie systems. In this way, we start by introducing solutions of Lie systems in terms of superposition rules.

As a first approximation, a Lie system is a first-order system of ODEs that admits a superposition rule.

Definition 1.

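A superposition rule for a system X on N is a map $\Phi: N^m\times N\to N$ such that the general solution $x(t)$ of X can be written as $x(t) = \Phi\big(x_{(1)}(t),\ldots,x_{(m)}(t);\rho\big)$, where $x_{(1)}(t),\ldots,x_{(m)}(t)$ is a generic family of particular solutions and ρ is a point in N related to the initial conditions of X.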

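A classic example of Lie system is the Riccati equation ([2], Example 3.3), that is,

$$\frac{dx}{dt} = b_1(t) + b_2(t)\,x + b_3(t)\,x^2,\qquad x\in\mathbb{R}, \quad (5)$$

with $b_1(t),b_2(t),b_3(t)$ being arbitrary functions of t. It is known that the general solution, $x(t)$, of the Riccati equation can be written as

$$x(t) = \frac{x_{(1)}(t)\big(x_{(3)}(t)-x_{(2)}(t)\big) + \lambda\,x_{(2)}(t)\big(x_{(1)}(t)-x_{(3)}(t)\big)}{\big(x_{(3)}(t)-x_{(2)}(t)\big) + \lambda\big(x_{(1)}(t)-x_{(3)}(t)\big)},$$

where $x_{(1)}(t),x_{(2)}(t),x_{(3)}(t)$ are three different particular solutions of (5) and $\lambda\in\mathbb{R}$ is an arbitrary constant. This implies that the Riccati equation admits a superposition rule $\Phi:\mathbb{R}^3\times\mathbb{R}\to\mathbb{R}$ such that

$$\Phi\big(x_{(1)},x_{(2)},x_{(3)};\lambda\big) = \frac{x_{(1)}(x_{(3)}-x_{(2)}) + \lambda\,x_{(2)}(x_{(1)}-x_{(3)})}{(x_{(3)}-x_{(2)}) + \lambda(x_{(1)}-x_{(3)})}. \quad (6)$$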
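The superposition rule (6) can be checked numerically. The following sketch assumes the illustrative coefficients $b_1(t)=\cos t$, $b_2(t)=0.1$, $b_3(t)=-0.5$, which are not taken from the text. It integrates four particular solutions with the classical RK4 scheme, computes the constant λ from the initial data, and verifies two things: λ stays constant along the solutions, and (6) reproduces the fourth solution from the other three. The helper names are hypothetical.

```python
import math


def rk4_path(f, x0, T, steps):
    """Classical RK4 for dx/dt = f(t, x); returns the list [x_0, ..., x_steps]."""
    h, t, x, path = T / steps, 0.0, x0, [x0]
    for _ in range(steps):
        k1 = f(t, x)
        k2 = f(t + h / 2, x + h / 2 * k1)
        k3 = f(t + h / 2, x + h / 2 * k2)
        k4 = f(t + h, x + h * k3)
        x += h / 6 * (k1 + 2 * k2 + 2 * k3 + k4)
        t += h
        path.append(x)
    return path


def superposition(x1, x2, x3, lam):
    """Superposition rule (6) for the Riccati equation."""
    return (x1 * (x3 - x2) + lam * x2 * (x1 - x3)) / ((x3 - x2) + lam * (x1 - x3))


def lam_of(x, x1, x2, x3):
    """The constant lambda such that x = superposition(x1, x2, x3, lambda)."""
    return (x - x1) * (x3 - x2) / ((x1 - x3) * (x2 - x))


# Hypothetical coefficients in (5): b1(t) = cos t, b2(t) = 0.1, b3(t) = -0.5.
riccati = lambda t, x: math.cos(t) + 0.1 * x - 0.5 * x * x

x1, x2, x3, x4 = (rk4_path(riccati, x0, 1.0, 1000) for x0 in (0.0, 1.0, -0.5, 2.0))
lam = lam_of(x4[0], x1[0], x2[0], x3[0])  # fixed by the initial conditions
drift = max(abs(lam_of(a, b, c, d) - lam) for a, b, c, d in zip(x4, x1, x2, x3))
recon = max(abs(superposition(b, c, d, lam) - a) for a, b, c, d in zip(x4, x1, x2, x3))
print(lam, drift, recon)
```

Both `drift` and `recon` only reflect the RK4 discretization error, since λ is exactly conserved by the continuous flow.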

The conditions that guarantee the existence of a superposition rule are gathered in the Lie theorem ([34], Theorem 44).

Theorem 1.

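(Lie theorem). A first-order system X on N,

$$\frac{dx}{dt} = F(t,x),\qquad x\in N, \quad (7)$$

admits a superposition rule if and only if X can be written as

$$X(t,x) = \sum_{\alpha=1}^{r}b_\alpha(t)X_\alpha(x) \quad (8)$$

for a certain family $b_1(t),\ldots,b_r(t)$ of t-dependent functions and a family $X_1,\ldots,X_r$ of vector fields on N that generate an r-dimensional Lie algebra of vector fields.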

The Lie theorem yields that every Lie system X is related to (at least) one VG Lie algebra, V, that satisfies $V^X = \mathrm{Lie}\big(\{X_t\}_{t\in\mathbb{R}}\big)\subset V$. This implies that the minimal Lie algebra of X has to be finite-dimensional, and vice versa [5].

Example 1.

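The t-dependent vector field on the real line associated with (5) is $X = b_1(t)X_1 + b_2(t)X_2 + b_3(t)X_3$, where $X_1,X_2,X_3$ are vector fields on $\mathbb{R}$ given by

$$X_1 = \frac{\partial}{\partial x},\qquad X_2 = x\frac{\partial}{\partial x},\qquad X_3 = x^2\frac{\partial}{\partial x}.$$

Since the commutation relations are

$$[X_1,X_2] = X_1,\qquad [X_1,X_3] = 2X_2,\qquad [X_2,X_3] = X_3, \quad (9)$$

the vector fields $X_1,X_2,X_3$ generate a VG Lie algebra isomorphic to $\mathfrak{sl}(2,\mathbb{R})$. Then, the Lie theorem guarantees that (5) admits a superposition rule, which is precisely the one shown in (6).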
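For vector fields on $\mathbb{R}$ one has $[f\,\partial_x, g\,\partial_x] = (fg' - gf')\,\partial_x$, so the commutation relations of the Riccati vector fields $X_1=\partial_x$, $X_2=x\partial_x$, $X_3=x^2\partial_x$ follow from a one-line computation:

```latex
[X_1, X_2] = \big(1\cdot 1 - x\cdot 0\big)\frac{\partial}{\partial x} = X_1, \qquad
[X_1, X_3] = \big(1\cdot 2x - x^2\cdot 0\big)\frac{\partial}{\partial x} = 2X_2, \qquad
[X_2, X_3] = \big(x\cdot 2x - x^2\cdot 1\big)\frac{\partial}{\partial x} = X_3.
```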

2.3.1. Automorphic Lie Systems

The general solution of a Lie system on N with a VG Lie algebra V can be obtained from a single particular solution of a Lie system on a Lie group G whose Lie algebra is isomorphic to V, a so-called automorphic Lie system ([5], §1.4). As the notion of automorphic Lie system is central in our paper, let us study it in some detail (see [5] for further details).

Definition 2.

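An automorphic Lie system is a t-dependent system of first-order differential equations on a Lie group G of the form

$$\frac{dg}{dt} = \sum_{\alpha=1}^{r}b_\alpha(t)X^R_\alpha(g),\qquad g\in G, \quad (10)$$

where $\{X^R_1,\ldots,X^R_r\}$ is a basis of the space of right-invariant vector fields on G and $b_1(t),\ldots,b_r(t)$ are arbitrary t-dependent functions. Furthermore, we shall refer to the right-hand side of Equation (10) as $X^R_G(t,g)$, i.e., $X^R_G(t,g) = \sum_{\alpha=1}^{r}b_\alpha(t)X^R_\alpha(g)$.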

Since the vector fields $X^R_\alpha$ are right-invariant, systems of the form $X^R_G$ have the following important property.

Proposition 1.

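(See [5], §1.3) Given a Lie group G and a particular solution $g(t)$ of the Lie system defined on G as

$$\frac{dg}{dt} = \sum_{\alpha=1}^{r}b_\alpha(t)X^R_\alpha(g), \quad (11)$$

where $b_1(t),\ldots,b_r(t)$ are arbitrary t-dependent functions and $X^R_1,\ldots,X^R_r$ are right-invariant vector fields, we have that $g(t)h$ is also a solution of (11) for each $h\in G$.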

An immediate consequence of Proposition 1 is that, once we know a particular solution of $X^R_G$, any other solution can be obtained simply by multiplying the known solution on the right by a suitable element of G. More concretely, if we know a solution $g(t)$ of (11), then the solution of (11) with initial condition $g(0)h$, for any $h\in G$, can be expressed as $g(t)h$. This justifies that henceforth we only worry about finding one particular solution of $X^R_G$, e.g., the one that fulfills $g(0) = e$. The previous result can be understood in terms of the Lie theorem or via superposition rules. In fact, since (11) admits the superposition rule $\Phi: (g,h)\in G\times G\mapsto gh\in G$, the system (11) must be a Lie system. Alternatively, the same result follows from the Lie theorem and the fact that the right-invariant vector fields on G span a finite-dimensional Lie algebra of vector fields.
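Proposition 1 can be observed numerically for a matrix automorphic system $dg/dt = A(t)g$. The sketch below uses a hypothetical traceless curve $A(t)$ and the classical RK4 scheme. Each RK4 step acts on g by left multiplication with a matrix that depends only on t and h. As a result, the discrete solutions inherit the property $g_h(t) = g_e(t)h$ up to round-off. The helper names are our own.

```python
import math


def matmul(A, B):
    n = len(A)
    return [[sum(A[i][k] * B[k][j] for k in range(n)) for j in range(n)] for i in range(n)]


def shift(g, k, c):
    """Return g + c*k."""
    return [[gij + c * kij for gij, kij in zip(gr, kr)] for gr, kr in zip(g, k)]


def rk4_matrix(A, g0, T, steps):
    """Classical RK4 for dg/dt = A(t) g, g(0) = g0; returns g(T)."""
    h, t, g = T / steps, 0.0, [row[:] for row in g0]
    for _ in range(steps):
        k1 = matmul(A(t), g)
        k2 = matmul(A(t + h / 2), shift(g, k1, h / 2))
        k3 = matmul(A(t + h / 2), shift(g, k2, h / 2))
        k4 = matmul(A(t + h), shift(g, k3, h))
        g = shift(shift(shift(shift(g, k1, h / 6), k2, h / 3), k3, h / 3), k4, h / 6)
        t += h
    return g


A = lambda t: [[math.sin(t), 1.0], [t, -math.sin(t)]]  # hypothetical curve in sl(2, R)
I = [[1.0, 0.0], [0.0, 1.0]]
h0 = [[2.0, 1.0], [1.0, 1.0]]                          # det = 1, so h0 lies in SL(2, R)

g_e = rk4_matrix(A, I, 1.0, 200)    # solution with g(0) = e
g_h = rk4_matrix(A, h0, 1.0, 200)   # solution with g(0) = h0
err = max(abs(x - y) for rx, ry in zip(g_h, matmul(g_e, h0)) for x, y in zip(rx, ry))
print(err)
```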

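There are several reasons to study automorphic Lie systems. One is that, around the neutral element of their Lie group, they can be locally written in the form

$$\frac{dg}{dt} = \Big(\sum_{\alpha=1}^{r}b_\alpha(t)M_\alpha\Big)g,$$

where $M_\alpha\in\mathcal{M}_n(\mathbb{K})$, the set of $n\times n$ matrices with coefficients in $\mathbb{K}$, for every $\alpha = 1,\ldots,r$.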

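The main reason to study automorphic Lie systems is given by the following results, which show how they can be used to solve any Lie system on a manifold. Let us start with a Lie system X defined on N. Hence, X can be written as

$$X(t,x) = \sum_{\alpha=1}^{r}b_\alpha(t)X_\alpha(x), \quad (12)$$

for certain t-dependent functions $b_1(t),\ldots,b_r(t)$ and vector fields $X_1,\ldots,X_r$ that generate an r-dimensional VG Lie algebra V. The VG Lie algebra V is always isomorphic to the Lie algebra $\mathfrak{g}$ of a certain Lie group G. The VG Lie algebra spanned by $X_1,\ldots,X_r$ gives rise to a (local) Lie group action $\Phi: G\times N\to N$ whose fundamental vector fields are those of V. In particular, there exists a basis $\{v_1,\ldots,v_r\}$ in $\mathfrak{g}$ so that

$$X_\alpha(x) = \frac{d}{dt}\Big|_{t=0}\Phi\big(\exp(tv_\alpha),x\big),\qquad \alpha = 1,\ldots,r.$$

In other words, $\Phi(\exp(tv_\alpha),\cdot)$ is the flow of the vector field $X_\alpha$ for $\alpha = 1,\ldots,r$. Note that if $[X_\alpha,X_\beta] = \sum_{\gamma=1}^{r}c_{\alpha\beta}^{\gamma}X_\gamma$ for $\alpha,\beta = 1,\ldots,r$, then $[v_\alpha,v_\beta] = -\sum_{\gamma=1}^{r}c_{\alpha\beta}^{\gamma}v_\gamma$ for $\alpha,\beta = 1,\ldots,r$ (cf. [1]).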

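To determine the exact form of the Lie group action $\Phi: G\times N\to N$ as in (4), we impose

$$\Phi\big(\exp(\lambda_\alpha v_\alpha),x\big) = \varphi_\alpha(\lambda_\alpha,x),\qquad \alpha = 1,\ldots,r, \quad (13)$$

where $\varphi_\alpha:\mathbb{R}\times N\to N$ is the flow of $X_\alpha$. If we stay in a neighborhood U of the neutral element of G, where every element $g\in U$ can be written in the form

$$g = \exp(\lambda_1v_1)\cdots\exp(\lambda_rv_r),$$

then the relations (13) and the properties of $\Phi$ allow us to determine $\Phi$ on U. If we fix x, the left-hand side of (13), as a function of $\lambda_\alpha$, turns into an integral curve of the vector field $X_\alpha$; this is why (13) holds.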

Proposition 2.

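(see [1,5] for details) Let $g(t)$ be a solution to the system

$$\frac{dg}{dt} = \sum_{\alpha=1}^{r}b_\alpha(t)X^R_\alpha(g),\qquad g\in G,$$

where $X^R_\alpha$ is the right-invariant vector field on G with $X^R_\alpha(e) = v_\alpha$. Then, $x(t) = \Phi(g(t),x_0)$ is a solution of X, where $x_0\in N$. In particular, if one takes the solution $g(t)$ that satisfies the initial condition $g(0) = e$, then $\Phi(g(t),x_0)$ is the solution of X such that $x(0) = x_0$.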

Let us study a particularly relevant form of automorphic Lie systems that will be used hereafter. If $\mathfrak{g}$ is a finite-dimensional Lie algebra, then Ado's theorem [30] guarantees that $\mathfrak{g}$ is isomorphic to a matrix Lie algebra $\mathfrak{g}_M\subset\mathfrak{gl}(n,\mathbb{K})$. Let $\{M_1,\ldots,M_r\}$ be a basis of $\mathfrak{g}_M$. As reviewed in Section 2.2, each $M_\alpha$ gives rise to a right-invariant vector field $X^R_\alpha(g) = M_\alpha g$, with $g\in G$, on G. These vector fields have the opposite commutation relations to the (matrix) elements of the basis.

In the case of matrix Lie groups, the system (11) takes a simpler form. Let $M_\alpha$ be the matrix associated with the element $v_\alpha\in\mathfrak{g}$. Using the right invariance property of each $X^R_\alpha$, we have that

$$X^R_\alpha(g) = T_eR_g\,X^R_\alpha(e) = T_eR_g\,M_\alpha,\qquad \alpha = 1,\ldots,r.$$

We can write the last term as $T_eR_g\,M_\alpha = M_\alpha g$, in such a way that, for matrix Lie groups, the system on the Lie group is

$$\frac{dg}{dt} = \Big(\sum_{\alpha=1}^{r}b_\alpha(t)M_\alpha\Big)g,\qquad g(0) = I, \quad (14)$$

where I is the identity matrix (which corresponds to the neutral element of the matrix Lie group) and the matrices $M_1,\ldots,M_r$ form a finite-dimensional Lie algebra that is anti-isomorphic to the VG Lie algebra of the system (by anti-isomorphic we mean that both Lie algebras have the same structure constants up to a global sign).
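For the Riccati equation (5), this anti-isomorphism can be checked by hand. The sketch below assumes one possible matrix realization in $\mathfrak{sl}(2,\mathbb{R})$, namely the matrices listed in the code (our own choice among many). Using exact rational arithmetic, it verifies that they satisfy the relations (9) with the opposite sign.

```python
from fractions import Fraction as F


def matmul(A, B):
    return [[sum(A[i][k] * B[k][j] for k in range(2)) for j in range(2)] for i in range(2)]


def bracket(A, B):
    """Matrix commutator [A, B] = AB - BA."""
    AB, BA = matmul(A, B), matmul(B, A)
    return [[AB[i][j] - BA[i][j] for j in range(2)] for i in range(2)]


def scale(c, A):
    return [[c * x for x in row] for row in A]


# Hypothetical matrix realization of the Riccati VG Lie algebra (9) in sl(2, R).
M1 = [[F(0), F(1)], [F(0), F(0)]]
M2 = [[F(1, 2), F(0)], [F(0), F(-1, 2)]]
M3 = [[F(0), F(0)], [F(-1), F(0)]]

# VG relations: [X1,X2] = X1, [X1,X3] = 2 X2, [X2,X3] = X3; the matrices carry the opposite sign.
print(bracket(M1, M2) == scale(-1, M1))  # True
print(bracket(M1, M3) == scale(-2, M2))  # True
print(bracket(M2, M3) == scale(-1, M3))  # True
```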

There exist various methods to solve system (11) analytically ([6], §2.2), such as the Levi decomposition [35] or the theory of reduction of Lie systems ([4], Theorem 2). In some cases it is relatively easy to solve, as happens when $b_1,\ldots,b_r$ are constants. We will present an example of this particular case in Section 4. Nonetheless, we are interested in a numerical approach, since we aim to solve the automorphic Lie system with adapted geometric integrators. The solutions on the Lie group can be straightforwardly translated into solutions of the Lie system on N via the Lie group action (2).

To finish this section, we will employ the previous developments in order to define our novel procedure to (geometrically) construct a continuous solution of a given Lie system.

The 7-step method: Reduction procedure to automorphic Lie system

The method can be itemized in the following seven steps:

1. We identify the VG Lie algebra of vector fields $X_1,\ldots,X_r$ that defines the Lie system X on N.
2. We look for a Lie algebra $\mathfrak{g}$ isomorphic to the VG Lie algebra, whose basis $\{v_1,\ldots,v_r\}$ has the same structure constants as $\{X_1,\ldots,X_r\}$ in absolute value, but with the opposite sign.
3. We integrate the vector fields $X_1,\ldots,X_r$ to obtain their respective flows $\varphi_\alpha:\mathbb{R}\times N\to N$, with $\alpha = 1,\ldots,r$.
4. Using canonical coordinates of the second kind and the previous flows, we construct the Lie group action $\Phi: G\times N\to N$ using the expressions in (13).
5. We define an automorphic Lie system $X^R_G$ on the Lie group G associated with $\mathfrak{g}$ as in (11).
6. We compute the solution $g(t)$ of the system $X^R_G$ that fulfills $g(0) = e$.
7. Finally, we retrieve the solution of X on N with $x(0) = x_0$ through the expression $x(t) = \Phi(g(t),x_0)$.
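The seven steps can be sketched end to end for the Riccati equation (5). The code below is an illustration under our own assumptions:

- The basis $M_\alpha$ of $\mathfrak{sl}(2,\mathbb{R})$ is the one written in the code.
- The action obtained from (13) is taken to be the linear-fractional (Möbius) action $\Phi(g,x) = (g_{11}x+g_{12})/(g_{21}x+g_{22})$.
- Step 6 is carried out with the classical RK4 scheme rather than with the Lie group methods of Section 3.

All function names are hypothetical.

```python
import math

# Step 2 (a hypothetical but valid choice): basis of sl(2, R) whose structure
# constants are those of (9) with the opposite sign.
M = [[[0.0, 1.0], [0.0, 0.0]],
     [[0.5, 0.0], [0.0, -0.5]],
     [[0.0, 0.0], [-1.0, 0.0]]]

# Step 3: flows of X1 = d/dx, X2 = x d/dx and X3 = x^2 d/dx.
flows = [lambda l, x: x + l,
         lambda l, x: math.exp(l) * x,
         lambda l, x: x / (1.0 - l * x)]


def exp_basis(alpha, l):
    """Closed-form exp(l * M[alpha]) (M1, M3 nilpotent; M2 diagonal)."""
    if alpha == 0:
        return [[1.0, l], [0.0, 1.0]]
    if alpha == 1:
        return [[math.exp(l / 2), 0.0], [0.0, math.exp(-l / 2)]]
    return [[1.0, 0.0], [-l, 1.0]]


def action(g, x):
    """Step 4: the linear-fractional action, which satisfies (13) for this basis."""
    return (g[0][0] * x + g[0][1]) / (g[1][0] * x + g[1][1])


def matmul(A, B):
    return [[sum(A[i][k] * B[k][j] for k in range(2)) for j in range(2)] for i in range(2)]


def rhs(b, t, g):
    """Step 5: right-hand side of the matrix automorphic system (14)."""
    A = [[sum(b[a](t) * M[a][i][j] for a in range(3)) for j in range(2)] for i in range(2)]
    return matmul(A, g)


def shift(g, k, c):
    return [[g[i][j] + c * k[i][j] for j in range(2)] for i in range(2)]


def solve_riccati_via_group(b, x0, T, steps):
    """Steps 6-7: RK4 for g(t) with g(0) = I, then x(t_k) = action(g(t_k), x0)."""
    h, t, g, xs = T / steps, 0.0, [[1.0, 0.0], [0.0, 1.0]], [x0]
    for _ in range(steps):
        k1 = rhs(b, t, g)
        k2 = rhs(b, t + h / 2, shift(g, k1, h / 2))
        k3 = rhs(b, t + h / 2, shift(g, k2, h / 2))
        k4 = rhs(b, t + h, shift(g, k3, h))
        g = shift(shift(shift(shift(g, k1, h / 6), k2, h / 3), k3, h / 3), k4, h / 6)
        t += h
        xs.append(action(g, x0))
    return xs


# dx/dt = 1 + x^2 (b1 = b3 = 1, b2 = 0) with x(0) = 0 has solution tan(t).
b = (lambda t: 1.0, lambda t: 0.0, lambda t: 1.0)
print(solve_riccati_via_group(b, 0.0, 1.0, 1000)[-1], math.tan(1.0))
```

For these constant coefficients, the printed value should agree with $\tan 1$ to many digits. The flows of Step 3 can also be compared with $\Phi(\exp(\lambda M_\alpha),x)$ to check (13) for this choice of basis and action.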

3. Discretization of Lie Systems

This section adapts known numerical methods on Lie groups to automorphic Lie systems. For this purpose, we start by briefly reviewing some fundamentals on numerical methods for ordinary differential equations and Lie groups [36,37,38], and later focus on two specific numerical methods on Lie groups, the Magnus expansion and RKMK methods [8,23,24,25,26].

Recall that, in this paper, we focus on ordinary differential equations of the form

$$\frac{dx}{dt} = f(t,x),\qquad x\in N. \quad (15)$$

When N is (diffeomorphic to) a Euclidean space, there is a plethora of numerical schemes approximating the analytic solution of (15) [36,37]. We will focus on one-step methods with a fixed time step. By that we mean that solutions are approximated by a sequence $\{x_k\}_{k=0}^{N_h}$ with $x_k\approx x(t_k)$, $t_k = kh$, $x_0 = x(0)$, and

$$x_{k+1} = x_k + h\,f_h(t_k,x_k),\qquad k = 0,\ldots,N_h-1, \quad (16)$$

where $N_h$ is the number of steps into which our time interval $[0,T]$ is divided, so that $h = T/N_h$. We call h the time step, which is fixed, while $f_h$ is a discrete vector field which (recall that, for now, we set N to be a Euclidean space with norm $\|\cdot\|$) is a given approximation of f in (15). As usual, we denote the local truncation error by $\tau_{k+1}$, where

$$\tau_{k+1} = x(t_{k+1}) - x(t_k) - h\,f_h\big(t_k,x(t_k)\big),$$

and say that the method is of order r if $\|\tau_{k+1}\| = \mathcal{O}(h^{r+1})$ as $h\to0$. Regarding the global error

$$e_k = x(t_k) - x_k,$$

we shall say that the method is convergent of order r if $\max_k\|e_k\| = \mathcal{O}(h^r)$ as $h\to0$. For the simulations, we fix a specific norm to measure the global error.

Given the relevant examples in this paper, e.g., Riccati equations, where N is a Euclidean space, we will employ classical methods to approximate (15), particularly the Heun method (convergent of order 2) and RK4 (convergent of order 4), and compare them with our novel discretization proposal.
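The classical schemes can be written as one-step maps of the form (16). The following hypothetical helpers implement Heun and RK4. They estimate the observed order from the global error (maximum over the grid) when the step is halved. The test problem is the Riccati equation $dx/dt = 1+x^2$, $x(0)=0$, whose solution is $\tan t$.

```python
import math


def heun_step(f, t, x, h):
    k1 = f(t, x)
    k2 = f(t + h, x + h * k1)
    return x + h / 2 * (k1 + k2)


def rk4_step(f, t, x, h):
    k1 = f(t, x)
    k2 = f(t + h / 2, x + h / 2 * k1)
    k3 = f(t + h / 2, x + h / 2 * k2)
    k4 = f(t + h, x + h * k3)
    return x + h / 6 * (k1 + 2 * k2 + 2 * k3 + k4)


def global_error(step, f, exact, x0, T, n_steps):
    """max_k |x(t_k) - x_k| for a fixed-step one-step method of the form (16)."""
    h, t, x, err = T / n_steps, 0.0, x0, 0.0
    for k in range(1, n_steps + 1):
        x = step(f, t, x, h)
        t = k * h
        err = max(err, abs(exact(t) - x))
    return err


def observed_order(step, f, exact, x0, T, n):
    """log2 of the ratio of global errors when the time step is halved."""
    return math.log2(global_error(step, f, exact, x0, T, n) /
                     global_error(step, f, exact, x0, T, 2 * n))


# Riccati test problem dx/dt = 1 + x^2, x(0) = 0, with exact solution tan(t).
f = lambda t, x: 1.0 + x * x
print(observed_order(heun_step, f, math.tan, 0.0, 1.0, 40))  # expected to be close to 2
print(observed_order(rk4_step, f, math.tan, 0.0, 1.0, 40))   # expected to be close to 4
```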

3.1. Numerical Methods on Matrix Lie Groups

Our purpose is to numerically solve the initial value problem for system (14) defined on a matrix Lie group G of the form

$$\frac{dY}{dt} = A(t)\,Y,\qquad Y(0) = I, \quad (18)$$

where $Y(t)\in G$, while $A(t)\in\mathfrak{g}$ is a given t-dependent matrix and I is the identity matrix in G. That is, we are searching for a discrete sequence $\{Y_k\}_{k=0}^{N_h}\subset G$ such that $Y_k\approx Y(t_k)$. In a neighborhood of zero in $\mathfrak{g}$, the exponential map defines a diffeomorphism onto an open neighborhood of the neutral element of G, and the problem is equivalent to searching for a curve $\Omega(t)$ in $\mathfrak{g}$ such that

$$Y(t) = \exp\big(\Omega(t)\big). \quad (19)$$

This ansatz helps us to transform (18), which is defined in a nonlinear space, into a new problem in a linear space, namely the Lie algebra . This is expressed in the classical result by Magnus [39].

Theorem 2.

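(Magnus, 1954). The solution of the matrix Lie group equation (18) in G can be written, for values of t close enough to zero, as $Y(t) = \exp(\Omega(t))$, where $\Omega(t)$ is the solution of the initial value problem

$$\frac{d\Omega}{dt} = \mathrm{dexp}^{-1}_{\Omega(t)}\big(A(t)\big),\qquad \Omega(0) = \mathbf{0}, \quad (20)$$

where $\mathbf{0}$ is the zero element in $\mathfrak{g}$.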

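When we are dealing with matrix Lie groups and Lie algebras, the map $\mathrm{dexp}^{-1}_\Omega$ is given by

$$\mathrm{dexp}^{-1}_{\Omega}(H) = \sum_{j=0}^{\infty}\frac{B_j}{j!}\,\mathrm{ad}^j_{\Omega}(H), \quad (21)$$

where the $B_j$ are the Bernoulli numbers ($B_0 = 1$, $B_1 = -\tfrac12$, $B_2 = \tfrac16$, $B_3 = 0$, ...), $\mathrm{ad}_\Omega(H) = [\Omega,H] = \Omega H - H\Omega$, and $\mathrm{ad}^j_\Omega = \mathrm{ad}_\Omega\circ\mathrm{ad}^{j-1}_\Omega$ with $\mathrm{ad}^0_\Omega(H) = H$. The series (21) converges as long as $\|\Omega\| < \pi$ [39].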
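The series (21) is easy to truncate in code. The sketch below (pure Python, names hypothetical) generates exact Bernoulli numbers through the recurrence $\sum_{k=0}^{m}\binom{m+1}{k}B_k = 0$, which yields the convention $B_1=-\tfrac12$ used in (21), and evaluates the truncated $\mathrm{dexp}^{-1}_\Omega(H)$. A simple consistency check is that, for a curve $\Omega(t)$, the truncated series applied to $\tfrac{d}{dt}\big(e^{\Omega(t)}\big)e^{-\Omega(t)}$ should return $\dot\Omega(t)$. The small `expm` helper is included for that purpose.

```python
import math
from fractions import Fraction


def bernoulli(n):
    """Bernoulli numbers B_0, ..., B_n (convention B_1 = -1/2) as exact fractions."""
    B = [Fraction(1)] + [Fraction(0)] * n
    for m in range(1, n + 1):
        B[m] = -sum(math.comb(m + 1, k) * B[k] for k in range(m)) / (m + 1)
    return B


def matmul(A, B):
    n = len(A)
    return [[sum(A[i][k] * B[k][j] for k in range(n)) for j in range(n)] for i in range(n)]


def axpy(A, B, s):
    """Return A + s*B."""
    return [[a + s * b for a, b in zip(ra, rb)] for ra, rb in zip(A, B)]


def bracket(A, B):
    """ad_A(B) = [A, B] = AB - BA."""
    return axpy(matmul(A, B), matmul(B, A), -1.0)


def expm(X, terms=20):
    """exp(X) via scaling and squaring plus a truncated Taylor series."""
    n, s = len(X), 0
    norm = max(sum(abs(x) for x in row) for row in X)
    while norm > 0.5:
        norm, s = norm / 2.0, s + 1
    A = [[x / 2.0 ** s for x in row] for row in X]
    E = [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]
    T = [row[:] for row in E]
    for k in range(1, terms):
        T = [[x / k for x in row] for row in matmul(T, A)]  # T = A^k / k!
        E = axpy(E, T, 1.0)
    for _ in range(s):
        E = matmul(E, E)
    return E


def dexpinv(Omega, H, order):
    """Truncation of (21): sum_{j=0}^{order} (B_j / j!) ad_Omega^j(H)."""
    B = bernoulli(order)
    term, out = H, [row[:] for row in H]  # j = 0 contributes B_0 * H = H
    for j in range(1, order + 1):
        term = bracket(Omega, term)  # ad_Omega^j(H)
        out = axpy(out, term, float(B[j]) / math.factorial(j))
    return out


print(bernoulli(4))  # [Fraction(1, 1), Fraction(-1, 2), Fraction(1, 6), Fraction(0, 1), Fraction(-1, 30)]
```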

If we try to integrate (20) by applying a numerical method directly (note that now we could safely employ one-step methods (16)), $\Omega(t)$ might drift too far away from the origin, where the exponential map no longer provides valid coordinates. This would be a problem, since we are assuming that $\Omega(t)$ stays in a neighborhood of the origin of $\mathfrak{g}$ where the exponential map defines a local diffeomorphism with the Lie group. Since we do not know a priori how to characterize this neighborhood, we need a strategy that keeps the resolution of (20) sufficiently close to the origin. The remedy is to change the coordinate system at each iteration of the numerical method. In the next lines we explain how this is achieved.

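Consider now the restriction of the exponential map given by

$$\exp: U\subset\mathfrak{g}\longrightarrow\exp(U)\subset G,$$

where U is an open neighborhood of the origin in $\mathfrak{g}$ such that this map is a diffeomorphism onto its image. Since the elements of the matrix Lie group are invertible matrices, for every $Y_k\in G$ the map $\Omega\mapsto\exp(\Omega)Y_k$ from U to the set

$$\exp(U)\,Y_k = \{\exp(\Omega)\,Y_k : \Omega\in U\}$$

is also a diffeomorphism. This map gives rise to the so-called canonical coordinates of the first kind centered at $Y_k$.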

As is well known, the solutions of (20) are curves in $\mathfrak{g}$ whose images by the exponential map are solutions to (18). In particular, the solution of system (20) such that $\Omega(0)$ is the zero matrix in $\mathfrak{g}$, namely $\mathbf{0}$, corresponds to the solution of the system (18) on G such that $Y(0) = I$. Now, for a certain $t_k$, the solution $\Omega^{(k)}(t)$ of (20) in $\mathfrak{g}$ such that $\Omega^{(k)}(t_k) = \mathbf{0}$ corresponds to $Y(t)$ via canonical coordinates of the first kind centered at $Y(t_k)$, since

$$Y(t) = \exp\big(\Omega^{(k)}(t)\big)\,Y(t_k):$$

both sides solve (18) and coincide at $t = t_k$, so the existence and uniqueness theorem guarantees the equality around $t_k$. In this way, we can use the curve $\Omega^{(k)}(t)$ and the canonical coordinates centered at $Y(t_k)$ to obtain values of the solution of (18) in the proximity of $t_k$, instead of using $\Omega(t)$. Whilst the curve $\Omega(t)$ could be far from the origin of coordinates for t close to $t_k$, we know that $\Omega^{(k)}(t)$ will be close to it, by construction. Applying this idea at each iteration of the numerical method, we change the curve in $\mathfrak{g}$ used to obtain the approximate solution of (18) while staying near the origin (as long as the time step is small enough).

Thus, what is left is defining proper numerical methods for (20) whose solutions, i.e., approximations $\Omega_{k+1}\approx\Omega^{(k)}(t_{k+1})$, provide, via the exponential map, a numerical solution of (18) remaining in G. In other words, the general Lie group method defined this way [8,23] can be set by the recursion

$$Y_{k+1} = \exp(\Omega_{k+1})\,Y_k,\qquad Y_0 = I. \quad (22)$$

Next, we introduce two relevant families of numerical methods providing $\Omega_{k+1}$.

3.1.1. The Magnus Method

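Based on the work by Magnus, the Magnus method was introduced in [23,40]. The starting point of this method is to solve Equation (20) by means of Picard iteration, which produces a sequence of functions that converges to the solution of (20) in a small enough neighborhood. Operating, one obtains the Magnus expansion

$$\Omega(t) = \sum_{m=1}^{\infty}\Omega^{[m]}(t), \quad (23)$$

where each $\Omega^{[m]}(t)$ is a linear combination of iterated commutators of A. The first three terms are given by

$$\Omega^{[1]}(t) = \int_0^t A(t_1)\,dt_1,$$

$$\Omega^{[2]}(t) = \frac12\int_0^t\!\!\int_0^{t_1}\big[A(t_1),A(t_2)\big]\,dt_2\,dt_1,$$

$$\Omega^{[3]}(t) = \frac16\int_0^t\!\!\int_0^{t_1}\!\!\int_0^{t_2}\Big(\big[A(t_1),[A(t_2),A(t_3)]\big] + \big[A(t_3),[A(t_2),A(t_1)]\big]\Big)\,dt_3\,dt_2\,dt_1.$$

Note that the Magnus expansion (23) converges absolutely in a given norm for every $t\geq0$ such that ([8], p. 48)

$$\int_0^t\|A(s)\|\,ds < \pi.$$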

In practice, if we work with the Magnus expansion, we need a way to handle the infinite series and to calculate the iterated integrals. Iserles and Nørsett proposed a method based on binary trees [23,40]. In ([8], §4.3) one can find a method to truncate the series so as to obtain the desired order of convergence. Similarly, ([8], §5) discusses in detail how the iterated integrals can be computed numerically. In our case, for practical reasons, we implement the Magnus method following the guidelines of Blanes, Casas, and Ros [41], which are based on a Taylor expansion of A(t) in (18) around the midpoint $t = h/2$ of the step (recall that, in the Lie group and Lie algebra equations, we are setting the initial time $t_0 = 0$). With this technique, one is able to achieve different orders of convergence. In particular, we will use the second- and fourth-order methods ([41], §3.2), although one can build methods up to eighth order.

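The second-order approximation is

$$\Omega(h) = h\,a_0 + \mathcal{O}(h^3), \quad (24)$$

and the fourth-order one reads

$$\Omega(h) = \alpha_1 + \frac{1}{12}\alpha_3 - \frac{1}{12}[\alpha_1,\alpha_2] + \mathcal{O}(h^5), \quad (25)$$

where $\alpha_i = h^i a_{i-1}$ and

$$a_i = \frac{1}{i!}\,\frac{d^iA(t)}{dt^i}\Big|_{t=h/2}.$$

As we see from the definitions, the fourth-order method requires the first and second derivatives of the matrix A(t), whereas the second-order one only needs A at the midpoint. Applying the coordinate change (22) at each iteration, we can implement them through the following equations:

$$Y_{k+1} = \exp\big(h\,A_{k+1/2}\big)\,Y_k,$$

$$Y_{k+1} = \exp\Big(h\,A_{k+1/2} + \frac{h^3}{24}\,\ddot A_{k+1/2} - \frac{h^3}{12}\big[A_{k+1/2},\dot A_{k+1/2}\big]\Big)\,Y_k,$$

where $A_{k+1/2} = A(t_k + h/2)$, and $\dot A_{k+1/2}$, $\ddot A_{k+1/2}$ stand for the first and second derivatives of A(t) with respect to t at $t_k + h/2$. Note that the convergence order is defined for the Lie group dynamics (18). That is, when we say that the above methods are convergent of order 2 (respectively, 4), we mean that $\max_k\|Y(t_k) - Y_k\| = \mathcal{O}(h^2)$ (respectively, $\mathcal{O}(h^4)$) as $h\to0$, for a suitable matrix norm.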
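The two Magnus schemes can be sketched as follows. To have an exact reference, we assume the hypothetical test problem $A(t) = P + R(t)QR(t)^{\top}$ with $R(t)=\exp(tP)$ a rotation. Its solution of (18) is $Y(t) = R(t)\exp(tQ)$, and its derivatives $\dot A = R[P,Q]R^{\top}$ and $\ddot A = R[P,[P,Q]]R^{\top}$ are available in closed form. The helpers (`lin`, `expm`, `magnus`) are our own and are not taken from [41].

```python
import math


def identity(n):
    return [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]


def matmul(A, B):
    n = len(A)
    return [[sum(A[i][k] * B[k][j] for k in range(n)) for j in range(n)] for i in range(n)]


def lin(*terms):
    """Linear combination sum c*X of square matrices, given as (c, X) pairs."""
    n = len(terms[0][1])
    return [[sum(c * X[i][j] for c, X in terms) for j in range(n)] for i in range(n)]


def bracket(A, B):
    return lin((1.0, matmul(A, B)), (-1.0, matmul(B, A)))


def expm(X, terms=20):
    """exp(X) via scaling and squaring plus a truncated Taylor series."""
    s, norm = 0, max(sum(abs(x) for x in row) for row in X)
    while norm > 0.5:
        norm, s = norm / 2.0, s + 1
    A, E, T = lin((0.5 ** s, X)), identity(len(X)), identity(len(X))
    for k in range(1, terms):
        T = lin((1.0 / k, matmul(T, A)))  # T = A^k / k!
        E = lin((1.0, E), (1.0, T))
    for _ in range(s):
        E = matmul(E, E)
    return E


def magnus(A, dA, ddA, T, n, order, exact):
    """Magnus step (24) (order=2) or (25) (order=4) inside recursion (22).
    Returns (Y_n, max over k and entries of |Y(t_k) - Y_k|)."""
    h, Y, err = T / n, identity(len(A(0.0))), 0.0
    for k in range(n):
        tm = k * h + h / 2  # midpoint t_k + h/2
        Am = A(tm)
        if order == 2:
            Om = lin((h, Am))
        else:
            Om = lin((h, Am), (h ** 3 / 24, ddA(tm)), (-h ** 3 / 12, bracket(Am, dA(tm))))
        Y = matmul(expm(Om), Y)  # Y_{k+1} = exp(Omega_{k+1}) Y_k
        Ye = exact((k + 1) * h)
        err = max(err, max(abs(a - b) for ra, rb in zip(Y, Ye) for a, b in zip(ra, rb)))
    return Y, err


# Hypothetical test problem: A(t) = P + R(t) Q R(t)^{-1}, R(t) = exp(tP), Y(t) = R(t) exp(tQ).
P = [[0.0, -1.0], [1.0, 0.0]]
Q = [[0.3, 0.5], [0.2, -0.3]]
R = lambda t: [[math.cos(t), -math.sin(t)], [math.sin(t), math.cos(t)]]
conj = lambda t, X: matmul(matmul(R(t), X), R(-t))  # R(-t) = R(t)^{-1}
A = lambda t: lin((1.0, P), (1.0, conj(t, Q)))
dA = lambda t: conj(t, bracket(P, Q))
ddA = lambda t: conj(t, bracket(P, bracket(P, Q)))
exact = lambda t: matmul(R(t), expm(lin((t, Q))))

for order in (2, 4):
    e_coarse = magnus(A, dA, ddA, 1.0, 20, order, exact)[1]
    e_fine = magnus(A, dA, ddA, 1.0, 40, order, exact)[1]
    print(order, math.log2(e_coarse / e_fine))  # observed order
```

The printed observed orders should be close to 2 and 4. Since every $\Omega_{k+1}$ is traceless here, the iterates stay in $SL(2,\mathbb{R})$ up to round-off.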

3.1.2. The Runge–Kutta–Munthe–Kaas Method

Changing the coordinate system at each step, as explained in the previous sections, the classical RK methods applied to Lie groups give rise to the so-called Runge–Kutta–Munthe–Kaas (RKMK) methods [25,26]. The equations that implement the method are

$$\Theta_i = h\sum_{j=1}^{s}a_{ij}k_j,\qquad k_i = \mathrm{dexp}^{-1}_{\Theta_i}\big(A(t_k + c_ih)\big),\qquad i = 1,\ldots,s,$$

$$\Omega_{k+1} = h\sum_{i=1}^{s}b_ik_i,\qquad Y_{k+1} = \exp(\Omega_{k+1})\,Y_k,$$

where the constants $a_{ij}$, $b_i$, $c_i$ can be obtained from a Butcher's table ([38], §11.8) (note that s is the number of stages of the usual RK method). Apart from this, we have the consistency condition $c_i = \sum_{j=1}^{s}a_{ij}$. As the equation that we want to solve involves an infinite series, it is necessary to decide how to evaluate the function $\mathrm{dexp}^{-1}$. For this, we use the series (21) truncated at a certain order, chosen so that the order of convergence of the underlying classical RK method is preserved. If the classical RK method is of order p and the series in (20) is truncated at order j, with $j\geq p-2$, then the RKMK method is of order p (see [25,26] and ([42], Theorem 8.5, p. 124)). Again, this convergence order refers to the equation in the Lie group (18).

Let us now determine the RKMK method associated with the explicit Runge–Kutta whose Butcher’s table is

that is, a Runge–Kutta of order 4 (RK4). This implies that we need to truncate the series at :

Then, the RKMK implementation for the given Butcher’s table is

where is (26).

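| $c_i$ | $a_{i1}$ | $a_{i2}$ | $a_{i3}$ | $a_{i4}$ |
|---|---|---|---|---|
| 0 | | | | |
| 1/2 | 1/2 | | | |
| 1/2 | 0 | 1/2 | | |
| 1 | 0 | 0 | 1 | |
| $b_j$ | 1/6 | 1/3 | 1/3 | 1/6 |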
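The RKMK step associated with the RK4 table above can be sketched as follows, again assuming the linear form $Y' = A(t)Y$ of (18) on SL(2). The series $\mathrm{dexp}^{-1}_\Omega(A) = A - \tfrac12[\Omega, A] + \tfrac1{12}[\Omega,[\Omega,A]] - \cdots$ is cut after the $j = 2$ term, in line with the condition $j \geq p - 2$ for $p = 4$. The helper names and the test matrix are hypothetical.

```python
import cmath

import numpy as np


def expm_sl2(omega):
    # exp of a traceless 2x2 matrix: cosh(s) I + sinh(s)/s Omega, s^2 = -det(Omega).
    s2 = -(omega[0, 0] * omega[1, 1] - omega[0, 1] * omega[1, 0])
    if abs(s2) < 1e-10:
        return (1.0 + s2 / 2.0) * np.eye(2) + (1.0 + s2 / 6.0) * omega
    s = cmath.sqrt(s2)
    return cmath.cosh(s).real * np.eye(2) + (cmath.sinh(s) / s).real * omega


def comm(X, Y):
    return X @ Y - Y @ X


def dexpinv(omega, a, j=2):
    # sum_{k=0}^{j} B_k / k! ad_Omega^k(a) with B_0 = 1, B_1 = -1/2, B_2 = 1/6.
    terms = [a, -0.5 * comm(omega, a), comm(omega, comm(omega, a)) / 12.0]
    return sum(terms[1 : j + 1], terms[0])


def rkmk4_step(A, t, h, j=2):
    # Classical RK4 coefficients applied in the Lie algebra.
    k1 = A(t)  # dexp^{-1} at Omega = 0 is the identity map
    k2 = dexpinv(0.5 * h * k1, A(t + 0.5 * h), j)
    k3 = dexpinv(0.5 * h * k2, A(t + 0.5 * h), j)
    k4 = dexpinv(h * k3, A(t + h), j)
    return expm_sl2(h / 6.0 * (k1 + 2.0 * k2 + 2.0 * k3 + k4))


def integrate(step, T, n):
    # Y_{k+1} = exp(Omega_k) Y_k, every increment computed from the identity.
    h, Y = T / n, np.eye(2)
    for k in range(n):
        Y = step(k * h, h) @ Y
    return Y


A = lambda t: np.array([[0.0, 1.0], [-(1.0 + t**2), 0.0]])  # hypothetical test matrix
Y1, Y2, Y4 = (integrate(lambda t, h: rkmk4_step(A, t, h), 1.0, m) for m in (20, 40, 80))
p = np.log2(np.linalg.norm(Y1 - Y2) / np.linalg.norm(Y2 - Y4))
print(f"observed order ~ {p:.2f}, det(Y) = {np.linalg.det(Y4):.12f}")
```

Because $A$ does not depend on $Y$ here, the stage values $\exp(\Omega_i)Y_k$ never need to be formed; for a genuinely nonlinear $f(t, Y)$ they would enter the evaluations of the $k_i$.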

It is interesting to note that the method obtained in the previous section from the Magnus expansion (24) can be retrieved as an RKMK method associated with the following Butcher's table:

Since it is an order 2 method, the series (20) can be truncated at $j = 0$, so that no commutator terms need to be computed.

| $c_i$ | $a_{i1}$ | $a_{i2}$ |
|---|---|---|
| 0 | | |
| 1/2 | 1/2 | |
| $b_j$ | 0 | 1 |
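The equivalence with the second-order Magnus method can be checked directly on one step. With the midpoint table, $k_1 = A(t)$ and, for $j = 0$, $k_2 = A(t + h/2)$, so $\Omega = h\,A(t + h/2)$, which is exactly the Magnus 2 increment. The sketch below compares both increments for a hypothetical test matrix; the function names are illustrative.

```python
import numpy as np


def comm(X, Y):
    return X @ Y - Y @ X


def dexpinv(omega, a, j):
    # Truncated dexp^{-1} series with Bernoulli weights 1, -1/2, 1/12.
    terms = [a, -0.5 * comm(omega, a), comm(omega, comm(omega, a)) / 12.0]
    return sum(terms[1 : j + 1], terms[0])


def rkmk_midpoint_omega(A, t, h, j=0):
    # Tableau: c = (0, 1/2), a_21 = 1/2, b = (0, 1).
    k1 = A(t)
    k2 = dexpinv(0.5 * h * k1, A(t + 0.5 * h), j)
    return h * k2  # b_1 = 0, so k1 only enters through the stage Omega_2


def magnus2_omega(A, t, h):
    return h * A(t + 0.5 * h)


A = lambda t: np.array([[0.0, 1.0], [-(1.0 + t**2), 0.0]])  # hypothetical test matrix
t, h = 0.3, 0.1
print(np.allclose(rkmk_midpoint_omega(A, t, h), magnus2_omega(A, t, h)))  # True
```

With $j = 1$ the two increments differ by $-\tfrac{h^2}{4}[A(t), A(t + h/2)]$; since $[A(t), A(t + h/2)] = O(h)$ for smooth $A$, this is an $O(h^3)$ term and does not affect the order 2.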

3.2. Numerical Methods for Lie Systems

So far, we have established in the procedure of Section 2.3.1 how to construct an analytical solution of a Lie system on a manifold N via a Lie group action on N, which is obtained by integrating the VG Lie algebra of the Lie system. On the other hand, in Section 3.1 we have reviewed some methods from the literature that provide a numerical approximation of the solution of (18) remaining in the Lie group G, which is their most remarkable geometric property.

Now, let us explain how we combine these two elements to construct our new numerical methods, which retrieve the solution of (12) on N. Let be the Lie group action (13) and consider the solution of the system (18) such that . This solution permits us to retrieve the solution on N of (12) for small values of t, i.e., while the solution of (18) stays close to the neutral element and hence the Lie group action is properly defined. We have seen that the solutions of (18) can be approximated numerically through (21), say , and (22), as long as we stay close enough to the origin. As particular examples, we have picked the Magnus and RKMK methods to obtain and, furthermore, the sequence . Next, we establish the scheme providing the numerical solution to Lie systems.

Definition 3.

Let us consider a Lie system evolving on a manifold N of the form

and let

be its associated automorphic Lie system. We define the numerical solution to the Lie system, i.e., , via Algorithm 1.

| Algorithm 1 Lie systems method |


At this point, we would like to highlight an interesting geometric feature of this method. On the one hand, the discretization is based on the numerical solution of the automorphic Lie system underlying the Lie system, which is itself founded upon the geometric structure of the latter. This numerical solution remains on G, i.e., for all k, owing to the particular design of the Lie group methods (as long as h is small). On the other hand, our construction also respects the geometric structure of the Lie system, which evolves on the manifold N. We observe that the iteration

leads to this preservation, since as long as and (we recall that ). Note also that applying a one-step method (16) directly to a general Lie system (12) does not, in general, preserve this structure.
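The iteration behind Algorithm 1 can be sketched generically: a Lie group integrator supplies an increment $Y_k \in G$ over $[t_k, t_k + h]$ (starting at the identity), and the action carries $x_k$ to $x_{k+1} = \varphi(Y_k, x_k)$. As a hypothetical test case we borrow the Riccati equation of Section 4.1, written here as $dx/dt = b_0(t) + b_1(t)x + b_2(t)x^2$, with the homography $\varphi(Y, x) = (y_{11}x + y_{12})/(y_{21}x + y_{22})$. The matrix $M(t) = \begin{pmatrix} b_1/2 & b_0 \\ -b_2 & -b_1/2 \end{pmatrix}$ is the $\mathfrak{sl}(2)$ element that matches this action under our own sign convention, which need not coincide with the basis chosen in Section 4.1. The group step is Magnus 2, and all function names are illustrative.

```python
import cmath
import math

import numpy as np


def expm_sl2(omega):
    # exp of a traceless 2x2 matrix: cosh(s) I + sinh(s)/s Omega, s^2 = -det(Omega).
    s2 = -(omega[0, 0] * omega[1, 1] - omega[0, 1] * omega[1, 0])
    if abs(s2) < 1e-10:
        return (1.0 + s2 / 2.0) * np.eye(2) + (1.0 + s2 / 6.0) * omega
    s = cmath.sqrt(s2)
    return cmath.cosh(s).real * np.eye(2) + (cmath.sinh(s) / s).real * omega


def lie_system_method(group_step, action, x0, T, n):
    """x_{k+1} = action(Y_k, x_k), with Y_k = group_step(t_k, h) an element of G."""
    h, x = T / n, x0
    for k in range(n):
        x = action(group_step(k * h, h), x)
    return x


def homography(Y, x):
    # SL(2) acting on the real line: x -> (y11 x + y12) / (y21 x + y22).
    return (Y[0, 0] * x + Y[0, 1]) / (Y[1, 0] * x + Y[1, 1])


def riccati_matrix(b0, b1, b2):
    # sl(2) element whose fundamental vector field is (b0 + b1 x + b2 x^2) d/dx
    # for the homography above (sign convention assumed, not taken from the text).
    return np.array([[b1 / 2.0, b0], [-b2, -b1 / 2.0]])


# Hypothetical example: dx/dt = 1 + x^2, x(0) = 0, exact solution tan(t).
coeffs = lambda t: (1.0, 0.0, 1.0)
magnus2 = lambda t, h: expm_sl2(h * riccati_matrix(*coeffs(t + h / 2)))
x_num = lie_system_method(magnus2, homography, 0.0, 1.0, 10)
print(x_num, math.tan(1.0))
```

With constant coefficients the increment $\exp(hM)$ is exact, so the scheme reproduces $\tan t$ up to rounding; with $t$-dependent coefficients the accuracy is inherited from the chosen group step.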

4. Application to SL

4.1. SL() and the Riccati Equation

Let us recall the first-order Riccati equation over the real line . A comprehensive description of the physical applications of this equation can be found in [2]. The Riccati equation reads

where are arbitrary t-dependent functions. The associated t-dependent vector field is , where

and their commutators are

This proves that the Riccati equation is a Lie system related to a VG Lie algebra isomorphic to . Thus, we employ the 7-step method of Section 2.3.1 to study its solutions. We choose the basis of to integrate the VG Lie algebra to a Lie group action of SL on . In more detail,

Note that

We obtain the flows of the vector fields , and by integrating them in terms of the real parameters , respectively. These flows read

respectively. Using canonical coordinates of the second-kind, we can write near the neutral element as

We define the Lie group action through the equations

Calculating the three exponential expressions in (30) and comparing the result with an arbitrary element with parameters , we have

from which the parameters read

The action is obtained as

and substituting the flows,

Now, substituting the parameters (31) and bearing in mind that, for any , it holds that , we arrive at the expression of the action, which turns out to be a homography [43]
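The correspondence between the Riccati coefficients and $\mathfrak{sl}(2)$ can be checked numerically under the convention $M = \begin{pmatrix} b_1/2 & b_0 \\ -b_2 & -b_1/2 \end{pmatrix}$ for $dx/dt = b_0 + b_1x + b_2x^2$, which is our assumption: differentiating $\varepsilon \mapsto \varphi(I + \varepsilon M, x)$ at $\varepsilon = 0$ (a curve with the same first-order behavior as $\exp(\varepsilon M)$) should reproduce $b_0 + b_1x + b_2x^2$, and the homography should compose like the matrix product, $\varphi(Y_1Y_2, x) = \varphi(Y_1, \varphi(Y_2, x))$. The coefficients and matrices below are arbitrary test data.

```python
import numpy as np


def homography(Y, x):
    # phi(Y, x) = (a x + b) / (c x + d) for Y = [[a, b], [c, d]].
    return (Y[0, 0] * x + Y[0, 1]) / (Y[1, 0] * x + Y[1, 1])


def riccati_matrix(b0, b1, b2):
    # Assumed convention: fundamental vector field (b0 + b1 x + b2 x^2) d/dx.
    return np.array([[b1 / 2.0, b0], [-b2, -b1 / 2.0]])


def fundamental_field(M, x, eps=1e-6):
    # Central difference along eps -> I + eps M, which agrees with exp(eps M)
    # to first order, so the derivative at eps = 0 is the same.
    I = np.eye(2)
    return (homography(I + eps * M, x) - homography(I - eps * M, x)) / (2.0 * eps)


b0, b1, b2, x = 0.7, -1.3, 2.1, 0.4  # arbitrary test data
M = riccati_matrix(b0, b1, b2)
print(fundamental_field(M, x), b0 + b1 * x + b2 * x**2)

# Left action: phi(Y1 Y2, x) = phi(Y1, phi(Y2, x)) for Y1, Y2 in SL(2).
Y1 = np.array([[2.0, 1.0], [1.0, 1.0]])
Y2 = np.array([[1.0, -0.5], [0.4, 0.8]])
print(homography(Y1 @ Y2, x), homography(Y1, homography(Y2, x)))
```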

4.1.1. Exact Solution

If the coefficients of the Lie system are constant, the matrix Y associated with the linear system on the Lie group is t-independent, and the solution of the automorphic Lie system is easily obtained.

For example, consider the Riccati equation with constant coefficients

obtained by assuming , and in (28). The system on the group (14) associated with this Riccati equation reads

where is the identity matrix and is

If we write (33) in the canonical form

or equivalently, , where

the solution of the system reads

Observe that the matrix is constant, so the integration is immediate. Furthermore, since is nilpotent, the exponential series terminates at order 2. In this way, we obtain the solution:

Applying the Lie group action, we retrieve the solution of the original system:

where is the initial condition.
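The nilpotent case can be reproduced on a hypothetical example of our own, which need not coincide with (33). Writing the Riccati equation as $dx/dt = b_0 + b_1x + b_2x^2$ and using the $\mathfrak{sl}(2)$ matrix $M = \begin{pmatrix} b_1/2 & b_0 \\ -b_2 & -b_1/2 \end{pmatrix}$ (our sign convention for the homography $x \mapsto (y_{11}x + y_{12})/(y_{21}x + y_{22})$), we have $\det M = b_0b_2 - b_1^2/4$, so $M$ is nilpotent exactly when $b_1^2 = 4b_0b_2$, that is, when the right-hand side is a perfect square. For $dx/dt = (x-1)^2$, $M^2 = 0$ and $\exp(tM) = I + tM$; the sketch compares the resulting homography with the elementary solution $x(t) = 1 + (x_0 - 1)/(1 - (x_0 - 1)t)$.

```python
import numpy as np

# dx/dt = (x - 1)^2 = 1 - 2x + x^2, i.e. b0 = 1, b1 = -2, b2 = 1 (hypothetical example).
b0, b1, b2 = 1.0, -2.0, 1.0
M = np.array([[b1 / 2.0, b0], [-b2, -b1 / 2.0]])
assert np.allclose(M @ M, 0.0)  # nilpotent: the exponential series stops after I + tM


def solution(t, x0):
    Y = np.eye(2) + t * M  # exact exp(tM), an element of SL(2)
    return (Y[0, 0] * x0 + Y[0, 1]) / (Y[1, 0] * x0 + Y[1, 1])


def elementary(t, x0):
    # y = x - 1 solves y' = y^2, so y(t) = y0 / (1 - y0 t).
    return 1.0 + (x0 - 1.0) / (1.0 - (x0 - 1.0) * t)


for t in (0.0, 0.5, 1.5):
    print(t, solution(t, 0.2), elementary(t, 0.2))
```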

4.1.2. Numerical Example

Let us now put into practice the numerical methods proposed in Definition 3. To this end, we consider

This is another Riccati equation, with t-dependent coefficients , and . Its solution is

for the initial condition .

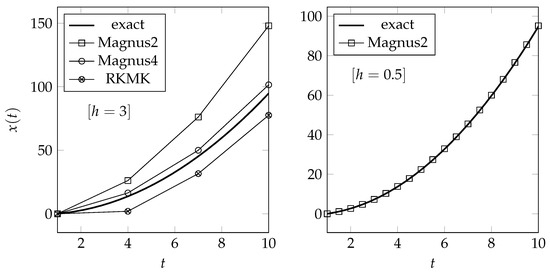

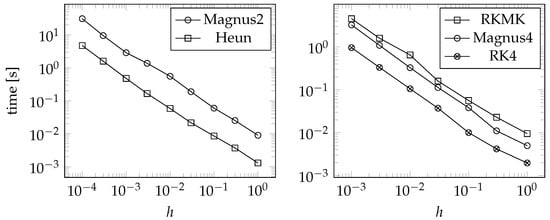

In Figure 1, we show how the described numerical methods approximate the exact solution (35) on the interval , for different time steps, using Magnus 2, Magnus 4, and RKMK as the underlying Lie group methods.

Figure 1.

Exact vs. numerical solutions of (34) with . The left plot shows the expected better accuracy of higher-order methods for large time steps (). The right plot shows that the numerical solutions get closer to the exact dynamics as h decreases ().

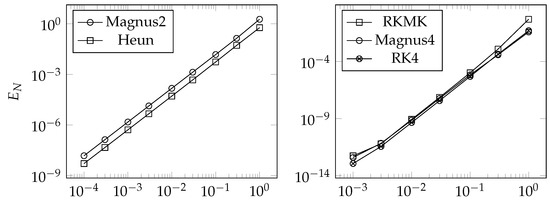

In Figure 2, we show convergence plots. For a fair comparison, we include two classical numerical schemes, Heun (order 2) and RK4 (order 4), applied directly to (34). As is apparent, the slopes of the convergence lines are two and four, which shows that, in this particular example, the order of convergence of the numerical methods on the underlying Lie group is transmitted to the manifold. This transmission can be understood in terms of the local truncation error of the underlying Lie group method and the particular form of the analytical solution we obtain, i.e., (32). Namely, if we apply an order p Lie group method in this example, then , , , , where min. Naturally, are the components of the SL matrix we are dealing with. Taking this into account, together with the analytical expression (32) and the definition of the local truncation error introduced in (17), it is straightforward to see that , and, consequently, the convergence order of the Lie group method is expected to be transmitted to the manifold.

Figure 2.

Convergence for the Riccati Equation (34).

4.2. SL() and Matrix Riccati Equations

A general matrix Riccati equation [44] has the following form:

where . The case that matters to us is , for which the matrix Riccati equation has a VG Lie algebra isomorphic to . Then, Equation (36) takes the form

where are arbitrary functions of time. Equivalently, we can write the previous matrix equation as

The t-dependent vector field associated with this system can be written as

where

Note that X actually depends on only eight t-dependent functions, since , and appear only through the linear combinations and . We list only the nonvanishing commutators of these vector fields:

From this we conclude that (37) is a Lie system. Now, we choose a matrix basis for :

To integrate the VG Lie algebra of (38) to a Lie group action, we express the elements of the Lie group in terms of canonical coordinates of the second kind in the following way:

where are real parameters uniquely determined for each Y in an open neighborhood of the neutral element of . The exponentials in the above expression are easy to compute and, by substituting their values into (40), it turns out that

where and . Expressing some of these equalities in terms of the others, i.e., and , we obtain a linear system that yields and . Operating with the remaining equalities, we calculate the rest of the parameters.

Integrating the vector fields , we obtain their flows, , which in turn give us the action

In view of (40), the composition of the flows allows us to obtain the complete action , with

Operating with these expressions, we can rewrite the action through homographies as follows:

with coefficients

Numerical Example

To illustrate our numerical methods once more, we take the following equation as an example:

which is a matrix Riccati Equation (37) with t-dependent functions

More precisely, it is an affine system of first-order differential equations. For the initial condition , the solution of (44) is

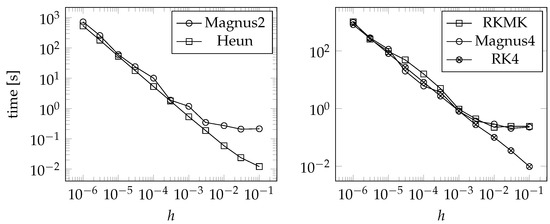

Figure 3 shows convergence plots.

Figure 3.

Convergence for the affine system of first-order differential Equation (44).

In this case, one can observe that, although our method is still convergent, the order of the Lie group method is not transmitted to the manifold N (in both cases, the slope of the convergence lines is about 1). Here, our method is compared not with Heun and RK4 applied directly to (44) but with an alternative scheme given by

where is the numerical solution of (18) obtained when Heun and RK4 are applied to it (in Figure 3, these schemes are referred to as Heun and RK4). Naturally, this implies that .

Our conjecture is that, in this case, the construction of the action changes the convergence of the method, which may be explained by the strong nonlinearity of the expressions defining the parameters (41), (42), and (43). An interesting open question is whether the methods can be modified according to the Lie group action so that the convergence order is transmitted correctly. Another clue pointing in this direction is that, as can be easily seen in the plots, although the rate of convergence is about the same for our method and for (45), the error of the former is quantitatively lower. We regard this as another (positive) geometric symptom, since the error apparently worsens when the underlying Lie group structure is not preserved.

4.3. Generalization to SL()

The special linear Lie group plays an essential role in mechanical and integrable systems (see [19,27,45] and references therein). For this reason, we briefly outline a possible generalization of our methods to SL().

Recall that the Lie algebra associated with the Lie group has dimension . Indeed, a matrix representation of is given by the Lie algebra of traceless matrices. For simplicity, we can choose a basis of given by the matrices with a single nonzero off-diagonal entry, equal to one, together with diagonal traceless matrices of the form

All these matrices are traceless and linearly independent. A Lie group action can then be constructed via homographies as follows (cf. [44]):
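The basis described above can be generated programmatically. Here the diagonal elements are taken to be $H_k = E_{kk} - E_{k+1,k+1}$ for $k = 1, \dots, n-1$, which is our own choice because the exact diagonal matrices used in the text are not reproduced here; the check confirms that the construction gives $n^2 - 1$ traceless, linearly independent matrices.

```python
import numpy as np


def sl_basis(n):
    """Basis of sl(n): off-diagonal units E_ij (i != j) plus H_k = E_kk - E_{k+1,k+1}."""
    basis = []
    for i in range(n):
        for j in range(n):
            if i != j:
                E = np.zeros((n, n))
                E[i, j] = 1.0
                basis.append(E)
    for k in range(n - 1):
        H = np.zeros((n, n))
        H[k, k], H[k + 1, k + 1] = 1.0, -1.0
        basis.append(H)
    return basis


for n in (2, 3, 4):
    B = sl_basis(n)
    rank = np.linalg.matrix_rank(np.array([b.ravel() for b in B]))
    print(n, len(B), rank, max(abs(np.trace(b)) for b in B))
```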

where , where is

Note that if is the standard scalar product in and we call , with , the rows of Y and stands for the point in , then (46) can be rewritten as for .
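A minimal sketch of the homography action in the scalar-product form just described, assuming that $\bar x$ is obtained by appending $1$ as the last coordinate of $x \in \mathbb{R}^{n-1}$: the first $n-1$ components of $Y\bar x$ are divided by the last one. Since $Y_2\bar x$ is a nonzero multiple of $(\varphi(Y_2, x), 1)$, the map composes like the matrix product, $\varphi(Y_1Y_2, x) = \varphi(Y_1, \varphi(Y_2, x))$; the check verifies this for two matrices of SL(3) chosen arbitrarily near the identity.

```python
import numpy as np


def projective_action(Y, x):
    """phi(Y, x)_i = <Y_i, xbar> / <Y_n, xbar> with xbar = (x, 1) (assumed layout)."""
    xbar = np.append(x, 1.0)
    z = Y @ xbar
    return z[:-1] / z[-1]


def to_sl(Y):
    # Rescale a matrix with positive determinant into SL(n).
    return Y / np.linalg.det(Y) ** (1.0 / Y.shape[0])


# Arbitrary test matrices near the identity (both have positive determinant).
Y1 = to_sl(np.array([[1.0, 0.2, 0.0], [0.1, 1.0, 0.3], [0.0, -0.2, 1.0]]))
Y2 = to_sl(np.array([[1.0, 0.0, 0.5], [0.3, 1.0, 0.0], [0.0, 0.1, 1.0]]))
x = np.array([0.3, -0.7])
lhs = projective_action(Y1 @ Y2, x)
rhs = projective_action(Y1, projective_action(Y2, x))
print(lhs, rhs)
```

For $n = 2$ this reduces to the scalar homography $x \mapsto (y_{11}x + y_{12})/(y_{21}x + y_{22})$.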

It is worth noting that if two VG Lie algebras on two manifolds are diffeomorphic, i.e., there exists a diffeomorphism such that , then can be integrated to two -equivariant Lie group actions and , i.e., for every and . In particular, if is the VG Lie algebra of the matrix Riccati equations studied in this section and is another VG Lie algebra on diffeomorphic to , then the Lie group action is -equivariant to . Since every diffeomorphism in can be understood as a change of variables, the -equivariance of and implies that a change of variables in allows us to write the action of every via as a homography. Note that it is simple to prove that (46) gives rise to a Lie group action of SL and that its fundamental vector fields are those related to matrix Riccati equations.

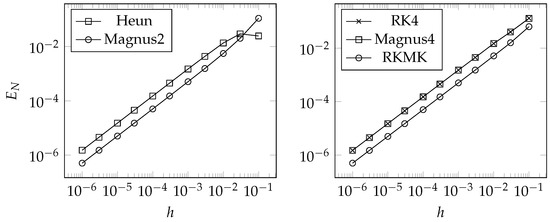

Increase in Numerical Cost as n Increases

We can measure the numerical cost of our schemes indirectly through the computing time they need to obtain the solution. Let us consider the following equation:

whose analytical solution is

Now, we apply our five numerical schemes to the equation above and plot the step size (which determines the number of steps n) versus the computing time needed to solve the equation.

The diagrams displayed in Figure 4 show that, on logarithmic axes, the relation between the variables is nearly linear. As expected, the fourth-order schemes (RKMK, Magnus 4, and RK4) show a larger increase in numerical cost as n increases.

Figure 4.

Numerical integration of Riccati Equation (47).

Now, we repeat the same process for the following differential system:

whose solution can be written as

and we obtain the following figures.

In Figure 5, when h is small (and, therefore, n is large), we again observe a linear relation between the numerical cost and the index n.

Figure 5.

Numerical integration of Equation (48).

5. Applications in Linear Quadratic Control

We now provide an application to optimal control of the procedure of Section 2.3.1 for obtaining the solution of Lie systems. A very useful model to control dynamical systems is the state-space representation. The most general representation in such a space is

where is a vector containing the state variables of the system, is its time derivative, is the vector containing the input variables, is the vector with the output variables, and and are two arbitrary t-dependent vector fields. We can manipulate the inputs to modify the state of the system.

Linear systems are a very important and common model, owing to their simplicity [46]. Indeed, nonlinear problems are very often linearized. The most general representation of a linear system is

where the t-dependent matrices , and are the state (or system) matrix, the input matrix, the output matrix, and the feedthrough (or feedforward) matrix, respectively. For the system to be well defined, the dimensions of the matrices must be , , , and for every .

In particular, we are interested in the problem of optimal control with a quadratic cost function, which, as we are going to show, can be transformed into a matrix Riccati equation. That is, given a linear system (49), the state , and the time interval , we need to find an input, starting from the condition , that minimizes the quadratic cost function, i.e.,

where S is a positive semi-definite matrix and for all the matrices and are, respectively, positive semi-definite and positive definite. Obviously, , and for every .

Since the matrices involved are positive (semi-)definite, the quadratic forms they define measure the size of the vectors and . Each term “penalizes” a different aspect of the control. The first one measures how far the system is from the null state at the end of the time interval. Analogously, the second term measures the distance between the state and the null state over time, so the faster the system approaches the null state, the smaller this term is. On the other hand, the third term measures the size of the input over time, so that the smaller the input is (with respect to the measure defined by the matrix ), the smaller the value of J will be.

By adjusting the matrices, we choose which aspects are more important. If we choose the matrix S so that staying far from the null state at the end of the interval is heavily penalized, the optimal control will drive the system towards this state at the end of the time interval, at the cost of a larger input. If dominates the other two matrices, the control will lead the system to the null state as fast as possible. On the contrary, if the dominant matrix is , the input will be small, but the other two aspects will probably be adversely affected. This is useful when the size of the input is related to some other quantity that we would like to minimize.
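For scalar signals, the cost function can be evaluated from sampled trajectories with the trapezoidal rule. The sketch assumes the form $J = s\,x(T)^2 + \int_0^T (q\,x^2 + r\,u^2)\,dt$ with scalar weights $s$, $q$, $r$ standing in for $S$, $Q(t)$, $R(t)$; some texts include a factor $1/2$, which does not change the minimizer. The trajectory in the check is hypothetical.

```python
import numpy as np


def quadratic_cost(t, x, u, s, q, r):
    """J = s x(T)^2 + int_0^T (q x^2 + r u^2) dt, trapezoidal rule on samples."""
    integrand = q * x**2 + r * u**2
    running = np.sum((integrand[1:] + integrand[:-1]) * np.diff(t)) / 2.0
    return s * x[-1] ** 2 + running


t = np.linspace(0.0, 1.0, 1001)
x = np.exp(-t)        # hypothetical state trajectory
u = np.zeros_like(t)  # no input
J = quadratic_cost(t, x, u, s=1.0, q=1.0, r=1.0)
exact = np.exp(-2.0) + (1.0 - np.exp(-2.0)) / 2.0
print(J, exact)
```

Increasing $r$ for a nonzero input raises $J$ in proportion, which is the trade-off between the three terms described above.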

In this formulation, the cost function drives the system towards the null state. Nonetheless, it is easy to modify the problem so that the system drifts towards a different state. If we aim to bring the system to a certain state and we can find an input such that

then, performing the change of variables

we obtain a new system

to which we can apply the quadratic cost function to obtain an optimal control problem that steers the system towards . In this way, the original system will tend to .

The solution of the linear quadratic control problem is given as a state-feedback controller, i.e., the optimal input that minimizes is a function of the state of the system. In particular, we can write , where is the feedback matrix and is calculated as

where is the solution of the following matrix differential Riccati equation:

The condition is imposed at the end of the time interval because the equation has to be integrated backward in time ([47], §8.2). Equation (50) is a matrix Riccati equation of the type introduced in Section 4.
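For a scalar linearized model $\Delta v' = a\,\Delta v + b\,\Delta u$ with constant weights $s$, $q$, $r$, the Riccati equation reads, in the standard convention we assume here, $-dp/dt = 2ap - (b^2/r)p^2 + q$ with $p(T) = s$, and the feedback gain is $K = bp/r$. In reversed time $\tau = T - t$ this becomes the constant-coefficient Riccati equation $dp/d\tau = b_0 + b_1p + b_2p^2$ with $b_0 = q$, $b_1 = 2a$, $b_2 = -b^2/r$, so it is solved exactly by a single exponential in SL(2) followed by a homography. The values $a = -2$, $b = 1$, $s = q = r = 1$ are hypothetical, and the $\mathfrak{sl}(2)$ matrix follows our own sign convention $M = \begin{pmatrix} b_1/2 & b_0 \\ -b_2 & -b_1/2 \end{pmatrix}$. For large $\tau$, the solution approaches the stabilizing root $r\,(a + \sqrt{a^2 + b^2q/r})/b^2 = \sqrt5 - 2$.

```python
import cmath

import numpy as np


def expm_sl2(omega):
    # exp of a traceless 2x2 matrix: cosh(s) I + sinh(s)/s Omega, s^2 = -det(Omega).
    s2 = -(omega[0, 0] * omega[1, 1] - omega[0, 1] * omega[1, 0])
    if abs(s2) < 1e-10:
        return (1.0 + s2 / 2.0) * np.eye(2) + (1.0 + s2 / 6.0) * omega
    s = cmath.sqrt(s2)
    return cmath.cosh(s).real * np.eye(2) + (cmath.sinh(s) / s).real * omega


a, b, s, q, r = -2.0, 1.0, 1.0, 1.0, 1.0  # hypothetical model and weights
b0, b1, b2 = q, 2.0 * a, -b * b / r        # dp/dtau = b0 + b1 p + b2 p^2
M = np.array([[b1 / 2.0, b0], [-b2, -b1 / 2.0]])


def p_of_tau(tau):
    # Exact: Y(tau) = exp(tau M) in SL(2), then the homography applied to p(0) = s.
    Y = expm_sl2(tau * M)
    return (Y[0, 0] * s + Y[0, 1]) / (Y[1, 0] * s + Y[1, 1])


def gain(t, T=10.0):
    # Feedback u = -K(t) dv with K = b p / r, where p is evaluated at tau = T - t.
    return b * p_of_tau(T - t) / r


print(p_of_tau(0.0), p_of_tau(10.0), np.sqrt(5.0) - 2.0, gain(0.0))
```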

We now solve an example of linear quadratic control by applying our analytical method for solving Lie systems.

Example: Velocity of a Vehicle

We propose a model to control the velocity of a vehicle. There is a single input variable, which corresponds to the engine force accelerating the vehicle. Let us assume that the only force that can decelerate the vehicle is air friction and that it is proportional to the square of the velocity [48]. For simplicity, our model only describes motions with positive velocity. Under these hypotheses, the velocity of the vehicle suffices as a state variable to characterize the system completely. Applying Newton’s second law, we obtain the equation describing the system:

where F is the engine force, v is the velocity, k is a constant of proportionality, and m is the mass of the vehicle. For simplicity, we take . Denoting the input of the system by u instead of F, the system now reads

This system is nonlinear, but since we are designing a control that keeps the velocity close to a certain value, we can linearize the system in a neighborhood of that value to compute the optimal control with quadratic cost function that keeps the vehicle at cruising speed. Again, to simplify the computations, we take . Under these circumstances, , so we obtain . The linearized system around the point reads

where and are the incremental variables around .

To simplify further, we take all the matrices in the quadratic cost function constant and equal to one on the time interval . Then, the cost function is

The function that minimizes (51) is , where . In our case, , being the solution of the Riccati equation

with (final) condition .

We now solve (52) analytically by applying the procedure explained in Section 2.3.1. Since it is a Riccati equation with constant coefficients, its analytical solution can be computed by solving its associated linear system on the group . In this case, we have to solve , with , where the matrix A is (according to the notation in Section 4.1)

The exact solution of this system will be expressed in its canonical form , where

Its solution is

that is,

Finally, we can retrieve the solution to (52) by means of the Lie group action of on as

The solution of the optimal control problem is . For comparison, we also introduce a constant input that carries the system from an initial perturbation to the operating point . Starting from a point , the constant value of u that takes the system back to the cruising speed is determined by the equation

with initial conditions and . Its solution is straightforward to compute:

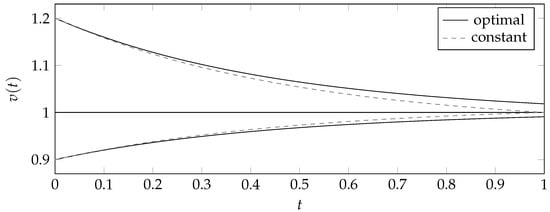

In Figure 6, we depict the evolution of the system for different initial conditions around . The solid line represents the evolution of the system under the optimal control, and the dashed line corresponds to a constant u.

Figure 6.

Evolution of the system with optimal control and constant control.

The chosen values of for the optimal control do not bring the vehicle to cruising speed within the time interval considered. This makes sense if we regard the quadratic cost function as a compromise between reducing the size of the input and reaching the operating point quickly and efficiently. If we want to ensure that the vehicle reaches the cruising speed, we need to reflect this in the cost function by giving more weight to S and Q.

If we now compute the cost function for different initial conditions, we see that the constant control makes the system reach the cruising speed faster and with less error than the optimal control, whereas the cost of the optimal control is smaller. Some values are listed in Table 1.

Table 1.

Summary of results in example.

The input represents the engine force accelerating the vehicle. It is also reasonable to assume that fuel consumption is proportional to the magnitude of this force. In this way, we can derive the optimal control that keeps the vehicle at cruising speed and minimizes the amount of fuel.

6. Conclusions

This paper is concerned with the integration of Lie systems, from both the analytical and the numerical perspectives, using techniques adapted to their geometric features. This work is rooted in the field of numerical and discrete methods specifically adapted to Lie systems, which is still a largely unexplored branch of research [2,13,14,49].

One major result in this paper is that we are able to solve Lie systems on Lie groups. This permits us to solve, at the same time, all Lie systems related to the same automorphic Lie system (equivalently, all Lie systems that have isomorphic VG Lie algebras) [1,5]. Automorphic Lie systems admit a simple superposition rule that depends on a single particular solution only. This is an advantage over superposition rules for general Lie systems, which usually depend on a larger number of particular solutions. A second important advantage is that, since Lie groups admit a local matrix representation, automorphic Lie systems can be written as first-order systems of linear homogeneous ODEs in normal form.

Employing the geometric structure of Lie systems, we propose a particular geometric integrator for Lie systems that exploits the properties of this structure. In particular, we employ the Lie group action obtained by integrating the Vessiot–Guldberg Lie algebra of a Lie system to obtain the analytical solution of the Lie system. We use the automorphic Lie system related to a Lie system, together with geometric schemes, namely Lie group integrators, to preserve the group structure. Specifically, we use two families of numerical schemes: the first is based on the Magnus expansion, whereas the second is based on RKMK methods. We have compared both methods in different situations. In general, the fourth-order RKMK method is slightly more accurate than the Magnus expansion of the same order. Regarding the transmission of the convergence order from the Lie group method to the Lie system method, our conjecture, rooted in the results obtained for different Lie groups, is that the way in which the Lie group action is constructed plays a central role. Whilst the numerical methods work very satisfactorily at the Lie group level, when we translate their properties to the manifold, the convergence and precision of the numerical method can change (as in the SL case). Nonetheless, since our methods are based on geometric integrators, they inherit the geometric properties we wish to preserve, and the solutions always belong to the manifold where the Lie system is defined (something that classical numerical schemes do not guarantee).

From the results obtained for and , we have been able to provide a generalization to , and we have discussed the form of the Lie group action. As noted above, is a relevant Lie group, appearing recurrently in Winternitz–Smorodinsky nonlinear oscillators, Milne–Pinney equations, and Ermakov systems, as well as in higher-order Riccati equations.

Finally, the ability to solve higher-order Riccati equations has allowed us to address important examples appearing in engineering problems. In particular, we have proposed a problem in optimal control in which matrix Riccati equations appear naturally from quadratic cost functions.

In the future, we will analyze the transmission of the convergence order from automorphic Lie systems to related Lie systems. In addition, since the exponential is a local diffeomorphism, the topological study of matrix Lie groups would allow us to establish the optimal time step for Lie group methods, which is a long-standing problem whose solution would also help optimize Lie system methods. Another goal is to study Lie systems on more general manifolds that are not necessarily isomorphic to and to depict how some geometric and topological invariants are preserved [50,51]. We are currently working on examples on anti-de Sitter spaces to illustrate how the curvature is preserved under the numerical method. This could be generalized to other systems on curved spaces. Such examples would demonstrate the interest of our 7-step method, since one could argue that the nongeometric approximation methods seem to perform rather better than our proposal. Nonetheless, in forthcoming publications, we will show that when invariants are involved, the 7-step method is the best choice to preserve certain geometric and topological invariants.

Author Contributions

Conceptualization, J.d.L., C.S. and F.J.A.; methodology, L.B.D.; software, L.B.D.; validation, F.J.A. and C.S.; formal analysis, L.B.D.; investigation, L.B.D.; data curation, L.B.D.; writing—original draft preparation, C.S.; writing—review and editing, L.B.D. and F.J.A.; visualization, J.d.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The datasets generated during and/or analyzed during the current study are available from the corresponding author on reasonable request.

Acknowledgments

J. de Lucas acknowledges partial financial support from MINIATURA-5 Nr 2021/05/X/ST1/01797, funded by the National Science Centre (Poland). C. Sardón and F. Jiménez acknowledge project “Teoría de aproximación constructiva y aplicaciones” (TACA-ETSII), UPM, Madrid.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Cariñena, J.F.; Grabowski, J.; Marmo, G. Lie-Scheffers Systems: A Geometric Approach; Napoli Series in Physics and Astrophysics; Bibliopolis: Bruxelles, Belgium, 2000. [Google Scholar]

- de Lucas, J.; Sardón, C. A Guide to Lie Systems with Compatible Geometric Structures; World Scientific: Singapore, 2020. [Google Scholar]

- Winternitz, P. Nonlinear action of Lie groups and superposition rules for nonlinear differential equations. Phys. A 1982, 114, 105–113. [Google Scholar] [CrossRef]

- Cariñena, J.F.; Grabowski, J.; Ramos, A. Reduction of t-dependent systems admitting a superposition principle. Acta Appl. Math. 2001, 66, 67–87. [Google Scholar] [CrossRef]

- Cariñena, J.F.; de Lucas, J. Lie Systems: Theory, Generalisations, and Applications. Diss. Math. 2011, 479. [Google Scholar] [CrossRef]

- Sardón, C. Lie Systems, Lie Symmetries and Reciprocal Transformations. Ph.D. Thesis, Universidad de Salamanca, Salamanca, Spain, 2015. [Google Scholar]

- Cortés, J.; Martínez, S. Non-holonomic integrators. Nonlinearity 2001, 14, 1365–1392. [Google Scholar] [CrossRef]

- Iserles, A.; Munthe-Kaas, H.; Nørsett, S.; Zanna, A. Lie-group methods. Acta Numer. 2005, 9, 215–365. [Google Scholar] [CrossRef]

- Marrero, J.C.; Martín de Diego, D.; Martínez, E. Discrete Lagrangian and Hamiltonian mechanics on Lie groupoids. Nonlinearity 2006, 19, 1313–1348. [Google Scholar] [CrossRef]

- Marsden, J.E.; West, M. Discrete mechanics and variational integrators. Acta Numer. 2001, 10, 357–514. [Google Scholar] [CrossRef]

- McLachlan, R.; Quispel, G.R.W. Splitting methods. Acta Numer. 2002, 11, 341–434. [Google Scholar] [CrossRef]

- Sanz-Serna, J.M. Symplectic integrators for Hamiltonian problems: An overview. Acta Numer. 1992, 243–286. [Google Scholar] [CrossRef]

- Pietrzkowski, G. Explicit solutions of the a1-type Lie-Scheffers system and a general Riccati equation. J. Dyn. Control Syst. 2012, 18, 551–571. [Google Scholar] [CrossRef]

- Rand, D.W.; Winternitz, P. Nonlinear superposition principles: A new numerical method for solving matrix Riccati equations. Comput. Phys. Commun. 1984, 33, 305–328. [Google Scholar] [CrossRef]

- Cariñena, J.F.; Grabowski, J.; Marmo, G. Superposition rules, Lie theorem and partial differential equations. Rep. Math. Phys. 2007, 60, 237–258. [Google Scholar] [CrossRef]

- Cariñena, J.F.; Ramos, A. Integrability of the Riccati equation from a group theoretical viewpoint. Int. J. Mod. Phys. A 1999, 14, 1935–1951. [Google Scholar] [CrossRef]

- Angelo, R.M.; Wresziński, W.F. Two-level quantum dynamics, integrability and unitary NOT gates. Phys. Rev. A 2005, 72, 034105. [Google Scholar] [CrossRef]

- Lázaro-Camí, J.A.; Ortega, J.P. Superposition rules and stochastic Lie-Scheffers systems. Ann. Inst. H. Poincaré Probab. Stat. 2009, 45, 910–931. [Google Scholar] [CrossRef]

- Hussin, V.; Beckers, J.; Gagnon, L.; Winternitz, P. Superposition formulas for nonlinear superequations. J. Math. Phys. 1990, 31, 2528–2534. [Google Scholar]

- Cariñena, J.F.; de Lucas, J.; Sardón, C. A new Lie systems approach to second-order Riccati equations. Int. J. Geom. Meth. Mod. Phys. 2011, 9, 1260007. [Google Scholar] [CrossRef]

- Cariñena, J.F.; de Lucas, J. Applications of Lie systems in dissipative Milne-Pinney equations. Int. J. Geom. Meth. Mod. Phys. 2009, 6, 683–699. [Google Scholar] [CrossRef]

- Odzijewicz, A.; Grundland, A.M. The Superposition Principle for the Lie Type first-order PDEs. Rep. Math. Phys. 2000, 45, 293–306. [Google Scholar] [CrossRef]

- Iserles, A.; Nørsett, S.P. On the solution of linear differential equations in Lie groups. Philos. Trans. R. Soc. A 1999, 357, 983–1020. [Google Scholar] [CrossRef]

- Zanna, A. Collocation and relaxed collocation for the Fer and Magnus expansions. J. Numer. Anal. 1999, 36, 1145–1182. [Google Scholar] [CrossRef]

- Munthe-Kaas, H. Runge-Kutta methods on Lie groups. BIT Numer. Math. 1998, 38, 92–111. [Google Scholar] [CrossRef]

- Munthe-Kaas, H. High order Runge-Kutta methods on manifolds. J. Appl. Numer. Math. 1999, 29, 115–127. [Google Scholar] [CrossRef]

- de Lucas, J.; Grundland, A.M. A Lie systems approach to the Riccati hierarchy and partial differential equations. J. Differ. Equ. 2017, 263, 299–337. [Google Scholar]

- Kučera, V. A Review of the Matrix Riccati Equation. Kybernetika 1973, 9, 42–61. [Google Scholar]

- Lee, J.M. Introduction to Smooth Manifolds; Graduate Texts in Mathematics 218; Springer: New York, NY, USA, 2003. [Google Scholar]

- Ado, I.D. The representation of Lie algebras by matrices. Uspekhi Mat. Nauk. 1947, 2, 159–173. [Google Scholar]

- Curtis, M.L. Matrix Groups, 2nd ed.; Springer: New York, NY, USA, 1984. [Google Scholar]

- Hall, B. Matrix Lie Groups. In Lie Groups, Lie Algebras, and Representations: An Elementary Introduction; Springer International Publishing: Berlin/Heidelberg, Germany, 2015; pp. 3–30. [Google Scholar]

- Sattinger, D.H.; Weaver, O.L. Lie Groups and Algebras with Applications to Physics; Geometry and Mechanics; Springer: Berlin/Heidelberg, Germany, 1986. [Google Scholar]

- Lie, S.; Scheffers, G. Vorlesungen über continuierliche Gruppen mit geometrischen und anderen Anwendungen; Teubner: Leipzig, Germany, 1893.

- Levi, E.E. Sulla Struttura dei Gruppi Finiti e Continui. Atti Della R. Accad. Delle Sci. Torino 1905, 40, 551–565.

- Hairer, E.; Nørsett, S.P.; Wanner, G. Solving Ordinary Differential Equations I: Nonstiff Problems; Springer: Berlin/Heidelberg, Germany, 1993.

- Isaacson, E.; Keller, H.B. Analysis of Numerical Methods; John Wiley & Sons: New York, NY, USA; London, UK; Sydney, Australia, 1966.

- Quarteroni, A.; Sacco, R.; Saleri, F. Numerical Mathematics; Springer: New York, NY, USA, 2007.

- Magnus, W. On the exponential solution of differential equations for a linear operator. Commun. Pure Appl. Math. 1954, 7, 649–673.

- Iserles, A.; Nørsett, S.P.; Rasmussen, A.F. t-Symmetry and High-Order Magnus Methods; Technical Report 1998/NA06, DAMTP; University of Cambridge: Cambridge, UK, 1998.

- Blanes, S.; Casas, F.; Ros, J. Improved high order integrators based on the Magnus expansion. BIT Numer. Math. 2000, 40, 434–450.

- Hairer, E.; Lubich, C.; Wanner, G. Geometric Numerical Integration; Springer: Berlin/Heidelberg, Germany, 2006.

- Hartshorne, R. Foundations of Projective Geometry; W.A. Benjamin, Inc.: New York, NY, USA, 1967.

- Harnad, J.; Winternitz, P.; Anderson, R.L. Superposition principles for matrix Riccati equations. J. Math. Phys. 1983, 24, 1062.

- Reid, W.T. Riccati Differential Equations; Academic: New York, NY, USA, 1972.

- Domínguez, S.; Campoy, P.; Sebastián, J.M.; Jiménez, A. Control en el Espacio de Estado; Pearson: London, UK, 2006.

- Sontag, E.D. Mathematical Control Theory: Deterministic Finite Dimensional Systems; Springer: New York, NY, USA, 1998.

- Pandey, A.; Ghose-Choudhury, A.; Guha, P. Chiellini integrability and quadratically damped oscillators. Int. J. Non-Linear Mech. 2017, 92, 153–159.

- Penskoi, A.V.; Winternitz, P. Discrete matrix Riccati equations with superposition formulas. J. Math. Anal. Appl. 2004, 294, 533–547.

- Herranz, F.J.; de Lucas, J.; Tobolski, M. Lie-Hamilton systems on curved spaces: A geometrical approach. J. Phys. A 2017, 50, 495201.

- Lange, J.; de Lucas, J. Geometric models for Lie–Hamilton systems on ℝ². Mathematics 2019, 7, 1053.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).