Abstract

This paper presents a tensor approximation algorithm, based on the Levenberg–Marquardt method for the nonlinear least squares problem, that decomposes a large-scale tensor into a sum of products of vector groups of a given scale, or computes a low-rank tensor approximation without losing too much accuracy. An Armijo-like rule for inexact line search is also incorporated into the algorithm. The result of the tensor decomposition is adjustable, so the decomposition can be specified according to the users’ requirements. Convergence is proved, and numerical experiments show that the algorithm has some advantages over the classical Levenberg–Marquardt method. The algorithm is applicable to both symmetric and asymmetric tensors, and it is expected to be useful for large-scale data compression and storage and for large-scale tensor approximation operations.

1. Introduction

Tensors are widely used in practical fields such as video analysis [1,2], text analysis [3], high-level network analysis [4,5,6], data encryption [7,8,9], and privacy preservation [10,11,12]. As a form of data storage, tensors usually contain a large amount of data, which can make problems involving tensors, for instance solving tensor equations [13,14,15,16], very difficult. Sometimes it is even impossible to store all entries of a higher-order tensor explicitly. Thus, tensor decomposition and tensor approximation have received increasing attention since the last century, especially with the arrival of the big data era.

Research on tensors has become an attractive topic, and an increasing number of methods [17,18,19,20] for solving tensor decomposition problems have been proposed, such as the widely used steepest descent method, Newton’s method [21], the Gauss–Newton method [22], CANDECOMP/PARAFAC (CP) decomposition [23], Tucker decomposition, Tensor-Train (TT) decomposition, and algebraic methods [24,25,26]. We also refer to [27] and the references therein for more related work on low-rank tensor approximation techniques. These methods have rich theoretical achievements and practical applications [28,29,30]. Among them, the CP decomposition is an ideal method in terms of both theory and accuracy, and a specific algorithm is given in [31]. However, finding the rank of a CP decomposition is NP-hard [32], so only a few types of tensors can be decomposed by CP decomposition. Moreover, some of these decompositions even increase the data size when the rank is too large. As for TT decomposition [33], it is convenient because it applies the Singular Value Decomposition (SVD), but the accuracy is greatly degraded.

Although the accuracy of tensor decomposition is crucial in practical applications, some error is inevitable, and it is tolerable as long as it remains within an acceptable range. The accuracy of tensor approximation is equally important. To reduce the storage and minimize the error, this paper explores a nonlinear least squares method for tensor decomposition and low-rank CP tensor approximation, which decomposes a tensor into, or approximates it by, a sum of rank-one tensors. We first transform the problem into a non-linear least squares problem, so that the theory and techniques of descent methods can be used. Then, we combine the Levenberg–Marquardt method [34,35,36] with the damped method [37] to obtain a more efficient method for solving this least squares problem. An Armijo-like rule for inexact line search is introduced into the algorithm to ensure convergence, which distinguishes it from the Alternating Least Squares (ALS) method. We also optimize the damping parameter to accelerate convergence. The Levenberg–Marquardt method was proposed independently by Kenneth Levenberg [34] and Donald Marquardt [35]. It is an effective method for solving least squares problems, since it can achieve local quadratic convergence under a local error bound assumption. Recently, the Levenberg–Marquardt method has been extended to tensor-related problems, such as tensor equations, complementarity problems, and tensor split feasibility problems [38,39,40,41].

It should be noted that, although this damped Levenberg–Marquardt method improves the computational efficiency of tensor approximation, the iterative process inevitably takes more and more time as the tensor grows. Nevertheless, the proposed method has the following advantages. Firstly, the tensor storage can be significantly reduced, since the tensor approximation can be specified according to the users’ requirements. Secondly, the proposed algorithm is universal, because the tensor need not satisfy any special properties, and it is applicable to both symmetric and asymmetric tensors. Thirdly, the method is convergent in theory and shows some advantages over the classical Levenberg–Marquardt method in numerical experiments. The primary contribution of this paper is methodological, and the method may play a role in large-scale data compression and storage and in large-scale tensor approximation operations, as other tensor approximation methods do.

The rest of this paper is organized as follows. Section 2 introduces the symbols and concepts. Section 3 focuses on the tensor approximation problem and converts it into a non-linear least squares problem; to solve this problem, an algorithm based on the Levenberg–Marquardt method combined with the damped method is then introduced in the same section. After that, Section 4 reports numerical experiments that test the feasibility and efficiency of the proposed algorithm. Finally, Section 5 concludes the paper.

2. Preliminaries

2.1. Notation

Throughout this paper, vectors are written as italic lowercase letters, such as $u$; matrices are written as capital letters, such as $J$; and tensors are written as calligraphic letters, such as $\mathcal{A}$. The symbol $\mathbb{N}$ denotes the set of non-negative integers, the symbol $\mathbb{N}_+$ denotes the set of positive integers, $\mathbb{R}$ denotes the set of real numbers, and $\mathbb{R}^n$ denotes n-dimensional Euclidean space.

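Suppose the function $F:\mathbb{R}^n \to \mathbb{R}$ is differentiable and so smooth that the following Taylor expansion is valid:

$$F(x+h) = F(x) + h^{\top} g + \tfrac{1}{2} h^{\top} H h + O(\|h\|^3), \tag{1}$$

where g in this paper denotes the gradient

$$g \equiv F'(x) = \left[ \frac{\partial F}{\partial x_1}(x), \ldots, \frac{\partial F}{\partial x_n}(x) \right]^{\top}, \tag{2}$$

and H stands for the Hessian

$$H \equiv F''(x) = \left[ \frac{\partial^2 F}{\partial x_i \partial x_j}(x) \right]. \tag{3}$$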

Let the function L be defined as follows:

where gain ratio in the Levenberg–Marquardt method is defined as

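For a vector function $f:\mathbb{R}^n \to \mathbb{R}^p$, its Taylor expansion is

$$f(x+h) = f(x) + J(x)\,h + O(\|h\|^2), \tag{6}$$

where $J(x) \in \mathbb{R}^{p \times n}$ is the Jacobian, with

$$(J(x))_{ij} = \frac{\partial f_i}{\partial x_j}(x). \tag{7}$$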

2.2. Least Squares Problem

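A non-linear least squares problem is to find $x^*$, which is a local minimizer for

$$F(x) = \frac{1}{2} \sum_{i=1}^{p} \bigl(f_i(x)\bigr)^2, \tag{8}$$

where $f_i:\mathbb{R}^n \to \mathbb{R}$, $i = 1, \ldots, p$, are given functions.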
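To make the least squares setting concrete, the following sketch evaluates an objective of the form $F(x) = \frac{1}{2}\sum_i f_i(x)^2$ for a small curve-fitting residual and checks numerically that its gradient equals $J^{\top} f$. The model, the data, and all function names are hypothetical and chosen only for illustration; the factor $\frac{1}{2}$ follows the usual convention in [22] and is assumed here.

```python
import numpy as np

# Hypothetical data for a toy model y ≈ x0 * exp(x1 * t); not from the paper.
t = np.array([0.0, 1.0, 2.0, 3.0])
y = np.array([2.0, 2.7, 3.6, 4.9])

def f(x):
    """Residual vector with components f_i(x) = x0 * exp(x1 * t_i) - y_i."""
    return x[0] * np.exp(x[1] * t) - y

def jacobian(x):
    """Analytic Jacobian, J[i, j] = d f_i / d x_j."""
    e = np.exp(x[1] * t)
    return np.column_stack([e, x[0] * t * e])

def F(x):
    """Least squares objective F(x) = 0.5 * sum_i f_i(x)^2."""
    r = f(x)
    return 0.5 * r @ r

x = np.array([1.5, 0.2])
g = jacobian(x).T @ f(x)          # gradient of F computed as J^T f

# Central finite differences as an independent check of the gradient.
eps = 1e-6
g_fd = np.array([(F(x + eps * e_j) - F(x - eps * e_j)) / (2 * eps)
                 for e_j in np.eye(2)])
print(g, g_fd)
```

The two printed vectors should agree to several digits, which is a cheap way to validate a hand-written Jacobian before using it in an iterative method.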

2.3. Descending Condition

From a starting point $x_0$, a descent method produces a series of points $x_1, x_2, \ldots$, which (hopefully) converges to $x^*$, a local minimizer for a given function, and the descending condition is [22]

$$F(x_{k+1}) < F(x_k). \tag{9}$$

2.4. Rank-One Tensors

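If an mth-order tensor $\mathcal{A} \in \mathbb{R}^{n_1 \times n_2 \times \cdots \times n_m}$ satisfies

$$\mathcal{A} = a^{(1)} \circ a^{(2)} \circ \cdots \circ a^{(m)}, \tag{10}$$

where $a^{(k)} \in \mathbb{R}^{n_k}$, $k = 1, \ldots, m$, and the symbol “∘” means the vector outer product, i.e.,

$$\mathcal{A}_{i_1 i_2 \cdots i_m} = a^{(1)}_{i_1} a^{(2)}_{i_2} \cdots a^{(m)}_{i_m}, \tag{11}$$

then $\mathcal{A}$ is called a rank-one tensor [31].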
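A rank-one tensor can be built directly from its factor vectors. The sketch below uses NumPy and three made-up vectors to form a third-order rank-one tensor, compares one entry with the product of the corresponding vector entries, and checks that a matrix unfolding of the tensor has rank one. The vectors and variable names are illustrative assumptions.

```python
import numpy as np

# Three hypothetical factor vectors, one per mode; values are illustrative only.
a1 = np.array([1.0, 2.0])
a2 = np.array([0.5, -1.0, 3.0])
a3 = np.array([2.0, 4.0, 6.0, 8.0])

# Outer product a1 ∘ a2 ∘ a3: entry (i, j, k) equals a1[i] * a2[j] * a3[k].
A = np.einsum("i,j,k->ijk", a1, a2, a3)

print(A.shape)                            # (2, 3, 4)
print(A[1, 2, 3], a1[1] * a2[2] * a3[3])  # both 48.0

# Unfolding along the first mode gives a 2 x 12 matrix of rank one.
unfolding_rank = np.linalg.matrix_rank(A.reshape(2, -1))
```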

2.5. Frobenius Norm

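For an mth-order tensor $\mathcal{A} \in \mathbb{R}^{n_1 \times n_2 \times \cdots \times n_m}$, the Frobenius norm is defined as

$$\|\mathcal{A}\|_F = \sqrt{\sum_{i_1=1}^{n_1} \sum_{i_2=1}^{n_2} \cdots \sum_{i_m=1}^{n_m} \mathcal{A}_{i_1 i_2 \cdots i_m}^2}. \tag{12}$$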
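As a quick numerical illustration, the Frobenius norm of a tensor is the square root of the sum of the squares of all its entries, which is the Euclidean norm of the flattened tensor. The small tensor below is an arbitrary example.

```python
import numpy as np

A = np.arange(24, dtype=float).reshape(2, 3, 4)   # small illustrative tensor

# Frobenius norm: square root of the sum of squares of all entries.
fro = np.sqrt(np.sum(A ** 2))

# The same value from the Euclidean norm of the flattened tensor.
fro_flat = np.linalg.norm(A.ravel())
print(fro, fro_flat)
```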

3. A Damped Levenberg–Marquardt Method for Tensor Approximation

For a given tensor , the number of the data is denoted as . Given , the tensor can be approximated by , with

where , according to (10), are all rank-one tensors as

Here,

Thus, there are variables in the vectors . In order to approximate with , we construct a vector function ,

where

Then, we obtain a non-linear least squares problem, which is to find

where

and

Concurrently,

combined with (7),

yields

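Consequently, the number of stored values decreases from $n_1 n_2 \cdots n_m$, the number of entries of a tensor of size $n_1 \times n_2 \times \cdots \times n_m$, to $R(n_1 + n_2 + \cdots + n_m)$, the number of entries in the R groups of factor vectors. In other words, when the given number R is small enough that $R(n_1 + n_2 + \cdots + n_m) < n_1 n_2 \cdots n_m$, the data size is reduced.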
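The storage argument can be checked on a small case. The sketch below rebuilds a tensor from R groups of factor vectors, forms a residual vector and the objective value, and compares the two storage counts. The sizes, the random factors, the helper names `cp_reconstruct` and `residual`, and the sign convention (approximation minus target) are assumptions made for illustration.

```python
import numpy as np
from math import prod

def cp_reconstruct(factors):
    """Sum of R rank-one tensors; factors[k] has shape (n_k, R)."""
    R = factors[0].shape[1]
    shape = tuple(U.shape[0] for U in factors)
    A_hat = np.zeros(shape)
    for r in range(R):
        term = factors[0][:, r]
        for U in factors[1:]:
            term = np.multiply.outer(term, U[:, r])   # add one mode to the outer product
        A_hat += term
    return A_hat

def residual(factors, A):
    """Residual vector: all entries of (A_hat - A), flattened."""
    return (cp_reconstruct(factors) - A).ravel()

shape, R = (10, 12, 8), 3                      # illustrative sizes
rng = np.random.default_rng(0)
factors = [rng.standard_normal((n, R)) for n in shape]
A = cp_reconstruct(factors)                    # exactly a sum of R rank-one terms

n_entries = prod(shape)                        # n_1 * n_2 * ... * n_m
n_params = R * sum(shape)                      # R * (n_1 + ... + n_m)
F = 0.5 * np.sum(residual(factors, A) ** 2)    # zero here, since A was built from the factors
print(n_entries, n_params, F)
```

For these sizes the factor vectors hold 90 numbers against 960 tensor entries; the saving grows with the tensor order and shrinks as R increases.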

To obtain a minimizer of (19), this paper applies the Levenberg–Marquardt method combined with the damped method, motivated by the high efficiency of the Levenberg–Marquardt method in solving least squares problems. With the starting point $u_0$, we generate a sequence $\{u_k\}$ that satisfies the descending condition (9). Every step from the current point u consists of: (a) find a descent direction h; (b) find a step size $\alpha$ that achieves a proper decrease in the F-value; and (c) generate the next point $u + \alpha h$. The iterative direction h (also written as $h_{\mathrm{lm}}$) is obtained by solving the following equation:

$$(J^{\top} J + \mu I)\, h = -g, \tag{24}$$

where $J$ is given in (22), $g$ in (23), I is the identity matrix, and $\mu > 0$ is the damping parameter.
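A single Levenberg–Marquardt direction can be sketched as follows, assuming the standard damped normal equations $(J^{\top} J + \mu I) h = -g$ with $g = J^{\top} f$ as in [22]. The matrix `J`, the vector `f`, and the function name `lm_step` are made up for illustration. The loop shows how the damping parameter changes the step: a small value gives a step close to the Gauss–Newton step, while a large value gives a short step close to the steepest descent direction $-g/\mu$.

```python
import numpy as np

def lm_step(J, f, mu):
    """Solve (J^T J + mu * I) h = -g with g = J^T f; return h and g."""
    g = J.T @ f
    A = J.T @ J + mu * np.eye(J.shape[1])
    return np.linalg.solve(A, -g), g

# Tiny illustrative problem: 4 residuals, 2 unknowns.
J = np.array([[1.0, 0.0], [1.0, 1.0], [1.0, 2.0], [1.0, 3.0]])
f = np.array([0.5, -0.2, 0.1, 0.4])

for mu in (1e-3, 1.0, 1e3):
    h, g = lm_step(J, f, mu)
    # h @ g is negative for every mu > 0, so h is a descent direction.
    print(mu, h, h @ g)
```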

According to (5), the gain ratio in this damped Levenberg–Marquardt method is

for

where is positive as long as , and we find by (24) that . Therefore, whether is positive or not is determined by . Now, the damped Levenberg–Marquardt algorithm is stated as follows (Algorithm 1).

Remark 1.

In this algorithm, we applied the Damped technique, which indicates that when ϱ is small, μ should be increased, and thereby the penalty on large steps will be increased; on the contrary, when ϱ is large, is a good approximation to for h, and μ should be decreased. According to [37], the following strategy in general outperforms: if , then , otherwise . The termination condition is . Moreover, steps 9 and 10 show the line search process [41], where are given parameters, and are diagonal elements of matrix .
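The damping update described in this remark can be sketched in a few lines. The rule below is the widely used update from Nielsen's report [37], as presented in [22]; whether Algorithm 1 uses exactly these constants is an assumption, and the function name `update_damping` and the auxiliary factor `nu` are illustrative.

```python
def update_damping(rho, mu, nu):
    """One damping update in the style of Nielsen's rule (assumed form)."""
    if rho > 0:
        # Good agreement between model and objective: relax the damping.
        mu *= max(1.0 / 3.0, 1.0 - (2.0 * rho - 1.0) ** 3)
        nu = 2.0
    else:
        # Poor agreement: increase the damping, faster after repeated failures.
        mu *= nu
        nu *= 2.0
    return mu, nu

mu, nu = 1.0, 2.0
for rho in (0.9, 0.5, -0.1, -0.3, 0.95):
    mu, nu = update_damping(rho, mu, nu)
    print(f"rho={rho:+.2f}  mu={mu:.4f}  nu={nu:.0f}")
```

Compared with a rule that switches between two fixed factors, this update changes the damping parameter smoothly with the gain ratio and escalates quickly only after consecutive rejected steps.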

Theorem 1.

If is the cluster point of sequence generated by Algorithm 1, then .

Proof.

Suppose the contrary, it holds . From (24) and as the cluster point, we obtain

then . According to steps 9 and 10 in Algorithm 1, we have

for sequence generated by steps in Algorithm 1, when is sufficiently large, it holds that

By the Armijo criterion, we obtain , then

It follows that

Take the limit, and we have

which implies that . This contradicts (27). Therefore, it holds that . □

Algorithm 1. A damped Levenberg–Marquardt method for tensor approximation.

Input: Output: u
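The following sketch shows one plausible way to arrange the ingredients discussed above (damped step, gain ratio, damping update, and an Armijo-like backtracking fallback) for a third-order tensor. It is not a transcription of Algorithm 1: the ordering of the steps, the parameter names `tau`, `beta`, `sigma`, and `tol`, their default values, the stopping test, and the lower bound `1e-10` on the damping parameter are all assumptions made for illustration.

```python
import numpy as np

def unpack(u, shape, R):
    """Split the parameter vector u into one (n_k, R) factor matrix per mode."""
    mats, start = [], 0
    for n in shape:
        mats.append(u[start:start + n * R].reshape(n, R))
        start += n * R
    return mats

def residual(u, T, R):
    """f(u) = vec(A_hat - T) for a third-order tensor T."""
    A, B, C = unpack(u, T.shape, R)
    return (np.einsum("ir,jr,kr->ijk", A, B, C) - T).ravel()

def jacobian(u, shape, R):
    """Jacobian of f; columns follow the ordering used by unpack()."""
    A, B, C = unpack(u, shape, R)
    n1, n2, n3 = shape
    N = n1 * n2 * n3
    JA = np.einsum("ip,jr,kr->ijkpr", np.eye(n1), B, C).reshape(N, n1 * R)
    JB = np.einsum("ir,jp,kr->ijkpr", A, np.eye(n2), C).reshape(N, n2 * R)
    JC = np.einsum("ir,jr,kp->ijkpr", A, B, np.eye(n3)).reshape(N, n3 * R)
    return np.hstack([JA, JB, JC])

def damped_lm(T, R, u0, tau=1e-3, beta=0.5, sigma=1e-4, tol=1e-8, max_iter=100):
    """Damped LM with an Armijo-like fallback; returns u and the F-value history."""
    u, nu = u0.copy(), 2.0
    f, J = residual(u, T, R), jacobian(u, T.shape, R)
    mu = tau * np.max(np.sum(J * J, axis=0))        # tau * largest diagonal entry of J^T J
    history = [0.5 * f @ f]
    for _ in range(max_iter):
        g = J.T @ f
        if np.linalg.norm(g, np.inf) <= tol:         # stationarity test (assumed form)
            break
        h = np.linalg.solve(J.T @ J + mu * np.eye(u.size), -g)
        F_old, alpha = history[-1], 1.0
        f_new = residual(u + h, T, R)
        F_new = 0.5 * f_new @ f_new
        rho = (F_old - F_new) / (0.5 * h @ (mu * h - g))   # gain ratio of the full step
        if rho > 0:                                  # full step accepted, relax damping
            mu = max(mu * max(1 / 3, 1 - (2 * rho - 1) ** 3), 1e-10)
            nu = 2.0
        else:                                        # backtrack along h, raise damping
            for m in range(1, 60):
                alpha = beta ** m
                f_new = residual(u + alpha * h, T, R)
                F_new = 0.5 * f_new @ f_new
                if F_new <= F_old + sigma * alpha * (g @ h):
                    break
            else:
                break                                # no acceptable step size found
            mu, nu = mu * nu, 2 * nu
        u = u + alpha * h
        f, J = f_new, jacobian(u, T.shape, R)
        history.append(F_new)
    return u, history

rng = np.random.default_rng(0)
shape, R = (4, 3, 2), 2
true = rng.standard_normal(R * sum(shape))
T = residual(true, np.zeros(shape), R).reshape(shape)   # exact sum of R rank-one terms
u, history = damped_lm(T, R, rng.standard_normal(R * sum(shape)))
print(len(history) - 1, history[0], history[-1])
```

Every accepted step either has a positive gain ratio or satisfies the sufficient decrease test, so the recorded F-values never increase. The Jacobian is formed densely here for clarity; for large tensors this and the linear solve dominate the cost.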

4. Numerical Results

In this section, we perform numerical experiments to test the proposed method and report the results. We evaluate the error by . The computing environment is MATLAB R2018b on a laptop with an Intel(R) Core(TM) i7-7700HQ CPU @ 2.80 GHz and 16.0 GB RAM.

Example 1.

The tensor is generated such that all elements are integers in the range , with . Let with , and parameters are with , we can obtain the iterative process as follows.

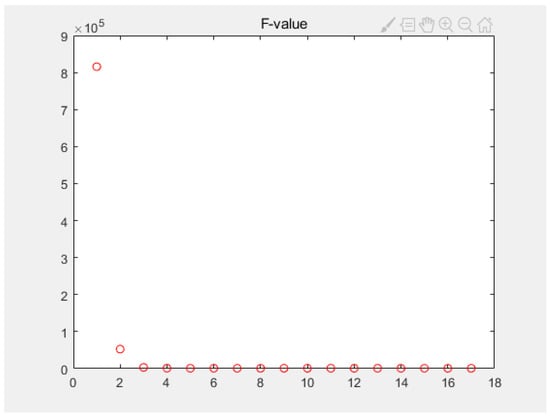

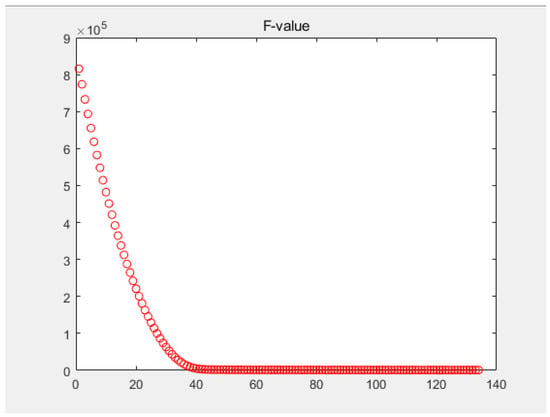

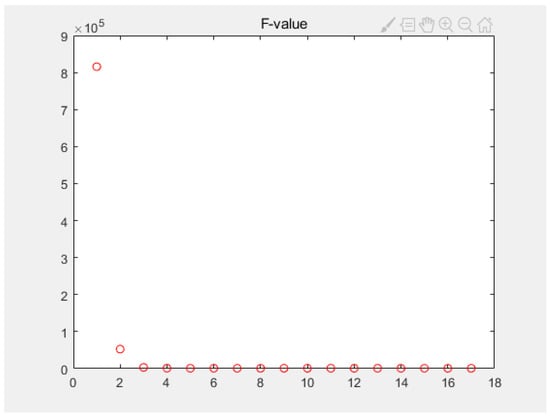

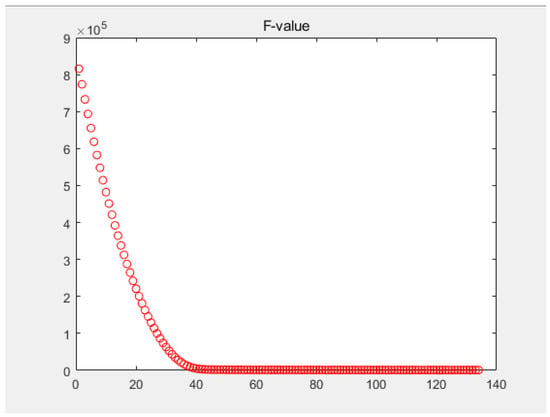

The iterative process of Algorithm 1 for solving this example is reported in Table 1. For comparison, the classical Levenberg–Marquardt method with simply is also applied to solve this example, and its iterative process is shown in Table 2. Algorithm 1 clearly converges faster than the classical method with simply . Figure 1 and Figure 2 show how the F-value decreases when this problem is solved by Algorithm 1 and by the classical Levenberg–Marquardt method, respectively.

Table 1.

Results of Algorithm 1 solving Example 1.

Table 2.

Results of the classical Levenberg–Marquardt method solving Example 1.

Figure 1.

F-value, is generated by Algorithm 1.

Figure 2.

F-value, by the classical Levenberg–Marquardt method.

Example 2.

The tensor is generated such that all elements are integers in the range , with . Let with , and parameters are with . The iterative process is reported in Table 3. This example shows that Algorithm 1 is also applicable to non-square tensors.

Table 3.

Results of Algorithm 1 solving Example 2.

Example 3.

The tensor is generated such that all elements are integers in the range , with . Let with , and parameters are with . The time cost of some essential parts of Algorithm 1 is given in Table 4.

Table 4.

Example 3.

Here, the column “Time for h” gives the time spent computing h, and the column “Time cost” gives the runtime. Table 4 shows that solving Equation (24) for h accounts for most of the runtime. In this paper, the linear system is solved with the MATLAB R2018b function “mldivide”, a general-purpose solver that includes LU decomposition among its methods.
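Since the linear solve dominates the runtime, it may help to see that the damped system has an equivalent linear least squares form. The sketch below is not the approach used in the paper; it assumes the standard system $(J^{\top} J + \mu I) h = -J^{\top} f$ and shows that the same h minimizes $\|J h + f\|^2 + \mu \|h\|^2$, which can be solved without forming $J^{\top} J$. All data are random and purely illustrative.

```python
import numpy as np

rng = np.random.default_rng(1)
J = rng.standard_normal((30, 6))      # illustrative Jacobian: 30 residuals, 6 unknowns
f = rng.standard_normal(30)           # illustrative residual vector
mu = 0.1                              # illustrative damping parameter

# Normal-equations form: (J^T J + mu I) h = -J^T f.
h_normal = np.linalg.solve(J.T @ J + mu * np.eye(6), -J.T @ f)

# Equivalent least squares form: minimize ||J h + f||^2 + mu * ||h||^2,
# written as an ordinary least squares problem with an augmented matrix.
J_aug = np.vstack([J, np.sqrt(mu) * np.eye(6)])
f_aug = np.concatenate([f, np.zeros(6)])
h_lstsq, *_ = np.linalg.lstsq(J_aug, -f_aug, rcond=None)
print(np.max(np.abs(h_normal - h_lstsq)))
```

The augmented form avoids squaring the condition number of J, at the price of working with a taller matrix; which form is faster depends on the problem size and on the solver.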

Example 4.

The tensor is generated from the RGB values of an image ( pixels) shown below, with . Let with , and parameters are with . We apply Algorithm 1 to decompose the tensors generated from the images, obtain their approximating rank-one tensors, and then show the images generated from the approximating tensors (i.e., the restored images generated from ). See Figures 3–8: Figure 3, Figure 5, and Figure 7 are the original images, and Figure 4, Figure 6, and Figure 8 are the corresponding restored images. The iterative process is reported in Table 5. Table 5 and Figures 3–8 show that Algorithm 1 is applicable to color image tensor decomposition.

Figure 3.

No. 1, yellow and blue color bar, original image.

Figure 4.

No. 1, yellow and blue color bar, restored image.

Figure 5.

No. 2, red, yellow, and blue color bar, original image.

Figure 6.

No. 2, red, yellow, and blue color bar, restored image.

Figure 7.

No. 3, letter E, original image.

Figure 8.

No. 3, letter E, restored image.

Table 5.

Example 4.
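To illustrate why color-bar images such as those in Example 4 are well suited to this kind of approximation, the sketch below builds a synthetic two-bar image as a third-order tensor (height × width × RGB) and writes it exactly as a sum of two rank-one tensors. The image size, the colors, and the variable names are assumptions for illustration and are not the paper's test images.

```python
import numpy as np

# Synthetic 6 x 8 "yellow and blue color bar" image with RGB values in [0, 1].
height, width = 6, 8
yellow, blue = np.array([1.0, 1.0, 0.0]), np.array([0.0, 0.0, 1.0])
image = np.zeros((height, width, 3))
image[:, : width // 2] = yellow
image[:, width // 2 :] = blue

# Each bar is one rank-one term: (all rows) ∘ (columns of the bar) ∘ (its RGB color).
rows = np.ones(height)
left = (np.arange(width) < width // 2).astype(float)
right = 1.0 - left
approx = (np.einsum("i,j,k->ijk", rows, left, yellow)
          + np.einsum("i,j,k->ijk", rows, right, blue))

stored_full = image.size                       # height * width * 3 entries
stored_cp = 2 * (height + width + 3)           # R * (n_1 + n_2 + n_3) with R = 2

# Map an approximating tensor back to 8-bit pixel values for display.
restored = np.clip(np.rint(approx * 255), 0, 255).astype(np.uint8)
print(stored_full, stored_cp, np.array_equal(approx, image))
```

Images with more structure, such as the letter E, generally need more rank-one terms or accept a visible approximation error.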

These numerical results show that Algorithm 1 can be used for tensor decomposition. Compared with the classical Levenberg–Marquardt method, the damped Levenberg–Marquardt method behaves better in tensor approximation. With the help of the damped technique, the objective function F decreases rapidly, and the damping parameter plays an important role in adjusting h. With tensor approximation, the data size is clearly reduced; however, the computation becomes slower as the size of the tensor increases. The principal reason is that solving Equation (24) to obtain h takes most of the time.

5. Conclusions

In this paper, we explore a tensor approximation method, based on nonlinear least squares, to reduce the scale of tensor storage. It improves the classical Levenberg–Marquardt algorithm by adjusting the damping parameter according to the gain ratio ϱ, which can speed up the convergence of the algorithm. An Armijo-like line search rule is also introduced into the algorithm to ensure convergence. Preliminary numerical results show that this tensor decomposition method can reduce the data size effectively. Additionally, the method does not require the tensor to satisfy any specific properties, such as supersymmetry [40]. The tensor can be square or non-square, sparse or non-sparse, symmetric or asymmetric. However, the computing time increases rapidly as the size of the tensor increases. The numerical experiments indicate that the main reason is that solving the linear equations (step 2 in Algorithm 1) to compute h takes most of the time. How to overcome this bottleneck needs further study. Nevertheless, the proposed method is still of value for large-scale data compression and storage and for large-scale tensor approximation operations.

Author Contributions

In this paper, J.Z. (Jinyao Zhao) is in charge of the Levenberg–Marquardt method for the tensor decomposition problem, the numerical experiments, and most of the paper writing. X.Z. is in charge of part of the work and of corrections. J.Z. (Jinling Zhao) is in charge of methodology, theoretical analysis, experimental design, and supervision. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Natural Science Foundation of China, grant numbers 12171105 and 11271206, and the Fundamental Research Funds for the Central Universities, grant number FRF-DF-19-004.

Data Availability Statement

The authors confirm that the data supporting the findings of this study are available within the article.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Li, Q.; Shi, X.; Schonfeld, D. A general framework for robust HOSVD-based indexing and retrieval with high-order tensor data. In Proceedings of the IEEE International Conference on Acoustics, Prague, Czech Republic, 22–27 May 2011.

2. Yan, H.Y.; Chen, M.Q.; Hu, L.; Jia, C.F. Secure video retrieval using image query on an untrusted cloud. Appl. Soft Comput. 2020, 97, 106782.

3. Liu, N.; Zhang, B.Y.; Yan, J. Text Representation: From Vector to Tensor. In Proceedings of the IEEE International Conference on Data Mining, Houston, TX, USA, 27–30 November 2005.

4. Kolda, T.G.; Bader, B.W.; Kenny, J.P. Higher-Order Web Link Analysis Using Multilinear Algebra. In Proceedings of the 5th IEEE International Conference on Data Mining (ICDM 2005), Houston, TX, USA, 27–30 November 2005.

5. Jiang, N.; Jie, W.; Li, J.; Liu, X.M.; Jin, D. GATrust: A Multi-Aspect Graph Attention Network Model for Trust Assessment in OSNs. IEEE Trans. Knowl. Data Eng. 2022. Early Access.

6. Ai, S.; Hong, S.; Zheng, X.Y.; Wang, Y.; Liu, X.Z. CSRT rumor spreading model based on complex network. Int. J. Intell. Syst. 2021, 36, 1903–1913.

7. Liu, Z.L.; Huang, Y.Y.; Song, X.F.; Li, B.; Li, J.; Yuan, Y.L.; Dong, C.Y. Eurus: Towards an Efficient Searchable Symmetric Encryption With Size Pattern Protection. IEEE Trans. Dependable Secur. Comput. 2022, 19, 2023–2037.

8. Gao, C.Z.; Li, J.; Xia, S.B.; Choo, K.K.R.; Lou, W.J.; Dong, C.Y. MAS-Encryption and its Applications in Privacy-Preserving Classifiers. IEEE Trans. Knowl. Data Eng. 2022, 34, 2306–2323.

9. Mo, K.H.; Tang, W.X.; Li, J.; Yuan, X. Attacking Deep Reinforcement Learning with Decoupled Adversarial Policy. IEEE Trans. Dependable Secur. Comput. 2023, 20, 758–768.

10. Zhu, T.Q.; Zhou, W.; Ye, D.Y.; Cheng, Z.S.; Li, J. Resource Allocation in IoT Edge Computing via Concurrent Federated Reinforcement Learning. IEEE Internet Things J. 2022, 9, 1414–1426.

11. Liu, Z.L.; Lv, S.Y.; Li, J.; Huang, Y.Y.; Guo, L.; Yuan, Y.L.; Dong, C.Y. EncodeORE: Reducing Leakage and Preserving Practicality in Order-Revealing Encryption. IEEE Trans. Dependable Secur. Comput. 2022, 19, 1579–1591.

12. Zhu, T.Q.; Li, J.; Hu, X.Y.; Xiong, P.; Zhou, W.L. The Dynamic Privacy-Preserving Mechanisms for Online Dynamic Social Networks. IEEE Trans. Knowl. Data Eng. 2022, 34, 2962–2974.

13. Li, X.; Ng, M.K. Solving sparse non-negative tensor equations: Algorithms and applications. Front. Math. China 2015, 10, 649–680.

14. Li, D.H.; Xie, S.; Xu, H.R. Splitting methods for tensor equations. Numer. Linear Algebra Appl. 2017, 24, e2102.

15. Ding, W.Y.; Wei, Y.M. Solving multi-linear systems with M-tensors. J. Sci. Comput. 2016, 68, 689–715.

16. Han, L. A homotopy method for solving multilinear systems with M-tensors. Appl. Math. Lett. 2017, 69, 49–54.

17. Hitchcock, F.L. The expression of a tensor or a polyadic as a sum of products. J. Math. Phys. 1927, 6, 164–189.

18. Hitchcock, F.L. Multiple invariants and generalized rank of a p-way matrix or tensor. J. Math. Phys. 1928, 7, 39–79.

19. Kolda, T.G. Multilinear operators for higher-order decompositions. Sandia Rep. 2006.

20. Sidiropoulos, N.D.; Bro, R. On the uniqueness of multilinear decomposition of N-way arrays. J. Chemom. 2000, 14, 229–239.

21. Goncalves, M.L.N.; Oliveira, F.R. An Inexact Newton-like conditional gradient method for constrained nonlinear systems. Appl. Numer. Math. 2018, 132, 22–34.

22. Madsen, K.; Nielsen, H.B.; Tingleff, O. Methods for Non-Linear Least Squares Problems, 2nd ed.; Technical University of Denmark: Kongens Lyngby, Denmark, 2004; Available online: https://orbit.dtu.dk/en/publications/methods-for-non-linear-least-squares-problems-2nd-ed (accessed on 7 March 2023).

23. Harshman, R.A. Foundations of the PARAFAC procedure: Models and conditions for an “explanatory” multimodal factor analysis. UCLA Work. Pap. Phon. 1970, 16, 1–84.

24. Drexler, F. Eine Methode zur Berechnung sämtlicher Lösungen von Polynomgleichungssystemen. Numer. Math. 1977, 29, 45–48.

25. Garcia, C.; Zangwill, W. Finding all solutions to polynomial systems and other systems of equations. Math. Program. 1979, 16, 159–176.

26. Li, T. Numerical solution of multivariate polynomial systems by homotopy continuation methods. Acta Numer. 1997, 6, 399–436.

27. Grasedyck, L.; Kressner, D.; Tobler, C. A literature survey of low-rank tensor approximation techniques. Gamm-Mitteilungen 2013, 36, 53–78.

28. Li, J.; Ye, H.; Li, T.; Wang, W.; Lou, W.J.; Hou, Y.; Liu, J.Q.; Lu, R.X. Efficient and Secure Outsourcing of Differentially Private Data Publishing With Multiple Evaluators. IEEE Trans. Dependable Secur. Comput. 2022, 19, 67–76.

29. Yan, H.Y.; Hu, L.; Xiang, X.Y.; Liu, Z.L.; Yuan, X. PPCL: Privacy-preserving collaborative learning for mitigating indirect information leakage. Inf. Sci. 2021, 548, 423–437.

30. Hu, L.; Yan, H.Y.; Li, L.; Pan, Z.J.; Liu, X.Z.; Zhang, Z.L. MHAT: An efficient model-heterogenous aggregation training scheme for federated learning. Inf. Sci. 2021, 560, 493–503.

31. Kolda, T.G.; Bader, B.W. Tensor decompositions and applications. SIAM Rev. 2009, 51, 455–500.

32. Carroll, J.D.; Chang, J.J. Analysis of individual differences in multidimensional scaling via an N-way generalization of “Eckart-Young” decomposition. Psychometrika 1970, 35, 283–319.

33. Oseledets, I.V. Tensor-train decomposition. SIAM J. Sci. Comput. 2011, 33, 2295–2317.

34. Levenberg, K. A method for the solution of certain non-linear problems in least squares. Q. Appl. Math. 1944, 2, 164–168.

35. Marquardt, D. An algorithm for least-squares estimation of nonlinear parameters. SIAM J. Appl. Math. 1963, 11, 431–441.

36. Tichavsky, P.; Phan, H.A.; Cichocki, A. Krylov-Levenberg-Marquardt Algorithm for Structured Tucker Tensor Decompositions. IEEE J. Sel. Top. Signal Process. 2021, 99, 1–10.

37. Nielsen, H.B. Damping Parameter in Marquardt’s Method. IMM. 1999. Available online: https://findit.dtu.dk/en/catalog/537f0cba7401dbcc120040af (accessed on 7 March 2023).

38. Huang, B.H.; Ma, C.F. The modulus-based Levenberg-Marquardt method for solving linear complementarity problem. Numer. Math. Theory Methods Appl. 2018, 12, 154–168.

39. Huang, B.H.; Ma, C.F. Accelerated modulus-based matrix splitting iteration method for a class of nonlinear complementarity problems. Comput. Appl. Math. 2018, 37, 3053–3076.

40. Lv, C.Q.; Ma, C.F. A Levenberg-Marquardt method for solving semi-symmetric tensor equations. J. Comput. Appl. Math. 2018, 332, 13–25.

41. Jin, Y.X.; Zhao, J.L. A Levenberg–Marquardt Method for Solving the Tensor Split Feasibility Problem. J. Oper. Res. Soc. China 2021, 9, 797–817.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).