In the original publication [1], some content needs to be corrected.

1. Addition of Authors

Y.J., Y.G., J.L., C.H., Y.Z. and P.L. were not included as authors in the original publication. The corrected Author Contributions Statement appears here.

Original form:

Tao Ning 1,2,*, Chengguo Wang 1, Yumeng Han 1

1 Institute of Computer Science and Engineering, Dalian Minzu University, Dalian 116000, China

2 Big Data Application Technology Key Laboratory of State Ethnic Affairs Commission, Dalian Minzu University, Dalian 116000, China

Correct form:

Tao Ning 1,2,*, Chengguo Wang 1, Yumeng Han 1, Yuchen Jin 3, Yan Gao 3, Jizhen Liu 3, Chunhua Hu 3, Yangyang Zhou 3 and PinPin Li 3

1 Sai Ling Co., Ltd., Dalian 116021, China

2 Institute of Computer Science and Engineering, Dalian Minzu University, Dalian 116000, China

3 Key Laboratory of Modern Agricultural Equipment and Technology, Zhenjiang 212013, China

- Author Contributions: Conceptualization, methodology, J.L., C.H., Y.Z., P.L.; software, T.N. and C.W.; formal analysis, Y.H. and Y.G.; resources, T.N., Y.J. and J.L.; writing—original draft preparation, T.N. and C.W.; writing—review and editing, T.N.; funding acquisition, T.N. and Y.H.

2. In Abstract and Keywords

- Abstract: Within the context of large-scale symmetry, a study on deep vision servo multi-vision tracking coordination planning for sorting robots was conducted to address the problems of low recognition and sorting accuracy and efficiency in existing sorting robots. In this paper, a kinematic model of a mobile picking manipulator was built. Then, the kinematics design of the X, Y, Z three-dimensional space manipulator was carried out, and a method was proposed for deriving and calculating the base position coordinates from the target point coordinates, the current moving chassis center coordinates and the determined manipulator grasping attitude conditions, which keeps the adjustment of the position and attitude of the moving chassis as small as possible. The multi-vision tracking coordinated sorting accounts for 79.8% of the whole cycle. The design of a picking robot proposed in this paper can greatly improve the coordination symmetry of logistic package target recognition, detection and picking.

- Keywords: picking manipulator; multi-vision tracking coordination; pattern recognition

3. In Section 1. Introduction, Paragraph 2, Table 1 and under Table 1.

Table 1.

Differences between the different previous methods and the proposed method.

| Methods | Characteristic |
|---|---|
| Greedy algorithm [3] | path is too long, too much time spent |
| Visual servo robot [4] | only meet medium and large parcels |
| Humanoid dual-arm robot [5] | experimental accuracy is insufficient, and Multi vision tracking coordination control is not fully realized |
| RealSense picking robot [6] | the experiment only achieved two-dimensional image pixel position |
| Multi vision tracking system based on RSRR300 [7] | the control study of Multi vision tracking coordination has not been conducted |
| Proposed method | lifting picking robot and “far and near” Multi vision tracking coordination control |

In order to achieve efficient picking robot operation under replicated real-world conditions, “multi-camera” multi-vision tracking coordination control based on RGB-D sensors has become an objective need and research consensus [8]. In this paper, a visual tracking device depth servo picking robot design and far and near scene motion planning were carried out and verified by experiments based on the visual tracking device depth sensor’s reliable identification of 160–1200 mm depth of field and 160 mm close range package, combined with the structural design of lifting picking robots, the new multi-vision tracking configuration mode and the combination of “far and near” multi-vision tracking coordination control.

4. Section 2 Is Redescribed, and Figure 1 Is Updated

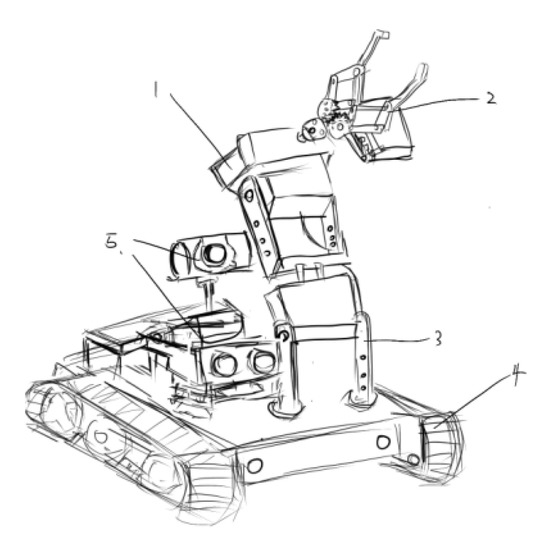

The structure of the RealSense depth servo small telescopic picking robot is shown in Figure 1, which is composed of an autonomous mobile chassis, a scissor lift mechanism, a three-turn robot arm, and a wrist RealSense SR300 depth sensor.

Figure 1.

Structure diagram of small lifting picking robot. 1. Three-axis rotating parts; 2. Grab manipulator; 3. Autonomous mobile chassis; 4. Moving track; 5. Visual identification equipment.

- (1) A 3D rotating shaft mechanism driven by AC motor is designed to maximize the freedom of the picking space. At the same time, the stability of the chassis crawler is designed to overcome the imbalance caused by large packages.

- (2) Based on the three-dimensional joint manipulator, the retractable rotary structure of the grasping rotating platform is designed. The manipulator with a high degree of freedom is composed of a grasping device, a rotating mechanism and three claw end components. The positioning and picking of express packages are completed in the set height area, so as to reduce the complexity of grasping movement and shorten the movement cycle without rotation.

- (3) In this paper, a stereo vision recognition system combining binocular camera and monocular camera is designed. Referring to the same type of mechanical devices, the depth of field is set within the range of 120–1500 mm, and the field of view is set at 70 degrees in the vertical direction and 90 degrees in the horizontal direction, so as to ensure the accurate positioning of the three-eye camera combined in the near and far range.

5. Section 3.1 Is Corrected as Follows, and Section 3.1.1 Is Deleted

The picking manipulator designed in this paper realizes the package picking in a large range through the rotation of the vertical rotation axis and the three-dimensional space rotation of the manipulator. Because the package stacking height is basically constant, the device is designed for lifting.

6. In Section 3.2

Paragraph 2 is updated and a new reference [10] is added as follows:

Assuming that the coordinates of the parcel position obtained by RealSense SR300 are S0 = (x0, y0, z0), the position S1 of the target parcel relative to the robot coordinate system is S1 = S0M [10].
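The relation S1 = S0M can be illustrated with a minimal sketch. It assumes that M is a 4 × 4 homogeneous transform applied in row-vector convention (translation in the bottom row) and that S0 is extended with a trailing 1; the notice does not state the form of M, so the function name `to_robot_frame` and the matrix `M_EXAMPLE` are hypothetical.

```python
def to_robot_frame(s0, m):
    """Map a camera-frame point s0 = (x0, y0, z0) to the robot frame as S1 = S0 * M.

    Assumption: m is a 4x4 homogeneous transform in row-vector convention,
    so the translation occupies the bottom row.
    """
    row = (s0[0], s0[1], s0[2], 1.0)  # homogeneous row vector
    out = [sum(row[i] * m[i][j] for i in range(4)) for j in range(4)]
    return (out[0], out[1], out[2])


# Hypothetical M: no rotation, camera origin offset by (100, 0, 50) mm.
M_EXAMPLE = [
    [1.0, 0.0, 0.0, 0.0],
    [0.0, 1.0, 0.0, 0.0],
    [0.0, 0.0, 1.0, 0.0],
    [100.0, 0.0, 50.0, 1.0],
]

s1 = to_robot_frame((10.0, 20.0, 30.0), M_EXAMPLE)
print(s1)  # (110.0, 20.0, 80.0)
```

In practice M would come from the hand-eye calibration of the wrist-mounted sensor and would change with the arm pose.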

Equation (1) is deleted, and from “L1-L6—The length…” to “…, and 125 mm.” is deleted.

7. Section 4 Is Updated as Follows

4. Robotic Arm Motion Planning Based on RealSense Combined Near and Far View

4.1. Critical Path Points for Hand-Eye Coordination

RealSense vision sensors are installed on the wrist of the robotic arm and feed back target depth information at the far and near points, respectively. A far-and-near point coordination strategy uses this information to guide the robotic arm to the target parcel for picking.

According to the operating range of the robot arm, it is known that the best vision detection range of RealSense SR300 is 500–700 mm [11], and the best close view recognition distance is 200 mm. The settings of the key position are critical to the precise positioning of the robot arm, and the hand-eye tasks at each key position are as follows:

- (1) The robot arm scans the package horizontally at a distance of 350–800 mm from the top of the package to obtain the long-range vertical height crest data of the package. The 800 mm detection area is 1126 mm × 810 mm, and the 350 mm detection area is 720 mm × 520 mm [12].

- (2) Close view position: After determining the subregion, the robot arm moves to the center of the targeted subregion, which is the best close view position at 200 mm from the subregion, and still uses the horizontal attitude as the ideal attitude. The vision sensor detection area is 288 mm × 208 mm [12].

- (3) Picking position: According to the coordinates of the targeted parcel, the robot arm reaches the picking position through attitude planning and picks the parcels up in a horizontal, upward or downward attitude.
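As a rough illustration of how the detection area depends on viewing distance, the sketch below scales the close-view area quoted above (288 mm × 208 mm at 200 mm) linearly with distance. Linear scaling is an assumption of a simple pinhole camera model and gives only a first-order estimate; the long-range areas reported above need not follow it exactly. The names `detection_area` and `covers` are hypothetical.

```python
# Reference values taken from the close view position: 288 mm x 208 mm at 200 mm.
REF_DISTANCE_MM = 200.0
REF_AREA_MM = (288.0, 208.0)


def detection_area(distance_mm):
    """Estimate the (width, height) of the detection area at a given distance.

    Assumption: ideal pinhole model, so the area scales linearly with distance.
    """
    scale = distance_mm / REF_DISTANCE_MM
    return (REF_AREA_MM[0] * scale, REF_AREA_MM[1] * scale)


def covers(distance_mm, parcel_w_mm, parcel_h_mm):
    """Return True if a parcel of the given size fits inside the estimated area."""
    width, height = detection_area(distance_mm)
    return parcel_w_mm <= width and parcel_h_mm <= height


print(detection_area(200.0))      # (288.0, 208.0)
print(covers(200.0, 300.0, 100.0))  # False: parcel is wider than the close view
```

A check of this kind could help decide whether a parcel is fully visible from the close view position or must be located from the far view first.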

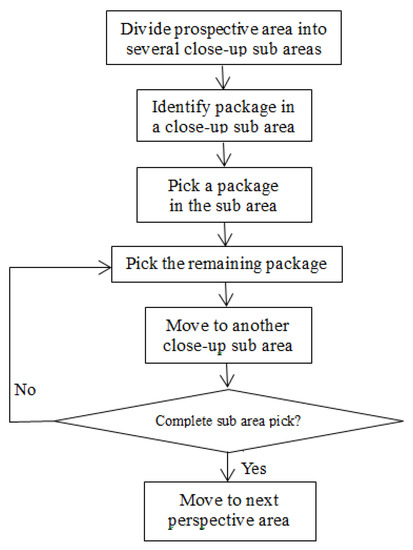

The flowchart of the picking method is shown in Figure 2.

Figure 2.

Flow chart of picking process.

4.2. Segmented Planning of Picking Manipulator’s Working Trajectory

4.2.1. Trajectory Planning Method

In order to realize the control of the express parcel picking manipulator, we need to design the initial posture and the rotation axis angle before picking. The quintic polynomial of References [13,14] is used to describe the fitting trajectory of joint angle.

Then the joint angular velocity and acceleration time functions of the manipulator can be obtained by differentiation. After setting the key points related to the joint angle and the picking track, the quintic polynomial in Equation (1) is substituted into the calculation to obtain how the rotation angle of the manipulator changes over time.
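The quintic fitting described above can be sketched as follows. This is the standard textbook quintic joint trajectory with position, velocity and acceleration constraints at both ends, not necessarily the exact form used in References [13,14]; the function names and the 90 degree, 2 s example values are hypothetical.

```python
def quintic_coefficients(q0, qf, T, v0=0.0, vf=0.0, a0=0.0, af=0.0):
    """Coefficients c0..c5 of q(t) = sum(c_k * t**k) on [0, T].

    Boundary conditions: q(0)=q0, q(T)=qf, q'(0)=v0, q'(T)=vf,
    q''(0)=a0, q''(T)=af.
    """
    d = qf - q0
    c0 = q0
    c1 = v0
    c2 = a0 / 2.0
    c3 = (20.0 * d - (8.0 * vf + 12.0 * v0) * T - (3.0 * a0 - af) * T**2) / (2.0 * T**3)
    c4 = (-30.0 * d + (14.0 * vf + 16.0 * v0) * T + (3.0 * a0 - 2.0 * af) * T**2) / (2.0 * T**4)
    c5 = (12.0 * d - 6.0 * (vf + v0) * T - (a0 - af) * T**2) / (2.0 * T**5)
    return (c0, c1, c2, c3, c4, c5)


def evaluate(c, t):
    """Return joint angle, angular velocity and angular acceleration at time t."""
    pos = sum(c[k] * t**k for k in range(6))
    vel = sum(k * c[k] * t**(k - 1) for k in range(1, 6))
    acc = sum(k * (k - 1) * c[k] * t**(k - 2) for k in range(2, 6))
    return pos, vel, acc


# Hypothetical joint move: 0 to 90 degrees in 2 s, at rest at both ends.
coeffs = quintic_coefficients(0.0, 90.0, 2.0)
for t in (0.0, 1.0, 2.0):
    print(t, evaluate(coeffs, t))
```

Setting a nonzero `vf` for one segment and the same value as `v0` for the next keeps the joint velocity continuous where two segments meet.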

4.2.2. Track Parameter Settings

The trajectory of the picking manipulator designed in this paper includes the picking process and the placing process. During the movement from the initial position to the perspective position, the speed of the mechanical claw is 0 at both the start and end positions, that is, the speed at the picking point and the placing point is 0. The moving speed from the distant position to the close position is not 0. It can be seen from the picking operation route and each joint point that the straight-line trajectory planning must be carried out from the starting position to the prospective position, from the prospective position to the picking position, and from the picking position to the storage position. Compared with the literature [14], the key point coordinates and joint parameters are set as shown in Table 2.

Table 2.

D Coordinates of key points and joint parameters.

In order to ensure the accuracy of the linear trajectory of each fitting point, an interpolation point is selected every 15 mm, and the key parameters of the manipulator corresponding to each interpolation point are obtained by inverse solution. The linear interpolation trajectory of each segment of P0 to P1, P1 to P2, and P2 to P3 was obtained with MATLAB simulation.
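The 15 mm interpolation step can be sketched as below. The sketch only generates the Cartesian interpolation points on a straight segment; the inverse solution for the joint parameters is not shown. The function name and the example coordinates are hypothetical, since the Table 2 values are not reproduced here, and the spacing is treated as an upper bound so that the points divide each segment evenly.

```python
import math


def interpolate_segment(p_start, p_end, max_step_mm=15.0):
    """Return evenly spaced points on the line from p_start to p_end.

    The spacing is at most max_step_mm; both end points are included.
    """
    length = math.dist(p_start, p_end)
    n = max(1, math.ceil(length / max_step_mm))  # number of intervals
    return [
        tuple(a + (b - a) * i / n for a, b in zip(p_start, p_end))
        for i in range(n + 1)
    ]


# Hypothetical key points in mm (placeholders, not the Table 2 values).
P0 = (0.0, 0.0, 0.0)
P1 = (45.0, 0.0, 0.0)

for point in interpolate_segment(P0, P1):
    print(point)
```

Each returned point would then be passed to the inverse kinematics of the manipulator to obtain the corresponding joint parameters.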

8. Figure 3 Is Updated as Follows

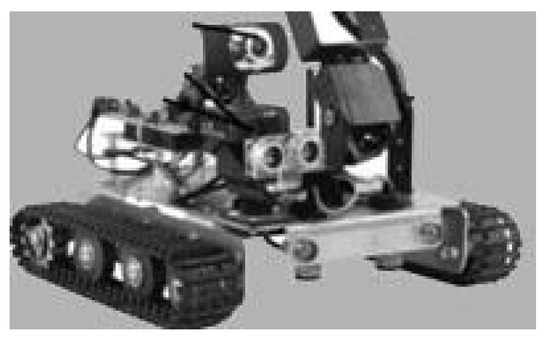

Figure 3.

Schematic of measurement system.

9. In Section 5.2.1. Test Material

From “As shown in Figure 4,….” to “…robot are (Section 3.1.1 Workspace analysis)” is deleted.

10. Section 7. Conclusions Is Corrected as Follows

From “According to the parameters of …” to “…efficiency of hand–eye coordination” is redescribed as “The time consumed by the robot arm’s action accounts for 79.8% of the whole cycle. The design of a picking robot proposed in this paper can greatly improve the coordination symmetry of logistic package target recognition, detection, and picking.”

11. In Reference Section

References 9–13 and 15 are deleted, reference 14 is renumbered as reference 9, and a new reference 10 is added. With this correction, the order of some references has been adjusted accordingly.

- 10. Jin, Y.; Gao, Y.; Liu, J.; Hu, C.; Zhou, Y.; Li, P. Hand-eye coordination planning with deep visual servo for harvesting robot. Trans. Chin. Soc. Agric. Mach. 2021, 52, 18–25, 42.

The authors apologize for any inconvenience caused and state that the scientific conclusions are unaffected. The original publication has also been updated.

Reference

- Ning, T.; Wang, C.; Han, Y. Deep Vision Servo Hand-Eye Coordination Planning Study for Sorting Robots. Symmetry 2022, 14, 152.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).