Abstract

Essentially, the solution to an attribute reduction problem can be viewed as a reduct searching process. Currently, among various searching strategies, meta-heuristic searching has received extensive attention. As an emerging meta-heuristic approach, the forest optimization algorithm (FOA) is introduced to the problem solving of attribute reduction in this study. To further improve the classification performance of the attributes selected in a reduct, an ensemble framework is also developed: firstly, multiple reducts are obtained by FOA and data perturbation, and the structure of those multiple reducts is symmetrical, which indicates that no order exists among those reducts; secondly, the multiple reducts are used to execute voting classification over testing samples. Finally, comprehensive experiments on 20 UCI datasets validate the effectiveness of our framework: it not only outputs reducts with superior classification accuracies and classification stabilities but is also suitable for pre-processing data with noise. This improvement makes FOA more useful for data processing in life, health, medical and other fields.

1. Introduction

In the era of big data, with rapid growth in the amount of data, a high dimension of data is a representative characteristic. Nevertheless, it is well-known that not all features in data are useful for providing valuable learning ability [1,2,3]. That is why feature selection has been validated to be one of the crucial data pre-processing techniques in the fields of machine learning, knowledge discovery and so on.

Attribute reduction, from the perspective of rough set, is one state-of-the-art feature selection technique [4,5,6]. One of the important advantages of attribute reduction is that it possesses rich semantic explanations based on various measures related to rough set. For instance, a reduct can be regarded as one minimal subset of attributes that satisfies the pre-defined constraint constructed by using measures such as dependency, conditional entropy, conditional discrimination index and so on [7,8].

Without loss of generality, the problem solving of attribute reduction can be considered as a search optimization procedure. To date, many approaches have been reported to meet different requirements [9].

With a careful review of the previous literature, most searching methods can be categorized into the following two groups. They are exhaustive searching [10] and heuristic searching [11,12].

- Exhaustive searching. The fundamental advantage of exhaustive searching is that such a process can seek out all qualified reducts. Nevertheless, the apparent limitation of such a search is its unacceptable complexity. For example, the discernibility matrix [13,14] and backtracking [15] are two representative mechanisms of exhaustive searching. Though some simplified and pruning modes have been proposed to improve the efficiency of exhaustive searching, they still face a big challenge in the dimension reduction of large-scale data.

- Heuristic searching. Different from exhaustive searching, heuristic searching acquires one and only one reduct per round of execution. The dominating superiority of heuristic searching is its low complexity, which is due to the guidance of heuristic information throughout the whole process of searching. Take forward greedy searching [16,17,18] as an example: the variation of the measure used in the definition of attribute reduction serves as the heuristic information, and such a variation can be employed to identify the importance of candidate attributes.

Since heuristic searching is superior to exhaustive searching in most cases, the former has been widely accepted by many researchers. Among various heuristic searches, meta-heuristic searching is especially popular [19,20]. Meta-heuristic searching is mainly inspired by relevant behaviors in the natural world. Its inherent computational intelligence mechanism is useful for seeking out the optimal solution to complex optimization problems. Different from forward greedy searching, meta-heuristic searching combines both random searching and local searching strategies [21,22], so that the global optimal solution can be gradually approached over the whole process of searching. However, it should also be emphasized that the random factor in meta-heuristic searching may involve the following limitations.

- Random factor may result in the poor adaptability of reduct. This is mainly because each iteration of identifying qualified attributes is equipped with strong randomness. As has been reported by Li et al., a feature selection algorithm without the guarantee of stability usually leads to significantly different feature subsets if the data varies [23]. Such a case implies that the classification results based on selected features will shake the confidence of domain experts.

- Random factor may generate an ineffective reduct if high-dimensional data is faced. This is mainly because, for data with hundreds of attributes, a large number of possible reducts exist, and the random factor may then identify a reduct without the preferable learning ability [24,25].

To fill the gaps mentioned above, a new strategy to perform meta-heuristic searching has become necessary. First, in this study, the forest optimization algorithm (FOA) [26,27] is selected as the problem-solving approach for attribute reduction. Secondly, to further improve the effectiveness of the reducts derived by FOA, an ensemble framework is developed, which aims to generate multiple reducts. It should be emphasized that the structure of those multiple reducts is symmetrical, which indicates that no order exists among those reducts. The advantage of such a symmetric structure is that our approach can be easily performed over parallel platforms. The multiple reducts obtained are then used to execute voting classification over testing samples, which is the key to achieving the objective of improving effectiveness [28,29]. The main contributions of our study are as follows.

- It is the first attempt to introduce forest optimization-based searching into the problem solving of attribute reduction. Different from the forward greedy searching widely used in the literature, this research helps to push forward the application of meta-heuristic searching in data pre-processing.

- The ensemble framework proposed in this research is a general form; though it is combined with FOA in this article, such an ensemble framework can also be further embedded into other searchings. From this point of view, the discussed framework possesses the advantage of plug-and-play.

The remainder of this paper is organized as follows. In Section 2, we briefly review notions related to our study. Attribute reduction via FOA and the proposed ensemble framework are addressed in Section 3. Comparative experiments on 20 classical datasets from the UCI repository are reported and analyzed in Section 4. Finally, the article is summarized and the future work plan is given in Section 5.

2. Preliminaries

2.1. Granular Computing and Rough Set

Thus far, the principle of dividing complex data/information into several minor blocks to perform problem solving has been widely accepted in the era of big data. This thinking is referred to as information granulation in the framework of Granular Computing (GrC) [30,31]. As the fundamental operation element in GrC, the concept called information granule has been thoroughly investigated [32,33,34]. The degree to which the structure of information granules is coarser or finer can also be characterized; it is regarded as granularity.

Presently, it has been widely accepted that rough set is a general mathematical tool to carry out GrC. The reason can be attributed to the following two facts:

- the fundamental characteristic of rough set is to approximate the objective target by using information granules;

- different structures of information granules imply different values of granularity, and then the results of rough approximations may vary.

In rough set theory, a dataset or decision system [35] can be denoted by $\mathbf{DS} = \langle U, AT, d \rangle$, in which $U = \{x_1, x_2, \ldots, x_n\}$ is a nonempty finite set of samples, $AT$ is a set of condition attributes, and $d$ is the decision that records the labels of samples, i.e., $\forall x_i \in U$, $d(x_i)$ is the label of sample $x_i$. Given a decision system $\mathbf{DS}$, to derive the result of information granulation over $U$, various binary relations have been developed with respect to different requirements. Two representative forms are illustrated as follows.

- To deal with categorical data, the equivalence relation, or the so-called indiscernibility relation proposed by Pawlak, can be used. For example, if the classification task is considered, then the equivalence relation induced by decision $d$ is $\mathrm{IND}(d) = \{(x_i, x_j) \in U \times U : d(x_i) = d(x_j)\}$. Therefore, a partition over $U$ is derived such as $U/\mathrm{IND}(d) = \{X_1, X_2, \ldots, X_q\}$. $\forall X_k \in U/\mathrm{IND}(d)$, $X_k$ is actually a collection of samples that possess the same label.

- To handle continuous or non-structured data, parameterized binary relations can be employed. For instance, Hu et al. [36] have presented a neighborhood relation such as $\delta_A = \{(x_i, x_j) \in U \times U : r_A(x_i, x_j) \leq \delta\}$, in which $r_A(x_i, x_j)$ is the distance between samples $x_i$ and $x_j$ over $A$ and $\delta$ is the radius (one form of parameter). Following $\delta_A$, each sample in $U$ can induce a related neighborhood, which is a parameterized form of an information granule [37,38].
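The two forms of information granulation described above can be illustrated with a minimal Python sketch. It assumes that samples are rows of numeric values, that attributes are column indices, and that the distance is Euclidean (the text does not fix a particular distance); the function names `decision_classes` and `neighborhood` are hypothetical.

```python
from math import dist


def decision_classes(labels):
    """Partition sample indices by label (equivalence classes induced by d)."""
    classes = {}
    for i, y in enumerate(labels):
        classes.setdefault(y, []).append(i)
    return list(classes.values())


def neighborhood(X, i, attrs, delta):
    """Indices of samples within distance delta of sample i over attrs.

    Euclidean distance is an assumption made for this sketch.
    """
    xi = [X[i][a] for a in attrs]
    return [j for j, row in enumerate(X)
            if dist(xi, [row[a] for a in attrs]) <= delta]


# Toy data (hypothetical): three samples, two condition attributes.
X = [[0.10, 0.20], [0.15, 0.25], [0.90, 0.80]]
labels = ["a", "a", "b"]
parts = decision_classes(labels)       # samples 0 and 1 share a label
nbr = neighborhood(X, 0, [0, 1], 0.2)  # sample 2 is too far from sample 0
```

A smaller radius produces finer neighborhoods, which is the sense in which the radius controls the granularity.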

2.2. Attribute Selection

Note that since continuous data is more popular than categorical data in real-world applications, we will mainly focus on parameterized binary relationships in the context of this study. Furthermore, it has been pointed out that different specific parameters will lead to different results of information granulation, and then the related values of granularity may also be different. For example, Zhang et al. fused the multi granularity decision-making theory rough set with the hesitant fuzzy language group decision-making, and expanded the application of multi-granularity three-way decision-making in information analysis and information fusion by introducing the adjustable parameter of expected risk preference [39].

From the discussions above, it is interesting to explore one of the crucial topics in the field of rough set based on the parameter; that is, attribute reduction. Many researchers have performed outstanding work in the field of attribute reduction. Xu et al. proposed assignment reduction and approximate reduction for inconsistent ordered information systems to enhance the effectiveness of attribute reduction in complex information systems [40]. Chen et al. proposed the solution of a granular ball in the process of information granular attribute reduction, which improved the effectiveness of searching attributes [41]. We will first present the following general definition of attribute reduction.

Theorem 1.

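Given a decision system $\mathbf{DS}$ and a parameter $\omega$, assuming that $C_{\rho}^{\omega}$-constraint is a constraint related to a specific measure $\rho$ and parameter $\omega$, $\forall A \subseteq AT$, $A$ is referred to as a $C_{\rho}^{\omega}$-reduct if and only if:

1. $A$ satisfies $C_{\rho}^{\omega}$-constraint;

2. $\forall B \subset A$, $B$ does not satisfy $C_{\rho}^{\omega}$-constraint.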

Without loss of generality, in the scenario of rough set, the value of measure $\rho$ is closely related to the given parameter $\omega$. Therefore, the semantic explanation of a $C_{\rho}^{\omega}$-reduct is a minimal subset of raw condition attributes that satisfies the pre-defined constraint [42,43].

In most state-of-the-art studies about attribute reduction, the measure $\rho$ falls into one of the following two cases.

- If a measure is positive preferred, that is, the measure-value is expected to be as high as possible (e.g., the measures called approximation quality and classification accuracy), then the $C_{\rho}^{\omega}$-constraint is usually expressed as “$\rho^{\omega}(A) \geq \rho^{\omega}(AT)$”, where $\rho^{\omega}(A)$ is the value of measure $\rho$ derived based on condition attributes $A$ and parameter $\omega$.

- If a measure is negative preferred, that is, the measure-value is expected to be as low as possible (e.g., the measures called conditional entropy and classification error rate), then the $C_{\rho}^{\omega}$-constraint is usually expressed as “$\rho^{\omega}(A) \leq \rho^{\omega}(AT)$”.

Following Theorem 1, how to select qualified attributes and construct the required reduct becomes a problem worth exploring. As a classic heuristic strategy, forward greedy searching has received extensive attention due to its low complexity and high efficiency. The key to such searching is to select the most significant attribute in each iteration. The detailed algorithm is shown as follows.

The qualified attribute is obtained in step 5 of Algorithm 1, and the selected attribute is closely related to the measure used in the algorithm. In detail, if a positive preferred measure is used in the attribute search process, then the qualified attribute $b$ should yield the highest measure value among the candidates, such as $\rho^{\omega}(A \cup \{b\}) = \max\{\rho^{\omega}(A \cup \{a\}) : a \in AT - A\}$; conversely, if a negative preferred measure is used, the qualified attribute $b$ should yield the lowest measure value, that is, $\rho^{\omega}(A \cup \{b\}) = \min\{\rho^{\omega}(A \cup \{a\}) : a \in AT - A\}$.

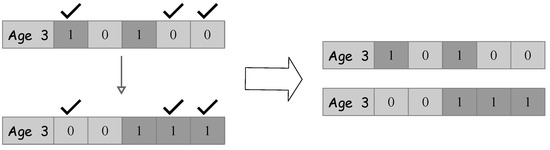

Algorithm 1: Forward greedy searching to select attributes.

When the number of samples is $|U|$ and the number of condition attributes is $|AT|$, the time complexity of Algorithm 1 is $\mathcal{O}(|U|^2 \cdot |AT|^2)$.
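The forward greedy strategy can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not a transcription of Algorithm 1: the measure is assumed to be positive preferred, the function `forward_greedy` and the toy measure `toy_measure` are hypothetical, and no backward elimination step is included, so minimality of the returned subset is not guaranteed in general.

```python
def forward_greedy(all_attrs, measure):
    """Add the attribute with the highest measure value per iteration.

    Stops as soon as the constraint measure(A) >= measure(all attributes)
    holds. `measure` takes a frozenset of attributes (assumed interface).
    """
    target = measure(frozenset(all_attrs))
    selected = set()
    remaining = set(all_attrs)
    while remaining and measure(frozenset(selected)) < target:
        # sorted() makes tie-breaking deterministic (lowest index wins).
        best = max(sorted(remaining),
                   key=lambda a: measure(frozenset(selected | {a})))
        selected.add(best)
        remaining.remove(best)
    return selected


# Toy positive preferred measure (hypothetical): share of "useful"
# attributes that are covered by the candidate subset.
USEFUL = {1, 3}


def toy_measure(attrs):
    return len(USEFUL & attrs) / len(USEFUL)


reduct = forward_greedy([0, 1, 2, 3], toy_measure)
```

For a negative preferred measure, the same loop applies with `min` in place of `max` and the inequality reversed.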

3. FOA and Proposed Framework

3.1. FOA

The forest optimization algorithm (FOA) is an evolutionary algorithm that was developed by Ghaemi in 2014 [26]. Such an algorithm is inspired by the phenomenon that a few trees in the forest can subsist for a long time while other trees can only survive for a limited time. Presently, FOA has been introduced into the problem solving of feature selection. Since attribute reduction can be regarded as one rough set-based feature-selection tool, it is an interesting topic to search for the qualified attributes required in a reduct by the principles of FOA.

Generally, in FOA, each tree represents a possible solution, i.e., a subset of condition attributes. To simplify our discussion, such a tree can be denoted by a vector with the length of $|AT| + 1$, where $|AT|$ is the number of condition attributes. That is, a vector consists of $|AT| + 1$ variables. The first variable in such a vector is the “Age” of a tree, and the remaining variables denote the existence/nonexistence of attributes. For example, value “1” in a vector indicates that the corresponding attribute is kept for the subsequent iteration, whereas value “0” implies that the corresponding attribute is removed for the subsequent iteration.

Following classical FOA, five main steps are used and elaborated as follows.

- Initializing trees. The variable “Age” of each tree is set to be ‘0’. Further, other variables of each tree are initialized randomly with either “0” or “1”. The following stage, called local seeding, will increase the values of “Age” of all trees except newly generated trees.

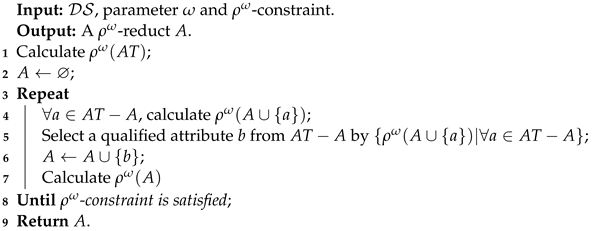

- Local seeding. For each tree with “Age” 0 in the forest, some variables are selected randomly (the “LSC” parameter determines the number of selected variables). Such a tree is split into an “LSC” number of trees, and for each split tree the value of one distinct selected variable is changed from 0 to 1 or vice versa. Figure 1 shows an example of the local seeding operator applied to one tree. In such an example, the value of “LSC” is set to 2.

Figure 1. An example of local seeding with “LSC” = 2.

- Population limiting. In this stage, to form the candidate population, the following two types of trees are removed from the forest: (1) trees whose “Age” is bigger than a parameter called “life time”; (2) the extra trees that exceed a parameter called “area limit” after the trees are sorted by their fitness values.

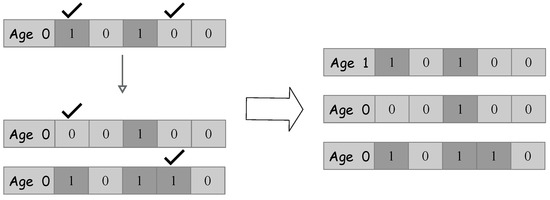

- Global seeding. For trees in the candidate population, the number of variables to be selected is determined by a parameter named “GSC”, and these variables are selected at random. The values of those selected variables are then changed (from 0 to 1 or vice versa). An example of performing global seeding is shown in Figure 2. In such an example, the value of “GSC” is set to 3.

Figure 2. An example of global seeding with “GSC” = 3.

- Updating the best tree. In this stage, by sorting the trees in the candidate population based on their fitness values, the tree with the greatest fitness value is identified as the best one, and its “Age” is set to “0”. These stages are performed iteratively until the constraint in attribute reduction is satisfied.

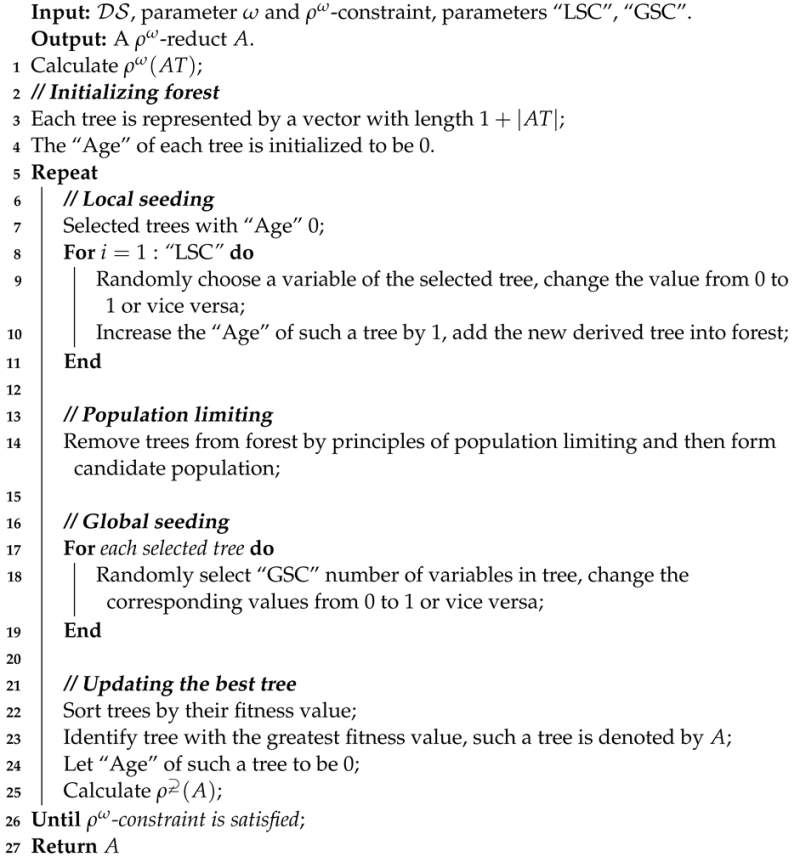

Algorithm 2 illustrates a detailed process to select attributes and then construct a reduct by using FOA.

Algorithm 2: FOA to select attributes.
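To make the tree encoding and the seeding operators more concrete, the following Python sketch implements the vector representation, local seeding, global seeding and population limiting as described in the steps above. It is an illustration only and does not reproduce Algorithm 2: the function names, the `fitness` callable and the way removed trees are collected into the candidate population are assumptions made for this sketch.

```python
import random


def new_tree(n_attrs, rng):
    """A tree is [age, b_1, ..., b_n]; b_k = 1 keeps attribute k."""
    return [0] + [rng.randint(0, 1) for _ in range(n_attrs)]


def local_seeding(tree, lsc, rng):
    """Split a tree into `lsc` children, each flipping one distinct bit."""
    children = []
    for p in rng.sample(range(1, len(tree)), lsc):
        child = tree[:]
        child[0] = 0                 # newly generated trees have Age 0
        child[p] = 1 - child[p]      # flip 0 -> 1 or 1 -> 0
        children.append(child)
    return children


def global_seeding(tree, gsc, rng):
    """Flip `gsc` randomly chosen bits of one candidate tree at once."""
    child = tree[:]
    child[0] = 0
    for p in rng.sample(range(1, len(tree)), gsc):
        child[p] = 1 - child[p]
    return child


def limit_population(forest, fitness, life_time, area_limit):
    """Return (kept forest, candidate population).

    Trees older than `life_time` and trees beyond `area_limit` (after
    sorting by fitness, best first) are moved to the candidates.
    """
    young = [t for t in forest if t[0] <= life_time]
    old = [t for t in forest if t[0] > life_time]
    young.sort(key=fitness, reverse=True)
    return young[:area_limit], old + young[area_limit:]


rng = random.Random(0)
tree = new_tree(5, rng)
kids = local_seeding(tree, lsc=2, rng=rng)
far = global_seeding(tree, gsc=3, rng=rng)
```

In this sketch, local seeding explores the immediate neighborhood of a tree (one flipped attribute per child), while global seeding jumps further by flipping several attributes at once.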

3.2. Ensemble FOA

By carefully reviewing the process shown in Algorithm 2, we observe that some specific steps involve randomness. For this reason, it may be argued that the reduct derived by FOA is unstable. In other words, two different reducts may be obtained even though the same searching procedure is executed twice over the same data.

Furthermore, following the research reported by Yang et al. [44], an unstable reduct may be of little value in providing robust learning, i.e., the stability of classification results may be far from what we expect. Such a study has indicated that attention should be paid not only to the learning accuracy but also to the learning stability.

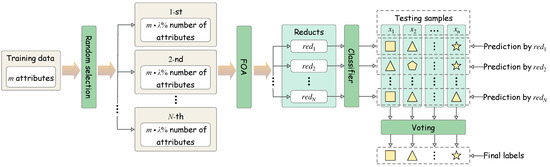

To fill the gaps mentioned above, a new approach to carrying out FOA has become necessary. That is why an ensemble strategy is developed to further improve the performance of FOA in the problem solving of attribute reduction. Formally, the details of our ensemble strategy can be elaborated as follows. First, the searching process of FOA is executed N times; it follows that N reducts may be derived such as $A_1, A_2, \ldots, A_N$. Secondly, each testing sample is predicted by each of those derived reducts. Finally, voting is used to determine the final prediction of the testing sample.

Nevertheless, it is not difficult to observe that two main limitations may emerge for the above ensemble strategy. First, though different reducts can be obtained, the diversity over those derived reducts is still unsatisfactory. Secondly, the searching efficiency is a big problem because the reduct has to be calculated N times. By considering such limitations, a data perturbation mechanism is introduced into the whole process [45,46]. Suppose there are m attributes in the given raw training dataset. Our data perturbation is performed by randomly selecting a subset of the m raw attributes; the reduct is then generated from the selected attributes and the universe U. The first advantage of such a data perturbation is that the expected diversity of the reducts can be induced because different subsets of attributes are used to calculate the reducts. Moreover, since only a subset of the attributes is employed in each round of computing a reduct, the time consumption can be reduced.
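The ensemble strategy with data perturbation can be sketched as follows. This is a minimal illustration, assuming that `search` is any callable that maps a list of attributes to a reduct (FOA in this study, but the sketch does not implement it) and that `subset_size` is chosen by the user, since the size of the random attribute subset is not fixed here; both names are hypothetical.

```python
import random
from collections import Counter


def ensemble_reducts(all_attrs, search, n_rounds, subset_size, seed=0):
    """Run `search` on `n_rounds` random attribute subsets.

    Each round perturbs the data by sampling `subset_size` attributes,
    so the rounds are independent and could be run in parallel.
    """
    rng = random.Random(seed)
    pool = sorted(all_attrs)
    return [search(rng.sample(pool, subset_size)) for _ in range(n_rounds)]


def majority_vote(predictions):
    """Final label of a testing sample: the most frequent prediction."""
    return Counter(predictions).most_common(1)[0][0]


# Hypothetical stand-in for FOA: keep the first two sampled attributes.
reducts = ensemble_reducts(range(6), lambda s: set(s[:2]),
                           n_rounds=5, subset_size=3)
final = majority_vote(["a", "b", "a"])
```

Because no round depends on another, the order of the reducts is irrelevant, which reflects the symmetric structure mentioned earlier.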

From the discussions above, a detailed framework of our proposed ensemble FOA is illustrated in Figure 3.

Figure 3.

Ensemble FOA-based searching and classification.

4. Experimental Analysis

4.1. Data Sets

To verify the superiority of the proposed framework, the experiments are conducted over 20 real-world datasets from the UCI Machine Learning Repository. Table 1 summarizes some details of these datasets. The values in all datasets have been normalized by column. Besides using the raw datasets shown in Table 1, we also added a comparative experiment with injected label noise. The specific operation is as follows: for a given label noise ratio, we randomly select the corresponding proportion of samples in the raw dataset and perform noise injection by replacing the labels of these selected samples with other labels. The purpose of this is to further test the robustness of our method.
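The label noise injection described above can be sketched in Python as follows. The function name `inject_label_noise`, the rounding of the number of corrupted samples and the uniform choice among the other labels are assumptions of this sketch; at least two distinct labels are required.

```python
import random


def inject_label_noise(labels, ratio, seed=0):
    """Replace the labels of a random `ratio` of samples with other labels."""
    rng = random.Random(seed)
    classes = sorted(set(labels))
    noisy = list(labels)
    n_flip = int(round(ratio * len(labels)))  # rounding is an assumption
    for i in rng.sample(range(len(labels)), n_flip):
        others = [c for c in classes if c != noisy[i]]
        noisy[i] = rng.choice(others)         # always a different label
    return noisy


clean = ["a"] * 5 + ["b"] * 5
noisy = inject_label_noise(clean, ratio=0.2)
```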

Table 1.

Datasets description.

4.2. Experimental Setup and Configuration

All experiments were performed on a Windows 10 system personal computer configured with an Intel Core i7-6700HQ CPU (2.60 GHz) and 16.00 GB of memory. The programming language is Matlab (MathWorks Inc., Natick, MA, USA), and the integrated development environment version used is R2019b.

In the following experiments, the neighborhood rough set is used to perform our framework. When multiple different radii are used, neighborhood rough sets can form multi-grained structures. Therefore, we specify a set of 20 radii in ascending order. Furthermore, the experiment uses 10-fold cross-validation; that is, in each calculation, 90% of the samples of the dataset are used to solve the attribute reduction, and the remaining samples are used to test the classification performance of the reduct.
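As a small illustration of the 10-fold protocol, the following Python sketch generates the index splits. The shuffling, the seed and the function name `k_fold_indices` are assumptions of this sketch and are not taken from the experimental code.

```python
import random


def k_fold_indices(n_samples, k=10, seed=0):
    """Yield (train, test) index lists; each fold is the test set once."""
    idx = list(range(n_samples))
    random.Random(seed).shuffle(idx)
    folds = [idx[i::k] for i in range(k)]
    for i in range(k):
        train = [j for f in folds[:i] + folds[i + 1:] for j in f]
        yield train, folds[i]


splits = list(k_fold_indices(50))
```

In each split, the reduct would be computed on the training indices only, and the classifier restricted to the reduct would be evaluated on the test indices.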

Note that two approaches have been tested in our experiment: one is the primitive forest optimization algorithm and the other is the ensemble forest optimization algorithm, denoted as PF and EF, respectively. Furthermore, to comprehensively compare our approaches, forward greedy searching (FG) has also been tested. KNN and CART are simple and mature classifiers, which have been widely accepted in various learning tasks. Therefore, we used KNN and CART classifiers in the experiment to measure the classification ability of the reducts obtained by the above three methods. These three methods are compared based on the following four measures.

- Approximation Quality (AQ) [47]: reflects the uncertainty of the sample space characterized by the information granularity derived from attribute subsets. Given a radius $\delta$, the approximation quality related to $d$ over $A$ can be denoted by $\gamma_A^{\delta}(d)$ and:

  $$\gamma_A^{\delta}(d) = \frac{|\{x \in U : \delta_A(x) \subseteq [x]_d\}|}{|U|},$$

  where $\delta_A(x)$ is the neighborhood of sample $x$ related to $A$ in terms of $\delta$, $[x]_d$ is the decision class of $x$, and $|\cdot|$ is the cardinality of a set. The higher the value, the better the performance of the condition attribute subset $A$. From this point of view, the constraint is set to be “$\gamma_A^{\delta}(d) \geq \gamma_{AT}^{\delta}(d)$”, with $AT$ the full set of condition attributes, in Algorithms 1 and 2 for deriving the Approximation Quality Reduct (AQR).

- Conditional Entropy (CE) [48]: reflects the uncertainty of the information granularity extracted from the attribute set. Given a radius, the conditional entropy related to d over A is computed as defined in [48]. The lower the value, the better the performance of the condition attribute subset A. From this point of view, the constraint requires the conditional entropy over A to be no higher than that over the full set of condition attributes in Algorithms 1 and 2 for deriving the Conditional Entropy Reduct (CER).

- Regularization Loss (RL) [49]: the regularizer is useful to robustly evaluate the significance of candidate attributes and then reasonably identify a valuable attribute. Given a radius, the regularization loss related to d over A combines the loss of approximation quality with a regularizer, and a hyper-parameter balances the two terms [49]. In the context of this paper, the regularizer is defined as a specific form of granularity [50]. The lower the value, the better the performance of the condition attribute subset A. From this point of view, the constraint requires the regularization loss over A to be no higher than that over the full set of condition attributes in Algorithms 1 and 2 for deriving the Regularization Loss Reduct (RLR).

- Neighborhood Discrimination Index (NDI) [51]: the measure is used to reflect the discrimination ability of attribute sets for different decision classes. Given a radius, the neighborhood discrimination index related to d over A is computed as defined in [51]. The lower the value, the better the performance of the condition attribute subset A. From this point of view, the constraint requires the neighborhood discrimination index over A to be no higher than that over the full set of condition attributes in Algorithms 1 and 2 for deriving the Neighborhood Discrimination Index Reduct (NDIR).
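Of the four measures, approximation quality is the simplest to compute directly from its description: it is the share of samples whose neighborhood lies entirely inside their own decision class. The Python sketch below illustrates this under the assumptions that the distance is Euclidean and that attributes are column indices; the function name `approximation_quality` and the toy data are hypothetical.

```python
from math import dist


def approximation_quality(X, labels, attrs, delta):
    """Share of samples whose delta-neighborhood over attrs is label-pure.

    Euclidean distance is an assumption made for this sketch.
    """
    consistent = 0
    for i, xi in enumerate(X):
        pi = [xi[a] for a in attrs]
        # Sample i counts if every neighbor carries the same label.
        if all(labels[j] == labels[i]
               for j, xj in enumerate(X)
               if dist(pi, [xj[a] for a in attrs]) <= delta):
            consistent += 1
    return consistent / len(X)


# Toy data (hypothetical): attribute 0 separates the classes,
# attribute 1 is constant and therefore uninformative.
X = [[0.1, 0.5], [0.2, 0.5], [0.8, 0.5], [0.9, 0.5]]
y = [0, 0, 1, 1]
q_good = approximation_quality(X, y, [0], 0.3)
q_bad = approximation_quality(X, y, [1], 0.3)
```

On this toy data, attribute 0 alone already reaches the approximation quality of both attributes together, so a search driven by this measure would stop after selecting it.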

With these measures, four groups of comparative experiments are carried out on the three algorithms (primitive forest optimization algorithm, ensemble forest optimization algorithm and forward greedy algorithm): PF-AQR, EF-AQR and FG-AQR constitute a group of comparisons based on the measure of approximation quality; PF-CER, EF-CER and FG-CER constitute a group of comparisons based on the measure of conditional entropy; PF-RLR, EF-RLR and FG-RLR constitute a group of comparisons based on the measure of regularization loss; PF-NDIR, EF-NDIR and FG-NDIR constitute a group of comparisons based on the measure of neighborhood discrimination index.

Moreover, three types of results will be reported: (1) the classification accuracy, (2) the classification stability and (3) the AUC.

4.3. Comparisons among Classification Accuracies of the Derived Reducts

In this subsection, the classification accuracies under three methods based on four measures will be shown. These results are shown for the raw dataset and the dataset with 10%, 20%, 30% and 40% different label noise ratios. Note that CART and KNN classifiers are employed to evaluate the classification performance of the derived reducts.

4.3.1. Classification Accuracies (Raw Data)

Table 2 and Table 3 below show the classification accuracies of the raw dataset with different classifiers.

Table 2.

Comparison among KNN classification accuracies (raw data and higher values are in bold).

Table 3.

Comparison among CART classification accuracies (raw data and higher values are in bold).

With a deep investigation of Table 2 and Table 3, it is not difficult to observe that, regardless of the classifier used, the classification accuracies associated with our method dominate those of the compared methods on most datasets. Take “Statlog (Heart) (ID = 13)” as an example: with the KNN classifier, the classification accuracies related to our method are 0.8457, 0.8407, 0.8419 and 0.8426, respectively, whereas the classification accuracies of the other compared methods are no more than 0.8.

4.3.2. Classification Accuracies (10%, 20%, 30% and 40% Label Noise)

The classification accuracies in terms of four different noise ratios are reported in Table 4, Table 5, Table 6, Table 7, Table 8, Table 9, Table 10 and Table 11.

Table 4.

Comparison among KNN classification accuracies (10% label noise and higher values are in bold).

Table 5.

Comparison among CART classification accuracies (10% label noise and higher values are in bold).

Table 6.

Comparison among KNN classification accuracies (20% label noise and higher values are in bold).

Table 7.

Comparison among CART classification accuracies (20% label noise and higher values are in bold).

Table 8.

Comparison among KNN classification accuracies (30% label noise and higher values are in bold).

Table 9.

Comparison among CART classification accuracies (30% label noise and higher values are in bold).

Table 10.

Comparison among KNN classification accuracies (40% label noise and higher values are in bold).

Table 11.

Comparison among CART classification accuracies (40% label noise and higher values are in bold).

From the above results, it is not difficult to observe that the performance of both CART and KNN classifiers decreases as the ratio of label noise increases. Taking “Connectionist Bench (Vowel Recognition—Deterding) (ID = 2)” as an example, when the CART classifier is used and the approximation quality defines the constraint of attribute reduction, the values of EF-AQR in Table 5, Table 7, Table 9 and Table 11 are 0.7764, 0.7419, 0.6925 and 0.6234 as the noise ratio increases from 10% to 40%. Obviously, the classification accuracies are significantly reduced. Such observations suggest that more label noise does have a negative impact on the classification performance of the attributes selected in the reducts.

At the same time, when the label noise ratios are 20% and 30%, the results in Table 6, Table 7, Table 8 and Table 9 show that, with the KNN classifier, the classification accuracy of our method is slightly lower than that of the other methods on a small number of datasets. This suggests that our method is more suitable for the CART classifier when the label noise ratio is 20% or 30%.

Note that for the four ratios of label noise we tested, the classification accuracies associated with our method are generally superior to the results associated with the compared methods. Such observations also imply that our proposed strategy is more robust on noisy data.

4.4. Comparisons among Classification Stabilities of the Derived Reducts

In this subsection, we will show the classification stability values related to three different methods under four metrics. These comparative experiments are not only performed on the raw dataset but also performed on the dataset with ratios of label noises of 10%, 20%, 30% and 40%. The CART and KNN classifiers are used to test the performance of all algorithms.

4.4.1. Classification Stabilities (Raw Data)

Table 12.

Comparison among KNN classification stabilities (raw data and higher values are in bold).

Table 13.

Comparison among CART classification stabilities (raw data and higher values are in bold).

By carefully observing the data in the tables, it is not difficult to find that no matter which classifier is used, in most datasets, our method is more stable and better than other methods. Take “LSVT Voice Rehabilitation (ID = 8)” as an example; using the CART classifier, the classification stabilities related to EF under four different measures are 0.8340, 0.8488, 0.8372 and 0.8488, respectively, while the classification stabilities related to other methods are lower under the same measure.

By comparing Table 12 and Table 13, we also found that on the raw datasets, when the KNN classifier is used, taking the neighborhood discrimination index as the measure to derive reducts yields the best classification stability. When the other three measures are used, the classification stability of our method is slightly inferior to that of the other two methods on the datasets with IDs of 3, 5, 14 and 15. This also shows that our method is more suitable for the CART classifier. When using the KNN classifier, we recommend the neighborhood discrimination index as the measure of attribute reduction.

4.4.2. Classification Stabilities (10%, 20%, 30% and 40% Label Noise)

Table 14, Table 15, Table 16, Table 17, Table 18, Table 19, Table 20 and Table 21 report different classification stabilities obtained on datasets with different noise ratios, on which the stabilities are tested for KNN and CART classifiers.

Table 14.

Comparison among KNN classification stabilities (10% label noise and higher values are in bold).

Table 15.

Comparison among CART classification stabilities (10% label noise and higher values are in bold).

Table 16.

Comparison among KNN classification stabilities (20% label noise and higher values are in bold).

Table 17.

Comparison among CART classification stabilities (20% label noise and higher values are in bold).

Table 18.

Comparison among KNN classification stabilities (30% label noise and higher values are in bold).

Table 19.

Comparison among CART classification stabilities (30% label noise and higher values are in bold).

Table 20.

Comparison among KNN classification stabilities (40% label noise and higher values are in bold).

Table 21.

Comparison among CART classification stabilities (40% label noise and higher values are in bold).

Through the detailed investigation of Table 14, Table 15, Table 16, Table 17, Table 18, Table 19, Table 20 and Table 21, it can be seen that on all experimental datasets, whether the CART or the KNN classifier is used, the stability decreases as the label noise increases. Taking “Statlog (Image Segmentation) (ID = 14)” as an example, based on the CART classifier, in Table 15, where the noise ratio is only 10%, the classification stabilities related to EF-AQR, EF-CER, EF-NDIR and EF-RLR are 0.9599, 0.9474, 0.9449 and 0.9441, respectively; these values are reduced to 0.7553, 0.7502, 0.7597 and 0.7495 in Table 21 with a noise ratio of 40%. Such observations suggest that more label noise does have a negative impact on the classification stabilities of the attributes selected in the reducts.

It is easy to see that, regardless of the proportion of noise injected into the raw datasets, the classification stabilities of our method are higher than those of the other two comparison methods. The results show that our method is more robust to dirty (noisy) data.
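To make the noise setting concrete, the following is a minimal sketch of how label noise at a given ratio could be injected. It is an illustration only: the function name `inject_label_noise`, the uniform choice of the replacement class and the fixed seed are assumptions, not the procedure used in the experiments above.

```python
import random


def inject_label_noise(labels, ratio, seed=0):
    """Return a copy of `labels` in which round(ratio * n) entries
    are replaced by a different, randomly chosen class.

    Hypothetical helper: the replacement class is drawn uniformly
    from the other classes, which is an assumption of this sketch.
    """
    rng = random.Random(seed)
    classes = sorted(set(labels))
    if len(classes) < 2:
        raise ValueError("need at least two classes to flip labels")
    noisy = list(labels)
    n_flip = round(ratio * len(labels))
    # Pick the samples to corrupt without replacement.
    for i in rng.sample(range(len(labels)), n_flip):
        alternatives = [c for c in classes if c != labels[i]]
        noisy[i] = rng.choice(alternatives)
    return noisy


labels = ["a", "b", "c", "a", "b", "c", "a", "b", "c", "a"]
for ratio in (0.1, 0.2, 0.3, 0.4):
    noisy = inject_label_noise(labels, ratio)
    changed = sum(x != y for x, y in zip(labels, noisy))
    print(f"ratio={ratio:.0%} changed={changed}")
```

Because every selected sample receives a class different from its original one, the number of changed labels equals the requested count exactly; a scheme that redraws labels from all classes would corrupt fewer samples than the nominal ratio.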

4.5. Comparisons among AUC of the Derived Reducts

The receiver operating characteristic (ROC) curve has been used in machine learning to describe the trade-off between the hit rate and the false alarm rate of classifiers. Because the curve is a two-dimensional description, it is not easy to compare classifiers with it directly, so the area under the ROC curve (AUC) is usually used in experiments. The AUC is a portion of the area of the unit square, so its value lies between 0 and 1.0 [52]. In this subsection, the AUC values related to the three different approaches to deriving reducts under four measures are compared. They are obtained on raw datasets and on datasets with label noise ratios of 10%, 20%, 30% and 40%.
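As an illustration of why the AUC lies between 0 and 1.0, the sketch below builds the ROC points for a binary problem and integrates them with the trapezoidal rule. The names `roc_points` and `auc` are hypothetical, labels are assumed to be 0/1, and the binary setting is a simplification; how the AUC is aggregated over multi-class datasets in the experiments is not covered here.

```python
def roc_points(y_true, scores):
    """Return ROC points (false alarm rate, hit rate), obtained by
    lowering the decision threshold over the sorted scores."""
    pos = sum(1 for y in y_true if y == 1)
    neg = len(y_true) - pos
    if pos == 0 or neg == 0:
        raise ValueError("both classes must be present")
    pairs = sorted(zip(scores, y_true), key=lambda p: -p[0])
    points = [(0.0, 0.0)]
    tp = fp = 0
    i = 0
    while i < len(pairs):
        j = i
        # Samples with tied scores cross the threshold together.
        while j < len(pairs) and pairs[j][0] == pairs[i][0]:
            if pairs[j][1] == 1:
                tp += 1
            else:
                fp += 1
            j += 1
        points.append((fp / neg, tp / pos))
        i = j
    return points


def auc(points):
    """Area under a piecewise-linear curve (trapezoidal rule)."""
    area = 0.0
    for (x0, y0), (x1, y1) in zip(points, points[1:]):
        area += (x1 - x0) * (y0 + y1) / 2.0
    return area


y_true = [1, 0, 1, 0]
scores = [0.9, 0.8, 0.7, 0.1]
print(auc(roc_points(y_true, scores)))
```

Both axes are rates in [0, 1], so the curve stays inside the unit square and the area under it cannot exceed 1.0; a classifier that scores all samples identically yields the diagonal and an area of 0.5.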

4.5.1. AUC (Raw Data)

Table 22.

Comparison among AUC (raw data and higher values are in bold).

Table 23.

Comparison among AUC (raw data and higher values are in bold).

With a thorough investigation of Table 22 and Table 23, it is not difficult to conclude that, no matter which measure our framework uses as the constraint for search termination, the reducts derived by our method achieve better AUC values under different classifiers. Take “Urban Land Cover (ID = 18)” as an example: the AUC values related to EF-CER based on the KNN and CART classifiers are 0.9227 and 0.9188, respectively; under the same classifier, the AUC value of EF-CER is higher than those of PF-CER and FG-CER.

4.5.2. AUC (10%, 20%, 30% and 40% Label Noise)

Table 24, Table 25, Table 26, Table 27, Table 28, Table 29, Table 30 and Table 31 report the AUC values obtained on datasets with different label noise ratios, tested with the KNN and CART classifiers.

Table 24.

Comparison among AUC (10% label noise and higher values are in bold).

Table 25.

Comparison among AUC (10% label noise and higher values are in bold).

Table 26.

Comparison among AUC (20% label noise and higher values are in bold).

Table 27.

Comparison among AUC (20% label noise and higher values are in bold).

Table 28.

Comparison among AUC (30% label noise and higher values are in bold).

Table 29.

Comparison among AUC (30% label noise and higher values are in bold).

Table 30.

Comparison among AUC (40% label noise and higher values are in bold).

Table 31.

Comparison among AUC (40% label noise and higher values are in bold).

From Table 24, Table 25, Table 26, Table 27, Table 28, Table 29, Table 30 and Table 31, it is not difficult to draw the following conclusions. Whether the CART or KNN classifier is used, the AUC value decreases as the label noise increases. Take “Wine_Nor (ID = 20)” as an example: for the KNN classifier, when the regularization loss is used in defining the constraint of attribute reduction and the noise ratio increases from 10% to 40%, the values of EF-RLR reported in these tables are 0.9584, 0.9358, 0.9180 and 0.8790, respectively. Obviously, the AUC has been significantly reduced. Such observations suggest that more label noise does have a negative impact on the AUC.

Note that no matter which ratio of label noise is injected into the data, the AUC value provided by the EF method is higher than those of the other methods on most datasets. Therefore, our ensemble FOA approach can not only improve the stability of the reduct but also bring better classification performance. It also shows that our strategy is more robust to dirty data. However, it is undeniable that our method has a slight disadvantage in AUC value on a small number of datasets, which also provides a direction for our future research.
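The voting step of the ensemble framework, in which the classifiers built on multiple reducts jointly label each testing sample, can be sketched as follows. This is a simplified illustration under stated assumptions: `majority_vote` is a hypothetical helper, each inner list is assumed to hold the predictions of one reduct-based classifier, and ties are broken in favor of the label that appears first among the votes.

```python
from collections import Counter


def majority_vote(predictions_per_reduct):
    """Combine per-reduct predictions by majority voting.

    predictions_per_reduct[k][i] is the label predicted for testing
    sample i by the classifier trained on the k-th reduct.
    """
    n_samples = len(predictions_per_reduct[0])
    if any(len(p) != n_samples for p in predictions_per_reduct):
        raise ValueError("all classifiers must predict every sample")
    voted = []
    for i in range(n_samples):
        votes = Counter(preds[i] for preds in predictions_per_reduct)
        # most_common keeps first-seen order among equal counts.
        voted.append(votes.most_common(1)[0][0])
    return voted


# Three hypothetical reduct-based classifiers, four testing samples.
predictions = [
    ["x", "y", "y", "x"],
    ["x", "x", "y", "y"],
    ["y", "x", "y", "x"],
]
print(majority_vote(predictions))
```

Since every reduct contributes one vote of equal weight, the result does not depend on the order of the reducts except when breaking ties.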

5. Conclusions and Future Plans

This research introduces the forest optimization algorithm into the problem solving of attribute reduction. To further improve the effectiveness of the attributes selected by the forest optimization algorithm, an ensemble framework is developed, which performs ensemble classification based on multiple derived reducts. Our comparative experiments have demonstrated that our framework outperforms the other popular algorithms under four widely used measures in rough set theory. In the fields of medicine and health, there are many prediction tasks on drug and disease data, and our improvement may benefit these applications in the future. The following topics deserve further study.

- Our framework can be introduced into other rough set models to perform on complex data under different scenarios, e.g., semi-supervised or unsupervised data.

- Combining our framework with other effective feature selection techniques is also a challenge for data pre-processing.

Author Contributions

Conceptualization, X.Y.; methodology, X.Y.; software, Y.L.; validation, X.Y.; formal analysis, Y.L.; investigation, J.C.; resources, J.C.; data curation, J.W.; writing—original draft preparation, J.W.; writing—review and editing, J.W.; visualization, J.W.; supervision, J.C. All authors have read and agreed to the published version of the manuscript.

Funding

This research was supported by the National Natural Science Foundation of China (Nos. 62076111, 62176107).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Gheyas, I.A.; Smith, L.S. Feature Subset Selection in Large Dimensionality Domains. Pattern Recognit. 2010, 43, 5–13.

- Hosseini, E.S.; Moattar, M.H. Evolutionary Feature Subsets Selection Based on Interaction Information for High Dimensional Imbalanced Data Classification. Appl. Soft Comput. 2019, 82, 105581.

- Sang, B.; Chen, H.; Li, T.; Xu, W.; Yu, H. Incremental Approaches for Heterogeneous Feature Selection in Dynamic Ordered Data. Inf. Sci. 2020, 541, 475–501.

- Xu, W.; Li, Y.; Liao, X. Approaches to Attribute Reductions Based on Rough Set and Matrix Computation in Inconsistent Ordered Information Systems. Knowl. Based Syst. 2012, 27, 78–91.

- Zhang, X.; Chen, J. Three-Hierarchical Three-Way Decision Models for Conflict Analysis: A Qualitative Improvement and a Quantitative Extension. Inf. Sci. 2022, 587, 485–514.

- Zhang, X.; Yao, Y. Tri-Level Attribute Reduction in Rough Set Theory. Expert Syst. Appl. 2022, 190, 116187.

- Yang, X.; Liang, S.; Yu, H.; Gao, S.; Qian, Y. Pseudo-Label Neighborhood Rough Set: Measures and Attribute Reductions. Int. J. Approx. Reason. 2019, 105, 112–129.

- Liu, K.; Yang, X.; Yu, H.; Mi, J.; Wang, P.; Chen, X. Rough Set Based Semi-Supervised Feature Selection via Ensemble Selector. Knowl. Based Syst. 2019, 165, 282–296.

- Sun, L.; Wang, L.; Ding, W.; Qian, Y.; Xu, J. Feature Selection Using Fuzzy Neighborhood Entropy-Based Uncertainty Measures for Fuzzy Neighborhood Multigranulation Rough Sets. IEEE Trans. Fuzzy Syst. 2021, 29, 19–33.

- Pendharkar, P.C. Exhaustive and Heuristic Search Approaches for Learning a Software Defect Prediction Model. Eng. Appl. Artif. Intell. 2010, 23, 34–40.

- Hu, Q.; Yu, D.; Liu, J.; Wu, C. Neighborhood Rough Set Based Heterogeneous Feature Subset Selection. Inf. Sci. 2008, 178, 3577–3594.

- Jia, X.; Shang, L.; Zhou, B.; Yao, Y. Generalized Attribute Reduct in Rough Set Theory. Knowl. Based Syst. 2016, 91, 204–218.

- Chen, D.; Zhao, S.; Zhang, L.; Yang, Y.; Zhang, X. Sample Pair Selection for Attribute Reduction with Rough Set. IEEE Trans. Knowl. Data Eng. 2012, 24, 2080–2093.

- Dai, J.; Hu, H.; Wu, W.-Z.; Qian, Y.; Huang, D. Maximal-Discernibility-Pair-Based Approach to Attribute Reduction in Fuzzy Rough Sets. IEEE Trans. Fuzzy Syst. 2018, 26, 2174–2187.

- Yang, X.; Qi, Y.; Song, X.; Yang, J. Test Cost Sensitive Multigranulation Rough Set: Model and Minimal Cost Selection. Inf. Sci. 2013, 250, 184–199.

- Ju, H.; Yang, X.; Yu, H.; Li, T.; Yu, D.-J.; Yang, J. Cost-Sensitive Rough Set Approach. Inf. Sci. 2016, 355–356, 282–298.

- Qian, Y.; Liang, J.; Pedrycz, W.; Dang, C. An Efficient Accelerator for Attribute Reduction from Incomplete Data in Rough Set Framework. Pattern Recognit. 2011, 44, 1658–1670.

- Wang, X.; Wang, P.; Yang, X.; Yao, Y. Attribution Reduction Based on Sequential Three-Way Search of Granularity. Int. J. Mach. Learn. Cybern. 2021, 12, 1439–1458.

- Tan, K.C.; Teoh, E.J.; Yu, Q.; Goh, K.C. A Hybrid Evolutionary Algorithm for Attribute Selection in Data Mining. Expert Syst. Appl. 2009, 36, 8616–8630.

- Zhang, X.; Lin, Q. Three-Learning Strategy Particle Swarm Algorithm for Global Optimization Problems. Inf. Sci. 2022, 593, 289–313.

- Xie, X.; Qin, X.; Zhou, Q.; Zhou, Y.; Zhang, T.; Janicki, R.; Zhao, W. A Novel Test-Cost-Sensitive Attribute Reduction Approach Using the Binary Bat Algorithm. Knowl. Based Syst. 2019, 186, 104938.

- Ju, H.; Ding, W.; Yang, X.; Fujita, H.; Xu, S. Robust Supervised Rough Granular Description Model with the Principle of Justifiable Granularity. Appl. Soft Comput. 2021, 110, 107612.

- Li, Y.; Si, J.; Zhou, G.; Huang, S.; Chen, S. FREL: A Stable Feature Selection Algorithm. IEEE Trans. Neural Netw. Learn. Syst. 2015, 26, 1388–1402.

- Li, S.; Harner, E.J.; Adjeroh, D.A. Random KNN Feature Selection—A Fast and Stable Alternative to Random Forests. BMC Bioinform. 2011, 12, 450.

- Sarkar, C.; Cooley, S.; Srivastava, J. Robust Feature Selection Technique Using Rank Aggregation. Appl. Artif. Intell. 2014, 28, 243–257.

- Ghaemi, M.; Feizi-Derakhshi, M.-R. Forest Optimization Algorithm. Expert Syst. Appl. 2014, 41, 6676–6687.

- Ghaemi, M.; Feizi-Derakhshi, M.-R. Feature Selection Using Forest Optimization Algorithm. Pattern Recognit. 2016, 60, 121–129.

- Hu, Q.; An, S.; Yu, X.; Yu, D. Robust Fuzzy Rough Classifiers. Fuzzy Sets Syst. 2011, 183, 26–43.

- Hu, Q.; Pedrycz, W.; Yu, D.; Lang, J. Selecting Discrete and Continuous Features Based on Neighborhood Decision Error Minimization. IEEE Trans. Syst. Man Cybern. Part B 2010, 40, 137–150.

- Xu, W.; Pang, J.; Luo, S. A Novel Cognitive System Model and Approach to Transformation of Information Granules. Int. J. Approx. Reason. 2014, 55, 853–866.

- Liu, D.; Li, T.; Ruan, D. Probabilistic Model Criteria with Decision-Theoretic Rough Sets. Inf. Sci. 2011, 181, 3709–3722.

- Pedrycz, W.; Succi, G.; Sillitti, A.; Iljazi, J. Data Description: A General Framework of Information Granules. Knowl. Based Syst. 2015, 80, 98–108.

- Wu, W.-Z.; Leung, Y. A Comparison Study of Optimal Scale Combination Selection in Generalized Multi-Scale Decision Tables. Int. J. Mach. Learn. Cybern. 2020, 11, 961–972.

- Jiang, Z.; Yang, X.; Yu, H.; Liu, D.; Wang, P.; Qian, Y. Accelerator for Multi-Granularity Attribute Reduction. Knowl. Based Syst. 2019, 177, 145–158.

- Wang, W.; Zhan, J.; Zhang, C. Three-Way Decisions Based Multi-Attribute Decision Making with Probabilistic Dominance Relations. Inf. Sci. 2021, 559, 75–96.

- Hu, Q.; Yu, D.; Xie, Z. Neighborhood Classifiers. Expert Syst. Appl. 2008, 34, 866–876.

- Liu, K.; Li, T.; Yang, X.; Yang, X.; Liu, D.; Zhang, P.; Wang, J. Granular Cabin: An Efficient Solution to Neighborhood Learning in Big Data. Inf. Sci. 2022, 583, 189–201.

- Jiang, Z.; Liu, K.; Yang, X.; Yu, H.; Fujita, H.; Qian, Y. Accelerator for Supervised Neighborhood Based Attribute Reduction. Int. J. Approx. Reason. 2020, 119, 122–150.

- Zhang, C.; Li, D.; Liang, J. Multi-Granularity Three-Way Decisions with Adjustable Hesitant Fuzzy Linguistic Multigranulation Decision-Theoretic Rough Sets over Two Universes. Inf. Sci. 2020, 507, 665–683.

- Xu, W.; Zhang, W. Knowledge Reduction and Matrix Computation in Inconsistent Ordered Information Systems. Int. J. Bus. Intell. Data Min. 2008, 3, 409–425.

- Chen, Y.; Wang, P.; Yang, X.; Mi, J.; Liu, D. Granular Ball Guided Selector for Attribute Reduction. Knowl. Based Syst. 2021, 229, 107326.

- Liu, K.; Yang, X.; Fujita, H.; Liu, D.; Yang, X.; Qian, Y. An Efficient Selector for Multi-Granularity Attribute Reduction. Inf. Sci. 2019, 505, 457–472.

- Ba, J.; Liu, K.; Ju, H.; Xu, S.; Xu, T.; Yang, X. Triple-G: A New MGRS and Attribute Reduction. Int. J. Mach. Learn. Cybern. 2022, 13, 337–356.

- Yang, X.; Yao, Y. Ensemble Selector for Attribute Reduction. Appl. Soft Comput. 2018, 70, 1–11.

- Sun, D.; Zhang, D. Bagging Constraint Score for Feature Selection with Pairwise Constraints. Pattern Recognit. 2010, 43, 2106–2118.

- Xu, S.; Yang, X.; Yu, H.; Yu, D.-J.; Yang, J.; Tsang, E.C.C. Multi-Label Learning with Label-Specific Feature Reduction. Knowl. Based Syst. 2016, 104, 52–61.

- Liang, J.; Li, R.; Qian, Y. Distance: A More Comprehensible Perspective for Measures in Rough Set Theory. Knowl. Based Syst. 2012, 27, 126–136.

- Zhang, X.; Mei, C.; Chen, D.; Li, J. Feature Selection in Mixed Data: A Method Using a Novel Fuzzy Rough Set-Based Information Entropy. Pattern Recognit. 2016, 56, 1–15.

- Lianjie, D.; Degang, C.; Ningling, W.; Zhanhui, L. Key Energy-Consumption Feature Selection of Thermal Power Systems Based on Robust Attribute Reduction with Rough Sets. Inf. Sci. 2020, 532, 61–71.

- Xu, W.; Liu, S.; Zhang, X.; Zhang, W. On Granularity in Information Systems Based on Binary Relation. Intell. Inf. Manag. 2011, 3, 75–86.

- Wang, C.; Hu, Q.; Wang, X.; Chen, D.; Qian, Y.; Dong, Z. Feature Selection Based on Neighborhood Discrimination Index. IEEE Trans. Neural Netw. Learn. Syst. 2018, 29, 2986–2999.

- Wang, S.; Li, D.; Petrick, N.; Sahiner, B.; Linguraru, M.G.; Summers, R.M. Optimizing Area under the ROC Curve Using Semi-Supervised Learning. Pattern Recognit. 2015, 48, 276–287.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).