Abstract

To improve the performance of the whale optimization algorithm and further enhance the search accuracy, while increasing the convergence speed, a quasi-reflective chaotic mutant whale swarm optimization, namely QNWOA, is proposed, fused with an operator of Fish Aggregating Devices (FADs) in this paper. Firstly, the swarm diversity is increased by using logistic chaotic mapping. Secondly, a quasi-reflective learning mechanism is introduced to improve the convergence speed of the algorithm. Then, the FADs vortex effect and wavelet variation of the marine predator algorithm (MPA) are introduced in the search phase to enhance the stability of the algorithm in the early and late stages and the ability to escape from the local optimum by broking the symmetry of iterative routes. Finally, a combination of linearly decreasing and nonlinear segmentation convergence factors is proposed to balance the local and global search capabilities of the algorithm. Nine benchmark functions are selected for the simulation, and after comparing with other algorithms, the results show that the convergence speed and solution accuracy of the proposed algorithm are promising in this study.

1. Introduction

The whale optimization algorithm (WOA) is a newly proposed swarm intelligence optimization algorithm, developed in recent years. Due to its simple algorithm structure, few operators required for operation, fast convergence of iterations, and easy implementation, it has received wide attention and applications in various disciplines, such as power systems [1], data mining [2], training artificial neural networks (ANN) [3], network optimization [4] robot path planning [5], aero-engine optimization [6], and construction engineering [7]. According to existing studies, WOA has the advantage of strong global search capability, but occasionally falls into local optimality and cannot perform global searches comprehensively [8].

To address the above drawbacks and to improve the performance of the algorithm, on the one hand, domestic and foreign researchers have proposed many relevant improved WOA algorithms by using many strategies, such as changing operators [9,10,11,12], fusing chaotic mappings [13,14,15], and mixing other algorithms with the WOA algorithm [16,17,18,19]. For example, a Cauchy mutator was introduced into WOA to vary the individual movement step of whales through the Cauchy inverse cumulative distribution function method [9]; the combination of lens imaging backward learning and optimal worst backward learning strategies were used to improve the quality of swarm individuals [10]. Both the convergence factor and inertia weights were modified by introducing nonlinear strategies to balance the ability of the algorithm between the global exploration and local search, and accelerate the convergence speed of the algorithm [11]. Through differential evolution and Levy mutation, the algorithm was expanded the range of each search and enhanced the global search capability [12]. On the other hand, by introducing Iterative mapping and nonlinear fitting into the algorithm, the initial diversity of the swarm is ensured, while balancing the global search ability and local search of the algorithm to some extent [13]. By introducing Gauss chaos mapping [14] and Singer chaos mapping [15] into the algorithm, it is further demonstrated that chaos mapping has a good improvement effect on the WOA algorithm. In addition, mixing other algorithms is a common strategy in WOA improvement, and combining local search strategies, it can effectively reduce the occurrence of WOA algorithms falling into local optimum situations. For example, by mixing with algorithms, such as slime mould algorithm (SMA) [16], social group optimization (SGO) [17]., teaching-learning-based optimization (TLBO) [18], particle swarm optimization (PSO) [19,20], bat algorithm (BA) [21], and grey wolf optimizer (GWO) [22], it not only reduces the occurrence of falling into local optima, but also solves the problems of insufficient search capability and low efficiency of WOA when high-dimensional problems exist, which is of high use for the performance improvement of WOA algorithms.

The data of the above-mentioned improved WOA algorithm show that, although the performance of some of the test functions has been improved, the computational power of some of the test functions decreases too, and there are still problems, such as easily falling into local optimum and not finding the optimal solution. Therefore, based on previous research, we propose a quasi-reflective chaotic mutant whale swarm optimization fused with FADs (QNWOA) to further improve the convergence speed and optimization accuracy of the algorithm.

2. Whale Optimization Algorithm

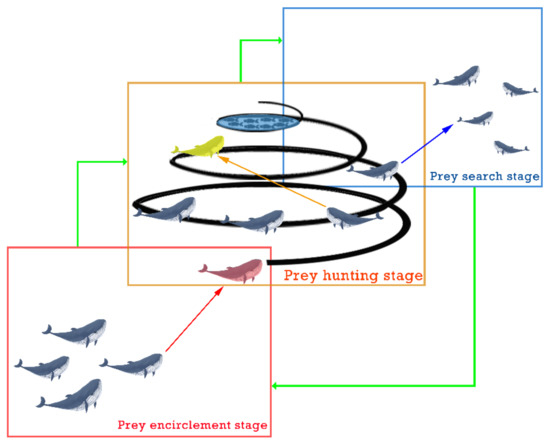

The Whale Optimization Algorithm (WOA) is a new heuristic optimization algorithm that mimics the hunting behavior of humpback whales and is, thus, proposed. It is easy to implement and robust compared to other swarm intelligence algorithms, and the algorithm requires fewer control parameters. In nature, humpback whales have a special hunting method, and this hunting method is called the bubble-net hunting strategy. In this strategy, the humpback whale dives tens of meters and then creates a spiral bubble around its prey to gather them before swimming to the surface to feed. Interestingly, the symmetrical route of whale hunting forms a spiral, as shown in Figure 1, and the original whale optimization algorithm was proposed based on the following text.

Figure 1.

WOA three stages and conversion schematic.

- (1)

- Prey encirclement stage

In this stage, the position of each whale is considered as a solution, and the whale closest to the prey is considered as the optimal solution. The other whale individuals complete an “encirclement”, such as the movement towards the prey based on the position of the best individual in the current iteration. In other words, the whales do not currently know the exact location of the prey and simply move in the direction of the current optimal whale, thus, surrounding the prey. To describe this behavior, an equation is written below.

where t indicates the current number of iterations; D represents the step size between the current individual and the optimal individual; A and C are coefficients; X (t) is the position vector of the optimal whale in the current iteration; X (t) indicates the current position vector of the whale to be moved; A and C can be obtained from the following equations:

where and are random numbers between (0, 1); the value of a decreases linearly from 0 to 2; t denotes the number of current iterations; T denotes the maximum number of iterations.

- (2)

- Prey hunting stage

During this stage, the whale dives into the sea by swimming upward in a spiral position and spitting out bubbles of varying sizes. The bubbles rise to sea level and form a web of bubbles to gather the prey together. In the algorithm, the distance between the individual and the current optimal whale position is firstly calculated, and secondly, the whale is simulated to swim along a spiral trajectory towards the prey position. Based on the behavior of humpback whales, the equation for their spiral swim towards prey is as follows:

where = | X (t) − X(t)| denotes the distance vector from the individual whale to the current best whale; b is a constant used to define the shape of the helix, usually taking the value 1, and l is a random number between (−1, 1).

As the whale swims in a spiral towards its prey, it also needs to shrink its encirclement. Therefore, to describe this model of synchronous behavior, a random number P between (0, 1) is introduced. Assume that there is a probability of P to choose the contracting encirclement mechanism and a probability of 1 − P to choose spiral predation to update the whale’s position with the following equation:

In the hunting stage, the algorithm sets the value of a to decrease linearly as it approaches the prey, so that the fluctuation of A also decreases with a. During the iterative process, the value of a decreases linearly from 2 to 0, A is a random value within [−a, a], and the algorithm sets that when |A| ≤ 1, the whale attacks the prey, i.e., enters the prey encirclement stage and prey hunting stage.

- (3)

- Prey search stage

In this stage, to enhance the diversity of the swarm, the algorithm randomly selects an individual whale in the current iteration and updates the positions of other whales based on the randomly selected whale position, forcing the whales to deviate from the prey and, thus, find a more suitable prey; at the same time, the search capability of the algorithm is enhanced to enable the WOA algorithm to perform a global search. The algorithm sets the whale to perform the prey search stage when |A| ≥ 1, which is formulated as follows:

where is a randomly selected whale position vector.

3. Improved Whale Optimization Algorithm

3.1. Quasi-Reflection-Based Learning

Ewees et al. [23] proposed a new concept of quasi-reflective-based learning (QRBL) based on opposition-based learning (OBL) and quasi-opposition-based learning (QOBL). The main idea is to determine the location of the quasi-reflection solution by comparing the current solution with the middle value of the upper and lower bounds of the algorithm, and it is known through experiments that QRBL is closer to the optimal solution compared to OBL and QOBL [23] and the equation to generate the quasi-reflective solution is as follows:

3.2. Logistic Chaos Mapping

Chaotic mapping is a nonlinear theory with the characteristics of non-linearity, universality, traversal, and randomness, which can traverse all states in a certain range without repetition, according to its characteristics, and can help generate new solutions and increase swarm diversity in intelligent algorithm optimization; thus, it is widely used. Although WOA has a good convergence speed, there is still room for improvement in the optimization-seeking capability. Therefore, to improve the performance of the algorithm, this paper introduces logistic chaotic mapping in the WOA algorithm, inspired by the chaotic Jaya algorithm of Jian et al. [24]. Its equation is given as:

It has been shown that the chaotic sequence has better pseudo-random properties when β ∈ [3.57, 4].

3.3. Introduction of Mutation Operations for Vortex Effects in FADs

To enhance the ability of the algorithm to escape from the local optimum later, the vortex effect of FADs in the marine predator algorithm (MPA) [25] is introduced into the search stage of the algorithm. In the MPA algorithm, iterations in the later stage cause individuals to obtain longer steps to find another prey distribution environment, and this strategy enables MPA to overcome the early convergence problem and escape from local extremes in the process of finding the best vortex effect, known as FADs. By introducing the FADs eddy current effect and improving it, Equation (10) is changed to Equations (16) and (17), as follows:

where CF is the adaptive parameter controlling the movement step of the predator; FADs are the influence probability, taken as 0.2; r is the random number within [0, 1]; and are the randomly selected individual whale position vectors, respectively.

On this basis, to limit the FADs to perform the search blindly in the early stage, which affects the convergence of the algorithm, this paper only enables the FADs in the late stage of the algorithm iteration and strengthens the upfront capability of the algorithm by introducing the variation operation. Inspired by the wavelet variation particle swarm algorithm proposed by Su et al. [26], wavelet variation is introduced in the preliminary search stage, as follows:

Then Equation (10) changes to:

In this paper, Morlet wavelet is chosen as the wavelet basis, w (φ) is the wavelet function value, φ is the random value within [−2.5, 2.5], and the mutation probability α is 0.1.

3.4. Convergence Factors for Linear Differential Decrement and Nonlinear Segmentation

In the original WOA algorithm, the search relies on A to switch between global and local through linear decrement. However, due to the disadvantage of the linear decreasing, the algorithm tends to fall into local optimum due to insufficient global search capability in the early stage, and it fails to find the optimal solution in the later stage due to insufficient local exploitation capability. Thus, from Equation (5), a convergence strategy that integrates linear differential decrement and nonlinear decrement is proposed, as follows:

where T denotes the maximum number of iterations, t is the current generation, and μ, λ, and ρ are constants. By introducing the convergence factor of nonlinear segmentation, the algorithm maintains good global searchability in the early stage and accelerates the convergence speed in the later stage, which can effectively balance the local search and global search of the algorithm.

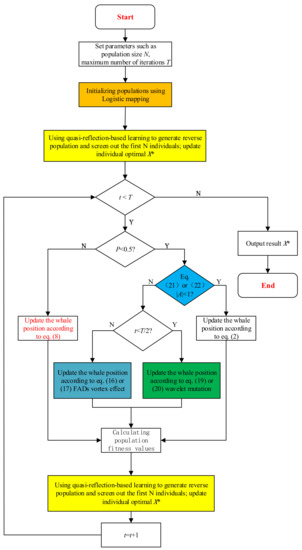

3.5. Algorithm Flowchart and Pseudo-Code

The flow and pseudo-code of the proposed algorithm are as shown in Figure 2 and Algorithm 1 respectively.

| Algorithm 1 the proposed algorithm (QNWOA) |

| 1: Input: The swarm size N, maximum number of iteration T. |

| 2: Output: Initialize the random swarm (i = 1, 2…, N); Generating species group according to logistic chaos and calculate fitness Quasi—reflex learning generates inverse swarm and calculate fitness The first N are selected in new swarm and the optimal individual was updated |

| 3: While (t < T) do |

| 4: Update l, P, r, variation rate r1, and influence probability FADs; Update a, A, C by Equation (21) or (22) |

| 5: if (P < 0.5) |

| 6: if (|A| ≥ 1) |

| 7: if (t < T/2) |

| 8: if (t < r1) |

| 9: Update location by Equation (19) or (20). |

| 10: else Update location by Equation (10). |

| 11: end if |

| 12: else if location by Equation (16) or (17). |

| 13: end if |

| 14: else if (|A| < 1) |

| 15: Update location by Equation (2). |

| 16: end if |

| 17: else if (P ≥ 0.5) |

| 18: Update location by Equation (8). |

| 19: end if |

| 20: Calculate the N individual fitness value of the current swarm; 21: Quasi—reflex learning generates inverse swarm and calculate fitness. 22: The first N was selected in new swarm and the optimal individual was updated. 23: End while |

| 24: Return |

Figure 2.

QNWOA algorithm flowchart.

4. Experimental Results and Analysis

4.1. Experimental Design

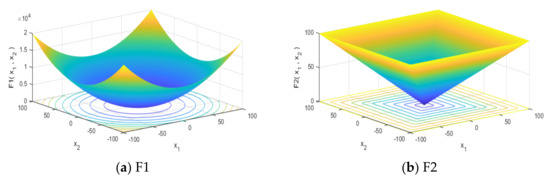

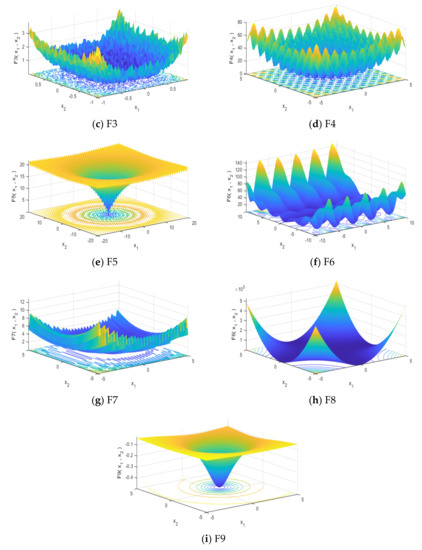

To test the performance of the improved algorithm, the experiment selects nine benchmark functions that are more representative for testing, as shown in Table 1: F1–F3 for unimodal (UM) function; F4–F7 for multi-modal (MM) function; F8-F9 for composition (CM) function. From the 2D forms of nine benchmarks as shown in Figure 3a–i, it can be seen that the UM function has only one optimal solution and is often used to test the algorithm’s ability to find the optimal solution. Since the MM function has multiple optima, it is difficult to find globally optimal solutions for these functions, but it can be used to test the algorithm’s ability to explore and jump out of local optima by broking the symmetry of iterative routes. The CM function also has a large number of optimal solutions, but it can be used to test the stability of the algorithm, since the optimal solution is easily found due to the low dimensionality. The tests are done in an experimental environment of CPU Inter(R) Core (TM) i7-8700, RAM 16 GB, and MATLAB.

Table 1.

Test Functions.

Figure 3.

Demonstration of functions.

On the other hand, QNWOA is compared and experimented with the currently existing WOA algorithms and improved WOA algorithms: WOA (traditional whale optimization algorithm), OWOA (whale optimization algorithm based on reverse learning), EWOA (Whale optimization algorithm introducing nonlinear time-varying adaptive weights and differential mutation perturbation factors), WOAWC (Whale optimization algorithm based on adaptive weight and Cauchy mutation); there are also three more representative swarm intelligence algorithms: PSO (particle swarm optimization algorithm), SOA (seagull optimization algorithm), and GWO (gray wolf optimization algorithm).

4.2. Analysis of Experimental Test Results

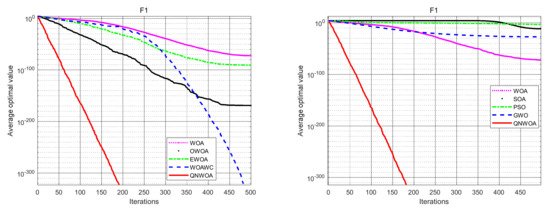

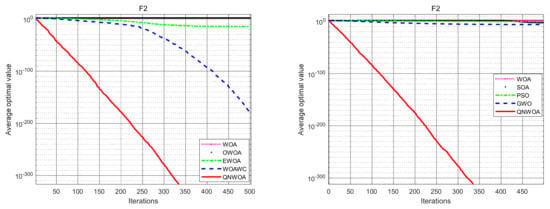

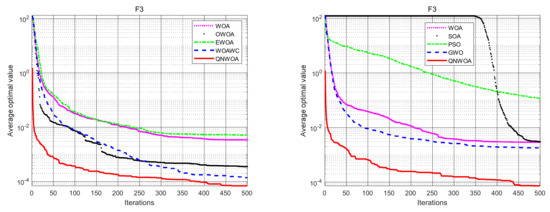

In our experimental tests, the algorithm parameters are set as shown in Table 2, and all algorithms run 30 times repeatedly on each function to record and count the optimal value (Best), mean value (Mean) and standard deviation (SD) run results, as shown in Table 3 and Table 4. By analyzing the experimental statistical results, it can be seen from the data in Table 3, combining with the lefts of Figure 4, Figure 5 and Figure 6, that both QNWOA and WOAWC converge to the minimum value in the UM function F1, but the convergence speed of QNWOA is much higher than several other algorithms, and QNWOA outperforms other algorithms, in terms of convergence speed, optimal value, mean and standard deviation in F2 and F3. Further, it can be seen from Table 4 that QNWOA outperforms several other swarm intelligence algorithms in F1–F3, in terms of the mean and standard deviation of optimal values. In summary, QNWOA in the UM function has a higher search capability compared to several other algorithms.

Table 2.

Algorithm parameter settings.

Table 3.

Function experiment result 1.

Table 4.

Function experiment result 2.

Figure 4.

F1 convergence comparison chart.

Figure 5.

F2 convergence comparison chart.

Figure 6.

F3 convergence comparison chart.

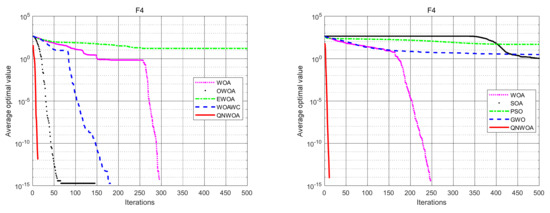

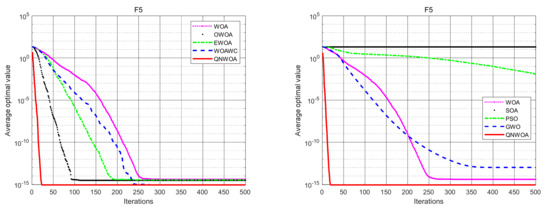

From the data in Table 3, combined with Figure 7, Figure 8 and Figure 9, we can see that QNWOA and WOAWC in the CM functions, F4 and F5, have significantly better optimal values, mean values, and standard deviations compared with other improved WOA algorithms, while QNWOA is much faster than WOAWC in terms of convergence speed. The improvement in the optimal value is especially large. From Table 4, it can be seen that the QNWOA proposed in this paper outperforms the other three group intelligence algorithms compared in all data, especially in terms of optimal values. It proves that the improved QNWOA effectively avoids the local optimum in the CM function and has a better global capability.

Figure 7.

F4 convergence comparison chart.

Figure 8.

F5 convergence comparison chart.

Figure 9.

F6 convergence comparison chart.

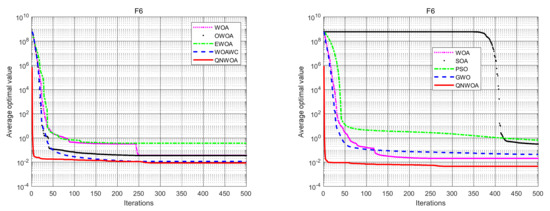

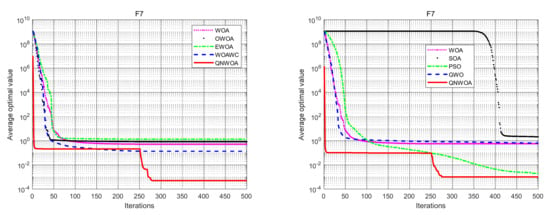

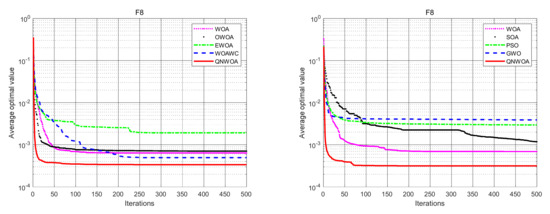

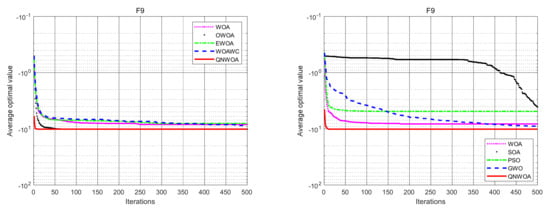

From the data in Table 3 and Table 4, combined with Figure 10, Figure 11 and Figure 12, we can see that QNWOA in CM functions F7 and F8 has a certain degree of improvement in the optimal value and mean value, compared with the other six algorithms, and the standard deviation has significantly reduced. It has shown that the improved algorithm not only performs better, but also has better stability in the CM functions.

Figure 10.

F7 convergence comparison chart.

Figure 11.

F8 convergence comparison chart.

Figure 12.

F9 convergence comparison chart.

In general, the improved QNWOA in this paper has significantly improved the convergence speed, convergence accuracy, and stability compared with other algorithms.

5. Conclusions

In this paper, by introducing logistic chaotic mapping and a quasi-reflection-based learning mechanism, we proposed a whale swarm optimization algorithm, namely QNWOA, that fused with the FADs vortex effect and wavelet mutation of marine predator algorithm (MPA) in the search phase. In the algorithm, a nonlinear segmentation convergence factor is used to effectively improve the convergence speed and the search accuracy of the WOA algorithm, according to the experimental results. However, the proposed algorithm fluctuates a lot when the FADs vortex effect is enabled, and the next work should consider how to efficiently coordinate the FADs vortex effect for iterations without affecting the convergence of the algorithm, which require further study in the future.

Author Contributions

Methodology, S.S. and C.H.; project administration, S.S.; supervision, S.S.; investigation, C.H.; software, C.H. and L.X.; investigation, L.X.; software, C.H. and L.X.; writing—review and editing, S.S. and L.X. All authors have read and agreed to the published version of the manuscript.

Funding

The work was partially supported by the Scientific Research Foundations and the Virtual Experimental Class Projects of Jingling Institute of Technology (nos. JIT-rcyj-201505 and D2020005), the National Natural Science Foundation of China (nos. 61375121 and 41801303), the Major Project of Natural Scientific Research for Universities in Jiangsu Province (no.21KJA120001), and sponsored by the funds from Jiangsu Provincial Sci-Tech Innovation Team of Swarm Computing and Smart Software, led by S.S. (corresponding author).

Conflicts of Interest

The authors declare no conflict of interest.

References

- Kumar, R.; Singha, R.; Ashfaqa, H.; Singh, S.K.; Badoni, M. Power system stability enhancement by damping and control of Sub-synchronous torsional oscillations using Whale optimization algorithm based Type-2 wind turbines. ISA Trans. 2021, 108, 240–256. [Google Scholar] [CrossRef] [PubMed]

- Mafarja, M.M.; Mirjalili, S. Hybrid whale optimization algorithm with simulated annealing for feature selection. Neurocomputing 2017, 260, 302–312. [Google Scholar] [CrossRef]

- Alameer Zakaria Mohamed, A.E.; Ewees, A.A.; Ye, H.; Jianhua, Z. Forecasting gold price fluctuations using improved multilayer perceptron neural network and whale optimization algorithm. Resour. Policy 2019, 61, 250–260. [Google Scholar] [CrossRef]

- Deepa, R.; Revathi, V. Enhancing Whale Optimization Algorithm with Levy Flight for coverage optimization in wireless sensor networks. Comput. Electr. Eng. 2021, 94, 107359. [Google Scholar] [CrossRef]

- Petrović, M.Z.; Miljković, Z.; Jokić, A. A novel methodology for optimal single mobile robot scheduling using whale optimization algorithm. Appl. Soft Comput. 2019, 81, 105520. [Google Scholar] [CrossRef]

- Huang, X.; Wang, R.; Zhao, X.; Hu, K. Aero-engine performance optimization based on whale optimization algorithm. In Proceedings of the 2017 36th Chinese Control Conference (CCC), Dalian, China, 26–28 July 2017; pp. 11437–11441. [Google Scholar]

- Aala, K.; Vamsi, K.R.; Venkata, L.N. A combinatorial social group whale optimization algorithm for numerical and engineering optimization problems. Appl. Soft Comput. 2021, 99, 106903. [Google Scholar] [CrossRef]

- Zhou, Y.; Ling, Y.; Luo, Q. Lévy Flight Trajectory-Based Whale Optimization Algorithm for Global Optimization. IEEE Access 2017, 5, 6168–6186. [Google Scholar]

- Guo, Z.Z.; Wang, P.; Ma, Y.F.; Qi, W.; Chang-qing, G. Whale algorithm based on adaptive weight and Cauchy mutation. Microelectron. Comput. 2017, 34, 20–24. [Google Scholar]

- Chakraborty, S.; Sharma, S.; Saha, A.K.; Chakraborty, S. SHADE–WOA: A metaheuristic algorithm for global optimization. Appl. Soft Comput. 2021, 113, 107866. [Google Scholar] [CrossRef]

- Xu, H.; Zhang, M.D.; Wang, Y.R.; Song, T.T.; Fan, Y. Hybrid strategy to improve whale optimization algorithm. Comput. Eng. Des. 2020, 41, 12–19. [Google Scholar]

- Liu, M.; Yao, X.; Li, Y. Hybrid whale optimization algorithm enhanced with Lévy flight and differential evolution for job shop scheduling problems. Appl. Soft Comput. 2020, 87, 105954. [Google Scholar] [CrossRef]

- Li, S.Y.; Ju, C.X.; Ding, H.Q. Whale optimization algorithm based on iterative mapping and nonlinear fitting. J. Chongqing Univ. 2021. Available online: https://kns.cnki.net/kcms/detail/50.1044.n.20210812.1732.002.html (accessed on 10 January 2022).

- Zhao, Y.; Peng, Z.R. Whale Optimization Algorithm with Chaotic Maps and Its Application in Finite Element Model Updating. J. Lanzhou Jiao Tong Univ. 2021, 40, 39–46. (In Chinese) [Google Scholar]

- Oliva, D.; El Aziz, M.A.; Hassanien, A.E. Parameter estimation of photovoltaic cells using an improved chaotic whale optimization algorithm. Appl. Energy 2017, 200, 141–154. [Google Scholar] [CrossRef]

- Abdel-Basset, M.; Chang, V.; Mohamed, R. HSMA_WOA: A hybrid novel Slime mould algorithm with whale optimization algorithm for tackling the image segmentation problem of chest X-ray images. Appl. Soft Comput. 2020, 95, 106642. [Google Scholar] [CrossRef]

- Su, S.B.; Zhao, W.; Wang, C.S. Parallel swarm intelligent motion planning with energy-balanced for multirobot in obstacles environment. Wirel Commun. Mob. Com. 2021, 32, 8902328. [Google Scholar]

- Vijaya Lakshmi, A.; Mohanaiah, P. WOA-TLBO: Whale optimization algorithm with Teaching- learning-based optimization for global optimization and facial emotion recognition. Appl. Soft Comput. 2021, 110, 107623. [Google Scholar] [CrossRef]

- Ul Hassan, N.; Bangyal, W.H.; Ali Khan, M.S.; Nisar, K.; Ag. Ibrahim, A.A.; Rawat, D.B. Improved Opposition-Based Particle Swarm Optimization Algorithm for Global Optimization. Symmetry 2021, 13, 2280. [Google Scholar] [CrossRef]

- Pervaiz, S.; Bangyal, W.H.; Ashraf, A.; Kashif, N.; Reazul, H.M.; Ag. Asri, A.I.; Chowdhry, B.S.; Waqas, R.; Joel, J.P.C.R.; Richard, E.; et al. Comparative research directions of population initialization techniques using pso algorithm. Intell. Autom. Soft Comput. 2022, 32, 1427–1444. [Google Scholar] [CrossRef]

- Bangyal, W.H.; Hameed, A.; Ahmad, J.; Nisar, K.; Haque, M.R.; Asri, A.; Ibrahim, A.; Rodrigues, J.J.P.C.; Khan, M.A.; Rawat, D.B.; et al. New Modified Controlled Bat Algorithm for Numerical Optimization Problem. Comput. Mater. Contin. 2021, 70, 2241–2259. [Google Scholar] [CrossRef]

- Raja, M.; Dhanasekaran, S.; Vasudevan, V. Opposition Based Joint Grey Wolf-Whale Optimization Algorithm Based Attribute Based Encryption in Secure Wireless Communication. Wirel. Pers. Commun. 2021, 121, 1–21. [Google Scholar] [CrossRef]

- Ewees, A.A.; Abd Elaziz, M.; Houssein, E.H. Improved Grasshopper Optimization Algorithm using Opposition-based Learning. Expert Syst. Appl. 2018, 112, 156–172. [Google Scholar] [CrossRef]

- Jian, X.Z.; Weng, Z.Y. A logistic chaotic JAYA algorithm for parameters identification of photovoltaic cell and module models. Optik 2020, 203, 164041. [Google Scholar] [CrossRef]

- Faramarzi, A.; Heidarinejad, M.; Mirjalili, S.; Gandomi, A.H. Marine Predators Algorithm: A nature-inspired meta heuristic. Expert Syst. Appl. 2020, 152, 113377. [Google Scholar] [CrossRef]

- Su, S.B.; Guo, H.F.; Tian, H.M.; Wu, G. A novel pattern clustering algorithm based on particle swarm optimization joint adaptive wavelet neural network model. Mobile Netw. Appl. 2017, 22, 692–701. [Google Scholar] [CrossRef]

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations. |

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).