Abstract

Several queueing systems in heavy traffic regimes are shown to admit a diffusive approximation in terms of the Reflected Brownian Motion. The latter is defined by solving the Skorokhod reflection problem on the trajectories of a standard Brownian motion. In recent years, fractional queueing systems have been introduced to model a class of queueing systems with heavy-tailed interarrival and service times. In this paper, we consider a subdiffusive approximation for such processes in the heavy traffic regime. To do this, we introduce the Delayed Reflected Brownian Motion by either solving the Skorokhod reflection problem on the trajectories of the delayed Brownian motion or by composing the Reflected Brownian Motion with an inverse stable subordinator. The heavy traffic limit is achieved via the continuous mapping theorem. As a further interesting consequence, we obtain a simulation algorithm for the Delayed Reflected Brownian Motion via a continuous-time random walk approximation.

MSC:

60K25; 60G22; 60J70; 60K15

1. Introduction

Queueing theory is a widely studied branch of mathematics, thanks to its numerous applications. Aside from the classical ones in telecommunications [1] and traffic engineering [2], one can cite the ones in computing [3] (with particular attention to scheduling) and economics (see, for instance, [4]), together with the fact that such a branch clearly shares different models with population dynamics (see also [5]). The simplest building block of such a theory is given by the M/M/1 queue, which is the prototype of a Markovian queueing model. While, on the one hand, the study of this queueing model at its stationary phase can be achieved without too much effort (see, for instance, [6,7]), the determination of its distribution during the transient phase is a quite difficult task that requires much more attention (see, for instance, [8,9]).

The main feature of the M/M/1 queue is the Markov property, which, on the one hand, gives a quite tractable model, while, on the other, precludes any possibility of including memory effects. To overcome such a problem, one can extend the study to the class of semi-Markov processes, introduced by Lévy in [10]. A special class of semi-Markov processes can be obtained by means of a time change, i.e., the introduction of a non-Markov stochastic clock in the model. This is done, for instance, by composing a parent Markov process with the inverse of a subordinator. In the specific case of the α-stable subordinator, one refers to such a new process as a fractional version of the parent one, due to the link between this time-change procedure and fractional calculus, as one can see from [11,12,13,14,15,16,17], to cite some of the several works on the topic. Together with a purely mathematical interest, let us stress that this procedure also leads to some interesting applications (see, for instance, [18] in economics, [19] in computing and [20] in computational neurosciences). In the context of queueing theory, this was first done in [4], in the case of the M/M/1 queue, and then extended in [21,22] to the case of queues with catastrophes, [23] in the case of Erlang queues and [24] for the queues.

Another quite interesting feature of the classical M/M/1 queue is given by its link with the Brownian motion. It is well known that the behavior of the queue is widely influenced by the traffic intensity ρ. In particular, if ρ < 1, the queue is ergodic (and thus admits a stationary state), while for ρ ≥ 1, this is no longer true. In a certain sense, we can see the critical value ρ = 1 as a bifurcation of the system (seen as some sort of integral curve in the space of probability measures), in which it loses its globally asymptotically stable equilibrium point. Thus, a natural question one can ask concerns what happens, at a proper scaling, to the queueing model as ρ is near 1. This is called the heavy traffic regime. In the case of the M/M/1 queue, it is well known that, up to a proper scaling, the queue length process in the heavy traffic regime can be approximated by means of a Reflected Brownian Motion with negative drift (see [25]), i.e., a Brownian motion with negative drift whose velocity is symmetrically regulated as soon as it touches 0. More precisely, the Reflected Brownian Motion is the solution of the Skorokhod reflection problem on the trajectory of a drifted Brownian motion. This process is of independent importance, as it provides the stochastic representation of the solution of the heat equation with Neumann boundary condition (see [26]).

The time-change procedure described before has been also applied to the Brownian motion, obtaining the so-called Delayed Brownian Motion (see [27]). Despite the name, it is interesting to observe that such a process is not necessarily delayed: it is true that it exhibits some intervals of constancy; nevertheless, on short times, it can vary faster than the parent Brownian motion, as evidenced from its expected lifetime in fractal sets (see [28]). Once this procedure has been applied to the Brownian motion, one could ask what happens as we adopt a time-change also to the Reflected Brownian Motion.

In this paper, we introduce the Delayed Reflected Brownian Motion (DRBM) as the solution of the Skorokhod reflection problem for the trajectories of the Delayed Brownian Motion (DBM). In particular, we prove that the process obtained in this way coincides with the one achieved by time-changing the Reflected Brownian Motion via an inverse stable subordinator. As a consequence, we can obtain some alternative representations of the process as a direct counterpart of the ones in the classical case. Among them, it is interesting to cite that we are able to exhibit a representation analogous to the one of [29] in terms of time-changed stochastic differential equations (introduced in [30]). We then use such a process to characterize the heavy traffic regime of a fractional M/M/1 queue. The results that we obtain have a twofold interest. On the one hand, we achieve a subdiffusive approximation of the fractional M/M/1 queue in the heavy traffic regime, which is a quite powerful tool to study the properties of such queueing models. On the other hand, if we look at the results from a different point of view, we have a continuous-time random walk approximation of the Delayed Reflected Brownian Motion. The latter can be used to provide simulation algorithms that do not require us to simulate the inverse subordinator or to compute inverse Laplace transforms (both of them being expensive computational tasks in terms of errors and time). The motivation of the paper lies indeed in this twofold result: we want to provide a new tool to study the properties of the fractional M/M/1 queue introduced in [4] in the heavy traffic regime, while, at the same time, exploiting and better investigating the power of the discrete event simulation algorithm (see [31]) via continuous-time random walk approximations of a subdiffusive process.

The paper is organized as follows: in Section 2, we introduce Skorokhod's reflection problem and the Reflected Brownian Motion, while in Section 3, we investigate the Delayed Reflected Brownian Motion, with particular attention to the scaling properties of the two processes. The heavy traffic approximation for both the classical and the fractional M/M/1 queues is discussed in Section 4, together with some scaling properties of the classical queueing system. In this section, we also refine (and, in a certain sense, complete) the analysis of the queueing model introduced in [4], by proving the respective independence of the interarrival and the service times (recall that they are not mutually independent) and providing their distributions (with a different strategy with respect to [21] that does not require any further conditioning). Finally, in Section 5, we exhibit the simulation algorithm for the fractional queue and how to use it to simulate a Delayed Reflected Brownian Motion, together with some heuristic considerations on stopping criteria.

2. The Reflected Brownian Motion

2.1. Skorokhod’s Reflection Problem

Let us denote by (where ) the space of continuous functions . In the following, we also need the space of càdlàg functions , i.e., the space of functions that are right-continuous and whose left limits exist and are finite. Let us denote, for simplicity, and , which are convex cones. Let us also recall that any monotone function is locally of bounded variation and thus admits a distributional derivative that is a Radon measure (see [32]).

Definition 1.

Let . We say that a pair is a solution of the one-dimensional Skorokhod reflection problem if

- (i)

- ;

- (ii)

- for any ;

- (iii)

- a is nondecreasing, and is supported on

In practice, we are asking whether it is possible to construct a path z starting from y by adding a term a in such a way that whenever y touches 0, it is instantaneously symmetrically reflected (by using the function a), so that the resulting path z is constrained to remain non-negative. For these reasons, the function a is called the regulator, while z is called the regulated path. Let us emphasize that the problem can be extended to the n-dimensional setting, for instance by asking that the regulated path remains in a certain orthant (so that the path is symmetrically reflected as soon as it touches a coordinate hyperplane) or a polyhedral region; see [33]. Let us recall that the one-dimensional Skorokhod problem admits a unique solution.

Theorem 1.

Let . Then, there exists a unique solution of the one-dimensional Skorokhod reflection problem (given in Definition 1). In particular,

where . Moreover, if , then .

With the previous theorem in mind, one can define the reflection map as such that, for any , , where is the unique solution of the Skorokhod problem. We also have that is well defined.
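To make the reflection map concrete, the following is a minimal sketch on a sampled path. It assumes the standard explicit form of the regulator, a(t) = sup over s ≤ t of max(−y(s), 0), with z = y + a, which is what Theorem 1 is taken to state here; the function name `skorokhod_reflection` and the sample path are illustrative only.

```python
import numpy as np


def skorokhod_reflection(y):
    """Discrete one-dimensional Skorokhod reflection of a sampled path y.

    Assumed regulator: a[k] = max(0, max_{j <= k} (-y[j])); regulated path z = y + a.
    """
    y = np.asarray(y, dtype=float)
    # Running supremum of the negative part of the path.
    a = np.maximum.accumulate(np.maximum(-y, 0.0))
    return y + a, a


# Illustrative path that dips below zero twice.
y = np.array([0.0, -1.0, -0.5, -2.0, 1.0, 0.5])
z, a = skorokhod_reflection(y)
print(z)  # regulated path, non-negative
print(a)  # regulator, nondecreasing
```

On this sample the regulator increases only at indices where the regulated path sits at 0, which is the discrete analogue of condition (iii) in Definition 1.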

Concerning the continuity of the reflection map on , we first have to consider some topology on it (see [25] (Chapter 3)).

Definition 2.

Let us consider the space of the càdlàg functions . We denote by U the uniform topology, induced on by the metric

Let us denote by ι the identity map on , i.e., for any . Moreover, let

We denote by the topology on defined by the metric

where is the composition map, i.e.,

We denote by and the convergence in , respectively, in U and . It is clear that if , then . We say that in if in for a sequence of continuity points of x such that . This topology is metrizable and we still denote the metric as .

In general, if we have a sequence of -valued random variables, we denote whenever converges in distribution to a -valued random variable X with respect to the topology.

Now, we can describe the continuity properties of the reflection map (see [25] (Lemma and Theorem ) for the compact domain case and extend it with [25] (Theorem ) to the non-compact domain case).

Theorem 2.

The map is Lipschitz-continuous in both U and topology with Lipschitz constant 2, i.e.,

and

Moreover, is Lipschitz-continuous in the topology with Lipschitz constant 2, i.e., Equation (1) still holds in this case.

Let us also denote

and observe the following elementary properties of the reflection map (which are particular cases of [25] (Lemma 13.5.2)).

Lemma 1.

The following properties are true:

- (i)

- For any and , it holds that

- (ii)

- For any and , it holds that

Proof.

Let us first observe that, clearly, . Then it holds, for any , by Theorem 1, that

proving property (i).

Concerning property (ii), let us observe that . Define the running supremum for any . Then, by Theorem 1. Moreover, we have . Indeed, let us define the set of maps and the range map as for any and any . Then, being increasing and continuous, we have

Hence,

and finally

□

2.2. The Reflected Brownian Motion

Let us fix a complete filtered probability space , supporting all the following random variables and processes. Let be a standard Brownian motion and be the Brownian motion with drift and diffusion parameter with (we denote it as ) defined as

If , we denote , and if, additionally, , we denote . In any case, we set . It is well known that ; thus, we can solve the Skorokhod problem on each sample path of .

Definition 3.

The Reflected Brownian Motion (RBM) with drift η and diffusion parameter is given by the process defined as

and we denote . If , we denote it by . If additionally , we denote it by .

It is clear, by definition, that an RBM is almost surely non-negative. This is achieved thanks to the regulator, which provides a symmetrization of the velocity with which the original BM tries to cross 0. This additive component symmetrizes perfectly such an effort, thus preventing the process from crossing the 0 threshold. However, in this case, the regulator is a singular function; thus, we cannot properly speak about velocity. The symmetrizing effect of the regulator is instead clearer in the processes that are linked to the queueing systems, as will be shown in Section 4. First, we want to prove a simple scaling property. To do this, let us denote as . Obviously, . Extending the arguments in [25] (Theorem ), we have the following result.

Proposition 1.

Let . The following properties hold true.

- (i)

- For any , it holds that

- (ii)

- If , it holds that

- (iii)

- If , it holds that

- (iv)

- If , it holds that

Proof.

Let us first prove property (i). Consider such that . The self-similarity of the Brownian motion implies that

Applying the reflection map on both sides, we conclude the proof by Lemma 1.

Property (ii) follows from property (i) applied on by choosing and . Property (iii) follows from property (i) applied on by choosing and . Finally, property (iv) follows from (i) applied on by choosing and . □

From now on, we will focus on the case , as any other case can be deduced from this one. Let us stress that the one-dimensional distribution of is well known (see [25] (Theorem ) or [34] (Section )).

Theorem 3.

For , and , it holds that

where Φ is the cumulative distribution function (CDF) of a standard normal random variable, i.e.,

$$\Phi(x) = \frac{1}{\sqrt{2\pi}} \int_{-\infty}^{x} e^{-\frac{y^2}{2}}\, dy.$$

Remark 1.

It is clear that for any by definition. Let us also observe that, if , then the one-dimensional distribution of is a folded Gaussian distribution. This will be made clearer in the following subsection.
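As a numerical companion to Theorem 3, the sketch below evaluates the classical closed form for the one-dimensional distribution of an RBM with drift η, unit diffusion parameter and starting point 0, namely Φ((x − ηt)/√t) − e^{2ηx} Φ((−x − ηt)/√t). That this is exactly the expression displayed in the theorem is an assumption; the function names are hypothetical.

```python
import math


def std_normal_cdf(x):
    """CDF of a standard normal random variable, via the error function."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def rbm_cdf(x, t, eta=0.0):
    """P(RBM(t) <= x) for drift eta, unit diffusion, started at 0 (assumed form).

    Intended for moderate values of eta * x: the exponential factor may
    overflow for large positive eta * x.
    """
    if x < 0.0:
        return 0.0
    root = math.sqrt(t)
    return (std_normal_cdf((x - eta * t) / root)
            - math.exp(2.0 * eta * x) * std_normal_cdf((-x - eta * t) / root))


# Driftless case: folded Gaussian, P(|W(1)| <= 1) = 2 * Phi(1) - 1.
print(rbm_cdf(1.0, 1.0, eta=0.0))
# Negative drift, large time: close to the exponential law 1 - exp(2 * eta * x).
print(rbm_cdf(1.0, 200.0, eta=-1.0))
```

For η = 0 the formula reduces to 2Φ(x/√t) − 1, in agreement with the folded Gaussian observation of Remark 1.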

As a consequence of the previous theorem, we have the following corollary (see [35] (Corollary 1.1.1)).

Corollary 1.

For with and , it holds that

where φ is the probability density function (PDF) of a standard normal random variable, i.e.,

$$\varphi(x) = \frac{1}{\sqrt{2\pi}}\, e^{-\frac{x^2}{2}}.$$

2.3. Alternative Construction of the Reflected Brownian Motion

One can provide a different construction of the Reflected Brownian Motion by means of the absolute value of an Itô process. To do this, let us observe, as done in [36], that, whenever , it holds that

Using this characterization, together with the fact that , we have

It is clear that is still a Brownian motion and we can write

where and is the running maximum of . Combining the previous argument with [29] (Theorem 1), we get the following result.

Theorem 4.

For , the following properties are true.

- (i)

- There exists a process such that, denoting by , it holds that

- (ii)

- Let and consider the unique strong solution of the stochastic differential equation

Then, it holds that

Remark 2.

- In the case , the previous theorem tells us that , i.e., it is a folded Gaussian process, as observed in the previous subsection.

- The equality in law has been proven by Lévy prior to [29]; see [37] (Chapter VI, Theorem ).

The previous characterization of the RBM can be fruitfully used for stochastic simulation. Indeed, Equation (2) can be discretized by means of the Euler scheme (see [38]), which is weakly convergent of order 1. On the other hand, one could also simulate by means of the reflection map. However, such an algorithm is weakly convergent of order . In any case, one can provide not only algorithms with improved weak convergence order, but also exact simulation algorithms for at some grid points (see [39]). Other approaches to generate the sample paths of the RBM are based on the Gauss–Markov nature of the Drifted Brownian Motion (see [40,41]). There is also another approximate simulation method that makes use of a continuous-time random walk (CTRW) approximation. The latter is made clearer in Section 5.
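The simulation via the reflection map mentioned above can be sketched as follows: a drifted Brownian path is sampled on a uniform grid and the discrete reflection is applied to it. This is only an illustration under stated assumptions (unit diffusion parameter, starting point 0, Gaussian increments on a fixed grid); it is not the exact or higher-order schemes of [39], and the function name is hypothetical.

```python
import numpy as np


def simulate_rbm_paths(eta, t_max, n_steps, n_paths, rng):
    """Approximate RBM paths on a uniform grid by reflecting drifted BM paths."""
    dt = t_max / n_steps
    # Gaussian increments of the drifted Brownian motion.
    steps = eta * dt + np.sqrt(dt) * rng.standard_normal((n_paths, n_steps))
    y = np.concatenate([np.zeros((n_paths, 1)), np.cumsum(steps, axis=1)], axis=1)
    # Discrete regulator: running maximum of the negative part of each path.
    a = np.maximum.accumulate(np.maximum(-y, 0.0), axis=1)
    return y + a


rng = np.random.default_rng(0)
paths = simulate_rbm_paths(eta=0.0, t_max=1.0, n_steps=1000, n_paths=4000, rng=rng)
# In the driftless case the exact mean at time 1 is sqrt(2 / pi).
print(paths[:, -1].mean(), np.sqrt(2.0 / np.pi))
```

The grid only sees the path at discrete instants, so the regulator is slightly underestimated; the gap with the exact mean shrinks as the grid is refined.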

3. The Reflected Brownian Motion Delayed by the Inverse Stable Subordinator

3.1. Inverse Stable Subordinators and Semi-Markov Processes

Let us first introduce the inverse stable subordinator. To do this, we recall the following definitions (we refer to [42,43,44]).

Definition 4.

For any , a standard α-stable subordinator is an increasing Lévy process with almost surely (a.s.) and such that

We call the inverse α-stable subordinator the process defined as

We denote by the Laplace transform operator, i.e., for a function ,

and

the abscissa of convergence of f. It is well known that is well defined for any (see [45] (Proposition )).

In the following proposition, we recall some of the main properties of the stable subordinator and its inverse (see [44]).

Proposition 2.

Let and an α-stable subordinator with inverse . Then,

- (i)

- is a strictly increasing process with a.s. pure jump sample paths;

- (ii)

- is an increasing process with a.s. continuous sample paths;

- (iii)

- For any fixed , is an absolutely continuous random variable with PDF satisfying

where . This means that ;

- (iv)

- For any fixed , is an absolutely continuous random variable with PDF satisfying

- (v)

- For any fixed , it holds that and

Remark 3.

Note that, while is a Lévy process and thus a strong Markov process, is not even a Markov process.

As it is clear from the previous proposition, explicit formulae for the one-variable function lead to analogous ones for the density of the stable subordinator and its inverse. However, such explicit formulae are not so easy to handle. First of all, there are several integral representations of (see [46] and references therein). Among them, let us recall the so-called Mikusinski representation:

This representation is shown to be quite useful both to determine asymptotic properties of and to evaluate it numerically (see [47]). The function can be also expressed in several ways as a function series (see [48]). Let us refer, among them, to the following formula:

The latter leads to a quite interesting representation of , which can be also used to prove some regularity results (as, for instance, done in [49]). Further discussion on the various representations of and is given, for instance, in the Appendix of [50].

In the following, we want to apply a time-change procedure on by using as a stochastic clock the process . Thus, it could be useful to recall the notion of the semi-Markov process, as introduced in [10].

Definition 5.

A real-valued càdlàg process is semi-Markov if, denoting by its age process, i.e., , for any and any , where is the Borel σ-algebra of , it holds that

In particular, if we consider a strong Markov process and an independent inverse α-stable subordinator , we can define the time-changed process . In [51], it is shown that, if we define the process as for any , the couple is a strong Markov process. This implies (actually, it is equivalent) that is a semi-Markov process. The semi-Markov property of , in particular, follows from the fact that is a Markov additive process in the sense of [52] (see [51] for details). Finally, let us recall that such a semi-Markov property actually tells us that the process satisfies a (strong) regenerative property (in the sense of [53]) with the regenerative set given by

i.e., the process satisfies the strong Markov property on any random time such that for almost any . Actually, a regenerative property is common for any semi-Markov process and could be informally stated as follows: a semi-Markov process exhibits the strong Markov property at each time in which it changes its state.

3.2. The Reflected Brownian Motion Delayed by the Inverse Stable Subordinator

We are now ready to introduce the Delayed Reflected Brownian Motion.

Definition 6.

Let , and be an inverse stable subordinator independent of . We define the Reflected Brownian Motion delayed by an inverse α-stable subordinator (DRBM) as the process , we denote it as and we call its parent process. As before, if , we denote it as , and if furthermore , we denote it as .

First of all, let us stress that the arguments in the previous subsection tell us that is semi-Markov. Together with , we can define the Delayed Brownian Motion , where and is an inverse α-stable subordinator independent of it (see, for instance, [27]). We denote this as and we call its parent process.

Another natural definition for the DRBM could be obtained by simply considering . In the following proposition, we observe that such a definition is equivalent to the one we have given.

Proposition 3.

Let with parent process . Let and with parent process . Then, almost surely.

Proof.

This proposition easily follows from property (ii) of Lemma 1 after observing that . □

Remark 4.

The couple is the unique solution of the Skorokhod problem in Definition 1 for the sample paths of .

We can also deduce a scaling property for the DRBM in the spirit of Proposition 1.

Proposition 4.

Let . The following properties hold true.

- (i)

- For any , it holds that

- (ii)

- It holds that

Proof.

Let us first show property (i). Let be the parent process of , and be the involved inverse α-stable subordinator. By Proposition 1 with , we get . By the Skorokhod representation theorem (see, for instance, [54]), we can suppose, without loss of generality, that there exists a process such that almost surely. This clearly implies that also is independent of . Thus, composing both sides of the equality with , we conclude the proof of (i). Property (ii) follows from (i) applied to with . □

Remark 5.

Thanks to the previous proposition, we can always reduce to the case . However, we cannot reduce to the case . This is due to the fact that the composition operator is not symmetric and in general.

Thus, from now on, let us consider . Concerning the one-dimensional distribution, we get a subordination principle from a simple conditioning argument.

Proposition 5.

For , and , it holds that

Proof.

Let us rewrite as a conditional expectation. Precisely, if we let be the indicator function of the set , that is to say,

it holds that

where is the parent process of and is independent of . Using the tower property of conditional expectations, we achieve

We can set with

where we also use the fact that is independent of and Theorem 3. Finally, we have

concluding the proof. □
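The subordination principle can be checked numerically by averaging the RBM distribution over samples of the inverse stable subordinator. The sketch below relies on three assumptions that are not spelled out in the text: the stable subordinator is normalized so that its Laplace transform at time 1 is exp(−s^α); samples are drawn through Kanter's representation; and the inverse at time t is sampled through the self-similarity identity L(t) = (t/S(1))^α in law. The RBM distribution used is the classical closed form for unit diffusion and starting point 0. All function names are hypothetical.

```python
import math

import numpy as np


def sample_stable(alpha, size, rng):
    """Positive alpha-stable samples with E[exp(-s S)] = exp(-s**alpha), 0 < alpha < 1."""
    u = math.pi * (1.0 - rng.random(size))  # uniform on (0, pi]
    e = rng.exponential(1.0, size)
    return (np.sin(alpha * u) / np.sin(u) ** (1.0 / alpha)
            * (np.sin((1.0 - alpha) * u) / e) ** ((1.0 - alpha) / alpha))


def sample_inverse_stable(alpha, t, size, rng):
    """Samples of the inverse subordinator at time t, via L(t) = (t / S(1))**alpha in law."""
    return (t / sample_stable(alpha, size, rng)) ** alpha


_erf = np.vectorize(math.erf)


def norm_cdf(x):
    return 0.5 * (1.0 + _erf(np.asarray(x, dtype=float) / math.sqrt(2.0)))


def rbm_cdf(x, s, eta):
    """Assumed closed form of P(RBM(s) <= x), vectorized in the time argument s."""
    s = np.asarray(s, dtype=float)
    root = np.sqrt(s)
    return (norm_cdf((x - eta * s) / root)
            - math.exp(2.0 * eta * x) * norm_cdf((-x - eta * s) / root))


def drbm_cdf_mc(x, t, eta, alpha, n_samples, rng):
    """Monte Carlo estimate of P(DRBM(t) <= x) by conditioning on the random clock."""
    clock = sample_inverse_stable(alpha, t, n_samples, rng)
    return float(np.mean(rbm_cdf(x, clock, eta)))


print(drbm_cdf_mc(1.0, 1.0, eta=-1.0, alpha=0.7, n_samples=50_000,
                  rng=np.random.default_rng(1)))
```

For α = 1/2 the sampler can be validated against the Mittag-Leffler identity E[exp(−L(1))] = e · erfc(1), which gives an independent sanity check on the random clock before it is plugged into the average.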

With the same exact argument, we can prove the following statement.

Corollary 2.

For with and , it holds that

As for the classical case, we could ask if there are some alternative representations of the DRBM in terms of the solution of some particular SDE. This is done by generalizing Theorem 4.

Theorem 5.

For , the following properties are true.

- (i)

- There exists a process such that, denoting by its running maximum, it holds that

almost surely.

- (ii)

- Let and consider the unique strong solution of the time-changed stochastic differential equation (in the sense of [30])

Then, it holds that

Proof.

Let us first prove property (i). Let be the parent process of . By item (i) of Theorem 4, we know that there exists such that, setting , it holds that

Let with parent process and define as in the statement. Arguing as in Lemma 1, the fact that implies almost surely. Thus, applying the composition with on both sides of equality (5), we conclude the proof of (i). To prove the second item, let us define as the unique strong solution of Equation (2), where W is the parent process of . Observe that, being almost surely continuous, W is in synchronization with (in the sense of [30] (p. 793)) and then we can use the duality theorem [30] (Theorem ) to state that is the unique strong solution of

Continuity of leads to Equation (4). Vice versa, if is the unique strong solution of Equation (4), then the duality theorem tells us that is the unique strong solution of Equation (2). Thus, we observe that is the unique strong solution of Equation (4) if and only if , and the statement directly follows from item (ii) of Theorem 4. □

One could try to use the previous characterization to provide an algorithm for the simulation of the DRBM. Such an approach requires, first of all, the simulation of a stable subordinator, which can be done by means of the Chambers–Mallows–Stuck algorithm [55], which is a generalization of the Box–Muller algorithm. As underlined in [43] (Section 5), one can simulate a time-changed process by setting a grid (with ), simulating and then the parent process X up to . Finally, an approximate trajectory of the process is obtained by a step plot between the nodes . Let us stress that such an algorithm can be generalized to any subordinator, but in this case, Laplace inversion could be required. Moreover, in the case of the DRBM, this involves the simulation of an RBM. We can bypass such a step by using a CTRW approximation of , at least for . This follows from an analogous approach for the RBM, which will be discussed in the following sections.
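The grid-based procedure just described can be sketched as follows. The operational-time grid has step `du`; the subordinator is simulated at the grid nodes through Kanter's form of the positive stable sampler (normalization exp(−s^α) assumed), the parent RBM is simulated on the same grid by discrete reflection, and the time-changed path is read off as a step function. Grid choice, chunk size and all names are assumptions made for illustration; this is not necessarily the exact algorithm of [43].

```python
import math

import numpy as np


def stable_increments(alpha, du, size, rng):
    """Increments of the stable subordinator over an operational step du."""
    u = math.pi * (1.0 - rng.random(size))  # uniform on (0, pi]
    e = rng.exponential(1.0, size)
    s = (np.sin(alpha * u) / np.sin(u) ** (1.0 / alpha)
         * (np.sin((1.0 - alpha) * u) / e) ** ((1.0 - alpha) / alpha))
    return du ** (1.0 / alpha) * s  # self-similarity of the subordinator


def simulate_drbm(alpha, eta, t_grid, du, rng, chunk=1024):
    """Step-function approximation of the DRBM at the physical times in t_grid."""
    t_grid = np.asarray(t_grid, dtype=float)
    t_max = t_grid.max()
    # Subordinator values at the nodes k * du, extended until they exceed t_max.
    pieces, total = [np.zeros(1)], 0.0
    while total <= t_max:
        block = total + np.cumsum(stable_increments(alpha, du, chunk, rng))
        pieces.append(block)
        total = block[-1]
    sub = np.concatenate(pieces)
    # Parent RBM on the same operational grid, via discrete reflection.
    n = len(sub) - 1
    y = np.concatenate([[0.0], np.cumsum(eta * du + math.sqrt(du) * rng.standard_normal(n))])
    x = y + np.maximum.accumulate(np.maximum(-y, 0.0))
    # Index k with sub[k] <= t < sub[k + 1]: the clock is approximated by k * du.
    k = np.searchsorted(sub, t_grid, side="right") - 1
    return x[k], du * k


values, clock = simulate_drbm(alpha=0.7, eta=-0.5, t_grid=np.linspace(0.0, 1.0, 51),
                              du=0.01, rng=np.random.default_rng(2))
print(values[:5], clock[:5])
```

The returned clock is piecewise constant, so the simulated path shows the intervals of constancy typical of time-changed processes; refining `du` sharpens both the clock and the parent path.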

4. Heavy Traffic Approximation of the Fractional Queue

4.1. The Heavy Traffic Approximation of the Classical Queue

4.1.1. The Queueing Model

Let us first introduce the queueing model. Consider a system in which, at a certain average rate λ, a job (client) enters the system to be processed by a server, which takes a certain amount of time of mean 1/μ to perform. If a job is currently being served and another job enters the system, it waits in line in a queue. Jobs that are waiting are then processed, as soon as the server is free, via a First In First Out (FIFO) policy. To model such a system, we assume in any case that the interarrival times (i.e., the time intervals between the arrivals of two consecutive jobs) are i.i.d. and so are the service times.

In this first case, i.e., the M/M/1 queueing system, we also suppose that:

- service times and interarrival times are independent of each other;

- the jobs enter the system following a Poisson arrival process;

- service times are exponentially distributed.

Thus, we consider two sequences and of i.i.d. exponential random variables of parameters, respectively, and that are independent of each other, representing, respectively, the interarrival and the service times. We can define the sequence of the arrival times (i.e., the time instants in which the jobs enter the system) as

where we set . Let be the arrival counting process, i.e., is the number of arrivals up to time t. It is defined as

In the case of an M/M/1 system, as we supposed before, A is a Poisson process of parameter and we denote it by . We can also define the sequence , which is, for any , the total amount of time that is necessary to process the first k jobs, as

where we set , and the cumulative input process as

i.e., the necessary service times to process all the tasks that entered the system up to time t. Let us stress that is not the time of completion of the k-th task, as there could have been some idle periods. By using the cumulative input process, we can introduce the net input process as

The net input process takes, in a certain sense, a balance between the total service time that is necessary to process all the tasks and the time that has passed since the system was initialized. By definition, X is a càdlàg process that decreases with continuity while it increases only by jumping. Moreover, , and thus almost surely, and we can define the workload process via the Skorokhod reflection map as .

One can visualize how works: when it is positive, the process decreases linearly with slope −1 until it reaches 0; once 0 has been reached, it remains constant (while the net input could be still decreasing); each time X jumps, so does . Thus, it is clear that is 0 during idle periods and it is positive while a job is being served. We can state that , in a certain sense, measures how much residual time is needed to process all the tasks.

Here, the effect of the regulator is much clearer than in the RBM case. Indeed, the process tries to cross 0 with a fixed slope of −1. As soon as touches 0, the regulator symmetrizes such a slope, thus adding a linear term with slope 1 to the process. The sum of the symmetric effects of the proper velocity of (or, in other words, the effort that puts into driving against 0) and the velocity of the regulator is the reason why the process stabilizes on 0 until it increases by jumping away. Such an example actually shows how the threshold 0 works as a mirror for the velocity of the process and how the regulator provides a symmetric additional term to such a velocity.

Moreover, it is clear that, for any , it holds that : they increase together by the same amount, but decreases with continuity in the meantime. As C measures the total amount of necessary service time, up to time t, to process all the jobs, and , the remaining amount of time to process all the waiting jobs, up to time t, their difference represents the total amount of time, up to time t, in which the server was actually working.
We call such a process the cumulative busy time process , where . The cumulative busy time process is increasing and piecewise linear. It remains constant during idle periods; otherwise, it grows with slope 1. Thus, if we consider B as the clock of the process, we are only neglecting the idle periods. Thus, if we count service times only on this clock, we obtain the exact number of processed jobs. Hence, we consider a counting process of the service times, i.e.,

and then we define the departure process as . Finally, the balance between the number of arrivals and the number of departures, i.e., the number of jobs that are currently in the system, gives us the queue length process , i.e., . Since we are mainly interested in the process Q, we summarize the full construction with the notation . In any case, we will adopt the full notation that we introduced before, since the previously constructed processes will still play a role. Moreover, if we write , then all the involved processes will carry the same superscript.

It is clear that the traffic of the system can be expressed in terms of the traffic intensity ρ, i.e., the ratio between the arrival rate and the service rate. If ρ < 1, Q admits a stationary distribution and, in particular, is ergodic, while ρ ≥ 1 implies that Q is not ergodic. The threshold ρ = 1 thus separates the ergodic behavior from the non-ergodic one. We are interested in what happens when ρ < 1 but ρ is close to 1. In this situation, we say that the queueing system is in the heavy traffic regime.

4.1.2. The Heavy Traffic Approximation

It is well known that, under a suitable rescaling, a queueing system in the heavy traffic regime can be approximated (in distribution) by a Reflected Brownian Motion. The following theorem, which is essentially [25] (Section ), summarizes the heavy traffic approximation result.

Theorem 6.

Fix and let be a sequence such that for any , and . Set , and . Let and define . Then, there exists a process such that, as ,

Proof.

Let and be the sequences of interarrival and service times of and , and be the arrival and cumulative service times. Observe that we can construct the n-th sequence of service times by rescaling the ones of the first model, i.e., we can set , while is independent of n and thus can be considered to be equal to . Let us define the processes and as

Observing that, for any ,

and that and are independent of each other, we know, by Donsker’s theorem (see [25] (Theorem )), that there exist two independent Brownian motions , , such that, as ,

where we also use the fact that . Since and are almost surely continuous, we can use both [25] (Theorems and ) to conclude that with

where and are defined in the statement of the theorem. Denoting , we know that , and then, by property (i) of Proposition 1, we get

concluding the proof. □

In the previous theorem, we considered a space–time scaling . However, in place of the time one, we could consider an appropriate scaling of the arrival and service rates. From now on, we will denote the fact that a random variable T is exponentially distributed with parameter with . We have the following scaling property.

Proposition 6.

Let and . Then, the following equalities hold:

Proof.

Since and , it holds that and . This, together with the independence of the involved variables, implies and . As a direct consequence, it holds that , . By definition, one can easily check that, for the counting processes (which are Poisson processes), it holds that and . The independence of and leads to

and, in particular, . Moving to the net input processes, we clearly get . Since the Skorokhod reflection mapping is continuous, we have

Next, consequently, we have

Now, let us focus on the queue length processes. To show the equality in law, we need to use a different representation of the queue length, due to [56] and widely exploited in [57]. Precisely, if we consider another sequence of i.i.d. random variables with , we can define the sequence , with and , and the counting process , where

In particular, we obtain that, for the process , one has

Arguing as before, we get

Finally, the property of the departure process follows by difference. □

Remark 6.

The fact that is distributed as the service times is typical of the M/M/1 case. If one considers a multi-channel queue, represents the potential service times, i.e., the service times one gets if the servers are not shut down when they are idle. This means that, in the multi-channel case, in place of having a single sequence of service times, each one associated with a job, one has many sequences of service times and each sequence is associated with one server (as if the system admits as many queues as servers). It is clear that in the M/M/1 queue, this makes no difference and is really the sequence of service times . In particular, this means that the queue length process Q can be written as the reflection of the process . In general, Q can be written as the reflection of the process , which is called the modified net input process.

We can restate Theorem 6 in terms of such a scaling property.

Corollary 3.

Fix and let be a sequence such that for any , and . Set , , and . Let . Then, there exists a process such that, as ,

Proof.

The statement follows from Theorem 6 after observing from Proposition 6 that , where , and . □

4.2. The Heavy Traffic Approximation of the Fractional M/M/1 Queue

4.2.1. The Queueing Model

Now, we need to introduce the model that we are interested in. Precisely, we refer to the fractional M/M/1 queue, first defined in [4].

Definition 7.

Let , and be an inverse stable subordinator independent of Q. We define a fractional M/M/1 queue by means of its queue length process and we denote it as . We call Q its parent process and we extend the definition to , by setting as a classical M/M/1 queue length process.

Since the process is defined by means of a time-change procedure, we know that it is a semi-Markov process. On the other hand, the interpretation of as a queueing model is unclear unless we define some other quantities. In place of proceeding with a forward construction as done for the M/M/1 queue, here, we need to consider a backward construction to define the main quantities of a queueing system. Indeed, we can recognize the arrival times by setting and then

Hence, the interarrival times are defined by setting

Analogously, one can define the departure times by setting and

To identify the service times, we have to distinguish between two cases. Indeed, if , then, as soon as the -th job leaves the system, the k-th one is already being served. Thus, its service time is . Otherwise, as soon as the -th job leaves the system, to identify the service time, we have to wait for the k-th job to enter the system. Hence, we can define the service times as

Once the sequences and are defined, we can reconstruct all the quantities involved in the queueing model, as done in Section 4.1.1, and thus we will use the same notation with the superscript to denote the respective fractional counterpart. We are also interested in the interevent times . To define them, let us first define the event times by setting and

and then set

In the case , the interevent time is exponentially distributed with parameter if , while it is exponentially distributed with parameter if . In particular, if for some , then is the minimum between the interarrival time and the residual service time

If for some and , then is the minimum between the service time and the residual interarrival time

Clearly, if for some and , then . As , and are still independent and exponentially distributed with parameters and : this is due to the loss of memory property of the exponential distribution. Thus, the fact that, in the first two cases, is an exponential with parameter can be seen as a consequence of the fact that it is the minimum of two independent exponential random variables.

This is, in general, not true for . To describe the distribution of , and , we need some additional definitions (see [58,59,60]).

Definition 8.

We denote by the Mittag–Leffler function of order , defined as

For , it holds that .

We say that T is a Mittag–Leffler random variable of order and parameter if

and we denote it by .

We say that T is a generalized Erlang random variable of order , rate and shape parameter if

and we denote it by . In particular, if , , are independent, then .
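For illustration, the series defining the Mittag–Leffler function can be evaluated numerically by truncation. The following Python sketch assumes the standard one-parameter definition E_α(z) = Σ_{k≥0} z^k / Γ(αk + 1); the function name `mittag_leffler` and its truncation parameters are hypothetical, and the truncated series should only be trusted for moderate values of |z|.

```python
import math


def mittag_leffler(alpha: float, z: float, max_terms: int = 500,
                   tol: float = 1e-16) -> float:
    """Truncated series E_alpha(z) = sum_{k>=0} z**k / Gamma(alpha*k + 1).

    Assumption: standard one-parameter Mittag-Leffler function, real z.
    The alternating series loses accuracy for large negative z.
    """
    if alpha <= 0.0:
        raise ValueError("alpha must be positive")
    if z == 0.0:
        return 1.0
    total = 1.0  # k = 0 term
    log_abs_z = math.log(abs(z))
    for k in range(1, max_terms):
        # Work on the log scale so that z**k and Gamma do not overflow separately.
        term = math.exp(k * log_abs_z - math.lgamma(alpha * k + 1.0))
        if z < 0.0 and k % 2 == 1:
            term = -term
        total += term
        # The log-terms are concave in k, so once a term is negligible
        # all later terms are smaller still.
        if abs(term) < tol * max(1.0, abs(total)):
            break
    return total


if __name__ == "__main__":
    print(mittag_leffler(1.0, 1.0))   # close to e, since E_1 is the exponential
    print(mittag_leffler(0.5, -1.0))  # close to exp(1) * erfc(1)
```

For α = 1 the series reduces to the exponential function, and for α = 1/2 the closed form E_{1/2}(−x) = exp(x²) erfc(x) offers a convenient sanity check.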

Mittag–Leffler random variables naturally arise from the evaluation of an -stable subordinator in an independent exponential random variable.

Lemma 2.

Let be independent of . Then, .

Proof.

It is clear that for , it holds that . By using the independence of Y and , we get, for ,

where the last equality follows from the Laplace transform of the inverse -stable subordinator, as obtained in [61]. □

In the following, we will specify some distributional properties of , and . Let us stress that the distribution of has already been obtained in [4]. On the other hand, the distribution of has been obtained in [21] under the condition that there are no departures between two arrivals: the conditioning arises from the fact that the proof is carried out by using the semi-Markov property and a modification of the Kolmogorov equations for the queueing system. A similar argument holds for in [21]. Here, we use a different technique that relies on the fact that is a Lévy process. As a consequence, as will be clear in the following statement, we do not have to consider any conditioning to obtain the distribution of and . Moreover, we also obtain the independence, respectively, of the interarrival times and the service times, while the mutual independence of the two sequences holds only if , as observed in [4].

Proposition 7.

Let . Then,

- 1.

- The variables are independent of each other;

- 2.

- For any , conditioned to the event ;

- 3.

- For any , conditioned to the event ;

- 4.

- For any , conditioned to the event ;

- 5.

- The variables are independent of each other;

- 6.

- For any , it holds that ;

- 7.

- The variables are independent of each other;

- 8.

- For any , it holds that ;

- 9.

- If , the sequences and are not independent of each other.

Proof.

To prove item , let us first observe that almost surely. Now, let us suppose that . Arguing on , by definition, we get

Since is constant for , there exists a random variable such that almost surely. Thus, we can rewrite

If is strictly increasing, we have that, almost surely,

We conclude, by induction, that for any .

Now, let us consider two interevent times with . By definition, it holds that and . Fix and observe that

where the last equality holds due to the fact that is stochastically continuous and is independent of . Nevertheless, by the fact that is independent of , if we define , it holds that

If is a Lévy process, whenever , it holds that , where . However, almost surely; thus,

where we also use the fact that and are independent. Now, we need to determine . To do this, we use again the fact that is a Lévy process independent of the sequence T to obtain that

Properties (2) and (3) follow from Equation (8), as

and Lemma 2. Property is a direct consequence of , together with the decomposition property of the generalized Erlang distribution. The proof of item is analogous to the one of item applied to . Item follows from the equality , which can be proven analogously as in the case of the interevent times, and Lemma 2. The proof of item is similar to the one of item ; however, once are fixed, one has to discuss separately

Item follows from and Lemma 2. Finally, item follows from the fact that, despite , , as a consequence of the lack of semigroup property of the Mittag–Leffler function for (see [62]). □

Remark 7.

It is clear from the previous proposition that the arrival counting process is a fractional Poisson process (see [15,16]) of parameter λ and order α.

4.2.2. The Heavy Traffic Approximation

Now, we are ready to prove the heavy traffic limit for the fractional M/M/1 queue. It will be a clear consequence of the definition of the fractional M/M/1 queue, the classic heavy traffic approximation and the continuous mapping theorem.

Theorem 7.

Fix λ and let be a sequence such that for any , and . Set , , and . Let . Then, there exists a process such that, as ,

Proof.

Let be the parent process of for any . By Corollary 3, we know that there exists such that

Without loss of generality, we can suppose that is independent of each and . Then, we have that in . Now, let us denote by the set of discontinuity points of the composition map with respect to the topology. Denoting by , it holds that almost surely. Moreover, (see [25] ()); thus, . We get Equation (9) with by the continuous mapping theorem (see [25] (Theorem )). □

5. Simulating a with via CTRW

In this section, we want to derive a simulation algorithm for the with from the heavy traffic approximation of the fractional queue. To do this, we first need to investigate how to simulate the fractional queue. Let us anticipate that we will adapt the well-known Doob–Gillespie algorithm (see [63]) to this case. This has been already done in [4] and discussed for general discrete event systems in [31] (Chapter II, Section 6); however, for completeness, let us recall the main steps of such generalization.

5.1. Simulation of the M/M/1

To achieve the simulation algorithm for the fractional , we need to exploit some distributional properties of the -stable subordinator. First, let us define stable random variables.

Definition 9.

We say that a real random variable S is stable if there exist , , and such that

and we denote it as . We refer to [64] for the parametrization.

Comparing (3) with (10), it is clear that . The latter observation can be used to state the following lemma (see also [23] (Corollary )).

Lemma 3.

Let and be independent of each other. Then, .

Proof.

Let be an -stable subordinator independent of Y. Thus, it is clear, by simple conditioning arguments and property (iii) of Proposition 2, that . Finally, Lemma 2 concludes the proof. □

Since we know how to simulate an exponential random variable by means of the inversion method (see [31] (Chapter II, Example 2.3)) and a stable random variable by means of the Chambers–Mallows–Stuck algorithm (see [55]), we are able to simulate Mittag–Leffler random variables. Moreover, let us stress that one can easily simulate discrete random variables (see [31] (Chapter II, Example 2.1)). We only need to understand how to combine all these simulation procedures to obtain the fractional M/M/1 queue for fixed and .
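The product representation of Lemma 3 can be sketched in Python as follows. The sketch assumes that the stable variable is normalized so that its Laplace transform is exp(−s^α), and it draws that variable through Kanter's representation (one uniform and one exponential variable) in place of the general algorithm of [55]. The names `sample_positive_stable` and `sample_mittag_leffler` are hypothetical.

```python
import math
import random


def sample_positive_stable(alpha: float, rng: random.Random) -> float:
    """Draw S >= 0 with E[exp(-s*S)] = exp(-s**alpha), 0 < alpha <= 1.

    Assumption: Kanter's representation for one-sided stable laws.
    Extreme draws (overflow in the powers) are not guarded against.
    """
    if not 0.0 < alpha <= 1.0:
        raise ValueError("alpha must lie in (0, 1]")
    if alpha == 1.0:
        return 1.0  # degenerate case: the subordinator is the identity
    u = math.pi * (1.0 - rng.random())  # uniform on (0, pi]
    w = rng.expovariate(1.0)
    while w == 0.0:  # avoid a division by zero on a measure-zero event
        w = rng.expovariate(1.0)
    return (math.sin(alpha * u) / math.sin(u) ** (1.0 / alpha)
            * (math.sin((1.0 - alpha) * u) / w) ** ((1.0 - alpha) / alpha))


def sample_mittag_leffler(alpha: float, rate: float, rng: random.Random) -> float:
    """Draw T = Y**(1/alpha) * S with Y ~ Exp(rate) independent of S."""
    y = rng.expovariate(rate)
    return y ** (1.0 / alpha) * sample_positive_stable(alpha, rng)


if __name__ == "__main__":
    rng = random.Random(0)
    draws = [sample_mittag_leffler(0.5, 1.0, rng) for _ in range(100_000)]
    empirical = sum(t > 1.0 for t in draws) / len(draws)
    exact = math.exp(1.0) * math.erfc(1.0)  # E_{1/2}(-1)
    print(empirical, exact)
```

For α = 1/2 the survival function is available in closed form, P(T > t) = exp(λ²t) erfc(λ√t), which is what the check at the end compares against.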

To do this, we define the functions (where is the set of non-negative integers) as

Let us define the sequence by setting and , for any , via the distribution

It is clear that is the jump chain of the classical M/M/1 queue. Moreover, let us stress that the time-change procedure does not change the jump chain of a continuous-time random walk; thus, is also the jump chain of the fractional M/M/1 queue. Concerning the interevent times, let us define the sequence of i.i.d. random variables with distribution dictated by the following relation:

In general, we can define the sequence by setting and and the counting process given by

Once is defined, we can define the process as . Since, by definition, the process is a Markov-additive process, is a semi-Markov process (see [51]). For , it is well known that and the Markov-additive decomposition that we exploited before is the main tool behind the Doob–Gillespie algorithm [63]. Hence, it is clear that, to generalize the previous algorithm, we only need to show that .

Proposition 8.

Let and be two processes as defined before, and let be an inverse stable subordinator independent of both and . Then, . As a consequence, .

Proof.

First, let us observe that, if is independent of , we have for any by Lemma 2. Arguing as in Proposition 7, one can prove by induction that for any and that is a sequence of independent random variables. Thus, we conclude that . By Skorokhod’s representation theorem (see, for instance, [54]), we can suppose, without loss of generality, that the equality holds almost surely. Hence, we obtain that if and only if for any and any ; that is to say, . This concludes the proof. □

Now, we are ready to express the simulation algorithm. It is clear that we only need to simulate the following arrays:

- The state array , which contains the states of the queue length process;

- The calendar array , which contains the times in which an event happens.

Indeed, in such a case, one can recover by finding k such that and then setting , as done in Algorithm 1. Let us stress that all the algorithms will be given as procedures, so that each algorithm can be called from the others.

| Algorithm 1 Generation of the queue length process from the state and calendar arrays |

| procedure GenerateQueue ▹ Input: , |

| ▹ Output: |

| function (t) |

| ▹ Recall that the arrays start with 0 |

| if then |

| Error |

| else |

| while do |

| end while |

| end if |

| end function |

| end procedure |
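The lookup performed by Algorithm 1 can be sketched in Python as follows. The sketch assumes that the k-th entry of the state array is the queue length held from the k-th calendar time (inclusive) up to the next one (exclusive); the name `generate_queue` is hypothetical, and a binary search replaces the while loop of the pseudocode.

```python
from bisect import bisect_right
from typing import Callable, Sequence


def generate_queue(states: Sequence[int],
                   calendar: Sequence[float]) -> Callable[[float], int]:
    """Return the step function t -> states[k], calendar[k] <= t < calendar[k+1].

    Assumption: both arrays start at index 0, with calendar[0] the initial time.
    """
    if len(states) != len(calendar) or len(calendar) == 0:
        raise ValueError("states and calendar must be non-empty and equally long")

    def queue_length(t: float) -> int:
        # Outside the simulated window the value of the process is unknown.
        if t < calendar[0] or t > calendar[-1]:
            raise ValueError("t lies outside the simulated time window")
        k = bisect_right(calendar, t) - 1  # last index with calendar[k] <= t
        return states[k]

    return queue_length


if __name__ == "__main__":
    q = generate_queue([0, 1, 2, 1], [0.0, 0.5, 1.2, 3.0])
    print(q(0.0), q(1.0), q(2.9))
```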

Once we know how to generate the queue from the arrays, let us state Algorithm 2, which simulates them up to the N-th event.

| Algorithm 2 Simulation of a queue up to event |

| procedure SimulateArraysEvent ▹ Input: , , |

| ▹ Output: , |

| for do |

| Simulate uniform in |

| if then |

| else |

| end if |

| Simulate |

| Simulate |

| end for |

| end procedure |
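A possible Python rendering of this procedure is sketched below, under assumptions that are ours: the queue starts empty; from state 0 the jump chain moves to 1 with probability one, and otherwise it moves up with probability λ/(λ+μ); the interevent time is Mittag–Leffler of order α with rate λ when the queue is empty and rate λ+μ otherwise, in line with the case α = 1 discussed in Section 4.2.1. The function names are hypothetical.

```python
import math
import random


def sample_mittag_leffler(alpha, rate, rng):
    """Mittag-Leffler draw of order alpha in (0, 1] as Y**(1/alpha) * S (Lemma 3)."""
    y = rng.expovariate(rate)
    if alpha == 1.0:
        return y
    u = math.pi * (1.0 - rng.random())  # uniform on (0, pi]
    w = rng.expovariate(1.0)
    while w == 0.0:
        w = rng.expovariate(1.0)
    # Positive stable variable with Laplace transform exp(-s**alpha).
    s = (math.sin(alpha * u) / math.sin(u) ** (1.0 / alpha)
         * (math.sin((1.0 - alpha) * u) / w) ** ((1.0 - alpha) / alpha))
    return y ** (1.0 / alpha) * s


def simulate_arrays_event(lam, mu, alpha, n_events, rng):
    """State and calendar arrays of a fractional M/M/1 queue up to n_events events."""
    states = [0]      # assumption: the queue starts empty
    calendar = [0.0]
    for _ in range(n_events):
        x = states[-1]
        if x == 0:
            step, rate = 1, lam  # only an arrival can occur
        else:
            step = 1 if rng.random() < lam / (lam + mu) else -1
            rate = lam + mu
        # The current state x is held for a Mittag-Leffler time, then the jump occurs.
        calendar.append(calendar[-1] + sample_mittag_leffler(alpha, rate, rng))
        states.append(x + step)
    return states, calendar


if __name__ == "__main__":
    states, calendar = simulate_arrays_event(1.0, 1.2, 0.8, 10, random.Random(1))
    print(states)
    print([round(t, 3) for t in calendar])
```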

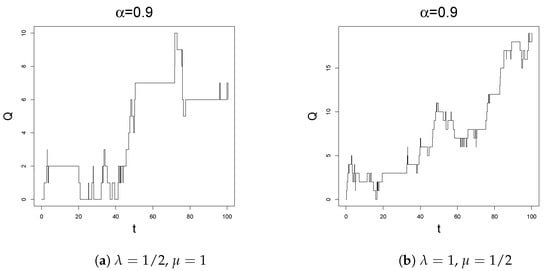

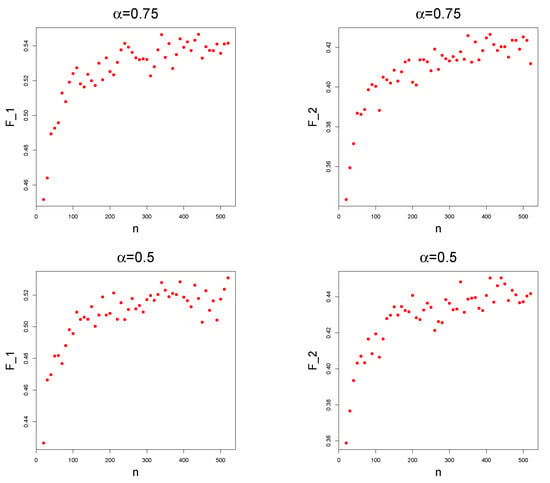

Algorithm 2 can be easily adapted to other stopping conditions. In the following, it will be useful to express how to simulate a queue up to time (precisely, up to the time ). This is done in Algorithm 3, and some simulation results are shown in Figure 1.

| Algorithm 3 Simulation of a queue up to time |

| procedure SimulateArraysTime ▹ Input: , , |

| ▹ Output: , |

| while do |

| Simulate uniform in |

| if then |

| else |

| end if |

| Simulate |

| Simulate |

| end while |

| end procedure |

Figure 1.

Simulated sample paths of up to time for different values of , and . Precisely, on the left , while on the right .

5.2. Simulation of the with

Now, we want to use Algorithm 3 to determine a simulation algorithm for the Delayed Reflected Brownian Motion with negative . To do this, let us observe that the limit process in Theorem 7 is for some constants and . Recalling that , we know that, in order to have , it must hold that , i.e., , and then . Now, set , where has to be chosen in a proper way, precisely . However, since we need , it must hold that , which is true if and only if . Taking into account this requirement, we can develop an algorithm for the simulation of the DRBM with negative up to time and iteration . Again, we only need to simulate the state and calendar arrays of the approximating queue length process and then generate the DRBM from them. This is done in Algorithms 4 and 5.

| Algorithm 4 Simulation of a for up to time and iteration |

| procedure SimulateDRBM ▹ Input: , , , |

| ▹ Output: |

| while do |

| Simulate uniform in |

| if then |

| else |

| end if |

| Simulate |

| Simulate |

| end while |

| GenerateDRBM() |

| end procedure |

| Algorithm 5 Generation of the DRBM process from the state and calendar arrays |

| procedure GenerateDRBM ▹ Input: , , |

| ▹ Output: |

| function (t) |

| ▹ Recall that the arrays start with 0 |

| if then |

| Error |

| else |

| while do |

| end while |

| end if |

| end function |

| end procedure |
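The two procedures above can be illustrated by the following Python sketch, which should be read as one possible parametrization and not necessarily the one adopted in Algorithm 4. It assumes a target drift η < 0 and diffusion coefficient σ, and it uses an approximating fractional queue with arrival rate nλ and service rate nμ_n, where λ = σ²/2 and μ_n = λ + |η|/√n, with the state rescaled by 1/√n. This choice matches the classical heavy traffic scaling of the drift and variance of the net input, but the constants should be checked against Theorem 7 before use. All function names are hypothetical.

```python
import math
import random
from bisect import bisect_right


def sample_mittag_leffler(alpha, rate, rng):
    """Mittag-Leffler draw of order alpha in (0, 1] as Y**(1/alpha) * S (Lemma 3)."""
    y = rng.expovariate(rate)
    if alpha == 1.0:
        return y
    u = math.pi * (1.0 - rng.random())  # uniform on (0, pi]
    w = rng.expovariate(1.0)
    while w == 0.0:
        w = rng.expovariate(1.0)
    s = (math.sin(alpha * u) / math.sin(u) ** (1.0 / alpha)
         * (math.sin((1.0 - alpha) * u) / w) ** ((1.0 - alpha) / alpha))
    return y ** (1.0 / alpha) * s


def simulate_drbm_arrays(eta, sigma, alpha, t_max, n, rng):
    """Arrays of the approximating queue, simulated until time t_max is passed."""
    if eta >= 0.0:
        raise ValueError("the drift eta must be negative")
    lam = sigma ** 2 / 2.0                 # assumed: variance 2*lam = sigma**2
    mu_n = lam + abs(eta) / math.sqrt(n)   # assumed: sqrt(n)*(lam - mu_n) = eta
    states, calendar = [0], [0.0]
    while calendar[-1] <= t_max:
        x = states[-1]
        if x == 0:
            step, rate = 1, n * lam
        else:
            step = 1 if rng.random() < lam / (lam + mu_n) else -1
            rate = n * (lam + mu_n)
        calendar.append(calendar[-1] + sample_mittag_leffler(alpha, rate, rng))
        states.append(x + step)
    return states, calendar


def generate_drbm(states, calendar, n):
    """Step function t -> states[k] / sqrt(n) on calendar[k] <= t < calendar[k+1]."""
    scale = 1.0 / math.sqrt(n)

    def path(t):
        if t < calendar[0] or t > calendar[-1]:
            raise ValueError("t lies outside the simulated time window")
        return scale * states[bisect_right(calendar, t) - 1]

    return path


if __name__ == "__main__":
    rng = random.Random(3)
    states, calendar = simulate_drbm_arrays(-1.0, 1.0, 0.7, 1.0, 400, rng)
    w = generate_drbm(states, calendar, 400)
    print([round(w(0.1 * i), 3) for i in range(11)])
```

Since the loop stops only once the calendar has passed `t_max`, the returned path can be evaluated at every time in the interval from 0 to `t_max`.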

5.3. Numerical Results

In this section, we want to show some simulation results. However, how can one visualize the convergence in distribution?

By the Portmanteau Theorem (see [54] (Chapter 2, Section 3)) and Theorem 7, we know that, for any bounded functional that is continuous in the topology, it holds that

and thus we could study whether

converges to 0. However, to do this, we should know a priori, which is not always possible. Moreover, let us observe that even the evaluation map is not continuous everywhere in the topology; hence, we need to enlarge the set of possible functionals . To do this, we need the following weaker version of the Portmanteau Theorem.

Proposition 9.

Let be a random variable with values in a metric space such that . Let also be a function such that the following properties hold true:

- (i)

- It holds that ;

- (ii)

- There exist two constants such that for any .

Then,

Proof.

By property (i) and the continuous mapping theorem (see [25] (Theorem )), we know that . Moreover, property (ii) implies that are uniformly integrable. We conclude the proof by [54] (Chapter 2, Theorem 3.5). □

Now, let us select two particular functionals. The first one is given by

i.e., it is an evaluation functional. To work with such a functional, we need to prove the following result.

Proposition 10.

The functional satisfies the hypotheses of Proposition 9 with respect to the sequence .

Proof.

Let us first observe that if is continuous, it is clear that ; see [25] (Proposition 13.2.1). Thus, we only need to prove item (ii). To do this, let us select and remark that we need to estimate . Let and observe that, by a simple conditioning argument,

where is an inverse -stable subordinator independent of . By [65], we know that is increasing. If also is almost surely increasing, it is clear that is increasing and then

Recalling that almost surely, we have, by the monotone convergence theorem,

If for any , we can combine Equations and in [7] to get

where we also use the fact that . Hence, we finally get

concluding the proof. □

The second functional that we consider is the integral functional

This time, we will directly prove the limit result.

Proposition 11.

It holds that

Proof.

Let us first rewrite

where we use Fubini’s theorem, being for all . By Propositions 9 and 10, we have that

Furthermore, arguing exactly as in the proof of Proposition 10 and using Equation in [7], we get

and thus we can use the dominated convergence theorem to conclude the proof. □

In particular, , , could be estimated numerically by means of Corollary 2 and Mikusinski’s representation of . However, one still needs to estimate for , which is not an easy task since the distribution (and then the expected value) of the fractional queue admits some series representations (see [4,21]), which are not easy to evaluate numerically. To overcome this problem, we adopt a Monte Carlo method (with 5000 samples) to determine . However, this means that we have to consider the fact that the evaluated value randomly oscillates around the exact one. For this reason, one cannot use the Cauchy-type error

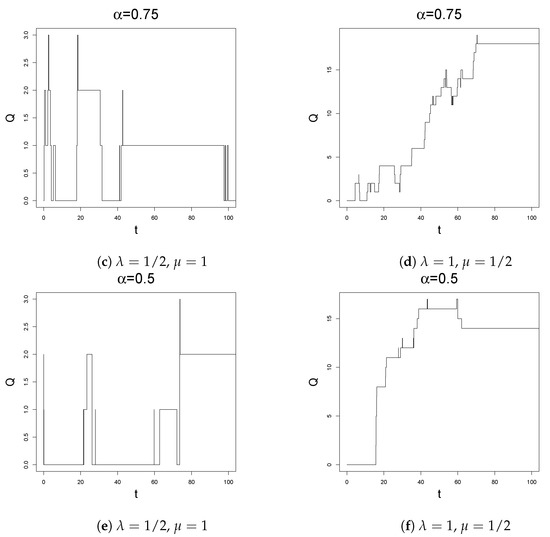

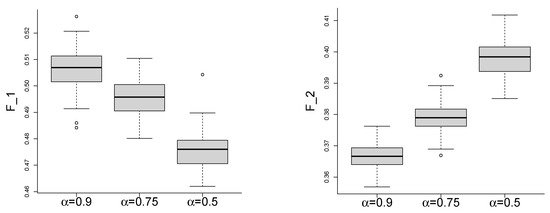

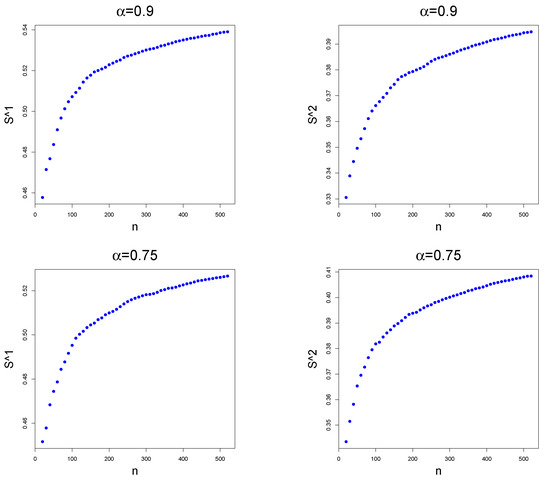

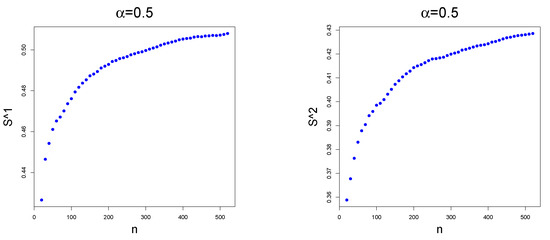

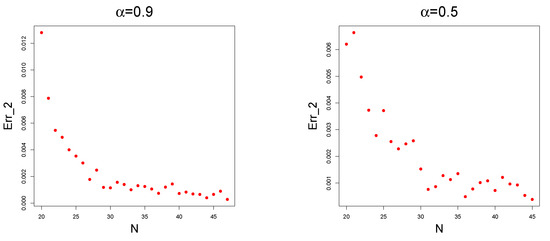

to estimate the actual approximation error. In any case, a further investigation of the link between and will be carried out in future works. Let us also underline that, clearly, the oscillation of the Monte Carlo evaluation depends on the variance of and, thus, for fixed n, depends on . This is made clear in the boxplots in Figure 2. The plots of against n are given in Figure 3. The convergence of such a sequence is not so clear due to the oscillations caused by the Monte Carlo method. Another visualization of the convergence of can be obtained by considering the fact that, for any , the following Cesàro convergence holds:

Figure 2.

Boxplots of 100 simulations of (resp. on the right and on the left) via Monte Carlo method for , , and different values of .

Figure 3.

Point plots of (resp. on the right and on the left) against n for , and different values of .

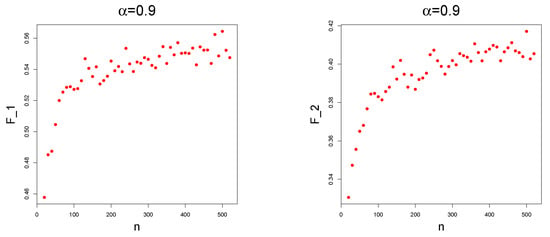

Indeed, the sequence provides some form of smoothing of the point plot and the convergence is much clearer in this case, as shown in Figure 4. As is smoother than the original sequence, one could still use the error

to impose a stopping criterion of the form for the absolute error or for the relative error.
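A stopping rule of this kind can be sketched in Python as follows. The sketch assumes that the smoothed sequence is the running arithmetic mean c_n = (a_1 + ... + a_n)/n of the Monte Carlo estimates a_n, and that the error is the increment |c_n − c_{n−1}| (divided by |c_n| in the relative case); these formulas, as well as the name `cesaro_stop`, are our assumptions.

```python
from typing import Iterable, Optional, Tuple


def cesaro_stop(values: Iterable[float], tol: float,
                relative: bool = False) -> Optional[Tuple[int, float]]:
    """Return (n, c_n) for the first n >= 2 with |c_n - c_{n-1}| below tol.

    c_n is the running mean of the first n values. With relative=True the
    increment is divided by |c_n|. Returns None if the rule never triggers.
    """
    running_sum = 0.0
    previous_mean = None
    for n, value in enumerate(values, start=1):
        running_sum += value
        mean = running_sum / n
        if previous_mean is not None:
            error = abs(mean - previous_mean)
            if relative:
                if mean == 0.0:
                    previous_mean = mean
                    continue  # relative error undefined, keep iterating
                error /= abs(mean)
            if error < tol:
                return n, mean
        previous_mean = mean
    return None


if __name__ == "__main__":
    # An oscillating sequence whose running means settle near 1.
    oscillating = [2.0 if k % 2 == 0 else 0.0 for k in range(50)]
    print(cesaro_stop(oscillating, tol=0.25))
```

On the oscillating input above the raw increments never decrease, while the increments of the running means shrink like 1/n, which is the smoothing effect described in the text.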

Figure 4.

Point plots of (resp. on the right and on the left) against n for , and different values of .

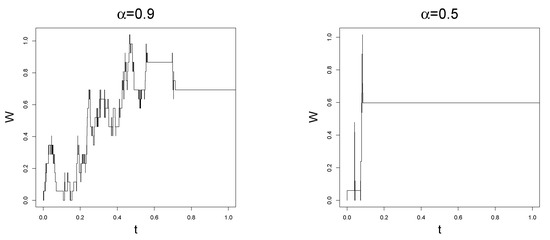

The procedure to simulate the DRBM and is given in Algorithm 6, while a simulation algorithm that takes this stopping criterion into account is given in Algorithm 7. To show some simulated sample paths, we used Algorithm 7 with (which takes into consideration the whole simulated trajectory). The results are illustrated in Figure 5, while the sequence is plotted in Figure 6.

| Algorithm 6 Simulation of trajectories of a for up to time with iteration and evaluation of the functional |

| procedure SimDRBMwFunc ▹ Input: , , , |

| ▹ Output: , , |

| for do |

| while do |

| Simulate uniform in |

| if then |

| else |

| end if |

| Simulate |

| Simulate |

| end while |

| end for |

| end procedure |

| Algorithm 7 Simulation of trajectories of a for up to time with tolerance and maximum number of iterations |

| procedure SimDRBMwTol ▹ Input: , , , |

| ▹ Output: trajectories of , |

| SimDRBMwFunc() |

| SimDRBMwFunc() |

| while and do |

| SimDRBMwFunc() |

| end while |

| for do |

| GenerateDRBM() |

| end for |

| end procedure |

Figure 5.

Sample paths of for and different values of simulated by means of Algorithm 7 with , , and .

Figure 6.

Sequence of to produce the plots of Figure 5.

6. Conclusions

To summarize the results, we introduced the Delayed Reflected Brownian Motion by means of a suitable time change of the Reflected Brownian Motion (or, equivalently, by solving Skorokhod’s reflection problem on the paths of the Delayed Brownian Motion) and recalled the main properties of fractional queues as defined in [4]. These two processes are then linked via the heavy traffic approximation result established in Theorem 7. As we also underlined in the Introduction, such a theorem can be read in two ways depending on the process that we want to focus on. If we are interested in the properties of the fractional queues, the theorem provides a subdiffusive approximation of them, in terms of the Delayed Reflected Brownian Motion, when the traffic intensity is near 1. This is quite useful if one needs to investigate (approximately) some distributional property of a fractional queue in the transient state. Indeed, different formulations of the one-dimensional distribution of the queue length process in the fractional case are given in [4,21] but they involve nested series of functions, which can be difficult to evaluate even numerically. However, if is near 1, one can approximate the one-dimensional distribution of the queue length process with the one of the Delayed Reflected Brownian Motion given in Proposition 5, which can be numerically evaluated thanks to Mikusinski’s representation of the density of the stable subordinator.

Vice versa, if we are interested in the properties of the Delayed Reflected Brownian Motion, Theorem 7 provides a continuous-time random walk approximation of such a process. This is a quite useful property when combined with the discrete event simulation procedure (which is a generalization of the well-known Gillespie’s algorithm; see [31,63]). Indeed, the simulation of an inverse -stable subordinator is usually done by means of Laplace inversion, if we start from the Laplace transform of , or by inverting the subordinator, which is instead simulated via the Chambers–Mallows–Stuck method [55]. The algorithm presented in the paper does not rely at all on the simulation of the inverse subordinator, thanks to the fact that we are able to simulate Mittag–Leffler random variables by using the Chambers–Mallows–Stuck method.

This second point of view is investigated with more attention in Section 5. Precisely, Theorem 7 gives us a limit result, but does not tell us how large we should choose n to have a suitably good approximation. Moreover, as we observed before, estimating the distributional properties of a fractional queue is not an easy task, due to the quite complicated form of the state probabilities. However, some distributional quantities can be provided by Monte Carlo estimates, which, due to the random nature of the approach, invalidate the idea of using a form of error based on the Cauchy property of converging sequences. This problem can be overcome by using the Cesàro convergence of the sequence, as taking the average smooths in some sense the oscillating simulated data, as one can observe by comparing Figure 3 and Figure 4. Thus, one can use the sequence of averages in place of the original one to provide a stopping criterion, as done in Algorithm 7. Such an approach is supported by the sequence of errors given in Figure 6. Clearly, one could use other smoothing procedures on data to overcome the oscillations caused by Monte Carlo estimates. In future works, we aim to discuss the properties of time-changed queues and Reflected Brownian Motions with more general inverse subordinators, trying to link them via a heavy traffic approximation result. The simulation of such types of queueing models will require some more sophisticated methods, due to the lack, in general, of both the self-similarity property and a special algorithm for the subordinator.

Author Contributions

All authors equally contributed to the paper. All authors have read and agreed to the published version of the manuscript.

Funding

G. Ascione and E. Pirozzi were partially supported by MIUR-PRIN 2017, project “Stochastic Models for Complex Systems”, grant number 2017JFFHSH. G. Ascione was partially supported by INdAM Gruppo Nazionale per l’Analisi Matematica, la Probabilità e le loro Applicazioni. E. Pirozzi was partially supported by INdAM Gruppo Nazionale per il Calcolo Scientifico. N. Leonenko was partially supported by LMS grant 42997, ARC grant DP220101680. G. Ascione, N. Leonenko and E. Pirozzi were supported by the Isaac Newton Institute (Cambridge) Program Fractional Differential Equations.

Acknowledgments

The authors would like to thank the anonymous referees for their valuable comments. The authors would like to thank the Isaac Newton Institute for Mathematical Sciences for support and hospitality during the programme Fractional Differential Equations (FDE2), when work on this paper was undertaken.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Erlang, A.K. The theory of probabilities and telephone conversations. Nyt. Tidsskr. Mat. Ser. B 1909, 20, 33–39. [Google Scholar]

- Helbing, D. A section-based queueing-theoretical traffic model for congestion and travel time analysis in networks. J. Phys. A Math. Gen. 2003, 36, L593. [Google Scholar] [CrossRef]

- Kleinrock, L. Queueing Systems: Computer Applications; John Wiley: Hoboken, NJ, USA, 1976. [Google Scholar]

- Cahoy, D.O.; Polito, F.; Phoha, V. Transient behavior of fractional queues and related processes. Methodol. Comput. Appl. Probab. 2015, 17, 739–759. [Google Scholar] [CrossRef]

- Schoutens, W. Stochastic Processes and Orthogonal Polynomials; Springer Science & Business Media: Berlin/Heidelberg, Germany, 2012; Volume 146. [Google Scholar]

- Ross, S.M. Introduction to Probability Models; Academic Press: Cambridge, MA, USA, 2014. [Google Scholar]

- Kleinrock, L. Queueing Systems: Theory; John Wiley: Hoboken, NJ, USA, 1975. [Google Scholar]

- Conolly, B.; Langaris, C. On a new formula for the transient state probabilities for M/M/1 queues and computational implications. J. Appl. Probab. 1993, 30, 237–246. [Google Scholar] [CrossRef]

- Parthasarathy, P. A transient solution to an M/M/1 queue: A simple approach. Adv. Appl. Probab. 1987, 19, 997–998. [Google Scholar] [CrossRef]

- Levy, P. Processus semi-markoviens. In Proceedings of the International Congress of Mathematicians, Amsterdam, The Netherlands, 2–9 September 1954. [Google Scholar]

- Orsingher, E.; Polito, F. Fractional pure birth processes. Bernoulli 2010, 16, 858–881. [Google Scholar] [CrossRef]

- Orsingher, E.; Polito, F. On a fractional linear birth–death process. Bernoulli 2011, 17, 114–137. [Google Scholar] [CrossRef]

- Leonenko, N.N.; Meerschaert, M.M.; Sikorskii, A. Fractional Pearson diffusions. J. Math. Anal. Appl. 2013, 403, 532–546. [Google Scholar] [CrossRef]

- Ascione, G.; Leonenko, N.; Pirozzi, E. Fractional immigration-death processes. J. Math. Anal. Appl. 2021, 495, 124768. [Google Scholar] [CrossRef]

- Laskin, N. Fractional Poisson process. Commun. Nonlinear Sci. Numer. Simul. 2003, 8, 201–213. [Google Scholar] [CrossRef]

- Meerschaert, M.; Nane, E.; Vellaisamy, P. The fractional Poisson process and the inverse stable subordinator. Electron. J. Probab. 2011, 16, 1600–1620. [Google Scholar] [CrossRef]

- Baeumer, B.; Meerschaert, M.M. Stochastic solutions for fractional Cauchy problems. Fract. Calc. Appl. Anal. 2001, 4, 481–500. [Google Scholar]

- Scalas, E.; Toaldo, B. Limit theorems for prices of options written on semi-Markov processes. Theory Probab. Math. Stat. 2021, 105, 3–33. [Google Scholar] [CrossRef]

- Ascione, G.; Cuomo, S. A sojourn-based approach to semi-Markov Reinforcement Learning. arXiv 2022, arXiv:2201.06827. [Google Scholar]

- Ascione, G.; Toaldo, B. A semi-Markov leaky integrate-and-fire model. Mathematics 2019, 7, 1022. [Google Scholar] [CrossRef]

- Ascione, G.; Leonenko, N.; Pirozzi, E. Fractional queues with catastrophes and their transient behaviour. Mathematics 2018, 6, 159. [Google Scholar] [CrossRef]

- De Oliveira Souza, M.; Rodriguez, P.M. On a fractional queueing model with catastrophes. Appl. Math. Comput. 2021, 410, 126468. [Google Scholar]

- Ascione, G.; Leonenko, N.; Pirozzi, E. Fractional Erlang queues. Stoch. Process. Their Appl. 2020, 130, 3249–3276. [Google Scholar] [CrossRef]

- Ascione, G.; Leonenko, N.; Pirozzi, E. On the Transient Behaviour of Fractional M/M/∞ Queues. In Nonlocal and Fractional Operators; Springer: Berlin/Heidelberg, Germany, 2021; pp. 1–22. [Google Scholar]

- Whitt, W. Stochastic-Process Limits: An Introduction to Stochastic-Process Limits and their Application to Queues; Springer Science & Business Media: Berlin/Heidelberg, Germany, 2002.

- Skorokhod, A.V. Stochastic equations for diffusion processes in a bounded region. II. Theory Probab. Its Appl. 1962, 7, 3–23.

- Magdziarz, M.; Schilling, R. Asymptotic properties of Brownian motion delayed by inverse subordinators. Proc. Am. Math. Soc. 2015, 143, 4485–4501.

- Capitanelli, R.; D’Ovidio, M. Delayed and rushed motions through time change. Lat. Am. J. Probab. Math. Stat. 2020, 17, 183–204.

- Graversen, S.E.; Shiryaev, A.N. An extension of P. Lévy’s distributional properties to the case of a Brownian motion with drift. Bernoulli 2000, 6, 615–620.

- Kobayashi, K. Stochastic calculus for a time-changed semimartingale and the associated stochastic differential equations. J. Theor. Probab. 2011, 24, 789–820.

- Asmussen, S.; Glynn, P.W. Stochastic Simulation: Algorithms and Analysis; Springer Science & Business Media: Berlin/Heidelberg, Germany, 2007; Volume 57.

- Ambrosio, L.; Fusco, N.; Pallara, D. Functions of Bounded Variation and Free Discontinuity Problems; Courier Corporation: Washington, DC, USA, 2000.

- Dupuis, P.; Ramanan, K. Convex duality and the Skorokhod problem. I. Probab. Theory Relat. Fields 1999, 115, 153–195.

- Harrison, J.M. Brownian Motion and Stochastic Flow Systems; Wiley: New York, NY, USA, 1985.

- Abate, J.; Whitt, W. Transient behavior of regulated Brownian motion, I: Starting at the origin. Adv. Appl. Probab. 1987, 19, 560–598.

- Kinkladze, G. A note on the structure of processes the measure of which is absolutely continuous with respect to the Wiener process modulus measure. Stochastics: Int. J. Probab. Stoch. Process. 1982, 8, 39–44.

- Revuz, D.; Yor, M. Continuous Martingales and Brownian Motion; Springer Science & Business Media: Berlin/Heidelberg, Germany, 2013; Volume 293.

- Göttlich, S.; Lux, K.; Neuenkirch, A. The Euler scheme for stochastic differential equations with discontinuous drift coefficient: A numerical study of the convergence rate. Adv. Differ. Equ. 2019, 2019, 429.

- Asmussen, S.; Glynn, P.; Pitman, J. Discretization error in simulation of one-dimensional reflecting Brownian motion. Ann. Appl. Probab. 1995, 5, 875–896.

- Buonocore, A.; Nobile, A.G.; Pirozzi, E. Simulation of sample paths for Gauss-Markov processes in the presence of a reflecting boundary. Cogent Math. 2017, 4, 1354469.

- Buonocore, A.; Nobile, A.G.; Pirozzi, E. Generating random variates from PDF of Gauss–Markov processes with a reflecting boundary. Comput. Stat. Data Anal. 2018, 118, 40–53.

- Bertoin, J. Subordinators: Examples and Applications. In Lectures on Probability Theory and Statistics; Springer: Berlin/Heidelberg, Germany, 1999; pp. 1–91.

- Meerschaert, M.M.; Sikorskii, A. Stochastic Models for Fractional Calculus; de Gruyter: Berlin, Germany, 2019.

- Meerschaert, M.M.; Straka, P. Inverse stable subordinators. Math. Model. Nat. Phenom. 2013, 8, 1–16.

- Arendt, W.; Batty, C.J.; Hieber, M.; Neubrander, F. Vector-Valued Laplace Transforms and Cauchy Problems; Springer: Berlin/Heidelberg, Germany, 2011.

- Mikusiński, J. On the function whose Laplace-transform is $e^{-s^{\alpha}}$. Stud. Math. 1959, 2, 191–198.

- Saa, A.; Venegeroles, R. Alternative numerical computation of one-sided Lévy and Mittag-Leffler distributions. Phys. Rev. E 2011, 84, 026702.

- Penson, K.; Górska, K. Exact and explicit probability densities for one-sided Lévy stable distributions. Phys. Rev. Lett. 2010, 105, 210604.

- Ascione, G.; Patie, P.; Toaldo, B. Non-local heat equation with moving boundary and curve-crossing of delayed Brownian motion. 2022; in preparation.

- Leonenko, N.; Pirozzi, E. First passage times for some classes of fractional time-changed diffusions. Stoch. Anal. Appl. 2021, 1–29.

- Meerschaert, M.M.; Straka, P. Semi-Markov approach to continuous time random walk limit processes. Ann. Probab. 2014, 42, 1699–1723.

- Çinlar, E. Markov additive processes. II. Z. Wahrscheinlichkeitstheorie Verwandte Geb. 1972, 24, 95–121.

- Kaspi, H.; Maisonneuve, B. Regenerative systems on the real line. Ann. Probab. 1988, 16, 1306–1332.

- Billingsley, P. Convergence of Probability Measures; John Wiley & Sons: Hoboken, NJ, USA, 2013.

- Chambers, J.M.; Mallows, C.L.; Stuck, B. A method for simulating stable random variables. J. Am. Stat. Assoc. 1976, 71, 340–344.

- Borovkov, A. Some limit theorems in the theory of mass service. Theory Probab. Its Appl. 1964, 9, 550–565.

- Iglehart, D.L.; Whitt, W. Multiple channel queues in heavy traffic. I. Adv. Appl. Probab. 1970, 2, 150–177.

- Kilbas, A.A.; Srivastava, H.M.; Trujillo, J.J. Theory and Applications of Fractional Differential Equations; Elsevier: Amsterdam, The Netherlands, 2006; Volume 204.

- Mainardi, F.; Gorenflo, R.; Scalas, E. A fractional generalization of the Poisson processes. Vietnam J. Math. 2004, 32, 53–64.

- Mainardi, F.; Gorenflo, R.; Vivoli, A. Renewal processes of Mittag-Leffler and Wright type. Fract. Calc. Appl. Anal. 2005, 8, 7–38.

- Bingham, N.H. Limit theorems for occupation times of Markov processes. Z. Wahrscheinlichkeitstheorie Verwandte Geb. 1971, 17, 1–22.

- Peng, J.; Li, K. A note on property of the Mittag-Leffler function. J. Math. Anal. Appl. 2010, 370, 635–638.

- Gillespie, D.T. A general method for numerically simulating the stochastic time evolution of coupled chemical reactions. J. Comput. Phys. 1976, 22, 403–434.

- Nolan, J.P. Numerical calculation of stable densities and distribution functions. Commun. Stat. Stoch. Model. 1997, 13, 759–774.

- Abate, J.; Whitt, W. Transient behavior of the M/M/1 queue: Starting at the origin. Queueing Syst. 1987, 2, 41–65.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).