Abstract

The artificial rabbits optimization (ARO) algorithm is a recently developed metaheuristic (MH) method motivated by the survival strategies of rabbits with bilateral symmetry in nature. Although ARO performs competitively against popular MH algorithms, it still suffers from poor convergence accuracy and a tendency to get stuck in local solutions. To mitigate these deficiencies, this paper develops an enhanced variant of ARO, the Lévy flight and selective opposition version of the artificial rabbit algorithm (LARO), which combines the Lévy flight and selective opposition strategies. First, a Lévy flight strategy is introduced in the random hiding phase to improve the diversity and dynamics of the population. The more diverse population deepens global exploration and thus improves the convergence accuracy of the algorithm. Then, ARO is further improved by a selective opposition strategy that enhances tracking efficiency and prevents the algorithm from getting stuck in local solutions. LARO is compared with various algorithms on 23 classical functions and the IEEE CEC2017 and IEEE CEC2019 test suites. On these three test sets, LARO performs best on 15 (65%), 11 (39%), and 6 (38%) of the functions, respectively. The practicality of LARO is further demonstrated on six mechanical optimization problems. The experimental results, assessed with several performance metrics, show that LARO is a competitive MH algorithm for complicated optimization problems.

1. Introduction

Many practical problems are, by their very nature, optimization problems [1]. Optimization has therefore received sustained attention, and efficient methods for complicated optimization problems (COPs) have attracted scholars in many fields. Traditional mathematical optimization methods require the objective function to satisfy properties such as convexity and separability; these requirements guarantee, in theory, that the method approaches the optimal solution. However, traditional mathematical strategies struggle with highly complex and demanding optimization problems [2]. Newton's method and the branch-and-bound method are typical deterministic algorithms. Although such algorithms can outperform nature-inspired metaheuristic algorithms on some single-parameter test functions, they tend to fall into local optima when the objective and constraint functions are more demanding. Deterministic methods may be ineffective on multimodal, discrete, non-differentiable, or non-convex problems, or on problems with a large search space. In addition, some deterministic algorithms require derivative information. Consequently, deterministic algorithms often fail on engineering problems [3].

MH techniques have recently attracted increasing scholarly attention because they can provide suitable candidate solutions to a wide range of complex, realistic optimization problems. In general, MH methods have several advantages over traditional mathematical optimization methods: they are efficient, low-complexity global search methods, and because different solutions are explored in each iteration, they are highly competitive at locating optimal solutions [4].

Depending on their source of inspiration, MH algorithms are commonly classified into four categories: evolution-based algorithms, group-intelligence-based algorithms, physical- or chemical-based algorithms, and human-behavior-based algorithms (HBAs) [5]. Evolution-based algorithms imitate the laws of natural evolution in the biological world; examples include genetic algorithms [6], evolution strategies [7], differential evolution [8], which is based on mutation, crossover, and selection, and evolutionary programming (EP) [9].

Group-intelligence-based (GIB) algorithms are usually motivated by the cooperative behavior of plants and animals that live in groups and work together to find food or prey. This category includes aphid–ant mutualism (AAM) [10], bottlenose dolphin optimizer (BDO) [11], beluga whale optimization (BWO) [12], capuchin search algorithm (CapSA) [13], sand cat swarm optimization (SCSO) [14], manta ray foraging optimization algorithm (MRFO) [15], black widow optimization algorithm (BWOA) [16], and chimp optimization algorithm (CHOA) [17].

A physical- or chemical-based algorithm simulates physical laws and chemical phenomena in nature and usually follows a general set of rules that govern the interactions between candidate solutions. A popular example is the gravitational search algorithm [18], which is motivated by Newton's law of gravity: agents attract each other through gravitational forces. Other examples include atom search optimization [19], the ion motion algorithm [20], the equilibrium optimizer [21], and the water cycle algorithm (WCA) [22].

The last of the four categories, HBAs, draws on various characteristics of human behavior. Representative algorithms include human mental search [23], the poor and rich optimization algorithm [24], and teaching-learning-based optimization [25].

Solving COPs usually involves two phases, exploration and exploitation, which are opposing strategies. In the exploration phase, the algorithm searches the whole domain for better solutions; in the exploitation phase, it refines the best solution found so far by searching the neighborhood of the candidate solutions. The trade-off between exploration and exploitation is one of the most common problems in current metaheuristic algorithms [26], because strengthening one search mechanism usually comes at the expense of the other. Many scholars have therefore proposed algorithms that balance the two. For example, Zamani proposed a quantum-based avian navigation optimizer algorithm inspired by the navigation behavior of migratory birds [27], and Nadimi-Shahraki introduced a multi-trial vector approach and an archiving mechanism into differential evolution, yielding a diversity-maintained differential evolution algorithm [28]. ARO is a recently developed metaheuristic technique whose steps are inspired by the survival strategies of rabbits in nature [29].

According to the no-free-lunch theorem [30], no nature-inspired method can optimally solve every realistic COP [31]. In other words, an optimization method that works well on some COPs may not be effective on others. Moreover, experimental results show that ARO has poor convergence accuracy and tends to get stuck in local solutions when handling complicated or high-dimensional problems. Motivated by these two observations, this paper proposes an enhanced ARO variant that hybridizes ARO with Lévy flight and a selective opposition strategy, called LARO. First, the Lévy flight strategy is used to enhance the global search capability of ARO [32]; it helps LARO avoid local solutions and explore the search space globally. Second, a selective opposition strategy is used to strengthen the local exploitation of LARO and improve its convergence accuracy [33]. The main contributions of this paper are as follows:

- (i) The Lévy flight strategy is introduced in the random hiding phase to improve the diversity and dynamics of the population, which further improves the convergence accuracy of ARO.

- (ii) The selective opposition strategy extends the basic opposition strategy and adaptively re-updates the population to improve the algorithm's ability to escape local optima.

- (iii) Numerical experiments are conducted on 23 standard test functions, the CEC2017 test set, and the CEC2019 test set.

- (iv) LARO is applied to and tested on six engineering design cases.

The remainder of this paper is organized as follows. Section 2 describes the mathematical model of ARO. Section 3 introduces the Lévy flight strategy, the selective opposition strategy, and the LARO algorithm. Section 4 presents and discusses the numerical results of the proposed algorithm, mainly on 23 benchmark functions and the CEC2019 test set. Section 5 applies LARO to six real engineering problems. Section 6 concludes the paper and discusses future work.

2. Artificial Rabbits Optimization (ARO)

The ARO algorithm is based mainly on two survival strategies of rabbits in nature: detour foraging and random hiding [29]. Detour foraging is an exploration strategy in which rabbits eat the grass near other rabbits' nests rather than near their own, so that predators do not discover their nests. Random hiding is a strategy in which rabbits dig several burrows and randomly move into one of them to hide. Like any population-based search algorithm, ARO begins with initialization. Let d be the dimension of the design variables, N the size of the artificial rabbit colony, and ub and lb the upper and lower bounds. The initialization is then performed as follows.

where z_{i,j} denotes the position of the jth dimension of the ith rabbit, and r is a random number.
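As a minimal sketch, assuming r is drawn uniformly from [0, 1) independently for every rabbit and every dimension, the initialization can be written in Python as follows. The function name `initialize_population` is hypothetical and not taken from the authors' code.

```python
import random

def initialize_population(n, lb, ub, rng=random):
    """Initialization sketch: z[i][j] = r * (ub[j] - lb[j]) + lb[j], r ~ U[0, 1)."""
    d = len(lb)
    return [[rng.random() * (ub[j] - lb[j]) + lb[j] for j in range(d)]
            for _ in range(n)]

# Example: 5 rabbits in the 2-D box [-10, 10] x [0, 1]
pop = initialize_population(5, [-10.0, 0.0], [10.0, 1.0], random.Random(42))
```

Passing a seeded `random.Random` instance makes runs reproducible, which matters when results are averaged over independent trials.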

A metaheuristic algorithm must balance exploration and exploitation, and detour foraging mainly serves the exploration phase. In detour foraging, each rabbit tends to move around food sources and to explore the location of another rabbit chosen at random from the group in order to obtain enough food. The update formula for detour foraging is given below.

Here, the new candidate position of the ith artificial rabbit is computed from z_i(t), the current position of the ith artificial rabbit, and z_j(t), the position of another randomly selected rabbit, where i, j = 1, …, N and j ≠ i. Tmax is the maximum number of iterations. ⌈·⌉ denotes the ceiling function, which rounds up to the nearest integer, and randp returns a random permutation of the integers from 1 to d. r1, r2, and r3 are random numbers between 0 and 1. L is the running length, which represents the movement speed during detour foraging. n1 follows the standard normal distribution, and the perturbation is introduced mainly through this normally distributed random number. The perturbation in the last term of Equation (2) helps ARO avoid local extrema and perform a global search.
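The detour foraging update can be sketched as below. Because Equations (2)–(6) are not reproduced in this text, the code assumes the form we understand the original ARO paper [29] to use: R = L·c with L = (e − e^{((t−1)/Tmax)²})·sin(2πr2), c a 0/1 mask over ⌈r3·d⌉ dimensions drawn from a random permutation, and a perturbation term round(0.5·(0.05 + r1))·n1 with a single scalar draw n1. The function names and the `max(1, …)` guard (so that at least one dimension moves when r3 = 0) are our own assumptions.

```python
import math
import random

def running_length(t, t_max, r2):
    """Assumed running length: L = (e - exp(((t - 1) / Tmax)^2)) * sin(2*pi*r2)."""
    return (math.e - math.exp(((t - 1) / t_max) ** 2)) * math.sin(2 * math.pi * r2)

def mapping_vector(d, r3, rng):
    """0/1 mask c with ones on ceil(r3*d) dimensions taken from a random permutation."""
    k = max(1, math.ceil(r3 * d))      # hypothetical guard: at least one dimension moves
    perm = rng.sample(range(d), d)     # randp: random permutation of 0..d-1
    c = [0] * d
    for idx in perm[:k]:
        c[idx] = 1
    return c

def detour_foraging(pop, i, t, t_max, rng=random):
    """Candidate for rabbit i: z_j + R*(z_i - z_j) + round(0.5*(0.05 + r1))*n1."""
    n, d = len(pop), len(pop[0])
    j = rng.choice([k for k in range(n) if k != i])    # another random rabbit
    L = running_length(t, t_max, rng.random())         # uses r2
    c = mapping_vector(d, rng.random(), rng)           # uses r3
    R = [L * ck for ck in c]                           # R = L * c
    # round(0.5*(0.05 + r1)) is 1 only when r1 exceeds about 0.95, so the
    # N(0, 1) perturbation n1 is applied only occasionally
    jump = round(0.5 * (0.05 + rng.random())) * rng.gauss(0.0, 1.0)
    return [pop[j][k] + R[k] * (pop[i][k] - pop[j][k]) + jump for k in range(d)]
```

Because L shrinks from e − 1 toward 0 as t approaches Tmax, the step toward or past the partner rabbit becomes smaller over time.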

Random hiding mainly models the exploitation stage of the algorithm: rabbits usually dig several burrows around their nests and randomly choose one to hide in, which reduces the probability of being caught by predators. We first define how the rabbits generate burrows. The ith rabbit produces its jth burrow by:

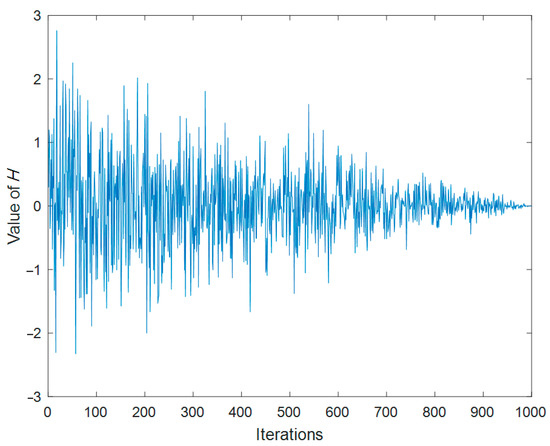

where i = 1, …, N and j = 1, …, d, and n2 follows the standard normal distribution. H is the hiding parameter, which decreases linearly from 1 to 1/Tmax with random perturbations. Figure 1 shows how H changes over 1000 iterations. H shows an overall decreasing trend, which maintains a balanced transition from exploration to exploitation throughout the iterations.
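The burrow equation is only available as an image here, so the sketch below assumes the form H = (Tmax − t + 1)/Tmax · n2 and b_{i,j} = z_i + H·g·z_i, where g is 1 in dimension j and 0 elsewhere; this matches the description above (a factor falling linearly from 1 to 1/Tmax, perturbed by n2) but is our reconstruction. Drawing a fresh H for every burrow is also an assumption, and all function names are hypothetical.

```python
import random

def hiding_parameter(t, t_max, rng=random):
    """Assumed H = (Tmax - t + 1) / Tmax * n2 with n2 ~ N(0, 1).

    The deterministic factor falls linearly from 1 (t = 1) to 1/Tmax (t = Tmax).
    """
    return (t_max - t + 1) / t_max * rng.gauss(0.0, 1.0)

def burrow(z_i, j, H):
    """b_{i,j} = z_i + H * g * z_i, where g is 1 in dimension j and 0 elsewhere."""
    return [z + H * z if k == j else z for k, z in enumerate(z_i)]

def dig_burrows(z_i, t, t_max, rng=random):
    """Rabbit i digs d burrows, one per dimension (fresh H draw per burrow)."""
    return [burrow(z_i, j, hiding_parameter(t, t_max, rng)) for j in range(len(z_i))]
```

Each burrow differs from the rabbit's position in exactly one coordinate, so burrows stay close to the current position and the search is local.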

Figure 1.

The change of H over the course of 1000 iterations.

The update formula for the random hiding method is shown below.

In this update, the new position of the artificial rabbit is computed from a burrow randomly selected among the d burrows the rabbit has generated for hiding; r4 and r5 are random numbers in the interval (0, 1), and R is given by Equations (3)–(6).
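A minimal sketch of the random hiding update follows, assuming Equations (12)–(14) take the form v = z_i + R·(r4·b_{i,r} − z_i), where b_{i,r} is the burrow whose perturbed dimension is ⌈r5·d⌉. The vector R is passed in (computed as in the detour foraging step), and the function name `random_hiding` is hypothetical.

```python
import math
import random

def random_hiding(z_i, R, t, t_max, rng=random):
    """Assumed random hiding update: v = z_i + R * (r4 * b_{i,r} - z_i)."""
    d = len(z_i)
    H = (t_max - t + 1) / t_max * rng.gauss(0.0, 1.0)   # hiding parameter
    r = max(1, math.ceil(rng.random() * d)) - 1          # ceil(r5 * d), as a 0-based index
    b = list(z_i)
    b[r] = z_i[r] + H * z_i[r]                           # the selected burrow b_{i,r}
    r4 = rng.random()
    return [z + R[k] * (r4 * b[k] - z) for k, z in enumerate(z_i)]
```

Where R is zero the rabbit stays put, so the mask inside R also controls which dimensions the hiding move can change.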

After either update strategy has been applied, the position of the ith artificial rabbit is updated by Equation (15).

This equation is a greedy update: based on the fitness values, the rabbit keeps its current position unless the new candidate position is better, in which case it moves there.
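For a minimization problem, the greedy update can be sketched as follows. We assume that a tie keeps the current position; that tie rule and the helper names `greedy_update` and `sphere` are illustrative assumptions.

```python
def greedy_update(z_i, f_i, v_i, f_v):
    """Greedy update sketch (minimization): move only if the candidate is strictly better."""
    if f_v < f_i:
        return v_i, f_v
    return z_i, f_i

def sphere(z):
    """Hypothetical test objective: sum of squares."""
    return sum(x * x for x in z)

z, f = greedy_update([1.0, 1.0], sphere([1.0, 1.0]), [0.5, 0.0], sphere([0.5, 0.0]))
```

Because a rabbit never accepts a worse position, the best fitness in the population cannot deteriorate from one iteration to the next.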

An optimization algorithm should generally favor exploration in the early stages and exploitation in the middle and late stages. ARO uses the energy of the rabbits to switch between its search strategies: the rabbits' energy decreases over time, which simulates the transition from exploration to exploitation. The energy factor in ARO is defined as:

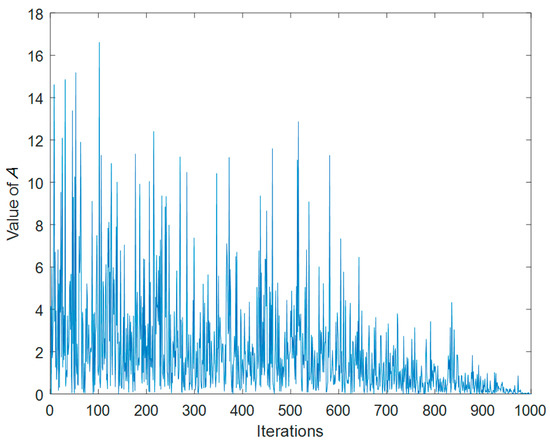

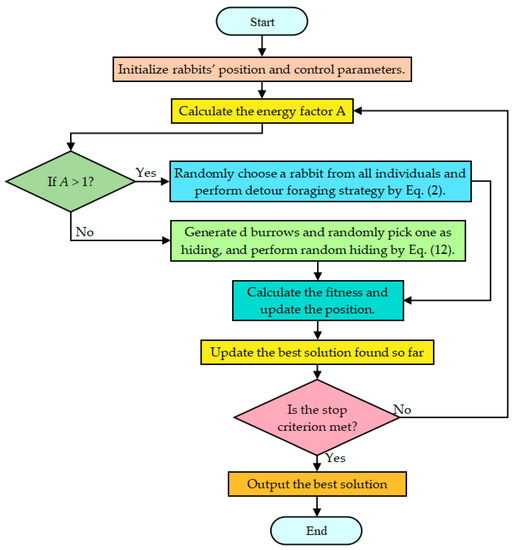

where r is a random number in (0, 1). Figure 2 shows how A changes over 1000 iterations. The value of A shows an overall decreasing trend, which maintains a balanced transition from exploration to exploitation throughout the iterations. Algorithm 1 gives the pseudo-code of the basic ARO, and Figure 3 shows its flow chart.
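Since the energy-factor equation is only available as an image, the sketch below assumes the form A = 4(1 − t/Tmax)·ln(1/r) from the original ARO paper [29] as we understand it. Line 7 of Algorithm 1 then selects detour foraging when A > 1 and random hiding otherwise. The function names are hypothetical.

```python
import math
import random

def energy_factor(t, t_max, rng=random):
    """Assumed A = 4 * (1 - t / Tmax) * ln(1 / r) with r in (0, 1]."""
    r = 1.0 - rng.random()            # shift [0, 1) to (0, 1] so ln(1/r) stays finite
    return 4.0 * (1.0 - t / t_max) * math.log(1.0 / r)

def choose_phase(A):
    """Algorithm 1, line 7: A > 1 -> detour foraging (exploration), else random hiding."""
    return "detour foraging" if A > 1 else "random hiding"
```

Since ln(1/r) is exponentially distributed with mean 1, P(A > 1) = exp(−1/(4(1 − t/Tmax))). Under this assumption, about 78% of updates are detour foraging at the start of a run and only about 8% at t = 0.9·Tmax.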

| Algorithm 1: The framework of artificial rabbits optimization |

| 1: Set the parameters of artificial rabbits optimization: the population size N and TMax. |

| 2: Randomly initialize a set of rabbits zi and calculate their fitness fi. |

| 3: Find the best rabbit. |

| 4: While t ≤ TMax do |

| 5: For i = 1 to N do |

| 6: Calculate the energy factor A by Equation (16). |

| 7: If A > 1 then |

| 8: Randomly choose a rabbit from all individuals. |

| 9: Compute the R using Equations (3)–(6). |

| 10: Perform detour foraging strategy by Equation (2). |

| 11: Calculate the fitness value of the rabbit’s position fi. |

| 12: Update the position of the rabbit by Equation (15). |

| 13: Else |

| 14: Generate d burrows and select one randomly according to Equation (14). |

| 15: Perform random hiding strategy by Equation (12). |

| 16: Calculate the fitness value of the rabbit’s position fi. |

| 17: Update the position of the rabbit by Equation (15). |

| 18: End if |

| 19: End for |

| 20: Search for the best artificial rabbit. |

| 21: t = t + 1. |

| 22: End while |

| 23: Output the best artificial rabbit. |

Figure 2.

The change of A over the course of 1000 iterations.

Figure 3.

Flow chart of ARO.

3. Hybrid Artificial Rabbits Optimization

Hybrid optimization algorithms are widely used in practical engineering because targeted modifications of the original algorithm can improve different aspects of its performance. For example, Liu proposed a hybrid algorithm that combines particle swarm optimization with a single-layer neural network to exploit the complementary advantages of both, and successfully applied it to wavefront shaping [34]. Islam effectively solved the clustered vehicle routing problem by combining particle swarm optimization (PSO) and variable neighborhood search (VNS), fusing the solution diversity of PSO with the ability of VNS to drive solutions toward local optima [35]. Devarapalli proposed a hybrid modified grey wolf optimization–sine cosine algorithm that effectively tunes power system stabilizer parameters in a multimachine power system [36]. To mitigate the poor accuracy of the original ARO algorithm and its tendency to fall into local solutions, we propose a hybrid improved algorithm, LARO, by introducing a Lévy flight strategy and selective opposition into ARO, and we apply it to engineering optimization problems. Lévy flight is employed to improve the accuracy of the algorithm, and the selective opposition strategy helps it escape local solutions.

3.1. Lévy Flight Method

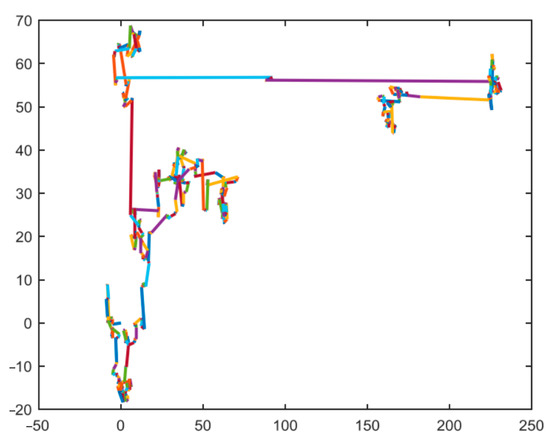

The Lévy flight method is often used in new and improved algorithms, mainly to add dynamism to the update rules. The Lévy flight operator generates random numbers that are small in most cases and occasionally large. This distribution of random numbers helps various update strategies remain dynamic and escape local solutions. The Lévy distribution is defined by the following equation. Figure 4 shows a Lévy flight path in two-dimensional space [32].

where t is the step length, which is calculated by Equation (18). The step size of the Lévy flight is computed with Equations (18)–(21).

where σu and σv are defined in Equations (20) and (21). u and v follow Gaussian distributions with mean 0 and variances σu² and σv², respectively, as shown in Equation (19). Γ denotes the standard Gamma function, and β is a correlation parameter, usually set to 1.5.
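Equations (18)–(21) are not reproduced in this text. The sketch below assumes they follow Mantegna's algorithm, the usual way to draw Lévy steps in metaheuristics: step = u/|v|^{1/β}, with σu = [Γ(1+β)·sin(πβ/2) / (Γ((1+β)/2)·β·2^{(β−1)/2})]^{1/β} and σv = 1. The function names are hypothetical.

```python
import math
import random

def levy_sigma_u(beta=1.5):
    """sigma_u from Mantegna's algorithm (sigma_v is taken as 1)."""
    num = math.gamma(1 + beta) * math.sin(math.pi * beta / 2)
    den = math.gamma((1 + beta) / 2) * beta * 2 ** ((beta - 1) / 2)
    return (num / den) ** (1 / beta)

def levy_step(beta=1.5, rng=random):
    """Levy-distributed step u / |v|^(1/beta), u ~ N(0, sigma_u^2), v ~ N(0, 1)."""
    u = rng.gauss(0.0, levy_sigma_u(beta))
    v = rng.gauss(0.0, 1.0)
    return u / abs(v) ** (1 / beta)
```

For β = 1.5, σu ≈ 0.6966. Most steps are small, but dividing by a near-zero |v| occasionally produces a very large jump. This heavy tail is the behavior described above.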

Figure 4.

Lévy flight path of 500 movements in two-dimensional space.

In the random hiding phase, we replace the random number r4 with a random number generated by the Lévy flight strategy. Because random hiding is an exploitation stage, introducing Lévy flight here helps ARO avoid falling into local candidate solutions during exploitation; it also improves the convergence accuracy and the flexibility of the random hiding stage. The following equation gives the Lévy-flight-based random hiding phase, where α is a parameter fixed to 0.1.
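Equation (22) is not reproduced in this text. As a minimal sketch, assume it keeps the random hiding form v = z_i + R·(r4·b_{i,r} − z_i) and only replaces r4 with α·Levy(β), where α = 0.1, β = 1.5, and the Lévy step is drawn with Mantegna's algorithm. Both the placement of α and the function names are our assumptions.

```python
import math
import random

def levy_step(beta=1.5, rng=random):
    """Mantegna-style Levy step with sigma_v = 1 (assumed step generator)."""
    num = math.gamma(1 + beta) * math.sin(math.pi * beta / 2)
    den = math.gamma((1 + beta) / 2) * beta * 2 ** ((beta - 1) / 2)
    sigma_u = (num / den) ** (1 / beta)
    return rng.gauss(0.0, sigma_u) / abs(rng.gauss(0.0, 1.0)) ** (1 / beta)

def levy_random_hiding(z_i, R, t, t_max, alpha=0.1, beta=1.5, rng=random):
    """Random hiding with r4 replaced by alpha * Levy(beta) (assumed form of Eq. (22))."""
    d = len(z_i)
    H = (t_max - t + 1) / t_max * rng.gauss(0.0, 1.0)   # hiding parameter
    r = max(1, math.ceil(rng.random() * d)) - 1          # burrow index from r5
    b = list(z_i)
    b[r] = z_i[r] + H * z_i[r]                           # selected burrow
    step = alpha * levy_step(beta, rng)                  # replaces r4
    return [z + R[k] * (step * b[k] - z) for k, z in enumerate(z_i)]
```

Unlike r4, which lies in (0, 1), the Lévy-scaled factor can be negative or occasionally large. This allows the hiding move to overshoot or reverse, which is the intended escape mechanism.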

3.2. Selective Opposition (SO) Strategy

SO is a modified form of opposition-based learning (OBL) [33]. The idea of SO is to apply opposition-based learning to the dimensions of rabbits that are far from the optimal solution, bringing them closer to the rabbit at the optimal position. The selective opposition strategy is governed by a linearly decreasing threshold. When SO is applied, it changes the far dimensions of different rabbits, which helps them reach better positions in the exploitation phase [37]. The update proceeds as follows.

First, we define a threshold value that decreases linearly over the iterations until it reaches its limit. As shown in the following equation, SO computes, for every candidate rabbit, the distance in each dimension between the rabbit's current position and the best rabbit position.

where ddj is the distance in the jth dimension between a rabbit and the best rabbit. Dimensions whose ddj exceeds the threshold (TS) are classified as far dimensions and the remaining ones as close dimensions, and the distances over all dimensions are then collected.

src measures the correlation between the current rabbit and the optimal rabbit position. If src ≤ 0 and the number of far dimensions (df) is larger than the number of close dimensions (dc), the rabbit's position is updated by Equation (25).

Algorithm 2 gives the pseudo-code for selective opposition (SO).

| Algorithm 2: Selective Opposition (SO) |

| 1: Set the parameters of selective opposition: current generation (t), population size (N), maximum generation (TMax), dimension (d), dc = [], and df = []. |

| 2: TS = 2 − [t·(2/TMax)]. |

| 3: For i = 1 to N do |

| 4: If Zi ≠ Zibest then |

| 5: For j = 1 to d do |

| 6: ddj = |zibest,j-zi,j|{ddj = the discrepancy distance of the jth dimension} |

| 7: If ddj > TS then |

| 8: Determine the far dimensions (df). |

| 9: Count the far dimensions (df). |

| 10: Else |

| 11: Determine the close dimensions (dc). |

| 12: Count the close dimensions (dc). |

| 13: End if |

| 14: End for |

| 15: Summing over all ddj. |

| 16: Calculate src by Equation (24). |

| 17: If src ≤ 0 and size(df) > size(dc) then |

| 18: Perform Z′df = LBdf + UBdf − Zdf. |

| 19: End if |

| 20: End if |

| 21: End for |
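The selective opposition step can be sketched for a single rabbit as below. Several parts are assumptions because Equations (23)–(25) are not reproduced in this text. First, a dimension counts as far when ddj > TS, following Step 7. Second, src uses the Spearman-style form 1 − 6·Σddj²/(d·(d² − 1)), which we believe the selective-opposition literature [33] uses; this form requires d ≥ 2. Third, the opposite point LB + UB − Z is taken in the far dimensions only, as in line 18 of Algorithm 2. The function names are hypothetical.

```python
def threshold(t, t_max):
    """Linearly decreasing threshold TS = 2 - t * (2 / Tmax) (Algorithm 2, line 2)."""
    return 2 - t * (2 / t_max)

def selective_opposition(z, z_best, lb, ub, ts):
    """One SO step for one rabbit; returns a new position list (assumes d >= 2)."""
    d = len(z)
    if z == z_best:                                # the best rabbit is left unchanged
        return list(z)
    dd = [abs(b - x) for b, x in zip(z_best, z)]   # per-dimension distance to the best
    far = [j for j in range(d) if dd[j] > ts]      # assumed: large distance = far dimension
    n_close = d - len(far)
    # Assumed Spearman-style coefficient for Eq. (24)
    src = 1 - 6 * sum(x * x for x in dd) / (d * (d * d - 1))
    new = list(z)
    if src <= 0 and len(far) > n_close:
        for j in far:                              # opposite point in the far dimensions only
            new[j] = lb[j] + ub[j] - z[j]
    return new
```

As TS shrinks toward 0, more dimensions qualify as far. Late in the run, SO therefore reacts even to small deviations from the best rabbit.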

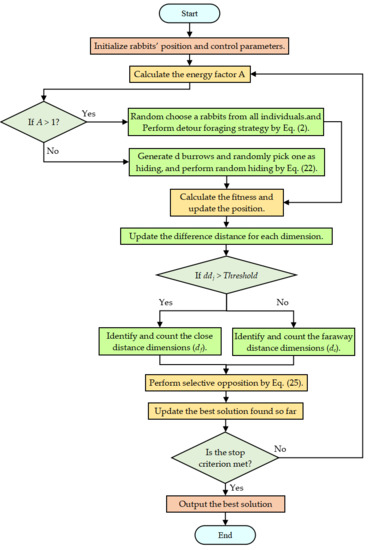

3.3. Detailed Implementation of LARO

LARO incorporates two modifications into ARO: Lévy flight and selective opposition. These modifications improve the convergence and population diversity of ARO and yield higher-quality candidate solutions. The detailed procedure of LARO is as follows.

Step1: Set the parameters of LARO: the population size N, the dimensionality d of the variables, the upper and lower bounds ub and lb of the problem variables, and the maximum number of iterations TMax;

Step2: Randomly initialize the rabbit positions and calculate their fitness values. Find the rabbit with the best position;

Step3: Calculate the energy factor A by Equation (16). If A > 1, randomly select a rabbit from the population;

Step4: Calculate R by Equations (3)–(6) and perform the detour foraging strategy by Equation (2). Then calculate the fitness value of the new rabbit position and update the rabbit position by Equation (15);

Step5: If A ≤ 1, generate d burrows and randomly select one according to Equation (14). Update the rabbit's position with the Lévy-flight-based random hiding strategy of Equation (22). Calculate the corresponding fitness and then update the rabbit's position by Equation (15);

Step6: Calculate, by Equation (23), the distance ddj in each dimension between each rabbit and the best rabbit;

Step7: If ddj > Threshold, mark dimension j as a far dimension (df) and count the far dimensions; if ddj ≤ Threshold, mark it as a close dimension (dc) and count the close dimensions. Then calculate src from the ddj values by Equation (24);

Step8: If src ≤ 0 and the number of far dimensions exceeds the number of close dimensions, execute Equation (25) to re-update the rabbit's position;

Step9: If the number of iterations exceeds the maximum, output the optimal result; otherwise, return to Step3.

To present the proposed algorithm more clearly, Algorithm 3 gives the pseudo-code of LARO. Line 15 is the random hiding strategy improved by Lévy flight, and lines 20–40 implement the selective opposition strategy. Figure 5 shows the flowchart of the LARO algorithm.

| Algorithm 3: The algorithm composition of LARO |

| 1: Set the parameters of artificial rabbits optimization: the population size N, TMax, the sensitive parameters α and β, dc = [], and df = []. |

| 2: Randomly initialize a set of rabbits zi and calculate their fitness fi. |

| 3: Find the best rabbit. |

| 4: While t ≤ TMax do |

| 5: For i =1 to N do |

| 6: Compute the energy factor A using Equation (16). |

| 7: If A > 1 then |

| 8: Randomly choose a rabbit from all individuals. |

| 9: Compute the R using Equations (3)–(6). |

| 10: Perform detour foraging strategy by Equation (2). |

| 11: Calculate the fitness value of the rabbit’s position fi. |

| 12: Update the position of the rabbit by Equation (15). |

| 13: Else |

| 14: Generate d burrows and select one randomly according to Equation (14). |

| 15: Perform random hiding strategy by Equation (22). |

| 16: Calculate the fitness value of the rabbit’s position fi. |

| 17: Update the position of the rabbit by Equation (15). |

| 18: End if |

| 19: End for |

| 20: TS = 2 − [t·(2/TMax)]. |

| 21: For i = 1 to N do |

| 22: If Zi ≠ Zibest then |

| 23: For j = 1 to d do |

| 24: ddj = |zibest,j-zi,j|{ddj = the discrepancy distance of the jth dimension} |

| 25: If ddj > TS then |

| 26: Determine the far dimensions (df). |

| 27: Count the far dimensions (df). |

| 28: Else |

| 29: Determine the close dimensions (dc). |

| 30: Count the close dimensions (dc). |

| 31: End if |

| 32: End for |

| 33: Summing over all ddj. |

| 34: Calculate src by Equation (24). |

| 35: If src ≤ 0 and size(df) > size(dc) then |

| 36: Perform Z′df = LBdf + UBdf − Zdf. |

| 37: End if |

| 38: End if |

| 39: End for |

| 40: Update the position of the rabbit by Equation (15). |

| 41: Search for the best rabbit. |

| 42: t = t + 1. |

| 43: End while |

| 44: Output the best artificial rabbit. |

Figure 5.

Flowchart for the LARO algorithm.

3.4. In-Depth Discussion of LARO Complexity

The complexity of LARO is estimated by adding the cost of the selective opposition step to that of the basic ARO algorithm. The Lévy flight strategy changes only how ARO updates positions and does not increase the complexity. Computational complexity is a useful measure of the cost of solving real problems. It depends on the population size N, the dimension d, and the maximum number of iterations TMax. The total complexity of ARO is as follows [29].

The selective opposition strategy processes every dimension of every rabbit position. Therefore, the complexity of the LARO algorithm is:

4. Numerical Experiments

To validate the capabilities of LARO numerically, two benchmark suites were selected: 23 benchmark test functions [26] and the ten benchmark functions of the CEC2019 test suite [26]. We compared LARO with several established metaheuristic algorithms: the arithmetic optimization algorithm (AOA) [38], grey wolf optimization (GWO) [39], the coot optimization algorithm (COOT) [40], golden jackal optimization (GJO) [41], the weighted mean of vectors (INFO) [42], moth–flame optimization (MFO) [43], multi-verse optimization (MVO) [44], the sine cosine optimization algorithm (SCA) [45], the salp swarm optimization algorithm (SSA) [46], and the whale optimization algorithm (WOA) [47]. LARO was compared with all the other search algorithms using the Wilcoxon rank-sum test and Friedman's mean rank test. Each algorithm was run 20 times independently, and the best, worst, mean, and standard deviation (STD) values over these runs are reported. The main parameters of the compared algorithms are listed in Table 1.
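The Friedman mean rank used above can be sketched as follows. For each test function, the algorithms are ranked by their mean result, and each algorithm's ranks are then averaged over all functions. We assume lower values are better and that tied algorithms share the average of their ranks. The function name is hypothetical.

```python
def friedman_mean_ranks(results):
    """results[f][a] = mean result of algorithm a on function f (lower is better).

    Returns the average rank of each algorithm; tied values share the average rank.
    """
    n_alg = len(results[0])
    totals = [0.0] * n_alg
    for row in results:
        order = sorted(range(n_alg), key=lambda a: row[a])
        pos = 0
        while pos < n_alg:
            end = pos
            while end + 1 < n_alg and row[order[end + 1]] == row[order[pos]]:
                end += 1
            avg_rank = (pos + end) / 2 + 1          # 1-based average rank of the tie group
            for k in range(pos, end + 1):
                totals[order[k]] += avg_rank
            pos = end + 1
    return [s / len(results) for s in totals]

ranks = friedman_mean_ranks([[1.0, 2.0, 3.0], [2.0, 1.0, 3.0], [1.0, 1.0, 3.0]])
```

In this toy example, the first two algorithms each average 1.5 and the third averages 3.0. A smaller mean rank, such as a value near 1, indicates more consistent first-place finishes.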

Table 1.

Parameter settings of the compared algorithms.

4.1. Experimental Analysis of Exploration and Exploitation

The differences between candidate solutions in each dimension determine whether the population diverges or aggregates. When the population diverges, the differences among candidate individuals in all dimensions become pronounced, meaning that the individuals explore the domain in a dispersed manner; this allows the optimization method to search the solution space more extensively. Conversely, when the population aggregates, the candidate solutions search collaboratively, the variability among individuals decreases, and the region already found is exploited in detail. A suitable balance between this divergent exploration pattern and the aggregated exploitation pattern is necessary to ensure good optimization capability.

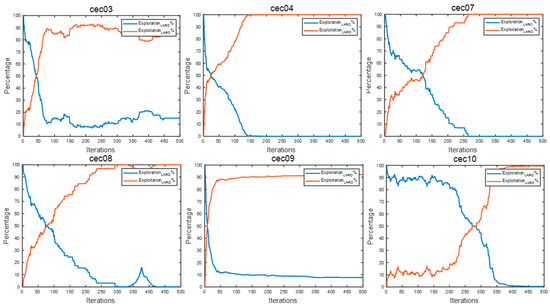

For this experiment, we adopt the dimension-wise diversity metric proposed by Hussain et al. [48] and calculate the corresponding exploration and exploitation ratios. Using the CEC2019 test set, Figure 6 shows the exploration and exploitation curves for some of its test functions.
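The diversity metric can be sketched as below, assuming the dimension-wise form we understand Hussain et al. [48] to define. For each dimension, take the mean absolute deviation from the dimension's median, then average over dimensions to get Div. The percentages are XPL% = Div/Div_max × 100 and XPT% = |Div − Div_max|/Div_max × 100, where Div_max is the largest diversity recorded during the run. The function names are hypothetical, and at least one nonzero diversity value is assumed.

```python
from statistics import median

def diversity(pop):
    """Div = (1/d) * sum_j (1/N) * sum_i |median(z^j) - z_i^j|."""
    n, d = len(pop), len(pop[0])
    total = 0.0
    for j in range(d):
        col = [z[j] for z in pop]
        m = median(col)
        total += sum(abs(m - x) for x in col) / n
    return total / d

def exploration_exploitation(div_history):
    """Per-iteration XPL% and XPT% relative to the largest recorded diversity."""
    div_max = max(div_history)
    xpl = [100 * dv / div_max for dv in div_history]
    xpt = [100 * abs(dv - div_max) / div_max for dv in div_history]
    return xpl, xpt

xpl, xpt = exploration_exploitation([4.0, 2.0, 1.0])
```

Calling `diversity` on the population after every iteration and passing the history to `exploration_exploitation` produces curves like those in Figure 6. Because every value is at most Div_max, XPL% and XPT% always sum to 100.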

Figure 6.

The exploration and exploitation diagrams of LARO.

Figure 6 shows that LARO starts with exploration on all test functions and then gradually transitions to exploitation. On cec03 and cec09, LARO still maintains a high exploration rate in the middle and late iterations, whereas on cec04, cec07, and cec08 it shifts quickly to a high exploitation rate in the middle stage and ends the run in an exploitation-dominated state. This behavior shows that the high early exploration rate of LARO provides a good global search capability and prevents it from getting stuck in local solutions. The middle stage then provides a smooth transition, and the high exploitation rate in the later stage ensures that the regions found during exploration are exploited with high accuracy.

4.2. Comparative Analysis of Populations and Maximum Iterations

The population size and the maximum number of iterations affect the performance of population-based metaheuristic algorithms. In this section, we therefore perform a sensitivity analysis of LARO with respect to these two parameters. Two commonly used combinations are considered: (1) a colony of 50 artificial rabbits with Tmax = 1000, and (2) a colony of 100 artificial rabbits with Tmax = 500. LARO with setting (1) is denoted LARO1, and LARO with setting (2) is denoted LARO2. The performance and running time of LARO1 and LARO2 are compared on the 23 test functions.

Table 2 compares LARO under the two settings on the 23 benchmark functions. The numerical results show that both settings require almost the same running time, which is expected because N × Tmax = 50,000 position updates in both cases. However, LARO1 achieves better convergence accuracy than LARO2, indicating that with setting (1) LARO provides better accuracy at the same running cost. The results thus show that the performance of LARO is affected by the population size and the number of iterations, and the best performance is obtained with a population size of 50 and a maximum of 1000 iterations.

Table 2.

Comparison of LARO with two different parameters in 23 benchmark functions.

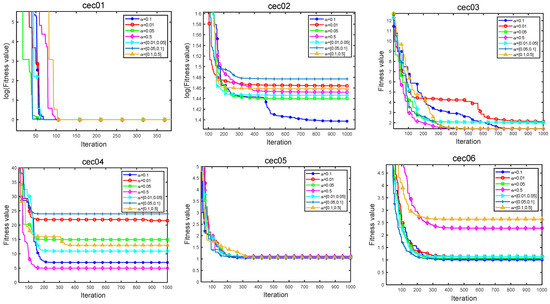

4.3. Analysis of the Lévy Flight Jump Parameter α

Following the mechanism of the Lévy flight strategy, we replace the random number r4 with a random number generated by the Lévy flight strategy, which helps prevent ARO from falling into local candidate solutions during exploitation. The jump parameter α affects how much the updated position changes. A larger α increases the Lévy flight step size, which helps the algorithm escape local solutions but may also prevent information about the optimal solution from being preserved. A value that is too small weakens the effect of the Lévy flight strategy and thus reduces the accuracy of the algorithm. The jump parameter α therefore has a large impact on the performance of LARO.

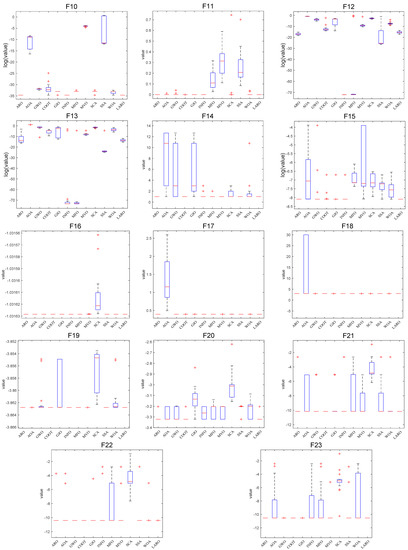

This section examines the impact of the jump parameter α on the performance of the algorithm using the ten CEC2019 test functions. α takes four fixed values (0.1, 0.01, 0.5, and 0.5) and three intervals ([0.01, 0.05], [0.05, 0.1], and [0.1, 0.5]); for an interval, α is a random number drawn from within it. Table 3 reports the mean values of the solutions obtained by LARO on the CEC2019 functions over 20 independent runs. To show the effect of α on LARO's performance more clearly, Figure 7 provides the convergence curves on the ten test functions.

Table 3.

Performance analysis of jump parameter α in CEC2019.

Figure 7.

Convergence curves for the seven jump-parameter settings on CEC2019.

Table 3 shows that α = 0.1 yields the smallest average rank (3.2) and the best mean values on five test functions (cec01, cec05, cec07, cec08, and cec10). This intermediate value balances retaining information about the optimal solution with escaping local solutions. Figure 7 shows the convergence curves for the seven jump-parameter settings: with α = 0.1, LARO converges faster and reaches higher accuracy. Therefore, LARO performs best when α = 0.1.

4.4. Experiments on the 23 Classical Functions

To evaluate how well LARO traverses the solution space, finds the optimal candidate solution, and escapes local solutions, we used 23 benchmark functions. The unimodal benchmarks (F1–F7) examine the exploitation accuracy of LARO, the multimodal benchmarks (F8–F13) test its exploration capability, and the fixed-dimensional multimodal benchmarks (F14–F23) assess its ability to explore low-dimensional search spaces. F1–F13 have a dimension of 30, whereas F14–F23 are fixed-dimensional functions whose dimensions are 2, 4, 2, 2, 2, 3, 6, 4, 4, and 4, respectively.

Table 4 reports the experimental and statistical results of LARO, the original ARO, and ten other search algorithms under five evaluation metrics (best, worst, average, standard deviation, and rank). The Friedman ranking of all algorithms, based on the mean values, is also given, and Table 4 additionally summarizes the Wilcoxon test between LARO and the other selected MH algorithms at a significance level of 0.05. Here, “+” denotes that a given MH algorithm converges significantly better than LARO, “−” denotes the opposite, and “=” indicates no significant difference between that algorithm and LARO on the given test problem.
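A minimal sketch of how the “+”, “=”, and “−” labels can be assigned from the per-run results of two algorithms on one function. It assumes a two-sided Wilcoxon rank-sum test with the large-sample normal approximation and no tie or continuity correction (the same statistic that `scipy.stats.ranksums` computes), a 0.05 level, and that lower values are better; the paper does not give these details. The function names are hypothetical, and ASCII "-" stands in for “−”.

```python
import math
from statistics import mean
from typing import Sequence


def ranksum_p(a: Sequence[float], b: Sequence[float]) -> float:
    """Two-sided Wilcoxon rank-sum p-value via the normal approximation."""
    n1, n2 = len(a), len(b)
    # Mann-Whitney U of sample a: pairs where a ranks above b (ties count 0.5).
    # U - n1*n2/2 equals the rank sum of a minus its expected value.
    u = sum((x > y) + 0.5 * (x == y) for x in a for y in b)
    z = (u - n1 * n2 / 2.0) / math.sqrt(n1 * n2 * (n1 + n2 + 1) / 12.0)
    return math.erfc(abs(z) / math.sqrt(2.0))  # equals 2 * (1 - Phi(|z|))


def wilcoxon_label(laro_runs: Sequence[float], other_runs: Sequence[float],
                   significance: float = 0.05) -> str:
    """'+' other algorithm significantly better, '-' LARO better, '=' no difference."""
    if ranksum_p(laro_runs, other_runs) >= significance:
        return "="
    # Minimization: the smaller mean is the better algorithm.
    return "+" if mean(other_runs) < mean(laro_runs) else "-"


if __name__ == "__main__":
    laro = [1.0, 2.0, 3.0, 4.0, 5.0]
    other = [6.0, 7.0, 8.0, 9.0, 10.0]
    print(wilcoxon_label(laro, other))  # -
```

Counting the labels over all test functions gives triples of the form “+/=/−” such as those reported in Table 5.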

Table 4.

Statistical outcomes of the different MH methods on the 23 test functions.

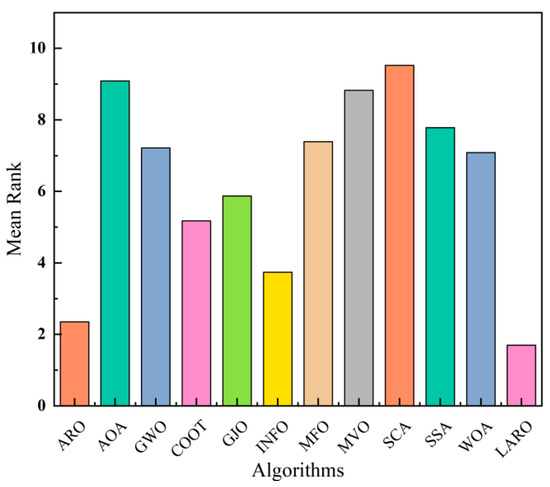

Table 4 shows that the proposed LARO has a Friedman rank of 1.6957 and ranks first, followed by ARO with 2.3478. Figure 8 provides the average ranks of the 12 algorithms. LARO provides the best result among all algorithms on 16 test functions. In more detail, LARO ranks first on two unimodal functions (F1 and F3), obtains the best results on four multimodal functions (F9, F10, F11, and F13), and obtains the best results on eight fixed-dimensional functions (F14, F16, F17, F18, F19, F20, F21, and F23). LARO is also highly competitive on F4, F8, F15, and F22. On some functions, LARO shares the best result with other algorithms; for example, ARO, GJO, INFO, and LARO all obtain the best average solution on F9 and F11. ARO also performs notably well, successfully solving three unimodal and seven multimodal problems. Overall, LARO mainly improves the original algorithm's ability to handle unimodal problems while moderately enhancing its ability to handle multimodal and fixed-dimensional problems.

Figure 8.

The average rank of the twelve algorithms.

These experimental results show that introducing the Lévy flight strategy in the random hiding phase improves the convergence ability of the algorithm, giving LARO good convergence and accuracy on unimodal problems, which have no multiple local solutions. In addition, the selective opposition strategy allows LARO to filter the optimal solution from among multiple local solutions on multimodal and fixed-dimensional problems, because it helps the algorithm jump out of local solutions adaptively. The experimental results also show that LARO converges better than ARO and the other algorithms on multimodal and fixed-dimensional problems, indicating that LARO is a reliable optimization method. However, LARO tends to upset the balance between exploration and exploitation over the course of the iterations, which affects its performance.
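The selective opposition idea can be illustrated with a simplified variant. This is a hypothetical sketch, not the exact LARO rule: the dimensions in which a rabbit lies farther than a threshold from the current best are reflected to their opposite positions, lb + ub − x, and the opposite candidate is kept only if it improves the fitness (greedy selection). The threshold, the sphere test objective, and the function names are assumptions.

```python
from typing import Callable, List, Sequence


def selective_opposition(x: Sequence[float], best: Sequence[float],
                         lb: Sequence[float], ub: Sequence[float],
                         fitness: Callable[[Sequence[float]], float],
                         threshold: float) -> List[float]:
    """Reflect only the 'far' dimensions of x; keep the result if it is better.

    Simplified, hypothetical variant: the selection rule and the threshold
    are assumptions for illustration.
    """
    candidate = list(x)
    for d in range(len(x)):
        if abs(x[d] - best[d]) > threshold:      # dimension d is far from the best
            candidate[d] = lb[d] + ub[d] - x[d]  # opposite point inside [lb, ub]
    return candidate if fitness(candidate) < fitness(x) else list(x)


def sphere(v: Sequence[float]) -> float:
    """Toy minimization objective with its optimum at the origin."""
    return sum(t * t for t in v)


if __name__ == "__main__":
    print(selective_opposition([4.0, 0.1], [0.0, 0.0], [-2.0, -2.0], [8.0, 8.0],
                               sphere, threshold=1.0))  # [2.0, 0.1]
```

Only the first coordinate is reflected here because it is the only one farther than the threshold from the best; the near coordinate is left untouched, which is what distinguishes selective opposition from full opposition-based learning.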

Table 5 gives the p-values of the Wilcoxon test between LARO and each of the 11 other MH algorithms, used to check whether LARO outperforms them. The Wilcoxon test results for ARO, AOA, GWO, COOT, GJO, and INFO are 2/16/5, 1/1/21, 0/1/22, 0/11/12, 3/2/18, and 3/12/8, respectively, and those for MFO, MVO, SCA, SSA, and WOA are 1/5/17, 0/1/22, 0/1/22, 1/7/15, and 2/3/18, respectively.

Table 5.

Statistical output and associated p-values on 23 test functions.

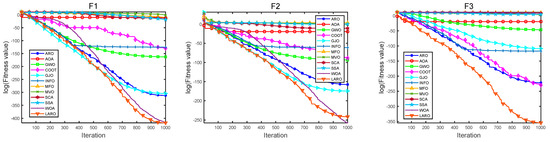

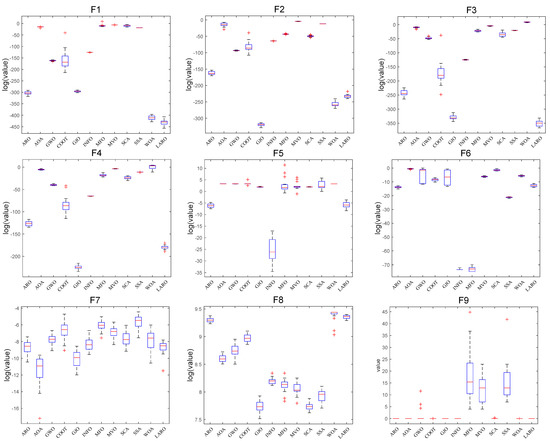

Figure 9 shows the convergence curves of the twelve methods on the 23 benchmark functions, where the x-axis is the iteration number and the y-axis is the fitness value (for F1, F2, F3, F4, F5, F6, F7, F10, F11, F12, F13, F14, and F15, the y-axis uses a base-10 logarithmic scale). Among the unimodal functions F1–F7, LARO converges quickly and accurately on F1, F2, F3, F4, and F5. LARO also continues to improve its accuracy near the optimal solution late in the iteration, which shows that it reliably escapes local optima. On F8–F23, LARO transitions rapidly from the early search phase to the late exploitation phase and converges near the optimal position early in the iteration; it then progressively refines the best position and updates the solution to confirm the earlier search results. Figure 10 shows box plots of the 12 MH algorithms to illustrate the distribution of the results on each problem. On most of the tested problems, the distribution of LARO is more concentrated and lower than those of the other algorithms, which illustrates the consistency and stability of LARO. Overall, LARO handles the 23 basic test functions very well.
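Convergence curves of this kind plot the best fitness found so far at each iteration, optionally on a base-10 logarithmic scale. A minimal sketch of that post-processing, assuming the per-iteration fitness values of a run are available; the function name and the small floor `eps`, which keeps log10 defined once a run reaches exactly 0, are assumptions.

```python
import math
from typing import List, Sequence


def convergence_curve(fitness_per_iter: Sequence[float], log10: bool = False,
                      eps: float = 1e-300) -> List[float]:
    """Best-so-far fitness per iteration, optionally as log10 values.

    The log scale is only meaningful for non-negative objectives such as F1-F7;
    eps is an assumed floor so that an exact 0 does not break log10.
    """
    curve: List[float] = []
    best = math.inf
    for f in fitness_per_iter:
        best = min(best, f)
        if log10:
            if best < 0:
                raise ValueError("log scale needs non-negative fitness values")
            curve.append(math.log10(max(best, eps)))
        else:
            curve.append(best)
    return curve


if __name__ == "__main__":
    print(convergence_curve([5.0, 3.0, 4.0, 1.0]))               # [5.0, 3.0, 3.0, 1.0]
    print(convergence_curve([100.0, 1000.0, 10.0], log10=True))  # [2.0, 2.0, 1.0]
```

The negativity check reflects why functions with negative optima, such as F8, cannot be shown on a logarithmic axis.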

Figure 9.

Convergence plots of LARO and different MH methods on 23 test functions.

Figure 10.

Box plot of LARO and ARO, AOA, GWO, COOT, GJO, INFO, MFO, MVO, SCA, SSA, WOA on 23 test functions.

4.5. Experiments on the CEC2017 Classical Functions

In this section, LARO is tested on 29 CEC2017 test functions. LARO and the comparison methods are each run 20 times independently, with the same parameters as in Section 4.4. The problem dimension of cec01 and cec03–cec30 is 10. The compared methods are ARO [29], BWO [12], CapSA [13], GA [49], PSO [50], RSA [51], WSO [52], GJO [41], E-WOA [53], WMFO [54], and CSOAOA [26]. Table 6 compares LARO with these methods under each evaluation metric on all 29 test functions, and the last part of the table gives the results of the Friedman test, whose ranks are computed from the mean values.
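Given the average ranks $\bar{R}_j$ of $k$ algorithms over $N$ test functions, the Friedman statistic is $\chi^2_F = \frac{12N}{k(k+1)}\sum_j \bar{R}_j^2 - 3N(k+1)$, which is compared with a $\chi^2$ distribution with $k-1$ degrees of freedom. The sketch below computes it from average ranks; the function name and the toy inputs are hypothetical and do not reproduce Table 6.

```python
from typing import Sequence


def friedman_chi2(mean_ranks: Sequence[float], n_problems: int) -> float:
    """Friedman statistic from the average ranks of k algorithms on N problems."""
    k = len(mean_ranks)
    # Average ranks of k algorithms always sum to k(k+1)/2 (loose tolerance
    # because published ranks are usually rounded).
    if abs(sum(mean_ranks) - k * (k + 1) / 2.0) > 1e-3:
        raise ValueError("average ranks of k algorithms must sum to k(k+1)/2")
    n = n_problems
    return 12.0 * n / (k * (k + 1)) * sum(r * r for r in mean_ranks) - 3.0 * n * (k + 1)


if __name__ == "__main__":
    # Toy case: 3 algorithms that finish in the same order on all 4 problems.
    print(friedman_chi2([1.0, 2.0, 3.0], n_problems=4))  # 8.0
```

With k = 3 the statistic has 2 degrees of freedom, and 8.0 exceeds the 0.05 critical value of 5.991, so the toy rankings differ significantly.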

Table 6.

Statistical outcomes of the different search methods on the CEC2017 test functions.

As shown in Table 6, the average Friedman rank of LARO is 1.8621, while those of WSO and ARO are 2.6897 and 2.7241, respectively, so LARO ranks first overall. LARO provides good output on the 29 test functions: it obtains the best results on 11 functions (cec05, cec07, cec09, cec11, cec15, cec17, cec18, cec20, cec22, cec23, cec28, cec30), and it obtains better optimal and average values on ten functions (cec01, cec03, cec06, cec08, cec10, cec14, cec19, cec21, cec27, cec29). The numerical results show that LARO performs very well on the unimodal problems, again indicating that it converges quickly. Its performance on the multimodal functions shows that the selective opposition strategy effectively helps the algorithm jump out of local solutions. On the hybrid and composition functions, LARO also shows excellent optimization ability, indicating that the Lévy flight strategy improves the accuracy of the algorithm. By comparison, WSO and ARO successfully solve six functions (cec01, cec08, cec10, cec12, cec13, cec29) and four functions (cec06, cec14, cec19, cec26), respectively.

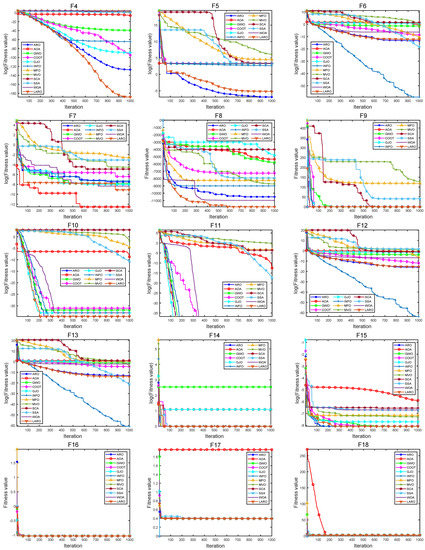

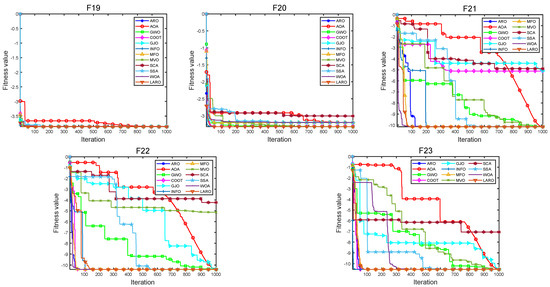

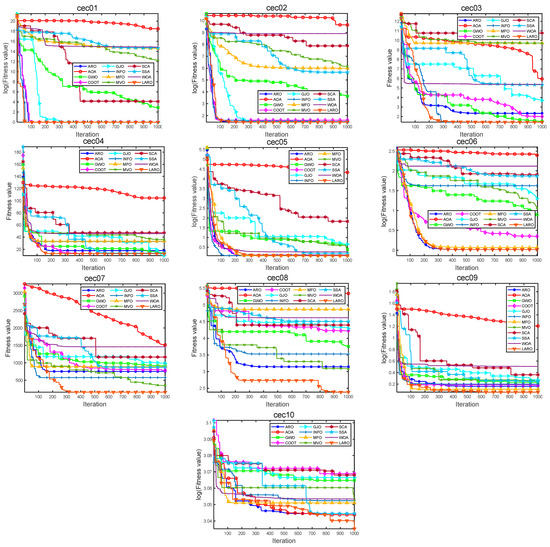

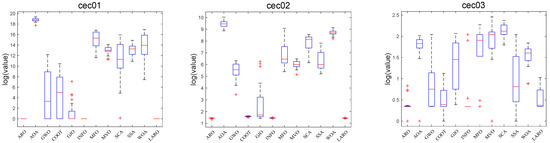

4.6. Experiments on CEC2019 Test Functions

In this section, LARO is tested on the ten CEC2019 functions [26]. LARO is run 20 times independently, with the same parameters as the numerical experiments in Section 4.3. Functions cec01–cec03 have dimensions 9, 16, and 18, respectively, while cec04–cec10 have dimension 10 [55]. The results are compared with those of AOA [38], GWO [39], COOT [40], GJO [41], INFO [42], MFO [43], MVO [44], SCA [45], SSA [46], and WOA [47]. Table 7 compares all methods under four evaluation metrics on the ten test functions, and the last part of the table gives the results of the Wilcoxon and Friedman tests. The results show that LARO handles these challenging optimization problems well: it ranks first with an average rank of 1.3636 and obtains the best result on seven of the ten CEC2019 functions (cec01, cec04, cec05, cec06, cec07, cec08, cec10), while ARO obtains the best results on the other three (cec02, cec03, cec09). These results show that LARO can accurately approach the optimal solution and is highly competitive with other MH methods on various types of problems. They also indicate that adding the Lévy flight and selective opposition strategies increases the diversity of the population and the accuracy of the solutions, which helps LARO avoid local optima.

Table 7.

Statistical outcomes of the different search methods on the CEC2019 test functions.

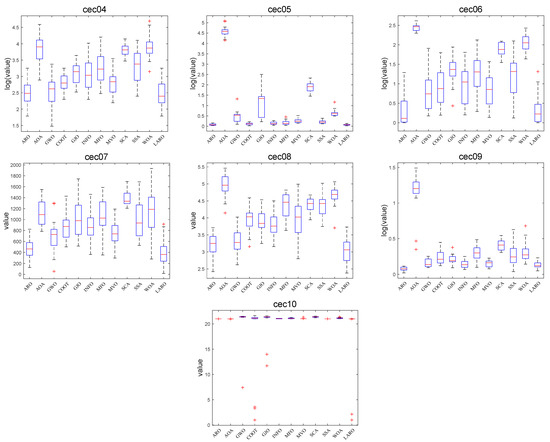

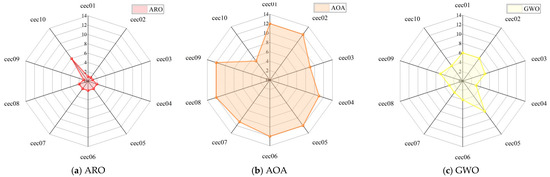

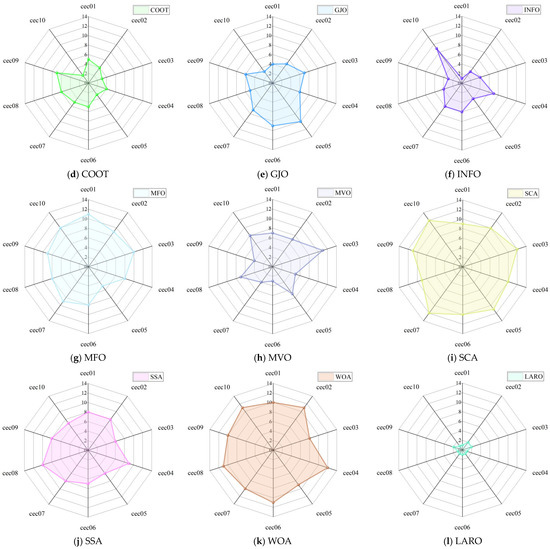

The convergence curves in Figure 11 show that LARO obtains high-quality, accurate solutions with a fast convergence speed on cec01, cec02, cec03, cec04, cec05, cec06, cec07, cec08, and cec10. Figure 12 and Figure 13 provide box plots and radar charts, respectively, of the CEC2019 runs. The boxes of LARO are very narrow, indicating its stability and superiority, and the radar chart shows that LARO has the smallest rank across the tested functions. Table 8 gives the p-values of the Wilcoxon test between LARO and each of the 11 other MH methods, used to check whether LARO outperforms them. The Wilcoxon test results for ARO, AOA, GWO, COOT, GJO, and INFO are 2/7/1, 0/0/10, 0/2/8, 0/1/9, 0/0/. The Wilcoxon test results for MFO, MVO, SCA, SSA, and WOA are 0/0/10, 0/1/9, 0/0/10, 0/1/9, and 0/0/10, respectively.

Figure 11.

Convergence plots of LARO and other search methods on the CEC2019 test functions.

Figure 12.

Box plot of LARO and ARO, AOA, GWO, COOT, GJO, INFO, MFO, MVO, SCA, SSA, WOA on CEC2019 test functions.

Figure 13.

Radar chart of ARO, AOA, GWO, COOT, GJO, INFO, MFO, MVO, SCA, SSA, WOA, and LARO on CEC2019.

Table 8.

Statistical output and associated p-values on the CEC2019 test functions.

4.7. Impact Analysis of Each Improvement

The preceding experiments compared LARO with other algorithms on three standard test sets (the 23 benchmark test functions, CEC2017, and CEC2019). This section summarizes how each improvement strategy affects the performance of the algorithm.

The Lévy flight strategy is introduced into ARO mainly to address the low convergence accuracy of the original ARO. Unimodal functions (e.g., F01–F07) are commonly used to test convergence accuracy because they have no multiple solutions and the vicinity of the optimum is easy to reach. On the 23 benchmark test functions, LARO ranks 1, 3, 1, 2, 3, 5, and 3 on the unimodal functions F01–F07, respectively, and except on F05–F06, its convergence accuracy is higher than that of the original ARO. In the CEC2017 experiments, LARO also ranks higher than the original ARO on both cec01 and cec03. The Lévy flight strategy therefore helps ARO improve its convergence accuracy.

The selective opposition strategy is introduced mainly to help ARO jump out of local solutions in time. Multimodal functions (e.g., F08–F13, cec04–cec10 of CEC2017, and cec01–cec10 of CEC2019) contain multiple local solutions, so the search easily becomes trapped near one of them, which degrades convergence. An algorithm's performance on multimodal functions therefore reflects its ability to jump out of local solutions. On the 23 benchmark test functions, LARO ranks 2, 1, 1, 1, 1, 3, and 1 on F08–F13, respectively, and except on F12, its average result is better than that of the original ARO. On cec04–cec10 of CEC2017, LARO ranks 3, 1, 2, 1, 2, 1, 1, and 2, respectively, and except on cec06, its average result is better than that of the original ARO. On CEC2019, the average rank of LARO is 1.3636, better than the 1.9091 of ARO. LARO therefore converges better than the original ARO on multimodal functions, which indicates that the selective opposition strategy helps LARO jump out of local solutions.

5. Application of LARO in Semi-Real Mechanical Engineering

This section applies LARO to six practical mechanical engineering problems. Constraints in optimization problems can be handled in many ways, such as penalty functions, co-evolutionary, adaptive, and annealing penalties [56]. Among these, penalty functions are the most widely used because they are simple to construct and easy to apply. This paper therefore uses the penalty function strategy to handle the constraints of the six mechanical engineering optimization models. A constrained minimization problem is defined as:

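Minimize: $f(\mathbf{x})$

Subject to: $g_i(\mathbf{x}) \le 0,\ i = 1, 2, \ldots, m; \quad h_j(\mathbf{x}) = 0,\ j = 1, 2, \ldots, k$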

where m is the number of inequality constraints, k is the number of equality constraints, and $\mathbf{x} = [x_1, x_2, \ldots, x_n]$ is the n-dimensional design vector of the engineering problem. When boundary constraints are present, every variable must satisfy

$lb_d \le x_d \le ub_d, \quad d = 1, 2, \ldots, n$

where lb and ub are the lower and upper bounds of the variables and n is the number of dimensions.

Therefore, the mathematical description of the engineering optimization problem after constraint weighting is

where α is the weight of the inequality constraints and β is the weight of the equality constraints. To ensure that the inequality and equality constraints are satisfied during optimization, α and β must be large; this paper sets both to 1 × 10^5 [38]. As a result, the objective function is heavily penalized (its value increases) whenever a solution violates any constraint, which steers the algorithm away from infeasible solutions generated during the iterative process.
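One common way to realize this weighted objective is a static penalty. The sketch below assumes $F(\mathbf{x}) = f(\mathbf{x}) + \alpha \sum_i \max(0, g_i(\mathbf{x}))^2 + \beta \sum_j h_j(\mathbf{x})^2$ with α = β = 1 × 10^5 as stated above. Squaring the violations, and clipping candidates back into [lb, ub] to enforce the boundary constraints, are assumptions rather than details confirmed by the paper; `penalized` and `clip` are hypothetical names.

```python
from typing import Callable, List, Sequence

Vector = Sequence[float]


def penalized(f: Callable[[Vector], float],
              ineq: Callable[[Vector], Sequence[float]],
              eq: Callable[[Vector], Sequence[float]],
              alpha: float = 1e5, beta: float = 1e5) -> Callable[[Vector], float]:
    """Wrap f so that violations of g_i(x) <= 0 and h_j(x) = 0 add a penalty.

    The squared penalty form is an assumption; alpha and beta follow the text.
    """
    def objective(x: Vector) -> float:
        g_pen = sum(max(0.0, g) ** 2 for g in ineq(x))
        h_pen = sum(h ** 2 for h in eq(x))
        return f(x) + alpha * g_pen + beta * h_pen
    return objective


def clip(x: Vector, lb: Vector, ub: Vector) -> List[float]:
    """Project a candidate back into the box lb <= x <= ub (assumed handling)."""
    return [min(max(v, lo), hi) for v, lo, hi in zip(x, lb, ub)]


if __name__ == "__main__":
    # Toy problem: minimize x0^2 subject to 1 - x0 <= 0 (i.e. x0 >= 1).
    F = penalized(lambda x: x[0] ** 2, lambda x: [1.0 - x[0]], lambda x: [])
    print(F([2.0]))  # 4.0 (feasible, no penalty)
    print(F([0.0]))  # 100000.0 (a violation of 1 is penalized)
    print(clip([12.0, -3.0], [0.0, 0.0], [10.0, 10.0]))  # [10.0, 0.0]
```

Any optimizer, LARO included, can then minimize the wrapped objective as if the problem were unconstrained.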

LARO and all comparison algorithms were each run 30 times, with a maximum of 1000 iterations and a population size of 50. For the practical engineering applications, we used the same comparison algorithms as in the numerical experiments, including AOA [38], GWO [39], COOT [40], GJO [41], INFO [42], MFO [43], MVO [44], SCA [45], SSA [46], and WOA [47].

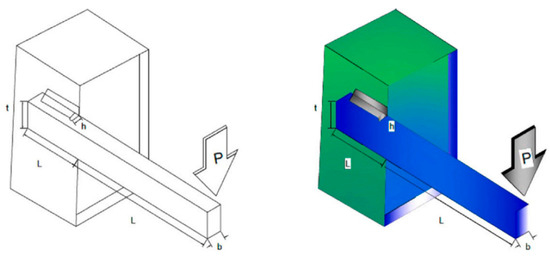

5.1. Welded Beam Design Problem (WBD)

The WBD problem is to minimize the design cost of a welded beam under various constraints. A schematic of the WBD structure is shown in Figure 14. The WBD has four independent design variables: the weld thickness (h), the length of the attached part of the rod (l), the rod height (t), and the rod thickness (b) [38]. These variables must satisfy seven constraints. The model of the WBD is given below.

Figure 14.

WBD structure.

Minimize:

Variable:

Subject to:

where,

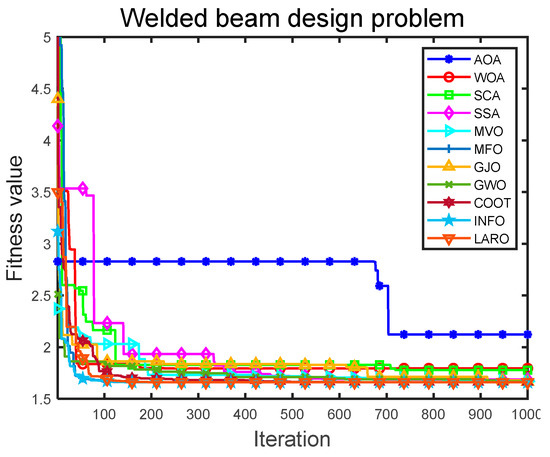

Table 9 provides the output results and best solutions of the search methods, and Table 10 reports their statistical results. Taken together, the two tables show that, under the same parameter settings, LARO obtains the best optimal value, achieves better average and STD values than the other methods, and also performs well on the worst-score metric. These results suggest that LARO is well suited to solving the WBD problem. Figure 15 shows the convergence curves of LARO and the compared algorithms on the WBD problem: LARO converges best, reaching the vicinity of the optimal solution in the early iterations, whereas AOA shows the worst convergence and accuracy.

Table 9.

The output results of search methods and the best average solution for solving the WBD problem.

Table 10.

The statistical output results of the search methods in solving the WBD problem.

Figure 15.

Convergence iteration plot of LARO and comparison algorithms in WBD problem.
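For reference, the sketch below encodes the WBD model in the form most widely used in the literature, with x = (h, l, t, b), load P = 6000 lb, L = 14 in, E = 30 × 10^6 psi, G = 12 × 10^6 psi, τmax = 13,600 psi, σmax = 30,000 psi, and δmax = 0.25 in. These constants and constraint forms are assumptions that may differ in detail from the model used in the paper. The commonly reported near-optimal design x ≈ (0.20573, 3.47049, 9.03662, 0.20573), with a cost of about 1.7249, serves only as a numerical check.

```python
import math
from typing import List, Sequence

# Constants of the widely used WBD formulation (assumed, not taken from the paper).
P = 6000.0          # load, lb
L_BEAM = 14.0       # beam overhang, in
E = 30e6            # Young's modulus, psi
G = 12e6            # shear modulus, psi
TAU_MAX, SIGMA_MAX, DELTA_MAX = 13600.0, 30000.0, 0.25


def wbd_cost(x: Sequence[float]) -> float:
    """Fabrication cost for x = (h, l, t, b)."""
    h, l, t, b = x
    return 1.10471 * h**2 * l + 0.04811 * t * b * (L_BEAM + l)


def wbd_constraints(x: Sequence[float]) -> List[float]:
    """g_1..g_7 of the assumed formulation; feasible when every value is <= 0."""
    h, l, t, b = x
    tau_p = P / (math.sqrt(2.0) * h * l)                  # primary shear stress
    m = P * (L_BEAM + l / 2.0)                             # bending moment at the weld
    r = math.sqrt(l**2 / 4.0 + ((h + t) / 2.0) ** 2)
    j = 2.0 * math.sqrt(2.0) * h * l * (l**2 / 12.0 + ((h + t) / 2.0) ** 2)
    tau_pp = m * r / j                                     # secondary (torsional) shear
    tau = math.sqrt(tau_p**2 + 2.0 * tau_p * tau_pp * l / (2.0 * r) + tau_pp**2)
    sigma = 6.0 * P * L_BEAM / (b * t**2)                  # bending stress in the rod
    delta = 4.0 * P * L_BEAM**3 / (E * t**3 * b)           # end deflection
    p_c = (4.013 * E * math.sqrt(t**2 * b**6 / 36.0) / L_BEAM**2
           * (1.0 - t / (2.0 * L_BEAM) * math.sqrt(E / (4.0 * G))))  # buckling load
    return [
        tau - TAU_MAX,
        sigma - SIGMA_MAX,
        h - b,
        0.10471 * h**2 + 0.04811 * t * b * (L_BEAM + l) - 5.0,
        0.125 - h,
        delta - DELTA_MAX,
        P - p_c,
    ]


if __name__ == "__main__":
    x = [0.20573, 3.47049, 9.03662, 0.20573]
    print(round(wbd_cost(x), 4))
    print(wbd_constraints(x))
```

Combined with the penalty wrapper sketched for the constraint-handling scheme, these two functions give an unconstrained objective that any of the compared algorithms could minimize.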

5.2. Pressure Vessel Design Problem (PVD)

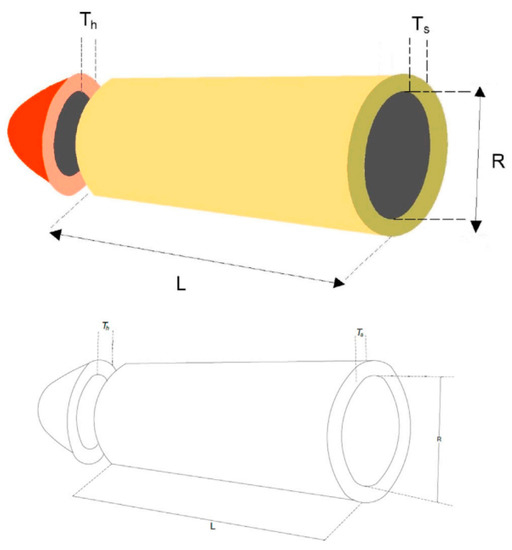

The structure of the PVD is illustrated in Figure 16. The aim of the PVD problem is to minimize the total cost of a cylindrical vessel, which consists of three cost components. The vessel is capped at both ends, and its heads are hemispherical. The PVD has four design variables: the shell thickness (Ts), the head thickness (Th), the inner radius (R), and the length of the cylindrical section (L) [38]. The mathematical model of the PVD, which has four constraints, is presented as follows.

Figure 16.

PVD structure.

Minimize:

Variable range:

Subject to:

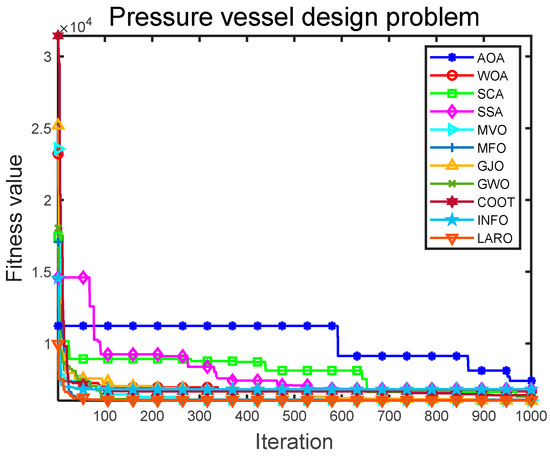

Table 11 provides the output results of the different search methods and the best average solution for the PVD problem, and Table 12 reports the statistical outputs of the different methods. Analyzing both tables shows that, under the same parameter settings, LARO obtains the best optimal value and the best average and STD values among the compared search methods, indicating that LARO performs well on the PVD problem. Figure 17 shows the convergence curves of LARO and the comparison algorithms on the PVD problem. LARO converges to the optimal solution with the fastest convergence rate of all algorithms, whereas SCA and SSA converge poorly in the early stages and AOA converges poorly throughout. These results show that LARO has an advantage over the other algorithms in solving the PVD problem.

Table 11.

The output results of the different search methods and the best average solution for solving the PVD problem.

Table 12.

The statistical output of the different search methods in solving the PVD problem.

Figure 17.

Convergence iteration plot of LARO and comparison algorithms in PVD problem.
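As with the WBD, the sketch below encodes the PVD in the formulation commonly found in the literature, with x = (Ts, Th, R, L). The coefficients are assumptions that may differ from the paper's model, and the widely cited design (0.8125, 0.4375, 42.0984, 176.6366), with a cost of about 6059.71, is used only as a numerical check.

```python
import math
from typing import List, Sequence


def pvd_cost(x: Sequence[float]) -> float:
    """Total cost for x = (Ts, Th, R, L) in the commonly used (assumed) formulation."""
    ts, th, r, length = x
    return (0.6224 * ts * r * length + 1.7781 * th * r**2
            + 3.1661 * ts**2 * length + 19.84 * ts**2 * r)


def pvd_constraints(x: Sequence[float]) -> List[float]:
    """g_1..g_4 of the assumed formulation; feasible when all values are <= 0."""
    ts, th, r, length = x
    return [
        -ts + 0.0193 * r,                                                    # shell thickness
        -th + 0.00954 * r,                                                   # head thickness
        -math.pi * r**2 * length - 4.0 / 3.0 * math.pi * r**3 + 1296000.0,  # minimum volume
        length - 240.0,                                                      # maximum length
    ]


if __name__ == "__main__":
    x = [0.8125, 0.4375, 42.0984, 176.6366]
    print(round(pvd_cost(x), 2))
    print(pvd_constraints(x))
```

In this formulation the first constraint is essentially active at the reference design, which is typical of the best PVD solutions reported in the literature.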

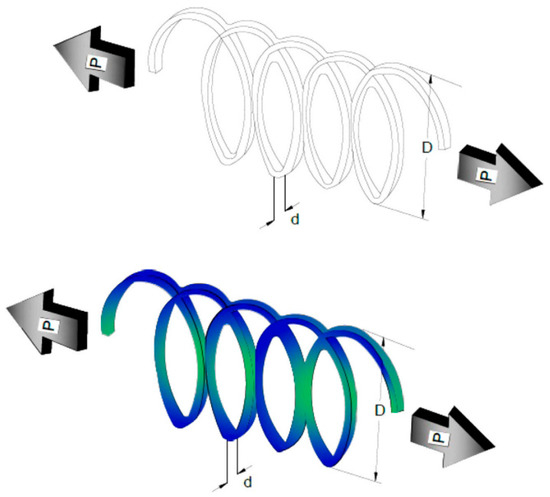

5.3. Tension/Compression Spring Design (TCS)

The objective of the TCS problem is to minimize the weight of a tension/compression spring. The TCS has three design variables: the wire diameter (d), the number of active coils (N), and the mean coil diameter (D) [38]. A schematic of the TCS problem is shown in Figure 18, and the design model of the TCS is given below.

Figure 18.

TCS structure.

Minimize:

Variable range:

Subject to:

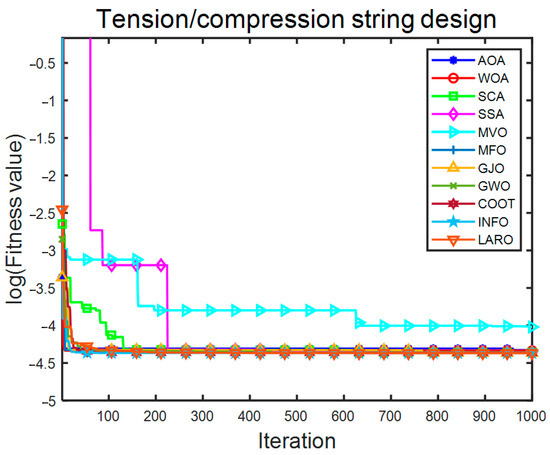

Table 13 lists, for all search methods, the best decision variables, the best average objective function values, and the values of the four constraints for the TCS problem, and Table 14 gives the statistical results of all search algorithms. Analyzing both tables shows that LARO obtains better experimental results than the other comparison algorithms, with the best optimal, average, worst, and STD values, which suggests that LARO performs very well on the TCS problem. Figure 19 shows the convergence curves of LARO and the comparison algorithms on the TCS problem, with the logarithm of the fitness value on the vertical axis. LARO converges to the optimal solution faster than the other algorithms, whereas SSA converges poorly in the early stage and MVO converges poorly throughout the process. These results show that LARO has an advantage over the other algorithms in solving the TCS problem.

Table 13.

The output results of the different search methods and the best average solution for solving the TCS problem.

Table 14.

The statistical output of the different search methods in solving the TCS problem.

Figure 19.

Convergence iteration plot of LARO and comparison algorithms in TCS problem.
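The sketch below encodes the TCS in the standard formulation from the literature, taking the variables in the order x = (d, D, N). The variable order, constants, and constraint forms are assumptions; the commonly reported near-optimal design (0.051689, 0.356718, 11.288966), with a weight of about 0.012665, is used only as a check.

```python
from typing import List, Sequence


def tcs_weight(x: Sequence[float]) -> float:
    """Spring weight for x = (d, D, N) (variable order assumed)."""
    d, D, N = x
    return (N + 2.0) * D * d**2


def tcs_constraints(x: Sequence[float]) -> List[float]:
    """g_1..g_4 of the assumed formulation; feasible when all values are <= 0."""
    d, D, N = x
    return [
        1.0 - D**3 * N / (71785.0 * d**4),                           # minimum deflection
        (4.0 * D**2 - d * D) / (12566.0 * (D * d**3 - d**4))
        + 1.0 / (5108.0 * d**2) - 1.0,                               # shear stress
        1.0 - 140.45 * d / (D**2 * N),                               # surge frequency
        (D + d) / 1.5 - 1.0,                                         # outer diameter
    ]


if __name__ == "__main__":
    x = [0.051689, 0.356718, 11.288966]
    print(round(tcs_weight(x), 6))
    print(tcs_constraints(x))
```

At the reference design the deflection constraint is nearly active, while the surge-frequency and diameter constraints are slack.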

5.4. Gear Train Design (GTD)

The goal of the GTD problem is to choose the gear set of a compound gear train so that the cost of its gear ratio is minimized. A schematic of the GTD problem is shown in Figure 20. The GTD has four integer design variables, Ta, Tb, Tc, and Td, which represent the numbers of teeth on four gears [26]. The mathematical model of the GTD is given below.

Figure 20.

GTD structure.

Minimize:

Variable range:

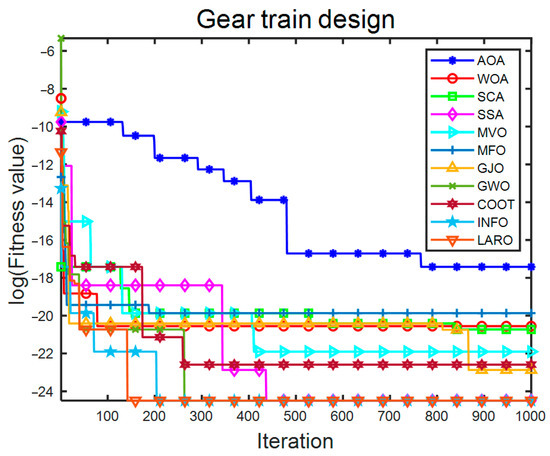

Table 15 provides the experimental results of all search algorithms and the best average solution for the GTD problem, and Table 16 presents their statistical outputs. The analysis shows that LARO obtains better results than the other search algorithms, with the best optimal, average, worst, and STD values, which indicates that LARO solves the GTD problem accurately. Figure 21 shows the convergence curves of LARO and the comparison algorithms on the GTD problem, with the logarithm of the fitness value on the vertical axis. LARO converges to the optimal solution with reasonable speed and accuracy compared with the other algorithms. SSA, GWO, INFO, and LARO all converge well, whereas AOA converges poorly throughout the process. These results show that LARO has an advantage over the other algorithms in solving the GTD problem.

Table 15.

The output results of the different search methods and the best average solution for solving the GTD problem.

Table 16.

The statistical output of the different search methods in solving the GTD problem.

Figure 21.

Convergence iteration plot of LARO and comparison algorithms in GTD problem.
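Because the GTD has only four integer variables, its optimum can be checked exhaustively. The sketch below assumes the common formulation f = (1/6.931 − Tb·Tc/(Ta·Td))² with 12 ≤ T ≤ 60; which gears sit in the numerator and denominator is an assumption. Instead of looping over all 49⁴ ≈ 5.8 million combinations, it sorts every possible denominator Ta·Td and, for each numerator Tb·Tc, checks only the two neighbours of the ideal denominator 6.931·Tb·Tc. This is sufficient because the ratio Tb·Tc/(Ta·Td) decreases monotonically as Ta·Td grows. The function names are hypothetical.

```python
import bisect
from itertools import product
from typing import Optional, Tuple

TARGET = 1.0 / 6.931  # required ratio in the common GTD formulation (assumed)


def gear_error(ta: int, tb: int, tc: int, td: int) -> float:
    """Squared deviation of the train ratio Tb*Tc/(Ta*Td) from 1/6.931."""
    return (TARGET - (tb * tc) / (ta * td)) ** 2


def exhaustive_gtd(lo: int = 12, hi: int = 60
                   ) -> Tuple[float, Optional[Tuple[int, int, int, int]]]:
    """Exact minimum of gear_error over integer teeth counts in [lo, hi]."""
    teeth = range(lo, hi + 1)
    # Every possible denominator Ta*Td, sorted for binary search.
    dens = sorted((ta * td, ta, td) for ta, td in product(teeth, repeat=2))
    keys = [q for q, _, _ in dens]
    best_err, best_x = float("inf"), None
    for tb, tc in product(teeth, repeat=2):
        ideal = 6.931 * tb * tc  # denominator that would give zero error
        i = bisect.bisect_left(keys, ideal)
        # Only the largest denominator below and the smallest at or above
        # the ideal value can give the smallest error for this numerator.
        for j in (i - 1, i):
            if 0 <= j < len(dens):
                _, ta, td = dens[j]
                err = gear_error(ta, tb, tc, td)
                if err < best_err:
                    best_err, best_x = err, (ta, tb, tc, td)
    return best_err, best_x


if __name__ == "__main__":
    print(exhaustive_gtd())
    print(gear_error(43, 16, 19, 49))  # about 2.70e-12
```

Such an exact reference value is useful for judging how close a stochastic method like LARO gets to the true GTD optimum.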

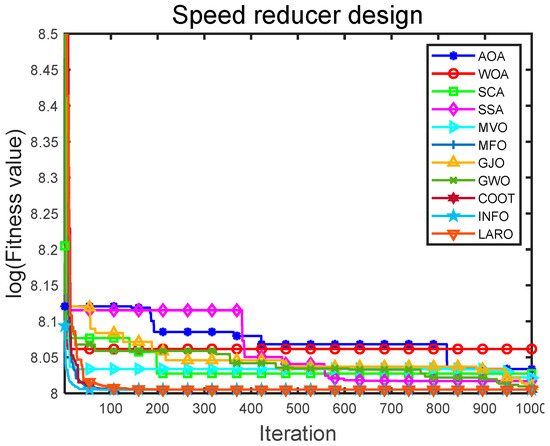

5.5. Speed Reducer Design (SRD)

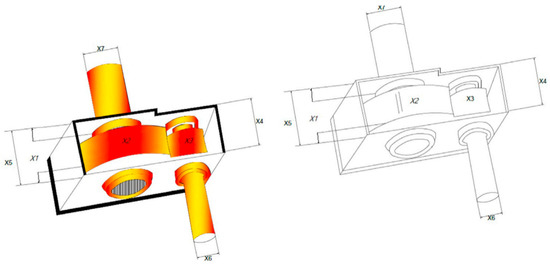

The aim of the SRD problem is to minimize the weight of a speed reducer while satisfying 11 constraints. A schematic of the SRD is shown in Figure 22. The SRD has seven design variables, and its constraints limit the bending stress of the gear teeth, the surface stress, the transverse deflections of the shafts, and the stresses in the shafts [38]. Here, z1 is the face width, z2 is the module of the teeth, and z3 is a discrete design variable giving the number of teeth on the pinion. Similarly, z4 is the length of the first shaft between the bearings and z5 is the length of the second shaft between the bearings. The sixth and seventh design variables (z6 and z7) are the diameters of the first and second shafts, respectively. The design model of the SRD (objective function and 11 constraints) is given below.

Figure 22.

SRD structure.

Minimize:

Variable range:

Subject to:

Table 17 shows the best outputs of LARO and the comparison methods on the SRD problem, and Table 18 gives the statistics of all search algorithms. LARO outperforms the other search algorithms, obtaining the best optimal, worst, average, and STD values under the same maximum number of iterations; its small STD also indicates good robustness. LARO is therefore effective in optimizing SRD solutions. Figure 23 shows the convergence curves of LARO and the comparison algorithms on the SRD problem, with the logarithm of the fitness value on the vertical axis. LARO converges to the optimal solution with good convergence speed and accuracy compared with the other algorithms, although all the algorithms converge near the optimal solution in the early iterations. These results show that LARO is an excellent algorithm for solving the SRD problem.

Table 17.

The output results of the different search methods and the best average solution for solving the SRD problem.

Table 18.

The statistical output of the different search methods in solving the SRD problem.

Figure 23.

Convergence iteration plot of LARO and comparison algorithms in SRD problem.
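The sketch below encodes the SRD objective and its 11 constraints in the formulation commonly used in the literature, with z = (z1, ..., z7) as defined above and z3 (the number of pinion teeth) expected to be an integer. The coefficients are assumptions that may differ from the paper's model. The widely reported design (3.5, 0.7, 17, 7.3, 7.71532, 3.35021, 5.28665), with a weight of about 2994.47, is used only as a check.

```python
import math
from typing import List, Sequence


def srd_weight(z: Sequence[float]) -> float:
    """Speed-reducer weight in the commonly used (assumed) formulation."""
    z1, z2, z3, z4, z5, z6, z7 = z
    return (0.7854 * z1 * z2**2 * (3.3333 * z3**2 + 14.9334 * z3 - 43.0934)
            - 1.508 * z1 * (z6**2 + z7**2)
            + 7.4777 * (z6**3 + z7**3)
            + 0.7854 * (z4 * z6**2 + z5 * z7**2))


def srd_constraints(z: Sequence[float]) -> List[float]:
    """g_1..g_11 (feasible when all values are <= 0)."""
    z1, z2, z3, z4, z5, z6, z7 = z
    return [
        27.0 / (z1 * z2**2 * z3) - 1.0,                     # tooth bending stress
        397.5 / (z1 * z2**2 * z3**2) - 1.0,                 # surface stress
        1.93 * z4**3 / (z2 * z3 * z6**4) - 1.0,             # deflection, shaft 1
        1.93 * z5**3 / (z2 * z3 * z7**4) - 1.0,             # deflection, shaft 2
        math.sqrt((745.0 * z4 / (z2 * z3)) ** 2 + 16.9e6)
        / (110.0 * z6**3) - 1.0,                            # stress, shaft 1
        math.sqrt((745.0 * z5 / (z2 * z3)) ** 2 + 157.5e6)
        / (85.0 * z7**3) - 1.0,                             # stress, shaft 2
        z2 * z3 / 40.0 - 1.0,                               # geometric limits
        5.0 * z2 / z1 - 1.0,
        z1 / (12.0 * z2) - 1.0,
        (1.5 * z6 + 1.9) / z4 - 1.0,                        # shaft design rules
        (1.1 * z7 + 1.9) / z5 - 1.0,
    ]


if __name__ == "__main__":
    z = [3.5, 0.7, 17.0, 7.3, 7.71532, 3.35021, 5.28665]
    print(round(srd_weight(z), 2))
    print(max(srd_constraints(z)))
```

Several constraints (for example, both shaft-stress limits) are almost exactly active at this design, which is why small perturbations quickly become infeasible and a strong penalty is needed.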

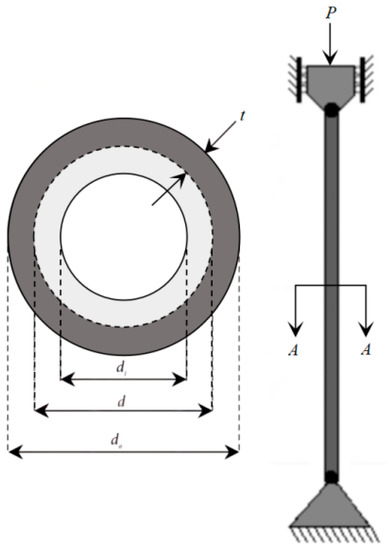

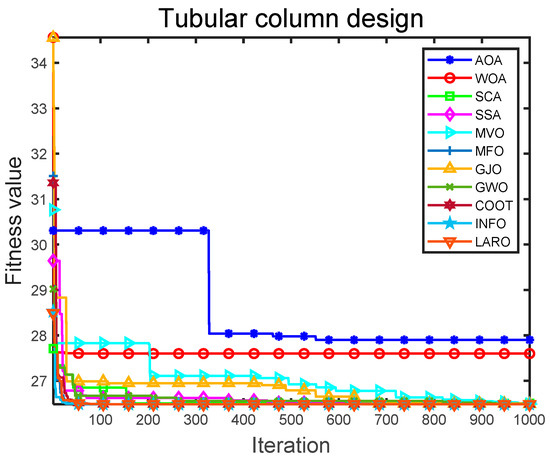

5.6. Tubular Column Design (TCD)

The TCD problem is to design, at minimum cost, a uniform column with a tubular cross-section that carries a given compressive load while satisfying six constraints [4]. A schematic of the TCD is shown in Figure 24. The material of the column has a yield stress σy = 500 kgf/cm² and a modulus of elasticity E = 0.85 × 10^6 kgf/cm². The mathematical model of the TCD problem is given below.

Figure 24.

TCD structure.

Minimize:

Variable range:

Subject to:

Table 19 presents the best outputs obtained by LARO and the comparison algorithms on the TCD problem, and Table 20 gives the statistics of all search algorithms. LARO outperforms the other search methods, obtaining the best optimal, worst, average, and STD values under the same maximum number of iterations; its small STD also indicates good robustness. LARO is therefore effective in solving TCD problems. Figure 25 shows the convergence curves of LARO and the comparison algorithms on the TCD problem. LARO converges to the optimal solution faster than the other algorithms, whereas MVO converges poorly in the early stages and AOA and WOA converge poorly throughout the process. These results show that LARO has an advantage over the other algorithms in solving the TCD problem.

Table 19.

The output results of the different search methods and the best average solution for solving the TCD problem.

Table 20.

The statistical output of the different search methods in solving the TCD problem.

Figure 25.

Convergence iteration plot of LARO and comparison algorithms in TCD problem.
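The sketch below encodes the TCD in the formulation commonly associated with this benchmark, with design variables x = (d, t) (mean diameter and wall thickness, in cm) and the material constants stated above. The compressive load P = 2500 kgf and column length L = 250 cm are assumptions, as are the constraint forms. The point (5.4521, 0.2919), close to commonly reported optima with a cost of about 26.50, is used only as a check; at this point the yield-stress and buckling constraints are nearly active.

```python
import math
from typing import List, Sequence

SIGMA_Y = 500.0   # yield stress, kgf/cm^2 (from the text)
E_MOD = 0.85e6    # modulus of elasticity, kgf/cm^2 (from the text)
P_LOAD = 2500.0   # compressive load, kgf (assumption)
LENGTH = 250.0    # column length, cm (assumption)


def tcd_cost(x: Sequence[float]) -> float:
    """Material-plus-construction cost for x = (d, t) (assumed formulation)."""
    d, t = x
    return 9.8 * d * t + 2.0 * d


def tcd_constraints(x: Sequence[float]) -> List[float]:
    """g_1..g_6 (feasible when all values are <= 0)."""
    d, t = x
    return [
        P_LOAD / (math.pi * d * t * SIGMA_Y) - 1.0,                   # yield stress
        8.0 * P_LOAD * LENGTH**2
        / (math.pi**3 * E_MOD * d * t * (d**2 + t**2)) - 1.0,         # Euler buckling
        2.0 / d - 1.0,        # d >= 2
        d / 14.0 - 1.0,       # d <= 14
        0.2 / t - 1.0,        # t >= 0.2
        t / 0.8 - 1.0,        # t <= 0.8
    ]


if __name__ == "__main__":
    x = [5.4521, 0.2919]
    print(round(tcd_cost(x), 4))
    print(tcd_constraints(x))
```

The last four constraints simply encode the variable bounds, so with bound clipping in place only the stress and buckling constraints need to be penalized.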

6. Conclusions

In this study, an effective metaheuristic method, the enhanced ARO algorithm (LARO), is proposed as a variant of the ARO algorithm. To boost the global search ability of ARO, LARO uses the Lévy flight strategy to improve global exploration and the avoidance of local solutions, and it uses the selective opposition strategy for local exploitation. The most remarkable features of LARO are its straightforward structure and high computational accuracy; it often requires only the basic parameters (i.e., population size and termination conditions) to solve optimization problems. We tested LARO on 23 classical test functions, the CEC2017 and CEC2019 test suites, and six mechanical engineering design problems. The experimental results show that LARO obtains the optimal average solution on 16 of the 23 classical test functions and the smallest average rank (1.6957). LARO also obtains the best solutions on five and seven functions of CEC2017 and CEC2019, respectively. These results show that the proposed improvements to ARO are very effective in improving its optimization performance. However, there is still room to improve the exploration ability of LARO on the CEC2017 test functions. In the mechanical design problems, all six practical problems are complex, with multiple nonlinear constraints and multiple local solutions, and the results show that LARO obtains the best decision variables and objective function values. Because of its excellent convergence, strong exploration ability, and lack of need to fine-tune initial parameters, LARO has great potential for handling optimization problems with various characteristics.

In future work, we will develop further versions of the ARO algorithm, including ARO with opposition-based learning initialization, a multi-objective ARO, a binary ARO, and a discrete ARO [57,58,59,60,61,62]. We will also apply LARO to various complex real-world engineering optimization problems, such as hyperparameter optimization of machine learning algorithms, urban travel recommendation in smart cities, job-shop scheduling, image segmentation, developable surface modeling [63], and smooth path planning for mobile robots.

Author Contributions

Conceptualization, Y.W. and G.H.; Data curation, Y.W., L.H. and G.H.; Formal analysis, L.H. and J.Z.; Funding acquisition, G.H.; Investigation, Y.W., L.H. and J.Z.; Methodology, L.H., J.Z. and G.H.; Project administration, Y.W., J.Z. and G.H.; Resources, Y.W. and G.H.; Software, Y.W., L.H. and J.Z.; Supervision, G.H.; Validation, J.Z. and G.H.; Visualization, G.H.; Writing–original draft, Y.W., L.H., J.Z. and G.H.; Writing—review & editing, Y.W., L.H., J.Z. and G.H. All authors have read and agreed to the published version of the manuscript.

Funding

This work is supported by the Research Fund of Department of Science and Department of Education of Shaanxi, China (Grant No. 21JK0615).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

All data generated or analyzed during this study were included in this published article.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Zhao, W.; Wang, L.; Mirjalili, S. Artificial hummingbird algorithm: A new bio-inspired optimizer with its engineering applications. Comput. Methods Appl. Mech. Eng. 2022, 388, 114194.

- Zamani, H.; Nadimi-Shahraki, M.H.; Gandomi, A.H. Starling murmuration optimizer: A novel bio-inspired algorithm for global and engineering optimization. Comput. Methods Appl. Mech. Eng. 2022, 392, 114616.

- Knypiński, Ł. Performance analysis of selected metaheuristic optimization algorithms applied in the solution of an unconstrained task. COMPEL—Int. J. Comput. Math. Electr. Electron. Eng. 2021, 41, 1271–1284.

- Agushaka, J.O.; Ezugwu, A.E.; Abualigah, L. Dwarf Mongoose Optimization Algorithm. Comput. Methods Appl. Mech. Eng. 2022, 391, 114570.

- Abdollahzadeh, B.; Gharehchopogh, F.S.; Mirjalili, S. African vultures optimization algorithm: A new nature-inspired metaheuristic algorithm for global optimization problems. Comput. Ind. Eng. 2021, 158, 107408.

- Ozcalici, M.; Bumin, M. Optimizing filter rule parameters with genetic algorithm and stock selection with artificial neural networks for an improved trading: The case of Borsa Istanbul. Expert Syst. Appl. 2022, 208, 118120.

- Storn, R.; Price, K. Differential Evolution—A Simple and Efficient Heuristic for global Optimization over Continuous Spaces. J. Glob. Optim. 1997, 11, 341–359.

- Han, Z.; Chen, M.; Shao, S.; Wu, Q. Improved artificial bee colony algorithm-based path planning of unmanned autonomous helicopter using multi-strategy evolutionary learning. Aerosp. Sci. Technol. 2022, 122, 107374.

- Fogel, D.B. Artificial Intelligence through Simulated Evolution. In Evolutionary Computation: The Fossil Record; Wiley-IEEE Press: New York, NY, USA, 1998; pp. 227–296.

- Eslami, N.; Yazdani, S.; Mirzaei, M.; Hadavandi, E. Aphid–Ant Mutualism: A novel nature-inspired metaheuristic algorithm for solving optimization problems. Math. Comput. Simul. 2022, 201, 362–395.

- Srivastava, A.; Das, D.K. A bottlenose dolphin optimizer: An application to solve dynamic emission economic dispatch problem in the microgrid. Knowl.-Based Syst. 2022, 243, 108455.

- Zhong, C.; Li, G.; Meng, Z. Beluga whale optimization: A novel nature-inspired metaheuristic algorithm. Knowl.-Based Syst. 2022, 251, 109215.

- Braik, M.; Sheta, A.; Al-Hiary, H. A novel meta-heuristic search algorithm for solving optimization problems: Capuchin search algorithm. Neural Comput. Appl. 2021, 33, 2515–2547.

- Seyyedabbasi, A.; Kiani, F. Sand Cat swarm optimization: A nature-inspired algorithm to solve global optimization problems. Eng. Comput. 2022, 1–25.

- Hu, G.; Li, M.; Wang, X.; Wei, G.; Chang, C.-T. An enhanced manta ray foraging optimization algorithm for shape optimization of complex CCG-Ball curves. Knowl.-Based Syst. 2022, 240, 108071.

- Hu, G.; Du, B.; Wang, X.; Wei, G. An enhanced black widow optimization algorithm for feature selection. Knowl.-Based Syst. 2022, 235, 107638.

- Hu, G.; Dou, W.; Wang, X.; Abbas, M. An enhanced chimp optimization algorithm for optimal degree reduction of Said–Ball curves. Math. Comput. Simul. 2022, 197, 207–252.

- Rashedi, E.; Nezamabadi-pour, H.; Saryazdi, S. GSA: A Gravitational Search Algorithm. Inf. Sci. 2009, 179, 2232–2248.

- Zhao, W.; Wang, L.; Zhang, Z. Atom search optimization and its application to solve a hydrogeologic parameter estimation problem. Knowl.-Based Syst. 2019, 163, 283–304.

- Javidy, B.; Hatamlou, A.; Mirjalili, S. Ions motion algorithm for solving optimization problems. Appl. Soft Comput. 2015, 32, 72–79.

- Faramarzi, A.; Heidarinejad, M.; Stephens, B.; Mirjalili, S. Equilibrium optimizer: A novel optimization algorithm. Knowl.-Based Syst. 2020, 191, 105190.

- Eskandar, H.; Sadollah, A.; Bahreininejad, A.; Hamdi, M. Water cycle algorithm–A novel metaheuristic optimization method for solving constrained engineering optimization problems. Comput. Struct. 2012, 110–111, 151–166.

- Mousavirad, S.J.; Ebrahimpour-Komleh, H. Human mental search: A new population-based metaheuristic optimization algorithm. Appl. Intell. 2017, 47, 850–887.

- Samareh Moosavi, S.H.; Bardsiri, V.K. Poor and rich optimization algorithm: A new human-based and multi populations algorithm. Eng. Appl. Artif. Intell. 2019, 86, 165–181.

- Rao, R.V.; Savsani, V.J.; Vakharia, D.P. Teaching–Learning-Based Optimization: An optimization method for continuous non-linear large scale problems. Inf. Sci. 2012, 183, 1–15.

- Hu, G.; Zhong, J.; Du, B.; Wei, G. An enhanced hybrid arithmetic optimization algorithm for engineering applications. Comput. Methods Appl. Mech. Eng. 2022, 394, 114901.

- Zamani, H.; Nadimi-Shahraki, M.H.; Gandomi, A.H. QANA: Quantum-based avian navigation optimizer algorithm. Eng. Appl. Artif. Intell. 2021, 104, 104314.

- Nadimi-Shahraki, M.H.; Zamani, H. DMDE: Diversity-maintained multi-trial vector differential evolution algorithm for non-decomposition large-scale global optimization. Expert Syst. Appl. 2022, 198, 116895.

- Wang, L.; Cao, Q.; Zhang, Z.; Mirjalili, S.; Zhao, W. Artificial rabbits optimization: A new bio-inspired meta-heuristic algorithm for solving engineering optimization problems. Eng. Appl. Artif. Intell. 2022, 114, 105082.

- Griffiths, E.J.; Orponen, P. Optimization, block designs and No Free Lunch theorems. Inf. Process. Lett. 2005, 94, 55–61.

- Service, T.C. A No Free Lunch theorem for multi-objective optimization. Inf. Process. Lett. 2010, 110, 917–923.

- Iacca, G.; dos Santos Junior, V.C.; Veloso de Melo, V. An improved Jaya optimization algorithm with Lévy flight. Expert Syst. Appl. 2021, 165, 113902.

- Dhargupta, S.; Ghosh, M.; Mirjalili, S.; Sarkar, R. Selective Opposition based Grey Wolf Optimization. Expert Syst. Appl. 2020, 151, 113389.

- Liu, K.; Zhang, H.; Zhang, B.; Liu, Q. Hybrid optimization algorithm based on neural networks and its application in wavefront shaping. Opt. Express 2021, 29, 15517–15527.

- Islam, M.A.; Gajpal, Y.; ElMekkawy, T.Y. Hybrid particle swarm optimization algorithm for solving the clustered vehicle routing problem. Appl. Soft Comput. 2021, 110, 107655.

- Devarapalli, R.; Bhattacharyya, B. A hybrid modified grey wolf optimization-sine cosine algorithm-based power system stabilizer parameter tuning in a multimachine power system. Optim. Control. Appl. Methods 2020, 41, 1143–1159.

- Arini, F.Y.; Chiewchanwattana, S.; Soomlek, C.; Sunat, K. Joint Opposite Selection (JOS): A premiere joint of selective leading opposition and dynamic opposite enhanced Harris’ hawks optimization for solving single-objective problems. Expert Syst. Appl. 2022, 188, 116001.

- Abualigah, L.; Diabat, A.; Mirjalili, S.; Abd Elaziz, M.; Gandomi, A.H. The Arithmetic Optimization Algorithm. Comput. Methods Appl. Mech. Eng. 2021, 376, 113609.

- Mirjalili, S.; Mirjalili, S.M.; Lewis, A. Grey Wolf Optimizer. Adv. Eng. Softw. 2014, 69, 46–61.

- Naruei, I.; Keynia, F. A new optimization method based on COOT bird natural life model. Expert Syst. Appl. 2021, 183, 115352.

- Chopra, N.; Mohsin Ansari, M. Golden jackal optimization: A novel nature-inspired optimizer for engineering applications. Expert Syst. Appl. 2022, 198, 116924.

- Ahmadianfar, I.; Heidari, A.A.; Noshadian, S.; Chen, H.; Gandomi, A.H. INFO: An efficient optimization algorithm based on weighted mean of vectors. Expert Syst. Appl. 2022, 195, 116516.

- Mirjalili, S. Moth-flame optimization algorithm: A novel nature-inspired heuristic paradigm. Knowl.-Based Syst. 2015, 89, 228–249.

- Mirjalili, S.; Mirjalili, S.M.; Hatamlou, A. Multi-Verse Optimizer: A nature-inspired algorithm for global optimization. Neural Comput. Appl. 2016, 27, 495–513.

- Mirjalili, S. SCA: A Sine Cosine Algorithm for solving optimization problems. Knowl.-Based Syst. 2016, 96, 120–133.

- Devarapalli, R.; Sinha, N.; Rao, B.; Knypiński, Ł.; Lakshmi, N.; García Márquez, F.P. Allocation of real power generation based on computing over all generation cost: An approach of Salp Swarm Algorithm. Arch. Electr. Eng. 2021, 70, 337–349.

- Mirjalili, S.; Lewis, A. The Whale Optimization Algorithm. Adv. Eng. Softw. 2016, 95, 51–67.

- Hussain, K.; Salleh, M.N.M.; Cheng, S.; Shi, Y. On the exploration and exploitation in popular swarm-based metaheuristic algorithms. Neural Comput. Appl. 2019, 31, 7665–7683.

- Squires, M.; Tao, X.; Elangovan, S.; Gururajan, R.; Zhou, X.; Acharya, U.R. A novel genetic algorithm based system for the scheduling of medical treatments. Expert Syst. Appl. 2022, 195, 116464.

- Peng, J.; Li, Y.; Kang, H.; Shen, Y.; Sun, X.; Chen, Q. Impact of population topology on particle swarm optimization and its variants: An information propagation perspective. Swarm Evol. Comput. 2022, 69, 100990.

- Abualigah, L.; Elaziz, M.A.; Sumari, P.; Geem, Z.W.; Gandomi, A.H. Reptile Search Algorithm (RSA): A nature-inspired meta-heuristic optimizer. Expert Syst. Appl. 2022, 191, 116158.

- Braik, M.; Hammouri, A.; Atwan, J.; Al-Betar, M.A.; Awadallah, M.A. White Shark Optimizer: A novel bio-inspired meta-heuristic algorithm for global optimization problems. Knowl.-Based Syst. 2022, 243, 108457.

- Nadimi-Shahraki, M.H.; Zamani, H.; Mirjalili, S. Enhanced whale optimization algorithm for medical feature selection: A COVID-19 case study. Comput. Biol. Med. 2022, 148, 105858.

- Nadimi-Shahraki, M.H.; Fatahi, A.; Zamani, H.; Mirjalili, S.; Oliva, D. Hybridizing of Whale and Moth-Flame Optimization Algorithms to Solve Diverse Scales of Optimal Power Flow Problem. Electronics 2022, 11, 831.

- Brest, J.; Maučec, M.S.; Bošković, B. The 100-Digit Challenge: Algorithm jDE100. In Proceedings of the 2019 IEEE Congress on Evolutionary Computation (CEC), Wellington, New Zealand, 10–13 June 2019; pp. 19–26.

- Coello Coello, C.A. Theoretical and numerical constraint-handling techniques used with evolutionary algorithms: A survey of the state of the art. Comput. Methods Appl. Mech. Eng. 2002, 191, 1245–1287.

- Hu, G.; Yang, R.; Qin, X.Q.; Wei, G. MCSA: Multi-strategy boosted chameleon-inspired optimization algorithm for engineering applications. Comput. Methods Appl. Mech. Eng. 2023, 403, 115676.

- Zheng, J.; Hu, G.; Ji, X.; Qin, X. Quintic generalized Hermite interpolation curves: Construction and shape optimization using an improved GWO algorithm. Comput. Appl. Math. 2022, 41, 115.

- Huang, L.; Wang, Y.; Guo, Y.; Hu, G. An Improved Reptile Search Algorithm Based on Lévy Flight and Interactive Crossover Strategy to Engineering Application. Mathematics 2022, 10, 2329.

- Li, Y.; Zhu, X.; Liu, J. An Improved Moth-Flame Optimization Algorithm for Engineering Problems. Symmetry 2020, 12, 1234.

- Nadimi-Shahraki, M.H.; Taghian, S.; Mirjalili, S.; Ewees, A.A.; Abualigah, L.; Abd Elaziz, M. MTV-MFO: Multi-Trial Vector-Based Moth-Flame Optimization Algorithm. Symmetry 2021, 13, 2388.

- Chen, Y.; Wang, L.; Liu, G.; Xia, B. Automatic Parking Path Optimization Based on Immune Moth Flame Algorithm for Intelligent Vehicles. Symmetry 2022, 14, 1923.

- Hu, G.; Zhu, X.N.; Wang, X.; Wei, G. Multi-strategy boosted marine predators algorithm for optimizing approximate developable surface. Knowl.-Based Syst. 2022, 254, 109615.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).