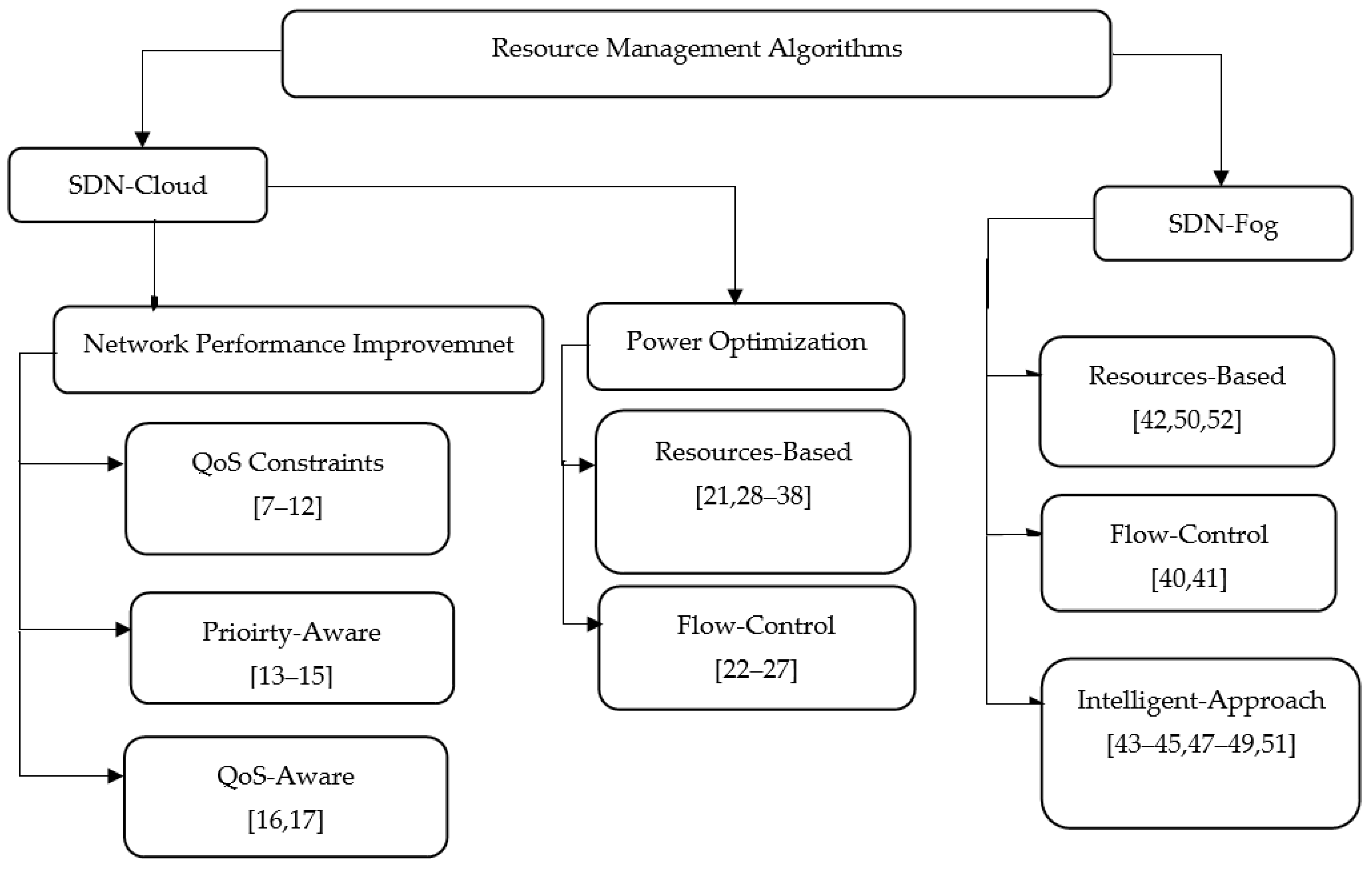

Resource Management in SDN-Based Cloud and SDN-Based Fog Computing: Taxonomy Study

Abstract

1. Introduction

2. Methodology of the Literature Review

3. SDN-Based Cloud Resource Management in Network Performance Improvement

3.1. Resources Management Based on QoS Constraints

3.2. Priority-Aware Resources Management

3.3. QoS-Aware Resources Management

3.4. Contributions on VM Migration Task in Resources Management

4. SDN-Based Cloud Computing Resource Management in Energy Efficiency

5. SDN-Based Fog Computing

6. Open Issues

- Power saving remains a challenge, along with related issues such as data transfer, powered devices, and energy wastage.

- Network performance can be improved by making the resource management algorithm aware of factors such as priority and QoS, and by accounting for bandwidth because of its impact on overall performance. Moreover, control over resources leads to performance improvement.

- There has always been a tradeoff between network performance and power optimization, owing to the consequences of overutilized and underutilized resources. Only a few studies manage to balance the two.

- Dynamically determining the overall objective from a set of objectives, such as minimizing delay or maximizing reliability, based on the application type is an open issue.

- Most research has focused on the Central Processing Unit (CPU) as the dominant factor in energy consumption. Although the CPU is a high-power element of a physical machine (PM), other important factors, such as RAM, the network card, and storage, are being neglected.

- Most of the presented techniques focus only on the server level and ignore possible contributions at the flow/link level.

- State-of-the-art research on SDN-based fog shows that related fields such as machine learning and artificial intelligence are being introduced to provide a fully programmable environment, which is a promising direction for future research.

- Identifying and fulfilling requests with higher priority prior to low-priority requests is an issue due to the high volume of data transferred among different devices, which results in congested links and, thus, latency.

- Combining SDN with named data networking (NDN) is a new approach that aims to gain the maximum benefit of both techniques. However, little research has been conducted in this field.

- Furthermore, SDN-based cloud is an innovative field that offers a great opportunity for researchers, since most studies contribute to either cloud or SDN alone.

- Scalability is an issue with two aspects: resource scalability, that is, scaling up or down in the cloud and fog layers, and controller scalability (local and global), which concerns the location and number of required controllers.

- Security is one of the main challenges encountered in SDN-based cloud/fog, including authentication and SDN security.

- Legacy SDN, such as OpenFlow, imposes certain architectures on controller design, which leads to a rigid environment for evolution and development, especially in fog environments.

- Only a few contributions address the simulation tools and testbeds that facilitate experiments in SDN-based environments.
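
The objective-selection issue in the list above can be made concrete with a small sketch. The Python below is illustrative only: the `Path` record, the `OBJECTIVE_BY_APP` mapping, and the application-type names are hypothetical and do not come from any surveyed work. It assumes that each candidate path already carries a delay estimate and a reliability estimate, and it simply switches between the two objectives named above according to the application type.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Path:
    """A candidate network path (hypothetical record for illustration)."""
    name: str
    delay_ms: float      # estimated end-to-end delay
    reliability: float   # estimated delivery probability in [0, 1]


# Hypothetical static policy: which objective each application type prefers.
OBJECTIVE_BY_APP = {
    "video_call": "min_delay",
    "telemetry": "max_reliability",
}


def select_path(paths, app_type, default_objective="min_delay"):
    """Pick a path by the objective associated with the application type."""
    if not paths:
        raise ValueError("at least one candidate path is required")
    objective = OBJECTIVE_BY_APP.get(app_type, default_objective)
    if objective == "min_delay":
        return min(paths, key=lambda p: p.delay_ms)
    if objective == "max_reliability":
        return max(paths, key=lambda p: p.reliability)
    raise ValueError(f"unknown objective: {objective}")


candidates = [
    Path("short_lossy", delay_ms=5.0, reliability=0.90),
    Path("long_stable", delay_ms=12.0, reliability=0.99),
]
```

A fixed lookup table is the simplest possible policy. The open issue is that this mapping would need to be determined dynamically, for example from observed traffic, rather than written by hand as it is here.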

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Culver, P. Software Defined Networks, 2nd ed.; Morgan Kaufmann: Burlington, MA, USA, 2016. [Google Scholar]

- Nunes, B.A.A.; Mendonca, M.; Nguyen, X.-N.; Obraczka, K.; Turletti, T. A survey of software-defined networking: Past, present, and future of programmable networks. IEEE Commun. Surv. Tutor 2013, 16, 1617–1634. [Google Scholar] [CrossRef]

- Leon-Garcia, A.; Bannazadeh, H.; Zhang, Q. Openflow and SDN for Clouds. Cloud Serv. Netw. Manag. 2015, 6, 129–152. [Google Scholar] [CrossRef]

- Arivazhagan, C.; Natarajan, V. A Survey on Fog computing paradigms, Challenges and Opportunities in IoT. In Proceedings of the 2020 International Conference on Communication and Signal Processing (ICCSP), Chennai, India, 28–30 July 2020; pp. 385–389. [Google Scholar]

- Schaller, S.; Hood, D. Software defined networking architecture standardization. Comput. Stand. Interfaces 2017, 54, 197–202. [Google Scholar] [CrossRef]

- Gonzalez, N.M.; Carvalho, T.C.M.D.B.; Miers, C.C. Cloud resource management: Towards efficient execution of large-scale scientific applications and workflows on complex infrastructures. J. Cloud Comput. 2017, 6, 13. [Google Scholar] [CrossRef]

- Wang, R.; Esteves, R.; Shi, L.; Wickboldt, J.A.; Jennings, B.; Granville, L.Z. Network-aware placement of virtual machine ensembles using effective bandwidth estimation. In Proceedings of the 10th International Conference on Network and Service Management (CNSM) and Workshop, Rio de Janeiro, Brazil, 17–21 November 2014; pp. 100–108. [Google Scholar]

- Ballani, H.; Costa, P.; Karagiannis, T.; Rowstron, A. Towards predictable datacenter networks. ACM SIGCOMM Comput. Commun. Rev. 2011, 41, 242–253. [Google Scholar] [CrossRef]

- Wang, R.; Wickboldt, J.A.; Esteves, R.P.; Shi, L.; Jennings, B.; Granville, L.Z. Using empirical estimates of effective bandwidth in network-aware placement of virtual machines in datacenters. IEEE Trans. Netw. Serv. Manag. 2016, 13, 267–280. [Google Scholar] [CrossRef]

- Wang, R.; Mangiante, S.; Davy, A.; Shi, L.; Jennings, B. QoS-aware multipathing in datacenters using effective bandwidth estimation and SDN. In Proceedings of the 2016 12th International Conference on Network and Service Management (CNSM), Montreal, QC, Canada, 31 October–4 November 2016; pp. 342–347. [Google Scholar]

- Hopps, C. “Analysis of an Equal-Cost Multi-Path Algorithm,” Internet Requests for Comments, RFC 2992. November 2000. Available online: https://www.hjp.at/doc/rfc/rfc2992.html (accessed on 5 March 2021).

- Al-Mansoori, A.; Abawajy, J.; Chowdhury, M. BDSP in the cloud: Scheduling and Load Balancing utlizing SDN and CEP. In Proceedings of the 2020 20th IEEE/ACM International Symposium on Cluster, Cloud and Internet Computing (CCGRID), Melbourne, VIC, Australia, 11–14 May 2020; pp. 827–835. [Google Scholar]

- Tsai, W.-T.; Shao, Q.; Elston, J. Prioritizing Service Requests on Cloud with Multi-tenancy. In Proceedings of the 2010 IEEE 7th International Conference on E-Business Engineering, Shanghai, China, 10–12 November 2010; pp. 117–124. [Google Scholar]

- Ellens, W.; Ivkovic, M.; Akkerboom, J.; Litjens, R.; van den Berg, H. Performance of cloud computing centers with multiple priority classes. In Proceedings of the 2012 IEEE Fifth International Conference on Cloud Computing, Honolulu, HI, USA, 24–29 June 2012; pp. 245–252. [Google Scholar]

- Son, J.; Buyya, R. Priority-Aware VM Allocation and Network Bandwidth Provisioning in Software-Defined Networking (SDN)-Enabled Clouds. IEEE Trans. Sustain. Comput. 2018, 4, 17–28. [Google Scholar] [CrossRef]

- Lin, S.-C.; Wang, P.; Luo, M. Jointly optimized QoS-aware virtualization and routing in software defined networks. Comput. Netw. 2016, 96, 69–78. [Google Scholar] [CrossRef]

- Wang, S.-H.; Huang, P.P.W.; Wen, C.H.P.; Wang, L.C. EQVMP: Energy-efficient and qos-aware virtual machine placement for software defined datacenter networks. In Proceedings of the International Conference on Information Networking 2014 (ICOIN2014), Phuket, Thailand, 10–12 February 2014; pp. 220–225. [Google Scholar]

- Cziva, R.; Jout, S.; Stapleton, D.; Tso, F.P.; Pezaros, D.P. SDN-based virtual machine management for cloud data centers. IEEE Trans. Netw. Serv. Manag. 2016, 13, 212–225. [Google Scholar] [CrossRef]

- Tso, F.P.; Oikonomou, K.; Kavvadia, E.; Pezaros, D.P.; Oikonomou, K. Scalable Traffic-Aware Virtual Machine Management for Cloud Data Centers. In Proceedings of the 2014 IEEE 34th International Conference on Distributed Computing Systems, Madrid, Spain, 30 June–3 July 2014; pp. 238–247. [Google Scholar]

- Mann, V.; Gupta, A.; Dutta, P.; Vishnoi, A.; Bhattacharya, P.; Poddar, R.; Iyer, A. Remedy: Network-aware steady state VM management for data centers. In Proceedings of the International Conference on Research in Networking, Prague, Czech Republic, 21–25 May 2012; Volume 7289, pp. 190–204. [Google Scholar]

- Son, J.; Dastjerdi, A.V.; Calheiros, R.N.; Buyya, R. SLA-Aware and Energy-Efficient Dynamic Overbooking in SDN-Based Cloud Data Centers. IEEE Trans. Sustain. Comput. 2017, 2, 76–89. [Google Scholar] [CrossRef]

- Zheng, K.; Wang, X. Dynamic Control of Flow Completion Time for Power Efficiency of Data Center Networks. In Proceedings of the 2017 IEEE 37th International Conference on Distributed Computing Systems (ICDCS), Atlanta, GA, USA, 5–8 June 2017; pp. 340–350. [Google Scholar]

- Zheng, K.; Wang, X.; Wang, X. PowerFCT: Power optimization of data center network with flow completion time constraints, IPDPS. In Proceedings of the 2015 IEEE International Parallel and Distributed Processing Symposium, Hyderabad, India, 25–29 May 2015. [Google Scholar]

- Zheng, K.; Wang, X.; Liu, J. DISCO: Distributed traffic flow consolidation for power efficient data center network. In Proceedings of the 2017 IFIP Networking Conference (IFIP Networking) and Workshops, Stockholm, Sweden, 12–16 June 2017; pp. 1–9. [Google Scholar]

- Heller, B.; Seetharaman, S.; Mahadevan, P.; Yiakoumis, Y.; Sharma, P.; Banerjee, S.; McKeown, N. ElasticTree: Saving energy in data center networks. In Proceedings of the 7th USENIX Symposium on Networked Systems Design and Implementation (NSDI 2010), San Jose, CA, USA, 28–30 April 2010; p. 17. [Google Scholar]

- Wang, X.; Yao, Y.; Wang, X.; Lu, K.; Cao, Q. CARPO: Correlation-aware power optimization in data center networks. In Proceedings of the 2012 Proceedings IEEE INFOCOM, Orlando, FL, USA, 25–30 March 2012; pp. 1125–1133. [Google Scholar]

- Alizadeh, M.; Greenberg, A.; Maltz, D.A.; Padhye, J.; Patel, P.; Prabhakar, B.; Sengupta, S.; Sridharan, M. Data center TCP (DCTCP). In Proceedings of the SIGCOMM ‘10: Proceedings of the ACM SIGCOMM 2010 Conference, New York, NY, USA, 30 August–3 September 2010; pp. 63–74. [Google Scholar]

- Abts, D.; Marty, M.R.; Wells, P.M.; Klausler, P.; Liu, H. Energy proportional datacenter networks. ACM SIGARCH Comput. Arch. News 2010, 38, 338–347. [Google Scholar] [CrossRef]

- Zhao, J.Z.; Li, K. An Energy-Aware Algorithm for Virtual Machine Placement in Cloud Computing. IEEE Access 2019, 7, 55659–55668. [Google Scholar] [CrossRef]

- Sharma, N.K.; Reddy, G.R.M. Multi-Objective Energy Efficient Virtual Machines Allocation at the Cloud Data Center. IEEE Trans. Serv. Comput. 2019, 12, 158–171. [Google Scholar] [CrossRef]

- Liu, X.; Cheng, B.; Wang, S. Availability-Aware and Energy-Efficient Virtual Cluster Allocation Based on Multi-Objective Optimization in Cloud Datacenters. IEEE Trans. Netw. Serv. Manag. 2020, 17, 972–985. [Google Scholar] [CrossRef]

- Alanazi, S.; Hamdaoui, B. Energy-Aware Resource Management Framework for Overbooked Cloud Data Centers with SLA Assurance. In Proceedings of the 2018 IEEE Global Communications Conference (GLOBECOM), Abu Dhabi, United Arab Emirates, 9–13 December 2018; pp. 1–6. [Google Scholar]

- Li, L.; Dong, J.; Zuo, D.; Wu, J. SLA-Aware and Energy-Efficient VM Consolidation in Cloud Data Centers Using Robust Linear Regression Prediction Model. IEEE Access 2019, 7, 9490–9500. [Google Scholar] [CrossRef]

- Shaw, R.; Howley, E.; Barrett, E.A. Predictive Anti-Correlated Virtual Machine Placement Algorithm for Green Cloud Computing. In Proceedings of the 2018 IEEE/ACM 11th International Conference on Utility and Cloud Computing (UCC), Zurich, Switzerland, 17–20 December 2018; pp. 267–276. [Google Scholar]

- Gul, B.; Khan, I.A.; Mustafa, S.; Khalid, O.; Hussain, S.S.; Dancey, D.; Nawaz, R. CPU and RAM Energy-Based SLA-Aware Workload Consolidation Techniques for Clouds. IEEE Access 2020, 8, 62990–63003. [Google Scholar] [CrossRef]

- Than, M.M.; Thein, T. Energy-Saving Resource Allocation in Cloud Data Centers. In Proceedings of the 2020 IEEE Conference on Computer Applications (ICCA), Yangon, Myanmar, 27–28 February 2020; pp. 1–6. [Google Scholar]

- Al-Hazemi, F.; Lorincz, J.; Mohammed, A.F.Y. Minimizing Data Center Uninterruptable Power Supply Overload by Server Power Capping. IEEE Commun. Lett. 2019, 23, 1342–1346. [Google Scholar] [CrossRef]

- Ding, Z.; Tian, Y.; Tang, M.; Li, Y.; Wang, Y.; Zhou, C. Profile-Guided Three-Phase Virtual Resource Management for Energy Efficiency of Data Centers. IEEE Trans. Ind. Electron. 2020, 67, 2460–2468. [Google Scholar] [CrossRef]

- Rawas, S. Energy, network, and application-aware virtual machine placement model in SDN-enabled large scale cloud data centers. Multimed. Tools Appl. 2021, 1–22. [Google Scholar] [CrossRef]

- Okay, F.Y.; Ozdemir, S.; Demirci, M. SDN-Based Data Forwarding in Fog-Enabled Smart Grids. In Proceedings of the 2019 1st Global Power Energy and Communication Conference (GPECOM), Nevsehir, Turkey, 12–15 June 2019; pp. 62–67. [Google Scholar]

- Bellavista, P.; Giannelli, C.; Montenero, D.D.P. A Reference Model and Prototype Implementation for SDN-Based Multi Layer Routing in Fog Environments. IEEE Trans. Netw. Serv. Manag. 2020, 17, 1460–1473. [Google Scholar] [CrossRef]

- Kadhim, A.J.; Seno, S.A.H. Maximizing the Utilization of Fog Computing in Internet of Vehicle Using SDN. IEEE Commun. Lett. 2018, 23, 140–143. [Google Scholar] [CrossRef]

- Sellami, B.; Hakiri, A.; Ben Yahia, S.; Berthou, P. Deep Reinforcement Learning for Energy-Efficient Task Scheduling in SDN-based IoT Network. In Proceedings of the 2020 IEEE 19th International Symposium on Network Computing and Applications (NCA), Cambridge, MA, USA, 24–27 November 2020; pp. 1–4. [Google Scholar]

- Casas-Velasco, D.M.; Villota-Jacome, W.F.; Da Fonseca, N.L.S.; Rendon, O.M.C. Delay Estimation in Fogs Based on Software-Defined Networking. In Proceedings of the 2019 IEEE Global Communications Conference (GLOBECOM), Waikoloa, HI, USA, 9–13 December 2019; pp. 1–6. [Google Scholar]

- Frohlich, P.; Gelenbe, E.; Nowak, M.P. Smart SDN Management of Fog Services. In Proceedings of the 2020 Global Internet of Things Summit (GIoTS), Dublin, Ireland, 3 June 2020; pp. 1–6. [Google Scholar]

- Amadeo, M.; Campolo, C.; Ruggeri, G.; Molinaro, A.; Iera, A. Towards Software-defined Fog Computing via Named Data Networking. In Proceedings of the IEEE INFOCOM 2019—IEEE Conference on Computer Communications Workshops (INFOCOM WKSHPS), Paris, France, 29 April–2 May 2019; pp. 133–138. [Google Scholar]

- Boualouache, A.; Soua, R.; Engel, T. Toward an SDN-based Data Collection Scheme for Vehicular Fog Computing. In Proceedings of the ICC 2020—2020 IEEE International Conference on Communications (ICC), Dublin, Ireland, 7–11 June 2020; pp. 1–6. [Google Scholar]

- Akbar, A.; Ibrar, M.; Jan, M.A.; Bashir, A.K.; Wang, L. SDN-Enabled Adaptive and Reliable Communication in IoT-Fog Environment Using Machine Learning and Multiobjective Optimization. IEEE Internet Things J. 2021, 8, 3057–3065. [Google Scholar] [CrossRef]

- Nkenyereye, L.; Nkenyereye, L.; Islam, S.M.R.; Kerrache, C.A.; Abdullah-Al-Wadud, M.; Alamri, A. Software Defined Network-Based Multi-Access Edge Framework for Vehicular Networks. IEEE Access 2019, 8, 4220–4234. [Google Scholar] [CrossRef]

- Phan, L.-A.; Nguyen, D.-T.; Lee, M.; Park, D.-H.; Kim, T. Dynamic fog-to-fog offloading in SDN-based fog computing systems. Future Gener. Comput. Syst. 2021, 117, 486–497. [Google Scholar] [CrossRef]

- Ibrar, M.; Akbar, A.; Jan, R.; Jan, M.A.; Wang, L.; Song, H.; Shah, N. ARTNet: AI-based Resource Allocation and Task Offloading in a Reconfigurable Internet of Vehicular Networks. IEEE Trans. Netw. Sci. Eng. 2020, 1. [Google Scholar] [CrossRef]

- Cao, B.; Sun, Z.; Zhang, J.; Gu, Y. Resource Allocation in 5G IoV Architecture Based on SDN and Fog-Cloud Computing. IEEE Trans. Intell. Transp. Syst. 2021, 1–9. [Google Scholar] [CrossRef]

- Ollora Zaballa, E.; Franco, D.; Aguado, M.; Berger, M.S. Next-generation SDN and fog computing: A new paradigm for SDN-based edge computing. In Proceedings of the 2nd Workshop on Fog Computing and the IoT, Sydney, Australia, 8 April 2020; pp. 9:1–9:8. [Google Scholar]

| Ref. | Major Feature | Main Tasks | Env. | Dataset | Eval. Tool | Measured Metrics | Contribution |
|---|---|---|---|---|---|---|---|
| [7] | | | DCs | | Emulated virtual DCN | | |
| [8] | | | | | Simulation and testbed | | |
| [9] | | | DCs | | Testbed | | |
| [10] | | | SDN | | Testbed | | |
| [12] | | | SDN-Cloud | | CloudSim with SDN settings | | |
| [13] | | | | | Simulation | | Greater revenue with maintained response time |
| [14] | | | | | Simulation | | Analytical determination of rejection probability for different priority classes in the resource allocation problem. |
| [15] | | | | | CloudSimSDN | | Reduced response time |
| [16] | | | | | Simulation | | Better tenant isolation with improved congestion latency and less delay. |
| [17] | Three-tier algorithm: | | | | Simulation: NS2 | | Significant increase in system throughput with an acceptable decrease in power consumption and average delay. |
| [18,19] | | | | | | | Significant decrease in congestion and enhanced overall throughput. |
| [20] | | | | | Simulation | | Reduced unsatisfied bandwidth |

| Ref. | Power Consumption | Response Time | QoS Violation Rate | Throughput | Link/Network Utilization | Rejection Rate/Rejected Requests | Delay | Cost | Profit/Revenue | Job/File Completion Time | Number of Shared Links | Latency | Communication Cost | Scalability | Number of Migrations | Unsatisfied Bandwidth |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| [7] | ● | ● | ||||||||||||||

| [8] | ● | ● | ● | ● | ● | |||||||||||

| [9] | ● | ● | ● | |||||||||||||

| [10] | ● | ● | ||||||||||||||

| [12] | ● | |||||||||||||||

| [13] | ● | ● | ||||||||||||||

| [14] | ● | |||||||||||||||

| [15] | ● | ● | ||||||||||||||

| [16] | ● | ● | ● | |||||||||||||

| [17] | ● | ● | ● | |||||||||||||

| [18] | ● | ● | ● | ● | ● | |||||||||||

| [19] | ● | ● | ● | ● | ● | |||||||||||

| [20] | ● | ● | ● |

| Ref. | Major Feature | Main Tasks | Env. | Dataset | Evaluation Tool | Measured Metrics | Contribution |
|---|---|---|---|---|---|---|---|
| [21] | Dynamic overbooking & correlation analysis | Overbooking controller: VM placement & flow consolidation using overbooking ratio and correlation analysis. Resource Utilization Monitor: collects history data of PM utilization metrics, VMs & virtual links. | SDN-clouds | Wikipedia workload | Simulation: CloudSimSDN | | Reduced power consumption while reducing SLA violation rate. |
| [22] | | Traffic consolidation method that considers flow completion time: FCTcon | DCN | Real DCN traces from Wikipedia and Yahoo. | Simulation & testbed | | |
| [23] | | Power optimization: PowerFCT | DCN | Wikipedia DCN traces. | Testbed and simulation | | Looser FCT requirements lead to higher power savings, while the FCT miss ratio is the minimum in all cases. |
| [24] | | | DCN | Real DCN traces from Wikipedia, Yahoo, and DCP. | Simulation & testbed. | | |
| [25] | Using traffic stats and control over flow path. | Power optimization: ElasticTree | DCN | Traffic generator tool. | Testbed | | Ability to reduce power while supporting performance and robustness. |
| [26] | Dynamic consolidation of traffic flows based on correlation analysis. | Power optimization: correlation analysis, traffic consolidation, and link rate adaptation: CARPO | DCN | Wikipedia and Yahoo traces. | Testbed | | Outperforms in power saving. |
| [27] | Early detection of congestion | Reducing buffer space while maintaining performance by leveraging Explicit Congestion Notification (ECN): DCTCP | DCN | Three types of traffic: | Testbed | | |
| [28] | Butterfly topology to reach a scalable network with full utilization and reduced power | | DCN | | Simulation | | Links operate mostly in low power consumption mode |
| [29] | Genetic algorithm with the tabu search algorithm | VM placement using a genetic algorithm, where the tabu search algorithm enhances the search by performing mutation operations. | Cloud datacenter | | PyCharm 3.3 | | Reduced power consumption with a lower load balance value. Also gives the highest number of optimal solutions. |
| [30] | Multi-objective resource allocation based on Euclidean distance, using Genetic Algorithm (GA) and Particle Swarm Optimization (PSO) | | Cloud DC | | Java | | Better results when combined with VM migration |
| [31] | Natural evolutionary algorithm | | Cloud DC | | CloudSim | | Lower risk value, least power consumption, better average resource utilization with least load balance value |
| [32] | Dynamic VM placement | | Cloud DC | | | | Fewer overloads, and outperforms in minimizing SLA violations. |
| [33] | Linear regression prediction model | VM placement based on a linear regression prediction model: overloaded and underloaded algorithms. | Cloud DC | | Simulation: CloudSim | | Reduced power with minimum SLA violation. |
| [34] | Neural networks | Dynamic VM placement based on joint CPU demands & prediction of future VM placement. | Cloud | | Simulation: CloudSim | | Best result in reducing power consumption with smaller SLA violation. |
| [35] | Considers CPU and RAM as major power consumers | | Cloud DC | | Simulation: CloudSim | | Reduced power consumption and performance degradation with dynamic threshold. |
| [36] | Experimental evaluation of different policies, power management models, and power models. | Two algorithms that use FCFS/SJF task scheduling and DVFS power management with a cubic energy model. | Cloud DC | | Simulation: CloudSim | | Reduced power consumption. |
| [37] | Uninterruptible power supply (UPS) minimization cost function. | Centralized manager with two server-level agents: | Cloud | | Testbed | | Power is assigned properly, which prevents UPS overloading. |
| [38] | Three phases for VM placement | Three phases for VM management: | Cloud DC | | | | Energy saving is increased by at most 12% |
| [39] | Genetic Algorithm | | SDN-Cloud | | CloudSimSDN | | Outperforms in reducing power consumption, response time, and hourly cost. |
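
Row [36] of the table above mentions a cubic energy model. As a rough illustration, one common form of such a model raises CPU utilization to the third power and scales it between idle and peak power. The sketch below assumes that form; the function names, the idle and peak wattages, and the fixed sampling interval are hypothetical and are not taken from [36].

```python
def cubic_power(utilization: float, p_idle: float, p_max: float) -> float:
    """Estimated power draw in watts for a CPU utilization in [0, 1].

    Assumed form: P(u) = P_idle + (P_max - P_idle) * u**3.
    """
    if not 0.0 <= utilization <= 1.0:
        raise ValueError("utilization must be between 0 and 1")
    return p_idle + (p_max - p_idle) * utilization ** 3


def energy_joules(utilizations, interval_s: float, p_idle: float, p_max: float) -> float:
    """Energy over equally spaced utilization samples (power times time)."""
    return sum(cubic_power(u, p_idle, p_max) * interval_s for u in utilizations)
```

Under this assumed form, a host at half load draws far less than half of its dynamic power range, which is why consolidation and frequency scaling policies are sensitive to the choice of power model.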

| Ref. | Power Consumption/Saving | Response Time | Flow Completion Time | FCT Miss Ratio | Delay | Robustness | Latency | Packet Drop Ratio | Throughput | Queue Length | Convergence | Timeout Query/Flow and Query Completion Time | Cumulative Fraction of Query Latency and Completion Time | Fraction of Time Spent at Each Link | Load Balance | Number of Optimal Solutions | Resources Utilization | Risk Value | Number of Overloads | SLA Violation | Performance Degradation | CPU Cap/Frequency |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| [21] | ● | ● | ||||||||||||||||||||

| [22] | ● | ● | ||||||||||||||||||||

| [23] | ● | ● | ||||||||||||||||||||

| [24] | ● | ● | ||||||||||||||||||||

| [25] | ● | ● | ● | |||||||||||||||||||

| [26] | ● | ● | ● | |||||||||||||||||||

| [27] | ● | ● | ● | ● | ● | |||||||||||||||||

| [28] | ● | ● | ||||||||||||||||||||

| [29] | ● | ● | ● | |||||||||||||||||||

| [30] | ● | ● | ||||||||||||||||||||

| [31] | ● | ● | ● | ● | ||||||||||||||||||

| [32] | ● | ● | ● | |||||||||||||||||||

| [33] | ● | ● | ||||||||||||||||||||

| [34] | ● | ● | ||||||||||||||||||||

| [35] | ● | ● | ● | |||||||||||||||||||

| [36] | ● | |||||||||||||||||||||

| [37] | ● | ● | ||||||||||||||||||||

| [38] | ● |

| Ref. | Major Feature | Main Tasks | Env. | Dataset | Eval. Tool | Measured Metrics | Contribution |
|---|---|---|---|---|---|---|---|
| [40] | | | SDN-fog-enabled smart grid | | Mininet with OpenFlow protocol support | | |
| [41] | | | SDN-based fog computing | | Testbed | | |
| [42] | Resource utilization using local and global load balancers | | SDN-Fog IoVs | | OMNeT++ and SUMO | | |
| [43] | | | | | CRISP-DM methodology. | | Predicted delay values similar to actual delay values. |
| [44] | | | | | Testbed | | |
| [45] | Reinforcement learning | | | | Testbed | | Ability to adapt to medium and high load. |
| [46] | | | | | MATLAB | | Communication between nodes and controller is reduced |
| [47] | | | | | Veins Simulation Framework | | |
| [48] | | | | | Mininet with POX SDN controller | | Paths are selected based on a QoS constraint (reliable path or minimum delay), with fewer data packets and less delay. |
| [49] | | 4-layer architecture for SD-MEC: access layer, forwarding layer, multi edge computing layer, and control layer | | | OMNeT++ with INET framework. | | Least average E2E delay and highest packet delivery ratio |
| [50] | | 4-layer architecture: fog layer, core switches, controller, and service layer | | | Mininet and Python | | Outperforms in minimizing selection time, thus minimizing delay. Also provides better throughput and lower response time. |
| [51] | | 3-layer architecture: IoV layer, fog layer, and intelligent SDN layer | | IoV based on SDV-F framework | | | Least energy consumption and latency. |
| [52] | | Multi-objective resource allocation | | | | | Outperforms in execution time stability and load balancing objectives |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Alomari, A.; Subramaniam, S.K.; Samian, N.; Latip, R.; Zukarnain, Z. Resource Management in SDN-Based Cloud and SDN-Based Fog Computing: Taxonomy Study. Symmetry 2021, 13, 734. https://doi.org/10.3390/sym13050734