Abstract

Clustering analysis reveals the internal relationships among data without prior knowledge and groups data with common attributes together. To address the reliance of existing algorithms on prior knowledge, we propose a fast searching density peak clustering algorithm based on shared nearest neighbor and adaptive clustering center (DPC-SNNACC). It automatically locates the knee point in the decision graph according to the characteristics of each dataset, and thereby determines the number of clustering centers without human intervention. First, an improved method for calculating local density based on the symmetric distance matrix is proposed. Then, the position of the knee point is obtained by calculating the change in the difference between decision values. Finally, experimental and comparative evaluation on several datasets from diverse domains establishes the viability of the DPC-SNNACC algorithm.

1. Introduction

Clustering is a process of “clustering by nature”. As an important research topic in data mining and artificial intelligence, clustering is an unsupervised pattern recognition method that works without the guidance of prior information [1]. It aims at finding potential similarities and grouping the data so that the distance between two points in the same cluster is as small as possible and the distance between points in different clusters is as large as possible. A large number of studies have been devoted to solving clustering problems. Generally speaking, clustering algorithms can be divided into multiple categories such as partition-based, model-based, hierarchical-based, grid-based, density-based, and their combinations. Various clustering algorithms have been successfully applied in many fields [2].

With the emergence of big data, there is an increasing demand for clustering algorithms that can automatically understand and summarize data. Traditional clustering algorithms struggle with data that have hundreds of dimensions, which leads to low efficiency and poor results. There is therefore an urgent need to improve existing clustering algorithms or to propose new ones that improve stability while ensuring clustering accuracy [3].

In June 2014, Rodriguez et al. published clustering by fast search and find of density peaks (referred to as CFSFDP) in Science [4]. This is a clustering algorithm based on density and distance. The performance of the CFSFDP algorithm is superior to other traditional clustering algorithms in many aspects. First, the CFSFDP algorithm is efficient and straightforward, and it significantly reduces the calculation time because it does not iterate over an objective function. Second, cluster centers can be found intuitively with the help of a decision graph. Third, the CFSFDP algorithm can recognize groups regardless of their shape and spatial dimensions. As a result, shortly after it was proposed, the algorithm was widely adopted in computer vision [5], image recognition [6], and other fields.

Although the CFSFDP algorithm has distinct advantages over other clustering algorithms, it also has some disadvantages, which are as follows:

- The measurement method for calculating local density and distance still needs to be improved. It does not consider the processing of complex datasets, which makes it difficult to achieve the expected clustering results when dealing with datasets with various densities, multi-scales, or other complex characteristics.

- The allocation strategy of the remaining points after finding the cluster centers may lead to a “domino effect”, whereby once one point is assigned wrongly, there may be many more points subsequently mis-assigned.

- As the cut-off distance $d_c$ appropriate for different datasets may differ, it is usually challenging to determine $d_c$. Additionally, the clustering results are sensitive to $d_c$.
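To make the role of the cut-off distance concrete, the following minimal sketch computes the quantities CFSFDP is built on: the local density (the number of points closer than the cut-off distance), the distance to the nearest point of higher density, and their product as a decision value. It follows the standard published definitions of CFSFDP with a cut-off kernel. The function name `cfsfdp_quantities`, the argument name `d_c`, and the toy data are illustrative assumptions, not code from this paper.

```python
import math

def cfsfdp_quantities(points, d_c):
    """Return (rho, delta, gamma) for a list of coordinate tuples.

    Hypothetical helper for illustration; uses the cut-off kernel of CFSFDP.
    """
    n = len(points)
    dist = [[math.dist(p, q) for q in points] for p in points]
    # Local density: number of other points closer than the cut-off distance d_c.
    rho = [sum(1 for j in range(n) if j != i and dist[i][j] < d_c) for i in range(n)]
    delta = []
    for i in range(n):
        higher = [dist[i][j] for j in range(n) if rho[j] > rho[i]]
        # A point with no denser point gets its largest distance to any point.
        delta.append(min(higher) if higher else max(dist[i]))
    # Decision value: large for points that are dense and far from denser points.
    gamma = [r * d for r, d in zip(rho, delta)]
    return rho, delta, gamma

# Toy data (made up for illustration): a group of three points and a group of four.
points = [(0.0, 0.0), (0.1, 0.0), (0.0, 0.1),
          (5.0, 5.0), (5.1, 5.0), (5.0, 5.1), (5.1, 5.1)]
rho_small, delta_small, gamma_small = cfsfdp_quantities(points, d_c=0.12)
rho_large, delta_large, gamma_large = cfsfdp_quantities(points, d_c=10.0)
```

With the large cut-off every point receives the same density, so the density no longer separates centers from ordinary points, which is one way the sensitivity to the cut-off distance shows up in practice.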

In 2018, Rui Liu et al. proposed a shared-nearest-neighbor-based clustering by fast search and find of density peaks (SNN-DPC) algorithm to solve some fundamental problems of the CFSFDP algorithm [7]. The SNN-DPC algorithm is illustrated by the following innovations.

- A novel metric to indicate local density is put forward. It makes full use of neighbor information to show the characteristics of data points. This standard not only works for simple datasets, but also applies to complex datasets with multiple scales, cross twining, different densities, or high dimensions.

- By improving the distance calculation approach, an adaptive measurement method of distance from the nearest point with large density is proposed. The new approach considers both distance factors and neighborhood information. Thus, it can compensate for the points in the low-density cluster, which means that the possibilities of selecting the correct center point are raised.

- For the non-center points, a two-step point allocation is carried out on the SNN-DPC algorithm. The unassigned points are divided into “determined subordinate points” and “uncertain subordinate points”. In the process of calculation, the points are filtered continuously, which improves the possibility of correctly allocating non-center points and avoids the “domino effect”.
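The neighbor information these innovations rely on is the set of shared nearest neighbors of two points. The sketch below illustrates only that ingredient; it is not the full SNN-DPC density or distance metric, whose formulas are not reproduced in this section. The helper names `knn_sets` and `shared_neighbours`, the choice to exclude a point from its own neighbor set, and the toy data are assumptions made for illustration.

```python
import math

def knn_sets(points, k):
    """For each point, the set of indices of its k nearest neighbours.

    Assumption: a point is not counted among its own neighbours.
    """
    neighbours = []
    for i, p in enumerate(points):
        others = [j for j in range(len(points)) if j != i]
        others.sort(key=lambda j: math.dist(p, points[j]))
        neighbours.append(set(others[:k]))
    return neighbours

def shared_neighbours(neighbours, i, j):
    """Indices that appear in the k-neighbourhoods of both point i and point j."""
    return neighbours[i] & neighbours[j]

# Toy data (made up): a square of four points and a distant group of three.
points = [(0, 0), (1, 0), (0, 1), (1, 1), (10, 10), (11, 10), (10, 11)]
nb = knn_sets(points, k=2)
same_group = shared_neighbours(nb, 0, 3)   # two opposite corners of the square
other_group = shared_neighbours(nb, 0, 4)  # points from different groups
```

Points in the same group share neighbors, while points from different groups share none, which is why a density built on shared neighbors reflects local structure rather than absolute distance alone.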

Although the SNN-DPC algorithm makes up for some deficiencies of the CFSFDP algorithm, it still has some unsolved problems. Most obviously, the number of clusters in the dataset needs to be input manually. Alternatively, if the number of clusters is unknown, cluster centers can be selected through the decision graph. It is worth noting that both methods require some prior knowledge, so the clustering results are less reliable because they depend heavily on human judgment. In order to solve the problem of adaptively determining the cluster centers, a fast searching density peak clustering algorithm based on shared nearest neighbor and adaptive clustering center, called DPC-SNNACC, is proposed in this paper. The main innovations of the algorithm are as follows:

- The original local density measurement method is updated, and a new density measurement approach is proposed. This approach can enlarge the gap between decision values to prepare for automatically determining the cluster center. The improvement can not only be applied to simple datasets, but also to complex datasets with multi-scale and cross winding.

- A novel and fast method is proposed that can select the number of clustering centers automatically according to the decision values. The “knee point” of decision values can be adjusted according to information of the dataset, then the clustering centers are determined. This method can find the cluster centers of all clusters quickly and accurately.
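The idea of locating a knee point from the decision values can be sketched as follows. This is one plausible reading of "the change in the difference between decision values", not the exact rule of the DPC-SNNACC algorithm: sort the decision values in descending order, keep only the first few (the search range), take the gaps between consecutive values, and pick the position where the gap shrinks the most. The function name `estimate_center_count`, the parameter `search_range`, and the sample values are hypothetical.

```python
def estimate_center_count(decision_values, search_range):
    """Estimate the number of cluster centers from a knee in the decision values.

    Illustrative sketch only; the selection rule is an assumption.
    """
    top = sorted(decision_values, reverse=True)[:search_range]
    if len(top) < 3:
        raise ValueError("need at least three decision values in the search range")
    # First differences: gap between each value and the next smaller one.
    gaps = [top[i] - top[i + 1] for i in range(len(top) - 1)]
    # Change in the differences: large when a big gap is followed by a small one.
    changes = [gaps[i] - gaps[i + 1] for i in range(len(gaps) - 1)]
    knee = max(range(len(changes)), key=lambda i: changes[i])
    # Values before the big gap are treated as cluster centers.
    return knee + 1

# Made-up decision values: three large ones followed by a flat tail.
values = [0.3, 9.0, 0.5, 8.0, 0.2, 8.5, 0.4]
n_centers = estimate_center_count(values, search_range=7)
```

A larger gap between the decision values of centers and non-centers makes this kind of rule more reliable, which is the motivation for the first innovation in the list above.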

The rest of this paper is organized as follows. In Section 2, we introduce some research achievements related to the CFSFDP algorithm. In Section 3, we present the basic definitions and processes of the CFSFDP algorithm and the SNN-DPC algorithm. In Section 4, we propose the improvements to the SNN-DPC algorithm, introduce the processes of the DPC-SNNACC algorithm, and analyze the complexity of the algorithm according to these processes. In Section 5, we first introduce the datasets used in this paper, and then discuss the parameters used in the DPC-SNNACC algorithm. Further experiments are conducted in Section 6, where the DPC-SNNACC algorithm is compared with other classical clustering algorithms. Finally, in Section 7, the advantages and disadvantages of the DPC-SNNACC algorithm are summarized, and some future research directions are pointed out.

2. Related Works

In this section, we briefly review several kinds of widely used clustering methods and elaborate on the improvement of the CFSFDP algorithm.

K-means is one of the most widely used partition-based clustering algorithms because it is easy to implement, is efficient, and has been successfully applied to many practical case studies. The core idea of K-means is to update the cluster center represented by the centroid of the data point through iterative calculation, and the iterative process will continue until the convergence criterion is met [8]. Although K-means is simple and has a high computing efficiency in general, there still exist some drawbacks. For example, the clustering result is sensitive to K; moreover, it is not suitable for finding clusters with a nonconvex shape. PAM [9] (Partitioning Around Medoids), CLARA [10] (Clustering Large Applications), CLARANS [11] (Clustering Large Applications based upon RANdomized Search), and AP [12] (Affinity Propagation) are also typical partition-based clustering algorithms. However, they also fail to find non-convex shape clusters.
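The iterative centroid update described above can be sketched in a few lines. This is a minimal version of the standard K-means (Lloyd) iteration that starts from caller-supplied initial centers; the function name `kmeans`, the handling of empty clusters, and the toy data are illustrative assumptions.

```python
import math

def kmeans(points, centers, max_iter=100):
    """Run K-means from the given initial centers; return (centers, labels)."""
    centers = [tuple(c) for c in centers]
    labels = []
    for _ in range(max_iter):
        # Assignment step: each point joins the cluster of its nearest center.
        labels = [min(range(len(centers)), key=lambda c: math.dist(p, centers[c]))
                  for p in points]
        # Update step: each center moves to the centroid of its members.
        new_centers = []
        for c in range(len(centers)):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:
                new_centers.append(tuple(sum(x) / len(members) for x in zip(*members)))
            else:
                new_centers.append(centers[c])  # keep the center of an empty cluster
        if new_centers == centers:  # convergence: no center moved
            break
        centers = new_centers
    return centers, labels

# Toy data (made up): two well-separated pairs of points.
points = [(0, 0), (0, 2), (10, 0), (10, 2)]
final_centers, final_labels = kmeans(points, centers=[(1, 1), (9, 1)])
```

Because every point is assigned to its nearest centroid, the resulting clusters are separated by straight boundaries, which is why K-means cannot recover nonconvex shapes, and the result depends on the K implied by the number of initial centers.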

The hierarchical-based clustering method merges the nearest pair of clusters from bottom to top. Typical algorithms include BIRCH [13] (Balanced Iterative Reducing and Clustering using Hierarchies) and ROCK [14] (RObust Clustering using linKs).

The grid-based clustering method divides the original data space into a grid structure with a certain size for clustering. STING [15] (A Statistical Information Grid Approach to Spatial Data Mining) and CLIQUE [16] (clustering in QUEst) are typical algorithms of this type, and their complexity relative to the data size is very low. However, it is difficult to scale these methods to higher-dimensional spaces.

The density-based clustering method assumes that an area with a high density of points in the data space is regarded as a cluster. DBSCAN [17] (Density-Based Spatial Clustering of Applications with Noise) is the most famous density-based clustering algorithm. It uses two parameters to determine whether the neighborhood of points is dense: the radius of the neighborhood and the minimum number of points in the neighborhood.
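As a small illustration of how the two DBSCAN parameters interact, the sketch below marks the points whose neighborhood is dense enough. It covers only the dense-neighborhood test, not the full cluster expansion of DBSCAN. The function name `core_points`, the parameter names `eps` and `min_pts`, and the convention of counting a point as part of its own neighborhood are assumptions for illustration.

```python
import math

def core_points(points, eps, min_pts):
    """Indices of points with at least min_pts points within radius eps.

    Assumption: a point counts as a member of its own neighbourhood.
    """
    core = []
    for i, p in enumerate(points):
        count = sum(1 for q in points if math.dist(p, q) <= eps)
        if count >= min_pts:
            core.append(i)
    return core

# Toy data (made up): three nearby points and one isolated point.
points = [(0, 0), (0, 1), (1, 0), (8, 8)]
dense = core_points(points, eps=1.5, min_pts=3)
strict = core_points(points, eps=1.5, min_pts=4)
```

Raising the minimum number of points (or shrinking the radius) turns dense points into non-dense ones, so both parameters must be tuned together for each dataset.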

Based on the CFSFDP algorithm, many algorithms have been put forward to make some improvements. Research on the CFSFDP algorithm mainly involves the following three aspects:

1. The density measurements of the CFSFDP algorithm.

Xu proposed a method to adaptively choose the cut-off distance [18]. Using the characteristics of Improved Sheather–Jones (ISJ), the method can accurately estimate $d_c$.

The Delta-Density based clustering with a Divide-and-Conquer strategy clustering algorithm (3DC) has also been proposed [19]. It is based on the Divide-and-Conquer strategy and the density-reachable concept in Density-Based Spatial Clustering of Applications with Noise (referred to as DBSCAN).

Xie proposed a density peak searching and point assigning algorithm based on the fuzzy weighted K-nearest neighbor (FKNN-DPC) technique to solve the problem of the non-uniformity of point density measurements in the CFSFDP algorithm [20]. This approach uses K-nearest neighbor information to define the local density of points and to search and discover cluster centers.

Du proposed density peak clustering based on K-nearest neighbors (DPC-KNN), which introduces the concept of K-nearest neighbors (KNN) to CFSFDP and provides another option for computing the local density [21].

Qi introduced a new metric for density that eliminates the effect of $d_c$ on clustering results [22]. This method uses a cluster diffusion algorithm to distribute the remaining points.

Liu suggested calculating two kinds of densities, one based on k nearest neighbors and one based on local spatial position deviation, to handle datasets with mixed density clusters [23].

2. Automatic determination of the number of groups.

Bie proposed the Fuzzy-CFSFDP algorithm [24]. This algorithm uses fuzzy rules to select centers for different density peaks, and then the number of final clustering centers is determined by judging whether there are similar internal patterns between density peaks and merging density peaks.

Li put forward the concept of potential cluster centers based on the CFSFDP algorithm [25], and considered that if the shortest distance between a potential cluster center and the known cluster centers is less than the cut-off distance $d_c$, then the potential cluster center is redundant. Otherwise, it is considered the center of another group.
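The redundancy rule described in this paragraph can be sketched as a simple filter. This is a hedged illustration of the rule as stated here, not the implementation from [25]. The function name `filter_potential_centers`, the assumption that an accepted candidate is treated as a known center for later candidates, and the sample coordinates are all hypothetical.

```python
import math

def filter_potential_centers(known_centers, potential_centers, d_c):
    """Keep a potential center only if it is at least d_c from every known center.

    Assumptions: known_centers is non-empty, and accepted candidates are
    added to the known centers before the next candidate is checked.
    """
    accepted = list(known_centers)
    for candidate in potential_centers:
        nearest = min(math.dist(candidate, c) for c in accepted)
        if nearest >= d_c:
            accepted.append(candidate)  # far enough: center of another group
        # otherwise the candidate is redundant and is dropped
    return accepted

# Made-up coordinates for illustration.
centers = filter_potential_centers(
    known_centers=[(0.0, 0.0)],
    potential_centers=[(0.5, 0.0), (5.0, 5.0), (5.2, 5.0)],
    d_c=1.0,
)
```

In this example the first candidate is too close to the known center and the third is too close to the newly accepted second candidate, so only two centers remain.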

Lin proposed an algorithm [26] that used the radius of neighborhood to automatically select a group of possible density peaks, then used potential density peaks as density peaks, and used CFSFDP to generate preliminary clustering results. Finally, single link clustering was used to reduce the number of clusters. The algorithm can avoid the clustering allocation problem in CFSFDP.

3. Application of the CFSFDP algorithm.

Zhong and Huang applied the improved density and distance-based clustering method to the actual evaluation process to evaluate the performance of enterprise asset management (EAM) [27]. This method greatly reduces the resource investment in manual data analysis and performance sorting.

Shi et al. used the CFSFDP algorithm for scene image clustering [5]. Chen et al. applied it to obtain a possible age estimate according to a face image [6]. Additionally, Li et al. applied the CFSFDP algorithm and entropy information to detect and remove the noise data field from datasets [25].

5. Discussion

Before the experiment, some parameters should be discussed. The purpose is to find the best value of each parameter related to the DPC-SNNACC algorithm. First, some datasets and metrics are introduced. Second, we discuss the performance of the DPC-SNNACC algorithm from several aspects, including the number of nearest neighbors $k$, the decision values, and the search range of clustering centers. The optimal parameter values corresponding to the optimal metrics were found through comparative experiments.

5.1. Introduction to Datasets and Metrics

The performance of a clustering algorithm is usually verified on benchmark datasets. In this paper, we used 12 commonly used datasets [28,29,30,31,32,33,34,35,36], comprising eight synthetic datasets in Table 2 and four UCI (University of California, Irvine) real datasets in Table 3. The tables list the basic information, including the number of data records, the number of clusters, and the data dimensions. The datasets in Table 2 are two-dimensional for the convenience of graphic display. In contrast to the synthetic datasets, the real datasets in Table 3 usually have more than two dimensions.

Table 2.

Synthetic datasets.

Table 3.

UCI (University of California, Irvine) real datasets.

The evaluation metrics of a clustering algorithm usually include internal and external metrics. Generally speaking, internal metrics are suitable when the data labels are unknown, while external metrics are appropriate for data with known labels. As the datasets used in this experiment were already labeled, several external evaluation metrics were used to judge the accuracy of the clustering results, including normalized mutual information (NMI) [37], adjusted mutual information (AMI) [38], adjusted Rand index (ARI) [38], F-measure [39], accuracy [40], and Fowlkes-Mallows index (FMI) [41]. The maximum value of each of these metrics is 1, and the larger the value, the higher the accuracy.
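To show how an external metric compares a clustering with known labels, the following sketch computes the Fowlkes-Mallows index by pair counting, using its standard definition TP / sqrt((TP + FP)(TP + FN)) over all pairs of points. The function name `fowlkes_mallows` and the sample label lists are illustrative assumptions; the quadratic loop over pairs is chosen for clarity, not speed.

```python
from itertools import combinations
import math

def fowlkes_mallows(true_labels, pred_labels):
    """Fowlkes-Mallows index computed by counting pairs of points."""
    tp = fp = fn = 0
    for i, j in combinations(range(len(true_labels)), 2):
        same_true = true_labels[i] == true_labels[j]
        same_pred = pred_labels[i] == pred_labels[j]
        if same_true and same_pred:
            tp += 1      # pair grouped together in both labelings
        elif same_pred:
            fp += 1      # grouped together only in the prediction
        elif same_true:
            fn += 1      # grouped together only in the ground truth
    if tp == 0:
        return 0.0
    return tp / math.sqrt((tp + fp) * (tp + fn))

# Made-up labelings: the first prediction only renames the clusters.
perfect = fowlkes_mallows([0, 0, 1, 1], [1, 1, 0, 0])
partial = fowlkes_mallows([0, 0, 1, 1], [0, 0, 0, 1])
```

The index depends only on which points are grouped together, so renaming the cluster labels does not change the score, and a perfect grouping reaches the maximum value of 1.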

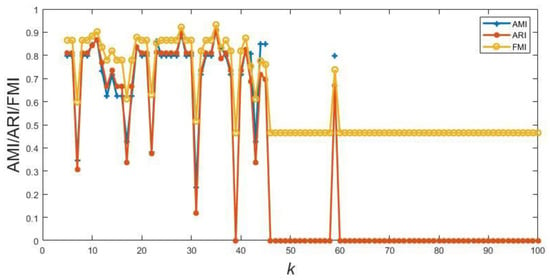

5.2. The Influence of $k$ on the Metrics

The only parameter that needs to be determined in the DPC-SNNACC algorithm is the number of nearest neighbors $k$. In order to analyze the impact of $k$ on the algorithm, the Aggregation dataset was used, as shown in Figure 4.

Figure 4.

Changes in the various metrics of the Aggregation dataset.

To select the optimal number of neighbors, we increased $k$ from 5 to 100. For the lower boundary, if the number of neighbors is too low, the density is sparse and no similarity can be measured. Furthermore, a small $k$ may cause errors for some datasets. Thus, the lower limit was set to 5. For the upper limit, if the value of $k$ is too high, on the one hand the algorithm becomes complex and runs for a long time, and on the other hand a high $k$ affects the results of the algorithm. The analysis of $k$ shows that an exorbitant $k$ brings no improvement to the results, so further tests beyond that point are of little significance. We therefore set 100 as the upper limit.

When $k$ ranges from 5 to 100, the corresponding metrics oscillate noticeably, and the trends of the selected metrics AMI, ARI, and FMI are more or less the same. Therefore, the change in one metric can stand in for the changes in several. For example, when the AMI metric reaches its optimal value, the other external metrics are close to their optimal values, and the corresponding $k$ is the best number of neighbors. Additionally, the metric curves show that the value of each metric tends to stabilize as $k$ increases, but an exorbitant $k$ leads to a decrease in the metrics. Therefore, if a value of $k$ is fixed in advance without experiment, the optimal clustering result may not be obtained, and the significance of the similarity measurement is lost. In the case of the Aggregation dataset, each metric reached a high value when $k = 35$, so 35 was selected as the best number of neighbors for the Aggregation dataset.
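The parameter sweep described above amounts to a simple search over the number of neighbors. The sketch below is a generic version of that procedure under stated assumptions: `cluster_fn` stands for any clustering routine that takes the data and a neighbor count and returns labels, and `score_fn` stands for any external metric such as AMI. Both are hypothetical placeholders supplied by the caller, not functions defined in this paper.

```python
def select_best_k(cluster_fn, score_fn, data, true_labels, k_min=5, k_max=100):
    """Return (best_k, best_score) over k_min..k_max inclusive.

    cluster_fn(data, k) -> predicted labels   (hypothetical callable)
    score_fn(true_labels, predicted) -> float (hypothetical callable)
    """
    best_k, best_score = None, float("-inf")
    for k in range(k_min, k_max + 1):
        score = score_fn(true_labels, cluster_fn(data, k))
        if score > best_score:  # keep the smallest k among ties
            best_k, best_score = k, score
    return best_k, best_score

# Stand-in callables so the sketch runs: the "score" simply peaks at k = 35.
def fake_cluster(data, k):
    return k

def fake_score(truth, predicted):
    return -abs(predicted - 35)

best_k, best_score = select_best_k(fake_cluster, fake_score, data=None, true_labels=None)
```

Since the metrics follow similar trends, a single metric can serve as `score_fn`; note that this search needs labeled data, so it is a tuning procedure for benchmarks rather than something available on unlabeled datasets.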

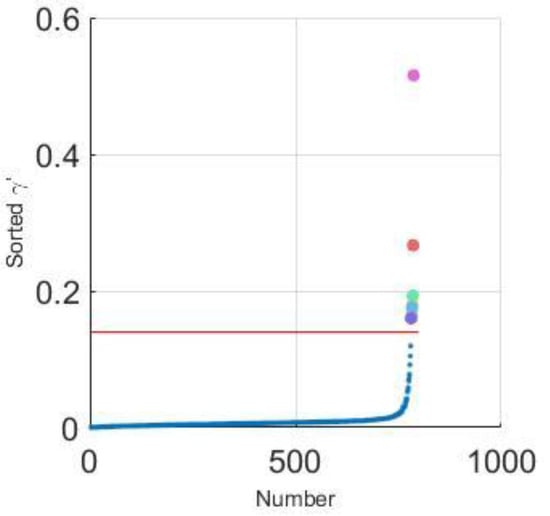

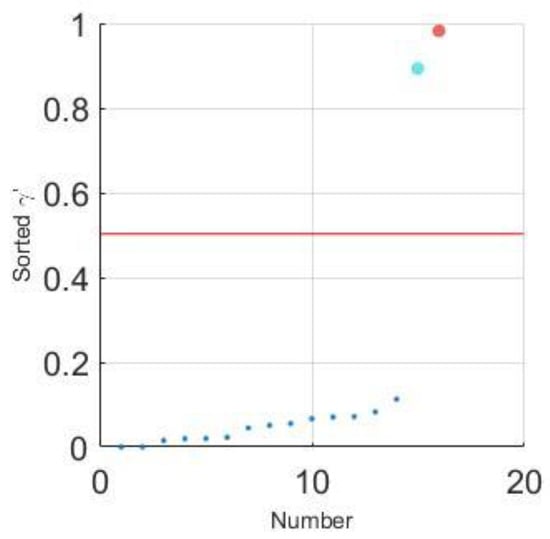

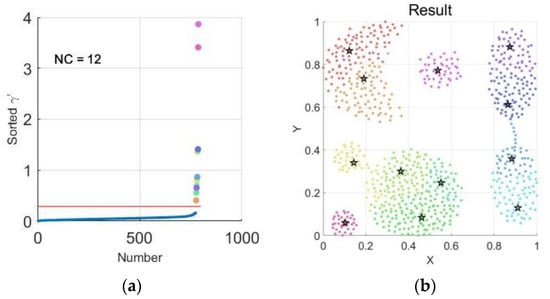

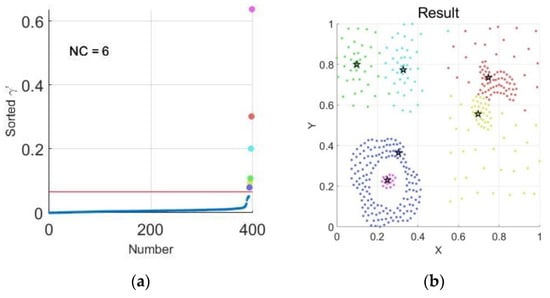

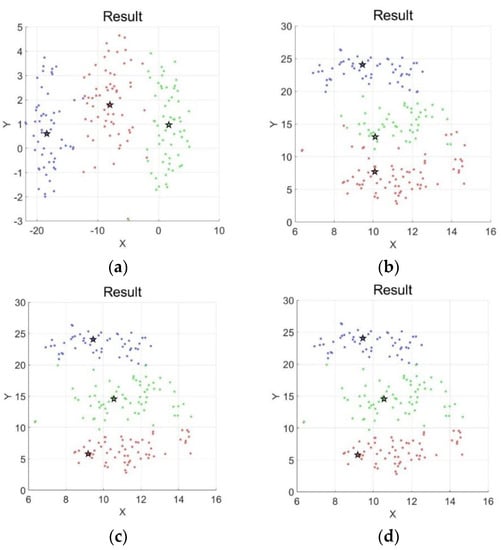

5.3. The Comparison of Decision Values between SNN-DPC and DPC-SNNACC

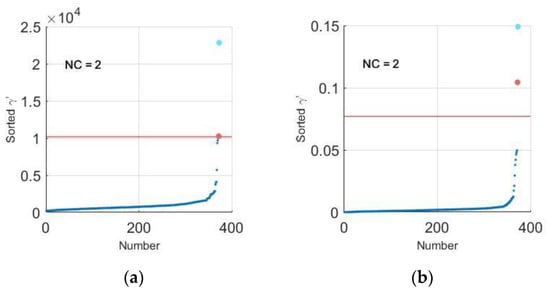

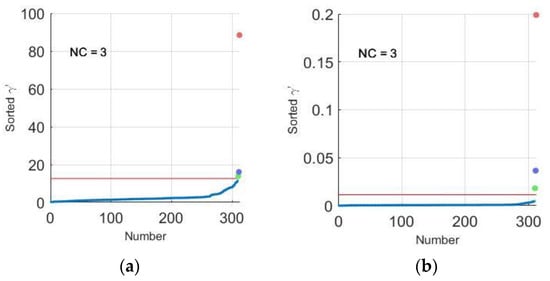

As we changed the calculation method of local density, the difference between contiguous decision values increases. Two different datasets are used to illustrate the comparison between the two algorithms in Figure 5 and Figure 6. The values of $k$ used were the best parameters for the respective algorithms.

Figure 5.

Comparison of decision values between shared-nearest-neighbor-based clustering by fast search and find of density peaks (SNN-DPC) and fast searching density peak clustering algorithm based on shared nearest neighbor and adaptive clustering center (DPC-SNNACC) in the Jain dataset. (a) Decision values of SNN-DPC; (b) decision values of DPC-SNNACC.

Figure 6.

Comparison of decision values between shared-nearest-neighbor-based clustering by fast search and find of density peaks (SNN-DPC) and fast searching density peak clustering algorithm based on shared nearest neighbor and adaptive clustering center (DPC-SNNACC) in the Spiral dataset. (a) Decision values of SNN-DPC; (b) decision values of DPC-SNNACC.

Comparing the above figures shows that both the SNN-DPC algorithm and the DPC-SNNACC algorithm select the correct number of cluster centers on the Jain and Spiral datasets; the distinction lies in the differences between decision values. In other words, the improved method produces a bigger difference between the cluster centers and the non-center points, which indicates that the DPC-SNNACC algorithm can be used to identify the cluster centers and reduce unnecessary errors.

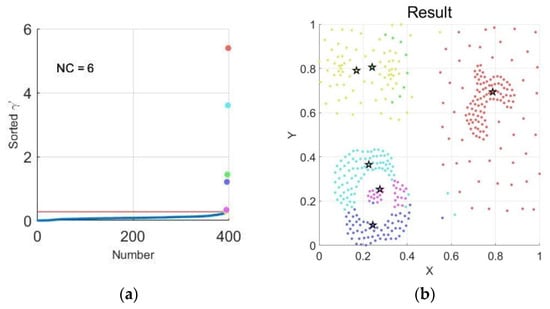

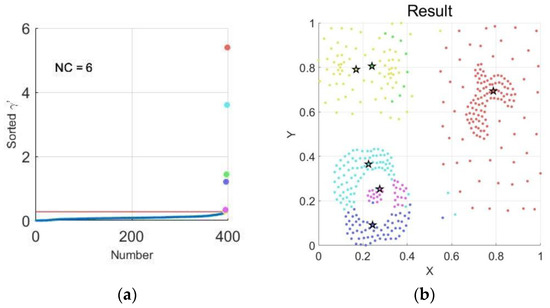

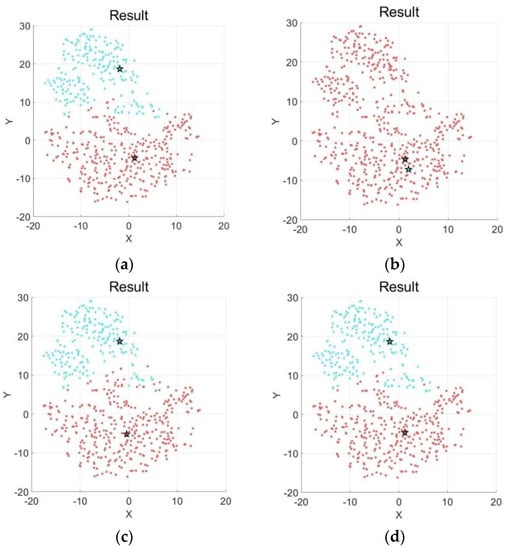

5.4. The Influence of Different on Metrics

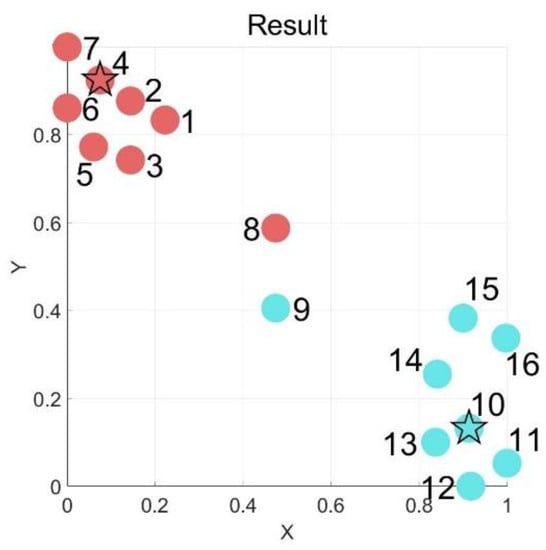

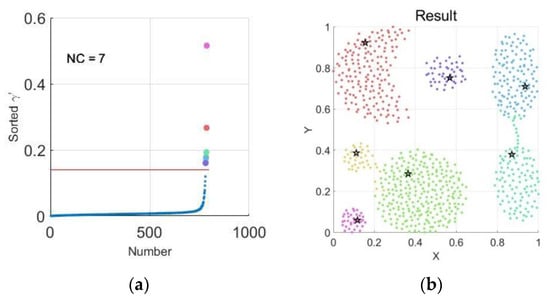

As above-mentioned, represents the search range of clustering centers and is an integer closest to the result of treatment. In order to reduce the search range of the decision values of the cluster centers, we used closest to as the search scale of clustering centers. In this part, the effects of using several other values instead of as were subjected to further analysis. This section applies the Aggregation and Compound datasets to illustrate this problem.

As can be seen from Figure 7, Figure 8 and Figure 9, achieves better cluster quantity than other values of in terms of the distributions of and the clustering results. Furthermore, when , the algorithm loses the exact number of clusters.

Figure 7.

Clustering results when in the Aggregation dataset. (a) Distribution of in the Aggregation dataset; (b) Clustering result of the Aggregation dataset.

Figure 8.

Clustering results when in the Aggregation dataset. (a) Distribution of in the Aggregation dataset; (b) Clustering result of the Aggregation dataset.

Figure 9.

Clustering results when in the Aggregation dataset. (a) Distribution of in the Aggregation dataset; (b) Clustering result of the Aggregation dataset.

Table 4 uses a number of metrics to explain the problem objectively, which explain the clustering situations when chooses different values. The bold type indicates the best clustering situation. obtained higher metrics than and , indicating that is the best value to determine .

Table 4.

Metrics corresponding to different in the Aggregation dataset.

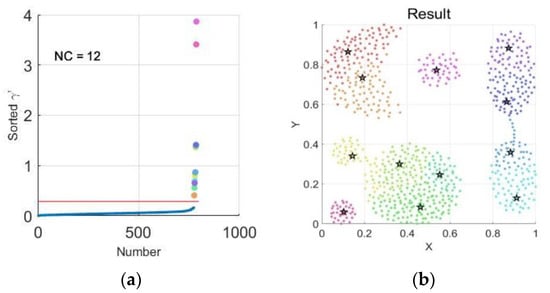

It can be seen from Figure 10, Figure 11 and Figure 12 that different obtained the same number of clusters, but the corresponding metrics were quite different. Comparing Figure 10b and Figure 11b, it can be clearly seen that the case of correctly separated each cluster, while the case of divided a complete cluster into three parts, and merged two upper left clusters that should have been separated.

Figure 10.

Clustering results when in the Compound dataset. (a) Distribution of in the Compound dataset; (b) Clustering result of the Compound dataset.

Figure 11.

Clustering results when in the Compound dataset. (a) Distribution of in the Compound dataset; (b) Clustering result of the Compound dataset.

Figure 12.

Clustering results when in the Compound dataset. (a) Distribution of in the Compound dataset; (b) Clustering result of the Compound dataset.

The conclusions of Table 5 are similar to those in Table 4; when and were selected as , the performances were not as good as . This means that choosing as the search range of the decision value is reasonable to determine the cluster centers.

Table 5.

Metrics corresponding to different in the Compound dataset.

6. Experiment

6.1. Preprocessing and Parameter Selection

Before starting the experiment, we had to eliminate the effects of missing values and dimension differences on the datasets. For missing values, we could replace them with the average of all valid values of the same dimension. For data preprocessing, we used the “min-max normalization method”, as shown in Equation (18), to make all data linearly map into [0, 1]. In this way, we could reduce the influence of different measures on the experimental results, eliminate dimension differences, and improve the calculation efficiency [42].

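$$x'_{ij} = \frac{x_{ij} - \min(x_j)}{\max(x_j) - \min(x_j)} \tag{18}$$

where $x'_{ij}$ is the standardized data of the $i$-th sample in the $j$-th dimension; $x_{ij}$ is the original data of the $i$-th sample in the $j$-th dimension; and $x_j$ is the original data of the entire $j$-th dimension.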
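The preprocessing described above, mean imputation followed by min-max normalization of each dimension into [0, 1], can be sketched as follows. The function name `impute_and_normalize`, the use of `None` to mark missing values, and the choice to map a constant column to 0.0 are assumptions made for illustration.

```python
def impute_and_normalize(rows):
    """Fill missing values with the column mean, then min-max scale each column.

    rows: list of equal-length lists; None marks a missing value (assumption).
    Each column is assumed to contain at least one valid value.
    """
    scaled_columns = []
    for col in zip(*rows):
        valid = [v for v in col if v is not None]
        mean = sum(valid) / len(valid)
        filled = [mean if v is None else v for v in col]
        lo, hi = min(filled), max(filled)
        span = hi - lo
        # A constant column carries no information; map it to 0.0 to avoid
        # dividing by zero.
        scaled_columns.append([(v - lo) / span if span else 0.0 for v in filled])
    return [list(row) for row in zip(*scaled_columns)]

# Made-up data: the second sample is missing its second attribute.
raw = [[1.0, 10.0], [3.0, None], [5.0, 30.0]]
normalized = impute_and_normalize(raw)
```

After this step both dimensions span the same range, so neither dominates the distance calculation merely because of its unit of measurement.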

First, to reflect the actual results of the algorithms more objectively, we tuned the parameters for each dataset to ensure the best performance of each algorithm. Specifically, the exact cluster numbers are given to K-means, SNN-DPC, and CFSFDP. The DPC-SNNACC algorithm, however, does not take the number of clusters in advance; it is calculated automatically by the algorithm itself. For the SNN-DPC and DPC-SNNACC algorithms, we increased the number of neighbors from 5 to 100 and conducted experiments to find the best number of neighbors $k$. The authors of the original CFSFDP algorithm provide a rule of thumb for the cut-off distance, which makes the average number of neighbors between 1% and 2% of the total number of points. We approached the best result by adjusting the values through preliminary experiments.

6.2. Results on Synthetic Datasets

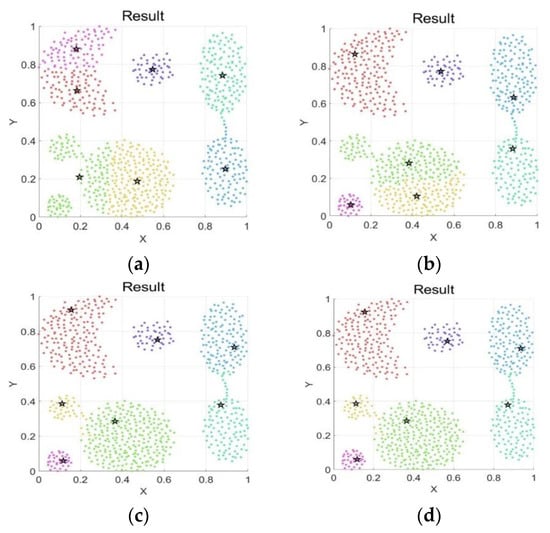

In this part, we tested the DPC-SNNACC algorithm with eight synthetic datasets. Figure 13 and Figure 14 intuitively show the visual comparisons among the four algorithms by using two-dimensional images. The color points represent the points assigned to different groups, and the pentagram points are clustering centers.

Figure 13.

The clustering results on Aggregation by the four algorithms. (a) K-means on the Aggregation; (b) CFSFDP on the Aggregation; (c) SNN-DPC on the Aggregation; (d) DPC-SNNACC on Aggregation.

Figure 14.

The clustering results on Flame by the four algorithms. (a) K-means on Flame; (b) CFSFDP on Flame; (c) SNN-DPC on Flame; (d) DPC-SNNACC on Flame.

The clustering results for the Aggregation dataset in Figure 13 show that both the SNN-DPC and the DPC-SNNACC algorithms achieved better clustering results, whereas the K-means and CFSFDP algorithms each split a complete cluster. Specifically, the SNN-DPC and the DPC-SNNACC algorithms found the same clustering centers, which means that the improved algorithm achieved gratifying results in determining the centers. The K-means algorithm in Figure 13a, in contrast, divided the complete cluster at the bottom of the two-dimensional image down the middle into two independent clusters and merged the left part with two similar groups into one cluster. The CFSFDP algorithm in Figure 13b divided a cluster into upper and lower parts; because several data points from the upper group were connected to an adjacent small group, they were combined into a single cluster.

The clustering results in Figure 14 demonstrate that the SNN-DPC and the DPC-SNNACC algorithms identified the clusters correctly, whereas K-means and CFSFDP failed to do so. For the K-means algorithm, a small part of the lower cluster was allocated to the upper cluster, so the dataset appeared to be cut diagonally into two groups, which is clearly not the desired result. For the CFSFDP algorithm, points on the sides of the lower cluster were allocated to the upper cluster, so the resulting upper and lower parts were still not what we expected.

Table 6 shows the results in terms of the NMI, AMI, ARI, F-Measure, Accuracy, FMI, number of clusters, and Time evaluation metrics on the synthetic datasets, where the symbol “–” indicates a parameter that is not meaningful for that algorithm, and the best results are in bold.

Table 6.

Performances of different clustering algorithm on different synthetic datasets.

As reported in Table 6, the improved algorithm competes favorably with the other three clustering algorithms. The cluster-number column shows the number of clusters. For the first three algorithms this number is given in advance, whereas for the DPC-SNNACC algorithm it is adaptively determined by the improved method. The values in this column are the same within each dataset, indicating that the DPC-SNNACC algorithm correctly identified the number of clusters in the selected datasets.

Compared with K-means, the other three algorithms obtained fairly good clustering performance. The Spiral dataset is commonly used to verify the ability of an algorithm to handle cross-winding datasets. The K-means algorithm clearly failed to perform well on it, while the other three clustering algorithms reached the optimal result. This may result from the fact that the other three algorithms use both the local density $\rho$ and the distance $\delta$ to describe each point, which makes the characteristics more distinct. The K-means algorithm uses only distance to classify points, so it cannot generally represent the characteristics of the points; in other words, it cannot deal with cross-winding datasets.

Sometimes, the CFSFDP algorithm cannot recognize the clusters effectively, even on datasets with a few more points. This may be due to its simplistic density estimation as well as its approach to allocating the remaining points. The DPC-SNNACC and the SNN-DPC algorithms outperformed the other clustering algorithms on nearly all of these synthetic datasets, the exception being CFSFDP on Jain. Furthermore, every algorithm obtained good clustering results on the R15 dataset. Similarly, on the D31 dataset, the results of K-means and CFSFDP were almost as good as those of the SNN-DPC and the DPC-SNNACC algorithms, which means that these algorithms can be applied to datasets with many groups and to larger datasets. Although the DPC-SNNACC algorithm runs a little longer, its running time is still within the acceptable range.

6.3. Results on UCI (University of California, Irvine) Datasets

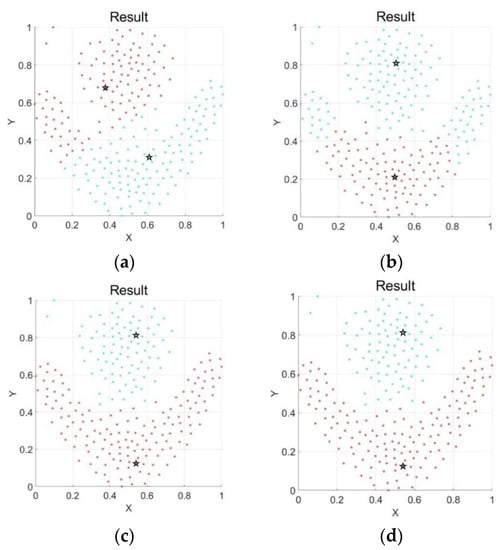

In this part, the improved algorithm was subjected to further tests with four UCI real datasets.

The Wine dataset consists of three clusters with few intersections among them. As shown in Figure 15, all four algorithms divided the data into three clusters, but they differed in how they classified points near the cluster boundaries. For instance, the CFSFDP algorithm produced overlapping regions between the second and third clusters from top to bottom, while the other algorithms produced three roughly separate clusters. The weaker result of CFSFDP is also reflected in the distance between the centers of its second and third groups, which was much smaller than in the other algorithms. The other three algorithms basically achieved correct clustering results.

Figure 15.

The clustering results on Wine by the four algorithms. (a) K-means on Wine; (b) CFSFDP on Wine; (c) SNN-DPC on Wine; (d) DPC-SNNACC on Wine.

Figure 16 shows the performance of the four algorithms on the WDBC dataset. As shown in the figure, all algorithms except CFSFDP found the cluster centers of the breast cancer database (WDBC). Specifically, as the distribution density of the dataset was uneven and the overall density was similar, the CFSFDP algorithm classified most of the points into one group, resulting in a poor clustering effect. The K-means, DPC-SNNACC, and SNN-DPC algorithms appear to find the cluster centers correctly and allocate the points reasonably, but a closer inspection reveals that the DPC-SNNACC and SNN-DPC algorithms distinguish the two clusters well, while the K-means algorithm has some crossed points between the two clusters.

Figure 16.

The clustering results on the WDBC (Wisconsin Diagnosis Breast Cancer Database) by the four algorithms. (a) K-means on WDBC; (b) CFSFDP on WDBC; (c) SNN-DPC on WDBC; (d) DPC-SNNACC on WDBC.

Again, the performances of the four algorithms were benchmarked in terms of NMI, AMI, ARI, F-Measure, Accuracy, and FMI. Table 7 displays the performance of the four algorithms on the UCI datasets. The symbol “–” in the table means that the entry has no actual value, and the best results are shown in bold. As with the synthetic datasets, the DPC-SNNACC algorithm correctly identified the number of clusters, which means that the improved algorithm can be used to adaptively determine the cluster centers. Furthermore, the SNN-DPC and DPC-SNNACC algorithms were superior to the other algorithms in terms of metrics. Almost every metric of the DPC-SNNACC algorithm was on par with that of the SNN-DPC, indicating great potential in clustering. Although the metric values of the DPC-SNNACC on the WDBC dataset were slightly lower than those of the SNN-DPC algorithm, they were much higher than those of K-means. The CFSFDP algorithm had trouble with the WDBC dataset, which indicates that the DPC-SNNACC algorithm is more robust than CFSFDP. In terms of time, the DPC-SNNACC was similar to the others, with differences within 0.7 s.

Table 7.

Performances of the different clustering algorithms on different UCI datasets.

Through the above-detailed analysis of the performance of the DPC-SNNACC algorithm with other clustering algorithms on the synthetic and real-world datasets from the UCI, we can conclude that the DPC-SNNACC algorithm had better performance than the other common algorithms in clustering, which substantiates its potentiality in clustering. Most importantly, it could find the cluster centers adaptively, which is not a common characteristic of the other algorithms.

6.4. Running Time

The execution efficiency of an algorithm is an important measure of its performance, and running time is often used to represent it. This section compares the DPC-SNNACC algorithm with the SNN-DPC, CFSFDP, and K-means algorithms in terms of time complexity. In addition, the clustering times on the synthetic and real datasets reported in Section 6.2 and Section 6.3 are compared to judge the advantages and disadvantages of the DPC-SNNACC algorithm.

Table 8 compares the time complexity of the four clustering algorithms. The time complexity of the K-means algorithm is the lowest, that of the CFSFDP algorithm ranks second, and that of the SNN-DPC and DPC-SNNACC algorithms is the highest. However, the number of nearest neighbors and the number of clusters are usually much smaller than the number of data points, so they have little effect on the time complexity of the algorithms.

Table 8.

Comparison of the time complexity of different algorithms.

The last columns of Table 6 and Table 7 show the time each algorithm needed to cluster the different datasets. For most datasets, the K-means algorithm was the fastest, followed by CFSFDP. The SNN-DPC and DPC-SNNACC algorithms took longer, which is consistent with their time complexity, but the difference in running time among the algorithms on each dataset was less than 1 s, which is acceptable. Therefore, even though the running time of the DPC-SNNACC algorithm is not optimal, the algorithm remains practical.
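
As an illustration of how such running times can be measured, the sketch below times an arbitrary clustering callable over several repetitions and reports the median. It is not the measurement procedure used for Table 6 and Table 7; the helper `time_clustering` and the quadratic stand-in workload `pairwise_sq_dists` are hypothetical names introduced only for this example.

```python
import statistics
import time


def time_clustering(cluster_fn, data, repeats=5):
    """Return the median wall-clock time (in seconds) of cluster_fn(data)."""
    durations = []
    for _ in range(repeats):
        start = time.perf_counter()
        cluster_fn(data)
        durations.append(time.perf_counter() - start)
    # The median is less sensitive to occasional slow runs than the mean.
    return statistics.median(durations)


def pairwise_sq_dists(points):
    """Stand-in workload: all pairwise squared Euclidean distances, O(n^2)."""
    return [
        [sum((a - b) ** 2 for a, b in zip(p, q)) for q in points]
        for p in points
    ]


if __name__ == "__main__":
    data = [(i * 0.5, (i * 7) % 11) for i in range(200)]
    seconds = time_clustering(pairwise_sq_dists, data)
    print(f"median running time: {seconds:.4f} s")
```

Any function that accepts a dataset can be passed in place of the stand-in, so the same harness can time each algorithm on the same data.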

7. Conclusions

In this paper, to solve the problem that the SNN-DPC algorithm requires the cluster centers to be selected through a decision graph or the number of clusters to be input manually, we proposed an improved method called DPC-SNNACC. By optimizing the calculation of local density, the difference in local density among points becomes larger, as does the difference in decision values. The knee point is then obtained by calculating the change in decision values. The points with high decision values are selected as cluster centers, and the number of cluster centers is obtained adaptively. In this way, the DPC-SNNACC algorithm can cluster unknown or unfamiliar datasets.
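
The center-selection idea summarized above can be sketched as follows. This is a simplified illustration under stated assumptions, not the exact DPC-SNNACC criterion: the decision value is taken as the product of local density and distance, only roughly the square root of n largest decision values are inspected, and the threshold is assumed to be the mean gap between adjacent sorted values. The function name `select_centers` is hypothetical.

```python
import math


def select_centers(rho, delta):
    """Return indices of cluster centers chosen from decision values.

    Assumed rule (illustrative only): sort the decision values in
    descending order, inspect about sqrt(n) of the largest ones, and place
    the knee at the last adjacent gap that reaches the mean gap.
    """
    n = len(rho)
    if n < 2:
        return list(range(n))
    # Decision value: element-wise product of density and distance.
    gamma = [r * d for r, d in zip(rho, delta)]
    order = sorted(range(n), key=lambda i: gamma[i], reverse=True)
    # Candidate window; it must be larger than the true number of clusters.
    m = min(n, max(2, round(math.sqrt(n))))
    window = order[:m]
    gaps = [gamma[window[i]] - gamma[window[i + 1]] for i in range(m - 1)]
    threshold = sum(gaps) / len(gaps)  # assumption: mean gap as threshold
    # Last position where the drop is at least the threshold; fall back to
    # a single center if rounding leaves no gap above the mean.
    knee = max((i for i, g in enumerate(gaps) if g >= threshold), default=0)
    return sorted(window[: knee + 1])


if __name__ == "__main__":
    # Three points with high density and large distance, thirteen ordinary points.
    rho = [10, 9, 8] + [1] * 13
    delta = [5, 5, 5] + [0.1] * 13
    print(select_centers(rho, delta))
```

In this toy input the three prominent points are separated from the rest by a large drop in decision value, so they are returned as centers without the number of clusters being supplied.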

The experimental and comparative evaluation of several datasets from diverse domains established the viability of the DPC-SNNACC algorithm. It correctly obtained the cluster centers, and almost every metric matched that of the SNN-DPC algorithm, which in turn was superior to the traditional CFSFDP and K-means algorithms. Moreover, the DPC-SNNACC algorithm is applicable to datasets of different dimensions and sizes. It has some shortcomings, such as a relatively long running time, but these remain within an acceptable range. In general, the DPC-SNNACC algorithm not only retains the advantages of the SNN-DPC algorithm but also determines the number of clusters adaptively. It is also robust to noise and to differences in cluster density.

In future work, first, we can further explore clustering algorithms based on shared neighbors, find a more accurate method to automatically determine , and simplify the process of determining the algorithm parameters. Second, the DPC-SNNACC algorithm can be combined with other algorithms so that their strengths compensate for its shortcomings. Third, the algorithm can be applied to practical problems to broaden its applicability.

Author Contributions

Conceptualization, Y.L.; Formal analysis, Y.L.; Supervision, M.L.; Validation, M.L.; Writing—original draft, Y.L.; Writing—review & editing, M.L. and Y.X. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Fundamental Research Funds for the Central Universities, grant number 222201917006.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A

Table A1.

Summary of the meaning of symbols used in this paper.

| Symbol | Meaning |
|---|---|
| | The cut-off distance, the neighborhood radius of a point |
| | The dataset with as its -th data point |
| | The number of records in the dataset |
| | The Euclidean distance between point and |
| | The distances of the pairs of data points in |
| | The local density |
| | The distance from larger density point |
| | The decision value, the element-wise product of and |
| | The ascending sorted |
| | The number of K-nearest neighbors considered |
| | The set of K-nearest neighbors of point |
| | The set of shared nearest neighbors of point , maybe an empty set |
| L(xi) = (x1, …, xk) | The set of points with the highest similarity to point |
| | The SNN similarity between points and |
| | The number of clusters in the experiment |
| | An integer closest to result after rooting to |
| | The knee point |
| | The difference value between adjacent values |
| | The threshold for judging knee point |
| | The set of cluster centers in the experiment |
| | The representation of final clustering result |
| | The noncentral points which are unassigned, possible subordinate points |
| | The representation of initial clustering result |
| | Ergodic matrix for distribution of possible subordinate points |
| | The queue that has points waiting to be processed |
| | The set of K-nearest neighbors of point |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).