Abstract

The computation of the minimum distance between a point and a planar implicit curve is an important problem in geometric modeling and graphics. This paper presents an integrated hybrid second order algorithm for this computation. The proofs indicate that the convergence of the algorithm is independent of the initial value and that its convergence order is up to two. Numerical examples further confirm that the algorithm is more robust and efficient than existing methods.

1. Introduction

Because of their favorable properties, implicit curves have many applications. As a result, rendering implicit curves and surfaces is an important topic in computer graphics [1]; it usually relies on four techniques: (1) representation conversion; (2) curve tracking; (3) space subdivision; and (4) symbolic computation. In [2], a practical algorithm for rasterizing algebraic curves is obtained by replacing the Euclidean distance test with approximate distance tests. Using the idea that field functions can be combined on both their values and their gradients, [3] develops a set of binary composition operators that tackle four major problems in constructive modeling. As a powerful tool for implicit shape modeling, a new type of bivariate spline function is applied in [4]; it can be created from any given set of 2D polygons that partitions the plane, with any required degree of smoothness. Furthermore, the spline basis functions created by this procedure are piecewise polynomials with an explicit analytical form.

Aside from rendering, implicit curves also play an important role in other areas of computer graphics. For example, many applications need to compute the intersection of parametric and algebraic curves. In [5], elimination theory and a matrix determinant expression of the resultant are applied to the intersection equations. Some researchers transform the intersection problem into computing the eigenvalues and eigenvectors of a numeric matrix. Similarly to elimination theory and matrix determinant expressions, [6] combines marching methods with an algebraic formulation to obtain an efficient algorithm for intersecting algebraic and NURBS surfaces. For degenerate intersections of two quadric surfaces, which frequently arise in geometric and solid modeling, [7] proposes a simple method that determines the conic types without actually computing the intersection and enumerates all possible conic types. M. Aizenshtein et al. [8] present a solver that robustly solves well-constrained transcendental systems, with applications to curve-curve and curve-surface intersections, ray-trap and geometric constraint problems.

To improve implicit modeling, many techniques have been developed to compute the distance between a point and an implicit curve or surface. To compute the bounded Hausdorff distance between two real space algebraic curves, [9] gives a theoretical result that reduces the bound on the Hausdorff distance of algebraic curves from the spatial case to the planar case. Ron [10] discusses and analyzes formulas for the curvature of implicit planar curves, the curvature and torsion of implicit space curves, and the mean and Gaussian curvature of implicit surfaces, and extends the curvature formulas to higher dimensions. Using parametric approximation, Thomas et al. [11] accurately approximate an implicit curve or surface with a relatively small number of low-degree curve segments or surface patches, and further construct monoid curves and surfaces free of the undesirable singularities and branches normally associated with implicit representations. Slightly differently from [11], Eva et al. [12] use a support function representation to identify and approximate monotone segments of algebraic curves. Anderson et al. [13] present an efficient and robust algorithm to compute the foot points for planar implicit curves.

Contribution: An integrated hybrid second order algorithm is presented for orthogonal projection onto planar implicit curves. The algorithm is designed to converge for any test point p, for any planar implicit curve with or without singular points and of any degree, and for any distance between the test point and the curve. It consists of two parts: the hybrid second order algorithm and the initial iterative value estimation algorithm.

The hybrid second order algorithm fuses three basic ideas: (1) the tangent line orthogonal iteration method with one correction; (2) the steepest descent method, which forces the iteration point to fall on the planar implicit curve as closely as possible; and (3) Newton–Raphson’s iterative method, which accelerates the iteration.

Accordingly, the hybrid second order algorithm consists of six steps. The first step uses the steepest descent form of Newton’s iterative method to drive the initial iterative point onto the planar implicit curve; this step does not involve the test point p. In the second step, Newton’s iterative method uses the relationship determined by the test point p to accelerate the iteration. The third step finds the orthogonal projection q of the test point p onto the tangent line through the current iterative point. The fourth step computes the linear orthogonal increment. In the fifth step, the relationship from the second step is used again to accelerate the iteration. The final step corrects the iterative values obtained in the fourth and fifth steps.

One problem with the hybrid second order algorithm is that it may diverge when the test point p lies far from the planar implicit curve. We have found that when the initial iterative point is close to the orthogonal projection point $\mathbf{p}_\Gamma$, the algorithm converges no matter how far the test point p is from the curve. We therefore propose an algorithm, named the initial iterative value estimation algorithm, that drives the initial iterative value as close to the orthogonal projection point as possible. The hybrid second order algorithm combined with the initial iterative value estimation algorithm is called the integrated hybrid second order algorithm.

The rest of this paper is organized as follows. Section 2 reviews related work on orthogonal projection onto planar implicit curves. Section 3 presents the integrated hybrid second order algorithm. Section 4 gives the convergence analysis of the algorithm. Section 5 reports experimental results, including performance data. Finally, Sections 6 and 7 conclude the paper.

2. Related Work

The existing methods can be divided into three categories: local methods, global methods and compromise methods between local and global methods.

2.1. Local Methods

The first local method is Newton’s iterative method. Let $\mathbf{x} = (x, y)$ be a point in the plane, and let $\Gamma$ be a smooth planar implicit curve of the form:

$$\Gamma: f(\mathbf{x}) = 0. \tag{1}$$

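Let $\mathbf{p}$ be a point in the vicinity of the curve $\Gamma$. The orthogonal projection point $\mathbf{p}_\Gamma$ satisfies the relationships:

$$f(\mathbf{p}_\Gamma) = 0, \qquad \nabla f(\mathbf{p}_\Gamma) \wedge (\mathbf{p} - \mathbf{p}_\Gamma) = 0, \tag{2}$$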

where ∧ is the difference-product ([14]). The nonlinear system (2) can be solved using Newton’s iterative method [15]:

$$\mathbf{x}_{m+1} = \mathbf{x}_m - J^{-1}(\mathbf{x}_m)\, F(\mathbf{x}_m), \tag{3}$$

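where $F(\mathbf{x}) = \big(f(\mathbf{x}),\ \nabla f(\mathbf{x}) \wedge (\mathbf{p} - \mathbf{x})\big)^{T}$ and $J$ is the Jacobian matrix of partial derivatives of $F$ with respect to $\mathbf{x}$. Sullivan et al. [16] used Lagrange multipliers and Newton’s algorithm to compute the closest point on the curve for each point.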

where $\lambda$ is the Lagrange multiplier. Repeated iteration of Equation (4) for the increment, starting from the initial values, converges to the closest point. However, Newton’s method and Newton-type methods converge only locally: they sometimes fail to converge even from a reasonably good initial guess. On the other hand, once the initial guess lies in the vicinity of the solution, they offer two advantages: fast convergence and high accuracy. In this paper, these two advantages are exploited to improve the accuracy and efficiency of the integrated hybrid second order algorithm.
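A minimal Python sketch of Newton’s method applied to system (2) follows. It assumes that in the plane the difference-product reduces to the determinant $\mathbf{a} \wedge \mathbf{b} = a_1 b_2 - a_2 b_1$, and that the caller supplies f, its gradient and its second derivatives; the names `newton_footpoint`, `grad` and `hess` are hypothetical and not taken from [15] or [16]. The example starts close to the footpoint, in line with the local convergence discussed above.

```python
def wedge(a, b):
    """Planar difference-product, assumed here to be a1*b2 - a2*b1."""
    return a[0] * b[1] - a[1] * b[0]


def newton_footpoint(f, grad, hess, p, x0, tol=1e-12, max_iter=50):
    """Newton's method on F(x) = (f(x), grad f(x) ^ (p - x)) = 0, i.e. system (2).

    grad(x, y) -> (fx, fy); hess(x, y) -> (fxx, fxy, fyy).
    Converges only locally: x0 should already be near the footpoint.
    """
    x, y = x0
    for _ in range(max_iter):
        fx, fy = grad(x, y)
        fxx, fxy, fyy = hess(x, y)
        dx, dy = p[0] - x, p[1] - y
        F1 = f(x, y)                      # curve condition f(x) = 0
        F2 = wedge((fx, fy), (dx, dy))    # orthogonality condition
        # Jacobian of (F1, F2); the second row follows from the product rule.
        J11, J12 = fx, fy
        J21 = fxx * dy - fxy * dx + fy
        J22 = fxy * dy - fyy * dx - fx
        det = J11 * J22 - J12 * J21
        if det == 0.0:
            raise ZeroDivisionError("singular Jacobian; choose another x0")
        # Solve J * (sx, sy) = -(F1, F2) by Cramer's rule.
        sx = (-F1 * J22 + F2 * J12) / det
        sy = (-F2 * J11 + F1 * J21) / det
        x, y = x + sx, y + sy
        if abs(sx) + abs(sy) < tol:
            break
    return x, y


# Unit circle f = x^2 + y^2 - 1 and test point p = (2, 2).
circle = lambda x, y: x * x + y * y - 1.0
circle_grad = lambda x, y: (2.0 * x, 2.0 * y)
circle_hess = lambda x, y: (2.0, 0.0, 2.0)
print(newton_footpoint(circle, circle_grad, circle_hess, (2.0, 2.0), (0.9, 0.5)))
```

Solving the 2x2 system with Cramer's rule keeps the sketch dependency-free; a general implementation would use a linear solver and a damping strategy.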

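The second local method is the homotopy method [17,18]. To solve the target nonlinear system (2), written as $F(\mathbf{x}) = 0$, these methods start from a system $G(\mathbf{x}) = 0$ whose solutions are known and form a homotopy such as:

$$H(\mathbf{x}, t) = (1 - t)\, G(\mathbf{x}) + t\, F(\mathbf{x}), \tag{5}$$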

where t is a continuous parameter ranging from 0 to 1 that deforms the start system $G(\mathbf{x}) = 0$ into the target system $F(\mathbf{x}) = 0$. Numerical continuation (homotopy) methods can compute all isolated solutions of a polynomial system and are globally convergent, exhaustive solvers; their robustness is summarized in [19], and their high computational cost is confirmed in [20].
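To make the continuation idea concrete, here is a scalar Python sketch rather than the full planar system (2). It tracks one root of a linear homotopy of the form (5) from a start equation $g(x) = 0$ with a known root to a target equation $f(x) = 0$, stepping t in small increments and correcting with Newton steps. The function `track_homotopy`, the step counts and the example polynomials are illustrative assumptions; practical homotopy solvers typically use predictor-corrector schemes with adaptive step sizes.

```python
def track_homotopy(f, df, g, dg, x_start, steps=50, newton_iters=5):
    """Follow a root of H(x, t) = (1 - t) g(x) + t f(x) from t = 0 to t = 1.

    x_start must be a root of the start equation g(x) = 0.
    """
    x = x_start
    for k in range(1, steps + 1):
        t = k / steps
        # Corrector: Newton steps on H(., t), warm-started at the previous root.
        for _ in range(newton_iters):
            h = (1.0 - t) * g(x) + t * f(x)
            dh = (1.0 - t) * dg(x) + t * df(x)
            x -= h / dh
    return x


# Start system g(x) = x^3 - 8 (root 2), target f(x) = x^3 - 2x - 5.
f = lambda x: x ** 3 - 2.0 * x - 5.0
df = lambda x: 3.0 * x ** 2 - 2.0
g = lambda x: x ** 3 - 8.0
dg = lambda x: 3.0 * x ** 2
root = track_homotopy(f, df, g, dg, 2.0)
print(root, f(root))
```

Each intermediate problem is solved from the previous root, which is what makes the path-following robust even though each corrector is only a local Newton method.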

2.2. Global Methods

The first global method uses resultants. For algebraic curves of degree at most four, resultant methods are a good choice. Classical elimination theory yields, from two polynomial equations in two unknowns, a resultant polynomial in one variable whose roots correspond to the solutions of the two simultaneous equations [21,22,23,24]. Assume two polynomial equations $f(x, y) = 0$ and $g(x, y) = 0$ of degrees m and n, respectively. Let and be two integers. To apply the resultant method, y is treated as a constant. This gives:

where is the zero of the x-coordinate and is a common solution of $f$ and $g$, and $B$ is the Bézout matrix of $f$ and $g$, whose elements are polynomials in y only and do not depend on x. Therefore, the determinant $\det(B)$ of the Bézout matrix is a polynomial in y, and $f$ and $g$ have at most mn intersections. The roots of this polynomial give the y-coordinates of at most mn candidate closest points on the curve. Well-known results, such as Sylvester’s resultant and Cayley’s statement of Bézout’s method [21,22,24], indicate that it is very difficult to use the resultant method to solve a two-polynomial system when the algebraic curve has degree higher than five.
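The elimination step can be illustrated with the Sylvester form of the resultant, which the text mentions alongside the Bézout form. The Python sketch below builds the Sylvester matrix of two univariate polynomials and takes its exact determinant with `fractions.Fraction`; holding y fixed, as above, turns the coefficients into numbers. The helper names are hypothetical, and this is not the Bézout-matrix construction itself.

```python
from fractions import Fraction


def sylvester_matrix(a, b):
    """Sylvester matrix of a and b (coefficient lists, highest degree first)."""
    m, n = len(a) - 1, len(b) - 1
    size = m + n
    rows = [[0] * i + list(a) + [0] * (size - m - 1 - i) for i in range(n)]
    rows += [[0] * i + list(b) + [0] * (size - n - 1 - i) for i in range(m)]
    return rows


def determinant(mat):
    """Exact determinant by Gaussian elimination over the rationals."""
    a = [[Fraction(v) for v in row] for row in mat]
    size = len(a)
    det = Fraction(1)
    for col in range(size):
        pivot = next((r for r in range(col, size) if a[r][col] != 0), None)
        if pivot is None:
            return Fraction(0)
        if pivot != col:
            a[col], a[pivot] = a[pivot], a[col]
            det = -det
        det *= a[col][col]
        for r in range(col + 1, size):
            factor = a[r][col] / a[col][col]
            for c in range(col, size):
                a[r][c] -= factor * a[col][c]
    return det


def resultant(a, b):
    """Res(a, b); with nonzero leading coefficients it vanishes iff a, b share a root."""
    return determinant(sylvester_matrix(a, b))


# f = x^2 + y^2 - 1 and g = x - y with y held fixed at 1/2:
y = Fraction(1, 2)
print(resultant([1, 0, y * y - 1], [1, -y]))  # -1/2, i.e. 2*y**2 - 1 at y = 1/2
```

Treating y symbolically instead gives the polynomial $2y^2 - 1$, whose roots are the y-coordinates of the intersections of the unit circle with the line $x = y$.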

The second global method uses the Bézier clipping technique. Using the convex hull property of the Bernstein–Bézier representation, footpoints can be found by solving the nonlinear system (2) [25,26,27]. After (2) is transformed into Bernstein–Bézier form, subdomains whose convex hull boxes cannot contain a solution are eliminated. The elimination rules are applied repeatedly together with the de Casteljau subdivision algorithm, and the algorithm stops once a prescribed accuracy is reached. The Bézier clipping method can find all solutions of the system (2), including the singular points of the implicit curve. It is globally convergent, but relatively expensive because it requires many subdivision steps.
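The convex hull test behind these subdivision methods can be shown in one dimension. The Python sketch below isolates the roots of a polynomial given by its Bernstein coefficients on [0, 1]: if all coefficients share one strict sign, the convex hull property rules out a root and the interval is discarded; otherwise the interval is split at its midpoint with de Casteljau's algorithm. This is plain subdivision, a simpler relative of Bézier clipping (which also shrinks the interval using the convex hull), and the function names are hypothetical.

```python
def de_casteljau_split(coeffs):
    """Split Bernstein coefficients at the parameter midpoint into two halves."""
    left, right = [coeffs[0]], [coeffs[-1]]
    work = list(coeffs)
    while len(work) > 1:
        work = [(u + v) / 2.0 for u, v in zip(work, work[1:])]
        left.append(work[0])
        right.append(work[-1])
    right.reverse()
    return left, right


def bernstein_roots(coeffs, lo=0.0, hi=1.0, tol=1e-9):
    """Approximate roots of a (not identically zero) polynomial in Bernstein form.

    Convex hull property: if all control coefficients have the same strict
    sign, the polynomial has no root on [lo, hi] and the interval is dropped.
    """
    if all(c > 0 for c in coeffs) or all(c < 0 for c in coeffs):
        return []
    if hi - lo < tol:
        return [(lo + hi) / 2.0]
    mid = (lo + hi) / 2.0
    left, right = de_casteljau_split(coeffs)
    return bernstein_roots(left, lo, mid, tol) + bernstein_roots(right, mid, hi, tol)


# (t - 0.3)(t - 0.7) = t^2 - t + 0.21 has Bernstein coefficients [0.21, -0.29, 0.21].
print(bernstein_roots([0.21, -0.29, 0.21]))
```

A root that falls exactly on a subdivision point can be reported twice, once from each side; a production solver would merge nearby results.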

Building on [26] and more efficient than it, the hybrid parallel algorithm in [28] solves systems with multivariate constraints by exploiting both CPU and GPU multi-core architectures. Its GPU-based subdivision method exploits the inherent parallelism of multivariate polynomial subdivision. The hybrid parallel algorithm applies to geometric problems and greatly improves performance compared with state-of-the-art CPU subdivision-based solvers. The two blending schemes presented in [29] efficiently eliminate domains without roots and therefore greatly reduce the number of subdivisions. Each scheme seeks a function, obtained as a simple linear blend of the functions of the given polynomial system, that satisfies two conditions: it contributes no roots, and all control points of its Bernstein–Bézier representation have the same sign. Such functions are generated repeatedly to eliminate root-free domains during the subdivision process.

Van Sosin et al. [30] decompose and efficiently solve a wide variety of complex piecewise polynomial constraint systems, with both zero (equality) constraints and inequality constraints, whose solution spaces are zero-dimensional or univariate. The algorithm has two parts, a subdivision-based polynomial solver and a decomposition algorithm, and can handle large, complex systems. The authors report that it outperforms existing methods.

2.3. Compromise Methods between Local and Global Methods

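The first compromise method between the local and global methods uses successive tangent approximation. The geometric iterative method for orthogonal projection onto an implicit curve presented by Hartmann [31,32] consists of two steps, and its key step is the local approximation of the curve by its tangent or tangent parabola:

$$\mathbf{x}_{n+1} = \mathbf{x}_n - \frac{f(\mathbf{x}_n)}{\langle \nabla f(\mathbf{x}_n), \nabla f(\mathbf{x}_n) \rangle}\, \nabla f(\mathbf{x}_n), \tag{7}$$

$$\mathbf{q} = \mathbf{p} - \frac{\langle \mathbf{p} - \mathbf{x}_n, \nabla f(\mathbf{x}_n) \rangle}{\langle \nabla f(\mathbf{x}_n), \nabla f(\mathbf{x}_n) \rangle}\, \nabla f(\mathbf{x}_n). \tag{8}$$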

The first step of [31,32] repeatedly applies the iterative Formula (7), a steepest-descent step, so that the iterative point moves as close as possible to the curve from an arbitrary initial iterative point. The second step of [31,32] then obtains the foot point q by the iterative Formula (8).

These two steps are repeated until the foot point q falls on the curve $\Gamma$. Unfortunately, the successive tangent approximation method can fail for some planar implicit curves.
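A minimal Python sketch of the two-step successive tangent iteration is given below, assuming Formula (7) is the steepest-descent step toward $f = 0$ and Formula (8) the foot point of p on the tangent line. The function names are hypothetical. The example test point is fairly close to the curve; the loop is capped by `max_rounds` because convergence is not guaranteed in general.

```python
def descend_to_curve(f, grad, x, y, tol=1e-12, max_iter=100):
    """Formula (7) style: repeat x <- x - f(x) grad f(x) / |grad f(x)|^2."""
    for _ in range(max_iter):
        v = f(x, y)
        if abs(v) < tol:
            break
        gx, gy = grad(x, y)
        g2 = gx * gx + gy * gy
        x, y = x - v * gx / g2, y - v * gy / g2
    return x, y


def tangent_foot(grad, p, x, y):
    """Formula (8) style: foot point of p on the tangent line of f = 0 at (x, y)."""
    gx, gy = grad(x, y)
    s = ((p[0] - x) * gx + (p[1] - y) * gy) / (gx * gx + gy * gy)
    return p[0] - s * gx, p[1] - s * gy


def successive_tangent(f, grad, p, x0, tol=1e-10, max_rounds=200):
    x, y = x0
    for _ in range(max_rounds):
        x, y = descend_to_curve(f, grad, x, y)   # first step: land on the curve
        qx, qy = tangent_foot(grad, p, x, y)     # second step: foot point q
        if abs(qx - x) + abs(qy - y) < tol:      # q already lies on the curve
            return qx, qy
        x, y = qx, qy
    return x, y


circle = lambda x, y: x * x + y * y - 1.0
circle_grad = lambda x, y: (2.0 * x, 2.0 * y)
print(successive_tangent(circle, circle_grad, (1.2, 0.9), (1.0, 0.0)))
```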

The second compromise method uses the successive circular approximation technique. Similar to [31,32], Nicholas [33] uses the osculating circle to develop another geometric iteration method. For a planar implicit curve $\Gamma: f(x, y) = 0$, the curvature at a point of the curve is (see [10])

$$K = \frac{f_{xx} f_y^2 - 2 f_{xy} f_x f_y + f_{yy} f_x^2}{\left(f_x^2 + f_y^2\right)^{3/2}},$$

and the radius r of curvature can be expressed as:

$$r = \frac{1}{|K|}. \tag{9}$$

The osculating circle at a point of the curve has the radius r from Formula (9), and its center lies on the normal line through that point at distance r, on the concave side of the curve. As the current point moves along the curve toward the orthogonal projection point, the next iterative point is the intersection of the current osculating circle with the line segment joining the test point p and the center of that circle. The iteration is repeated until the distance between successive iterative points is almost zero. The geometric iteration method [33] may fail in three cases: there is no intersection; the intersection is difficult to compute when the algebraic curve has degree higher than five; or the new estimated iterative point lies very far from the planar implicit curve. A third geometric iteration method uses curvature information to orthogonally project a point onto the parametric osculating circle and osculating sphere [34]. Although the method in [34] handles orthogonal projection onto parametric curves and surfaces, its basic idea is the same as that of [33] for planar implicit curves. The convergence analysis of the method in [34] is provided in [35]. This method [34] is more robust than the existing methods, but it is time consuming.
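The curvature formula and Formula (9) can be checked numerically. The Python sketch below evaluates K from the partial derivatives, builds the osculating circle, and takes the point of that circle on the ray from its center toward p, which is one reading of the next-iterate rule of [33] (an assumption about the exact rule). All names are hypothetical.

```python
import math


def implicit_curvature(fx, fy, fxx, fxy, fyy):
    """Curvature K of f(x, y) = 0 from first and second partial derivatives."""
    num = fxx * fy * fy - 2.0 * fxy * fx * fy + fyy * fx * fx
    return num / math.hypot(fx, fy) ** 3


def osculating_circle(x, y, fx, fy, fxx, fxy, fyy):
    """Return (center, r) of the osculating circle at the curve point (x, y)."""
    k = implicit_curvature(fx, fy, fxx, fxy, fyy)
    if k == 0.0:
        raise ValueError("zero curvature: no osculating circle")
    r = 1.0 / abs(k)                              # Formula (9)
    g = math.hypot(fx, fy)
    # With this sign convention, K > 0 puts the center on the -grad f side.
    s = -1.0 if k > 0 else 1.0
    return (x + s * r * fx / g, y + s * r * fy / g), r


def osculating_step(center, r, p):
    """Point of the osculating circle on the ray from its center toward p."""
    cx, cy = center
    d = math.hypot(p[0] - cx, p[1] - cy)
    return cx + r * (p[0] - cx) / d, cy + r * (p[1] - cy) / d


# Circle of radius 2, f = x^2 + y^2 - 4, at the point (2, 0).
center, r = osculating_circle(2.0, 0.0, 4.0, 0.0, 2.0, 0.0, 2.0)
print(center, r)
print(osculating_step(center, r, (3.0, 3.0)))
```

For a circle the osculating circle is the curve itself, so one step already lands on the exact footpoint; on general curves the step is only an approximation.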

The third compromise method between the local and global methods is the circle shrinking technique [14]. It repeatedly applies Equation (7) in the steepest-descent manner so that the iterative point, starting from an arbitrary initial point, moves as close as possible to the curve. The resulting point on the curve is called the point , and the two points p and then define a circle with center p. The point is computed as the (local) maximum of the curve function f along this circle, starting from . The intersection between the line segment and the planar implicit curve is the next iterative point . The point is then replaced with the point , and the process is repeated until the distance between these two points approaches zero.

Hu et al. [36] proposed a circle double-and-bisect algorithm to reliably evaluate the accurate geometric distance between a point and an implicit curve. The circle doubling stage begins with a very small circle centered at the test point p and doubles its radius as long as the circle does not intersect the implicit curve $\Gamma$. The doubling stops when the current circle intersects the curve but the previous one does not; the previous and current radii are called the interior radius $r_1$ and the exterior radius $r_2$, respectively. The bisecting stage then repeatedly takes the new radius $r = (r_1 + r_2)/2$: if the circle of radius r intersects the curve, $r_2$ is replaced with r; otherwise, $r_1$ is replaced with r. This procedure is repeated until $r_2 - r_1$ falls below a prescribed tolerance.

Similar to the circle shrinking method, Chen et al. [37,38] contributed to orthogonal projection onto parametric curves and surfaces. Given a test point p and a planar implicit curve $\Gamma$, the footpoint must be a solution of a well-constrained system:

This formulation follows [38]. Efficient algebraic solvers can solve this system, and one then takes the minimum over all candidate footpoints. The method uses a circular (spherical) clipping technique to eliminate the curve parts (surface patches) lying outside a circle (sphere) centered at the test point; the key technique is the objective squared distance function that decides whether a curve or surface lies outside the circle or sphere. The radius of the elimination circle or sphere decreases during subdivision, and the iteration ends once the radius can no longer be reduced. Although highly robust, the algorithm has two drawbacks: it is time consuming, and it has difficulty computing the intersection between the circle and a planar implicit curve of relatively high degree (more than five).
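A Python sketch of the double-and-bisect idea of Hu et al. [36] follows. The circle-curve intersection test here is an assumption made for brevity: it samples f on the circle and reports an intersection when f changes sign, which can miss small curve features; [36] may use an exact test. The names and tolerances are hypothetical.

```python
import math


def circle_hits_curve(f, p, r, samples=720):
    """Sampling test (assumed): does f change sign on the circle |x - p| = r?"""
    vals = [f(p[0] + r * math.cos(2.0 * math.pi * k / samples),
              p[1] + r * math.sin(2.0 * math.pi * k / samples))
            for k in range(samples)]
    return min(vals) <= 0.0 <= max(vals)


def double_and_bisect(f, p, r0=1e-2, tol=1e-9):
    """Approximate distance from p to f = 0 by doubling, then bisecting, a radius."""
    r_in, r_out = 0.0, r0
    # Doubling stage: grow the circle until it first meets the curve.
    while not circle_hits_curve(f, p, r_out):
        r_in, r_out = r_out, 2.0 * r_out
    # Bisection stage: shrink the bracket [r_in, r_out] around the distance.
    while r_out - r_in > tol:
        r = 0.5 * (r_in + r_out)
        if circle_hits_curve(f, p, r):
            r_out = r
        else:
            r_in = r
    return r_out


circle = lambda x, y: x * x + y * y - 1.0
print(double_and_bisect(circle, (3.0, 0.0)))  # distance from (3, 0) to the unit circle
```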

3. Integrated Hybrid Second Order Algorithm

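Let $\Gamma: f(x, y) = 0$ be a smooth planar implicit curve, and let $\mathbf{p} = (p_1, p_2)$ be a point (the test point) in the vicinity of $\Gamma$. Assume that s is the arc-length parameter of $\Gamma$, and let $\mathbf{t} = \left(\frac{dx}{ds}, \frac{dy}{ds}\right)$ be the tangent vector along $\Gamma$. The orthogonal projection point $\mathbf{p}_\Gamma$ of $\mathbf{p}$ onto $\Gamma$ satisfies:

$$f(\mathbf{p}_\Gamma) = 0, \qquad \nabla f(\mathbf{p}_\Gamma) \wedge (\mathbf{p} - \mathbf{p}_\Gamma) = 0, \tag{11}$$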

where ∧ is the difference-product ([14]).

3.1. Orthogonal Tangent Vector Method

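The derivative of the planar implicit curve equation with respect to the parameter s is:

$$\frac{df}{ds} = f_x \frac{dx}{ds} + f_y \frac{dy}{ds} = \langle \nabla f, \mathbf{t} \rangle = 0, \tag{12}$$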

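where $\nabla$ is the nabla (Hamilton) operator and $\langle \cdot, \cdot \rangle$ denotes the inner product. Geometrically, the tangent vector $\mathbf{t}$ is orthogonal to the corresponding gradient $\nabla f$. Combining the tangent vector $\mathbf{t}$ with Formula (12) gives:

$$\mathbf{t} = \left(\frac{dx}{ds}, \frac{dy}{ds}\right) \parallel \left(-f_y,\ f_x\right). \tag{13}$$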

From (13), the unit tangent vector is:

$$\mathbf{t} = \frac{\left(-f_y,\ f_x\right)}{\sqrt{f_x^2 + f_y^2}}.$$

The following first order iterative algorithm determines the foot point of p on $\Gamma$.

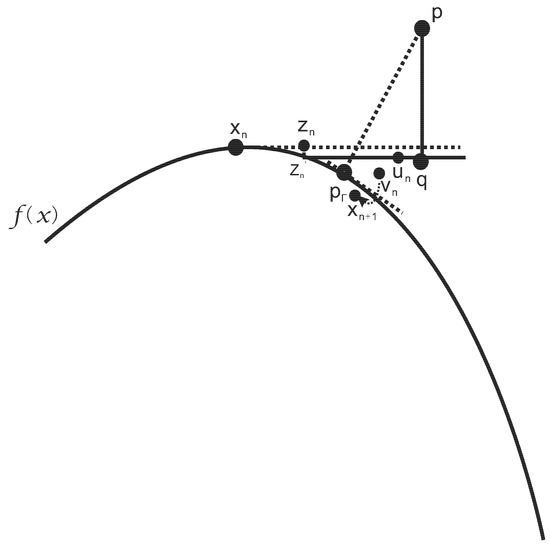

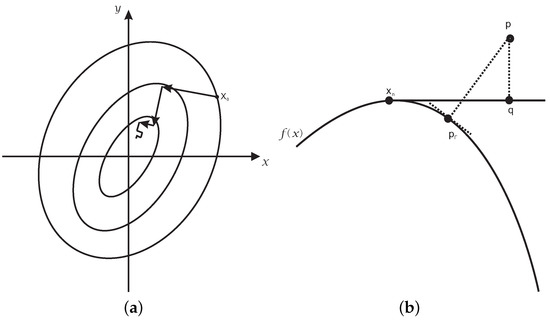

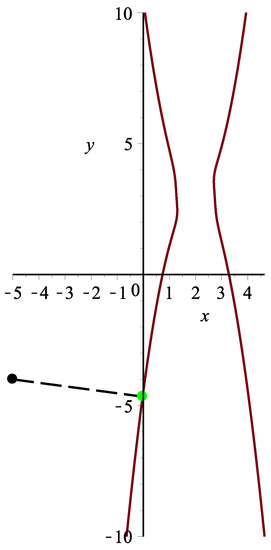

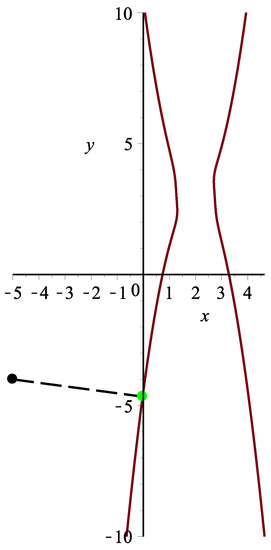

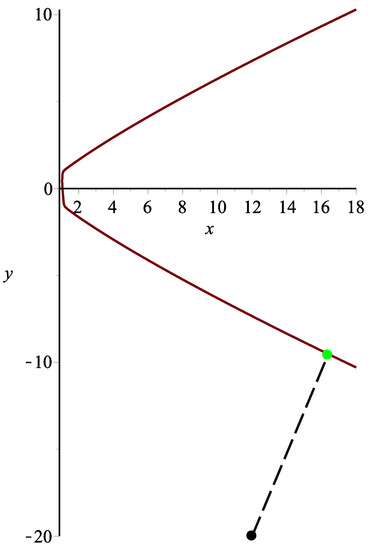

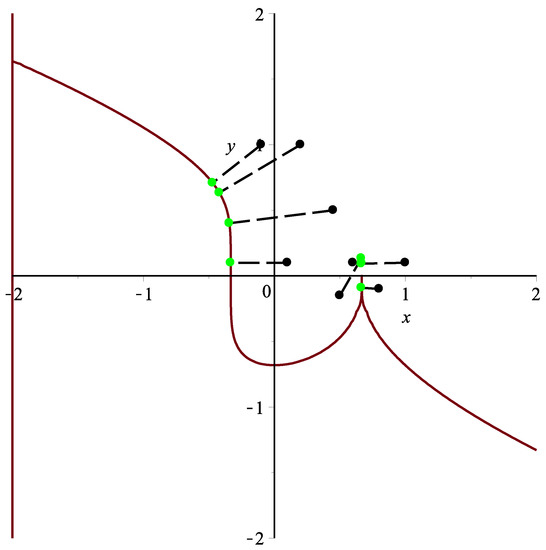

where , . Here q is the orthogonal projection of the test point p onto the tangent line through the initial iterative point $\mathbf{x}_0$ (see Figure 1). Formula (14) can be expressed as:

where $\mathbf{x}_0$ is the initial iterative point. Many numerical tests show that the iterative Formula (15) depends strongly on the initial iterative point; in particular, its iterative values rarely fall on the planar implicit curve.
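To see why Formula (15) alone rarely lands on the curve, the following Python sketch assumes that (15) moves the current point to the foot of p on the tangent line through it, $\mathbf{x}_{n+1} = \mathbf{x}_n + \langle \mathbf{p} - \mathbf{x}_n, \mathbf{t} \rangle \mathbf{t}$, with the unit tangent $\mathbf{t} = (-f_y, f_x)/\|\nabla f\|$. Its fixed points satisfy only the orthogonality condition, not $f = 0$, so nothing pulls the iterate back onto the curve. The function names are hypothetical.

```python
import math


def unit_tangent(grad, x, y):
    """t = (-f_y, f_x) / |grad f|, orthogonal to the gradient."""
    gx, gy = grad(x, y)
    g = math.hypot(gx, gy)
    return -gy / g, gx / g


def tangent_iteration(grad, p, x0, iters=50):
    """Assumed form of (15): x <- x + <p - x, t(x)> t(x)."""
    x, y = x0
    for _ in range(iters):
        tx, ty = unit_tangent(grad, x, y)
        s = (p[0] - x) * tx + (p[1] - y) * ty
        x, y = x + s * tx, y + s * ty
    return x, y


circle = lambda x, y: x * x + y * y - 1.0
circle_grad = lambda x, y: (2.0 * x, 2.0 * y)
x, y = tangent_iteration(circle_grad, (2.0, 1.0), (1.0, 0.0))
print((x, y), circle(x, y))  # the residual f(x, y) stays far from zero
```

On the unit circle the iterate drifts outward onto the normal line through p and stops there, well off the curve, which is the behavior the preprocessing of Section 3.2 is meant to fix.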

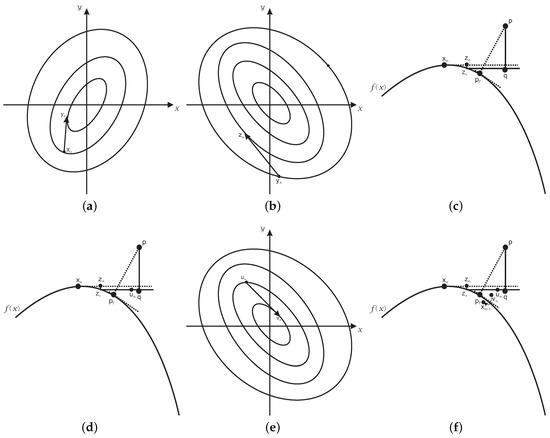

Figure 1.

Graphic demonstration for the hybrid second order algorithm.

3.2. Steepest Descent Method

To make the iterative value fall on the planar implicit curve as closely as possible, a preprocessing step is introduced. Before the iterative Formula (15) is applied, the steepest descent method is adopted: a basic Newton’s iterative formula is added so that the iterative value falls on the planar implicit curve as closely as possible.

where , .
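A sketch of this preprocessing follows, under the assumption that (16) applies one basic Newton (steepest-descent) step toward the curve and then the tangent step of (15) from the resulting point, which amounts to projecting p onto that point's tangent line. Only the order of operations is taken from the text; the exact expressions in (16) and the names below are assumptions.

```python
def descent_step(f, grad, x, y):
    """One basic Newton (steepest-descent) step toward f = 0."""
    v = f(x, y)
    gx, gy = grad(x, y)
    g2 = gx * gx + gy * gy
    return x - v * gx / g2, y - v * gy / g2


def tangent_step(grad, p, x, y):
    """Foot point of p on the tangent line of f at (x, y)."""
    gx, gy = grad(x, y)
    s = ((p[0] - x) * gx + (p[1] - y) * gy) / (gx * gx + gy * gy)
    return p[0] - s * gx, p[1] - s * gy


def preprocessed_iteration(f, grad, p, x0, iters=200):
    x, y = x0
    for _ in range(iters):
        x, y = descent_step(f, grad, x, y)      # pull toward the curve first
        x, y = tangent_step(grad, p, x, y)      # then the (15)-style step
    return x, y


circle = lambda x, y: x * x + y * y - 1.0
circle_grad = lambda x, y: (2.0 * x, 2.0 * y)
print(preprocessed_iteration(circle, circle_grad, (1.2, 0.9), (1.0, 0.0)))
```

Unlike the plain tangent iteration, the fixed point of this composite map must satisfy both $f = 0$ and the orthogonality condition, so on the unit circle it reaches the footpoint (0.8, 0.6).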

3.3. Linear Calibrating Method

Although the iterative Formula (16) is more robust than (15) to a certain extent, its convergence behavior often changes when the test point p or the initial iterative point takes different values. In particular, for large , the iterative point deviates greatly from the planar implicit curve, namely . In this case, the deviation of the iterative point is corrected as follows. If , the increment is used for correction; that is, and are the iteration values before and after correction, respectively, and . The correction aims to make the deviation of the iteration value from the planar implicit curve as small as possible. Let be perpendicular to the increment and orthogonal to the planar implicit curve such that and , where and take their values at . Then it is easy to obtain and . The corresponding iterative formula with correction is:

where , , , . The stability and efficiency of the iterative Formula (17) improve greatly compared with the previous iterative Formulas (15) and (16).
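One way to realize the correction described above is sketched below in Python. It assumes the correction $\Delta^{\perp}$ is perpendicular to the last increment $\Delta$ and sized so that the linearization $f + \langle \nabla f, \Delta^{\perp} \rangle$ vanishes, which gives $\Delta^{\perp} = \frac{f}{\det[\nabla f, \Delta]}(-\Delta_y, \Delta_x)$. Formula (17) itself is not reproduced here, and the name `perpendicular_correction` and its zero-determinant fallback are hypothetical.

```python
def perpendicular_correction(f, grad, x, y, dx, dy):
    """Shift (x, y) perpendicular to the increment (dx, dy) so that the
    first order expansion of f at (x, y) vanishes after the shift."""
    gx, gy = grad(x, y)
    det = gx * dy - gy * dx           # det[grad f, increment]
    if det == 0.0:
        return x, y                   # fallback: no usable direction, keep the point
    mu = f(x, y) / det
    return x - mu * dy, y + mu * dx   # (x, y) + mu * (-dy, dx)


circle = lambda x, y: x * x + y * y - 1.0
circle_grad = lambda x, y: (2.0 * x, 2.0 * y)
# The last increment (0, 0.1) moved the iterate off the unit circle to (1, 0.1).
nx, ny = perpendicular_correction(circle, circle_grad, 1.0, 0.1, 0.0, 0.1)
print((nx, ny), circle(1.0, 0.1), circle(nx, ny))
```

The determinant in the denominator is why a zero-determinant guard is needed: when the increment is parallel to the gradient, no perpendicular move can change f to first order.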

3.4. Newton’s Accelerated Method

Many tests indicate that the iterative Formula (17) sometimes fails to converge when the test point lies far from the planar implicit curve. Newton’s accelerated method is adopted to address this problem. The classic second order Newton iteration is:

$$\mathbf{x}_{n+1} = \mathbf{x}_n - \frac{F(\mathbf{x}_n)}{\langle \nabla F(\mathbf{x}_n), \nabla F(\mathbf{x}_n) \rangle}\, \nabla F(\mathbf{x}_n), \tag{18}$$

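where $\langle \nabla F, \nabla F \rangle$ is the inner product of the gradient of the function $F$ with itself. The function $F$ is expressed as:

$$F(\mathbf{x}) = \left[\, \mathbf{p} - \mathbf{x},\ \nabla f(\mathbf{x}) \,\right], \tag{19}$$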

where the symbol [ ] denotes the determinant of a matrix. To improve stability and the rate of convergence, the hybrid second order algorithm for orthogonal projection onto the planar implicit curve $\Gamma$ is built on the iterative Formula (17). The iterative Formula (18) is inserted twice: between Steps 1 and 2 of Formula (17), and between Steps 3 and 4 of the same formula. This improves the stability, speed, efficiency and numerical accuracy of the iterative algorithm (17). The iterative formula then becomes:

where , , , . The iteration of Formula (20) terminates when: . Compared with the previous iteration formulas, the iterative Formula (20) is more robust and stable: even when the test point p is relatively far from the planar implicit curve, it often still converges (Remark 2 discusses cases where it does not).
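Formulas (18) and (19) can be sketched as a single Python step. The sketch assumes $F(\mathbf{x}) = \det[\mathbf{p} - \mathbf{x}, \nabla f(\mathbf{x})]$ with $\mathbf{p} - \mathbf{x}$ as the first column; swapping the columns only flips the sign of F, and the step $F \nabla F / \langle \nabla F, \nabla F \rangle$ is unchanged by that flip. The gradient of F needs the second derivatives of f, supplied here through a hypothetical `hess` callback.

```python
def newton_F_step(grad, hess, p, x, y):
    """One step x <- x - F(x) grad F(x) / <grad F(x), grad F(x)>, Formula (18),
    with F(x) = det[p - x, grad f(x)] as in (19)."""
    fx, fy = grad(x, y)
    fxx, fxy, fyy = hess(x, y)
    dx, dy = p[0] - x, p[1] - y
    F = dx * fy - dy * fx
    # Partial derivatives of F by the product rule.
    Fx = -fy + dx * fxy - dy * fxx
    Fy = dx * fyy + fx - dy * fxy
    g2 = Fx * Fx + Fy * Fy
    if g2 == 0.0:
        return x, y                   # stationary point of F: no Newton direction
    return x - F * Fx / g2, y - F * Fy / g2


circle_grad = lambda x, y: (2.0 * x, 2.0 * y)
circle_hess = lambda x, y: (2.0, 0.0, 2.0)
# For the unit circle and p = (2, 2), F vanishes on the line y = x.
print(newton_F_step(circle_grad, circle_hess, (2.0, 2.0), 0.6, 0.8))
```

F measures how far $\mathbf{p} - \mathbf{x}$ is from being parallel to the gradient, so this step uses the test point, in contrast to the curve-only steepest-descent step.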

After normalization of the second equation and the fifth equation in the iterative Formula (20), it becomes,

where . The iterative Formula (21) can be implemented in six steps. The first step uses the basic Newton’s iterative formula to compute a point on the planar implicit curve from any initial iterative point; this step does not involve the test point p. The second step uses Newton’s iterative method, which does involve the test point p, to accelerate the iteration and obtain a new iterative point. The third step computes the orthogonal projection point q (footpoint) of p on the tangent line. The fourth equation of Formula (21) yields the new iterative point. Together, the third and fourth steps compute the linear orthogonal increment, which (with the linear calibrating method of the sixth step) is the core of Formula (21). The fifth step accelerates the iteration again, using the test point p, and yields a new iterative point. The sixth step corrects the result of the previous three steps. Together, these six steps make the whole iteration robust. The procedure is repeated until the iterative point coincides with the orthogonal projection point $\mathbf{p}_\Gamma$ (see Figure 1 and the detailed explanation in Remark 3).
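A simplified Python loop that follows the six-step outline above is sketched next. It is not Formula (21): the fourth step simply takes the footpoint q as the new point, and the sixth step uses a perpendicular linear correction that is skipped for negligible increments or increments nearly parallel to the gradient; both are stand-ins chosen for illustration, and all names are hypothetical.

```python
import math


def hybrid_sketch(f, grad, hess, p, x0, eps=1e-12, max_iter=100):
    """Six-step loop in the spirit of Formula (21); steps 4 and 6 are stand-ins."""

    def descent(x, y):                    # basic Newton step toward f = 0
        v, (gx, gy) = f(x, y), grad(x, y)
        g2 = gx * gx + gy * gy
        return x - v * gx / g2, y - v * gy / g2

    def newton_F(x, y):                   # Newton step on F = det[p - x, grad f]
        (fx, fy), (fxx, fxy, fyy) = grad(x, y), hess(x, y)
        dx, dy = p[0] - x, p[1] - y
        F = dx * fy - dy * fx
        Fx, Fy = -fy + dx * fxy - dy * fxx, dx * fyy + fx - dy * fxy
        g2 = Fx * Fx + Fy * Fy
        return (x, y) if g2 == 0.0 else (x - F * Fx / g2, y - F * Fy / g2)

    def foot(x, y):                       # foot point of p on the tangent line
        gx, gy = grad(x, y)
        s = ((p[0] - x) * gx + (p[1] - y) * gy) / (gx * gx + gy * gy)
        return p[0] - s * gx, p[1] - s * gy

    x, y = x0
    for _ in range(max_iter):
        x_prev, y_prev = x, y
        x, y = descent(x, y)              # Step 1: ignores p
        x, y = newton_F(x, y)             # Step 2: uses p
        qx, qy = foot(x, y)               # Steps 3-4: move to the footpoint q
        zx, zy = newton_F(qx, qy)         # Step 5: accelerate again
        # Step 6: correct perpendicular to the increment of steps 3-5.
        ix, iy = zx - x, zy - y
        v = f(zx, zy)
        gx, gy = grad(zx, zy)
        det = gx * iy - gy * ix
        if (abs(v) > eps and math.hypot(ix, iy) > eps
                and abs(det) > 0.1 * math.hypot(gx, gy) * math.hypot(ix, iy)):
            mu = v / det
            zx, zy = zx - mu * iy, zy + mu * ix
        x, y = zx, zy
        if math.hypot(x - x_prev, y - y_prev) < eps:
            break
    return x, y


circle = lambda x, y: x * x + y * y - 1.0
circle_grad = lambda x, y: (2.0 * x, 2.0 * y)
circle_hess = lambda x, y: (2.0, 0.0, 2.0)
print(hybrid_sketch(circle, circle_grad, circle_hess, (2.0, 2.0), (1.0, 0.0)))
```

The termination test mirrors the idea of stopping when successive iterates coincide; the tolerance is an assumed value.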

Remark 1.

In the implementation of the iterative Formula (21) of the hybrid second order algorithm (Algorithm 1), three techniques are used to optimize the process. First, on the right-hand sides of Steps 1, 2, 3 and 5, the part in parentheses is computed first and the part outside the parentheses afterwards, to prevent overflow in intermediate results. Second, error handling is added for the second term on the right-hand side of Step 4 of Formula (21): if , sign = 1. Third, for the second term on the right-hand side of Step 6 of Formula (21), if the determinant of is zero, then ; that is, if any component of equals zero, the sixth step is replaced with to avoid overflow.

According to the analyses above, the hybrid second order algorithm is presented as follows.

| Algorithm 1: Hybrid second order algorithm. |
|---|
| Input: Initial iterative value $\mathbf{x}_0$, test point p and planar implicit curve $\Gamma$. |
| Output: The orthogonal projection point $\mathbf{p}_\Gamma$. |
| Description: |
| Step 1: |
| ; |
| do{ |
| ; |
| Update according to the iterative Formula (21); |
| }while(); |
| Step 2: |
| = ; |
| return $\mathbf{p}_\Gamma$; |

Remark 2.

Many tests demonstrate that if the test point p is not far from the planar implicit curve, Algorithm 1 converges for any initial iterative point $\mathbf{x}_0$. For instance, for a planar implicit curve and four different test points , Algorithm 1 converges efficiently from the given initial iterative value. See Table 1 for details, where p is the test point, $\mathbf{x}_0$ is the initial iterative point, iterations is the number of iterations, is the absolute function value at the orthogonal projection point $\mathbf{p}_\Gamma$, and Error_2 = .

Table 1.

Convergence of the hybrid second order algorithm for four given test points.

However, when the test point p is far from the planar implicit curve, Algorithm 1 sometimes oscillates and fails to converge, regardless of whether the initial iterative point $\mathbf{x}_0$ is close to the curve. For example, for the same planar implicit curve with test point p = and initial iterative point = , the iteration still oscillates without converging after 838 iterations (see Table 2).

Table 2.

Oscillation of the hybrid second order algorithm for the planar implicit curve with a far-away test point.

3.5. Initial Iterative Value Estimation Algorithm

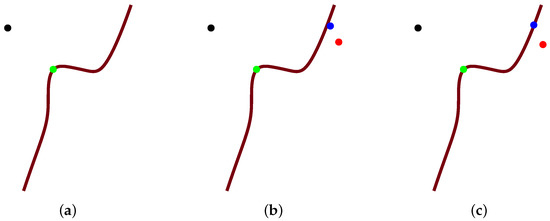

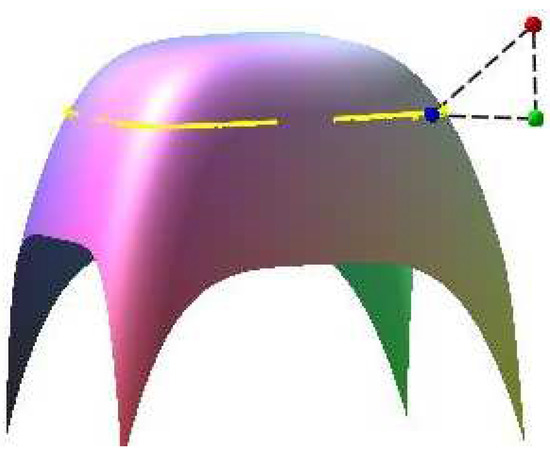

As Remark 2 shows, when the test point p is not far from the planar implicit curve, Algorithm 1 converges from an initial iterative point in any position. However, when the test point p is far from the curve, Algorithm 1 sometimes oscillates and fails to converge, even if the initial iterative point is close to the curve. This is essentially a problem for any Newton-based method. Consider strong nonlinearity that the local linearization cannot capture: the surface $z = f(x, y)$ oscillates strongly near the plane $z = 0$ but intersects that plane in a single closed branch. In this case, for a test point p far from the curve, any Newton-based method may oscillate and fail to converge; Remark 2 gives such a counterexample. To overcome this non-convergence, the initial iterative point is placed close to the orthogonal projection point $\mathbf{p}_\Gamma$. The task therefore becomes constructing an algorithm that brings the initial iterative value of Formula (21) as close as possible to $\mathbf{p}_\Gamma$. The algorithm can be summarized as follows. Starting from an initial iterative point $\mathbf{x}_0$, iterate repeatedly with the basic Newton’s iterative formula until the iterative point lies on the planar implicit curve (see Figure 2c). Then apply the footpoint formula once, projecting p onto the tangent line at that point, where the blue point denotes the initial iterative value (see Figure 2c). After this first round (Figure 2), replace the initial iterative value with the resulting value q and perform the second round (see Figure 3). After the second round, replace the initial iterative value with the new value q and perform the third round (see Figure 4). The detailed algorithm is as follows.

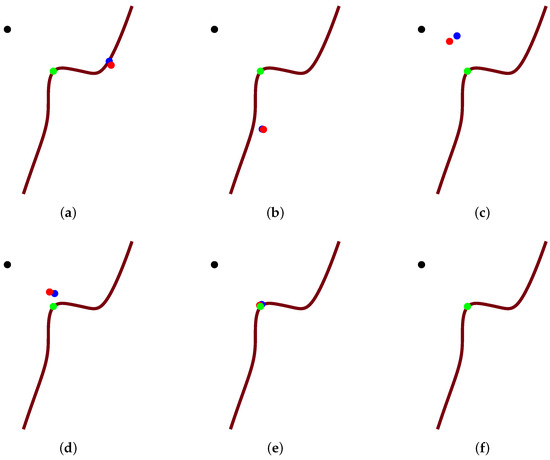

Figure 2.

The entire graphical demonstration of the first round iteration in Algorithm 2. (a) Initial status; (b) Intermediate status; (c) Final status.

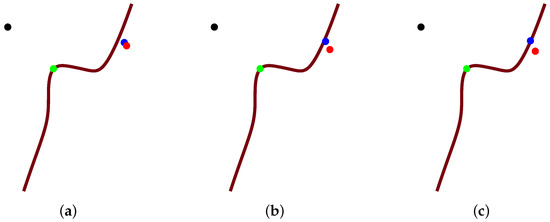

Figure 3.

The entire graphical demonstration of the second round iteration in Algorithm 2. (a) Initial status; (b) Intermediate status; (c) Final status.

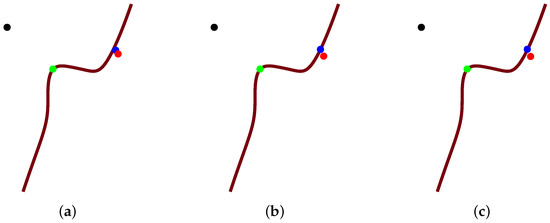

Figure 4.

The entire graphical demonstration of the third round iteration in Algorithm 2. (a) Initial status; (b) Intermediate status; (c) Final status.

First, the notation of Figure 2, Figure 3 and Figure 4 is clarified. Black and green points represent the test point p and the orthogonal projection point $\mathbf{p}_\Gamma$, respectively. The blue point denotes the iterate of Step 2 in Algorithm 2, whether or not it lies on the planar implicit curve. The footpoint q (red point) denotes q in Step 3 of Algorithm 2, and the brown curve is the planar implicit curve $\Gamma$.

Second, Algorithm 2 is interpreted geometrically. Step 2 of Algorithm 2 uses the basic Newton’s iterative method: it repeatedly applies the steepest descent step of Section 3.2 until the blue point lies on the planar implicit curve $\Gamma$. Step 3 of Algorithm 2 then yields the footpoint q (see Figure 2). After the first round, the round counter n equals one. With the blue point on the curve, the initial iterative value is replaced with q and the second round is performed, after which n equals two (see Figure 3). The initial iterative value is replaced with the new q once more and the third round is performed, after which n equals three (see Figure 4). When n reaches three in Step 4, Algorithm 2 exits, and the current footpoint q becomes the initial iterative value for Algorithm 1.

| Algorithm 2: Initial iterative value estimation algorithm. |
|---|
| Input: Initial iterative value $\mathbf{x}_0$, test point p and planar implicit curve $\Gamma$. |
| Output: The footpoint q. |
| Description: |
| Step 1: ; ; |
| Step 2: |
| do{ |
| ; |
| ; |
| }while(); |
| Step 3: ; |
| Step 4: ; |
| if { |
| ; |
| go to Step 1; |
| } |
| else |
| return q; |

Third, the reason for choosing n = 3 in Algorithm 2 is explained. Many planar implicit curves without singular points were tested. As long as , the output of Algorithm 2 could be used as the initial iterative value of Algorithm 1 to obtain convergence. However, if the planar implicit curve has singular points or strong fluctuation and oscillation, n = 3 guarantees convergence. A more efficient method that specifies the integer n automatically is left for future study.
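A Python sketch of Algorithm 2 with a fixed number of rounds is given below; three rounds are used by default, matching the three rounds shown in Figure 2, Figure 3 and Figure 4. It assumes that Step 2 is the steepest-descent iteration of Section 3.2 and that Step 3 computes the footpoint of p on the tangent line; the function name and tolerances are hypothetical.

```python
def initial_value_estimate(f, grad, p, x0, rounds=3, eps=1e-12, max_iter=100):
    """Sketch of Algorithm 2: alternate 'land on the curve' and 'tangent footpoint'."""
    x, y = x0
    for _ in range(rounds):
        # Step 2: basic Newton / steepest-descent iteration until |f| < eps.
        for _ in range(max_iter):
            v = f(x, y)
            if abs(v) < eps:
                break
            gx, gy = grad(x, y)
            g2 = gx * gx + gy * gy
            x, y = x - v * gx / g2, y - v * gy / g2
        # Step 3: footpoint q of p on the tangent line at the current curve point.
        gx, gy = grad(x, y)
        s = ((p[0] - x) * gx + (p[1] - y) * gy) / (gx * gx + gy * gy)
        # Step 4: q becomes the initial value of the next round.
        x, y = p[0] - s * gx, p[1] - s * gy
    return x, y


circle = lambda x, y: x * x + y * y - 1.0
circle_grad = lambda x, y: (2.0 * x, 2.0 * y)
q = initial_value_estimate(circle, circle_grad, (1.0, 1.0), (1.0, 0.0))
print(q)  # would then be handed to Algorithm 1 as its initial value
```

The returned point is only an estimate; in general it need not lie on the curve, which is why Algorithm 1 is run afterwards.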

3.6. Integrated Hybrid Second Order Algorithm

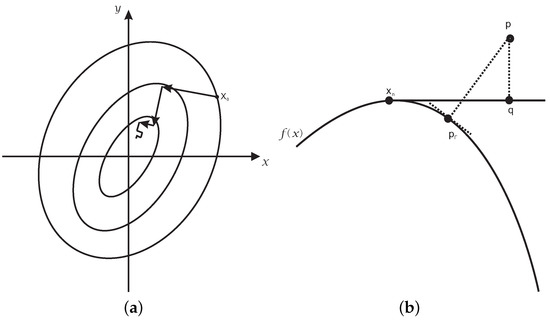

Algorithm 2 optimizes the initial iterative value for Algorithm 1, and Algorithm 1 then projects the test point p onto the planar implicit curve $\Gamma$. The integrated hybrid second order algorithm (Algorithm 3) combines the advantages of Algorithms 1 and 2, which for convenience are denoted Algorithm 1 ($\mathbf{x}_0$, p, $\Gamma$) and Algorithm 2 ($\mathbf{x}_0$, p, $\Gamma$), respectively. Algorithm 3 can be described as follows (see Figure 5).

Figure 5.

The entire graphical demonstration for the whole iterative process of Algorithm 3. (a) Initial status; (b) First intermediate status; (c) Second intermediate status; (d) Third intermediate status; (e) Fourth intermediate status; (f) Final status.

First, the notation of Figure 5, which shows the entire iterative process of Algorithm 3, is clarified. The black point is the test point p; the green point is the orthogonal projection point $\mathbf{p}_\Gamma$; the blue point is the left-hand side of the first equation of the iterative Formula (21) in Algorithm 1; the footpoint q (red point) is the left-hand side of the third equation of the iterative Formula (21) in Algorithm 1; and the brown curve is the planar implicit curve $\Gamma$.

| Algorithm 3: Integrated hybrid second order algorithm. |

| Input: Initial iterative value , test point p and planar implicit curve . |

| Output: The orthogonal projection point . |

| Description: |

| Step 1: q = Algorithm 2 ; |

| Step 2: = Algorithm 1 ; |

| Step 3: return ; |

Secondly, we interpret Algorithm 3. The output of Algorithm 2 is taken as the initial iterative value of Algorithm 1 (see the footpoint q, i.e., the red point, in Figure 4c). Algorithm 1 then iterates until the termination criterion ( ) is satisfied (see Figure 5). The six subgraphs in Figure 5 show successive stages of the iterative process of Algorithm 1. Finally, the green, blue and red points merge into the orthogonal projection point (see Figure 5f).
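The two-stage structure of Algorithm 3 can be sketched in Python as follows. This is a minimal sketch under stated assumptions, not the authors' implementation: the steepest-descent step x ← x − f(x)∇f(x)/‖∇f(x)‖² and the tangent-line footpoint are our reading of Remark 3, and because Formula (21) is not reproduced in this section, Algorithm 1 is replaced by a plain Newton solve of the two projection conditions f(x) = 0 and (x − p) × ∇f(x) = 0. All function names (`descend_to_curve`, `tangent_footpoint`, `estimate_initial_value`, `newton_refine`, `project`) and the unit-circle test curve are hypothetical.

```python
from typing import Tuple

Vec = Tuple[float, float]


def descend_to_curve(f, grad, x: Vec, tol=1e-14, max_iter=100) -> Vec:
    # Assumed form of the steepest-descent step: x <- x - f(x) * grad / |grad|^2,
    # repeated until the point lies on f = 0 within tol.
    for _ in range(max_iter):
        fx = f(x)
        if abs(fx) < tol:
            break
        gx, gy = grad(x)
        g2 = gx * gx + gy * gy
        x = (x[0] - fx * gx / g2, x[1] - fx * gy / g2)
    return x


def tangent_footpoint(grad, x: Vec, p: Vec) -> Vec:
    # Foot of the perpendicular from p onto the tangent line of the curve at x.
    gx, gy = grad(x)
    s = ((p[0] - x[0]) * gx + (p[1] - x[1]) * gy) / (gx * gx + gy * gy)
    return (p[0] - s * gx, p[1] - s * gy)


def estimate_initial_value(f, grad, x0: Vec, p: Vec, rounds=3) -> Vec:
    # Hypothetical stand-in for Algorithm 2: a fixed number of rounds of
    # "descend onto the curve, then take the tangent-line footpoint".
    q = x0
    for _ in range(rounds):
        q = tangent_footpoint(grad, descend_to_curve(f, grad, q), p)
    return q


def newton_refine(f, grad, hess, x: Vec, p: Vec, tol=1e-14, max_iter=50) -> Vec:
    # Stand-in for Algorithm 1 (NOT Formula (21)): Newton's method on
    # F1 = f(x) = 0 and F2 = (x - p) x grad f(x) = 0 (orthogonality).
    for _ in range(max_iter):
        fx_, fy_ = grad(x)
        fxx, fxy, fyy = hess(x)
        dx, dy = x[0] - p[0], x[1] - p[1]
        F1 = f(x)
        F2 = dx * fy_ - dy * fx_
        a11, a12 = fx_, fy_                   # dF1/dx, dF1/dy
        a21 = fy_ + dx * fxy - dy * fxx       # dF2/dx
        a22 = dx * fyy - fx_ - dy * fxy       # dF2/dy
        det = a11 * a22 - a12 * a21
        if det == 0.0:
            raise ZeroDivisionError("singular Jacobian")
        # Solve J s = -F with Cramer's rule.
        sx = (-a22 * F1 + a12 * F2) / det
        sy = (a21 * F1 - a11 * F2) / det
        x = (x[0] + sx, x[1] + sy)
        if abs(sx) + abs(sy) < tol:
            break
    return x


def project(f, grad, hess, x0: Vec, p: Vec) -> Vec:
    # Structure of Algorithm 3: initial value estimation, then local refinement.
    q = estimate_initial_value(f, grad, x0, p)
    return newton_refine(f, grad, hess, q, p)


if __name__ == "__main__":
    # Unit circle f(x, y) = x^2 + y^2 - 1, used only as an illustrative curve.
    f = lambda v: v[0] * v[0] + v[1] * v[1] - 1.0
    grad = lambda v: (2.0 * v[0], 2.0 * v[1])
    hess = lambda v: (2.0, 0.0, 2.0)  # (f_xx, f_xy, f_yy)
    print(project(f, grad, hess, (1.0, 0.0), (1.2, 0.9)))  # close to (0.8, 0.6)
```

Keeping the initializer and the refinement as separate functions mirrors the division of labour in Algorithm 3: the first stage only has to land near the right projection point, and the second stage supplies the fast local convergence.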

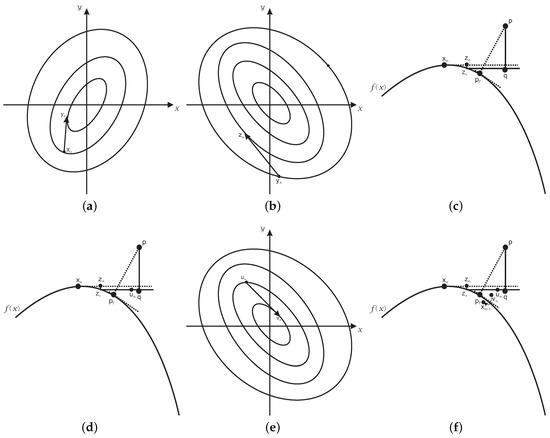

Remark 3.

Algorithm 3 and its two sub-algorithms are interpreted geometrically here; Algorithms 2 and 1 are illustrated in Figure 6 and Figure 7, respectively. In Figure 6a and Figure 7a, the closed loops are the orthogonal projections of the contour lines of the surface onto the horizontal plane , respectively. In Figure 7b,e, the closed loops are likewise the orthogonal projections of the contour lines of the surface onto the horizontal plane , respectively. In Figure 6a, the vector starting at point is the gradient , and its length is . From an arbitrary initial iterative point , the iterative formula of the steepest descent method (Step 2 of Algorithm 2) is applied repeatedly until the iterative point lies on the planar implicit curve . In Figure 6b, Step 3 of Algorithm 2 computes the footpoint q, i.e., the intersection of the tangent line to the planar implicit curve at the point and the perpendicular line through the test point p. After the first round of Algorithm 2, the initial iterative point is replaced by the footpoint q, and the second and third rounds are carried out in the same way. These three rounds constitute Algorithm 2, the first part of Algorithm 3.

Figure 6.

Graphical demonstration of Algorithm 2. (a) Step 2 of Algorithm 2; (b) Step 3 of Algorithm 2.
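The geometry of Figure 6 can also be written as formulas. Under our reading of Remark 3 (an assumption, since the exact iterative formulas of Algorithm 2 are not reproduced in this section), one round of Algorithm 2 combines a gradient step, repeated until the iterate lies on the curve $f(x)=0$, with the foot of the perpendicular from $p$ onto the tangent line at the resulting curve point $x_c$. The symbols $f$, $x_k$, $x_c$ and $\varepsilon$ are our own notation.

```latex
\begin{aligned}
x_{k+1} &= x_k - \frac{f(x_k)}{\lVert \nabla f(x_k) \rVert^{2}}\, \nabla f(x_k),
  \qquad \text{repeated until } |f(x_{k+1})| < \varepsilon \text{; call the result } x_c, \\[4pt]
q &= p - \frac{\langle p - x_c,\, \nabla f(x_c) \rangle}{\lVert \nabla f(x_c) \rVert^{2}}\, \nabla f(x_c).
\end{aligned}
```

The second line places $q$ on the tangent line, because $\langle q - x_c, \nabla f(x_c)\rangle = \langle p - x_c, \nabla f(x_c)\rangle - \langle p - x_c, \nabla f(x_c)\rangle = 0$, and it makes $p - q$ parallel to $\nabla f(x_c)$, i.e., perpendicular to that line. The next round restarts the first line from $x_0 = q$.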

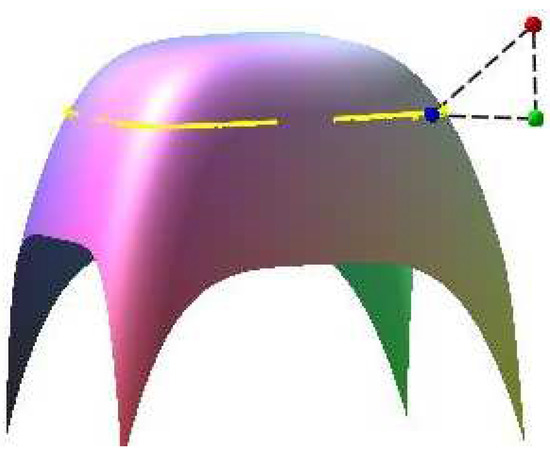

Figure 7.

Graphical demonstration of Algorithm 1. (a) The first step of the iterative Formula (21); (b) The second step of the iterative Formula (21); (c) The third step of the iterative Formula (21); (d) The fourth step of the iterative Formula (21); (e) The fifth step of the iterative Formula (21); (f) The sixth step of the iterative Formula (21).

In each subfigure of Figure 7, points p and are the test point and the corresponding orthogonal projection point, respectively. In Figure 7a, the vector starting at point is the gradient , and its length is . Starting from the initial iterative point supplied by Algorithm 2, the iterative formula of the steepest descent method (Step 1 of Algorithm 1) is applied once. In Figure 7b, the vector starting at point is the gradient , and its length is . Starting from the iterative point produced by Step 1 of Algorithm 1, , the iterative formula of the steepest descent method (Step 2 of Algorithm 1) is applied once. In Figure 7c, Step 3 of Algorithm 1 computes the footpoint q, i.e., the intersection of the tangent line to the planar implicit curve at the point and the perpendicular line through the test point p. In the actual iterative process, point is approximately equal to point . In Figure 7d, point comes from the fourth step of Algorithm 1, which aims to obtain a linear orthogonal increment. In Figure 7e, the vector starting at point is the gradient , and its length is . Starting from the iterative point produced by Step 4 of Algorithm 1, the iterative formula of the steepest descent method (Step 5 of Algorithm 1) is applied once more. In Figure 7f, the iterative point from the sixth step of Algorithm 1 corrects the iterative point from the fifth step. These six steps are repeated until the termination criteria are met. Finally, the footpoint q, the iterative point and the orthogonal projecting point merge into the orthogonal projection point . These six steps constitute Algorithm 1, the second part of Algorithm 3.

4. Convergence Analysis

In this section, we present the convergence analysis of the integrated hybrid second order algorithm. We prove that the convergence order of the algorithm is up to two and that the convergence of Algorithm 3 is independent of the initial value.

Theorem 1.

Given an implicit function that can be parameterized, the convergence order of the iterative Formula (21) is up to two.

Proof.

Without loss of generality, assume that the parametric representation of the planar implicit curve is . Suppose that the parameter corresponds to the orthogonal projection point of the test point onto the parametric curve .

The first part shows that the order of convergence of the first step of the iterative Formula (21) is up to two. The first step of the iterative Formula (21) corresponds to the following iteration equation of Newton’s second order parameterized iterative method:

A Taylor expansion around gives:

where and . Thus, we obtain:

From (22)–(24), the error iteration can be expressed as,

where .

The second part proves that the order of convergence of the second step of the iterative Formula (21) is two. The second step of the iterative Formula (21) is essentially the following parameterized iterative equation of Newton’s second order iterative method,

where:

A Taylor expansion around gives:

where and . Thus, we obtain:

From Formulas (26)–(29), Taylor expansion and simplification give the following error relationship,

where . Because the fifth step is identical to the second step of the iterative Formula (21), and the outputs of Newton’s iterative method are closely related to the test point p, the order of convergence of the fifth step of the iterative Formula (21) is also two.
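As a concrete illustration of a second order parameterized Newton step, the sketch below applies the classical Newton iteration to the orthogonality condition g(t) = ⟨c(t) − p, c′(t)⟩ = 0 on a parametric curve c(t), with g′(t) = ‖c′(t)‖² + ⟨c(t) − p, c″(t)⟩. It is an assumption that this is the same iteration as the Newton steps of Formula (21); the function name and the unit-circle test curve are hypothetical. For the unit circle the update reduces to e ← e − tan e for the parameter error e, whose leading term is −e³/3, so the error collapses within a few iterations once the iterate is near the solution.

```python
import math


def newton_parametric_projection(c, dc, ddc, p, t0, tol=1e-14, max_iter=50):
    """Newton's method on g(t) = <c(t) - p, c'(t)> = 0.

    Uses g'(t) = |c'(t)|^2 + <c(t) - p, c''(t)>. Returns (t, list of iterates).
    Convergence is only local: g'(t) must stay away from zero.
    """
    t = t0
    iterates = [t]
    for _ in range(max_iter):
        (x, y), (dx, dy), (ddx, ddy) = c(t), dc(t), ddc(t)
        rx, ry = x - p[0], y - p[1]
        g = rx * dx + ry * dy
        dg = dx * dx + dy * dy + rx * ddx + ry * ddy
        step = g / dg
        t -= step
        iterates.append(t)
        if abs(step) < tol:
            break
    return t, iterates


if __name__ == "__main__":
    # Unit circle; the test point lies at parameter 0.7, distance 1.5 from the origin.
    c = lambda t: (math.cos(t), math.sin(t))
    dc = lambda t: (-math.sin(t), math.cos(t))
    ddc = lambda t: (-math.cos(t), -math.sin(t))
    p = (1.5 * math.cos(0.7), 1.5 * math.sin(0.7))
    t, its = newton_parametric_projection(c, dc, ddc, p, t0=0.0)
    for k, tk in enumerate(its):
        print(k, abs(tk - 0.7))  # parameter error per iteration
```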

The third part shows that the order of convergence of the third and fourth steps of the iterative Formula (21) is one. Following the first order method for orthogonal projection onto a parametric curve [32,39,40], the parameterized iterative equation for the footpoint in the third step of the iterative Formula (21) can be expressed as follows,

Combining the iterative Equation (31) with the fourth step of the iterative Formula (21) gives:

where denotes the scalar product of vectors . Let , and repeat the procedure (32) until is less than a given tolerance . Because the parameter corresponds to the orthogonal projection point of the test point onto the parametric curve , it is easy to verify that

Because the footpoint q is the intersection of the tangent line of the parametric curve at and the perpendicular line determined by the test point p, the equation of the tangent line of the parametric curve at is:

The vector of the line segment connecting the test point p and the point is:

Since the vector (35) is orthogonal to the tangent vector of the tangent line (34), the parameter value on the tangent line (34) is:

Substituting (36) into (34) and simplifying gives the footpoint q = ,

Substituting (37) into (32) and simplifying gives:

Combining (33) with (38) and carrying out the Taylor expansion with the symbolic computation software Maple 18 gives:

Simplifying (39) gives:

where the symbol denotes the coefficient of the first order error term on the right-hand side of Formula (40). This result shows that the third and fourth steps of the iterative Formula (21) are first order convergent. From the iterative Formula (21) and the three error iteration relationships (25), (30) and (40), the convergence order of each step is not more than two. The iterative error relationship of the iterative Formula (21) can therefore be expressed as follows:
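By contrast, first order behaviour can be seen in a minimal sketch of the classical tangent-line foot point iteration for parametric curves, t ← t + ⟨p − c(t), c′(t)⟩ / ‖c′(t)‖², which moves the parameter to the foot of p on the tangent line at c(t). We assume this is the kind of update meant by Equations (31)–(32); the function name and the unit-circle test curve are hypothetical. For the unit circle the update becomes e ← e − |p| sin e for the parameter error e, so near the solution the error is multiplied by roughly 1 − |p| per step: the convergence is linear and, locally, only occurs when 0 < |p| < 2.

```python
import math


def tangent_foot_iteration(c, dc, p, t0, tol=1e-12, max_iter=500):
    """First order foot point iteration on a parametric curve c(t).

    Each step projects p onto the tangent line at c(t) and moves the
    parameter by the corresponding tangent-line coordinate.
    Returns (t, number_of_steps).
    """
    t = t0
    for k in range(1, max_iter + 1):
        (x, y), (dx, dy) = c(t), dc(t)
        delta = ((p[0] - x) * dx + (p[1] - y) * dy) / (dx * dx + dy * dy)
        t += delta
        if abs(delta) < tol:
            return t, k
    return t, max_iter


if __name__ == "__main__":
    c = lambda t: (math.cos(t), math.sin(t))
    dc = lambda t: (-math.sin(t), math.cos(t))
    p = (1.5 * math.cos(0.7), 1.5 * math.sin(0.7))
    t, steps = tangent_foot_iteration(c, dc, p, t0=0.0)
    print(t, steps)  # t is close to 0.7, but many more steps than Newton needs
```

Run on the same test point as a Newton-type step, the step count grows with the number of correct digits rather than shrinking, which is the practical signature of first order convergence.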

To sum up, the convergence order of the iterative Formula (21) is up to two.

Theorem 2.

In terms of convergence, the hybrid second order algorithm (Algorithm 1) is a compromise between local and global methods.

Proof.

The third and fourth steps of the iterative Formula (21) of Algorithm 1 are equivalent to the foot point algorithm for implicit curves in [32]. The work in [14] explains that, in terms of convergence, the foot point algorithm for implicit curves proposed in [14] is a compromise between local and global methods. Hence, the convergence of Algorithm 1 is also a compromise between local and global methods. Namely, if a test point is close to the foot point on the planar implicit curve, the convergence of Algorithm 1 is independent of the initial iterative value; otherwise, it depends on the initial iterative value. The sixth step of Algorithm 1 improves robustness, but the third, fourth and sixth steps of Algorithm 1 together still constitute a compromise between local and global methods. The first step (steepest descent method) of Algorithm 1 moves the iterative point onto the planar implicit curve and improves robustness. The second and fifth steps constitute the classical Newton’s iterative method, which accelerates convergence and, to some extent, improves robustness. Although the steepest descent method of the first step and Newton’s iterative method of the second and fifth steps make Algorithm 1 more robust and efficient, they cannot change the fact that Algorithm 1 is a compromise between local and global methods. In summary, Algorithm 1 is a compromise between local and global methods. ☐

Theorem 3.

The convergence of the integrated hybrid second order algorithm (Algorithm 3) is independent of the initial iterative value.

Proof.

The integrated hybrid second order algorithm (Algorithm 3) consists of two sub-algorithms (Algorithm 1 and Algorithm 2). By Theorem 2, Algorithm 1 is a compromise between local and global methods. Whether the test point p is far from or close to the planar implicit curve , Algorithm 1 can converge if the initial iterative value lies close to the orthogonal projection point . In every case, Algorithm 2 moves the initial iterative value of Algorithm 1 sufficiently close to the orthogonal projection point to ensure the convergence of Algorithm 1. In this way, Algorithm 3 can converge for any initial iterative value. Therefore, the convergence of the integrated hybrid second order algorithm (Algorithm 3) is independent of the initial value. ☐

5. Results of the Comparison

Example 1.

([14]) Assume a planar implicit curve . One thousand six hundred test points are taken from the square . The integrated hybrid second order algorithm (Algorithm 3) orthogonally projects all 1600 points onto the planar implicit curve Γ, and the results satisfy the relationships and .
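The two relationships that the projected points satisfy are not reproduced in this section. Assuming (our assumption) that they are a curve residual |f(q)| and an orthogonality residual between p − q and the normal ∇f(q), they could be checked with the hypothetical helper below. Using the sine of the angle rather than the raw cross product makes the second residual independent of the scale of f and of the distance from p to the curve.

```python
import math


def projection_residuals(f, grad, p, q):
    """Return (|f(q)|, |sin of the angle between p - q and grad f(q)|).

    Both values should be close to zero when q is the orthogonal
    projection of p onto the curve f = 0.
    """
    gx, gy = grad(q)
    vx, vy = p[0] - q[0], p[1] - q[1]
    norm = math.hypot(vx, vy) * math.hypot(gx, gy)
    # If p == q, or grad f(q) == 0 (a singular point), the angle is undefined;
    # report 0.0, which means the orthogonality test is uninformative there.
    orth = abs(vx * gy - vy * gx) / norm if norm > 0.0 else 0.0
    return abs(f(q)), orth


if __name__ == "__main__":
    f = lambda v: v[0] * v[0] + v[1] * v[1] - 1.0  # unit circle, for illustration
    grad = lambda v: (2.0 * v[0], 2.0 * v[1])
    print(projection_residuals(f, grad, (1.2, 0.9), (0.8, 0.6)))  # both tiny
    print(projection_residuals(f, grad, (1.2, 0.9), (1.0, 0.0)))  # on curve, not orthogonal
```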

The test points are selected/sampled in two steps:

(1) Uniformly divide the planar square of the planar implicit curve into sub-regions , where

(2) Randomly select a test point in each sub-region and then an initial iterative value in its vicinity.

The same procedure to select/sample test points applies for other examples below.
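The two-step sampling procedure can be sketched as follows. The square, the number of sub-regions and the size of the "vicinity" are not given in this section, so the bounds, the m × m grid and the `radius` parameter below are hypothetical; with m = 40 the grid yields the 1600 test points of Example 1.

```python
import random


def sample_test_points(xmin, xmax, ymin, ymax, m, radius=0.05, seed=0):
    """Split the square into m x m equal cells; in each cell draw one random
    test point p and one initial iterative value within `radius` of p
    (per coordinate). Returns a list of (cell index, p, initial value)."""
    rng = random.Random(seed)  # seeded so the sample is reproducible
    hx, hy = (xmax - xmin) / m, (ymax - ymin) / m
    samples = []
    for i in range(m):
        for j in range(m):
            x_lo, y_lo = xmin + i * hx, ymin + j * hy
            p = (rng.uniform(x_lo, x_lo + hx), rng.uniform(y_lo, y_lo + hy))
            x0 = (p[0] + rng.uniform(-radius, radius),
                  p[1] + rng.uniform(-radius, radius))
            samples.append(((i, j), p, x0))
    return samples


if __name__ == "__main__":
    pts = sample_test_points(-2.0, 2.0, -2.0, 2.0, m=40)  # hypothetical square
    print(len(pts))  # 1600 = 40 * 40
```

Drawing one point per cell, rather than 1600 points over the whole square, guarantees that every part of the square is covered.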

In the first case, the test point is specified. Using Algorithm 3, the corresponding orthogonal projection point is = (−0.47144354751227009, 0.70879213227958752), and the initial iterative values are (−0.1,0.8), (−0.1,0.9), (−0.1,1.1), (−0.1,1.2), (−0.2,0.8), (−0.2,0.9), (−0.2,1.1) and (−0.2,1.2). Algorithm 3 is run 12 times from each initial iterative value, yielding 12 running times in nanoseconds. As listed in Table 3, the average running times of Algorithm 3 for the eight initial iterative values are 1,099,243, 582,078, 525,942, 490,537, 392,090, 364,817, 369,739 and 367,654 nanoseconds, respectively. The overall average running time is 524,013 nanoseconds, whereas that of the circle shrinking algorithm in [14] is 8.9 ms under the same initial iteration conditions.

Table 3.

Running time for different initial iterative values by Algorithm 3 in Example 1.

The iterative error analysis for the test point under the same conditions is presented in Table 4, whose first row lists the initial iterative points. The other rows contain error values computed with the distance function ; the examples below use the same distance-function criterion. The left column of Table 4 gives the iteration number, as in Tables 8–15.

Table 4.

The error analysis of the iteration process of Algorithm 3 in Example 1.

In the second case, the test point is specified. Using Algorithm 3, the corresponding orthogonal projection point is = (−0.42011639143389254, 0.63408011508207950), and the initial iterative values are (0.3,0.9), (0.3,1.2), (0.4,0.9), (0.3,0.7), (0.1,0.8), (0.1,0.6), (0.4,1.1) and (0.4,1.3). Algorithm 3 is run 10 times from each initial iterative value, yielding 10 running times in nanoseconds. As listed in Table 5, the average running times of Algorithm 3 for the eight initial iterative values are 1,152,664, 844,250, 525,540, 1,106,098, 1,280,232, 1,406,429, 516,779 and 752,429 nanoseconds, respectively. The overall average running time is 948,053 nanoseconds, whereas that of the circle shrinking algorithm in [14] is 12.6 ms under the same initial iteration conditions.

Table 5.

Running times for different initial iterative values by Algorithm 3 in Example 1.

In the third case, the test point is specified. Using Algorithm 3, the corresponding orthogonal projection point is =, , and the initial iterative values are (0.1,0.2), (0.1,0.3), (0.1,0.4), (0.2,0.2), (0.2,0.3), (0.3,0.2), (0.3,0.3) and (0.3,0.4). Algorithm 3 is run 12 times from each initial iterative value, yielding 12 running times in nanoseconds. As listed in Table 6, the average running times of Algorithm 3 for the eight initial iterative values are 183,515, 680,338, 704,694, 192,564, 601,235, 161,127, 713,697 and 1,034,443 nanoseconds, respectively. The overall average running time is 533,952 nanoseconds, whereas that of the circle shrinking algorithm in [14] is 9.4 ms under the same initial iteration conditions.

Table 6.

Running times for different initial iterative values by Algorithm 3 in Example 1.

In all three cases, Algorithm 3 is faster than the circle shrinking algorithm in [14] (see Figure 8).
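The overall averages quoted for Example 1 can be reproduced from the per-initial-value averages reported for Tables 3, 5 and 6, and the same numbers give the speed-up over the circle shrinking algorithm in [14]. The short script below is only arithmetic on the reported values (the names `CASES` and `summarize` are ours): it rounds the mean half up, as the text does, and yields speed-ups of roughly 17.0, 13.3 and 17.6 for the three cases.

```python
# Per-initial-value average running times in nanoseconds, copied from the
# text for Tables 3, 5 and 6 (Example 1), together with the reported running
# time of the circle shrinking algorithm in [14] for the same case, in ms.
CASES = {
    "first case": ([1_099_243, 582_078, 525_942, 490_537,
                    392_090, 364_817, 369_739, 367_654], 8.9),
    "second case": ([1_152_664, 844_250, 525_540, 1_106_098,
                     1_280_232, 1_406_429, 516_779, 752_429], 12.6),
    "third case": ([183_515, 680_338, 704_694, 192_564,
                    601_235, 161_127, 713_697, 1_034_443], 9.4),
}


def summarize(times_ns, baseline_ms):
    """Return (overall mean in ns rounded half up, speed-up over baseline)."""
    mean_ns = sum(times_ns) / len(times_ns)
    overall_ns = int(mean_ns + 0.5)  # the text rounds 524,012.5 up to 524,013
    speedup = baseline_ms * 1e6 / mean_ns
    return overall_ns, speedup


if __name__ == "__main__":
    for name, (times, baseline) in CASES.items():
        overall, speedup = summarize(times, baseline)
        print(f"{name}: {overall:,} ns overall, about {speedup:.1f}x faster")
```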

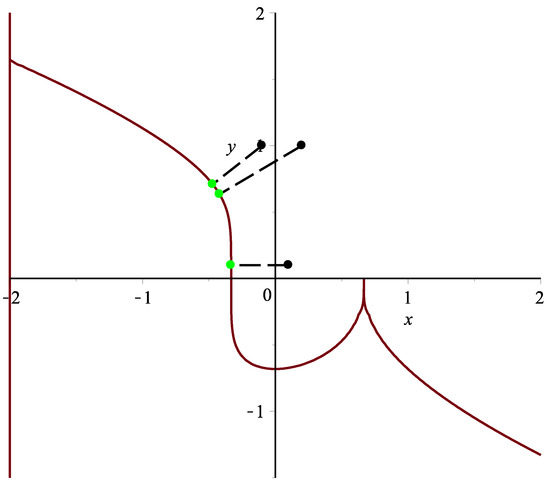

Figure 8.

Graphic demonstration for Example 1.

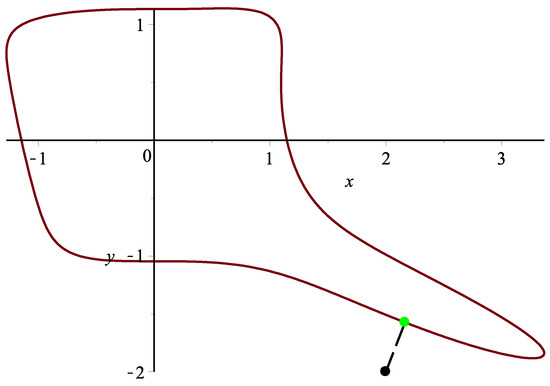

Example 2.

Assume a planar implicit curve . Nine hundred test points are taken from the square . Algorithm 3 correctly projects all 900 points orthogonally onto the planar implicit curve Γ, and the results satisfy the relationships and . In this case, the test point is specified. Using Algorithm 3, the corresponding orthogonal projection point is = (−1.2539379406252056281, 0.57568037362837924613), and the initial iterative values are (−1.4,0.6), (−1.3,0.7), (−1.2,0.6), (−1.6,0.4), (−1.4,0.7), (−1.4,0.3), (−1.3,0.6) and (−1.2,0.8). Algorithm 3 is run 10 times from each initial iterative value, yielding 10 running times in nanoseconds. As listed in Table 7, the average running times of Algorithm 3 for the eight initial iterative values are 4,487,449, 4,202,203, 4,555,396, 4,533,326, 4,304,781, 4,163,107, 4,268,792 and 4,378,470 nanoseconds, respectively. The overall average running time is 4,361,691 nanoseconds (see Figure 9).

Table 7.

Running times for different initial iterative values by Algorithm 3 in Example 2.

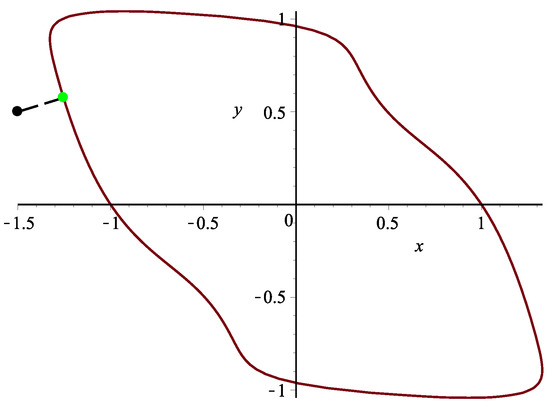

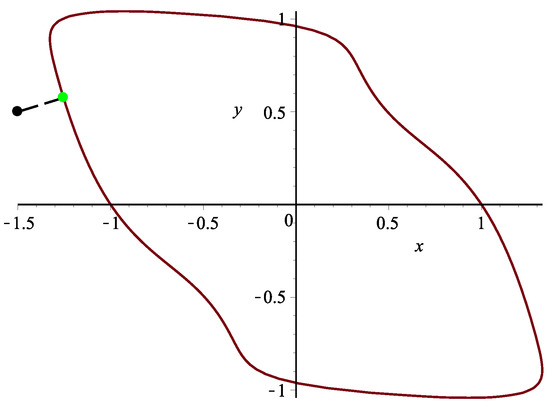

Figure 9.

Graphic demonstration for Example 2.

The iterative error analysis for the test point p = (−1.5,0.5) under the same condition is presented in Table 8 with initial iterative points in the first row.

Table 8.

The error analysis of the iteration process of Algorithm 3 in Example 2.

Example 3.

Assume a planar implicit curve . Three thousand six hundred test points are taken from the square . Algorithm 3 orthogonally projects all 3600 points onto the planar implicit curve Γ, and the results satisfy the relationships and . In this case, the test point is specified. Using Algorithm 3, the corresponding orthogonal projection point is = (−0.027593939033081903,−4.6597845115690539), and the initial iterative values are (−12,−7), (−3,−5), (−5,−4), (−6.6,−9.9), (−2,−7), (−11,−6), (−5.6,−2.3) and (−4.3,−5.7). Algorithm 3 is run 10 times from each initial iterative value, yielding 10 running times in nanoseconds. As listed in Table 9, the average running times of Algorithm 3 for the eight initial iterative values are 299,569, 267,569, 290,719, 139,263, 125,962, 149,431, 289,643 and 124,885 nanoseconds, respectively. The overall average running time is 210,880 nanoseconds (see Figure 10).

Table 9.

Running times for different initial iterative values by Algorithm 3 in Example 3.

Figure 10.

Graphic demonstration for Example 3.

The iterative error analysis for the test point under the same condition is presented in Table 10 with initial iterative points in the first row.

Table 10.

The error analysis of the iteration process of Algorithm 3 in Example 3.

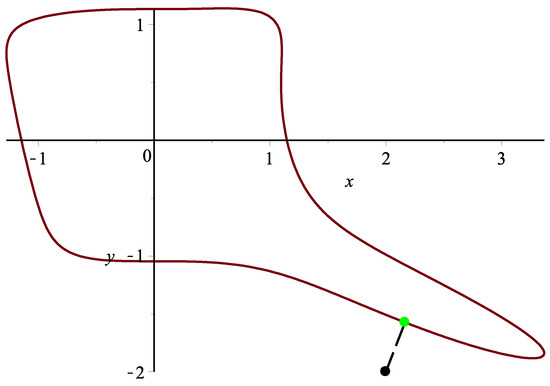

Example 4.

Assume a planar implicit curve . Two thousand one hundred test points are taken from the square . Algorithm 3 orthogonally projects all 2100 points onto the planar implicit curve Γ, and the results satisfy the relationships and . In this case, the test point is specified. Using Algorithm 3, the corresponding orthogonal projection point is = (2.1654788271485294, −1.5734131236664724), and the initial iterative values are (2.2,−2.1), (2.3,−1.9), (2.4,−1.8), (2.1,−2.3), (2.4,−1.6), (2.3,−1), (1.6,−2.5) and (2.6,−2.5). Algorithm 3 is run 10 times from each initial iterative value, yielding 10 running times in nanoseconds. As listed in Table 11, the average running times of Algorithm 3 for the eight initial iterative values are 403,539, 442,631, 395,384, 253,156, 241,510, 193,592, 174,340 and 187,362 nanoseconds, respectively. The overall average running time is 286,439 nanoseconds (see Figure 11).

Table 11.

Running times for different initial iterative values by Algorithm 3 in Example 4.

Figure 11.

Graphic demonstration for Example 4.

The iterative error analysis for the test point under the same condition is presented in Table 12 with initial iterative points in the first row.

Table 12.

The error analysis of the iteration process of Algorithm 3 in Example 4.

Example 5.

Assume a planar implicit curve . Two thousand four hundred test points are taken from the square . Algorithm 3 orthogonally projects all 2400 points onto the planar implicit curve Γ, and the results satisfy the relationships and .

In this case, the test point is specified. Using Algorithm 3, the corresponding orthogonal projection point is = (16.9221067487652, −9.77831982969495), and the initial iterative values are (12,−20), (3,−5), (5,−4), (66,−99), (14,−21), (11,−6), (56,−23) and (13,−7). Algorithm 3 is run 10 times from each initial iterative value, yielding 10 running times in nanoseconds. As listed in Table 13, the average running times of Algorithm 3 for the eight initial iterative values are 285,449, 447,036, 405,726, 451,383, 228,491, 208,624, 410,489 and 224,141 nanoseconds, respectively. The overall average running time is 332,667 nanoseconds (see Figure 12).

Table 13.

Running times for different initial iterative values by Algorithm 3 in Example 5.

Figure 12.

Graphic demonstration for Example 5.

The iterative error analysis for the test point under the same condition is presented in Table 14 with initial iterative points in the first row.

Table 14.

The error analysis of the iteration process of Algorithm 3 in Example 5.

Example 6.

Assume a planar implicit curve . A spatial test point is specified and orthogonally projected onto the plane , which gives the planar test point . Using Algorithm 3, the corresponding orthogonal projection point on the plane is , and it satisfies the two relationships and . In the iterative error Table 15, the six points (1,1), (1.5,1.5), (−1,1), (1,−1), (1.5,1) and (1,1.5) in the first row are the initial iterative points of Algorithm 3. In Figure 13, the red, green and blue points are the spatial test point, the planar test point and their common orthogonal projection point, respectively. Assume the surface with two free variables x and y; the yellow curve is the planar implicit curve .

Table 15.

The error analysis of the iteration process of Algorithm 3 in Example 6.

Figure 13.

Graphic demonstration for Example 6.

Remark 4.

All computations in the 22 tables were performed with g++ under Fedora Linux 8. The iterative termination criteria for Algorithm 1 and Algorithm 2 are and , respectively. Examples 1–6 were computed on a personal computer with an Intel i7-4700 3.2-GHz CPU and 4.0 GB of memory.

In Examples 2–6, when the degree of a planar implicit curve exceeds five, it is difficult to compute the intersection between the line segment determined by the test point p and and the planar implicit curve with the circle shrinking algorithm in [14]. Therefore, no running time comparison with the algorithm in [14] was performed. For the same reason, no comparison was made with the circle double-and-bisect algorithm in [36], because it is difficult to compute the intersection between the circle and the planar implicit curve with that algorithm. In addition, many methods (Newton’s method, the geometrically-motivated method [31,32], the osculating circle algorithm [33], the Bézier clipping method [25,26,27], etc.) cannot guarantee convergence for all test points in Examples 2–5, so no running time comparison with the methods in [25,26,27,31,32,33] has been made. According to Table 2 in [36], the circle shrinking algorithm in [14] is faster than the other existing methods, and in Example 1, Algorithm 3 is faster than the circle shrinking algorithm in [14]. Hence, Algorithm 3 is faster than the existing methods; furthermore, it is more robust and efficient than the existing methods.

In addition, Algorithm 3 requires less average running time when the test point p is close to the planar implicit curve, the initial iterative point is close to the test point p, the planar implicit curve has a lower degree and fewer terms, and the required iteration precision is lower; otherwise, it requires more time.

Remark 5.

Algorithm 3 essentially computes an orthogonal projection of the test point onto a planar implicit curve . When there are multiple orthogonal projection points, the authors’ approach is as follows:

(1) Divide a planar region of the planar implicit curve into sub-regions , where .

(2) Randomly select an initial iterative value in each sub-region.

(3) Run Algorithm 3 from each initial iterative value, and denote the corresponding orthogonal projection points by , respectively.

(4) Compute the local minimum distances , where .

(5) Compute the global minimum distance .

To find as many solutions as possible, a larger value of m is taken.
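Steps (1)–(5) amount to a multi-start search. The sketch below is a hypothetical illustration under stated assumptions: the region is split into an m × m grid, the local solver is a plain Newton iteration on f(x) = 0 and (x − p) × ∇f(x) = 0 standing in for Algorithm 3, and starts that do not converge are simply discarded. For the unit circle and p = (0.3, 0.4), the two orthogonal projection points are ±(0.6, 0.8), at distances 0.5 and 1.5, and the global minimum is attained at (0.6, 0.8).

```python
import math
import random


def newton_orthogonal(f, grad, hess, p, x, tol=1e-13, max_iter=60):
    """Local solver (stand-in for Algorithm 3): Newton's method on
    F1 = f(x) = 0 and F2 = (x - p) x grad f(x) = 0.
    Returns the converged point, or None on failure."""
    for _ in range(max_iter):
        fx_, fy_ = grad(x)
        fxx, fxy, fyy = hess(x)
        dx, dy = x[0] - p[0], x[1] - p[1]
        F1, F2 = f(x), dx * fy_ - dy * fx_
        a11, a12 = fx_, fy_
        a21 = fy_ + dx * fxy - dy * fxx
        a22 = dx * fyy - fx_ - dy * fxy
        det = a11 * a22 - a12 * a21
        if det == 0.0:
            return None
        sx = (-a22 * F1 + a12 * F2) / det
        sy = (a21 * F1 - a11 * F2) / det
        x = (x[0] + sx, x[1] + sy)
        if abs(sx) + abs(sy) < tol:
            return x
    return None


def global_projection(f, grad, hess, p, region, m, seed=0):
    """Steps (1)-(5): one random start per grid cell, local projections,
    local distances, and the global minimum distance."""
    xmin, xmax, ymin, ymax = region
    hx, hy = (xmax - xmin) / m, (ymax - ymin) / m
    rng = random.Random(seed)
    local = []  # (distance, projection point) for each converged start
    for i in range(m):
        for j in range(m):
            x0 = (rng.uniform(xmin + i * hx, xmin + (i + 1) * hx),
                  rng.uniform(ymin + j * hy, ymin + (j + 1) * hy))
            q = newton_orthogonal(f, grad, hess, p, x0)
            if q is not None and all(map(math.isfinite, q)):
                local.append((math.dist(p, q), q))
    if not local:
        return None, local
    return min(local), local


if __name__ == "__main__":
    f = lambda v: v[0] * v[0] + v[1] * v[1] - 1.0  # unit circle, for illustration
    grad = lambda v: (2.0 * v[0], 2.0 * v[1])
    hess = lambda v: (2.0, 0.0, 2.0)
    best, _ = global_projection(f, grad, hess, (0.3, 0.4), (-2.0, 2.0, -2.0, 2.0), m=4)
    print(best)  # distance close to 0.5, point close to (0.6, 0.8)
```

A larger m costs proportionally more local solves but, as the remark notes, makes it more likely that every orthogonal projection point is reached by at least one start.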

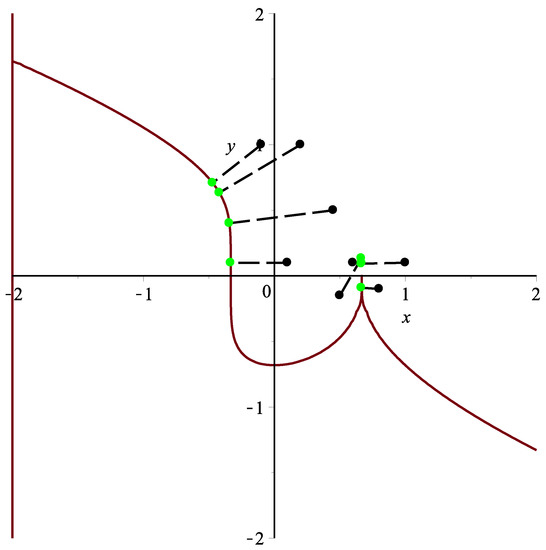

Remark 6.

In Example 1, for the test points (−0.1,1.0), (0.2,1.0), (0.1,0.1) and (0.45,0.5), Algorithm 3 gives the corresponding orthogonal projection points , , , ,, respectively (see Figure 14 and Table 16). Many other examples besides the six test examples have also been tested. These results show that if the test point p is close to the planar implicit curve , Algorithm 3 can converge to the corresponding orthogonal projection point from different initial iterative values that are also close to , and the test point p and its orthogonal projection point then satisfy the inequality relationships:

Figure 14.

Graphic demonstration for the singular point case of Algorithm 3.

Table 16.

Distance for the singular point case of Algorithm 3.

These results illustrate that the convergence of Algorithm 3 is independent of the initial value and that Algorithm 3 is efficient. In summary, the algorithm meets the top two of the ten challenges proposed by Professor Les A. Piegl [41] in terms of robustness and efficiency.

Remark 7.

According to the authors’ six test examples, Algorithm 3 is robust and efficient. If the test point p is very far from the planar implicit curve and the degree of the planar implicit curve is very high, Algorithm 3 still converges; however, the inequality relationships (42) cannot then be satisfied simultaneously. In addition, if the planar implicit curve contains singular points, Algorithm 3 only works for a test point p in a suitable position. Namely, for any initial iterative point , the test point p can be orthogonally projected onto the planar implicit curve, but the resulting distance is larger than the minimum distance between the test point and the orthogonal projection point , where is the singular point. For example, for the test points (1.0,0.01), (0.6,0.1), (0.5,−0.15) and (0.8,−0.1), Algorithm 3 gives the orthogonal projection points , , , , respectively, whereas the actual orthogonal projection point of all four test points is (see Figure 14 and Table 16).

Remark 8.

Prompted by the reviewers’ insightful comments, this remark numerically validates the convergence order of two and corrects an earlier erroneous calculation of the convergence order. The iterative error ratios for the test point p = in Example 1 are presented in Table 17, whose first row lists the initial iterative points. In the other rows, the error ratio of each iteration is computed with the formula ; Tables 18–22 are computed in the same way. Together with the order of convergence formula , these six tables show that the order of convergence in each example lies approximately between one and two, which verifies Theorem 1. The convergence formula ρ comes from [42], i.e., .
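The order estimate used in this remark can be computed from a sequence of iteration errors. Because the exact formula from [42] is not reproduced here, the sketch below uses the common computational order of convergence ρ_k ≈ ln(e_{k+1}/e_k) / ln(e_k/e_{k−1}), which is an assumption; the function name is hypothetical. A value near 2 indicates quadratic convergence and a value near 1 indicates linear convergence.

```python
import math


def convergence_orders(errors):
    """Estimate rho_k = ln(e_{k+1}/e_k) / ln(e_k/e_{k-1}) for k = 1, ..., n-2.

    `errors` holds positive error magnitudes e_0, e_1, ...; the estimate
    stops at the first zero error or stalled step, where it is undefined.
    """
    orders = []
    for k in range(1, len(errors) - 1):
        e_prev, e_cur, e_next = errors[k - 1], errors[k], errors[k + 1]
        if min(e_prev, e_cur, e_next) <= 0.0 or e_cur == e_prev:
            break
        orders.append(math.log(e_next / e_cur) / math.log(e_cur / e_prev))
    return orders


if __name__ == "__main__":
    print(convergence_orders([1e-1, 1e-2, 1e-4, 1e-8]))      # about [2.0, 2.0]
    print(convergence_orders([0.5 ** k for k in range(6)]))  # [1.0, 1.0, 1.0, 1.0]
```

In practice the last few errors of a run are dominated by rounding, so estimates taken from the middle of the error sequence are the most informative.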

Table 17.

The error ratios for each iteration in Example 1 of Algorithm 3.

Table 18.

The error ratios for each iteration in Example 2 of Algorithm 3.

Table 19.

The error ratios for each iteration in Example 3 of Algorithm 3.

Table 20.

The error ratios for each iteration in Example 4 of Algorithm 3.

Table 21.

The error ratios for each iteration in Example 5 of Algorithm 3.

Table 22.

The error ratios for each iteration in Example 6 of Algorithm 3.

6. Conclusions

This paper investigates the projection of a point onto a planar implicit curve. The proposed integrated hybrid second order algorithm is composed of two sub-algorithms (the hybrid second order algorithm and the initial iterative value estimation algorithm). For any test point p and any planar implicit curve, including curves with singular points, and whether p is close to or very far from the curve, the integrated hybrid second order algorithm can converge. It is proven that the convergence of Algorithm 3 is independent of the initial value, and the convergence analysis shows that its convergence order is up to two. Numerical examples illustrate that the algorithm is robust and efficient.

7. Future Work

For any initial iterative point and any test point in the plane, and for any planar implicit curve (including curves with singular points and curves of arbitrarily high degree), future work will construct a new algorithm that meets three requirements: (1) it converges, and the orthogonal projection point simultaneously satisfies the three relationships of Formula (11); (2) it handles singularities effectively; and (3) it takes less time than the current Algorithm 3. Finding such an algorithm will be very challenging.

Another potential topic for future research is to develop a more efficient method to compute the minimum distance between a point and a spatial implicit curve or a spatial implicit surface. The new method must satisfy three requirements in terms of convergence, effectiveness at tackling singularity and efficiency.

Author Contributions

The contributions of all of the authors were the same. All of them have worked together to develop the present manuscript.

Funding

This research was funded by the National Natural Science Foundation of China (grant number 71772106), the Scientific and Technology Foundation Funded Project of Guizhou Province (grant number [2014]2093), the Feature Key Laboratory for Regular Institutions of Higher Education of Guizhou Province (grant number [2016]003), the Training Center for Network Security and Big Data Application of Guizhou Minzu University (grant number 20161113006), the Shandong Provincial Natural Science Foundation of China (grant number ZR2016GM24), the Scientific and Technology Key Foundation of Taiyuan Institute of Technology (grant number 2016LZ02), the Fund of National Social Science (grant number 14XMZ001) and the Fund of the Chinese Ministry of Education (grant number 15JZD034).

Acknowledgments

We take the opportunity to thank the anonymous reviewers for their thoughtful and meaningful comments.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Gomes, A.J.; Morgado, J.F.; Pereira, E.S. A BSP-based algorithm for dimensionally nonhomogeneous planar implicit curves with topological guarantees. ACM Trans. Graph. 2009, 28, 1–24. [Google Scholar] [CrossRef]

- Gabriel, T. Distance approximations for rasterizing implicit curves. ACM Trans. Graph. 1994, 13, 342. [Google Scholar]

- Gourmel, O.; Barthe, L.; Cani, M.P.; Wyvill, B.; Bernhardt, A.; Paulin, M.; Grasberger, H. A gradient-based implicit blend. ACM Trans. Graph. 2013, 32, 12. [Google Scholar] [CrossRef]

- Li, Q.; Tian, J. 2D piecewise algebraic splines for implicit modeling. ACM Trans. Graph. 2009, 28, 13. [Google Scholar] [CrossRef]

- Dinesh, M.; Demmel, J. Algorithms for intersecting parametric and algebraic curves I: Simple intersections. ACM Trans. Graph. 1994, 13, 73–100. [Google Scholar]

- Krishnan, S.; Manocha, D. An efficient surface intersection algorithm based on lower-dimensional formulation. ACM Trans. Graph. 1997, 16, 74–106. [Google Scholar] [CrossRef]

- Shene, C.-K.; John, K.J. On the lower degree intersections of two natural quadrics. ACM Trans. Graph. 1994, 13, 400–424. [Google Scholar] [CrossRef]

- Maxim, A.; Michael, B.; Gershon, E. Global solutions of well-constrained transcendental systems using expression trees and a single solution test. Comput. Aided Geom. Des. 2012, 29, 265–279. [Google Scholar]

- Sonia, L.R.; Juana, S.; Sendra, J.R. Bounding and estimating the Hausdorff distance between real space algebraic curves. Comput. Aided Geom. Des. 2014, 31, 182–198. [Google Scholar]

- Ron, G. Curvature formulas for implicit curves and surfaces. Comput. Aided Geom. Des. 2005, 22, 632–658. [Google Scholar]

- Thomas, W.S.; Zheng, J.; Klimaszewski, K.; Dokken, T. Approximate implicitization using monoid curves and surfaces. Graph. Mod. Image Proc. 1999, 61, 177–198. [Google Scholar]

- Eva, B.; Zbyněk, Š. Identifying and approximating monotonous segments of algebraic curves using support function representation. Comput. Aided Geom. Des. 2014, 31, 358–372. [Google Scholar]

- Anderson, I.J.; Cox, M.G.; Forbes, A.B.; Mason, J.C.; Turner, D.A. An Efficient and Robust Algorithm for Solving the Foot Point Problem. In Proceedings of the International Conference on Mathematical Methods for Curves and Surfaces II, Lillehammer, Norway, 3–8 July 1997; pp. 9–16.

- Aigner, M.; Jüttler, B. Robust computation of foot points on implicitly defined curves. In Mathematical Methods for Curves and Surfaces: Tromsø; Nashboro Press: Brentwood, TN, USA, 2004; pp. 1–10.

- Press, W.H.; Flannery, B.P.; Teukolsky, S.A.; Vetterling, W.T. Numerical Recipes in C: The Art of Scientific Computing, 2nd ed.; Cambridge University Press: Cambridge, UK, 1992.

- Sullivan, S.; Sandford, L.; Ponce, J. Using geometric distance fits for 3-D object modeling and recognition. IEEE Trans. Pattern Anal. Mach. Intell. 1994, 16, 1183–1196.

- Morgan, A.P. Polynomial continuation and its relationship to the symbolic reduction of polynomial systems. In Symbolic and Numerical Computation for Artificial Intelligence; Academic Press: Cambridge, MA, USA, 1992; pp. 23–45.

- Watson, L.T.; Billups, S.C.; Morgan, A.P. Algorithm 652: HOMPACK: A suite of codes for globally convergent homotopy algorithms. ACM Trans. Math. Softw. 1987, 13, 281–310.

- Horn, B.K.P. Relative orientation revisited. J. Opt. Soc. Am. A 1991, 8, 1630–1638.

- Manocha, D.; Krishnan, S. Solving algebraic systems using matrix computations. ACM SIGSAM Bull. 1996, 30, 4–21.

- Chionh, E.-W. Base Points, Resultants, and the Implicit Representation of Rational Surfaces. Ph.D. Thesis, University of Waterloo, Waterloo, ON, Canada, 1990.

- De Montaudouin, Y.; Tiller, W. The Cayley method in computer aided geometric design. Comput. Aided Geom. Des. 1984, 1, 309–326.

- Albert, A.A. Modern Higher Algebra; D.C. Heath and Company: New York, NY, USA, 1933.

- Sederberg, T.W.; Anderson, D.C.; Goldman, R.N. Implicit representation of parametric curves and surfaces. Comput. Vis. Graph. Image Proc. 1984, 28, 72–84.

- Nishita, T.; Sederberg, T.W.; Kakimoto, M. Ray tracing trimmed rational surface patches. ACM SIGGRAPH Comput. Graph. 1990, 24, 337–345.

- Elber, G.; Kim, M.-S. Geometric Constraint Solver Using Multivariate Rational Spline Functions. In Proceedings of the 6th ACM Symposium on Solid Modeling and Applications, Ann Arbor, MI, USA, 4–8 June 2001; pp. 1–10.

- Sherbrooke, E.C.; Patrikalakis, N.M. Computation of the solutions of nonlinear polynomial systems. Comput. Aided Geom. Des. 1993, 10, 379–405.

- Park, C.-H.; Elber, G.; Kim, K.-J.; Kim, G.Y.; Seong, J.K. A hybrid parallel solver for systems of multivariate polynomials using CPUs and GPUs. Comput. Aided Des. 2011, 43, 1360–1369.

- Bartoň, M. Solving polynomial systems using no-root elimination blending schemes. Comput. Aided Des. 2011, 43, 1870–1878.

- Van Sosin, B.; Elber, G. Solving piecewise polynomial constraint systems with decomposition and a subdivision-based solver. Comput. Aided Des. 2017, 90, 37–47.

- Hartmann, E. The normal form of a planar curve and its application to curve design. In Mathematical Methods for Curves and Surfaces II; Vanderbilt University Press: Nashville, TN, USA, 1997; pp. 237–244.

- Hartmann, E. On the curvature of curves and surfaces defined by normal forms. Comput. Aided Geom. Des. 1999, 16, 355–376.

- Redding, N.J. Implicit polynomials, orthogonal distance regression, and the closest point on a curve. IEEE Trans. Pattern Anal. Mach. Intell. 2000, 22, 191–199.

- Hu, S.-M.; Wallner, J. A second order algorithm for orthogonal projection onto curves and surfaces. Comput. Aided Geom. Des. 2005, 22, 251–260.

- Li, X.; Wang, L.; Wu, Z.; Hou, L.; Liang, J.; Li, Q. Convergence analysis on a second order algorithm for orthogonal projection onto curves. Symmetry 2017, 9, 210.

- Hu, M.; Zhou, Y.; Li, X. Robust and accurate computation of geometric distance for Lipschitz continuous implicit curves. Vis. Comput. 2017, 33, 937–947.

- Chen, X.-D.; Yong, J.-H.; Wang, G.; Paul, J.C.; Xu, G. Computing the minimum distance between a point and a NURBS curve. Comput. Aided Des. 2008, 40, 1051–1054.

- Chen, X.-D.; Xu, G.; Yong, J.-H.; Wang, G.; Paul, J.C. Computing the minimum distance between a point and a clamped B-spline surface. Graph. Mod. 2009, 71, 107–112.

- Hoschek, J.; Lasser, D.; Schumaker, L.L. Fundamentals of Computer Aided Geometric Design; A. K. Peters, Ltd.: Natick, MA, USA, 1993.

- Hu, S.; Sun, J.; Jin, T.; Wang, G. Computing the parameter of points on NURBS curves and surfaces via moving affine frame method. J. Softw. 2000, 11, 49–53. (In Chinese)

- Piegl, L.A. Ten challenges in computer-aided design. Comput. Aided Des. 2005, 37, 461–470.

- Weerakoon, S.; Fernando, T.G.I. A variant of Newton’s method with accelerated third-order convergence. Appl. Math. Lett. 2000, 13, 87–93.

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).