Abstract

Approximate computing is a promising approach to the design of area–power-performance-efficient circuits for computation error-tolerant applications such as image processing and machine learning. Approximate functional units, such as approximate adders and approximate multipliers, have been actively studied for the past decade, and some of these approximate functional units can dynamically change the degree of computation accuracy. The greater their computational inaccuracy, the faster they are. This study examined the high-level synthesis of approximate circuits that take advantage of such accuracy-controllable functional units. Scheduling methods based on integer linear programming (ILP) and list scheduling were proposed. Under resource and time constraints, the proposed method tries to minimize the computation error of the output value by selectively multi-cycling operations. Operations that have a large impact on the output accuracy are multi-cycled to perform exact computing, whereas operations with a small impact on the accuracy are assigned a single cycle for approximate computing. In the experiments, we explored the trade-off between performance, hardware cost, and accuracy to demonstrate the effectiveness of this work.

1. Introduction

Computational approximation is a promising paradigm that exploits hardware capabilities or mitigates computational demands. Approximate computing is an attractive technique for trading accuracy for higher performance and lower power in applications such as image processing and machine learning, which have an inherent tolerance to errors. This tolerance enables the relaxation of the computation requirement regarding performance or energy because exact computation is not always needed [1,2]. Design techniques for approximate arithmetic circuits have been developed at different design levels, from the transistor to the architecture level [2,3,4,5,6,7,8,9,10,11,12,13,14,15,16]. A variety of techniques for approximate computing have been proposed at the circuit level in the literature, including arithmetic circuits such as approximate adders [6,7,8] and multipliers [9,10,11,12,13]. Approximate computing circuits are generally designed by combining normal (i.e., accurate) functional units with such approximate functional units, based on the requirements for the circuits.

Computational quality (i.e., accuracy) allowed in an application varies in terms of degree of error tolerance, and the degree of approximation for functional units at the circuit level is also different. Approximate functional units have become desirable recently because they enable configuration of the control of accuracy at runtime [8,12,13]. In order to design a circuit that satisfies the requirements in terms of resources and time, in addition to accuracy, it is indispensable to assess how the errors incurred by the approximation circuits propagate via exact and approximate computations, and thus finally affect the output. It is important to discuss this topic, especially for high-level synthesis (HLS) of approximate circuits. Although a large number of studies on HLS of approximate computing circuits have been published [14,15,16], none efficiently utilize such accuracy-controllable functional units.

In this paper, we present a scheduling method that is aware of exact and approximate computations. We mathematically derive a scheduling problem for approximate computing circuits with variable-cycle multipliers based on integer linear programming (ILP). During scheduling, each arithmetic operation is assigned to either the exact or the approximate mode, together with its degree of approximation (i.e., its number of cycles), so that the resource and time constraints are satisfied and the error at the output is minimized. In addition, we extend the problem to take into account operation chaining, which enables the flexible use of approximate computations and further mitigates the output errors. Furthermore, we propose a list-scheduling algorithm for the proposed scheduling problem to obtain an approximate solution in polynomial time.

The main contributions of this paper are twofold:

- Scheduling for approximate computing circuits with accuracy-controllable approximate multipliers is mathematically derived using an ILP formulation. Our proposed scheduling takes account of exact and approximate computations, and determines whether each arithmetic operation is scheduled as exact or approximate under resource and time constraints such that the error at the output is minimized.

- A list-scheduling algorithm is proposed to solve the proposed scheduling problem in polynomial time; it runs faster than the ILP method.

The remainder of this paper is organized as follows. Section 2 introduces related work. In Section 3, we derive our proposed variable-cycle scheduling problem based on ILP. In addition, we take into account chaining in the scheduling problem. Section 4 proposes a list-scheduling algorithm to efficiently find a solution in polynomial time. Section 5 evaluates our proposed methods. Finally, Section 6 concludes this paper.

2. Related Work

Approximate computing has been established as a promising technique, and several surveys have been reported [1,2,3]. The research on approximate computing broadly encompasses approximate arithmetic circuits [3,4,5,6,7,8,9,10,11,12,13], logic synthesis [14,15,16,17,18], and modeling [19,20,21,22,23].

According to the review in [3], approximate arithmetic circuits such as approximate adders and multipliers have been introduced. The authors in [6] focused on low-power design, and they developed approximate adders for DSPs to simplify the complexity of a conventional mirror adder cell at the transistor level by adopting approximate computing. As another approach to energy-efficient DSP applications, a reverse carry propagate adder (RCPA) was proposed in [7]. In addition to an approximate adder, the work in [9] developed an approximate multiplier, where the approximate circuits are a bio-inspired imprecise adder and multiplier. In [11], the authors also developed an approximate adder and a multiplier. The proposed multiplier uses the proposed approximate adder for the accumulation of the error signals in the error vectors to reduce the error at the output. Although the mentioned works aimed to purely pursue energy efficiency, high performance, or area reduction at the sacrifice of accuracy, the required accuracy of an error-tolerant application using approximate computing circuits varies significantly at runtime. In previous research [8,12,13], accuracy-controllable approximate arithmetic circuits were developed. The authors in [8] raised the issue that the static approximation, which fixes accuracy, may fail to satisfy the requirements in terms of energy, performance, or area. They sequentially extended their work to propose an accuracy-controllable approximate multiplier [12]. Sano et al. followed these studies to develop a 32-bit accuracy-controllable approximate multiplier for FPGAs [13]. The work aimed to trade-off an approximate computing circuit in terms of energy, performance, and area with respect to the accuracy requirement.

Approximate computing circuits are generally designed by combining accurate functional units with approximate functional units [3,4,5,6,7,8,9,10,11,12,13], based on the requirements for the circuits. A number of works have proposed logic synthesis techniques for approximate computing. Nepal et al. proposed automated behavioral synthesis of approximate computing circuits, called ABACUS, which synthesizes an approximate computing circuit by operating directly on the behavioral descriptions of circuits to automatically generate approximate variants [14,15]. Based on their work, Schafer proposed a method that does not use approximate computing circuits but uses exact circuits having lower bitwidths [16]. The author focused on enabling resource-sharing-based design space exploration (DSE) for FPGAs, and did not aim to determine a trade-off between area and error. At a higher level of abstraction, an approximate high-level synthesis (AHLS) was proposed to synthesize a register-transfer-level (RTL) implementation from an accurate high-level C description, which aimed to optimize energy efficiency with voltage scaling [17]. Unlike the works in [16,17], Leipnitz and Nazar proposed an FPGA-oriented approximation methodology that combines various optimizations, such as precision scaling of operators [14], bitwidth reduction [16], or variable-to-constant (V2C) substitution [17], or uses a set of libraries in [18,24]. The issue has also been addressed from the perspectives of resources, throughput, and real-time operation [25,26,27,28]. In [29], Shirane et al. presented a case study of high-level synthesis with accuracy-controllable approximate multipliers [12].

Modeling techniques for statistical and analytical perspectives are regarded as significant approaches to approximate computing designs in order to estimate the error produced by approximate circuits [19,20,21,22,23]. Venkatesan et al. proposed a modeling and analysis framework for approximate computing circuits [19]. The drawback of this technique is that it focuses on post-design analysis, and it cannot be easily applied to optimization. In [20], the authors used the error rate, which represents the probability that a result is approximated and different from the exact value, but only addressed the frequency of the error and ignored the magnitude. Some research proposed error propagation rules to overcome this drawback [21,22]. In addition to [21], the authors in [23] proposed a set of analytical models to estimate circuit metrics and a DSE method to derive Pareto-optimal solutions for approximate designs.

Unfortunately, most of the previously mentioned works have paid little attention to allocation, scheduling, and binding algorithms, which are the crucial techniques in HLS. Regarding this perspective, our work is similar to [22,30]. However, none of the previous studies developed scheduling methods that are aware of accuracy-controllable approximate arithmetic circuits. In this work, we focus on scheduling for approximate computing circuits of variable-cycle approximate multipliers. The reason for targeting multipliers is that they are the most common operation in a variety of applications. Although most research involves approximate adders, we deliberately neglect them because the area and delay of a multiplier accounts for a larger portion than those of an adder. Instead, we assume that clock cycles of our approximate multipliers are variable, for which the delay can be controlled by configuring the accuracy. For example, it is assumed that the exact multiplication takes two cycles and the approximate multiplication takes one cycle. The accuracy-controllable multiplier [12,13] can operate with a long delay if an exact multiplication is needed with a single multiplier, or with a short delay if an approximation is good enough. The novelty of our work is the variable cycle of approximate multipliers.

3. ILP-Based Scheduling for Variable-Cycle Approximate Functional Units in High-Level Synthesis

3.1. A Scheduling Example

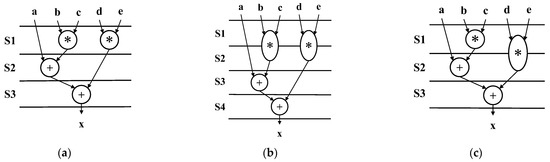

In Figure 1, the three data flow graphs (DFGs) show an example of scheduling. In general, a DFG consists of nodes and edges that represent operations and precedence constraints (i.e., data dependencies between the operations), respectively. Each DFG consists of two additions and three multiplications. The label S denotes a clock cycle; for instance, S1 is the first cycle and S2 is the second cycle. In this work, we assume a dual-mode multiplier based on the accuracy-controllable multiplier presented in [12,13]. One mode is a normal multiplier that takes two cycles for exact calculation, and the other is an approximate multiplier that takes one cycle. Figure 1a shows the case in which the multiplications are each calculated approximately in one cycle, and the whole operation is performed in three cycles. Note that each multiplication in this case takes one cycle, so that the performance is maximized without accounting for accuracy. Therefore, the case in Figure 1a may have a larger error than the case in Figure 1b, where each multiplication is performed in two cycles. However, Figure 1b shows that four cycles are necessary for all the operations, which degrades performance compared with the case in Figure 1a. Our proposal in Figure 1c flexibly selects each multiplication as being either exact or approximate. In this case, one multiplication is approximated, and the others are calculated exactly. The output error should be smaller than in the case in Figure 1a. In addition, the total number of cycles is the same as that in Figure 1b, so there is no further performance degradation. Given the DFG and constraints on the resources and the maximum number of time steps, our proposed scheduling determines the mode of each multiplication and determines an optimal schedule.

Figure 1.

An example of scheduling for approximate computing circuits with variable-cycle approximate multipliers. (a) Approximate multiplications. (b) Exact multiplications. (c) Variable-cycle multiplications.

3.2. ILP Formulation

Scheduling for variable-cycle approximate functional units is mathematically derived as an ILP formulation in this paper. Given a DFG that consists of functional units and data flow dependencies, scheduling determines the number of cycles for each operation under time and resource constraints such that the error at the output is minimized. We assume that the multipliers are accuracy-controllable, and can be selected as being in either exact or approximate mode. The exact mode yields accurate output, but the approximate mode outputs an inaccurate result. The approximate mode of a multiplier can vary its own cycles, and scheduling also determines the number of cycles for each multiplication.

We introduce a binary decision variable for each multiplication and each cycle; it becomes 1 if the multiplication is being performed in that cycle. Similarly, a binary decision variable is introduced for each operation other than multiplication and each cycle. Each multiplication is assigned either the approximate or the exact mode and takes one cycle or several cycles. The number of cycles for a multiplication depends on the degree of approximation. The delay of the other operations is shorter than that of multiplication. For simplicity, we classify the operations into multiplications and other operations, but an operation other than multiplication could also be given a variable cycle count without any essential difference.

Here, we define the number of cycles for each multiplication and for each of the other operations. For ease of understanding, we assume in Equations (1) and (2) that a multiplication takes either one or two cycles and any other operation takes one cycle. However, these cycle counts can be easily extended to a decision variable and an arbitrary number of cycles, respectively.

For each multiplication, we define its number of cycles, its start time, and its finish time. Each multiplication is assumed to be performed in one or two cycles, and the start time and finish time are the same if the multiplication is performed in one cycle.
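As a minimal sketch of how these quantities can be tied together, the relations below use assumed notation that is not taken from the formulation itself: $x_{i,j}$ is the binary variable for multiplication $i$ in cycle $j$, $c_i$, $s_i$, and $f_i$ are its number of cycles, start time, and finish time, and $T$ is the number of available cycles.

```latex
c_i = \sum_{j=1}^{T} x_{i,j}, \qquad
1 \le c_i \le 2, \qquad
f_i = s_i + c_i - 1, \qquad
x_{i,j} = 1 \iff s_i \le j \le f_i
```

With $c_i = 1$ the third relation gives $f_i = s_i$, which matches the one-cycle case described above. The last condition is written as a logical equivalence for readability; an actual ILP model would need to linearize it.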

Next, we define the number of cycles for each operation other than multiplication. Although such an operation is assumed to take only one cycle in this description, the number of cycles can be easily extended to an arbitrary number with equations similar to Equations (3)–(6).

The DFG, which is given as an input, includes precedence constraints between the operations. A binary value is given for each pair of operations i1 and i2; if it is one, there is a precedence constraint between the two operations. Equation (8) indicates that the successor operation i2 must start after the finish time of the predecessor operation i1. The constraints for operations other than multiplication can be easily taken into account by adding equations similar to Equation (8).

Most scheduling problems involve resource and time constraints, and both are assumed to be given in advance. The resource constraints are fixed as given constants, which limits the number of multipliers. In any cycle, the number of multipliers assigned to active multiplications cannot exceed the total number of multipliers, as follows. Functional units other than multipliers, such as adders and ALUs, are assumed to be available without limit in order to avoid sharing overhead.

Furthermore, a time constraint is given that limits the finish time of the multiplications and the other operations. All the operations must finish by the time constraint. We also define the finish time of each operation other than multiplication. In this paper, the start time and the finish time of such an operation are synonymous because it is assumed that operations other than multiplication can be performed in one cycle. The time constraints are given as follows:
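The precedence, resource, and time constraints described above can be sketched as follows. The notation is assumed for illustration only: $d_{i_1,i_2}$ is the given dependency value, $s_i$ and $f_i$ are start and finish times, $x_{i,j}$ is 1 when multiplication $i$ is active in cycle $j$, $R$ is the number of multipliers, and $T$ is the time constraint.

```latex
\begin{aligned}
s_{i_2} &\ge f_{i_1} + 1 && \text{for every pair with } d_{i_1,i_2} = 1 \\
\sum_{i} x_{i,j} &\le R && \text{for every cycle } j \\
f_i &\le T && \text{for every operation } i
\end{aligned}
```

Because a two-cycle multiplication has $x_{i,j} = 1$ in both of its cycles, it occupies a multiplier in the resource sum for both cycles.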

Accuracy is evaluated by the magnitude of the error. In other words, the smaller the error, the higher the accuracy of the circuit. We describe how errors are produced when operations are approximated, using a multiplication as an example. Consider a multiplication of two operands. We denote the errors propagated into the two operands, the error introduced by the multiplication itself, and the error of its product by separate symbols. Here, we derive the approximate multiplication in Equation (12). It should be noted that the product of the two operand errors can be ignored due to negligible loss of accuracy, as in [21,22].
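A worked form of this propagation rule, under assumed notation, may help: let $a$ and $b$ be the exact operand values, $\varepsilon_a$ and $\varepsilon_b$ the errors already propagated into them, $\varepsilon_{mul}$ the error introduced by the approximate multiplier, and $\varepsilon_p$ the resulting error of the product.

```latex
\begin{aligned}
(a + \varepsilon_a)(b + \varepsilon_b) + \varepsilon_{mul}
  &= ab + a\,\varepsilon_b + b\,\varepsilon_a + \varepsilon_a \varepsilon_b + \varepsilon_{mul} \\
\varepsilon_p &\approx a\,\varepsilon_b + b\,\varepsilon_a + \varepsilon_{mul}
\end{aligned}
```

The second line drops the second-order term $\varepsilon_a \varepsilon_b$, which is small whenever both operand errors are small relative to the operands.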

Based on this assumption, we define the error produced in each multiplication. We also define, for each multiplication, an error term that is generated when the multiplication is approximated: it is non-zero if the multiplication is approximated and zero if the multiplication is performed exactly without approximation. Note that Equation (13) assumes that the exact multiplication takes two cycles. However, it can easily be extended to take an arbitrary number of cycles if the error is allowed to increase linearly with the degree of approximation. The same formulation is also used for operations other than multiplication.

We define the error at the output as the error of the final output of the operations, which is either the error of a multiplication or the error of another operation. The error at the output derives from the errors propagated from each of the operations. In this work, the objective of our scheduling is to minimize the error at the output.

We formulate this problem as ILP and can solve it using an ILP solver. In this work, the multiplications are performed in one or two cycles; however, the presented formulation can be easily changed to be performed in any number of cycles.
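To make the problem statement concrete, the following sketch solves a tiny instance by exhaustive search instead of an ILP solver. It is only an illustration under stated assumptions: the DFG, the names `kinds`, `preds`, and `impact`, and the simplified objective (the sum of a precomputed impact weight over the approximated multiplications, rather than full error propagation) are hypothetical and not part of the formulation above.

```python
from itertools import product


def feasible(kinds, preds, start, finish, n_mult, t_max):
    """Check the time, precedence, and multiplier constraints of one schedule."""
    if any(f > t_max for f in finish.values()):
        return False  # time constraint violated
    if any(finish[p] >= start[o] for o, ps in preds.items() for p in ps):
        return False  # a successor starts before its predecessor has finished
    for cycle in range(1, t_max + 1):
        active = sum(1 for o in kinds
                     if kinds[o] == "mul" and start[o] <= cycle <= finish[o])
        if active > n_mult:
            return False  # more active multiplications than multipliers
    return True


def solve_exhaustively(kinds, preds, impact, n_mult, t_max, approx=1, exact=2):
    """Try every mode and start-time combination; return (error, cycles, start)."""
    ops = list(kinds)
    muls = [o for o in ops if kinds[o] == "mul"]
    best = None
    for modes in product((approx, exact), repeat=len(muls)):
        cycles = {o: 1 for o in ops}  # non-multiplications take one cycle
        cycles.update(zip(muls, modes))
        # Simplified objective: total impact of the approximated multiplications.
        error = sum(impact[m] for m in muls if cycles[m] == approx)
        if best is not None and error >= best[0]:
            continue  # cannot improve on the best solution found so far
        for starts in product(range(1, t_max + 1), repeat=len(ops)):
            start = dict(zip(ops, starts))
            finish = {o: start[o] + cycles[o] - 1 for o in ops}
            if feasible(kinds, preds, start, finish, n_mult, t_max):
                best = (error, cycles, start)
                break
    return best


# Hypothetical DFG: (m1, m2) -> a1, (a1, m3) -> a2
kinds = {"m1": "mul", "m2": "mul", "m3": "mul", "a1": "add", "a2": "add"}
preds = {"a1": ["m1", "m2"], "a2": ["a1", "m3"]}
impact = {"m1": 4, "m2": 1, "m3": 2}
result = solve_exhaustively(kinds, preds, impact, n_mult=2, t_max=4)
print(result[0], result[1])
```

On this instance, making all three multiplications exact cannot fit into four cycles with two multipliers, so the search keeps the multiplication with the smallest impact approximate. Exhaustive search is only practical for a handful of operations, which is why an ILP solver or a heuristic is needed for realistic DFGs.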

3.3. Chaining

Scheduling algorithms for high-level synthesis that cannot handle optimization techniques are of no practical value. Among the several optimization techniques that exist, we consider chaining in this work. For the proposed method, the aim of chaining is to speed up the circuit and improve the computational accuracy by increasing the number of accurate multiplications.

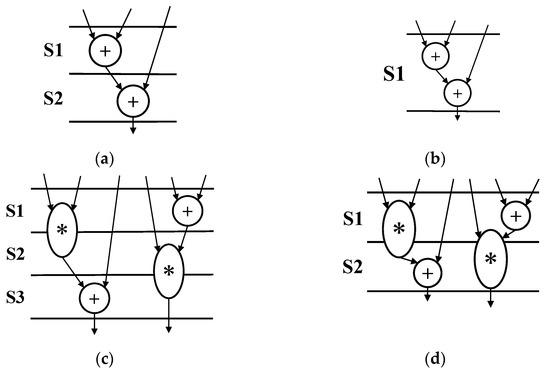

The chaining assumed in this work is shown in Figure 2. We assume two types of chaining. The first is general addition chaining, in which the number of stages is changed based on the given delay constraints. Without considering addition chaining, it takes two cycles to perform two additions, as shown in Figure 2a. If two adders are used, as in Figure 2b, it can be performed in one cycle. Thus, the configuration of the functional unit is important when performing chaining. Next, we consider not only the chaining of additions, but also the chaining of exact multiplications and additions. Here, we assume that exact multiplication is performed in two cycles. If chaining is not considered, it takes three cycles, as shown in Figure 2c. If we perform exact multiplication and addition with chaining, it can be performed in two cycles, as shown in Figure 2d. Since the scheduling problem in this work is to minimize the error under the constraints of resources and time, we predict that exact multiplication and addition chaining will have a significant impact on the error reduction.

Figure 2.

Example of chaining in this work. (a) Add to Add (no Chaining). (b) Add to Add (Chaining). (c) Exact Mult to Add (no Chaining). (d) Exact Mult to Add (Chaining).

The important point in chaining is which operations are chained. In particular, under resource constraints, the overall number of execution cycles differs greatly depending on which operations are chained. In the ILP method, the following changes are made to some equations to take chaining into account.

First, in the ILP method without chaining, the operations in Equations (1) and (2) were classified into multiplication and non-multiplication operations. With chaining, they are classified into multiplication, addition, and other operations by adding Equation (15). We introduce a 0–1 decision variable that is 1 when a given addition is performed in a given cycle, and we also define the execution time of each addition. Thus, addition is defined as in Equations (15) and (16), and time constraints are defined as in Equation (17), as in the ILP without chaining. To avoid sharing overhead, an unlimited number of adders is assumed to be available.

We define the number of steps when chaining additions together, and let STEP be the maximum number of steps over which additions can be chained. To avoid chaining beyond the delay condition, STEP is derived from the delay condition.
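One plausible way to derive STEP from a delay condition is sketched below. The rule used here (the number of adder delays that fit into one clock period) and the function names are assumptions for illustration; the delay values are hypothetical and unitless.

```python
def max_chain_steps(clock_period, add_delay):
    """Largest number of dependent additions that fit into one clock cycle."""
    return max(1, clock_period // add_delay)


def cycles_for_addition_chain(n_additions, step):
    """Cycles needed for n dependent additions when up to `step` are chained."""
    return -(-n_additions // step)  # ceiling division on integers


# Hypothetical delays: a clock period of 10 and two different adder delays.
step_fast = max_chain_steps(clock_period=10, add_delay=4)  # two additions fit
step_slow = max_chain_steps(clock_period=10, add_delay=6)  # only one fits
print(cycles_for_addition_chain(2, step_fast))
print(cycles_for_addition_chain(2, step_slow))
```

With the faster adder, two dependent additions complete in one cycle, as in Figure 2b; with the slower adder they need two cycles, as in Figure 2a.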

We also make changes to the dependencies. In the case of the dependency between additions, it is defined as in Equation (19). In the case of no chaining, the two additions are executed in different cycles. In the case of chaining, the two additions are performed in the same cycle.

The dependency between addition and multiplication is defined as in Equations (20) and (21). Equation (20) shows the dependency from multiplication to addition and Equation (21) shows the dependency from addition to multiplication. A constant is defined as 1 for chaining from exact multiplication to addition, and as 2 for chaining from addition to exact multiplication. As in the case of chaining between additions, the two operations are performed in different cycles when they are not chained, and in the same cycle when they are chained. Approximate multiplication is not chained in this work.

4. Heuristic Scheduling Algorithms Based on List Scheduling

4.1. List-Scheduling Algorithm

Table 1 shows the symbols used in the list scheduling. We propose a heuristic algorithm for the variable-cycle scheduling problem and show pseudo-code based on the conventional resource-constrained list scheduling in Algorithm 1. For a given DFG G(V,E), we define four sets: the set of all operations, the set of multiplications, the set of operations other than multiplication, and the set of approximate multiplications. We further define the set of operations that can be executed, the set of operations that are being executed, and the set of operations that have completed execution. The numbers of execution cycles for approximate multiplication, exact multiplication, and other operations are given as preconditions. For each operation, the execution cycle calculated by As Late As Possible (ALAP) scheduling is used as the basis of its list-scheduling priority. Each operation has a start time and an end time; if the operation is executed in a single cycle, these are the same value. We also track the remaining time until the execution of each operation is completed, as well as the current cycle. As for the constraints, the resource constraint limits the number of multipliers and the time constraint limits the overall number of execution cycles. The number of currently available multipliers is updated every cycle. For each multiplication, an index of the magnitude of the error given to the output value when that multiplication is approximated is obtained from the calculation of error propagation. To minimize the output error, scheduling is performed by switching multiplications to the exact mode in descending order of this error index. The set of multiplications that have never been made exact initially contains all the multiplications, and we loop until every multiplication has been made exact once.

| Algorithm 1 List-Scheduling Algorithm | |

| 1 | ListScheduling(G(V,E)) begin |

| 2 | for do |

| 3 | ← ALAP_schedule |

| 4 | end for |

| 5 | for do |

| 6 | |

| 7 | end for |

| 8 | for n in 1..|M|+1 do |

| 9 | for do |

| 10 | if then |

| 11 | |

| 12 | else end if |

| 13 | end for |

| 14 | = 0, |

| 15 | while do |

| 16 | |

| 17 | for do |

| 18 | for do |

| 19 | if then |

| 20 | |

| 21 | if then |

| 22 | , |

| 23 | |

| 24 | end if |

| 25 | end if |

| 26 | if then |

| 27 | |

| 28 | if then |

| 29 | , , |

| 30 | |

| 31 | end if |

| 32 | end if |

| 33 | end for |

| 34 | if then |

| 35 | , |

| 36 | if then |

| 37 | , |

| 38 | |

| 39 | elif then |

| 40 | , |

| 41 | else , end if |

| 42 | end if |

| 43 | if then |

| 44 | |

| 45 | if then |

| 46 | , |

| 47 | |

| 48 | else , end if |

| 49 | end if |

| 50 | end for |

| 51 | end while |

| 52 | if then |

| 53 | , |

| 54 | |

| 55 | else |

| 56 | |

| 57 | end if |

| 58 | |

| 59 | end for |

| 60 | end |

Table 1.

Symbols for list-scheduling algorithm.

In Algorithm 1, ALAP scheduling is first performed in line 3. Here, all the multiplications are scheduled as approximate multiplications. This result is used as the priority when performing resource-constrained list scheduling from line 8. Next, line 6 calculates the error propagation to find, for each multiplication, the magnitude of its influence on the output error if it is approximated. Between line 8 and line 59, resource-constrained list scheduling is performed once with all the multiplications approximate and then once for each multiplication as it is switched to the exact mode one by one, i.e., the total number of multiplications plus one times. Scheduling is performed to minimize the error under resource and time constraints. As a new change, in line 12, we reduce the priority used for resource-constrained list scheduling only for exact multiplications. This is to avoid performing ALAP again when some of the multiplications are switched to the exact mode and to make it easier to satisfy the time constraints. Lines 18 to 35 process the multicycle operations that are being executed. Lines 36 to 51 execute the operations with the highest priority among the operations that can be executed. After executing all the operations, satisfaction of the time constraint is checked at line 52. If it is satisfied, the schedule is retained as the best solution. If not, the exact multiplication is returned to approximate multiplication. Finally, the approximate solution that satisfies the resource and time constraints and minimizes the error is output. The computational complexity of this algorithm is that of a single list scheduling multiplied by the number of multiplications plus one, because the list scheduling is repeated one time plus the number of multiplications.
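The overall flow described above can be sketched in Python as follows. This is a simplified reading of Algorithm 1 under several assumptions, not a transcription of it: approximate and exact multiplications take one and two cycles, adders are unlimited, the priority is the ALAP start cycle lowered by one for each exact multiplication, ties are broken by operation name, and the DFG and `impact` values are hypothetical.

```python
def alap_all_approx(kinds, preds, t_max):
    """ALAP start cycle of every operation, assuming one cycle per operation."""
    succs = {o: [] for o in kinds}
    for o, ps in preds.items():
        for p in ps:
            succs[p].append(o)
    alap = {}

    def visit(o):
        if o not in alap:
            alap[o] = min((visit(s) - 1 for s in succs[o]), default=t_max)
        return alap[o]

    for o in kinds:
        visit(o)
    return alap


def list_schedule(kinds, preds, cycles, priority, n_mult):
    """Resource-constrained list scheduling; returns {operation: start cycle}."""
    start, cycle = {}, 0
    while len(start) < len(kinds):
        cycle += 1
        finish = {o: start[o] + cycles[o] - 1 for o in start}
        # Multipliers still occupied by multicycle multiplications.
        busy = sum(1 for o in start if kinds[o] == "mul" and finish[o] >= cycle)
        ready = [o for o in kinds if o not in start and
                 all(p in finish and finish[p] < cycle for p in preds.get(o, []))]
        for o in sorted(ready, key=lambda o: (priority[o], o)):
            if kinds[o] == "mul":
                if busy >= n_mult:
                    continue  # no free multiplier in this cycle
                busy += 1
            start[o] = cycle
    return start


def variable_cycle_schedule(kinds, preds, impact, n_mult, t_max):
    """Greedily switch multiplications to exact mode, largest impact first."""
    alap = alap_all_approx(kinds, preds, t_max)
    cycles = {o: 1 for o in kinds}  # start with all multiplications approximate

    def run():
        prio = {o: alap[o] - (cycles[o] - 1) for o in kinds}
        start = list_schedule(kinds, preds, cycles, prio, n_mult)
        return start, max(start[o] + cycles[o] - 1 for o in kinds)

    best, length = run()
    if length > t_max:
        return None  # even the all-approximate schedule is too long
    for m in sorted(impact, key=impact.get, reverse=True):
        cycles[m] = 2  # try the exact mode
        start, length = run()
        if length <= t_max:
            best = start  # keep this multiplication exact
        else:
            cycles[m] = 1  # revert to the approximate mode
    return best, cycles


# Hypothetical DFG: (m1, m2) -> a1, (a1, m3) -> a2
kinds = {"m1": "mul", "m2": "mul", "m3": "mul", "a1": "add", "a2": "add"}
preds = {"a1": ["m1", "m2"], "a2": ["a1", "m3"]}
impact = {"m1": 4, "m2": 1, "m3": 2}
start, cycles = variable_cycle_schedule(kinds, preds, impact, n_mult=2, t_max=4)
print(start, cycles)
```

In this run, `m1` and `m3` are accepted as exact and the attempt to make `m2` exact is reverted because the schedule would need five cycles. The list scheduler is invoked four times, i.e., the number of multiplications plus one.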

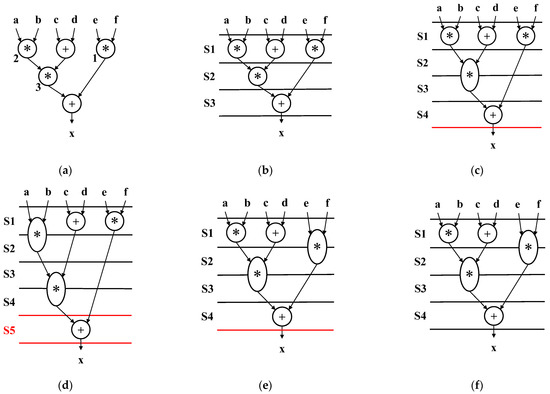

4.2. Proposed List-Scheduling Example

To illustrate the operation of lines 8 to 59 of Algorithm 1, an example problem on a DFG with three multiplications and two additions is shown in Figure 3. We assume one cycle for approximate multiplications, two cycles for exact multiplications, and one cycle for additions. The resource constraint for the list scheduling is two accuracy-controllable approximate multipliers and one adder. The time constraint is four cycles. Figure 3a shows the given DFG, and the number at the bottom left of each multiplication is the magnitude of the influence of its error on the output value obtained in line 6 of Algorithm 1. First, as shown in Figure 3b, all the multiplications are approximate multiplications, and resource-constrained list scheduling is performed. Next, as shown in Figure 3c, the list scheduling is performed again with the multiplication that has the largest error made exact. At this time, since all the operations can be performed within the time constraint of four cycles, the multiplication that was made exact in Figure 3c is kept exact for the next scheduling. Next, as shown in Figure 3d, the multiplication with the second-largest error is made exact and list scheduling is performed. However, in Figure 3d the time constraint of four cycles is exceeded, so the upper left multiplication is returned to approximation. Next, the multiplication with the smallest error is made exact and list scheduling is performed. The result in Figure 3e satisfies the time constraint, so we keep the multiplication as exact. Finally, since every multiplication has been made exact once, the result of Figure 3e, which has the smallest error so far, is output as the final solution, as shown in Figure 3f. In this example, we show 1–2 cycles for simplicity, but our proposed method can be applied to operations with three or more cycles by changing the numbers of execution cycles given for approximate and exact multiplication.

Figure 3.

Proposed list-scheduling example. (a) A given DFG. (b) Approximate all multiplications (result of first list scheduling). (c) Exact multiplication with the largest error (result of second list scheduling). (d) Exact multiplication with the second largest error (result of third list scheduling). (e) Exact multiplication with the smallest error (result of fourth list scheduling). (f) Final output that satisfies resource and time constraints.

4.3. Chaining

List scheduling takes chaining into account by adding the following process. Similar to the ILP, list scheduling classifies operations into three categories: multiplication, addition, and others. Algorithms 2 and 3 are the additions to the list scheduling of Algorithm 1; they use the set of additions and the length of the execution cycle of the additions. Algorithm 2 is added after line 25 and Algorithm 3 is added after line 42 to perform resource-constrained list scheduling. As in the ILP, an unlimited number of adders is assumed to be available to avoid sharing overhead.

| Algorithm 2 Add in List-Scheduling Algorithm ① | |

| 1 | if then |

| 2 | |

| 3 | if then |

| 4 | , , |

| 5 | |

| 6 | end if |

| 7 | end if |

| Algorithm 3 Add in List-Scheduling Algorithm ② | |

| 1 | if then |

| 2 | |

| 3 | if then |

| 4 | , |

| 5 | |

| 6 | else , end if |

| 7 | end if |

Moreover, Algorithm 4 is added to Algorithm 1 to perform the chaining process. Three chaining processes are performed in sequence: exact multiplication to addition, addition to exact multiplication, and addition to addition. A flag indicates whether chaining is possible based on the delay condition of exact multiplication. When this value is 1, exact multiplication and addition can be chained, and when it is 0, chaining is not possible. Similarly, a second flag indicates whether chaining is possible between additions based on the delay condition of the addition.
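The two flags could be computed from delay figures along the following lines. The conditions used here are assumptions for illustration: an exact multiplication followed by an addition may be chained if their combined delay fits into the cycles allotted to the exact multiplication, and two additions may be chained if both fit into one clock period. The function name and delay values are hypothetical.

```python
def chaining_flags(clock_period, exact_mult_delay, add_delay, exact_cycles=2):
    """Return (mult_add_flag, add_add_flag) as 0/1 values."""
    # Exact multiplication plus addition must fit into the multiplier's cycles.
    mult_add_flag = int(exact_mult_delay + add_delay <= exact_cycles * clock_period)
    # Two dependent additions must fit into a single clock period.
    add_add_flag = int(2 * add_delay <= clock_period)
    return mult_add_flag, add_add_flag


# Hypothetical, unitless delays.
flags_fast_adder = chaining_flags(clock_period=10, exact_mult_delay=14, add_delay=4)
flags_slow_adder = chaining_flags(clock_period=10, exact_mult_delay=14, add_delay=7)
print(flags_fast_adder, flags_slow_adder)
```

Computing both flags once before scheduling keeps the per-cycle chaining checks in the scheduler down to simple flag tests.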

| Algorithm 4 Chaining in List-Scheduling Algorithm | |

| 1 | if then |

| 2 | for do |

| 3 | if then |

| 4 | |

| 5 | if then |

| 6 | , |

| 7 | |

| 8 | else , end if |

| 9 | end if |

| 10 | if then |

| 11 | , |

| 12 | if then |

| 13 | , |

| 14 | |

| 15 | else , end if |

| 16 | end if |

| 17 | end for |

| 18 | end if |

| 19 | if then |

| 20 | for do |

| 21 | if then |

| 22 | , |

| 23 | if then |

| 24 | , |

| 25 | |

| 26 | else , end if |

| 27 | end if |

| 28 | end for |

| 29 | end if |

We consider a pair of operations in which one is the subsequent operation of the other. In Algorithm 4, line 1 determines whether exact multiplication and addition can be chained based on the delay condition. To perform exact multiplication to addition chaining, line 3 determines whether an exact multiplication has been completed in this cycle and whether there is a subsequent addition. If these conditions are met, exact multiplication and addition chaining is performed. Similarly, chaining from addition to exact multiplication is performed from line 10. Finally, the process of chaining addition to addition is performed from line 19. The processing is done in this order because the objective function of this scheduling problem is to minimize the output error, so the emphasis is on chaining exact multiplication with addition rather than on chaining addition with addition.

In this method, chaining is performed after processing the resource constraint list scheduling for each cycle; however, chaining is always performed if it is possible to chain at that time. Under resource constraints, the overall number of execution cycles varies greatly depending on which operations are chained; therefore, this approximation algorithm may not be optimal in terms of the number of execution cycles. However, list scheduling is an algorithm used to shorten the algorithm runtime, and the objective function aims to minimize the error. Therefore, the chaining process is straightforward.

With the above changes, the scheduling is undertaken with the aim of minimizing the output error under resource and time constraints. In this work, exact multiplication and addition are used for chaining; however, depending on the delay conditions, it can easily be modified so that approximate multiplication or other operations are used for chaining.

5. Experiment

5.1. Experimental Setup

We conducted experiments to demonstrate the effectiveness of the proposed method. We used CPLEX 12.10 as the ILP solver, with a limit of one hour of wall-clock time per problem, on a PC with an AMD Ryzen 7 PRO 4750G CPU and 64 GB of main memory. If an optimal solution could not be found within one hour, the best solution found up to that point was used. The list-scheduling algorithms were implemented in Python with the NumPy library. Under various resource constraints, we compared the error of conventional resource-constrained scheduling, which does not consider the error, with that of the proposed variable-cycle scheduling, which minimizes the output error. The number of accuracy-controllable approximate multipliers is restricted as a resource constraint. Adders, ALUs, and other units are assumed to be available without restriction because of their large sharing overhead.

Conventional resource-constrained scheduling, which minimizes the number of execution cycles, assumes that each operation is performed in a fixed number of cycles. We can therefore find the minimum number of cycles when all the multiplications are scheduled in one cycle (n cycles) and the minimum number of cycles when all the multiplications are scheduled in two cycles (m cycles). We increase the number of functional units given as the resource constraint until n and m no longer change. Under each resource constraint, the proposed method is given a time constraint between n and m cycles and performs variable-cycle scheduling, in which each multiplication is performed in one or two cycles, with the aim of minimizing the output error.

We used MediaBench [31] as the benchmark suite. Both the ILP-based method and the list-scheduling algorithm assume that approximate multiplication takes one cycle, exact multiplication takes two cycles, and every operation other than multiplication takes one cycle. Based on the scheduling results, we synthesized circuits that use the 32-bit accuracy-controllable approximate multiplier from [13]. We then compared the area and the error of the circuits synthesized for an xc7z020clg484-1 device with Vivado 2020.1 provided by Xilinx. The error was evaluated by Monte Carlo simulation. We compared the following methods:
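
The way n and m bound the time constraints can be sketched with a small fixed-latency list scheduler. This is only an illustration: the function `min_cycles`, the encoding of the DFG as the dictionaries `ops` and `preds`, and the use of dictionary order as the priority are assumptions of the example, and at least one multiplier is assumed to be available.

```python
def min_cycles(ops, preds, num_mults, mul_latency):
    """Schedule length of resource-constrained list scheduling.

    ops   : dict op -> "mul" or "other" ("other" takes one cycle, unlimited units)
    preds : dict op -> list of predecessor ops
    Every multiplication takes mul_latency cycles on one of num_mults multipliers.
    """
    finish = {}                       # op -> last cycle the op occupies
    mult_free_at = [1] * num_mults    # first cycle in which each multiplier is free
    cycle = 0
    while len(finish) < len(ops):
        cycle += 1
        # an op is ready when all its predecessors finished before this cycle
        ready = [o for o in ops if o not in finish
                 and all(finish.get(p, cycle) < cycle for p in preds.get(o, []))]
        for o in ready:
            if ops[o] == "mul":
                for i, free_at in enumerate(mult_free_at):
                    if free_at <= cycle:
                        mult_free_at[i] = cycle + mul_latency
                        finish[o] = cycle + mul_latency - 1
                        break         # multiplier found; otherwise wait
            else:
                finish[o] = cycle
    return max(finish.values())

# Toy DFG: (m1, m2) -> a1 -> m3
ops = {"m1": "mul", "m2": "mul", "a1": "other", "m3": "mul"}
preds = {"a1": ["m1", "m2"], "m3": ["a1"]}

for num_mults in (1, 2):
    n = min_cycles(ops, preds, num_mults, mul_latency=1)  # all approximate
    m = min_cycles(ops, preds, num_mults, mul_latency=2)  # all exact
    print(num_mults, list(range(n, m + 1)))  # time constraints to explore
```

For this toy graph, one multiplier gives n = 4 and m = 7, and two multipliers give n = 3 and m = 5, so fewer multipliers widen the range of time constraints that variable-cycle scheduling can explore.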

- All-exact (AE): each of the multiplications is performed without approximation and takes two cycles.

- All-approximated (AA): each of the multiplications is approximated and performed in one cycle.

- Mixed: each multiplication is scheduled either as exact (two cycles) or as approximate (one cycle).

- Mixed-chain: each multiplication is scheduled either as exact (two cycles) or as approximate (one cycle), with chaining taken into account.
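
To make the comparison concrete, the following sketch estimates the output error of AE, AA, and Mixed by Monte Carlo simulation on a toy computation y = a*b + c*d. It rests on loudly stated assumptions: `approx_mul` is a hypothetical truncation-based stand-in and not the multiplier from [13], and the input range, trial count, and error metric (mean relative error) are chosen only for illustration.

```python
import random

def approx_mul(a, b, k=4):
    """Hypothetical approximate multiplier: drops the k low bits of each operand."""
    mask = ~((1 << k) - 1)
    return (a & mask) * (b & mask)

def mean_relative_error(modes, trials=10_000, seed=0):
    """Monte Carlo estimate of the relative output error of y = a*b + c*d.

    modes: pair of booleans, one per multiplication. True means exact
    (two cycles); False means approximate (one cycle).
    """
    rng = random.Random(seed)         # fixed seed: same inputs for every method
    total = 0.0
    for _ in range(trials):
        a, b, c, d = (rng.randint(1, 2**16 - 1) for _ in range(4))
        exact = a * b + c * d
        p1 = a * b if modes[0] else approx_mul(a, b)
        p2 = c * d if modes[1] else approx_mul(c, d)
        total += abs(exact - (p1 + p2)) / exact
    return total / trials

errors = {
    "AE": mean_relative_error((True, True)),      # all exact
    "AA": mean_relative_error((False, False)),    # all approximate
    "Mixed": mean_relative_error((True, False)),  # one exact, one approximate
}
print(errors)
```

With this stand-in multiplier, AE has zero error and the error of Mixed lies between those of AE and AA, which is the kind of intermediate design point that variable-cycle scheduling is meant to expose.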

5.2. Experimental Results

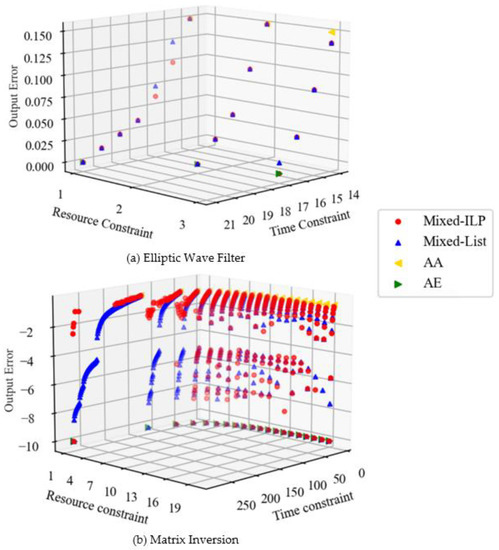

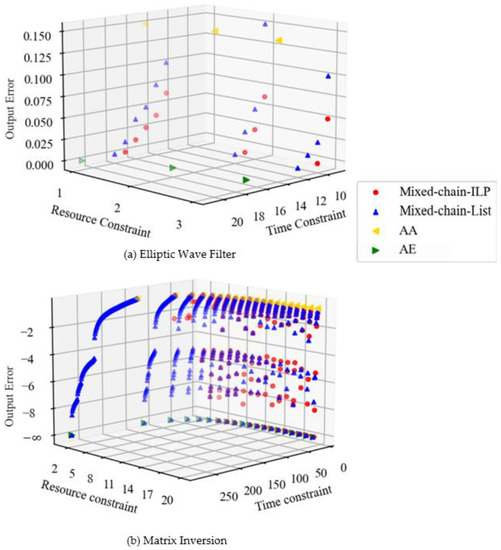

Figure 4 shows the scheduling results for each benchmark. The output error is expressed as the error in the output relative to the exact result. In Figure 4b, the output error is shown on a logarithmic scale and is denoted as when all multiplications are performed in two cycles (i.e., when there is no error). The higher the point, the more stringent the time constraint. The fewer functional units the resource constraint allows, the larger the difference between the number of cycles required to perform all the multiplications in one cycle and in two cycles. Scheduling results with a wide distribution of errors provide more options for circuit design. This makes it possible to find a solution that meets the designer’s requirements on area, execution time, and output error.

Figure 4.

Comparison of ILP and list-scheduling errors.

The Elliptic Wave Filter has a regular DFG with a short critical path compared to Matrix Inversion. Therefore, as the time constraint is relaxed and the number of approximate multiplications is reduced, the error of Mixed decreases gradually, as shown in Figure 4a. By comparison, Matrix Inversion has a complex DFG. In such a case, the magnitude of the error differs greatly depending on which multiplication is approximated. In Matrix Inversion, there is a multiplication on the critical path that has a large impact on the output error. Therefore, the distribution in the graph becomes narrower when more functional units are available and the execution time is short. AA and AE in Figure 4 do not take error into account, so they offer only a trade-off between area and execution time. By comparison, Mixed minimizes the output error under resource and time constraints, which establishes a trade-off among area, execution time, and output error.

Table 2 shows the comparison between the list-scheduling algorithm and the ILP-based technique. In the table, Nodes represents the total number of operations for each benchmark, and Mult is the number of multiplications among them. Designs indicates the number of design problems for a variety of resource and time constraints. Wins, Losses, and Draws represent the number of designs for which our list-scheduling algorithm outperforms the ILP-based technique in terms of accuracy, even slightly; the number of designs for which the ILP-based technique outperforms our list-scheduling algorithm; and the number of designs for which the same solutions are found, respectively. The number of times ILP takes more than one hour is the number of times the optimal solution is not obtained with ILP. In the Elliptic Wave Filter, there are three cases where the approximate solution of list scheduling is inferior to the optimal solution of ILP, but all the results in Figure 4a are close to the optimal solution. In Matrix Inversion, about half of the ILP runs could not find the optimal solution within one hour. Most of these runs yield either no solution or a solution that is far from optimal and inferior to the approximate solution of list scheduling. In contrast, list scheduling obtained solutions with a wide distribution regardless of the number of nodes, which suggests that it is effective even for large applications.
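
The Wins, Losses, and Draws columns can be tallied as in the sketch below. The function name `tally` and the input encoding are hypothetical, and treating an ILP run without any solution as a win for list scheduling is an assumption of this example, not something the text states.

```python
def tally(list_errors, ilp_errors):
    """Count designs where list scheduling beats, loses to, or ties ILP.

    Each argument holds one output error per design problem. An ILP entry of
    None stands for "no solution within the time limit" and is counted as a
    win for list scheduling (an assumption of this sketch).
    """
    wins = losses = draws = 0
    for ls_err, ilp_err in zip(list_errors, ilp_errors):
        if ilp_err is None or ls_err < ilp_err:
            wins += 1       # list scheduling is more accurate, even slightly
        elif ls_err > ilp_err:
            losses += 1     # ILP is more accurate
        else:
            draws += 1      # same error
    return wins, losses, draws
```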

Table 2.

Comparison of the list-scheduling solution with the ILP solution.

Table 3 shows the longest, shortest, and mean runtimes required for scheduling for each benchmark. ILP was unable to find an optimal solution in one hour when the number of nodes exceeded 50. However, list scheduling was able to find an approximate solution within three minutes even when the number of nodes exceeded 300. With ILP, scheduling can already take a long time for Cosine with 42 nodes; thus, if the number of nodes exceeds 300, it is expected to take several days to a month or more. As the number of nodes increases, the runtime increases exponentially, making ILP impractical. With list scheduling, there is a difference between the longest and shortest runtime with Matrix Inversion, which has a wide range of given time constraints. It is possible to obtain a near-optimal solution in a short time.

Table 3.

Runtime for scheduling (s).

Table 4 shows the logic synthesis results for some of the scheduling results of the Auto Regression Filter, obtained with Vivado 2020.1 for the xc7z020clg484-1 device. For the conventional method, we synthesized an AE circuit that executes in 12 cycles. For ILP and list scheduling, we synthesized Mixed circuits that execute in 12 cycles. All of these circuits were therefore scheduled under the same performance constraint (number of cycles). The circuits designed with the two proposed methods both achieved lower area and power through approximation. Furthermore, the PSNR values show that the magnitude of the error is minute. Therefore, the proposed method can design circuits with the same performance as that of the conventional method, with lower area and lower power, without much loss of accuracy. A comparison of the PSNR values of the two proposed methods shows that the approximate solution of list scheduling is almost equal to the optimal solution of ILP. The difference in area and power between the proposed methods, despite their equal accuracy, can be attributed mainly to the optimization performed by the synthesis tools.
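
For reference, PSNR is commonly computed from the mean squared error between the exact and approximate outputs, as sketched below. The text does not state which peak value or output samples were used, so the `peak` parameter and the function name `psnr` are assumptions of this example.

```python
import math

def psnr(exact, approx, peak):
    """Peak signal-to-noise ratio in dB between exact and approximate outputs."""
    if len(exact) != len(approx) or not exact:
        raise ValueError("inputs must be non-empty and of equal length")
    mse = sum((e - a) ** 2 for e, a in zip(exact, approx)) / len(exact)
    if mse == 0:
        return math.inf               # identical outputs: no error
    return 10 * math.log10(peak ** 2 / mse)
```

A higher PSNR means a smaller error, so two schedules with nearly equal PSNR values can be regarded as nearly equally accurate.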

Table 4.

Comparison of synthesized auto regression filter.

Figure 5 shows the scheduling results when chaining is considered. AA and AE here do not use chaining and are plotted at the same locations as in Figure 4. Comparing Figure 4a and Figure 5a shows that both the execution time and the error are reduced. This is because chaining is applied not only between additions but also between exact multiplication and addition. Comparing Figure 4b and Figure 5b shows a trend similar to that of the Elliptic Wave Filter. Moreover, with chaining, a wide distribution in terms of error is obtained even when many functional units are available.

Figure 5.

Comparison of ILP and list-scheduling errors with chaining.

Table 5 compares the list-scheduling solution with the ILP solution in terms of output error for each benchmark, summarizing the results shown in Figure 5. Compared to Table 2, there was no significant increase in the number of times the ILP exceeded one hour. The number of times the approximate solution of list scheduling is inferior to the optimal solution of ILP has increased. This is because the approximate algorithm does not perform chaining as effectively as ILP. However, as the number of nodes increases, the number of times that list scheduling outperforms ILP increases, indicating that the approximate algorithm is more effective for large-scale applications.

Table 5.

Comparison of the list-scheduling solution with the ILP solution with chaining.

Table 6 shows the longest and shortest runtimes required for scheduling with chaining for each benchmark. With ILP, the runtime does not increase much even when chaining is taken into account. With list scheduling, the runtime is approximately doubled by chaining, simply because of the additional processing. However, list scheduling can still be solved in polynomial time.

Table 6.

Runtime for scheduling with chaining (s).

The overall experimental results show that the proposed method can take advantage of approximate multipliers whose accuracy can be dynamically controlled in high-level synthesis. Whereas conventional scheduling explores circuits that meet the requirements through the trade-off between resources and performance, the proposed method, which incorporates approximate computing, searches for circuits that meet the requirements through the trade-off among resources, performance, and accuracy. Due to this flexibility, high-level synthesis can identify a more efficient circuit suitable for an application.

Our proposed ILP and list-scheduling methods suit different target applications. Although the ILP method can obtain an optimal schedule, its computational time becomes very long as the number of operations in the application increases. In contrast, the list-scheduling method can quickly find a circuit schedule, although it sometimes fails to obtain an optimal schedule. In summary, the ILP method is preferred for a small application, and the list-scheduling method is suitable for a large application. In addition, we considered chaining, an optimization technique in high-level synthesis, and the two proposed methods remain practical when combined with it.

The circuit synthesis results show that the proposed method achieves lower power and lower cost with the same performance by applying approximations. Furthermore, it can be said that both the ILP and list-scheduling methods have small errors, although approximations are applied. This means that the proposed method improves resource and power use without much impact on the application. However, there is a large difference in power and resources used between list scheduling and ILP, even though the accuracy is almost the same. This indicates that it is necessary to consider memory resources and other factors when scheduling, and this is one of the issues to be addressed in the future. In addition, this research can be applied to ASICs. However, since we only experimented with FPGAs, this is also an issue to be addressed in the future.

6. Conclusions

We present a scheduling algorithm that exploits the difference in latency between approximate and exact operations in variable-cycle multiplication. The two proposed methods efficiently utilize approximate functional units whose accuracy can be dynamically controlled. The first method uses ILP to search for the optimal solution over time. The second method searches for a good solution in a short time by list scheduling. The two proposed methods extend the design search space compared to the conventional methods. This enables the design of approximate computing circuits that meet the requirements of resources, performance, and accuracy. The circuits synthesized with the proposed methods consume less power and fewer resources than conventional accurate circuits at the expense of small errors. In addition, they can be combined with optimization techniques and are practical. Although this paper focuses on approximate multipliers, the presented approach can be combined with other approximate methods.

Future studies will combine the presented approach with other optimization techniques such as pipelining and bitwidth reduction. In addition, since experiments were conducted only on FPGAs, we believe that experiments on ASICs will be necessary.

Author Contributions

Conceptualization, K.O.; Data curation, K.O.; Formal analysis, K.O.; Funding acquisition, H.T.; Investigation, K.O.; Methodology, K.O.; Project administration, H.T.; Software, K.O.; Supervision, H.N., X.K. and H.T.; Validation, K.O.; Visualization, K.O.; Writing—original draft, K.O.; Writing—review & editing, H.N., X.K. and H.T. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by Japan Society for the Promotion of Science grant numbers 20H00590, 20H04160, and 21K19776.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

All data were presented in the main text.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Mittal, S. A survey of techniques for approximate computing. ACM Comput. Surv. 2016, 48, 1–33.

- Xu, Q.; Mytkowicz, T.; Kim, N.S. Approximate computing: A survey. IEEE Des. Test 2016, 33, 8–22.

- Jie, H.; Orshansky, M. Approximate computing: An emerging paradigm for energy-efficient design. In Proceedings of the 2013 18th IEEE European Test Symposium (ETS), Avignon, France, 27–30 May 2013.

- Ye, R.; Wang, T.; Yuan, F.; Kumar, R.; Xu, Q. On reconfiguration-oriented approximate adder design and its application. In Proceedings of the 2013 IEEE/ACM International Conference on Computer-Aided Design (ICCAD), San Jose, CA, USA, 18–21 November 2013.

- Camus, V.; Schlachter, J.; Enz, C. A low-power carry cut-back approximate adder with fixed-point implementation and floating-point precision. In Proceedings of the IEEE/ACM Design Automation Conference, Austin, TX, USA, 5–9 June 2016.

- Gupta, V.; Mohapatra, D.; Raghunathan, A.; Roy, K. Low-power digital signal processing using approximate adders. IEEE Trans. Comput.-Aided Des. Integr. Circuits Syst. 2013, 32, 124–137.

- Pashaeifar, M.; Kamal, M.; Kusha, A.A.; Pedram, M. Approximate reverse carry propagate adder for energy-efficient dsp applications. IEEE Trans. Very Large Scale Integr. Syst. 2018, 26, 2530–2541.

- Yang, T.; Ukezono, T.; Sato, T. A low-power configurable adder for approximate applications. In Proceedings of the 2018 19th International Symposium on Quality Electronic Design (ISQED), Santa Clara, CA, USA, 13–14 March 2018.

- Mahdiani, H.R.; Ahmadi, A.; Fakhraie, S.M.; Lucas, C. Bio-Inspired imprecise computational blocks for efficient VLSI implementation of Soft-computing applications. IEEE Trans. Circuits Syst. I Regul. Pap. 2010, 57, 850–862.

- Lin, C.H.; Lin, I.C. High accuracy approximate multiplier with error correction. In Proceedings of the 2013 IEEE 31st International Conference on Computer Design (ICCD), Asheville, NC, USA, 6–9 October 2013.

- Liu, C.; Han, J.; Lombardi, F. A low-power, high-performance approximate multiplier with configurable partial error recovery. In Proceedings of the 2014 Design, Automation & Test in Europe Conference & Exhibition (DATE), Dresden, Germany, 24–28 March 2014.

- Yang, T.; Ukezono, T.; Sato, T. A low-power high-speed accuracy-controllable approximate multiplier design. In Proceedings of the 2018 23rd Asia and South Pacific Design Automation Conference (ASP-DAC), Jeju, Korea, 22–25 January 2018.

- Sano, M.; Nishikawa, H.; Kong, X.; Tomiyama, H.; Ukezono, T. Design of a 32-bit accuracy-controllable approximate multiplier for FPGAs. In Proceedings of the 2021 18th International SoC Design Conference (ISOCC), Jeju, Korea, 6–9 October 2021.

- Nepal, K.; Li, Y.; Bahar, R.I.; Reda, S. ABACUS: A technique for automated behavioral synthesis of approximate computing circuits. In Proceedings of the Design, Automation & Test in Europe Conference & Exhibition, Dresden, Germany, 24–28 March 2014.

- Nepal, K.; Hashemi, S.; Tann, H.; Bahar, R.I.; Reda, S. Automated high-level generation of low-power approximate computing circuits. IEEE Trans. Emerg. Top. Comput. 2019, 7, 18–30.

- Schafer, B.C. Enabling high-level synthesis resource sharing design space exploration in FPGAs through automatic internal bitwidth adjustments. IEEE Trans. Comput.-Aided Des. Integr. Circuits Syst. 2017, 36, 97–105.

- Lee, S.; John, L.K.; Gerstlauer, A. High-level synthesis of approximate hardware under joint precision and voltage scaling. In Proceedings of the Design, Automation & Test in Europe Conference & Exhibition (DATE), Lausanne, Switzerland, 27–31 March 2017.

- Vaverka, F.; Hrbacek, R.; Sekanina, L. Evolving component library for approximate high level synthesis. In Proceedings of the 2016 IEEE Symposium Series on Computational Intelligence (SSCI), Athens, Greece, 6–9 December 2016.

- Venkatesan, R.; Agarwal, A.; Roy, K.; Raghunathan, A. MACACO: Modeling and analysis of circuits for approximate computing. In Proceedings of the 2011 IEEE/ACM International Conference on Computer-Aided Design (ICCAD), San Jose, CA, USA, 7–10 November 2011.

- Chan, W.T.J.; Kahng, A.B.; Kang, S.; Kumar, R.; Sartori, J. Statistical analysis and modeling for error composition in approximate computation circuits. In Proceedings of the 2013 IEEE 31st International Conference on Computer Design (ICCD), Asheville, NC, USA, 6–9 October 2013.

- Li, C.; Luo, W.; Sapatnekar, S.S.; Hu, J. Joint precision optimization and high-level synthesis for approximate computing. In Proceedings of the 2015 52nd ACM/EDAC/IEEE Design Automation Conference (DAC), San Francisco, CA, USA, 8–12 June 2015.

- Godínez, J.C.; Esser, S.; Shafique, M.; Pagani, S.; Henkel, J. Compiler-driven error analysis for designing approximate accelerators. In Proceedings of the 2018 Design, Automation & Test in Europe Conference & Exhibition (DATE), Dresden, Germany, 19–23 March 2018.

- Godínez, J.C.; Vargas, J.M.; Shafique, M.; Henkel, J. AxHLS: Design space exploration and high-level synthesis of approximate accelerators using approximate functional units and analytical models. In Proceedings of the 2020 IEEE/ACM International Conference on Computer Aided Design (ICCAD), San Diego, CA, USA, 2–5 November 2020.

- Xu, S.; Schafer, B.C. Exposing approximate computing optimizations at different levels: From behavioral to gate-level. IEEE Trans. Very Large Scale Integr. Syst. 2017, 25, 3077–3088.

- Leipnitz, M.T.; Nazar, G.L. High-level synthesis of resource-oriented approximate designs for FPGAs. In Proceedings of the Design Automation Conference, Las Vegas, NV, USA, 2–6 June 2019.

- Leipnitz, M.T.; Nazar, G.L. High-level synthesis of approximate designs under real-time constraints. ACM Trans. Embed. Comput. Syst. 2019, 18, 59.

- Leipnitz, M.T.; Perleberg, M.R.; Porto, M.S.; Nazar, G.L. Enhancing Real-Time Motion Estimation through Approximate High-Level Synthesis. In Proceedings of the 2020 IEEE Computer Society Annual Symposium on VLSI (ISVLSI), Limassol, Cyprus, 6–8 July 2020.

- Leipnitz, M.T.; Nazar, G.L. Throughput-oriented spatio-temporal optimization in approximate high-level synthesis. In Proceedings of the 2020 IEEE 38th International Conference on Computer Design (ICCD), Hartford, CT, USA, 18–21 October 2020.

- Shirane, K.; Nishikawa, H.; Kong, X.; Tomiyama, H. High-level synthesis of approximate computing circuits with dual accuracy modes. In Proceedings of the 2021 18th International SoC Design Conference (ISOCC), Jeju, Korea, 6–9 October 2021.

- Shin, W.K.; Liu, J.W.S. Algorithms for scheduling imprecise computations with timing constraints to minimize maximum error. IEEE Trans. Comput. 1995, 44, 466–471.

- Lee, C.; Potkonjak, M.; Smith, W.H.M. MediaBench: A tool for evaluating and synthesizing multimedia and communications systems. In Proceedings of the 30th Annual International Symposium on Microarchitecture, Research Triangle Park, NC, USA, 3 December 1997.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).