Abstract

Musculoskeletal disorders (MSDs) can significantly impact individuals’ quality of life (QoL), often requiring effective rehabilitation strategies to promote recovery. However, traditional rehabilitation methods can be expensive and may lack engagement, leading to poor adherence to therapy exercise routines. An exergame system can be a solution to this problem. In this paper, we investigate appropriate hand gestures for controlling video games in a rehabilitation exergame system. The Mediapipe Python library is adopted for the real-time recognition of gestures. We choose 10 easy gestures among 32 possible simple gestures. Then, we specify and compare the best and the second-best groups used to control the game. Comprehensive experiments are conducted with 16 students at Andalas University, Indonesia, to find appropriate gestures and evaluate user experiences of the system using the System Usability Scale (SUS) and User Experience Questionnaire (UEQ). The results show that the hand gestures in the best group are more accessible than in the second-best group. The results suggest appropriate hand gestures for game controls and confirm the proposal’s validity. In future work, we plan to enhance the exergame system by integrating a diverse set of video games, while expanding its application to a broader and more diverse sample. We will also study other practical applications of the hand gesture control function.

1. Introduction

Musculoskeletal disorders (MSDs) can greatly affect individuals’ quality of life (QoL). Musculoskeletal conditions significantly impair dexterity and mobility, which can result in early retirement from the workforce, decreased well-being, and the diminished capacity to engage in society [1]. They are the highest contributor to the global need for effective rehabilitation. Fortunately, recent studies reveal that physical exercises using hands and/or fingers can help reduce pain in people with musculoskeletal issues [2]. These issues are common in children and young people [3].

People suffering from MSDs usually visit therapists for rehabilitation. However, conventional rehabilitation methods can be costly and may not engage patients, which results in poor compliance with treatment exercise routines [4].

A rehabilitation exergame system, in which users play video games by making various hand gestures [5], offers an enjoyable way to engage in hand exercises [6,7]. It can promote happiness and motivation during rehabilitation. The term exergame refers to the combination of digital gaming with exercise [8]. In particular, an exergame is a physical activity that uses information technology such as a video game to require a player to be active or conduct an exercise [9,10]. Many studies have demonstrated that an exergame system can address the limitations of real-life rehabilitation environments by providing a low-cost virtual training environment for patients [11,12]. Playing a video game utilizing hand gestures can serve as an effective independent exercise at home for people who are suffering from MSDs and are undergoing therapy [13].

Previously, hand gestures have been widely implemented for controlling video games in rehabilitation exergame systems [14]. However, it is not known whether or not the implemented hand gestures in a system are easy and intuitive for users. Technically, prior studies have yet to discuss the investigation of hand gesture controls. They mainly focus on the exergame system using hand gestures. Hence, in this paper, we investigate easy and intuitive hand gestures for controlling video games in an exergame system to support people who conduct hand or finger exercises for rehabilitation.

To find suitable hand gestures in the exergame system in this paper, we explore individual responses and their insights. For this purpose, we design and implement an exergame system that uses hand gestures to control a web-based video game. This prototype utilizes the Mediapipe Python library [15] to recognize the defined hand gestures in the images that are captured by a web camera. Based on this library’s output, specific hand gestures will be detected to control character movements in the game.

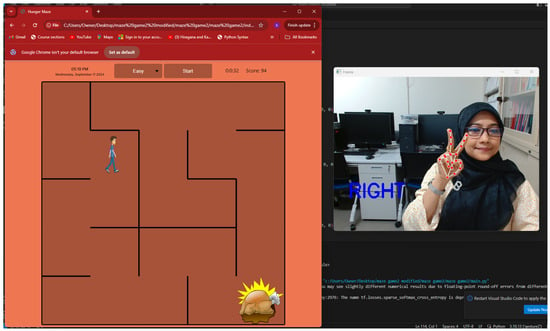

In this paper, we adopt Maze as a simple video game, and control the avatar’s movements using five simple hand gestures. Figure 1 illustrates an overview of our proposal. A Python program is implemented to identify the user’s hand gestures via the web camera and to direct the avatar’s movement, enabling up, down, left, right, and release movements. Due to the simplicity of the game rules, we expect that individuals can easily manage various hand gestures to control video games. The objective of this study is to investigate the effectiveness of selected hand gestures for game controls in the rehabilitation exergame system. It is expected to support rehabilitation with hand or finger exercises in daily life so that users can practice them independently.

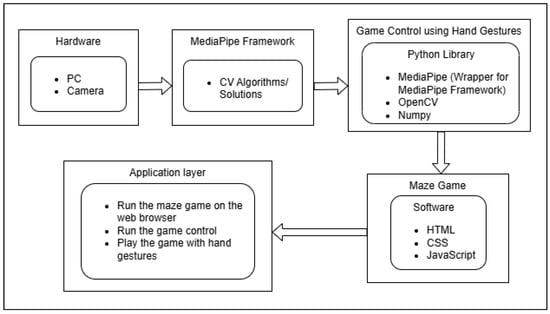

Figure 1.

Overview of exergame system with hand gestures.

For evaluations, we ask 16 students at Andalas University in Indonesia to play this Maze game and answer the 10 questions in the usability questionnaire. The results confirm the validity of the proposal for young people. A future study will involve the evaluation of the system by other groups of people.

The rest of this paper is organized as follows: Section 2 explores relevant studies in a literature review. Section 3 discusses the methodology. Section 4 presents the rehabilitation exergame system. Section 5 investigates appropriate hand gestures for game controls. Section 6 shows the evaluation. Finally, Section 7 concludes this paper with suggestions for future work.

2. Literature Review

In this section, we review the literature related to this study. Some studies discuss exergames using hand gestures for rehabilitation, and others discuss the theory of exergames.

2.1. Exergames Using Hand Gestures for Rehabilitation

In [16], Yeng et al. proposed a game-based hand rehabilitation system. They applied seven common hand gestures for hand exercises to control the game “2048”. The experimental results showed that an appropriate distance between the webcam (computer monitor) and hand (user) is significant in this system. The optimal distance of a computer monitor from the user ranges from 0.52 m to 0.73 m. This distance ensures successful hand detection and tracking both indoors and outdoors. The system can function well in indoor settings with longer distances, such as approximately 1.83 m, for bedridden patients.

In [17], Fonteles et al. introduced an interactive musical game that enables users to practice hand therapy exercises while learning rhythm and melodic structures. This game employs MIDI files and the leap motion controller to detect the gestures made by the user’s hands and fingers. This study found that children and adults completed the hand therapy tasks while enjoying the game.

In [18], Saman et al. suggested hand mobility recovery through the game “Tetris”, which is controlled by nine hand gestures. The primary goal of this application is to encourage users to perform as many hand exercises as possible. These exercises aim to improve blood circulation, helping the affected area heal faster, restoring flexibility, and strengthening the hand muscles, thus increasing the chances of recovering hand functionality.

In [19], Nasri et al. proposed an sEMG-controlled 3D game that leverages a deep learning-based architecture for real-time gesture recognition for rehabilitation therapies. This study also presents a rehabilitation game controlled by seven dissimilar hand gestures for patients who require a recovery process due to their limitations caused by aging or a health condition.

In [20], Farahanipad et al. designed a 3D game-based system for wrist rehabilitation using hand gestures called HandReha. The gestures are selected from a set of the ones suitable for wrist rehabilitation and are implemented to control the game built in a 3D environment. This study concluded that the wrist rehabilitation system is user-friendly and effective for rehabilitation purposes.

In [21], Sophiya et al. introduced hand gesture-driven gaming for effective rehabilitation, allowing people of varied physical abilities to participate in immersive gaming experiences to improve their quality of life. Unlike the previous studies, we focus on how to obtain easy hand gestures for controlling video games in the rehabilitation exergame system as the preliminary stage for further studies.

2.2. Theory of Exergames

In [22], Oh et al. explained exergames and exergaming. They mentioned exergames as video games that promote players’ physical movements or exertions. They defined exergaming as the experimental activity of playing exergames, requiring physical exertions or movements that are more than sedentary activities and also including activities of strength, balance, and flexibility.

In [23], Brox et al. discussed social exergaming to prevent loneliness and encourage physical activity for seniors. They gave a narrative review of how exergames can help motivate the elderly to exercise more, focusing on possible social interactions in online exergames and persuasive technologies.

In [24], Huang et al. examined whether playing exergames improves physical fitness in young adults and whether such an improvement depends on their previous participation in other forms of exercise. They designed a 12-week randomized controlled trial for an exergame program.

In [25], Yu et al. examined how exergames affect physical fitness in middle-aged and older adults. Their findings demonstrated that the exergame program enhanced cardiopulmonary endurance and leg muscle strength in healthy individuals within this age group.

In [26], Pirovano et al. presented a methodology for designing effective and safe therapeutic exergames for rehabilitation. They gave a comprehensive definition of therapeutic exergames, from which a method is derived to create safe exergames for actual therapy pathways.

In [27], Kaur et al. reviewed various techniques of hand gesture recognition. The three main approaches to hand gesture recognition systems are glove-based, vision-based, and depth-based systems. They also discussed sensor-based, vision-based, motion-based, color-based, skeletal-based, and depth-based recognitions. Sensor-based recognition uses data gloves or other sensors to capture the motion and position of the hand. High accuracy and fast response speed are the benefits of this approach. However, data gloves are often expensive and inflexible because of their complex hardware requirements. Afterward, the development of vision-based recognition eliminated the need for wearing a glove.

In [28], Quan et al. presented the KNN classifier as a simple and effective method for hand gesture recognition. First, the hand region is separated from the background using skin color, and a distance signature describes the shape of the hand gesture. Then, the KNN classifier recognizes the hand gesture. The experimental results demonstrate that it performs well for hand gesture recognition with a dataset of 240 hand images.

In [29], Prema et al. investigated gaming using different hand gestures by artificial neural networks (ANNs) that allow players to interact with games using natural hand movements. In [30], Eshitha et al. also used artificial neural networks to recognize hand gestures. In [31], Zhu et al. presented a Kinect-based hand gesture recognition system for playing racing video games. The system could track both one-hand and two-hand movements utilizing the RGB-D Kinect sensor.

In [32], Kavana et al. discussed the recognition of hand gestures using Mediapipe. They simplified finger spelling recognition and data processing using Mediapipe Hands, proposed by Google, which can be used with a typical digital camera. In this study, we investigate hand gesture controls using the Maze game in our experiments with a rehabilitation exergame system.

3. Methodology

This section provides the methodology for investigating hand gesture controls and conducting the experiments in our exergame system.

3.1. Methodology of Hand Gesture Investigation

We investigate appropriate hand gestures for controlling video games in our system through the following steps.

- We list all the combinations of simple hand gestures using one hand, where, for simplicity, we distinguish the hand gestures only by whether each finger is open (straight) or closed (bent).

- We make the questionnaire with 10 questions about all of the 32 hand gestures to choose appropriate ones according to the difficulty level from very easy (scale of 5) to very difficult (scale of 1).

- We ask 16 students to perform the 32 hand gestures and fill in the questionnaire.

- We calculate the average rating for each hand gesture.

- Based on the result, we choose the top 10 hand gestures with the highest rating.

- Since five hand gestures are necessary to control the exergame system, we select the top five gestures in the best group and the next top five gestures in the second-best group.

- We assign the five arrow keys to the five hand gestures in each group.

3.2. Methodology of Experiments

We conduct the experiments as follows.

- We make the SUS questionnaire with 10 questions.

- We ask 15 students to play the exergame system using the hand gestures in the best group and in the second-best group.

- We ask the students to fill in the questionnaire.

- We compare and analyze the results of the game scores and the SUS scores between the two groups.

- We measure the accuracy of the hand gesture recognition by experimenting with 30 students.

- We measure the user experience of the exergame system using the User Experience Questionnaire (UEQ) with the best hand gestures.

- We analyze the UEQ results.

4. Exergame System Using Hand Gestures

In this section, we present the exergame system using hand gestures for rehabilitation.

4.1. System Design

Figure 2 shows the design of the proposed exergame system. The hardware includes the PC and the camera. The camera captures the user’s hand and sends the video data to the PC, which processes the data. On the PC, the MediaPipe Framework, which is the brain behind the system, analyzes the camera feed using computer vision (CV) algorithms to detect and track the hand. The Mediapipe Python library, which provides a Python interface to the MediaPipe Framework, is used to process the gestures and convert them into commands for game controls. The Maze game responds to these commands, letting the user play the game by making hand gestures. Thus, hand gestures are recognized from the captured images to control the game in real time using the Mediapipe and OpenCV Python libraries. NumPy is also used for numerical calculations. The video game, developed using HTML, CSS, and JavaScript, runs within a web browser for ease of access.

Figure 2.

Design of exergame system.

4.2. Gesture Recognition

To recognize the defined hand gestures from a given image or video frame, Mediapipe is first used to detect the coordinates of the 21 keypoints of one hand shown in Figure 3 [33]. Mediapipe is available as a Python library, and its hand tracking consists of two functions called BlazePalm and Landmark Model. Then, a Python program called Gesture Recognizer is implemented to recognize each hand gesture by calculating the distance between two selected keypoints and comparing it with a given threshold. The distance between keypoints 0 and 4 is used for the thumb, the distance between keypoints 0 and 8 is used for the index finger, the distance between keypoints 0 and 12 is used for the middle finger, the distance between keypoints 0 and 16 is used for the ring finger, and the distance between keypoints 0 and 20 is used for the little finger. When the distance is larger than the given threshold, the corresponding finger is regarded as open; otherwise, it is regarded as closed. The outlines of these two functions and Gesture Recognizer are described as follows:

Figure 3.

Twenty-one keypoints of one hand.

- BlazePalm detects the hand region in the image. It works on the full image and yields an oriented bounding box around the hand.

- Landmark Model detects the locations of the 21 keypoints in the detected region of the hand. It operates on the cropped image region defined by BlazePalm and returns the coordinates of the keypoints.

- Gesture Recognizer generates a result object of gesture detection for each recognition run. It classifies the previously computed keypoint configuration into one of a discrete set of gestures.
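The distance-and-threshold rule described above can be sketched as follows. This is a minimal illustration, not the authors' Gesture Recognizer: it assumes the 21 keypoints are already available as 2D (x, y) pairs in normalized image coordinates, and the function name `finger_states` and the threshold value of 0.3 are hypothetical choices made only for this example.

```python
import math

# Keypoint indices follow the 21-keypoint hand layout described in the text:
# 0 is the wrist, and 4, 8, 12, 16, 20 are the five fingertips.
WRIST = 0
FINGERTIPS = {"thumb": 4, "index": 8, "middle": 12, "ring": 16, "little": 20}


def finger_states(keypoints, threshold=0.3):
    """Return {finger: True if open, False if closed}.

    keypoints: sequence of 21 (x, y) pairs (assumed normalized coordinates).
    threshold: hypothetical distance above which a finger counts as open.
    """
    if len(keypoints) != 21:
        raise ValueError("expected 21 keypoints")
    wrist_x, wrist_y = keypoints[WRIST]
    states = {}
    for finger, tip in FINGERTIPS.items():
        tip_x, tip_y = keypoints[tip]
        # Euclidean distance between the wrist and the fingertip.
        distance = math.hypot(tip_x - wrist_x, tip_y - wrist_y)
        states[finger] = distance > threshold
    return states


# Synthetic example: every keypoint sits on the wrist except the index fingertip.
example = [(0.5, 0.9)] * 21
example[8] = (0.5, 0.4)
print(finger_states(example))
```

In practice, a single fixed threshold may not suit every hand size or camera distance, so the threshold would likely need tuning or normalization; the text does not specify how it was chosen.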

4.3. Video Game

Maze in Figure 4 is adopted as the video game in the exergame system [34]. We chose this game in our system because it is easy to play and needs a low number of movements in the development. This game can also help develop problem-solving skills and improve cognitive abilities [35]. This game runs on a web browser and has four difficulty levels with different complexities: easy, medium, hard, and extreme. The objective of the game is to find food to ease the hunger of the human character. The character’s moving direction should be properly changed to reach the food as quickly as possible. In the exergame system, this direction change is possible by showing the corresponding hand gesture to each direction.

Figure 4.

Maze in exergame system.

5. Investigation of Hand Gestures for Exergame

In this section, we investigate the appropriate hand gestures in the exergame system.

5.1. Possible Hand Gestures

First, we list possible simple hand gestures using one hand for video game controls. In this investigation, we only consider the simple state for each finger, namely, open (straight) or closed (bent). We do not consider the rotation of the hand. Then, 32 different hand gestures can exist, since each of the five fingers has two states. Figure 5 illustrates them.

Figure 5.

All possible gestures of one hand.
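The count of 32 gestures follows from five fingers with two states each. A short sketch of the enumeration is given below; the order produced here is arbitrary and is not claimed to match the gesture numbering used in Figure 5, and the name `all_gestures` is introduced only for illustration.

```python
from itertools import product

FINGERS = ("thumb", "index", "middle", "ring", "little")


def all_gestures():
    """Enumerate every open/closed combination of the five fingers.

    True means open (straight) and False means closed (bent).
    """
    return [dict(zip(FINGERS, states))
            for states in product((False, True), repeat=len(FINGERS))]


gestures = all_gestures()
print(len(gestures))  # 2 ** 5 combinations
```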

5.2. Hand Gesture Rating

To find appropriate easy hand gestures among the 32 possible ones, we asked 16 students in Okayama University, Japan, to make the hand gestures one by one and to rate each gesture using a five-point Likert scale: 5 (very easy), 4 (easy), 3 (neutral), 2 (difficult), and 1 (very difficult). Then, the average rating for each gesture was calculated.
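The rating and selection steps can be sketched as below. The ratings in the usage example are made-up placeholders, not the study data, and breaking ties by the smaller gesture number is an assumption, since the text does not say how ties were handled. The name `select_groups` is hypothetical.

```python
from statistics import mean


def select_groups(ratings, group_size=5):
    """Rank gestures by average Likert rating and split off the top two groups.

    ratings: {gesture_id: [scores from 1 (very difficult) to 5 (very easy)]}.
    Returns (best_group, second_best_group, averages).
    """
    averages = {gesture: mean(scores) for gesture, scores in ratings.items()}
    # Highest average first; ties go to the smaller gesture id (assumption).
    ranked = sorted(averages, key=lambda gesture: (-averages[gesture], gesture))
    best = ranked[:group_size]
    second_best = ranked[group_size:2 * group_size]
    return best, second_best, averages


# Hypothetical ratings for 12 gestures by two respondents.
sample = {
    1: [5, 5], 2: [5, 4], 3: [4, 4], 4: [4, 3], 5: [3, 3], 6: [3, 2],
    7: [2, 2], 8: [2, 1], 9: [1, 1], 10: [5, 3], 11: [1, 2], 12: [3, 4],
}
best, second_best, _ = select_groups(sample)
print(best, second_best)
```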

5.3. Selected Hand Gestures

Table 1 shows the rating results of the 32 hand gestures by the 16 students. Based on the responses of the 16 respondents, we calculated the average rating score for each gesture in Figure 5. According to the average rating results, the top 10 gestures are 1, 2, 4, 12, 29, 3, 7, 11, 21, and 8, in this order. The gestures with lower rating scores in Table 1 are harder to make and less reliable than those with higher scores. For instance, gesture 27, which has the lowest average score of 1.7, is particularly challenging for most respondents. This gesture requires the middle and little fingers to be closed while the other fingers are open. Since the adopted video game needs five hand gestures for the four direction keys (right, left, up, and down) and another key (space), we made the best hand gesture group of 1, 2, 4, 12, and 29, and the second-best group of 3, 7, 11, 21, and 8. The second-best group was made for performance comparisons with the best group. Figure 6 and Figure 7 show the five hand gestures for the keys in the best group and in the second-best group, respectively.

Table 1.

Rating results for 32 hand gestures.

Figure 6.

Five hand gestures for keys in best group.

Figure 7.

Five hand gestures for keys in second-best group.

5.4. Hand Gesture Recognition for Best Group

The Python program Gesture Recognizer is implemented to recognize each defined hand gesture in the best group using the keypoint results from Mediapipe.

- Right Direction: Gesture 12 is used for the right direction key. When both the index finger and the middle finger are open and the others are closed, it is recognized.

- Left Direction: Gesture 4 is used for the left direction key. When the index finger is open and the others are closed, it is recognized.

- Up Direction: Gesture 2 is used for the up direction key. When all the fingers are open, it is recognized.

- Down Direction: Gesture 1 is used for the down direction key. When all the fingers are closed, it is recognized.

- Space: Gesture 29 is used for the space key. When the middle finger, the ring finger, and the little finger are all open and the others are closed, it is recognized.
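The best-group assignment listed above can be expressed as a lookup table from finger states to keys. The sketch below is illustrative only: the finger patterns are taken from the descriptions in this list, while the names `BEST_GROUP_KEYS` and `gesture_to_key` and the choice to return `None` for any other gesture are assumptions.

```python
# Finger order used for every pattern: thumb, index, middle, ring, little.
FINGERS = ("thumb", "index", "middle", "ring", "little")

# True means open and False means closed.
BEST_GROUP_KEYS = {
    (False, True, True, False, False): "right",   # Gesture 12
    (False, True, False, False, False): "left",   # Gesture 4
    (True, True, True, True, True): "up",         # Gesture 2
    (False, False, False, False, False): "down",  # Gesture 1
    (False, False, True, True, True): "space",    # Gesture 29
}


def gesture_to_key(states):
    """Map a {finger: is_open} dict to a key name, or None if unmapped."""
    pattern = tuple(bool(states[finger]) for finger in FINGERS)
    return BEST_GROUP_KEYS.get(pattern)


# Index and middle fingers open, the others closed.
print(gesture_to_key({"thumb": False, "index": True, "middle": True,
                      "ring": False, "little": False}))
```

The same table structure could hold the second-best group by swapping in its five patterns.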

5.5. Hand Gesture Recognition for Second-Best Group

Gesture Recognizer is implemented to recognize each defined hand gesture in the second-best group using the keypoint results from Mediapipe.

- Right Direction: Gesture 8 is used for the right direction key. When both the thumb and the index finger are open and the others are closed, it is recognized.

- Left Direction: Gesture 3 is used for the left direction key. When the thumb is open and the others are closed, it is recognized.

- Up Direction: Gesture 11 is used for the up direction key. When both the thumb and the little finger are open and the others are closed, it is recognized.

- Down Direction: Gesture 7 is used for the down direction key. When the little finger is open and the others are closed, it is recognized.

- Space: Gesture 21 is used for the space key. When the index finger, the middle finger, and the ring finger are all open and the others are closed, it is recognized.

6. Evaluation of Best Hand Gestures

In this section, we evaluate the effectiveness of the best hand gestures in the previous section.

6.1. Game Score Results

First, we evaluate the effectiveness of the best hand gestures in playing video games in the exergame system in terms of game scores. We asked the 15 students to play the game using the best hand gestures and the second-best ones, and compared their scores. Table 2 shows the results. For every student, the best hand gestures gave higher scores, which supports the effectiveness of the best hand gestures in playing this video game.

Table 2.

Game score results.

6.2. System Usability Scale (SUS) Results

Second, we evaluate the usability of the exergame system by playing the game using the best hand gestures through the System Usability Scale (SUS) [36].

6.2.1. SUS Standard Ranges

Table 3 shows the standard ranges for the SUS evaluation. The SUS gives the final score ranging from 0 to 100. The higher the score, the better the perceived usability. Moreover, it also gives the interpretation of the final results.

Table 3.

Standard range of SUS score.

6.2.2. Questions in SUS Questionnaire

Table 4 shows the ten questions for the SUS questionnaire. For each question, it is requested to answer with five levels (5: strongly agree; 4: agree; 3: neutral; 2: disagree; 1: strongly disagree). The questions adhere to the SUS rule such that all the statements alternate between positive and negative to prevent respondents from answering on autopilot.

Table 4.

Questions for questionnaire.

6.2.3. Procedure of SUS Score Calculation

From the answer results, the SUS score is computed by the following procedure [37]:

- Add up the answer levels for the odd-numbered questions (1, 3, 5, 7, and 9), and subtract 5 from the total to obtain the odd raw SUS score.

- Add up the answer levels for the even-numbered questions (2, 4, 6, 8, and 10), and subtract the total from 25 to obtain the even raw SUS score.

- Add up the odd and even raw scores, and multiply the sum by 2.5. This resulting number is the final SUS score.
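The three steps above can be written as a short function. This is a sketch of the stated procedure; the function name `sus_score` and the input validation are additions for illustration.

```python
def sus_score(answers):
    """Compute the final SUS score from ten answers on a 1-5 scale.

    answers[0] is question 1, answers[1] is question 2, and so on.
    """
    if len(answers) != 10 or any(a not in range(1, 6) for a in answers):
        raise ValueError("expected ten answers, each from 1 to 5")
    # Odd-numbered questions (1, 3, 5, 7, 9): sum of levels minus 5.
    odd_raw = sum(answers[0::2]) - 5
    # Even-numbered questions (2, 4, 6, 8, 10): 25 minus sum of levels.
    even_raw = 25 - sum(answers[1::2])
    return (odd_raw + even_raw) * 2.5


print(sus_score([5, 1, 5, 1, 5, 1, 5, 1, 5, 1]))  # most favorable answers
print(sus_score([3] * 10))                        # all neutral answers
```

Because each raw score lies between 0 and 20, the final score always falls between 0 and 100.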

6.2.4. SUS Results

Table 5 displays the responses, the raw SUS score, and the final SUS score by each student when the best hand gestures are used. Referring to Table 3, the average final SUS score of 84 suggests Excellent. Table 6 shows the results when the second-best hand gestures are used. The average final SUS score of 79.3 indicates Good. Thus, the usability of the best ones is higher than that of the second-best ones.

Table 5.

SUS results for best hand gestures.

Table 6.

SUS results for second-best hand gestures.

6.3. The Accuracy of Hand Gesture Recognition

Third, we evaluate the accuracy of hand gesture recognition to measure whether the system correctly detects each defined hand gesture or not.

6.3.1. Procedure of Measuring the Accuracy of Hand Gesture Recognition

We conduct the experiment with 30 students to measure the accuracy of correctly detecting each defined hand gesture by the system as follows.

- Give the 10 easiest defined hand gestures.

- Ask each student to show each gesture for one second and see whether the system correctly detects the gesture or not.

- Repeat the previous step 10 times for each gesture.
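One plausible way to summarize the outcome of this procedure for a single gesture is sketched below. It assumes that each student's result is recorded as the number of correct detections out of 10 trials; the function name `recognition_accuracy` and this data layout are hypothetical, and the counts in the usage example are placeholders rather than measured values.

```python
from statistics import mean


def recognition_accuracy(correct_counts, trials=10):
    """Summarize recognition results for one gesture.

    correct_counts: correct detections per student, each between 0 and trials.
    Returns (average correct detections per student, accuracy in percent).
    """
    if not correct_counts:
        raise ValueError("no results given")
    if any(count < 0 or count > trials for count in correct_counts):
        raise ValueError("count outside 0..trials")
    average = mean(correct_counts)
    return average, 100.0 * average / trials


# Placeholder counts for three students.
average, percent = recognition_accuracy([10, 9, 8])
print(average, percent)
```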

6.3.2. The Accuracy Results of Hand Gesture Recognition

Table 7 displays the accuracy results of the best group (Gesture 12, Gesture 4, Gesture 2, Gesture 1, and Gesture 29) and the second-best group (Gesture 8, Gesture 3, Gesture 11, Gesture 7, and Gesture 21) with the average and percentage for each gesture. The results show that the accuracy of correctly detecting each defined hand gesture in the best group is higher than in the second-best group. Some gestures in the second-best group, such as Gesture 3, Gesture 11, and Gesture 7, resulted in lower rates than the others. These gestures can fail to be correctly captured by the camera in real time for the following reasons.

Table 7.

The accuracy results of hand gesture recognition.

- Low-lighting conditions: Poor lighting reduces the camera’s ability to clearly capture hand landmarks and edges, making it difficult for the model to detect gestures accurately.

- Camera resolution: Low-resolution cameras may not capture fine details of the hand, particularly the position and orientation of fingertips, which can lead to inaccurate detection.

- Occlusion of hand parts: When fingers overlap or the hand is partially hidden, the algorithm may fail to detect all necessary landmarks, resulting in missed or incorrect gesture interpretation.

- Inconsistent hand position or distance from the camera: If the hand is too close or too far from the camera, it may fall outside the optimal detection range, making it harder for the model to track it accurately.

However, we can improve the gesture recognition reliability as follows:

- Ensure good, consistent lighting and minimal background noise.

- Use a high-resolution, high-frame-rate camera.

- Minimize hand occlusions and keep gestures within a moderate distance from the camera.

- Use optimized hardware for faster processing in real-time applications.

6.4. User Experience Questionnaire (UEQ) Results

Finally, we evaluate the user experience of the exergame system with the User Experience Questionnaire (UEQ) using the best hand gestures. The UEQ includes 26 questions which can be seen in [38].

6.4.1. Six Scales in UEQ

The UEQ consists of the following six scale values [39]:

- Attractiveness: The exergame system looks attractive, enjoyable, friendly, and pleasant.

- Perspicuity: The exergame system is easy to understand, clear, simple, and easy to learn.

- Efficiency: The user can perform tasks with the system fast, efficiently, and in a pragmatic way.

- Dependability: The interaction with the exergame system is predictable, secure, and meets expectations. The system supports the user in performing the tasks.

- Stimulation: Using the exergame system is interesting, exciting, and motivating.

- Novelty: The exergame system is innovative, inventive, and creatively designed.

6.4.2. UEQ Results

Figure 8 shows the UEQ results that are calculated with the responses from the students. The results show that Attractiveness, Perspicuity, Efficiency, and Stimulation are Excellent, and Dependability and Novelty are Good. Hence, the results confirm the validity of the proposed rehabilitation exergame system with the best hand gestures.

Figure 8.

UEQ results for best hand gestures.

6.5. Discussions on Usage in Practical Applications

Here, we discuss the use of the proposed hand gestures in practical application systems. In future works, we will study their implementations and evaluations.

6.5.1. Smart Advertising Display

In [40], an interactive advertising display system based on the user’s interest recognitions is studied. It utilizes YOLOv7 Pose for the feature detection and the pose estimation based on angular relationships. SVM is employed to determine which grid region of the advertising display the subject is currently focused on. However, this proposal limits the number of selections on the display, because the detection resolution for grids is low. Instead, the proposed hand gestures using finger open/closed states can be used to exclude this limitation to extend the number of selected displaying grids by defining the necessary number of hand gestures using different combinations of fingers.

6.5.2. Exercise and Performance Learning Assistant System

In [41,42], an Exercise and Performance Learning Assistant System (EPLAS) is studied to assist people in practicing exercises or learning performances, such as Yoga poses, by themselves at home. Using the open-source software OpenPose, the rating, arrow guide, and reaction-time functions were implemented to evaluate the user’s pose and point out the feature points to be improved. In [43], the difficulty level of Yoga poses is rated with OpenPose, which offers an objective reference to help Yoga self-practitioners choose appropriate poses based on their level. This objective difficulty level can prevent users from choosing poses that are too difficult for them and injuring themselves during self-practice.

This EPLAS system was implemented for practical use on a conventional personal computer (PC) and a GPU equipped with a camera. Since the rating function requires that the whole body of a user is detected by the camera, the user needs to stand at a distant position from the PC or the GPU. Thus, a remote control of the system by a user is important for its usability. Then, the proposed hand gestures can be used to realize it by defining the necessary hand gestures for operations. The user can control the system including selecting various difficulty-level Yoga poses by hand gestures.

7. Conclusions

This paper investigated appropriate hand gestures for controlling video games in the rehabilitation exergame system, where Mediapipe was adopted for real-time recognition of the gestures and Maze was adopted as a simple, easy video game. Comprehensive investigations using the System Usability Scale (SUS) and User Experience Questionnaire (UEQ) were conducted with 16 students at Andalas University, Indonesia. We also measured the recognition accuracy for each defined hand gesture performed by 30 students. The results confirm the validity of the hand gestures selected in the proposal. Moreover, the results indicated that the Maze game is easy to control with the best group of hand gestures. This implies that the best group gestures can be used to control other games, whereas the low-rated hand gestures make controlling the video game challenging.

In future works, we will use the best group hand gestures to control various video games in the rehabilitation exergame system. Additionally, we will investigate other suitable hand gestures for the system by consulting rehabilitation therapy experts. Then, we will enhance the exergame system by improving the user interface, considering hand rotations and/or movements, and installing other video games. Subsequently, we will continue our evaluation by extending the set of users to a more diverse population. Finally, we will study other practical applications of the hand gesture control function.

Furthermore, we consider the following points for real-world applications of hand gestures in a rehabilitation exergame system. First, collaboration with medical experts ensures that the selected gestures align with medical practice. The experts can recommend safe, appropriate gestures for specific medical conditions, such as MSDs, stroke recovery, hand rehabilitation, or motor function disorders. Depending on the patient’s needs, they can identify movements that improve range of motion, fine motor skills, or coordination. Next, conducting therapy through an exergame controlled by hand gestures can make therapy enjoyable and engaging, which matters because patients often find traditional rehabilitation repetitive and boring, leading to poor adherence. Finally, patients can continue rehabilitation independently at home with minimal equipment, e.g., a PC with a webcam, which makes the therapy accessible to patients in remote areas or those with limited mobility.

Author Contributions

Conceptualization, N.F.; software, R.H.; validation, K.C.B.; formal analysis, I.T.A.; investigation, R.H.; data curation, A.A.R.; writing—original draft, R.H.; writing—review and editing, N.F. and K.C.B.; visualization, C.-P.F.; supervision, N.F. All authors have read and agreed to the published version of this manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Ethical review and approval were waived for this study because human participants were involved only to provide hand gestures in the testing phase, in order to validate the feasibility of our developed system.

Informed Consent Statement

The authors would like to assure readers that all participants involved in this research were informed, and all photographs used were supplied voluntarily by the participants themselves. The authors have ensured that all ethical considerations, particularly regarding participant consent and data protection, have been rigorously adhered to. Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

The original contributions presented in the study are included in the article; further inquiries can be directed to the corresponding author.

Acknowledgments

We would like to thank all the colleagues in the Distributing System Laboratory, Okayama University, who were involved in this study.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Musculoskeletal Health. Available online: https://www.who.int/news-room/fact-sheets/detail/musculoskeletal-conditions (accessed on 11 May 2024).

- Gasibat, Q.; Simbak, N.B.; Aziz, A.; Petridis, L.; Tróznai, Z. Stretching exercises to prevent work-related musculoskeletal disorders: A review article. Am. J. Sport Sci. Med. 2017, 5, 27–37.

- Klein, S.; Chiu, K.; Clinch, J.; Liossi, C. Musculoskeletal pain in children and young people. In Managing Pain in Children and Young People: A Clinical Guide; Wiley: Hoboken, NJ, USA, 2024; pp. 147–169.

- Lohse, K.; Shirzad, N.; Verster, A.; Hodges, N.; Van der Loos, H.M. Video games and rehabilitation: Using design principles to enhance engagement in physical therapy. J. Neurol. Phys. Ther. 2013, 37, 166–175.

- Exergame. Available online: https://healthysd.gov/what-is-exergaming/ (accessed on 7 February 2024).

- Pereira, M.F.; Prahm, C.; Kolbenschlag, J.; Oliveira, E.; Rodrigues, N.F. A virtual reality serious game for hand rehabilitation therapy. In Proceedings of the 2020 IEEE 8th International Conference on Serious Games and Applications for Health (SeGAH), Vancouver, BC, Canada, 12–14 August 2020; pp. 1–7.

- Knight, J. The Importance of Hand Exercises. Available online: https://handandwristinstitute.com/the-importance-of-hand-exercises/ (accessed on 5 February 2024).

- Staiano, A.E.; Calvert, S.L. The promise of exergames as tools to measure physical health. Entertain. Comput. 2011, 2, 17–21.

- Macvean, A.; Robertson, J. Understanding exergame users’ physical activity, motivation and behavior over time. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, New York, NY, USA, 27 April 2013; pp. 1251–1260.

- Sween, J.; Wallington, S.F.; Sheppard, V.; Taylor, T.; Llanos, A.A.; Adams-Campbell, L.L. The role of exergaming in improving physical activity: A review. J. Phys. Act. Health 2014, 11, 864–870.

- Demers, M.; Martinie, O.; Winstein, C.; Robert, M.T. Active video games and low-cost virtual reality: An ideal therapeutic modality for children with physical disabilities during a global pandemic. Front. Neurol. 2020, 11, 601898.

- Xue, Y.; Zhao, L.; Xue, M.; Fu, J. Gesture interaction and augmented reality based hand rehabilitation supplementary system. In Proceedings of the 2018 IEEE 3rd Advanced Information Technology, Electronic and Automation Control Conference (IAEAC), Chongqing, China, 12–14 October 2018; pp. 2572–2576.

- Alfieri, F.M.; da Silva Dias, C.; de Oliveira, N.C.; Battistella, L.R. Gamification in musculoskeletal rehabilitation. Curr. Rev. Musculoskelet. Med. 2022, 15, 629–636.

- Pirbabaei, E.; Amiri, Z.; Sekhavat, Y.A.; Goljaryan, S. Exergames for hand rehabilitation in elders using Leap Motion Controller: A feasibility pilot study. Int. J. Hum.-Comput. Stud. 2023, 178, 103099.

- Mediapipe 0.10.11. Available online: https://pypi.org/project/mediapipe/ (accessed on 12 March 2024).

- Yeng, A.C.M.; Han, P.Y.; How, K.W.; Yin, O.S. Hand gesture controlled game for hand rehabilitation. In Proceedings of the International Conference on Computer, Information Technology and Intelligent Computing (CITIC 2022); Atlantis Press: Amsterdam, The Netherlands, 2022; pp. 205–215.

- Fonteles, J.H.; Serpa, Y.R.; Barbosa, R.G.; Rodrigues, M.A.F.; Alves, M.S.P.L. Gesture-controlled interactive musical game to practice hand therapy exercises and learn rhythm and melodic structures. In Proceedings of the 2018 IEEE 6th International Conference on Serious Games and Applications for Health (SeGAH), Vienna, Austria, 16–18 May 2018; pp. 1–8.

- Saman, O.; Stanciu, L. Hand mobility recovery through game controlled by gestures. In Proceedings of the 2019 IEEE 13th International Symposium on Applied Computational Intelligence and Informatics (SACI), Timisoara, Romania, 29–31 May 2019; pp. 241–244.

- Nasri, N.; Orts-Escolano, S.; Cazorla, M. An semg-controlled 3d game for rehabilitation therapies: Real-time time hand gesture recognition using deep learning techniques. Sensors 2020, 20, 6451.

- Farahanipad, F.; Nambiappan, H.R.; Jaiswal, A.; Kyrarini, M.; Makedon, F. HAND-REHA: Dynamic hand gesture recognition for game-based wrist rehabilitation. In Proceedings of the 13th ACM International Conference on PErvasive Technologies Related to Assistive Environments, Corfu, Greece, 30 June–3 July 2020; pp. 1–9.

- Sophiya, E.; Reddy, S.S. Hand gesture-driven gaming for effective rehabilitation and improved quality of life-a review. In Proceedings of the 2024 5th International Conference on Innovative Trends in Information Technology (ICITIIT), Kottayam, India, 15–16 March 2024; pp. 1–6.

- Oh, Y.; Yang, S. Defining exergames & exergaming. Proc. Meaningful Play 2010, 2010, 21–23.

- Brox, E.; Luque, L.F.; Evertsen, G.J.; Hernández, J.E.G. Exergames for elderly: Social exergames to persuade seniors to increase physical activity. In Proceedings of the 2011 5th International Conference on Pervasive Computing Technologies for Healthcare (PervasiveHealth) and Workshops, Dublin, Ireland, 23–26 May 2011; pp. 546–549.

- Huang, H.C.; Wong, M.K.; Lu, J.; Huang, W.F.; Teng, C.I. Can using exergames improve physical fitness? A 12-week randomized controlled trial. Comput. Hum. Behav. 2017, 70, 310–316.

- Yu, T.C.; Chiang, C.H.; Wu, P.T.; Wu, W.L.; Chu, I.H. Effects of exergames on physical fitness in middle-aged and older adults in Taiwan. Int. J. Environ. Res. Public Health 2020, 17, 2565.

- Pirovano, M.; Surer, E.; Mainetti, R.; Lanzi, P.L.; Borghese, N.A. Exergaming and rehabilitation: A methodology for the design of effective and safe therapeutic exergames. Entertain. Comput. 2016, 14, 55–65.

- Kaur, H.; Rani, J. A review: Study of various techniques of hand gesture recognition. In Proceedings of the 2016 IEEE 1st International Conference on Power Electronics, Intelligent Control and Energy Systems (ICPEICES), Delhi, India, 4–6 July 2016; pp. 1–5.

- Quan, C.; Liang, J. A simple and effective method for hand gesture recognition. In Proceedings of the 2016 International Conference on Network and Information Systems for Computers (ICNISC), Wuhan, China, 15–17 April 2016; pp. 302–305.

- Prema, S.; Deena, G.; Hemalatha, D.; Aruna, K.; Hashini, S. Gaming using different hand gestures using artificial neural network. EAI Endorsed Trans. Internet Things 2024, 10, 1–9.

- Eshitha, K.; Jose, S. Hand gesture recognition using artificial neural network. In Proceedings of the 2018 International Conference on Circuits and Systems in Digital Enterprise Technology (ICCSDET), Kottayam, India, 21–22 December 2018; pp. 1–5.

- Zhu, Y.; Yuan, B. Real-time hand gesture recognition with Kinect for playing racing video games. In Proceedings of the 2014 International Joint Conference on Neural Networks (IJCNN), Beijing, China, 6–11 July 2014; pp. 3240–3246.

- Kavana, K.; Suma, N. Recognization of hand gestures using Mediapipe hands. Int. Res. J. Mod. Eng. Technol. Sci. 2022, 4, 4149–4156.

- Google AI Blog, On-Device, Real-Time Hand Tracking with MediaPipe. Available online: https://ai.googleblog.com/2019/08/on-device-real-time-hand-tracking-with.html (accessed on 18 January 2024).

- MazeGame. Available online: https://www.sourcecodester.com/javascript/16517/simple-hunger-maze-game-javascript-free-source-code.html (accessed on 12 January 2024).

- Nef, T.; Chesham, A.; Schütz, N.; Botros, A.A.; Vanbellingen, T.; Burgunder, J.M.; Müllner, J.; Martin Müri, R.; Urwyler, P. Development and evaluation of maze-like puzzle games to assess cognitive and motor function in aging and neurodegenerative diseases. Front. Aging Neurosci. 2020, 12, 87.

- SUS: System Usability Scale. Available online: https://www.usability.gov/how-to-and-tools/methods/system-usability-scale.html (accessed on 10 January 2024).

- Brooke, J. SUS-A quick and dirty usability scale. Usability Eval. Ind. 1996, 189, 4–7.

- UEQ: User Experience Questionnaire. Available online: https://www.ueq-online.org/ (accessed on 1 July 2024).

- Schrepp, M. User experience questionnaire handbook. In All You Need to Know to Apply the UEQ Successfully in Your Project; ResearchGate: Berlin, Germany, 2015; pp. 1–16.

- Lee, Y.E.; Fan, C.P. Identification of user’s interests by deep-learning based pose estimation with angle relationship from keypoints for smart advertising displays. In Proceedings of the 2024 IEEE International Conference on Consumer Electronics-Asia (ICCE-Asia), Danang, Vietnam, 3–6 November 2024; pp. 1–3.

- Anggraini, I.T.; Basuki, A.; Funabiki, N.; Lu, X.; Fan, C.; Hsu, Y.C.; Lin, C.H. A proposal of exercise and performance learning assistant system for self-practice at home. Adv. Sci. Technol. Eng. Syst. J. 2020, 5, 1196–1203.

- Huang, W.C.; Shih, C.L.; Anggraini, I.T.; Funabiki, N.; Fan, C.P. Human’s reaction time-based score calculation of self-practice dynamic yoga system for user’s feedback by open pose and fuzzy rules. In Proceedings of the 2023 11th International Conference on Information and Education Technology (ICIET), Fujisawa, Japan, 18–20 March 2023; pp. 577–581.

- Shih, C.L.; Liu, J.Y.; Anggraini, I.T.; Xiao, Y.; Funabiki, N.; Fan, C.P. Difficulty evaluation of yoga poses by angular velocity and body area calculation for GPU-based yoga self-practice system. In Proceedings of the 2024 IEEE Gaming, Entertainment, and Media Conference (GEM), Turin, Italy, 5–7 June 2024; pp. 1–4.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).