Abstract

The detection of unmanned aerial vehicles (UAVs) is of great significance to public and communication security. Infrared detection is largely insensitive to environmental factors and can detect UAVs in complex environments. However, because infrared detection equipment is expensive and data collection is difficult, few infrared UAV images exist, which makes it difficult to train deep neural networks. In addition, infrared images contain background clutter and noise, such as heavy clouds and buildings, so both the signal-to-clutter ratio and the signal-to-noise ratio are low, and traditional methods struggle to detect UAVs. These challenges make infrared UAV detection a difficult task. To address them, this work draws on the visual processing mechanism of the human brain and proposes an effective framework for UAV detection in infrared images. The framework first determines the parameters of a continuous-coupled neural network (CCNN) from image statistics such as the standard deviation and mean. It then feeds the image into the CCNN, groups the pixels through iteration, obtains the segmentation result through dilation and erosion, and finally obtains the detection result through the minimum bounding rectangle. The experimental results show that, compared with existing state-of-the-art brain-inspired image-understanding methods, this framework achieves the best intersection over union (IoU) on UAV infrared images, with an average of 74.79% (up to 97.01%), and can effectively perform UAV detection. (The IoU is the area of overlap between the predicted segmentation and the label divided by the area of their union.)

1. Introduction

In recent years, unmanned aerial vehicles (UAVs) have been widely used in civilian and commercial applications. Because non-cooperative intrusions by civilian UAVs into low-altitude airspace occur frequently, UAV target detection technology has received widespread attention. Early UAV detection methods mainly included radar detection, radio frequency detection, photoelectric identification and tracking, and acoustic monitoring. However, the electromagnetic signals emitted by small UAVs are very limited [1,2], so they are difficult to detect with conventional radar, and conventional radar does not handle their Doppler signatures well [3]. With the development of artificial intelligence in image processing, image-recognition methods have made significant progress in UAV detection. These methods, however, require manually constructing a feature extractor based on the shape, color, and other characteristics of the target, so they are strongly affected by the background and place high demands on the environment. In practice, UAVs often need to be identified in complex environments, such as at night or in rain and snow. Small UAVs are not easily captured by cameras [4,5], which results in unclear features, and such methods cannot operate reliably in these environments.

Infrared detection is largely insensitive to environmental factors and therefore has a wide range of application scenarios; for example, it can capture targets at night. Compared with optical images, infrared images offer several advantages for UAV detection, such as better thermal sensitivity and all-weather detection capability [6,7]. Infrared imaging receives infrared radiation, so it does not require good lighting conditions and can be used at night. It also has strong reconnaissance ability: because infrared radiation has a thermal effect and can pass through clouds, infrared imaging can observe targets hidden behind clouds, independent of weather changes [8]. Therefore, using images collected by infrared thermal imagers instead of color images has become the main approach to target detection in special environments such as at night.

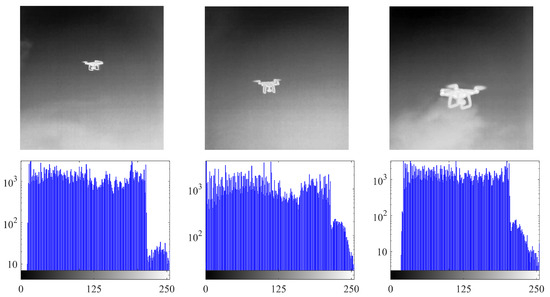

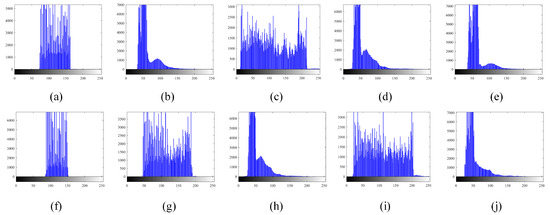

It is difficult to identify UAVs in infrared images using state-of-the-art deep learning methods or low-level image-processing methods, for two reasons. (1) Infrared detection equipment is expensive and data collection is difficult, so few infrared images of UAVs exist, which makes it difficult to train deep neural networks. (2) Infrared images contain background clutter and noise, such as heavy clouds and buildings, resulting in a low signal-to-clutter ratio and a low signal-to-noise ratio [9], so traditional methods struggle to detect UAVs. As shown in Figure 1, when clouds interfere, the histogram of the infrared image no longer has an obvious bimodal shape; instead, it may be nearly bimodal, unimodal, or multimodal.

Figure 1.

Infrared image and its histogram.

To solve these problems, this work draws inspiration from the visual processing mechanisms of mammals and introduces a model of the visual cortex of the human brain, the continuous-coupled neural network (CCNN), into infrared image processing. CCNN neurons encode the grayscale values of image pixels as neuronal firing frequencies [10], and target objects are identified by distinguishing the frequencies of different neural clusters. The higher the grayscale value of a pixel, the higher the frequency of the corresponding output signal, and pixels with similar grayscale values have similar frequencies. This processing reduces local gray-level differences in the image and compensates for small local discontinuities. However, no existing method uses the CCNN to process infrared images automatically. In this work, we propose a framework for automatically detecting UAVs in infrared images. The framework first sets the CCNN parameters from image statistics such as the standard deviation and mean. It then feeds the image into the CCNN, groups the pixels through iteration while using the image entropy to control the number of iterations, obtains the segmentation result through dilation and erosion, and finally obtains the UAV detection result through the minimum bounding rectangle. The framework identifies UAVs in infrared images automatically and avoids the difficulty that deep learning faces when only a few samples are available.

In summary, the main contributions of this work are listed as follows:

- (1) It introduces the primary visual cortex model into infrared image processing and analyzes the status characteristics of the CCNN in infrared image processing.

- (2) It proposes a new framework for the automatic detection of UAVs in infrared images. The framework configures the CCNN parameters automatically from the input image, groups the image pixel values through the iterative process of the CCNN, reconstructs the image from the output values, and controls the number of iterations through the image entropy.

- (3) The proposed detection framework was tested in complex environments, using infrared images collected against complex buildings and cloud cover, to verify its effectiveness. The average IoU of the framework on the UAV infrared images reached 74.79% (up to 97.01%), showing that it can effectively perform UAV detection.

2. Related Works

2.1. UAV Detection Method Based on Machine Learning

With the development of artificial intelligence in image processing, many researchers have made significant progress in UAV detection. Molchanov et al. extracted features based on the eigenvectors and eigenvalues of multi-dimensional scaling (MDS), a classic dimensionality-reduction method [11,12]. They trained linear and non-linear support vector machines (SVMs), which are binary classifiers whose basic form is the maximum-margin linear classifier in feature space, as well as a naive Bayes classifier. In a second test, the authors excluded some UAV models from training and found that the classifiers could still assign them to the fixed-wing, stationary-rotor, or helicopter classes with an accuracy of 87% to 100%. The main challenge was robust alignment of the micro-Doppler (m-D) signature: the proposed feature-extraction algorithm requires the signature to be properly aligned, and the alignment method does not account for discontinuities in the signature (e.g., when part of the signature is shadowed by another object), so such scenarios require a more robust alignment procedure [11]. Jahangir and Baker trained a binary decision tree model that raised the UAV detection probability to 88% and reduced the false alarm rate to 0 [13,14]. However, this model was trained on the Doppler characteristics of UAVs, and the electromagnetic signals emitted by small UAVs are very limited [1,2] and difficult to detect with conventional radar [3], so the method remains limited for UAV detection in special environments. Medaiyese et al. used several categories of wavelet transforms (the discrete wavelet transform, continuous wavelet transform, and wavelet scattering transform) to extract features from radio frequency (RF) signals and built models on these features. They used the wavelet scattering transform to extract signatures (scattergrams) from the steady state of the RF signals at a 30 dB signal-to-noise ratio (SNR), trained SqueezeNet on these scattergrams, and achieved an accuracy of 98.9% at a 10 dB SNR [15]. However, extracting features with several categories of wavelet transforms is complicated, and the RF signals radiated by UAVs are weak in some complex environments, which makes model construction very challenging.

However, these methods require a manually constructed feature extractor that extracts information according to the shape, color, and other characteristics of the target. Feature extraction is one of the more difficult steps in image pattern recognition; sources of difficulty include the presence of irrelevant information and the relationship of a feature set to a particular application [16]. This requires considerable domain expertise, is prone to poor generalization, and makes real-time detection difficult to achieve. The resulting classifier is also harder to interpret, and this tends to worsen as the number of features grows. Further, the prediction variability tends to increase, the classifier is sensitive to outliers, and there is no guarantee that it will perform as well on a new set of samples [17].

2.2. UAV Detection Method Based on Deep Learning

Deep learning has shown great promise in computer vision and pattern recognition [18,19], and deep neural networks are increasingly used for image segmentation [20] and image fusion [21]. Deep learning is, therefore, a promising means of detecting and identifying UAVs [18,22]. In [18], an object-detection algorithm based on YOLOv2 (YOLO, short for "you only look once", is a detector that predicts the categories and locations of the objects in an image in a single pass) was used to detect loaded and unloaded UAVs, reaching an average precision of 74.94%; this was the first time a YOLOv2-based algorithm was applied to loaded and unloaded UAV detection. However, the scenes in that dataset are relatively simple and free of interference such as noise, so the method still needs to be verified on more datasets [18]. Wang et al. proposed a convolutional neural network (CNN) with two heads [23]: one classifies an input patch of the range-Doppler (R-D) map as target-present or target-absent, and the other regresses the offset between the target and the patch center. Based on the network output, they developed a non-maximum suppression (NMS) mechanism, composed of probability-based initial recognition, distribution-density-based recognition, and voting-based regression, to control false alarms. Experiments on both simulated and real data showed that the method locates targets more accurately and achieves a much lower false alarm rate than the constant false alarm rate (CFAR) detector at a comparable detection rate. However, because the network input is a series of patches extracted from the R-D map, training and testing are time-consuming, which limits its practical application. In addition, algorithms such as R-CNN, Fast R-CNN, and Faster R-CNN are widely used in UAV detection [1,24].

However, the image-based methods above were all trained and tested on images collected by visible-light cameras and do not consider situations in which an optical lens cannot capture the UAV in a complex environment. As a result, they have limited room for improvement when detecting UAVs among clouds or at night. Although infrared images are largely insensitive to environmental factors, infrared detection equipment is relatively expensive and data collection is difficult, so few infrared UAV images exist, which makes it difficult to train deep neural networks on infrared images.

2.3. Brain-Inspired Computing

There are billions of neurons in the human brain. Each neuron receives its inputs through its dendrites [25]. The cell body integrates the incoming spikes received by the different dendritic branches and generates a spike when its membrane potential reaches a threshold. Spikes travel along the axon and reach other neurons via synapses [26,27,28].

Various types of brain-inspired models have been proposed to simulate the human brain faithfully. In recent years, spiking neural networks (SNNs) have attracted enormous research interest, and the number of SNN-related papers has been increasing [25]. For example, a variety of spiking neuron models have been proposed [10] to emulate spike generation at different levels of fidelity and computational cost, and many memristor-based models have been proposed [29,30] to simulate synapses [31].

More and more brain-inspired models are being used in computer vision. The pulse-coupled neural network (PCNN), proposed in the 1990s, is a third-generation artificial neural network constructed by simulating the activity of neurons in the mammalian visual cortex. Because the PCNN was developed from studies of mammalian physiological and visual characteristics, it has clear advantages in target segmentation [32].

Inspired by the primary visual cortex of mammals such as cats, PCNN models display synchronized oscillations and can process digital images without training. Over the past few decades, many studies [33,34,35] have shown that PCNN models perform better than other neuron models in image-processing tasks [36,37]. For example, Di et al. [36] performed medical image fusion based on rolling guidance filtering in the nonsubsampled contourlet transform (NSCT) domain and an adaptive PCNN, and Qi et al. [37] performed breast density segmentation based on the Morph-SPCNN model. However, PCNN models exhibit periodic behavior under a periodic stimulus [10], which is the main obstacle to using PCNNs to build a brain-like machine.

The continuous-coupled neural network (CCNN) is a new brain-like neural network that evolved from the PCNN. The difference is that the firing process in all PCNN models is deterministic and cannot realistically simulate neurons in the human brain, whereas the CCNN shows periodic behavior under constant stimulation and chaotic behavior [38] under periodic stimulation, and can therefore simulate human brain neurons more realistically [10].

However, there is currently no CCNN-based method for processing infrared images, and the CCNN requires its parameters to be adjusted manually during image processing. The lack of an automatic CCNN-based image-segmentation solution limits the application of the CCNN, especially to infrared images.

3. Method

In this section, we describe in detail a framework for UAV detection in infrared images that draws on the visual processing mechanisms of the human brain.

3.1. Continuous-Coupled Neural Network

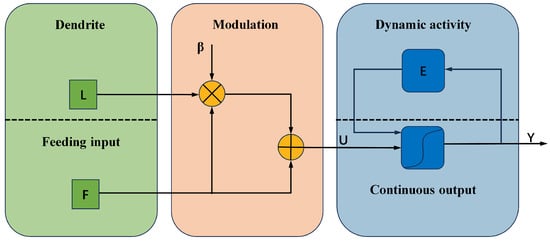

Unlike traditional artificial neural networks, the CCNN does not require any training and has only a single layer of laterally linked continuous-coupled neurons [39]. Each neuron consists of five main components: couple linking, feeding input, modulation product, dynamic activity, and continuous output [32].

On the dendritic tree, the feeding synapses receive the external stimulus, which is the main input signal, as well as the action potentials of neighboring neurons, whereas the linking synapses receive input only from adjacent neurons [32]. The interaction of these two kinds of synapses produces the membrane potential of the neuron, which is compared with a dynamic threshold potential to determine whether an action potential is generated.

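The CCNN is described as follows:

$$
\begin{aligned}
F_{ij}(n) &= e^{-\alpha_f} F_{ij}(n-1) + V_F \sum_{kl} M_{ijkl} Y_{kl}(n-1) + S_{ij},\\
L_{ij}(n) &= e^{-\alpha_l} L_{ij}(n-1) + V_L \sum_{kl} W_{ijkl} Y_{kl}(n-1),\\
U_{ij}(n) &= F_{ij}(n)\left[1 + \beta L_{ij}(n)\right],\\
E_{ij}(n) &= e^{-\alpha_e} E_{ij}(n-1) + V_E Y_{ij}(n-1),\\
Y_{ij}(n) &= \frac{1}{1 + e^{-\left(U_{ij}(n) - E_{ij}(n)\right)}},
\end{aligned}
$$

where the five main parts are the couple linking $L_{ij}(n)$, feeding input $F_{ij}(n)$, modulation product $U_{ij}(n)$, dynamic activity $E_{ij}(n)$, and continuous output $Y_{ij}(n)$ of the neuron at position $(i, j)$ in iteration $n$. The quantity $S_{ij}$ is the external feeding input received by the receptive field. The parameters $\alpha_f$ and $\alpha_l$ are exponential decay factors that record previous input states, and $V_F$ and $V_L$ are weighting factors modulating the action potentials of the surrounding neurons; $\alpha_e$ and $V_E$ play the corresponding roles for the dynamic activity. $M_{ijkl}$ and $W_{ijkl}$ denote the feeding and linking synaptic weights, respectively, and $\beta$ denotes the linking strength, which directly determines the contribution of $L_{ij}(n)$ to the modulation product $U_{ij}(n)$.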

Figure 2 presents an intuitive illustration of the five parts.

Figure 2.

Continuous-coupled neuron network.
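To make the iteration concrete, the following is a minimal NumPy sketch of a CCNN update, assuming the standard form in which $F$ and $L$ decay exponentially and accumulate the weighted outputs of the eight neighbors from the previous iteration, $U = F(1 + \beta L)$, the dynamic activity $E$ decays and accumulates $V_E$ times the neuron's previous output, and the output is the sigmoid of $U - E$. The 3 × 3 kernel values, the use of one kernel for both $M$ and $W$, and the zero initial states are illustrative assumptions rather than the authors' settings; `ccnn` and `neighbour_sum` are hypothetical helper names.

```python
import numpy as np

# Hypothetical 3x3 synaptic weight kernel (centre weight 0, so a neuron ignores itself).
# The same kernel is used here for both the feeding weights M and the linking weights W.
KERNEL = np.array([[0.5, 1.0, 0.5],
                   [1.0, 0.0, 1.0],
                   [0.5, 1.0, 0.5]])


def neighbour_sum(y: np.ndarray, kernel: np.ndarray = KERNEL) -> np.ndarray:
    """Weighted sum of each pixel's 8-neighbour outputs, zero-padded at the border."""
    h, w = y.shape
    padded = np.pad(y, 1, mode="constant")
    out = np.zeros((h, w))
    for di in range(3):
        for dj in range(3):
            out += kernel[di, dj] * padded[di:di + h, dj:dj + w]
    return out


def ccnn(S, n_iter, alpha_f, alpha_l, alpha_e, V_F, V_L, V_E, beta):
    """Run n_iter CCNN iterations on the 2-D stimulus S.

    Returns the final continuous output Y and the per-iteration histories of Y and E.
    All internal states start at zero (an assumption of this sketch).
    """
    S = np.asarray(S, dtype=float)
    F = np.zeros_like(S)  # feeding input F(n)
    L = np.zeros_like(S)  # couple linking L(n)
    E = np.zeros_like(S)  # dynamic activity E(n)
    Y = np.zeros_like(S)  # continuous output Y(n)
    Y_hist, E_hist = [], []
    for _ in range(n_iter):
        nb = neighbour_sum(Y)  # neighbours' outputs from the previous iteration
        F = np.exp(-alpha_f) * F + V_F * nb + S
        L = np.exp(-alpha_l) * L + V_L * nb
        U = F * (1.0 + beta * L)  # modulation product U(n)
        E = np.exp(-alpha_e) * E + V_E * Y  # Y still holds Y(n-1) at this point
        # Sigmoid output; clipping only guards np.exp against overflow.
        Y = 1.0 / (1.0 + np.exp(-np.clip(U - E, -50.0, 50.0)))
        Y_hist.append(Y.copy())
        E_hist.append(E.copy())
    return Y, Y_hist, E_hist
```

For example, `ccnn(img, 10, 0.7, 0.7, 0.7, 0.1, 0.1, 10.0, 0.2)` runs ten iterations on an image `img` normalized to [0, 1]; the returned histories of Y and E are the kind of per-iteration values that can be plotted to study when the network reaches a steady state.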

The CCNN model can be considered a self-organizing method, i.e., the network is provided with inputs but does not require a desired output. For image segmentation, however, the output of the CCNN often does not correspond well with the content of the image because of the internal parameter-setting mechanisms that adjust the behavior of the neurons [40].

Based on the research of Zhan et al. [41] and Chen et al. [42], we propose an automatic parameter-setting method for the CCNN tailored to infrared images. The method involves five adjustable parameters, all of which are set automatically from image statistics.

In this setting, the required image statistics are the Otsu threshold, the maximum intensity, and the standard deviation of the image. Three of the parameters have a particularly large impact on the CCNN: the larger the linking strength, the more strongly a neuron is influenced by the outputs of its eight neighbors, while larger values of either of the other two influential parameters lower the segmentation accuracy.
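Since the setting rule builds on Chen et al. [42], the sketch below assumes the simplified-PCNN (SPCNN) rules from that work: $\alpha_f = \ln(1/\sigma(S))$, $\beta V_L = (S_{max}/S' - 1)/6$, $V_E = e^{-\alpha_f} + 1 + 6\beta V_L$, and $\alpha_e = \ln\!\big(V_E / [S'(1 - e^{-3\alpha_f})/(1 - e^{-\alpha_f}) + 6\beta V_L e^{-\alpha_f}]\big)$, where $S'$ is the Otsu threshold, $S_{max}$ the maximum intensity, and $\sigma(S)$ the standard deviation of the normalized image. Whether the authors use exactly these expressions is an assumption, as is fixing $V_L = 1$; these rules also leave the CCNN's $\alpha_l$ and $V_F$ to be chosen separately. `otsu_threshold` and `auto_params` are hypothetical helper names.

```python
import numpy as np


def otsu_threshold(img: np.ndarray, bins: int = 256) -> float:
    """Otsu threshold of an image whose intensities lie in [0, 1]."""
    hist, edges = np.histogram(img, bins=bins, range=(0.0, 1.0))
    p = hist / hist.sum()
    centers = (edges[:-1] + edges[1:]) / 2
    omega = np.cumsum(p)         # probability mass of the lower class
    mu = np.cumsum(p * centers)  # cumulative mean of the lower class
    mu_t = mu[-1]                # global mean
    denom = omega * (1.0 - omega)
    with np.errstate(divide="ignore", invalid="ignore"):
        between = np.where(denom > 0, (mu_t * omega - mu) ** 2 / denom, 0.0)
    k = int(np.argmax(between))  # index of the last bin in the lower class
    return float(edges[k + 1])


def auto_params(S: np.ndarray, V_L: float = 1.0) -> dict:
    """Automatic parameter setting, assuming the SPCNN rules of Chen et al."""
    S = np.asarray(S, dtype=float)
    S = (S - S.min()) / (S.max() - S.min())  # normalize intensities to [0, 1]
    sigma = S.std()                          # standard deviation sigma(S)
    s_otsu = otsu_threshold(S)               # Otsu threshold S'
    s_max = S.max()                          # maximum intensity (1 after normalization)
    alpha_f = np.log(1.0 / sigma)
    beta = (s_max / s_otsu - 1.0) / (6.0 * V_L)
    V_E = np.exp(-alpha_f) + 1.0 + 6.0 * beta * V_L
    denom = (s_otsu * (1.0 - np.exp(-3.0 * alpha_f)) / (1.0 - np.exp(-alpha_f))
             + 6.0 * beta * V_L * np.exp(-alpha_f))
    alpha_e = np.log(V_E / denom)
    return {"alpha_f": alpha_f, "beta": beta, "V_L": V_L,
            "V_E": V_E, "alpha_e": alpha_e, "otsu": s_otsu}
```

The design keeps all parameters a function of three cheap image statistics, which is what allows the network to run without manual tuning; a low Otsu threshold relative to the maximum intensity, for instance, yields a large linking strength.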

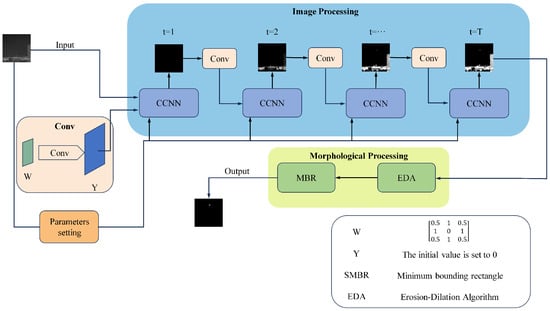

3.2. Image-Processing Framework

Figure 3 shows the basic framework for segmenting UAVs in infrared images with the CCNN model. The CCNN, which simulates the visual cortex of the human brain with simplified parameters, processes the infrared image, and morphological operations such as dilation and erosion are then used to remove the background and segment the UAV.

Figure 3.

Image-processing framework of UAVs in infrared images.

3.2.1. Erosion–Dilation Algorithm

During erosion, the output gray value at each position is the minimum of the values in a rectangular neighborhood of that position. Consequently, the image after erosion is darker overall than the original image [43]: bright regions shrink and dark regions grow. Dilation is the dual operation, which takes the neighborhood maximum, so bright objects grow and dark objects shrink.
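A minimal NumPy sketch of the neighborhood minimum and maximum described above is given below. The 3 × 3 square window, edge padding at the border, and the opening and closing combinations are illustrative assumptions; the text does not specify the window size or the order in which the two operations are applied. The function names are hypothetical.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def grey_erode(img: np.ndarray, size: int = 3) -> np.ndarray:
    """Grayscale erosion: minimum over a size x size rectangular neighborhood."""
    r = size // 2
    padded = np.pad(img, r, mode="edge")
    return sliding_window_view(padded, (size, size)).min(axis=(-2, -1))


def grey_dilate(img: np.ndarray, size: int = 3) -> np.ndarray:
    """Grayscale dilation: maximum over a size x size rectangular neighborhood."""
    r = size // 2
    padded = np.pad(img, r, mode="edge")
    return sliding_window_view(padded, (size, size)).max(axis=(-2, -1))


def opening(img: np.ndarray, size: int = 3) -> np.ndarray:
    """Erosion then dilation: removes bright specks smaller than the window."""
    return grey_dilate(grey_erode(img, size), size)


def closing(img: np.ndarray, size: int = 3) -> np.ndarray:
    """Dilation then erosion: fills dark holes smaller than the window."""
    return grey_erode(grey_dilate(img, size), size)
```

With a 3 × 3 window, an isolated bright pixel disappears after opening, while a 3 × 3 bright block survives unchanged, which is why opening suits the removal of small clutter around a larger target.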

3.2.2. Minimum Bounding Rectangle

After the segmentation result is obtained, the final result is produced through the minimum bounding rectangle (MBR). Specifically, the UAV is identified in the segmented image as the largest connected region [44], and its centroid is then calculated to determine the bounding box.
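The step can be sketched as follows: label 8-connected foreground regions with a breadth-first search, keep the largest one, and report its centroid together with its enclosing rectangle. The axis-aligned box and the 8-connectivity are assumptions of this sketch (a minimum bounding rectangle may also be rotated), and `largest_component_box` is a hypothetical name.

```python
from collections import deque

import numpy as np


def largest_component_box(mask):
    """Return (centroid, box) of the largest 8-connected foreground region.

    box = (row_min, col_min, row_max, col_max), axis-aligned and inclusive.
    Returns None if the mask contains no foreground pixels.
    """
    mask = np.asarray(mask, dtype=bool)
    h, w = mask.shape
    seen = np.zeros((h, w), dtype=bool)
    best = []
    for i in range(h):
        for j in range(w):
            if not mask[i, j] or seen[i, j]:
                continue
            # Breadth-first search over the 8-neighborhood from this seed pixel.
            component, queue = [], deque([(i, j)])
            seen[i, j] = True
            while queue:
                y, x = queue.popleft()
                component.append((y, x))
                for dy in (-1, 0, 1):
                    for dx in (-1, 0, 1):
                        ny, nx = y + dy, x + dx
                        if 0 <= ny < h and 0 <= nx < w and mask[ny, nx] and not seen[ny, nx]:
                            seen[ny, nx] = True
                            queue.append((ny, nx))
            if len(component) > len(best):
                best = component
    if not best:
        return None
    rows = np.array([p[0] for p in best])
    cols = np.array([p[1] for p in best])
    centroid = (float(rows.mean()), float(cols.mean()))
    box = (int(rows.min()), int(cols.min()), int(rows.max()), int(cols.max()))
    return centroid, box
```

Keeping only the largest region is a simple way to discard residual clutter that survives the morphological step, at the cost of missing a second target if one is present.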

4. Experiment

In this section, we conduct experiments on infrared images and compare the results with state-of-the-art low-level image-processing methods.

4.1. Dataset

First, we used a handheld infrared thermal-imaging device to collect some UAV data at night. The equipment parameters were as follows:

The IR resolution was 640 × 512 px; the pixel size was 12 μm; the focal length of the infrared objective lens was 50 mm; the field of view (FOV) was ; the focal length adaptation range was 50 m .

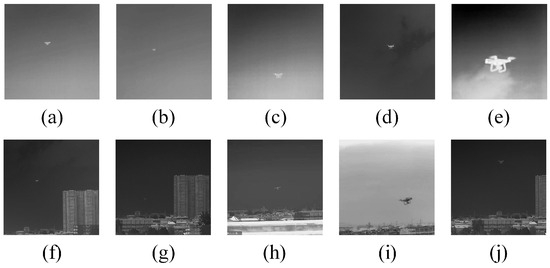

We collected a total of 19 UAV infrared images and divided them into two categories: high-altitude UAVs and low-altitude UAVs with buildings in the background. All images were labeled manually. Figure 4 depicts five samples from each category: Images 1–5 were taken when the UAV was flying at a high altitude, and Images 6–10 were taken when it was flying at a low altitude. The parameter settings of the experiment were the same as those described in Section 3.

Figure 4.

High-flying-height images: (a) Image 1, (b) Image 2, (c) Image 3, (d) Image 4, and (e) Image 5. Low-flying-height images: (f) Image 6, (g) Image 7, (h) Image 8, (i) Image 9, and (j) Image 10.

4.2. Experimental Results

The results of our model were then compared with those of other low-level image-processing methods, namely the PCNN, Otsu thresholding, fuzzy c-means (FCM), the iteration method, and the 2-mode method.

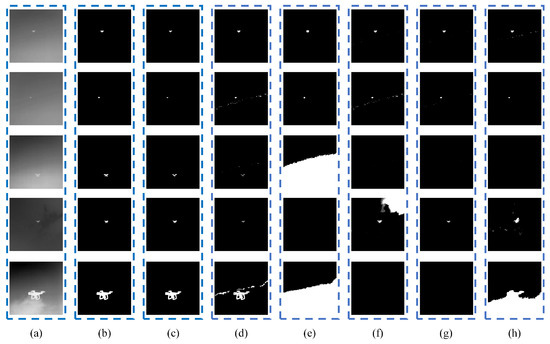

Figure 5 shows the experiments on data captured at a high flight altitude, where the background interference consisted of clouds.

Figure 5.

Comparative results obtained by different methods when the background is clouds: (a) an original image, (b) results of manual labeling, (c) results of the CCNN, (d) results of the PCNN, (e) results of FCM, (f) results of Otsu, (g) results of iteration, and (h) results of 2-mode.

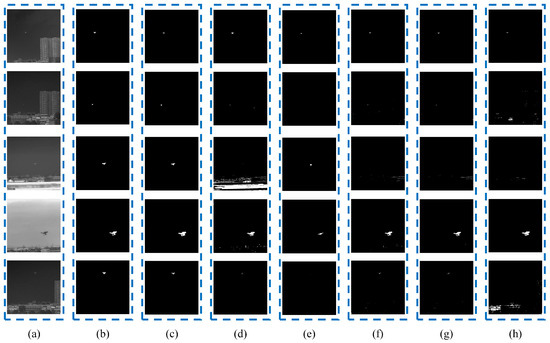

Figure 6 shows the experiments on data captured at a low flight altitude, where the background interference consisted of buildings.

Figure 6.

Comparative results obtained by different methods when the background is buildings: (a) an original image, (b) results of manual labeling, (c) results of the CCNN, (d) results of the PCNN, (e) results of FCM, (f) results of Otsu, (g) results of iteration, and (h) results of 2-mode.

Figure 7 shows the histograms of the original infrared images. Although the histograms are irregularly shaped (bimodal or nearly bimodal, unimodal, or multimodal), our proposed model effectively extracted the targets from the background, as shown in Figure 5 and Figure 6.

Figure 7.

Histograms of experimental images: (a) Image 1, (b) Image 2, (c) Image 3, (d) Image 4, (e) Image 5, (f) Image 6, (g) Image 7, (h) Image 8, (i) Image 9, and (j) Image 10.

4.3. Performance Measure

Because this research needs to identify UAVs in complex environments, we used the intersection over union (IoU) [45] to evaluate the performance of the segmentation methods. The IoU is the area of overlap between the predicted segmentation and the label divided by the area of their union. It ranges from 0 to 1 (0–100%), where 0 means no overlap and 1 means perfectly overlapping segmentations. It is calculated as:

$$
\mathrm{IoU} = \frac{|P \cap G|}{|P \cup G|} = \frac{TP}{TP + FP + FN},
$$

where $P$ is the predicted segmentation and $G$ is the label. In the segmentation task, the pixels can be divided into true positive (TP), false positive (FP), true negative (TN), and false negative (FN) samples; TP, FP, and FN denote the numbers of the corresponding samples.
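The metric can be computed directly from binary masks by counting TP, FP, and FN pixels, as in the minimal sketch below. Returning 1.0 when both masks are empty is a convention assumed here, not one stated in the text; `iou` is a hypothetical helper name.

```python
import numpy as np


def iou(pred, label) -> float:
    """IoU = TP / (TP + FP + FN) for two binary masks of the same shape."""
    pred = np.asarray(pred, dtype=bool)
    label = np.asarray(label, dtype=bool)
    tp = np.logical_and(pred, label).sum()   # predicted and labeled
    fp = np.logical_and(pred, ~label).sum()  # predicted but not labeled
    fn = np.logical_and(~pred, label).sum()  # labeled but missed
    denom = tp + fp + fn
    # Assumed convention: two empty masks count as a perfect match.
    return float(tp / denom) if denom else 1.0
```

For instance, if the prediction and the label each cover two pixels and share one, then TP = 1, FP = 1, and FN = 1, giving an IoU of 1/3.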

Table 1 lists the performance metrics of each algorithm. Our model achieved the highest IoU, indicating that it outperformed the other methods in segmentation performance.

Table 1.

IoU of the above Images 1–10.

After testing on all the data, we found that the CCNN achieved the highest average IoU, reaching 74.79%; the PCNN, iteration, Otsu, and bimodal (2-mode) methods performed comparably, with average IoUs of 46.96%, 41.11%, 40.61%, and 35.50%, respectively; and FCM performed worst, at 22.56%.

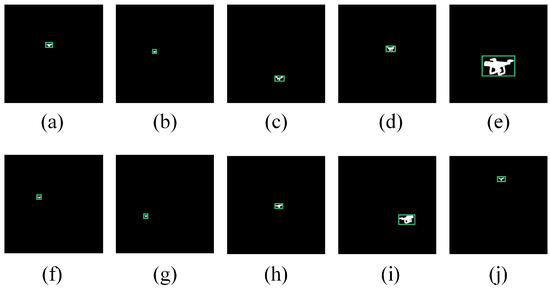

4.4. Detection Results

After obtaining the segmentation results, we applied erosion, dilation, and the minimum bounding rectangle algorithm to obtain the detection results, which are shown in Figure 8.

Figure 8.

Final results: (a) Image 1, (b) Image 2, (c) Image 3, (d) Image 4, (e) Image 5, (f) Image 6, (g) Image 7, (h) Image 8, (i) Image 9, and (j) Image 10.

4.5. Status Characteristics

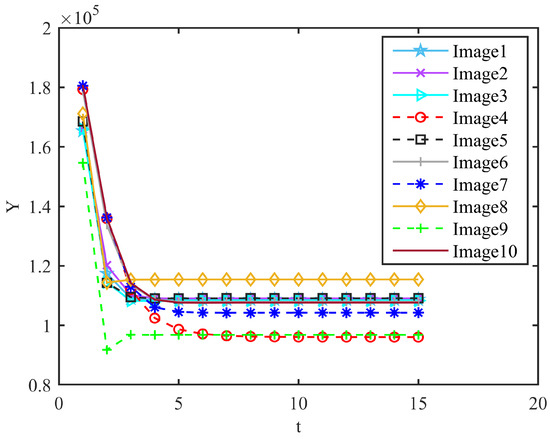

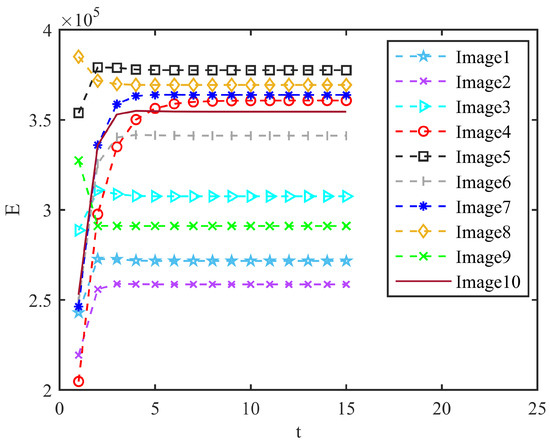

To study the status characteristics of the CCNN, we recorded the values of Y and E at different iterations, where t is the iteration number of the CCNN. The number of iterations needed to reach a steady state differed between images, but the network generally stabilized after 6 to 7 iterations, as shown in Figure 9 and Figure 10.

Figure 9.

Value of Y during CCNN iteration.

Figure 10.

Value of E during CCNN iteration.

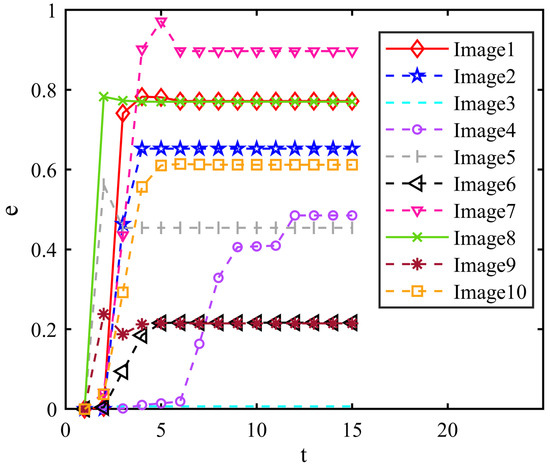

We used the change in image entropy to decide whether to continue iterating. As shown in Figure 11, the entropy of all images remained constant once the number of iterations reached 15.

Figure 11.

Value of e during CCNN iteration.
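The entropy-based stopping rule can be sketched as follows: compute the Shannon entropy of each iteration's output histogram and stop once it no longer changes. The 256-bin histogram over [0, 1], the tolerance, and the iteration cap are illustrative assumptions, as is applying the entropy to the network output rather than to another intermediate image; `image_entropy` and `stopping_iteration` are hypothetical names.

```python
import numpy as np


def image_entropy(img: np.ndarray, bins: int = 256) -> float:
    """Shannon entropy (in bits) of the intensity histogram of an image in [0, 1]."""
    hist, _ = np.histogram(img, bins=bins, range=(0.0, 1.0))
    p = hist[hist > 0] / hist.sum()
    return float(-(p * np.log2(p)).sum())


def stopping_iteration(outputs, tol: float = 1e-6, max_iter: int = 50) -> int:
    """Return the 1-based iteration at which the output entropy stops changing.

    `outputs` is any iterable of per-iteration output images (e.g., CCNN outputs).
    Stops when |H(n) - H(n-1)| < tol, or after max_iter iterations.
    """
    prev, n = None, 0
    for n, y in enumerate(outputs, start=1):
        h = image_entropy(y)
        if prev is not None and abs(h - prev) < tol:
            return n
        if n >= max_iter:
            return n
        prev = h
    return n
```

Because the rule only needs the current and previous entropy, it can be evaluated inside the iteration loop without storing the full output history.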

5. Limitations and Future Work

Although this work provided a breakthrough for the task of detecting UAVs in infrared images, a few limitations remain, which future studies should address:

- (1) The results showed that the model still misses some detections. In the future, we hope to introduce structural information about the UAV to better detect all of its parts.

- (2) Designing an algorithm for video tasks based on the characteristics of the CCNN model, so as to achieve real-time detection, is another important direction for future work.

- (3) In this work, we used the CCNN to process infrared images and detect UAVs. In the future, we will work on hardware implementations of the CCNN based on memristive synaptic devices.

6. Conclusions

In this work, we designed an automatic detection framework for infrared UAV images based on the CCNN. The framework processes infrared images without learning, which avoids the problem of insufficient training data in deep learning, and all parameters are acquired automatically in a self-adaptive way. It segments infrared images within a small number of iterations and achieves high performance, which is of practical value for small-target recognition in infrared images.

Author Contributions

Conceptualization, Z.Y., J.L. (Jing Lian) and J.L. (Jizhao Liu); methodology, Z.Y., J.L. (Jing Lian) and J.L. (Jizhao Liu); software, Z.Y. and J.L. (Jizhao Liu); validation, Z.Y., J.L. (Jing Lian) and J.L. (Jizhao Liu); formal analysis, J.L. (Jing Lian) and J.L. (Jizhao Liu); investigation, Z.Y., J.L. (Jing Lian) and J.L. (Jizhao Liu); resources, Z.Y., J.L. (Jing Lian) and J.L. (Jizhao Liu); data curation, Z.Y.; writing—original draft preparation, Z.Y. and J.L. (Jizhao Liu); writing—review and editing, Z.Y., J.L. (Jing Lian) and J.L. (Jizhao Liu); visualization, Z.Y.; supervision, J.L. (Jizhao Liu); project administration, J.L. (Jizhao Liu); funding acquisition, J.L. (Jizhao Liu). All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded in part by the Natural Science Foundation of Gansu Province under Grant 21JR7RA510.

Data Availability Statement

The data that support the findings of this study are available from the corresponding author upon reasonable request.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Singha, S.; Aydin, B. Automated Drone Detection Using YOLOv4. Drones 2021, 5, 95. [Google Scholar] [CrossRef]

- Behera, D.K.; Bazil Raj, A. Drone Detection and Classification using Deep Learning. In Proceedings of the 2020 4th International Conference on Intelligent Computing and Control Systems (ICICCS), Madurai, India, 13–15 May 2020; pp. 1012–1016. [Google Scholar] [CrossRef]

- Shi, Q.; Li, J. Objects Detection of UAV for Anti-UAV Based on YOLOv4. In Proceedings of the 2020 IEEE 2nd International Conference on Civil Aviation Safety and Information Technology ICCASIT, Weihai, China, 14–16 October 2020; pp. 1048–1052. [Google Scholar] [CrossRef]

- Memon, S.A.; Ullah, I. Detection and tracking of the trajectories of dynamic UAVs in restricted and cluttered environment. Expert Syst. Appl. 2021, 183, 115309. [Google Scholar] [CrossRef]

- Memon, S.A.; Son, H.; Kim, W.G.; Khan, A.M.; Shahzad, M.; Khan, U. Tracking Multiple Unmanned Aerial Vehicles through Occlusion in Low-Altitude Airspace. Drones 2023, 7, 241. [Google Scholar] [CrossRef]

- Jiang, C.; Ren, H.; Ye, X.; Zhu, J.; Zeng, H.; Nan, Y.; Sun, M.; Ren, X.; Huo, H. Object detection from UAV thermal infrared images and videos using YOLO models. Int. J. Appl. Earth Obs. Geoinf. 2022, 112, 102912. [Google Scholar] [CrossRef]

- Jiang, B.; Ma, X.; Lu, Y.; Li, Y.; Feng, L.; Shi, Z. Ship detection in spaceborne infrared images based on Convolutional Neural Networks and synthetic targets. Infrared Phys. Technol. 2019, 97, 229–234. [Google Scholar] [CrossRef]

- Lin, Z.; Huang, M.; Zhou, Q. Infrared small target detection based on YOLO v4. J. Phys. Conf. Ser. 2023, 2450, 012019. [Google Scholar] [CrossRef]

- Fang, H.; Xia, M.; Zhou, G.; Chang, Y.; Yan, L. Infrared Small UAV Target Detection Based on Residual Image Prediction via Global and Local Dilated Residual Networks. IEEE Geosci. Remote Sens. Lett. 2022, 19, 1–5. [Google Scholar] [CrossRef]

- Liu, J.; Lian, J.; Sprott, J.C.; Liu, Q.; Ma, Y. The Butterfly Effect in Primary Visual Cortex. IEEE Trans. Comput. 2022, 71, 2803–2815. [Google Scholar] [CrossRef]

- Molchanov, P.; Harmanny, R.I.; de Wit, J.J.; Egiazarian, K.; Astola, J. Classification of small UAVs and birds by micro-Doppler signatures. Int. J. Microw. Wirel. Technol. 2014, 6, 435–444. [Google Scholar] [CrossRef]

- Taha, B.; Shoufan, A. Machine Learning-Based Drone Detection and Classification: State-of-the-Art in Research. IEEE Access 2019, 7, 138669–138682. [Google Scholar] [CrossRef]

- Jahangir, M.; Baker, C.J. Extended dwell Doppler characteristics of birds and micro-UAS at l-band. In Proceedings of the 2017 18th International Radar Symposium (IRS), Prague, Czech Republic, 28–30 June 2017; pp. 1–10. [Google Scholar] [CrossRef]

- Jahangir, M.; Baker, C.J.; Oswald, G.A. Doppler characteristics of micro-drones with L-Band multibeam staring radar. In Proceedings of the 2017 IEEE Radar Conference (RadarConf), Seattle, WA, USA, 8–12 May 2017; pp. 1052–1057.

- Medaiyese, O.O.; Ezuma, M.; Lauf, A.P.; Guvenc, I. Wavelet transform analytics for RF-based UAV detection and identification system using machine learning. Pervasive Mob. Comput. 2022, 82, 101569.

- Hall, E.; Kruger, R.; Dwyer, S.; Hall, D.; Mclaren, R.; Lodwick, G. A Survey of Preprocessing and Feature Extraction Techniques for Radiographic Images. IEEE Trans. Comput. 1971, C-20, 1032–1044.

- Loew, M.H. Feature extraction. Handb. Med. Imaging 2000, 2, 273–342.

- Seidaliyeva, U.; Alduraibi, M.; Ilipbayeva, L.; Almagambetov, A. Detection of loaded and unloaded UAV using deep neural network. In Proceedings of the 2020 Fourth IEEE International Conference on Robotic Computing (IRC), Taichung, Taiwan, 9–11 November 2020; pp. 490–494.

- Dou, J.X.; Pan, A.Q.; Bao, R.; Mao, H.H.; Luo, L. Sampling through the lens of sequential decision making. arXiv 2022, arXiv:2208.08056.

- Zhu, H.; Zhang, M.; Zhang, X.; Zhang, L. Two-branch encoding and iterative attention decoding network for semantic segmentation. Neural Comput. Appl. 2020, 33, 5151–5166.

- Zhu, H.; Wang, K.; Zhang, Z.; Liu, Y.; Jiang, W. Low-light image enhancement network with decomposition and adaptive information fusion. Neural Comput. Appl. 2022, 34, 7733–7748.

- Dou, J.X.; Bao, R.; Song, S.; Yang, S.; Zhang, Y.; Liang, P.P.; Mao, H.H. Demystify the Gravity Well in the Optimization Landscape (student abstract). In Proceedings of the AAAI Conference on Artificial Intelligence, Washington, DC, USA, 7–14 February 2023.

- Wang, C.; Tian, J.; Cao, J.; Wang, X. Deep Learning-Based UAV Detection in Pulse-Doppler Radar. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–12.

- Dou, J.X.; Mao, H.; Bao, R.; Liang, P.P.; Tan, X.; Zhang, S.; Jia, M.; Zhou, P.; Mao, Z.H. The Measurement of Knowledge in Knowledge Graphs. In Proceedings of the AAAI 2023 Workshop on Representation Learning for Responsible Human-Centric AI (R2HCAI); Association for the Advancement of Artificial Intelligence (AAAI): Washington, DC, USA, 2023.

- Yi, Z.; Lian, J.; Liu, Q.; Zhu, H.; Liang, D.; Liu, J. Learning rules in spiking neural networks: A survey. Neurocomputing 2023, 531, 163–179.

- Di, J.; Ren, L.; Liu, J.; Guo, W.; Zhange, H.; Liu, Q.; Lian, J. FDNet: An end-to-end fusion decomposition network for infrared and visible images. PLOS ONE 2023, 18, e0290231.

- Ma, T.; Mou, J.; Al-Barakati, A.A.; Jahanshahi, H.; Miao, M. Hidden dynamics of memristor-coupled neurons with multi-stability and multi-transient hyperchaotic behavior. Phys. Scr. 2023, 98, 105202.

- Ma, T.; Mou, J.; Banerjee, S.; Cao, Y. Analysis of the functional behavior of fractional-order discrete neuron under electromagnetic radiation. Chaos Solitons Fractals 2023, 176, 114113.

- Zhang, X.R.; Wang, X.Y.; Ge, Z.Y.; Li, Z.L.; Wu, M.Y.; Borah, S. A Novel Memristive Neural Network Circuit and Its Application in Character Recognition. Micromachines 2022, 12, 2074.

- Wang, X.Y.; Dong, C.T.; Zhou, P.F.; Nandi, S.K.; Nath, S.K.; Elliman, R.G.; Iu, H.H.C.; Kang, S.M.; Eshraghian, J.K. Low-Variance Memristor-Based Multi-Level Ternary Combinational Logic. IEEE Trans. Circuits Syst. I Regul. Pap. 2022, 69, 2423–2434.

- Ding, S.; Wang, N.; Bao, H.; Chen, B.; Wu, H.; Xu, Q. Memristor synapse-coupled piecewise-linear simplified Hopfield neural network: Dynamics analysis and circuit implementation. Chaos Solitons Fractals 2023, 166, 112899.

- Lian, J.; Yang, Z.; Liu, J.; Sun, W.; Zheng, L.; Du, X.; Yi, Z.; Shi, B.; Ma, Y. An overview of image segmentation based on pulse-coupled neural network. Arch. Comput. Methods Eng. 2021, 28, 387–403.

- Lian, J.; Ma, Y.; Ma, Y.; Shi, B.; Liu, J.; Yang, Z.; Guo, Y. Automatic gallbladder and gallstone regions segmentation in ultrasound image. Int. J. Comput. Assist. Radiol. Surg. 2017, 12, 553–568.

- Qi, Y.; Yang, Z.; Lian, J.; Guo, Y.; Sun, W.; Liu, J.; Wang, R.; Ma, Y. A new heterogeneous neural network model and its application in image enhancement. Neurocomputing 2021, 440, 336–350.

- Lian, J.; Liu, J.; Yang, Z.; Qi, Y.; Zhang, H.; Zhang, M.; Ma, Y. A Pulse-Number-Adjustable MSPCNN and Its Image Enhancement Application. IEEE Access 2021, 9, 161069–161086.

- Di, J.; Guo, W.; Liu, J.; Lian, J.; Ren, L. Medical image fusion based on rolling guide filter and adaptive PCNN in NSCT domain. Appl. Res. Comput. Yingyong Yanjiu 2023, 40, 15374–15406.

- Qi, Y.; Yang, Z.; Lei, J.; Lian, J.; Liu, J.; Feng, W.; Ma, Y. Morph_SPCNN model and its application in breast density segmentation. Multimed. Tools Appl. 2020, 80, 2821–2845.

- Wang, X.Y.; Zhang, X.R.; Gao, M.; Tian, Y.Z.; Wang, C.H.; Iu, H.H. A Color Image Encryption Algorithm Based on Hash Table, Hilbert Curve and Hyper-Chaotic Synchronization. Mathematics 2023, 3, 567.

- Lian, J.; Shi, B.; Li, M.; Nan, Z.; Ma, Y. An automatic segmentation method of a parameter-adaptive PCNN for medical images. Int. J. Comput. Assist. Radiol. Surg. 2017, 12, 1511–1519.

- Zhou, D.; Zhou, H.; Gao, C.; Guo, Y. Simplified parameters model of PCNN and its application to image segmentation. Pattern Anal. Appl. 2015, 19, 939–951.

- Zhan, K.; Zhang, H.; Ma, Y. New Spiking Cortical Model for Invariant Texture Retrieval and Image Processing. IEEE Trans. Neural Netw. 2009, 20, 1980–1986.

- Chen, Y.; Park, S.K.; Ma, Y.; Ala, R. A New Automatic Parameter Setting Method of a Simplified PCNN for Image Segmentation. IEEE Trans. Neural Netw. 2011, 22, 880–892.

- Johnson, T.; Iacoviello, F.; Hayden, D.; Welsh, J.; Levison, P.; Shearing, P.; Bracewell, D. Packed bed compression visualisation and flow simulation using an erosion-dilation approach. J. Chromatogr. A 2020, 1611, 460601.

- Li, Z.; Guo, B.; Ren, X.; Liao, N.N. Vertical Interior Distance Ratio to Minimum Bounding Rectangle of a Shape. In Hybrid Intelligent Systems; Springer International Publishing: Berlin/Heidelberg, Germany, 2021; pp. 1–10.

- Cheng, B.; Girshick, R.; Dollár, P.; Berg, A.C.; Kirillov, A. Boundary IoU: Improving Object-Centric Image Segmentation Evaluation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 19–25 June 2021; pp. 15334–15342.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).