Abstract

High-accuracy size measurement is essential in the physical and biomedical sciences. Various sizing techniques are widely used for sorting colloidal materials, analyzing bioparticles, and monitoring the quality of food and the atmosphere. Most imaging-free methods, such as light scattering, measure the averaged size of particles and have difficulty sizing non-spherical particles. Image acquisition using a camera can observe individual nanoparticles in real time, but the accuracy is compromised by image defocusing and instrumental calibration. In this work, a machine learning-based pipeline is developed to facilitate high-accuracy imaging-based particle sizing. The pipeline consists of an image segmentation module for cell identification and a machine learning model for accurate pixel-to-size conversion. The results show significantly improved accuracy, indicating great potential for a wide range of applications in environmental sensing, biomedical diagnostics, and material characterization.

1. Introduction

High-accuracy size measurement is important for characterizing nanoscale and microscale particles. Various sizing techniques are widely used to sort colloidal materials [1], analyze natural bioparticles such as pollen [2], characterize cells [3,4,5,6], examine soil particles [7], monitor food quality during harvest [8], and assess air quality [9]. For instance, a gold standard for monitoring parasites in drinking water systems identifies different types of bioparticles by their sizes [5]. Commonly used particle sizing techniques include non-imaging-based techniques such as sieve analysis [10], static laser light scattering [11], dynamic light scattering [12], nanoparticle tracking analysis [13], and the time-of-transition (TOT) principle [14], as well as imaging-based techniques such as bright-field microscopy [15], fluorescent microscopy [16,17], and electron microscopy [18].

Sieve analysis [10,19] is a traditional method for measuring particle size. It uses stacked sieves with increasing aperture sizes to retain particles and generate a size distribution. Other non-imaging-based sizing techniques estimate the particle size indirectly. For instance, static laser scattering [11] measures the gyration size instead of the physical size based on the scattering pattern. Dynamic light scattering [12] retrieves the particle size from the correlation function of the scattered light signal, which essentially measures the diffusion coefficient of the particle. Nanoparticle tracking analysis [13], based on Brownian motion, also obtains the size from the diffusion coefficient of the particle. The non-imaging-based sizing techniques mentioned above cannot accurately determine the size of non-spherical particles because of the limits of the applied models. Conventional flow cytometry [20] determines the size according to the scattering pattern or other optical signatures, which requires prior calibration using particles of known size. Unfortunately, the calibration is not generalizable because particles of the same size may have substantially different optical signatures due to differences in materials, surface properties, internal structures, or fluorescent labels.

Imaging-based sizing techniques provide a direct measurement of the physical size of particles based on image analysis. Image analysis is an automated method that uses software to analyze large numbers of images. Images of microscale and nanoscale particles are usually acquired using imaging-based microscopy. Commonly used image sensors include single-point photodetectors such as the photomultiplier tube (PMT) and avalanche photodiode (APD) [21] as well as two-dimensional (2D) photosensor arrays such as the charge-coupled device (CCD) or complementary metal-oxide-semiconductor (CMOS) sensor [22]. In the 2D sensing case, the size of an individual particle is estimated by converting its size in pixels to a physical size at a fixed conversion ratio, which is determined theoretically from the specifications of the optical components. For example, a single pixel in the acquired images corresponds to 0.33 μm with a 60× objective and 0.5 μm with a 40× objective according to the product specifications. However, this fixed conversion ratio does not always yield accurate particle sizing, probably because of factors such as objective error, imaging error, and segmentation error. Hence, the relationship between the pixel number and the physical size is difficult to model because of possible nonlinearity.

To address the issues mentioned above, a machine learning-based pipeline for imaging-based high-accuracy particle sizing has been developed. The pipeline automatically segments microparticles from the images, estimates the pixel size of the particles, and predicts the physical size from the pixel information using a machine learning model trained with labeled images of spherical calibration beads. Compared to conventional approaches, our pipeline offers more accurate particle sizing by learning from the calibration data. This machine learning-enabled pipeline would greatly extend the applicability of imaging-based sizing in the fields of environmental sensing, biomedical diagnostics, and material characterization.

2. Methods

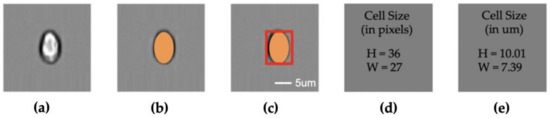

The pipeline algorithm automatically analyzes the pixel information of the target particles and converts it into the actual size using a machine learning model (Figure 1a–e). First, it generates a contour of the particle using a segmentation algorithm. Then, the contour information is used to estimate the shape of the particle. Finally, the shape information is converted to physical length and width using the pixel-to-size module, a quadratic model trained with least-squares regression [23] on spherical beads of known sizes. All the aforementioned operations are integrated into an image processing pipeline that automatically predicts the physical size of particles from images acquired using an imaging flow cytometer (Amnis® ImageStream®X Mk II [24,25]).

Figure 1.

Size measurement pipeline; (a) cell image; (b) segmentation; (c) cell shape; (d) cell size in pixel numbers; (e) cell size in μm; (a–c) share the same scale bar.

2.1. Segmentation and Pixel Measurement Module

Deep learning has recently made impressive progress in image segmentation. For example, U-Net [26], Deep Cell [27], Faster R-CNN [28], Mask R-CNN [29], and RetinaNet [30] have been demonstrated for instance segmentation in single-cell analysis [31]. However, those deep learning models are computationally intensive and require extensive manual labelling. Imaging flow cytometry generates single-cell images with a clear background and is therefore well suited for computer vision-based analysis.

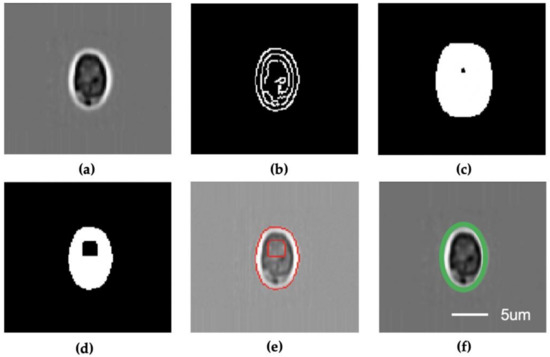

The computer vision-based segmentation algorithm (Figure 2) first resizes the input single-particle images to 120 × 120 pixels and removes the noise using a Gaussian blurring module (Figure 2a). Then, a Canny detector is applied to the processed images to generate the edge images (Figure 2b), which are subsequently processed with erosion (Figure 2c) and dilation (Figure 2d) to generate the output blob images. Next, the algorithm identifies the edge in the blob images and generates the contour information of the particle (Figure 2e). The height and width in pixels are estimated from the particle contour (Figure 2f). In the case of spherical particles, the height and width have the same value. Finally, the physical size of the particles is determined using the machine learning model.

Figure 2.

Segmentation and pixel measurement module. (a) Gaussian blur; (b) Canny detector; (c) Erode; (d) Dilate; (e) Find contours; (f) Estimate shape. All subfigures share the same scale bar.

Signal noise often degrades the image quality and introduces errors into the subsequent processing submodules of the pipeline. Gaussian blur [32] is a popular algorithm for reducing noise and enhancing image quality. The Gaussian blur is expressed as

$$g(x, y) = \sum_{i}\sum_{j} f(x + i,\, y + j)\, G(i, j)$$

where $g(x, y)$ is the output pixel value, $f(x, y)$ is the input image pixel, and $G$ is a Gaussian kernel given by

$$G(x, y) = A \exp\left(-\frac{(x - \mu_x)^2}{2\sigma_x^2} - \frac{(y - \mu_y)^2}{2\sigma_y^2}\right)$$

where $A$ is the amplitude of the Gaussian kernel, $\mu_x$ and $\mu_y$ mark the center position of the kernel, and $\sigma_x$ and $\sigma_y$ represent the standard deviation (SD) with respect to the variables $x$ and $y$.
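The blurring step can be illustrated with a minimal pure-Python sketch. It is not the authors' implementation: the function names `gaussian_kernel` and `gaussian_blur`, the kernel normalisation, and the border handling (edge pixels are replicated) are assumptions made for illustration only.

```python
import math


def gaussian_kernel(size, sigma_x, sigma_y, amplitude=1.0):
    """Build a size x size Gaussian kernel centred on its middle element.

    The kernel is normalised to sum to 1 so that blurring preserves the
    mean brightness (an assumption; the text does not state this).
    """
    mu = (size - 1) / 2.0  # kernel centre along both axes
    kernel = [
        [
            amplitude * math.exp(
                -((x - mu) ** 2) / (2 * sigma_x ** 2)
                - ((y - mu) ** 2) / (2 * sigma_y ** 2)
            )
            for x in range(size)
        ]
        for y in range(size)
    ]
    total = sum(sum(row) for row in kernel)
    return [[v / total for v in row] for row in kernel]


def gaussian_blur(image, kernel):
    """Apply the kernel to a 2D list of pixel values.

    Pixels outside the image are taken from the nearest edge pixel.
    The kernel size is assumed to be odd.
    """
    h, w = len(image), len(image[0])
    k = len(kernel)
    r = k // 2
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            acc = 0.0
            for j in range(k):
                for i in range(k):
                    yy = min(max(y + j - r, 0), h - 1)
                    xx = min(max(x + i - r, 0), w - 1)
                    acc += image[yy][xx] * kernel[j][i]
            out[y][x] = acc
    return out
```

Because the kernel is symmetric, sliding it over the image in this way is equivalent to a convolution. A uniform image is left unchanged, while an isolated bright pixel is spread over its neighbours.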

The Canny detector [33] is a popular edge detection technique because of its low error rate, good localization, and minimal response. The Canny edge detection algorithm can be implemented in the following steps.

First, the gradient strength and direction are calculated as

$$G = \sqrt{G_x^2 + G_y^2}, \qquad \theta = \arctan\left(\frac{G_y}{G_x}\right)$$

where $G_y$ and $G_x$ are the first derivatives in the vertical direction ($y$) and horizontal direction ($x$), respectively. The direction $\theta$ is rounded to 0, 45, 90, or 135 degrees. For example, a $\theta$ between 22.5 degrees and 67.5 degrees maps to 45 degrees. Next, non-maximum suppression is applied to remove pixels that are not part of an edge so that only thin lines remain. Finally, a hysteresis stage with high and low thresholds is applied to the lines to further improve the results.
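The first Canny step (gradient strength and rounded direction) can be sketched as follows. This is an illustrative example, not the authors' code: the Sobel operator is assumed as the derivative filter because the text does not name one, and the helper names `gradient_at` and `quantize_direction` are hypothetical.

```python
import math

# 3 x 3 Sobel kernels (an assumed choice of first-derivative filter).
SOBEL_X = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]
SOBEL_Y = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]


def gradient_at(image, y, x):
    """Return (strength, direction in degrees within [0, 180)) at an interior pixel."""
    gx = gy = 0.0
    for j in range(3):
        for i in range(3):
            p = image[y + j - 1][x + i - 1]
            gx += SOBEL_X[j][i] * p
            gy += SOBEL_Y[j][i] * p
    strength = math.hypot(gx, gy)
    direction = math.degrees(math.atan2(gy, gx)) % 180.0
    return strength, direction


def quantize_direction(angle_deg):
    """Round a direction to 0, 45, 90, or 135 degrees."""
    a = angle_deg % 180.0
    if a < 22.5 or a >= 157.5:
        return 0
    if a < 67.5:
        return 45
    if a < 112.5:
        return 90
    return 135
```

Non-maximum suppression and hysteresis thresholding would then operate on these per-pixel strengths and quantized directions; they are omitted here to keep the sketch short.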

Dilation and erosion [34] are two basic morphological operations for removing noise, isolating or joining individual components, and finding intensity bumps or holes in an image. The dilate operation scans a kernel covering a small neighborhood of pixels, with an anchor point at the center of the kernel, over the image and calculates the maximum pixel value within the kernel. That maximum value replaces the value at the anchor point. As a result, bright regions expand, and individual components separated by small gaps are connected. In contrast, the erode operation replaces the value at the anchor point with the minimum value, which renders a thinner bright area.
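The two morphological operations can be sketched in a few lines of pure Python. The 3 × 3 default kernel size and the border handling (the window is clipped at the image edge) are assumptions made for illustration; the function names are hypothetical.

```python
def _morph(image, ksize, pick):
    """Slide a ksize x ksize window over a 2D list and apply pick (max or min)."""
    h, w = len(image), len(image[0])
    r = ksize // 2
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            # Collect the pixels under the kernel, clipped to the image bounds.
            window = [
                image[yy][xx]
                for yy in range(max(0, y - r), min(h, y + r + 1))
                for xx in range(max(0, x - r), min(w, x + r + 1))
            ]
            out[y][x] = pick(window)
    return out


def dilate(image, ksize=3):
    """Replace each pixel with the maximum value under the kernel."""
    return _morph(image, ksize, max)


def erode(image, ksize=3):
    """Replace each pixel with the minimum value under the kernel."""
    return _morph(image, ksize, min)
```

Dilating a broken edge closes gaps of about one pixel, which is what turns a thin, possibly interrupted edge map into a connected blob.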

The find contours operation [35] obtains the contour information. A contour is a closed curve whose points all lie on the boundary and have the same value. In our algorithm, an ellipse is used to approximate the outline of the cells. In the last stage of the image processing, the contour information of the cells is passed into an estimator function to obtain the rotated rectangle in which the fitted ellipse is inscribed.
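As a rough illustration of the shape estimation step, the sketch below estimates the two axis lengths of an elliptical blob from a filled binary mask. It deliberately swaps in a simpler technique than the one described above: instead of border following and ellipse fitting on the contour, it uses the second-order moments of the foreground pixels. The function name `ellipse_axes_from_mask` is hypothetical.

```python
import math


def ellipse_axes_from_mask(mask):
    """Return (major, minor) full axis lengths in pixels.

    The lengths are those of the ellipse that has the same second-order
    moments as the foreground pixels of the filled binary mask.
    """
    pts = [(x, y) for y, row in enumerate(mask) for x, v in enumerate(row) if v]
    n = len(pts)
    if n == 0:
        raise ValueError("mask contains no foreground pixels")
    cx = sum(p[0] for p in pts) / n
    cy = sum(p[1] for p in pts) / n
    sxx = sum((p[0] - cx) ** 2 for p in pts) / n
    syy = sum((p[1] - cy) ** 2 for p in pts) / n
    sxy = sum((p[0] - cx) * (p[1] - cy) for p in pts) / n
    # Eigenvalues of the 2 x 2 covariance matrix of the pixel coordinates.
    mean = (sxx + syy) / 2.0
    diff = math.sqrt(((sxx - syy) / 2.0) ** 2 + sxy ** 2)
    lam_major = mean + diff
    lam_minor = max(mean - diff, 0.0)
    # A filled ellipse with semi-axis a has variance a^2 / 4 along that axis,
    # so the full axis length is 4 * sqrt(variance).
    return 4.0 * math.sqrt(lam_major), 4.0 * math.sqrt(lam_minor)
```

For an approximately spherical particle the two returned lengths are nearly equal, which matches the statement that height and width coincide for spherical beads.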

2.2. Size Converter Module

The size converter module converts the size in pixels to a size in micrometers with the machine learning model. The calibration process started with collecting images of microplastic beads with diameters of 3 μm, 4 μm, 4.6 μm, 5 μm, 5.64 μm, 7.32 μm, 8 μm, 10 μm, 12 μm, and 15 μm (from Thermo Fisher Scientific, Duke Scientific and Polysciences Inc., Warrington, FL, USA). Then, the images were processed with the segmentation algorithm to obtain the bead diameters in pixels. Finally, a quadratic curve model $y = ax^2 + bx + c$ was learned. The least-squares regression [36] parameters a, b, and c of the quadratic curve can be calculated from the matrix equation

$$\begin{bmatrix} \sum x_i^4 & \sum x_i^3 & \sum x_i^2 \\ \sum x_i^3 & \sum x_i^2 & \sum x_i \\ \sum x_i^2 & \sum x_i & n \end{bmatrix} \begin{bmatrix} a \\ b \\ c \end{bmatrix} = \begin{bmatrix} \sum x_i^2 y_i \\ \sum x_i y_i \\ \sum y_i \end{bmatrix}$$

where each sum runs over $i = 1, \dots, n$. In the equation, $x_i$ is the size in pixels of an individual bead, $y_i$ is the corresponding physical size of the bead, and $n$ is the total number of beads.
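A least-squares quadratic fit of this kind can be sketched by building and solving the 3 × 3 normal equations directly. The function name `fit_quadratic` is hypothetical, and any data passed to it in an example would be illustrative rather than the authors' calibration measurements.

```python
def fit_quadratic(pixels, sizes_um):
    """Least-squares fit of size = a * p**2 + b * p + c.

    Returns the tuple (a, b, c) obtained by solving the normal equations
    with Gaussian elimination and partial pivoting.
    """
    n = len(pixels)
    if n < 3 or n != len(sizes_um):
        raise ValueError("need at least three (pixel, size) pairs of equal length")
    # s[k] = sum of p**k, t[k] = sum of p**k * y (s[0] equals n).
    s = [sum(p ** k for p in pixels) for k in range(5)]
    t = [sum((p ** k) * y for p, y in zip(pixels, sizes_um)) for k in range(3)]
    # Augmented matrix of the normal equations.
    m = [
        [s[4], s[3], s[2], t[2]],
        [s[3], s[2], s[1], t[1]],
        [s[2], s[1], s[0], t[0]],
    ]
    for col in range(3):
        pivot = max(range(col, 3), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("normal equations are singular")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, 3):
            f = m[r][col] / m[col][col]
            for c in range(col, 4):
                m[r][c] -= f * m[col][c]
    # Back substitution.
    coeffs = [0.0, 0.0, 0.0]
    for r in (2, 1, 0):
        acc = m[r][3] - sum(m[r][c] * coeffs[c] for c in range(r + 1, 3))
        coeffs[r] = acc / m[r][r]
    return tuple(coeffs)
```

In practice a library routine for polynomial fitting would usually be preferred for numerical robustness; the explicit version is shown only because it mirrors the matrix form of the problem.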

After the models were learned, we obtained the parameters m = 0.2905 and b = 0.4785 for the linear model and a = −0.000163, b = 0.301, and c = −0.618 for the quadratic curve model. As the Root Mean Square Error (RMSE) of the quadratic model is smaller than that of the linear model (0.2657 vs. 0.2668), the quadratic curve model was adopted to implement the size converter module.
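Applying the learned models amounts to evaluating a polynomial at the measured pixel size. The sketch below copies the coefficients reported above; the function and constant names are hypothetical and are not taken from the authors' software.

```python
# Coefficients as reported in the text for the bead calibration.
LINEAR_M, LINEAR_B = 0.2905, 0.4785
QUAD_A, QUAD_B, QUAD_C = -0.000163, 0.301, -0.618


def pixels_to_um_linear(pixels):
    """Convert a size in pixels to micrometers with the linear model."""
    return LINEAR_M * pixels + LINEAR_B


def pixels_to_um_quadratic(pixels):
    """Convert a size in pixels to micrometers with the quadratic model."""
    return QUAD_A * pixels ** 2 + QUAD_B * pixels + QUAD_C
```

Both functions accept the height or width in pixels produced by the segmentation module, so a non-spherical particle is converted one axis at a time.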

2.3. Performance Evaluation

To evaluate the performance of the image processing pipeline, an image database of microplastic beads of known sizes and biological cells was built. First, the image segmentation algorithm was evaluated with the Intersection over Union (IoU) metric [37]. Then, the performance of the machine learning model was evaluated with the Root Mean Square Error (RMSE). Finally, measurements on a realistic cell dataset were performed. The IoU and RMSE metrics are expressed as

$$\mathrm{IoU} = \frac{|A \cap B|}{|A \cup B|}$$

and

$$\mathrm{RMSE} = \sqrt{\frac{1}{n}\sum_{i=1}^{n}\left(y_i - \hat{y}_i\right)^2}$$

where $A$ is the area in the ground truth and $B$ is the target segmented area; $y_i$ is the physical size, $\hat{y}_i$ is the predicted size, and $n$ is the total number of particles. Furthermore, the height and width distributions of particles such as beads, Cryptosporidium oocysts, and Giardia cysts are determined using bright-field imaging flow cytometry.
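Both metrics are simple to compute. The following sketch assumes the ground truth and the segmentation output are available as filled binary masks of equal size (2D lists of 0 and 1); the function names and the convention for two empty masks are assumptions.

```python
import math


def iou(mask_a, mask_b):
    """Intersection over Union of two binary masks of equal shape."""
    inter = 0
    union = 0
    for row_a, row_b in zip(mask_a, mask_b):
        for a, b in zip(row_a, row_b):
            if a and b:
                inter += 1
            if a or b:
                union += 1
    if union == 0:
        return 1.0  # assumed convention: two empty masks agree perfectly
    return inter / union


def rmse(actual, predicted):
    """Root Mean Square Error between two equally long sequences."""
    if len(actual) != len(predicted) or not actual:
        raise ValueError("sequences must be non-empty and of equal length")
    squared = [(y - p) ** 2 for y, p in zip(actual, predicted)]
    return math.sqrt(sum(squared) / len(squared))
```

An IoU of 1 means the predicted region coincides with the labelled one, while an RMSE of 0 means every predicted size equals its reference value.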

3. Results and Discussions

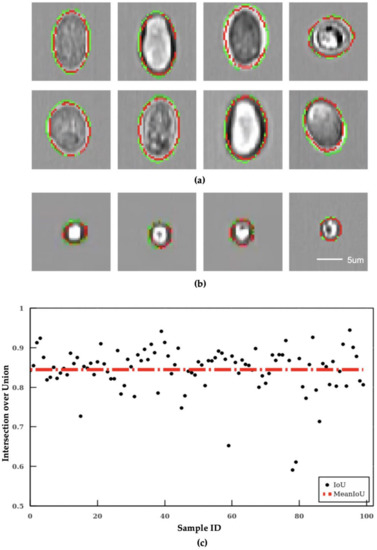

3.1. Segmentation and Pixel Measurement

The segmentation results are evaluated with the IoU score between the contour labelled by human operators and the contour predicted by the algorithm. The output of the segmentation algorithm is depicted in Figure 3a. The top two rows are segmented Giardia cyst images, and the bottom row shows segmented Cryptosporidium oocyst images. In these images, the green line is the ground truth (human labeled), and the red line is the output of the segmentation algorithm. As shown in Figure 3a, the outputs of the segmentation algorithm are close to the ground truth. Overall, the segmentation algorithm achieved a mean IoU of 84.4% (red dotted line), as shown in Figure 3b, in which each blue dot represents the IoU of an individual image in the testing dataset.

Figure 3.

Error analysis of the segmentation algorithm and quadratic curve-based calibration. (a) Segmentation results for Giardia; (b) segmentation results for Cryptosporidium; (c) Intersection over Union results of the segmentation algorithm. All subfigures share the same scale bar.

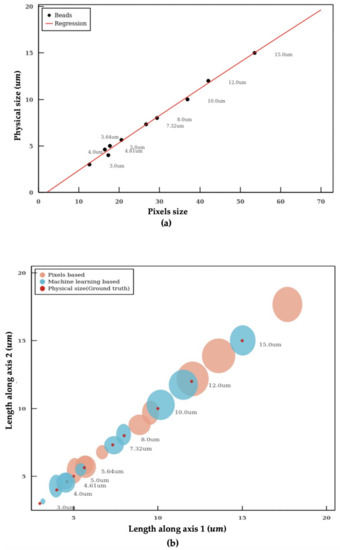

3.2. Physical Size Measurement

The imaging flow cytometer uses a fixed pixel-to-size ratio based on the specifications of the optics for particle sizing. However, this approach often leads to large errors in particle size (Table 1). Therefore, a machine learning model is established to determine the pixel-to-size relationship for accurate sizing. Both linear and quadratic regression models were fitted to learn the relationship between the size in pixels and the length in μm of microplastic beads of known sizes. As the Root Mean Square Error (RMSE) of the quadratic model is smaller than that of the linear model (0.2657 vs. 0.2668), the quadratic curve model was employed. Figure 4a shows the diameter versus the pixel size of the microplastic beads. The quadratic regression model is shown as the blue curve.

Table 1.

Measurement error analysis.

Figure 4.

Calibration of size measurement. (a) Quadratic curve-based calibration. (b) Length distribution in both axes of microplastic particles within a distribution range. Each circle represents the population distribution with its error range. Red circles are the physical diameters of the beads, pink circles are the bead sizes based on the assumed fixed pixel-to-size ratio, and cyan circles are the bead sizes based on the machine learning calibration.

The sizes of the microplastic beads measured using our algorithm and using the fixed pixel-to-size ratio (0.33 μm/pixel with the 60× objective on the Amnis® ImageStream®X Mk II) are summarized in Table 1 and Figure 4b. The fixed pixel-to-size conversion ratio is the mainstream approach used in imaging flow cytometry. Our algorithm gives significantly more accurate sizing in comparison. Figure 4b shows the length distribution in both axes of microplastic particles within a distribution range. The red dots represent the actual sizes of the beads (ground truth based on the manufacturer’s specifications), the brown dots represent the sizes measured using the fixed pixel-to-size ratio, and the dark green dots represent the sizes measured using the machine learning model. The sizes of the dots represent the SD of the measurement. The microplastic particles have a narrow size distribution with a coefficient of variation (CV) < 2% according to the product specifications.

The machine learning model yields significantly more accurate size measurements than the approach using a fixed pixel-to-size ratio. The sizes of microplastic beads measured using the machine learning model deviate only slightly from the ground truth, with a mean percentage error of 4.2% (Table 1). In contrast, the mean percentage error using the fixed conversion ratio is 23.3%, which is more than five times that of the machine learning model.
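The comparison can be made concrete with a small sketch of the fixed-ratio conversion and a percentage error calculation. The text does not define the mean percentage error formula, so the mean of absolute relative errors is assumed here; the function names are hypothetical, and only the 0.33 μm/pixel ratio comes from the text.

```python
FIXED_UM_PER_PIXEL = 0.33  # 60x objective, as stated in the text


def fixed_ratio_size(pixels, um_per_pixel=FIXED_UM_PER_PIXEL):
    """Convert a size in pixels to micrometers with a fixed ratio."""
    return pixels * um_per_pixel


def mean_percentage_error(true_sizes, measured_sizes):
    """Mean of |measured - true| / true, expressed in percent (assumed definition)."""
    if len(true_sizes) != len(measured_sizes) or not true_sizes:
        raise ValueError("sequences must be non-empty and of equal length")
    errors = [abs(m - t) / t for t, m in zip(true_sizes, measured_sizes)]
    return 100.0 * sum(errors) / len(errors)
```

Evaluating `mean_percentage_error` once with the fixed-ratio sizes and once with the model-predicted sizes, against the same nominal bead diameters, gives the kind of side-by-side figures reported in Table 1.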

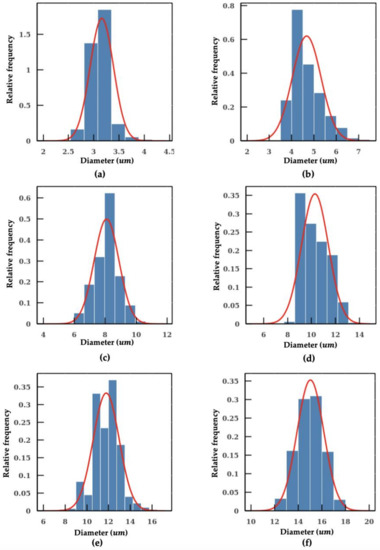

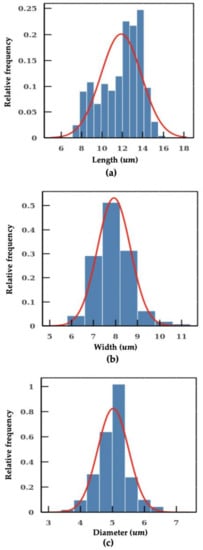

As shown in Figure 4b, methods using a fixed conversion ratio tend to overestimate the size of the particle. In the worst case, the percentage error reaches a value close to 40%. In addition, the SD measured with the machine learning model is smaller, which indicates better precision in particle sizing. The individual measurements of microplastic beads of 3 μm, 5 μm, 12 μm and 15 μm using the machine learning model are shown in Figure 5.

The aforementioned algorithms were integrated into a pipeline. With this pipeline, the height and width distributions of Cryptosporidium oocysts and Giardia cysts were determined using bright-field images from imaging flow cytometry. The results are presented in Figure 6 and Table 2. The pipeline determines that the mean height of the Giardia cysts is 11.87 μm with an SD of 1.9 μm, and their mean width is 7.92 μm with an SD of 0.75 μm. The Cryptosporidium oocysts are approximately spherical, and their mean diameter measured using our algorithm is 5.03 μm with an SD of 0.48 μm. In contrast, when calculated using the fixed conversion ratio, the mean height and width of the Giardia cysts are 12.94 μm and 8.45 μm, and the mean diameter of the Cryptosporidium oocysts is 5.17 μm.

Figure 5.

Measured size distributions of individual microplastic particles: (a) 3 μm; (b) 5 μm; (c) 8 μm; (d) 10 μm; (e) 12 μm; and (f) 15 μm.

Figure 6.

Measurements of Cryptosporidium and Giardia: (a) length of Giardia; (b) width of Giardia; (c) diameter of Cryptosporidium.

Table 2.

Measurement results on bioparticles.

4. Conclusions

In this paper, a machine learning-based pipeline for imaging-based high-accuracy bioparticle sizing is demonstrated. It consists of an image segmentation module for extracting contours and estimating the pixel size of the bioparticle as well as a machine learning model for accurate pixel-to-size conversion. The image segmentation algorithm achieves a mean IoU of 84.4%, and the particle size determined by the machine learning model has a mean percentage error of only 4.2%, which is more than five times lower than that of methods using a fixed pixel-to-size conversion ratio (23.3%). Our method enables imaging systems such as imaging flow cytometers to perform highly accurate particle sizing and shows great potential for a wide range of applications in environmental sensing, biomedical diagnostics, and material characterization.

Author Contributions

Conceptualization, S.L., A.Q.L. and T.B.; methodology, S.L., H.T., G.C. and X.J.; software, S.L.; validation, X.J., Y.S. and Y.L.; data curation, K.T.N. and S.F.; writing—original draft preparation, S.L.; writing—review and editing, Y.Z., Y.L., P.H., X.J., A.Q.L., T.B. and Y.S.; supervision, T.B. and A.Q.L.; funding acquisition, A.Q.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Singapore National Research Foundation under the Competitive Research Program (NRF-CRP13-2014-01), and Ministry of Education Tier 1 RG39/19.

Conflicts of Interest

The authors declare no conflict of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript, or in the decision to publish the results.

References

- Mage, P.L.; Csordas, A.T.; Brown, T.; Klinger, D.; Eisenstein, M.; Mitragotri, S.; Hawker, C.; Soh, H.T. Shape-based separation of synthetic microparticles. Nat. Mater. 2019, 18, 82.

- Park, J.H.; Seo, J.; Jackman, J.A.; Cho, N.-J. Inflated sporopollenin exine capsules obtained from thin-walled pollen. Sci. Rep. 2016, 6, 1–10.

- Viles, C.L.; Sieracki, M.E. Measurement of marine picoplankton cell size by using a cooled, charge-coupled device camera with image-analyzed fluorescence microscopy. Appl. Environ. Microbiol. 1992, 58, 584–592.

- Shi, Y.Z.; Xiong, S.; Zhang, Y.; Chin, L.K.; Chen, Y.Y.; Zhang, J.B.; Zhang, T.; Ser, W.; Larrson, A.; Lim, S. Sculpting nanoparticle dynamics for single-bacteria-level screening and direct binding-efficiency measurement. Nat. Commun. 2018, 9, 1–11.

- Dreelin, E.A.; Ives, R.L.; Molloy, S.; Rose, J.B. Cryptosporidium and Giardia in surface water: A case study from Michigan, USA to inform management of rural water systems. Int. J. Environ. Res. Public Health 2014, 11, 10480–10503.

- Medema, G.J.; Schets, F.M.; Teunis, P.F.M.; Havelaar, A.H. Sedimentation of Free and Attached Cryptosporidium Oocysts and Giardia Cysts in Water. Appl. Environ. Microbiol. 1998, 64, 4460–4466.

- Abbireddy, C.O.; Clayton, C.R. A review of modern particle sizing methods. Proc. Inst. Civ. Eng. Geotech. Eng. 2009, 162, 193–201.

- Costa, C.; Loy, A.; Cataudella, S.; Davis, D.; Scardi, M. Extracting fish size using dual underwater cameras. Aquac. Eng. 2006, 35, 218–227.

- Almeida, S.M.; Pio, C.; Freitas, M.C.; Reis, M.; Trancoso, M.A. Approaching PM2.5 and PM2.5−10 source apportionment by mass balance analysis, principal component analysis and particle size distribution. Sci. Total Environ. 2006, 368, 663–674.

- Fernlund, J.M. The effect of particle form on sieve analysis: A test by image analysis. Eng. Geol. 1998, 50, 111–124.

- Saveyn, H.; Thu, T.L.; Govoreanu, R.; van der Meeren, P.; Vanrolleghem, P.A. In-line comparison of particle sizing by static light scattering, time-of-transition, and dynamic image analysis. Part. Part. Syst. Charact. 2006, 23, 145–153.

- Brown, W. Dynamic Light Scattering: The Method and Some Applications; Clarendon Press Oxford: Oxford, UK, 1993; Volume 313.

- Filipe, V.; Hawe, A.; Jiskoot, W. Critical evaluation of Nanoparticle Tracking Analysis (NTA) by NanoSight for the measurement of nanoparticles and protein aggregates. Pharm. Res. 2010, 27, 796–810.

- Weiner, B.; Tscharnuter, W.W.; Karasikov, N. Improvements in Accuracy and Speed Using the Time-of-Transition Method and Dynamic Image Analysis for Particle Sizing: Some Real-World Examples; ACS Publications: Washington, DC, USA, 1998.

- Bradbury, S.; Bracegirdle, B. Introduction to Light Microscopy; Bios Scientific: Oxford, UK, 1998.

- Hamilton, N. Quantification and its applications in fluorescent microscopy imaging. Traffic 2009, 10, 951–961.

- Shi, Y.; Zhu, T.; Zhang, T.; Mazzulla, A.; Tsai, D.P.; Ding, W.; Liu, A.Q.; Cipparrone, G.; Sáenz, J.J.; Qiu, C.-W. Chirality-assisted lateral momentum transfer for bidirectional enantioselective separation. Light Sci. Appl. 2020, 9, 1–12.

- Flegler, S.L.; Flegler, S.L. Scanning & Transmission Electron Microscopy; Oxford University Press: Oxford, UK, 1997.

- Shi, Y.; Zhao, H.; Nguyen, K.T.; Zhang, Y.; Chin, L.K.; Zhu, T.; Yu, Y.; Cai, H.; Yap, P.H.; Liu, P.Y. Nanophotonic array-induced dynamic behavior for label-free shape-selective bacteria sieving. ACS Nano 2019, 13, 12070–12080.

- Adan, A.; Alizada, G.; Kiraz, Y.; Baran, Y.; Nalbant, A. Flow cytometry: Basic principles and applications. Crit. Rev. Biotechnol. 2017, 37, 163–176.

- Lawrence, W.G.; Varadi, G.; Entine, G.; Podniesinski, E.; Wallace, P.K. A comparison of avalanche photodiode and photomultiplier tube detectors for flow cytometry. In Proceedings of Imaging, Manipulation, and Analysis of Biomolecules, Cells, and Tissues VI, San Jose, CA, USA, 29 February 2008; p. 68590M.

- Han, Y.; Gu, Y.; Zhang, A.C.; Lo, Y.H. Review: Imaging technologies for flow cytometry. Lab Chip 2016, 16, 4639–4647.

- Pham, H. Springer Handbook of Engineering Statistics; Springer Science & Business Media: Berlin/Heidelberg, Germany, 2006; pp. 26–27.

- Basiji, D.A. Principles of Amnis Imaging Flow Cytometry. In Imaging Flow Cytometry; Springer: New York, NY, USA, 2016; pp. 13–21.

- Erdbrugger, U.; La Salvia, S.; Lannigan, J. Detection of Extracellular Vesicles Using the ImageStream® X MKII Imaging Flow Cytometer. Available online: https://research.ouhsc.edu/Portals/1329/Assets/Documents/CoreFacilities/FlowCytometryandImaging/Amnis-Detection%20of%20excellular%20vesicles.pdf?ver=2020-06-16-101254-110 (accessed on 1 November 2020).

- Falk, T.; Mai, D.; Bensch, R.; Cicek, O.; Abdulkadir, A.; Marrakchi, Y.; Bohm, A.; Deubner, J.; Jackel, Z.; Seiwald, K.; et al. U-Net: Deep learning for cell counting, detection, and morphometry. Nat. Methods 2019, 16, 67–70.

- Van Valen, D.A.; Kudo, T.; Lane, K.M.; Macklin, D.N.; Quach, N.T.; DeFelice, M.M.; Maayan, I.; Tanouchi, Y.; Ashley, E.A.; Covert, M.W. Deep learning automates the quantitative analysis of individual cells in live-cell imaging experiments. PLoS Comput. Biol. 2016, 12, e1005177.

- Girshick, R. Fast R-CNN. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 1440–1448.

- He, K.; Gkioxari, G.; Dollar, P.; Girshick, R. Mask R-CNN. IEEE Trans. Pattern Anal. Mach. Intell. 2018.

- Lin, T.-Y.; Goyal, P.; Girshick, R.; He, K.; Dollár, P. Focal loss for dense object detection. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2980–2988.

- Moen, E.; Bannon, D.; Kudo, T.; Graf, W.; Covert, M.; van Valen, D. Deep learning for cellular image analysis. Nat. Methods 2019, 1–14.

- Szeliski, R. Computer Vision: Algorithms and Applications; Springer: Berlin/Heidelberg, Germany, 2010; pp. 101–107.

- Canny, J. A computational approach to edge detection. IEEE Trans. Pattern Anal. Mach. Intell. 1986, 6, 679–698.

- Gil, J.Y.; Kimmel, R. Efficient dilation, erosion, opening, and closing algorithms. IEEE Trans. Pattern Anal. Mach. Intell. 2002, 24, 1606–1617.

- Suzuki, S. Topological structural analysis of digitized binary images by border following. Comput. Vis. Graph. Image Process. 1985, 30, 32–46.

- Strang, G. Linear Algebra and Learning from Data; Wellesley-Cambridge Press: Wellesley, MA, USA, 2019.

- Udupa, J.K.; LaBlanc, V.R.; Schmidt, H.; Imielinska, C.; Saha, P.K.; Grevera, G.J.; Zhuge, Y.; Currie, L.M.; Molholt, P.; Jin, Y. Methodology for evaluating image-segmentation algorithms. In Proceedings of the SPIE 4684, Medical Imaging 2002: Image Processing, San Diego, CA, USA, 9 May 2002; pp. 266–277.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).