Highlights

What are the main findings?

- A multimodal detection framework using UAV-based DOM and DSM data for individual planted tree seedling detection.

- A novel SSM-based multimodal fusion module for feature-level fusion in the multimodal detection algorithm.

What are the implications of the main findings?

- Improved accuracy and robustness of individual planted tree seedling detection through feature fusion of DOM and DSM data.

- Improved accuracy and efficiency of the multimodal detection algorithm through the SSM-based fusion module, which provides global feature fusion with linear computational complexity.

Abstract

Detection of planted tree seedlings at the individual level is crucial for monitoring forest ecosystems and supporting silvicultural management. The combination of deep learning (DL) object detection algorithms and remote sensing (RS) data from unmanned aerial vehicles (UAVs) offers efficient and cost-effective solutions. However, current methods predominantly rely on unimodal RS data sources, overlooking the multi-source nature of RS data, which may result in an insufficient representation of target features. Moreover, there is a lack of multimodal frameworks tailored explicitly for detecting planted tree seedlings. Consequently, we propose a multimodal object detection framework for this task by integrating texture information from digital orthophoto maps (DOMs) and geometric information from digital surface models (DSMs). We introduce alternate scanning fusion (ASF), a novel multimodal fusion module based on state space models (SSMs). The ASF can achieve global feature fusion while maintaining linear computational complexity. We embed ASF modules into a dual-backbone YOLOv5 object detection framework, enabling feature-level fusion between DOM and DSM for end-to-end detection. To train and evaluate the proposed framework, we establish the planted tree seedling (PTS) dataset. On the PTS dataset, our method achieves an AP50 of 72.6% for detecting planted tree seedlings, significantly outperforming the original YOLOv5 on unimodal data: 63.5% on DOM and 55.9% on DSM. Within the YOLOv5 framework, comparative experiments on both our PTS dataset and the public VEDAI benchmark demonstrate that the ASF surpasses representative fusion methods in multimodal detection accuracy while maintaining relatively low computational cost.

1. Introduction

Accurate information on planted tree seedlings is essential for monitoring forest regeneration, guiding silvicultural practices, and supporting ecological restoration [1]. Such data plays a critical role in applications ranging from tracking the migration of tree lines [2] and forecasting mechanisms of extreme climate events [3] to evaluating post-disaster forest recovery [4]. In particular, statistics on seedling distribution and density inform planting strategies to enhance forest establishment efficiency [5] and optimize ecological outcomes [6]. While field surveys can provide detailed individual-level data, they are time-consuming, labor-intensive, and unsuitable for large-scale monitoring. RS offers a more efficient and cost-effective alternative for large-scale surveys. However, the accurate detection of individual planted tree seedlings remains a technical challenge due to their small size, dense distribution, and complex backgrounds. Addressing this challenge is essential for enabling further attribute extractions, such as height, crown width, and health status, which ultimately support data-driven forest management and environmental assessment. Therefore, developing a high-precision and robust remote sensing detection algorithm is of great theoretical significance and practical value for improving the efficiency and accuracy of planted tree seedling identification.

Traditional approaches for individual tree detection have shown promise, particularly those based on geometric features such as canopy height models and normalized point clouds extracted from airborne light detection and ranging (LiDAR) data [7,8]. However, these methods often struggle to distinguish tree seedlings from mature trees in forested environments and require costly high-density point cloud data, which makes them unsuitable for seedling-specific detection tasks [9,10]. To address this limitation, Pearse et al. [11] adopted a DL-based solution that uses Faster R-CNN [12] to detect seedlings directly in aerial DOM imagery by leveraging fine-grained texture features instead of LiDAR-derived features. However, this approach proved sensitive to visual interference caused by dense undergrowth or non-target shrubs [11]. On the other hand, geometric characteristics available in digital surface models (DSMs), such as crown height and crown morphology, have the potential to enhance class separability in such complex environments. This highlights the importance of fusing texture and geometric information for more reliable seedling detection in realistic scenarios. Motivated by this, we propose integrating features from DOM and DSM within a deep learning framework to achieve more accurate and robust detection of individual planted tree seedlings.

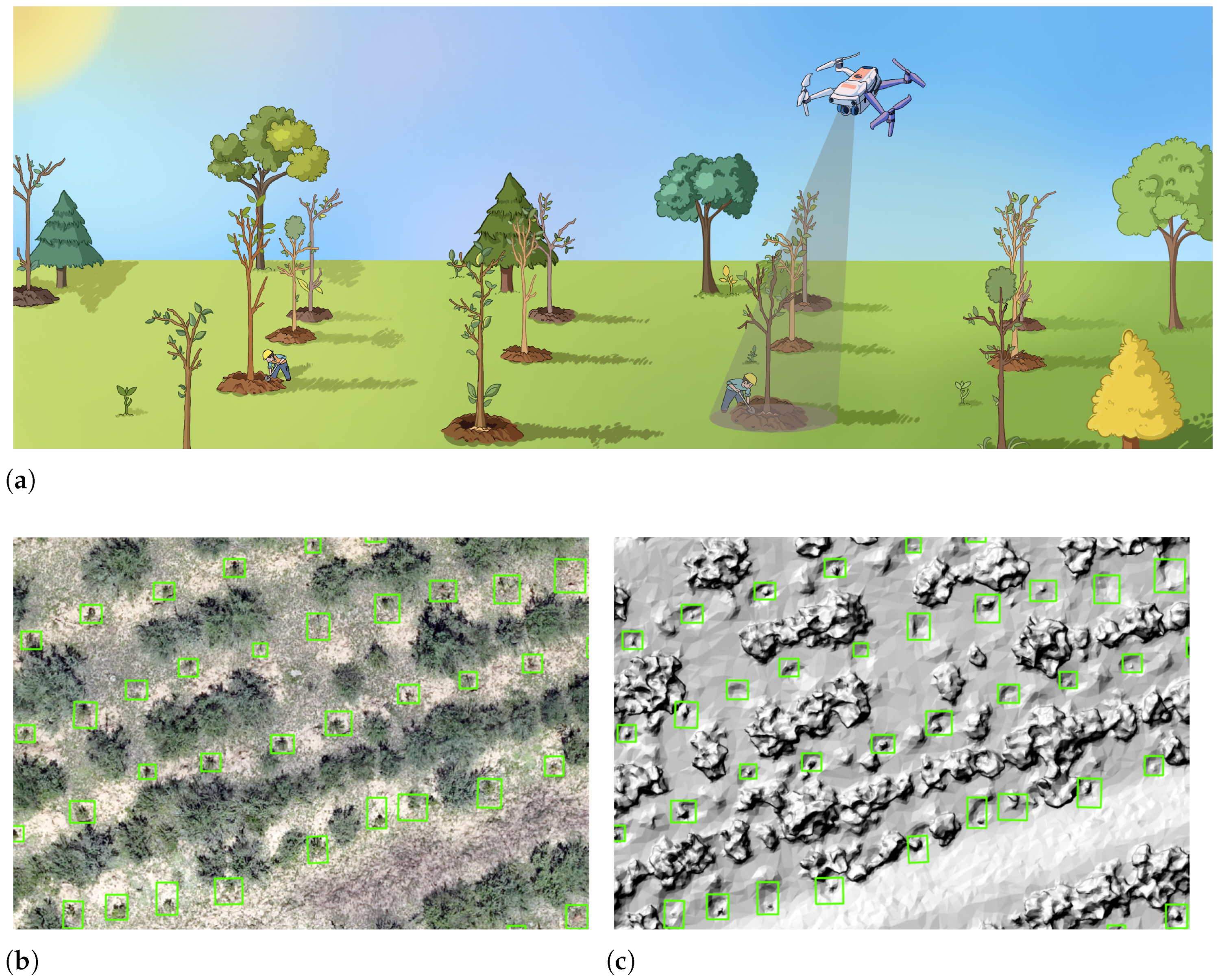

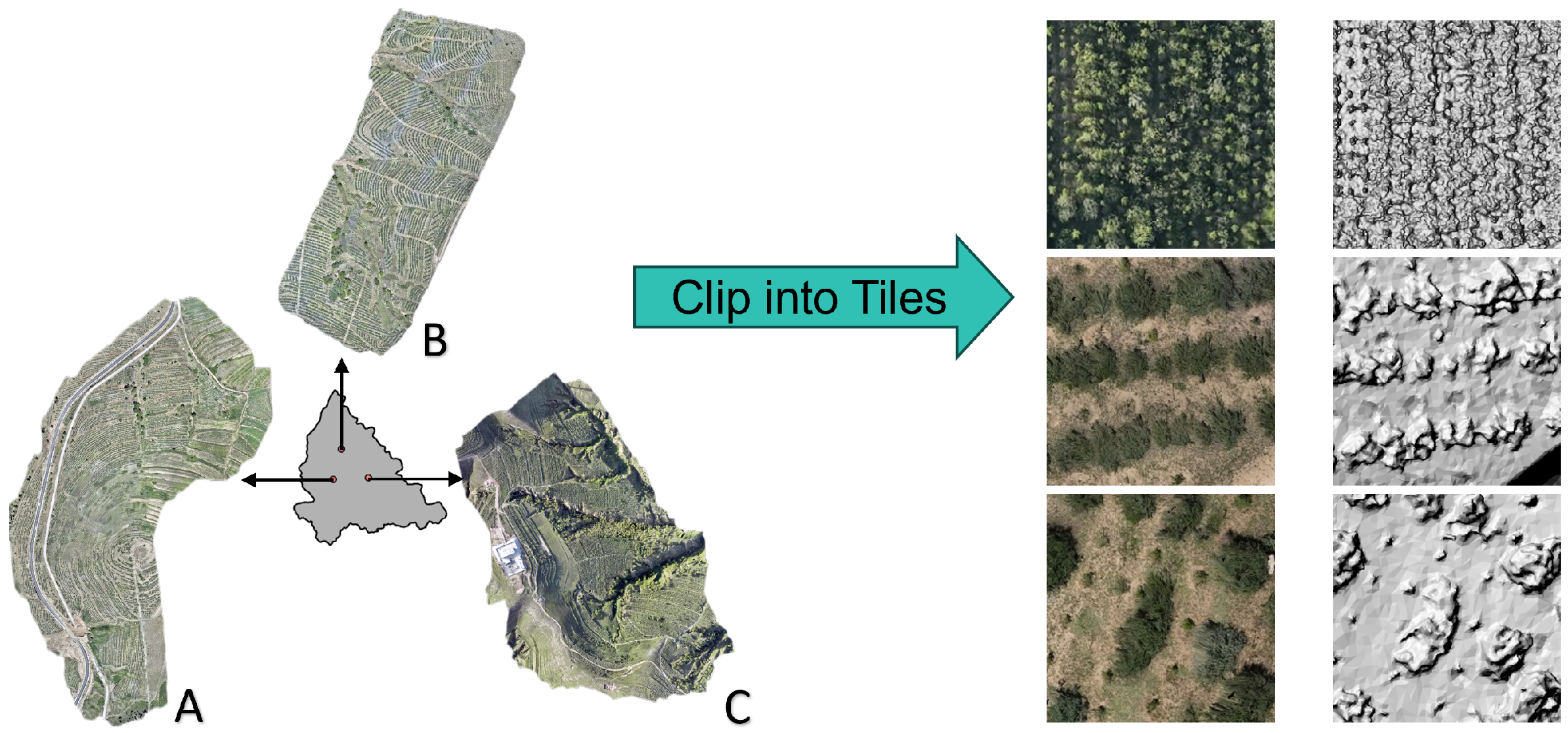

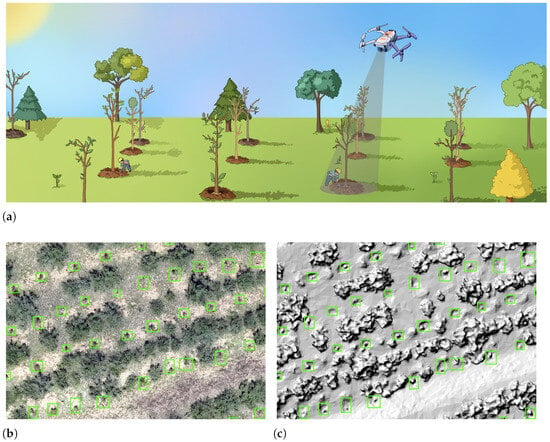

The rapid development of UAV platforms and structure-from-motion (SfM) photogrammetry has made it increasingly practical to acquire high-resolution DOM and DSM imagery over large forested areas [13]. Figure 1a shows our aerial survey mission for planted tree seedlings. Images with high spatial resolution can now be obtained over large areas using UAVs equipped with advanced cameras and then processed with SfM software (e.g., Pix4Dmapper 4.6.4 [14]) to generate high-resolution DOM and DSM. The two products characterize distinct forest features. As shown in Figure 1b,c, DOM primarily characterizes textural features, such as tree canopy color and shadows, whereas DSM highlights geometric features, including tree crown morphology and planting traces. Our work focuses on effectively fusing features from the two modalities to improve the detection accuracy of tree seedlings.

Figure 1.

(a) UAV-based aerial survey mission for individual planted tree seedling detection. (b) DOM with ground-truth annotations. (c) DSM with ground-truth annotations.

Current multimodal DL-based fusion methods are predominantly built on attention mechanisms (e.g., squeeze-and-excitation (SE) [15]) and transformer [16] architectures. Attention mechanisms typically involve compressing feature maps, potentially leading to the loss of global information. Transformer-based approaches are better at constructing global intermodal interactions but exhibit quadratic computational complexity, $O(N^2)$, where $N$ denotes the number of input tokens. The recent emergence of SSMs [17] enables strong global modeling capabilities with linear computational complexity, $O(N)$. The global modeling capability can promote comprehensive multimodal feature interaction, thereby alleviating spatial misalignment between different modalities, while the linear computational cost can contribute to a more efficient fusion network. Despite the strong potential of SSMs, a well-performing SSM-based fusion method tailored for object detection frameworks remains lacking.

To fill this gap, we introduce ASF, a fusion module that incorporates an alternate scanning strategy [18] to exploit the advantages of SSMs for multimodal object detection. To apply this approach to our task of detecting planted tree seedlings, the ASF modules are embedded into a dual-backbone YOLOv5 [19] object detection framework, enabling feature-level fusion between DOM and DSM for end-to-end detection. Ablation experiments have validated the performance contributions of our proposed framework for this task. Extensive comparative experiments have demonstrated that ASF surpasses representative fusion methods for multimodal RS object detection, achieving superior detection results.

In summary, the main contributions of this paper are as follows:

- (1) We propose a multimodal framework that integrates DOM and DSM for the detection of individual planted tree seedlings, significantly improving the detection accuracy in forested scenarios compared to unimodal detection systems.

- (2) We develop the ASF module, an SSM-based multimodal fusion network that enables global feature fusion with linear complexity. The ASF modules are embedded into a dual-backbone YOLOv5 framework for feature-level fusion to enhance end-to-end multimodal detection.

- (3) We collect and establish the PTS dataset, which covers 96 hectares of forest area and contains high-resolution aerial DOM and DSM data, for the task of detecting planted tree seedlings. This dataset is specifically designed for the training and evaluation of multimodal object detection algorithms.

- (4) Within the YOLOv5 framework, our ASF outperforms existing representative fusion methods for multimodal object detection, achieving superior detection performance on both the PTS dataset and the public VEDAI [20] benchmark.

The remainder of this paper is organized as follows. Section 2 reviews the related work of multimodal fusion methods and SSM-based models. Section 3 details our multimodal individual planted tree seedling detection framework and the architecture of the ASF module. Section 4 introduces the data and experimental preparation. The experimental results and discussion are provided in Section 5. Section 6 concludes and outlines future work.

2. Related Work

2.1. Fusion Methods for Multimodal Object Detection

In the field of RS data analysis, multimodal fusion is a viable approach to overcoming the constraints inherent in unimodal methods. Integrating information from distinct data sources enables more reliable and robust decision-making across diverse tasks such as object detection, land cover classification, and change detection [21]. The fusion strategies are commonly classified into three categories: pixel-level, feature-level, and decision-level fusion [22]. Pixel-level fusion refers to the fusion of multimodal data at the input stage, after which a single-backbone network processes the fused data to extract features for downstream tasks. Feature-level fusion utilizes multi-stream-backbone networks to extract features from various modalities and subsequently combines these features for further use. Decision-level fusion decomposes a multimodal task into multiple independent unimodal tasks, with the fusion performed at the final results.

In multimodal RS object detection tasks, decision-level fusion is seldom used, as it requires repeated inference, resulting in high computational cost. Pixel-level fusion is often utilized in real-time algorithms. A notable example is SuperYOLO [23], which employs SE modules for shallow fusion of multimodal data at the input stage and integrates super-resolution techniques to improve detection performance for small objects. The most widely adopted fusion strategy is the feature-level fusion. Previous studies by Liu et al. [24] and Cao et al. [25] have demonstrated that feature-level fusion outperforms the other two fusion strategies in object detection accuracy. Building upon feature-level fusion, Fang et al. [26] proposed decoupling multimodal features into common features and distinct features for fusion and developed a multimodal object detection framework named YOLOFusion. They designed a Cross-Modality Attentive Feature Fusion module, in which each group of features interacted across modalities through SE refinement. However, the SE requires the compression of feature maps along spatial dimensions, which can easily result in the loss of spatial information. With the advance of vision transformer architectures, Fang et al. [27] developed the cross-modality fusion transformer (CFT). The CFT tokenizes feature maps from different modalities and concatenates them into a unified sequence, enabling global intermodal interaction through the use of the self-attention mechanism. Despite its architectural simplicity and global modeling capability, the self-attention computation over concatenated tokens introduces significant computational redundancy. To address this limitation, Shen et al. [28] proposed the iterative cross-attention guided feature fusion (ICAFusion), which eliminates token concatenation and directly implements cross-modal interaction through the use of the cross-attention mechanism. 
This reduces the computational overhead of CFT by half while maintaining competitive fusion performance, but it does not alter the inherent quadratic computational complexity of the transformer.
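As a rough worked count of where a factor of about two can come from, assume each modality contributes $N$ tokens and count only query-key score pairs. This counting model is an illustrative assumption, not a cost analysis taken from [27] or [28].

```latex
\underbrace{(2N)^2}_{\text{self-attention over the concatenated sequence}} = 4N^2,
\qquad
\underbrace{N^2 + N^2}_{\text{cross-attention in both directions}} = 2N^2,
\qquad
\frac{2N^2}{4N^2} = \frac{1}{2}.
```

Under this assumption the cross-attention variant needs half as many score pairs, yet both counts still grow quadratically in $N$.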

Although attention mechanisms and transformer architectures have provided effective fusion solutions for multimodal object detection, they both exhibit inherent limitations: attention mechanisms may lead to information loss, while transformers require substantial computational resources. Recently, the emerging SSM architecture not only rivals transformers in global modeling capabilities but also maintains linear computational complexity. These advantages inspire our exploration of a novel SSM-based fusion method for multimodal object detection.

2.2. SSM-Based Models

The S4 module [17] presents the first practical solution to the high computation and memory requirements of SSMs, enabling long-range dependency modeling with linear scalability of sequence length. After that, Fu et al. [29] proposed the H3 block to narrow the gap between attention and SSMs in language modeling, achieving transformer-comparable performance. To further advance the above research, Gu et al. [30] introduced critical innovations by proposing an S6 module and a generic language model backbone called Mamba. The S6 module addresses the limitations of S4 in flexibility and efficiency through a selection mechanism and a hardware-aware algorithm. Leveraging the S6 design, Mamba demonstrates better performance over transformers in modeling large-scale sequential data.

The strong performance of SSMs on sequential data has driven researchers to explore their adaptation to visual tasks. VMamba [31] emerged as a pioneering implementation and performed well on visual tasks, relying on a backbone of stacked visual state space (VSS) blocks. Building on this progress, numerous SSM-based image processing methods have been proposed, providing valuable ideas for multimodal fusion. Xie et al. [32] developed a dynamic feature fusion module based on SSMs for the color enhancement of medical images. He et al. [33] introduced Pan-Mamba, which integrated low-resolution multispectral and high-resolution panchromatic images to generate a high-resolution multispectral image. Yang et al. [34] proposed Mamba Twister, a language-image fusion backbone for referring image segmentation. Dong et al. [35] adopted a parameter-sharing mechanism for SSM matrices to achieve language-video multimodal fusion. Chen et al. [18] proposed ChangeMamba for spatiotemporal change detection in RS, whose key innovation lies in the scanning strategies for modality alignment, summarized as three types: alternate scanning, parallel scanning, and cascade scanning.

The advantages of SSMs have garnered considerable attention, prompting many researchers to explore their applications across various multimodal domains. However, an open-source and effective SSM-based fusion module for multimodal object detection remains unavailable, motivating our development and application of such a module for individual planted tree seedling detection.

3. Methodology

3.1. Overview of Multimodal Individual Tree Seedling Detection Framework

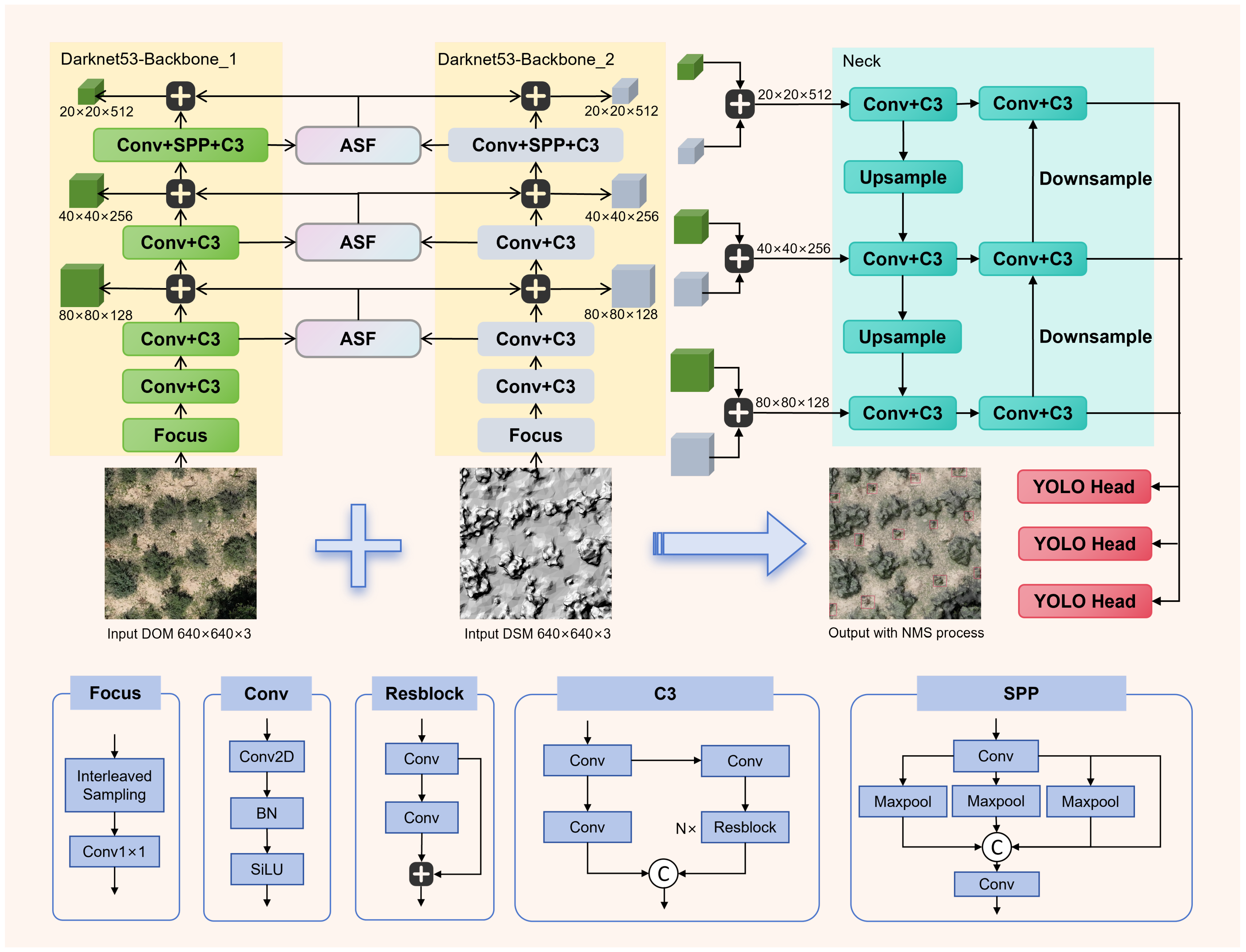

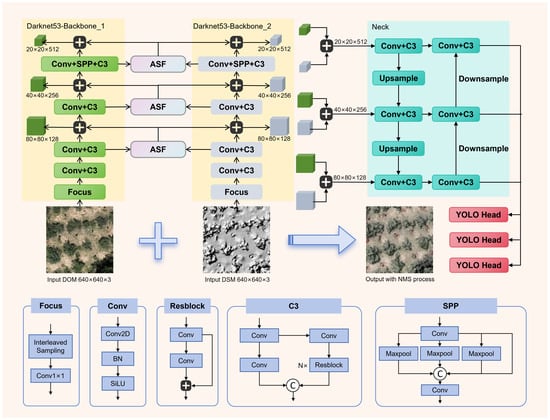

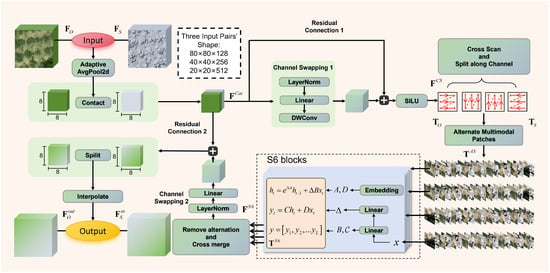

Our multimodal individual tree seedling detection framework, named ASFYOLO, adopts a dual-backbone YOLOv5 architecture. The entire workflow, as illustrated in Figure 2, comprises three key stages: (1) unimodal feature extraction, (2) feature-level fusion, and (3) detection neck and head [28].

Figure 2.

The overall architecture of multimodal individual tree seedling detection. The multimodal framework primarily employs a dual-backbone YOLOv5 architecture, where the two backbone networks extract features from DOM and DSM, with feature interaction through ASF modules.

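Unimodal feature extraction is first conducted by two backbone networks dedicated to the DOM and DSM inputs, as formulated in Equation (1).

$$F_{DOM}^{i} = \Phi_{backbone}\left(I_{DOM};\, \theta_{DOM}\right), \qquad F_{DSM}^{i} = \Phi_{backbone}\left(I_{DSM};\, \theta_{DSM}\right), \qquad F_{DOM}^{i},\, F_{DSM}^{i} \in \mathbb{R}^{H \times W \times C} \tag{1}$$

where $F_{DOM}^{i}$ and $F_{DSM}^{i}$ denote the DOM-stream and DSM-stream feature maps from the $i$-th ($i = 3, 4, 5$) layer of the backbones with parameters $\theta_{DOM}$ and $\theta_{DSM}$; $H$, $W$, and $C$ denote the height, width, and number of channels of the feature maps; and $I_{DOM}$ and $I_{DSM}$ denote the pair of DOM and DSM input images. CSPDarkNet [36] is employed as the backbone network, whose main components include Focus [37], Conv, C3 [38], and SPP [39] blocks. The feature maps $F_{DOM}^{i}$ and $F_{DSM}^{i}$, taken from the outputs of the last three C3 blocks, are provided to the feature-level fusion method.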

The feature-level fusion process can be formulated as Equation (2).

$$F_{fuse}^{i} = \Phi_{fuse}\left(F_{DOM}^{i},\, F_{DSM}^{i};\, \theta_{fuse}\right) \tag{2}$$

where $F_{fuse}^{i}$ denotes the $i$-th layer result of the multimodal fusion function $\Phi_{fuse}$ with parameters $\theta_{fuse}$, applied to the DOM-stream and DSM-stream feature maps $F_{DOM}^{i}$ and $F_{DSM}^{i}$. $\Phi_{fuse}$ incorporates the proposed ASF module and an addition operation. Residual connections bridge the ASF modules between the dual-backbone networks, preventing the fusion modules from directly influencing the backbone networks. The addition operation (Add) or a 1 × 1 convolution (1 × 1 Conv) is commonly employed as the final step to merge the dual-stream feature maps into one path.
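The residual bridging and Add merging described above can be sketched on toy data. This is a minimal illustration, not the authors' implementation: feature maps are flattened to plain lists, and `fuse_stage`, `zero_fusion`, and the `FusionFn` type are hypothetical names introduced here. It assumes the merged path is the element-wise sum of the two compensated streams.

```python
from typing import Callable, List, Tuple

Feature = List[float]  # a flattened feature map, for illustration only
FusionFn = Callable[[Feature, Feature], Tuple[Feature, Feature]]


def fuse_stage(f_dom: Feature, f_dsm: Feature,
               fusion: FusionFn) -> Tuple[Feature, Feature, Feature]:
    """Residual compensation on both streams, followed by Add merging."""
    comp_dom, comp_dsm = fusion(f_dom, f_dsm)
    # Residual connections: the fusion output is added to the backbone
    # features instead of replacing them.
    out_dom = [x + c for x, c in zip(f_dom, comp_dom)]
    out_dsm = [x + c for x, c in zip(f_dsm, comp_dsm)]
    # Add merging: element-wise sum of the two compensated streams.
    fused = [a + b for a, b in zip(out_dom, out_dsm)]
    return out_dom, out_dsm, fused


def zero_fusion(f_dom: Feature, f_dsm: Feature) -> Tuple[Feature, Feature]:
    """Hypothetical stand-in for a fusion module that contributes nothing."""
    return [0.0] * len(f_dom), [0.0] * len(f_dsm)


dom, dsm = [1.0, 2.0, 3.0], [0.5, 0.5, 0.5]
out_dom, out_dsm, fused = fuse_stage(dom, dsm, zero_fusion)
```

With a fusion function that returns zeros, both streams pass through unchanged, which is the sense in which a residual branch compensates the backbone features rather than overwriting them.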

The last stage transforms the fused feature maps into bounding boxes and class labels through the detection neck and head, as defined in Equation (3).

$$\left(O^{3},\, O^{4},\, O^{5}\right) = \Phi_{head}\left(\Phi_{neck}\left(F_{fuse}^{3},\, F_{fuse}^{4},\, F_{fuse}^{5}\right)\right) \tag{3}$$

where multi-scale transformations are applied to the fused feature maps $F_{fuse}^{3}$, $F_{fuse}^{4}$, and $F_{fuse}^{5}$ by the neck network $\Phi_{neck}$, and the head network $\Phi_{head}$ implements classification and bounding box regression to produce the final output vectors $O^{3}$, $O^{4}$, and $O^{5}$. The neck network employs a hybrid architecture of the feature pyramid network (FPN) [40] and path aggregation network (PAN) [41]. The FPN establishes a top-down pathway to propagate high-level semantic features across scales, while the PAN introduces a bottom-up pathway to preserve critical spatial details through low-level feature aggregation. The YOLO head network generates the final objects, and the loss function can be formulated as Equation (4).

$$\mathcal{L}_{total} = \mathcal{L}_{box} + \mathcal{L}_{cls} + \mathcal{L}_{obj} \tag{4}$$

where $\mathcal{L}_{total}$ denotes the total object detection loss, $\mathcal{L}_{box}$ denotes the bounding box loss for localization, $\mathcal{L}_{cls}$ denotes the object classification loss, and $\mathcal{L}_{obj}$ denotes the objectness loss, which judges whether an object is present. The detection results are further processed by non-maximum suppression (NMS) [42] to eliminate redundant overlapping predictions, thereby completing the entire object detection pipeline.
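The NMS post-processing step can be illustrated with a minimal greedy sketch in plain Python. The `(x1, y1, x2, y2)` box layout, the function names, and the 0.45 IoU threshold are assumptions chosen for illustration; the paper does not state the threshold it uses.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def nms(boxes: List[Box], scores: List[float],
        iou_thr: float = 0.45) -> List[int]:
    """Return the indices of the kept boxes, highest score first."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep: List[int] = []
    for i in order:
        # Keep a box only if it does not overlap a kept box too strongly.
        if all(iou(boxes[i], boxes[j]) <= iou_thr for j in keep):
            keep.append(i)
    return keep


boxes = [(10, 10, 50, 50), (12, 12, 52, 52), (100, 100, 140, 140)]
scores = [0.9, 0.8, 0.7]
kept = nms(boxes, scores)
```

In this toy input the second box overlaps the first with an IoU of about 0.82, so it is suppressed, while the distant third box survives.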

3.2. Preliminaries of SSM

SSMs derive their theoretical foundation from linear time-invariant systems, in which an input signal $x(t)$ is mapped through a hidden state $h(t)$ to the system response $y(t)$. This process can be formulated as the linear ordinary differential equations (ODEs) in Equation (5).

$$h'(t) = A\,h(t) + B\,x(t), \qquad y(t) = C\,h(t) + D\,x(t) \tag{5}$$

where $A$ denotes the state transition parameter, $B$ and $C$ denote the projection parameters, and $D$ denotes the skip connection parameter (assumed to be zero for computational simplification).

However, ODEs describe continuous signals and systems, which are incompatible with the discrete tensor computation of DL. Temporal discretization is therefore required. The zero-order hold (ZOH) method is one such discretization approach; it employs a time scale parameter $\Delta$ to convert the continuous parameters $A$ and $B$ into their discrete counterparts $\bar{A}$ and $\bar{B}$, as given by Equation (6).

$$\bar{A} = \exp(\Delta A), \qquad \bar{B} = (\Delta A)^{-1}\left(\exp(\Delta A) - I\right)\Delta B \tag{6}$$

where $I$ denotes the identity matrix. Through this transformation, Equation (5) can be discretized into discrete-time equations whose structure parallels that of RNNs [43], expressed as Equation (7).

$$h_{t} = \bar{A}\,h_{t-1} + \bar{B}\,x_{t}, \qquad y_{t} = C\,h_{t} \tag{7}$$

By recursively expanding Equation (7), the equations can be rewritten in the convolutional form of Equation (8).

$$\bar{K} = \left(C\bar{B},\; C\bar{A}\bar{B},\; \ldots,\; C\bar{A}^{L-1}\bar{B}\right), \qquad y = x * \bar{K} \tag{8}$$

where $L$ denotes the length of the input sequence $x$, $\bar{K} \in \mathbb{R}^{L}$ can be regarded as a convolutional kernel, and $*$ represents the convolution operation.
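The equivalence between the recurrent and convolutional forms can be checked numerically. The sketch below is a simplification under stated assumptions: the state matrix is diagonal with nonzero entries (so matrix exponentials reduce to scalar ones), the skip term is zero, and the standard ZOH formulas $\bar{A} = \exp(\Delta A)$ and $\bar{B} = (\Delta A)^{-1}(\exp(\Delta A) - I)\,\Delta B$ are used. All function names are hypothetical.

```python
import math
from typing import List, Tuple


def zoh_discretize(a: List[float], b: List[float],
                   delta: float) -> Tuple[List[float], List[float]]:
    """Zero-order hold for a diagonal A whose entries are all nonzero."""
    a_bar = [math.exp(delta * ai) for ai in a]
    # (delta*a)^-1 * (exp(delta*a) - 1) * delta*b == (exp(delta*a) - 1) / a * b
    b_bar = [(abi - 1.0) / ai * bi for abi, ai, bi in zip(a_bar, a, b)]
    return a_bar, b_bar


def ssm_recurrent(x: List[float], a_bar: List[float], b_bar: List[float],
                  c: List[float]) -> List[float]:
    """Recurrent form: one hidden-state update per input sample."""
    h = [0.0] * len(a_bar)
    ys = []
    for xt in x:
        h = [ab * hi + bb * xt for ab, hi, bb in zip(a_bar, h, b_bar)]
        ys.append(sum(ci * hi for ci, hi in zip(c, h)))
    return ys


def ssm_convolutional(x: List[float], a_bar: List[float], b_bar: List[float],
                      c: List[float]) -> List[float]:
    """Convolutional form: build the kernel, then apply a causal convolution."""
    length = len(x)
    # kernel[k] = C * A_bar^k * B_bar, summed over the diagonal state entries.
    kernel = [sum(ci * ab ** k * bb for ci, ab, bb in zip(c, a_bar, b_bar))
              for k in range(length)]
    # Naive causal convolution, written for clarity rather than speed.
    return [sum(kernel[k] * x[t - k] for k in range(t + 1))
            for t in range(length)]


a_bar, b_bar = zoh_discretize(a=[-1.0, -2.0], b=[1.0, 0.5], delta=0.1)
c = [0.3, -0.7]
x = [1.0, 0.0, -2.0, 3.0, 0.5]
y_rec = ssm_recurrent(x, a_bar, b_bar, c)
y_conv = ssm_convolutional(x, a_bar, b_bar, c)
```

Both functions return the same sequence up to floating-point error. The recurrence performs one state update per token, which is where the linear scaling in sequence length comes from.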

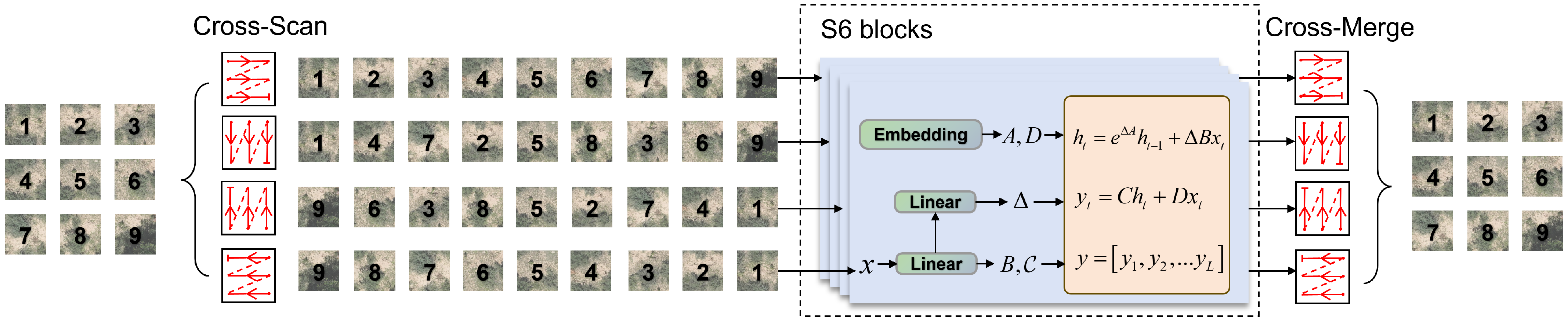

Furthermore, the S6 block incorporates a selection mechanism that projects the input sequence onto the parameters $B$, $C$, and $\Delta$ via linear layers, as illustrated in Figure 3, thereby establishing dynamic coupling between the SSM parameters and the input. To adapt the S6 architecture for image processing, the adopted SS2D strategy applies a Cross-Scan to the image through four directional traversals, decomposing the 2D spatial relationships into sequential token streams for the S6 blocks. After S6 modeling, the output tokens undergo Cross-Merge to generate the final 2D feature maps. The combination of hardware-efficient computation and effective global context modeling positions the SS2D architecture as a significant advancement for DL-based image processing.

Figure 3.

SS2D (2D selective scan) with S6 blocks. This approach utilizes Cross-Scan to transform image patches into four directional token sequences, which are then fed into S6 blocks for modeling. Finally, the spatial dimensions are reconstructed via Cross-Merge.
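Cross-Scan and Cross-Merge can be sketched on a small single-channel grid. The sketch assumes the four traversals are row-major, column-major, and their two reverses, and that Cross-Merge restores each sequence to grid order and sums the four results. Both points are assumptions about the scan convention, and the S6 modeling between the two steps is omitted.

```python
from typing import List

Grid = List[List[float]]


def cross_scan(grid: Grid) -> List[List[float]]:
    """Unfold an H x W grid into four 1D sequences, one per direction."""
    h, w = len(grid), len(grid[0])
    row_major = [grid[r][c] for r in range(h) for c in range(w)]
    col_major = [grid[r][c] for c in range(w) for r in range(h)]
    return [row_major, col_major, row_major[::-1], col_major[::-1]]


def cross_merge(seqs: List[List[float]], h: int, w: int) -> Grid:
    """Undo each traversal and sum the four restored maps."""
    row_major, col_major, row_rev, col_rev = seqs
    row_back, col_back = row_rev[::-1], col_rev[::-1]
    out = [[0.0] * w for _ in range(h)]
    for r in range(h):
        for c in range(w):
            # Row-major index is r * w + c; column-major index is c * h + r.
            out[r][c] = (row_major[r * w + c] + row_back[r * w + c]
                         + col_major[c * h + r] + col_back[c * h + r])
    return out


grid = [[1.0, 2.0, 3.0],
        [4.0, 5.0, 6.0]]
sequences = cross_scan(grid)
merged = cross_merge(sequences, h=2, w=3)
```

Because no sequence model is applied between the two steps here, each output cell is simply four times its input value, which confirms that every token returns to its original position.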

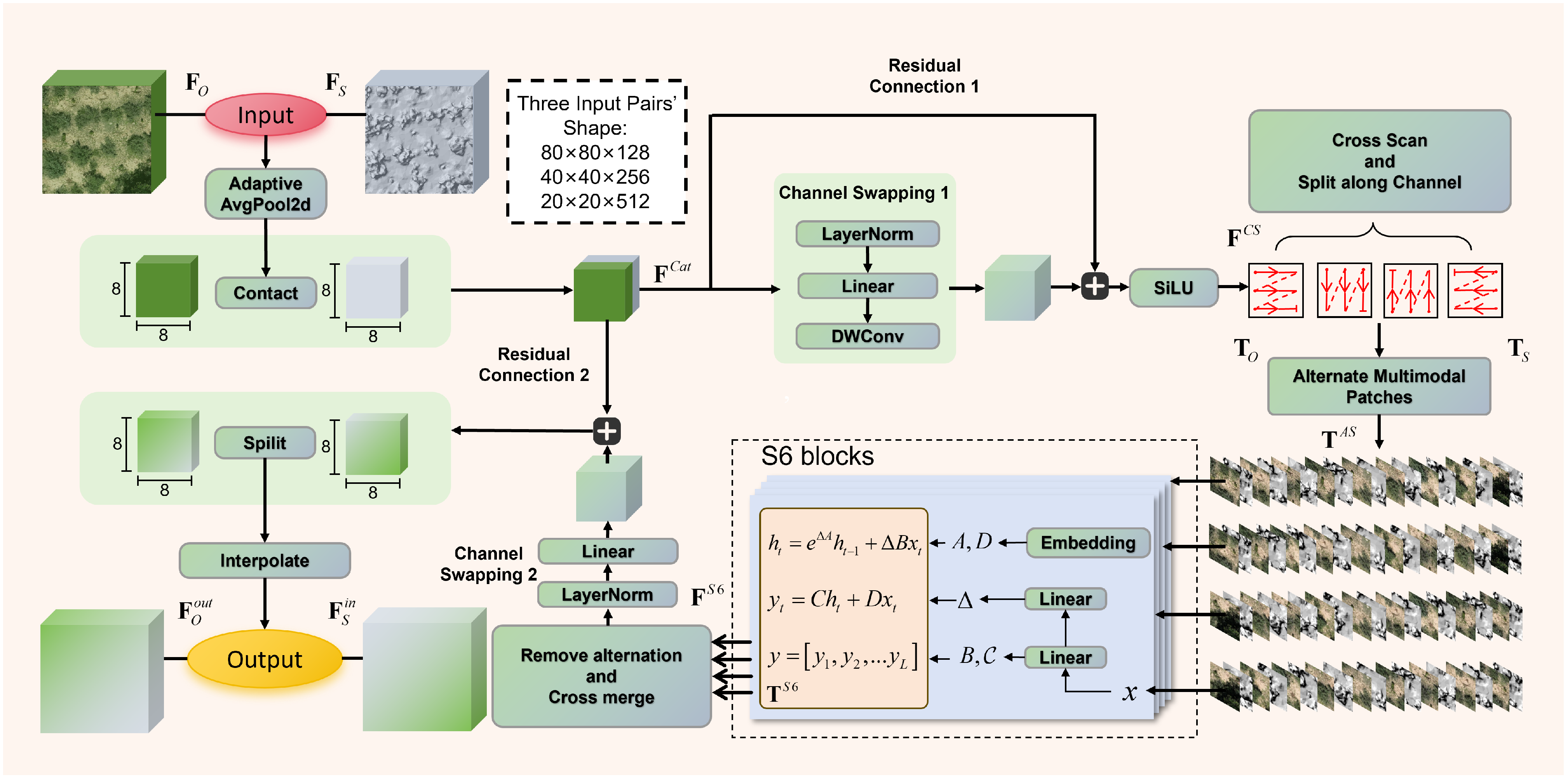

3.3. ASF: Alternate Scanning Fusion

The core innovation of this paper is the SSM-based ASF module, which bridges the dual-stream backbones for feature-level fusion; its overall architecture is illustrated in Figure 4. Given a pair of input feature maps $F_{DOM}^{i}$ and $F_{DSM}^{i}$, the SSM can process the tokenized feature maps with a computational complexity of $O(L)$, where $L$ is the token sequence length in Equation (8). However, despite the computational efficiency of SSMs, tokenizing the entire input feature maps, which are derived from the outputs of the last three C3 blocks in the backbone, still incurs significant GPU memory consumption and computational overhead. A straightforward way to mitigate this issue is spatial dimensionality reduction through a pooling operation [27]. Specifically, the ASF module employs adaptive average pooling layers to downsample the pair of input feature maps to an 8 × 8 spatial resolution, followed by channel-wise concatenation to facilitate downstream processing. This process can be represented by Equation (9).

$$F_{cat} = \mathrm{Concat}\left(\mathrm{AvgPool}\left(F_{DOM}^{i}\right),\, \mathrm{AvgPool}\left(F_{DSM}^{i}\right)\right) \tag{9}$$

where $F_{cat}$ is the channel-wise concatenation of the pair of pooled feature maps.
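The pooling and concatenation step can be sketched without a DL library. The sketch assumes a channel-first layout (a list of 2D maps) and the usual adaptive-pooling window rule, in which output cell $i$ averages input rows from $\lfloor iH/8 \rfloor$ up to, but not including, $\lceil (i+1)H/8 \rceil$. The function names are hypothetical.

```python
from typing import List

Map2D = List[List[float]]


def adaptive_avg_pool2d(fmap: Map2D, out_h: int = 8, out_w: int = 8) -> Map2D:
    """Average-pool one channel to a fixed out_h x out_w resolution."""
    in_h, in_w = len(fmap), len(fmap[0])
    pooled = []
    for i in range(out_h):
        # Window bounds: floor(i * H / out) to ceil((i + 1) * H / out).
        r0 = (i * in_h) // out_h
        r1 = ((i + 1) * in_h + out_h - 1) // out_h
        row = []
        for j in range(out_w):
            c0 = (j * in_w) // out_w
            c1 = ((j + 1) * in_w + out_w - 1) // out_w
            window = [fmap[r][c] for r in range(r0, r1) for c in range(c0, c1)]
            row.append(sum(window) / len(window))
        pooled.append(row)
    return pooled


def pool_and_concat(dom: List[Map2D], dsm: List[Map2D]) -> List[Map2D]:
    """Pool every channel of both streams, then concatenate along channels."""
    return [adaptive_avg_pool2d(channel) for channel in dom + dsm]


# Two toy streams with 2 channels each and a 20 x 20 spatial size.
dom = [[[1.0] * 20 for _ in range(20)] for _ in range(2)]
dsm = [[[2.0] * 20 for _ in range(20)] for _ in range(2)]
fused = pool_and_concat(dom, dsm)
```

The result has four channels of size 8 × 8 regardless of the input resolution, so the token count seen by the scan stays fixed at 64 per direction.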

Figure 4.

Overall architecture of the ASF module. The key is that tokens, generated via Cross-Scan on the multimodal hybrid feature map, are split along the channel and alternately arranged for S6 modeling.

The subsequent fusion stage adopts the fundamental architecture of the VSS block, simplified by removing the original feed-forward network. Before SS2D, the processing pipeline sequentially applies LayerNorm [44], linear projection, and depthwise separable convolution (DWConv) [45] to achieve channel swapping on the concatenated feature map $F_{cat}$. To prevent channel swapping from directly disrupting the feature maps and to preserve intermodal discriminative characteristics, a residual connection bypassing the channel swapping layers is integrated, and the result is activated by the SiLU [46] function, formally expressed as Equation (10).

$$F_{swap} = \mathrm{SiLU}\left(\mathrm{DWConv}\left(\mathrm{Linear}\left(\mathrm{LN}\left(F_{cat}\right)\right)\right) + F_{cat}\right) \tag{10}$$

where $F_{swap}$ denotes the channel-swapped feature map and $\mathrm{LN}$ denotes LayerNorm.

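The channel-swapped feature map $F_{swap}$ is tokenized through Cross-Scan in SS2D and split along the channel dimension to obtain four scanning-direction token groups for each of the two modalities, denoted as $T_{DOM}$ and $T_{DSM}$. $T_{DOM}$ and $T_{DSM}$ are alternately arranged along the sequence dimension and fed into the S6 block for state space modeling, as specified in Equation (11).

$$T_{alt} = \mathrm{Alternate}\left(T_{DOM},\, T_{DSM}\right), \qquad Y_{alt} = \mathrm{S6}\left(T_{alt}\right) \tag{11}$$

where $T_{alt}$ denotes the alternate tokens from $T_{DOM}$ and $T_{DSM}$, $Y_{alt}$ denotes the output of the S6 blocks, and the S6 initial state parameters are given by Equation (12).

$$A = -\exp(\mathbf{O}), \qquad (B,\, C,\, \Delta) = \mathrm{Linear}\left(T_{alt}\right), \qquad D = \mathbf{1}, \qquad b_{\Delta} = U \tag{12}$$

where $\mathbf{O}$ denotes a zeros matrix for the initialization of $A$ with a negative exponential; $B$, $C$, and $\Delta$ are obtained by the linear projection of $T_{alt}$; $\mathbf{1}$ denotes a ones matrix for the initialization of $D$; and $U$ denotes a random parameter uniformly distributed within [0, 1] for the bias $b_{\Delta}$ of $\Delta$.

After the S6 modeling, the output $Y_{alt}$ is de-alternated and cross-merged into a feature map $F_{ssm}$ with the same shape as the concatenated feature map $F_{cat}$. $F_{ssm}$ is then transformed through a linear projection and layer normalization, residually added to $F_{cat}$, and subjected to the inverse operations (channel split and bilinear interpolation) to obtain the final outputs $\hat{F}_{DOM}^{i}$ and $\hat{F}_{DSM}^{i}$.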
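The alternate arrangement and its removal after sequence modeling can be sketched as a token interleave and de-interleave. The sketch assumes that "alternately arranged along the sequence dimension" means tokens of the two modalities take turns position by position. The function names are hypothetical, and tokens are plain strings for readability.

```python
from typing import List, Sequence, Tuple, TypeVar

T = TypeVar("T")


def alternate(tokens_a: Sequence[T], tokens_b: Sequence[T]) -> List[T]:
    """Interleave two equally long token sequences: a0, b0, a1, b1, ..."""
    if len(tokens_a) != len(tokens_b):
        raise ValueError("both modalities must provide the same number of tokens")
    mixed: List[T] = []
    for ta, tb in zip(tokens_a, tokens_b):
        mixed.extend((ta, tb))
    return mixed


def dealternate(mixed: Sequence[T]) -> Tuple[List[T], List[T]]:
    """Undo the interleave: even positions are stream a, odd are stream b."""
    return list(mixed[0::2]), list(mixed[1::2])


dom_tokens = ["dom0", "dom1", "dom2"]
dsm_tokens = ["dsm0", "dsm1", "dsm2"]
mixed = alternate(dom_tokens, dsm_tokens)
restored_dom, restored_dsm = dealternate(mixed)
```

With this ordering, each token is adjacent in the sequence to the token of the other modality at the same spatial position, so a sequential state update mixes both modalities at every step.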

4. Materials and Experimental Setup

4.1. Datasets

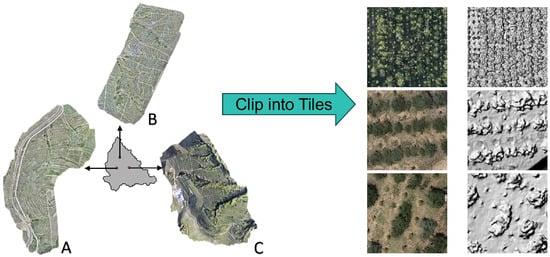

4.1.1. PTS: Planted Tree Seedlings

To train and validate the individual planted tree seedling detection framework, this study collected data with a UAV from three sites (A, B, C) in Pinglu District, Shuozhou City, Shanxi Province, China, as shown in the left panel of Figure 5. SfM software was used to generate DOM (RGB imagery) and DSM (grayscale imagery stretched to the range of 0–255), covering 96 hectares, with spatial resolutions of 0.01 m (Site A), 0.02 m (Site B), and 0.05 m (Site C). Additionally, multispectral imagery data (red, green, blue, and near-infrared bands) were collected in Site A to explore the impact of different spectral data combinations on detection performance. For annotating planted tree seedlings on the large imagery, manual labeling was performed using the annotation tools in ArcGIS Pro 3.0.2 [47], and semi-automatic annotation was conducted with the Geo SAM 1.1.0 plugin [48] integrated into QGIS 3.30.3 [49]. The identification of planted tree seedlings was guided by visual cues in both DOM and DSM, such as morphology, shadow characteristics, planting traces, and spatial arrangement. Following the completion of a portion of annotations, a pre-trained object detection model was fine-tuned on the annotated samples and subsequently applied to the large imagery to infer additional samples. The inferred results were then manually inspected and refined to ensure accuracy and completeness, ultimately yielding a high-quality dataset containing approximately 25,000 samples.

Figure 5.

(Left): UAV survey areas; (right): PTS dataset for DL-based object detection algorithms.

Given the computational infeasibility of processing full-scene raster data with DL-based frameworks, we implemented a tiling strategy to enable efficient inference. The original imagery was clipped into 4318 georeferenced tile pairs (640 × 640 pixels), each co-registering the DOM and DSM, as shown in the right panel of Figure 5. These organized tiles constitute the PTS dataset, which is designed explicitly for DL-based object detection algorithms in this task.
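The tiling step can be sketched as a generator of pixel windows that is applied identically to the DOM and DSM rasters, which keeps each tile pair co-registered. The stride (no overlap) and the edge handling (the last tile is shifted back to stay inside the raster) are assumptions; the paper does not describe how partial edge tiles were treated.

```python
from typing import Iterator, List, Tuple

Window = Tuple[int, int, int, int]  # (x0, y0, x1, y1) in pixels


def axis_starts(size: int, tile: int, stride: int) -> List[int]:
    """Start offsets along one axis so that the tiles cover [0, size)."""
    if size <= tile:
        return [0]
    starts = list(range(0, size - tile + 1, stride))
    if starts[-1] + tile < size:
        # Assumption: shift the last tile back so it stays inside the raster.
        starts.append(size - tile)
    return starts


def tile_windows(width: int, height: int, tile: int = 640,
                 stride: int = 640) -> Iterator[Window]:
    """Yield pixel windows; use each one for both the DOM and the DSM."""
    for y0 in axis_starts(height, tile, stride):
        for x0 in axis_starts(width, tile, stride):
            yield (x0, y0, min(x0 + tile, width), min(y0 + tile, height))


windows = list(tile_windows(width=1500, height=1280))
```

For a 1500 × 1280 raster this yields six 640 × 640 windows, the last column overlapping its neighbor so that no tile extends past the image border.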

4.1.2. VEDAI: Vehicle Detection in Aerial Imagery

To further validate the effectiveness and generalization capability of the proposed method, we incorporate the widely recognized VEDAI benchmark into our experiments. This dataset comprises 10 subsets, each containing over 1200 aligned infrared and visible images. These images are provided at two sizes: 1024 × 1024 pixels and 512 × 512 pixels, with a decimeter-level spatial resolution. The dataset encompasses a diverse range of RS scenarios, including rural landscapes, urban areas, and transportation networks. It provides annotated instances spanning eight distinct vehicle categories: car, truck, pickup, tractor, camping car, boat, plane, and van. Its multimodal characteristics and multi-class targets make it particularly suitable for investigating advanced multimodal RS object detection algorithms.

4.2. Implementation Details

Our proposed framework is implemented in PyTorch (version 2.1.0) and runs on an Ubuntu 22.04 server equipped with CUDA 11.8 and an NVIDIA RTX 4090 GPU. The PTS and VEDAI datasets are used to train and validate the framework. The PTS dataset is split into train, test, and validation sets at an 8:1:1 ratio. The train and test sets are used for our experiments, and the validation set is provided for the user’s final validation. Following [23], the VEDAI is devised for 10-fold cross-validation, in which each subset is split into train and test at a 9:1 ratio. The ablation experiments are primarily conducted on the PTS dataset, with VEDAI fold01 used as an additional source of validation. The comparative experiments with previous methods are performed on the PTS dataset and the 10 folds of VEDAI by averaging the results. For the hyperparameter configuration, we adopt a stochastic gradient descent (SGD) optimizer with an initial learning rate of 0.01 and a momentum of 0.94, small COCO pre-trained weights for model initialization, a batch size of 32, 100 epochs for the PTS training, and 300 epochs for the VEDAI training.

4.3. Evaluation Metrics

The evaluation metrics include three primary measures: AP50 (average precision at 50% intersection over union (IoU)), AP75 (at 75% IoU), and AP50:95 (over IoU thresholds from 50% to 95% at 5% intervals). In object detection, a predicted bounding box is considered correct if its IoU with the ground truth exceeds a predefined threshold. Due to the small size of targets in RS imagery, the more lenient AP50 is adopted as the foremost evaluation metric. The AP is calculated as the area under the precision–recall curve, and the mAP is the mean of AP values across all classes. The corresponding formulas are defined in Equation (13).

$$\mathrm{Precision} = \frac{TP}{TP + FP}, \qquad \mathrm{Recall} = \frac{TP}{TP + FN}, \qquad \mathrm{AP} = \int_{0}^{1} P(R)\, dR, \qquad \mathrm{mAP} = \frac{1}{N}\sum_{i=1}^{N} \mathrm{AP}_{i} \tag{13}$$

where TP, FP, and FN denote the numbers of true positives, false positives, and false negatives; $P(R)$ denotes precision as a function of recall; $i$ indexes the classes; and $N$ denotes the number of classes.
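The AP computation for one class at one IoU threshold can be sketched as follows. The sketch assumes each detection has already been matched against the ground truth, so it carries a true-positive flag, and it integrates the precision envelope over all recall points. Evaluation toolkits differ in how they sample the curve, so this is one common variant rather than the exact protocol used in the experiments.

```python
from typing import List, Tuple


def average_precision(detections: List[Tuple[float, bool]],
                      num_gt: int) -> float:
    """AP from (confidence, is_true_positive) pairs and the ground-truth count."""
    ranked = sorted(detections, key=lambda d: d[0], reverse=True)
    tp = fp = 0
    precisions: List[float] = []
    recalls: List[float] = []
    for _, is_tp in ranked:
        if is_tp:
            tp += 1
        else:
            fp += 1
        precisions.append(tp / (tp + fp))
        recalls.append(tp / num_gt)
    # Precision envelope: make precision non-increasing as recall grows.
    for i in range(len(precisions) - 2, -1, -1):
        precisions[i] = max(precisions[i], precisions[i + 1])
    # Area under the precision-recall curve as a sum of rectangles.
    ap, prev_recall = 0.0, 0.0
    for p, r in zip(precisions, recalls):
        ap += p * (r - prev_recall)
        prev_recall = r
    return ap


def mean_average_precision(per_class_ap: List[float]) -> float:
    """mAP: the mean of the per-class AP values."""
    return sum(per_class_ap) / len(per_class_ap)


# Four detections for one class, four ground-truth objects (one is missed).
dets = [(0.9, True), (0.8, False), (0.7, True), (0.6, True)]
ap = average_precision(dets, num_gt=4)
```

For this toy input the recall reaches 0.75 and the resulting AP is 0.625. For a single-class task such as seedling detection, mAP equals the AP of that class.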

Additionally, GFLOPs and the number of parameters are used as metrics to quantify the model’s computational complexity and architectural scale.

5. Results and Discussion

5.1. Ablation Study

5.1.1. Necessity of Multimodality and ASF

To demonstrate the performance gains from multimodal data (DOM and DSM) in individual planted tree seedling detection, we first implemented popular unimodal detectors on unimodal data through the MMDetection [50] project. The detection algorithms include one-stage detectors (RetinaNet [51], YOLOv5), two-stage detectors (Faster R-CNN, Cascade R-CNN [52]), and transformer-based detectors (DINO [53], DDQ-DETR [54]). Subsequently, we implemented the proposed ASFYOLO multimodal detection system on both DOM and DSM data. To validate the enhancement brought by the ASF, the compared baselines include unimodal YOLOv5 and dual-backbone YOLOv5 with basic fusion strategies (Add and 1 × 1 Conv). The comparisons are detailed in Table 1.

Table 1.

Ablation study results of multimodality and ASF for individual planted tree seedling detection.

From the experimental results, all unimodal object detection algorithms perform better on DOM than on DSM. DDQ-DETR achieved the best detection performance, with an AP50 of 65.5% on DOM and 55.9% on DSM, while YOLOv5 also achieved competitive results, with an AP50 of 63.5% on DOM and 55.9% on DSM. Compared with the two-stage and transformer-based methods, YOLOv5 has a more lightweight architecture, faster inference speed, and lower development complexity, making it the preferred baseline for fusion methods. After implementing basic fusion strategies on both DOM and DSM, detection performance improved substantially, reaching 70.2% AP50 with Add fusion and 70.0% AP50 with 1 × 1 Conv fusion. These results confirm that integrating DOM and DSM multimodal data is highly effective for enhancing individual planted tree seedling detection. Embedding ASF modules into the Add fusion strategy further improved detection performance, raising AP50 to 72.6%. Compared with the original YOLOv5 on unimodal data (either DOM or DSM), the ASFYOLO model, which integrates multimodal DOM and DSM, raises AP50 by 9.1 and 16.7 percentage points, respectively.

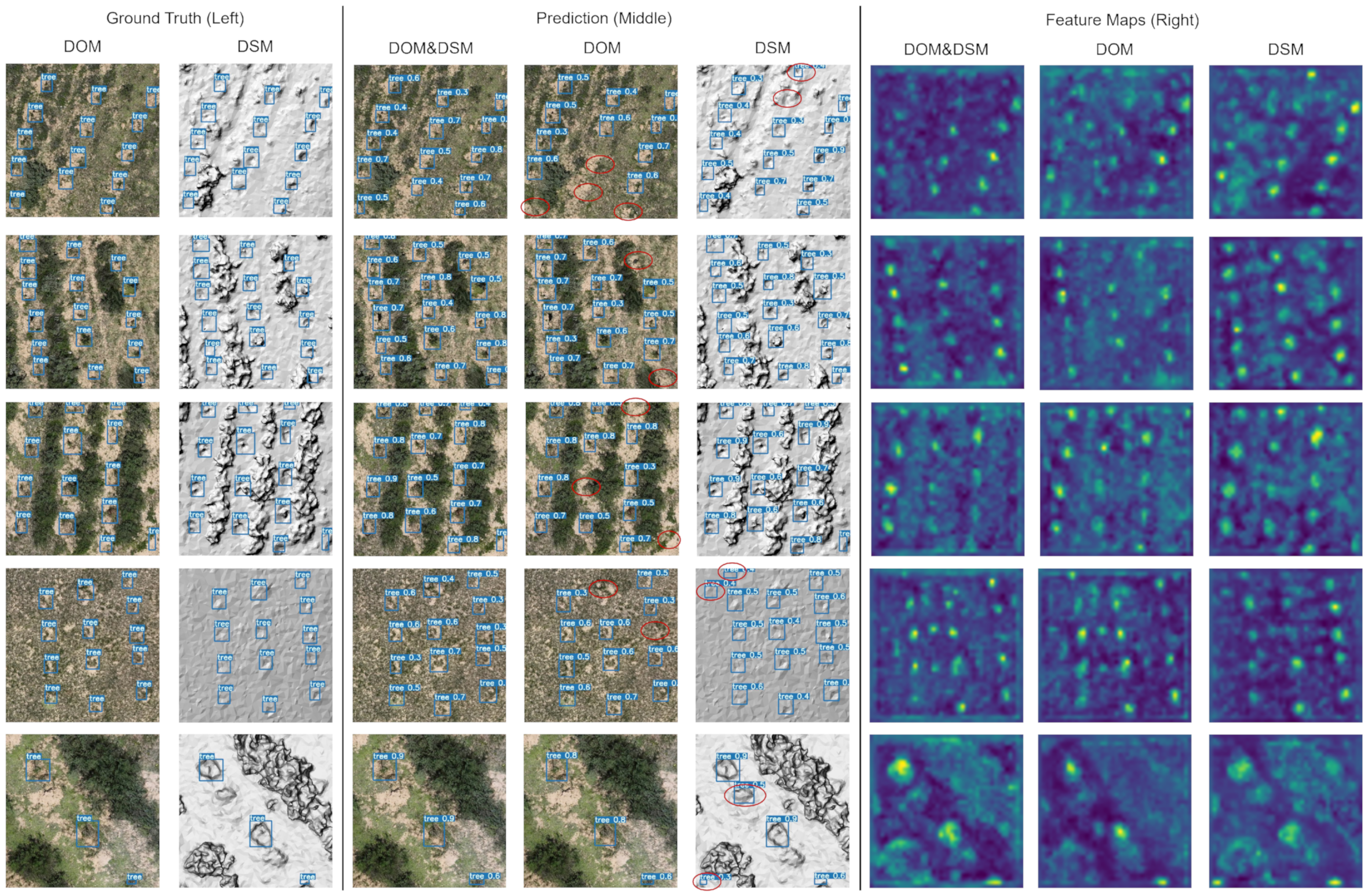

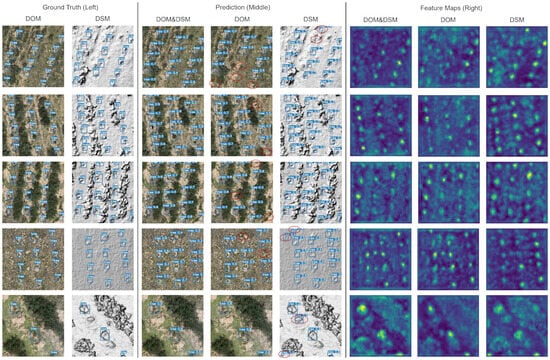

To visualize the detection outcomes of the ASFYOLO framework using different data sources, representative examples are presented in Figure 6. The middle three columns, respectively, show detection results from (1) dual multimodal data (DOM and DSM), (2) dual unimodal DOM data, and (3) dual unimodal DSM data. The right three columns display their corresponding feature maps. Recognizing that shallow feature maps capture texture details and deep layers represent semantic content, we selectively present intermediate-level feature maps with channel-wise averaging for visualization. It can be observed that using multimodal sources in our framework enables the detection of targets that are missed or incorrectly detected in unimodal data sources. In the corresponding feature maps, the target’s elevation-response region exhibits better separability in the multimodal data compared with the unimodal data.

Figure 6.

(Left): Ground truth of planted tree seedlings on DOM and DSM; (middle): detection results of multimodal and unimodal data; (right): corresponding intermediate-level feature maps. FP and FN are circled in red.
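The channel-wise averaging used to visualize the feature maps can be sketched as follows. This is a minimal pure-Python illustration, assuming a feature map stored as nested lists indexed `[channel][row][col]`; the min-max rescaling step is an added assumption for display purposes and is not described in the text, and the toy values are synthetic.

```python
from typing import List

FeatureMap = List[List[List[float]]]  # indexed as [channel][row][col]


def channel_mean(fmap: FeatureMap) -> List[List[float]]:
    """Average a C x H x W feature map over its channels, giving H x W."""
    c = len(fmap)
    h, w = len(fmap[0]), len(fmap[0][0])
    return [
        [sum(fmap[k][i][j] for k in range(c)) / c for j in range(w)]
        for i in range(h)
    ]


def min_max_normalize(img: List[List[float]]) -> List[List[float]]:
    """Rescale values to [0, 1] for display (assumed step); flat maps become zeros."""
    flat = [v for row in img for v in row]
    lo, hi = min(flat), max(flat)
    if hi == lo:
        return [[0.0 for _ in row] for row in img]
    return [[(v - lo) / (hi - lo) for v in row] for row in img]


# Synthetic 2-channel, 2 x 2 feature map (toy values only).
toy = [
    [[0.0, 2.0], [4.0, 6.0]],
    [[2.0, 4.0], [6.0, 8.0]],
]
mean_map = channel_mean(toy)
heat = min_max_normalize(mean_map)
print(mean_map)
```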

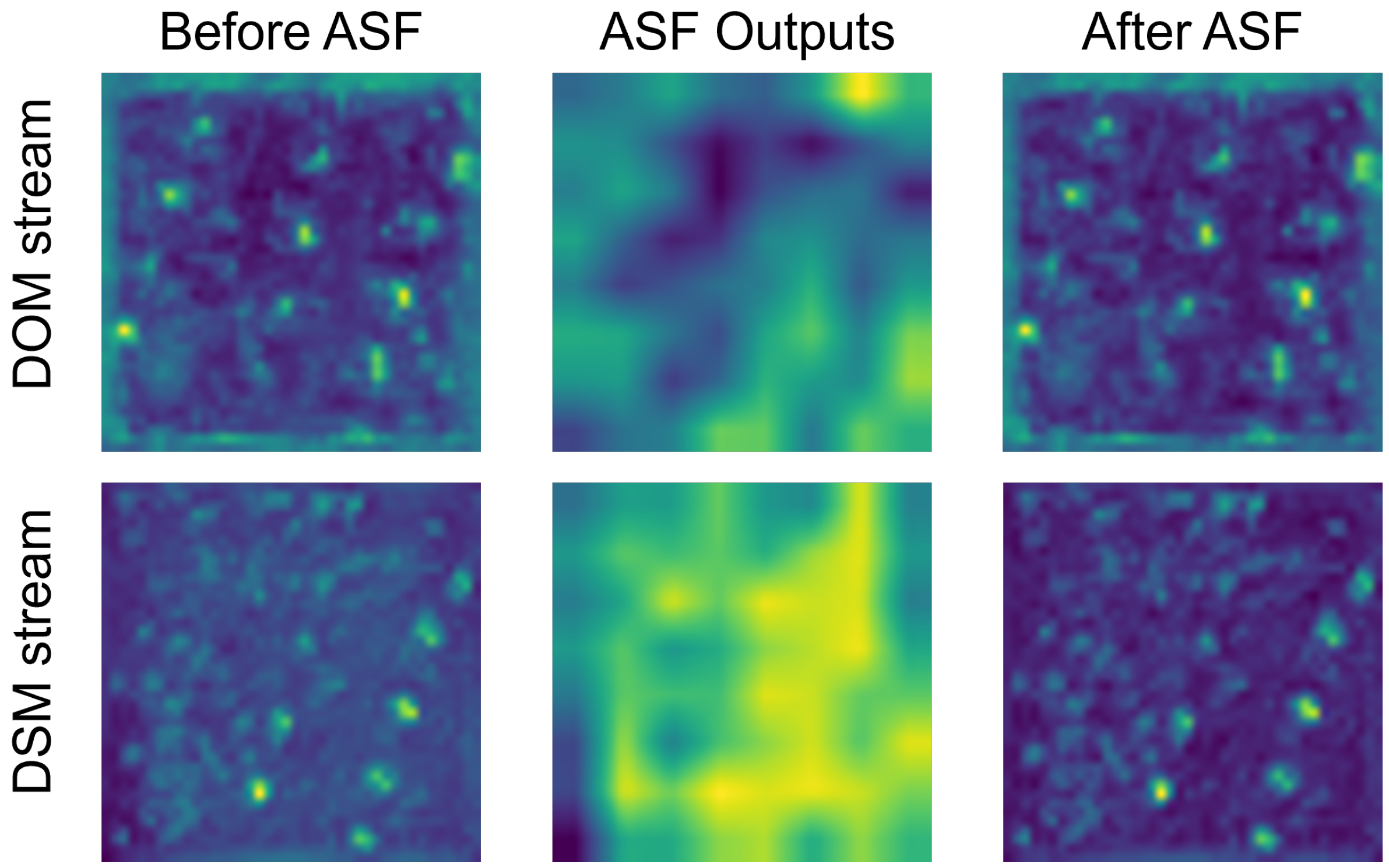

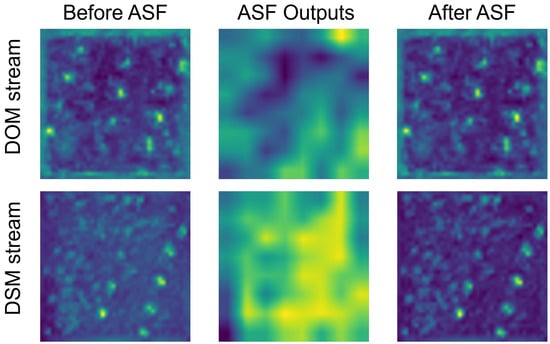

We also provide a visualization of the ASF module’s functionality, as it serves as a critical component for fusing DOM and DSM features. As mentioned in the previous section, the ASF module bridges the dual-backbone networks in a branch structure, providing residual compensation to the extracted feature maps. As illustrated in Figure 7 (corresponding to the first row of Figure 6), this compensation enhances the target features while suppressing irrelevant background features, thereby improving the distinctiveness of the target features.

Figure 7.

Comparison of dual-stream feature maps before and after ASF compensation in the intermediate-level layer.
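The branch structure with residual compensation can be sketched as below. Only the residual form (each stream's features plus a compensation term produced by the fusion branch) follows the description in the text. The `fuse` callable and `toy_fuse` are hypothetical placeholders and are not the ASF module.

```python
from typing import Callable, List, Tuple

Vector = List[float]
FuseFn = Callable[[Vector, Vector], Tuple[Vector, Vector]]


def residual_compensate(
    f_dom: Vector, f_dsm: Vector, fuse: FuseFn
) -> Tuple[Vector, Vector]:
    """Add the fusion branch's output back onto each backbone stream."""
    d_dom, d_dsm = fuse(f_dom, f_dsm)  # compensation terms from the branch
    out_dom = [x + d for x, d in zip(f_dom, d_dom)]
    out_dsm = [x + d for x, d in zip(f_dsm, d_dsm)]
    return out_dom, out_dsm


def toy_fuse(f_dom: Vector, f_dsm: Vector) -> Tuple[Vector, Vector]:
    """Placeholder fusion (NOT ASF): each stream receives half of the other."""
    return [0.5 * y for y in f_dsm], [0.5 * x for x in f_dom]


out_dom, out_dsm = residual_compensate([1.0, 2.0], [4.0, 0.0], toy_fuse)
print(out_dom, out_dsm)
```

Because the compensation is additive, a fusion branch that outputs zeros leaves both backbone streams unchanged.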

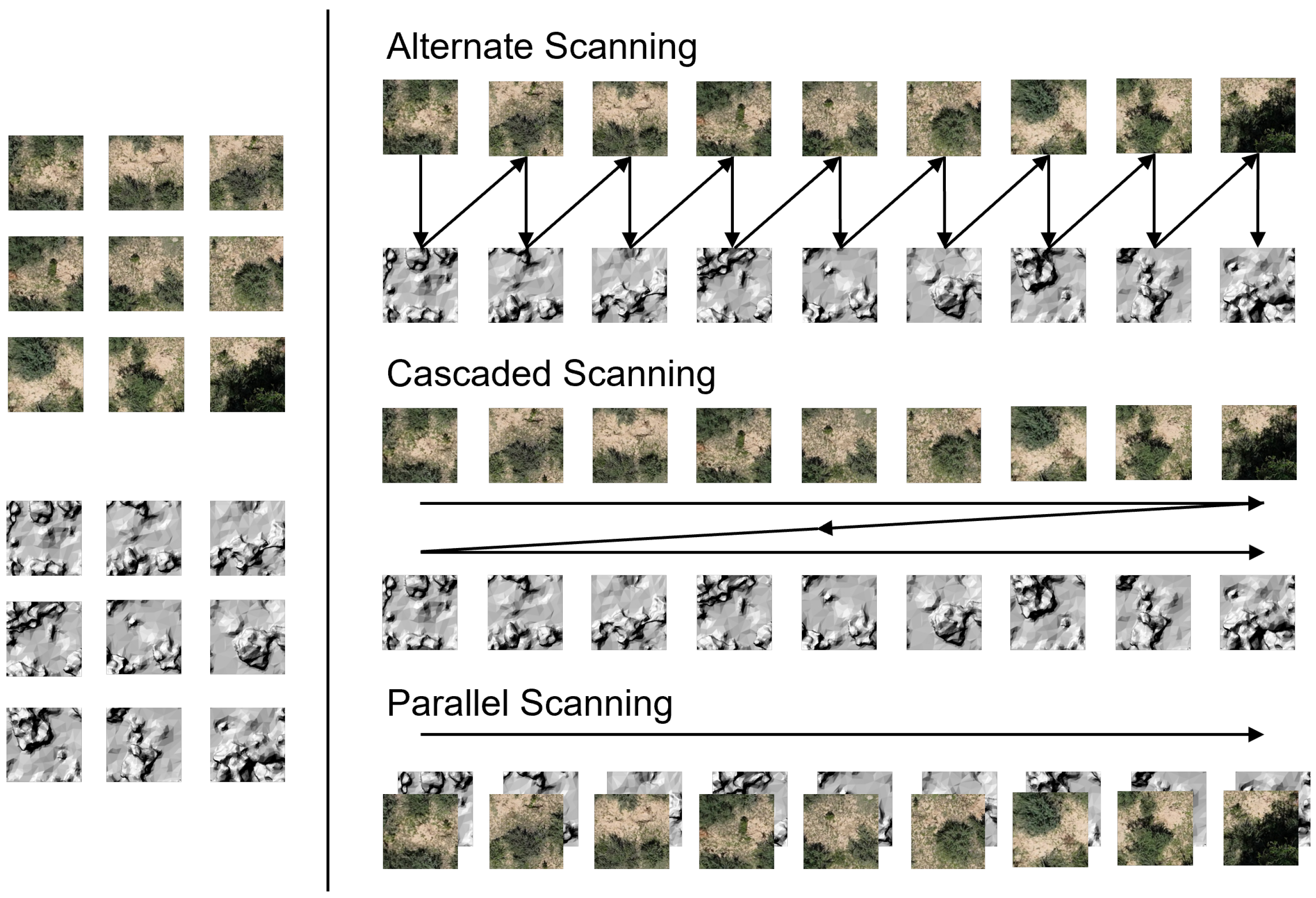

5.1.2. Necessity of Alternate Scanning

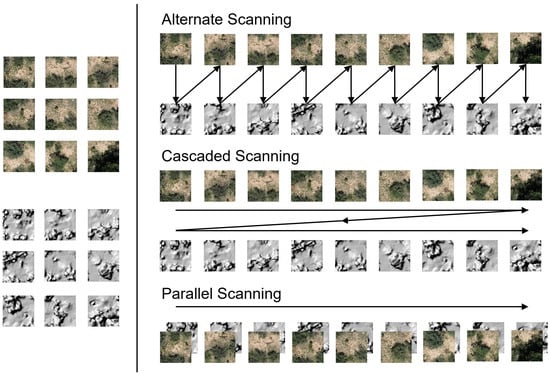

Within the ASF module structure, the alternate scanning (AS) strategy is applied to feature maps to facilitate multimodal feature alignment during SSM modeling. The ChangeMamba framework introduces two additional scanning strategies alongside AS: cascaded scanning (CS) and parallel scanning (PS), as depicted in Figure 8.

Figure 8.

Three scanning strategies: alternate, cascaded, and parallel scanning.

To determine the best strategy for multimodal object detection, we replaced AS with CS and with PS in turn, then assessed each variant on the PTS dataset and the VEDAI fold01 benchmark. As shown in Table 2, all three strategies (AS, CS, PS) achieve comparable detection performance on PTS, with AP50 scores of 72.6%, 71.8%, and 72.1%, respectively. On the VEDAI benchmark, the AS strategy is clearly superior, achieving a mAP50 of 80.7% and outperforming CS (77.7%) and PS (77.5%) by 3.0 and 3.2 percentage points, respectively. Based on these results, we adopt the AS strategy in the design of the fusion module for this study.

Table 2.

Comparative evaluation of AS, CS, and PS scanning strategies on the PTS dataset and VEDAI benchmark.
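To make the three token orderings concrete, here is a minimal sketch on two equal-length token sequences. The exact definitions are not spelled out in this section, so the interpretations below are assumptions based on the strategy names: alternate scanning interleaves tokens from the two modalities, cascaded scanning places one sequence after the other, and parallel scanning pairs tokens at the same position (as in channel-wise concatenation). All function names are hypothetical.

```python
from typing import List, Sequence, Tuple, TypeVar

T = TypeVar("T")


def _check(a: Sequence[T], b: Sequence[T]) -> None:
    if len(a) != len(b):
        raise ValueError("both modalities must provide the same number of tokens")


def alternate_scan(a: Sequence[T], b: Sequence[T]) -> List[T]:
    """Assumed AS: interleave token by token -> a0, b0, a1, b1, ..."""
    _check(a, b)
    return [tok for pair in zip(a, b) for tok in pair]


def cascaded_scan(a: Sequence[T], b: Sequence[T]) -> List[T]:
    """Assumed CS: all tokens of a, followed by all tokens of b."""
    _check(a, b)
    return list(a) + list(b)


def parallel_scan(a: Sequence[T], b: Sequence[T]) -> List[Tuple[T, T]]:
    """Assumed PS: pair tokens at the same position; length stays n."""
    _check(a, b)
    return list(zip(a, b))


dom = ["d0", "d1", "d2"]  # toy DOM tokens
dsm = ["s0", "s1", "s2"]  # toy DSM tokens
print(alternate_scan(dom, dsm))
print(cascaded_scan(dom, dsm))
print(parallel_scan(dom, dsm))
```

Under these assumptions, AS and CS both produce a sequence of length 2n but differ in how far apart corresponding DOM and DSM tokens sit: adjacent in AS, n positions apart in CS. PS keeps length n and widens each token instead.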

5.1.3. Ablation Study on ASF Module Components

The ASF module comprises several critical components, including channel swapping blocks, SS2D, and residual connection designs, as labeled in Figure 4. In the ablation study on the PTS dataset, each component is removed individually to evaluate its contribution and to validate the overall effectiveness of the module. The experimental results are presented in Table 3. Removing any single component degrades detection performance, confirming the importance of the proposed design choices. Excluding SS2D causes the largest degradation, underscoring its crucial role in the module.

Table 3.

Results of the ablation study on ASF module components.

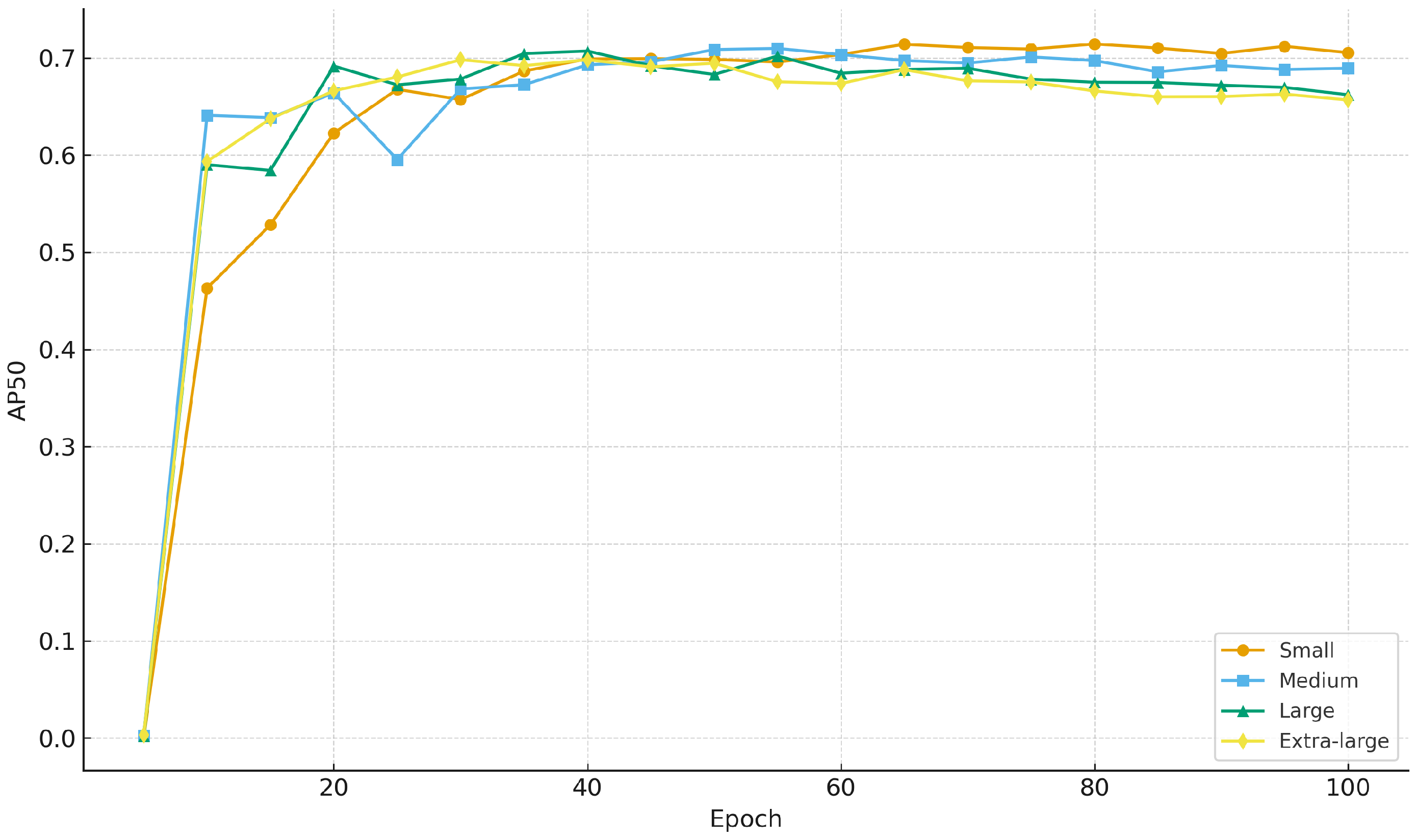

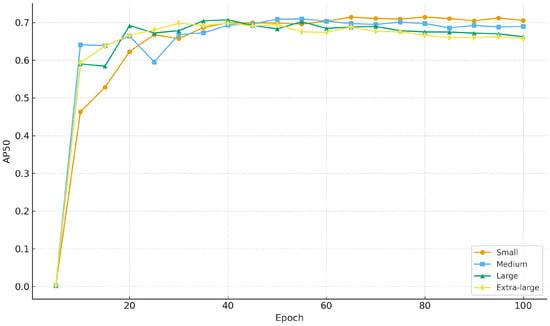

5.2. Hyperparameter Selection for Individual Tree Seedling Detection

The hyperparameter selection experiments focused on the scale of ASFYOLO models (small, medium, large, and extra-large), batch size, and learning rate. The first step was to select the appropriate model scale. A learning rate of 0.01 and the maximum batch size supported by GPU memory were applied for all model scales. The experimental results, presented in Table 4, show that the small model achieved the best performance. The training curves of all model scales, shown in Figure 9, indicate that all models converge properly, while larger models are more prone to overfitting. Therefore, the small model was selected for subsequent experiments. Based on this choice, further experiments were conducted on the PTS dataset by varying batch sizes (8, 16, 32) and learning rates (0.02, 0.01, 0.005). As presented in Table 5, the model achieved the highest AP50 with a batch size of 32 and a learning rate of 0.01. The best performance for AP75 and AP was obtained with a batch size of 8 and a learning rate of 0.005. Since AP50 is the primary evaluation metric in this study, a learning rate of 0.01 and a batch size of 32 were selected.

Table 4.

Detection performance and model complexity across different scales.

Figure 9.

Training curves of different model scales on AP50.

Table 5.

Detection performance under different batch sizes and learning rates.
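The selection procedure described in this subsection (evaluate every batch size and learning rate combination, then pick the configuration with the best primary metric) can be sketched as follows. The `evaluate` callable stands in for a full training and validation run. The `toy_evaluate` function returns purely synthetic, unitless scores so the sketch is runnable; these are not results from the paper.

```python
from itertools import product
from typing import Callable, Dict, Iterable, Tuple

Scores = Dict[str, float]


def select_by_metric(
    batch_sizes: Iterable[int],
    learning_rates: Iterable[float],
    evaluate: Callable[[int, float], Scores],
    metric: str = "AP50",
) -> Tuple[Tuple[int, float], Scores]:
    """Return the (batch size, learning rate) pair with the highest `metric`."""
    best_cfg, best_scores = None, None
    for bs, lr in product(batch_sizes, learning_rates):
        scores = evaluate(bs, lr)  # in practice: train, then validate
        if best_scores is None or scores[metric] > best_scores[metric]:
            best_cfg, best_scores = (bs, lr), scores
    return best_cfg, best_scores


def toy_evaluate(bs: int, lr: float) -> Scores:
    """Synthetic placeholder scores (NOT measured values)."""
    return {
        "AP50": bs / 100 - abs(lr - 0.01),
        "AP": -bs / 100 - abs(lr - 0.005),
    }


grid_bs = (8, 16, 32)
grid_lr = (0.02, 0.01, 0.005)
cfg_ap50, _ = select_by_metric(grid_bs, grid_lr, toy_evaluate, metric="AP50")
cfg_ap, _ = select_by_metric(grid_bs, grid_lr, toy_evaluate, metric="AP")
print(cfg_ap50, cfg_ap)
```

The toy scores are constructed so that different metrics favor different configurations, which is why the primary metric has to be fixed before the grid is searched.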

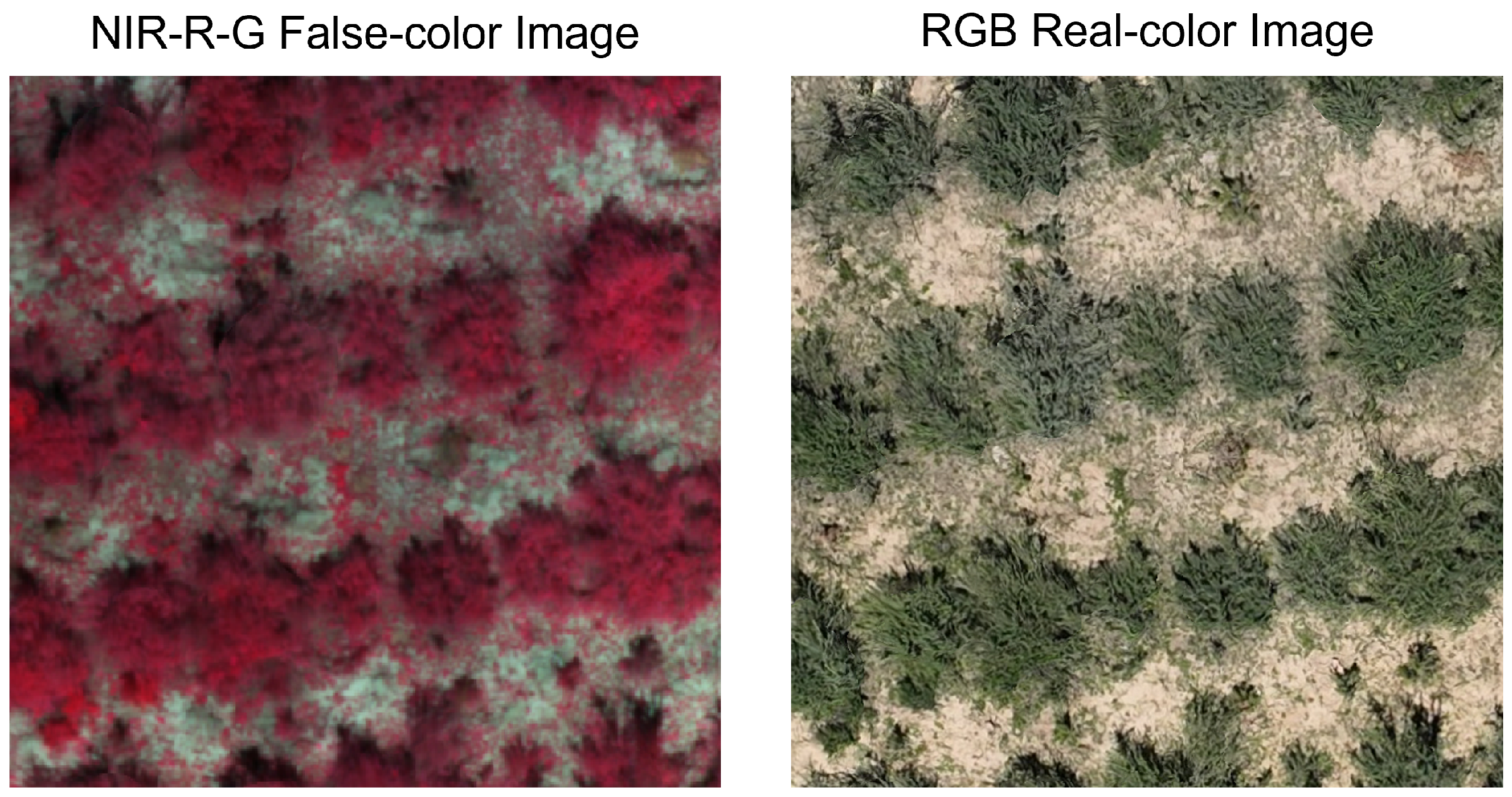

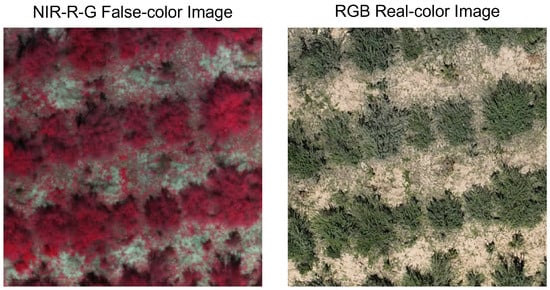

5.3. RGB vs. NIR-R-G: Performance on Individual Tree Seedling Detection Task

As previously mentioned in the introduction to the PTS dataset, we acquired multispectral DOM data from Site A, which comprises near-infrared (NIR), red, green, and blue spectral bands. The NIR band exhibits heightened sensitivity to vegetation reflectance characteristics. A typical visualization approach for vegetation utilizes a three-channel false-color composite combining NIR, red, and green bands (NIR-R-G). In this composite, vegetation appears as distinct red hues, providing enhanced contrast against bare soil substrates, as illustrated in Figure 10. To investigate whether NIR-R-G false-color imagery improves individual planted tree seedling detection performance, we conducted experiments on Site A by replacing the original RGB imagery with NIR-R-G imagery as input for our ASFYOLO detection framework.

Figure 10.

(Left): Multispectral NIR-R-G false-color DOM; (right): RGB true-color DOM.
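A false-color composite of this kind maps the NIR, red, and green bands to the red, green, and blue display channels. The sketch below is a minimal illustration assuming each band is an H x W nested list of reflectance-like values in [0, 1]; the band keys and the toy pixel values are hypothetical.

```python
from typing import Dict, List, Tuple

Band = List[List[float]]
Pixel = Tuple[float, float, float]


def false_color_composite(bands: Dict[str, Band]) -> List[List[Pixel]]:
    """Build an NIR-R-G composite: display (R, G, B) = (NIR, red, green)."""
    nir, red, green = bands["nir"], bands["red"], bands["green"]
    return [
        [(nir[i][j], red[i][j], green[i][j]) for j in range(len(nir[0]))]
        for i in range(len(nir))
    ]


# Toy 1 x 2 scene: a vegetation-like pixel (high NIR) and a soil-like pixel.
bands = {
    "nir": [[0.8, 0.3]],
    "red": [[0.1, 0.3]],
    "green": [[0.2, 0.25]],
    "blue": [[0.1, 0.2]],  # unused by the NIR-R-G composite
}
composite = false_color_composite(bands)
print(composite)
```

In the toy scene, the high-NIR pixel is dominated by the display red channel, which is why vegetation appears red in such composites.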

The experimental results are presented in Table 6. Overall detection accuracy is lower than in the preceding experiments because restricting the data to Site A reduces the dataset scale. With unimodal inputs, RGB achieves a marginally higher AP50 (56.7%) than NIR-R-G (55.9%). Within the multimodal framework (DOM + DSM), NIR-R-G input shows a slight advantage, attaining an AP50 of 59.8% compared with 58.6% for RGB. Although the NIR-R-G composite offers only a modest improvement for multimodal detection, we deem it more valuable for downstream ecological applications.

Table 6.

Detection accuracy metrics: RGB vs. NIR-R-G spectral inputs.

5.4. Comparisons with Previous Methods

In the comparative analysis, we benchmarked our proposed ASFYOLO against several powerful unimodal object detection methods, including YOLOv11 [55], DDQ-DETR, and MambaYOLO [56], as well as representative multimodal object detection methods also built upon the YOLOv5 framework, including SuperYOLO, YOLOv5 (Add), YOLOFusion, CFT, and ICAFusion. SuperYOLO employs a lightweight single-backbone structure with pixel-level fusion and does not incorporate super-resolution enhancement in our experiment. All other multimodal algorithms utilize a dual-backbone structure with feature-level fusion. The experimental results for individual planted tree seedling detection on the PTS dataset are presented in Table 7, and the multi-class object detection results on the VEDAI dataset are shown in Table 8.

Table 7.

Comparison of powerful unimodal and multimodal object detection methods on the PTS dataset (individual planted tree seedling detection).

Table 8.

Comparison of multimodal object detection methods on the VEDAI benchmark (IR + RGB multi-class object detection). AP50 is used for evaluating single-class objects.

Table 7 reports the accuracy and computational resource consumption of the detection methods using different modalities on the planted tree seedling detection task. All multimodal detection methods using the combined DOM + DSM input significantly outperformed the unimodal detection systems using DOM or DSM alone. These results demonstrate that the DOM + DSM fusion strategy is highly effective for individual planted tree seedling detection. Within the DOM + DSM modality, our proposed ASFYOLO achieved the best detection performance with an AP50 of 72.6%. Leveraging the efficiency of the SSM architecture, ASFYOLO also has fewer parameters and a lower computational cost than the transformer-based CFT. Compared with CFT, ASFYOLO nearly halves the parameters while reducing GFLOPs by 3.35. Compared with ICAFusion, ASFYOLO has more parameters but still requires 0.44 fewer GFLOPs. These results highlight ASF’s effective balance between detection accuracy and computational efficiency, making it well suited to practical applications in large-scale RS imagery interpretation.

Since PTS is a single-class dataset, we further evaluated the effectiveness of ASFYOLO on a standard multi-class RS object detection task by conducting comparative experiments on the publicly available VEDAI dataset using both IR and RGB modalities. The experimental results are presented in Table 8. ASFYOLO achieves the best mAP50 (77.4%) and the highest mAP (45.8%), and delivers the best AP50 for six target classes. These results further demonstrate the superiority and scalability of our model across different multimodal detection scenarios.

6. Conclusions and Future Work

In this paper, we propose fusing textural features from DOM and geometric features from DSM to enhance the robustness of individual planted tree seedling detection in forested scenarios. A dual-backbone YOLOv5 architecture is adopted as the foundational framework for executing this multimodal object detection task. Within this architecture, we develop an ASF module for feature-level fusion between DOM and DSM. The ASF module, evolved from the VSS block, employs an AS strategy to align multimodal tokens for S6 modeling, enabling computationally efficient global multimodal fusion. To train and evaluate the entire framework, we establish the PTS dataset, which contains co-registered DOM and DSM data covering a 96-hectare forest area. By integrating DOM and DSM, the multimodal framework achieves significantly improved detection performance compared with unimodal detection systems using DOM or DSM individually. We benchmark the ASFYOLO against prior multimodal object detection methods on both the PTS dataset and the public VEDAI dataset. The ASFYOLO demonstrates superior performance for multimodal detection tasks while maintaining relatively low computational cost.

Although our study has achieved promising results, it still has certain limitations and opens up broad opportunities for future research. The main limitations are as follows:

- (1) The current dataset is limited to a district-level region and has a narrow temporal coverage. Therefore, the model’s generalization capability across different forest types, larger spatial scales, and varying seasonal conditions has not yet been fully verified.

- (2) The current detection task is still at an early stage of information extraction for planted tree seedlings, focusing mainly on locating and delineating individual targets, while attributes such as species, health status, and growth conditions have not yet been addressed.

- (3) The interpretability of the proposed ASF method regarding the mechanisms of feature fusion remains limited.

To address the above limitations, our future work will include, but is not limited to, the following directions:

- (1) We will expand the dataset in both spatial and temporal dimensions to support studies on model generalization across diverse forest environments and seasonal conditions.

- (2) We will build upon the findings of this study to develop more refined methods for extracting detailed attributes of tree seedlings, supporting practical forestry applications such as pest monitoring and plantation optimization.

- (3) We will explore the interpretability of the ASF module in multimodal feature fusion and integrate the insights into practical remote sensing tasks to achieve more task-adaptive fusion strategies.

We also hope our work will inspire further collaborative research in these directions.

Author Contributions

Conceptualization, T.Q. and Y.L.; methodology, T.Q. and P.Y.; software, Y.L. and J.T.; validation, T.Q., Y.L. and J.T.; formal analysis, T.Q.; investigation, P.Y.; resources, J.T.; data curation, T.Q.; writing—original draft preparation, T.Q.; writing—review and editing, Y.L.; visualization, T.Q.; supervision, C.H., Z.L. and Z.Z.; project administration, J.T.; funding acquisition, Y.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research was supported by the National Key R&D Program of China under Grants 2022YFB3903000 and 2022YFB3903004, the Youth Innovation Promotion Association CAS under Grant 2021118, and the National Natural Science Foundation of China under Grant 42371376.

Data Availability Statement

The original contributions presented in the study are included in the article. Further inquiries can be directed to the corresponding author.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Teng, M.; Ouaknine, A.; Laliberté, E.; Bengio, Y.; Rolnick, D.; Larochelle, H. Bringing SAM to new heights: Leveraging elevation data for tree crown segmentation from drone imagery. arXiv 2025, arXiv:2506.04970.

- Lett, S.; Dorrepaal, E. Global drivers of tree seedling establishment at alpine treelines in a changing climate. Funct. Ecol. 2018, 32, 1666–1680.

- Browne, L.; Markesteijn, L.; Engelbrecht, B.M.; Jones, F.A.; Lewis, O.T.; Manzané-Pinzón, E.; Wright, S.J.; Comita, L.S. Increased mortality of tropical tree seedlings during the extreme 2015–16 El Niño. Glob. Chang. Biol. 2021, 27, 5043–5053.

- Ibáñez, T.S.; Wardle, D.A.; Gundale, M.J.; Nilsson, M.C. Effects of soil abiotic and biotic factors on tree seedling regeneration following a boreal forest wildfire. Ecosystems 2022, 25, 471–487.

- Holl, K.D.; Zahawi, R.A.; Cole, R.J.; Ostertag, R.; Cordell, S. Planting seedlings in tree islands versus plantations as a large-scale tropical forest restoration strategy. Restor. Ecol. 2011, 19, 470–479.

- Brancalion, P.H.; Holl, K.D. Guidance for successful tree planting initiatives. J. Appl. Ecol. 2020, 57, 2349–2361.

- Hyyppa, J. Detecting and estimating attributes for single trees using laser scanner. Photogramm. J. Finl. 1999, 16, 27–42.

- Li, W.; Guo, Q.; Jakubowski, M.K.; Kelly, M. A new method for segmenting individual trees from the lidar point cloud. Photogramm. Eng. Remote Sens. 2012, 78, 75–84.

- Næsset, E.; Nelson, R. Using airborne laser scanning to monitor tree migration in the boreal–alpine transition zone. Remote Sens. Environ. 2007, 110, 357–369.

- Stumberg, N.; Bollandsås, O.M.; Gobakken, T.; Næsset, E. Automatic detection of small single trees in the forest-tundra ecotone using airborne laser scanning. Remote Sens. 2014, 6, 10152–10170.

- Pearse, G.D.; Tan, A.Y.; Watt, M.S.; Franz, M.O.; Dash, J.P. Detecting and mapping tree seedlings in UAV imagery using convolutional neural networks and field-verified data. ISPRS J. Photogramm. Remote Sens. 2020, 168, 156–169.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. IEEE Trans. Pattern Anal. Mach. Intell. 2016, 39, 1137–1149.

- Ecke, S.; Dempewolf, J.; Frey, J.; Schwaller, A.; Endres, E.; Klemmt, H.J.; Tiede, D.; Seifert, T. UAV-based forest health monitoring: A systematic review. Remote Sens. 2022, 14, 3205.

- Jarahizadeh, S.; Salehi, B. A comparative analysis of UAV photogrammetric software performance for forest 3D modeling: A case study using AgiSoft photoscan, PIX4DMapper, and DJI Terra. Sensors 2024, 24, 286.

- Hu, J.; Shen, L.; Sun, G. Squeeze-and-excitation networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 7132–7141.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. Adv. Neural Inf. Process. Syst. 2017, 30.

- Gu, A.; Goel, K.; Ré, C. Efficiently modeling long sequences with structured state spaces. arXiv 2021, arXiv:2111.00396.

- Chen, H.; Song, J.; Han, C.; Xia, J.; Yokoya, N. ChangeMamba: Remote sensing change detection with spatiotemporal state space model. IEEE Trans. Geosci. Remote Sens. 2024, 62, 1–20.

- Jocher, G.; Stoken, A.; Borovec, J.; Chaurasia, A.; Changyu, L.; Hogan, A.; Hajek, J.; Diaconu, L.; Kwon, Y.; Defretin, Y.; et al. ultralytics/yolov5: v5.0 - YOLOv5-P6 1280 models, AWS, Supervise.ly and YouTube integrations. Zenodo. 2021. Available online: https://github.com/ultralytics/yolov5/releases/tag/v5.0 (accessed on 12 April 2021).

- Razakarivony, S.; Jurie, F. Vehicle detection in aerial imagery: A small target detection benchmark. J. Vis. Commun. Image Represent. 2016, 34, 187–203.

- Li, J.; Hong, D.; Gao, L.; Yao, J.; Zheng, K.; Zhang, B.; Chanussot, J. Deep learning in multimodal remote sensing data fusion: A comprehensive review. Int. J. Appl. Earth Obs. Geoinf. 2022, 112, 102926.

- Gómez-Chova, L.; Tuia, D.; Moser, G.; Camps-Valls, G. Multimodal classification of remote sensing images: A review and future directions. Proc. IEEE 2015, 103, 1560–1584.

- Zhang, J.; Lei, J.; Xie, W.; Fang, Z.; Li, Y.; Du, Q. SuperYOLO: Super resolution assisted object detection in multimodal remote sensing imagery. IEEE Trans. Geosci. Remote Sens. 2023, 61, 1–15.

- Liu, J.; Zhang, S.; Wang, S.; Metaxas, D.N. Multispectral deep neural networks for pedestrian detection. arXiv 2016, arXiv:1611.02644.

- Cao, Z.; Yang, H.; Zhao, J.; Guo, S.; Li, L. Attention fusion for one-stage multispectral pedestrian detection. Sensors 2021, 21, 4184.

- Qingyun, F.; Zhaokui, W. Cross-modality attentive feature fusion for object detection in multispectral remote sensing imagery. Pattern Recognit. 2022, 130, 108786.

- Qingyun, F.; Dapeng, H.; Zhaokui, W. Cross-modality fusion transformer for multispectral object detection. arXiv 2021, arXiv:2111.00273.

- Shen, J.; Chen, Y.; Liu, Y.; Zuo, X.; Fan, H.; Yang, W. ICAFusion: Iterative cross-attention guided feature fusion for multispectral object detection. Pattern Recognit. 2024, 145, 109913.

- Fu, D.Y.; Dao, T.; Saab, K.K.; Thomas, A.W.; Rudra, A.; Ré, C. Hungry hungry hippos: Towards language modeling with state space models. arXiv 2022, arXiv:2212.14052.

- Gu, A.; Dao, T. Mamba: Linear-time sequence modeling with selective state spaces. arXiv 2023, arXiv:2312.00752.

- Liu, Y.; Tian, Y.; Zhao, Y.; Yu, H.; Xie, L.; Wang, Y.; Ye, Q.; Jiao, J.; Liu, Y. Vmamba: Visual state space model. Adv. Neural Inf. Process. Syst. 2024, 37, 103031–103063.

- Xie, X.; Cui, Y.; Tan, T.; Zheng, X.; Yu, Z. Fusionmamba: Dynamic feature enhancement for multimodal image fusion with mamba. Vis. Intell. 2024, 2, 37.

- He, X.; Cao, K.; Zhang, J.; Yan, K.; Wang, Y.; Li, R.; Xie, C.; Hong, D.; Zhou, M. Pan-mamba: Effective pan-sharpening with state space model. Inf. Fusion 2025, 115, 102779.

- Yang, Y.; Ma, C.; Yao, J.; Zhong, Z.; Zhang, Y.; Wang, Y. Remamber: Referring image segmentation with mamba twister. In Proceedings of the European Conference on Computer Vision, Milan, Italy, 29 September–4 October 2024; Springer: Berlin/Heidelberg, Germany, 2024; pp. 108–126.

- Dong, Z.; Beedu, A.; Sheinkopf, J.; Essa, I. Mamba fusion: Learning actions through questioning. arXiv 2024, arXiv:2409.11513.

- Bochkovskiy, A.; Wang, C.Y.; Liao, H.Y.M. Yolov4: Optimal speed and accuracy of object detection. arXiv 2020, arXiv:2004.10934.

- Song, A.; Zhao, Z.; Xiong, Q.; Guo, J. Lightweight the focus module in yolov5 by dilated convolution. In Proceedings of the 2022 3rd International Conference on Computer Vision, Image and Deep Learning & International Conference on Computer Engineering and Applications (CVIDL & ICCEA), Changchun, China, 20–22 May 2022; pp. 111–114.

- Quan, J.; Deng, Y. Enhancing YOLOv3 Object Detection: An In-Depth Analysis of C3 Module Integrated Architecture. In Proceedings of the 2024 9th International Conference on Image, Vision and Computing (ICIVC), Suzhou, China, 15–17 July 2024; pp. 117–121.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Spatial pyramid pooling in deep convolutional networks for visual recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 37, 1904–1916.

- Lin, T.Y.; Dollár, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature pyramid networks for object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2117–2125.

- Liu, S.; Qi, L.; Qin, H.; Shi, J.; Jia, J. Path aggregation network for instance segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 8759–8768.

- Rothe, R.; Guillaumin, M.; Van Gool, L. Non-maximum suppression for object detection by passing messages between windows. In Proceedings of the Asian Conference on Computer Vision, Singapore, 1–5 November 2014; Springer: Berlin/Heidelberg, Germany, 2014; pp. 290–306.

- Schuster, M.; Paliwal, K.K. Bidirectional recurrent neural networks. IEEE Trans. Signal Process. 1997, 45, 2673–2681.

- Ba, J.L.; Kiros, J.R.; Hinton, G.E. Layer normalization. arXiv 2016, arXiv:1607.06450.

- Chollet, F. Xception: Deep learning with depthwise separable convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 1251–1258.

- Elfwing, S.; Uchibe, E.; Doya, K. Sigmoid-weighted linear units for neural network function approximation in reinforcement learning. Neural Netw. 2018, 107, 3–11.

- Maguire, D.J. ArcGIS: General purpose GIS software system. In Encyclopedia of GIS; Springer: Berlin/Heidelberg, Germany, 2008; pp. 25–31.

- Zhao, Z.; Fan, C.; Liu, L. Geo SAM: A QGIS Plugin Using Segment Anything Model (SAM) to Accelerate Geospatial Image Segmentation, Version 1.1.0; Zenodo, 2023. Available online: https://zenodo.org/records/8191039 (accessed on 2 November 2025).

- Moyroud, N.; Portet, F. Introduction to QGIS. QGIS Generic Tools 2018, 1, 1–17.

- Chen, K.; Wang, J.; Pang, J.; Cao, Y.; Xiong, Y.; Li, X.; Sun, S.; Feng, W.; Liu, Z.; Xu, J.; et al. MMDetection: Open mmlab detection toolbox and benchmark. arXiv 2019, arXiv:1906.07155.

- Lin, T.Y.; Goyal, P.; Girshick, R.; He, K.; Dollár, P. Focal loss for dense object detection. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2980–2988.

- Cai, Z.; Vasconcelos, N. Cascade r-cnn: Delving into high quality object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 6154–6162.

- Zhang, H.; Li, F.; Liu, S.; Zhang, L.; Su, H.; Zhu, J.; Ni, L.M.; Shum, H.Y. Dino: Detr with improved denoising anchor boxes for end-to-end object detection. arXiv 2022, arXiv:2203.03605.

- Zhang, S.; Wang, X.; Wang, J.; Pang, J.; Lyu, C.; Zhang, W.; Luo, P.; Chen, K. Dense distinct query for end-to-end object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 7329–7338.

- Khanam, R.; Hussain, M. Yolov11: An overview of the key architectural enhancements. arXiv 2024, arXiv:2410.17725.

- Wang, Z.; Li, C.; Xu, H.; Zhu, X.; Li, H. MambaYOLO: A Simple Baseline for Object Detection with State Space Model. Proc. AAAI Conf. Artif. Intell. 2025, 39, 8205–8213.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).