Abstract

The reconstruction of high-resolution (HR) remote sensing images (RSIs) from low-resolution (LR) counterparts is a critical task in remote sensing image super-resolution (RSISR). Recent advances in convolutional neural networks (CNNs) and Transformers have significantly improved RSISR performance owing to their strengths in local feature extraction and global modeling, respectively. However, several limitations remain, including the underutilization of multi-scale features in RSIs, the limited receptive field of the Swin Transformer’s window self-attention (WSA), and the high computational complexity of existing methods. To address these issues, this paper introduces NGSTGAN, which employs an N-Gram Swin Transformer as the generator and a multi-attention U-Net as the discriminator. The discriminator adds channel, spatial, and pixel attention (CSPA) modules to strengthen its attention to key multi-scale features, while the generator uses an improved shallow feature extraction (ISFE) module to extract multi-scale and multi-directional features, enhancing the capture of complex textures and details. The N-Gram concept is introduced to expand the receptive field of the Swin Transformer, and sliding window self-attention (S-WSA) is employed to enable interaction between neighboring windows. Additionally, channel-reducing group convolution (CRGC) is used to reduce the number of parameters and the computational complexity. A cross-sensor multispectral dataset combining Landsat-8 (L8) and Sentinel-2 (S2) imagery is constructed to enhance the resolution of L8’s blue (B), green (G), red (R), and near-infrared (NIR) bands from 30 m to 10 m. Experiments show that NGSTGAN outperforms state-of-the-art (SOTA) methods, improving the peak signal-to-noise ratio (PSNR) by 0.5180 dB and the structural similarity index measure (SSIM) by 0.0153 over the second-best method and offering a more effective solution for this task.

1. Introduction

Over the past few decades, the rapid development of satellite technology has led to a significant increase in the volume of RSIs, with applications spanning disaster monitoring [1,2], agriculture [3], weather forecasting [4], target detection [5], and land use classification [6,7]. HR images, in particular, offer richer texture information, which is crucial for detailed surface feature extraction and interpretation in downstream tasks and thus improves the accuracy of these applications. Although image super-resolution (SR) does not increase the physical resolution of the imaging sensor, it can effectively reconstruct high-frequency details from existing image data, yielding more informative representations than traditional enhancement methods. SR has therefore emerged as a promising way to extract richer information from RSIs without increasing hardware costs. Early methods include pan-sharpening [8] and interpolation [9], which are simple and efficient but often produce over-smoothed, blurry results. Other methods, such as reconstruction-based approaches [10,11], sparse representation [12], and projection onto convex sets [13], offer strong theoretical support but are complex and require substantial prior knowledge, which limits their application to remote sensing images from different sensors.

The rapid advancement of information technology has led to the widespread adoption of deep learning-based methods across various fields, including image SR. CNNs [14] have garnered significant attention in computer vision owing to their automatic feature extraction, parameter sharing, preservation of spatial structure, translation invariance, and generalization ability. The seminal work by Dong et al. [15] (SRCNN), which applied CNNs to image SR for the first time, outperformed traditional methods and validated the feasibility of CNNs in this domain. Building on SRCNN, Dong et al. [16] proposed FSRCNN, which performs upsampling at the end of the network (post-upsampling) so that feature mapping runs on the LR input; this design has since become a dominant framework for image SR. However, both SRCNN and FSRCNN are shallow networks (SRCNN has only three convolutional layers), which limits their representational capacity. The advent of residual networks [17] made much deeper models trainable. VDSR [18], for instance, uses 20 convolutional layers and a global residual connection that adds the input image to the network output element-wise, effectively mitigating vanishing gradients in deep models; its performance surpassed that of SRCNN. Subsequently, CNN-based SR models grew progressively deeper [19,20,21], although increasing model depth has its own limitations. Researchers therefore began to incorporate attention mechanisms into image SR. RCAN [22] combined channel attention with a very deep residual structure to build the first attention-based SR network, demonstrating that attention mechanisms can significantly enhance SR performance. Following this, second-order attention [23], residual attention [24], and pixel attention [25] were employed to further improve SR models. 
Today, attention mechanisms and residual structures have become standard components of SR models. CNNs have also been introduced into the SR of RSIs. Unlike ordinary optical images, RSIs are characterized by complex ground-object types and significant scale differences [26]. Exploiting these characteristics, LGCNet [27] outperformed SRCNN by learning multi-level representations of local details and global environments, becoming the first network specifically designed for RSISR. Lei et al. [28] built on LGCNet and, by incorporating the multi-scale self-similarity of RSIs, proposed HSENet, which has become a classic RSISR model. To further improve RSISR, researchers have continuously refined network structures and proposed various new attention mechanisms [29,30] that significantly improve the quality of generated images. However, the local receptive fields of CNNs limit their ability to model global pixel dependencies in RSIs, which constrains their RSISR performance.

Despite the substantial advances of CNNs in image SR, many of these methods primarily minimize pixel-wise errors, which often yields a high peak signal-to-noise ratio (PSNR) but overly smooth images with poor perceptual quality. To overcome these limitations, generative adversarial networks (GANs) have been applied to SR [31,32,33]. A GAN consists of two competing networks, a generator and a discriminator, trained in a two-player minimax game: the generator aims to create realistic images that fool the discriminator, while the discriminator learns to distinguish real images from generated ones. Through this adversarial training, GANs generate images with better perceptual quality than traditional CNN-based methods. SRGAN [31], the first network to apply GANs to image SR, uses a generator built from multiple stacked residual blocks and a VGG-style convolutional discriminator. SRGAN also introduces a perceptual loss that uses a pre-trained VGG network to extract image features and minimizes the feature distance between generated and real samples to improve perceptual similarity. However, the perceptual loss can sometimes introduce artifacts. To mitigate this, ESRGAN [32] improves on SRGAN by using dense residual blocks and removing batch normalization layers, which reduces artifacts. The dense residual blocks, however, increase computational resource consumption, which may reduce ESRGAN’s efficiency. Moreover, applying GAN-based SR models designed for natural images directly to RSIs may lead to blurred features and texture loss in the generated images. Researchers have therefore advanced GANs for RSISR in various ways, including edge sharpening [34], attention mechanisms [35], gradient referencing [36], and the exploitation of unique RSI characteristics [37]. 
These improvements mostly focus on optimizing the generator. As research has progressed, some scholars have shifted their focus to the discriminator. For instance, Wei et al. [38] proposed a multi-scale attention U-Net discriminator that captures structural features of images at multiple scales and yields more realistic HR images. Similarly, Wang et al. [39] introduced a vision Transformer (ViT) discriminator, which also captures structural features at multiple scales and achieves notable results. Despite these advances, GAN-based methods still build their generators and discriminators mainly on CNN architectures, so they do not fully overcome the inherent locality of CNNs.

The Transformer architecture [40] was first applied in natural language processing (NLP), and Dosovitskiy et al. [41] introduced it into computer vision (CV) by proposing the ViT, which has achieved SOTA performance in several CV tasks. ViT directly models the global dependencies of an image through self-attention, which handles global features better than the local convolutions of CNNs, especially on large-scale datasets. However, the computational complexity of multi-head self-attention (MHSA) in ViT is quadratic in the length of the input sequence, which consumes significant computational resources for large images. To reduce this complexity, the Swin Transformer [42] restricts MHSA to non-overlapping local windows and shifts the window partition between consecutive layers. This makes the computational complexity linear in the image size while still allowing information to flow across windows, effectively improving computational efficiency. SwinIR [43] combines the local feature extraction capability of CNNs with the global modeling capability of Transformers. It consists of a shallow feature extraction module, a deep feature extraction module, and an HR reconstruction module, where the deep feature extraction module stacks multiple Swin Transformer layers with residual connections. This structure has become a mainstream design for Transformer-based SR methods. SRFormer [44] introduces a permuted self-attention mechanism into the Transformer, which improves reconstruction quality while reducing the computational burden and preserving details. TransENet [45] was the first Transformer model applied to RSISR. However, it upsamples the feature maps before decoding, resulting in high computational cost and complexity. 
To tackle token redundancy and limited multi-scale representation in large-area remote sensing SR, TTST [46] introduces a Transformer that selectively retains key tokens, enriches multi-scale features, and leverages global context, achieving better performance at reduced computational cost. CSCT [47] proposes a channel–spatial coherent Transformer that enhances structural detail and edge reconstruction through spatial–channel attention and frequency-aware modeling, aiming to overcome the limitations of CNN- and GAN-based RSISR methods. SWCGAN [48] integrates CNNs and Swin Transformers in both the generator and the discriminator and improves perceptual quality over earlier CNN-based GANs. Despite their advantages, these Transformer-based methods still suffer from high memory consumption and computational overhead during training, particularly in the deep feature extraction stage. Although the Swin Transformer improves efficiency with its shifted window mechanism, the limited receptive field of each window may restrict the model’s ability to capture long-range dependencies across wide spatial extents, which is essential in remote sensing tasks. In contrast, the proposed NGSTGAN explicitly addresses these limitations with a lightweight N-Gram-based sliding window self-attention mechanism that enhances the model’s capacity to capture both local structures and global dependencies without significantly increasing computational complexity. Moreover, the ISFE module strengthens the preservation of spatial structure by extracting multi-scale and multi-directional shallow features. Compared with TTST, CSCT, and SWCGAN, NGSTGAN strikes a better balance between structural fidelity, perceptual quality, and computational efficiency, which is crucial for scalable deployment in large-area RSISR applications.
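The linear-versus-quadratic scaling described above can be checked with a few lines of arithmetic. The sketch below assumes the per-layer cost formulas reported in the Swin Transformer paper, which ignore the softmax: Ω(MSA) = 4hwC² + 2(hw)²C for global attention and Ω(W-MSA) = 4hwC² + 2M²hwC for attention inside M × M windows. The channel count and window size in the example are arbitrary illustrative values, not settings from this paper.

```python
def msa_cost(h: int, w: int, c: int) -> int:
    """Global multi-head self-attention: 4hwC^2 + 2(hw)^2 C (quadratic in hw)."""
    return 4 * h * w * c**2 + 2 * (h * w) ** 2 * c


def wmsa_cost(h: int, w: int, c: int, m: int) -> int:
    """Window self-attention with m x m windows: 4hwC^2 + 2 m^2 hwC (linear in hw)."""
    return 4 * h * w * c**2 + 2 * m**2 * h * w * c


if __name__ == "__main__":
    channels, window = 96, 8  # illustrative values only
    for side in (32, 64, 128):
        ratio = msa_cost(side, side, channels) / wmsa_cost(side, side, channels, window)
        print(f"{side}x{side} feature map: MSA costs {ratio:.1f}x more than W-MSA")
```

Doubling the side length multiplies the W-MSA cost by exactly 4, whereas the attention term of global MSA grows 16-fold, which is why window attention stays affordable on large remote sensing tiles.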

In language modeling (LM), an N-Gram [49] is a contiguous sequence of N characters or words. N-Gram models are widely used in NLP as probabilistic, statistics-based language models, with N usually set to 2 or 3 [49]. They performed well in early statistical approaches because they take the local context of several consecutive words into account. The N-Gram concept is still employed in some deep learning-based LMs: Sent2Vec [50] learns sentence embeddings by composing word and N-Gram embeddings, and, to better learn sentence representations, the model proposed in [51] uses recurrent neural networks (RNNs) to compute the context of word N-Grams and passes the results to an attention layer. Some high-level vision studies have also adopted this concept. For example, pixel N-Grams [52] apply N-Grams at the pixel level, both horizontally and vertically, and view N-Gram networks [53] treat successive multi-view images of three-dimensional objects along time steps as N-Grams. Unlike these works, this paper builds on earlier N-Gram language models: following NGSwin [54], the N-Gram concept is introduced into image processing with a focus on bidirectional two-dimensional information at the local window level. This provides a new approach to single-image RSISR, a low-level vision task.

In summary, current RSISR models face three major limitations. First, RSIs often contain multiple ground objects with strong self-similarity and repeated patterns across spatial scales, yet most existing methods fail to effectively exploit these inherent multi-scale characteristics. Second, the WSA commonly used in the Swin Transformer is confined to local windows, making it difficult to leverage texture cues from adjacent regions; because the windows are processed independently and shifted only sequentially, the model’s ability to capture global contextual dependencies is further restricted. Third, many state-of-the-art RSISR models rely on heavy architectures with high computational cost. It is therefore essential to design lightweight models that keep the parameter count within 1M–4M and also reduce the total number of multiply–accumulate operations (MACs) during inference [55,56].

This study aims to enhance the spatial resolution of LR RSIs using deep learning, thereby producing high-quality data for remote sensing applications. To this end, it proposes a new RSISR model, NGSTGAN, which uses an N-Gram Swin Transformer as the generator and a multi-attention U-Net as the discriminator. To handle the multi-scale characteristics of remote sensing imagery, the multi-attention U-Net discriminator extends the attention U-Net [38] by adding channel, spatial, and pixel attention modules before each downsampling step. The U-Net structure provides pixel-wise feedback to the generator, encouraging it to generate more detailed features, and its multi-level feature extraction and skip connections integrate information from different scales, enhancing feature extraction [57]. The channel attention module enhances the feature maps at each layer and enables the discriminator to concentrate on critical channels, which is particularly important for complex remote sensing features such as texture and color. The spatial attention module identifies significant regions, allowing the model to focus on specific feature regions and accurately assess spatial details in the reconstructed HR images. The pixel attention module provides robust feature representation at the local pixel level, making the U-Net more flexible in processing fine image information such as feature edges and small details. In addition, the ISFE module is designed in the generator to extract multi-scale and multi-directional features from the input image, enriching the feature representation and enhancing the model’s ability to capture complex textures and details. 
To address the limited receptive field of the Swin Transformer, which prevents degraded pixels from being recovered using information from neighboring windows, we follow NGSwin [54] and introduce the N-Gram concept into the Swin Transformer. With S-WSA, neighboring uni-Gram embeddings interact with each other, so N-Gram contextual features are generated before window partitioning and the information of neighboring windows can be fully used to recover degraded pixels. The N-Gram implementation is detailed in Section 2.3.2. To reduce the number of parameters and the complexity of the model, we employ CRGC [54,58] to simplify the N-Gram interaction process.
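The channel, spatial, and pixel attention modules described above for the discriminator follow well-known gating patterns. The dependency-free sketch below shows generic versions of each on a feature map stored as nested lists (channels × height × width): SE-style channel attention, a simplified spatial gate, and PAN-style pixel attention. The weight matrices are hypothetical inputs, and the exact CSPA layer sizes and the order in which the three modules are chained are not specified in the text, so this illustrates the mechanisms rather than reproducing the paper’s module.

```python
import math
from typing import List

Matrix = List[List[float]]
FeatureMap = List[Matrix]  # channels x height x width


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def channel_attention(feat: FeatureMap, w1: Matrix, w2: Matrix) -> FeatureMap:
    """SE-style: global average pool -> FC (w1) -> ReLU -> FC (w2) -> sigmoid -> rescale channels."""
    pooled = [sum(map(sum, ch)) / (len(ch) * len(ch[0])) for ch in feat]
    hidden = [max(0.0, sum(w * p for w, p in zip(row, pooled))) for row in w1]
    scales = [sigmoid(sum(w * v for w, v in zip(row, hidden))) for row in w2]
    return [[[v * s for v in r] for r in ch] for ch, s in zip(feat, scales)]


def spatial_attention(feat: FeatureMap) -> FeatureMap:
    """Simplified spatial gate: sigmoid of the channel-wise mean at each position
    (CBAM-style modules additionally use the channel-wise max and a learned convolution)."""
    h, w = len(feat[0]), len(feat[0][0])
    mask = [[sigmoid(sum(ch[i][j] for ch in feat) / len(feat)) for j in range(w)]
            for i in range(h)]
    return [[[ch[i][j] * mask[i][j] for j in range(w)] for i in range(h)] for ch in feat]


def pixel_attention(feat: FeatureMap, weight: Matrix) -> FeatureMap:
    """PAN-style: a 1x1 convolution (C x C `weight`) + sigmoid gives one gate per channel and pixel."""
    c, h, w = len(feat), len(feat[0]), len(feat[0][0])
    return [[[feat[o][i][j] * sigmoid(sum(weight[o][k] * feat[k][i][j] for k in range(c)))
              for j in range(w)] for i in range(h)] for o in range(c)]
```

With all-zero weights, every channel and pixel gate equals sigmoid(0) = 0.5, so those two modules simply halve their input; learned weights instead amplify informative channels and pixels relative to the rest.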

Currently, most RSISR studies primarily rely on RGB-band datasets, which are widely used due to their availability and ease of visualization. However, for many real-world applications such as land use classification, crop monitoring, and environmental assessment, multispectral data can provide more comprehensive and accurate information. Recent studies have highlighted the value of multispectral Landsat imagery in various remote sensing tasks beyond RGB, including cloud removal and missing data recovery. For example, spatiotemporal neural networks have been used to effectively remove thick clouds from multi-temporal Landsat images [59], while tempo-spectral models have demonstrated strong performance in reconstructing missing data across time series of Landsat observations [60]. These works underscore the growing importance of leveraging multi-band satellite data to enhance the performance of downstream remote sensing tasks. To broaden the application scope of super-resolved imagery, this study introduces a novel SR dataset composed of multi-band remote sensing images derived from publicly available L8 and S2 sources. Unlike most existing RSISR datasets limited to RGB, our dataset includes the NIR band, enabling super-resolution processing on four bands: R, G, B, and NIR. The proposed method is capable of handling this multi-band input, expanding the potential applications of SR-enhanced imagery in multispectral analysis scenarios.

The primary contributions of this paper are summarized as follows:

- (1) An ISFE module is proposed to extract multi-scale and multi-directional features by combining different convolutional kernel parameters, thereby enhancing the model’s ability to capture complex textures and details; it is specifically designed for multispectral RSIs.

- (2) The N-Gram concept is introduced into the Swin Transformer, and an N-Gram window partition is proposed that enlarges the receptive field while its channel-reducing group convolution keeps the number of parameters and the computational complexity low.

- (3) A multi-attention U-Net discriminator is proposed by adding spatial, channel, and pixel attention mechanisms to the attention U-Net discriminator, which improves the accuracy of detail recovery and reduces information loss in SR tasks, ultimately enhancing image quality.

- (4) A custom multi-band remote sensing image dataset is constructed to verify the applicability and performance of NGSTGAN for multispectral image SR.

This paper is structured as follows. Section 2 presents the method proposed in this study, Section 3 describes the datasets and experimental setup, Section 4 provides both quantitative and qualitative analyses of the experimental results, Section 5 discusses the findings, and Section 6 concludes the paper with a summary of the key findings.

2. Methods

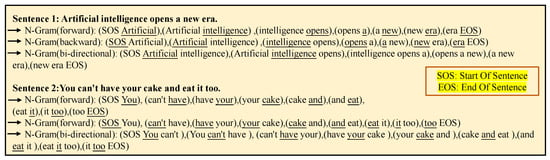

2.1. Definition of N-Gram in Images

As illustrated in Figure 1, N-Gram language models use consecutive sequences of forward, backward, or bidirectional neighboring words as N-Grams to predict target words [50]. In models that do not employ N-Grams, words are often treated as independent entities, so RNNs, attention mechanisms, or averaged word embeddings must be introduced to incorporate contextual features. In an N-Gram model, text is segmented into sequences of N words, and each N-Gram is treated as a separate feature. Once extracted, an N-Gram is typically considered independently, without direct interaction or combination with other N-Grams.

Figure 1.

N-Grams in text. The underlined words represent the target words, while the non-underlined words serve as the neighbors of these target words.
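A minimal sketch of extracting N-Grams from a tokenized sentence, in the spirit of Figure 1. The direction convention is an assumption made for illustration: here a forward N-Gram takes the target word and the N-1 words after it, a backward N-Gram takes the N-1 words before it, and a bidirectional N-Gram takes both sides.

```python
from typing import List, Tuple


def ngrams(tokens: List[str], n: int) -> List[Tuple[str, ...]]:
    """All contiguous N-Grams of a token sequence."""
    return [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]


def context_ngram(tokens: List[str], idx: int, n: int, direction: str) -> Tuple[str, ...]:
    """N-Gram around the target word at `idx` (direction convention is illustrative)."""
    if direction == "forward":        # target + the n-1 following words
        return tuple(tokens[idx:idx + n])
    if direction == "backward":       # the n-1 preceding words + target
        return tuple(tokens[max(0, idx - n + 1):idx + 1])
    if direction == "bidirectional":  # n-1 words on each side of the target
        return tuple(tokens[max(0, idx - n + 1):idx + n])
    raise ValueError(f"unknown direction: {direction}")
```

For the sentence "the cat sat on the mat" with target "sat" and N = 2, this returns ("sat", "on") forward, ("cat", "sat") backward, and ("cat", "sat", "on") bidirectional.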

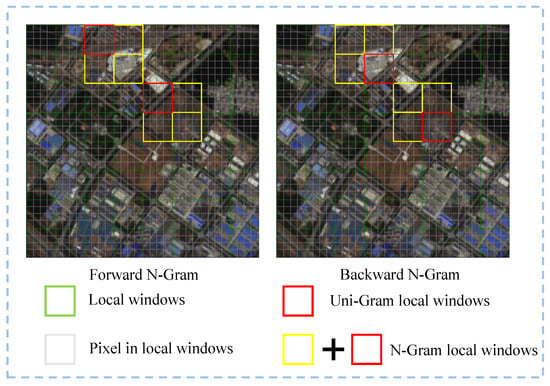

In an image, we define each non-overlapping local window of the Swin Transformer as a uni-Gram, within which every pixel interacts with every other pixel through self-attention. An N-Gram is a larger window that contains the uni-Gram and its neighboring windows. A uni-Gram in the image and a pixel within it are thus analogous to a word and one of its characters in text, respectively. As shown in Figure 2, with N set to 2, the red frame delineates the local uni-Gram window, while the yellow frame highlights its neighboring uni-Gram windows; together, the red and yellow areas form the local N-Gram window. The local windows to the lower right (or upper left) are designated as forward (or backward) N-Gram neighbors. The interaction between N-Grams is described in the “N-Gram Window Partition” paragraph of Section 2.3.2.

Figure 2.

N-Grams in an image. Each local window is considered a uni-Gram, while the lower-right (or upper-left) local windows are treated as forward (or backward) N-Gram neighbors.
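The window-level analogy can be made concrete by indexing windows on a grid. The sketch below, a hypothetical helper rather than the paper’s implementation, lists the uni-Gram windows that form the forward (lower-right) or backward (upper-left) N-Gram of a given window. Windows that would fall outside the image are simply dropped here, whereas the paper handles borders with padding.

```python
from typing import List, Tuple

Window = Tuple[int, int]  # (row, col) index of a local window on the window grid


def ngram_windows(i: int, j: int, n: int, grid: Tuple[int, int], direction: str) -> List[Window]:
    """Uni-Grams forming the N-Gram of window (i, j).

    'forward' collects the n x n block of windows extending to the lower right,
    'backward' the block extending to the upper left; out-of-grid windows are dropped.
    """
    if direction not in ("forward", "backward"):
        raise ValueError(f"unknown direction: {direction}")
    step = 1 if direction == "forward" else -1
    rows, cols = grid
    neighbors = []
    for di in range(n):
        for dj in range(n):
            r, c = i + step * di, j + step * dj
            if 0 <= r < rows and 0 <= c < cols:
                neighbors.append((r, c))
    return neighbors


def window_pixels(window: Window, size: int) -> Tuple[range, range]:
    """Pixel rows and columns covered by a square window of side `size`."""
    r, c = window
    return range(r * size, (r + 1) * size), range(c * size, (c + 1) * size)
```

With N = 2 on a 4 × 4 window grid, `ngram_windows(2, 2, 2, (4, 4), "forward")` returns `[(2, 2), (2, 3), (3, 2), (3, 3)]`, i.e., the uni-Gram itself plus its three lower-right neighbors.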

To illustrate how the N-Gram concept expands the model’s receptive field, consider an input image processed by a single convolutional layer with kernel size k, stride s, and padding p. The receptive field (R) of the single-layer convolution can be calculated using the following equation:

For a multilayer convolution, the receptive field is initially for the first layer and subsequently for the second layer. More generally, the receptive field of the l-th layer can be recursively expressed as follows:
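The layer-by-layer recursion can be sketched as follows, assuming the standard receptive-field rule for stacked convolutions, R_l = R_{l-1} + (k_l - 1) × (s_1 × ... × s_{l-1}) with R_0 = 1, in which padding does not affect the receptive field. This is the textbook form and its notation may differ from the paper’s equations.

```python
from typing import Sequence


def receptive_field(kernels: Sequence[int], strides: Sequence[int]) -> int:
    """Receptive field of stacked convolutions: R_l = R_{l-1} + (k_l - 1) * prod(s_1..s_{l-1})."""
    if len(kernels) != len(strides):
        raise ValueError("need one stride per layer")
    rf, jump = 1, 1  # jump = product of the strides of all previous layers
    for k, s in zip(kernels, strides):
        rf += (k - 1) * jump
        jump *= s
    return rf
```

For example, two 3 × 3 layers with stride 1 give a receptive field of 5, while three 3 × 3 layers with stride 2 give 15, because each stride multiplies the step size seen by all later layers.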

If the N-Gram mechanism is employed after each layer of convolution to integrate the context (i.e., the information of neighboring windows) at each position, let w denote the window size and n denote the size of the N-Gram. For computational convenience, we consider only the information of the neighboring windows before and after the current window for both the forward and backward N-Gram mechanisms. The receptive fields of the forward and backward N-Grams are calculated using the same formula, which can be uniformly expressed as follows:

To demonstrate the effect of the N-Gram mechanism on the receptive field more intuitively, we fix the kernel size, stride, padding, window size, and N-Gram size (k, s, p, w, and n). This configuration yields a receptive field of 5 for the single-layer window, and the receptive fields of the forward and backward N-Grams sum to 16. By incorporating the forward and backward N-Gram mechanisms, the receptive field is therefore extended from 5 to 16, which substantially enhances the model’s ability to capture contextual information and is expected to improve perceptual quality.

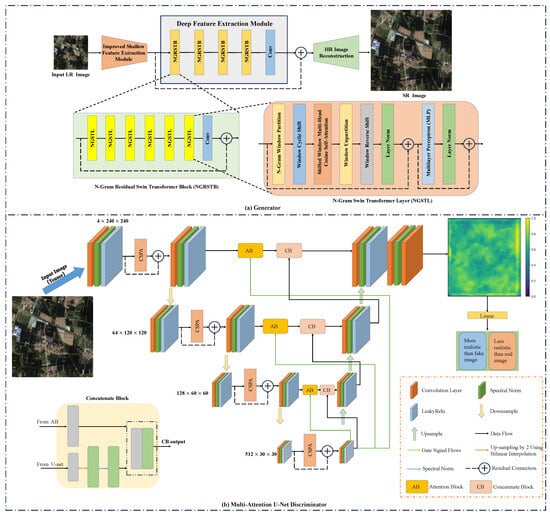

2.2. Overall Architecture of the Model

Figure 3 illustrates the overall architecture of the proposed NGSTGAN model, which comprises two primary components: the generator and the discriminator. The generator consists of the ISFE, the deep feature extraction module (DFEM), and the HR image reconstruction module. Inspired by NGSwin [54], the N-Gram concept is introduced into the residual Swin Transformer block (RSTB) of the DFEM [43] to form the N-Gram residual Swin Transformer block (NGRSTB). Drawing on A-ESRGAN [38], the discriminator adopts an improved attention U-Net: its main structure is a U-Net, and CSPA modules are applied before each downsampling step to enhance feature representation at different levels. The training configuration of the NGSTGAN generator is provided in Table 1. Each module is described in detail below.

Figure 3.

Overall architecture of the proposed NGSTGAN. The framework consists of (a) a generator based on the N-Gram Swin Transformer, comprising a shallow feature extraction module, a deep feature extraction module with N-Gram Residual Swin Transformer Blocks (NGRSTBs), and an HR image reconstruction module, and (b) a multi-attention U-Net discriminator incorporating attention blocks, concatenation blocks, and channel–spatial–pixel attention modules.

Table 1.

Training configuration of the NGSTGAN generator.

2.3. Generator

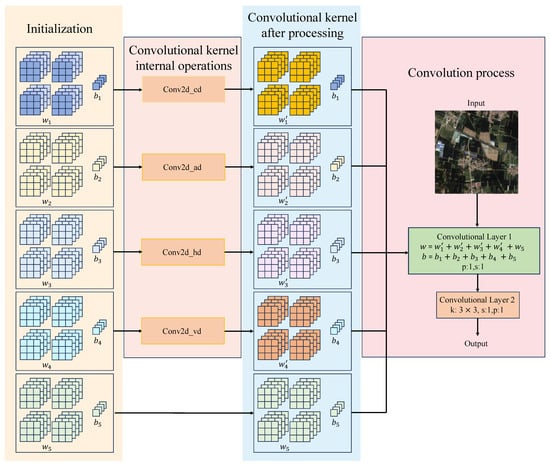

2.3.1. Improved Shallow Feature Extraction Module

Consider a training dataset of paired LR and HR images with the same number of spectral bands, where H and W denote the height and width of the LR image, rH and rW denote the height and width of the HR image, and r denotes the SR scale factor. An LR image and its corresponding HR image are selected from the training set. First, ISFE maps the input LR image to a higher-dimensional feature space. Unlike traditional shallow feature extraction [43], which uses only a single 2D convolutional layer, ISFE combines multiple convolutional operations with differently parameterized kernels, allowing it to extract multi-scale and multi-directional features from the input image, enrich the feature representation, and enhance the model’s ability to capture complex textures and details. The structure of ISFE is shown in Figure 4. ISFE first initializes five convolution kernels together with their weights and biases. In Conv2d_cd, the 3 × 3 convolution kernel is flattened, and its center element (index 4, counting from 0) is replaced by itself minus the sum of all kernel elements. The adjusted kernel therefore sums to zero and acts as a high-pass filter, and the updated weights and bias are returned. The formula is expressed as follows:

Figure 4.

Detailed architecture of the improved shallow feature extraction (ISFE) module. The design integrates five parallel convolutional kernel branches with specialized transformations—including center difference, angle-directional modulation, horizontal difference, and vertical difference—to enhance multi-scale and directional feature extraction, followed by unified kernel-weight fusion for shallow representation learning.
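The Conv2d_cd update can be sketched on a single flattened 3 × 3 kernel. The sketch assumes the standard central-difference formulation, in which the center weight becomes w[4] - sum(w); applying this kernel to a patch is then equivalent to weighting the differences between each pixel and the center pixel, so flat regions produce zero response. Function names are illustrative.

```python
from typing import List, Sequence

Kernel = List[float]  # 3x3 kernel flattened row by row: indices 0..8, centre = 4


def central_difference(weight: Sequence[float]) -> Kernel:
    """Conv2d_cd-style update: centre <- centre - sum of all nine elements."""
    if len(weight) != 9:
        raise ValueError("expected a flattened 3x3 kernel")
    adjusted = list(weight)
    adjusted[4] = weight[4] - sum(weight)
    return adjusted


def apply(weight: Sequence[float], patch: Sequence[float]) -> float:
    """Response of a flattened kernel on a flattened 3x3 patch (cross-correlation, no bias)."""
    return sum(w * x for w, x in zip(weight, patch))


if __name__ == "__main__":
    cd = central_difference([1.0] * 9)
    print(cd)                     # centre becomes 1 - 9 = -8, all other weights stay 1
    print(apply(cd, [5.0] * 9))   # a constant patch gives 0.0
```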

The structure of Conv2d_ad is similar to that of Conv2d_cd, with an additional parameter that weights the kernel elements after they are rearranged in a predetermined order. This enhances the model’s ability to extract multi-scale and multi-directional features, particularly from complex multispectral RSIs, thereby improving its capture of texture and detail. The adjustment of each element of the convolution kernel can be expressed by the following equation:

where denotes the weights of the convolution kernel returned after the Conv2d_ad weight update.
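A sketch of the Conv2d_ad update under explicitly hypothetical choices: the weighting parameter is called `theta`, and the predetermined order is taken to be a one-step clockwise shift of the eight outer weights (a common choice in angular pixel-difference convolutions), with the center mapped to itself. Each element then becomes w[p] - theta * w[perm[p]]; the actual ordering is the one defined by the equation above.

```python
from typing import List, Sequence

Kernel = List[float]  # flattened 3x3 kernel, indices 0..8 row by row

# Hypothetical ordering: each outer-ring position takes the weight of its clockwise
# predecessor (0<-3, 1<-0, 2<-1, 5<-2, 8<-5, 7<-8, 6<-7, 3<-6); the centre maps to itself.
RING_SHIFT = [3, 0, 1, 6, 4, 2, 7, 8, 5]


def angular_difference(weight: Sequence[float], theta: float = 1.0) -> Kernel:
    """Conv2d_ad-style update: W_ad[p] = W[p] - theta * W[RING_SHIFT[p]]."""
    if len(weight) != 9:
        raise ValueError("expected a flattened 3x3 kernel")
    return [weight[p] - theta * weight[RING_SHIFT[p]] for p in range(9)]
```

With `theta = 0` the kernel is returned unchanged, and with `theta = 1` a constant kernel becomes all zeros, so larger `theta` values push the kernel toward pure angular differences.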

The Conv2d_hd operation involves copying the elements from the first column (i.e., elements 0, 3, and 6) of the original convolution kernel to the first column of the new tensor. The third column (elements 2, 5, and 8) of the new tensor is then filled with the negative values of the corresponding elements from the original convolution kernel. The Conv2d_vd operation follows a similar approach, but it operates on different elements of the convolution kernel. It copies the elements from the first row (i.e., elements 0, 1, and 2) of the original convolution kernel to the first row of the new tensor and fills the third row (elements 6, 7, and 8) with the negative values of the corresponding elements from the original convolution kernel. The formulas for adjusting the elements in the convolution kernel for Conv2d_hd and Conv2d_vd are provided in Equations (6) and (7), respectively.
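Conv2d_hd and Conv2d_vd can be sketched the same way. Here "the corresponding elements" is read as the values copied into the first column (or first row), so that each row (or column) of the new kernel sums to zero and the kernel acts as a horizontal (or vertical) difference filter; this reading is an assumption.

```python
from typing import List, Sequence

Kernel = List[float]  # flattened 3x3 kernel, indices 0..8 row by row


def horizontal_difference(weight: Sequence[float]) -> Kernel:
    """Conv2d_hd-style kernel: first column (0, 3, 6) copied, third column (2, 5, 8)
    set to the negated first-column values, middle column zero."""
    out = [0.0] * 9
    for left, right in ((0, 2), (3, 5), (6, 8)):
        out[left] = weight[left]
        out[right] = -weight[left]
    return out


def vertical_difference(weight: Sequence[float]) -> Kernel:
    """Conv2d_vd-style kernel: first row (0, 1, 2) copied, third row (6, 7, 8)
    set to the negated first-row values, middle row zero."""
    out = [0.0] * 9
    for top, bottom in ((0, 6), (1, 7), (2, 8)):
        out[top] = weight[top]
        out[bottom] = -weight[top]
    return out
```

For weights 1 to 9, `horizontal_difference` gives [1, 0, -1, 4, 0, -4, 7, 0, -7] and `vertical_difference` gives [1, 2, 3, 0, 0, 0, -1, -2, -3].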

Here, the left-hand sides of Equations (6) and (7) are the updated kernel weights of Conv2d_hd and Conv2d_vd, respectively. The weights of the fifth, standard convolution kernel are left unchanged, and none of the biases is modified by the operations above. Finally, the adjusted weights and biases of all five kernels are summed to form the weights and bias of convolutional layer 1, as calculated below:

The LR image is then fed sequentially into convolutional layer 1 and convolutional layer 2 to produce the ISFE output, a shallow feature map with C feature channels. The entire ISFE process can thus be summarized as follows:

The implementation of ISFE primarily relies on convolutional operations, which are adept at processing early visual information. This characteristic facilitates more stable optimization and enhances the quality of the outcomes, as previously noted in [45]. Moreover, ISFE offers a straightforward approach to mapping the input image space into a higher dimensional feature space.
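Because convolution is linear in its kernel, summing the five adjusted kernels and their biases into convolutional layer 1 gives exactly the same response as running the five branches separately and adding their outputs, so once the weights are fused the multi-branch design costs no more at inference than a single 3 × 3 convolution. The sketch checks this identity at one spatial position; the kernel values are placeholders, and the second convolutional layer of ISFE is omitted.

```python
from typing import List, Sequence, Tuple

Kernel = List[float]  # flattened 3x3 kernel


def fuse_branches(weights: Sequence[Sequence[float]], biases: Sequence[float]) -> Tuple[Kernel, float]:
    """Element-wise sum of the branch kernels and sum of their biases."""
    fused = [sum(column) for column in zip(*weights)]
    return fused, sum(biases)


def respond(weight: Sequence[float], bias: float, patch: Sequence[float]) -> float:
    """Single-position response of a flattened kernel plus bias on a flattened 3x3 patch."""
    return sum(w * x for w, x in zip(weight, patch)) + bias


if __name__ == "__main__":
    # Five placeholder kernels standing in for the cd, ad, hd, vd and standard branches.
    branch_weights = [[float(i + b) for i in range(9)] for b in range(5)]
    branch_biases = [0.1, 0.2, 0.3, 0.4, 0.5]
    patch = [float(j * j) for j in range(9)]
    w, b = fuse_branches(branch_weights, branch_biases)
    fused = respond(w, b, patch)
    separate = sum(respond(bw, bb, patch) for bw, bb in zip(branch_weights, branch_biases))
    print(abs(fused - separate) < 1e-9)  # True: one fused conv equals five summed convs
```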

2.3.2. Deep Feature Extraction Module

As illustrated in Figure 3a, the DFEM is primarily composed of K NGRSTBs and a convolutional layer. Specifically, the intermediate features and the DFEM output features are extracted sequentially as follows:

where the first operator represents the i-th NGRSTB and the second denotes the final convolutional layer within the DFEM. By adding this convolutional layer, the DFEM introduces the inductive bias of convolution into the Transformer-based network, enhancing the integration of shallow and deep features.
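The sequential data flow of the DFEM can be sketched with plain callables standing in for the K NGRSTBs and the final convolution. The names and the list-based "feature map" are hypothetical simplifications used only to show the order of operations.

```python
from typing import Callable, List, Sequence

Feature = List[float]                  # stand-in for a feature map
Module = Callable[[Feature], Feature]  # stand-in for a network module


def deep_feature_extraction(f0: Feature, ngrstbs: Sequence[Module], conv: Module) -> Feature:
    """F_i = NGRSTB_i(F_{i-1}) for i = 1..K, followed by F_DF = CONV(F_K)."""
    features = f0
    for block in ngrstbs:
        features = block(features)
    return conv(features)
```

For example, three blocks that each add 1 and a final "convolution" that doubles its input map [0.0, 1.0] to [6.0, 8.0].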

N-Gram Residual Swin Transformer Block. Each NGRSTB comprises N N-Gram Swin Transformer layers (NGSTLs) and a convolutional layer. Given the input features of the i-th NGRSTB, we first extract the intermediate features through the NGSTLs:

where the j-th operator denotes the j-th NGSTL within the i-th NGRSTB. A convolutional layer is then appended before the residual connection, and the output of the i-th NGRSTB can be expressed as follows:

where the added operator represents the convolutional layer of the i-th NGRSTB. This design offers two key advantages. First, although Transformers can be viewed as a specific form of spatially varying convolution [61,62], convolutional layers with spatially invariant filters can enhance the translational equivariance of the network. Second, the residual connection provides shortcuts from different blocks to the reconstruction module, enabling the aggregation of features at different levels.
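The residual structure of one NGRSTB can be sketched in the same simplified style, with hypothetical callables standing in for the N NGSTLs and the block’s convolution: the layers run in sequence, the convolution is applied, and the block input is added back.

```python
from typing import Callable, List, Sequence

Feature = List[float]                  # stand-in for a feature map
Module = Callable[[Feature], Feature]  # stand-in for a network module


def ngrstb(f_in: Feature, ngstls: Sequence[Module], conv: Module) -> Feature:
    """F_{i,j} = NGSTL_{i,j}(F_{i,j-1}) for j = 1..N, then F_out = CONV_i(F_{i,N}) + F_{i,0}."""
    features = f_in
    for layer in ngstls:
        features = layer(features)
    return [a + b for a, b in zip(conv(features), f_in)]
```

If the convolution outputs zeros, the block passes its input through unchanged, which illustrates how the residual path gives each block a direct shortcut toward the reconstruction module.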

The NGSTL is an enhancement to the Swin Transformer layer of SwinIR [43]. The primary innovation of NGSTL lies in its introduction of the N-Gram concept to the window partitioning mechanism, leading to the N-Gram window partition. While retaining the fundamental structure of the Swin Transformer layer, NGSTL incorporates the N-Gram window partition, with the rest of the architecture remaining largely unchanged; thus, details are omitted for brevity.

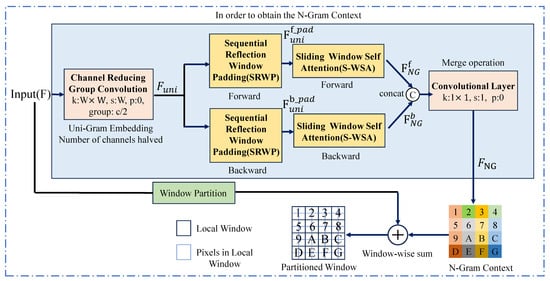

N-Gram Window Partition. As depicted in Figure 5, the N-Gram window partition (NGWP) is composed of three components: CRGC, sequential reflection window padding (SRWP), and S-WSA. For clarity, the input to the NGWP is denoted as $F \in \mathbb{R}^{c \times h \times w}$, a feature map with c channels, a height of h, and a width of w. Note that the input to the NGWP varies across NGSTL instances: the first NGSTL takes the output of ISFE as input, whereas each subsequent NGSTL receives the output of its predecessor. CRGC transforms F into the uni-Gram embedding $F_u \in \mathbb{R}^{\frac{c}{2} \times \frac{h}{W} \times \frac{w}{W}}$ using a convolution kernel of size $k \times k$, a step size of W, and g groups. This convolution decreases both the channel count and the spatial resolution, which substantially reduces the cost of the N-Gram interactions and thereby lowers the computational requirements and parameter count.

Figure 5.

The process diagram of the N-Gram window partition. k, s, p, g, and W are the convolutional kernel size, step size, padding, number of groups, and size of the local window, respectively. Channel-reducing group convolution, sequential reflection window padding, and sliding window self-attention work together to introduce the N-Gram idea while reducing the number of parameters and MACs.
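The effect of CRGC on tensor shapes follows the standard convolution output-size rule. As a sketch, assume the kernel size equals the window size (k = s = W), no padding, and halved output channels; these settings are assumptions consistent with the stated step size of W, not confirmed values. With the embedding dimension of 96 and window size of 8 given in Section 3.3, and an assumed 80 × 80 feature map, every 8 × 8 window collapses into a single uni-Gram:

```python
def conv_out_size(size: int, k: int, s: int, p: int) -> int:
    # Standard convolution output-size rule: floor((size + 2p - k) / s) + 1
    return (size + 2 * p - k) // s + 1


def crgc_shape(c: int, h: int, w: int, window: int):
    # Assumed CRGC settings: kernel = stride = window size, no padding,
    # output channels halved. The group count does not change the shape.
    k = s = window
    return c // 2, conv_out_size(h, k, s, 0), conv_out_size(w, k, s, 0)


print(crgc_shape(96, 80, 80, 8))  # (48, 10, 10)
```

Grouping only changes which input channels each filter sees (and hence the parameter count), not the output shape, so it does not appear in `crgc_shape`.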

To illustrate the effectiveness of CRGC, a mathematical analysis is provided. Given an input feature map of size $c \times h \times w$, a convolution kernel of size $k \times k$, a step size of s, padding of p, and g groups, the convolution produces $c_{out}$ output channels. For a standard convolution (g = 1) that preserves the spatial size $h \times w$, the numbers of parameters ($P_{std}$) and FLOPs ($F_{std}$) are calculated as follows:

$$P_{std} = k^2 \, c \, c_{out}, \qquad F_{std} = k^2 \, c \, c_{out} \, h \, w.$$

For the CRGC in this work, assuming a window size of W, a reduction in input channels to $c/r$ (where r is the reduction factor), and a reduction in spatial resolution to $\frac{h}{W} \times \frac{w}{W}$, the numbers of parameters ($P_{CRGC}$) and FLOPs ($F_{CRGC}$) are calculated as follows:

$$P_{CRGC} = k^2 \, \frac{c}{r} \, c_{out}, \qquad F_{CRGC} = k^2 \, \frac{c}{r} \, c_{out} \, \frac{h}{W} \, \frac{w}{W}.$$

From the above equations, the application of CRGC reduces the number of parameters by a factor of r and the number of FLOPs by a factor of $rW^2$ compared with standard convolution. The generator of NGSTGAN is primarily based on an improved version of the Swin Transformer, whose window self-attention has the following computational complexity:

$$\Omega(\text{W-MSA}) = 4hwc^2 + 2W^2hwc,$$

where halving c and reducing the number of tokens hw by a factor of $W^2$ significantly reduces the computational complexity.
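The size of these savings can be checked numerically. The sketch evaluates the parameter and FLOP counts of a k × k convolution before and after reducing the input channels by r and the spatial size by W in each direction, together with the standard Swin Transformer window-attention complexity, 4hwc² + 2W²hwc. The sizes chosen (c = 96, h = w = 80, W = 8, r = 2, k = 3, c_out = 96) are illustrative assumptions, not measurements from the paper.

```python
def conv_params(k: int, c_in: int, c_out: int) -> int:
    # Weights of a k x k convolution with no grouping (bias ignored).
    return k * k * c_in * c_out


def conv_flops(k: int, c_in: int, c_out: int, h_out: int, w_out: int) -> int:
    # One multiply-accumulate per weight per output position.
    return conv_params(k, c_in, c_out) * h_out * w_out


def wmsa_cost(h: int, w: int, c: int, window: int) -> int:
    # Swin Transformer window-attention complexity: 4hwc^2 + 2W^2hwc.
    return 4 * h * w * c * c + 2 * window * window * h * w * c


# Illustrative sizes only (not measurements from the paper).
c, h, w, W, r, k, c_out = 96, 80, 80, 8, 2, 3, 96

std_p = conv_params(k, c, c_out)
std_f = conv_flops(k, c, c_out, h, w)
crgc_p = conv_params(k, c // r, c_out)
crgc_f = conv_flops(k, c // r, c_out, h // W, w // W)
print(std_p / crgc_p, std_f / crgc_f)  # 2.0 128.0, i.e. factors r and r * W**2

full = wmsa_cost(h, w, c, W)
reduced = wmsa_cost(h // W, w // W, c // 2, W)
print(full, reduced, full / reduced)
```

The quadratic term in c dominates at full resolution, which is why halving the channels and shrinking the token count together yield a much larger saving than either change alone.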

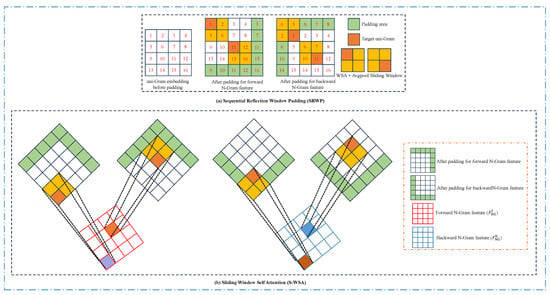

Sequential Reflection Window Padding. The output of CRGC, i.e., the uni-Gram embedding $F_u$, is fed into SRWP. As illustrated in Figure 6a, SRWP pads $F_u$ so that an $N \times N$ N-Gram window can slide over every uni-Gram, where N is the N-Gram size. For forward padding, $N-1$ rows/columns are padded at the lower right of $F_u$, using the adjacent small window of $N-1$ rows/columns to its upper left as the padding values. Similarly, for backward padding, the upper left of $F_u$ is padded, using the adjacent $N-1$ rows/columns to its lower right as the padding values. The advantage of this approach is that, for both forward and backward padding, the padding values are neighbors of the target uni-Gram, so the principle that each uni-Gram interacts with its neighbors is preserved, rather than simply padding with zeros or random values. The forward feature map ($F_f$) and the backward feature map ($F_b$) are obtained through SRWP.

Figure 6.

Sequential reflection window padding (a) and sliding window self-attention (b) in the N-Gram window partition module, where N is set to 2. As the window slides over the uni-Gram embedding, WSA is applied within each window and the results are average-pooled to obtain the forward and backward N-Gram features.
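One reading of SRWP can be sketched on a 2D grid of uni-Grams: forward padding appends copies of the last N − 1 rows and columns at the lower right, and backward padding prepends copies of the first N − 1 rows and columns at the upper left, so every padded value is a neighbour of a border uni-Gram. This copy-the-adjacent-rows interpretation is an assumption, and the function names are hypothetical.

```python
from typing import List

Grid = List[List[float]]


def srwp_forward(grid: Grid, n: int) -> Grid:
    """Pad n-1 rows/cols at the bottom/right with copies of the adjacent rows/cols."""
    k = n - 1
    if k == 0:
        return [row[:] for row in grid]
    rows = [row[:] for row in grid] + [row[:] for row in grid[-k:]]
    return [row + row[-k:] for row in rows]


def srwp_backward(grid: Grid, n: int) -> Grid:
    """Pad n-1 rows/cols at the top/left with copies of the adjacent rows/cols."""
    k = n - 1
    if k == 0:
        return [row[:] for row in grid]
    rows = [row[:] for row in grid[:k]] + [row[:] for row in grid]
    return [row[:k] + row for row in rows]


uni = [[1, 2], [3, 4]]
print(srwp_forward(uni, 2))   # [[1, 2, 2], [3, 4, 4], [3, 4, 4]]
print(srwp_backward(uni, 2))  # [[1, 1, 2], [1, 1, 2], [3, 3, 4]]
```

With N = 2 each padded map grows by one row and one column, exactly what an N × N window needs to visit every original uni-Gram as its top-left (forward) or bottom-right (backward) element.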

Sliding Window Self-Attention. As shown in Figure 6b, to capture local context information and thus obtain the N-Gram context ($F_{ng}$), S-WSA is applied to the forward feature map ($F_f$) and the backward feature map ($F_b$). S-WSA slides over $F_f$ and $F_b$ to obtain $F_f'$ and $F_b'$, respectively. At each position, WSA is computed within the $N \times N$ N-Gram window and average pooling is then applied, so that the result carries information about the uni-Gram and its neighbors. At the end of the slide, $F_f'$, containing forward information, and $F_b'$, containing backward information, are obtained.

Then, the forward context $F_f'$ and the backward context $F_b'$ are merged using a convolution to obtain the N-Gram context $F_{ng}$, which encapsulates rich N-Gram information. After F is partitioned into windows, $F_{ng}$ is added to the partitioned windows window by window. Note that $F_{ng}$ has its number of channels restored to c because it merges $F_f'$ and $F_b'$. After the N-Gram window partition, processing continues through the rest of the NGSTL; since this part is identical to the STL in SwinIR [43], it is not repeated here.
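The sliding step and the window-wise addition can be sketched in plain Python. An n × n window moves over a padded map of feature vectors; inside each window, single-head self-attention with identity query/key/value projections is computed among the n² tokens, and the attended tokens are average-pooled into one context vector. The identity projections, the single head, and the single-channel addition are simplifying assumptions; the convolution that merges the forward and backward contexts is omitted, and all function names are hypothetical.

```python
import math
from typing import List

Vec = List[float]
VecMap = List[List[Vec]]


def window_attention_pool(tokens: List[Vec]) -> Vec:
    """Single-head self-attention over the n*n tokens of one window, then average pooling."""
    d = len(tokens[0])
    attended = []
    for q in tokens:
        # Scaled dot-product scores; identity Q/K/V projections are an assumption.
        scores = [sum(a * b for a, b in zip(q, key)) / math.sqrt(d) for key in tokens]
        m = max(scores)
        weights = [math.exp(s - m) for s in scores]  # numerically stable softmax
        total = sum(weights)
        attended.append([sum(wt * v[ch] for wt, v in zip(weights, tokens)) / total
                         for ch in range(d)])
    return [sum(t[ch] for t in attended) / len(attended) for ch in range(d)]


def sliding_wsa(padded: VecMap, n: int) -> VecMap:
    """Slide an n x n window over an (h+n-1) x (w+n-1) padded map; output is h x w."""
    h, w = len(padded) - (n - 1), len(padded[0]) - (n - 1)
    return [[window_attention_pool([padded[i + a][j + b]
                                    for a in range(n) for b in range(n)])
             for j in range(w)]
            for i in range(h)]


def add_context_per_window(feat: List[List[float]], context: List[List[float]],
                           window: int) -> List[List[float]]:
    """Add one context value per window to every pixel of that window (one channel)."""
    return [[feat[i][j] + context[i // window][j // window]
             for j in range(len(feat[0]))]
            for i in range(len(feat))]
```

Applied to the forward- and backward-padded maps, `sliding_wsa` yields the two directional context maps; after they are merged, `add_context_per_window` shows how one value per uni-Gram is broadcast over its W × W window of F.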

2.3.3. HR Image Reconstruction

In the context of HR image reconstruction, we employ the lightweight pixel-shuffle direct method [43], which is built from two components: PixelShuffle and UpsampleOneStep. PixelShuffle is an efficient upsampling technique that increases resolution by directly rearranging feature channels into the spatial dimensions; it reduces the number of parameters and the computational complexity while maintaining high-quality reconstruction. UpsampleOneStep is a one-step upsampling module built on PixelShuffle and designed for lightweight SR reconstruction. The output features of the deep feature extraction module, $F_{DF} \in \mathbb{R}^{C \times 80 \times 80}$, are added to the shallow features through a residual connection, so the input features of the reconstruction module, $F_{in}$, retain the same dimensions. UpsampleOneStep then performs efficient upsampling with a single convolutional layer followed by a PixelShuffle operation, accomplishing the SR task with fewer parameters and lower computational complexity. Given an upsampling factor of r = 3 and a final output channel number of $C_{out} = 4$, the feature map after the convolution has dimensions $36 \times 80 \times 80$. PixelShuffle then rearranges the channels of this feature map: the number of channels is reduced to 4, while the spatial dimensions (height and width) are tripled to 240, resulting in an output of size $4 \times 240 \times 240$. Inside PixelShuffle, the number of output channels is calculated as follows:

$$C_{out} = \frac{C_{conv}}{r^2} = \frac{36}{3^2} = 4,$$

where $C_{conv}$ denotes the number of channels after the convolution operation, with a value of 36.
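The channel-to-space rearrangement can be written out explicitly. The sketch follows the index mapping of PyTorch's `torch.nn.PixelShuffle`, where input channel c·r² + i·r + j feeds output pixel (y·r + i, x·r + j) of output channel c. It is implemented in plain Python on nested lists for illustration, so with r = 3, 36 input channels map to 4 output channels, as in the reconstruction step described above.

```python
from typing import List

Tensor3 = List[List[List[float]]]  # [channel][row][col]


def pixel_shuffle(x: Tensor3, r: int) -> Tensor3:
    c_in, h, w = len(x), len(x[0]), len(x[0][0])
    if c_in % (r * r) != 0:
        raise ValueError("number of channels must be divisible by r^2")
    c_out = c_in // (r * r)
    out = [[[0.0] * (w * r) for _ in range(h * r)] for _ in range(c_out)]
    for c in range(c_out):
        for i in range(r):
            for j in range(r):
                src = x[c * r * r + i * r + j]  # sub-pixel (i, j) of output channel c
                for y in range(h):
                    for xx in range(w):
                        out[c][y * r + i][xx * r + j] = src[y][xx]
    return out


feat = [[[float(ch)] * 2 for _ in range(2)] for ch in range(36)]  # 36 x 2 x 2
up = pixel_shuffle(feat, 3)
print(len(up), len(up[0]), len(up[0][0]))  # 4 6 6
```

No arithmetic is performed, only a permutation of values, which is why the upsampler adds no parameters beyond the preceding convolution.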

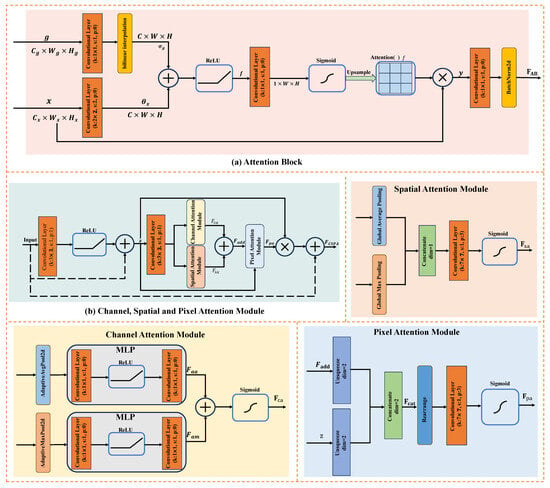

2.4. Multi-Attention U-Net Discriminator

The multi-attention U-Net discriminator is an improvement on the attention U-Net discriminator [38], as shown in Figure 3. It differs from the conventional U-Net discriminator by incorporating three key modules: the CSPA, the attention block (AB), and the concatenate block (CB). The U-Net architecture consists of two parts, an encoder and a decoder, each comprising multiple layers. The CSPA is integrated into the encoder: before each downsampling step, the input features are processed by CSPA and then enhanced through a residual connection. Integrating spatial, channel, and pixel attention into the U-Net framework strengthens the model's ability to focus on critical features. Spatial attention highlights crucial regions, improving detail reconstruction; channel attention enables adaptive selection of multi-channel information; and pixel attention refines each pixel through fine-grained processing, which improves the accuracy of detail recovery in SR tasks, reduces information loss, and enhances the final image quality. The processed features are then passed to the downsampling layer.

The AB module, applied between the encoder and decoder, helps the model capture key feature regions more efficiently by jointly processing the encoder's output and the output of the bottom-most bridge. This improves the network's discriminative ability when the input features are complex or noisy, helping the model focus accurately on important information and thereby enhancing overall performance. The CB module, in turn, integrates the outputs of the AB module with the outputs of the next decoder layer and passes the result to the current decoder layer. Specifically, the CB module upsamples the LR feature maps from the next decoder layer to the resolution of the current decoder's feature maps, combining detailed encoder features with abstract decoder features and thereby retaining more detailed information and contextual associations when recovering images or generating output.

2.4.1. Attention Block

As depicted in Figure 7a, the input to the AB module consists of the output of the current encoder, denoted as x, and the output of the bottom bridge section, denoted as g. x is first processed by a convolutional layer with a step size of 2 to obtain a downsampled feature map ($\theta_x$). Simultaneously, g is convolved by a convolutional layer and then upsampled with bilinear interpolation to match the spatial dimensions of $\theta_x$, producing $\phi_g$. The fused feature map (f) is obtained by adding $\theta_x$ and $\phi_g$ and applying the ReLU activation function. The attention weight map ($\alpha$) is then generated from f by the Sigmoid activation function and upsampled to the size of the original input x to ensure consistent spatial dimensions. Finally, x and $\alpha$ are multiplied element-wise to yield the output (y) as follows:

$$y = x \odot \alpha,$$

Figure 7.

Detailed architecture of key attention components in the proposed multi-attention U-Net discriminator. (a) The attention block integrates encoder and bridge features through a spatial attention mechanism to enhance salient region focus. (b) The channel, spatial, and pixel attention module combines three attention sub-modules to sequentially refine features in the encoder by emphasizing informative channels, highlighting spatially relevant regions, and enhancing pixel-level details.

Subsequently, y is passed through a convolutional layer followed by normalization, resulting in the final AB output $y'$.
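The gating arithmetic of the AB can be traced on single-channel maps. In this sketch, which is an illustrative assumption rather than the discriminator's actual layers, the stride-2 convolution is replaced by 2 × 2 average pooling scaled by a weight, the other convolutions by scalar weights, and bilinear upsampling by nearest-neighbour upsampling; the weights `w_x`, `w_g`, and `w_psi` are hypothetical.

```python
import math
from typing import List

Map = List[List[float]]


def avg_pool2(m: Map) -> Map:
    # 2x2, stride-2 average pooling (stand-in for the stride-2 convolution).
    return [[(m[i][j] + m[i][j + 1] + m[i + 1][j] + m[i + 1][j + 1]) / 4
             for j in range(0, len(m[0]) - 1, 2)]
            for i in range(0, len(m) - 1, 2)]


def upsample_nearest(m: Map, factor: int) -> Map:
    # Nearest-neighbour upsampling (stand-in for bilinear interpolation).
    return [[m[i // factor][j // factor] for j in range(len(m[0]) * factor)]
            for i in range(len(m) * factor)]


def attention_block(x: Map, g: Map, w_x: float = 1.0, w_g: float = 1.0,
                    w_psi: float = 1.0) -> Map:
    theta = [[w_x * v for v in row] for row in avg_pool2(x)]              # theta_x
    scale = len(theta) // len(g)
    phi = upsample_nearest([[w_g * v for v in row] for row in g], scale)  # phi_g
    f = [[max(0.0, a + b) for a, b in zip(r1, r2)] for r1, r2 in zip(theta, phi)]
    alpha = [[1.0 / (1.0 + math.exp(-w_psi * v)) for v in row] for row in f]
    alpha = upsample_nearest(alpha, 2)  # back to the spatial size of x
    return [[a * b for a, b in zip(r1, r2)] for r1, r2 in zip(x, alpha)]  # y = x * alpha
```

A strongly negative bridge signal drives the ReLU output to zero and the gate to 0.5, while positive evidence pushes the gate towards 1, so the bridge features decide how much of each encoder region passes through.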

2.4.2. Concatenate Block

As shown in Figure 3b, the CB module integrates the output of the AB, denoted as $y'$, with the output of the next decoder layer of the U-Net, denoted as d. Specifically, d is first upsampled by a factor of two using bilinear interpolation to match the spatial dimensions of $y'$. Then, $y'$, after spectral normalization, is concatenated with the upsampled d to produce the final CB output.
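The CB data flow reduces to upsampling followed by channel concatenation. In this sketch, feature maps are lists of channels, nearest-neighbour upsampling stands in for bilinear interpolation, and spectral normalization is omitted; the function names are hypothetical.

```python
from typing import List

Map = List[List[float]]
Feat = List[Map]  # list of channels


def upsample2_nearest(ch: Map) -> Map:
    # Doubles height and width (stand-in for bilinear interpolation).
    return [[ch[i // 2][j // 2] for j in range(2 * len(ch[0]))]
            for i in range(2 * len(ch))]


def concatenate_block(ab_out: Feat, decoder_next: Feat) -> Feat:
    up = [upsample2_nearest(ch) for ch in decoder_next]
    if len(up[0]) != len(ab_out[0]) or len(up[0][0]) != len(ab_out[0][0]):
        raise ValueError("spatial sizes must match after upsampling")
    return ab_out + up  # concatenation along the channel axis
```

The output has as many channels as both inputs combined, so the next decoder convolution sees the gated encoder detail and the coarser decoder context side by side.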

2.4.3. Channel, Spatial, and Pixel Attention Module

The CSPA module, as depicted in Figure 7b, comprises three primary components: the channel attention module, the spatial attention module, and the pixel attention module. The input to CSPA, $F_e$, originates from the encoder. It is first convolved and activated by a ReLU function, and the result is added to the original input through a residual connection to yield a feature map z. z is then convolved again and fed into both the channel attention module and the spatial attention module, producing $M_c$ and $M_s$, respectively. These outputs are concatenated to form $M_{cs}$, which, together with z, is input into the pixel attention module to generate $M_p$. The final output, $F_{out}$, is calculated as follows:

The input of the channel attention module, z, is convolved and then subjected to adaptive average pooling and adaptive maximum pooling, producing $F^c_{avg}$ and $F^c_{max}$, respectively. Both are fed into the same multi-layer perceptron (MLP), consisting of two convolutional layers with a ReLU activation function between them. The two MLP outputs are summed and passed through a sigmoid function to generate the channel attention output, $M_c$. This module enhances significant channels while suppressing less important ones, thereby improving the network's feature selectivity.

The input to the spatial attention module is likewise the convolved z. Average pooling and maximum pooling are applied along the channel dimension to yield $F^s_{avg}$ and $F^s_{max}$, respectively. These are concatenated along the channel dimension to create a two-channel feature map, which is convolved and passed through a sigmoid function to produce $M_s$. This module focuses on crucial spatial locations in the image, enhancing the network's perception of key information and reducing the impact of noise.
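Both attention maps can be sketched in plain Python on a feature map stored as a list of channels. For illustration, the shared MLP is reduced to two hypothetical scalar weights instead of learned 1 × 1 convolutions, the spatial branch combines the two pooled maps with fixed weights in place of the learned convolution, and the convolution applied to z beforehand is omitted.

```python
import math
from typing import List

Map = List[List[float]]
Feat = List[Map]


def sigmoid(v: float) -> float:
    return 1.0 / (1.0 + math.exp(-v))


def channel_attention(z: Feat, w1: float = 1.0, w2: float = 1.0) -> List[float]:
    # Shared two-layer MLP with ReLU, reduced to scalar weights (assumption).
    def mlp(v: float) -> float:
        return w2 * max(0.0, w1 * v)

    avg = [sum(map(sum, ch)) / (len(ch) * len(ch[0])) for ch in z]  # per-channel mean
    mx = [max(map(max, ch)) for ch in z]                            # per-channel max
    return [sigmoid(mlp(a) + mlp(m)) for a, m in zip(avg, mx)]


def spatial_attention(z: Feat, w_avg: float = 0.5, w_max: float = 0.5) -> Map:
    # Pool across channels at each pixel, combine the two maps, apply sigmoid.
    h, w = len(z[0]), len(z[0][0])
    out = []
    for i in range(h):
        row = []
        for j in range(w):
            vals = [ch[i][j] for ch in z]
            row.append(sigmoid(w_avg * sum(vals) / len(vals) + w_max * max(vals)))
        out.append(row)
    return out
```

The channel branch returns one weight per channel and the spatial branch one weight per pixel, which is why the two maps complement each other.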

The input to the pixel attention module consists of z and the concatenated output of the channel and spatial attention modules ($M_{cs}$). First, $M_{cs}$ and z are each expanded with an extra dimension after the channel dimension, changing their shapes to $c \times 1 \times h \times w$, so that the two tensors can be concatenated along this new dimension. Concatenation yields a tensor of shape $c \times 2 \times h \times w$, which holds, at each spatial location, both the input features and the corresponding attention weights. This tensor is then rearranged to $2c \times h \times w$ and fed into a convolutional layer with a $7 \times 7$ kernel, a step size of 1, and a padding of 3. Finally, the pixel attention output ($M_p$) is obtained by the Sigmoid activation function. This module further strengthens the attention paid to each pixel, which benefits image detail reconstruction and feature enhancement.
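The shape manipulation in the pixel attention module can be made concrete. Stacking the two tensors on a new axis after the channel axis and then flattening the (channel, 2) pair into 2c channels interleaves them as z₀, a₀, z₁, a₁, and so on. The sketch below shows that interleaving on lists of channels and assumes the concatenated channel/spatial attention output has the same number of channels as z; the function name is hypothetical.

```python
from typing import List

Map = List[List[float]]
Feat = List[Map]


def interleave_channels(z: Feat, attn: Feat) -> Feat:
    # Equivalent to: stack z and attn on a new axis after the channel axis
    # (c x 2 x h x w), then flatten (c, 2) -> 2c, giving z0, a0, z1, a1, ...
    if len(z) != len(attn):
        raise ValueError("z and attn must have the same number of channels")
    out: Feat = []
    for z_ch, a_ch in zip(z, attn):
        out.append(z_ch)
        out.append(a_ch)
    return out
```

Keeping each feature channel adjacent to its attention channel lets the subsequent convolution combine them locally.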

2.5. Loss Function

This study integrates pixel loss, perceptual loss, and relative adversarial loss to optimize the generator, thereby enhancing the quality of SR images.

2.5.1. Pixel Loss

Commonly employed pixel losses include the L1 loss and the L2 loss, which calculate the absolute difference and the squared difference, respectively, between the pixel values of the SR image and the HR image. The L1 loss is more robust and produces higher-quality images than the L2 loss. Given a training dataset $\{I^{LR}_i, I^{HR}_i\}_{i=1}^{N}$ with N pairs of samples, the L1 loss can be expressed as follows:

$$L_{pix} = \frac{1}{N} \sum_{i=1}^{N} \left\| G_w(I^{LR}_i) - I^{HR}_i \right\|_1,$$

where $G_w$ represents the SR network with parameters w.
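The robustness argument can be illustrated with a small worked example. The sketch computes the batch-averaged L1 loss exactly as in the formula, plus an L2 counterpart for comparison, on flattened toy images; the data are made up for illustration.

```python
from typing import List, Sequence


def l1_loss(sr_batch: Sequence[List[float]], hr_batch: Sequence[List[float]]) -> float:
    # (1/N) * sum_i || SR_i - HR_i ||_1 over N flattened image pairs.
    n = len(sr_batch)
    return sum(sum(abs(a - b) for a, b in zip(sr, hr))
               for sr, hr in zip(sr_batch, hr_batch)) / n


def l2_loss(sr_batch: Sequence[List[float]], hr_batch: Sequence[List[float]]) -> float:
    # (1/N) * sum_i || SR_i - HR_i ||_2^2, for comparison.
    n = len(sr_batch)
    return sum(sum((a - b) ** 2 for a, b in zip(sr, hr))
               for sr, hr in zip(sr_batch, hr_batch)) / n


hr = [[0.0, 0.0, 0.0, 0.0]]
small = [[0.1, 0.1, 0.1, 0.1]]    # error spread over all pixels
outlier = [[0.4, 0.0, 0.0, 0.0]]  # same total absolute error in one pixel
print(l1_loss(small, hr), l1_loss(outlier, hr))
print(l2_loss(small, hr), l2_loss(outlier, hr))
```

Both predictions have the same L1 loss, but the L2 loss of the concentrated error is four times larger, so L2 lets a few outlying pixels dominate the gradient.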

2.5.2. Perceptual Loss

While optimizing the generator with pixel loss can yield images with high PSNR values, the lack of high-frequency content, such as edges and textures, often makes the images appear overly smooth, lacking detail and sharpness, with poor visual quality. SRGAN [31] introduced perceptual loss to enhance the visual quality of SR images. Perceptual loss extracts feature maps from HR and SR images using a pre-trained VGG network [63]; these feature maps contain rich spatial and semantic information that captures texture and content. The distance between the SR and HR feature maps is then calculated as the perceptual loss. By optimizing in this high-dimensional perceptual space, perceptual loss mitigates the effect of differences in viewing angle and lighting conditions between LR and HR images, thereby improving the reconstructed image quality. The perceptual loss is computed as follows:

$$L_{VGG/i,j} = \frac{1}{W_{i,j} H_{i,j}} \sum_{x=1}^{W_{i,j}} \sum_{y=1}^{H_{i,j}} \left( \phi_{i,j}(I^{HR})_{x,y} - \phi_{i,j}\big(G(I^{LR})\big)_{x,y} \right)^2$$

In this study, $\phi_{i,j}$ represents the feature map obtained from the i-th convolution (after activation) preceding the j-th max-pooling layer within the VGG19 network, G denotes the generator, and $H_{i,j}$ and $W_{i,j}$ denote the height and width of the corresponding feature maps, respectively. To fully exploit the spatial information of low-level feature maps and the semantic information of high-level features, this research uses multi-layer features of the VGG19 network to calculate the perceptual loss. The perceptual losses from different layers are weighted and summed to form the final perceptual loss:

$$L_{per} = \sum_{i,j} \lambda_{i,j} \, L_{VGG/i,j},$$

where $\lambda_{i,j}$ signifies the weight of the perceptual loss calculated from the output features of the i-th convolutional layer preceding the j-th max-pooling layer. Note that using multi-layer features to compute the perceptual loss may increase the memory usage of the network and slow down training, but the inference phase is unaffected.
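Given feature maps already extracted by a VGG19 network, the multi-layer perceptual loss is a weighted sum of per-layer mean squared distances. The sketch takes precomputed single-channel feature maps keyed by hypothetical layer names and leaves out the VGG19 feature extraction itself; the layer names and weights are placeholders, not values reported for NGSTGAN.

```python
from typing import Dict, List

Map = List[List[float]]


def layer_distance(f_hr: Map, f_sr: Map) -> float:
    # (1 / (W*H)) * sum_{x,y} (phi(HR)_{x,y} - phi(SR)_{x,y})^2
    h, w = len(f_hr), len(f_hr[0])
    return sum((a - b) ** 2 for r1, r2 in zip(f_hr, f_sr)
               for a, b in zip(r1, r2)) / (h * w)


def perceptual_loss(feats_hr: Dict[str, Map], feats_sr: Dict[str, Map],
                    weights: Dict[str, float]) -> float:
    # Weighted sum of per-layer distances over the chosen VGG19 layers.
    return sum(weights[name] * layer_distance(feats_hr[name], feats_sr[name])
               for name in weights)
```

Only the layers listed in `weights` contribute, so adding a deeper layer with a small weight is a cheap way to inject semantic guidance without letting it dominate.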

2.5.3. Relative Adversarial Loss

The adversarial loss in this study adopts the relativistic average discriminator proposed in RaGAN [64]. Unlike the standard discriminator, which estimates the probability that an input image is real, the relativistic average discriminator estimates the probability that a real image is, on average, more realistic than a generated one. Compared with the standard GAN, this approach more effectively highlights the relative difference between real and generated data, which mitigates the instability of standard GAN training, strengthens the discriminator, and guides the generator to produce more realistic images. The relativistic adversarial losses can be formulated as follows:

$$D_{Ra}(x_r, x_f) = \sigma\left(C(x_r) - \mathbb{E}_{x_f}[C(x_f)]\right),$$

$$L_D^{Ra} = -\mathbb{E}_{x_r}\left[\log D_{Ra}(x_r, x_f)\right] - \mathbb{E}_{x_f}\left[\log\left(1 - D_{Ra}(x_f, x_r)\right)\right],$$

$$L_G^{Ra} = -\mathbb{E}_{x_r}\left[\log\left(1 - D_{Ra}(x_r, x_f)\right)\right] - \mathbb{E}_{x_f}\left[\log D_{Ra}(x_f, x_r)\right],$$

where $D_{Ra}$ denotes the discriminator that employs the RaGAN concept, $C(\cdot)$ is the discriminator output before the sigmoid function $\sigma$, $x_r \sim \mathbb{P}$ follows the distribution of real-world HR images, and $x_f \sim \mathbb{Q}$ follows the distribution of SR images generated by the generator.

The total loss of the proposed NGSTGAN is the weighted sum of the three losses above:

$$L_{total} = \lambda_1 L_{pix} + \lambda_2 L_{per} + \lambda_3 L_{adv},$$

where $\lambda_1$, $\lambda_2$, and $\lambda_3$ denote the weighting coefficients for the pixel loss, perceptual loss, and relativistic adversarial loss, respectively. In this study, $\lambda_1$, $\lambda_2$, and $\lambda_3$ are set to 1, 0.5, and 0.9, respectively.
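For a batch of raw discriminator outputs (logits) C(x) on real and generated images, the relativistic average losses and the weighted generator objective can be computed as below. This follows the standard RaGAN formulation as also used in ESRGAN; the logit values and function names are illustrative, and only the weights 1, 0.5, and 0.9 come from the text.

```python
import math
from typing import List


def sigmoid(v: float) -> float:
    return 1.0 / (1.0 + math.exp(-v))


def mean(xs: List[float]) -> float:
    return sum(xs) / len(xs)


def ragan_losses(c_real: List[float], c_fake: List[float]):
    # D_Ra(x_r, x_f) = sigmoid(C(x_r) - E[C(x_f)]), and symmetrically for fakes.
    eps = 1e-12  # guards log(0)
    m_real, m_fake = mean(c_real), mean(c_fake)
    d_real = [sigmoid(c - m_fake) for c in c_real]
    d_fake = [sigmoid(c - m_real) for c in c_fake]
    loss_d = (-mean([math.log(d + eps) for d in d_real])
              - mean([math.log(1 - d + eps) for d in d_fake]))
    loss_g = (-mean([math.log(1 - d + eps) for d in d_real])
              - mean([math.log(d + eps) for d in d_fake]))
    return loss_d, loss_g


def total_generator_loss(l_pix: float, l_per: float, l_adv: float,
                         lambdas=(1.0, 0.5, 0.9)) -> float:
    # Weighted sum of pixel, perceptual, and adversarial losses.
    return lambdas[0] * l_pix + lambdas[1] * l_per + lambdas[2] * l_adv
```

When the discriminator cannot separate the two sets (equal logits), both losses equal 2·ln 2; the generator's loss uses real and generated images symmetrically, so it receives gradients from both.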

3. Material and Experimental Setup

3.1. Dataset Description

This paper uses L8 and S2 cross-sensor images as the experimental data. Because land use types vary across regions and with seasonal, climatic, and weather conditions, a substantial amount of training data is needed to improve the model's generalization performance. However, such data not only consume considerable storage but also demand substantial computational resources, which are often insufficient for training a general-purpose SR model.

Therefore, in this study, images primarily from the northern region of Henan province, including parts of Shandong, Hebei, Shanxi, and Shanxi, are selected for training of the SR model. The main surface types in this region include mountains and plains, with the mountains belonging to the Taihang mountains and the plains covering the majority of the northern Henan plains region, where wheat is the primary food crop, making it a key wheat production base in China.

The L8 satellite carries the Operational Land Imager (OLI) and the Thermal Infrared Sensor (TIRS), providing coverage of the global landmass with spatial resolutions of 30 m, 100 m, and 15 m (panchromatic). The imaging scene size is 185 km × 180 km, the spacecraft altitude is 705 km, and the revisit period is 16 days. The S2 system consists of two satellites, S2A and S2B, with a revisit period of 10 days for a single satellite and 5 days for the two combined. Each S2 satellite carries a MultiSpectral Instrument (MSI) with 13 bands at a spacecraft altitude of 786 km, with a swath width of 290 km and spatial resolutions of 10 m, 20 m, and 60 m. In the experiment, we collected 8 L8 (Collection 2 Level 2 T1) images and 9 S2A images from the official websites of the U.S. Geological Survey (USGS) and the European Space Agency (ESA), respectively; both are surface reflectance products. To minimize cloud interference, we selected cloud-free areas from L8 and S2 images acquired no more than half a month apart. The 10 m S2 blue, green, red, and NIR bands were used as the HR labels, and the corresponding 30 m L8 bands were used as the LR input, giving a 3-fold resolution difference between LR and HR. The multispectral images were composited, mosaicked, and cropped to obtain matched L8 and S2 images, where each L8 image was 3660 × 3660 pixels and the corresponding S2 image was 10,980 × 10,980 pixels. To ensure dataset representativeness and support generalizability, the collected images cover diverse geographic regions featuring various land cover classes, such as urban areas, agricultural fields, forests, and water bodies. The dataset also includes scenes with different terrain types and heterogeneity, ranging from flat plains to more complex landscapes. This diversity in land cover and terrain enhances the robustness of our super-resolution method across a wide range of remote sensing scenarios.

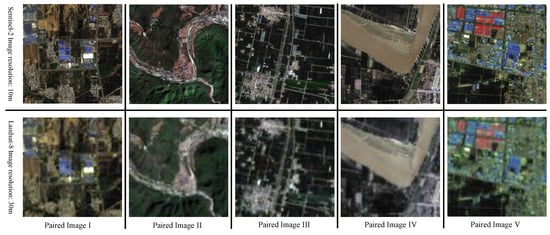

To feed the images into the model, the matched images were cropped into non-overlapping patches along a regular grid, yielding a total of 16,598 patches, with each L8 patch measuring 80 × 80 pixels and each corresponding S2 patch measuring 240 × 240 pixels. After manually removing unusable patches (e.g., cloudy or no-data patches), 16,250 usable patches remained and were divided into training, validation, and testing datasets at a ratio of 8:1:1. Figure 8 shows part of the training dataset.

Figure 8.

In this study, we use paired image patches from various scenarios to train the SR model. The 10 m HR S2 images are placed in the first row, while the 30 m LR L8 images are in the second row.
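The grid cropping and the 8:1:1 split can be reproduced arithmetically. The sketch counts how many complete patches fit on a regular grid (using the 10,980-pixel S2 tile and an assumed 240-pixel S2 patch as an example) and splits 16,250 patches by the stated ratio. The rounding rule (floor for training and validation, remainder to testing) is an assumption, because the text does not specify it.

```python
def grid_patches(height: int, width: int, patch: int) -> int:
    # Number of complete non-overlapping patches on a regular grid;
    # leftover border pixels that cannot fill a patch are discarded.
    return (height // patch) * (width // patch)


def split_811(total: int):
    # Assumed rounding: floor for train/val, remainder goes to test.
    train = total * 8 // 10
    val = total // 10
    return train, val, total - train - val


print(grid_patches(10980, 10980, 240))  # 2025 patches per full S2 tile
print(split_811(16250))                 # (13000, 1625, 1625)
```

With 16,250 patches the ratio divides evenly, so the rounding rule only matters for other dataset sizes.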

3.2. Evaluation Indicators

To evaluate the quality of SR images generated by the model, this study employs seven evaluation metrics: PSNR [65], SSIM [65,66], local standard deviation (LSD) [67], similarity measurement based on angle (SAM) [68], learned perceptual image patch similarity (LPIPS) [69], the number of parameters [56], and multiply–accumulate operations (MACs) [70,71].

3.2.1. Peak Signal-to-Noise Ratio (PSNR)

The PSNR [65] assesses the quality of SR images by comparing the pixel differences between the HR image and the SR image. Because PSNR decreases monotonically with the mean squared error, minimizing an MSE (L2) pixel loss is equivalent to maximizing PSNR. A higher PSNR indicates a higher-quality SR image. The PSNR can be expressed as follows:

$$PSNR = 10 \log_{10} \left( \frac{\text{max}^2}{MSE} \right),$$

where G denotes the generator, max represents the maximum possible pixel value of the image, $G(I^{LR})$ denotes the SR image generated by the generator, and MSE is the mean squared error between the pixels of the SR image and the real-world HR image, expressed as follows:

$$MSE = \frac{1}{hw} \sum_{i=1}^{h} \sum_{j=1}^{w} \left( I^{HR}(i,j) - G(I^{LR})(i,j) \right)^2,$$
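As a worked example, the sketch computes MSE and PSNR = 10·log10(max²/MSE) on flattened images. It assumes reflectance values scaled to [0, 1] so that max = 1; this scaling convention is an assumption, not stated in the text.

```python
import math
from typing import Sequence


def mse(hr: Sequence[float], sr: Sequence[float]) -> float:
    # Mean squared error over all pixels.
    return sum((a - b) ** 2 for a, b in zip(hr, sr)) / len(hr)


def psnr(hr: Sequence[float], sr: Sequence[float], max_val: float = 1.0) -> float:
    # PSNR = 10 * log10(max^2 / MSE); identical images give infinity.
    err = mse(hr, sr)
    return math.inf if err == 0 else 10.0 * math.log10(max_val ** 2 / err)


print(psnr([0.5, 0.5, 0.5, 0.5], [0.51, 0.49, 0.51, 0.49]))  # about 40 dB
```

A uniform error of 0.01 on a [0, 1] scale gives an MSE of 1e-4 and therefore about 40 dB; each tenfold reduction of the MSE adds 10 dB.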

3.2.2. Structural Similarity Index Measure (SSIM)

Although PSNR is a commonly used metric for image quality assessment, it does not adequately represent the subjective perception of image quality by the human eye, as it does not account for perceptual similarity. Therefore, models optimized for pixel loss may perform well on PSNR but often yield unsatisfactory visual results. SSIM [65,66] compares SR and HR images in terms of luminance, contrast, and structure; its value is at most 1, with values closer to 1 indicating greater similarity between images. SSIM is expressed as follows:

$$SSIM(x, y) = [l(x,y)]^{\alpha} \, [c(x,y)]^{\beta} \, [s(x,y)]^{\gamma},$$

where l, c, and s denote the similarity between the SR image x and the HR image y in terms of luminance, contrast, and structure, respectively, and $\alpha$, $\beta$, and $\gamma$ denote the weights of these components in the calculation of SSIM. When $\alpha = \beta = \gamma = 1$ (with the structure constant set to half of $C_2$), SSIM can be expressed as follows:

$$SSIM(x, y) = \frac{(2\mu_x\mu_y + C_1)(2\sigma_{xy} + C_2)}{(\mu_x^2 + \mu_y^2 + C_1)(\sigma_x^2 + \sigma_y^2 + C_2)},$$

where $\mu$ and $\sigma^2$ denote the mean and variance of an image, respectively, $\sigma_{xy}$ is the covariance between x and y, and $C_1 = (k_1 L)^2$ and $C_2 = (k_2 L)^2$ are constants, with L representing the dynamic range of the image pixels and $k_1$ and $k_2$ typically set to 0.01 and 0.03, respectively.
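A simplified SSIM can be computed from global statistics of two images, as sketched below. Standard implementations evaluate the same expression inside local (typically Gaussian-weighted) windows and average the results, so using one global window is a simplification; L = 1 assumes data scaled to [0, 1].

```python
from typing import Sequence


def global_ssim(x: Sequence[float], y: Sequence[float], L: float = 1.0,
                k1: float = 0.01, k2: float = 0.03) -> float:
    n = len(x)
    mu_x, mu_y = sum(x) / n, sum(y) / n
    var_x = sum((a - mu_x) ** 2 for a in x) / n
    var_y = sum((b - mu_y) ** 2 for b in y) / n
    cov = sum((a - mu_x) * (b - mu_y) for a, b in zip(x, y)) / n
    c1, c2 = (k1 * L) ** 2, (k2 * L) ** 2
    numerator = (2 * mu_x * mu_y + c1) * (2 * cov + c2)
    denominator = (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2)
    return numerator / denominator
```

Identical images score exactly 1, while a contrast-inverted copy such as [0, 1, 0, 1] versus [1, 0, 1, 0] yields a negative value because the covariance term changes sign.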

3.2.3. Local Standard Deviation (LSD)

The LSD [67] reflects the grayscale variation in the edge regions of ground features in RSIs, making it suitable for assessing the quality of edge reconstruction in super-resolved images. The LSD can be formulated as follows:

$$LSD = \sqrt{\frac{1}{K^2} \sum_{i=1}^{K} \sum_{j=1}^{K} \left( I(i,j) - \mu \right)^2},$$

where K signifies the size of the $K \times K$ window used to compute the local standard deviation, $I(i,j)$ represents the pixel value at coordinates $(i,j)$, and $\mu$ denotes the average pixel value within the window.
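The formula evaluates a single K × K window. To summarise a whole band, one option is to slide the window over every valid position and average the results; this aggregation is an assumption, because the text does not state how window values are combined.

```python
import math
from typing import List


def window_std(img: List[List[float]], top: int, left: int, k: int) -> float:
    # Population standard deviation inside one k x k window.
    vals = [img[i][j] for i in range(top, top + k) for j in range(left, left + k)]
    mu = sum(vals) / (k * k)
    return math.sqrt(sum((v - mu) ** 2 for v in vals) / (k * k))


def mean_lsd(img: List[List[float]], k: int = 3) -> float:
    # Average of the local standard deviation over all valid window positions.
    h, w = len(img), len(img[0])
    stds = [window_std(img, i, j, k)
            for i in range(h - k + 1) for j in range(w - k + 1)]
    return sum(stds) / len(stds)
```

A flat region scores 0, and only windows straddling an edge contribute, which is why the measure tracks how sharply edges are reconstructed.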

3.2.4. Similarity Measurement Based on Angle (SAM)

The SAM [68] is a commonly used similarity metric in remote sensing imagery and spectral analysis, mainly used to measure the angular difference between two spectral vectors. The method treats the spectrum of each pixel in an image as a high-dimensional vector and evaluates the similarity of two vectors by calculating the angle between them. The smaller the angle, the more similar the two spectra are, and vice versa. The SAM can be expressed as follows:

$$SAM = \arccos\left( \frac{v_{SR}^{T} \, v_{HR}}{\|v_{SR}\|_2 \, \|v_{HR}\|_2 + \epsilon} \right),$$

where $v_{SR}^{T}$ denotes the transpose of the SR spectral vector $v_{SR}$, $v_{HR}$ is the corresponding HR spectral vector, and $\epsilon$ is a very small constant that prevents the denominator from being zero.
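For one pixel, SAM is the angle between the SR and HR spectral vectors (here four bands: blue, green, red, and NIR). The sketch computes it in radians and averages over pixels; both the averaging and the use of radians are assumptions about how the image-level value is reported. The clamp keeps `math.acos` valid when rounding pushes the cosine slightly above 1.

```python
import math
from typing import List, Sequence


def spectral_angle(v_sr: Sequence[float], v_hr: Sequence[float], eps: float = 1e-12) -> float:
    dot = sum(a * b for a, b in zip(v_sr, v_hr))
    norm = math.sqrt(sum(a * a for a in v_sr)) * math.sqrt(sum(b * b for b in v_hr))
    cos = dot / (norm + eps)
    return math.acos(max(-1.0, min(1.0, cos)))


def mean_sam(sr_pixels: List[Sequence[float]], hr_pixels: List[Sequence[float]]) -> float:
    angles = [spectral_angle(s, h) for s, h in zip(sr_pixels, hr_pixels)]
    return sum(angles) / len(angles)
```

Because only the direction of each vector matters, a uniformly brighter or darker reconstruction has a SAM near zero; the metric isolates spectral shape from overall intensity.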

3.2.5. Learned Perceptual Image Patch Similarity (LPIPS)

The LPIPS [69] is a metric designed to assess the perceptual similarity between images in line with human visual perception. It uses a pre-trained neural network to extract deep feature maps from both the super-resolved ($I^{SR}$) and high-resolution ($I^{HR}$) images and quantifies similarity as the distance between these feature maps. Because it relies on high-level features learned by a deep model, LPIPS reflects human perception of image similarity more accurately. LPIPS values are non-negative, with smaller values indicating a smaller perceptual distance between images and thus higher similarity. The LPIPS can be expressed as follows:

$$LPIPS(I^{SR}, I^{HR}) = \sum_{l} \frac{1}{H_l W_l} \sum_{h,w} \left\| w_l \odot \left( \hat{y}^{l}_{SR,hw} - \hat{y}^{l}_{HR,hw} \right) \right\|_2^2,$$

where $\hat{y}^{l}$ denotes the channel-normalized features from the l-th layer of the pre-trained network; $w_l$ represents the channel-wise scaling vector for the feature maps in the l-th layer; and $H_l$ and $W_l$ denote the height and width of the output feature maps in the l-th layer of the pre-trained network, respectively.
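Once the pre-trained network's activations are available, the LPIPS distance is a weighted, spatially averaged squared difference of unit-normalised features. The sketch starts from precomputed per-layer feature maps stored as [row][col][channel] and uses hypothetical weights w_l; it does not include the pre-trained network or the learned weights of the official LPIPS implementation.

```python
import math
from typing import List

Layer = List[List[List[float]]]  # [row][col][channel]


def unit_normalize(v: List[float], eps: float = 1e-10) -> List[float]:
    # Normalize each feature vector along the channel axis.
    n = math.sqrt(sum(a * a for a in v)) + eps
    return [a / n for a in v]


def lpips_distance(feats_sr: List[Layer], feats_hr: List[Layer],
                   weights: List[List[float]]) -> float:
    total = 0.0
    for f_sr, f_hr, w_l in zip(feats_sr, feats_hr, weights):
        h, w = len(f_sr), len(f_sr[0])
        layer_sum = 0.0
        for i in range(h):
            for j in range(w):
                a = unit_normalize(f_sr[i][j])
                b = unit_normalize(f_hr[i][j])
                layer_sum += sum((wc * (x - y)) ** 2 for wc, x, y in zip(w_l, a, b))
        total += layer_sum / (h * w)  # spatial average per layer
    return total
```

Normalising each feature vector first means the distance compares activation patterns rather than raw magnitudes, one reason LPIPS tracks perceived texture differences better than pixel metrics.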

3.2.6. Parameters and Multiply–Accumulate Operations

Parameters [65] are the learnable weights and biases of a deep learning model, which store and update the model's knowledge. The number of parameters directly influences the model's capacity, storage requirements, and computational overhead. More parameters generally enable the model to capture more complex patterns but may also lead to overfitting or computational inefficiency. MACs [70,71] are a key measure of a model's computational complexity, counting the multiply-accumulate operations performed during inference. Higher MACs imply greater computational cost and, typically, longer inference times. Because MACs do not depend on a particular device, they allow the computational cost of models to be compared independently of the hardware platform. Balancing parameters and MACs is essential for preserving a model's expressive power while optimizing efficiency under limited computational resources. In practice, the numbers of parameters and MACs significantly affect a model's deployability and inference performance, especially on edge devices or in real-time systems.
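For a single convolutional layer, both quantities follow directly from its shape. The sketch counts weights plus biases as parameters and one MAC per weight per output position, not counting bias additions as MACs; this is a common convention, profiling tools may differ, and the example layer is hypothetical.

```python
def conv2d_params(c_in: int, c_out: int, k: int, groups: int = 1, bias: bool = True) -> int:
    # Each output channel sees c_in / groups input channels through a k x k kernel.
    weights = (c_in // groups) * c_out * k * k
    return weights + (c_out if bias else 0)


def conv2d_macs(c_in: int, c_out: int, k: int, h_out: int, w_out: int, groups: int = 1) -> int:
    # One multiply-accumulate per weight per output position.
    return (c_in // groups) * c_out * k * k * h_out * w_out


print(conv2d_params(96, 96, 3))        # 83040
print(conv2d_macs(96, 96, 3, 80, 80))  # 530841600
```

Parameters are independent of the input size, while MACs scale with the output resolution; this is why shrinking the spatial size, as CRGC does, cuts MACs far more than parameters.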

3.3. Experimental Configuration and Implementation Details

The PyTorch framework [72,73] was employed for model development and training, run on an NVIDIA GeForce RTX 3090 GPU with 24 GB of memory. The training process spanned 500 epochs, with each batch containing 16 data instances. To enhance the generalization capability of the model, various data augmentation strategies were applied during preprocessing, including horizontal and vertical flips, as well as rotations of 90°, 180°, and 270°. The AdamW optimizer [74] was adopted for training stability and efficiency, initialized with a learning rate of 0.0002. A step learning rate scheduler was used, reducing the learning rate by a factor of 0.2 every 150 epochs to facilitate convergence. The overall loss function was constructed as a weighted sum of pixel-wise loss (L1 loss), perceptual loss, and adversarial loss, with respective weights set to 1, 0.5, and 0.9. The perceptual loss was computed using intermediate feature maps from a pre-trained VGG-19 network, while the adversarial loss was derived from a multi-attention U-Net-based discriminator. The generator architecture, as detailed in Table 1, follows a four-stage encoder–decoder structure built on Transformer blocks, configured with an N-Gram size of 2, uniform depth of (6, 6, 6, 6), 6 attention heads per stage, embedding dimension of 96, and window size of 8. Patch-wise tokenization was performed using a patch size of 1, and LayerNorm and GELU were used as the normalization and activation functions, respectively. These detailed configurations ensure both the reproducibility of our results and the robustness of the NGSTGAN framework.
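The step schedule (initial rate 0.0002, multiplied by 0.2 every 150 epochs) and the flip/rotation augmentations, assumed here to be 90°, 180°, and 270° rotations, can be sketched without a framework. In PyTorch the schedule would typically be expressed as `torch.optim.lr_scheduler.StepLR(optimizer, step_size=150, gamma=0.2)`; the plain-Python version below only reproduces the resulting values, and the augmentation helper works on a nested-list image for illustration.

```python
from typing import List


def step_lr(epoch: int, base_lr: float = 2e-4, step: int = 150, gamma: float = 0.2) -> float:
    # Learning rate used during a given (0-indexed) epoch.
    return base_lr * gamma ** (epoch // step)


def rot90(img: List[List[float]]) -> List[List[float]]:
    # Rotate 90 degrees counter-clockwise: transpose, then reverse the row order.
    return [list(row) for row in zip(*img)][::-1]


def augmentations(img: List[List[float]]) -> List[List[List[float]]]:
    # Horizontal flip, vertical flip, and 90/180/270-degree rotations.
    hflip = [row[::-1] for row in img]
    vflip = img[::-1]
    r90 = rot90(img)
    r180 = rot90(r90)
    r270 = rot90(r180)
    return [hflip, vflip, r90, r180, r270]


for e in (0, 150, 300, 450):
    print(e, step_lr(e))
```

Over 500 epochs the rate drops three times (at epochs 150, 300, and 450), ending at 1.6 × 10⁻⁶.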

3.4. Comparison with SOTA Methods

To thoroughly validate the efficacy of the proposed NGSTGAN model, we conducted a comprehensive comparison with representative deep learning methods. Specifically, the CNN and attention mechanism-based methods include SRCNN [15], VDSR [18], RCAN [22], LGCNet [27], and DCMNet [75]; the Transformer-based methods are SwinIR [43] and SRFormer [44]; and the GAN-based methods are SRGAN [31] and ESRGAN [32].

4. Experimental Results and Analysis

4.1. Quantitative Analysis of Experimental Results

As shown in Table 2, the proposed NGSTGAN performs strongly across several critical metrics, achieving the best scores in four core evaluation metrics (PSNR, SSIM, RMSE, and LPIPS), which validates its advantages in detail restoration and perceptual quality. Specifically, the PSNR of NGSTGAN reaches 34.1317 dB, substantially higher than those of the traditional CNN method SRCNN (30.0448 dB) and the GAN-based SRGAN (30.2481 dB), with improvements of 4.0869 dB and 3.8836 dB, respectively. Compared with the strong SwinIR and SRFormer, it also improves PSNR by 0.0869 dB and 0.0836 dB, respectively. For SSIM, NGSTGAN reaches 0.8800, surpassing the second-ranked ESRGAN (0.8669) by 0.0131 and SRCNN by 0.1229, indicating better structural preservation. For RMSE, NGSTGAN scores 0.0165, clearly lower than VDSR (0.0257) and RCAN (0.0252), with reductions of 0.0092 and 0.0087, respectively, reflecting its superior error control; compared with the second-best methods, SwinIR and SRFormer, its RMSE is lower by 0.0012 in both cases. In addition, NGSTGAN scores 0.1906 on LPIPS, 0.0267 lower than the second-best model, ESRGAN (0.2173), further demonstrating its superior perceptual quality and high visual consistency. For spectral information retention, NGSTGAN achieves a SAM of 0.1025, second only to DCMNet (0.0915), indicating that it is close to optimal in this respect.

Regarding the number of parameters and MACs, although NGSTGAN is not the smallest model, its complexity remains low relative to its performance gain: it uses only 3.77 M parameters and 20.43 G MACs, far fewer than complex models such as RCAN (15.63 M, 99.32 G). Moreover, NGSTGAN is built on SwinIR, and compared with SwinIR it reduces the number of parameters by 1.28 M and MACs by 11.92 G, which shows that the modifications to SwinIR reduce computation and model complexity while achieving better results. Thus, NGSTGAN strikes a good balance between performance and computational complexity, showing its potential as a lightweight and efficient SR method.

Table 2.

Average quality evaluation of different models on the test dataset. The top-performing results are marked in red, while the second best are marked in blue. In the table, ↑ indicates that higher values are better, while ↓ means lower values are better.

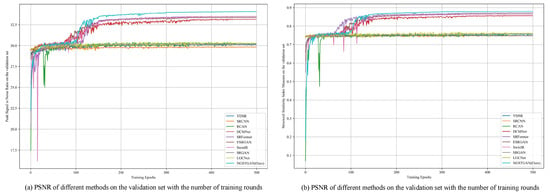

Figure 9 shows how PSNR and SSIM on the validation dataset evolve with the number of training epochs for different models; PSNR and SSIM follow similar patterns. Within the first 100 epochs, the performance of each model improves quickly and fluctuates considerably, and NGSTGAN lags behind several other models during this stage. Beyond 100 epochs, models such as SRCNN, SRGAN, VDSR, RCAN, and LGCNet gradually converge, with only minor fluctuations following learning rate adjustments. Around epoch 110, NGSTGAN begins to close the performance gap with the other models, and by epoch 150 (following a learning rate adjustment), it substantially narrows this gap. In subsequent training, performance stabilizes, with only minor increases after the learning rate adjustments at epochs 300 and 450, indicating that the model's performance is approaching saturation.

Figure 9.

Comparison plot of PSNR and SSIM variation with training rounds for different models on validation data.
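The learning-rate adjustments described for Figure 9 follow a step-decay pattern. As a hedged illustration only, the function below mimics the closed form used by PyTorch's `torch.optim.lr_scheduler.MultiStepLR`, with milestones at rounds 150, 300, and 450 taken from the discussion of Figure 9. The initial rate of 2e-4 and the decay factor of 0.5 are hypothetical values, not settings reported for NGSTGAN.

```python
from bisect import bisect_right


def step_lr(round_idx: int, base_lr: float = 2e-4,
            milestones: tuple[int, ...] = (150, 300, 450), gamma: float = 0.5) -> float:
    """Learning rate at a given training round under multi-step decay.

    The rate is multiplied by `gamma` once for every milestone already reached.
    """
    # Number of (sorted) milestones that are <= round_idx.
    n_decays = bisect_right(milestones, round_idx)
    return base_lr * gamma ** n_decays


if __name__ == "__main__":
    for r in (0, 149, 150, 299, 300, 450, 499):
        print(f"round {r:3d}: lr = {step_lr(r):.2e}")
```

Each plateau in this schedule corresponds to a stretch in which the curves can only settle. The small jumps the text reports after rounds 300 and 450 are what one would expect when a smaller step size lets the optimizer refine an already converged solution.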

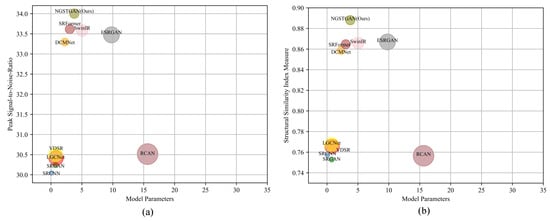

Figure 10 compares the models in the RSISR task on four metrics: PSNR, SSIM, number of parameters, and MACs. The size of each scatter point encodes the model's computational complexity (its MACs), with larger circles indicating higher complexity. In Figure 10a, the horizontal axis is the number of model parameters and the vertical axis is PSNR. Our proposed NGSTGAN achieves the highest PSNR with a relatively small number of parameters, clearly outperforming the other models. In contrast, traditional models such as VDSR and SRCNN have very few parameters but perform poorly. RCAN, with more parameters, performs better but still falls short of NGSTGAN while requiring more MACs. SwinIR and SRFormer achieve a balance between PSNR and parameter count, yet neither surpasses NGSTGAN. In Figure 10b, the horizontal axis is again the number of parameters and the vertical axis is SSIM. NGSTGAN again achieves the highest SSIM among all compared models. SwinIR and SRFormer reach SSIM values close to 0.86, approaching that of NGSTGAN, while RCAN reaches close to 0.85 at a higher computational cost. Traditional models such as VDSR, SRCNN, and SRGAN, which have fewer parameters, reach SSIM values of only around 0.75, indicating poor performance.

Figure 10.

Number of parameters versus average performance on the test dataset; MACs are represented by the size of each scatter point.

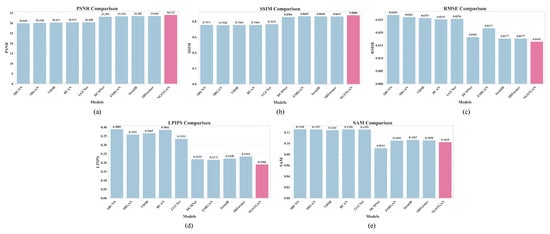

To demonstrate the advantages of NGSTGAN more intuitively, Figure 11 presents bar charts comparing NGSTGAN with other SOTA methods on five evaluation metrics: PSNR, SSIM, RMSE, LPIPS, and SAM. NGSTGAN is highlighted in a distinct color in each chart. NGSTGAN outperforms the compared models on all five metrics, demonstrating superior performance on both fidelity-based and perceptual quality measures. This comprehensive comparison further supports the effectiveness of the proposed method.

Figure 11.

Performance comparison of NGSTGAN with leading super-resolution models across PSNR (a), SSIM (b), RMSE (c), LPIPS (d), and SAM (e).
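For readers who want to reproduce the fidelity-type metrics, the sketch below gives NumPy versions of PSNR, RMSE, and SAM. It assumes that SR and HR images are float arrays of shape (H, W, C) scaled to [0, 1], and that SAM is averaged over pixels and expressed in radians. These conventions are not stated in this section, so they are assumptions. SSIM and LPIPS are omitted: SSIM is available as `skimage.metrics.structural_similarity` in scikit-image, and LPIPS requires a pretrained network.

```python
import numpy as np


def rmse(sr: np.ndarray, hr: np.ndarray) -> float:
    """Root-mean-square error over all pixels and bands."""
    return float(np.sqrt(np.mean((sr - hr) ** 2)))


def psnr(sr: np.ndarray, hr: np.ndarray, data_range: float = 1.0) -> float:
    """Peak signal-to-noise ratio in dB for images with values in [0, data_range]."""
    mse = float(np.mean((sr - hr) ** 2))
    if mse == 0.0:
        return float("inf")
    return float(10.0 * np.log10(data_range ** 2 / mse))


def sam(sr: np.ndarray, hr: np.ndarray, eps: float = 1e-12) -> float:
    """Mean spectral angle in radians between per-pixel spectra (last axis = bands)."""
    dot = np.sum(sr * hr, axis=-1)
    norms = np.linalg.norm(sr, axis=-1) * np.linalg.norm(hr, axis=-1)
    # Clip guards against rounding pushing the cosine slightly outside [-1, 1].
    cos = np.clip(dot / (norms + eps), -1.0, 1.0)
    return float(np.mean(np.arccos(cos)))


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    hr = rng.random((64, 64, 4))  # hypothetical 4-band (B, G, R, NIR) patch
    sr = np.clip(hr + rng.normal(0.0, 0.01, hr.shape), 0.0, 1.0)
    print(f"PSNR {psnr(sr, hr):.2f} dB, RMSE {rmse(sr, hr):.4f}, SAM {sam(sr, hr):.4f} rad")
```

Test-set figures such as those in Table 2 would then be averages of these per-image values over all test images, which is why an average PSNR need not equal the PSNR implied by the average RMSE.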

4.2. Qualitative Analysis of Experimental Results

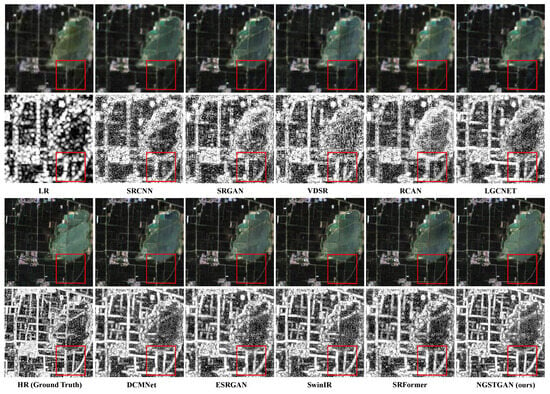

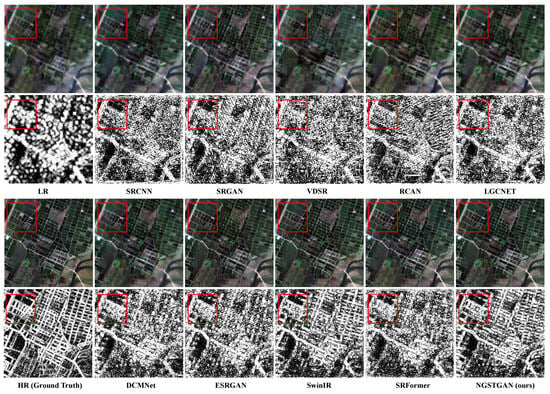

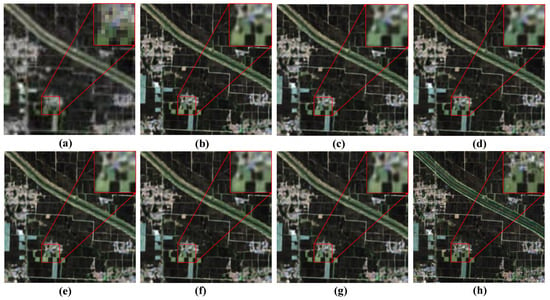

Figures 12 and 13 compare the global reconstruction results of different models for two RSIs, showing both the RGB true-color image and the local standard deviation image. Reconstructions optimized with pixel loss alone appear smoother than those of other methods but are blurred at image edges. In contrast, the proposed method, which combines pixel, adversarial, and perceptual losses, produces sharper reconstructions, particularly in detail recovery, that closely match the HR images. To highlight the differences between models further, we compute the standard deviation of each pixel within a local window and enhance the contrast through normalization, histogram equalization, and sharpening filters. The standard deviation maps show that the proposed method recovers detail closer to the real image, particularly in high-frequency regions, significantly improving the reconstruction.

Figure 12.

Global reconstruction results of different models (I). The red box highlights the emphasized region.

Figure 13.

Global reconstruction results of different models (II). The red box highlights the emphasized region.
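The local standard deviation maps in Figures 12 and 13 can be approximated with the NumPy sketch below. It follows the steps named in the text: a per-pixel standard deviation over a sliding window, min-max normalization, histogram equalization, and sharpening. The text does not specify several details, so the following are assumptions: the 7 x 7 window, reflect padding, 256-bin equalization, and unsharp masking as the sharpening filter. The function names are hypothetical, and the map is assumed to be computed on one band at a time.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def local_std(band: np.ndarray, win: int = 7) -> np.ndarray:
    """Per-pixel standard deviation over a win x win neighbourhood (odd win, reflect padding)."""
    pad = win // 2
    windows = sliding_window_view(np.pad(band, pad, mode="reflect"), (win, win))
    return windows.std(axis=(-2, -1))


def normalize(x: np.ndarray) -> np.ndarray:
    """Min-max scaling to [0, 1]; a constant image maps to zeros."""
    lo, hi = float(x.min()), float(x.max())
    return (x - lo) / (hi - lo) if hi > lo else np.zeros_like(x, dtype=float)


def equalize(x: np.ndarray, bins: int = 256) -> np.ndarray:
    """Histogram equalization of a [0, 1] image: map each pixel to its bin's CDF value."""
    hist, edges = np.histogram(x, bins=bins, range=(0.0, 1.0))
    cdf = np.cumsum(hist) / x.size
    return cdf[np.digitize(x, edges[1:-1])]


def unsharp(x: np.ndarray, amount: float = 1.0) -> np.ndarray:
    """Sharpen by adding back the difference from a 3 x 3 box blur, then clip to [0, 1]."""
    blurred = sliding_window_view(np.pad(x, 1, mode="edge"), (3, 3)).mean(axis=(-2, -1))
    return np.clip(x + amount * (x - blurred), 0.0, 1.0)


def std_map(band: np.ndarray, win: int = 7) -> np.ndarray:
    """Local standard deviation map with contrast enhancement for visual comparison."""
    return unsharp(equalize(normalize(local_std(band.astype(float), win))))


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    band = rng.random((128, 128))  # stand-in for one band of an SR or HR image
    print(std_map(band).shape)
```

Flat regions yield near-zero local deviation, whereas edges and textures yield large values. Applying the same pipeline to the SR outputs and to the HR reference therefore makes missing or hallucinated high-frequency detail stand out.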

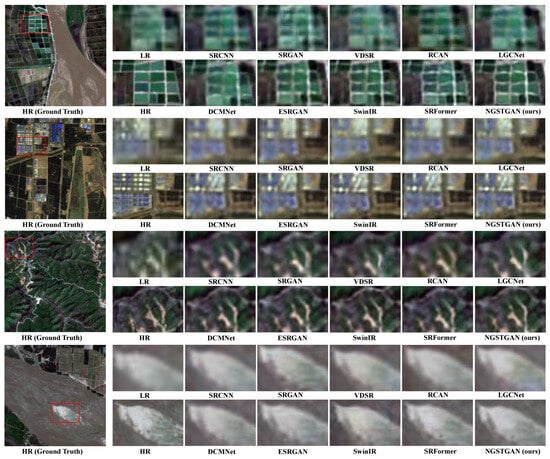

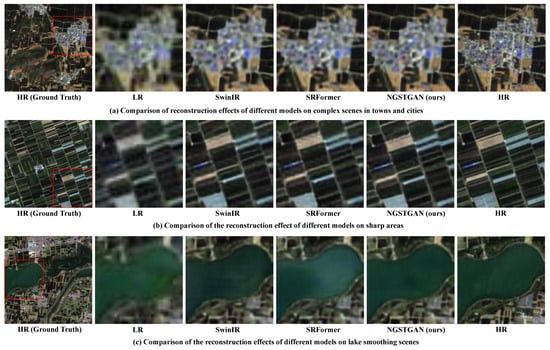

Figure 14 compares the reconstruction results of different models on local areas of four remote sensing images, where NGSTGAN shows notable advantages in several respects. Compared with the other models, NGSTGAN more accurately restores fine textures such as farmland boundaries, road outlines, vegetation patterns, and river contours, better meeting the high-resolution requirements of remote sensing imagery. NGSTGAN performs particularly well in edge regions, surpassing traditional models such as SRCNN and VDSR, and more clearly distinguishes building edges from natural boundaries. It also shows better texture consistency, which reduces reconstruction artifacts and yields a clearer and more realistic result, most visibly in the preservation of edge structures and textures. Furthermore, it recovers contrast and brightness faithfully, bringing the reconstruction closer to the HR image. Across farmland, urban, mountain, and river scenes, NGSTGAN consistently delivers high-quality reconstructions, highlighting its robustness and detail-restoration ability and underscoring its suitability for high-precision RSISR.

Figure 14.

Local reconstruction results of different models, including farmland, towns, mountains, and rivers. The red boxes indicate the regions of interest that are enlarged for visual comparison.

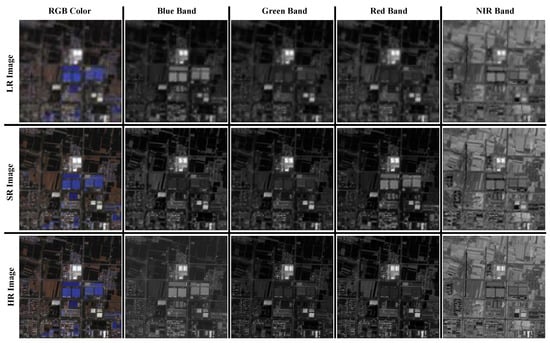

Figure 15 compares the LR image, the SR image generated by NGSTGAN, and the HR image for the RGB composite and for the individual blue, green, red, and NIR bands. NGSTGAN achieves near-true reconstruction in all bands, substantially enhancing the resolution and detail of the images. In the RGB image, the SR result is closer in color to the HR image and shows much better detail and contrast than the LR image, particularly in the texture of building areas and farmland, with sharper edges. In the single-band images, the SR results closely match the HR images in brightness, contrast, and texture. This is especially notable in the NIR band, where the SR image clearly depicts the texture of farmland and vegetation areas that appears blurred or distorted in the LR image. These results indicate that NGSTGAN achieves high-quality SR reconstruction not only in the visible bands but also across the multispectral bands.

Figure 15.

Reconstruction results of our method for each band of the image.

4.3. Ablation Experiments of Important Components

To comprehensively evaluate the contribution of each core module in NGSTGAN, we conducted a series of ablation experiments in which the ISFE module, the NGWP module, and the multi-attention U-Net discriminator were selectively removed. This yielded eight model configurations (Cases 1–8), presented in Table 3. Each configuration was assessed with five commonly adopted metrics: PSNR, SSIM, RMSE, LPIPS, and SAM. Higher PSNR and SSIM values indicate better image quality, while lower RMSE, LPIPS, and SAM values indicate more accurate reconstructions with better perceptual consistency and spectral fidelity.
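The eight configurations arise from switching each of the three components on or off (2^3 = 8). A short sketch of that enumeration follows. The cases whose composition is stated in the analysis of Table 3 (Cases 1, 2, 3, 7, and 8) are encoded explicitly. The assignment of the three single-module configurations to Cases 4 to 6 is not stated there, so it is deliberately left unmapped. All names in the code are illustrative.

```python
from collections import Counter
from itertools import product

MODULES = ("ISFE", "NGWP", "Discriminator")

# Every on/off combination of the three components (True = module kept): 2**3 = 8.
configs = [dict(zip(MODULES, flags)) for flags in product((True, False), repeat=len(MODULES))]

# Cases whose composition is given in the text; Cases 4-6 (one module each) are not mapped.
KNOWN_CASES = {
    1: {"ISFE": False, "NGWP": True, "Discriminator": True},
    2: {"ISFE": True, "NGWP": False, "Discriminator": True},
    3: {"ISFE": True, "NGWP": True, "Discriminator": False},
    7: {"ISFE": False, "NGWP": False, "Discriminator": False},
    8: {"ISFE": True, "NGWP": True, "Discriminator": True},
}

# How many configurations keep 3, 2, 1, or 0 modules.
kept_counts = Counter(sum(cfg.values()) for cfg in configs)

if __name__ == "__main__":
    for cfg in configs:
        kept = [m for m, on in cfg.items() if on] or ["none"]
        print(", ".join(kept))
    print(dict(sorted(kept_counts.items(), reverse=True)))
```

The grouping it produces (one full model, three two-module variants, three single-module variants, and one variant with none) is the structure the analysis of Table 3 relies on when comparing Cases 1 to 3 with Cases 4 to 6.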

Table 3.

Experimental ablation results of important components in NGSTGAN. The top-performing results are marked in red, while the second-best are marked in blue. In the table, ↑ indicates that higher values are better, while ↓ means lower values are better. ✓ indicates module inclusion, whereas ✗ denotes module removal.

The full model (Case 8) achieves the best performance on all metrics, with a PSNR of 34.1317 dB, an SSIM of 0.8800, and the lowest RMSE, LPIPS, and SAM values (0.0165, 0.1906, and 0.1025, respectively), demonstrating a strong synergy among the three modules. All other configurations, which omit one or more components, show varying degrees of performance degradation, underscoring the necessity of each module. Case 3, in which only the discriminator is removed, yields the second-best results, suggesting that although the discriminator contributes significantly, the generator remains robust on its own. Case 1 (without ISFE) and Case 2 (without NGWP) perform similarly, indicating that the two modules play complementary roles in enhancing reconstruction. Moreover, the configurations retaining two modules (Cases 1, 2, and 3) consistently outperform those retaining only one (Cases 4, 5, and 6). Case 7, in which all three components are removed, gives the poorest results on all metrics. Configurations that retain a single module still offer partial gains, but their performance lags well behind that of the full model. In summary, the ISFE module, the NGWP module, and the multi-attention U-Net discriminator each play an indispensable role in NGSTGAN, and their integration substantially improves both reconstruction accuracy and perceptual quality. The ablation study thus validates both the individual value of each component and the soundness of the overall architectural design.

5. Discussion

5.1. Advantages of ISFE

To evaluate the effectiveness of the proposed ISFE module, we conducted a series of ablation experiments under different model configurations (Table 4). First, comparing the generator with and without ISFE shows that incorporating ISFE improves performance on all evaluation metrics. PSNR increases by 0.3929 dB and SSIM by 0.0092, while RMSE, LPIPS, and SAM decrease by 0.0008, 0.0226, and 0.0029, respectively. These improvements indicate that ISFE enhances the generator's ability to reconstruct fine details and maintain spectral consistency.

Table 4.

Ablation experiments with ISFE. The top-performing results are marked in red, while the second-best are marked in blue. In the table, ↑ indicates that higher values are better, while ↓ means lower values are better.

Furthermore, among the GAN-based configurations, the model with both a generator and a discriminator (Ours) equipped with ISFE achieves the best overall performance. Compared with its counterpart without ISFE, PSNR improves by 0.4106 dB and SSIM by 0.0112, while RMSE, LPIPS, and SAM decrease by 0.0009, 0.0278, and 0.0031, respectively. These consistent improvements across all metrics confirm the contribution of ISFE, particularly in joint adversarial training, where both structural fidelity and perceptual quality are critical.

5.2. Advantages of N-Gram Window Partition

To evaluate how well the NGWP module improves performance and reduces complexity, we conducted a series of comparative and ablation experiments. Note that in GAN-based architectures only the generator is used during inference and testing; therefore, all reported parameter counts and MACs refer to the generator alone. The detailed results are summarized in Table 5.
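The reported complexity covers only the generator, and MACs depend on the spatial size of the feature maps being processed. It may therefore help to recall how these quantities are conventionally counted. The sketch below computes the parameters and MACs of a single, optionally grouped, 2-D convolution layer. Grouped convolution is the kind of operation underlying the channel-reducing group convolution (CRGC) described in this paper. The layer sizes in the example are hypothetical and are not taken from NGSTGAN. Real profiling tools may also differ in details, such as whether bias additions are counted.

```python
def conv2d_cost(c_in: int, c_out: int, k: int, h_out: int, w_out: int,
                groups: int = 1, bias: bool = True) -> tuple[int, int]:
    """Return (parameters, MACs) of a k x k 2-D convolution layer."""
    if c_in % groups or c_out % groups:
        raise ValueError("channel counts must be divisible by groups")
    # Each output channel only sees c_in / groups input channels.
    weights = c_out * (c_in // groups) * k * k
    params = weights + (c_out if bias else 0)
    # One multiply-accumulate per weight, repeated at every output position.
    macs = h_out * w_out * weights
    return params, macs


if __name__ == "__main__":
    # Hypothetical 64-channel 3 x 3 layer on a 64 x 64 feature map.
    dense = conv2d_cost(64, 64, 3, 64, 64)
    grouped = conv2d_cost(64, 64, 3, 64, 64, groups=4)
    print(dense, grouped)
```

With four groups, the layer needs a quarter of the weights and a quarter of the MACs of its dense counterpart. Because MACs scale with h_out * w_out while parameters do not, a model's MAC count is only meaningful together with the input size at which it was measured.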

Table 5.

Ablation experiments on NGWP. The top-performing results are marked in red, while the second-best are marked in blue. In the table, ↑ indicates that higher values are better, while ↓ means lower values are better.

The baseline SwinIR model achieves a PSNR of 33.5820 dB and an SSIM of 0.8658, with RMSE, LPIPS, and SAM values of 0.0177, 0.2240, and 0.1067, respectively, using 5.05 M parameters and 32.35 G MACs. Integrating the NGWP module yields consistent improvements: PSNR increases to 33.6133 dB (+0.0313 dB), SSIM rises to 0.8673, and RMSE and LPIPS decrease to 0.0176 and 0.2224, respectively. At the same time, the model size drops from 5.05 M to 3.77 M parameters and the computational cost from 32.35 G to 20.43 G MACs. This shows that NGWP improves performance while substantially improving efficiency. Adding the ISFE module further raises PSNR to 34.0062 dB and SSIM to 0.8765, with RMSE and LPIPS falling to 0.0168 and 0.1998, respectively. Notably, these gains come without an increase in the reported parameter count or MACs, demonstrating that ISFE improves reconstruction quality in a resource-efficient manner. When the discriminator is added alongside NGWP and ISFE, the model achieves its best performance. PSNR reaches 34.1317 dB and SSIM 0.8800, RMSE and LPIPS fall to 0.0165 and 0.1906, and SAM falls to 0.1025. Relative to the baseline, this corresponds to gains of 0.5497 dB in PSNR and 0.0142 in SSIM, and reductions of 0.0012, 0.0334, and 0.0042 in RMSE, LPIPS, and SAM, respectively, highlighting the synergy of the proposed components. In conclusion, the NGWP module improves feature interaction while compressing the model efficiently. Combined with the ISFE module and the multi-attention discriminator, the full NGSTGAN framework delivers notable improvements in both reconstruction accuracy and perceptual quality, validating the effectiveness and necessity of these components.
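PSNR and SSIM are higher-is-better, while RMSE, LPIPS, and SAM are lower-is-better. The gains quoted above are therefore easiest to verify with a sign convention in which a positive number always means an improvement. The short sketch below applies that convention to the baseline and full-model values reported in this section. The dictionary and function names are illustrative.

```python
# Direction of each metric: True if higher values are better (the arrows in Table 5).
HIGHER_IS_BETTER = {"PSNR": True, "SSIM": True, "RMSE": False, "LPIPS": False, "SAM": False}

# Values quoted in the text for the SwinIR baseline and the full NGSTGAN.
baseline = {"PSNR": 33.5820, "SSIM": 0.8658, "RMSE": 0.0177, "LPIPS": 0.2240, "SAM": 0.1067}
full = {"PSNR": 34.1317, "SSIM": 0.8800, "RMSE": 0.0165, "LPIPS": 0.1906, "SAM": 0.1025}


def improvements(new: dict[str, float], ref: dict[str, float]) -> dict[str, float]:
    """Per-metric improvement of `new` over `ref`; positive always means better."""
    return {
        m: round(new[m] - ref[m] if up else ref[m] - new[m], 4)
        for m, up in HIGHER_IS_BETTER.items()
    }


if __name__ == "__main__":
    print(improvements(full, baseline))
```

All five entries come out positive and match the gains and reductions stated in the paragraph above. A negative entry would flag a metric on which the new configuration is worse.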

5.3. Advantages of Multi-Attention U-Net Discriminator