Abstract

Landslides are characterized by their suddenness and destructive power, making rapid and accurate identification crucial for emergency rescue and disaster assessment in affected areas. To address the challenges of limited landslide samples and data complexity, a landslide identification sample library was constructed using high-resolution remote sensing imagery combined with field validation. We propose a Dual-Coded Segmentation Network (DS Net) that dynamically aligns and deeply fuses local details with global context, and image features with domain knowledge, through the multi-attention mechanisms of its Prior Knowledge Integration (PKI) and Cross-Feature Aggregation (CFA) modules, thereby improving the accuracy and reliability of landslide detection. To objectively evaluate the performance of the DS Net model, four efficient semantic segmentation models (SegFormer, SegNeXt, FeedFormer, and U-MixFormer) were selected for comparison. The results demonstrate that DS Net achieves superior performance (overall accuracy = 0.926, precision = 0.884, recall = 0.879, and F1-score = 0.882), with metrics that are 3.5–7.1% higher than the other models. These findings confirm that DS Net effectively improves the accuracy and efficiency of landslide identification, providing a critical scientific basis for landslide prevention and mitigation.

1. Introduction

China is a country prone to frequent geological disasters [1,2]. According to data from the National Bureau of Statistics, hundreds of thousands of geological disasters occurred in China between 2007 and 2022, with landslides ranking as the most common type. Landslides are characterized by their wide distribution, high frequency, and complex causes and mechanisms. Frequent landslides can lead to a variety of disasters and accidents, including traffic disruptions, river blockages, farmland damage, building destruction, village burial, and human and animal casualties. These events have become one of the most severe geological threats, posing significant risks to the safety of local residents’ lives and property, the sustainable development of regional economic and social health, and the safety of major engineering construction and operations.

Therefore, it is urgent to conduct research on the distribution and activity of landslide disasters, as high-precision identification and extraction of landslides form the foundation for studying landslide disasters [3,4,5,6]. Such efforts can provide critical references for landslide disaster prevention and mitigation research. However, landslides are often triggered by external environmental or geological tectonic factors, leading to fragmented terrain and inaccessible sites [7]. These conditions make it challenging for traditional landslide identification methods to accurately determine the specific location, scale, and quantity of landslide disasters [8]. Moreover, traditional methods lack standardized criteria for landslide identification, require extensive manpower and resources, and suffer from inefficiency. With the significant advancements in remote sensing platforms and sensor technology, high-resolution remote sensing imagery has been widely applied in geological disaster identification and investigation [9,10]. Consequently, using optical remote sensing imagery for high-precision landslide identification has become a key focus and a prominent research area in landslide disaster studies.

Deep learning has developed rapidly in the field of image recognition and has attracted significant attention from researchers [11]. Compared to traditional machine learning, deep learning can achieve strong feature extraction and representation capabilities by organically combining single-layer feature extraction modules [12]. Compared to natural images, remote sensing images have more complex semantic and background information, making the application of Convolutional Neural Networks (CNNs) to remote sensing images a hot topic [13]. In recent years, research on computer vision (CV) technology for landslide recognition has made important breakthroughs, significantly improving the accuracy and efficiency of geologic disaster monitoring. At the data level, the fusion of multi-source remote sensing data has become the mainstream direction. Chen et al. [14] fused DEM and remote sensing image features with a two-branch network in the Sichuan–Tibet area, which enhanced the robustness of intelligent landslide detection models. At the algorithmic level, the introduction of novel deep learning frameworks such as Transformer [15] and meta-learning [16] effectively addresses the problem of model accuracy under small-sample conditions. However, deep neural networks (DNNs) still have many shortcomings in meeting the demands of remote sensing applications. On the one hand, the performance of DNNs is usually limited by the time-consuming and laborious labeling process and by single-task restrictions (e.g., each visual recognition task usually requires a separate DNN to be trained); on the other hand, the labeled data of specific sensor images are very scarce [17,18].

The AI large model, also known as a “foundation model”, refers to a large-scale pre-trained model based on a deep neural network architecture. This type of model mainly adopts the Transformer and other advanced architectures and is trained on massive data, with the following significant features: 1. powerful transfer learning ability, which allows it to be adapted to a variety of application scenarios; 2. excellent generalization performance; and 3. wide application potential. As a core technology in the field of artificial intelligence, the foundation model has become an important support for computer vision, natural language processing, and other applications [19,20]. The rapid advancement of task-specific foundation models has revolutionized image segmentation. Notably, the Segment Anything Model (SAM) [21] established the first promptable segmentation framework with zero-shot generalization, though its class-agnostic design limits domain-specific precision. Mask2Former [22] unified various segmentation tasks through masked attention transformers, demonstrating particular strength in boundary-sensitive applications. Domain-adapted variants like MedSAM [23] (for medical images) and RSRefSeg [23] (for remote sensing) proved the value of injecting domain knowledge into foundation models. Currently, intelligent recognition research primarily learns from training samples to extract and internalize the a priori knowledge in the data. However, the efficiency and effectiveness of this process are highly dependent on the number and quality of training samples [24,25]. When dealing with complex and data-scarce problems such as landslide prediction, the a priori knowledge that the model can extract is inevitably limited by the number of available samples. Due to the episodic and unpredictable nature of landslide events, collecting relevant samples is often extremely challenging and results in a relatively limited dataset.
Therefore, even if the model architecture is advanced and the algorithm is optimized, without sufficient diversity and quantity of training samples, the model’s ability to learn landslide feature patterns and recognition rules will remain partial and insufficient. Thus, maximizing the extraction and effective utilization of a priori knowledge is the top priority of this study. The main content of this study includes the following points:

- (1) A library of landslide identification samples was created using high-resolution remote sensing imagery and landslide boundary data obtained through field validation.

- (2) Large models have billions or even hundreds of billions of parameters. They are trained on large amounts of data, enabling the computer to acquire human-like “thinking” abilities and perform a variety of complex tasks, including image generation. In this paper, we design DSNet, which realizes the dynamic alignment and deep fusion of local details with global context, and of image features with domain knowledge, through the multi-attention mechanism of the Prior Knowledge Integration (PKI) module and the Cross-Feature Aggregation (CFA) module, significantly improving the accuracy and reliability of landslide detection.

- (3) The model’s prediction results on the landslide sample set were tested under different data augmentation modes to determine the optimal data augmentation strategy for landslide identification within the study area.

2. Materials and Methods

2.1. Study Area

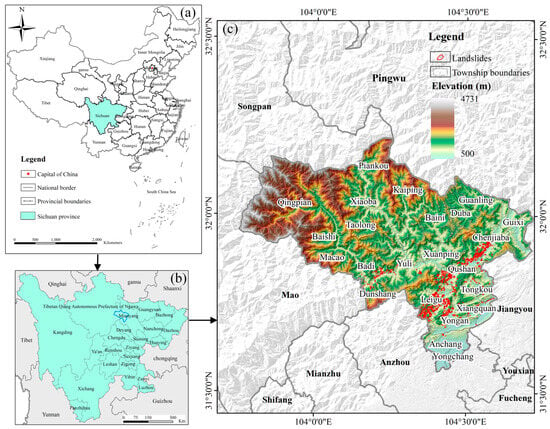

Beichuan Qiang Autonomous County is located in the transition zone from the northwestern edge of the Sichuan Basin to the western Sichuan Plateau (Figure 1). It falls under the jurisdiction of Mianyang City and has a land area of 3082.72 km² [26]. The county borders Jiangyou City, Anzhou District, Mao County, Songpan County, and Pingwu County, and is situated in the Longmen Mountain region, a transitional area between the Sichuan Basin and the Qinghai–Tibet Plateau. Tectonically, it is located at the junction of the front and back ranges of the Longmen Mountains [27]. The entire area is mountainous, characterized by long gullies, steep slopes, and a complex geological environment. The Yingxiu-Beichuan fault, which triggered the Wenchuan earthquake, lies in the southeastern part of the study area. Along the fault zone, Silurian phyllite and sandstone, Carboniferous carbonate rocks, and loose Quaternary deposits are widely exposed on both sides of rivers and valleys. These highly weathered rocks provide abundant material sources for geological disasters. Moreover, the area is part of the well-known Lutou Mountain heavy rainfall region, which experiences abundant precipitation. The geographical location, topography, geomorphology, and geological and tectonic conditions of the study area make landslides highly frequent in this region.

Figure 1.

Location of the study area. (a) Location of Sichuan Province; (b) location of Mianyang City; (c) location of Beichuan.

2.2. Production of a Landslide Identification Database

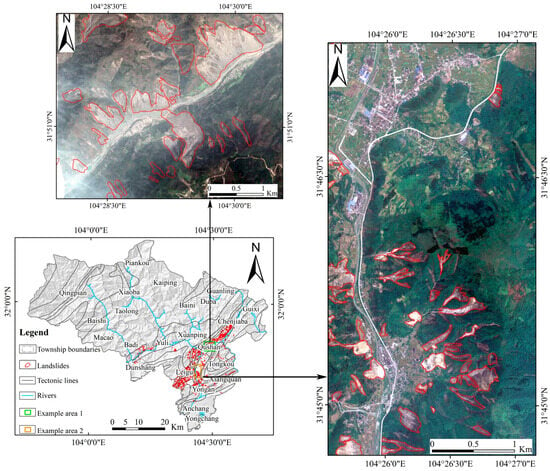

The model training process often requires a large amount of labeled sample data. From the schematic diagram of the spatial distribution of landslides in Beichuan County (Figure 2), it can be observed that earthquake-induced landslides in the Beichuan area are primarily distributed in the southeastern and parts of the central regions of the county. Their distribution is significantly influenced by tectonic lines and rivers, while landslide development in the northern and western regions of the county is relatively weaker.

Figure 2.

Visual Interpretation Results of Beichuan County Landslides.

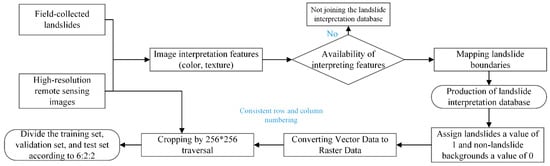

Using high-resolution Google Earth images with a spatial resolution of 0.5 m, combined with the spectral characteristics of landslides in the study area, we applied visual interpretation to construct landslide identification sample data. The landslide contours were used as boundaries to outline landslide surface vector data and generate label data. The process of sample library production included vector editing, field assignment, vector-to-raster conversion, label generation, data segmentation, and sample dataset division. The detailed process of sample library production is shown in Figure 3.

Figure 3.

Process of landslide database production.

- (1) Based on the existing landslide interpretation signs, the vector editing tool in ArcGIS 10.8 was used to edit the remote sensing images in TIFF format (Figure 2), and the boundaries were manually outlined through visual interpretation. Because the accuracy of boundary outlining directly affects the quality of the landslide sample library and, in turn, the accuracy of model training, vector outlining errors must be strictly controlled: the error of the outlined vector boundaries should be kept within 1–2 pixels.

- (2) ArcGIS software was used to assign attributes to the landslide interpretation data by adding a “label” attribute field to the outlined landslide boundary data. The landslide attribute field was assigned a value of “1”, while the non-landslide background was assigned a value of “0”.

- (3) Python 3.6, combined with the GDAL library, was used to convert vector files into raster files based on the assigned attribute field values. The relevant information was read from the raster files to create binary maps, ultimately generating the label data required for constructing the landslide sample library. Additionally, it was ensured that the generated raster data matched the number of rows and columns in the image data.

- (4) Due to limited computer memory, the entire remote sensing image contains too much information to be used directly as input for the network model. Therefore, the remote sensing image and its corresponding landslide label data must be divided into several smaller images to ensure compatibility with the model for training. To simplify the processing of remote sensing images and enable the network model to better extract detailed landslide features while ensuring effective convergence, the dataset was divided into images of 256 × 256 pixels.

- (5) After the sample segmentation operation, a total of 4879 high-resolution images were obtained, forming the remote sensing landslide sample library. To ensure sample diversity and make the dataset as varied as possible, the data were randomly divided into training, validation, and test sets in a ratio of 6:2:2. The training set, consisting of 2927 images, was used for model training to extract features. The validation set, comprising 976 images, was used to evaluate the model during training, while the test set, also consisting of 976 images, was used to assess the model’s performance.
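Steps (4) and (5) can be illustrated with a short sketch. It is only a minimal illustration under stated assumptions, not the authors' pipeline: the function names `tile_pair` and `split_622`, the choice to drop edge remainders smaller than one tile, and the fixed random seed are all hypothetical, and the toy arrays stand in for a real image and its rasterized label.

```python
import random
import numpy as np

TILE = 256  # tile edge length in pixels, as stated in step (4)


def tile_pair(image, label, tile=TILE):
    """Cut an image (H, W, C) and its label mask (H, W) into aligned tiles.

    Assumption: edge remainders smaller than `tile` are dropped; padding
    them instead would be an equally reasonable choice.
    """
    assert image.shape[:2] == label.shape, "image and label must share rows/cols"
    h, w = label.shape
    pairs = []
    for top in range(0, h - tile + 1, tile):
        for left in range(0, w - tile + 1, tile):
            pairs.append((image[top:top + tile, left:left + tile],
                          label[top:top + tile, left:left + tile]))
    return pairs


def split_622(n_samples, seed=0):
    """Shuffle sample indices and split them 6:2:2 into train/val/test."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    n_train = round(0.6 * n_samples)
    n_val = round(0.2 * n_samples)
    return (indices[:n_train],
            indices[n_train:n_train + n_val],
            indices[n_train + n_val:])


# Toy stand-ins for a remote sensing image and its binary landslide mask.
image = np.zeros((600, 520, 3), dtype=np.uint8)
label = np.zeros((600, 520), dtype=np.uint8)
tiles = tile_pair(image, label)       # 2 x 2 full tiles fit in 600 x 520

train, val, test = split_622(4879)    # sample count reported in step (5)
```

With rounding to the nearest integer, the 6:2:2 split of 4879 samples gives the 2927, 976, and 976 images reported above.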

2.3. Methods

2.3.1. Dual-Coded Segmentation Network (DSNet) Architecture

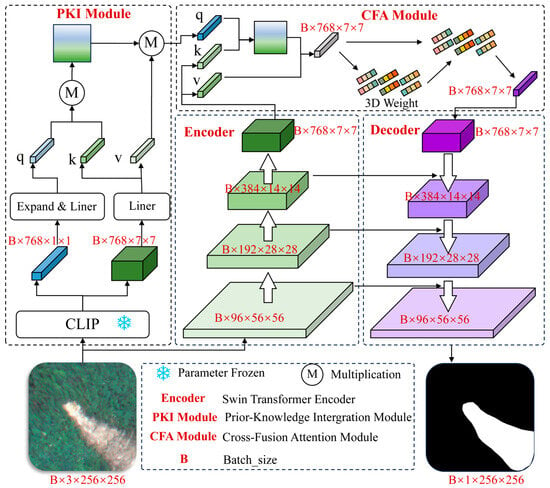

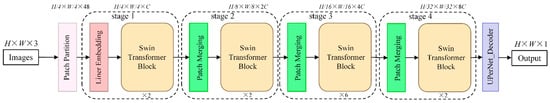

In this study, we propose a Dual-coded Segmentation Network (DSNet) that integrates prior knowledge features through a dual-encoder single-decoder architecture. The network consists of three key components: 1. Swin Transformer Encoder: It extracts multi-scale and hierarchical features through shifted window-based self-attention, capturing both local and global image characteristics. 2. CLIP-based Encoder: It leverages the pretrained CLIP large model [28] to enhance feature representation, combining its strong visual-semantic understanding with domain-specific adaptation for landslide identification. 3. Hierarchical Decoder: It utilizes progressive upsampling with skip connections to recover spatial details while maintaining feature consistency across scales. The workflow of the model is illustrated in Figure 4.

Figure 4.

Structure of the DSNet.

First, the core of CLIP lies in its dual-tower architecture (image encoder and text encoder) with stacked Transformers, which aligns image-text features through contrastive learning during training. In this study, we exclusively employ the image encoder architecture of CLIP. In Figure 4, the green cube in CLIP’s output represents the high-level features extracted through multiple Transformer layers, while the blue cube denotes the corresponding global features. The local information captures fine-grained details such as cracks, soil texture, or small deformations, while the global information provides broader contextual understanding, such as terrain shape and overall landslide morphology. Simultaneously, the Swin model, with its hierarchical structure and ability to model long-range dependencies, is employed to capture rich feature information from the images, ensuring comprehensive representation of complex landslide scenes.

Next, the PKI (Prior Knowledge Integration) module is introduced to effectively integrate the local and global information extracted by CLIP. The core equation of PKI is shown in Equation (1). By incorporating domain-specific knowledge about landslides, such as common deformation patterns or geological characteristics, the PKI module generates a priori knowledge tailored to landslide analysis. This step ensures that the model focuses on the most relevant features for landslide identification.

Here, MA is Multi-Head Attention; $X_G$ and $X_L$ represent the global and local features output by CLIP, respectively; E() represents the dimension expansion of the matrix; L() is the fully connected layer; and $\sqrt{d_k}$ is a scaling factor that prevents vanishing gradients caused by large dot-product values.

Subsequently, in the CFA (Cross-Feature Aggregation) module (Equations (2) and (3)), the image features extracted by the Swin model are deeply fused with the a priori knowledge obtained from the PKI module. This fusion process enriches the feature representation with landslide-specific information, enhancing the model’s ability to distinguish landslides from other geological phenomena. The result is a robust and semantically meaningful feature set that improves the accuracy and reliability of landslide image analysis.

Here, L() is the fully connected layer; $\sqrt{d_k}$ is a scaling factor that prevents vanishing gradients caused by large dot-product values; and E is an energy function associated with the input features, usually computed as a significance measure for each position or channel in the feature map.

In the process of information integration and fusion, we use the multi-head attention mechanism [29] to enhance the interaction between different types of information. The core of this mechanism lies in its ability to dynamically model relationships between global and local features through scaled dot-product attention, as shown in Equation (4):

$$\mathrm{Attention}(q, k, v) = \mathrm{softmax}\left(\frac{q k^{\top}}{\sqrt{d_k}}\right) v \tag{4}$$

where q (Query) is derived from global information, k (Key) and v (Value) are projected from local information, and $d_k$ is the dimension of the key vectors.

This design allows the model to focus on the most relevant local details guided by the broader context, thereby improving the extraction of landslide prior information. By leveraging the attention mechanism, the model can dynamically weigh the importance of local features within the global context, ensuring a more accurate and comprehensive representation of landslide characteristics.

In the fusion stage of image features and prior knowledge, the roles are reversed: landslide prior information is used as the query (q), and image feature information serves as the key (k) and value (v). This reversal enables the model to align the extracted image features with the domain-specific prior knowledge, further strengthening the representation of landslide-related information within the image features. The multi-head attention mechanism facilitates fine-grained interactions between the prior knowledge and image features, allowing the model to highlight regions or patterns that are most indicative of landslides. This two-stage, attention-driven approach ensures that the final feature representation is both contextually aware and semantically enriched, significantly improving the model’s performance in landslide detection and analysis.
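The two attention stages described above can be sketched with single-head scaled dot-product attention. This is a hedged illustration rather than the DSNet implementation: it omits the learned projections, the multi-head split, and the E() and L() operations, and the token counts, feature width `d`, and the variable names `x_global`, `x_local`, and `x_image` are assumptions chosen for the example.

```python
import numpy as np


def softmax(x, axis=-1):
    """Numerically stable softmax along the given axis."""
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def cross_attention(q, k, v):
    """Scaled dot-product attention: softmax(q k^T / sqrt(d_k)) v."""
    d_k = k.shape[-1]
    weights = softmax(q @ k.T / np.sqrt(d_k))  # (n_q, n_kv), each row sums to 1
    return weights @ v, weights


rng = np.random.default_rng(0)
d = 16                                  # illustrative feature width
x_global = rng.normal(size=(1, d))      # hypothetical CLIP global token
x_local = rng.normal(size=(49, d))      # hypothetical CLIP local tokens
x_image = rng.normal(size=(64, d))      # hypothetical Swin feature tokens

# Stage 1 (prior extraction): global context queries the local details.
prior, w_prior = cross_attention(x_global, x_local, x_local)

# Stage 2 (fusion): roles reversed, the prior queries the image features.
fused, w_fused = cross_attention(prior, x_image, x_image)
```

A multi-head version would run the same computation in parallel on several channel groups and concatenate the results.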

In addition, we incorporate the SimAM (Simple Attention Module) [30] into the CFA module. SimAM is an attention model based on twin network architecture, which processes paired input data through a two-branch structure with shared weights to efficiently capture long-distance dependencies and cross-sample interactions. To further enhance spatial-channel feature selection, we extend SimAM with a 3D weight mechanism. The core of SimAM lies in the use of cross-attention to compute the correlation between two input features: one branch serves as the Query and the other provides the Key and Value, and a similarity matrix is used to generate the attention weights and fuse the features. This mechanism assigns unique weights to neurons, enabling the model to focus more precisely on information that is critical to the task at hand while effectively ignoring irrelevant or less important parts. Finally, through the decoder’s step-by-step decoding process, the model generates an accurate landslide mask.

2.3.2. Swin Transformer

Historically, convolutional neural networks (CNNs) have dominated the field of computer vision. Since the success of AlexNet in the 2012 ImageNet competition [31], CNNs have been the model of choice for a variety of vision tasks. Given the success of Transformers in natural language processing, researchers have begun to explore their application in image processing. Compared to traditional convolutional neural networks, Transformer-based models leverage their multi-head attention mechanism to establish relationships between different pixels, thereby better extracting feature information in a global context. Vision Transformer (ViT), as a representative application of Transformers in image processing, has achieved outstanding results, surpassing traditional CNNs in the field of image recognition [32,33,34]. This demonstrates that Transformers can, to some extent, replace CNNs in image processing and hold great promise for future applications. However, an image contains far more pixels than a paragraph contains words, and traditional Transformer models compute attention globally across the entire image. As a result, the computational cost grows quadratically with the number of image tokens and becomes extremely high for high-resolution images.

To address the aforementioned issues, the Swin Transformer (Shifted Window Transformer) [35] modifies the original Multi-Head Self-Attention (MSA) structure of the Transformer and introduces a Window-based MSA (W-MSA) structure. This approach divides an image into multiple non-overlapping windows and computes attention within each window independently, significantly reducing computational complexity compared to the whole-image attention computation in ViT. Meanwhile, to overcome the limitation of feature transfer between different windows, the Swin Transformer further proposes a Shifted Window-based MSA (SW-MSA) structure. This method facilitates the transfer of feature information between windows by shifting their positions, thereby ensuring the network’s recognition accuracy. Experimental results have demonstrated that the Swin Transformer achieves top performance across various image classification tasks, showcasing its outstanding capabilities. The specific structure is shown in Figure 5.

Figure 5.

Structure of the Swin Transformer.

Similar to ViT, images entering the Swin Transformer are first divided into patches by the patch partition layer, and each patch is flattened along the channel dimension. These feature maps are then processed through four stages to extract feature information. In the first stage, each patch undergoes a linear transformation before being fed into two consecutive Swin Transformer blocks. In the subsequent stages, to further extract features and reduce the size of the feature maps, the feature maps are downsampled using patch merging before being fed into the next Swin Transformer blocks.
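The window grouping behind W-MSA and the shift behind SW-MSA can be sketched on a toy feature map. This is an illustrative NumPy sketch, not the Swin Transformer reference code: the function names, the 8 × 8 map, and the window size of 4 are assumptions, and the attention masks that the full method applies to regions wrapped around by the cyclic shift are omitted.

```python
import numpy as np


def window_partition(x, window):
    """Split a feature map (H, W, C) into non-overlapping windows.

    Returns an array of shape (num_windows, window * window, C) so that
    attention can be computed independently inside each window.
    """
    h, w, c = x.shape
    assert h % window == 0 and w % window == 0, "map must tile evenly"
    x = x.reshape(h // window, window, w // window, window, c)
    x = x.transpose(0, 2, 1, 3, 4)            # (nH, nW, window, window, C)
    return x.reshape(-1, window * window, c)


def cyclic_shift(x, window):
    """Roll the map by half a window so new windows straddle the old borders."""
    shift = window // 2
    return np.roll(x, shift=(-shift, -shift), axis=(0, 1))


# Toy 8 x 8 single-channel map whose values encode their own position.
feat = np.arange(8 * 8, dtype=np.float32).reshape(8, 8, 1)

regular = window_partition(feat, window=4)                    # W-MSA grouping
shifted = window_partition(cyclic_shift(feat, 4), window=4)   # SW-MSA grouping
```

Each of the four windows holds 16 tokens, so attention is computed over 16 × 16 pairs per window instead of 64 × 64 pairs for the whole map, which is where the saving over global attention comes from.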

2.3.3. Evaluation Indicators

Overall accuracy (OA), precision, recall, and F1_score were used as model evaluation metrics [36,37]. To calculate these four metrics, the following quantities were defined for the landslide identification problem: TP (True Positive), the number of landslide pixels correctly predicted as landslide; FP (False Positive), the number of non-landslide pixels incorrectly predicted as landslide; FN (False Negative), the number of landslide pixels incorrectly predicted as non-landslide; and TN (True Negative), the number of non-landslide pixels correctly predicted as non-landslide [38].

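In summary, the above metrics are calculated as follows:

$$\mathrm{OA} = \frac{TP + TN}{TP + TN + FP + FN}$$

$$\mathrm{Precision} = \frac{TP}{TP + FP}$$

$$\mathrm{Recall} = \frac{TP}{TP + FN}$$

$$\mathrm{F1\_score} = \frac{2 \times \mathrm{Precision} \times \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}}$$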
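As a worked illustration, the four metrics can be computed from pixel-level confusion counts as follows. This is a minimal sketch using the standard definitions. The function names and the eight-pixel toy masks are hypothetical, and returning 0.0 for an empty denominator is an assumption made to keep the example safe to run.

```python
def confusion_counts(pred, truth):
    """Count TP, FP, FN, TN from two equal-length sequences of 0/1 pixel labels."""
    tp = fp = fn = tn = 0
    for p, t in zip(pred, truth):
        if p == 1 and t == 1:
            tp += 1          # landslide pixel predicted as landslide
        elif p == 1 and t == 0:
            fp += 1          # background pixel predicted as landslide
        elif p == 0 and t == 1:
            fn += 1          # landslide pixel missed
        else:
            tn += 1          # background pixel predicted as background
    return tp, fp, fn, tn


def segmentation_metrics(tp, fp, fn, tn):
    """Overall accuracy, precision, recall, and F1 from confusion counts."""
    total = tp + fp + fn + tn
    oa = (tp + tn) / total if total else 0.0
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return {"OA": oa, "precision": precision, "recall": recall, "F1": f1}


# Toy flattened masks: 3 landslide pixels and 5 background pixels.
truth = [1, 1, 1, 0, 0, 0, 0, 0]
pred = [1, 1, 0, 1, 0, 0, 0, 0]

counts = confusion_counts(pred, truth)       # (TP, FP, FN, TN) = (2, 1, 1, 4)
metrics = segmentation_metrics(*counts)
```

In this toy case OA is 6/8 = 0.75, while precision, recall, and F1 are all 2/3.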

2.3.4. Implementation Details

The experiments in this paper were conducted on the Windows 11 x64 Edition operating system. For deep learning frameworks and acceleration, the experiments used PyTorch version 2.1.1, complemented by PyTorch-CUDA version 11.8, to enable GPU acceleration and speed up model training. Training ran on an NVIDIA GeForce RTX 4090 GPU with 24 GB of video memory, which supports large-scale model training and high-resolution image processing. Regarding model training parameters, 100 training epochs were conducted for experiments involving the Swin Transformer and the Dual-Coded Segmentation Network to thoroughly explore the model’s learning capabilities and mitigate overfitting. The batch_size was uniformly set to 8 to balance memory utilization and convergence speed. The network input consisted of remote sensing images with dimensions of 3 × 256 × 256, adhering to the standard input format required by most deep learning models. Stochastic Gradient Descent (SGD) [39] was used as the optimizer. During training, the learning rate must be reduced gradually to improve the model. In this paper, we use the ReduceLROnPlateau learning rate scheduler in PyTorch, which automatically lowers the learning rate when the monitored metric has stopped improving for N training rounds, balancing training speed against training quality. We tried learning rates of 1 × 10⁻¹, 1 × 10⁻², 1 × 10⁻³, 1 × 10⁻⁴, and 1 × 10⁻⁵, and the training results confirmed that 1 × 10⁻⁴ was the most effective. Therefore, in this paper, the initial learning rate is set to 0.0001 and N is 10; i.e., if the training accuracy does not improve after 10 rounds of training, a new learning rate is applied, obtained by reducing the learning rate by 20%. This study quantifies the computational efficiency based on the model parameters, average training time for each epoch, and floating-point operations (FLOPs), with the experimental results detailed in Table 1.
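The plateau-based schedule described above can be sketched in plain Python. This is a simplified stand-in, not PyTorch's implementation: the class name `PlateauLR` is hypothetical, reading "reduced by 20%" as a multiplicative factor of 0.8 is an assumption, and details such as improvement thresholds and cooldown are left out. In PyTorch, the corresponding settings would be passed to `torch.optim.lr_scheduler.ReduceLROnPlateau` through its `mode`, `factor`, and `patience` arguments.

```python
class PlateauLR:
    """Simplified reduce-on-plateau rule (illustrative, not PyTorch's code)."""

    def __init__(self, lr=1e-4, factor=0.8, patience=10):
        self.lr = lr              # initial learning rate stated in the text
        self.factor = factor      # assumption: "reduce by 20%" means multiply by 0.8
        self.patience = patience  # N = 10 rounds without improvement
        self.best = None
        self.bad_epochs = 0

    def step(self, accuracy):
        """Record one epoch's accuracy and return the learning rate to use next."""
        if self.best is None or accuracy > self.best:
            self.best = accuracy
            self.bad_epochs = 0
        else:
            self.bad_epochs += 1
            if self.bad_epochs >= self.patience:
                self.lr *= self.factor
                self.bad_epochs = 0
        return self.lr


scheduler = PlateauLR()
history = [0.80] + [0.79] * 10      # one improvement, then ten stagnant epochs
rates = [scheduler.step(acc) for acc in history]
```

In this toy run the rate stays at 1 × 10⁻⁴ through the ninth stagnant epoch and drops to 8 × 10⁻⁵ on the tenth.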

Table 1.

Computational resource metrics of DSNet.

3. Results and Analysis

3.1. Analysis of Ablation Experiment Results

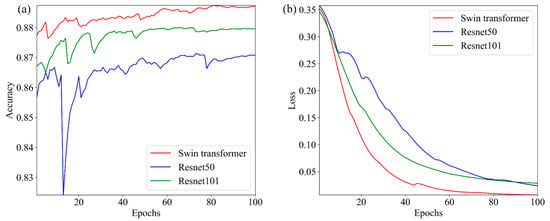

In this study, a set of ablation experiments was conducted to investigate the impact of the backbone network on the experimental results. ResNet50, ResNet101, and the Swin Transformer were each used as the encoder backbone to assess their influence on the feature extraction performance of the newly constructed DS Net. The backbone network largely determines the feature extraction capability of the encoder, and a deep learning model with strong learning ability relies heavily on a backbone with robust feature extraction capabilities. Regarding the selection of ResNet models, both ResNet50 and ResNet101 are well-established network architectures that have been validated through extensive experiments and have demonstrated excellent performance across various computer vision tasks, including image classification, object detection, and semantic segmentation. Compared to ResNet18 and ResNet34, ResNet50 and ResNet101 have greater network depth, enabling them to better capture high-level image features. At the same time, they are faster to train and easier to optimize than deeper models such as ResNet152. Therefore, in this study, ResNet50 and ResNet101, as representative ResNet architectures, were chosen as encoders with their fully connected layers removed. The validation results for the three backbones are presented in Figure 6.

Figure 6.

Comparison of different backbone network validation phases: (a) validation accuracy; (b) validation loss.

The experimental results show that, compared to the DS Net using ResNet50 and ResNet101 as backbone networks, the DS Net with the Swin Transformer as the backbone network not only achieves a steady improvement in validation accuracy, reaching a higher level, but also exhibits relatively lower loss.

The Swin Transformer model demonstrates significant performance advantages, particularly in comparison to the classic ResNet50 and ResNet101 models [40]. From the perspective of validation accuracy, the accuracy curve of the Swin Transformer exhibits a steady upward trend, quickly surpassing the baseline performance of ResNet50. Moreover, as training progresses, it further widens the gap with ResNet101, eventually stabilizing at a relatively high accuracy level. This improvement highlights the Swin Transformer’s remarkable advancements in feature extraction and model generalization capabilities.

Meanwhile, observing the changes in the loss, the loss curve of the Swin Transformer exhibits a smoother and more rapidly descending trajectory. In the early stages, its loss value is significantly lower than that of the ResNet family of models, indicating that the Swin Transformer can converge more quickly to the vicinity of the optimal solution. As the number of training rounds increases, this advantage becomes even more pronounced, with the loss curve continuing to decline and eventually reaching a value lower than both ResNet50 and ResNet101. This directly highlights the Swin Transformer’s efficiency and stability during the optimization process. Additionally, it demonstrates that while increasing network depth can enable the extraction of more features, it may also lead to training challenges such as gradient vanishing or gradient explosion, which can adversely affect the model’s convergence speed and segmentation performance.

3.2. Visual Analysis of Experimental Results

The Swin Transformer demonstrated the best overall performance as the backbone network in the ablation experiments. Therefore, in this subsection, the prediction results of the DS Net and the Swin Transformer are visualized and compared individually. The results of the evaluation metrics are presented in Table 2.

Table 2.

Evaluation metrics for the DS Net compared to the Swin Transformer.

As shown in Table 2, a comprehensive evaluation of the two models, Swin Transformer and DS Net, demonstrates the advantages of DS Net. Specifically, DS Net achieves an OA of 0.926, surpassing the Swin Transformer’s 0.895. Additionally, DS Net attains a precision (P) of 0.884, compared to the Swin Transformer’s 0.843, showing its superior ability to accurately identify pixels in landslide areas. More importantly, DS Net significantly outperforms the Swin Transformer on the key metric of recall, with a value of 0.879 compared to the Swin Transformer’s 0.814. This indicates that DS Net is better equipped to comprehensively identify landslide areas in actual events, effectively reducing the risk of missed detections. The F1_score, which combines P and recall, further confirms the superiority of DS Net, with a score of 0.882 compared to the Swin Transformer’s 0.828. This highlights DS Net’s robust and consistent performance in landslide identification tasks. These performance improvements can be attributed to DS Net’s network design, which incorporates a priori knowledge extracted and fused through the CLIP model. This enables DS Net to more effectively capture the complex features of landslide areas.

3.3. Comparison with Other Semantic Segmentation Models

To evaluate the performance of the DS Net-based landslide recognition model more objectively, we incorporated findings from the backbone network ablation experiments, which showed that CNN-based models significantly underperformed compared to the Swin Transformer. Therefore, four representative efficient semantic segmentation models were selected for comparison in this study: 1. SegFormer [41] uses a hierarchical transformer encoder and a lightweight MLP decoder to significantly reduce computational complexity while maintaining accuracy. 2. SegNeXt [42] innovatively fuses convolution and attention mechanisms, and its Convolutional Attention Module (CAM) excels in edge preservation and detail segmentation. 3. FeedFormer [43] creates the first feed-forward transformer architecture, replacing the standard attention layer with a cascading feed-forward network to dramatically increase processing speed and memory efficiency. 4. U-MixFormer [44] is an improved hybrid transformer based on U-shaped architecture, which is especially suitable for complex scene segmentation tasks by optimizing local and global feature fusion. The selection of these advanced models provided a comprehensive and rigorous frame of reference for DS Net’s performance evaluation.

All models in the comparison, including DS Net, were trained on the same Beichuan County landslide extraction dataset, which contains rich landslide disaster information. This dataset was chosen because it reflects the actual conditions of landslide extraction and because it rigorously tests the identification and generalization abilities of each model in a complex and changing environment. A unified training framework and testing process ensured that all models were evaluated under the same conditions, excluding potential interference from external factors. Table 3 lists the key evaluation metrics of each model on the test set: OA, P, recall, and F1_score. DS Net achieves better results than the other models on every metric. Its OA of 0.926 is higher than those of FeedFormer, SegFormer, SegNeXt, and U-MixFormer by 3.6%, 3.7%, 3.6%, and 3.5%, respectively. Its P is 0.884, which is 5.5%, 7.3%, 5.9%, and 4.8% higher than those of the other models. Its recall reaches 0.879, which is 6.5%, 4.2%, 5.7%, and 7.1% higher than those of the other models, while its F1_score of 0.882 exceeds those of FeedFormer, SegFormer, SegNeXt, and U-MixFormer by 6.1%, 5.8%, 5.9%, and 6.0%, respectively.
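For readers who wish to reproduce the four metrics, the following is a minimal sketch of how OA, P, recall, and F1_score are conventionally derived from pixel-level confusion counts for a binary landslide mask. It assumes the standard definitions; the function names and the toy masks are illustrative and are not taken from the study's code.

```python
def confusion_counts(pred, label):
    """Count TP, FP, FN, TN over two binary masks given as lists of rows."""
    tp = fp = fn = tn = 0
    for pred_row, label_row in zip(pred, label):
        for p, t in zip(pred_row, label_row):
            if p and t:
                tp += 1          # landslide pixel correctly detected
            elif p and not t:
                fp += 1          # background predicted as landslide
            elif t:
                fn += 1          # landslide pixel missed
            else:
                tn += 1          # background correctly rejected
    return tp, fp, fn, tn


def segmentation_metrics(tp, fp, fn, tn):
    """Return OA, P, recall and F1_score for the landslide (positive) class."""
    total = tp + fp + fn + tn
    oa = (tp + tn) / total if total else 0.0
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return {"OA": oa, "P": precision, "recall": recall, "F1_score": f1}


# Toy 2 x 4 masks (1 = landslide, 0 = background), purely illustrative.
label = [[1, 1, 0, 0], [1, 0, 0, 0]]
pred = [[1, 0, 0, 0], [1, 1, 0, 0]]
counts = confusion_counts(pred, label)
metrics = segmentation_metrics(*counts)
print(counts, metrics)
```

Because OA counts correctly classified background pixels, it can remain high even when landslide pixels are missed, which is why P, recall, and F1_score are reported alongside it.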

Table 3.

Comparison of DS Net results with other semantic segmentation networks.

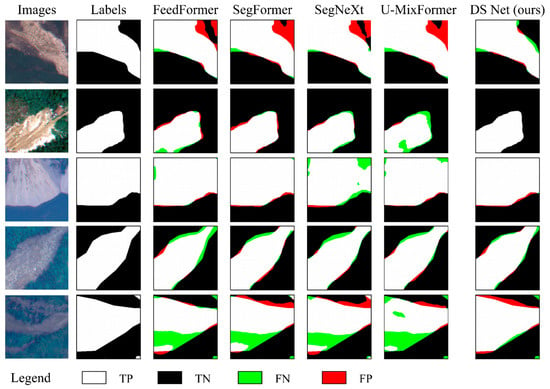

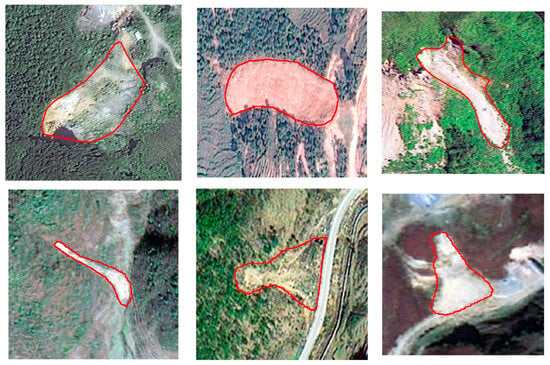

In order to demonstrate more intuitively the difference in the performance of the models in the landslide identification task, this study also provides a visual output of the model comparison, as shown in Figure 7.

Figure 7.

Comparison of confusion matrix visualizations for different models.

In Figure 7, we can closely observe the recognition results produced by different models for the same complex landslide area. These results not only visualize the performance differences among the models but also reveal their technical limitations and advantages in handling specific geological features. Leveraging its superior design, the DS Net model significantly outperforms other comparative models in recognizing the precise boundaries of landslides, capturing landslide-specific features with remarkable detail, and accurately distinguishing landslide areas from non-landslide areas. By extracting multi-scale hierarchical features and local-global information of the images through the Swin and CLIP models and employing multi-head and SimAM attention mechanisms for in-depth feature fusion and focusing on key information, the DS Net model achieves highly accurate landslide mask generation. The effectiveness of this network can be attributed to its advanced feature fusion strategy, fine-grained information processing mechanism, and its ability to precisely capture critical landslide information.

Compared to the excellent performance of DS Net, the other four semantic segmentation models in the comparison, despite their expertise in specific domains, exhibited relatively similar overall performance levels in the task of landslide identification. This suggests potential deficiencies in their ability to model landslide-specific attributes. For instance, FeedFormer, an innovative sequence-to-sequence model based on the Transformer architecture, excels in domains such as natural language processing by effectively handling large volumes of sequential data with its self-attention mechanism. However, its application in semantic segmentation, particularly in the highly specialized task of landslide recognition, appears limited. This limitation stems primarily from the extreme complexity and diversity of landslide terrain, which exceeds the scope of features that the model can learn from a limited and potentially biased training dataset. Combined with constraints in generalization ability, FeedFormer struggles to make accurate predictions when encountering variant landslide features. Additionally, its attention mechanism may fail to adequately focus on critical landslide details, such as minute cracks and soil disturbances, leading to incomplete recognition results. SegFormer, another lightweight Transformer-based semantic segmentation model, leverages a self-attention mechanism to capture long-distance dependencies in images, aiming to enhance global information comprehension and improve segmentation accuracy. However, when faced with the highly similar spectral and textural features of landslides and vegetation, SegFormer encounters difficulties in distinguishing between these visually similar categories. In areas with dense vegetation and complex landslide morphology, its prediction results often fail to accurately delineate boundaries between landslides and vegetation cover, revealing its limitations in addressing closely related categories. 

SegNeXt and U-MixFormer face challenges more closely tied to their structural characteristics. SegNeXt, a convolutional neural network-based model, enhances spatial attention with a multi-scale convolutional attention module. However, its conventional convolutional operations may fall short in capturing the subtle but crucial spectral and textural differences between landslides and their surroundings, particularly vegetation. This limitation is most evident where landslide edges are blurred or intertwined with vegetation. U-MixFormer, a hybrid model combining the advantages of U-Net and the Transformer, should in theory improve segmentation accuracy through hierarchical feature extraction and global contextual understanding. In practice, however, its decoder may struggle to differentiate between landslide and vegetation features when fusing multi-level encoder outputs. Similarly, its hybrid attention mechanism may fail to focus sufficiently on key landslide areas, resulting in inaccurate recognition. These observations indicate that even models integrating multiple advanced techniques require further optimization of their structural design and feature extraction strategies to capture and distinguish complex terrain features. Such optimization is essential to meet the high-precision demands of specialized tasks such as landslide detection in practical applications.

4. Discussion

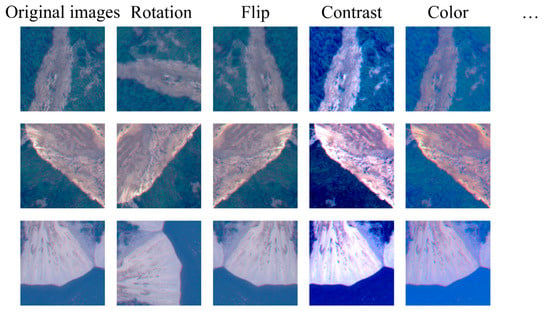

4.1. Comparison and Analysis of Different Data Enhancement Patterns

Data enhancement is a data processing technique that applies diverse transformations to segmented images [45,46], such as rotation, flipping, adding noise, and blurring [47,48,49] (as shown in Figure 8). Although it does not introduce genuinely new data, it simulates changes that images may undergo under practical conditions, increasing the diversity and value of the dataset at low cost. It is particularly important that the corresponding labels undergo the same geometric transformations as the augmented images. Labels are crucial in supervised learning because they guide the model to produce the correct outputs from input images. For example, if an image is rotated, its label must be rotated in the same manner to maintain the alignment between image content and label information. This synchronized transformation of labels is a critical factor in the effectiveness of data enhancement. Failure to maintain this consistency could cause the model to learn incorrect information, ultimately degrading its performance.
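The synchronized transformation of images and labels described above can be sketched as follows. This is a minimal illustration using plain nested lists rather than the study's actual pipeline; the helper names (`rotate90`, `hflip`, `augment_pair`) are hypothetical. Geometric transforms are applied identically to both image and label, whereas photometric changes such as noise or contrast adjustment would be applied to the image only.

```python
import random


def rotate90(grid):
    """Rotate a 2-D grid (list of rows) by 90 degrees clockwise."""
    return [list(row) for row in zip(*grid[::-1])]


def hflip(grid):
    """Mirror a 2-D grid left to right."""
    return [row[::-1] for row in grid]


def augment_pair(image, label, rng):
    """Apply one randomly chosen geometric transform to image AND label."""
    quarter_turns = rng.randrange(4)   # 0, 90, 180 or 270 degrees
    do_flip = rng.random() < 0.5
    for _ in range(quarter_turns):
        image, label = rotate90(image), rotate90(label)
    if do_flip:
        image, label = hflip(image), hflip(label)
    return image, label


# Toy example: bright pixels (> 100) are labelled as landslide (1).
image = [[10, 200, 30], [20, 220, 40]]
label = [[0, 1, 0], [0, 1, 0]]
aug_image, aug_label = augment_pair(image, label, random.Random(0))
print(aug_image, aug_label)
```

Whatever transform is drawn, each label pixel still sits on the image pixel it originally described, which is the alignment property the paragraph above requires.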

Figure 8.

Enhancement results of partial training set images.

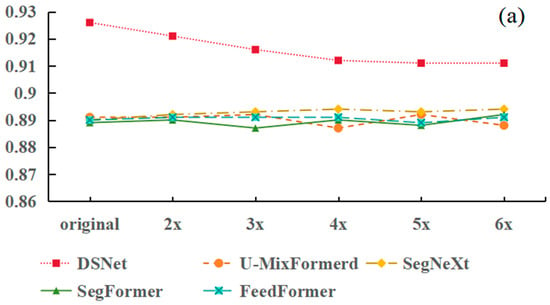

Because this study uses a self-built landslide identification dataset, it may contain fewer images than public datasets. To prevent the model from overfitting on this limited number of images, data enhancement techniques were employed to expand the original dataset, primarily image rotation, contrast adjustment, and chromaticity adjustment. These transformation operations enhance the model’s ability to recognize different viewpoints, mirror morphologies, noise interference, and blurring effects in images. This improves the model’s generalization ability, enabling it to exhibit stronger adaptability and robustness when dealing with new data or complex scenarios. In this study, training sample sets with different enhancement levels were used to statistically analyze the changes in predictive indicators for various models on the test set. The results are shown in Figure 9.

Figure 9.

Evolution of test set metrics with different training set enhancement multipliers: (a) OA; (b) P; (c) F1_score; (d) recall.

The key performance indicators (OA, P, F1_score, recall) of DS Net decline gradually as the dataset enhancement multiplier increases. From the feature perspective, although data enhancement increases data diversity and improves the model’s generalization ability to unseen samples, excessive enhancement can compromise key structural and detailed information within the images, particularly the subtle features critical to segmentation tasks. While the Swin Transformer and CLIP encoders in DS Net excel at capturing multi-scale, multi-level, and global contextual information, they depend on high-quality features in the original images for accurate encoding. When these features are distorted by over-enhancement, the encoders’ feature extraction capability may be severely impaired, producing features that no longer accurately reflect the content of the original image and thereby degrading segmentation performance.

From the model learning perspective, the new samples introduced through data enhancement are not always consistent with the original task distribution. Over-enhanced images may deviate significantly from the original data, causing the model to learn features or noise unrelated to the task. This “noise learning” phenomenon disrupts the model’s ability to identify and utilize key features, leading to poorer performance on the test set. Such interference is especially pronounced in landslide identification with models such as DS Net, which rely heavily on a priori knowledge for precise segmentation.

When employing data enhancement techniques, it is therefore crucial to balance the intensity of enhancement against model performance to avoid feature distortion, noise learning, and overfitting caused by excessive enhancement. Future research can explore more intelligent data enhancement strategies, such as dynamic enhancement guided by model feedback or task-oriented enhancement methods. Developing more robust feature extraction and segmentation models will also help address the challenges posed by data enhancement, and in-depth investigation of how data enhancement affects model learning mechanisms is another essential direction for future research.

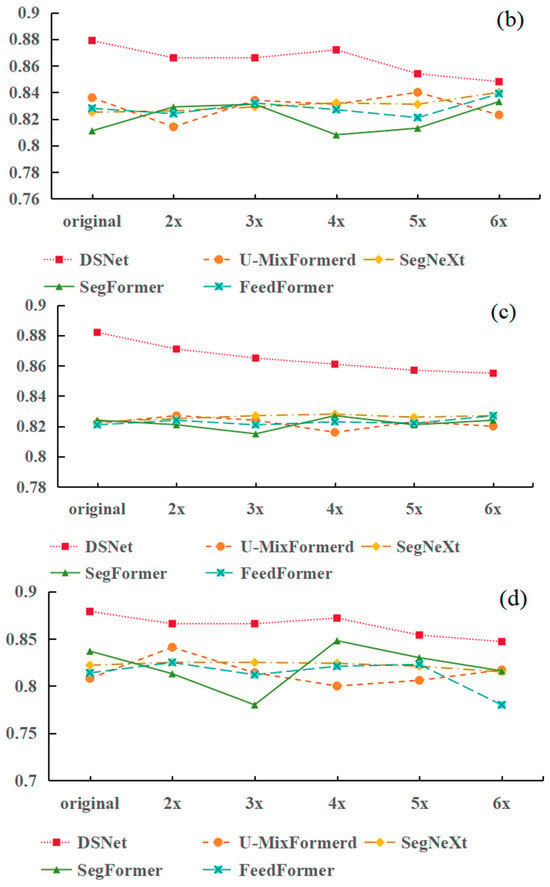

4.2. The Model Adaptation Capability of DS Net on the Bijie Dataset

The practical value of landslide identification models largely depends on their ability to adapt to different environments. To verify the practical effectiveness of the DS Net proposed in this study, the publicly available landslide dataset for Bijie, Guizhou [50], was used for an adaptability analysis. The area lies in the slope zone of the transition from the Tibetan Plateau to the eastern hills, with altitudes of 457–2900 m, large differences in relative elevation, many steep slopes, abundant rainfall (average annual rainfall of 849–1399 mm), and a fragile ecological environment. Bijie City is located on the Yunnan–Guizhou Plateau, in the transition zone between the second and third steps of China’s terrain. These topographic and precipitation conditions favor the development of landslides. Examples of landslides in the dataset are shown in Figure 10.

Figure 10.

Some landslide samples from the Bijie dataset: red lines are landslide boundaries.

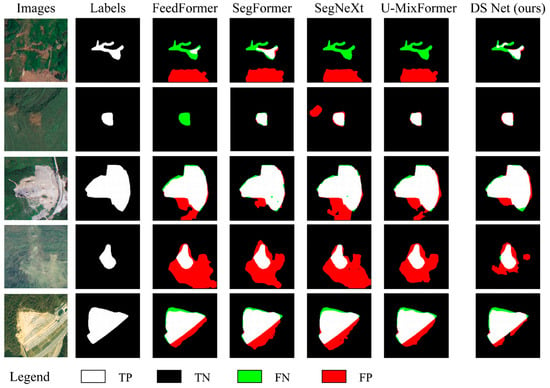

In this study, the Bijie landslide dataset was randomly partitioned into training, validation, and test sets in a ratio of 6:2:2, and data expansion was applied to the training samples. The network parameters of all models were fixed during the experiment, and the optimal weights obtained from pre-training were loaded directly for inference. The final test results are shown in Table 4 and Figure 11. DS Net outperforms the other models overall.
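A randomized 6:2:2 partition of this kind can be sketched as below. The function name, the fixed seed, and the placeholder file names are assumptions made for illustration; the study does not state how the split was implemented. Integer arithmetic is used for the subset sizes to avoid floating-point rounding at the boundaries.

```python
import random


def split_dataset(items, parts=(6, 2, 2), seed=42):
    """Shuffle items reproducibly and split them by the given integer ratio."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    total = sum(parts)
    n = len(shuffled)
    n_train = n * parts[0] // total
    n_val = n * parts[1] // total
    train = shuffled[:n_train]
    val = shuffled[n_train:n_train + n_val]
    test = shuffled[n_train + n_val:]   # remainder goes to the test set
    return train, val, test


# Hypothetical sample identifiers standing in for image/label file pairs.
samples = [f"sample_{i:03d}.png" for i in range(100)]
train_set, val_set, test_set = split_dataset(samples)
print(len(train_set), len(val_set), len(test_set))
```

Only the training subset would then be passed to the enhancement step, so that the validation and test images remain unaltered.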

Table 4.

DS Net generalization results in Bijie dataset compared to other semantic segmentation networks.

Figure 11.

Comparison of confusion matrix visualizations for different models on the Bijie dataset.

As can be seen from Table 4, loading the optimal weights of the pre-trained model effectively improves the model’s performance. The pre-trained weights have already learned rich feature representations from historical data, and using them as initialization parameters for the new task enables the model to quickly acquire strong landslide identification capability. Even on a completely new landslide dataset, excellent test results can be achieved with only a short training period.

Figure 11 compares the prediction results of the different models (FeedFormer, SegFormer, SegNeXt, U-MixFormer, and DS Net) on the Bijie landslide identification task. Comparing each model’s predictions with the ground-truth labels shows that DS Net detects the landslide region more completely, produces fewer false negatives (FN) and false positives (FP), and segments boundaries more accurately, giving better overall performance than the other models. This indicates that the DS Net proposed in this study learns landslide features more efficiently, thus improving recognition accuracy.

4.3. Application of DS Net in Other Scenarios

To verify the generalizability of DS Net, the publicly available GVLM dataset [51] was selected for this study. The GVLM dataset contains 17 pairs of very-high-resolution (VHR) images acquired through the Google Earth service at a spatial resolution of 0.59 m. As shown in Table 5, the dataset covers diverse landslide scenarios in different countries and regions on six continents: Asia, Africa, North America, South America, Europe, and Oceania. The dataset covers a total area of 163.77 km², compensating for the fact that existing public landslide databases are limited to a few cities or regions [52].

Table 5.

Details of the GVLM dataset.

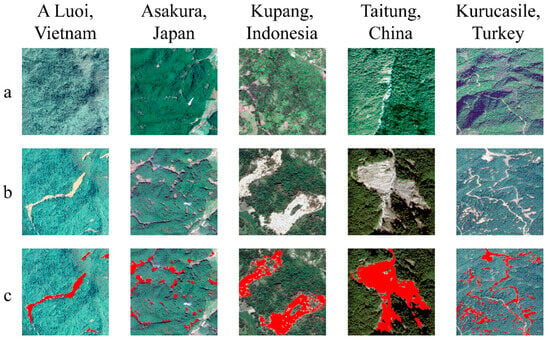

The dataset contains 17 subsets covering landslides in different geographical areas. These landslides differ significantly in size, morphology, phenology, and land cover type (some landslide samples are shown in Figure 12), so the remote sensing images exhibit a high degree of spectral heterogeneity and variation in radiation intensity. The landslides were triggered by a variety of mechanisms, including rainfall, earthquakes, floods, hurricanes, snowmelt, and rock loosening. The major land cover types in the GVLM dataset can be categorized into three groups: (1) man-made surfaces (roads, buildings, and residential areas); (2) vegetation cover (farmland, forests, shrubs, and grasslands); and (3) bodies of water (snow, ice, rivers, and oceans). The high diversity of this dataset provides a solid foundation for evaluating the generalization ability of the DS Net proposed in this study.

Figure 12.

Some samples of the GVLM dataset. (a,b) Pre- and post-event imagery. (c) Landslides with red masks.

The entire GVLM dataset was used as a test set for direct prediction. Table 6 compares the generalization results of DS Net with those of the other semantic segmentation models. DS Net outperforms the other models overall.

Table 6.

DS Net generalization results in GVLM dataset compared to other semantic segmentation networks.

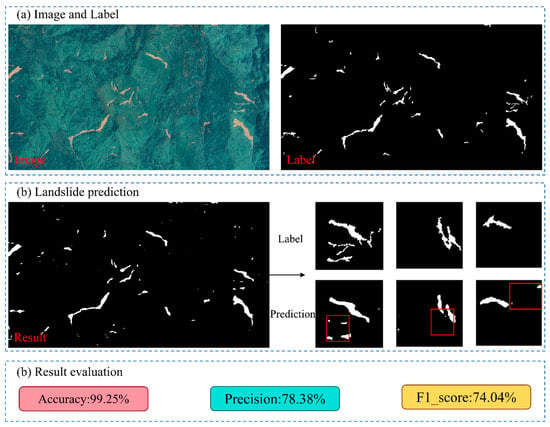

This study presents the prediction results for A Luoi, Vietnam, in detail, as shown in Figure 13. DS Net identified most of the landslide areas in the experimental area and maintained strong recognition performance under complex terrain conditions, with a precision of 78.38%, an overall accuracy of 99.25%, and an F1 score of 74.04%.
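The recall for this case is not stated, but it can be inferred from the reported precision and F1 score by inverting the harmonic-mean definition, F1 = 2PR / (P + R). The sketch below assumes that both reported figures refer to the same landslide class at pixel level; the function name is illustrative. Under that assumption the implied recall is roughly 0.70, noticeably lower than the overall accuracy, which is dominated by correctly classified background pixels.

```python
def recall_from_precision_f1(precision, f1):
    """Solve F1 = 2PR / (P + R) for R, given P and F1."""
    denom = 2 * precision - f1
    if denom <= 0:
        raise ValueError("F1 must be smaller than 2 * precision")
    return f1 * precision / denom


# Values reported for the A Luoi case (expressed as fractions).
implied_recall = recall_from_precision_f1(precision=0.7838, f1=0.7404)
print(f"implied recall = {implied_recall:.4f}")
```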

Figure 13.

Generalization experiment results.

These quantitative indicators provide evidence of the model’s core competencies. Even when faced with the challenge of cross-regional application, the model successfully captured key features of the landslides. Notably, the model is able to recognize image features that differ from those of Beichuan County, showing good generalization performance. The test results also show that DS Net recognizes relatively small landslides poorly, which indicates the direction for the model’s subsequent improvement. The identification case in Figure 13 shows that the modeling framework is able to locate landslides accurately and provide reliable technical support for disaster assessment. The practical value of this study lies in confirming the potential of DS Net as a new-generation landslide identification tool. Although its adaptability differs across geologic environments, its core recognition framework performs well and provides a new technical path for geologic hazard monitoring. In the future, the model is expected to achieve a wider range of applications through continued optimization of the training strategy and enhancement of its feature learning capability.

4.4. Limitations and Future Work

This study proposes a dual-encoding segmentation network (DS Net), which extracts multi-scale image features and global-local information through dual-path encoding with the Swin Transformer and the CLIP model. The PKI module integrates a priori knowledge of landslide identification, and the multi-head attention and SimAM mechanisms in the CFA module realize the deep fusion of features and knowledge. The landslide mask is then output through hierarchical decoding. DS Net shows significant advantages in recall (0.879) and F1-score (0.882), providing a new paradigm for landslide detection in complex terrain.

An in-depth analysis of the generalization experiment’s prediction results in Figure 13 reveals that the model is still deficient in the precise delineation of landslide boundaries, especially for landslides that are relatively small and elongated. This limitation stems mainly from three factors: 1. Insufficient coverage of small-scale elongated landslide samples in the current training dataset; 2. The relatively weak pixel features that this type of landslide presents in remote sensing imagery; 3. Fragmentation of edge features caused by the elongated morphology, which makes it difficult for the model to establish a continuous and accurate boundary representation.

To address these limitations in elongated landslide boundary identification, future work will pursue systematic improvements at two levels: data collection and algorithm optimization. At the data level, the focus will be on supplementing typical elongated landslide samples. A combination of UAV oblique photography and ground-based LiDAR scanning will be used to acquire three-dimensional topographic data with centimeter-level accuracy, and a system of quantitative indexes for landslide morphology will be established, including parameters such as aspect ratio, curvature, and slope direction. A sample augmentation algorithm based on generative adversarial networks will also be developed to generate synthetic samples of different sizes and morphology ratios through parameterized control, targeting in particular extreme-morphology landslides with a length of more than 80 m and an aspect ratio of more than 5:1. At the algorithm level, the focus will be on transforming morphological parameters such as landslide aspect ratio and strike into attention weights to strengthen feature extraction for slender structures. In addition, a post-processing module based on the active contour model will be developed to combine deep learning output with geometric constraint optimization, so that boundary predictions are consistent with both image characteristics and geological morphological laws.
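As an illustration of how a morphological index such as aspect ratio might be quantified from a binary landslide mask, the following sketch estimates the length and width of the foreground region from the principal axes of its pixel coordinates. This is one possible approach under stated assumptions, not the method planned by the authors: the function name, the `pixel_size_m` parameter, and the rectangle-equivalent length definition are all hypothetical.

```python
import math


def mask_shape_indexes(mask, pixel_size_m=1.0):
    """Estimate length (m), width (m) and aspect ratio of a binary mask.

    Uses the eigenvalues of the 2-D covariance of foreground pixel
    coordinates. Adding 1/12 accounts for the unit extent of each pixel,
    so an axis-aligned w x h rectangle yields exactly w and h.
    """
    pts = [(x, y) for y, row in enumerate(mask)
           for x, v in enumerate(row) if v]
    n = len(pts)
    if n == 0:
        raise ValueError("mask contains no foreground pixels")
    mx = sum(x for x, _ in pts) / n
    my = sum(y for _, y in pts) / n
    sxx = sum((x - mx) ** 2 for x, _ in pts) / n
    syy = sum((y - my) ** 2 for _, y in pts) / n
    sxy = sum((x - mx) * (y - my) for x, y in pts) / n
    # Closed-form eigenvalues of the symmetric matrix [[sxx, sxy], [sxy, syy]].
    half_trace = (sxx + syy) / 2
    root = math.sqrt(((sxx - syy) / 2) ** 2 + sxy ** 2)
    lam_max = half_trace + root + 1 / 12
    lam_min = max(half_trace - root, 0.0) + 1 / 12
    length_px = math.sqrt(12 * lam_max)
    width_px = math.sqrt(12 * lam_min)
    return length_px * pixel_size_m, width_px * pixel_size_m, length_px / width_px


# Toy mask: a 30 x 6 pixel rectangle inside a 10-row, 40-column grid.
mask = [[1 if 2 <= r < 8 and 5 <= c < 35 else 0 for c in range(40)]
        for r in range(10)]
length_m, width_m, ratio = mask_shape_indexes(mask, pixel_size_m=0.5)
print(length_m, width_m, ratio)
```

Indexes of this kind could be compared against thresholds such as the 80 m length and 5:1 aspect ratio mentioned above to flag elongated samples, although irregular or curved landslides would need a more careful shape descriptor.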

5. Conclusions

In this study, an efficient and accurate landslide identification method was developed, featuring a Dual-Coded Segmentation Network (DS Net) that integrates features extracted from a priori knowledge. This network combines the Swin Transformer and the CLIP large model as dual encoders and uses hierarchical up-sampling for decoding, enabling comprehensive capture of multi-scale, multi-layer, and global information from landslide images. The results of comparison and ablation experiments reveal the following:

- DS Net with the Swin Transformer as the encoder demonstrates better accuracy than with ResNet50 or ResNet101 in ablation experiments;

- The DS Net model excels in landslide identification tasks, significantly outperforming comparison models such as SegFormer, SegNeXt, FeedFormer, and U-MixFormer across all evaluation metrics, with specific improvements ranging from 3.5% to 7.3%;

- In the landslide identification task, excessive data enhancement can disrupt key image features and introduce noise, degrading the performance of DS Net. The relationship between enhancement intensity and model performance must therefore be carefully balanced;

- While DS Net achieves superior performance (recall = 0.879, F1 = 0.882), its current limitation lies in accurately segmenting small, elongated landslides due to dataset gaps and weak edge features. Future work will combine UAV/LiDAR-based 3D morphology augmentation and GAN-generated synthetic samples with algorithm-level enhancements integrating aspect-ratio-guided attention and active contour post-processing.

Author Contributions

Conceptualization, X.W. and S.L.; methodology, X.W.; software, D.Z.; validation, X.W., D.Z. and X.S.; formal analysis, X.W.; investigation, X.W.; resources, S.L.; data curation, X.W.; writing—original draft preparation, X.W.; writing—review and editing, C.L.; visualization, L.X.; supervision, X.W.; project administration, S.L.; funding acquisition, Y.D. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Natural Science Foundation of China (Grant No. 4230140) and the Open Project Fund of the Southwest Mountain Natural Resources Remote Sensing Monitoring Engineering Technology Innovation Center, Ministry of Natural Resources (No. RSMNRSCM-2024-007).

Data Availability Statement

The original contributions presented in the study are included in the article; further inquiries can be directed to the corresponding author.

Conflicts of Interest

Author Xiaochuan Song was employed by the company Sichuan 402 Surveying and Mapping Technology Corp. The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

References

- Liu, X.L.; Miao, C. Large-Scale Assessment of Landslide Hazard, Vulnerability and Risk in China. Geomat. Nat. Hazards Risk 2018, 9, 1037–1052. [Google Scholar] [CrossRef]

- Zhang, L.; Guo, Z.X.; Qi, S.; Zhao, T.H.; Wu, B.C.; Li, P. Landslide Susceptibility Evaluation and Determination of Critical Influencing Factors in Eastern Sichuan Mountainous Area, China. Ecol. Indic. 2024, 169, 112911. [Google Scholar] [CrossRef]

- Samia, J.; Temme, A.; Bregt, A.K.; Wallinga, J.; Stuiver, J.; Guzzetti, F.; Ardizzone, F.; Rossi, M. Implementing Landslide Path Dependency in Landslide Susceptibility Modelling. Landslides 2018, 15, 2129–2144. [Google Scholar] [CrossRef]

- Yang, Y.; Song, S.L.; Yue, F.C.; He, W.; Shao, W.; Zhao, K.; Nie, W. Superpixel-Based Automatic Image Recognition for Landslide Deformation Areas. Eng. Geol. 2019, 259, 105166. [Google Scholar] [CrossRef]

- Xun, Z.Y.; Zhao, C.Y.; Kang, Y.; Liu, X.J.; Liu, Y.Y.; Du, C.Y. Automatic Extraction of Potential Landslides by Integrating an Optical Remote Sensing Image with an InSAR-Derived Deformation Map. Remote Sens. 2022, 14, 2669. [Google Scholar] [CrossRef]

- Huang, F.M.; Liu, K.J.; Li, Z.Y.; Zhou, X.T.; Zeng, Z.Q.; Li, W.B.; Huang, J.S.; Catani, F.; Chang, Z.L. Single Landslide Risk Assessment Considering Rainfall-Induced Landslide Hazard and the Vulnerability of Disaster-Bearing Body. Geol. J. 2024, 59, 2549–2565. [Google Scholar] [CrossRef]

- Guo, L.J.; Miao, F.S.; Zhao, F.C.; Wu, Y.P. Data Mining Technology for the Identification and Threshold of Governing Factors of Landslide in the Three Gorges Reservoir Area. Stoch. Environ. Res. Risk Assess. 2022, 36, 3997–4012. [Google Scholar] [CrossRef]

- Goetz, J.N.; Brenning, A.; Petschko, H.; Leopold, P. Evaluating Machine Learning and Statistical Prediction Techniques for Landslide Susceptibility Modeling. Comput. Geosci. 2015, 81, 1–11. [Google Scholar] [CrossRef]

- Chen, T.H.K.; Prishchepov, A.; Fensholt, R.; Sabel, C.E. Detecting and Monitoring Long-Term Landslides in Urbanized Areas with Nighttime Light Data and Multi-Seasonal Landsat Imagery across Taiwan from 1998 to 2017. Remote Sens. Environ. 2019, 225, 317–327. [Google Scholar] [CrossRef]

- Lissak, C.; Bartsch, A.; De Michele, M.; Gomez, C.; Maquaire, O.; Raucoules, D.; Roulland, T. Remote Sensing for Assessing Landslides and Associated Hazards. Surv. Geophys. 2020, 41, 1391–1435. [Google Scholar] [CrossRef]

- Ren, P.Z.; Xiao, Y.; Chang, X.J.; Huang, P.Y.; Li, Z.H.; Gupta, B.B.; Chen, X.J.; Wang, X. A Survey of Deep Active Learning. ACM Comput. Surv. 2022, 54, 1–40. [Google Scholar] [CrossRef]

- Caroppo, A.; Leone, A.; Siciliano, P. Comparison Between Deep Learning Models and Traditional Machine Learning Approaches for Facial Expression Recognition in Ageing Adults. J. Comput. Sci. Technol. 2020, 35, 1127–1146. [Google Scholar] [CrossRef]

- Chen, L.Y.; Li, S.B.; Bai, Q.; Yang, J.; Jiang, S.L.; Miao, Y.M. Review of Image Classification Algorithms Based on Convolutional Neural Networks. Remote Sens. 2021, 13, 4712. [Google Scholar] [CrossRef]

- Zhang, R.; Lv, J.C.; Yang, Y.J.; Wang, T.Y.; Liu, G.X. Analysis of the Impact of Terrain Factors and Data Fusion Methods on Uncertainty in Intelligent Landslide Detection. Landslides 2024, 21, 1849–1864. [Google Scholar] [CrossRef]

- Dong, A.A.; Dou, J.; Li, C.D.; Chen, Z.Q.; Ji, J.; Xing, K.; Zhang, J.; Daud, H. Accelerating Cross-Scene Co-Seismic Landslide Detection Through Progressive Transfer Learning and Lightweight Deep Learning Strategies. IEEE Trans. Geosci. Remote Sens. 2024, 62. [Google Scholar] [CrossRef]

- Song, Y.; Song, Y.X.; Wang, C.N.; Wu, L.W.; Wu, W.C.; Li, Y.; Li, S.C.; Chen, A.Q. Landslide Susceptibility Assessment through Multi-Model Stacking and Meta-Learning in Poyang County, China. Geomat. Nat. Hazards Risk 2024, 15, 2354499. [Google Scholar] [CrossRef]

- Lv, P.Y.; Ma, L.S.; Li, Q.M.; Du, F. ShapeFormer: A Shape-Enhanced Vision Transformer Model for Optical Remote Sensing Image Landslide Detection. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2023, 16, 2681–2689. [Google Scholar] [CrossRef]

- Chen, X.R.; Zhao, C.Y.; Lu, Z.; Xi, J.B. Landslide Inventory Mapping Based on Independent Component Analysis and UNet plus: A Case of Jiuzhaigou, China. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2024, 17, 2213–2223. [Google Scholar] [CrossRef]

- Dias, P.; Potnis, A.; Guggilam, S.; Yang, L.; Tsaris, A.; Medeiros, H.; Lunga, D. An Agenda for Multimodal Foundation Models for Earth Observation. In Proceedings of the IGARSS 2023-2023 IEEE International Geoscience and Remote Sensing Symposium, Pasadena, CA, USA, 16–21 July 2023; pp. 1237–1240. [Google Scholar]

- Jiao, L.; Huang, Z.; Lu, X.; Liu, X.; Yang, Y.; Zhao, J.; Zhang, J.; Hou, B.; Yang, S.; Liu, F. Brain-Inspired Remote Sensing Foundation Models and Open Problems: A Comprehensive Survey. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2023, 16, 10084–10120. [Google Scholar] [CrossRef]

- Kirillov, A.; Mintun, E.; Ravi, N.; Mao, H.; Rolland, C.; Gustafson, L.; Xiao, T.; Whitehead, S.; Berg, A.C.; Lo, W.-Y. Segment Anything. In Proceedings of the IEEE/CVF international Conference on Computer Vision, Paris, France, 2–6 October 2023; pp. 4015–4026. [Google Scholar]

- Cheng, B.; Choudhuri, A.; Misra, I.; Kirillov, A.; Girdhar, R.; Schwing, A.G. Mask2former for Video Instance Segmentation. arXiv 2021, arXiv:2112.10764. [Google Scholar]

- Ma, J.; He, Y.; Li, F.; Han, L.; You, C.; Wang, B. Segment Anything in Medical Images. Nat. Commun. 2024, 15, 654. [Google Scholar] [CrossRef] [PubMed]

- Lessee, M.A.N.; Hamburger, M.W.; Ferrara, M.R.; McLean, A.; FitzGerald, C. A Global Dataset and Model of Earthquake-Induced Landslide Fatalities. Landslides 2020, 17, 1363–1376. [Google Scholar] [CrossRef]

- Xu, Y.; Ouyang, C.; Xu, Q.; Wang, D.; Zhao, B.; Luo, Y. CAS Landslide Dataset: A Large-Scale and Multisensor Dataset for Deep Learning-Based Landslide Detection. Sci. Data 2024, 11, 12. [Google Scholar] [CrossRef] [PubMed]

- Ding, M.T.; Hu, K.H. Susceptibility Mapping of Landslides in Beichuan County Using Cluster and MLC Methods. Nat. Hazards 2014, 70, 755–766. [Google Scholar] [CrossRef]

- Qin, X.H.; Chen, Q.C.; Wu, M.L.; Tan, C.X.; Feng, C.J.; Meng, W. In-Situ Stress Measurements along the Beichuan-Yingxiu Fault after the Wenchuan Earthquake. Eng. Geol. 2015, 194, 114–122. [Google Scholar] [CrossRef]

- Radford, A.; Kim, J.W.; Hallacy, C.; Ramesh, A.; Goh, G.; Agarwal, S.; Sastry, G.; Askell, A.; Mishkin, P.; Clark, J. Learning Transferable Visual Models from Natural Language Supervision. In Proceedings of the International Conference on Machine Learning, PMLR, Online, 18–24 July 2021; pp. 8748–8763. [Google Scholar]

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention Is All You Need. In Proceedings of the Advances in Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017; pp. 5998–6008. [Google Scholar]

- Yang, L.; Zhang, R.-Y.; Li, L.; Xie, X. Simam: A Simple, Parameter-Free Attention Module for Convolutional Neural Networks. In Proceedings of the International conference on machine learning, PMLR, Online, 18–24 July 2021; pp. 11863–11874. [Google Scholar]

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet Classification with Deep Convolutional Neural Networks. In Proceedings of the Advances in Neural Information Processing Systems; Pereira, F., Burges, C.J., Bottou, L., Weinberger, K.Q., Eds.; Curran Associates, Inc.: Red Hook, NY, USA, 2012; Volume 25. [Google Scholar]

- Han, K.; Wang, Y.H.; Chen, H.T.; Chen, X.H.; Guo, J.Y.; Liu, Z.H.; Tang, Y.H.; Xiao, A.; Xu, C.J.; Xu, Y.X.; et al. A Survey on Vision Transformer. IEEE Trans. Pattern Anal. Mach. Intell. 2023, 45, 87–110. [Google Scholar] [CrossRef]

- Parvaiz, A.; Khalid, M.A.; Zafar, R.; Ameer, H.; Ali, M.; Fraz, M.M. Vision Transformers in Medical Computer Vision-A Contemplative Retrospection. Eng. Appl. Artif. Intell. 2023, 122, 106126.

- Xu, H.M.; Xu, Q.; Cong, F.Y.; Kang, J.; Han, C.; Liu, Z.Y.; Madabhushi, A.; Lu, C. Vision Transformers for Computational Histopathology. IEEE Rev. Biomed. Eng. 2024, 17, 63–79.

- Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Lin, S.; Guo, B. Swin Transformer: Hierarchical Vision Transformer Using Shifted Windows. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 10012–10022.

- Hong, H.Y.; Pradhan, B.; Sameen, M.I.; Kalantar, B.; Zhu, A.X.; Chen, W. Improving the Accuracy of Landslide Susceptibility Model Using a Novel Region-Partitioning Approach. Landslides 2018, 15, 753–772.

- Lv, J.C.; Zhang, R.; Wu, R.Z.; Bao, X.; Liu, G.X. Landslide Detection Based on Pixel-Level Contrastive Learning for Semi-Supervised Semantic Segmentation in Wide Areas. Landslides 2024, 22, 1087–1105.

- Nohani, E.; Khazaei, S.; Dorjahangir, M.; Asadi, H.; Elkaee, S.; Mahdavi, A.; Hatamiafkoueieh, J.; Tiefenbacher, J.P. Delineating Flood-Prone Areas Using Advanced Integration of Reduced-Error Pruning Tree with Different Ensemble Classifier Algorithms. Acta Geophys. 2024, 72, 3473–3484.

- Asadi, M.; Mokhtari, L.G.; Shirzadi, A.; Shahabi, H.; Bahrami, S. A Comparison Study on the Quantitative Statistical Methods for Spatial Prediction of Shallow Landslides (Case Study: Yozidar-Degaga Route in Kurdistan Province, Iran). Environ. Earth Sci. 2022, 81, 51.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Xie, E.; Wang, W.; Yu, Z.; Anandkumar, A.; Alvarez, J.M.; Luo, P. SegFormer: Simple and Efficient Design for Semantic Segmentation with Transformers. Adv. Neural Inf. Process. Syst. 2021, 34, 12077–12090.

- Guo, M.H.; Lu, C.Z.; Hou, Q.; Liu, Z.; Cheng, M.M.; Hu, S.M. SegNeXt: Rethinking Convolutional Attention Design for Semantic Segmentation. arXiv 2022, arXiv:2209.08575.

- Shim, J.; Yu, H.; Kong, K.; Kang, S.-J. FeedFormer: Revisiting Transformer Decoder for Efficient Semantic Segmentation. In Proceedings of the AAAI Conference on Artificial Intelligence, Washington, DC, USA, 7–14 February 2023; Volume 37, pp. 2263–2271.

- Yeom, S.-K.; von Klitzing, J. U-MixFormer: UNet-like Transformer with Mix-Attention for Efficient Semantic Segmentation. arXiv 2023, arXiv:2312.06272.

- Wei, R.L.; Ye, C.M.; Sui, T.B.; Zhang, H.J.; Ge, Y.G.; Li, Y. A Feature Enhancement Framework for Landslide Detection. Int. J. Appl. Earth Obs. Geoinf. 2023, 124, 103521.

- Liu, Y.F.; Yang, H.L.; Jiao, R.C.; Wang, Z.P.; Wang, L.Y.; Zeng, W.; Han, J.F. A New Deformation Enhancement Method Based on Multitemporal InSAR for Landslide Surface Stability Assessment. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2024, 17, 11086–11100.

- Qi, J.H.; Chen, H.; Chen, F.P. Extraction of Landslide Features in UAV Remote Sensing Images Based on Machine Vision and Image Enhancement Technology. Neural Comput. Appl. 2022, 34, 12283–12297.

- Lin, H.J.; Li, L.; Qiang, Y.; Xu, X.L.; Liang, S.Y.; Chen, T.; Yang, W.J.; Zhang, Y. A Method for Landslide Identification and Detection in High-Precision Aerial Imagery: Progressive CBAM-U-Net Model. Earth Sci. Inform. 2024, 17, 5487–5498.

- Lian, X.G.; Li, Y.; Wang, X.B.; Shi, L.F.; Xue, C.H. Research on Identification and Location of Mining Landslide in Mining Area Based on Improved YOLO Algorithm. Drones 2024, 8, 150.

- Ji, S.; Yu, D.; Shen, C.; Li, W.; Xu, Q. Landslide Detection from an Open Satellite Imagery and Digital Elevation Model Dataset Using Attention Boosted Convolutional Neural Networks. Landslides 2020, 17, 1337–1352.

- Zhang, X.K.; Yu, W.K.; Pun, M.O.; Shi, W.Z. Cross-Domain Landslide Mapping from Large-Scale Remote Sensing Images Using Prototype-Guided Domain-Aware Progressive Representation Learning. ISPRS J. Photogramm. Remote Sens. 2023, 197, 1–17.

- Zhu, Q.Q.; Zhang, Y.N.; Wang, L.Z.; Zhong, Y.F.; Guan, Q.F.; Lu, X.Y.; Zhang, L.P.; Li, D.R. A Global Context-Aware and Batch-Independent Network for Road Extraction from VHR Satellite Imagery. ISPRS J. Photogramm. Remote Sens. 2021, 175, 353–365.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).