Abstract

Recently, radar high-resolution range profiles (HRRPs) have gained significant attention in radar automatic target recognition because they are easy to acquire, have a small data footprint, and provide rich target structural information. However, existing recognition methods typically focus on single-domain features, using either the raw HRRP sequence or the extracted feature sequence independently. To fully exploit the multi-domain information present in HRRP sequences, this paper proposes a novel target feature fusion recognition approach. By combining a convolutional long short-term memory (ConvLSTM) network with a cascaded gated recurrent unit (GRU) structure, the proposed method leverages multi-domain and temporal information to enhance recognition performance. Furthermore, a multi-input framework based on learnable parameters is designed to improve target representation capabilities. Experimental results on 6 ship targets demonstrate that the fusion recognition method achieves higher accuracy and faster convergence than methods relying on single-domain sequences. The recognition accuracy reaches 93.32% and 82.15% for full polarization under signal-to-noise ratios (SNRs) of 20 dB and 5 dB, respectively, and the proposed method consistently outperforms the previous methods considered.

1. Introduction

The radar high-resolution range profile (HRRP) contains structural feature information from the scattering centers of a ship target. Compared with imaging approaches such as synthetic aperture radar (SAR) and inverse SAR (ISAR) [1,2,3,4,5], HRRP sequences offer more convenient acquisition of radar echo data and simpler imaging algorithms. Consequently, HRRP has emerged as a significant research focus in the field of radar automatic target recognition. Although HRRP carries rich target structural information, it is sensitive to amplitude variations and noise, which can severely degrade target recognition performance [6].

In recent years, data-driven learning methods for HRRP sequence recognition have developed significantly [7,8,9,10]. A common approach is to employ convolutional neural networks (CNNs) for target feature extraction [11,12], with the aim of improving target recognition performance. By constructing multilayer CNNs and optimizing them under suitable conditions, abstract features of HRRP sequences can be effectively extracted, enhancing the generalization ability of the model for target recognition. CNN-based methods inherit the characteristics of orientation and translation invariance, thereby improving the recognition rate of HRRP sequences. However, CNNs are not effective in handling temporal characteristics. The long short-term memory (LSTM) network is therefore commonly used to model the characteristics of HRRP sequences [13,14]. Ding et al. [15] proposed an HRRP sequence recognition method based on self-attention and used an LSTM network to address long-distance dependencies in sequences. This method improves the convergence properties of the model while better handling long-range HRRP sequences. Zhang et al. [16] applied a feature extraction-based approach to the recognition of HRRP sequences, which reduces noise sensitivity and enhances target recognition accuracy. However, the above methods do not consider the multi-domain features within HRRP sequences: they do not integrate the feature domain with the amplitude information that represents the time domain.

To make full use of the multi-domain information in HRRP sequences and improve radar HRRP target recognition performance, this paper proposes an HRRP target feature fusion classification method. The radar echo and HRRP sequence samples of ships are first generated by electromagnetic (EM) scattering simulation. By fusing the original sequence with the feature sequence, the model can effectively extract multi-dimensional sequence features from HRRP based on gated recurrent unit (GRU) training. This approach improves radar HRRP sequence recognition performance, even in complex environments. In summary, the contributions are as follows:

- An end-to-end learning approach based on an overall objective is proposed, which combines the time domain and feature domain of HRRP sequences by adjusting the contribution allocation between features and learnable parameters, enabling accurate target recognition;

- By integrating a convolutional LSTM (ConvLSTM) network with GRU structures, a multi-input framework based on learnable parameters is designed to address the sensitivity characteristics of HRRP sequence recognition.

Experiments on 6 ship models are conducted to test the recognition accuracy of the proposed method. In addition, Gaussian white noise is added to the original dataset to test robustness. The proposed method consistently outperforms the previous methods considered, with a recognition accuracy of up to 93.32% for full polarization at a signal-to-noise ratio (SNR) of 20 dB. To further assess recognition performance, the loss function and the confusion matrix are visualized to analyze the proposed model and the comparison models. Additionally, feature dimensionality reduction based on the t-SNE method is used to evaluate the model’s classification ability in a more visual manner [17]. The results show that the proposed method has high recognition accuracy and robustness.

2. Scattering Simulation and HRRP Sequences

The radar data and range profiles of ship targets are difficult to obtain by measurement. Therefore, scattering simulation techniques are used to generate the radar echo, and the HRRP sequence samples are then generated from the simulated echo. In general, the radar data depend on the attacking scene and the missile’s trajectory.

2.1. Attacking Scene

In practical applications, the radar echo of a target ship is acquired by the radar of the missile’s seeker during its motion. Therefore, the radar echoes are simulated at the missile’s positions during an anti-ship missile attack on a ship target.

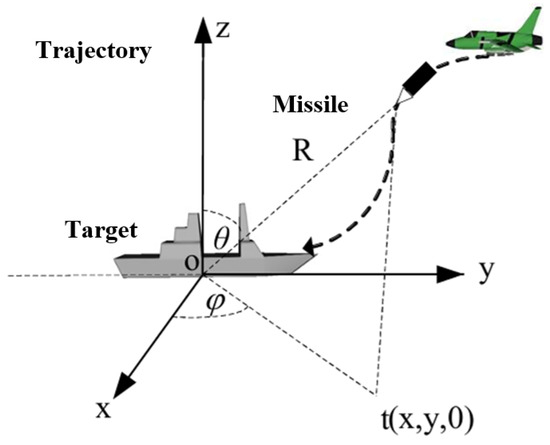

To describe the anti-ship missile attack process, the missile’s position during the attack must first be determined. Because the missile may approach from different positions, its trajectory is sampled and discretized, resulting in a sequence of positions as shown in Figure 1. The elevation and azimuth angles relative to the ship target are obtained from these positions. In our experiments, the elevation angle ranges from 30° to 90° with a step of 5°, and the azimuth angle ranges from −60° to 60° with a step of 1°. This gives 13 elevation angles and 121 azimuth angles, and therefore 13 × 121 = 1573 sequence samples in the dataset.

Figure 1.

Anti-Ship Missile Attacking Scene.
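The sample count follows directly from the angular sampling grid. The short sketch below enumerates the grid to confirm it; the function and variable names are illustrative and not taken from the original simulation code.

```python
def angle_grid(start_deg, stop_deg, step_deg):
    """Return the inclusive list of angles from start to stop in fixed steps."""
    count = (stop_deg - start_deg) // step_deg + 1
    return [start_deg + i * step_deg for i in range(count)]


elevations = angle_grid(30, 90, 5)   # 30, 35, ..., 90
azimuths = angle_grid(-60, 60, 1)    # -60, -59, ..., 60

# One HRRP sample per (elevation, azimuth) pair
aspect_angles = [(el, az) for el in elevations for az in azimuths]
print(len(elevations), len(azimuths), len(aspect_angles))
```

Each ship model is simulated at every pair in `aspect_angles`, once per polarization mode.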

2.2. Electromagnetic Scattering Simulation

In order to obtain the radar echo at different positions, we employ the shooting and bouncing ray—physical optics (SBR-PO) method for electromagnetic scattering simulation of ship targets [18]. The core concept involves using optical approximations to describe the propagation of EM waves within a scene. Ray tracing technology is utilized to calculate the trajectories of rays within the scene. Geometrical optics (GO) is then applied to resolve the field strengths of rays within ray tubes as they bounce between surface elements. Finally, physical optics (PO) is used to derive the induced electromagnetic surface currents to determine the target’s scattering field.

For a conducting target, the electric surface current induced by the primary incidence of the EM wave is approximated using the physical optics method, as given in Equation (1):

where is the outward unit normal of the target surface at . The incident magnetic field is given by

where is the polarization of incident wave, with vertical polarization given by , and horizontal polarization given by . Here, is the radar incident angle. The wave vector is the incident direction. The EM wave number in free space is , c is light speed, f is radar frequency. The lit region denotes the part of the target surface that is illuminated by the incident field, and the shadow region is the part of the target surface that is not exposed to the incident field.

Finally, the scattering field can be expressed as:

where denotes the lit portion of the target surface, is the characteristic impedance in free space. is the scattering direction. r is the radar distance, is the position of received radar, . For backscattered field, .
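For reference, the PO quantities described above can be written in a standard textbook form as follows. This is a sketch and not necessarily the exact notation of Equations (1) to (3): the symbols $\hat{n}$, $\mathbf{r}'$, $\hat{e}_i$, $\hat{k}_i$, $\hat{k}_s$, $E_0$, $\eta_0$ and $S_{lit}$ are assumed names, and an $e^{j\omega t}$ time convention is assumed.

```latex
% PO surface current on a conducting target
\mathbf{J}_s(\mathbf{r}') =
\begin{cases}
2\,\hat{n} \times \mathbf{H}_i(\mathbf{r}'), & \mathbf{r}' \in \text{lit region} \\
0, & \mathbf{r}' \in \text{shadow region}
\end{cases}

% Incident plane-wave magnetic field with polarization \hat{e}_i
\mathbf{H}_i(\mathbf{r}') = \frac{E_0}{\eta_0}\,\hat{k}_i \times \hat{e}_i\,
e^{-jk\,\hat{k}_i \cdot \mathbf{r}'}, \qquad k = \frac{2\pi f}{c}

% Far-field scattered field radiated by the induced current
\mathbf{E}_s(\mathbf{r}) \approx \frac{jk\eta_0\, e^{-jkr}}{4\pi r}
\int_{S_{lit}} \hat{k}_s \times \left[\hat{k}_s \times \mathbf{J}_s(\mathbf{r}')\right]
e^{jk\,\hat{k}_s \cdot \mathbf{r}'}\, dS',
\qquad \hat{k}_s = -\hat{k}_i \ \text{for backscattering}
```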

The scattering effect arising from multiple bounces of the EM wave on the complex target is taken into account by the ray tracing approach. A dense set of ray tubes, which represent the incident EM field, are launched to be incident on the target [19,20]. Each ray tube is traced through multiple bounces on the target according to the law of geometrical optics (GO) until it exits the target geometry. The field in the ray tube is calculated by the GO method. At each reflection point on the target, the PO method is applied to compute the scattered field produced by the induced surface current associated with that ray tube, which is given by

where denotes the footprint of the ray tube on the target surface, is the position vector at any point on the target surface within the ray footprint, and is the magnetic field in the ray tube of multiple bounces at .

Finally, the total scattered field is collected at the far field, and can be obtained by the summation of contributions of primary incidence and multiple bounces. The received scattered field intensities at given polarization and frequency f can then be determined and expressed as

where is the polarization of scattering wave.

Therefore, the scattered fields include four polarization results, one for each combination of incident and scattering polarization: vertical-vertical (VV), vertical-horizontal (VH), horizontal-horizontal (HH), and horizontal-vertical (HV). In addition, graphics processing unit (GPU) parallelism [21,22] is used to accelerate the EM scattering simulation so that the radar echo dataset can be generated efficiently.

2.3. HRRP Sequences by Backscattered Field

Assume that N point scatterers lie along the down-range direction (taken as the x-axis), each at a different location. Then, the backscattered field at the far field can be approximated as

where is the backscattered field amplitude for the point scatterer at . The range profile can be constructed by inverse Fourier transforming this frequency diverse field with respect to as given below:

In the above equation, both the summing and the integration operators are linear and therefore can be interchanged as

Then, the integral in Equation (8) reduces to the impulse (Dirac delta) function, as given below:

Here, represents the range profile as a function of range x. Therefore, the point scatterers located at different locations are perfectly pinpointed in the range axis with their associated backscattered field amplitudes of “”s. The result in Equation (9) is valid for the infinite bandwidth. In real applications, however, the backscattered field data can only be collected within a finite bandwidth of frequencies, i.e.,: ranging from to . Then, the limits of the integral in Equation (8) should be changed to give

Evaluating the definite integral in Equation (10) gives

The above result can be simplified as

, the center frequency , the frequency bandwidth . is the sinc function, sinc defocusing around the scattering centers in the range profile pattern is unavoidable due to finite bandwidth of the radar signal. Therefore, the scattering centers on the range are centered at the true locations of “”s with their corresponding field amplitudes, “”s. When the radar transmits a signal modulated at N different stepped frequencies of and collects the scattered field intensities, , for these N discrete frequency values. Then, the range profile can easily be constructed by applying the inverse fast Fourier transform (IFFT) operation as [23]
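The derivation steps described above can be summarized in the following sketch, written in a common textbook form. The symbols $A_i$, $x_i$, $k_L$, $k_H$, $k_c$ and $BW_k$ are assumed names, the inverse Fourier transform is assumed to be taken with respect to $2k$, and the constant factors may differ from those of Equations (6) to (12).

```latex
% Backscattered field of N point scatterers
E^s(k) \approx \sum_{i=1}^{N} A_i\, e^{-j2kx_i}

% Infinite bandwidth: interchange sum and integral, obtain impulses
E^s(x) = \frac{1}{2\pi}\int_{-\infty}^{\infty} E^s(k)\, e^{j2kx}\, d(2k)
       = \sum_{i=1}^{N} A_i\, \frac{1}{2\pi}\int_{-\infty}^{\infty} e^{j2k(x-x_i)}\, d(2k)
       = \sum_{i=1}^{N} A_i\, \delta(x-x_i)

% Finite bandwidth, k_L \le k \le k_H
E^s(x) = \sum_{i=1}^{N} A_i\, \frac{1}{2\pi}\int_{k_L}^{k_H} e^{j2k(x-x_i)}\, d(2k)
       = \sum_{i=1}^{N} A_i\, \frac{e^{j2k_H(x-x_i)} - e^{j2k_L(x-x_i)}}{j2\pi (x-x_i)}
       = \frac{BW_k}{\pi} \sum_{i=1}^{N} A_i\, e^{j2k_c(x-x_i)}\,
         \mathrm{sinc}\!\left(\frac{BW_k}{\pi}(x-x_i)\right)

% Definitions used above
k_c = \frac{k_L+k_H}{2}, \qquad BW_k = k_H-k_L, \qquad
\mathrm{sinc}(t) = \frac{\sin(\pi t)}{\pi t}
```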

Therefore, the HRRP sequences of the ship targets can be obtained by the EM scattering simulation and transforming formulation aforementioned. In our experiment, 6 different ship models are used to generate radar echo and range profile, and the size parameters of ships are shown in Table 1. And the radar’s central frequency GHz, with a bandwidth of MHz.
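As an illustration of how a range profile follows from stepped-frequency data, the sketch below synthesizes the backscattered field of two ideal point scatterers and applies a naive inverse discrete Fourier transform. The radar parameters, scatterer positions and function names are hypothetical values chosen for illustration only; they are not the settings used in the experiments, and a practical implementation would use an FFT library instead of the O(N²) loop.

```python
import cmath
import math

C = 299_792_458.0  # speed of light in m/s


def stepped_frequency_field(scatterers, f_start, delta_f, n_freq):
    """Backscattered field of ideal point scatterers at n_freq stepped frequencies.

    scatterers is a list of (amplitude, down_range_position_in_m) pairs.
    """
    field = []
    for n in range(n_freq):
        k = 2.0 * math.pi * (f_start + n * delta_f) / C  # wavenumber
        field.append(sum(a * cmath.exp(-2j * k * x) for a, x in scatterers))
    return field


def range_profile(field):
    """Magnitude of the inverse DFT of the frequency samples (naive O(N^2))."""
    n_freq = len(field)
    profile = []
    for m in range(n_freq):
        acc = sum(field[n] * cmath.exp(2j * math.pi * n * m / n_freq)
                  for n in range(n_freq))
        profile.append(abs(acc) / n_freq)
    return profile


# Hypothetical radar parameters, chosen only for illustration
N_FREQ = 64
F_START = 9.5e9   # Hz
DELTA_F = 2.0e6   # Hz
BIN_SIZE = C / (2.0 * N_FREQ * DELTA_F)  # range-bin width in m

# Two scatterers placed exactly on range bins 5 and 20
targets = [(1.0, 5 * BIN_SIZE), (0.5, 20 * BIN_SIZE)]
profile = range_profile(stepped_frequency_field(targets, F_START, DELTA_F, N_FREQ))
strongest_bin = max(range(N_FREQ), key=lambda m: profile[m])
print(strongest_bin, round(profile[5], 3), round(profile[20], 3))
```

Because the scatterers sit exactly on range bins, each one appears as a single peak whose height equals its amplitude. Off-bin scatterers would instead show the sinc-shaped leakage discussed above.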

Table 1.

Size parameters of 6 ships.

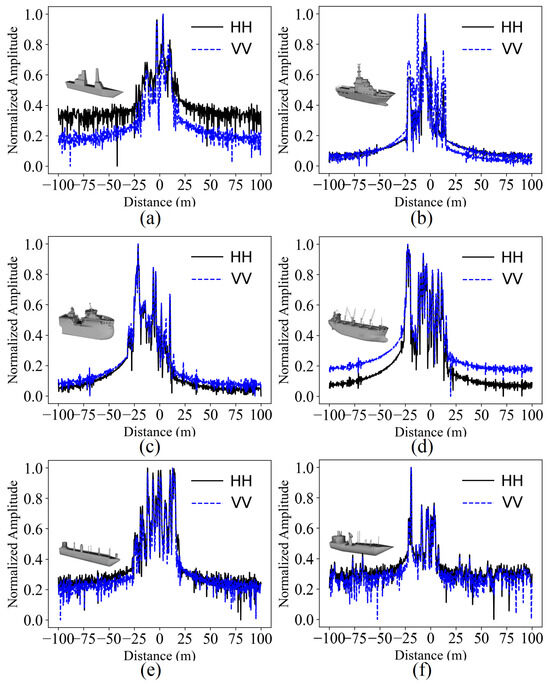

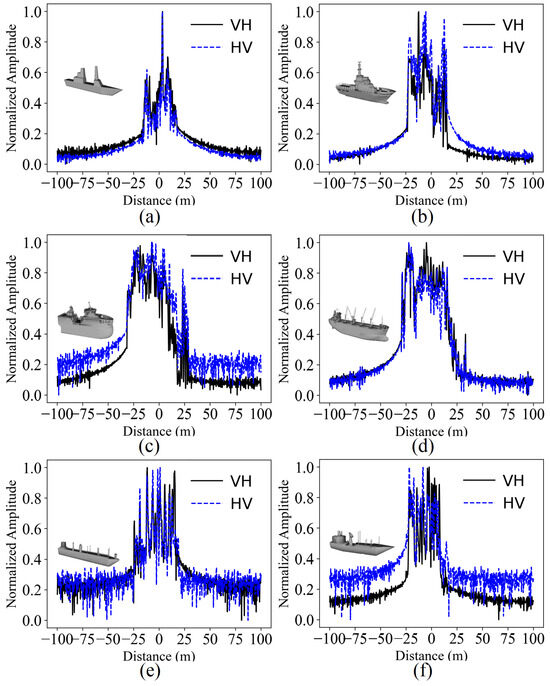

The polarimetric projection of target scattering centers contains a wealth of scattering information in both the spatial and polarimetric dimensions. Four polarization modes (HH, HV, VV, VH) are therefore adopted, and the data are normalized during dataset generation to remove scale sensitivity. As an example, Figure 2 and Figure 3 show the co-polarization (HH and VV) and cross-polarization (HV and VH) HRRPs of the 6 ship models at a particular azimuth/elevation angle. The range profiles indicate the scattering intensities at different locations along the range axis, which depend on the ship model.

Figure 2.

Co-polarization HRRPs of 6 ships at , (a) Ship 1; (b) Ship 2; (c) Ship 3; (d) Ship 4; (e) Ship 5; (f) Ship 6.

Figure 3.

Cross-polarization HRRPs of 6 ships at , (a) Ship 1; (b) Ship 2; (c) Ship 3; (d) Ship 4; (e) Ship 5; (f) Ship 6.

3. Proposed Features Fusion Methods

After the HRRP sequence of the target ship has been computed as described above, feature extraction and feature fusion are performed as follows.

3.1. Feature Extraction and Extension

Initially, the raw HRRP sequence of the target undergoes data preprocessing in the frequency domain. The detailed parameters for feature extraction [24,25] are listed in Table 2.

Table 2.

Extracted HRRP features.

Table 2 presents statistical features extracted from the HRRP sequence of the target ship. The mean value represents the average level of electromagnetic wave reflection from the target. The minimum value indicates the lowest reflection intensity, while the maximum value signifies the highest reflection intensity. The range is calculated as the difference between the maximum and minimum values, representing the magnitude of the reflection intensity value range. The standard deviation measures the degree of variation or dispersion of the reflection intensity values from the mean, indicating the consistency of the reflection intensity. The coefficient of variation provides a normalized measure of dispersion relative to the mean. The smoothness evaluates the level of uniformity in the sequence. The skewness measures the asymmetry of the distribution of reflection intensity values relative to the mean, while the kurtosis indicates the peakedness of the distribution relative to a normal distribution. These features collectively provide a comprehensive statistical characterization of the HRRP sequence, facilitating effective analysis and interpretation of the electromagnetic wave reflection properties of the target ship.
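The statistical features described above can be computed as in the following minimal sketch. It assumes population (divide-by-n) moments and a non-constant profile with a non-zero mean; the function name is illustrative. The smoothness feature is omitted because its exact definition is not stated in the text.

```python
import math


def hrrp_statistics(profile):
    """Basic statistical features of one range profile (a list of amplitudes)."""
    n = len(profile)
    mean = sum(profile) / n
    minimum, maximum = min(profile), max(profile)
    # Central moments in population form (dividing by n)
    m2 = sum((v - mean) ** 2 for v in profile) / n
    m3 = sum((v - mean) ** 3 for v in profile) / n
    m4 = sum((v - mean) ** 4 for v in profile) / n
    std = math.sqrt(m2)
    return {
        "mean": mean,
        "min": minimum,
        "max": maximum,
        "range": maximum - minimum,
        "std": std,
        "cv": std / mean,            # coefficient of variation
        "skewness": m3 / std ** 3,   # asymmetry about the mean
        "kurtosis": m4 / std ** 4,   # equals 3 for a normal distribution
    }


features = hrrp_statistics([1.0, 2.0, 3.0, 4.0, 5.0])
print(features)
```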

To eliminate the amplitude sensitivity of static features, a backpropagation (BP) neural network is used in the feature extraction network. BP neural networks are widely recognized for their ability to approximate complex functions through iterative, gradient-based learning. They employ a multi-layered structure consisting of an input layer, hidden layers, and an output layer. Each layer is composed of artificial neurons connected by weighted links, and each neuron applies a nonlinear activation function to its inputs, allowing the network to model nonlinear relationships. First, to ensure amplitude consistency, the input static features are convolved with a sliding window to remove their differences in amplitude. Then, the output sequence is transformed with the method of [25] so that it is consistent with the high-dimensional data of the original sequence.

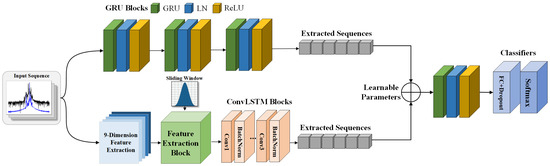

3.2. Network Structure

3.2.1. Multi-Input GRU Feature Fusion

To enhance the recognition accuracy of the raw HRRP sequence, an end-to-end HRRP recognition model, namely the fusion-input GRU model, is proposed, as illustrated in Figure 4. This recognition framework primarily consists of an HRRP-based statistical feature extraction section, a multi-input GRU adaptive feature fusion section, and a final fully connected classifier network. The statistical feature extraction methods are employed to perform feature dimensionality reduction and extraction on the target sequence. Table 3 gives the configurations of the proposed multi-input GRU. Following this, an effective fusion of the composite domain HRRP features is achieved within the GRU framework using hierarchical fusion methods. Finally, the target features are classified and identified through a fully connected layer based on Softmax. Figure 4 and Algorithm 1 show the overall structure and the algorithm flow, respectively.

Algorithm 1: Fusion Model of Feature Extraction and HRRPs

Input: Received scattered field data , label . Here N denotes sequence length.

Figure 4.

Overall structure of the fusion process.

Table 3.

Configurations of Multi-input GRU.

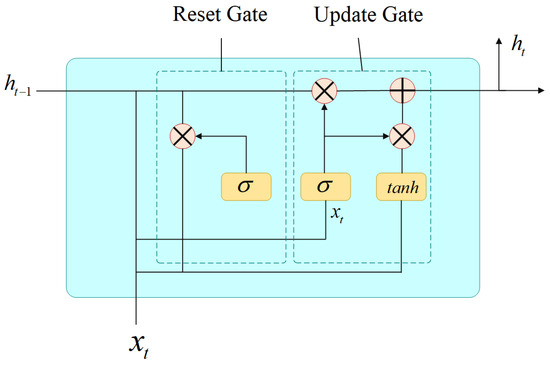

Similar to the LSTM network, the GRU employs gating mechanisms to control the retention and forgetting of information in its units. This approach effectively addresses issues of long-term dependencies and vanishing gradients [27].

To determine the retention of historical information, this framework employs a GRU module with an incorporated residual connection. The diagram of the reset gate and update gate in the GRU module is shown in Figure 5 and the specific parameters are as follows:

where $r_t$ and $z_t$ indicate the reset gate and update gate, respectively, $h_t$ represents the current state information, and $\sigma$ is the sigmoid function, whose output ranges from 0 to 1 and controls how much information is retained or forgotten.
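For reference, one common formulation of the GRU gates is sketched below. The weight matrices $W$, $U$ and biases $b$ are assumed names, the residual connection of the proposed module is not shown, and the roles of $z_t$ and $1 - z_t$ are swapped in some references.

```latex
z_t = \sigma\left(W_z x_t + U_z h_{t-1} + b_z\right)          % update gate
r_t = \sigma\left(W_r x_t + U_r h_{t-1} + b_r\right)          % reset gate
\tilde{h}_t = \tanh\left(W_h x_t + U_h (r_t \circ h_{t-1}) + b_h\right)  % candidate state
h_t = (1 - z_t) \circ h_{t-1} + z_t \circ \tilde{h}_t         % current state
```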

Figure 5.

GRU network structure.

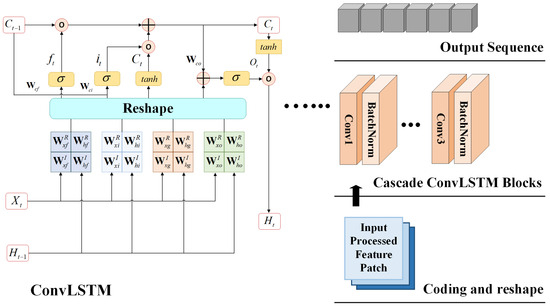

3.2.2. ConvLSTM Blocks

The ConvLSTM network represents a specialized variant of the conventional LSTM network. By replacing the matrix multiplication operations within each gating unit with convolutional operations, ConvLSTM is better equipped to handle high-dimensional input data. Figure 6 shows the diagram of a ConvLSTM block and the overall cascade structure connected through the feature extraction block. For the t-th time step, the expressions for the memory cell $C_t$, the gates $i_t$, $f_t$, $o_t$, and the output $H_t$ are formally given as

where “$\sigma$” refers to the sigmoid function, and “tanh” denotes the hyperbolic tangent function. The symbols “∗” and “∘” signify the convolution operator and the Hadamard product, respectively. $X_t$ represents the input to the current cell, while $\tilde{C}_t$ is the candidate memory cell for information transmission. Additionally, $W$ and $b$ correspond to convolution kernels and bias terms, respectively, and their subscripts indicate the terms they connect. For example, $W_{xo}$ denotes the convolution kernel between the input and the output gate, and $b_o$ is the bias term of the output gate.
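For reference, a commonly used form of the ConvLSTM update consistent with the description above is sketched here. It assumes the variant without peephole connections, and the subscript naming of the kernels is an assumption.

```latex
i_t = \sigma\left(W_{xi} * X_t + W_{hi} * H_{t-1} + b_i\right)   % input gate
f_t = \sigma\left(W_{xf} * X_t + W_{hf} * H_{t-1} + b_f\right)   % forget gate
o_t = \sigma\left(W_{xo} * X_t + W_{ho} * H_{t-1} + b_o\right)   % output gate
\tilde{C}_t = \tanh\left(W_{xc} * X_t + W_{hc} * H_{t-1} + b_c\right)  % candidate memory cell
C_t = f_t \circ C_{t-1} + i_t \circ \tilde{C}_t                  % memory cell
H_t = o_t \circ \tanh\left(C_t\right)                            % output
```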

Figure 6.

Flowchart of the ConvLSTM Blocks.

Table 4 and Table 5 give the parameter settings of each ConvLSTM layer in Figure 4. The GlobalAveragePooling, FullConnectionLayer, and Dropout layers are removed so that the features can be fused.

Table 4.

Parameter settings of ConvLSTM.

Table 5.

Parameter settings of output layers.

3.2.3. Features Fusion of Learnable Parameters

Achieving effective fusion between semantically strong feature domain sequences and spatially rich HRRP sequences is a crucial method for improving the accuracy of the final recognition results. Let the GRU feature be and the HRRP feature be . The fusion method can be formulated as

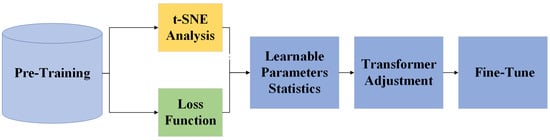

where, and are learnable parameters intended to manage the contribution of each data domain feature for adaptive feature fusion. The whole process needs to go through a pre-training process, which is shown in Figure 7. In a similar manner, high-level feature fusion of these data domains can be achieved by following the aforementioned steps. This fitting method for learnable parameters based on pre-training integration is combined with t-SNE analysis and loss function analysis. Given the linear relationship between and , the proposed method performs parameter fitting under full polarization.

Figure 7.

Learnable parameters adjustment framework.
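The idea of weighting the two feature domains can be illustrated with a minimal sketch. It assumes that the fusion is a weighted sum of two equal-length feature vectors and that the two weights are tied linearly as beta = 1 - alpha. Both the form and the names `alpha`, `beta` and `fuse_features` are assumptions made for illustration, not the exact fusion formula of this paper. In training, `alpha` would be a learnable parameter updated by backpropagation; here it is passed in as a fixed number.

```python
def fuse_features(gru_feature, hrrp_feature, alpha):
    """Weighted sum of two equal-length feature vectors.

    alpha weights the GRU (feature-domain) branch and beta = 1 - alpha weights
    the HRRP (time-domain) branch. The linear tie between the two weights is
    an assumption of this sketch.
    """
    if len(gru_feature) != len(hrrp_feature):
        raise ValueError("feature vectors must have the same length")
    beta = 1.0 - alpha
    return [alpha * g + beta * h for g, h in zip(gru_feature, hrrp_feature)]


fused = fuse_features([1.0, 2.0], [3.0, 4.0], alpha=0.25)
print(fused)
```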

4. Experiments and Analysis

The 6 ship targets described above are used to generate the radar echoes and the HRRP dataset. The results for each ship cover 1573 sampled radar angles and 4 polarization modes. In addition, Gaussian white noise is added to both the training and evaluation sets of the original dataset to test the robustness of the proposed method. The signal-to-noise ratios (SNRs) are set to (1) 20 dB, (2) 15 dB, (3) 10 dB, and (4) 5 dB.
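Adding Gaussian white noise at a prescribed SNR can be sketched as follows. The example assumes real-valued amplitude samples and defines the SNR from the average signal power of the sequence; the function name and the test signal are illustrative and not the original experiment code.

```python
import math
import random


def add_awgn(samples, snr_db, rng=random):
    """Add zero-mean Gaussian white noise to real-valued samples at a target SNR (dB)."""
    signal_power = sum(s * s for s in samples) / len(samples)
    noise_power = signal_power / (10.0 ** (snr_db / 10.0))
    sigma = math.sqrt(noise_power)
    return [s + rng.gauss(0.0, sigma) for s in samples]


# Illustrative check on a synthetic signal with a fixed random seed
rng = random.Random(0)
clean = [math.sin(0.01 * i) for i in range(20000)]
noisy = add_awgn(clean, snr_db=20.0, rng=rng)

signal_power = sum(s * s for s in clean) / len(clean)
noise_power = sum((n - s) ** 2 for n, s in zip(noisy, clean)) / len(clean)
measured_snr_db = 10.0 * math.log10(signal_power / noise_power)
print(round(measured_snr_db, 1))
```

The measured SNR fluctuates slightly around the target because the noise power is estimated from a finite number of samples.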

The experimental evaluation of the proposed model considers two metrics: (1) accuracy, denoted as $Acc$, and (2) the loss function, denoted as $L$.

where TP, TN, FP, and FN represent positive samples judged as positive, negative samples judged as negative, negative samples judged as positive, and positive samples judged as negative, respectively. $L$ is the cross-entropy loss function. The value of $y_{ij}$ is 1 if the category of sample i is j; otherwise it is 0. $p_{ij}$ is the probability that sample i is judged to be of category j. N denotes the total number of samples.
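In their standard forms, the two metrics described above read as follows. The symbols $Acc$, $L$, $y_{ij}$, $p_{ij}$ and the class count $K$ are assumed names for this sketch.

```latex
Acc = \frac{TP + TN}{TP + TN + FP + FN}

L = -\frac{1}{N} \sum_{i=1}^{N} \sum_{j=1}^{K} y_{ij} \log p_{ij}
```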

In addition, all experiments are performed on a computer with the environment shown in Table 6.

Table 6.

Training Environments of proposed methods.

In Figure 7, a fitting method for learnable parameters based on pre-training integration is presented. Given the linear relationship between and , the proposed method performs parameter fitting under full polarization.

4.1. Adjustment of Learnable Parameters

Different learnable parameter combinations are tested on the original radar dataset. Table 7 and Table 8 compare the performance of the parameters in the feature fusion model of Equation (16). In Table 7, the selected setting achieves the highest recognition accuracy, reaching 94.22%, which is superior to the other parameter combinations. In Table 8, this combination also exhibits the fastest convergence, reaching the early stopping condition in only 31 epochs. This indicates that the parameter combination not only achieves high recognition accuracy but also converges in a shorter training time. Therefore, this parameter combination is selected in our experiments.

Table 7.

Comparison of Learnable Parameters Accuracy (Full Polarization).

Table 8.

Comparison of Learnable Parameters Early Stop (Full Polarization).

Compared with recognition based on a single sequence, the fusion recognition method achieves better recognition accuracy and a faster convergence rate.

4.2. Comparisons of SNR Analysis

As a comparison, support vector machine (SVM) is chosen as a single classifier and Self-Attention with LSTM is also selected for comparison. SVM is a robust supervised machine learning algorithm that identifies an optimal hyperplane to separate and classify data points, thereby maximizing the margin between different classes. This algorithm operates by mapping the input data into a higher-dimensional space and utilizing support vectors to delineate the optimal decision boundary for classification.

Self-attention with LSTM enhances classification by capturing temporal dependencies with LSTM and focusing on relevant parts of the input sequence with self-attention. This combination improves the ability to understand complex patterns and dependencies within the data, leading to more accurate classification outcomes.

Table 9 and Table 10 compare the results at different noise levels for full polarization and HH polarization, respectively. SVM and the traditional CNN perform poorly at every SNR level. The proposed method is more stable than the other LSTM-based methods as the noise level changes, and it achieves higher accuracy than the other methods. Under the SNR of 20 dB, the recognition accuracy is about 93.32% and 87.15% for full polarization and single (HH) polarization, respectively. Even under the SNR of 5 dB, the accuracy is about 82.15% and 76.10%, respectively, which indicates that the proposed method is robust and reliable. The performance of the proposed method becomes more comparable to that of the other methods at noise levels typical of real conditions, but it consistently outperforms the previous methods overall.

Table 9.

Comparison of Classification Methods under different SNRs (Full Polarization).

Table 10.

Comparison of Classification Methods under different SNRs (HH Polarization).

Table 11 compares the results of the proposed method with those of two other established classification methods. A comparison on similar HRRP sequences shows that the proposed HRRP target feature fusion classification method achieves a higher recognition rate than the existing models in this category. This improvement suggests that the feature fusion approach effectively captures the characteristics of HRRP sequences, leading to more reliable classification in practical scenarios.

Table 11.

Comparison with existing methods.

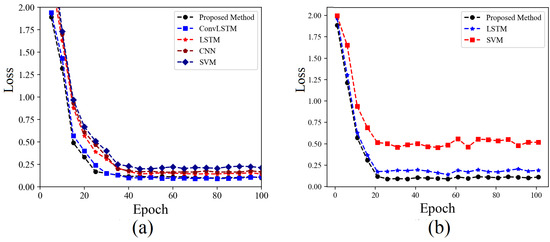

4.3. Visualization of Loss Function

Figure 8 shows the loss function curves versus training epochs for the proposed method and the other training methods under full polarization and HH polarization. The curves show that the proposed method converges better than the traditional methods under both polarization settings. This is because the proposed method uses a fusion network structure, which reduces the instability of a single network framework. Moreover, compared with the traditional methods, the loss function settles at a smaller value once it becomes stable, which shows that fusing the extracted features with the original HRRP sequences enhances classification accuracy.

Figure 8.

Comparison of loss function (a) full polarization (b) HH polarization.

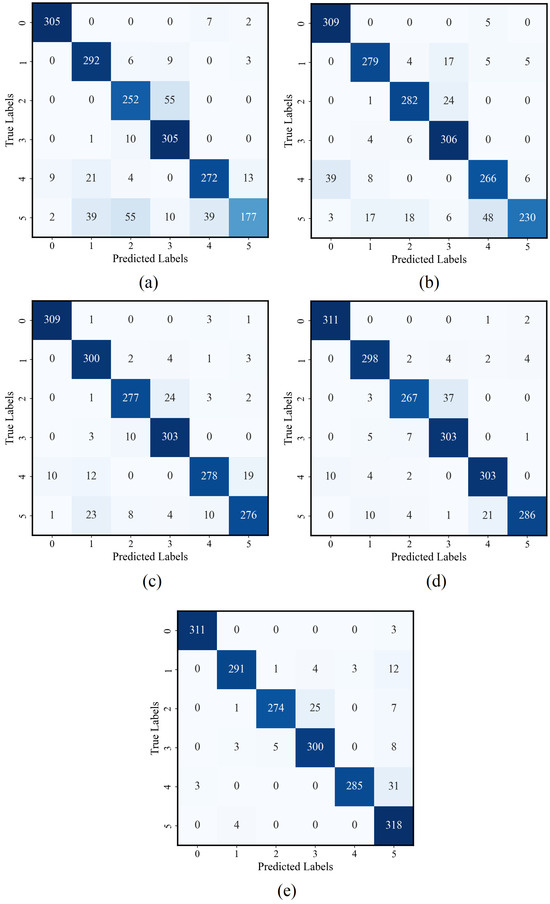

4.4. Visualization of Confusion Matrix

Figure 9 shows the confusion matrix of the proposed model on full polarization dataset. Table 12 shows related PA and OA of the proposed model from confusion matrix.

Figure 9.

Confusion matrix of the proposed method in full polarization: (a) 5 dB SNR (b) 10 dB SNR (c) 15 dB SNR (d) 20 dB SNR (e) Original dataset.

Table 12.

OA and PA Comparison of Confusion Matrix.

The Overall Accuracy (OA) is the ratio of the number of correctly classified samples to the total number of samples. It can be expressed as:

The Producer’s Accuracy (PA) for a specific class is the proportion of the correctly classified instances of that class to the total actual instances of that class. It is computed for each class i as:

Here, $TP_i$ denotes the true positives for class i, $FP_i$ denotes the false positives for class i, and M is the total number of samples.
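Both quantities can be read directly from a confusion matrix, as in the sketch below. It assumes that rows index the actual class and columns index the predicted class, so that the PA of a class is its diagonal entry divided by its row sum, following the verbal definition above. The matrix values are made up for illustration and are not experimental results.

```python
def overall_accuracy(confusion):
    """Correctly classified samples divided by all samples."""
    total = sum(sum(row) for row in confusion)
    correct = sum(confusion[i][i] for i in range(len(confusion)))
    return correct / total


def producers_accuracy(confusion):
    """Per-class ratio of correct predictions to actual instances (row sums)."""
    return [row[i] / sum(row) for i, row in enumerate(confusion)]


# Hypothetical 3-class confusion matrix: rows are actual, columns are predicted
confusion = [
    [8, 2, 0],
    [1, 9, 0],
    [0, 5, 5],
]
oa = overall_accuracy(confusion)
pa = producers_accuracy(confusion)
print(round(oa, 4), pa)
```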

It can be observed that, when the SNR is greater than 10 dB, the proposed model obtains an OA above 90%, which shows good performance in multi-class classification. The PA is high for ship 1, ship 2 and ship 4, whereas ship 6 shows the lowest values across the noise levels. This may be because ship 6 does not have significant features that are easy to distinguish.

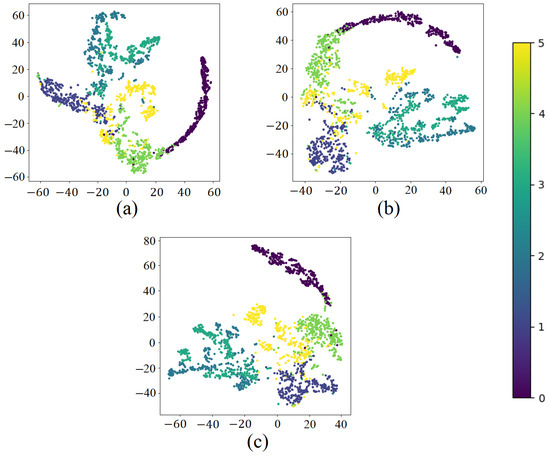

4.5. Visualization of Feature Extraction

To further visualize the feature representation of the 6 simulated models, the high-dimensional output of the fully connected layer is fed into t-distributed stochastic neighbor embedding (t-SNE) [17] and reduced to two dimensions. Figure 10 shows the results, where the 6 colors denote the 6 target classes. The proposed method yields more distinguishable features than the other two methods, indicating a stronger ability to adapt to various dataset learning conditions. This helps in detecting subtle feature differences between ships and mitigates the negative impact of large variations in the feature sequences under different viewing angles. As a result, more feasible and stable recognition outcomes can be achieved.

Figure 10.

Two-dimensional t-SNE projection from extracted feature vectors with 6 classes of target. (a) LSTM (b) ConvLSTM (c) Proposed Method.

5. Conclusions

In this paper, a feature fusion method based on HRRP sequences is proposed to enhance target recognition accuracy. By fusing the original sequence with the feature sequence, the method can effectively extract multi-dimensional sequence features from HRRP. Experiments based on the EM scattering simulation of 6 ship models indicate that it has high recognition accuracy and robustness. In this study, the radar echoes come from ship targets alone; more realistic scattering problems, including ships in a sea environment, will be addressed in future work.

Author Contributions

Conceptualization: W.Y., T.C., Z.Z., H.H. and J.H.; Methodology: W.Y., T.C., Z.Z. and J.H.; Validation: S.L.; writing—original draft preparation: W.Y. and T.C.; Writing—review and editing: W.Y., S.L. and Z.Z.; Supervision: H.H.; Project administration: H.H. and J.H.; funding acquisition: W.Y. and J.H. All authors have read and agreed to the published version of the manuscript.

Funding

This work is supported in part by the National Natural Science Foundation of China (62031010, 62471108); and in part by the Natural Science Foundation of Sichuan Province (2023NSFSC0464).

Data Availability Statement

No new data were created or analyzed in this study. Data sharing is not applicable to this article.

Acknowledgments

The authors would like to thank the anonymous reviewers and editors for their selfless help to improve our manuscript.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Chen, V.C.; Martorella, M. Inverse Synthetic Aperture Radar Imaging; SciTech Publishing: Chennai, India, 2014.

- Zhao, W.; Heng, A.; Rosenberg, L.; Nguyen, S.T.; Hamey, L.; Orgun, M. ISAR Ship Classification Using Transfer Learning. In Proceedings of the 2022 IEEE Radar Conference (RadarConf22), New York, NY, USA, 21–25 March 2022; pp. 1–6.

- Kechagias-Stamatis, O.; Aouf, N. Automatic target recognition on synthetic aperture radar imagery: A survey. IEEE Aerosp. Electron. Syst. Mag. 2021, 36, 56–81.

- Huang, Y.; Zhao, Z.; Nie, Z.; Liu, Q.H. Dynamic volume equivalent SBR method for electromagnetic scattering of targets moving on the sea. IEEE Trans. Antennas Propag. 2023, 71, 3509–3519.

- Brem, R.; Eibert, T.F. A shooting and bouncing ray (SBR) modeling framework involving dielectrics and perfect conductors. IEEE Trans. Antennas Propag. 2015, 63, 3599–3609.

- Guo, C.; Xu, C.; Sun, S.; Zhang, X. The Denoise and Reconstruction Method for Radar HRRP Using Enhanced Sparse Auto-Encoder. In Proceedings of the 2019 IEEE International Conference on Signal, Information and Data Processing (ICSIDP), Chongqing, China, 11–13 December 2019; pp. 1–4.

- Lin, C.-L.; Chen, T.-P.; Fan, K.-C.; Cheng, H.-Y.; Chuang, C.-H. Radar high-resolution range profile ship recognition using two-channel convolutional neural networks concatenated with bidirectional long short-term memory. Remote Sens. 2021, 13, 1259.

- Zeng, Z.; Sun, J.; Xu, C.; Wang, H. Unknown SAR target identification method based on feature extraction network and KLD–RPA joint discrimination. Remote Sens. 2021, 13, 2901.

- Chung, J.; Gulcehre, C.; Cho, K.; Bengio, Y. Empirical evaluation of gated recurrent neural networks on sequence modeling. arXiv 2014, arXiv:1412.3555.

- Guo, D.; Chen, B.; Chen, W.; Wang, C.; Liu, H.; Zhou, M. Variational temporal deep generative model for radar HRRP target recognition. IEEE Trans. Signal Process. 2020, 68, 5795–5809.

- Ding, B.; Chen, P. HRRP feature extraction and recognition method of radar ground target using convolutional neural network. In Proceedings of the 2019 International Conference on Electromagnetics in Advanced Applications (ICEAA), Granada, Spain, 9–13 September 2019; pp. 0658–0661.

- Fu, Z.; Li, S.; Li, X.; Dan, B.; Wang, X. A Performance Analysis of Neural Network Models in HRRP Target Recognition. In Proceedings of the 2019 IEEE International Conference on Signal, Information and Data Processing (ICSIDP), Chongqing, China, 11–13 December 2019; pp. 1–4.

- Hu, W.-S.; Li, H.-C.; Wang, R.; Gao, F.; Du, Q.; Plaza, A. Pseudo Complex-Valued Deformable ConvLSTM Neural Network with Mutual Attention Learning for Hyperspectral Image Classification. IEEE Trans. Geosci. Remote Sens. 2022, 60, 5533017.

- Hu, W.-S.; Li, H.-C.; Deng, Y.-J.; Sun, X.; Du, Q.; Plaza, A. Lightweight Tensor Attention-Driven ConvLSTM Neural Network for Hyperspectral Image Classification. IEEE J. Sel. Top. Signal Process. 2021, 15, 734–745.

- Zhang, L.; Li, Y.; Wang, Y.; Wang, J.; Long, T. Polarimetric HRRP Recognition Based on ConvLSTM with Self-Attention. IEEE Sens. J. 2021, 21, 7884–7898.

- Wang, J.; Liu, Z.; Ran, L.; Xie, R. Feature extraction method for DCP HRRP-based radar target recognition via m-x decomposition and sparsity-preserving discriminant correlation analysis. IEEE Sens. J. 2020, 20, 4321–4332.

- Pan, M.; Jiang, J.; Kong, Q.; Shi, J.; Sheng, Q.; Zhou, T. Radar HRRP Target Recognition Based on t-SNE Segmentation and Discriminant Deep Belief Network. IEEE Geosci. Remote Sens. Lett. 2017, 14, 1609–1613.

- Huang, W.-F.; Zhao, Z.-Q.; Zhao, R.; Wang, J.-Y.; Nie, Z.-P.; Liu, Q.-H. GO/PO and PTD with virtual divergence factor for fast analysis of scattering from concave complex targets. IEEE Trans. Antennas Propag. 2015, 63, 2170–2179.

- Yang, W.; Kee, C.Y.; Wang, C.-F. Novel extension of SBR-PO method for solving electrically large and complex electromagnetic scattering problem in half-space. IEEE Trans. Geosci. Remote Sens. 2017, 55, 3931–3940.

- Tao, Y.; Lin, H.; Bao, H. GPU-based shooting and bouncing ray method for fast RCS prediction. IEEE Trans. Antennas Propag. 2010, 58, 494–502.

- Parker, S.G.; Bigler, J.; Dietrich, A.; Friedrich, H.; Hoberock, J.; Luebke, D.; McAllister, D.; McGuire, M.; Morley, K.; Robison, A.; et al. OptiX: A general purpose ray tracing engine. ACM Trans. Graph. (TOG) 2010, 29, 66.

- Kee, C.Y.; Wang, C.-F. Efficient GPU implementation of the high-frequency SBR-PO method. IEEE Antennas Wirel. Propag. Lett. 2013, 12, 941–944.

- Özdemir, C. Inverse Synthetic Aperture Radar Imaging with MATLAB Algorithms, 2nd ed.; John Wiley & Sons, Inc.: Hoboken, NJ, USA, 2021; ISBN 978-1-119-52133-4.

- Wang, Y.; Long, B.; Wang, F. RCS Statistical Feature Extraction for Space Target Recognition Based on Bi-LSTM. In Proceedings of the IGARSS 2023—2023 IEEE International Geoscience and Remote Sensing Symposium, Pasadena, CA, USA, 16–21 July 2023; pp. 6049–6052.

- Zhang, J.; Hu, S.; Yang, Q.; Fan, X. RCS Statistical Features and Recognition Model of Air Floating Corner Reflector. Syst. Eng. Electron. 2019, 41, 780–786.

- Phinyomark, A.; Phukpattaranont, P.; Limsakul, C. Feature reduction and selection for EMG signal classification. Expert Syst. Appl. 2012, 39, 7420–7431.

- Li, L.; Zhu, Y.; Zhu, Z. Automatic Modulation Classification Using ResNeXt-GRU with Deep Feature Fusion. IEEE Trans. Instrum. Meas. 2023, 72, 2519710.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).