Abstract

Cracks are a common defect in civil infrastructure, and their occurrence is closely related to structural loading conditions, material properties, design and construction, and other factors. Detecting and analyzing cracks in civil infrastructure therefore helps determine the extent of damage, which is crucial for safe operation. In this paper, Web of Science (WOS) and Google Scholar were used as literature search tools, and “crack”, “civil infrastructure”, and “computer vision” were selected as search terms. With the keyword “computer vision”, 325 relevant documents were found for the study period from 2020 to 2024. These 325 documents were then screened against the keywords, and 120 were selected for analysis. Based on their main research methods, we classify the 120 documents into three categories of crack detection methods: fusion of traditional methods and deep learning, multimodal data fusion, and semantic image understanding. We examine the application characteristics of each category in crack detection and discuss its advantages, challenges, and future development trends.

1. Introduction

Civil infrastructure is a critical component of the long-term stable development of an economy, and regular inspection and maintenance is essential to prevent major accidents. Traditionally, manual visual inspection has been used to assess the condition of infrastructure during operation [,]. However, this method is time-consuming, subjective [], and potentially hazardous. The current trend in civil infrastructure inspection involves integrating state-of-the-art computer vision technologies with traditional methods. This integration has led to numerous studies focused on intelligent detection techniques aimed at enhancing both robustness and efficiency [,]. Automatic crack detection is a significant area within intelligent inspection of civil infrastructure [,]. Due to the inherent properties of construction materials, most infrastructures develop cracks during their service life []. In some cases, especially under harsh conditions and increasing loads, the formation and propagation of cracks can be rapid, leading to a shortened lifespan and potentially severe safety incidents [,]. Moreover, there are numerous types of cracks in large quantities, categorized based on factors such as causes, sizes, and shapes. Different types of cracks have varying impact on structural safety []. Therefore, employing traditional manual methods to inspect these cracks individually poses significant challenges in terms of efficiency and cost. Accurately identifying cracks that may affect the safety performance of structures from the vast number of cracks is currently one of the most critical issues in the field.

To solve this problem, researchers are actively exploring intelligent crack identification techniques based on computer vision. By building a large database of crack images and combining image processing and pattern recognition techniques, algorithmic models can be trained that can automatically identify different types of cracks. Common crack detection algorithm models include the following: (1) Crack detection by traditional image processing methods mainly relies on techniques such as edge detection, threshold segmentation, morphological operations, etc. to identify cracks. Commonly used algorithms include Canny edge detection [], Otsu technique [], Hough transform [], Gray-Level Co-occurrence Matrix (GLCM) based on texture features [], wavelet transform [], etc. (2) Machine learning-based crack detection uses machine learning algorithms to learn crack features from training data and classify test images to detect cracks. Commonly used algorithms include support vector machines (SVMs) [,], Random Forest [,], Decision Trees [], etc. (3) Deep learning-based crack detection builds a convolutional neural network (CNN) model [] to realize end-to-end crack detection. Frequently used models include U-Net [,], FCN [,], SegNet [], etc. Azouz et al. [] reviewed image processing techniques and visual-based machine learning algorithms and summarized crack detection and analysis techniques for monitoring geometric changes in building structures. Hsieh et al. [] reviewed 68 machine learning (ML)-based crack detection methods. Hamishebahar et al. [] reviewed 61 crack detection methods based on deep learning and found that semantic segmentation is one of the popular methods in the field of crack detection in recent years. 
In addition, advanced technologies such as drones [,] and 3D laser scanning [] can be used to obtain three-dimensional information of the infrastructure surface, and combined with computer vision algorithms to realize automatic identification and quantitative analysis of cracks, which can greatly improve the efficiency and accuracy of identification. Satellite technologies also play a crucial role in monitoring cracks in civil infrastructure. Key technologies include synthetic aperture radar interferometry [] (e.g., MT-InSAR []), high-resolution optical imaging (e.g., HSR bitemporal satellite images []), multispectral and hyperspectral imaging [], and thermal infrared imaging []. These technologies effectively identify and monitor cracks and structural deterioration by providing high-resolution imagery, three-dimensional modeling, and surface temperature data. Combined with change detection techniques and time-series analysis, they significantly enhance the efficiency and accuracy of infrastructure monitoring. Effective crack detection usually involves overcoming problems related to shadows, light, and illumination. To improve the visibility of cracks in images, shadow removal, light compensation, and illumination adjustment techniques are needed. For example, using illumination enhancement techniques such as Retinex [] or adaptive thresholding [] techniques can improve image quality and ensure accurate crack detection even when lighting conditions change. The deep learning-based algorithm can continuously improve its recognition ability and can gradually improve the accuracy and robustness of identifying various types of cracks. Intelligent crack detection technology can effectively solve the problems of recognition efficiency and cost caused by traditional manual labor, resulting in a more reliable guarantee for the safe operation of civil infrastructure.

In recent years, the field of crack detection technology based on computer vision has witnessed a remarkable advancement. A number of researchers have employed feature-based deep learning methods and traditional image processing techniques for the extraction and analysis of cracks [,,]. However, in real-world scenarios, cracks typically exist under complex and variable environmental conditions, resulting in detection data that often contain substantial noise, which can severely impact the accuracy of the detection results []. Consequently, the advancement of high-precision and highly robust crack detection methodologies has become a shared objective within both the scientific and engineering communities, with the aim of achieving accurate crack detection for civil infrastructure in a variety of scenarios []. Researchers in the field of civil engineering employ the distinctive attributes of their discipline to facilitate interdisciplinary, cross-industry, and cross-domain collaboration, aiming to establish an artificial intelligence theoretical framework applicable to this field []. They have successfully integrated deep learning-based crack detection algorithms with modern technologies, including advanced sensing equipment, high-definition imaging systems [], and lightweight robotic platforms []. This integration has enabled intelligent crack detection, providing effective decision support for the maintenance and repair of civil infrastructure and advancing the field towards greater levels of automation [,]. At present, some researchers are improving deep learning models such as convolutional neural networks (CNNs) and recurrent neural networks (RNNs) [,] to enhance the accuracy and robustness of crack detection. In addition, there are also methods based on weakly supervised learning [,] and transfer learning [,,] in the field of crack detection. 
These methods train models using a small number of labeled samples, thereby reducing the workload associated with data annotation. In summary, the development of high-precision and highly robust crack detection methods represents a critical research direction in the field of computer vision. In the future, deep learning-based crack detection technology will continue to evolve and improve, playing an increasingly important role in engineering practice.

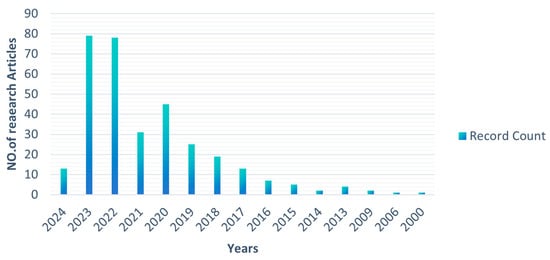

For this paper, we used the Web of Science (WOS) and Google Scholar as literature search tools, with “crack”, “civil infrastructure”, and “computer vision” as search terms, and obtained 325 relevant papers. Figure 1 shows the distribution of relevant studies by year.

Figure 1. Year-wise distribution of articles.

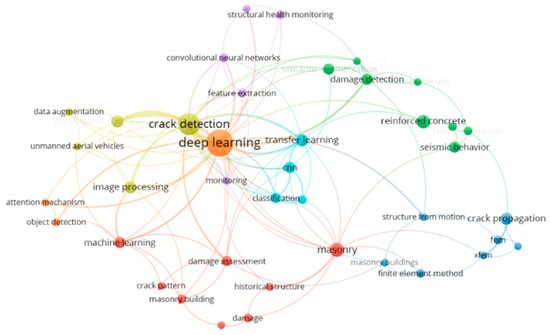

A keyword search was then conducted on the retrieved literature to identify frequent keywords related to the aforementioned terms. The keywords identified were ‘deep learning’, ‘crack detection’, ‘feature extraction’, ‘CNN’, and ‘image processing’, as shown in Figure 2.

Figure 2. Keywords for crack detection.

To maximize the retrieval of papers relevant to the research, these keywords were combined with a basic set of keywords to comprehensively utilize the database; a total of 120 papers were ultimately selected for analysis and summarization over the period 2020–2024. Based on recent research and technology development trends in the field of crack detection in civil engineering infrastructure, this paper proposes a comprehensive classification framework that classifies crack detection methods into three categories: combination of traditional methods and deep learning, multimodal data fusion, and semantic image understanding, reflecting on the characteristics and development trends of the application of various methods in crack detection in recent years. Next, we summarize the datasets and evaluation metrics applied to crack detection. Finally, the advantages and limitations of existing methods are summarized, and the development trends of intelligent crack detection in civil engineering infrastructure are analyzed and discussed.

The comprehensive classification framework proposed in this paper not only covers a combination of traditional and modern technologies, but also integrates various data processing approaches and provides a systematic and multifaceted perspective to help readers more fully understand current technological advances, strengths, and challenges. Compared to studies that focus only on a specific time period or data source, this paper surveys key papers from the past five years to ensure the most up-to-date and comprehensive research.

2. Crack Detection Combining Traditional Image Processing Methods and Deep Learning

In image processing, feature extraction derives representative and discriminative information from raw image data for further analysis and processing. Traditional crack detection methods mainly rely on hand-crafted features obtained through edge detection, thresholding, and morphological operations. These methods can detect cracks effectively under certain conditions. However, they often lack robustness and accuracy when faced with complex backgrounds, changing lighting conditions, and noise interference.

With the rapid development of artificial intelligence, methods represented by deep learning have made a breakthrough in the field of computer vision. Convolutional neural networks (CNNs) have become a central algorithm in deep learning due to their superior performance in image processing and computer vision tasks. Compared to traditional methods, deep learning models not only automatically learn and extract multi-layered complex features from data but are also better able to adapt to lighting variations, noise interference, and complex backgrounds. Deep learning models can be trained and optimized end-to-end directly from the input image to the output result. This approach simplifies the processing workflow, reduces the accumulation of errors in intermediate stages, and improves overall detection efficiency and accuracy. Deep learning models also provide powerful generalization capabilities that can be adapted to a variety of application scenarios as well as training data.

By integrating traditional methods and deep learning for crack detection, we can fully utilize the advantages of both approaches to improve crack detection performance. Traditional methods have rich experience and effectiveness in data preprocessing and feature extraction, while deep learning models excel at learning complex representations of crack features to realize more accurate crack detection.

2.1. Crack Detection Based on Image Edge Detection and Deep Learning

Cracks exhibit distinctive edge features that can be identified with edge detection techniques. Typically, the gray-scale value changes sharply between the regions on either side of a crack edge and the edge pixels themselves. Edge detection algorithms exploit this gray-scale gradient information through differential operators to localize crack edges accurately, which makes crack identification effective [,]. For example, the gradient magnitude used for edge detection is calculated using Equation (1):

$$G = \sqrt{(G_x * I)^2 + (G_y * I)^2} \tag{1}$$

where $I$ represents the input image, $*$ denotes convolution, and $G_x$ and $G_y$ are the gradient operators in the horizontal and vertical directions, respectively. This formula calculates the strength of gradients in various directions to detect edges in the image. The most common edge detection operators include gradient operators (first-order differential operators), second-order differential operators, and the operators used in the Canny edge detection algorithm. First-order differential operators include the Sobel operator, the Prewitt operator, and the Roberts operator. For example, first-order derivative operators (e.g., Sobel and Prewitt operators) define contours by computing local changes in image intensity, as shown for the Sobel operator in Equation (2):

$$G_x = \begin{bmatrix} -1 & 0 & 1 \\ -2 & 0 & 2 \\ -1 & 0 & 1 \end{bmatrix}, \qquad G_y = \begin{bmatrix} -1 & -2 & -1 \\ 0 & 0 & 0 \\ 1 & 2 & 1 \end{bmatrix} \tag{2}$$

Equation (2) gives the convolution kernels with which the image gradient is computed for edge detection. Classical second-order derivative operators (e.g., the Laplace operator []) compute the image change using Equation (3):

$$\nabla^2 I = \frac{\partial^2 I}{\partial x^2} + \frac{\partial^2 I}{\partial y^2} \tag{3}$$

Equation (3) detects edges and texture changes in the image, which are enhanced by computing second-order derivatives. Different edge detection operators suit different types of crack edges. Although these operators are relatively simple and fast to compute, a single operator often cannot meet the requirements of crack segmentation against complex backgrounds, and their detection capability is limited in the presence of substantial noise. Consequently, many researchers have recently combined edge detection with deep learning techniques to further improve the accuracy and robustness of crack detection. Table 1 provides a summary of crack detection methods based on edge detection and deep learning.
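As a rough illustration of the first-order and second-order operators described above, the following sketch computes a gradient magnitude and a Laplacian response with NumPy. The Sobel kernels, the 4-neighbour Laplacian kernel, the border handling, and all function names are assumptions chosen for this example and are not taken from any of the reviewed papers.

```python
import numpy as np

# Assumed 3x3 kernels: Sobel for first-order, 4-neighbour Laplacian for second-order.
SOBEL_X = np.array([[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]], dtype=float)
SOBEL_Y = SOBEL_X.T
LAPLACE = np.array([[0, 1, 0], [1, -4, 1], [0, 1, 0]], dtype=float)


def filter3x3(image, kernel):
    """Cross-correlate a 2D image with a 3x3 kernel, replicating border pixels.

    Cross-correlation differs from true convolution only by a kernel flip,
    which changes the sign of the Sobel responses but not their magnitude.
    """
    padded = np.pad(image.astype(float), 1, mode="edge")
    h, w = image.shape
    out = np.zeros((h, w), dtype=float)
    for dy in range(3):
        for dx in range(3):
            out += kernel[dy, dx] * padded[dy:dy + h, dx:dx + w]
    return out


def gradient_magnitude(image):
    """Combine horizontal and vertical responses into one edge-strength map."""
    gx = filter3x3(image, SOBEL_X)
    gy = filter3x3(image, SOBEL_Y)
    return np.sqrt(gx ** 2 + gy ** 2)


def laplacian(image):
    """Second-order response; changes sign across an intensity edge."""
    return filter3x3(image, LAPLACE)


# Synthetic example: a bright surface with a one-pixel-wide dark vertical "crack".
surface = np.full((9, 9), 200.0)
surface[:, 4] = 50.0
edges = gradient_magnitude(surface)   # strong on both sides of the crack column
ridge = laplacian(surface)            # positive on the dark crack centreline
```

On this synthetic image the gradient magnitude peaks on the two columns flanking the crack, while the Laplacian is positive on the crack itself, which is one reason the two operator families respond differently to thin cracks.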

Table 1. Crack detection method based on edge detection and deep learning.

Kao et al. [] first applied YOLOv4 for crack detection and then enhanced the quality of the resulting images by integrating Canny edge detection, which notably improved the accuracy of the subsequent detection process. Building upon the GM-ResNet crack detection model, Li et al. [] extracted additional quantitative information about cracks using Canny edge detection. Choi et al. [] first enhanced crack feature information using the Sobel algorithm and then achieved high-precision, high-efficiency crack detection with the ResNet50 neural network. Guo et al. [] employed BARNet for feature learning and prediction and then combined it with the Sobel algorithm to achieve precise crack detection and localization. Luo et al. [] integrated the Canny edge detection results with the low-level feature layers of the DeepLabV3+ network. This enhanced the positional and detailed information of road cracks and compensated for the loss of detail when merging high-level and low-level feature layers, thereby achieving high-precision crack detection.

Edge detection can effectively extract crack edge information and reduce the interference of background noise. Therefore, edge detection is commonly used as a preprocessing step. Its main purpose is to simplify the input data and reduce the complexity and noise interference by first extracting the edge features from the image. This can improve the learning efficiency and detection efficiency of deep learning models. By reducing the processing load of irrelevant areas and reducing the consumption of computational resources, the model can quickly focus on areas that may contain cracks. This improves the final detection accuracy and efficiency. Edge detection can also be used as a postprocessing step to optimize and improve the output of a deep learning model. In this scenario, edge detection is employed to further extract and refine the edge information of cracks to improve crack detection and localization results. Deep learning models have strong generalization ability and can adapt to different types and shapes of cracks. Combining edge detection with deep learning models not only improves detection accuracy but also allows detailed features such as crack length, width, and shape to be extracted. This integration can improve the accuracy of crack risk assessment and maintenance decision-making.

However, incorporating edge detection into the preprocessing stage increases the complexity of the detection pipeline and requires additional computational resources and time. The edge detection algorithm itself also requires appropriate parameter settings, which strongly affect the efficiency of preprocessing. Moreover, edge detection results depend on the quality of the image and the performance of the algorithm: if the noise level in the image is high or the algorithm is poorly tuned, the detection accuracy of the subsequent deep learning model may suffer. Deep learning models typically require large amounts of labeled data, placing high demands on data quality and resources, and inadequate data quality can degrade model performance. The key to efficient crack detection lies in leveraging the advantages of both edge detection and deep learning while compensating for the shortcomings of each.

2.2. Crack Detection Based on Threshold Segmentation and Deep Learning

Threshold segmentation is a method that divides an image into several classes on the basis of one or more threshold values in order to separate features of interest from background pixels. The basic principle of threshold segmentation can be expressed by Equation (4):

$$g(x, y) = \begin{cases} 1, & f(x, y) > T \\ 0, & f(x, y) \le T \end{cases} \tag{4}$$

where $f(x, y)$ represents the pixel intensity at position $(x, y)$ in the image, $T$ is the threshold value, and $g(x, y)$ is the segmented image. This formula indicates that pixels with intensity greater than the threshold are classified as foreground (1), while those with lower intensity are classified as background (0). Low computational cost, fast processing, stable performance, and ease of implementation make this the most basic and commonly used method for detecting cracks in images of concrete structures. Common threshold-based segmentation methods include the Otsu method [], adaptive thresholding [], multi-level thresholding [], histogram-based thresholding [], and fuzzy thresholding []. The Otsu method determines the optimal threshold by maximizing the between-class variance $\sigma_B^2$ of the segmented regions, whose formula is shown in Equation (5):

$$\sigma_B^2(T) = \omega_0(T)\,\omega_1(T)\,\left[\mu_0(T) - \mu_1(T)\right]^2 \tag{5}$$

where $\omega_0$ and $\omega_1$ are the ratios of the number of pixels in each of the two classes to the total number of pixels in the image, and $\mu_0$ and $\mu_1$ are the average pixel intensities of the two classes. The threshold $T$ is chosen by maximizing $\sigma_B^2(T)$ for optimum foreground and background separation. The adaptive thresholding method adjusts the threshold according to the local characteristics of the image and is usually calculated by Equation (6):

$$T(x, y) = \mu(x, y) - C \tag{6}$$

where $C$ is a constant subtracted from the average intensity $\mu(x, y)$ of the local neighborhood around $(x, y)$. The method handles different illumination conditions by adjusting the threshold according to the local image statistics. Threshold segmentation methods are suitable for images with a constant background gray scale, uniform illumination, and high contrast. However, if illumination is uneven or the background contains noise, a single-threshold segmentation method often fails to deliver good results. In crack detection, these limitations become more pronounced, especially for complex images, which is why improved and integrated threshold segmentation methods continue to emerge. The combination of threshold segmentation and deep learning is currently a major trend in image processing and computer vision. Table 2 summarizes crack detection methods based on threshold segmentation and deep learning.
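The Otsu and adaptive thresholding rules described above can be sketched in a few lines of NumPy. This is a minimal sketch under stated assumptions: 8-bit grayscale input, a square mean filter for the local neighborhood, and hypothetical function names and default parameters (`block`, `c`) that do not come from the reviewed papers.

```python
import numpy as np


def otsu_threshold(gray):
    """Return the 8-bit threshold that maximizes the between-class variance.

    `gray` is assumed to be a uint8 image. Class 0 holds pixels <= T,
    class 1 holds pixels > T.
    """
    hist = np.bincount(gray.ravel(), minlength=256).astype(float)
    prob = hist / hist.sum()
    levels = np.arange(256)
    w0 = np.cumsum(prob)                      # fraction of pixels in class 0
    w1 = 1.0 - w0                             # fraction of pixels in class 1
    cum_mean = np.cumsum(prob * levels)
    valid = (w0 > 0) & (w1 > 0)               # both classes must be non-empty
    mu0 = cum_mean / np.where(valid, w0, 1.0)
    mu1 = (cum_mean[-1] - cum_mean) / np.where(valid, w1, 1.0)
    sigma_b = np.where(valid, w0 * w1 * (mu0 - mu1) ** 2, 0.0)
    return int(np.argmax(sigma_b))


def adaptive_threshold(gray, block=5, c=5.0):
    """Binarize with a per-pixel threshold: local mean in a block x block window minus c."""
    r = block // 2
    padded = np.pad(gray.astype(float), r, mode="edge")
    h, w = gray.shape
    local_sum = np.zeros((h, w), dtype=float)
    for dy in range(block):
        for dx in range(block):
            local_sum += padded[dy:dy + h, dx:dx + w]
    local_mean = local_sum / (block * block)
    return (gray > local_mean - c).astype(np.uint8)


# Synthetic example: left-to-right illumination gradient with a dark horizontal crack.
scene = np.tile(np.linspace(100, 220, 20), (20, 1)).astype(np.uint8)
scene[10, :] -= 60
global_mask = (scene > otsu_threshold(scene)).astype(np.uint8)
local_mask = adaptive_threshold(scene, block=5, c=5.0)
crack_mask = 1 - local_mask   # cracks are darker than the surface, so invert
```

Because cracks are usually darker than the surrounding surface, the foreground produced by the "greater than threshold" rule is the intact surface, and the crack mask is its complement. On the synthetic scene, the local rule isolates the dark row despite the illumination gradient, which a single global threshold is not guaranteed to do.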

Table 2. Crack detection method based on threshold segmentation and deep learning.

Flah et al. [] proposed a model for defect detection that integrates convolutional neural networks and advanced Otsu image processing techniques. The model is applicable to the detection of inaccessible areas of concrete structures and enables the classification, localization, segmentation, and quantification of damage in cracked structures. Mazni et al. [] combined transfer learning and the Otsu method to monitor the structural condition of concrete surfaces. He et al. [] first preprocessed images using the Otsu algorithm and then used the YOLOv7 network to accurately classify crack repair traces (CRTs) and secondary cracks (SCs). Zhang et al. [] used an adaptive thresholding method to remove the image background and highlight the cracked areas. Weakly supervised learning models (WSISs) were then used to identify cracks and achieve higher detection accuracy. This approach improved detection performance by reducing background noise and increasing the sensitivity of the model to crack features. He et al. [] applied adaptive thresholding to the segmentation of crack images obtained with the U-GAT-IT model, followed by re-detection with the model. The proposed weakly supervised method showed excellent performance in crack detection.

When deep learning models are used for crack detection, threshold segmentation is usually used as a preprocessing step. The reason for this is that threshold segmentation effectively filters out background noise, separating regions of possible cracks from the background and retaining only those regions where cracks are likely to be present. By simplifying the input data and reducing the computational burden on the deep learning model, threshold segmentation allows the model to further learn complex features on the preprocessed image and adapt to cracks of different shapes and sizes. Furthermore, by extracting crack features from the image first through threshold segmentation, it provides more distinct and targeted key features for subsequent models. This improves the robustness of the deep learning model under different scenarios and conditions. Furthermore, threshold segmentation can be applied in the postprocessing step. After the deep learning model outputs initial crack detection results, threshold segmentation can be used to further refine these results and improve the clarity and continuity of crack edges. Threshold segmentation techniques can also be used to further filter the detection results output by the deep learning model to eliminate potentially false-positive areas and improve detection accuracy.
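The postprocessing role of thresholding described above can be sketched as follows: a crack-probability map, as a segmentation network might output, is binarized, and small isolated regions are discarded as likely false positives. The function name, the default threshold of 0.5, the `min_area` parameter, and the use of 8-connectivity are all assumptions made for this illustration, not details from the reviewed methods.

```python
from collections import deque

import numpy as np


def refine_probability_map(prob, threshold=0.5, min_area=5):
    """Binarize a crack-probability map and drop small connected regions."""
    mask = np.asarray(prob) > threshold
    h, w = mask.shape
    visited = np.zeros((h, w), dtype=bool)
    refined = np.zeros((h, w), dtype=bool)
    for y in range(h):
        for x in range(w):
            if not mask[y, x] or visited[y, x]:
                continue
            # Breadth-first flood fill over the 8-connected neighbourhood.
            queue = deque([(y, x)])
            visited[y, x] = True
            region = []
            while queue:
                cy, cx = queue.popleft()
                region.append((cy, cx))
                for ny in range(max(cy - 1, 0), min(cy + 2, h)):
                    for nx in range(max(cx - 1, 0), min(cx + 2, w)):
                        if mask[ny, nx] and not visited[ny, nx]:
                            visited[ny, nx] = True
                            queue.append((ny, nx))
            # Keep only regions large enough to be a plausible crack segment.
            if len(region) >= min_area:
                ys, xs = zip(*region)
                refined[list(ys), list(xs)] = True
    return refined


# Hypothetical network output: one elongated crack plus an isolated speck.
prob = np.zeros((10, 10))
prob[5, 1:9] = 0.9    # crack-like streak, kept
prob[0, 0] = 0.8      # single-pixel false positive, removed
prob[2, 2] = 0.3      # below the threshold, never enters the mask
clean = refine_probability_map(prob)
```

The area filter is the simplest possible criterion; an elongation or aspect-ratio test would be a natural refinement, since cracks are thin and long while noise blobs are compact.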

Thresholding has drawbacks both as a preprocessing step and as a postprocessing step. As preprocessing, it can lead to information loss: fixed thresholds degrade the input quality of deep learning models because they adapt poorly to changing image conditions and cannot handle complex textures effectively. As postprocessing, it can oversimplify the model output and lose detail. It also relies on parameter tuning and is susceptible to noise, which can easily be mistaken for cracks. These issues can reduce the accuracy and robustness of the final detection results. Despite its simplicity and ease of implementation, threshold segmentation often performs poorly on complex crack detection tasks because of these inherent limitations.

Incorporating a threshold segmentation branch into a deep learning model allows for more flexible and efficient crack detection and alleviates some of the aforementioned problems. This branch can act as an additional module in the network to improve the detection performance and robustness of the model by processing intermediate features using the thresholding technique. By incorporating the threshold segmentation branch before the feature extraction stage of the deep learning model, more image details can be preserved and the model can further process and optimize these details in subsequent layers to reduce information loss. Adaptive thresholding methods can be incorporated to address the non-adaptive nature of fixed thresholds. By leveraging deep learning models to automatically learn and adjust threshold parameters, models can adapt to different image and lighting conditions to improve robustness. To address the issue of oversimplification of results, feature maps generated by the deep learning model can be integrated with the initial results of threshold segmentation, allowing the model to improve and optimize results in the early stages. This approach helps reduce oversimplification. Subsequent network layers can further improve the fine detail of crack edges and increase detection accuracy. The practical effectiveness of the aforementioned approaches depends on the specific design of the model and the quality of the training data. To achieve the best detection performance, the model structure must be continuously tuned and optimized in real-world applications. This iterative process includes fine-tuning model parameters, trial and error of different architectures, and ensuring that the training data accurately represent the target problem. Continuous improvement of the model can be aimed at improving detection performance.

2.3. Crack Detection Based on Morphological Operations and Deep Learning

The crack detection method based on morphological operations [] involves performing image processing techniques such as erosion, dilation, opening, and closing. These operations effectively remove non-crack edge information and refine the edges of cracks. Subsequently, the features extracted using these morphological operations facilitate crack identification. By combining morphological operations with deep learning, it is possible to detect cracks in complex concrete surface images more effectively, leveraging attributes such as crack shape, connectivity, and curvature. This integration significantly enhances detection accuracy and robustness. Table 3 summarizes the crack detection methods reviewed in this section, highlighting the use of morphological operations and deep learning.

Table 3. Crack detection method based on morphological operations and deep learning.

Huang et al. [] proposed integrating morphological closing operations into the Mask R-CNN model for crack detection in images, which can effectively detect cracks and segment instances. Fan et al. [] proposed a parallel ResNet for crack recognition and then used mathematical morphology to extract the skeleton of the crack and obtain its length, width, and area. Kong et al. [] proposed a dual-scale CNN for crack detection, morphological operations for crack measurement, and shape context for crack monitoring; the morphological operations extract the skeleton of the crack to obtain its length, width, and area. Dang et al. [] proposed the TunnelURes framework for segmentation based on basic crack features to realize automatic evaluation of cracks and quantitative growth monitoring. Andrushia et al. [] proposed a pixel-level thermal image crack detection method based on a U-Net architecture with an encoder–decoder framework. To extract the shape information of the crack and accurately quantify the damage, morphological operations and an improved distance transformation method were used to quantify the crack and calculate its width at the pixel level.

In the crack detection process, integrating morphological operations with deep learning models can improve detection effectiveness at several levels. Specifically, this integration takes three forms. In the preprocessing stage, morphological operations such as erosion and dilation are applied before the image is fed into the deep learning model. These operations reduce noise and emphasize crack features, allowing the deep learning model to focus on the crack region, which improves the learning efficiency and detection performance of the model. In the postprocessing stage, after the output of the deep learning model is obtained, morphological operations such as closing are applied to refine the detection results. These operations fill gaps along the crack edges and smooth the crack contours, improving the accuracy of the detection results and ensuring their continuity and integrity. Hybrid models incorporate morphological operations into the structure of the deep learning model itself, for example between layers or at specific points in the network. This approach leverages the strengths of both technologies to realize more accurate crack detection. Hybrid models not only improve detection accuracy but also enhance the model’s adaptability to a variety of complex crack morphologies.
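The postprocessing use of morphological closing described above (dilation followed by erosion, to bridge small gaps in a detected crack) can be sketched with NumPy alone. The square structuring element, the zero padding, and the function names are assumptions for this example; production code would more likely rely on an image processing library.

```python
import numpy as np


def _shifted_views(mask, size, pad_value):
    """Return every shifted copy of `mask` covered by a size x size square element."""
    r = size // 2
    padded = np.pad(mask, r, mode="constant", constant_values=pad_value)
    h, w = mask.shape
    return [padded[dy:dy + h, dx:dx + w] for dy in range(size) for dx in range(size)]


def dilate(mask, size=3):
    """A pixel becomes foreground if any pixel under the element is foreground."""
    mask = np.asarray(mask, dtype=bool)
    return np.logical_or.reduce(_shifted_views(mask, size, False))


def erode(mask, size=3):
    """A pixel stays foreground only if every pixel under the element is foreground."""
    mask = np.asarray(mask, dtype=bool)
    return np.logical_and.reduce(_shifted_views(mask, size, False))


def close_gaps(mask, size=3):
    """Morphological closing: dilation followed by erosion.

    The mask is first padded with background so that cracks touching the
    image border are not eroded away by the zero padding.
    """
    if size < 3 or size % 2 == 0:
        raise ValueError("size must be an odd integer >= 3")
    r = size // 2
    canvas = np.pad(np.asarray(mask, dtype=bool), r, mode="constant", constant_values=False)
    closed = erode(dilate(canvas, size), size)
    return closed[r:-r, r:-r]


# Hypothetical network output: a crack broken by a one-pixel gap at column 4.
broken = np.zeros((7, 9), dtype=bool)
broken[3, 1:4] = True
broken[3, 5:8] = True
repaired = close_gaps(broken, size=3)   # the gap at (3, 4) is filled
```

A larger structuring element bridges wider gaps but also merges nearby cracks and noise, so the element size is a tuning parameter tied to image resolution and expected crack width.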

3. Crack Detection Based on Multimodal Data Fusion

Crack detection is critical in civil engineering, directly affecting the safety and stability of buildings and infrastructure. However, methods based on a single data source face challenges such as sensitivity to illumination, viewing angle, and noise, as well as limitations in capturing crack morphology and depth. To address these issues, multimodal data fusion techniques have emerged as a significant research direction. These techniques integrate information from different sensors or data sources to provide more comprehensive and precise crack detection, enhancing detection accuracy, robustness, and reliability. This section explores the current application status of multimodal data fusion in crack detection for civil infrastructure inspection, focusing on two key areas: multi-sensor fusion and multisource data fusion.

3.1. Multi-Sensor Fusion

Multi-sensor fusion enhances the adaptability of crack detection systems to environmental changes and interferences by integrating information from various sensor types. By combining data from optical sensors, laser sensors, thermal imaging sensors, and others, it provides more comprehensive and precise detection results. This approach increases information sharing among different sensors and enhances the overall reliability and robustness of the system. Table 4 illustrates the specific application methods of various sensors in multi-sensor fusion, highlighting the advantages and disadvantages of each sensor type.

Table 4.

Multi-sensor fusion for crack detection.

Jian et al. [] combined digital photography with LiDAR technology and used YOLOv5s to train and detect cracks in the collected images. This integration achieved temporal and spatial synchronization of multiple sensors, ensuring accurate and efficient crack detection under large-scale data conditions. Liang et al. [] fused features from optical and infrared sensors to capture images and employed C-Net for pixel-level detection of cracks. The thermal features provided by infrared images compensated for the limitations of visible light images under low-light conditions or when obscured, thereby enhancing the accuracy of road crack detection. Alamdari et al. [] first used CNNs to localize cracks in images and then combined the geometric shapes and dimensions of cracks obtained from laser scanners with the surrounding environmental information acquired by LiDAR using point cloud registration techniques. This approach enabled comprehensive, accurate, and detailed defect detection. Liu et al. [] proposed a multifunctional sensor for crack detection by combining optical and capacitive sensors. The optical function of the sensor localizes cracks, while the capacitive function triggers alerts. Through image processing and capacitive measurements, this sensor efficiently detects and quantifies cracks. Park et al. [] utilized visual sensors and two laser sensors to detect cracks on the surface of concrete structures in real time using the YOLO algorithm. They calculated crack dimensions based on the position of the laser beams and enhanced measurement accuracy through distance sensor and laser alignment calibration algorithms. Simulation and experimental validation demonstrated that this system achieves high-precision real-time detection and quantification of cracks.

Different sensors possess varying sensitivities and error characteristics. By integrating diverse types of sensors such as vision, LiDAR, ultrasound, and infrared thermography, researchers can overcome the limitations imposed by environmental noise or interference on a single sensor. This integration enhances resistance to interference and allows for the acquisition of multi-dimensional information about cracks, providing more comprehensive and detailed detection results. The integration of multiple sensors offers redundant information, ensuring that even if one sensor fails or provides inaccurate data, other sensors can still supply valid information. This redundancy ensures the reliability and continuity of the system. Different sensors capture various characteristics of cracks, and by leveraging their complementary nature, a comprehensive capture of the diverse features and changes of cracks can be achieved. For instance, visual sensors provide high-resolution images that aid in the precise localization and measurement of surface cracks, while LiDAR offers three-dimensional structural data. The integration of these two types of sensors is particularly suitable for detecting cracks in complex and large-scale infrastructure. The combination of ultrasonic sensors and infrared thermography is widely used for detecting internal cracks and material defects. Ultrasonic sensors penetrate materials to detect internal cracks, while infrared thermography identifies potential structural damage through temperature variations. Utilizing the inherent characteristics of these sensors enables real-time monitoring of cracks in civil infrastructure, aiding in the timely detection and assessment of crack development. This facilitates prompt maintenance and repair actions to prevent further crack propagation.

With the continuous advancement of artificial intelligence and deep learning technologies, the processing and analysis of multi-sensor data have become increasingly intelligent. Deep learning algorithms enable efficient processing of large-scale data, enhancing the identification and analysis of crack morphology and predicting crack development trends. Concurrently, the application of Internet of Things (IoT) technologies empowers crack detection systems with remote monitoring and automated management capabilities. Monitoring data can be transmitted in real-time to central servers for analysis and processing, facilitating real-time monitoring and management of infrastructure crack conditions.

The integration of multiple sensors in crack detection faces several challenges. Firstly, the synchronization and alignment of sensor data require precise time and spatial calibration. Secondly, the complexity of data processing and fusion algorithms increases the difficulty in system design and implementation. Lastly, addressing the high cost and complex deployment processes of sensors is essential.

3.2. Multi-Source Data Fusion

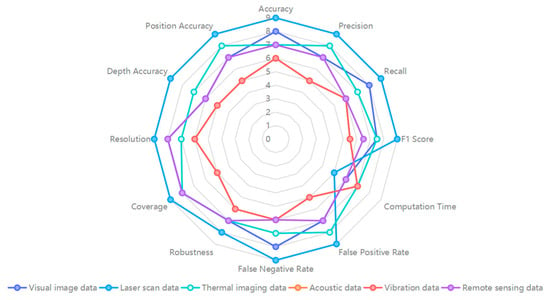

Multi-source data fusion integrates information from sources of different types or origins, such as images, sound, and vibrations, and combines them logically in the spatial or temporal domain according to defined standards. This comprehensive analysis aims to accurately describe the condition of cracks, thereby enhancing the performance and accuracy of crack detection systems. By leveraging the strengths of multiple data sources, this approach overcomes the limitations of individual data streams, enhancing the reliability and precision of detection. It provides detailed and comprehensive crack information, supporting more accurate damage assessment and informed maintenance decisions. Figure 3 compares the performance of multiple data sources in crack detection to help select the most suitable data source or combination of data sources.

Figure 3.

Performance comparison of multi-source data fusion crack detection.

Yan et al. [] combined RGB images with LiDAR data to identify regions of interest and extract depth information, thereby improving the accuracy of crack detection and quantification. This integration enhances the estimation of actual pixel sizes of cracks. Dong et al. [] fused point cloud data with grayscale images as inputs to the YOLOv5 model, which enhanced the effectiveness of detecting pavement cracks. Kim et al. [] addressed the challenge of varying camera angles with concrete surfaces by integrating data from RGB-D cameras and high-resolution digital cameras, resulting in precise crack measurements. Chen et al. [] reduced false alarms in concrete defect detection by integrating geotagged aerial images with Building Information Modeling (BIM) data. Pozzer et al. [] evaluated the performance of various deep neural network models in detecting defects in concrete structures by combining thermal imaging with visible light images, providing comprehensive insights into the structural integrity of concrete.
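To illustrate why depth information helps estimate the physical size of a pixel, the following sketch uses the standard pinhole camera model. It is not the method of any of the studies cited above. It assumes a surface roughly perpendicular to the optical axis, and the function names and example values (depth, focal length, pixel pitch) are hypothetical.

```python
def mm_per_pixel(depth_mm, focal_length_mm, pixel_pitch_mm):
    """Physical size on the surface covered by one pixel (pinhole model).

    Assumes the surface is fronto-parallel to the image plane, so that
    size_on_surface / depth = pixel_pitch / focal_length.
    """
    if depth_mm <= 0 or focal_length_mm <= 0 or pixel_pitch_mm <= 0:
        raise ValueError("depth, focal length, and pixel pitch must be positive")
    return depth_mm * pixel_pitch_mm / focal_length_mm


def crack_width_mm(width_px, depth_mm, focal_length_mm, pixel_pitch_mm):
    """Convert a crack width measured in pixels to millimetres."""
    return width_px * mm_per_pixel(depth_mm, focal_length_mm, pixel_pitch_mm)


# Hypothetical setup: surface 2 m away, 50 mm lens, 5 micrometre pixels.
scale = mm_per_pixel(depth_mm=2000.0, focal_length_mm=50.0, pixel_pitch_mm=0.005)
width = crack_width_mm(3, 2000.0, 50.0, 0.005)
```

With these assumed values, one pixel covers about 0.2 mm of the surface, so a crack that is 3 pixels wide is about 0.6 mm wide. Without a depth measurement this scale is unknown, which is the gap that LiDAR or RGB-D data fills.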

The fusion of multi-source data offers both significant advantages and challenges in the detection of cracks in civil infrastructure. By integrating various data types, such as image data, laser scanning data, and thermal imaging data, multi-source data fusion provides a comprehensive understanding of crack conditions, enhancing the reliability and accuracy of detection. This approach also improves the system’s adaptability to diverse environmental conditions, expands detection coverage, and uncovers hidden cracks, thereby aiding in infrastructure maintenance and management decisions. However, multi-source data fusion faces several challenges. Data processing is complex, requiring solutions to technical issues such as data alignment, fusion, and analysis, which increase system complexity and costs. The differences between various data sources necessitate the optimization of algorithms and models to improve detection efficiency and accuracy. Additionally, multi-source data fusion relies on support from different types of sensors and equipment, thereby increasing hardware and maintenance costs. Therefore, when applying multi-source data fusion for crack detection in civil infrastructure, it is crucial to carefully select the most suitable approach.

In recent years, the continuous advancement of artificial intelligence, deep learning, and big data technologies has driven new trends in the application of multi-source data fusion for crack detection in civil infrastructure. Firstly, the ability to process and analyze multi-source data using deep learning techniques has significantly improved, leading to enhanced accuracy and efficiency in crack detection systems. Secondly, the ongoing innovation and widespread adoption of sensor technologies have expanded data fusion applications across various sensor types, enriching the methods and content of data fusion in crack detection systems. Furthermore, advancements in cloud computing and edge computing technologies have improved the real-time performance and scalability of multi-source data fusion, making it easier to adapt to the monitoring and management needs of large-scale infrastructure. Overall, the development of multi-source data fusion technology in crack detection is moving towards greater intelligence, integration, and real-time capabilities.

Table A1 in Appendix A compares the advantages and disadvantages of traditional methods with those of the fusion of deep learning and multimodal data.

4. Crack Detection Based on Image Semantic Understanding

Image understanding (IU) involves in-depth analysis and comprehension of images, focusing on semantic understanding. Its primary objective is to recognize and interpret semantic information within images. Typically, IU encompasses three aspects: classification, detection, and segmentation [,]. By employing image understanding techniques, deeper insights into image content can be achieved, facilitating a comprehensive understanding of image information. This capability enables efficient and precise crack detection, providing crucial technical support for safety management and maintenance in civil engineering.

4.1. Crack Detection Based on Classification Networks

Convolutional neural networks (CNNs) were initially developed for image classification problems. They are designed to mimic the workings of the human visual system, with multiple layers of convolution and pooling operations progressively extracting features from images. These features are then fed into fully connected layers for classification. The structure of CNNs enables them to automatically learn features from raw image data and adjust weights during training, leading to accurate classification of images across different categories. Zhang et al. [] pioneered the application of deep learning in crack detection by using CNNs, achieving superior results compared to traditional methods such as support vector machines (SVMs) and boosting.

A classification network can be used to detect the presence of cracks in an image and classify the image as either a positive or a negative sample. Given an image as input, the network analyzes its features and makes a classification judgment. If cracks are identified, the network labels the image as a positive sample; otherwise, it labels it as a negative sample. This approach can be applied at both the whole-image level and the image-patch level. Classifying positive and negative samples in crack images is a crucial step in crack detection and analysis. It facilitates the automation of crack detection and enhances structural safety and maintenance efficiency. Classification errors directly affect the accuracy of subsequent crack damage assessment and maintenance strategies.

Some researchers focus on classifying entire images containing structural cracks. This approach uses the entire image as input and distinguishes between crack and non-crack regions through annotation, feature extraction, model training, and evaluation. Flah et al. [] proposed a classification model for concrete structural cracks, categorizing them into five types, including the presence of surface cracks and four directional cracks (HR, HL, VR, and VL), achieving high classification accuracy. Yang et al. [] compared the performance of three convolutional neural networks (AlexNet, VGGNet13, and ResNet18) in recognizing and classifying crack images. The results indicate that ResNet18 outperforms the other two networks, demonstrating superior feature extraction capability and accurate crack identification. Rajadurai et al. [] combined transfer learning with fine-tuning of the AlexNet model for crack classification tasks, resulting in improved accuracy and practical performance. Kim et al. [] proposed OLeNet, a classification model for surface cracks in concrete structures, obtained by fine-tuning the hyperparameters of the LeNet-5 architecture. This model effectively handles low-quality images and works with Internet of Things (IoT) devices without relying on high-performance computing resources. O’Brien et al. [] employed deep convolutional neural networks and transfer learning techniques to achieve automatic detection and classification of cracks in concrete tunnel lining images.

Additionally, some researchers segment original images containing cracks into multiple small-sized images (patches) and independently classify each patch to determine if it contains a crack. For instance, Chen et al. [] used high-definition images of facade cracks captured by multi-source drones. They combined CNN models to classify and detect 128 × 128 pixel image blocks, achieving a 94% F1 score, which enabled the classification of minor cracks. Dais et al. [] utilized transfer learning for image patch-level classification in crack detection. They modified a pre-trained network model by removing the fully connected layer, adding a new fully connected layer as the top layer, and incorporating batch normalization and dropout layers with a dropout probability of 0.5. Finally, they added a fully connected layer with softmax activation to classify images into crack or non-crack categories. This approach enhanced the performance and generalization ability while reducing the need for extensive labeled data and accelerating model training speed, maintaining high classification accuracy. Li et al. [] proposed a method based on residual neural networks (ResNets) and transfer learning, capable of accurately classifying and recognizing dam structural crack images with a resolution of 200×200 pixels without detailed morphology feature annotation. This method also enables the localization of the lower part and shape of the cracks.
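The patch-level workflow described above can be sketched as follows: the image is tiled into non-overlapping patches, each patch is classified independently, and the results form a coarse grid of crack locations. This is a minimal illustration only. The function names are hypothetical, and `dark_fraction_classifier` is a placeholder standing in for a trained CNN; it is not a method used in the cited studies.

```python
def classify_patches(image, patch, is_crack):
    """Tile a grayscale image (list of rows) into patch x patch tiles and
    classify each tile. Returns a 2D grid of booleans, one per tile.
    Pixels that do not fill a complete tile at the right or bottom edge
    are ignored in this sketch."""
    h, w = len(image), len(image[0])
    rows, cols = h // patch, w // patch
    grid = [[False] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            y0, x0 = r * patch, c * patch
            tile = [row[x0:x0 + patch] for row in image[y0:y0 + patch]]
            grid[r][c] = bool(is_crack(tile))
    return grid


def dark_fraction_classifier(tile, dark_level=50, min_fraction=0.1):
    """Placeholder for a trained CNN: flags a tile as 'crack' when at least
    min_fraction of its pixels are darker than dark_level."""
    pixels = [p for row in tile for p in row]
    dark = sum(1 for p in pixels if p < dark_level)
    return dark / len(pixels) >= min_fraction


# Hypothetical 4 x 4 image: bright surface with two dark pixels top-left.
image = [[200] * 4 for _ in range(4)]
image[0][0] = 10
image[1][1] = 10
grid = classify_patches(image, patch=2, is_crack=dark_fraction_classifier)
```

The resulting grid marks only the top-left patch as containing a crack. The resolution of this localization is bounded by the patch size, which is why patch-level results remain coarse compared with pixel-level segmentation.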

The advantage of whole-image-level classification lies in its simplicity and effectiveness when cracks are prominent and widely distributed throughout the image. This method relies on the overall image features to determine the presence of cracks. However, in cases where crack locations are unclear or unevenly distributed, classification accuracy may be lower. In contrast, patch-level classification analyzes images at a finer granularity, compensating for the limitations of whole-image classification. This approach divides the image into smaller patches and classifies each one, which can improve accuracy by identifying local regions containing cracks. Table 5 summarizes algorithms of recent years for crack classification.

Table 5.

Summary of relevant details of crack classification.

Currently, research primarily focuses on patch-level classification, as it better identifies local regions within images, determining the position and shape of cracks. This method lays the groundwork for subsequent crack segmentation and quantification. However, patch-level classification can be cumbersome and produces relatively coarse results, making it challenging to perform refined crack feature assessments. In crack classification tasks, networks typically end with fully connected layers, allowing only for patch-level classification. Consequently, classification results are generally binary (crack or non-crack) and do not achieve pixel-level classification or accurately represent specific crack morphologies. To obtain more precise crack morphology information, it is necessary to integrate object detection or segmentation networks with image processing techniques.

By combining these approaches, we can achieve more detailed and accurate crack detection, enhancing the effectiveness of crack hazard assessment and maintenance decision-making.

4.2. Crack Detection Based on Object Detection Networks

Object detection networks are capable of automatically recognizing and localizing cracks in images, typically using bounding boxes. Deep learning-based object detection networks can be categorized into single-stage and two-stage detectors []. These networks can effectively handle crack detection tasks under various scales, shapes, and occlusion conditions, providing crucial technical support for crack detection in civil infrastructure.

Two-stage detectors achieve higher detection accuracy by dividing the task into two separate stages. First, they generate region proposals; then, they perform classification and bounding box regression on these proposals. Typical two-stage detectors include the R-CNN series (R-CNN [], Fast R-CNN [], Faster R-CNN []) and Mask R-CNN []. Figure 4 illustrates the evolution of two-stage detectors.

Figure 4.

Two-stage detectors from 2014 to present.

Single-stage detectors are a type of object detection method that directly predicts the class and location of objects in an image in a single forward pass. Unlike two-stage detectors, which first generate region proposals and then perform classification and bounding box regression, single-stage detectors accomplish both tasks simultaneously. This makes them faster and suitable for real-time applications. Common single-stage detectors include the YOLO (You Only Look Once) series [,,,,], SSD (Single Shot MultiBox Detector) [], and RetinaNet []. Figure 5 illustrates the development process of single-stage detectors.

Figure 5.

Single-stage detectors from 2014 to present.

Park et al. [] combined YOLOv3-tiny with laser scanning to achieve high-precision real-time detection and quantification of cracks on structural surfaces. Xu et al. [] enhanced Mask R-CNN by incorporating Path Aggregation Feature Pyramid Network (PAFPN) and Sobel filter branch, resulting in improved defect detection performance. Zhao et al. [] proposed a Crack-FPN network integrated with YOLOv5, which achieved higher detection accuracy and computational efficiency under varying lighting conditions and complex backgrounds. Li et al. [] combined Faster R-CNN with drones for bridge crack detection, enhancing both the efficiency in detecting fine cracks on bridges and the overall detection accuracy. Tran et al. [] conducted crack detection on bridge decks and compared five different object detection networks. Their results demonstrated that YOLOv7 excelled in detecting cracks on high-resolution bridge deck images, offering superior accuracy and faster analysis speed compared to the other networks. Zhang et al. [] replaced the feature extraction network in YOLOv4 with a lightweight network, reducing the number of parameters and backbone layers. This approach not only achieved real-time detection but also demonstrated high precision and processing speed. Table 6 summarizes the performance of various object detection networks on their respective datasets.

Table 6.

Summary of region-based crack detection.

In the field of crack detection, single-stage detectors are valued for their fast computation speed and simple structure, enabling real-time processing of large-scale data. They can swiftly localize cracks in a single forward pass, making them ideal for scenarios demanding high real-time performance. However, their performance may be limited in complex backgrounds and when detecting fine cracks. In contrast, two-stage detectors, though more computationally complex and slower, offer superior accuracy. They are more precise in detecting fine cracks in complex backgrounds, making them particularly suitable for high-precision detection tasks. In summary, single-stage methods, with their speed advantages, are more suitable for large-scale rapid monitoring and early warning systems. Two-stage methods are better suited for detailed analysis and high-precision crack assessment tasks. In practical applications, the choice of method depends on specific requirements. Alternatively, a hybrid detection system that combines the strengths of both methods can balance speed and accuracy.

Crack object detection networks can intelligently localize crack positions but often fall short in providing detailed information about crack morphology and trajectory. Such detailed information is crucial for crack hazard assessment and maintenance decision-making. To address these shortcomings, recent research has focused on pixel-level crack detection. Pixel-level detection leverages image processing techniques or segmentation networks to extract detailed features of cracks, including their morphology, size, and orientation. This approach not only enhances the accuracy of crack detection but also provides a more reliable basis for engineering management and maintenance. Consequently, it improves the accuracy of crack hazard assessments and the effectiveness of maintenance decisions.

In summary, while object detection networks excel at localizing cracks, pixel-level detection offers the detailed analysis necessary for comprehensive crack assessment and informed maintenance planning.

4.3. Crack Detection Based on Segmentation Networks

To achieve more refined crack detection, researchers have developed pixel-level crack detection methods based on deep learning segmentation algorithms. Segmentation networks have been employed for this purpose. Table 7 summarizes the mainstream semantic segmentation algorithms used in recent years.

Models based on the encoder–decoder architecture and the Spatial Pyramid Pooling (SPP) structure are widely applied in crack detection because they capture crack features across different scales and scenes. This is particularly useful for targets or scenes with significant scale variations. Many scholars have conducted pixel-level detection of structural cracks using network architectures such as FCN, SegNet, and U-Net [,]. Some researchers have enhanced the accuracy and robustness of these semantic segmentation networks by embedding various functional modules. These enhancements improve the utilization of information within images, thereby boosting the network’s crack detection performance [].

Table 7.

Summary of semantic segmentation algorithms.

| Model | Improvement/Innovation | Backbone/Feature Extraction Architecture | Efficiency | Results |
|---|---|---|---|---|
| FCS-Net [] | Integrating ResNet-50, ASPP, and BN | ResNet-50 | - | MIoU = 74.08% |
| FCN-SFW [] | Combining fully convolutional network (FCN) and structural forests with wavelet transform (SFW) for detecting tiny cracks | FCN | Computing time = 1.5826 s | Precision = 64.1% Recall = 87.22% F1 score = 68.28% |
| AFFNet [] | Using ResNet101 as the backbone network, and incorporating two attention mechanism modules, namely VH-CAM and ECAUM | ResNet101 | Execution time = 52 ms | MIoU = 84.49% FWIoU = 97.07% PA = 98.36% MPA = 92.01% |
| DeepLabv3+ [] | Replacing ordinary convolution with separable convolution; improved SE_ASSP module | Xception-65 | - | AP = 97.63% MAP = 95.58% MIoU = 81.87% |
| U-Net [] | The parameters were optimized (the depths of the network, the choice of activation functions, the selection of loss functions, and the data augmentation) | Encoder and decoder | Analysis speed (1024 × 1024 pixels) = 0.022 s | Precision = 84.6% Recall = 72.5% F1 score = 78.1% IoU = 64% |
| KTCAM-Net [] | Combined CAM and RCM; integrating classification network and segmentation network | DeepLabv3 | FPS = 28 | Accuracy = 97.26% Precision = 68.9% Recall = 83.7% F1 score = 75.4% MIoU = 74.3% |
| ADDU-Net [] | Featuring asymmetric dual decoders and dual attention mechanisms | Encoder and decoder | FPS = 35 | Precision = 68.9% Recall = 83.7% F1 score = 75.4% MIoU = 74.3% |
| CGTr-Net [] | Optimized CG-Trans, TCFF, and hybrid loss functions | CG-Trans | - | Precision = 88.8% Recall = 88.3% F1 score = 88.6% MIoU = 89.4% |
| PCSN [] | Using Adadelta as the optimizer and categorical cross-entropy as the loss function for the network | SegNet | Inference time = 0.12 s | mAP = 83% Accuracy = 90% Recall = 50% |
| DEHF-Net [] | Introducing dual-branch encoder unit, feature fusion scheme, edge refinement module, and multi-scale feature fusion module | Dual-branch encoder unit | - | Precision = 86.3% Recall = 92.4% Dice score = 78.7% mIoU = 81.6% |
| Student model + teacher model [] | Proposed a semi-supervised semantic segmentation network | EfficientUNet | - | Precision = 84.98% Recall = 84.38% F1 score = 83.15% |

Li et al. [] proposed an FCS-Net segmentation network that integrates Atrous Spatial Pyramid Pooling (ASPP) and batch normalization (BN) modules with the original ResNet-50. This enhancement aims to improve the segmentation capability for detecting fine cracks. Wang et al. [] combined fully convolutional network (FCN) with multiscale structured forests to construct five FCN-based network architectures capable of accurately segmenting tiny cracks. Hang et al. [] developed an Adaptive Feature Fusion Network (AFFNet) composed of ResNet101 as the backbone and two attention mechanism modules, which enables automatic pixel-level detection of concrete cracks. Sun et al. [] enhanced the DeepLabv3+ model by replacing standard convolutions with depthwise separable convolutions, adjusting the dilation rates of convolutions, assigning channel-wise weights to the spatial pyramid module, and selecting feature maps to contribute to crack detection. This approach not only improved segmentation accuracy and detail preservation but also enhanced the model’s ability to accurately localize cracks and resist background interference. Tabernik et al. [] introduced a two-stage deep learning architecture based on segmentation and decision networks, achieving excellent crack segmentation performance with only 25–30 training samples.

Semantic segmentation networks offer significant advantages in crack detection compared to other detection methods. By classifying each pixel, semantic segmentation can separate cracks from the background, achieving precise localization and recognition. This approach provides fine-grained segmentation results, preserving edge details and enhancing detection accuracy. Additionally, semantic segmentation is adaptable to varying lighting conditions and complex backgrounds, demonstrating robustness in handling diverse crack morphologies. Semantic segmentation effectively filters noise, highlights crack regions, and extracts morphological features such as width, length, and orientation, providing quantitative information about cracks at multiple scales. In monitoring systems, applying semantic segmentation networks enables efficient batch data processing, facilitating automatic and intelligent maintenance and inspection of large-scale civil infrastructure. This method reduces the impact of human errors and allows for continuous learning and optimization, making the detection process more reliable and efficient.

In addition to the aforementioned crack detection models, the Vision Transformer (ViT) has received significant attention in recent years. ViT, proposed by Dosovitskiy et al. in 2020, is based on a self-attention mechanism. Unlike traditional convolutional neural networks (CNNs), ViT converts an image into sequential data by dividing it into fixed-size patches and flattening each patch into a one-dimensional vector, which is then combined with a linear embedding and position encoding. Thanks to the self-attention mechanism, ViT captures global contextual information in images, offering enhanced feature modeling capabilities. As a result, ViT can outperform CNNs in tasks such as image classification, object detection, and semantic segmentation, which is why many researchers have applied it to crack detection. Several strategies are in use. Applying ViT directly extracts global features from images for crack detection through its self-attention mechanism. Combining ViT with a CNN leverages the CNN’s local feature extraction capabilities alongside ViT’s global information processing. Feature enhancement techniques can be applied to the features extracted by ViT to improve detection performance. ViT can also process images at different scales to capture crack features of various sizes. Additionally, self-supervised learning can reduce the dependence on labeled data by pre-training ViT without labels and then fine-tuning it with labeled data. Finally, the accuracy of crack detection can be further improved by optimizing the self-attention mechanism or by incorporating other attention mechanisms. In future computer vision tasks related to crack detection, ViT is expected to extend its applications and address the shortcomings of CNNs.
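The patch-to-sequence conversion described above can be sketched as follows. This is a minimal illustration, not the ViT implementation: the learned linear embedding and the position encoding are omitted, and the function name `image_to_patch_sequence` and the nested-list image layout (height × width × channels) are assumptions made for this example.

```python
def image_to_patch_sequence(image, patch):
    """Split an H x W x C image (nested lists) into non-overlapping
    patch x patch tiles, in row-major order, and flatten each tile
    into a one-dimensional vector of length patch * patch * C."""
    h, w = len(image), len(image[0])
    if h % patch or w % patch:
        raise ValueError("image size must be divisible by the patch size")
    tokens = []
    for y0 in range(0, h, patch):
        for x0 in range(0, w, patch):
            vector = [value
                      for row in image[y0:y0 + patch]
                      for pixel in row[x0:x0 + patch]
                      for value in pixel]
            tokens.append(vector)
    return tokens


# Small single-channel example: each pixel stores 10 * row + column.
small = [[[10 * y + x] for x in range(4)] for y in range(4)]
small_tokens = image_to_patch_sequence(small, patch=2)

# A 224 x 224 RGB image with 16 x 16 patches.
rgb = [[[0, 0, 0] for _ in range(224)] for _ in range(224)]
rgb_tokens = image_to_patch_sequence(rgb, patch=16)
```

For the 224 × 224 RGB image with 16 × 16 patches, this yields 196 vectors of length 768. Self-attention then relates every patch to every other patch, which is how the model obtains global context for thin, elongated structures such as cracks.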

5. Datasets

While refining the algorithm is critical to the successful implementation of crack detection models, the quality and size of the dataset, as well as the support of high-performance computing hardware, are equally important. The dataset is the basis on which a deep learning model is trained and directly affects its final performance. Accurate crack detection depends not only on advanced algorithms but also on rich, high-quality data from which the model can learn more features and patterns, improving detection accuracy and reliability. To better evaluate the performance of crack detection models, Table 8 summarizes some key publicly available datasets for crack classification, object detection, and pixel-level segmentation. The details of these datasets include the name of the dataset, number of images included, image resolution, manual labeling information, application area, and limitations.

Table 8.

Open-source crack detection datasets.

This information will help researchers select the appropriate dataset for their research needs and evaluate the dataset’s applicability and limitations. Manual pixel-level labeling is a time-consuming and labor-intensive process. As a result, datasets for crack segmentation generally contain far fewer samples than datasets for crack classification or object detection. This is primarily because pixel-level labeling requires not only significant time and effort but also an extremely high level of labeling accuracy, which significantly increases the cost of building these datasets.

Datasets form the basis for model training and evaluation, and their size, quality, and diversity directly affect model performance, generalization ability, and application effectiveness. High-quality datasets increase model accuracy and reliability, while inadequate or inappropriate datasets limit model performance and scope. Dataset size has a significant impact on the performance of a crack detection model. Large, diverse datasets provide a rich set of samples from which the model can learn more representative features, which improves generalization and reduces the risk of overfitting. Large datasets also typically contain more data for validation and testing, allowing a more accurate assessment of model performance. If the training dataset is too small, the model tends to memorize details of the training data and overfit, i.e., it performs well on the training data but poorly on new data. In addition, a small dataset that is not diverse enough prevents the model from learning enough features to handle different crack types and changing conditions, and it also leads to inaccurate evaluation results because of the insufficient sample size during validation. Complex crack detection models require a large amount of training data to reach their full capability; with insufficient data, they cannot learn effective features and perform poorly. Simple models can learn effectively from smaller datasets, but their upper bound on performance is limited.

6. Evaluation Index

To objectively evaluate the accuracy, reliability, and validity of crack detection models, a set of mathematical measures is commonly used to quantify model performance. These measures provide a quantitative basis for comparing and validating different methods. Table 9 summarizes the metrics commonly used to evaluate the performance of crack detection models.

Table 9. Performance evaluation indices of crack detection models.

The selection and calculation of evaluation metrics may vary across crack detection tasks, but the core quantities are usually the numbers of true positives (TPs), false positives (FPs), true negatives (TNs), and false negatives (FNs). These counts are used to calculate precision, recall, and the F1 score. Precision measures the proportion of regions predicted as cracks that are actually cracks. Recall measures the proportion of actual crack regions that the model identifies. The F1 score is the harmonic mean of precision and recall and provides a combined assessment of performance. For the F1 score to be objective, the prediction threshold often needs to be reset for the specific task, while accuracy is only informative when the classes are balanced.
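As a minimal sketch of how these quantities relate, the following Python function computes precision, recall, F1, and accuracy from the four counts. The function name and the example counts are hypothetical and chosen only for illustration; accuracy is included to show how it can look high when crack samples are rare.

```python
def classification_metrics(tp, fp, tn, fn):
    """Compute precision, recall, F1, and accuracy from confusion-matrix counts.

    Ratios with a zero denominator are reported as 0.0 (a convention assumed here).
    """
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    # F1 is the harmonic mean of precision and recall.
    f1 = (2 * precision * recall / (precision + recall)
          if (precision + recall) else 0.0)
    total = tp + fp + tn + fn
    accuracy = (tp + tn) / total if total else 0.0
    return {"precision": precision, "recall": recall,
            "f1": f1, "accuracy": accuracy}


# Hypothetical, strongly imbalanced example: 100 crack samples, 9900 background.
metrics = classification_metrics(tp=80, fp=20, tn=9880, fn=20)
for name, value in metrics.items():
    print(f"{name}: {value:.3f}")
```

With these illustrative counts, precision, recall, and F1 are all about 0.8, while accuracy is above 0.99 because the background class dominates.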

In crack object detection, intersection over union (IoU) is a commonly used evaluation metric that measures the degree of overlap between the predicted and actual regions. The closer the IoU is to 1, the more accurate the detection result. IoU is calculated as the ratio of the intersection to the union of the predicted and actual target regions, and is only valid if the prediction belongs to the same category as the actual target. Average precision (AP) is calculated by 11-point interpolation [] or as the area under the precision-recall curve (AUC), and mean average precision (mAP) is the average of the APs over the different categories.
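The following Python sketch illustrates these definitions under simplifying assumptions: boxes are axis-aligned and given as (x1, y1, x2, y2), and the precision-recall points are supplied directly rather than derived from ranked detections. The function names and the sample values are hypothetical.

```python
def box_iou(a, b):
    """IoU of two axis-aligned boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    # Width and height of the overlap are clamped at zero for disjoint boxes.
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def ap_11_point(recalls, precisions):
    """11-point interpolated AP.

    For each recall level r in {0.0, 0.1, ..., 1.0}, take the maximum
    precision among points whose recall is >= r, then average the 11 values.
    """
    total = 0.0
    for i in range(11):
        r = i / 10
        candidates = [p for rec, p in zip(recalls, precisions) if rec >= r]
        total += max(candidates) if candidates else 0.0
    return total / 11


def mean_ap(ap_per_class):
    """mAP: the average of per-category AP values (dict: category -> AP)."""
    return sum(ap_per_class.values()) / len(ap_per_class)


# Hypothetical values for illustration only.
print(box_iou((0, 0, 2, 2), (1, 1, 3, 3)))
print(ap_11_point([0.5, 1.0], [1.0, 0.5]))
print(mean_ap({"transverse": 0.8, "longitudinal": 0.6}))
```

In practice, a detection is usually counted as a true positive only when its IoU with a ground-truth box of the same category exceeds a chosen threshold; the threshold value depends on the task and is not fixed by this sketch.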

For the crack segmentation task, evaluation is based on pixel-level true positives (TPs), false positives (FPs), false negatives (FNs), and true negatives (TNs). Because manual labeling is subjective, some error is typically allowed at the edges of the labeled crack region, with a tolerance of usually 2 to 5 pixels []. To further reduce the effect of subjective labeling errors, the enhanced Hausdorff distance metric [] and the newly proposed CovEval criterion [] are employed to improve the accuracy of evaluation results.
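One possible way to apply such a pixel tolerance is sketched below in plain Python. It assumes binary masks given as nested lists and a square (Chebyshev) neighborhood of radius `tol`; both choices, and the function name, are illustrative assumptions rather than the procedure of any cited work. A predicted crack pixel counts as correct if a labeled crack pixel lies within the tolerance, and a labeled crack pixel counts as found if a predicted pixel lies within the tolerance.

```python
def tolerant_precision_recall(pred, truth, tol=2):
    """Pixel-level precision, recall, and F1 with a spatial tolerance.

    pred, truth: 2D lists of 0/1 with the same shape.
    tol: tolerance in pixels (square neighborhood, an assumption of this sketch).
    """
    pred_px = {(r, c) for r, row in enumerate(pred)
               for c, v in enumerate(row) if v}
    true_px = {(r, c) for r, row in enumerate(truth)
               for c, v in enumerate(row) if v}

    def near(pixel, others):
        # True if any pixel of `others` lies within `tol` of `pixel`.
        r, c = pixel
        return any((r + dr, c + dc) in others
                   for dr in range(-tol, tol + 1)
                   for dc in range(-tol, tol + 1))

    matched_pred = sum(near(p, true_px) for p in pred_px)
    matched_true = sum(near(t, pred_px) for t in true_px)
    precision = matched_pred / len(pred_px) if pred_px else 0.0
    recall = matched_true / len(true_px) if true_px else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if (precision + recall) else 0.0)
    return precision, recall, f1


# Hypothetical example: the predicted crack is shifted by one pixel.
truth = [[0, 0, 1, 0, 0, 0] for _ in range(5)]
pred = [[0, 0, 0, 1, 0, 0] for _ in range(5)]
print(tolerant_precision_recall(pred, truth, tol=0))  # strict matching
print(tolerant_precision_recall(pred, truth, tol=1))  # one-pixel tolerance
```

In this example, strict matching scores the one-pixel shift as a complete miss, whereas a one-pixel tolerance treats it as a correct detection, which is the effect the tolerance is meant to have on labeling noise at crack edges.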

The above evaluation indices and methods provide a scientific basis for systematically evaluating the performance of the crack detection model. They help to understand the model’s strengths and weaknesses and offer data support for subsequent research and technical improvements.

7. Discussion

With the continuous development of technology, computer vision, particularly deep learning techniques, has become an important tool in the field of crack detection in civil infrastructure. These techniques have greatly improved the accuracy and efficiency of detection, but their practical application still faces many challenges. In order to gain a comprehensive understanding of these challenges and strategies to address them, this section reviews the current status, challenges, and future directions of using computer vision for crack detection.

(1) Currently, deep learning is a mainstream trend in crack detection research and applications. However, it faces challenges such as high computational resource requirements, limited end-to-end interpretability, and model accuracy affected by data quality. Traditional image processing methods still offer advantages in specific scenarios. Therefore, integrating traditional methods with deep learning to leverage their respective strengths is an important direction for future research.

(2) Existing deep learning-based crack detection algorithms mainly rely on two-dimensional visible-light images to analyze visible crack information, which limits the acquisition of crack depth information. Combining multidimensional remote sensing data (e.g., high-resolution satellite imagery, 3D laser scanning images, and infrared images) offers a way to address this problem. These data sources can provide additional support and accuracy in obtaining and analyzing crack depth information. For example, high-resolution satellite imagery can provide information on crack distribution over large areas, and multispectral satellite imagery can help characterize cracks in different materials. Laser scanning and infrared images can provide more accurate spatial depth information, and RGBD (red–green–blue depth) images combine color and depth information, further improving the accuracy of crack detection.

Future research can focus on developing crack detection algorithms that combine multidimensional remote sensing data with deep learning. Training the model on several data sources allows different crack characteristics to be covered and analyzed more comprehensively, which can improve the accuracy and reliability of the detection algorithm. Applying this approach should promote the development of crack detection technology and provide better support for the safe maintenance of civil infrastructure.

(3) Utilizing semantic segmentation algorithms for crack detection in civil infrastructure requires extensive pixel-level annotated data, which is both costly and challenging to obtain. Current crack segmentation models often lack robustness and generalization capabilities when working with limited datasets. To address this issue, it is crucial to establish more comprehensive crack segmentation datasets and develop algorithms designed for small-sample training. Crack segmentation tasks also require fine pixel-level annotations, which can lead to subjective discrepancies among annotators, particularly in the edge regions of micro-cracks. Proposing edge evaluation metrics to mitigate the impact of annotation errors is a valuable research direction. By studying these metrics in depth, we can achieve a more objective assessment of crack detection models’ performance, reducing the influence of human factors and improving the reliability and practicality of these models.

Future research should focus on designing effective edge evaluation metrics and incorporating small-sample training algorithms to enhance the performance of crack segmentation models in real-world environments. Additionally, leveraging techniques such as transfer learning and generative adversarial networks can address issues of data scarcity and annotation errors. These approaches can help mitigate the limitations posed by limited data and subjective biases, thereby improving the accuracy and robustness of crack detection models.

(4) Vision Transformer (ViT) is very demanding on computational resources and training data: its training process is complex, its performance on small datasets is insufficient, and it is inferior to convolutional neural networks (CNNs) in local feature extraction. While ViT performs well on large datasets, its high computational requirements and model complexity remain challenges for future research. In future computer vision tasks related to crack detection, ViT is expected to see wider application and to compensate for the limitations of traditional CNNs, which are largely restricted to local feature extraction. As the technology matures, ViT is expected to contribute significantly to advances in the field of computer vision.

(5) The size, quality, and diversity of the dataset have a direct impact on the performance of the crack detection model. A large and diverse dataset increases the generalizability and accuracy of the model, while a small dataset can lead to overfitting and limit the model’s performance on new data. Complex models require sufficient data; simple models can be trained effectively on small datasets, but their upper performance limit is lower. Therefore, datasets should be scaled and optimized to improve model performance, and future research should explore data augmentation and synthesis methods for more efficient training and evaluation.

(6) In crack detection, the choice of the number of epochs (full passes over the training data) and the batch size significantly affects model performance. An appropriate number of epochs ensures that the model fully learns the fine characteristics of cracks and thus improves detection accuracy, but too many epochs can lead to overfitting, which impairs the model’s ability to generalize to new data. The batch size, in turn, affects training stability and computational efficiency. A smaller batch size can improve the generalization ability of the model but may make training unstable; a larger batch size provides more stable gradient estimates and speeds up training but may reduce generalization ability. A reasonable configuration of epochs and batch size is therefore important for accurate and efficient model training, and a balance must be found between training stability, computational resources, and model performance.
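One common way to limit the number of epochs in practice is early stopping on the validation loss. The sketch below is a framework-independent illustration in plain Python, not a method taken from the reviewed studies; the function names, the `patience` and `min_delta` parameters, and the loss values are hypothetical. It also shows how the batch size determines the number of parameter updates per epoch.

```python
import math


def steps_per_epoch(num_samples, batch_size):
    """Number of parameter updates in one epoch for a given batch size."""
    return math.ceil(num_samples / batch_size)


def early_stopping_epoch(val_losses, patience=3, min_delta=0.0):
    """Return (stop_epoch, best_epoch) as 0-based indices.

    Training stops once the validation loss has failed to improve by more
    than `min_delta` for `patience` consecutive epochs. If that never
    happens, the last epoch is returned as the stop epoch.
    """
    best_loss = float("inf")
    best_epoch = -1
    waited = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss - min_delta:
            best_loss, best_epoch, waited = loss, epoch, 0
        else:
            waited += 1
            if waited >= patience:
                return epoch, best_epoch
    return len(val_losses) - 1, best_epoch


# Hypothetical validation losses: improvement stalls after the third epoch.
losses = [0.90, 0.70, 0.60, 0.62, 0.65, 0.70, 0.80]
stop, best = early_stopping_epoch(losses, patience=3)
print(f"stop after epoch {stop}, keep weights from epoch {best}")
print(steps_per_epoch(num_samples=1000, batch_size=32))
```

Keeping the weights from the best validation epoch rather than the last one is a design choice that guards against the overfitting described in item (6).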

Through these discussions, we aim to provide a comprehensive understanding of the application of computer vision for crack detection, identify bottlenecks in existing technologies, and suggest appropriate research directions and solutions to advance the field.

8. Conclusions

Over time, civil infrastructure projects inevitably develop surface damage such as cracks and spalling, which are common problems for concrete structures. Surface cracks, in particular, are a major contributor to concrete failure. The results of crack detection can clearly show the extent of damage and identify early signs of structural defects. Early detection, assessment, and monitoring of cracks are essential to maintain the integrity and extend the service life of concrete structures. Crack detection using machine vision has several advantages over manual inspection, including increased efficiency, safety, and cost-effectiveness. This technology reduces the risks associated with hazardous conditions and long inspection time, improves inspection efficiency, reduces costs, and is gaining importance in engineering practice. This paper provides a systematic review of current research on crack detection in civil infrastructure. Its conclusions highlight the achievements, challenges, and future directions of computer vision techniques for the inspection and maintenance of concrete structures.

(1) In practice, the choice among image classification, object detection, and semantic segmentation algorithms should be based on the specific requirements of the task at hand. If the goal is to identify images containing cracks, a classification network should be used. This simple yet effective preliminary screening tool provides foundational information for subsequent crack localization and analysis. For crack classification and localization, which supports rapid inspection of surface cracks on the target object, an object detection network is appropriate. This method delivers more detailed crack information, enabling quicker inspection and analysis and offering crucial references for maintenance and repair work. To obtain quantitative information about crack morphology, a segmentation network should be employed. It performs pixel-level segmentation of images, accurately identifying crack regions and providing quantitative information on the crack’s shape and size. In summary, the algorithm should be selected to match the specific needs of the inspection task to ensure accurate and efficient crack detection and analysis.

(2) Computer vision methods for civil infrastructure face a number of challenges. Data quality and labeling issues (e.g., insufficient datasets and inconsistent labeling) affect model training and performance. Environmental variations such as lighting and weather conditions, together with the variety of crack types, increase detection complexity and call for more adaptive methods. High computational resource requirements and real-time processing demands are important constraints that traditional methods often cannot satisfy, and they limit detection speed and efficiency. Model generalization is also a concern: models may not perform well on new datasets or under new conditions, and there is a risk of overfitting. Algorithms need to be more adaptive and stable, and standardization and compatibility issues impede system integration. Ethical and legal issues, including data protection and compliance, must also be addressed. These challenges emphasize the need for comprehensive solutions to ensure effective and sustainable management of civil infrastructure.