Automatic Classification of Submerged Macrophytes at Lake Constance Using Laser Bathymetry Point Clouds

Abstract

1. Introduction

2. Materials

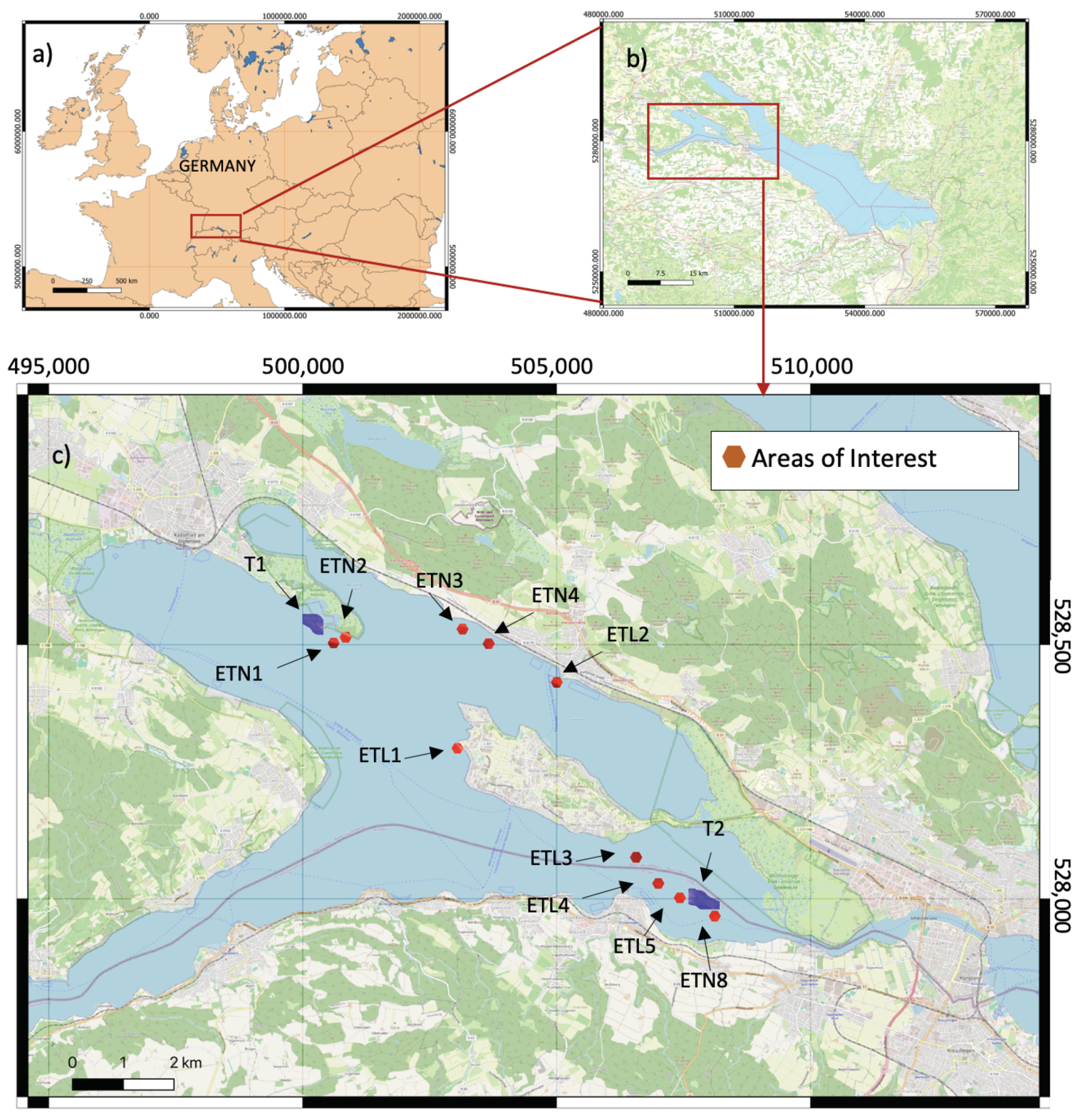

2.1. Study Area and Research Project

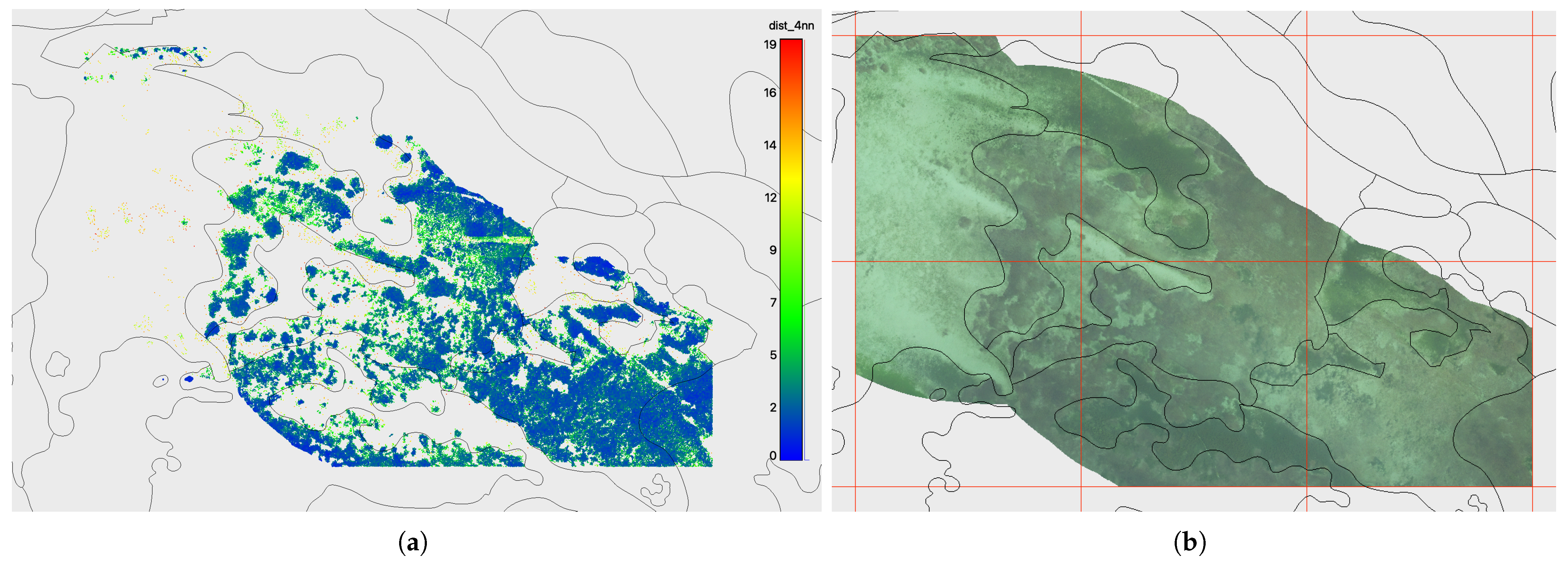

2.2. Dataset

2.3. Software Framework

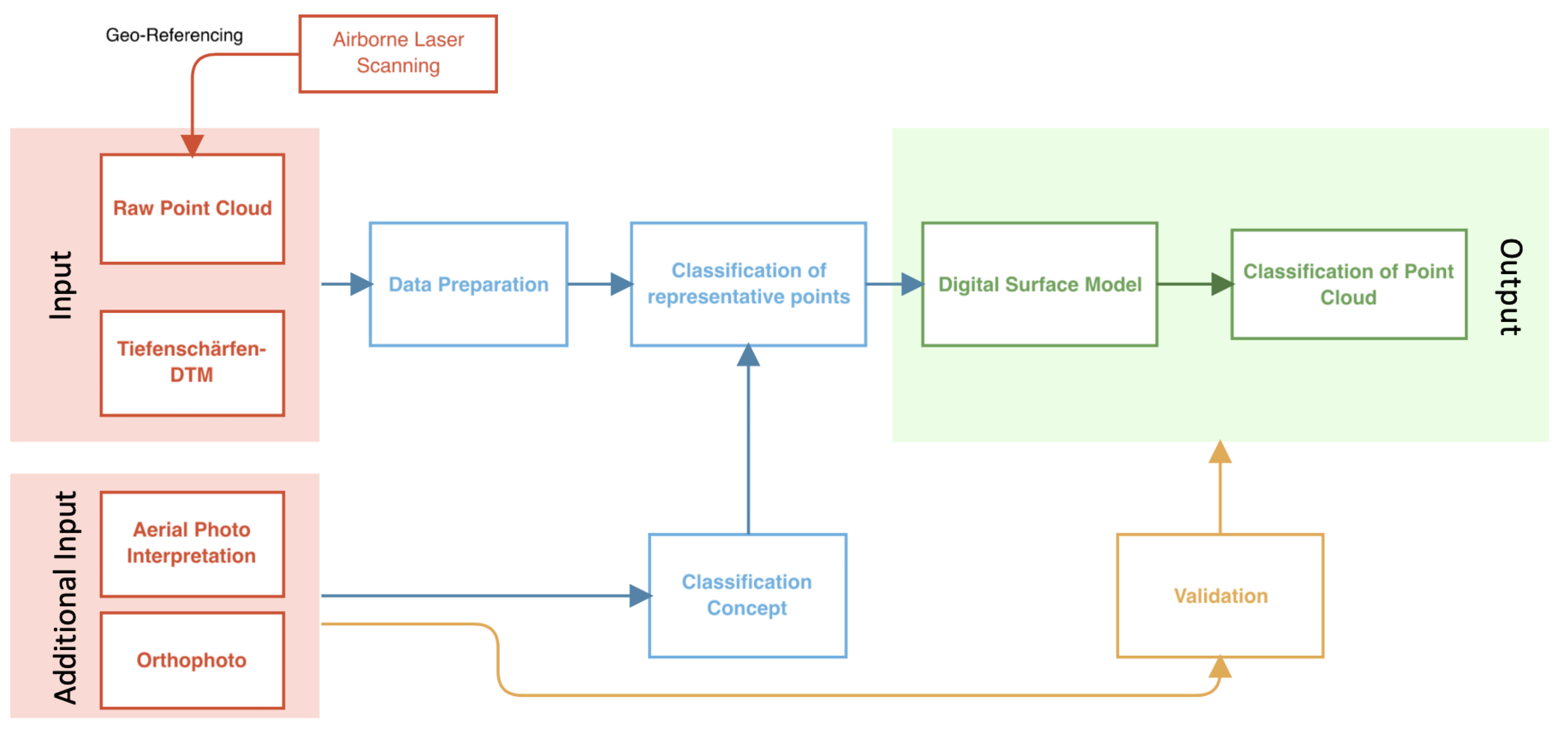

3. Methods

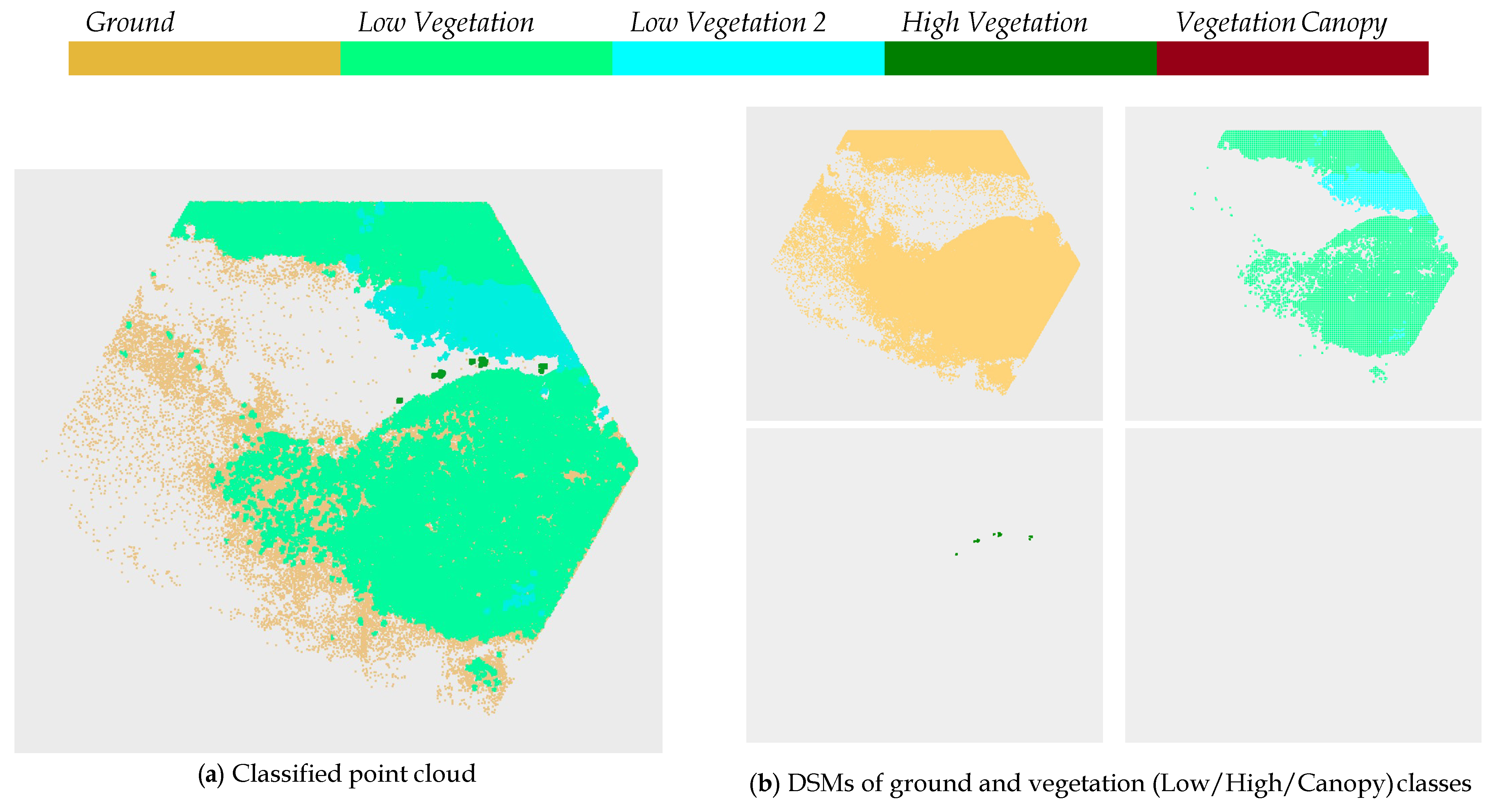

3.1. Airborne Laser Scanning Data Processing

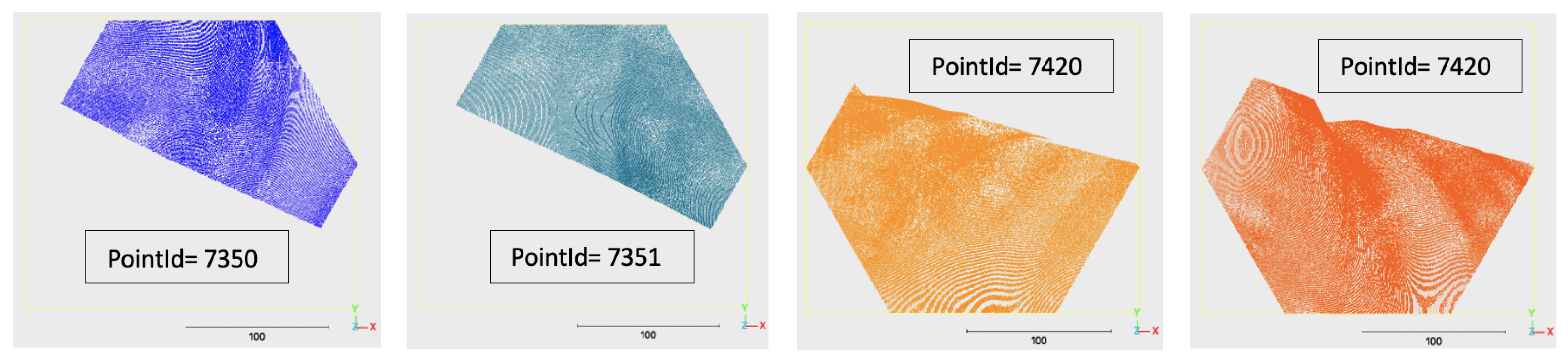

3.1.1. Data Preparation

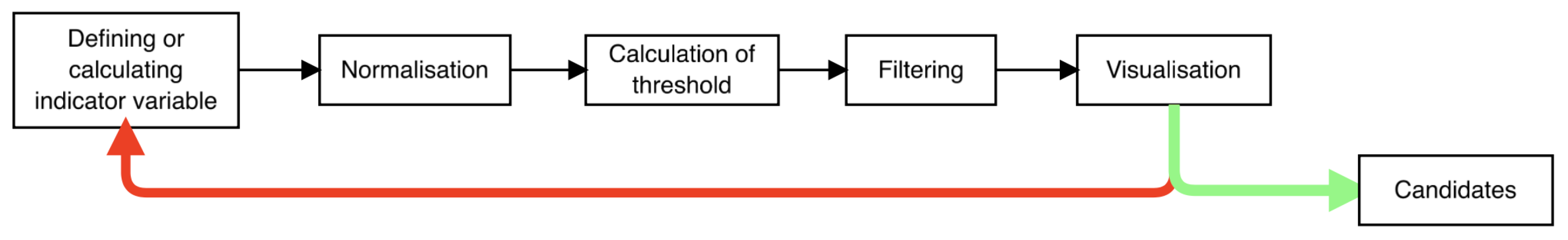

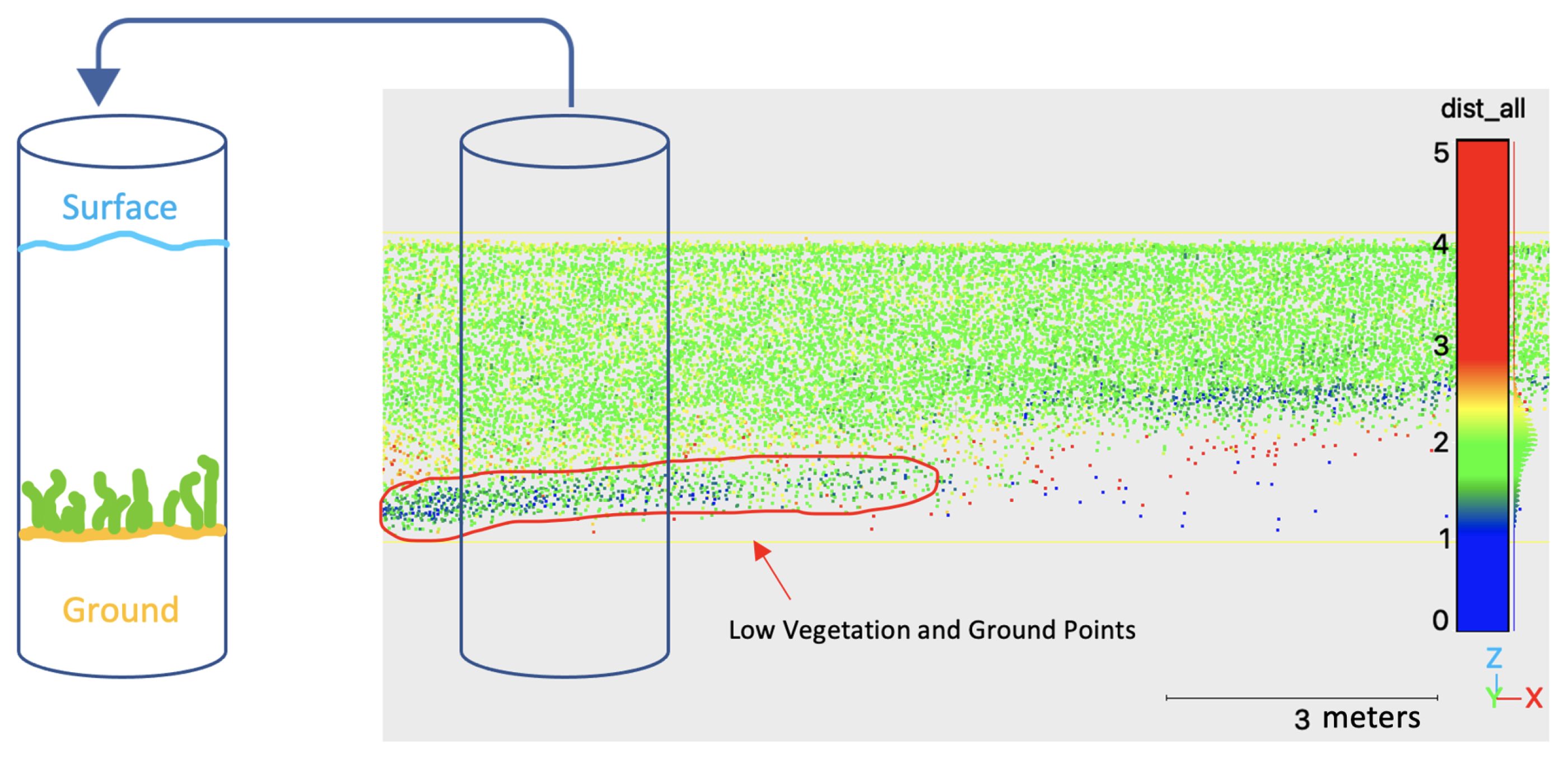

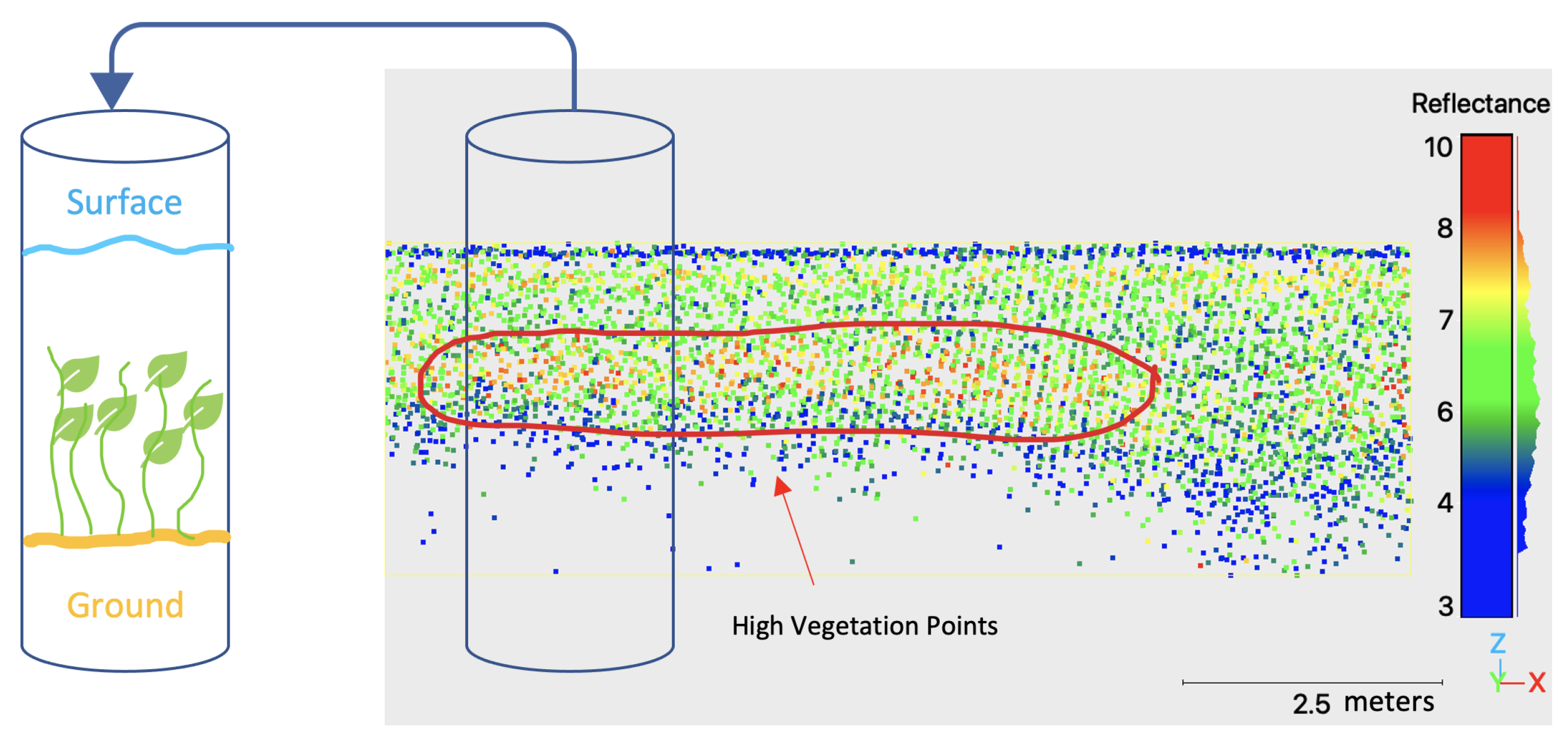

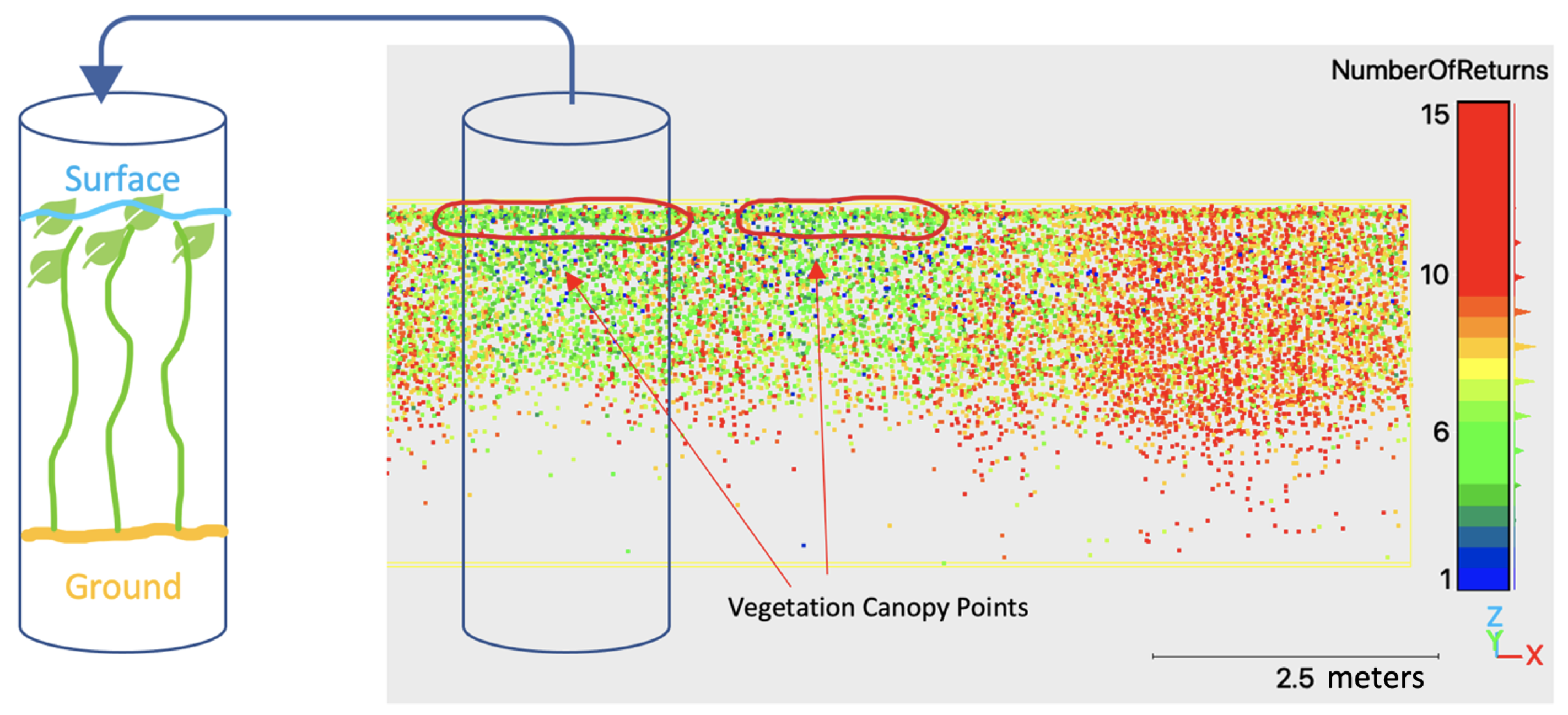

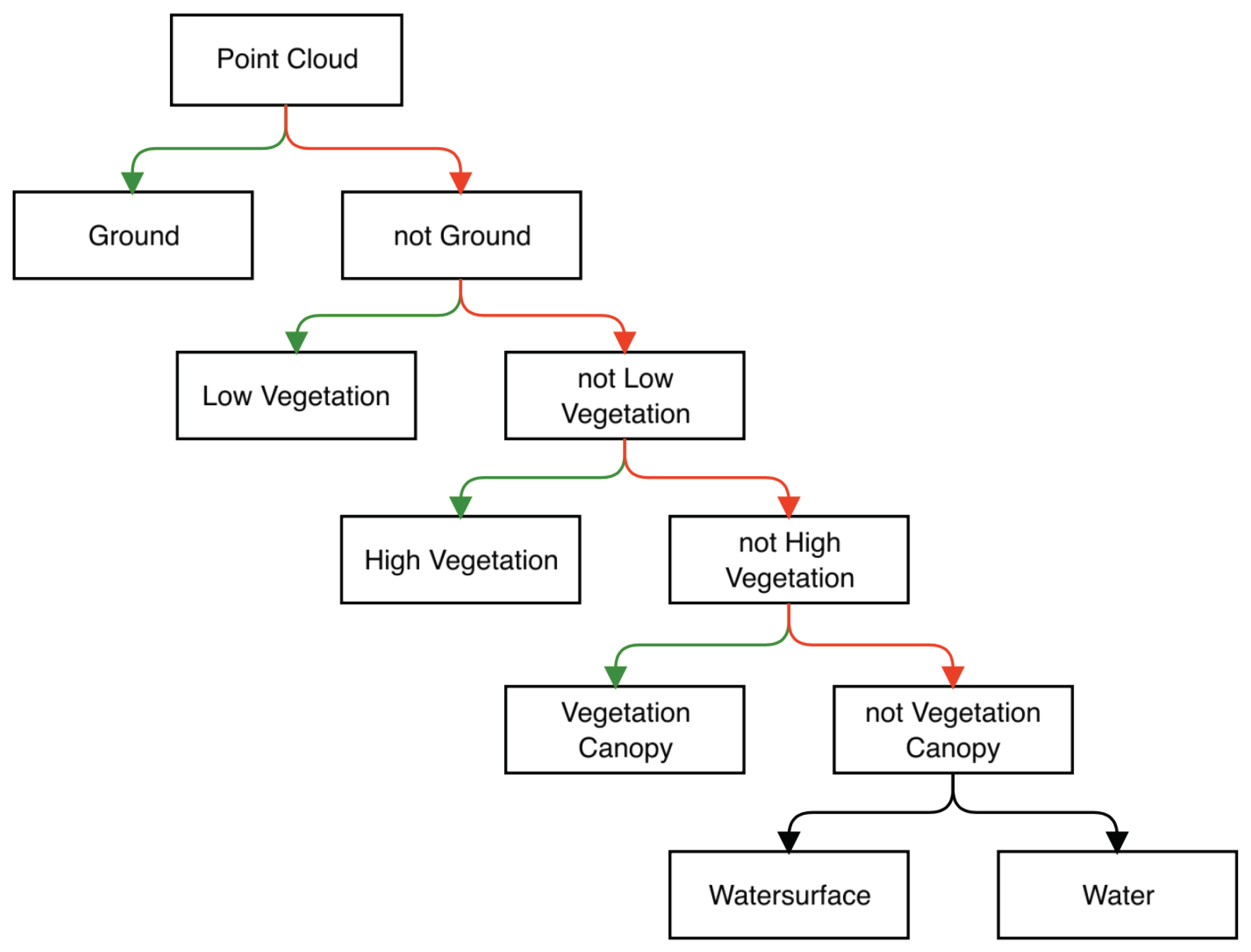

3.1.2. Classification of Candidates

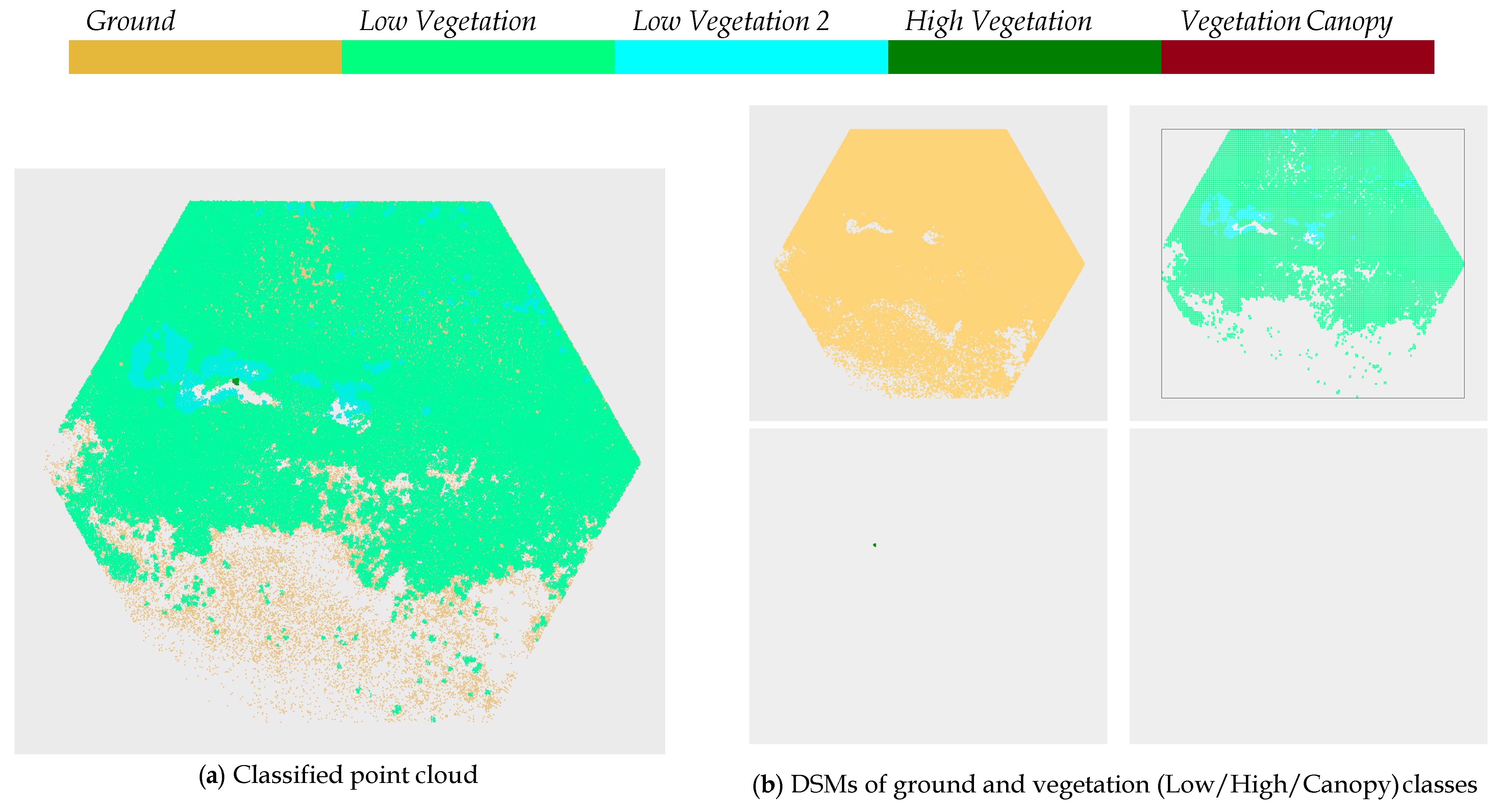

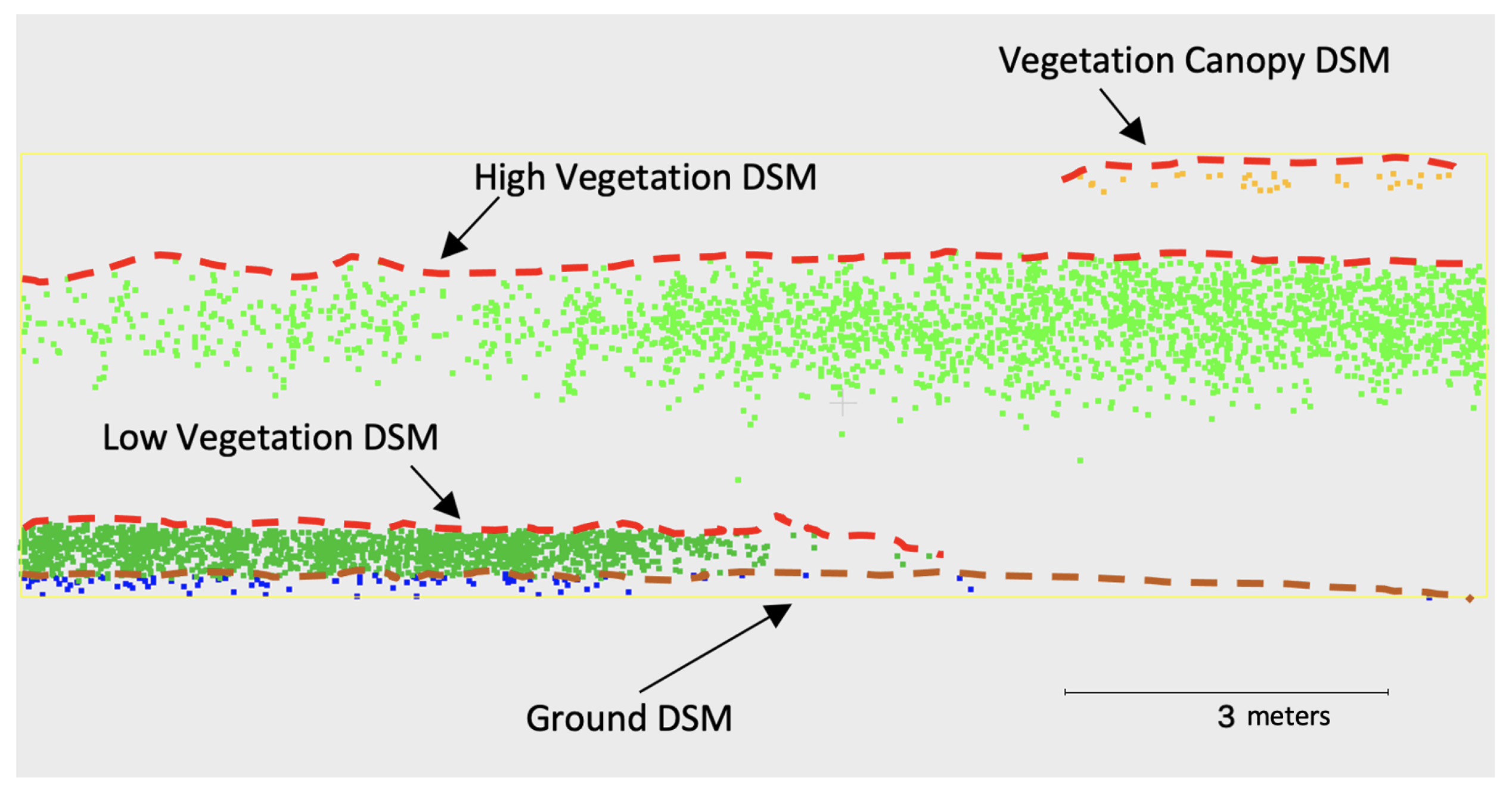

3.1.3. Calculation of Digital Surface Models

3.1.4. Classification of Point Cloud

3.2. Processing of Additional Data for Quality Assessment

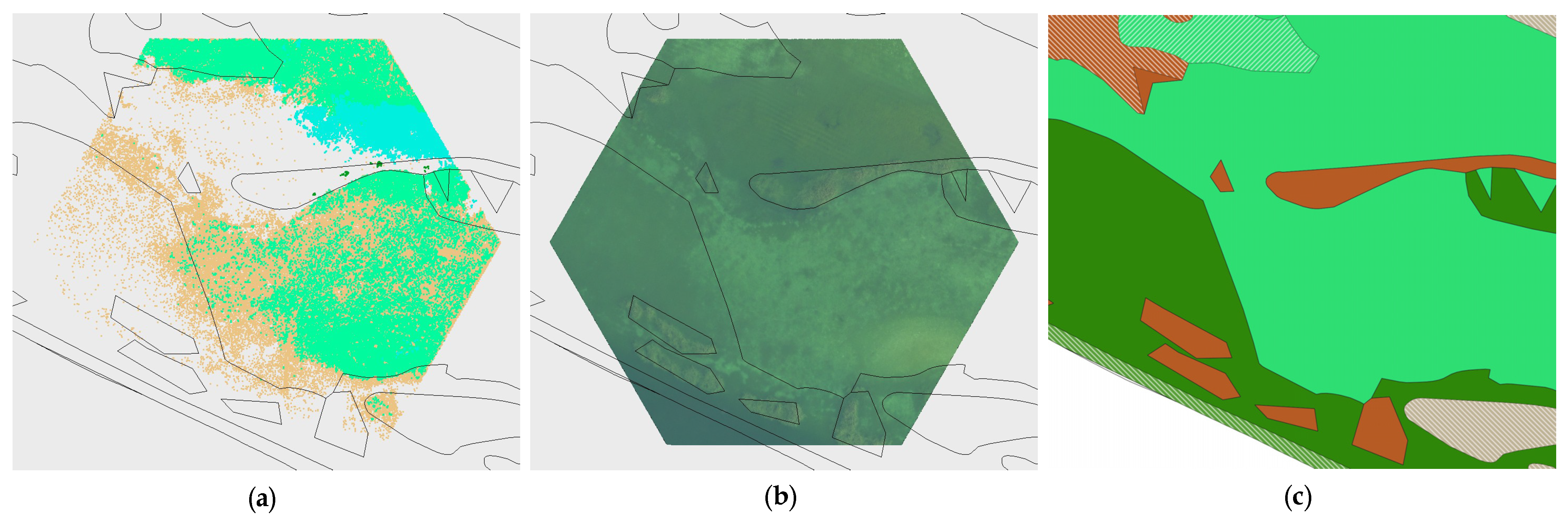

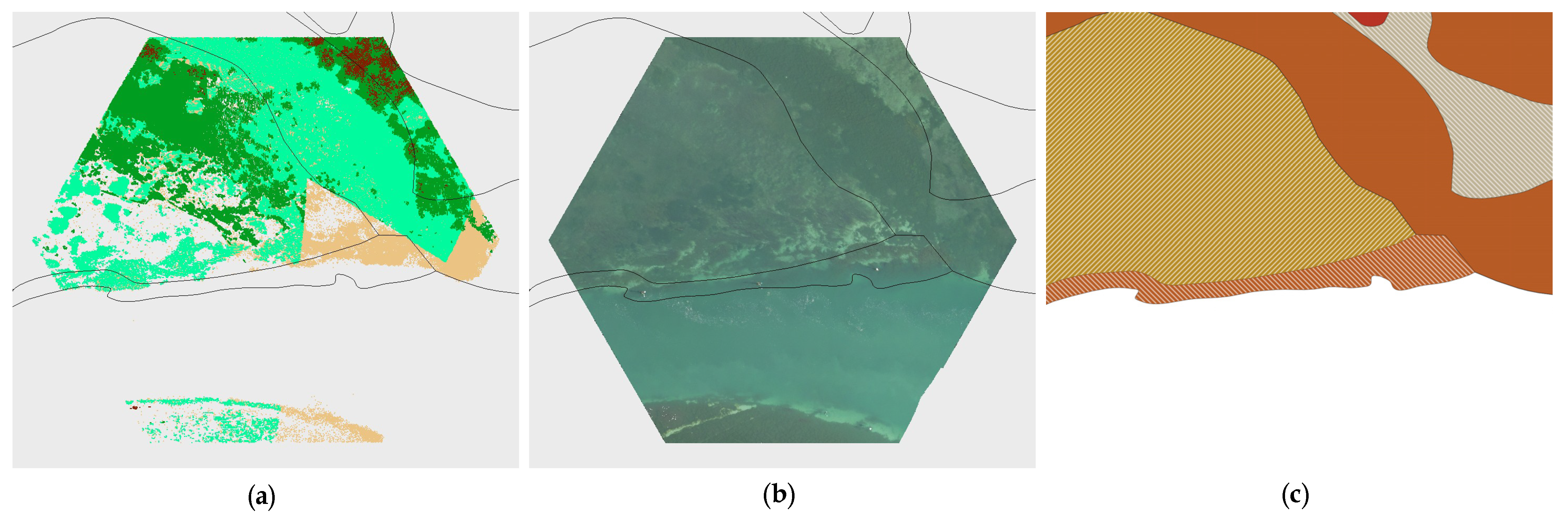

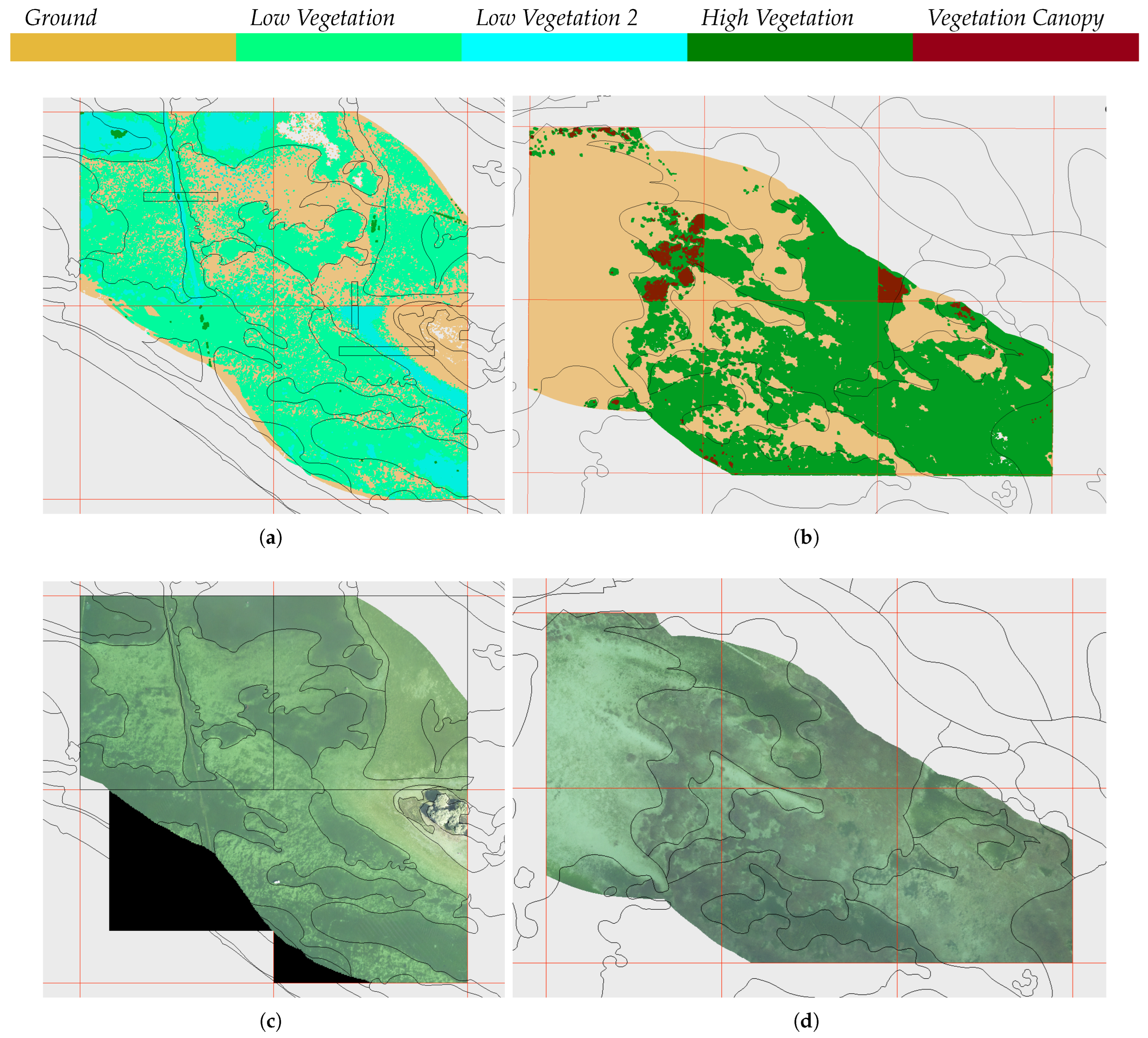

4. Results

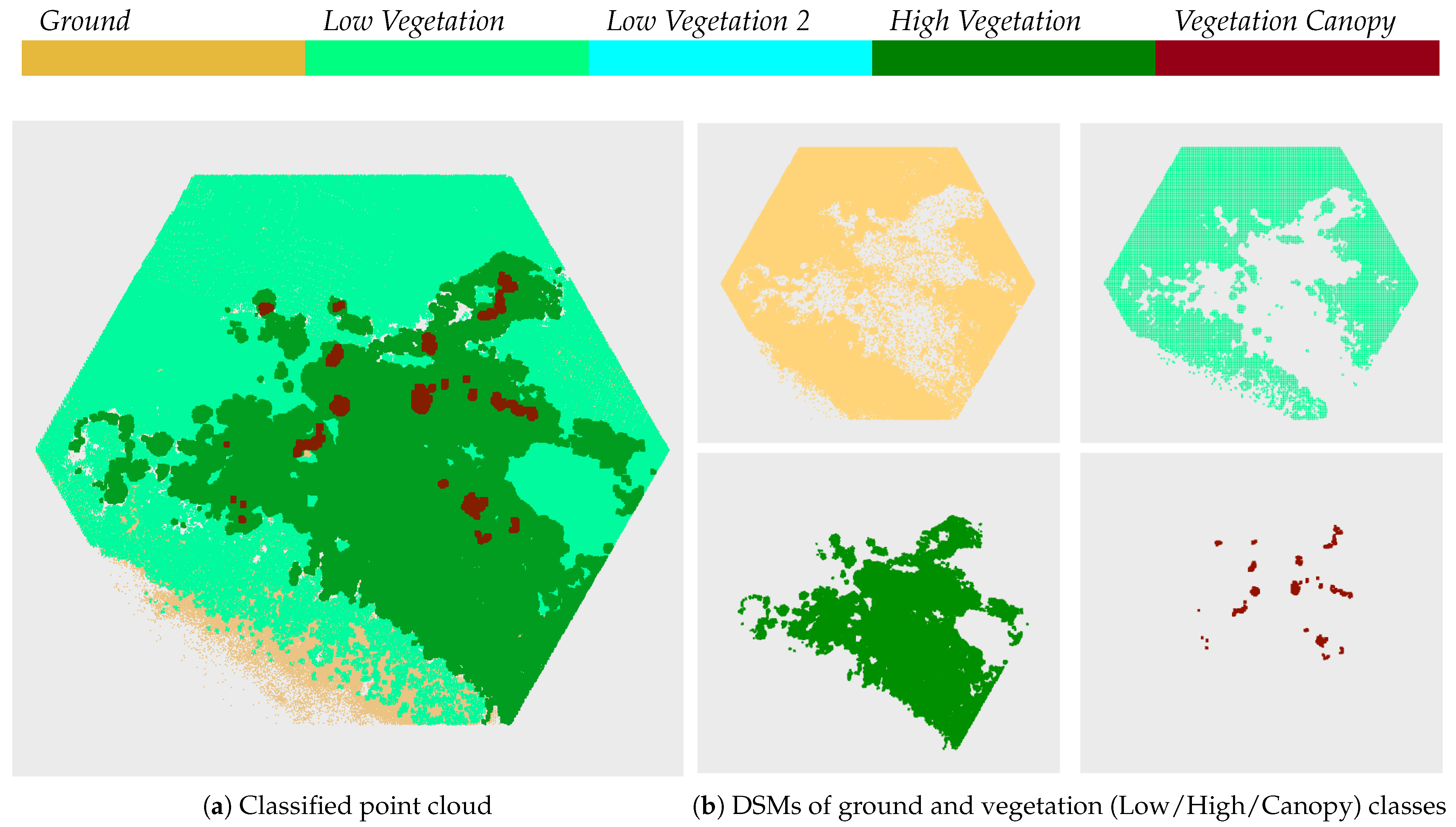

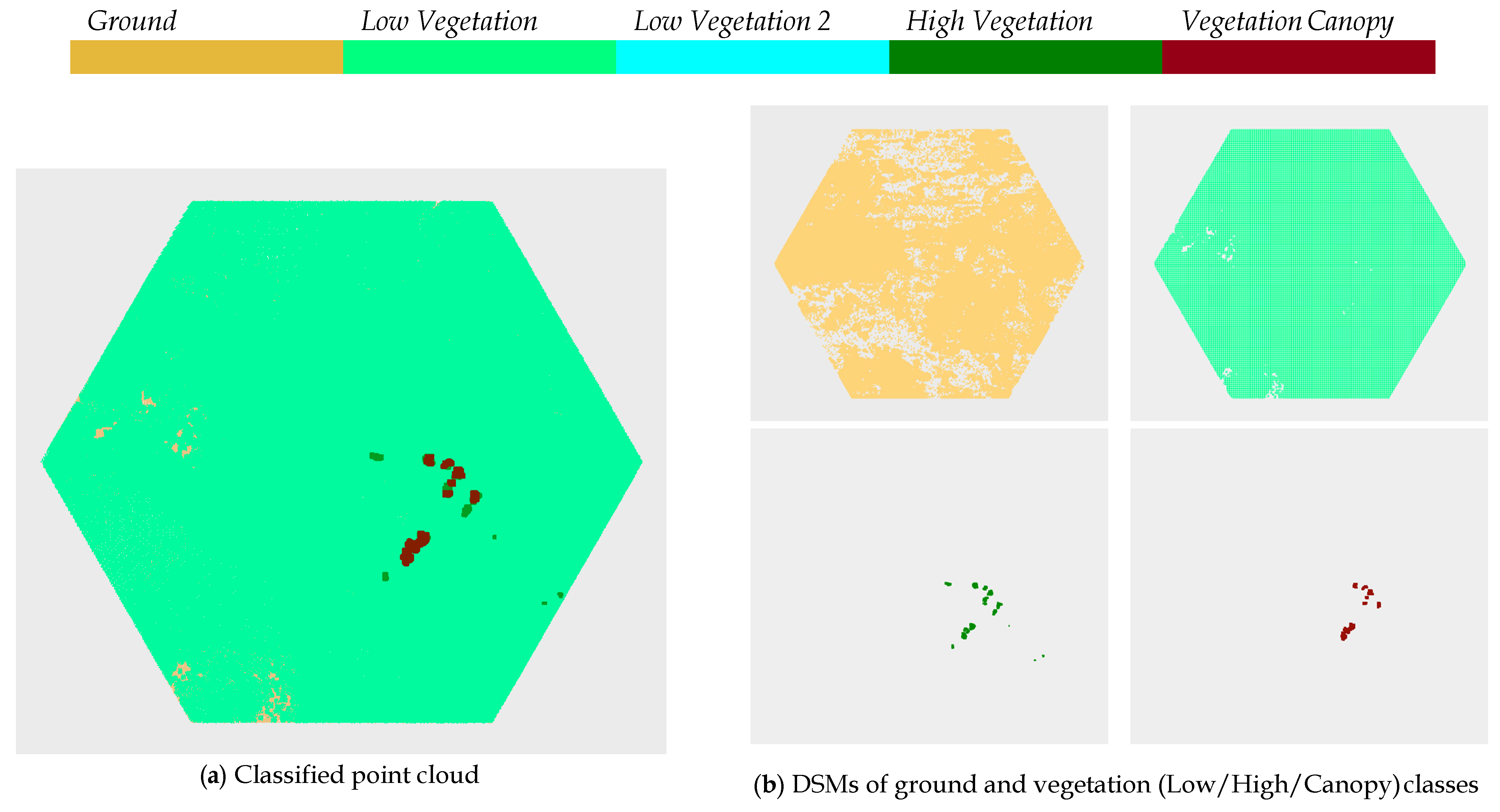

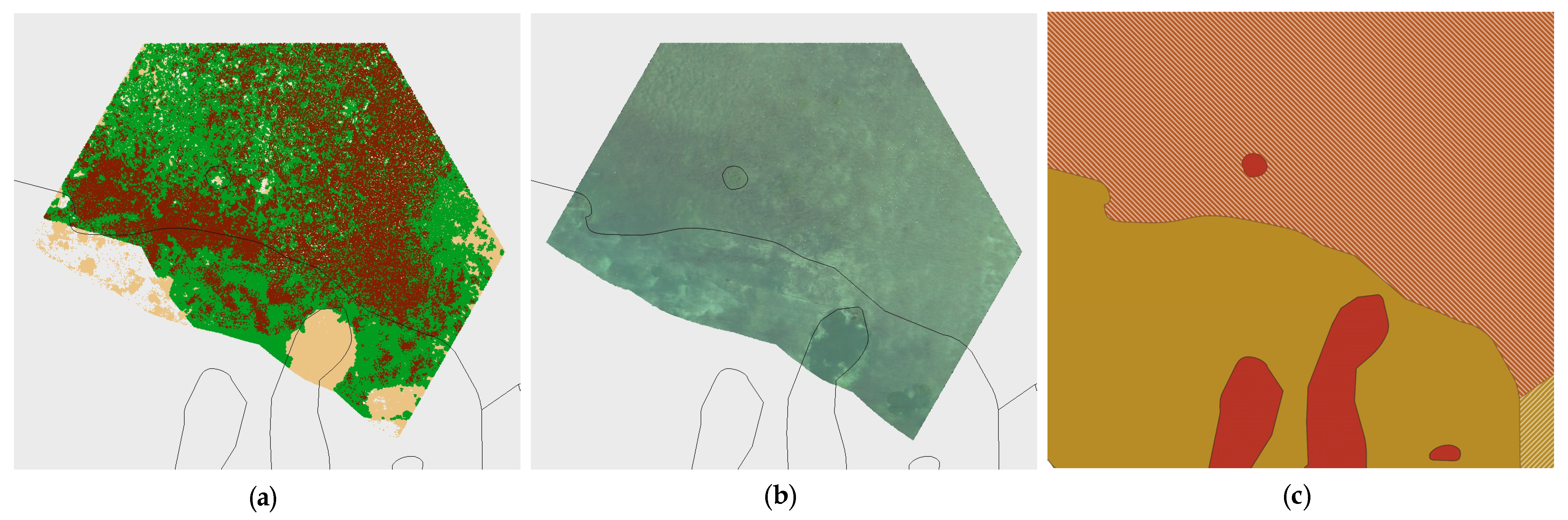

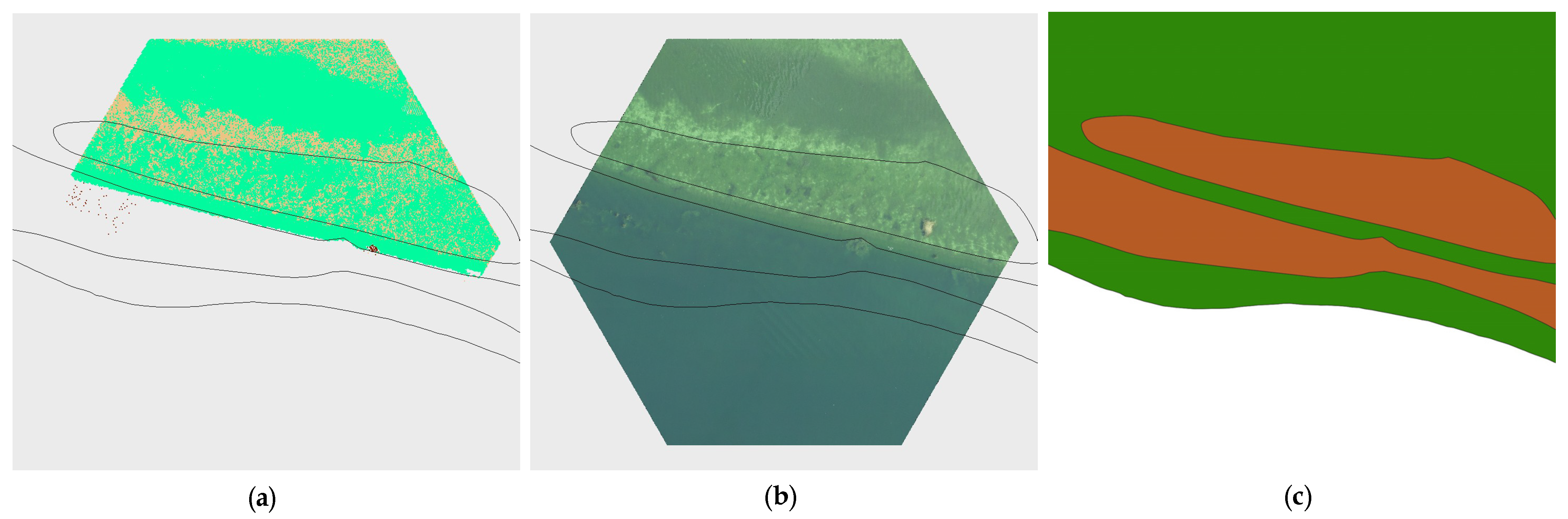

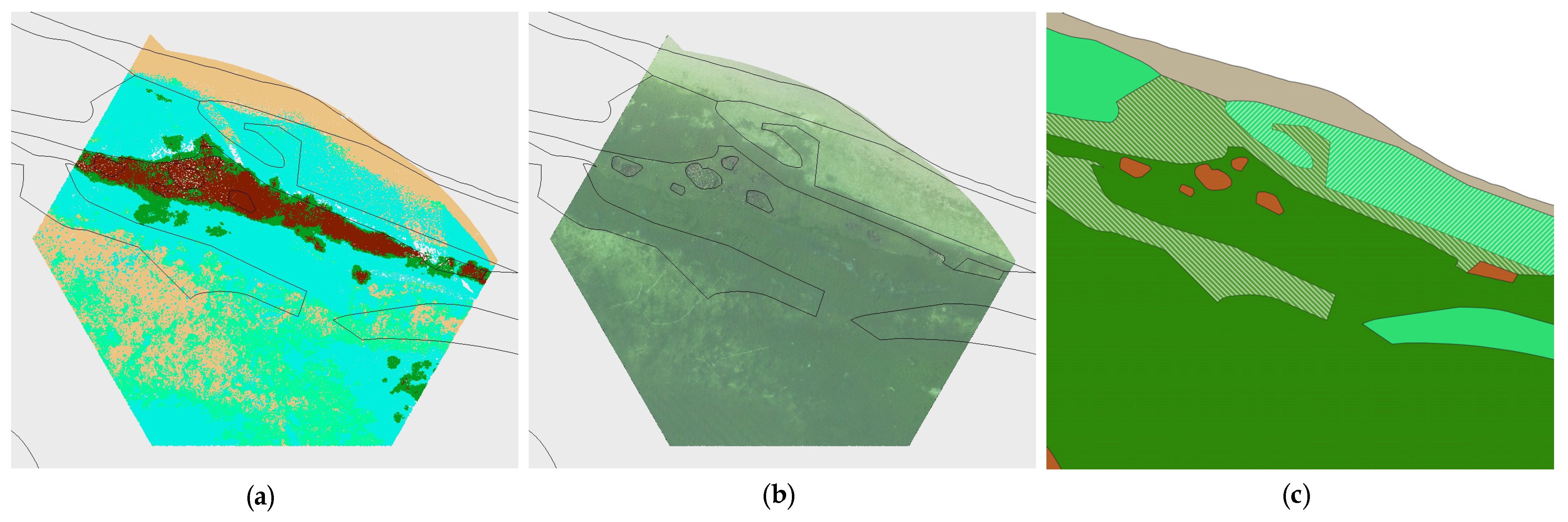

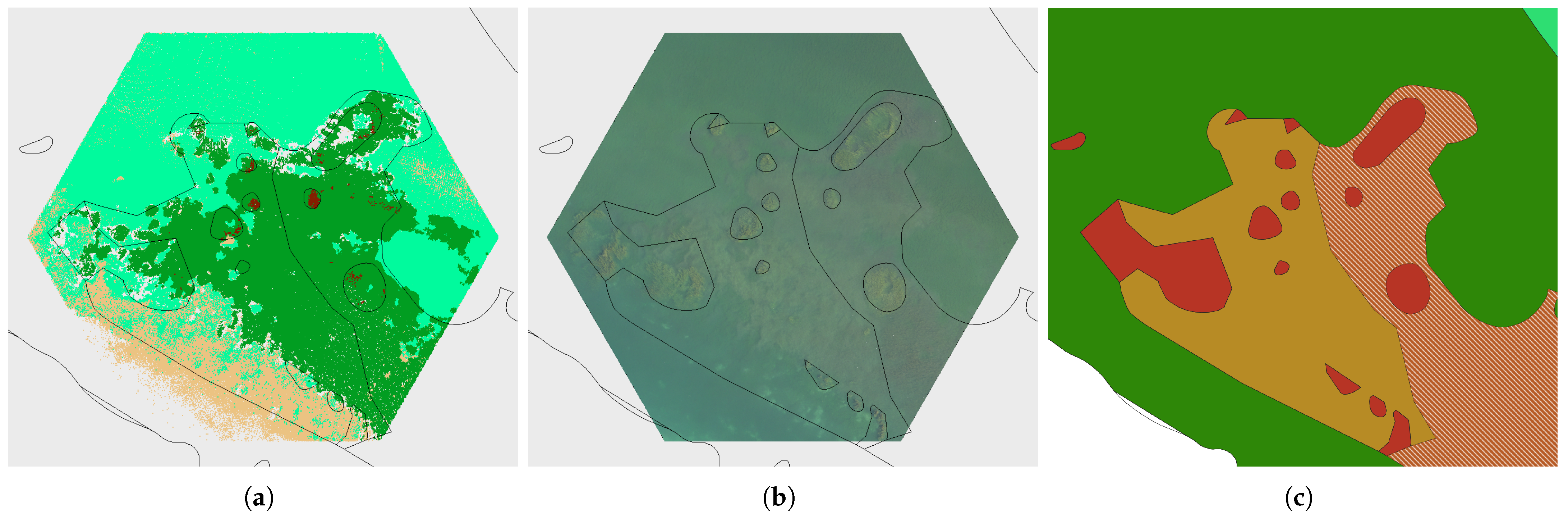

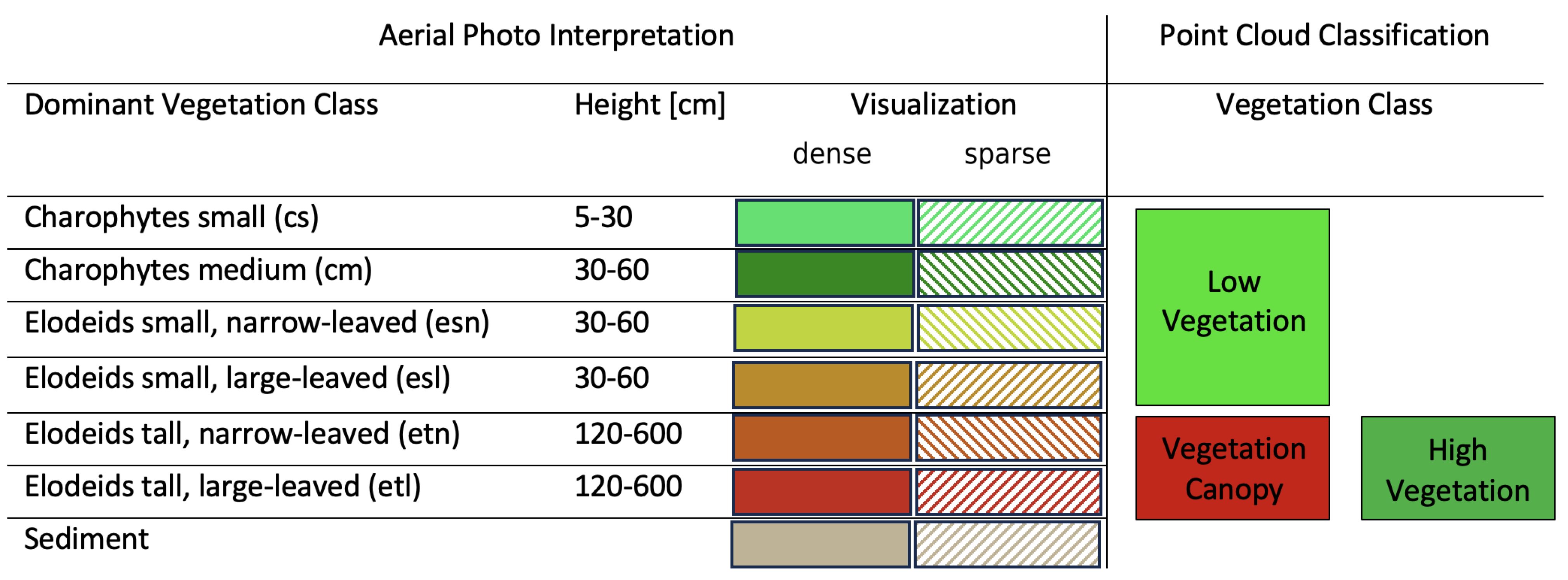

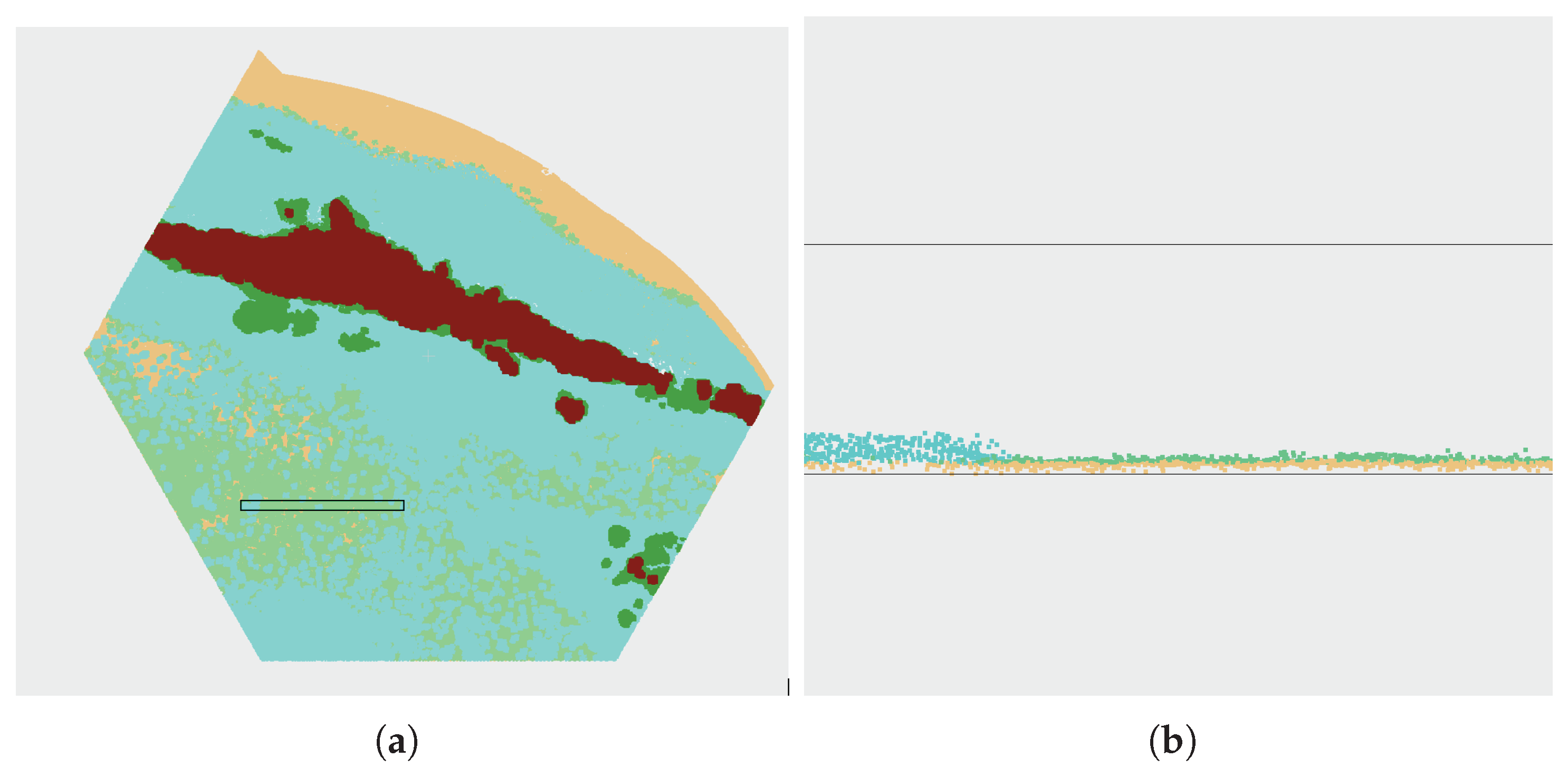

4.1. Classification Results

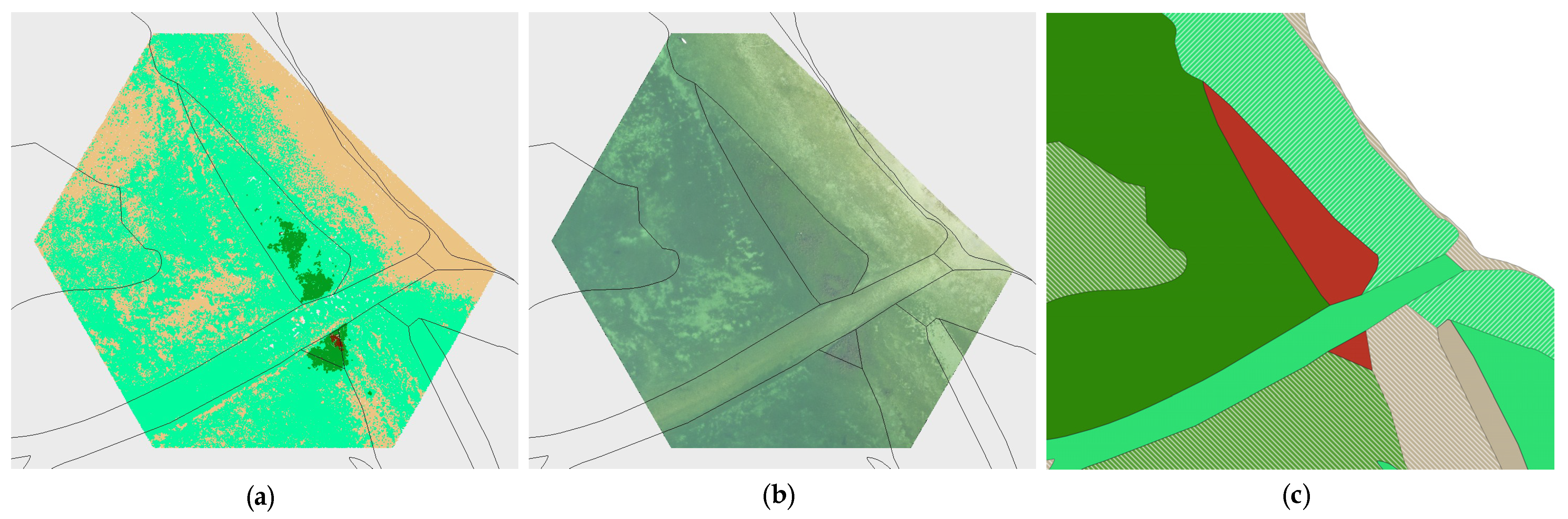

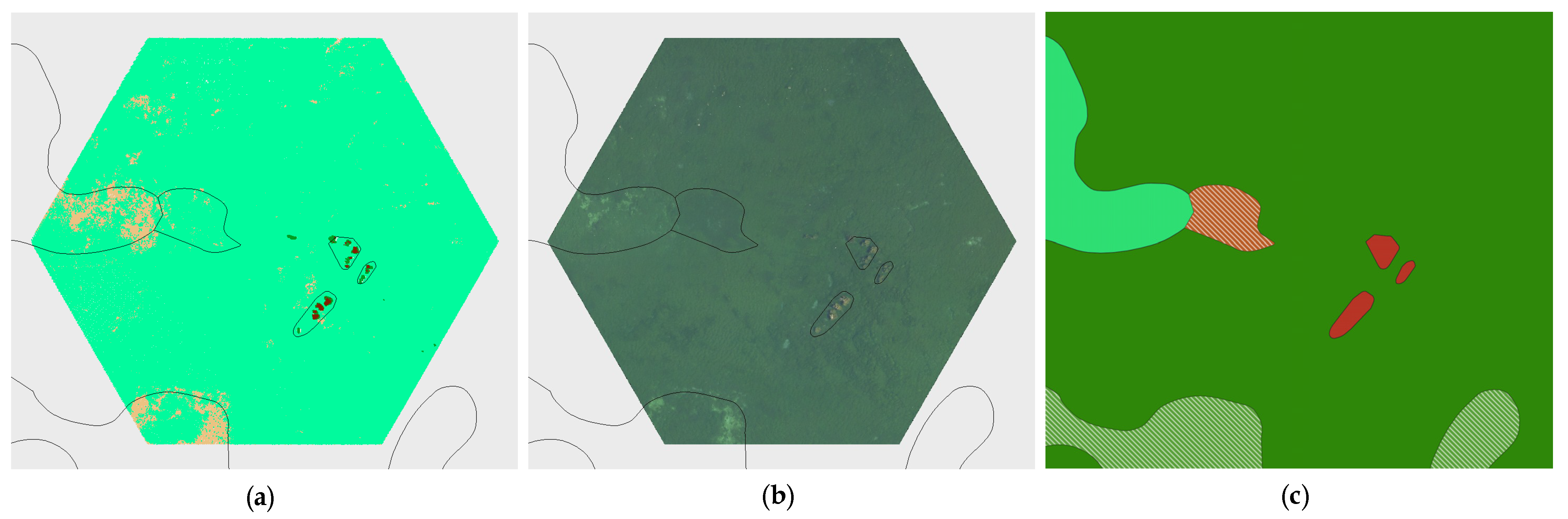

4.2. Comparison with Reference Data

5. Validation

5.1. Validation of Ground and Low Vegetation Class

5.2. Validation of High Vegetation Class

5.3. Validation of Vegetation Canopy Class

6. Discussion

6.1. Summary of the Validation

6.2. Vertical Complexity of Macrophyte Stands

6.3. Potential for Improvement and Extensions

- The calculation of vegetation volume and biomass by incorporating knowledge of vegetation densities.

- The extension of the data analysis to determine vegetation density.

- Leaf size could also be included in the analysis, following the aerial photo-based classification. The hypothesis is that large-leaved plants let less of the laser signal penetrate than small-leaved plants.

- The most ambitious extension of the LiDAR data analysis would be an advanced classification process that distinguishes vegetation classes in more detail, or even identifies vegetation types, by combining several indicator attributes. Instead of relying on a single main indicator per vegetation class, a combination of attributes such as vegetation height, vegetation area size, leaf size, vegetation density, water depth, Reflectance, NumberOfReturns, and other influencing variables could lead to a more precise classification. Incorporating additional knowledge about vegetation types and their characteristics could develop this idea further.
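As an illustration of the first and third items in the list above, the following minimal sketch shows how a vegetation volume and a simple penetration indicator might be computed. It is not part of the published workflow. It assumes that a vegetation canopy model and a ground model are available as equally sized grids of elevations in metres, with `None` marking empty cells. The function names, the grid layout, and the definition of the penetration ratio (ground returns divided by all returns in a cell) are hypothetical choices made for this example.

```python
from typing import Optional, Sequence

# Hypothetical input type: a raster stored as rows of elevations in metres,
# with None marking cells that contain no data.
Grid = Sequence[Sequence[Optional[float]]]


def vegetation_volume(canopy: Grid, ground: Grid, cell_size_m: float) -> float:
    """Sum (canopy - ground) * cell area over all cells valid in both grids.

    Negative height differences are clamped to zero so that noise in either
    model cannot reduce the total volume.
    """
    cell_area = cell_size_m ** 2
    volume = 0.0
    for canopy_row, ground_row in zip(canopy, ground):
        for top, bottom in zip(canopy_row, ground_row):
            if top is None or bottom is None:
                continue  # skip cells without data in either model
            height = max(top - bottom, 0.0)
            volume += height * cell_area
    return volume


def penetration_ratio(n_ground_returns: int, n_total_returns: int) -> Optional[float]:
    """Share of returns in a cell that reached the ground (assumed indicator).

    Under the leaf-size hypothesis, large-leaved stands would be expected to
    show lower values than small-leaved stands. Returns None for empty cells.
    """
    if n_total_returns == 0:
        return None
    return n_ground_returns / n_total_returns
```

Multiplying the resulting volume by a stand-specific density factor would give a rough biomass estimate; such a factor would have to come from field data and is not provided here.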

6.4. Transferability

6.5. Applications

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A. Classification Results and Comparative Data


| Class | Height [cm] | Species |
|---|---|---|
| Charophytes small (cs) | 5–30 | Chara aspera Willd., Chara aspera var. subinermis Kütz., Chara tomentosa L., Chara virgata Kütz., Nitella hyalina (DC.) C. Agardh |
| Charophytes medium (cm) | 30–60 | Chara contraria A. Braun ex Kütz., Chara dissoluta A. Braun ex Leonhardi, Chara globularis Thuill., Nitella flexilis (L.) C. Agardh, Nitellopsis obtusa (Desv.) J. Groves |
| Elodeids tall, large-leaved (etl) | 120–600 | Potamogeton angustifolius J. Presl, Potamogeton crispus L., Potamogeton lucens L., Potamogeton perfoliatus L. |
| Elodeids tall, narrow-leaved (etn) | 120–600 | Ceratophyllum demersum L., Myriophyllum spicatum L., Potamogeton helveticus (G. Fisch.) W. Koch, Potamogeton pectinatus L., Potamogeton pusillus L., Potamogeton trichoides Cham. & Schltdl., Ranunculus circinatus Sibth., Ranunculus trichophyllus Chaix, Ranunculus fluitans Lam., Zannichellia palustris L. (tall) |
| Elodeids small, large-leaved (esl) | 30–60 | Elodea canadensis Michx., Elodea nuttallii (Planch.) H. St. John, Groenlandia densa (L.) Fourr. |
| Elodeids small, narrow-leaved (esn) | 30–60 | Alisma gramineum Lej., Alisma lanceolatum With., Najas marina subsp. intermedia (Wolfg. ex Gorski) Casper, Potamogeton friesii Rupr., Potamogeton gramineus L., Zannichellia palustris L. (small) |
| Other macroalgae (o) | no data | Cladophora sp. Kütz., Ulva (Enteromorpha) sp. L., Hydrodictyon sp. Roth, Spirogyra sp. Link, Vaucheria sp. A.P. de Candolle |
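The height ranges in the table above can be read as a simple lookup from a measured vegetation height to the growth-form classes it is compatible with. The sketch below is only an illustration of that reading and not the classification procedure of this study. The function name is hypothetical, the ranges are treated as inclusive at both ends (an assumption, since the table does not state how boundary values such as 30 cm are assigned), and the class without height data is left out.

```python
from typing import Dict, List, Tuple

# Height ranges in centimetres, copied from the table above.
# "Other macroalgae (o)" is omitted because the table gives no height data.
HEIGHT_RANGES_CM: Dict[str, Tuple[float, float]] = {
    "cs": (5, 30),      # Charophytes small
    "cm": (30, 60),     # Charophytes medium
    "etl": (120, 600),  # Elodeids tall, large-leaved
    "etn": (120, 600),  # Elodeids tall, narrow-leaved
    "esl": (30, 60),    # Elodeids small, large-leaved
    "esn": (30, 60),    # Elodeids small, narrow-leaved
}


def candidate_classes(height_cm: float) -> List[str]:
    """Return the codes of all classes whose height range contains height_cm.

    Both range limits are treated as inclusive (assumption), so a boundary
    value can match classes on either side of the boundary.
    """
    return [
        code
        for code, (low, high) in HEIGHT_RANGES_CM.items()
        if low <= height_cm <= high
    ]
```

Because several classes share the same height range, height alone narrows the candidates but cannot separate, for example, large-leaved from narrow-leaved elodeids. Heights between 60 cm and 120 cm match no class in the table and yield an empty list.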

| Tile | Ground | Low Vegetation | Low Vegetation 2 | High Vegetation | Vegetation Canopy |
|---|---|---|---|---|---|
| ETL1 | 85.34 | 76.02 | 0.0 | 2.29 | 0.21 |
| ETL2 | 68.89 | 64.18 | 0.0 | 0.0 | 0.0 |
| ETL3 | 81.41 | 101.75 | 0.0 | 0.47 | 0.40 |
| ETL4 | 75.30 | 60.69 | 0.0 | 38.66 | 1.56 |
| ETL5 | 67.40 | 0.0 | 0.0 | 69.38 | 57.66 |
| ETN1 | 39.53 | 46.55 | 0.0 | 0.0 | 0.82 |
| ETN2 | 61.49 | 50.32 | 57.30 | 10.26 | 8.82 |
| ETN3 | 91.49 | 70.0 | 3.10 | 0.01 | 0.0 |
| ETN4 | 62.75 | 39.97 | 5.42 | 0.09 | 0.0 |
| ETN8 | 56.35 | 44.50 | 0.0 | 22.09 | 3.59 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Wagner, N.; Franke, G.; Schmieder, K.; Mandlburger, G. Automatic Classification of Submerged Macrophytes at Lake Constance Using Laser Bathymetry Point Clouds. Remote Sens. 2024, 16, 2257. https://doi.org/10.3390/rs16132257