Abstract

The non-invasive detection and digital documentation of buried archaeological heritage by means of geophysical prospection is increasingly gaining importance in modern field archaeology and archaeological heritage management. It frequently provides the detailed information required for heritage protection or targeted further archaeological research. High-resolution magnetometry and ground-penetrating radar (GPR) have become invaluable tools for the efficient and comprehensive non-invasive exploration of complete archaeological sites and archaeological landscapes. The analysis and detailed archaeological interpretation of the resulting large 2D and 3D datasets, and of related data from aerial archaeology or airborne remote sensing, is a time-consuming and complex process that requires the integration of all data at hand, three-dimensional imagination, and a broad understanding of the archaeological problem; therefore, informative 3D visualizations that support both the exploration of complex 3D datasets and the interpretative process are in great demand. This paper presents a novel integrated 3D GPR interpretation approach, centered around the flexible 3D visualization of heterogeneous data, which supports the conjoint visualization of scenes composed of GPR volumes, 2D prospection imagery, and 3D interpretative models. We found that the flexible visual combination of the original 3D GPR datasets with images derived from the data by post-processing techniques inspired by medical image analysis and seismic data processing contributes to the perceptibility of archaeologically relevant features and their respective context within a stratified volume. Moreover, such visualizations help the interpreting archaeologists develop a deeper understanding of the complex datasets, both as a starting point for and throughout the implemented interactive interpretative process.

1. Introduction

Non-invasive archaeological prospection integrating aerial archaeology, airborne remote sensing, magnetometry, and ground-penetrating radar (GPR) is becoming increasingly important in modern field archaeology and archaeological heritage management. Dedicated large-scale applications aimed at exploring entire archaeological sites and landscapes, initiated by the Ludwig Boltzmann Institute for Archaeological Prospection and Virtual Archaeology (LBI ArchPro) and focusing on sites such as the World Heritage Site of Stonehenge (UK) [1,2,3], Roman Carnuntum (A) [4,5,6,7], or the Viking Age sites of Birka (S) [8,9], Kaupang (N) [10], and Borre (N) [11,12], among other case studies in Europe, have opened up new horizons for understanding the explored hidden archaeological heritage and its historical significance. The resulting 2D and 3D datasets are large and diverse, which makes their analysis and archaeological interpretation complex and therefore time-consuming; these processes generally require the integration of all data at hand, three-dimensional imagination, and a broad understanding of the archaeological problem. A central task in interpreting such large-scale prospection datasets is the identification of archaeologically relevant features, their graphical definition, archaeological classification, and description. This is conventionally performed manually within a GIS environment in the form of an interpretative mapping based on 2D data visualizations: archaeologically relevant features are located and delineated by hand and assigned predefined feature classes and standardized descriptions. For prospection methods producing two-dimensional data, such as aerial images and magnetometry, this is a viable approach.
Other modalities, such as laser scanning and image-based modeling, add three-dimensional surface information, and high-resolution GPR data provide a detailed 3D insight into the subsurface; GIS solutions were not originally designed for such data.

Typical high-resolution archaeological GPR datasets store scalar reflectivity values defined over rectilinear three-dimensional grids with grid spacings below one decimeter, representing the surveyed 3D volume of the subsurface [13]. Such a high data resolution entails large datasets and a correspondingly high time expenditure for the detailed interpretative mapping. GPR interpretation is still mostly performed manually, although first approaches towards semi-automatic data interpretation have been published [14,15] and deep learning techniques have been investigated to support and accelerate this process [16].

Current manual GIS-based interpretative mapping approaches typically work on horizontal time or depth slices extracted from the 3D GPR dataset volume. The visualization examples provided are based on data from the southern part of the Roman forum of Carnuntum (A), one of the largest building complexes within the former Civil Town. This area has been used for years as a test field for geophysical instrumentation [17] and as a reference dataset for processing and visualization [5]. The GPR measurement reveals clearly recognizable remains of Roman walls, floors, steps, sewage canals, and other anthropogenic structures. The dataset was recorded using a motorized 16-channel ground-penetrating radar (MIRA) with an antenna frequency of 400 MHz. Data processing was performed using the in-house developed software ApRadar (https://archpro.lbg.ac.at/technologie/apsoft-2-0/, accessed on 29 January 2022), which is capable of handling large-scale high-resolution prospection data through many processing steps, including bandpass filtering, resampling, and Kirchhoff migration. The resulting dataset comprises 1601 × 1601 × 50 voxels at a resolution of 5 × 5 × 5 cm [13], covering an area of 80.05 × 80.05 m down to a depth of 2.5 m. The initial manual interpretation of the dataset identified more than 800 individual features of archaeological interest [18].
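As a quick check of the figures above, the covered extent and the raw size of the reference dataset follow directly from the grid dimensions and the 5 cm spacing. The sketch below assumes 4-byte float32 voxels, which the text does not state; it says nothing about how ApRadar actually stores the data.

```python
import numpy as np

# Grid dimensions (x, y, z) and voxel spacing in metres, as reported for the
# Carnuntum forum dataset.
shape = (1601, 1601, 50)
spacing_m = 0.05

# Covered extent: number of cells times cell size along each axis.
extent_m = tuple(round(n * spacing_m, 2) for n in shape)

# Memory footprint, ASSUMING 4-byte float32 voxels (not stated in the text).
n_voxels = int(np.prod(shape, dtype=np.int64))
bytes_float32 = n_voxels * np.dtype(np.float32).itemsize

print(extent_m)                      # (80.05, 80.05, 2.5)
print(n_voxels)                      # 128160050
print(round(bytes_float32 / 2**20))  # 489 (MiB)
```

At roughly half a gigabyte for a single scalar channel, keeping several derived versions of the volume in memory at once (filtered copies, attributes) quickly becomes a practical concern.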

In this work, we present a novel interpretation approach based on 3D GPR data visualization techniques derived from earlier approaches for medical imaging data [19,20]. This is possible because 3D medical imaging techniques and GPR provide comparable 3D datasets. In addition, the exploration of archaeological GPR slices is very similar to the way radiologists read X-ray computed tomography (CT) or magnetic resonance imaging (MRI) data; however, the medical imaging processes and the respective questions differ greatly from the archaeological application of GPR. While radiologists try to identify and assess deviations from a well-known norm (healthy human anatomy) based on well-understood and standardized imaging techniques, archaeologists need to locate and analyze any conceivable kind of man-made feature in the generally unknown subsurface or within a unique archaeological stratification, based on an imaging process that is, from the archaeological perspective, comparatively poorly understood and difficult to standardize. The detectability of archaeologically relevant features in the subsurface heavily depends on GPR imaging quality, which itself is influenced by many technical and environmental factors, such as soil conditions or the physical contrast between the archaeological structures and the surrounding soil matrix or archaeological stratification.

Beyond the mere recognition of relevant features, the archaeological interpretation process requires a comprehensive understanding of the respective stratification, the depicted features, their contexts, 3D shapes, and spatial arrangement. These requirements clearly demand advanced visualization approaches beyond 2D slices that preserve the 3D nature of the data and support the analysis by reducing complexity and enhancing the relevant information. The challenges posed by the archaeological interpretation of GPR data are exacerbated by the fact that in most cases it is not clear how archaeological structures are recorded by the imaging technique. Just as bones in radiographs look very different from photographs, since X-ray absorption rather than color is recorded, GPR datasets reflect abstract physical properties of the archaeological features [21,22,23]. In practice, high-resolution images of a complex stony soil matrix or archaeological stratification appear noisy. Major structures such as walls are only distinguishable by slight textural changes or by the geometrical layout of the buildings involved. The 3D visualization of GPR data faces the challenge of depicting the archaeologically relevant dataset regions and features comprehensively, while at the same time suppressing irrelevant information that interferes with their perception. This requires robust, intuitive, and highly flexible visualization techniques, as well as advanced data post-processing after GPR dataset reconstruction, migration, and the computation of the commonly used reflectance amplitude images using the Hilbert transform. Applying 3D image processing techniques, including filtering, classification, and segmentation, has the potential to reveal and depict more archaeologically relevant information. The computation of texture features is just one example of how post-processing can enhance data understanding [24].
Moreover, GPR measurements can be exploited beyond reflectivity amplitudes. Seismic attribute analysis yields new insights by better depicting certain man-made structures [25].
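The reflectance amplitude images mentioned above are commonly obtained from the analytic signal of each trace, and the same analytic signal yields classic complex-trace attributes familiar from seismic processing. The following minimal sketch uses `scipy.signal.hilbert` on a synthetic 400 MHz wavelet; the trace, the 0.1 ns sampling interval, and the choice of attributes (envelope, instantaneous phase, and instantaneous frequency) are illustrative assumptions, not the specific attributes used in [25].

```python
import numpy as np
from scipy.signal import hilbert

def trace_attributes(trace: np.ndarray, dt_ns: float):
    """Return envelope, unwrapped instantaneous phase (rad) and instantaneous
    frequency (GHz) of a single trace, computed from its analytic signal."""
    analytic = hilbert(trace)                 # trace + i * (Hilbert transform of trace)
    envelope = np.abs(analytic)               # instantaneous amplitude (reflection strength)
    phase = np.unwrap(np.angle(analytic))     # continuous phase along the trace
    inst_freq = np.gradient(phase, dt_ns) / (2 * np.pi)  # cycles per ns = GHz
    return envelope, phase, inst_freq

# Synthetic trace (assumed): Gaussian-modulated 400 MHz wavelet centred at 50 ns.
dt = 0.1                                      # ns, assumed sampling interval
t = np.arange(1024) * dt
trace = np.exp(-((t - 50.0) / 3.0) ** 2) * np.cos(2 * np.pi * 0.4 * (t - 50.0))
env, phase, freq = trace_attributes(trace, dt)
print(t[np.argmax(env)], freq[500])           # envelope peak time and frequency there
```

The envelope is insensitive to the polarity and oscillation of the wavelet, which is why amplitude images derived from it look smoother than raw trace values; phase and frequency carry complementary information that amplitude maps discard.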

The goal of optimally exploiting such additional information channels can only be achieved through joint analysis. Image fusion is one way to combine the respective information. The software toolbox TAIFU (Toolbox for Archaeological Image FUsion) developed by the LBI ArchPro offers numerous 2D image fusion algorithms [26]. It could theoretically be extended to 3D GPR data, enabling, e.g., the combination of different seismic attributes into a single image; however, image fusion does not solve the problem of how to efficiently analyze the resulting 3D volume. Moreover, fusion is limited to a single type of data, such as 2D or 3D images. Fused visualization is an alternative approach. Unlike image fusion, which operates in the dataset domain, fused visualization only combines the visual contributions of the individual datasets. This has the advantage that heterogeneous data with different representations, formats, and resolutions can be handled, which is exactly what the developed 3D visualization approach does. It supports the visual combination of 3D GPR volumes, point clouds, 3D vector data, and 2D imagery, which together yields a novel way to support the understanding of archaeological GPR datasets, as well as of the totality of heterogeneous data available for an integrated multimodal archaeological interpretative process. For the first time, it is possible to extract convincing visual representations of both the outer shape and the internal composition of archaeological structures still hidden underground directly from the data at hand. Such visualizations not only support visual exploration, but also provide an exceptional basis for further analysis and the comprehensive extraction of archaeologically relevant features.
The possibility of deriving 3D models of an archaeological site from, and rechecking them against, the underlying 3D GPR measurement evidence increases their accuracy and credibility, and thereby aids the dissemination of scientific results and strengthens the soundness of the methodology.

2. Materials and Methods

2.1. Ground Penetrating Radar and the Similarity to Medical 3D Image Data

High-resolution 3D GPR datasets for archaeological prospection are computed from the echoes of an electromagnetic pulse emitted into the ground by a transmitting antenna and recorded by a receiving antenna. Practical survey grids have sub-decimeter intervals, depending on the radar wavelength used [7,13]. The properties of the recorded echo wave strongly depend on the physical properties of the archaeological features, as well as on the physical properties, such as composition, grain size, pore volume, and humidity, of the surrounding soil matrix or of embedded archaeological and geological deposits. GPR dataset reconstruction aims at computing the radar reflectivity at distinct 3D locations beneath the recording surface. The necessary transformation of the signal recorded in 2D space into the 3D volume is typically calculated based on estimated signal propagation velocities; however, the complex radar wave/soil interaction requires extensive pre-processing, including band-pass filtering and migration, to avoid artifacts such as excessive noise and reflector localization ambiguities. Finally, the commonly used reflectivity measure is computed from the absolute amplitude of the adjusted wave signal, resampled at each cell or voxel of the dataset grid defined over the measurement volume (3D cuboid) [21,27].
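The velocity-based time-to-depth transformation mentioned above can be illustrated under the simplifying assumption of a single constant propagation velocity, using the standard low-loss relations $v = c/\sqrt{\varepsilon_r}$ and $d = v\,t/2$ for a two-way travel time $t$. Actual processing uses estimated, possibly depth-dependent velocities; the values below are illustrative only.

```python
import numpy as np

C_M_PER_NS = 0.299792458  # speed of light in vacuum, metres per nanosecond

def velocity_from_permittivity(eps_r: float) -> float:
    """Propagation velocity (m/ns) in a low-loss medium with relative permittivity eps_r."""
    return C_M_PER_NS / np.sqrt(eps_r)

def twt_to_depth(twt_ns, v_m_per_ns: float):
    """Convert two-way travel time (ns) to depth (m) assuming a constant velocity.
    The factor 1/2 accounts for the down-and-back path of the echo."""
    return 0.5 * v_m_per_ns * np.asarray(twt_ns, dtype=float)

v = 0.1  # m/ns, an assumed illustrative velocity (relative permittivity of about 9)
print(twt_to_depth([10, 25, 50], v))        # depths of 0.5, 1.25 and 2.5 m
print(velocity_from_permittivity(9.0))      # about 0.0999 m/ns
```

An error in the assumed velocity scales all depths proportionally, which is one reason why depth slices are often labeled by travel time rather than by depth.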

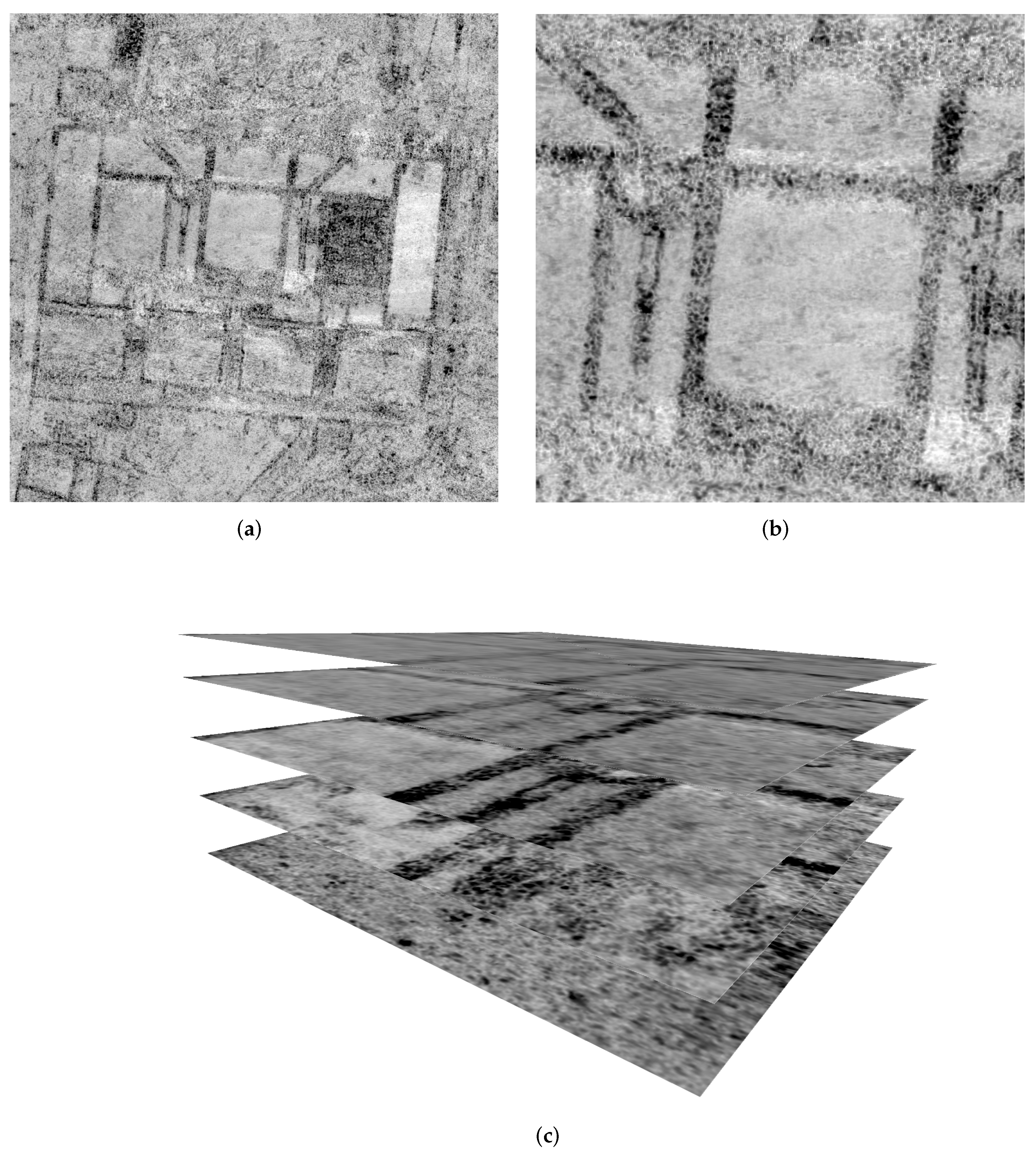

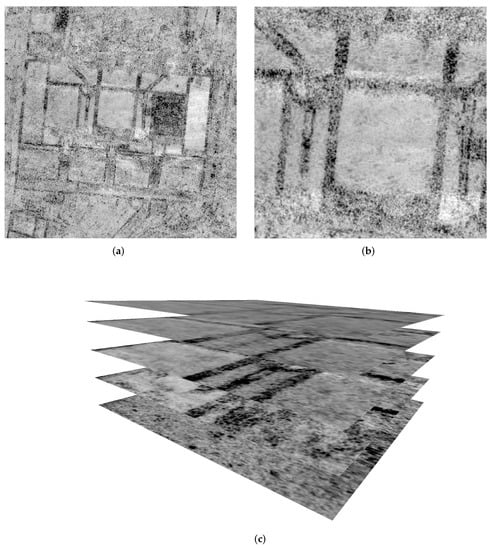

In the archaeological interpretation process, GPR datasets are generally visualized as stacks of 2D time or depth slice images, which are horizontal sections through the dataset volume representing a certain depth or time interval (see Figure 1c). In addition, vertical sections can be extracted and explored in orthogonal views for enhanced perception of 3D structures. For display, we deliberately use a grey scale ranging from white for low radar reflectivity to black for high radar reflectivity (see Figure 1).
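A horizontal depth slice is simply a constant-index section of the reflectivity volume, and the inverted grey scale described above maps low values to white and high values to black. The hypothetical helper below assumes an (x, y, z) array layout and 1st/99th-percentile contrast limits, neither of which is specified in the text.

```python
import numpy as np

def depth_slice_to_gray(volume: np.ndarray, k: int, lo=None, hi=None) -> np.ndarray:
    """Extract horizontal slice k from an (x, y, z) reflectivity volume and map it to
    8-bit grey values: white (255) for low and black (0) for high reflectivity."""
    sl = np.asarray(volume[:, :, k], dtype=float)
    # Robust contrast limits (assumed choice) unless given explicitly.
    lo = np.percentile(sl, 1) if lo is None else lo
    hi = np.percentile(sl, 99) if hi is None else hi
    span = max(hi - lo, np.finfo(float).eps)      # avoid division by zero on flat slices
    norm = np.clip((sl - lo) / span, 0.0, 1.0)
    return np.round(255 * (1.0 - norm)).astype(np.uint8)  # invert: high -> black
```

Vertical sections for the orthogonal views follow analogously from `volume[:, j, :]` or `volume[i, :, :]`.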

Figure 1.

Overview and detailed view of a high-resolution (4 × 4 cm), north-oriented GPR slice showing the unexcavated remains of the southern third of the Roman forum in the Civil Town of Carnuntum, Austria. The slice image depicts the main outline of the building complex adjoining the main open square in the north, as well as internal details such as preserved paved floors, individual stone slabs in the foundation walls, or thresholds in door openings. The grid of individual pillars underneath the paved floors of the eastern building shows the remains of a hypocaust for heating the three main buildings interpreted as the curia, the city hall. Furthermore, remains of the sewerage are visible within the building complex and underneath the streets east and west of the forum (a,b). Selection of detail slices forming a sub-volume of the dataset stack, which shows the 3D nature of the GPR data in analogy to a medical 3D dataset (see Figure 2). Some structures, such as walls, are traceable over a wide depth range, while small structures are only visible in individual layers. A comprehensive understanding of the data, including the 3D shapes of such structures, obviously requires three-dimensional visualizations (c).

Larger time or depth intervals reduce the total number of stacked images to be analyzed and, at the same time, the amount of noise. The aggregation of values along the vertical time/depth axis supports the detectability of linear or geometrical outlines of structures such as walls within a noisy background; however, it inevitably results in a loss of contour fidelity when it comes to the definition of a specific feature during the interpretative mapping. In addition, displaying the stack of GPR data slices with minimal depth or time intervals, on the order of half the wavelength, as an infinite video loop has been established as a visualization standard [28]. This approach seems to support the interpreter in the initial formation of a mental three-dimensional model of the stratification under investigation. The spatial relationships of the features and structures recorded in the radar survey become more readily comprehensible through animation, since information that is only fragmentarily captured in the individual slices is merged by the motion [29].
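The aggregation of consecutive slices into thicker time or depth intervals can be sketched as a block average along the vertical axis. For independent noise, averaging n slices reduces the noise standard deviation by a factor of about √n, which is the noise reduction referred to above; the (x, y, z) layout and dropping leftover slices are assumptions of this sketch.

```python
import numpy as np

def aggregate_slices(volume: np.ndarray, n: int, op=np.mean) -> np.ndarray:
    """Combine every n consecutive depth slices of an (x, y, z) volume into one slice
    using op (e.g. np.mean or np.max). Trailing slices that do not fill a complete
    slab are dropped (an assumed convention)."""
    nz = (volume.shape[2] // n) * n
    slabs = volume[:, :, :nz].reshape(volume.shape[0], volume.shape[1], nz // n, n)
    return op(slabs, axis=3)

# Example: 50 slices of 5 cm aggregated in groups of 5 give 10 slabs of 25 cm.
rng = np.random.default_rng(0)
noise = rng.normal(size=(64, 64, 50))           # pure unit-variance noise
agg = aggregate_slices(noise, 5)
print(agg.shape, noise.std(), agg.std())        # std drops by about sqrt(5)
```

The same block structure makes the trade-off visible: a feature thinner than one slab is averaged with its surroundings, which is the loss of contour fidelity mentioned above.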

It is also appropriate to use varying parameters for the generation of the time and depth slices, as well as to use different processing methods such as GPR attributes [30]. Amplitude maps can be complemented with sectional views for improved three-dimensional perception. This is especially useful for surveys with high geological complexity. Integrating additional data or modalities can improve comprehension of GPR datasets and facilitate their interpretation [31].

For the implementation of the interpretation process, GIS environments with the appropriate tools are frequently employed. GIS environments not only support GPR dataset visualization in the form of horizontal 2D slices with globally referenced positioning, but also provide the functionality to overlay supporting 2D images and 2.5D data derived from magnetometry, remote sensing, and existing geographical or historical maps. Software solutions such as GPR-Slice and GPR-Insights (https://www.screeningeagle.com/en/products/category/software/gpr-slice-insights#GPR-Insights, accessed on 29 January 2022) offer comparable functionality and additionally provide GPR data processing, visualization of 2D time or depth slices and profiles, as well as the extraction of 3D iso-surfaces from the GPR datasets to be combined with additional 2D images and 3D models. The support for integrating 3D models in GIS is improving but still limited. Basic support for the visualization of 3D volume datasets such as GPR has only recently been introduced in widespread GIS environments such as ArcGIS Pro (version 2.6 and higher) (https://pro.arcgis.com/de/pro-app/latest/help/mapping/layer-properties/what-is-a-voxel-layer-.htm, accessed on 29 January 2022). More general volume visualization tools such as Voxler (https://www.goldensoftware.com/products/voxler, accessed on 29 January 2022) or VG Studio Max (https://www.volumegraphics.com/de/produkte/vgstudio-max.html, accessed on 29 January 2022) support multiple volume datasets, as well as the inclusion of non-volumetric data. Still, their flexibility is limited in terms of targeted local control of the visualization outcome, as well as the integration of non-volumetric data as required for the archaeological interpretation process.

The 3D visualization of GPR datasets in the form of iso-surfaces was established at an early stage [32] and is still widely used [33,34], e.g., to depict Roman foundations and walls. Iso-surfaces provide acceptable but clearly suboptimal results for the archaeological analysis, since they assume that relevant structures can be separated on the basis of different dataset values.

In GPR data, this is not the case due to high intensity variations and intrinsic noise throughout the datasets. Depending on the choice of the iso value, relevant structures in extracted iso-surfaces either appear fragmented or are not visible at all. In general, iso-surfaces sacrifice most of the information inherent in the data for a single surface of constant value.
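The fragmentation problem can be made tangible by counting the connected regions enclosed by an iso-surface, i.e., the voxels at or above the iso value. In this sketch with a synthetic "wall" embedded in unit-variance Gaussian noise (all values invented for illustration), the noise-free wall yields a single region or vanishes entirely, while the noisy volume breaks up into many small pieces at the same iso value.

```python
import numpy as np
from scipy import ndimage

def count_iso_fragments(volume: np.ndarray, iso: float) -> int:
    """Count 6-connected regions with values >= iso, i.e. the separate pieces
    that an iso-surface at this value would enclose."""
    _, n_regions = ndimage.label(volume >= iso)   # default structure: 6-connectivity in 3D
    return n_regions

# Synthetic example (invented values): a wall-like slab with contrast 3.
clean = np.zeros((40, 40, 20))
clean[18:22, :, 5:15] = 3.0
rng = np.random.default_rng(1)
noisy = clean + rng.normal(0.0, 1.0, clean.shape)  # unit-variance "soil" noise

for iso in (1.5, 2.5, 3.5):
    print(iso, count_iso_fragments(clean, iso), count_iso_fragments(noisy, iso))
```

At a low iso value the noise itself produces hundreds of spurious pieces; raising the iso value suppresses them but also erodes the wall, and above the wall's contrast nothing is left, which mirrors the behavior described in the text.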

The techniques presented in [35] represent an early example of the application of so-called direct volume rendering (DVR) techniques to GPR datasets; DVR generally computes 3D views from volumetric data, taking into account all available dataset information. Although it focused on the integration of GPR and EM-61 data into one single 3D volume, the work in [35] already emphasized the importance of 3D visualization for object localization and the interpretation process, as well as the key role of an appropriate transfer function mapping measurement values to optical properties. Unfortunately, it had no further impact on archaeological applications. Consequently, 3D volume visualization techniques are not yet established as a standard for archaeological GPR applications. Other examples of DVR in the geoscience context exist but are rare, e.g., the visualization of volumetric atmospheric data as part of large multivariate datasets [36,37].

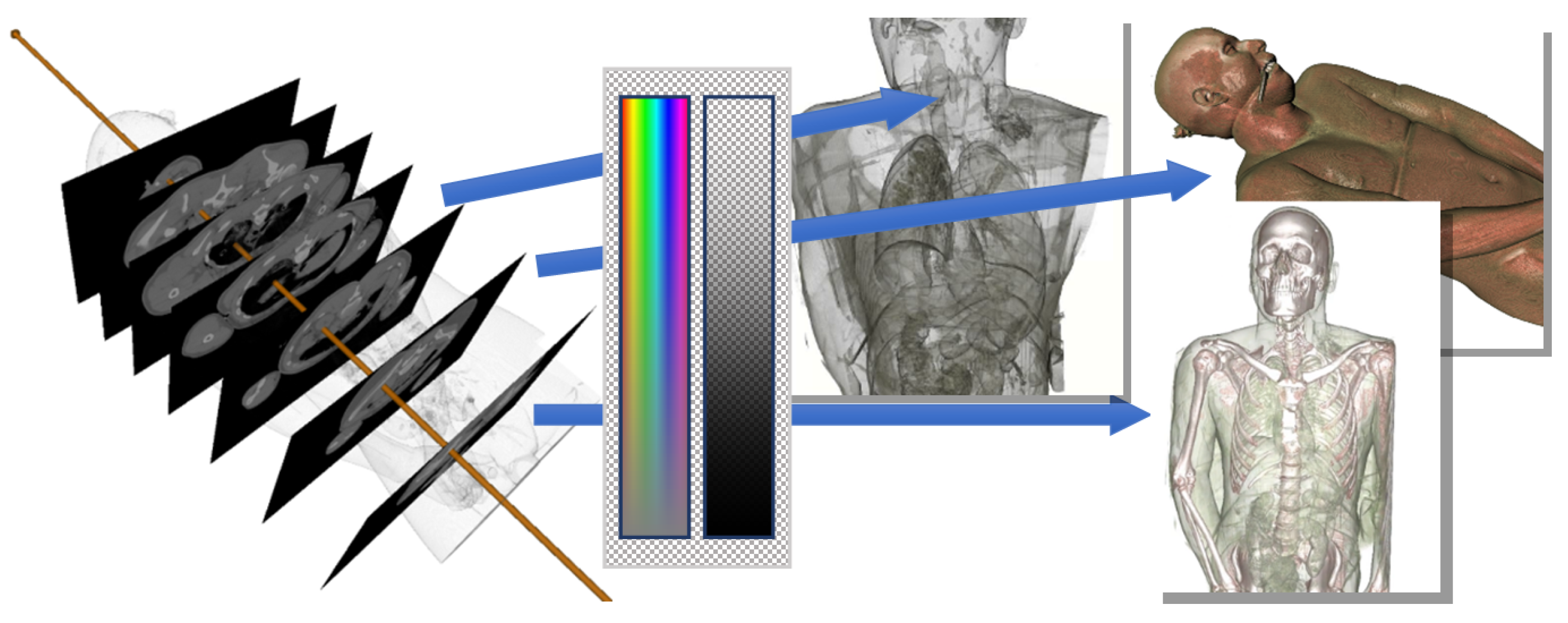

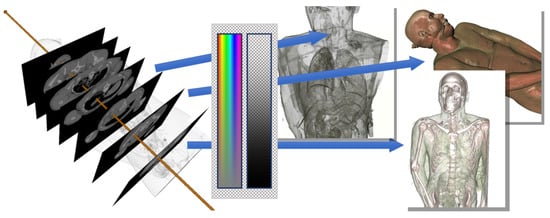

Medical imaging techniques such as X-ray computed tomography (CT) or magnetic resonance imaging (MRI) provide 3D volume datasets similar to GPR. Medical imaging tools such as ITK-SNAP (http://www.itksnap.org, accessed on 29 January 2022) or 3D Slicer (https://www.slicer.org/, accessed on 29 January 2022) use coupled 2D cross-sectional views as their central visualization means. This approach has become established as a standard in medical imaging, since it simplifies tracing the course of structures in 3D space compared to a single view. The cross-sections displayed are not limited to the dataset grid axes; instead, they are obtained by sampling the dataset on arbitrarily oriented slice planes. This may also include the aggregation of sample values in a slab region around the slice plane using operations such as averaging, minimum, or maximum. Many clinical questions do not require the full dataset resolution provided by modern CT scanners. In these cases, the loss of detail due to aggregation is acceptable and outweighed by the time saved through the reduced number of slices to be processed. The 3D visualization of CT data using iso-surface rendering is well suited to depicting structures that are well defined by ideally unique dataset value ranges, such as bones; however, iso-surfaces have largely been replaced by DVR using a transfer function that maps dataset values to color and transparency. This approach is more flexible, supporting semi-transparent representations as well as the visual separation of multiple features, as long as they are characterized by unambiguous dataset value ranges. DVR images are typically computed by casting view rays through the dataset volume, densely sampling the data, applying the transfer function, computing artificial lighting at data-inherent feature surfaces, and finally accumulating the visual contributions of all samples into a color value, as shown in Figure 2 [38].
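Sampling on arbitrarily oriented planes with slab aggregation, as described above for medical viewers, can be sketched with `scipy.ndimage.map_coordinates`. The function below is a minimal illustration in voxel coordinates; it assumes orthonormal in-plane direction vectors and unit voxel spacing, and it is not taken from ITK-SNAP or 3D Slicer.

```python
import numpy as np
from scipy import ndimage

def oblique_slab(volume, center, u, v, size, n_slab=1, op=np.max, order=1):
    """Sample `volume` on a plane through `center` (voxel coordinates) spanned by the
    (assumed orthonormal) directions u and v, and aggregate n_slab parallel planes,
    one voxel apart along the normal u x v, with op (np.mean, np.min, np.max)."""
    u = np.asarray(u, float) / np.linalg.norm(u)
    v = np.asarray(v, float) / np.linalg.norm(v)
    normal = np.cross(u, v)
    normal /= np.linalg.norm(normal)
    h, w = size
    ss, tt = np.meshgrid(np.arange(h) - (h - 1) / 2.0,
                         np.arange(w) - (w - 1) / 2.0, indexing="ij")
    c = np.asarray(center, float)[:, None, None]
    planes = []
    for d in np.arange(n_slab) - (n_slab - 1) / 2.0:       # offsets along the normal
        pts = c + u[:, None, None] * ss + v[:, None, None] * tt + normal[:, None, None] * d
        # Trilinear interpolation (order=1) at the 3 x h x w sample coordinates.
        planes.append(ndimage.map_coordinates(volume, pts, order=order, mode="nearest"))
    return op(np.stack(planes), axis=0)

# Example: a 45-degree vertical section through a synthetic 21^3 volume, with a
# 5-plane maximum-intensity slab.
vol = np.random.default_rng(0).random((21, 21, 21))
section = oblique_slab(vol, (10, 10, 10), (1, 1, 0), (0, 0, 1), (15, 15), n_slab=5)
```

With `n_slab=1` this reduces to a plain oblique cross-section; larger slabs with `np.mean` trade detail for fewer, less noisy views in the same way as the aggregated time slices discussed earlier.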

Figure 2.

Generalized direct volume rendering (DVR) principle: The color values for single pixels of the output images on the right are computed by sampling the underlying dataset along view rays (arrow, left) and accumulating their individual visual contributions obtained by applying the transfer function assigning optical properties (color and transparency) to measurement values (middle).
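The DVR principle of Figure 2 can be sketched as front-to-back compositing of transfer-function samples along view rays. To keep the example short, this assumed implementation casts orthographic rays along the z axis (one ray per (x, y) column, top to bottom), uses a piecewise-linear transfer function, and omits the lighting step; it is a textbook emission-absorption scheme, not the renderer described in this paper.

```python
import numpy as np

def transfer_function(values, points, rgba):
    """Piecewise-linear transfer function: map scalar values to RGBA by interpolating
    between control points (points must be ascending; rgba has shape (len(points), 4))."""
    rgba = np.asarray(rgba, float)
    return np.stack([np.interp(values, points, rgba[:, c]) for c in range(4)], axis=-1)

def render_orthographic(volume, points, rgba, step=1.0):
    """Front-to-back compositing of axis-aligned rays along z. Lighting is omitted;
    opacities are corrected for the sampling step relative to one voxel."""
    color = np.zeros(volume.shape[:2] + (3,))
    alpha = np.zeros(volume.shape[:2])
    for k in range(volume.shape[2]):                   # advance all rays by one sample
        s = transfer_function(volume[:, :, k], points, rgba)
        a = 1.0 - (1.0 - s[..., 3]) ** step            # opacity correction for step size
        w = (1.0 - alpha) * a                          # remaining transparency times opacity
        color += w[..., None] * s[..., :3]             # accumulate weighted sample colour
        alpha += w                                     # accumulate opacity
        if np.all(alpha > 0.99):                       # early ray termination
            break
    return color, alpha

# Example transfer function (assumed): low values transparent, mid values
# semi-transparent red, high values opaque blue.
tf_points = [0.0, 1.0, 2.0]
tf_rgba = [[0, 0, 0, 0], [1, 0, 0, 0.5], [0, 0, 1, 1]]
```

Because each sample is weighted by the transparency still remaining in front of it, the order of samples matters: a semi-transparent red sample in front of an opaque blue one produces a purple pixel, whereas the reverse order shows pure blue.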

Although the visualization functionality offered by medical tools is useful for the archaeological analysis of GPR data, their applicability in archaeology is limited. Medical software tools are neither developed with an archaeological workflow in mind nor designed to support interpretative mapping. Consequently, support for the integration of multiple volume datasets as well as for georeferencing is missing. Furthermore, the unmodified use of medical DVR has been found to be insufficient for depicting archaeological features in the interpretation process as well as for illustration purposes [39,40]. Compared to their medical counterparts, GPR datasets show different value distributions and characteristics. While CT Hounsfield values are characteristic of particular tissue types, with moderate variations, GPR data may show high-frequency intensity changes over the entire dataset volume, e.g., in stony ground, which leads to noisy-looking images. Noise may also be introduced in the measurement and reconstruction stages due to methodological limitations and limited a priori knowledge of the volume under investigation. Soil moisture reduces the contrast. These are challenging problems, which indicate the need for specific data pre-processing workflows. Nevertheless, visualization methods for medical data offer numerous inspirations for archaeological applications, but require special adaptation to the archaeological requirements and objectives, i.e., optimal visibility of archaeological structures for their identification and definition in the interpretative process.

2.2. Integrated 3D Visualization for Archaeological Prospection Data

Based on the demand for 3D visualization of prospection data and on standards developed in medical imaging, we specified a novel integrated visualization approach for archaeological 3D prospection datasets, with the goal of optimally supporting both GPR dataset exploration and the interpretation process. The application of specific 3D image processing techniques is crucial to improve the suitability of the datasets for 3D visualization, and thereby to achieve a representation quality that enables a novel, interactive, computer-assisted 3D interpretation process. The approach must also be able to seamlessly incorporate 2D images for multimodal analysis, since these can contribute valuable information. Magnetometry images, for example, depict metal finds or former fireplaces and may thereby provide valuable clues to the past use of particular buildings or rooms. Airborne images and laser scans provide context information. Integrating monuments still standing above the surface, in the form of 3D point clouds from terrestrial laser scanning, 3D meshes from image-based modeling, or virtual reconstruction models, contributes further context information. The combined 3D visualization of heterogeneous data supports the development of a deep understanding of the initial GPR dataset, as well as of the whole area under investigation, through the explicit integration of the multimodal information measured by the various sensors.

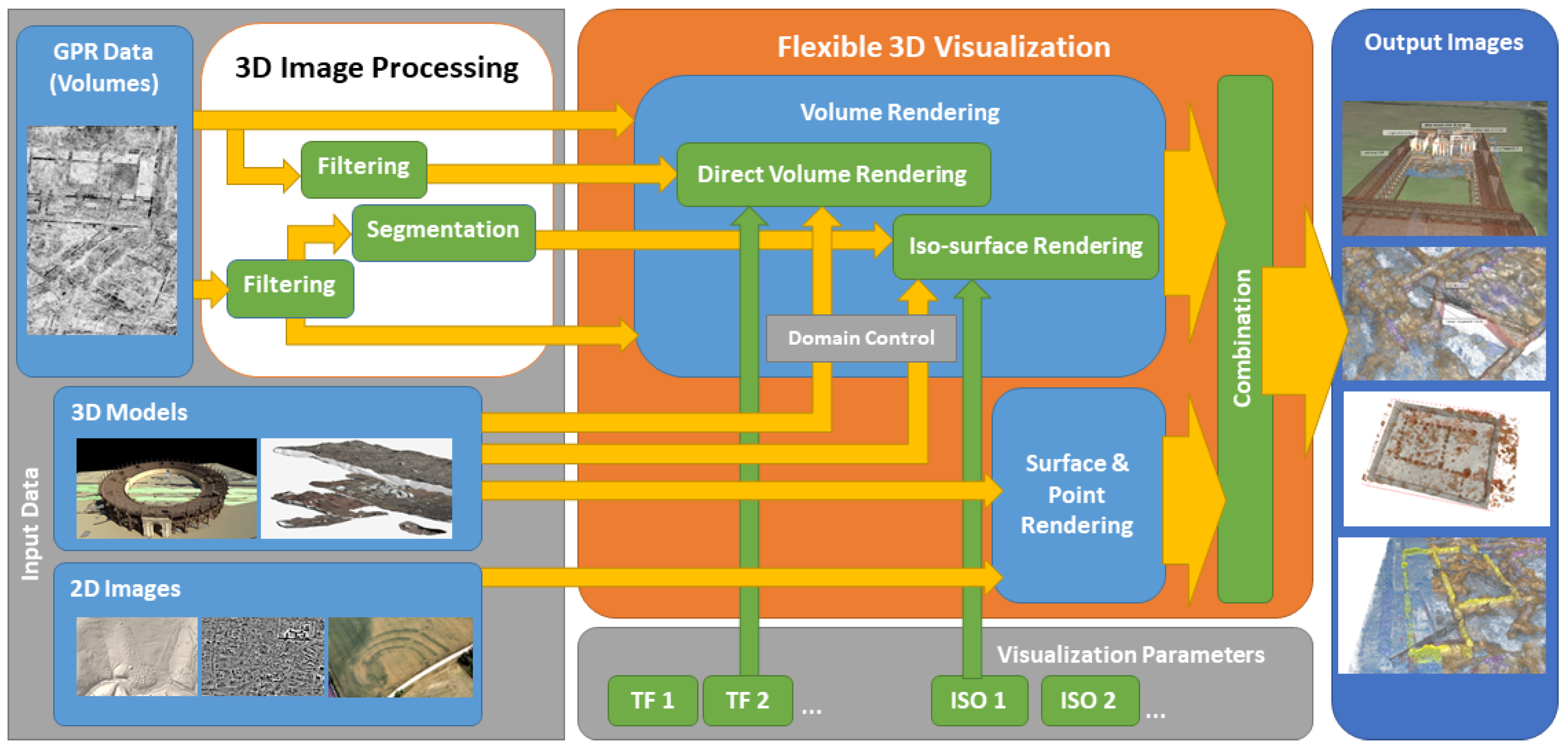

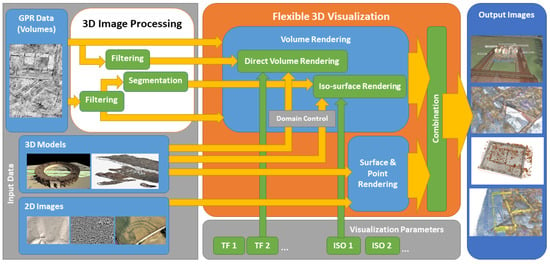

Figure 3 gives an overview of the proposed 3D visualization system for heterogeneous archaeological prospection data centered around a flexible 3D visualization algorithm capable of handling the respective data types. GPR datasets may first undergo 3D image processing using a variety of filters (Section 2.3.1) and segmentation algorithms (Section 2.4). Original and derived 3D volumes are visualized using DVR or iso-surface rendering using transfer functions or iso values, respectively (Section 2.3.2). The 3D models, point clouds, and 2D images are first handled using a standard rendering pipeline, and later seamlessly integrated with the volume rendering contributions leading to the output image (Section 2.5). The 3D surface models may also be used to define the visible portion of volume datasets as well as application domains of the transfer function, which offers a multitude of possibilities for visualization (Section 2.6) and interpretation (Section 3.1).

Figure 3.

Visualization system overview: The system supports a wide range of data types. The 3D volumes may be visualized using DVR or as iso-surface, directly or after undergoing 3D image processing. The flexible visualization algorithm supports combining multiple datasets, or multiple versions of the same dataset by combining all their visual contributions. Furthermore, contributions from 3D models and 2D imagery are integrated. The 3D models can be used to control the visible portions of volume datasets, as well as the application domains of transfer functions.
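One way to realize transfer functions whose application domains are controlled by 3D models is to voxelize the models into binary masks and select a transfer function per voxel. The sketch below assumes such masks already exist (the voxelization step is not shown), lets later masks take precedence, and hides a region by assigning zero opacity; the function names are hypothetical and the code illustrates the idea rather than the system's actual mechanism.

```python
import numpy as np

def classify_with_masks(volume, masks_and_tfs, default_tf):
    """Assign RGBA per voxel, using a different transfer function inside each binary
    mask (e.g. a voxelized 3D interpretation model). Later masks take precedence;
    voxels outside all masks use default_tf."""
    rgba = default_tf(volume)
    for mask, tf in masks_and_tfs:
        rgba = np.where(mask[..., None], tf(volume), rgba)   # broadcast mask over RGBA
    return rgba

def grey_ramp(v):
    """Grey transfer function: colour and opacity equal the value clipped to [0, 1]."""
    v = np.clip(v, 0.0, 1.0)
    return np.stack([v, v, v, v], axis=-1)

def hidden(v):
    """Fully transparent transfer function that hides a region."""
    return np.zeros(np.shape(v) + (4,))

# Example: show only the voxels inside an (assumed) wall model, hide everything else.
rng = np.random.default_rng(0)
volume = rng.random((32, 32, 16))
wall_mask = np.zeros(volume.shape, dtype=bool)
wall_mask[10:14, :, :] = True
rgba = classify_with_masks(volume, [(wall_mask, grey_ramp)], hidden)
```

The resulting RGBA volume can be fed into any compositing scheme; swapping `hidden` for a faint transfer function keeps the surrounding soil visible as context instead of removing it.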

2.3. GPR Data Pre-Processing for 3D Visualization

Direct application of volume rendering techniques such as DVR or iso-surface rendering to GPR data is possible in principle; however, the results do not reach the quality of DVR in medical applications. This is due to the different dataset properties related to the measurement principle, the underlying physics, and the reconstruction process. A detailed comparison would go far beyond the scope of this work. In a nutshell, CT measures X-ray attenuation, which differs between air, soft tissue, and bone, with moderate variations within each of them. Structures of interest such as organs or lesions are often more than an order of magnitude larger (centimeters) than the dataset resolution (approx. 0.5 mm). Finally, there is a high degree of redundancy in the measurement process, which, combined with state-of-the-art reconstruction algorithms, leads to crisp datasets with few artifacts.

In GPR imaging, there is a trade-off between the achievable depth of penetration and the possible spatial resolution, both linked to the applied radar wavelength [7,21]. We often use 400 or 500 MHz antennas, leading to a penetration depth of approximately 2 to 3 m, which defines the vertical extent of the measurement volume. The spatial resolution of the reconstructed 3D data volume typically lies between 2 cm and 25 cm, which corresponds to the extent of the smallest archaeological features of interest, such as individual walls, column bases, postholes, or hypocaust pillars. Nevertheless, such features are covered by only a small number of voxels, even in high-resolution GPR datasets.
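The link between antenna frequency, wavelength, and the size of features relative to the grid can be worked through numerically. The sketch assumes an illustrative propagation velocity of 0.1 m/ns and a 20 cm hypocaust pillar; the quarter-wavelength figure is a common rule of thumb for vertical resolution rather than a value from this paper.

```python
def wavelength_m(freq_mhz: float, v_m_per_ns: float) -> float:
    """Wavelength in the ground, lambda = v / f, with v in m/ns and f in MHz."""
    return v_m_per_ns / (freq_mhz * 1e-3)   # MHz -> GHz, i.e. cycles per ns

def voxels_across(feature_m: float, spacing_m: float) -> float:
    """Number of grid cells spanned by a feature of the given size."""
    return feature_m / spacing_m

v = 0.1  # m/ns, assumed velocity (relative permittivity of about 9)
for f in (400, 500):
    lam = wavelength_m(f, v)
    print(f"{f} MHz: lambda = {lam:.3f} m, lambda/4 = {lam / 4:.4f} m, lambda/2 = {lam / 2:.3f} m")

# Assumed 20 cm pillar on the 5 cm grid of the Carnuntum dataset.
print(voxels_across(0.20, 0.05))
```

Under these assumptions a 400 MHz antenna gives a wavelength of about 25 cm, so a 20 cm pillar is both close to the physical resolution limit and only about four voxels wide on a 5 cm grid, which illustrates why such features are represented by so few voxels.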

A trained human interpreter can perceive the outlines of buildings and discern different types of floors, open squares, garden areas, etc., based on the visual inspection of 2D GPR slices (see Figure 1). This is largely due to the ability of the human brain to supplement incomplete data with existing a priori knowledge through analogy inferences in the mental interpretation process. In this way, a human expert may recognize contextual information that cannot be derived by an automatic segmentation or classification system; thus, the human interpreter applies a kind of mental filtering that reduces the complex data visualizations to the archaeologically relevant content by discerning geometrical layouts and individual features, or by differentiating various patterns in relation to the complete image content. Noise normally does not impair this ability, as it is faded out in the mental interpretation process.

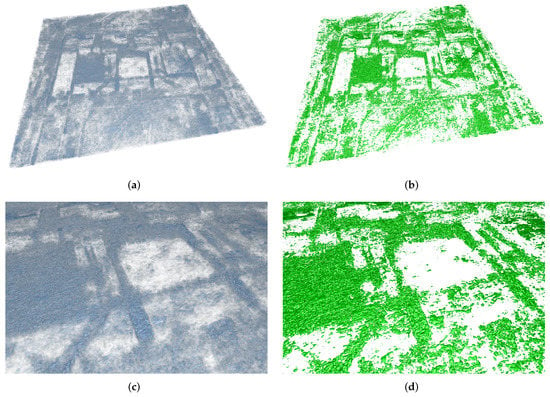

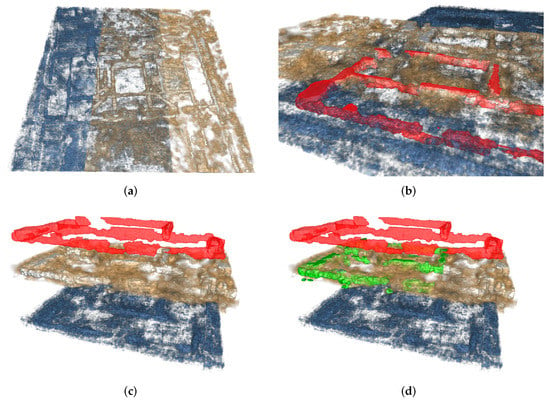

In the proposed approach the interpretative process is facilitated using 3D volume visualizations based on DVR offering the interpreter a comprehensive and thus enhanced perception of the data in 3D space. DVR reveals the 3D shapes of major structures of archaeological interest such as walls, but the images produced appear noisy (see Figure 4). It is possible to identify archaeologically relevant information such as walls, floors, hypocausts, ditches, or pits within the displayed diffuse GPR patterns. The exact delineation of individual features or larger structures in the course of the interpretative mapping is challenging in both 2D and 3D visualizations. Small features can easily be mistaken for ground structures.

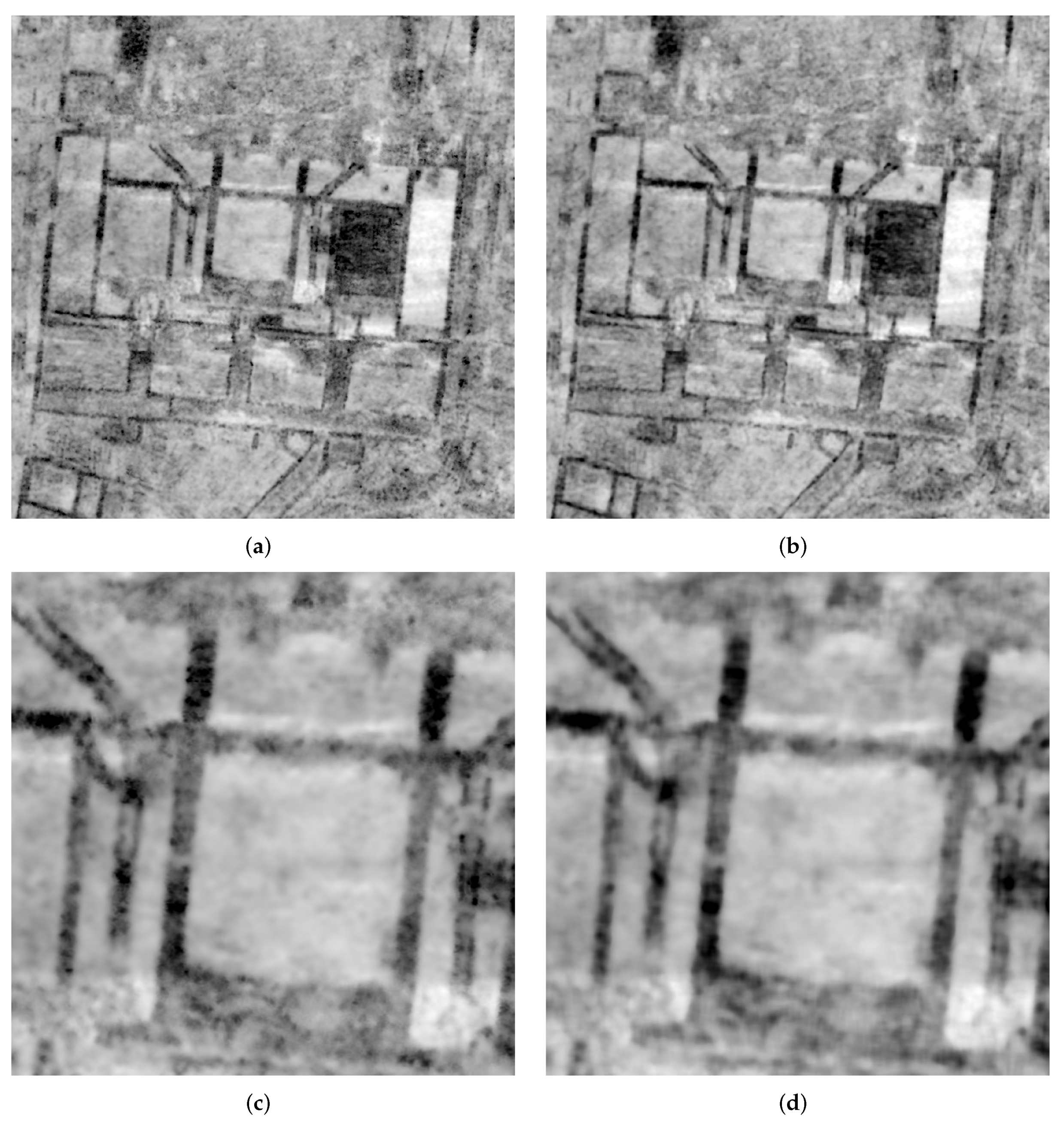

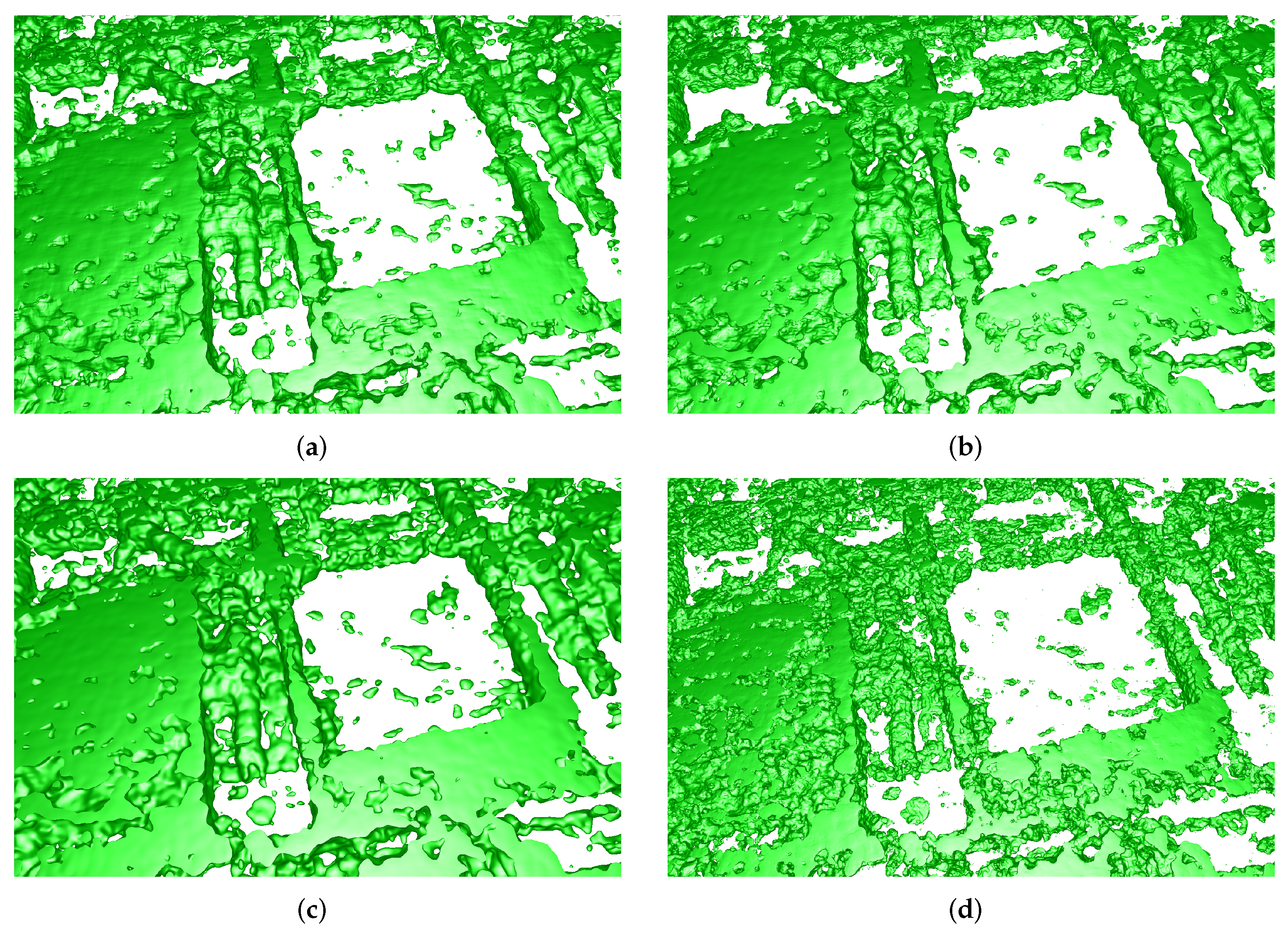

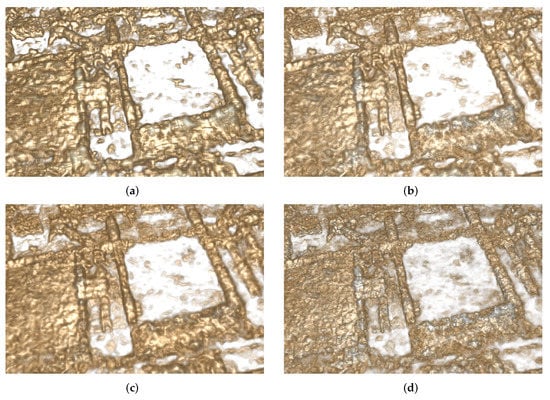

Figure 4.

Direct volume visualization and iso-surface visualization of unfiltered GPR dataset: The structures visible in individual 2D slices can also be seen in the 3D DVR images (a,c). The moderate gain in plasticity for walls is paid for with a high degree of clutter caused by ground structures sharing the same dataset values. Iso-surface visualization avoids the blurring caused by blending semi-transparent soil structure contributions. Still, the walls are only visualized as accumulations of strong radar reflectors like individual bricks or stones, rather than independent superordinate structures (b,d).

2.3.1. Filtering of GPR Data Volumes for 3D Visualization

We investigated image processing techniques capable of improving the suitability of GPR datasets for 3D visualization, in the sense of producing 3D illustrations that are easier to understand. Such processing techniques should produce datasets with increased perceptibility of archaeologically relevant features and their respective 3D shapes, as well as better distinguishability from geological structures, in order to facilitate the interpretative process.

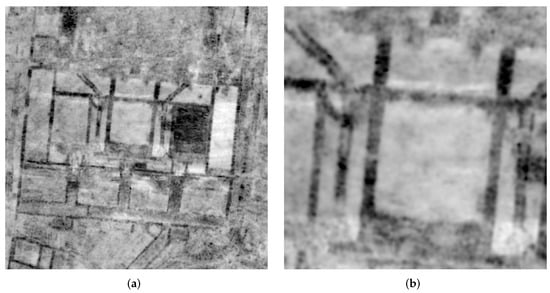

Image smoothing filters such as averaging and Gaussian smoothing remove noise and thereby inherently lead to more homogeneous GPR images. This improvement comes at the cost of blurred contours; therefore, we investigated edge-preserving image smoothing filters, which are often used for medical tomography data, and their applicability for the enhancement of GPR datasets. The simplest smoothing filter attributed with edge-preserving characteristics is the median filter [41]. Unfortunately, it did not meet our expectations, as it blurred the dataset disproportionately (see Figure 5).
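The contrast between Gaussian smoothing and the edge-preserving property attributed to the median filter can be illustrated on an idealized step edge using `scipy.ndimage`. This is only a textbook illustration with arbitrary parameters; as noted above, the median filter blurred the real GPR data disproportionately, so the sketch should not be read as reproducing Figure 5.

```python
import numpy as np
from scipy import ndimage

# Idealized, noise-free 3D step edge: a "wall" (value 1) next to "soil" (value 0).
vol = np.zeros((16, 16, 16))
vol[8:, :, :] = 1.0

gauss = ndimage.gaussian_filter(vol, sigma=1.5)   # linear smoothing
median = ndimage.median_filter(vol, size=5)       # rank-order smoothing, 5^3 neighbourhood

# Profiles across the edge (axis 0) through the volume centre.
print(np.round(gauss[5:11, 8, 8], 2))   # gradual transition: the edge is smeared
print(median[5:11, 8, 8])               # [0. 0. 0. 1. 1. 1.]: the step is kept
```

On such a clean edge the median keeps the step intact; on real, noisy data with thin structures its rank-order behavior can instead remove or distort small features, which is consistent with the disappointing results reported above.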

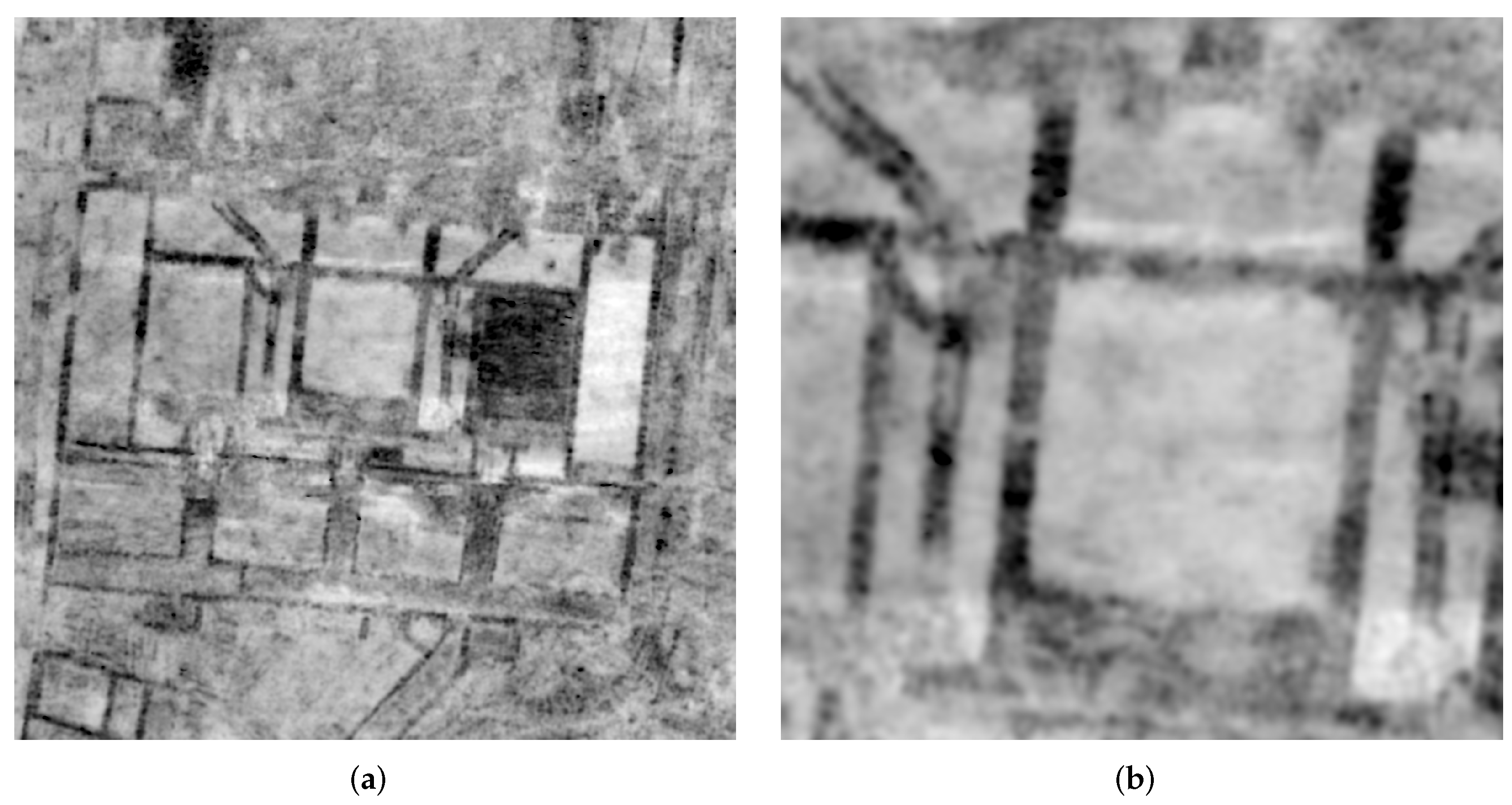

Figure 5.

Results of a 3D bilateral filter (a,c) and a median filter (b,d) applied to a GPR dataset. Both filters reveal the actual boundaries of the foundation walls in a more defined way compared to the original image in Figure 1. The bilateral filter avoids blurring at structure boundaries.

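The bilateral filter, an extension of Gaussian smoothing, preserves major discontinuities while smoothing the image. This is achieved by weighting the contributions of neighbor values based on their distance in data value space, i.e., in radar reflectivity, in addition to the weighting by distance in 3D Euclidean space, according to Equation (1) [42]:

$$\mathrm{BF}[I]_p = \frac{1}{W_p}\sum_{q\in S} G_{\sigma_s}\!\left(\lVert p-q\rVert\right) G_{\sigma_r}\!\left(\lvert I_p-I_q\rvert\right) I_q, \qquad W_p = \sum_{q\in S} G_{\sigma_s}\!\left(\lVert p-q\rVert\right) G_{\sigma_r}\!\left(\lvert I_p-I_q\rvert\right) \tag{1}$$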

Filtered image values $\mathrm{BF}[I]_p$ at position $p$ are evaluated as the normalized weighted sum of values $I_q$ at positions $q$ in a neighborhood $S$, where $G_{\sigma_s}$ and $G_{\sigma_r}$ denote the weights for the distance to the center voxel and for the image value distance, respectively, based on Gaussian distributions with standard deviations $\sigma_s$ and $\sigma_r$. The weighting by proximity in value space largely avoids smearing across structure boundaries, since smoothing is mostly performed using dataset values close to the center value $I_p$.

Experiments performed with the reference GPR dataset from the forum of Roman Carnuntum showed that the bilateral filter preserves the sharp borders of larger structures, while noise is reduced and small low-contrast structures disappear. A median filter of the same kernel size inevitably leads to a higher degree of blurring. Depending on the choice of $\sigma_r$, many small structures such as sewerage ducts can also be preserved, as shown in Figure 5.
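To make the weighting of Equation (1) concrete, the following minimal Python sketch applies it to a 1D trace instead of a 3D volume. The function name, the parameters `sigma_s`, `sigma_r`, and `radius`, and the test trace are illustrative assumptions, not the GPU implementation used in this work:

```python
import math


def bilateral_1d(signal, sigma_s, sigma_r, radius):
    """1D bilateral filter: Gaussian weights in space and in value, normalized per sample."""
    n = len(signal)
    out = []
    for p in range(n):
        center = signal[p]
        weighted_sum = 0.0
        weight_total = 0.0
        # Neighborhood S: all samples within `radius` of p, clipped at the borders.
        for q in range(max(0, p - radius), min(n, p + radius + 1)):
            w_spatial = math.exp(-((p - q) ** 2) / (2.0 * sigma_s ** 2))
            w_range = math.exp(-((center - signal[q]) ** 2) / (2.0 * sigma_r ** 2))
            weight = w_spatial * w_range
            weighted_sum += weight * signal[q]
            weight_total += weight
        # Dividing by the weight sum (W_p) leaves constant regions unchanged.
        out.append(weighted_sum / weight_total)
    return out


# Hypothetical noisy trace: weak soil reflections next to a strongly reflecting wall.
noisy_step = [0.0, 0.05, -0.05, 0.05, -0.05, 1.0, 0.95, 1.05, 0.95, 1.05]
filtered = bilateral_1d(noisy_step, sigma_s=2.0, sigma_r=0.2, radius=3)
```

With `sigma_r` much smaller than the jump height, samples on the other side of the step receive almost zero range weight, so the edge stays sharp while the small oscillations on either side are averaged out.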

Anisotropic diffusion filters are another type of filter that achieves smoothing without sacrificing edge features. The general idea behind anisotropic diffusion filters is to remove noise from images by solving a heat equation (a partial differential equation) of the form

$$\frac{\partial u}{\partial t} = \operatorname{div}\left(D\,\nabla u\right), \qquad u(x,0) = u_0(x), \tag{2}$$

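with $D$ denoting the diffusion speed through the medium and $u_0$ the initial image. With $D$ set to a constant, solving the above PDE leads to isotropic behavior similar to that of ordinary smoothing filters; therefore, in [43], $D$ was replaced by an edge detector of the form

$$D = g\left(\lvert\nabla u\rvert^{2}\right) = \frac{1}{1 + \lvert\nabla u\rvert^{2}/K^{2}}, \qquad K > 0, \tag{3}$$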

leading to an inhomogeneous but still isotropic diffusion, which preserves edges by limiting smoothing near them.
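The difference between constant diffusion and an edge-stopping diffusivity can be illustrated with an explicit finite-difference scheme in 1D. This is a minimal sketch, assuming no-flux boundaries, a time step `dt` below the explicit stability limit of 0.5, and the rational Perona-Malik diffusivity with an illustrative contrast parameter `k`; names and values are hypothetical:

```python
def diffuse_1d(signal, steps, dt, diffusivity):
    """Explicit scheme for u_t = d/dx(D(u_x) u_x) with no-flux boundaries."""
    u = list(signal)
    n = len(u)
    for _ in range(steps):
        # flux[i] is the diffusive flow from sample i+1 into sample i.
        flux = []
        for i in range(n - 1):
            grad = u[i + 1] - u[i]
            flux.append(diffusivity(grad) * grad)
        new_u = []
        for i in range(n):
            from_right = flux[i] if i < n - 1 else 0.0
            to_left = flux[i - 1] if i > 0 else 0.0
            new_u.append(u[i] + dt * (from_right - to_left))
        u = new_u
    return u


def perona_malik(k):
    """Rational edge-stopping diffusivity 1 / (1 + (grad/k)^2)."""
    return lambda grad: 1.0 / (1.0 + (grad / k) ** 2)


# Hypothetical trace: a step edge (wall boundary) plus a small bump (noise) at index 4.
trace = [0.0] * 10 + [1.0] * 10
trace[4] = 0.05
pm = diffuse_1d(trace, steps=25, dt=0.2, diffusivity=perona_malik(0.1))
linear = diffuse_1d(trace, steps=25, dt=0.2, diffusivity=lambda grad: 1.0)
```

The jump of height 1 lies far above `k`, so its diffusivity is about 0.01 and the edge survives, whereas the small bump is smoothed almost as with linear diffusion, which in turn blurs the edge.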

Weickert used the structure tensor to achieve truly anisotropic behavior by aligning the diffusion with the local surface structure [44,45]. Results differ depending on how this alignment is achieved. Edge-enhancing diffusion (EED) largely inhibits diffusion flow, and therefore blurring, in the surface normal direction, thereby facilitating edge-preserving smoothing [46]. Other variants such as coherence-enhancing diffusion steer diffusion along line structures, e.g., to reconnect interrupted lines.
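The following sketch shows how EED turns this idea into a diffusion tensor for a 2D image: its eigenvectors are parallel and perpendicular to the gradient of the pre-smoothed image, and only the eigenvalue across the edge is reduced. As simplifying assumptions, the gradient `(gx, gy)` is taken as given, and the rational diffusivity with a hypothetical contrast parameter `k` replaces the diffusivity typically used for EED in Weickert's work:

```python
import math


def eed_tensor(gx, gy, k):
    """2x2 edge-enhancing diffusion tensor for the gradient (gx, gy) of a pre-smoothed image."""
    magnitude_sq = gx * gx + gy * gy
    if magnitude_sq == 0.0:
        # Flat region: no preferred direction, so diffuse isotropically.
        return [[1.0, 0.0], [0.0, 1.0]]
    magnitude = math.sqrt(magnitude_sq)
    v1 = (gx / magnitude, gy / magnitude)  # across the edge (surface normal)
    v2 = (-v1[1], v1[0])                   # along the edge
    lambda1 = 1.0 / (1.0 + magnitude_sq / (k * k))  # damped flow across the edge
    lambda2 = 1.0                                   # full flow along the edge
    # D = lambda1 * v1 v1^T + lambda2 * v2 v2^T
    return [
        [lambda1 * v1[0] * v1[0] + lambda2 * v2[0] * v2[0],
         lambda1 * v1[0] * v1[1] + lambda2 * v2[0] * v2[1]],
        [lambda1 * v1[1] * v1[0] + lambda2 * v2[1] * v2[0],
         lambda1 * v1[1] * v1[1] + lambda2 * v2[1] * v2[1]],
    ]


# A strong horizontal gradient, e.g., at a vertical wall face in a depth slice.
across_edge = eed_tensor(10.0, 0.0, k=1.0)
```

Using such a tensor in the diffusion equation smooths along wall faces while hardly transporting values across their boundaries.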

Applied to GPR data, EED filters can remove noise from small structures. The loss of outline detail through smearing is less prominent than with the median filter, as shown in Figure 6.

Figure 6.

Edge-enhancing anisotropic diffusion filter applied to the Roman GPR dataset. Similar to the bilateral filter, EED (a,b) depicts the outline of the foundation walls more clearly than the unfiltered image in Figure 1. Compared to the median filter result in the detail view in Figure 5d, blurring of wall boundaries occurs to a lesser extent. Noise in the surrounding soil regions is still largely removed (b).

Another state-of-the-art class of methods for edge-preserving image denoising comprises models based on the total variation (TV), as first presented in [47]. The ROF denoising model introduced there uses an energy functional consisting of a term penalizing differences between the initial image $f$ and the denoised image $u$ based on the Euclidean (L2) norm, and a second term penalizing high dataset variations. This model leads to an energy minimization problem of the form

$$\min_{u} \int_{\Omega} \lvert\nabla u\rvert \,\mathrm{d}x + \frac{\lambda}{2}\int_{\Omega} \left(u - f\right)^{2} \mathrm{d}x. \tag{4}$$

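The parameter $\lambda$ can be used to trade data fidelity against smoothness of the solution. In [48], a modified functional using the L1 norm was proposed:

$$\min_{u} \int_{\Omega} \lvert\nabla u\rvert \,\mathrm{d}x + \lambda\int_{\Omega} \lvert u - f\rvert \,\mathrm{d}x. \tag{5}$$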

For both formulations, low values of $\lambda$ lead to a high degree of smoothing, so that only the contours of major structures are preserved, whereas higher values also preserve small details by penalizing differences to the original dataset more strongly.

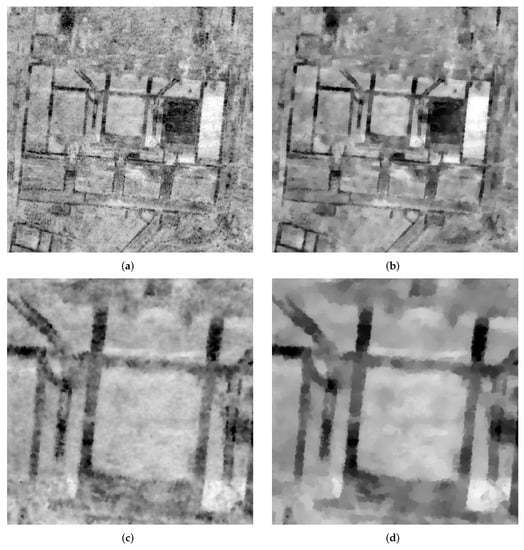

In our experiments with archaeological GPR datasets, the TV-L1 denoising formulation of [48] consistently produced images better suited for 3D visualization than the original ROF model, which is ideally suited for removing Gaussian noise. Small soil structures visible in GPR datasets, such as individual stones, resemble so-called salt-and-pepper noise. This type of noise is better dealt with by applying the L1 norm, which is known to be robust against outliers. The TV-L1 model also has the advantageous property that the minimum size of the features to be preserved is inversely proportional to the value of $\lambda$. Applied to GPR datasets, this leads to highly homogeneous features that are sharply delineated at the scale selected by $\lambda$: the dataset values inside structures converge to a representative value, while smaller structures and noise are suppressed, as shown in Figure 7. Overall, the resulting datasets are much better suited for direct volume visualization.
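A minimal 1D sketch of the TV-L1 model illustrates this size selectivity. It uses the first-order primal-dual algorithm of Chambolle and Pock, a common solver for TV problems chosen here for brevity; the step sizes, iteration count, and test trace are illustrative assumptions, and the GPU solver used in this work is not reproduced. For an isolated feature of width w and constant contrast h, keeping it costs 2h in the TV term, while removing it costs λwh in the data term, so features narrower than 2/λ samples disappear while wider ones keep their full contrast:

```python
def tv_l1_denoise_1d(f, lam, iterations=5000, tau=0.49, sigma=0.49):
    """Minimize sum_i |u[i+1]-u[i]| + lam * sum_i |u[i]-f[i]| with a primal-dual scheme."""
    n = len(f)
    u = list(f)
    u_bar = list(f)
    p = [0.0] * (n - 1)  # dual variable, one per forward difference
    # Convergence requires tau * sigma * ||D||^2 <= 1, and ||D||^2 <= 4 in 1D.
    for _ in range(iterations):
        # Dual ascent, then projection onto |p_i| <= 1 (the dual of the TV term).
        for i in range(n - 1):
            p[i] = max(-1.0, min(1.0, p[i] + sigma * (u_bar[i + 1] - u_bar[i])))
        u_old = u
        u = []
        for i in range(n):
            # Adjoint of the forward difference: (D^T p)_i = p[i-1] - p[i].
            dtp = (p[i - 1] if i > 0 else 0.0) - (p[i] if i < n - 1 else 0.0)
            v = u_old[i] - tau * dtp
            # Proximal step of lam*|u - f|: shrink v towards f[i] by tau*lam.
            d = v - f[i]
            shrink = max(abs(d) - tau * lam, 0.0)
            u.append(f[i] + (shrink if d > 0 else -shrink))
        # Over-relaxation (extrapolation) of the primal variable.
        u_bar = [2.0 * a - b for a, b in zip(u, u_old)]
    return u


# Hypothetical trace: a 1-sample spike (stone-like outlier) and an 8-sample plateau (wall).
trace = [0.0] * 5 + [1.0] + [0.0] * 5 + [1.0] * 8 + [0.0] * 5
smooth = tv_l1_denoise_1d(trace, lam=0.5)    # removes features narrower than 2/0.5 = 4
detailed = tv_l1_denoise_1d(trace, lam=3.0)  # keeps features wider than 2/3
```

With `lam=0.5`, the single-sample spike vanishes while the 8-sample plateau survives without loss of contrast; with `lam=3.0`, both are kept.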

Figure 7.

GPR data denoising using the TV-L1 filter: Dataset values converge to the representative values of their respective regions depending on the choice of the parameter $\lambda$. High $\lambda$ values preserve smaller details such as the side walls of the Roman sewerage ducts on both sides of the forum of Carnuntum. Large features, such as walls, remain noisy, and the filtered image is more similar to the original image in Figure 4 (a,c). A lower $\lambda$ yields images with largely homogeneous walls whose contours remain well defined and sharp, while small details such as the cavity of the sewer begin to blur or disappear (b,d).

Image filtering is performed on modern GPUs to exploit their computing power. Since all the above-mentioned methods are parallelizable, the achievable speedups are on the order of 100 compared to a CPU implementation, reducing computation times for complex iterative algorithms such as TV-L1 denoising from minutes to seconds for practical grid sizes such as that of the Carnuntum dataset (1601 × 1601 × 50 voxels). Simple algorithms such as the kernel-based bilateral filter can be computed in less than a second. In the presented solution, filtering on the GPU is integrated with the 3D visualization, which allows for interactive tuning of filter parameters.

2.3.2. 3D Visualization of Filtered GPR Data Volumes

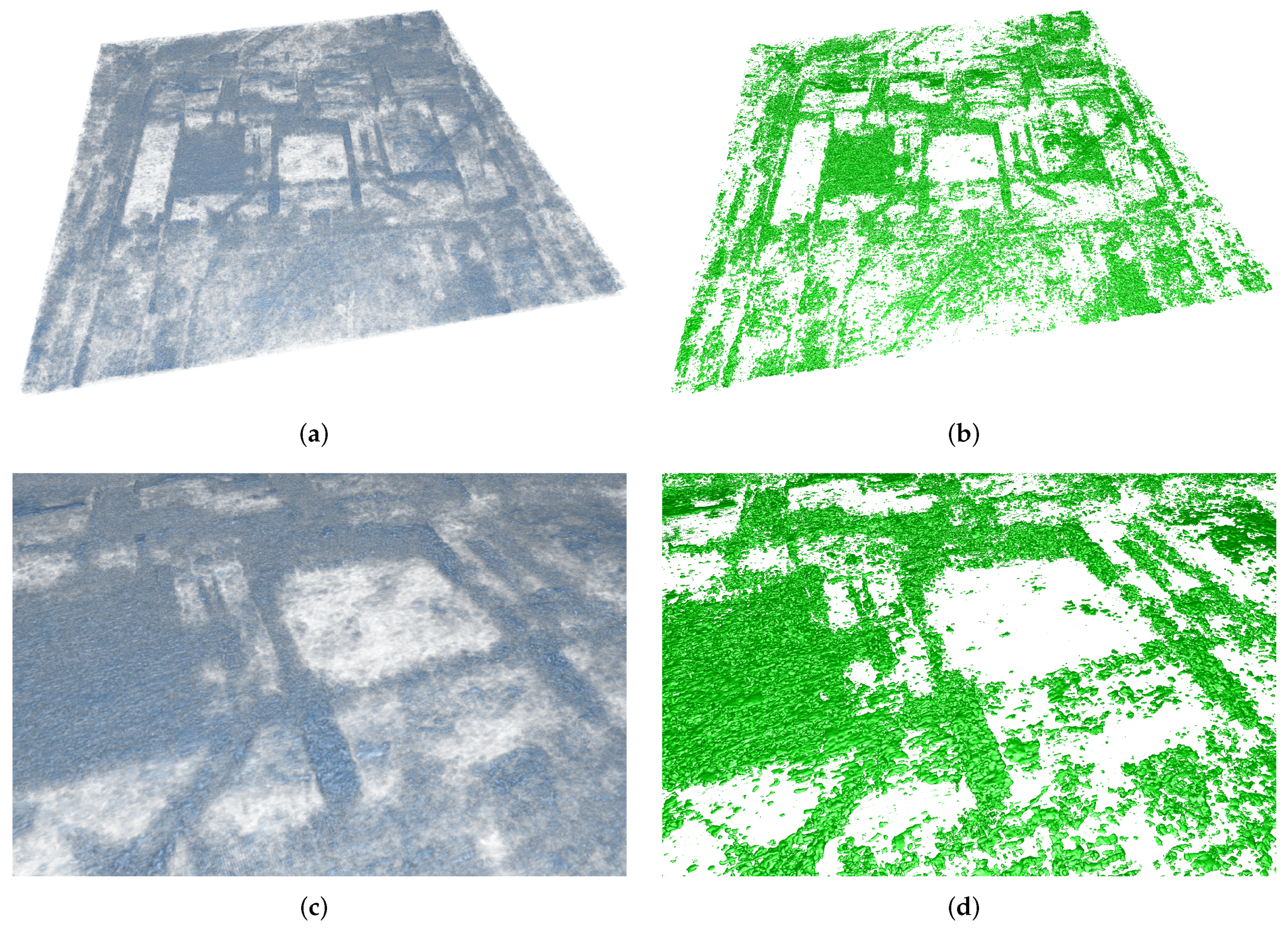

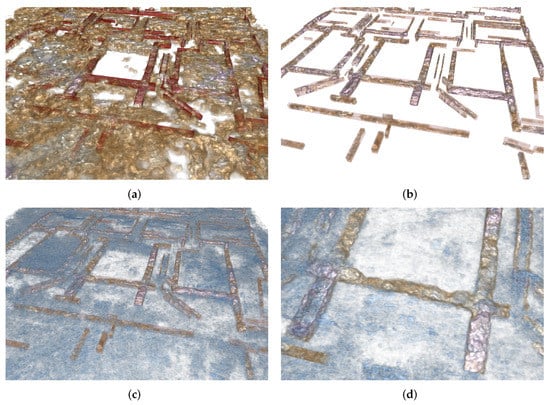

The filtering algorithms introduced in Section 2.3.1 increase dataset homogeneity, as required for contiguous volume visualizations of, e.g., the walls of a building. These algorithms remove high-frequency noise, and the edges of large structures become more clearly defined. This has a significant impact on the 3D visualization output: the 3D shapes of major structures can immediately be seen in both DVR (Figure 8) and iso-surface rendering (Figure 9). Building outlines are apparent at first glance, which allows further investigation of details such as doors and floors. Occlusion due to aggregations of small structures still occurs but is far less disturbing than in the DVR results obtained with unfiltered GPR data in Figure 4.

Figure 8.

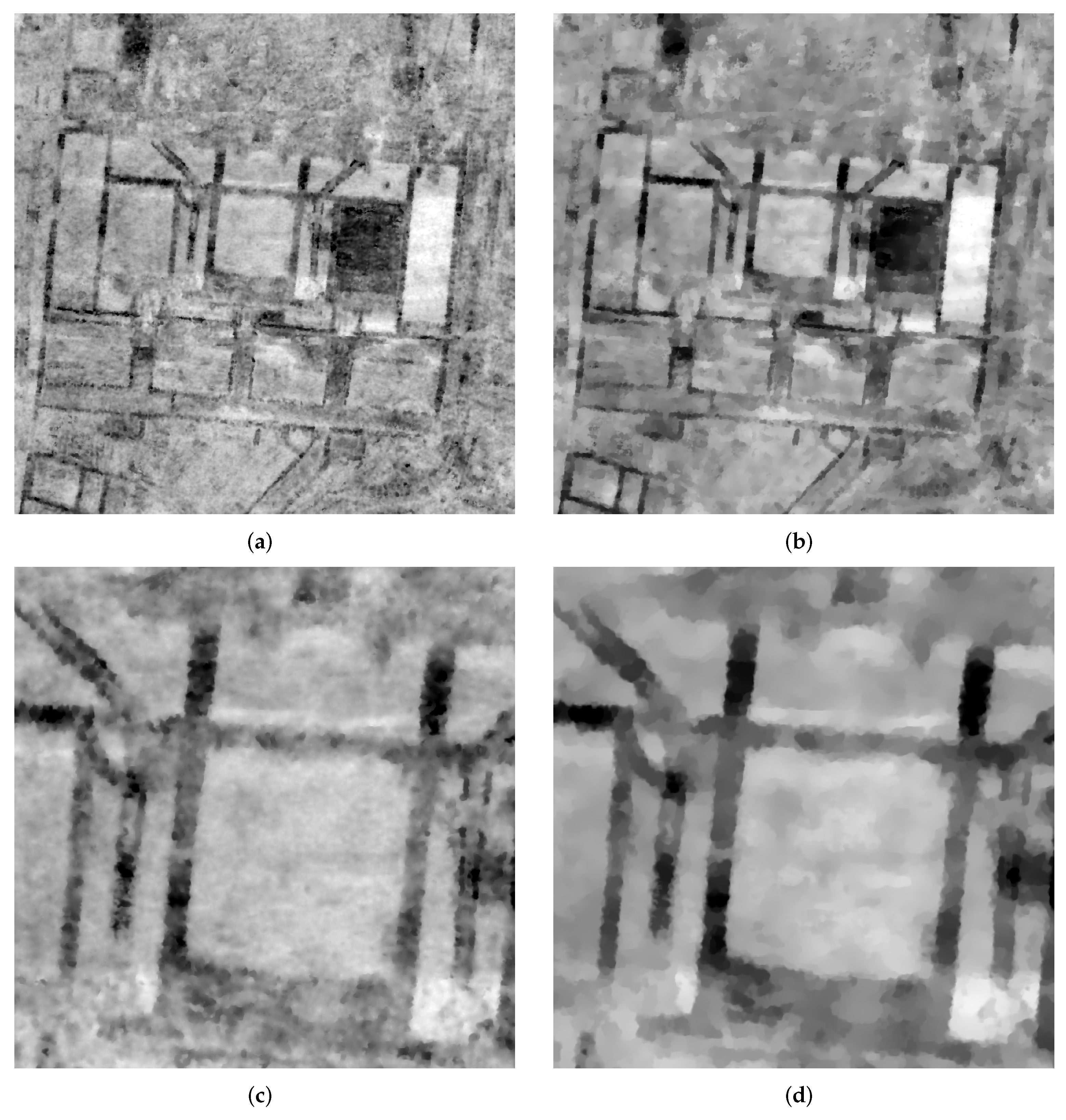

Direct volume rendering of GPR datasets filtered with a 3D median filter of kernel size 5 (a), a bilateral filter (b), an edge-enhancing anisotropic diffusion filter (EED) (c), and a TV-L1 filter (d). The outlines of the walls, sewerage ducts, and paved floors are clearly visible in all images. The median filter generally seems to introduce more blur and respects the edges of archaeological features to a lesser extent. The EED filter delivers results very similar to those of the bilateral filter. The TV-L1 filter results appear more detailed, since blurring of structure boundaries is largely avoided.

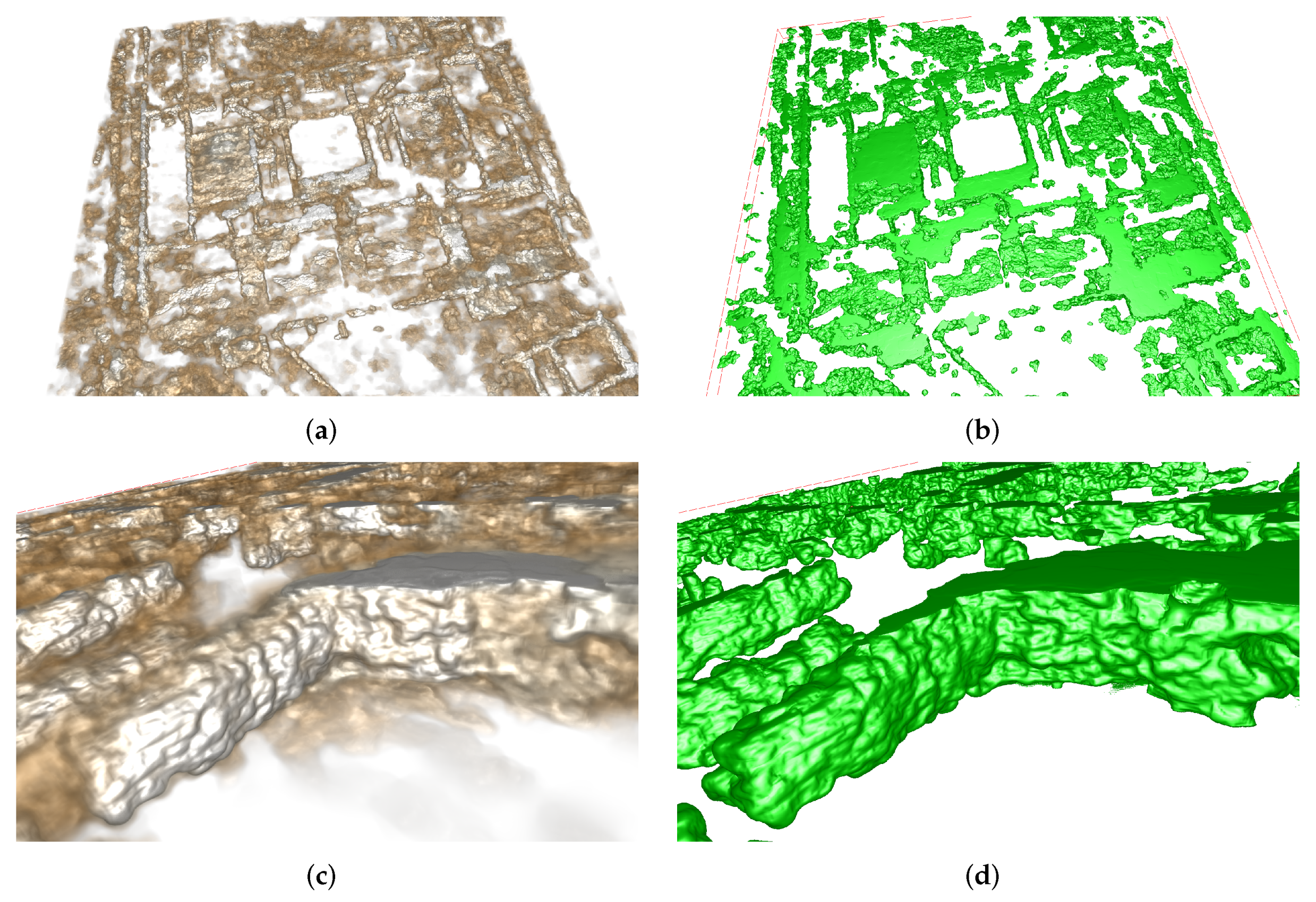

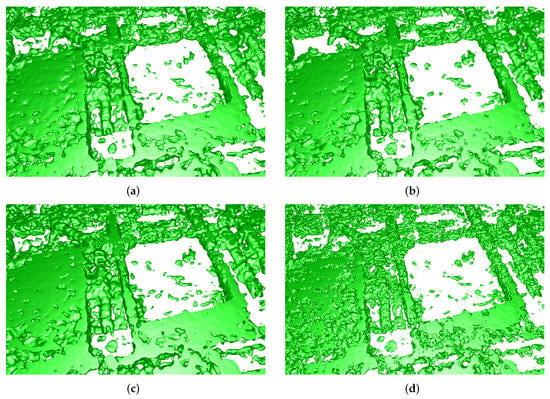

Figure 9.

Iso-surface visualization of GPR datasets filtered with a 3D median filter of kernel size 5 (a), a bilateral filter (b), an edge-enhancing anisotropic diffusion filter (c), and a TV-L1 filter (d). The results are comparable to Figure 8, although DVR preserves slightly more structure surface details. Nevertheless, iso-surfaces of filtered GPR data are a useful complement to DVR.

With conservative parameter settings, the filters merely reduce noisy patterns within the archaeological stratification and the geological background. More aggressive parameter settings, such as larger kernel sizes for the median filter, larger $\sigma_s$ and $\sigma_r$ for the bilateral filter, or a higher number of iterations for the EED filter, also lead to an increasing degree of structure surface blur, which is usually undesirable. The TV-L1 filter stands out in preserving sharp transitions at structure boundaries even for small $\lambda$ values, at which only large structures are preserved. By tuning the parameter $\lambda$ to a specific structure size, e.g., the wall width, the filter removes virtually all smaller structures while surface details are still preserved. The illustration in Figure 10 gives the impression that individual stones within the walls become visible; confirming this, however, would require an excavation.

Figure 10.

TV-L1-based volume visualization using a more aggressive filter setting (lower $\lambda$), leading to the removal of most structures smaller than the wall width in the DVR result, while wall surface structure details are still visible (a,c). In the corresponding iso-surface visualization (b,d), these surfaces are even more clearly defined at the expense of inner structural information.

We mainly use the TV-L1 filter for visualization in the interpretative process. Experiments beyond the scope of this work have confirmed that it consistently maintains the highest level of detail. Furthermore, it is easy to tune. For other purposes such as preparing GPR datasets for 3D printing, a higher degree of smoothing might be desirable.

2.4. GPR Segmentation

Image filtering greatly improves the quality of 3D GPR data visualization; however, neither the global classification by value in DVR nor the implicit thresholding in iso-surface rendering is able to depict individual features, such as the walls of a single building in a dataset containing many buildings, or features characterized by similar dataset values.

In digital image processing nomenclature, segmentation refers to the process of assigning labels to the individual pixels/voxels of an image or dataset that share certain characteristics, such as similar data values, or that belong to the same archaeological entity, e.g., the foundation or the paved floor of a Roman building. Strictly speaking, the binary classification by thresholding values in iso-surface visualization is already a simple form of segmentation, although with limited capabilities: thresholding is not powerful enough to separate archaeologically relevant structures in GPR data, since their data value ranges are not unique. In medical imaging, advanced segmentation techniques using image features and statistics, texture information, and prior knowledge about the target structure can delineate structures such as organs or lesions despite ambiguous data values and noise [49]. Being able to extract structures of archaeological interest and, ultimately, to divide GPR datasets into archaeologically meaningful, non-overlapping labeled features using segmentation techniques would greatly aid the analysis of prospection data. Segmentation approaches applied to GPR datasets could also serve as a starting point for the archaeological interpretation process.

Automatic segmentation of archaeological prospection datasets would require incorporating the existing knowledge and expertise of skilled human interpreters into specific algorithms. The feasibility of such solutions has recently been shown for well-defined problems in medical image analysis, such as whole heart segmentation, where state-of-the-art approaches based on deep convolutional neural networks (DCNNs) can even outperform human experts [50]. CNN-based methods learn by example, which requires large training datasets ideally covering all possible data variations due to soil conditions and all conceivable shape variations of the structures to be detected or segmented. To our knowledge, such training data, i.e., archaeological GPR datasets with the target structures manually located and delimited by a human expert and subsequently validated by excavation, do not exist, and it is unlikely that they will become available in the near future.

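To nevertheless be able to segment, and thereby flexibly extract, a variety of structures of archaeological interest from GPR datasets, we adopted the interactive segmentation approach in [48], which uses an energy functional combining a geodesic active contour edge weight $g$, the total variation of the solution $u$, and seed regions $f$ specified by the user:

$$\min_{u \in [0,1]} \int_{\Omega} g(x)\,\lvert\nabla u(x)\rvert \,\mathrm{d}x + \lambda\int_{\Omega} \lvert u(x) - f(x)\rvert \,\mathrm{d}x, \qquad g(x) = \exp\left(-\alpha\,\lvert\nabla I(x)\rvert^{\beta}\right). \tag{6}$$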

This minimization problem can be solved efficiently on GPUs, allowing real-time tuning of the parameters $\alpha$ and $\beta$, which control the influence of the gradient of the input image $I$ in the edge weight $g$; this weight generally attracts the solution towards sharp edges in the input dataset. The parameter $\lambda$ controls the desired similarity to the specified seed region $f$. Integrated in the visualization framework, the seed region image can be interactively edited by painting constraint regions on top of the input image, thereby efficiently steering the algorithm towards the desired result while avoiding unwanted or missing parts.
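The following 1D Python sketch illustrates the principle of such seeded, edge-weighted TV segmentation. It assumes the edge weight g = exp(-α|∇I|^β), a data term that acts only on user-painted seed pixels (1 = object, 0 = background), and a Chambolle-Pock primal-dual solver; all names, parameter values, and the test profile are hypothetical and chosen for illustration:

```python
import math


def segment_1d(image, seeds, lam, alpha, beta, iterations=3000, tau=0.49, sigma=0.49):
    """Seeded TV segmentation: min sum g_i|u[i+1]-u[i]| + sum_seeds lam*|u-f|, u in [0, 1]."""
    n = len(image)
    # Edge weights: close to zero across strong reflectivity jumps, so cuts prefer them.
    g = [math.exp(-alpha * abs(image[i + 1] - image[i]) ** beta) for i in range(n - 1)]
    u = [0.5] * n
    u_bar = list(u)
    p = [0.0] * (n - 1)
    for _ in range(iterations):
        for i in range(n - 1):
            # Dual step with projection onto |p_i| <= g_i (weighted total variation).
            p[i] = max(-g[i], min(g[i], p[i] + sigma * (u_bar[i + 1] - u_bar[i])))
        u_old = u
        u = []
        for i in range(n):
            dtp = (p[i - 1] if i > 0 else 0.0) - (p[i] if i < n - 1 else 0.0)
            v = u_old[i] - tau * dtp
            if i in seeds:
                # Seed pixels are pulled towards their label f_i by soft shrinkage.
                d = v - seeds[i]
                shrink = max(abs(d) - tau * lam, 0.0)
                v = seeds[i] + (shrink if d > 0 else -shrink)
            u.append(min(1.0, max(0.0, v)))  # keep the relaxed labeling in [0, 1]
        u_bar = [2.0 * a - b for a, b in zip(u, u_old)]
    # Threshold the relaxed solution to obtain a binary mask.
    return [1 if value > 0.5 else 0 for value in u]


image = [0.1] * 5 + [0.9] * 8 + [0.1] * 7  # hypothetical reflectivity profile
seeds = {8: 1, 0: 0, 19: 0}                # one painted object sample, two background samples
mask = segment_1d(image, seeds, lam=1.0, alpha=10.0, beta=1.0)
```

Because g is almost zero across the two strong reflectivity jumps, the cheapest way to separate the object seed from the background seeds is to cut exactly there, so a single painted sample inside the structure suffices to recover its full extent.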

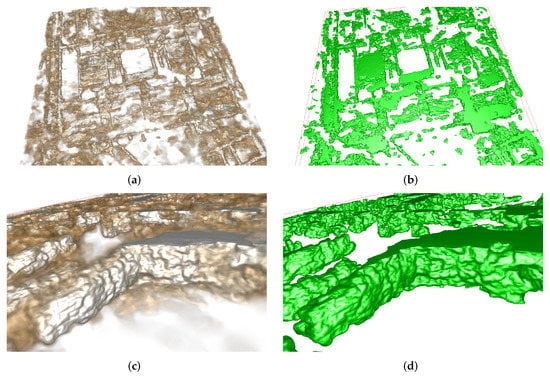

In our experiments, we tried to segment structures such as barrows, postholes, ditches, and walls, including those of the forum in Roman Carnuntum. Using this segmentation approach, it was possible to obtain plausible 3D models with little user interaction. For wall segmentation, the outline of the desired building had to be roughly drawn on top of representative GPR slices, using both the cross-sectional slices shown in the 3D view and three coupled cross-sectional 2D views of the dataset. For the visualization of the resulting binary segmentation masks, iso-surface rendering was the method of choice. Figure 11 shows such segmentation results in relation to a manual interpretation model and the underlying dataset.

Figure 11.

Combined visualization of original GPR dataset (DVR) using the same transfer function as in Figure 4 (blue), mesh representation of an interpretation model (red) obtained by extruding polygons drawn on top of 2D GPR slices, and the result from interactive 3D segmentation of the walls of two buildings (yellow and orange). The segmentation datasets are more detailed, reflecting the actual shape of the walls, while the hand drawn interpretation shows an idealized floor plan.

2.5. Flexible GPR Volume Visualization

The presented visualization system offers visualization techniques that go far beyond DVR and iso-surface visualization. Its high degree of flexibility is achieved using a hybrid ray-casting-based rendering algorithm for 3D volume and surface data, inspired by work presented in [20] and later extended to support conjoint 3D visualization of heterogeneous data in forensic science [19]. The central idea of the algorithm is to combine the visual contributions of arbitrary datasets passed along the view rays through each pixel of the screen in the sense of DVR (see Figure 2). For each ray, the accumulated output color $\hat{C}_i$ and opacity $\hat{\alpha}_i$ at sample $i$ with color $C_i$ and opacity $\alpha_i$ are computed using the recursive blending operation in Equation (7):

$$\hat{C}_i = \hat{C}_{i-1} + \left(1 - \hat{\alpha}_{i-1}\right)\alpha_i C_i, \qquad \hat{\alpha}_i = \hat{\alpha}_{i-1} + \left(1 - \hat{\alpha}_{i-1}\right)\alpha_i. \tag{7}$$
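The recursive blending corresponds to standard front-to-back alpha compositing: each new sample is weighted by its opacity and by the transparency still left in front of it. A minimal Python sketch for one ray, assuming non-premultiplied RGB colors; the early ray termination threshold `opacity_cutoff` is a common optimization added here as an assumption:

```python
def composite_front_to_back(samples, opacity_cutoff=0.99):
    """Accumulate (color, alpha) samples ordered from the viewer outwards along one ray."""
    acc_color = [0.0, 0.0, 0.0]
    acc_alpha = 0.0
    for color, alpha in samples:
        # Contribution = own opacity times the transparency left in front of the sample.
        weight = (1.0 - acc_alpha) * alpha
        acc_color = [c + weight * s for c, s in zip(acc_color, color)]
        acc_alpha = acc_alpha + weight
        if acc_alpha >= opacity_cutoff:
            break  # early ray termination: later samples are practically invisible
    return acc_color, acc_alpha
```

For two half-transparent samples, red in front of blue, the result is 0.5 red plus 0.25 blue with an accumulated opacity of 0.75.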

In DVR, samples are taken from a single volume dataset. In Equation (8), we generalize this formulation to samples in multiple volumes and include samples at ray intersections with 3D models, where the number of volume or surface samples hit may differ for each ray location $i$.

While volume dataset samples can be obtained directly from GPU texture memory, meshes and point sets need to be handled differently: the computation of the accumulated color and opacity requires their contributions in sorted order with respect to the distance from the viewpoint, which standard rasterization of these data types does not provide. The HA-buffer presented in [51] facilitates efficient sorted access to the visual contributions of 3D models using a hash table, which is populated by rendering the respective models using the OpenGL graphics pipeline. We construct a modified HA-buffer in the first rendering stage, which stores fragment color contributions from visible 3D models. In addition, it handles invisible 3D bounding geometry for GPR volumes, which can be cuboids representing the whole dataset range, sub-volumes, or arbitrary 3D shapes. HA-buffer entries from GPR dataset boundary fragments store a signed object ID, whose sign distinguishes between entering and leaving the dataset.

In the second rendering stage, the HA-buffer is resolved by traversing its depth-sorted entries. The object IDs allow maintaining the set of objects the ray has entered but not yet left, the so-called active objects. Starting with the first HA-buffer entry, visible contributions from surface entries are directly combined with the accumulated color. If a positive object ID is read from an HA-buffer entry, the associated GPR volume is activated until its negative ID is read. The contribution of volumes in the sense of DVR is computed by ray casting and accumulating the samples of all active volumes (Equation (8)) until the next HA-buffer entry is reached; multiple contributions at a single sampling location are combined. Implicit iso-surface visualization is achieved by detecting iso-surface hits based on two consecutive dataset samples, refining the hit point, and inserting its visual contribution as an additional sample.
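The second rendering stage can be sketched for a single pixel as follows. This CPU-side Python illustration assumes that the HA-buffer entries of the pixel have already been gathered into a list of `(depth, kind, data)` tuples, that volumes are sampled by hypothetical callables at a fixed step size, and that coinciding samples of several active volumes are blended in ID order; none of these details are taken from the CUDA implementation described above:

```python
def composite(acc, color, alpha):
    """Front-to-back blending of one (color, alpha) contribution into acc = (rgb, alpha)."""
    rgb, acc_alpha = acc
    weight = (1.0 - acc_alpha) * alpha
    return [c + weight * s for c, s in zip(rgb, color)], acc_alpha + weight


def resolve_ray(entries, volume_samplers, step):
    """Resolve one pixel's entries (depth, kind, data) in depth order.

    kind == "surface": data is (color, alpha) of a visible 3D-model fragment.
    kind == "boundary": data is a signed volume ID (+id enters, -id leaves; ids start at 1).
    volume_samplers maps each volume ID to a function depth -> (color, alpha).
    """
    acc = ([0.0, 0.0, 0.0], 0.0)
    active = set()
    entries = sorted(entries, key=lambda entry: entry[0])
    for index, (depth, kind, data) in enumerate(entries):
        if kind == "surface":
            acc = composite(acc, *data)
        elif data > 0:
            active.add(data)       # ray enters the volume's bounding geometry
        else:
            active.discard(-data)  # ray leaves it again
        # Ray-cast all active volumes up to the next entry.
        end = entries[index + 1][0] if index + 1 < len(entries) else depth
        t = depth
        while t < end and active:
            for volume_id in sorted(active):  # coinciding samples: blended in ID order
                acc = composite(acc, *volume_samplers[volume_id](t))
            t += step
    return acc


# Hypothetical pixel: a semi-transparent green volume between depths 1 and 2,
# followed by an opaque red surface of a 3D model at depth 3.
entries = [
    (3.0, "surface", ((1.0, 0.0, 0.0), 1.0)),
    (1.0, "boundary", 1),
    (2.0, "boundary", -1),
]
pixel = resolve_ray(entries, {1: lambda depth: ((0.0, 1.0, 0.0), 0.1)}, step=0.5)
```

In this example, two volume samples leave 81% transparency, which the opaque red surface then fills, so the pixel ends up as 0.81 red plus 0.19 green.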

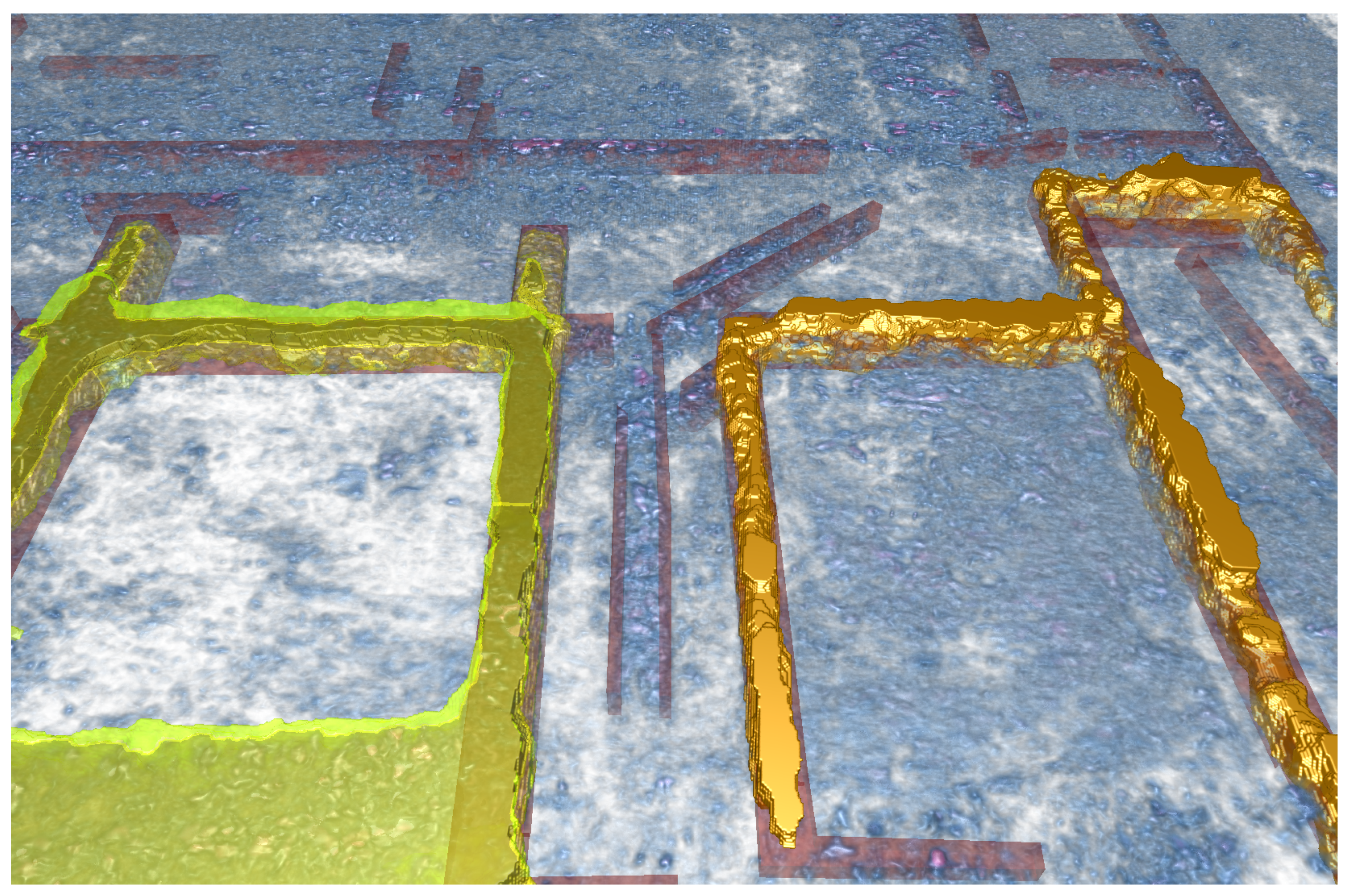

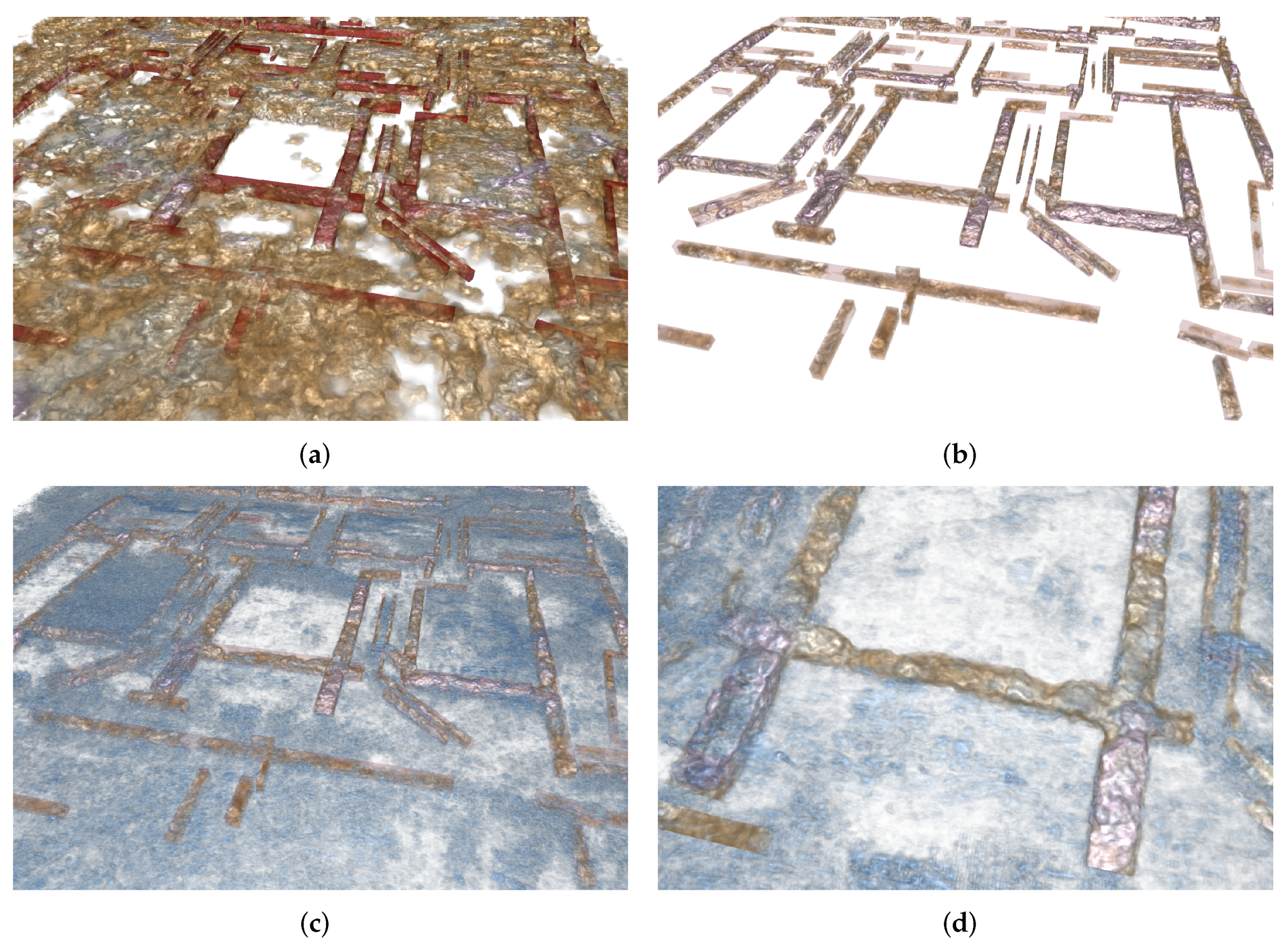

This hybrid rendering approach for heterogeneous data offers a multitude of visualization possibilities. Support for multiple volume datasets allows the fused visualization of unfiltered and filtered dataset versions, as well as of segmentation datasets. DVR and/or iso-surface rendering, and their respective visualization parameters, can be chosen independently. Furthermore, datasets from multiple measurements can either be superimposed or visualized next to each other. In our fused visualization approach, the orientations and resolutions of the datasets do not have to be identical, which is particularly useful if a site has been measured at different points in time or with different measurement equipment. Figure 12 shows examples of how to use this functionality in archaeologically beneficial ways: The superimposition of an unfiltered dataset with a TV-L1 filtered version shows the major features emphasized by the filter, and thanks to the fused visualization approach, the details suppressed by the filter can be re-added as visual contributions from the unfiltered dataset. The integration of a segmentation volume displayed as a semi-transparent iso-surface allows the detailed analysis of the enclosed volume while remaining aware of the depicted structure's shape and extent, in this case, the remains of the wall. Spatially separated visualization avoids mutual occlusion. Iso-surface rendering integrated with DVR may generally be used to further emphasize specific features, such as regions of exceptionally high radar reflectivity or segmentation results; in the example, the choice of a high iso-value depicts most of the foundation.
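Fusing datasets with different orientations and resolutions requires each volume to be sampled in its own voxel coordinate system. A minimal sketch, assuming each volume is described by an origin, a per-axis voxel spacing, and a rotation `yaw` about the vertical axis (real datasets may need a full affine transform), maps a world-space ray sample into voxel indices and interpolates trilinearly; all names are hypothetical:

```python
import math


def world_to_voxel(point, origin, spacing, yaw):
    """Map a world-space point to continuous voxel indices of a volume rotated by `yaw` about z."""
    dx, dy, dz = (point[0] - origin[0], point[1] - origin[1], point[2] - origin[2])
    # Undo the volume's rotation about the vertical axis.
    c, s = math.cos(-yaw), math.sin(-yaw)
    lx, ly = c * dx - s * dy, s * dx + c * dy
    return (lx / spacing[0], ly / spacing[1], dz / spacing[2])


def trilinear(volume, x, y, z):
    """Trilinear interpolation in volume[k][j][i] (at least 2 voxels per axis); None outside."""
    nz, ny, nx = len(volume), len(volume[0]), len(volume[0][0])
    if not (0 <= x <= nx - 1 and 0 <= y <= ny - 1 and 0 <= z <= nz - 1):
        return None
    i0, j0, k0 = min(int(x), nx - 2), min(int(y), ny - 2), min(int(z), nz - 2)
    fx, fy, fz = x - i0, y - j0, z - k0
    value = 0.0
    for dk, wz in ((0, 1.0 - fz), (1, fz)):
        for dj, wy in ((0, 1.0 - fy), (1, fy)):
            for di, wx in ((0, 1.0 - fx), (1, fx)):
                value += wx * wy * wz * volume[k0 + dk][j0 + dj][i0 + di]
    return value


# Hypothetical 4 x 3 x 2 volume whose value equals the x index.
volume = [[[float(i) for i in range(4)] for _ in range(3)] for _ in range(2)]
rotated = world_to_voxel((10.0, 1.0, 0.0), (10.0, 0.0, 0.0), (0.5, 0.5, 0.25), math.pi / 2)
```

Each ray sample is transformed once per active volume, so datasets recorded with different grid spacings or survey orientations can contribute to the same compositing step.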

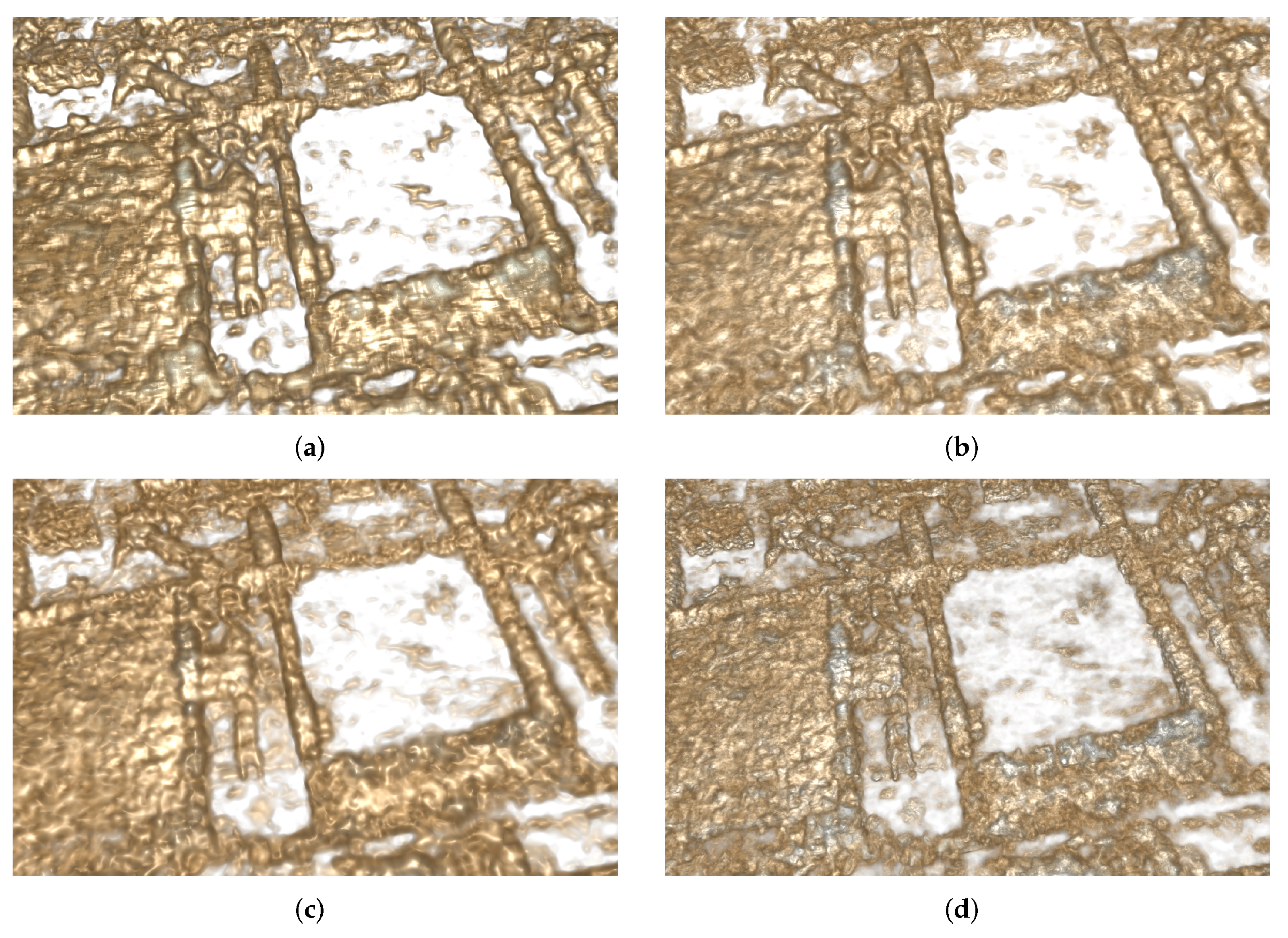

Figure 12.

Visualization results overview: Multi-volume DVR based on partially overlapping sub-volumes of the unfiltered (blue) and TV-L1 filtered (brown) Carnuntum dataset. In the overlap region, the visual contributions are combined. The floor plan remains clearly recognizable, details lost during filtering are supplemented from the unfiltered dataset (a). Integration of a semi-transparent segmentation result displayed in red emphasizing the walls of the segmented building. In the unfiltered region the superimposition with the segmentation supports the structural analysis of the walls (b). Spatial separation in, e.g., vertical direction reduces occlusion and is therefore useful for illustrating results of GPR data analysis (c). Likewise, including iso-surface visualization of the filtered dataset may be used to highlight regions of high radar reflectivity. In our case, there is a good match with the segmented walls (d).

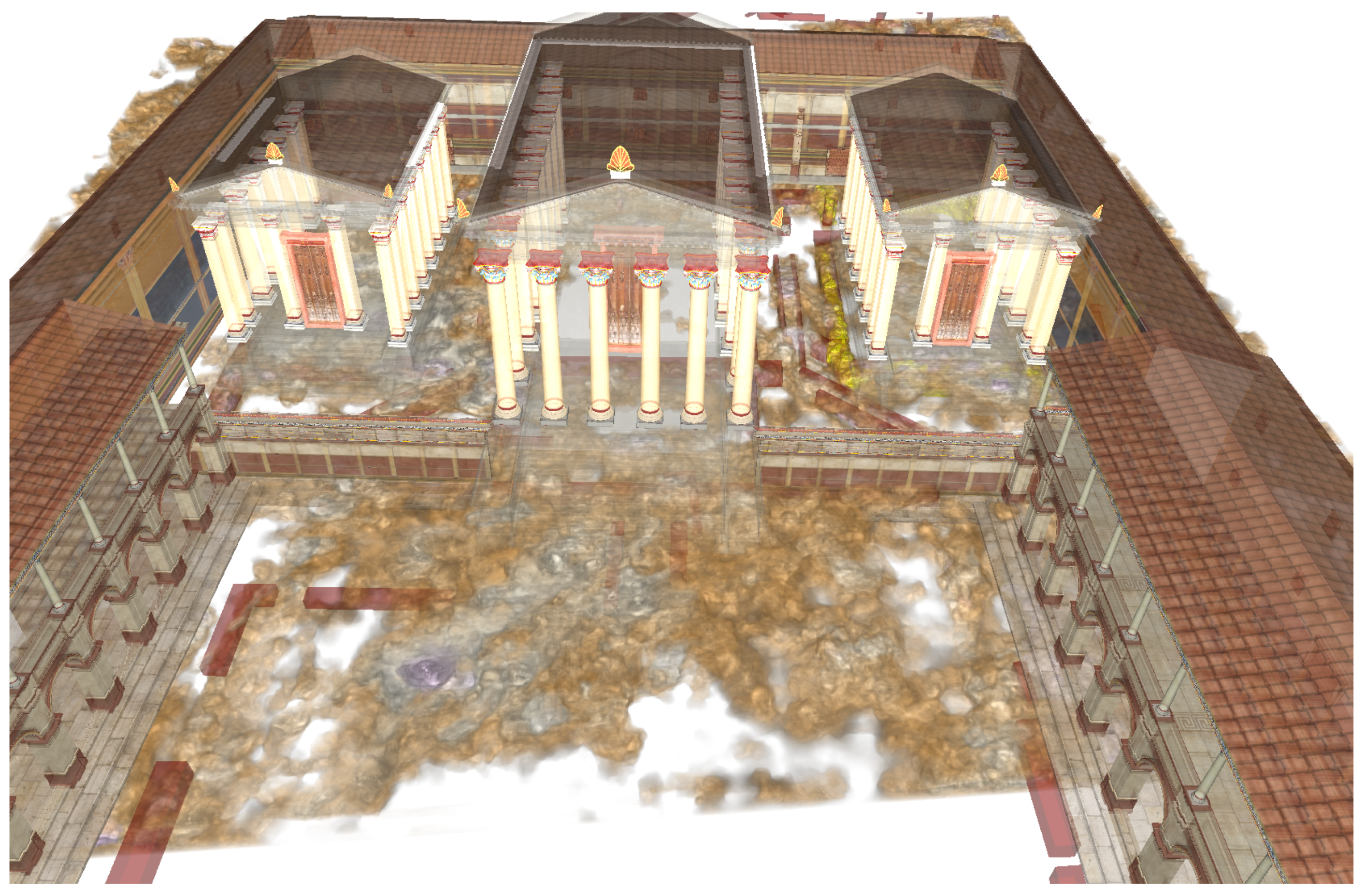

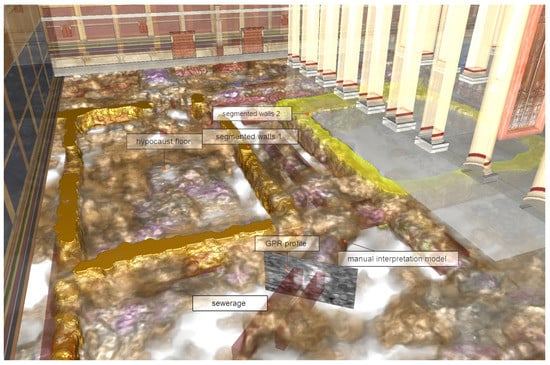

Support for arbitrary 3D data is a key feature of the proposed approach. It enables comprehensive visualizations of measured data together with 3D interpretation models converted from interpretations manually elaborated in GIS tools by drawing polygons; the 2D polygons are converted into 3D models by extrusion. The simultaneous visibility of recorded prospection data, computer-aided analysis results, and the results of human reasoning opens up new possibilities for comprehensive prospection data analysis and has the potential to support the discussion of interpretation results. Such visualizations are also a powerful tool for disseminating complex interpretation results to non-experts, whose understanding may increase when the link between abstract prospection data and more tangible virtual models is established. Figure 13 shows a virtual reconstruction of the Roman forum of Carnuntum together with filtered GPR data and a 3D interpretation model.

Figure 13.

Combined visualization of GPR data, interpretation model, segmentation, and a virtual reconstruction of the Roman forum in Carnuntum. The integration of the 3D model allows viewers with no prior knowledge of Roman architecture to gain an idea of the possible original appearance of the buildings detected by ground penetrating radar.

2.6. Local Visualization Control

We have shown how to visually combine multiple heterogeneous datasets by accumulating their individual contributions in a fused visualization approach. Still, the possibilities of the proposed method are far from exhausted: since the core rendering algorithm keeps track of the objects encountered along the view rays, it enables even more flexible visualization techniques. Motivated by the archaeological need to visually depict structures in GPR data, we explored using 3D models to control the domain in which DVR is applied, thereby extending work in [19]. The simplest form of such geometry-based visualization control is to restrict DVR to the volume regions inside or outside a 3D model. It is also possible to use different DVR parameters, and thus a different DVR appearance, in the intersection region, or to select the displayed dataset version (filtered or unfiltered) depending on the location relative to the controlling 3D model.

Our implementation supports restricting the visible portion of the dataset based on constructive solid geometry (CSG) operations. CSG techniques are routinely used in the CAD domain to model complex mechanical parts by applying a series of intersection, union, and difference operations with simple geometric primitives to an initial geometric model, e.g., a difference operation using a cylinder to model the drilling of a borehole into an existing 3D model.
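One way to realize such CSG-based restriction on a per-ray basis is to represent, for each closed 3D model, the depth intervals in which the ray is inside the model; the enter and exit events collected along the ray provide exactly these intervals. CSG operations then reduce to Boolean combinations of interval lists, and DVR samples are accumulated only inside the resulting intervals. A minimal Python sketch, assuming watertight models so that enter and exit events pair up; the function names are hypothetical:

```python
def _combine(a, b, keep):
    """Combine two sorted lists of disjoint (enter, exit) intervals with a Boolean rule."""
    # Sweep over all interval boundaries and test membership between them.
    cuts = sorted({t for interval in a + b for t in interval})

    def inside(intervals, t):
        return any(lo <= t < hi for lo, hi in intervals)

    result = []
    for lo, hi in zip(cuts, cuts[1:]):
        mid = 0.5 * (lo + hi)
        if keep(inside(a, mid), inside(b, mid)):
            if result and result[-1][1] == lo:
                result[-1] = (result[-1][0], hi)  # merge with the previous interval
            else:
                result.append((lo, hi))
    return result


def union(a, b):
    return _combine(a, b, lambda in_a, in_b: in_a or in_b)


def intersection(a, b):
    return _combine(a, b, lambda in_a, in_b: in_a and in_b)


def difference(a, b):
    return _combine(a, b, lambda in_a, in_b: in_a and not in_b)
```

For example, `difference([(0, 10)], [(3, 5)])` yields `[(0, 3), (5, 10)]`, i.e., the borehole example above cut out of a single ray segment.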

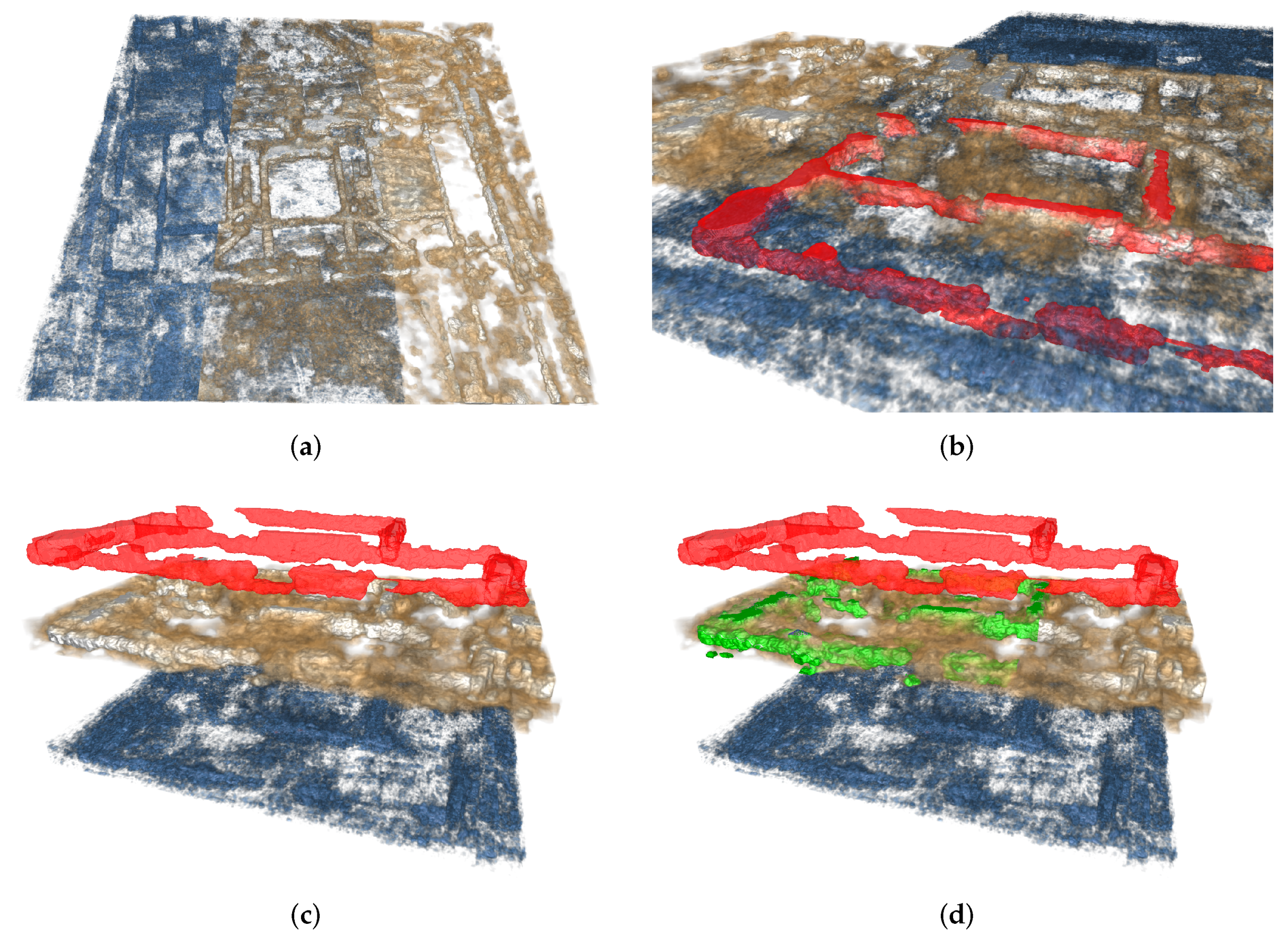

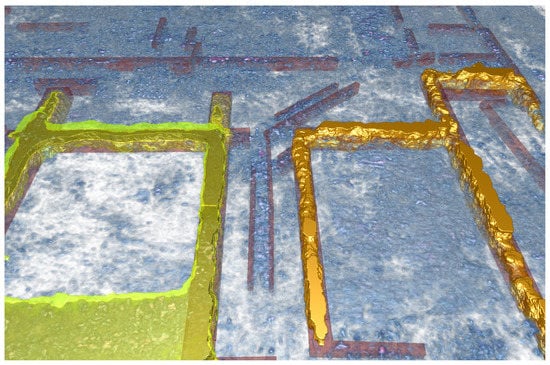

Figure 14 shows a local visualization control example using a simple 3D interpretation model depicting walls and sewers. Instead of displaying the 3D model and the GPR dataset together (Figure 14a), the invisible 3D interpretation model geometry is used to restrict GPR DVR to its extent. This way, surface details of the dataset features in the selected portion of the filtered GPR dataset can clearly be seen, while occlusion by visual contributions from outside the interpretation boundaries does not occur at all (Figure 14b). Another option is to show dataset regions outside the model using a different transfer function. In the example, both options were combined, i.e., the unfiltered version of the dataset and a different transfer function were used outside the model. Walls with well-defined contours stand out in the resulting images, while the surrounding dataset remains visible (Figure 14c,d).

Figure 14.

Local visualization control example: Conjoint visualization of filtered GPR dataset and 3D interpretation model (a). GPR dataset DVR limited to the inside of the invisible 3D model geometry (b). Combination of unfiltered GPR DVR outside and filtered GPR DVR inside the interpretation model. The building floor plan stands out while the remainder of the dataset is still visible. Wall surface details are visible despite the simplistic nature of the interpretation (c,d).

3. Results

The presented visualization and interactive interpretation approach has been successfully tested with data from multiple sites. In this publication, we restrict ourselves to the Carnuntum example, where the GPR amplitude dataset was the central source of information. We analyzed the dataset using visualizations of the original dataset as well as of multiple TV-L1 filter results, displayed together with other sources of information. In the analysis stage, we tried to extract the walls of the three main buildings of the Roman forum from the GPR dataset using the developed tools, for comparison with the existing 3D interpretation model elaborated using a slice-based approach in a GIS environment.

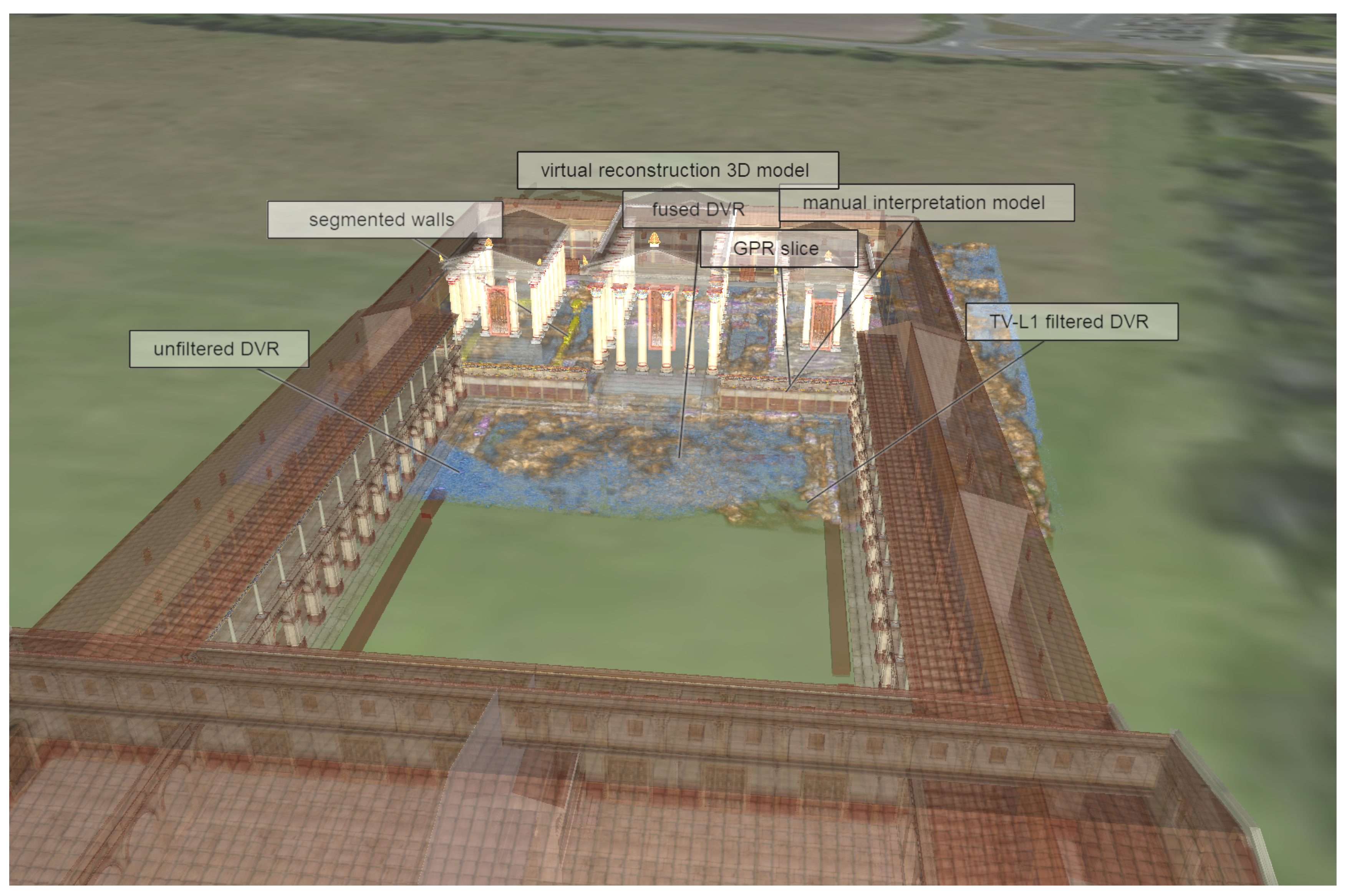

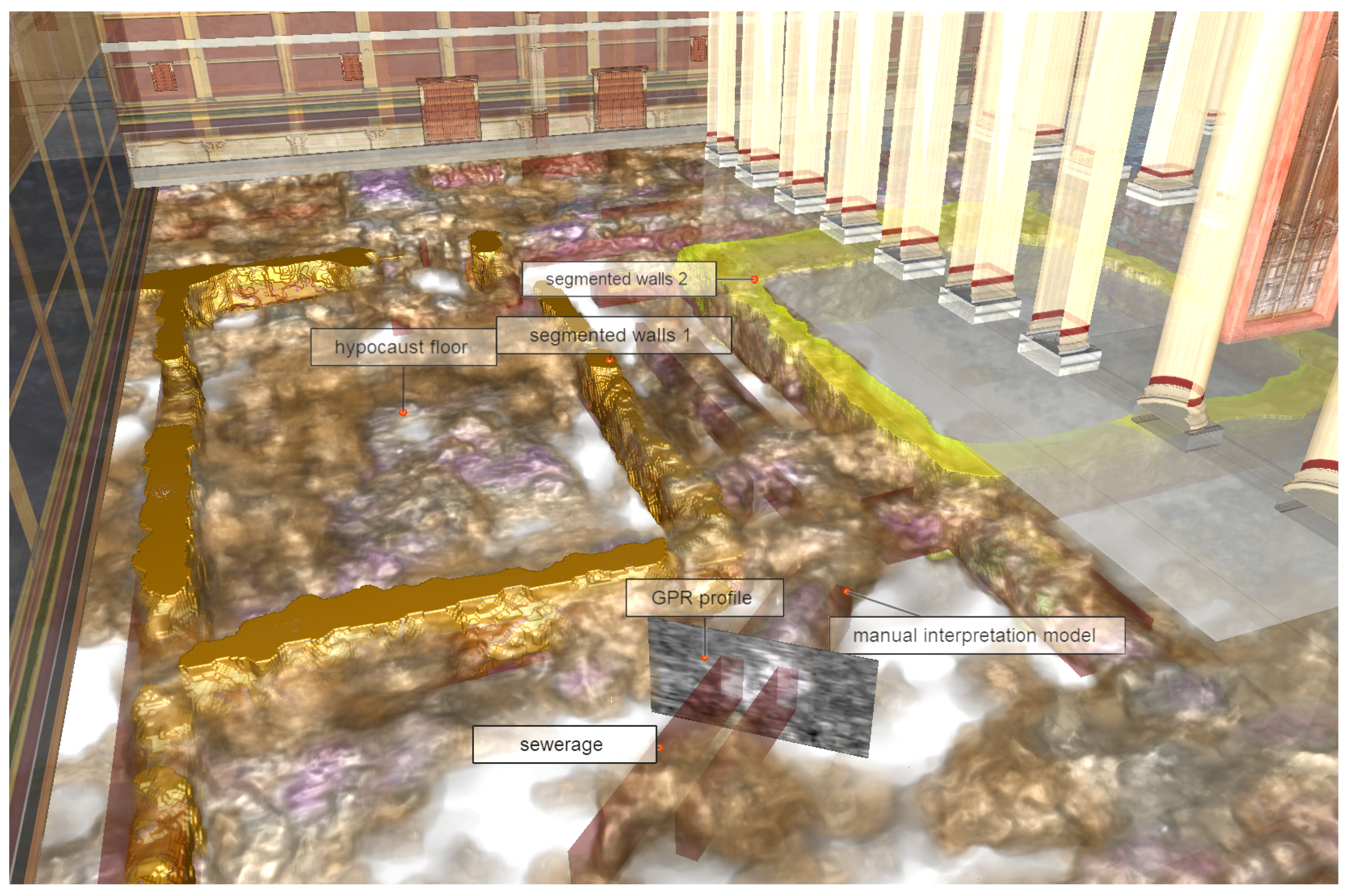

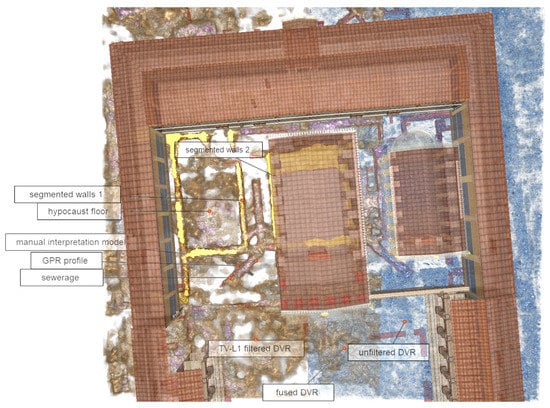

For site illustration, the GPR datasets, the manual interpretation model, and the segmented walls were visualized together with a reconstructed 3D model of the forum embedded in a 3D terrain model of the site, in order to relate them spatially to the present-day landscape. The included virtual reconstruction also supports the direct visual evaluation of the 3D-modeled interpretation hypotheses against the prospection data measured by sensors. Flexible control over the visible datasets and features helps to steer the visualization towards optimal perceptibility of the information relevant to questions in application scenarios ranging from data exploration to the comprehensive dissemination of prospection results to stakeholders and the general public. In the context of dissemination, the 3D annotation functionality of the system supports marking and labeling important details in the data in the analysis stage, e.g., to point out structures of archaeological interest. The corresponding labels are automatically placed in arbitrary views of the illustration [52]. Figure 15 shows an overview illustration elaborated using the test GPR dataset from Carnuntum, its filtered versions, a 3D interpretation model, a 3D model of the building complex, a terrain model of the area textured using an aerial image, and annotations. A vertical shift of −2.5 m applied to the terrain dataset prevents occlusion of the subsurface data. Semi-transparent display of the 3D models makes their spatial relationships to GPR dataset features easier to recognize. Labels point to the individual datasets and regions, making the illustration easier to understand.

Figure 15.

Result image combining multiple versions of the GPR dataset, a 3D interpretation model of the walls, segmented walls, and the reconstructed model. All the datasets are visualized together with a 3D terrain model.

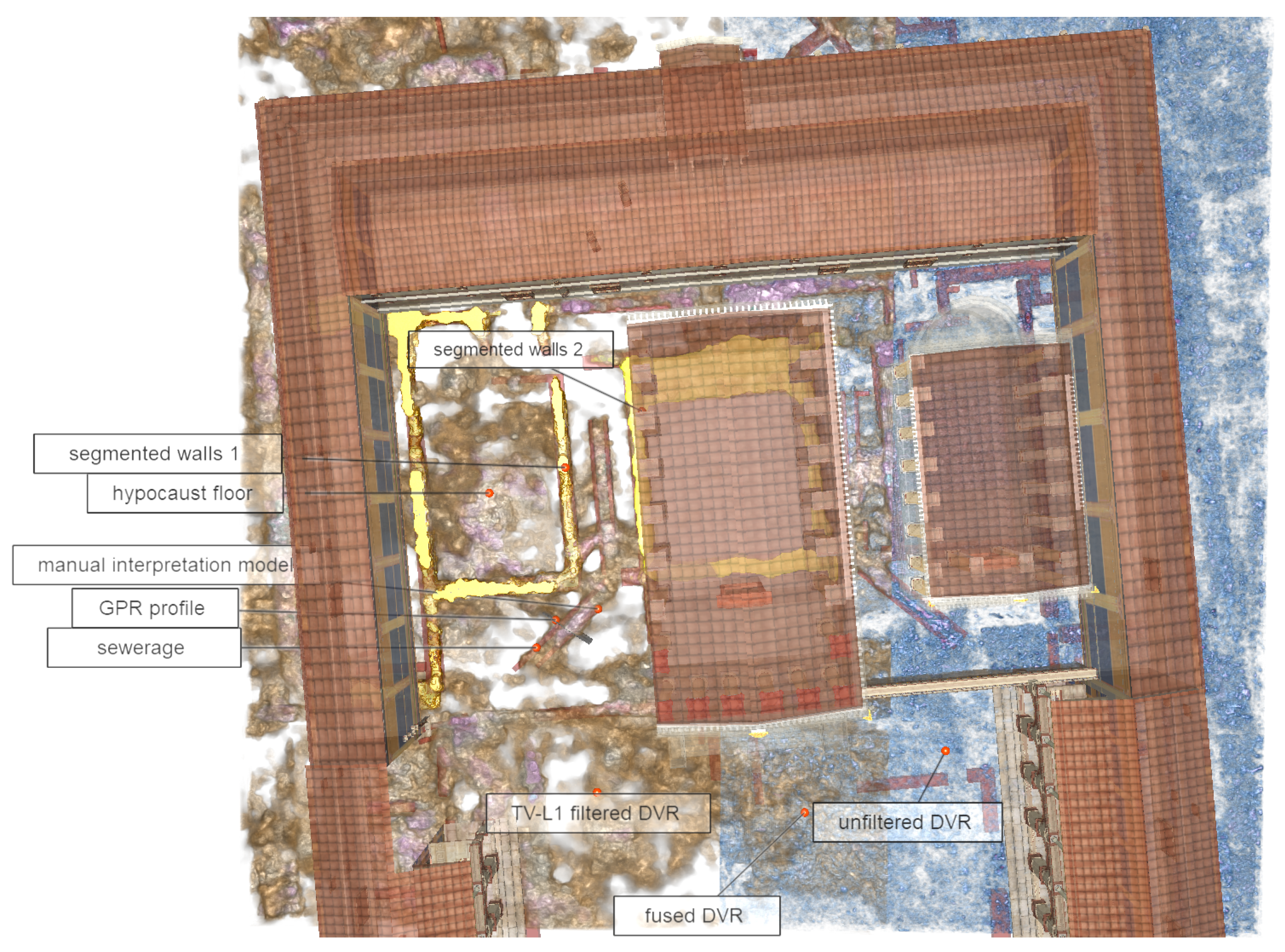

Figure 16 is a top view of the forum without the terrain model. Again, the 3D model is transparent so that the underground structures remain partially visible. For better visibility of the hypocaust and the sewer, the left building was removed using a CSG difference operation with a corresponding cuboid. Again, labels point to important dataset features, making the illustration largely self-explanatory.

Figure 16.

Top view of the scene from Figure 15 without terrain and parts of the 3D model cut out (Section 2.6) for better visibility of the prospection datasets, interpretation model, and the segmented walls (Section 2.4).

Figure 17 is an oblique view better depicting the 3D shapes of the segmented walls and the hypocaust. It also shows the possibility to place arbitrarily oriented GPR slices in the 3D scene to illustrate findings such as the cavity inside the sewer based on the data.

Figure 17.

Detail view: The segmentation results depict and visually separate the two buildings within the surrounding GPR visualization. A vertical profile sampled from the unfiltered dataset and placed perpendicular to the sewer illustrates the still existing cavity in its center, indicated by lower (darker) reflectivity values; its small size, on the order of the dataset resolution, limits the quality of the presentation. Furthermore, details such as the pillars of a hypocaust floor are visible.

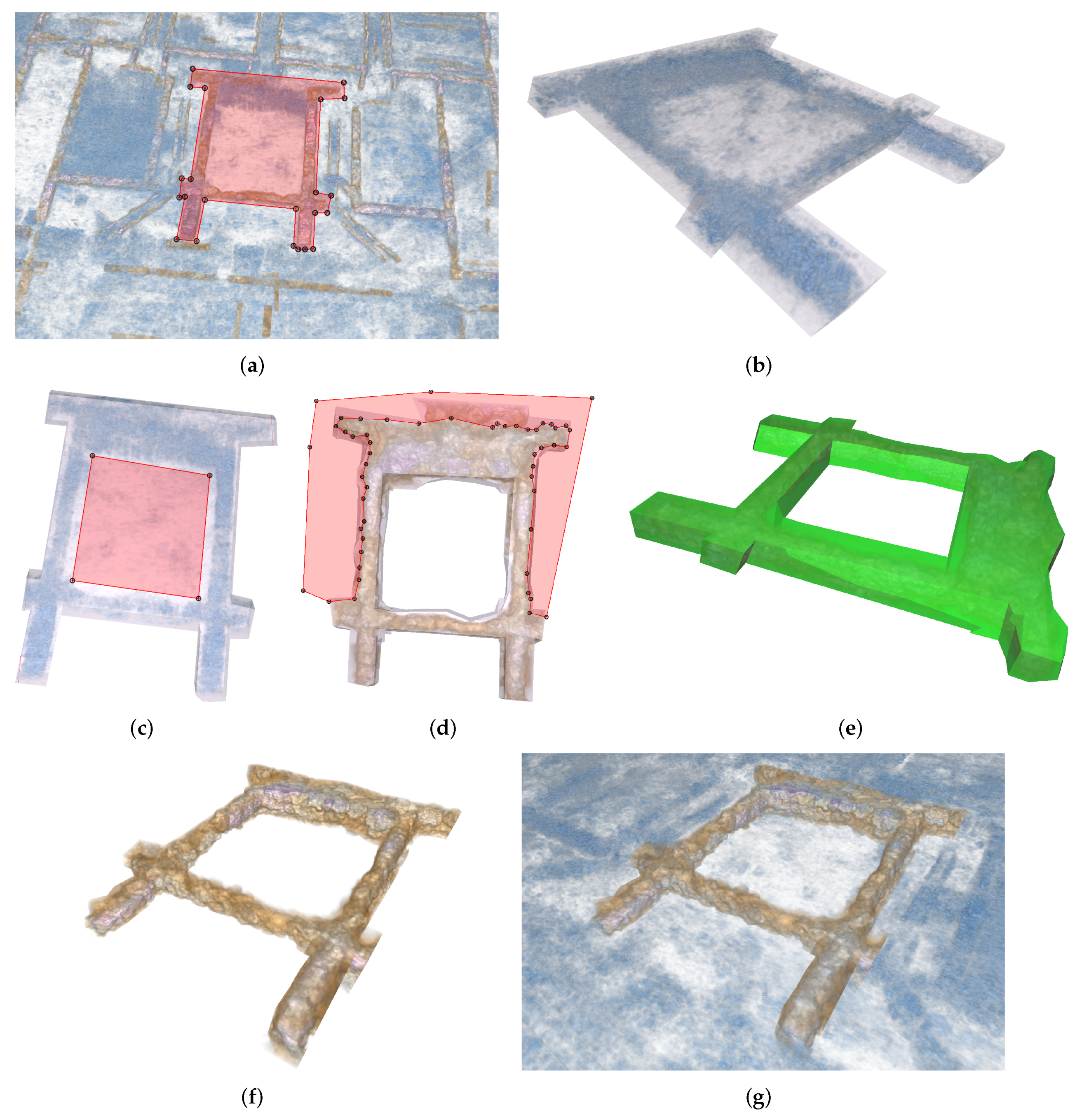

3.1. Interactive 3D Interpretation

In our experiments, we found that using the techniques from Section 2.6 in conjunction with a simplistic 3D interpretation model is often sufficient for the visual depiction of archaeological structures, including fine surface details in DVR, since these techniques can remove occluding portions of the dataset or visually differentiate structures from nearby structures of similar radar reflectivity, as shown in Figure 14d. The still time-consuming segmentation is therefore not necessary unless an explicit 3D model is needed for further analysis or 3D printing. For all other cases, we developed interactive GPR interpretation tools based on 3D shapes obtained by extruding polygons drawn on top of 3D DVR renderings. Figure 18 shows the results of using these tools for the extraction of the walls shown in Figure 11, in a fraction of the time needed for the segmentation. Starting from an initial coarse interpretation geometry restricting DVR to its interior, the interpretation was refined by drawing more polygons and combining their extruded 3D geometry with the previous interpretation model. This is performed using the CSG operations intersection, union, and difference, computed with the geometry processing library libigl [53], which uses the implementation of Boolean operations on Nef polyhedra in CGAL [54]. In the example, we mainly used difference operations. Refinement steps can be performed from arbitrary viewpoints and directions, which, unlike GIS-based interpretation, leads to true 3D models with sufficient expressive power to represent the shapes of most archaeological structures.
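The implementation described above performs these Boolean operations on meshes using libigl and CGAL. To keep the example self-contained, the following Python sketch approximates the same workflow on a voxel grid instead, which is an assumption of this illustration: a polygon drawn in top view is extruded vertically into a binary mask, and a second extruded polygon is subtracted voxel by voxel. Function names, polygons, and grid sizes are hypothetical:

```python
def point_in_polygon(x, y, polygon):
    """Even-odd rule: count crossings of a horizontal ray from (x, y) with polygon edges."""
    inside = False
    n = len(polygon)
    for k in range(n):
        x1, y1 = polygon[k]
        x2, y2 = polygon[(k + 1) % n]
        if (y1 > y) != (y2 > y):
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def extrude_to_mask(polygon, nx, ny, nz, z_range):
    """Voxel mask (indexed [k][j][i]) of a top-view polygon extruded over z_range."""
    z_min, z_max = z_range
    return [[[z_min <= k < z_max and point_in_polygon(i + 0.5, j + 0.5, polygon)
              for i in range(nx)] for j in range(ny)] for k in range(nz)]


def mask_difference(a, b):
    """CSG difference on voxel masks: keep voxels of a that are not in b."""
    return [[[va and not vb for va, vb in zip(row_a, row_b)]
             for row_a, row_b in zip(slice_a, slice_b)]
            for slice_a, slice_b in zip(a, b)]


outer = [(1, 1), (9, 1), (9, 9), (1, 9)]  # hypothetical building outline (voxel units)
inner = [(3, 3), (7, 3), (7, 7), (3, 7)]  # hypothetical room interior to subtract
ring = mask_difference(extrude_to_mask(outer, 10, 10, 3, (0, 3)),
                       extrude_to_mask(inner, 10, 10, 3, (0, 3)))
```

A mesh-based implementation avoids the staircase artifacts of voxel masks and supports extrusion along arbitrary view directions, which this sketch does not cover.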

Figure 18.

Interactive 3D GPR interpretation by polygon extrusion and CSG operations: Initial 2D contour (a). Visualization limiting DVR to the interior of the extruded 3D model (b). More 2D contours (c,d) and refined 3D interpretation model obtained using difference operations (e). Final 3D model applied to TV-L1 filtered dataset. Details of the structure surface are visible from any viewing perspective (f). Highlighting of the interpreted wall in the surrounding data (g).

3.2. System Requirements and Performance

The presented visualization framework relies on the computing power of a modern graphics card. The software uses both OpenGL, for feeding 3D models into the rendering framework, and CUDA, for combining all their contributions and sampling volume datasets for the final images. The computational complexity depends on the number of contributions to accumulate. Semi-transparent 3D models are more costly than opaque ones, since it is not sufficient to display only the surface closest to the viewpoint. Volume sampling complexity increases with the number of overlapping datasets and the number of samples required to accurately display them, which depends on the dataset size and resolution. For an output image size of 1920 × 1080 pixels on an NVIDIA GeForce RTX 2080 Ti GPU, rendering times for the Carnuntum forum scene range from around 20 milliseconds for top views of a single dataset to 200 milliseconds for four overlapping dataset versions, the interpretation model, and the virtual reconstruction. This is sufficient for interactive data exploration and analysis in a desktop setting. Dataset size is currently limited by the graphics card memory: the 11 gigabytes provided by the card used in our experiments were sufficient to process datasets in the single-digit hectare range, depending on the respective resolution and the number of derived datasets used.
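As a rough, illustrative estimate, assuming each voxel is stored as a 32-bit float (the storage format is an assumption, not stated above), a single version of the Carnuntum grid occupies about half a gigabyte of GPU memory:

$$1601 \times 1601 \times 50 = 128\,160\,050\ \text{voxels}, \qquad 128\,160\,050 \times 4\ \text{B} = 512\,640\,200\ \text{B} \approx 0.51\ \text{GB}$$

Under this assumption, 11 GB could in principle hold around twenty such dataset versions before other allocations, such as 3D models, the HA-buffer, and output images, are taken into account.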

4. Discussion

The experiments carried out so far have clearly shown the potential of the proposed approach. Archaeologists were enthusiastic that displaying filtered GPR data using DVR, for the first time, makes the shape of the contained archaeological structures immediately and clearly visible. This holds not only for major structures such as walls, but also for features close to the dataset resolution, such as hypocaust pillars or the inner structure of the sewers. First experiments with GPR datasets from other sites yielded similarly good results once the filtering and rendering parameters had been optimized. Nevertheless, a more detailed investigation is required to determine whether the proposed filters work for all datasets, since in principle they could hide relevant structures or introduce artificial ones. Archaeologists were also impressed by several further aspects: the improved visualization fidelity compared with the state of the art, the multitude of ways to illustrate GPR data, and the possibility to seamlessly combine them with other datasets in the GIS sense. The experiments performed so far have also shown that an arbitrary amount of information cannot be overlaid without losing the overview. The visible data area will therefore have to be adapted flexibly to the research question and to the viewer's perspective. The visualization algorithm already provides the basic functionality for this.

The ability to visualize, and also to develop, archaeological interpretations based on data displayed in three dimensions was seen as an important step forward. One reason is that displaying interpretations developed on a slice-by-slice basis together with the 3D-visualized GPR data in the respective regions revealed significant deviations. Some of these can be explained by the interpretation process itself, which aims to recover building floor plans in their original form before decay and erosion. Others indicate genuine interpretation inaccuracies, inconsistencies, or even errors, which could be related to the limited capability of 2D views to convey 3D data. Interpretations developed using segmentation techniques were consistently in accordance with the shapes implicitly perceivable in the 3D visualization. These observations clearly require further investigation.
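The deviations described above were observed visually. One possible way to quantify them, which is not part of the presented workflow, is to voxelize both interpretation models on a common grid and compare them with an overlap measure such as the Dice coefficient. The voxels where the models disagree can also be kept for display. The masks in the example are hypothetical toy data. A real comparison would additionally require both models to be registered to the same grid, and the function names are illustrative.

```python
import numpy as np


def dice(a, b):
    """Dice similarity of two boolean voxel masks (1.0 = identical)."""
    a = np.asarray(a, dtype=bool)
    b = np.asarray(b, dtype=bool)
    total = a.sum() + b.sum()
    if total == 0:
        return 1.0  # convention: two empty models agree perfectly
    return 2.0 * np.logical_and(a, b).sum() / total


def deviation_masks(reference, candidate):
    """Voxels missed by the candidate and voxels it adds beyond the reference."""
    missed = reference & ~candidate
    extra = candidate & ~reference
    return missed, extra


# Hypothetical toy data: a wall segment and a laterally shifted slice-based outline
shape = (2, 4, 10)
segmented = np.zeros(shape, dtype=bool)
segmented[:, :, 2:6] = True
slice_based = np.zeros(shape, dtype=bool)
slice_based[:, :, 3:8] = True

missed, extra = deviation_masks(segmented, slice_based)
print(round(dice(segmented, slice_based), 3), missed.sum(), extra.sum())  # 0.667 8 16
```

A single overlap score hides where the disagreement occurs. Rendering the `missed` and `extra` masks together with the GPR volume would therefore be the more useful output for archaeological review.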

From a hardware perspective, practical datasets can be visualized and processed on a single PC. In the future, visualization and filtering of datasets that do not fit into GPU memory, e.g., archaeological landscapes covering several square kilometers, could be achieved by employing out-of-core and view-dependent multi-resolution techniques.
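View-dependent level-of-detail selection could bound GPU memory as follows. This is a minimal sketch with several assumptions:

- The volume is split into equally sized bricks.
- Each brick is stored at resolution levels that halve the sampling along every axis, so each coarser level needs one eighth of the memory.
- Bricks farther from the camera get coarser levels.
- The farthest bricks are coarsened further until the selection fits a memory budget.

Only the selected levels would then be streamed from disk (out-of-core). The brick layout, the distance rule, and the greedy strategy are hypothetical choices for illustration. They do not describe an existing implementation.

```python
import math


def brick_lod(distance, base_distance, max_level):
    """One level coarser for each doubling of distance beyond base_distance."""
    if distance <= base_distance:
        return 0
    return min(max_level, math.floor(math.log2(distance / base_distance)))


def select_levels(brick_centers, camera, brick_bytes, budget_bytes,
                  base_distance, max_level):
    """Choose a resolution level per brick so the total fits the memory budget."""
    dists = [math.dist(c, camera) for c in brick_centers]
    levels = [brick_lod(d, base_distance, max_level) for d in dists]

    def total():
        # Level k halves every axis, so it needs 1/8**k of the full-resolution size
        return sum(brick_bytes // 8**lvl for lvl in levels)

    # Greedily coarsen the farthest brick that can still be coarsened
    order = sorted(range(len(levels)), key=lambda i: dists[i], reverse=True)
    while total() > budget_bytes:
        for i in order:
            if levels[i] < max_level:
                levels[i] += 1
                break
        else:
            raise MemoryError("budget too small even at the coarsest level")
    return levels


# Hypothetical scene: four bricks in a row, camera at the origin
centers = [(0.5, 0, 0), (1.5, 0, 0), (2.5, 0, 0), (3.5, 0, 0)]
camera = (0, 0, 0)
BRICK = 64**3 * 4  # a 64^3 brick of float32 voxels
print(select_levels(centers, camera, BRICK, 10_000_000, 1.0, 2))  # [0, 0, 1, 1]
print(select_levels(centers, camera, BRICK, 2_200_000, 1.0, 2))   # [0, 0, 2, 2]
```

The selection would be recomputed whenever the camera moves, so that nearby structures stay at full resolution while distant parts of a landscape-scale dataset are represented coarsely.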

Author Contributions

Conceptualization, A.B. and W.N.; methodology, A.B. and W.N.; software, A.B.; formal analysis, A.B. and W.N.; investigation, A.B. and W.N.; writing—original draft preparation, A.B.; writing—review and editing, A.B. and W.N.; visualization, A.B.; supervision, W.N.; funding acquisition, W.N. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Restrictions apply to the availability of these data.

Acknowledgments

The present work is based on preliminary work in the field of medical imaging for specific applications in forensics at the Ludwig Boltzmann Institute for Clinical Forensic Imaging, continued at the Ludwig Boltzmann Institute for Archaeological Prospection and Virtual Archaeology (LBI ArchPro). We thank Johannes Höller for contributing to the user interface of the software as well as for preparing 3D models. We thank Mario Wallner for the fruitful discussions of the interpretative process as well as for providing 3D models of GIS-based interpretations. We also thank Alois Hinterleitner for providing us with high-quality GPR volume datasets. The LBI ArchPro (archpro.lbg.ac.at) is based on an international cooperation of the Ludwig Boltzmann Gesellschaft (A), Amt der Niederösterreichischen Landesregierung (A), University of Vienna (A), TU Wien (A), Danube University Krems (A), ZAMG—Central Institute for Meteorology and Geodynamics (A), 7reasons (A), The Spanish Riding School Vienna (A), LWL—Federal state archaeology of Westphalia-Lippe (D), NIKU—Norwegian Institute for Cultural Heritage (N), and Vestfold and Telemark fylkeskommune—Kulturarv (N).

Conflicts of Interest

The authors declare no conflict of interest.

References

- Gaffney, C.; Gaffney, V.; Neubauer, W.; Baldwin, E.; Chapman, H.; Garwood, P.; Moulden, H.; Sparrow, T.; Bates, R.; Löcker, K.; et al. The Stonehenge Hidden Landscapes Project. Archaeol. Prospect. 2012, 19, 147–155.

- Gaffney, V.; Neubauer, W.; Garwood, P.; Gaffney, C.; Löcker, K.; Bates, R.; De Smedt, P.; Baldwin, E.; Chapman, H.; Hinterleitner, A.; et al. Durrington walls and the Stonehenge Hidden Landscape Project 2010–2016. Archaeol. Prospect. 2018, 25, 255–269.

- Gaffney, V.; Baldwin, E.; Bates, M.; Bates, C.; Gaffney, C.; Hamilton, W.; Kinnaird, T.; Neubauer, W.; Yorston, R.; Allaby, R.; et al. A Massive, Late Neolithic Pit Structure associated with Durrington Walls Henge. Internet Archaeol. 2020, 55.

- Neubauer, W.; Wallner, M.; Gugl, C.; Loecker, K.; Vonkilch, A.; Trausmuth, T.; Nau, E.; Jansa, V.; Wilding, J.; Eder-Hinterleitner, A.; et al. Zerstörungsfreie archäologische Prospektion des römischen Carnuntum – erste Ergebnisse des Forschungsprojekts “ArchPro Carnuntum”. In Carnuntum Jahrbuch; Verlag der Österreichischen Akademie der Wissenschaften: Wien, Austria, 2018; Volume 1, pp. 55–75.

- Neubauer, W. Die Entdeckung des Forums der Zivilstadt. In Carnuntum. Wiedergeborene Stadt der Kaiser; Humer, F., Ed.; Von Zabern: Darmstadt, Germany, 2014.

- Neubauer, W.; Doneus, M.; Trinks, I.; Verhoeven, G.; Hinterleitner, A.; Seren, S.; Löcker, K. Long-term Integrated Archaeological Prospection at the Roman Town of Carnuntum/Austria. Oxbow Monogr. Ser. 2012, 2, 202–221.

- Neubauer, W.; Eder-Hinterleitner, A.; Seren, S.; Melichar, P. Georadar in the Roman civil town Carnuntum, Austria: An approach for archaeological interpretation of GPR data. Archaeol. Prospect. 2002.

- Trinks, I.; Neubauer, W.; Hinterleitner, A. First High-resolution GPR and Magnetic Archaeological Prospection at the Viking Age Settlement of Birka in Sweden. Archaeol. Prospect. 2014, 21, 185–199.

- Trinks, I.; Neubauer, W.; Nau, E.; Gabler, M.; Wallner, M.; Hinterleitner, A.; Biwall, A.; Doneus, M.; Pregesbauer, M. Archaeological prospection of the UNESCO World Cultural Heritage Site Birka-Hovgården. In Archaeological Prospection: Proceedings of the 10th International Conference on Archaeological Prospection, Vienna, Austria, 29 May–2 June 2013; Austrian Academy of Sciences Press: Vienna, Austria, 2013; pp. 39–40.

- Gustavsen, L.; Cannell, R.J.; Nau, E.; Tonning, C.; Trinks, I.; Kristiansen, M.; Gabler, M.; Paasche, K.; Gansum, T.; Hinterleitner, A.; et al. Archaeological prospection of a specialized cooking-pit site at Lunde in Vestfold, Norway. Archaeol. Prospect. 2018, 25, 17–31.

- Tonning, C.; Schneidhofer, P.; Nau, E.; Gansum, T.; Lia, V.; Gustavsen, L.; Filzwieser, R.; Wallner, M.; Kristiansen, M.; Neubauer, W.; et al. Halls at Borre: The discovery of three large buildings at a Late Iron and Viking Age royal burial site in Norway. Antiquity 2020, 94, 145–163.

- Draganits, E.; Doneus, M.; Gansum, T.; Gustavsen, L.; Nau, E.; Tonning, C.; Trinks, I.; Neubauer, W. The late Nordic Iron Age and Viking Age royal burial site of Borre in Norway: ALS- and GPR-based landscape reconstruction and harbour location at an uplifting coastal area. Quat. Int. 2015, 367, 96–110.

- Trinks, I.; Hinterleitner, A.; Neubauer, W.; Nau, E.; Löcker, K.; Wallner, M.; Gabler, M.; Filzwieser, R.; Wilding, J.; Schiel, H.; et al. Large-area high-resolution ground-penetrating radar measurements for archaeological prospection. Archaeol. Prospect. 2018, 25, 171–195.

- Hinterleitner, A.; Löcker, K.; Neubauer, W.; Trinks, I.; Sandici, V.; Wallner, M.; Pregesbauer, M.; Kastowsky-Priglinger, K. Automatic detection, outlining and classification of magnetic anomalies in large-scale archaeological magnetic prospection data. In Archaeological Prospection, Proceedings of the 11th International Conference on Archaeological Prospection, Warsaw, Poland, 15–19 September 2015; Rzeszotarska-Nowakiewicz, A., Ed.; The Institute of Archaeology and Ethnology, Polish Academy of Sciences: Warsaw, Poland, 2015; pp. 296–299.

- Poscetti, V.; Zotti, G.; Neubauer, W. Improving the GIS-based 3D mapping of archeological features in GPR data. In Archaeological Prospection, Proceedings of the 11th International Conference on Archaeological Prospection, Warsaw, Poland, 15–19 September 2015; Rzeszotarska-Nowakiewicz, A., Ed.; The Institute of Archaeology and Ethnology, Polish Academy of Sciences: Warsaw, Poland, 2015; pp. 603–607.

- Küçükdemirci, M.; Sarris, A. Deep learning based automated analysis of archaeo-geophysical images. Archaeol. Prospect. 2020, 27, 107–118.

- Seren, S.; Eder-Hinterleitner, A.; Neubauer, W.; Melichar, P. New Results on Comparison of Different GPR Systems and Antenna Configurations at the Roman Site Carnuntum. In Proceedings of the 20th EEGS Symposium on the Application of Geophysics to Engineering and Environmental Problems, Denver, CO, USA, 1–5 April 2007; pp. 6–12.

- Poscetti, V.; Neubauer, W.; Trinks, I. Archaeological interpretation of GPR data: State-of-the-art and the road ahead. In Archaeological Prospection, Proceedings of the 9th International Conference on Archaeological Prospection, Izmir, Turkey, 19–24 September 2011; Drahor, M.G., Berge, M.A., Eds.; Archaeology and Art Publications: Istanbul, Turkey, 2011; pp. 145–148.

- Bornik, A.; Urschler, M.; Schmalstieg, D.; Bischof, H.; Krauskopf, A.; Schwark, T.; Scheurer, E.; Yen, K. Integrated computer-aided forensic case analysis, presentation, and documentation based on multimodal 3D data. Forensic Sci. Int. 2018, 287, 12–24.

- Kainz, B.; Grabner, M.; Bornik, A.; Hauswiesner, S.; Muehl, J.; Schmalstieg, D. Ray casting of multiple volumetric datasets with polyhedral boundaries on manycore GPUs. ACM Trans. Graph. 2009, 28.

- Conyers, L.B. Ground-Penetrating Radar for Archaeology; AltaMira Press: Walnut Creek, CA, USA, 2004.

- Goodman, D.; Piro, S. GPR Remote Sensing in Archaeology, 1st ed.; Springer: Berlin/Heidelberg, Germany, 2013; 233p.

- Conyers, L.B. Chapter 2—Basic Method and Theory of Ground-penetrating Radar. In Ground-Penetrating Radar for Geoarchaeology; John Wiley & Sons, Ltd.: Hoboken, NJ, USA, 2016; Chapter 2; pp. 12–33.

- Zhao, W.; Forte, E.; Pipan, M. Texture Attribute Analysis of GPR Data for Archaeological Prospection. Pure Appl. Geophys. 2016, 173, 2737–2751.

- Trinks, I.; Hinterleitner, A. Beyond Amplitudes: Multi-Trace Coherence Analysis for Ground-Penetrating Radar Data Imaging. Remote Sens. 2020, 12, 1583.

- Verhoeven, G.J.J. TAIFU—Toolbox for Archaeological Image FUsion. In Proceedings of the Aerial Archaeology Research Group (AARG) 2015 Annual Conference, Santiago de Compostela, Spain, 9 September 2015; p. 36.

- Conyers, L.B. Ground-penetrating Radar for Archaeological Mapping. In Remote Sensing in Archaeology; Wiseman, J., El-Baz, F., Eds.; Interdisciplinary Contributions to Archaeology; Springer: Berlin/Heidelberg, Germany, 2006; pp. 329–344.

- Neubauer, W. Magnetische Prospektion in der Archäologie. Ph.D. Thesis, University of Vienna, Vienna, Austria, 2001.

- Neubauer, W.; Seren, S.; Hinterleitner, A.; Löcker, K.; Melichar, P. Archaeological interpretation of combined magnetic and GPR surveys of the Roman town Flavia Solva, Austria. ArchéoSciences 2009, 33, 225–228.

- Zhao, W.; Forte, E.; Pipan, M.; Tian, G. Ground Penetrating Radar (GPR) attribute analysis for archaeological prospection. J. Appl. Geophys. 2013, 97, 107–117.

- Conyers, L.B. Ground-penetrating radar mapping using multiple processing and interpretation methods. Remote Sens. 2016, 8, 562.

- Leckebusch, J.; Peikert, R. Investigating the true resolution and three-dimensional capabilities of ground-penetrating radar data in archaeological surveys: Measurements in a sand box. Archaeol. Prospect. 2001, 8, 29–40.

- Novo, A.; Grasmueck, M.; Viggiano, D.A.; Lorenzo, H. 3D GPR in Archeology: What can be gained from dense Data Acquisition and Processing? In Proceedings of the 12th International Conference on Ground Penetrating Radar, Birmingham, UK, 15–19 June 2008; pp. 2–6.

- Verdonck, L.; Launaro, A.; Vermeulen, F.; Millett, M. Ground-penetrating radar survey at Falerii Novi: A new approach to the study of Roman cities. Antiquity 2020, 94, 705–723.

- Kadioğlu, S.; Daniels, J.J. 3D visualization of integrated ground penetrating radar data and EM-61 data to determine buried objects and their characteristics. J. Geophys. Eng. 2008, 5, 448–456.

- Andres, J.; Davis, M.; Fujiwara, K.; Anderson, J.; Fang, T.; Nedbal, M. A geospatially enabled, PC-based, software to fuse and interactively visualize large 4D/5D data sets. In Proceedings of the OCEANS 2009, Biloxi, MS, USA, 26–29 October 2009.

- Liang, J.; Gong, J.; Li, W.; Ibrahim, A.N. Visualizing 3D atmospheric data with spherical volume texture on virtual globes. Comput. Geosci. 2014, 68, 81–91.

- Engel, K.; Hadwiger, M.; Kniss, J.M.; Lefohn, A.E.; Salama, C.R.; Weiskopf, D. Real-Time Volume Graphics; Taylor & Francis Ltd.: Abingdon, UK, 2006; pp. 47–186.

- Bornik, A.; Wallner, M.; Hinterleitner, A.; Verhoeven, G.; Neubauer, W. Integrated volume visualisation of archaeological ground penetrating radar data. In Eurographics Workshop on Graphics and Cultural Heritage; Sablatnig, R., Wimmer, M., Eds.; The Eurographics Association: Geneve, Switzerland, 2018.

- Neubauer, W.; Bornik, A.; Wallner, M.; Verhoeven, G. Novel volume visualisation of GPR data inspired by medical applications. In Proceedings of the 13th International Conference on Archaeological Prospection, Sligo, Ireland, 28 August–1 September 2019.

- Gonzalez, R.C.; Woods, R.E. Digital Image Processing; Prentice Hall: Upper Saddle River, NJ, USA, 2008.

- Tomasi, C.; Manduchi, R. Bilateral filtering for gray and color images. In Proceedings of the 6th IEEE International Conference on Computer Vision, Bombay, India, 7 January 1998.