A Scalable and Accurate De-Snowing Algorithm for LiDAR Point Clouds in Winter

Abstract

1. Introduction

- (1) The characteristics of LiDAR point cloud data under snowy conditions were systematically analyzed in terms of distance, intensity, and point proportions, providing a solid basis for subsequent studies;

- (2) Based on these characteristics, a dynamic filter that integrates distance and intensity was developed. Its thresholds adjust dynamically, so that snow noise is removed accurately while environmental features are fully preserved. Evaluation experiments on the WADS dataset demonstrated the excellent performance of the method.

2. Related Work

- (1) Statistics-based Snow Noise Filtering Methods

- (2) Intensity-based Snow Noise Filtering Methods

- (3) Deep Learning-based Snow Noise Filtering Methods
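As an illustration of the neighbourhood statistics that filters in group (1) rely on, the sketch below implements Dynamic Radius Outlier Removal (DROR, Charron et al., 2018), one of the baselines compared in the evaluation. It is a minimal brute-force sketch, not a reference implementation: the search radius grows with horizontal range, and a point with fewer than `k_min` neighbours inside that radius is treated as noise. The parameter defaults (`beta`, `min_radius`, `k_min`) and the 0.2° angular resolution in the demo are illustrative assumptions.

```python
import math


def dror(points, alpha_rad, beta=3.0, min_radius=0.04, k_min=3):
    """Dynamic Radius Outlier Removal (after Charron et al., 2018), brute-force search.

    points: (x, y, z) tuples in the sensor frame, in metres.
    alpha_rad: horizontal angular resolution of the LiDAR, in radians.
    Returns (kept, removed). Default parameter values are illustrative assumptions.
    """
    kept, removed = [], []
    for i, p in enumerate(points):
        r_p = math.hypot(p[0], p[1])  # horizontal range of p from the sensor
        # Adjacent returns spread apart linearly with range, so the radius does too.
        radius = max(min_radius, beta * r_p * alpha_rad)
        neighbours = sum(
            1 for j, q in enumerate(points) if j != i and math.dist(p, q) <= radius
        )
        (kept if neighbours >= k_min else removed).append(p)
    return kept, removed


# Synthetic scene: a dense wall about 10 m away and one isolated return 3 m away.
wall = [(10.0, -0.5 + 0.05 * i, 0.05 * j) for i in range(21) for j in range(11)]
flake = (3.0, 0.5, 1.0)
kept, removed = dror(wall + [flake], alpha_rad=math.radians(0.2))
print(len(kept), removed)
```

The range-dependent radius is the key design choice: a fixed radius (plain ROR) tends to delete valid but sparse distant returns, whereas scaling it with range keeps them while still isolating nearby snowflakes.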

3. Materials and Methods

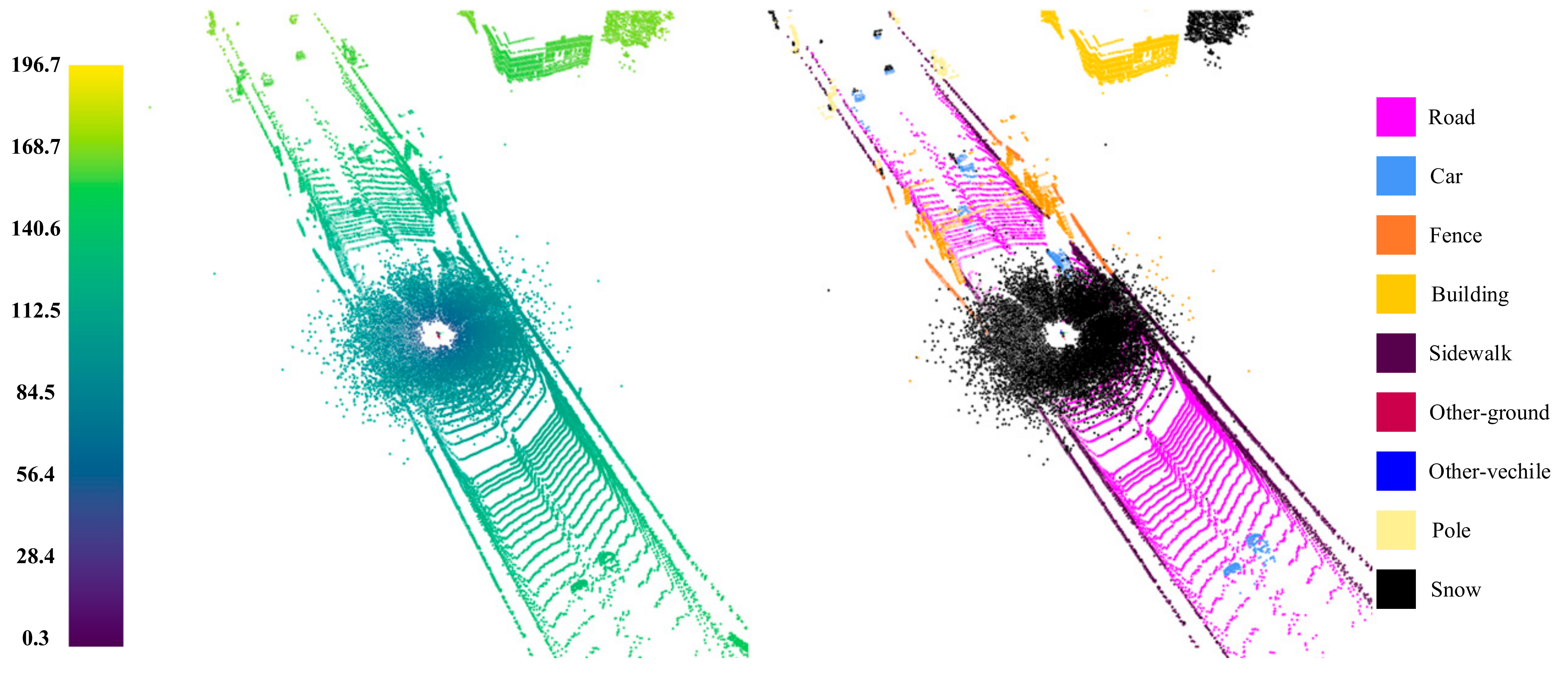

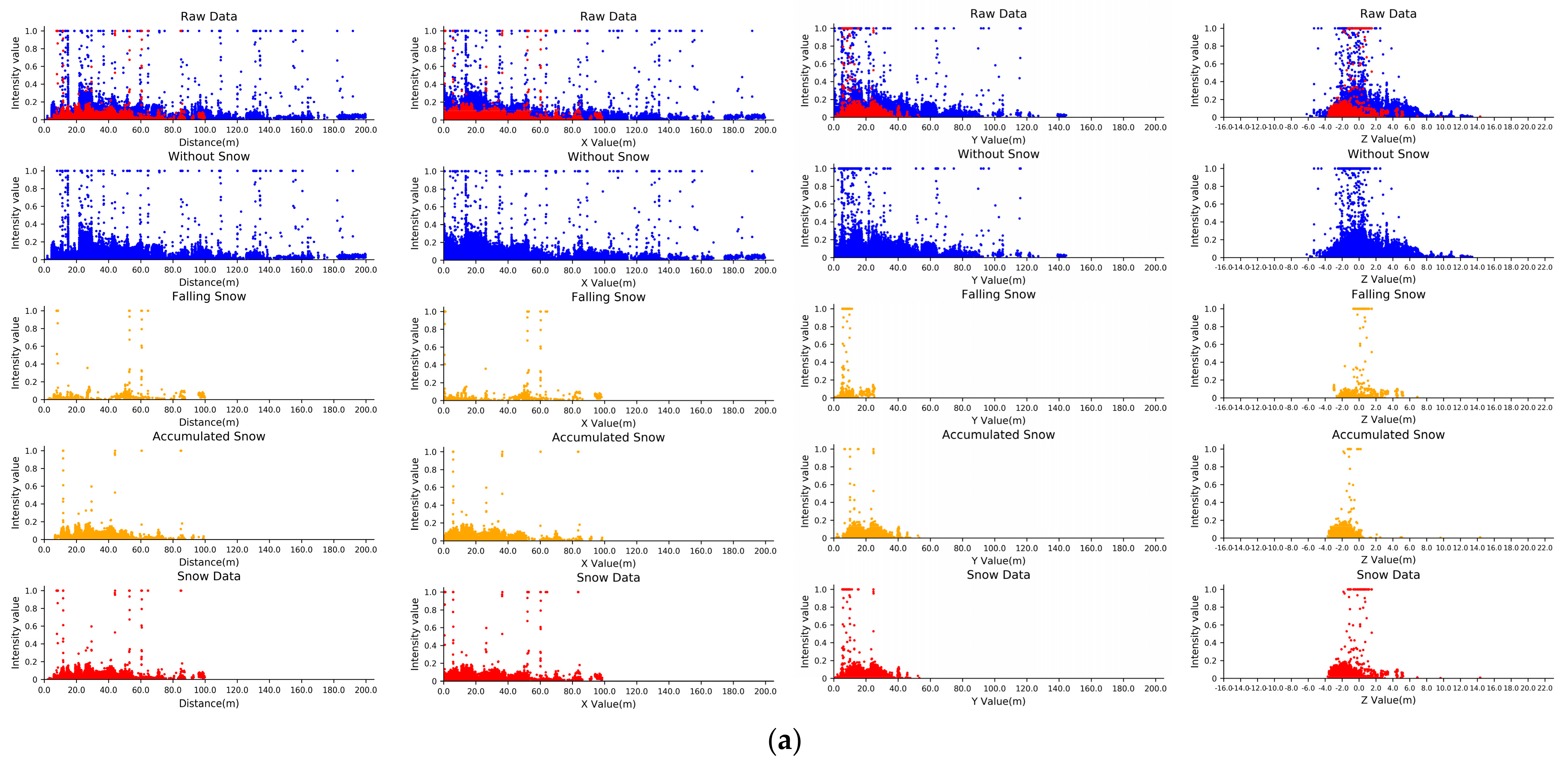

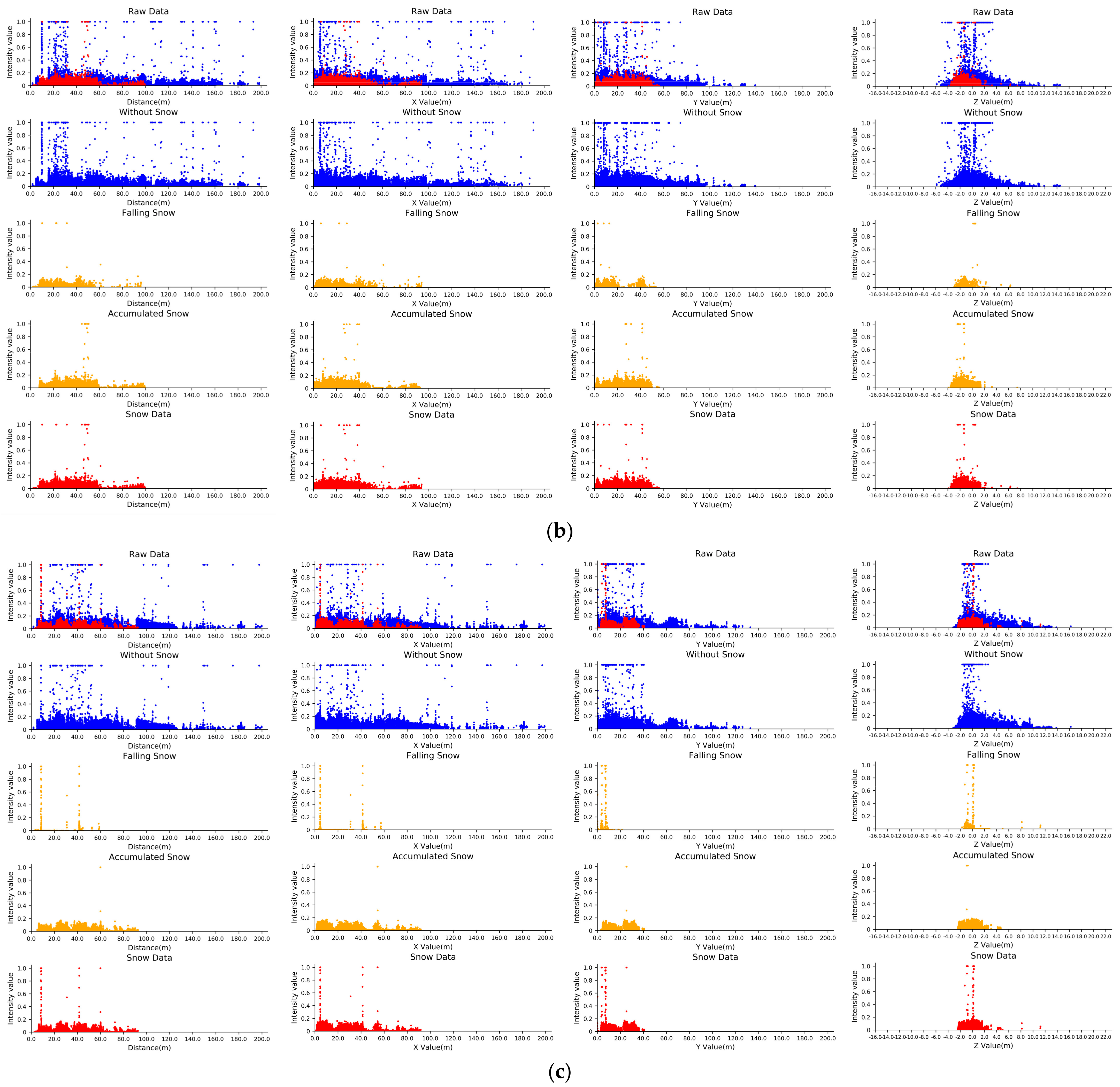

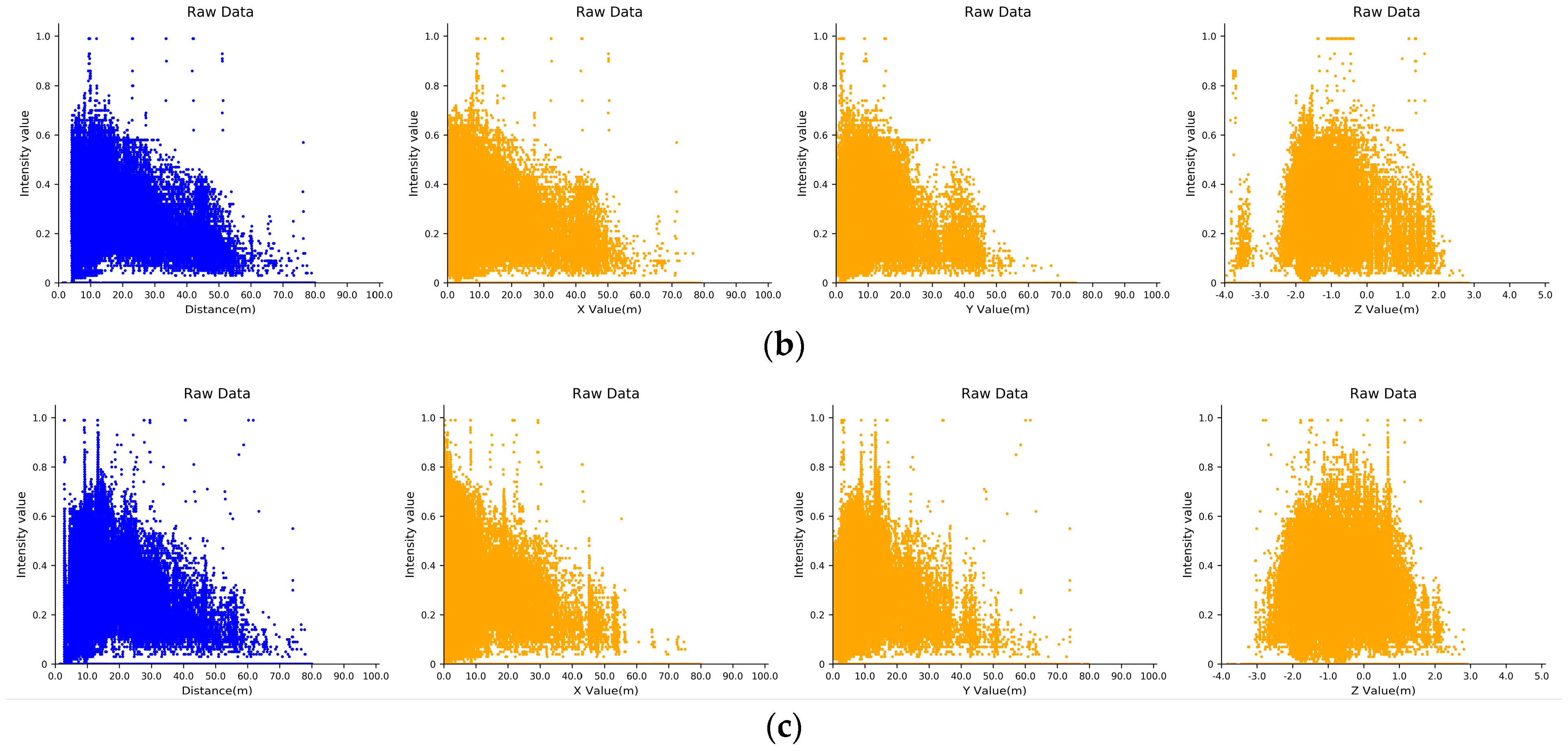

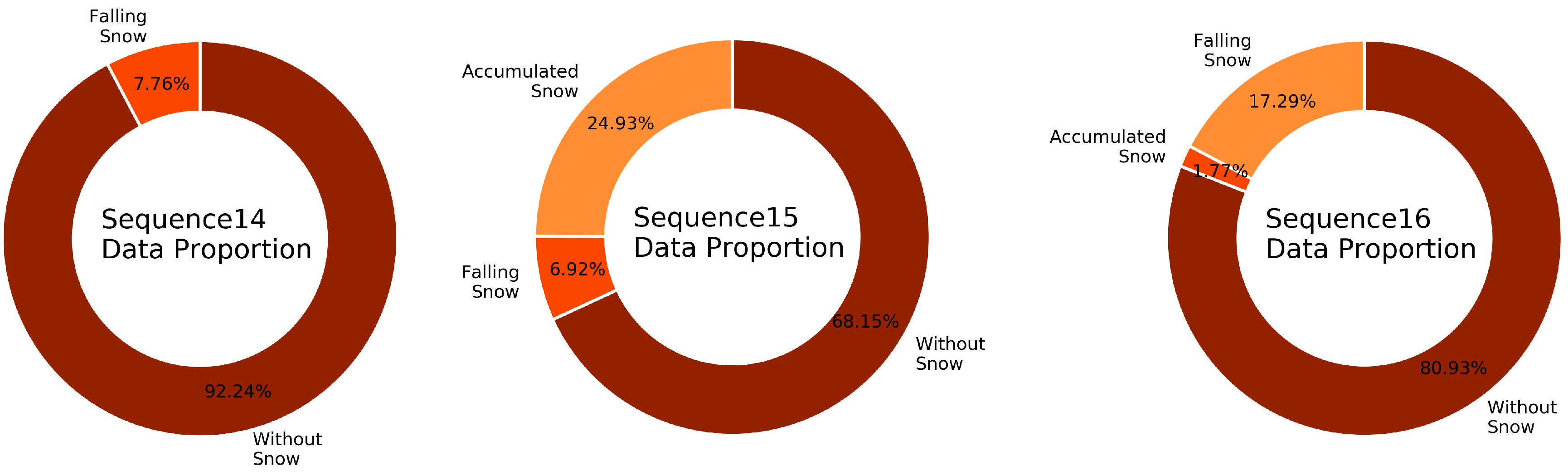

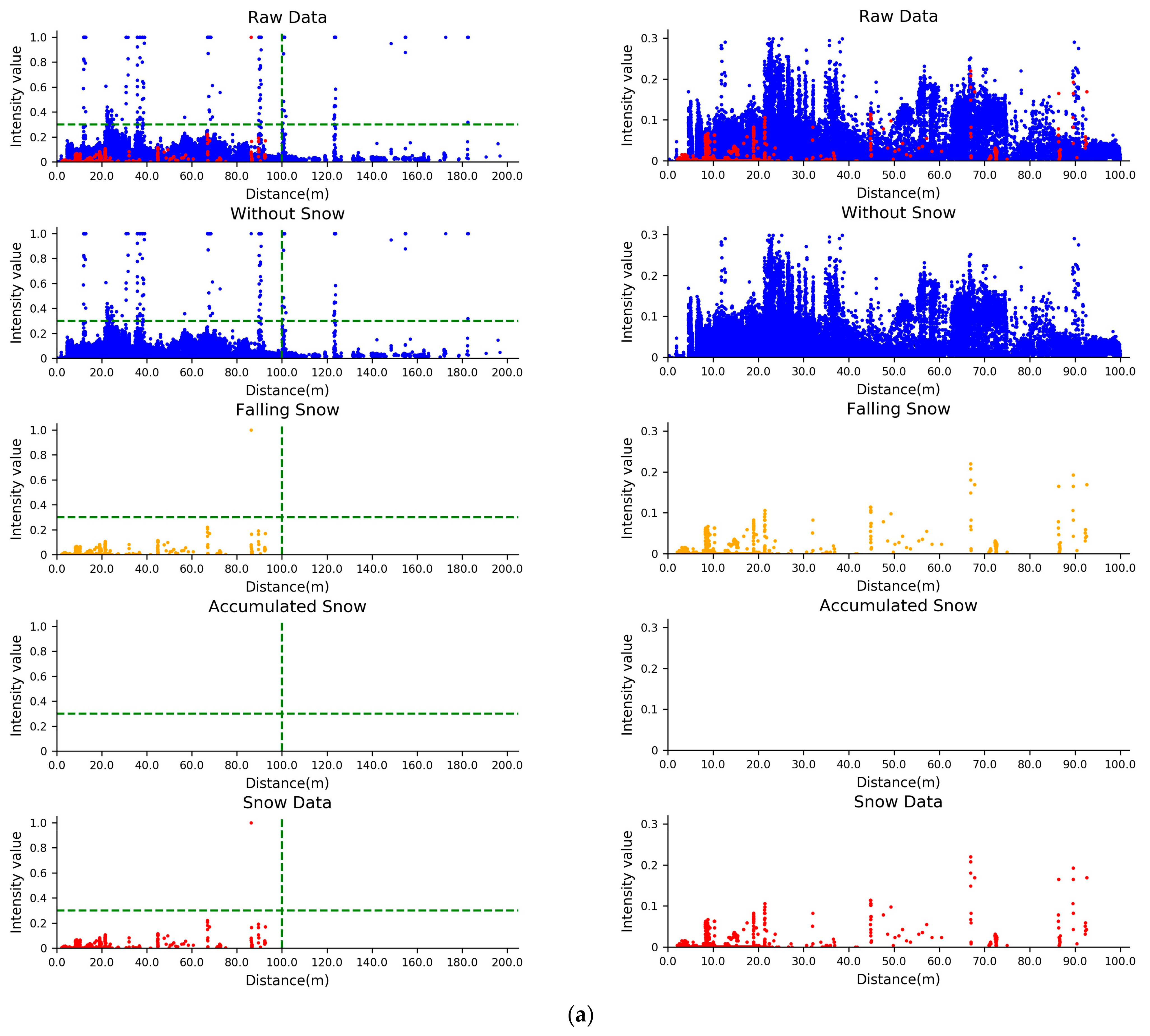

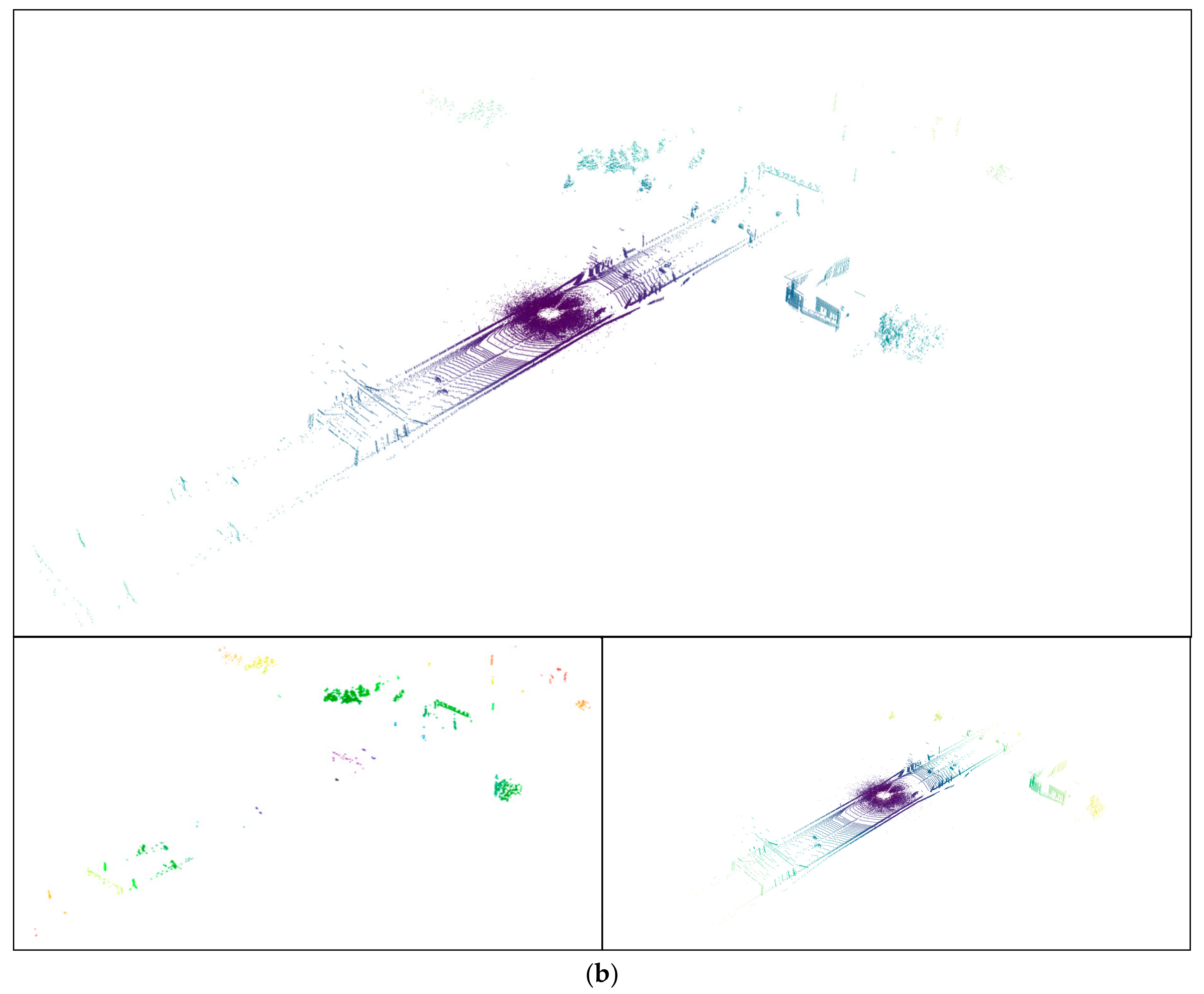

3.1. Characteristic Analysis of LiDAR Point Cloud Data Collected under Snowy Conditions

3.1.1. Basis of Data Analysis: The Dataset

3.1.2. How Do Snowy Days Affect LiDAR Point Clouds?

3.2. Dynamic Filtering Algorithm for Snow Noise Removal

| Algorithm 1: Dynamic Distance–Intensity Outlier Removal |
|---|
| Input: point cloud; dynamic distance coefficient; number of nearest neighbors |
| Output: de-snowed point cloud; filtered point cloud |
| Intermediate variables: mean distance; Mean_distances; distance; dynamic filtering threshold |
| Begin |
| for …, do |
| …; |
| end |
| calculate … |
| for …, do |
| calculate … |
| switch … |
| calculate … |
| if …, then |
| else |
| end |
| return …, … |
| end |
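Algorithm 1 lists its ingredients (each point's mean distance to its nearest neighbours, its range from the sensor, a dynamic distance coefficient, and a dynamic filtering threshold), but the exact formulas are not legible here. The sketch below shows one plausible way to combine them and is not the authors' DDIOR. Assumptions: a DSOR-style global threshold μ + `std_mult`·σ over the mean k-NN distances, scaled linearly by range and a coefficient `beta`; a point counts as snow only if it is sparse *and* its intensity is below a hypothetical `intensity_max`. The `switch` step of Algorithm 1 is not reproduced, and the neighbour search is brute force.

```python
import math
import statistics


def mean_knn_distances(points, k):
    """Mean Euclidean distance from each point to its k nearest neighbours (O(n^2))."""
    means = []
    for i, p in enumerate(points):
        dists = sorted(math.dist(p[:3], q[:3]) for j, q in enumerate(points) if j != i)
        means.append(sum(dists[:k]) / k)
    return means


def distance_intensity_filter(points, k=4, std_mult=1.0, beta=0.05, intensity_max=0.1):
    """Split (x, y, z, intensity) points into (kept, snow).

    Hypothetical rule (DSOR-style threshold plus an intensity gate, NOT the exact
    DDIOR): snow = mean k-NN distance above a range-scaled threshold AND low intensity.
    """
    means = mean_knn_distances(points, k)
    mu = statistics.fmean(means)
    sigma = statistics.pstdev(means)
    global_threshold = mu + std_mult * sigma  # statistical-outlier-removal style threshold

    kept, snow = [], []
    for p, mean_d in zip(points, means):
        r = math.dist((0.0, 0.0, 0.0), p[:3])            # range of p from the sensor
        dynamic_threshold = global_threshold * beta * r  # looser for distant, sparser points
        sparse = mean_d > dynamic_threshold
        low_intensity = p[3] < intensity_max
        (snow if sparse and low_intensity else kept).append(p)
    return kept, snow


# Synthetic scene: a dense wall 10 m away, four isolated low-intensity points near the
# sensor, and one isolated but highly reflective point (e.g. a sign).
wall = [(10.0, -0.25 + 0.05 * i, 0.05 * j, 0.3) for i in range(11) for j in range(11)]
flakes = [(2.0, 0.0, 0.0, 0.05), (2.0, 1.0, 1.0, 0.05),
          (2.0, -1.0, 1.0, 0.05), (3.0, 0.0, 2.0, 0.05)]
sign = [(2.0, 2.0, 0.0, 0.9)]
kept, snow = distance_intensity_filter(wall + flakes + sign)
print(len(kept), len(snow))
```

In this toy scene the intensity gate is what saves the isolated reflective point: it is as sparse as the flakes, so a purely distance-based filter would remove it too.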

4. Experiment

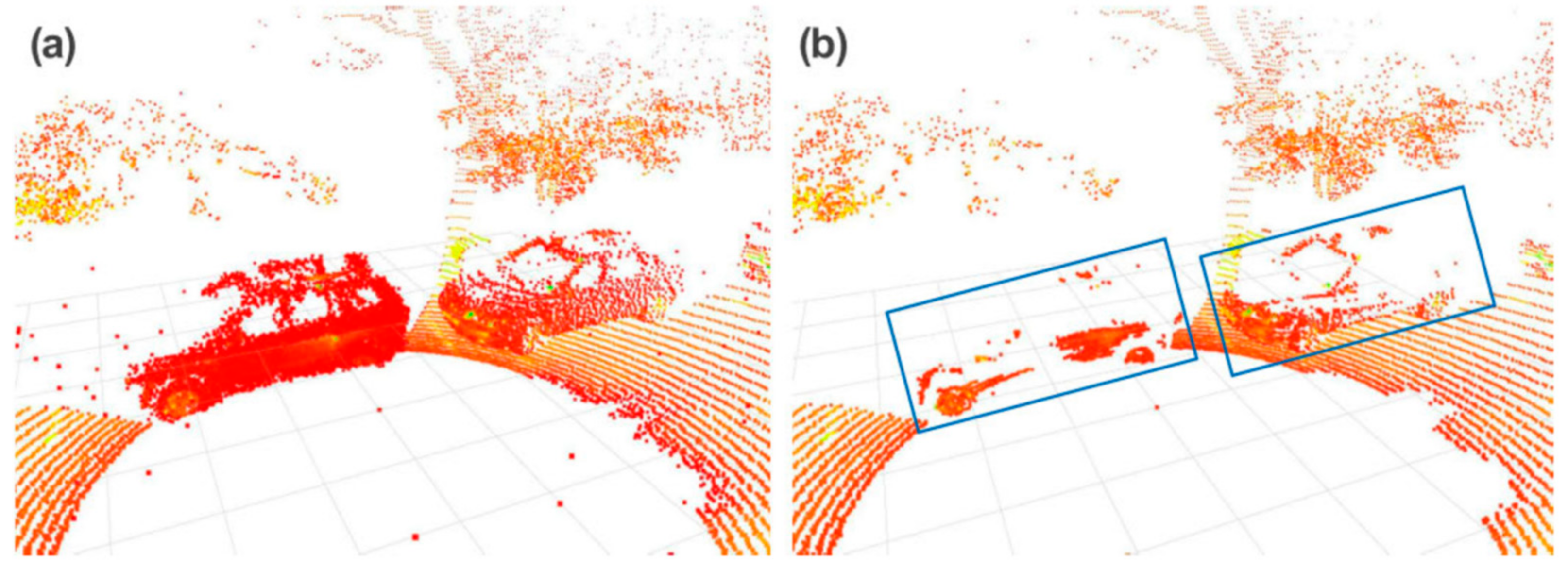

4.1. Qualitative Assessment

4.2. Quantitative Evaluation

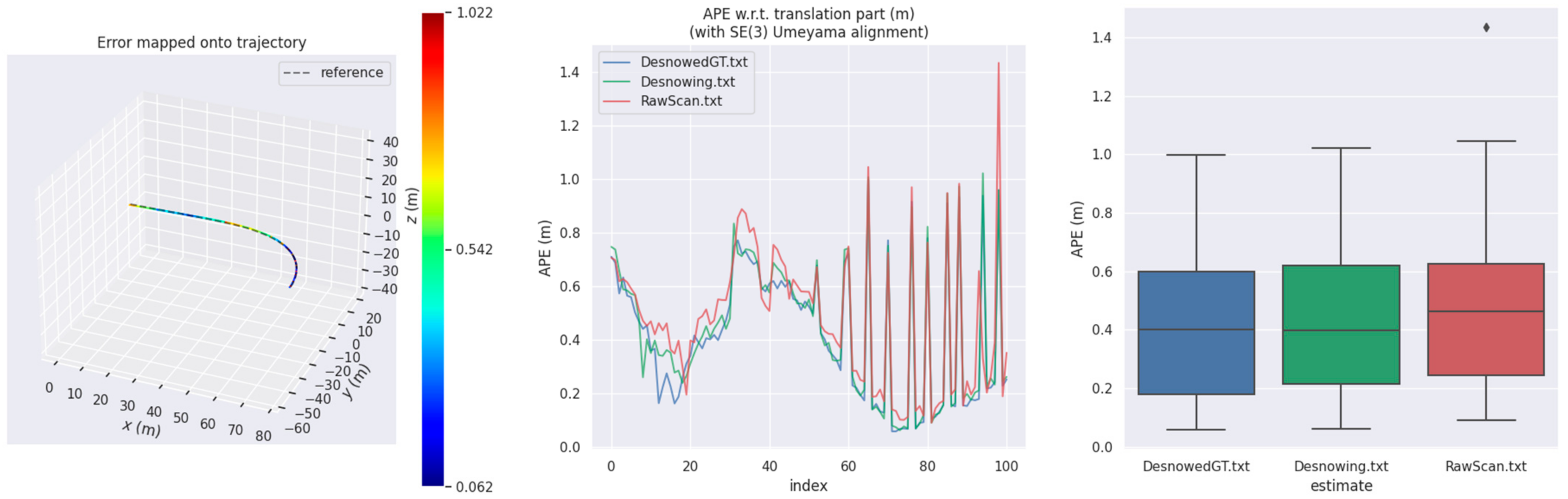

4.3. Other Applications

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Sensors | Sun Glare | Rain | Fog | Snow |
|---|---|---|---|---|
| LiDAR | – | Reflectivity degradation; reduction in measuring range; shape change due to splash | Reflectivity degradation; reduction in measuring range | Noise due to snow; road surface occlusion |
| MWR | – | Reduction in measuring range | Reduction in measuring range | Noise due to snow |
| Camera | Whiteout of objects | Visibility degradation | Visibility degradation | Visibility degradation; road surface occlusion |

| Classification / Distance | (0 m, 50 m) | (50 m, 100 m) | (100 m, 150 m) | (150 m, +∞) |
|---|---|---|---|---|
| Falling snow points | 15,196 | 1195 | 0 | 0 |
| Accumulated snow points | 0 | 0 | 0 | 0 |
| Non-snow points | 184,045 | 9535 | 864 | 292 |

| Classification / Distance | (0 m, 50 m) | (50 m, 100 m) | (100 m, 150 m) | (150 m, +∞) |
|---|---|---|---|---|
| Falling snow points | 14,364 | 38 | 0 | 0 |
| Accumulated snow points | 49,610 | 2236 | 22 | 0 |
| Non-snow points | 123,333 | 16,600 | 1730 | 150 |

| Classification / Distance | (0 m, 50 m) | (50 m, 100 m) | (100 m, 150 m) | (150 m, +∞) |
|---|---|---|---|---|
| Falling snow points | 35,156 | 970 | 0 | 0 |
| Accumulated snow points | 3312 | 379 | 14 | 0 |
| Non-snow points | 156,717 | 11,435 | 793 | 127 |
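The three distance tables above can be condensed into one number each: the share of snow points (falling plus accumulated) that lie within 50 m of the sensor. The short script below does that arithmetic directly from the tabulated counts; the helper name `snow_share_near` is only for illustration.

```python
# Point counts from the three distance tables above.
# Bins: (0 m, 50 m), (50 m, 100 m), (100 m, 150 m), (150 m, +inf).
DISTANCE_TABLES = [
    {"falling": [15196, 1195, 0, 0], "accumulated": [0, 0, 0, 0],
     "non_snow": [184045, 9535, 864, 292]},
    {"falling": [14364, 38, 0, 0], "accumulated": [49610, 2236, 22, 0],
     "non_snow": [123333, 16600, 1730, 150]},
    {"falling": [35156, 970, 0, 0], "accumulated": [3312, 379, 14, 0],
     "non_snow": [156717, 11435, 793, 127]},
]


def snow_share_near(table, n_bins=1):
    """Fraction of snow points (falling + accumulated) in the first n_bins distance bins."""
    snow = [f + a for f, a in zip(table["falling"], table["accumulated"])]
    return sum(snow[:n_bins]) / sum(snow)


shares = [snow_share_near(t) for t in DISTANCE_TABLES]
for idx, share in enumerate(shares, start=1):
    print(f"table {idx}: {share:.1%} of snow points lie within 50 m")
```

This prints about 92.7%, 96.5%, and 96.6%, and no table contains any snow point beyond 150 m, which is why a range-dependent threshold can be much stricter close to the sensor.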

| Classification / Intensity | (0, 0.1) | (0.1, 0.2) | (0.2, 0.3) | (0.3, 0.4) | (0.4, 0.5) | (0.5, 0.6) | (0.6, 0.7) | (0.7, 0.8) | (0.8, 0.9) | (0.9, 1.0) |
|---|---|---|---|---|---|---|---|---|---|---|
| Falling snow points | 14,681 | 54 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 4 |
| Accumulated snow points | 28,277 | 344 | 28 | 19 | 8 | 5 | 2 | 0 | 6 | 34 |
| Non-snow points | 133,871 | 28,885 | 596 | 218 | 39 | 29 | 17 | 19 | 17 | 216 |

| Classification / Intensity | (0, 0.1) | (0.1, 0.2) | (0.2, 0.3) | (0.3, 0.4) | (0.4, 0.5) | (0.5, 0.6) | (0.6, 0.7) | (0.7, 0.8) | (0.8, 0.9) | (0.9, 1.0) |
|---|---|---|---|---|---|---|---|---|---|---|
| Falling snow points | 14,737 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| Accumulated snow points | 55,481 | 1021 | 12 | 5 | 5 | 0 | 0 | 0 | 1 | 27 |
| Non-snow points | 112,142 | 23,874 | 707 | 65 | 63 | 34 | 11 | 15 | 13 | 379 |

| Classification / Intensity | (0, 0.1) | (0.1, 0.2) | (0.2, 0.3) | (0.3, 0.4) | (0.4, 0.5) | (0.5, 0.6) | (0.6, 0.7) | (0.7, 0.8) | (0.8, 0.9) | (0.9, 1.0) |
|---|---|---|---|---|---|---|---|---|---|---|
| Falling snow points | 35,592 | 261 | 4 | 2 | 7 | 2 | 5 | 3 | 3 | 44 |
| Accumulated snow points | 32,403 | 399 | 12 | 2 | 0 | 0 | 0 | 2 | 0 | 2 |
| Non-snow points | 110,280 | 1526 | 304 | 285 | 130 | 56 | 67 | 51 | 29 | 437 |
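The intensity tables above can be summarized the same way, by comparing how much of each class falls in the lowest intensity bin (0, 0.1). The script below computes this from the tabulated counts; `lowest_bin_share` is an illustrative helper name.

```python
# Counts from the three intensity tables above; bins (0, 0.1), (0.1, 0.2), ..., (0.9, 1.0).
INTENSITY_TABLES = [
    {"falling": [14681, 54, 0, 0, 0, 1, 0, 0, 0, 4],
     "accumulated": [28277, 344, 28, 19, 8, 5, 2, 0, 6, 34],
     "non_snow": [133871, 28885, 596, 218, 39, 29, 17, 19, 17, 216]},
    {"falling": [14737, 0, 1, 0, 0, 0, 0, 0, 0, 0],
     "accumulated": [55481, 1021, 12, 5, 5, 0, 0, 0, 1, 27],
     "non_snow": [112142, 23874, 707, 65, 63, 34, 11, 15, 13, 379]},
    {"falling": [35592, 261, 4, 2, 7, 2, 5, 3, 3, 44],
     "accumulated": [32403, 399, 12, 2, 0, 0, 0, 2, 0, 2],
     "non_snow": [110280, 1526, 304, 285, 130, 56, 67, 51, 29, 437]},
]


def lowest_bin_share(counts):
    """Fraction of points whose intensity falls in the lowest bin (0, 0.1)."""
    return counts[0] / sum(counts)


snow_low_shares, non_snow_low_shares = [], []
for t in INTENSITY_TABLES:
    snow = [f + a for f, a in zip(t["falling"], t["accumulated"])]
    snow_low_shares.append(lowest_bin_share(snow))
    non_snow_low_shares.append(lowest_bin_share(t["non_snow"]))

for idx, (s, n) in enumerate(zip(snow_low_shares, non_snow_low_shares), start=1):
    print(f"table {idx}: snow {s:.1%}, non-snow {n:.1%} in intensity bin (0, 0.1)")
```

On these counts about 98.5–98.9% of snow points fall in the lowest bin, but so do roughly 82–97% of non-snow points. An intensity threshold on its own would therefore also discard much of the scene, which is consistent with pairing intensity with a distance-based criterion.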

| Classification | Intensity | (0 m, 10 m) | (10 m, 20 m) | (20 m, 30 m) | (30 m, 40 m) | (40 m, 50 m) | (50 m, 60 m) | (60 m, 70 m) | (70 m, 80 m) | (80 m, 90 m) | (90 m, 100 m) | (100 m, 110 m) | (110 m, 120 m) | (120 m, 130 m) | (130 m, 140 m) | (140 m, 150 m) | (150 m, 160 m) | (160 m, 170 m) | (170 m, 180 m) | (180 m, 190 m) | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Snow Noise Points | (0, 0.1) | 14,735 | 16,913 | 5685 | 2780 | 1807 | 966 | 38 | 0 | 9 | 5 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| | (0.1, 0.2) | 26 | 116 | 142 | 62 | 49 | 3 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| | (0.2, 0.3) | 0 | 6 | 21 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| | (0.3, 0.4) | 0 | 0 | 16 | 0 | 3 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| | (0.4, 0.5) | 0 | 2 | 6 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| | (0.5, 0.6) | 0 | 0 | 5 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| | (0.6, 0.7) | 0 | 0 | 2 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| | (0.7, 0.8) | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| | (0.8, 0.9) | 0 | 0 | 6 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| | (0.9, 1.0) | 0 | 14 | 20 | 0 | 0 | 0 | 0 | 0 | 4 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| Non-Snow Points | (0, 0.1) | 7420 | 60,310 | 25,987 | 18,140 | 11,848 | 4243 | 1872 | 1219 | 681 | 784 | 343 | 41 | 109 | 319 | 430 | 41 | 28 | 52 | 0 | 4 |
| | (0.1, 0.2) | 312 | 19,328 | 1774 | 1330 | 5529 | 355 | 27 | 17 | 59 | 93 | 4 | 0 | 0 | 51 | 4 | 0 | 1 | 1 | 0 | 0 |
| | (0.2, 0.3) | 0 | 394 | 155 | 14 | 13 | 10 | 0 | 2 | 0 | 4 | 0 | 0 | 2 | 0 | 0 | 2 | 0 | 0 | 0 | 0 |
| | (0.3, 0.4) | 0 | 155 | 30 | 28 | 1 | 4 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| | (0.4, 0.5) | 0 | 17 | 16 | 4 | 0 | 1 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| | (0.5, 0.6) | 0 | 9 | 8 | 0 | 2 | 2 | 0 | 2 | 0 | 2 | 0 | 0 | 2 | 0 | 0 | 1 | 0 | 0 | 0 | 1 |
| | (0.6, 0.7) | 0 | 11 | 2 | 4 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| | (0.7, 0.8) | 0 | 6 | 2 | 2 | 0 | 3 | 0 | 2 | 0 | 0 | 0 | 2 | 0 | 0 | 2 | 0 | 0 | 0 | 0 | 0 |
| | (0.8, 0.9) | 0 | 6 | 4 | 2 | 2 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 2 | 0 | 0 | 0 | 0 |
| | (0.9, 1.0) | 0 | 152 | 22 | 16 | 2 | 10 | 0 | 0 | 0 | 7 | 0 | 0 | 0 | 0 | 1 | 1 | 0 | 5 | 0 | 0 |

| Classification | Intensity | (0 m, 10 m) | (10 m, 20 m) | (20 m, 30 m) | (30 m, 40 m) | (40 m, 50 m) | (50 m, 60 m) | (60 m, 70 m) | (70 m, 80 m) | (80 m, 90 m) | (90 m, 100 m) | (100 m, 110 m) | (110 m, 120 m) | (120 m, 130 m) | (130 m, 140 m) | (140 m, 150 m) | (150 m, 160 m) | (160 m, 170 m) | (170 m, 180 m) | (180 m, 190 m) | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Snow Noise Points | (0, 0.1) | 18,840 | 28,769 | 15,238 | 3905 | 1904 | 594 | 330 | 228 | 223 | 95 | 47 | 5 | 22 | 16 | 2 | 0 | 0 | 0 | 0 | 0 |
| | (0.1, 0.2) | 394 | 10 | 405 | 78 | 81 | 4 | 26 | 3 | 14 | 3 | 2 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| | (0.2, 0.3) | 2 | 0 | 5 | 5 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| | (0.3, 0.4) | 0 | 0 | 4 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 |
| | (0.4, 0.5) | 0 | 0 | 5 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| | (0.5, 0.6) | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| | (0.6, 0.7) | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| | (0.7, 0.8) | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| | (0.8, 0.9) | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| | (0.9, 1.0) | 0 | 0 | 26 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| Non-Snow Points | (0, 0.1) | 30,308 | 30,395 | 21,908 | 10,986 | 6482 | 4913 | 912 | 2206 | 1626 | 1247 | 617 | 40 | 4 | 78 | 41 | 4 | 353 | 14 | 0 | 8 |
| | (0.1, 0.2) | 8307 | 1568 | 4511 | 3982 | 3212 | 1195 | 35 | 436 | 390 | 217 | 7 | 0 | 0 | 14 | 0 | 0 | 0 | 0 | 0 | 0 |
| | (0.2, 0.3) | 31 | 2 | 390 | 229 | 38 | 0 | 5 | 2 | 0 | 2 | 6 | 2 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| | (0.3, 0.4) | 18 | 0 | 18 | 20 | 8 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| | (0.4, 0.5) | 16 | 0 | 12 | 17 | 7 | 3 | 3 | 4 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| | (0.5, 0.6) | 2 | 0 | 8 | 13 | 6 | 3 | 2 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| | (0.6, 0.7) | 4 | 0 | 1 | 6 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| | (0.7, 0.8) | 0 | 0 | 4 | 8 | 3 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| | (0.8, 0.9) | 0 | 0 | 4 | 6 | 0 | 3 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| | (0.9, 1.0) | 0 | 2 | 131 | 115 | 82 | 18 | 10 | 21 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| Classification | Intensity | (0 m, 10 m) | (10 m, 20 m) | (20 m, 30 m) | (30 m, 40 m) | (40 m, 50 m) | (50 m, 60 m) | (60 m, 70 m) | (70 m, 80 m) | (80 m, 90 m) | (90 m, 100 m) | (100 m, 110 m) | (110 m, 120 m) | (120 m, 130 m) | (130 m, 140 m) | (140 m, 150 m) | (150 m, 160 m) | (160 m, 170 m) | (170 m, 180 m) | (180 m, 190 m) | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Snow Noise Points | (0, 0.1) | 25,333 | 22,024 | 16,438 | 3145 | 730 | 145 | 69 | 29 | 64 | 18 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| | (0.1, 0.2) | 4 | 248 | 351 | 37 | 5 | 4 | 0 | 2 | 9 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| | (0.2, 0.3) | 0 | 2 | 8 | 6 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| | (0.3, 0.4) | 0 | 2 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 2 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| | (0.4, 0.5) | 0 | 0 | 0 | 5 | 0 | 0 | 0 | 0 | 2 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| | (0.5, 0.6) | 0 | 0 | 0 | 2 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| | (0.6, 0.7) | 0 | 0 | 0 | 4 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| | (0.7, 0.8) | 0 | 2 | 0 | 1 | 2 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| | (0.8, 0.9) | 0 | 2 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| | (0.9, 1.0) | 0 | 10 | 0 | 8 | 9 | 0 | 0 | 0 | 4 | 15 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| Non-Snow Points | (0, 0.1) | 8022 | 29,970 | 29,444 | 14,438 | 9784 | 4570 | 2263 | 1390 | 997 | 914 | 1170 | 2011 | 1135 | 2090 | 628 | 648 | 294 | 54 | 53 | 405 |
| | (0.1, 0.2) | 22 | 177 | 409 | 160 | 43 | 30 | 72 | 28 | 109 | 137 | 130 | 120 | 51 | 12 | 2 | 10 | 2 | 1 | 1 | 10 |
| | (0.2, 0.3) | 0 | 248 | 13 | 15 | 3 | 2 | 2 | 9 | 0 | 0 | 9 | 0 | 0 | 1 | 0 | 0 | 0 | 2 | 0 | 0 |
| | (0.3, 0.4) | 0 | 262 | 9 | 0 | 1 | 1 | 2 | 4 | 0 | 0 | 0 | 2 | 0 | 0 | 0 | 0 | 0 | 2 | 0 | 2 |
| | (0.4, 0.5) | 0 | 98 | 8 | 4 | 0 | 0 | 2 | 7 | 2 | 2 | 2 | 0 | 0 | 5 | 0 | 0 | 0 | 0 | 0 | 0 |
| | (0.5, 0.6) | 0 | 48 | 0 | 2 | 0 | 0 | 2 | 2 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 2 |
| | (0.6, 0.7) | 0 | 52 | 4 | 1 | 0 | 1 | 0 | 6 | 0 | 0 | 1 | 0 | 2 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| | (0.7, 0.8) | 0 | 36 | 6 | 4 | 0 | 0 | 0 | 0 | 0 | 2 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 2 | 1 | 0 |
| | (0.8, 0.9) | 0 | 14 | 10 | 2 | 0 | 0 | 0 | 3 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| | (0.9, 1.0) | 0 | 268 | 18 | 20 | 2 | 2 | 14 | 41 | 0 | 26 | 4 | 0 | 2 | 7 | 0 | 1 | 0 | 19 | 6 | 7 |
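A cumulative share along the 10 m distance bins makes the distance–intensity tables above easier to compare across classes. The sketch below applies it to the low-intensity (0, 0.1) rows of the first of these tables; `cumulative_share` is a hypothetical helper, and the trailing bins of the snow row are written as zeros exactly as tabulated.

```python
from itertools import accumulate


def cumulative_share(counts):
    """Running fraction of the row total covered by the first 1, 2, ..., n distance bins."""
    total = sum(counts)
    return [c / total for c in accumulate(counts)]


# Low-intensity (0, 0.1) rows of the first distance-intensity table above (10 m bins).
snow_low = [14735, 16913, 5685, 2780, 1807, 966, 38, 0, 9, 5] + [0] * 10
non_snow_low = [7420, 60310, 25987, 18140, 11848, 4243, 1872, 1219, 681, 784,
                343, 41, 109, 319, 430, 41, 28, 52, 0, 4]

snow_30m = cumulative_share(snow_low)[2]          # bins (0, 10), (10, 20), (20, 30) m
non_snow_30m = cumulative_share(non_snow_low)[2]
print(f"within 30 m: snow {snow_30m:.1%}, non-snow {non_snow_30m:.1%}")
```

For this row it prints `within 30 m: snow 86.9%, non-snow 70.0%`: low-intensity snow noise is more concentrated near the sensor than low-intensity scene points, which is the kind of difference a distance-aware threshold can exploit.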

| Distance | (0 m, 10 m) | (10 m, 20 m) | (20 m, 30 m) | (30 m, 40 m) | (40 m, 50 m) | (50 m, 60 m) | (60 m, 70 m) | (70 m, 80 m) | (80 m, 90 m) | |
|---|---|---|---|---|---|---|---|---|---|---|
| Value | 0.016 | 0.018 | 0.020 | 0.022 | 0.024 | 0.026 | 0.028 | 0.030 | 0.032 | 0.034 |

| Filters | Precision (%) | Recall (%) |
|---|---|---|
| DROR | 71.51 | 91.89 |
| DSOR | 65.07 | 95.60 |
| DDIOR | 69.87 | 95.23 |
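Precision and recall trade off against each other in the comparison above, so a single summary number can help. The sketch below computes the F1 score (the harmonic mean of precision and recall) for each filter from the tabulated values; these F1 values are derived here, not listed in the table.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall; output is in the same units as the inputs (%)."""
    return 2 * precision * recall / (precision + recall)


results = {"DROR": (71.51, 91.89), "DSOR": (65.07, 95.60), "DDIOR": (69.87, 95.23)}
for name, (p, r) in results.items():
    print(f"{name}: F1 = {f1_score(p, r):.2f}")
```

This prints F1 values of about 80.43 (DROR), 77.43 (DSOR), and 80.60 (DDIOR), so on this summary DDIOR edges out DROR while keeping a recall close to DSOR's.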

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Wang, W.; You, X.; Chen, L.; Tian, J.; Tang, F.; Zhang, L. A Scalable and Accurate De-Snowing Algorithm for LiDAR Point Clouds in Winter. Remote Sens. 2022, 14, 1468. https://doi.org/10.3390/rs14061468


Chicago/Turabian Style: Wang, Weiqi, Xiong You, Lingyu Chen, Jiangpeng Tian, Fen Tang, and Lantian Zhang. 2022. "A Scalable and Accurate De-Snowing Algorithm for LiDAR Point Clouds in Winter." Remote Sensing 14, no. 6: 1468. https://doi.org/10.3390/rs14061468
