Using Sentinel-1, Sentinel-2, and Planet Imagery to Map Crop Type of Smallholder Farms

Abstract

1. Introduction

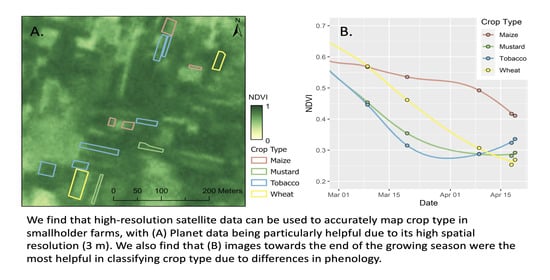

- How accurately do Sentinel-1, Sentinel-2, and PlanetScope imagery map crop types in smallholder systems? Which sensor or sensor combination leads to the greatest classification accuracy?

- Does crop type classification accuracy vary with farm size? Does adding Planet satellite data improve the classification accuracy more for the smallest farms?

- How does classification accuracy vary with the timing of the imagery used? Are there particular times during the growing season that lead to better discrimination of crop types?

2. Materials and Methods

2.1. Data

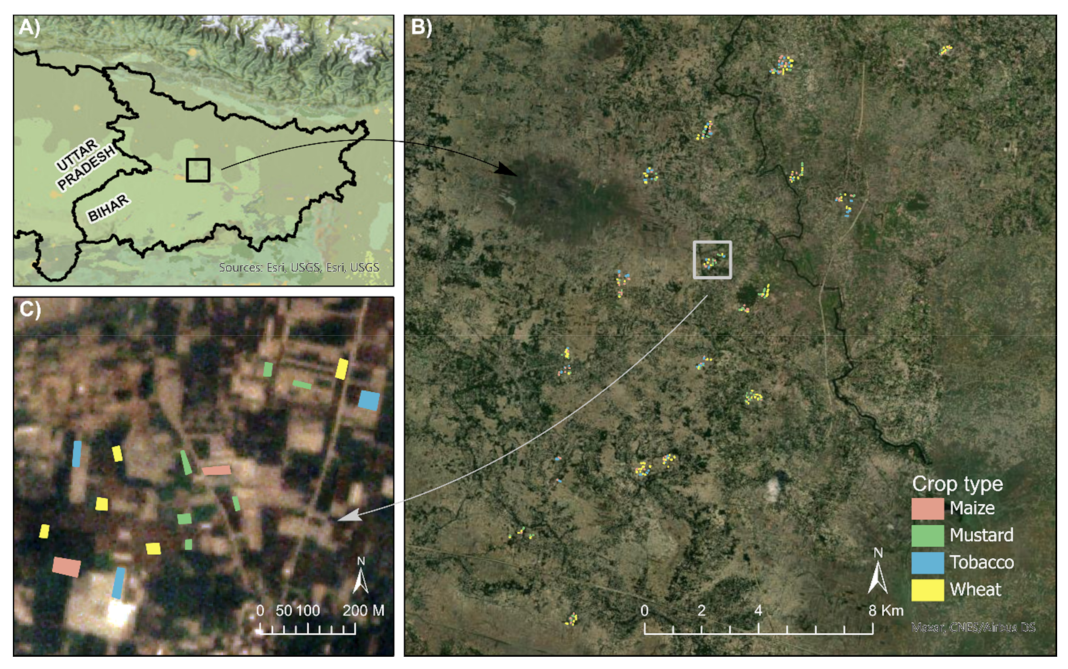

2.1.1. Study Area and Field Polygons

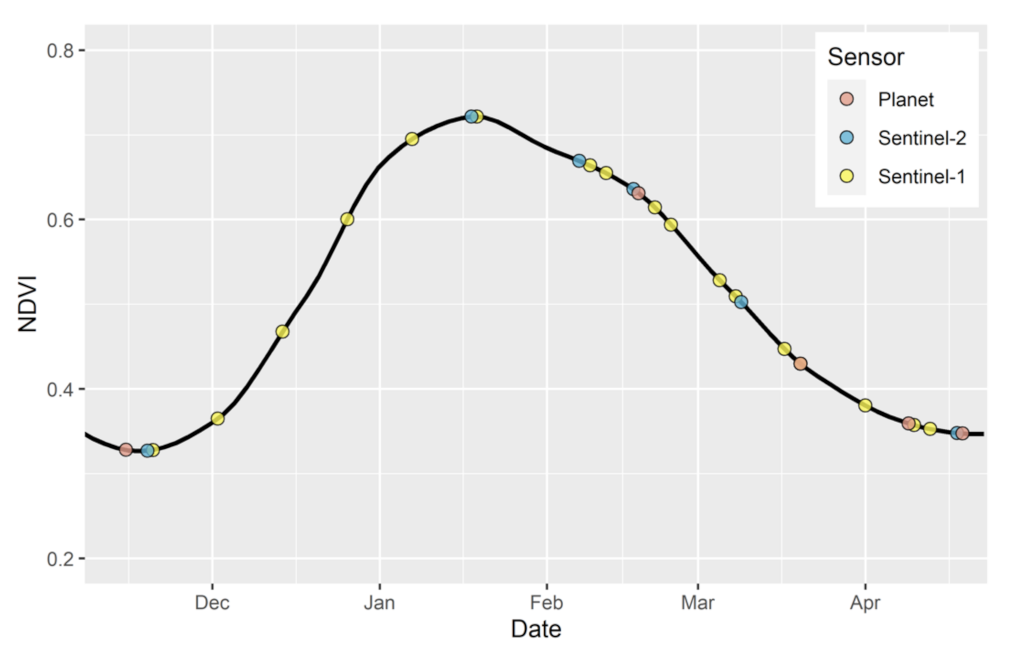

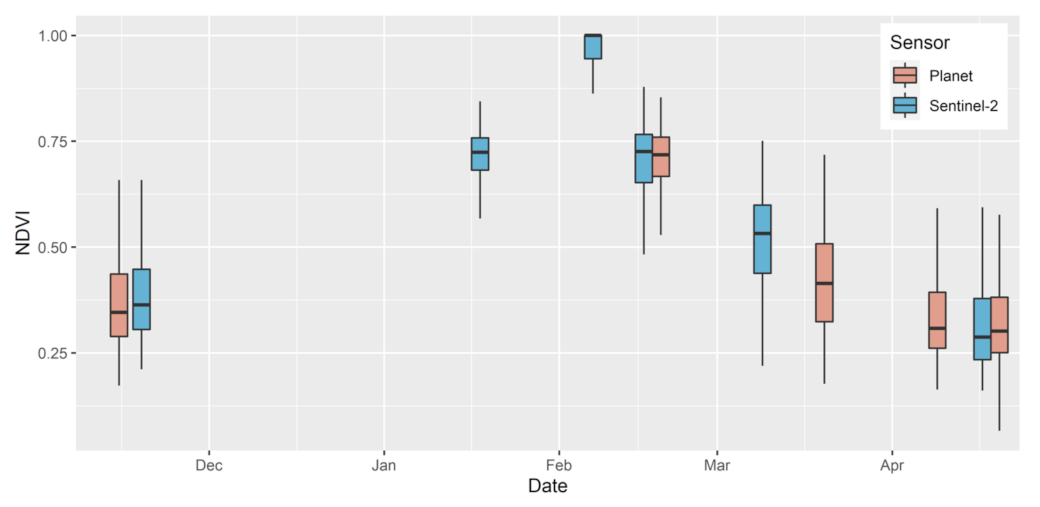

2.1.2. Satellite Data

2.2. Methods

2.2.1. Sampling Strategy and Feature Selection for Model Development

2.2.2. Performance of Different Sensor Combinations

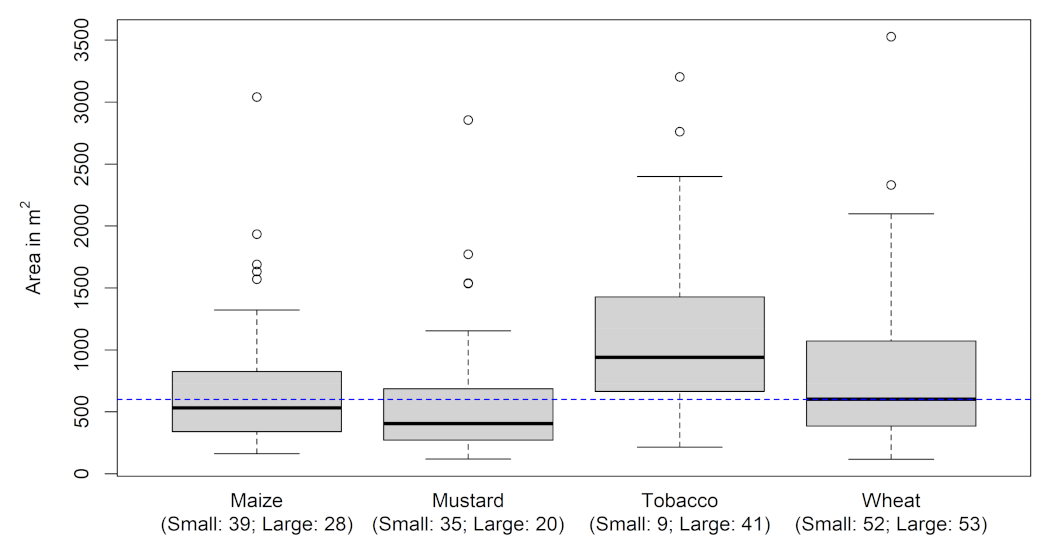

2.2.3. Classification Accuracy Based on Farm Size

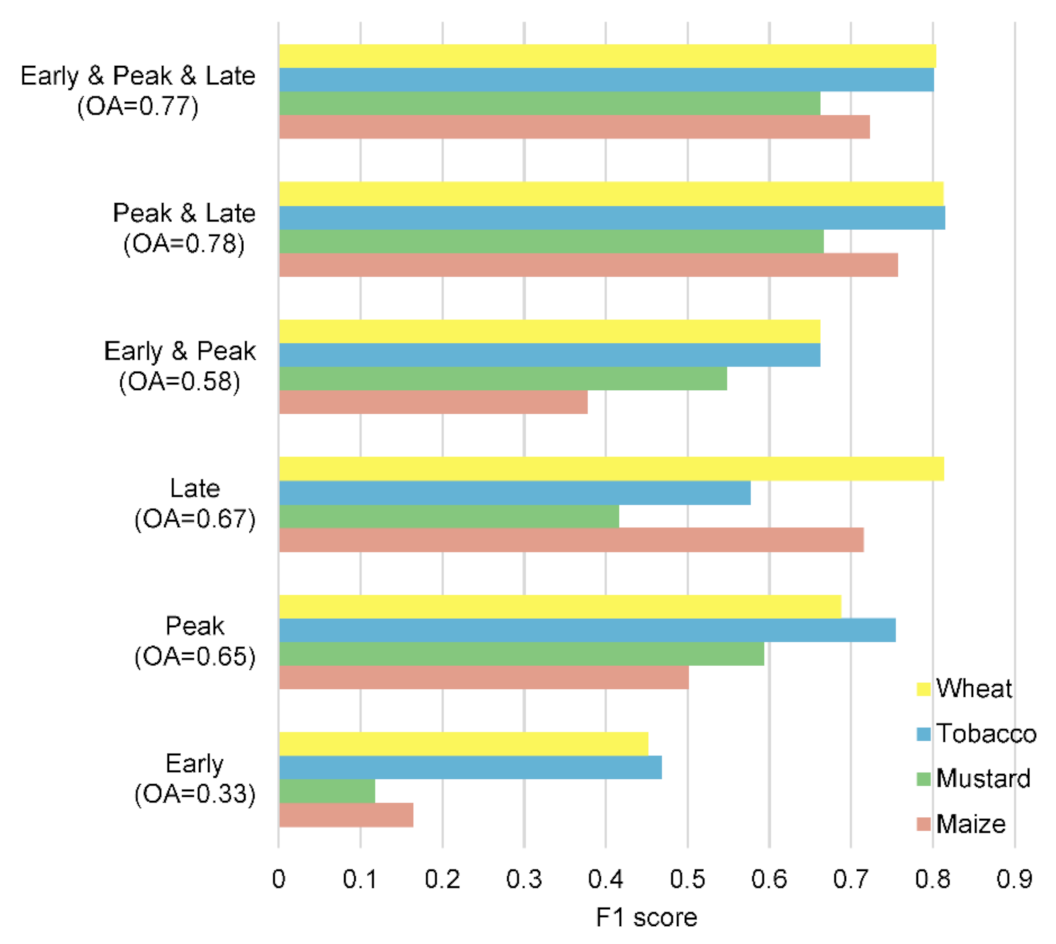

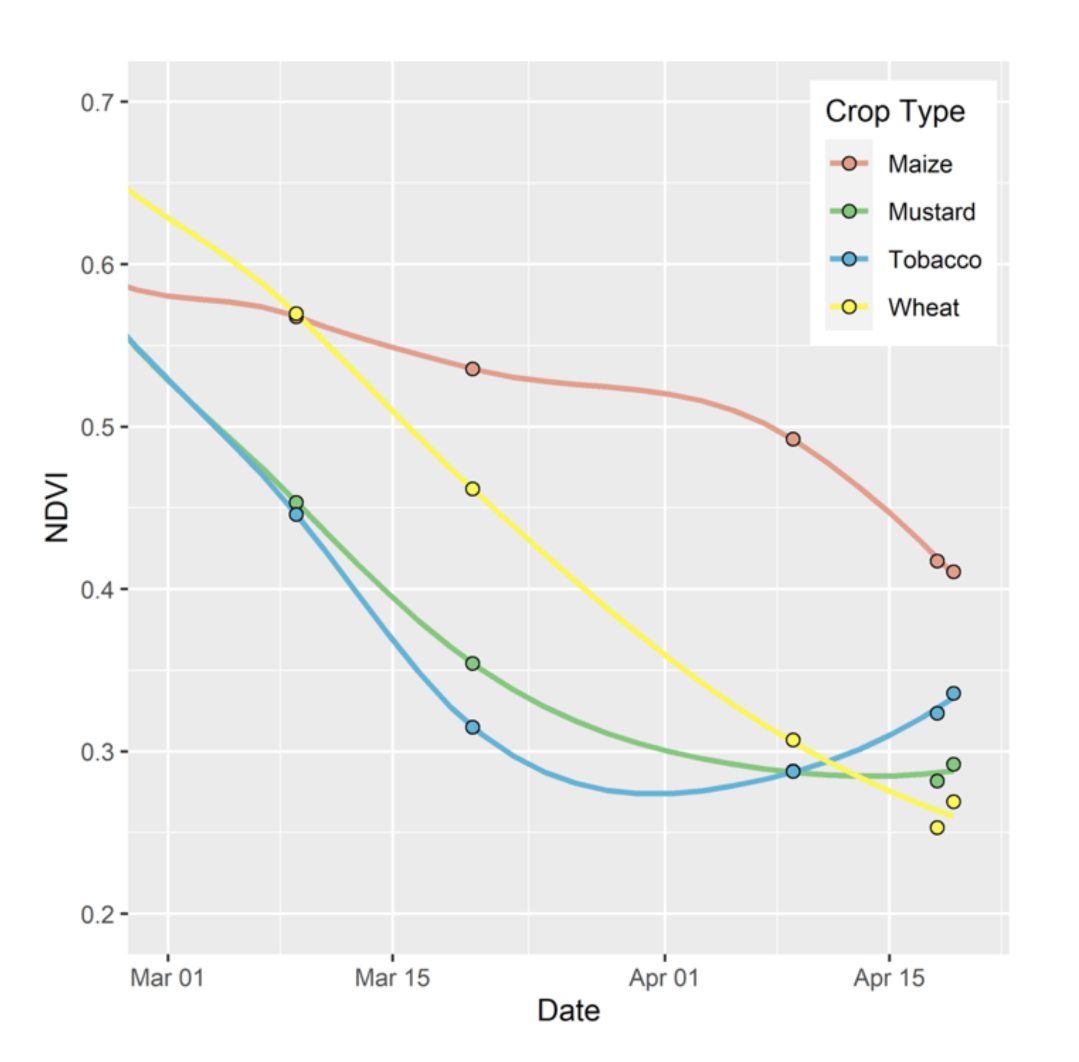

2.2.4. Classification Accuracy and Image Sampling Dates

3. Results

3.1. Crop Classification Accuracies from a Combination of Different Sensors

3.2. The Influence of Farm Size on Classification Accuracy

3.3. Classification Accuracies Based on Images from Different Periods of the Growing Season

4. Discussion

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Ricciardi, V.; Ramankutty, N.; Mehrabi, Z.; Jarvis, L.; Chookolingo, B. How much of the world’s food do smallholders produce? Glob. Food Secur. 2018, 17, 64–72.

2. Lowder, S.K.; Skoet, J.; Raney, T. The number, size, and distribution of farms, smallholder farms, and family farms worldwide. World Dev. 2016, 87, 16–29.

3. Lobell, D.B.; Burke, M.B.; Tebaldi, C.; Mastrandrea, M.D.; Falcon, W.P.; Naylor, R.L. Prioritizing climate change adaptation needs for food security in 2030. Science 2008, 319, 607–610.

4. Cohn, A.S.; Newton, P.; Gil, J.D.B.; Kuhl, L.; Samberg, L.; Ricciardi, V.; Manly, J.R.; Northrop, S. Smallholder agriculture and climate change. Annu. Rev. Environ. Resour. 2017, 42, 347–375.

5. Jain, M.; Singh, B.; Srivastava, A.A.K.; Malik, R.K.; McDonald, A.J.; Lobell, D.B. Using satellite data to identify the causes of and potential solutions for yield gaps in India’s wheat belt. Environ. Res. Lett. 2017, 12, 094011.

6. Lobell, D.B. The use of satellite data for crop yield gap analysis. Field Crops Res. 2013, 143, 56–64.

7. Jain, M.; Mondal, P.; DeFries, R.S.; Small, C.; Galford, G.L. Mapping cropping intensity of smallholder farms: A comparison of methods using multiple sensors. Remote Sens. Environ. 2013, 134, 210–223.

8. Jain, M.; Singh, B.; Rao, P.; Srivastava, A.K.; Poonia, S.; Blesh, J.; Azzari, G.; McDonald, A.J.; Lobell, D.B. The impact of agricultural interventions can be doubled by using satellite data. Nat. Sustain. 2019, 2, 931–934.

9. Jin, Z.; Azzari, G.; You, C.; di Tommaso, S.; Aston, S.; Burke, M.; Lobell, D.B. Smallholder maize area and yield mapping at national scales with Google Earth Engine. Remote Sens. Environ. 2019, 228, 115–128.

10. Jain, M.; Naeem, S.; Orlove, B.; Modi, V.; DeFries, R.S. Understanding the causes and consequences of differential decision-making in adaptation research: Adapting to a delayed monsoon onset in Gujarat, India. Glob. Environ. Change 2015, 31, 98–109.

11. Kurukulasuriya, P.; Mendelsohn, R. Crop switching as a strategy for adapting to climate change. Afr. J. Agric. Resour. Econ. 2008, 2, 1–22.

12. Wang, S.; di Tommaso, S.; Faulkner, J.; Friedel, T.; Kennepohl, A.; Strey, R.; Lobell, D.B. Mapping crop types in Southeast India with smartphone crowdsourcing and deep learning. Remote Sens. 2020, 12, 2957.

13. Useya, J.; Chen, S. Exploring the potential of mapping cropping patterns on smallholder scale croplands using Sentinel-1 SAR data. Chin. Geogr. Sci. 2019, 29, 626–639.

14. Gumma, M.K.; Tummala, K.; Dixit, S.; Collivignarelli, F.; Holecz, F.; Kolli, R.N.; Whitbread, A.M. Crop type identification and spatial mapping using Sentinel-2 satellite data with focus on field-level information. Geocarto Int. 2020, 1–17.

15. Jain, M.; Srivastava, A.K.; Singh, B.; McDonald, A.; Malik, R.K.; Lobell, D.B. Mapping smallholder wheat yields and sowing dates using micro-satellite data. Remote Sens. 2016, 8, 860.

16. Rustowicz, R.M.; Cheong, R.; Wang, L.; Ermon, S.; Burke, M.; Lobell, D. Semantic segmentation of crop type in Africa: A novel dataset and analysis of deep learning methods. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) Workshops, Long Beach, CA, USA, 16–20 June 2019; pp. 75–82.

17. Kpienbaareh, D.; Sun, X.; Wang, J.; Luginaah, I.; Kerr, R.B.; Lupafya, E.; Dakishoni, L. Crop type and land cover mapping in northern Malawi using the integration of Sentinel-1, Sentinel-2, and PlanetScope satellite data. Remote Sens. 2021, 13, 700.

18. Khatami, R.; Mountrakis, G.; Stehman, S.V. A meta-analysis of remote sensing research on supervised pixel-based land-cover image classification processes: General guidelines for practitioners and future research. Remote Sens. Environ. 2016, 177, 89–100.

19. Gorelick, N.; Hancher, M.; Dixon, M.; Ilyushchenko, S.; Thau, D.; Moore, R. Google Earth Engine: Planetary-scale geospatial analysis for everyone. Remote Sens. Environ. 2017, 202, 18–27.

20. Wilson, R.T. Py6S: A Python interface to the 6S radiative transfer model. Comput. Geosci. 2013, 51, 166–171.

21. Vermote, E.F.; Tanre, D.; Deuze, J.L.; Herman, M.; Morcette, J.-J. Second simulation of the satellite signal in the solar spectrum, 6S: An overview. IEEE Trans. Geosci. Remote Sens. 1997, 35, 675–686.

22. Fletcher, R.S. Using vegetation indices as input into random forest for soybean and weed classification. Am. J. Plant Sci. 2016, 7, 720–726.

23. Gurram, R.; Srinivasan, M. Detection and estimation of damage caused by thrips Thrips tabaci (Lind) of cotton using hyperspectral radiometer. Agrotechnology 2014, 3.

24. Tucker, C.J. Remote sensing of leaf water content in the near infrared. Remote Sens. Environ. 1980, 10, 23–32.

25. Hatfield, J.L.; Prueger, J.H. Value of using different vegetative indices to quantify agricultural crop characteristics at different growth stages under varying management practices. Remote Sens. 2010, 2, 562–578.

26. Filella, I.; Serrano, L.; Serra, J.; Peñuelas, J. Evaluating wheat nitrogen status with canopy reflectance indices and discriminant analysis. Crop Sci. 1995, 35.

27. Gitelson, A.A.; et al. Remote estimation of leaf area index and green leaf biomass in maize canopies. Geophys. Res. Lett. 2003. Available online: https://agupubs-onlinelibrary-wiley-com.proxy.lib.umich.edu/doi/full/10.1029/2002GL016450 (accessed on 4 March 2021).

28. Peña-Barragán, J.M.; Ngugi, M.K.; Plant, R.E.; Six, J. Object-based crop identification using multiple vegetation indices, textural features and crop phenology. Remote Sens. Environ. 2011, 115, 1301–1316.

29. McNairn, H.; Protz, R. Mapping corn residue cover on agricultural fields in Oxford County, Ontario, using Thematic Mapper. Can. J. Remote Sens. 1993, 19, 152–159.

30. Vreugdenhil, M.; Wagner, W.; Bauer-Marschallinger, B.; Pfeil, I.; Teubner, I.; Rüdiger, C.; Strauss, P. Sensitivity of Sentinel-1 backscatter to vegetation dynamics: An Austrian case study. Remote Sens. 2018, 10, 1396.

31. Planet Team. Planet Application Program Interface: In Space for Life on Earth; Planet Team: San Francisco, CA, USA, 2017; Available online: https://Api.Planet.Com (accessed on 1 March 2018).

32. Houborg, R.; McCabe, M.F. A CubeSat enabled spatio-temporal enhancement method (CESTEM) utilizing Planet, Landsat and MODIS data. Remote Sens. Environ. 2018, 209, 211–226.

33. Hijmans, R.J. Raster: Geographic Data Analysis and Modeling; R Package Version 3.4-5. 2020. Available online: https://CRAN.R-project.org/package=raster (accessed on 1 March 2018).

34. R Core Team. R: A Language and Environment for Statistical Computing; R Foundation for Statistical Computing: Vienna, Austria, 2021. Available online: https://www.R-project.org/ (accessed on 1 March 2018).

35. Kuhn, M. caret: Classification and Regression Training; R Package Version 6.0-86. 2020. Available online: https://CRAN.R-project.org/package=caret (accessed on 1 March 2018).

36. Van Tricht, K.; Gobin, A.; Gilliams, S.; Piccard, I. Synergistic use of radar Sentinel-1 and optical Sentinel-2 imagery for crop mapping: A case study for Belgium. Remote Sens. 2018, 10, 1642.

37. Crnojevic, V.; Lugonja, P.; Brkljac, B.; Brunet, B. Classification of small agricultural fields using combined Landsat-8 and RapidEye imagery: Case study of Northern Serbia. J. Appl. Remote Sens. 2014, 8, 83512.

38. Bannari, A.; Morin, D.; Bonn, F.; Huete, A.R. A review of vegetation indices. Remote Sens. Rev. 1995, 13, 95–120.

39. Ferrant, S.; Selles, A.; Le Page, M.; Herrault, P.-A.; Pelletier, C.; Al-Bitar, A.; Mermoz, S.; Gascoin, S.; Bouvet, A.; Saqalli, M.; et al. Detection of irrigated crops from Sentinel-1 and Sentinel-2 data to estimate seasonal groundwater use in South India. Remote Sens. 2017, 9, 1119.

| Spectral Index | Sensor | Application | Reference |
|---|---|---|---|
| Green-Blue Normalized Difference Vegetation Index, G-B NDVI: (G − B)/(G + B) * | Sentinel-2, Planet | Plant pigment for differentiating species | [22] |
| Green-Red Normalized Difference Vegetation Index, G-R NDVI: (G − R)/(G + R) | Sentinel-2, Planet | Plant pigment for differentiating species | [22,23] |
| Normalized Difference Vegetation Index, NDVI: (NIR − R)/(NIR + R) | Sentinel-2, Planet | LAI, intercepted PAR | [22,24] |
| Plant Senescence Reflectance Index, PSRI: (R − G)/NIR | Sentinel-2, Planet | Plant senescence | [25] |
| Normalized Pigment Chlorophyll Index, NPCI: (R − B)/(R + B) | Sentinel-2, Planet | Leaf chlorophyll (esp. during late stages) | [22,25,26] |
| Green Chlorophyll Index, GCVI or CI green: (NIR/G) − 1 | Sentinel-2, Planet | LAI, GPP, chlorophyll (early stages) | [27] |
| Normalized Difference Index 7, NDI7: (NIR − SWIR)/(NIR + SWIR) | Sentinel-2 | Vegetation status, water content, residue cover | [28,29] |
| Cross Ratio, CR: VH/VV | Sentinel-1 | Vegetation water content | [30] |
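
The index formulas in the table above can be sketched in a few lines of Python. This is a minimal illustration and not the authors' processing code: the function names are hypothetical, the inputs are assumed to be per-pixel surface reflectance values for the optical bands, the table does not say which Sentinel-2 SWIR band NDI7 uses, and the cross ratio is assumed here to be taken on backscatter in linear power units.

```python
def normalized_difference(a: float, b: float) -> float:
    """Return (a - b) / (a + b), the form shared by most indices in the table."""
    return (a - b) / (a + b)


def optical_indices(blue, green, red, nir, swir=None):
    """Compute the optical indices from the table for one pixel.

    Inputs are assumed to be surface reflectance values. `swir` is optional
    because the table lists NDI7 for Sentinel-2 only (Planet has no SWIR band).
    """
    indices = {
        "GB_NDVI": normalized_difference(green, blue),
        "GR_NDVI": normalized_difference(green, red),
        "NDVI": normalized_difference(nir, red),
        "PSRI": (red - green) / nir,
        "NPCI": normalized_difference(red, blue),
        "GCVI": nir / green - 1.0,
    }
    if swir is not None:
        indices["NDI7"] = normalized_difference(nir, swir)
    return indices


def cross_ratio(vh: float, vv: float) -> float:
    """Sentinel-1 cross ratio VH/VV (assumes linear power, not decibels)."""
    return vh / vv


# Illustrative reflectance values only; they are not taken from the study.
pixel = optical_indices(blue=0.04, green=0.08, red=0.06, nir=0.40, swir=0.20)
```

If the backscatter were stored in decibels instead, the ratio would become a difference (VH minus VV), so the unit assumption matters when reusing this sketch.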

| Sensor | No. of Images | No. of Bands and Indices per Image | No. of Features Used |
|---|---|---|---|
| Planet | 5 | 4 bands; 6 indices | 31 of 50 |
| Sentinel-2 | 6 | 10 bands; 7 indices | 65 of 102 |
| Sentinel-1 | 17 | 2 bands; 1 index | 51 of 51 |
| Sentinel-1 + Sentinel-2 | 18 | – | 116 of 153 |
| Planet + Sentinel-2 | 11 | – | 96 of 152 |
| Planet + Sentinel-1 + Sentinel-2 | 28 | – | 147 of 203 |
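
The candidate feature totals for the single sensors in the table above (the number after "of") appear to equal the number of images multiplied by the bands plus indices per image. That reading is inferred from the figures rather than stated in the table, and the names in this short sketch are hypothetical.

```python
# Per-sensor image, band, and index counts copied from the table.
SENSORS = {
    "Planet": {"images": 5, "bands": 4, "indices": 6},
    "Sentinel-2": {"images": 6, "bands": 10, "indices": 7},
    "Sentinel-1": {"images": 17, "bands": 2, "indices": 1},
}


def candidate_features(images: int, bands: int, indices: int) -> int:
    """Assumed rule: one feature per band or index, per image date."""
    return images * (bands + indices)


totals = {name: candidate_features(**spec) for name, spec in SENSORS.items()}
all_sensors_total = sum(totals.values())
```

Under this assumption the totals reproduce the 50, 102, and 51 candidate features listed for Planet, Sentinel-2, and Sentinel-1, and their sum matches the 203 candidates listed for the three-sensor combination.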

| Sensor | Early (Mid November–Early December) | Peak (Mid January–Late February) | Late (Late March–Mid April) | Early and Peak | Peak and Late | Early and Peak and Late |
|---|---|---|---|---|---|---|
| Sentinel-1 | 1120 | 0221 | 0410 | 1120 + 0221 | 0221 + 0410 | 1120 + 0221 + 0410 |
| Sentinel-2 | 1119 | 0217 | 0418 | 1119 + 0217 | 0217 + 0418 | 1119 + 0217 + 0418 |
| Planet | 1115 | 0218 | 0409 | 1115 + 0218 | 0218 + 0409 | 1115 + 0218 + 0409 |
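
The six image sets per sensor in the table above can be generated from one image date per growth stage. The sketch below is illustrative only: the names are hypothetical, and the four-digit labels are assumed to be month-day (MMDD) strings, which fits the stage windows in the header but is not stated explicitly.

```python
# One image date per sensor and growth stage, copied from the table
# (assumed to be MMDD strings).
STAGE_DATES = {
    "Sentinel-1": {"early": "1120", "peak": "0221", "late": "0410"},
    "Sentinel-2": {"early": "1119", "peak": "0217", "late": "0418"},
    "Planet": {"early": "1115", "peak": "0218", "late": "0409"},
}

# The six stage combinations listed in the table header. Note that the
# table has no "early + late" column, so that pair is not included.
STAGE_SETS = [
    ("early",),
    ("peak",),
    ("late",),
    ("early", "peak"),
    ("peak", "late"),
    ("early", "peak", "late"),
]


def date_sets(sensor: str) -> dict:
    """Map each stage combination to the list of image dates for one sensor."""
    dates = STAGE_DATES[sensor]
    return {" + ".join(stages): [dates[s] for s in stages] for stages in STAGE_SETS}


planet_sets = date_sets("Planet")
```

Each resulting list identifies the images whose bands and indices would feed one classification run for that sensor and period.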

| Sensor or Sensor Combination | Overall Accuracy |
|---|---|
| Sentinel-1 | 0.69 |
| Sentinel-2 | 0.72 |
| Planet | 0.73 |
| Sentinel-1 + Sentinel-2 | 0.80 |
| Planet + Sentinel-1 | 0.82 |
| Planet + Sentinel-2 | 0.82 |
| Planet + Sentinel-1 + Sentinel-2 | 0.85 |

| Crop | F1 Score |
|---|---|
| Maize | 0.81 |
| Mustard | 0.76 |
| Tobacco | 0.87 |
| Wheat | 0.89 |
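
For readers unfamiliar with the metric, the crop-specific F1 scores above presumably follow the standard definition, the harmonic mean of precision $P_c$ and recall $R_c$ for crop class $c$. The symbols here are introduced for illustration and do not appear in the tables.

```latex
F1_c = \frac{2\,P_c\,R_c}{P_c + R_c},
\qquad
P_c = \frac{TP_c}{TP_c + FP_c},
\qquad
R_c = \frac{TP_c}{TP_c + FN_c}
```

Here $TP_c$, $FP_c$, and $FN_c$ are the true positives, false positives, and false negatives for class $c$ in the confusion matrix. Overall accuracy, by contrast, is the share of all samples assigned to the correct class.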

| F1 Score | Small Farms, Without Planet | Small Farms, With Planet | Large Farms, Without Planet | Large Farms, With Planet |
|---|---|---|---|---|
| Maize | 0.76 | 0.82 | 0.71 | 0.81 |
| Mustard | 0.61 | 0.68 | 0.71 | 0.78 |
| Tobacco | 0.65 | 0.71 | 0.84 | 0.88 |
| Wheat | 0.78 | 0.82 | 0.88 | 0.90 |
| Overall accuracy | 0.73 | 0.79 | 0.82 | 0.86 |
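
The question of whether Planet data help small farms more than large farms can be read off the table above by differencing the "with" and "without" columns. The sketch below only redoes that arithmetic on the published values; the variable and function names are hypothetical.

```python
# (without Planet, with Planet) scores copied from the table.
SCORES = {
    "Maize": {"small": (0.76, 0.82), "large": (0.71, 0.81)},
    "Mustard": {"small": (0.61, 0.68), "large": (0.71, 0.78)},
    "Tobacco": {"small": (0.65, 0.71), "large": (0.84, 0.88)},
    "Wheat": {"small": (0.78, 0.82), "large": (0.88, 0.90)},
    "Overall accuracy": {"small": (0.73, 0.79), "large": (0.82, 0.86)},
}


def planet_gain(pair):
    """Score with Planet minus score without Planet, rounded to 2 decimals."""
    without_planet, with_planet = pair
    return round(with_planet - without_planet, 2)


gains = {
    row: {size: planet_gain(pair) for size, pair in sizes.items()}
    for row, sizes in SCORES.items()
}

# Rows where the gain from adding Planet is strictly larger on small farms.
larger_on_small = [row for row, g in gains.items() if g["small"] > g["large"]]
```

On these values, adding Planet raises overall accuracy by 0.06 on small farms and 0.04 on large farms, and the gain is also larger on small farms for tobacco and wheat. Maize is the exception (0.06 on small farms against 0.10 on large farms), and mustard gains 0.07 in both groups.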

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Rao, P.; Zhou, W.; Bhattarai, N.; Srivastava, A.K.; Singh, B.; Poonia, S.; Lobell, D.B.; Jain, M. Using Sentinel-1, Sentinel-2, and Planet Imagery to Map Crop Type of Smallholder Farms. Remote Sens. 2021, 13, 1870. https://doi.org/10.3390/rs13101870
