Abstract

Integrated Pest Management (IPM) is a system that combines all available plant protection methods. IPM guidelines apply to all professional users of plant protection products and require the prioritization of preventive methods. Adherence to IPM principles contributes to the production of healthy and safe food. In Poland, the implementation of IPM in agricultural practice remains a challenge, and it is necessary to ensure education in IPM and its implementation at the basic and practical levels. An element of IPM particularly emphasized in Directive 2009/128/EC is the use of so-called warning systems, tools that support decisions on the application of plant protection products. In this regard, it is necessary to use decision support systems (DSSs). DSSs are digital solutions that integrate meteorological, biological, and field data. They estimate the risk of disease and pest occurrence and indicate the timing of treatment. DSSs do not replace the farmer’s experience or observation but support sound decisions. DSSs can reduce costs, the side effects of plant protection, and energy consumption. Examples of such solutions in Poland include the eDWIN platform and OPWS, used, among others, in protecting cereals against fungal diseases. The aim of this article is to present the role, capabilities, and limitations of decision support systems in modern agricultural production and their importance in the context of the Green Deal and digital agriculture.

1. Introduction

Integrated Pest Management (IPM)

Integrated Pest Management has been the primary approach to plant protection since the 1950s. IPM is a method of protecting plants from harmful organisms that aims to minimize the risk to human and animal health and the environment. The core objective of integrated plant protection is to achieve high yields of good quality, in optimal growing conditions, in a way that does not threaten the natural environment and human health.

According to the definition by the Food and Agriculture Organization of the United Nations [1], the IPM model combines all available pest control techniques. It integrates biological, chemical, physical, and crop-specific (cultural) management strategies and practices [1]. IPM promotes sustainable forms of agriculture, with a significant reduction in the use of synthetic plant protection products (pesticides). It also strengthens natural mechanisms of pest and disease control, which are considered essential components of the agroecosystem. Pest populations must be constantly monitored and maintained below the thresholds of economic harm. The role of pests in IPM was already presented in the concept of Bottler [2], which pointed out that it is necessary to tolerate pests at an acceptable level, manage plant protection on an ecosystem scale, maximize the use of natural enemies of pests, and take an interdisciplinary approach to the problem. To understand integrated pest management, it is essential to first clarify its ecological foundations. Ecology concerns the interactions among living organisms, and environmental disturbances directly affect these interactions, thereby contributing to the emergence of pests. Within the framework of IPM, a pest is understood in terms of the population density of a phytophagous species; consequently, this concept is directly linked to the determination of economic thresholds. The ecological foundations of IPM refer to the principles and processes governing interactions between plants, pests, their natural enemies, and the surrounding environment. They emphasize how ecological balance, biodiversity, and environmental factors regulate pest populations and plant health. Understanding these foundations is essential for developing sustainable strategies that minimize chemical inputs and rely instead on natural regulatory mechanisms within agroecosystems [1,3].

Despite the long-term promotion of IPM, the global use of pesticides has continued uninterrupted. This persistence has had negative consequences for the preservation and protection of biodiversity, as well as the human right to healthy food [1,3]. Excessive use of agrochemicals has caused serious environmental damage, including water and air pollution, soil fertility degradation, and the decline of beneficial organisms such as bees [4,5].

Limitations of implementing Integrated Pest Management (IPM) in cereal crops in Poland

The implementation of IPM in cereal crops in Poland, despite being formally in place since 2014, still encounters significant barriers. The most important problem remains the lack of a clear reduction in pesticide use, especially in large, economically strong farms that dominate domestic cereal production. In these entities, low motivation to limit chemical plant protection is observed, which may result from the lack of effective financial and advisory incentives. The authors of the review clearly highlight the challenges of implementing integrated plant protection programs in Polish farms, particularly in winter wheat, which is the dominant cereal crop in Poland. These challenges are primarily related to the management of powdery mildew, septoria tritici blotch, and aphid infestations [6].

Another limitation is the low level of knowledge of IPM principles among farmers. Studies indicate that a significant number of producers do not know integrated protection methods for specific crops, which indicates insufficient effectiveness of the training and education system [6].

As a result, IPM remains largely a theoretical framework, the practical implementation of which in cereal crops requires comprehensive systemic support, through the development of advisory services, improvement of education, and the introduction of motivational mechanisms [6].

The most important principles of IPM—integrated plant protection (IPP)

Since 1 January 2014, pursuant to the Directive of the European Parliament and of the Council of 21 October 2009 establishing a framework for Community action with regard to the use of pesticides (2009/128/EC), all professional users have had to observe the rules of integrated plant protection (IPP). Sections 2 and 3 of Annex III state the following:

Section 2: Harmful organisms must be monitored using appropriate methods and tools, if available. Such tools shall include monitoring of fields, as well as warning systems and systems for forecasting and early prediction based on solid scientific evidence, when the application thereof is possible, along with advice from people with relevant and adequate professional qualifications.

Section 3: Based on the results of monitoring actions, a professional user must decide whether to apply plant protection methods and when to apply them. The main factor contributing to decision-making is the threshold of harmfulness of harmful organisms, clearly defined and determined based on solid scientific research. If it is possible, the user should take into account the threshold values relevant for a given region, area, crop, and specific weather conditions.

One of the most important elements of integrated control, which is emphasized in the Directive, is “warning systems”, i.e., different types of systems assisting in making the decision to protect the crops chemically. Recent years have witnessed the development of scientific research regarding the basics of such decision support systems (DSSs). An important aspect of such research is the analysis of the development of diseases and pests, as influenced by meteorological conditions. Based on this, it is possible to create supporting systems able to forecast an increase in pathogen pressure or the beginning of the pest development stages at which the pests need to be combated. Modern pest monitoring technologies increasingly rely on Internet of Things (IoT) solutions, including sensor networks and automated camera systems capable of quantifying insect populations in real time. These devices transmit data wirelessly (e.g., via Wi-Fi or other communication protocols) to centralized servers, where advanced algorithms and data-driven models process and interpret the information. The system then generates automated alerts and decision recommendations, which are communicated directly to farmers and crop advisors, facilitating rapid, evidence-based interventions in crop protection.

However, it must be stressed that the application of DSS in plant protection does not relieve the producer or the consultant from conducting detailed observations and field inspections at a given plantation to assess the economic advisability of the treatment [7,8,9].

Another key element of integrated protection is the selection of resistant and locally adapted crop varieties, which supports long-term sustainability [10].

Limitations of the use of advisory systems in IPP

The reduction in the use of crop protection products in field crops is based on the implementation of decision support systems for plant protection against pests and diseases.

European and global trends to reduce the amount of chemicals used in crop protection rely on greater precision in their application. The basis is the precise timing of application and the correct selection of chemical preparations. Treatments carried out too late or too early often have low or no effectiveness. Accurate determination of the optimal timing of treatment is facilitated by a DSS designed for chemical plant protection.

Using and following the recommendations of decision-making systems is relatively easy and does not require special training, education, or sophisticated equipment. However, it should be remembered, first of all, that the final decision is made by the user. Advisory systems only recommend carrying out the treatment. In such a situation, it is necessary to carry out field observations, properly identify the threat, assess whether the pest threshold has been exceeded, and only then proceed with treatment using appropriate chemical preparations. Another barrier to wider adoption of advisory systems is the perception of economic risk. Farmers may be reluctant to rely solely on DSS-generated recommendations, fearing potential yield losses if the advice proves inaccurate. Furthermore, the generational divide in Polish agriculture also plays a role, as younger farmers are more open to adopting digital solutions, while older farmers often remain skeptical of their reliability. In Poland, the effectiveness of advisory systems is also shaped by institutional factors, including the availability of extension services and public funding for digital agriculture. Without adequate systemic support, such as subsidies for modern monitoring equipment or training programs, adoption of DSSs is likely to remain limited to larger, more technologically advanced farms.
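The sequence described above (DSS recommendation, field inspection, threat identification, threshold check, and only then treatment) can be sketched as a small decision function. This is a minimal illustration, not part of any of the cited systems; the class `FieldObservation`, its fields, and the function `should_treat` are hypothetical names introduced here, and the numeric values in the usage lines are invented for the example.

```python
from dataclasses import dataclass


@dataclass
class FieldObservation:
    """Result of a field inspection (hypothetical structure for illustration)."""
    threat_identified: bool  # the harmful organism was confirmed in the field
    observed_level: float    # e.g. percent of infected leaves or pests per plant
    threshold: float         # harmfulness threshold for this crop and region


def should_treat(dss_recommends: bool, obs: FieldObservation) -> bool:
    """Return True only when a DSS recommendation is confirmed in the field.

    The DSS output alone never triggers a treatment: the threat must be
    identified on the plantation and the threshold must be exceeded.
    """
    if not dss_recommends:
        return False
    if not obs.threat_identified:
        return False
    return obs.observed_level > obs.threshold


# Usage with invented numbers: the DSS issues an alert, scouting confirms it.
confirmed = FieldObservation(threat_identified=True, observed_level=12.0, threshold=10.0)
below = FieldObservation(threat_identified=True, observed_level=4.0, threshold=10.0)
print(should_treat(True, confirmed), should_treat(True, below))
```

Keeping the DSS signal and the field observation as separate inputs mirrors the point made in the text: the system recommends, while the user decides.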

The aim of the article is to review the current decision-making systems supporting strategies for combating the most important diseases and pests occurring in the Polish climate zone and their importance in the context of the Green Deal and digital agriculture.

In integrated plant protection, DSS is the foundation for informed decisions on protecting crops against fungi attacking cereals [11]. Within IPM, the increasing impact of diseases on crops in the era of climate change makes their role even more important. Advanced DSSs play a key role in deciding whether protective treatments are needed, when to carry them out, and which active substances to select. DSS platforms give farmers access to reliable and up-to-date knowledge from both a scientific and a practical perspective, as well as to professional advisory support, which makes precise and sustainable crop production possible. DSSs in plant protection can generally be classified into two main types: Integrated Pest Management (IPM) systems, which aim to reduce pest populations, and Integrated Disease Management (IDM) systems, which focus on controlling plant pathogens. These approaches differ in both diagnostic methods and analytical perspectives. In pest management, the emphasis is placed on monitoring population dynamics, identifying harmful developmental stages, and determining the timing of transitions between life cycle phases. In contrast, disease diagnostics primarily address factors such as incidence, symptom severity, and host susceptibility. In both cases, agrometeorological conditions play a pivotal role, as they may either promote pest development or increase the likelihood of disease outbreaks [4,12,13,14].

In Poland, the development of such tools was possible thanks to the close cooperation of research institutes of national importance, such as the Institute of Plant Protection—National Research Institute at Poznań, the Institute of Horticulture—National Research Institute in Skierniewice, the Institute of Cultivation, Fertilization and Soil Science—National Research Institute at Puławy and the Institute of Plant Breeding and Acclimatization—National Research Institute at Radzików. An important role in Poland is also played by provincial agricultural advisory centers and other scientific and implementation units.

As a result of this cooperation, an online information platform was created, the task of which is to integrate knowledge and data from the field of plant protection and to make them available to producers in the form of modern decision-support tools (www.agrofagi.com.pl, accessed on 12 August 2025). Thanks to this, it is possible to effectively implement the principles of integrated plant protection in agricultural practice, taking into account local soil and climate conditions and the specificity of individual crops. The decision support system is based on the analysis of input data, including the following components: information on crop rotation, soil properties, varieties used, meteorological conditions (both current and forecast), as well as economic data on the costs of treatments. It also takes into account the thresholds of harmfulness, which are the basis for decisions on the need for action. A major limitation of current DSS platforms is their only partial integration with broader farm management systems. The lack of interoperability between DSS, precision farming tools, and economic planning software limits their effectiveness and reduces their appeal to farmers seeking comprehensive digital solutions [15].

2. Decision Support Systems (DSSs) for Selected Cereal Diseases

2.1. Powdery Mildew of Cereals and Grasses—Blumeria graminis (Dc.) Speer f. sp. tritici (Erysiphaceae)

In the context of Integrated Disease Management (IDM), which targets plant pathogens, as distinct from pest-oriented IPM, powdery mildew of cereals and grasses is one of the main fungal threats to cereals in Europe, including Poland. Diseases, unlike pests that are studied through population dynamics, are evaluated based on their frequency of occurrence and severity of infection, which makes continuous monitoring crucial.

The pathogen responsible for powdery mildew of cereals and grasses in wheat is Blumeria graminis f. sp. tritici (Erysiphaceae). This disease was selected for analysis because it is considered one of the most important fungal pathogens of wheat and barley. It occurs every year in both Poland and Europe and continues to pose a serious threat to cereal crops. Therefore, specialized decision support systems (DSSs) are used to monitor and forecast it [16,17,18]. In practice, this disease is sometimes classified as a key risk factor because it can reduce the yield of cereals grown in continental and humid regions by up to several percent [16]. Maximum losses occur in areas with constant high humidity and heavy rainfall, i.e., in areas with a maritime climate [17].

Global warming and prolonged droughts during the vegetation period in southern Europe may significantly reduce the occurrence of powdery mildew of cereals and grasses. However, it is expected that in a changing climate, fungal pathogens may be more active (aggressive) due to selection pressure, which, in turn, may contribute to an increase in the geographical range of their occurrence, and growers should vigilantly invest in up-to-date monitoring and forecasting systems [17].

In wheat fields, in conditions particularly favorable to the development of B. graminis f. sp. tritici, the reduction in grain yield may range from 13% to 30% [18]. In the face of such threats, advisory systems are an important diagnostic and support tool for farmers in making rational decisions regarding plant protection. Advisory systems for powdery mildew of cereals and grasses integrate meteorological data (e.g., humidity, temperature, rainfall) with pathogen development models. In Poland, DSS platforms provided by the Institute of Plant Protection—NRI (IPP–NRI) are available to farmers, supporting early detection, risk forecasting, and optimized fungicide use.

Advanced machine learning (ML) models, combined with explainable artificial intelligence capabilities, are increasingly being used. Models for powdery mildew of cereals and grasses, however, traditionally rely mainly on empirical and regression approaches that estimate the probability of infection from weather conditions; their results allow farmers to assess whether and when preventive treatments are economically justified [19]. Thanks to ongoing monitoring of threats, taking into account weather conditions and pathogen development forecasts, advisory systems enable precise and timely planning of protective treatments. Thus, they contribute to minimizing yield losses and limiting the excessive use of fungicides.

Monitoring plants for the occurrence of powdery mildew of cereals and grasses is particularly important if, in the previous growing year, the pathogen B. graminis f. sp. tritici occurred in a given place at least to a minimal extent, ranging from one to several infected leaves. The most effective way of protecting against B. graminis f. sp. tritici is prevention, which significantly reduces the chances of its occurrence. One effective way of minimizing the occurrence of this pathogen is to leave more space between plants (lower sowing density). Such a solution improves air circulation between plants and their exposure to sunlight, which reduces infection by the pathogen.

It is recommended to properly prepare the soil before sowing, plow the stubble, destroy volunteer cereals, apply balanced fertilization (not too much nitrogen, the right amount of potassium), and cultivate varieties resistant to this disease [11]. Sprinkler irrigation of cultivated fields also affects the occurrence of B. graminis f. sp. tritici: it increases relative humidity, which promotes the development of cereal fungi, whereas regular watering can inhibit the development of powdery mildew of cereals and grasses [20].

Sustainable agrotechnical practices and an integrated approach to plant protection are essential for controlling powdery mildew of cereals and grasses. Excessive fertilization can stimulate excessive growth of young plant tissues, which are more susceptible to infection, so it should be used with caution. It is equally important to remove weeds and plant debris from fields because they can be a source of pathogen spores.

The use of crop rotation and the selection of plant varieties resistant to powdery mildew of cereals and grasses infection helps reduce disease pressure by interrupting the life cycle of the pathogen and reducing its survival in the soil [20].

Cereal monocultures intensify the infection by the pathogen B. graminis f. sp. tritici by accumulating infectious material in crop residues and in the ground. In order to limit the spread of the pathogen, it is recommended to, among others, properly prepare the soil, thoroughly plow stubble, destroy volunteers, apply balanced fertilization (with nitrogen limitation and potassium optimization), and grow resistant varieties [10].

Advisory systems for the protection of winter barley against powdery mildew of cereals and grasses are available on the website of the Institute of Plant Protection—NRI (IPP–NRI). These are specific tools that provide information on monitoring and forecasting, as well as advisory systems for the protection of cereals against the most important diseases in Poland.

Although preventive agronomic practices such as crop rotation, resistant varieties, balanced fertilization (reduced nitrogen, adequate potassium), and proper soil preparation remain essential, combining these strategies with DSS-supported IDM ensures sustainable disease management. By combining resistant varieties, cultivation practices, and forecasting systems, farmers can minimize both crop losses and fungicide use.

2.2. Rusts in Cereals—Yellow Rust (Puccinia striiformis f. sp. tritici Westend., Pucciniaceae), Leaf Rust (Puccinia triticina Erikss., Pucciniaceae), Stem Rust (Puccinia graminis f. sp. tritici Pers., Pucciniaceae)

In contrast to pests, which are studied in terms of population dynamics, plant diseases are assessed based on their frequency and severity. Among the pathogens, the most dangerous are three forms of cereal rust: yellow rust (Puccinia striiformis f. sp. tritici Westend., Pucciniaceae), leaf rust (Puccinia triticina Erikss., Pucciniaceae), and stem rust (Puccinia graminis f. sp. tritici Pers., Pucciniaceae). Each of these pathogenic fungi can cause significant yield losses, ranging from 5% to even 50%, depending on weather conditions, crop location, and plant development stage at the time of the epidemic [21,22]. Fungal diseases globally can cause yield losses of up to 40%, posing a major threat to global food security [23].

DSS models are available for selected diseases of cereal crops in Poland. As with powdery mildew of cereals and grasses, the development and risk of rust diseases are monitored using IDM and DSS models, which allow the timing of treatment to be predicted from weather conditions and the crop growth stage. These models often incorporate exponential or linear functions, linking temperature, humidity, and plant growth stage with pathogen incubation and disease dynamics. Alternative models can address the time at which protective and loss-prevention measures are implemented.

In the context of increasing legislative restrictions on plant protection products and environmental pressures, the development and use of disease-resistant varieties become essential for sustainable crop production [23,24]. In the context of climate change, increasingly higher temperatures, changed atmospheric circulation, and more frequent and intense precipitation create favorable conditions for the development of diseases, in particular leaf rust (Puccinia triticina Erikss., Pucciniaceae). This pathogen is a specialized biotrophic fungus that infects leaves of spring and winter wheat. It is common on all continents of wheat cultivation, and its pressure increases under conditions of intensive cultivation, high nitrogen fertilization, and abundant dew [25]. Infection occurs at 100% relative humidity and a temperature of about 20 °C, and spores germinate after 4–8 h [26,27,28]. Yield losses associated with P. triticina infection depend mainly on the severity of infection and the developmental stage of the plant. In conditions of severe infestation, losses can range from 40% to 80%, and in Poland, typically amount to approx. 15%, although in favorable conditions they can reach even 30–60% [29].
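The infection conditions quoted above (saturated air, a temperature of about 20 °C, and germination after 4–8 h) suggest a simple screening rule on hourly weather records. The sketch below is only an illustration of that idea and is not taken from any of the cited models: the function names, the tolerance of 15–25 °C around 20 °C, the 99% humidity cut-off, and the use of the lower germination bound of 4 h are all assumptions made for the example.

```python
def longest_favorable_run(hourly, rh_min=99.0, t_low=15.0, t_high=25.0):
    """Length (in hours) of the longest unbroken spell of favorable weather.

    `hourly` is a sequence of (temperature_c, relative_humidity_pct) pairs.
    The limits are assumed tolerances around the 100% RH and ~20 °C in the text.
    """
    longest = current = 0
    for temp_c, rh in hourly:
        if rh >= rh_min and t_low <= temp_c <= t_high:
            current += 1
            longest = max(longest, current)
        else:
            current = 0  # the spell is interrupted, start counting again
    return longest


def infection_possible(hourly, min_hours=4):
    """True if a favorable spell lasts at least `min_hours` (assumed: 4 h)."""
    return longest_favorable_run(hourly) >= min_hours


# Usage with invented records: five wet hours, one dry hour, two wet hours.
night = [(20.0, 100.0)] * 5 + [(20.0, 80.0)] + [(20.0, 100.0)] * 2
print(longest_favorable_run(night), infection_possible(night))
```

A rule of this kind only flags periods that deserve attention; it does not replace field inspection or a calibrated epidemiological model.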

Changing climate significantly affects the epidemiology of fungal diseases of wheat. Forecasts indicate that increased temperatures in the 21st century may shorten the incubation period of the disease and contribute to its earlier and more intense occurrence. The model developed by Wójtowicz et al. [30] for two wheat varieties, Ostroga and Turnia, showed a strong relationship between temperature and the length of the incubation period of brown leaf rust. These results indicate a potential increase in the frequency of infection by P. triticina in western Poland in the coming decades.

Similarly to powdery mildew of cereals and grasses, leaf rust development can be predicted using IDM and DSS models. The study analyzed the impact of predicted climate warming on the incubation period of wheat leaf rust in western Poland using two mathematical models: LP1 and LP2. Wheat leaf rust is one of the most dangerous cereal pathogens in Central Europe, and its development strongly depends on thermal conditions. In view of global climate change, it is becoming important to predict the dynamics of this disease under various temperature scenarios. The main objective of the study was to quantify the impact of temperature increase on the length of the pathogen incubation period, as well as to estimate how the conditions of disease development will change. The LP1 and LP2 models were used to predict the length of the incubation period after increasing the temperature by 1, 2, 3, or 4 °C compared to the actual values from 2010 to 2014. The data obtained can be used in modeling the pathogen threat and supporting Integrated Disease Management (IDM). The model structure is based on the exponential relationship between temperature and the length of the incubation period, expressed in hours. The presented models were parameterized for western Poland, but the approach is applicable to Central Europe. Modern monitoring systems increasingly combine DSS with remote sensing (drones, cameras) and meteorological sensors, improving real-time disease risk assessment.

Computer simulations were based on meteorological data from 2010 to 2014, recorded in three selected locations in western Poland. For each year, five data variants were developed: the base one and four with increased temperatures by 1, 2, 3, and 4 °C. Simulations were carried out for the months from April to July, calculating monthly sums of the daily incubation rate (MSDTI), which was a measure of the intensity of disease development in individual periods of the growing season.

The LP1 and LP2 models are exponential tools that combine temperature with the incubation period duration, expressed in hours. Monthly sums of daily incubation indices were calculated to measure disease development. These models provide farmers with predictive information that allows them to plan Integrated Disease Management (IDM), optimize fungicide applications, and assess pathogen risk under various climate scenarios. Modern monitoring methods, such as drones, camera systems, and climate-based decision support systems, are further improving the precision of rust management.
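The simulation procedure described in this section (an exponential temperature model of the incubation period, daily incubation rates summed per month, and warming scenarios of +1 to +4 °C) can be sketched as follows. This is a hedged illustration only: the coefficients `A_HOURS` and `B_PER_DEG` are hypothetical and are not the published LP1 or LP2 parameters, and defining the daily incubation rate as 24 h divided by the incubation period is an assumption made here to obtain a summable daily quantity.

```python
import math

# Hypothetical coefficients, NOT the published LP1/LP2 values.
A_HOURS = 600.0    # assumed incubation period at 0 °C, in hours
B_PER_DEG = 0.08   # assumed exponential rate per °C


def incubation_hours(temp_c, a=A_HOURS, b=B_PER_DEG):
    """Incubation period in hours as an exponential function of temperature."""
    return a * math.exp(-b * temp_c)


def daily_incubation_rate(temp_c):
    """Assumed definition: fraction of the incubation period completed in one day."""
    return 24.0 / incubation_hours(temp_c)


def msdti(daily_mean_temps_c, warming_c=0.0):
    """Monthly sum of daily incubation rates for one month of daily temperatures."""
    return sum(daily_incubation_rate(t + warming_c) for t in daily_mean_temps_c)


# Usage with invented daily mean temperatures for a 30-day month.
month = [8.0 + 0.2 * day for day in range(30)]
for delta in (0, 1, 2, 3, 4):
    print(f"+{delta} °C: MSDTI = {msdti(month, warming_c=delta):.2f}")
```

Because the incubation period shrinks exponentially with temperature, each additional degree of warming raises the monthly sum, which is the qualitative behavior the scenarios in the study are designed to quantify.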

2.3. Septoria tritici Blotch—Zymoseptoria tritici (Mycosphaerellaceae)

Septoria tritici blotch (STB), caused by Z. tritici, is a fungal disease that can reduce wheat yields by up to 30–50% under conditions favorable to the pathogen [31]. Traditional protection strategies based on calendar-based fungicide applications are often inefficient, costly, and environmentally harmful. In response, decision support systems have emerged, integrating meteorological, phenological, and epidemiological data to assess infection risk and optimize treatment timing. The development and implementation of DSS tools based on epidemiological models, such as those by Chaloner et al. [32], El Jarroudi et al. [31], and Delfosse et al. [33], represent a breakthrough in sustainable control of STB in wheat. By integrating weather data, crop status, and pathogen biology, these tools enable more effective and environmentally responsible disease management. For agricultural advisory services, this provides powerful instruments for delivering accurate and timely recommendations that support modern, sustainable farming.

The mechanism of operation of decision support systems (DSSs) for the control of STB in wheat is based on various modeling approaches that simulate the development of epidemics caused by Z. tritici, incorporating both meteorological and crop phenological data. The scientific literature distinguishes three main modeling approaches for predicting infection risk and supporting decisions on the timing and intensity of fungicide applications: mechanistic, statistical, and layered (vertical) approaches.

The mechanistic model developed by Chaloner et al. [32] considers four key phases of the pathogen’s life cycle: infection, latency, sporulation, and spore dispersal. It relies on meteorological inputs such as air temperature, relative humidity, and precipitation to calculate the infection rate and simulate epidemic development. A major advantage of this model lies in its ability to dynamically track infection risk in real time, enabling precise timing of fungicide applications.

The second approach, a statistical model developed by El Jarroudi et al. [31], is based on regression analysis and field data. This model integrates weather variables—temperature, cumulative rainfall, and the number of rainy days—with wheat growth stages. Its primary aim is to predict the severity of infection in lower leaf layers and estimate the risk to upper canopy leaves, which are critical for yield formation. Due to its simplicity, this model can be easily implemented in mobile applications designed for agricultural advisors and farmers.

The most widely used model in Europe is the one proposed by Delfosse et al. [33], known by the acronym SEPTTR (Septoria Leaf Blotch Model). This layered infection model links disease severity in the lower canopy with infection risk in the upper canopy, taking into account microclimatic variability within the wheat crop. This allows for more accurate predictions of vertical disease spread and supports more targeted decisions regarding the second (T2) and third (T3) fungicide applications, which are key to protecting yield-critical flag leaves.

The SEPTTR model uses the following meteorological data: daily minimum air temperature measured at 2 m above ground level, daily total precipitation, and crop phenological data related to the BBCH 31 growth stage. Meteorological observations are collected starting 120 days prior to the occurrence of BBCH 31 and continue until that stage is reached. Based on these inputs, the model generates output data, including cumulative precipitation, cumulative temperature, and a risk indicator that initially takes negative values.

The adopted model:

0.046 × precipitation + 0.042 × Tmin − 6.69 > 0 [33]

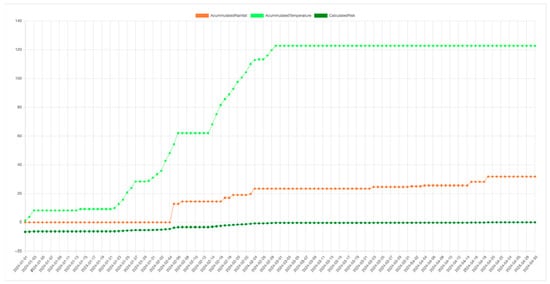

The prediction model is designed to predict septoria epidemics based on meteorological data recorded before growth stage BBCH 31. An epidemic was defined as an infection of at least 5% on the three uppermost leaves (sum of the three leaves). A calculated value > 0 indicates a risk of early septoria infection (Figure 1) [34,35]. DSSs for STB help reduce the number of unnecessary protective treatments by adjusting their timing to the actual risk of infection. This allows for reducing plant protection costs and delaying the development of pathogen resistance to fungicides. By integrating local weather data and epidemiological models, agricultural advisors can provide recommendations tailored to specific field conditions, which increases producers’ confidence in the recommendations and their effectiveness.

Figure 1. Visualization of SEPTTR model results: cumulative rainfall, temperature, and calculated infection risk over time. The graphic shows recommendations regarding the risk of pathogen infection and the need for treatment.

The SEPTTR model is a valuable tool for the prediction of Septoria leaf blotch due to its mechanistic analysis of plant layers and integration of weather conditions and field infection status. It is particularly useful in seasons with intense disease pressure. However, its use may result in too many false alarms, and it does not adequately account for less susceptible varieties. Additional challenges include the limited resolution of weather data and a lack of personalization to individual farms. In practice, it is worth combining its results with field observations and taking into account additional factors, such as variety resistance or local microclimates. The SEPTTR system is currently being tested for agricultural advisory purposes at the Wielkopolska Agricultural Advisory Centre in Poznań. The aim of the pilot is to assess its usefulness under real farming conditions and to adapt the model to the specifics of crops in the region. Based on the developed mathematical model, a computer-based advisory application has been created, whose main function is monitoring the risk of early infection by fungal pathogens (Figure 1). The application integrates meteorological data, crop condition information, and biological parameters of the pathogen, enabling a faster response to disease threats and more precise planning of plant protection treatments.

Decision support systems use meteorological data and damage thresholds to determine the best time for chemical treatment. Examples include the Danish system “PC-Plant Protection” (now “Crop Protection Online”) and “PROCULTURE”, which use parameters such as precipitation, relative humidity, and temperature and also take into account the biology of the harmful organism, which allows infections to be predicted [36,37]. The effectiveness of DSSs depends on the model settings and weather conditions. In years with high disease intensity, using DSSs increased yields by 4–42%, and the financial gain was 3–16% [38]. In years with low disease pressure, such systems often recommended no spraying, which allowed fungicide treatments to be omitted and reduced costs. Transferring systems from countries with a different climate and growing season, such as the Iowa climate, may be problematic [37].

Research by Jørgensen et al. [22], published within the project “Rust management in wheat using IPM principles and alternative products”, demonstrated that DSSs allowed fungicide use and the number of spray applications to be reduced without compromising protection, in many cases permitting a complete elimination of sprays if the variety was resistant and the conditions were not conducive to disease. The recommendations of these systems were based on field monitoring (scouting), the application of damage thresholds (as low as 1–10% for yellow rust), local conditions, and disease pressure, as well as the restrictions defined in each country. The main target of the DSSs was yellow rust and, in some cases, other pathogens such as Septoria tritici. Their effectiveness in controlling brown rust was limited, probably because of the low priority given to this disease in the algorithms. The use of DSSs not only improves the effectiveness of protection but also contributes to treatment profitability and supports strategies to prevent the development of fungicide resistance in pathogens. The DSSs in the project combine monitoring with forecasting models that use data such as temperature and relative humidity to assess the risk of disease occurrence. For example, it was indicated that infection by the yellow rust pathogen decreases at temperatures below −5 °C.

The CPO model is a simple example of a practical decision support system for limiting the losses caused by powdery mildew of cereals and grasses (B. graminis f. sp. tritici) in a primary crop. It operates on decision thresholds defined by three main parameters: growth stage (GS), varietal susceptibility to infection, and the frequency of attacked plants. Although the system documentation gives no explicit mathematical function, the model structure can be expressed formally as a simple logic-threshold rule, which makes it a deterministic model [39].

The CPO model recommends treatment when the thresholds are exceeded. The thresholds depend on varietal susceptibility, so the disease level that triggers treatment differs between susceptible and other varieties. If fungicides are applied, active substances with proven efficacy against powdery mildew of cereals and grasses should be selected [22].

It is worth remembering that the model does not automatically account for the effect of previous fungicide sprays. If a fungicide effective against powdery mildew has been applied in the last 10 days, the risk can be considered neutralized.
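The logic-threshold structure described above can be sketched as follows. This is an illustration under stated assumptions, not the CPO implementation: the function name `cpo_recommend`, the keys of the threshold table, and the reading of the third input as an observed disease level in percent are all hypothetical. The threshold values must be supplied by the user from the system documentation; only the 10-day rule comes from the text.

```python
from datetime import date
from typing import Dict, Optional, Tuple


def cpo_recommend(
    growth_stage: str,
    susceptibility: str,
    disease_level_pct: float,
    thresholds: Dict[Tuple[str, str], float],
    today: date,
    last_mildew_spray: Optional[date] = None,
    protection_days: int = 10,
) -> bool:
    """Return True when a treatment would be recommended.

    ASSUMPTION: `thresholds` maps (growth-stage band, susceptibility class)
    to a disease level in percent; the real CPO values are not given here.
    """
    # A mildew-effective fungicide applied within the last 10 days
    # neutralizes the risk, so no new treatment is recommended.
    if last_mildew_spray is not None:
        days_since = (today - last_mildew_spray).days
        if 0 <= days_since <= protection_days:
            return False
    # Deterministic rule: recommend treatment only above the threshold.
    return disease_level_pct > thresholds[(growth_stage, susceptibility)]
```

Passing the thresholds in as data keeps the rule itself deterministic and makes it clear that the numeric values belong to the system documentation rather than to this sketch.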

3. Decision Support Systems for Selected Pests

3.1. Aphids in Cereals—Rhopalosiphum padi (L.), Sitobion avenae (Fabricius) (Aphididae)

Aphid-transmitted viral diseases in cereals, particularly Barley Yellow Dwarf Virus (BYDV), pose a serious threat to European cereal production. The bird cherry-oat aphid (R. padi L.) and the grain aphid (S. avenae Fabricius) are the most important vectors of BYDV. Once transmitted, the virus causes stunted growth, discoloration, and significant yield reductions. R. padi is a migratory aphid species that colonizes winter wheat in the autumn. It transmits BYDV in a persistent, circulative manner—meaning that even low aphid populations can lead to significant infection if the vector is viruliferous.

The economic damage caused by Barley Yellow Dwarf Virus (BYDV) largely depends on the timing of infection, as early infections result in significantly greater yield losses. Another critical factor is the abundance and activity of aphid populations, which serve as the primary vectors of the virus. Additionally, environmental conditions that favor virus transmission—such as mild and wet autumn weather—can substantially increase the risk of outbreaks and the severity of symptoms, ultimately leading to greater reductions in cereal crop yields.

The need for timely detection and response has led to the development of models that help determine when control measures are justified. Conventional calendar-based insecticide applications are often inefficient, leading to unnecessary treatments or late intervention. Modern crop protection strategies increasingly rely on decision support systems that use pest biology and weather data to predict infection risk and optimize the timing of control measures [40].

The TSUM model is a phenological decision support system (DSS) developed to predict the risk of Barley Yellow Dwarf Virus (BYDV) transmission by aphid vectors, primarily R. padi. This thermal-time-based model accumulates daily mean temperatures above a base threshold of 3 °C, starting from October 1st. Once the cumulative temperature sum (TSUM) reaches 170 °C days, the model signals a high probability of aphid colonization and the associated risk of BYDV transmission. This critical threshold, as indicated by White et al. [41], serves as a decision point to intensify monitoring of aphid activity and to consider potential intervention strategies.
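The accumulation rule can be illustrated with a short sketch. The base temperature (3 °C), the start date (1 October), and the 170 degree-day threshold come from the text; the function name `tsum_threshold_date` is hypothetical, and the sketch assumes that each day contributes only the excess of its mean temperature over the base, with colder days contributing zero.

```python
from datetime import date, timedelta
from typing import Iterable, Optional, Tuple

BASE_TEMP_C = 3.0       # base threshold quoted in the text
TSUM_THRESHOLD = 170.0  # degree-days that trigger the warning


def tsum_threshold_date(
    daily_mean_temps: Iterable[float], start: date
) -> Tuple[Optional[date], float]:
    """Return the date on which the temperature sum reaches the threshold.

    `daily_mean_temps` holds one daily mean temperature (°C) per day,
    beginning on `start` (1 October in the text). If the threshold is not
    reached, the date is None and the accumulated sum so far is returned.
    """
    total = 0.0
    for offset, temp in enumerate(daily_mean_temps):
        # ASSUMPTION: only the excess above the base temperature counts.
        total += max(0.0, temp - BASE_TEMP_C)
        if total >= TSUM_THRESHOLD:
            return start + timedelta(days=offset), total
    return None, total
```

In practice the returned date would mark the point at which aphid monitoring is intensified, not an automatic spray date.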

The TSUM model is complemented by standardized field observations, which play a crucial role in both decision-making and model validation. These observations involve the use of sticky and suction traps, as well as direct plant inspections to detect the presence of aphid populations. Monitoring is carried out on a daily or weekly basis during the autumn period, particularly as the cumulative temperature approaches the 170 °C threshold. Field data collected through these protocols are also used to calibrate and validate the model under diverse climatic and agronomic conditions, ensuring its relevance and accuracy in different geographical regions.

In addition to predicting the timing of aphid activity, the TSUM model supports insecticide decision-making by integrating biological and meteorological data. The DSS outputs risk assessments—categorized as low, moderate, or high—based on aphid presence and the status of the cumulative temperature sum. Insecticide treatments are recommended only when aphid populations are confirmed shortly before or around the time the TSUM threshold is reached. This targeted approach helps reduce the frequency of chemical applications, supporting more sustainable pest management practices.

The usefulness of the TSUM model has been confirmed in regional monitoring programs, such as the AHDB BYDV [42] management tool in the United Kingdom. These programs demonstrate how real-time data collection and integration into DSS platforms can enhance model validation and contribute to adapting the model to local aphid population dynamics and climatic variability. Historical studies, such as those by Plumb [43], have further emphasized the significance of temperature thresholds and autumn aphid behavior in effective BYDV management.

The BYDV TSUM model is recognized for its simplicity and operational ease, providing an accessible method for forecasting BYDV risk based on the accumulation of degree days. This facilitates better planning of insecticide applications and optimization of input use. Nonetheless, the model’s effectiveness depends heavily on the incorporation of actual aphid monitoring data. Neglecting factors such as aphid presence, vector infectivity, and local agronomic conditions can result in false warnings and unnecessary treatments. Such inaccuracies not only undermine the reliability of the DSS but may also contribute to the development of insecticide resistance. Therefore, a balanced integration of thermal models with empirical field data remains essential for the responsible and effective use of the TSUM model in Integrated Pest Management programs.

3.2. Colorado Potato Beetle—Leptinotarsa decemlineata (Say) (Chrysomelidae)

The occurrence of the Colorado potato beetle (Leptinotarsa decemlineata Say) has been a problem in potato crops since the 1950s [44]. In the conventional system, many pesticides are approved for use to control this pest, which, however, acquires resistance to them quite quickly. Losses caused by the potato beetle alone, in the absence of chemical plant protection, can reach 40%, and in extreme cases even 70% [45,46,47,48].

For this pest, an effective system is the SIMLEP computerized decision support program. It was developed in Germany at the Institut für Folgenabschätzung im Pflanzenschutz (JKI-SF). It is used to optimize the chemical protection of potatoes against the potato beetle and consists of two modules: SIMLEP 1 and SIMLEP 3. The first simulates the development of the pest in a region based on an analysis of thermal conditions, while the second simulates the development of the pest on a selected potato plantation, based on temperature measurements and field inspections carried out during the period when the first eggs are laid [49].

SIMLEP 1 is a regional forecasting model that predicts the development of the pest within a region, from emergence from the soil after winter to the occurrence of young beetles of the first generation. The hibernation period is not reflected in the model. As input, the model requires hourly air temperature (2 m height). Weather data must be available from 1st April onwards. The amount of information required and its frequency may hinder the use of the model. It should also be emphasized that in practice, SIMLEP 1 is used to forecast the first occurrence of egg clusters in a specific location [49].

SIMLEP 3 is a field-specific model that forecasts the occurrence of the developmental stages of L. decemlineata, simulating its development from the beginning of egg laying to the occurrence of old larvae. It requires more input parameters than SIMLEP 1: hourly air temperature (2 m height) from 1st April onwards, the date when the first egg clusters were found, the date of the previous assessment with no egg clusters, and the number of egg clusters. Despite the amount of data that must be entered, the outputs are considerably more useful: the date of first occurrence of young larvae (L1/L2) and the date of first occurrence of old larvae (L3/L4). One of the most important outputs is the predicted period of maximum egg laying, which is the optimal time to assess population density for decisions on insecticide use. The subsequent period of maximum abundance of young larvae is the optimal time for chemical treatment [47,49].

Furthermore, studies have shown the effectiveness of the SIMLEP 3 system in many regions, not only at the local level. The model has been widely introduced into agricultural practice in many countries (Germany, Austria, Poland), and its use has led to some improvements in farmers’ crop protection efforts.

The requirements of integrated control clearly indicate the need to use all available non-chemical methods to protect crop plantations. All integrated protection guidelines strongly highlight pest signaling and recommend the use of available DSSs.

At the Institute of Plant Protection – National Research Institute (IPP–NRI), two DSSs were developed and validated in field experiments: one to control cutworms (Agrotis segetum Denis & Schiffermüller and Agrotis exclamationis L.) (Noctuidae) in sugar beets, and one to control cereal leaf beetles (Oulema spp.) (Chrysomelidae) in cereals. For short-term forecasting of cutworm occurrence, the arrival of cutworm moths on farms should be monitored from early May. Moth flight times depend largely on the weather in a given year. In the spring, treatments are timed on the basis of the number of moths caught with light and/or pheromone traps. The date of treatment is set at 30–35 days (depending on weather conditions) after more than one moth is first caught within 2–3 days.

The timing of insecticide application can also be determined from air temperature readings. This involves calculating the sum of effective temperatures: (1) from the day when moths first appeared en masse until the cumulative heat sum reaches 501.1 °C, corresponding to the L2 stage, and (2) from the day of the first mass moth appearance until the cumulative effective temperature sum reaches 230.0 °C, calculated as the sum of daily air temperature values above the developmental physiological threshold for A. segetum and A. exclamationis (average 10.9 °C), also corresponding to the L2 stage. A slight delay of a few days in the application of chemicals will not compromise control effectiveness. The best chemical treatment results are obtained when cutworms reach the L2 stage and the plants reach the inter-row covering (rosette development) stage.

Monitoring A. segetum plays a key role in Integrated Pest Management, as it enables early detection of moth emergence and determination of economic thresholds for larval populations. Several types of monitoring devices and predictive models are commonly used. The most widespread are light traps and pheromone traps, which allow continuous tracking of adult activity. Pheromone traps, due to their selectivity in attracting males, provide reliable data on flight dynamics and form the basis for phenological models. Light traps, although less selective, offer a broader picture of nocturnal insect activity within a given habitat.
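The two temperature-sum criteria for cutworms (501.1 °C as a cumulative heat sum, and 230.0 °C as an effective sum above 10.9 °C) can be sketched with one helper. The function name `date_sum_reached` is hypothetical, and two assumptions are made: the cumulative heat sum is a plain sum of daily mean air temperatures, and the day of the first mass moth appearance counts as the first day of accumulation.

```python
from datetime import date, timedelta
from typing import Iterable, Optional


def date_sum_reached(
    daily_mean_temps: Iterable[float],
    first_mass_flight: date,
    target_sum: float,
    threshold_c: Optional[float] = None,
) -> Optional[date]:
    """Return the date on which the temperature sum reaches `target_sum`.

    With `threshold_c=None` the daily means are summed directly
    (ASSUMPTION for the 501.1 °C criterion). With a threshold, only the
    excess above it is summed (the 230.0 °C criterion with 10.9 °C).
    Returns None if the target is not reached within the data supplied.
    """
    total = 0.0
    for offset, temp in enumerate(daily_mean_temps):
        if threshold_c is None:
            total += temp
        else:
            total += max(0.0, temp - threshold_c)
        if total >= target_sum:
            return first_mass_flight + timedelta(days=offset)
    return None


# Example call with made-up temperatures (20 °C every day):
temps = [20.0] * 40
flight = date(2024, 6, 1)
heat_sum_date = date_sum_reached(temps, flight, 501.1)
effective_sum_date = date_sum_reached(temps, flight, 230.0, threshold_c=10.9)
```

Both criteria are stated to correspond to the L2 stage, so in real data the two dates would be expected to fall close together; a large gap could indicate a data or calibration problem.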

In addition, various agrometeorological models have been developed, which combine weather data (temperature, humidity, precipitation) with species-specific biological parameters. These models can predict larval emergence or moth flight peaks, thereby facilitating the timing of control measures.

Each approach has distinct advantages and limitations. Pheromone traps are highly sensitive and easy to operate, but their effectiveness may be influenced by competing odor sources in the environment or weather conditions affecting moth activity. Light traps capture multiple species simultaneously, which is valuable for biodiversity studies, but they require labor-intensive identification of specimens and incur relatively high energy costs. Agrometeorological models enable real-time forecasting and reduce the need for continuous field monitoring; however, their accuracy depends strongly on the quality of meteorological data and proper calibration to local populations.

Research findings indicate that combining different methods provides the most effective results. Predictive models that integrate pheromone trap data with meteorological inputs achieve high accuracy in forecasting A. segetum flight peaks, thereby improving the scheduling of insecticide applications [9,50,51,52,53]. More recently, studies have also highlighted the potential of automated digital traps, which employ imaging technology and remote data transmission to reduce labor requirements and enhance monitoring precision.

The DSS for cereal leaf beetles requires observing and recording the date of mass oviposition by the pest (in practice, this is also the time when the first larvae hatch from previously laid eggs, which are about 1 mm in size) and, starting on that date, recording the average daily air temperature and humidity. Using the mathematical model (a multiple curvilinear regression equation), the total effective temperature for pest development is calculated (10.6 °C), and the chemical treatment is recommended on that basis [9]. Field experiments demonstrated the effectiveness of both DSSs and their suitability for agricultural practice. As a result, farmers have access to knowledge supported by solid research and field tests. Based on these results, applications have been developed to assist producers in deciding whether chemical protection of sugar beet against cutworms and of cereals against cereal leaf beetles is needed, so that treatment is recommended only when necessary [9].

The examples of the SIMLEP, cutworm, and cereal leaf beetle DSSs highlight the growing importance of integrating biological monitoring, weather data, and predictive modeling into pest management strategies. While challenges remain, such as the need for frequent and reliable meteorological data, the widespread validation and adoption of these systems across Europe demonstrate their effectiveness. By enabling more precise timing of interventions, they support the principles of IPM, reduce unnecessary pesticide use, and help safeguard crop yields under changing climatic conditions.

4. Conclusions

Future strategies of advisory systems in integrated plant protection should include a global approach to resistance breeding for broad crop adaptation to diverse environments. Gene pyramiding targeting several pathogens should be combined with plant tolerance to abiotic stresses [49]. However, when multiple diseases occur simultaneously, resistance alone may be insufficient and should be complemented with other methods, such as crop rotation and spatial and temporal crop diversity [54,55]. In the era of climate change, the role of integrated advisory systems becomes particularly important.

Climate change increases pest pressure from both established and invasive species expanding into new regions due to warmer winters and longer growing seasons [52]. DSSs should, therefore, integrate disease forecasting with pest population monitoring and risk assessment. Early detection using weather-based models, pheromone traps, remote sensing, and AI image recognition allows for proactive responses before pests reach damaging thresholds [56,57].

Effective DSSs must integrate meteorological data, pest phenology, crop susceptibility, and field monitoring to enable precise forecasting, better planning, and reduced pesticide use [58,59]. Web-DSS platforms such as eDWIN in Poland provide predictive models for pest and disease occurrence, infection risk tracking, and treatment planning. Similarly, the Online Pest Warning System (OPWS) (www.agrofagi.com.pl, accessed on 12 August 2025) operated by IPP–NRI delivers public monitoring results, regional alerts, and recommendations, supporting rapid responses to threats. Access to various DSS tools is also embedded within OPWS.

These platforms are vital under increasing climate variability, enhancing early warning and supporting site-specific, environmentally sound protection. Integration with mobile apps and sensor networks further improves the timeliness and precision of advice. However, despite growing DSS availability in Poland, their practical implementation remains limited due to low awareness, training gaps, and farmers’ attitudes. Advisory institutions, thus, play a key role in education, technical support, and system adaptation to local conditions, which increases acceptance.

Author Contributions

Conceptualization, A.T. and M.J.; methodology, A.T., M.J. and A.P.-R.; software, M.J.; validation, A.T., M.J. and A.P.-R.; formal analysis, A.T., M.J. and A.P.-R.; investigation, A.T., M.J. and A.P.-R.; data curation, M.J.; writing—original draft preparation, A.T. and M.J.; writing—review and editing, A.T., M.J. and A.P.-R.; visualization, A.T. and M.J.; supervision, A.T., M.J. and A.P.-R.; project administration, A.T.; funding acquisition, A.T. and M.J. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The dataset is available on request from the authors.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- FAO. International Code of Conduct on Pesticide Management. 2025. Available online: https://www.fao.org (accessed on 10 July 2025).

- Bottrell, D.G. Integrated Pest Management; Council on Environmental Quality; U.S. Government Printing Office: Washington, DC, USA, 1979; 120p. [Google Scholar]

- Deguine, J.P.; Aubertot, J.N.; Flor, R.J.; Lescourret, F.; Wyckhuys, K.A.G.; Ratnadass, A. Integrated pest management: Good intentions, hard realities. A review. Agron. Sustain. Dev. 2021, 41, 38. [Google Scholar] [CrossRef]

- Aktar, W.; Sengupta, D.; Chowdhury, A. Impact of pesticides use in agriculture: Their benefits and hazards. Interdiscip. Toxicol. 2009, 2, 1–12. [Google Scholar] [CrossRef] [PubMed]

- Nicolopoulou-Stamati, P.; Maipas, S.; Kotampasi, C.; Stamatis, P.; Hens, L. Chemical Pesticides and Human Health: The Urgent Need for a New Concept in Agriculture. Front. Public Health 2016, 4, 148. [Google Scholar] [CrossRef] [PubMed]

- Piwowar, A. The use of pesticides in Polish agriculture after integrated pest management (IPM) implementation. Environ. Sci. Pollut. Res. 2021, 28, 26628–26642. [Google Scholar] [CrossRef]

- Tratwal, A.; Horoszkiewicz-Janka, J.; Bereś, P.; Walczak, F.; Podleśny, A. Przydatność aplikacji komputerowej do wyznaczania optymalnego terminu zwalczania rdzy brunatnej pszenicy. Zagadnienia Doradz. Rol. 2014, 3, 106–113. [Google Scholar]

- Tratwal, A.; Jakubowska, M.; Baran, M. Online Pest Warning System–Support for Agriculture and Transfer from Science to Practice. In Farm Machinery and Processes Management in Sustainable Agriculture; FMPMSA 2022, Pascuzzi, S., Santoro, F., Eds.; Cham Lecture Notes in Civil Engineering; Springer: Cham, Switzerland, 2022; Volume 289, pp. 209–215. [Google Scholar]

- Jakubowska, M.; Tratwal, A.; Kachel, M. The weather as an indicator for decision-making support systems regarding the control of cutworms in beets and cereal leaf beetles in cereals and their adoption in farming practice. Agron. Sect. Precis. Digit. Agric. 2023, 13, 786. [Google Scholar] [CrossRef]

- Niedbała, G.; Tratwal, A.; Piekutowska, M.; Wojciechowski, T.; Uglis, J. A Framework for Financing Post-Registration Variety Testing System: A Case Study from Poland. Agronomy 2022, 12, 325. [Google Scholar] [CrossRef]

- Gacek, E.; Głazek, M.; Matyjaszczyk, E.; Pruszyński, G.; Pruszyński, S.; Stobiecki, S. Metody Ochrony w Integrowanej Ochronie Roślin; PruszyńskiS., Ed.; Centrum Doradztwa Rolniczego w Brwinowie, Oddział w Poznaniu: Poznań, Poland, 2016; p. 148. [Google Scholar]

- Oliveira, C.M.; Auad, A.M.; Mendes, S.M.; Frizzas, M.R. Crop losses and the economic impact of insect pests on Brazilian agriculture. Crop Prot. 2014, 56, 50–54. [Google Scholar] [CrossRef]

- Rossi, V.; Salinari, F.; Poni, S.; Caffi, T.; Bettati, T. Addressing the implementation problem in agricultural Decision Support Systems: The example of vite.net®. Comput. Electron. Agril. 2014, 100, 88–99. [Google Scholar] [CrossRef]

- Damos, P. Modular structure of web-based Decision Support Systems for integrated pest management. A review. Agron. Sustain. Dev. 2015, 35, 1347–1372. [Google Scholar] [CrossRef]

- Barzman, M.; Bàrberi, P.; Birch, A.N.E.; Boonekamp, P.; Dachbrodt-Saaydeh, S.; Graf, B.; Hommel, B.; Jensen, J.E.; Kiss, J.; Kudsk, P.; et al. Eight principles of integrated pest management. Agron. Sustain. Dev. 2015, 35, 1199–1215. [Google Scholar] [CrossRef]

- Griffey, C.A.; Das, M.K.; Stromberg, E.L. Effectiveness of adult-plant resistance in reducing grain yield loss to powdery mildew in winter wheat. Plant Dis. 1993, 77, 618–622. [Google Scholar] [CrossRef]

- Pietrusińska, A.; Żurek, M. Wpływ mączniaka prawdziwego zbóż i traw na uprawy pszenicy w kontekście zmian klimatu. Biul. IHAR 2024, 301, 45–51. [Google Scholar] [CrossRef]

- Lackermann, K.V.; Conley, S.P.; Gaska, J.M.; Martinka, M.J.; Esker, P.D. Effect of location, cultivar, and diseases on grain yield of soft red winter wheat in Wisconsin. Plant Dis. 2011, 95, 1401–1406. [Google Scholar] [CrossRef]

- Diachenko, G.; Laktionov, I.; Vinyukov, O.; Likhushyna, H. A Decision Support System for Wheat Powdery Mildew Risk Prediction Using Weather Monitoring, Machine Learning and Explainable Artificial Intelligence. Comput. Electron. Agric. 2025, 230, 109905. [Google Scholar] [CrossRef]

- Deiss, F. How to Identify, Prevent, and Treat Powdery Mildew on Plants. CABI BioProtection Portal. 2025. Available online: https://bioprotectionportal.com/resources/how-to-identify-prevent-and-treat-powdery-mildew (accessed on 1 July 2025).

- Singh, R.P.; Singh, P.K.; Rutkoski, J.; Hodson, D.P.; He, X.; Jørgensen, L.N.; Hovmøller, M.S.; Huerta-Espino, J. Disease Impact on Wheat Yield Potential and Prospects of Genetic Control. Annu. Rev. Phytopathol. 2016, 54, 303–322. [Google Scholar] [CrossRef]

- Jørgensen, L.N.; Matzen, N.; Leitzke, R.; Thomas, J.E.; O’Driscoll, A.; Klocke, B.; Maumene, C.; Lindell, I.; Wahlquist, K.; Zemeca, L.; et al. Management of rust in wheat using IPM principles and alternative products. Agriculture 2024, 14, 821. [Google Scholar] [CrossRef]

- Savary, S.; Nelson, A.; Sparks, A.H.; Willocquet, L.; Duveiller, E.; Mahuku, G.; Forbes, G.; Garrett, K.A.; Hodson, D.; Padgham, J.; et al. International agricultural research tackling the effects of global and climate changes on plant diseases in the developing world. Plant Dis. 2011, 95, 1204–1216. [Google Scholar] [CrossRef] [PubMed]

- Morgounov, A.; Tufan, H.A.; Sharma, R.; Akin, B.; Bagci, A.; Braun, H.J.; Kaya, Y.; Keser, M.; Payne, T.S.; Sonder, K.; et al. Global incidence of wheat rust and powdery mildew during 1969–2010 and durability of resistance of winter wheat variety Bezostaya 1. Eur. J. Plant Pathol. 2012, 132, 323–340. [Google Scholar] [CrossRef]

- Kolmer, J. Leaf Rust of Wheat: Pathogen Biology, Variation and Host Resistance. Forests 2013, 4, 70–84. [Google Scholar] [CrossRef]

- Hu, G.; Rijkenberg, F.H. Subcellular localization of beta-1,3-glucanase in Puccinia recondita f sp. tritici-infected wheat leaves. Planta 1998, 204, 324–334. [Google Scholar] [CrossRef] [PubMed]

- Zhang, L.; Meakin, H.; Dickinson, M. Isolation of genes expressed during compatible interactions between leaf rust (Puccinia triticina) and wheat using cDNA-AFLP. Mol. Plant Pathol. 2003, 4, 469–477. [Google Scholar] [CrossRef]

- Rodríguez-Moreno, V.M.; Jiménez-Lagunes, A.; Estrada-Avalos, J.; Mauricio-Ruvalcaba, J.E.; Padilla-Ramírez, J.S. Weather-data-based model: An approach for forecasting leaf and stripe rust on winter wheat. Meteorol. Appl. 2020, 27, e1896. [Google Scholar] [CrossRef]

- Strzembicka, A.; Czajowski, G.; Karska, K. Characteristic of the winter wheat breeding materials in respect of resistance to leaf rust Puccinia triticina. Bull. Plant Breed. Acclim. Inst. 2013, 268, 7–14. [Google Scholar]

- Wójtowicz, A.; Wójtowicz, M.; Ratajkiewicz, H.; Pasternak, M. Prognoza zmian czasu inkubacji sprawcy rdzy brunatnej pszenicy w reakcji na przewidywane ocieplenie klimatu. Fragm. Agron. 2017, 34, 197–207. [Google Scholar]

- El Jarroudi, M.; Delfosse, P.; Maraite, H.; Hoffmann, L.; Tychon, B. Assessing the accuracy of simulation model for Septoria leaf blotch disease progress on winter wheat. Plant Dis. 2009, 93, 983–992. [Google Scholar] [CrossRef] [PubMed]

- Chaloner, T.M.; Fones, H.N.; Varma, V.; Bebber, D.P.; Gurr, S.J. A new mechanistic model of weather-dependent Septoria tritici blotch disease risk. Philos. Trans. R. Soc. B 2019, 374, 20180266. [Google Scholar] [CrossRef]

- Delfosse, P. Assessing the Interplay between Weather and Septoria Leaf Blotch Severity on Lower Leaves on the Disease Risk on Upper Leaves in Winter Wheat. J. Fungi 2022, 8, 1119. [Google Scholar] [CrossRef]

- Te Beest, D.E.; Shaw, M.W.; Paveley, N.D.; van den Bosch, F. Evaluation of a predictive model for Mycosphaerella graminicola for economic and environmental benefits. Plant Pathol. 2009, 58, 1001–1009. [Google Scholar] [CrossRef]

- Te Beest, D.E.; Shaw, M.W.; Pietravalle, S.; van den Bosch, F. A predictive model for early-warning of Septoria leaf blotch on winter wheat. Eur. J. Plant Pathol. 2009, 124, 413–425. [Google Scholar] [CrossRef]

- Hagelskjæ, L.; Jørgensen, L.N. A web-based decision support system for integrated management of cereal pests. EPPO Bull. 2013, 33, 467–471. [Google Scholar] [CrossRef]

- Švarta, A.; Bimšteine, G. Winter wheat leaf diseases and several steps included in their integrated control: A review. Res. Rural Dev. 2019, 25, 55–62. [Google Scholar]

- El Jarroudi, M.; Kouadoi, L.; El Jarroudi, J.; Junk, J.; Bock, C.; Diouf, A.A.; Delfosse, P. Improving fungal disease forecasts in winter wheat: A critical role of intraday variations of meteorological conditions in the development of Septoria leaf blotch. Field Crop Res. 2017, 213, 12–20. [Google Scholar] [CrossRef]

- Aarhus University; SEGES. CPO Model for Mildew in Wheat [Factsheet]. IPM Decisions. Available online: https://www.ipmdecisions.net/media/ryrghkcv/ipm_factsheet-cpo-model-for-mildew-in-wheat_v0001.pdf (accessed on 1 July 2025).

- Thackray, D.J.; Diggle, A.J.; Jones, R.A.C. BYDV PREDICTOR: A simulation model to predict aphid arrival, epidemics of Barley yellow dwarf virus and yield losses in wheat crops in a Mediterranean-type environment. Plant Pathol. 2009, 58, 186–202. [Google Scholar] [CrossRef]

- White, S.; Telling, S.; Griffiths, H.G.; Skirvin, D.J.; Williamson, M.; Ellis, S.; Schaare, T.; Granger, R.E.; Potter, O. Management of Aphid and BYDV Risk in Winter Cereals; Project Report; AHDB: Coventry, UK, 2023; p. 163. [Google Scholar]

- Agriculture and Horticulture Development Board (AHDB). Barley Yellow Dwarf Virus (BYDV) Management Tool. Available online: https://ahdb.org.uk/bydv (accessed on 30 June 2025).

- Plumb, R.T. A rational approach to the control of barley yellow dwarf virus. J. R. Agric. Soc. Engl. 1986, 147, 162–171.

- Węgorek, W. Badania nad pośrednim i bezpośrednim wpływem fotoperiodu na rozwój i fizjologię stonki ziemniaczanej (Leptinotarsa decemlineata Say.). Pr. Nauk. Inst. Ochr. Roślin 1959, 1, 5–35.

- Pawińska, M.; Osowski, J. Wpływ ochrony na jakość bulw. Ziemn. Pol. 1998, 4, 13–21.

- Pawińska, M.; Mrówczyński, M. Występowanie i zwalczanie stonki ziemniaczanej (Leptinotarsa decemlineata Say) w latach 1978–1999. Prog. Plant Prot. 2000, 40, 292–299.

- Wójtowicz, A.; Jörg, E. Prognozowanie rozwoju stonki ziemniaczanej za pomocą programu komputerowego SIMLEP. Prog. Plant Prot. 2004, 44, 538–541.

- Pawińska, M. Historia i kierunki ochrony plantacji ziemniaka przed głównymi agrofagami. Prog. Plant Prot. 2009, 49, 1637–1642.

- Jörg, E.; Racca, P.; Preiß, U.; Butturini, A.; Schmiedl, J.; Wójtowicz, A. Control of Colorado Potato Beetle with the SIMLEP Decision Support System. EPPO Bull. 2007, 37, 353–358.

- Garnis, J.; Dąbrowski, Z.T. Suitability of three methods used in monitoring and establishing presence of Agrotis segetum (Schiff.) by extension staff and vegetable producers. Ann. Warsaw Univ. Life Sci.—SGGW-Hortic. Landsc. Archit. 2008, 29, 19–30.

- Lewandowski, A.; Zjawińska, M. The efficiency of light trapping and pheromone traps for monitoring cutworms (Noctuinae) infesting in the cultivation of carrots and the importance of correctly forecasting. Prog. Plant Prot. 2012, 52, 535–540.

- Jakubowska, M.; Bocianowski, J. Efektywność pułapek feromonowych w monitoringu odłowów Agrotis segetum (Den. et Schiff.) i Agrotis exclamationis (L.) (Lep. Noctuidae) dla potrzeb prognozowania krótkoterminowego. Ann. Univ. Mariae Curie-Skłodowska. Sect. E Agric. 2013, 68, 1–9.

- Legrève, A.; Duveiller, E. Preventing potential diseases and pest epidemics under a changing climate. In Climate Change and Crop Production; Reynolds, M.P., Ed.; CABI Publishing: Wallingford, UK, 2010; pp. 50–70.

- Sparks, A.N.; Riley, D.G.; Kuhar, T.P.; Blackmer, J.; Gonzalez, R. Climate change and pest management: Unanticipated consequences of trophic dislocation. Pest Manag. Sci. 2020, 76, 3855–3860.

- Tilman, D.; Cassman, K.G.; Matson, P.A.; Naylor, R.; Polasky, S. Agricultural sustainability and intensive production practices. Nature 2002, 418, 671–677.

- Czembor, E.; Tratwal, A.; Pukacki, J.; Krystek, M.; Czembor, J.H. Managing fungal pathogens of field crops in sustainable agriculture and AgroVariety internet application as a case study. J. Plant Protect. Res. 2025, 65, 1–26.

- Skendžić, S.; Zovko, M.; Živković, I.P.; Lešić, V.; Lemić, D. The impact of climate change on agricultural insect pests. Insects 2021, 12, 440.

- Bale, J.S.; Masters, G.J.; Hodkinson, I.D.; Awmack, C.; Bezemer, T.M.; Brown, V.K.; Whittaker, J.B. Herbivory in global climate change research: Direct effects of rising temperature on insect herbivores. Glob. Change Biol. 2002, 8, 1–16.

- Parsa, S.; Morse, S.; Bonifacio, A.; Chancellor, T.C.B.; Condori, B.; Crespo-Pérez, V.; Furlong, M.J. Obstacles to integrated pest management adoption in developing countries. Proc. Natl. Acad. Sci. USA 2014, 111, 3889–3894.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).