Abstract

Innovations in specialized navigation systems have become prominent research topics. As an applied science for people with special needs, navigation aids for the visually impaired are a key sociotechnique that helps users to independently navigate and access needed resources indoors and outdoors. This paper adopts the informetric analysis method to assess current research and explore trends in navigation systems for the visually impaired, based on bibliographic records retrieved from the Web of Science Core Collection (WoSCC). A total of 528 relevant publications from 2010 to 2020 were analyzed. This work answers the following questions: What are the publication characteristics and most influential publication sources? Who are the most active and influential authors? What are their research interests and primary contributions to society? What are the featured key studies in the field? What are the most popular topics and research trends, described by keywords? Additionally, we closely investigate renowned works that use different multisensor fusion methods, which are believed to be the bases of upcoming research. The key findings of this work aim to help upcoming researchers move into the field quickly, as they can easily grasp the frontiers and trends of R&D in the research area. Moreover, we suggest that researchers embrace smartphone-based agile development and pay more attention to prominent phone-based frameworks such as ARCore or ARKit to achieve fast prototyping of their proposed systems. This study also provides references for researchers in related fields by highlighting the critical junctures of modern assistive travel aids for people with visual impairments.

1. Introduction

Visual impairment refers to the congenital or acquired impairment of visual function, resulting in decreased visual acuity or an impaired visual field. According to the World Health Organization, approximately 188.5 million people worldwide suffer from mild visual impairment, 217 million from moderate to severe visual impairment, and 36 million people are blind, with the number estimated to reach 114.6 million by 2050 [1]. In daily life, it is challenging for people with visual impairments (PVI) to travel, especially in places they are not familiar with. Although there have been remarkable efforts worldwide toward barrier-free infrastructure construction and ubiquitous services, in most cases people with visual impairments still have to rely on relatives or personal travel aids to navigate. In the post-epidemic era, independent living and independent travel have grown in importance, since people have to maintain a social distance from each other. Thus, research has consistently concentrated on coupling technology and tools with a human-centric design to extend the guidance capabilities of navigation aids.

CiteSpace is a graphical user interface (GUI) bibliometric analysis tool developed by Chen [2], which has been widely adopted to analyze co-occurrence networks with rich elements, including authors, keywords, institutions, countries, and subject categories, as well as cited authors, cited literature, and a citation network of cited journals [3,4]. It has been widely applied to analyze the research features and trends in information science, regenerative medicine, lifecycle assessment, and other active research fields. Burst detection, betweenness centrality, and heterogeneous networks are the three core concepts of CiteSpace, which help to identify research frontiers, influential keywords, and emerging trends, along with sudden changes over time [3]. The visual knowledge graph derived by CiteSpace consists of nodes and relational links. In this graph, the size of a particular node indicates the co-occurrence frequency of an element, the thickness and color of the ring indicate the co-occurrence time slice of this element, and the thickness of a link between two nodes shows how frequently the two elements co-occur [5]. Additionally, the purple circle represents the centrality of an element, and the thicker the purple circle, the stronger the centrality. Nodes with high centrality are usually regarded as turning points or pivotal points in the field [6]. In this work, we answer the following questions: What are the most influential publication sources? Who are the most active and influential authors? What are their research interests and primary contributions to society? What are the featured key studies in the field? What are the most popular topics and research trends, described by keywords?
Moreover, we closely investigate milestone sample works that use different multisensor fusion methods; these works help to illustrate machine perception, intelligence, and human–machine interactions in renowned cases and reveal how frontier technologies influence PVI navigation aids. By conducting narrative studies on representative works with unique multisensor combinations or representative multimodal interaction mechanisms, we aim to enlighten upcoming researchers by illustrating the state-of-the-art multimodal trial works conducted by predecessors.
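
To make the notion of betweenness centrality more concrete, the following minimal sketch computes normalized betweenness centrality on a small undirected graph using Brandes' algorithm. It is an illustration only: the toy graph and its keyword labels are invented for this example and are not taken from the studied dataset, and CiteSpace's own implementation may differ in detail.

```python
from collections import deque


def betweenness_centrality(graph):
    """Normalized betweenness centrality for an undirected, unweighted graph.

    `graph` maps each node to the set of its neighbours.
    """
    centrality = {node: 0.0 for node in graph}
    for source in graph:
        # Breadth-first search from `source`, counting shortest paths.
        stack = []
        predecessors = {node: [] for node in graph}
        sigma = {node: 0 for node in graph}  # number of shortest paths
        sigma[source] = 1
        distance = {source: 0}
        queue = deque([source])
        while queue:
            v = queue.popleft()
            stack.append(v)
            for w in graph[v]:
                if w not in distance:
                    distance[w] = distance[v] + 1
                    queue.append(w)
                if distance[w] == distance[v] + 1:
                    sigma[w] += sigma[v]
                    predecessors[w].append(v)
        # Back-propagate pair dependencies, farthest nodes first.
        delta = {node: 0.0 for node in graph}
        while stack:
            w = stack.pop()
            for v in predecessors[w]:
                delta[v] += sigma[v] / sigma[w] * (1.0 + delta[w])
            if w != source:
                centrality[w] += delta[w]
    n = len(graph)
    # Every unordered pair was counted twice, so divide by (n-1)(n-2).
    scale = 1.0 / ((n - 1) * (n - 2)) if n > 2 else 1.0
    return {node: value * scale for node, value in centrality.items()}


# Hypothetical toy network: a chain of four keywords (illustrative only).
toy_graph = {
    "ultrasonic sensor": {"obstacle detection"},
    "obstacle detection": {"ultrasonic sensor", "navigation"},
    "navigation": {"obstacle detection", "smartphone"},
    "smartphone": {"navigation"},
}
scores = betweenness_centrality(toy_graph)
for keyword, score in sorted(scores.items(), key=lambda item: -item[1]):
    print(f"{keyword}: {score:.2f}")
```

In this toy chain, the two inner nodes lie on the shortest paths between the other nodes and therefore receive the highest scores, which is the sense in which high-centrality nodes act as pivotal points.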

This paper is organized as follows. In the next section, we apply CiteSpace bibliometrics to the Web of Science Core Collection and answer five essential questions about the field. Subsequently, in Section 3, we look closely at and illustrate the renowned works of the field. Finally, Section 4 discusses the key findings and concludes the work.

2. Data Collection and Bibliometric Analysis

2.1. Data Collection

The data for bibliometric analysis were collected from the subset of Clarivate Analytics’ Web of Science Core Collection, which included entries indexed by SCI-EXPANDED, SSCI, A&HCI, CPCI-SSH, CPCI-S, and ESCI. The data retrieval strategy was as follows: TI = (((travel OR mobility OR navigation OR guidance OR guiding OR walking support OR mobile OR wayfinding) AND (system OR aid OR assist OR assistive OR assistant OR assistance OR aid OR aids OR prototype OR device)) AND (blind OR visually impair OR visually challenge OR visual impair OR visual challenge OR visually impaired OR visual impairment OR visual impairments OR vision impaired OR blindness)), where the time span = 2010–2020 (data retrieved on 23 February 2021). A total of 550 references were obtained. The key parameters were set as follows: year per slice (1), term source (all selections), node type (choose one at a time), selection criteria (k = 25), and visualization (cluster view-static, show merged network). Publications on signal processing and control theory were excluded. Note that for most research works on travel aids for visually impaired people, the essential keywords appear in the titles. Thus, the bibliometric search was carried out on the title (TI) rather than the topic (TS).
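
The structure of the title query above (three OR-groups joined by AND) can be sketched as a small string builder. This is a convenience sketch rather than part of the retrieval procedure: the helper names and the group labels `activity_terms`, `artifact_terms`, and `population_terms` are assumptions introduced here. The generated string flattens the nested parentheses and drops the repeated `aid`, neither of which changes the Boolean meaning.

```python
def or_group(terms):
    """Join terms with OR inside parentheses, dropping repeated terms."""
    unique_terms = list(dict.fromkeys(terms))  # keeps first-seen order
    return "(" + " OR ".join(unique_terms) + ")"


def build_title_query(*groups):
    """AND several OR-groups together and restrict the search to titles (TI)."""
    return "TI = (" + " AND ".join(or_group(group) for group in groups) + ")"


# Term lists copied from the retrieval strategy; the variable names are ours.
activity_terms = ["travel", "mobility", "navigation", "guidance", "guiding",
                  "walking support", "mobile", "wayfinding"]
artifact_terms = ["system", "aid", "assist", "assistive", "assistant",
                  "assistance", "aid", "aids", "prototype", "device"]
population_terms = ["blind", "visually impair", "visually challenge",
                    "visual impair", "visual challenge", "visually impaired",
                    "visual impairment", "visual impairments",
                    "vision impaired", "blindness"]

query = build_title_query(activity_terms, artifact_terms, population_terms)
print(query)
```

Keeping the term lists separate from the query string makes it easier to rerun the search with an added synonym or with the topic field (TS) instead of the title field.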

2.2. Bibliometric Analysis of Publication Outputs

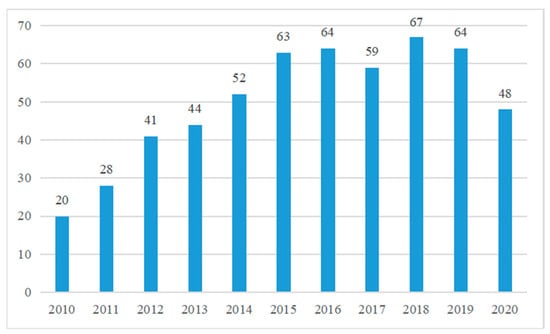

The total number of publications increased over the period studied but with some fluctuations. As shown in Figure 1, the period studied can be divided into two stages: the first stage was from 2010 to 2015 and the second stage was from 2016 to 2020. The first stage was a rapid development period; publication output increased from 20 in 2010 to 63 in 2015. One of the most important reasons was the boom of modern smartphones, led by Apple’s iPhone and Google’s Android phones after 2008. This facilitated the development of ubiquitous and affordable travel aid solutions for people with visual impairment, a trend revealed on the international telecom market and in the later analysis in this work [7]. In the second stage, publication output appears to have developed steadily.

Figure 1. Publication output performance for navigation systems for PVI from 2010 to 2020 in Web of Science.
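
As a rough worked example of the first-stage growth, the two figures quoted in the text (20 publications in 2010 and 63 in 2015) imply a compound annual growth rate of roughly 26%. The sketch below assumes smooth compounding between the two endpoints, which is a simplification, since the actual yearly counts fluctuate.

```python
def compound_annual_growth_rate(start_value, end_value, years):
    """Average yearly growth rate that turns start_value into end_value."""
    return (end_value / start_value) ** (1.0 / years) - 1.0


# Endpoints taken from the text: 20 publications in 2010, 63 in 2015.
rate = compound_annual_growth_rate(20, 63, 2015 - 2010)
print(f"{rate:.1%}")  # about 25.8%
```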

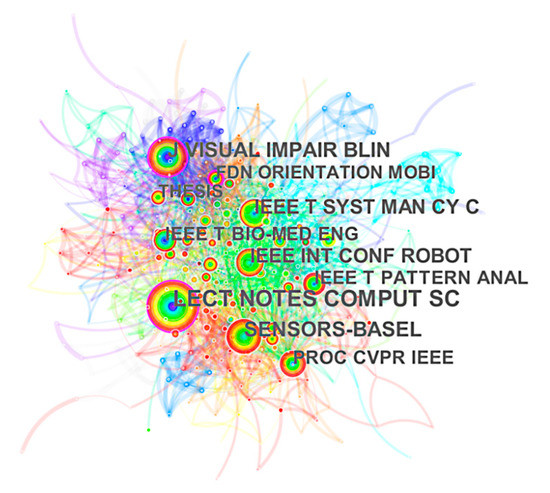

2.3. Most Influential Journals by Co-Citation Journals Map

To further analyze the most popular journals in the development of PVI travel aids, a co-cited journals (proceedings) knowledge map that highlights influential journals was generated. The resulting co-citation journal map has 419 nodes and 2442 links. From Figure 2, the top 10 co-cited journals were Lecture Notes in Computer Science (0.11), Sensors-Basel (0.05), Journal of Visual Impairment & Blindness (0.14), IEEE Transactions on Systems, Man, and Cybernetics-Part C (0.06), IEEE International Conference on Robotics and Automation (0.05), Proceedings of IEEE Conference on Computer Vision and Pattern Recognition (0.07), IEEE Transactions on Biomedical Engineering (0.07), IEEE Transactions on Pattern Analysis and Machine Intelligence (0.05), Foundations of Orientation and Mobility (0.12) and THESIS (0.04). The journals Lecture Notes in Computer Science, Journal of Visual Impairment & Blindness and Foundations of Orientation and Mobility have larger node sizes and higher centrality, at 0.14, 0.15, and 0.12, respectively. Therefore, we suggest that these three journals have high influence in the field of navigation assistance for PVI. In addition, according to the primary focus of the abovementioned journals, it is evident that travel aids for PVI possess interdisciplinary characteristics from both social and natural technological aspects.

Figure 2. Journal co-citation map of studied publications from 2010 to 2020.

2.4. Most Active and Influential Authors by Co-Authorship and Co-Citationship

Active authors are those who published the most documents in the given period. As shown in Figure 3, a co-author map composed of 316 nodes and 197 links was derived. The size of a node indicates the number of articles published by the author, and the lengths of the links between nodes are inversely proportional to the collaboration frequency of author pairs. From the co-author map, we observed that the connections between the most active authors are not intensive. The 10 most active authors were Dragan Ahmetovic, Chieko Asakawa, Akihiro Yamashita, Joao Guerreiro, Edwige Pissaloux, Katsushi Matsubayashi, Cang Ye, Bogdan Mocanu, Kei Sato, and Xiaochen Zhang.

Figure 3. Co-authorship map of studied publications from 2010 to 2020.

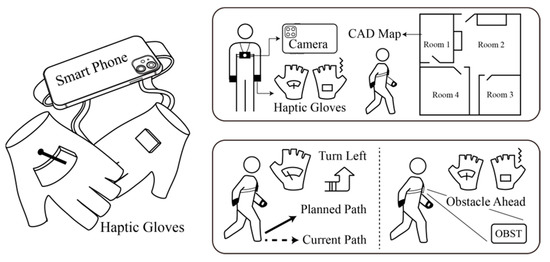

According to the co-authorship map, the most active author in the field is Dragan Ahmetovic, who has been working on computer vision and human–machine interactions for assistive systems. As a leading author, he worked closely with Chieko Asakawa and Joao Guerreiro when he was a research fellow at Carnegie Mellon University. The NavCog system he proposed is a smartphone-based navigation system providing instructions for PVI, both indoors and outdoors. It uses Bluetooth Low Energy (BLE) beacons to collect scene knowledge and instructs users verbally. A considerable number of experimental studies have been conducted in airports [8], campuses [9], and other venues. It is worth mentioning that its later version also displays guidance clues on the phone screen to enhance the instructions for users with residual vision [9,10]. Akihiro Yamashita, who worked closely with Katsushi Matsubayashi and Kei Sato, built a navigation system that uses radio frequency identification devices (RFID) and the quasi-zenith satellite system (QZSS) to reinforce localization. The system uses Microsoft HoloLens to learn the geometric layout of the surroundings, which is necessary to plan feasible paths [11,12,13]. Edwige Pissaloux recently used a framework based on deep convolutional neural networks (Deep CNN) to detect indoor targets [14,15], an essential component for intelligent assistive systems. Cang Ye focused on guiding robots [16,17], while Bogdan Mocanu mainly studied mobile facial recognition, which is intended to support the assistive system [18,19]. The ANSVIP proposed by Xiaochen Zhang uses ARCore-based simultaneous localization and mapping (SLAM) to localize users when GPS is not available, and haptic feedback is designed to ensure that instructions are understood by the users [20].

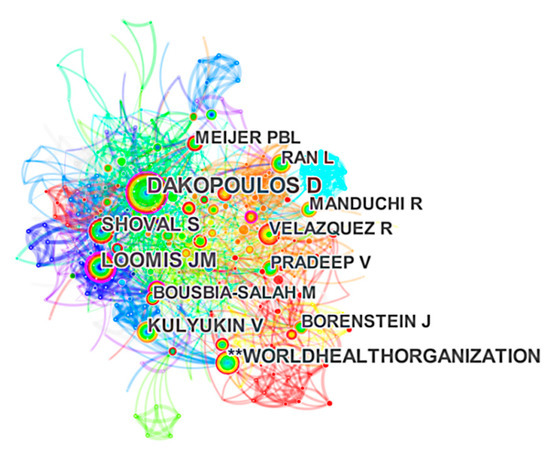

Author co-citations reveal the influential researchers who are leading the hot topics in research, as researchers are likely to cite these works while working on relevant studies. The author co-citation map shown in Figure 4 is composed of 409 nodes and 2365 links. Specifically, each node represents one author, while the node size denotes the corresponding citation counts. For better visualization of the graph, we adjusted the layout of the nodes and removed the anonymous nodes. Thus, the lengths of links no longer indicate relationships between author nodes. According to the co-citation map, the top 10 influential authors were Dimitrios Dakopoulos (0.14), Jack M. Loomis (0.2), Shraga Shoval (0.09), the World Health Organization (0.01), Vladimir Kulyukin (0.06), Vivek Pradeep (0.05), Lisa Ran (0.04), Ramiro Velazquez (0.03), Peter B. L. Meijer (0.03), and Mounir Bousbia-Salah (0.08). According to the centrality of co-citationship, Dimitrios Dakopoulos and Jack M. Loomis are the most influential authors in the field.

Figure 4. Author co-citation map of studied publications from 2010 to 2020.
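
The counting behind a co-citation map can be sketched as follows: two items are co-cited whenever they appear together in the reference list of the same citing paper, and the number of such papers gives the link strength. The reference lists below are invented placeholders (`ref_A`, `ref_B`, ...) rather than records from the studied dataset, and the function name is an assumption made for this sketch.

```python
from collections import Counter
from itertools import combinations


def cocitation_counts(reference_lists):
    """Count how many citing papers list each pair of cited items together."""
    pair_counts = Counter()
    for references in reference_lists:
        # Sort and de-duplicate so each unordered pair has one canonical key.
        for first, second in combinations(sorted(set(references)), 2):
            pair_counts[(first, second)] += 1
    return pair_counts


# Hypothetical reference lists of three citing papers (illustrative only).
citing_papers = [
    ["ref_A", "ref_B", "ref_C"],
    ["ref_A", "ref_B"],
    ["ref_B", "ref_D"],
]
counts = cocitation_counts(citing_papers)
for pair, count in counts.most_common():
    print(pair, count)
```

The same counting applies to cited authors or cited journals once each reference has been mapped to its author or source.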

Specifically, Jack M. Loomis specializes in spatial cognition, vision-free navigation, and augmented reality for assistive technology. He proposed a guided eye system using GPS, GIS, and virtual acoustics components. As a user with the guided eye system moves, descriptive speech is generated by a voice synthesizer. Furthermore, the user is expected to be able to identify the orientation of each described element, since the environmental information is conveyed by stereo or spatialized sound [21].

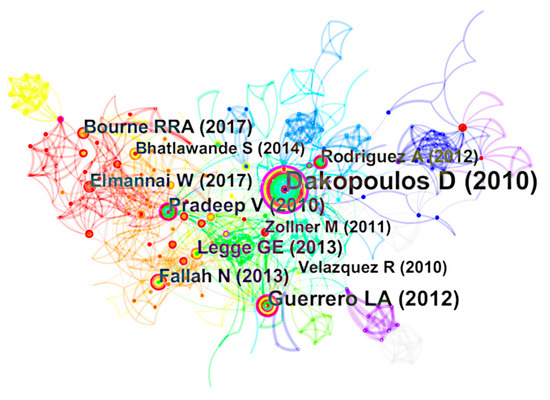

2.5. Key References by Co-Citation

Analyzing the citation frequency and centrality of co-cited references helps to clarify the key literature of state-of-the-art works in the field. As shown in Figure 5, the reference co-citation map has 455 nodes and 1518 links. Table 1 shows the details of the top 10 co-cited references ranked by citation frequency. In terms of centrality, the key works are by Dakopoulos (2010), Guerrero (2012), Pradeep (2010), and Rodriguez (2012).

Figure 5. Reference co-citation map of studied publications from 2010 to 2020.

Table 1. Top 10 co-cited references of studied publications from 2010 to 2020.

Dakopoulos categorized indoor navigation systems into audio feedback, tactile feedback, and without interface, according to their human–computer interaction, and evaluated a sample of each type based on its structure and operating specifications. He claimed that the human–computer interactions of travel aids must strive to be hands-free, nonauditory, and wearable [22]. The system proposed by Guerrero was composed of infrared cameras, infrared lights, a computer, and a smartphone. When the infrared camera, installed indoors, scans the infrared lights embedded in the cane, the vision system identifies the user’s position with respect to the environmental coordinate system and guides the user accordingly via voice messages cast by the smartphone [23].

Another key work, by Pradeep, introduced a polynomial system utilizing trifocal tensor geometry and a quaternion representation of rotation matrices, from which camera motion parameters can be extracted in the presence of noise [24]. Rodriguez used stereovision to achieve obstacle detection. It is worth noting that RANSAC and bone conduction technology were applied to enhance the optimization of localization and the user experience of human–machine interaction [25].

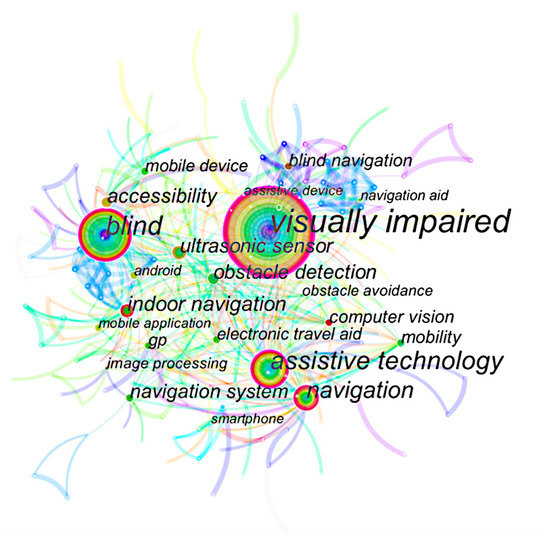

2.6. Primary Topics and Research Hotspots by Keyword Co-Occurrence

The keyword co-occurrence knowledge map reflects the primary topics and hotspots over time [31]. By setting the term source to author keywords/descriptors (DE), a keyword co-occurrence map was generated with 364 nodes and 682 links, where each node represents one keyword and its size indicates the corresponding co-occurrence frequency, as shown in Figure 6. Table 2 lists the top 20 keywords in terms of co-occurrence frequency and centrality. As shown in the table, the most frequent keywords were ‘visually impaired’, ‘blind’, ‘assistive technology’, ‘navigation’, ‘ultrasonic sensor’, ‘accessibility’, ‘indoor navigation’, ‘obstacle detection’, ‘navigation system’, ‘blind navigation’, ‘computer vision’, ‘mobility’, ‘gps’ (shown as ‘gp’ in Figure 6), ‘mobile device’, ‘image processing’, ‘electronic travel aid’, ‘navigation aid’, ‘android’, ‘mobile application’, and ‘assistive device’. The ‘visually impaired’ consisted of eight similar elements, which were ‘visually impaired’, ‘visual impairment’, ‘visually impaired people’, ‘visualimpairmen’, ‘blind and visually impaired’, ‘blind and visually impaired’, ‘visual impaired’, and ‘visually impaired people (vip)’. The terms ‘blind’, ‘blindness’, ‘blind people’, and ‘blind user’ together formed the element ‘blind’. Moreover, ‘Assistive technology’ and ‘assistive technology’ were combined as ‘assistive technology’; ‘Computer vision’ and ‘computervision’ were combined as ‘computer vision’; ‘ultrasonicsensor’ and ‘ultrasonic sensor’ were combined as ‘ultrasonic sensor’.

Figure 6. Keyword co-occurrence map of studied publications from 2010 to 2020.

Table 2. Top 20 keywords by co-occurrence.
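
The merging of keyword variants described above can be sketched as a lookup table applied before counting. The variant-to-canonical pairs below are the ones named in the text; the lowercasing step, the function names, and the sample records are assumptions made for this illustration and do not describe the exact procedure used in CiteSpace.

```python
from collections import Counter

# Variant spellings mapped to the merged keyword, as listed in the text.
CANONICAL = {
    "visual impairment": "visually impaired",
    "visually impaired people": "visually impaired",
    "visualimpairmen": "visually impaired",
    "blind and visually impaired": "visually impaired",
    "visual impaired": "visually impaired",
    "visually impaired people (vip)": "visually impaired",
    "blindness": "blind",
    "blind people": "blind",
    "blind user": "blind",
    "computervision": "computer vision",
    "ultrasonicsensor": "ultrasonic sensor",
}


def normalize(keyword):
    """Lowercase a keyword and map known variants to their merged form."""
    key = keyword.strip().lower()
    return CANONICAL.get(key, key)


def keyword_frequencies(records):
    """Count the number of records in which each merged keyword appears."""
    counts = Counter()
    for keywords in records:
        # A set ensures a keyword is counted once per record after merging.
        counts.update({normalize(keyword) for keyword in keywords})
    return counts


# Hypothetical author-keyword lists of three records (illustrative only).
sample_records = [
    ["Computer vision", "blind people"],
    ["computervision", "Blindness", "blind user"],
    ["ultrasonicsensor", "visualimpairmen"],
]
frequencies = keyword_frequencies(sample_records)
print(frequencies.most_common())
```

Lowercasing handles case-only variants such as ‘Assistive technology’ and ‘assistive technology’ without needing an explicit table entry.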

We categorize the top 20 keywords into four topics, namely user, goal, requirement, and technology, as shown in Table 2. User refers to the research population targeted, goal emphasizes the outputs, requirement indicates the user needs to be met during the process, and technology represents the specific scientific and engineering means used to achieve the goal. According to the co-occurrence of keywords, it is evident that researchers have been taking advantage of interdisciplinary technologies to empower navigation aids for PVI, but less work has considered social–technical issues such as human–machine cooperation theory, usability engineering, feasibility analysis, and user experience.

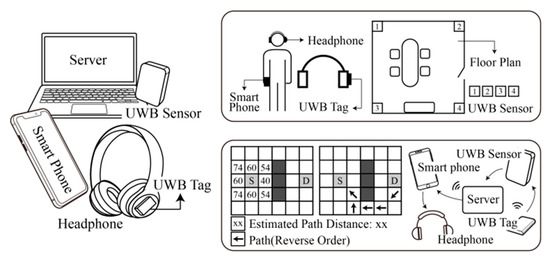

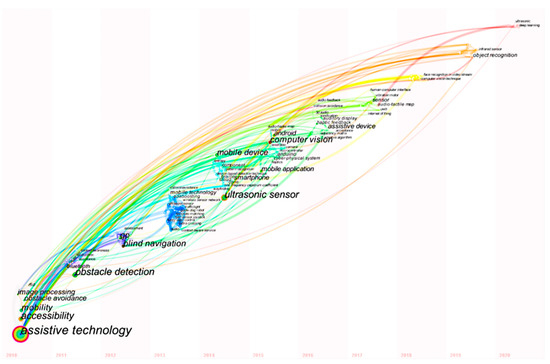

The timezone map of the keywords for requirement and technology is shown in Figure 7. Comprehensive analysis of Table 2 and Figure 7 helps to identify the research hotspots in navigation assistance for PVI over the past decade. In terms of technology, ‘assistive technology’ can be regarded as a general term, so the keyword appears more frequently and stands as a root of the map in Figure 7. Subsequently, specific technologies emerged for services with explicit demands, such as ultrasonic sensors [32], infrared sensors [33], computer vision [34], ultrawideband (UWB) [35], and GPS [36] to collect environmental information; image processing [37], convolutional neural networks (CNN) [38], and deep learning [14] to achieve advanced intelligence; and stereo audio [39], audio–tactile maps [40], and haptic feedback [41] to bridge humans and machines. From the perspective of requirement, ‘accessibility’, ‘mobility’, and ‘wearable’ are among the most popular keywords. The specific requirements of the system include: (1) detecting, recognizing, and avoiding objects [42,43]; (2) situational awareness [44]; (3) smartphone-based [45] mobile applications [46]; and (4) audio [47] and haptic feedback [48]. Furthermore, it can be seen in Figure 7 that the research hotspots in the last three years are CNN, assistive wearable devices, facial recognition in video streaming, infrared sensors, object recognition, and deep learning. To summarize, works with artificial intelligence such as neural networks and deep learning have emerged as hot topics in navigation aids for PVI.

Figure 7. Map of timezone view of keywords for topics by requirement and technology.

The timezone map presents the development process over a period, thereby providing insights to predict the future trend of the studied research field. Specifically, for scene understanding during the period 2010 to 2015, systems mainly used Bluetooth, RFID, and ultrasonic sensors to obtain environmental data. After 2015, computer-vision-based approaches became more popular for situational awareness. Furthermore, with respect to functionalities, the primary focus shifted from simple obstacle avoidance to more complicated mixed functionalities borrowed from autonomous mobile robotics to achieve better navigation. Concerning hardware, since 2008, mobile phones have gradually become a sensible option, and the flexibility and compatibility of smartphone apps have injected vitality into the research community. As a result, the development of smartphone-based approaches increased remarkably over time, and its growth rate reached a peak by 2015. Smartphone-based approaches are still thriving and stable. This also explains why the publications studied are divided into two stages. According to Figure 7, more artificial intelligence approaches such as deep learning and neural networks appear as later nodes. Therefore, we propose that the trend towards autonomy and intelligence is unstoppable, while the primary device chosen as the carrier of navigation aids will still be the smartphone. On the basis of ensuring safe roaming, new methodologies such as vision-based cognitive systems [49] and social semantics [50] are gaining more attention as ways to comprehensively improve the quality of users’ daily lives.
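
The idea behind a timezone view, namely placing each keyword in the time slice where it first appears, can be sketched in a few lines. The records below use placeholder keywords and invented years purely for illustration; they are not the data behind Figure 7, and the function names are assumptions made for this sketch.

```python
from collections import defaultdict


def first_appearance(records):
    """Map each keyword to the earliest publication year in which it occurs."""
    first_year = {}
    for year, keywords in records:
        for keyword in keywords:
            if keyword not in first_year or year < first_year[keyword]:
                first_year[keyword] = year
    return first_year


def timezone_columns(records):
    """Group keywords by first-appearance year, ordered by year."""
    columns = defaultdict(list)
    for keyword, year in first_appearance(records).items():
        columns[year].append(keyword)
    return {year: sorted(words) for year, words in sorted(columns.items())}


# Hypothetical (year, keywords) records; names and years are invented.
sample_records = [
    (2012, ["keyword_a", "keyword_b"]),
    (2010, ["keyword_a"]),
    (2016, ["keyword_b", "keyword_c"]),
    (2019, ["keyword_c", "keyword_d"]),
]
for year, words in timezone_columns(sample_records).items():
    print(year, words)
```

Reading the columns from left to right then shows which keywords entered the literature later, which is how later nodes are interpreted as emerging topics.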

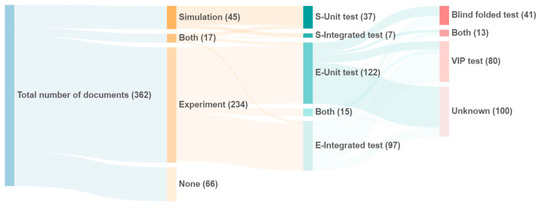

According to the bibliometric study, we have answered the following questions: What are the most influential publication sources? Who are the most active and influential authors? What are their research interests and primary contributions to society? What are the featured key studies in the field? What are the most popular topics and research trends, described by keywords? However, bibliometric analysis has its shortcomings since the results do not provide sufficient detailed insights due to two internal causes. First, the conclusions lack applicability, as they do not take a closer look into sample milestone works. Second, it is weak at identifying pioneering works using epoch-making approaches, as the influence of citation and hotspot requires more time to emerge. Thus, we have conducted a narrative study that facilitates further examination into works with unique multisensor combinations or representative multimodal interaction mechanisms.

4. Discussion and Conclusions

In this work, a bibliometric study using CiteSpace was carried out on research works concerning PVI navigation aids. We answered the following questions: What are the publication characteristics and most influential publication sources? Who are the most active and influential authors? What are their research interests and primary contributions to society? What are the featured key studies in the field? What are the most popular topics and research trends, described by keywords? Since bibliometric analysis responds slowly to changes in research focus and lacks closer views of milestone and representative works, we conducted a narrative illustration of remarkable works with different system architectures and sensory combinations for achieving modern navigation aids. The narrative study categorizes the works by their primary focus and takes ten representative works as illustrative examples to show the distinct influential sensory combinations and mechanisms applied.

The main findings follow. First, as an applied science, navigation aids research and development periodically reflects the status of frontier science and the economic rules of electronic products that accompany the development of personal computing devices and services; the carriers of navigation aids have gradually shifted from specially manufactured equipment to ubiquitous personal devices, e.g., PDAs, computers, and, currently, smartphones. Second, research and development works focus less on fundamental theories; the most influential journals of this field share the scope of sensory technology, assistive technology, robotics, and human–machine interactions. Third, the most active authors are with institutions in the USA and Japan. Cooperation between leading authors results in high publication output and quality. Fourth, the key references reflect the fact that wearable and compact aids, two essential means of usability engineering, are drawing more attention and adoption by researchers. Fifth, hybrid functionalities supported by computer vision and multimodal sensors appear to gain more focus than conventional obstacle avoidance. Moreover, the latest progress in science and technology, such as artificial intelligence, robotics simultaneous localization and mapping, and stereo multimodal feedback, allows navigation aids to be more powerful, compact, efficient, and user-friendly. Finally, there have been numerous attempts using different sensor combinations and distinct multimodal human–machine interactions to realize navigation aids.

However, there is no clear conclusion that establishes the best way to achieve navigation aids with comprehensive consideration of affordability, usability, and sufficient functionality. One reason is that the field lacks a recognized guideline or benchmark for these features. Thus, besides the scientific and engineering efforts, the next stage of research and development of PVI navigation aids may require additional efforts on user-centric factors to make systems user-friendly and practical in terms of affordability and usability, and on working cooperatively with leading research institutions and dominant organizations (WHO, World Health Organization, or RESNA, Rehabilitation Engineering and Assistive Technology Society of North America) to jointly propose recommended standards and unified evaluation indicators for the research field.

As can be seen, although interdisciplinary scientific advances offer significant potential benefits for PVI travel aids, a large number of under-researched areas remain. Based on the literature, advanced sensing, multimodal perception, autonomous navigation planning, and human–machine interactions are among the most viable and practical supportive components for modern PVI travel aids. Based on our study of the literature and insights gained from users, a lightweight system is clearly preferred to a highly coupled complex system. Moreover, in addition to scientific contributions and advances, the human-centric principle is also an essential concern.

By comprehensively considering usability, feasibility, and development and technology adoption costs, we believe that the use of smartphones as the primary carrier, including the use of their onboard sensors with optional accessories, appears to be a clear trend for the upcoming research and development of navigation aids for PVI.

Specifically, for researchers and developers in this field, we subjectively but constructively suggest embracing the agile development principle in realizing navigation systems for PVI. That is, rather than undertaking all development from scratch, we believe a more sensible approach is to build a fast prototype with open interfaces, such as Google ARCore or Apple ARKit, in addition to other compatible and powerful technologies that encompass positioning, perception, navigation, and artificial intelligence in a shell. After the conceptual system is verified by the function-equivalent rapid prototype, the team could further explore and expand the potential of the smartphone-based system or move to dedicated frameworks, which are considered to be less efficient in terms of time and effort, in addition to being commercially expensive.

Although most papers do not discuss the stability and compatibility of their works, we understand how easily and frequently navigation-aid systems crash or fail in practice. We highly recommend frameworks such as ARCore and ARKit, because they are engineering innovations that achieve stable mapping and positioning (repositioning) through numerous scientific and engineering efforts of their R&D teams. These technologies were developed for multiplayer applications in a shared physical space, which is consistent with the needs of PVI navigation, and are termed sensor-based positioning, sparse feature SLAM, and human–machine systems. In addition, the development process is simpler than that of conventional approaches; that is, more intuitively understandable and powerful cross-platform development environments are emerging, such as Unity3D and Xcode. These are user-friendly approaches for researchers and college students.

Given the increasing popularity of smartphones, we believe that ‘smartphone+’ will be the dominant form of future PVI travel aids due to its various merits, such as integrated and well-calibrated sensors, powerful processing capability, ease of use, and on-the-move intelligent interfaces, which are endorsed by prominent teams; lower development cost; and, primarily, high rates of ownership and a minimum additional learning burden.

Author Contributions

Conceptualization, X.Z., F.S., and F.H.; methodology, X.Y. and F.S.; software, X.Y.; validation, L.H., and X.Y.; resources, X.Z.; data curation, X.Z. and L.H.; writing—original draft preparation, X.Y. and L.H.; writing—review and editing, X.Z., F.S., and F.H.; visualization, X.Y. and L.H.; supervision, X.Z. and F.H.; project administration, X.Z. and F.H.; funding acquisition, X.Z. and F.H. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Humanity and Social Science Youth Foundation of the Ministry of Education of China, grant numbers 18YJCZH249 and 19YJC760109; the Guangzhou Science and Technology Planning Project, grant number 201904010241; and the ‘Design Science and Art Research Center’ of the Guangdong Social Science Research Base.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data sharing not applicable.

Acknowledgments

We thank MDPI for its linguistic assistance during the preparation of this manuscript.

Conflicts of Interest

The authors declare no conflict of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript; or in the decision to publish the results.

References

- Bourne, R.R.; Flaxman, S.R.; Braithwaite, T.; Cicinelli, M.V.; Das, A.; Jonas, J.B.; Keeffe, J.; Kempen, J.H.; Leasher, J.; Limburg, H.; et al. Magnitude, temporal trends, and projections of the global prevalence of blindness and distance and near vision impairment: A systematic review and meta-analysis. Lancet Glob. Health 2017, 5, e888–e897.

- Ping, Q.; He, J.; Chen, C. How many ways to use CiteSpace? A study of user interactive events over 14 months. J. Assoc. Inf. Sci. Technol. 2017, 68, 1234–1256.

- Chen, C. CiteSpace II: Detecting and visualizing emerging trends and transient patterns in scientific literature. J. Am. Soc. Inf. Sci. Technol. 2006, 57, 359–377.

- Liu, Z.; Yin, Y.; Liu, W.; Dunford, M. Visualizing the intellectual structure and evolution of innovation systems research: A bibliometric analysis. Scientometrics 2015, 103, 135–158.

- Chen, C.; Hu, Z.; Liu, S.; Tseng, H. Emerging trends in regenerative medicine: A scientometric analysis in CiteSpace. Expert Opin. Biol. Ther. 2012, 12, 593–608.

- Chen, C. The centrality of pivotal points in the evolution of scientific networks. In Proceedings of the 10th International Conference on Intelligent User Interfaces, San Diego, CA, USA, 10–13 January 2005; pp. 98–105.

- Del Rosario, M.B.; Redmond, S.J.; Lovell, N.H. Tracking the evolution of smartphone sensing for monitoring human movement. Sensors 2015, 15, 18901–18933.

- Guerreiro, J.; Ahmetovic, D.; Sato, D.; Kitani, K.M.; Asakawa, C. Airport accessibility and navigation assistance for people with visual impairments. In Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems, Glasgow, UK, 4–9 May 2019; pp. 1–14.

- Sato, D.; Oh, U.; Guerreiro, J.; Ahmetovic, D.; Naito, K.; Takagi, H.; Kitani, K.M.; Asakawa, C. NavCog3 in the wild: Large-scale blind indoor navigation assistant with semantic features. ACM Trans. Access. Comput. 2019, 12, 1–30.

- Ahmetovic, D.; Gleason, C.; Kitani, K.M.; Takagi, H.; Asakawa, C. NavCog: Turn-by-turn smartphone navigation assistant for people with visual impairments or blindness. In Proceedings of the 13th Web for All Conference, Montreal, QC, Canada, 11–13 April 2016; pp. 1–2.

- Sato, K.; Yamashita, A.; Matsubayashi, K. Development of a navigation system for the visually impaired and the substantiative experiment. In Proceedings of the 2016 Fifth ICT International Student Project Conference (ICT-ISPC), Nakhon Pathom, Thailand, 27–28 May 2016; pp. 141–144.

- Endo, Y.; Sato, K.; Yamashita, A.; Matsubayashi, K. Indoor positioning and obstacle detection for visually impaired navigation system based on LSD-SLAM. In Proceedings of the 2017 International Conference on Biometrics and Kansei Engineering (ICBAKE), Kyoto Sangyo University, Kyoto, Japan, 15–17 September 2017; pp. 158–162.

- Yamashita, A.; Sato, K.; Sato, S.; Matsubayashi, K. Pedestrian navigation system for visually impaired people using hololens and RFID. In Proceedings of the 2017 Conference on Technologies and Applications of Artificial Intelligence (TAAI), Taipei, Taiwan, 1–3 December 2017; pp. 130–135.

- Afif, M.; Ayachi, R.; Said, Y.; Pissaloux, E.; Atri, M. An evaluation of retinanet on indoor object detection for blind and visually impaired persons assistance navigation. Neural Process. Lett. 2020, 51, 1–15.

- Afif, M.; Ayachi, R.; Pissaloux, E.; Said, Y.; Atri, M. Indoor objects detection and recognition for an ICT mobility assistance of visually impaired people. Multimed. Tools Appl. 2020, 79, 1–18. [Google Scholar] [CrossRef]

- Ye, C.; Qian, X. 3-D object recognition of a robotic navigation aid for the visually impaired. IEEE Trans. Neural Syst. Rehabil. Eng. 2017, 26, 441–450. [Google Scholar] [CrossRef]

- Zhang, H.; Ye, C. Human-Robot Interaction for Assisted Wayfinding of a Robotic Navigation Aid for the Blind. In Proceedings of the 12th International Conference on Human System Interaction (HSI), Richmond, VA, USA, 25–27 June 2019; pp. 137–142. [Google Scholar]

- Tapu, R.; Mocanu, B.; Zaharia, T. Face recognition in video streams for mobile assistive devices dedicated to visually impaired. In Proceedings of the 2018 14th International Conference on Signal-Image Technology & Internet-Based Systems (SITIS), Las Palmas de Gran Canaria, Spain, 26–29 November 2018; pp. 137–142. [Google Scholar]

- Mocanu, B.; Tapu, R.; Zaharia, T. Deep-see face: A mobile face recognition system dedicated to visually impaired people. IEEE Access 2018, 6, 51975–51985. [Google Scholar] [CrossRef]

- Zhang, X.; Yao, X.; Zhu, Y.; Hu, F. An ARCore based user centric assistive navigation system for visually impaired people. Appl. Sci. 2019, 9, 989. [Google Scholar] [CrossRef]

- Loomis, J.M.; Klatzky, R.L.; Golledge, R.G. Navigating without vision: Basic and applied research. Optom. Vis. Sci. 2001, 78, 282–289. [Google Scholar] [CrossRef]

- Dakopoulos, D.; Bourbakis, N.G. Wearable obstacle avoidance electronic travel aids for blind: A survey. IEEE Trans. Syst. Man Cybern. Part C 2010, 40, 25–35. [Google Scholar] [CrossRef]

- Guerrero, L.A.; Vasquez, F.; Ochoa, S.F. An indoor navigation system for the visually impaired. Sensors 2012, 12, 8236–8258. [Google Scholar] [CrossRef] [PubMed]

- Pradeep, V.; Lim, J. Egomotion using assorted features. In Proceedings of the 2010 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, San Francisco, CA, USA, 13–18 June 2010; pp. 1514–1521. [Google Scholar]

- Rodríguez, A.; Yebes, J.J.; Alcantarilla, P.F.; Bergasa, L.M.; Almazán, J.; Cela, A. Assisting the visually impaired: Obstacle detection and warning system by acoustic feedback. Sensors 2012, 12, 17476–17496. [Google Scholar] [CrossRef]

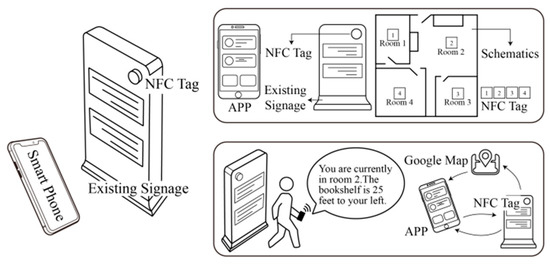

- Legge, G.E.; Beckmann, P.J.; Tjan, B.S.; Havey, G.; Kramer, K.; Rolkosky, D.; Gage, R.; Chen, M.; Puchakayala, S.; Rangarajan, A. Indoor navigation by people with visual impairment using a digital sign system. PLoS ONE 2013, 8, e76783. [Google Scholar] [CrossRef] [PubMed]

- Fallah, N.; Apostolopoulos, I.; Bekris, K.; Folmer, E. Indoor human navigation systems: A survey. Interact. Comput. 2013, 25, 21–33. [Google Scholar]

- Elmannai, W.; Elleithy, K. Sensor-based assistive devices for visually-impaired people: Current status, challenges, and future directions. Sensors 2017, 17, 565. [Google Scholar] [CrossRef] [PubMed]

- Zöllner, M.; Huber, S.; Jetter, H.-C.; Reiterer, H. NAVI—A proof-of-concept of a mobile navigational aid for visually impaired based on the microsoft kinect. In IFIP Conference on Human-Computer Interaction; Springer: Berlin, Germany, 2011; pp. 584–587. [Google Scholar]

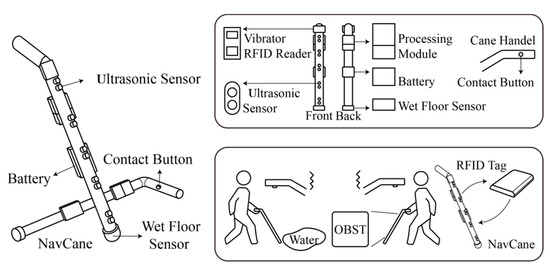

- Bhatlawande, S.; Mahadevappa, M.; Mukherjee, J.; Biswas, M.; Das, D.; Gupta, S. Design, development, and clinical evaluation of the electronic mobility cane for vision rehabilitation. IEEE Trans. Neural Syst. Rehabil. Eng. 2014, 22, 1148–1159. [Google Scholar] [CrossRef]

- Yu, D.; Xu, Z.; Pedrycz, W.; Wang, W. Information Sciences 1968–2016: A retrospective analysis with text mining and bibliometric. Inf. Sci. 2017, 418, 619–634. [Google Scholar] [CrossRef]

- Kumar, K.; Champaty, B.; Uvanesh, K.; Chachan, R.; Pal, K.; Anis, A. Development of an ultrasonic cane as a navigation aid for the blind people. In Proceedings of the 2014 International Conference on Control, Instrumentation, Communication and Computational Technologies (ICCICCT), Kanyakumari, India, 10–11 July 2014; pp. 475–479. [Google Scholar]

- Marzec, P.; Kos, A. Low Energy Precise Navigation System for the Blind with Infrared Sensors. In Proceedings of the 2019 MIXDES-26th International Conference “Mixed Design of Integrated Circuits and Systems”, Rzeszów, Poland, 27–29 June 2019; pp. 394–397. [Google Scholar]

- Chaccour, K.; Badr, G. Computer vision guidance system for indoor navigation of visually impaired people. In Proceedings of the 2016 IEEE 8th International Conference on Intelligent Systems (IS), Sofia, Bulgaria, 4–6 September 2016; pp. 449–454. [Google Scholar]

- Ma, J.; Zheng, J. High precision blind navigation system based on haptic and spatial cognition. In Proceedings of the 2017 2nd International Conference on Image, Vision and Computing (ICIVC), Chengdu, China, 2–4 June 2017; pp. 956–959. [Google Scholar]

- Prudhvi, B.; Bagani, R. Silicon eyes: GPS-GSM based navigation assistant for visually impaired using capacitive touch braille keypad and smart SMS facility. In Proceedings of the 2013 World Congress on Computer and Information Technology (WCCIT), Sousse, Tunisia, 22–24 June 2013; pp. 1–3. [Google Scholar]

- Filipe, V.; Fernandes, F.; Fernandes, H.; Sousa, A.; Paredes, H.; Barroso, J. Blind navigation support system based on Microsoft Kinect. Procedia Comput. Sci. 2012, 14, 94–101. [Google Scholar] [CrossRef]

- Hsieh, Y.-Z.; Lin, S.-S.; Xu, F.-X. Development of a wearable guide device based on convolutional neural network for blind or visually impaired persons. Multimed. Tools Appl. 2020, 79, 29473–29491. [Google Scholar] [CrossRef]

- Matsuda, K.; Kondo, K. Towards an accurate route guidance system for the visually impaired using 3D audio. In Proceedings of the 2017 IEEE 6th Global Conference on Consumer Electronics (GCCE), Nagoya, Japan, 24–27 October 2017; pp. 1–2. [Google Scholar]

- Papadopoulos, K.; Koustriava, E.; Koukourikos, P. Orientation and mobility aids for individuals with blindness: Verbal description vs. audio-tactile map. Assist. Technol. 2018, 30, 191–200. [Google Scholar] [CrossRef] [PubMed]

- Ahlmark, D.I.; Prellwitz, M.; Röding, J.; Nyberg, L.; Hyyppä, K. An initial field trial of a haptic navigation system for persons with a visual impairment. J. Assist. Technol. 2015, 9, 199–206. [Google Scholar] [CrossRef]

- Costa, P.; Fernandes, H.; Martins, P.; Barroso, J.; Hadjileontiadis, L.J. Obstacle detection using stereo imaging to assist the navigation of visually impaired people. Procedia Comput. Sci. 2012, 14, 83–93. [Google Scholar] [CrossRef]

- Milotta, F.L.; Allegra, D.; Stanco, F.; Farinella, G.M. An electronic travel aid to assist blind and visually impaired people to avoid obstacles. In International Conference on Computer Analysis of Images and Patterns; Springer: Berlin, Germany, 2015; pp. 604–615. [Google Scholar]

- Silva, C.S.; Wimalaratne, P. Context-aware Assistive Indoor Navigation of Visually Impaired Persons. Sens. Mater. 2020, 32, 1497–1509. [Google Scholar] [CrossRef]

- Carbonara, S.; Guaragnella, C. Efficient stairs detection algorithm Assisted navigation for vision impaired people. In Proceedings of the 2014 IEEE International Symposium on Innovations in Intelligent Systems and Applications (INISTA), Alberobello, Italy, 23–25 June 2014; pp. 313–318. [Google Scholar]

- Chaccour, K.; Badr, G. Novel indoor navigation system for visually impaired and blind people. In Proceedings of the 2015 International Conference on Applied Research in Computer Science and Engineering (ICAR), Beirut, Lebanon, 8–9 October 2015; pp. 1–5. [Google Scholar]

- Laubhan, K.; Trent, M.; Root, B.; Abdelgawad, A.; Yelamarthi, K. A wearable portable electronic travel aid for blind. In Proceedings of the 2016 International Conference on Electrical, Electronics, and Optimization Techniques (ICEEOT), Chennai, India, 3–5 March 2016; pp. 1999–2003. [Google Scholar]

- Loconsole, C.; Dehkordi, M.B.; Sotgiu, E.; Fontana, M.; Bergamasco, M.; Frisoli, A. An IMU and RFID-based navigation system providing vibrotactile feedback for visually impaired people. In International Conference on Human Haptic Sensing and Touch Enabled Computer Applications; Springer: Berlin, Germany, 2016; pp. 360–370. [Google Scholar]

- Nair, V.; Budhai, M.; Olmschenk, G.; Seiple, W.H.; Zhu, Z. ASSIST: Personalized indoor navigation via multimodal sensors and high-level semantic information. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018. [Google Scholar]

- Ntakolia, C.; Dimas, G.; Iakovidis, D.K. User-centered system design for assisted navigation of visually impaired individuals in outdoor cultural environments. Univers. Access Inf. Soc. 2020, 20, 1–26. [Google Scholar]

- Katz, B.F.G.; Kammoun, S.; Parseihian, G.; Gutierrez, O.; Brilhault, A.; Auvray, M.; Truillet, P.; Denis, M.; Thorpe, S.; Jouffrais, C. NAVIG: Augmented reality guidance system for the visually impaired. Virtual Real. 2012, 16, 253–269. [Google Scholar] [CrossRef]

- Gelmuda, W.; Kos, A. Multichannel ultrasonic range finder for blind people navigation. Bull. Pol. Acad. Sci. 2013, 61, 633–637. [Google Scholar] [CrossRef]

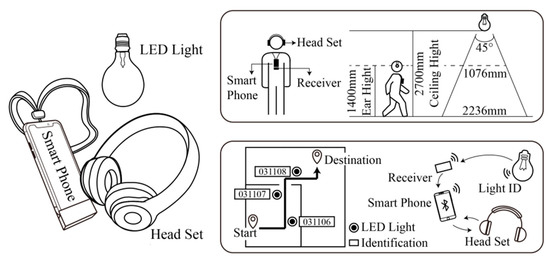

- Nakajima, M.; Haruyama, S. New indoor navigation system for visually impaired people using visible light communication. EURASIP J. Wirel. Commun. Netw. 2013, 2013, 37. [Google Scholar] [CrossRef]

- Praveen, R.G.; Paily, R.P. Blind navigation assistance for visually impaired based on local depth hypothesis from a single image. Procedia Eng. 2013, 64, 351–360. [Google Scholar] [CrossRef]

- Bourbakis, N.; Makrogiannis, S.K.; Dakopoulos, D. A system-prototype representing 3D space via alternative-sensing for visually impaired navigation. IEEE Sens. J. 2013, 13, 2535–2547. [Google Scholar] [CrossRef]

- Aladren, A.; López-Nicolás, G.; Puig, L.; Guerrero, J.J. Navigation assistance for the visually impaired using RGB-D sensor with range expansion. IEEE Syst. J. 2014, 10, 922–932. [Google Scholar] [CrossRef]

- Martinez-Sala, A.S.; Losilla, F.; Sánchez-Aarnoutse, J.C.; García-Haro, J. Design, implementation and evaluation of an indoor navigation system for visually impaired people. Sensors 2015, 15, 32168–32187. [Google Scholar] [CrossRef] [PubMed]

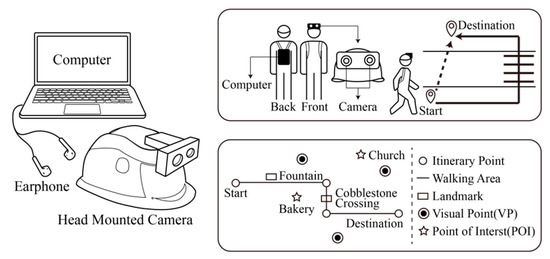

- Xiao, J.; Joseph, S.L.; Zhang, X.; Li, B.; Li, X.; Zhang, J. An assistive navigation framework for the visually impaired. IEEE Trans. Hum.-Mach. Syst. 2015, 45, 635–640. [Google Scholar] [CrossRef]

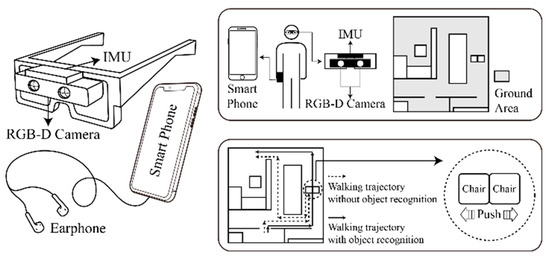

- Li, B.; Munoz, J.P.; Rong, X.; Xiao, J.; Tian, Y.; Arditi, A. ISANA: Wearable context-aware indoor assistive navigation with obstacle avoidance for the blind. In European Conference on Computer Vision; Springer: Berlin, Germany, 2016; pp. 448–462. [Google Scholar]

- Ganz, A.; Schafer, J.; Tao, Y.; Yang, Z.; Sanderson, C.; Haile, L. PERCEPT navigation for visually impaired in large transportation hubs. J. Technol. Pers. Disabil. 2018, 6, 336–353. [Google Scholar]

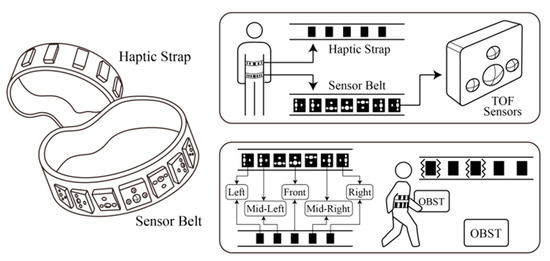

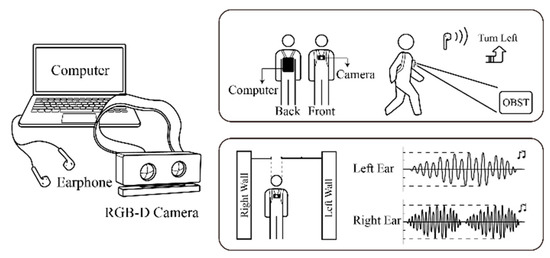

- Katzschmann, R.K.; Araki, B.; Rus, D. Safe local navigation for visually impaired users with a time-of-flight and haptic feedback device. IEEE Trans. Neural Syst. Rehabil. Eng. 2018, 26, 583–593. [Google Scholar] [CrossRef]

- Meshram, V.V.; Patil, K.; Meshram, V.A.; Shu, F.C. An astute assistive device for mobility and object recognition for visually impaired people. IEEE Trans. Hum.-Mach. Syst. 2019, 49, 449–460. [Google Scholar] [CrossRef]

- Bai, J.; Liu, Z.; Lin, Y.; Li, Y.; Lian, S.; Liu, D. Wearable travel aid for environment perception and navigation of visually impaired people. Electronics 2019, 8, 697. [Google Scholar] [CrossRef]

- Barontini, F.; Catalano, M.G.; Pallottino, L.; Leporini, B.; Bianchi, M. Integrating wearable haptics and obstacle avoidance for the visually impaired in indoor navigation: A user-centered approach. IEEE Trans. Haptics 2020, 14, 109–122. [Google Scholar] [CrossRef] [PubMed]

- Nair, V.; Olmschenk, G.; Seiple, W.H.; Zhu, Z. ASSIST: Evaluating the usability and performance of an indoor navigation assistant for blind and visually impaired people. Assist. Technol. 2020, 1–11. [Google Scholar] [CrossRef] [PubMed]

- Duh, P.J.; Sung, Y.C.; Chiang, L.Y.F.; Chang, Y.J.; Chen, K.W. V-eye: A vision-based navigation system for the visually impaired. IEEE Trans. Multimed. 2020, 23, 1567–1580. [Google Scholar] [CrossRef]

- Rodrigo-Salazar, L.; Gonzalez-Carrasco, I.; Garcia-Ramirez, A.R. An IoT-based contribution to improve mobility of the visually impaired in Smart Cities. Computing 2021, 103, 1233–1254. [Google Scholar] [CrossRef]

- Mishra, G.; Ahluwalia, U.; Praharaj, K.; Prasad, S. RF and RFID based Object Identification and Navigation system for the Visually Impaired. In Proceedings of the 2019 32nd International Conference on VLSI Design and 2019 18th International Conference on Embedded Systems (VLSID), Delhi, India, 5–9 January 2019; pp. 533–534. [Google Scholar]

- Ganz, A.; Schafer, J.M.; Tao, Y.; Wilson, C.; Robertson, M. PERCEPT-II: Smartphone based indoor navigation system for the blind. In Proceedings of the 2014 36th annual international conference of the IEEE engineering in medicine and biology society, Chicago, IL, USA, 26–30 August 2014; pp. 3662–3665. [Google Scholar]

- Kahraman, M.; Turhan, C. An intelligent indoor guidance and navigation system for the visually impaired. Assist. Technol. 2021, 1–9. [Google Scholar] [CrossRef]

- Zhang, X.; Zhang, H.; Zhang, L.; Zhu, Y.; Hu, F. Double-Diamond Model-Based Orientation Guidance in Wearable Human–Machine Navigation Systems for Blind and Visually Impaired People. Sensors 2019, 19, 4670. [Google Scholar] [CrossRef] [PubMed]

- Nakajima, M.; Haruyama, S. Indoor navigation system for visually impaired people using visible light communication and compensated geomagnetic sensing. In Proceedings of the 2012 1st IEEE International Conference on Communications in China (ICCC), Beijing, China, 15–17 August 2012; pp. 524–529. [Google Scholar]

- Li, B.; Munoz, J.P.; Rong, X.; Chen, Q.; Xiao, J.; Tian, Y.; Arditi, A.; Yousuf, M. Vision-based mobile indoor assistive navigation aid for blind people. IEEE Trans. Mob. Comput. 2018, 18, 702–714. [Google Scholar] [CrossRef] [PubMed]

- Katz, B.F.G.; Truillet, P.; Thorpe, S.; Jouffrais, C. NAVIG: Navigation assisted by artificial vision and GNSS. In Proceedings of the Workshop on Multimodal Location Based Techniques for Extreme Navigation, Helsinki, Finland, 17 May 2010; pp. 1–4. [Google Scholar]

- Katz, B.F.G.; Dramas, F.; Parseihian, G.; Gutierrez, O.; Kammoun, S.; Brilhault, A.; Brunet, L.; Gallay, M.; Oriola, B.; Auvray, M. NAVIG: Guidance system for the visually impaired using virtual augmented reality. Technol. Disabil. 2012, 24, 163–178. [Google Scholar] [CrossRef]

- Bai, J.; Lian, S.; Liu, Z.; Wang, K.; Liu, D. Smart guiding glasses for visually impaired people in indoor environment. IEEE Trans. Consum. Electron. 2017, 63, 258–266. [Google Scholar] [CrossRef]

- Bai, J.; Lian, S.; Liu, Z.; Wang, K.; Liu, D. Virtual-blind-road following-based wearable navigation device for blind people. IEEE Trans. Consum. Electron. 2018, 64, 136–143. [Google Scholar] [CrossRef]

- Pełczyński, P.; Ostrowski, B. Automatic calibration of stereoscopic cameras in an electronic travel aid for the blind. Metrol. Meas. Syst. 2013, 20, 229–238. [Google Scholar] [CrossRef]

- Song, M.; Ryu, W.; Yang, A.; Kim, J.; Shin, B.S. Combined scheduling of ultrasound and GPS signals in a wearable ZigBee-based guidance system for the blind. In Proceedings of the 2010 Digest of Technical Papers International Conference on Consumer Electronics (ICCE), Las Vegas, NV, USA, 9–13 January 2010; pp. 13–14. [Google Scholar]

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).