Abstract

Digital learning competence (DLC) can help students learn effectively in digital learning environments. However, most studies in the literature have focused on digital competencies in general without paying specific attention to learning. Therefore, this paper developed a DLC framework based on a comprehensive literature review, which consists of six dimensions, namely technology use, cognitive processing, digital reading skill, time management, peer management and will management. This study then developed a scale to assess these competencies, and 3473 middle school students participated in the scale validation process. Specifically, exploratory factor analysis, confirmatory factor analysis and item discrimination were used to validate this scale. The six dimensions accounted for 58.66% of the total variance of the scale, and the overall internal consistency coefficient (Cronbach’s alpha) of the scale was 0.94. The results showed that the developed DLC scale is a valid and reliable instrument for assessing middle school students’ digital learning competence. By providing a new validated scale, the findings of this study can help teachers and specialists assess learners, identify their DLC weaknesses and provide the needed training accordingly.

1. Introduction

1.1. Digital Learning Competencies

In recent years, digital technologies have been widely used across society and adopted at all levels of education [1]. With the infusion of technology, the educational system has been digitalized [2]. Learning environments have shifted from physical to blended environments where learning can take place both online and offline. Learning content has shifted from paper-based formats to a mix of digital and physical formats. Learners themselves now belong to a digital generation (variously called Digital Learners [3], Digital Natives [4,5,6], the Net Generation [7], Millennials [8] and Generation Z [9]). The rapid advancement of technology in recent years has changed learning behaviors, and the increasing focus on informal learning has become one of several challenges faced by current educational systems [10].

Digital competence has been interpreted in various ways (e.g., digital literacy, digital capacity, e-literacy, e-skills, e-competence, computer literacy and media literacy) in policy documents, in academic literature, and in teaching, learning and certification practices [11]. The International Computer and Information Literacy Study (ICILS) defines digital literacy/competence as an individual’s ability to use computers to investigate, create and communicate in order to participate effectively at home, at school, in the workplace and in the community [12]. However, different fields require different (digital) competencies. For instance, the competencies required in education differ from those required in finance or health. Additionally, education as a field draws on several sub-fields, including pedagogy, educational technology and instructional design. There is therefore particular interest in investigating digital competencies for learning specifically. In line with this, several organizations, such as UNESCO and the OECD [13], have called for investigations of digital learning competencies. For example, UNESCO’s report A Global Framework of Reference on Digital Literacy Skills emphasized learning with technology as the key digital literacy [14]. The National Conference of State Legislatures (NCSL) integrated “the ability to use digital tools safely and effectively for learning” into the concept of digital competence/literacy [15]. Brolpito [16] emphasized the importance of digital competence for online learning throughout life. Huang et al. [17] pointed out that the COVID-19 pandemic has further emphasized the need for students to acquire digital learning competencies so that they can cope with a rapidly changing world. However, the current literature has not focused in depth on digital learning competence specifically.

Learning competence refers to the ways in which individuals (and organizations as groups of individuals) are able to recognize, absorb and use knowledge [18]. The term conveys that organisms are able to learn, that something enables them to do so, and that this something (which is actually a multitude of things) varies across individuals [19]. It is generally believed that learning competence comprises three elements: goal (motivation), will and ability (knowledge and practice) [20]. This implies that a person’s learning competence depends entirely on whether the person has a clear goal, a strong will and a wealth of theoretical knowledge, as well as plenty of practical experience. Based on this, Digital Learning Competence (DLC) is defined in this paper as a set of knowledge, skills and attitudes that enable students to learn efficiently and effectively using digital tools in digital learning environments.

1.2. Research Gap and Objectives

Against this background, several studies have explored digital competence frameworks, but these frameworks have not been sufficiently discussed from the perspective of students’ learning. For example, Ferrari [21] described a framework for developing and understanding digital competence in Europe, which involves five areas, namely information, communication, content creation, safety and problem-solving. The self-assessment grid within this framework gives each European citizen a chance to evaluate his/her own level of digital competence. Cartelli [22] also developed a framework for digital competence assessment based on three dimensions: cognitive, affective and socio-relational. While digital competence frameworks have been discussed in different fields, limited attention has been paid to pedagogic contexts. In particular, most studies that focused on digital competence in education paid attention to teachers’ competence to integrate digital tools into their pedagogical and administrative work [23]. For instance, the Norwegian Centre for ICT in Education introduced a professional digital competence framework for teachers consisting of seven competence areas, including subjects and basic skills, change and development, and interaction and communication [23]. However, with the spread of COVID-19, students all over the world were affected by school closures, and face-to-face classes were shifted to online courses. During that time, not only teachers’ digital competencies but also students’ digital learning competencies needed to be improved [17]. In José Sá and Serpa’s research [24], educators’ scores for facilitating learners’ digital competence were the lowest compared with their scores for professional competencies and digital pedagogic competencies. Clearly, exploring students’ digital learning competencies is needed more than ever.

Additionally, most of the instruments developed to assess digital competencies were tested with limited sample sizes. For example, only 127 participants completed the questionnaire in José Sá and Serpa’s research [24], and 268 students participated in Cartelli’s research [22]. Therefore, to address these two research gaps, this study contributes to the extant literature by creating a DLC framework based on a systematic research method, namely a comprehensive literature review. It then uses a large sample to develop and validate a scale based on this framework to assess students’ DLC. Specifically, this paper answers the following two research questions:

- RQ1. What learning competencies are needed by students for digital learning?

- RQ2. How can students’ DLC be assessed?

The rest of the paper is structured as follows: Section 2 answers the first research question by creating a Digital Learning Competence (DLC) framework, while Section 3 answers the second research question by developing and validating a scale that assesses the digital learning competencies identified in RQ1. Section 4 discusses the obtained results. Finally, Section 5 concludes the paper with a summary of the findings, research implications and future directions.

2. Creation of the DLC Framework

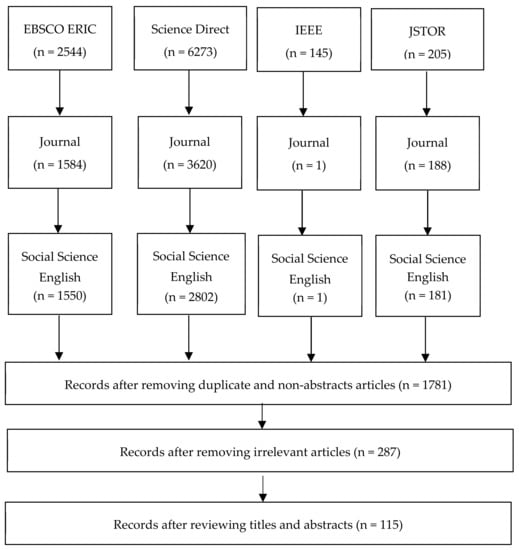

To answer the first research question, two authors of this paper (themselves educators and educational technology researchers) conducted a comprehensive review aimed at identifying the learning competencies needed in digital environments. Several search keywords were used, including “digital competency”, “digital literacy”, “information literacy”, “online learning competencies”, “digital learning competencies”, “e-learning competencies” and “cyberlearning competencies”. The search was conducted in Google Scholar and in several electronic databases, including ScienceDirect, IEEE Xplore and JSTOR. The following exclusion criteria were applied: (1) papers not written in English; (2) book chapters, white papers and conference papers; and (3) papers that do not provide a comprehensive description of the possible needed digital learning competencies. After preliminary screening, 1781 journal articles were retained. Based on abstract screening, 1553 redundant articles and articles that did not meet the inclusion/exclusion criteria were removed. Finally, two researchers went through the full text of the remaining 287 articles, and 115 articles were retained for this study. Figure 1 presents the literature review process.

Figure 1.

Process of the literature review.

The collected DLC were then grouped into categories using a card sorting method. Card sorting is used to organize information and improve its architecture. It is an established method for knowledge elicitation that creates categories from collected information, and it has been widely applied in several fields, including education, psychology, robotics, knowledge engineering, software engineering and website design [25,26,27]. To ensure the reliability of the final categories, two researchers in this study participated in the categorization process based on the definitions of DLC reported in the reviewed studies. Based on the competencies extracted from the literature review and considering the three elements of DLC in digital learning environments presented above (goal, will and ability), a DLC framework of six competencies was developed, as shown in Table 1. Each of these competencies is discussed below.

Table 1.

Framework of digital learning competence.

Technology Use: UNESCO [28] highlighted that students should use various networked devices, digital resources and electronic environments to learn, and proposed the ICT Competency Framework for Teachers. However, Rolf, Knutsson and Ramberg [29] stated that the appropriate use of technology within applied teaching practices is still a challenge. Several researchers have therefore highlighted that learners’ abilities to use technology should be one of teachers’ concerns [30,31]. Similarly, Beetham [32] stated that “No technologies should be introduced to the learning situation without consideration of learners’ confidence and competence in their use” (p. 43). Accordingly, 63% of teachers were found to focus specifically on developing their learners’ ICT skills [30]. However, students still lack the required ICT skills, despite the rapid digitalization of society and working life [33,34]. Therefore, in the DLC framework, technology use assesses students’ abilities to learn, work and innovate using different technologies, including technology selection, media understanding and evaluation, and creation and communication using digital media.

Cognitive Processing: Eagleman [35] defined cognitive processing as the way different pieces of information are gathered and used meaningfully in a specific situation (e.g., while learning). Because today’s students have grown up with digital technology and the Internet, it has been argued that cognitive development in the era of digital learning is influenced by technology [36]. Weinstein, Madan and Sumeracki [37] further noted that cognitive processing should be considered in education to increase learning efficiency, especially in this new era. Moreover, digital technologies such as mixed reality have been shown to amplify the role of learners’ cognitive processing [38,39], which indicates that cognitive processing in digital environments should be taken into consideration when evaluating digital learning competency. Therefore, in this framework, cognitive processing assesses students’ abilities to process information during learning, including generating ideas, rehearsing, elaborating, organizing and reflecting.

Digital Reading Skill: The Internet offers students different learning scenarios by giving access to information in different formats (e.g., text, video or audio) from different sources (websites, blogs, social networks, etc.). In these scenarios, students have to deal with a huge number of available information sources, as well as different information formats of varying reliability [40]. Salmerón, Strømsø, Kammerer, Stadtler and van den Broek further stated that digital reading skill, including searching and navigation, information assessment and integration, is important for making effective use of these learning scenarios. Researchers have pointed out that readers may face reading problems when exposed to complex information environments [41,42]. Therefore, in the DLC framework, digital reading skill assesses students’ reading abilities and covers the skills needed in digital learning, namely surveying, questioning, reading actively, reciting and reviewing.

Time Management: In the digital learning era, several educators now use self-regulated learning strategies in their teaching, whereby students control their learning process and are self-oriented rather than controlled by the teacher. Time management is especially crucial in online learning environments, where students are expected to be autonomous and self-directed learners [43]. Therefore, in the DLC framework, time management assesses students’ ability to use time effectively, including setting goals, prioritizing learning tasks, scheduling and feedback.

Peer Management: Peer-to-peer management refers to a group of students who share a common spoken language working together to work through different educational concepts [44]. Hegarty [45] noted that digital learning is facilitated not only by teachers but also by peers. Therefore, in the DLC framework, peer management assesses students’ ability to collaborate with peers to achieve goals, including building mutual trust, influencing and guiding others, negotiating and resolving conflicts.

Will Management: Kyndt, Onghena, Smet and Dochy [46] stated that providing learning opportunities to learners is not enough, as the willingness to take up these opportunities is the first crucial step towards learning [47,48]. Rubin’s pioneering study [49] listed several features of a good learner, including willingness to guess, make mistakes, communicate and monitor one’s own speech and that of others. Additionally, learners are expected to become more willing to take responsibility for their own learning in order to develop continuously [50]. This is especially true in digital learning, where teachers are mostly absent and no face-to-face instruction is provided (i.e., teachers cannot continuously motivate and guide students). Therefore, in the DLC framework, will management assesses students’ self-management of learning motivation, including learning beliefs, motivation and self-efficacy.

Based on the proposed framework in Table 1, and to answer the second research question, a 5-point Likert scale was developed and validated for the assessment of digital learning competence, as discussed in the next section.
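As an illustration of how responses to such a 5-point Likert scale might be turned into per-dimension scores, the following Python sketch averages a respondent’s answers within each dimension and reports the lowest-scoring one, which is the kind of DLC weakness a teacher might target. The item identifiers (`TU1`, `CP1`, etc.), the two-items-per-dimension mapping and the use of unweighted means are hypothetical assumptions for illustration only; the actual items and their dimensions are those listed in Table 2.

```python
from statistics import mean

# Hypothetical item IDs and item-to-dimension mapping (two items per dimension
# for brevity); the actual 39 items and their dimensions are given in Table 2.
DIMENSIONS = {
    "technology_use": ["TU1", "TU2"],
    "cognitive_processing": ["CP1", "CP2"],
    "digital_reading": ["DR1", "DR2"],
    "time_management": ["TM1", "TM2"],
    "peer_management": ["PM1", "PM2"],
    "will_management": ["WM1", "WM2"],
}


def dimension_scores(responses, dimensions=DIMENSIONS):
    """Return the unweighted mean of 5-point Likert responses for each dimension."""
    for item, value in responses.items():
        # Each answer must be one of the five Likert points (1 = lowest, 5 = highest).
        if value not in (1, 2, 3, 4, 5):
            raise ValueError(f"{item}: {value!r} is not a valid 5-point response")
    return {dim: mean(responses[item] for item in items)
            for dim, items in dimensions.items()}


def weakest_dimension(scores):
    """Return the dimension with the lowest mean score (a candidate for training)."""
    return min(scores, key=scores.get)


answers = {"TU1": 4, "TU2": 5, "CP1": 4, "CP2": 4, "DR1": 3, "DR2": 4,
           "TM1": 2, "TM2": 3, "PM1": 4, "PM2": 5, "WM1": 4, "WM2": 3}
scores = dimension_scores(answers)
print(weakest_dimension(scores))  # time_management
```

Unweighted means keep every item equally important within its dimension; a weighted scheme (e.g., using factor loadings) would be an equally plausible alternative.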

3. Validation of the DLC Scale

An experiment was conducted to answer the second research question, and the obtained results are reported in the following subsections.

3.1. Experimental Procedure

In the first stage, a 5-point Likert scale with 52 original items was developed based on several validated instruments. For instance, the items of the “technology use” dimension drew on the VARK questionnaire [51], the European Digital Competence Framework (EDCF) [52] and the Global Framework of Reference on Digital Literacy Skills for Indicator 4.4.2 [14]. The items of the “cognitive processing” and “will management” dimensions were adapted from the Motivated Strategies for Learning Questionnaire (MSLQ) [53] and the Learning and Study Strategies Inventory (LASSI) [54]. The items of the “digital reading” dimension were based on the SQ3R method [55]. The items of the “time management” dimension were adapted from the Adolescence Time Management Scale (ATMS) by Huang [56]. Finally, the items of the “peer management” dimension were compiled by the authors according to the interpersonal skill requirements of collaborative learning [57].

Ten experts in the fields of learning technology, education and psychology then assessed the scale by rating how well each item measured its construct, on a range from “not measuring” to “total measuring”. The results showed that only 41 of the 52 items were rated as “total measuring”. Specifically, 9 items were deleted, mainly those adapted from Teo’s [58] digital native assessment scale, because most experts considered that these items evaluated technology use preferences rather than competence. Additionally, 2 items were deleted because the experts viewed them as highly correlated with other items in the same dimension.

Afterwards, a large-scale pilot study was conducted with 1000 middle school students. After Exploratory Factor Analysis (EFA) was applied, two more items were deleted (marked with * in Table 2) because they had cross-loadings with a factor loading difference of less than 0.15 [59]. The resulting Digital Learning Competence Scale (DLCS) contained 39 items, as shown in Table 2.
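The cross-loading criterion can be expressed as a simple check on each item’s two largest absolute loadings. The Python sketch below is a minimal illustration, assuming a rotated loading matrix stored as a dictionary of per-item rows with at least two factors each; the item names and loading values are hypothetical and are not taken from this study’s data.

```python
def flag_cross_loadings(loadings, min_gap=0.15):
    """Flag items whose two largest absolute loadings differ by less than min_gap.

    `loadings` maps an item name to its row of rotated factor loadings
    (at least two factors per row).
    """
    flagged = []
    for item, row in loadings.items():
        # Compare magnitudes, since the sign of a loading does not affect its strength.
        first, second = sorted((abs(x) for x in row), reverse=True)[:2]
        if first - second < min_gap:
            flagged.append(item)
    return flagged


# Hypothetical loadings on three factors, for illustration only.
example = {
    "item_a": [0.72, 0.18, 0.10],
    "item_b": [0.48, 0.41, 0.05],   # difference 0.07 -> cross-loading
    "item_c": [0.15, 0.66, -0.30],
}
print(flag_cross_loadings(example))  # ['item_b']
```

In practice this check is often combined with a minimum primary loading, but the text only states the 0.15 difference rule, so the sketch implements that rule alone.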

Table 2.

The final scale with 39 items.

Finally, a series of small-scale pilot trials with high school students was conducted to improve the accuracy, clarity and parsimony of each item, taking into account the input of several middle school students who read the full questionnaire. For instance, the item “When I read the newspaper and magazine, I can transfer the title of the article into questions” was changed to “When I read the newspaper and magazine, I can transfer the title of the article into questions, in order to guide the following reading”.

In the final stage, data were collected from a sample of 3473 middle school students (1560 male, 1913 female; aged 13–14) from 38 schools. Students were given 30 min to complete the online questionnaire in computer classrooms under teacher guidance. Both cluster and convenience sampling were used. Cluster sampling was used to survey 3700 Grade 8 students from 100 classes in 38 schools distributed evenly across 7 districts of Beijing, accounting for about one-sixth of all Grade 8 students in Beijing. Within each school, 3 or 4 classes were then selected by convenience sampling.

3.2. Data Analysis

SPSS 21.0 and AMOS 22.0 were used to analyze the collected data. Exploratory factor analysis (EFA) was used to determine the factor structure, and confirmatory factor analysis (CFA) was applied to establish the structural validity of the scale. Sampling adequacy for the factor analysis was also assessed. There were no missing values in the data. The normality of the data was examined by inspecting skewness and kurtosis. The skewness (technology use = −0.397; cognitive processing = −0.680; digital reading = −0.180; time management = −0.240; peer management = −0.367; will management = −0.525) and kurtosis (technology use = 0.512; cognitive processing = 1.787; digital reading = 0.579; time management = 0.434; peer management = 0.698; will management = 0.648) values were within the recommended cut-offs of |3| and |10| for skewness and kurtosis, respectively [60], indicating that the data were approximately normally distributed. The Kaiser-Meyer-Olkin (KMO) measure (0.957) and Bartlett’s Test of Sphericity (χ2 (3473) = 69043.803, p < 0.001) further showed that the data were appropriate for factor analysis.
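The normality screen can be reproduced directly from the skewness and kurtosis values reported above. The Python sketch below checks each dimension against the |3| and |10| cut-offs and also includes a simple moment-based estimator of skewness and excess kurtosis for raw scores; this estimator is an assumption for illustration and may differ slightly from the sample-size-adjusted statistics reported by SPSS.

```python
from statistics import fmean

# Skewness and kurtosis values reported for the six dimensions.
SKEWNESS = {"technology_use": -0.397, "cognitive_processing": -0.680,
            "digital_reading": -0.180, "time_management": -0.240,
            "peer_management": -0.367, "will_management": -0.525}
KURTOSIS = {"technology_use": 0.512, "cognitive_processing": 1.787,
            "digital_reading": 0.579, "time_management": 0.434,
            "peer_management": 0.698, "will_management": 0.648}


def within_cutoffs(skew, kurt, skew_cut=3.0, kurt_cut=10.0):
    """Return, per dimension, whether |skewness| <= skew_cut and |kurtosis| <= kurt_cut."""
    return {dim: abs(skew[dim]) <= skew_cut and abs(kurt[dim]) <= kurt_cut
            for dim in skew}


def moment_skew_kurtosis(values):
    """Moment-based skewness and excess kurtosis of a list of scores.

    No small-sample correction is applied; `values` must be a list (it is
    iterated several times) and must not be constant.
    """
    m = fmean(values)
    m2 = fmean((x - m) ** 2 for x in values)
    m3 = fmean((x - m) ** 3 for x in values)
    m4 = fmean((x - m) ** 4 for x in values)
    return m3 / m2 ** 1.5, m4 / m2 ** 2 - 3.0


print(all(within_cutoffs(SKEWNESS, KURTOSIS).values()))  # True
```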

3.3. Results

3.3.1. Exploratory Factor Analysis

A principal component analysis with varimax rotation was used to explore the factor structure of the collected data. The six factors accounted for 58.66% of the total variance, and the overall Cronbach’s alpha of the scale was 0.94. Cronbach’s alpha values for the subscales ranged from 0.736 to 0.894, as shown in Table 3. The first factor, technology use, with an eigenvalue of 12.811, included nine items (Cronbach’s alpha = 0.870). The second factor, cognitive processing, with an eigenvalue of 3.520, included seven items (Cronbach’s alpha = 0.894). The third factor, digital reading skill, with an eigenvalue of 2.176, included seven items (Cronbach’s alpha = 0.867). The fourth factor, peer management, with an eigenvalue of 1.827, included six items (Cronbach’s alpha = 0.886). The fifth factor, time management, with an eigenvalue of 1.360, included six items (Cronbach’s alpha = 0.858). The sixth factor, will management, with an eigenvalue of 1.181, included five items (Cronbach’s alpha = 0.736). All six factors had eigenvalues greater than 1 and can therefore be considered representative [61]. The alpha values of all constructs are above the threshold of 0.7 recommended by Nunnally [62].
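Two of the quantities above can be reproduced or checked with a few lines of Python. The sketch below implements the standard Cronbach’s alpha formula, α = k/(k − 1) × (1 − Σσᵢ²/σ²ₜₒₜₐₗ), and computes the share of total variance explained from the reported eigenvalues, assuming these are eigenvalues of the 39 × 39 item correlation matrix (whose total variance equals the number of items). Under that assumption, the six eigenvalues sum to 22.875, or about 58.65% of 39, which agrees with the reported 58.66% up to rounding.

```python
from statistics import pvariance


def cronbach_alpha(items):
    """Cronbach's alpha from item scores.

    `items` is a list of k columns; each column holds one item's scores for
    the same respondents in the same order.
    """
    k = len(items)
    if k < 2:
        raise ValueError("alpha needs at least two items")
    totals = [sum(scores) for scores in zip(*items)]       # each respondent's total score
    item_variance = sum(pvariance(col) for col in items)   # sum of item variances
    # Population variances are used throughout; the n/(n - 1) factor cancels in the ratio.
    return k / (k - 1) * (1 - item_variance / pvariance(totals))


def percent_variance_explained(eigenvalues, n_items):
    """Share of total variance (in %) for components of a correlation-matrix PCA."""
    return 100 * sum(eigenvalues) / n_items


# Eigenvalues of the six retained factors as reported in the text.
EIGENVALUES = [12.811, 3.520, 2.176, 1.827, 1.360, 1.181]
print(round(percent_variance_explained(EIGENVALUES, 39), 2))  # 58.65
print(all(ev > 1 for ev in EIGENVALUES))                       # True (Kaiser criterion)
```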

Table 3.

Factor loading and Cronbach’s alpha values for the six factors.

3.3.2. Confirmatory Factor Analysis

To further explore the relations among the six constructs, and to substantiate the structure of the scale, CFA was conducted in AMOS 22.

As shown in Table 4, CFA yielded satisfactory indices (χ2 (682) = 6336.345, p < 0.001; RMSEA = 0.049; CFI = 0.918; GFI = 0.901; NFI = 0.909; TLI = 0.910), indicating that the six-factor model obtained from EFA in SPSS 21.0 fit the data well. As noted by Jöreskog and Sörbom [63], the p value of the chi-square test (an absolute fit index) can be disregarded when the sample size is greater than 200. Hou, Wen and Cheng [64] also noted that χ2/df is easily affected by sample size; therefore, χ2/df is not reported here because of the large sample.
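The reported RMSEA can be cross-checked from the chi-square statistic, its degrees of freedom and the sample size. The Python sketch below uses the common point-estimate formula RMSEA = √(max(χ² − df, 0) / (df × (N − 1))); whether AMOS uses N or N − 1 in the denominator is an assumption not stated in the text, but with N = 3473 the difference is negligible. With χ² = 6336.345, df = 682 and N = 3473, the formula gives about 0.0489, consistent with the reported value of 0.049.

```python
from math import sqrt


def rmsea(chi2, df, n):
    """Point estimate of RMSEA from the model chi-square, its df and sample size n."""
    # Misfit beyond what df alone would predict, scaled per degree of freedom and per case.
    return sqrt(max(chi2 - df, 0.0) / (df * (n - 1)))


# Values reported for the six-factor CFA model.
print(round(rmsea(6336.345, 682, 3473), 4))  # 0.0489
```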

Table 4.

Model fit measurement statistics.

3.3.3. Convergent and Discriminant Validity

In addition to the model fit indices, composite reliability (CR) and average variance extracted (AVE), both of which can be obtained from CFA, were estimated to examine the validity of the scale [61]. A CR of 0.70 or above and an AVE of more than 0.50 are deemed acceptable [65]. As shown in Table 5, the CR values of all factors were above the recommended threshold, providing further evidence of construct reliability. The AVE values were 0.50 or above, except for the three factors of technology use, reading skill and will management, whose AVE values were higher than 0.4 but less than 0.5. According to Fornell and Larcker [66], if AVE is less than 0.5 but CR is higher than 0.6, the convergent validity of the construct is still adequate. These results support the convergent and discriminant validity of the scale.
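For readers who wish to compute these indices from standardized CFA loadings, the Python sketch below implements the usual formulas, CR = (Σλ)² / ((Σλ)² + Σ(1 − λ²)) and AVE = Σλ²/k, together with the adequacy rule cited above and the Fornell–Larcker discriminant check (the square root of each factor’s AVE should exceed its correlation with the other factor). The inter-factor correlations are not reported in this section, so the loadings and correlations used in the example are hypothetical values, not results of this study.

```python
from math import sqrt


def composite_reliability(loadings):
    """CR from standardized loadings: (sum l)^2 / ((sum l)^2 + sum(1 - l^2))."""
    total = sum(loadings)
    error = sum(1 - loading ** 2 for loading in loadings)  # error variances of standardized items
    return total ** 2 / (total ** 2 + error)


def average_variance_extracted(loadings):
    """AVE: mean of the squared standardized loadings."""
    return sum(loading ** 2 for loading in loadings) / len(loadings)


def convergent_adequate(cr, ave):
    """Rule as stated in the text: AVE >= 0.5, or AVE < 0.5 with CR > 0.6."""
    return ave >= 0.5 or cr > 0.6


def fornell_larcker_ok(ave_a, ave_b, corr_ab):
    """Discriminant check: sqrt(AVE) of each factor exceeds their correlation."""
    return sqrt(ave_a) > abs(corr_ab) and sqrt(ave_b) > abs(corr_ab)


# Hypothetical standardized loadings for a five-item factor (not study data).
lam = [0.7, 0.7, 0.7, 0.7, 0.7]
cr, ave = composite_reliability(lam), average_variance_extracted(lam)
print(round(cr, 3), round(ave, 3), convergent_adequate(cr, ave))  # 0.828 0.49 True
```

The example mirrors the situation described for three of the factors: an AVE just below 0.5 paired with a CR well above 0.6.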

Table 5.

CR and AVE of confirmatory factor analysis.

4. Discussion

The present study focused on developing and validating a scale to evaluate students’ digital learning competence. A framework of digital learning competence with six dimensions was first proposed based on a comprehensive literature review. Based on this framework, a new 39-item scale (DLCS) was developed, and a survey was carried out with 3473 students in China to test the reliability and validity of the scale. EFA revealed a six-factor structure accounting for 58.66% of the total variance, and the overall Cronbach’s alpha was 0.944. CFA supported the six-factor model: technology use, cognitive processing, digital reading, peer management, time management and will management. All the constructs satisfied the conditions of reliability and discriminant validity. Statistical analyses showed that the DLCS is a valid and reliable instrument.

Digital learning competence is of critical importance for students learning with digital devices in digital environments, and it is also an essential capability for digital citizens in the Internet age [67]. Understanding the structure of digital learning competence could contribute to cultivating students’ ability to learn in a digital world. The six identified competencies, namely technology use, cognitive processing, digital reading, peer management, time management and will management, should be taken into consideration to promote students’ 21st century skills. In fact, the results of this study also align with the four domains of “learning to learn” proposed by Caena and Stringher [68], namely the cognitive and metacognitive domain, the affective-motivational and learning dispositions domain, the proactive domain and the social domain.

Cognitive processing, digital reading and technology use are the three fundamental factors of digital learning competence. Cognitive processing emphasizes information processing capacity, which includes the ability to select the main knowledge points from lectures, to link knowledge for better understanding and to reflect on the learned content [69]. Digital reading emphasizes the ability to read digital materials, including the capability to summarize them, pose and answer questions (e.g., questions posted in online forums), and review the materials read [40]. Technology use emphasizes the ability to use different kinds of technology to live, create, learn and communicate, which is closely related to one’s information literacy [70].

Peer management, time management and will management are the three competencies that help ensure that digital learning goals are accomplished on time. Huang et al. [67] noted that students with strong management skills can more easily reach their learning goals and achieve better learning outcomes, especially when the teacher is absent (i.e., when learning outside the classroom). In the process of digital learning, students control and adjust their actions according to their goals, overcome difficulties and achieve those goals; this is embodied in the three aspects of will management, time management and peer management. Peer management emphasizes students’ ability to cooperate with others, including the ability to talk and listen, to influence others and to resolve conflicts [57]. Time management emphasizes the ability to use time effectively, including the ability to plan, schedule and evaluate [56]. Will management emphasizes students’ ability to self-manage their learning motivation, including learning beliefs and motivation [71].

5. Conclusions and Future Work

This study created a framework of digital learning competence with six dimensions, namely technology use, cognitive processing, digital reading, peer management, time management and will management. It then developed and validated a scale to assess these competencies. EFA revealed a six-factor structure accounting for 58.66% of the total variance, and the overall Cronbach’s alpha was 0.944. Statistical analyses showed that the DLCS is a valid and reliable instrument.

This study can advance the educational technology field by providing researchers and practitioners with a new framework of digital learning competence that can be used to investigate how these competencies vary from one learner to another based on individual differences (gender, personality, learning style, ethnicity, etc.) and, hence, to personalize the learning process accordingly. This study can also help teachers and specialists improve their learners’ competencies by providing a new validated scale to assess learners and identify their DLC weaknesses. Specifically, understanding the structure of digital learning competence could contribute to cultivating students’ learning ability in digital environments. With the emerging trend of integrating online and offline learning, especially in the post-COVID-19 era, digital learning competence is becoming key to learners’ success when they learn independently.

Despite the solid ground that this study provides for DLC, several limitations should be acknowledged. For instance, digital natives’ learning competencies are increasingly complex and integral to their learning process. Therefore, more research is needed to establish a systematic process for identifying these competencies and how they overlap with competencies from other fields. Additionally, the DLC constructs were derived from the literature review and therefore depended on the papers included in it. To overcome this limitation, future research should conduct a Delphi study with international experts in the field to further validate the framework and scale (i.e., the experts might suggest adding or deleting some competencies or items).

Future research could focus on: (1) determining the acquisition of competencies in their entirety (learning and implementation) as a skill; and (2) studying other digitally advanced cultures to predict the relationship between students’ performance and digital learning competence. Multivariate statistical methods, such as multiple regression analysis, should be used to uncover the relations between the subscales and learning achievement, as well as the relations among the questionnaire items. Norms of digital learning competence for digital natives should be established through large-sample surveys.

Author Contributions

Conceptualization, J.Y., R.H., and R.Z.; methodology, R.Z.; software, R.Z.; validation, A.T., J.Y.; data curation, R.Z.; writing—original draft preparation, J.Y., R.Z.; writing—review and editing, A.T., K.K.B.; supervision, R.H.; project administration, J.Y.; funding acquisition, J.Y. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the 2019 Zhejiang Provincial Philosophy and Social Planning Project: Design and Evaluation of Learning Space for Digital Learners (No: 19ZJQN21YB).

Informed Consent Statement

Informed consent was obtained from all subjects involved in this study.

Data Availability Statement

The data presented in this study are available on request from the corresponding author.

Conflicts of Interest

The authors declare there is no conflict of interest regarding the publication of this paper.

References

- Carrier, M.; Damerow, R.M.; Bailey, K.M. Digital Language Learning and Teaching: Research, Theory, and Practice; Routledge: Abingdon-on-Thames, UK, 2017.

- Yang, J.; Yu, H. Learner is Changing: Review of Research on Digital Generation of Learners. Int. Comp. Educ. 2015, 37, 78–84. (In Chinese)

- Kalantzis, M.; Cope, B. Introduction: The Digital Learner—Towards a Reflexive Pedagogy. In Handbook of Research on Digital Learning; IGI Global: Hershey, PA, USA, 2020; pp. xviii–xxxi.

- Prensky, M. Digital Natives, Digital Immigrants Part 1. Horizon 2001, 9, 1–6.

- Barak, M. Are digital natives open to change? Examining flexible thinking and resistance to change. Comput. Educ. 2018, 121, 115–123.

- Kesharwani, A. Do (how) digital natives adopt a new technology differently than digital immigrants? A longitudinal study. Inf. Manag. 2020, 57, 103170.

- Tapscott, D. Wer ist die Netz-Generation? In Net Kids; Gabler Verlag: Berlin/Heidelberg, Germany, 1998; pp. 35–58.

- Dimock, M. Defining Generations: Where Millennials End and Generation Z Begins. Pew Res. Cent. 2019, 17, 1–7.

- Goh, E.; Okumus, F. Avoiding the hospitality workforce bubble: Strategies to attract and retain generation Z talent in the hospitality workforce. Tour. Manag. Perspect. 2020, 33, 100603.

- Chen, N.S.; Cheng, I.L.; Chew, S.W. Evolution is not enough: Revolutionizing current learning environments to smart learning environments. Int. J. Artif. Intell. Educ. 2016, 26, 561–581.

- Gallardo-Echenique, E.E.; Marqués-Molias, L.; de Oliveira, J.M. Digital Competence in the Knowledge Society. MERLOT J. Online Learn. Teach. 2015, 11, 1–16.

- Fraillon, J.; Ainley, J.; Schulz, W.; Duckworth, D.; Friedman, T. IEA International Computer and Information Literacy Study 2018 Assessment Framework; Springer Science and Business Media LLC: Berlin/Heidelberg, Germany, 2019.

- OECD. Students, Computers and Learning: Making the Connection, PISA; OECD Publishing: Paris, France, 2015.

- Law, N.; Woo, D.; de la Torre, J.; Wong, G. A Global Framework of Reference on Digital Literacy Skills for Indicator 4.4.2; UNESCO Institute for Statistics: Montreal, QC, Canada, 2018.

- National Conference of State Legislatures (NCSL). Digital Literacy. Available online: http://www.ncsl.org/research/education/digital-literacy.aspx (accessed on 11 April 2021).

- Brolpito, A. Digital Skills and Competence, and Digital and Online Learning; European Training Foundation: Turin, Italy, 2018.

- Huang, R.H.; Liu, D.J.; Zhan, T.; Amelina, N.; Yang, J.F.; Zhuang, R.X.; Chang, T.W.; Cheng, W. Guidance on Active Learning at Home during Educational Disruption: Promoting Student’s Self-Regulation Skills during COVID-19 Out-Break; Smart Learning Institute of Beijing Normal University: Beijing, China, 2020.

- Van Winkelen, C.; McKenzie, J. Knowledge Works: The Handbook of Practical Ways to Identify and Solve Common Organizational Problems for Better Performance; John Wiley & Sons: New York, NY, USA, 2011.

- Mercado, E. Mapping Individual Variations in Learning Capacity. Int. J. Comp. Psychol. 2011, 24, 4–35.

- Learning Capacity. Available online: http://wiki.mbalib.com/wiki/%E5%AD%A6%E4%B9%A0%E5%8A%9B (accessed on 25 February 2021).

- Ferrari, A. DIGCOMP: A Framework for Developing and Understanding Digital Competence in Europe. Available online: https://www.researchgate.net/publication/282860020_DIGCOMP_a_Framework_for_Developing_and_Understanding_Digital_Competence_in_Europe (accessed on 11 April 2021).

- Cartelli, A. Frameworks for Digital Competence Assessment: Proposals, Instruments and Evaluation. In Proceedings of the 2010 InSITE Conference, Cassino, Italy, 19–24 June 2010; Informing Science Institute: Cassino, Italy, 2010; pp. 561–574.

- Kelentrić, M.; Helland, K.; Arstorp, A.T. Professional Digital Competence Framework for Teachers. EU Science Hub. Available online: https://ec.europa.eu/jrc/en/digcompedu (accessed on 11 April 2021).

- Sá, M.J.; Serpa, S. COVID-19 and the Promotion of Digital Competences in Education. Univers. J. Educ. Res. 2020, 8, 4520–4528.

- Cheng, Y.W.; Sun, P.C.; Chen, N.S. The essential applications of educational robot: Requirement analysis from the perspectives of experts, researchers and instructors. Comput. Educ. 2018, 126, 399–416.

- Nurmuliani, N.; Zowghi, D.; Williams, S.P. Using card sorting technique to classify requirements change. In Proceedings of the 12th IEEE International Requirements Engineering Conference, Washington, DC, USA, 6–10 September 2004; pp. 240–248.

- Tlili, A.; Nascimbeni, F.; Burgos, D.; Zhang, X.; Huang, R.; Chang, T.-W. The evolution of sustainability models for Open Educational Resources: Insights from the literature and experts. Interact. Learn. Environ. 2020, 1–16.

- UNESCO. UNESCO ICT Competency Framework for Teachers; UNESCO: Paris, France. Available online: http://unesdoc.unesco.org/images/0026/002657/265721e.pdf (accessed on 11 April 2021).

- Rolf, E.; Knutsson, O.; Ramberg, R. An analysis of digital competence as expressed in design patterns for technology use in teaching. Br. J. Educ. Technol. 2019, 50, 3361–3375.

- Fraillon, J.; Ainley, J.; Schulz, W.; Friedman, T.; Gebhardt, E. Preparing for Life in a Digital Age: The IEA International Computer and Information Literacy Study International Report; Springer: Berlin/Heidelberg, Germany, 2014.

- Littlejohn, A.; Beetham, H.; McGill, L. Learning at the digital frontier: A review of digital literacies in theory and practice. J. Comput. Assist. Learn. 2012, 28, 547–556.

- Beetham, H. Designing for Active Learning in Technology-Rich Contexts. In Rethinking Pedagogy for a Digital Age; Routledge India: London, UK, 2013; pp. 55–72.

- Omito, O. Evaluating learners’ ability to use technology in distance education: The case of external degree programme of the university of nairobi. Turk. Online J. Distance Educ. 2016, 17, 147–157.

- Hiltunen, L. Lack of ICT Skills Hinders Students’ Professional Competence. Available online: https://jyunity.fi/en/thinkers/lack-of-ict-skills-hinders-students-professional-competence/ (accessed on 11 April 2021).

- Eagleman, D. Cognitive Processing: What It Is and Why It Is Important. Available online: https://braincheck.com/blog/cognitive-processing-what-it-is-why-important (accessed on 11 April 2021).

- Di Giacomo, D.; Ranieri, J.; Lacasa, P. Digital Learning as Enhanced Learning Processing? Cognitive Evidence for New insight of Smart Learning. Front. Psychol. 2017, 8, 1329.

- Weinstein, Y.; Madan, C.R.; Sumeracki, M.A. Teaching the science of learning. Cogn. Res. Princ. Implic. 2018, 3, 1–17.

- Sethy, S.S. Cognitive Skills: A Modest Way of Learning through Technology. Turk. Online J. Distance Educ. 2012, 13, 260–274.

- Raptis, G.E.; Fidas, C.; Avouris, N. Effects of mixed-reality on players’ behaviour and immersion in a cultural tourism game: A cognitive processing perspective. Int. J. Hum. Comput. Stud. 2018, 114, 69–79.

- Salmerón, L.; Strømsø, H.I.; Kammerer, Y.; Stadtler, M.; van den Broek, P. Comprehension Processes in Digital Reading. In Learning to Read in a Digital World; John Benjamins Publishing Company: Amsterdam, The Netherlands, 2018; pp. 91–120.

- McNamara, D.S.; Magliano, J. Toward a comprehensive model of comprehension. Psychol. Learn. Motiv. 2009, 51, 297–384.

- Rouet, J.F. The Skills of Document Use: From Text Comprehension to Web-Based Learning; Psychology Press: London, UK, 2006.

- Dembo, M.H.; Junge, L.G.; Lynch, R. Becoming a self-regulated learner: Implications for web-based education. In Web-Based Learning: Theory, Research, and Practice; Routledge: London, UK, 2006; pp. 185–202.

- Lu, M.; Deng, Q.; Yang, M. EFL Writing Assessment: Peer Assessment vs. Automated Essay Scoring. In Proceedings of the International Symposium on Emerging Technologies for Education, Magdeburg, Germany, 23–25 September 2019; pp. 21–29.

- Hegarty, B. Attributes of open pedagogy: A model for using open educational resources. Educ. Technol. 2015, 55, 3–13.

- Kyndt, E.; Onghena, P.; Smet, K.; Dochy, F. Employees’ willingness to participate in work-related learning: A multilevel analysis of employees’ learning intentions. Int. J. Educ. Vocat. Guid. 2014, 14, 309–327.

- Maurer, T.J.; Weiss, E.M.; Barbeite, F.G. A model of involvement in work-related learning and development activity: The effects of individual, situational, motivational, and age variables. J. Appl. Psychol. 2003, 88, 707.

- Tharenou, P. The relationship of training motivation to participation in training and development. J. Occup. Organ. Psychol. 2001, 74, 599–621.

- Rubin, J. What the “good language learner” can teach us. TESOL Q. 1975, 9, 41–51.

- Hurtz, G.M.; Williams, K.J. Attitudinal and motivational antecedents of participation in voluntary employee development activities. J. Appl. Psychol. 2009, 94, 635.

- Fleming, N.D.; Mills, C. Not Another Inventory, Rather a Catalyst for Reflection. Improv. Acad. 1992, 11, 137–155.

- Kluzer, S.; Priego, L.P. Digcomp into Action: Get Inspired, Make It Happen. A User Guide to the European Digital Competence Framework (No. JRC110624); Publications Office of the European Union: Luxembourg, 2018.

- Pintrich, P.R.; Smith, D.; Garcia, T.; McKeachie, W. Predictive validity and reliability of the Motivated Strategies for Learning Questionnaire (MSLQ). Educ. Psychol. Meas. 1993, 53, 801–813.

- Cano, F. An In-Depth Analysis of the Learning and Study Strategies Inventory (LASSI). Educ. Psychol. Meas. 2006, 66, 1023–1038.

- Marzuki, A.G. The Implementation of SQ3R Method to Develop Students’ Reading Skill on Islamic Texts in EFL Class in Indonesia. Regist. J. 2019, 12, 49–61.

- Huang, X.T.; Zhang, Z.J. The compiling of adolescence time management scale. Acad. J. Psychol. 2001, 4, 338–343. (In Chinese)

- Johnson, D.; Johnson, R.; Holubec, E. Advanced Cooperative Learning; Interaction Book Company: Edina, MN, USA, 1988.

- Teo, T. An initial development and validation of a Digital Natives Assessment Scale (DNAS). Comput. Educ. 2013, 67, 51–57.

- Worthington, R.L.; Whittaker, T.A. Scale Development Research. Couns. Psychol. 2006, 34, 806–838.

- Kline, R.B. Principles and Practice of Structural Equation Modeling, 2nd ed.; Guilford Press: New York, NY, USA, 2015; ISBN 978-1-60623-876-9.

- Hair, J.F.; Black, W.C.; Babin, B.J.; Anderson, R.E.; Tatham, R.L. Multivariate Data Analysis, 6th ed.; Pearson Prentice Hall: Upper Saddle River, NJ, USA, 2006.

- Nunnally, J.C. Psychometric Theory, 2nd ed.; McGraw-Hill: New York, NY, USA, 1994; ISBN 007047849X.

- Jöreskog, K.G.; Sörbom, D. LISREL 8: Structural Equation Modeling; Scientific Software International Corp: Chicago, IL, USA, 1996.

- Hou, J.; Wen, Z.; Cheng, Z. Structural Equation Model and Its Application; Education Science Publication: Beijing, China, 2004.

- Dillon, W.R.; Goldstein, M. Multivariate Analysis: Methods and Applications; Wiley: New York, NY, USA, 1984.

- Fornell, C.; Larcker, D.F. Evaluating structural equation models with unobservable variables and measurement error. J. Mark. Res. 1981, 18, 39–50.

- Huang, R.H.; Liu, D.J.; Tlili, A.; Yang, J.F.; Wang, H.H. Handbook on Facilitating Flexible Learning during Educational Disruption: The Chinese Experience in Maintaining Undisrupted Learning in COVID-19 Outbreak; Smart Learning Institute of Beijing Normal University: Beijing, China, 2020.

- Caena, F.; Stringher, C. Towards a new conceptualization of Learning to Learn. Aula Abierta 2020, 49, 199–216.

- Yip, M.C.W. The Reliability and Validity of the Chinese Version of the Learning and Study Strategies Inventory (LASSI-C). J. Psychoeduc. Assess. 2012, 31, 396–403.

- Carretero, S.; Vuorikari, R.; Punie, Y. DigComp 2.1: The Digital Competence Framework for Citizens with Eight Proficiency Levels and Examples of Use (No. JRC106281). Joint Research Centre (Seville Site). Available online: http://publications.jrc.ec.europa.eu/repository/bitstream/JRC-106281/web-digcomp2.1pdf(online).pdf (accessed on 11 April 2021).

- Duncan, T.G.; McKeachie, W.J. The Making of the Motivated Strategies for Learning Questionnaire. Educ. Psychol. 2005, 40, 117–128.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).