Abstract

Background: Internists formulate diagnostic hypotheses and personalized treatment plans by integrating data from a comprehensive clinical interview, the patient’s medical history, physical examination and findings from complementary tests. The patient treatment life cycle generates a significant volume of data points that can offer valuable insights to improve patient care by guiding clinical decision-making. Artificial Intelligence (AI) and, in particular, Generative AI (GAI), are promising tools in this regard, particularly after the introduction of Large Language Models. The European Federation of Internal Medicine (EFIM) recognizes the transformative impact of AI in leveraging clinical data and advancing the field of internal medicine. This position paper from the EFIM explores how AI can be applied to achieve the goals of P6 Medicine principles in internal medicine. P6 Medicine is an advanced healthcare model that extends the concept of Personalized Medicine toward a holistic, predictive, patient-centered approach that also integrates psycho-cognitive and socially responsible dimensions. An additional concept introduced is that of Digital Therapies (DTx), software applications designed to prevent and manage diseases and disorders through AI, which are used in the clinical setting if validated by rigorous research studies. Methods: The literature examining the relationship between AI and Internal Medicine was investigated through a bibliometric analysis. The themes identified in the literature review were further examined through the Delphi method. Thirty international AI and Internal Medicine experts constituted the Delphi panel. Results: Delphi results were summarized in a SWOT Analysis. The evidence indicates that extensive data analysis improves diagnostic capacity, drug development and patient tracking. Conclusions: The panel unanimously considered AI in Internal Medicine an opportunity. AI-driven solutions, including clinical applications of GAI and DTx, hold the potential to substantially change internal medicine by streamlining workflows, enhancing patient care and generating valuable data.

1. Introduction

The practice of Internal Medicine is based on a detailed collection of information from each specific patient. Diagnostic hypotheses and personalized treatment plans are formulated by integrating data from a comprehensive clinical interview, the patient’s medical history, physical examination, and findings from complementary tests [1]. This meticulous process is vital to delivering high-quality care and ensuring patient safety [2]. Additionally, the complexity of patients in internal medicine sets this specialty apart from others [3]. Patients are often elderly, present with multiple comorbidities, and their health is managed with multiple chronic medications. When hospitalized, these patients require high-touch or high-intensity care resources, such as additional diagnostic tests and multiple treatments, which in turn can have significant clinical and economic consequences. In this challenging scenario, a well-designed clinical decision support system that synthesizes the large volumes of patient data collected or generated during usual care can guide decision-making [4,5,6,7,8,9]. Artificial Intelligence is a promising tool in this regard. One of its subsets, Generative AI (GAI), is especially worthy of in-depth exploration.

The European Federation of Internal Medicine (EFIM) recognizes the transformative potential of AI in advancing the field of internal medicine. This position paper from the EFIM explores how AI can be applied to achieve the goals of P6 Medicine principles in internal medicine [10]. P6 Medicine integrates a holistic approach to patient treatment and considers a wide range of factors to provide increasingly effective preventive and medical care [6,7,8]. Together, AI adoption, especially generative artificial intelligence (GAI) and digital therapeutics (DTx), and a focus on psycho-cognitive factors for patients pave a path towards an integrated, data-driven future for healthcare. Data can be collected via the Internet of Medical Things (IoMT), a network comprising connected medical devices, sensors, and software that gather, share, and analyze real-time health information [9]. Although AI is promising in medical applications, its adoption in Internal Medicine may be inconsistent and unregulated. Specific issues of concern about AI applications include clinical efficacy and usability, ethical responsibility, and legal compliance [10,11,12,13,14]. In addition, there is still a lack of clear, practice-oriented guidelines for internists on how to incorporate these technologies safely, effectively, and ethically into patient care [10,11,12,13,14,15,16,17,18,19].

1.1. Generative AI in Internal Medicine

Artificial intelligence (AI) applications can improve clinical decision-making by enhancing clinical accuracy [11] through the detection of patterns and changes in medical data [12]. Additionally, AI facilitates personalized treatments by using longitudinal parameters and continuous symptom tracking [12]. GAI shows significant potential benefits in medical applications; however, its overall effectiveness and usability in clinical practice remain to be fully validated. GAI can generate new content from existing data patterns. Hence, it can be used to generate images, text or even synthetic patient data for purposes such as medical education, clinical decision support systems in the form of chatbots, or research [18]. In terms of diagnostic effectiveness, GAI can solve simulated medical cases at rates that are comparable to or even higher than those of clinicians [19]. In a randomized clinical trial, clinicians who were directly supported by GAI improved their overall diagnostic performance [20]. A systematic review and meta-analysis across ten medical databases found that ChatGPT (version GPT-4), as a specific form of GAI, achieved an accuracy of 56% in addressing medical queries, although with considerable variability depending on specialty and task [21]. Regarding communication-related applications, such as drafting discharge instructions and preparing patient messages, GAI can generate texts that are high in empathy and readability, potentially reducing clinician workload [22]. GAI also shows promise in supporting clinicians in tasks like information-gathering and summarization [23], such as summarizing a patient history from the electronic health record (EHR) [24].

In specific specialties, GAI can also be trained on medical and multiomics databases to design highly personalized treatment plans for patients affected by comorbidities or complex conditions, such as rare genetic disorders and cardiovascular diseases. GAI can monitor biomarkers longitudinally and optimize pharmaceutical prescriptions to improve patient care [25,26]. GAI in medical imaging and radiology applications can improve image quality by synthesizing clearer pathology images and training more robust diagnostic models. Promising techniques include Generative Adversarial Networks (GANs) and diffusion models, which allow the generation of high-fidelity medical image reconstructions [27]. Other examples of GAI use in medical research include clinical trial simulation, disease outbreak modelling, and drug development assistance [28], as well as pilot studies to evaluate the quality and accuracy of ChatGPT-generated answers to common patient questions [4,29]. In education, GAI could help medical students practice collecting a patient history with virtual patients that are AI chatbots [30]. Digital Therapies (DTx) (for example, Youper 12.04.000), which are AI software applications for disease or disorder prevention and management, are used in the clinical setting to influence patients’ health behaviors and health outcomes, similar to how drugs interact with a patient’s physiology [31]. Like a prescribable medication, a DTx consists of an active ingredient (algorithm) and one or more excipients (dense dynamic interactions). DTx aim to correct dysfunctional behaviors through cognitive therapies and can pair with devices, sensors, or wearables. DTx are mostly prescription-based once rigorously evaluated for effectiveness, and they also collect or generate real-world data for continuing analytics that support therapy optimization [8].

However, Generative AI faces challenges when it comes to integration into existing workflows due to missed instructions and variable accuracy depending on the pathology or discipline [23]. Successful integration also relies on social or organizational factors, such as training and oversight, rather than solely on the GAI application or model [32]. Importantly, GAI is known to generate hallucinations, or fabricated outputs, which could have serious health care consequences. For example, hallucinations were detected in 6% of GAI-generated discharge summaries, while 18% presented potential patient safety issues [33]. GAI models also exhibit sociodemographic biases arising from their training data and algorithms (also known as data bias or algorithmic bias), raising the risk of perpetuating health system biases and inequities [34].

For these reasons, GAI applications in medicine require constant human supervision, or a human-in-the-loop approach, to prevent errors, bias, and harmful consequences for patients. GAI should be a supportive tool in patient treatment and management, rather than an autonomous agent [23]. AI literacy will become a necessary competency for clinicians who use GAI, so that they can effectively formulate queries, critically interpret outputs, and recognize potential system failures, including hallucinations and bias [25]. GAI design, instruction, and implementation in a healthcare context should be adapted to the needs of individual organizations and medical departments [10]. This includes the potential for AI agents to be applied in clinical practice. Privacy and ownership of health data are also important considerations because GAI platforms are privately owned, even if publicly available for use, and are governed by regional or national laws and regulations [35]. As a result, GAI applications must strictly comply with government regulations and established frameworks to protect privacy and data ownership and to guarantee transparency and accountability in patient care [36,37].

1.2. EFIM Position on AI in Internal Medicine

The European Federation of Internal Medicine (EFIM) identified an urgent need to close the gap between emerging technologies and their practical applications in clinical internal medicine settings [38]. This position paper offers professional guidance for internal medicine practitioners. We aim to:

- Provide practical and immediate recommendations for internal medicine specialists: Offer advice on how to include AI in the clinical workflow within a department or clinic, and with other medical disciplines. AI can also be employed to support the daily clinical activities and reduce administrative workload.

- Ensure that human expertise and value are not overlooked and that physician well-being is supported: Core clinical skills and medical professional competencies remain a foundation on which digital literacy and AI literacy should be built.

- Reduce uncertainty regarding ethical, legal, and data privacy issues: Internists require clear frameworks to manage risks such as algorithmic bias, liability, compliance with regulations like the General Data Protection Regulation (GDPR), patient consent, and transparency.

Recommendations drawn from rigorous studies of innovative technologies allow for evidence-based applications of AI, including GAI, and DTx. This avoids misleading information, reliance on hype, unrealistic expectations, misallocation of resources, and loss of trust in scientific and medical advancements. Furthermore, robust scientific investigations, including pilot studies where appropriate and ethical, allow for application developments that account for patient safety, data privacy, data and algorithmic biases, and accountability in controlled environments before widespread adoption. They also offer pathways towards justifying investment in GAI research and implementation in healthcare systems.

This EFIM position paper describes the approach to evidence synthesis and recommendations for internal medicine specialists on how AI can be applied to achieve the goals of P6 Medicine principles in internal medicine.

2. Methodology

To develop an evidence base for analysis, this position paper involved the following key approaches: a literature search and bibliometric analysis; a Delphi consensus development among a group of 30 European internal medicine AI specialists and digital health experts; and a SWOT analysis. The methodology diagram is shown in Figure 1.

Figure 1.

Methodology diagram.

2.1. Literature Review

Literature examining the relationship between AI and Internal Medicine was retrieved through a bibliometric analysis [39]. We iteratively refined the search approach with different keywords and analyzed the retrieved citations for relevance to the study aim. Only English language peer-reviewed literature was included. The search was performed on 2 April 2024, using Elsevier’s Scopus search engine. The query used was: TITLE-ABS-KEY (“artificial intelligence” OR “machine learning” OR “deep learning” OR “AI”) AND TITLE-ABS-KEY (“internal medicine” OR “clinical medicine” OR “internal diseases” OR “internal health”) AND TITLE-ABS-KEY (“cost” OR “costs” OR “expenditure” OR “financial impact” OR “economic impact” OR “expenses”) AND TITLE-ABS-KEY (“benefit” OR “benefits” OR “advantages” OR “improvements” OR “outcomes”). The definitions available in MeSH (National Library of Medicine portal) were adopted: https://www.ncbi.nlm.nih.gov/mesh/ (accessed on 2 April 2024).
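As an illustration of how the query above is structured, the following minimal Python sketch assembles it from four groups of terms: terms within a group are joined with OR inside one TITLE-ABS-KEY clause, and the clauses are joined with AND. The function name `build_scopus_query` and the variable `TERM_GROUPS` are hypothetical names introduced for this example; the sketch only builds the string and does not call any Scopus service.

```python
# Term groups copied from the search string reported in the text.
TERM_GROUPS = [
    ["artificial intelligence", "machine learning", "deep learning", "AI"],
    ["internal medicine", "clinical medicine", "internal diseases", "internal health"],
    ["cost", "costs", "expenditure", "financial impact", "economic impact", "expenses"],
    ["benefit", "benefits", "advantages", "improvements", "outcomes"],
]


def build_scopus_query(term_groups, field="TITLE-ABS-KEY"):
    """Join terms with OR inside each field clause, then join clauses with AND.

    Hypothetical helper for illustration; it is not part of any Scopus client.
    """
    clauses = []
    for terms in term_groups:
        quoted = " OR ".join(f'"{term}"' for term in terms)
        clauses.append(f"{field} ({quoted})")
    return " AND ".join(clauses)


query = build_scopus_query(TERM_GROUPS)
print(query)
```

Keeping the term groups in a list makes it straightforward to document each iteration of the search refinement, since every variant of the query differs only in the contents of one group.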

The bibliometric analysis was performed using the R package bibliometrix (version 4.4.1) [40]. The analysis combined network analysis with multiple correspondence analysis, a multivariate statistical method that synthesizes the results and identifies thematic communities of keywords, to build conceptual maps that help identify the specific relationships between critical keywords in the literature [15]. To determine the semantic cores of the literature and strengthen the findings from each methodology, we performed a hierarchical cluster analysis based on the different community results obtained from the keyword co-occurrence networks [16]. Following the same approach [16], we validated the different clusters obtained.

2.2. Delphi Method

Themes identified from the literature review were further examined using the Delphi method [41,42,43], centered on the Grand Tour Question: “What role should Artificial Intelligence play in the care of Internal Medicine patients?”

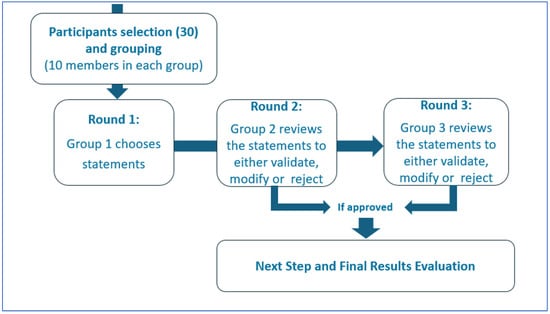

The Delphi process allows for a participatory and shared process between experts (both clinical and technical) similar to Socrates’ maieutics in order to highlight the different facets of the topic under study (Figure 2).

Figure 2.

Delphi Method.

Thirty international AI and Internal Medicine experts participated in the Delphi panel, including members of the EFIM Telemedicine, Innovative Technologies and Digital Health Working Group and other professionals with relevant academic and clinical experience [42]. The panel was divided into three groups of ten experts. The first group selected the initial statements for investigation; the second group then reviewed the statements to validate, reject, or modify them. The validated statements were included in the Strengths, Weaknesses, Opportunities and Threats (SWOT) analysis, while the third group was to analyze the rejected or replaced statements [44]. Each statement was scored for perceived value and risk using a Likert scale from −10 to +10 [43]. The median scores were used to determine consensus and were plotted on x (risk) and y (value) axes to identify strengths (−x/+y), weaknesses (−x/−y), threats (+x/−y), and opportunities (+x/+y) [18]. Panel discussions were carried out in person or remotely during video calls (via Zoom or Google Meet). Participants scored the statements via a Google Form; they could not view other participants’ individual responses. One author (FR) had access to the results at the end of the third round, and also conducted the statistical survey. As consensus was reached between the first and second group of experts, input from the third group of the Delphi panel was not necessary. Open Science Framework, however, does not allow Italy to be selected as a repository for the data.
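The quadrant rule described above can be sketched in a few lines of Python. This is a minimal illustration, not the panel's actual analysis script: the function name `classify_statement` and the example scores are hypothetical, and the handling of a median that is exactly zero is an assumption, because the text does not state how such cases were treated.

```python
from statistics import median


def classify_statement(risk_scores, value_scores):
    """Return (median_risk, median_value, quadrant) for one Delphi statement.

    Quadrants follow the sign convention given in the text:
    strength (-x/+y), weakness (-x/-y), threat (+x/-y), opportunity (+x/+y),
    where x is the median risk and y is the median value.
    """
    x = median(risk_scores)
    y = median(value_scores)
    if x == 0 or y == 0:
        # Assumption: a zero median is left unclassified in this sketch.
        quadrant = "undetermined"
    elif y > 0:
        quadrant = "opportunity" if x > 0 else "strength"
    else:
        quadrant = "threat" if x > 0 else "weakness"
    return x, y, quadrant


# Hypothetical scores from five panelists on the -10..+10 scale.
example = classify_statement([-4, -2, -6, -3, -5], [7, 8, 6, 9, 7])
print(example)
```

Using the median rather than the mean limits the influence of a single extreme score, which matters with small groups of ten raters.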

3. Research Outcomes

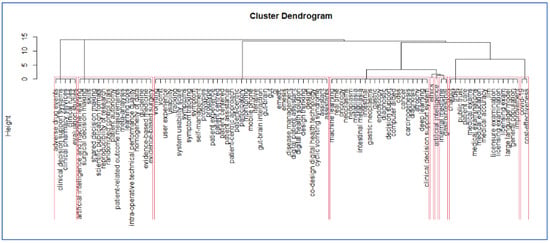

The methodology described in the paper is based on a comprehensive review of relevant literature and the identification of key themes through hierarchical clustering on the application of AI in medicine. The resulting dendrogram (Figure 3) shows the different fields of application and their integration in clinical practice.

Figure 3.

The final dendrogram of the bibliometric analysis. Each red rectangle shows a different cluster of keywords reflecting the current debate in the field.

The bibliometric analysis combined network analysis of the co-occurring keywords with multiple correspondence analysis, a multivariate statistical method that helped to synthesize the results and identify the thematic communities of keywords. The dendrogram summarizes the results of the bibliometric analysis, showing the different semantic cores of the literature (Figure 3). Each red rectangle shows a different group of keywords reflecting the current debate in the field. Because the dendrogram is a tree-like diagram created by hierarchical grouping, it shows how the data points are grouped in sequence. At the bottom of the diagram, each data point (keyword) is represented as a single “leaf”. Moving upwards, the branches connect to show which keywords or groups of keywords come together to form larger groups. Figure 3 therefore shows where there may be relevant debates in the field of AI applications in Internal Medicine. To interpret the dendrogram, note that two keywords on the same branch appear close together in the scientific literature, that is, the topics are discussed jointly in articles. More generally, keywords that appear in the same cluster or group relate to similar debates in the literature (they appear together in different publications).
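To make the reading of a dendrogram concrete, the following Python sketch groups keywords bottom-up according to how often they appear in the same articles. It is a simplified stand-in and not the bibliometrix procedure used in this paper: it replaces multiple correspondence analysis with a plain Jaccard distance on keyword co-occurrence and uses average-linkage agglomerative clustering. The article keyword sets and all function names are hypothetical and serve only as an illustration.

```python
from itertools import combinations

# Hypothetical keyword sets for four articles (illustrative only, not study data).
ARTICLES = [
    {"artificial intelligence", "diagnosis", "cost"},
    {"artificial intelligence", "diagnosis"},
    {"telemedicine", "cost", "adherence"},
    {"telemedicine", "adherence"},
]


def jaccard_distance(a, b, articles):
    """1 - (articles containing both keywords) / (articles containing either)."""
    both = sum(1 for kws in articles if a in kws and b in kws)
    either = sum(1 for kws in articles if a in kws or b in kws)
    return 1.0 - both / either


def agglomerate(articles):
    """Average-linkage agglomerative clustering; returns the merge history.

    Each entry is (cluster_1, cluster_2, distance). Read top to bottom, the
    history corresponds to moving from the leaves of a dendrogram upwards.
    """
    keywords = sorted(set().union(*articles))
    dist = {
        frozenset(pair): jaccard_distance(pair[0], pair[1], articles)
        for pair in combinations(keywords, 2)
    }

    def linkage(pair):
        # Mean pairwise distance between the members of two clusters.
        c1, c2 = pair
        total = sum(dist[frozenset((a, b))] for a in c1 for b in c2)
        return total / (len(c1) * len(c2))

    clusters = [(k,) for k in keywords]  # every keyword starts as its own leaf
    merges = []
    while len(clusters) > 1:
        c1, c2 = min(combinations(clusters, 2), key=linkage)
        merges.append((c1, c2, round(linkage((c1, c2)), 3)))
        remaining = [c for c in clusters if c not in (c1, c2)]
        clusters = remaining + [tuple(sorted(c1 + c2))]
    return merges


for step in agglomerate(ARTICLES):
    print(step)
```

In this toy example, keywords that always appear together merge first at distance 0, which mirrors the interpretation given above: keywords on the same low branch are discussed jointly in the literature.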

The bibliometric analysis emphasizes the relevance of AI in Internal Medicine, particularly its role in earlier disease detection, which can reduce healthcare costs and improve patient treatments [45]. In addition, AI can improve clinical workflows and reduce the risk of medical errors [46]. AI also facilitates personalized medicine with tailored treatment strategies [5]. However, AI can entail substantial costs, which include not only its initial integration into medical practice, but also the ongoing need for updates and maintenance.

The Delphi results were summarized in a SWOT Analysis, as shown in Table 1 and Figure 4. Substantial comments were included in the SWOT Analysis, while textual clarifications were addressed within the panel.

Table 1.

SWOT Analysis: AI used in Internal Medicine.

Figure 4.

Quantitative scores from the SWOT analysis. Green area: positive area. Yellow area: items that can be improved or that require more attention when applied. Red area: dangerous area.

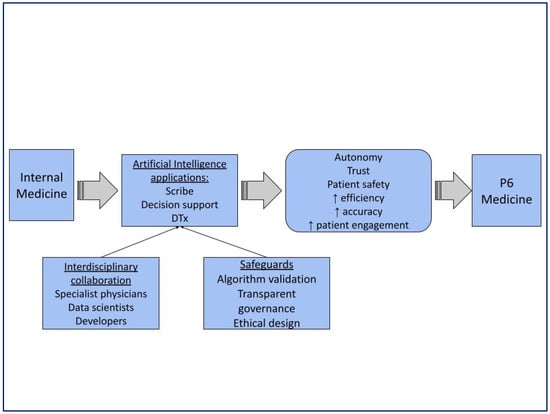

The in-depth discussion within the groups highlighted the main shared conclusion: increasing the capacity to analyze data significantly improves diagnostic capacity, the development of therapies, and patient tracking (Figure 5).

Figure 5.

Main outcomes of the Delphi consensus and their relation to P6 Medicine. The arrows show how the use of AI contributes to the realization of P6 Medicine by increasing autonomy, trust, patient safety, efficiency, accuracy and patient engagement.

The consensus panel unanimously acknowledged AI in Internal Medicine as an opportunity. Fewer opportunities for improvement emerged and showed polarized views, as represented in the graph by a clustering of points toward the outer vertices, which is typical of disruptive technologies.

3.1. Consensus Recommendations on Generative AI in Internal Medicine

In summary, GAI can be trained to generate real-time content, such as text, images, or even synthetic patient data, based on patterns from existing data. In internal medicine, GAI offers promising applications, including the following:

- Research and Drug Discovery: GAI can generate synthetic patient data for clinical trial simulations and accelerate drug development. For instance, it can assist in drug development by identifying new therapeutic compounds or simulating their effects.

- Personalized Medicine: GAI can generate synthetic data to train AI models for more accurate personalized medicine applications, leading to tailored treatment plans.

- Population Health Management: GAI has the potential to model disease outbreaks and develop more effective public health interventions. By simulating different scenarios, internists and public health officials can proactively design strategies to minimize the impact of infectious diseases and chronic conditions in populations.

- Improving Clinical Documentation: GAI provides new methods for introducing information into clinical records, such as voice recognition or predictive writing, which can significantly improve the documentation process and reduce the associated workload and professional burnout [7].

- Clinical Decision Support and Patient Education: GAI can be used for clinical decision support systems in the form of chatbots or for medical education, such as generating images, text, or synthetic patient data for history taking in virtual patients. Pilot studies have evaluated the quality and accuracy of ChatGPT-generated answers to common patient questions [8].

Generative AI offers a pathway for internal medicine specialists to advance P6 Medicine goals and improve overall efficiency.

3.2. Recommended Integration of AI into Clinical Processes

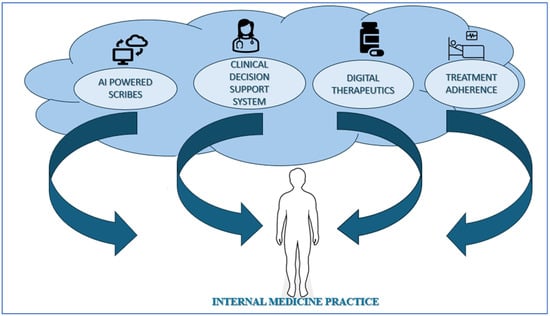

Successfully integrating AI into clinical workflows requires careful consideration and a detailed analysis of existing processes by internists and healthcare organizations. The aim is to identify areas where AI solutions can improve the quality of care and reduce documentation burden. Examples of practical applications include:

- AI-powered Scribes: These tools automate documentation tasks, such as compiling clinical information registries or generating clinical notes, potentially reducing administrative burden and freeing up attention and time for the internal medicine specialist to focus on patient interaction [45].

- Clinical Decision Support Systems (CDSS): AI-powered CDSS can provide real-time guidance to internists at the point of care, improving diagnostic accuracy and treatment efficiency by analyzing vast datasets and offering evidence-based recommendations [46].

- Digital Therapies (DTx): Examples include DTx for preventing hospital visits, improving patient self-management, and providing remote therapy. DTx may facilitate increasing patient participation and empowerment, continuous monitoring, feedback, and behavioral support in medical treatments. Physicians can serve patients as a guide, interpreting data, personalizing treatments, and coordinating effective integration of digital treatments into health pathways, especially for patients with lower digital skills [24,47]. From an economic perspective, DTx could lead to cost-saving opportunities through increasing treatment adherence, reducing acute exacerbations of chronic conditions, offering scalable interventions, and improving healthcare service efficiencies [47].

Key areas for AI integration in internal medicine include tools such as medical scribes, clinical decision support systems (CDSS), digital therapeutics, and treatment adherence support, offering clear opportunities for improving efficiency, accuracy, and patient engagement. The strengths highlighted in the SWOT analysis suggest significant potential for improving both clinical workflows and patient outcomes. At the same time, the analysis emphasizes that these benefits cannot be realized in isolation: careful algorithm validation, seamless workflow integration, and transparent governance are essential to ensure safety and trust. Ethical issues such as equity and patient autonomy must be considered, recognizing that poorly designed or implemented AI tools risk reinforcing disparities or undermining patient-centered care. Overall, the analysis positions AI as a transformative force in internal medicine, but its success depends on responsible, deliberate, and ethically grounded implementation. Applied in this way, AI may give internal medicine specialists more time and capacity to attend to complex patient care.

To advance the field, GAI applications can be evaluated in various ways in both pilot and large-scale settings. Example GAI evaluations could focus on administrative efficiencies (e.g., reduced documentation time or prescribing and ordering time), factual accuracy or errors (e.g., in medical history summaries), usability and acceptability by healthcare professionals and patients, and the introduction or perpetuation of systemic biases and inequity. DTx, as a specific AI application, also deserves evaluation due to heterogeneity of endpoints, which limits generalizability. Use of primary endpoints would improve the robustness of DTx evaluation. For example, primary endpoints are the Insomnia Severity Index for insomnia assessment, and HbA1c changes between two clinically meaningful time points for diabetes monitoring. Ultimately, randomized, parallel-group trials where ethically possible would be a gold standard for evaluating all possible AI applications and DTx. Therefore, future studies should concentrate on primary endpoint examples.

3.3. Legal, Regulatory, and Ethical Considerations

Legal, regulatory, and ethical considerations are vital in the potential use of AI, particularly GAI, in healthcare [48]. Many of the challenges internal medicine specialists face are universal for all clinicians. First, AI tools are required to comply with strict data privacy regulations, such as the General Data Protection Regulation (GDPR) in the EU [49]. In the current environment, assuring the protection of sensitive patient information is an evergreen challenge [50]. However, discrepancies in the interpretation of regulations across different regions and countries result in variability in compliance, and ongoing vigilance and collaboration may allow for pathways towards uniform regulatory interpretations. Additionally, robust cybersecurity measures involving AI will need to evolve as data breaches, hacking attempts, and new threats appear. This could include clinicians developing competencies and awareness of new protocols, and organizations engaging in routine security audits [46].

The European Commission has established a comprehensive framework to promote the safe, ethical, and reliable use of AI in healthcare [37,38]. This framework sets out key principles that are crucial considerations for AI applications in internal medicine:

- Transparency: AI models should be designed to allow clinicians to understand decision-making processes.

- Fairness: AI must operate without bias and should support equal access to care.

- Accountability: Clear responsibility must be assigned for AI development and implementation.

These principles are embedded in the EU Artificial Intelligence Act (AI Act) of March 2024, which sets out detailed requirements for high-risk AI applications, including those in healthcare. However, some regulatory gaps remain, particularly concerning DTx, which is by design a software-based solution. DTx are categorized as Medical Device Software (MDSW) under the EU Medical Devices Regulation 2017/745 (MDR). Despite this, the regulation does not address them. Uncertainties about DTx classification and market authorization could lead to misclassification as low-risk, even if a DTx has a high therapeutic impact. To address these gaps, frameworks like the “Software as a Medical Device: Possible Framework for Risk Categorization and Corresponding Considerations,” developed by the International Medical Device Regulators Forum (IMDRF), can be beneficial [51].

Second, liability for errors occurring as a result of AI applications remains uncertain: whether the technology developer, healthcare organization, or individual clinician is liable for errors is yet to be determined. Whether AI can be liable is also unclear, given that there are already some industries where AI, specifically GAI, cannot be accountable for its outputs.

Third, explainable AI is founded on transparency for the user to sufficiently understand how the model works in generating an output, such as a decision support recommendation. This includes aspects of bias and equity, which may involve explainability also of the source data for developing and training AI.

Finally, AI is best positioned to augment human expertise rather than replace it. The human-in-the-loop model is relevant to achieving the greatest gains from AI applications. It may also best support productive therapeutic patient-physician relationships in an increasingly automated environment.

To summarize, AI applications in Internal Medicine offer significant opportunities to improve both efficiency and quality of care and reduce administrative burdens. The EFIM sees opportunities and challenges in AI applications advancing P6 Medicine principles in the field, evolving and improving care effectiveness. Figure 6 highlights the impact of AI on Internal Medicine practice.

Figure 6.

Impact of AI on Internal Medicine practice.

The EFIM provides an official statement and key recommendations for the implementation of AI in internal medicine (Table 2). These recommendations are designed to guide internal medicine specialists and their organizations in using AI responsibly and effectively to improve patient care.

Table 2.

The EFIM Official statement proposed for the implementation of AI in Internal Medicine.

Several key points were identified by the panel as fundamental elements for AI use in Internal Medicine:

- Increasing medical data poses a significant administrative challenge.

- Organizing information is essential for digitizing clinical workflows, such as clinical decision support systems.

- Comprehensive and precise collection of clinical data allows the design of AI-driven decision support tools that integrate into internal medicine practices.

- Ethical and responsible data collection is essential to ensure patient privacy and consent to maintain patient safety and trust [54].

4. Conclusions

AI applications, including GAI and DTx, have the potential to transform internal medicine by streamlining workflows, enhancing patient care, and generating valuable data. These technologies offer a path towards more efficient, personalized, and human-centered healthcare. However, successful integration of such technologies in the healthcare domain hinges on interdisciplinary collaboration. Care teams should involve new professional roles, such as clinical data scientists and bioengineers. Additional fundamental requirements are adherence to evolving EU regulations and a focus on ethical considerations like transparency and fairness. Patients and their caregivers must remain at the center of care delivery. As a call to action, the EFIM recommends that internal medicine specialists, along with their organizations, professional societies, and communities, take a leading role in shaping the education, design, implementation, evaluation, and regulation of AI in clinical settings.

Author Contributions

Conceptualization, I.S.-C., F.P., M.M., O.M. and T.I.L.; Data curation, C.D. and A.V.; Formal analysis, I.S.-C., F.R., A.V. and A.S.; Funding acquisition, R.G.-H.; Methodology, C.D. and A.V.; Project administration, F.P.; Supervision, F.P.; Visualization, A.S.; Writing—original draft, I.S.-C., F.P., F.R., O.M. and A.V.; Writing—review & editing, F.P., M.M., F.R., C.D., T.I.L., A.S. and R.G.-H. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data will be provided by the corresponding author upon request.

Conflicts of Interest

The authors declare that they have no conflicts of interest.

References

- Lichstein, P.R. The Medical Interview. In Clinical Methods: The History, Physical, and Laboratory Examinations, 3rd ed.; Walker, H.K., Hall, W.D., Hurst, J.W., Eds.; Butterworths: Boston, MA, USA, 1990. Available online: http://www.ncbi.nlm.nih.gov/books/NBK349/ (accessed on 8 February 2025).

- Pietrantonio, F.; Orlandini, F.; Moriconi, L.; La Regina, M. Acute Complex Care Model: An organizational approach for the medical care of hospitalized acute complex patients. Eur. J. Intern. Med. 2015, 26, 759–765.

- Jeyaraman, M.; Balaji, S.; Jeyaraman, N.; Yadav, S. Unraveling the Ethical Enigma: Artificial Intelligence in Healthcare. Cureus 2023, 15, e43262.

- Dave, T.; Athaluri, S.A.; Singh, S. ChatGPT in medicine: An overview of its applications, advantages, limitations, future prospects, and ethical considerations. Front. Artif. Intell. 2023, 6, 1169595.

- Pietrantonio, F.; Florczak, M.; Kuhn, S.; Kärberg, K.; Leung, T.; Said Criado, I.; Sikorski, S.; Ruggeri, M.; Signorini, A.; Rosiello, F.; et al. Applications to augment patient care for Internal Medicine specialists: A position paper from the EFIM working group on telemedicine, innovative technologies & digital health. Front. Public Health 2024, 12, 1370555.

- Gupta, N.S.; Kumar, P. Perspective of artificial intelligence in healthcare data management: A journey towards precision medicine. Comput. Biol. Med. 2023, 162, 107051.

- Aranyossy, M.; Halmosi, P. Healthcare 4.0 value creation—The interconnectedness of hybrid value propositions. Technol. Forecast. Soc. Change 2024, 208, 123718.

- Coiera, E.; Liu, S. Evidence synthesis, digital scribes, and translational challenges for artificial intelligence in healthcare. Cell Rep. Med. 2022, 3, 100860.

- Winkel, A.F.; Telzak, B.; Shaw, J.; Hollond, C.; Magro, J.; Nicholson, J.; Quinn, G. The Role of Gender in Careers in Medicine: A Systematic Review and Thematic Synthesis of Qualitative Literature. J. Gen. Intern. Med. 2021, 36, 2392–2399.

- Rangarajan, D.; Rangarajan, A.; Reddy, C.K.K.; Doss, S. Exploring the Next-Gen Transformations in Healthcare Through the Impact of AI and IoT. In Advances in Medical Technologies and Clinical Practice; IGI Global: Hershey, PA, USA, 2024; pp. 73–98. Available online: https://services.igi-global.com/resolvedoi/resolve.aspx?doi=10.4018/979-8-3693-8990-4.ch004 (accessed on 8 July 2025).

- Xie, Y.; Zhai, Y.; Lu, G. Evolution of artificial intelligence in healthcare: A 30-year bibliometric study. Front. Med. 2025, 11, 1505692.

- Singh, B. Artificial Intelligence (AI) in the Digital Healthcare and Medical Industry: Projecting Vision for Governance and Regulations. In Advances in Healthcare Information Systems and Administration; IGI Global: Hershey, PA, USA, 2024; pp. 161–188. Available online: https://services.igi-global.com/resolvedoi/resolve.aspx?doi=10.4018/979-8-3693-6294-5.ch007 (accessed on 8 July 2025).

- Bhavanam, B.R. The role of AI in transforming healthcare: A technical analysis. World J. Adv. Eng. Technol. Sci. 2025, 15, 803–811.

- Kotowicz, M.; Bieniak-Pentchev, M.; Koczkodaj, M. Artificial Intelligence in medicine: Potential and Application Possibilities—Comprehensive literature review. Qual. Sport 2025, 41, 60222.

- Pietrantonio, F.; Vinci, A.; Maurici, M.; Ciarambino, T.; Galli, B.; Signorini, A.; La Fazia, V.M.; Rosselli, F.; Fortunato, L.; Iodice, R.; et al. Intra- and Extra-Hospitalization Monitoring of Vital Signs—Two Sides of the Same Coin: Perspectives from LIMS and Greenline-HT Study Operators. Sensors 2023, 23, 5408.

- De Freitas, J.; Nave, G.; Puntoni, S. Ideation with Generative AI—In Consumer Research and Beyond. J. Consum. Res. 2025, 52, 18–31.

- Gude, V. Factors Influencing ChatGpt Adoption for Product Research and Information Retrieval. J. Comput. Inf. Syst. 2025, 65, 222–231.

- Siragusa, L.; Angelico, R.; Angrisani, M.; Zampogna, B.; Materazzo, M.; Sorge, R.; Giordano, L.; Meniconi, R.; Coppola, A.; SPIGC Survey Collaborative Group; et al. How future surgery will benefit from SARS-CoV-2-related measures: A SPIGC survey conveying the perspective of Italian surgeons. Updat. Surg. 2023, 75, 1711–1727.

- Eriksen, A.V.; Möller, S.; Ryg, J. Use of GPT-4 to Diagnose Complex Clinical Cases. NEJM AI 2024, 1, AIp2300031.

- Goh, E.; Gallo, R.; Hom, J.; Strong, E.; Weng, Y.; Kerman, H.; Cool, J.A.; Kanjee, Z.; Parsons, A.S.; Ahuja, N.; et al. Large Language Model Influence on Diagnostic Reasoning: A Randomized Clinical Trial. JAMA Netw. Open 2024, 7, e2440969.

- Wei, Q.; Yao, Z.; Cui, Y.; Wei, B.; Jin, Z.; Xu, X. Evaluation of ChatGPT-generated medical responses: A systematic review and meta-analysis. J. Biomed. Inform. 2024, 151, 104620.

- Hu, D.; Guo, Y.; Zhou, Y.; Flores, L.; Zheng, K. A systematic review of early evidence on generative AI for drafting responses to patient messages. npj Health Syst. 2025, 2, 27.

- Hager, P.; Jungmann, F.; Holland, R.; Bhagat, K.; Hubrecht, I.; Knauer, M.; Vielhauer, J.; Makowski, M.; Braren, R.; Kaissis, G.; et al. Evaluation and mitigation of the limitations of large language models in clinical decision-making. Nat. Med. 2024, 30, 2613–2622.

- Fassbender, A.; Donde, S.; Silva, M.; Friganovic, A.; Stievano, A.; Costa, E.; Winders, T.; Van Vugt, J. Adoption of Digital Therapeutics in Europe. Ther. Clin. Risk Manag. 2024, 20, 939–954.

- Bhuyan, S.S.; Sateesh, V.; Mukul, N.; Galvankar, A.; Mahmood, A.; Nauman, M.; Rai, A.; Bordoloi, K.; Basu, U.; Samuel, J. Generative Artificial Intelligence Use in Healthcare: Opportunities for Clinical Excellence and Administrative Efficiency. J. Med. Syst. 2025, 49, 10.

- Singh, A. Automated Patient History Summarization Using Generative AI. SSRN. 2025. Available online: https://www.ssrn.com/abstract=5184805 (accessed on 15 September 2025).

- Khosravi, B.; Purkayastha, S.; Erickson, B.J.; Trivedi, H.M.; Gichoya, J.W. Exploring the potential of generative artificial intelligence in medical image synthesis: Opportunities, challenges, and future directions. Lancet Digit. Health 2025, 7, 100890.

- Pietrantonio, F.; Piasini, L.; Spandonaro, F. Internal Medicine and emergency admissions: From a national Hospital Discharge Records (SDO) study to a regional analysis. Ital. J. Med. 2016, 10, 157–167.

- Ferrari-Light, D.; Merritt, R.E.; D’Souza, D.; Ferguson, M.K.; Harrison, S.; Madariaga, M.L.; Lee, B.E.; Moffatt-Bruce, S.D.; Kneuertz, P.J. Evaluating ChatGPT as a patient resource for frequently asked questions about lung cancer surgery—A pilot study. J. Thorac. Cardiovasc. Surg. 2025, 169, 1174–1180.e18.

- Yi, Y.; Kim, K.-J. The feasibility of using generative artificial intelligence for history taking in virtual patients. BMC Res. Notes 2025, 18, 80.

- Kister, K.; Laskowski, J.; Makarewicz, A.; Tarkowski, J. Application of artificial intelligence tools in diagnosis and treatment of mental disorders. Curr. Probl. Psychiatry 2023, 24, 1–18.

- Reddy, S. Generative AI in healthcare: An implementation science informed translational path on application, integration and governance. Implement. Sci. 2024, 19, 27.

- Stanceski, K.; Zhong, S.; Zhang, X.; Khadra, S.; Tracy, M.; Koria, L.; Lo, S.; Naganathan, V.; Kim, J.; Dunn, A.G.; et al. The quality and safety of using generative AI to produce patient-centred discharge instructions. NPJ Digit. Med. 2024, 7, 329.

- Omar, M.; Soffer, S.; Agbareia, R.; Bragazzi, N.L.; Apakama, D.U.; Horowitz, C.R.; Charney, A.W.; Freeman, R.; Kummer, B.; Glicksberg, B.S.; et al. Sociodemographic biases in medical decision making by large language models. Nat. Med. 2025, 31, 1873–1881.

- Chen, M.; Mohd Said, N.; Mohd Rais, N.C.; Ho, F.; Ling, N.; Chun, M.; Ng, Y.S.; Eng, W.N.; Yao, Y.; Korc-Grodzicki, B.; et al. Remaining Agile in the COVID-19 pandemic healthcare landscape—How we adopted a hybrid telemedicine Geriatric Oncology care model in an academic tertiary cancer center. J. Geriatr. Oncol. 2022, 13, 856–861.

- Lekadir, K.; Frangi, A.F.; Porras, A.R.; Glocker, B.; Cintas, C.; Langlotz, C.P.; Weicken, E.; Asselbergs, F.W.; Prior, F.; Collins, G.S.; et al. FUTURE-AI: International consensus guideline for trustworthy and deployable artificial intelligence in healthcare. BMJ 2025, 388, e081554.

- Li, H.; Moon, J.T.; Purkayastha, S.; Celi, L.A.; Trivedi, H.; Gichoya, J.W. Ethics of large language models in medicine and medical research. Lancet Digit. Health 2023, 5, e333–e335.

- Zhang, K.; Meng, X.; Yan, X.; Ji, J.; Liu, J.; Xu, H.; Zhang, H.; Liu, D.; Wang, J.; Wang, X.; et al. Revolutionizing Health Care: The Transformative Impact of Large Language Models in Medicine. J. Med. Internet Res. 2025, 27, e59069.

- Drago, C.; Gatto, A.; Ruggeri, M. Telemedicine as technoinnovation to tackle COVID-19: A bibliometric analysis. Technovation 2023, 120, 102417.

- Aria, M.; Cuccurullo, C. bibliometrix: An R-tool for comprehensive science mapping analysis. J. Informetr. 2017, 11, 959–975.

- Pietrantonio, F.; Rosiello, F.; Alessi, E.; Pascucci, M.; Rainone, M.; Cipriano, E.; Di Berardino, A.; Vinci, A.; Ruggeri, M.; Ricci, S. Burden of COVID-19 on Italian Internal Medicine Wards: Delphi, SWOT, and Performance Analysis after Two Pandemic Waves in the Local Health Authority “Roma 6” Hospital Structures. Int. J. Environ. Res. Public Health 2021, 18, 5999.

- Savic, L.C.; Smith, A.F. How to conduct a Delphi consensus process. Anaesthesia 2023, 78, 247–250.

- Niederberger, M.; Spranger, J. Delphi Technique in Health Sciences: A Map. Front. Public Health 2020, 8, 457.

- Ruggeri, M.; Drago, C.; Rosiello, F.; Orlando, V.; Santori, C. Economic Evaluation of Treatments for Migraine: An Assessment of the Generalizability Following a Systematic Review. PharmacoEconomics 2020, 38, 473–484.

- Topol, E.J. High-performance medicine: The convergence of human and artificial intelligence. Nat. Med. 2019, 25, 44–56.

- Briganti, G.; Le Moine, O. Artificial Intelligence in Medicine: Today and Tomorrow. Front. Med. 2020, 7, 27.

- Kendziorra, J.; Seerig, K.H.; Winkler, T.J.; Gewald, H. From awareness to integration: A qualitative interview study on the impact of digital therapeutics on physicians’ practices in Germany. BMC Health Serv. Res. 2025, 25, 568.

- Moghbeli, F.; Langarizadeh, M.; Ali, A. Application of Ethics for Providing Telemedicine Services and Information Technology. Med. Arch. 2017, 71, 351.

- Mort, M.; Roberts, C.; Pols, J.; Domenech, M.; Moser, I.; The EFORTT investigators. Ethical implications of home telecare for older people: A framework derived from a multisited participative study. Health Expect. 2015, 18, 438–449.

- Wang, C.; Liu, S.; Yang, H.; Guo, J.; Wu, Y.; Liu, J. Ethical Considerations of Using ChatGPT in Health Care. J. Med. Internet Res. 2023, 25, e48009.

- Software as a Medical Device: Possible Framework for Risk Categorization and Corresponding Considerations. IMDRF. Available online: https://www.imdrf.org/documents/software-medical-device-possible-framework-risk-categorization-and-corresponding-considerations (accessed on 22 September 2025).

- World Health Organization. Telemedicine: Opportunities and Developments in Member States: Report on the Second Global Survey on eHealth. World Health Organization. 2009. Available online: https://www.afro.who.int/publications/telemedicine-opportunities-and-developments-member-state (accessed on 25 April 2025).

- Rosiello, F.; Vinci, A.; Vitali, M.; Monti, M.; Ricci, L.; D’oca, E.; Damato, F.M.; Costanzo, V.; Ferrari, G.; Ruggeri, M.; et al. Is Rome ready to react to chemical, biological, radiological, nuclear, and explosive attacks? A tabletop simulation. Ital. J. Med. 2024, 18, 2.

- Hamet, P.; Tremblay, J. Artificial intelligence in medicine. Metabolism 2017, 69, S36–S40.

- Wachter, S. Normative challenges of identification in the Internet of Things: Privacy, profiling, discrimination, and the GDPR. Comput. Law Secur. Rev. 2018, 34, 436–449.

- Tolentino, R.; Baradaran, A.; Gore, G.; Pluye, P.; Abbasgholizadeh-Rahimi, S. Curriculum Frameworks and Educational Programs in AI for Medical Students, Residents, and Practicing Physicians: Scoping Review. JMIR Med. Educ. 2024, 10, e54793.

- Subbaraman, R.; De Mondesert, L.; Musiimenta, A.; Pai, M.; Mayer, K.H.; Thomas, B.E.; Haberer, J. Digital adherence technologies for the management of tuberculosis therapy: Mapping the landscape and research priorities. BMJ Glob. Health 2018, 3, e001018.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).