Abstract

Post-5G and 6G telecommunication infrastructures face critical information security challenges due to increasing network complexity and sophisticated cyberattacks. Traditional intrusion detection systems based on statistical traffic analysis struggle to identify advanced threats that exploit semantic-level vulnerabilities in modern communication protocols. This paper proposes a Transformer-based intrusion detection system specifically designed for post-5G and 6G networks. Our approach integrates three key innovations: (1) a comprehensive feature extraction method that captures both semantic content characteristics and communication behavior patterns; (2) a dynamic semantic embedding mechanism that adaptively adjusts positional encoding based on semantic context changes; and (3) a Transformer-based classifier with multi-head attention mechanisms to model long-range dependencies in attack sequences. Extensive experiments on the CICIDS2017 and UNSW-NB15 datasets demonstrate superior performance compared to LSTM, GRU, and CNN baselines across multiple evaluation metrics. Robustness testing and cross-dataset validation confirm strong generalization capability, making the system suitable for deployment in heterogeneous post-5G and 6G telecommunication environments.

1. Introduction

Post-5G and 6G telecommunication infrastructures have become essential for modern society, supporting the communication needs of billions of devices and users worldwide. These next-generation networks promise unprecedented capabilities including ultra-high bandwidth, ultra-low latency, massive connectivity, and intelligent network management [,,]. However, with the increasing complexity of network environments and the rapid influx of access devices, information security has gradually become a key factor restricting the development of telecommunication infrastructure. On one hand, cyberattacks are becoming increasingly diverse and sophisticated. Hackers, malware, and other threats continue to launch attacks on telecommunication infrastructure, attempting to steal important data and disrupt the normal operation of systems []. This situation can lead to the disclosure of user privacy and may cause extensive communication failures, seriously affecting social and economic activities. On the other hand, the complexity of telecommunication infrastructure itself also brings difficulties to information security. Large network architectures, numerous access points, and constantly updated technologies create new security vulnerabilities that attackers can exploit [].

Traditional intrusion detection systems (IDSs) based on long short-term memory (LSTM) networks or convolutional neural networks (CNNs) have been widely deployed in telecommunication networks [,]. These methods analyze packet headers, flow statistics, and traffic patterns to identify known attack signatures and anomalous behaviors []. However, these approaches face significant limitations when applied to post-5G and 6G networks. First, they primarily rely on statistical properties of network traffic and struggle to capture semantic-level attack patterns that exploit the meaning and context of communications. Second, the massive scale and heterogeneity of post-5G and 6G networks generate enormous volumes of traffic data, making it difficult for traditional methods to process information in real time. Third, advanced persistent threats (APTs) and zero-day attacks often exhibit subtle behavioral patterns that evade signature-based detection. While some research has explored deep learning approaches for network security [,], directly detecting intrusions in telecommunication networks using transformer architectures that can model long-range dependencies and semantic context remains an underexplored area. This gap motivates our work to develop an advanced intrusion detection system specifically designed for post-5G and 6G telecommunication infrastructures.

To address these challenges, we propose a Transformer-based intrusion detection system that leverages dynamic semantic embedding and multi-head attention mechanisms. Our approach represents a significant advancement over existing methods in several key aspects. First, we extract comprehensive features from network traffic that capture both semantic content features, e.g., message similarity and information entropy, and communication behavior patterns, such as timing intervals and message lengths. This dual-perspective feature extraction enables the detection of sophisticated attacks that manifest across multiple dimensions. Second, we introduce a dynamic semantic embedding mechanism that adaptively adjusts positional encoding based on semantic context changes. This allows the model to focus on anomalous semantic transitions that often indicate intrusion attempts, improving detection accuracy for advanced threats. Third, by leveraging the Transformer architecture with its powerful self-attention mechanism, our model can capture complex dependencies across long traffic sequences, which is crucial for detecting multi-stage and coordinated attacks.

The experimental validation confirms the effectiveness of our approach for post-5G and 6G telecommunication security. On the CICIDS2017 dataset, our model achieves an F1-score of 0.92 and an AUC-ROC of 0.95, substantially outperforming LSTM, GRU, and CNN baselines. The robustness tests demonstrate that our model maintains high detection accuracy even under noisy conditions with perturbation levels up to 0.4, where baseline models experience significant performance degradation. Furthermore, cross-dataset validation shows that our model generalizes well to unseen network environments, achieving an F1-score of 0.87 when trained on UNSW-NB15 and tested on CICIDS2017, which is 8% higher than LSTM and 5% higher than CNN under the same conditions. These results indicate that our approach provides a reliable and practical solution for securing post-5G and 6G telecommunication infrastructures against diverse and evolving cyber threats.

The main contributions of this paper are as follows:

- We design a comprehensive hybrid feature extraction method for telecommunication network traffic that provides rich information for intrusion detection by considering both semantic content features and communication behavior patterns. This dual-perspective approach enables more robust detection of sophisticated attacks in post-5G and 6G networks compared to methods that rely on a single feature type.

- We propose a novel dynamic semantic embedding mechanism that integrates semantic context awareness into positional encoding, allowing the model to highlight semantic anomalies and improve the detection of advanced intrusion attempts. This adaptive mechanism adjusts the positional information based on semantic changes, enabling the model to identify potential security threats.

- We develop a complete Transformer-based intrusion detection system and validate its effectiveness, robustness, and generalization capability through extensive testing on public network security datasets. Our experimental results demonstrate significant improvements over baseline methods, with F1-score gains of ∼8% on both the CICIDS2017 and UNSW-NB15 datasets compared to LSTM-based approaches, making it suitable for deployment in post-5G and 6G telecommunication infrastructures.

The paper is organized as follows: Section 2 describes related work in telecommunication network security, intrusion detection systems, and transformer-based threat detection; Section 3 introduces the proposed method including feature extraction, dynamic semantic embedding, and model architecture; Section 4 presents the experimental setup and comprehensive analysis of the results; Section 5 concludes the paper and offers perspectives for future work in post-5G and 6G security.

2. Related Work

The development of post-5G and 6G telecommunication infrastructures introduces unprecedented security challenges that require advanced detection mechanisms beyond traditional approaches. Our work builds upon three primary research domains: security in next-generation telecommunication networks, intrusion detection systems, and the application of transformer architectures for threat detection.

2.1. Security in Post-5G and 6G Telecommunication Networks

Post-5G and 6G networks represent a paradigm shift in telecommunication infrastructure, offering ultra-high bandwidth, ultra-low latency, and massive device connectivity [,,]. However, these advanced capabilities come with significant security challenges. The increased network complexity, heterogeneous device ecosystem, and diverse communication protocols create a larger attack surface for cyber threats []. Early research has identified various security vulnerabilities in next-generation networks, including privacy leakage through side-channel attacks, unauthorized access through compromised IoT devices, and service disruption through distributed denial-of-service (DDoS) attacks []. Physical layer security methods have been proposed to protect communication channels from eavesdropping [], and encryption techniques have been developed to ensure data confidentiality []. However, these approaches primarily focus on preventing attacks rather than detecting ongoing intrusions. Advanced persistent threats (APTs) and zero-day exploits require real-time detection systems that can identify malicious activities based on behavioral patterns and semantic anomalies [,]. Unlike existing work that focuses on specific attack types, our approach provides a comprehensive intrusion detection framework suitable for the diverse threat landscape of post-5G and 6G networks.

2.2. Intrusion Detection Systems for Telecommunication Networks

Intrusion detection systems (IDSs) have been a cornerstone of network security for decades, evolving from signature-based approaches to sophisticated machine learning methods []. Traditional IDSs rely on predefined attack signatures and rule-based detection, which are effective against known threats but struggle with novel attacks []. With the advent of machine learning, anomaly-based IDSs have gained prominence, using statistical models and neural networks to identify deviations from normal behavior. Recent works have applied deep learning techniques such as convolutional neural networks (CNNs) and recurrent neural networks (RNNs) to analyze network traffic patterns and detect intrusions []. However, these approaches face limitations when applied to post-5G and 6G networks. First, the massive scale and high-speed nature of next-generation networks generate enormous volumes of traffic data that challenge real-time processing capabilities. Second, traditional feature engineering methods may not capture the semantic-level patterns that characterize sophisticated attacks in modern telecommunication systems. Third, the heterogeneity of post-5G and 6G networks, which integrate diverse technologies including edge computing, network slicing, and software-defined networking, requires detection systems that can generalize across different network environments. Our work addresses these limitations by leveraging transformer architectures and dynamic semantic embedding to capture complex attack patterns in heterogeneous telecommunication infrastructures.

2.3. Transformer-Based Threat Detection

Deep learning models, particularly those designed for sequential data analysis, have demonstrated remarkable success in cybersecurity applications. Traditional recurrent neural networks (RNNs) and long short-term memory (LSTM) networks have been widely used in intrusion detection and malware analysis [,], but struggle to capture long-range dependencies in massive data streams due to vanishing gradient problems and limited context windows. The Transformer architecture [] overcomes these limitations through self-attention mechanisms that simultaneously weigh the importance of all elements in a sequence, effectively modeling complex temporal relationships in network traffic []. Recent applications of Transformers in security domains include botnet detection [], anomaly detection in time series data [], and malware classification. However, existing transformer-based security systems typically operate on raw traffic features without considering the semantic context of communications. In post-5G and 6G networks, where semantic communication and intelligent services are becoming prevalent, attacks may exploit semantic-level vulnerabilities that are invisible to traditional traffic analysis. Our proposed solution is the first to leverage Transformers with dynamic semantic embedding to simultaneously analyze traffic patterns and semantic context for intrusion detection in post-5G and 6G telecommunication networks.

3. Detection Method

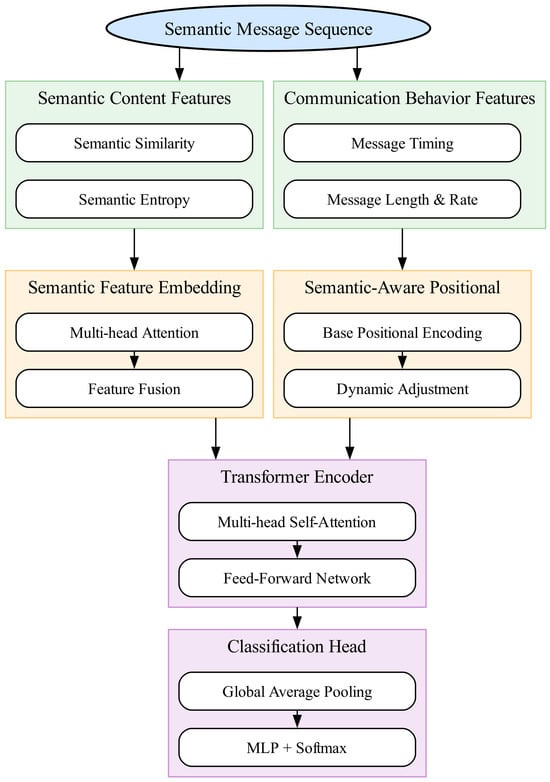

The proposed detection method focuses on detecting security threats in semantic communication, specifically identifying semantic information leaks and adversarial attacks. The overall architecture consists of semantic feature extraction, dynamic semantic embedding, and Transformer-based classification. Figure 1 illustrates the complete system architecture, showing how semantic messages flow through feature extraction, dynamic embedding, and Transformer-based classification stages. Algorithm 1 provides the pseudocode for the complete training and inference pipeline. The details are shown below.

Figure 1.

System architecture of the proposed Transformer-based threat detection model. The framework consists of three main components: (1) semantic feature extraction that captures both content features and behavior features; (2) dynamic semantic embedding with semantic-aware positional encoding; (3) Transformer encoder with a classification head for threat detection.

Algorithm 1. Dynamic Semantic Threat Detection

3.1. Semantic Feature Extraction

To detect security threats in semantic communication, such as semantic information leakage or adversarial attacks, we extract multidimensional features from semantic messages. Semantic communication encodes information into semantic representations, so our feature extraction focuses on semantic content and communication patterns. The features include semantic embedding features and communication behavior features, which are fused to capture potential threats.

3.1.1. Semantic Content Features

Semantic content features are extracted from the embedding vectors of semantic messages, reflecting the semantic richness and potential sensitivity of the information. These features capture the intrinsic properties of semantic content and provide crucial indicators for identifying malicious activities that manipulate or exploit semantic information.

For the semantic embedding vector, we assume that the semantic message sequence is $S = \{s_1, s_2, \ldots, s_T\}$, where $s_t \in \mathbb{R}^{d_s}$ is the semantic embedding vector at time $t$, which can be generated by pre-trained language models such as BERT or other transformer-based encoders. Each embedding vector has a dimension of $d_s$, typically ranging from 256 to 768 dimensions depending on the model architecture. These high-dimensional representations capture rich semantic information, including syntactic structure, contextual meaning, and semantic relationships between concepts.

For the semantic similarity feature, we calculate the cosine similarity between consecutive semantic messages to capture semantic changes and detect potential anomalies in the semantic flow. For time $t$, the similarity is $\mathrm{sim}_t = \frac{s_t \cdot s_{t-1}}{\|s_t\| \, \|s_{t-1}\|}$, where $s_0$ is set to a zero vector for initialization. This feature can identify abnormal semantic jumps, which may indicate adversarial attacks attempting to inject malicious content or semantic information leakage where sensitive data are being transmitted in unexpected patterns. A sudden drop in semantic similarity often signals a context switch that could be either a legitimate topic change or malicious manipulation. By tracking this metric over time, we can establish baseline patterns for normal communication and flag deviations that require further investigation.
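As a minimal sketch of the similarity feature, the snippet below computes the cosine similarity between consecutive embeddings in plain Python. The function names `cosine_similarity` and `similarity_sequence` are hypothetical, and returning 0.0 when either vector has (near-)zero norm, which covers the zero-vector initialization, is an assumption made for illustration rather than something the text specifies.

```python
import math

def cosine_similarity(a, b, eps=1e-12):
    """Cosine similarity of two equal-length vectors; 0.0 if either norm is ~0."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a < eps or norm_b < eps:
        return 0.0  # assumption: an undefined similarity is reported as 0
    return dot / (norm_a * norm_b)

def similarity_sequence(embeddings):
    """Similarity of each message to its predecessor (zero vector before the first)."""
    if not embeddings:
        return []
    prev = [0.0] * len(embeddings[0])
    sims = []
    for s in embeddings:
        sims.append(cosine_similarity(s, prev))
        prev = s
    return sims
```

A sharp drop in the returned sequence marks the kind of semantic jump described above.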

For the semantic entropy feature, we evaluate the uncertainty and information density of semantic messages by computing the information entropy of each embedding vector. We apply softmax normalization to $s_t$ to obtain a probability distribution, then calculate the entropy

$$H_t = -\sum_{i=1}^{d_s} p_{t,i} \log p_{t,i}$$

where $p_{t,i}$ is the normalized probability for dimension $i$. High entropy values may suggest information leakage risks, as they indicate that the semantic representation is spread across many dimensions, potentially carrying more information than typical messages. Conversely, unusually low entropy might indicate adversarial attacks that produce overly focused or artificial semantic patterns. This metric provides a complementary perspective to semantic similarity by measuring the internal structure of individual messages rather than relationships between messages.
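The entropy feature can be sketched as follows, assuming a natural logarithm and a numerically stable softmax (neither detail is fixed by the text). The helper names `softmax` and `semantic_entropy` are illustrative.

```python
import math

def softmax(v):
    """Numerically stable softmax over a list of floats."""
    m = max(v)
    exps = [math.exp(x - m) for x in v]
    total = sum(exps)
    return [e / total for e in exps]

def semantic_entropy(embedding):
    """Shannon entropy (in nats) of the softmax-normalized embedding."""
    p = softmax(embedding)
    # Terms with p == 0 contribute nothing and are skipped to avoid log(0).
    return -sum(pi * math.log(pi) for pi in p if pi > 0.0)
```

A flat embedding of dimension d gives the maximum value log(d), while an embedding dominated by a single dimension gives a value close to zero.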

3.1.2. Communication Behavior Features

Communication behavior features are based on the transmission patterns of semantic messages, including temporal and statistical features. These features complement semantic content features by capturing the behavioral characteristics of communication that may reveal attack patterns or anomalous activities. While semantic content features focus on what is being communicated, behavior features focus on how and when communication occurs.

For the message interval feature, we calculate the time interval between consecutive semantic messages as $\Delta_t = \tau_t - \tau_{t-1}$, where $\tau_t$ represents the timestamp of message $t$. Anomalous intervals may imply malicious interference, such as timing-based attacks or denial-of-service attempts that disrupt normal communication patterns. We also compute statistical measures of these intervals, including the mean and standard deviation over a sliding window, to capture both short-term fluctuations and long-term trends. Sudden changes in message timing can indicate that an attacker is attempting to inject messages, replay captured messages, or interfere with legitimate communication flows.

For the message length feature, we record the dimension or encoding length of each semantic message, denoted as $l_t$. Abnormal lengths may correspond to tampering attacks where additional information is injected into messages, or truncation attacks where critical information is removed. We track both the absolute length and the relative deviation from the expected length distribution. In semantic communication systems, message length can vary naturally based on content complexity, but statistical outliers often indicate security issues. We compute a normalized length score as $z_t = (l_t - \mu_l) / \sigma_l$, where $\mu_l$ and $\sigma_l$ are the mean and standard deviation of message lengths in the training data, allowing us to identify messages that are unusually long or short compared to typical patterns.

For the communication rate feature, we compute the rate of change in the number of messages or data volume within a sliding time window of size $w$, similar to traffic intensity but applied to semantic message flows. Specifically, we calculate the message rate as $r_t = N_t / w$, where $N_t$ is the number of messages in the window ending at time $t$. We also track the rate of change to detect sudden bursts or drops in communication activity. Rapid increases in message rate might indicate flooding attacks or information exfiltration attempts, while sudden decreases could signal jamming or selective denial-of-service attacks. This temporal analysis provides context about the overall communication dynamics that individual message features cannot capture.
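The three behavior features can be sketched in a few lines of plain Python. The function names, the convention that the first message has interval 0.0, and the half-open window (t - w, t] are assumptions made here for illustration; timestamps are assumed to be sorted in ascending order.

```python
def message_intervals(timestamps):
    """Interval to the previous message; the first message gets 0.0 (assumption)."""
    if not timestamps:
        return []
    return [0.0] + [b - a for a, b in zip(timestamps, timestamps[1:])]

def normalized_lengths(lengths, mean, std, eps=1e-12):
    """Z-score of each length against training-set statistics."""
    return [(l - mean) / (std + eps) for l in lengths]

def message_rates(timestamps, w):
    """Messages per unit time in the window (t - w, t] ending at each message."""
    if w <= 0:
        raise ValueError("window size w must be positive")
    rates = []
    start = 0  # index of the oldest message still inside the window
    for end, t in enumerate(timestamps):
        while timestamps[start] <= t - w:
            start += 1
        rates.append((end - start + 1) / w)
    return rates
```

For example, with timestamps `[0.0, 1.0, 2.0, 10.0]` and `w = 2.0`, the long gap before the last message shows up both as a large interval and as a drop in the message rate.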

After extracting these individual features, we execute the feature fusion step. We concatenate the semantic content features and communication behavior features into a comprehensive feature vector for each time block $m$. Formally, $F_m = [\mathrm{sim}_m,\, H_m,\, \Delta_m,\, l_m,\, z_m,\, r_m]$, where each component may itself be a vector of related measurements. This fused representation provides a holistic view of both the semantic content and the communication context, enabling our model to detect sophisticated attacks that manifest across multiple feature dimensions. The fusion process preserves the complementary information from different feature types while creating a unified input representation for the subsequent embedding and classification stages.
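Feature fusion by concatenation might look like the sketch below. The function name `fuse_features` and the ordering of the components are assumptions; the only behavior taken from the text is that each component may be a scalar or a vector and that all components are concatenated into one vector.

```python
def fuse_features(*components):
    """Concatenate scalar or vector-valued components into one flat feature vector."""
    fused = []
    for component in components:
        if isinstance(component, (list, tuple)):
            fused.extend(float(x) for x in component)  # vector-valued component
        else:
            fused.append(float(component))             # scalar component
    return fused
```

Calling `fuse_features(0.9, 1.2, [0.5, 0.1], -0.3, 2.0)` yields a six-dimensional vector in which the interval component contributes two entries (for instance, a raw interval and a windowed mean).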

3.2. Dynamic Semantic Embedding

To handle the temporal dynamics of semantic data, this paper proposes a dynamic semantic embedding method that combines semantic-aware positional encoding and an adaptive adjustment mechanism. The embedding module fuses raw semantic data with extracted features and provides positional information to the model.

3.2.1. Semantic Feature Embedding

For the extracted semantic features, we design a sophisticated embedding layer to capture semantic relationships and interactions between different feature components. Rather than using simple linear transformations that treat each feature independently, we employ multi-head self-attention to preprocess semantic embedding vectors, allowing the model to learn complex dependencies and correlations within the semantic data. This approach is particularly important for semantic communication security, where threats often manifest as subtle patterns across multiple related features rather than isolated anomalies.

Specifically, we apply multi-head attention to the semantic message sequence $S$, i.e., $E_s = \mathrm{MHA}(S, S, S)$, where $\mathrm{MHA}(\cdot)$ represents the multi-head attention mechanism. The multi-head attention operation can be formally expressed as $\mathrm{MHA}(Q, K, V) = \mathrm{Concat}(\mathrm{head}_1, \ldots, \mathrm{head}_h)\, W^O$, where each attention head

$$\mathrm{head}_i = \mathrm{Attention}(Q W_i^Q,\; K W_i^K,\; V W_i^V)$$

computes attention using learned query, key, and value projection matrices $W_i^Q$, $W_i^K$, and $W_i^V$, and $W^O$ is the output projection matrix. This multi-head mechanism allows the model to attend to information from different representation subspaces, capturing diverse semantic relationships simultaneously.

Simultaneously, we map the extracted features to the same dimension as the semantic embeddings via a fully connected layer with non-linear activation, i.e.,

$$E_f = \mathrm{ReLU}(W_f F_m + b_f)$$

where $W_f \in \mathbb{R}^{d_s \times d_f}$ is a weight matrix, $b_f \in \mathbb{R}^{d_s}$ is a bias vector, and $d_f$ is the dimension of the fused feature vector $F_m$. The ReLU activation function introduces non-linearity, enabling the model to learn complex feature transformations. We also apply layer normalization and dropout with a rate of 0.1 to this feature embedding to improve training stability and prevent overfitting.

The fused embedding is computed as $E = E_s + E_f$, which is an element-wise addition to retain both semantic and feature information. This additive fusion strategy preserves the original semantic representations while incorporating the extracted behavioral and statistical features. The resulting embedding serves as a comprehensive representation that combines the rich semantic content from pre-trained language models with the security-relevant features we explicitly extracted. This dual-stream architecture ensures that the model has access to both learned semantic representations and hand-crafted security features, leveraging the strengths of both data-driven and knowledge-driven approaches.
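The feature-embedding branch and the additive fusion can be illustrated for a single time step as below. This is a sketch under stated assumptions: layer normalization is shown without learnable gain and bias, dropout is omitted (as at inference time), and the semantic-branch output is passed in as a ready-made vector. All function names are hypothetical.

```python
import math

def linear(W, x, b):
    """Affine map W x + b, with W given as a list of rows."""
    return [sum(w * xj for w, xj in zip(row, x)) + bi for row, bi in zip(W, b)]

def relu(v):
    return [max(0.0, x) for x in v]

def layer_norm(v, eps=1e-5):
    """Layer normalization without learnable scale/shift (simplifying assumption)."""
    mean = sum(v) / len(v)
    var = sum((x - mean) ** 2 for x in v) / len(v)
    return [(x - mean) / math.sqrt(var + eps) for x in v]

def embed_features(W_f, b_f, features):
    """ReLU(W_f F + b_f) followed by layer normalization; dropout is skipped."""
    return layer_norm(relu(linear(W_f, features, b_f)))

def fuse_embeddings(e_s, e_f):
    """Element-wise addition of the semantic and feature embeddings."""
    if len(e_s) != len(e_f):
        raise ValueError("semantic and feature embeddings must share a dimension")
    return [a + b for a, b in zip(e_s, e_f)]
```

The dimension check makes explicit why the feature vector has to be projected to the semantic embedding dimension before the two streams can be added.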

3.2.2. Semantic-Aware Positional Embedding

Positional embedding is crucial for transformer models to understand the sequential order of inputs, as the self-attention mechanism itself is permutation-invariant. In our semantic communication security context, positional information is particularly important because attack patterns often exhibit temporal characteristics, and the relative ordering of semantic messages can reveal malicious activities. However, standard positional encodings treat all positions uniformly, which may not be optimal for semantic data where the importance of position varies based on semantic content changes. Therefore, we propose a semantic-aware positional embedding that considers both the order of semantic messages and changes in semantic context, using dynamic encoding based on semantic similarity.

For the base positional encoding, we use sinusoidal encoding to represent absolute positions, following the standard Transformer architecture. However, for semantic data, we also incorporate relative positional encoding to capture the relationships between messages at different time steps. For position $n$ in the sequence, the base encoding employs the standard Transformer sinusoidal function:

$$PE_{\text{base}}(n, 2i) = \sin\!\left(\frac{n}{10000^{2i/d_{\text{model}}}}\right), \qquad PE_{\text{base}}(n, 2i+1) = \cos\!\left(\frac{n}{10000^{2i/d_{\text{model}}}}\right)$$

where $i$ indexes the dimension and $d_{\text{model}}$ is the model dimension. This encoding provides a continuous representation of position that allows the model to learn to attend by relative positions, as the encoding for position $n + k$ can be represented as a linear function of the encoding for position $n$.
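A short sketch of the standard sinusoidal encoding for a single position is given below; the function name `sinusoidal_encoding` is illustrative. Even indices hold the sine terms and odd indices the cosine terms, both sharing the frequency 1 / 10000^(2i / d_model).

```python
import math

def sinusoidal_encoding(n, d_model):
    """Standard Transformer sinusoidal positional encoding for position n."""
    pe = [0.0] * d_model
    for k in range(0, d_model, 2):  # k = 2i is the even dimension index
        angle = n / (10000 ** (k / d_model))
        pe[k] = math.sin(angle)
        if k + 1 < d_model:
            pe[k + 1] = math.cos(angle)
    return pe
```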

For the semantic dynamic adjustment, we dynamically adjust the positional embedding based on semantic similarity to emphasize positions where semantic content changes significantly. The intuition is that semantic discontinuities often indicate important events such as topic changes, context switches, or potential security threats. We define a dynamic weight $\lambda_n = 1 - \mathrm{sim}_n$, where $\mathrm{sim}_n$ is the semantic similarity between consecutive messages computed as described in Section 3.1.1. When semantic similarity is high, $\lambda_n$ is small, and the positional adjustment is minimal. Conversely, when semantic similarity drops, $\lambda_n$ increases, amplifying the positional signal. The dynamic adjustment term is then computed as follows:

$$PE_{\text{dyn}}(n) = \lambda_n \, v_{\text{adj}}$$

which isolates the learnable semantic modulation from the base encoding. Here, $v_{\text{adj}} \in \mathbb{R}^{d_{\text{model}}}$ is a learnable adjustment vector that the model optimizes during training to best capture semantic mutation patterns. This learnable component allows the model to adapt the positional encoding to the specific characteristics of semantic communication security threats observed in the training data.

The final positional embedding is $PE(n) = PE_{\text{base}}(n) + PE_{\text{dyn}}(n)$, which combines the standard positional information with the semantic-aware dynamic adjustment. This hybrid approach ensures that the model maintains awareness of absolute and relative positions while also being sensitive to semantic context changes. By modulating positional encodings based on semantic similarity, our method allows the model to focus attention on semantic mutation points where threats are more likely to occur, improving detection accuracy while maintaining the temporal structure necessary for understanding sequential attack patterns.
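To make the combination concrete, the sketch below adds a similarity-dependent adjustment to the sinusoidal base encoding. It rests on two assumptions that the surviving text only describes qualitatively: the dynamic weight is taken as one minus the cosine similarity (small when similarity is high, larger when it drops), and the adjustment is that weight times a learnable vector, here passed in as the fixed list `v_adj`. Names are hypothetical.

```python
import math

def sinusoidal_encoding(n, d_model):
    """Standard sinusoidal base encoding for position n."""
    pe = [0.0] * d_model
    for k in range(0, d_model, 2):
        angle = n / (10000 ** (k / d_model))
        pe[k] = math.sin(angle)
        if k + 1 < d_model:
            pe[k + 1] = math.cos(angle)
    return pe

def semantic_aware_encoding(n, sim_n, v_adj):
    """Base encoding plus a similarity-scaled adjustment vector."""
    weight = 1.0 - sim_n  # assumed form of the dynamic weight
    base = sinusoidal_encoding(n, len(v_adj))
    return [b + weight * v for b, v in zip(base, v_adj)]
```

With `sim_n = 1.0` the result equals the base encoding, so positions inside a semantically stable stretch are left untouched, while a drop in similarity shifts the encoding along `v_adj`.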

Unlike Transformer-XL and DeBERTa, which incorporate relative position biases within each attention head, the proposed semantic-aware positional embedding modulates the positional channels before attention based on real-time semantic similarity. Their relative attention tables encode only distance indices or syntactic offsets and remain static once training finishes; our mechanism recalculates $\lambda_n$ for every message using $\mathrm{sim}_n$, so the magnitude and direction of the positional perturbation depend on the detected semantic mutation rather than only token spacing. This semantic-driven re-weighting lets the detector mark semantically disruptive turns even when they occur at constant physical lags.

Dynamic positional encodings built for anomaly detectors such as TranAD [] typically rely on deterministic oscillations or trend filters tied to network-level features, meaning their positional gates mirror the same pattern for every flow once the anomaly score is computed. In contrast, we recompute $\lambda_n$ from joint semantic–behavioral features so the encoder can suppress benign context changes while amplifying attack-specific semantic ruptures. Similarly, adversarially robust semantic communication schemes (e.g., []) concentrate on protecting message delivery by embedding semantics into resistance-friendly constellations; our modulation targets threat detection, coupling positional perturbation with classifier gradients to highlight suspicious segments rather than hardening the transmitted signal.

3.3. Model Construction and Security Detection

We construct a Transformer-based semantic security detection model to classify threats in semantic communication, e.g., normal, leakage, and adversarial attacks. The model includes a Transformer encoder and a task-specific head.

3.3.1. Transformer Architecture

The input to our Transformer model is the fused embedding $X = E + PE$, which combines the semantic and feature embeddings with the semantic-aware positional encoding. This rich input representation provides the Transformer with comprehensive information about both the content and context of semantic messages. The Transformer encoder consists of multiple stacked layers, each containing multi-head self-attention mechanisms and feed-forward networks, designed to capture long-range dependencies in the semantic sequence and model complex threat patterns that may span multiple messages.

Each Transformer layer $l$ processes the input through two main sub-layers. First, the multi-head self-attention sub-layer computes attention scores across all positions in the sequence, allowing the model to identify relationships between distant messages. The attention mechanism is defined as follows:

$$\mathrm{Attention}(Q, K, V) = \mathrm{softmax}\!\left(\frac{Q K^{\top}}{\sqrt{d_k}}\right) V$$

where $Q$, $K$, and $V$ are the query, key, and value matrices derived from the input, and $d_k$ is the key dimension. For real-time detection scenarios, we introduce a causal attention mask to ensure only historical data is used, preventing the model from accessing future information during inference. This mask sets the pre-softmax attention scores of future positions to negative infinity, so their attention weights become zero and predictions at time $t$ depend only on messages up to time $t$, making the system suitable for online threat detection in live communication streams.
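The effect of the causal mask can be seen in a small single-head sketch. It implements scaled dot-product attention in plain Python and assigns a score of negative infinity to every future position before the softmax. The function name and the single-head, unbatched setting are simplifications chosen for illustration.

```python
import math

def causal_attention(Q, K, V):
    """Single-head scaled dot-product attention; position t sees only positions <= t."""
    d_k = len(K[0])
    outputs, weights = [], []
    for t, q in enumerate(Q):
        # Future positions get a score of -inf, so their softmax weight is exactly 0.
        scores = [
            sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) if j <= t else -math.inf
            for j, k in enumerate(K)
        ]
        m = max(scores)  # finite, because position t itself is never masked
        exps = [math.exp(s - m) for s in scores]
        total = sum(exps)
        w = [e / total for e in exps]
        weights.append(w)
        outputs.append([sum(wj * v[c] for wj, v in zip(w, V)) for c in range(len(V[0]))])
    return outputs, weights
```

The returned weight matrix is lower triangular: the first message attends only to itself, and no row places weight on a later message.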

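Following the attention sub-layer, we apply a position-wise feed-forward network consisting of two linear transformations with a ReLU activation in between:

$$\mathrm{FFN}(x) = W_2 \, \mathrm{ReLU}(W_1 x)$$

where $W_1 \in \mathbb{R}^{d_{ff} \times d_{\text{model}}}$ and $W_2 \in \mathbb{R}^{d_{\text{model}} \times d_{ff}}$ are weight matrices, with $d_{ff}$ typically set to $4 d_{\text{model}}$ to provide sufficient capacity for learning complex transformations. We add residual connections around both sub-layers, followed by layer normalization, to enhance training stability and enable effective gradient flow through deep networks. The complete layer operation is

$$\tilde{H}^{(l)} = \mathrm{LayerNorm}\big(H^{(l-1)} + \mathrm{MHA}(H^{(l-1)})\big), \qquad H^{(l)} = \mathrm{LayerNorm}\big(\tilde{H}^{(l)} + \mathrm{FFN}(\tilde{H}^{(l)})\big)$$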

This architecture allows our model to build increasingly abstract representations of semantic communication patterns across layers, with early layers capturing local patterns and later layers integrating information across the entire sequence to detect sophisticated multi-stage attacks.

3.3.2. Threat Detection and Classification

After processing the semantic message sequence through the Transformer encoder, we obtain contextualized representations that capture both local and global patterns in the communication. For threat detection and classification, we apply a multi-layer perceptron (MLP) classification head on top of the Transformer output. Specifically, we take the final hidden state corresponding to each time step and apply global average pooling across the sequence dimension to obtain a fixed-size representation: $h_{\text{pool}} = \frac{1}{T} \sum_{i=1}^{T} h_i^{(L)}$, where $h_i^{(L)}$ is the output of the final Transformer layer for position $i$. This pooled representation is then fed through a two-layer MLP with dropout for regularization:

$$\hat{y} = \mathrm{softmax}\big(W_{c2} \, \mathrm{Dropout}(\mathrm{ReLU}(W_{c1} h_{\text{pool}}))\big)$$

where $W_{c1} \in \mathbb{R}^{d_h \times d_{\text{model}}}$, $W_{c2} \in \mathbb{R}^{C \times d_h}$, and $C$ is the number of threat categories.

The output layer employs Softmax activation to generate a probability distribution over threat categories, which include normal semantic communication, semantic information leakage, adversarial semantic attacks, and other potential threat types. Each output probability $\hat{y}_c$ represents the model’s confidence that the input sequence belongs to class $c$. During inference, we classify the sequence as the category with the highest probability: $\hat{c} = \arg\max_c \hat{y}_c$.
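The classification head can be sketched as mean pooling, a two-layer MLP, softmax, and an arg-max. The sketch assumes inference mode (dropout disabled) and bias-free linear layers; the names `mean_pool`, `classify`, `W1`, and `W2` are hypothetical and the weights are passed in as lists of rows.

```python
import math

def mean_pool(H):
    """Average the per-position hidden states into one fixed-size vector."""
    T = len(H)
    return [sum(h[c] for h in H) / T for c in range(len(H[0]))]

def matvec(W, x):
    return [sum(w * xj for w, xj in zip(row, x)) for row in W]

def classify(H, W1, W2):
    """Return (class probabilities, predicted class index) for one sequence."""
    pooled = mean_pool(H)
    hidden = [max(0.0, z) for z in matvec(W1, pooled)]  # ReLU; dropout is off at inference
    logits = matvec(W2, hidden)
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    probs = [e / total for e in exps]
    predicted = max(range(len(probs)), key=probs.__getitem__)
    return probs, predicted
```

In a three-class setting (for example normal, leakage, adversarial), `predicted` is the index of the most probable category and `probs[predicted]` can serve as a confidence score for alert thresholds.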

For the loss function, we use Focal Loss to handle class imbalance, which is common in security datasets where normal traffic significantly outnumbers attack instances. The Focal Loss is defined as follows:

$$\mathcal{L}_{\text{focal}} = -\sum_{c=1}^{C} \alpha_c \, y_c \, (1 - \hat{y}_c)^{\gamma} \log \hat{y}_c$$

where $y_c$ is the ground truth label, $\alpha_c$ is a class-specific weighting factor, and $\gamma$ is the focusing parameter (typically set to 2). The term $(1 - \hat{y}_c)^{\gamma}$ down-weights the loss for well-classified examples, allowing the model to focus on hard-to-classify instances and minority classes. This is particularly important for detecting rare but critical threats.
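For a single example with a one-hot label, the focal loss reduces to one term for the true class. The sketch below computes that term; the function name, the clamping constant `eps`, and the per-class weight list `alpha` are illustrative choices.

```python
import math

def focal_loss(probs, label, alpha, gamma=2.0, eps=1e-12):
    """Focal loss for one example: -alpha_c * (1 - p_c)^gamma * log(p_c) for the true class c."""
    p_true = min(max(probs[label], eps), 1.0)  # clamp to avoid log(0)
    return -alpha[label] * (1.0 - p_true) ** gamma * math.log(p_true)
```

With `gamma = 2`, an example predicted at probability 0.9 contributes about one hundredth of its cross-entropy loss, whereas an example predicted at 0.1 keeps 81% of it, which is how the loss shifts emphasis toward hard and minority-class samples. Setting `gamma = 0` recovers class-weighted cross-entropy.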

Beyond classification, we also analyze attention weights to identify key semantic messages or positions that contribute most to threat detection decisions. By examining the attention distribution from the final Transformer layer, we can identify which messages the model focuses on when making predictions. High attention weights often correspond to anomalous semantic patterns or critical transition points in attack sequences. This interpretability feature not only helps validate the model’s decisions but also provides security analysts with actionable insights about where threats are located within communication streams, facilitating faster incident response and forensic analysis.

3.3.3. Overall Process

The complete threat detection pipeline operates in several coordinated stages. The first step is to input the semantic message sequence from the communication channel and extract both semantic features and communication behavior features as described in Section 3.1. These features are computed in real-time as messages arrive, maintaining a sliding window of recent history for temporal feature calculation.
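The sliding window mentioned above can be kept with a bounded queue so that temporal features are updated as each message arrives. The following is a minimal sketch; the class name, the window size, and the choice of mean inter-arrival time as the example feature are assumptions.

```python
from collections import deque

class SlidingWindow:
    """Keep the most recent `size` message timestamps and derive temporal features."""

    def __init__(self, size):
        # A deque with maxlen discards the oldest entry automatically.
        self.timestamps = deque(maxlen=size)

    def push(self, timestamp):
        self.timestamps.append(timestamp)

    def mean_inter_arrival(self):
        """Mean gap between consecutive timestamps in the window (0.0 if fewer than two)."""
        ts = list(self.timestamps)
        if len(ts) < 2:
            return 0.0
        gaps = [b - a for a, b in zip(ts, ts[1:])]
        return sum(gaps) / len(gaps)

window = SlidingWindow(size=3)
for t in (0.0, 1.0, 3.0):
    window.push(t)
first = window.mean_inter_arrival()    # gaps 1.0 and 2.0
window.push(7.0)                       # oldest timestamp (0.0) is evicted
second = window.mean_inter_arrival()   # gaps 2.0 and 4.0
print(first, second)  # 1.5 3.0
```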

Then, we apply dynamic semantic embedding to obtain the model input representation. This involves processing semantic embeddings through multi-head attention, mapping extracted features through fully connected layers, fusing these representations, and adding semantic-aware positional encodings. The resulting embeddings capture both the semantic content and the behavioral context of the communication, providing a rich input for the Transformer model.

Next, we process the embedded sequence through the Transformer model, which applies multiple layers of self-attention and feed-forward transformations to build hierarchical representations of the semantic communication patterns. The ability to model long-range dependencies allows the model to detect complex attack patterns that unfold over extended time periods, such as multi-stage intrusions or gradual information exfiltration.

Finally, the classification head outputs threat predictions, which can be used to trigger alerts, block suspicious messages, or initiate deeper inspection. We also incorporate domain knowledge, such as semantic protocol rules and known attack signatures, to optimize detection accuracy. This can be done through post-processing filters that validate model predictions against protocol specifications, or through auxiliary loss terms that encourage the model to learn security-relevant patterns. The combination of data-driven learning and domain knowledge creates a robust detection system that balances high accuracy with low false positive rates, making it suitable for deployment in production semantic communication networks.

4. Experiments

4.1. Experimental Setup

4.1.1. Datasets

We evaluate the proposed method on two widely used network security datasets, CICIDS2017 and UNSW-NB15, adapted to semantic communication security scenarios.

The CICIDS2017 dataset contains diverse application-layer protocol traffic (HTTP, FTP, SSH, email) with approximately 2.8 million records and 80 flow-level statistical features. Because the public release does not include raw payloads, we normalize the numerical feature vectors and pass them through the learnable projection in our dynamic semantic embedding module, yielding semantic tokens that capture protocol behavior and map attack types (DoS, infiltration, brute force) to semantic threats (interference, information leakage).

The UNSW-NB15 dataset provides 2.5 million records with 49 numerical attributes describing traffic statistics, including backdoor, injection, reconnaissance, and worm attacks. We apply the same normalization and semantic projection pipeline to obtain embeddings linked to semantic adversarial attacks (tampering, injection).

Traffic-to-token conversion. To clarify this process, we detail how raw traffic becomes semantic tokens. Raw packet captures from CICIDS2017 and UNSW-NB15 are parsed into bidirectional flows using the five-tuple (source IP, destination IP, source port, destination port, protocol) with a 60 s timeout. For every flow, we compute the same hand-crafted statistics that accompany the public datasets, covering packet counts, byte counts, temporal indicators (mean inter-arrival time, burstiness), and protocol flags. Each flow therefore becomes a feature vector whose entries summarize how the communication behaves rather than its payload, which is essential because payloads are partially redacted in both datasets.
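The flow construction step can be illustrated as follows. This sketch assumes that the 60 s timeout is an idle (inactivity) timeout and that packets arrive sorted by timestamp; the tuple layout, the function names, and the three statistics computed are illustrative only and cover a small subset of the features described above.

```python
def flow_key(src_ip, dst_ip, src_port, dst_port, proto):
    """Direction-independent key so both directions map to the same flow."""
    a, b = (src_ip, src_port), (dst_ip, dst_port)
    return (min(a, b), max(a, b), proto)

def aggregate_flows(packets, timeout=60.0):
    """Group time-sorted packets into bidirectional flows.

    packets: iterable of (timestamp, src_ip, dst_ip, src_port, dst_port, proto, size).
    Returns a list of dicts with packet count, byte count, and mean inter-arrival time.
    """
    active, finished = {}, []
    for ts, sip, dip, sp, dp, proto, size in packets:
        key = flow_key(sip, dip, sp, dp, proto)
        flow = active.get(key)
        if flow is not None and ts - flow["last"] > timeout:
            finished.append(flow)      # idle too long: close the old flow
            flow = None
        if flow is None:
            flow = {"key": key, "first": ts, "last": ts, "packets": 0, "bytes": 0}
            active[key] = flow
        flow["packets"] += 1
        flow["bytes"] += size
        flow["last"] = ts
    finished.extend(active.values())
    for f in finished:
        n = f["packets"]
        f["mean_iat"] = (f["last"] - f["first"]) / (n - 1) if n > 1 else 0.0
    return finished

packets = [
    (0.0, "10.0.0.1", "10.0.0.2", 1234, 80, "TCP", 100),
    (1.0, "10.0.0.2", "10.0.0.1", 80, 1234, "TCP", 200),    # reverse direction, same flow
    (100.0, "10.0.0.1", "10.0.0.2", 1234, 80, "TCP", 50),   # gap exceeds 60 s: new flow
]
flows = aggregate_flows(packets)
print(len(flows))  # 2
```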

Before entering the Transformer, these feature vectors are normalized feature-wise using training-set means and standard deviations so that quantities measured in bytes, seconds, or binary flags contribute comparably. Let $x$ denote a normalized vector for one time step. We process $x$ through the learnable projection inside the dynamic semantic embedding module, implemented as two linear layers with GELU activation and dropout. This projection learns to highlight statistics that align with semantic behaviors, such as steady entropy growth or abnormal pacing, and produces a latent representation $z$. We treat $z$ as a semantic token for the corresponding time step. Tokens are assembled chronologically within each flow window, which provides the Transformer with an ordered semantic sequence that tracks how the communication intent evolves over time.
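The normalization and projection described above can be sketched as follows. The weights are hypothetical, dropout is omitted, and placing GELU between the two linear layers is an assumption, since the text does not state where the activation sits.

```python
import math

def fit_standardizer(train_rows):
    """Compute per-feature mean and standard deviation on the training set only."""
    n, d = len(train_rows), len(train_rows[0])
    means = [sum(r[j] for r in train_rows) / n for j in range(d)]
    stds = []
    for j in range(d):
        var = sum((r[j] - means[j]) ** 2 for r in train_rows) / n
        stds.append(math.sqrt(var) or 1.0)  # constant features: avoid division by zero
    return means, stds

def standardize(row, means, stds):
    """Apply z-score normalization with the stored training statistics."""
    return [(v - m) / s for v, m, s in zip(row, means, stds)]

def gelu(v):
    """Exact GELU, v * Phi(v), using the Gaussian CDF."""
    return 0.5 * v * (1.0 + math.erf(v / math.sqrt(2.0)))

def project(x, W1, b1, W2, b2):
    """Two linear layers with GELU in between (dropout omitted)."""
    hidden = [gelu(sum(w * xi for w, xi in zip(row, x)) + b) for row, b in zip(W1, b1)]
    return [sum(w * h for w, h in zip(row, hidden)) + b for row, b in zip(W2, b2)]

train = [[0.0, 5.0], [2.0, 5.0]]              # second feature is constant
means, stds = fit_standardizer(train)
x = standardize([2.0, 5.0], means, stds)      # [1.0, 0.0]
token = project(x, [[1.0, 0.0], [0.0, 1.0]], [0.0, 0.0], [[1.0, 1.0]], [0.0])
```

Fitting the statistics on the training split alone keeps validation and test information out of the preprocessing step.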

Although CICIDS2017 and UNSW-NB15 lack verbatim payload semantics, their flow-level statistics still encode structured intent because they summarize purposeful protocol exchanges between endpoints. Each numerical attribute, like burst rate, inter-arrival variance, TCP flag proportion, or packet size distribution, is produced by decisions that sender and receiver take in reaction to application goals or attack objectives. Repeated SYN bursts with incomplete handshakes reflect reconnaissance intent, evenly spaced HTTP posts mirror exfiltration workflows, while entropy spikes in packet lengths follow obfuscation tactics. In semantic communication security, we therefore interpret these long-range statistical regularities as pragmatic semantics: they capture the goals of participants even when the literal words are redacted.

The learnable projection does not fabricate artificial payloads; it reorganizes these behavioral descriptors into tokens whose geometry respects known semantic threat categories, namely interference, tampering, and eavesdropping. By constraining the projection with downstream supervision, the resulting embedding preserves the causal link between a statistical pattern and the attack meaning it conveys, yielding a semantically coherent sequence despite the absence of raw text or audio. This representation remains valid when payloads are encrypted, because it infers intent from the same flow statistics, which do not depend on payload content. Treating these statistics as semantic surrogates therefore bridges real-world operational practice with the semantic communication framework evaluated here.

Data preprocessing includes (1) cleaning missing values and outliers, (2) converting traffic features into semantic sequences, (3) normalizing numerical features, and (4) temporal windowing based on flow identifiers. Datasets are split into training (70%), validation (15%), and test (15%) sets using stratified sampling. The sequence length is fixed to 100 time steps with zero-padding for shorter sequences. For cross-dataset validation, both datasets are mapped into a shared 80-dimensional schema: the 49 UNSW-NB15 attributes fill the overlapping indices, while the remaining CICIDS2017 fields are zero-padded. A binary presence mask is concatenated so the learnable projection can discount dataset-specific padding and operate on a harmonized feature space.
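The padding and schema-harmonization steps can be illustrated with the sketch below. It rests on two loud assumptions: the presence mask is modeled as a single flag that is 1 only when every slot of the shared schema is populated (chosen to match the 81-dimensional input reported in Section 4.1.3), and `index_map` is a hypothetical lookup from feature name to slot index.

```python
def pad_sequence(tokens, length=100, dim=None):
    """Truncate or zero-pad a non-empty list of feature vectors to `length` steps."""
    dim = dim if dim is not None else len(tokens[0])
    clipped = [list(t) for t in tokens[:length]]
    return clipped + [[0.0] * dim for _ in range(length - len(clipped))]

def to_shared_schema(values, index_map, width=80):
    """Place named features into a fixed-width vector and append a presence flag.

    values    : dict of feature name -> value for one record
    index_map : dict of feature name -> slot index (assumed one-to-one)
    Returns a list of length width + 1; the last entry is 1.0 when all slots
    are populated and 0.0 when some slots hold zero padding.
    """
    vector = [0.0] * width
    for name, value in values.items():
        vector[index_map[name]] = float(value)
    complete = 1.0 if len(values) == width else 0.0
    return vector + [complete]

padded = pad_sequence([[1.0, 2.0]], length=3)
partial = to_shared_schema({"a": 5.0}, {"a": 1}, width=3)
full = to_shared_schema({"a": 1.0, "b": 2.0}, {"a": 0, "b": 1}, width=2)
print(padded)   # [[1.0, 2.0], [0.0, 0.0], [0.0, 0.0]]
print(partial)  # [0.0, 5.0, 0.0, 0.0]
print(full)     # [1.0, 2.0, 1.0]
```

A per-feature mask would be an equally plausible reading of the text; the single-flag variant is used here only because it reproduces the stated input width.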

4.1.2. Comparison Models

We select several state-of-the-art baseline models: (1) LSTM: a two-layer bidirectional LSTM with 256 hidden units capturing temporal dependencies via gating mechanisms, (2) CNN: three 1D convolutional layers (kernel sizes 3, 5, 7) with max pooling for local feature extraction, (3) GRU: a lightweight recurrent variant with two bidirectional layers of 256 hidden units, and (4) BERT-Transformer: fine-tuned BERT-base (12 layers, 768 dimensions) with a classification head. All models use identical input features and optimization settings for fair comparison.

4.1.3. Experimental Parameters

The model parameters are uniformly set as sequence length 100, batch size 64, training epochs 50, and learning rate 0.001 (Adam optimizer). The Transformer model has 4 layers, 8 attention heads, and a hidden dimension of 256. The position embedding dimension is 64, and the dynamic weight is evaluated at each time step. The semantic projection module processes 81 input features (80 normalized statistical attributes plus 1 presence mask) through a two-layer stack to yield 128-dimensional semantic tokens. The feed-forward network dimension is set to 512 with GELU activation, and the dropout rate is 0.1 across all layers. Parameters for comparison models align with their standard implementations, e.g., LSTM hidden dimension 256. Experiments are repeated 5 times, with average results reported to ensure stability.

4.1.4. Evaluation Metrics

We employ multiple metrics to comprehensively evaluate model performance: (1) Accuracy: Overall proportion of correctly classified samples, (2) Recall: Proportion of actual threats correctly identified, crucial for minimizing missed attacks, (3) F1-Score: Harmonic mean of precision and recall, providing balanced performance measurement, (4) AUC-ROC: Area under the ROC curve, evaluating classification ability across thresholds, and (5) Privacy Leakage Risk Score: Model-specific metric that quantifies how accurately a post-hoc privacy auditor can recover sensitive semantic attributes from a model’s latent representations. The auditor is a regularized multinomial logistic probe that is trained with five-fold cross-validation on frozen intermediate features to predict protected attributes (e.g., user identity or scenario labels) using only information that an adversary could observe; its held-out accuracy therefore measures how much sensitive meaning remains linearly accessible in the representations. We report the min–max normalised value $(a - a_{\min})/(a_{\max} - a_{\min})$, where $a$ is the auditor’s held-out accuracy and the extrema $a_{\min}$ and $a_{\max}$ are taken across all evaluated models and datasets to enable direct comparison. A score near 1 indicates that sensitive semantics can be inferred with high confidence, signalling weak semantic confidentiality, whereas a score near 0 shows that the model suppresses leakage and leaves the auditor close to chance. Secure semantic communication requires delivering task-relevant meaning while concealing unintended semantics; this risk score indicates how well each model protects private content. All metrics are reported as the mean and standard deviation across 5 runs on stratified splits.
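The min–max normalisation behind the Privacy Leakage Risk Score is simple to express in code. The sketch below uses hypothetical auditor accuracies and hypothetical model labels purely to show the arithmetic; it does not reproduce any value from the experiments.

```python
def min_max_normalize(scores):
    """Rescale raw auditor accuracies to [0, 1] across all evaluated entries.

    scores: dict mapping an identifier (e.g. a model/dataset pair) to held-out accuracy.
    """
    lo, hi = min(scores.values()), max(scores.values())
    if hi == lo:
        # All auditors perform identically; no ranking information is available.
        return {key: 0.0 for key in scores}
    return {key: (value - lo) / (hi - lo) for key, value in scores.items()}

# Hypothetical auditor accuracies for three models.
raw = {"model_a": 0.5, "model_b": 0.75, "model_c": 1.0}
risk = min_max_normalize(raw)
print(risk)  # {'model_a': 0.0, 'model_b': 0.5, 'model_c': 1.0}
```

Because the extrema come from the evaluated set itself, the resulting score is relative: it ranks models against each other and is not an absolute measure of leakage.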

4.2. Experimental Results

4.2.1. Main Experiment: Model Performance Comparison

Table 1 shows the performance of each model on the CICIDS2017 and UNSW-NB15 datasets under a fixed sequence length of 100. The proposed Transformer model achieves the best results on most metrics, particularly leading in F1-score and AUC-ROC, as its dynamic semantic embedding and self-attention mechanism effectively capture semantic threat patterns. BERT-Transformer performs similarly but at a higher computational cost. LSTM and GRU exhibit slightly lower recall, potentially because they miss long-range dependencies. CNN relies on local feature extraction, which appears insufficient for threats that unfold across a whole sequence. The privacy leakage risk scores show that all models exhibit relatively high normalized risk values (0.77–0.88), indicating that the privacy auditor can recover protected attributes from their representations with moderate to high accuracy. Our model’s score of 0.88 on CICIDS2017 reflects that its rich semantic embeddings, while effective for threat detection, retain linearly accessible sensitive information. This trade-off between detection performance and privacy protection suggests that future work should explore privacy-preserving techniques such as adversarial training or differential privacy to reduce leakage risk without compromising security detection capability.

Table 1. Model Performance Comparison.

4.2.2. Resource Utilization Analysis

Table 2 compares the resource requirements of all evaluated models on a representative CICIDS2017 training run executed with a single NVIDIA GPU and a 32-core CPU host. The proposed Transformer finishes training in 6.4 h, consuming 9.2 GB of device memory and delivering 18.7 ms inference latency, which is notably lighter than the BERT-based baseline. BERT requires nearly twice the training time and peaks at 14.5 GB because of its deeper encoder stack and larger embedding space, reinforcing that its performance gains come with substantially higher infrastructure costs.

Table 2. Resource requirements comparison across models (CICIDS2017, batch size = 64).

While the recurrent and convolutional baselines demand less memory and converge faster (3–4 h) because of their smaller parameter counts, their limited receptive field leaves 6–8% F1-score on the table. The proposed Transformer therefore strikes a middle ground: it delivers substantially better detection accuracy than LSTM/GRU/CNN at the cost of a modest 2–3 GB memory premium and a few milliseconds of additional latency, yet it still meets near-real-time inference budgets. These results show that incorporating dynamic semantic embedding yields favorable accuracy–efficiency trade-offs without incurring the steep operational cost of a full BERT-stack.

Post-5G and 6G slices typically budget 20–30 ms for inline analytics before control decisions must be enforced. Against the 30 ms upper bound, the 18.7 ms inference stage consumes about 62% of the envelope, leaving roughly 11 ms for streaming feature extraction, queuing, and mitigation logic. Against the 20 ms lower bound, however, only about 1.3 ms of headroom remains, so the real-time requirement is comfortably met only toward the upper end of the range. The detector operates on micro-batches that can be sharded across distributed edge clouds, and per-flow latency remains stable when telemetry volume reaches millions of flows per second.
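The headroom left by the 18.7 ms inference stage can be checked directly for both ends of the 20–30 ms range. The helper names below are illustrative.

```python
INFERENCE_MS = 18.7  # inference latency reported for the proposed model

def headroom_ms(budget_ms, inference_ms=INFERENCE_MS):
    """Time left for feature extraction, queuing, and mitigation."""
    return budget_ms - inference_ms

def budget_fraction(budget_ms, inference_ms=INFERENCE_MS):
    """Share of the latency budget consumed by inference."""
    return inference_ms / budget_ms

for budget in (20.0, 30.0):
    print(budget, round(headroom_ms(budget), 1), round(budget_fraction(budget), 3))
# 20.0 1.3 0.935
# 30.0 11.3 0.623
```

The two rows show that the margin depends strongly on which end of the budget range applies to a given slice.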

4.2.3. Ablation Study

To validate the contribution of each component, we conduct a comprehensive ablation study on the CICIDS2017 dataset, with results shown in Table 3. We systematically remove key components, including the dynamic embedding internals and the stream fusion block, to assess their individual impact on detection performance.

Table 3. Ablation Study Results.

When removing dynamic semantic embedding and using only standard positional encoding, the model experiences a 5-point drop in F1-score (from 0.92 to 0.87, about 5.4% in relative terms), highlighting the importance of the adaptive mechanism in capturing semantic context changes. The recall drops from 0.91 to 0.86, indicating that the dynamic embedding is particularly effective at identifying subtle attack patterns that manifest as semantic transitions.

Removing semantic features and using only communication behavior features leads to more significant performance degradation, with the F1-score dropping to 0.82 and the recall to 0.81. This 10-point reduction in F1-score (about 10.9% in relative terms) verifies the critical role of semantic content analysis in threat detection. The results demonstrate that behavioral patterns alone are insufficient for detecting sophisticated attacks that exploit semantic-level vulnerabilities.

Conversely, removing behavior features while retaining semantic features results in an F1-score of 0.85, suggesting that both feature types contribute complementarily to overall performance. The full model, which integrates both semantic and behavioral features with dynamic embedding, achieves balanced and superior performance across all metrics, confirming the effectiveness of our hybrid approach.

Isolating the internals of the dynamic semantic embedding reveals finer-grained effects. Disabling the semantic-aware positional modulation while keeping the learnable positional vector lowers the F1-score to 0.89 and the AUC-ROC to 0.93, showing that the modulation coefficients drive most of the recall gains on bursty attacks. Conversely, removing the learnable vector but retaining the modulation yields an F1-score of 0.88 and recall of 0.87, indicating that the learned positional residuals provide additional sensitivity to slow-drifting semantic shifts but cannot substitute for the modulation gate on their own.

Finally, when the semantic and behavioral streams are kept separate and no longer fused before classification, the F1-score drops to 0.84 and the AUC-ROC to 0.88. This degradation, which sits between the single-stream ablations, demonstrates that the fusion layer is not merely aggregating features but aligning semantic cues with transport behaviors so that the detector can reason about cross-domain correlations.
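The F1-scores quoted in this ablation study can be turned into absolute and relative drops with a few lines of arithmetic. The variant labels below are shorthand introduced here for readability; the scores are those stated in the text.

```python
FULL_F1 = 0.92

# F1-scores reported for each ablated variant.
ablations = {
    "no dynamic embedding": 0.87,
    "behavior features only": 0.82,
    "semantic features only": 0.85,
    "no positional modulation": 0.89,
    "no learnable vector": 0.88,
    "no stream fusion": 0.84,
}

def drops(full, ablated):
    """Return (absolute drop in F1, relative drop in percent)."""
    absolute = full - ablated
    return round(absolute, 2), round(100.0 * absolute / full, 1)

for name, f1 in ablations.items():
    print(name, drops(FULL_F1, f1))
```

Reporting both figures avoids ambiguity: a fall from 0.92 to 0.87 is 0.05 in absolute terms but about 5.4% relative to the full model.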

4.2.4. Stability Analysis of Dynamic Weight

To evaluate whether the dynamic positional gate introduces instability, we logged all consecutive semantic pairs during training and inference on both datasets (≈480 k pairs per epoch). On CICIDS2017, the cosine similarity averaged with 5th/95th percentiles , while UNSW-NB15 yielded . Consequently, remains within for 95% of updates and never exceeded , so the modulation term perturbs the positional channels by less than 12% relative to the static encoding. Successive values differ by only on average, indicating that even though cosine similarities lie in a narrow band, their small but consistent deltas still capture semantically meaningful shifts instead of producing abrupt gates.
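The logging procedure described above amounts to computing the cosine similarity of each consecutive token pair and summarizing the distribution. A minimal sketch follows; the nearest-rank percentile rule and the toy tokens are assumptions, since the text does not state how the percentiles were computed.

```python
import math

def cosine_similarity(u, v):
    """Cosine of the angle between two vectors (0.0 if either has zero norm)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        return 0.0
    return dot / (norm_u * norm_v)

def consecutive_similarities(tokens):
    """Similarity of every adjacent pair in a chronologically ordered token sequence."""
    return [cosine_similarity(a, b) for a, b in zip(tokens, tokens[1:])]

def percentile(values, q):
    """Nearest-rank percentile for q in (0, 100]."""
    ordered = sorted(values)
    rank = max(1, math.ceil(q / 100.0 * len(ordered)))
    return ordered[rank - 1]

tokens = [[1.0, 0.0], [1.0, 0.0], [0.0, 1.0]]   # hypothetical semantic tokens
sims = consecutive_similarities(tokens)
print(sims)  # [1.0, 0.0]
```

The second pair is orthogonal, which is the kind of abrupt semantic change that the dynamic gate is meant to react to.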

We also tracked the gradient norms reaching the dynamic positional block. Across five random seeds, the mean is with a coefficient of variation of , indistinguishable from the recorded when was frozen to its dataset mean. No gradient spike above was observed, confirming that the residual or LayerNorm stack damps the gate before backpropagation. Applying a short exponential moving average to (decay 0.2) lowered the variance of by only 2.1% and changed the F1-score by less than 0.1%, so the unsmoothed formulation was retained to keep the detector responsive to rapid attack bursts while remaining numerically stable.
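The smoothing experiment and the stability statistic can be sketched as below. The recurrence used for the exponential moving average, where `decay` multiplies the previous state, is one common convention and is an assumption here, as is the use of the population standard deviation in the coefficient of variation.

```python
import math

def ema(values, decay=0.2):
    """Exponential moving average: state = decay * state + (1 - decay) * value."""
    smoothed, state = [], None
    for v in values:
        state = v if state is None else decay * state + (1.0 - decay) * v
        smoothed.append(state)
    return smoothed

def coefficient_of_variation(values):
    """Population standard deviation divided by the mean (mean must be non-zero)."""
    mean = sum(values) / len(values)
    variance = sum((v - mean) ** 2 for v in values) / len(values)
    return math.sqrt(variance) / mean

print(ema([1.0, 0.0], decay=0.5))            # [1.0, 0.5]
print(coefficient_of_variation([1.0, 3.0]))  # 0.5
```

With a small decay such as 0.2 the average follows the raw signal closely, which is consistent with the observation that smoothing changed the results very little.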

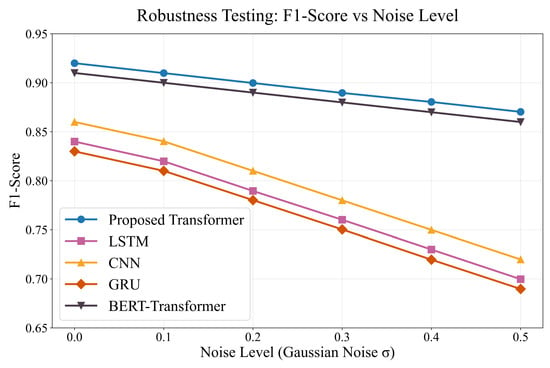

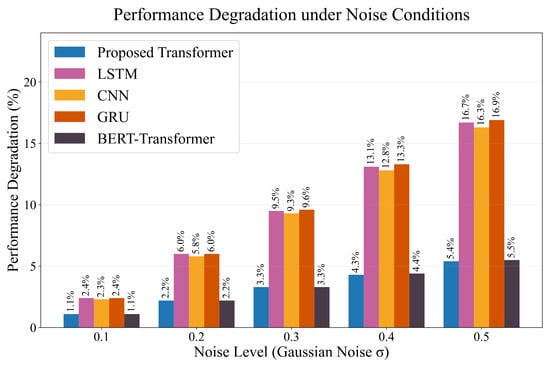

4.2.5. Robustness Testing

To simulate semantic adversarial attack scenarios, noise and perturbations are injected into the test set. The proposed model maintains an F1-score above 0.89 under noisy conditions where baseline models drop by 5–10%, benefiting from the dynamic positional embedding’s adaptability to semantic mutations. Figure 2 illustrates the F1-score versus noise level, and Figure 3 shows the performance degradation under different noise conditions. Our model demonstrates superior robustness across all noise levels compared to baseline models.

Figure 2. F1-score versus noise level on CICIDS2017 dataset. The proposed Transformer model demonstrates superior robustness compared to baseline models across all noise levels.

Figure 3. Performance degradation percentage under different noise conditions. The proposed Transformer model maintains better performance stability compared to LSTM, GRU, CNN, and BERT-Transformer baselines.

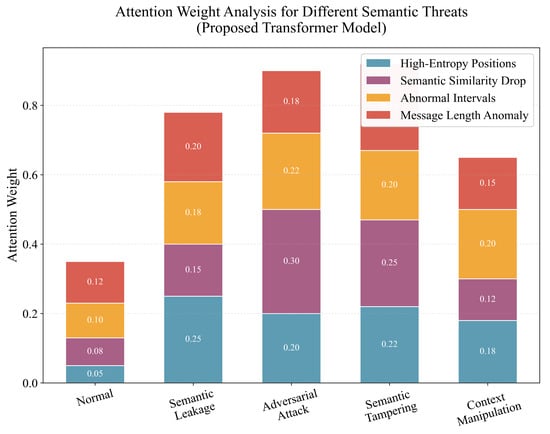

Attention weight analysis shows the model can focus on anomalous semantic points, enhancing interpretability. Figure 4 presents the attention weight analysis, showing heightened attention to high-entropy positions and semantic similarity drops during adversarial attacks, validating that our dynamic semantic embedding mechanism successfully guides the model to focus on semantically critical regions.

Figure 4. Attention weight analysis for different semantic threats in the proposed Transformer model. The model shows heightened attention to high-entropy positions and semantic similarity drops during adversarial attacks.

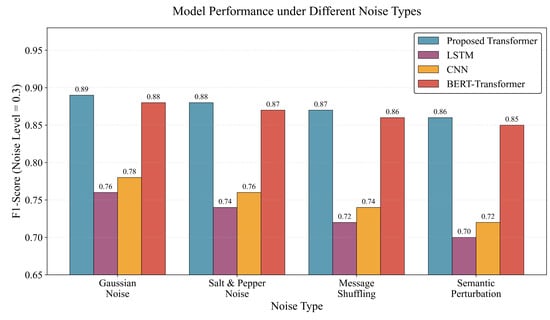

To further validate robustness, we evaluated model performance under various noise types at a noise level = 0.3. As shown in Figure 5, our model maintains superior performance across Gaussian noise, salt-and-pepper noise, message shuffling, and semantic perturbation scenarios, consistently outperforming baseline approaches.

Figure 5. Model performance comparison under different noise types (noise level = 0.3). The proposed Transformer maintains superior performance across Gaussian noise, salt-and-pepper noise, message shuffling, and semantic perturbation scenarios.

The comprehensive robustness evaluation confirms that our approach provides reliable threat detection capabilities even under challenging semantic adversarial conditions, making it suitable for real-world semantic communication security applications.
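The perturbations used in this kind of robustness test can be generated as sketched below. How the noise level maps onto each perturbation is not specified in the text, so the interpretations here are assumptions: the level is the standard deviation for Gaussian noise, the per-value replacement probability for salt-and-pepper noise, and the per-message selection probability for shuffling. All function names are illustrative.

```python
import random

def gaussian_noise(seq, level, rng):
    """Add zero-mean Gaussian noise with standard deviation `level` to every value."""
    return [[v + rng.gauss(0.0, level) for v in row] for row in seq]

def salt_and_pepper(seq, level, rng, low=0.0, high=1.0):
    """With probability `level`, replace a value by the low or high extreme."""
    return [
        [rng.choice((low, high)) if rng.random() < level else v for v in row]
        for row in seq
    ]

def shuffle_messages(seq, level, rng):
    """Select each position with probability `level` and permute the selected messages."""
    out = [list(row) for row in seq]
    selected = [i for i in range(len(out)) if rng.random() < level]
    permuted = selected[:]
    rng.shuffle(permuted)
    moved = [out[j] for j in permuted]
    for i, row in zip(selected, moved):
        out[i] = row
    return out

rng = random.Random(0)  # fixed seed so that perturbed test sets are reproducible
sequence = [[0.0, 1.0], [0.5, 0.5], [1.0, 0.0]]
noisy = gaussian_noise(sequence, 0.3, rng)
corrupted = salt_and_pepper(sequence, 0.3, rng)
reordered = shuffle_messages(sequence, 0.3, rng)
```

Passing an explicit `random.Random` instance keeps the perturbations repeatable across models, so that every baseline is evaluated on the same corrupted inputs.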

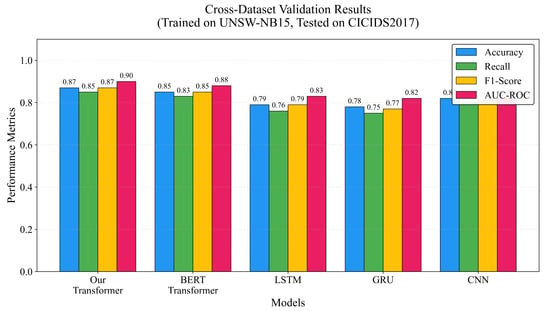

4.2.6. Cross-Dataset Validation

To assess generalization capability, we train the model on UNSW-NB15 and evaluate on the CICIDS2017 test set. The proposed Transformer achieves an F1-score of 0.87, outperforming LSTM (0.79) and CNN (0.82), which suggests that it adapts better to shifts in traffic distribution of the kind expected in dynamic environments such as satellite internet. Figure 6 presents the cross-dataset validation results, comparing all models across multiple metrics. As shown in Figure 6, our proposed model maintains superior performance across accuracy, recall, F1-score, and AUC-ROC metrics, with particularly strong generalization compared to baseline approaches.

Figure 6. Cross-dataset validation results. Models trained on UNSW-NB15 and tested on CICIDS2017. The proposed Transformer model demonstrates superior generalization capability across all metrics compared to LSTM, GRU, CNN, and BERT-Transformer baselines.

4.3. Experimental Analysis

The comprehensive experimental evaluation demonstrates the effectiveness of the proposed approach in intrusion detection for post-5G and 6G telecommunication networks. Several key findings emerge from our analysis.

First, the synergy between dynamic semantic embedding and the Transformer architecture significantly improves threat identification accuracy. The dynamic embedding mechanism enables the model to adaptively focus on semantic context changes, which are often indicative of security threats. This is evidenced by the consistent performance gains across both CICIDS2017 and UNSW-NB15 datasets, where our model achieves F1-scores of 0.92 and 0.89, respectively, outperforming all baseline methods.

Second, the ablation study reveals that each component contributes meaningfully to overall performance. The dynamic semantic embedding provides a 5-point improvement in F1-score (0.87 to 0.92), while semantic features contribute a 10-point improvement over the behavior-only variant (0.82 to 0.92). This validates our design choice of integrating multiple complementary feature types with adaptive positional encoding.

Third, robustness testing confirms the model’s resilience under adversarial conditions. The ability to maintain high performance under various noise types demonstrates practical applicability in real-world telecommunication environments where network traffic may be corrupted or manipulated. The attention analysis further provides interpretability, showing that the model correctly focuses on semantically critical regions during threat detection.

Finally, cross-dataset validation demonstrates strong generalization capability, with the model achieving competitive performance even when tested on unseen data distributions. This suggests that the learned representations capture fundamental patterns of intrusion behavior rather than dataset-specific artifacts, making the approach suitable for deployment in heterogeneous post-5G and 6G network environments with diverse traffic characteristics.

5. Conclusions

This paper proposes a Transformer-based intrusion detection system with dynamic semantic embedding for post-5G and 6G telecommunication infrastructures. The system extracts multi-dimensional features capturing both semantic content and communication behavior patterns, combined with adaptive positional embedding that responds to semantic context changes. Experimental results on CICIDS2017 and UNSW-NB15 datasets demonstrate superior performance compared to LSTM, GRU, and CNN baselines in detection accuracy, robustness, and generalization capability. Ablation studies confirm the effectiveness of dynamic semantic embedding and hybrid feature extraction.

Future work includes exploring advanced semantic representation methods for telecommunication traffic, integrating encryption and privacy-preserving mechanisms, adapting the model to emerging technologies such as network slicing and edge computing, and investigating federated learning for collaborative intrusion detection. This study contributes to securing post-5G and 6G telecommunication infrastructures and provides a foundation for intelligent network security systems.

Author Contributions

Conceptualization, H.Y.; methodology, S.Z.; software, S.Z.; validation, X.P. and H.F.; formal analysis, X.P. and H.F.; investigation, X.P. and H.F.; resources, H.Y.; data curation, X.P. and H.F.; writing—original draft preparation, H.Y.; writing—review and editing, H.Y., X.P., S.Z. and H.F.; visualization, X.P. and H.F.; supervision, H.Y.; project administration, H.Y. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

Datasets are contained within the article.

Conflicts of Interest

Author Haonan Yan was employed by the company Hangzhou Hikvision Digital Technology Co., Ltd. The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.



© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).