Abstract

The transition to 5G networks brings unprecedented speed, ultra-low latency, and massive connectivity. Nevertheless, it introduces complex traffic patterns and broader attack surfaces that render traditional intrusion detection systems (IDSs) ineffective. Existing rule-based methods and classical machine learning approaches struggle to capture the temporal and dynamic characteristics of 5G traffic, while many deep learning models lack interpretability, making them unsuitable for high-stakes security environments. To address these challenges, we propose the Bidirectional Temporal Anomaly Detector (BiTAD), a deep temporal learning architecture for anomaly detection in 5G networks. BiTAD leverages dual-direction temporal sequence modelling with attention to encode both past and future dependencies while focusing on critical segments within network sequences. Like many deep models, BiTAD faces interpretability challenges. To address its “black-box” nature, we propose a dual-perspective explainability module, TwinLens, which integrates SHAP and TimeSHAP to provide global feature attribution and temporal relevance. Evaluated on the public 5G-NIDD dataset, BiTAD demonstrates superior detection performance compared to existing models. TwinLens provides transparent insights by identifying which features were most influential to anomaly predictions and when they exerted that influence. By jointly addressing the limitations in temporal modelling and interpretability, our work contributes a practical IDS framework tailored to the demands of next-generation mobile networks.

1. Introduction

Fifth-generation networks bring a paradigm shift with ultra-high data rates and extremely low latency, facilitating numerous Internet of Things (IoT) and machine-type communication (MTC) applications. The introduction of 5G, combined with the exponential growth of connected devices, presents new challenges for efficient and reliable network management. Legacy intrusion detection systems (IDSs) based on static signatures or shallow machine learning (ML) models are ill-suited to this environment [1]. In particular, traditional signature-based IDSs often fail to detect novel or sophisticated threats [2], while static-threshold anomaly detectors cannot cope with the complexity of 5G traffic [3]. For example, although a hybrid ML framework reported ~99.87% accuracy on the CICIDS2018 dataset [4], that dataset lacks the bursty, session-based traffic and protocol diversity found in real-world 5G networks. Unlike legacy networks, 5G traffic is highly non-stationary and session-oriented, characterized by short, bursty flows and ephemeral IoT connections. Features such as GPRS Tunnelling Protocol (GTP) tunnels, network slicing, and new header structures further contribute to a heterogeneous traffic mix. Anomalies in this environment can include sudden latency spikes, packet loss, or signalling storms that disrupt service. Recent studies define 5G anomalies as unexpected increases in latency or unauthorized access events, reflecting the need to handle dynamic behaviours that are unseen in earlier datasets [3]. These factors also increase the attack surface for IoT-borne threats, which can propagate rapidly over low-latency 5G connections. Given that widely used IDS benchmarks (e.g., KDD’99, NSL-KDD, CICIDS2017) fail to capture 5G-specific characteristics, newer datasets such as 5G-NIDD, collected from a live 5G testbed, have been developed to more accurately represent real-world 5G traffic [5]. 
However, most current 5G IDSs still prioritise accuracy on non-5G benchmarks and provide limited transparency into which features and, crucially, which timesteps drive decisions in 5G flows. In this work, we adopt the 5G-NIDD dataset to develop a new Bidirectional Temporal Anomaly Detector (BiTAD) with an explainability scheme for next-generation mobile networks.

Fifth-generation traffic is characterized by complex features such as GTP tunnels, short-lived IoT connections and session-based flows, creating a highly dynamic network environment [3]. Anomalies in such networks may manifest as sudden latency spikes, signalling storms, or packet loss. These behaviours typically unfold gradually rather than occurring all at once [6], making temporal modelling essential for reliable detection.

To address this challenge, we propose BiTAD, a deep temporal anomaly detection framework tailored for 5G networks. BiTAD combines a stacked Bidirectional Long Short-Term Memory (BiLSTM) architecture with a single-head temporal self-attention module, allowing the model to emphasise the most relevant segments of each network-flow sequence. This architecture captures both forward and backward dependencies, improving sensitivity to gradual or evolving attack patterns in 5G traffic. To enhance transparency, BiTAD integrates an explainability framework termed TwinLens, which combines SHAP (for global feature-level attribution) and TimeSHAP (for time-step relevance). Together, they reveal not only which features contribute to anomaly predictions but also when they do so. Unlike prior BiLSTM–Attention detectors evaluated on legacy datasets, BiTAD is, to the best of our knowledge, the first interpretable deep temporal IDS tested on the 5G-NIDD dataset, achieving strong detection accuracy while providing time-aware insights that support operational trust and understanding.

Additionally, the model adopts a hierarchical BiLSTM design, where the output dimensionality is progressively reduced from 128 to 64 across two stacked BiLSTM layers (64→32 units per direction). This facilitates the abstraction of both short-term burst patterns and long-term dependencies, enabling a deeper understanding of temporal behaviours compared to flat or CNN-based models. Despite its robust anomaly detection capabilities, BiTAD—like most deep learning models—faces challenges in interpretability. To address this, we introduce TwinLens, a novel dual-perspective explainability framework that integrates SHAP for feature-level attribution and TimeSHAP for temporal relevance analysis. TwinLens allows for the post hoc interpretation of model predictions, identifying both which features contributed to the anomaly classification and when those features exerted the most influence in the input sequence. This integrated interpretability enables network analysts to derive transparent, actionable insights, thus enhancing operational trust and supporting responsive threat mitigation in real-world environments.

The key contributions of this work are as follows:

- BiTAD: A novel anomaly detection model that combines a stacked BiLSTM (leveraging the standard LSTM cell gates) with a custom single-head temporal self-attention mechanism. BiTAD integrates bidirectional sequence modelling and attention weighting to emphasise informative timesteps within each flow sequence while preserving LSTM’s intrinsic gating dynamics.

- TwinLens Explainability: A dual explainability module integrating SHAP and TimeSHAP to reveal both which features contribute to anomaly decisions and when they do so.

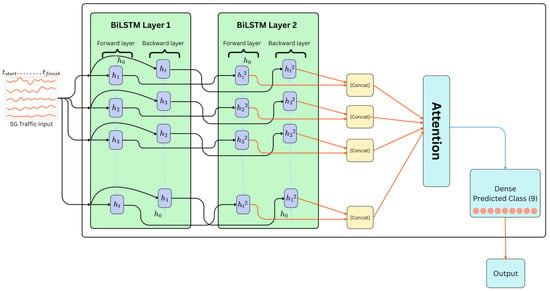

Figure 1 illustrates the architecture of the proposed BiTAD model, which is designed for anomaly detection in 5G network traffic. The model begins with a time-series input representing multivariate 5G traffic patterns across five sequential time steps. This input is first processed by the initial BiLSTM layer, which captures temporal dependencies in both forward and backward directions to extract low-level contextual features. The output from this layer is then passed into a second BiLSTM layer, which operates bidirectionally as well, but with a reduced hidden dimensionality, enabling the model to further refine and condense temporal features while preserving bidirectional context. At each time step, the hidden outputs from the forward and backward passes of the second BiLSTM layer are concatenated to form a unified representation. These representations are then passed into an attention mechanism, which assigns dynamic importance weights to different time steps based on their relevance to the final classification task. The attention-weighted context vector is subsequently processed by a dense (fully connected) layer that outputs the final predicted class, which consists of nine possible labels corresponding to various benign or anomalous traffic categories. This final output layer reflects the model’s classification decision. The combination of deep temporal modelling and attention-based interpretability enables BiTAD to effectively identify complex patterns in 5G traffic data.

Figure 1. Architecture of the proposed BiTAD model.

2. Related Work

2.1. Classical and Statistical Approaches to Network Anomaly Detection

Traditional intrusion detection has relied on signature-based and statistical methods for their simplicity and speed. Signature-based IDSs (e.g., Snort) match known attack patterns quickly, but by design they cannot flag novel (zero-day) threats in 5G traffic because no signature exists [7,8]. Likewise, simple ML classifiers, including support vector machines (SVM), decision trees, k-nearest neighbours (KNN), and Naive Bayes (NB), have long been popular due to their interpretability and computational efficiency. For example, NB is noted for its low computational cost [8]. However, these methods assume static data and require manual feature engineering, so they do not capture sequential patterns in time. Crucially, almost all prior work evaluates such classifiers on legacy datasets (e.g., KDD’99/NSL-KDD, CICIDS-2017) that do not reflect the dynamic protocols and slicing of modern 5G [9]. As a result, classical approaches tend to underperform on high-dimensional, time-varying 5G traffic because they lack temporal modelling and adaptivity. Table 1 summarizes representative classical methods, noting that their speed comes at the cost of temporal context and the ability to generalize to new 5G attacks. These gaps motivate our BiTAD model, which employs sequence learning to overcome the static assumptions of traditional methods.

Table 1. Representative classical methods.

2.2. Deep Learning Models for Temporal Anomaly Detection

Deep learning methods have become the standard for sequence modelling in IDS. Recurrent neural networks (RNNs) and their gated variants (LSTM, BiLSTM) effectively capture temporal correlations in network traffic. Kurochkin et al. developed a GRU-based deep neural network for intrusion detection in software-defined networks, demonstrating that GRUs outperform baseline models in handling sequential traffic patterns, though long-range dependencies remain challenging [10]. The authors proposed an RNN (GRU) based IDS for a cloud environment using the CSE-CIC-IDS2018 dataset, achieving 99.92% accuracy and 99.69% precision, showcasing strong performance in multi-class detection. Nikitenko et al. [11] introduced a CNN–BiGRU with attention model for network IDS, where a 1D CNN extracts features and a BiGRU with attention emphasizes important time steps. This model achieved 99.81% accuracy on NSL-KDD and 97.80% on UNSW-NB15. Hu et al. [12] proposed a Self-Attention-Gated BiGRU (SAG-BiGRU) for intrusion detection, incorporating under- and over-sampling to handle data imbalance, and attaining notably higher classification precision than a standard BiGRU on CICIDS2017.

LSTM networks mitigate the vanishing gradient problem and effectively learn long-range dependencies, making them well-suited for spotting evolving attack patterns. BiLSTM extends this by processing traffic in both forward and backward directions, thereby improving context modelling. Attention mechanisms have further enhanced these models by highlighting important time steps. For instance, Zhang et al. [13] developed a BiLSTM with multi-head self-attention, which learned sequence features more effectively and achieved over 95% accuracy on KDD and CICIDS benchmarks. In short, modern IDS research shows that LSTM-based models vastly outperform simple RNNs by explicitly modelling sequence memory. Nevertheless, nearly all these studies rely on older datasets. Since the release of the 5G-NIDD dataset [14], several works have evaluated deep models directly on this corpus. For instance, deep transfer learning has improved DDoS detection: a BiLSTM pre-trained on a large 5G slice corpus and fine-tuned on 5G-NIDD achieved about 98.7% accuracy, with higher recall and F1-score than training from scratch [15]. A Transformer-based IDS achieved about 99.79% accuracy on 5G-NIDD with lower prediction time than recurrent baselines, indicating a trade-off between accuracy and efficiency that favours real-time detection [16]. These results, together with the dataset study that highlights realistic 5G traffic composition, confirm that sequence-aware deep models can effectively learn 5G attack patterns on 5G-NIDD.

Despite this progress, most evaluations of attention-based sequence models on 5G-NIDD still emphasize accuracy, without addressing which features and which time steps influence decisions. Our proposed BiTAD model fills this gap by combining a BiLSTM layer to capture bidirectional dependencies with a self-attention layer to reweight features across time—a combination not previously explored on authentic 5G IDS data. Table 2 summarizes the key strengths and limitations of LSTM, GRU, CNN-BiGRU, RNN, and SAG-BiGRU for 5G anomaly detection.

Table 2. Qualitative comparison of deep models for 5G intrusion detection.

The comparison in Table 2 highlights that despite the emergence of advanced architectures such as Transformers and Graph Neural Networks (GNNs), LSTM-based models remain the most balanced and reliable choice for 5G anomaly detection. While transformer and graph-based detectors achieve near-perfect accuracies (≈98–99%), they rely heavily on GPU resources and prolonged training time, limiting their practicality for real-time or edge-level 5G monitoring. On the other hand, hybrid models, such as CNN–BiGRU and SAG–BiGRU, also deliver strong results, but they are computationally intensive and less interpretable, making them less suitable for operational deployment. In contrast, LSTM networks consistently achieve robust performance (≈90–95%) with moderate training cost, effectively capturing temporal dependencies inherent in 5G traffic flows. Their sequential memory mechanisms allow the detection of subtle and evolving anomalies that short-term or purely spatial models may overlook.

Prior work on the 5G-NIDD dataset has largely prioritised architectural novelty and detection performance over interpretability. However, real-world 5G deployments demand models that are not only accurate but also explainable and operationally feasible. By leveraging an LSTM-based backbone with an attention mechanism, the proposed BiTAD model builds on these strengths, establishing a transparent, sequence-aware framework that bridges the gap between accuracy, interpretability, and deployability in modern 5G intrusion detection.

2.3. Public Datasets for 5G Anomaly Detection

Most intrusion detection studies use public network datasets that predate 5G. Widely used benchmarks include NSL-KDD (an enhanced version of KDD’99), UNSW-NB15, CICIDS-2017, and the BoT-IoT dataset [18]. For example, many recent IDS models have been evaluated on NSL-KDD, CICIDS2017, and UNSW-NB15. BoT-IoT (2019) contains ~73 million IoT flows with diverse botnet attacks, but like the others, it was generated in 4G-era testbeds. As such, these datasets lack essential 5G characteristics, including 5G-specific protocols, network slicing, and realistic mobility patterns. To address this gap, Samarakoon et al. introduced the 5G-NIDD dataset in 2022 [14]. As used in this study (Section 3.1), 5G-NIDD contains roughly 5.1 million flow records (≈55% benign, ≈45% attack) collected from a functional 5G test network under various DoS and scanning attacks. It captures live traffic from real mobile devices connected to operational 5G base stations, rather than relying on simulations, making it uniquely representative of modern 5G conditions. Table 3 compares these benchmark datasets, highlighting that 5G-NIDD is the only one reflecting current 5G network traffic. For this reason, we base our experiments on 5G-NIDD to ensure relevance to 5G networks.

Table 3. Comparison of benchmark intrusion-detection datasets.

2.4. Explainability for Temporal Black-Box Models

While deep learning (DL) methods offer strong detection capabilities, they also exacerbate the “black box” issue. Interpreting IDS predictions is critical for trust, debugging, and operational use. In recent years, model-agnostic explainable AI (XAI) tools like SHAP and LIME have been applied to IDS research [22,23,24]. SHAP (SHapley Additive exPlanations) assigns each feature a contribution to a prediction based on game theory [25]. Compared with local-surrogate methods, SHAP often yields more stable, globally coherent attributions for IDS models, which helps analysts validate what the model actually relies on. It has been used to pinpoint influential flow/header features and to audit models for spurious inductive bias, improving trust and guiding feature-pruning in practice. On the other hand, LIME builds local surrogate models to explain individual decisions [26]. For example, Hermosilla et al. compared SHAP vs. LIME on XGBoost and TabNet using the UNSW-NB15 dataset [22].

As 5G intrusion detectors grow more complex, researchers have also integrated XAI to interpret their decisions. A common approach is to apply SHAP post hoc to trained models. For instance, Radoglou-Grammatikis et al. employed TreeSHAP (a SHAP variant for tree ensembles) to provide local and global explanations of a 5G core network IDS, identifying which features (e.g., PFCP flow statistics) most influenced each detection [27]. Such feature attribution helps validate that the IDS is reacting to meaningful traffic patterns rather than noise. For sequential deep models (e.g., RNN-based detectors), specialized explainers such as TimeSHAP have been proposed [28]. TimeSHAP extends SHAP into the temporal domain, attributing importance not only to input features but also to individual time steps, thereby identifying when events (e.g., traffic bursts) contribute most to an alert. Despite its potential, TimeSHAP has seen sparse application in network security. A recent systematic review notes that most XAI work in IDS still emphasizes static feature importances, with minimal attention to temporal explanations.

To bridge this gap, we propose TwinLens, a dual-perspective explainability framework that combines SHAP-based feature attribution with TimeSHAP-based temporal attribution for our BiTAD model. To the best of our knowledge, no prior 5G-NIDD study has integrated both feature-level and time-step-level explanations in the same sequential IDS model. TwinLens provides comprehensive insights into both why and when anomalies are detected, enabling transparent, interpretable, and actionable outputs that enhance trust and support real-time mitigation.

3. Methodology

3.1. The 5G-NIDD Dataset

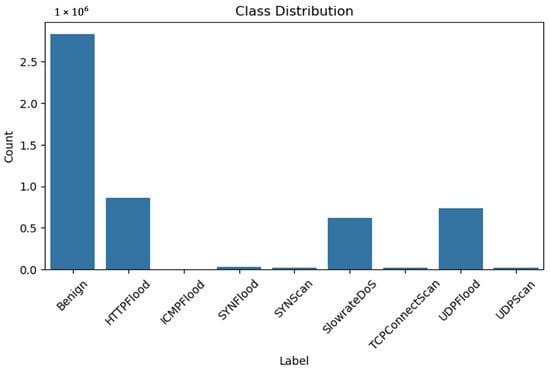

In this study, we adopt the 5G-NIDD dataset, the first public IDS dataset built on a real 5G testbed [14]. 5G-NIDD contains 5,128,776 network flow records with 52 features (32 continuous, 12 integer, 8 categorical). Of these flows, 2,834,524 (55.27%) are benign and 2,294,252 (44.73%) are malicious, and each malicious flow is labelled with one of 8 attack classes. Figure 2 illustrates the class distribution of the 5G-NIDD dataset. Benign is the largest single class, with over 2.8 million samples. HTTPFlood, SlowrateDoS, and UDPFlood are the most represented attack types, though each has far fewer samples than the benign class, while ICMPFlood, SYNScan, and UDPScan have very few samples. These minority classes are difficult to learn, and a model trained naively may be biased toward the majority class. This strong class imbalance calls for strategies such as class weighting, resampling, or synthetic data generation to ensure effective learning across all categories during model training.

Figure 2. The class distribution in the 5G-NIDD dataset.

Unlike legacy IDS benchmarks (e.g., NSL-KDD or CICIDS2017, which were generated in controlled/simulated settings), 5G-NIDD was collected on a live 5G network [14]. This authenticity means the data includes real 5G-specific protocols (e.g., GTP) and traffic patterns not seen in earlier datasets. The literature notes a “significant gap” between simulated datasets and real 5G traffic, so using 5G-NIDD addresses that gap. In summary, 5G-NIDD provides a comprehensive mix of benign and diverse attacks in a 5G context.

3.2. Data Preprocessing

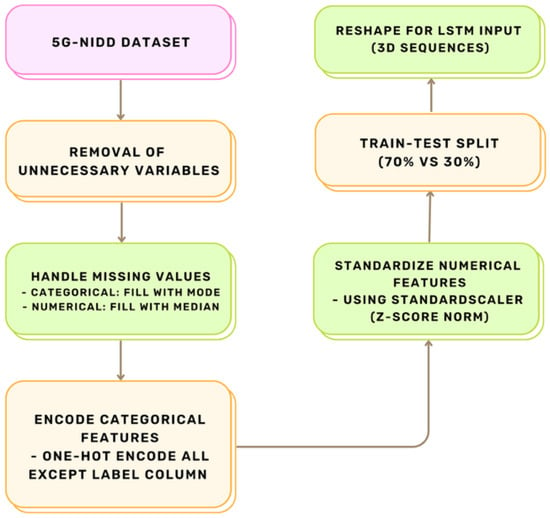

Prior to model training, we apply a series of essential preprocessing steps to improve the quality of the input data for reliable data learning. These steps include the following:

- Removal of Irrelevant Fields: Identifiers and metadata that do not contribute to anomaly detection, such as flow IDs, are removed, since they may introduce noise into the model.

- Categorical Encoding: The 5G-NIDD dataset contains 52 features, including 8 categorical fields (e.g., protocol type, flag, slice ID). These categorical variables are converted to numerical form using one-hot encoding and ordinal encoding. In one-hot encoding, a categorical feature with k values is mapped to a k-dimensional binary vector in which the entry corresponding to the observed category i is set to 1 and all remaining entries are set to 0: $e_i \in \{0,1\}^k$, with $(e_i)_j = 1$ if $j = i$ and $(e_i)_j = 0$ otherwise.

- Normalization: Continuous features (e.g., flow duration, byte counts, packet rates, inter-arrival times) are standardized using Z-score scaling so that all features have comparable ranges (mean 0, standard deviation 1): $z = (x - \mu)/\sigma$, where $\mu$ and $\sigma$ are the mean and standard deviation of the feature. Normalization prevents large-magnitude features (e.g., flood packet counts) from dominating the learning process. It also mitigates exploding gradients and speeds up training convergence.

- Sequence Shaping: To accommodate sequence-based models, we group the network flows into fixed-length sequences. With a window size of T = 5, each instance is defined as $X = [x_1, x_2, \ldots, x_T] \in \mathbb{R}^{T \times d}$, where d is the feature dimension after encoding. Thus, the model input has a 3D shape of (batch_size, 5, d). For flow-level classification, which determines whether a specific network flow is normal or an attack, the label for each sequence is taken from the most recent timestep $x_T$. Grouping multiple consecutive flows into a sequence allows the model to capture temporal dependencies and learn dynamic patterns across flows, which is useful for identifying slow-building attacks or sequential behaviours.

- Class Balancing: As mentioned previously, the 5G-NIDD dataset is imbalanced, with benign flows making up about 55% of all records (Section 3.1) and the attack classes sharing the remainder unevenly. Among the attack cases, some classes, such as ICMP Flood, account for less than 1% of all malicious flows. Oversampling methods such as SMOTE or ADASYN were explored but found to be impractical because of the dataset’s large scale (≈5.1 million flows) and their tendency to distort sequential dependencies across consecutive flows. Consequently, we use class weighting. Specifically, we weight the loss for each class c by $w_c = \frac{N}{C \cdot N_c}$, where N is the total number of samples, C is the number of classes (9), and $N_c$ is the number of samples in class c. This weighting scheme mitigates imbalance while preserving the temporal structure of the sequences. Such preprocessing (label encoding, normalization, and class weighting) follows established best practices for telecom anomaly detection.
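The preprocessing steps listed above can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the pipeline used in the study: the function names, the example category list, and the choice of overlapping windows with a stride of one flow are all hypothetical, and the class-weight function assumes the common balanced form N / (C · N_c).

```python
import math
from collections import Counter


def one_hot(value, categories):
    """Map a categorical value to a k-dimensional binary vector."""
    return [1 if value == c else 0 for c in categories]


def zscore(column):
    """Standardise a numeric column to mean 0 and standard deviation 1."""
    mean = sum(column) / len(column)
    var = sum((x - mean) ** 2 for x in column) / len(column)
    std = math.sqrt(var) or 1.0  # constant columns: avoid division by zero
    return [(x - mean) / std for x in column]


def make_windows(rows, labels, T=5):
    """Group consecutive flows into length-T sequences (stride 1, an assumption).

    Each sequence takes the label of its most recent flow.
    """
    X, y = [], []
    for end in range(T, len(rows) + 1):
        X.append(rows[end - T:end])
        y.append(labels[end - 1])
    return X, y


def class_weights(labels):
    """Balanced weights w_c = N / (C * N_c) for every class present in labels."""
    counts = Counter(labels)
    n, c = len(labels), len(counts)
    return {cls: n / (c * n_c) for cls, n_c in counts.items()}


# Hypothetical category list for a protocol field.
print(one_hot("udp", ["tcp", "udp", "icmp"]))  # [0, 1, 0]
```

Rare classes receive weights above 1 and frequent classes receive weights below 1, so the loss contribution of each class is roughly equalised without duplicating or synthesising flows.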

After preprocessing, each flow is represented as a fixed-length numerical vector of dimension d. We adopt a train-test split in which 70% of the instances are used to train the model and the remaining 30% are held out to evaluate its performance. Figure 3 illustrates the data preprocessing pipeline.

Figure 3. Preprocessing pipeline for 5G-NIDD dataset.

3.3. Model Architecture

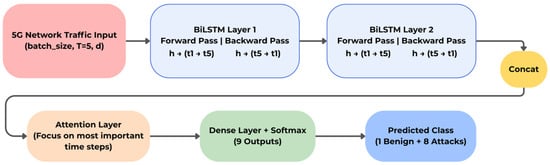

In this study, we propose the Bidirectional Temporal Anomaly Detector (BiTAD) for 5G network traffic classification. The model is built on a BiLSTM backbone with an attention mechanism to capture discriminative contextual and temporal patterns within network flow sequences. The output layer is a dense layer with softmax activation. Figure 4 depicts the BiTAD architecture.

Figure 4. The architecture of the proposed BiTAD.
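To make the layer-by-layer dimensionality concrete, the following sketch traces output shapes and trainable-parameter counts through the stack. It assumes the 64→32 units-per-direction configuration described in the Introduction, the standard LSTM parameter count of four weight blocks per direction (three gates plus the candidate cell state), and a hypothetical encoded feature dimension d = 40. The attention layer's parameter count is left open because it depends on the scoring function.

```python
def lstm_params(input_dim, units):
    """Trainable parameters of one LSTM direction.

    Four weight blocks, each with input weights, recurrent weights and a bias.
    """
    return 4 * (units * (input_dim + units) + units)


def bitad_shape_trace(d, T=5, units1=64, units2=32, num_classes=9):
    """Return (stage name, output shape, parameter count) for each stage."""
    out1, out2 = 2 * units1, 2 * units2  # forward + backward states concatenated
    return [
        ("bilstm_1", (T, out1), 2 * lstm_params(d, units1)),
        ("bilstm_2", (T, out2), 2 * lstm_params(out1, units2)),
        ("attention_pool", (out2,), None),  # depends on the scoring function
        ("dense_softmax", (num_classes,), out2 * num_classes + num_classes),
    ]


# d = 40 is a hypothetical feature dimension after encoding.
for name, shape, params in bitad_shape_trace(d=40):
    print(name, shape, params)
```

The trace shows the per-timestep representation shrinking from 128 to 64 dimensions before attention collapses the time axis into a single 64-dimensional context vector.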

In the proposed model, a BiLSTM network is adopted to capture temporal features in both the forward and backward directions. For each time step t, a forward hidden state $\overrightarrow{h}_t$ and a backward hidden state $\overleftarrow{h}_t$ are computed. The two states are concatenated, $h_t = [\overrightarrow{h}_t ; \overleftarrow{h}_t]$.

An attention layer is applied to focus on the most informative time steps. Let the sequence of hidden states be expressed as $H = \{h_1, h_2, \ldots, h_T\}$. The attention mechanism computes a context vector c as a weighted sum of the hidden states:

$$c = \sum_{t=1}^{T} \alpha_t h_t, \qquad \alpha_t = \frac{\exp(e_t)}{\sum_{s=1}^{T} \exp(e_s)}$$

where $e_t$ denotes the attention score, and $\alpha_t$ is the normalized weight for time step t.
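The attention pooling step can be illustrated with a short sketch. It takes the per-timestep attention scores as given, since the scoring function itself is not specified here, and shows only the softmax normalisation and the weighted sum. The function names and the toy hidden states are hypothetical.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_pool(hidden_states, scores):
    """Return (context, weights) where context = sum_t weights[t] * hidden_states[t]."""
    alphas = softmax(scores)
    dim = len(hidden_states[0])
    context = [
        sum(a * h[j] for a, h in zip(alphas, hidden_states)) for j in range(dim)
    ]
    return context, alphas


# Two toy hidden states with equal scores: the context is their average.
context, alphas = attention_pool([[1.0, 0.0], [3.0, 2.0]], [0.0, 0.0])
print(alphas, context)
```

When one score is much larger than the rest, its weight approaches 1 and the context vector approaches that timestep's hidden state, which is how attention lets a single decisive timestep dominate the classification.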

Next, the context vector c is fed into a dense output layer with softmax activation to generate the probability distribution over the classes (i.e., 1 benign + 8 attack classes). The prediction is formulated as

$$\hat{y} = \mathrm{softmax}(W_o c + b_o)$$

where $W_o$ and $b_o$ are the weight matrix and bias of the output layer.

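In this study, we train multiple model variants using the Adam optimiser with a learning rate of 0.001, a batch size of 32, and a dropout rate of 0.3 to prevent model overfitting. We train the models using categorical cross-entropy loss:

$$\mathcal{L} = -\sum_{k=1}^{C} y_k \log \hat{y}_k$$

where $y_k$ is the one-hot ground-truth indicator for class k, $\hat{y}_k$ is the predicted probability for class k, and C is the number of classes.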
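As a worked illustration of the output layer and the loss, the sketch below applies a dense softmax layer to a context vector and evaluates categorical cross-entropy for a one-hot target. The weight matrix, bias, and toy context vector are hypothetical placeholders rather than trained values.

```python
import math


def softmax(logits):
    """Numerically stable softmax."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def dense_softmax(context, weights, bias):
    """Class probabilities softmax(W c + b); weights holds one row per class."""
    logits = [
        sum(w * x for w, x in zip(row, context)) + b
        for row, b in zip(weights, bias)
    ]
    return softmax(logits)


def cross_entropy(true_class, y_hat, eps=1e-12):
    """Categorical cross-entropy for a one-hot target given by its class index."""
    return -math.log(max(y_hat[true_class], eps))


# An untrained layer with all-zero weights predicts a uniform distribution
# over the 9 classes, so the loss equals log(9).
y_hat = dense_softmax([0.5, -1.0], [[0.0, 0.0]] * 9, [0.0] * 9)
print(cross_entropy(3, y_hat), math.log(9))
```

The log(9) value is a useful reference point: a training loss that stays near it indicates that the model has not yet learned to separate the nine classes.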

To ensure consistent and reproducible evaluation, the dataset was divided using a 70:30 train–test split with class-stratified sampling to preserve label proportions. Hyperparameters were tuned empirically through iterative experimentation, adjusting hidden-unit size, dropout, and learning rate based on validation performance within the training set. The final configuration—64 hidden units per direction, dropout = 0.3, learning rate = 0.001—offered the most stable convergence. The model was trained using the Adam optimizer for 10 epochs with early stopping to prevent overfitting. Each experiment used a fixed random seed (42) and was repeated three times, and the reported metrics represent the average performance across runs to ensure statistical reliability.
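A class-stratified 70:30 split with a fixed seed can be sketched as follows. This is an illustrative stand-in, not the code used in the experiments: the function name is hypothetical, and the indices are assumed to refer to already-windowed sequences so that shuffling does not disturb the order of flows inside a sequence.

```python
import random
from collections import defaultdict


def stratified_split(labels, test_fraction=0.3, seed=42):
    """Return (train_idx, test_idx) that preserve per-class proportions."""
    rng = random.Random(seed)  # local generator keeps the split reproducible
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)

    train_idx, test_idx = [], []
    for label in sorted(by_class):  # fixed class order for determinism
        idxs = by_class[label]
        rng.shuffle(idxs)
        n_test = round(len(idxs) * test_fraction)
        test_idx.extend(idxs[:n_test])
        train_idx.extend(idxs[n_test:])
    return sorted(train_idx), sorted(test_idx)


# Toy label list: 70 benign and 30 attack sequences.
labels = ["benign"] * 70 + ["attack"] * 30
train_idx, test_idx = stratified_split(labels)
print(len(train_idx), len(test_idx))  # 70 30
```

Splitting within each class keeps rare attack types represented in both partitions, which a purely random split over an imbalanced dataset does not guarantee.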

3.4. Model Variants for Comparison

To evaluate the contribution of individual architectural components, we develop several model variants with different configurations. Table 4 presents these variants. The proposed BiTAD incorporates three key mechanisms: (1) LSTM cell gating (i.e., the standard input/forget/output gates within each LSTM cell), (2) bidirectionality strategy, and (3) attention mechanism. The LSTM cell gates control internal memory updates and forgetting dynamics, bidirectionality provides full-sequence context, and the attention mechanism selectively emphasises the most informative temporal segments for classification.

Table 4. Summary of the LSTM-based model architectures.

3.5. TwinLens: Explainability Framework

Deep learning-based IDSs achieve promising accuracy, but they operate as “black boxes”. In security-critical applications, it is crucial to understand why an IDS flagged a flow as malicious [29]. To address this, we propose TwinLens, an XAI framework that provides dual-perspective explanations of predictions made by our BiTAD model, integrating both SHAP [25] and TimeSHAP [28]. Prior studies have demonstrated that transparency builds analyst trust. For example, Gaspar et al. and Barnard et al. incorporated SHAP explanations into their IDS pipelines [30,31]. Building on this, TwinLens not only highlights which features influence predictions (via SHAP) but also reveals when in time those features become influential (via TimeSHAP). SHAP provides feature-level attribution based on game-theoretic Shapley values [25]. For each feature i, the Shapley value is computed as

$$\phi_i = \sum_{S \subseteq F \setminus \{i\}} \frac{|S|!\,(|F| - |S| - 1)!}{|F|!} \left[ f(S \cup \{i\}) - f(S) \right]$$

where F is the set of all features and f(S) is the model output when only the features in S are present.

In practice, KernelSHAP [32] is used to approximate these values. SHAP yields local explanations (characterising feature contributions for individual flow predictions) and global insights (by aggregating values across flows, ranking the features by overall importance). For example, SHAP may reveal that “packet count” is the most influential variable in detecting UDP flood attacks, or that a specific port number drove a particular alert.
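For intuition, the Shapley value can be computed exactly on a small toy model by enumerating every feature subset. The sketch below is not KernelSHAP and does not use the SHAP library. It is a brute-force illustration whose cost grows exponentially with the number of features, which is why approximations are needed in practice. The linear toy model, its weights, and the zero baseline are hypothetical.

```python
from itertools import combinations
from math import factorial


def shapley_values(value_fn, n_features):
    """Exact Shapley values by subset enumeration.

    value_fn maps a frozenset of present feature indices to a model output.
    """
    phi = [0.0] * n_features
    for i in range(n_features):
        others = [j for j in range(n_features) if j != i]
        for size in range(len(others) + 1):
            weight = (
                factorial(size)
                * factorial(n_features - size - 1)
                / factorial(n_features)
            )
            for subset in combinations(others, size):
                s = frozenset(subset)
                phi[i] += weight * (value_fn(s | {i}) - value_fn(s))
    return phi


# Hypothetical linear model; absent features fall back to a zero baseline.
weights = [2.0, -1.0, 0.5]
x = [1.0, 3.0, 4.0]
phi = shapley_values(lambda s: sum(weights[j] * x[j] for j in s), 3)
print(phi)
```

For a linear model with a zero baseline, each attribution reduces to the feature's weight times its value, and the attributions sum to the difference between the full prediction and the baseline prediction.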

However, standard SHAP treats each input as a flat vector, ignoring temporal structure. Bento et al. extended SHAP to time-series data [28]. TimeSHAP computes Shapley attributions for each time step and feature in a sequence. By perturbing (masking) different segments of the flow sequence, TimeSHAP identifies which time indices (and which features within them) most influenced the model’s prediction. The generated output is a time-varying importance heatmap: for each time step t and feature j, TimeSHAP yields a value $\phi_{t,j}$ indicating how much that event-feature pair contributed to the prediction. In essence, TimeSHAP shows which points in the sequence drove the model’s decision, complementing SHAP’s insight into which features mattered.
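The idea of attributing a prediction to time steps by masking can be illustrated with a small exact computation. This sketch is not the TimeSHAP library or its algorithm. It treats each of the T time steps as a player, replaces absent steps with a baseline vector, and enumerates all coalitions, which is feasible only for short windows such as T = 5. The burst detector standing in for a trained model, its threshold, and the toy sequence are hypothetical.

```python
from itertools import combinations
from math import factorial


def timestep_shapley(model, sequence, baseline):
    """Exact Shapley value of each time step; masked steps use the baseline."""
    T = len(sequence)

    def value(coalition):
        masked = [sequence[t] if t in coalition else baseline for t in range(T)]
        return model(masked)

    phi = [0.0] * T
    for t in range(T):
        others = [u for u in range(T) if u != t]
        for size in range(T):
            weight = factorial(size) * factorial(T - size - 1) / factorial(T)
            for subset in combinations(others, size):
                s = set(subset)
                phi[t] += weight * (value(s | {t}) - value(s))
    return phi


def burst_detector(seq, threshold=100.0):
    """Hypothetical stand-in for a trained model: flags any packet-count burst."""
    return 1.0 if any(step[0] > threshold for step in seq) else 0.0


# One feature (packet count) over five time steps, with a burst at step 4.
sequence = [[10.0], [12.0], [11.0], [500.0], [9.0]]
print(timestep_shapley(burst_detector, sequence, baseline=[0.0]))
```

Because only the fourth time step can trigger the detector, it receives the entire attribution while the other steps receive none, mirroring the kind of statement a temporal explanation is meant to support.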

The proposed TwinLens framework combines these two explainers for the BiTAD model. SHAP highlights the most influential features (e.g., “byte count” or “protocol”), whereas TimeSHAP reveals temporal decision dynamics (e.g., a surge at packet 4 being decisive for detection). By merging these perspectives, TwinLens produces both aggregated feature rankings and time-varying importance heatmaps. For example, TwinLens may reveal that “packet count” and activity at time step 4 together drive the detection of a UDP flood attack. This dual explainability transforms BiTAD from a black-box into a transparent model, enabling analysts to pinpoint both the features and the time steps responsible for alerts. In summary, TwinLens provides explanations at two complementary levels: feature-level SHAP values and sequence-level TimeSHAP scores.

TwinLens provides a more comprehensive interpretability perspective than using SHAP or TimeSHAP individually. While SHAP identifies the most influential features contributing to a given prediction, it does not reveal when these features exert their influence. Conversely, TimeSHAP highlights the most critical time steps but lacks explicit feature-level attribution. By integrating both, TwinLens enables simultaneous reasoning about what features are important and when they become decisive during a network event sequence. This dual insight allows security analysts to trace evolving attack behaviours (e.g., a sudden surge in packet count or a protocol shift near the end of a sequence) that would otherwise remain hidden in single-view explanations.

Nevertheless, TwinLens introduces a moderate computational overhead because TimeSHAP requires iterative perturbation across timesteps, and its interpretability is bounded by the sequence length T. Future work will focus on optimising this process through selective timestep masking and real-time approximation techniques to reduce computation cost without compromising explanatory depth.

4. Results and Analysis

4.1. Model Performance Analysis

In this study, we analyse the performance of four sequential deep learning models on the 5G-NIDD dataset: LSTM, BiLSTM, LSTM + Attention, and our proposed BiTAD. The performance metrics are accuracy, precision, recall, and F1-score. For the binary classification task, results show a consistent improvement from the baseline LSTM to the BiTAD model. BiLSTM improves accuracy by 0.54 percentage points over LSTM (90.82%→91.36%) by capturing both forward and backward temporal dependencies, enabling better modelling of bidirectional flow characteristics in 5G traffic. Incorporating attention into LSTM increases accuracy to 91.41% and improves precision from 0.82 to 0.83, reflecting its ability to dynamically weight the most critical time steps for classification. BiTAD, which integrates both bidirectionality and attention, delivers the highest performance with 93.33% accuracy and an F1-score of 0.91, an improvement of 1.92 percentage points over LSTM + Attention, 1.97 over BiLSTM, and 2.51 over the baseline LSTM. These results indicate that combining both mechanisms yields complementary benefits, enhancing the model’s ability to capture complex temporal dynamics in binary intrusion detection.

For the multiclass classification task, a similar performance pattern emerges. BiLSTM achieves a 2.43% accuracy gain over LSTM (86.32%→88.75%) due to its capacity to model bidirectional dependencies more effectively. Adding attention to LSTM boosts precision from 0.88 to 0.90 and recall from 0.84 to 0.87, demonstrating its strength in focusing on the most informative segments of the input sequence. BiTAD once again achieves the highest results, 90.47% accuracy and an F1-score of 0.91, gaining 1.72% over BiLSTM and more than 4% over LSTM. This consistent improvement across a more complex multiclass setting highlights the synergy of bidirectional temporal processing and attention weighting, providing significant advantages in handling diverse intrusion types.
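The accuracy gains quoted above are differences in percentage points and can be re-derived directly from the reported figures. The short sketch below does only that arithmetic; the dictionary and function names are illustrative, and the multiclass accuracy of LSTM + Attention is omitted because it is not stated in the text.

```python
# Accuracies (%) as reported in the text for the 5G-NIDD test set.
binary = {"LSTM": 90.82, "BiLSTM": 91.36, "LSTM+Attention": 91.41, "BiTAD": 93.33}
multiclass = {"LSTM": 86.32, "BiLSTM": 88.75, "BiTAD": 90.47}


def gain_pp(scores, model, baseline):
    """Absolute accuracy gain of `model` over `baseline`, in percentage points."""
    return round(scores[model] - scores[baseline], 2)


# Gain of BiTAD over every other model, per task.
binary_gains = {name: gain_pp(binary, "BiTAD", name) for name in binary if name != "BiTAD"}
multiclass_gains = {name: gain_pp(multiclass, "BiTAD", name) for name in multiclass if name != "BiTAD"}

print(binary_gains)
print(multiclass_gains)
```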

Overall, BiTAD establishes a new performance benchmark for sequential deep learning-based IDSs on the 5G-NIDD dataset. Across both binary and multiclass scenarios, it consistently outperforms baseline models by leveraging bidirectional temporal modelling and attention-driven feature weighting. While the achieved accuracies (93.33% for binary, 90.47% for multiclass) fall short of near-perfect levels (~99%), the model’s stable gains and smooth convergence patterns underscore its robustness, technical soundness, and suitability for practical 5G intrusion detection deployments. This combination of bidirectionality and attention mirrors proven strategies in IoT and network anomaly detection, further validating BiTAD’s real-world applicability.

4.2. Why BiLSTM + Attention Excels

The comparative results in Table 5 highlight the distinct performance advantages introduced by each architectural enhancement. The bidirectional structure in BiLSTM enables the model to capture both past and future temporal dependencies, improving the representation of 5G traffic flow dynamics such as connection initiation and termination sequences. This yields measurable gains over the baseline LSTM in both binary (91.36% vs. 90.82%) and multiclass (88.75% vs. 86.32%) tasks. The addition of the attention mechanism further boosts detection by assigning greater weight to the most informative time steps, improving precision, particularly in multiclass classification, where attention raises LSTM’s precision from 0.88 to 0.90 and BiLSTM’s from 0.91 to 0.92. Combined, the BiTAD model leverages both bidirectional memory and attention-driven focus, achieving the highest overall scores in both tasks (binary: 93.33% accuracy, F1 = 0.91; multiclass: 90.47% accuracy, F1 = 0.91).

Table 5.

Performance of deep learning models on the 5G-NIDD test set.

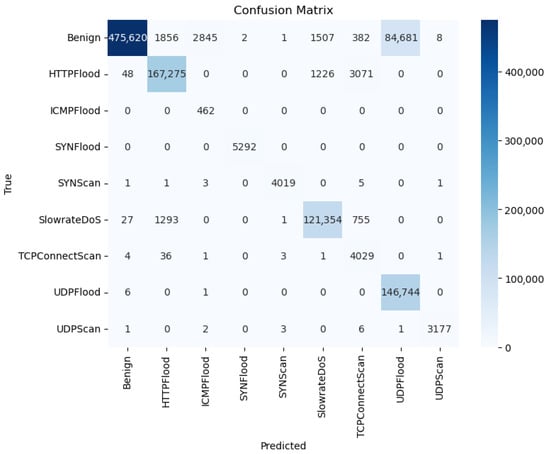

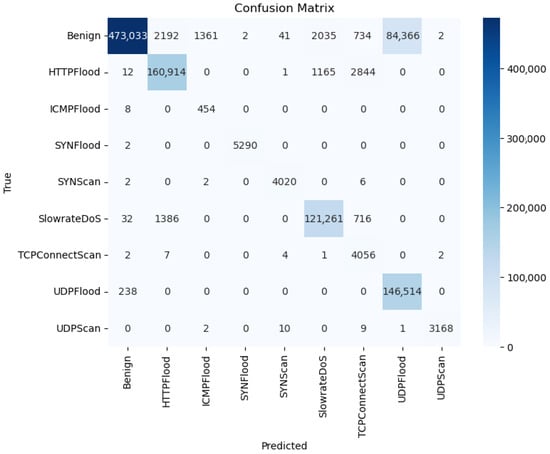

The confusion matrix in Figure 5 and per-class metrics in Table 6 further highlight BiTAD’s capability to generalize across diverse and imbalanced attack categories. High precision and recall are achieved for dominant attack types such as SYNFlood (0.99/1.00) and SYNScan (0.99/0.99). More challenging classes like ICMPFlood maintain perfect recall (1.00) despite low precision (0.13), meaning that no ICMPFlood flows in the test set were missed, at the cost of additional false alarms for this rare category. Similarly, TCPConnectScan achieves a recall of 0.98, substantially higher than that of unidirectional or non-attention baselines, though with moderate precision (0.48), reflecting a willingness to tolerate occasional false positives for rare but critical threats. HTTPFlood, SlowrateDoS, UDPFlood, and UDPScan also achieve near-perfect recall, demonstrating the model’s robustness across both frequent and infrequent attacks. While the Benign class records a slightly lower recall (0.83), this trade-off prioritizes minimizing false negatives in attack detection, which is preferable in high-security operational contexts.
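To make the precision/recall pattern for rare classes concrete, the following sketch derives per-class metrics from a confusion matrix. The counts are hypothetical and are not taken from Figure 5; they are chosen only to show how a rare class can reach perfect recall with low precision when a small share of benign flows is misclassified into it.

```python
def per_class_metrics(confusion, labels):
    """Precision, recall and F1 per class; rows are true labels, columns are predictions."""
    metrics = {}
    for k, label in enumerate(labels):
        tp = confusion[k][k]
        predicted = sum(row[k] for row in confusion)  # column sum: everything predicted as k
        actual = sum(confusion[k])                    # row sum: everything truly of class k
        precision = tp / predicted if predicted else 0.0
        recall = tp / actual if actual else 0.0
        denom = precision + recall
        f1 = 2 * precision * recall / denom if denom else 0.0
        metrics[label] = {"precision": precision, "recall": recall, "f1": f1}
    return metrics


# Hypothetical counts for illustration only (not the paper's results).
labels = ["Benign", "ICMPFlood", "SYNFlood"]
confusion = [
    [830, 70, 100],  # true Benign: 170 flows raised as false alarms
    [0, 10, 0],      # true ICMPFlood (rare): all 10 detected
    [0, 0, 1000],    # true SYNFlood: all detected
]
metrics = per_class_metrics(confusion, labels)
for label in labels:
    print(label, metrics[label])
```

In this toy matrix the rare class has only 10 true samples, so 70 benign false alarms are enough to pull its precision down to 0.125 while its recall stays at 1.00.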

Figure 5.

Confusion matrix for BiTAD on 5G-NIDD.

Table 6.

BiTAD performance on the 5G-NIDD (Multiclass) test set.

These improvements are largely attributed to the temporal attention mechanism’s ability to focus on critical bursts or irregular patterns within sequences, rather than distributing equal importance across all time steps. This selective weighting enables BiTAD to maintain strong detection capabilities even for minority classes, outperforming simpler LSTM and LSTM + Attention models under significant class imbalance.

Importantly, these performance gains are achieved with modest computational overhead. BiTAD’s parameter count (~83 k) is only about 12% higher than that of the baseline LSTM (~74 k) and remains several times smaller than hybrid CNN + LSTM architectures (~300 k–500 k). This balance of accuracy and efficiency supports real-time feasibility, making BiTAD well-suited for operational 5G IDS deployment.
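As a rough guide to where such parameter counts come from, the sketch below applies the standard parameter formula for an LSTM layer (four gates, each with input weights, recurrent weights, and a bias) and recomputes the relative overhead from the rounded totals quoted above. The layer width of 64 units is a hypothetical value chosen for illustration; the paper's actual layer sizes are not given in this section, so the printed layer counts are not expected to match the reported totals.

```python
def lstm_params(input_dim, units):
    """Trainable parameters of one standard LSTM layer.

    Each of the 4 gates has input weights (input_dim x units),
    recurrent weights (units x units) and a bias (units).
    """
    return 4 * (units * (input_dim + units) + units)


def bilstm_params(input_dim, units):
    """A bidirectional layer holds two independent LSTMs (forward and backward)."""
    return 2 * lstm_params(input_dim, units)


# 16 input features as stated in the text; 64 units is an assumed width.
print(lstm_params(16, 64))
print(bilstm_params(16, 64))

# Relative overhead implied by the rounded totals (~83 k vs. ~74 k).
overhead = (83_000 - 74_000) / 74_000
print(f"BiTAD vs. LSTM overhead: {overhead:.1%}")
```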

5G flows vary across devices and sessions with bursty traffic. Anomalies often unfold over time rather than at a single instant. BiLSTM captures past and future context, revealing delayed or emerging patterns such as slow scans and staggered exfiltration that unidirectional models often miss. The attention layer focuses on the most informative time steps, for example, sharp shifts in header fields or payload burstiness, so decisive windows drive the prediction. This design explains the gains in Table 5 and the strong recall on rare attacks. The model is compact at about 83 k parameters, so it remains feasible for near real-time use.
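The role of the attention layer can be illustrated with a minimal attention-pooling sketch in plain Python. It assumes a simple learned scoring vector applied to each hidden state; the exact attention formulation used in BiTAD is defined elsewhere in the paper, so the function names, the scoring rule, and the toy values here are assumptions for illustration only.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_pool(hidden_states, score_vector):
    """Weight T hidden states by relevance and sum them into one context vector.

    hidden_states: T lists of length H (e.g., concatenated forward/backward outputs).
    score_vector:  list of length H standing in for learned attention parameters.
    """
    scores = [sum(h_j * w_j for h_j, w_j in zip(h, score_vector)) for h in hidden_states]
    weights = softmax(scores)
    dim = len(hidden_states[0])
    context = [
        sum(weights[t] * hidden_states[t][j] for t in range(len(hidden_states)))
        for j in range(dim)
    ]
    return weights, context


# Toy hidden states: the second time step carries a sharp burst.
hidden_states = [[0.1, 0.0], [2.0, 1.0], [0.2, 0.1]]
score_vector = [1.0, 1.0]
weights, context = attention_pool(hidden_states, score_vector)
print(weights)
print(context)
```

The time step with the largest score receives most of the weight, so the context vector passed to the classifier is dominated by that window rather than by an unweighted average over all steps.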

The next section (Section 4.3) evaluates interpretability using the TwinLens framework, demonstrating how explanation-guided tuning can further refine model precision and training efficiency.

4.3. TwinLens Interpretability

TwinLens is an interpretability framework that combines a SHAP explainer for feature-wise attribution with a TimeSHAP explainer for temporal attribution. The proposed XAI technique surfaces underlying patterns in the data and provides insights into model behaviour. Specifically, the dual-perspective TwinLens identifies which features drive predictions and when they do so.

4.3.1. Performance Interpretability Trade-Offs

TwinLens introduces post hoc analysis overhead, but explanation-guided pruning did not degrade detection. Guided by SHAP (feature importance) and TimeSHAP (timestep importance), we reduced the inputs from 16 to 10 features and from 5 to 3 timesteps, trimming parameters from 83,342 to 80,268 (a reduction of ~3.7%) while retaining accuracy and improving training speed and stability. Confusion matrices likewise remain clean for frequent attacks and show sensitivity on rare classes, indicating a favorable precision-recall balance for deployment. In practice, TwinLens also enables explainable alerts: each flagged flow can be accompanied by the top contributing features and the decisive timesteps, improving analyst trust and auditability.
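A sketch of what such an explainable alert might look like is given below. The `build_alert` helper, the alert fields, the flow identifier, and all attribution values are hypothetical; only the feature names are taken from the SHAP analysis reported in this paper.

```python
def build_alert(flow_id, predicted_class, feature_attr, event_attr, top_k=3):
    """Attach the top-k features and the most decisive event to a flagged flow.

    feature_attr: feature name -> SHAP-style attribution for this prediction.
    event_attr:   event (time step) name -> TimeSHAP-style attribution.
    """
    top_features = sorted(feature_attr, key=lambda f: abs(feature_attr[f]), reverse=True)[:top_k]
    decisive_event = max(event_attr, key=lambda e: abs(event_attr[e]))
    return {
        "flow_id": flow_id,
        "predicted_class": predicted_class,
        "top_features": [(f, feature_attr[f]) for f in top_features],
        "decisive_event": decisive_event,
    }


# Illustrative attribution values only (not results from the paper).
alert = build_alert(
    flow_id="flow-0001",
    predicted_class="SYNFlood",
    feature_attr={"dstport_t4": 0.41, "ip.ttl_t4": -0.22, "ip.proto_t2": 0.09, "tcp.hdr_len_t4": 0.15},
    event_attr={"Event 1": 0.52, "Event 2": 0.18, "Event 3": 0.07},
)
print(alert)
```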

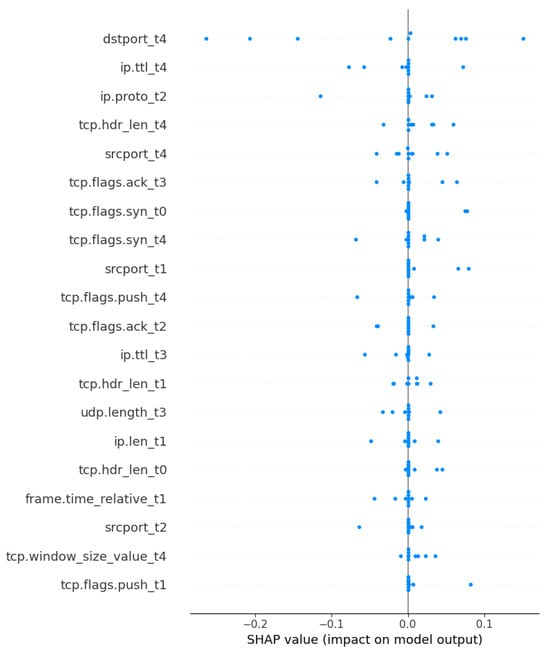

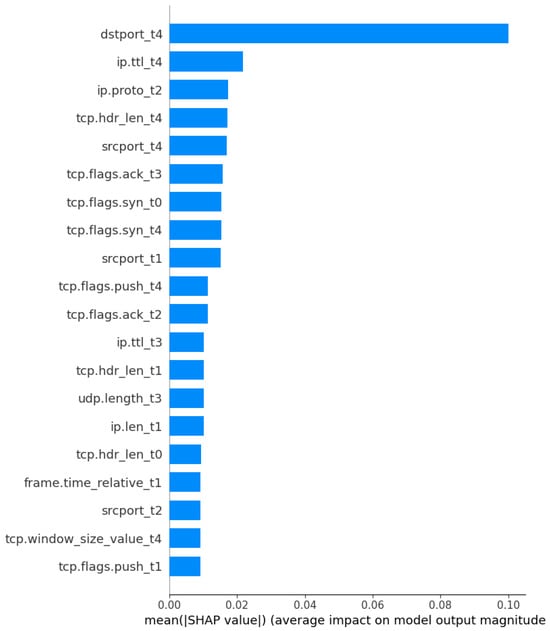

4.3.2. SHAP Analysis: Which Features

Figure 6 (SHAP summary plot) and Figure 7 (mean absolute SHAP values, |SHAP|) show the most influential features learned by the BiTAD model. The attributes dstport_t4, ip.ttl_t4, ip.proto_t2, and tcp.hdr_len_t4 are top-ranked and are therefore treated as dominant features. These attributes reflect traffic-level indicators of port targeting, IP-level protocol behaviour, and TCP header variations that are characteristic of certain DoS and scan attacks. These insights align with prior studies [7,14,33], which highlight that port-based and TTL-based features contribute significantly to attack detection in high-speed 5G environments. The feature distribution also suggests redundancy in lower-ranked attributes, which were pruned in the next tuning phase.

Figure 6.

SHAP summary plot: feature-level attribution.

Figure 7.

Mean absolute SHAP values of the top 20 features.

4.3.3. TimeSHAP Analysis: When Features Matter

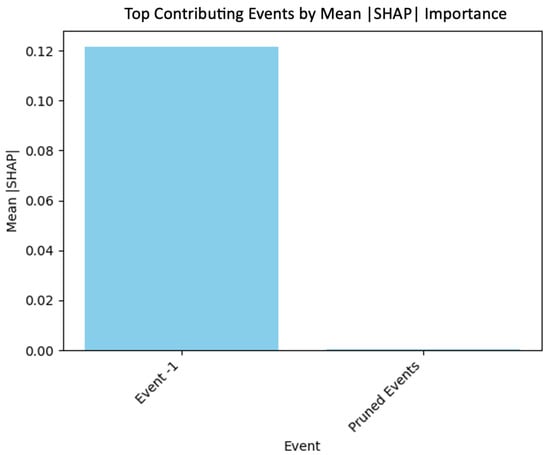

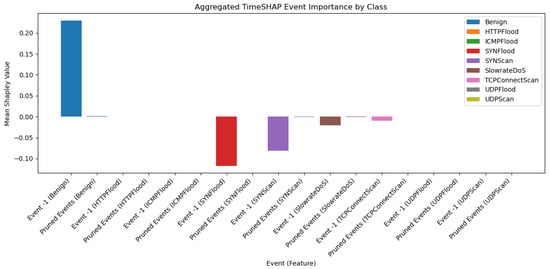

While SHAP identifies which features most influence the model’s predictions, TimeSHAP complements this by revealing when these features have the greatest impact within the sequence of events. In our study, the input sequence length was set to T = 5 time steps. Each “Event” refers to a specific time step position within this sequence, with Event 1 being the earliest observation and Event 5 the most recent.

TimeSHAP computes Shapley attributions for every time–feature pair by perturbing (masking) different temporal segments. This allows identification of both the time indices (events) and the features within them that most influenced the model’s decision. The resulting output is a time-varying importance map, where each event–feature pair (t,i) is assigned an importance score for each time step t and feature i. This score quantifies how much the event-feature pair contributed to the final prediction.
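The idea of attributing a prediction to time steps by masking can be sketched with an exact Shapley computation over events. This is not the TimeSHAP library's implementation; it is a brute-force enumeration that is only practical because T is small (2^5 coalitions for T = 5). The toy linear `model`, the zero baseline, and the step weights are assumptions made for illustration.

```python
from itertools import combinations
from math import factorial


def event_shapley(model, sequence, baseline):
    """Exact Shapley value of each time step; masked steps take the baseline value."""
    T = len(sequence)

    def value(present):
        masked = [sequence[t] if t in present else baseline[t] for t in range(T)]
        return model(masked)

    phi = []
    for t in range(T):
        others = [s for s in range(T) if s != t]
        total = 0.0
        for size in range(T):
            # Shapley weight for coalitions of this size that exclude step t.
            weight = factorial(size) * factorial(T - size - 1) / factorial(T)
            for subset in combinations(others, size):
                present = set(subset)
                total += weight * (value(present | {t}) - value(present))
        phi.append(total)
    return phi


# Toy stand-in for a trained detector: a weighted sum that favours early steps.
step_weights = [0.5, 0.2, 0.15, 0.1, 0.05]


def model(seq):
    return sum(w * x for w, x in zip(step_weights, seq))


sequence = [1.0, 1.0, 1.0, 1.0, 1.0]
baseline = [0.0, 0.0, 0.0, 0.0, 0.0]
phi = event_shapley(model, sequence, baseline)
print(phi)
```

For this linear toy model each event's attribution equals its weight, and the attributions sum to the difference between the prediction on the full sequence and on the fully masked baseline.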

Figure 8 shows that Event 1 consistently dominates in mean SHAP importance across all classes, suggesting that early sequence indicators are highly predictive for anomaly detection. In contrast, pruned events (later time steps beyond Event 3) contribute minimally, justifying their removal during sequence pruning for computational efficiency.

Figure 8.

Top contributing events, showing that Event 1 dominates in influence.

Figure 9 presents the class-wise aggregated TimeSHAP values by step. Most anomalies, particularly SYNFlood and Benign flows, exhibit peak influence from Event 1, with moderate contributions from Event 2 and Event 3. The minimal influence of pruned events confirms that early packet-level patterns carry sufficient information for accurate classification. This aligns with prior findings that initial traffic bursts often signal abnormal behaviour in 5G fronthaul networks.

Figure 9.

Class-wise aggregated SHAP values by step.

These findings validate the effectiveness of using shorter input sequences, specifically Event 1 to Event 3, without significant loss in anomaly detection capability. This reduction directly supports TwinLens’ design objective of improving computational efficiency while maintaining accuracy. The early dominance of Event 1 further confirms that initial packet bursts in 5G fronthaul traffic often carry the most critical indicators for intrusion detection. By identifying and focusing on these early events, TwinLens not only reduces model complexity but also provides clear, explainable temporal insights, transforming BiTAD from a black box into an interpretable and operationally practical IDS. Similar observations were reported by Zhang et al. [13], where early network bursts served as key anomaly indicators in 5G fronthaul behaviour.

4.3.4. Model Tuning Guided by XAI Insights

We leverage the insights obtained from the TwinLens XAI to tune and optimize the proposed BiTAD model. Two key modifications were made based on the explainability analysis:

- Feature pruning: The 10 highest-ranked features according to the SHAP value rankings are retained (SHAP-based).

- Temporal pruning: The TimeSHAP findings indicate that the early time steps (i.e., t1–t3) possess higher predictive power. Accordingly, the input sequence is reduced from 5 to 3 time steps (TimeSHAP-based).
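The two pruning steps can be sketched as follows. The sketch assumes that SHAP values are available as one row per sample and one column per base feature (that is, already aggregated across time steps), and that sequences are nested lists of shape samples × time steps × features. The helper names and the toy values are illustrative and are not the authors' code.

```python
def top_k_features(shap_values, k):
    """Indices of the k features with the largest mean absolute SHAP value."""
    n_samples = len(shap_values)
    n_features = len(shap_values[0])
    mean_abs = [sum(abs(row[j]) for row in shap_values) / n_samples for j in range(n_features)]
    ranked = sorted(range(n_features), key=lambda j: mean_abs[j], reverse=True)
    return sorted(ranked[:k])  # restore original column order


def prune_inputs(sequences, keep_features, keep_steps):
    """Keep only the selected feature columns and the first `keep_steps` time steps."""
    return [
        [[step[j] for j in keep_features] for step in seq[:keep_steps]]
        for seq in sequences
    ]


# Toy example: 4 features, keep 2; 5 time steps, keep 3 (the paper keeps 10 of 16 features).
shap_values = [[0.5, -0.1, 0.0, 0.3], [-0.4, 0.2, 0.1, -0.3]]
keep = top_k_features(shap_values, k=2)

sequences = [[[t + 0.1 * j for j in range(4)] for t in range(5)]]
pruned = prune_inputs(sequences, keep, keep_steps=3)
print(keep)
print(pruned)
```

Slicing with `seq[:keep_steps]` retains Events 1 to 3, the earliest observations, in line with the TimeSHAP findings above.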

These modifications produce a lightweight version of the BiTAD model (TwinLens-Tuned BiTAD). Table 7 records the total parameter counts of the original BiTAD model and the TwinLens-Tuned BiTAD. The total number of learnable parameters falls from 83,342 to 80,268, which leads to faster training and inference.

Table 7.

Parameter counts before and after XAI-guided tuning.

4.3.5. Training Curve and Confusion Matrix Analysis

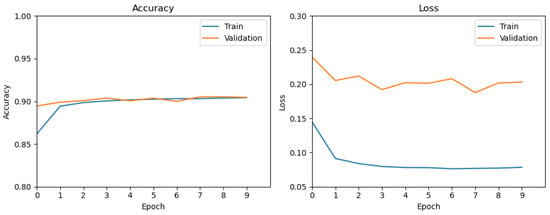

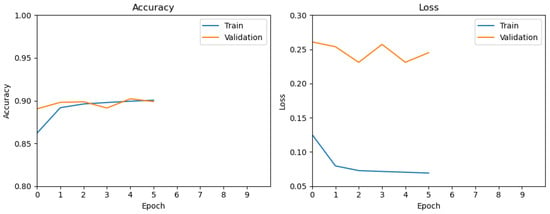

Figure 10 and Figure 11 show the training dynamics for the original BiTAD and the XAI-Tuned BiTAD (TwinLens-guided).

Figure 10.

Training accuracy/loss for original BiTAD.

Figure 11.

Training accuracy/loss for XAI-Tuned BiTAD.

Original BiTAD (Figure 10):

- Training Loss: Drops from ~0.15 to <0.075 over 9 epochs.

- Validation Loss: Relatively stable, fluctuating around ~0.20.

- Accuracy: Gradual increase to ~0.90, with minimal fluctuation.

XAI-Tuned BiTAD (Figure 11):

- Training Loss: Drops from ~0.13 to <0.07 within 5–6 epochs.

- Validation Loss: Slightly higher, fluctuating around ~0.24.

- Accuracy: Achieves ~0.90 in fewer epochs, matching the original’s final performance.

Overall, the XAI-Tuned BiTAD reaches the same final accuracy as the original model but does so with faster convergence and slightly lower training loss. While the validation loss is marginally higher, the improved training speed and efficiency from TwinLens-guided feature and sequence pruning present a favorable trade-off.

Figure 12 breaks down class-level performance using the confusion matrix, compared against the baseline in Figure 5. Complementing this, Table 8 presents the detailed class-wise metrics (accuracy, precision, recall, and F1-score) for BiTAD + TwinLens. With TwinLens-guided tuning, BiTAD sustains high detection accuracy for frequent attack types (SYNFlood, SYNScan, UDPFlood) while notably improving recall for rare classes such as TCPConnectScan (from 98% to 99%). HTTPFlood also maintains strong detection despite slightly lower benign recall (a common trade-off in IDS). Together, these results demonstrate that TwinLens tuning enhances sensitivity to minority attack classes without sacrificing overall detection capability, a critical factor for balanced IDS performance in diverse 5G traffic environments.

Figure 12.

Confusion matrix of BiTAD + TwinLens.

Table 8.

BiTAD + TwinLens performance on the 5G-NIDD (Multiclass) test set.

4.3.6. Summary and Justification

By leveraging the TwinLens framework, interpretability was enhanced at both the feature and sequence levels, offering a clearer understanding of how BiTAD identifies anomalies in 5G network traffic. Using SHAP for feature-level importance and TimeSHAP for temporal relevance, we identified and retained only the most critical input features and time steps. This explanation-guided pruning reduced the total parameter count by approximately 3000 (from ~83 k to ~80 k), resulting in faster training and lower computational load, while maintaining comparable classification performance (Section 4.3.5). The preservation of overall accuracy after pruning suggests that these removals targeted largely redundant components without compromising detection capability.

The streamlined BiTAD not only delivers strong detection across common and rare attack types but also meets the practical requirements of near-real-time intrusion detection in 5G environments. This efficiency gain is particularly important for edge deployments, where computational resources may be limited. Furthermore, the TwinLens-driven pruning process is model-agnostic, meaning the same approach can be applied to other sequential deep learning architectures for network anomaly detection or similar time-series classification problems. These findings align with the growing research trend toward interpretable and resource-efficient AI models, bridging the gap between high performance and practical deployability in operational security systems.

4.4. Comparative Benchmarking and Dataset Discrepancy

Many intrusion-detection studies report accuracies exceeding 99% on legacy or synthetic datasets such as CICIDS-2018, NSL-KDD, or BoT-IoT. These datasets are relatively small, highly balanced, and lack 5G-specific traffic characteristics such as GTP tunnelling and IoT signalling bursts. In contrast, the 5G-NIDD dataset used in this study contains authentic 5G-core traffic collected from an operational testbed and exhibits severe class imbalance (for example, ICMPFlood ≈ 0.05% of total flows).

Such real-world imbalance and heterogeneity make 5G-NIDD considerably more challenging for machine-learning-based IDS models. Therefore, while BiTAD’s overall multiclass accuracy of 90.47% may appear lower than the results reported on synthetic datasets, it reflects robust generalisation under genuine 5G conditions and is more representative of the operational performance that can be expected in live networks. Table 8 summarises the performance of BiTAD for each class, illustrating the impact of class imbalance on the precision and recall of different attack types. These findings indicate that BiTAD strikes a balanced trade-off between deployability and interpretability, offering a more realistic indicator of achievable performance in real 5G deployments.

4.5. Computational Efficiency, Experimental Setup, and Reproducibility

BiTAD was implemented in Python 3.10 using TensorFlow 2.12 on a CPU-based workstation equipped with an Intel Xeon W-2155 processor and 16 GB RAM. The model was trained for 10 epochs using the Adam optimiser with a learning rate of 0.001, batch size = 32, and a dropout rate of 0.3 to prevent overfitting. The categorical cross-entropy loss was weighted according to class-imbalance ratios to ensure fair learning across all attack classes.
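The class-weighted loss can be illustrated with a small stdlib-only sketch. The exact weighting scheme is not specified beyond "class-imbalance ratios", so the sketch assumes the common inverse-frequency ("balanced") heuristic, weight = N / (K × count); the function names and the toy label distribution are hypothetical.

```python
import math
from collections import Counter


def balanced_class_weights(labels):
    """Inverse-frequency weights: N / (K * count) for each class (an assumed scheme)."""
    counts = Counter(labels)
    n_samples, n_classes = len(labels), len(counts)
    return {cls: n_samples / (n_classes * count) for cls, count in counts.items()}


def weighted_cross_entropy(probs, label, weights, eps=1e-12):
    """Weighted categorical cross-entropy for one sample.

    probs: class -> predicted probability; label: the true class.
    """
    return -weights[label] * math.log(max(probs[label], eps))


# Toy label distribution: 90 benign flows and 10 flows of a rare attack class.
labels = ["Benign"] * 90 + ["ICMPFlood"] * 10
weights = balanced_class_weights(labels)

probs = {"Benign": 0.7, "ICMPFlood": 0.3}
loss_rare = weighted_cross_entropy(probs, "ICMPFlood", weights)
loss_common = weighted_cross_entropy(probs, "Benign", weights)
print(weights)
print(loss_rare, loss_common)
```

Under this scheme an error on the rare class contributes far more to the loss than an error on the dominant class, which is the intended effect of weighting by imbalance.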

The data preprocessing pipeline followed the procedures outlined in Section 3.2, including the removal of non-informative identifiers, one-hot and ordinal encoding for categorical variables, Z-score normalisation for continuous features, and temporal sequence grouping with a fixed window size (T = 5). The dataset was divided using a 70:30 train–test split with class-stratified sampling to preserve label distribution. Model hyperparameters (LSTM units, dropout rate, and learning rate) were tuned empirically through repeated experiments, and each configuration was trained three times to ensure statistical reliability.
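Two of the preprocessing steps, Z-score normalisation and fixed-size temporal windowing, can be sketched as below. The details of how flows are grouped into sequences are given in Section 3.2; this sketch assumes overlapping windows with a stride of one, which may differ from the authors' grouping, and the function names and sample values are illustrative.

```python
from statistics import mean, pstdev


def zscore(column):
    """Standardise one continuous feature to zero mean and unit variance."""
    mu, sigma = mean(column), pstdev(column)
    return [(x - mu) / sigma if sigma else 0.0 for x in column]


def sliding_windows(rows, T=5):
    """Group consecutive rows into overlapping sequences of length T (stride 1, assumed)."""
    return [rows[i:i + T] for i in range(len(rows) - T + 1)]


# Toy feature column and a toy run of 7 consecutive flow records.
normalised = zscore([2, 4, 4, 4, 5, 5, 7, 9])
windows = sliding_windows(list(range(7)), T=5)
print(normalised)
print(windows)
```

In practice the normalisation statistics would be computed on the training split only and then applied to the test split, to avoid leaking test information into training.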

A complete training cycle on the 5G-NIDD dataset (≈5.1 million flows) required approximately 60 min per run on CPU, which is reasonable given the dataset’s scale and the sequential nature of BiLSTM operations. The model contains only ≈83,000 trainable parameters, making it substantially lighter than transformer- or graph-based intrusion-detection systems that typically comprise millions of parameters. Despite CPU-only execution, BiTAD demonstrates an effective balance between accuracy, interpretability, and computational efficiency, supporting deployment even on commodity workstations or edge-level 5G monitoring nodes.

4.6. Real-World Deployment and Real-Time Detection

The practical deployment of an anomaly detection model in operational 5G networks entails addressing scalability, latency, and architectural integration challenges beyond controlled testbeds. The proposed BiTAD model was trained on the complete 5G-NIDD dataset (≈5.2 million flows) collected from a live 5G testbed [14], ensuring that the model captures realistic traffic dynamics, including genuine user behaviour and attack traffic. Training on large-scale, authentic data improves model generalization to live environments compared with synthetic or subsampled datasets.

4.6.1. Deployment Challenges and Practical Considerations

Modern 5G networks are highly distributed, virtualized, and data-intensive, requiring detection systems that are both efficient and adaptive. The deployment of BiTAD in such an environment must consider the following aspects:

- High Data Volume and Scalability: 5G core networks routinely sustain hundreds of gigabits per second of traffic while serving millions of devices. As emphasized by Bocu and Iavich [34], real-time intrusion detection in high-bandwidth 5G cores is feasible when deployed in a virtualized and distributed manner. In this context, BiTAD’s compact architecture (approximately 83,000 parameters) and linear inference complexity enable parallelized deployment across network slices or MEC clusters, distributing the computational load efficiently.

- Low-Latency Requirements: Intrusion detection systems must operate within strict temporal budgets to enable real-time threat mitigation. Studies such as [34] have demonstrated that ML-based IDS frameworks can achieve real-time performance in live 5G cores without disrupting low-latency services. Similarly, Azkaei et al. [35] reported that their ML-based anomaly detectors executed within the near-real-time RIC loop, validating the feasibility of such systems. Given BiTAD’s lightweight design and attention-based sequence modelling, similar sub-second inference times are achievable when deployed on MEC or near-real-time RIC infrastructure.

- Dynamic Network Conditions: 5G networks evolve continuously through new services, firmware updates, and shifting traffic distributions. To address concept drift, BiTAD can be periodically retrained or fine-tuned using recently captured flow data. This aligns with operational ML pipelines that incorporate incremental learning for adaptive intrusion detection in live networks.

- System Integration and Explainability: Integration with software-defined and virtualized 5G architectures (e.g., NFV, SDN, and MANO) facilitates elastic scaling and automated deployment. BiTAD can be containerized as a microservice within such frameworks, enabling per-slice or per-cluster instantiation. The TwinLens explainability module complements this deployment by providing interpretable, feature- and time-level attributions, enhancing analysts’ trust and situational awareness, capabilities identified as critical for operational IDS adoption [27].

4.6.2. Real-Time Detection Feasibility

Real-time anomaly detection in 5G networks requires that anomalous flows be identified within milliseconds to seconds. This can be achieved using three key strategies:

1. Efficient Model Design: By employing bidirectional temporal encoding with attention and a reduced parameter count, BiTAD minimizes inference latency. Similar deep learning architectures, such as ScalaDetect-5G [36], have achieved F1 > 99% while supporting real-time deployment through feature compression and model optimization.

2. Edge and Hierarchical Deployment: Locating detection instances at MEC nodes or near the RAN layer reduces data transmission delay and allows faster decision-making. Azkaei et al. [35] demonstrated that ML-based anomaly detectors deployed at the edge can operate efficiently within O-RAN’s near-real-time (≈1 s) control loop, validating the practicality of such designs.

3. Parallelization and Slicing: Logical partitioning of traffic across multiple BiTAD instances or slices enables concurrent analysis of large data volumes. Bocu and Iavich [34] confirmed that appropriately defined 5G virtual networks can sustain real-time IDS performance across high-throughput subnets.

Collectively, these findings in the literature [34,35,36] substantiate that with appropriate engineering—feature compression, distributed deployment, and efficient architectures—real-time anomaly detection on large-scale 5G traffic is technically achievable. Given BiTAD’s lightweight nature and linear complexity, similar operational performance is attainable using commodity or edge hardware.

4.6.3. Summary

In summary, BiTAD is designed with deployment practicality in mind. Its compact architecture, linear inference time, and dual-perspective interpretability render it suitable for distributed 5G environments that demand both scalability and transparency. When integrated into virtualized or MEC-based infrastructures, BiTAD can function as an interpretable, near-real-time anomaly detector, offering an effective and deployable intrusion detection solution aligned with the performance and reliability expectations of next-generation 5G networks.

5. Conclusions

This study proposed BiTAD, a bidirectional LSTM model with attention, for anomaly detection in 5G networks, paired with TwinLens, a dual-explainability framework combining SHAP for feature attribution and TimeSHAP for temporal relevance. Designed to address the high-speed, bursty, and complex protocol behaviour of 5G traffic, BiTAD effectively captures temporal dependencies, while TwinLens provides interpretable insights into both feature- and sequence-level contributions. When evaluated on the real-world 5G-NIDD dataset, BiTAD achieved superior detection performance and generalization compared to baseline LSTM models, particularly for rare attack classes, while maintaining competitive accuracy for common threats. TwinLens-guided pruning reduced parameters and training time without sacrificing accuracy, improving both efficiency and interpretability, which are key for operational deployment in critical 5G infrastructure. Future work will focus on optimizing computational efficiency via lightweight recurrent models, pruning and quantization strategies, and expanding applicability through hybrid architectures (e.g., GNN-LSTM), federated learning, and continual adaptation to evolving threats, with the aim of robust, explainable, and scalable 5G intrusion detection.

Author Contributions

Conceptualization, J.L.T.L., Y.H.P. and S.Y.O.; methodology, J.L.T.L., Y.H.P. and H.S.L.; model development, J.L.T.L. and Y.H.P.; validation, J.L.T.L., Y.H.P. and H.S.L.; formal analysis, J.L.T.L.; writing—original draft preparation, J.L.T.L.; writing—review and editing, Y.H.P. and H.S.L.; visualization, J.L.T.L.; resources, C.Z., D.S., K.Y.C. and W.L.P.; supervision, Y.H.P., C.Z., H.S.L., D.S., S.Y.O., K.Y.C. and W.L.P. All authors have read and agreed to the published version of the manuscript.

Funding

This research is supported by the project “Integrated Software Toolbox for Secure IoT-to-Cloud Computing (INTACT)”, funded by the European Commission Horizon Europe Programme under contract number 101168438.

Data Availability Statement

The data used in this study are publicly available. The experiments were conducted using the 5G-NIDD dataset, which can be accessed from https://doi.org/10.23729/e80ac9df-d9fb-47e7-8d0d-01384a415361 (accessed on 16 October 2025). No new data were created or collected for this research.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Zolotukhin, M.; Zhang, D.; Hämäläinen, T.; Miraghaei, P. On Attacking Future 5G Networks with Adversarial Examples: Survey. Network 2022, 3, 39–90. [Google Scholar] [CrossRef]

- Mohale, V.Z.; Obagbuwa, I.C. Evaluating machine learning-based intrusion detection systems with explainable AI: Enhancing transparency and interpretability. Front. Comput. Sci. 2025, 7, 1520741. [Google Scholar] [CrossRef]

- Pavani, G.K.; Veeramallu, B. Hybrid Machine Learning Framework for Anomaly Detection in 5G Networks. J. Inf. Syst. Eng. Manag. 2025, 10, 733–739. [Google Scholar] [CrossRef]

- Rabih, R.; Vahdat-Nejad, H.; Mansoor, W.; Joloudari, J.H. Highly accurate anomaly based intrusion detection through integration of the local outlier factor and convolutional neural network. Sci. Rep. 2025, 15, 21147. [Google Scholar] [CrossRef]

- Wang, Z.; Fok, K.W.; Thing, V.L.L. Emerging Trends in 5G Malicious Traffic Analysis: Enhancing Incremental Learning Intrusion Detection Strategies. In Proceedings of the 2024 IEEE International Conference on Cyber Security and Resilience, CSR, London, UK, 2–4 September 2024; pp. 114–119. [Google Scholar] [CrossRef]

- Rahman, A.-U.; Mahmud, M.; Iqbal, T.; Saraireh, L.; Kholidy, H.; Gollapalli, M.; Musleh, D.; Alhaidari, F.; Almoqbil, D.; Ahmed, M.I.B. Network Anomaly Detection in 5G Networks. Math. Model. Eng. Probl. 2022, 9, 397–404. [Google Scholar] [CrossRef]

- Noor, K.; Imoize, A.L.; Li, C.-T.; Weng, C.-Y. A Review of Machine Learning and Transfer Learning Strategies for Intrusion Detection Systems in 5G and Beyond. Mathematics 2025, 13, 1088. [Google Scholar] [CrossRef]

- Waghmode, P.; Kanumuri, M.; El-Ocla, H.; Boyle, T. Intrusion detection system based on machine learning using least square support vector machine. Sci. Rep. 2025, 15, 12066. [Google Scholar] [CrossRef]

- Kurochkin, I.I.; Volkov, S.S. Using GRU based deep neural network for intrusion detection in software-defined networks. In Proceedings of the IOP Conference Series: Materials Science and Engineering, Ulaanbaatar, Mongolia, 10–13 September 2020. [Google Scholar] [CrossRef]

- Kamel, F.F.; Mahdi, M.S. Intrusion Detection Systems Based on RNN and GRU Models using CSE-CIC-IDS2018 Dataset in AWS Cloud. J. Al-Qadisiyah Comput. Sci. Math. 2024, 16, 141–160. [Google Scholar] [CrossRef]

- Nikitenko, A.; Bashkov, Y. Construction of a network intrusion detection system based on a convolutional neural network and a bidirectional gated recurrent unit with attention mechanism. East.-Eur. J. Enterp. Technol. 2024, 3, 6–15. [Google Scholar] [CrossRef]

- Hu, Z.; Liu, G.; Li, Y.; Zhuang, S. SAGB: Self-attention with gate and BiGRU network for intrusion detection. Complex Intell. Syst. 2024, 10, 8467–8479. [Google Scholar] [CrossRef]

- Zhang, J.; Zhang, X.; Liu, Z.; Fu, F.; Jiao, Y.; Xu, F. A Network Intrusion Detection Model Based on BiLSTM with Multi-Head Attention Mechanism. Electronics 2023, 12, 4170. [Google Scholar] [CrossRef]

- Samarakoon, S.; Siriwardhana, Y.; Porambage, P.; Liyanage, M.; Chang, S.-Y.; Kim, J.; Ylianttila, M. 5G-NIDD: A Comprehensive Network Intrusion Detection Dataset Generated over 5G Wireless Network. arXiv 2022, arXiv:2212.01298. [Google Scholar] [CrossRef]

- Farzaneh, B.; Shahriar, N.; Al Muktadir, A.H.; Towhid, S.; Khosravani, M.S. DTL-5G: Deep transfer learning-based DDoS attack detection in 5G and beyond networks. Comput. Commun. 2024, 228, 107927. [Google Scholar] [CrossRef]

- Harshdeep, K.; Sumalatha, K.; Mathur, R. DeepTransIDS: Transformer-Based Deep learning Model for Detecting DDoS Attacks on 5G NIDD. Results Eng. 2025, 26, 104826. [Google Scholar] [CrossRef]

- Zhang, A.; Zhao, Y.; Zhou, C.; Zhang, T. ResACAG: A graph neural network based intrusion detection. Comput. Electr. Eng. 2024, 122, 109956. [Google Scholar] [CrossRef]

- Peterson, J.M.; Leevy, J.L.; Khoshgoftaar, T.M. A Review and Analysis of the Bot-IoT Dataset. In Proceedings of the 15th IEEE International Conference on Service-Oriented System Engineering, SOSE 2021, Online, 23–26 August 2021; pp. 20–27. [Google Scholar] [CrossRef]

- Jiang, K.; Wang, W.; Wang, A.; Wu, H. Network Intrusion Detection Combined Hybrid Sampling with Deep Hierarchical Network. IEEE Access 2020, 8, 32464–32476. [Google Scholar] [CrossRef]

- Moustafa, N.; Slay, J. UNSW-NB15: A Comprehensive Data Set for Network Intrusion Detection Systems (UNSW-NB15 Network Data Set). In Proceedings of the 2015 Military Communications and Information Systems Conference (MilCIS), Canberra, Australia, 10–12 November 2015; IEEE: Piscataway, NJ, USA, 2015. [Google Scholar] [CrossRef]

- Borah, S.; Panigrahi, R. A detailed analysis of CICIDS2017 dataset for designing Intrusion Detection Systems. Int. J. Eng. Technol. 2018, 7, 479–482. Available online: https://www.researchgate.net/publication/329045441 (accessed on 16 October 2025).

- Hermosilla, P.; Berríos, S.; Allende-Cid, H. Explainable AI for Forensic Analysis: A Comparative Study of SHAP and LIME in Intrusion Detection Models. Appl. Sci. 2025, 15, 7329. [Google Scholar] [CrossRef]

- Kumar, A.; Thing, V.L.L. Evaluating The Explainability of State-of-the-Art Deep Learning-based Network Intrusion Detection Systems. arXiv 2025, arXiv:2408.14040. [Google Scholar] [CrossRef]

- Keshk, M.; Koroniotis, N.; Pham, N.; Moustafa, N.; Turnbull, B.; Zomaya, A.Y. An explainable deep learning-enabled intrusion detection framework in IoT networks. Inf. Sci. 2023, 639, 119000. [Google Scholar] [CrossRef]

- Lundberg, S.; Lee, S.-I. A Unified Approach to Interpreting Model Predictions. In Proceedings of the 31st International Conference on Neural Information Processing Systems (NIPS’17), Long Beach, CA, USA, 4–9 December 2017; pp. 4768–4777. [Google Scholar] [CrossRef]

- Ribeiro, M.T.; Singh, S.; Guestrin, C. Why Should I Trust You? Explaining the predictions of any classifier. In Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, San Francisco, CA, USA, 13–17 August 2016; Association for Computing Machinery: New York, NY, USA, 2016; pp. 1135–1144. [Google Scholar] [CrossRef]

- Radoglou-Grammatikis, P.; Nakas, G.; Amponis, G.; Giannakidou, S.; Lagkas, T.; Argyriou, V.; Goudos, S.; Sarigiannidis, P. 5GCIDS: An Intrusion Detection System for 5G Core with AI and Explainability Mechanisms. In Proceedings of the 2023 IEEE Globecom Workshops, GC Wkshps 2023, Kuala Lumpur, Malaysia, 4–8 December 2023; pp. 353–358.

- Bento, J.; Saleiro, P.; Cruz, A.F.; Figueiredo, M.A.; Bizarro, P. TimeSHAP: Explaining Recurrent Models through Sequence Perturbations. In Proceedings of the ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, Virtually, 14–18 August 2021; pp. 2565–2573.

- Li, B. Unsupervised Temporal Anomaly Detection: Time Series, Data Stream, and Interpretability. Ph.D. Thesis, Technische Universität Dortmund, Dortmund, Germany, 2024.

- Islam, A.; Chang, S.-Y.; Kim, J.; Kim, J. Anomaly Detection in 5G using Variational Autoencoders. In Proceedings of the 2024 Silicon Valley Cybersecurity Conference, SVCC 2024, Seoul, Republic of Korea, 17–19 June 2024; pp. 1–6.

- Corea, P.M.; Liu, Y.; Wang, J.; Niu, S.; Song, H. Explainable AI for Comparative Analysis of Intrusion Detection Models. In Proceedings of the 2024 IEEE International Mediterranean Conference on Communications and Networking (MeditCom), Madrid, Spain, 8–11 July 2024; pp. 585–590.

- Gaspar, D.; Silva, P.; Silva, C. Explainable AI for Intrusion Detection Systems: LIME and SHAP Applicability on Multi-Layer Perceptron. IEEE Access 2024, 12, 30164–30175.

- Barnard, P.; Marchetti, N.; DaSilva, L.A. Robust Network Intrusion Detection Through Explainable Artificial Intelligence (XAI). IEEE Netw. Lett. 2022, 4, 167–171.

- Bocu, R.; Iavich, M. Real-Time Intrusion Detection and Prevention System for 5G and beyond Software-Defined Networks. Symmetry 2022, 15, 110.

- Azkaei, B.; Joshi, K.C.; Exarchakos, G. Machine Learning-Driven Anomaly Detection for 5G O-RAN Performance Metrics. arXiv 2025, arXiv:2509.03290.

- Chang, S.; Cui, B.; Feng, S. ScalaDetect-5G: Ultra High-Precision Highly Elastic Deep Intrusion Detection System for 5G Network. CMES—Comput. Model. Eng. Sci. 2025, 144, 3805–3827.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).