Abstract

Surrogate-assisted evolutionary algorithms (SAEAs) are widely used for high-dimensional expensive optimization. However, real-world problems are usually complex and exhibit a variety of landscape features, which makes it challenging to choose the most appropriate surrogate. It has been shown that multiple surrogates can characterize the fitness landscape more accurately than a single surrogate. In this work, a multi-surrogate-assisted multi-tasking optimization algorithm (MSAMT) is proposed that solves high-dimensional problems by simultaneously optimizing multiple surrogates as related tasks using the generalized multi-factorial evolutionary algorithm. In the MSAMT, all exactly evaluated samples are first grouped into clusters. The search space is then divided into several regions based on these clusters, and a surrogate is constructed in each region; together, these surrogates describe the entire fitness landscape and improve the exploration capability of the algorithm. Near the current optimal solution, a novel ensemble surrogate is adopted for local search to speed up convergence. Within the multi-tasking optimization framework, the surrogates are optimized simultaneously as related tasks. As a result, several optimal solutions spread across disjoint regions can be found for real function evaluation. Fourteen 10- to 100-dimensional test functions and a spatial truss design problem were used to compare the proposed approach with several recently proposed SAEAs. The results show that the proposed MSAMT performs better than the comparison algorithms on most test functions and on the real engineering problem.

1. Introduction

Evolutionary algorithms (EAs), such as genetic algorithms (GAs) [1], differential evolution (DE) [2], and particle swarm optimization (PSO) [3], have been successfully applied to various practical engineering problems, such as the design of aircraft wings [4], robotics [5], wireless networks [6], and the optimization of vehicle technology trajectory [7,8]. However, using EAs to address optimization problems requires hundreds of expensive exact fitness evaluations (FEs) to achieve an acceptable solution, which severely hinders their application in practical engineering problems. SAEAs have been proposed to solve this problem. SAEAs combine EAs with surrogate models: inexpensive surrogates are built to predict the fitness values of candidate solutions and replace exact FEs in the EA. In recent years, SAEAs have received increasing attention because the computational cost of constructing surrogates and predicting fitness values is lower than that of exact FEs.

The model management strategy, which selects new samples for exact FEs and uses them to rebuild the surrogate model, is crucial for SAEAs [9]. Existing model management strategies are mainly categorized as generation-based or individual-based: the former exactly evaluates all individuals in selected generations, whereas the latter selects only promising individuals for exact evaluation. Both strategies have been shown to be effective, but in general, individual-based criteria are more flexible than generation-based criteria [10,11]. There are usually two criteria for selecting promising individuals. The first is the performance-based criterion, which chooses the individual with the best predicted fitness value for exact evaluation to enhance the performance of the surrogate and facilitate the exploitation of the currently promising area [12]. The second is the uncertainty-based criterion, which enhances exploration by selecting the most uncertain individual for exact evaluation [13]. It has been shown [14] that using only one infill criterion may lead to premature convergence or trapping in a local optimum. Therefore, this paper selects the optimal or average individual for real function evaluation.

Current SAEAs can be classified into two principal categories. The first category comprises single-layer SAEAs, which have no hierarchically organized components and usually use only one model, i.e., a local or global surrogate, to assist one evolutionary algorithm. Li et al. [15] presented a fast algorithm that uses a global radial basis function (RBF) model to assist PSO, combining the performance-based criterion with a new criterion that considers distance and fitness value concurrently to boost the efficiency of the algorithm. Li et al. [16] also developed a surrogate-assisted multi-swarm optimization technique that alternates between teaching-learning-based optimization (TLBO) to enhance exploration and PSO to accelerate convergence. Nourian et al. [17] combined graph neural networks (GNNs) and PSO for the optimal design of truss structures. Global surrogates can summarize the overall fitness landscape. However, a large number of exactly evaluated samples is generally required to build highly accurate global surrogates for large-scale expensive optimization problems, which makes it difficult for algorithms to balance high accuracy against low time consumption. Therefore, local surrogates are commonly used to increase accuracy by capturing the regional characteristics of the fitness landscape. Cai et al. [18] presented a trust-region-based search technique that establishes a local RBF model in the trust region surrounding the optimal population, thereby boosting optimization efficiency. Pan et al. [19] used neighboring samples to train a more accurate local RBF model that assists TLBO and DE alternately, and proposed a new prescreening criterion.

The second category comprises hierarchical SAEAs, in which multiple models are used to assist different EAs. A fine local surrogate captures the details of the fitness landscape, while a coarse global surrogate mitigates the impact of local optima. Sun et al. [20] developed a hierarchical surrogate that uses both local and global models to assist PSO in directing the swarm towards the global optimum. Wang et al. [21] used a global RBF model and a local Kriging model to assist DE for high-dimensional expensive problems. Kůdela et al. [22] integrated a global RBF model and a new Lipschitz-based surrogate-assisted global optimization procedure with local optimization based on a local RBF model. Hierarchical SAEAs may incur more computational overhead than single-layer SAEAs, which can provide a solution in a shorter amount of time. However, for high-dimensional multimodal problems, hierarchical SAEAs are preferred: they can first identify promising regions in the global search phase and then explore more deeply in local regions, which benefits a comprehensive search of the decision space.

The reliability of SAEAs is mainly influenced by the surrogate and the evolutionary algorithm. As the problem dimension increases, a single surrogate easily causes the algorithm to become trapped in local optima. Moreover, a fixed surrogate can hardly describe different optimization problems accurately. To address this issue, ensembles of surrogates are widely used in SAEAs [23,24]. Ren et al. [25] developed a hybrid algorithm that first carries out a series of global searches and then alternates between global and local searches, employing social learning particle swarm optimization (SL-PSO) assisted by a surrogate ensemble for the global searches and DE assisted by RBF for the local searches. Guo et al. [13] replaced the time-consuming Gaussian processes with a heterogeneous ensemble composed of models with different input features, which improves the diversity and reliability of the surrogate. Adaptive surrogate model selection has also received increasing attention. Li et al. [26] adaptively selected either an RBF model or an ensemble model to assist PSO according to the standard deviation. Liu et al. [27] introduced a decision space partition-based SAEA for expensive optimization problems, which divides the space into several disjoint regions by k-means clustering and establishes an RBF model in each region to assist PSO in global search, while an adaptive surrogate assists local exploitation. Zhang et al. [28] designed a hierarchical surrogate framework that adopts RBF-assisted TLBO to locate promising regions and dynamic surrogate-assisted DE to precisely determine the global optimal solution. The evolutionary algorithm is also a key component of SAEAs. When faced with a complex problem, a single EA may cause the optimization process to follow a similar trajectory and become trapped in local optima [29].
As a result, several SAEAs use different EAs at the same time. In addition, some SAEAs based on multi-task evolutionary algorithms have been developed. Multi-task optimization (MTO) processes several optimization tasks simultaneously and can accelerate the overall solving progress through the transfer of information between tasks. Liao et al. [30] introduced a multi-tasking framework that uses the generalized multi-factorial evolutionary algorithm (G-MFEA) to optimize global and local surrogates simultaneously when addressing expensive optimization problems. Yang et al. [31] took a global Kriging model or a local RBF model as the assistant task at different stages and then used the MFEA to optimize the assistant task and the target task simultaneously.

In addition, multi-agent systems based on the partition idea are also effective in preventing algorithms from falling into local optima. Intelligent agents evolve in the decomposed dimensional space to find local suboptimal solutions, and the global optimum is determined through the dynamic interaction of the agents. Akopov et al. [32] proposed a multi-agent genetic algorithm for multi-objective optimization in which global optimization is achieved by coordinating intelligent agents, which significantly reduces the number of true function evaluations. Zhong et al. [33] proposed a multi-agent genetic algorithm that fixes all agents in a lattice environment and then uses competition or cooperation among these agents to find the global optimum. Table 1 summarizes the important algorithms, including EAs and SAEAs.

Table 1.

Currently important algorithms, including EAs and SAEAs.

Inspired by the above surrogate-assisted optimization techniques, this study presents a novel multi-surrogate-assisted multi-tasking optimization algorithm (MSAMT) for large-scale optimization problems. The algorithm first divides the entire decision space into different regions and constructs an RBF model for each region to support global exploration. Then, a novel dynamic ensemble of surrogates is used to build a highly reliable surrogate for local exploitation near the current optimal solution. Finally, the G-MFEA is used to optimize the multiple surrogates, searching each subregion and the space around the current optimal solution. The innovations and major contributions of the proposed method are as follows:

- Under the framework of the G-MFEA, a space partition strategy is used to introduce multiple surrogate models as optimization tasks, and global and local searches are carried out simultaneously so that the algorithm can achieve good exploration and considerably reduce the chance of falling into local optima.

- A novel dynamic ensemble of surrogates is proposed. In this adaptive strategy, a single surrogate or an ensemble of two surrogates or three surrogates is selected as a local model from the base model pool (including RBF, polynomial response surface (PRS), and support vector regression (SVR)) according to the error index, thus accelerating the convergence.

- Experimental results indicate that the proposed algorithm outperforms the compared algorithms on most of the test functions and on a truss design problem, yielding better results and faster convergence.

The rest of this paper is structured as follows: Section 2 briefly reviews the information on the surrogate models (RBF, PRS, SVR, and the ensemble of surrogates) and the MFEA, and introduces the details of the MSAMT. The performance of the proposed algorithm is evaluated by extensive empirical studies in Section 3. Lastly, Section 4 concludes this work.

2. Materials and Methods

This section first introduces the surrogate models (SVR, RBF, PRS, and the unified ensemble of surrogates) used in this work, followed by a brief description of the MFEA.

2.1. Polynomial Response Surface

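Box and Wilson [36] first proposed the PRS model, which is one of the most commonly used surrogates. The second-order polynomial is the most widely used form because overfitting occurs easily when the order exceeds 3. The second-order PRS can be represented as follows:

$$\hat{y}(\mathbf{x}) = \beta_0 + \sum_{i=1}^{n} \beta_i x_i + \sum_{i=1}^{n} \beta_{ii} x_i^2 + \sum_{i=1}^{n-1} \sum_{j=i+1}^{n} \beta_{ij} x_i x_j \tag{1}$$

where $\hat{y}$ is the predicted value of the surrogate, $n$ denotes the number of input variables, and $\beta_0$, $\beta_i$, $\beta_{ii}$, and $\beta_{ij}$ represent the coefficients that need to be determined.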

PRS offers strong interpretability, simple computation, high efficiency, and good noise filtering, which make it effective for low-dimensional nonlinear problems. However, its accuracy becomes relatively low as the problem dimension increases.
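As an illustration of the second-order PRS described above, the following minimal sketch fits the coefficients by ordinary least squares. The function names (`prs_features`, `fit_prs`, `predict_prs`) and the test function are assumptions made for this example and are not taken from the paper.

```python
import numpy as np

def prs_features(X):
    """Second-order design matrix: 1, x_i, x_i^2, and x_i * x_j for i < j."""
    X = np.atleast_2d(np.asarray(X, dtype=float))
    n_samples, n_vars = X.shape
    cols = [np.ones(n_samples)]
    cols += [X[:, i] for i in range(n_vars)]
    cols += [X[:, i] ** 2 for i in range(n_vars)]
    cols += [X[:, i] * X[:, j]
             for i in range(n_vars) for j in range(i + 1, n_vars)]
    return np.column_stack(cols)

def fit_prs(X, y):
    """Estimate the polynomial coefficients by ordinary least squares."""
    beta, *_ = np.linalg.lstsq(prs_features(X), np.asarray(y, dtype=float),
                               rcond=None)
    return beta

def predict_prs(beta, X):
    """Evaluate the fitted polynomial at new points."""
    return prs_features(X) @ beta

# Hypothetical quadratic test function in two variables.
rng = np.random.default_rng(0)
X_train = rng.uniform(-1.0, 1.0, size=(30, 2))
y_train = (1.0 + 2.0 * X_train[:, 0] - X_train[:, 1] ** 2
           + 0.5 * X_train[:, 0] * X_train[:, 1])
beta = fit_prs(X_train, y_train)
y_new = predict_prs(beta, np.array([[0.3, -0.2]]))
```

The number of coefficients grows quadratically with the number of input variables, which is one reason the model needs many samples in high dimensions.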

2.2. Radial Basis Function

The RBF model, which was first developed by Hardy [37], can be used to approximate irregular terrain. The interpolation form of RBF is as follows:

$$\hat{y}(\mathbf{x}) = \sum_{i=1}^{N} \omega_i \, \varphi_i\left(\lVert \mathbf{x} - \mathbf{x}_i \rVert\right) \tag{2}$$

where $N$ represents the sample size, $\varphi_i(\cdot)$ represents the $i$th basis function, $\omega_i$ denotes the weight coefficient, and $\lVert \mathbf{x} - \mathbf{x}_i \rVert$ represents the Euclidean distance between the point $\mathbf{x}$ and the $i$th sample $\mathbf{x}_i$. There are multiple kinds of basis functions, including thin plate splines, linear splines, and cubic splines.
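The interpolation form above can be sketched in a few lines. This example assumes the linear spline basis $\varphi(r) = r$ without a polynomial tail, chosen here only because it keeps the code short; the helper names are hypothetical and the paper's RBF configuration may differ.

```python
import numpy as np

def pairwise_dist(A, B):
    """Euclidean distances between every row of A and every row of B."""
    A = np.asarray(A, dtype=float)
    B = np.asarray(B, dtype=float)
    return np.linalg.norm(A[:, None, :] - B[None, :, :], axis=-1)

def fit_rbf(X, y, basis=lambda r: r):
    """Solve Phi @ w = y so that the model interpolates the samples."""
    Phi = basis(pairwise_dist(X, X))
    return np.linalg.solve(Phi, np.asarray(y, dtype=float))

def predict_rbf(X_train, weights, X_new, basis=lambda r: r):
    """Weighted sum of basis functions centered on the training samples."""
    return basis(pairwise_dist(X_new, X_train)) @ weights

# Hypothetical data: 12 samples of a 3-dimensional sphere function.
rng = np.random.default_rng(0)
X_train = rng.uniform(-5.0, 5.0, size=(12, 3))
y_train = np.sum(X_train ** 2, axis=1)
weights = fit_rbf(X_train, y_train)
y_at_samples = predict_rbf(X_train, weights, X_train)
```

Because the weights solve the interpolation system exactly, the model reproduces the exact fitness at every training sample.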

2.3. Support Vector Regression

The SVR surrogate model is given as follows:

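For a linear function approximation, SVR can be written as follows:

$$f(\mathbf{x}) = \langle \mathbf{w}, \mathbf{x} \rangle + b \tag{4}$$

where $b$ is the threshold and $\langle \cdot, \cdot \rangle$ represents the dot product. To keep $f$ as flat as possible, the weights $\mathbf{w}$ with the smallest norm are sought, and slack variables $\xi_i$ and $\xi_i^*$ are introduced, which corresponds to solving the following optimization problem:

$$\min_{\mathbf{w}, b, \xi, \xi^*} \ \frac{1}{2} \lVert \mathbf{w} \rVert^2 + C \sum_{i=1}^{N} \left(\xi_i + \xi_i^*\right) \quad \text{s.t.} \quad \begin{cases} y_i - \langle \mathbf{w}, \mathbf{x}_i \rangle - b \le \varepsilon + \xi_i \\ \langle \mathbf{w}, \mathbf{x}_i \rangle + b - y_i \le \varepsilon + \xi_i^* \\ \xi_i, \ \xi_i^* \ge 0 \end{cases} \tag{5}$$

where $C$ represents a regularization parameter that controls the trade-off between the complexity of the model and the level at which errors are allowed to exceed $\varepsilon$. The following formula can be used to obtain the weight coefficient:

$$\mathbf{w} = \sum_{i=1}^{N} \left(\alpha_i - \alpha_i^*\right) \mathbf{x}_i \tag{6}$$

where $\alpha_i$ and $\alpha_i^*$ are the Lagrange multipliers. The SVR model obtained by inserting Equation (6) into Equation (4) is as follows:

$$f(\mathbf{x}) = \sum_{i=1}^{N} \left(\alpha_i - \alpha_i^*\right) \langle \mathbf{x}_i, \mathbf{x} \rangle + b \tag{7}$$

For nonlinear approximation, a kernel function $k(\cdot, \cdot)$ can be used to replace the dot product of the input vectors to obtain the nonlinear transformation, and the resulting SVR model is expressed as follows:

$$f(\mathbf{x}) = \sum_{i=1}^{N} \left(\alpha_i - \alpha_i^*\right) k(\mathbf{x}_i, \mathbf{x}) + b \tag{8}$$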

2.4. Dynamic Ensemble of Surrogates

Generally speaking, no single surrogate outperforms all others on all kinds of problems. Therefore, the ensemble surrogate, which consists of several component surrogates and can exploit the benefits of each, has been widely used. The prediction of the ensemble surrogate is obtained by weighting the predicted values of multiple surrogates, so the approximate fitness of a sample can be determined by the following:

$$\hat{y}_{\mathrm{ens}}(\mathbf{x}) = \sum_{i=1}^{M} w_i \, \hat{y}_i(\mathbf{x}) \tag{9}$$

where $\hat{y}_{\mathrm{ens}}$ is the prediction of the ensemble of surrogates, $\hat{y}_i$ denotes the prediction of the $i$th surrogate, $M$ is the number of surrogates, and $w_i$ is the weight factor of the $i$th surrogate, which must satisfy $\sum_{i=1}^{M} w_i = 1$.
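The weighted-sum prediction can be illustrated with a short sketch. The weighting rule used here (weights proportional to the inverse of each surrogate's global error) is only one simple global measure, assumed for illustration; it is not the UES weighting discussed below, and the function names are hypothetical.

```python
import numpy as np

def inverse_error_weights(errors):
    """Global weights proportional to 1 / error, normalized to sum to 1.

    Assumes every error is strictly positive.
    """
    inv = 1.0 / np.asarray(errors, dtype=float)
    return inv / inv.sum()

def ensemble_predict(predictions, weights):
    """Weighted sum of component predictions.

    predictions has shape (M, n_points): one row per component surrogate.
    """
    return np.asarray(weights, dtype=float) @ np.asarray(predictions, dtype=float)

# Hypothetical global errors and predictions of three component surrogates.
errors = [0.1, 0.2, 0.4]
weights = inverse_error_weights(errors)
preds = np.array([[1.0, 2.0],
                  [1.2, 2.2],
                  [0.8, 1.6]])
y_ens = ensemble_predict(preds, weights)
```

With these errors the weights are 4/7, 2/7, and 1/7, so the most accurate surrogate dominates the ensemble prediction.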

According to the characteristics of weight coefficients, the existing methods for ensemble modeling can be classified into global measures-based, local measures-based, and mixed measures-based. The weight coefficient in global measures is a constant calculated according to the global error of each surrogate, while the weight coefficient in local measures is influenced by the location of the prediction sample. The former ignores the difference in fitting accuracy of the model in local areas, while the accuracy of the latter may decline away from the sample points. Mixed measures-based combines the advantages of the two, taking into account the regions near and away from the samples. On the basis of the existing methods, Zhang et al. [38] suggested a new unified ensemble of surrogates (UES), which integrates global and local measures, and the weight coefficients are determined according to the position of the predicted sample within the entire decision space as follows:

where and represent the global and local weight coefficients of the ith surrogate, respectively and is the control function used to determine the impact of the global and local measures, where and represent the distances between the predicted sample and its closest and second-closest samples, respectively.

As a fixed ensemble of Kriging (KRG), RBF, and PRS, the UES is difficult to apply to high-dimensional problems. For one thing, the time cost of constructing the KRG model gradually exceeds the affordable range as the dimension increases. For another, the UES uses leave-one-out cross-validation to calculate weight coefficients, which can consume a lot of time if the fixed ensemble of component surrogates is used. To alleviate the running cost, Zhang et al. [28] developed a dynamic ensemble of surrogates (DES) based on the UES, which takes RBF, PRS, and SVR as component surrogates and uses the coefficient of determination ($R^2$) and the normalized root mean square error (NRMSE) to filter the models. $R^2$ and NRMSE are represented as follows:

where denotes the size of test samples, denotes the prediction of the th test sample by surrogates, denotes the exact function fitness of the th test sample, and is the average value corresponding to the exact function values for test samples.
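The two error metrics can be computed as in the following sketch. The $R^2$ form is the standard coefficient of determination; for NRMSE, this example assumes normalization by the range of the exact values, which is one common convention and may differ from the normalization used in Equation (12). The function names are hypothetical.

```python
import numpy as np

def r_squared(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    ss_res = np.sum((y_true - y_pred) ** 2)
    ss_tot = np.sum((y_true - y_true.mean()) ** 2)
    return 1.0 - ss_res / ss_tot

def nrmse(y_true, y_pred):
    """RMSE divided by the range of the exact values (assumed normalization)."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    rmse = np.sqrt(np.mean((y_true - y_pred) ** 2))
    return rmse / (y_true.max() - y_true.min())

# Hypothetical test samples: a perfect model and a constant (mean) model.
y_exact = np.array([1.0, 2.0, 3.0, 4.0])
r2_perfect = r_squared(y_exact, y_exact)
r2_mean = r_squared(y_exact, np.full(4, 2.5))
err_mean = nrmse(y_exact, np.full(4, 2.5))
```

A larger $R^2$ and a smaller NRMSE both indicate a better fit, so the two metrics must be ranked in opposite directions when they are combined.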

2.5. Multi-Factorial Evolutionary Algorithm

Gupta et al. [34] first proposed the multi-factorial evolutionary algorithm (MFEA), a prototypical multi-tasking optimization algorithm. The MFEA is inspired by bio-cultural models of multifactorial inheritance and mimics the biological processes of mutation, selection, and reproduction across generations. The term “multi-factorial” means that the MFEA can deal with multiple tasks simultaneously: it optimizes several tasks at once with a single evolutionary population in a unified search space. The multi-factorial optimization problem is defined as follows:

$$\{\mathbf{x}_1^*, \mathbf{x}_2^*, \ldots, \mathbf{x}_K^*\} = \arg\min \{f_1(\mathbf{x}_1), f_2(\mathbf{x}_2), \ldots, f_K(\mathbf{x}_K)\}, \quad \mathbf{x}_j \in X_j \subset \mathbb{R}^{D_j}, \ j = 1, 2, \ldots, K \tag{13}$$

where $K$ denotes the number of optimization problems, $f_j$ represents the $j$th optimization problem, $X_j$ represents the decision space of the $j$th optimization problem, $D_j$ is the dimension of $X_j$, and $\mathbf{x}_j^*$ denotes the optimal solution of the $j$th optimization problem.

Each individual in the MFEA is defined by a collection of new properties, which are as follows:

Definition 1.

The factorial cost of the individual $p_i$ on the $j$th optimization problem is given by $\Psi_j^i = f_j^i$, where $f_j^i$ is the objective value of $p_i$ with respect to the $j$th optimization problem.

Definition 2.

The factorial rank, designated as $r_j^i$, represents the index of the individual $p_i$ on the $j$th optimization problem when the population is sorted by factorial cost $\Psi_j$ in ascending order.

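Definition 3.

The scalar fitness of the individual $p_i$ is obtained from its minimum factorial rank achieved across all tasks, i.e., $\varphi_i = 1 / \min_{j \in \{1, \ldots, K\}} r_j^i$, where $r_j^i$ is the factorial rank of $p_i$ on the $j$th task.

Definition 4.

The skill factor of an individual $p_i$ represents the task on which it is most effective among all tasks, that is, $\tau_i = \arg\min_{j \in \{1, \ldots, K\}} r_j^i$.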
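The four properties can be computed from a matrix of factorial costs, as in the sketch below. The function name and the example costs are assumptions for illustration; ties in the minimum rank are resolved here by taking the first task, whereas an implementation may break them randomly.

```python
import numpy as np

def mfea_properties(factorial_cost):
    """Compute factorial rank, scalar fitness, and skill factor.

    factorial_cost[i, j] is the cost of individual i on task j
    (lower is better).
    """
    cost = np.asarray(factorial_cost, dtype=float)
    # argsort of argsort gives each individual's 0-based position in the
    # ascending ordering of every column; adding 1 makes rank 1 the best.
    order = np.argsort(cost, axis=0, kind="stable")
    factorial_rank = np.argsort(order, axis=0, kind="stable") + 1
    scalar_fitness = 1.0 / factorial_rank.min(axis=1)
    skill_factor = factorial_rank.argmin(axis=1)  # first task on ties
    return factorial_rank, scalar_fitness, skill_factor

# Hypothetical population of three individuals evaluated on two tasks.
cost = np.array([[3.0, 10.0],
                 [1.0, 30.0],
                 [2.0, 20.0]])
rank, fitness, skill = mfea_properties(cost)
```

In this example the first individual is the best on the second task and the second individual is the best on the first task, so both receive a scalar fitness of 1, while the third individual ranks second on both tasks and receives 0.5.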

In the MFEA, the evolutionary population is first created in a unified search space with boundary $[0, 1]$ in every dimension. The dimension of this unified space is $\max_i \{D_i\}$, where $D_i$ denotes the dimension of the $i$th optimization problem. Each individual is associated with an optimization task based on its factorial rank and obtains a skill factor. Offspring are then generated through assortative mating and are assigned skill factors through vertical cultural transmission. Finally, individuals with better scalar fitness are chosen as the parent population of the next generation. These steps are repeated until the computational budget is depleted.
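The mating step can be sketched as follows. This is a simplified illustration under stated assumptions: uniform crossover and Gaussian mutation stand in for whatever operators the algorithm actually uses, and the names `assortative_mating`, `rmp`, and `sigma` are chosen for this example (`rmp` plays the role of the matching probability).

```python
import numpy as np

def assortative_mating(pa, pb, skill_a, skill_b, rmp, rng, sigma=0.02):
    """Return two offspring in [0, 1]^D and their inherited skill factors."""
    if skill_a == skill_b or rng.random() < rmp:
        # Parents share a task, or cross-task mating is allowed by chance:
        # uniform crossover (a stand-in for the actual crossover operator).
        mask = rng.random(pa.shape) < 0.5
        ca = np.where(mask, pa, pb)
        cb = np.where(mask, pb, pa)
        # Vertical cultural transmission: each child imitates a random parent.
        skills = rng.choice([skill_a, skill_b], size=2)
    else:
        # Otherwise each parent is mutated slightly and keeps its own task.
        ca = pa + rng.normal(0.0, sigma, pa.shape)
        cb = pb + rng.normal(0.0, sigma, pb.shape)
        skills = np.array([skill_a, skill_b])
    return np.clip(ca, 0.0, 1.0), np.clip(cb, 0.0, 1.0), skills

# Hypothetical parents in a 5-dimensional unified space.
rng = np.random.default_rng(1)
pa, pb = rng.random(5), rng.random(5)
child_a, child_b, child_skills = assortative_mating(pa, pb, 0, 1, rmp=0.3, rng=rng)
```

A larger `rmp` lets parents assigned to different tasks recombine more often, which increases the transfer of genetic material between tasks.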

2.6. Methodology

In this section, a novel SAEA, denoted as the MSAMT, is presented, and the general framework of the MSAMT is first introduced. Then, a global RBF model based on decision space partition and a new dynamic ensemble of surrogates are given. Two phases of the MSAMT are subsequently presented in detail, namely local search based on an ensemble surrogate and global search based on space partition.

2.6.1. The General Framework of the MSAMT Method

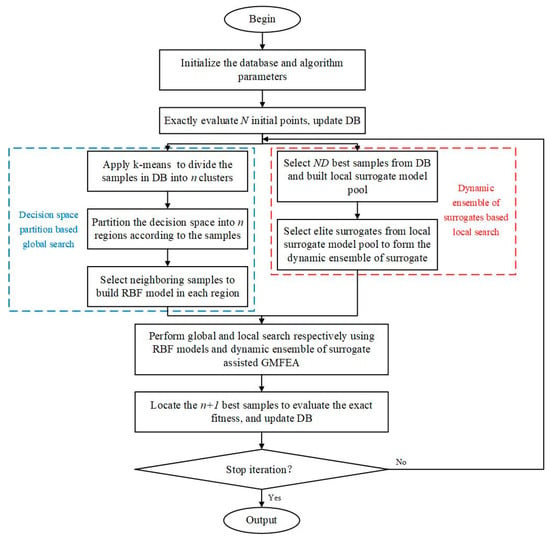

The main framework of the MSAMT is given in Figure 1. During surrogate construction, all samples in the database are first divided into clusters using k-means clustering, which helps prevent the algorithm from becoming trapped in local optima. Then, the entire space is divided into disjoint regions, each of which is associated with a different cluster. Finally, an RBF model is constructed in each region to assist the optimizer in finding promising points for the global search, and a dynamic ensemble of surrogates is constructed from the best samples in the database to search for a promising point within the local region. The former is concerned with exploring the entire decision space, while the latter tends to exploit the subregion near the optimal individual. The promising points identified by the surrogate-assisted G-MFEA are exactly evaluated and added to the database (DB).

Figure 1.

The flow chart of the proposed MSAMT.

Algorithm 1 provides the pseudocode of the MSAMT. First, initial samples are generated by Latin hypercube sampling (LHS), evaluated by the real objective function, and added to the DB. Second, all exactly evaluated samples are clustered into groups using k-means clustering, the entire space is divided into disjoint regions based on the clusters, and an RBF model is built for each region. Third, the samples with the best fitness values are chosen to construct the dynamic ensemble of surrogates described in Algorithm 2. Finally, the surrogates are used to assist the G-MFEA in evolving the population, as shown in Algorithm 3, with Algorithm 4 producing the initial evolutionary population. Individuals with superior fitness values predicted by the surrogates are exactly evaluated and archived in the DB. The global and local searches are conducted simultaneously, so each iteration locates promising points distributed in disjoint regions and one promising point in the region around the optimal individual. The entire process is repeated until the maximum number of FEs is exhausted.

| Algorithm 1 Pseudocode of the MSAMT algorithm |

| Input: , problem dimension; , initial population size; , population size of dynamic ensemble of surrogates; , evolutionary population size; DB, database; , the number of exact evaluations; , the maximum number of exact evaluations; , the real objective function; , the number of clusters; rmp, matching probability; , the maximum number for each running of multitasking optimization; |

| Output: the position of the best individual and its fitness value; |

| 1: Generate initial samples by LHS; |

| 2: Evaluate exactly initial samples by the real objective function and save them to the DB; |

| 3: while , do |

| 4: //Decision space partition based global search |

| 5: Randomly select samples from the DB as clustering centers; |

| 6: Calculate the distance between all samples in the DB and each clustering center and assign each sample to the clustering center nearest to it; |

| 7: Calculate the mean of each cluster as the new clustering center; |

| 8: Repeat lines 6 and 7 until the clustering centers no longer change, and output the center positions; |

| 9: for to , do |

| 10: Calculate the according to Equation (14); |

| 11: end for |

| 12: Determine in which region each of the samples in the database is located; |

| 13: for to , do |

| 14: if the number of exactly evaluated samples distributed in the th region is larger than , then |

| 15: Select all exactly evaluated samples in the th region to construct the RBF surrogate model; |

| 16: else |

| 17: Select the neighboring exactly evaluated samples to the th region to construct the RBF surrogate model; |

| 18: end if |

| 19: end for |

| 20: //Dynamic ensemble of surrogates based local search |

| 21: Apply Algorithm 2 to construct a dynamic ensemble of surrogates; |

| 22: //Perform global and local searches using RBF models and the dynamic ensemble of the surrogate-assisted G-MFEA, respectively; |

| 23: Apply Algorithm 4 to generate an evolutionary population and let ; |

| 24: Apply Algorithm 3 to perform multi-tasking optimization; |

| 25: Locate the individuals having better predicted fitness; |

| 26: Evaluate the real fitness value of the best individuals and save them to the DB; |

| 27: ; |

| 28: end while |

| Algorithm 2 Pseudocode for the dynamic ensemble of surrogates |

| Input: , population size of the dynamic ensemble of surrogates; DB, database; |

| Output: the dynamic ensemble of surrogates; |

| 1: Apply -fold cross validation (fivefold cross-validation is adopted in this paper) to best samples in the DB and build a pool of different single surrogate models (denoted as ); |

| 2: Compute the error metrics ( and ) of these surrogates according to Equations (11) and (12); |

| 3: Sort the and of these surrogates in ascending order and add the corresponding indices; |

| 4: Add the and the indices for each surrogate together to obtain the final ranking indicator for all surrogates (denoted as ); |

| 5: if a surrogate with the ranking indicator of 2 exists, then |

| 6: Remove the other surrogates from the pool and use the single surrogate model with the ranking indicator of 2; |

| 7: else if there are two surrogates with the ranking indicator of 3, then |

| 8: Remove the other surrogate from the pool and formulate the two surrogates with the ranking indicator of 3 into an ensemble surrogate using the UES; |

| 9: else |

| 10: Formulate the three surrogates into an ensemble surrogate using the UES; |

| 11: end if |

| Algorithm 3 Pseudocode for multi-tasking optimization |

| Input: , evolutionary population; , evolutionary population size; , the maximum number for each running of multi-tasking optimization; , the global surrogate model; , the local surrogate model; , the frequency to change the translation direction; , the threshold value to start the decision variable translation strategy; |

| Output: the optimal solutions of surrogate models; |

| 1: Apply Algorithm 4 to generate the evolutionary population and let ; |

| 2: Predict the fitness of each individual in with the RBF surrogates and the ensemble surrogate; |

| 3: Compute the skill factor () of each individual; |

| 4: while , do |

| 5: Randomize the individuals in ; |

| 6: for to , do |

| 7: Select two parent candidates and from ; |

| 8: Generate a random number , , and between 0 and 1; |

| 9: if or , then |

| 10: Parents and crossover to give two offspring individuals and ; |

| 11: else |

| 12: Mutate parent slightly to give an offspring ; |

| 13: Mutate parent slightly to give an offspring ; |

| 14: end if |

| 15: Assign skill factors by parent candidates to offspring through vertical cultural transmission; |

| 16: if and , then |

| 17: Calculate the translated direction of each task; |

| 18: end if |

| 19: Update the position of each offspring according to the translated direction of its corresponding task; |

| 20: end for |

| 21: Predict the fitness of each offspring with the RBF surrogates and the ensemble surrogate and select better individuals as next parents; |

| 22: ; |

| 23: end while |

| Algorithm 4 Pseudocode for generating the evolutionary population |

| Input: , evolutionary population size; DB, database; , the number of samples in the DB; |

| Output: the evolutionary population ; |

| 1: If , then |

| 2: Add all samples from the DB to ; |

| 3: Generate individuals by LHS and add them to ; |

| 4: else |

| 5: If , then |

| 6: Add the first sample from the DB to ; |

| 7: Randomly select samples from other samples in the DB and add them to ; |

| 8: else |

| 9: Add the first sample from the DB to ; |

| 10: Randomly select samples from 1 to in the DB and add them to ; |

| 11: end if |

| 12: Generate individuals by LHS and add them to ; |

| 13: end if |

2.6.2. The Global and Local Models

Multimodal optimization problems have several local optima, so an algorithm can easily become trapped in one of them. The proposed MSAMT therefore adopts decision space partition to help the optimization algorithm carry out global search. Here, we adopt the method suggested by Liu et al. [27] to partition the decision space. First, all the samples in the database are divided into different clusters using the k-means clustering technique, i.e., . Then, the decision space is divided into disjoint regions according to the clusters. The radius of the th region can be obtained as follows:

where and are the center positions of and , respectively, represents the Euclidean distance from to , and and denote the number of clusters and input variables, respectively.

After the decision space is divided, the samples are selected from the DB to build RBF models (denoted as ) in each subregion separately. Given the th region, if the number of accurately assessed samples in this region is greater than , all accurately assessed samples are chosen for modeling. Otherwise, the neighboring accurately assessed samples to this region are selected for modeling.
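The clustering and sample-selection steps can be sketched as follows. This is a simplified illustration: regions are represented by cluster membership only (the region radius is not used), `min_samples` is a hypothetical name for the sample-count threshold, and the "neighboring" samples are assumed to be those nearest to the cluster center.

```python
import numpy as np

def nearest_center(X, centers):
    """Index of the closest center for every sample."""
    dist = np.linalg.norm(X[:, None, :] - centers[None, :, :], axis=-1)
    return dist.argmin(axis=1)

def kmeans(X, k, rng, max_iter=100):
    """Plain k-means: random samples as initial centers, then iterate."""
    centers = X[rng.choice(len(X), size=k, replace=False)]
    for _ in range(max_iter):
        labels = nearest_center(X, centers)
        # An empty cluster keeps its previous center.
        new_centers = np.array([
            X[labels == j].mean(axis=0) if np.any(labels == j) else centers[j]
            for j in range(k)
        ])
        if np.allclose(new_centers, centers):
            break
        centers = new_centers
    return centers, nearest_center(X, centers)

def modelling_samples(X, centers, labels, region, min_samples):
    """Indices of the samples used to build the RBF model of one region."""
    idx = np.flatnonzero(labels == region)
    if len(idx) > min_samples:
        return idx
    # Too few samples inside the region: fall back to the nearest neighbours
    # of the region center (an assumed reading of "neighboring samples").
    dist = np.linalg.norm(X - centers[region], axis=1)
    return np.argsort(dist)[:min_samples]

# Hypothetical database of 20 exactly evaluated samples in 2 dimensions.
rng = np.random.default_rng(0)
X_db = rng.random((20, 2))
centers, labels = kmeans(X_db, k=3, rng=rng)
region_idx = [modelling_samples(X_db, centers, labels, j, min_samples=5)
              for j in range(3)]
```

The fallback guarantees that every regional model is trained on at least `min_samples` points even when a cluster is sparsely populated.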

As a dynamic ensemble of RBF, PRS, and SVR, the DES model has been used for high-dimensional optimization problems [28]. However, its accuracy and robustness need to be improved. On the one hand, the DES requires two parameters and to select appropriate component surrogates, which may make it poorly suited to some optimization problems. On the other hand, the DES makes the first selection of component surrogates by and then makes the second selection by or according to the number of component surrogates remaining after the first selection. Therefore, the DES may use only one error metric when selecting component surrogates, which may lead to deviations in the accuracy of the final ensemble surrogate.

To enhance the accuracy of the ensemble surrogate, a novel dynamic selection for the ensemble surrogate (DSES) is presented in this paper. Algorithm 2 provides the DSES technique in detail. The DSES differs from the DES in two main respects. First, the DSES uses the $R^2$ and NRMSE error metrics simultaneously to select component surrogates, ensuring that the final components used to formulate the ensemble surrogate have better fitting performance. Second, because the DSES ranks component surrogates from two perspectives at the same time, it does not need thresholds to filter surrogates, which avoids the trouble of adjusting parameters. The final DSES (denoted as ) can be a single surrogate or an ensemble of two or three surrogates.
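The rank-based selection can be sketched as follows. This is one reading of Algorithm 2, under the assumption that rank 1 is the best value of each metric (highest $R^2$, lowest NRMSE), so a ranking indicator of 2 means a surrogate is best on both metrics. The function name and the metric values are hypothetical.

```python
def select_components(r2, nrmse):
    """Pick component surrogates from their summed metric ranks.

    r2 and nrmse map surrogate names to metric values.
    """
    names = list(r2)
    by_r2 = sorted(names, key=lambda n: -r2[n])     # rank 1 = highest R^2
    by_err = sorted(names, key=lambda n: nrmse[n])  # rank 1 = lowest NRMSE
    indicator = {n: (by_r2.index(n) + 1) + (by_err.index(n) + 1)
                 for n in names}
    best = [n for n in names if indicator[n] == 2]
    if best:
        return best                 # one surrogate wins on both metrics
    threes = [n for n in names if indicator[n] == 3]
    if len(threes) == 2:
        return threes               # ensemble of the two leading surrogates
    return names                    # otherwise keep all three

# Hypothetical cross-validation results for the three base models.
single = select_components({"RBF": 0.9, "PRS": 0.5, "SVR": 0.7},
                           {"RBF": 0.1, "PRS": 0.4, "SVR": 0.2})
pair = select_components({"RBF": 0.9, "PRS": 0.5, "SVR": 0.8},
                         {"RBF": 0.2, "PRS": 0.4, "SVR": 0.1})
triple = select_components({"RBF": 0.9, "PRS": 0.5, "SVR": 0.8},
                           {"RBF": 0.3, "PRS": 0.1, "SVR": 0.2})
```

No threshold appears anywhere in the rule: the outcome depends only on how the surrogates rank relative to one another.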

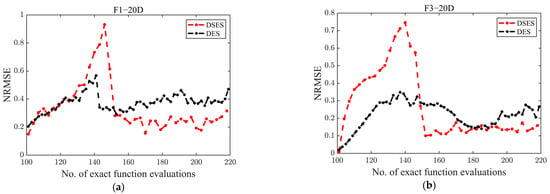

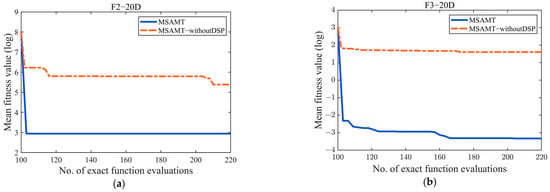

To demonstrate that the DSES performs better than the DES, Figure 2 gives the values of both on the 20-dimensional F1 and F3 in the search process. Figure 2 shows that the values of the DSES and the DES fluctuate greatly in the early period, but both gradually stabilize as the number of real function evaluations increases. After stabilization, the DSES has smaller values than the DES. This suggests that, across different optimization problems, the DSES builds surrogate models with higher reliability than the DES.

Figure 2.

The values of the DSES and the DES on functions F1 and F3 with 20 dimensions in the search process: (a) F1; (b) F3.

2.6.3. Multi-Tasking Optimization Based on Decision Space Partition and a Dynamic Ensemble of Surrogates

It has been demonstrated that multi-tasking optimization may effectively handle multiple optimization tasks simultaneously [30,31]. In this study, the generalized multi-factorial evolutionary algorithm (G-MFEA) [35] is utilized to identify the best solution. The G-MFEA is a generalization of the MFEA that adds two strategies, decision variable translation and decision variable shuffling, to promote knowledge transfer between optimization problems with different optimal solutions. The RBF models and one dynamic ensemble of surrogates are optimized simultaneously as tasks in the G-MFEA. The pseudocode for multi-tasking optimization is given in Algorithm 3.
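To make the shared-population idea concrete, the following minimal Python sketch shows how multifactorial evolution usually compares individuals across tasks: each individual is ranked on every task, its skill factor is the task on which it ranks best, and its scalar fitness is the reciprocal of that best rank. This follows the standard MFEA definitions rather than the authors' implementation; the function names and the toy cost matrix are hypothetical, and in the MSAMT the costs would be surrogate predictions (one column per RBF model plus one for the dynamic ensemble).

```python
from typing import List, Tuple


def factorial_ranks(costs: List[List[float]]) -> List[List[int]]:
    """costs[i][j] is the predicted cost of individual i on task j (minimization).

    Returns ranks[i][j], the 1-based position of individual i when the
    population is sorted by ascending cost on task j.
    """
    n, k = len(costs), len(costs[0])
    ranks = [[0] * k for _ in range(n)]
    for j in range(k):
        order = sorted(range(n), key=lambda i: costs[i][j])
        for position, i in enumerate(order, start=1):
            ranks[i][j] = position
    return ranks


def skill_and_scalar_fitness(costs: List[List[float]]) -> Tuple[List[int], List[float]]:
    """Skill factor = task with the best rank; scalar fitness = 1 / best rank."""
    ranks = factorial_ranks(costs)
    skill = [min(range(len(r)), key=lambda j: r[j]) for r in ranks]
    scalar = [1.0 / min(r) for r in ranks]
    return skill, scalar


# Toy example: three individuals, two tasks (hypothetical predicted costs).
toy_costs = [[3.0, 0.5], [1.0, 2.0], [2.0, 1.0]]
skill, scalar = skill_and_scalar_fitness(toy_costs)
print(skill)   # [1, 0, 0]
print(scalar)  # [1.0, 1.0, 0.5]
```

Because scalar fitness depends only on ranks, individuals specialized on different surrogates can be compared directly when the next parent population is selected.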

In Algorithm 3, an evolutionary population is first generated, and the skill factors of its individuals are computed by predicting their fitness with the RBF models and the dynamic ensemble of surrogates. The parent population generates an offspring population through an assortative mating strategy, and vertical cultural transmission then assigns skill factors to the offspring. A decision variable transformation strategy is employed to map each offspring to a new location. The scalar fitness of the current populations is computed using surrogates, and individuals with better scalar fitness are chosen to become the next parent population. Algorithm 4 details the procedure for generating the initial evolutionary population. If the number of exactly evaluated samples in the database is below a first threshold, all the samples in the database are added to the evolutionary population; if the number lies between the first threshold and a second, larger threshold, samples are randomly selected from the database to be added to the evolutionary population; otherwise, the best samples are first picked out from the database, and samples are then selected from them to be added to the evolutionary population. Finally, the LHS is used to generate the remaining evolutionary individuals. Because the initial evolutionary population is generated in this way, it contains both historical data and new data, which can lead to better algorithmic performance.
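The initialization rule of Algorithm 4 can be sketched as follows. This is only an illustration under stated assumptions: the parameter names `n_hist` (how many historical samples seed the population) and `n_best` (size of the pool of best samples) are hypothetical stand-ins for the thresholds of Algorithm 4, the mapping of those thresholds onto the three cases is assumed, and a simple pure-Python Latin hypercube sampler replaces whatever LHS routine the authors used.

```python
import random


def latin_hypercube(n, lower, upper, rng):
    """Draw n points; each dimension is split into n strata, one point per stratum."""
    columns = []
    for lo, hi in zip(lower, upper):
        strata = list(range(n))
        rng.shuffle(strata)  # independent stratum order per dimension
        columns.append([lo + (s + rng.random()) / n * (hi - lo) for s in strata])
    return [[col[i] for col in columns] for i in range(n)]


def initial_population(database, pop_size, n_hist, n_best, lower, upper, rng):
    """database: list of (x, fitness) pairs, x a list of floats (minimization).

    n_hist and n_best are hypothetical names for the Algorithm 4 thresholds.
    """
    if len(database) <= n_hist:
        # Few samples: reuse every evaluated point.
        seeds = [x for x, _ in database]
    elif len(database) < n_best:
        # Moderate number of samples: random subset of the database.
        seeds = [x for x, _ in rng.sample(database, n_hist)]
    else:
        # Many samples: random subset of the n_best fittest points.
        best = sorted(database, key=lambda s: s[1])[:n_best]
        seeds = [x for x, _ in rng.sample(best, n_hist)]
    seeds = seeds[:pop_size]
    # Fill the rest of the population with new LHS points.
    return seeds + latin_hypercube(pop_size - len(seeds), lower, upper, rng)


# Usage with a toy 2-D database of 30 evaluated samples (hypothetical data).
rng = random.Random(1)
db = [([0.01 * i, 0.01 * i], float(i)) for i in range(30)]
population = initial_population(db, 10, 4, 8, [-1.0, -1.0], [1.0, 1.0], rng)
print(len(population))
```

Seeding from the best historical samples keeps the search near known good regions, while the LHS points preserve coverage of the rest of the decision space.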

In addition, to avoid becoming trapped in a local optimum, this paper compares the most promising individual with the average individual and selects the one with the better fitness for exact evaluation and archiving in the database. The average individual is as follows:

where represents a parameter that rises with the number of exact evaluations, denotes the number of samples, and denotes a random number that conforms to the Gaussian distribution.

3. Results and Discussion

This section evaluates the performance of the MSAMT through a series of analyses. First, the test functions and parameters are provided, and the behavior of the MSAMT is studied in depth. Then, the MSAMT is compared with six recently proposed SAEAs, namely HSAOA [28], DSP-SAEA [27], BiS-SAHA [25], FSAPSO [15], GL-SADE [21], and LSADE [22], on fourteen test functions and a practical engineering problem. Finally, the computational complexity of the MSAMT is analyzed.

3.1. Test Problems

To study the effectiveness of the MSAMT, fourteen commonly used test functions with different characteristics were adopted, and each was tested in five dimensions, namely 10D, 20D, 30D, 50D, and 100D. Table 2 provides the relevant details about these functions.

Table 2.

Test functions used in the experiment study.

3.2. Parameter Settings

In the MSAMT, for 10D, 20D, and 30D test functions, the initial population size, the maximum number of exact function evaluations, and the sample size for training the ensemble surrogate were set to the same values as used in [28]. For 50D and 100D test functions, and were both set to , and was set to 400. In the G-MFEA, the evolutionary population size was set to 100 as used in [31], the iteration number for population evolution was set to 50, and the mating probability was set to 0.4. In addition, a vital parameter in the MSAMT is the number of clusters, which was fixed at 2. To reduce the effect of randomness, each SAEA was run independently 20 times in MATLAB R2021b on an Intel Core i5-10400F processor at 2.90 GHz to obtain the comparison results, and the MSAMT was compared with the other SAEAs using the Wilcoxon rank sum test at a fixed significance level on the fourteen test functions. The symbols “+”, “-”, and “=” indicate that the proposed MSAMT is superior to, inferior to, and competitive with the corresponding algorithm, respectively.
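As an illustration of how the “+”, “-”, and “=” symbols can be derived, the sketch below implements a two-sided Wilcoxon rank sum test with the usual normal approximation (average ranks for ties, no tie correction of the variance) and maps the outcome to a symbol for a minimization problem. It is not the authors' MATLAB code; the function names are hypothetical, and the significance level `alpha` is left as an argument (the 0.05 in the usage example is an assumed value).

```python
import math


def rank_sum_test(a, b):
    """Two-sided Wilcoxon rank sum test (normal approximation).

    Returns (z, p), where z < 0 means sample a tends to have smaller values.
    """
    pooled = sorted([(v, 0) for v in a] + [(v, 1) for v in b])
    rank_sum_a = 0.0
    i = 0
    while i < len(pooled):
        j = i
        while j < len(pooled) and pooled[j][0] == pooled[i][0]:
            j += 1
        avg_rank = (i + 1 + j) / 2.0  # mean of the 1-based ranks i+1 .. j
        rank_sum_a += avg_rank * sum(1 for t in range(i, j) if pooled[t][1] == 0)
        i = j
    n1, n2 = len(a), len(b)
    mean = n1 * (n1 + n2 + 1) / 2.0
    std = math.sqrt(n1 * n2 * (n1 + n2 + 1) / 12.0)
    z = (rank_sum_a - mean) / std
    p = math.erfc(abs(z) / math.sqrt(2.0))  # two-sided p-value
    return z, p


def compare(msamt_runs, other_runs, alpha):
    """'+' if MSAMT is significantly better (smaller), '-' if worse, '=' otherwise."""
    z, p = rank_sum_test(msamt_runs, other_runs)
    if p >= alpha:
        return "="
    return "+" if z < 0 else "-"


# Usage with hypothetical final fitness values from 20 independent runs each.
msamt = [0.1 * i for i in range(1, 21)]
other = [v + 10.0 for v in msamt]
print(compare(msamt, other, alpha=0.05))  # +
```

With 20 runs per algorithm the normal approximation is generally considered adequate; for much smaller samples an exact test would be the safer choice.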

3.3. Behavior Study of the MSAMT

3.3.1. Effect of the Number of Clusters

The number of clusters is an important parameter in the MSAMT. To study its impact on performance, we compared MSAMT variants with different numbers of clusters on the 10-, 20-, and 30-dimensional versions of the 14 test problems, as reported in Table A1.

Table A1 shows that the variants performed noticeably differently on the same test problems. For example, the MSAMT with n = 2 outperformed the other variants on test problems such as F1, F4, and F5, the MSAMT with n = 5 performed well on the F7 test function, and the MSAMT with n = 6 did best on test functions such as F9 and F10. Comparing the results of all variants, the MSAMT with n = 2 exhibited a superior performance on most of the test problems. Therefore, n = 2 was used in this paper.

3.3.2. Effect of Matching Probability on Multi-Tasking Optimization

Exploration and exploitation capabilities determine how well an optimization algorithm performs. In the G-MFEA, the matching probability is used to balance exploration and exploitation. When the matching probability is closer to 0, cross-task mating becomes less likely, which enhances the exploitation ability. However, this may cause the algorithm to converge to a local optimum. Conversely, when the matching probability is closer to 1, cross-task mating becomes more likely, and the algorithm can search the decision space extensively and thus escape from local optima.
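The role of the matching probability can be illustrated with the assortative mating rule of the original MFEA, sketched below. Two parents are recombined if they share a skill factor or if a random draw falls below the matching probability `rmp`; otherwise each parent is only mutated and its offspring keeps the parent's task. Uniform crossover and clipped Gaussian mutation are simple stand-ins chosen here for brevity (they are assumptions, not the operators of the G-MFEA), and all names are hypothetical.

```python
import random


def mutate(parent, rng, sigma=0.1):
    """Gaussian perturbation, clipped to the unified search space [0, 1]."""
    return [min(1.0, max(0.0, g + rng.gauss(0.0, sigma))) for g in parent]


def assortative_mating(p1, p2, skill1, skill2, rmp, rng):
    """Return two (child, skill_factor) pairs from parents p1 and p2."""
    if skill1 == skill2 or rng.random() < rmp:
        # Same task, or cross-task mating allowed: uniform crossover.
        c1, c2 = [], []
        for g1, g2 in zip(p1, p2):
            if rng.random() < 0.5:
                g1, g2 = g2, g1
            c1.append(g1)
            c2.append(g2)
        # Vertical cultural transmission: each child imitates a random parent.
        return [(c1, rng.choice((skill1, skill2))),
                (c2, rng.choice((skill1, skill2)))]
    # No cross-task mating: mutate each parent; the child inherits its task.
    return [(mutate(p1, rng), skill1), (mutate(p2, rng), skill2)]


# Usage: parents assigned to different tasks (hypothetical genes in [0, 1]).
rng = random.Random(0)
parent_a, parent_b = [0.1, 0.2], [0.8, 0.9]
print(assortative_mating(parent_a, parent_b, 0, 1, 0.0, rng))  # mutation only
print(assortative_mating(parent_a, parent_b, 0, 1, 1.0, rng))  # always crossover
```

With `rmp = 0.0` genetic material never crosses task boundaries, which matches the exploitation-heavy regime described above, whereas `rmp = 1.0` always permits cross-task recombination.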

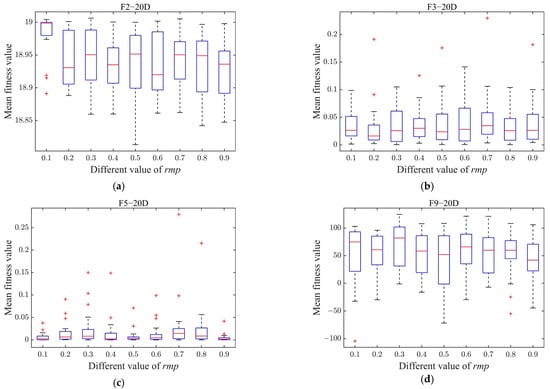

Figure 3 plots the mean fitness values of the MSAMT with different matching probabilities on problems F2, F3, F5, and F9 with 20D. As Figure 3 shows, the performance of the MSAMT varied considerably with the matching probability. Disregarding the red outliers, the MSAMT obtained the best overall performance when the matching probability was equal to 0.4. Therefore, the matching probability was set to 0.4 in this work.

Figure 3.

Performances of the MSAMT with different matching probabilities on 20D F2, F3, F5, and F9 (From (a) to (d) are the box plots of F1, F3, F5, and F9, respectively).

3.3.3. Effect of the Maximum Generation Number for Each Run of the G-MFEA

The maximum generation number for each run of the G-MFEA balances performance against optimization cost. A smaller value may make it difficult for the algorithm to locate an improved solution, whereas a larger value favors the discovery of the optimal solution but may result in an unaffordable running cost.

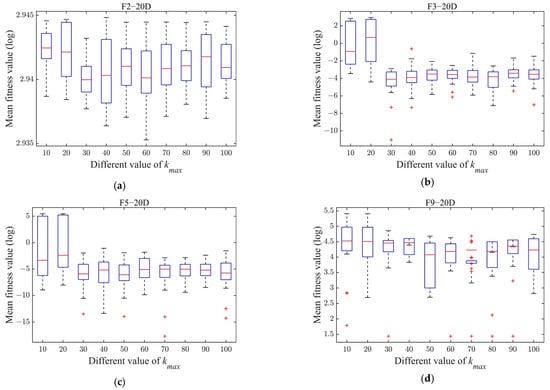

To find a maximum generation number that balances performance and optimization time, the same four test problems employed in the preceding section were used to assess MSAMT variants with different maximum generation numbers. Figure 4 displays the box plots of the average fitness values of these variants. It is evident from Figure 4 that the median, the maximum, and the interquartile range of the MSAMT were worse when the maximum generation number was equal to 10 or 20. For different test problems, the performance of the MSAMT with values greater than or equal to 30 fluctuated, but the differences were not statistically significant. Taking into account both the optimization efficiency and the computing cost, the maximum number of iterations for the G-MFEA was set to 50.

Figure 4.

Performances of the MSAMT with different maximum generation numbers on 20D F2, F3, F5, and F9 (From (a) to (d) are the box plots of F1, F3, F5, and F9, respectively).

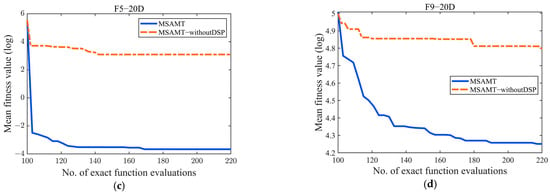

3.3.4. Effect of the Decision Space Partition

To evaluate the effectiveness of the decision space partition strategy, two variants, namely the proposed MSAMT and MSAMT-withoutDSP (in which only one RBF model is built to guide the global search), were compared using the same four test functions as in Section 3.3.3. Figure 5 gives the convergence curves for the two variants. It shows that the MSAMT performed significantly better than the MSAMT-withoutDSP. In particular, the MSAMT converged much faster than the MSAMT-withoutDSP in the early search stage.

Figure 5.

Convergence curves of the MSAMT with/without decision space partition on 20D F2, F3, F5, and F9 (From (a) to (d) are the convergence curves of F1, F3, F5, and F9, respectively).

3.3.5. Effect of the DSES

To validate the impact of the DSES on the MSAMT, the proposed MSAMT was compared with two variants on 20-dimensional test functions: the MSAMT-KRG, which adopts the KRG model in local search, and the MSAMT-fixed, which carries out the original UES. Statistical results for the three variants are provided in Table A2. The MSAMT demonstrated a superior performance, achieving the best solution on 8 of the 14 test functions. The MSAMT-fixed beat the other two variants on four test functions, while the MSAMT-KRG exhibited the best results on two test functions. On all test functions, the MSAMT took less computational time than the other two variants. The results demonstrate the effectiveness of the DSES.

3.4. Comparison with Other Recently Proposed Algorithms

3.4.1. Experimental Results on 10D, 20D, and 30D Test Problems

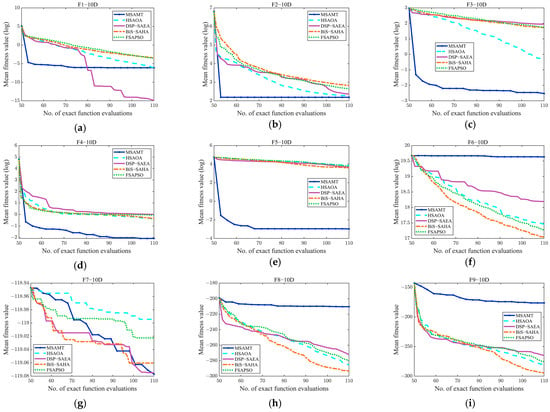

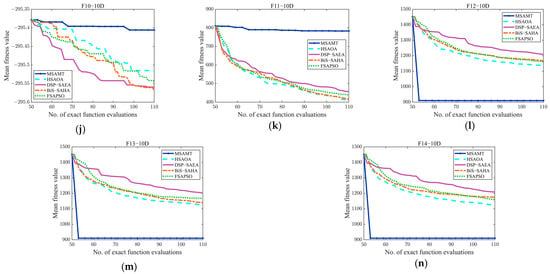

Four recently proposed SAEAs, i.e., HSAOA, DSP-SAEA, BiS-SAHA, and FSAPSO, were first selected as comparison algorithms and compared with the proposed MSAMT on the 14 test functions in 10D, 20D, and 30D to evaluate the performance of the MSAMT. The HSAOA is a hierarchical surrogate-assisted optimization algorithm that alternately uses DE and TLBO. During the local search phase, the HSAOA selects the samples that are closest to the optimal solution or have the best fitness value to construct a novel dynamic ensemble of surrogates. The DSP-SAEA is a two-stage search algorithm that integrates a global search phase based on the decision space partition strategy with a local search phase using an adaptive surrogate within the trust region. The BiS-SAHA performs the global and local search stages in turn using the SL-PSO assisted by a surrogate ensemble and RBF-assisted DE. The FSAPSO is a fast optimization technique using global RBF-assisted PSO that introduces a novel evaluation criterion to select particles for real function evaluation.

Table A3 demonstrates that the proposed MSAMT performed better than the other SAEAs, with the best results on 26 functions and the second-best results on 3 functions. Compared with the HSAOA, the MSAMT performed better on 28 test problems. Both algorithms use a dynamic ensemble of surrogates developed from the same ensemble. However, the dynamic ensemble of surrogates proposed in the MSAMT has a better fitting performance, which helps the algorithm converge better. The MSAMT significantly outperformed the DSP-SAEA on 66.7% of the test functions. Although the MSAMT adopts the decision space partition strategy of the DSP-SAEA in the global search, it uses the G-MFEA to seek the optimal solution, which facilitates information exchange between different decision space regions and prevents premature convergence to local optima. The MSAMT performed better than the BiS-SAHA on 31 out of 42 functions. Both use a surrogate ensemble, but the G-MFEA employed by the MSAMT enables the algorithm to converge better. The MSAMT performed better than the FSAPSO on 73.8% of the test functions. The FSAPSO uses only the global RBF model, whereas the MSAMT simultaneously uses the RBF models and the dynamic ensemble of surrogates, which makes the MSAMT perform better than the FSAPSO.

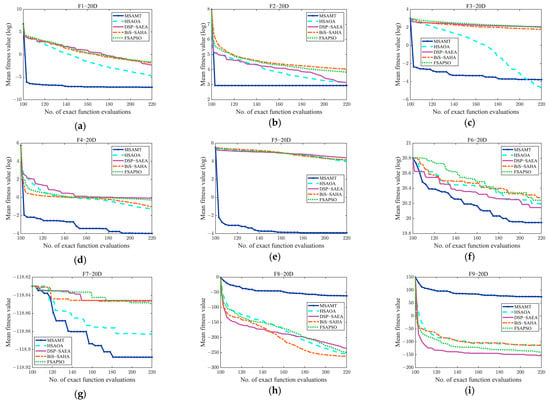

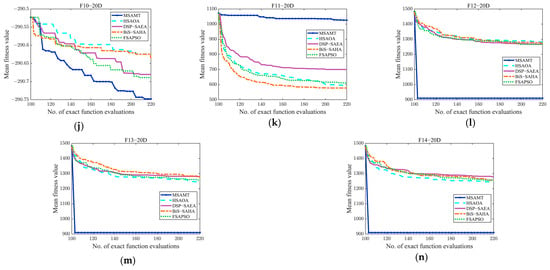

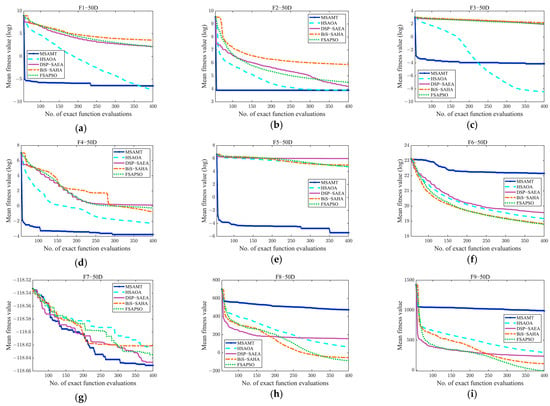

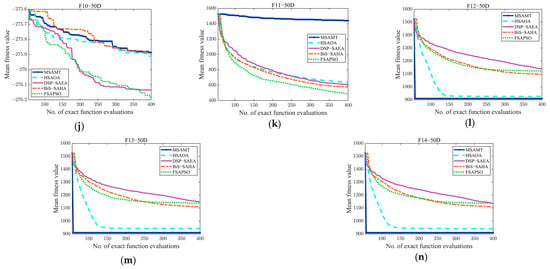

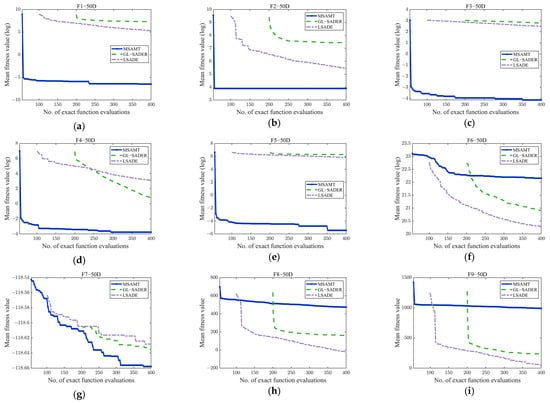

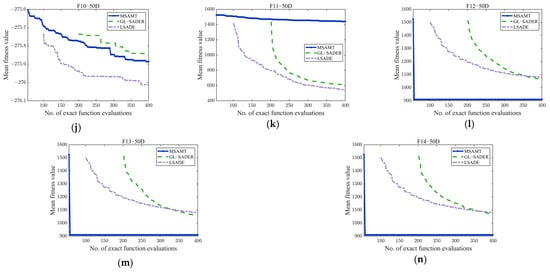

The convergence curves of the MSAMT and the four comparison algorithms on 10-, 20-, and 30-dimensional test functions are given in Figure A1, Figure A2 and Figure A3, respectively. According to the figures, the MSAMT outperformed the competing algorithms overall, with better convergence on most of the test functions. The preceding analysis indicates that MSAMT is competitive for low- and medium-dimensional problems.

3.4.2. Experimental Results on 50D and 100D Test Problems

High-dimensional optimization problems are common in practice, and thus this paper further validates the scalability of the MSAMT on 50D and 100D test problems. In addition to the four competing algorithms previously described, two further algorithms, namely GL-SADE and LSADE, are compared with the MSAMT in this section. The GL-SADE alternately uses RBF and Kriging to assist DE in performing global and local searches and then performs the local search again, using a rewarding mechanism, if the local search yields a better result. The LSADE proposes a new Lipschitz-based surrogate model and adopts DE as the optimization algorithm. Both the GL-SADE and LSADE were proposed for expensive optimization problems. Note that on 50D and 100D test problems, the initial population size was set to 200 for the GL-SADE, while for the LSADE it was set to 100 and 200, respectively.

Table A4 shows that the MSAMT achieved the optimal mean results on 60.7% of the test functions, thereby exhibiting a superior performance compared to the four competing SAEAs. For 18 of the 28 test functions, the MSAMT performed better than the HSAOA. Compared with the DSP-SAEA and the FSAPSO, the MSAMT achieved better results on 19 test functions. The MSAMT had a better performance than the FSAPSO on 71.4% of the functions. Table A5 presents the comparison results of the MSAMT with the GL-SADE and the LSADE on functions with 50 and 100 dimensions. The MSAMT obtained better results on 19 out of 28 functions. Compared with the GL-SADE, the MSAMT was more competitive on 20 test functions. In contrast to the LSADE, the MSAMT had better mean values on 19 test functions.

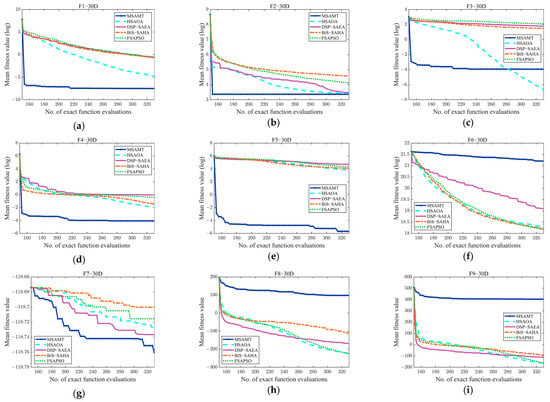

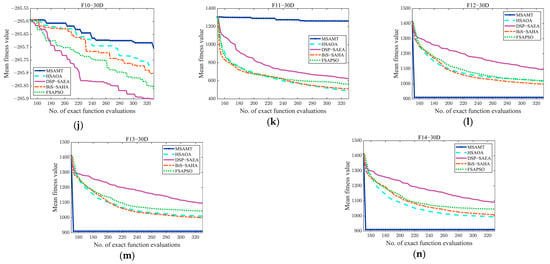

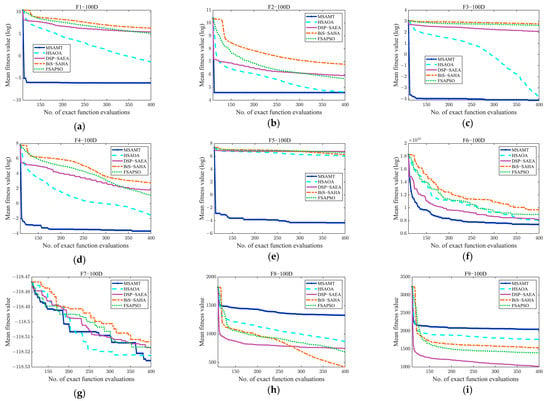

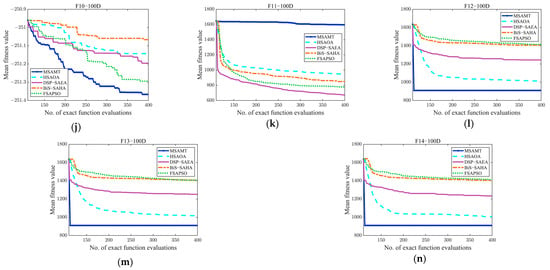

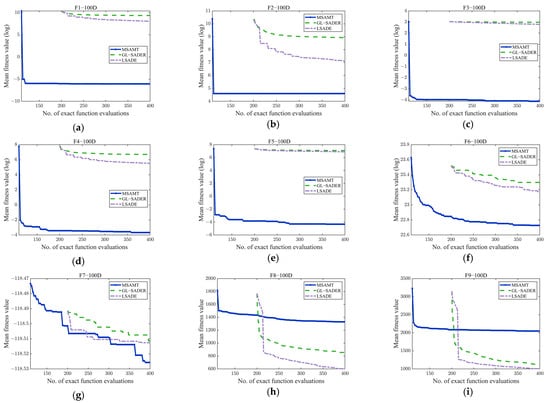

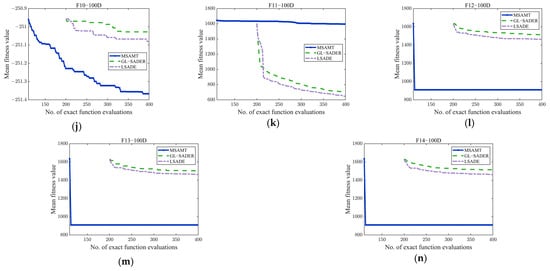

Convergence curves of the MSAMT, HSAOA, DSP-SAEA, BiS-SAHA, and FSAPSO for 50D and 100D test functions are given in Figure A4 and Figure A5, respectively. The figures demonstrate that the MSAMT had excellent convergence and could produce outcomes that are either better or comparable on most of the test functions. The HSAOA obtained the optimal results only on the F1-50 and F3-50 functions. The DSP-SAEA outperformed the other algorithms on the F9-100 and F11-100 functions, while the BiS-SAHA was most effective only for the F8-100 function. The FSAPSO obtained the best average value on five test problems, i.e., F6-50, F8-50, F9-50, F10-50, and F11-50. Figure A6 and Figure A7 show the convergence curves of the MSAMT, GL-SADE, and LSADE on the 50- and 100-dimensional test problems, respectively. The MSAMT achieved the best mean values for all functions except F6-50, F8, F9, F10-50, and F11-50. The GL-SADE beat the other algorithms only on the F9-100 function. The LSADE won on 7 out of 28 functions. In summary, the MSAMT achieved the best mean values on most of the test problems and was competitive in solving high-dimensional problems.

3.5. Computational Complexity Analysis of the MSAMT

Computational complexity is another essential aspect of an algorithm's performance. The time complexity of the MSAMT is determined mainly by five parts: the real function evaluation, the training of the dynamic ensemble of surrogates and the RBF models, the sorting of the samples in the database, the process of optimization using the G-MFEA, and other operations. The computational costs of the other operations, such as the generation and mutation of the population and the updating of the database, are usually negligible.

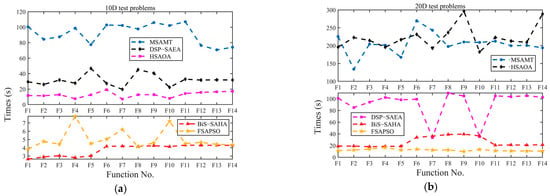

Run times of the proposed MSAMT and the six competing algorithms on 10-, 20-, 30-, 50-, and 100-dimensional test functions are given in Figure 6. For the 10-dimensional functions, the BiS-SAHA consumed the least time, followed by the FSAPSO. For the 20- and 30-dimensional functions, the FSAPSO had the lowest time cost, followed by the BiS-SAHA. The LSADE took the least time on the 50- and 100-dimensional functions, while the HSAOA and the DSP-SAEA required significantly more time than the other algorithms. This is because the FSAPSO, LSADE, and GL-SADE were each constructed with only a single surrogate, whereas the BiS-SAHA, MSAMT, DSP-SAEA, and HSAOA all used a surrogate ensemble. The proposed MSAMT consumed the most time on the 10-dimensional test functions, but as the problem dimension increased, its time cost was much lower than those of the HSAOA and the DSP-SAEA.

Figure 6.

The average run times of the proposed algorithm and the competing algorithms on each test problem (From (a) to (e) are the average run times of 10D, 20D, 30D, 50D, and 100D test problems, respectively).

3.6. Case Study on a Spatial Truss Design Problem

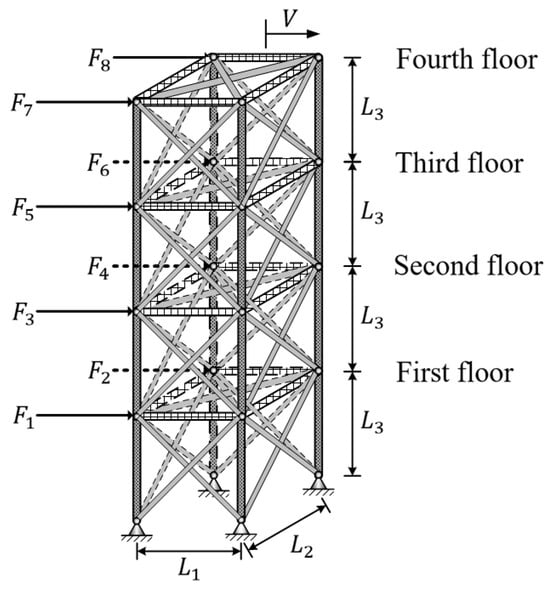

Spatial truss design is a representative optimal design problem that involves several design variables and is widely used to verify the prediction accuracy and robustness of different algorithms. In the following, the MSAMT proposed in this paper is applied to a spatial truss design problem and compared with existing algorithms. The design problem aims to find the best structural parameters under the maximum allowable horizontal displacement. The parameters include the lengths of the horizontal, longitudinal, and vertical bars, as well as the Young’s modulus and cross-sectional area of the vertical, horizontal/longitudinal, and layer diagonal bars from the first to the fourth floor. Figure 7 shows the finite element model of the spatial truss structure, which comprises 72 bar elements under horizontal loads. The horizontal loads were set to fixed values. The initial values and ranges of the parameters to be optimized are shown in Table 3. The objective function to be optimized is given by the following:

where represents the maximum horizontal displacement, is a vector containing the 15 parameters to be optimized, and are the upper and lower bounds of the parameters, respectively, and denotes the number of structural parameters ().

Figure 7.

Simulation diagram of the spatial truss structure.

Table 3.

Initial values as well as upper and lower bounds of the parameters to be optimized.

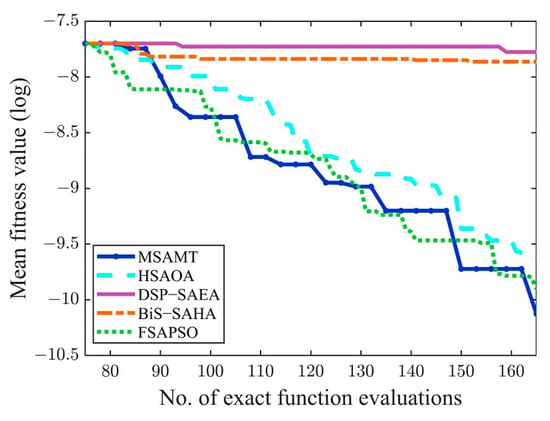

The HSAOA, DSP-SAEA, BiS-SAHA, and FSAPSO were chosen as the competing algorithms. The relevant parameters were kept the same as in the previous sections. The optimal structural parameters and statistical results of the MSAMT and the competing algorithms for the spatial truss structure design problem are given in Table A6 and Table 4, respectively. The data show that the MSAMT achieved the best results. The convergence history is given in Figure 8, which shows that the MSAMT converged faster than the comparison algorithms. These results indicate that the MSAMT is highly competitive in solving real engineering optimal design problems.

Table 4.

Statistical results obtained by the MSAMT, HSAOA, DSP-SAEA, BiS-SAHA, and FSAPSO on the spatial truss.

Figure 8.

Convergence curves of the MSAMT, HSAOA, DSP-SAEA, BiS-SAHA, and FSAPSO on the spatial truss.

4. Conclusions

This paper developed a multi-surrogate-assisted multi-tasking optimization algorithm (MSAMT) in the framework of the G-MFEA for expensive optimization problems. The proposed MSAMT first divides the space into several disjoint regions using a decision space partition strategy and then builds an RBF model on each region. A new dynamic ensemble of surrogates is then constructed using the samples with the best fitness values. Several RBF models and a surrogate ensemble are then optimized simultaneously as tasks in multi-tasking optimization using the generalized multi-factorial evolutionary algorithm.

To evaluate the performance of the proposed approach, algorithm analyses of the MSAMT were first carried out on fourteen test functions, covering the parameters, the decision space partition strategy, the dynamic ensemble of surrogates, and the computational complexity. Then, fourteen 10- to 100-dimensional test functions and a spatial truss design problem were used to compare the proposed approach with several recently proposed SAEAs. The results show that the proposed MSAMT outperforms the comparison algorithms on most of the test functions and on a real-world engineering problem.

The present study shows that the surrogate models can greatly affect the optimization results because they are used to select the samples that need to be evaluated exactly. Furthermore, as the modality of the optimization problem increases, both global and local searches may be unable to identify better individuals. Therefore, the proposed MSAMT enhances the population diversity through strategies such as the new dynamic ensemble of surrogates, the decision space partitioning, and the combination of local and global search under the G-MFEA framework to improve the performance of the algorithm.

In this work, the components used for constructing the ensemble surrogate are RBF, PRS, and SVR, and more components such as Kriging and the response surface model (RSM) could be further investigated to improve the ability of the MSAMT to handle various optimization problems. In addition, the control functions in the formula for calculating the weight coefficients (Equation (10)) can be adaptively selected according to different model combinations to further explore more reasonable weighting factors. Finally, it is possible to consider how the suggested MSAMT makes trade-offs between different objectives, thereby extending it to multi-objective optimization.

Author Contributions

Methodology, investigation, software, writing—original draft, data curation, and formal analysis, H.L.; conceptualization, methodology, supervision, project administration, and writing—review and editing, L.C.; methodology, supervision, validation, funding acquisition, and writing—review and editing, J.Z.; visualization and writing—review and editing, M.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Natural Science Foundation of China (grant No. 11872190).

Data Availability Statement

Data will be made available on request.

Conflicts of Interest

The authors declare no conflicts of interest.

Appendix A

Table A1.

Performances of the MSAMT with different values on test functions with 10, 20, and 30 dimensions.

| No. | D | n = 2 | n = 3 | n = 4 | n = 5 | n = 6 |
|---|---|---|---|---|---|---|
| F1 | 10 | 6.1576 × 10^−04/9.0159 × 10^−04 | 1.1315 × 10^−03/2.1076 × 10^−03 | 1.3484 × 10^−03/1.6437 × 10^−03 | 5.2604 × 10^−03/1.4730 × 10^−03 | 6.4951 × 10^−03/1.2067 × 10^−02 |
| F1 | 20 | 8.7857 × 10^−04/1.2927 × 10^−03 | 7.5156 × 10^−04/1.0109 × 10^−03 | 2.3772 × 10^−03/3.4051 × 10^−03 | 1.1820 × 10^−03/1.7333 × 10^−03 | 1.2078 × 10^−03/1.8732 × 10^−03 |
| F1 | 30 | 5.0537 × 10^−04/8.3404 × 10^−04 | 7.4973 × 10^−04/1.1724 × 10^−03 | 2.7145 × 10^−03/5.4419 × 10^−03 | 1.2440 × 10^−03/1.6409 × 10^−03 | 1.7024 × 10^−03/4.0160 × 10^−03 |
| F2 | 10 | 8.9377 × 10^0/1.9688 × 10^−02 | 8.9276 × 10^0/2.4215 × 10^−02 | 8.9145 × 10^0/1.8565 × 10^−02 | 8.9132 × 10^0/1.4603 × 10^−02 | 8.9213 × 10^0/1.1374 × 10^−02 |
| F2 | 20 | 1.8948 × 10^1/4.3698 × 10^−02 | 1.8972 × 10^1/3.0864 × 10^−02 | 1.8968 × 10^1/2.9814 × 10^−02 | 1.8982 × 10^1/2.2537 × 10^−02 | 1.8957 × 10^1/3.4526 × 10^−02 |
| F2 | 30 | 2.8907 × 10^1/6.3428 × 10^−02 | 2.8932 × 10^1/5.2952 × 10^−02 | 2.8933 × 10^1/5.1432 × 10^−02 | 2.8954 × 10^1/4.2116 × 10^−02 | 2.8942 × 10^1/4.6175 × 10^−02 |
| F3 | 10 | 1.0046 × 10^−1/9.8901 × 10^−2 | 2.5499 × 10^−1/2.4696 × 10^−1 | 1.9278 × 10^−1/1.8896 × 10^−1 | 4.4993 × 10^−1/4.7951 × 10^−1 | 3.6344 × 10^−1/4.7301 × 10^−1 |
| F3 | 20 | 3.5661 × 10^−2/3.4910 × 10^−2 | 3.8880 × 10^−2/4.9165 × 10^−2 | 3.4230 × 10^−2/2.5054 × 10^−2 | 5.9253 × 10^−2/6.3396 × 10^−2 | 3.6311 × 10^−2/4.0904 × 10^−2 |
| F3 | 30 | 2.0405 × 10^−2/2.5210 × 10^−2 | 2.8334 × 10^−2/3.1228 × 10^−2 | 2.4139 × 10^−2/2.2255 × 10^−2 | 2.6908 × 10^−2/2.4515 × 10^−2 | 4.1592 × 10^−2/3.5518 × 10^−2 |
| F4 | 10 | 2.4538 × 10^−1/2.5738 × 10^−1 | 2.9535 × 10^−1/3.3024 × 10^−1 | 4.1281 × 10^−1/3.4644 × 10^−1 | 4.5236 × 10^−1/3.5449 × 10^−1 | 3.7287 × 10^−1/3.7932 × 10^−1 |
| F4 | 20 | 3.6554 × 10^−2/4.8874 × 10^−2 | 9.7207 × 10^−2/2.0167 × 10^−1 | 1.4333 × 10^−1/1.9419 × 10^−1 | 1.1596 × 10^−1/1.9961 × 10^−1 | 1.4841 × 10^−1/1.5769 × 10^−1 |
| F4 | 30 | 2.2352 × 10^−2/3.6281 × 10^−2 | 3.9419 × 10^−2/6.3087 × 10^−2 | 5.1184 × 10^−2/8.1190 × 10^−2 | 9.0757 × 10^−2/1.3953 × 10^−1 | 6.1304 × 10^−2/9.2959 × 10^−2 |
| F5 | 10 | 1.5706 × 10^−2/1.7458 × 10^−2 | 4.3639 × 10^−2/1.2757 × 10^−1 | 9.5155 × 10^−2/2.8352 × 10^−1 | 1.5851 × 10^−1/4.0449 × 10^−1 | 2.0106 × 10^−1/3.0228 × 10^−1 |
| F5 | 20 | 2.5543 × 10^−2/4.0294 × 10^−2 | 3.9266 × 10^−2/6.3480 × 10^−2 | 2.8908 × 10^−2/4.8136 × 10^−2 | 2.2724 × 10^−2/6.1190 × 10^−2 | 2.8357 × 10^−2/3.9895 × 10^−2 |
| F5 | 30 | 7.7361 × 10^−3/2.0119 × 10^−2 | 1.8737 × 10^−2/3.8945 × 10^−2 | 1.1835 × 10^−2/1.4120 × 10^−2 | 2.3746 × 10^−2/4.0766 × 10^−2 | 1.7943 × 10^−2/2.4801 × 10^−2 |
| F6 | 10 | 3.9109 × 10^8/2.4226 × 10^8 | 3.2550 × 10^8/1.9101 × 10^8 | 3.4137 × 10^8/1.6906 × 10^8 | 3.4896 × 10^8/1.7638 × 10^8 | 3.3892 × 10^8/1.7821 × 10^8 |
| F6 | 20 | 4.4850 × 10^8/1.6046 × 10^8 | 4.4514 × 10^8/2.0654 × 10^8 | 4.3962 × 10^8/1.3582 × 10^8 | 4.9135 × 10^8/1.6797 × 10^8 | 5.3339 × 10^8/1.9813 × 10^8 |
| F6 | 30 | 1.6204 × 10^9/3.4767 × 10^8 | 1.7716 × 10^9/4.8095 × 10^8 | 1.8055 × 10^9/4.1151 × 10^8 | 1.8337 × 10^9/4.2732 × 10^8 | 1.9834 × 10^9/5.5657 × 10^8 |
| F7 | 10 | −1.1906 × 10^2/8.2173 × 10^−2 | −1.1906 × 10^2/1.3282 × 10^−1 | −1.1906 × 10^2/1.2635 × 10^−1 | −1.1912 × 10^2/6.3425 × 10^1 | −1.1902 × 10^2/1.1162 × 10^−1 |
| F7 | 20 | −1.1883 × 10^2/9.7998 × 10^−2 | −1.1885 × 10^2/8.0033 × 10^−2 | −1.1883 × 10^2/8.4834 × 10^−2 | −1.1885 × 10^2/8.4656 × 10^−2 | −1.1883 × 10^2/9.7280 × 10^−2 |
| F7 | 30 | −1.1872 × 10^2/7.4332 × 10^−2 | −1.1870 × 10^2/5.8735 × 10^−2 | −1.1871 × 10^2/5.5026 × 10^−2 | −1.1873 × 10^2/4.8874 × 10^−2 | −1.1872 × 10^2/5.1845 × 10^−2 |
| F8 | 10 | −2.1209 × 10^2/1.6889 × 10^1 | −2.1083 × 10^2/1.8400 × 10^1 | −2.0987 × 10^2/1.7706 × 10^1 | −2.0507 × 10^2/1.6862 × 10^1 | −2.0916 × 10^2/2.1757 × 10^1 |
| F8 | 20 | −6.6481 × 10^1/2.9068 × 10^1 | −6.8770 × 10^1/2.8479 × 10^1 | −5.3536 × 10^1/2.5079 × 10^1 | −5.6753 × 10^1/2.2102 × 10^1 | −7.4236 × 10^1/2.9150 × 10^1 |
| F8 | 30 | 7.6683 × 10^1/4.5207 × 10^1 | 9.2089 × 10^1/3.5833 × 10^1 | 7.5062 × 10^1/3.7829 × 10^1 | 7.8364 × 10^1/3.5119 × 10^1 | 6.6761 × 10^1/4.4122 × 10^1 |
| F9 | 10 | −1.8777 × 10^2/1.5327 × 10^1 | −1.7113 × 10^2/2.4084 × 10^1 | −1.7066 × 10^2/2.7885 × 10^1 | −1.7145 × 10^2/2.1716 × 10^1 | −1.7240 × 10^2/2.4936 × 10^1 |
| F9 | 20 | 6.4664 × 10^1/3.7553 × 10^1 | 6.7919 × 10^1/4.3892 × 10^1 | 6.6263 × 10^1/4.1520 × 10^1 | 5.5372 × 10^1/3.2486 × 10^1 | 4.8552 × 10^1/5.0830 × 10^1 |
| F9 | 30 | 3.8454 × 10^2/5.8307 × 10^1 | 4.1287 × 10^2/5.2558 × 10^1 | 3.7172 × 10^2/9.9035 × 10^1 | 3.8358 × 10^2/1.0230 × 10^2 | 3.3660 × 10^2/9.2954 × 10^1 |
| F10 | 10 | −2.9541 × 10^2/1.3311 × 10^−1 | −2.9542 × 10^2/1.3419 × 10^−1 | −2.9544 × 10^2/1.7197 × 10^−1 | −2.9543 × 10^2/1.4707 × 10^−1 | −2.9545 × 10^2/1.3905 × 10^−1 |
| F10 | 20 | −2.9078 × 10^2/2.0940 × 10^−1 | −2.9076 × 10^2/1.3735 × 10^−1 | −2.9077 × 10^2/2.3274 × 10^−1 | −2.9073 × 10^2/1.7840 × 10^−1 | −2.9080 × 10^2/2.1605 × 10^−1 |
| F10 | 30 | −2.8567 × 10^2/1.9433 × 10^−1 | −2.8567 × 10^2/2.0172 × 10^−1 | −2.8570 × 10^2/1.8898 × 10^−1 | −2.8567 × 10^2/2.2014 × 10^−1 | −2.8570 × 10^2/2.0380 × 10^−1 |
| F11 | 10 | 7.6382 × 10^2/1.3531 × 10^2 | 7.9326 × 10^2/1.4033 × 10^2 | 7.8300 × 10^2/1.3476 × 10^2 | 7.4459 × 10^2/1.4442 × 10^2 | 7.4430 × 10^2/1.2479 × 10^2 |
| F11 | 20 | 1.0332 × 10^3/1.4849 × 10^2 | 1.0469 × 10^3/1.5155 × 10^2 | 1.0438 × 10^3/1.6408 × 10^2 | 1.0268 × 10^3/1.7361 × 10^2 | 1.0437 × 10^3/1.5754 × 10^2 |
| F11 | 30 | 1.2662 × 10^3/1.0251 × 10^2 | 1.2770 × 10^3/1.1732 × 10^2 | 1.2701 × 10^3/1.1117 × 10^2 | 1.2567 × 10^3/1.2224 × 10^2 | 1.2538 × 10^3/1.3072 × 10^2 |
| F12 | 10 | 9.1010 × 10^2/1.5644 × 10^−1 | 9.1007 × 10^2/1.2011 × 10^−1 | 9.1026 × 10^2/3.9976 × 10^−1 | 9.1038 × 10^2/4.5278 × 10^−1 | 9.1037 × 10^2/7.8635 × 10^−1 |
| F12 | 20 | 9.1001 × 10^2/9.9019 × 10^−3 | 9.1002 × 10^2/2.6173 × 10^−2 | 9.1002 × 10^2/2.4352 × 10^−2 | 9.1001 × 10^2/2.0584 × 10^−2 | 9.1005 × 10^2/1.1789 × 10^−1 |
| F12 | 30 | 9.1000 × 10^2/6.0359 × 10^−3 | 9.1001 × 10^2/9.8419 × 10^−3 | 9.1001 × 10^2/1.0193 × 10^−2 | 9.1001 × 10^2/6.8736 × 10^−3 | 9.1001 × 10^2/1.2882 × 10^−2 |
| F13 | 10 | 9.1014 × 10^2/2.2779 × 10^−1 | 9.1008 × 10^2/8.9839 × 10^−2 | 9.1020 × 10^2/3.9035 × 10^−1 | 9.1015 × 10^2/1.8995 × 10^−1 | 9.1048 × 10^2/1.0171 × 10^0 |
| F13 | 20 | 9.1001 × 10^2/1.0621 × 10^−2 | 9.1002 × 10^2/1.9057 × 10^−2 | 9.1002 × 10^2/5.5961 × 10^−2 | 9.1003 × 10^2/6.8497 × 10^−2 | 9.1001 × 10^2/1.6544 × 10^−2 |
| F13 | 30 | 9.1001 × 10^2/1.0603 × 10^−2 | 9.1001 × 10^2/4.4586 × 10^−3 | 9.1001 × 10^2/6.7372 × 10^−3 | 9.1001 × 10^2/7.0401 × 10^−3 | 9.1001 × 10^2/5.5567 × 10^−3 |
| F14 | 10 | 9.1005 × 10^2/6.6851 × 10^−2 | 9.1008 × 10^2/9.6278 × 10^−2 | 9.1037 × 10^2/6.8048 × 10^−1 | 9.1042 × 10^2/6.8477 × 10^−1 | 9.1078 × 10^2/1.7724 × 10^0 |
| F14 | 20 | 9.1001 × 10^2/1.2135 × 10^−2 | 9.1002 × 10^2/1.2772 × 10^−2 | 9.1001 × 10^2/8.6495 × 10^−3 | 9.1003 × 10^2/3.5058 × 10^−2 | 9.1001 × 10^2/2.4665 × 10^−2 |
| F14 | 30 | 9.1001 × 10^2/6.3484 × 10^−3 | 9.1001 × 10^2/1.4759 × 10^−2 | 9.1001 × 10^2/1.0503 × 10^−2 | 9.1001 × 10^2/1.5119 × 10^−2 | 9.1001 × 10^2/6.8025 × 10^−3 |

Table A2.

Performances of the MSAMT, MSAMT-KRG, and MSAMT-fixed on 20-dimensional test functions.

| No. | MSAMT Mean/Std | MSAMT Time (s) | MSAMT-KRG Mean/Std | MSAMT-KRG Time (s) | MSAMT-Fixed Mean/Std | MSAMT-Fixed Time (s) |
|---|---|---|---|---|---|---|
| F1 | 7.1867 × 10^−4/1.2786 × 10^−3 | 2.2559 × 10^2 | 8.5112 × 10^−4/1.6087 × 10^−3 | 1.4392 × 10^3 | 5.6628 × 10^−5/1.2308 × 10^−4 | 1.8153 × 10^3 |
| F2 | 1.8918 × 10^1/3.0714 × 10^−2 | 1.3417 × 10^2 | 1.8957 × 10^1/3.2927 × 10^−2 | 1.3663 × 10^3 | 1.8932 × 10^1/4.3047 × 10^−2 | 1.8511 × 10^3 |
| F3 | 2.2813 × 10^−2/1.8972 × 10^−2 | 2.0433 × 10^2 | 3.1443 × 10^−2/3.0788 × 10^−2 | 1.4397 × 10^3 | 1.8529 × 10^−2/1.9483 × 10^−2 | 1.7036 × 10^3 |
| F4 | 1.8587 × 10^−2/2.6338 × 10^−2 | 2.0119 × 10^2 | 4.6343 × 10^−2/6.6799 × 10^−2 | 1.4155 × 10^3 | 2.1738 × 10^−2/2.5219 × 10^−2 | 1.8022 × 10^3 |
| F5 | 1.9954 × 10^−2/3.7313 × 10^−2 | 1.6742 × 10^2 | 6.4855 × 10^−3/1.2761 × 10^−2 | 1.4257 × 10^3 | 5.7906 × 10^−4/9.8625 × 10^−4 | 1.9176 × 10^3 |
| F6 | 4.5970 × 10^8/1.7431 × 10^8 | 2.0610 × 10^2 | 4.4432 × 10^8/1.8899 × 10^8 | 1.0199 × 10^3 | 4.7695 × 10^8/2.0986 × 10^8 | 1.4189 × 10^3 |
| F7 | −1.1891 × 10^2/8.6824 × 10^−2 | 2.0682 × 10^2 | −1.1883 × 10^2/9.6093 × 10^−2 | 1.0735 × 10^3 | −1.1884 × 10^2/7.2111 × 10^−2 | 1.3799 × 10^3 |
| F8 | −6.1893 × 10^1/3.3596 × 10^1 | 1.9759 × 10^2 | −5.6754 × 10^1/2.7057 × 10^1 | 1.4987 × 10^3 | −4.6272 × 10^1/3.2021 × 10^1 | 1.9541 × 10^3 |
| F9 | 7.3996 × 10^1/3.6260 × 10^1 | 2.1021 × 10^2 | 5.0348 × 10^1/4.5175 × 10^1 | 9.3336 × 10^2 | 6.6196 × 10^1/3.5719 × 10^1 | 1.3000 × 10^3 |
| F10 | −2.9075 × 10^2/1.7213 × 10^−1 | 2.0911 × 10^2 | −2.9078 × 10^2/1.5655 × 10^−1 | 1.0734 × 10^3 | −2.9081 × 10^2/1.8345 × 10^−1 | 1.3873 × 10^3 |
| F11 | 1.0258 × 10^3/1.7481 × 10^2 | 2.1239 × 10^2 | 1.0309 × 10^3/1.7726 × 10^2 | 1.1297 × 10^3 | 1.0365 × 10^3/1.8045 × 10^2 | 1.6549 × 10^3 |
| F12 | 9.1001 × 10^2/9.8825 × 10^−3 | 1.9979 × 10^2 | 9.1001 × 10^2/1.2676 × 10^−2 | 1.4347 × 10^3 | 9.1001 × 10^2/6.2157 × 10^−3 | 1.8808 × 10^3 |
| F13 | 9.1001 × 10^2/1.6433 × 10^−2 | 2.0068 × 10^2 | 9.1001 × 10^2/1.6238 × 10^−2 | 1.3507 × 10^3 | 9.1001 × 10^2/3.7595 × 10^−3 | 1.8873 × 10^3 |
| F14 | 9.1001 × 10^2/7.5166 × 10^−3 | 1.9395 × 10^2 | 9.1001 × 10^2/1.5057 × 10^−2 | 1.4379 × 10^3 | 9.1001 × 10^2/4.5504 × 10^−3 | 1.7661 × 10^3 |

Table A3.

Statistical results (AVG ± STD) obtained by the MSAMT, HSAOA, DSP-SAEA, BiS-SAHA, and FSAPSO on test functions with 10, 20, and 30 dimensions.

| No. | D | MSAMT | HSAOA | DSP-SAEA | BiS-SAHA | FSAPSO |

|---|---|---|---|---|---|---|

| F1 | 10 | 2.2186 × 10−3/7.3964 × 10−3 | 2.4575 × 10−3/2.4031 × 10−3(+) | 3.3321 × 10−7/6.0800 × 10−7(−) | 3.1726 × 10−2/3.9344 × 10−2(+) | 2.7795 × 10−2/3.0173 × 10−2(+) |

| F1 | 20 | 7.1867 × 10−4/1.2786 × 10−3 | 9.5862 × 10−3/2.1410 × 10−2(+) | 9.8464 × 10−2/1.4483 × 10−1(+) | 1.5405 × 10−1/1.1005 × 10−1(+) | 1.9539 × 10−1/1.3521 × 10−1(+) |

| F1 | 30 | 5.4775 × 10−4/8.8610 × 10−4 | 8.1057 × 10−3/1.8270 × 10−2(+) | 4.9800 × 10−1/2.7486 × 10−1(+) | 5.3745 × 10−1/2.1538 × 10−1(+) | 6.1116 × 10−1/3.2927 × 10−1(+) |

| F2 | 10 | 8.9291 × 100/1.6436 × 10−2 | 9.7479 × 100/1.4695 × 100(+) | 1.0654 × 101/1.5344 × 100(+) | 1.6730 × 101/5.7852 × 100(+) | 1.4193 × 101/7.3871 × 100(+) |

| F2 | 20 | 1.8918 × 101/3.0714 × 10−2 | 2.3221 × 101/4.1746 × 100(+) | 2.3291 × 101/2.9022 × 100(+) | 5.6260 × 101/2.1739 × 101(+) | 4.6111 × 101/2.6400 × 101(+) |

| F2 | 30 | 2.8913 × 101/6.1577 × 10−2 | 3.0580 × 101/1.6938 × 100(+) | 3.2337 × 101/1.2599 × 100(+) | 9.6668 × 101/2.8762 × 101(+) | 6.1852 × 101/1.9570 × 101(+) |

| F3 | 10 | 7.9531 × 10−2/9.6623 × 10−2 | 6.9486 × 10−1/8.2074 × 10−1(+) | 7.0093 × 100/2.4921 × 100(+) | 5.6567 × 100/2.8986 × 100(+) | 5.7167 × 100/2.4296 × 100(+) |

| F3 | 20 | 2.2813 × 10−2/1.8972 × 10−2 | 9.6387 × 10−3/9.7639 × 10−3(−) | 7.8720 × 100/1.8258 × 100(+) | 5.9863 × 100/4.0404 × 100(+) | 7.5047 × 100/2.7653 × 100(+) |

| F3 | 30 | 1.9428 × 10−2/2.3069 × 10−2 | 1.2704 × 10−3/5.7678 × 10−4(−) | 5.8237 × 100/1.2778 × 100(+) | 4.3556 × 100/1.6599 × 100(+) | 7.8665 × 100/2.3169 × 100(+) |

| F4 | 10 | 1.2388 × 10−1/1.7845 × 10−1 | 6.8511 × 10−1/2.8208 × 10−1(+) | 1.0043 × 100/7.5751 × 100(+) | 7.1742 × 10−1/2.9906 × 10−1(+) | 9.4176 × 10−1/6.4448 × 10−2(+) |

| F4 | 20 | 1.8587 × 10−2/2.6338 × 10−2 | 2.7908 × 10−1/3.4399 × 10−1(+) | 9.1369 × 10−1/9.7421 × 10−2(+) | 3.5613 × 10−1/1.9511 × 10−1(+) | 7.4943 × 10−1/1.2541 × 10−1(+) |

| F4 | 30 | 1.7011 × 10−2/3.2582 × 10−2 | 1.3709 × 10−1/1.9915 × 10−1(+) | 9.6615 × 10−1/5.4062 × 10−2(+) | 2.3262 × 10−1/1.4185 × 10−1(+) | 6.5227 × 10−1/1.0699 × 10−1(+) |

| F5 | 10 | 5.1065 × 10−2/9.6333 × 10−2 | 4.7218 × 101/2.8014 × 101(+) | 3.9081 × 101/1.4233 × 101(+) | 3.6467 × 101/2.1593 × 101(+) | 4.0986 × 101/1.6733 × 101(+) |

| F5 | 20 | 1.9954 × 10−2/3.7313 × 10−2 | 5.2701 × 101/2.5755 × 101(+) | 8.0158 × 101/2.6458 × 101(+) | 6.3888 × 101/3.0763 × 101(+) | 6.1867 × 101/3.2755 × 101(+) |

| F5 | 30 | 3.4102 × 10−3/5.3363 × 10−3 | 4.6209 × 101/2.4258 × 101(+) | 2.1261 × 101/1.2658 × 101(+) | 6.1469 × 101/2.9189 × 101(+) | 7.6937 × 101/3.5378 × 101(+) |

| F6 | 10 | 3.3595 × 108/1.6961 × 108 | 3.8227 × 107/4.1371 × 107(−) | 7.9564 × 107/6.1272 × 107(−) | 2.3740 × 107/3.0509 × 107(−) | 3.1398 × 107/3.0647 × 107(−) |

| F6 | 20 | 4.5970 × 108/1.7431 × 108 | 5.8898 × 108/2.0217 × 108(+) | 5.6116 × 108/2.8450 × 108(+) | 5.7606 × 108/2.2375 × 108(+) | 6.1811 × 108/2.9055 × 108(+) |

| F6 | 30 | 1.6180 × 109/3.5130 × 108 | 8.6408 × 107/4.6362 × 107(−) | 1.9755 × 108/7.0611 × 107(−) | 8.1104 × 107/4.5028 × 107(−) | 7.7800 × 107/3.3843 × 107(−) |

| F7 | 10 | −1.1908 × 102/1.3742 × 10−1 | −1.1884 × 102/1.0851 × 10−1(+) | −1.1907 × 102/1.2945 × 10−1(=) | −1.1906 × 102/1.3686 × 10−1(=) | −1.1902 × 102/1.2587 × 10−1(=) |

| F7 | 20 | −1.1891 × 102/8.6824 × 10−2 | −1.1888 × 102/9.6667 × 10−2(=) | −1.1885 × 102/1.0603 × 10−1(=) | −1.1885 × 102/1.0527 × 10−1(=) | −1.1885 × 102/9.6983 × 10−2(=) |

| F7 | 30 | −1.1876 × 102/5.8657 × 10−2 | −1.1873 × 102/5.4940 × 10−2(=) | −1.1874 × 102/5.3362 × 10−2(=) | −1.1870 × 102/5.1004 × 10−2(=) | −1.1872 × 102/4.7621 × 10−2(=) |

| F8 | 10 | −2.1031 × 102/1.7589 × 101 | −2.8556 × 102/1.4680 × 101(−) | −2.7223 × 102/1.9182 × 101(−) | −2.9346 × 102/1.6692 × 101(−) | −2.8002 × 102/1.9757 × 101(−) |

| F8 | 20 | −6.1893 × 101/3.3596 × 101 | −2.5524 × 102/2.1918 × 101(−) | −2.3584 × 102/2.7262 × 101(−) | −2.6230 × 102/3.2670 × 101(−) | −2.4975 × 102/3.3611 × 101(−) |

| F8 | 30 | 9.7566 × 101/4.0362 × 101 | −2.2256 × 102/3.2951 × 101(−) | −1.6840 × 102/3.1401 × 101(−) | −1.1260 × 102/4.4708 × 101(−) | −2.2617 × 102/2.8949 × 101(−) |

| F9 | 10 | −1.7629 × 102/1.7730 × 101 | −2.8029 × 102/2.2697 × 101(−) | −2.6487 × 102/1.2332 × 101(−) | −2.9478 × 102/1.5338 × 101(−) | −2.7535 × 102/1.8625 × 101(−) |

| F9 | 20 | 7.3996 × 101/3.6260 × 101 | −1.1239 × 102/3.3385 × 101(−) | −1.5357 × 102/1.3680 × 101(−) | −1.1359 × 102/3.1975 × 101(−) | −1.4092 × 102/1.9300 × 101(−) |

| F9 | 30 | 4.0272 × 102/4.2241 × 101 | −1.6286 × 102/3.6614 × 101(−) | −1.1403 × 102/2.3933 × 101(−) | −9.3406 × 101/4.1676 × 101(−) | −1.6389 × 102/4.0406 × 101(−) |

| F10 | 10 | −2.9541 × 102/1.3752 × 10−1 | −2.9552 × 102/1.9088 × 10−1(=) | −2.9555 × 102/1.6229 × 10−1(=) | −2.9557 × 102/2.2424 × 10−1(=) | −2.9554 × 102/1.6612 × 10−1(=) |

| F10 | 20 | −2.9075 × 102/1.7213 × 10−1 | −2.9063 × 102/1.6249 × 10−1(=) | −2.9073 × 102/1.9203 × 10−1(=) | −2.9064 × 102/2.0176 × 10−1(=) | −2.9069 × 102/2.1238 × 10−1(=) |

| F10 | 30 | −2.8570 × 102/1.9496 × 10−1 | −2.8577 × 102/1.9317 × 10−1(=) | −2.8597 × 102/2.7387 × 10−1(=) | −2.8580 × 102/2.3075 × 10−1(=) | −2.8587 × 102/2.2160 × 10−1(=) |

| F11 | 10 | 7.8293 × 102/1.4282 × 102 | 4.0859 × 102/8.5902 × 101(−) | 4.5692 × 102/9.1476 × 101(−) | 4.1606 × 102/8.9614 × 101(−) | 4.3799 × 102/1.3829 × 102(−) |

| F11 | 20 | 1.0258 × 103/1.7481 × 102 | 5.9594 × 102/9.8286 × 101(−) | 6.9914 × 102/1.1353 × 102(−) | 5.7719 × 102/7.4371 × 101(−) | 6.0940 × 102/1.0422 × 102(−) |

| F11 | 30 | 1.2613 × 103/1.2295 × 102 | 4.9137 × 102/9.0273 × 101(−) | 6.2205 × 102/9.6358 × 101(−) | 5.1114 × 102/1.3416 × 102(−) | 5.6040 × 102/1.0212 × 102(−) |

| F12 | 10 | 9.1006 × 102/1.4643 × 10−1 | 1.1360 × 103/6.7100 × 101(+) | 1.2088 × 103/5.1323 × 101(+) | 1.1684 × 103/6.9199 × 101(+) | 1.1621 × 103/5.3208 × 101(+) |

| F12 | 20 | 9.1001 × 102/9.8825 × 10−3 | 1.2808 × 103/8.0984 × 101(+) | 1.2678 × 103/4.4563 × 101(+) | 1.2720 × 103/7.2891 × 101(+) | 1.2653 × 103/4.0572 × 101(+) |

| F12 | 30 | 9.1001 × 102/5.5963 × 10−3 | 1.0180 × 103/5.7919 × 101(+) | 1.0939 × 103/3.7824 × 101(+) | 9.9759 × 102/4.3238 × 101(+) | 1.0213 × 103/6.1759 × 101(+) |

| F13 | 10 | 9.1011 × 102/1.3738 × 10−1 | 1.1264 × 103/6.7088 × 101(+) | 1.2016 × 103/7.0017 × 101(+) | 1.1395 × 103/3.9149 × 101(+) | 1.1673 × 103/6.1350 × 101(+) |

| F13 | 20 | 9.1001 × 102/1.6433 × 10−2 | 1.2447 × 103/5.9141 × 101(+) | 1.2806 × 103/5.6512 × 101(+) | 1.2790 × 103/6.7908 × 101(+) | 1.2571 × 103/4.5539 × 101(+) |

| F13 | 30 | 9.1001 × 102/8.4273 × 10−3 | 1.0098 × 103/4.6875 × 101(+) | 1.0963 × 103/3.7419 × 101(+) | 9.9866 × 102/3.4782 × 101(+) | 1.0440 × 103/6.4525 × 101(+) |

| F14 | 10 | 9.1010 × 102/1.8543 × 10−1 | 1.1254 × 103/7.7471 × 101(+) | 1.2085 × 103/5.6439 × 101(+) | 1.1744 × 103/9.4485 × 101(+) | 1.1591 × 103/8.3353 × 101(+) |

| F14 | 20 | 9.1001 × 102/7.5166 × 10−3 | 1.2435 × 103/7.2796 × 101(+) | 1.2788 × 103/5.1990 × 101(+) | 1.2569 × 103/8.4563 × 101(+) | 1.2566 × 103/3.8660 × 101(+) |

| F14 | 30 | 9.1001 × 102/4.6669 × 10−3 | 9.9491 × 102/4.8337 × 101(+) | 1.0910 × 103/4.7972 × 101(+) | 1.0076 × 103/6.3577 × 101(+) | 1.0462 × 103/6.7583 × 101(+) |

| +/=/− | | | 24/5/13 | 24/6/12 | 25/6/11 | 25/6/11 |

Table A4.

Statistical results (AVG ± STD) obtained by the MSAMT, HSAOA, DSP-SAEA, BiS-SAHA, and FSAPSO on test functions with 50 and 100 dimensions.

| No. | D | MSAMT | HSAOA | DSP-SAEA | BiS-SAHA | FSAPSO |

|---|---|---|---|---|---|---|

| F1 | 50 | 1.5139 × 10−3/2.9703 × 10−3 | 6.6420 × 10−4/1.1716 × 10−3(−) | 8.5655 × 100/2.3768 × 100(+) | 3.5827 × 101/1.0570 × 102(+) | 8.6070 × 100/4.2065 × 100(+) |

| F1 | 100 | 2.2974 × 10−3/4.1236 × 10−3 | 2.7541 × 10−1/5.4010 × 10−1(+) | 2.2511 × 102/3.6066 × 101(+) | 5.6663 × 102/4.9770 × 102(+) | 1.7318 × 102/7.0301 × 101(+) |

| F2 | 50 | 4.8989 × 101/2.0683 × 10−2 | 4.9004 × 101/3.8941 × 10−1(+) | 6.4929 × 101/8.2349 × 100(+) | 3.5411 × 102/3.4942 × 102(+) | 9.0141 × 101/2.3460 × 101(+) |

| F2 | 100 | 9.8980 × 101/2.6847 × 10−2 | 1.0434 × 102/2.6441 × 100(+) | 3.6493 × 102/4.7918 × 101(+) | 8.9207 × 102/3.4642 × 102(+) | 2.9185 × 102/3.7701 × 101(+) |

| F3 | 50 | 1.5949 × 10−2/1.7975 × 10−2 | 2.1525 × 10−4/4.6254 × 10−5(−) | 7.0014 × 100/1.3281 × 100(+) | 8.9919 × 100/3.6852 × 100(+) | 7.5177 × 100/7.1600 × 10−1(+) |

| F3 | 100 | 1.6202 × 10−2/1.6438 × 10−2 | 2.1473 × 10−2/1.0452 × 10−2(+) | 7.9457 × 100/4.3654 × 10−1(+) | 1.5824 × 101/1.9239 × 100(+) | 1.3845 × 101/1.3817 × 100(+) |

| F4 | 50 | 2.3249 × 10−2/3.9907 × 10−2 | 2.1299 × 10−1/1.3435 × 10−1(+) | 1.1393 × 100/4.7626 × 10−2(+) | 4.5965 × 10−1/1.6026 × 10−1(+) | 7.7063 × 10−1/6.8645 × 10−2(+) |

| F4 | 100 | 2.5404 × 10−2/4.1350 × 10−2 | 2.1299 × 10−1/1.3435 × 10−1(+) | 5.9262 × 100/8.2541 × 10−1(+) | 1.5578 × 101/2.7415 × 101(+) | 3.0273 × 100/1.0937 × 100(+) |

| F5 | 50 | 4.3405 × 10−3/8.5700 × 10−3 | 1.1369 × 102/3.9883 × 101(+) | 3.9735 × 102/3.8838 × 101(+) | 1.4639 × 102/6.6315 × 101(+) | 1.1791 × 102/3.1529 × 101(+) |

| F5 | 100 | 1.3211 × 10−2/2.5329 × 10−2 | 4.3194 × 102/2.2999 × 102(+) | 8.6395 × 102/3.0622 × 101(+) | 5.3052 × 102/1.2590 × 102(+) | 7.1114 × 102/1.2708 × 102(+) |

| F6 | 50 | 4.1817 × 109/1.0109 × 109 | 2.0962 × 108/8.6102 × 107(−) | 3.1424 × 108/9.2524 × 107(−) | 1.4923 × 108/6.2721 × 107(−) | 1.4518 × 108/4.9036 × 107(−) |

| F6 | 100 | 7.4188 × 109/1.0592 × 109 | 8.1092 × 109/1.4021 × 109(+) | 8.1388 × 109/1.5812 × 109(+) | 9.6883 × 109/2.0580 × 109(+) | 8.9691 × 109/1.4803 × 109(+) |

| F7 | 50 | −1.1865 × 102/4.2963 × 10−2 | −1.1862 × 102/4.9648 × 10−2(=) | −1.1865 × 102/5.0892 × 10−2(=) | −1.1862 × 102/4.5914 × 10−2(=) | −1.1863 × 102/3.7352 × 10−2(=) |

| F7 | 100 | −1.1853 × 102/3.9229 × 10−2 | −1.1852 × 102/3.9939 × 10−2(=) | −1.1852 × 102/2.9043 × 10−2(=) | −1.1851 × 102/2.4057 × 10−2(=) | −1.1852 × 102/3.1575 × 10−2(=) |

| F8 | 50 | 4.7430 × 102/4.0367 × 101 | 6.2601 × 101/4.0803 × 101(−) | 1.5347 × 102/3.5406 × 101(−) | −5.2526 × 101/4.7545 × 101(−) | −8.6248 × 101/4.4722 × 101(−) |

| F8 | 100 | 1.3291 × 103/5.3634 × 101 | 8.6902 × 102/6.8374 × 101(−) | 7.4663 × 102/5.4666 × 101(−) | 4.2095 × 102/7.9754 × 101(−) | 6.8752 × 102/1.1339 × 102(−) |

| F9 | 50 | 9.8795 × 102/5.0244 × 101 | 2.9357 × 102/1.0638 × 102(−) | 2.3344 × 102/4.2587 × 101(−) | 1.0728 × 102/7.2037 × 101(−) | −1.4294 × 101/4.0704 × 101(−) |

| F9 | 100 | 2.0480 × 103/5.4173 × 101 | 1.7680 × 103/1.3779 × 102(−) | 1.0183 × 103/7.6560 × 101(−) | 1.5369 × 103/1.6818 × 102(−) | 1.3951 × 103/1.7096 × 102(−) |

| F10 | 50 | −2.7589 × 102/1.8276 × 10−1 | −2.7594 × 102/2.2129 × 10−1(=) | −2.7613 × 102/3.6886 × 10−1(−) | −2.7589 × 102/2.0396 × 10−1(=) | −2.7618 × 102/1.7560 × 10−1(−) |

| F10 | 100 | −2.5137 × 102/1.6843 × 10−1 | −2.5114 × 102/1.7815 × 10−1(=) | −2.5120 × 102/1.6293 × 10−1(=) | −2.5107 × 102/1.8699 × 10−1(=) | −2.5129 × 102/2.6863 × 10−1(=) |

| F11 | 50 | 1.4403 × 103/8.7875 × 101 | 6.3811 × 102/1.3438 × 102(−) | 6.0781 × 102/6.7380 × 101(−) | 5.7609 × 102/8.6520 × 101(−) | 4.8557 × 102/9.5737 × 101(−) |

| F11 | 100 | 1.5965 × 103/5.8848 × 101 | 9.4489 × 102/9.5478 × 101(−) | 6.7137 × 102/3.6199 × 101(−) | 8.4819 × 102/7.7597 × 101(−) | 7.7777 × 102/9.1593 × 101(−) |

| F12 | 50 | 9.1000 × 102/2.2301 × 10−3 | 9.2622 × 102/7.2549 × 101(+) | 1.1402 × 103/3.9379 × 101(+) | 1.0975 × 103/5.4559 × 101(+) | 1.1225 × 103/5.2349 × 101(+) |

| F12 | 100 | 9.1000 × 102/3.2326 × 10−3 | 1.0103 × 103/5.1691 × 101(+) | 1.2434 × 103/4.7584 × 101(+) | 1.4063 × 103/3.4757 × 101(+) | 1.4119 × 103/3.9495 × 101(+) |

| F13 | 50 | 9.1000 × 102/4.4973 × 10−3 | 9.4249 × 102/1.0050 × 102(+) | 1.1483 × 103/4.3132 × 101(+) | 1.1096 × 103/6.5641 × 101(+) | 1.1379 × 103/5.7170 × 101(+) |

| F13 | 100 | 9.1000 × 102/4.4477 × 10−3 | 1.0144 × 103/6.9600 × 101(+) | 1.2516 × 103/4.2059 × 101(+) | 1.4071 × 103/4.0183 × 101(+) | 1.4066 × 103/3.1890 × 101(+) |

| F14 | 50 | 9.1000 × 102/3.8153 × 10−3 | 9.3946 × 102/9.2767 × 101(+) | 1.1362 × 103/3.0108 × 101(+) | 1.1092 × 103/5.7922 × 101(+) | 1.1373 × 103/5.5069 × 101(+) |

| F14 | 100 | 9.1000 × 102/2.9016 × 10−3 | 1.0065 × 103/4.9499 × 101(+) | 1.2338 × 103/3.9768 × 101(+) | 1.4038 × 103/3.4837 × 101(+) | 1.4129 × 103/2.9752 × 101(+) |

| +/=/− | | | 15/4/9 | 17/3/8 | 17/4/7 | 17/3/8 |

Table A5.

Statistical results (AVG ± STD) obtained by the MSAMT, GL-SADE, and LSADE on test functions with 50 and 100 dimensions.

| No. | D | MSAMT | GL-SADE | LSADE |

|---|---|---|---|---|

| F1 | 50 | 1.5139 × 10−3/2.9703 × 10−3 | 1.5209 × 103/1.3836 × 102(+) | 1.9977 × 102/6.6385 × 101(+) |

| F1 | 100 | 2.2974 × 10−3/4.1236 × 10−3 | 1.1735 × 104/7.3673 × 102(+) | 3.1721 × 103/4.0235 × 102(+) |

| F2 | 50 | 4.8989 × 101/2.0683 × 10−2 | 1.6358 × 103/1.3374 × 102(+) | 2.2193 × 102/5.4929 × 101(+) |

| F2 | 100 | 9.8980 × 101/2.6847 × 10−2 | 7.4367 × 103/6.0353 × 102(+) | 1.2336 × 103/2.1562 × 102(+) |

| F3 | 50 | 1.5949 × 10−2/1.7975 × 10−2 | 1.6100 × 101/1.0188 × 100(+) | 1.2094 × 101/1.8939 × 100(+) |

| F3 | 100 | 1.6202 × 10−2/1.6438 × 10−2 | 1.9667 × 101/2.3333 × 10−1(+) | 1.6278 × 101/9.3989 × 10−1(+) |