1. Introduction

The impetus to achieve triple bottom line outcomes by businesses, ecologically sustainable development goals by governments, and social, economic, and ecological sustainability in natural resource use has resulted in a wide range of management options being developed that produce different combinations of outcomes across the broad set of criteria being used for their assessment. In many cases, these management alternatives result in trade-offs in outcomes against different objectives. For example, achieving environmental goals may result in a loss of employment opportunities at a national level, while at the business level, social considerations may result in loss of profits. Assessing trade-offs in these outcomes, however, is complex, particularly when different management options produce substantially different outcomes against each criterion. This assessment is made more difficult when the outcomes of the different management options are measured in different and non-commensurable units (e.g., pollution in tons, profits in monetary values, employment impacts in terms of number of jobs lost, etc.).

Several approaches have been developed to rank different management options given their impact on the different criteria of interest, usually through the estimation of a composite indicator that allows the overall impacts to be compared. These approaches fall under the general area of multi-criteria decision analysis (MCDA), which aggregates outcomes over multiple objectives or criteria to provide a ranking of management alternatives given these potential trade-offs. Key to the application of MCDA is the use of preference weights associated with each objective, allowing a weighted composite value to be estimated by aggregation across all objectives. MCDA has been used to support management decisions in a wide range of areas, such as healthcare [1,2], agriculture [3,4], transportation [5,6], and resource and environmental management [7,8,9,10], to name a few.
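To make the idea of a weighted composite value concrete, the following is a minimal sketch that assumes a simple linear additive (weighted-sum) aggregation with min-max normalisation. This is only one of many MCDA aggregation rules. The options, criteria and weights are hypothetical. Normalising first is what allows outcomes measured in non-commensurable units (dollars, jobs, an index) to be combined.

```python
# Hypothetical illustration: linear additive (weighted-sum) aggregation, one of
# many MCDA aggregation rules; the weights and outcome values are made up.
def min_max_normalise(values):
    """Rescale a list of raw criterion outcomes to the interval [0, 1]."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [1.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


def weighted_composite(outcomes, weights):
    """Return one composite score per option.

    outcomes: list of rows, one per management option, one column per criterion
              (all criteria assumed to be 'more is better').
    weights:  preference weight for each criterion, assumed to sum to 1.
    """
    columns = list(zip(*outcomes))  # one tuple per criterion
    normalised = [min_max_normalise(list(col)) for col in columns]
    n_options = len(outcomes)
    return [
        sum(w * normalised[c][i] for c, w in enumerate(weights))
        for i in range(n_options)
    ]


# Three hypothetical options scored on profit ($), jobs and an ecological index.
options = [[120.0, 30, 0.4], [100.0, 45, 0.6], [80.0, 20, 0.9]]
scores = weighted_composite(options, weights=[0.5, 0.3, 0.2])
ranking = sorted(range(len(options)), key=lambda i: scores[i], reverse=True)
```

With these weights, option 1 only narrowly beats option 0 (0.63 versus 0.62). A modest change to the weights could therefore reverse the ranking, which is the kind of trade-off sensitivity that makes the choice of preference weights central to MCDA.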

Data envelopment analysis (DEA) has also been broadly employed in an MCDA context. DEA is commonly used to estimate productivity measures such as technical efficiency, technical change, and capacity utilisation of individual firms or businesses. These firms are generally considered individual decision making units (DMUs). These measures reflect the extent to which the set of outputs produced by a DMU is maximized given the set of inputs employed (an output-oriented approach), or the degree to which its input use is minimized to achieve a given level of output (an input-oriented approach). From a decision support perspective, outcomes under different management options (even for a given firm) can effectively be considered as units of production, where the outputs are the expected set of outcomes associated with each criterion or objective under each management option. Consistent with DEA terminology, we treat these as equivalent to DMUs, even though they represent the set of alternatives rather than decision makers per se.

The similarities between DEA and MCDA have long been established [11,12,13,14], and the use of DEA as an MCDA approach is gaining increasing interest in the decision making literature. It is often combined with criterion preference weights derived using other approaches, such as the analytic hierarchy process (AHP) [15], or used to estimate the criteria weights based on AHP-type pairwise comparisons [16]. de Oliveira et al. [15] identified over 220 papers published between 1994 and 2022 that combined DEA and MCDA approaches or where DEA was used in an MCDA context. Applications of DEA as an MCDA tool have been undertaken in a wide variety of industries, such as agriculture [12], energy [17], fisheries [18], and railways [19]. DEA has also been applied to compare the efforts of different countries in protecting biodiversity [20] and improving water quality [21], where outputs are expressed in terms of biodiversity or water quality indices, respectively.

The DEA efficiency measure is sometimes referred to as the ‘benefit of the doubt’ indicator (BODI) [22,23] in an MCDA context, as it implicitly recognizes that DMUs may have different objective preferences and that their choices of output mix may reflect these preferences. In the case of DMUs actually representing different management options (rather than decision makers per se), these options may have been designed with particular outcomes in mind, and hence their performance across multiple criteria may reflect this. A key advantage of the benefit of the doubt indicator is that objective preference weights do not need to be explicitly provided, as they are assumed to be implicit in the production mix of the DMU. However, as noted above, decision maker preferences can be directly included in the DEA model.
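The ‘benefit of the doubt’ idea can be written as a small linear programme. Each option chooses the non-negative output weights most favourable to itself, subject to no option scoring above 1 under those weights. The sketch below assumes every option uses one identical unit of input, so the score coincides with the output-oriented, constant-returns radial DEA score. It uses SciPy's `linprog`, and the four options are hypothetical.

```python
import numpy as np
from scipy.optimize import linprog


def benefit_of_the_doubt(Y, k):
    """Benefit-of-the-doubt score for option k (multiplier form).

    Chooses the non-negative output weights u most favourable to option k:
        max  u . y_k   subject to  u . y_j <= 1 for every option j,  u >= 0.
    With a single unit input for every option this equals the output-oriented,
    constant-returns radial DEA efficiency score.
    """
    Y = np.asarray(Y, dtype=float)
    n_outputs = Y.shape[1]
    res = linprog(
        c=-Y[k],                    # linprog minimises, so negate u . y_k
        A_ub=Y,                     # u . y_j <= 1 for every option j
        b_ub=np.ones(Y.shape[0]),
        bounds=[(0, None)] * n_outputs,
        method="highs",
    )
    if not res.success:
        raise RuntimeError(res.message)
    return -res.fun, res.x          # score and the implied output weights


# Four hypothetical options scored on two criteria (more is better).
Y = [[4, 1], [1, 4], [2, 2], [3, 3]]
scores = [benefit_of_the_doubt(Y, k)[0] for k in range(len(Y))]
```

The weights are chosen separately for each option, so options that excel on different criteria (here (4, 1), (1, 4) and (3, 3)) can all score 1. Option (2, 2) is dominated by (3, 3), and no choice of weights lifts it above 2/3.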

A feature of these studies is that all criterion indicators and their associated outcomes are compared at the same time. Where these indicators could be considered sub-indicators of a broader objective set (e.g., ecological, economic, or social objectives), different numbers of indicators in each implicitly apply different importance weightings to their corresponding broader objective, as objectives with more indicators have a greater overall influence on the BODI/efficiency score. For example, an objective represented by five indicators would have (implicitly) greater influence on the overall efficiency score than an objective with two or three indicators. While this may be intentional in some cases, in others it may reflect the availability of indicators rather than an implicit preference for some outcomes. For example, environmental indicators may be more readily accessible than social indicators (or vice versa). Without some compensating analysis, the environmental outcomes would dominate the determination of the ‘best’ management option in this case.
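A rough way to see the implicit weighting is to suppose, purely for illustration, that every indicator carried equal weight. The hypothetical sketch below makes two comparisons. First, it computes each objective's share of the total weight when all indicators are pooled. Second, it computes the share each objective receives when weight is first split equally across objectives and then across each objective's indicators. DEA itself chooses weights option by option, so this is an aid to intuition rather than a description of the DEA calculation.

```python
def pooled_objective_shares(indicators_per_objective):
    """Share of total weight per objective if every indicator is weighted equally."""
    total = sum(indicators_per_objective.values())
    return {obj: n / total for obj, n in indicators_per_objective.items()}


def hierarchical_indicator_weights(indicators_per_objective):
    """Per-indicator weight if each objective first gets an equal share,
    which is then split equally among that objective's indicators."""
    n_objectives = len(indicators_per_objective)
    return {obj: 1 / (n_objectives * n) for obj, n in indicators_per_objective.items()}


# Hypothetical example: five environmental indicators, two economic, two social.
counts = {"environmental": 5, "economic": 2, "social": 2}
pooled = pooled_objective_shares(counts)          # environmental gets 5/9 of the weight
per_indicator = hierarchical_indicator_weights(counts)
hierarchical = {obj: per_indicator[obj] * n for obj, n in counts.items()}  # 1/3 each
```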

Two key approaches to DEA are the radial [24] and additive (slacks-based) [25] approaches. Both approaches have been widely applied in efficiency analysis, and each has particular theoretical and practical advantages and disadvantages. Radial models are conceptually simple, assuming that outputs (or inputs) change proportionally given an output (input) orientation, subject to any underlying returns to scale conditions. Slacks-based measures remove the proportionality assumption and assume that outputs (or inputs) can change individually based on what combinations are observed in the data [26]. The previously cited applications of DEA used either the radial approach [12,17,18] or the slacks-based approach [19], without consideration of the impact of the approach used on the outcomes of the analysis.

In this study, we consider the implications of both approaches when using DEA for MCDA. We also consider the impact of imposing a hierarchical structure on the resultant ranking of options. Hierarchical structures are commonly imposed in MCDA [27], with one method, the analytic hierarchy process (AHP) [28], designed specifically to provide decision support through a hierarchical structure. AHP is generally used to estimate weights associated with each objective, with the weights estimated for each level of the hierarchy. Higher-level objectives include, for example, maximizing overall ‘social’ or ‘economic’ outcomes, while lower-level (but more specific) objectives include community benefits, equity, etc. We consider the impact on option ranking of ignoring the implicit hierarchical structure when there are different numbers of indicators associated with each higher-order objective.

The overall purpose of the study is to guide future applications of DEA to multi-criteria decision making by clarifying the implications of the approach adopted. Specifically, we consider how the type of DEA model used, and how it is applied, affects the estimated relative efficiency score of each management alternative being considered by decision makers. We do this using, first, a hypothetical data set to illustrate the differences in outcomes more generally and, second, an example data set from a previous MCDA application.

2. Materials and Methods

2.1. Data Envelopment Analysis

DEA is a non-parametric (linear programming) frontier-based method that is used to assess the relative efficiency of a set of decision making units (DMUs). DEA is a well-established method for productivity analysis [29,30,31] that has been broadly applied to a wide range of industries.

The efficiency of a DMU is determined by its level of outputs (e.g., level of production) and inputs (e.g., capital and labor) relative to other DMUs. The efficient frontier is defined by the data, given by the set of DMUs that produce the highest level of (different combinations of) outputs for a given set of inputs. A particular advantage of DEA is the ability to include multiple outputs and inputs in the analysis. A further advantage of DEA is that the underlying functional form of the production function (i.e., the relationship between outputs and inputs) does not need to be specified and, essentially, a separate ‘production function’ is identified for each DMU.

Outputs are represented by the extent to which objective or criterion outcomes are achieved under each management option. In this context, each management option is effectively treated as the equivalent of a DMU in a production context. In practice, these outputs are generally represented by indicators associated with the objective rather than the objective per se. There do not need to be any inputs in the traditional productivity analysis sense if each option requires the same level of resources to implement. If different management options involve different resource inputs, however, these can be captured in the analysis. For example, if the different management options involve different costs, these can be included as an input, and the resultant measure is then similar to what may be produced in a cost-effectiveness analysis. Otherwise, each DMU is assigned an equal ‘input’ with a value of 1 (one).
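The arrangement described above can be sketched as follows. Each management option becomes a row of outputs. The single input is either a common unit input or, where available, the option's implementation cost. The function name and list-of-rows layout are illustrative choices for this sketch, not part of any particular DEA package.

```python
def build_dea_data(outcomes, costs=None):
    """Arrange management options as DEA 'DMUs' (hypothetical helper).

    outcomes: one row per management option, one column per indicator (outputs).
    costs:    optional implementation cost per option, used as the single input;
              if omitted, every option receives the same input value of 1.
    Returns (inputs, outputs) as lists of rows.
    """
    outputs = [[float(v) for v in row] for row in outcomes]
    if costs is None:
        inputs = [[1.0] for _ in outputs]
    else:
        if len(costs) != len(outputs):
            raise ValueError("need exactly one cost per management option")
        inputs = [[float(c)] for c in costs]
    return inputs, outputs


outcomes = [[3, 5, 2], [4, 2, 6]]                 # two hypothetical options
X_unit, Y = build_dea_data(outcomes)              # equal unit input
X_cost, _ = build_dea_data(outcomes, costs=[120, 95])  # cost as the input
```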

We consider two approaches to the estimation of the management option ‘efficiency’: an output-oriented radial DEA model [

24] and an output-oriented additive model (often referred to as a slacks-based model) [

25,

32,

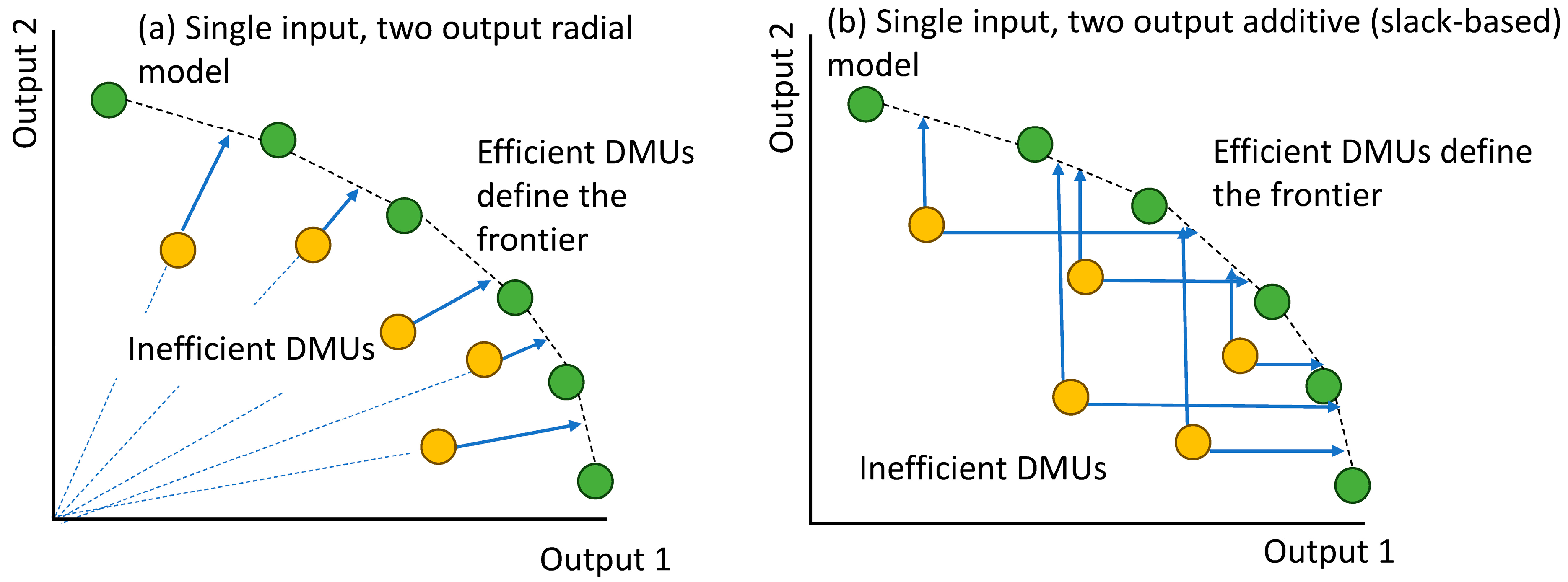

33]. A simplified comparison of the two approaches is given in

Figure 1, where the radial model results in a radial expansion to the frontier, while the additive model estimates the horizontal or vertical distance to the frontier. The radially (or additive) expanded inefficient DMU at the frontier may fall between two (or more in the case of multiple outputs) other DMUs (as seen in

Figure 1), the position determined by the weighted average of the outputs of the efficient DMUs.

The radial DEA model has been applied in a wide range of contexts, including to assess different management options given multiple objectives [18]. For the purposes of using DEA as an MCDA tool, we employ an output-oriented model, as we seek to identify the management options that maximize the outcomes with respect to the multiple objectives or criteria. For the DMU being evaluated, $k$, we can define the output-oriented radial model as:

$$
\begin{aligned}
\max_{\phi_k,\, z}\quad & \phi_k \\
\text{subject to}\quad & \sum_{j} z_j\, y_{j,m} \ge \phi_k\, y_{k,m} && \forall m \\
& \sum_{j} z_j\, x_{j,n} \le x_{k,n} && \forall n \\
& z_j \ge 0 && \forall j
\end{aligned}
$$

where $\phi_k$ is a scalar showing by how much the outcomes of DMU $k$ can be increased, $y_{j,m}$ is the outcome against objective $m$ achieved by DMU $j$, $x_{j,n}$ is the amount of input $n$ used by DMU $j$ (set at 1 for all DMUs), and $z_j$ are weighting factors. In a productivity context, we can add an additional constraint, $\sum_j z_j = 1$, to impose variable returns to scale in the model; otherwise, the model (implicitly) imposes constant returns to scale. With a single (constant) input, there is no difference between imposing variable returns or constant returns to scale [34]. With variable inputs (e.g., costs of each option), imposing variable returns to scale as well as constant returns to scale may provide additional information to assist decision makers via the derived measure of scale efficiency. However, assuming decision makers aim to maximize the outcomes given the available resources, constant returns to scale may be a more appropriate assumption.

From this, the technical efficiency (TE) associated with the management option can be given by:

$$TE_k = \frac{1}{\phi_k^{*}}$$

where $\phi_k^{*}$ is the optimal value of $\phi_k$. The measure of TE ranges from 0 to 1, with 1 being full efficiency (i.e., on the frontier). Values less than 1 indicate that the DMU is operating at less than its full potential given the set of inputs. In the context of our analysis, the most efficient management option is that which best achieves the overall set of objectives.
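The output-oriented radial model and the technical efficiency measure can be sketched with SciPy's `linprog` as below. This is an illustrative implementation under stated assumptions, not the Benchmarking package used in the study. The decision vector stacks φ_k with the z_j, and the optional `vrs` flag adds the constraint Σ_j z_j = 1. The four two-criterion options with a common unit input are hypothetical.

```python
import numpy as np
from scipy.optimize import linprog


def radial_output_te(X, Y, k, vrs=False):
    """Output-oriented radial DEA for DMU k (envelopment form).

    max phi  s.t.  sum_j z_j y_jm >= phi * y_km  (all outputs m)
                   sum_j z_j x_jn <= x_kn        (all inputs n)
                   z_j >= 0  [and sum_j z_j = 1 if vrs]
    Returns (TE, phi, z) with TE = 1 / phi.
    """
    X = np.asarray(X, dtype=float)
    Y = np.asarray(Y, dtype=float)
    n_dmu = Y.shape[0]
    # Decision vector: [phi, z_1, ..., z_J]; linprog minimises, so use -phi.
    c = np.zeros(1 + n_dmu)
    c[0] = -1.0
    # Outputs: phi * y_km - sum_j z_j y_jm <= 0
    A_out = np.hstack([Y[k][:, None], -Y.T])
    # Inputs: sum_j z_j x_jn <= x_kn
    A_in = np.hstack([np.zeros((X.shape[1], 1)), X.T])
    A_ub = np.vstack([A_out, A_in])
    b_ub = np.concatenate([np.zeros(Y.shape[1]), X[k]])
    A_eq = b_eq = None
    if vrs:
        A_eq = np.concatenate([[0.0], np.ones(n_dmu)])[None, :]
        b_eq = [1.0]
    bounds = [(None, None)] + [(0, None)] * n_dmu
    res = linprog(c, A_ub=A_ub, b_ub=b_ub, A_eq=A_eq, b_eq=b_eq,
                  bounds=bounds, method="highs")
    if not res.success:
        raise RuntimeError(res.message)
    phi = res.x[0]
    return 1.0 / phi, phi, res.x[1:]


Y = [[4, 1], [1, 4], [2, 2], [3, 3]]   # hypothetical outcomes
X = [[1.0] for _ in Y]                 # same unit input for every option
te_crs, phi, z = radial_output_te(X, Y, 2)
te_vrs, _, _ = radial_output_te(X, Y, 2, vrs=True)
```

Option (2, 2) can expand both outcomes by φ = 1.5 to reach (3, 3), giving TE = 2/3. The result is the same whether or not Σ_j z_j = 1 is imposed, consistent with the statement that the two returns-to-scale assumptions coincide when every DMU has the same single input.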

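In this study, we also consider an additive (also known as slacks-based) form of the DEA model, following Tone [25], given by:

$$
\begin{aligned}
\min_{z,\, s^-,\, s^+}\quad & \rho_k = \frac{1 - \frac{1}{N}\sum_{n} s_n^- / x_{k,n}}{1 + \frac{1}{M}\sum_{m} s_m^+ / y_{k,m}} \\
\text{subject to}\quad & \sum_{j} z_j\, y_{j,m} - s_m^+ = y_{k,m} && \forall m \\
& \sum_{j} z_j\, x_{j,n} + s_n^- = x_{k,n} && \forall n \\
& z_j,\ s_n^-,\ s_m^+ \ge 0
\end{aligned}
$$

where the $y$ and $x$ variables are as defined previously, $M$ and $N$ are the numbers of outputs and inputs, and $s_n^-$ and $s_m^+$ represent the input and output slack variables required to reach the frontier. As with the radial model, adding an additional constraint, $\sum_j z_j = 1$, imposes variable returns to scale in the model, while excluding this constraint results in constant returns to scale being (implicitly) imposed.

As all DMUs have the same input level, $s_n^- = 0$, and the objective function can be re-expressed as [35]:

$$\max_{z,\, s^+}\ \frac{1}{M}\sum_{m} \frac{s_m^+}{y_{k,m}}$$

In this case, technical efficiency is given by $TE_k^{SBM} = \rho_k^{*} = 1 \Big/ \left(1 + \frac{1}{M}\sum_{m} s_m^{+*} / y_{k,m}\right)$. A further feature of the measure is that $TE_k^{SBM} \le TE_k^{R}$, where $TE_k^{R}$ is the radial measure. That is, the slacks-based measure of efficiency is always less than or equal to the radial measure.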
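The re-expressed slacks-based problem can be sketched in the same way with SciPy's `linprog`. The sketch rests on several assumptions. Every option has the same unit input with zero input slack, so Σ_j z_j = 1. Outputs of the evaluated option must be strictly positive. The data are hypothetical. It is not the deaR implementation used in the study.

```python
import numpy as np
from scipy.optimize import linprog


def sbm_output_te_unit_input(Y, k):
    """Slacks-based efficiency of option k when every option has input 1.

    Sketch of the re-expressed problem (input slacks fixed at zero):
        max (1/M) * sum_m s_m / y_km
        s.t. sum_j z_j y_jm - s_m = y_km  (all outputs m)
             sum_j z_j = 1                (unit input, no input slack)
             z_j, s_m >= 0
    TE = 1 / (1 + optimal objective). Requires strictly positive y_km.
    """
    Y = np.asarray(Y, dtype=float)
    n_dmu, n_out = Y.shape
    if np.any(Y[k] <= 0):
        raise ValueError("outputs of the evaluated option must be positive")
    # Decision vector: [z_1..z_J, s_1..s_M]; negate because linprog minimises.
    c = np.concatenate([np.zeros(n_dmu), -1.0 / (n_out * Y[k])])
    A_eq = np.vstack([
        np.hstack([Y.T, -np.eye(n_out)]),                   # output balance
        np.concatenate([np.ones(n_dmu), np.zeros(n_out)]),  # sum of z = 1
    ])
    b_eq = np.concatenate([Y[k], [1.0]])
    res = linprog(c, A_eq=A_eq, b_eq=b_eq,
                  bounds=[(0, None)] * (n_dmu + n_out), method="highs")
    if not res.success:
        raise RuntimeError(res.message)
    slacks = res.x[n_dmu:]
    return 1.0 / (1.0 - res.fun), slacks


Y = [[4, 1], [1, 4], [2, 2], [3, 3], [3, 1]]   # hypothetical outcomes
te, slacks = sbm_output_te_unit_input(Y, 4)    # evaluate option (3, 1)
```

For the hypothetical option (3, 1), the optimal projection is option (3, 3). This leaves output slacks of (0, 2) and TE^SBM = 1/(1 + ½(0/3 + 2/1)) = 0.5. On the same data, the output-oriented radial score for this option works out at 7/9 ≈ 0.78, from a radial projection onto the segment between (4, 1) and (3, 3). This is consistent with TE^SBM ≤ TE^R.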

The radial models were implemented using the Benchmarking package [36] in R [37], and the slacks-based model using the deaR package [38].

2.2. Hierarchical Structure of Decision Making

MCDA approaches originate from different theoretical traditions, but most involve some form of aggregation model to allow preference estimates to be combined and compared across criteria [39]. This is generally achieved through the construction of an objective or criterion hierarchy, with specific measurable objectives/criteria [27].

An example of such a hierarchy is given in Figure 2, in which three objectives have a different number of indicators (or measurable sub-objectives) associated with each. In this example, ignoring the hierarchical structure and comparing all indicators at the same time will implicitly give more weight to Objective 3 (as it has the most indicators) and least weight to Objective 2 (as it has the fewest associated indicators).

The hierarchical structure is taken into account in the analysis by first estimating the efficiency score for each objective separately (based on the indicator values that make up that objective), and then estimating the overall score using the individual objective scores as the outputs. This two-stage process compensates for different objectives having different numbers of indicators when preferences relating to each indicator are unknown.
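The two-stage calculation can be sketched as follows. For brevity, this hypothetical sketch scores each stage with the radial benefit-of-the-doubt linear programme under a common unit input, solved with SciPy's `linprog`. A slacks-based scorer could be substituted at either stage. The column groupings stand in for the objectives of a hierarchy such as that in Figure 2.

```python
import numpy as np
from scipy.optimize import linprog


def bod_scores(Y):
    """Radial (benefit-of-the-doubt) efficiency of every row of Y, assuming
    one unit input per option: max u.y_k s.t. u.y_j <= 1 for all j, u >= 0."""
    Y = np.asarray(Y, dtype=float)
    scores = []
    for k in range(Y.shape[0]):
        res = linprog(-Y[k], A_ub=Y, b_ub=np.ones(Y.shape[0]),
                      bounds=[(0, None)] * Y.shape[1], method="highs")
        if not res.success:
            raise RuntimeError(res.message)
        scores.append(-res.fun)
    return np.array(scores)


def hierarchical_scores(Y, groups):
    """Two-stage scores: stage 1 scores each objective from its own indicator
    columns; stage 2 treats those objective scores as the outputs."""
    Y = np.asarray(Y, dtype=float)
    stage1 = np.column_stack([bod_scores(Y[:, cols]) for cols in groups])
    return bod_scores(stage1), stage1


Y = [[5, 5, 1], [1, 1, 5], [3, 3, 3], [2, 4, 2]]   # hypothetical options
groups = [[0, 1], [2]]       # objective A: columns 0-1; objective B: column 2
overall, stage1 = hierarchical_scores(Y, groups)
flat = bod_scores(Y)         # all indicators pooled, for comparison
```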

2.3. Data and Analyses

The analysis utilized two different data sets to test the implications of model choice given the structure of the data used and the importance of hierarchical structures. First, a hypothetical data set was generated to ensure a wide spread of criterion outcomes. The ‘efficiency’ of each set of outcomes was compared given different hierarchical structures and model type (i.e., radial or slacks-based) to assess how these impact the overall scores.

Second, a waste management data set from a previous MCDA application was re-examined using the two different DEA methods, with the DEA outcomes compared with the original MCDA analysis.

The two data sets differ in terms of the relationship between the variables. The variables (representing outcome indicators) in the hypothetical data set are relatively uncorrelated. In contrast, the outcome variables in the waste management data set are more highly correlated.

A hierarchical structure was also applied to both data sets to test the effects of introducing such a structure on the overall management alternative efficiency score. In the case of the hypothetical data set, this structure was varied to test the effects of different numbers of indicators/sub-objectives/criteria on the estimated efficiency score and overall estimate of performance. For the waste management data set, the indicators naturally grouped into environmental, technical and economic higher-order groups.

2.3.1. Hypothetical Data and Analysis

One hundred DMUs were randomly generated, each producing nine outputs (i.e., indicators) with values ranging from 1 to 10. The R code used to generate and analyze the data is presented in the Supporting Information. The range of values of each variable and the correlation between these values are illustrated in Figure 3. Correlation between the variables was low, generally within ±0.2.
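The study's own data-generation code is in R. As a rough Python analogue, the sketch below draws 100 hypothetical options, each with nine independent outputs from a continuous uniform distribution on [1, 10]. The distribution is an assumption, since only the range is stated. The sketch then computes all 36 pairwise Pearson correlations. The seed is arbitrary, so the draws will not reproduce the study's data.

```python
import random
import statistics


def generate_hypothetical_data(n_dmu=100, n_outputs=9, low=1.0, high=10.0, seed=1):
    """Independent uniform draws: one row per hypothetical option (DMU)."""
    rng = random.Random(seed)
    return [[rng.uniform(low, high) for _ in range(n_outputs)] for _ in range(n_dmu)]


def pearson(a, b):
    """Pearson correlation coefficient of two equal-length sequences."""
    ma, mb = statistics.fmean(a), statistics.fmean(b)
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    var_a = sum((x - ma) ** 2 for x in a)
    var_b = sum((y - mb) ** 2 for y in b)
    return cov / (var_a * var_b) ** 0.5


data = generate_hypothetical_data()
cols = list(zip(*data))   # one tuple per output (indicator)
pairwise = [pearson(cols[i], cols[j]) for i in range(9) for j in range(i + 1, 9)]
```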

The data were analyzed first by comparing all indicators together using both DEA methods (i.e., radial and slacks-based). The set of efficient DMUs was then identified, representing the management options that produce the greatest overall benefits. A set of different hierarchical structures was then developed. Each structure was assumed to include three different broader ‘objectives’, each with varying numbers of associated indicators. An outline of the experimental design is given in Table 1, where the number in brackets represents the number of indicators associated with each of the three objectives. The same experimental design was applied to both DEA model types.

For the hierarchical analysis, a two-step process was implemented. First, the indicators within each broader (higher-level) objective were compared, giving an efficiency score for each objective. For example, in the ‘equal’ scenario (Table 1), the efficiency score for the first higher-level objective group was derived using only variables one to three; the second using variables four to six; and the third using variables seven to nine. Next, these higher-level objective group efficiency scores were used as the outcome associated with each broader objective, and the three objectives were compared in a second round of analysis. That is, the efficiency score of each higher-level objective group was used as an output, which was then compared with the efficiency scores of the other higher-order objective groups. The number of indicators associated with each of the broader objectives was varied to see the effects of this on the resultant efficiency score of each DMU.
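The allocation of indicator columns to higher-level objective groups can be expressed as a small helper that turns the bracketed counts of each scenario into consecutive column indices. Only the ‘equal’ (3, 3, 3) scenario is spelled out in the text, so the unequal split below is a hypothetical example rather than one of the Table 1 scenarios.

```python
def split_indicators(counts):
    """Turn per-objective indicator counts into lists of 0-based column indices."""
    groups, start = [], 0
    for n in counts:
        groups.append(list(range(start, start + n)))
        start += n
    return groups


equal = split_indicators((3, 3, 3))     # variables 1-3, 4-6 and 7-9
unequal = split_indicators((5, 2, 2))   # hypothetical unequal scenario
```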

2.3.2. Waste Management Data

The analysis was also applied to data from a previous MCDA analysis involving a range of different options for the development of a waste management system. For each management alternative, a measure of the cost of its implementation and the outcomes in terms of environmental impacts and benefits were estimated. The data were originally produced by Hokkanen and Salminen [40], who applied ELECTRE III [41] to assess the different options. They were more recently used by Sarkis [42], who compared DEA to a range of other MCDA approaches.

The data include several undesirable outputs. There are a number of ways of dealing with undesirable outputs, including ignoring them, including them as inputs, or transforming the variables [43]. Sarkis [42] included these undesirable outputs as inputs, along with the costs of their implementation. In our study, we have applied the approach developed by Seiford and Zhu [44]. The undesirable outputs ($y$) are transformed as $y' = -y + D$, where $D$ is a constant value that makes the resultant transformed output ($y'$) positive for all $y$. In this case, $D$ was set to the maximum value of each undesirable variable plus one and added to the negated values. The ‘plus one’ was added such that the minimum value of the transformed variable was 1 (rather than zero). The higher the value of the transformed variable, the better the option compares to the worst-performing option. This approach was adopted as the resultant data can be readily applied in both radial and slacks-based DEA.
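The transformation can be illustrated with a hypothetical undesirable output (for example, emissions in arbitrary units). Setting D to the maximum value plus one makes the worst-performing option score exactly 1, and larger transformed values indicate better performance.

```python
def transform_undesirable(values):
    """Seiford and Zhu style translation y' = -y + D, with D = max(y) + 1."""
    D = max(values) + 1
    return [D - y for y in values]


emissions = [120, 80, 95]               # hypothetical, lower is better
transformed = transform_undesirable(emissions)
```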

The transformed data are presented in Table 2 for the 22 different management alternatives. A hierarchy containing three higher-level criteria (Environmental, Technical, and Economic) was assumed and the outputs allocated accordingly. All Environmental outputs were undesirable, with the values in Table 2 being transformed as indicated above. The cost of each management alternative was used as the input.

This is a relatively small data set for use in DEA. To ensure appropriate degrees of freedom in the analysis, the number of comparisons generally should be greater than $\max[3(m + n),\, m \times n]$, where $m$ and $n$ are the number of outputs and inputs, respectively [45]. This suggests that 24 or more comparisons would produce more reliable results. With fewer alternatives than this, the share of fully efficient options (i.e., TE = 1) may be spuriously inflated.

Unlike the hypothetical data, the waste treatment data are more highly correlated (Table 3), both positively and negatively. This indicates a greater degree of proportionality in outputs as well as explicit trade-offs (e.g., greenhouse gases compared with acidic gases). This may affect the efficiency scores derived using the different methods.

As with the hypothetical data, the alternatives were examined using both the radial and slacks-based models, and with and without a hierarchical structure imposed.

4. Discussion

The use of hierarchical structures in MCDA is relatively common. Different management objectives often have a different number of sub-objectives, or a different number of indicators relating to each objective. Introducing a hierarchical structure in the assessment of management alternatives using DEA provides a number of benefits. Foremost, it allows each management alternative to be assessed against each objective, with these assessments then combined into an overall benefit of the doubt measure over all objectives and indicators. While not considered in this study, the efficiency score associated with each group of indicators (i.e., potentially representing a separate management objective) could be considered alongside the overall performance measure of the management option.

The use of an intermediate step also has benefits in terms of saving degrees of freedom when data are limited. The number of indicators that can be compared in an analysis is limited by the number of available observations. The discrimination power and accuracy of DEA with respect to the ability to estimate the performance of DMUs decrease as the number of DMUs decreases or the number of inputs and outputs increases [46]. There is no definitive estimate of the number of observations required to ensure robust estimation of the efficiency scores. However, a commonly applied rule of thumb is that the number of observations (DMUs) should be greater than $\max[3(m + n),\, m \times n]$, where $m$ and $n$ are the number of outputs and inputs, respectively [45]. In our hypothetical example, with nine outputs and one input, 30 observations would be required to meet this. With 100 generated DMUs, this was not a concern. For the waste management example, however, degrees of freedom issues may explain the contrast between the large number of efficient alternatives when all the data were used and the substantially smaller number when the problem was broken up into essentially four separate estimation stages (one for each objective and one for the combined objective-level analysis). For example, when all data were used, the problem required 24 observations (i.e., 3 × (7 + 1)), although only 22 alternatives were available. In contrast, estimating the efficiency score associated with just the environmental objectives required only 15 observations (i.e., 3 × (4 + 1)).
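The rule of thumb is easy to check for each estimation stage. The sketch below reproduces the counts quoted in this paragraph.

```python
def min_observations(n_outputs, n_inputs):
    """Rule-of-thumb number of DMUs: max[3(m + n), m * n] for m outputs, n inputs."""
    return max(3 * (n_outputs + n_inputs), n_outputs * n_inputs)


hypothetical_all = min_observations(9, 1)   # nine outputs, one input
waste_all = min_observations(7, 1)          # all waste management outputs
waste_env = min_observations(4, 1)          # environmental objective only
```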

If decision makers do have differing preferences for the different objectives, then weights can also be applied at each stage to provide a weighted overall efficiency score. While not explicitly covered in this study, an example of how importance weights can be applied in DEA is given by Pascoe et al. [18]. If weights were available for the waste management example, the correlation between the MCDA and DEA model rankings would be expected to be greater.

In the hypothetical analysis, each observation was treated as a separate management option for consideration. In many MCDA studies, multiple sets of observations are associated with each management option, reflecting uncertainty in management outcomes. These may be derived through stochastic simulation using models or through (differing) opinions from a range of experts. The DEA framework offers particular advantages in this case, as each potential outcome set is compared with all other sets. The resultant efficiency scores can be considered as a distribution associated with each management option, allowing the effects of uncertainty to be explicitly captured in the analysis.
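If each management option is represented by several outcome sets, the scores can be summarised per option once every set has been scored. The hypothetical sketch below assumes the scores are already available as (option, score) pairs. It reports a few simple summaries, including the share of an option's outcome sets that lie on the frontier. The choice of summaries is illustrative only.

```python
from collections import defaultdict
import statistics


def summarise_by_option(records):
    """records: iterable of (option_label, efficiency_score) pairs, e.g. one pair
    per simulated or expert-elicited outcome set."""
    by_option = defaultdict(list)
    for option, score in records:
        by_option[option].append(score)
    return {
        option: {
            "n": len(s),
            "mean": statistics.fmean(s),
            "min": min(s),
            "max": max(s),
            # share of this option's outcome sets with TE = 1 (on the frontier)
            "share_efficient": sum(abs(v - 1.0) < 1e-9 for v in s) / len(s),
        }
        for option, s in by_option.items()
    }


records = [("A", 1.0), ("A", 0.9), ("B", 0.7), ("B", 1.0), ("B", 0.8)]
summary = summarise_by_option(records)
```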

We find that the results (i.e., efficiency scores and relative rankings) are also sensitive to model choice. From the results using both data sets, the slacks-based model approach provided greater discrimination between management options (DMUs), with a smaller set of options identified as efficient. Avkiran et al. [35] note that a key challenge with radial models is that an identified efficient DMU may still exhibit lower levels of output in some indicators than others that are also identified as efficient. Slacks-based models directly assess these indicator-specific differences. However, the loss of the proportionality assumption may limit the ability of the options to achieve efficiency if such a proportionality constraint exists [35]. As we are interested not in improving the efficiency of inefficient management alternatives but in identifying which options are the most efficient in a multi-criteria environment, this is not necessarily a problem.

The choice of which model to use to assess the management alternatives may depend on the degree of independence between management outcomes. If management outcomes are perfectly independent, such that trade-offs between them are possible, then the slacks-based model may be more appropriate. Conversely, if the outcomes are not totally independent, such that increasing one will require some increase in another (and vice versa), then the radial model may be more appropriate given the associated proportionality assumptions. In the hypothetical data set, each outcome was totally independent, and hence the slacks-based model was able to provide greater discrimination between the hypothetical alternatives that produced them. For the waste management example, several outputs were correlated (e.g., toxins, acidic gases and nitrogen released in the water), suggesting the proportionality approach underlying the radial model may have been appropriate. For this example, both models produced similar rankings and numbers of ‘efficient’ options, with the use of the hierarchical structure more important in distinguishing the most efficient alternatives.

The study assumes that the relative preferences for each of the management outcomes are unknown, and hence the models provide a ‘benefit of the doubt’ measure of the efficiency of the different alternatives. Under this scenario, the outcomes are sensitive to the form of model used and to how a hierarchy of criteria (if any) is employed. The addition of preference weights is possible in DEA, as noted previously, and this may reduce these differences if the importance of each criterion to the decision maker were more explicit. This is an area for future consideration.

5. Conclusions

The results of the analysis have several implications for the use of DEA for MCDA. First, as a ‘benefit of the doubt’ measure, DEA will not necessarily identify a single ‘best’ management option, but may instead identify a subset of efficient options. These options will have different strengths with regard to different objectives. With the addition of objective weightings, this subset may be narrowed further and potentially a single best option identified. Without a set of objective weights, managers will need to assess trade-offs within this (reduced) group subjectively.

Second, in cases where different objectives have a different number of indicators (or quantifiable and measurable sub-objectives), ignoring the implicit (or explicit) hierarchical structure can result in less efficient management options appearing to be efficient. This could result in less-than-optimal decisions being made, as the pool of potentially optimal management options is larger than it should be. The triple bottom line framework (i.e., environmental, social and economic) provides a useful conceptual classification system for many MCDA variables, even if an explicit hierarchy has not been pre-determined.

The third key result is that the efficiency scores, and hence ranking of the management options, are sensitive to the DEA approach, with the radial approach potentially overestimating the proportion of efficient options when the outcome data are uncorrelated. In contrast, the slacks-based approach can provide greater discrimination between management options, minimizing the set of efficient options that need to be subsequently considered.