Abstract

This paper proposes a new group-sparsity-inducing regularizer to approximate the $\ell_{2,0}$ pseudo-norm. The regularizer is nonconvex and can be seen as a linearly involved generalized Moreau enhancement of the $\ell_{2,1}$-norm. Moreover, the overall convexity of the corresponding group-sparsity-regularized least squares problem can be achieved. The model can handle general group configurations such as weighted group sparse problems, and it can be solved through a proximal splitting algorithm. The bias of convex regularizers may lead to incorrect classification results, especially for unbalanced training sets. With this in mind, we apply the proposed model to the (weighted) group sparse classification problem. The proposed classifier can use the label, similarity and locality information of samples, and it suppresses the bias of convex regularizer-based classifiers. Experimental results demonstrate that the proposed classifier improves the performance of convex regularizer-based methods, especially when the training data set is unbalanced. This paper enhances the potential applicability and effectiveness of using nonconvex regularizers in the frame of convex optimization.

1. Introduction

In recent decades, sparse reconstruction has become an active topic in many areas, such as signal processing, statistics, and machine learning [1]. By reconstructing a sparse solution from a linear measurement, we can express high-dimensional data as a vector with only a small number of nonzero entries. In practical applications, the data of interest can often be assumed to have a special structure. Examples include microarray analysis of gene expression [2,3], hyperspectral image unmixing [4,5,6], force identification in industrial applications [7], and classification problems [8,9,10,11,12,13]. In such cases the solution of interest often possesses a group-sparsity structure: its coefficients have a natural grouping, and nonzero entries occur only in a few groups.

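This paper focuses on the estimation of group sparse solutions, which is related to the Group LASSO (least absolute shrinkage and selection operator) [14]. Suppose $x = [x_1^\top, \ldots, x_g^\top]^\top \in \mathbb{R}^n$ is a group sparse signal, where $x_i \in \mathbb{R}^{n_i}$ $(i = 1, \ldots, g)$, $\sum_{i=1}^{g} n_i = n$, and $g$ is the number of groups. The $\ell_0$ pseudo-norm is used to evaluate sparsity. In the same way, the group sparsity of $x$ can be evaluated with the $\ell_{2,0}$ pseudo-norm, i.e., $\|x\|_{2,0} := \|[\|x_1\|_2, \ldots, \|x_g\|_2]^\top\|_0$. Here $\|\cdot\|_2$ is the Euclidean norm, and $\|\cdot\|_0$ is the $\ell_0$ pseudo-norm, which counts the number of nonzero entries of a vector in $\mathbb{R}^g$.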
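The following minimal NumPy sketch makes these definitions concrete. It evaluates $\|x\|_{2,0}$ and the (optionally weighted) $\ell_{2,1}$-norm for a vector split into consecutive groups. The helper names (`split_groups`, `l20`, `l21`) are illustrative, and so is the assumption that groups are contiguous blocks given by a list of sizes. Neither is part of the paper's method.

```python
import numpy as np

def split_groups(x, sizes):
    """Split a flat vector into consecutive blocks x_1, ..., x_g of the given sizes."""
    x = np.asarray(x, dtype=float)
    assert sum(sizes) == x.size, "group sizes must add up to n"
    return np.split(x, np.cumsum(sizes)[:-1])

def l20(x, sizes, tol=0.0):
    """l_{2,0} pseudo-norm: number of groups whose Euclidean norm exceeds tol."""
    return int(sum(np.linalg.norm(xi) > tol for xi in split_groups(x, sizes)))

def l21(x, sizes, w=None):
    """Weighted l_{2,1}-norm sum_i w_i ||x_i||_2 (all w_i = 1 if w is None)."""
    groups = split_groups(x, sizes)
    w = np.ones(len(groups)) if w is None else np.asarray(w, dtype=float)
    return float(sum(wi * np.linalg.norm(xi) for wi, xi in zip(w, groups)))

# Three groups of sizes 2, 3 and 1; only the first and last groups are active.
x = [3.0, 4.0, 0.0, 0.0, 0.0, 1.0]
sizes = [2, 3, 1]
print(l20(x, sizes))                     # 2 active groups
print(l21(x, sizes))                     # 5 + 0 + 1 = 6.0
print(l21(x, sizes, w=np.sqrt(sizes)))   # Group LASSO-style weights w_i = sqrt(n_i)
```

Counting active groups is discontinuous and combinatorial. The $\ell_{2,1}$ value, by contrast, grows with the magnitudes inside each active group, which is the source of the bias discussed below.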

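The group sparse regularized least squares problem can be modeled as

$$\min_{x \in \mathbb{R}^n} \frac{1}{2}\|y - Ax\|_2^2 + \lambda \|x\|_{2,0}, \tag{1}$$

where $y \in \mathbb{R}^m$ and $A \in \mathbb{R}^{m \times n}$ are known, and $\lambda > 0$ is the regularization parameter. However, the use of the $\ell_{2,0}$ pseudo-norm makes (1) NP-hard [15]. Most applied studies replace the nonconvex regularizer $\|\cdot\|_{2,0}$ with its tightest convex envelope [16] (or its weighted variants). This leads to the following regularized least squares problem, known as the Group LASSO [14]:

$$\min_{x \in \mathbb{R}^n} \frac{1}{2}\|y - Ax\|_2^2 + \lambda \|x\|_{2,1}^{w}, \tag{2}$$

where the regularization term is

$$\|x\|_{2,1}^{w} := \sum_{i=1}^{g} w_i \|x_i\|_2 \tag{3}$$

and its weights $w_i > 0$ $(i = 1, \ldots, g)$ are used to adjust for group sizes, with $w_i = \sqrt{n_i}$ in [14,18]. This is the weighted $\ell_{2,1}$-norm of $x$, i.e., a separable weighted version [17] of the $\ell_{2,1}$-norm $\|x\|_{2,1} := \sum_{i=1}^{g} \|x_i\|_2$. In Appendix A, we give a simple explanation of the bias that group size causes in the $\ell_{2,1}$-norm when it is applied to group sparse classification (GSC).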

Although the convex optimization problem (2) has been used as a standard model for group sparse estimation, the convex regularizer does not necessarily promote group sparsity sufficiently. This is mainly because the $\ell_{2,1}$-norm is only an approximation of the $\ell_{2,0}$ pseudo-norm under the severe restriction of convexity. To promote group sparsity more effectively than convex regularizers, several nonconvex regularizers have been used for group sparse estimation problems:

- group SCAD (smoothly clipped absolute deviation) [3];
- group MCP (minimax concave penalty) [18,19];
- $\ell_{p,q}$ regularization ($p \geq 1$, $0 < q < 1$) [20];
- iterative weighted group minimization [21];
- $\ell_0(\ell_2)$ [22].

However, they lose the overall convexity of the optimization problems, and consequently their algorithms have no guarantee of convergence to global minimizers of the overall cost functions. (In [23], a nonconvex regularizer that preserves the overall convexity was proposed. However, the fidelity term of its optimization model is $\frac{1}{2}\|y - x\|_2^2$, i.e., it is limited to the case $A = I$, where $I$ is the identity matrix, so it cannot be applied to (1) for general $A$.)

In this paper, we propose a generalized weighted group sparse estimation model based on the linearly involved generalized-Moreau-enhanced (LiGME) approach [24]. It uses a nonconvex regularizer while maintaining the overall convexity of the optimization problem. Our contributions can be summarized as follows:

- We show in Proposition 2 that the generalized Moreau enhancement (GME) of $\|\cdot\|_{2,1}^{w}$, i.e., $(\|\cdot\|_{2,1}^{w})_B$ (see (11)), can bridge the gap between $\|\cdot\|_{2,1}^{w}$ and $\|\cdot\|_{2,0}$. For the non-separable weighted $\ell_{2,1}$-norm $\|W\cdot\|_{2,1}$, we show that its GME can be expressed as the LiGME of $\|\cdot\|_{2,1}$ when the weight matrix $W$ has full row-rank.

- We present a convex regularized least squares model with a nonconvex group-sparsity-promoting regularizer based on LiGME. It can serve as a unified model for many types of group-sparsity-related applications.

- We illustrate the unfairness of the $\ell_{2,1}$ regularizer in unbalanced classification. We then apply the proposed model to reduce this unfairness in GSC and weighted GSC (WGSC) [11].

The remainder of this paper is organized as follows. In Section 2, we give a brief review of LiGME model and WGSC method. In Section 3, we present our group sparse enhanced representation model and its mathematical properties. In Section 4, we apply the proposed model to group-sparsity-based classification problems. The conclusion is given in Section 5.

A preliminary short version of this paper was presented at a conference [25].

2. Preliminaries

2.1. Review of Linearly Involved Generalized-Moreau-Enhanced (LiGME) Model

We first give a brief review of linearly involved generalized-Moreau-enhanced (LiGME) models, which are closely related to our method. The convex $\ell_1$-norm (or nuclear norm) is the most frequently adopted regularizer for sparsity (or low-rank) pursuing problems, but it tends to underestimate high-amplitude values (or large singular values) [26,27]. Convexity-preserving nonconvex regularizers have been widely explored in [24,28,29,30,31,32,33]. They promote sparsity (or low-rankness) more effectively than convex regularizers without losing the overall convexity. Among them, the generalized minimax concave (GMC) function in [31] does not rely on certain strong assumptions on the least squares term, and it has great potential for dealing with nonconvex variations of $\|\cdot\|_1$. Motivated by the GMC function, the LiGME model [24] provides a general framework for constructing linearly involved nonconvex regularizers for sparsity (or low-rank) regularized linear least squares while maintaining the overall convexity of the cost function.

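Let $(\mathcal{X}, \langle\cdot,\cdot\rangle_{\mathcal{X}}, \|\cdot\|_{\mathcal{X}})$, $\mathcal{Y}$, $\mathcal{Z}$, and $\widetilde{\mathcal{Z}}$ be finite-dimensional real Hilbert spaces. Let $\mathcal{B}(\mathcal{Z}, \widetilde{\mathcal{Z}})$ denote the set of linear operators from $\mathcal{Z}$ to $\widetilde{\mathcal{Z}}$. Let a function $\Psi \in \Gamma_0(\mathcal{Z})$ be coercive with $\operatorname{dom}\Psi = \mathcal{Z}$.

Here $\Gamma_0(\mathcal{Z})$ is the set of functions from $\mathcal{Z}$ to $(-\infty, \infty]$ that are:

- proper, i.e., $\operatorname{dom}\Psi := \{z \in \mathcal{Z} \mid \Psi(z) < \infty\} \neq \emptyset$;
- lower semicontinuous, i.e., $\{z \in \mathcal{Z} \mid \Psi(z) \leq \alpha\}$ is closed for every $\alpha \in \mathbb{R}$;
- convex, i.e., $\Psi(\theta z_1 + (1-\theta) z_2) \leq \theta\Psi(z_1) + (1-\theta)\Psi(z_2)$ for $z_1, z_2 \in \mathcal{Z}$ and $\theta \in [0,1]$.

A function $\Psi$ is called coercive if $\|z\|_{\mathcal{Z}} \to \infty \Rightarrow \Psi(z) \to \infty$. For $\gamma > 0$, the proximity operator of $\Psi$ is defined by

$$\operatorname{prox}_{\gamma\Psi}: \mathcal{Z} \to \mathcal{Z}: z \mapsto \operatorname*{argmin}_{v \in \mathcal{Z}} \left[\Psi(v) + \frac{1}{2\gamma}\|z - v\|_{\mathcal{Z}}^2\right].$$

The generalized Moreau enhancement (GME) of $\Psi$ with $B \in \mathcal{B}(\mathcal{Z}, \widetilde{\mathcal{Z}})$ is defined as

$$\Psi_B(\cdot) := \Psi(\cdot) - \min_{v \in \mathcal{Z}}\left[\Psi(v) + \frac{1}{2}\|B(\cdot - v)\|_{\widetilde{\mathcal{Z}}}^2\right], \tag{4}$$

where $B$ is a tuning matrix for the enhancement. The LiGME model is then defined as the minimization of

$$J_{\Psi_B \circ L}(x) := \frac{1}{2}\|y - Ax\|_{\mathcal{Y}}^2 + \mu\,\Psi_B \circ L(x), \tag{5}$$

where $(A, L, \mu) \in \mathcal{B}(\mathcal{X}, \mathcal{Y}) \times \mathcal{B}(\mathcal{X}, \mathcal{Z}) \times \mathbb{R}_{++}$.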

Please note that GMC [31] can be seen as a special case of (5) with $\Psi = \|\cdot\|_1$ and $L = \mathrm{Id}$, where Id is the identity operator. Model (5) can also be seen as an extension of [32,33].

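The GME function $\Psi_B$ in (4) is not convex in general for $B \neq O_{\mathcal{Z},\widetilde{\mathcal{Z}}}$, where $O_{\mathcal{Z},\widetilde{\mathcal{Z}}}$ is the zero operator. Even so, the overall convexity of the cost function (5) can be achieved by designing $B$ to satisfy the following convexity condition.

Proposition 1

(Overall-convexity condition [24].) The cost function $J_{\Psi_B \circ L}$ in (5) satisfies $J_{\Psi_B \circ L} \in \Gamma_0(\mathcal{X})$ if

$$A^*A - \mu L^*B^*BL \succeq O_{\mathcal{X}}. \tag{6}$$

A method of designing $B$ that satisfies (6) for given $(A, L, \mu)$ is provided in [24]; see Proposition A1 in Appendix B. Now take any $\Psi \in \Gamma_0(\mathcal{Z})$ with $\operatorname{dom}\Psi = \mathcal{Z}$ that is coercive, even symmetric and prox-friendly. Here even symmetry means $\Psi \circ (-\mathrm{Id}) = \Psi$, and prox-friendliness means $\operatorname{prox}_{\gamma\Psi}$ is computable for every $\gamma \in \mathbb{R}_{++}$. For such $\Psi$, [24] provides a proximal splitting algorithm (see Proposition A2 in Appendix B). Under the overall-convexity condition (6), this algorithm is guaranteed to converge to a globally optimal solution of model (5).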

2.2. Basic Idea of Weighted Group Sparse Classification (WGSC)

As a relatively simple but typical scenario for applying the proposed idea, we introduce the main idea of weighted group sparse classification (WGSC). Classification is one of the fundamental tasks in signal and image processing and pattern recognition. For a classification problem with $g$ classes of subjects, the training samples form a dictionary matrix $A = [A_1, \ldots, A_g] \in \mathbb{R}^{m \times n}$, where:

- $A_i = [a_{i1}, \ldots, a_{in_i}] \in \mathbb{R}^{m \times n_i}$ is the subset of the training samples from subject $i$;
- $a_{ij} \in \mathbb{R}^m$ is the $j$-th training sample from the $i$-th class;
- $n_i$ is the number of training samples from class $i$;
- $n = \sum_{i=1}^{g} n_i$ is the total number of training samples.

The aim is to correctly determine which class an input test sample $y \in \mathbb{R}^m$ belongs to. Deep learning is popular and powerful for classification tasks, but it requires a very large-scale training set. It also needs substantial computational resources to train numerous parameters with complicated back-propagation.

Wright et al. proposed sparse representation-based classification (SRC) [34] for face recognition. It assumes that samples of a specific subject lie in a linear subspace. A valid test sample is then expected to be well approximated by a linear combination of the training samples from the same class, which leads to a sparse representation coefficient vector over all training samples. Specifically, the test sample $y$ is approximated by a linear combination of the dictionary items, i.e., $y \approx Ax$, where $x \in \mathbb{R}^n$ is the coefficient vector. A simple minimization model with sparse representation is $\min_{x} \frac{1}{2}\|y - Ax\|_2^2 + \lambda\|x\|_0$. In most SRC-based approaches, the $\ell_0$ regularizer is relaxed to $\ell_1$, and the model becomes the well-known LASSO model [35] in statistics.

The simple SRC model does not use the label information of the dictionary atoms, so the regression is based solely on the structure of each sample. When the subspaces spanned by different classes are not independent, SRC may represent the test image with training samples from several different classes. Ideally, the test image should be well approximated only by training samples from the correct class. With this in mind, the authors of [8,9,10] divided the training samples into groups by prior label information and used group-sparsity regularizers. Naturally, the coefficient vector then has the group structure $x = [x_1^\top, \ldots, x_g^\top]^\top$, where $x_i \in \mathbb{R}^{n_i}$. This kind of group sparse classification (GSC) approach aims to represent the test image using the minimum number of groups. An ideal model is therefore (1), which is NP-hard. As stated in Section 1, the $\ell_{2,1}$-norm, a convex approximation of $\ell_{2,0}$, has been widely used as the tightest convex regularizer to incorporate the class labels.

More generally, the non-separable weighted $\ell_{2,1}$-norm $\|Wx\|_{2,1}$ has also been used as the regularizer in GSC [11,36,37]. For example, Tang et al. [11] proposed the following weighted GSC (WGSC) model. It involves both the similarity between the query sample and each class and the distance between the query sample and each training sample:

$$\min_{x \in \mathbb{R}^n} \frac{1}{2}\|y - Ax\|_2^2 + \lambda\sum_{i=1}^{g} w_i\|d_i \odot x_i\|_2, \tag{7}$$

The terms in (7) are as follows:

- $d_i \in \mathbb{R}^{n_i}$ penalizes the distance between $y$ and each training sample of the $i$-th class;
- $w_i$ assesses the relative importance of training samples from the $i$-th class for representing the test sample;
- $\odot$ denotes element-wise multiplication.

Specifically, the weights are computed by

where and are bandwidth parameters, , computes the distance from $y$ to the individual subspace generated by $A_i$, and denotes the minimum reconstruction error of . The regularizer $\sum_{i=1}^{g} w_i\|d_i \odot x_i\|_2$ in (7) can be written as a non-separable weighted $\ell_{2,1}$-norm $\|Wx\|_{2,1}$, where

$$W := \operatorname{blockdiag}\big(w_1 \operatorname{diag}(d_1), \ldots, w_g \operatorname{diag}(d_g)\big). \tag{9}$$

These methods first obtain the optimal solution, denoted by $\hat{x} = [\hat{x}_1^\top, \ldots, \hat{x}_g^\top]^\top$. They then assign $y$ to the class that minimizes the class reconstruction residual $r_i(y) := \|y - A_i\hat{x}_i\|_2$.
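The residual-based decision rule takes only a few lines of code. The sketch below assumes that the dictionary is given as a list of class blocks `A_blocks` $= [A_1, \ldots, A_g]$ and that the estimate is given as matching coefficient blocks. These names are hypothetical, and the returned class labels are 0-based.

```python
import numpy as np

def classify_by_residual(y, A_blocks, x_blocks):
    """Return (argmin_i ||y - A_i x_i||_2, array of all class residuals)."""
    residuals = np.array([np.linalg.norm(y - Ai @ xi)
                          for Ai, xi in zip(A_blocks, x_blocks)])
    return int(np.argmin(residuals)), residuals

# Toy example: the first class explains y almost perfectly, the second does not.
y = np.array([1.0, 0.0])
A_blocks = [np.array([[1.0], [0.0]]), np.array([[0.0], [1.0]])]
x_blocks = [np.array([0.9]), np.array([0.1])]
label, res = classify_by_residual(y, A_blocks, x_blocks)
print(label, res)   # label 0; residuals approx. [0.1, 1.005]
```

The rule depends on how large the correct class's coefficients are allowed to be. If the regularizer shrinks them too much, the correct class's residual grows.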

The $\ell_{2,1}$ regularizer and its weighted variants are widely used in GSC- and WGSC-based methods. However, they not only suppress the number of selected classes, but also suppress significant nonzero coefficients within classes. The latter may lead to underestimation of high-amplitude elements and adversely affect performance. Nonconvex regularizers such as $\ell_{2,p}$ $(0 < p < 1)$ [37] and group MCP [38] make the corresponding optimization problems nonconvex. Therefore, we aim to use a regularizer that reduces the bias and approximates $\ell_{2,0}$ better than $\ell_{2,1}$, while ensuring the overall convexity of the problem.

3. LiGME Model for Group Sparse Estimation

3.1. GME of Weighted $\ell_{2,1}$-Norm and Its Properties

In the group sparse estimation literature, the $\ell_{2,1}$-norm (or one of its weighted variants) is the favored way to approximate $\ell_{2,0}$. However, it has a large bias and does not promote group sparsity as effectively as $\ell_{2,0}$. GME provides a way to approximate direct discrete measures better than their convex envelopes do (e.g., $\ell_0$ for sparsity and matrix rank for low-rankness). We therefore propose to use it for designing group-sparsity pursuing regularizers.

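More generally, let us consider the GME of $\|\cdot\|_{2,1}^{w}$ in (3). Clearly, $\|\cdot\|_{2,1}^{w}$ is coercive, even symmetric and prox-friendly. Its proximity operator can be computed group-wise by

$$\left[\operatorname{prox}_{\gamma\|\cdot\|_{2,1}^{w}}(x)\right]_i = \begin{cases} \left(1 - \dfrac{\gamma w_i}{\|x_i\|_2}\right) x_i, & \text{if } \|x_i\|_2 > \gamma w_i,\\ 0, & \text{otherwise,} \end{cases} \qquad (i = 1, \ldots, g), \tag{10}$$

where $x = [x_1^\top, \ldots, x_g^\top]^\top \in \mathbb{R}^n$ is a signal with group structure, and $\gamma > 0$.

Consider now the GME of $\|\cdot\|_{2,1}^{w}$ with $B \in \mathbb{R}^{q \times n}$ $(q \in \mathbb{N})$, defined as in (4):

$$\left(\|\cdot\|_{2,1}^{w}\right)_B(x) := \|x\|_{2,1}^{w} - \min_{v \in \mathbb{R}^n}\left[\|v\|_{2,1}^{w} + \frac{1}{2}\|B(x - v)\|_2^2\right] \quad (x \in \mathbb{R}^n). \tag{11}$$

This function can serve as a parametric bridge between $\|\cdot\|_{2,1}^{w}$ and $\|\cdot\|_{2,0}$.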
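Below is a minimal NumPy sketch of the group-wise soft-thresholding rule for the proximity operator of $\gamma\|\cdot\|_{2,1}^{w}$. It assumes contiguous groups given by a list of sizes, and `prox_weighted_l21` is a hypothetical helper name.

```python
import numpy as np

def prox_weighted_l21(x, sizes, gamma, w=None):
    """prox of gamma * sum_i w_i ||x_i||_2, computed by block soft thresholding."""
    x = np.asarray(x, dtype=float)
    w = np.ones(len(sizes)) if w is None else np.asarray(w, dtype=float)
    out = np.zeros_like(x)
    start = 0
    for ni, wi in zip(sizes, w):
        xi = x[start:start + ni]
        nrm = np.linalg.norm(xi)
        if nrm > gamma * wi:                 # shrink the block towards 0
            out[start:start + ni] = (1.0 - gamma * wi / nrm) * xi
        # otherwise the whole block stays zero
        start += ni
    return out

x = np.array([3.0, 4.0, 0.3, 0.4])                        # group norms 5 and 0.5
print(prox_weighted_l21(x, [2, 2], gamma=1.0))            # [2.4, 3.2, 0, 0]
print(prox_weighted_l21(x, [2, 2], gamma=1.0, w=[2, 1]))  # [1.8, 2.4, 0, 0]
```

Each surviving group loses exactly $\gamma w_i$ of its Euclidean norm, no matter how large that norm is. This constant shrinkage is the bias that the GME is designed to reduce.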

Proposition 2.

(GME of can bridge the gap between and .) Let for , where is the weight in (3) for . Then, for any ,

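We also have $(\|\cdot\|_{2,1}^{w})_{O} = \|\cdot\|_{2,1}^{w}$, where $O$ is the zero matrix. Together with this fact, the regularization term in Proposition 2 can serve as a parametric bridge between $\|\cdot\|_{2,1}^{w}$ and $\|\cdot\|_{2,0}$. As a special case ($w_i = 1$ for all $i$), the GME of $\|\cdot\|_{2,1}$ can serve as a parametric bridge between $\|\cdot\|_{2,1}$ and $\|\cdot\|_{2,0}$.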

Proof.

The regularization term , where

for . By ([39], Example 24.20), we obtain

Then, we obtain

and

□
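The following worked computation illustrates the mechanism behind Proposition 2. Consider a single group $x_i \in \mathbb{R}^{n_i}$ with $\varphi(x_i) := w_i\|x_i\|_2$. As a simplifying assumption made only for this example, take $B = \sqrt{\vartheta}\, I_{n_i}$ with $\vartheta > 0$; this need not coincide with the choice of $B$ in Proposition 2. The inner minimization is then a Huber-type Moreau envelope of the scaled Euclidean norm, which gives the GME in closed form:

$$
\min_{v\in\mathbb{R}^{n_i}}\Big[w_i\|v\|_2+\tfrac{\vartheta}{2}\|x_i-v\|_2^2\Big]
=\begin{cases}
\tfrac{\vartheta}{2}\|x_i\|_2^2, & \|x_i\|_2\le w_i/\vartheta,\\[2pt]
w_i\|x_i\|_2-\tfrac{w_i^2}{2\vartheta}, & \|x_i\|_2> w_i/\vartheta,
\end{cases}
\qquad\Longrightarrow\qquad
\varphi_{B}(x_i)=\begin{cases}
w_i\|x_i\|_2-\tfrac{\vartheta}{2}\|x_i\|_2^2, & \|x_i\|_2\le w_i/\vartheta,\\[2pt]
\tfrac{w_i^2}{2\vartheta}, & \|x_i\|_2> w_i/\vartheta.
\end{cases}
$$

The minimizer is $v = \max\{1 - w_i/(\vartheta\|x_i\|_2), 0\}\,x_i$. Hence $\frac{2\vartheta}{w_i^2}\varphi_B(x_i)$ equals $1$ whenever $\|x_i\|_2 \geq w_i/\vartheta$ and equals $0$ at $x_i = 0$. As $\vartheta \to \infty$, this rescaled GME therefore tends to the group indicator $\mathbb{1}[x_i \neq 0]$, whereas $\vartheta = 0$ gives back $w_i\|x_i\|_2$. Because the enhanced term is constant for large groups, large coefficients are not penalized further.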

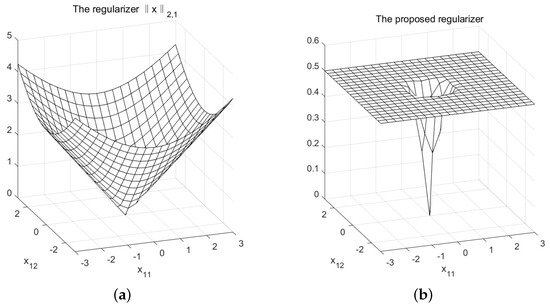

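Figure 1 illustrates simple one-group examples of $\|\cdot\|_{2,1}$ and $(\|\cdot\|_{2,1})_B$. As the figure shows, $(\|\cdot\|_{2,1})_B$ approximates $\|\cdot\|_{2,0}$ better than $\|\cdot\|_{2,1}$ does.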

Figure 1.

Simple examples of two group sparse regularizers (one-group case): (a) the $\ell_{2,1}$ regularizer $\|\cdot\|_{2,1}$; (b) the regularizer $(\|\cdot\|_{2,1})_B$.

As reviewed in Section 2.1, we can minimize $J_{(\|\cdot\|_{2,1}^{w})_B \circ I}$ (see (5) with $L = I$) with the algorithm (A3) in Proposition A2. This requires the overall-convexity condition $A^\top A - \mu B^\top B \succeq O$.

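In the following, we consider the GME of the non-separable weighted $\ell_{2,1}$-norm $\|W\cdot\|_{2,1}$, where $W$ is not necessarily a diagonal matrix. Some applications introduce weights inside groups as well, so that every entry can have a different weight, to improve estimation accuracy. Examples are the classification problems [11,36,37] described in Section 2.2 and heterogeneous feature selection [40]. The GME of $\|W\cdot\|_{2,1}$ with $B$ is well defined as

$$\left(\|W\cdot\|_{2,1}\right)_B(x) := \|Wx\|_{2,1} - \inf_{v \in \mathbb{R}^n}\left[\|Wv\|_{2,1} + \frac{1}{2}\|B(x - v)\|_2^2\right].$$

(Because $\|W\cdot\|_{2,1}$ may lack coercivity, min is replaced by inf here.) Therefore we can formulate

$$\min_{x \in \mathbb{R}^n} \frac{1}{2}\|y - Ax\|_2^2 + \mu\left(\|W\cdot\|_{2,1}\right)_B(x).$$

However, we should remark that $\|W\cdot\|_{2,1}$ is even symmetric but not necessarily coercive or prox-friendly. Prox-friendliness can be guaranteed only in special cases. By ([39], Proposition 24.14), if a linear operator $L$ and $\Psi \in \Gamma_0(\mathcal{Z})$ satisfy $LL^* = \nu\,\mathrm{Id}$ for some $\nu > 0$, then

$$\operatorname{prox}_{\Psi \circ L} = \mathrm{Id} + \nu^{-1}L^*\circ(\operatorname{prox}_{\nu\Psi} - \mathrm{Id})\circ L.$$

In such a special case, if $\Psi$ is prox-friendly, then $\Psi \circ L$ is also prox-friendly. For a general $L$ that does not satisfy such standard conditions, the prox-friendliness of $\Psi \circ L$ has to be examined case by case.

Fortunately, Proposition 3 below gives a useful alternative. If $W$ has full row-rank and $B$ can be expressed as $B = \widetilde{B}W$ for some $\widetilde{B}$, then

$$\left(\|W\cdot\|_{2,1}\right)_{\widetilde{B}W} = \left(\|\cdot\|_{2,1}\right)_{\widetilde{B}} \circ W.$$

This implies that the GME of $\|W\cdot\|_{2,1}$ can be handled as the LiGME of $\|\cdot\|_{2,1}$.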
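The prox rule from ([39], Proposition 24.14) quoted above can be checked numerically. The sketch below assumes $\Psi = \|\cdot\|_{2,1}$ with contiguous groups of $Wx$ and a matrix $W$ with $WW^\top = \nu I$. The function names are illustrative. It is only a sanity check of the identity, not a general prox for arbitrary $W$.

```python
import numpy as np

def prox_l21(z, sizes, gamma):
    """prox of gamma*||.||_{2,1}: block soft thresholding of consecutive groups."""
    out, start = np.zeros_like(z), 0
    for ni in sizes:
        zi = z[start:start + ni]
        nrm = np.linalg.norm(zi)
        if nrm > gamma:
            out[start:start + ni] = (1.0 - gamma / nrm) * zi
        start += ni
    return out

def prox_l21_of_W(x, W, sizes, gamma=1.0):
    """prox of gamma*||W.||_{2,1}; valid only when W W^T = nu*I for some nu > 0."""
    nu = W[0] @ W[0]                       # candidate nu read off the first row
    assert np.allclose(W @ W.T, nu * np.eye(W.shape[0])), "requires W W^T = nu I"
    Wx = W @ x
    return x + W.T @ (prox_l21(Wx, sizes, nu * gamma) - Wx) / nu

# W = 2I: ||2x||_{2,1} = 2||x||_{2,1}, i.e. block soft thresholding at level 2.
print(prox_l21_of_W(np.array([3.0, 4.0]), 2.0 * np.eye(2), [2]))   # [1.8, 2.4]
# W keeps two of three coordinates; the third is unpenalized and passes through.
W = np.array([[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]])
print(prox_l21_of_W(np.array([3.0, 4.0, 7.0]), W, [2]))            # [2.4, 3.2, 7.0]
```

The second case also shows why $\|W\cdot\|_{2,1}$ need not be coercive: directions in the null space of $W$ are not penalized at all.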

Proposition 3.

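Let $\Psi \in \Gamma_0(\mathcal{Z})$ be coercive with $\operatorname{dom}\Psi = \mathcal{Z}$, and assume $W \in \mathcal{B}(\mathcal{X}, \mathcal{Z})$ has full row-rank (i.e., $W$ is surjective). Then, for any $\widetilde{B} \in \mathcal{B}(\mathcal{Z}, \widetilde{\mathcal{Z}})$,

$$(\Psi \circ W)_B(x) = \Psi_{\widetilde{B}}(Wx) \quad \text{for all } x \in \mathcal{X},$$

where $B := \widetilde{B}W$, and $(\Psi \circ W)_B$ is defined as in (4) with min replaced by inf.

Proof.

On one hand, by the definition of GME, we have

$$(\Psi \circ W)_B(x) = \Psi(Wx) - \inf_{v \in \mathcal{X}}\left[\Psi(Wv) + \frac{1}{2}\|\widetilde{B}(Wx - Wv)\|_{\widetilde{\mathcal{Z}}}^2\right] = \Psi(Wx) - \min_{z \in \mathcal{Z}}\left[\Psi(z) + \frac{1}{2}\|\widetilde{B}(Wx - z)\|_{\widetilde{\mathcal{Z}}}^2\right].$$

The second equality holds because $W$ is surjective, so every $z \in \mathcal{Z}$ can be written as $z = Wv$. One choice of $v$ is $v := W^*(WW^*)^{-1}z$. The minimum is attained because $\Psi$ is coercive, lower semicontinuous and convex.

On the other hand, $\Psi_{\widetilde{B}}(Wx) = \Psi(Wx) - \min_{z \in \mathcal{Z}}\left[\Psi(z) + \frac{1}{2}\|\widetilde{B}(Wx - z)\|_{\widetilde{\mathcal{Z}}}^2\right]$ by definition. Thus, we obtain the conclusion. □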

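In the rest of the paper, we focus on the LiGME model of the $\ell_{2,1}$-norm.

3.2. LiGME of $\ell_{2,1}$-Norm

For simplicity, and for effectiveness in application to GSC and WGSC, we focus on the LiGME model of $\|\cdot\|_{2,1}$ with an invertible linear operator $W \in \mathbb{R}^{n \times n}$:

$$J_{(\|\cdot\|_{2,1})_B \circ W}(x) := \frac{1}{2}\|y - Ax\|_2^2 + \mu\left(\|\cdot\|_{2,1}\right)_B(Wx). \tag{20}$$

In this case, to achieve $J_{(\|\cdot\|_{2,1})_B \circ W} \in \Gamma_0(\mathbb{R}^n)$, we can simply design $B$ in a way similar to ([31], (48)), as in the next proposition.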

Proposition 4.

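For an invertible $W \in \mathbb{R}^{n \times n}$, let

$$B := \sqrt{\theta/\mu}\, A W^{-1}, \quad 0 \leq \theta \leq 1. \tag{21}$$

Then, for the LiGME model in (20), $J_{(\|\cdot\|_{2,1})_B \circ W} \in \Gamma_0(\mathbb{R}^n)$.

Proof.

We have $A^\top A - \mu W^\top B^\top B W = (1 - \theta)A^\top A \succeq O_n$. By Proposition 1, $J_{(\|\cdot\|_{2,1})_B \circ W} \in \Gamma_0(\mathbb{R}^n)$ is ensured. □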
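The overall-convexity condition can be checked numerically for a concrete design. The sketch below assumes the GMC-style choice $B = \sqrt{\theta/\mu}\,AW^{-1}$ with $0 \le \theta \le 1$, in the spirit of ([31], (48)). For illustration only, it draws a random $A$ and an invertible diagonal $W$. It then reports the smallest eigenvalue of $A^\top A - \mu W^\top B^\top B W$. If that value is nonnegative up to rounding, the condition of Proposition 1 with $L = W$ holds.

```python
import numpy as np

def design_B(A, W, mu, theta):
    """B = sqrt(theta/mu) A W^{-1}, obtained by solving W^T B^T = sqrt(theta/mu) A^T."""
    return np.linalg.solve(W.T, np.sqrt(theta / mu) * A.T).T

def convexity_margin(A, W, B, mu):
    """Smallest eigenvalue of A^T A - mu W^T B^T B W (>= 0 means overall convexity)."""
    M = A.T @ A - mu * W.T @ B.T @ B @ W
    return float(np.linalg.eigvalsh((M + M.T) / 2).min())

rng = np.random.default_rng(0)
A = rng.standard_normal((20, 30))            # m < n, so A^T A is singular
W = np.diag(rng.uniform(1.0, 2.0, size=30))  # an invertible weight matrix
mu = 0.5
for theta in (0.0, 0.9, 1.0, 1.5):
    B = design_B(A, W, mu, theta)
    print(theta, convexity_margin(A, W, B, mu))
# theta in [0, 1]: margin ~ 0 up to rounding; theta = 1.5: clearly negative
```

Since $BW = \sqrt{\theta/\mu}\,A$, the tested matrix equals $(1-\theta)A^\top A$. So for $A \neq O$ this design keeps convexity exactly when $\theta \le 1$.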

Model (20) can be applied to many different applications that conform to group-sparsity structure.

4. Application to Classification Problems

4.1. Proposed Algorithm for Group-Sparsity Based Classification

The $\ell_{2,1}$ regularizer in GSC is unfair to classes of different sizes (see Appendix A), while the $\ell_{2,0}$ regularizer is not. Our purpose is therefore to use a better approximation of $\ell_{2,0}$ as the regularizer, and we apply model (20) to group-sparsity-based classification. Following GSC, we can set $W = I_n$ in (20).

WGSC [11] carefully designs weights to exploit the locality and similarity information of samples. Inspired by it, we can also set the weight matrix $W$ according to (9). The classification algorithm is summarized in Algorithm 1.

4.2. Experiments

First, we set $W = I_n$ and conduct experiments on a relatively simple dataset. These experiments have two goals. The first is to investigate how the bias of the $\ell_{2,1}$ regularizer influences the classification problem, especially when the training set is unbalanced. The second is to verify the performance improvement obtained by using $(\|\cdot\|_{2,1})_B$ as the regularizer. The USPS handwritten digit database [42] has 11,000 samples of digits “0” through “9” (1100 samples per class). Each sample is a $16 \times 16$ image, which we vectorized into a 256-D vector in our classification experiments. The number of training samples per class is not necessarily equal and varies from 5 to 50. The test set is fixed to 50 images per class.

We set $W = I_n$ for the proposed model (20), so the computation of $W$ in the initialization step of Algorithm 1 is replaced accordingly. We compared this model with GSC (with the $\ell_{2,1}$ regularizer) [10]. We set and fix to achieve the overall convexity of the proposed method, and set , , . The initial estimate is set as , and the stopping criterion is set to either or the number of iterations reaching 10,000.

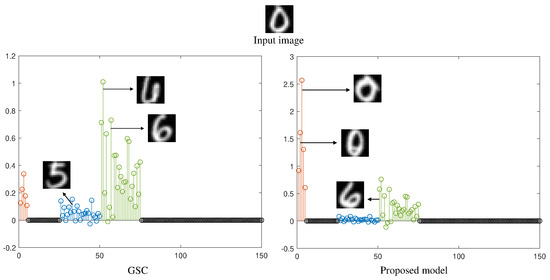

Figure 2 shows an example with an unbalanced training set, in which digits “0” through “4” have 5 samples per class and “5” through “9” have 25 samples per class. The input, an image of digit “0”, was misclassified as digit “6” by GSC but classified correctly by the proposed method. Figure 2 illustrates the coefficient vectors obtained by GSC and by the proposed method, and also displays some samples corresponding to nonzero coefficients.

In GSC, samples of digit “6” made the greatest contribution to the representation, while samples of “5” and “0” made small contributions. In our method, samples from the correct class “0” made the largest contribution and led to the correct result. This is reasonable, because our method does not excessively suppress high-amplitude coefficients, whereas $\ell_{2,1}$ does. The strong suppression by $\ell_{2,1}$ prevented the coefficients of the correct class from becoming large enough, which easily led to misclassification.

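| Algorithm 1: The proposed group-sparsity enhanced classification algorithm |

| Input: A matrix $A = [A_1, \ldots, A_g]$ of training samples grouped by class information, a test sample vector $y$, and the model and algorithm parameters. |

| 1. Initialization: Let $(x^{(0)}, v^{(0)}, w^{(0)})$ be an initial point. Compute the weight matrix $W$ by (9). Choose $B$ as in Proposition 4. Choose $(\sigma, \tau, \kappa)$ satisfying (22). |

| 2. For $k = 0, 1, 2, \ldots$, compute $(x^{(k+1)}, v^{(k+1)}, w^{(k+1)})$ by the iteration (A3) in Proposition A2 (with $L = W$) until the stopping criterion is fulfilled. |

| 3. Compute the class label of $y$ by $\operatorname{argmin}_{i} \|y - A_i\hat{x}_i\|_2$, where $\hat{x}$ is the final estimate. |

| Output: The class label corresponding to $y$. |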

Figure 2.

Sparse coefficients estimated by GSC and the proposed method, respectively.

(For example, any , and can satisfy (22).)
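The following compact NumPy sketch implements Algorithm 1 for $\Psi = \|\cdot\|_{2,1}$ and an invertible $W$. It rests on several assumptions, all of which are our reading of [24] and should be checked against it:

- The LiGME iteration has the form
  - $x^+ = x - \sigma^{-1}[(A^\top A - \mu W^\top B^\top BW)x + \mu W^\top B^\top B v + \mu W^\top w - A^\top y]$,
  - $v^+ = \operatorname{prox}_{(\mu/\tau)\Psi}[\tfrac{2\mu}{\tau}B^\top BWx^+ - \tfrac{\mu}{\tau}B^\top BWx + (I - \tfrac{\mu}{\tau}B^\top B)v]$,
  - $w^+ = (I - \operatorname{prox}_{\Psi})(2Wx^+ - Wx + w)$.
- $B$ is designed as $B = \sqrt{\theta/\mu}\,AW^{-1}$.
- The step sizes are $\sigma = \|\tfrac{\kappa}{2}A^\top A + \mu W^\top W\|_{\mathrm{op}} + (\kappa-1)$ and $\tau = (\tfrac{\kappa}{2}+\tfrac{2}{\kappa})\mu\|B\|_{\mathrm{op}}^2 + (\kappa-1)$, with $\kappa > 1$.

A fixed iteration count replaces the stopping rule, and all function names are hypothetical.

```python
import numpy as np

def prox_l21(z, sizes, gamma):
    """Block soft thresholding: prox of gamma*||.||_{2,1} over consecutive groups."""
    out, start = np.zeros_like(z), 0
    for ni in sizes:
        zi = z[start:start + ni]
        nrm = np.linalg.norm(zi)
        if nrm > gamma:
            out[start:start + ni] = (1.0 - gamma / nrm) * zi
        start += ni
    return out

def ligme_l21(A, y, W, sizes, mu, theta, kappa=1.5, n_iter=500):
    """Sketch: minimize 0.5||y-Ax||^2 + mu*(||.||_{2,1})_B(Wx), B = sqrt(theta/mu) A W^{-1}."""
    n = A.shape[1]
    B = np.linalg.solve(W.T, np.sqrt(theta / mu) * A.T).T
    BtB, AtA, Aty = B.T @ B, A.T @ A, A.T @ y
    sigma = np.linalg.norm(kappa / 2 * AtA + mu * W.T @ W, 2) + (kappa - 1)
    tau = (kappa / 2 + 2 / kappa) * mu * np.linalg.norm(B, 2) ** 2 + (kappa - 1)
    x, v, w = np.zeros(n), np.zeros(n), np.zeros(n)
    for _ in range(n_iter):
        Wx = W @ x
        # primal step on x
        x_new = x - (AtA @ x - mu * W.T @ (BtB @ Wx) + mu * W.T @ (BtB @ v)
                     + mu * W.T @ w - Aty) / sigma
        Wx_new = W @ x_new
        # step on the auxiliary variable v of the inner minimization in the GME
        v = prox_l21(2 * mu / tau * BtB @ Wx_new - mu / tau * BtB @ Wx
                     + v - mu / tau * BtB @ v, sizes, mu / tau)
        # dual step: (I - prox_Psi) applied to the extrapolated point
        u = 2 * Wx_new - Wx + w
        w = u - prox_l21(u, sizes, 1.0)
        x = x_new
    return x

def classify(y, A, x, sizes):
    """Class label = argmin_i ||y - A_i x_i||_2 (0-based labels)."""
    res, start = [], 0
    for ni in sizes:
        res.append(np.linalg.norm(y - A[:, start:start + ni] @ x[start:start + ni]))
        start += ni
    return int(np.argmin(res))

# Toy check with W = I (the GSC setting): two classes of 3 atoms each.
A, W, sizes = np.eye(6), np.eye(6), [3, 3]
y = np.array([5.0, 0, 0, 0, 0, 0])
x_gme = ligme_l21(A, y, W, sizes, mu=1.0, theta=0.9)   # enhanced regularizer
x_l21 = ligme_l21(A, y, W, sizes, mu=1.0, theta=0.0)   # B = O: plain l_{2,1}
print(x_gme[0], x_l21[0], classify(y, A, x_gme, sizes))  # approx. 5.0, 4.0, class 0
```

In this toy case the plain $\ell_{2,1}$ estimate shrinks the single active coefficient from 5 to 4, whereas the enhanced regularizer leaves it at 5. This is the bias reduction discussed above. For the weighted variant, one would pass a $W$ built as in (9) instead of the identity.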

Table 1 summarizes the recognition accuracy of GSC and of the proposed method with $W = I_n$. The training set includes samples per class for digits “0” through “4” and samples per class for “5” through “9”. Through numerical experiments, we found that GSC with and the proposed method with perform well on this dataset. We also tested the proposed method using , whose accuracy did not degrade much compared with using . The results show that GSC degrades when the training set is unbalanced, and that the proposed method outperforms GSC especially in such cases.

Table 1.

Recognition results on the USPS database.

Next, we conduct experiments on a classic face dataset to verify the validity of the proposed linearly involved model, with the weight matrix $W$ set according to (9). The ORL Database of Faces [43] contains 400 images of 40 distinct subjects (10 images per subject). The images vary in lighting, facial expressions (open or closed eyes, smiling or not smiling) and facial details (glasses or no glasses). In our experiments, following [44], all images were downsampled to $16 \times 16$ pixels and vectorized into 256-D vectors. The number of training samples per class is not necessarily equal and varies from 4 to 8. The test set is fixed to 2 images per class.

We compared the proposed model (20), with $W = I_n$ and with $W$ computed by (9), against GSC [10] and WGSC [11]. To achieve the overall convexity, we set and fix for the proposed method. The settings of , the initial estimate and the stopping criterion are the same as in the previous experiment. When the parameter is set too small, the obtained coefficient vector is not group sparse. When the parameter or is set too small, the locality or similarity information plays a decisive role. We found that the following settings work well on this dataset: for $\ell_{2,1}$ regularizer-based methods (i.e., GSC and WGSC), for the proposed method, and for weight-involved methods (i.e., WGSC and the proposed method with $W$ computed by (9)).

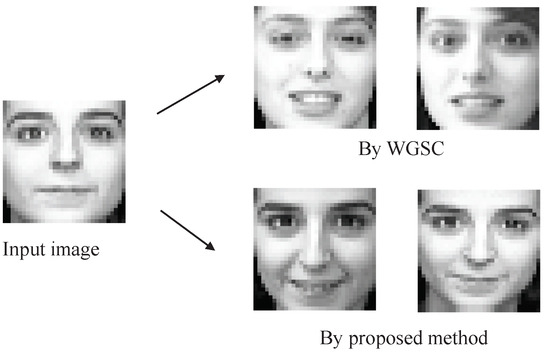

Figure 3 shows a classification result of WGSC and of the proposed method ($W$ computed by (9)) when the training set is unbalanced. Here 20 subjects have 8 samples per class and the others have 6 samples per class. The input is an image of subject 10, which WGSC misclassified as subject 8 but the proposed method classified correctly.

Figure 3.

An example of results by WGSC and the proposed method.

Table 2 summarizes the recognition accuracy of GSC, the proposed method with $W = I_n$, WGSC, and the proposed method with $W$ computed by (9). In the training set, 20 subjects have samples per class and the others have samples per class. With the strategically designed matrix (9), WGSC achieves a significant improvement over GSC. The proposed method with $W$ computed by (9) improves the performance further, especially when the training set is unbalanced.

Table 2.

Recognition results on the ORL database.

5. Conclusions

In this paper, we explored the potential applicability and effectiveness of using nonconvex regularizers in the convex optimization framework. We proposed a generalized Moreau enhancement (GME) of the weighted $\ell_{2,1}$ function and analyzed its relationship with the linearly involved GME of the $\ell_{2,1}$-norm. The proposed regularizer is nonconvex and promotes group sparsity more effectively than $\ell_{2,1}$, while maintaining the overall convexity of the regression model. The model can be used in many applications, and we applied it to classification problems. Our model makes use of the grouping structure given by class information and suppresses the tendency to underestimate high-amplitude coefficients. Experimental results showed that the proposed method is effective for image classification.

Author Contributions

Conceptualization, M.Y. and I.Y.; methodology, Y.C., M.Y. and I.Y.; software, Y.C.; writing-original draft, Y.C.; writing-review and editing, M.Y. and I.Y. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by JSPS Grants-in-Aid grant number 18K19804 and by JST SICORP grant number JPMJSC20C6.

Data Availability Statement

Publicly available data sets were analyzed in this study. These data can be found here: https://www.csie.ntu.edu.tw/~cjlin/libsvmtools/datasets/multiclass.html##usps; https://cam-orl.co.uk/facedatabase.html; and http://www.cad.zju.edu.cn/home/dengcai/Data/FaceData.html (accessed on 25 October 2021).

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| LASSO | Least Absolute Shrinkage and Selection Operator |

| SCAD | Smoothly Clipped Absolute Deviation |

| MCP | Minimax Concave Penalty |

| GMC | Generalized Minimax Concave |

| GME | Generalized Moreau Enhancement |

| LiGME | Linearly involved Generalized-Moreau-Enhanced (or Enhancement) |

| SRC | Sparse Representation-based Classification |

| GSC | Group Sparse Classification |

| WGSC | Weighted Group Sparse Classification |

Appendix A. The Bias of ℓ2,1 Regularizer in Group Sparse Classification

Using the $\ell_{2,1}$ regularizer in classification problems not only minimizes the number of selected classes, but also minimizes the $\ell_2$-norm of the coefficients within each class. The latter may adversely affect the classification result, because the optimal representation of a test sample by training samples of the correct subject may contain large coefficients. Moreover, in many classification applications, the number of training samples differs from class to class. We argue that the bias of the $\ell_{2,1}$ regularizer makes it unfair to classes of different sizes.

Example A1.

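Suppose that a test sample $y$ can be represented without error by a combination of all samples from class $i$, i.e., $y = A_i x_i$, where $A_i \in \mathbb{R}^{m \times n_i}$ and $x_i \in \mathbb{R}^{n_i}\setminus\{0\}$.

- (a)

- Suppose the number of samples in this class is doubled by duplication, so that the training set of class $i$ becomes $[A_i, A_i] \in \mathbb{R}^{m \times 2n_i}$. Obviously, $y$ can also be represented exactly as $y = [A_i, A_i]\tilde{x}_i$, where $\tilde{x}_i := \frac{1}{2}[x_i^\top, x_i^\top]^\top \in \mathbb{R}^{2n_i}$. However, $\|\tilde{x}_i\|_2 = \|x_i\|_2/\sqrt{2} < \|x_i\|_2$. That is, the $\ell_{2,1}$ value of the first representation (before duplication) is greater than that of the second one (after duplication).

- (b)

- Suppose the number of samples in this class is increased to $dn_i$ by copying $A_i$ $d$ times ($d > 1$), so that the training set of class $i$ becomes $[A_i, \ldots, A_i] \in \mathbb{R}^{m \times dn_i}$. Obviously, $\tilde{x}_i := \frac{1}{d}[x_i^\top, \ldots, x_i^\top]^\top \in \mathbb{R}^{dn_i}$ gives a representation $y = [A_i, \ldots, A_i]\tilde{x}_i$. Then $\|\tilde{x}_i\|_2 = \|x_i\|_2/\sqrt{d}$.

Example A1 shows that group size affects the value of the $\ell_{2,1}$ regularizer. Even if the new training samples are only copies of the original samples and add no new information, the value of the $\ell_{2,1}$ regularizer decreases. Therefore, the $\ell_{2,1}$ regularizer is unfair to classes of different sizes. It tends to reject a class with relatively few samples, because the corresponding coefficient vector is more likely to have a large $\ell_{2,1}$ value. Please note that the $\ell_{2,0}$ regularizer is independent of group size and does not have such unfairness.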
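Example A1 can be reproduced numerically. The sketch below draws a random class block $A_i$ and coefficient vector $x_i$ (illustrative data only). It duplicates the block $d$ times and splits the coefficients evenly across the copies. It then confirms that the reconstruction is unchanged while the group norm drops by a factor of $\sqrt{d}$.

```python
import numpy as np

rng = np.random.default_rng(1)
A_i = rng.standard_normal((10, 4))   # one class with n_i = 4 training samples
x_i = rng.standard_normal(4)         # its representation coefficients
y = A_i @ x_i                        # y is represented without error

for d in (1, 2, 4):
    A_dup = np.tile(A_i, (1, d))     # [A_i, A_i, ..., A_i]  (d copies)
    x_dup = np.tile(x_i / d, d)      # (1/d) [x_i; x_i; ...; x_i]
    same_fit = np.allclose(A_dup @ x_dup, y)
    ratio = np.linalg.norm(x_dup) / np.linalg.norm(x_i)
    print(d, same_fit, ratio, 1 / np.sqrt(d))   # ratio equals 1/sqrt(d)
```

The fit is identical for every $d$, yet the $\ell_{2,1}$ contribution of the class shrinks as $1/\sqrt{d}$. Larger classes are thus favored for reasons unrelated to how well they explain $y$.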

Appendix B. Parameter Tuning and Proximal Splitting Algorithm for LiGME Model

Proposition A1

([24], Proposition 2). In (5), let , , and . Choose a nonsingular satisfying . Then , ensures , where and is an eigendecomposition.

Proposition A2

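([24], Theorem 1). Consider the minimization of $J_{\Psi_B \circ L}$ in (5) under the overall-convexity condition (6). Let $\mathcal{H} := \mathcal{X} \times \mathcal{Z} \times \mathcal{Z}$ be a real Hilbert space formed as a product space. Define an operator $T_{\mathrm{LiGME}}: \mathcal{H} \to \mathcal{H}$ with parameters $(\sigma, \tau) \in \mathbb{R}_{++} \times \mathbb{R}_{++}$ by

Then the following holds:

- 1.

- $\operatorname{argmin}_{x \in \mathcal{X}} J_{\Psi_B \circ L}(x) = \Xi(\operatorname{Fix}(T_{\mathrm{LiGME}}))$, where $\Xi: \mathcal{H} \to \mathcal{X}: (x, v, w) \mapsto x$.

- 2.

- Choose $(\sigma, \tau, \kappa) \in \mathbb{R}_{++} \times \mathbb{R}_{++} \times (1, \infty)$ satisfying condition (A1), where $\|\cdot\|_{\mathrm{op}}$ is the operator norm. Then $T_{\mathrm{LiGME}}$ is $\frac{\kappa}{2\kappa - 1}$-averaged nonexpansive in the Hilbert space $\mathcal{H}$ equipped with the inner product specified in [24].

- 3.

- Assume condition (A1) holds. Then, for any initial point $(x_0, v_0, w_0) \in \mathcal{H}$, consider the sequence $(x_k, v_k, w_k)_{k \in \mathbb{N}}$ generated by $(x_{k+1}, v_{k+1}, w_{k+1}) = T_{\mathrm{LiGME}}(x_k, v_k, w_k)$ (A3). It converges to a point $(x^\star, v^\star, w^\star) \in \operatorname{Fix}(T_{\mathrm{LiGME}})$, and $\lim_{k \to \infty} x_k = x^\star \in \operatorname{argmin}_{x \in \mathcal{X}} J_{\Psi_B \circ L}(x)$.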

References

- Theodoridis, S. Machine Learning: A Bayesian and Optimization Perspective; Academic Press: Cambridge, MA, USA, 2015.

- Ma, S.; Song, X.; Huang, J. Supervised group Lasso with applications to microarray data analysis. BMC Bioinform. 2007, 8, 60.

- Wang, L.; Chen, G.; Li, H. Group SCAD regression analysis for microarray time course gene expression data. Bioinformatics 2007, 23, 1486–1494.

- Wang, X.; Zhong, Y.; Zhang, L.; Xu, Y. Spatial group sparsity regularized nonnegative matrix factorization for hyperspectral unmixing. IEEE Trans. Geosci. Remote Sens. 2017, 55, 6287–6304.

- Drumetz, L.; Meyer, T.R.; Chanussot, J.; Bertozzi, A.L.; Jutten, C. Hyperspectral image unmixing with endmember bundles and group sparsity inducing mixed norms. IEEE Trans. Image Process. 2019, 28, 3435–3450.

- Huang, J.; Huang, T.Z.; Zhao, X.L.; Deng, L.J. Nonlocal tensor-based sparse hyperspectral unmixing. IEEE Trans. Geosci. Remote Sens. 2020, 59, 6854–6868.

- Qiao, B.; Mao, Z.; Liu, J.; Zhao, Z.; Chen, X. Group sparse regularization for impact force identification in time domain. J. Sound Vib. 2019, 445, 44–63.

- Majumdar, A.; Ward, R.K. Classification via group sparsity promoting regularization. In Proceedings of the 2009 IEEE International Conference on Acoustics, Speech and Signal Processing, Taipei, Taiwan, 19–24 April 2009; pp. 861–864.

- Elhamifar, E.; Vidal, R. Robust classification using structured sparse representation. In Proceedings of the CVPR 2011, Colorado Springs, CO, USA, 20–25 June 2011; pp. 1873–1879.

- Huang, J.; Nie, F.; Huang, H.; Ding, C. Supervised and projected sparse coding for image classification. In Proceedings of the Twenty-Seventh AAAI Conference on Artificial Intelligence, Bellevue, WA, USA, 14–18 July 2013.

- Tang, X.; Feng, G.; Cai, J. Weighted group sparse representation for undersampled face recognition. Neurocomputing 2014, 145, 402–415.

- Rao, N.; Nowak, R.; Cox, C.; Rogers, T. Classification with the sparse group lasso. IEEE Trans. Signal Process. 2015, 64, 448–463.

- Tan, S.; Sun, X.; Chan, W.; Qu, L.; Shao, L. Robust face recognition with kernelized locality-sensitive group sparsity representation. IEEE Trans. Image Process. 2017, 26, 4661–4668.

- Yuan, M.; Lin, Y. Model selection and estimation in regression with grouped variables. J. R. Stat. Soc. Ser. B (Stat. Methodol.) 2006, 68, 49–67.

- Natarajan, B.K. Sparse approximate solutions to linear systems. SIAM J. Comput. 1995, 24, 227–234.

- Argyriou, A.; Foygel, R.; Srebro, N. Sparse prediction with the k-support norm. In Proceedings of the 25th International Conference on Neural Information Processing Systems, Siem Reap, Cambodia, 13–16 December 2018; Volume 1, pp. 1457–1465.

- Deng, W.; Yin, W.; Zhang, Y. Group sparse optimization by alternating direction method. In Wavelets and Sparsity XV; International Society for Optics and Photonics: Bellingham, WA, USA, 2013; Volume 8858, p. 88580R.

- Huang, J.; Breheny, P.; Ma, S. A selective review of group selection in high-dimensional models. Stat. Sci. A Rev. J. Inst. Math. Stat. 2012, 27.

- Breheny, P.; Huang, J. Group descent algorithms for nonconvex penalized linear and logistic regression models with grouped predictors. Stat. Comput. 2015, 25, 173–187.

- Hu, Y.; Li, C.; Meng, K.; Qin, J.; Yang, X. Group sparse optimization via ℓp,q regularization. J. Mach. Learn. Res. 2017, 18, 960–1011.

- Jiang, L.; Zhu, W. Iterative Weighted Group Thresholding Method for Group Sparse Recovery. IEEE Trans. Neural Netw. Learn. Syst. 2020, 32, 63–76.

- Jiao, Y.; Jin, B.; Lu, X. Group Sparse Recovery via the ℓ0(ℓ2) Penalty: Theory and Algorithm. IEEE Trans. Signal Process. 2016, 65, 998–1012.

- Chen, P.Y.; Selesnick, I.W. Group-sparse signal denoising: Non-convex regularization, convex optimization. IEEE Trans. Signal Process. 2014, 62, 3464–3478.

- Abe, J.; Yamagishi, M.; Yamada, I. Linearly involved generalized Moreau enhanced models and their proximal splitting algorithm under overall convexity condition. Inverse Probl. 2020, 36, 035012.

- Chen, Y.; Yamagishi, M.; Yamada, I. A Generalized Moreau Enhancement of ℓ2,1-norm and Its Application to Group Sparse Classification. In Proceedings of the 2021 29th European Signal Processing Conference (EUSIPCO), Dublin, Ireland, 23–27 August 2021.

- Fan, J.; Li, R. Variable selection via nonconcave penalized likelihood and its oracle properties. J. Am. Stat. Assoc. 2001, 96, 1348–1360.

- Larsson, V.; Olsson, C. Convex low rank approximation. Int. J. Comput. Vis. 2016, 120, 194–214.

- Blake, A.; Zisserman, A. Visual Reconstruction; MIT Press: Cambridge, MA, USA, 1987.

- Zhang, C.H. Nearly unbiased variable selection under minimax concave penalty. Ann. Stat. 2010, 38, 894–942.

- Nikolova, M.; Ng, M.K.; Tam, C.P. Fast nonconvex nonsmooth minimization methods for image restoration and reconstruction. IEEE Trans. Image Process. 2010, 19, 3073–3088.

- Selesnick, I. Sparse regularization via convex analysis. IEEE Trans. Signal Process. 2017, 65, 4481–4494.

- Yin, L.; Parekh, A.; Selesnick, I. Stable principal component pursuit via convex analysis. IEEE Trans. Signal Process. 2019, 67, 2595–2607.

- Abe, J.; Yamagishi, M.; Yamada, I. Convexity-edge-preserving signal recovery with linearly involved generalized minimax concave penalty function. In Proceedings of the ICASSP 2019 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Brighton, UK, 12–17 May 2019; pp. 4918–4922.

- Wright, J.; Yang, A.Y.; Ganesh, A.; Sastry, S.S.; Ma, Y. Robust face recognition via sparse representation. IEEE Trans. Pattern Anal. Mach. Intell. 2008, 31, 210–227.

- Tibshirani, R. Regression shrinkage and selection via the lasso. J. R. Stat. Soc. Ser. B (Methodol.) 1996, 58, 267–288.

- Xu, Y.; Sun, Y.; Quan, Y.; Luo, Y. Structured sparse coding for classification via reweighted ℓ2,1 minimization. In CCF Chinese Conference on Computer Vision; Springer: Berlin/Heidelberg, Germany, 2015; pp. 189–199.

- Zheng, J.; Yang, P.; Chen, S.; Shen, G.; Wang, W. Iterative re-constrained group sparse face recognition with adaptive weights learning. IEEE Trans. Image Process. 2017, 26, 2408–2423.

- Zhang, C.; Li, H.; Chen, C.; Qian, Y.; Zhou, X. Enhanced group sparse regularized nonconvex regression for face recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2020.

- Bauschke, H.H.; Combettes, P.L. Convex Analysis and Monotone Operator Theory in Hilbert Spaces, 2nd ed.; Springer International Publishing: New York, NY, USA, 2017.

- Zhao, L.; Hu, Q.; Wang, W. Heterogeneous feature selection with multi-modal deep neural networks and sparse group lasso. IEEE Trans. Multimed. 2015, 17, 1936–1948.

- Qin, Z.; Scheinberg, K.; Goldfarb, D. Efficient block-coordinate descent algorithms for the group lasso. Math. Program. Comput. 2013, 5, 143–169.

- Hull, J.J. A database for handwritten text recognition research. IEEE Trans. Pattern Anal. Mach. Intell. 1994, 16, 550–554.

- Samaria, F.S.; Harter, A.C. Parameterisation of a stochastic model for human face identification. In Proceedings of the 1994 IEEE Workshop on Applications of Computer Vision, Sarasota, FL, USA, 5–7 December 1994; pp. 138–142.

- Cai, D.; He, X.; Hu, Y.; Han, J.; Huang, T. Learning a spatially smooth subspace for face recognition. In Proceedings of the 2007 IEEE Conference on Computer Vision and Pattern Recognition, Minneapolis, MN, USA, 17–22 June 2007; pp. 1–7.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).