Abstract

In this paper, a modification to the Polak–Ribière–Polyak (PRP) nonlinear conjugate gradient method is presented. The proposed method always generates a sufficient descent direction, independent of the accuracy of the line search and the convexity of the objective function. Under appropriate conditions, the modified method is proved to be globally convergent under the Wolfe or an Armijo-type line search. Moreover, the proposed technique is extended to the Hestenes–Stiefel (HS) and Liu–Storey (LS) methods. Numerical experiments are reported to illustrate the efficiency of the proposed method.

1. Introduction

Conjugate gradient methods are among the most popular methods for solving optimization problems, especially large-scale ones, owing to the simplicity and low storage requirements of their iterations [1].

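Consider the following unconstrained optimization problem:

$$\min_{x\in\mathbb{R}^n} f(x), \tag{1}$$

where $f:\mathbb{R}^n\to\mathbb{R}$ is continuously differentiable. Let $x_0\in\mathbb{R}^n$ be an arbitrary initial point for problem (1); the conjugate gradient method then generates a sequence $\{x_k\}$ by

$$x_{k+1}=x_k+\alpha_k d_k,\qquad k=0,1,2,\ldots, \tag{2}$$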

where $x_k$ is the $k$th iterate, $\alpha_k>0$ is a steplength obtained by some line search, and $d_k$ is a search direction defined by

$$d_k=\begin{cases}-g_k, & k=0,\\ -g_k+\beta_k d_{k-1}, & k\ge 1,\end{cases} \tag{3}$$

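where $g_k=g(x_k)=\nabla f(x_k)$ denotes the gradient of $f$ at $x_k$, and $\beta_k$ is a scalar whose choice determines the particular conjugate gradient method [2,3,4,5,6]. In this paper, we focus on the well-known Polak–Ribière–Polyak (PRP) [4,5], Hestenes–Stiefel (HS) [3] and Liu–Storey (LS) [6] methods, which share the same numerator $g_k^Ty_{k-1}$ in $\beta_k$. The update parameters of these methods are, respectively, given by

$$\beta_k^{PRP}=\frac{g_k^Ty_{k-1}}{\|g_{k-1}\|^2},\qquad \beta_k^{HS}=\frac{g_k^Ty_{k-1}}{d_{k-1}^Ty_{k-1}},\qquad \beta_k^{LS}=-\frac{g_k^Ty_{k-1}}{g_{k-1}^Td_{k-1}}, \tag{4}$$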

where $y_{k-1}=g_k-g_{k-1}$ and $\|\cdot\|$ denotes the Euclidean norm of vectors. Other nonlinear conjugate gradient methods and their global convergence properties can be found in [1,7].
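The following minimal Python sketch (our illustration, not code from this paper; the function names `dot` and `beta_prp_hs_ls` are hypothetical) evaluates the three parameters in (4) from $g_k$, $g_{k-1}$ and $d_{k-1}$, computing the shared numerator $g_k^Ty_{k-1}$ only once.

```python
def dot(u, v):
    """Euclidean inner product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))


def beta_prp_hs_ls(g, g_prev, d_prev):
    """Return (beta_PRP, beta_HS, beta_LS) from formula (4).

    g, g_prev and d_prev stand for g_k, g_{k-1} and d_{k-1}.
    """
    y = [a - b for a, b in zip(g, g_prev)]  # y_{k-1} = g_k - g_{k-1}
    num = dot(g, y)                         # shared numerator g_k^T y_{k-1}
    beta_prp = num / dot(g_prev, g_prev)    # denominator ||g_{k-1}||^2
    beta_hs = num / dot(d_prev, y)          # denominator d_{k-1}^T y_{k-1}
    beta_ls = -num / dot(g_prev, d_prev)    # denominator -g_{k-1}^T d_{k-1}
    return beta_prp, beta_hs, beta_ls


# Here d_{k-1} = -g_{k-1}, so g_{k-1}^T d_{k-1} = -||g_{k-1}||^2.
print(beta_prp_hs_ls([1.0, 2.0], [2.0, 0.0], [-2.0, 0.0]))  # (0.75, 1.5, 0.75)
```

In this example $g_{k-1}^Td_{k-1}=-\|g_{k-1}\|^2$, so $\beta_k^{LS}$ coincides with $\beta_k^{PRP}$; the same holds whenever $g_{k-1}^Td_{k-1}=-\|g_{k-1}\|^2$.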

The PRP, HS and LS methods are generally regarded as among the most efficient conjugate gradient methods in practical computation. This can be attributed to Property (*) introduced by Gilbert and Nocedal [8]: roughly speaking, $\beta_k$ becomes small whenever the step $s_{k-1}=x_k-x_{k-1}$ is small, so the next direction stays close to the steepest descent direction and jamming is avoided. Polak and Ribière [4] proved the global convergence of the PRP method for strongly convex functions under exact line search. Yuan [9] also established the global convergence of the PRP method under the assumption that the search direction satisfies the descent condition

$$g_k^Td_k<0\quad\text{for all } k, \tag{5}$$

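and the following standard Wolfe line search:

$$\begin{cases} f(x_k+\alpha_k d_k)\le f(x_k)+\delta\alpha_k g_k^Td_k,\\ g(x_k+\alpha_k d_k)^Td_k\ge \sigma g_k^Td_k,\end{cases} \tag{6}$$

where $0<\delta<\sigma<1$.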
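As an illustrative sketch (hypothetical helper names; the default values $\delta=10^{-4}$ and $\sigma=0.9$ are common textbook choices assumed here, not the parameters used in this paper), the two Wolfe conditions in (6) can be checked for a trial steplength as follows.

```python
def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def satisfies_wolfe(f, grad, x, d, alpha, delta=1e-4, sigma=0.9):
    """Check the standard Wolfe conditions (6) for the trial step alpha.

    delta and sigma must satisfy 0 < delta < sigma < 1; the defaults are
    illustrative assumptions, not values taken from this paper.
    """
    if not 0 < delta < sigma < 1:
        raise ValueError("require 0 < delta < sigma < 1")
    gtd = dot(grad(x), d)  # g_k^T d_k, negative for a descent direction
    x_new = [xi + alpha * di for xi, di in zip(x, d)]
    sufficient_decrease = f(x_new) <= f(x) + delta * alpha * gtd
    curvature = dot(grad(x_new), d) >= sigma * gtd
    return sufficient_decrease and curvature


def f(x):
    return 0.5 * dot(x, x)  # f(x) = ||x||^2 / 2


def grad(x):
    return list(x)  # gradient of ||x||^2 / 2


# Steepest descent direction d = -g from x = [1.0].
print([satisfies_wolfe(f, grad, [1.0], [-1.0], a) for a in (1.0, 1e-3, 2.5)])
# [True, False, False]
```

For $f(x)=\tfrac12\|x\|^2$, the short step $\alpha=10^{-3}$ violates the curvature condition and the long step $\alpha=2.5$ violates the sufficient decrease condition.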

However, the convergence properties of these methods are unsatisfactory in many situations. Powell [10] gave a counterexample showing that there exist nonconvex functions on which the PRP method does not converge globally even if the exact line search is used. Inspired by Powell's work, Gilbert and Nocedal [8] proved that the modified PRP method with $\beta_k^{PRP+}=\max\{\beta_k^{PRP},0\}$ is globally convergent. The resulting search direction effectively prevents jamming, and in their analysis it is assumed to satisfy the descent property (5) or the following sufficient descent condition:

$$g_k^Td_k\le -c\|g_k\|^2,\qquad c>0, \tag{7}$$

which is very important for establishing global convergence. In [11], Hager and Zhang proposed a modified HS formula defined by

$$\beta_k^{HZ}=\frac{1}{d_{k-1}^Ty_{k-1}}\left(y_{k-1}-2d_{k-1}\frac{\|y_{k-1}\|^2}{d_{k-1}^Ty_{k-1}}\right)^Tg_k.$$

The resulting method, called CG-DESCENT, possesses the sufficient descent property (7) with $c=7/8$. Hager and Zhang also presented the following extension of $\beta_k^{HZ}$:

$$\beta_k^{\tau}=\frac{g_k^Ty_{k-1}}{d_{k-1}^Ty_{k-1}}-\tau\frac{\|y_{k-1}\|^2\,g_k^Td_{k-1}}{(d_{k-1}^Ty_{k-1})^2},$$

where $\tau\ge 0$ is a parameter. If $\tau>1/4$, then the method possesses the sufficient descent property with $c=1-1/(4\tau)$; the choice $\tau=2$ recovers $\beta_k^{HZ}$ and $c=7/8$. Cheng [12] developed a two-term PRP-based descent method satisfying (7) by using a projection technique for unconstrained optimization. Yu et al. [13] proposed the following modified form of $\beta_k^{PRP}$:

$$\beta_k=\beta_k^{PRP}-\mu\frac{\|y_{k-1}\|^2\,g_k^Td_{k-1}}{\|g_{k-1}\|^4}.$$

Notably, if $\mu>1/4$, then condition (7) holds with $c=1-1/(4\mu)$. Yuan [14] presented a new PRP-type formula that guarantees the sufficient descent property (7). Livieris and Pintelas [15] proposed a new class of spectral conjugate gradient methods that ensure sufficient descent independent of the accuracy of the line search.
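Both sufficient descent results above rest on the same inequality. Write $\beta_k=g_k^Ty_{k-1}/D-t\,\|y_{k-1}\|^2g_k^Td_{k-1}/D^2$ with $D=d_{k-1}^Ty_{k-1}$ (the Hager–Zhang family, $t=\tau$) or $D=\|g_{k-1}\|^2$ (the formula of Yu et al., $t=\mu$). For $d_k=-g_k+\beta_kd_{k-1}$, the Cauchy–Schwarz inequality and $ab\le a^2/(4t)+tb^2$, applied with $a=\|g_k\|$ and $b=\|y_{k-1}\|\,|g_k^Td_{k-1}|/|D|$, give $g_k^Td_k\le-(1-\tfrac{1}{4t})\|g_k\|^2$. The sketch below (hypothetical names; random test vectors only illustrate the bound and do not prove it) checks this numerically.

```python
import random


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def penalized_direction(g, g_prev, d_prev, t, denom="hs"):
    """d_k = -g_k + beta_k d_{k-1} with
    beta_k = g_k^T y / D - t * ||y||^2 * g_k^T d_{k-1} / D^2, where
    D = d_{k-1}^T y_{k-1} ("hs", Hager-Zhang family, t = tau) or
    D = ||g_{k-1}||^2 ("prp", formula of Yu et al., t = mu).
    """
    y = [a - b for a, b in zip(g, g_prev)]
    D = dot(d_prev, y) if denom == "hs" else dot(g_prev, g_prev)
    beta = dot(g, y) / D - t * dot(y, y) * dot(g, d_prev) / D ** 2
    return [-gi + beta * di for gi, di in zip(g, d_prev)]


def worst_descent_ratio(t, denom, trials=2000, n=5, seed=0):
    """Largest observed g_k^T d_k / ||g_k||^2 over random test vectors."""
    rng = random.Random(seed)
    worst = -float("inf")
    for _ in range(trials):
        g = [rng.uniform(-1, 1) for _ in range(n)]
        g_prev = [rng.uniform(-1, 1) for _ in range(n)]
        d_prev = [rng.uniform(-1, 1) for _ in range(n)]
        y = [a - b for a, b in zip(g, g_prev)]
        if denom == "hs" and abs(dot(d_prev, y)) < 1e-2:
            continue  # skip nearly singular denominators d^T y
        d = penalized_direction(g, g_prev, d_prev, t, denom)
        worst = max(worst, dot(g, d) / dot(g, g))
    return worst


# For t = 2 both ratios should not exceed -(1 - 1/(4t)) = -0.875.
print(worst_descent_ratio(2.0, "hs"), worst_descent_ratio(2.0, "prp"))
```

With $t=2$ the observed worst-case ratio should not exceed $-7/8$, matching the constant $c=7/8$ of CG-DESCENT.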

Wei et al. [16] gave a variant of the PRP method called the VPRP method, in which the parameter $\beta_k$ is given by

$$\beta_k^{VPRP}=\frac{g_k^T\left(g_k-\frac{\|g_k\|}{\|g_{k-1}\|}g_{k-1}\right)}{\|g_{k-1}\|^2}.$$

Based on the VPRP method, Zhang [17] made a slight modification and obtained the NPRP method:

$$\beta_k^{NPRP}=\frac{\|g_k\|^2-\frac{\|g_k\|}{\|g_{k-1}\|}\left|g_k^Tg_{k-1}\right|}{\|g_{k-1}\|^2},$$

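and established the sufficient descent property (7) of the NPRP method. Zhang et al. [18] proposed a three-term conjugate gradient method, called the MPRP method, in which the direction takes the following form:

$$d_k=-g_k+\beta_k^{PRP}d_{k-1}-\theta_k y_{k-1},\qquad \theta_k=\frac{g_k^Td_{k-1}}{\|g_{k-1}\|^2}, \tag{8}$$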

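which gives the MPRP method the sufficient descent property; indeed, $g_k^Td_k=-\|g_k\|^2$ for all $k$ (with $d_0=-g_0$). This property holds independently of the line search and the convexity of the objective function. Under the following Armijo-type line search

$$f(x_k+\alpha_k d_k)\le f(x_k)-\delta\alpha_k^2\|d_k\|^2, \tag{9}$$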

where $\alpha_k$ is the largest element of $\{\rho^j: j=0,1,2,\ldots\}$ satisfying (9) and $\rho,\delta\in(0,1)$ are constants, the global convergence of the MPRP method is established. Note that the MPRP method in [18] reduces to the standard PRP method if the exact line search is used (since then $g_k^Td_{k-1}=0$, so $\theta_k=0$), and it converges globally under the line search (9). However, its global convergence under the Wolfe line search (6) cannot be guaranteed. The main reason is that the MPRP method does not satisfy the trust region property (Lemma 1 in Section 2). Based on the methods in [12,18], Dong et al. [19] proposed a three-term PRP-type conjugate gradient method that always satisfies the sufficient descent condition, independently of the line search employed.
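To make the MPRP iteration concrete, the following minimal sketch combines iteration (2), the three-term direction (8) and the Armijo-type line search (9). It implements the MPRP method of [18], not the ZPRP method of this paper; the constants $\rho=0.5$ and $\delta=10^{-4}$, the tolerance, the helper names and the quadratic test function are illustrative assumptions.

```python
import math


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def mprp_direction(g, g_prev, d_prev):
    """Three-term MPRP direction (8): -g_k + beta_k^PRP d_{k-1} - theta_k y_{k-1}."""
    y = [a - b for a, b in zip(g, g_prev)]  # y_{k-1} = g_k - g_{k-1}
    gp2 = dot(g_prev, g_prev)               # ||g_{k-1}||^2
    beta = dot(g, y) / gp2                  # beta_k^PRP
    theta = dot(g, d_prev) / gp2            # theta_k
    return [-gi + beta * di - theta * yi for gi, di, yi in zip(g, d_prev, y)]


def armijo_type_step(f, x, d, rho=0.5, delta=1e-4, max_backtracks=60):
    """Largest alpha in {1, rho, rho^2, ...} satisfying line search (9)."""
    fx, dd = f(x), dot(d, d)
    alpha = 1.0
    for _ in range(max_backtracks):
        x_new = [xi + alpha * di for xi, di in zip(x, d)]
        if f(x_new) <= fx - delta * alpha ** 2 * dd:
            return alpha
        alpha *= rho
    raise RuntimeError("no acceptable step found for line search (9)")


def mprp_minimize(f, grad, x0, tol=1e-6, max_iter=10000):
    """Iteration (2) with direction (8) and steps from (9); returns (x, iterations)."""
    x = list(x0)
    g = grad(x)
    d = [-gi for gi in g]  # d_0 = -g_0
    for k in range(max_iter):
        if math.sqrt(dot(g, g)) <= tol:
            return x, k
        alpha = armijo_type_step(f, x, d)
        x = [xi + alpha * di for xi, di in zip(x, d)]
        g_prev, g = g, grad(x)
        d = mprp_direction(g, g_prev, d)
    return x, max_iter


def f(x):
    return 0.5 * (x[0] ** 2 + 2 * x[1] ** 2 + 3 * x[2] ** 2)


def grad(x):
    return [x[0], 2 * x[1], 3 * x[2]]


# Sufficient descent identity g_k^T d_k = -||g_k||^2 (here -6) up to rounding.
g, g_prev, d_prev = [1.0, 2.0, -1.0], [0.5, -1.0, 2.0], [-0.5, 1.0, -1.0]
print(dot(g, mprp_direction(g, g_prev, d_prev)))
x_star, iters = mprp_minimize(f, grad, [1.0, 1.0, 1.0])
print(iters, x_star)
```

The first printed value equals $-\|g_k\|^2=-6$ up to rounding; the identity is algebraic, so it holds for any $d_{k-1}$, which is why it does not depend on the line search.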

Motivated by the above observations, we propose a modified three-term PRP formula based on (8), which possesses not only the sufficient descent property but also the trust region property. To this end, we first rewrite the search direction (8) in the following equivalent form:

This leads us to consider the following general form of the search direction:

For any choice of $\beta_k$, it is not difficult to deduce that the direction defined by (11) satisfies

which is independent of any line search and the convexity of the objective function.

In this paper, we further study the PRP method and propose a new three-term PRP method to improve its numerical performance and theoretical properties. The remainder of this paper is organized as follows. In Section 2, we present the modified PRP method based on a new technique, and in Section 3 we establish its global convergence. In Section 4, the technique is extended to the HS and LS methods. In Section 5, numerical results are reported to show that the modified methods are efficient, and Section 6 concludes the paper.

2. The Modified PRP Method and Its Properties

To ensure the sufficient descent condition while keeping the simple structure and good properties of the PRP method, we modify the denominator of the PRP formula as follows:

where . For convenience, we call the method defined by (2), (11) and (13) the ZPRP method. Clearly, the ZPRP method reduces to the PRP method if .

The modified PRP-type conjugate gradient method is stated in Algorithm 1.

Algorithm 1. Modified PRP-type Conjugate Gradient Method

3. Global Convergence of the ZPRP Method

In this section, we establish the global convergence of the proposed method. The following assumptions are commonly used in the literature to analyze the global convergence of conjugate gradient methods with inexact line searches.

Assumption 1

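- (i) The level set $\Omega=\{x\in\mathbb{R}^n : f(x)\le f(x_0)\}$ is bounded.

- (ii) In some neighborhood $N$ of $\Omega$, $f$ is continuously differentiable and its gradient is Lipschitz continuous; that is, there exists a constant $L>0$ such that $\|g(x)-g(y)\|\le L\|x-y\|$ for all $x,y\in N$.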

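We first prove that the ZPRP method is globally convergent under the Wolfe line search (6). Under Assumption 1, if each $d_k$ is a descent direction and $\alpha_k$ satisfies the Wolfe conditions (6), then the following Zoutendijk condition [20] holds:

$$\sum_{k\ge 0}\frac{(g_k^Td_k)^2}{\|d_k\|^2}<\infty. \tag{17}$$

Obviously, the Zoutendijk condition (17) and (12) imply that

$$\sum_{k\ge 0}\frac{\|g_k\|^4}{\|d_k\|^2}<\infty. \tag{18}$$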

Theorem 1.

Suppose that Assumption 1 holds. Consider the ZPRP method, where $\alpha_k$ is obtained by the Wolfe line search (6). Then we have

Proof of Theorem 1.

By Lemma 1, we have . Let , then we get

which implies

Hence, (19) holds. The proof is completed. ☐

Next, we prove the global convergence of the ZPRP method under the line search (9).

Theorem 2.

Suppose that Assumption 1 holds. Consider the ZPRP method, where $\alpha_k$ satisfies the Armijo-type line search (9). Then we have

Proof of Theorem 2.

Suppose that the conclusion is not true. Then there exists a constant such that ,

From (9) and Assumption 1 (i), we have

Suppose , then there is an infinite index set K such that

From (9), it follows that when is sufficiently large, satisfies the following inequality,

4. Extension to the HS and LS Method

In this section, we extend the above idea to the HS and LS methods. The corresponding methods, called the ZHS and ZLS methods, use $\beta_k$ defined, respectively, by

where . It is obvious that since . Hence, we now only need to discuss the global convergence of the ZHS method.

The following theorem shows that the ZHS method converges globally with the Wolfe line search (6).

Theorem 3.

Let Assumption 1 hold. Consider the ZHS method, where $\alpha_k$ is obtained by the Wolfe line search (6). Then

The following result shows that the ZHS method with the Armijo-type line search (9) is globally convergent.

Theorem 4.

Let Assumption 1 hold. Consider the ZHS method, where $\alpha_k$ is obtained by the line search (9). Then

Proof of Theorem 4.

The proof is similar to that of Theorem 2 for the ZPRP method and is omitted here. ☐

5. Numerical Experiments

In this section, we report numerical results on unconstrained optimization problems from the CUTE [21] test problem library. We test the ZPRP and ZHS methods and compare them with CG-DESCENT [11] and the MPRP method in [18]. The parameters and . All codes were written in MATLAB R2012a and run on a PC with a 3.00 GHz CPU under the Windows 7 operating system. We use the stopping criterion . The detailed numerical results are listed on the website: http://mathxiuxiu.blog.sohu.com/326066259.html.

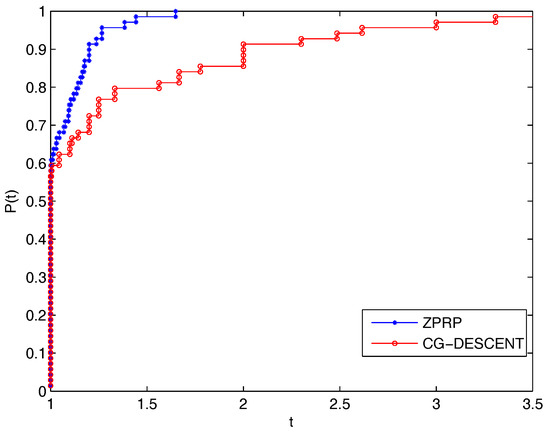

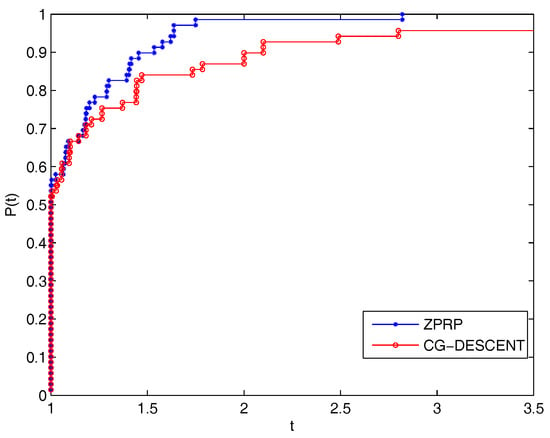

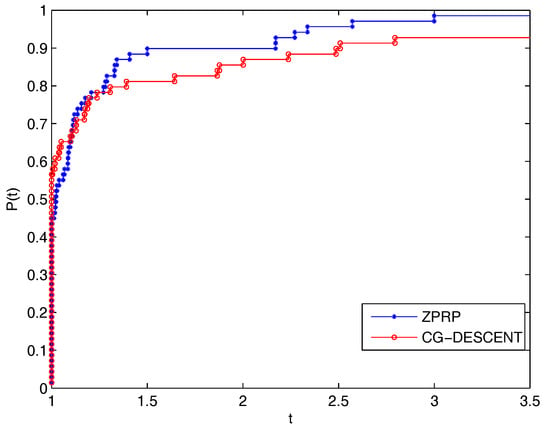

We first compare the performance of the ZPRP method with that of CG-DESCENT proposed by Hager and Zhang [11], with all methods using the Wolfe line search (6). Figure 1, Figure 2 and Figure 3 show the performance of these methods with respect to the total number of iterations, the total number of function and gradient evaluations, and CPU time, respectively, evaluated using the performance profiles of Dolan and Moré [22]. For each method, we plot the fraction P of problems for which the method is within a factor t of the smallest number of iterations, the smallest number of function and gradient evaluations, or the least CPU time, respectively. The left side of each figure gives the percentage of the test problems for which a method is the fastest; the right side gives the percentage of the test problems that are successfully solved by each method. The top curve corresponds to the method that solves the most problems within a factor t of the best performance. The ZPRP method has the highest probability of being the optimal solver and thus outperforms CG-DESCENT. Figure 1 shows that the ZPRP method solves about 59.5% of the test problems with the least number of iterations, while CG-DESCENT solves about 56.5%. For the total number of function and gradient evaluations, Figure 2 shows that the ZPRP method solves 55.2% of the test problems with the least number of evaluations, while CG-DESCENT solves about 52.2%. Therefore, on this test set, the ZPRP method outperforms CG-DESCENT.

Figure 1. Performance profiles for the number of iterations.

Figure 2. Performance profiles for the total number of function and gradient evaluations.

Figure 3. Performance profiles for the total CPU time.
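Performance profiles of the kind shown in these figures can be computed from a table of costs. In the minimal sketch below (our own illustration with hypothetical names, not the authors' MATLAB code), `costs[p][s]` is the positive cost of method `s` on problem `p` (iterations, evaluations or CPU time), with `math.inf` marking a failure; the profile value at $t=1$ is the fraction of problems on which a method is fastest, and its value for large $t$ is the fraction of problems it solves.

```python
import math


def performance_profile(costs, taus):
    """Dolan-More performance profiles.

    costs[p][s] > 0 is the cost of solver s on problem p (math.inf = failure).
    Returns profile[s][i], the fraction of problems with ratio r_{p,s} <= taus[i].
    """
    n_problems, n_solvers = len(costs), len(costs[0])
    ratios = []
    for row in costs:
        best = min(row)
        # r_{p,s} = t_{p,s} / min_s t_{p,s}; failures (and all-fail rows) stay infinite
        ratios.append([c / best if math.isfinite(c) else math.inf for c in row])
    return [
        [sum(1 for p in range(n_problems) if ratios[p][s] <= tau) / n_problems
         for tau in taus]
        for s in range(n_solvers)
    ]


# Two hypothetical solvers on four problems; solver 0 fails on the last one.
costs = [[1.0, 2.0], [2.0, 2.0], [4.0, 1.0], [math.inf, 3.0]]
print(performance_profile(costs, [1.0, 2.0, 4.0]))
# [[0.5, 0.5, 0.75], [0.75, 1.0, 1.0]]
```

Ties count as "fastest" for every tied method, which is why the values at $t=1$ can sum to more than 1.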

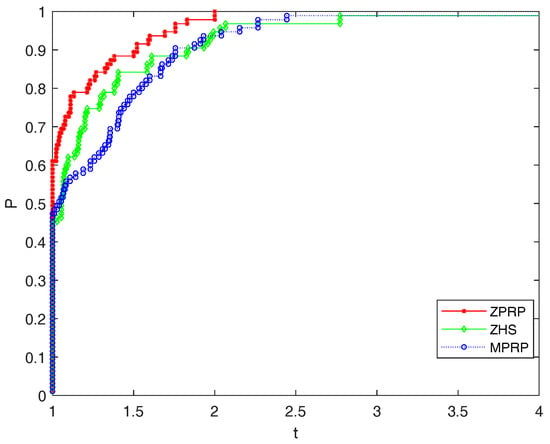

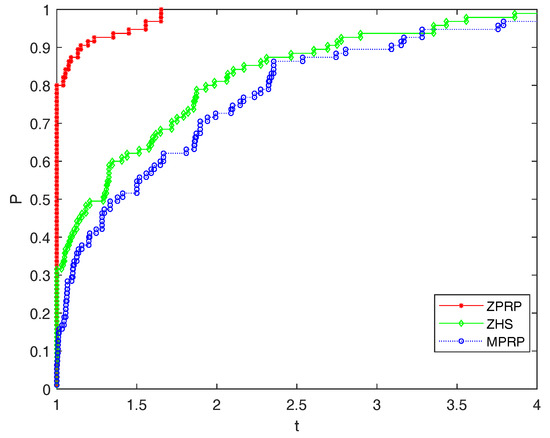

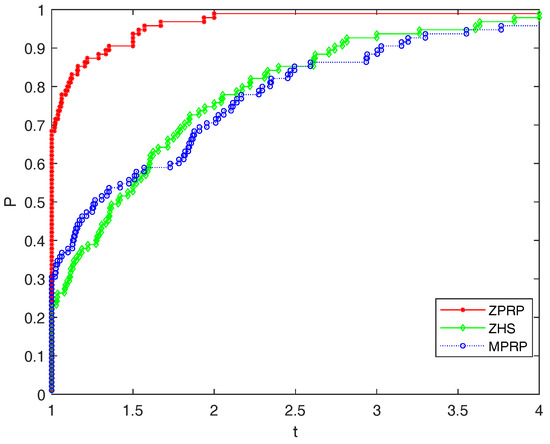

Next, we compare the performance of the ZPRP method with that of the ZHS method and the MPRP method in [18], with all methods using the line search (9). Figure 4, Figure 5 and Figure 6 show the performance of these methods with respect to the number of iterations, the total number of function and gradient evaluations, and CPU time, respectively. Figure 4 shows that the ZPRP method outperforms the MPRP and ZHS methods. More specifically, the performance profile for the number of iterations shows that ZPRP solves 61% of the test problems with the least number of iterations, while MPRP and ZHS solve about 47.5% and 45.2% of the test problems, respectively. As regards the number of function and gradient evaluations, Figure 5 shows that ZPRP solves 80% of the test problems with the least number of evaluations. Hence, the performance of the ZPRP method is slightly better than that of the MPRP and ZHS methods.

Figure 4. Performance profiles for the number of iterations.

Figure 5. Performance profiles for the total number of function and gradient evaluations.

Figure 6. Performance profiles for the total CPU time.

6. Conclusions

In this paper, we first proposed a modified PRP formula that provides sufficient descent directions for the objective function independent of any line search. We then applied the technique to the HS and LS conjugate gradient methods, which also yields the sufficient descent property. The global convergence of the modified methods is established under the standard Wolfe line search or the Armijo-type line search. Moreover, numerical experiments show that the proposed methods are promising. In future work, we will combine our coefficient with the spectral conjugate gradient method [15], which ensures sufficient descent independent of the accuracy of the line search, and study the convergence properties of the resulting spectral conjugate gradient method.

Author Contributions

J.S. performed the numerical experiments; X.Z. was in charge of the overall research of the paper.

Funding

This research received no external funding.

Acknowledgments

The authors would like to thank the editor and the reviewers for their very careful reading and constructive comments on this paper. This work is supported by the National Natural Science Foundation of China (No. 11702206), the Natural Science Basic Research Plan of Shaanxi Province (No. 2018JQ1043) and Shaanxi Provincial Education Department (No. 16JK1435).

Conflicts of Interest

The authors declare no conflict of interest.

References

- Dai, Y.; Yuan, Y. Nonlinear Conjugate Gradient Methods; Shanghai Scientific and Technical Publishers: Shanghai, China, 2000.

- Fletcher, R.; Reeves, C. Function minimization by conjugate gradients. Comput. J. 1964, 7, 149–154.

- Hestenes, M.; Stiefel, E. Methods of conjugate gradients for solving linear systems. J. Res. Natl. Bur. Stand. 1952, 49, 409–436.

- Polak, E.; Ribière, G. Note sur la convergence de méthodes de directions conjuguées. Rev. Fr. Inform. Rech. Oper. 1969, 3, 35–43.

- Polyak, B. The conjugate gradient method in extremal problems. USSR Comput. Math. Math. Phys. 1969, 9, 94–112.

- Liu, Y.; Storey, C. Efficient generalized conjugate gradient algorithms, Part 1: Theory. J. Optim. Theory Appl. 1991, 69, 177–182.

- Hager, W.W.; Zhang, H. A survey of nonlinear conjugate gradient methods. Pac. J. Optim. 2006, 2, 35–58.

- Gilbert, J.; Nocedal, J. Global convergence properties of conjugate gradient methods for optimization. SIAM J. Optim. 1992, 2, 21–42.

- Yuan, Y. Analysis on the conjugate gradient method. Optim. Methods Softw. 1993, 2, 19–29.

- Powell, M. Nonconvex minimization calculations and the conjugate gradient method. Numer. Anal. Lect. Notes Math. 1984, 1066, 122–141.

- Hager, W.W.; Zhang, H. A new conjugate gradient method with guaranteed descent and an efficient line search. SIAM J. Optim. 2005, 16, 170–192.

- Cheng, W. A two-term PRP-based descent method. Numer. Funct. Anal. Optim. 2007, 28, 1217–1230.

- Yu, G.; Guan, L.; Li, G. Global convergence of modified Polak–Ribière–Polyak conjugate gradient methods with sufficient descent property. J. Ind. Manag. Optim. 2008, 4, 565–579.

- Yuan, G. Modified nonlinear conjugate gradient methods with sufficient descent property for large-scale optimization problems. Optim. Lett. 2009, 3, 11–21.

- Livieris, I.; Pintelas, P. A new class of spectral conjugate gradient methods based on a modified secant equation for unconstrained optimization. J. Comput. Appl. Math. 2013, 239, 396–405.

- Wei, Z.; Yao, S.; Liu, L. The convergence properties of some new conjugate gradient methods. Appl. Math. Comput. 2006, 183, 1341–1350.

- Zhang, L. An improved Wei–Yao–Liu nonlinear conjugate gradient method for optimization computation. Appl. Math. Comput. 2009, 215, 2269–2274.

- Zhang, L.; Zhou, W.; Li, D. A descent modified Polak–Ribière–Polyak conjugate gradient method and its global convergence. IMA J. Numer. Anal. 2006, 26, 629–640.

- Dong, X.; Liu, H.; He, Y.; Babaie-Kafaki, S.; Ghanbari, R. A new three-term conjugate gradient method with descent direction for unconstrained optimization. Math. Model. Anal. 2016, 21, 399–411.

- Zoutendijk, G. Nonlinear programming, computational methods. In Integer and Nonlinear Programming; Abadie, J., Ed.; North-Holland: Amsterdam, The Netherlands, 1970; pp. 37–86.

- Bongartz, I.; Conn, A.; Gould, N.; Toint, P. CUTE: Constrained and unconstrained testing environments. ACM Trans. Math. Softw. 1995, 21, 123–160.

- Dolan, E.; Moré, J. Benchmarking optimization software with performance profiles. Math. Program. 2002, 91, 201–213.

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).