Abstract

Non-Intrusive Load Monitoring (NILM) enables appliance-level energy analysis from aggregated electrical signals, offering valuable insights for smart energy systems. While most NILM research targets high-resource environments, this study evaluates the feasibility of deploying NILM algorithms on constrained edge computing platforms. Two representative models, one for event detection and one for energy disaggregation, were trained on a high-end PC and tested on both the PC and two edge devices. A modular software framework using Docker containers and a Python virtual environment ensured reproducibility across platforms. Experiments using public datasets under simulated real-time streaming conditions revealed that, although all devices achieved consistent detection, classification, and disaggregation performance, the edge platforms struggled with real-time inference due to processing latency and memory limitations. This study presents a detailed comparison of execution time, resource usage, and model performance, highlights the trade-offs associated with NILM deployment on embedded systems, and proposes future directions for optimization and integration into smart grids.

1. Introduction

In the age of digital energy transformation, Non-Intrusive Load Monitoring (NILM) has become a cornerstone technology for understanding and optimizing energy consumption. By disaggregating total power usage into appliance-level insights using only aggregate measurements, NILM eliminates the need for costly and invasive submetering, offering a scalable and efficient solution for energy management, demand-side response, and smart grid optimization across residential, commercial, and industrial domains []. Recent real-world applications underscore NILM’s growing relevance. In industrial environments, NILM is being used for anomaly detection and predictive maintenance, enabling manufacturers to monitor machinery health and energy efficiency without installing sensors on every device. For example, active learning models have been developed to reduce the need for labeled data, cutting implementation costs by up to 99% while maintaining high prediction accuracy. In smart homes, NILM is integrated into intelligent energy management systems to track appliance usage, detect faults, and support demand-side optimization. Data-driven NILM approaches using deep learning have shown high accuracy in predicting future consumption and identifying abnormal patterns [,].

Despite these advances, most NILM research remains confined to high-performance computing environments or simulations, limiting its practical deployment []. This gap between theory and practice raises a key question: how can NILM algorithms be effectively integrated into the resource-constrained edge computing devices that are increasingly prevalent in smart grid and Internet of Things (IoT) ecosystems? Answering it requires evaluating how NILM algorithms actually perform on such platforms. Edge computing offers a compelling solution: by processing data locally, i.e., closer to where it is generated, it enables real-time, decentralized energy analysis, enhances privacy, and minimizes reliance on cloud infrastructure. This is particularly beneficial for NILM, where real-time inference and low-latency decision-making are essential. Studies have shown that edge-based NILM systems can reduce response time by up to 80% compared to cloud-based alternatives, and can achieve up to a 36% reduction in computational complexity and a 75% reduction in storage requirements through model optimization. Moreover, edge deployment enables scalable, decentralized energy monitoring, which is critical for smart grids and distributed energy systems [,]. However, the computational demands of machine learning-based NILM models, particularly those designed for event detection and energy disaggregation, raise critical questions about their feasibility on embedded platforms, whose computational power, memory, and latency budgets are limited.

This study presents an experimental evaluation of state-of-the-art NILM algorithms for both event detection and energy disaggregation on edge computing devices. Unlike most previous studies, which rely on simulations in high-performance computing environments, this research implements and evaluates two NILM models, Deep Detection, Feature Extraction and Multi-Label Classification (DeepDFML)-NILM for event detection and the Sequence-to-Point (Seq2Point) Convolutional Neural Network (CNN) for energy disaggregation, on constrained edge platforms, namely the NVIDIA Jetson Nano and Jetson Orin Nano. The goal of this work is to evaluate the execution time, resource usage, and inference performance of these two algorithms when deployed on edge computing devices, in order to assess the feasibility of implementing them on such systems. Thus, rather than comparing NILM algorithms against each other, this work focuses on the computational performance of the selected algorithms on the selected devices. It provides quantitative metrics on inference time, resource usage (CPU, RAM, GPU), model size, and prediction error, offering insights into the feasibility and trade-offs of deploying NILM systems on low-power hardware. The results highlight the limitations of the Jetson Nano and Jetson Orin Nano for real-time applications and establish a baseline for practical NILM deployments on embedded systems.

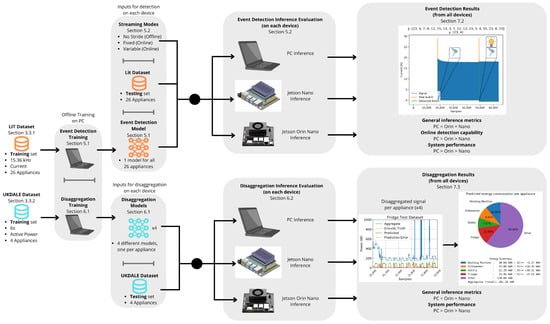

The present work is organized as follows: Section 2 reviews recent and relevant NILM research, with a focus on experimental studies and the limited exploration of embedded system deployment. Section 3 describes the selected NILM algorithms, DeepDFML-NILM for event detection and Seq2Point CNN for load disaggregation, along with their architectures and reported performance; Section 3.3 then details the Laboratory for Innovation and Technology (LIT) [] and UK Domestic Appliance-Level Electricity (UKDALE) [] datasets used in the experiments. Section 4 outlines the hardware and software setup, including the use of Docker containers and virtual environments to ensure consistent execution across devices. Section 5 presents the training and inference procedures for the event detection algorithm, evaluated on the PC, Jetson Nano, and Jetson Orin Nano, with corresponding resource usage and performance metrics. Similarly, Section 6 covers the training and inference procedures for the load disaggregation algorithm. Section 7 summarizes all the results and presents the conclusions for the metrics across devices. Finally, Section 8 discusses key findings, outlines limitations, and proposes future directions for optimizing NILM models for embedded systems, including GPU optimization, model compression, improved generalization testing, as well as a roadmap for their integration into smart grid and IoT ecosystems. A graphical overview of the present study’s pipeline is depicted in Figure 1.

Figure 1.

Graphical overview of the present study’s pipeline. Jetson Nano and Jetson Orin Nano images taken from [,].

2. Related Works

This section synthesizes key contributions in NILM research, focusing on computing paradigms, datasets, toolkits, and the distinction between load disaggregation and load detection. Promising approaches such as hybrid Long Short-Term Memory (LSTM)-CNN models [], spectrogram-based CNNs [], and lightweight event detection algorithms [] are highlighted, along with persistent challenges in generalization, real-time deployment, and standardized evaluation [,]. The section also shows that deploying NILM on edge devices remains challenging due to constraints in memory, processing power, and latency.

Non-Intrusive Load Monitoring (NILM) has evolved significantly since Hart’s foundational work in the 1980s, which introduced event-based detection and clustering for load disaggregation. NILM formulates energy disaggregation as the task of inferring per-appliance signals from aggregate mains data. Starting from Hart’s step-change signatures and combinatorial optimization [], research progressed to probabilistic state-space models (e.g., Hidden Markov Models (HMM) and Factorial Hidden Markov Models (FHMM)) and, more recently, to deep learning architectures (e.g., CNN, LSTM, seq2point, Sequence-to-Sequence (seq2seq), Generative Adversarial Networks (GANs), and Transformers) [,,].

Among the main approaches for load disaggregation (estimation), hybrid sequence models are reported to combine local feature extraction with temporal modeling. A compact LSTM-CNN sequence-to-sequence architecture reported high overall accuracy on the Electrical Load Measurements dataset (REFIT) with a notably modest parameter count (on the order of ), although per-appliance macro-F1 can lag for short-duty devices []. End-to-end CNNs operating on time–frequency representations (e.g., Short-Time Fourier Transform (STFT) spectrograms of current derivatives) jointly perform event handling and classification, showing near real-time feasibility in controlled tests and competitive accuracy on the Building-Level Fully Labeled Electricity Disaggregation Dataset (BLUED) [,]. Beyond these exemplars, widely used families include sequence-to-point CNNs [], causal 1D CNNs on complex power (WaveNILM) [], graph-signal approaches [], GAN-enhanced models, and transformer variants surveyed in recent reviews [,]. Despite accuracy gains, many works still report sensitivity to distribution shifts across houses and devices, and provide limited reporting of inference time and computing cost. In particular, compact LSTM and CNN disaggregation models can reach high overall accuracy with relatively few parameters, but they may underperform on short, bursty appliances and have primarily been validated on single datasets and homes [].

With regard to load detection (event detection), event-based pipelines are reported to segment aggregate signals into candidate transitions and then classify their signatures. Lightweight, on-site methods emphasize simple features and decision logic for low-latency detection of closely spaced events, explicitly targeting embedded/edge deployment []. Event-driven CNN pipelines pair robust start/stop detection with deep transient classification, advancing accuracy while retaining an interpretable detection stage []. Compared to direct disaggregation models, event-first approaches offer a pragmatic route to edge-friendly NILM, but they still require downstream assignment and, potentially, disaggregation for energy accounting.
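To make the event-first idea concrete, the following Python sketch shows the kind of simple decision logic such lightweight detectors rely on: a Hart-style step-change test on aggregate active power. It is an illustrative assumption, not the method of any cited work nor of DeepDFML-NILM, and the function name, the 30 W threshold, and the `min_gap` guard are hypothetical choices.

```python
from typing import List, Sequence, Tuple


def detect_step_events(power: Sequence[float],
                       threshold_w: float = 30.0,
                       min_gap: int = 3) -> List[Tuple[int, str, float]]:
    """Flag On/Off events where consecutive power samples differ by >= threshold_w.

    min_gap is the minimum number of samples between two reported events, a crude
    guard against reporting one slow transition several times.
    Returns (sample_index, "On" or "Off", delta_w) tuples, where sample_index is
    the first sample after the step.
    """
    events: List[Tuple[int, str, float]] = []
    last_idx = None
    for i in range(1, len(power)):
        delta = float(power[i]) - float(power[i - 1])
        if abs(delta) < threshold_w:
            continue  # change too small to count as a switching event
        if last_idx is not None and i - last_idx < min_gap:
            continue  # too close to the previously reported event
        events.append((i, "On" if delta > 0 else "Off", delta))
        last_idx = i
    return events


aggregate = [100, 100, 100, 1100, 1100, 1100, 1100, 100, 100]
print(detect_step_events(aggregate))
# [(3, 'On', 1000.0), (7, 'Off', -1000.0)]
```

In practice the threshold would need tuning per installation, and a separate classifier would still have to attribute each detected step to an appliance.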

Recent research has focused on enhancing NILM through deep learning, embedded computing, and standardized datasets. For instance, toolkits such as Non-Intrusive Load Monitoring Toolkit (NILMTK) (https://github.com/nilmtk/nilmtk, accessed on 2 September 2025) [] and its extension NILMTK-contrib (https://github.com/nilmtk/nilmtk-contrib, accessed on 2 September 2025) [] have enabled reproducible experimentation and benchmarking across algorithms and datasets. These frameworks support preprocessing, model training, and performance evaluation using metrics such as F1 score, root mean square error (RMSE), and other precision metrics. Furthermore, datasets such as LIT-dataset (https://pessoal.dainf.ct.utfpr.edu.br/douglasrenaux/LIT_Dataset/index.html, accessed on 2 September 2025), UKDALE-dataset (https://data.ceda.ac.uk/edc/efficiency/residential/EnergyConsumption/Domestic/UK-DALE-2015, accessed on 2 September 2025), and Reference Energy Disaggregation Dataset (REDD) [] have become essential for training and validating NILM models. The LIT dataset, in particular, offers high-frequency sampling and precise event labeling, facilitating advanced feature extraction and classification. Deep learning models, including CNN-based architectures like DeepDFML-NILM (https://github.com/LucasNolasco/DeepDFML-NILM, accessed on 2 September 2025) [], have achieved high accuracy in load detection and multi-label classification. These models often use high-frequency signals and image-like representations of voltage–current trajectories to improve appliance recognition.

In general, public datasets are central to benchmarking and reproducibility: REDD [], UKDALE [], REFIT [], BLUED [], and others (e.g., Almanac of Minutely Power Dataset (AMPds and AMPds2), Electricity Consumption and Occupancy Dataset (ECO), Plug-Load Appliance Identification Dataset (PLAID), Building-level Office environment Dataset (BLOND), etc.). They vary in sampling rates (sub-Hz to tens of kHz), duration, number of homes, and device coverage [,]. Tooling for fair comparison includes NILMTK [,,], which provides parsers, baselines, and evaluation metrics. Reviews emphasize the need for unified test protocols (e.g., unseen-home splits, cross-dataset validation, and standardized thresholds) to enable apples-to-apples comparisons [,]. It is important to highlight that spectrogram-CNN approaches demonstrate near real-time processing and strong lab results, with encouraging performance on BLUED, but broader, longitudinal in-home trials remain limited [,].

Furthermore, surveys and individual studies converge on three gaps: (i) Generalization: models often degrade on unseen homes due to device heterogeneity and usage diversity; domain adaptation and semi-unsupervised learning are active but not definitive [,,]. (ii) Real-time/on-device: few works report end-to-end latency, memory, and energy footprints; promising timings exist (e.g., ∼100 ms per 1 s window on desktop for spectrogram-CNN) but embedded deployment evidence remains scarce []. (iii) Evaluation standards: inconsistent splits, thresholds, and metrics hinder fair comparison; community toolkits help, but standardized scenarios are still needed [,,,].

Moreover, several authors agree on the following practical recommendations: (i) Edge–cloud co-design: Use lightweight, on-device event detection to gate higher-cost inference; escalate to compact seq2point/seq2seq disaggregation locally, and defer to the cloud only for ambiguous segments [,]. (ii) Evaluation protocol: Adopt NILMTK-based pipelines with unseen-home and cross-dataset tests; report accuracy, latency, and memory (and, where relevant, energy) [,]. (iii) Data strategy: Mix canonical datasets (REDD, UKDALE, REFIT, BLUED) with augmentation and careful validation on small in-home pilots to quantify out-of-distribution robustness [,,,,,].
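A minimal sketch of recommendation (i), assuming a Python pipeline, is shown below: a cheap detector decides whether the costlier disaggregation model runs at all. The function names and the trivial stand-in models are hypothetical, and the cloud-escalation path for ambiguous segments is omitted.

```python
from typing import Callable, List, Optional, Sequence, Tuple

Window = Sequence[float]


def gated_inference(windows: Sequence[Window],
                    detect: Callable[[Window], bool],
                    disaggregate: Callable[[Window], float]
                    ) -> Tuple[List[Optional[float]], int]:
    """Run the expensive disaggregation model only on windows flagged by the detector.

    Returns the per-window outputs (None where the model was skipped) and the
    number of expensive calls actually made.
    """
    outputs: List[Optional[float]] = []
    heavy_calls = 0
    for window in windows:
        if detect(window):
            outputs.append(disaggregate(window))
            heavy_calls += 1
        else:
            outputs.append(None)  # no event detected: skip the costly model
    return outputs, heavy_calls


# Hypothetical stand-ins: a range test as the cheap detector, a mean as the "heavy" model.
def has_step(window: Window, threshold_w: float = 30.0) -> bool:
    return max(window) - min(window) >= threshold_w


def mean_power(window: Window) -> float:
    return sum(window) / len(window)


windows = [[100, 101, 99], [100, 600, 600], [600, 600, 600]]
outputs, calls = gated_inference(windows, has_step, mean_power)
print(outputs, calls)
```

Here only the middle window triggers the expensive path, which is the saving this co-design aims for on steady-state data.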

Nevertheless, while NILM research has made substantial progress in algorithm development, dataset creation, and toolkit standardization, most NILM implementations remain confined to simulated or controlled environments [,,]. Furthermore, while performance on public datasets is strong, authors consistently report challenges in generalization to unseen homes, latency and memory constraints, and a scarcity of field deployments [,].

As a consequence, there is a pressing need for real-world implementations, cross-platform benchmarking, and adaptive models that can operate reliably in diverse environments and real-world deployments. For example, in [], an edge solution based on an EVALSTPM32 microcontroller is developed and validated through a year-long field study under dynamic residential conditions. In [], an Open Multi Power Meter (OMPM) is developed and validated as a low-cost, scalable, and open-source hardware solution compatible with NILMTK. Unlike previous Arduino- and Raspberry Pi-based solutions, it balances accuracy and scalability by building on a single microcontroller architecture with RS485 communication. The OMPM measures multiple electrical parameters (voltage, current, power, frequency, and power factor) simultaneously across several channels, offering a replicable platform for academic research and practical energy management applications. In [], a prototype smart meter architecture with embedded capabilities for high-frequency NILM is presented, and its computational performance is evaluated on six edge platforms: Raspberry Pi 4 (RP4), Intel Neural Compute Stick 2 (NCS2), NXP i.MX 8M Plus (IMX8), NVIDIA Jetson Nano (NANO), Jetson Xavier NX (XAVIERNX), and Ultra96v1 (ULTRA96V1) AMD-Xilinx MPSoC FPGA. In [], lightweight, retrainable neural architectures, such as a Siamese network with a fixed CNN and retrainable back-propagation (BP) layers, are shown to overcome the memory, processing, and latency constraints of edge devices; the pre-trained NILM algorithm is deployed on an embedded Linux system based on the STM32MP1. This approach enables real-time, scalable NILM with online adaptation, making it a promising solution for smart homes, IoT, and energy management systems [].
Similarly, in [], convolutional and LSTM neural networks are implemented to assess computational performance on the NVIDIA Jetson Orin NX and Nano, Google Coral (DevBoard and USB), Intel Neural Compute Stick 2, NXP i.MX8 Plus, and Xilinx Zynq UltraScale+ MPSoC ZCU104. The results show considerable variability among devices, with the ZCU104 and Jetson Orin achieving the lowest latencies across most models without any increase in the error metric.

From the above, event-driven methods deliver robust detection including closely spaced transitions, with resource-friendly designs suitable for embedded devices, yet they require integration with downstream disaggregation to provide appliance-level energy estimates [,].

Several studies have compared NILM algorithms and their computational performance using conventional computing environments. For instance, ref. [] evaluates four machine learning models (XGBoost, LSTM, Logistic Regression, and DTW-KNN) on a desktop equipped with an Intel i7-7700K CPU and NVIDIA GTX 1080 GPU, reporting accuracy, training time, and memory usage. Similarly, ref. [] analyzes computation times for each stage of its NILM pipeline on a Core i5 (2.3 GHz, 8 GB RAM) workstation, achieving an average processing speed of 19.35 ms per sample. These studies offer insights into algorithmic behavior but do not address edge-level deployment constraints. More recent efforts emphasize edge–cloud collaboration. The study in [] proposes a three-tier client–edge–cloud NILM architecture, deploying XGBoost and Seq2Point on a Raspberry Pi 5 (64-bit Arm Cortex-A76, 8 GB RAM) as the edge node and an Intel i7-10875H + RTX 2060 as the cloud, demonstrating that running inference at the edge significantly reduces latency. In addition, ref. [] converts a CNN into TensorFlow Lite and deploys it on an STM32MP1 dual-core SoC, achieving inference in 20 ms, while [] benchmarks edge-AI platforms (Jetson Orin NX/Nano, Google Coral, Intel NCS2, NXP i.MX8, Xilinx ZCU104) showing wide latency variability and identifying the ZCU104 and Jetson Orin as the fastest. Unlike studies such as [,], which primarily benchmark algorithms under desktop conditions, in the present work, a systematic experimental evaluation of NILM models is performed directly on embedded edge devices, specifically the NVIDIA Jetson Nano and Jetson Orin Nano, to quantify latency, resource consumption, and accuracy under real-time constraints. 
Similar to the edge-oriented approaches in [], the present study shares the goal of enabling practical NILM deployment under latency and scalability limitations; however, it extends these efforts by providing detailed empirical measurements of processing time, CPU/GPU/RAM utilization, and energy disaggregation accuracy across multiple streaming configurations (fixed and variable stride).

3. Baseline NILM Algorithms

For this work, two NILM algorithms from the literature were implemented due to their good reported performance: DeepDFML-NILM, focused on load event detection, and Seq2Point CNN, focused on load disaggregation in domestic power grids. This section describes the key characteristics of both algorithms, including their architectures and reported performance.

3.1. Event Detection Algorithm

NILM event detection algorithms analyze high- or low-frequency aggregated signals to study the electrical signatures of the appliances connected to a grid and to detect events such as these appliances turning on or off. Electrical signatures are characteristic waveforms produced when appliances connect to or disconnect from the grid. These signatures can reveal the type of event (On or Off) and the specific appliance that caused the change in the grid.

The DeepDFML-NILM algorithm [] is employed for event detection in this study. Working directly on high-frequency aggregated signals, such as those in the LIT dataset described in Section 3.3.1, it performs on/off event detection, multi-label classification, and temporal regression (estimation of the event’s sample index) without requiring a separate disaggregation step.

The algorithm is based on a one-dimensional Convolutional Neural Network (1D-CNN) that processes sliding windows of 12,800 samples from the aggregated signal, with a stride of 2560 samples. Each window is divided into five non-overlapping segments and includes unmapped margins corresponding to 15% of the total samples. These margins are excluded from prediction to avoid detecting events too close to the window edges, where limited sample representation may reduce accuracy. Each segment is passed through fully connected subnetworks that generate three outputs: the event type (On or Off), the corresponding appliance label, and the estimated sample index indicating the event’s occurrence. A post-processing stage is applied to align, filter, and smooth the raw predictions, resulting in a consistent and temporally coherent activation timeline for each detected appliance.
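The windowing arithmetic can be sketched in Python with NumPy. At 15,360 Hz, a 12,800-sample window spans about 0.833 s, and a 2560-sample stride advances it by about 166.7 ms. The text does not specify how the 15% margin is split between the two window edges, so this sketch assumes an equal split; the segment boundaries are therefore illustrative, and the helper names are hypothetical rather than taken from the DeepDFML-NILM repository.

```python
import numpy as np

FS_HZ = 15_360          # LIT Synthetic subset sampling rate (Section 3.3.1)
WINDOW = 12_800         # samples per input window
STRIDE = 2_560          # samples between consecutive window starts
N_SEGMENTS = 5          # non-overlapping segments per window
MARGIN_FRACTION = 0.15  # assumption: total unmapped share, split equally at both edges


def sliding_windows(signal, window=WINDOW, stride=STRIDE):
    """Return a (n_windows, window) view of a 1D signal, one row every `stride` samples."""
    signal = np.asarray(signal)
    if signal.shape[0] < window:
        return np.empty((0, window), dtype=signal.dtype)
    return np.lib.stride_tricks.sliding_window_view(signal, window)[::stride]


def segment_bounds(window=WINDOW, n_segments=N_SEGMENTS, margin_fraction=MARGIN_FRACTION):
    """(start, end) sample indices of each mapped segment, excluding the edge margins."""
    margin = int(round(window * margin_fraction / 2))
    seg_len = (window - 2 * margin) // n_segments
    return [(margin + k * seg_len, margin + (k + 1) * seg_len) for k in range(n_segments)]


print(f"window = {WINDOW / FS_HZ:.3f} s, hop = {STRIDE / FS_HZ * 1000:.1f} ms")
print(segment_bounds())
```

Using a strided view avoids copying the 12,800-sample windows, which matters on memory-limited boards; the model input would still need a copy or batch assembly before inference.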

According to the results presented in [], the algorithm achieved a 95.8% success rate in event detection, 97% accuracy in load classification, and an average error of 0.8 half-cycles in estimating the event’s sample index. Additionally, the model was validated on a Jetson TX1 platform, where it processed each window in 11.2 ms, with an average resource usage of 94.8% RAM, 30.9% CPU, and 50.6% GPU. The model has 464,990 parameters and occupies 5.7 MB of memory.

3.2. Load Disaggregation Algorithm

NILM load disaggregation algorithms aim to estimate the individual energy consumption of appliances by analyzing aggregated power data, which is typically sampled at low frequency. These algorithms decompose the total electrical load into distinct components by identifying patterns or features characteristic of specific devices.

NILMTK [] and its extension NILMTK-contrib [] are toolkits designed to support reproducible research in the NILM domain. They provide a suite of benchmark disaggregation algorithms, evaluation metrics, statistical analysis tools, and data preprocessing functionalities.

Among the available algorithms, one of the best-performing is Seq2Point-nilm (https://github.com/MingjunZhong/seq2point-nilm, accessed on 2 September 2025) [,], a one-dimensional Convolutional Neural Network (1D-CNN) that predicts the power consumption of a target appliance at the center (midpoint) of a window of the aggregated signal. The window length is 99 samples in the NILMTK framework and 599 samples in the standalone implementation, and the window is shifted with a one-sample stride.
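The one-sample-stride windowing with a midpoint target can be sketched in NumPy as follows. This is a hypothetical helper, not code from the seq2point-nilm repository; it drops positions at the signal edges that lack a full window and omits normalization and batching.

```python
import numpy as np


def seq2point_pairs(aggregate, appliance, window=599):
    """Pair each aggregate window with the appliance reading at its midpoint.

    Windows advance by one sample; positions without a full window at the signal
    edges are dropped here (padding the aggregate is a common alternative).
    """
    if window % 2 == 0:
        raise ValueError("window must be odd so that it has a single midpoint")
    aggregate = np.asarray(aggregate, dtype=np.float32)
    appliance = np.asarray(appliance, dtype=np.float32)
    if aggregate.shape != appliance.shape:
        raise ValueError("aggregate and appliance series must be aligned")
    half = window // 2
    inputs = np.lib.stride_tricks.sliding_window_view(aggregate, window)  # one-sample stride
    targets = appliance[half: half + inputs.shape[0]]  # midpoint of each window
    return inputs, targets


# Toy usage with a short window; the configurations described above use 99 or 599 samples.
agg = np.arange(10)
app = np.arange(10) * 10
X, y = seq2point_pairs(agg, app, window=5)
print(X.shape, y[:3])
```

Because the stride is one sample, a series of N points yields N - window + 1 training pairs, which is why seq2point inference cost grows linearly with the length of the input signal.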

The architecture consists of five convolutional layers, followed by a dense layer and a single scalar output. In [], models were trained on GPUs (GTX 970 and GTX TITAN X); however, no information was provided regarding resource usage or model size. For evaluation, the UKDALE dataset (see Section 3.3.2) was employed to train appliance-specific models for the kettle, microwave, fridge, dishwasher, and washing machine. Reported performance includes per-point Mean absolute errors (MAE) ranging from 7.4 W to 27.8 W, and daily Summed absolute errors (SAE) between 0.069 and 0.645.
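For reference, the two error measures can be computed as below, assuming the common definitions: MAE as the average absolute per-sample error, and SAE as the normalized error of the total energy over a period, |r_hat - r| / r, where r and r_hat are the summed true and predicted signals. The per-period helper (for example, one day of samples) is a hypothetical way to obtain a daily figure; whether it matches the exact normalization used in [] is an assumption.

```python
from typing import Sequence


def mae(pred: Sequence[float], truth: Sequence[float]) -> float:
    """Per-point mean absolute error, in the signal's units (W)."""
    if len(pred) != len(truth) or len(truth) == 0:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum(abs(p - t) for p, t in zip(pred, truth)) / len(truth)


def sae(pred: Sequence[float], truth: Sequence[float]) -> float:
    """Normalized aggregate error |r_hat - r| / r over the given samples."""
    r_hat, r = sum(pred), sum(truth)
    if r == 0:
        raise ValueError("ground-truth total is zero")
    return abs(r_hat - r) / r


def mean_sae_per_period(pred: Sequence[float], truth: Sequence[float], period: int) -> float:
    """Average SAE over consecutive periods of `period` samples, skipping periods with zero energy."""
    scores = [sae(pred[i:i + period], truth[i:i + period])
              for i in range(0, len(truth), period)
              if sum(truth[i:i + period]) > 0]
    return sum(scores) / len(scores) if scores else float("nan")


truth = [0, 100, 100, 0]
pred = [10, 90, 110, 0]
print(mae(pred, truth), sae(pred, truth), mean_sae_per_period(pred, truth, period=2))
```

Note that errors of opposite sign cancel in SAE but not in MAE, which is why a model can show a small SAE (correct total energy) alongside a sizeable per-point MAE.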

3.3. Datasets

This section provides an overview of the datasets used in this study. The purpose of this study is not to assess or demonstrate the generalization performance of the selected algorithms across datasets, unseen appliances, or unseen households. Instead, the selected subsets are used as representative samples of data that can be obtained in real-world environments. These subsets serve solely as input data to evaluate the computational performance and feasibility of the algorithms when executed on the target edge computing devices.

3.3.1. LIT Dataset

LIT-dataset, ref. [], was developed by the Laboratory for Innovation and Technology in Embedded Systems (LIT) at UTFPR. The dataset is composed of three main subsets: Natural, Simulated, and Synthetic loads. The Natural subset collects waveforms from real environments over long periods of time, recording the on and off times of each load, as well as the current and voltage waveforms of the aggregated signal and of all monitored loads. The Simulated subset was created in a MATLAB/Simulink environment to reproduce complex scenarios that are difficult to recreate in the real world.

On the other hand, the Synthetic subset, which is the one used in this study, contains current and voltage waveforms collected using a custom setup that precisely controls the on and off times of the loads with a resolution better than 5 ms. This subset is sampled at 15,360 Hz and includes data from 26 different appliances. Each recording contains a combination of one, two, three, or eight appliances operating simultaneously. Additionally, for each combination, the appliances are activated at 16 different triggering angles relative to the grid waveform, yielding a diverse set of waveforms for each reading. The original dataset includes only MATLAB tools, as explained in (https://pessoal.dainf.ct.utfpr.edu.br/douglasrenaux/LIT_Dataset/Matlab/index.html, accessed on 2 September 2025); however, a Python-compatible version in .hdf5 format can be downloaded from the following link (https://drive.google.com/file/d/10NL9S8BYioj1U1_phCEoKX4WWRQoBuYW/view, accessed on 2 September 2025).

3.3.2. UKDALE Dataset

UKDALE-dataset, ref. [], is a whole-house demand open-access dataset from five UK houses. It includes two main data subsets: a high-frequency (16 kHz) aggregated current and voltage signal, and a low-frequency power dataset with disaggregated appliance-level readings at 6 s intervals and aggregated whole-house power at 1 s intervals. In this work, only the low-frequency power subset is used.

The five houses vary in the number of monitored appliances, from five in House 3 to fifty-three in House 1, and in recording duration, from a few months for most houses to more than two years for House 1. Different types of power meters were used, measuring either active or apparent power. For this study, only appliances recorded with active power, specifically the fridge, kettle, dishwasher, and washing machine, were considered.
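Because the UKDALE aggregate (1 s) and appliance (6 s) channels are sampled at different rates, they must be aligned before training or evaluation. The text does not state how this was done; one simple option, sketched here under that assumption with a hypothetical helper, averages the aggregate readings within each 6 s appliance interval and marks data gaps explicitly.

```python
from bisect import bisect_right
from typing import List, Optional, Sequence


def align_to_appliance(agg_ts: Sequence[float], agg_w: Sequence[float],
                       app_ts: Sequence[float], period_s: float = 6.0
                       ) -> List[Optional[float]]:
    """Average the aggregate readings in (t - period_s, t] for each appliance timestamp t.

    agg_ts must be sorted ascending (e.g. Unix seconds). None marks an interval
    with no aggregate data, i.e. a gap in the recording.
    """
    aligned: List[Optional[float]] = []
    for t in app_ts:
        lo = bisect_right(agg_ts, t - period_s)  # first reading after t - period_s
        hi = bisect_right(agg_ts, t)             # one past the last reading at or before t
        chunk = agg_w[lo:hi]
        aligned.append(sum(chunk) / len(chunk) if len(chunk) else None)
    return aligned


agg_ts = list(range(1, 13))          # twelve 1 s aggregate readings
agg_w = [100.0] * 6 + [200.0] * 6
print(align_to_appliance(agg_ts, agg_w, app_ts=[6, 12, 30]))
# [100.0, 200.0, None]
```

Keeping gaps as explicit None values, rather than silently interpolating, makes it easy to drop incomplete windows before they reach the model.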

4. Experimental Setup

This section outlines the experimental setup used to evaluate the performance of NILM algorithms for event detection and load disaggregation on edge computing platforms. It describes the hardware devices, runtime environments, and software architecture used across the PC, Jetson Nano, and Jetson Orin Nano for deploying and executing the models.

4.1. Hardware Devices

The goal of this work is to evaluate the execution time, resource usage, and inference performance of two NILM algorithms—DeepDFML-NILM for event detection and Seq2Point CNN for energy disaggregation—when deployed on edge computing devices, specifically the Jetson Nano and Jetson Orin Nano, to assess the feasibility of implementing these algorithms on such systems.

The devices used for this analysis are specified in Table 1. The PC was used both for training the models and for running inference, whereas the Jetson boards only ran inference with the models previously trained on the PC.

Table 1.

Hardware and software specifications of the development and edge platforms. Nvidia platforms information taken from [,].

Although the experiments conducted in this study did not directly measure the power consumption of the hardware platforms, their nominal energy profiles are a relevant reference for practical deployment. The NVIDIA Jetson Nano can be configured with a maximum power mode of 10 W, while the NVIDIA Jetson Orin Nano offers higher computational capacity with a maximum power mode of 15 W. For comparison, the baseline experiments were carried out on a PC equipped with an Intel Core i7-13620H processor (10 cores, 16 threads) and an NVIDIA RTX 4060 GPU, whose combined power consumption under load typically ranges between 150 W and 180 W, roughly an order of magnitude higher than that of the edge devices. This contrast underscores the efficiency advantage of embedded platforms in terms of nominal energy usage.
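To put these nominal figures in perspective, the following back-of-the-envelope Python calculation assumes each platform draws its stated power continuously for 24 h. Real consumption depends on workload and power mode, so the results are rough illustrations derived from the nominal values above, not measurements.

```python
# Nominal figures from the text: maximum power modes of the Jetson boards and the
# typical loaded range quoted for the PC. These are not measurements.
NOMINAL_POWER_W = {
    "Jetson Nano (10 W mode)": 10.0,
    "Jetson Orin Nano (15 W mode)": 15.0,
    "PC, low estimate": 150.0,
    "PC, high estimate": 180.0,
}


def energy_kwh(power_w: float, hours: float) -> float:
    """Energy drawn at a constant power over a duration, in kWh."""
    return power_w * hours / 1000.0


for name, watts in NOMINAL_POWER_W.items():
    print(f"{name}: {energy_kwh(watts, 24):.2f} kWh per day of continuous operation")

# Ratios: the PC draws about 10x to 12x the Orin Nano's cap and 15x to 18x the Nano's.
print(150 / 15, 180 / 15, 150 / 10, 180 / 10)
```

For an always-on NILM meter, this difference (about 0.24 to 0.36 kWh per day for the boards versus 3.6 to 4.3 kWh per day for the PC) would be comparable to the consumption of some of the appliances being monitored.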

4.2. Execution Environments: Docker Containers and Virtual Environment

To ensure portability, reproducibility, and compatibility across heterogeneous platforms, controlled execution environments were configured for each device. On the PC and Jetson Nano, Docker containers with the necessary libraries and dependencies were used. For the Jetson Orin Nano, a Python virtual environment managed with venv and pip was created instead, due to compatibility issues with the Docker container used on the Jetson Nano. Table 2 summarizes the main features of the runtime environments used on each platform.

Table 2.

Summary of runtime environments for each platform (Docker or virtual environment).

4.3. Software Architecture

A modular software architecture was developed with two independent systems: one for real-time event detection and another for energy disaggregation. Both systems provide a RESTful API and include a user interface built with Streamlit.

(i) Detection System: This service uses FastAPI to receive POST requests issued after each inference of the event detection model and streams the detected events in real time over WebSockets. The Streamlit-based interface connects to the API via WebSocket and displays the real-time status of the 26 monitored devices.

(ii) Disaggregation System: This component offers a separate API for running inferences using the Seq2Point model on aggregated power signals. Users can specify the target appliance and input CSV file; the system performs the prediction and stores the results. The corresponding dashboard allows users to select appliances, run inferences, and visualize the outputs through time-series and energy distribution plots.

(iii) Metrics Collection: To evaluate computational performance during model training and inference, a real-time monitoring system was implemented. It logs CPU, RAM, and GPU usage at 1 Hz on the Jetson boards and 10 Hz on the PC. The data is saved in CSV format as Timestamp, CPU Usage (%), RAM Usage (%), GPU Usage (%), and later processed to compute average resource utilization during the relevant execution periods.
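The averaging step can be sketched with Python's standard `csv` module. The log layout follows the columns listed above; storing the timestamp as seconds and delimiting each execution period by explicit start and end times are assumptions of this sketch, and the helper name is hypothetical. The sampling itself is not shown.

```python
import csv
import io
from typing import Dict, IO

USAGE_COLUMNS = ("CPU Usage (%)", "RAM Usage (%)", "GPU Usage (%)")


def average_usage(log: IO[str], start: float, end: float) -> Dict[str, float]:
    """Average each usage column over rows whose Timestamp lies in [start, end].

    Assumes Timestamp is stored as seconds (e.g. Unix time); skipinitialspace
    tolerates spaces after the commas in the header and rows.
    """
    reader = csv.DictReader(log, skipinitialspace=True)
    totals = dict.fromkeys(USAGE_COLUMNS, 0.0)
    count = 0
    for row in reader:
        if start <= float(row["Timestamp"]) <= end:
            for col in USAGE_COLUMNS:
                totals[col] += float(row[col])
            count += 1
    if count == 0:
        raise ValueError("no samples in the requested interval")
    return {col: total / count for col, total in totals.items()}


LOG_TEXT = (
    "Timestamp, CPU Usage (%), RAM Usage (%), GPU Usage (%)\n"
    "0.0, 10, 40, 0\n"
    "1.0, 50, 45, 80\n"
    "2.0, 70, 47, 90\n"
    "3.0, 12, 41, 0\n"
)
print(average_usage(io.StringIO(LOG_TEXT), start=1.0, end=2.0))
```

Restricting the average to the execution interval matters here: idle samples before and after inference (the first and last rows above) would otherwise dilute the reported utilization.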

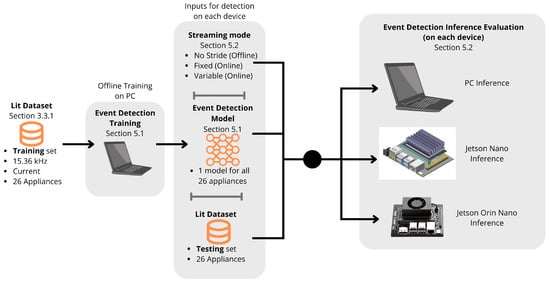

5. Event Detection Training and Inference

This section describes the complete workflow for the event detection model, including both the training process conducted on a personal computer (PC) and the inference phase performed across all target platforms: the PC, Jetson Nano, and Jetson Orin Nano. Each device used its corresponding runtime environment, as detailed in Table 1 and Table 2. Figure 2 shows a zoomed-in view of the event detection pipeline, originally introduced in the overall pipeline in Figure 1.

Figure 2.

Event detection pipeline. Jetson Nano and Jetson Orin Nano images taken from [,].

5.1. Event Detection Training

The event detection model was trained using the LIT dataset and the original training parameters from the DeepDFML-NILM repository. All training was performed on the PC. Table 3 summarizes key training details, such as training time, average resource usage, and model size.

Table 3.

Detection model training time, average resource usage on PC, and model size.

Table 3 shows that the trained model matches the original model published in the DeepDFML-NILM repository in both parameter count and size. The recorded resource usage indicates that training was GPU-intensive, while the system had sufficient memory to handle the workload without bottlenecks.

After training, the model was evaluated on all devices. The evaluation covered event detection, load classification, temporal accuracy (Event Distance), and the average processing time per 0.833 s window. The results across devices are shown in Table 4. Processing times are based on 10 measurements (n = 10) and reported with a 95% confidence interval (CI).

Table 4.

Performance metrics of the Event Detection trained model and average processing time for a 0.833 s window across devices.

Table 4 shows that all evaluation metrics are consistent across devices: approximately 96% of On/Off events are correctly detected, the temporal error is only 0.78 AC half-cycles (6.5 ms), and appliance classification accuracy is about 97%, with very few misclassifications.
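
The conversion between half-cycles and milliseconds depends on the mains frequency. The sketch below assumes a 60 Hz grid, which is consistent with the 0.78 half-cycles ≈ 6.5 ms figure above; the constant and function names are illustrative.

```python
MAINS_HZ = 60.0   # assumption: 60 Hz mains, consistent with 0.78 half-cycles = 6.5 ms
FS_HZ = 15_360    # sampling rate of the LIT signals (Hz)


def half_cycles_to_ms(half_cycles: float, mains_hz: float = MAINS_HZ) -> float:
    """Convert an Event Distance error from AC half-cycles to milliseconds."""
    half_cycle_ms = 1000.0 / (2.0 * mains_hz)  # about 8.33 ms at 60 Hz
    return half_cycles * half_cycle_ms


def half_cycles_to_samples(half_cycles: float, fs_hz: float = FS_HZ,
                           mains_hz: float = MAINS_HZ) -> float:
    """Same error expressed in samples (128 samples per half-cycle at 15,360 Hz and 60 Hz)."""
    return half_cycles * fs_hz / (2.0 * mains_hz)
```

`half_cycles_to_ms(0.78)` returns approximately 6.5 ms, and `half_cycles_to_samples(0.78)` shows that the same error spans roughly 100 samples of the 15,360 Hz signal.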

The devices differ only in processing time. This is expected: the same trained model was used on all systems, so the variations reflect differences in computing capability. The PC processing time serves as the reference. As expected, the Jetson Orin Nano processes a window faster than the Jetson Nano, consistent with its higher computational performance. These results are directly comparable with those obtained in [], where similar performance metrics were achieved but with a lower processing time of 11.2 ms per window on an NVIDIA Jetson TX1 using a custom runtime environment.

A second evaluation measured the system resource usage of the model when processing a 0.833 s window on each device. Table 5 summarizes the CPU, GPU, and RAM usage. All results are based on 352 samples (n = 352) with a 95% CI.

Table 5.

Average resource usage of the Event Detection trained model when processing a 0.833 s window across devices.

Table 5 shows that CPU and RAM usage reflect each device's compute capability and available resources. The PC, used as the reference, shows the lowest usage because it has the most resources available. Among the embedded platforms, the Jetson Nano uses a higher percentage of its resources because it has fewer of them. GPU usage is low on all devices: this algorithm does not trigger GPU-intensive processes, and the measured GPU usage is due primarily to system processes rather than to the algorithm itself.
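
The 95% confidence intervals reported with n = 10 and n = 352 are not accompanied by a formula in this section. A common choice, assumed in the sketch below, is a Student-t interval around the sample mean; `mean_ci` is a hypothetical helper, and the caller supplies the t critical value.

```python
import math
import statistics
from typing import Optional, Sequence, Tuple


def mean_ci(samples: Sequence[float], t_crit: Optional[float] = None) -> Tuple[float, float]:
    """Return (mean, half-width) of a two-sided 95% confidence interval.

    t_crit should be the Student-t critical value for n - 1 degrees of freedom
    (2.262 for n = 10). If omitted, the normal value z = 1.96 is used, which is
    a reasonable approximation only for large n such as 352 or 410.
    """
    n = len(samples)
    if n < 2:
        raise ValueError("at least two samples are needed")
    if t_crit is None:
        t_crit = statistics.NormalDist().inv_cdf(0.975)
    sem = statistics.stdev(samples) / math.sqrt(n)  # standard error of the mean
    return statistics.fmean(samples), t_crit * sem
```

For the n = 10 timing runs, `mean_ci(times, t_crit=2.262)` uses the t value for 9 degrees of freedom; for n = 352 the default normal approximation differs from the exact t value by less than 0.01.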

5.2. Event Detection Inference Evaluation

In a real NILM scenario, data is continuously acquired from a smart meter or energy sensor and then streamed to a pipeline with the necessary algorithms for inference processing. Inference can be performed in two ways: online, where data is processed in real time to detect appliance switching events as they happen, and offline, where the entire stored dataset is analyzed later when needed. In this work, three data streaming modes were implemented that recreate different real-world scenarios using 37.7 s signals sampled at 15,360 Hz from the LIT dataset:

(i) Mode 1—No Stride: a baseline offline mode that processes the entire 37.7 s signal at once and returns results only after the full signal has been analyzed. This mode assumes that the complete signal is already stored and suits scenarios where real-time detection is not required and only historical event analysis is needed.

(ii) Mode 2—Fixed Stride: an online mode that assumes real-time data are being streamed from an energy meter. Each iteration processes a fixed-length 4.16 s window while the meter keeps buffering new data. Once the current window has been processed, the window advances by exactly 0.833 s over the meter's buffered data. If, when processing finishes, less than 0.833 s of new data has been buffered, the pipeline waits until 0.833 s of new data is available. If more than 0.833 s has been buffered, the window slides only over the oldest 0.833 s, and the remainder is kept for later iterations. In that case the backlog grows with every iteration, and real-time detection is no longer possible.

(iii) Mode 3—Variable Stride: an online mode that also processes a 4.16 s fixed-length window, as in Mode 2, but advances the window by an amount equal to the time taken to process the current window. Results are therefore returned more or less frequently depending on the window processing time, and the variable stride changes the overlap between windows, which may affect detection robustness. Both online modes enable continuous inference over overlapping windows, which enhances event detection. To avoid duplicate detections, events occurring within 50 ms of each other are grouped, and only the one with the highest confidence is kept.
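
The 50 ms duplicate-suppression rule can be sketched as below. The text does not specify how groups are formed, so this sketch assumes that consecutive detections at most 50 ms apart are chained into one group regardless of appliance label; the `Event` type and its fields are hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Event:
    t: float           # absolute event time in seconds (hypothetical field)
    label: int         # appliance class predicted by the model
    is_on: bool        # True for an On event, False for an Off event
    confidence: float  # model confidence for this detection


def merge_duplicates(events: List[Event], tol_s: float = 0.050) -> List[Event]:
    """Chain detections at most tol_s after their predecessor; keep the most confident per group."""
    merged: List[Event] = []
    group: List[Event] = []
    for ev in sorted(events, key=lambda e: e.t):
        if group and ev.t - group[-1].t > tol_s:
            merged.append(max(group, key=lambda e: e.confidence))
            group = []
        group.append(ev)
    if group:
        merged.append(max(group, key=lambda e: e.confidence))
    return merged
```

Chaining on the previous detection lets a group grow wider than 50 ms when detections keep arriving; measuring the distance from the first event of each group instead would cap the group width, at the cost of occasionally splitting one physical event.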

Section 5.2.1, Section 5.2.2 and Section 5.2.3 present the evaluation of Mode 1, Mode 2, and Mode 3, respectively, across the platforms and environments detailed earlier in Table 1 and Table 2, and analyze whether each mode is viable on each device.

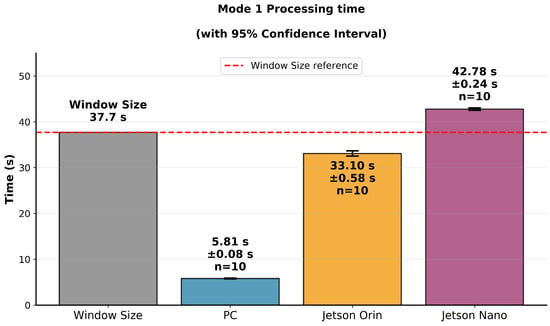

5.2.1. Mode 1—No Stride

As previously described, Mode 1 is an offline mode that processes an entire stored signal at once, in this case a 37.7 s signal sampled at 15,360 Hz, equivalent to about 580,000 samples. Figure 3 compares the 37.7 s window size with the average processing time of that window on the PC, Jetson Orin Nano, and Jetson Nano, obtained from 10 data points per device with a 95% CI.
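
The sample counts follow directly from the 15,360 Hz sampling rate. The sketch below additionally assumes, as a hypothesis rather than a stated fact, that the 0.833 s and 4.16 s durations are rounded values of 50 and 250 cycles of a 60 Hz mains, which gives exact integer sample counts.

```python
FS_HZ = 15_360   # LIT sampling rate (Hz)
MAINS_HZ = 60    # assumption: 60 Hz mains, i.e. 256 samples per cycle


def seconds_to_samples(seconds: float, fs_hz: int = FS_HZ) -> int:
    return round(seconds * fs_hz)


def cycles_to_samples(cycles: int, fs_hz: int = FS_HZ, mains_hz: int = MAINS_HZ) -> int:
    return cycles * fs_hz // mains_hz


full_signal = seconds_to_samples(37.7)   # Mode 1 input, about 580,000 samples
stride = cycles_to_samples(50)           # 0.833 s, assumed to be 50 cycles
window = cycles_to_samples(250)          # 4.16 s, assumed to be 250 cycles
print(full_signal, stride, window, window / FS_HZ)
```

Under this assumption the 4.16 s window holds exactly five 0.833 s strides.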

Figure 3.

Mode 1: Average processing time of a 37.7 s window across devices compared with the window size.

Figure 3 shows consistent measurements, demonstrating that Mode 1 is stable and repeatable across all devices. The processing time on the Jetson Nano exceeds the window duration; however, since this is an offline mode, such processing times are acceptable even when they exceed the recording time.
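
The comparison in Figure 3 can be expressed as a real-time factor (RTF), the ratio of processing time to signal duration. The sketch uses the Mode 1 processing times reported for the Jetson boards in Section 7.2; an RTF above 1 is acceptable offline but means the device processes the signal more slowly than it was recorded.

```python
def real_time_factor(processing_s: float, signal_s: float) -> float:
    """Processing time divided by signal duration; values above 1 are slower than real time."""
    return processing_s / signal_s


# Mode 1 processing times for the Jetson boards, as reported in Section 7.2.
mode1_times_s = {"Jetson Orin Nano": 33.10, "Jetson Nano": 42.78}
for device, t in mode1_times_s.items():
    rtf = real_time_factor(t, 37.7)
    print(f"{device}: RTF = {rtf:.2f} ({'slower' if rtf > 1 else 'faster'} than real time)")
```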

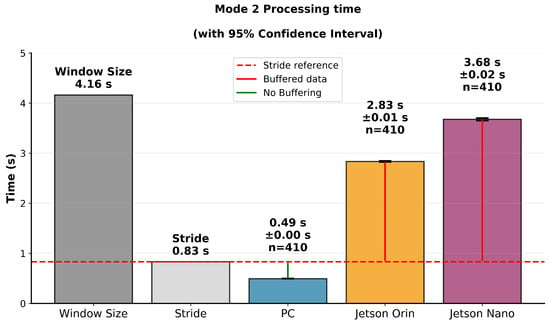

5.2.2. Mode 2—Fixed Stride

Mode 2 is an online mode that processes a 4.16 s window and slides it over the buffered energy-meter data with a constant 0.833 s stride. Figure 4 shows the window size, the stride, and the average processing time of the 4.16 s window on the PC, Jetson Orin Nano, and Jetson Nano, obtained from 410 data points per device with a 95% CI. By comparing each device's processing time with the stride, the figure shows whether the device processes a window before new data become available or whether the processing time exceeds the stride, in which case the surplus data must be held temporarily in the buffer.

Figure 4.

Mode 2: Average processing time of a 4.16 s window across devices compared with a 0.833 s stride and the window size.

Figure 4 shows consistent measurements, demonstrating that Mode 2 is stable and repeatable across all devices. The PC, the reference device, illustrates the case in which the window processing time is lower than the stride (0.833 s). The energy meter then continues to capture data until 0.833 s have elapsed since the last window shift, and the shifted window is processed with the new data without accumulating information in the buffer.

On the Jetson devices, the processing time for each window exceeds the stride, so while a window is being processed the energy meter continues to generate data that accumulate in the buffer. For example, on the Jetson Orin Nano approximately 2.83 s of new data are recorded while a window is processed, but only 0.833 s are used in the subsequent analysis; the rest remains stored, progressively increasing the amount of data pending processing. As a result, neither the Jetson Orin Nano nor the Jetson Nano achieved real-time performance, and the limitation is more pronounced on the Jetson Nano because of its lower computational capacity.
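
The buffer growth described above can be reproduced with a small simulation. This is a simplified model, assuming a constant processing time per window and ignoring any other overhead; `mode2_backlog` is a hypothetical helper, not part of the implemented pipeline.

```python
from typing import List


def mode2_backlog(proc_time_s: float, stride_s: float = 0.833,
                  iterations: int = 10) -> List[float]:
    """Seconds of unprocessed meter data left in the buffer after each Mode 2 step.

    Processing one 4.16 s window takes proc_time_s, during which the meter keeps
    buffering. If less than one stride has accumulated, the pipeline waits for it
    (the backlog returns to zero); otherwise only one stride is consumed and the
    surplus carries over to the next step.
    """
    backlog = 0.0
    history = []
    for _ in range(iterations):
        buffered = backlog + proc_time_s          # data available when processing ends
        backlog = max(0.0, buffered - stride_s)   # one stride is consumed per step
        history.append(backlog)
    return history
```

With a per-window time of about 2.83 s, as on the Jetson Orin Nano, the backlog grows by roughly 2 s per step and never drains, whereas any processing time below the stride keeps it at zero.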

5.2.3. Mode 3—Variable Stride

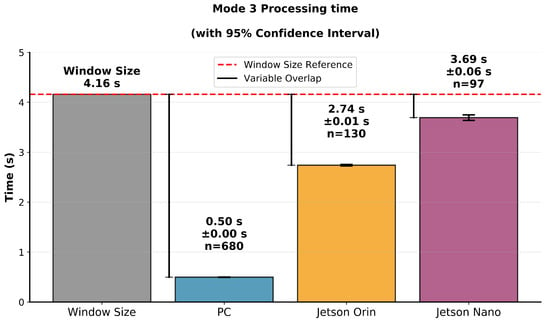

Mode 3 addresses the buffering issue with a variable stride equal to the processing time of the previous window, ensuring that the most recent data are always used. The DeepDFML-NILM implementation used in this work excludes unmapped window margins from prediction to avoid detecting events near the window edges, where limited sample context may reduce accuracy. Maintaining a high overlap between windows is therefore beneficial, as it provides redundant data and minimizes the impact of these unmapped margins. In this mode, however, the stride can become excessively large, which reduces window overlap and may compromise detection robustness. Figure 5 shows the window size and the average processing time of the 4.16 s window on the PC, Jetson Orin Nano, and Jetson Nano, with the number of data points (n) varying across devices and a 95% CI. The figure also shows the resulting overlap between windows on each device.

Figure 5.

Mode 3: Average processing time of a 4.16 s window across devices compared with the window size, highlighting the difference between the processing time and window size, which translates to the window overlap per device.

Figure 5 shows consistent measurements, demonstrating that Mode 3 is stable and repeatable across all devices. The PC, the reference device, maintains ample overlap between windows thanks to its low stride of 0.5 s; experiments showed that strides of up to 0.833 s can be tolerated without degrading event detection. In contrast, the Jetson devices produce average strides of 3.69 s on the Nano and 2.74 s on the Orin Nano, which reduce the overlap to only 11% and 34%, respectively. Such limited overlap affects performance in two ways: it decreases redundancy, making event detection less reliable, and it increases the likelihood of missing events that occur near the window edges. The impact of limited overlap was evaluated in an experiment with 180 events: the PC detected all of them, whereas detection dropped to 66% on the Jetson Orin Nano and 55% on the Jetson Nano. By comparison, all devices detected 100% of these events in the offline mode.
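
The overlap values follow from overlap = (W − s)/W for a window length W and stride s. The second function below adds a hedged illustration of why large strides miss events: assuming each window discards an unmapped margin m at both edges, the mapped window centres leave uncovered gaps whenever s > W − 2m. The margin value is hypothetical, since it is not given in this section.

```python
def overlap(window_s: float, stride_s: float) -> float:
    """Fraction of a window shared with the next window (0 when stride >= window)."""
    return max(0.0, window_s - stride_s) / window_s


def uncovered_fraction(window_s: float, stride_s: float, margin_s: float) -> float:
    """Fraction of time not covered by the mapped centre of any window.

    margin_s is a hypothetical unmapped margin excluded at each edge of every window.
    """
    centre = window_s - 2.0 * margin_s
    if centre <= 0.0:
        return 1.0
    return max(0.0, stride_s - centre) / stride_s


WINDOW_S = 4.16
for device, stride in {"PC": 0.5, "Jetson Orin Nano": 2.74, "Jetson Nano": 3.69}.items():
    print(f"{device}: overlap = {overlap(WINDOW_S, stride):.0%}")
```

The same `overlap` function gives 80% for the fixed 0.833 s stride of Mode 2.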

Based on the results in Section 5.2.1, Section 5.2.2 and Section 5.2.3, several key observations can be made about the feasibility and performance of each inference mode across the evaluated devices.

As expected, the PC, used as the reference device, performed well. In Mode 1 (No Stride), it processes the entire 37.7 s signal in just 5.81 s, making it efficient for offline analysis. Mode 2 (Fixed Stride) supports real-time inference, with a window processing time below the stride and an 80% overlap between windows, while Mode 3 (Variable Stride) further increases the overlap to 88%, improving detection reliability.

By contrast, Mode 2 is impractical on both the Jetson Orin Nano and the Jetson Nano. Their processing times for a single window (2.83 s and 3.68 s, respectively) are much longer than the fixed stride of 0.833 s, causing data accumulation that makes real-time operation infeasible. Mode 3 yields average strides of 2.74 s on the Orin Nano and 3.69 s on the Nano, reducing the overlap between windows to only 34% and 11%, respectively. This limited overlap reduces redundancy, making event detection less reliable, and increases the risk of missing events that occur near the window edges.

In summary, online inference modes are clearly limited on edge platforms with constrained computational resources: with the current system settings, the Jetson devices can perform offline detection but struggle with online inference.

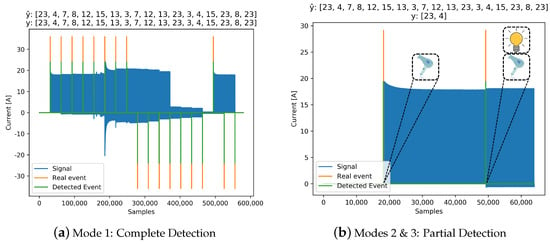

Figure 6 illustrates the output produced when processing the full signal with Mode 1, compared to the segmented analysis performed by Modes 2 and 3. Mode 1 delivers results only after the entire signal is processed, whereas Modes 2 and 3 allow more frequent updates by operating on smaller, overlapping time windows.

Figure 6.

Detection output results for different modes.

In Figure 6, the symbol “ŷ” denotes the ground truth events, while “y” marks the predicted detections. Upward vertical lines correspond to power-on events, and downward lines to power-off events. In this test signal, all events were correctly detected, with accurate appliance classification. The first detected event corresponds to appliance 23 being turned on, followed by appliance 4. According to the LIT dataset documentation, these devices are a hairdryer and an LED panel, respectively.

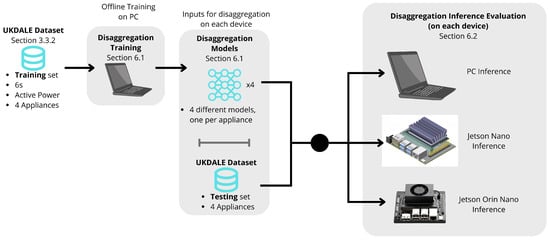

6. Load Disaggregation Training and Inference

This section describes the complete workflow for the load disaggregation model, including both the training process conducted on a personal computer (PC) and the inference phase performed across all target platforms: the PC, Jetson Nano, and Jetson Orin Nano. Each device used its corresponding runtime environment, as detailed in Table 1 and Table 2. Figure 7 shows a zoomed-in view of the load disaggregation pipeline, originally introduced in the overall pipeline in Figure 1.

Figure 7.

Load disaggregation pipeline. Jetson Nano and Jetson Orin Nano images taken from [,].

6.1. Disaggregation Training

The training of the disaggregation models was implemented based on the NILMTK [], NILMTK-contrib [], and Seq2Point-nilm [,] repositories, from which the scripts, normalization values, and CNN architectures were obtained.
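
A sequence-to-point model maps a window of the aggregate signal to the appliance power at the window's midpoint. The sketch below shows how such training pairs can be built; the z-score normalization statistics and the window length are parameters here (in practice they come from the repositories cited above), and the function name is hypothetical. No padding is applied, so the first and last half-window of samples receive no target.

```python
from typing import List, Sequence, Tuple


def seq2point_pairs(aggregate: Sequence[float], appliance: Sequence[float],
                    window: int, agg_mean: float, agg_std: float,
                    app_mean: float, app_std: float) -> List[Tuple[List[float], float]]:
    """Return (normalized aggregate window, normalized midpoint target) pairs."""
    if window % 2 == 0:
        raise ValueError("use an odd window so that the midpoint is well defined")
    if len(aggregate) != len(appliance):
        raise ValueError("aggregate and appliance series must be aligned")
    half = window // 2
    agg = [(x - agg_mean) / agg_std for x in aggregate]   # z-score normalization
    app = [(y - app_mean) / app_std for y in appliance]
    return [(agg[s:s + window], app[s + half])            # target = centre sample
            for s in range(len(agg) - window + 1)]
```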

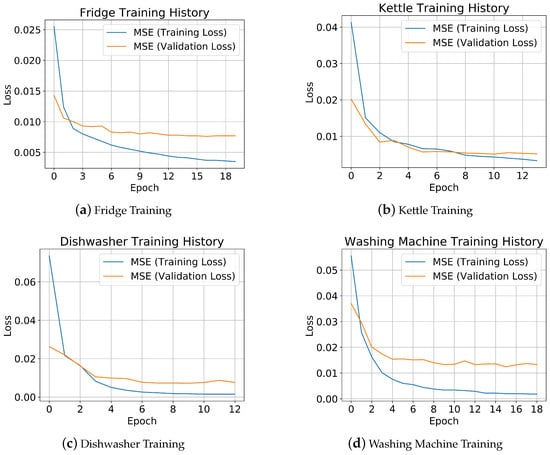

After preprocessing a training subset of the UK-DALE dataset, models were trained for the fridge, kettle, dishwasher, and washing machine. Figure 8 shows the training curves for each appliance.

Figure 8.

Training curves for each appliance disaggregation model trained on the PC.

Figure 8 shows that the validation loss closely follows the training loss. Although a small gap is present in most cases, it remains minor, indicating that the models are not significantly overfitting.

Each generated model has 3,623,449 parameters and a file size of approximately 43.6 MB. The average resource usage and time required during training are shown in Table 6.
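
The reported parameter count can be cross-checked arithmetically. Assuming the classic Seq2Point layer stack (five Conv1D layers with 30, 30, 40, 50, and 50 filters and kernel sizes 10, 8, 6, 5, and 5, 'valid' padding, followed by a 1024-unit dense layer and a single-unit output), exactly 3,623,449 parameters are obtained for an input window of 99 samples. This is an inference from the numbers, not a configuration stated in the text. At 4 bytes per float32 weight the raw weights occupy about 14.5 MB, roughly one third of the 43.6 MB file; a checkpoint that also stores optimizer state (for example, Adam's two moment estimates per weight) would be consistent with this, but that is not confirmed here.

```python
def conv1d_params(kernel: int, in_channels: int, filters: int) -> int:
    return kernel * in_channels * filters + filters   # weights + biases


def dense_params(in_features: int, units: int) -> int:
    return in_features * units + units


def seq2point_params(window: int) -> int:
    """Parameter count of the assumed Seq2Point CNN for a given input window length."""
    total, length, channels = 0, window, 1
    for filters, kernel in [(30, 10), (30, 8), (40, 6), (50, 5), (50, 5)]:
        total += conv1d_params(kernel, channels, filters)
        length -= kernel - 1          # 'valid' padding, stride 1
        channels = filters
    total += dense_params(length * channels, 1024)  # flatten -> Dense(1024)
    total += dense_params(1024, 1)                  # Dense(1) output; dropout adds no parameters
    return total


n_params = seq2point_params(99)
print(n_params, f"{n_params * 4 / 1e6:.1f} MB of float32 weights")
```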

Table 6.

Summary of training resource usage and training time on PC by appliance.

The training resource summary in Table 6 shows that all models were trained efficiently, with training times ranging from 13.4 to 20.5 s. CPU usage remained consistent around 10%, while RAM usage was between 72 and 74%, indicating moderate memory demands. GPU usage was higher for some appliances, especially the washing machine, but always stayed under 50%, without overloading the system.

Table 7 reports the complete training metrics of the models.

Table 7.

Summary of training error metrics for each appliance disaggregation model trained on the PC.

Table 7 confirms good training performance across all appliances. The training and validation metrics, MSE, MSLE, and MAE, remain low overall, with only minor increases in validation values, particularly for the dishwasher and washing machine. This supports earlier observations of minimal overfitting, suggesting that the models generalize well to unseen data.
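
For reference, the three error metrics in Table 7 can be written as below. This is a plain-Python sketch of the standard definitions, not the training code; whether the metrics were computed on normalized or rescaled power values is not stated here, and clipping negative values to zero in MSLE is an assumption of this sketch.

```python
import math
from typing import Sequence


def mse(y: Sequence[float], p: Sequence[float]) -> float:
    return sum((a - b) ** 2 for a, b in zip(y, p)) / len(y)


def mae(y: Sequence[float], p: Sequence[float]) -> float:
    return sum(abs(a - b) for a, b in zip(y, p)) / len(y)


def msle(y: Sequence[float], p: Sequence[float]) -> float:
    # Negative values are clipped to zero before log(1 + x) (an assumption of this sketch).
    return sum((math.log1p(max(a, 0.0)) - math.log1p(max(b, 0.0))) ** 2
               for a, b in zip(y, p)) / len(y)
```

MSLE compares values on a logarithmic scale, so it emphasizes relative rather than absolute deviations and is less dominated by large power peaks than MSE.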

6.2. Disaggregation Inference Evaluation

The disaggregation models were evaluated with an offline strategy, in which aggregate signals were processed on demand. A test subset of the UK-DALE dataset was used, corresponding to approximately 30 days of energy consumption subsampled at 60 s intervals (44,286 samples).

For each device and appliance, the average resource usage was measured as shown in Table 8, with a 95% CI and the number of data points (n) varying across appliances and devices.

Table 8.

Average resource usage of the appliance disaggregation models across devices when processing a 30-day window.

In addition, Table 9 summarizes the cumulative energy error (SAE), the inference error metrics, and the average processing times (n = 11, 95% CI).

Table 9.

SAE, inference error metrics, and average processing time of a 30-day window per appliance across devices.

The results in Table 8 and Table 9 confirm that all devices successfully performed offline disaggregation over 30 days of data, but with clear differences in efficiency, accuracy, and resource usage.

The PC, as the reference device, achieved the fastest inference times (≈2.2 s per appliance), with consistently low CPU usage (≈10%), moderate GPU usage (≈23%), and the lowest RAM usage (50–58%). Its error metrics were also the lowest of all devices, confirming that high-precision inference was maintained. The Orin Nano completed inference in 18–19 s per appliance, with moderate CPU (≈25%) and GPU (≈29–30%) usage and high RAM usage (75–81%). Its error metrics were slightly worse than those of the PC but closely matched those of the Jetson Nano. The Jetson Nano showed the highest resource usage (CPU ≈ 38%, GPU ≈ 52–56%, RAM ≈ 90–91%) and the slowest inference times (≈22–25 s per appliance). Its error metrics were nearly identical to those of the Orin Nano, indicating that the performance differences stem from hardware constraints rather than from inference quality. Its high resource utilization suggests that the Jetson Nano operates close to capacity during inference.

GPU usage showed the highest variability across all devices, with confidence intervals of up to ±5% on the Jetson Nano. This suggests that GPU utilization is more sensitive to fluctuations in workload and background processes than CPU or RAM usage, which remained relatively stable. In terms of processing time, the Jetson Nano exhibited the highest variability (up to ±2.9 s), compared with the Jetson Orin Nano and especially the PC, whose execution times were extremely consistent (only ±0.02–0.06 s). This indicates that the runtime of the Jetson Nano is less predictable, likely because of scheduling, memory management, or differences in precision handling.

The results also show that, although the same trained disaggregation model was used on all devices, slight differences appear in metrics such as MSE, MAE, and MSLE. This variation is likely due to differences in inference precision, hardware architecture, or the backend libraries used on each platform. Devices such as the Jetson Nano and Orin Nano may rely on reduced precision, which can introduce minor numerical discrepancies during inference. However, the SAE (cumulative energy error) remains identical across all platforms, confirming that, despite these low-level differences, the overall energy estimation is consistent and the model's core disaggregation capability is stable across platforms.

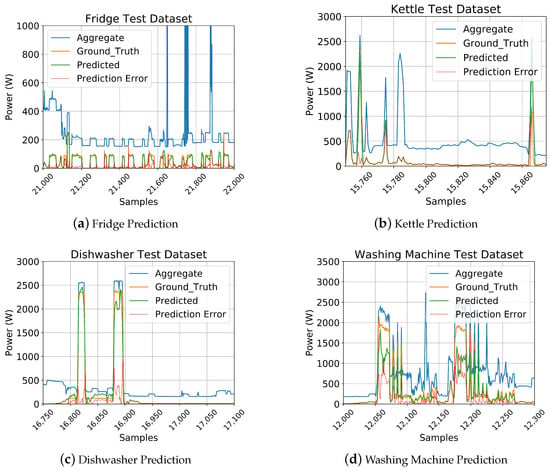

Figure 9 shows, for each appliance, examples of the aggregate signal, the ground truth, the predicted signal, and the prediction error.

Figure 9.

Prediction data visualization for different appliances.

Figure 9 shows that the models are capable of accurately tracking the disaggregated signal throughout the inference period. This indicates that the models have learned the general patterns and behavior of each appliance and can effectively separate their individual consumption from the aggregate signal. The alignment between the predicted and actual values suggests good generalization and reliable performance, even when processing extended periods of real-world data.

The fridge prediction error curve indicates that this model is among the best trained in the study. Errors only rise briefly during state transitions or when other appliances introduce disturbances during steady states. The dishwasher error curve follows a similar pattern, with peaks at state changes and difficulties in capturing low-power states, though the overall error remains low.

In contrast, the kettle and washing machine error curves show much higher error levels. For the kettle, the error is persistent across the entire timeline, with additional peaks where the model incorrectly predicts appliance activity while the ground truth is zero. The washing machine error is also substantial, likely due to the complex, non-steady patterns that are hard to replicate.

Despite the washing machine's high appliance-level error, its cumulative energy error (SAE) is the lowest among all models in this study. This shows that sample-level error and cumulative energy error do not necessarily go together: a low per-sample error does not guarantee an accurate estimate of total energy consumption, and a high per-sample error does not preclude one.
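
A toy example makes this concrete. It uses the usual NILM definition of SAE as the relative error of total energy, |Σp − Σy| / Σy (assumed here, since the formula is not restated in this section), and made-up traces that are purely illustrative.

```python
from typing import Sequence


def sae(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Relative error of total energy: |sum(pred) - sum(true)| / sum(true)."""
    total_true = sum(y_true)
    return abs(sum(y_pred) - total_true) / total_true


def mean_abs_error(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    return sum(abs(a - b) for a, b in zip(y_true, y_pred)) / len(y_true)


truth = [0, 2000, 0, 2000]          # toy appliance power trace (W)
shifted = [2000, 0, 2000, 0]        # correct energy, wrong timing
damped = [0, 1000, 0, 1000]         # correct timing, half the power
for name, pred in [("shifted", shifted), ("damped", damped)]:
    print(name, mean_abs_error(truth, pred), sae(truth, pred))
```

The shifted trace has four times the sample-level error of the damped one yet a perfect SAE, because positive and negative deviations cancel in the energy total.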

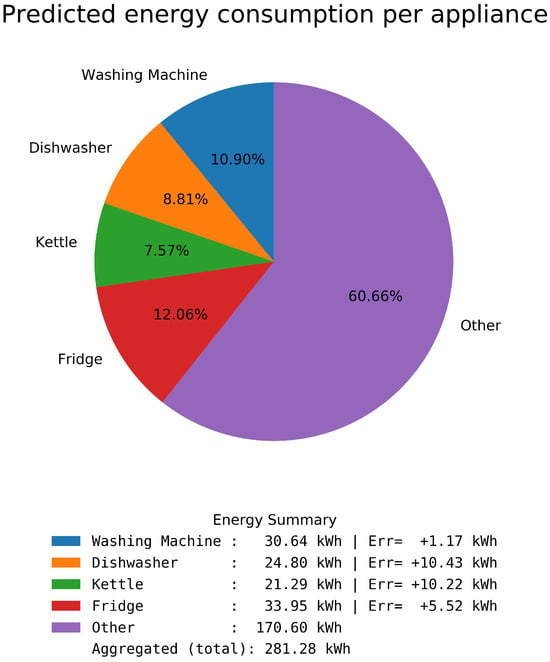

Figure 10 shows the results of disaggregating each appliance from the same aggregate signal through the API and dashboard, together with each appliance's contribution to the total energy consumption.

Figure 10.

Predicted energy consumption per appliance and contribution to the total energy consumption.

Figure 10 shows that the washing machine has the lowest total consumption error, consistent with its SAE value, whereas the kettle's total consumption error is almost half of its predicted value. All errors are positive, meaning that every model overestimates rather than underestimates the consumption of its appliance.

7. Results

This section summarizes the key metrics, beginning with the training performance and resulting models for event detection and load disaggregation on the PC, including training durations, model complexity, and resource usage. It then compares inference performance across the hardware platforms (PC, Jetson Nano, and Jetson Orin Nano), highlighting differences in processing time, resource usage, and accuracy.

7.1. Training Performance

Table 10 summarizes the average training time and hardware resource usage for both the detection and disaggregation models on the PC. It includes CPU, RAM, and GPU usage during training, as well as the parameter count and memory size for each model.

Table 10.

Detection and disaggregation model average training time, average resource usage on PC, and model size.

Table 10 shows that although the detection model involved a more complex training process (requiring more training time and higher GPU usage), it remains significantly lighter than the disaggregation model in terms of both the number of parameters and memory size.

7.2. Event Detection Results

The event detection algorithm demonstrated strong performance across all devices, achieving a detection accuracy of 96.1%, a load classification accuracy of 97.06%, and an event distance error of only 0.78 half-cycles. These metrics are very similar to those reported in []. The main difference lies in the processing time for a 0.833 s window: while [] reported 11.2 ms on a Jetson TX1, this work obtained 23.71 ms on the PC, 138.17 ms on the Jetson Orin Nano, and 191.63 ms on the Jetson Nano, as shown in Table 4.

The variability of the measurements in this work is very low, as shown in Table 4 and Table 5 and in Figure 3, Figure 4 and Figure 5, which makes the results reliable and consistent. This analysis shows that the processing time reported for the Jetson TX1 in [] is significantly lower than that obtained even on the PC. Furthermore, while [] reported an average GPU utilization of 50.6%, GPU usage in this study remained low (Table 5), suggesting that the detection algorithm was not directly leveraging GPU acceleration for data processing. This behavior may be attributed to the compatibility of the libraries and packages available in each execution environment, which could prevent effective use of the GPU.

Table 4 and Table 5 show that the PC has the lowest CPU and RAM usage and the fastest processing time. Among the embedded platforms, the Jetson Orin Nano outperforms the Jetson Nano, with lower CPU and GPU usage and faster inference. In all cases, CPU and GPU usage remained within reasonable limits, whereas RAM usage reached its limit, especially on the Jetson devices.

To analyze the streaming modes and real-time inference capability, Table 11 summarizes the processing times presented in Figure 3, Figure 4 and Figure 5, with their respective number of samples (n) and CI, and compares the inference latency of the detection modes across platforms. The table also reports the percentage of events detected by each device in Mode 3, based on 180 test events.

Table 11.

Event Detection processing times across platforms using different streaming modes, and % of detection in Mode 3.

The PC, serving as the reference device, fully meets the requirements for real-time detection. It achieved a response time of 0.5 s when processing a 4.16 s window while detecting 100% of events under the variable stride configuration (Mode 3). It also handled offline inference efficiently, processing the entire 37.7 s window in only 5.5 s, which makes it well suited to both real-time and batch processing.

The Jetson Orin Nano, although faster than the Jetson Nano, cannot sustain real-time operation. It required approximately 2.8 s to process a 4.16 s window and 33.10 s for the full 37.7 s window. These times are too long for stride-based detection, making the device unsuitable for online use. Under variable stride (Mode 3), its detection rate dropped to only 66%, further confirming its limitations for time-sensitive applications. Moreover, in Mode 2, approximately 2 s of data accumulates in the buffer during each iteration, clearly illustrating the latency gap between data acquisition and processing.

The Jetson Nano showed even greater limitations and likewise cannot sustain real-time operation. It required approximately 3.7 s to process a 4.16 s window and 42.78 s for the full 37.7 s window. These processing times are far too long for stride-based detection, making the device unsuitable for online use. Under variable stride (Mode 3), its detection rate dropped to only 55%, underscoring its limited applicability in latency-critical scenarios. Furthermore, in Mode 2, nearly 2.8 s of data accumulates in the buffer during each iteration, highlighting a severe mismatch between data acquisition and processing speed.

In summary, all devices evaluated are acceptable for offline processing tasks. However, the Jetson boards cannot achieve real-time detection in either Mode 2 or Mode 3, as their processing times are too high, leading to potential data buffering or missed detections caused by insufficient overlap.

7.3. Disaggregation Results

The disaggregation models effectively replicated the temporal patterns of consumption, as seen in Figure 9 and Table 9. Nevertheless, the cumulative energy analysis reveals room for improvement, especially for appliances with sporadic activations such as the kettle or dishwasher, where the SAE exceeds 0.7. This discrepancy suggests that, while the models may be accurate at the sample level, they could benefit from optimizations focused on predicting total consumption, such as adjusting the loss function or performing targeted retraining.

Table 8 and Table 9 show that, although all platforms used the same disaggregation models and achieved identical SAE values, indicating similar cumulative energy estimates, there are clear differences in resource usage and inference time. The PC, the reference device, achieved the best overall efficiency, with the lowest latency (about 2.2 s) and minimal CPU (10.4%) and GPU (22.8%) usage across all appliances. In contrast, the Jetson Nano exhibited the highest computational load and the slowest inference, at about 23.8 s per appliance. The Jetson Orin Nano demonstrated balanced performance, with a latency of about 18.9 s and moderate resource usage. The consistent GPU usage on all devices indicates that the algorithm made use of GPU acceleration. RAM was one of the most heavily used resources during inference and became the main computational bottleneck. This was particularly evident on the Jetson Nano, whose very limited memory saturated easily and caused the system to stop functioning.

In conclusion, all devices are suitable for performing offline disaggregation. However, the Jetson Nano presented significant issues due to its limited RAM, making the system unstable and prone to crashes.

8. Discussion

This study evaluates the execution time, resource usage, and inference performance of two NILM algorithms (DeepDFML-NILM for event detection and a Seq2Point CNN for energy disaggregation) on edge devices such as the Jetson Nano and Jetson Orin Nano, in order to assess the feasibility of implementing these algorithms on such systems.

The results presented in Section 5.2 show that both the Jetson Orin Nano and the Jetson Nano can perform accurate offline event detection. However, neither device can perform real-time detection in either of the two implemented online modes: they suffer either from buffer accumulation or from reduced detection performance caused by the limited overlap that results from the high processing time per window. Another important bottleneck is the limited RAM, particularly on the Jetson Nano, which frequently became saturated and caused the device to crash during the experiments. The low GPU utilization observed in this study is also worth highlighting: the reference work [] reports an average GPU utilization of 50.6% and a processing time of 11.2 ms for a 0.833 s window, less than half the 23.7 ms achieved for the same window by the best-performing device in this study, the PC. This suggests that the experiments conducted here do not fully leverage the GPU, possibly because of the specific software versions available on each system. Future work will therefore examine in detail the software dependencies required to take full advantage of the GPU resources on the Jetson devices and explore model compression methods, such as conversion with the TensorFlow Lite Converter as done in [], to run models efficiently on edge hardware, potentially enabling real-time event detection on these platforms.

On the other hand, the results presented in Section 6.2 show that both Jetson devices are fully capable of performing appliance-level signal disaggregation efficiently, since processing data equivalent to 30 days of measurements was completed in under 30 s on both platforms. The only bottleneck in this case is, again, the RAM on the Jetson Nano, which occasionally saturates when running the algorithm. For real-world deployments, the Jetson Nano is, therefore, not recommended for either algorithm, as it does not guarantee stability and would require constant monitoring. Future work in this direction includes training models specifically to optimize the SAE metric, as it is the one most closely related to accurate appliance-level energy estimation. Additionally, it is proposed to evaluate the trained models using different datasets, or data from households other than the one used for training, where consumption patterns vary significantly, in order to test their generalization ability.

Finally, once models with good generalization capacity have been optimized for real-time processing on embedded systems such as the Jetson Nano and Jetson Orin Nano, future work will focus on integrating NILM into practical smart grid and IoT ecosystems. The following roadmap is proposed:

- Data and hardware infrastructure: Ensure the availability of high-resolution measurements through smart meters and sensors, together with the selection of edge devices that balance energy consumption, cost, and computational capacity.

- Algorithm optimization: Adapt and compress models for efficient deployment on resource-constrained hardware, ensuring low latency and scalability across different types of appliances.

- IoT architecture integration: Design an edge-to-cloud architecture that allows local event processing while transmitting aggregated data to the cloud for advanced analytics, employing standard protocols and interoperable APIs.

- User- and grid-oriented applications: Provide appliance-level consumption feedback to users and enable demand-side management services, anomaly detection, and energy-use optimization in households and microgrids.

- Security and privacy: Implement encryption and access control to preserve the privacy of energy data.

- Scaling and validation: Conduct pilot tests in real environments, evaluate accuracy, latency, and robustness metrics, and finally integrate the results into smart grid platforms for large-scale services such as load forecasting, demand response, and distributed energy trading.
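The following sketch illustrates the IoT architecture integration and security items above. It shows one way an edge device could reduce disaggregated appliance power to interval energies locally and send only that compact summary to the cloud. The summary is signed with HMAC-SHA256 so the receiver can check its integrity and origin. Everything here is an assumption for illustration, not part of the authors' framework. The payload fields (`device_id`, `interval_start`, `energy_kwh`), the pre-shared key, and the helper names are hypothetical. HMAC also provides authentication rather than confidentiality, so encryption would still have to come from the transport layer (for example, TLS).

```python
import hashlib
import hmac
import json


def energy_kwh(power_w: list[float], sample_period_s: float) -> float:
    """Integrate evenly spaced power samples (W) into energy (kWh), rectangle rule."""
    return sum(power_w) * sample_period_s / 3_600_000.0  # 1 kWh = 3.6e6 J


def build_payload(device_id: str, interval_start: str,
                  appliance_power_w: dict[str, list[float]],
                  sample_period_s: float) -> dict:
    """Hypothetical edge-to-cloud message: one energy value per appliance."""
    return {
        "device_id": device_id,
        "interval_start": interval_start,  # e.g. an ISO 8601 timestamp
        "sample_period_s": sample_period_s,
        "energy_kwh": {
            name: round(energy_kwh(samples, sample_period_s), 6)
            for name, samples in sorted(appliance_power_w.items())
        },
    }


def sign(payload: dict, key: bytes) -> tuple[bytes, str]:
    """Serialize deterministically and compute an HMAC-SHA256 tag over the bytes."""
    body = json.dumps(payload, sort_keys=True, separators=(",", ":")).encode("utf-8")
    return body, hmac.new(key, body, hashlib.sha256).hexdigest()


def verify(body: bytes, tag: str, key: bytes) -> bool:
    """Cloud-side check; compare_digest avoids timing side channels."""
    expected = hmac.new(key, body, hashlib.sha256).hexdigest()
    return hmac.compare_digest(expected, tag)


if __name__ == "__main__":
    key = b"pre-shared-demo-key"  # hypothetical; provision securely in practice
    one_hour = 3600               # samples at 1 s
    payload = build_payload(
        "edge-01", "2025-01-01T00:00:00Z",
        {
            "fridge": [100.0] * one_hour,                                  # 100 W for 1 h
            "kettle": [2000.0] * 180 + [0.0] * (one_hour - 180),           # 2 kW for 3 min
        },
        sample_period_s=1.0,
    )
    body, tag = sign(payload, key)
    print(body.decode())
    print("valid:", verify(body, tag, key))
```

Sending per-interval appliance energies instead of raw high-resolution samples keeps uplink traffic small and exposes less detail about household activity. The cloud side can still feed the data into services such as load forecasting or demand response.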

Author Contributions

Conceptualization, D.S.; methodology, D.S.; software, D.S. and C.A.; validation, D.S. and C.A.; formal analysis, D.S.; investigation, D.S.; resources, A.G. and T.M.; writing—original draft preparation, D.S. and T.M.; writing—review and editing, D.S., C.A. and T.M.; visualization, D.S.; supervision, T.M. and A.G.; project administration, A.G. and J.S.; funding acquisition, J.S. All authors have read and agreed to the published version of the manuscript.

Funding

The authors would like to thank the Research Program “Energy Efficiency 2030: Transition to Sustainable Construction”, with code 1216-938-106387, funded by the Ministry of Science, Technology and Innovation (Minciencias) of the Government of Colombia through the call “938-2023 Ecosystems in Sustainable, Efficient and Affordable Energy” with contract No. 395-2023.

Data Availability Statement

The code used in this study is openly available in the following repositories: NILM-PC (https://github.com/DASV12/NILM-PC, accessed on 2 September 2025); NILM-Jetson (https://github.com/DASV12/NILM-Jetson, accessed on 2 September 2025); Jetson Orin (https://github.com/Carlos278arulo/jetson-nilm-model, accessed on 2 September 2025).

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
|---|---|
| NILM | Non-Intrusive Load Monitoring |
| NILMTK | Non-Intrusive Load Monitoring Toolkit |
| DeepDFML | Deep Detection, Feature Extraction and Multi-Label Classification |
| CNN | Convolutional Neural Network |
| LSTM | Long Short-Term Memory |
| HMM | Hidden Markov Models |
| FHMM | Factorial Hidden Markov Models |
| seq2point | Sequence-to-Point |
| seq2seq | Sequence-to-Sequence |
| GANs | Generative Adversarial Networks |
| RESTful | Representational State Transfer |
| API | Application Programming Interface |
| IoT | Internet of Things |
| UI | User Interface |
| PC | Personal Computer |
| RAM | Random Access Memory |
| CPU | Central Processing Unit |
| GPU | Graphics Processing Unit |
| MB | Megabyte |
| GB | Gigabyte |
| CSV | Comma-Separated Values |
| Hz | Hertz |
| kHz | Kilohertz |
| s/sec | Seconds |
| ms | Milliseconds |
| LIT | Laboratory for Innovation and Technology (LIT Dataset) |
| UKDALE | UK Domestic Appliance-Level Electricity |
| REFIT | Electrical Load Measurements |
| STFT | Short-Time Fourier Transform |
| BLUED | Building-Level Fully Labeled Electricity Disaggregation |
| REDD | Reference Energy Disaggregation Dataset |
| AMPds | Almanac of Minutely Power Dataset |
| ECO | Electricity Consumption and Occupancy |
| PLAID | Plug-Load Appliance Identification Dataset |
| BLOND | Building-Level Office Environment Dataset |
| OMPM | Open Multi Power Meter |
| MAE | Mean Absolute Error |
| MSE | Mean Squared Error |
| MSLE | Mean Squared Logarithmic Error |
| RMSE | Root Mean Squared Error |
| Val_MAE | Validation Mean Absolute Error |
| Val_MSE | Validation Mean Squared Error |
| Val_MSLE | Validation Mean Squared Logarithmic Error |
| SAE | Sum of Absolute Errors |
| W | Watt |
| kWh | Kilowatt-hour |
| CI | Confidence Interval |
| n | Number of samples |

References

- Gawin, B.; Małkowski, R.; Rink, R. Will NILM Technology Replace Multi-Meter Telemetry Systems for Monitoring Electricity Consumption? Energies 2023, 16, 2275.

- Fabri, L.; Leuthe, D.; Schneider, L.M.; Wenninger, S. Fostering non-intrusive load monitoring for smart energy management in industrial applications: An active machine learning approach. Energy Inform. 2025, 8, 54.

- Nutakki, M.; Mandava, S. Resilient data-driven non-intrusive load monitoring for efficient energy management using machine learning techniques. EURASIP J. Adv. Signal Process. 2024, 2024, 62.

- Kizonde, B.K.; Mathaba, T.N.D.; Langa, H.M. A Non-intrusive Load Monitoring Technique for Real-Time Energy Management. In Innovations in Smart Cities Applications Volume 8; Ben Ahmed, M., Abdelhakim, B.A., Karaș, İ.R., Ben Ahmed, K., Eds.; Springer: Cham, Switzerland, 2025; pp. 453–462.

- Xu, Q.; Liu, Y.; Luan, K. Edge-Based NILM System with MDMR Filter-Based Feature Selection. In Proceedings of the 2022 IEEE 5th International Electrical and Energy Conference (CIEEC), Nanjing, China, 27–29 May 2022; pp. 5015–5020.

- Sykiotis, S.; Athanasoulias, S.; Kaselimi, M.; Doulamis, A.; Doulamis, N.; Stankovic, L.; Stankovic, V. Performance-Aware NILM Model Optimization for Edge Deployment. IEEE Trans. Green Commun. Netw. 2023, 7, 1434–1446.

- Renaux, D.P.B.; Pottker, F.; Ancelmo, H.C.; Lazzaretti, A.E.; Lima, C.R.E.; Linhares, R.R.; Oroski, E.; da Silva Nolasco, L.; Lima, L.T.; Mulinari, B.M.; et al. A Dataset for Non-Intrusive Load Monitoring: Design and Implementation. Energies 2020, 13, 5371.

- Kelly, J.; Knottenbelt, W. The UK-DALE Dataset, Domestic Appliance-Level Electricity Demand and Whole-House Demand from Five UK Homes. Sci. Data 2015, 2, 150007.

- Franklin, D. Jetson Nano Brings AI Computing to Everyone. 2019. Available online: https://developer.nvidia.com/blog/jetson-nano-ai-computing/ (accessed on 7 October 2025).

- NVIDIA Corporation. Jetson Orin Nano Developer Kit User Guide. 2025. Available online: https://developer.nvidia.com/embedded/learn/jetson-orin-nano-devkit-user-guide/index.html (accessed on 7 October 2025).

- Naderian, S. A Novel Hybrid Deep Learning Approach for Non-Intrusive Load Monitoring of Residential Appliance Based on LSTM and CNN; Manuscript/Extended Paper as Compiled in Collection; Experiments on REFIT; University of Glasgow: Glasgow, UK, 2020.

- Ciancetta, F.; Bucci, G.; Fiorucci, E.; Mari, S.; Fioravanti, A. A New Convolutional Neural Network-Based System for NILM Applications. IEEE Trans. Instrum. Meas. 2021, 70, 1501112.

- Kotsilitis, S.; Kalligeros, E.; Marcoulaki, E.C.; Karybali, I.G. An Efficient Lightweight Event Detection Algorithm for On-Site Non-Intrusive Load Monitoring. IEEE Trans. Instrum. Meas. 2023, 72, 9000313.

- Faustine, A.; Mvungi, N.H.; Kaijage, S.; Michael, K. A Survey on Non-Intrusive Load Monitoring Methodologies and Techniques for Energy Disaggregation Problem. arXiv 2017, arXiv:1703.00785.

- Rafiq, H.; Manandhar, P.; Rodriguez-Ubinas, E.; Qureshi, O.A.; Palpanas, T. A review of current methods and challenges of advanced deep learning-based non-intrusive load monitoring (NILM) in residential context. Energy Build. 2024, 305, 113890.

- Hart, G.W. Nonintrusive appliance load monitoring. Proc. IEEE 1992, 80, 1870–1891.

- Kaselimi, M.; Protopapadakis, E.; Voulodimos, A.; Doulamis, N.; Doulamis, A. Towards Trustworthy Energy Disaggregation: A Review of Challenges, Methods, and Perspectives for Non-Intrusive Load Monitoring. Sensors 2022, 22, 5872.

- Anderson, K.D.; Ocneanu, A.; Benitez, D.; Carlson, D.; Rowe, A.; Bergés, M. BLUED: A fully labeled public dataset for event-based non-intrusive load monitoring research. In Proceedings of the KDD Workshop on Data Mining Applications in Sustainability, Beijing, China, 12–16 August 2012; Available online: https://api.semanticscholar.org/CorpusID:25397318 (accessed on 7 October 2025).

- Zhang, C.; Zhong, M.; Wang, Z.; Goddard, N.; Sutton, C. Sequence-to-Point Learning with Neural Networks for Non-Intrusive Load Monitoring. In Proceedings of the Thirty-Second AAAI Conference on Artificial Intelligence, New Orleans, LA, USA, 2–7 February 2018; AAAI Press: Washington, DC, USA, 2018; pp. 2604–2611.

- Harell, A.; Makonin, S.; Bajic, I.V. WaveNILM: A Causal Neural Network for Power Disaggregation from the Complex Power Signal. In Proceedings of the ICASSP, Brighton, UK, 12–17 May 2019; IEEE: New York, NY, USA, 2019; pp. 8335–8339.

- He, K.; Stankovic, L.; Liao, J.; Stankovic, V. Non-Intrusive Load Disaggregation Using Graph Signal Processing. IEEE Trans. Smart Grid 2018, 9, 1739–1747.

- Yang, D.; Gao, X.; Kong, L.; Pang, Y.; Zhou, B. An Event-Driven Convolutional Neural Architecture for Non-Intrusive Load Monitoring of Residential Appliance. IEEE Trans. Consum. Electron. 2020, 66, 173–182.

- Batra, N.; Kelly, J.; Parson, O.; Dutta, H.; Knottenbelt, W.; Rogers, A.; Singh, A.; Srivastava, M. NILMTK: An Open Source Toolkit for Non-Intrusive Load Monitoring. In Proceedings of the 5th International Conference on Future Energy Systems (e-Energy ’14), Cambridge, UK, 11–13 June 2014; Association for Computing Machinery: New York, NY, USA, 2014; pp. 265–276.

- Batra, N.; Kukunuri, R.; Pandey, A.; Malakar, R.; Kumar, R.; Krystalakos, O.; Zhong, M.; Meira, P.; Parson, O. Towards Reproducible State-of-the-Art Energy Disaggregation. In Proceedings of the 6th ACM International Conference on Systems for Energy-Efficient Buildings, Cities, and Transportation (BuildSys 2019), New York, NY, USA, 13–14 November 2019; pp. 193–202.

- Kolter, J.Z.; Johnson, M.J. REDD: A Public Data Set for Energy Disaggregation Research. In Proceedings of the ACM Workshop on Data Mining Applications in Sustainability (SustKDD), San Diego, CA, USA, 21 August 2011; Available online: https://api.semanticscholar.org/CorpusID:15339254 (accessed on 7 October 2025).

- da Silva Nolasco, L.; Lazzaretti, A.E.; Mulinari, B.M. DeepDFML-NILM: A New CNN-Based Architecture for Detection, Feature Extraction and Multi-Label Classification in NILM Signals. IEEE Sens. J. 2022, 22, 501–509.

- Murray, D.; Stankovic, L.; Stankovic, V. An electrical load measurements dataset of United Kingdom households from a two-year longitudinal study. Sci. Data 2017, 4, 160122.

- Kelly, J.; Batra, N.; Parson, O.; Dutta, H.; Knottenbelt, W.; Rogers, A.; Singh, A.; Srivastava, M. NILMTK v0.2: A Non-intrusive Load Monitoring Toolkit for Large Scale Data Sets. In Proceedings of the BuildSys, Memphis, TN, USA, 3–6 November 2014; pp. 182–183.

- Cruz-Rangel, D.; Ocampo-Martinez, C.; Diaz-Rozo, J. Online non-intrusive load monitoring: A review. Energy Nexus 2025, 17, 100348.

- Shabbir, N.; Vassiljeva, K.; Nourollahi Hokmabad, H.; Husev, O.; Petlenkov, E.; Belikov, J. Comparative Analysis of Machine Learning Techniques for Non-Intrusive Load Monitoring. Electronics 2024, 13, 1420.

- Liaqat, R.; Sajjad, I.A. An Event Matching Energy Disaggregation Algorithm Using Smart Meter Data. Electronics 2022, 11, 3596.

- Rodriguez-Navarro, C.; Portillo, F.; Robalo, I.; Alcayde, A. Evaluation of Traditional and Data-Driven Algorithms for Energy Disaggregation Under Sampling and Filtering Conditions. Inventions 2025, 10, 43.

- Mari, S.; Bucci, G.; Ciancetta, F.; Fiorucci, E.; Fioravanti, A. An Embedded Deep Learning NILM System: A Year-Long Field Study in Real Houses. IEEE Trans. Instrum. Meas. 2023, 72, 2531215.

- Rodríguez-Navarro, C.; Portillo, F.; Martínez, F.; Manzano-Agugliaro, F.; Alcayde, A. Development and Application of an Open Power Meter Suitable for NILM. Inventions 2024, 9, 2.

- Kolosov, D.; Robinson, M.; Schirmer, P.A.; Mporas, I. An Instrumental High-Frequency Smart Meter with Embedded Energy Disaggregation. Sensors 2025, 25, 5280.

- Lu, L.; Kang, J.S.; Meng, F.; Yu, M. Non-Intrusive Load Identification Based on Retrainable Siamese Network. Sensors 2024, 24, 2562.

- González, M.L.; Ruiz, J.; Andrés, L.; Lozada, R.; Skibinsky, E.S.; Fernández, J.; Sedano, J.; García-Vico, Á.M. Deep Learning Inference on Edge: A Preliminary Device Comparison. In Intelligent Data Engineering and Automated Learning–IDEAL 2024; Julian, V., Camacho, D., Yin, H., Alberola, J.M., Nogueira, V.B., Novais, P., Tallón-Ballesteros, A., Eds.; Springer: Cham, Switzerland, 2025; pp. 265–276.

- Xue, J.; Zhang, Y.; Wang, X.; Wang, Y.; Tang, G. Towards Real-world Deployment of NILM Systems: Challenges and Practices. In Proceedings of the 2024 IEEE International Conference on Sustainable Computing and Communications (SustainCom), Kaifeng, China, 30 October–2 November 2024; IEEE: Los Alamitos, CA, USA, 2024; pp. 16–23.

- D’Incecco, M.; Squartini, S.; Zhong, M. Transfer Learning for Non-Intrusive Load Monitoring. IEEE Trans. Smart Grid 2020, 11, 1419–1429.