Abstract

To address the missed detection of abnormal images in current substation image inspection methods, which stems from the scarcity of defect samples and inadequate model training, this paper proposes a new substation image inspection method based on visual communication and the combination of normal and abnormal samples. In this method, the quality of substation equipment images is first evaluated, and images are recaptured when they are defocused or underexposed. The images are then preprocessed to eliminate the impact of noise on the algorithm, and image feature alignment is performed to mitigate camera displacement errors that could degrade algorithmic accuracy. Subsequently, normal-labeled images are used to train the model, thereby establishing a normal sample database. Built upon a visual communication infrastructure with low-level quantization, the visual feature discrepancy between the current inspection images and those in the normal sample database is calculated using the Learned Perceptual Image Patch Similarity (LPIPS) metric. Through this process, normal images are filtered out, while abnormal images are classified and reported. Finally, the method was validated at a municipal power supply company in China: at an abnormal image reporting rate of 18.9%, the abnormal image reporting accuracy rate was 100%. These results demonstrate that the proposed method can significantly reduce the workload of substation operation and maintenance personnel in reviewing substation inspection images, shorten the time required for a single inspection of substation equipment, and improve the efficiency of video-based substation inspections.

1. Introduction

Substations, as the hub of the power grid, undertake the critical tasks of power conversion and transmission, and the operational status of substation equipment directly affects the security and stability of the grid. Substations have a wide variety of equipment and are geographically dispersed across complex environments characterized by high temperature, high humidity, and electromagnetic interference [1,2,3]. As a result, efficient operation and maintenance of substations has always been a critical issue in the power industry.

In traditional operation and maintenance models, grid operators rely primarily on manual inspections to monitor equipment conditions. To discover potential failures, substation personnel must periodically check meter readings, equipment appearance, connection parts, and other components on site [4,5]. However, as the power grid continues to expand and the number of substations and types of equipment steadily increases, manual inspection has shown significant limitations. Firstly, the massive volume of inspection tasks has sharply increased the workload of substation personnel, making it difficult to inspect equipment promptly and in detail. Secondly, manual judgment is susceptible to subjective experience and fatigue, posing risks of missed detections or misjudgments of failures [6,7].

To address the above issues, intelligent inspection technologies have become a hot research topic. In 2019, the State Grid Corporation of China began establishing high-definition (HD) video inspection systems for substations. These systems use HD video cameras to remotely monitor substation equipment and automatically identify defects with computer vision technologies. Early studies primarily followed a defective sample-based identification approach, employing methods such as target segmentation, tracking of substation equipment images, and the Faster Region-Based Convolutional Neural Network (Faster R-CNN) to segment and locate defective regions in equipment images. A defective sample database was then constructed to train models that automatically generate alarms for failures [8]. While such methods have reduced manual intervention to some extent and improved inspection efficiency, they remain unsatisfactory in practical applications due to the scarcity of defective equipment samples in substations.

Specifically, current defective sample-based identification methods face two major bottlenecks. First, the coverage of the defective sample database is limited. Substation equipment defects are highly diverse, including insulation aging, connector overheating, and oil leaks, and some defects are exceedingly rare, so it is difficult for the sample database to cover all possible failure types. Second, existing models generalize poorly. Due to the scarcity of defective samples and significant differences between operational scenarios, the trained models often exhibit low accuracy and high false positive rates in practice. As a result, operation and maintenance personnel can treat the model's identification results only as references and must verify them a second time through traditional methods such as visual inspection of gauges and manual transcription of readings. Consequently, the workload has not been fundamentally reduced [9].

In recent years, researchers have attempted to optimize image-based substation inspection technologies from various aspects. State-of-the-art (SOTA) models primarily focus on improving the sample database, either by leveraging data augmentation techniques to expand defective samples or by exploring unsupervised learning methods to reduce reliance on labeled samples. Among these, self-supervised contrastive learning trains models to learn discriminative features by constructing positive pairs from augmented views of the same image and negative pairs from different images. Its advantage lies in its ability to uncover intrinsic patterns in data without labels. However, in substation scenarios, the structural features of equipment images are difficult to learn through simple augmentations, so this approach shows insufficient sensitivity to minor defects such as bushing oil leaks [10]. The Few-Shot Transformers Learning method uses a small number of labeled abnormal samples to rapidly adapt to new defect types. However, substation defects are highly diverse, ranging from insulation aging to mechanical failures, and some occur with extremely low frequency. This makes it difficult to satisfy the prerequisite that the few-shot samples be representative, thus limiting generalization capability [11]. Diffusion-based reconstruction methods generate images that conform to the distribution of normal samples through a diffusion process and detect anomalies through the discrepancy between the input and the reconstructed images. While such methods can achieve high reconstruction accuracy, they are computationally expensive, often requiring more than 100 ms of inference time per image, which makes it difficult to meet the real-time requirements of substation inspection [12]. Other studies focus on algorithmic improvements.
The core idea of PatchCore is to memorize local feature patches of normal samples and detect anomalies by measuring the distance between input patches and this memory bank. This method offers fast identification. However, because it focuses solely on local features, it exhibits a high false-negative rate for global structural anomalies in substation equipment, such as unclosed switchgear cabinet doors [13]. The DRAEM (discriminatively trained reconstruction anomaly embedding model) algorithm integrates a reconstructive autoencoder with a discriminative subnetwork, simultaneously outputting a reconstructed image and an anomaly mask to achieve unified localization and identification. It achieves pixel-level accuracy in locating anomalies; however, its training process requires a large number of normal-abnormal sample pairs. Due to the scarcity of defective samples in substations, the model struggles to converge effectively in this specific scenario [14]. The aforementioned methods still fail to fundamentally resolve the core issue arising from the scarcity of defective samples.

In fact, substation equipment operates under normal conditions the vast majority of the time. Statistically, the ratio of normal operation time to failure time exceeds 500:1 [15]. This suggests a new direction for inspection technologies: if models can be trained on the abundant normal equipment images, anomalies can be identified by comparing current inspection images with normal samples, thereby overcoming the limitation imposed by the scarcity of defective samples.

Hence, this paper proposes an intelligent substation image inspection algorithm based on the comparison of normal samples. Its core idea is to construct a normal sample database encompassing the normal states of various equipment. Current inspection images are then compared against those in this database to automatically filter out normal images. Only a small number of abnormal images undergo defect classification before being submitted to the personnel for semi-supervised learning review. The proposed method can significantly reduce the workload of substation operation and maintenance personnel in reviewing substation inspection images and shorten the time required for a single inspection of substation equipment, thus improving the efficiency of video-based substation inspections.

To clarify the relationship between existing studies and this paper, Table 1 summarizes key findings from the literature on image-based substation inspection, their limitations, and the innovations of this paper.

Table 1.

Key Findings from Literature in Image-Based Substation Inspection and Novel Contributions of This Paper.

The core innovations of the proposed method are as follows. Because normal samples of substation equipment vastly outnumber defective ones, the proposed method constructs a normal sample database and employs visual comparison to circumvent the reliance of state-of-the-art (SOTA) approaches on defective samples. Meanwhile, local feature alignment and global structural comparison are integrated to balance identification accuracy against real-time performance, so that the method better suits the complex inspection scenarios of substations.

2. Substation Image Inspection Process

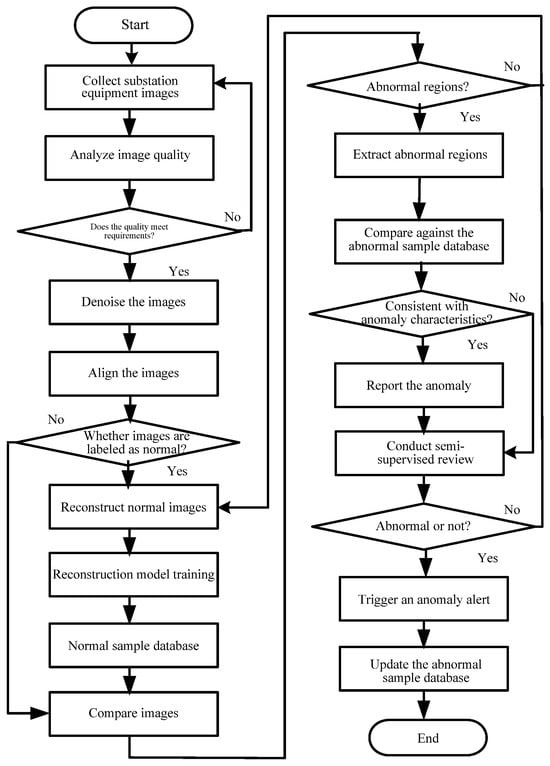

The process of the substation image inspection method based on visual communication and the combination of normal and abnormal samples (hereinafter referred to as the VC-CNAS method) comprises three key steps: preprocessing equipment images, detecting abnormal equipment images, and reviewing abnormal images. The substation image inspection process is shown in Figure 1.

Figure 1.

Substation Image Inspection Process.

(1) Preprocessing Equipment Images

During a substation image inspection, the proposed algorithm controls the HD video cameras in the substation to photograph the equipment at predefined points and thus obtain images of the operational status of the substation equipment. The algorithm then evaluates image quality to determine whether each image meets its requirements for inspection and identification. For images that fail to meet these requirements, such as defocused or underexposed images, the algorithm controls the HD video camera to recapture images until they meet the quality standards. Finally, the algorithm applies a morphological opening operation to the images to remove noise.

(2) Detecting Abnormal Equipment Images

First, substation equipment inspection images are aligned to mitigate camera displacement errors that may reduce the identification accuracy of the algorithm. Next, images labeled as normal are reconstructed to train the model and establish a normal sample database. The current substation inspection images are then compared against the normal sample database to identify potential abnormal regions, which are extracted from the images that contain them. Normal substation equipment images are subsequently used to further train the model of the normal sample database.

(3) Reviewing Abnormal Images

First, abnormal regions in substation equipment images are compared against the abnormal sample database to determine whether they meet the abnormal criteria. For images whose abnormal regions match the characteristics of the abnormal sample database, the abnormal information is reported and a semi-supervised review is conducted; for images that do not match, only a semi-supervised review is performed. In the semi-supervised review process, alarms are automatically generated for abnormal substation equipment images, while normal substation equipment images are used to train the model of the normal sample database. Finally, the abnormal sample database is updated with features of the abnormal equipment images.

3. Substation Image Inspection Model

3.1. Preprocessing Equipment Images

3.1.1. Collecting Substation Equipment Images

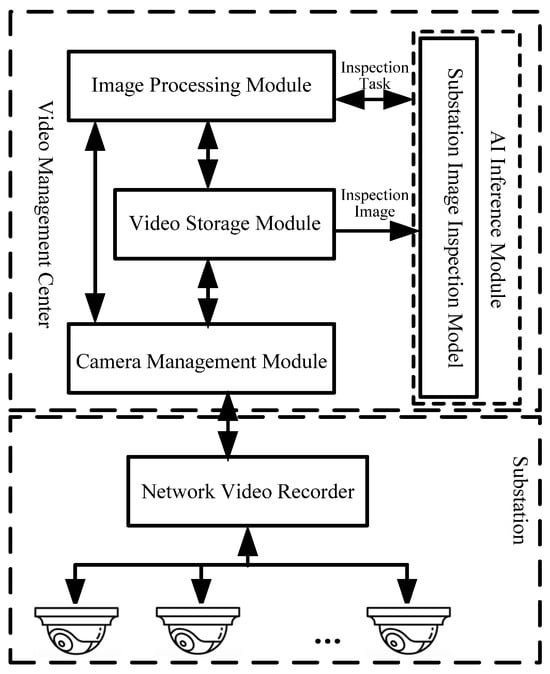

In 2020, the State Grid Corporation of China started to build video management centers for substations at municipal power supply companies, with the purpose of collecting and analyzing equipment images from all substations under municipal jurisdiction in a unified manner. The algorithm is deployed in the AI inference module of the substation video management center. It issues inspection tasks to the image processing module and retrieves substation equipment inspection images from the video storage module. These images are then analyzed, and any abnormal information detected is reported, as shown in Figure 2.

Figure 2.

Substation Image Collection Framework.

The algorithm issues a command to the video management center to inspect equipment. The video management center controls the network video recorders (NVRs) and cameras in the substation to capture images according to the predefined inspection sequence. For example, the predefined points for image inspection of a 110 kV indoor substation are shown in Table 2.

Table 2.

Predefined Points for Image Inspection in Substations.

3.1.2. Analyzing the Quality of Substation Equipment Images

The purpose of assessing the quality of substation equipment images is to analyze whether the current images exhibit quality issues such as defocus or underexposure, thereby avoiding algorithmic errors during image inspection and analysis. For defocused or underexposed equipment images, the proposed algorithm controls the HD video camera to recapture images until they meet the quality standards.

In quality analysis of substation equipment images, defocused images are identified through their gradient information. When the gradient falls below the threshold value Δf1, it indicates insufficient information and poor sharpness at the edges, meaning that the image is defocused. Underexposure is identified by statistically analyzing the peak signal-to-noise ratio (PSNR) [16]. When the PSNR falls below the threshold Δf2, it indicates excessive noise and underexposure in the image.

The Laplace operator is a second-order differential operator applied to both horizontal and vertical coordinates of images. This operator, with its coefficients summing to zero and possessing rotation-invariant properties, meets the requirements for calculating the gradients of substation equipment images [17]. The gradient of substation equipment images is calculated as follows:

where s(Ex, Ey) represents the input substation equipment image; ∂²s/∂Ex² denotes the second-order partial derivative of the image along the horizontal axis; and ∂²s/∂Ey² denotes the second-order partial derivative along the vertical axis. This formula uses the Laplace operator to reflect the second-order rate of change of image pixels along both the horizontal (Ex) and vertical (Ey) axes. A higher gradient value indicates sharper image edges, while a lower value suggests that the image is defocused. In practical computation, the second-order partial derivatives are calculated at each pixel of the input image s(Ex, Ey) and summed. The final result is a scalar value, which is compared with the threshold Δf1.
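The gradient computation can be sketched in Python with NumPy. This is a minimal illustration, not the authors' implementation: it assumes the common 4-neighbour 3 × 3 Laplacian kernel and aggregates the per-pixel responses as a mean of absolute values, because a signed sum of Laplacian responses over an image largely cancels out. The helper names `laplacian`, `sharpness`, and `is_defocused` are hypothetical.

```python
import numpy as np

# 4-neighbour discrete Laplacian: coefficients sum to zero and the kernel is
# unchanged by 90-degree rotations.
LAPLACE_KERNEL = np.array([[0, 1, 0],
                           [1, -4, 1],
                           [0, 1, 0]], dtype=np.float64)


def laplacian(image: np.ndarray) -> np.ndarray:
    """Apply the 3x3 Laplacian to a 2-D grayscale image (valid region only)."""
    img = image.astype(np.float64)
    h, w = img.shape
    out = np.zeros((h - 2, w - 2))
    # Sum weighted, shifted copies of the image; the kernel is symmetric, so
    # this correlation equals a convolution.
    for dy in range(3):
        for dx in range(3):
            out += LAPLACE_KERNEL[dy, dx] * img[dy:dy + h - 2, dx:dx + w - 2]
    return out


def sharpness(image: np.ndarray) -> float:
    """Scalar gradient score: mean absolute Laplacian response (assumed aggregation)."""
    return float(np.mean(np.abs(laplacian(image))))


def is_defocused(image: np.ndarray, delta_f1: float) -> bool:
    """Flag the image for recapture when its score falls below the threshold."""
    return sharpness(image) < delta_f1
```

With this aggregation a uniform image scores 0, while a one-pixel 0/255 checkerboard scores 1020, so the absolute scale of Δf1 depends on how the per-pixel responses are aggregated and normalized.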

The gradient threshold for substation equipment images is jointly determined by the current image and those in the normal sample database. The gradient threshold Δf1 for substation equipment images is calculated as follows:

where np denotes the number of images in the normal sample database; fa represents the gradient of the current image; fbi indicates the gradient values of images in the normal sample database; fbmax − fbmin is the gradient deviation range (fbmax is the maximum gradient value in the normal sample database; fbmin is the minimum gradient value in the normal sample database). The formula consists of two components: The first part represents the average gradient value of the normal sample database, while the second part is the gradient offset correction term (fbmax − fbmin). The purpose of the correction term is to prevent extreme samples from affecting the threshold.

The peak signal-to-noise ratio (PSNR) is one of the key metrics for evaluating image quality. Calculated from the root mean square error (RMSE), PSNR expresses the ratio of the maximum possible signal power to the power of the noise [18]. It is therefore adopted in this study to assess the quality of substation equipment images.

The RMSE of substation equipment images is calculated as follows:

where na is the number of pixels along the horizontal axis (image width); oa is the number of pixels along the vertical axis (image height); Hij is the substation equipment image with added noise; and Eij is the original substation equipment image. This formula quantifies the difference between the noisy image Hij and the original noise-free image Eij. During calculation, the squared difference is computed for every pixel, the squared differences are averaged over all na × oa pixels, and the square root of this average is taken. A higher Rmse value indicates more severe image noise. The signal-to-noise ratio threshold Δf2 is calculated using the method described in Equation (2); due to space limitations, further details are not covered in this paper.

The PSNR of substation equipment images is calculated as follows:

where Amax represents the maximum possible pixel value of the substation equipment image. PSNR is measured in decibels (dB) and decreases as Rmse increases, so a noisier image yields a lower PSNR. For an 8-bit grayscale image, Amax is 255; for an 8-bit RGB color image, Amax is likewise 255 per channel, with the error averaged over all three channels.
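Because the RMSE and PSNR equations are not reproduced in this section, the sketch below uses the standard definitions, RMSE = sqrt(mean((H − E)²)) and PSNR = 20·log10(Amax / RMSE), assuming these match the authors' Equations. The function names `rmse` and `psnr` are illustrative, and the reference image E is assumed to be available.

```python
import numpy as np


def rmse(noisy: np.ndarray, reference: np.ndarray) -> float:
    """Root mean square error over all na x oa pixels (and channels, if any)."""
    diff = noisy.astype(np.float64) - reference.astype(np.float64)
    return float(np.sqrt(np.mean(diff ** 2)))


def psnr(noisy: np.ndarray, reference: np.ndarray, a_max: float = 255.0) -> float:
    """PSNR in dB: 20 * log10(A_max / RMSE); infinite for identical images."""
    err = rmse(noisy, reference)
    if err == 0.0:
        return float("inf")
    return float(20.0 * np.log10(a_max / err))
```

For example, an 8-bit image that differs from its reference by one grey level at every pixel has an RMSE of 1 and a PSNR of about 48.13 dB, well above the 28.3 dB threshold reported later in this section.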

When the gradient or PSNR of a substation equipment image falls below the corresponding threshold, the proposed algorithm issues a recapture command to the video management center, which controls the HD video camera to recapture images until their quality meets the inspection requirements.

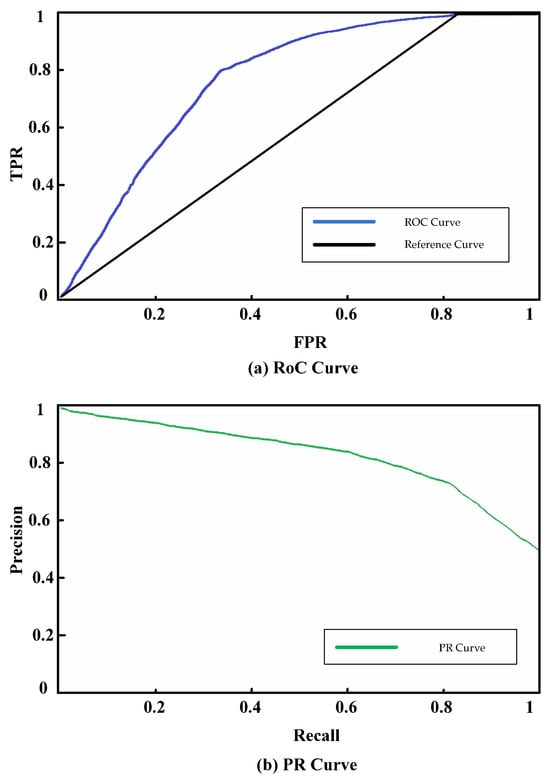

To further validate the rationality of the gradient threshold (Δf1) and the PSNR threshold (Δf2), this paper employs the Receiver Operating Characteristic (ROC) curve and the Precision-Recall (PR) curve for statistical analysis. A total of 50,000 images from five different substations, including 1200 genuine defective images, were labeled, and the True Positive Rate (TPR), False Positive Rate (FPR), precision, and recall were calculated for different threshold values. The Area Under the Curve (AUC) summarizes the discriminative ability of each metric, and the optimal threshold is selected as the operating point with the highest TPR while the FPR remains at or below 5%. This approach ensures that the thresholds generalize across different substation environments and avoids deviations introduced by subjective judgment.

The ROC curve and the PR curve are shown in Figure 3.

Figure 3.

Receiver Operating Characteristic (ROC) Curve and Precision-Recall (PR) Curve.

Optimal Δf1 threshold selection: Under the constraint of FPR ≤ 5%, the threshold point with the highest TPR is selected, resulting in a Δf1 value of 18.7.

Optimal threshold selection for Δf2: Under the constraint of FPR ≤ 5%, the optimal threshold value for Δf2 is 28.3 dB.
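The selection rule "highest TPR subject to FPR ≤ 5%" can be sketched as a sweep over candidate thresholds. The sketch assumes that low-quality images are the positive class and that an image is flagged when its metric (gradient or PSNR) is below the threshold, matching the recapture rule above. The function name `select_threshold` and the toy scores in the usage check are hypothetical; the authors' 50,000 labeled images are not reproduced here.

```python
import numpy as np


def select_threshold(scores, is_low_quality, max_fpr=0.05):
    """Return (threshold, tpr, fpr) with the highest TPR subject to FPR <= max_fpr.

    An image is flagged (predicted positive) when its score < threshold.
    """
    scores = np.asarray(scores, dtype=np.float64)
    positives = np.asarray(is_low_quality, dtype=bool)
    n_pos = positives.sum()
    n_neg = (~positives).sum()
    best_t, best_tpr, best_fpr = None, -1.0, 1.0
    # Candidate thresholds: each observed score, plus one above the maximum.
    for t in np.append(np.unique(scores), scores.max() + 1.0):
        flagged = scores < t  # metric below threshold -> recapture
        tpr = (flagged & positives).sum() / n_pos
        fpr = (flagged & ~positives).sum() / n_neg
        # Keep the feasible point with the highest TPR (ties: lower FPR).
        if fpr <= max_fpr and (tpr > best_tpr or (tpr == best_tpr and fpr < best_fpr)):
            best_t, best_tpr, best_fpr = float(t), float(tpr), float(fpr)
    return best_t, best_tpr, best_fpr
```

Sweeping only the observed scores is sufficient because TPR and FPR change only when the threshold crosses one of them.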

3.1.3. Denoising Substation Equipment Images

Environmental factors introduce noise into substation equipment images captured by HD video cameras. Although noise in the images can be reduced by analyzing the quality of the images and recapturing qualified images, it cannot be completely eliminated [19]. Therefore, during the image preprocessing stage, substation equipment images must be denoised.

The opening operation is a morphological image processing method that first erodes and then dilates an image, thereby eliminating noise such as burrs. Specifically, erosion shrinks bright regions in the image, removing small noise artifacts such as burrs while preserving genuine image features, and dilation then restores the extent of the bright regions that survive. Through this erosion-dilation sequence, noise in the image is effectively removed [20]. Therefore, the morphological opening operation is employed in this study to denoise substation equipment images.

The eroding of substation equipment images is defined as follows:

where lz represents the structuring element, typically a 3 × 3 or 5 × 5 rectangular kernel; qc is the position parameter of the erosion kernel; and Ea is the input substation equipment image to be denoised. The result is the set of all pixels at which “the structuring element is entirely contained within the highlighted image regions,” which eliminates noise points smaller than the structuring element.

The dilating of substation equipment images is defined as follows:

What distinguishes dilation from erosion is the criterion that “the structuring element must intersect the highlighted regions of the image.” This operation restores valid regions that shrank during erosion. Combined with erosion, it forms the opening operation, which removes noise while preserving the integrity of equipment contours. Through erosion followed by dilation, small objects such as burrs are eliminated, the size of the remaining equipment regions is kept essentially unchanged, and their boundaries are smoothed.
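Erosion and dilation with a square structuring element reduce to sliding-window minimum and maximum filters on grayscale images, and these reduce to the set definitions above on binary images. The NumPy sketch below assumes a square k × k element and edge-replicated borders; the helper names are hypothetical, and a production system would more likely call a library routine such as OpenCV's `morphologyEx`.

```python
import numpy as np


def _filter(image, size, reducer):
    """Slide a size x size square window and reduce each window with min or max."""
    r = size // 2
    padded = np.pad(image, r, mode="edge")  # replicate borders, no artificial edges
    h, w = image.shape
    # Stack every shifted view of the window, then reduce across the stack.
    views = [padded[dy:dy + h, dx:dx + w] for dy in range(size) for dx in range(size)]
    return reducer(np.stack(views), axis=0)


def erode(image, size=3):
    """Keep a bright pixel only if the whole window around it is bright."""
    return _filter(image, size, np.min)


def dilate(image, size=3):
    """Brighten a pixel if any pixel in its window is bright."""
    return _filter(image, size, np.max)


def opening(image, size=3):
    """Morphological opening: erosion followed by dilation."""
    return dilate(erode(image, size), size)
```

In a small test, a single bright "burr" pixel disappears after opening, while a 3 × 3 bright block survives with its original size, which is the behaviour described above.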

3.2. Detecting Abnormal Equipment Images

3.2.1. Aligning Substation Equipment Images

The proposed algorithm identifies abnormal regions by performing pixel-wise subtraction between the current substation equipment images and images in the normal sample database. Therefore, the current substation equipment images must be aligned with those in the normal sample database using corner features to avoid identification accuracy degradation caused by displacement errors from HD video cameras.
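The pixel-wise subtraction step can be sketched as an absolute difference followed by a threshold. This is an illustrative sketch only: the difference threshold of 30 grey levels and the helper names `abnormal_mask` and `bounding_box` are assumptions, and the images are assumed to be already aligned.

```python
import numpy as np


def abnormal_mask(current, reference, diff_threshold=30):
    """Binary mask of pixels whose absolute difference exceeds diff_threshold.

    Casting to int32 first avoids uint8 wrap-around (e.g. 0 - 100 -> 156).
    """
    diff = np.abs(current.astype(np.int32) - reference.astype(np.int32))
    return diff > diff_threshold


def bounding_box(mask):
    """(row_min, row_max, col_min, col_max) of the flagged pixels, or None."""
    rows, cols = np.nonzero(mask)
    if rows.size == 0:
        return None
    return int(rows.min()), int(rows.max()), int(cols.min()), int(cols.max())
```

Even a displacement of a few pixels would cause edges to light up across the whole difference mask. This is why alignment must precede the subtraction.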

The scale invariant feature transform (SIFT), as a local feature description algorithm, exhibits scale-invariant properties and can effectively detect corner features from power substation equipment images [21]. Perspective transformation (PT) is an image projection method that projects the current substation equipment images onto the plane of normal sample images to align corners of these images [22]. Therefore, the algorithm first applies SIFT to process the current substation equipment images to extract corner features, then uses perspective transformation (PT) to align the current images with those in the normal sample database.

The Gaussian function used in SIFT detection for substation equipment images is as follows:

where xc and yc denote the horizontal and vertical coordinates of the substation equipment image; δ is the standard deviation of the Gaussian kernel, which controls the degree of blurring (the larger the value, the blurrier the image); and eb is the scale-space factor used to construct the multi-scale pyramid. By applying Gaussian blurring to the image at different scales, this function provides scale invariance for subsequent corner feature detection.

Then, the scale space of the substation equipment images is computed as follows:

where Exy is the input substation equipment image and the asterisk (*) denotes the convolution operator. Convolving the Gaussian function with the original image Exy enables multi-scale image feature extraction and paves the way for corner feature detection in the SIFT algorithm.

Then, the Gaussian pyramid is built, and extremum points of the substation equipment images are computed to obtain corner features.
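The scale-space and extremum steps can be sketched following the Difference-of-Gaussians (DoG) construction of Lowe's SIFT. The sketch assumes that δ corresponds to `sigma` and that the scale-space factor eb corresponds to the ratio `k` between adjacent scales. The parameter values (`sigma0=1.0`, `k=√2`, five levels, contrast threshold 1.0) and all function names are illustrative. A full SIFT implementation also applies sub-pixel refinement, edge-response rejection, orientation assignment, and descriptors; OpenCV's `cv2.SIFT_create()` provides these.

```python
import numpy as np


def gaussian_kernel_1d(sigma):
    """Sampled, normalised 1-D Gaussian; the 2-D G(x, y, sigma) is separable."""
    radius = max(1, int(np.ceil(3 * sigma)))
    x = np.arange(-radius, radius + 1, dtype=np.float64)
    k = np.exp(-x ** 2 / (2 * sigma ** 2))
    return k / k.sum()


def gaussian_blur(image, sigma):
    """L(x, y, sigma) = G(x, y, sigma) * E(x, y), computed as two 1-D passes."""
    k = gaussian_kernel_1d(sigma)
    r = len(k) // 2
    img = np.pad(image.astype(np.float64), r, mode="reflect")
    img = np.apply_along_axis(np.convolve, 1, img, k, mode="valid")
    return np.apply_along_axis(np.convolve, 0, img, k, mode="valid")


def dog_stack(image, sigma0=1.0, k=2 ** 0.5, levels=5):
    """Blur at sigma0 * k**i and subtract adjacent levels (Difference of Gaussians)."""
    blurred = [gaussian_blur(image, sigma0 * k ** i) for i in range(levels)]
    return np.stack([blurred[i + 1] - blurred[i] for i in range(levels - 1)])


def dog_extrema(dog, contrast=1.0):
    """(scale, row, col) of points that are the max or min of their 3x3x3
    neighbourhood in the DoG stack and whose magnitude reaches `contrast`."""
    points = []
    n_s, h, w = dog.shape
    for s in range(1, n_s - 1):
        for y in range(1, h - 1):
            for x in range(1, w - 1):
                v = dog[s, y, x]
                cube = dog[s - 1:s + 2, y - 1:y + 2, x - 1:x + 2]
                if abs(v) >= contrast and (v == cube.max() or v == cube.min()):
                    points.append((s, y, x))
    return points
```

On a 32 × 32 test image containing a bright 4 × 4 blob at rows and columns 14 to 17, a DoG minimum appears at the blob centre on the second DoG layer. This shows how the extremum search localizes a feature in both position and scale.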

Finally, corner features of the substation equipment image are projected onto the normal sample via perspective transformation (PT) to align corner features. The 3D perspective coordinate system for substation equipment images is defined as follows:

where xb, yb, and zb represent the horizontal, vertical, and depth coordinates of the substation equipment image before perspective projection, and elements b11, b12, b13, b21, b22, b23, b31, b32, and b33 constitute a 3 × 3 perspective transformation (PT) matrix. Because the matrix is defined only up to scale, b33 is typically normalized to 1, leaving eight unknowns that can be solved from 4 pairs of matching points, each pair contributing two equations. Through perspective transformation, image tilt caused by camera angle deviations can be corrected, achieving pixel-level alignment between the current images and those in the sample database.
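Solving the PT matrix from four matched points amounts to an 8 × 8 linear system once b33 is fixed to 1. Each pair (x, y) → (u, v) gives u = (b11·x + b12·y + b13)/(b31·x + b32·y + 1), plus the analogous equation for v. The sketch below assumes that the four source points are in general position, meaning that no three of them are collinear. The function names are hypothetical. With many noisy SIFT matches, a robust estimator such as OpenCV's `cv2.findHomography` with RANSAC would normally be used instead.

```python
import numpy as np


def homography_from_4_points(src, dst):
    """Solve the 3x3 perspective matrix B (b33 fixed to 1) mapping src -> dst.

    src, dst: arrays of shape (4, 2) holding (x, y) pixel coordinates.
    """
    a, rhs = [], []
    for (x, y), (u, v) in zip(src, dst):
        # Linearised forms of u = (b11 x + b12 y + b13) / (b31 x + b32 y + 1)
        # and v = (b21 x + b22 y + b23) / (b31 x + b32 y + 1).
        a.append([x, y, 1, 0, 0, 0, -x * u, -y * u])
        a.append([0, 0, 0, x, y, 1, -x * v, -y * v])
        rhs.extend([u, v])
    b = np.linalg.solve(np.array(a, dtype=np.float64), np.array(rhs, dtype=np.float64))
    return np.append(b, 1.0).reshape(3, 3)


def apply_homography(B, points):
    """Map (x, y) points through B using homogeneous coordinates."""
    pts = np.asarray(points, dtype=np.float64)
    pts = np.hstack([pts, np.ones((len(pts), 1))])
    proj = pts @ B.T
    return proj[:, :2] / proj[:, 2:3]  # divide by the third (depth) coordinate
```

A round trip recovers the matrix: mapping four corners through a known matrix, then solving from those correspondences, returns the same matrix up to floating-point error.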

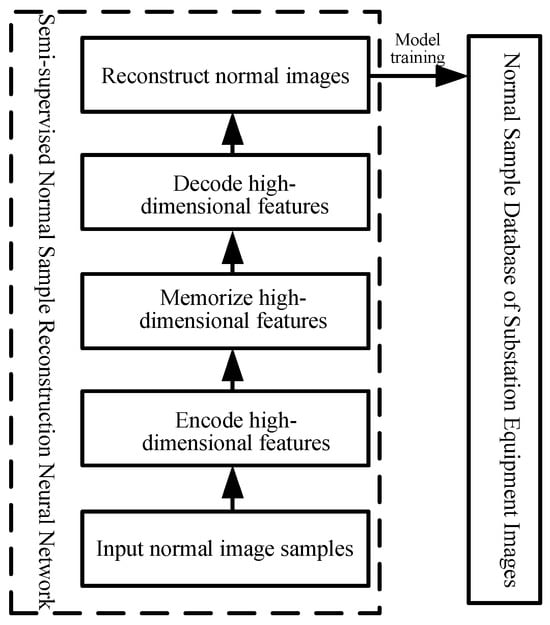

3.2.2. Utilizing Normal Substation Equipment Images for Model Training

To reconstruct substation equipment images labeled as normal, aligned substation equipment images are input and a semi-supervised learning-based neural network for reconstructing normal samples is run to extract, memorize, and decode high-dimensional features [23]. Finally, the neural network outputs reconstructed images exhibiting features of normal samples. Such images are used for model training to establish a normal sample database for substation equipment images, as shown in Figure 4.

Figure 4.

Normal Sample Training of Substation Equipment Image.

In supervised learning methods for substation equipment images, model training is performed by manually labeling image features. However, the vast quantity of normal sample images and the lack of manpower to label them have resulted in poor generalization performance of the trained inspection models at the substation [24]. Semi-supervised learning (SSL) incorporates both unlabeled and labeled samples for model training, which not only reduces the workload for substation operation and maintenance personnel, but also improves the accuracy of model training [25]. Therefore, SSL is adopted to utilize normal substation equipment images for model training.

A convolutional neural network (CNN) is a deep learning model built from convolutional computations in a deep layered architecture. Inspired by biological visual systems, CNNs can be used to implement semi-supervised learning (SSL). The main components of a CNN are the convolutional layer, the activation function, and the pooling layer [26]. When substation equipment images are processed, the feature maps output by the CNN layers serve as input to the fully-connected layer, which completes the mapping from the substation images to label sets.

In the encoding stage, a CNN encodes high-dimensional features of the input substation equipment images, reduces the dimensionality of the image vectors, and memorizes the high-dimensional features. In the decoding stage, the CNN decodes the high-dimensional features and increases the dimensionality of the image vectors. Normal images are then reconstructed, and a normal sample database of substation equipment images is established through model training.

The CNN convolution computes a weighted sum of the pixel intensities of the equipment image. After convolution, the sum is defined as follows:

where ne denotes the size of the CNN convolution kernel; oei represents the pixel intensities within the kernel window; and wei represents the corresponding kernel weights. The operation is implemented through a sliding window, and the output feature map captures local features such as edges and textures.

After performing convolution on the substation equipment images, a bias term is added and activation is applied to obtain the final result:

where β denotes the bias term of the CNN, initialized to 0 and updated through backpropagation, and γ represents the sigmoid activation function. By mapping the convolutional outputs to the range (0, 1), this operation introduces nonlinearity, which improves the fitting capacity of the network.
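The convolution, bias, and sigmoid steps can be sketched for a single channel and a single kernel. The sketch assumes stride 1 and no padding. Like most CNN frameworks, it computes a cross-correlation rather than a flipped convolution. The helper names are hypothetical, and the kernel weights would be learned by backpropagation rather than fixed as they are in the usage check.

```python
import numpy as np


def conv2d_valid(image, kernel):
    """Sliding-window weighted sum of pixel intensities (stride 1, no padding)."""
    kh, kw = kernel.shape
    h, w = image.shape
    out = np.empty((h - kh + 1, w - kw + 1))
    for i in range(out.shape[0]):
        for j in range(out.shape[1]):
            # Sum of weight * intensity over the current kernel window.
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out


def sigmoid(z):
    """Map any real value into the open interval (0, 1)."""
    return 1.0 / (1.0 + np.exp(-z))


def conv_layer(image, kernel, bias=0.0):
    """gamma(sum(w * o) + beta): convolution, bias, then sigmoid activation."""
    return sigmoid(conv2d_valid(np.asarray(image, dtype=np.float64), kernel) + bias)
```

As a check, a 2 × 2 all-ones kernel slid over the 4 × 4 image `np.arange(16).reshape(4, 4)` yields the 3 × 3 window sums [[10, 14, 18], [26, 30, 34], [42, 46, 50]].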

Subsequently, pooling is performed to reduce the feature space dimensionality of the substation equipment images. Finally, through the fully-connected layer, the processed substation equipment image is output as:

where ng denotes the number of neural layers in CNN; wgj represents the weights of different CNN neural layers; ui−1 is the output from the previous layer’s neurons. The fully connected layer projects high-dimensional features into the sample label space to reconstruct the image.

Substation operation and maintenance personnel determine whether equipment is in proper operating condition by comparing the current equipment images with normal images. If the two match, the equipment is confirmed to be in normal condition. Therefore, during the establishment of the normal sample database of substation equipment images, high-dimensional features of normal sample images are memorized. This ensures that the high-dimensional features of substation equipment images after model training remain consistent with the memorized features.

During the model memorization process, normal image samples of substation equipment are input into the model, where features of normal images are extracted as the memorized information. During the inference phase, high-dimensional features are retrieved from the normal sample database of equipment images in CNN and are compared with the current memorized information to show the differences between the input image and images in the normal sample database.

where nh denotes the number of normal image samples of substation equipment in the normal sample database; Mbi represents the features of the input substation equipment images; Mai represents the features of the substation equipment images in the normal sample database; and ‖·‖2 denotes the L2 norm. This formula calculates the maximum difference between the current image features Mbi and the sample database features Mai. This maximum deviation is taken as the maximum allowable tolerance for deviations of normal samples during feature matching.
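The tolerance computation can be sketched as a maximum over L2 distances. The sketch assumes that each current feature vector Mbi is paired by index with a database feature vector Mai, with one vector per row. The function names `tolerance` and `within_tolerance` are hypothetical.

```python
import numpy as np


def tolerance(current_feats, normal_feats):
    """max_i ||M_b,i - M_a,i||_2 over n_h index-paired feature vectors (rows)."""
    diffs = np.asarray(current_feats, dtype=float) - np.asarray(normal_feats, dtype=float)
    return float(np.max(np.linalg.norm(diffs, axis=1)))


def within_tolerance(feature, reference, tol):
    """True if one feature vector lies within tol (L2) of its reference."""
    gap = np.linalg.norm(np.asarray(feature, dtype=float) - np.asarray(reference, dtype=float))
    return float(gap) <= tol
```

Because the tolerance is the maximum deviation observed on normal samples, a single atypical normal sample widens it for every comparison. This is a trade-off between fewer false alarms and lower sensitivity.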

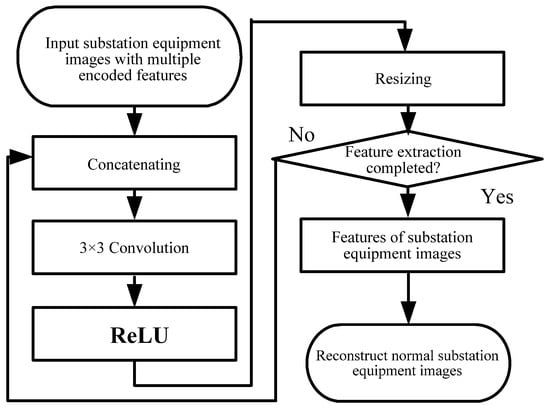

In the high-dimensional feature decoding phase, multiple encoded features of substation equipment images from the CNN memorization module are first concatenated along the channel dimension. The concatenated features then undergo convolutional processing to adjust the channel dimensions and are passed through a Rectified Linear Unit (ReLU) activation function to generate the first-layer output of the high-dimensional feature decoder [27]. Next, the feature maps are resized to generate the second-layer output. This process is iterated until the decoder outputs all features, as illustrated in Figure 5.

Figure 5.

High-Dimensional Feature Decoding Structure.
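One decoding stage described above (concatenate, convolve to adjust channels, ReLU, resize) can be sketched in NumPy as follows. This is an illustrative sketch, not the authors' decoder: the kernel size is not stated in the text, so a 1 × 1 convolution is assumed, the resize is assumed to be 2× nearest-neighbour upsampling, and all names and shapes are hypothetical.

```python
import numpy as np


def relu(x):
    return np.maximum(x, 0.0)


def conv1x1(x, weight, bias):
    """1x1 convolution on a (C_in, H, W) array; it only mixes channels.
    weight: (C_out, C_in), bias: (C_out,)."""
    return np.einsum("oc,chw->ohw", weight, x) + bias[:, None, None]


def upsample2x(x):
    """Nearest-neighbour resize by a factor of 2 along both spatial axes."""
    return x.repeat(2, axis=1).repeat(2, axis=2)


def decoder_stage(encoded_feats, weight, bias):
    """Concatenate on channels -> 1x1 conv -> ReLU (first-layer output),
    then resize (second-layer output)."""
    x = np.concatenate(encoded_feats, axis=0)  # axis 0 is the channel axis
    first = relu(conv1x1(x, weight, bias))
    second = upsample2x(first)
    return first, second


rng = np.random.default_rng(0)
feats = [rng.standard_normal((8, 16, 16)) for _ in range(2)]  # two encoded maps
w = 0.1 * rng.standard_normal((4, 16))  # 16 concatenated channels -> 4
b = np.zeros(4)
first, second = decoder_stage(feats, w, b)
print(first.shape, second.shape)  # (4, 16, 16) (4, 32, 32)
```

In a trained decoder the weights would be learned parameters; random values are used here only to show the data flow and shapes.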

In semi-supervised learning (SSL), normal samples of substation equipment images labeled by operation and maintenance personnel are used to train the model, which then identifies unlabeled image samples, thereby reducing the heavy workload of manually labeling normal samples. Training minimizes the mean squared error (MSE) loss function [28] and is complete once the MSE converges as the iterations increase. The MSE of the substation equipment image is defined as:

where nk is the number of substation equipment images; Ekj is the value of the current image of different substation equipment; Hki is the value of the reconstructed normal image of different substation equipment. Training minimizes the MSE through gradient descent, and convergence is declared when the MSE falls below 1 × 10⁻⁵.
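Assuming the standard form MSE = (1/nk) Σ (Ekj − Hki)², the stopping rule can be illustrated with a toy gradient-descent loop. This is a deliberately simplified sketch: the "model" is just one learnable reconstruction value per pixel rather than a CNN, and the learning rate and pixel values are hypothetical; only the 1 × 10⁻⁵ criterion comes from the text.

```python
def mse(current, reconstructed):
    """Mean squared error over n_k paired pixel values."""
    n = len(current)
    return sum((e - h) ** 2 for e, h in zip(current, reconstructed)) / n


def train_until_converged(targets, lr=0.5, tol=1e-5, max_iter=10_000):
    """Toy gradient descent that learns one reconstructed value per pixel and
    stops once the MSE falls below tol (the 1e-5 criterion in the text)."""
    n = len(targets)
    recon = [0.0] * n
    loss = mse(targets, recon)
    for step in range(1, max_iter + 1):
        # dMSE/dH_i = 2 * (H_i - E_i) / n
        recon = [h - lr * 2.0 * (h - e) / n for e, h in zip(targets, recon)]
        loss = mse(targets, recon)
        if loss < tol:
            return recon, loss, step
    return recon, loss, max_iter


pixels = [0.2, 0.5, 0.8, 1.0]  # hypothetical normalized pixel values
recon, loss, steps = train_until_converged(pixels)
print(f"MSE {loss:.2e} after {steps} steps")
```

In the real model the gradient flows through the CNN weights instead of directly into per-pixel values, but the convergence test on the MSE is the same.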

During model training, ReLU activation functions are applied in the network layers to improve training accuracy. In substation image inspection, when minor image abnormalities such as oil leakage from high-voltage bushings must be detected, the algorithm uses a semantic segmentation network to extract key regions of interest in the image and mask out irrelevant background information. The images are then reconstructed to establish the normal sample database of substation equipment images. Due to space limitations, semantic segmentation is not elaborated in this paper; details are given in Reference [29].

3.2.3. Comparing Substation Equipment Images and Extracting Abnormal Regions

Anomalies in substation equipment images are detected by comparing the preprocessed and aligned images against the normal image database to identify abnormal regions and obtain the difference between the images, while normal equipment images are filtered out.

Visual communication (VC) is the process of conveying information through visual elements such as symbols, imagery, and text, with an emphasis on efficient and intuitive information exchange through visual media. During substation equipment image comparison, VC can simulate human visual perception to identify discrepancies between current equipment images and standard normal images, thereby improving the accuracy of automated comparison.

Visual communication refers to the collaborative mechanism between machine-based visual feature quantization and human perception-based verification in this method. Specifically, it encompasses both the low level and the high level. At the low level, the visual difference between images is calculated using the LPIPS metric to quantify the machine-based identification results of “abnormal vs. normal” features. At the high level, a human-in-the-loop review process is introduced to manually verify high-risk abnormal samples identified by the machine, thereby calibrating the discrepancy between machine-based judgment and human visual perception. In this way, a closed loop of “machine computation—human verification—model iteration” is established.

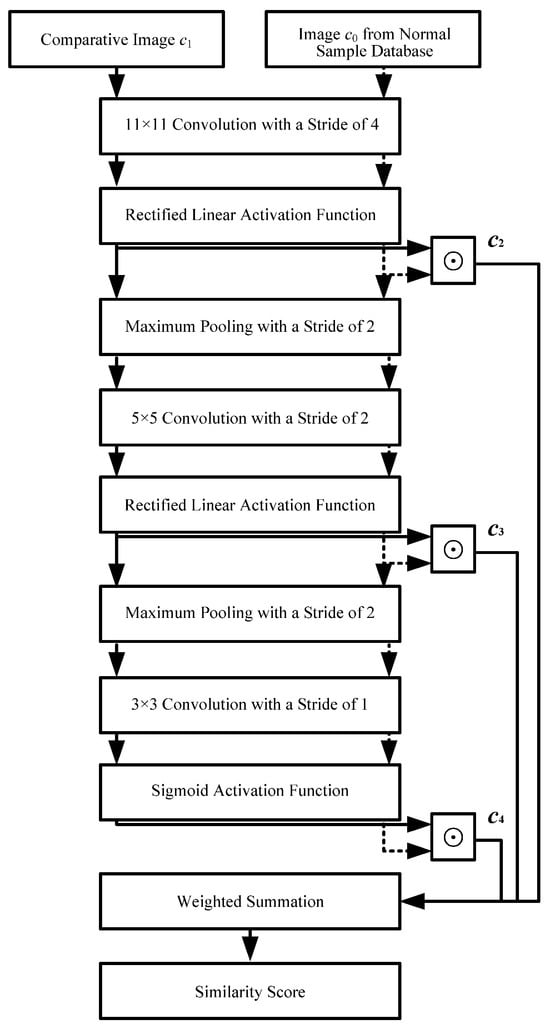

Learned perceptual image patch similarity (LPIPS) is a VC approach that measures perceptual differences between two images. Rather than comparing raw pixel values, it compares the deep features that a convolutional network extracts from the normal sample image and from the current image. LPIPS scores agree closely with human perceptual judgments [30]; therefore, the proposed comparison system employs LPIPS to compare substation equipment images. The model that assesses the similarity between substation equipment images and images from the normal sample database is illustrated in Figure 6.

Figure 6.

Structure of Similarity Comparison Model.

As illustrated in Figure 6, c0 denotes the feature vector of the image from the normal sample database of substation equipment, while c1 represents the feature vector of the current input image of substation equipment. First, c0 and c1 undergo an 11 × 11 convolution with a stride of 4, followed by ReLU activation, to obtain c2. Then, they undergo max pooling, a 5 × 5 convolution with a stride of 2, and ReLU activation to obtain c3. Next, they again undergo max pooling, a 3 × 3 convolution with a stride of 1, and Sigmoid activation to obtain c4. Finally, the weighted average of c2, c3, and c4 is calculated to obtain the similarity score with respect to the normal samples of substation equipment.
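Because c2, c3, and c4 come from different depths, their spatial sizes differ, so the weighted average has to be taken over per-layer scores (for example, each map reduced to a scalar). The arithmetic below illustrates this for the 256 × 256 input used in Section 4. It assumes the stages are applied in sequence, with no padding and 3 × 3 max pooling of stride 2; the pooling size, the padding, the layer weights, and the helper names are assumptions rather than values from the text.

```python
def conv_out(n, kernel, stride, padding=0):
    """Output side length of a convolution or pooling window (no dilation)."""
    return (n + 2 * padding - kernel) // stride + 1


def weighted_score(layer_scores, weights):
    """Weighted average of per-layer similarity scores."""
    return sum(w * s for w, s in zip(weights, layer_scores)) / sum(weights)


n = 256                       # CNN input size used in Section 4
c2 = conv_out(n, 11, 4)       # 11x11 conv, stride 4
p1 = conv_out(c2, 3, 2)       # assumed 3x3 max pool, stride 2
c3 = conv_out(p1, 5, 2)       # 5x5 conv, stride 2
p2 = conv_out(c3, 3, 2)       # assumed 3x3 max pool, stride 2
c4 = conv_out(p2, 3, 1)       # 3x3 conv, stride 1
print(c2, c3, c4)             # 62 13 4
```

With maps of 62 × 62, 13 × 13, and 4 × 4, the per-layer scores would be combined with `weighted_score`, where the weights stand in for the trained layer weights.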

The similarity between the current substation equipment image and the images in the normal sample database is defined as follows:

where nb represents the number of layers in LPIPS; nc denotes the number of scores and combined weights per layer in LPIPS; wi represents the trainable weights of the same dimension for different channels; vi represents the mapping scores for different channels; wl is the sum of the LPIPS channel weights of the same dimension; yaj and ybj represent the unit-normalized values of the feature stacks extracted from the current substation equipment image and the normal sample image, respectively. A smaller dab value indicates higher similarity between two images. If the dab value is less than the similarity threshold Δf3, it indicates that the substation equipment image is similar to those in the normal sample database. If dab exceeds Δf3, it signifies the presence of abnormal regions in the substation equipment image. Subsequently, Equation (1) is applied to perform edge detection on abnormal regions in the substation equipment image and information of the abnormal regions is extracted.
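Assuming dab follows the published LPIPS formulation, the distance can be computed in four steps. First, unit-normalize each layer's features along the channel axis. Second, scale the difference by per-channel weights. Third, square and average over spatial positions. Finally, sum over the nb layers. The sketch below does this in NumPy on synthetic feature stacks. The layer shapes, the all-ones weights, and the threshold value are hypothetical. A real system would use pretrained backbone features and trained weights, as in the reference `lpips` PyTorch package (for example `lpips.LPIPS(net="alex")`).

```python
import numpy as np


def unit_normalize(feat, eps=1e-10):
    """Scale each spatial position of a (C, H, W) stack to unit length over channels."""
    norm = np.sqrt((feat ** 2).sum(axis=0, keepdims=True))
    return feat / (norm + eps)


def lpips_like_distance(layers_a, layers_b, channel_weights):
    """Sum over layers of the spatially averaged, channel-weighted squared
    difference between unit-normalized feature stacks."""
    d = 0.0
    for fa, fb, w in zip(layers_a, layers_b, channel_weights):
        diff = unit_normalize(fa) - unit_normalize(fb)
        d += float(((w[:, None, None] * diff) ** 2).sum(axis=0).mean())
    return d


rng = np.random.default_rng(1)
shapes = [(64, 31, 31), (192, 15, 15), (384, 7, 7)]  # hypothetical layer sizes
normal = [rng.standard_normal(s) for s in shapes]
current = [f + 0.5 * rng.standard_normal(f.shape) for f in normal]
weights = [np.ones(s[0]) for s in shapes]             # untrained: all ones

delta_f3 = 0.1                                        # hypothetical threshold
d_ab = lpips_like_distance(current, normal, weights)
print("abnormal" if d_ab > delta_f3 else "normal")
```

Identical feature stacks give a distance of exactly zero, and the distance grows as the current image departs from the normal sample, matching the rule that a smaller dab means higher similarity.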

The similarity threshold Δf3 is defined as:

where fc is the current similarity score; fcavg is the average similarity score among images in the normal sample database; fcmax − fcmin is the image similarity deviation score, in which fcmax is the maximum and fcmin the minimum image similarity in the normal sample database. The correction term coefficient expands the threshold range so that minor anomalies are not missed.

3.3. Reviewing Abnormal Images

The abnormal image database of substation equipment aims to dynamically accumulate abnormal data encountered by substation operation and maintenance personnel during inspections, enabling the model to develop the capability for classifying abnormal images. In addition to utilizing normal samples for model training, the training process for the abnormal image database of substation equipment images also incorporates spatial attention module-based feature weighting, enabling the model to focus more on defective regions in substation equipment images. Due to space limitations, only the spatial attention-based feature weighting is described for the training of the abnormal image database of substation equipment, with the remaining procedures detailed in Equations (9)–(13).

In spatial attention module-based feature weighting, the maximum defect is first computed according to Equation (12) and is then input into CNN for average pooling to obtain Za3.

where nl denotes the channel count of the first-layer defective samples of substation equipment images and it is set as 64 in this paper. dmax1i represents the maximum dissimilarity value among the first-layer channel defect samples. This formula extracts features of salient regions through an averaging operation to enhance the model’s focus on defects.

Subsequently, samples are taken on the saliency map of Za3, and are additively combined with the mean defective sample value at the second layer. The substation equipment image defects at the second layer are defined as follows:

where no denotes the channel count of the second-layer defective samples of substation equipment images and it is set as 128 in this paper; dmax2i represents the maximum dissimilarity value for the second-layer defect samples; zah3 is the sampling value of the saliency map of Za3, and it can achieve cross-layer feature fusion.

Finally, samples are taken on the saliency map of Za2, and are additively combined with the mean defective sample value at the third layer. The substation equipment image defects output at the third layer are defined as follows:

where np denotes the channel count of the third-layer defective samples of substation equipment images, set to 256 in this paper; dmax3i represents the maximum dissimilarity value among the third-layer channel defect samples; zah2 is the sampling value of the saliency map of Za2. The final output is used for weighted enhancement of defective regions.
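The three-layer fusion described above can be sketched as follows. In this sketch, each layer's per-channel maximum-dissimilarity maps are averaged over channels, the previous saliency map is resampled onto the next layer's grid, and the two are added. It is a sketch under stated assumptions: the spatial sizes, nearest-neighbour resampling, and the final sigmoid gating used for "weighted enhancement" are hypothetical choices, and only the channel counts 64, 128, and 256 come from the text.

```python
import numpy as np


def channel_mean(d_max):
    """Average per-channel maximum-dissimilarity maps: (C, H, W) -> (H, W)."""
    return d_max.mean(axis=0)


def resample(saliency, shape):
    """Nearest-neighbour sampling of a 2-D saliency map onto a new grid."""
    rows = np.arange(shape[0]) * saliency.shape[0] // shape[0]
    cols = np.arange(shape[1]) * saliency.shape[1] // shape[1]
    return saliency[np.ix_(rows, cols)]


def fuse_defect_maps(d1, d2, d3):
    """Cross-layer fusion: Za3 from layer 1, Za2 = sampled Za3 + layer-2 mean,
    output = sampled Za2 + layer-3 mean."""
    za3 = channel_mean(d1)
    za2 = resample(za3, d2.shape[1:]) + channel_mean(d2)
    return resample(za2, d3.shape[1:]) + channel_mean(d3)


rng = np.random.default_rng(2)
d1 = rng.random((64, 64, 64))    # n_l = 64 channels
d2 = rng.random((128, 32, 32))   # n_o = 128 channels
d3 = rng.random((256, 16, 16))   # n_p = 256 channels
saliency = fuse_defect_maps(d1, d2, d3)
weighted = d3 * (1.0 / (1.0 + np.exp(-saliency)))  # hypothetical sigmoid gating
print(saliency.shape, weighted.shape)  # (16, 16) (256, 16, 16)
```

Because the maps are simply added, a region that is dissimilar at several depths accumulates a larger saliency value and is emphasized more strongly by the gating.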

During defect identification, Equation (15) is used to compare the abnormal regions of the current substation equipment images with those of images in the abnormal sample database. If the discrepancy is lower than the threshold Δf4, the abnormal regions in the current substation equipment image belong to that defect category. The abnormal images are then reported to substation operation and maintenance (O&M) personnel, who review them, label the defects as part of the semi-supervised learning (SSL) loop, and trigger an abnormal alert. The threshold Δf4 is calculated using Equation (16).

When the comparison values between the abnormal regions of the current substation equipment images and those of images in the abnormal sample database exceed the threshold Δf4, no similar fault exists in the abnormal sample database. The model then reports the images directly to the O&M personnel for review within the SSL loop. If the personnel confirm that the current images are defective, they label the defect information, which is added to the abnormal sample database for training, and an abnormal alert is triggered. If the images are confirmed to be normal, they are added to the normal sample database for training. The terminology used in this section is summarized in Table 3.

Table 3.

Terminology.
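The two-threshold routing described in Sections 3.2.3 and 3.3 can be summarized as a small decision function. This is a hypothetical sketch. The distances are assumed to be precomputed. The treatment of a distance exactly equal to Δf3 is not specified in the text, so this sketch filters it out. The threshold values in the usage lines are invented for illustration, and the action names are placeholders.

```python
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass
class Decision:
    action: str
    category: Optional[str] = None


def triage(d_ab: float, delta_f3: float,
           defect_distances: Dict[str, float], delta_f4: float) -> Decision:
    """Route one inspection image through the two thresholds.
    defect_distances maps a defect category in the abnormal sample database
    to its distance from the current abnormal region."""
    if d_ab <= delta_f3:
        return Decision("filter_out")  # matches the normal sample database
    if defect_distances:
        category, dist = min(defect_distances.items(), key=lambda kv: kv[1])
        if dist < delta_f4:
            return Decision("report_and_alert", category)  # known defect type
    # No similar fault on record: send to O&M staff for review and labeling
    return Decision("report_for_review")


print(triage(0.05, 0.2, {}, 0.3))
print(triage(0.98, 0.2, {"oil_leakage": 0.1, "meter_damage": 0.7}, 0.3))
print(triage(0.98, 0.2, {"meter_damage": 0.7}, 0.3))
```

Images labeled by personnel after a `report_for_review` decision would then be added to the abnormal or normal sample database, closing the loop described in the text.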

4. Case Analysis

This section validates the proposed method using real-world operational data from the substation operation and maintenance division of a power supply company in Southwest China (2022). The division manages 47 substations, including nine 220 kV substations with 318 standardized pre-defined inspection points for image inspection and thirty-nine 110 kV substations with 296 standardized pre-defined inspection points. The CNN input image size is set to 256 × 256 pixels, with an initial learning rate of 0.0001, a momentum of 0.5, and a batch size (BZ) of 16. The training set comprises 777,924 inspection images collected from the 47 substations between January and December 2021, including 1142 images labeled by substation operation and maintenance personnel for semi-supervised learning. The test set contains 194,481 images collected from January to March 2022.

In the model training phase, a pseudo-labeling strategy is employed to optimize the labeling quality of a large number of unlabeled samples using a small amount of labeled data.

(1) Hierarchical pseudo-labeling mechanism

To mitigate the quantity imbalance between 1142 labeled samples and 776,782 unlabeled samples, a hierarchical pseudo-labeling strategy which combines confidence filtering and dynamic update is adopted. During the initial pseudo-labeling, normal samples that have been labeled are used to train a base CNN model to predict unlabeled samples. Samples with prediction confidence no less than 95% are marked as high-confidence normal samples and incorporated into the training set. During iterative optimization, after each training round, the feature difference between unlabeled samples and those in the normal sample database is calculated. Samples with differences below the similarity threshold are automatically labeled as candidate normal samples. Finally, 5% of candidate samples are randomly selected for manual verification. Mislabeled samples are corrected, and the verified samples are added into the normal sample database for the next round of training.

(2) Confirmation bias mitigation measures

In the abnormal sample augmentation phase, 100 typical abnormal images are selected from the labeled samples. Through data augmentation techniques including rotation and brightness adjustment, 5000 abnormal samples are generated. These samples are then mixed with normal samples for training to balance the class distribution. To enforce feature diversity, a feature entropy maximization regularization term is incorporated into CNN training. This enables the model to learn diverse features of equipment under various angles and lighting conditions in order to prevent overfitting to a small number of labeled samples. During dynamic threshold adjustment, the similarity threshold is adjusted based on the sample distribution from each training round. When the feature variance of newly added pseudo-labeled samples exceeds 5%, the threshold range is expanded by 10% to ensure sample diversity.
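The pseudo-labeling loop of item (1) and the dynamic threshold rule of item (2) can be sketched as follows. This is a minimal sketch under stated assumptions. The model's confidences and feature differences are given as plain lists. `human_says_normal` is a hypothetical stand-in for the manual check. Only candidates that were checked and confirmed are returned as verified, because the text does not say how unchecked candidates are handled. The condition "feature variance exceeds 5%" is read as a population variance above 0.05 on features normalized to [0, 1]. All function names are hypothetical.

```python
import random
import statistics


def high_confidence_normals(confidences, conf_min=0.95):
    """Initial pass: indices whose 'normal' confidence is at least 95%."""
    return [i for i, c in enumerate(confidences) if c >= conf_min]


def refine_round(diffs, sim_threshold, human_says_normal, verify_frac=0.05, rng=None):
    """Iterative pass: samples whose feature difference to the normal database
    is below the threshold become candidates; a random 5% of them are checked
    by a human (human_says_normal stands in for that manual check)."""
    rng = rng or random.Random(0)
    candidates = [i for i, d in enumerate(diffs) if d < sim_threshold]
    k = max(1, round(verify_frac * len(candidates))) if candidates else 0
    checked = rng.sample(candidates, k)
    verified = [i for i in checked if human_says_normal(i)]
    corrected = [i for i in checked if not human_says_normal(i)]
    return candidates, verified, corrected


def adjust_threshold(threshold, new_feature_values, var_limit=0.05, expand=0.10):
    """Widen the similarity threshold by 10% when the variance of the newly
    pseudo-labeled samples' (normalized) feature values exceeds 0.05."""
    if len(new_feature_values) >= 2 and statistics.pvariance(new_feature_values) > var_limit:
        return threshold * (1.0 + expand)
    return threshold


print(high_confidence_normals([0.99, 0.50, 0.95, 0.94]))  # [0, 2]
diffs = [i / 100 for i in range(100)]            # hypothetical feature differences
cands, ok, fixed = refine_round(diffs, 0.4, lambda i: i % 10 != 3)
print(len(cands), len(ok) + len(fixed))          # 40 2
print(adjust_threshold(0.4, [0.1, 0.9, 0.5]))    # widened to about 0.44
```

Seeding the random generator keeps the 5% verification sample reproducible between runs, which helps when auditing which pseudo-labels were manually checked.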

The hardware platform of the simulation system in this paper is an Intel Xeon Gold 6238 central processing unit (CPU) with 44 processor cores, an operating frequency of 2.1 GHz, and 128 GB of memory. For graphics processing, the industrial-grade edge computing platform NVIDIA Jetson AGX Orin, featuring a 128-core GPU with 32 GB of memory, is employed. Windows Server 2019 is adopted as the software platform, and a comparative analysis is conducted with CLIP-based zero-shot detection [31] and the substation image inspection method based on YOLOv8 with few-shot fine-tuning [32]. This method employs a Single Shot MultiBox Detector (SSD) to address the issue of low accuracy in defect identification due to a small number of defect samples in substations, which has been widely applied in substation image inspection.

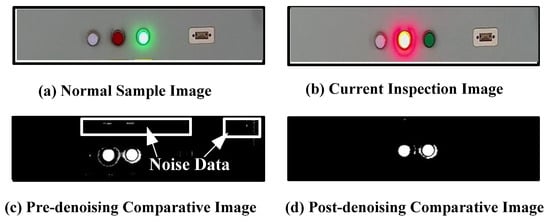

4.1. Analysis on Denoising of Substation Equipment Images

To illustrate the noise characteristics of substation equipment images, this paper compares images before and after denoising, as illustrated in Figure 7.

Figure 7.

Analysis of Image Denoising for Substation Equipment.

As shown in Figure 7, the substation inspection image before denoising is first compared with the normal sample image in Figure 7a for similarity analysis, resulting in a noisy difference image, namely the pre-denoising comparative image in Figure 7c. Next, the pre-denoising substation inspection image is input into the image denoising module, resulting in the current denoised inspection image, as shown in Figure 7b. Subsequently, Figure 7b is compared with Figure 7a to generate the denoised comparative image in Figure 7d. Finally, by comparing Figure 7d with Figure 7c, it can be observed that the white speckle noise near the switchgear indicator lights in the pre-denoising image (Figure 7c) has been effectively filtered out in the post-denoising image (Figure 7d).
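The speckle removal seen in Figure 7d is consistent with the morphological opening that the Conclusions name as the denoising operation. Opening is an erosion followed by a dilation. The NumPy sketch below applies it to a synthetic difference image and assumes a 3 × 3 square structuring element. Production code would more likely call a library routine such as `scipy.ndimage.grey_opening` or OpenCV's `cv2.morphologyEx` with `cv2.MORPH_OPEN`.

```python
import numpy as np


def _sliding_reduce(img, size, reducer):
    """Apply reducer (np.min or np.max) over every size x size window."""
    pad = size // 2
    padded = np.pad(img, pad, mode="edge")
    out = np.empty_like(img)
    for r in range(img.shape[0]):
        for c in range(img.shape[1]):
            out[r, c] = reducer(padded[r:r + size, c:c + size])
    return out


def opening(img, size=3):
    """Morphological opening = grey erosion (min) then grey dilation (max).
    Bright specks smaller than the square structuring element disappear,
    while larger bright regions keep their shape."""
    eroded = _sliding_reduce(img, size, np.min)
    return _sliding_reduce(eroded, size, np.max)


diff = np.zeros((9, 9))
diff[1, 1] = 255.0        # isolated white speck (noise)
diff[4:8, 4:8] = 255.0    # 4x4 region that differs for real
clean = opening(diff)
print(clean[1, 1], clean[4:8, 4:8].min())  # 0.0 255.0
```

The structuring-element size sets the trade-off: it must be larger than the noise specks but smaller than the smallest genuine abnormal region, or real defects would be erased along with the noise.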

4.2. Analysis on Alignment of Substation Equipment Images

For alignment of substation equipment images, the scale-invariant feature transform (SIFT) is first employed to detect feature points (keypoints) in the images. The feature points of the current image are then aligned with those of the corresponding image in the normal sample database, as shown in Figure 8.

Figure 8.

Image Alignment of Substation Equipment.

As shown in Figure 8, a total of 126 feature points, including indicator lights, display panels, and cabinet door handles, are detected with SIFT in the input substation equipment image (Figure 8a). Next, 126 corresponding feature points are taken from the normal sample image (Figure 8b) of the same pre-defined shooting point in the normal sample database. Perspective transformation (PT) is then performed between the 126 feature points of the input image (Figure 8a) and those of the normal sample image (Figure 8b) to achieve image alignment.
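A perspective transformation between matched points is a 3 × 3 homography, which can be estimated with the normalized Direct Linear Transform (DLT). The NumPy sketch below assumes the SIFT correspondences are already matched and free of outliers. In practice, a routine such as OpenCV's `cv2.findHomography(src, dst, cv2.RANSAC, 5.0)` would be used so that wrong matches are rejected. The synthetic transform and point set are hypothetical, and only the count of 126 points is taken from the text.

```python
import numpy as np


def apply_homography(H, pts):
    """Map (N, 2) points through a 3x3 perspective transform."""
    pts = np.asarray(pts, dtype=float)
    homog = np.hstack([pts, np.ones((len(pts), 1))]) @ H.T
    return homog[:, :2] / homog[:, 2:3]


def _normalize(pts):
    """Hartley normalization: centroid to origin, mean distance sqrt(2)."""
    mean = pts.mean(axis=0)
    scale = np.sqrt(2.0) / np.mean(np.linalg.norm(pts - mean, axis=1))
    T = np.array([[scale, 0.0, -scale * mean[0]],
                  [0.0, scale, -scale * mean[1]],
                  [0.0, 0.0, 1.0]])
    return apply_homography(T, pts), T


def estimate_homography(src, dst):
    """Normalized DLT: least-squares H with dst ~ H @ src from >= 4 pairs."""
    src = np.asarray(src, dtype=float)
    dst = np.asarray(dst, dtype=float)
    if src.shape != dst.shape or src.shape[0] < 4:
        raise ValueError("need at least 4 matching (x, y) pairs")
    s, Ts = _normalize(src)
    d, Td = _normalize(dst)
    rows = []
    for (x, y), (u, v) in zip(s, d):
        rows.append([-x, -y, -1.0, 0.0, 0.0, 0.0, u * x, u * y, u])
        rows.append([0.0, 0.0, 0.0, -x, -y, -1.0, v * x, v * y, v])
    _, _, vt = np.linalg.svd(np.asarray(rows))
    Hn = vt[-1].reshape(3, 3)            # null-space vector = normalized H
    H = np.linalg.inv(Td) @ Hn @ Ts      # undo the normalizations
    return H / H[2, 2]


rng = np.random.default_rng(3)
H_true = np.array([[1.02, 0.01, 5.0],
                   [-0.02, 0.98, -3.0],
                   [1e-4, 2e-4, 1.0]])
current_pts = rng.uniform(0, 256, size=(126, 2))  # 126 matched keypoints
normal_pts = apply_homography(H_true, current_pts)
H_est = estimate_homography(current_pts, normal_pts)
err = np.abs(apply_homography(H_est, current_pts) - normal_pts).max()
print(f"max reprojection error: {err:.2e} px")
```

The normalization step matters: with raw pixel coordinates the DLT matrix mixes entries of very different magnitudes, which makes the least-squares solution numerically fragile when the matches are noisy.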

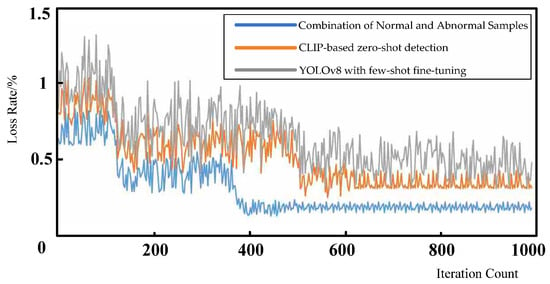

4.3. Analysis on Model Training Loss Function

In training the substation image inspection model, the choice of iteration count strongly influences the loss of the normal sample model. With too few iterations, the CNN cannot adequately learn the target features: training stops before gradient descent has converged, and the model often remains at a poor local optimum. With too many iterations, the CNN may overfit the training images and generalize poorly to unseen images.

Both the normal sample model and the YOLOv8 with few-shot fine-tuning model were configured with an initial learning rate of 0.0001, a momentum of 0.5, a batch size of 16, and a maximum of 1000 iterations. The loss function over the training iterations was analyzed for the normal sample model and the YOLOv8 with few-shot fine-tuning model, respectively, as illustrated in Figure 9.

Figure 9.

Analysis of Model Training Loss Function.

As shown in Figure 9, the loss of the VC-CNAS model converges to 0.2% at around 400 iterations. The loss of CLIP-based zero-shot detection converges to approximately 0.5% at around 600 iterations, and that of the YOLOv8 with few-shot fine-tuning model converges to approximately 0.4% at around 550 iterations. The normal sample model thus reaches a lower loss in fewer iterations than both CLIP-based zero-shot detection and YOLOv8 with few-shot fine-tuning.

4.4. Comparative Analysis of Abnormal Regions

The core functionality of the normal sample model lies in the comparison of abnormal regions. Normal substation equipment images are filtered out and abnormal images are identified through a dedicated defect analysis module. During comparison of abnormal regions, the current substation equipment image is compared against images in the normal sample database to identify abnormal regions and obtain the difference between the images.

Abnormal regions in images of transformer bushing oil levels are compared and analyzed in Figure 10.

Figure 10.

Comparative Analysis of Abnormal Oil Level Area of Transformer Bushing.

As shown in Figure 10, comparing the current image showing a decreased bushing oil level (Figure 10b) with the normal sample image of the bushing oil level (Figure 10a) yields an image highlighting the abnormal oil-level region (Figure 10c). The difference in the abnormal region reaches 98.3%, which exceeds the current abnormal threshold Δf3 of 23.6%. Therefore, it can be concluded that there is oil leakage in the transformer bushing.

4.5. Evaluation Metrics for Normal Image Identification

The proposed method differs fundamentally from defect-sample-based identification methods, whose performance is typically evaluated through false positive rates, false negative rates, accuracy, and time required. Instead, the proposed method filters out normal substation equipment images and reports the remaining images, which are judged abnormal, to operation and maintenance personnel for review. To enable comparison with traditional defect-sample-based identification methods, this paper uses precision, recall, and F1-score for evaluation. Precision is the proportion of samples identified as abnormal by the model that are actual defects; recall is the proportion of actual defective samples that the model correctly identifies; and the F1-score is the harmonic mean of precision and recall, so it reflects the model's overall performance.
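The three metrics follow directly from the counts of true positives (TP), false positives (FP), and false negatives (FN), with "abnormal" treated as the positive class. The short sketch below uses hypothetical labels and function names and is meant only to make the definitions concrete.

```python
def confusion_counts(y_true, y_pred, positive="abnormal"):
    """Count TP, FP, FN with 'abnormal' treated as the positive class."""
    tp = sum(t == positive and p == positive for t, p in zip(y_true, y_pred))
    fp = sum(t != positive and p == positive for t, p in zip(y_true, y_pred))
    fn = sum(t == positive and p != positive for t, p in zip(y_true, y_pred))
    return tp, fp, fn


def precision_recall_f1(tp, fp, fn):
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


y_true = ["abnormal", "abnormal", "normal", "normal", "abnormal"]
y_pred = ["abnormal", "normal", "normal", "abnormal", "abnormal"]
print(precision_recall_f1(*confusion_counts(y_true, y_pred)))
```

Here TP = 2, FP = 1, and FN = 1, so precision, recall, and F1 are all 2/3. A missed defect lowers recall, whereas a falsely reported normal image lowers precision.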

This paper employs the substation defect detection dataset publicly released by the Shandong Electric Power Enterprise Association of China. This dataset includes a total of 8307 images across eight major defect categories: meter damage, oil leakage, insulator damage, abnormal substation breathers, abnormal cabinet door closure, cover plate damage, foreign matter, and abnormal oil levels. These images are mixed with 50,000 normal substation inspection images to form the experimental dataset. The proposed VC-CNAS method, the CLIP-based zero-shot detection method, and the YOLOv8 with few-shot fine-tuning method are each used to detect anomalies, and their precision, recall, and F1-score are compared in Table 4.

Table 4.

Comparison of Metrics in Anomaly Identification.

As can be seen from Table 4, the proposed VC-CNAS method achieves an average F1-score of 94.3% on the actual defect dataset, indicating a stable capability for identifying real-world defects. The recall remains at 93.4% during training, showing that the model detects most actual defects. The precision is slightly higher than the recall, indicating that the model is relatively strict in its anomaly judgments and yields a low false positive rate. Because substation operation and maintenance practice favors “tolerating false positives rather than false negatives”, recall is the more critical of the two metrics in this setting. The proposed method outperforms both CLIP-based zero-shot detection and YOLOv8 with few-shot fine-tuning in precision, recall, and F1-score.

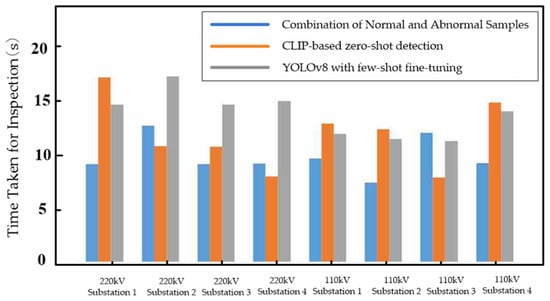

The time taken for substation equipment image inspection serves as the core performance metric of the proposed method. It is calculated as the time interval between inputting the substation equipment image into the algorithm and the algorithm generating the image identification result. The smaller this indicator is, the better the inspection performance is.

Four 110 kV substations and four 220 kV substations were randomly selected from the substation division of the power supply company. The time taken for equipment image inspection at these substations using the proposed VC-CNAS method, CLIP-based zero-shot detection, and YOLOv8 with few-shot fine-tuning is compared, and the results are shown in Figure 11.

Figure 11.

Performance of Substation Equipment Image Inspection.

As shown in Figure 11, the average time taken for equipment image inspection at the substations using the proposed VC-CNAS method is 11.23 s, slightly shorter than that of CLIP-based zero-shot detection and YOLOv8 with few-shot fine-tuning. The three methods therefore have comparable time efficiency, while the VC-CNAS method additionally identifies all abnormal equipment images in the substations.

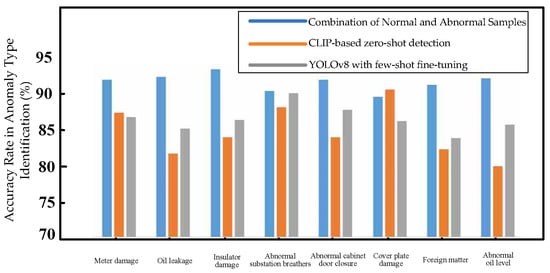

4.6. Analysis on the Accuracy Rate in Anomaly Type Identification

The accuracy rate in anomaly type identification is the key metric for evaluating the model's ability to identify abnormal defects. It is computed as the percentage of defect images correctly identified by the model out of the total number of randomly simulated defect images of substation inspection equipment. The metric ranges from 0% to 100%, with higher values indicating greater algorithm accuracy.

This experiment uses the same dataset as Section 4.5, which mixes the 8307 defect images of the dataset publicly released by the Shandong Electric Power Enterprise Association of China with 50,000 normal substation inspection images. The accuracy rates in identifying anomaly types using the VC-CNAS method, CLIP-based zero-shot detection, and YOLOv8 with few-shot fine-tuning are compared, and the results are shown in Figure 12.

Figure 12.

Accuracy Rate in Anomaly Type Identification (%).

As shown in Figure 12, the proposed VC-CNAS method achieves an accuracy rate of 92.3% in anomaly type identification for substation equipment image inspection, outperforming CLIP-based zero-shot detection and YOLOv8 with few-shot fine-tuning. The proposed method in this paper circumvents the reliance on defect samples by constructing a normal sample database, and dynamically updates the abnormal sample database through semi-supervised learning. It demonstrates significant advantages in scenarios where there is a lack of defective samples, making it particularly suitable for long-term operation and maintenance of substation equipment. CLIP-based zero-shot detection leverages the general visual knowledge of pre-trained models and requires no domain-specific data. However, it shows low accuracy in identifying specialized defects in substation equipment due to the cross-domain generalization gap. YOLOv8 with few-shot fine-tuning requires only a small number of labeled samples and is effective in detecting known defects. However, it exhibits a high false negative rate and a high false positive rate for unknown defects such as oil leaks.

4.7. Cross-Substation Threshold Stability Testing

A total of 37 substations that were not involved in the threshold optimization are selected, including four 220 kV substations and thirty-three 110 kV substations. The ROC method described above is used to recalculate the optimal threshold for each substation. Δf1 ranges from 17.9 to 19.5, with a mean of 18.6, a standard deviation of 0.52, and a coefficient of variation of 2.8%. Δf2 ranges from 27.8 to 28.9 dB, with a mean of 28.2 dB, a standard deviation of 0.31 dB, and a coefficient of variation of 1.1%. These results indicate that the thresholds remain stable across substations of different voltage levels and locations.
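The coefficients of variation quoted above are the standard deviation divided by the mean, and they can be checked from the reported summary statistics. The sketch below does this, and also shows the same computation from raw per-substation values. The raw-value helper assumes the sample standard deviation, because the text does not say which estimator was used.

```python
import statistics


def coefficient_of_variation(values):
    """CV in percent from raw per-substation thresholds (sample std / mean)."""
    return 100.0 * statistics.stdev(values) / statistics.mean(values)


def cv_from_summary(std, mean):
    """CV in percent from reported summary statistics."""
    return 100.0 * std / mean


print(round(cv_from_summary(0.52, 18.6), 1))   # Delta f1: 2.8
print(round(cv_from_summary(0.31, 28.2), 1))   # Delta f2: 1.1
```

Both reproduced values match the reported 2.8% and 1.1%.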

The performance of the original heuristic threshold and the optimized threshold tested in 37 substations is shown in Table 5.

As can be seen from Table 5, the optimized threshold significantly reduces the false positive rate while maintaining a high recall rate, demonstrating its strong generalization capability.

Table 5.

Performance Comparison Between the Original Heuristic Threshold and the Optimized Threshold.

4.8. Ablation Experiment Design and Results Analysis

This section systematically validates the impact of preprocessing steps, model architecture, and similarity metrics on the overall performance by using the above experimental dataset. The evaluation metrics include the accuracy rate in reporting abnormal images, the accuracy rate in anomaly type identification, and the time required for each inspection.

The contribution analysis of the preprocessing steps is conducted to validate the impact of morphological denoising and image alignment on performance, with the results shown in Table 6.

Table 6.

Contribution Analysis of the Preprocessing Steps.

As shown in Table 6, morphological denoising effectively filters out noise such as white spots on switch cabinet indicator lights. Without morphological denoising, there would be a 5.3% increase in misjudgment rates of abnormal regions due to noise interference. Image alignment corrects camera displacement through SIFT feature matching. Without image alignment, there would be errors in feature comparison caused by pixel misalignment, reducing the identification accuracy rate by 8.7%. The combined effect of both morphological denoising and image alignment can improve the overall performance by 13.5%, testifying to the necessity of the preprocessing steps.

The impact of model architecture selection is demonstrated by comparing the performance of CNN and Vision Transformer in reconstructing normal samples and detecting anomalies, with the results shown in Table 7.

Table 7.

Contribution Analysis of Model Architecture Selection.

As shown in Table 7, Vision Transformer slightly outperforms CNN in anomaly type identification, but its training converges 62.5% more slowly, and it is less effective than CNN at capturing regular features such as oil level markings. Because real-time performance and training efficiency are important for substation inspection, CNN is the more suitable choice.

The contribution of similarity metrics is demonstrated by comparing the performance of LPIPS with traditional SSIM and PSNR in image comparison, as shown in Table 8.

Table 8.

Contribution Analysis of Similarity Metrics.

As shown in Table 8, LPIPS achieves a higher identification rate for subtle anomalies by simulating human visual perception compared to traditional methods, which is the central reason for its 100% accuracy in reporting anomalies. Traditional methods, which focus on pixel-level differences, are susceptible to interference from lighting variations and can therefore lead to missed detections.
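The lighting-sensitivity argument can be illustrated with PSNR alone. A harmless global brightness change can score as a larger difference than a small, genuine local defect. The synthetic patches below are hypothetical, and the sketch does not reproduce LPIPS or the paper's Table 8 results. It only shows the failure mode of a pixel-level metric.

```python
import numpy as np


def psnr(a, b, max_val=255.0):
    """Peak signal-to-noise ratio in dB (higher means more similar)."""
    mse = np.mean((np.asarray(a, float) - np.asarray(b, float)) ** 2)
    return float("inf") if mse == 0 else float(10.0 * np.log10(max_val ** 2 / mse))


ref = np.full((64, 64), 120.0)      # flat "normal" patch
brighter = ref + 20.0               # global lighting change, no defect
defect = ref.copy()
defect[30:34, 30:34] = 20.0         # small dark 4x4 "defect"

print(round(psnr(ref, brighter), 1))  # 22.1
print(round(psnr(ref, defect), 1))    # 32.2
```

PSNR rates the defect-free but brighter patch as less similar to the reference (22.1 dB) than the patch with a real local defect (32.2 dB). A threshold tuned on such a metric either flags lighting changes or misses small defects.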

In conclusion, the preprocessing steps underpin the performance, while the integration of LPIPS with CNN represents the core innovation. The synergy of these three components achieves a balance between “zero missed detections” and high efficiency.

4.9. Labeling Quality Assessment

The evaluation of labeling quality encompasses pseudo-labeling accuracy, feature consistency, and training stability. Among them, the pseudo-labeling accuracy is the proportion of correctly labeled samples in the manually verified pseudo-labeled samples. Feature consistency is the average cosine similarity of high-dimensional features between pseudo-labeled samples and human-labeled samples. Training stability refers to the fluctuation magnitude of the model’s loss function after incorporating pseudo-labeled samples. The labeling quality assessment results in this paper are shown in Table 9.

Table 9.

Results of Labeling Quality Assessment.
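The three labeling-quality measures can be computed as sketched below. The following assumptions are made: feature consistency compares each pseudo-labeled feature vector with the centroid of the human-labeled features, because the text does not specify how samples are paired; loss fluctuation is taken as the population standard deviation of the recorded losses; and all names and toy values are hypothetical.

```python
import math
import statistics


def pseudo_label_accuracy(pseudo_labels, verified_labels):
    """Share of manually verified pseudo-labels that were correct."""
    if not verified_labels:
        raise ValueError("no verified samples")
    correct = sum(p == v for p, v in zip(pseudo_labels, verified_labels))
    return correct / len(verified_labels)


def cosine_similarity(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def feature_consistency(pseudo_feats, human_feats):
    """Mean cosine similarity of each pseudo-labeled feature vector to the
    centroid of the human-labeled feature vectors (an assumed pairing)."""
    dim = len(human_feats[0])
    centroid = [sum(f[j] for f in human_feats) / len(human_feats) for j in range(dim)]
    return statistics.mean(cosine_similarity(f, centroid) for f in pseudo_feats)


def loss_fluctuation(losses):
    """Population standard deviation of the loss after adding pseudo-labels."""
    return statistics.pstdev(losses)


print(pseudo_label_accuracy(["normal", "normal", "normal", "abnormal"],
                            ["normal", "normal", "normal", "normal"]))  # 0.75
print(feature_consistency([[1.0, 0.0], [0.0, 1.0]], [[1.0, 0.0]]))      # 0.5
print(loss_fluctuation([0.10, 0.11, 0.09]))
```

Values close to 1 for the first two measures and close to 0 for the third correspond to the favorable outcomes reported in Table 9.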

As shown in Table 9, the pseudo-labeling accuracy of 97.3% and feature consistency of 0.92 demonstrate that the labeling quality is close to that of manually labeled samples. The loss function exhibits a low fluctuation of 0.012, verifying that the strategy has effectively suppressed confirmation bias and prevented the model from overfitting due to sample imbalance.

5. Conclusions

To address the issue of missed detection of abnormal images caused by scarcity of defect samples and inadequate model training that characterize the current substation image inspection methods, this paper proposes the novel VC-CNAS method for substation image inspection. In light of the fact that normal samples of substation equipment vastly outnumber abnormal ones, the VC-CNAS method achieves precise identification of abnormal images by constructing a normal sample database and performing comparative analysis. It can be summarized as follows:

(1) A comprehensive image preprocessing workflow is established. Defocused or underexposed equipment images are assessed using Laplacian gradient and PSNR, and images failing to meet quality requirements are recaptured. Morphological opening operations are employed for denoising, thus effectively filtering out noise interference in the images.

(2) High-precision image feature alignment is achieved and the model is trained on normal samples. Corner features of the images are extracted with SIFT, and pixel-level alignment between the current image and the normal sample images is achieved with perspective transformation (PT), effectively eliminating camera displacement errors. A semi-supervised CNN is employed to reconstruct normal samples, and a normal sample database covering multiple types of equipment is constructed by encoding, memorizing, and decoding high-dimensional features.

(3) A novel image comparison mechanism based on visual communication is proposed. The LPIPS metric is introduced to quantify the visual feature discrepancy between the current inspection image and the images in the normal sample database. Combined with a spatial attention module that strengthens the focus on defective regions, this metric enables precise extraction and classification of abnormal regions.

(4) A closed-loop system for semi-supervised anomaly review is established. The abnormal sample database is dynamically updated through manual verification, enabling semi-supervised inspection of abnormal images and a continuous “machine screening → manual verification → model iteration” optimization cycle.
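The quality checks and denoising in item (1) can be illustrated with a NumPy-only sketch. It assumes that sharpness is scored by the variance of the Laplacian response, that PSNR is computed against a reference image of the same scene (for example a normal sample), and that opening uses a square window; the thresholds `sharp_thr` and `psnr_thr` and all function names are hypothetical placeholders, not values from the paper. In practice, OpenCV's `cv2.Laplacian` and `cv2.morphologyEx` with `cv2.MORPH_OPEN` would typically replace the hand-written filters.

```python
import numpy as np

LAPLACIAN = np.array([[0, 1, 0], [1, -4, 1], [0, 1, 0]], dtype=float)

def _filter3x3(img, kernel):
    """Correlate a 2-D image with a 3x3 kernel using edge padding."""
    p = np.pad(img, 1, mode="edge")
    h, w = img.shape
    out = np.zeros((h, w), dtype=float)
    for dy in range(3):
        for dx in range(3):
            out += kernel[dy, dx] * p[dy:dy + h, dx:dx + w]
    return out

def laplacian_variance(gray):
    """Sharpness score: low values suggest a defocused image."""
    return float(_filter3x3(gray.astype(float), LAPLACIAN).var())

def psnr(img, ref, max_val=255.0):
    """Peak signal-to-noise ratio (dB) of img with respect to ref."""
    mse = np.mean((img.astype(float) - ref.astype(float)) ** 2)
    return float("inf") if mse == 0 else float(10 * np.log10(max_val ** 2 / mse))

def _window_reduce(img, k, reducer):
    """Apply reducer (np.min or np.max) over every k x k neighborhood."""
    r = k // 2
    p = np.pad(img, r, mode="edge")
    h, w = img.shape
    stack = np.stack([p[dy:dy + h, dx:dx + w] for dy in range(k) for dx in range(k)])
    return reducer(stack, axis=0)

def morphological_open(gray, k=3):
    """Grey-scale opening: erosion (min filter) followed by dilation (max filter)."""
    return _window_reduce(_window_reduce(gray, k, np.min), k, np.max)

def needs_recapture(gray, ref, sharp_thr=100.0, psnr_thr=20.0):
    """Hypothetical quality gate combining both checks."""
    return laplacian_variance(gray) < sharp_thr or psnr(gray, ref) < psnr_thr
```

Opening removes bright specks smaller than the window while leaving larger structures intact, which is why it suits isolated impulse-like noise.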
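The perspective-transformation step in item (2) can be sketched as follows, assuming that SIFT matching has already produced corresponding point pairs (for example with OpenCV's `cv2.SIFT_create` and a descriptor matcher). The sketch estimates the 3x3 homography with the normalized direct linear transform; it omits the outlier rejection (such as the RANSAC option of `cv2.findHomography`) that real matches would need, and the function names are illustrative only.

```python
import numpy as np

def _normalize(pts):
    """Hartley normalization: move the centroid to the origin, mean distance sqrt(2)."""
    centroid = pts.mean(axis=0)
    scale = np.sqrt(2) / np.mean(np.linalg.norm(pts - centroid, axis=1))
    T = np.array([[scale, 0.0, -scale * centroid[0]],
                  [0.0, scale, -scale * centroid[1]],
                  [0.0, 0.0, 1.0]])
    homo = np.hstack([pts, np.ones((len(pts), 1))]) @ T.T
    return homo[:, :2], T

def estimate_homography(src, dst):
    """Estimate H with dst ~ H @ src from >= 4 point pairs (normalized DLT)."""
    src = np.asarray(src, dtype=float)
    dst = np.asarray(dst, dtype=float)
    src_n, T_src = _normalize(src)
    dst_n, T_dst = _normalize(dst)
    rows = []
    for (x, y), (u, v) in zip(src_n, dst_n):
        rows.append([-x, -y, -1.0, 0.0, 0.0, 0.0, u * x, u * y, u])
        rows.append([0.0, 0.0, 0.0, -x, -y, -1.0, v * x, v * y, v])
    _, _, vt = np.linalg.svd(np.array(rows))
    H_n = vt[-1].reshape(3, 3)              # singular vector of the smallest singular value
    H = np.linalg.inv(T_dst) @ H_n @ T_src  # undo the normalization
    return H / H[2, 2]

def apply_homography(H, pts):
    """Map (N, 2) pixel coordinates through H."""
    pts = np.asarray(pts, dtype=float)
    mapped = np.hstack([pts, np.ones((len(pts), 1))]) @ H.T
    return mapped[:, :2] / mapped[:, 2:3]
```

Once H is known, the current image could be warped onto the normal sample's frame (for example with `cv2.warpPerspective`) before pixel-level comparison.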
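The LPIPS comparison in item (3) can be sketched from the published LPIPS definition, d(x, x0) = Σ_l 1/(H_l W_l) Σ_{h,w} ||w_l ⊙ (ŷ^l_{hw} − ŷ^l_{0,hw})||²₂, where ŷ^l are channel-normalized feature maps of layer l. The sketch assumes the per-layer feature maps have already been extracted by a pretrained backbone (the original LPIPS uses AlexNet, VGG, or SqueezeNet features; the `lpips` Python package wraps this as `lpips.LPIPS(net='alex')`), defaults the learned weights w_l to ones, and does not model the spatial attention module. Function names are hypothetical.

```python
import numpy as np

def _unit_normalize(feat, eps=1e-10):
    """Scale each spatial position of a (C, H, W) map to unit length over channels."""
    return feat / (np.linalg.norm(feat, axis=0, keepdims=True) + eps)

def lpips_distance(feats_x, feats_ref, weights=None):
    """LPIPS-style distance between two images given their per-layer feature maps.

    feats_x, feats_ref: lists of (C_l, H_l, W_l) arrays from the same backbone.
    weights: optional list of per-channel weight vectors w_l (defaults to ones).
    Returns the scalar distance and one (H_l, W_l) discrepancy map per layer.
    """
    total, maps = 0.0, []
    for l, (fx, fr) in enumerate(zip(feats_x, feats_ref)):
        w = np.ones(fx.shape[0]) if weights is None else np.asarray(weights[l], dtype=float)
        diff = _unit_normalize(fx) - _unit_normalize(fr)
        # ||w_l * (y_hat - y0_hat)||^2 at every spatial position
        dmap = np.sum((w[:, None, None] * diff) ** 2, axis=0)
        maps.append(dmap)
        total += float(dmap.mean())  # spatial average, summed over layers
    return total, maps

def nearest_normal(feats_x, database):
    """Index and distance of the most similar normal sample in the database."""
    scores = [lpips_distance(feats_x, ref)[0] for ref in database]
    best = int(np.argmin(scores))
    return best, scores[best]
```

The per-layer maps indicate where the discrepancy arises, so large values can point toward candidate abnormal regions, while the scalar distance against the nearest normal sample can serve as an image-level anomaly score.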
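The closed loop in item (4) can be illustrated with a small bookkeeping sketch. Everything in it is hypothetical: the anomaly-score threshold, the retraining batch size, the callback standing in for the human reviewer, and the rule that retraining is triggered once enough newly verified samples have accumulated are illustrative assumptions rather than details from the paper.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple

@dataclass
class ReviewLoop:
    threshold: float                 # hypothetical anomaly-score cut-off
    retrain_batch: int = 50          # hypothetical retraining trigger size
    normal_db: List[str] = field(default_factory=list)
    abnormal_db: List[str] = field(default_factory=list)
    pending: int = 0                 # verified samples not yet used for retraining

    def screen(self, scored: List[Tuple[str, float]]) -> List[str]:
        """Machine screening: report images whose anomaly score exceeds the threshold."""
        return [img_id for img_id, score in scored if score > self.threshold]

    def verify(self, reported: List[str], is_defect: Callable[[str], bool]) -> None:
        """Manual verification: route each reported image to the abnormal or normal database."""
        for img_id in reported:
            target = self.abnormal_db if is_defect(img_id) else self.normal_db
            target.append(img_id)
            self.pending += 1

    def should_retrain(self) -> bool:
        """Model iteration: signal retraining once enough verified samples accumulate."""
        if self.pending >= self.retrain_batch:
            self.pending = 0
            return True
        return False
```

Routing false alarms back into the normal database is one plausible way to let later model iterations reduce the share of normal images among reported ones.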

In conclusion, the proposed VC-CNAS method reduces the reliance of traditional approaches on defect samples. In field validation at a municipal power supply company in China, the abnormal image reporting accuracy reached 100% when 18.9% of the inspection images were reported as abnormal, which substantially lowers the review workload while maintaining accurate identification of abnormal images. These results indicate that the method is well suited to equipment inspection in complex substation scenarios.

Future work will focus on optimizing the model architecture to further reduce the proportion of normal images among those reported as abnormal, thereby improving the practicality and cost-effectiveness of the proposed method.

Author Contributions

Conceptualization, D.T.; Methodology, D.T., Z.F. and Y.L.; Software, Z.F. and X.W.; Validation, D.T. and X.W.; Resources, Y.L.; Data curation, X.W.; Writing—original draft, Z.F., Y.L. and X.W.; Writing—review & editing, Y.L. and X.W.; Supervision, D.T. and Z.F.; Project administration, Z.F. All authors have read and agreed to the published version of the manuscript.

Funding

This research and the APC were funded by the National Key R&D Program of China (grant number 2024YFB095200).

Data Availability Statement

The data presented in this study are available on request from the corresponding author.

Conflicts of Interest

Authors Donglai Tang and Xiang Wan were employed by the company Aostar Information Technology Co., Ltd. The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

References

- Dong, H.; Tian, Z.; Spencer, J.W.; Fletcher, D.; Hajiabady, S. Bilevel Optimization of Sizing and Control Strategy of Hybrid Energy Storage System in Urban Rail Transit Considering Substation Operation Stability. IEEE Trans. Transp. Electrif. 2024, 10, 10102–10114.

- Ren, Z.; Jiang, Y.; Li, H.; Gu, Y.; Jiang, Z.; Lei, W. Probabilistic cost-benefit analysis-based spare transformer strategy incorporating condition monitoring information. IET Gener. Transm. Distrib. 2020, 14, 5816–5822.

- Zhao, X.; Yao, C.; Zhou, Z.; Li, C.; Wang, X.; Zhu, T.; Abu-Siada, A. Experimental Evaluation of Transformer Internal Fault Detection Based on V-I Characteristics. IEEE Trans. Ind. Electron. 2020, 67, 4108–4119.

- Zhou, Z.; Chen, Y.; Pan, C.; Zhao, X.; Zhang, L.; Wang, Z. Ultra-reliable and Low-latency Mobile Edge Computing Technology for Intelligent Power Inspection. High Volt. Eng. 2020, 46, 1895–1902.

- Tang, Z.; Xue, B.; Ma, H.; Rad, A. Implementation of PID controller and enhanced red deer algorithm in optimal path planning of substation inspection robots. J. Field Robot. 2024, 41, 1426–1437.

- Hu, J.L.; Zhu, Z.F.; Lin, X.B.; Li, Y.Y.; Liu, J. Framework Design and Resource Scheduling Method for Edge Computing in Substation UAV Inspection. High Volt. Eng. 2021, 47, 425–433.

- Zhou, J.; Yu, J.; Tang, S.; Yang, H.; Zhu, B.; Ding, Y. Research on temperature early warning system for substation equipments based on the mobile infrared temperature measurement. J. Electr. Power Sci. Technol. 2020, 35, 163–168.

- Chen, X.; Han, Y.F.; Yan, Y.F.; Qi, D.L.; Shen, J.X. A Unified Algorithm for Object Tracking and Segmentation and its Application on Intelligent Video Surveillance for Transformer Substation. Proc. CSEE 2020, 40, 7578–7586.

- Wan, J.; Wang, H.; Guan, M.; Shen, J.; Wu, G.; Gao, A.; Yang, B. An Automatic Identification for Reading of Substation Pointer-type Meters Using Faster R-CNN and U-Net. Power Syst. Technol. 2020, 44, 3097–3105.

- Zheng, Y.; Jin, M.; Pan, S.; Li, Y.-F.; Peng, H.; Li, M.; Li, Z. Toward Graph Self-Supervised Learning with Contrastive Adjusted Zooming. IEEE Trans. Neural Netw. Learn. Syst. 2024, 35, 8882–8896.

- Wan, X.; Cen, L.; Chen, X.; Xie, Y.; Gui, W. Multiview Shapelet Prototypical Network for Few-Shot Fault Incremental Learning. IEEE Trans. Ind. Inform. 2024, 20, 11751–11762.

- Lee, K.H.; Yun, G.J. Microstructure reconstruction using diffusion-based generative models. Mech. Adv. Mater. Struct. 2024, 31, 4443–4461.

- Jing, Y.; Zhong, J.-X.; Sheil, B.; Acikgoz, S. Anomaly detection of cracks in synthetic masonry arch bridge point clouds using fast point feature histograms and PatchCore. Autom. Constr. 2024, 168, 105766.

- Yang, Y.X.; Angelini, F.; Naqvi, S.M. Pose-driven human activity anomaly detection in a CCTV-like environment. IET Image Process. 2022, 17, 674–686.

- Wang, R.; Yin, B.; Yuan, L.; Wang, S.; Ding, C.; Lv, X. Partial Discharge Positioning Method in Air-Insulated Substation with Vehicle-Mounted UHF Sensor Array Based on RSSI and Regularization. IEEE Sens. J. 2024, 24, 18267–18278.

- Qin, J.-H.; Ma, L.-Y.; Zeng, G.-F.; Xu, B.-L.; Zhou, H.-L.; Nie, J.-H. A Detection Method for the Edge of Cupping Spots based on Improved Canny Algorithm. J. Imaging Sci. Technol. 2024, 68, 168–176.

- Jing, W.J. A unified homogenization approach for the Dirichlet problem in perforated domains. SIAM J. Math. Anal. 2020, 52, 1192–1220.

- Doss, K.; Chen, J.C. Utilizing deep learning techniques to improve image quality and noise reduction in preclinical low-dose PET images in the sinogram domain. Med. Phys. 2024, 51, 209–223.

- Sueyasu, S.; Kasamatsu, K.; Takayanagi, T.; Chen, Y.; Kuriyama, Y.; Ishi, Y.; Uesugi, T.; Rohringer, W.; Unlu, M.B.; Kudo, N.; et al. Technical note: Application of an optical hydrophone to ionoacoustic range detection in a tissue-mimicking agar phantom. Med. Phys. 2024, 51, 5130–5141.

- Prabhu, R.; Parvathavrthini, B.; Raja, R.A.A. Slum Extraction from High Resolution Satellite Data using Mathematical Morphology based approach. Int. J. Remote Sens. 2021, 42, 172–190.

- Li, C.; Hu, C.; Zhu, H.; Tang, F.; Zhao, L.; Zhou, Y.; Zhang, S. Establishment and extension of a compact and robust binary feature descriptor for UAV image matching. Meas. Sci. Technol. 2025, 36, 025403.

- Li, S.; Le, Y.; Li, X.; Zhao, X. Bolt loosening angle detection through arrangement shape-independent perspective transformation and corner point extraction based on semantic segmentation results. Struct. Health Monit. 2025, 24, 761–777.

- Zhang, Z.; Fang, W.; Du, L.L.; Qiao, Y.; Zhang, D.; Ding, G. Semantic Segmentation of Remote Sensing Image Based on Encoder-Decoder Convolutional Neural Network. Acta Opt. Sin. 2020, 40, 40–49.

- Tao, X.; Hou, W.; Xu, D. A Survey of Surface Defect Detection Methods Based on Deep Learning. Acta Autom. Sin. 2021, 47, 1017–1034.

- Chen, X.; Yuan, G.; Nie, F.; Ming, Z. Semi-Supervised Feature Selection via Sparse Rescaled Linear Square Regression. IEEE Trans. Knowl. Data Eng. 2020, 32, 165–176.

- Zhang, D.; Zhang, X.; Sun, H.; He, J.H. Fault Diagnosis for AC/DC Transmission System Based on Convolutional Neural Network. Autom. Electr. Power Syst. 2022, 46, 132–140.

- Shan, C.; Li, A.; Chen, X. Deep delay rectified neural networks. J. Supercomput. 2023, 79, 880–896.

- Wu, K.; Zhang, J.A.; Huang, X.; Guo, Y.J.; Heath, R.W. Waveform Design and Accurate Channel Estimation for Frequency-Hopping MIMO Radar-Based Communications. IEEE Trans. Commun. 2021, 69, 1244–1258.

- Zhang, H.R.; Zhao, J.H.; Xiaoguang, Z. High-resolution Image Building Extraction Using U-net Neural Network. Remote Sens. Inf. 2020, 35, 143–150.

- Yang, S.G. Perceptually enhanced super-resolution reconstruction model based on deep back projection. J. Appl. Opt. 2021, 42, 691–697, 716.

- Ming, Y.F.; Li, Y.X. How Does Fine-Tuning Impact Out-of-Distribution Detection for Vision-Language Models. Int. J. Comput. Vis. 2024, 132, 596–609.

- Wen, Y.H.; Wang, L. Yolo-sd: Simulated feature fusion for few-shot industrial defect detection based on YOLOv8 and stable diffusion. Int. J. Mach. Learn. Cybern. 2024, 15, 4589–4601.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).