Abstract

The limited nature of fossil resources and their unsustainable characteristics have led to increased interest in renewable sources. However, significant work remains to be carried out to fully integrate these systems into existing power distribution networks, both technically and legally. While renewable power holds great potential for improving energy production sustainability, the dependence of solar energy production plants on weather conditions can complicate the realization of consistent production without incurring high storage costs. Therefore, the accurate prediction of solar power production is vital for efficient grid management and energy trading. Machine learning models have emerged as a prospective solution, as they are able to handle immense datasets and model complex patterns within the data. This work explores the use of metaheuristic optimization techniques for optimizing recurrent forecasting models to predict power production from solar substations. Additionally, a modified metaheuristic optimizer is introduced to meet the demanding requirements of optimization. Simulations, along with a rigorous comparative analysis with other contemporary metaheuristics, are also conducted on a real-world dataset, with the best models achieving a mean squared error (MSE) of just 0.000935 volts and 0.007011 volts on the two datasets, suggesting viability for real-world usage. The best-performing models are further examined for their applicability in embedded tiny machine learning (TinyML) applications. The discussion provided in this manuscript also includes the legal framework for renewable energy forecasting, its integration, and the policy implications of establishing a decentralized and cost-effective forecasting system.

1. Introduction

In recent years, the use of fossil fuels has fallen out of favor for power production. The limited nature of fossil resources, as well as their unsustainable characteristics, has led to increased interest in renewable sources [1]. Nevertheless, the integration of renewable sources is not without challenges [2]. Sources such as wind turbines, solar cells, or hydroelectric dams can require a comparatively large upfront investment. Additionally, certain sources, such as solar and wind, rely heavily on external factors like the weather, making them unreliable for certain critical applications [3,4]. Despite these challenges, the integration of renewable sources is essential for sustainability and energy independence. The accurate prediction of solar power generation is crucial for efficient grid management and energy trading. Renewable sources also benefit from being relatively clean and decentralized, which can alleviate many losses caused by power line inefficiencies.

The legal framework for the production and distribution of solar power in Western Balkan countries is increasingly harmonized with European Union (EU) legislation, which issues various legal documents to regulate the development of the energy sector and support the use of renewable energy sources. In policies and directives such as the White and Green Papers on energy policy and renewable energy sources, the EU focuses on supply security, environmental protection, and industry competitiveness. Directive 2018/2001/EU [5] highlights the importance of renewable energy use and sets targets for 2030, including reducing CO2 emissions by 40%, increasing the share of renewable energy to 32%, and raising the share of renewables in transportation to 14%. To achieve these goals, member states develop national action plans with strategies, legislative frameworks, and incentives. Since the introduction of this directive, the share of renewable energy in the EU’s energy consumption has grown from 12.5% in 2010 to 23% in 2022 [6].

In line with EU policies, the legal framework for solar energy production and distribution in Western Balkan countries is oriented toward alignment with EU requirements. Although there are differences in the legislation of each country, most apply common standards through laws such as the Energy Act and the Renewable Energy Act, as well as through national energy development strategies. All Western Balkan countries have a basic Energy Act that encompasses renewable energy sources (RESs). This law typically defines the rights and obligations of solar energy producers, the obligation of transmission systems to facilitate grid connections for RES producers, procedures for issuing permits for the construction and commissioning of solar power plants, and incentives for investments in RES through subsidies, feed-in tariffs, or favorable loans.

The use of artificial intelligence (AI) has demonstrated impressive capabilities for handling time-series forecasting [7], even in the energy sector. By leveraging the forecasting abilities of AI algorithms to balance production and demand, better utilization of this valuable resource can be attained. Furthermore, by providing a more accurate and reliable estimate of production, better integration can be achieved with existing power distribution systems, reducing transmission losses [8,9].

However, AI algorithms are often computationally demanding, requiring substantial investments to train and execute predictions. Models are usually run on relatively expensive graphics processing units (GPUs) and require supporting system infrastructure. This can lead to relatively high power demands. Optimized models could potentially be compiled and executed on significantly cheaper systems, enabling accurate models to run at a fraction of the hardware costs and with significantly lower power demands. Tiny machine learning (TinyML) [10] is a subset of machine learning (ML) that explores the deployment of ML models on microcontroller units (MCUs). Such deployment could significantly reduce the cost of using ML in the field, especially in remote locations where solar panels and wind turbines may be installed. Further use of predictive models could help better integrate forecasting models into Internet of Things (IoT) networks [11,12].

This work explores the use of metaheuristic optimization algorithms to select optimal hyperparameters for three types of recurrent neural networks (RNNs) [13]. The goal is to select lightweight architectures that can be ported to MCUs for field use while maintaining suitable performance. As the task of hyperparameter tuning is often considered NP-hard [14], a modified metaheuristic optimizer is proposed, and a comparative analysis is conducted against several contemporary optimizers on two publicly available datasets, resulting in six experiments in total. The best-performing models are further explored for their forecasting potential once deployed on an MCU. The contributions of this work can be summarized as follows:

- An exploration of the legal foundations of energy forecasting in the Western Balkan region;

- The proposal of a lightweight framework for the in situ forecasting of solar energy production using models optimized for MCUs;

- The introduction of a modified metaheuristic optimizer tailored to the optimization needs of this study;

- The selection of lightweight time-series forecasting architectures with suitable accuracy for power systems;

- The implementation of the proposed model on an MCU to evaluate the practical effectiveness of the approach.

The structure of the remaining work is outlined as follows: Section 2 covers preceding works that inspire and support this study. In Section 3, the methodology is discussed in detail. Section 4 and Section 5 discuss the experimental configuration and present the attained results. Section 6 concludes the work and presents proposals for future research in the field.

2. Related Works

Renewable sources play an increasingly important role in maintaining energy sustainability. While full substitution with current technology is not yet possible, it is essential to shift the developmental focus toward improving and better integrating renewable sources into existing grids [15]. The utilization of renewable sources can take several forms; geothermal, wind, and solar energy have all been widely utilized [16], with wind and solar being among the most popular.

An issue with wind and solar energy production is the inherent reliance of this technology on the weather. Changes in production make it difficult to balance supply with demand. While storage solutions exist, they are often not economically viable. Accurately forecasting production can help improve utilization as well as planning for supplemental production from other sources. One potential way of forecasting production is through the use of time-series forecasting techniques [17]. Time-series forecasting has been applied in several fields and demonstrated notably good results. The use of AI algorithms can help improve forecasting accuracy, as AI can learn from observed data, accounting for more subtle relationships between features. Recurrent neural networks are particularly renowned for their capability to handle time series [18,19]; however, other approaches have been used as well, like deep learning models [20,21]. Several research papers outlined long short-term memory networks [22,23] and gated recurrent units [24,25] as particularly effective for this task.

Much like in situ production, in situ forecasting can help alleviate some of the challenges of operating remote systems [26]. While the training, preparation, and execution of ML forecasting models are undoubtedly very computationally demanding, once a trained model is established, its execution can be sufficiently optimized for relatively modest computational resources. Running predictive models on MCUs, known as TinyML, is a potentially lucrative approach for energy forecasting [12]. Remote production systems could be better tuned to maintain power production, balance stored power usage, and provide warnings when necessary. By operating on edge devices, TinyML minimizes the dependence on centralized infrastructure, effectively reducing the communication cost and improving system robustness. Additionally, it can support real-time data processing, lowering latency and allowing immediate predictions and decision-making. However, it is important to be mindful of the limited capacity of MCUs and design models that are sufficiently accurate yet computationally simple enough to run on economically viable and commercially available MCUs.

Hyperparameter optimization plays an essential role in adapting ML models for both accurate forecasting and execution on TinyML platforms. Hyperparameter selection helps tailor forecasting models to the applied problem, thereby improving overall model performance [27,28]. Optimization can also yield less computationally demanding models, striking a balance between accuracy and efficiency. Nevertheless, optimization in ML environments can be challenging. Given the large search spaces presented by modern algorithm configuration schemes, hyperparameter tuning can be considered NP-hard [29].

Researchers have adapted and utilized several techniques to address NP-hard optimization challenges. A popular approach among these is the use of metaheuristics. Metaheuristic optimizers adopt simulated search strategies to locate promising solutions within a given search space by employing a randomness-guided approach. Several optimizers have emerged over the years, drawing inspiration from various sources. Notable optimization algorithms include particle swarm optimization (PSO) [30] and genetic algorithms (GAs) [31]. More modern examples include the recently proposed reptile search algorithm (RSA) [32] and sinh-cosh optimizer (SCHO) [33]. Metaheuristic optimizers have shown promising outcomes applied to several challenges in the field, including hyperparameter selection. Some notable examples include applications in healthcare [34,35,36] and sentiment analysis [37,38]. Renewable energy forecasting has also been explored [39,40], as well as other time-series forecasting tasks [41,42].

While the use of AI algorithms for forecasting holds great potential in modern research, the incorporation of metaheuristic optimizers could enhance performance while reducing the computational costs of model execution. However, their combination has yet to be explored for deployment on TinyML devices. This work seeks to address this observed gap in the literature by proposing a modified metaheuristic optimizer designed to select optimal hyperparameter settings for solar energy production forecasting using RNN architectures, yielding adequately accurate models while maintaining low computational complexity. Furthermore, few studies have highlighted the difficulties and challenges associated with integration, given the current limitations of the legal framework in the Western Balkan regions.

2.1. Legal Framework for Production and Distribution of Solar Power

The legal framework in Western Balkan countries addressing the impact of solar energy on climate change is increasingly aligned with EU legislation. One of the key measures is the Renewable Energy Directive (RED II), which countries in the region implement through their membership in the Energy Community. The Energy Community, an international organization founded on 25 October 2005 in Athens, aims to extend the EU’s internal energy market to Southeast Europe, including the Montenegrin region.

The current Energy Law in Montenegro, for example, specifies “energy activities, regulates the conditions and manner of their performance to ensure quality and secure energy supply to end consumers, encourages the production of energy from renewable sources and high-efficiency cogeneration, regulates the organization and management of the electricity and gas markets, and other issues relevant to energy” [43]. In 2024, procedures began for adopting a new Energy Law in Montenegro, through which the EU directive will be transposed. It has also been announced that a new law on the cross-border exchange of electricity and natural gas will undergo public consultation. The adoption of this law would be significant for Montenegro, as it would formalize the complete regulatory package of the Energy Community.

In terms of environmental protection, the legal framework in Western Balkan countries is gradually aligning with EU legislation aimed at preserving natural resources, reducing pollution, and ensuring sustainable development. Although specific differences remain, most Western Balkan countries follow similar principles and frameworks. Key elements of environmental protection regulations include the Environmental Protection Law, which sets standards for air, water, and soil quality, environmental impact assessments, and ecological taxes; water resource management, which regulates conservation, pollution control, and river basin planning; biodiversity protection, which outlines strategies for habitat and endangered species preservation; waste management, which promotes recycling and penalizes improper disposal; air pollution control, which sets air quality standards and encourages a transition to cleaner energy sources; and energy efficiency and climate change policies, promoting renewable energy and adaptation to climate change [44].

While the environmental protection framework in the region is improving, implementation challenges remain, including a lack of funding and technical capacity. The international aspects of solar energy exchange and electricity distribution across the Western Balkans are becoming increasingly important due to growing energy demands and the transition to renewable sources. Most Western Balkan countries are connected through energy corridors and networks that enable cross-border energy exchange. However, capacities for solar energy exchange are still under development due to the specific characteristics of solar systems, whose production depends on weather conditions. Efficient solar energy exchange requires storage infrastructure, such as battery systems, which allow energy produced during sunny periods to be stored for later use or exported to neighboring countries during high demand. Solar energy exchange in the region is significant not only environmentally but also geopolitically, as it reduces dependence on fossil fuels and major energy exporters.

The Renewable Energy Directive encourages a greater share of solar and other clean energies in overall energy production, aiming to reduce emissions. The National Energy and Climate Plans (NECPs) of countries like Serbia, North Macedonia, and Albania set targets for renewable energy and CO2 emission reduction. Before the construction of solar power plants, an environmental impact assessment (EIA) is mandatory to ensure that solar projects do not harm the natural environment. Countries in the region are also introducing financial incentives, such as subsidies and feed-in tariffs, for solar projects, supporting ecological transition. The feed-in tariff (FiT) system is an incentive for renewable energy plants, where the distribution or transmission grid operator contracts with the plant operator to pay a predetermined price for each unit of electricity delivered over an agreed period [45].

According to Montenegro’s Energy Law, the National Energy and Climate Plan specifically includes, under Article 8, paragraph 2, national targets related to greenhouse gas emissions; renewable energy use; energy efficiency; energy security; the internal energy market; and research, innovation, and competitiveness [43]. In addition, regulations are harmonized with EU standards for air quality and pollution control, supporting a sustainable transition to solar energy and reducing the ecological footprint. In May 2021, the International Energy Agency (IEA) published a study [46] analyzing a complete decarbonization scenario by 2050, projecting that, by 2050, 90% of global electricity production could come from wind and solar photovoltaic power plants.

2.2. Recurrent Neural Networks

An RNN [13] is a specialized variant of a neural network designed to handle sequential data. Many core elements of a neural network are maintained in an RNN, with neurons and connections remaining practically identical. However, the RNN introduces recurrent self-connections for neurons, thus allowing preceding neuron activations to influence future outcomes. Given an input sequence I, an RNN operation for each time step t can be described as follows:

$$(y_t, h_t) = \mathrm{RNN}(I_t, h_{t-1}; W) \qquad (1)$$

where $y_t$ represents the output and $h_t$ denotes the hidden state at time t. The network is characterized by its weights W. While the simple RNN expanded the capabilities available to researchers working with sequential data and neural networks, new issues arose. Specifically, vanishing and exploding gradients tend to disrupt network training and can result in suboptimal overall performance. Thus, more refined versions of these models have been developed.
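The recurrence described above can be made concrete with a minimal sketch. It assumes a plain tanh cell with separate input weights `W_x`, recurrent weights `W_h`, and bias `b`; these names and the toy weight values are illustrative assumptions rather than the configuration used in this work.

```python
import math

def rnn_step(x_t, h_prev, W_x, W_h, b):
    """One recurrent step: h_t = tanh(W_x x_t + W_h h_prev + b)."""
    h_t = []
    for i in range(len(b)):
        s = b[i]
        s += sum(w * x for w, x in zip(W_x[i], x_t))     # input contribution
        s += sum(w * h for w, h in zip(W_h[i], h_prev))  # recurrent self-connection
        h_t.append(math.tanh(s))
    return h_t

def rnn_forward(sequence, W_x, W_h, b):
    """Run the cell over a whole sequence, starting from a zero hidden state."""
    h = [0.0] * len(b)
    for x_t in sequence:
        h = rnn_step(x_t, h, W_x, W_h, b)
    return h

# Toy usage: 1 input feature, 2 hidden units, 3 time steps (arbitrary weights).
W_x = [[0.5], [-0.3]]
W_h = [[0.1, 0.0], [0.0, 0.2]]
b = [0.0, 0.1]
h_last = rnn_forward([[0.2], [0.4], [0.6]], W_x, W_h, b)
```

Because the same weights are reused at every step, gradients are repeatedly multiplied through `W_h` during training, which is where the vanishing and exploding gradient issues mentioned above originate.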

2.3. Long Short-Term Memory Networks

One expanded version of the simple RNN is long short-term memory (LSTM) [47]. The LSTM network expands on the recurrent structure and integrates a complex series of gates to control the flow of information within the network, allowing some information to be retained within the network for later use. A cell state is introduced and used to preserve data. A forget gate ($f_t$) can be used to clear a state, as given in Equation (2).

$$f_t = \sigma\left(W_f x_t + U_f h_{t-1} + b_f\right) \qquad (2)$$

where $x_t$ signifies its inputs at time step t, $h_{t-1}$ represents the previous hidden state, and $W_f$ and $U_f$ refer to the weight coefficients linked to these inputs, with $b_f$ representing the bias vector. Incoming data can be introduced to the cell state by using an input gate ($i_t$), as outlined in Equation (3).

$$i_t = \sigma\left(W_i x_t + U_i h_{t-1} + b_i\right) \qquad (3)$$

The weight coefficients relevant to this step are given by $W_i$ and $U_i$, with the bias represented by $b_i$. A series of additional candidate values $\tilde{C}_t$ is computed through a hyperbolic tangent layer, as shown in Equation (4).

$$\tilde{C}_t = \tanh\left(W_c x_t + U_c h_{t-1} + b_c\right) \qquad (4)$$

Here, it is essential to consider the weight coefficients $W_c$ and $U_c$, as well as the bias term $b_c$. To update the cell state, an element-wise product ⊙ is used in combination with the forget gate $f_t$, which clears the prior cell state $C_{t-1}$ to allow the updated cell state $C_t$ to be established with the current data. The newly computed candidate values are multiplied by the input gate and then combined with this result, as defined by Equation (5).

$$C_t = f_t \odot C_{t-1} + i_t \odot \tilde{C}_t \qquad (5)$$

The initial sigmoid output, represented by $o_t$, is computed as indicated in Equation (6) and undergoes further processing that is influenced by the cell state. The cell state is passed through a $\tanh$ layer and multiplied by this output, as specified in Equation (7), resulting in the updated hidden state. Here, $W_o$ and $U_o$ are the weight coefficients, while $b_o$ represents the bias term.

$$o_t = \sigma\left(W_o x_t + U_o h_{t-1} + b_o\right) \qquad (6)$$

$$h_t = o_t \odot \tanh\left(C_t\right) \qquad (7)$$
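The gating steps described above can be followed in a short sketch of a single LSTM step. It uses the standard textbook formulation; the parameter dictionary `p`, its key names, and the helper `affine` are assumptions made for this illustration only.

```python
import math

def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))

def affine(W, x, U, h, b):
    """Return W x + U h + b as a list (one entry per hidden unit)."""
    return [
        sum(w * xi for w, xi in zip(W[i], x))
        + sum(u * hi for u, hi in zip(U[i], h))
        + b[i]
        for i in range(len(b))
    ]

def lstm_step(x_t, h_prev, c_prev, p):
    """One LSTM step; returns the new hidden state and cell state."""
    f_t = [sigmoid(v) for v in affine(p["W_f"], x_t, p["U_f"], h_prev, p["b_f"])]      # forget gate
    i_t = [sigmoid(v) for v in affine(p["W_i"], x_t, p["U_i"], h_prev, p["b_i"])]      # input gate
    c_hat = [math.tanh(v) for v in affine(p["W_c"], x_t, p["U_c"], h_prev, p["b_c"])]  # candidates
    c_t = [f * c + i * g for f, c, i, g in zip(f_t, c_prev, i_t, c_hat)]               # cell update
    o_t = [sigmoid(v) for v in affine(p["W_o"], x_t, p["U_o"], h_prev, p["b_o"])]      # output gate
    h_t = [o * math.tanh(c) for o, c in zip(o_t, c_t)]                                  # hidden state
    return h_t, c_t

# Toy usage with all-zero parameters (1 input feature, 1 hidden unit):
# every gate then equals 0.5 and the candidate equals 0.
zero = {k: [[0.0]] for k in ("W_f", "U_f", "W_i", "U_i", "W_c", "U_c", "W_o", "U_o")}
zero.update({k: [0.0] for k in ("b_f", "b_i", "b_c", "b_o")})
h_t, c_t = lstm_step([1.0], [0.0], [2.0], zero)
```

With zero parameters, the forget gate halves the previous cell state, which makes the role of each gate easy to verify by hand.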

2.4. Gated Recurrent Units

An additional variation of the simple RNN is the gated recurrent unit (GRU) [48] network. While this network also relies on a series of gates, a significantly simpler system, consisting of only update and reset gates, is utilized. This makes GRU networks less computationally complex than LSTM while still being able to resist some of the obvious drawbacks of the baseline RNN.

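In a GRU network, the update gate $z_t$, which controls how much of the hidden state is replaced with new information, is computed at time step t as follows:

$$z_t = \sigma\left(W_z x_t + U_z h_{t-1} + b_z\right)$$

The reset gate $r_t$, which controls how much of the previous hidden state contributes to the candidate, is computed analogously:

$$r_t = \sigma\left(W_r x_t + U_r h_{t-1} + b_r\right)$$

The candidate hidden state $\tilde{h}_t$ is computed as

$$\tilde{h}_t = \tanh\left(W_h x_t + U_h \left(r_t \odot h_{t-1}\right) + b_h\right)$$

Finally, the hidden state is updated by combining the previous hidden state and the candidate state:

$$h_t = \left(1 - z_t\right) \odot h_{t-1} + z_t \odot \tilde{h}_t$$

In this context, $\sigma$ denotes the sigmoid function, ⊙ represents element-wise multiplication, and $\tanh$ denotes the hyperbolic tangent function. The weights $W$ and $U$ and the biases $b$ are the learned parameters of the GRU.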
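A single GRU step can be sketched in the same way. The formulation below is the common textbook one, in which the update gate weights the candidate state; the parameter names in `p` are assumptions for illustration.

```python
import math

def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))

def affine(W, x, U, h, b):
    """Return W x + U h + b as a list (one entry per hidden unit)."""
    return [
        sum(w * xi for w, xi in zip(W[i], x))
        + sum(u * hi for u, hi in zip(U[i], h))
        + b[i]
        for i in range(len(b))
    ]

def gru_step(x_t, h_prev, p):
    """One GRU step; returns the new hidden state."""
    z_t = [sigmoid(v) for v in affine(p["W_z"], x_t, p["U_z"], h_prev, p["b_z"])]  # update gate
    r_t = [sigmoid(v) for v in affine(p["W_r"], x_t, p["U_r"], h_prev, p["b_r"])]  # reset gate
    gated = [r * h for r, h in zip(r_t, h_prev)]                                    # r_t ⊙ h_prev
    h_hat = [math.tanh(v) for v in affine(p["W_h"], x_t, p["U_h"], gated, p["b_h"])]
    # Blend the old state and the candidate according to the update gate.
    return [(1.0 - z) * h + z * g for z, h, g in zip(z_t, h_prev, h_hat)]

# Toy usage with all-zero parameters: z_t = 0.5 and the candidate is 0,
# so the new hidden state is half of the previous one.
zero = {k: [[0.0]] for k in ("W_z", "U_z", "W_r", "U_r", "W_h", "U_h")}
zero.update({k: [0.0] for k in ("b_z", "b_r", "b_h")})
h_new = gru_step([1.0], [0.8], zero)
```

Compared with the LSTM, there is no separate cell state and one fewer gate, which is the source of the lower computational cost noted above.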

3. Methodology

3.1. The Variable Neighborhood Search Optimizer

The variable neighborhood search (VNS) [49] algorithm utilizes a systematic approach to adapt the neighborhood structure during optimization. The idea behind this approach is to explore more distant locations within the search space, allowing the algorithm to escape local optima and move toward the global optimum. The main stages of the optimization strategy are shaking, local search, and neighborhood change.

During the shaking procedure, a solution in a simulated neighborhood, different from the current best, is selected. The structure of this neighborhood is then systematically altered to explore different regions of the search space. This can be represented as

$$x' = \mathrm{Shake}(x, k)$$

where k denotes the neighborhood index in a given iteration, while x denotes the current solution and $x'$ is the output. Once $x'$ is determined, a local search is conducted, as described in the following:

$$x'' = \mathrm{LocalSearch}(x')$$

If the local search finds an improved solution, meaning $f(x'') < f(x)$, the current solution is updated to $x''$, and the neighborhood index is reset to $k = 1$. If not, the algorithm explores more distant neighborhoods by increasing k:

$$k \leftarrow k + 1$$

These procedures are repeated until a suitable solution is found or another criterion is met. The VNS algorithm is fairly flexible, and different criteria can be used depending on the goal of optimization.
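The shaking, local search, and neighborhood change stages can be sketched for a continuous minimization problem as follows. The neighborhood definition (a box whose radius grows with k), the stochastic local search, and the wrap-around of k at `k_max` are assumptions chosen to keep the example small; the original VNS leaves these choices to the practitioner.

```python
import random

def shake(x, k, step, rng):
    """Pick a random point in the k-th neighborhood; the radius grows with k."""
    return [xi + rng.uniform(-k * step, k * step) for xi in x]

def local_search(x, f, step, rng, tries=20):
    """Simple stochastic descent around x (an assumed local search routine)."""
    best = x
    for _ in range(tries):
        cand = [xi + rng.uniform(-step, step) for xi in best]
        if f(cand) < f(best):
            best = cand
    return best

def vns(f, x0, k_max=3, step=0.5, iters=200, seed=0):
    rng = random.Random(seed)
    x, k = list(x0), 1
    for _ in range(iters):
        x1 = shake(x, k, step, rng)              # shaking
        x2 = local_search(x1, f, step / 4, rng)  # local search
        if f(x2) < f(x):                         # neighborhood change
            x, k = x2, 1                         # improvement: reset to the closest neighborhood
        else:
            k = k + 1 if k < k_max else 1        # no improvement: look further away
    return x

# Toy usage on the sphere function.
def sphere(v):
    return sum(t * t for t in v)

start = [3.0, -2.0]
best = vns(sphere, start)
```

Since the current solution is only replaced on strict improvement, the returned point is never worse than the starting point.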

3.2. The Modified VNS Algorithm

While the original VNS [49] optimizer still showcases admirable performance, extensive testing using Congress on Evolutionary Computation (CEC) benchmarking functions [50] hints that room for improvement exists. Empirical tests also suggest that population diversity improvements can help bolster the outcomes attained by the optimizer. Integrating an adaptive approach could help the modified optimizer strike a better balance between intensification and exploration, further benefiting the attained solution.

Diversification control mechanisms have been proposed in the literature [51] centered on the $L_1$ norm. This metric allows for the consideration of diversity on both the agent and population levels. Given a set of p agents for an n-dimensional problem, the $L_1$ norm can be computed as follows:

$$\bar{x}_j = \frac{1}{p}\sum_{i=1}^{p} x_{ij}$$

$$D_j = \frac{1}{p}\sum_{i=1}^{p} \left|x_{ij} - \bar{x}_j\right|$$

$$D^{P} = \frac{1}{n}\sum_{j=1}^{n} D_j$$

where $\bar{x}$ denotes the mean vector of agent positions across search space dimensions, with $D_j$ denoting an individual diversity vector expressed as the $L_1$ norm and $D^{P}$ signifying the diversity score of the entire population.

Since greater diversity is preferred in the early stages, diversity is dynamically adjusted throughout optimization. The $L_1$ norm manages this diversity via a dynamic threshold parameter, $D_t$. If population diversity is found to be inadequate, the worst-performing agents are removed from the population. Diversity is tracked using $D_t$, with an initial value $D_{t_0}$ calculated from the lower and upper bounds $lb_j$ and $ub_j$ of each dimension as follows:

$$D_{t_0} = \sum_{j=1}^{n} \frac{ub_j - lb_j}{2n}$$

As the algorithm progresses and solutions approach the optimal region, the value of $D_t$ should decrease from the initial value $D_{t_0}$ according to the following rule:

$$D_{t+1} = D_t - D_t \cdot \frac{t}{T}$$

where t and $t + 1$ represent the current and next iterations, and T is the total number of iterations per run. In the later stages, as $D_t$ is gradually reduced, this mechanism will no longer be triggered, regardless of the value of $D^{P}$.
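The diversity bookkeeping described above can be illustrated with a small sketch. The population diversity follows the usual $L_1$-norm definition (mean absolute deviation from the per-dimension mean); the initial threshold and its decay are written here under the assumption that they depend on the search bounds and on the ratio t/T, and all function names are hypothetical.

```python
def l1_diversity(population):
    """Population diversity: mean absolute deviation from the mean, averaged over dimensions."""
    p = len(population)       # number of agents
    n = len(population[0])    # number of dimensions
    means = [sum(agent[j] for agent in population) / p for j in range(n)]
    per_dim = [sum(abs(agent[j] - means[j]) for agent in population) / p for j in range(n)]
    return sum(per_dim) / n

def initial_threshold(lb, ub):
    """Assumed initial threshold: sum over dimensions of (ub_j - lb_j) / (2 n)."""
    n = len(lb)
    return sum(u - l for l, u in zip(lb, ub)) / (2 * n)

def next_threshold(d_t, t, T):
    """Assumed decay: the threshold shrinks faster as iteration t approaches T."""
    return d_t - d_t * t / T

# Toy usage: two agents in a 2-dimensional search space.
population = [[0.0, 0.0], [2.0, 4.0]]
diversity = l1_diversity(population)               # 1.5
threshold = initial_threshold([0.0, 0.0], [2.0, 4.0])  # 1.5
low_diversity = diversity < threshold              # triggers diversification when True
```

Under this schedule the threshold reaches zero at t = T, so the diversification mechanism is effectively switched off in the final iterations.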

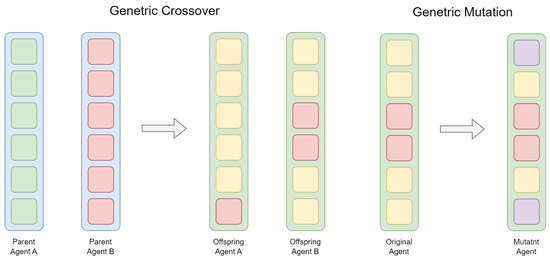

In each optimization iteration, worst-performing agents are removed from the population, and new solutions are generated using techniques inspired by the GA [31]. If $D^{P} < D_t$, then replacement agents are produced by recombining two random agents in the population. If the condition is not met, the two best-performing agents are recombined. Mutation then further alters the obtained solutions by randomly changing parameters of the created agent. The crossover and mutation mechanisms are depicted in Figure 1.

Figure 1. Genetic crossover and mutation mechanisms.

Crossover and mutation are controlled by the crossover and mutation probability parameters, $p_c$ and $p_m$, which are set to the empirically determined values that were shown to provide the best results.
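The replacement step can be sketched as follows. Uniform crossover and bound-respecting random-reset mutation are assumed here, since the exact operators are only shown in the figure; the probabilities passed in the usage example are placeholders, not the values used in this work, and the function names are hypothetical.

```python
import random

def uniform_crossover(parent_a, parent_b, p_c, rng):
    """Take each gene from parent_b with probability p_c, otherwise from parent_a."""
    return [b if rng.random() < p_c else a for a, b in zip(parent_a, parent_b)]

def mutate(agent, lb, ub, p_m, rng):
    """Resample each gene uniformly within its bounds with probability p_m."""
    return [rng.uniform(l, u) if rng.random() < p_m else x
            for x, l, u in zip(agent, lb, ub)]

def make_replacement(population, scores, low_diversity, lb, ub, p_c, p_m, rng):
    """Build one replacement agent (lower score = better, as with an MSE objective)."""
    if low_diversity:
        a, b = rng.sample(population, 2)   # recombine two random agents
    else:
        ranked = sorted(range(len(population)), key=lambda i: scores[i])
        a, b = population[ranked[0]], population[ranked[1]]  # recombine the two best
    child = uniform_crossover(a, b, p_c, rng)
    return mutate(child, lb, ub, p_m, rng)

# Toy usage with placeholder probabilities.
rng = random.Random(0)
population = [[1.0, 1.0], [2.0, 2.0], [3.0, 3.0]]
scores = [0.3, 0.1, 0.2]
child = make_replacement(population, scores, True, [0.0, 0.0], [4.0, 4.0], 0.5, 0.2, rng)
```

Recombining random agents when diversity is low spreads the population out, whereas recombining the two best agents concentrates the search around promising solutions.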

The updated metaheuristic incorporates an extra adaptive parameter. To encourage exploration in the early phases of optimization, an adjustable parameter $\psi$ is used. Later phases use the remarkably potent intensification mechanism of the original firefly algorithm (FA) [52] to encourage exploitation. The following equation presents the search process of the FA:

$$x_i^{t+1} = x_i^{t} + \beta_0 e^{-\gamma r_{ij}^{2}}\left(x_j^{t} - x_i^{t}\right) + \alpha \epsilon_i^{t}$$

where, as of iteration t, $x_i^{t}$ indicates the location of agent i, while $x_j^{t}$ represents the position of firefly j. Depending on their distance $r_{ij}$ from one another, the parameter $\beta_0$ regulates the attraction between i and j. $\gamma$ represents the light absorption coefficient, $\alpha$ governs the randomness, and $\epsilon_i^{t}$ represents the stochastic vector.

In order to balance exploration and exploitation and enable both algorithms to participate, the parameter $\psi$ is first set to 1.5 and is changed in each iteration in accordance with

$$\psi_{t+1} = \psi_t - \frac{t}{T}$$

where t is the current optimization iteration, and T is the total number of iterations. The FA search is used if a randomly generated value in the range $[0, 1]$, drawn at the beginning of each iteration, exceeds $\psi$; if not, the VNS search techniques are used. With the included adjustments in mind, the suggested optimizer is called the diversity-oriented adaptive VNS (DOAVNS). Algorithm 1 provides the procedural pseudocode for this optimizer.

Algorithm 1. Proposed DOAVNS optimizer pseudocode.
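The switching mechanism between the two search strategies can be sketched as below. The firefly move follows the standard FA position update; the decrement rule for the switching parameter, the [0, 1) sampling range, and the uniform noise term are treated as assumptions of this sketch, and the function names are hypothetical.

```python
import math
import random

def firefly_move(x_i, x_j, beta0, gamma, alpha, rng):
    """Move agent i toward a brighter agent j (standard FA position update)."""
    r2 = sum((a - b) ** 2 for a, b in zip(x_i, x_j))  # squared distance between i and j
    beta = beta0 * math.exp(-gamma * r2)              # attraction decays with distance
    # The noise term is assumed uniform in [-0.5, 0.5), scaled by alpha.
    return [a + beta * (b - a) + alpha * (rng.random() - 0.5)
            for a, b in zip(x_i, x_j)]

def choose_search(psi, rng):
    """FA is used only when a uniform draw from [0, 1) exceeds psi."""
    return "FA" if rng.random() > psi else "VNS"

def update_psi(psi, t, T):
    """Assumed schedule: psi shrinks by t / T after iteration t."""
    return psi - t / T

# Toy usage: which search is selected over T = 7 iterations, starting from psi = 1.5.
rng = random.Random(42)
psi, T = 1.5, 7
schedule = []
for t in range(1, T + 1):
    schedule.append(choose_search(psi, rng))
    psi = update_psi(psi, t, T)
```

While the parameter stays above 1, the draw can never exceed it, so only the VNS search runs in the first iterations; once it drops below 0, the FA search is always selected, which matches the intended shift from exploration to exploitation.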

3.3. The ESP32 Platform for TinyML Use

The ESP32 [53] is a popular platform for IoT devices. The system-on-a-chip offers many advantages with an affordable, small footprint. The device supports several Bluetooth protocols as well as Wi-Fi functionality. In module form, several standard interfaces are available, including UART, I2C, GPIO, DAC, and ADC, as well as SD card support and motor PWM. This makes the ESP32 a very versatile platform for use in IoT networks [54], especially when considering the low minimum current demands of only 500 mA. The module relies on two low-power Xtensa 32-bit LX6 microprocessors and supports 448 KB of ROM as well as 520 KB of on-chip SRAM. The ESP32 can support a real-time operating system (RTOS). A low-power coprocessor can be utilized if computational resources are not required, such as in the case of monitoring peripherals, allowing for further power reduction.

Several frameworks exist for porting ML models for use on the ESP32, with support from the Arduino community [55]. One such framework allows for TensorFlow Lite models to be compiled, loaded, and run on the ESP32. The best-performing model optimized by metaheuristic optimizers in this work is subjected to compilation and adapted for use on the ESP32 chip. The Arduino IDE is used for programming, TensorFlow Lite is used for model compilation, and Python is used to conduct model training and optimization tasks.
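Before porting a model, it can be useful to check roughly whether its weights fit in the available on-chip memory. The sketch below uses the textbook parameter count of a single recurrent layer (one, three, or four gate blocks for the RNN, GRU, and LSTM, respectively); actual framework implementations may add extra bias terms, and the interpreter, activations, and other runtime buffers are not counted. The layer sizes in the usage example are arbitrary.

```python
def recurrent_params(kind, input_dim, units):
    """Textbook weight count of one recurrent layer with `units` hidden units."""
    gate_blocks = {"rnn": 1, "gru": 3, "lstm": 4}[kind]
    # Each block has input weights, recurrent weights, and one bias per unit.
    return gate_blocks * (units * (input_dim + units) + units)

def footprint_kib(n_params, bytes_per_weight):
    """Storage for the weights alone, in KiB."""
    return n_params * bytes_per_weight / 1024

# Toy usage: one input feature and 32 hidden units.
for kind in ("rnn", "gru", "lstm"):
    n = recurrent_params(kind, input_dim=1, units=32)
    print(kind, n, footprint_kib(n, 4), "KiB as float32,", footprint_kib(n, 1), "KiB as int8")
```

Even the LSTM variant in this toy configuration needs far less than the 520 KB of on-chip SRAM for its weights, which illustrates why compact recurrent layers are attractive candidates for MCU deployment.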

TinyML offers considerable cost benefits in hardware and energy consumption. Microcontrollers compatible with TinyML like STM32 and Arduino Nano 33 BLE Sense cost approximately USD 5–20 per unit, compared to traditional IoT or edge devices such as Raspberry Pi or industrial computers, which range from USD 30 to 150. This results in hardware cost savings of up to 80%. In addition, TinyML devices typically consume just 1–10 milliwatts of power, considerably less than the 1–5 watts consumed by standard edge computing devices, resulting in over 90% reduction in energy usage and leading to lower operational costs and reduced battery maintenance needs.

Deployment and maintenance are also more economical with TinyML. These devices require minimal infrastructure and are simpler for management, resulting in an estimated 50% reduction in deployment and maintenance costs in comparison to the standard edge solutions. Furthermore, by processing data on-device, TinyML drastically reduces the volume of data sent to the cloud, considerably lowering bandwidth and storage costs. This can lead to bandwidth savings of up to 70%, which is particularly beneficial in remote or high-data environments.

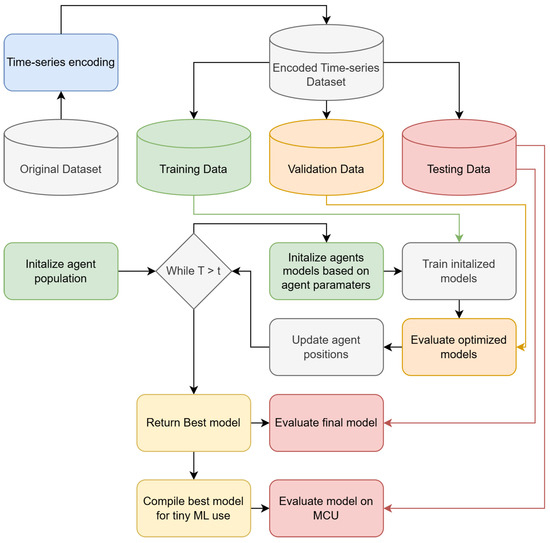

3.4. Proposed Framework

The framework proposed in this paper includes three-stage optimization. This encompasses data preparation and time-series encoding. A set of samples is used to create a time series, and data are separated into training, validation, and testing portions. Agent populations are generated, and based on solution parameters, models are generated. The generated models are trained using the training portion of the dataset and evaluated on the validation set. Once a termination criterion is met, the final model is evaluated using the withheld testing portion. The best trained model is then compiled for use on an MCU, and performance is simulated using input supplied over UART. In other words, once model optimization is completed (typically using more expensive hardware such as GPUs or TPUs), these optimized models can be efficiently deployed on embedded devices, operating with minimal power consumption. A flowchart explaining the proposed framework is presented in Figure 2.

Figure 2. Proposed framework flowchart.

4. Experimental Setup

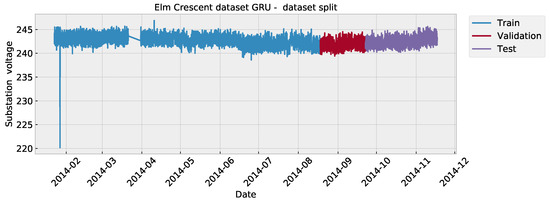

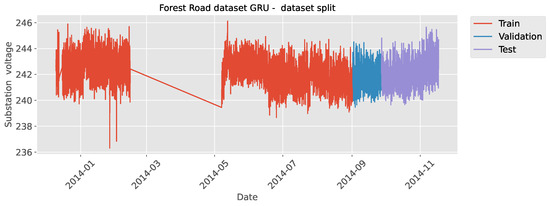

To evaluate the effectiveness of the proposed method, a publicly available dataset sourced from the real world was used, which can be found on Kaggle (https://www.kaggle.com/datasets/pythonafroz/solar-panel-energy-generation-data, accessed on 25 October 2024). The simulations concentrated on the Elm Crescent and Forest Road segments of the dataset. The data were sequentially divided into training, validation, and testing sets in a 70/10/20 ratio. Simulations were performed with a 12-step lag and 1-step-ahead targets. The dataset divisions are illustrated in Figure 3 for the Elm Crescent and in Figure 4 for the Forest Road portion of the dataset.
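The data preparation described above (a sequential 70/10/20 split, 12 lagged inputs, and a 1-step-ahead target) can be sketched as follows. The function names and the synthetic series are illustrative assumptions; windows are built within each portion separately so that no window spans two portions.

```python
def sequential_split(series, train_pct=70, val_pct=10):
    """Split a series in time order into training, validation, and testing portions."""
    n = len(series)
    i = n * train_pct // 100
    j = n * (train_pct + val_pct) // 100
    return series[:i], series[i:j], series[j:]

def make_windows(series, lag=12, horizon=1):
    """Turn a series into (inputs, target) pairs: `lag` past values predict the value `horizon` steps ahead."""
    X, y = [], []
    for s in range(len(series) - lag - horizon + 1):
        X.append(series[s:s + lag])
        y.append(series[s + lag + horizon - 1])
    return X, y

# Toy usage on a synthetic series of 100 samples.
series = list(range(100))
train, val, test = sequential_split(series)
X_train, y_train = make_windows(train)
```

Splitting before windowing keeps the evaluation honest: shuffling or splitting after windowing would let future values leak into the training portion.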

Figure 3. Elm Crescent dataset visualization.

Figure 4. Forest Road dataset visualization.

A comparative simulation was conducted between the proposed algorithm and the baseline VNS [49]. The evaluation also included other algorithms, such as the GA [31], PSO [30], RSA [32], and SCHO [33]. Each optimizer was implemented independently for the simulations using the parameter configurations specified in the original papers for each algorithm. Owing to the high computational cost associated with optimization, each optimizer was limited to seven iterations with a population of five individuals, and the simulations were repeated over 30 independent runs. The optimizers were responsible for hyperparameter selection for the RNN, LSTM, and GRU models using empirically determined ranges, as detailed in Table 1.

Table 1.

Parameter ranges for RNN, LSTM, and GRU networks.

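Model performance is assessed using standard regression metrics [56], namely the MSE, root mean squared error (RMSE), mean absolute error (MAE), and coefficient of determination (R2):

$$\mathrm{MSE} = \frac{1}{n}\sum_{i=1}^{n}\left(y_i - \hat{y}_i\right)^2$$

$$\mathrm{RMSE} = \sqrt{\frac{1}{n}\sum_{i=1}^{n}\left(y_i - \hat{y}_i\right)^2}$$

$$\mathrm{MAE} = \frac{1}{n}\sum_{i=1}^{n}\left|y_i - \hat{y}_i\right|$$

$$R^2 = 1 - \frac{\sum_{i=1}^{n}\left(y_i - \hat{y}_i\right)^2}{\sum_{i=1}^{n}\left(y_i - \bar{y}\right)^2}$$

An additional metric known as the index of agreement (IoA) [57] was also tracked during optimization to provide a more comprehensive view of the models’ performance. The formula for this metric is provided below:

$$\mathrm{IoA} = 1 - \frac{\sum_{i=1}^{n}\left(y_i - \hat{y}_i\right)^2}{\sum_{i=1}^{n}\left(\left|\hat{y}_i - \bar{y}\right| + \left|y_i - \bar{y}\right|\right)^2}$$

In the given formulas, $y_i$ and $\hat{y}_i$ represent the actual and predicted values for the i-th sample, $\bar{y}$ represents the mean of the actual values, and n is the sample size. For the simulations performed, MSE is used as the objective function, and a second metric serves as the indicator function.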
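The metrics reported throughout the results (MSE, RMSE, MAE, R2, and IoA) can be computed as in the following minimal sketch. It assumes the standard textbook definitions and Willmott's original form of the index of agreement; the function name `regression_metrics` and the sample values are illustrative only.

```python
import math


def regression_metrics(actual, predicted):
    """Return MSE, RMSE, MAE, R2, and IoA for two equal-length sequences."""
    n = len(actual)
    mean_actual = sum(actual) / n
    pairs = list(zip(actual, predicted))

    squared_error = sum((a - p) ** 2 for a, p in pairs)
    absolute_error = sum(abs(a - p) for a, p in pairs)
    # Total variation of the actual values around their mean (R2 denominator).
    total_variation = sum((a - mean_actual) ** 2 for a in actual)
    # "Potential error" term used in the denominator of Willmott's IoA.
    potential_error = sum(
        (abs(p - mean_actual) + abs(a - mean_actual)) ** 2 for a, p in pairs
    )

    return {
        "mse": squared_error / n,
        "rmse": math.sqrt(squared_error / n),
        "mae": absolute_error / n,
        "r2": 1.0 - squared_error / total_variation,
        "ioa": 1.0 - squared_error / potential_error,
    }


metrics = regression_metrics([1.0, 2.0, 3.0, 4.0], [1.5, 2.0, 2.5, 4.0])
perfect = regression_metrics([1.0, 2.0, 3.0], [1.0, 2.0, 3.0])
```

MSE, RMSE, and MAE are error measures where lower is better, whereas R2 and IoA approach 1 as the forecast improves, which matters when reading "best" scores in the tables.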

5. Simulation Outcomes

The simulation outcomes attained in the conducted experiments are presented in three parts. Firstly, the simulation scores of the RNN on the Elm Crescent and Forest Road datasets are presented. Then, LSTM simulation outcomes are presented in the same order, followed by the simulations with GRU. In all tables containing simulation outcomes, the best score in every observed category is highlighted in bold. The best-performing models in each set of simulations are then compared separately in terms of detailed metrics, and the outcomes are subjected to statistical validation. Finally, simulations for a model deployed on an ESP32 are reported.

5.1. RNN Simulations

5.1.1. RNN Elm Crescent Simulations

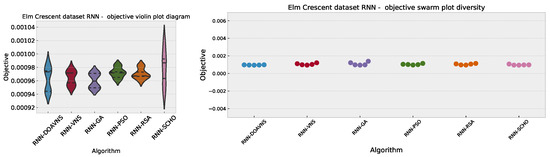

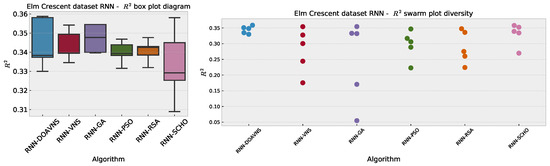

Comparisons in terms of the objective function for Elm Crescent RNN simulation scores are presented in Table 2. The introduced optimizer attained the best-performing model in terms of the objective function. The GA showcases impressive performance, achieving the best scores in the worst, mean, and median outcomes, while the PSO showcases the highest rate of stability despite not matching the favorable performance of other optimizers. Further stability comparisons are provided in terms of score distribution diagrams for the objective function in Figure 5 and the indicator function in Figure 6. While the modified optimizer showcases a lower rate of stability in comparison to the baseline optimizer, this is to be somewhat expected when boosting algorithm diversification. Furthermore, this drop in stability allowed the optimizer to explore more promising regions of the search space, ultimately attaining the best-performing parameter selections for this test case.

Table 2.

Elm Crescent RNN simulations’ objective function outcomes.

Figure 5.

Elm Crescent RNN simulations’ objective function distribution diagrams.

Figure 6.

Elm Crescent RNN simulations’ indicator function distribution diagrams.

Further comparisons in terms of detailed metrics are provided in Table 3. The model optimized by the introduced DOAVNS optimizer showcases a definitive advantage over other models, attaining the best scores across all metrics.

Table 3.

Elm Crescent RNN simulations’ detailed metrics for the best-performing optimized models.

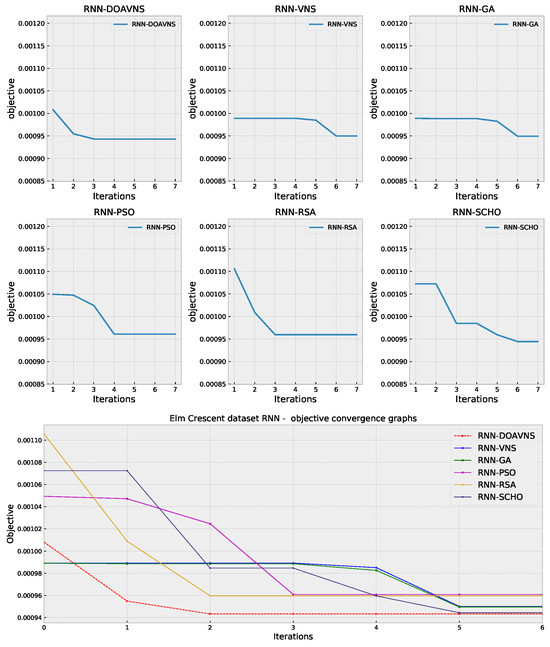

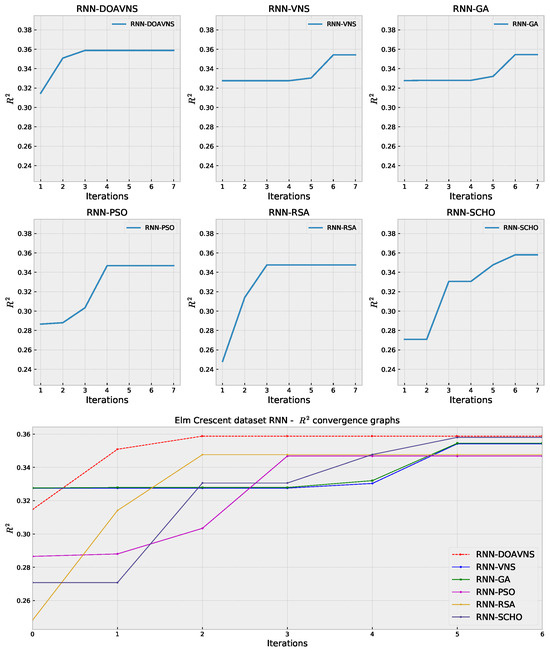

Further insights into the performance of the optimizer and its ability to prevent premature stagnation, as well as avoid being trapped in local optima, are showcased in terms of convergence diagrams. For each of the algorithms included in the comparative analysis, convergence diagrams in terms of objective and indicator functions are provided in Figure 7 and Figure 8. The boost in diversification helps the introduced optimizer converge toward an optimum, while other algorithms struggle with local best solutions.

Figure 7.

Elm Crescent RNN simulations’ objective function convergence diagrams.

Figure 8.

Elm Crescent RNN simulations’ indicator function convergence diagrams.

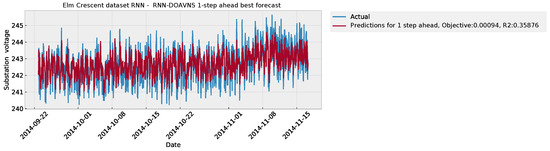

The parameter selections made by each algorithm for the respective best-performing model are provided in Table 4 to facilitate simulation repeatability. The predictions made by the best-performing model are provided in Figure 9, while the plot of the error over time is presented in Figure 10.

Table 4.

Best-performing RNN model parameter selections for Elm Crescent simulations.

Figure 9.

Forecasts of best DOAVNS model from Elm Crescent RNN simulations.

Figure 10.

Elm Crescent RNN simulations’ error over time.

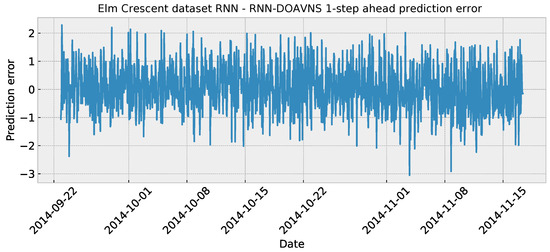

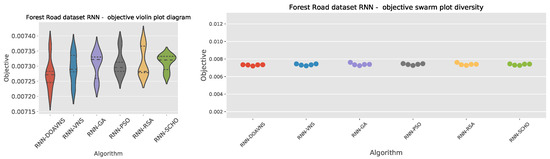

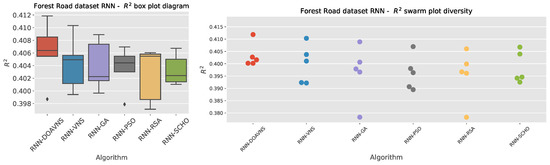

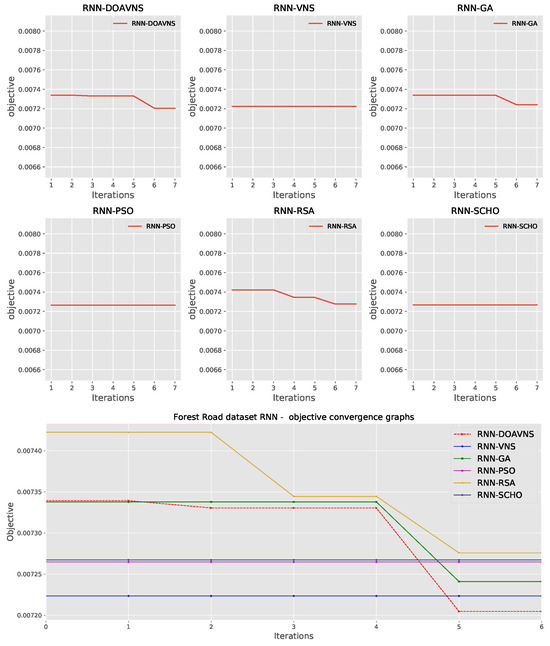

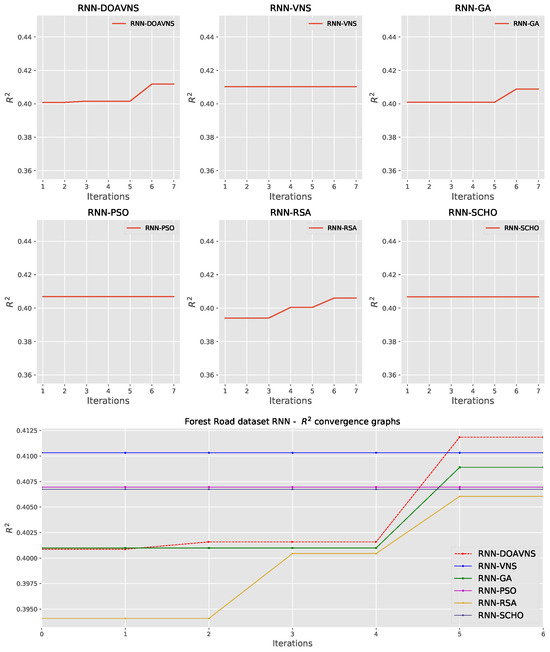

5.1.2. RNN Forest Road Simulations

Comparisons in terms of the objective function for Forest Road RNN simulation scores are presented in Table 5. The introduced optimizer attained the best-performing model in terms of the objective function and also showcases superior performance in the mean and median outcomes. The SCHO algorithm attained the best outcomes in terms of worst-case simulations and also demonstrates the highest rate of stability. Further stability comparisons are provided in terms of score distribution diagrams for the objective function in Figure 11 and the indicator function in Figure 12. The modified algorithm showcases slightly reduced stability in this set of simulations, focusing on a more promising region in comparison to all other optimizers, including the baseline VNS.

Table 5.

Forest Road RNN simulations’ objective function outcomes.

Figure 11.

Forest Road RNN simulations’ objective function distribution diagrams.

Figure 12.

Forest Road RNN simulations’ indicator function distribution diagrams.

Further comparisons in terms of detailed metrics are provided in Table 6. The model optimized by the introduced DOAVNS optimizer showcases the best scores in terms of R2, MSE, and RMSE, while the GA showcases a high score in terms of MAE, and the SCHO showcases the best IoA score.

Table 6.

Forest Road RNN simulations’ detailed metrics for the best-performing optimized models.

Convergence diagrams for each optimizer are provided in Figure 13 and Figure 14 for objective and indicator scores. The introduced optimizer overcomes a local optimum and converges toward a better solution in iteration five, outperforming competing optimizers.

Figure 13.

Forest Road RNN simulations’ objective function convergence diagrams.

Figure 14.

Forest Road RNN simulations’ indicator function convergence diagrams.

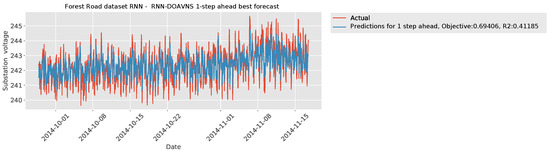

The parameter selections made by each algorithm for the respective best-performing model are provided in Table 7 to facilitate simulation repeatability. The predictions made by the best-performing model are provided in Figure 15, while the plot of the error over time is showcased in Figure 16.

Table 7.

Best-performing RNN model parameter selections for Forest Road simulations.

Figure 15.

Forecasts of best DOAVNS model from Forest Road RNN simulations.

Figure 16.

Forest Road RNN simulations’ error over time.

5.2. LSTM Simulations

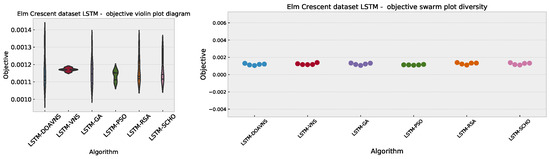

5.2.1. LSTM Elm Crescent Simulations

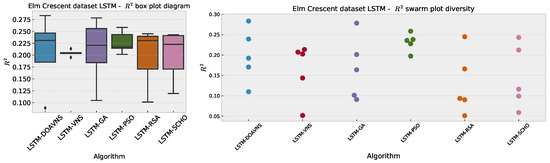

Comparisons in terms of the objective function for Elm Crescent LSTM simulation scores are presented in Table 8. The introduced optimizer attained the best-performing model in terms of the objective function. The PSO optimizer showcases decent results for the worst and mean scores; however, the introduced optimizer showcases the best median score. The VNS showcases the highest rate of stability when compared to other optimizers included in the comparative simulations. Further stability comparisons are provided in terms of score distribution diagrams for the objective function in Figure 17 and the indicator function in Figure 18. A notable drop in stability can be observed for the introduced optimizer. This is to be somewhat expected with increasing diversification. However, the introduced modifications help improve performance, allowing the modified algorithm to locate the most promising region and attain the best objective scores.

Table 8.

Elm Crescent LSTM simulations’ objective function outcomes.

Figure 17.

Elm Crescent LSTM simulations’ objective function distribution diagrams.

Figure 18.

Elm Crescent LSTM simulations’ indicator function distribution diagrams.

Further comparisons in terms of detailed metrics are provided in Table 9. The model optimized by the introduced DOAVNS optimizer showcases the best scores in terms of all metrics except the IoA, where the GA showcases the highest score.

Table 9.

Elm Crescent LSTM simulations’ detailed metrics for the best-performing optimized models.

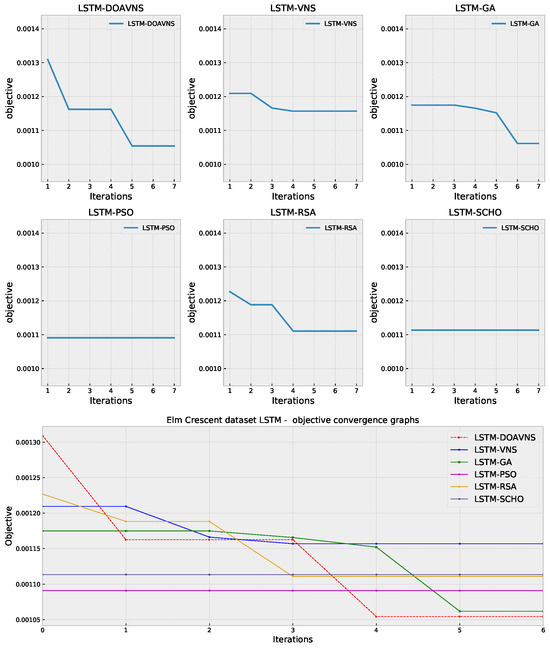

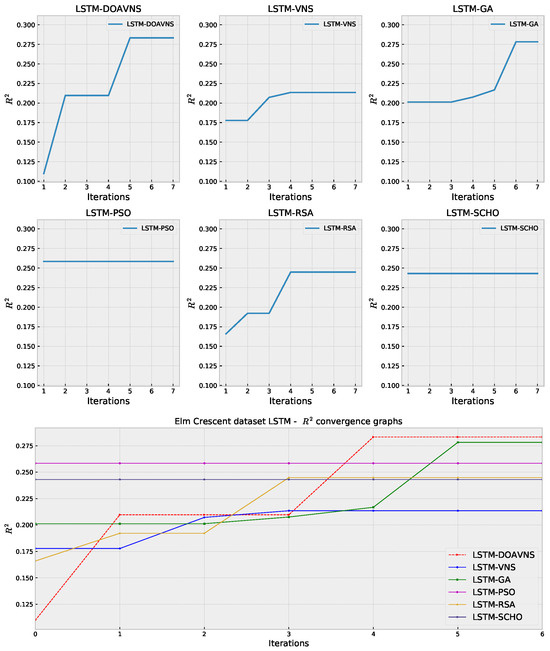

Convergence diagrams for each optimizer are provided in Figure 19 and Figure 20 for objective and indicator scores. The introduced optimizer overcomes a local optimum and converges toward a better solution in iteration four, outperforming competing optimizers.

Figure 19.

Elm Crescent LSTM simulations’ objective function convergence diagrams.

Figure 20.

Elm Crescent LSTM simulations’ indicator function convergence diagrams.

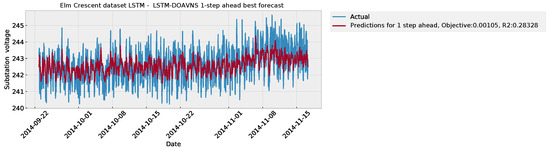

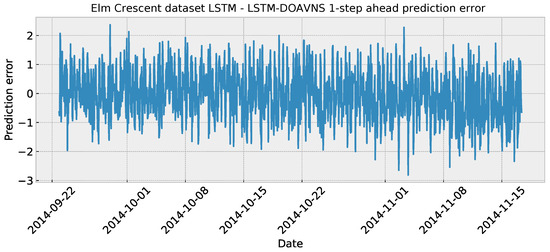

The parameter selections made by each algorithm for the respective best-performing model are provided in Table 10 to facilitate simulation repeatability. The predictions made by the best-performing model are provided in Figure 21, while the plot showcasing the error over time is outlined in Figure 22.

Table 10.

Best-performing LSTM model parameter selections for Elm Crescent simulations.

Figure 21.

Forecasts of best DOAVNS model from Elm Crescent LSTM simulations.

Figure 22.

Elm Crescent LSTM simulations’ error over time.

5.2.2. LSTM Forest Road Simulations

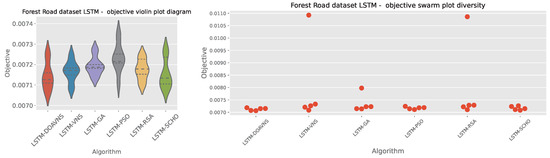

Comparisons in terms of the objective function for Forest Road LSTM simulation scores are presented in Table 11. The introduced optimizer attained the best-performing model in terms of the objective function. The optimizer also scores highly in terms of the mean and median. However, the RSA attains the best outcome in the worst-case simulation. The GA showcases the highest stability rating. Further stability comparisons are provided in terms of score distribution diagrams for the objective function in Figure 23 and the indicator function in Figure 24. A slight drop in stability can be observed for the introduced optimizer. This is to be somewhat expected with increasing diversification. Nevertheless, these modifications also allow the optimizer to avoid local optima, attaining overall better results in comparison to other tested algorithms.

Table 11.

Forest Road LSTM simulations’ objective function outcomes.

Figure 23.

Forest Road LSTM simulations’ objective function distribution diagrams.

Figure 24.

Forest Road LSTM simulations’ indicator function distribution diagrams.

Further comparisons in terms of detailed metrics are provided in Table 12. The model optimized by the introduced DOAVNS optimizer showcases the best scores in terms of all metrics in the conducted tests.

Table 12.

Forest Road LSTM simulations’ detailed metrics for the best-performing optimized models.

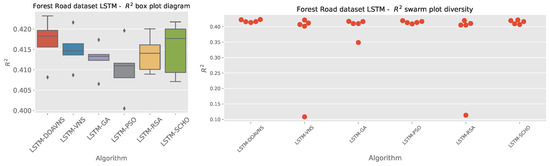

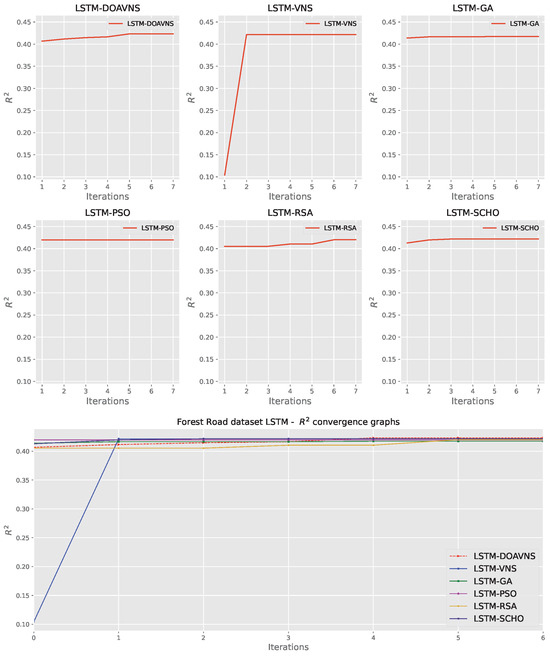

Convergence diagrams for each optimizer are provided in Figure 25 and Figure 26 for objective and indicator scores. The introduced optimizer overcomes a local optimum and converges toward a better solution, outperforming competing optimizers.

Figure 25.

Forest Road LSTM simulations’ objective function convergence diagrams.

Figure 26.

Forest Road LSTM simulations’ indicator function convergence diagrams.

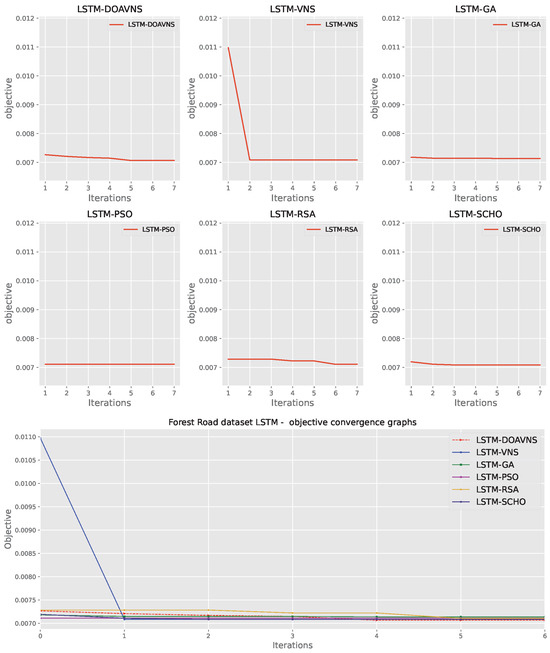

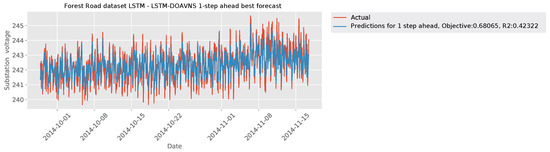

The parameter selections made by each algorithm for the respective best-performing model are provided in Table 13 to facilitate simulation repeatability. The predictions made by the best-performing model are provided in Figure 27, while Figure 28 provides insight into the error over time.

Table 13.

Best-performing LSTM model parameter selections for Forest Road simulations.

Figure 27.

Forecasts of best DOAVNS model from Forest Road LSTM simulations.

Figure 28.

Forest Road LSTM simulations’ error over time.

5.3. GRU Simulations

5.3.1. GRU Elm Crescent Simulations

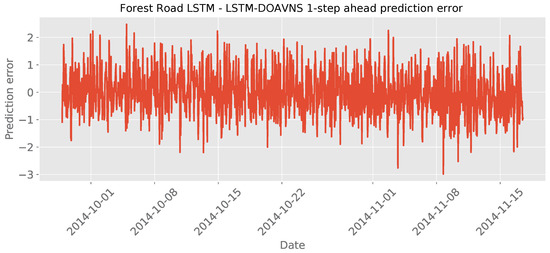

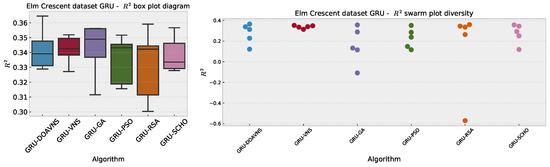

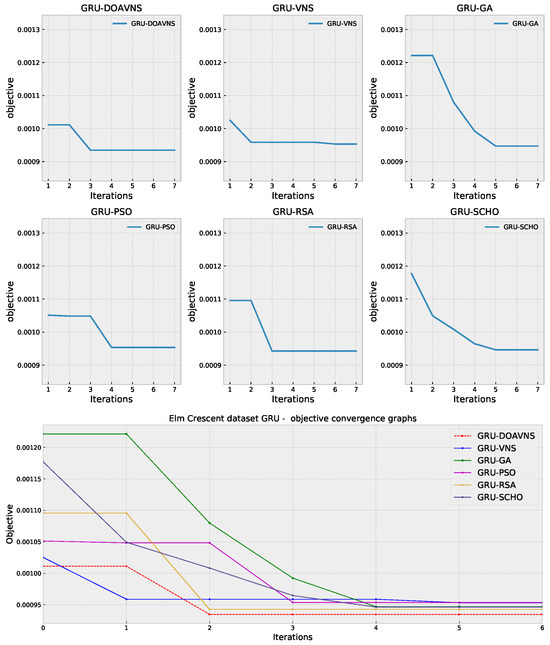

Comparisons in terms of the objective function for Elm Crescent GRU simulation scores are presented in Table 14. The introduced optimizer attained the best-performing model in terms of the objective function, as well as the best worst-case and mean scores. However, the best median performance goes to the GA. Stability rates are best maintained by the VNS algorithm, despite its models not attaining high scores in other metrics. Further stability comparisons are provided in terms of score distribution diagrams for the objective function in Figure 29 and the indicator function in Figure 30. A notable drop in stability can be observed for the introduced optimizer. However, as previously stated, this drop can be attributed to the boost in diversification.

Table 14.

Elm Crescent GRU simulations’ objective function outcomes.

Figure 29.

Elm Crescent GRU simulations’ objective function distribution diagrams.

Figure 30.

Elm Crescent GRU simulations’ indicator function distribution diagrams.

Further comparisons in terms of detailed metrics are provided in Table 15. The model optimized by the introduced DOAVNS optimizer showcases the best scores in terms of all metrics in the conducted tests.

Table 15.

Elm Crescent GRU simulations’ detailed metrics for the best-performing optimized models.

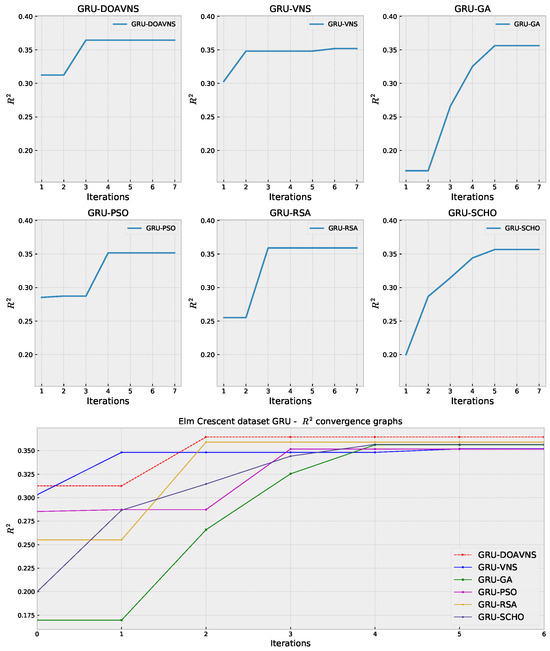

Convergence diagrams for each optimizer are provided in Figure 31 and Figure 32 for objective and indicator scores. The introduced optimizer overcomes a local optimum and converges toward a better solution, outperforming competing optimizers.

Figure 31.

Elm Crescent GRU simulations’ objective function convergence diagrams.

Figure 32.

Elm Crescent GRU simulations’ indicator function convergence diagrams.

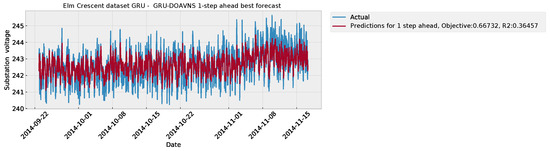

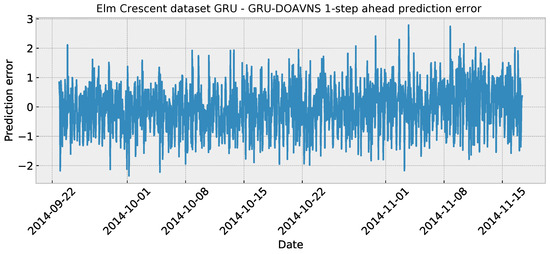

The parameter selections made by each algorithm for the respective best-performing model are provided in Table 16 to facilitate simulation repeatability. The predictions made by the best-performing model are provided in Figure 33. The plot of the error over time is given in Figure 34.

Table 16.

Best-performing GRU model parameter selections for Elm Crescent simulations.

Figure 33.

Forecasts of best DOAVNS model from Elm Crescent GRU simulations.

Figure 34.

Elm Crescent GRU simulations’ error over time.

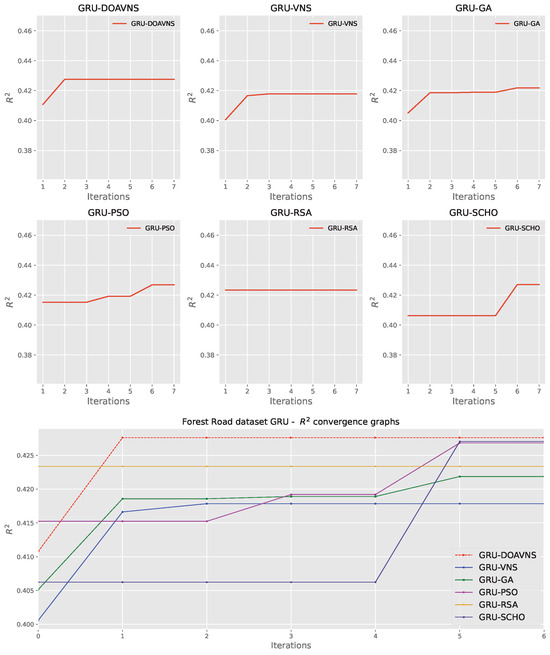

5.3.2. GRU Forest Road Simulations

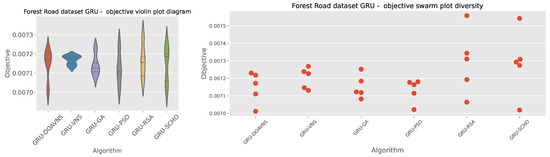

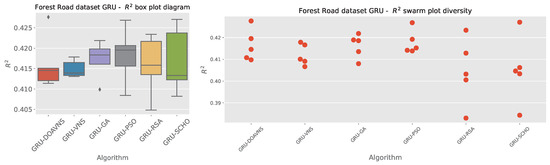

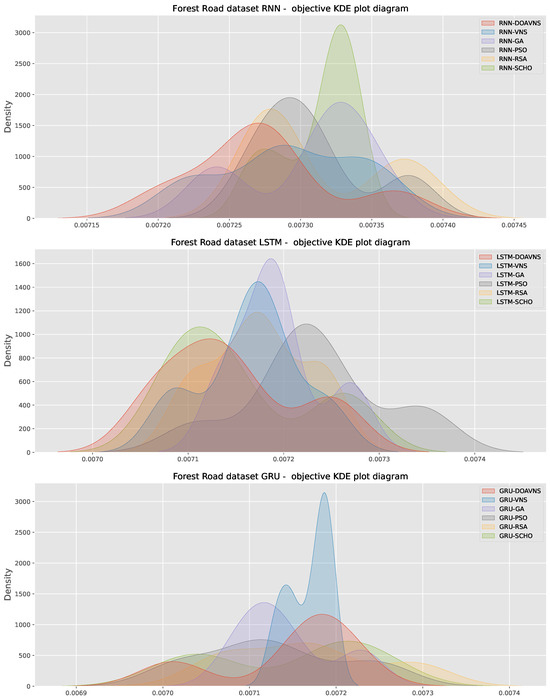

Comparisons in terms of the objective function for Forest Road GRU simulation scores are presented in Table 17. The introduced optimizer showcases the best outcome in terms of best-case executions. The other optimizers showcase good performance as well: the VNS scores best in terms of worst-case outcomes and has the highest rate of stability, while the PSO has strong scores in terms of the median and mean. Further stability comparisons are provided in terms of score distribution diagrams for the objective function in Figure 35 and the indicator function in Figure 36. A notable drop in stability can be observed for the introduced optimizer. However, as previously stated, this drop can be attributed to the boost in diversification.

Table 17.

Forest Road GRU simulations’ objective function outcomes.

Figure 35.

Forest Road GRU simulations’ objective function distribution diagrams.

Figure 36.

Forest Road GRU simulations’ indicator function distribution diagrams.

Further comparisons in terms of detailed metrics are provided in Table 18. The model optimized by the introduced DOAVNS optimizer showcases the best scores in terms of R2, MSE, and RMSE. However, the RSA shows admirable performance in terms of MAE as well as the IoA.

Table 18.

Forest Road GRU simulations’ detailed metrics for the best-performing optimized models.

Convergence diagrams for each optimizer are provided in Figure 37 and Figure 38 for objective and indicator scores. The introduced optimizer overcomes a local optimum and converges toward a better solution, outperforming competing optimizers.

Figure 37.

Forest Road GRU simulations’ objective function convergence diagrams.

Figure 38.

Forest Road GRU simulations’ indicator function convergence diagrams.

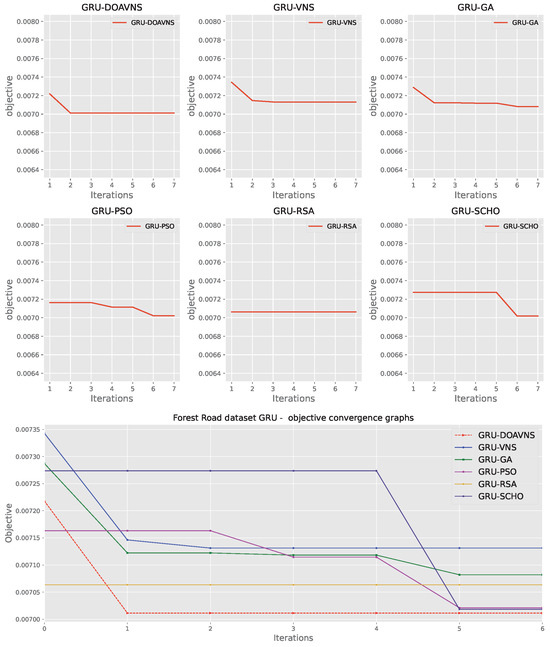

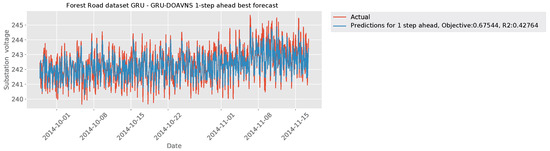

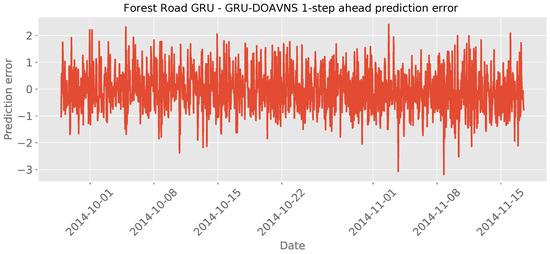

The parameter selections made by each algorithm for the respective best-performing model are provided in Table 19 to facilitate simulation repeatability. The predictions made by the best-performing model are provided in Figure 39. Lastly, Figure 40 plots the error over time.

Table 19.

Best-performing GRU model parameter selections for Forest Road simulations.

Figure 39.

Forecasts of best DOAVNS model from Forest Road GRU simulations.

Figure 40.

Forest Road GRU simulations’ error over time.

5.4. Comparison Between Best-Performing Models

A comparison between the best-performing optimized models in each simulation is presented in Table 20. The attained outcomes suggest that for the Elm Crescent dataset simulation, the GRU model showcases the best performance in terms of both R2 and MSE. In the case of Forest Road simulations, the GRU model also showcases the best scores for both metrics. Nevertheless, it is important to consider several factors when deciding on the most suitable model for use. The favorable performance of GRU makes these networks well suited to TinyML applications due to their relatively low computational demands in comparison to LSTM models while still showing higher robustness and resistance to common issues in simple RNNs. For this reason, the GRU models are chosen for simulations with ESP32 devices, developed by Espressif Systems, a Chinese company based in Shanghai, China, and manufactured by TSMC using their 40 nm process.

Table 20.

A comparison between the best-performing models in each simulation.

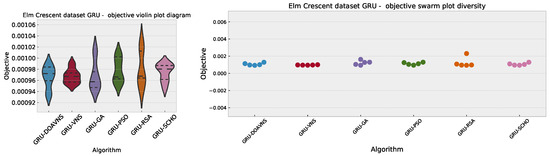

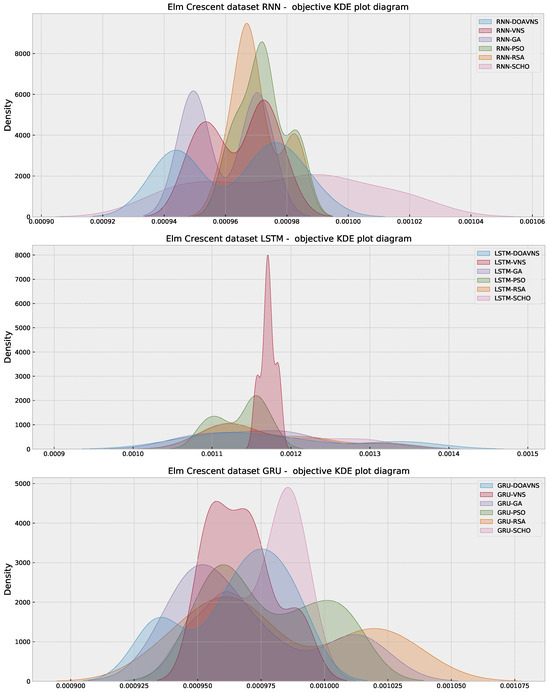

5.5. Optimization Statistical Validation

To establish a comparison between the evaluated algorithms, statistical validation is necessary, as relying on single executions can lead to misleading conclusions. The conducted simulations need to meet an established set of criteria in order to be eligible for evaluation using parametric testing. Otherwise, non-parametric tests need to be utilized [58]. A sufficient number of simulations is needed to conduct testing; therefore, 30 independent runs were conducted for each experiment, each with its own random seed, satisfying the independence criterion. Homoscedasticity was established via Levene’s test [59], where the resulting p-values for each of the simulations suggest that this criterion is also met. Finally, the normality criterion was tested with the Shapiro–Wilk test [60]. The attained outcomes are presented in Table 21. With the attained p-values falling below the significance threshold, the null hypothesis is rejected, suggesting that the normality assumption is not satisfied, and parametric test usage cannot be justified. The departure from normality is further confirmed by the objective function KDE diagrams presented in Figure 41 and Figure 42 for Elm Crescent and Forest Road simulations.

Table 21.

Shapiro–Wilk scores for forecasting experiments for normality condition evaluation.

Figure 41.

Elm Crescent simulation KDE diagrams.

Figure 42.

Forest Road simulation KDE diagrams.

As the conditions needed for parametric test application were not met, this work resorted to the Wilcoxon signed-rank test [61] to establish a comparison between the proposed DOAVNS and other optimizers. The outcomes of this test are outlined in Table 22. As all p-values fall below the significance threshold, the attained outcomes suggest the statistical significance of the attained comparative analysis scores.
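For readers unfamiliar with the Wilcoxon signed-rank test, the following minimal sketch shows how a two-sided p-value can be obtained for paired samples, such as the objective values of two optimizers over matched runs. It uses the large-sample normal approximation without tie or continuity corrections, which is an assumption of this sketch; the source does not state which variant or software was used. The function name and the sample values are illustrative only.

```python
import math


def wilcoxon_signed_rank(first, second):
    """Two-sided Wilcoxon signed-rank test (normal approximation).

    Returns (statistic, p_value), where the statistic is the smaller of
    the positive and negative rank sums. Zero differences are discarded.
    """
    diffs = [a - b for a, b in zip(first, second) if a != b]
    n = len(diffs)
    if n == 0:
        return 0.0, 1.0  # identical samples: no evidence of a difference

    # Rank the absolute differences; tied values share their mean rank.
    order = sorted(range(n), key=lambda idx: abs(diffs[idx]))
    ranks = [0.0] * n
    i = 0
    while i < n:
        j = i
        while j + 1 < n and abs(diffs[order[j + 1]]) == abs(diffs[order[i]]):
            j += 1
        mean_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1

    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    w_minus = sum(r for r, d in zip(ranks, diffs) if d < 0)
    statistic = min(w_plus, w_minus)

    mean = n * (n + 1) / 4
    std = math.sqrt(n * (n + 1) * (2 * n + 1) / 24)
    z = (statistic - mean) / std
    p_value = math.erfc(abs(z) / math.sqrt(2))  # two-sided tail probability
    return statistic, p_value


# Illustrative values only: 30 paired "runs" where the first method is
# consistently lower (better) than the second.
proposed = [0.010 + 0.0001 * i for i in range(30)]
baseline = [p + 0.002 + 0.00005 * i for i, p in enumerate(proposed)]
statistic, p_value = wilcoxon_signed_rank(proposed, baseline)
```

With 30 runs per optimizer, the normal approximation is generally considered reasonable; for much smaller samples an exact test would be preferable.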

Table 22.

Wilcoxon signed-rank test scores in forecasting experiments.

5.6. Deployment Simulations

Based on simulation outcomes, GRU models showcase optimal performance once optimized and trained for prediction in both simulation cases. This is favorable, as the lower computational demands of GRUs make them ideal for deployment on MCUs that face challenges with limited computational resources. In this study, the models were re-implemented using TensorFlow Lite, compiled, converted to C, and uploaded to an ESP32 development board using the Arduino IDE. The device is configured to monitor a serial port input and, based on a series of inputs, generates responses accordingly. Simulations using the test portion of the datasets yield results consistent with the scores reported in prior tables, suggesting viability for real-world use.
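The "converted to C" step above typically means embedding the serialized model as a byte array in the firmware source. The sketch below illustrates that step only; it assumes the model has already been serialized to bytes (in TensorFlow this is commonly done with `tf.lite.TFLiteConverter`), and the authors' exact toolchain commands are not given in the text. The function name `bytes_to_c_array`, the array name `gru_model_tflite`, and the placeholder bytes are hypothetical.

```python
def bytes_to_c_array(blob, name="gru_model_tflite", per_line=12):
    """Render a binary blob as C source, similar in spirit to `xxd -i`."""
    lines = []
    for start in range(0, len(blob), per_line):
        chunk = blob[start:start + per_line]
        lines.append("  " + ", ".join(f"0x{byte:02x}" for byte in chunk) + ",")
    body = "\n".join(lines)
    return (
        f"const unsigned char {name}[] = {{\n{body}\n}};\n"
        f"const unsigned int {name}_len = {len(blob)};\n"
    )


# Placeholder bytes standing in for a serialized model (assumption).
placeholder_model = bytes([0x1C, 0x00, 0x00, 0x00, 0x54, 0x46, 0x4C, 0x33])
source = bytes_to_c_array(placeholder_model)
```

The resulting text can be saved as a header or source file in the Arduino sketch folder so that the model is compiled into the firmware image alongside the inference code.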

It is important to note that the ESP32 supports additional configurations, such as a web server and the direct monitoring of voltages using a step-down transformer or external ADC, which could also be integrated with the platform. The proof-of-concept study conducted in this work suggests that an expanded version of this system could significantly aid in the distribution and democratization of IoT devices for energy forecasting, thereby improving renewable energy integration into existing power distribution networks and reducing losses from power overproduction and distribution.

6. Conclusions

With limited fossil resources and their unsustainable impacts, there is an increasing shift toward renewable energy. However, successfully incorporating these new sources into current power grids remains challenging, with both technical and regulatory obstacles to address. Although enhancing reliability could greatly improve energy sustainability, solar energy’s reliance on weather introduces variability that often requires costly storage to ensure stable output.

This study focuses on leveraging metaheuristic optimization to fine-tune recurrent forecasting models aimed at predicting solar power production, designed for TinyML deployment on MCUs in in situ applications. Various recurrent models (RNN, LSTM, and GRU) were tested to identify the most effective model. Additionally, a comparative evaluation on two real-world datasets for separate locations was conducted, encompassing multiple optimizers to validate the approach. A custom optimizer showed promising results, achieving an MSE as low as 0.000935 volts and 0.007011 volts in the best-case scenarios on the two evaluated datasets, signaling its potential for real-world implementation. The best models were further assessed on an ESP32 chip, utilizing a UART terminal to simulate execution. Model performance was consistent, with minimal latency for the application’s needs. By deploying models directly on-site, network infrastructure costs are reduced, and local functionalities can be added to help manage demand and supply more effectively, including alerting in critical situations.

One drawback is that, in this setup, models deployed on MCUs cannot be updated in real time; instead, they require retraining on separate hardware and reprogramming MCU firmware for updates. Other limitations include restricted population sizes and optimization times, given the high computational load. Future research aims to extend this methodology, exploring broader applications for the optimized model and developing the experimental framework within the TinyML environment. Specific directions will include the implementation of real-time model updates, the integration of wireless sensor networks, and the development of distributed forecasting systems over different renewable energy sources like wind, hydroelectric, and solar power. The research will also examine a solar power dataset from the Balkans, which is still being collected and is currently neither openly available nor complete enough for experiments of this scale. Ultimately, the application of TinyML models will be extended to other application domains, including healthcare, agriculture, IoT security, and industry, where these models may help in image classification, wireless sensor network routing, fault detection, fall prediction for children and elderly persons, etc.

Policy Implications

Precise TinyML-based solar energy forecasting systems can significantly impact legal policies related to grid reliability and renewable energy integration in the Western Balkans. By providing accurate, localized forecasts, these systems enhance compliance with EU regulations, like RED II, which promotes greater renewable energy use and efficient grid integration. Such models support policies aimed at improving grid stability by balancing renewable energy supply and demand, aligning with national energy security and greenhouse gas reduction goals set forth in NECPs. Furthermore, precise in situ forecasts can help reduce reliance on fossil fuel backups during periods of fluctuating solar output, advancing both regional and EU emission reduction targets.

TinyML’s cost-effective, localized deployment enables decentralized monitoring, reducing the need for extensive networking infrastructure and making renewable integration more accessible, particularly for smaller towns and remote areas. Building on incentives like feed-in tariffs, this capability could prompt policymakers to offer financial support for TinyML within larger renewable energy subsidies. Moreover, TinyML supports continuous monitoring and analysis of real-time data coming from sensors, which can aid in the identification of patterns indicating anomalies, like equipment malfunctioning or deviations in electricity output, ensuring fast interventions and reducing system downtime. Continuous monitoring can help in the integration of renewable systems with smart grids, ensuring that energy production matches demand and stabilizing the grid through predictions of energy fluctuations. Improved monitoring and storage capabilities enabled by TinyML could also support cross-border energy exchange regulations. With more accurate forecasting of production surpluses, energy can be stored or traded internationally, aiding regional energy independence, lowering reliance on imports, and achieving the Energy Community’s goal of harmonized market integration across the Western Balkans.

Finally, TinyML’s predictive accuracy aligns with environmental protection regulations by enabling solar projects to anticipate and mitigate environmental impacts more effectively. Accurate production models ensure compliance with air quality and resource conservation standards, expediting environmental impact assessments under regulations like the Environmental Protection Law. By integrating TinyML, regulatory oversight could become more efficient through real-time data that identify inefficiencies or excessive emissions. TinyML thus has the potential to drive policies supporting a resilient and sustainable energy grid across the Western Balkans, equipping decision-makers with improved monitoring and forecasting tools.

Author Contributions

Conceptualization, G.P., L.S. and L.J.; methodology, N.B.; software, N.B.; validation, N.B., M.Z. and Z.S.; formal analysis, M.Z.; investigation, M.Z.; resources, L.J.; data curation, L.J.; writing—original draft preparation, Z.S.; writing—review and editing, G.P.; visualization, M.Z.; supervision, Z.S.; project administration, N.B.; funding acquisition, L.S. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

The data utilized in this work are publicly available at https://www.kaggle.com/datasets/pythonafroz/solar-panel-energy-generation-data (accessed on 25 October 2024). Code snippets from the framework and the datasets that were used for testing are available at the following GitHub URL: https://github.com/nbacanin/solarforecastingtinyml (accessed on 25 October 2024).

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Höök, M.; Tang, X. Depletion of fossil fuels and anthropogenic climate change—A review. Energy Policy 2013, 52, 797–809. [Google Scholar] [CrossRef]

- Hayat, M.B.; Ali, D.; Monyake, K.C.; Alagha, L.; Ahmed, N. Solar energy—A look into power generation, challenges, and a solar-powered future. Int. J. Energy Res. 2019, 43, 1049–1067. [Google Scholar] [CrossRef]

- Hassan, Q.; Algburi, S.; Sameen, A.Z.; Salman, H.M.; Jaszczur, M. A review of hybrid renewable energy systems: Solar and wind-powered solutions: Challenges, opportunities, and policy implications. Results Eng. 2023, 20, 101621. [Google Scholar] [CrossRef]

- Simankov, V.; Buchatskiy, P.; Kazak, A.; Teploukhov, S.; Onishchenko, S.; Kuzmin, K.; Chetyrbok, P. A Solar and Wind Energy Evaluation Methodology Using Artificial Intelligence Technologies. Energies 2024, 17, 416. [Google Scholar] [CrossRef]

- European Parliament and Council of the European Union. Directive (EU) 2018/2001 of the European Parliament and of the Council on the Promotion of the Use of Energy from Renewable Sources (Recast); Text with EEA relevance; European Parliament and Council of the European Union: Brussels, Belgium, 2018. [Google Scholar]

- European Commission. EU’s Revised Renewable Energy Directive. 2018. Available online: https://eur-lex.europa.eu/eli/dir/2018/2001/oj (accessed on 31 October 2024).

- Lim, B.; Zohren, S. Time-series forecasting with deep learning: A survey. Philos. Trans. R. Soc. A 2021, 379, 20200209. [Google Scholar] [CrossRef]

- Kataray, T.; Nitesh, B.; Yarram, B.; Sinha, S.; Cuce, E.; Shaik, S.; Vigneshwaran, P.; Roy, A. Integration of smart grid with renewable energy sources: Opportunities and challenges—A comprehensive review. Sustain. Energy Technol. Assess. 2023, 58, 103363. [Google Scholar] [CrossRef]

- Khalid, M. Smart grids and renewable energy systems: Perspectives and grid integration challenges. Energy Strategy Rev. 2024, 51, 101299. [Google Scholar] [CrossRef]

- Berta, R.; Dabbous, A.; Lazzaroni, L.; Pau, D.; Bellotti, F. Developing a TinyML Image Classifier in a Hour. IEEE Open J. Ind. Electron. Soc. 2024, 5, 946–960. [Google Scholar] [CrossRef]

- Ficco, M.; Guerriero, A.; Milite, E.; Palmieri, F.; Pietrantuono, R.; Russo, S. Federated learning for IoT devices: Enhancing TinyML with on-board training. Inf. Fusion 2024, 104, 102189. [Google Scholar] [CrossRef]

- Elhanashi, A.; Dini, P.; Saponara, S.; Zheng, Q. Advancements in TinyML: Applications, Limitations, and Impact on IoT Devices. Electronics 2024, 13, 3562. [Google Scholar] [CrossRef]

- Medsker, L.; Jain, L.C. Recurrent Neural Networks: Design and Applications; CRC Press: Boca Raton, FL, USA, 1999. [Google Scholar]

- Bischl, B.; Binder, M.; Lang, M.; Pielok, T.; Richter, J.; Coors, S.; Thomas, J.; Ullmann, T.; Becker, M.; Boulesteix, A.L.; et al. Hyperparameter optimization: Foundations, algorithms, best practices, and open challenges. Wiley Interdiscip. Rev. Data Min. Knowl. Discov. 2023, 13, e1484. [Google Scholar] [CrossRef]

- Alazemi, T.; Darwish, M.; Radi, M. Renewable energy sources integration via machine learning modelling: A systematic literature review. Heliyon 2024, 10, e26088. [Google Scholar] [CrossRef] [PubMed]

- Khurshid, H.; Mohammed, B.S.; Al-Yacoubya, A.M.; Liew, M.; Zawawi, N.A.W.A. Analysis of hybrid offshore renewable energy sources for power generation: A literature review of hybrid solar, wind, and waves energy systems. Dev. Built Environ. 2024, 19, 100497. [Google Scholar] [CrossRef]

- Bacanin, N.; Jovanovic, L.; Zivkovic, M.; Kandasamy, V.; Antonijevic, M.; Deveci, M.; Strumberger, I. Multivariate energy forecasting via metaheuristic tuned long-short term memory and gated recurrent unit neural networks. Inf. Sci. 2023, 642, 119122. [Google Scholar] [CrossRef]

- Park, K.; Yim, J.; Lee, H.; Park, M.; Kim, H. Real-time solar power estimation through RNN-based attention models. IEEE Access 2023, 12, 62502–62510.

- Zameer, A.; Jaffar, F.; Shahid, F.; Muneeb, M.; Khan, R.; Nasir, R. Short-term solar energy forecasting: Integrated computational intelligence of LSTMs and GRU. PLoS ONE 2023, 18, e0285410.

- Moradzadeh, A.; Moayyed, H.; Mohammadi-Ivatloo, B.; Vale, Z.; Ramos, C.; Ghorbani, R. A novel cyber-resilient solar power forecasting model based on secure federated deep learning and data visualization. Renew. Energy 2023, 211, 697–705.

- Salman, D.; Direkoglu, C.; Kusaf, M.; Fahrioglu, M. Hybrid deep learning models for time series forecasting of solar power. Neural Comput. Appl. 2024, 36, 9095–9112.

- Olcay, K.; Tunca, S.G.; Özgür, M.A. Forecasting and performance analysis of energy production in solar power plants using long short-term memory (LSTM) and random forest models. IEEE Access 2024, 12, 103299–103312.

- Jailani, N.L.M.; Dhanasegaran, J.K.; Alkawsi, G.; Alkahtani, A.A.; Phing, C.C.; Baashar, Y.; Capretz, L.F.; Al-Shetwi, A.Q.; Tiong, S.K. Investigating the power of LSTM-based models in solar energy forecasting. Processes 2023, 11, 1382.

- Guo, X.; Zhan, Y.; Zheng, D.; Li, L.; Qi, Q. Research on short-term forecasting method of photovoltaic power generation based on clustering SO-GRU method. Energy Rep. 2023, 9, 786–793.

- Xu, Y.; Zheng, S.; Zhu, Q.; Wong, K.C.; Wang, X.; Lin, Q. A complementary fused method using GRU and XGBoost models for long-term solar energy hourly forecasting. Expert Syst. Appl. 2024, 254, 124286.

- Hayajneh, A.M.; Alasali, F.; Salama, A.; Holderbaum, W. Intelligent Solar Forecasts: Modern Machine Learning Models & TinyML Role for Improved Solar Energy Yield Predictions. IEEE Access 2024, 12, 10846–10864.

- Yang, L.; Shami, A. On hyperparameter optimization of machine learning algorithms: Theory and practice. Neurocomputing 2020, 415, 295–316.

- Bassey, K.E. Hybrid renewable energy systems modeling. Eng. Sci. Technol. J. 2023, 4, 571–588.

- Öcal, A.; Koyuncu, H. An in-depth study to fine-tune the hyperparameters of pre-trained transfer learning models with state-of-the-art optimization methods: Osteoarthritis severity classification with optimized architectures. Swarm Evol. Comput. 2024, 89, 101640.

- Kennedy, J.; Eberhart, R. Particle swarm optimization. In Proceedings of ICNN’95 - International Conference on Neural Networks, Perth, WA, Australia, 27 November–1 December 1995; Volume 4, pp. 1942–1948.

- Mirjalili, S. Genetic Algorithm. In Evolutionary Algorithms and Neural Networks: Theory and Applications; Springer International Publishing: Cham, Switzerland, 2019; pp. 43–55.

- Abualigah, L.; Elaziz, M.A.; Sumari, P.; Geem, Z.W.; Gandomi, A.H. Reptile Search Algorithm (RSA): A nature-inspired meta-heuristic optimizer. Expert Syst. Appl. 2022, 191, 116158.

- Bai, J.; Li, Y.; Zheng, M.; Khatir, S.; Benaissa, B.; Abualigah, L.; Abdel Wahab, M. A Sinh Cosh optimizer. Knowl.-Based Syst. 2023, 282, 111081.

- Minic, A.; Jovanovic, L.; Bacanin, N.; Stoean, C.; Zivkovic, M.; Spalevic, P.; Petrovic, A.; Dobrojevic, M.; Stoean, R. Applying recurrent neural networks for anomaly detection in electrocardiogram sensor data. Sensors 2023, 23, 9878.

- Jovanovic, L.; Djuric, M.; Zivkovic, M.; Jovanovic, D.; Strumberger, I.; Antonijevic, M.; Budimirovic, N.; Bacanin, N. Tuning XGBoost by planet optimization algorithm: An application for diabetes classification. In Proceedings of the Fourth International Conference on Communication, Computing and Electronics Systems: ICCCES 2022, Coimbatore, India, 15–16 September 2022; Springer: Berlin/Heidelberg, Germany, 2023; pp. 787–803.

- Jovanovic, L.; Bacanin, N.; Zivkovic, M.; Antonijevic, M.; Petrovic, A.; Zivkovic, T. Anomaly detection in ECG using recurrent networks optimized by modified metaheuristic algorithm. In Proceedings of the 2023 31st Telecommunications Forum (TELFOR), Belgrade, Serbia, 21–22 November 2023; pp. 1–4.

- Mladenovic, D.; Antonijevic, M.; Jovanovic, L.; Simic, V.; Zivkovic, M.; Bacanin, N.; Zivkovic, T.; Perisic, J. Sentiment classification for insider threat identification using metaheuristic optimized machine learning classifiers. Sci. Rep. 2024, 14, 25731.

- Dobrojevic, M.; Jovanovic, L.; Babic, L.; Cajic, M.; Zivkovic, T.; Zivkovic, M.; Muthusamy, S.; Antonijevic, M.; Bacanin, N. Cyberbullying Sexism Harassment Identification by Metaheurustics-Tuned eXtreme Gradient Boosting. Comput. Mater. Contin. 2024, 80, 4997–5027.

- Pavlov-Kagadejev, M.; Jovanovic, L.; Bacanin, N.; Deveci, M.; Zivkovic, M.; Tuba, M.; Strumberger, I.; Pedrycz, W. Optimizing long-short-term memory models via metaheuristics for decomposition aided wind energy generation forecasting. Artif. Intell. Rev. 2024, 57, 45.

- Damaševičius, R.; Jovanovic, L.; Petrovic, A.; Zivkovic, M.; Bacanin, N.; Jovanovic, D.; Antonijevic, M. Decomposition aided attention-based recurrent neural networks for multistep ahead time-series forecasting of renewable power generation. PeerJ Comput. Sci. 2024, 10, e1795.

- Bacanin, N.; Petrovic, A.; Jovanovic, L.; Zivkovic, M.; Zivkovic, T.; Sarac, M. Parkinson’s Disease Induced Gain Freezing Detection using Gated Recurrent Units Optimized by Modified Crayfish Optimization Algorithm. In Proceedings of the 2024 5th International Conference on Mobile Computing and Sustainable Informatics (ICMCSI), Lalitpur, Nepal, 18–19 January 2024; pp. 1–8.

- Bacanin, N.; Jovanovic, L.; Djordjevic, M.; Petrovic, A.; Zivkovic, T.; Zivkovic, M.; Antonijevic, M. Crop Yield Forecasting Based on Echo State Network Tuned by Crayfish Optimization Algorithm. In Proceedings of the 2024 IEEE International Conference on Contemporary Computing and Communications (InC4), Bengaluru, India, 15–16 March 2024; Volume 1, pp. 1–6.

- Government of Montenegro. Zakon o Energetici. Available online: https://www.gov.me/dokumenta/d17f9f62-ea19-4dd2-a73f-cbf6bfffab5c (accessed on 31 October 2024).

- European Union. Communication on REPowerEU Plan. 2022. Available online: https://eur-lex.europa.eu/legal-content/EN/TXT/HTML/?uri=CELEX:52022DC0230 (accessed on 31 October 2024).

- Ekološka Ekonomija. Što je feed-in tarifa za obnovljive izvore? [What Is a Feed-in Tariff for Renewable Energy?]. 2016. Available online: https://ekoloskaekonomija.wordpress.com/2016/09/30/sto-je-feed-in-tarifa-za-obnovljive-izvore/ (accessed on 31 October 2024).

- D’Aprile, P.; Engel, H.; van Gendt, G.; Helmcke, S.; Hieronimus, S.; Nauclér, T.; Pinner, D.; Walter, D.; Witteveen, M. Net-Zero Europe: Decarbonization Pathways and Socioeconomic Implications; McKinsey & Company: Stockholm, Sweden, 2020.

- Sherstinsky, A. Fundamentals of recurrent neural network (RNN) and long short-term memory (LSTM) network. Phys. D Nonlinear Phenom. 2020, 404, 132306.

- Chung, J.; Gulcehre, C.; Cho, K.; Bengio, Y. Empirical evaluation of gated recurrent neural networks on sequence modeling. In Proceedings of the NIPS 2014 Workshop on Deep Learning, Montreal, QC, Canada, 13 December 2014.

- Mladenović, N.; Hansen, P. Variable neighborhood search. Comput. Oper. Res. 1997, 24, 1097–1100.

- Luo, W.; Lin, X.; Li, C.; Yang, S.; Shi, Y. Benchmark Functions for CEC 2022 Competition on Seeking Multiple Optima in Dynamic Environments. arXiv 2022, arXiv:cs.NE/2201.00523.

- Cheng, S.; Shi, Y. Diversity control in particle swarm optimization. In Proceedings of the 2011 IEEE Symposium on Swarm Intelligence, Paris, France, 11–15 April 2011; pp. 1–9.

- Yang, X.S.; He, X. Firefly algorithm: Recent advances and applications. Int. J. Swarm Intell. 2013, 1, 36–50.

- Espressif Systems. ESP32 Series Datasheet. 2024. Available online: https://www.espressif.com/en/products/socs/esp32 (accessed on 15 October 2024).

- Maier, A.; Sharp, A.; Vagapov, Y. Comparative analysis and practical implementation of the ESP32 microcontroller module for the Internet of Things. In Proceedings of the 2017 Internet Technologies and Applications (ITA), Wrexham, UK, 12–15 September 2017; pp. 143–148.

- Zim, M.Z.H. TinyML: Analysis of Xtensa LX6 microprocessor for neural network applications by ESP32 SoC. arXiv 2021, arXiv:2106.10652.

- Tatachar, A.V. Comparative assessment of regression models based on model evaluation metrics. Int. J. Innov. Technol. Explor. Eng. 2021, 8, 853–860.

- Willmott, C.J.; Robeson, S.M.; Matsuura, K. A refined index of model performance. Int. J. Climatol. 2012, 32, 2088–2094.

- LaTorre, A.; Molina, D.; Osaba, E.; Poyatos, J.; Del Ser, J.; Herrera, F. A prescription of methodological guidelines for comparing bio-inspired optimization algorithms. Swarm Evol. Comput. 2021, 67, 100973.

- Schultz, B.B. Levene’s Test for Relative Variation. Syst. Biol. 1985, 34, 449–456.

- Shapiro, S.S.; Francia, R.S. An Approximate Analysis of Variance Test for Normality. J. Am. Stat. Assoc. 1972, 67, 215–216.

- Woolson, R.F. Wilcoxon Signed-Rank Test. In Encyclopedia of Biostatistics; John Wiley & Sons, Ltd.: Hoboken, NJ, USA, 2005.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).