Abstract

Historically, exchange rate forecasting models have exhibited poor out-of-sample performance and were inferior to the random walk model. Monthly panel data from 1973 to 2014 for ten currency pairs of OECD countries are used to make out-of-sample forecasts with artificial neural networks and XGBoost models. Most approaches show significant and substantial predictive power in directional forecasts. Moreover, the evidence suggests that information regarding prediction timing is a key component in the forecasting performance.

JEL Classification:

E0; E4; E5; E6; F0; F4

1. Introduction

A long-standing conundrum in macroeconomic theory is the inability to predict exchange rate movements with economic fundamentals. Several exchange rate forecasting models have been derived from assumptions that are nowadays an essential part of the economics curriculum. The uncovered interest parity (UIP), for example, is based on the empirically confirmed covered interest parity and the assumption of risk neutrality, both of which are widely accepted (Neely and Sarno 2002). However, while many exchange rate forecasting models are well founded from a theoretical point of view, their empirical applications have exhibited poor forecasting performance. In most cases, a simple random walk (RW) prediction outperforms these carefully derived models. Arguably the most prominent exchange rate forecasting models are the aforementioned UIP model, the purchasing power parity (PPP) model and the monetary model (MM). They are based on economic theories derived from different assumptions. For decades, little to no significant short-term influence of the fundamentals used in these models on exchange rate movements has been found, although the theoretical explanations seem reasonable. The fact that no relationship between fundamentals and exchange rate movements could be demonstrated with these economic models does not mean that no relationship exists per se. As Amat et al. (2018) point out, a critical constraining factor of these models is their functional form. The forecasting equations consist of simple linear combinations of the fundamentals, so potential nonlinearities are neglected. The strict linear form follows from the theoretical derivations of the models. The fundamentals could have a significant and substantial effect on the movement of exchange rates if a nonlinear model equation were used. Amat et al. (2018) use simple machine learning (ML) methods with economic fundamentals to test whether this leads to better forecasting results than ordinary least squares (OLS) estimates. Although their ridge regression and exponentially weighted average models improve the performance compared to the OLS estimates, they could not always beat the RW prediction.

Building on the research of Amat et al. (2018), I argue that the use of more complex ML models could lead to a better forecasting performance because they are better suited for capturing potential nonlinearities. The question I address with this examination is simple:

- Q:

- Do fundamentals from the PPP, UIP or MM model have forecasting power with regard to exchange rate movements, regardless of the functional form of the estimation equation?

The flexibility of ANN and XGBoost models should allow one to approximate a function which exploits the information in the economic fundamentals. Thus, if the fundamentals have predictive power, this should be evident by a higher predictive performance relative to the RW benchmark. The research question can be further specified by the following two specific questions:

- Q1:

- Can complex ML methods with economic fundamentals beat the RW predictions in forecasting exchange rate differentials?

- Q2:

- Can complex ML methods with economic fundamentals beat the RW predictions in forecasting exchange rate movement directions?

Here, the complex ML methods are XGBoost and ANN models, and economic fundamentals are derived from the PPP, UIP and MM theories. I show that neither ML model is able to convincingly outperform the RW forecast of exchange rate differentials, and when they do, the effect is comparatively small and statistical significance is almost always lacking. In predicting the direction of the exchange rate movements, on the other hand, both ML methods outperform the RW forecasts in almost all cases with varying levels of statistical significance. Moreover, ANNs provide better forecasting results than the XGBoost models. Additionally, the advantage of the ML models over the RW model disappears completely when time dummies are excluded from the features in the training phase. This suggests that the information driving the models’ directional forecast for the exchange rate is not derived from the fundamentals alone, but depends largely on the prediction timing, which is defined by the time dummy variables. This argument is supported by the good performance of a separate ANN model that predicts the direction of exchange rate movement using only time dummies as explanatory variables. However, models trained with fundamental variables still perform slightly better, suggesting that fundamentals may still have predictive power but are dependent on prediction timing.

This paper is structured as follows: In Section 2, I discuss the related literature. Section 3 describes the methodology, including the classic PPP, UIP and MM models, from which the economic fundamentals used in the empirical part of this study are derived, as well as the applied ML methods, ANN and XGBoost. In Section 4, I describe the dataset. Section 5 presents the results, followed by a brief discussion of the implications in Section 6. Finally, the conclusions are drawn in Section 7.

2. Related Literature

Classic economic exchange rate forecasting models are based on the PPP, UIP and MM theories and have been the subject of numerous studies. The most famous of these studies was conducted by Meese and Rogoff (1983), who tested various specifications of the monetary model. They included both sticky-price and flexible-price approaches in the form of the Frenkel–Bilson, the Dornbusch–Frankel and the Hooper–Morton monetary models (Meese and Rogoff 1983). They included different country pairs and varied the forecasting horizon from one to twelve months. Despite the different approaches, their models failed to outperform the RW benchmark in forecasting exchange rates. Vitek (2005) later repeated this approach with a larger dataset and confirmed these findings. In recent years, the literature has found conflicting evidence on the predictability of exchange rates using MM fundamentals. In a study by Mark (1995), a model based on MM fundamentals was able to beat the RW benchmark in forecasting exchange rate differentials over a long time horizon. On the other hand, Engel et al. (2017) found that their monetary model using Taylor-rule fundamentals cannot consistently beat the RW benchmark in the short run in out-of-sample (OOS) forecasts, even though the coefficients of fundamentals in their OLS regression are statistically significant. Regarding the significance of UIP fundamentals in exchange rate forecasting, they examined the effect of these fundamentals including inflation. They found that the coefficients of inflation were in many cases highly significant when predicting exchange rate differentials. However, the interest rate differential, which is supposed to be the explanatory factor for exchange rate changes according to the classical UIP theory, is not statistically significant in most cases (Engel et al. 2017). 
Regarding the PPP model, Edison and Klovland (1987) examined the short-term forecastability of exchange rate differentials. They used a time series from 1874 to 1971 for the country pair Norway and United Kingdom. They found no evidence for an effect of PPP fundamentals on the exchange rate differentials (Edison and Klovland 1987). In Dal Bianco et al. (2012), the authors find a good in-sample fit of their fundamental-based econometric models with an of using short-term weekly and monthly data. However, in OOS comparisons, their model is inferior to the RW model. In addition to using various sets of fundamentals, researchers also use various machine learning models to improve their predictions. The authors of Plakandaras et al. (2015) apply a wide range of machine learning models and model combinations to forecast exchange rate movements, ranging from ARIMA models to support vector machines and ANNs. They outperform the RW in OOS exchange rate prediction, taking into account a variety of variables, from commodity and equity prices to interest rates and GDP. However, they do not focus on a specific economic theory, as their objective is to achieve the best OOS performance possible (Plakandaras et al. 2015). In Amat et al. (2018), the authors use the exponentially weighted average strategy (EWA) with discount factors and ridge regression to forecast the exchange rate differentials as well as the direction of exchange rate movements. They incorporate fundamentals from the classic economic PPP, MM and UIP models. They compare the OOS forecasts of their models with OLS and RW benchmark models. They tested their results for statistical significance using the Diebold–Mariano (DM) statistics and p-values. In some cases, they narrowly beat the RW model; often the difference is less than one percent of the RMSE for different country pairs. 
Their directional forecasts show that the EWA model performs best, but the OLS regression can also correctly predict the direction of exchange rate movement over 50 percent of the time for most currency pairs (Amat et al. 2018).

The authors of Bajari et al. (2015) compare common machine learning models with classical econometric models for demand forecasting. They use linear and logistic regressions as econometric models, which they compare with random forests and support vector machines, as well as other machine learning models. Using Monte Carlo simulations, they show that model combination with linear regression improves OOS predictive power. Using real demand forecasting data, they show that ML models consistently provide better OOS predictions than their econometric counterparts, demonstrating why machine learning models should be considered in traditionally econometric settings.

A more complex machine learning model for economic forecasting is used by Qureshi et al. (2020). They use a two-stage XGBoost model for their Canadian GDP forecasts. In the first stage, they select variables based on the significance of their XGBoost model and in the second stage, they make predictions using a separate XGBoost model trained on the selected variables. Their results show that XGBoost models can lead to good predictive performance for monthly growth rate forecasts, as shown by the OOS forecasting performance (Qureshi et al. 2020).

3. Methodology

The fundamentals used in this research come from the UIP, PPP, and MM theories. In addition, I include the “all fundamentals” (AF) approach, which is the combined set of fundamentals from all three models. The descriptions of the models are by no means exhaustive and are instead intended to give an idea of where the linear form of the forecasting equations of classical models comes from and how the fundamentals are selected.

3.1. Uncovered Interest Rate Parity (UIP) Model

The UIP exchange rate forecasting model is based on the UIP condition. The UIP is derived from the covered interest rate parity (CIP) and the assumption of risk neutrality. The CIP states that investors cannot exploit differences in interest rates of two countries by using forward contracts and is expressed by Equation (1) below.

$$\frac{F_t}{S_t} = \frac{1 + i_t}{1 + i_t^*} \tag{1}$$

where $F_t$ is the price of a forward contract on the exchange rate at time t + 1. $S_t$ is the exchange rate at time t, denoted in units of the domestic country’s currency. $i_t$ and $i_t^*$ are the interest rates of the domestic and the foreign countries, respectively, on comparable assets with equal maturity. Transforming Equation (1) by applying the natural logarithm and assuming small values for $i_t$ and $i_t^*$ yields the following equation (Equation (2)):

$$f_t - s_t = i_t - i_t^* \tag{2}$$

where the forward rate is defined by $f_t = \ln F_t$ and the spot rate is defined by $s_t = \ln S_t$.

The forward rate can be represented as a composition of the investors’ expectation of the spot rate and the risk associated with holding a domestic asset relative to the risk associated with holding a foreign asset, expressed by the risk premium $\rho_t$. Hence, the forward rate can be described by Equation (3):

$$f_t = E_t[s_{t+1}] + \rho_t \tag{3}$$

Combining Equations (2) and (3) yields Equation (4), which relates the expected change in the spot rate to the difference in interest rates in t ($i_t - i_t^*$) and the risk premium $\rho_t$:

$$E_t[s_{t+1}] - s_t = i_t - i_t^* - \rho_t \tag{4}$$

When risk neutrality of the investor is assumed, the risk premium is neutralized ($\rho_t = 0$), leading to the UIP, described by Equation (5):

$$E_t[s_{t+1}] - s_t = i_t - i_t^* \tag{5}$$

Therefore, the difference in interest rates between the associated countries is assumed to explain the change in the exchange rate of their currencies. Hence, the explanatory variables considered for the later models are the current period interest rates of both countries for comparable assets with the same maturity (Meredith and Chinn 1998).
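The log approximation behind Equation (2) can be checked numerically. The following is a minimal sketch; the interest rates and the function name `log_forward_premium` are hypothetical illustration values and not taken from the dataset of this study.

```python
import math


def log_forward_premium(i_dom: float, i_for: float) -> float:
    """Exact log forward premium ln(F/S) implied by covered interest parity."""
    return math.log((1.0 + i_dom) / (1.0 + i_for))


# Hypothetical monthly interest rates, assumed for illustration only.
i_dom, i_for = 0.004, 0.001

exact = log_forward_premium(i_dom, i_for)
approx = i_dom - i_for  # first-order approximation, valid for small rates

print(f"exact={exact:.6f} approx={approx:.6f}")
```

For small interest rates the two values are nearly identical, which is why the linear interest differential is used as the UIP fundamental.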

3.2. Purchasing Power Parity (PPP) Model

An alternative way of explaining the exchange rate movement lies in the PPP theory. According to the PPP theory, goods are internationally tradable substitutes. Neglecting complex trading behavior, e.g., due to differences in preferences and tastes, PPP theory assumes the homogeneity of goods and allows them to be easily tradable between countries. The purchasing power parity is defined by the following equation (Equation (6)):

$$s_t = p_t - p_t^* \tag{6}$$

where $s_t$ is the natural logarithm of the exchange rate at time t, and $p_t$ and $p_t^*$ are the natural logarithms of the price levels in the domestic and the foreign country, respectively. Due to the no-arbitrage condition on the goods markets assumed in PPP theory, the expected exchange rate in t + 1 is given by Equation (7):

Therefore, the only variables to consider when forecasting exchange rate movements are the price levels of the respective countries, often reported as the consumer price index (CPI) (Juselius 1995).

3.3. Monetary Model (MM)

Regarding the MM, there are several variants that distinguish, for example, between rigid and flexible prices, such as the Dornbusch–Frankel model (rigid prices) and the Frenkel–Bilson model (flexible prices) (Meese and Rogoff 1983). This examination follows the forecasting approach of Amat et al. (2018), which is based on the Frenkel–Bilson flexible price model. They model the relationship between income and money supply as the following linear equation, Equation (8):

where $m_t$ and $m_t^*$ denote the log levels of the money supply of the domestic and foreign countries, respectively, while $y_t$ and $y_t^*$ represent their log incomes. To estimate exchange rate differences, taking the difference between two consecutive periods leads to Equation (9):

where $\Delta$ indicates that the connected variable is the time differential between the specified period and the previous period of the associated variable (i.e., $\Delta x_t = x_t - x_{t-1}$) (Amat et al. 2018).

3.4. Artificial Neural Networks (ANN)

All of the classical macroeconomic forecasting models described so far assume a linear relationship between fundamentals and exchange rate differentials or directions of movement. The linearity assumption stems from the theoretical derivations of the models. However, the restriction to a linear form may be a hindrance if the actual functional form is nonlinear. Multilayer perceptrons are known to be universal approximators. According to the universal approximation theorem, a multilayer perceptron model with enough hidden units can approximate any continuous function between two Euclidean spaces to arbitrary accuracy on compact sets. The flexibility of ANNs results from the large number of parameters as well as the use of activation functions, which are responsible for the nonlinear characteristic of the approximation (Hornik 1991). A detailed and extensive explanation of the functionality of neural networks can be found in Goodfellow et al. (2016). In my study, I use a multilayer perceptron (MLP) with two hidden layers. The first hidden layer consists of 1000 artificial neurons, the second of 500. The model equations of this network are shown in Equations (10) and (11) below. Let $x_t \in \mathbb{R}^p$ denote the vector of inputs to the network at time t. The vector consists of the values of the p economic fundamentals at time t. For the regression models, the prediction $\hat{y}_t$ (where $\hat{y}_t \in \mathbb{R}$) of the model at time t is calculated as follows:

$$\hat{y}_t = g_3\left(W_3\, g_2\left(W_2\, g_1\left(W_1 x_t + b_1\right) + b_2\right) + b_3\right) \tag{10}$$

while for the classification models, the predicted probability $\hat{p}_t$ of the model at time t is calculated as follows:

$$\hat{p}_t = g_3\left(W_3\, g_2\left(W_2\, g_1\left(W_1 x_t + b_1\right) + b_2\right) + b_3\right) \tag{11}$$

where $g_1$, $g_2$ and $g_3$ are the activation functions of the first and second hidden layers and the output layer, respectively. The prediction $\hat{y}_t$ is the predicted exchange rate differential. The prediction $\hat{p}_t$ is the predicted probability of the exchange rate differential being positive. $d_t$ is a binary response variable that is defined as follows, with $y_t$ denoting the realized exchange rate differential:

$$d_t = \begin{cases} 1 & \text{if } y_t > 0 \\ 0 & \text{otherwise} \end{cases} \tag{12}$$

$W_1$, $W_2$ and $W_3$ are differently sized weight matrices of the first and second hidden layers and the output layer. $b_1$, $b_2$ and $b_3$ denote scalar bias terms that are added in the first and second hidden layers and the output layer. For the activation functions $g_1$ and $g_2$, I chose the ReLU function, as stated in Equation (13) below.

$$g_1(z) = g_2(z) = \max(0, z) \tag{13}$$

For the binary classification MLP models, which predict the direction of exchange rate movements, a sigmoid activation function, presented in Equation (14), is used for $g_3$, the activation function of the output neuron. For the regression models, no output activation function is applied, i.e., $g_3$ is the identity.

$$g_3(z) = \frac{1}{1 + e^{-z}} \tag{14}$$
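The forward pass of such a two-hidden-layer network can be sketched in a few lines. This is a toy illustration under stated assumptions, not the implementation used in this study: the layer sizes are tiny (the study uses 1000 and 500 hidden neurons), the weights are random, and all function names (`dense`, `init_layer`, `mlp_forward`) are hypothetical.

```python
import math
import random


def relu(z):
    """Element-wise ReLU activation."""
    return [max(0.0, v) for v in z]


def sigmoid(v):
    """Logistic sigmoid for the classification output neuron."""
    return 1.0 / (1.0 + math.exp(-v))


def dense(weights, bias, inputs):
    """Affine map: one output per weight row, plus a scalar bias."""
    return [sum(w * x for w, x in zip(row, inputs)) + bias for row in weights]


def init_layer(n_out, n_in, rng):
    """Random weight matrix with small entries (assumed initialisation)."""
    return [[rng.uniform(-0.1, 0.1) for _ in range(n_in)] for _ in range(n_out)]


def mlp_forward(x, params, classify):
    """Two ReLU hidden layers, then identity (regression) or sigmoid (classification)."""
    (w1, b1), (w2, b2), (w3, b3) = params
    h1 = relu(dense(w1, b1, x))
    h2 = relu(dense(w2, b2, h1))
    out = dense(w3, b3, h2)[0]
    return sigmoid(out) if classify else out


rng = random.Random(0)
p, n1, n2 = 4, 8, 4  # toy sizes, assumed for illustration
params = [
    (init_layer(n1, p, rng), 0.0),
    (init_layer(n2, n1, rng), 0.0),
    (init_layer(1, n2, rng), 0.0),
]
x_t = [0.2, -0.1, 0.05, 0.3]  # hypothetical scaled fundamentals at time t

y_hat = mlp_forward(x_t, params, classify=False)  # predicted differential
p_hat = mlp_forward(x_t, params, classify=True)   # predicted P(differential > 0)
```

The only difference between the regression and the classification network in this sketch is the output activation, which mirrors the description above.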

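The MLP model is fit to a training dataset, consisting of 75% of the considered data. As the objective function for regression, I chose the sum of squared residuals (SSR) loss function.

$$L_{SSR} = \sum_{t=1}^{N} \left(y_t - \hat{y}_t\right)^2 \tag{15}$$

where $y_t$ is the observed exchange rate differential, $\hat{y}_t$ is its prediction and N is the number of training observations.

For classification, I implemented the binary cross-entropy (BCE) function as the loss function.

$$L_{BCE} = -\sum_{t=1}^{N} \left[d_t \ln \hat{p}_t + (1 - d_t) \ln\left(1 - \hat{p}_t\right)\right] \tag{16}$$

where $d_t$ is the observed binary direction of the exchange rate movement and $\hat{p}_t$ is the predicted probability of a positive exchange rate differential.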
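Both loss functions are simple to state in code. The sketch below sums over observations; many libraries average instead, which rescales the loss but does not change its minimiser. The function names and the clipping constant `eps` are assumptions made for this illustration.

```python
import math


def ssr(y_true, y_pred):
    """Sum of squared residuals for the regression models."""
    return sum((y - yh) ** 2 for y, yh in zip(y_true, y_pred))


def bce(d_true, p_pred, eps=1e-12):
    """Binary cross-entropy, summed over observations.

    Probabilities are clipped to [eps, 1 - eps] to avoid log(0).
    """
    total = 0.0
    for d, p in zip(d_true, p_pred):
        p = min(max(p, eps), 1.0 - eps)
        total -= d * math.log(p) + (1 - d) * math.log(1.0 - p)
    return total


# Hypothetical targets and predictions, for illustration only.
reg_loss = ssr([1.0, 2.0], [1.0, 4.0])
clf_loss = bce([1, 0], [0.5, 0.5])
```

An uninformative classifier that always predicts 0.5 incurs a loss of ln 2 per observation, which is a useful reference point when reading training curves.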

The loss function is minimized using the backpropagation algorithm. The backpropagation algorithm calculates the gradients of the loss function with respect to the weights in the network for each layer and updates the weights. Backpropagation uses the chain rule to compute the gradients and the gradient descent algorithm to apply the necessary weight changes to the network. Gradient descent scales the gradients by the learning rate before subtracting them from the weight matrices in the network, as shown in Equation (17) below (Goodfellow et al. 2016).

$$W \leftarrow W - \eta \, \nabla_W L \tag{17}$$

Here, W stands for any weight matrix of the network, L is the loss function and $\eta$ denotes the learning rate. I chose the value for $\eta$ that produces the greatest reduction in loss. Further, I did not use the exact version of the gradient descent algorithm described above, but the more advanced optimization algorithm Adam, which extends gradient descent with momentum terms, as described by Kingma and Ba (2015). I use mini-batch training, which updates the weights after every mini-batch (Goodfellow et al. 2016). Once the training set has been iterated through, an epoch is completed. I set the maximum number of epochs to 1000. This number of epochs was, however, never reached, as I implemented early stopping as a callback. Early stopping interrupts the training process whenever a predefined improvement criterion is no longer fulfilled. In my examination, early stopping interrupted the training of a model whenever the validation error did not improve by a margin of 0.0001 over the last 100 epochs. Setting this margin is a delicate process, as setting it too high can lead to underfitting, i.e., the model does not fit the training data closely enough and therefore loses potential predictive power. On the other hand, setting it too low might result in unnecessarily long training time without improvement (Yao et al. 2007). To further prevent overfitting, I implement the regularization method dropout. Dropout prevents co-adaptation, the over-adjustment of the model to the idiosyncrasies of the training dataset, by eliminating neurons during the training process (Srivastava et al. 2014). I apply dropout to both hidden layers with a probability of p = 0.5 for each node to be left out at each pass during training.
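The early stopping rule described above can be expressed as a small function over a sequence of validation losses. This is a minimal sketch assuming the common "patience" formulation, in which training stops once the best validation loss has not improved by more than `min_delta` for `patience` consecutive epochs; the function name and the exact tie-breaking are assumptions, not the callback used in this study.

```python
def early_stopping_epoch(val_losses, min_delta=1e-4, patience=100):
    """Return the 1-based epoch at which training would stop.

    An epoch counts as an improvement only if it lowers the best
    validation loss seen so far by more than min_delta.
    """
    best = float("inf")
    wait = 0
    for epoch, loss in enumerate(val_losses, start=1):
        if best - loss > min_delta:
            best = loss
            wait = 0
        else:
            wait += 1
            if wait >= patience:
                return epoch
    return len(val_losses)  # criterion never triggered


# Hypothetical validation curve: improves for three epochs, then stalls.
curve = [1.0, 0.9, 0.8] + [0.8] * 10
stop = early_stopping_epoch(curve, min_delta=1e-4, patience=3)
```

With the settings reported above (a margin of 0.0001 and 100 epochs), the defaults of this function apply directly.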

3.5. eXtreme Gradient Boosting (XGBoost)

XGBoost stands for eXtreme Gradient Boosting and is a package developed by Chen and Guestrin (2016) for easy application and tuning of gradient boosting with regularization methods on linear models and CART models (classification and regression trees). For my empirical studies, I use classification and regression trees as weak learners for the XGBoost models. XGBoost, as well as other gradient boosting methods, has provided state-of-the-art results in a variety of domains for both classification and regression problems. In general, gradient boosting is an ensemble method that combines the weighted predictions of multiple weak learners, in this case tree models, into a superior prediction model. In the training process, the XGBoost model is built by sequentially fitting weak learners to bootstrap samples. However, instead of being fitted to the target variable, each weak learner is fitted to the pseudo-residuals of the previously fitted weak learners. By adding up the predictions of the weak learners, the prediction error is reduced step by step. Once the error cannot be effectively reduced by additional weak learners, the final additive model takes the form of Equation (18) for regression and Equation (19) for classification (Chen and Guestrin 2016).

$$\hat{y}_t = \sum_{k=1}^{K} f_k(x_t) \tag{18}$$

$$\hat{p}_t = \sigma\left(\sum_{k=1}^{K} h_k(x_t)\right) \tag{19}$$

where $f_k$ and $h_k$ denote regression and classification tree models, respectively, $\sigma$ is the sigmoid function and K is the number of weak learners contained in the final model. Like the MLP model, the additive model is also fitted by minimizing a predefined loss function. Unlike before, the loss function includes a penalty term for regularization, as shown by Equation (20) for regression and (21) for classification.

$$L_{reg} = \sum_{t=1}^{N} \left(y_t - \hat{y}_t\right)^2 + \sum_{k=1}^{K} \Omega(f_k) \tag{20}$$

$$L_{clf} = -\sum_{t=1}^{N} \left[d_t \ln \hat{p}_t + (1 - d_t) \ln\left(1 - \hat{p}_t\right)\right] + \sum_{k=1}^{K} \Omega(h_k) \tag{21}$$

For the loss functions, the penalty term is specified in Equation (22).

$$\Omega(f) = \gamma M + \frac{1}{2} \lambda \lVert w \rVert^2 \tag{22}$$

Here, M denotes the number of leaves in a CART weak learner and $\lVert w \rVert^2$ denotes the squared $\ell_2$-norm of the weights w attached to each leaf in a tree, while $\gamma$ and $\lambda$ are regularization hyperparameters. Since M and w define the complexity of a tree, the term penalizes both the complexity of the tree and unnecessarily large weights in the loss function. A detailed explanation can be found in Chen and Guestrin (2016).
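The idea of sequentially fitting weak learners to residuals can be illustrated with a deliberately simplified sketch. It implements plain gradient boosting for squared error with one-split regression stumps on a single feature; it omits the regularization penalty, the second-order approximation and the subsampling that XGBoost adds. All names, the toy data and the learning rate are assumptions for illustration.

```python
def fit_stump(x, residuals):
    """Best single-split regression stump on a 1-D feature (squared error)."""
    best = None
    # Candidate thresholds exclude the maximum so both sides are non-empty.
    for threshold in sorted(set(x))[:-1]:
        left = [r for xi, r in zip(x, residuals) if xi <= threshold]
        right = [r for xi, r in zip(x, residuals) if xi > threshold]
        lv, rv = sum(left) / len(left), sum(right) / len(right)
        err = sum((r - lv) ** 2 for r in left) + sum((r - rv) ** 2 for r in right)
        if best is None or err < best[0]:
            best = (err, threshold, lv, rv)
    _, threshold, lv, rv = best
    return lambda xi: lv if xi <= threshold else rv


def boost(x, y, n_rounds=20, learning_rate=0.3):
    """Additive model: each stump is fitted to the current residuals."""
    trees = []
    pred = [0.0] * len(y)
    for _ in range(n_rounds):
        residuals = [yi - pi for yi, pi in zip(y, pred)]
        tree = fit_stump(x, residuals)
        trees.append(tree)
        pred = [pi + learning_rate * tree(xi) for xi, pi in zip(x, pred)]
    return lambda xi: sum(learning_rate * t(xi) for t in trees)


# Toy nonlinear target, assumed for illustration.
x = [0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8]
y = [xi ** 2 for xi in x]

model = boost(x, y)
mse = sum((model(xi) - yi) ** 2 for xi, yi in zip(x, y)) / len(x)
baseline_mse = sum(yi ** 2 for yi in y) / len(y)  # error of predicting zero
```

Each round lowers the in-sample error of the additive model, which is why a stopping rule or a penalty term is needed to avoid overfitting.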

4. Data

The dataset consists of fundamentals and exchange rates from 1973–2014 for 10 different country pairs. The fundamentals for this examination stem from the aforementioned UIP, PPP and MM theories as well as an AF approach. Exchange rates are expressed relative to the US dollar and recorded at the end of the month. The dataset is provided by Amat et al. (2018) and is available at their website, to which I refer the reader for more information about the dataset. The data were originally sourced from Datastream, the OECD, and the Federal Reserve’s website (Amat et al. 2020).

When training complex machine learning models, it is necessary to provide the algorithm with many observations in order to achieve an acceptable level of adaptation without overfitting. For this reason, I chose to train the algorithms using the combined data of all currencies, but to make the OOS forecasts for each currency pair separately. All variables are scaled with maximum absolute scaling. To distinguish between countries, I encoded the IDs of the currency pairs with dummy variables. Also included are time dummies for months and years.
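The two preprocessing steps can be sketched as follows. Maximum absolute scaling divides each variable by its largest absolute value, so the result lies in [-1, 1]. The dummy encoding shown here creates one indicator column per category; whether a reference category was dropped in this study is not stated, so the full encoding is an assumption, as are the function names and example values.

```python
def max_abs_scale(values):
    """Divide by the largest absolute value; leave an all-zero column unchanged."""
    m = max(abs(v) for v in values)
    return [v / m for v in values] if m else list(values)


def one_hot(labels):
    """One indicator column per distinct label, columns in sorted label order."""
    categories = sorted(set(labels))
    return [[1 if lab == c else 0 for c in categories] for lab in labels]


# Hypothetical inputs, for illustration only.
scaled = max_abs_scale([2.0, -4.0, 1.0])
pair_dummies = one_hot(["FRF/USD", "PTE/USD", "FRF/USD"])
month_dummies = one_hot([1, 2, 12])
```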

For every country pair, the dataset is split into a training set (in-sample) containing the first 67% of the available periods and a validation set (out-of-sample) consisting of the remaining 33% of the periods.
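A chronological split of this kind keeps the time order intact, so that no future observation leaks into the training set. The sketch below assumes the rows of one currency pair are already sorted by date; the function name and the rounding rule for the cut point are assumptions.

```python
def chronological_split(rows, train_share=0.67):
    """Split time-ordered rows into an early training part and a late validation part."""
    cut = round(len(rows) * train_share)
    return rows[:cut], rows[cut:]


# Hypothetical series of 100 monthly observations, indexed by time.
periods = list(range(100))
train, valid = chronological_split(periods)
```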

With this approach, I pursue the objective set by Amat et al. (2018). The goal is not to provide the most accurate forecasts of the exchange rate differentials or movement directions. Rather, the aim is to examine whether fundamentals from the aforementioned economic models have predictive power regarding the exchange rate differentials or movement directions.

5. Results

The hyperparameters for the ANN as well as the XGBoost models are chosen sequentially according to the associated reduction in OOS loss. The results of the examinations are recorded in Table 1, Table 2, Table 3, Table 4 and Table 5. Each table contains the metrics obtained from the OOS predictions, i.e., Diebold–Mariano (DM) statistics, DM p-values and Theil ratios for the regressions as well as DM statistics, DM p-values, and error rates for the classification, for each of the 10 currency pairs, once with and once without time dummies.
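The reported metrics can be sketched as follows, under stated assumptions: the Theil ratio is taken as the RMSE of the model divided by the RMSE of a no-change random walk forecast (which predicts a zero differential), and the DM statistic is computed for squared-error loss at a one-step horizon with a two-sided normal p-value. The exact variants used for the tables may differ, and all names and numbers below are hypothetical.

```python
import math


def rmse(errors):
    """Root mean squared error of a list of forecast errors."""
    return math.sqrt(sum(e * e for e in errors) / len(errors))


def theil_ratio(actual, predicted):
    """RMSE of the model relative to a random walk that predicts no change."""
    model_err = [a - p for a, p in zip(actual, predicted)]
    rw_err = list(actual)  # a zero forecast leaves the differential as the error
    return rmse(model_err) / rmse(rw_err)


def diebold_mariano(actual, pred_a, pred_b):
    """DM statistic and two-sided normal p-value (squared-error loss, horizon 1).

    A negative statistic means forecast A has the lower squared error.
    Assumes the loss differential is not constant (nonzero variance).
    """
    d = [(a - pa) ** 2 - (a - pb) ** 2 for a, pa, pb in zip(actual, pred_a, pred_b)]
    n = len(d)
    mean_d = sum(d) / n
    var_d = sum((di - mean_d) ** 2 for di in d) / n
    stat = mean_d / math.sqrt(var_d / n)
    p_value = math.erfc(abs(stat) / math.sqrt(2.0))
    return stat, p_value


def direction_error_rate(actual, predicted_prob):
    """Share of periods in which the predicted direction is wrong."""
    wrong = sum((a > 0) != (p > 0.5) for a, p in zip(actual, predicted_prob))
    return wrong / len(actual)


# Hypothetical differentials and forecasts, for illustration only.
actual = [0.01, -0.02, 0.015, -0.005, 0.02, -0.01]
model = [0.005, -0.01, 0.01, 0.0, 0.01, -0.005]
rw = [0.0] * len(actual)

theil = theil_ratio(actual, model)
dm_stat, dm_p = diebold_mariano(actual, model, rw)
err_rate = direction_error_rate(actual, [0.6, 0.4, 0.7, 0.6, 0.8, 0.3])
```

A Theil ratio below one and an error rate below 0.5 both indicate that the model beats the respective random walk benchmark; the DM test then indicates whether the difference is statistically distinguishable from zero.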

Table 1.

One-month-ahead forecasting of exchange rate differentials with fundamentals using XGBoost.

Table 2.

One-month-ahead forecasting of exchange rate differentials with fundamentals using ANN.

Table 3.

One-month-ahead forecasting of exchange rate direction with fundamentals using XGBoost.

Table 4.

One-month-ahead forecasting of exchange rate direction with fundamentals using ANN.

Table 5.

One-month-ahead forecasting of exchange rate differentials/direction without fundamentals using ANN/XGBoost.

Table 1 contains the results for regressions with all sets of fundamentals using XGBoost. It is noticeable that in no case is the Theil ratio below 1, indicating that all the associated models predict the OOS exchange rate differences worse than the RW predictions. Moreover, in many cases the RW prediction is significantly better than the associated XGBoost model. This is the case when the Theil ratio is above 1 and the DM test and p-value simultaneously suggest statistical significance. This is particularly evident for the model variants that do not contain time dummies. Here, the p-values for most countries and for all sets of fundamentals suggest statistical significance at least at the 10 percent level, sometimes even at the 1 percent level. Using the XGBoost algorithm, I can find no evidence that economic fundamentals improve the prediction of exchange rate differentials.

Alternatively, Table 2 shows the results for regressions using ANNs with all sets of fundamentals, once including and once excluding time dummies. Unlike the comparable results using XGBoost in Table 1, the Theil ratios are now closer to one. In about half of all cases they are even slightly below one. However, the difference is always very small and in most cases not statistically significant. There is no significant difference between the predictions generated by different sets of economic fundamentals and between the approaches that include time dummies or omit them. Although it appears that the ANNs are better fitted to the data than the XGBoost models, as indicated by the lower Theil ratios, this does not lead to significantly better predictions. Thus, it does not lead to the significance necessary to infer an overall effect of fundamentals on exchange rate differentials. Therefore, there is no evidence to support the claim that economic fundamentals have predictive power on exchange rate differentials.

Table 3 contains the results for the classifications with all sets of fundamentals, once with and once without time dummies, using XGBoost to classify the direction of the exchange rate movement. In contrast to the results of the regression models of Table 1 and Table 2, the results for the classification yield some predictive power. In almost all cases, the error rate of each XGBoost model is lower than the RW error rate, i.e., less than 0.5, which means that the XGBoost model predicts the correct direction in more than 50 percent of the cases. However, the only cases where the models’ predictions differ significantly from the RW prediction are for the PTE/USD and FRF/USD currency pairs. For these pairs, the result holds for all sets of fundamentals that include time dummies. Moreover, when comparing the models with time dummies to the models without time dummies, we observe an increase in the error rates as well as in the p-values for the models without time dummies. Thus, prediction timing seems to be important for classification, which is interesting since in most cases the original economic models do not consider prediction timing as a determining factor, but level differences between countries.

The results for the classifications using ANNs are shown in Table 4. For all sets of fundamentals, the error rates are much lower than for the RW benchmark. For most models that include time dummies, the results are statistically significant. Between these models, the differences in error rates and statistical significance are small. The low classification errors, as well as the presence of statistical significance in many cases, suggest the predictive power of fundamentals in terms of the exchange rate direction. However, the classification errors are much higher when the time dummies are excluded, and statistical significance disappears. This shows that the time dummies contain significant information for the predictions.

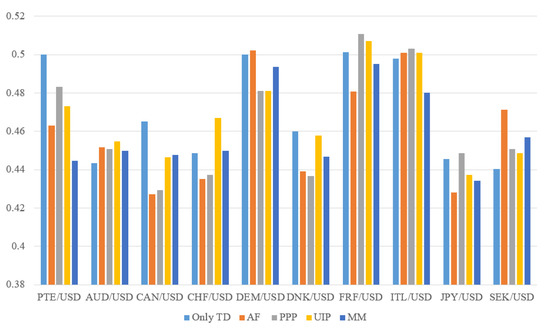

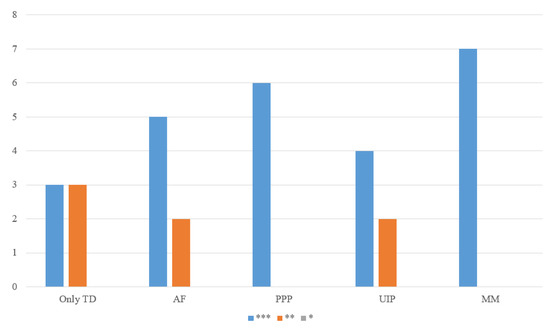

Based on these observations, I tested a new approach in which only the time dummies are included as features. The goal was to investigate whether the time dummies alone could be responsible for the forecastability of exchange rate movement directions. Table 5 contains the results of this experiment. The error rates of the ANN models are in most cases smaller than the RW error rates, and the difference is highly statistically significant. This raises the question of the role of fundamentals and their contribution to the predictive performance in the classification models of Table 3 and Table 4. In Figure 1, I compare the error rates of the fundamentals-based ANN models from Table 4, including time dummies, with the error rates of the ANN models from Table 5 that use only time dummies as features. The error rates of the ANN models from Table 5, called “Only TD”, are not strictly lower than those of the ANN models in Table 4, “AF”, “PPP”, “UIP”, and “MM”. For example, in the majority of cases, “MM” yields lower error rates than “Only TD”. Furthermore, Figure 2 shows in how many cases a certain level of statistical significance was reached by the models from Table 4 compared to the “Only TD” model of Table 5. “Only TD” displays statistical significance in six cases (for six country pairs), three times at the one percent level and three times at the five percent level. This is arguably worse than, for example, the “MM” model of Table 4, which reaches statistical significance at the one percent level in six cases. From this, I conclude that the good performance of the classifying ANN models from Table 4 cannot be attributed to the prediction timing alone; fundamentals have contributed to the forecasting performance as well. However, the size of the contribution of the fundamentals to the forecasting performance is unclear. 
There might be complex interactions between time dummies and fundamentals that are responsible for the forecasting performance of Table 4.

Figure 1.

Error rates—every set of fundamentals vs. only TD.

Figure 2.

Level of significance—every set of fundamentals vs. only TD.

6. Discussion

According to Alvarez et al. (2007), if fundamentals do not have a significant effect on exchange rate movements, monetary policy would not affect the exchange rate, since stimulating fundamentals would not lead to changes in exchange rates, but only to risk premia. The results from the ANN classification models of Table 4 suggest that fundamentals have forecasting power for exchange rates. However, as Table 5 shows, much of this is due to the time dummies. Therefore, the prediction timing is important. A possible explanation for these observations is that the fundamentals have some forecasting power for the exchange rate movement directions, but only in combination with the prediction timing. It is possible that there are complex interdependencies between time dummies and fundamental data. This fits well with the scapegoat theory of Bacchetta and Wincoop (2004). They argue that fundamental variables can be scapegoats in that the importance that market participants, policymakers, and analysts attribute to certain fundamentals changes over time. The weight they attach to individual fundamentals therefore varies, leading to time-varying expectations as well as varying foreign exchange trading decisions. As a consequence, the influence of macroeconomic fundamentals on exchange rates also changes over time. This effect could be captured by complex interactions between the time dummies and the fundamentals, since the time dummies contain the information about the timing. This has implications for monetary policy because the temporary scapegoat effect distorts the relationship between fundamentals and exchange rates. As a result, policymakers cannot rely on past relationships between fundamentals and exchange rates because they might have changed. 
Moreover, changes in fundamentals might indicate that policy intervention is needed, but the scapegoat effect distorts the exchange rate, making it appear that policy intervention is not needed and that the exchange rate is independent of fluctuations in fundamentals. The empirical results of this paper support a necessary condition for the existence of the scapegoat effect, namely that the size of the influence of fundamentals on exchange rates depends on prediction timing.
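The idea that a fundamental's weight can change with timing can be illustrated with a small synthetic example. The sketch below is not the paper's ANN setup: the data are simulated, the single two-period dummy and the helper name `interaction_features` are hypothetical, and ordinary least squares stands in for the nonlinear models. It only shows that a fundamental whose effect flips sign between periods looks nearly uninformative on its own, while the same fundamental interacted with a time dummy explains the movement.

```python
import numpy as np

def interaction_features(fundamentals, time_dummies):
    """Stack fundamentals, time dummies, and all pairwise products column-wise."""
    f = np.asarray(fundamentals, dtype=float)
    d = np.asarray(time_dummies, dtype=float)
    # Product column i * d.shape[1] + j holds f[:, i] * d[:, j].
    products = (f[:, :, None] * d[:, None, :]).reshape(len(f), -1)
    return np.hstack([f, d, products])

# Synthetic data (an assumption for illustration, not taken from the paper):
# one fundamental whose effect on the exchange rate change is +1 in the
# first half of the sample and -1 in the second half.
rng = np.random.default_rng(0)
n = 400
x = rng.normal(size=(n, 1))
period = (np.arange(n) >= n // 2).astype(float).reshape(-1, 1)  # 1 in second half
beta_t = np.where(period[:, 0] == 1.0, -1.0, 1.0)
y = beta_t * x[:, 0]

# Regression on the fundamental alone: the two regimes largely cancel out.
coef_plain, *_ = np.linalg.lstsq(x, y, rcond=None)

# Regression on fundamental, dummy, and their product: columns are [x, d, x*d].
X = interaction_features(x, period)
coef_inter, *_ = np.linalg.lstsq(X, y, rcond=None)

print("fundamental only:", coef_plain)
print("with interaction:", coef_inter)
```

In this toy setting the interaction coefficient absorbs the sign change, which is the kind of time-dependent weighting the scapegoat interpretation describes. A flexible model such as an ANN could in principle learn such products without them being supplied explicitly.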

7. Conclusions

In this paper, I examined the predictive power of macroeconomic fundamentals when used in nonlinear machine learning models. I applied artificial neural networks (ANNs) in the form of a multilayer perceptron, as well as gradient boosted decision trees using XGBoost, to forecast exchange rate movements. Models were trained individually for each currency pair, and I compared their OOS-RMSE (for regression) and their OOS classification error (for classification) to the random walk benchmark. The fundamentals were derived from the PPP, UIP, and MM theories. I assessed the significance of the differences in predictive performance between the trained models and the random walk benchmark using the Diebold–Mariano test statistic and its p-value. For the regression setting, i.e., the prediction of exchange rate differentials, I found no convincing evidence that fundamentals possess predictive power. For the classification setting, i.e., the prediction of exchange rate movement directions, the XGBoost models were able to outperform the random walk benchmark by a small margin, which was in some cases statistically significant. The ANN models were able to outperform the random walk benchmark by a larger margin, which was statistically significant at the 1 percent level in many cases. However, this was only the case when time dummies were included in the models. ANN models using only time dummies as explanatory variables also showed good predictive performance and high levels of statistical significance in many cases. This called into question the role of fundamentals in the predictions of the well-performing models. However, a comparison of these results with the ANN classification models that include fundamental data showed that the use of the MM fundamentals led to better results in terms of statistical significance and error rates. Hence, the predictive performance cannot be attributed to the time dummies alone. 
One possible explanation for this is that complex interactions between time dummies and fundamentals could be the key to good directional forecasts for exchange rates. The relationship between time dummies and fundamental data needs to be explored in more detail in future studies.
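For readers who want to reproduce this kind of comparison, the following is a minimal sketch of a Diebold–Mariano test on per-period losses. It rests on several assumptions that this section does not confirm: one-step-ahead forecasts (so no autocovariance terms enter the variance), no small-sample correction, an asymptotic normal reference distribution, and a 0-1 loss for directional forecasts. The function name and the example loss series are hypothetical.

```python
import math
import numpy as np

def diebold_mariano(loss_model, loss_benchmark):
    """Return the DM statistic and two-sided p-value for one-step-ahead forecasts.

    Negative values of the statistic mean the model has the lower average loss.
    Raises ZeroDivisionError if the loss differential is constant.
    """
    d = np.asarray(loss_model, dtype=float) - np.asarray(loss_benchmark, dtype=float)
    n = d.size
    # Variance of the mean loss differential (lag-0 autocovariance only).
    var_mean = d.var(ddof=0) / n
    stat = d.mean() / math.sqrt(var_mean)
    # Two-sided p-value under the standard normal: 2 * (1 - Phi(|stat|)).
    p_value = math.erfc(abs(stat) / math.sqrt(2.0))
    return stat, p_value

# Hypothetical 0-1 losses (1 = wrong direction) over 100 out-of-sample months.
model_loss = np.array([1] * 40 + [0] * 60)                 # 40 errors
benchmark_loss = np.array([0] * 30 + [1] * 50 + [0] * 20)  # 50 errors

stat, p_value = diebold_mariano(model_loss, benchmark_loss)
print(f"DM statistic: {stat:.3f}, p-value: {p_value:.3f}")
```

For multi-step horizons the variance estimate would need to include autocovariances of the loss differential, which this sketch deliberately omits.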

Funding

The funding was provided by the German Research Foundation (DFG).

Conflicts of Interest

The author declares no conflict of interest.

References

- Alvarez, Fernando, Andrew Atkeson, and Patrick J. Kehoe. 2007. If Exchange Rates are Random Walks, Then Almost Everything We Say About Monetary Policy is Wrong. American Economic Review 97: 339–45.

- Amat, Christophe, Tomasz Michalski, and Gilles Stoltz. 2018. Fundamentals and exchange rate forecastability with simple machine learning methods. Journal of International Money and Finance 88: 1–24.

- Amat, Christophe, Tomasz Michalski, and Gilles Stoltz. 2020. Data set and programs to reproduce the forecasts of "Fundamentals and exchange rate forecastability with simple machine learning methods". Mendeley Data V1.

- Bacchetta, Philippe, and Eric van Wincoop. 2004. A Scapegoat Model of Exchange Rate Fluctuations. Working Paper 10245. Cambridge, MA, USA: National Bureau of Economic Research.

- Bajari, Patrick, Denis Nekipelov, Stephen P. Ryan, and Miaoyu Yang. 2015. Demand Estimation with Machine Learning and Model Combination. Working Paper 20955. Cambridge, MA, USA: National Bureau of Economic Research.

- Chen, Tianqi, and Carlos Guestrin. 2016. XGBoost: A Scalable Tree Boosting System. Available online: http://arxiv.org/abs/1603.02754 (accessed on 29 October 2021).

- Dal Bianco, Marcos, Maximo Camacho, and Gabriel Perez Quiros. 2012. Short-run forecasting of the euro-dollar exchange rate with economic fundamentals. Journal of International Money and Finance 31: 377–96.

- Edison, Hali J., and Jan Tore Klovland. 1987. A quantitative reassessment of the purchasing power parity hypothesis: Evidence from Norway and the United Kingdom. Journal of Applied Econometrics 2: 309–33.

- Engel, Charles, Dohyeon Lee, Chang Liu, Chenxin Liu, and Steve Pak Yeung Wu. 2017. The Uncovered Interest Parity Puzzle, Exchange Rate Forecasting, and Taylor Rules. Working Paper 24059. Cambridge, MA, USA: National Bureau of Economic Research.

- Goodfellow, Ian, Yoshua Bengio, and Aaron Courville. 2016. Deep Learning. Cambridge: MIT Press. Available online: http://www.deeplearningbook.org (accessed on 29 October 2021).

- Hornik, Kurt. 1991. Approximation capabilities of multilayer feedforward networks. Neural Networks 4: 251–57.

- Juselius, Katarina. 1995. Do purchasing power parity and uncovered interest rate parity hold in the long run? An example of likelihood inference in a multivariate time-series model. Journal of Econometrics 69: 211–40.

- Kingma, Diederik P., and Jimmy Ba. 2015. Adam: A method for stochastic optimization. Paper presented at 3rd International Conference on Learning Representations, ICLR 2015, San Diego, CA, USA, May 7–9; Edited by Yoshua Bengio and Yan LeCun. Conference Track Proceedings.

- Mark, Nelson C. 1995. Exchange rates and fundamentals: Evidence on long-horizon predictability. The American Economic Review 85: 201–18.

- Meese, Richard A., and Kenneth Rogoff. 1983. Empirical exchange rate models of the seventies: Do they fit out of sample? Journal of International Economics 14: 3–24.

- Meredith, Guy, and Menzie D. Chinn. 1998. Long-Horizon Uncovered Interest Rate Parity. Working Paper 6797. Cambridge, MA, USA: National Bureau of Economic Research.

- Neely, Christopher, and Lucio Sarno. 2002. How Well Do Monetary Fundamentals Forecast Exchange Rates? Working Papers 2002-007. St. Louis, MO, USA: Federal Reserve Bank of St. Louis.

- Plakandaras, Vasilios, Theophilos Papadimitriou, and Periklis Gogas. 2015. Forecasting daily and monthly exchange rates with machine learning techniques. Journal of Forecasting 34: 560–73.

- Qureshi, Shafiullah, Ba M. Chu, and Fanny S. Demers. 2020. Forecasting Canadian GDP Growth Using XGBoost. Carleton Economic Papers 20-14. Ottawa: Department of Economics, Carleton University.

- Srivastava, Nitish, Geoffrey Hinton, Alex Krizhevsky, Ilya Sutskever, and Ruslan Salakhutdinov. 2014. Dropout: A simple way to prevent neural networks from overfitting. Journal of Machine Learning Research 15: 1929–58.

- Vitek, Francis. 2005. The Exchange Rate Forecasting Puzzle. International Finance 0509005. Munich: University Library of Munich, Germany.

- Yao, Yuan, Lorenzo Rosasco, and Andrea Caponnetto. 2007. On early stopping in gradient descent learning. Constructive Approximation 26: 289–315.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).