Simple Summary

Artificial intelligence (AI) is transforming urological oncology surgery. While earlier systems were mainly limited to analyzing image data, modern multimodal models can combine various sources of information, such as imaging, laboratory values, robotic data and clinical documentation. This allows for more precise diagnostics, surgical planning and follow-up care. Generative AI can also enhance communication between physicians and patients, as well as support surgical training by providing realistic simulations and personalized feedback. However, there are risks associated with incomplete or distorted training data, data protection issues and unclear responsibilities in AI-supported decision-making. A better understanding of the opportunities and limitations of multimodal and generative AI is crucial to ensure its safe, ethical and clinically meaningful use in urological practice.

Abstract

Background: Multimodal artificial intelligence (MMAI) is transforming urological oncology by enabling the seamless integration of diverse data sources, including imaging, clinical records and robotic telemetry to facilitate patient-specific decision-making. Methods: This narrative review summarizes the current developments, applications, opportunities and risks of multimodal AI systems throughout the entire perioperative process in uro-oncologic surgery. Results: MMAI demonstrates quantifiable benefits across the entire perioperative pathway. Preoperatively, it improves diagnostics and surgical planning via multimodal data fusion. Intraoperatively, AI-assisted systems provide real-time context-based decision support, risk prediction and skill assessment within the operating theater. Postoperatively, MMAI facilitates automated documentation, early complication detection and personalized follow-up. Generative AI further revolutionizes surgical training through adaptive feedback and simulations. However, critical limitations must be addressed, including data bias, the barrier of closed robotic platforms, insufficient model validation, data security issues, hallucinations and ethical concerns regarding liability and transparency. Conclusions: MMAI significantly enhances the precision, efficiency and patient-centeredness of uro-oncological care. To ensure safe and widespread implementation, resolving the technical and regulatory barriers to real-time integration into robotic platforms is paramount. This must be coupled with standardized quality controls, transparent decision-making processes and responsible integration that fully preserves physician autonomy.

1. Introduction

The integration of artificial intelligence (AI) into surgical workflows remains a major challenge, yet it has been proposed as a cornerstone of future development in uro-oncological surgery []. In the early stages of surgical AI research, most applications were single-modality, single-task systems, typically computer vision models for step and instrument recognition or for complication detection from intraoperative videos []. However, these models are limited to visual signals and do not consider the broader clinical context.

In urology, extensive data are generated before, during and after surgery, including laboratory data, imaging data, electronic health record (EHR) data, robotic telemetry and surgical videos [,]. These data are typically neither structured nor systematically recorded. By combining these heterogeneous data sources with large language models (LLMs), it is now possible to train multimodal models that can not only detect patterns but also explain them in clinically meaningful ways []. This enables richer context generation, automated reporting and training or feedback systems. The potential of this paradigm is particularly pronounced in uro-oncology, where procedures such as robotic-assisted radical prostatectomy (RARP), radical cystectomy (RC) and partial nephrectomy (PN) are highly standardized.

In this context, it is important to distinguish between the different concepts of AI.

“Single-modality AI” uses one type of data, such as endoscopic video, CT scans or pathology slides, to perform a specific task, such as segmentation, detection or prediction.

“Integrated AI” involves incorporating one or more AI tools into a clinical workflow or device (e.g., smart overlays on a robotic console) but does not necessarily combine different data modalities within one model.

In contrast, multimodal artificial intelligence (MMAI) explicitly combines two or more different types of data, such as imaging, clinical variables and digital pathology, to learn joint representations across these inputs []. Therefore, MMAI has the capacity to support end-to-end, patient-specific decision-making across the perioperative pathway, rather than optimizing isolated tasks.
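To make the distinction concrete, the following minimal Python sketch shows one simple way of combining modalities: late fusion, in which per-modality feature vectors are concatenated before a shared prediction head. It is an illustration under stated assumptions, not a description of any system covered in this review. The function names (`fuse`, `predict_risk`), the feature values and the weights are all hypothetical, and a real MMAI model would learn its joint representation from data rather than use hand-set weights.

```python
import math


def fuse(*modalities):
    """Concatenate per-modality feature vectors into one joint vector."""
    joint = []
    for features in modalities:
        joint.extend(features)
    return joint


def predict_risk(joint, weights, bias=0.0):
    """Logistic head on the fused vector (weights are illustrative, not trained)."""
    if len(joint) != len(weights):
        raise ValueError("weights must match the length of the fused vector")
    z = bias + sum(w * x for w, x in zip(weights, joint))
    return 1.0 / (1.0 + math.exp(-z))


# Hypothetical, already-normalized features for a single patient.
imaging = [0.8, 0.1]     # e.g., lesion score and scaled lesion volume
clinical = [0.5]         # e.g., a scaled laboratory value
pathology = [0.7, 0.2]   # e.g., features from a whole-slide image encoder

joint = fuse(imaging, clinical, pathology)
risk = predict_risk(joint, weights=[1.0, -0.5, 0.8, 1.2, 0.3])
print(f"fused feature length: {len(joint)}, illustrative risk: {risk:.2f}")
```

A single-modality model would, by contrast, see only one of the three input vectors; the fused vector is what allows one model to weigh imaging, clinical and pathology information jointly.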

This non-systematic narrative review aims to provide an overview of the opportunities and risks of MMAI in uro-oncological surgery across the preoperative, intraoperative and postoperative phases, as well as in surgical training.

Literature Search and Selection

This narrative review is based on a non-systematic literature search conducted in PubMed and Google Scholar up to October 2025. The search terms used were, among others, “multimodal artificial intelligence”, “artificial intelligence”, “urology”, “urologic surgery” and “robot-assisted surgery”.

Relevant peer-reviewed studies, clinical trials, feasibility studies and technical reports relating to the use of multimodal AI in the preoperative, intraoperative and postoperative phases of urological surgery were included. Non-peer-reviewed preprints and papers lacking methodological detail were excluded. This selection aims to reflect the breadth of available evidence, rather than claiming to be exhaustive.

2. Technological Background

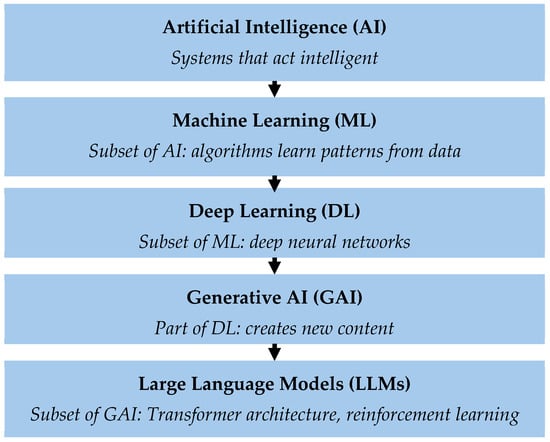

To gain a better understanding of current developments in urological surgery, it is necessary to provide an overview of the fundamental principles of AI, particularly generative AI. These relationships are depicted schematically in Figure 1.

Figure 1.

Hierarchical representation of the different levels of artificial intelligence (AI). AI describes systems that can perform tasks with human-like intelligence. Machine learning (ML) is a subset of AI in which algorithms learn independently from data and recognize patterns. Deep learning (DL) uses multi-layered neural networks to model complex relationships. Generative AI (GAI) is a subset of deep learning that can generate new content, such as text, images or simulations. Large language models (LLMs) are specialized GAI models designed for language processing. The Transformer architecture enables AI models to recognize relationships between words in a sentence by analyzing their meaning in the context of the entire text. This enables the model to process language and respond meaningfully. LLMs are further trained using “Reinforcement Learning from Human Feedback” (RLHF) to generate more precise and understandable answers.

The term “artificial intelligence” (AI) encompasses various technologies that enable machines to perform tasks that normally require human intelligence []. Machine learning (ML) is a subgroup of AI that uses statistical methods to learn from data, recognize patterns and make predictions []. As a further development of ML, deep learning (DL) uses artificial neural networks, loosely inspired by the human brain, to recognize patterns in large amounts of data. Generative AI (GAI) is a subset of DL. GAI can not only analyze and organize existing information but also create its own content, including text, images, audio files and other synthetic data. A distinction is made between natural language processing (NLP), image processing and prediction. Large language models (LLMs) are GAI models for interpreting, generating and manipulating human-like text []. The advantage of LLMs is that they can be developed and specialized for specific tasks [].

The quality and diversity of the underlying data are crucial for these models’ performance. In uro-oncology, the data are particularly diverse. Possible sources include robotic and endoscopic video sequences, as well as all instrument-specific data collected intraoperatively. Perioperative data, including electronic health record (EHR) data, laboratory values, imaging data and digital pathology data, are also available []. This data basis enables a wide range of applications of multimodal AI (MMAI) throughout the entire perioperative process.

3. Perioperative Applications of MMAI in Uro-Oncological Surgery

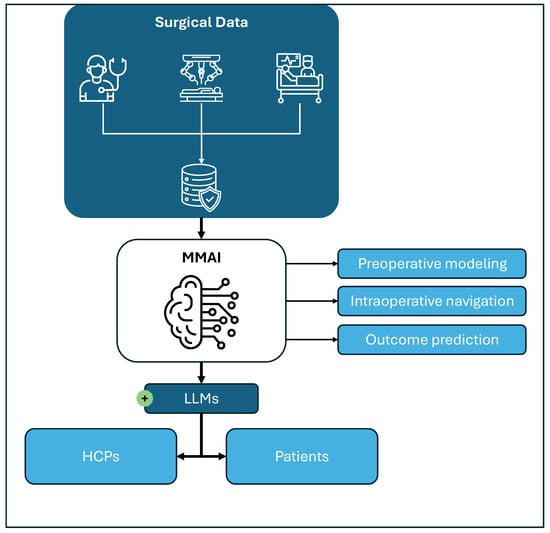

MMAI is envisioned to bridge the gap between single-task AI applications and the full integration of AI within clinical workflows. LLMs play a pivotal role in this context, as they can transform complex information into diverse, context-specific outputs tailored to various stakeholders, including healthcare professionals (e.g., in the operating room) and patients []. These outputs can be dynamically adapted to situational requirements, such as the desired level of empathy, depth of information and mode of visualization. As MMAI systems are not yet implemented in clinical practice, this work focuses on current challenges and early technological applications across the preoperative, intraoperative and postoperative settings, as well as in surgical training (see Figure 2).

Figure 2.

Integration of surgical data through multimodal artificial intelligence (MMAI). Surgical data from preoperative counseling and imaging, intraoperative robotic/video and monitoring data, as well as postoperative follow-up data, can be integrated into MMAI models to generate digital twins (preoperative modeling), perform intraoperative navigation and predict postoperative outcomes. Additional applications include simulation and training based on surgical data. Large language models (LLMs), as part of the MMAI, might facilitate communication and display of information generated by the MMAI to the healthcare professionals (HCPs) involved in the care of patients, as well as directly to the patient.

3.1. Preoperative

Before planning uro-oncological surgery, the treating urologist must take several preparatory steps. First, they assess the patient’s medical and treatment history, followed by a review of imaging modalities, current guidelines and the available literature []. Multiple data sources must therefore be evaluated together to guide surgical decision-making.

In urological oncology, several conventional imaging techniques are routinely used. These include ultrasonography, computed tomography (CT), magnetic resonance imaging (MRI), skeletal scintigraphy and positron emission tomography (PET) []. Conventional imaging techniques are increasingly being supplemented by molecular imaging, such as the use of prostate-specific membrane antigen (PSMA) tracers. These techniques allow tumors and lymph nodes to be localized more precisely and can be used with robotic systems during surgery [,,]. The importance of multiparametric MRI (mpMRI) has also increased, particularly in the diagnosis and preoperative evaluation of prostate cancer [].

Various imaging modalities can be integrated through MMAI. These systems combine imaging data with clinical parameters and genomic markers, where applicable, to improve surgical planning and decision-making [].

Artificial intelligence can complement imaging-based and anatomical planning by contributing to risk assessment and prognostication in uro-oncology. AI models can categorize the risk of progression in patients with intermediate-risk prostate cancer based on digitized histopathology []. AI-enabled prognostic models predict the risk of metastatic progression using whole-slide images of core biopsies or prostatectomy specimens. In a retrospective validation cohort of 176 patients, this deep learning model stratified intermediate-risk patients with high precision, achieving a hazard ratio (HR) of 4.66 (p < 0.001) for metastasis-free survival and an HR of 4.35 for biochemical recurrence-free survival compared to low-risk groups. This suggests that AI-derived histopathological biomarkers could complement genomic and clinical models for personalized therapy planning []. AI-assisted histopathological grading systems have demonstrated the ability to detect, grade and quantify prostate cancer with high accuracy. These systems achieve near-perfect agreement with expert pathologists and reduce interobserver variability []. These tools can improve diagnostic consistency, refine prognostic assessment and enhance personalized surgical decision-making. For instance, multi-classifier systems that combine long non-coding RNA (lncRNA)-based genomic classifiers, deep learning histology models and clinicopathological data have been shown to be more accurate than any single classifier at predicting recurrence-free survival in papillary renal cell carcinoma []. MMAI synthesizes diverse data types into clinically actionable insights, enabling more accurate and individualized prognostication and risk assessment in urologic oncology [,].
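Agreement between an AI grading system and expert pathologists, as described above, is commonly quantified with Cohen's kappa, which corrects the observed agreement for the agreement expected by chance. The following Python sketch computes the unweighted form for two raters. The grade lists are invented for illustration and are not taken from any cited study; grading studies often report a weighted kappa instead, which this sketch does not implement.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Unweighted Cohen's kappa for two equally long lists of category labels."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both raters must label the same, non-empty set of cases")
    n = len(rater_a)
    # Observed agreement: share of cases with identical labels.
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement: product of the raters' marginal label frequencies.
    count_a, count_b = Counter(rater_a), Counter(rater_b)
    expected = sum(count_a[k] * count_b[k] for k in count_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both raters used one identical label throughout
    return (observed - expected) / (1.0 - expected)


# Hypothetical grade groups (1-5) assigned to eight biopsies.
ai_grades = [1, 1, 2, 2, 3, 3, 4, 5]
pathologist_grades = [1, 1, 2, 3, 3, 3, 4, 5]

kappa = cohens_kappa(ai_grades, pathologist_grades)
print(f"kappa = {kappa:.2f}")
```

By a widely used convention, kappa values above 0.80 are read as near-perfect agreement, which is the sense in which the term is used for grading systems.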

In recent years, three-dimensional (3D) reconstructions have become an integral part of the preoperative planning process in urological surgery. AI-based 3D reconstructions and virtual simulations facilitate patient-specific surgical planning and improve visualization of intricate anatomical structures and their spatial relationships with tumors, blood vessels and neighboring organs []. This improved anatomical understanding enables more precise and safer surgical preparation. The clinical utility of such 3D models has already been demonstrated in urology, particularly when planning partial nephrectomy, radical cystectomy and robot-assisted radical prostatectomy [].

Recent evidence further supports this development. The 3DPN trial is a multicenter randomized controlled trial (RCT) investigating the benefits of virtual interactive 3D modeling compared with conventional CT for preoperative planning in robot-assisted partial nephrectomy []. The ongoing trial aims to enroll 370 patients across ten centers, with total console time as the primary endpoint, serving as a surrogate for surgical efficiency. Secondary outcomes include warm ischemia time and positive surgical margin rates. This study underscores the growing importance of 3D modeling as a decision-support tool and highlights its potential to improve surgical precision and perioperative outcomes in urological oncology.

The integration of anatomical 3D kidney models, including vasculature, the pyelocaliceal system and cystic structures, can enhance tumor localization and enable safer hilar dissection during robot-assisted partial nephrectomy. These models may be displayed on auxiliary monitors, integrated directly into robotic interfaces or superimposed using augmented reality (AR). Furthermore, the incorporation of perfusion-zone mapping allows identification of segmental arteries, facilitating selective clamping and maximizing preservation of healthy renal parenchyma [].

Beyond renal surgery, 3D modeling has also demonstrated its value in prostate cancer surgery [,]. Integrating 3D-printed prostate models into nerve-sparing RARP can have a positive impact on surgical outcomes, improving intraoperative orientation and pathological communication [].

MMAI further enhances these preoperative 3D reconstructions in urological oncology, particularly by optimizing automated segmentation, modeling and real-time intraoperative integration []. MMAI-supported algorithms allow precise, automated segmentation of tumors and critical structures, such as neurovascular bundles, on preoperative MRI or CT images. This facilitates the creation of high-resolution, patient-specific 3D models []. Furthermore, MMAI approaches can compensate for the limitations of individual modalities, which increases both the precision of tumor detection and the safety of resection [,]. Whereas early AI models primarily analyzed individual image modalities, modern multimodal systems integrate heterogeneous data sources, enabling more comprehensive and patient-specific preoperative planning.

Close interdisciplinary collaboration between radiology, urology and data science teams is essential for effectively combining these modalities and interpreting the resulting data in a clinically relevant context. The multimodal approach is a dynamic process that continuously optimizes itself through technological innovations and ongoing advances in AI [].

Beyond imaging, MMAI can also be used preoperatively for patient education. It can provide medical information not only as text but also as images, videos, audio and interactive formats, enabling a more personalized, understandable and engaging presentation of complex content. This has been shown to increase patient health literacy and understanding []. Using principles such as dual-coding theory and the reduction of cognitive overload, MMAI tools can prepare content so that it is processed both visually and verbally. This promotes the absorption and retention of relevant health information, particularly in younger or digitally savvy patient groups []. Initial studies show that AI-generated materials can improve the readability and accessibility of patient information, including in urology []. However, medical supervision is still required to ensure content accuracy and adaptation to the patient’s language level. In uro-oncology, AI has been used to translate complex information on prostate, bladder, kidney and testicular cancer into short, easy-to-understand texts for patients. In a randomized assessment, GPT-4 converted European Association of Urology (EAU) guidelines into patient education materials in a mean time of 52.1 s. The AI-generated content significantly improved readability, reducing the required reading level from Flesch–Kincaid Grade 11.6 to 6.1 (p < 0.001), while maintaining equivalent ratings for accuracy, completeness and clarity compared to original expert-authored texts []. Such AI tools can support patient education and improve understanding of the disease and treatment options. Multimodal AI can also overcome barriers such as language or literacy difficulties by generating explanatory videos, infographics or spoken explanations. This is particularly relevant for patients with low health literacy or in resource-limited settings [].
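The Flesch–Kincaid Grade Level cited above is computed from average sentence length and average syllables per word: 0.39 × (words / sentences) + 11.8 × (syllables / words) − 15.59. The Python sketch below applies this formula to two invented example texts. The syllable counter is a crude vowel-group heuristic assumed here for brevity, so absolute values will differ from those of validated readability tools; only the comparison between the two texts is meaningful.

```python
import re


def count_syllables(word):
    """Crude heuristic: one syllable per group of consecutive vowels."""
    groups = re.findall(r"[aeiouy]+", word.lower())
    return max(1, len(groups))


def flesch_kincaid_grade(text):
    """Flesch-Kincaid Grade Level using the heuristic syllable counter above."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    words = re.findall(r"[A-Za-z']+", text)
    if not sentences or not words:
        raise ValueError("text must contain at least one sentence and one word")
    syllables = sum(count_syllables(w) for w in words)
    return (0.39 * len(words) / len(sentences)
            + 11.8 * syllables / len(words)
            - 15.59)


# Invented example texts, not taken from any guideline.
expert_text = ("Radical prostatectomy necessitates comprehensive preoperative "
               "evaluation of oncological characteristics.")
plain_text = "The doctor checks the tumor first. Then the team plans the surgery."

grade_expert = flesch_kincaid_grade(expert_text)
grade_plain = flesch_kincaid_grade(plain_text)
print(f"expert text grade: {grade_expert:.1f}, plain text grade: {grade_plain:.1f}")
```

Shorter sentences and shorter words both lower the grade, which is the direction of change reported for the AI-generated patient materials.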

3.2. Intraoperative

Historically, the application of AI in surgery, and thus also in uro-oncology, focused on image-based approaches, chiefly computer vision for instrument and step recognition in the operating room. Intraoperative image data are automatically analyzed to identify relevant anatomical structures and support surgical steps. AI-supported systems can suggest intraoperative risk profiles, bleeding probabilities or the optimal resection line, thus facilitating decision-making [,,].

A key development has been the visualization and protection of delicate neurovascular structures, which are essential for functional outcomes in urologic oncology. Recent work on deep learning-based auto-contouring of prostate MRI achieved Dice similarity coefficients between 0.79 and 0.92, demonstrating robust segmentation of neurovascular bundles and periprostatic structures []. Accurately identifying these structures allows for nerve-sparing techniques during radical prostatectomy, which helps preserve erectile function and continence. Similarly, visualizing arterial anatomy helps minimize inadvertent vessel injury and intraoperative bleeding [].
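The Dice similarity coefficient reported above measures the overlap between a predicted segmentation and a reference contour: twice the number of pixels shared by both masks, divided by the total number of pixels in the two masks. A value of 1 means identical masks and 0 means no overlap. The following Python sketch computes it for two small binary masks; the masks are invented toy data, not MRI contours.

```python
def dice_coefficient(pred, truth):
    """Dice = 2 * |overlap| / (|pred| + |truth|) for two binary 2D masks."""
    flat_pred = [v for row in pred for v in row]
    flat_truth = [v for row in truth for v in row]
    if len(flat_pred) != len(flat_truth):
        raise ValueError("masks must have the same number of pixels")
    overlap = sum(1 for p, t in zip(flat_pred, flat_truth) if p and t)
    total = sum(1 for p in flat_pred if p) + sum(1 for t in flat_truth if t)
    if total == 0:
        return 1.0  # both masks empty: treated as full agreement in this sketch
    return 2 * overlap / total


# Toy 3x4 masks: 1 marks pixels labeled as the structure of interest.
predicted = [
    [0, 1, 1, 0],
    [0, 1, 1, 0],
    [0, 0, 1, 0],
]
reference = [
    [0, 1, 1, 0],
    [0, 1, 1, 1],
    [0, 0, 0, 0],
]

dice = dice_coefficient(predicted, reference)
print(f"Dice = {dice:.2f}")
```

In this toy case each mask marks five pixels and four of them coincide, so the score is 0.80, at the lower end of the range reported for the auto-contouring work.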

AI-based systems have demonstrated the ability to automatically identify and visualize critical anatomical structures, such as nerves and blood vessels during lymph node dissection. These models achieve high segmentation accuracy and near-real-time performance, suggesting their strong potential for use in surgical navigation []. Furthermore, deep learning models can accurately predict lymphovascular invasion from whole-slide images, demonstrating their independent prognostic value for survival prediction in bladder cancer patients [].

Confocal laser microscopy (CLM) has enabled rapid and accurate intraoperative assessment of surgical margins during robot-assisted radical prostatectomy. CLM significantly reduced the median intraoperative assessment time to 8 min compared to 50 min for conventional frozen sections, with a high specificity of 96% for detecting positive surgical margins []. MMAI is a promising extension of this approach because it can automatically analyze and correlate imaging, histopathology and confocal microscopy data ex vivo on resected tissue and potentially in vivo during surgery. This integration would allow for the precise, real-time identification of positive margins without delaying the procedure.

Augmented reality (AR) systems project relevant anatomical and procedural information directly into the surgeon’s field of vision []. AI-driven AR navigation during partial nephrectomy can visualize the renal vasculature and its anatomical relationships intraoperatively, reducing hilar dissection time and minimizing bleeding, especially in cases of complex vascular anatomy []. Pure vision models have evolved further toward multimodal interaction by incorporating speech and context understanding. Recent vision-language models integrate visual perception, temporal analysis and higher-order reasoning to enable the multimodal interpretation of surgical scenes [,].

Therefore, MMAI applications rely on the tight integration of imaging, sensor data (e.g., tension sensors on robotic arms) and software-based decision support systems. This enables the consideration of intraoperative changes, such as organ motion, in real time, thereby increasing surgical precision [].

In a prospective validation, the deep learning model ‘CystoNet’ achieved a sensitivity of 90.9% and a specificity of 98.6% in detecting bladder cancer during cystoscopy. The system successfully identified 95% of tumors (42 out of 44), potentially addressing the 20% miss rate associated with standard white-light cystoscopy []. Beyond oncology, computer vision-based navigation assistance has been successfully demonstrated in ureteroscopy, improving procedural safety and efficiency [].
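Sensitivity and specificity, as reported above, follow directly from the counts of true and false positives and negatives. The Python sketch below shows the arithmetic. Only the per-tumor figure (42 of 44 tumors detected) comes from the text; the counts behind the reported sensitivity and specificity are not given here, so the second example uses clearly hypothetical counts.

```python
def sensitivity(tp, fn):
    """Share of actual positives that were detected: TP / (TP + FN)."""
    return tp / (tp + fn)


def specificity(tn, fp):
    """Share of actual negatives that were correctly rejected: TN / (TN + FP)."""
    return tn / (tn + fp)


# Per-tumor detection from the text: 42 of 44 tumors were identified.
per_tumor_detection = sensitivity(tp=42, fn=2)
print(f"per-tumor detection: {per_tumor_detection:.1%}")

# Hypothetical counts for illustration only (not from the cited study).
example_sensitivity = sensitivity(tp=90, fn=10)
example_specificity = specificity(tn=95, fp=5)
print(f"example sensitivity: {example_sensitivity:.1%}, "
      f"example specificity: {example_specificity:.1%}")
```

The two functions answer different questions: sensitivity describes how many tumors are found, while specificity describes how often benign findings are correctly left unflagged.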

Beyond real-time visualization, deep learning models can analyze surgical video and kinematic data to assess surgeon proficiency. A multi-institutional study using RARP videos achieved 89% accuracy in identifying surgical phases and developed a parameter-based scoring system that effectively classified experts and novices with an accuracy of 86.2%. This suggests a high potential for automated, objective training and accreditation systems [].

In the context of globalization and increasingly international surgical teams, MMAI applications can facilitate the automatic translation of intraoperative instructions and interface displays into different languages. This improves team communication and workflow []. The integration of these technologies is driving the evolution toward a “digital operating room,” in which all available data streams are consolidated and utilized by the surgeon, assistants and nursing staff in real time and in a context-sensitive manner.

3.3. Postoperative

AI can also support documentation after surgery. Surgical reports generated by LLMs can be created significantly faster and comply better with guidelines, and they are rated positively by both surgeons and patients. AI-generated, realistic image representations further improve documentation, even though human input is still required []. Collaboration between surgeons and AI leads to the highest levels of acceptance and quality, whereas purely AI-generated reports, while faster, are less reliable [].

By integrating multimodal data, AI can identify postoperative complications early, create individual risk profiles and derive personalized follow-up recommendations []. In uro-oncology, AI already enables more precise predictions of treatment success and the analysis of complex relationships between imaging, pathology and clinical parameters []. The automated analysis of telemetry data from robotically assisted surgeries in combination with patient data enables continuous and comprehensive monitoring of treatment outcomes.

Patient inquiries and billing activities are a major cause of physician burnout []. In a landmark study comparing AI versus physician responses to patient queries, evaluators preferred the AI response in 78.6% of cases. The AI responses were rated significantly higher not only for quality (odds ratio 3.6) but notably also for empathy (odds ratio 9.8), suggesting a valuable role in alleviating the administrative burden of postoperative care []. LLMs can also automate billing processes by extracting relevant information from surgical reports, and studies have shown them to be highly accurate in coding surgical procedures [].

Selected studies demonstrating quantitative outcomes of AI applications in urological oncology across the perioperative course can be found in Table 1.

Table 1.

Overview of selected studies demonstrating quantitative outcomes of AI applications in urological oncology across the perioperative course. Abbreviations: BCR (biochemical recurrence), HR (hazard ratio), LLM (large language model), N (sample size), PCa (prostate cancer), RARP (robot-assisted radical prostatectomy), RCT (randomized controlled trial), rPN (robotic partial nephrectomy), κ (Kappa coefficient).

3.4. MMAI in Urological Training

Simulations are playing an increasingly important role in urologists’ training, from students to specialists. This applies not only to robotic surgery but also to endourology [,].

Realistic simulations are created using virtual reality (VR) and AR. These enable safe and effective surgical training. VR and AR promote technical skills and hand-eye coordination, while AI-based feedback personalizes learning and minimizes errors []. MMAI also enables objective analysis of learning curves and the provision of personalized feedback. This can improve surgical competence sustainably []. In an increasingly globalized world, surgical teams are also multinational []. Multimodal AI approaches combining text, images, video and speech can create language-independent learning environments. MMAI can also provide interactive case studies and adaptive learning materials tailored to the individual []. Multimodal generative AI can enable surgical training to take place in personalized, accessible learning environments, making training more efficient and inclusive [].

4. Future Directions: Risks, Opportunities and Integration into Robotic Platforms

The integration of MMAI applications into clinical practice requires clear quality controls, data protection measures and close collaboration between healthcare professionals and AI systems to avoid misinformation and misunderstandings []. A primary concern involves the quality and representativeness of training data. Models relying on single-center or specialized cohorts often exhibit inherent biases, resulting in limited generalizability and the potential for false decisions in cases involving atypical anatomy or rare tumors. Furthermore, some multimodal systems utilize synthetic or simulated data, which may lack crucial clinical validity []. Data protection and data security are further key challenges. Multimodal systems access highly sensitive information including imaging data, electronic health records, robotic telemetry and possibly voice data. Even with pseudonymization, there is a risk of re-identification []. Notably, many current LLMs are not fully compliant with existing medical data protection regulations []. At a technical level, insufficient model fidelity and the risk of hallucinations (the generation of plausible but false information) present serious clinical risks []. These errors could have critical consequences in intraoperative decision-making or automated documentation. Therefore, the development of domain-specific models and continuous, rigorous validation are crucial to avoiding misinterpretations [].

Finally, the increasing integration of AI entails socioethical and psychological risks. While AI systems optimize decision-making through objective data analysis and risk prediction, they must never replace the surgeon’s final clinical decision []. Physician responsibility mandates the critical evaluation of AI-generated recommendations, the consideration of individual patient factors and the integration of intuition and experience []. In summary, MMAI supports and complements perioperative decision-making, but the ultimate responsibility for diagnosis, treatment and explanation always rests with the attending physician.

Until now, MMAI solutions have not been fully integrated into the available robotic platforms. Direct integration has been hindered by medical device regulations, as the robotic platform itself is certified []. Despite the technological maturity of many AI models, integrating them into commercial robotic platforms therefore remains challenging. Currently, most robotic systems operate as ‘closed loops’ due to strict medical device regulations, which classify any software modification as a substantial change requiring re-certification. This creates an ecosystem in which third-party MMAI solutions cannot easily interface with the robotic console in real time. Furthermore, the computational latency incurred when processing multimodal data (video, MRI and telemetry) poses a safety risk during live surgery. Future platforms must therefore adopt open application programming interface (API) architectures that allow for secure, low-latency edge computing to bridge the gap between academic AI models and clinical surgical hardware.
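The latency concern can be framed as a simple budget: the processing stages between image capture and display must together stay below a limit that is acceptable for live guidance. The Python sketch below adds up per-stage latencies and checks them against such a limit. The stage names, timings and the 100 ms budget are hypothetical values chosen for illustration; they are not measurements from any robotic platform or a regulatory threshold.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Stage:
    """One processing step in a hypothetical intraoperative AI pipeline."""
    name: str
    latency_ms: float


def within_budget(stages, budget_ms):
    """Return the summed latency and whether it stays within the budget."""
    total = sum(stage.latency_ms for stage in stages)
    return total, total <= budget_ms


# Hypothetical stage timings in milliseconds.
pipeline = [
    Stage("video frame capture", 8.0),
    Stage("multimodal inference", 45.0),
    Stage("overlay rendering", 10.0),
]

total_ms, ok = within_budget(pipeline, budget_ms=100.0)
print(f"end-to-end latency: {total_ms:.0f} ms, within budget: {ok}")
```

Under these assumed numbers the pipeline fits the budget, but adding a network round trip to a remote server could exceed it, which is one reason the text above points to edge computing close to the console.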

However, new platforms are emerging in urology []. The capability to interface with MMAI solutions developed by external providers may represent a significant strategic advantage, enabling differentiation from competitors and enhancing future surgical capabilities. In this context, the concept of an open development ecosystem for MMAI solutions, analogous to applications available on app stores, could be particularly impactful.

5. Conclusions

Multimodal and generative AI systems open new perspectives in urology across all phases of the surgical process: from preoperative planning and intraoperative decision-making to postoperative care and training. By combining diverse data sources, MMAI can make clinical processes more precise, efficient and personalized. At the same time, however, new challenges arise: biased training data, unclear liability, a lack of transparency and data protection risks can jeopardize patient safety. The responsible use of multimodal AI therefore requires interdisciplinary collaboration, technical validation and clear ethical guidelines. In addition, robotic platforms and their configuration might play an important role in the adoption of MMAI in uro-oncologic surgery. AI systems must not replace surgical decisions but rather support them. Only when humans and machines work together in a complementary manner can MMAI realize its full potential and contribute to a safe, learning and patient-centered urological metaverse.

Author Contributions

Conceptualization, L.B., J.K. and S.R.; methodology, L.B., J.K. and S.R.; formal analysis, L.B., R.-B.I., J.R. and O.W.; investigation, K.H. and H.C.v.K.; data curation, J.K. and R.-B.I.; visualization, M.-L.W., P.S. and L.B.; writing—original draft preparation, L.B. and J.K.; writing—review and editing, all authors; supervision, S.R., N.C.B., J.J., T.B. and P.N. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

No new data were created or analyzed in this study. Data sharing is not applicable to this article.

Acknowledgments

ChatGPT 5 (OpenAI) was used to assist with language polishing for grammar and clarity only. No AI tool was used to generate, analyze or interpret data. The authors have reviewed and edited the output and take full responsibility for the content of this publication.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
|---|---|
| AI | Artificial Intelligence |
| MMAI | Multimodal Artificial Intelligence |
| RARP | Robotic-Assisted Radical Prostatectomy |
| RC | Radical Cystectomy |
| PN | Partial Nephrectomy |
| ML | Machine Learning |
| DL | Deep Learning |
| GAI | Generative Artificial Intelligence |
| NLP | Natural Language Processing |
| LLM | Large Language Model |
| RLHF | Reinforcement Learning from Human Feedback |
| EHR | Electronic Health Record |
| HCP | Healthcare Professional |
| CT | Computed Tomography |
| MRI | Magnetic Resonance Imaging |
| PET | Positron Emission Tomography |
| PSMA | Prostate-Specific Membrane Antigen |
| mpMRI | Multiparametric MRI |
| lncRNA | Long Non-Coding RNA |
| 3D | Three-Dimensional |
| AR | Augmented Reality |
| CLM | Confocal Laser Microscopy |
| VR | Virtual Reality |
| API | Application Programming Interface |

References

- Bhandari, M.; Zeffiro, T.; Reddiboina, M. Artificial Intelligence and Robotic Surgery: Current Perspective and Future Directions. Curr. Opin. Urol. 2020, 30, 48–54.

- Kitaguchi, D.; Takeshita, N.; Hasegawa, H.; Ito, M. Artificial Intelligence-based Computer Vision in Surgery: Recent Advances and Future Perspectives. Ann. Gastroenterol. Surg. 2022, 6, 29–36.

- Gögenur, I. Introducing Machine Learning-Based Prediction Models in the Perioperative Setting. Br. J. Surg. 2023, 110, 533–535.

- Miller, B.T.; Fafaj, A.; Tastaldi, L.; Alkhatib, H.; Zolin, S.; AlMarzooqi, R.; Tu, C.; Alaedeen, D.; Prabhu, A.S.; Krpata, D.M.; et al. Capturing Surgical Data: Comparing a Quality Improvement Registry to Natural Language Processing and Manual Chart Review. J. Gastrointest. Surg. 2022, 26, 1490–1494.

- Rodler, S.; Ganjavi, C.; De Backer, P.; Magoulianitis, V.; Ramacciotti, L.S.; De Castro Abreu, A.L.; Gill, I.S.; Cacciamani, G.E. Generative Artificial Intelligence in Surgery. Surgery 2024, 175, 1496–1502.

- Kaczmarczyk, R.; Wilhelm, T.I.; Martin, R.; Roos, J. Evaluating Multimodal AI in Medical Diagnostics. npj Digit. Med. 2024, 7, 205.

- National Academy of Medicine. Generative Artificial Intelligence in Health and Medicine: Opportunities and Responsibilities for Transformative Innovation; The Learning Health System Series; Maddox, T., Babski, D., Embi, P., Gerhart, J., Goldsack, J., Parikh, R., Sarich, T., Krishnan, S., Elliott, A., Eds.; National Academies Press: Washington, DC, USA, 2025; p. 28907. ISBN 978-0-309-73369-4.

- Adam, G.P.; Davies, M.; George, J.; Caputo, E.; Htun, J.M.; Coppola, E.L.; Holmer, H.; Kuhn, E.; Wethington, H.; Ivlev, I.; et al. Machine Learning Tools To (Semi-)Automate Evidence Synthesis: A Rapid Review and Evidence Map; AHRQ Methods for Effective Health Care; Agency for Healthcare Research and Quality (US): Rockville, MD, USA, 2024.

- Briganti, G. A Clinician’s Guide to Large Language Models. Future Med. AI 2023, 1, 1–6.

- Ong, J.C.L.; Jin, L.; Elangovan, K.; Lim, G.Y.S.; Lim, D.Y.Z.; Sng, G.G.R.; Ke, Y.H.; Tung, J.Y.M.; Zhong, R.J.; Koh, C.M.Y.; et al. Large Language Model as Clinical Decision Support System Augments Medication Safety in 16 Clinical Specialties. Cell Rep. Med. 2025, 6, 102323.

- Zia, A.; Aziz, M.; Popa, I.; Khan, S.A.; Hamedani, A.F.; Asif, A.R. Artificial Intelligence-Based Medical Data Mining. J. Pers. Med. 2022, 12, 1359.

- Whiting, D.; Bott, S.R. Current Diagnostics for Prostate Cancer. In Prostate Cancer; Bott, S.R., Lim Ng, K., Eds.; Exon Publications: Brisbane City, QLD, Australia, 2021; pp. 43–58. ISBN 978-0-6450017-5-4.

- Stammes, M.A.; Bugby, S.L.; Porta, T.; Pierzchalski, K.; Devling, T.; Otto, C.; Dijkstra, J.; Vahrmeijer, A.L.; De Geus-Oei, L.-F.; Mieog, J.S.D. Modalities for Image- and Molecular-Guided Cancer Surgery. Br. J. Surg. 2018, 105, e69–e83.

- Mondal, S.B.; O’Brien, C.M.; Bishop, K.; Fields, R.C.; Margenthaler, J.A.; Achilefu, S. Repurposing Molecular Imaging and Sensing for Cancer Image–Guided Surgery. J. Nucl. Med. 2020, 61, 1113–1122.

- KleinJan, G.H.; Van Gennep, E.J.; Postema, A.W.; Van Leeuwen, F.W.B.; Buckle, T. Hybrid Surgical Guidance in Urologic Robotic Oncological Surgery. J. Clin. Med. 2025, 14, 6128.

- Handke, A.E.; Ritter, M.; Albers, P.; Noldus, J.; Radtke, J.P.; Krausewitz, P. Multiparametrische MRT und alternative Methoden in der Interventions- und Behandlungsplanung beim Prostatakarzinom. Urologie 2023, 62, 1160–1168.

- Khizir, L.; Bhandari, V.; Kaloth, S.; Pfail, J.; Lichtbroun, B.; Yanamala, N.; Elsamra, S.E. From Diagnosis to Precision Surgery: The Transformative Role of Artificial Intelligence in Urologic Imaging. J. Endourol. 2024, 38, 824–835.

- Nair, S.S.; Muhammad, H.; Jain, P.; Xie, C.; Pavlova, I.; Brody, R.; Huang, W.; Nakadar, M.; Zhang, X.; Basu, H.; et al. A Novel Artificial Intelligence–Powered Tool for Precise Risk Stratification of Prostate Cancer Progression in Patients with Clinical Intermediate Risk. Eur. Urol. 2025, 87, 728–729.

- Mobadersany, P.; Tian, S.K.; Yip, S.S.F.; Greshock, J.; Khan, N.; Yu, M.K.; McCarthy, S.; Brookman-May, S.D.; Muhammad, H.; Xie, C.; et al. AI-Enabled Analysis of H&E-Stained Prostate Cancer Tissue Images: Assessing Risk for Metastasis Prior to Apalutamide (APA) Treatment of Patients with Non-Metastatic Castration-Resistant Prostate Cancer (nmCRPC). J. Clin. Oncol. 2023, 41, 5027.

- Huang, W.; Randhawa, R.; Jain, P.; Iczkowski, K.A.; Hu, R.; Hubbard, S.; Eickhoff, J.; Basu, H.; Roy, R. Development and Validation of an Artificial Intelligence–Powered Platform for Prostate Cancer Grading and Quantification. JAMA Netw. Open 2021, 4, e2132554.

- Huang, K.-B.; Gui, C.-P.; Xu, Y.-Z.; Li, X.-S.; Zhao, H.-W.; Cao, J.-Z.; Chen, Y.-H.; Pan, Y.-H.; Liao, B.; Cao, Y.; et al. A Multi-Classifier System Integrated by Clinico-Histology-Genomic Analysis for Predicting Recurrence of Papillary Renal Cell Carcinoma. Nat. Commun. 2024, 15, 6215.

- Zhang, B.; Li, S.; Jian, J.; Ren, X.; Zhao, Z.; Guo, L.; Su, F.; Meng, Z.; Zhao, Z. From Single-Cancer to Pan-Cancer Prognosis. Am. J. Pathol. 2025, 195, 1869–1884.

- Ning, Z.; Pan, W.; Chen, Y.; Xiao, Q.; Zhang, X.; Luo, J.; Wang, J.; Zhang, Y. Integrative Analysis of Cross-Modal Features for the Prognosis Prediction of Clear Cell Renal Cell Carcinoma. Bioinformatics 2020, 36, 2888–2895.

- Zhang, B.; Wan, Z.; Luo, Y.; Zhao, X.; Samayoa, J.; Zhao, W.; Wu, S. Multimodal Integration Strategies for Clinical Application in Oncology. Front. Pharmacol. 2025, 16, 1609079.

- Rivero Belenchón, I.; Congregado Ruíz, C.B.; Gómez Ciriza, G.; Gómez Dos Santos, V.; Burgos Revilla, F.J.; Medina López, R.A. Impact of 3D-Printed Models for Surgical Planning in Renal Cell Carcinoma With Venous Tumor Thrombus: A Randomized Multicenter Clinical Trial. J. Urol. 2025, 213, 568–580.

- Stolzenburg, J.-U.; Holze, S.; Dietel, A.; Franz, T.; Thi, P.H.; Trebst, D.; Köppe-Bauernfeind, N.; Bačák, M.; Spinos, T.; Liatsikos, E.; et al. Use of Interactive Three-Dimensional Modeling for Partial Nephrectomy in the 3DPN Study. Eur. Urol. Focus 2025, 11, 8–10.

- De Groote, R.; Vangeneugden, J.; De Backer, P.; Mottrie, A. Use of Interactive Three-Dimensional Models in Partial Nephrectomy: For, but Critical Evaluation Is Key. Eur. Urol. Focus 2025, 11, 37–39.

- Schiavina, R.; Bianchi, L.; Lodi, S.; Cercenelli, L.; Chessa, F.; Bortolani, B.; Gaudiano, C.; Casablanca, C.; Droghetti, M.; Porreca, A.; et al. Real-Time Augmented Reality Three-Dimensional Guided Robotic Radical Prostatectomy: Preliminary Experience and Evaluation of the Impact on Surgical Planning. Eur. Urol. Focus 2021, 7, 1260–1267.

- The ESUT Research Group; Porpiglia, F.; Bertolo, R.; Checcucci, E.; Amparore, D.; Autorino, R.; Dasgupta, P.; Wiklund, P.; Tewari, A.; Liatsikos, E.; et al. Development and Validation of 3D Printed Virtual Models for Robot-Assisted Radical Prostatectomy and Partial Nephrectomy: Urologists’ and Patients’ Perception. World J. Urol. 2018, 36, 201–207.

- Engesser, C.; Brantner, P.; Gahl, B.; Walter, M.; Gehweiler, J.; Seifert, H.; Subotic, S.; Rentsch, C.; Wetterauer, C.; Bubendorf, L.; et al. 3D-printed Model for Resection of Positive Surgical Margins in Robot-assisted Prostatectomy. BJU Int. 2025, 135, 657–667.

- Mei, H.; Yang, R.; Huang, J.; Jiao, P.; Liu, X.; Chen, Z.; Chen, H.; Zheng, Q. Artificial Intelligence-Assisted Segmentation of Prostate Tumors and Neurovascular Bundles: Applications in Precision Surgery for Prostate Cancer. Ann. Surg. Oncol. 2025, 32, 9391–9399.

- Tempany, C.M.C.; Jayender, J.; Kapur, T.; Bueno, R.; Golby, A.; Agar, N.; Jolesz, F.A. Multimodal Imaging for Improved Diagnosis and Treatment of Cancers. Cancer 2015, 121, 817–827.

- Xi, L.; Jiang, H. Image-guided Surgery Using Multimodality Strategy and Molecular Probes. WIREs Nanomed. Nanobiotechnol. 2016, 8, 46–60.

- Ardic, N.; Dinc, R. Emerging Trends in Multi-Modal Artificial Intelligence for Clinical Decision Support: A Narrative Review. Health Inform. J. 2025, 31, 14604582251366141.

- Meskó, B. The Impact of Multimodal Large Language Models on Health Care’s Future. J. Med. Internet Res. 2023, 25, e52865.

- Mehta, N.; Benjamin, J.; Agrawal, A.; Valanci, S.; Masters, K.; MacNeill, H. Addressing Educational Overload with Generative AI through Dual Coding and Cognitive Load Theories. Med. Teach. 2025, 1–3.

- Hershenhouse, J.S.; Mokhtar, D.; Eppler, M.B.; Rodler, S.; Storino Ramacciotti, L.; Ganjavi, C.; Hom, B.; Davis, R.J.; Tran, J.; Russo, G.I.; et al. Accuracy, Readability, and Understandability of Large Language Models for Prostate Cancer Information to the Public. Prostate Cancer Prostatic Dis. 2025, 28, 394–399.

- Rodler, S.; Cei, F.; Ganjavi, C.; Checcucci, E.; De Backer, P.; Rivero Belenchon, I.; Taratkin, M.; Puliatti, S.; Veccia, A.; Piazza, P.; et al. GPT-4 Generates Accurate and Readable Patient Education Materials Aligned with Current Oncological Guidelines: A Randomized Assessment. PLoS ONE 2025, 20, e0324175.

- Ihsan, M.Z.; Apriatama, D.; Amalia, R. AI-Assisted Patient Education: Challenges and Solutions in Pediatric Kidney Transplantation. Patient Educ. Couns. 2025, 131, 108575.

- Bellos, T.; Manolitsis, I.; Katsimperis, S.; Juliebø-Jones, P.; Feretzakis, G.; Mitsogiannis, I.; Varkarakis, I.; Somani, B.K.; Tzelves, L. Artificial Intelligence in Urologic Robotic Oncologic Surgery: A Narrative Review. Cancers 2024, 16, 1775.

- Vercauteren, T.; Unberath, M.; Padoy, N.; Navab, N. CAI4CAI: The Rise of Contextual Artificial Intelligence in Computer-Assisted Interventions. Proc. IEEE 2020, 108, 198–214.

- Van Den Berg, I.; Savenije, M.H.F.; Teunissen, F.R.; Van De Pol, S.M.G.; Rasing, M.J.A.; Van Melick, H.H.E.; Brink, W.M.; De Boer, J.C.J.; Van Den Berg, C.A.T.; Van Der Voort Van Zyp, J.R.N. Deep Learning for Automated Contouring of Neurovascular Structures on Magnetic Resonance Imaging for Prostate Cancer Patients. Phys. Imaging Radiat. Oncol. 2023, 26, 100453.

- Sasaki, S.; Kitaguchi, D.; Noda, T.; Matsuzaki, H.; Hasegawa, H.; Takeshita, N.; Ito, M. Deep Learning-Based Vessel and Nerve Recognition Model for Lateral Lymph Node Dissection: A Retrospective Feasibility Study. Langenbecks Arch. Surg. 2025, 410, 310.

- Jiao, P.; Wu, S.; Yang, R.; Ni, X.; Wu, J.; Wang, K.; Liu, X.; Chen, Z.; Zheng, Q. Deep Learning Predicts Lymphovascular Invasion Status in Muscle Invasive Bladder Cancer Histopathology. Ann. Surg. Oncol. 2025, 32, 598–608.

- Baas, D.J.H.; Vreuls, W.; Sedelaar, J.P.M.; Vrijhof, H.J.E.J.; Hoekstra, R.J.; Zomer, S.F.; Van Leenders, G.J.L.H.; Van Basten, J.A.; Somford, D.M. Confocal Laser Microscopy for Assessment of Surgical Margins during Radical Prostatectomy. BJU Int. 2023, 132, 40–46.

- Ukimura, O.; Gill, I.S. Imaging-Assisted Endoscopic Surgery: Cleveland Clinic Experience. J. Endourol. 2008, 22, 803–810.

- Shi, X.; Yang, B.; Guo, F.; Zhi, C.; Xiao, G.; Zhao, L.; Wang, Y.; Zhang, W.; Xiao, C.; Wu, Z.; et al. Artificial Intelligence Based Augmented Reality Navigation in Minimally Invasive Partial Nephrectomy. Urology 2025, 199, 20–26.

- Yuan, K.; Srivastav, V.; Yu, T.; Lavanchy, J.L.; Marescaux, J.; Mascagni, P.; Navab, N.; Padoy, N. Learning Multi-Modal Representations by Watching Hundreds of Surgical Video Lectures. Med. Image Anal. 2025, 105, 103644.

- Zeng, Z.; Zhuo, Z.; Jia, X.; Zhang, E.; Wu, J.; Zhang, J.; Wang, Y.; Low, C.H.; Jiang, J.; Zheng, Z.; et al. SurgVLM: A Large Vision-Language Model and Systematic Evaluation Benchmark for Surgical Intelligence. arXiv 2025, arXiv:2506.02555.

- Zaffino, P.; Moccia, S.; De Momi, E.; Spadea, M.F. A Review on Advances in Intra-Operative Imaging for Surgery and Therapy: Imagining the Operating Room of the Future. Ann. Biomed. Eng. 2020, 48, 2171–2191.

- Shkolyar, E.; Jia, X.; Chang, T.C.; Trivedi, D.; Mach, K.E.; Meng, M.Q.-H.; Xing, L.; Liao, J.C. Augmented Bladder Tumor Detection Using Deep Learning. Eur. Urol. 2019, 76, 714–718.

- Oliva Maza, L.; Steidle, F.; Klodmann, J.; Strobl, K.; Triebel, R. An ORB-SLAM3-Based Approach for Surgical Navigation in Ureteroscopy. Comput. Methods Biomech. Biomed. Eng. Imaging Vis. 2023, 11, 1005–1011.

- Zhao, X.; Takenaka, S.; Iuchi, S.; Kitaguchi, D.; Wakabayashi, M.; Sato, K.; Arakaki, S.; Sasaki, K.; Kosugi, N.; Takeshita, N.; et al. Automatic Recognition of Surgical Phase of Robot-Assisted Radical Prostatectomy Based on Artificial Intelligence Deep-Learning Model and Its Application in Surgical Skill Evaluation: A Joint Study of 18 Medical Education Centers. Surg. Endosc. 2025, 39, 5623–5635.

- Barallat, J.; Gómez, C.; Sancho-Cerro, A. AI, Diabetes and Getting Lost in Translation: A Multilingual Evaluation of Bing with ChatGPT Focused in HbA1c. Clin. Chem. Lab. Med. 2023, 61, e222–e224.

- Abdelhady, A.M.; Davis, C.R. Plastic Surgery and Artificial Intelligence: How ChatGPT Improved Operation Note Accuracy, Time, and Education. Mayo Clin. Proc. Digit. Health 2023, 1, 299–308.

- Hack, S.; Attal, R.; Locatelli, G.; Scotta, G.; Maniaci, A.; Parisi, F.M.; Van Der Poel, N.; Van Daele, M.; Garcia-Lliberos, A.; Rodriguez-Prado, C.; et al. Surgeon, Trainee, or GPT? A Blinded Multicentric Study of AI-Augmented Operative Notes. Laryngoscope 2025, 1–11.

- Froń, A.; Semianiuk, A.; Lazuk, U.; Ptaszkowski, K.; Siennicka, A.; Lemiński, A.; Krajewski, W.; Szydełko, T.; Małkiewicz, B. Artificial Intelligence in Urooncology: What We Have and What We Expect. Cancers 2023, 15, 4282.

- Lipkova, J.; Chen, R.J.; Chen, B.; Lu, M.Y.; Barbieri, M.; Shao, D.; Vaidya, A.J.; Chen, C.; Zhuang, L.; Williamson, D.F.K.; et al. Artificial Intelligence for Multimodal Data Integration in Oncology. Cancer Cell 2022, 40, 1095–1110.

- Tai-Seale, M.; Dillon, E.C.; Yang, Y.; Nordgren, R.; Steinberg, R.L.; Nauenberg, T.; Lee, T.C.; Meehan, A.; Li, J.; Chan, A.S.; et al. Physicians’ Well-Being Linked To In-Basket Messages Generated By Algorithms In Electronic Health Records. Health Aff. 2019, 38, 1073–1078.

- Ayers, J.W.; Poliak, A.; Dredze, M.; Leas, E.C.; Zhu, Z.; Kelley, J.B.; Faix, D.J.; Goodman, A.M.; Longhurst, C.A.; Hogarth, M.; et al. Comparing Physician and Artificial Intelligence Chatbot Responses to Patient Questions Posted to a Public Social Media Forum. JAMA Intern. Med. 2023, 183, 589–596.

- Zaidat, B.; Lahoti, Y.S.; Yu, A.; Mohamed, K.S.; Cho, S.K.; Kim, J.S. Artificially Intelligent Billing in Spine Surgery: An Analysis of a Large Language Model. Glob. Spine J. 2025, 15, 1113–1120.

- Goldenberg, M.G. Surgical Artificial Intelligence in Urology. Urol. Clin. N. Am. 2024, 51, 105–115.

- Nedbal, C.; Gauhar, V.; Herrmann, T.; Singh, A.; Talyshinskii, A.; Al Jaafari, F.; Somani, B.K. Simulation-Based Training in Minimally Invasive Surgical Therapies (MIST): Current Evidence and Future Directions for Artificial Intelligence Integration—A Systematic Review by EAU Endourology. World J. Urol. 2025, 43, 448.

- Aurello, P.; Pace, M.; Goglia, M.; Pavone, M.; Petrucciani, N.; Carrano, F.M.; Cicolani, A.; Chiarini, L.; Silecchia, G.; D’Andrea, V. Enhancing Surgical Education Through Artificial Intelligence in the Era of Digital Surgery. Am. Surg. 2025, 91, 1942–1948.

- Vedula, S.S.; Ghazi, A.; Collins, J.W.; Pugh, C.; Stefanidis, D.; Meireles, O.; Hung, A.J.; Schwaitzberg, S.; Levy, J.S.; Sachdeva, A.K.; et al. Artificial Intelligence Methods and Artificial Intelligence-Enabled Metrics for Surgical Education: A Multidisciplinary Consensus. J. Am. Coll. Surg. 2022, 234, 1181–1192.

- Hadjichristidis, C.; Geipel, J.; Keysar, B. The Influence of Native Language in Shaping Judgment and Choice. In Progress in Brain Research; Elsevier: Amsterdam, The Netherlands, 2019; Volume 247, pp. 253–272. ISBN 978-0-444-64252-3.

- Gupta, N.; Khatri, K.; Malik, Y.; Lakhani, A.; Kanwal, A.; Aggarwal, S.; Dahuja, A. Exploring Prospects, Hurdles, and Road Ahead for Generative Artificial Intelligence in Orthopedic Education and Training. BMC Med. Educ. 2024, 24, 1544.

- Rengers, T.A.; Thiels, C.A.; Salehinejad, H. Academic Surgery in the Era of Large Language Models: A Review. JAMA Surg. 2024, 159, 445.

- Fahrner, L.J.; Chen, E.; Topol, E.; Rajpurkar, P. The Generative Era of Medical AI. Cell 2025, 188, 3648–3660.

- Yang, Y.; Lin, M.; Zhao, H.; Peng, Y.; Huang, F.; Lu, Z. A Survey of Recent Methods for Addressing AI Fairness and Bias in Biomedicine. J. Biomed. Inform. 2024, 154, 104646.

- Morris, J.X.; Campion, T.R.; Nutheti, S.L.; Peng, Y.; Raj, A.; Zabih, R.; Cole, C.L. DIRI: Adversarial Patient Reidentification with Large Language Models for Evaluating Clinical Text Anonymization. AMIA Jt. Summits Transl. Sci. Proc. 2025, 2025, 355–364.

- Hou, J.; Cheng, X.; Liao, J.; Zhang, Z.; Wang, W. Ethical Concerns of AI in Healthcare: A Systematic Review of Qualitative Studies. Nurs. Ethics 2025, 09697330251385024.

- Tang, F.; Liu, C.; Xu, Z.; Hu, M.; Huang, Z.; Xue, H.; Chen, Z.; Peng, Z.; Yang, Z.; Zhou, S.; et al. Seeing Far and Clearly: Mitigating Hallucinations in MLLMs with Attention Causal Decoding. In Proceedings of the 2025 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 10 June 2025; pp. 26147–26159.

- Anh-Hoang, D.; Tran, V.; Nguyen, L.-M. Survey and Analysis of Hallucinations in Large Language Models: Attribution to Prompting Strategies or Model Behavior. Front. Artif. Intell. 2025, 8, 1622292.

- Loftus, T.J.; Tighe, P.J.; Filiberto, A.C.; Efron, P.A.; Brakenridge, S.C.; Mohr, A.M.; Rashidi, P.; Upchurch, G.R.; Bihorac, A. Artificial Intelligence and Surgical Decision-Making. JAMA Surg. 2020, 155, 148.

- Arjomandi Rad, A.; Vardanyan, R.; Athanasiou, T.; Maessen, J.; Sardari Nia, P. The Ethical Considerations of Integrating Artificial Intelligence into Surgery: A Review. Interdiscip. Cardiovasc. Thorac. Surg. 2025, 40, ivae192.

- Lee, A.; Baker, T.S.; Bederson, J.B.; Rapoport, B.I. Levels of Autonomy in FDA-Cleared Surgical Robots: A Systematic Review. npj Digit. Med. 2024, 7, 103.

- Cannoletta, D.; Gallioli, A.; Mazzone, E.; Gaya Sopena, J.M.; Territo, A.; Bello, F.D.; Casadevall, M.; Mas, L.; Mancon, S.; Diana, P.; et al. A Global Perspective on the Adoption of Different Robotic Platforms in Uro-Oncological Surgery. Eur. Urol. Focus 2025, 11, 22–25.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).