Simple Summary

Artificial intelligence (AI) is transforming cancer care by analyzing vast amounts of medical data that exceed human capacity. This review examines how AI enhances both productivity and creativity in clinical oncology. AI improves productivity by automating routine tasks like image analysis and report generation, allowing doctors to focus on patient care. More importantly, AI enables creative discovery by finding hidden patterns across different data types—combining pathology images, radiology scans, and genetic information to identify new biomarkers and treatment approaches. The FUTURE-AI framework provides practical guidelines to ensure these AI tools are fair, reliable, and understandable when used in clinical practice. This paper highlights how AI augments rather than replaces physician expertise, ultimately improving cancer diagnosis and treatment.

Abstract

Modern clinical oncology faces unprecedented data complexity that exceeds human analytical capacity, making artificial intelligence (AI) integration essential rather than optional. This review examines the dual impact of AI on productivity enhancement and creative discovery in cancer care. We trace the evolution from traditional machine learning to deep learning and transformer-based foundation models, analyzing their clinical applications. AI enhances productivity by automating diagnostic tasks, streamlining documentation, and accelerating research workflows across imaging modalities and clinical data processing. More importantly, AI enables creative discovery by integrating multimodal data to identify computational biomarkers, performing unsupervised phenotyping to reveal hidden patient subgroups, and accelerating drug development. Finally, we introduce the FUTURE-AI framework, outlining the essential requirements for translating AI models into clinical practice. The framework supports the responsible deployment of AI that augments rather than replaces clinical judgment while maintaining patient-centered care.

1. Introduction

Modern clinical oncology is at a turning point, shaped by accumulating biological knowledge and growing machine computing power. Cancer care is becoming more personalized through rapid advances in precision diagnostics and targeted therapies. However, this progress has also produced a vast, heterogeneous body of patient data that exceeds clinicians’ capacity to analyze and interpret [1,2]. High-resolution imaging, digital pathology, and multilayered genomic, transcriptomic, and proteomic profiles now require new analytic approaches [3,4,5]. In this context, artificial intelligence (AI) is not a future prospect but rather a present necessity for improving cancer care.

The integration of AI into clinical oncology is propelled by two related forces. First, breakthroughs in computational methods, particularly deep learning (DL), have produced multilayer artificial neural networks capable of detecting subtle patterns in extensive biomedical datasets that often escape human perception. Second, the digital transformation of healthcare has matured, with routine electronic health records (EHRs), whole-slide imaging (WSI) in pathology, and standardized genomic profiling now yielding longitudinal, high-quality datasets for training and validating AI models [6,7,8,9,10,11]. This creates a self-reinforcing data–AI loop: expanding datasets enable better models, whose finer-grained outputs become new data, further increasing the need for intelligent systems.

The impact of AI in oncology can be framed along two axes: productivity and creativity. AI improves productivity by automating labor-intensive tasks, reducing diagnostic errors, and streamlining clinical workflows. AI also enables creativity by uncovering new biological insights, generating hypotheses, and supporting diagnostic and therapeutic strategies that were previously infeasible. Through this lens, this review examines how AI reshapes clinical workflows, enables novel research, and extends precision medicine, and it outlines core technologies alongside the technical, ethical, and operational barriers to implementation.

2. An Overview of AI Technologies and Their Relevance to Oncology

AI in oncology has evolved through several distinct technological eras, each introducing new capabilities for data interpretation, prediction, and clinical decision support (Figure 1 and Figure 2). AI has progressed from traditional machine learning (ML) in the 1990s to deep learning (DL) in the 2010s and, since 2017, to transformer-based foundation models. During this period, the focus of analysis has shifted from hand-crafted features on structured data (data organized in tables with rows and columns, like blood test results or patient demographics) to representation learning for unstructured and multimodal inputs. Representation learning automatically learns features and latent vectors (embeddings) from data, reducing the need for manual feature engineering and providing reusable representations for downstream tasks such as classification, regression, and clustering. This shift has also introduced generative methods (Figure 1).

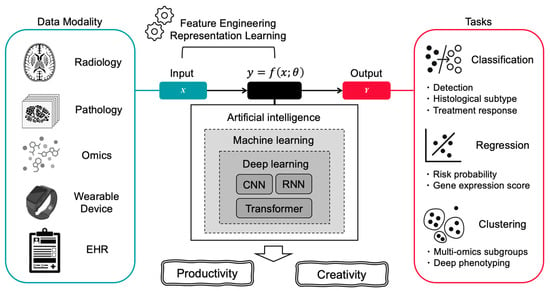

Figure 1.

Overview of AI Technologies and Their Relevance to Oncology. This figure illustrates how artificial intelligence transforms diverse clinical data sources into actionable insights for cancer care. The left panel shows various data modalities including radiology images, digital pathology slides, multi-omics data, wearable device outputs, and electronic health records (EHR). These inputs are processed through AI’s core components: representation learning powered by traditional ML and DL. The AI system achieves two primary outcomes: productivity enhancement (including automated detection, classification, differential subtype analysis, treatment response prediction, and regression tasks such as gene expression scoring) and creative discovery (enabling unsupervised clustering, multi-omics subgroup identification, and deep phenotyping).

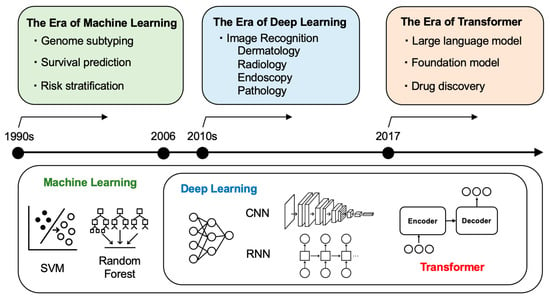

Figure 2.

AI Evolution and Application in Oncology: From Machine Learning to Transformers. Timeline showing the technological progression of AI in oncology across three eras: the era of machine learning (1990s) characterized by genome subtyping, survival prediction, and risk stratification using traditional algorithms (SVM, Random Forest); the era of deep learning (2010s–) featuring image recognition in dermatology, radiology, endoscopy, and pathology using CNN and RNN architectures; and the era of transformers (2017–present) enabling large language models, foundation models, and drug discovery applications.

ML forms the foundation of modern AI. Since the 1990s, ML has allowed computers to learn from examples rather than from handwritten rules for each task. In oncology, traditional ML models, such as support vector machines (SVM), decision trees, and random forests, have supported tumor classification, survival prediction, and risk stratification using structured clinical or genomic data [6,12,13,14]. However, a key limitation is the need for manual feature engineering, which requires domain expertise and limits scalability for unstructured inputs.

DL emerged in the 2010s, replacing hand-crafted features with networks that learn hierarchical representations from raw inputs. These multilayer neural networks capture complex, non-linear patterns in high-dimensional data. In oncology, DL has advanced image analysis in fields such as dermatology, radiology, endoscopy, and pathology. Convolutional neural networks (CNNs), built for spatial data, have demonstrated performance comparable to that of specialists in cancer detection and tumor segmentation [15,16,17,18,19,20]. For sequential data, recurrent neural networks (RNNs) support modeling of clinical time series and genomic sequences [20,21,22].
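The convolution at the heart of a CNN layer can be illustrated in plain Python. This is a minimal sketch, assuming a single-channel image and one hand-set 3 × 3 vertical-edge kernel; in a real CNN the kernel weights are learned from data, and many such layers with many channels are stacked. As in most deep learning libraries, the kernel is not flipped, so strictly speaking the operation is cross-correlation.

```python
def conv2d_valid(image, kernel):
    """Slide `kernel` over `image` with no padding and return the feature map.

    Like most deep learning frameworks, this computes cross-correlation
    (the kernel is not flipped).
    """
    kh, kw = len(kernel), len(kernel[0])
    ih, iw = len(image), len(image[0])
    feature_map = []
    for r in range(ih - kh + 1):
        row = []
        for c in range(iw - kw + 1):
            # Weighted sum over the kh x kw patch whose top-left corner is (r, c).
            acc = 0.0
            for i in range(kh):
                for j in range(kw):
                    acc += image[r + i][c + j] * kernel[i][j]
            row.append(acc)
        feature_map.append(row)
    return feature_map


def relu(feature_map):
    """Element-wise non-linearity typically applied after a convolution."""
    return [[max(0.0, v) for v in row] for row in feature_map]


# Toy 5x5 single-channel image: dark left columns, bright right columns.
image = [[0, 0, 1, 1, 1] for _ in range(5)]

# Hand-set vertical-edge kernel (a trained CNN would learn its own filters).
kernel = [[-1, 0, 1],
          [-1, 0, 1],
          [-1, 0, 1]]

activations = relu(conv2d_valid(image, kernel))
for row in activations:
    print(row)  # strong response where the dark-to-bright edge falls in the window
```

The same small filter is reused at every position, which is why CNNs suit spatial data such as radiology images and pathology tiles.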

Among DL architectures, the transformer (introduced in 2017 and used in tools such as ChatGPT) first achieved breakthrough results in machine translation between languages and has since been generalized to a wide range of sequence modeling tasks, becoming a major driver of recent advances in AI [23]. A transformer uses self-attention: for each element in the input, the model weighs how much all other elements should influence it, creating context-aware representations and capturing long-range relationships. Since text, images, and genomic sequences can all be broken into ordered pieces, the same method can be applied beyond language. In oncology, transformer-based methods support clinical text processing, whole-slide and radiologic image interpretation (vision transformers), and genomic sequence modeling [24,25,26,27]. As models and datasets grew larger, this same architecture gave rise to “foundation models”—large transformers pre-trained on broad data and adaptable to many tasks [28].
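The self-attention step described above can be sketched for a single head as softmax(QKᵀ/√d_k)V, where Q, K, and V are linear projections of the input tokens. In this minimal pure-Python example the projection matrices are set to the identity purely for illustration; a trained transformer learns them, uses multiple heads, and adds positional encodings and feed-forward layers that are omitted here.

```python
import math


def matmul(a, b):
    """Multiply matrices given as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))]
            for i in range(len(a))]


def transpose(m):
    return [list(col) for col in zip(*m)]


def softmax(row):
    m = max(row)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(x, w_q, w_k, w_v):
    """Single-head scaled dot-product self-attention.

    x: one row per token (sequence element); w_q, w_k, w_v: projection matrices.
    Returns the context-aware outputs and the attention weight matrix.
    """
    q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
    scale = math.sqrt(len(k[0]))
    scores = matmul(q, transpose(k))  # similarity of every token to every token
    weights = [softmax([s / scale for s in row]) for row in scores]
    return matmul(weights, v), weights


identity = [[1.0, 0.0], [0.0, 1.0]]          # illustrative projections only
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # three toy token embeddings
out, attn = self_attention(tokens, identity, identity, identity)
for row in attn:
    print([round(w, 3) for w in row])
```

Each row of the weight matrix sums to 1 and records how strongly one token attends to every other token, which is what makes the resulting representations context-aware.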

In summary, AI in oncology has evolved from hand-crafted, task-specific algorithms to large, adaptive platforms that handle multimodal data and produce clinically relevant outputs (Figure 1). Traditional ML remains useful for small, well-curated datasets, whereas DL and transformer-based models address increasingly complex problems. These technologies are now being applied across clinical oncology. The following sections provide concrete examples of how these technologies improve productivity and support creativity.

3. Productivity Enhancement in Clinical Oncology

In clinical oncology, the most immediate benefit of AI is increased productivity. AI can automate routine tasks, promote diagnostic consistency, and analyze large datasets. When used as assistive tools, these systems streamline workflows and allow clinicians to focus on complex decisions and patient care. This section reviews applications in three domains: imaging-based diagnostic support; natural language processing and large language models (LLMs) for documentation and data structuring; and AI tools that accelerate research workflows.

3.1. AI for Image-Based Oncologic Diagnosis: Radiology, Digital Pathology, Endoscopy, and Dermatology

As of 2024, the FDA had cleared or approved approximately 700 AI/ML-enabled medical devices, 76% of which are related to radiology. Most of these devices target image analysis, with oncologic imaging as a major application [1,29]. In mammography, deep learning systems have achieved radiologist-comparable breast cancer detection. In Sweden’s randomized Mammography Screening with Artificial Intelligence (MASAI) trial, 105,934 women were assigned to either AI-supported screening or standard double reading; the AI-supported screening was non-inferior and reduced radiologist workload by 44.2% (AI arm, 61,248 reads; control, 109,692) without increasing false-positive rates, and with evidence of earlier cancer detection [30,31].
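The reported 44.2% workload reduction is consistent with the read counts given above, computed as the relative reduction (control reads − AI-arm reads) / control reads. A minimal check:

```python
def relative_reduction(new_value, baseline_value):
    """Fractional reduction of new_value relative to baseline_value."""
    return (baseline_value - new_value) / baseline_value


# Screen-reading counts from the MASAI trial as reported in the text above.
ai_arm_reads = 61_248
control_reads = 109_692

reduction = relative_reduction(ai_arm_reads, control_reads)
print(f"Radiologist workload reduction: {reduction:.1%}")  # prints 44.2%
```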

Pathology, which is essential for definitive cancer diagnosis, has adopted imaging through digital pathology [32,33,34,35,36]. A recent meta-analysis that pooled 100 studies using more than 152,000 WSIs reported a mean sensitivity of 96.3% and a mean specificity of 93.3%. Performance (sensitivity/specificity) varied by subspecialty: gastrointestinal (93%/94%), urologic (95%/96%), and breast (83%/88%) [35]. Foundation-model efforts are also emerging. In the GigaPath project, a collaboration among Microsoft, Providence Health System, and the University of Washington, Prov-GigaPath was pretrained on more than 170,000 slides (>1 billion 256 × 256-pixel tiles); it is among the first open-access foundation models for digital pathology and has shown strong performance in cancer classification, mutation prediction, and vision-language tasks [36].
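The sensitivity and specificity figures above are proportions taken from a confusion matrix. A minimal sketch, using hypothetical slide-level counts (not data from the cited meta-analysis); pooling such estimates across studies, as a meta-analysis does, requires additional statistical modeling not shown here:

```python
def sensitivity_specificity(tp, fn, tn, fp):
    """Return (sensitivity, specificity) from confusion-matrix counts."""
    sensitivity = tp / (tp + fn)  # share of cancer slides the model flags
    specificity = tn / (tn + fp)  # share of benign slides the model clears
    return sensitivity, specificity


# Hypothetical counts for a single test set of 500 slides.
sens, spec = sensitivity_specificity(tp=180, fn=20, tn=285, fp=15)
print(f"sensitivity = {sens:.1%}, specificity = {spec:.1%}")
```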

In addition to radiology and pathology, image-based AI has proven useful in endoscopy and dermatology. Unlike other modalities, endoscopy requires real-time analysis of video streams [37,38,39,40,41,42]. In colonoscopy, AI-assisted systems have increased the adenoma detection rate (ADR) in randomized trials through real-time polyp identification, and meta-analyses report a ~14% relative improvement in the ADR over standard colonoscopy [39,40]. In dermatology, the foundation model PanDerm, trained on >2 million dermoscopic and clinical images, improved physicians’ diagnostic accuracy for skin cancer by >10%, with the greatest benefits observed for non-dermatologists [26].
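Because the ~14% figure is a relative improvement, the absolute gain depends on the baseline ADR. The sketch below assumes a hypothetical baseline ADR of 30% purely for illustration:

```python
def apply_relative_gain(baseline_rate, relative_gain):
    """Rate after a relative (multiplicative) improvement."""
    return baseline_rate * (1 + relative_gain)


baseline_adr = 0.30   # hypothetical ADR with standard colonoscopy
relative_gain = 0.14  # ~14% relative improvement, as reported in the meta-analyses
ai_adr = apply_relative_gain(baseline_adr, relative_gain)
absolute_gain_pp = (ai_adr - baseline_adr) * 100  # in percentage points

print(f"AI-assisted ADR: {ai_adr:.1%} ({absolute_gain_pp:+.1f} percentage points)")
```

At a lower baseline ADR the same relative gain corresponds to a smaller absolute gain, which is why relative and absolute effects are best reported together.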

3.2. NLP for Data Structuring and Report Generation

Natural language processing (NLP) increases productivity in clinical oncology by converting unstructured text into analyzable data and standardizing documentation [41,42,43,44,45,46]. Oncology generates large volumes of free-text notes, including treatment histories, adverse events, response assessments, and progression narratives, that are difficult to query at scale. NLP methods help structure these records for quality improvement and research purposes.
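As a toy illustration of turning free text into structured fields, the sketch below applies regular expressions to a hypothetical note. The patterns and field names are assumptions for demonstration only; the NLP systems discussed here rely on trained models that also handle negation, abbreviations, and varied templates, which simple rules do not.

```python
import re

# Hypothetical free-text snippet from an oncology note.
NOTE = (
    "68M with stage IV NSCLC. EGFR exon 19 deletion detected. "
    "ECOG PS 1. CT shows RUL mass 3.2 cm, previously 4.5 cm."
)


def extract_fields(note):
    """Pull a few structured fields out of a free-text note (illustrative rules)."""
    record = {}
    # ECOG performance status: "ECOG 1", "ECOG PS 1", "ECOG of 1".
    ecog = re.search(r"\bECOG(?:\s+PS)?\s*(?:of\s*)?([0-4])\b", note, re.IGNORECASE)
    if ecog:
        record["ecog"] = int(ecog.group(1))
    # Lesion sizes, normalized to millimetres.
    sizes = re.findall(r"(\d+(?:\.\d+)?)\s*(cm|mm)\b", note)
    record["sizes_mm"] = [float(v) * (10 if unit == "cm" else 1) for v, unit in sizes]
    # Crude EGFR flag: a mutation keyword in the same sentence as "EGFR".
    record["egfr_mutant"] = bool(
        re.search(r"\bEGFR\b[^.]*\b(mutation|deletion|L858R)\b", note, re.IGNORECASE)
    )
    return record


print(extract_fields(NOTE))
```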

Recent medically specialized LLMs address these needs. Models such as Med-PaLM, Clinical-T5, and GatorTron are trained on medical corpora and show stronger understanding of clinical text than general-purpose models [46,47,48,49]. Notably, Woollie has been reported as an oncology-specific LLM with multi-institutional validation: trained on real-world data from Memorial Sloan Kettering Cancer Center across lung, breast, prostate, pancreatic, and colorectal cancers, it achieved an area under the receiver operating characteristic curve (AUROC) of 0.97 for progression prediction, with external validation at the University of California, San Francisco (UCSF) showing an AUROC of 0.88 [50]. The drop from 0.97 to 0.88 reflects the difficulty of generalizing across institutions and underscores the importance of diverse training data and rigorous external validation, although the model retained clinically relevant predictive capability.
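The AUROC values above have a direct interpretation: the probability that a randomly chosen patient who progressed receives a higher model score than a randomly chosen patient who did not. A minimal pure-Python sketch of that pairwise definition, with hypothetical labels and scores:

```python
def auroc(labels, scores):
    """AUROC as the probability that a random positive outranks a random negative.

    Ties count as one half (Mann-Whitney formulation). The pairwise loop is
    O(n_pos * n_neg): fine for illustration, too slow for large cohorts.
    """
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative case")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


# Hypothetical progression labels (1 = progressed) and model risk scores.
labels = [1, 1, 1, 0, 0, 0, 0]
scores = [0.9, 0.8, 0.4, 0.5, 0.3, 0.2, 0.1]
print(round(auroc(labels, scores), 3))
```

Here 11 of the 12 positive-negative pairs are ranked correctly; a drop in AUROC on external data means more such pairs are misordered at the new site.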

Generative AI is also being used for radiology report drafting [51,52,53,54,55]. These systems can generate structured reports based on imaging findings, standardize terminology, and help ensure the inclusion of critical elements, such as tumor measurements and response assessments according to the Response Evaluation Criteria in Solid Tumors (RECIST) guidelines. This automation can reduce reporting variability and improve consistency in evaluating treatment responses across radiologists and institutions. One study reported a 30% reduction in initial report generation time, although comprehensive evaluations that include radiologists’ verification and correction time remain limited [56].
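The response categories such reports carry follow fixed arithmetic on the sum of target-lesion diameters. This is a simplified sketch of the RECIST 1.1 target-lesion rules, assuming diameters in millimetres; it deliberately ignores lymph-node short-axis criteria, non-target lesions, and other details of the full guideline, so it is not a substitute for it:

```python
def recist_target_response(baseline_sum_mm, nadir_sum_mm, current_sum_mm,
                           new_lesions=False):
    """Simplified RECIST 1.1 category from sums of target-lesion diameters (mm).

    nadir_sum_mm is the smallest sum recorded so far, including baseline.
    Lymph-node short-axis rules and non-target lesions are ignored.
    """
    if new_lesions:
        return "PD"  # unequivocal new lesions mean progression
    if current_sum_mm == 0:
        return "CR"  # all target lesions have disappeared
    increase = current_sum_mm - nadir_sum_mm
    # Progression: at least 20% above the nadir AND an absolute increase of at least 5 mm.
    if increase >= 0.20 * nadir_sum_mm and increase >= 5:
        return "PD"
    # Partial response: at least a 30% decrease from the baseline sum.
    if baseline_sum_mm - current_sum_mm >= 0.30 * baseline_sum_mm:
        return "PR"
    return "SD"


# Hypothetical patient: baseline sum 80 mm, best (nadir) sum so far 50 mm.
for current in (0, 52, 62):
    print(current, recist_target_response(80, 50, current))
print(70, recist_target_response(80, 80, 70))
```

Progression is checked before partial response because it is measured from the nadir, so a lesion sum can still be well below baseline and yet qualify as progression.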

Finally, AI supports clinical trial operations by automating patient-protocol matching. Systems parse biomarker requirements, prior therapies, and performance status from EHRs, identify potentially eligible patients, and flag candidates for screening [56,57,58,59,60,61,62]. Programs using real-time matching from automatically extracted criteria have reported up to a 50% increase in enrollment in precision oncology trials [57]. Integration with e-consent and automated pre-screening is expected to further streamline recruitment and expand access.
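Automated pre-screening of the kind described above reduces, at its core, to checking extracted patient attributes against protocol criteria. A minimal sketch, assuming the biomarkers, prior therapies, and ECOG performance status have already been extracted from the EHR; the trial criteria and patient records are hypothetical, and flagged patients would still go to human screening:

```python
from dataclasses import dataclass, field


@dataclass
class Patient:
    patient_id: str
    biomarkers: set       # alterations extracted from molecular reports
    prior_therapies: set  # agents extracted from treatment history
    ecog: int             # ECOG performance status extracted from notes


@dataclass
class TrialCriteria:
    required_biomarkers: set
    excluded_prior_therapies: set = field(default_factory=set)
    max_ecog: int = 1


def is_potentially_eligible(patient, criteria):
    """True if the patient passes every automated pre-screening check."""
    return (
        criteria.required_biomarkers <= patient.biomarkers  # all required present
        and not (criteria.excluded_prior_therapies & patient.prior_therapies)
        and patient.ecog <= criteria.max_ecog
    )


def prescreen(patients, criteria):
    """Return IDs of patients to flag for human eligibility review."""
    return [p.patient_id for p in patients if is_potentially_eligible(p, criteria)]


# Hypothetical trial and patients.
trial = TrialCriteria(required_biomarkers={"KRAS G12C"},
                      excluded_prior_therapies={"KRAS G12C inhibitor"},
                      max_ecog=1)
patients = [
    Patient("P001", {"KRAS G12C"}, {"carboplatin", "pembrolizumab"}, 1),
    Patient("P002", {"KRAS G12C"}, {"KRAS G12C inhibitor"}, 0),
    Patient("P003", {"EGFR L858R"}, set(), 0),
    Patient("P004", {"KRAS G12C", "STK11"}, {"docetaxel"}, 2),
]
print(prescreen(patients, trial))
```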

3.3. AI-Powered Research Support Tools

AI improves research productivity by making literature searches more efficient and by accelerating data analysis, allowing investigators to spend more time on hypothesis generation and interpretation rather than manual screening and coding. General-purpose, LLM-based chat systems (e.g., ChatGPT, Gemini) can answer academic questions from their pretraining data alone (Figure 3). Hallucination, however, remains a critical safety concern in healthcare applications: these systems can generate plausible-sounding but factually incorrect information with high confidence, potentially leading to dangerous clinical decisions. Newer “deep research” or web-enabled modes mitigate this issue by running targeted searches at query time, gathering recent sources, and returning cited summaries. In practice, these modes offer a faster and more comprehensive starting point for literature reviews than manual keyword searches alone. Coverage and citation quality nonetheless vary by platform and query, so source verification remains essential.

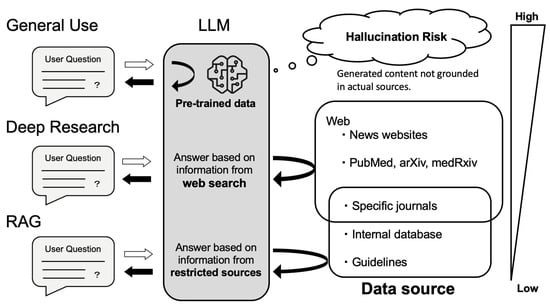

Figure 3.

How LLMs Answer Questions: Source Dependency and Hallucination Risk. General use relies solely on pre-trained data, which may result in hallucinations when content is not grounded in actual sources. Deep research mode performs real-time searches across openly available sources such as news websites, PubMed, arXiv, and medRxiv, allowing access to more recent but not necessarily curated information. Retrieval-augmented generation (RAG) answers questions based on specified, high-quality sources—including specific journals, internal databases, and clinical guidelines—thus providing the most reliable responses by constraining outputs to verified and authoritative content.

Reliability can be strengthened further with retrieval-augmented generation (RAG), which grounds model outputs in documents fetched from trusted corpora (e.g., PubMed, guidelines, institutional repositories) [63,64]. Conceptually, RAG follows three steps: retrieving passages relevant to the question; augmenting the prompt with those excerpts and bibliographic metadata; and generating an answer that quotes or cites the retrieved sources. Because the model is constrained to what it has “in context,” factual drift is less likely. Performance depends on corpus quality and retrieval ranking. Medical tools such as OpenEvidence use this approach [65]. The American Society of Clinical Oncology (ASCO) Guideline Assistant similarly restricts responses to curated ASCO content, improving reliability by design [66]. While RAG reduces the risk of hallucination, it does not eliminate the risk if retrieved content is incomplete or incorrect. This is why human verification of all outputs remains essential, particularly for clinical decision-making.
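The retrieve, augment, and generate steps can be sketched as follows. This minimal example rests on several assumptions: the corpus entries are placeholders, retrieval uses simple word overlap rather than the BM25 or embedding-based ranking typical of production systems, and the generation step is left out, so the code only builds the grounded prompt that would be sent to an approved model.

```python
import re
from collections import Counter

# Placeholder corpus standing in for curated guidelines or journal content.
CORPUS = [
    {"id": "G1", "source": "Placeholder lung cancer guideline, section 4",
     "text": "EGFR-mutant non-small cell lung cancer: recommendations for "
             "adjuvant targeted therapy after resection."},
    {"id": "G2", "source": "Placeholder breast cancer guideline, section 2",
     "text": "HER2-positive breast cancer: recommendations for neoadjuvant therapy."},
    {"id": "G3", "source": "Placeholder screening guideline, section 1",
     "text": "Colorectal cancer screening intervals for average-risk adults."},
]

STOPWORDS = {"a", "an", "the", "is", "for", "of", "what", "after", "to", "in"}


def tokens(text):
    """Lower-cased word counts, minus a tiny stopword list."""
    return Counter(w for w in re.findall(r"[a-z0-9]+", text.lower())
                   if w not in STOPWORDS)


def retrieve(question, corpus, k=2):
    """Step 1: rank passages by word overlap with the question (toy scorer)."""
    q = tokens(question)
    scored = [(sum((q & tokens(doc["text"])).values()), doc) for doc in corpus]
    ranked = sorted(scored, key=lambda pair: pair[0], reverse=True)
    return [doc for score, doc in ranked[:k] if score > 0]


def build_prompt(question, passages):
    """Step 2: augment the prompt with numbered, citable excerpts."""
    context = "\n".join(
        f"[{i}] ({doc['source']}) {doc['text']}" for i, doc in enumerate(passages, 1)
    )
    return (
        "Answer using ONLY the numbered sources below and cite them as [n]. "
        "If they do not contain the answer, say so.\n\n"
        f"{context}\n\nQuestion: {question}"
    )


question = "What adjuvant therapy is recommended for resected EGFR-mutant lung cancer?"
prompt = build_prompt(question, retrieve(question, CORPUS))
print(prompt)  # Step 3 (generation) would send this prompt to an approved model.
```

The instruction to answer only from the numbered sources, and to say so when they are insufficient, is what constrains the model to its context; the quality of the final answer still depends on what the retrieval step returns.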

Beyond search, LLMs lower the barrier to quantitative work by translating plain-language requests into statistical code or running analyses directly [67]. Tasks that once required SPSS/SAS expertise or R/Python coding (e.g., survival analysis, multivariable regression, and subgroup analyses) can now begin with prompts such as “compare progression-free survival between arms, adjusting for age and performance status.” This “vibe coding” workflow—iteratively stating the intent of the analysis and having the system draft or revise code—helps clinician-researchers prototype quickly [68]. While vibe coding offers remarkable convenience, it carries substantial risks when users lack fundamental statistical and analytical knowledge. Outputs still require method review (e.g., assumptions, model choice, and multiple testing), reproducibility safeguards (version-controlled code, fixed random seeds, environment capture), and appropriate governance for patient data.
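As an example of code a clinician-researcher might ask an LLM to draft, and then check line by line, here is a minimal Kaplan-Meier estimator in plain Python. It handles censoring and tied times but omits confidence intervals, log-rank tests, and covariate adjustment (e.g., a Cox model), which should come from validated statistical packages. The toy follow-up data are hypothetical:

```python
def kaplan_meier(times, events):
    """Return [(time, survival probability)] at each distinct event time.

    times  : follow-up per patient (e.g., months to progression or censoring)
    events : 1 if progression/death was observed at that time, 0 if censored
    Patients censored at an event time are still counted as at risk then.
    """
    data = sorted(zip(times, events))
    n_at_risk = len(data)
    surv = 1.0
    curve = []
    i = 0
    while i < len(data):
        t = data[i][0]
        observed = removed = 0
        while i < len(data) and data[i][0] == t:  # group tied times
            observed += data[i][1]
            removed += 1
            i += 1
        if observed:
            surv *= 1 - observed / n_at_risk  # product-limit step
            curve.append((t, surv))
        n_at_risk -= removed
    return curve


# Hypothetical progression-free survival data for one trial arm (months).
times = [2, 3, 3, 5, 8, 8, 10]
events = [1, 1, 0, 1, 0, 1, 0]
for t, s in kaplan_meier(times, events):
    print(t, round(s, 3))
```

Checking a drafted estimator against a small hand-computed case like this one (6/7 after the first event, then 5/7) is one inexpensive safeguard before trusting its output on real data.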

Although commercial AI tools are useful, clinicians must exercise caution when entering medical information into chat interfaces. Avoid submitting protected health information or potentially identifying details to public or consumer services. When clinical use is required, rely on institution-approved enterprise deployments with formal data-processing agreements, role-based access control, audit logging, and explicit data-retention settings (including disabling provider training on inputs) [69]. Prefer de-identified or synthetic data in prompts, and, when feasible, keep retrieval and model inference on-premises or within a virtual private cloud under institutional governance.
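Where de-identified prompts are required, a first-pass redaction step can be sketched with regular expressions. The patterns below are hypothetical and deliberately incomplete (for example, they miss names and addresses); rule-based redaction like this is not a validated de-identification method and does not by itself satisfy institutional or regulatory requirements:

```python
import re

# Illustrative patterns only; NOT a validated de-identification method.
PATTERNS = [
    ("MRN", re.compile(r"\bMRN[:#]?\s*\d{6,10}\b", re.IGNORECASE)),
    ("DATE", re.compile(r"\b\d{1,2}/\d{1,2}/\d{2,4}\b")),
    ("PHONE", re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b")),
    ("EMAIL", re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.]+\b")),
]


def redact(text):
    """Replace each matched identifier with a bracketed placeholder label."""
    for label, pattern in PATTERNS:
        text = pattern.sub(f"[{label}]", text)
    return text


# Hypothetical note fragment.
note = "Pt MRN: 12345678 seen 03/14/2024, call 555-123-4567 or jdoe@example.org."
print(redact(note))
```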

4. Creative Discovery in Clinical Oncology

While productivity gains are important, AI’s greater impact in oncology may be its ability to enable new discoveries. By analyzing complex, high-dimensional data, AI moves beyond optimizing existing tasks and supports the identification of previously unrecognized biological relationships and the design of new diagnostic and treatment approaches. This section examines how AI contributes to creative discovery in precision oncology.

4.1. Computational Biomarkers for Precision Oncology

AI is reshaping biomarker development by shifting the focus from single-analyte markers to computational biomarkers: patterns learned from combinations of images, molecular profiles, and clinical data. Whereas traditional efforts focused on a specific protein or mutation linked to disease or treatment response, AI extends this approach by modeling non-linear interactions across modalities to capture tumor biology more completely.

The most active line of work is multimodal deep learning (MDL), which integrates histopathology (“pathomics”), radiology (“radiomics”), genomics, and clinical records to create unified patient-level representations [70,71,72] (Figure 4). The Multimodal transformer with Unified maSKed modeling (MUSK) from Stanford exemplifies this approach as a vision-language system that pretrains on large, largely unpaired corpora of pathology image patches and clinical text, then aligns image–text pairs for downstream tasks [32]. In evaluations of lung and gastroesophageal cancers, MUSK outperformed the standard programmed cell death ligand 1 (PD-L1) biomarker alone in predicting immunotherapy benefit and surpassed clinicopathologic baselines for survival-related endpoints. These gains reflect the value of combining visual pathological features with contextual information from EHRs.
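MUSK learns a joint vision-language representation through large-scale pretraining, which is beyond a short example. The simpler idea of combining modalities into one patient-level representation can be sketched as late fusion: normalize each modality's embedding, concatenate them, and apply a prediction head. The embeddings, weights, and bias here are hypothetical, and this is not MUSK's architecture:

```python
import math


def l2_normalize(vec):
    """Scale a vector to unit length so no modality dominates by magnitude."""
    norm = math.sqrt(sum(v * v for v in vec)) or 1.0
    return [v / norm for v in vec]


def late_fusion(embeddings, modality_order):
    """Concatenate normalized per-modality embeddings in a fixed order."""
    fused = []
    for name in modality_order:
        fused.extend(l2_normalize(embeddings[name]))
    return fused


def predict_benefit(fused, weights, bias):
    """Logistic prediction head over the fused patient representation."""
    if len(weights) != len(fused):
        raise ValueError("weight vector must match the fused embedding length")
    z = bias + sum(w * x for w, x in zip(weights, fused))
    return 1.0 / (1.0 + math.exp(-z))


# Hypothetical embeddings from a pathology encoder and a clinical-text encoder.
patient = {"pathology": [3.0, 4.0], "clinical_text": [1.0, 0.0]}
fused = late_fusion(patient, ["pathology", "clinical_text"])
prob = predict_benefit(fused, weights=[1.0, 1.0, -1.0, 0.0], bias=0.0)
print(fused, round(prob, 3))
```

In practice the head (and often the encoders) would be trained on outcome labels; the fixed weights here only show how information from both modalities enters a single prediction.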

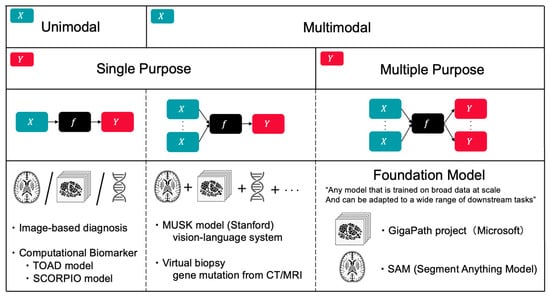

Figure 4.

Classification of AI Models by Input Modality and Output Task Multiplicity. Framework for categorizing AI models in oncology based on data modality (unimodal vs. multimodal) and task scope (single vs. multiple purpose). Examples include image-based diagnosis and computational biomarkers (TOAD, SCORPIO) for unimodal applications; MUSK model (Stanford vision-language system) and virtual biopsy for multimodal single-purpose tasks; and foundation models (GigaPath, SAM) that leverage multimodal inputs for multiple downstream applications. This classification illustrates the evolution toward integrated, versatile AI systems in precision oncology.

A useful distinction can be made between noninvasive “virtual biopsies” and histology-derived molecular surrogates [5,33,73]. Virtual biopsy refers to inferring histologic or genomic attributes directly from clinical imaging without tissue sampling—for example, predicting epidermal growth factor receptor (EGFR) mutation status from baseline computed tomography (CT) in non-small cell lung cancer or isocitrate dehydrogenase (IDH) mutation/1p/19q codeletion from brain magnetic resonance imaging (MRI) [74,75,76]. By contrast, histology-derived surrogates use hematoxylin and eosin (H&E) slides to infer molecular features (e.g., microsatellite instability (MSI) in colorectal cancer or Oncotype DX–like recurrence scores in breast cancer) [77,78,79,80,81]. The former may reduce the need for an initial biopsy; the latter can reduce add-on assays and cost while still relying on tissue obtained for standard care. Both require prospective, multi-site validation before routine adoption.

Beyond histology-derived surrogates, computational biomarkers with the potential to influence treatment decisions are emerging. The TOAD (Tumour Origin Assessment via Deep learning) model estimates tissue of origin from routine H&E WSIs [82]. Trained on 17,486 slides spanning 18 primary sites, it achieved top-1/top-3 accuracies of approximately 0.83/0.96 on an internal test set and 0.80/0.93 on an external test set; in a curated cancer of unknown primary (CUP) cohort (n = 317), its top-three predictions matched pathologists’ differentials in 82% of cases. By providing slide-level attention maps and calibrated probabilities, TOAD can prioritize ancillary testing and assist in the diagnostic workup. Another example is the SCORPIO (Standard Clinical and labOratory featuRes for Prognostication of Immunotherapy Outcomes) model, which predicts immunotherapy outcomes using only routine laboratory panels (complete blood counts and metabolic profiles) and basic clinical variables [83]. Developed on an institutional cohort (n ≈ 1600) and validated across internal sets (n ≈ 2500), multicenter phase-3 trials (n ≈ 4400), and an external real-world cohort (n ≈ 1100), the SCORPIO model was evaluated in nearly 10,000 patients treated with immune checkpoint inhibitors (ICIs) across more than 20 cancer types. SCORPIO outperformed tumor mutational burden (TMB) and PD-L1 in predicting overall survival and clinical benefit, offering a low-cost risk stratifier that can complement genomic tests where access is limited.
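The top-1 and top-3 accuracies reported for TOAD measure how often the true primary site is the model's first choice or among its three highest-ranked sites. A minimal sketch of the metric, with hypothetical predicted probabilities:

```python
def top_k_accuracy(predictions, true_sites, k):
    """Fraction of cases whose true site is among the k highest-probability sites."""
    hits = 0
    for probs, truth in zip(predictions, true_sites):
        ranked = sorted(probs, key=probs.get, reverse=True)  # sites, best first
        if truth in ranked[:k]:
            hits += 1
    return hits / len(true_sites)


# Hypothetical per-case probabilities over candidate primary sites.
predictions = [
    {"lung": 0.7, "breast": 0.2, "colorectal": 0.1},
    {"lung": 0.5, "pancreas": 0.3, "colorectal": 0.2},
    {"breast": 0.6, "ovary": 0.3, "lung": 0.1},
    {"colorectal": 0.4, "stomach": 0.35, "pancreas": 0.15, "lung": 0.1},
]
true_sites = ["lung", "pancreas", "ovary", "lung"]

print(top_k_accuracy(predictions, true_sites, k=1))
print(top_k_accuracy(predictions, true_sites, k=3))
```

A high top-3 accuracy is what makes such a model useful for narrowing the differential and choosing which ancillary tests to run first.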

4.2. Unsupervised Discovery and Deep Clinical Phenotyping

Clinical courses in cancer vary widely across patients. This heterogeneity reflects diversity within tumor cells as well as germline genetic factors and environmental influences. To manage these differences, patient stratification has long been a core goal. Early approaches relied on morphology (histologic subtype) and on staging systems such as TNM (and FIGO for gynecologic malignancies). These frameworks, derived from surgical and pathologic assessments, have guided decisions about extent of disease and initial management. Over time, molecular classification based on driver alterations has become central to treatment. Examples include selecting targeted therapies for EGFR-mutant lung cancer or human epidermal growth factor receptor 2 (HER2)-positive breast cancer [84,85,86].

Machine learning enables data-driven stratification that may reveal patterns not easily recognized by clinicians. In a 2019 study from RIKEN and Jikei University, investigators analyzed 435 patients with ovarian tumors using age and 32 preoperative laboratory measurements [87]. A supervised model distinguished malignant from benign tumors with an AUROC of 0.968. Applying unsupervised clustering to the same variables identified two groups within early-stage ovarian cancer: one with laboratory patterns resembling benign disease (cluster 1) and another resembling advanced cancer (cluster 2). Cluster 1 showed very low recurrence, whereas cluster 2 had higher recurrence and mortality, indicating a previously unrecognized subtype linked to prognosis based solely on preoperative data.
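The unsupervised step can be illustrated with k-means, one common clustering algorithm. This is a generic sketch, not necessarily the method used in the cited study; the two "laboratory" features are hypothetical standardized values, and the fixed random seed makes the result reproducible:

```python
import math
import random


def kmeans(points, k, n_iter=100, seed=0):
    """Plain k-means: alternate nearest-centroid assignment and centroid update."""
    rng = random.Random(seed)          # fixed seed for reproducibility
    centroids = rng.sample(points, k)  # initialize from k distinct patients

    def nearest(p):
        return min(range(k), key=lambda j: math.dist(p, centroids[j]))

    for _ in range(n_iter):
        clusters = [[] for _ in range(k)]
        for p in points:
            clusters[nearest(p)].append(p)
        updated = [
            tuple(sum(coord) / len(members) for coord in zip(*members))
            if members else centroids[j]  # keep an empty cluster's centroid
            for j, members in enumerate(clusters)
        ]
        if updated == centroids:
            break  # assignments have stabilized
        centroids = updated
    return [nearest(p) for p in points], centroids


# Hypothetical standardized (z-scored) values of two preoperative lab tests.
patients = [(-1.2, -0.9), (-0.8, -1.1), (-1.0, -1.0),  # benign-like profile
            (1.4, 1.3), (1.6, 1.1), (1.5, 1.2)]        # advanced-like profile
labels, centroids = kmeans(patients, k=2)
print(labels)
```

No outcome labels are used during clustering; associating the resulting groups with recurrence or survival afterwards is what turns a cluster into a candidate prognostic subtype.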

Recent work combines high-dimensional omics with DL to define subtypes grounded in tumor biology or hidden tissue structures. In hepatocellular carcinoma, for example, proteogenomic studies integrating whole-genome sequencing, RNA sequencing, and quantitative proteomics have identified three molecular classes: immune-“hot” with favorable outcomes, proliferative and TP53-enriched with angiogenic signaling and poorer outcomes, and CTNNB1-enriched with mTOR-pathway activation [88,89,90]. Candidate protein markers emerging from these analyses suggest targets for risk stratification and therapy development. Spatial omics extends phenotyping by mapping cell types and their interactions in situ [91,92,93,94]. Spatial transcriptomic analyses of lung adenocarcinoma show that, as tumors progress from in situ to invasive stages, the tumor microenvironment shifts from a relatively balanced immune milieu to a more immunosuppressive landscape. Incorporating such spatial information supports classification along immune context (often summarized as “hot” versus “cold” tumors).

Complementing these tumor-focused approaches, deep phenotyping emphasizes comprehensive, longitudinal profiling of individual physiology beyond the tumor itself [95,96,97,98]. This strategy integrates genomic sequencing, blood-based omics (e.g., metabolomics and proteomics), microbiome analysis, and wearable device data to capture each patient’s unique biological state over time. Importantly, while medicine has accumulated vast knowledge about individual causal mechanisms through experiments and clinical trials, integrating these fragmented insights remains challenging. AI for Medicine addresses this by combining data-driven models with domain knowledge—incorporating biological pathways and causal constraints into AI architectures rather than treating them as black boxes. This hybrid approach enables discovery of novel patterns while respecting established mechanistic understanding. By monitoring multiple physiological scales simultaneously, deep phenotyping can detect early transitions from wellness to disease and inform personalized treatment decisions based on the individual’s complete biological context rather than tumor characteristics alone. This holistic approach represents a paradigm shift toward predictive, preventive, personalized, and participatory (P4) medicine, which could transform cancer care by enabling earlier intervention and more precise therapeutic matching [99,100]. By quantifying disease heterogeneity and predicting individual trajectories, AI-enhanced deep phenotyping moves us closer to the dual goals of precision oncology: optimal treatment selection for existing cancers and proactive identification of at-risk individuals for prevention.

4.3. Drug Discovery

Drug discovery has long been slow, costly, and associated with high attrition rates. A recent breakthrough, DeepMind’s AlphaFold, addressed the decades-old “protein-folding problem,” and its developers, Demis Hassabis and John Jumper, shared the 2024 Nobel Prize in Chemistry [101]. By accurately predicting three-dimensional structures from sequences at the proteome scale, AlphaFold removes a frequent bottleneck in structure-based design and makes structural hypotheses more accessible. Building on this progress, deep generative models now design novel small molecules while predictive models triage candidates, forming a computational pipeline from target structure to testable chemical matter.

An end-to-end example has been reported for hepatocellular carcinoma. Using an integrated platform (Pharma.AI), disease-gene analyses identified CDK20 as a target (PandaOmics), an AlphaFold2 model supplied the target structure, and a generative module (Chemistry42) produced approximately 9000 candidate molecules [102,103]. Seven of these molecules were synthesized and tested; one showed promising activity in liver cancer cells and advanced as a lead, all within roughly 30 days from design initiation. A second example concerns WSB1, a protein linked to metastasis through hypoxia-response pathways in lung and pancreatic cancers but historically underexplored as a drug target [104]. Investigators built an AlphaFold2 model of WSB1, refined it with molecular simulation, and conducted virtual screening across millions of compounds. Approximately 20 candidates were selected for synthesis and testing, yielding four lead molecules. These cases demonstrate how structure prediction and large-scale in silico triage can produce tractable chemical matter for previously neglected targets.
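The triage step between generating thousands of candidates and synthesizing a handful can be sketched as filtering followed by ranking. In this illustrative example the filter is Lipinski's rule of five (a classic drug-likeness heuristic, substituted here as a stand-in; it is not the published pipelines' criterion), and the activity scores stand in for the output of a hypothetical predictive model:

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    name: str
    mol_weight: float       # daltons
    logp: float             # predicted octanol-water partition coefficient
    h_donors: int
    h_acceptors: int
    predicted_score: float  # hypothetical activity model output (higher = better)


def rule_of_five_violations(c):
    """Count Lipinski rule-of-five violations for one candidate."""
    return sum([c.mol_weight > 500, c.logp > 5, c.h_donors > 5, c.h_acceptors > 10])


def triage(candidates, top_n, max_violations=1):
    """Drop poorly drug-like molecules, then keep the top-scoring ones."""
    passing = [c for c in candidates if rule_of_five_violations(c) <= max_violations]
    passing.sort(key=lambda c: c.predicted_score, reverse=True)
    return [c.name for c in passing[:top_n]]


# Hypothetical generated molecules with precomputed properties.
candidates = [
    Candidate("A", 420.0, 3.1, 2, 6, 0.91),
    Candidate("B", 610.0, 6.2, 1, 8, 0.95),   # two violations: filtered out
    Candidate("C", 380.0, 2.4, 1, 5, 0.72),
    Candidate("D", 520.0, 4.0, 3, 9, 0.88),   # one violation: tolerated
    Candidate("E", 300.0, 1.5, 6, 11, 0.60),  # two violations: filtered out
]
print(triage(candidates, top_n=2))
```

Only the shortlisted molecules would proceed to synthesis and laboratory testing, mirroring the narrowing from thousands of designs to a few compounds described above.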

AlphaFold has been a breakthrough for drug discovery, including cancer therapeutics, but its limitations are well recognized [105,106,107,108]. It predicts a protein’s most stable shape; however, it does not model how the protein moves. Drug binding often depends on motion, such as small shifts when a compound binds, long-range effects within the protein, hidden pockets that open and close, or changes when proteins form complexes. Cancer-related mutations and chemical modifications can also alter these shapes. Therefore, drug development pipelines should incorporate computer simulations of protein motion, test binding against several plausible protein shapes, and confirm results with laboratory assays before moving candidates forward.

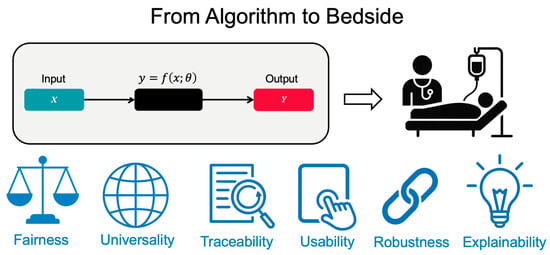

5. From Algorithm to Bedside: A FUTURE-AI–Informed Guide for Oncologists

AI supports both productivity and creativity in clinical oncology, and its use will continue to grow. Turning published models into tools that work at the bedside takes more than accuracy alone; it also requires trust, fairness, and fit with day-to-day workflows. The FUTURE-AI framework, developed by 117 experts from 50 countries, offers six practical principles to guide this work: fairness, universality, traceability, usability, robustness, and explainability [109] (Figure 5).

Figure 5.

The FUTURE-AI Framework: Essential Requirements for Clinical AI Implementation. The FUTURE-AI framework defines six essential principles for successful bedside implementation: Fairness (equitable performance across populations), Universality (generalization across settings), Traceability (transparent documentation), Usability (workflow integration), Robustness (real-world reliability), and Explainability (interpretable decisions). Each domain has a different maximum score, and the domain scores sum to 30 points, providing a quantitative assessment of clinical readiness.

5.1. Fairness: Ensuring Equitable AI Performance

AI models must perform consistently across all patient populations to avoid perpetuating health disparities [110,111,112,113,114,115,116]. For example, a melanoma detection algorithm trained primarily on images of light-skinned individuals may show significantly reduced sensitivity for darker-skinned patients, potentially delaying life-saving diagnoses. Similarly, breast cancer detection systems may perform differently depending on breast density, which varies across ethnic groups [117,118]. Achieving fairness requires deliberate strategies throughout development. First, teams must collect representative data across demographic groups, socioeconomic backgrounds, and geographic regions [119]. Federated learning is a powerful solution that enables institutions to collaborate on model training without sharing sensitive patient data: each hospital trains the model on its local population and shares only (typically encrypted) parameter updates. This approach has been used to develop algorithms that maintain accuracy across diverse groups [120,121,122]. When disparities are detected, techniques such as adversarial debiasing and fairness-aware ML can help correct imbalances while preserving diagnostic accuracy [123].
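The aggregation step at the heart of federated learning can be illustrated with federated averaging (FedAvg), one common scheme; the cited studies may use other variants. This is a minimal sketch under two assumptions: each site returns a flattened parameter vector after local training, and each site is weighted by its local sample count. Encryption or secure aggregation of the updates is omitted, and the hospital values are hypothetical.

```python
from typing import Sequence

Weights = list[float]  # toy representation: a model's parameters, flattened


def federated_average(site_weights: Sequence[Weights],
                      site_sizes: Sequence[int]) -> Weights:
    """FedAvg-style aggregation: weight each site's parameters by its sample count.

    Only parameter vectors reach the coordinator; patient records stay on site.
    """
    if not site_weights or len(site_weights) != len(site_sizes):
        raise ValueError("need one parameter vector per site")
    dim = len(site_weights[0])
    if any(len(w) != dim for w in site_weights):
        raise ValueError("all sites must train the same model architecture")
    total = sum(site_sizes)
    if total <= 0:
        raise ValueError("sites must report a positive number of samples")
    return [
        sum(w[i] * n for w, n in zip(site_weights, site_sizes)) / total
        for i in range(dim)
    ]


if __name__ == "__main__":
    # Hypothetical round: two hospitals return locally updated parameters.
    hospital_a = [1.0, 2.0]  # trained on 100 local patients
    hospital_b = [3.0, 0.0]  # trained on 300 local patients
    global_model = federated_average([hospital_a, hospital_b], [100, 300])
    print(global_model)  # [2.5, 0.5]
```

In a full protocol, the averaged model is sent back to every site for the next round of local training, and this cycle repeats until the model converges.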

5.2. Universality: Building Models for Diverse Clinical Settings

For AI to transform cancer care globally, models must generalize beyond their development environment [124]. A mammography algorithm developed on equipment from one manufacturer must perform equally well with systems from other vendors. This challenge extends to variations in imaging protocols, patient populations, and even staining techniques in digital pathology [125,126]. Transfer learning provides a critical solution by leveraging knowledge from large, pre-trained models. Rather than requiring millions of oncology-specific images, developers can adapt models originally trained on general photographs to detect lung nodules or classify pathology slides using only thousands of examples [127,128,129]. However, achieving true universality demands more than technical solutions. It also requires standardization efforts across institutions, including harmonized imaging protocols, common data formats, and shared quality metrics that enable models to maintain performance when deployed in new settings [130,131].
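The core pattern of this kind of adaptation can be sketched briefly: reuse a frozen feature extractor and train only a small task-specific head (often called linear probing). This is a toy illustration, not a clinical pipeline, and it rests on stated assumptions. `frozen_backbone` is a hypothetical stand-in for a pretrained network, the "images" are short lists of pixel intensities, and the labels are invented. Full fine-tuning, which also updates the backbone's weights, is a common alternative.

```python
import math
from typing import Sequence

Features = list[float]


def frozen_backbone(image: Sequence[float]) -> Features:
    """Hypothetical stand-in for a pretrained network's feature layer.

    In practice this would be a network pretrained on general photographs
    with its weights frozen; here it is a fixed function that is never updated.
    """
    mean = sum(image) / len(image)
    contrast = max(image) - min(image)
    return [mean, contrast]


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def train_linear_head(feats: list[Features], labels: list[int],
                      lr: float = 0.5, epochs: int = 500) -> tuple[list[float], float]:
    """Fit only a logistic-regression head on the frozen features (plain SGD)."""
    w = [0.0] * len(feats[0])
    b = 0.0
    for _ in range(epochs):
        for x, y in zip(feats, labels):
            # Gradient of the log-loss with respect to the logit is (p - y).
            err = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b) - y
            w = [wi - lr * err * xi for wi, xi in zip(w, x)]
            b -= lr * err
    return w, b


def predict(w: list[float], b: float, x: Features) -> float:
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


if __name__ == "__main__":
    # Toy "images" (pixel intensities); label 1 marks a hypothetical nodule.
    images = [[0.1, 0.1, 0.2, 0.1], [0.2, 0.1, 0.1, 0.2],
              [0.1, 0.9, 0.1, 0.1], [0.2, 0.1, 0.8, 0.2]]
    labels = [0, 0, 1, 1]
    feats = [frozen_backbone(img) for img in images]  # backbone is never trained
    w, b = train_linear_head(feats, labels)
    print([round(predict(w, b, f), 2) for f in feats])
```

Because only the head's few parameters are learned, far fewer labeled examples are needed than for training the whole network. This is the point the paragraph makes about adapting general-purpose models with thousands rather than millions of images.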

5.3. Traceability: Creating Transparent AI Systems

The path from training data to clinical decision must be fully documented and auditable [132,133,134,135,136]. Comprehensive traceability serves multiple purposes: enabling rapid identification of problems, facilitating regulatory approval, and building institutional trust. This includes documenting data sources and patient demographics, recording all preprocessing steps and augmentation techniques, tracking model architecture choices and hyperparameter selections, maintaining version control for both code and data, and logging validation results across different test sets. Modern AI platforms increasingly include built-in traceability features that automatically log training runs, data lineage, and performance metrics [137,138]. These systems generate regulatory-ready documentation, streamlining the path from research to clinical deployment. When models fail or show unexpected behavior, this documentation allows for a quick root cause analysis and corrective action.
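Below is a minimal sketch of the kind of audit record such platforms log for each training run. It assumes that a content hash of the training data, a code version string, the ordered preprocessing steps, the hyperparameters, and per-test-set validation metrics are enough to reproduce and audit a run. The field names and the `make_run_record` and `append_to_log` helpers are hypothetical; they are not the schema of any specific platform.

```python
import hashlib
import json
from datetime import datetime, timezone


def make_run_record(dataset_bytes: bytes, code_version: str,
                    preprocessing: list[str], hyperparams: dict,
                    metrics: dict) -> dict:
    """Build an audit record tying a trained model to its exact inputs.

    Hashing the training data means any later change to the data, however
    small, produces a different fingerprint.
    """
    return {
        "timestamp_utc": datetime.now(timezone.utc).isoformat(),
        "dataset_sha256": hashlib.sha256(dataset_bytes).hexdigest(),
        "code_version": code_version,    # e.g., a version-control commit ID
        "preprocessing": preprocessing,  # ordered list of steps applied
        "hyperparameters": hyperparams,
        "validation_metrics": metrics,   # keyed by test set
    }


def append_to_log(record: dict, path: str) -> None:
    """Append one JSON object per line (JSON Lines) so earlier runs are kept."""
    with open(path, "a", encoding="utf-8") as fh:
        fh.write(json.dumps(record, sort_keys=True) + "\n")


if __name__ == "__main__":
    # All values below are placeholders, not results from any real model.
    record = make_run_record(
        dataset_bytes=b"...contents of the training manifest...",
        code_version="<commit-id>",
        preprocessing=["resample_to_1mm", "intensity_normalize"],
        hyperparams={"learning_rate": 1e-4, "epochs": 50},
        metrics={"internal_test": {"auroc": None}, "external_test": {"auroc": None}},
    )
    print(json.dumps(record, indent=2))
```

When a deployed model misbehaves, comparing the data hash and preprocessing steps of the current run against earlier records is often the fastest way to see what changed.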

5.4. Usability: Integrating AI into Clinical Workflows

Even the most accurate AI model will fail if oncologists cannot use it effectively [139,140,141]. A lung nodule detection system that requires a separate login, manual image upload, and minutes of processing will see only minimal adoption, regardless of its accuracy. Successful integration requires AI to operate seamlessly within existing tools, such as highlighting suspicious regions directly within the Picture Archiving and Communication System (PACS), automatically flagging high-risk cases for priority review, and integrating predictions into EHRs. Usability encompasses more than technical integration. AI should reduce, not increase, the cognitive load on clinicians. This means presenting information at an appropriate level of detail, providing probability estimates with confidence intervals rather than binary predictions, and allowing easy access to supporting evidence. Development must involve oncologists from the beginning to ensure that interfaces are intuitive and outputs are clinically meaningful. Training materials and hands-on sessions help clinicians understand both the capabilities and limitations of AI.
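One simple way to report a risk estimate with a range instead of a yes/no label is to summarize the spread across an ensemble of independently trained models. This is a minimal sketch under that assumption. An ensemble percentile range is a rough uncertainty indicator, not a calibrated confidence interval; methods such as conformal prediction provide stronger guarantees. The model outputs and the `summarize_prediction` helper are hypothetical.

```python
import statistics
from typing import Sequence


def summarize_prediction(ensemble_probs: Sequence[float]) -> str:
    """Report a risk estimate with an uncertainty range instead of a yes/no label.

    The range is the 5th to 95th percentile of the ensemble members' outputs,
    a spread-based interval that is not guaranteed to be calibrated.
    """
    if len(ensemble_probs) < 2:
        raise ValueError("need at least two ensemble members")
    cuts = statistics.quantiles(ensemble_probs, n=20, method="inclusive")
    low, high = cuts[0], cuts[-1]  # 5th and 95th percentiles
    mean = statistics.fmean(ensemble_probs)
    return (f"Estimated malignancy risk {mean:.0%} "
            f"(90% range {low:.0%} to {high:.0%} across {len(ensemble_probs)} models)")


if __name__ == "__main__":
    # Hypothetical outputs of five independently trained models for one nodule.
    print(summarize_prediction([0.30, 0.35, 0.40, 0.45, 0.50]))
```

A wide range signals that the models disagree and the case may deserve closer review. A narrow range around a low value supports routine handling.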

5.5. Robustness: Sustained Performance Under Real-World Conditions

Laboratory performance does not always translate directly to clinical practice [142,143,144,145,146]. Models must maintain accuracy when faced with variations in image quality, missing data, equipment differences, and the full spectrum of real-world complexity. A pathology AI trained on archival-quality slides may fail when confronted with routine clinical specimens that have artifacts, varying stain quality, or tissue folding. Building robust models requires diverse training data that includes edge cases and imperfect examples. Data augmentation techniques, such as artificially introducing noise, rotation, and color variations, help models learn invariance to common perturbations. Continuous monitoring systems detect when models encounter data outside their training distribution or when performance begins to degrade [147,148,149,150,151]. Some systems implement continuous learning, allowing models to adapt to changing practices and populations while preventing “catastrophic forgetting” of previously learned patterns. Furthermore, models must be resilient against deliberate threats, including data poisoning attacks that could corrupt the training data or adversarial attacks designed to cause misclassification at inference time [152]. Safeguarding against these security vulnerabilities is critical for clinical deployment.
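One simple monitoring signal compares the distribution of an input feature at deployment against the training data, for example a per-slide stain-intensity score. The sketch below uses the population stability index (PSI), a drift metric borrowed from credit-risk modeling. The choice of metric, the binning scheme, the 0.25 alert threshold, and the example data are all assumptions; FUTURE-AI does not prescribe them.

```python
import math
from typing import Sequence


def population_stability_index(reference: Sequence[float],
                               current: Sequence[float],
                               bins: int = 10, eps: float = 1e-6) -> float:
    """PSI between training-time (reference) and deployment-time (current) values.

    Bins are equal-width over the reference range; out-of-range values are
    clipped into the edge bins, and empty bins get a small floor (eps) so the
    logarithm stays finite.
    """
    lo, hi = min(reference), max(reference)
    width = (hi - lo) / bins or 1.0  # guard against a constant reference

    def proportions(values: Sequence[float]) -> list[float]:
        counts = [0] * bins
        for v in values:
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        return [max(c / len(values), eps) for c in counts]

    ref_p, cur_p = proportions(reference), proportions(current)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref_p, cur_p))


if __name__ == "__main__":
    # Hypothetical per-slide stain-intensity scores.
    training = [i / 100 for i in range(100)]
    stable_site = [i / 100 + 0.005 for i in range(100)]
    drifted_site = [0.5 + i / 200 for i in range(100)]  # e.g., a new scanner
    for name, batch in [("stable", stable_site), ("drifted", drifted_site)]:
        psi = population_stability_index(training, batch)
        # Rule-of-thumb threshold (an assumption): PSI > 0.25 suggests major shift.
        print(name, round(psi, 3), "ALERT" if psi > 0.25 else "ok")
```

An alert like this does not prove that accuracy has dropped; it flags that the model is now seeing inputs unlike its training data, which should trigger a targeted performance check.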

5.6. Explainability: Making AI Decisions Understandable

The “black box” nature of DL remains a fundamental barrier to clinical trust [153,154,155,156,157]. Oncologists need to understand not only what an AI predicts but also why, especially when its recommendations differ from clinical judgment. Explainable AI techniques provide these insights through various methods. For imaging applications, techniques like Grad-CAM generate heatmaps showing which regions influenced a model’s decision, highlighting specific areas of a mammogram that suggest malignancy or pathology features indicative of aggressive disease [158,159,160,161]. For tabular data, SHAP values can rank the importance of different clinical factors and show whether a prognosis was driven primarily by tumor size, patient age, or biomarker status [162,163,164,165]. However, explainability must balance completeness with usability. The most effective systems provide layered explanations, offering simple summaries for routine cases and detailed analyses when needed, while always acknowledging uncertainty and limitations.
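SHAP values are Shapley values from cooperative game theory, so the underlying idea can be shown by computing them exactly for a toy model with three clinical inputs. This is a minimal sketch under stated assumptions. The risk score and its coefficients are hypothetical, and a single reference patient serves as the baseline. The `shap` library instead uses faster model-specific or sampling-based algorithms and averages over a background dataset, so the code illustrates the attribution rule, not that library's API.

```python
from itertools import combinations
from math import factorial
from typing import Callable, Mapping

Model = Callable[[Mapping[str, float]], float]


def shapley_values(model: Model, patient: Mapping[str, float],
                   baseline: Mapping[str, float]) -> dict[str, float]:
    """Exact Shapley values by enumerating every coalition of features.

    Features outside a coalition are set to their baseline value. The cost
    grows as 2**n_features, so this is only practical for a few features.
    """
    features = list(patient)
    n = len(features)

    def value(coalition: set[str]) -> float:
        x = {f: (patient[f] if f in coalition else baseline[f]) for f in features}
        return model(x)

    phi: dict[str, float] = {}
    for f in features:
        others = [g for g in features if g != f]
        total = 0.0
        for k in range(n):  # size of the coalition that f joins
            weight = factorial(k) * factorial(n - k - 1) / factorial(n)
            for subset in combinations(others, k):
                s = set(subset)
                total += weight * (value(s | {f}) - value(s))
        phi[f] = total
    return phi


def toy_risk(x: Mapping[str, float]) -> float:
    """Hypothetical linear risk score; coefficients are invented."""
    return 0.02 * x["tumor_size_mm"] + 0.01 * x["age"] + 0.3 * x["biomarker_pos"]


if __name__ == "__main__":
    patient = {"tumor_size_mm": 40, "age": 70, "biomarker_pos": 1}
    reference = {"tumor_size_mm": 20, "age": 60, "biomarker_pos": 0}
    for name, contrib in shapley_values(toy_risk, patient, reference).items():
        print(f"{name}: {contrib:+.2f}")
```

The contributions always sum to the difference between the patient's predicted risk and the baseline prediction. This additivity is what lets SHAP show how much each factor drove a particular prognosis.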

5.7. Future Directions

Current evidence reveals significant gaps between aspirations and reality. In a systematic review of AI research on radiological imaging for soft-tissue and bone tumors, the mean FUTURE-AI score was only 5.1 out of 30, indicating a substantial gap between current practice and established guidelines [166]. Beyond technical challenges, regulatory frameworks for AI in oncology are still evolving, with significant gaps in liability assignment when AI-assisted decisions lead to adverse outcomes [124]. Key unresolved questions include the following: Who bears responsibility when AI recommendations cause harm? What constitutes adequate evidence for AI tool approval? These regulatory uncertainties create barriers to implementation and require urgent attention from policymakers, professional societies, and healthcare institutions. Coordinated efforts across multiple stakeholders are required to move forward: developers must incorporate these principles from the outset, institutions must build robust infrastructure for responsible deployment, and regulators must establish frameworks that balance safety with innovation. Most importantly, the oncology community must participate in developing AI systems that address genuine clinical needs, ensuring that artificial intelligence augments, rather than replaces, the physician’s essential role in cancer care. Finally, as model complexity and scale increase, the oncology community should consider the environmental cost of computational requirements. Training a single large language model has been estimated to generate emissions equivalent to approximately 300,000 kg of CO2, roughly five times the lifetime emissions of an average automobile [165,166]. Sustainable AI development requires a commitment to algorithmic efficiency, careful model selection (avoiding unnecessarily large models when smaller ones suffice), and transparent reporting of computational costs. Progress in healthcare must not come at the expense of planetary health.
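Transparent reporting of computational costs can start from a back-of-the-envelope estimate. The sketch below is minimal and assumes that operational emissions scale with four factors: hardware power draw, run time, data-center overhead (power usage effectiveness, PUE), and the carbon intensity of the local grid. The inputs in the example are hypothetical placeholders. The estimate also ignores embodied emissions from manufacturing the hardware.

```python
def training_emissions_kg(n_gpus: int, gpu_power_kw: float, hours: float,
                          pue: float, grid_kg_co2_per_kwh: float) -> float:
    """Rough operational CO2-equivalent emissions of one training run.

    energy (kWh)   = GPUs x average draw per GPU (kW) x hours x PUE
    emissions (kg) = energy x carbon intensity of the local grid (kg CO2e/kWh)
    """
    if min(n_gpus, gpu_power_kw, hours, grid_kg_co2_per_kwh) < 0 or pue < 1:
        raise ValueError("inputs must be non-negative and PUE must be >= 1")
    energy_kwh = n_gpus * gpu_power_kw * hours * pue
    return energy_kwh * grid_kg_co2_per_kwh


if __name__ == "__main__":
    # Placeholder inputs: 8 GPUs at 0.3 kW for 10 days, PUE 1.5, 0.4 kg CO2e/kWh.
    kg = training_emissions_kg(8, 0.3, 240, 1.5, 0.4)
    print(f"{kg:.1f} kg CO2e")
```

Reporting the inputs alongside the result lets readers compare runs: the same training job on a lower-carbon grid or a more efficient data center yields proportionally lower emissions.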

6. Conclusions: Toward Collaborative Intelligence in Oncology

This review traced the evolution of AI in oncology, from traditional ML through DL to transformer-based foundation models, demonstrating its dual impact on enhancing productivity and facilitating creative discovery. While AI can automate diagnostic tasks, streamline workflows, and enable novel insights through computational biomarkers and drug discovery, its successful clinical implementation requires more than technical accuracy. The FUTURE-AI framework emphasizes fairness, universality, traceability, usability, robustness, and explainability in deployment. Moving forward demands a collaborative approach in which AI augments, rather than replaces, clinical judgment, with algorithms handling data-intensive tasks and clinicians providing contextual understanding and personalized care. To achieve this, we need prospective outcome studies, standardization across institutions, and comprehensive education for oncologists. Only through such coordinated efforts can we ensure that AI advances precision medicine while maintaining the patient-centered approach that is fundamental to quality cancer care.

Author Contributions

Conceptualization, M.K., H.O. and K.S.; investigation, M.K., H.O., T.I. and J.O.; writing—original draft preparation, M.K.; writing—review and editing, H.O., E.S., S.U., K.Y. (Kaoru Yoshikawa), A.O., T.I., J.O. and K.S.; visualization, M.K.; supervision, K.Y. (Kensei Yamaguchi) and K.S. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
| --- | --- |
| ADR | Adenoma Detection Rate |
| AI | Artificial Intelligence |
| ASCO | American Society of Clinical Oncology |
| AUROC | Area Under the Receiver Operating Characteristic Curve |
| CDK | Cyclin-Dependent Kinase |
| CNN | Convolutional Neural Network |
| CT | Computed Tomography |
| CTNNB1 | Catenin Beta 1 |
| CUP | Cancer of Unknown Primary |
| DL | Deep Learning |
| EGFR | Epidermal Growth Factor Receptor |
| EHR | Electronic Health Record |
| FDA | Food and Drug Administration |
| FIGO | International Federation of Gynecology and Obstetrics |
| Grad-CAM | Gradient-weighted Class Activation Mapping |
| H&E | Hematoxylin and Eosin |
| HER2 | Human Epidermal Growth Factor Receptor 2 |
| ICI | Immune Checkpoint Inhibitor |
| IDH | Isocitrate Dehydrogenase |
| LLM | Large Language Model |
| MASAI | Mammography Screening with Artificial Intelligence |
| MDL | Multimodal Deep Learning |
| ML | Machine Learning |
| MRI | Magnetic Resonance Imaging |
| MSI | Microsatellite Instability |
| mTOR | Mammalian Target of Rapamycin |
| MUSK | Multimodal transformer with Unified maSKed modeling |
| NLP | Natural Language Processing |
| P4 | Predictive, Preventive, Personalized, and Participatory |
| PACS | Picture Archiving and Communication System |
| PD-L1 | Programmed Cell Death Ligand 1 |
| RAG | Retrieval-Augmented Generation |
| RECIST | Response Evaluation Criteria in Solid Tumors |
| RNA | Ribonucleic Acid |
| RNN | Recurrent Neural Network |
| SCORPIO | Standard Clinical and labOratory featuRes for Prognostication of Immunotherapy Outcomes |
| SHAP | SHapley Additive exPlanations |
| SVM | Support Vector Machine |
| TMB | Tumor Mutational Burden |
| TNM | Tumor, Node, Metastasis |
| TOAD | Tumor Origin Assessment via Deep learning |
| TP53 | Tumor Protein p53 |
| UCSF | University of California, San Francisco |
| WSB | WD repeat and suppressor of cytokine signaling box containing |
| WSI | Whole-Slide Image |

References

- Lotter, W.; Hassett, M.J.; Schultz, N.; Kehl, K.L.; Van Allen, E.M.; Cerami, E. Artificial Intelligence in Oncology: Current Landscape, Challenges, and Future Directions. Cancer Discov. 2024, 14, 711–726. [Google Scholar] [CrossRef]

- Bhinder, B.; Gilvary, C.; Madhukar, N.S.; Elemento, O. Artificial Intelligence in Cancer Research and Precision Medicine. Cancer Discov. 2021, 11, 900–915. [Google Scholar] [CrossRef]

- Tiwari, A.; Mishra, S.; Kuo, T.-R. Current AI Technologies in Cancer Diagnostics and Treatment. Mol. Cancer 2025, 24, 159. [Google Scholar] [CrossRef]

- El Naqa, I.; Karolak, A.; Luo, Y.; Folio, L.; Tarhini, A.A.; Rollison, D.; Parodi, K. Translation of AI into Oncology Clinical Practice. Oncogene 2023, 42, 3089–3097. [Google Scholar] [CrossRef]

- Marra, A.; Morganti, S.; Pareja, F.; Campanella, G.; Bibeau, F.; Fuchs, T.; Loda, M.; Parwani, A.; Scarpa, A.; Reis-Filho, J.S.; et al. Artificial Intelligence Entering the Pathology Arena in Oncology: Current Applications and Future Perspectives. Ann. Oncol. 2025, 36, 712–725. [Google Scholar] [CrossRef]

- Beam, A.L.; Drazen, J.M.; Kohane, I.S.; Leong, T.-Y.; Manrai, A.K.; Rubin, E.J. Artificial Intelligence in Medicine. N. Engl. J. Med. 2023, 388, 1220–1221. [Google Scholar] [CrossRef]

- Howell, M.D.; Corrado, G.S.; DeSalvo, K.B. Three Epochs of Artificial Intelligence in Health Care. JAMA J. Am. Med. Assoc. 2024, 331, 242–244. [Google Scholar]

- Topol, E.J. High-Performance Medicine: The Convergence of Human and Artificial Intelligence. Nat. Med. 2019, 25, 44–56. [Google Scholar] [CrossRef] [PubMed]

- Tran, K.A.; Kondrashova, O.; Bradley, A.; Williams, E.D.; Pearson, J.V.; Waddell, N. Deep Learning in Cancer Diagnosis, Prognosis and Treatment Selection. Genome Med. 2021, 13, 152. [Google Scholar] [CrossRef] [PubMed]

- Wainberg, M.; Merico, D.; Delong, A.; Frey, B.J. Deep Learning in Biomedicine. Nat. Biotechnol. 2018, 36, 829–838. [Google Scholar] [CrossRef] [PubMed]

- Avanzo, M.; Stancanello, J.; Pirrone, G.; Drigo, A.; Retico, A. The Evolution of Artificial Intelligence in Medical Imaging: From Computer Science to Machine and Deep Learning. Cancers 2024, 16, 3702. [Google Scholar] [CrossRef]

- Cruz, G.A.; Wishart, D.S. Applications of Machine Learning in Cancer Prediction and Prognosis. Cancer Inform. 2007, 2, 59–77. [Google Scholar]

- Kourou, K.; Exarchos, T.P.; Exarchos, K.P.; Karamouzis, M.V.; Fotiadis, D.I. Machine Learning Applications in Cancer Prognosis and Prediction. Comput. Struct. Biotechnol. J. 2015, 13, 8–17. [Google Scholar] [CrossRef]

- Tseng, H.-H.; Wei, L.; Cui, S.; Luo, Y.; Haken, R.K.T.; El Naqa, I. Machine Learning and Imaging Informatics in Oncology. Oncology 2020, 98, 344–362. [Google Scholar] [CrossRef] [PubMed]

- Deng, J.; Dong, W.; Socher, R.; Li, L.-J.; Li, K.; Fei-Fei, L. ImageNet: A large-scale hierarchical image database. In Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; pp. 248–255. [Google Scholar] [CrossRef]

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet Classification with Deep Convolutional Neural Networks. Commun. ACM 2017, 60, 84–90. [Google Scholar] [CrossRef]

- Litjens, G.; Kooi, T.; Bejnordi, B.E.; Setio, A.A.A.; Ciompi, F.; Ghafoorian, M.; van der Laak, J.A.W.M.; van Ginneken, B.; Sánchez, C.I. A Survey on Deep Learning in Medical Image Analysis. Med. Image Anal. 2017, 42, 60–88. [Google Scholar] [CrossRef]

- Esteva, A.; Kuprel, B.; Novoa, R.A.; Ko, J.; Swetter, S.M.; Blau, H.M.; Thrun, S. Dermatologist-Level Classification of Skin Cancer with Deep Neural Networks. Nature 2017, 542, 115–118. [Google Scholar] [CrossRef] [PubMed]

- Coudray, N.; Ocampo, P.S.; Sakellaropoulos, T.; Narula, N.; Snuderl, M.; Fenyö, D.; Moreira, A.L.; Razavian, N.; Tsirigos, A. Classification and Mutation Prediction from Non-Small Cell Lung Cancer Histopathology Images Using Deep Learning. Nat. Med. 2018, 24, 1559–1567. [Google Scholar] [CrossRef]

- Elman, J.L. Finding Structure in Time. Cogn. Sci. 1990, 14, 179–211. [Google Scholar] [CrossRef]

- Werbos, P.J. Backpropagation through Time: What It Does and How to Do It. Proc. IEEE 1990, 78, 1550–1560. [Google Scholar]

- Azizi, S.; Bayat, S.; Yan, P.; Tahmasebi, A.; Kwak, J.T.; Xu, S.; Turkbey, B.; Choyke, P.; Pinto, P.; Wood, B.; et al. Deep Recurrent Neural Networks for Prostate Cancer Detection: Analysis of Temporal Enhanced Ultrasound. IEEE Trans. Med. Imaging 2018, 37, 2695–2703. [Google Scholar] [CrossRef]

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, L.; Polosukhin, I. Attention Is All You Need. arXiv 2017. [Google Scholar] [CrossRef]

- Pai, S.; Bontempi, D.; Hadzic, I.; Prudente, V.; Sokač, M.; Chaunzwa, T.L.; Bernatz, S.; Hosny, A.; Mak, R.H.; Birkbak, N.J.; et al. Foundation Model for Cancer Imaging Biomarkers. Nat. Mach. Intell. 2024, 6, 354–367. [Google Scholar] [CrossRef]

- Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An Image Is Worth 16x16 Words: Transformers for Image Recognition at Scale. arXiv 2020. [Google Scholar] [CrossRef]

- Yan, S.; Yu, Z.; Primiero, C.; Vico-Alonso, C.; Wang, Z.; Yang, L.; Tschandl, P.; Hu, M.; Ju, L.; Tan, G.; et al. A Multimodal Vision Foundation Model for Clinical Dermatology. Nat. Med. 2025, 31, 2691–2702. [Google Scholar] [CrossRef]

- Cui, H.; Wang, C.; Maan, H.; Pang, K.; Luo, F.; Duan, N.; Wang, B. scGPT: Toward Building a Foundation Model for Single-Cell Multi-Omics Using Generative AI. Nat. Methods 2024, 21, 1470–1480. [Google Scholar]

- Bommasani, R.; Hudson, D.A.; Adeli, E.; Altman, R.; Arora, S.; von Arx, S.; Bernstein, M.S.; Bohg, J.; Bosselut, A.; Brunskill, E.; et al. On the Opportunities and Risks of Foundation Models. arXiv 2021. [Google Scholar] [CrossRef]

- Korfiatis, P.; Kline, T.L.; Meyer, H.M.; Khalid, S.; Leiner, T.; Loufek, B.T.; Blezek, D.; Vidal, D.E.; Hartman, R.P.; Joppa, L.J.; et al. Implementing Artificial Intelligence Algorithms in the Radiology Workflow: Challenges and Considerations. Mayo Clin. Proc. Digit. Health 2025, 3, 100188. [Google Scholar]

- Lång, K.; Josefsson, V.; Larsson, A.-M.; Larsson, S.; Högberg, C.; Sartor, H.; Hofvind, S.; Andersson, I.; Rosso, A. Artificial Intelligence-Supported Screen Reading versus Standard Double Reading in the Mammography Screening with Artificial Intelligence Trial (MASAI): A Clinical Safety Analysis of a Randomised, Controlled, Non-Inferiority, Single-Blinded, Screening Accuracy Study. Lancet Oncol. 2023, 24, 936–944. [Google Scholar]

- Hernström, V.; Josefsson, V.; Sartor, H.; Schmidt, D.; Larsson, A.-M.; Hofvind, S.; Andersson, I.; Rosso, A.; Hagberg, O.; Lång, K. Screening Performance and Characteristics of Breast Cancer Detected in the Mammography Screening with Artificial Intelligence Trial (MASAI): A Randomised, Controlled, Parallel-Group, Non-Inferiority, Single-Blinded, Screening Accuracy Study. Lancet Digit. Health 2025, 7, e175–e183. [Google Scholar]

- Xiang, J.; Wang, X.; Zhang, X.; Xi, Y.; Eweje, F.; Chen, Y.; Li, Y.; Bergstrom, C.; Gopaulchan, M.; Kim, T.; et al. A Vision-Language Foundation Model for Precision Oncology. Nature 2025, 638, 769–778. [Google Scholar] [CrossRef]

- Bera, K.; Schalper, K.A.; Rimm, D.L.; Velcheti, V.; Madabhushi, A. Artificial Intelligence in Digital Pathology—New Tools for Diagnosis and Precision Oncology. Nat. Rev. Clin. Oncol. 2019, 16, 703–715. [Google Scholar] [CrossRef]

- Baxi, V.; Edwards, R.; Montalto, M.; Saha, S. Digital Pathology and Artificial Intelligence in Translational Medicine and Clinical Practice. Mod. Pathol. 2022, 35, 23–32. [Google Scholar]

- McGenity, C.; Clarke, E.L.; Jennings, C.; Matthews, G.; Cartlidge, C.; Freduah-Agyemang, H.; Stocken, D.D.; Treanor, D. Artificial Intelligence in Digital Pathology: A Systematic Review and Meta-Analysis of Diagnostic Test Accuracy. npj Digit. Med. 2024, 7, 114. [Google Scholar] [CrossRef]

- Xu, H.; Usuyama, N.; Bagga, J.; Zhang, S.; Rao, R.; Naumann, T.; Wong, C.; Gero, Z.; González, J.; Gu, Y.; et al. A Whole-Slide Foundation Model for Digital Pathology from Real-World Data. Nature 2024, 630, 181–188. [Google Scholar] [PubMed]

- Byrne, M.F.; Chapados, N.; Soudan, F.; Oertel, C.; Pérez, M.L.; Kelly, R.; Iqbal, N.; Chandelier, F.; Rex, D.K. Real-Time Differentiation of Adenomatous and Hyperplastic Diminutive Colorectal Polyps during Analysis of Unaltered Videos of Standard Colonoscopy Using a Deep Learning Model. Gut 2019, 68, 94–100. [Google Scholar] [PubMed]

- Mori, Y.; Bretthauer, M.; Kalager, M. Hopes and Hypes for Artificial Intelligence in Colorectal Cancer Screening. Gastroenterology 2021, 161, 774–777. [Google Scholar] [CrossRef]

- Hassan, C.; Spadaccini, M.; Iannone, A.; Maselli, R.; Jovani, M.; Chandrasekar, V.T.; Antonelli, G.; Yu, H.; Areia, M.; Dinis-Ribeiro, M.; et al. Performance of Artificial Intelligence in Colonoscopy for Adenoma and Polyp Detection: A Systematic Review and Meta-Analysis. Gastrointest. Endosc. 2021, 93, 77–85.e6. [Google Scholar] [CrossRef]

- Hassan, C.; Spadaccini, M.; Mori, Y.; Foroutan, F.; Facciorusso, A.; Gkolfakis, P.; Tziatzios, G.; Triantafyllou, K.; Antonelli, G.; Khalaf, K.; et al. Real-Time Computer-Aided Detection of Colorectal Neoplasia during Colonoscopy: A Systematic Review and Meta-Analysis. Ann. Intern. Med. 2023, 176, 1209–1220. [Google Scholar] [CrossRef]

- Ahmad, A.; Wilson, A.; Haycock, A.; Humphries, A.; Monahan, K.; Suzuki, N.; Thomas-Gibson, S.; Vance, M.; Bassett, P.; Thiruvilangam, K.; et al. Evaluation of a Real-Time Computer-Aided Polyp Detection System during Screening Colonoscopy: AI-DETECT Study. Endoscopy 2023, 55, 313–319. [Google Scholar] [PubMed]

- Karsenti, D.; Tharsis, G.; Perrot, B.; Cattan, P.; Sert, A.P.D.; Venezia, F.; Zrihen, E.; Gillet, A.; Lab, J.-P.; Tordjman, G.; et al. Effect of Real-Time Computer-Aided Detection of Colorectal Adenoma in Routine Colonoscopy (COLO-GENIUS): A Single-Centre Randomised Controlled Trial. Lancet Gastroenterol. Hepatol. 2023, 8, 726–734. [Google Scholar]

- Yim, W.-W.; Yetisgen, M.; Harris, W.P.; Kwan, S.W. Natural Language Processing in Oncology: A Review. JAMA Oncol. 2016, 2, 797–804. [Google Scholar] [CrossRef] [PubMed]

- Kehl, K.L.; Xu, W.; Lepisto, E.; Elmarakeby, H.; Hassett, M.J.; Van Allen, E.M.; Johnson, B.E.; Schrag, D. Natural Language Processing to Ascertain Cancer Outcomes from Medical Oncologist Notes. JCO Clin. Cancer Inform. 2020, 4, 680–690. [Google Scholar] [CrossRef]

- Savova, G.K.; Danciu, I.; Alamudun, F.; Miller, T.; Lin, C.; Bitterman, D.S.; Tourassi, G.; Warner, J.L. Use of Natural Language Processing to Extract Clinical Cancer Phenotypes from Electronic Medical Records. Cancer Res. 2019, 79, 5463–5470. [Google Scholar] [CrossRef]

- Jee, J.; Fong, C.; Pichotta, K.; Tran, T.N.; Luthra, A.; Waters, M.; Fu, C.; Altoe, M.; Liu, S.-Y.; Maron, S.B.; et al. Automated Real-World Data Integration Improves Cancer Outcome Prediction. Nature 2024, 636, 728–736. [Google Scholar] [CrossRef]

- Singhal, K.; Azizi, S.; Tu, T.; Mahdavi, S.S.; Wei, J.; Chung, H.W.; Scales, N.; Tanwani, A.; Cole-Lewis, H.; Pfohl, S.; et al. Large Language Models Encode Clinical Knowledge. Nature 2023, 620, 172–180. [Google Scholar] [CrossRef]

- Chen, D.; Alnassar, S.A.; Avison, K.E.; Huang, R.S.; Raman, S. Large Language Model Applications for Health Information Extraction in Oncology: Scoping Review. JMIR Cancer 2025, 11, e65984. [Google Scholar] [CrossRef]

- Shah, N.H.; Entwistle, D.; Pfeffer, M.A. Creation and Adoption of Large Language Models in Medicine. JAMA J. Am. Med. Assoc. 2023, 330, 866–869. [Google Scholar] [CrossRef]

- Zhu, M.; Lin, H.; Jiang, J.; Jinia, A.J.; Jee, J.; Pichotta, K.; Waters, M.; Rose, D.; Schultz, N.; Chalise, S.; et al. Large Language Model Trained on Clinical Oncology Data Predicts Cancer Progression. npj Digit. Med. 2025, 8, 397. [Google Scholar] [CrossRef]

- Rao, V.M.; Hla, M.; Moor, M.; Adithan, S.; Kwak, S.; Topol, E.J.; Rajpurkar, P. Multimodal Generative AI for Medical Image Interpretation. Nature 2025, 639, 888–896. [Google Scholar] [CrossRef] [PubMed]

- Izhar, A.; Idris, N.; Japar, N. Medical Radiology Report Generation: A Systematic Review of Current Deep Learning Methods, Trends, and Future Directions. Artif. Intell. Med. 2025, 168, 103220. [Google Scholar] [CrossRef] [PubMed]

- Wang, X.; Figueredo, G.; Li, R.; Zhang, W.E.; Chen, W.; Chen, X. A Survey of Deep-Learning-Based Radiology Report Generation Using Multimodal Inputs. Med. Image Anal. 2025, 103, 103627. [Google Scholar]

- Hong, K.E.; Ham, J.; Roh, B.; Gu, J.; Park, B.; Kang, S.; You, K.; Eom, J.; Bae, B.; Jo, J.-B.; et al. Diagnostic Accuracy and Clinical Value of a Domain-Specific Multimodal Generative AI Model for Chest Radiograph Report Generation. Radiology 2025, 314, e241476. [Google Scholar] [CrossRef]

- Huang, J.; Wittbrodt, M.T.; Teague, C.N.; Karl, E.; Galal, G.; Thompson, M.; Chapa, A.; Chiu, M.-L.; Herynk, B.; Linchangco, R.; et al. Efficiency and Quality of Generative AI-Assisted Radiograph Reporting. JAMA Netw. Open 2025, 8, e2513921. [Google Scholar] [CrossRef] [PubMed]

- Wornow, M.; Lozano, A.; Dash, D.; Jindal, J.; Mahaffey, K.W.; Shah, N.H. Zero-Shot Clinical Trial Patient Matching with LLMs. NEJM AI 2025, 2, AIcs2400360. [Google Scholar] [CrossRef]

- Von Itzstein, M.S.; Hullings, M.; Mayo, H.; Beg, M.S.; Williams, E.L.; Gerber, D.E. Application of Information Technology to Clinical Trial Evaluation and Enrollment: A Review. JAMA Oncol. 2021, 7, 1559–1566. [Google Scholar] [CrossRef] [PubMed]

- Chow, R.; Midroni, J.; Kaur, J.; Boldt, G.; Liu, G.; Eng, L.; Liu, F.-F.; Haibe-Kains, B.; Lock, M.; Raman, S. Use of Artificial Intelligence for Cancer Clinical Trial Enrollment: A Systematic Review and Meta-Analysis. J. Natl. Cancer Inst. 2023, 115, 365–374. [Google Scholar] [CrossRef]

- Beck, T.J.; Rammage, M.; Jackson, G.P.; Preininger, A.M.; Dankwa-Mullan, I.; Roebuck, M.C.; Torres, A.; Holtzen, H.; Coverdill, S.E.; Williamson, M.P.; et al. Artificial Intelligence Tool for Optimizing Eligibility Screening for Clinical Trials in a Large Community Cancer Center. JCO Clin. Cancer Inform. 2020, 4, 50–59. [Google Scholar] [CrossRef]

- Lu, X.; Yang, C.; Liang, L.; Hu, G.; Zhong, Z.; Jiang, Z. Artificial Intelligence for Optimizing Recruitment and Retention in Clinical Trials: A Scoping Review. J. Am. Med. Inform. Assoc. JAMIA 2024, 31, 2749–2759. [Google Scholar] [CrossRef]

- Saady, M.; Eissa, M.; Yacoub, A.S.; Hamed, A.B.; Azzazy, H.M.E.-S. Implementation of Artificial Intelligence Approaches in Oncology Clinical Trials: A Systematic Review. Artif. Intell. Med. 2025, 161, 103066. [Google Scholar] [CrossRef]

- Homolak, J. Opportunities and Risks of ChatGPT in Medicine, Science, and Academic Publishing: A Modern Promethean Dilemma. Croat. Med. J. 2023, 64, 1–3. [Google Scholar]

- Lewis, P.; Perez, E.; Piktus, A.; Petroni, F.; Karpukhin, V.; Goyal, N.; Küttler, H.; Lewis, M.; Yih, W.-T.; Rocktäschel, T.; et al. Retrieval-Augmented Generation for Knowledge-Intensive NLP Tasks. arXiv 2020. [Google Scholar] [CrossRef]

- Low, S.Y.; Jackson, M.L.; Hyde, R.J.; Brown, R.E.; Sanghavi, N.M.; Baldwin, J.D.; Pike, C.W.; Muralidharan, J.; Hui, G.; Alexander, N.; et al. Answering Real-World Clinical Questions Using Large Language Model, Retrieval-Augmented Generation, and Agentic Systems. Digit. Health 2025, 11, 20552076251348850. Available online: https://www.asco.org/practice-patients/guidelines/assistant (accessed on 1 September 2025). [CrossRef]

- Ahn, S. Data Science through Natural Language with ChatGPT’s Code Interpreter. Transl. Clin. Pharmacol. 2024, 32, 73–82. [Google Scholar] [CrossRef]

- Sapkota, R.; Roumeliotis, K.I.; Karkee, M. Vibe Coding vs. Agentic Coding: Fundamentals and Practical Implications of Agentic AI. arXiv 2025. [Google Scholar] [CrossRef]

- Rezaeikhonakdar, D. AI Chatbots and Challenges of HIPAA Compliance for AI Developers and Vendors. J. Am. Soc. Law Med. Ethics 2023, 51, 988–995. [Google Scholar] [CrossRef]

- Boehm, K.M.; Khosravi, P.; Vanguri, R.; Gao, J.; Shah, S.P. Harnessing Multimodal Data Integration to Advance Precision Oncology. Nat. Rev. Cancer 2022, 22, 114–126. [Google Scholar] [CrossRef]

- Lipkova, J.; Chen, R.J.; Chen, B.; Lu, M.Y.; Barbieri, M.; Shao, D.; Vaidya, A.J.; Chen, C.; Zhuang, L.; Williamson, D.F.; et al. Artificial Intelligence for Multimodal Data Integration in Oncology. Cancer Cell 2022, 40, 1095–1110. [Google Scholar] [CrossRef] [PubMed]

- Khosravi, P.; Fuchs, T.J.; Ho, D.J. Artificial Intelligence-Driven Cancer Diagnostics: Enhancing Radiology and Pathology through Reproducibility, Explainability, and Multimodality. Cancer Res. 2025, 85, 2356–2367. [Google Scholar] [CrossRef]

- Prelaj, M.V.; Zanitti, M.; Trovo, F.; Genova, C.; Viscardi, G.; Rebuzzi, S.E.; Mazzeo, L.; Provenzano, L.; Kosta, S.; Favali, M.; et al. Artificial Intelligence for Predictive Biomarker Discovery in Immuno-Oncology: A Systematic Review. Ann. Oncol. 2024, 35, 29–65. [Google Scholar] [CrossRef] [PubMed]

- Jansen, R.W.; van Amstel, P.; Martens, R.M.; Kooi, I.E.; Wesseling, P.; de Langen, A.J.; Menke-van der Houven van Oordt, C.W.; Jansen, B.H.E.; Moll, A.C.; Dorsman, J.C.; et al. Non-Invasive Tumor Genotyping Using Radiogenomic Biomarkers, a Systematic Review and Oncology-Wide Pathway Analysis. Oncotarget 2018, 9, 20134–20155. [Google Scholar] [CrossRef]

- Awais, M.; Rehman, A.; Bukhari, S.S. Advances in Liquid Biopsy and Virtual Biopsy for Care of Patients with Glioma: A Narrative Review. Expert Rev. Anticancer Ther. 2025, 25, 529–550. [Google Scholar] [CrossRef]

- Arthur, A.; Johnston, E.W.; Winfield, J.M.; Blackledge, M.D.; Jones, R.L.; Huang, P.H.; Messiou, C. Virtual Biopsy in Soft Tissue Sarcoma. How Close Are We? Front. Oncol. 2023, 12, 892620. [Google Scholar] [CrossRef]

- Cifci, D.; Foersch, S.; Kather, J.N. Artificial Intelligence to Identify Genetic Alterations in Conventional Histopathology. J. Pathol. 2022, 257, 430–444. [Google Scholar] [CrossRef]

- Campanella, G.; Kumar, N.; Nanda, S.; Singi, S.; Fluder, E.; Kwan, R.; Muehlstedt, S.; Pfarr, N.; Schüffler, P.J.; Häggström, I.; et al. Real-World Deployment of a Fine-Tuned Pathology Foundation Model for Lung Cancer Biomarker Detection. Nat. Med. 2025. [Google Scholar] [CrossRef] [PubMed]

- Echle, A.; Grabsch, H.I.; Quirke, P.; van den Brandt, P.A.; West, N.P.; Hutchins, G.G.A.; Heij, L.R.; Tan, X.; Richman, S.D.; Krause, J.; et al. Clinical-Grade Detection of Microsatellite Instability in Colorectal Tumors by Deep Learning. Gastroenterology 2020, 159, 1406–1416.e11. [Google Scholar] [CrossRef] [PubMed]

- Guo, Y.; Lyu, T.; Liu, S.; Zhang, W.; Zhou, Y.; Zeng, C.; Wu, G. Learn to Estimate Genetic Mutation and Microsatellite Instability with Histopathology H&E Slides in Colon Carcinoma. Cancers 2022, 14, 4144. [Google Scholar] [CrossRef]

- Boehm, K.M.; El Nahhas, O.S.M.; Marra, A.; Waters, M.; Jee, J.; Braunstein, L.; Schultz, N.; Selenica, P.; Wen, H.Y.; Weigelt, B.; et al. Multimodal Histopathologic Models Stratify Hormone Receptor-Positive Early Breast Cancer. Nat. Commun. 2025, 16, 2106. [Google Scholar] [CrossRef] [PubMed]

- Lu, M.Y.; Chen, T.Y.; Williamson, D.F.K.; Zhao, M.; Shady, M.; Lipkova, J.; Mahmood, F. AI-Based Pathology Predicts Origins for Cancers of Unknown Primary. Nature 2021, 594, 106–110. [Google Scholar] [CrossRef]

- Yoo, S.-K.; Fitzgerald, C.W.; Cho, B.A.; Fitzgerald, B.G.; Han, C.; Koh, E.S.; Pandey, A.; Sfreddo, H.; Crowley, F.; Korostin, M.R.; et al. Prediction of Checkpoint Inhibitor Immunotherapy Efficacy for Cancer Using Routine Blood Tests and Clinical Data. Nat. Med. 2025, 31, 869–880. [Google Scholar] [CrossRef]

- Nangalia, J.; Campbell, P.J. Genome Sequencing during a Patient’s Journey through Cancer. N. Engl. J. Med. 2019, 381, 2145–2156. [Google Scholar] [CrossRef]

- Aleksakhina, S.N.; Imyanitov, E.N. Cancer Therapy Guided by Mutation Tests: Current Status and Perspectives. Int. J. Mol. Sci. 2021, 22, 10931. [Google Scholar] [CrossRef]

- Zardavas, D.; Irrthum, A.; Swanton, C.; Piccart, M. Clinical Management of Breast Cancer Heterogeneity. Nat. Rev. Clin. Oncol. 2015, 12, 381–394. [Google Scholar] [CrossRef] [PubMed]

- Kawakami, E.; Tabata, J.; Yanaihara, N.; Ishikawa, T.; Koseki, K.; Iida, Y.; Saito, M.; Komazaki, H.; Shapiro, J.S.; Goto, C.; et al. Application of Artificial Intelligence for Preoperative Diagnostic and Prognostic Prediction in Epithelial Ovarian Cancer Based on Blood Biomarkers. Clin. Cancer Res. 2019, 25, 3006–3015. [Google Scholar] [CrossRef] [PubMed]

- Chaudhary, K.; Poirion, O.B.; Lu, L.; Garmire, L.X. Deep Learning-Based Multi-Omics Integration Robustly Predicts Survival in Liver Cancer. Clin. Cancer Res. 2018, 24, 1248–1259. [Google Scholar]

- Fujita, M.; Chen, M.-J.M.; Siwak, D.R.; Sasagawa, S.; Oosawa-Tatsuguchi, A.; Arihiro, K.; Ono, A.; Miura, R.; Maejima, K.; Aikata, H.; et al. Proteo-Genomic Characterization of Virus-Associated Liver Cancers Reveals Potential Subtypes and Therapeutic Targets. Nat. Commun. 2022, 13, 6481. [Google Scholar] [CrossRef] [PubMed]

- Wang, M.; Yan, X.; Dong, Y.; Li, X.; Gao, B. Machine Learning and Multi-Omics Data Reveal Driver Gene-Based Molecular Subtypes in Hepatocellular Carcinoma for Precision Treatment. PLoS Comput. Biol. 2024, 20, e1012113. [Google Scholar] [CrossRef]

- Karin, J.; Mintz, R.; Raveh, B.; Nitzan, M. Interpreting Single-Cell and Spatial Omics Data Using Deep Neural Network Training Dynamics. Nat. Comput. Sci. 2024, 4, 941–954. [Google Scholar] [CrossRef]

- An, H.; Fang, W.; Chen, H.; Huang, W.; Liu, H.; Zhang, Z.; Zhao, H.; Zhang, Y.; Zhao, M.; Qiu, J.; et al. Spatial Transcriptomics Unveils Regional Heterogeneity and Subclonal Dynamics in the Lung Adenocarcinoma Microenvironment. Comput. Methods Programs Biomed. 2025, 270, 108929. [Google Scholar] [CrossRef]

- Takano, Y.; Suzuki, J.; Nomura, K.; Fujii, G.; Zenkoh, J.; Kawai, H.; Kuze, Y.; Kashima, Y.; Nagasawa, S.; Nakamura, Y.; et al. Spatially Resolved Gene Expression Profiling of Tumor Microenvironment Reveals Key Steps of Lung Adenocarcinoma Development. Nat. Commun. 2024, 15, 10637. [Google Scholar] [CrossRef]

- Bischoff, P.; Trinks, A.; Obermayer, B.; Pett, J.P.; Wiederspahn, J.; Uhlitz, F.; Liang, X.; Lehmann, A.; Jurmeister, P.; Elsner, A.; et al. Single-Cell RNA Sequencing Reveals Distinct Tumor Microenvironmental Patterns in Lung Adenocarcinoma. Oncogene 2021, 40, 6748–6758. [Google Scholar] [CrossRef]

- Yurkovich, J.T.; Tian, Q.; Price, N.D.; Hood, L. A Systems Approach to Clinical Oncology Uses Deep Phenotyping to Deliver Personalized Care. Nat. Rev. Clin. Oncol. 2020, 17, 183–194. [Google Scholar]

- Reicher, L.; Shilo, S.; Godneva, A.; Lutsker, G.; Zahavi, L.; Shoer, S.; Krongauz, D.; Rein, M.; Kohn, S.; Segev, T.; et al. Deep Phenotyping of Health-Disease Continuum in the Human Phenotype Project. Nat. Med. 2025, 31, 3191–3203. [Google Scholar] [CrossRef] [PubMed]

- Rose, S.M.S.-F.; Contrepois, K.; Moneghetti, K.J.; Zhou, W.; Mishra, T.; Mataraso, S.; Dagan-Rosenfeld, O.; Ganz, A.B.; Dunn, J.; Hornburg, D.; et al. A Longitudinal Big Data Approach for Precision Health. Nat. Med. 2019, 25, 792–804. [Google Scholar] [CrossRef]

- Yurkovich, J.T.; Evans, S.J.; Rappaport, N.; Boore, J.L.; Lovejoy, J.C.; Price, N.D.; Hood, L.E. The Transition from Genomics to Phenomics in Personalized Population Health. Nat. Rev. Genet. 2024, 25, 286–302. [Google Scholar] [PubMed]

- Tian, Q.; Price, N.D.; Hood, L. Systems Cancer Medicine: Towards Realization of Predictive, Preventive, Personalized and Participatory (P4) Medicine: Key Symposium: Systems Cancer Medicine. J. Intern. Med. 2012, 271, 111–121. [Google Scholar] [CrossRef]

- Sakurada, K.; Ishikawa, T.; Oba, J.; Kuno, M.; Okano, Y.; Sakamaki, T.; Tamura, T. Medical AI and AI for Medical Sciences. JMA J. 2025, 8, 26–37. [Google Scholar]

- Jumper, J.; Evans, R.; Pritzel, A.; Green, T.; Figurnov, M.; Ronneberger, O.; Tunyasuvunakool, K.; Bates, R.; Žídek, A.; Potapenko, A.; et al. Highly Accurate Protein Structure Prediction with AlphaFold. Nature 2021, 596, 583–589. [Google Scholar] [CrossRef]

- Ren, F.; Ding, X.; Zheng, M.; Korzinkin, M.; Cai, X.; Zhu, W.; Mantsyzov, A.; Aliper, A.; Aladinskiy, V.; Cao, Z.; et al. AlphaFold Accelerates Artificial Intelligence Powered Drug Discovery: Efficient Discovery of a Novel CDK20 Small Molecule Inhibitor. Chem. Sci. 2023, 14, 1443–1452. [Google Scholar] [CrossRef] [PubMed]

- Le, M.H.N.; Nguyen, P.K.; Nguyen, T.P.T.; Nguyen, H.Q.; Tam, D.N.H.; Huynh, H.H.; Huynh, P.K.; Le, N.Q.K. An In-Depth Review of AI-Powered Advancements in Cancer Drug Discovery. Biochim. Biophys. Acta Mol. Basis Dis. 2025, 1871, 167680. [Google Scholar]

- Weng, Y.; Pan, C.; Shen, Z.; Chen, S.; Xu, L.; Dong, X.; Chen, J. Identification of Potential WSB1 Inhibitors by AlphaFold Modeling, Virtual Screening, and Molecular Dynamics Simulation Studies. Evid. Based Complement. Altern. Med. 2022, 2022, 4629392. [Google Scholar]

- Schauperl, M.; Denny, R.A. AI-Based Protein Structure Prediction in Drug Discovery: Impacts and Challenges. J. Chem. Inf. Model. 2022, 62, 3142–3156. [Google Scholar] [CrossRef] [PubMed]

- Agarwal, V.; McShan, A.C. The Power and Pitfalls of AlphaFold2 for Structure Prediction beyond Rigid Globular Proteins. Nat. Chem. Biol. 2024, 20, 950–959. [Google Scholar] [CrossRef]

- Nussinov, R.; Zhang, M.; Liu, Y.; Jang, H. AlphaFold, Allosteric, and Orthosteric Drug Discovery: Ways Forward. Drug Discov. Today 2023, 28, 103551. [Google Scholar] [CrossRef]

- Terwilliger, T.C.; Liebschner, D.; Croll, T.I.; Williams, C.J.; McCoy, A.J.; Poon, B.K.; Afonine, P.V.; Oeffner, R.D.; Richardson, J.S.; Read, R.J.; et al. AlphaFold Predictions Are Valuable Hypotheses and Accelerate but Do Not Replace Experimental Structure Determination. Nat. Methods 2024, 21, 110–116. [Google Scholar] [CrossRef] [PubMed]

- Lekadir, K.; Frangi, A.F.; Porras, A.R.; Glocker, B.; Cintas, C.; Langlotz, C.P.; Weicken, E.; Asselbergs, F.W.; Prior, F.; Collins, G.S.; et al. FUTURE-AI: International Consensus Guideline for Trustworthy and Deployable Artificial Intelligence in Healthcare. BMJ 2025, 388, e081554. [Google Scholar] [CrossRef] [PubMed]

- Daneshjou, R.; Vodrahalli, K.; Novoa, R.A.; Jenkins, M.; Liang, W.; Rotemberg, V.; Ko, J.; Swetter, S.M.; Bailey, E.E.; Gevaert, O.; et al. Disparities in Dermatology AI Performance on a Diverse, Curated Clinical Image Set. Sci. Adv. 2022, 8, eabq6147. [Google Scholar] [CrossRef]

- Adamson, A.S.; Smith, A. Machine Learning and Health Care Disparities in Dermatology. JAMA Dermatol. 2018, 154, 1247–1248. [Google Scholar] [CrossRef]

- Obermeyer, Z.; Powers, B.; Vogeli, C.; Mullainathan, S. Dissecting Racial Bias in an Algorithm Used to Manage the Health of Populations. Science 2019, 366, 447–453. [Google Scholar] [CrossRef]

- Char, D.S.; Shah, N.H.; Magnus, D. Implementing Machine Learning in Health Care—Addressing Ethical Challenges. N. Engl. J. Med. 2018, 378, 981–983. [Google Scholar]

- Seyyed-Kalantari, L.; Zhang, H.; McDermott, M.B.A.; Chen, I.Y.; Ghassemi, M. Underdiagnosis Bias of Artificial Intelligence Algorithms Applied to Chest Radiographs in Under-Served Patient Populations. Nat. Med. 2021, 27, 2176–2182. [Google Scholar] [CrossRef]

- Rajpurkar, P.; Lungren, M.P. The Current and Future State of AI Interpretation of Medical Images. N. Engl. J. Med. 2023, 388, 1981–1990. [Google Scholar] [CrossRef]

- Yang, Y.; Zhang, H.; Gichoya, J.W.; Katabi, D.; Ghassemi, M. The Limits of Fair Medical Imaging AI in Real-World Generalization. Nat. Med. 2024, 30, 2838–2848. [Google Scholar] [CrossRef]

- McKinney, S.M.; Sieniek, M.; Godbole, V.; Godwin, J.; Antropova, N.; Ashrafian, H.; Back, T.; Chesus, M.; Corrado, G.S.; Darzi, A.; et al. International Evaluation of an AI System for Breast Cancer Screening. Nature 2020, 577, 89–94. [Google Scholar] [CrossRef] [PubMed]

- Sharma, N.; Ng, A.Y.; James, J.J.; Khara, G.; Ambrózay, É.; Austin, C.C.; Forrai, G.; Fox, G.; Glocker, B.; Heindl, A.; et al. Multi-Vendor Evaluation of Artificial Intelligence as an Independent Reader for Double Reading in Breast Cancer Screening on 275,900 Mammograms. BMC Cancer 2023, 23, 460. [Google Scholar] [CrossRef] [PubMed]

- Rajkomar, A.; Hardt, M.; Howell, M.D.; Corrado, G.; Chin, M.H. Ensuring Fairness in Machine Learning to Advance Health Equity. Ann. Intern. Med. 2018, 169, 866–872. [Google Scholar] [CrossRef]

- Seyyed-Kalantari, L.; Liu, G.; McDermott, M.; Chen, I.Y.; Ghassemi, M. CheXclusion: Fairness Gaps in Deep Chest X-Ray Classifiers. arXiv 2020. [Google Scholar] [CrossRef]

- Sheller, M.J.; Edwards, B.; Reina, G.A.; Martin, J.; Pati, S.; Kotrotsou, A.; Milchenko, M.; Xu, W.; Marcus, D.; Colen, R.R.; et al. Federated Learning in Medicine: Facilitating Multi-Institutional Collaborations without Sharing Patient Data. Sci. Rep. 2020, 10, 12598. [Google Scholar] [CrossRef]

- Rieke, N.; Hancox, J.; Li, W.; Milletarì, F.; Roth, H.R.; Albarqouni, S.; Bakas, S.; Galtier, M.N.; Landman, B.A.; Maier-Hein, K.; et al. The Future of Digital Health with Federated Learning. npj Digit. Med. 2020, 3, 119. [Google Scholar] [CrossRef]

- Zhang, B.H.; Lemoine, B.; Mitchell, M. Mitigating Unwanted Biases with Adversarial Learning. arXiv 2018. [Google Scholar] [CrossRef]

- Kelly, C.J.; Karthikesalingam, A.; Suleyman, M.; Corrado, G.; King, D. Key Challenges for Delivering Clinical Impact with Artificial Intelligence. BMC Med. 2019, 17, 195. [Google Scholar] [CrossRef]

- Schömig-Markiefka, B.; Pryalukhin, A.; Hulla, W.; Bychkov, A.; Fukuoka, J.; Madabhushi, A.; Achter, V.; Nieroda, L.; Büttner, R.; Quaas, A.; et al. Quality Control Stress Test for Deep Learning-Based Diagnostic Model in Digital Pathology. Mod. Pathol. 2021, 34, 2098–2108. [Google Scholar] [CrossRef] [PubMed]

- Tellez, D.; Litjens, G.; Bándi, P.; Bulten, W.; Bokhorst, J.-M.; Ciompi, F.; van der Laak, J. Quantifying the Effects of Data Augmentation and Stain Color Normalization in Convolutional Neural Networks for Computational Pathology. Med. Image Anal. 2019, 58, 101544. [Google Scholar] [CrossRef]

- Napravnik, M.; Hržić, F.; Urschler, M.; Miletić, D.; Štajduhar, I. Lessons Learned from RadiologyNET Foundation Models for Transfer Learning in Medical Radiology. Sci. Rep. 2025, 15, 21622. [Google Scholar] [CrossRef] [PubMed]

- Tajbakhsh, N.; Shin, J.Y.; Gurudu, S.R.; Hurst, R.T.; Kendall, C.B.; Gotway, M.B.; Liang, J. Convolutional Neural Networks for Medical Image Analysis: Full Training or Fine Tuning? IEEE Trans. Med. Imaging 2016, 35, 1299–1312. [Google Scholar] [CrossRef]

- Raghu, M.; Zhang, C.; Kleinberg, J.; Bengio, S. Transfusion: Understanding Transfer Learning for Medical Imaging. arXiv 2019. [Google Scholar] [CrossRef]

- Lu, M.Y.; Williamson, D.F.K.; Chen, T.Y.; Chen, R.J.; Barbieri, M.; Mahmood, F. Data-Efficient and Weakly Supervised Computational Pathology on Whole-Slide Images. Nat. Biomed. Eng. 2021, 5, 555–570. [Google Scholar]

- Fedorov, A.; Longabaugh, W.J.R.; Pot, D.; Clunie, D.A.; Pieper, S.D.; Gibbs, D.L.; Bridge, C.; Herrmann, M.D.; Homeyer, A.; Lewis, R.; et al. National Cancer Institute Imaging Data Commons: Toward Transparency, Reproducibility, and Scalability in Imaging Artificial Intelligence. RadioGraphics 2023, 43, e230180. [Google Scholar] [CrossRef]

- Mongan, J.; Moy, L.; Kahn, C.E. Checklist for Artificial Intelligence in Medical Imaging (CLAIM): A Guide for Authors and Reviewers. Radiol. Artif. Intell. 2020, 2, e200029. [Google Scholar] [CrossRef]

- Ghassemi, M.; Oakden-Rayner, L.; Beam, A.L. The False Hope of Current Approaches to Explainable Artificial Intelligence in Health Care. Lancet Digit. Health 2021, 3, e745–e750. [Google Scholar]

- Schelter, S.; Klein, T. Automatically Tracking Metadata and Provenance of Machine Learning Experiments. In Machine Learning Systems Workshop at NIPS 2017. Available online: https://neurips.cc/virtual/2017/workshop/8774 (accessed on 20 October 2025).

- Namli, T.; Sınacı, A.A.; Gönül, S.; Herguido, C.R.; Garcia-Canadilla, P.; Muñoz, A.M.; Esteve, A.V.; Ertürkmen, G.B.L. A Scalable and Transparent Data Pipeline for AI-Enabled Health Data Ecosystems. Front. Med. 2024, 11, 1393123. [Google Scholar] [CrossRef]

- Ratwani, R.M.; Bates, D.W.; Classen, D.C. Patient Safety and Artificial Intelligence in Clinical Care. JAMA Health Forum 2024, 5, e235514. [Google Scholar] [CrossRef]

- Kwong, J.C.C.; Khondker, A.; Lajkosz, K.; McDermott, M.B.A.; Frigola, X.B.; McCradden, M.D.; Mamdani, M.; Kulkarni, G.S.; Johnson, A.E.W. APPRAISE-AI Tool for Quantitative Evaluation of AI Studies for Clinical Decision Support. JAMA Netw. Open 2023, 6, e2335377. [Google Scholar] [CrossRef]

- Shah, N.H.; Halamka, J.D.; Saria, S.; Pencina, M.; Tazbaz, T.; Tripathi, M.; Callahan, A.; Hildahl, H.; Anderson, B. A Nationwide Network of Health AI Assurance Laboratories. JAMA 2024, 331, 245–249. [Google Scholar] [CrossRef]

- Kolla, L.; Parikh, R.B. Uses and Limitations of Artificial Intelligence for Oncology. Cancer 2024, 130, 2101–2107. [Google Scholar] [CrossRef]