Abstract

Many recommendations and innovative approaches are available for the development and evaluation of complex health interventions. We investigated the dimensions of complexity described in health research and how these descriptions may affect the adopted research methodology (e.g., the choice of designs and methods). We used a mixed-methods approach to review the scientific literature evaluating complex interventions in the health field. Of 438 articles identified, 179 were subjected to descriptive analysis and 48 to content analysis. The three principal dimensions of complexity were: stakeholder characteristics, intervention multimodality and context. Recognition of these dimensions influenced the methodological choices made when evaluating the interventions, with designs and methods chosen to address the complexity. We analysed not only how researchers view complexity but also the effects of such views on researcher practices. Our results highlight the need to clarify what complexity means and to consider complexity when deciding how to evaluate research interventions.

1. Background

Complex interventions [1,2] challenge both researchers and stakeholders in terms of development and evaluation [3]. These interventions also pose challenges when they are transferred to different contexts and scaled up or out [4,5,6].

Given the substantial influence of intervention characteristics and context on the results [7,8], evaluating complex interventions involves more than assessing their effectiveness. The mechanisms, processes, conditions and modalities of implementation must also be explored with regard to their impact on context [9]. Researchers are effectively invited to open a “black box” in order to assess functionality, reliability, quality, causal mechanisms and the contextual factors associated with variations in outcomes [10,11].

Several approaches to complexity have been put forward. In 2000, the Medical Research Council (MRC) proposed a definition (revised in 2008) of a complex health intervention [1,10]. Complexity involves the interaction of multiple components, the behaviour of those providing and receiving the intervention, the organisational level targeted, the variability in outcomes and the flexibility of the intervention. Another approach to analysing complexity uses complex-systems thinking [9,12]. Intervention/system complexity is defined in terms of its components and its associations with various systems. Many factors must be considered, including internal (e.g., activities, human, material and financial resources) and external factors (e.g., the social environment and political context). The intervention context embraces the spatial and temporal conjunction of social events, individuals and social interactions; these generate causal mechanisms that interact with the intervention and modify the intervention’s effects [13].

Recognising the system characteristics, Cambon et al. suggest, in their approach to complexity, replacing the term “intervention” with “intervention system” [14]. An intervention system was defined as a set of interrelated human and nonhuman contextual agents within certain spatial and temporal boundaries that generate mechanistic configurations (mechanisms) that are prerequisites for changes in health.

A previous review highlighted challenges posed by the design, implementation and evaluation of complex interventions [3]. These relate to the content and standardisation of interventions, the impact on the people involved, the organisational context of implementation, the development of outcome measures and evaluation. Here we focus on evaluation. We recently published a review of the principal methods used to evaluate complex interventions [15]. We found that several methods are used successively or together at various stages of evaluation. Most designs feature process analyses. To complement this quantitative analysis, we used a mixed-methods approach to investigate the dimensions of complexity described in health research and how these descriptions may affect the adopted research methodology. More precisely, our specific objectives were to answer the following questions: 1. What are the key dimensions of complexity described by researchers? 2. How are such complexity dimensions evaluated? 3. How do researchers implement existing recommendations on the evaluation of complex interventions? and 4. How do they justify their choices at each stage of the research (from intervention development to its transferability)? Our previous article discussed the concepts and methods used by researchers as described in the reviewed articles, but only an in-depth analysis of the entire contents (including the discussions) could clarify how choices were made.

An understanding of researcher attitudes and practices will inform work in progress, in particular, the development of tailored tools for the development, analysis, adaptation, transfer and reporting of interventions [16,17,18,19,20].

2. Methods

We performed a review [21] of peer-reviewed scientific articles evaluating complex interventions in the field of health. The quantitative part of this review has already been published [15]. This article presents the qualitative part. The search strategy was designed to identify articles written by authors who evaluate complex interventions in the field of health (clinical care and health services research, health promotion and prevention) [15]. We used the PRISMA checklist [22].

2.1. Search Strategy

We searched the MEDLINE database via PubMed. The 11-year search period (from 1 January 2004 to 31 December 2014) covered studies that integrated the first definitions of complexity by the MRC [10,12]. We searched for the keywords “complex intervention[s]” AND “evaluation” in the title, abstract and/or body of the article.
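As an illustration only, the search described above could be expressed as a request to the NCBI E-utilities `esearch` endpoint. The literal query string used in the review is not reported, so the field tag (`[All Fields]`), the `retmax` value and the helper name `build_search_url` are assumptions. The sketch only builds the URL and does not contact the server.

```python
from urllib.parse import urlencode, urlparse, parse_qs

ESEARCH = "https://eutils.ncbi.nlm.nih.gov/entrez/eutils/esearch.fcgi"


def build_search_url(retmax: int = 1000) -> str:
    """Build an esearch URL for the keywords and period stated in Section 2.1."""
    # Assumed query: the article reports the keywords, not the exact string.
    term = (
        '("complex intervention"[All Fields] OR "complex interventions"[All Fields]) '
        "AND evaluation[All Fields]"
    )
    params = {
        "db": "pubmed",
        "term": term,
        "datetype": "pdat",          # filter on publication date
        "mindate": "2004/01/01",
        "maxdate": "2014/12/31",
        "retmax": retmax,            # hypothetical cap on returned IDs
    }
    return f"{ESEARCH}?{urlencode(params)}"


url = build_search_url()
parsed = parse_qs(urlparse(url).query)
print(parsed["term"][0])
print(parsed["mindate"][0], "to", parsed["maxdate"][0])
```

Keeping the query in code makes the period and keywords explicit and easy to rerun, although counts retrieved today would not necessarily match the 438 articles reported here.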

2.2. Article Selection

Included articles met the following criteria: 1. They were written in English or French; 2. They were research/evaluation articles, protocols or feasibility/pilot studies (we excluded reviews/meta-analyses, methodological articles, abstracts, book chapters, commentaries/letters, conference papers and other oral presentations and articles reviewing conceptual frameworks); 3. They evaluated a complex intervention; 4. They included a presentation of the dimensions of complexity.

Two reviewers working independently performed the initial title and abstract screening (to exclude articles that were deemed ineligible) followed by a detailed full-text screening of the remaining articles (to exclude those that did not meet all the inclusion criteria). Any disagreements were resolved via discussion with a third reviewer.

2.3. Data Extraction

All articles were independently read by two researchers. Any disagreements were resolved via discussion. Data were extracted using a grid including the following items: 1. Description of articles (title, author(s), country, date, journal, publication type, key words, discipline, publication field and objective of the article); 2. Definition and description of complexity (reference(s) used, dimension(s) of complexity identified by authors) and methods used to address complexity.
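The extraction grid described above can be pictured as a simple record type. This is a minimal sketch under stated assumptions: the class and field names are hypothetical renderings of the listed items, and the example values are placeholders that do not describe any reviewed article.

```python
from dataclasses import dataclass, field, asdict


@dataclass
class ArticleDescription:
    """Item 1 of the grid: description of the article."""
    title: str
    authors: list[str]
    country: str
    date: str
    journal: str
    publication_type: str
    keywords: list[str]
    discipline: str
    publication_field: str
    objective: str


@dataclass
class ComplexityDescription:
    """Item 2 of the grid: how complexity is defined, described and addressed."""
    references_used: list[str] = field(default_factory=list)
    dimensions_identified: list[str] = field(default_factory=list)
    methods_addressing_complexity: list[str] = field(default_factory=list)


@dataclass
class ExtractionRecord:
    article: ArticleDescription
    complexity: ComplexityDescription


# Placeholder values only; they do not describe any article in the review.
record = ExtractionRecord(
    article=ArticleDescription(
        title="<title>", authors=["<author>"], country="<country>",
        date="<date>", journal="<journal>", publication_type="<type>",
        keywords=["<keyword>"], discipline="<discipline>",
        publication_field="<field>", objective="<objective>",
    ),
    complexity=ComplexityDescription(dimensions_identified=["context"]),
)
print(sorted(asdict(record)))
```

A fixed record structure of this kind makes it easier for two readers to compare their extractions item by item before resolving disagreements.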

2.4. Data Analysis

The extracted articles were subjected to a two-step analysis. The first step was a descriptive analysis that identified any included references to complexity. The second involved a content analysis exploring the dimensions of complexity, the concordance between the dimensions highlighted by the authors and those of relevant citations, the level of detail in the provided descriptions of complexity and the influence of the consideration of complexity on the researchers’ methods. Two authors (J.T., J.K.) performed these analyses and cross-checked their results.

3. Results

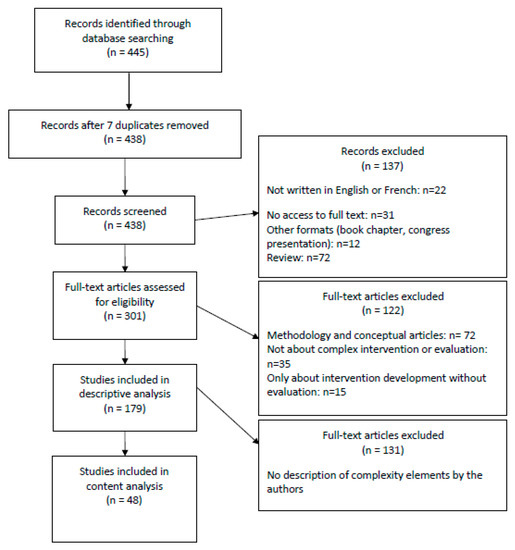

A total of 438 articles were identified. Among them, 179 met the inclusion criteria (Figure 1).

Figure 1. PRISMA flow chart.

3.1. General Description of the Published Articles

Of the 179 articles included in the study, 33.5% were protocols, 33.5% were pilot or feasibility studies and 33% were evaluation studies. Most articles originated from English-speaking countries. A nearly tenfold increase in the number of articles on the broad theme of healthcare was observed over our screening period (from 4 in 2004 to 39 in 2014). Most articles involved researchers from several scientific disciplines (71.5%), with collaborations ranging from two (54.5%) to six (1.2%) disciplines. The most frequently represented disciplines were medicine, public health, social sciences, health sciences, nursing, psychology and health research. The articles dealt with the following fields: prevention, health promotion and education (46%), clinical care (36%) and health services research (18%). The main themes were healthcare (40%), pathology (33%) and behavioural change (27%).

Among the 179 articles, 52 did not use a reference involving complexity (the expression “complex intervention” appeared in the keywords, abstracts or body text but without any citation or description), 79 cited an article on complexity and 48 additionally defined, described or justified the intervention complexity. Among these 48 articles, the most frequently cited references (alone or combined) were the MRC frameworks of 2000 and 2008 and related articles [1,10] (86%), Campbell et al. [23] (15%), Oakley et al. [24] (12.5%), and Hawe et al. [12] (12.5%).

A content analysis of the 48 articles (Table 1 and Table 2) that went beyond a single citation allowed us to identify the dimensions of complexity in the interventions.

Table 1. List of included articles.

Table 2. Description of included articles.

3.2. The Dimensions of Complexity

A thematic content analysis of the 48 articles revealed three major dimensions of complexity: 1. The characteristics of intervention stakeholders (27 articles); 2. The multimodality of an intervention (21 articles); and 3. The intervention context (14 articles).

3.2.1. Characteristics of Intervention Stakeholders

A total of 27 articles cited the individual characteristics of the different stakeholders (beneficiaries, field workers and decision makers) as one of the dimensions of complexity. The individual characteristics reflected, on the one hand, the stakeholders’ profiles and their possible effects on involvement in the intervention and/or the relationships between the different stakeholders, and on the other hand, the stakeholders’ knowledge and competencies (e.g., training). Although these combined characteristics were mentioned as elements of complexity, the individual characteristics were approached from different angles. Sometimes, they were treated as a “mechanism” or an “active ingredient” that was capable of positively or negatively influencing the outcome. For example, in article 45, the authors explained that the complexity of their intervention, which was partly attributable to the professional competencies of the stakeholders, directly influenced the outcomes. At other times, the characteristics were treated as a research objective or as an outcome such as a behavioural change that was difficult to achieve:

“Health care interventions […] are typically complex: they may […] seek to achieve outcomes that are difficult to influence, such as changes in individual patient or professional behaviour.” (article 8)

3.2.2. The Multimodality of an Intervention

Multimodality was identified in 21 articles as a dimension of complexity. We found two types of multimodal interventions. Multimodality may be a combination of several very distinct actions (e.g., training professionals, environmental actions) performed simultaneously or consecutively at different levels. Alternatively, multimodality may be a chain of stages in a single action (e.g., a medical treatment protocol). In article 28, the authors compared the evaluation results of a complex rehabilitation protocol with those of the usual treatments for patients discharged from intensive care units. The complexity here lay in a proposal to redesign care to feature several consecutive steps.

3.2.3. The Intervention Context

The context of the intervention was described as another dimension of complexity in 14 articles. Irrespective of whether the context was organisational, institutional, environmental, or social, it was cited because of its strong influence on both the implementation of the intervention (generally involving some flexibility) and its actual effectiveness.

In article 41, the authors explained the importance of defining elements of the context and identifying how these might (positively or negatively) influence the outcomes.

“To describe the contexts in which the intervention was delivered and explore contextual factors that may influence the delivery or impact of the intervention, and the outcomes. […] It may also explain intentional and unintentional differences in delivery. Reflecting on the relationship between organisational context and how each agency used the program to address local needs may have implications for future program design and delivery. […] We conceptualised context as incorporating the social, structural and political environment of each participating organisation.” (article 41)

Unlike the two previous dimensions, which, from the authors’ viewpoints, were sufficient to define interventions as complex, context was always combined with one or several other dimensions of complexity. In other words, context did not appear as an independent component, and, when it did appear, the complexity lay principally in the interaction between the context and stakeholders’ characteristics and/or the multimodality of the intervention.

3.3. The Level of Detail in Descriptions of Dimensions of Complexity

The level of detail varied greatly; we defined the approaches as minimalist, intermediate and in-depth descriptions. The minimalist approach corresponded to using only one citation for the source of complexity with little narrative description or details; the intermediate approach described a correspondence between the citation used and the elements of the intervention developed/evaluated; the in-depth approach included a structured and well-argued process of integrating dimensions of complexity into the evaluative approach.

3.3.1. Minimalist Descriptions

Of the 48 articles analysed, 24 offered minimalist descriptions, mentioning one or more intervention components accompanied by a definition of complexity and a comment on whether this was relevant to intervention development or evaluation. At this level, the dimensions of complexity were identified in terms of the literature review only and not the precise intervention context. Intervention complexity was considered to justify an innovative evaluative approach, but the 24 articles each made only one “formal” link between the definition of complexity and their choice of methods for developing or evaluating their intervention.

3.3.2. Intermediate Descriptions

Sixteen articles provided intermediate-level descriptions of complexity. Unlike at the minimalist level discussed above, the authors did not simply identify the dimensions of complexity of the intervention they were developing or evaluating based on the scientific literature; they also explained the dimensions by drawing on their own interventions.

Article 38 presented a pilot study of an e-intervention offering psycho-affective support for the siblings of people affected by a first psychotic episode. The authors introduced the notion of complexity via a citation and then emphasised the need to develop a more flexible, complex, multimodal intervention that could be adapted to the real lifestyles of their target population, identifying the dimensions of complexity of this intervention:

“The intervention is “complex” according to the MRC definition because it comprises a number of, and interactions between, components within the intervention that subsequently impact on a number of variable outcomes. The components are: psychoeducation and peer support, each of which is a complex intervention in its own right, and each may work independently as well as inter-dependently in exerting their effects. […]. Another issue for consideration in complex interventions is the number and difficulty of performing behaviours required by those delivering and/or receiving the intervention, such as those involved in this intervention by the sibling-participants.” (article 38)

At this level, although a link was established between the reference(s)/concept(s) used and the elements present in the interventions, reflection on complexity and its elements remained rather limited.

3.3.3. In-Depth Descriptions

The other eight articles provided detailed descriptions of complexity. These in-depth works deconstructed interventions into their various components to identify the dimensions of complexity and thus determine a corresponding methodology for intervention development and evaluation. The authors applied the concepts as closely as possible to their intervention practice. For example, a study on the dental health of patients who had suffered strokes structured complexity differently from the studies described above. The authors (article 16) interpreted their intervention theoretically by referencing the literature and deconstructing it into components that differed in terms of complexity. In their opinion, the extent of complexity depended on the number of interactions between levels, components and actions targeted by the intervention, the number and variability of participating groups and the contexts of the interventions.

The authors showed, on the one hand, a link between each element and the systems engaged and, on the other hand, the influence of such interdependence on the outcome. In other words, they formulated hypotheses about intervention mechanisms. They considered that an understanding of the interactions between the different elements was essential if a real-world intervention was to be successful. This implied that these elements were considered from the formulation of the research questions through to the completion of the evaluative process. Their analysis of complexity was integral to the development of the intervention, guiding first the theorisation and then the evaluative framework, allowing them to measure the impact of intervention components across the different contexts targeted.

Another article presented an exercise programme for community-dwelling, mobility-restricted, chronically ill older adults featuring structured support by general practitioners (article 34). The authors deconstructed the complexity of their intervention and developed a methodological strategy guided by the MRC framework. They combined all five of the MRC guidance dimensions with specific intervention elements. For example, for the item “number and difficulty of behaviours required by those delivering or receiving the intervention”, they identified “GPs’ counselling behaviour, exercise therapist’s counselling behaviour and patient’s behaviour including motivation and ability (information, instruction, equipment) to perform the exercise programme regularly”. They built their study via detailed reference to the first three phases of the MRC framework.

3.4. Recognising Complexity When Considering Interventions and Evaluations

In 30 of the articles (62.5%), intervention development and/or evaluation followed only the MRC recommendations. Thirteen (27.1%) cited Hawe et al. [12] and/or another reference specific to the theme of the intervention and five (10.4%) cited a complex intervention similar to that contemplated from within the authors’ own discipline.

Although all articles reported the use of methodological approaches that had been adapted to consider complexity, their application varied among studies, as the approaches were introduced at different points during the intervention. Of the 48 studies, 13 (27%) considered complexity only during the development of the intervention, 17 (35%) only during evaluation and 18 (37.5%) throughout the entire process.
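The shares reported above can be rechecked from the raw counts. The short sketch below is only a consistency check on the figures given in the text; the helper name `share` and the dictionary labels are illustrative, not taken from the source.

```python
def share(count: int, total: int) -> float:
    """Return count as a percentage of total, rounded to one decimal place."""
    if total <= 0:
        raise ValueError("total must be positive")
    return round(100 * count / total, 1)


TOTAL = 48  # articles subjected to content analysis

# Point at which complexity was considered, as counted in the text.
stage_counts = {
    "development only": 13,
    "evaluation only": 17,
    "entire process": 18,
}

# The three categories should partition the 48 articles.
assert sum(stage_counts.values()) == TOTAL

for stage, n in stage_counts.items():
    print(f"{stage}: {n}/{TOTAL} = {share(n, TOTAL)}%")
```

The computed values agree with the reported 27%, 35% and 37.5% within rounding.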

Regardless of the point at which complexity was considered, such consideration led the authors of 47 articles (98%) to use a methodological adaptation of the individual RCT (e.g., a cluster randomized controlled trial (RCT)) or an alternative to it (e.g., realistic evaluation), the individual RCT being the gold standard in clinical research. The principal adaptation was the inclusion of some form of process evaluation (46%), usually (but not always) combined with a trial. However, the descriptions of the methodological adaptations in relation to the dimensions of complexity varied in their level of detail, as did the reasons for the choices. Some authors cited only a single reference on the development and evaluation of complex interventions to justify the use of a particular methodology and/or evaluative model, specifically when that model diverged from the MRC recommendations. Others strictly applied the recommendations to which they were referring, or engaged in detailed reflection on their methodological choices in terms of the complexity of the intervention, also describing the adaptations in great detail. They also often advocated a wider use of the framework they had followed. In article 10, the authors clearly justified their evaluative choices and sought to take the various dimensions of the complexity of their intervention into account as fully as possible.

“This complexity makes a classical randomized controlled trial (RCT) design […] inappropriate for evaluating the effectiveness of public health interventions in a real-life setting [15,16]. […] Therefore, an evaluation approach is proposed that includes a combination of quantitative and qualitative evaluation methods to answer the three research questions of this study. To answer the first and second question, a quasi-experimental pre-test post-test study design […] is used. […] intervention inputs, activities, and outputs are recorded to assess the implementation process.” (article 10)

They returned to a discussion of the contributions made by their evaluative choice and explained that, in their opinion, a mixed-methods approach yielded detailed information on both the methodological feasibility of the intervention and its exact specification.

Finally, fewer than half of the articles (43%) used a theory-based approach to intervention development. The most frequently used theories were psychosocial constructs of behavioural change (21%).

4. Discussion

We analysed how health researchers defined and described complexity in their evaluations and how these descriptions influenced their methodological choices. This qualitative analysis complements our previous article on methodologies used [15]; here we seek to explain why they were used.

We found three principal dimensions of complexity:

1. Stakeholder characteristics were viewed as either a mechanism/active ingredient or an outcome to be achieved in terms of behavioural change.

2. The next dimension, multimodality, is key. However, multimodality per se does not adequately define complexity. In fact, a “complicated” intervention is distinguished from a “complex” one not only by the multimodality but also by its dynamic character and interactions with the context [25].

3. The interaction of the intervention with the organisational, social, environmental, or institutional context was clearly recognised as constitutive of complexity. Our review of experiences and practices, as reported in the articles, emphasises the perceived importance of such interactions in terms of intervention effects. This is consistent with the view that a complex intervention is an “event in a system” [2,9,14]. Pragmatic operational approaches are being developed to consider context in developing, evaluating and adapting interventions [17,26,27].

Identifying dimensions of complexity contributes to a better understanding of how and under what conditions an outcome can be achieved. This approach is essential also for transferability as it helps to highlight the “key functions” that need to be maintained to obtain similar outcomes in a new setting [4].

Most identifications and explanations of complexity were pragmatic in nature, countering the ambiguity generated by semantic variations when describing the dimensions of complexity [28]. The authors rarely used recognised terms such as “active ingredients” [29], “key functions” [12], “core elements” [30] or even “components” [10,31]. Reporting of interventions requires improvement [20,32]. In fact, the descriptions of interventions, processes, mechanisms and theoretical models were often poor [32,33]. A detailed description may not be feasible in a scientific article, but articles that included either intermediate or in-depth descriptions tended to highlight just one component of the intervention and used methodological adaptations for evaluation. Nearly half of the articles (46%) engaged in process evaluation, usually coupled with a trial, to evaluate implementation quality and clarify the mechanisms that did or did not impact the expected intervention outcomes, thus conforming to the MRC recommendations [1,11]. However, any connection between the recognition of intervention complexity and the decision to adapt a certain evaluative method received minimal attention; complexity per se was generally viewed as adequate justification for the use of an evaluative model other than the classic individual RCT, which remained the gold standard, even for those authors who used methodological adaptations [15].

The descriptions of dimensions of complexity varied in terms of precision and depth, the methodologies used and the justifications for adaptations introduced because of complexity. The extent to which researchers and stakeholders deal with complexity differs, as does the correspondence between theory and practice. Indeed, not all intervention complexity dimensions defined in the literature were used, or even mentioned, by a number of authors who claimed that their interventions were complex. However, not all dimensions are always relevant for a given intervention/evaluation. Furthermore, the number of dimensions and the extent to which complexity is considered in a research project depend on many factors, such as the questions asked [15]. Although some new dimensions of complexity were identified, they were generally only briefly discussed. We cannot tell whether this was because the concepts had only been partially adopted by the researchers and field workers or because “theoreticians” and “practitioners” viewed complexity differently.

Strengths and Limitations

The dimensions of complexity can be classified in various ways [11,34]. We chose a stakeholders/multimodality/context classification. This was appropriate because it reflected the concerns of the authors. This classification was pragmatic, not mechanistic. Other choices could have been made, e.g., the characteristics of those delivering interventions could have been viewed as implementation elements and/or key functions (i.e., producers of the result).

We may not have retrieved every useful work on complex interventions. The keyword “complex intervention” retrieved only interventions described as complex by their authors. A previous review on the same subject used a similar method (a search for the term “complex intervention” in the title and/or abstract) [3]. Moreover, the PubMed database references articles in the field of health but lacks some in the fields of educational and social sciences. We did not seek to be exhaustive; rather, we sought to provide an overview of the links between definitions of complexity and choices of evaluation methodologies made by researchers who themselves describe their interventions as complex.

Finally, a review of the scientific literature cannot fully achieve the objectives that we set. A review can deal with the practices of only a small proportion of researchers and stakeholders.

5. Conclusions

Here we clarified not only how researchers view complexity but also the effects of such views on researcher practices. Our results highlight the need for clarifications of what complexity means. We would encourage researchers to pay careful attention to their descriptions of the dimensions of the complexity of the interventions they evaluate.

Author Contributions

Conceptualization and methodology: all authors; formal analysis, J.T., J.K.; writing—original draft preparation, J.T., F.A.; writing—review and editing, all authors; supervision and project administration, F.A., J.K.; funding acquisition, F.A. All authors have read and agreed to the published version of the manuscript.

Funding

Université de Lorraine; Université de Bordeaux; French National Cancer Institute (Institut National du Cancer, INCa)/French Public Health Research Institute (Institut de Recherche en Santé Publique, IRESP)/ARC Foundation (Fondation ARC), call “Primary Prevention 2014”; The Cancer League (La Ligue contre le Cancer), “Research Project in Epidemiology 2014”; The Lorraine Region (Région Lorraine), “Research Projects of Regional Interest 2014”. Funders were not involved in the design of the study, the collection, analysis and interpretation of data, or the writing of the manuscript.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Craig, P.; Dieppe, P.; Macintyre, S.; Michie, S.; Nazareth, I.; Petticrew, M. Medical Research Council Guidance. Developing and evaluating complex interventions: The new Medical Research Council guidance. BMJ 2008, 337, a1655. [Google Scholar] [CrossRef] [PubMed]

- Hawe, P.; Shiell, A.; Riley, T. Theorising interventions as events in systems. Am. J. Community Psychol. 2009, 43, 267–276. [Google Scholar] [CrossRef] [PubMed]

- Datta, J.; Petticrew, M. Challenges to Evaluating Complex Interventions: A Content Analysis of Published Papers. BMC Public Health 2013, 13, 568. [Google Scholar] [CrossRef] [PubMed]

- Cambon, L.; Minary, L.; Ridde, V.; Alla, F. Transferability of interventions in health education: A review. BMC Public Health 2012, 12, 497. [Google Scholar] [CrossRef]

- Wang, S.; Moss, J.R.; Hiller, J.E. Applicability and transferability of interventions in evidence-based public health. Health Promot. Int. 2006, 21, 76–83. [Google Scholar] [CrossRef]

- Aarons, G.A.; Sklar, M.; Mustanski, B.; Benbow, N.; Brown, C.H. “Scaling-out” evidence-based interventions to new populations or new health care delivery systems. Implement. Sci. 2017, 12, 111. [Google Scholar] [CrossRef]

- McCabe, S.E.; West, B.T.; Veliz, P.; Frank, K.A.; Boyd, C.J. Social contexts of substance use among U.S. high school seniors: A multicohort national study. J. Adolesc. Health 2014, 55, 842–844. [Google Scholar] [CrossRef]

- Shoveller, J.; Viehbeck, S.; Di Ruggiero, E.; Greyson, D.; Thomson, K.; Knight, R. A critical examination of representations of context within research on population health interventions. Crit. Public Health 2016, 26, 487–500. [Google Scholar] [CrossRef]

- Shiell, A.; Hawe, P.; Gold, L. Complex interventions or complex systems? Implications for health economic evaluation. BMJ 2008, 336, 1281–1283. [Google Scholar] [CrossRef]

- Campbell, M.; Fitzpatrick, R.; Haines, A.; Kinmonth, A.L.; Sandercock, P.; Spiegelhalter, D.; Tyrer, P. Framework for design and evaluation of complex interventions to improve health. BMJ 2000, 321, 694–696. [Google Scholar] [CrossRef]

- Moore, G.F.; Audrey, S.; Barker, M.; Bond, L.; Bonell, C.; Hardeman, W.; Moore, L.; O’Cathain, A.; Tinati, T.; Wight, D.; et al. Process evaluation of complex interventions: Medical Research Council guidance. BMJ 2015, 350, h1258. [Google Scholar] [CrossRef] [PubMed]

- Hawe, P.; Shiell, A.; Riley, T. Complex interventions: How “out of control” can a randomised controlled trial be? BMJ 2004, 328, 1561–1563. [Google Scholar] [CrossRef] [PubMed]

- Poland, B.; Frohlich, K.L.; Cargo, M. Context as a Fundamental Dimension of Health Promotion Program Evaluation. In Health Promotion Evaluation Practices in the Americas; Potvin, L., McQueen, D.V., Hall, M., Anderson, L.M., Eds.; Springer: New York, NY, USA, 2008; pp. 299–317.

- Cambon, L.; Terral, P.; Alla, F. From intervention to interventional system: Towards greater theorization in population health intervention research. BMC Public Health 2019, 19, 339.

- Minary, L.; Trompette, J.; Kivits, J.; Cambon, L.; Tarquinio, C.; Alla, F. Which design to evaluate complex interventions? Toward a methodological framework through a systematic review. BMC Med. Res. Methodol. 2019, 19, 92.

- Petticrew, M.; Knai, C.; Thomas, J.; Rehfuess, E.A.; Noyes, J.; Gerhardus, A.; Grimshaw, J.M.; Rutter, H.; McGill, E. Implications of a complexity perspective for systematic reviews and guideline development in health decision making. BMJ Glob. Health 2019, 4 (Suppl. 1), e000899.

- Pfadenhauer, L.M.; Gerhardus, A.; Mozygemba, K.; Lysdahl, K.B.; Booth, A.; Hofmann, B.; Wahlster, P.; Polus, S.; Burns, J.; Brereton, L.; et al. Making sense of complexity in context and implementation: The Context and Implementation of Complex Interventions (CICI) framework. Implement. Sci. 2017, 12, 21.

- O’Cathain, A.; Croot, L.; Duncan, E.; Rousseau, N.; Sworn, K.; Turner, K.M.; Yardley, L.; Hoddinott, P. Guidance on how to develop complex interventions to improve health and healthcare. BMJ Open 2019, 9, e029954.

- Flemming, K.; Booth, A.; Garside, R.; Tunçalp, Ö.; Noyes, J. Qualitative evidence synthesis for complex interventions and guideline development: Clarification of the purpose, designs and relevant methods. BMJ Glob. Health 2019, 4 (Suppl. 1), e000882.

- Candy, B.; Vickerstaff, V.; Jones, L.; King, M. Description of complex interventions: Analysis of changes in reporting in randomised trials since 2002. Trials 2018, 19, 110.

- Government of Canada, Canadian Institutes of Health Research. A Guide to Knowledge Synthesis. CIHR. 2010. Available online: http://www.cihr-irsc.gc.ca/e/41382.html (accessed on 25 January 2018).

- Moher, D.; Liberati, A.; Tetzlaff, J.; Altman, D.G. Preferred reporting items for systematic reviews and meta-analyses: The PRISMA statement. BMJ 2009, 339, b2535.

- Campbell, N.C.; Murray, E.; Darbyshire, J.; Emery, J.; Farmer, A.; Griffiths, F.; Guthrie, B.; Lester, H.; Wilson, P.; Kinmonth, A.L. Designing and evaluating complex interventions to improve health care. BMJ 2007, 334, 455–459.

- Oakley, A.; Strange, V.; Bonell, C.; Allen, E.; Stephenson, J.; RIPPLE Study Team. Process evaluation in randomised controlled trials of complex interventions. BMJ 2006, 332, 413–416.

- Cohn, S.; Clinch, M.; Bunn, C.; Stronge, P. Entangled complexity: Why complex interventions are just not complicated enough. J. Health Serv. Res. Policy 2013, 18, 40–43.

- Craig, P.; Di Ruggiero, E.; Frohlich, K.L.; Mykhalovskiy, E.; White, M.; on behalf of the Canadian Institutes of Health Research (CIHR)–National Institute for Health Research (NIHR) Context Guidance Authors Group. Taking Account of Context in Population Health Intervention Research: Guidance for Producers, Users and Funders of Research; NIHR Evaluation, Trials and Studies Coordinating Centre: Southampton, UK, 2018.

- Movsisyan, A.; Arnold, L.; Evans, R.; Hallingberg, B.; Moore, G.; O’Cathain, A.; Pfadenhauer, L.M.; Segrott, J.; Rehfuess, E. Adapting evidence-informed complex population health interventions for new contexts: A systematic review of guidance. Implement. Sci. 2019, 14, 105.

- Minary, L.; Alla, F.; Cambon, L.; Kivits, J.; Potvin, L. Addressing complexity in population health intervention research: The context/intervention interface. J. Epidemiol. Community Health 2018, 72, 319–323.

- Durlak, J.A. Why Program Implementation Is Important. J. Prev. Interv. Community 1998, 17, 5–18.

- Galbraith, J.S.; Herbst, J.H.; Whittier, D.K.; Jones, P.L.; Smith, B.D.; Uhl, G.; Fisher, H.H. Taxonomy for strengthening the identification of core elements for evidence-based behavioral interventions for HIV/AIDS prevention. Health Educ. Res. 2011, 26, 872–885.

- Clark, A.M. What Are the Components of Complex Interventions in Healthcare? Theorizing Approaches to Parts, Powers and the Whole Intervention. Soc. Sci. Med. 2013, 93, 185–193.

- Hoffmann, T.C.; Glasziou, P.P.; Boutron, I.; Milne, R.; Perera, R.; Moher, D.; Altman, D.G.; Barbour, V.; Macdonald, H.; Johnston, M.; et al. Better reporting of interventions: Template for intervention description and replication (TIDieR) checklist and guide. BMJ 2014, 348, g1687.

- Michie, S.; Wood, C.E.; Johnston, M.; Abraham, C.; Francis, J.J.; Hardeman, W. Behaviour change techniques: The development and evaluation of a taxonomic method for reporting and describing behaviour change interventions (a suite of five studies involving consensus methods, randomised controlled trials and analysis of qualitative data). Health Technol. Assess. 2015, 19, 1–188.

- Lewin, S.; Hendry, M.; Chandler, J.; Oxman, A.D.; Michie, S.; Shepperd, S.; Reeves, B.C.; Tugwell, P.; Hannes, K.; Rehfuess, E.A.; et al. Assessing the complexity of interventions within systematic reviews: Development, content and use of a new tool (iCAT_SR). BMC Med. Res. Methodol. 2017, 17, 76.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).