Abstract

During the COVID-19 pandemic, when individuals were confronted with social distancing, social media served as a significant platform for expressing feelings and seeking emotional support. However, automated actors known as social bots have been found to coexist with human users in discussions of the coronavirus crisis, which may pose threats to public health. To examine how these actors distorted public opinion and sentiment expression during the outbreak, this study selected three critical time points in the development of the pandemic and conducted a topic-based sentiment analysis of bot-generated and human-generated tweets. The findings show that suspected social bots contributed as much as 9.27% of COVID-19 discussions on Twitter. Social bots and humans shared a similar trend in sentiment polarity (positive or negative) for almost all topics. For the most negative topics, social bots were even more negative than humans. Their sentiment expressions were weaker than those of humans for most topics, except for COVID-19 in the US and the healthcare system. In most cases, social bots were more likely to actively amplify humans’ emotions than to trigger humans’ amplification. In discussions of COVID-19 in the US, social bots managed to trigger bot-to-human anger transmission. Although these automated accounts expressed more sadness about health risks, they failed to pass that sadness on to humans.

1. Introduction

Social media is an emerging platform for the public to find information, share opinions, and seek coping strategies when faced with health emergencies [1,2,3]. In a disease outbreak, sentiments and emotions from social media data are regarded as useful mental health indicators [4]. Strong emotions, such as anxiety, fear, and stress about the disease, might be triggered by updates on infection and mortality and be shared on social media [5]. In turn, health information, telemedicine, and online psychological counseling on social media can help relieve individuals’ mental pressure. Interactions have also been found to promote emotion transmission on social media [6,7], potentially affecting individuals’ cognition and behaviors [8].

In the coronavirus disease 2019 (COVID-19) outbreak, a global health crisis caused by the infectious coronavirus, the public’s reliance on online information grew [9,10], as infection control measures such as travel limitations, community quarantine, and social distancing left people dependent on it to remain informed and connected. Researchers found that people expressed on social media their fear of infection and shock at the contagiousness of the disease [11], along with their feelings about infection control strategies [12,13,14]. They also reacted emotionally to some health-unrelated themes, such as the economic and global environmental impacts of the COVID-19 pandemic [15]. Meanwhile, racist conspiracies and hate speech concerning the pandemic [16], which have been shown to provoke negative emotions [17], also proliferated on social media. Greater social media exposure may also have raised the probability of anxiety and depression [18].

The studies mentioned above on online sentiment during health emergencies assumed that all content in the digital ecosystem was produced by humans. However, established evidence shows that social bots, accounts controlled by computer algorithms [19], can prosper on social media and take part in online sentiment manipulation [20]. These automated accounts can increase users’ exposure to negative and inflammatory messages, triggering mass hysteria and social conflicts in cyberspace [21]. They may also intentionally pick sides and disseminate a more complex set of emotions to attract more attention [22].

In health-related topics, social bots have been accused of promoting polarized opinions and misleading information [23,24,25]. Scientists have identified social bots in online communication around the COVID-19 pandemic and demonstrated their advocacy of conspiracies [23]. Nevertheless, social bots’ sentiment engagement in the pandemic has not been empirically studied. As Twitter may be used by health experts and policy makers to understand the opinions of the general public [14,26], investigating social bots’ interference in sentiment may lay the groundwork for accurate policy-making and strategic communication in health emergencies. This study aimed to characterize social bots’ sentiment engagement during the COVID-19 pandemic. We selected three important time points in the progress of the pandemic and conducted a topic-based sentiment analysis of bot-generated and human-generated tweets to examine social bots’ sentiment expression and transmission.

2. Literature

2.1. Emotions and Public Health Emergencies on Social Media

In public health emergencies, such as the COVID-19 pandemic, the increasing number of infected people and rising mortality elicited strong negative emotions such as anxiety, anger, and sadness in the public [5,27]. Prevention measures, such as quarantine and travel bans, limited individuals’ freedom, further raising people’s negative emotions [28,29]. Health-unrelated factors such as economic and political impacts, media coverage, and government responses also drove emotional expression [30,31]. When no better or more convenient offline outlet for these emotions is available during a public health emergency, people can be expected to turn to social media [1,2,3,32,33]. In addition to offering medical information and experiences that people may find useful [34,35,36,37], social media also accommodates the need for emotional release. Recent studies of emotions expressed on social media during the COVID-19 pandemic in various countries found that negative emotions such as depression, anxiety, fear, and anger increased and became more prevalent [4,11,38,39]. Further, since geographical boundaries and offline interpersonal circles matter less on social media, emotions can be shared more easily and affect others more widely, gradually forming a homogeneous emotional state; this process is termed “emotion contagion” [29,40].

2.2. Emotional Contagion on Social Media

Emotion contagion describes a phenomenon in which certain individuals’ emotions propagate to others and trigger similar emotions; it can take place in both the online and offline worlds [41,42,43]. In recent years, with the development of social media, a growing number of studies on emotion contagion have shifted their attention to this new arena [6,7,31]. Although people on social media do not encounter the non-verbal emotional cues that are common in face-to-face communication, emotion contagion has been found to occur via online emotional content [6,7,31,44]. Moreover, the scope of people’s interactions on social media is larger than their offline social circles [29]. It should also be noted that on social media, emotion contagion takes place not only in human-human interactions but also in human-robot interactions [45].

Complex factors affect emotion contagion on social media. The first is the valence of the content: although scholars have not reached consensus on whether positive or negative content is shared more on social media, it is clear that emotional content travels faster than non-emotional content [29]. Second, some specific emotions in social media messages, such as anger, are more likely than others to prompt public sharing [46]. Contagion of negative emotions such as anger, sadness, and anxiety on social media may have accelerated during the COVID-19 pandemic [29]. The “Purell panic” of the coronavirus crisis, in which fear of infection drove people to rush irrationally to buy Purell hand sanitizer, is a convincing example [47]. Furthermore, people’s emotions are susceptible to manipulation by other users or even bots [23,43,48], and this influence can be exacerbated in certain topics related to disease outbreaks [31,49].

2.3. Social Bots and Public Sentiments Manipulation

Social bots, accounts that are partly or entirely controlled by computer algorithms rather than human users [19], often act in a coordinated fashion and have been found to drive the dynamics of public emotions and sentiments in social media conversations [19,22,50,51]. Over the past few years, social bots have become increasingly prevalent on social media platforms, such that they now comprise approximately 9–15% of active Twitter accounts [20]. In the Twittersphere, social bots can drive high volumes of traffic, amplify polarizing and conspiracy content [52,53], and contribute to the circulation of misinformation [54].

By analyzing 7.7 million tweets in the 2014 Indian election, scholars found that bots tend to be less polarized when expressing sentiments—either positive or negative—than human users and are less likely to change their stance [55]. However, this does not necessarily indicate that social bots are more neutral in nature. Instead, they are often involved in public sentiment manipulation. Since emotionally charged tweets tend to be retweeted more often and more quickly than neutral ones [56], during polarizing events, social bots intentionally pick sides and disseminate a more complex set of emotions to attract more attention, while human users commonly express basic emotions, including sadness and fear [22]. These automated accounts can also increase users’ exposure to negative and inflammatory messages, which may trigger mass hysteria and social conflicts in cyberspace [21]. In spam campaigns, social bots are employed in an orchestrated fashion to post negative tweets, so as to smear competing products [57]. They can elicit varied emotional reactions, ranging from worship to hatred, during interactions with human users [58]. Even on harmless occasions such as Thanksgiving, these accounts are apt to trigger polarizing emotions in Twitter discourse [22].

2.4. Social Bots and Online Health Communication

Social bots may also be used to manipulate public attitudes, sentiments, and behaviors by engaging in online health-related discussions. On the one hand, through effective and friendly interactions with human users, social bots can help people achieve their physical and mental health goals [59], for example by helping control tobacco use [60] and by providing pediatric groups with mental health care, education, and consultations [61]. In the fight against the COVID-19 pandemic, researchers have noted that chatbots could be used to spread up-to-date health information, ease people’s anxiety about seeking medical care, and alleviate psychological pain [62]. On the other hand, some malicious bots in online social networks pose multifaceted threats to public health. In vaccine controversies, for example, social bots can be exploited to alter people’s willingness to be vaccinated and to strengthen anti-vaccination beliefs by retweeting content posted by users in this opinion group [63]. Furthermore, sophisticated automated accounts, such as Russian trolls, strategically pay equal attention to pro- and anti-vaccination arguments, a well-acknowledged tactic for disrupting civic conversations that has eroded public confidence in vaccine efficacy [24]. In addition, by suggesting medical uses for cannabis, social bots can be employed to skew the public’s attitudes towards the efficacy of cannabis in dealing with mental and physical problems [25].

During earlier public health emergencies, such as the Zika epidemic, social bots were detected as both influential and dominant voices in social media debate [64]. Some recent works have also investigated social bots’ engagement in online conversation about COVID-19. Researchers from Carnegie Mellon University (CMU) found that many social bots spread and amplified false medical advice and conspiracy theories and, in particular, promoted “reopening America” messages [65]. These automated agents have become even more active in the era of COVID-19, with activity double what researchers estimated based on previous crises and elections [65,66].

It is well acknowledged that non-human accounts contributed to social media infodemics during the outbreak [23,66,67], yet bots’ involvement in public sentiment during the pandemic remains unclear. In particular, social bots’ possible amplification of negative emotions, and their success or failure in triggering humans’ amplification under distinct sub-topics of health emergencies, have not been examined. This paper seeks to paint a detailed picture of Twitter discourse during COVID-19, when a diverse range of users shared their concerns, expressed emotions, and transmitted their sentiments in different ways, with a particular focus on characterizing the role of social bots in the online information ecosystem. Specifically, this study addresses the following questions:

- RQ1: When discussing different topics concerning the COVID-19 pandemic, how do social bots and human users differ in their (a) sentiment polarity and (b) sentiment strength?

- RQ2: For topics showing negative sentiment, how do social bots and human users differ in their expression of specific negative emotions, including (a) anger, (b) anxiety, and (c) sadness?

- RQ3: Are the negative emotions in tweets transmitted among different actors, and if so, how?

3. Materials and Methods

3.1. Data

In this study, we followed previous research [23,68,69] and chose Twitter to explore the sentiment features of social bots during the COVID-19 pandemic. Based on the COVID-19 pandemic timeline published by the WHO [70], we selected three important time points relating to the WHO’s reactions as data collection timestamps. (1) On 22 January 2020, the WHO mission to Wuhan issued a statement saying that evidence suggested human-to-human transmission in Wuhan but that more investigation was needed to understand the full extent of transmission; this marked the WHO’s attention to, and assessment of, the potential spread of COVID-19. (2) On 31 January, the WHO declared the novel coronavirus a public health emergency of international concern (PHEIC), indicating worldwide concern about COVID-19. (3) On 11 March, the WHO made the assessment that the COVID-19 outbreak could be characterized as a pandemic, meaning that the world was in the grip of COVID-19.

Because the WHO did not announce that the disease caused by the novel coronavirus would be called COVID-19 (short for coronavirus disease 2019) until 11 February, after the first two data collection time points, we used #coronavirus (case insensitive) as the data collection keyword at all three time points to ensure consistency.

This study used a custom Python script to collect tweets and their authors. We followed Twitter’s robot policy and collected only publicly available tweet texts. The following kinds of tweets were included: (1) all original tweets containing the keyword and (2) quote tweets containing the keyword in the comment part. In other words, all novel content containing “#coronavirus” was collected, whereas retweets were excluded, as were quote tweets whose comment did not contain the keyword. These criteria ensured that every collected text containing “#coronavirus” was written by the account it was attributed to. Emojis, symbols, and hyperlinks in the text were filtered out. Twitter users’ privacy was fully respected: personal information was not collected, analyzed, or displayed in our research.
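The inclusion rules above can be expressed as a small filter. This is only an illustrative sketch under stated assumptions: the study's own collection script is not published, so the `Tweet` record, its fields, and the `is_novel_keyword_tweet` and `clean_text` helpers are hypothetical, and the keyword is assumed to be the exact hashtag #coronavirus matched case insensitively. The two inclusion rules collapse into a single test: a tweet qualifies when it is not a retweet and the author's own text (for a quote tweet, the comment) contains the keyword.

```python
import re
import unicodedata
from dataclasses import dataclass
from typing import Optional

# Assumption: exact hashtag, case insensitive; "#CoronavirusOutbreak" does not match.
KEYWORD = re.compile(r"#coronavirus\b", re.IGNORECASE)
URL = re.compile(r"https?://\S+")


@dataclass
class Tweet:
    """Hypothetical tweet record; field names are illustrative only."""
    text: str                          # author's own text (comment part for quotes)
    is_retweet: bool = False
    quoted_text: Optional[str] = None  # text of the quoted tweet, if any


def is_novel_keyword_tweet(tweet: Tweet) -> bool:
    """Keep originals and quote tweets whose own text contains the keyword."""
    if tweet.is_retweet:
        return False
    # The quoted tweet's text is deliberately ignored: a keyword that appears
    # only in the quoted part does not qualify.
    return KEYWORD.search(tweet.text) is not None


def clean_text(text: str) -> str:
    """Drop hyperlinks, emojis, and other symbol characters; tidy whitespace."""
    text = URL.sub(" ", text)
    kept = []
    for ch in text:
        category = unicodedata.category(ch)
        if category.startswith("S"):      # symbols, including most emojis (So)
            continue
        if category == "Cf" or 0xFE00 <= ord(ch) <= 0xFE0F:
            continue                      # zero-width joiners, variation selectors
        kept.append(ch)
    return " ".join("".join(kept).split())
```

Removing characters by Unicode general category, rather than with a hand-written emoji range, is one design choice among several; it also removes other symbols such as currency and math signs, which matches the text's "emojis, symbols, and hyperlinks".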

3.2. Social Bot Detection

Given that social bots have become increasingly sophisticated, the lines between human-like and bot-like behaviors have become further blurred [19]. Researchers have developed multiple approaches to discriminate between social bots and humans [71,72], among which Botometer, formerly known as BotOrNot, is a widely used social bot detection interface for Twitter [73,74,75,76].

Previous studies have identified differences between human users and social bots in the semantic features of tweet sentiments [55] and in their social contacts [77]. Using machine learning algorithms, Botometer extracts more than 1000 predictive features that capture many suspicious behaviors, mainly by characterizing the account’s profile, friends, social network, temporal activity patterns, language, and sentiment [78,79]. The program then returns an ensemble classification score on a normalized scale indicating the likelihood that a Twitter account is a bot: scores closer to 1 indicate a higher chance of being a bot, while accounts with scores closer to 0 more likely belong to humans. Consistent with the sensitivity settings of previous studies [73,74,76], we set the threshold separating humans from bots at 0.5; that is, an account was labeled a social bot if its score was above 0.5. In some cases, Botometer fails to output a score because of issues such as account suspensions and authorization problems. Accounts that could not be assessed were excluded from further analysis.
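The labeling rule reduces to a threshold on the score. A minimal sketch, assuming the Botometer scores have already been retrieved into a dictionary that maps a hypothetical account identifier to a score, or to `None` when no score could be obtained; it does not call Botometer itself. Because an account is labeled a bot only if its score is above 0.5, a score of exactly 0.5 is treated as human here.

```python
from typing import Dict, Optional

BOT_THRESHOLD = 0.5  # threshold reported in the text


def label_accounts(
    scores: Dict[str, Optional[float]], threshold: float = BOT_THRESHOLD
) -> Dict[str, str]:
    """Label each scored account 'bot' or 'human'.

    `scores` maps a hypothetical account id to a Botometer-style score in
    [0, 1], or to None when no score could be obtained (for example, a
    suspended account). Unscored accounts are left out of the result,
    mirroring their exclusion from further analysis.
    """
    labels: Dict[str, str] = {}
    for account, score in scores.items():
        if score is None:
            continue
        labels[account] = "bot" if score > threshold else "human"
    return labels
```

For example, scores of 0.91, 0.12, 0.5, and `None` yield "bot", "human", "human", and no label, respectively.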

3.3. Sentiment Analysis

Although the terms sentiment and emotion are often used interchangeably [80], they differ slightly in emphasis [81]. In NLP (natural language processing), sentiment is by default defined as a positive or negative expression [82]. Emotion refers to diverse types of feelings or affect, such as anger, anxiety, and sadness, and emotion analysis is regarded as the secondary level of sentiment analysis in a broad sense [83]. To investigate the sentiment and emotion components of online messages from bots and human accounts, we analyzed our samples with Linguistic Inquiry and Word Count (LIWC). This automated text analysis application extracts structural and psychological observations from text records [84]. LIWC’s dictionary is organized into linguistic categories, each defined by a list of words. By counting the words belonging to each category in each text file, LIWC returns about 90 output variables, including summary language variables, general descriptor categories, and categories tapping psychological constructs. Some categories contain sub-dictionaries, so a single word can increment the scores of several categories at once. For instance, the word “cried” falls into five categories: sadness, verbs, negative emotions, overall affect, and past focus. Notably, LIWC2015 [85], the most recent version of the dictionary at the time of this study, can accommodate numbers and punctuation, as well as short phrases that are common in Twitter and Facebook posts (e.g., “:)” is coded as a positive emotion word). Previous studies have validated different indicators of the program [86,87]. Our analysis focused mainly on the categories indicative of sentimental and emotional cues in online posts: the affective processes, which include positive and negative emotions, and, within negative emotions, anger, anxiety, and sadness.
Following previous studies [56,88,89], we subtracted the negative emotion score from the positive emotion score to measure sentiment polarity and added the two scores to measure sentiment strength. Higher polarity indicates that a text is more positive overall, and greater strength means that more intense sentiments are expressed in it.
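The scoring steps can be illustrated with a toy dictionary. This sketch rests on explicit assumptions: the real LIWC2015 dictionary is proprietary and far larger, so `TOY_DICTIONARY`, its five words, and the category names are hypothetical stand-ins, and each category score is expressed here as the percentage of the text's words falling in that category. What the sketch does show is how one word (“cried”) increments several categories at once and how polarity and strength follow from the positive and negative emotion scores.

```python
import re
from collections import Counter
from typing import Dict, Tuple

# Hypothetical stand-in for a LIWC-style dictionary: each word maps to every
# category it increments (the real LIWC dictionary is proprietary and larger).
TOY_DICTIONARY = {
    "cried": {"sad", "negemo", "affect", "verb", "focuspast"},
    "afraid": {"anx", "negemo", "affect"},
    "hate": {"anger", "negemo", "affect"},
    "hope": {"posemo", "affect"},
    "safe": {"posemo", "affect"},
}


def category_scores(text: str) -> Dict[str, float]:
    """Percentage of the text's words that fall in each category."""
    words = re.findall(r"[a-z']+", text.lower())
    counts: Counter = Counter()
    for word in words:
        for category in TOY_DICTIONARY.get(word, ()):
            counts[category] += 1
    total = len(words) or 1  # avoid dividing by zero on empty text
    return {category: 100.0 * n / total for category, n in counts.items()}


def polarity_and_strength(scores: Dict[str, float]) -> Tuple[float, float]:
    """Polarity = positive - negative; strength = positive + negative."""
    positive = scores.get("posemo", 0.0)
    negative = scores.get("negemo", 0.0)
    return positive - negative, positive + negative
```

For the sentence “I cried but I hope we stay safe” (eight words, two positive and one negative), the positive and negative scores are 25.0 and 12.5, giving a polarity of 12.5 and a strength of 37.5.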

3.4. Structural Topic Model

Topic models are statistical natural language processing models developed for uncovering the hidden semantic structures within large corpora [90]. They have been frequently used by computer scientists and have become increasingly popular in social science research [91]. In this study, we used the relatively new Structural Topic Model (STM) to extract the latent topics in the original Twitter posts. STM is an unsupervised learning model introduced by Roberts et al. [92] as an extension of Latent Dirichlet Allocation (LDA) [93], one of the most common probabilistic topic models for computer-assisted text analysis. Comparisons between the two have found that STM outperforms its predecessor in covariate inference and out-of-sample prediction [94], and some social scientists have used STM to analyze social media text [95].

Before the text documents are input, STM requires researchers to specify a value of k, the number of topics to extract from the corpus. Each word that appears in the corpus has a probability of belonging to a given topic; although the words of different topics may overlap, users can distinguish topics by their highest-ranking words. We used the stm R package to conduct a topic-based sentiment analysis of bot-generated and human-generated tweets. The appropriate value of k for a given corpus is user-specified, with no absolute “right” answer [96], so researchers need to set it based on the interpretability of the results. After several preliminary tests, we limited the number of topics to a range of 6–14 and eventually specified k = 12. As suggested by the developers of STM [97], we labeled the topics by examining the words and the documents associated with each topic. The functions labelTopics, findThoughts, and plotQuote in the stm R package were used to generate high-probability words and example documents for each topic. The STM model automatically calculated the expected topic proportions of the corpus, and for each document, the topic proportions across the k topics add up to 1 [97]. In this study, the topic with the largest proportion in a tweet was regarded as its dominant topic.
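Assigning a dominant topic is an argmax over each row of the document-topic proportion matrix. The sketch below assumes that matrix (in the stm package, the fitted model’s `theta`) has been exported to Python as a list of rows; the helper names are hypothetical. It also averages the rows as one plausible way to obtain corpus-level topic proportions, which is an assumption for illustration rather than a description of how the reported proportions were computed. Ties go to the lower-numbered topic.

```python
from typing import List, Sequence


def dominant_topics(theta: Sequence[Sequence[float]], tol: float = 1e-6) -> List[int]:
    """Index of the largest topic proportion in each document (row)."""
    dominant = []
    for i, row in enumerate(theta):
        if abs(sum(row) - 1.0) > tol:
            raise ValueError(f"row {i} does not sum to 1")
        # max() returns the first maximal index, so ties go to the lower topic.
        dominant.append(max(range(len(row)), key=lambda j: row[j]))
    return dominant


def mean_topic_proportions(theta: Sequence[Sequence[float]]) -> List[float]:
    """Average each topic's proportion over all documents."""
    n_docs = len(theta)
    n_topics = len(theta[0])
    return [sum(row[j] for row in theta) / n_docs for j in range(n_topics)]
```

With three documents and three topics, `[[0.7, 0.2, 0.1], [0.1, 0.3, 0.6], [0.4, 0.4, 0.2]]` gives dominant topics `[0, 2, 0]`, the third document being a tie resolved toward topic 0.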

4. Results

As mentioned above, based on the timeline published by the World Health Organization [70], this exploratory study selected three data collection time points. A total of 195,201 tweets (7806, 14,375, and 173,020 at the three time points, respectively) were collected in this study, of which 18,497 (9.27%) were published by social bots, 176,166 (90.25%) by humans, and 535 (0.27%) by unknown users. A total of 118,720 unique users contributed to these tweets, of which 10,865 (9.15%) were social bots, 107,478 (90.53%) were humans, and 375 (0.32%) were unknown users.

Basic statistics. The STM model gave 12 lists of high probability words for 12 topics, which are displayed in descending order of proportion in Table 1. We labeled the top 11 word lists with the topic names. As the word list with the smallest proportion had no interpretable meaning, we labeled it as Else. Confirmed cases and deaths accounted for the largest proportion, followed by disease prevention and economic impacts. We further classified the top 11 topics into two categories: health-related and health-unrelated themes. Health-related themes covered eight topics, including confirmed cases and deaths, disease prevention, COVID-19 in the US, news/Q&A, COVID-19 in China, COVID-19 outbreak, healthcare system, and health risks. Health-unrelated themes included three topics, covering economic impacts, events canceled and postponed, and impacts on public life.

Table 1.

List of 12 tweet topics according to the structural topic model.

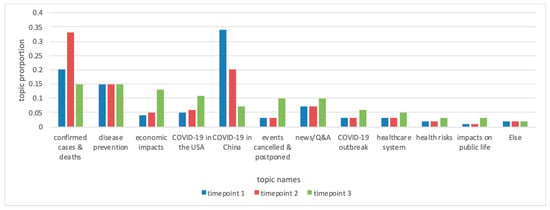

Figure 1 shows the proportions of the 12 topics at the three time points. At each time point, the 12 topics’ proportions sum to 1. Notably, the proportions of all three health-unrelated topics climbed as the pandemic spread. As for health-related topics, Twitter users in our dataset, who are English language speakers according to our data collection criteria, showed increasing interest in COVID-19 in the US and decreasing interest in COVID-19 in China. Concern about news/Q&A, COVID-19 outbreak, healthcare system, and health risks climbed, while concern about disease prevention remained stable. Discussion of confirmed cases and deaths peaked at time point 2.

Figure 1.

Topic proportions at the three time points.

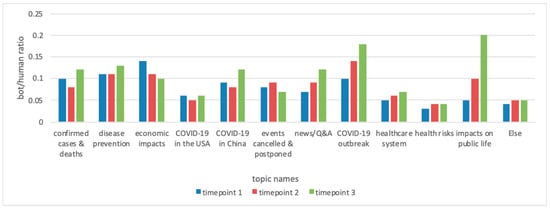

Bot-human ratio in different topics at three time points. Figure 2 shows the bot-human ratio (the number of bot-generated tweets divided by the number of human-generated tweets) for every topic at each of the three time points. Tweets about economic impacts, COVID-19 outbreak, and impacts on public life had the highest bot-human ratios at time points 1, 2, and 3, respectively.
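The ratio in Figure 2 is a per-cell division of two counts. A minimal sketch, assuming each tweet has already been reduced to a hypothetical `(time_point, topic, label)` tuple; tweets from accounts with any other label (such as unknown users) are ignored, and cells with no human tweets are left out because the ratio is undefined there.

```python
from collections import Counter
from typing import Dict, Hashable, Iterable, Tuple

Cell = Tuple[Hashable, Hashable]  # (time_point, topic)


def bot_human_ratio(records: Iterable[Tuple[Hashable, Hashable, str]]) -> Dict[Cell, float]:
    """Bot-generated tweets divided by human-generated tweets per (time point, topic)."""
    bots: Counter = Counter()
    humans: Counter = Counter()
    for time_point, topic, label in records:
        if label == "bot":
            bots[(time_point, topic)] += 1
        elif label == "human":
            humans[(time_point, topic)] += 1
        # any other label (e.g. an unscored account) is ignored
    # Counter returns 0 for cells with no bot tweets; every cell in `humans`
    # has at least one human tweet, so the division is always defined.
    return {cell: bots[cell] / humans[cell] for cell in humans}
```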

Figure 2.

Bot/human ratio for each topic at three time points.

Comparing Figures 1 and 2, we found that although the topic proportion of economic impacts increased as the pandemic developed, its bot-human ratio decreased, indicating that humans’ concern for economic impacts gradually emerged. For several health-related topics (such as news/Q&A, COVID-19 outbreak, healthcare system, and health risks) and one health-unrelated topic (impacts on public life), increases in topic proportion paralleled increases in social bot involvement, suggesting that social bots may have helped raise the salience of these topics on the social media agenda. For COVID-19 in China, social bots’ engagement increased at time point 3 while the topic’s proportion dropped, suggesting that social bots were working to maintain public attention on COVID-19 in China.

Topic-based analysis of sentiment characteristics for social bots and humans. In order to compare the sentiment characteristics of social bots’ contents and humans’ contents in different topics, we calculated the topic-based average value of sentiment polarity and sentiment strength for different user groups. A sentiment polarity greater than 0 refers to overall positive sentiment and polarity lower than 0 means overall negative sentiment. Greater sentiment strength means the sentiments present in texts are more intense and smaller sentiment strength means fewer sentiments are expressed. Table 2 shows the results in ascending order of overall sentiment polarity. Social bots showed similar sentiment polarity to humans in all topics. In four topics (COVID-19 in the US, health risks, COVID-19 in China, and economic impacts), more negative sentiments were expressed than positive sentiments overall and social bots were even more negative than humans when addressing COVID-19 in the US, COVID-19 in China, and economic impacts. In the other seven topics, the overall sentiment polarity was positive and social bots showed an even more positive attitude in impacts on public life, events canceled and postponed, and disease prevention. As for sentiment strength, social bots showed weaker sentiment in most topics, except for COVID-19 in the US and the healthcare system.
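The topic-based averages in Table 2 amount to grouping tweets by topic and user group and taking means. A sketch, assuming hypothetical `(topic, group, polarity, strength)` rows built from per-tweet values computed as described in the sentiment analysis method; every row also contributes to an `"all"` group so that the all-users column is computed the same way.

```python
from collections import defaultdict
from statistics import mean
from typing import Dict, Iterable, List, Tuple

Row = Tuple[str, str, float, float]  # (topic, group, polarity, strength)


def topic_group_means(rows: Iterable[Row]) -> Dict[Tuple[str, str], Tuple[float, float]]:
    """Mean polarity and strength for each (topic, group), plus an 'all' group."""
    buckets: Dict[Tuple[str, str], List[Tuple[float, float]]] = defaultdict(list)
    for topic, group, polarity, strength in rows:
        buckets[(topic, group)].append((polarity, strength))
        buckets[(topic, "all")].append((polarity, strength))
    return {
        key: (mean(p for p, _ in values), mean(s for _, s in values))
        for key, values in buckets.items()
    }
```

Comparing, say, the `("US", "bot")` and `("US", "human")` entries then answers the RQ1 questions of which group is more negative and which expresses stronger sentiment on that topic.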

Table 2.

Sentiment polarity and strength of all users, social bots, and humans for different topics.

For the four topics whose overall sentiment polarity was negative, we explored the expression of three specific negative emotions and the human-bot interactions around them in Tables 3 and 4. Table 3 reports the average values of sadness, anger, and anxiety for social bots and humans. When discussing COVID-19 in the US, social bots showed more anger, sadness, and anxiety than humans. When discussing COVID-19 in China, social bots showed more anger and anxiety, but less sadness. For health risks, social bots expressed more sadness and anxiety than humans, but less anger. For economic impacts, social bots showed more anger and anxiety.

Table 3.

Sadness, anger, and anxiety of social bots and humans for topics whose overall sentiment polarity was negative.

Table 4.

Emotion transmission among different user groups for the four topics whose overall sentiment polarity was negative.

Table 4 shows, for each kind of emotion in each topic, how much the emotion was amplified through bot-to-human, human-to-bot, bot-to-bot, and human-to-human retweeting, where, for example, bot-to-human retweeting means a human retweeting a bot’s tweet. Of the 195,201 tweets in our dataset, 62,940 were retweeted at least once, and these tweets were retweeted 416,204 times in total after they were published. We identified the retweeters of these 62,940 tweets with the official Twitter API and calculated their bot scores with Botometer to distinguish humans from suspected bots. This allowed us to classify the 416,204 retweets into bot-to-human, human-to-bot, bot-to-bot, and human-to-human retweeting. The anger, anxiety, and sadness scores of each of the 62,940 tweets were then used to calculate the volume of emotion transmitted through the 416,204 retweets. For each topic, we tallied the transmission volume of sadness, anger, and anxiety through bot-to-bot, bot-to-human, human-to-bot, and human-to-human retweeting and finally calculated the share of emotion transmission accounted for by each of these four categories. Consistent with Tables 2 and 3, Table 4 only shows the results for the four topics whose overall sentiment polarity was negative.
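The transmission calculation described above can be sketched as follows. The sketch rests on assumptions about details the text leaves open: a channel's volume is taken to be the sum of the retweeted tweet's emotion score over all retweets in that channel, a channel is named author type first (so `bot-to-human` is a human retweeting a bot), retweets involving accounts without a label are skipped, and all function and argument names are hypothetical.

```python
from collections import defaultdict
from typing import Dict, Iterable, Mapping, Tuple

Retweet = Tuple[str, str, str]  # (tweet_id, author_id, retweeter_id)


def transmission_shares(
    retweets: Iterable[Retweet],
    emotions: Mapping[str, Mapping[str, float]],  # tweet_id -> {emotion: score}
    topics: Mapping[str, str],                   # tweet_id -> dominant topic
    labels: Mapping[str, str],                   # account_id -> "bot" | "human"
) -> Dict[Tuple[str, str], Dict[str, float]]:
    """Share of each (topic, emotion) transmission volume per retweeting channel.

    Channels are named author type first, so "bot-to-human" means a human
    retweeted a bot's tweet. Channels with no volume are simply absent.
    """
    volume: Dict[Tuple[str, str], Dict[str, float]] = defaultdict(lambda: defaultdict(float))
    for tweet_id, author, retweeter in retweets:
        source, target = labels.get(author), labels.get(retweeter)
        if source is None or target is None:
            continue  # skip retweets involving unlabeled accounts
        channel = f"{source}-to-{target}"
        for emotion, score in emotions[tweet_id].items():
            volume[(topics[tweet_id], emotion)][channel] += score

    shares: Dict[Tuple[str, str], Dict[str, float]] = {}
    for key, by_channel in volume.items():
        total = sum(by_channel.values())
        shares[key] = {ch: (v / total if total else 0.0) for ch, v in by_channel.items()}
    return shares
```

Under this reading, a topic where the `bot-to-human` share of anger exceeds the `human-to-bot` share is one where bots triggered more anger transmission than they absorbed.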

The results in Table 4 reveal that emotion transmission among humans was the most intensive, while emotion transmission among social bots was the least intensive during the pandemic. In most cases, emotion transmission from humans to social bots was larger than that from social bots to humans; that is, social bots were more likely to actively amplify humans’ emotions than to trigger humans’ amplification. Among the four topics, social bots were most capable of exporting negative emotions in discussions of COVID-19 in the US. In particular, bot-to-human anger transmission was greater than human-to-bot anger transmission, suggesting that social bots succeeded in triggering anger transmission on this topic. Social bots did not trigger emotion transmission in every topic, however: comparing Tables 3 and 4, we found that although social bots expressed more sadness in response to health risks, they failed to pass that sadness on to humans.

5. Discussion

In our study, we identified the key concerns and sentiment expressions of different actors on Twitter, through which we confirmed that social bots were intentionally engaged in manipulating public opinion and sentiments during the COVID-19 crisis and that they managed to facilitate the contagion of negative emotions on certain topics.

Generally speaking, the distribution of topic proportions suggests that Twitter users reacted most actively to the increasing numbers of infections and deaths, especially when coronavirus risks spread worldwide (t2). However, unlike a previous study reporting that the incidence of infection cases and deaths paralleled negative public sentiment [11], we found that individuals’ sentiment expression was positive overall, except when discussing the US, China, the hit to the global economy, and potential coronavirus risks. Users also paid considerable attention to measures for protecting people from infection, suggesting that social media platforms can support disease prevention. Notably, China and the US, two of the primary triggers of negative emotion, turned out to be the two most prominent entities on the Twitter agenda, with the focus shifting from the former to the latter over time.

Regarding the role of bots in shaping online public opinion and sentiment dynamics, our findings indicate that these automated actors are influential in online social networks, in either a benign or a malicious manner. Across the three time points we considered, social bots made up only a small proportion of the Twitter users (9.15%) participating in COVID-19-related discussions, yet their share of messages was slightly higher (9.27%). Previous studies have indicated, however, that social bots are often perceived as credible and attractive [98] and that by constituting 5–10% of the participants in a discussion, these accounts can bias individuals’ perceptions and dominate public opinion [99]. Our study showed that the proportion of bots remained the same at t1 and t2, followed by a slight increase at t3. According to researchers from CMU, it was not until February that social bots started to appear more frequently in COVID-19-related conversations [65]. As shown in Table 2, and consistent with previous research [55], social bots generally expressed weaker sentiment than human users. We also found that the two communities shared a similar trend in sentiment polarity (positive or negative) for almost all topics, which may make social bots more deceptive. By impersonating humans, these automated accounts are designed to push particular narratives and drive the discourse on social network sites [19], primarily through two approaches: content generation and interactions with human users [100].

Scholars have argued that exposure to information containing “antagonistic memes”, such as those linking Zika to global warming, can trigger polarized affective responses [101]. Social bots have been found to create fear and panic among the public by, for example, steering online discussions with inaccurate health claims or unverified information [102]. In our case, these malicious actors pushed discouraging and depressing messages that could intensify people’s negative feelings. Because these accounts facilitate the spread of misinformation by exposing human users to low-credibility content and inducing them to reshare it [76], we argue that they use emotionally charged posts to bait humans into retweeting, thereby boosting the influence of negative emotions. More importantly, consistent with findings that social bots retweet more than legitimate users but are retweeted less [19], our results show that social bots were even more likely to amplify pessimistic content by retweeting human users than to trigger humans into resharing their own posts (see Table 4).
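The distinction between bots amplifying human emotions and bots triggering human amplification can be illustrated with a minimal Python sketch. It assumes each one-step retweet has already been labeled with whether the retweeter and the original author are suspected bots, and that each retweeted tweet carries a single emotion score (for example, a LIWC category percentage). The record layout and the names `direction` and `summarize_cascades` are hypothetical; this is an illustration of the bookkeeping, not the authors' actual pipeline.

```python
from collections import defaultdict
from statistics import mean
from typing import Dict, Iterable, List, Tuple

# Hypothetical record layout: (retweeter_is_bot, author_is_bot, emotion_score).
# emotion_score stands in for any per-tweet measure, e.g. a LIWC anger percentage.
Retweet = Tuple[bool, bool, float]


def direction(retweeter_is_bot: bool, author_is_bot: bool) -> str:
    """Label a one-step retweet by the direction the emotion travels: author -> retweeter."""
    source = "bot" if author_is_bot else "human"
    target = "bot" if retweeter_is_bot else "human"
    return f"{source}->{target}"


def summarize_cascades(retweets: Iterable[Retweet]) -> Dict[str, Tuple[int, float]]:
    """Return {direction: (number of retweets, mean emotion score of retweeted tweets)}."""
    groups: Dict[str, List[float]] = defaultdict(list)
    for retweeter_is_bot, author_is_bot, score in retweets:
        groups[direction(retweeter_is_bot, author_is_bot)].append(score)
    return {d: (len(scores), mean(scores)) for d, scores in groups.items()}


if __name__ == "__main__":
    # Toy data for illustration only, not from the study.
    sample = [
        (True, False, 2.0),   # bot retweets a human post: bot amplifies human emotion
        (True, False, 4.0),
        (False, True, 1.0),   # human retweets a bot post: bot triggers human amplification
        (False, False, 0.5),  # human retweets a human post
    ]
    for d, (n, avg) in sorted(summarize_cascades(sample).items()):
        print(d, n, avg)
```

In this toy run, the "human->bot" group (bots retweeting humans) is larger than the "bot->human" group (humans retweeting bots), which is the kind of asymmetry the comparison in Table 4 is about; real analyses would also need significance testing, which the sketch omits.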

More specifically, bots became more outraged and anxious when talking about China and the severe blow to the global economy, which may have damaged public impressions of these two topics. They also retweeted posts expressing extreme anger and angst, intensifying negative emotions on Twitter. During the outbreak, human users tended to be angry, worried, and anxious in response to health risks, and fears and actual health risks might have been exaggerated in their tweets [5]. The interference of social bots worsened this situation: in their own posts, bots expressed even more sadness and anxiety than humans, although their sadness was not transmitted from bots to human users.

Another point worth highlighting is that social bots successfully infiltrated and manipulated Twitter discourse about COVID-19 in the US. Congruent with previous research [55], social bots generally showed lower sentiment strength than human users; however, this was not the case in discussions about the coronavirus and the Trump administration, in which social bots posted stronger expressions of anger, sadness, and anxiety than human users did. These negative emotions, especially anger, were inflammatory and contagious enough that human users went on to amplify such messages, which might exacerbate public irritation and social conflict in the online ecosystem [21].

On a different note, social bots in our study were also found to be increasingly active and positive in the majority of health-related discussions, including news about the outbreak, medical suggestions, and relevant information about disease prevention. On the one hand, this sheds light on the potential benefits of these automated actors: media agencies and public institutions might leverage them to monitor and disseminate breaking health news and to provide updates on the latest developments in disease prevention and treatment [60,61]. As COVID-19 spread, people’s daily lives changed markedly. Our study showed that the number of social bots involved in discussions about impacts on public life increased steadily over time and that these bots tended to share positive messages, which might help comfort people and encourage them to stay positive during the crisis. On the other hand, given previous findings that social bots played a disproportionate role in spreading articles from low-credibility sources [76], these accounts should be treated cautiously, because seemingly positive content can also be misleading. For instance, social bots may push narratives suggesting that the epidemic has been halted and urge that public gatherings resume. If social bots generate positive content that runs counter to scientific consensus, they may still pose threats to public health [102,103]. One possible side effect is that the public’s confidence in online health communication may erode [104]. Furthermore, because social media data can be used to complement and extend the surveillance of health behaviors [105], interference by social bots may prevent risk communicators and public health officials from accurately monitoring emerging threats or correctly gauging public sentiment about health emergencies [103]. Thus, the credibility and impacts of seemingly positive content need further examination.

6. Conclusions

As social media has become one of the dominant platforms for health communication during pandemics, the prevalence of social bots may skew public sentiment by intentionally amplifying specific voices. This study extended previous research on social bots and sentiment by shedding light on the topic-based sentiment features of social bots in the context of health emergencies. Specifically, we characterized the differences between social bots and humans in sentiment polarity and sentiment strength and, in particular, explored how negative emotions are transmitted among different actors.

The results indicate that suspected social bots contributed as much as 9.27% of COVID-19 discussions on Twitter. Social bots and humans shared similar trends in sentiment polarity for almost all topics. Among the overall negative topics, social bots were even more negative than humans when addressing COVID-19 in the US, COVID-19 in China, and economic impacts. Social bots’ sentiment expressions were weaker than those of humans for most topics, except for COVID-19 in the US and the healthcare system. Social bots were more likely to actively amplify humans’ emotions than to trigger humans’ amplification, and their capability of triggering emotion contagion varied across cases. In discussions of COVID-19 in the US, social bots managed to trigger bot-to-human anger transmission. By contrast, although social bots expressed more sadness towards health risks, they failed to pass this sadness on to humans.

Limitations and Future Directions

This study used sentiment analysis and topic modeling to capture social bots’ sentiment engagement in distinct topics of online COVID-19 discussions, which may lay the groundwork for health researchers and communicators interested in sentiment dynamics on social media. However, although bot detection algorithms can automatically label suspected social bots, the current mechanism of the Twitter platform does not reveal who operates social bots behind the scenes, which makes it challenging for researchers to establish the political, social, or economic motivations of social bots. Thus, although we depicted the sentiment features of social bots and put forward several probable explanations, we cannot infer their intentions from their sentiment expressions alone. In addition, this study explored bot–human sentiment transmission only in one-step retweet cascades. Social media is a complex information ecosystem in which social bots and humans are embedded in large-scale social networks. Future studies could introduce a network perspective to identify social bots’ roles in starting, promoting, hindering, or terminating sentiment transmission, and could characterize sentiment transmission networks of different sizes and patterns to extend the current understanding of social bots’ impacts on online sentiment.

Author Contributions

Conceptualization, J.S., W.S., and J.Y.; methodology, W.S.; software, W.S.; validation, W.S.; formal analysis, W.S. and D.L.; data curation, W.S.; writing—original draft preparation, D.L., S.W., J.Z., and W.S.; writing—review and editing, J.Y., W.S., and J.S.; visualization, D.L. and W.S.; supervision, J.S.; project administration, J.S.; funding acquisition, J.S. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Tang, L.; Bie, B.; Zhi, D. Tweeting about measles during stages of an outbreak: A semantic network approach to the framing of an emerging infectious disease. Am. J. Infect. Control 2018, 46, 1375–1380.

- Lazard, A.J.; Scheinfeld, E.; Bernhardt, J.M.; Wilcox, G.B.; Suran, M. Detecting themes of public concern: A text mining analysis of the Centers for Disease Control and Prevention’s Ebola live Twitter chat. Am. J. Infect. Control 2015, 43, 1109–1111.

- Mollema, L.; Harmsen, I.A.; Broekhuizen, E.; Clijnk, R.; De Melker, H.; Paulussen, T.W.G.M.; Kok, G.; Ruiter, R.A.C.; Das, E. Disease Detection or Public Opinion Reflection? Content Analysis of Tweets, Other Social Media, and Online Newspapers During the Measles Outbreak in The Netherlands in 2013. J. Med. Internet Res. 2015, 17, e128.

- Gao, J.; Zheng, P.; Jia, Y.; Chen, H.; Mao, Y.; Chen, S.; Wang, Y.; Fu, H.; Dai, J. Mental health problems and social media exposure during COVID-19 outbreak. PLoS ONE 2020, 15, e0231924.

- Ahmed, W.; Bath, P.A.; Sbaffi, L.; DeMartini, G. Novel insights into views towards H1N1 during the 2009 Pandemic: A thematic analysis of Twitter data. Health Inf. Libr. J. 2019, 36, 60–72.

- Harris, R.B.; Paradice, D.B. An Investigation of the Computer-mediated Communication of Emotions. J. Appl. Sci. Res. 2007, 3, 2081–2090.

- Coviello, L.; Sohn, Y.; Kramer, A.D.I.; Marlow, C.; Franceschetti, M.; Christakis, N.A.; Fowler, J.H. Detecting Emotional Contagion in Massive Social Networks. PLoS ONE 2014, 9, e90315.

- Jo, W.; Jaeho, L.; Junli, P.; Yeol, K. Online Information Exchange and Anxiety Spread in the Early Stage of Novel Coronavirus Outbreak in South Korea. J. Med. Internet Res. 2020, 22, e19455.

- Vogels, E.A. From Virtual Parties to Ordering Food, How Americans Are Using the Internet during COVID-19. Pew Research Center. 30 April 2020. Available online: https://www.pewresearch.org/fact-tank/2020/04/30/from-virtual-parties-to-ordering-food-how-americans-are-using-the-internet-during-covid-19/ (accessed on 10 September 2020).

- Mander, J. Coronavirus: How Consumers Are Actually Reacting. Global WebIndex. 12 March 2020. Available online: https://blog.globalwebindex.com/trends/coronavirus-and-consumers/ (accessed on 10 September 2020).

- Medford, R.J.; Saleh, S.N.; Sumarsono, A.; Perl, T.M.; Lehmann, C.U. An “Infodemic”: Leveraging High-Volume Twitter Data to Understand Early Public Sentiment for the COVID-19 Outbreak 2020. Open Forum Infect. Dis. 2020, 7, ofaa258.

- Barkur, G.; Kamath, G.B. Sentiment analysis of nationwide lockdown due to COVID 19 outbreak: Evidence from India. Asian J. Psychiatry 2020, 51, 102089.

- Pastor, C.K. Sentiment Analysis of Filipinos and Effects of Extreme Community Quarantine Due to Coronavirus (COVID-19) Pandemic. SSRN Electron. J. 2020, 7, 91–95.

- Dubey, A.D.; Tripathi, S. Analysing the Sentiments towards Work-From-Home Experience during COVID-19 Pandemic. J. Innov. Manag. 2020, 8, 13–19.

- Singh, L.; Bansal, S.; Bode, L.; Budak, C.; Chi, G.; Kawintiranon, K.; Padden, C.; Vanarsdall, R.; Vraga, E.; Wang, Y. A First Look at COVID-19 Information and Misinformation Sharing on Twitter. Available online: https://arxiv.org/pdf/2003.13907.pdf (accessed on 10 September 2020).

- Schild, L.; Ling, C.; Blackburn, J.; Stringhini, G.; Zhang, Y.; Zannettou, S. “Go Eat a Bat, Chang!” An Early Look on the Emergence of Sinophobic Behavior on Web Communities in the Face of Covid-19. Available online: https://arxiv.org/pdf/2004.04046.pdf (accessed on 10 September 2020).

- Chen, L.; Lyu, H.; Yang, T.; Wang, Y.; Luo, J. In the Eyes of the Beholder: Sentiment and Topic Analyses on Social Media Use of Neutral and Controversial Terms for Covid-19. Available online: https://arxiv.org/pdf/2004.10225.pdf (accessed on 10 September 2020).

- Ni, M.Y.; Yang, L.; Leung, C.M.C.; Li, N.; Yao, X.; Wang, Y.; Leung, G.M.; Cowling, B.J.; Liao, Q. Mental Health, Risk Factors, and Social Media Use During the COVID-19 Epidemic and Cordon Sanitaire Among the Community and Health Professionals in Wuhan, China: Cross-Sectional Survey. JMIR Ment. Health 2020, 7, e19009.

- Ferrara, E.; Varol, O.; Davis, C.; Menczer, F.; Flammini, A. The rise of social bots. Commun. ACM 2016, 59, 96–104.

- Varol, O.; Ferrara, E.; Davis, C.A.; Menczer, F.; Flammini, A. Online Human-Bot Interactions: Detection, Estimation, and Characterization. Available online: https://arxiv.org/pdf/1703.03107.pdf (accessed on 10 September 2020).

- Stella, M.; Ferrara, E.; De Domenico, M. Bots increase exposure to negative and inflammatory content in online social systems. Proc. Natl. Acad. Sci. USA 2018, 115, 12435–12440.

- Kušen, E.; Strembeck, M. Why so Emotional? An Analysis of Emotional Bot-generated Content on Twitter. In Proceedings of the 3rd International Conference on Complexity, Future Information Systems and Risk, Madeira, Portugal, 20–21 March 2018; pp. 13–22.

- Ferrara, E. #Covid-19 on Twitter: Bots, Conspiracies, and Social Media Activism. Available online: https://arxiv.org/vc/arxiv/papers/2004/2004.09531v1.pdf (accessed on 10 September 2020).

- Broniatowski, D.A.; Jamison, A.M.; Qi, S.; Alkulaib, L.; Chen, T.; Benton, A.; Quinn, S.C.; Dredze, M. Weaponized Health Communication: Twitter Bots and Russian Trolls Amplify the Vaccine Debate. Am. J. Public Health 2018, 108, 1378–1384.

- Allem, J.-P.; Escobedo, P.; Dharmapuri, L. Cannabis Surveillance With Twitter Data: Emerging Topics and Social Bots. Am. J. Public Health 2020, 110, 357–362.

- Samuel, J.; Rahman, M.; Ali, G.G.M.N.; Samuel, Y.; Pelaez, A. Feeling Like It Is Time to Reopen Now? COVID-19 New Normal Scenarios Based on Reopening Sentiment Analytics. Available online: https://arxiv.org/pdf/2005.10961.pdf (accessed on 10 September 2020).

- Hassnain, S.; Omar, N. How COVID-19 is Affecting Apprentices. Biomedica 2020, 36, 251–255.

- Kleinberg, B.; van der Vegt, I.; Mozes, M. Measuring Emotions in the COVID-19 Real World Worry Dataset. 2020. Available online: https://www.aclweb.org/anthology/2020.nlpcovid19-acl.11.pdf (accessed on 10 September 2020).

- Steinert, S. Corona and value change. The role of social media and emotional contagion. Ethics Inf. Technol. 2020.

- Hung, M.; Lauren, E.; Hon, E.S.; Birmingham, W.C.; Xu, J.; Su, S.; Hon, S.D.; Park, J.; Dang, P.; Lipsky, M.S. Social Network Analysis of COVID-19 Sentiments: Application of Artificial Intelligence. J. Med. Internet Res. 2020, 22, e22590.

- Sánchez, P.P.I.; Witt, G.F.V.; Cabrera, F.E.; Maldonado, C.J. The Contagion of Sentiments during the COVID-19 Pandemic Crisis: The Case of Isolation in Spain. Int. J. Environ. Res. Public Health 2020, 17, 5918.

- Salathé, M.; Khandelwal, S. Assessing Vaccination Sentiments with Online Social Media: Implications for Infectious Disease Dynamics and Control. PLoS Comput. Biol. 2011, 7, e1002199.

- Chew, C.; Eysenbach, G. Pandemics in the Age of Twitter: Content Analysis of Tweets during the 2009 H1N1 Outbreak. PLoS ONE 2010, 5, e14118.

- Fung, I.C.-H.; Tse, Z.T.H.; Cheung, C.-N.; Miu, A.S.; Fu, K.-W. Ebola and the social media. Lancet 2014, 384, 2207.

- Liu, B.F.; Kim, S. How organizations framed the 2009 H1N1 pandemic via social and traditional media: Implications for U.S. health communicators. Public Relat. Rev. 2011, 37, 233–244.

- Keeling, D.I.; Laing, A.W.; Newholm, T. Health Communities as Permissible Space: Supporting Negotiation to Balance Asymmetries. Psychol. Mark. 2015, 32, 303–318.

- Pitt, C.; Mulvey, M.; Kietzmann, J. Quantitative insights from online qualitative data: An example from the health care sector. Psychol. Mark. 2018, 35, 1010–1017.

- Dubey, A.D. Twitter Sentiment Analysis during COVID19 Outbreak. SSRN Electron. J. 2020.

- Li, S.; Wang, Y.; Xue, J.; Zhao, N.; Zhu, T. The Impact of COVID-19 Epidemic Declaration on Psychological Consequences: A Study on Active Weibo Users. Int. J. Environ. Res. Public Health 2020, 17, 2032.

- Ferrara, E.; Yang, Z. Measuring Emotional Contagion in Social Media. PLoS ONE 2015, 10, e0142390.

- Ntika, M.; Sakellariou, I.; Kefalas, P.; Stamatopoulou, I. Experiments with Emotion Contagion in Emergency Evacuation Simulation. In Proceedings of the 4th International Conference on Theory and Practice of Electronic Governance, Beijing, China, 27–30 October 2014.

- Hatfield, E.; Cacioppo, J.T.; Rapson, R.L. Emotional Contagion. Curr. Dir. Psychol. Sci. 1993, 2, 96–100.

- Fan, R.; Xu, K.; Zhao, J. An agent-based model for emotion contagion and competition in online social media. Phys. A Stat. Mech. Appl. 2018, 495, 245–259.

- Kramer, A.D.I.; Guillory, J.E.; Hancock, J.T. Experimental evidence of massive-scale emotional contagion through social networks. Proc. Natl. Acad. Sci. USA 2014, 111, 8788–8790.

- Yu, C.-E. Emotional Contagion in Human-Robot Interaction. e-Rev. Tour. Res. 2020, 17, 793–798.

- Goldenberg, A.; Gross, J.J. Digital Emotion Contagion. Trends Cogn. Sci. 2020, 24, 316–328.

- Warton, K.A. Coronavirus: How Emotional Contagion Exacts a Toll. Knowledge@Wharton. 10 March 2020. Available online: https://knowledge.wharton.upenn.edu/article/coronavirus-how-emotional-contagion-exacts-a-toll/ (accessed on 12 September 2020).

- Liu, X. A big data approach to examining social bots on Twitter. J. Serv. Mark. 2019, 33, 369–379.

- Kearney, M.; Selvan, P.; Hauer, M.K.; Leader, A.E.; Massey, P.M. Characterizing HPV Vaccine Sentiments and Content on Instagram. Health Educ. Behav. 2019, 46, 37S–48S.

- Bessi, A.; Ferrara, E. Social bots distort the 2016 U.S. Presidential election online discussion. First Monday 2016, 21, 1–14.

- Freitas, C.; Benevenuto, F.; Ghosh, S.; Veloso, A. Reverse Engineering Socialbot Infiltration Strategies in Twitter. In Proceedings of the 2015 IEEE/ACM International Conference on Advances in Social Networks Analysis and Mining, Paris, France, 25–28 August 2015.

- Bradshaw, S.; Howard, P.; Kollanyi, B.; Neudert, L.-M. Sourcing and Automation of Political News and Information over Social Media in the United States, 2016–2018. Polit. Commun. 2019, 37, 173–193.

- Ozer, M.; Yildirim, M.Y.; Davulcu, H. Negative Link Prediction and Its Applications in Online Political Networks. In Proceedings of the 28th ACM Conference on Hypertext and Social Media, Prague, Czech Republic, 4–7 July 2017.

- Vosoughi, S.; Roy, D.; Aral, S. The spread of true and false news online. Science 2018, 359, 1146–1151.

- Dickerson, J.P.; Kagan, V.; Subrahmanian, V. Using sentiment to detect bots on Twitter: Are humans more opinionated than bots? In Proceedings of the IEEE/ACM International Conference on Advances in Social Networks Analysis and Mining, Beijing, China, 17–20 August 2014.

- Stieglitz, S.; Dang-Xuan, L. Emotions and Information Diffusion in Social Media—Sentiment of Microblogs and Sharing Behavior. J. Manag. Inf. Syst. 2013, 29, 217–248.

- Ferrara, E. Measuring Social Spam and the Effect of Bots on Information Diffusion in Social Media. In Complex Spreading Phenomena in Social Systems; Springer: Berlin/Heidelberg, Germany, 2018; pp. 229–255.

- Aiello, L.M.; Deplano, M.; Schifanella, R.; Ruffo, G. People are strange when you’re a stranger: Impact and influence of bots on social networks. In Proceedings of the 6th International AAAI Conference on Weblogs and Social Media, Dublin, Ireland, 4–7 June 2012.

- Feil-Seifer, D.; Mataric, M.J. Defining socially assistive robotics. In Proceedings of the 9th International Conference on Rehabilitation Robotics, Chicago, IL, USA, 28 June–1 July 2005.

- Deb, A.; Majmundar, A.; Seo, S.; Matsui, A.; Tandon, R.; Yan, S.; Allem, J.-P.; Ferrara, E. Social Bots for Online Public Health Interventions. In Proceedings of the IEEE/ACM International Conference on Advances in Social Networks Analysis and Mining, Barcelona, Spain, 28–31 August 2018.

- Henkemans, O.A.B.; Bierman, B.P.; Janssen, J.; Neerincx, M.A.; Looije, R.; Van Der Bosch, H.; Van Der Giessen, J.A. Using a robot to personalise health education for children with diabetes type 1: A pilot study. Patient Educ. Couns. 2013, 92, 174–181.

- Miner, A.S.; Laranjo, L.; Kocaballi, A.B. Chatbots in the fight against the COVID-19 pandemic. NPJ Digit. Med. 2020, 3, 1–4.

- Yuan, X.; Schuchard, R.J.; Crooks, A.T. Examining Emergent Communities and Social Bots Within the Polarized Online Vaccination Debate in Twitter. Soc. Media Soc. 2019, 5, 2056305119865465.

- Rabello, E.T.; Matta, G.; Silva, T. Visualising Engagement on Zika Epidemic. In Proceedings of the SMART Data Sprint: Interpreters of Platform Data, Lisboa, Portugal, 29 January–2 February 2018; Available online: https://smart.inovamedialab.org/smart-2018/project-reports/visualising-engagement-on-zika-epidemic (accessed on 10 September 2020).

- Kim, A. Nearly Half of the Twitter Accounts Discussing ‘Reopening America’ May Be Bots, Researchers Say. CNN. 22 May 2020. Available online: https://edition.cnn.com/2020/05/22/tech/twitter-bots-trnd/index.html (accessed on 10 September 2020).

- Gallotti, R.; Valle, F.; Castaldo, N.; Sacco, P.; De Domenico, M. Assessing the risks of ‘infodemics’ in response to COVID-19 epidemics. Nat. Hum. Behav. 2020, 1–9.

- Memon, S.A.; Carley, K.M. Characterizing COVID-19 Misinformation Communities Using a Novel Twitter Dataset. Available online: https://arxiv.org/pdf/2008.00791.pdf (accessed on 10 September 2020).

- Howard, P.N.; Kollanyi, B.; Woolley, S. Bots and Automation over Twitter during the US Election. Available online: http://blogs.oii.ox.ac.uk/politicalbots/wp-content/uploads/sites/89/2016/11/Data-Memo-US-Election.pdf (accessed on 10 September 2020).

- Luceri, L.; Deb, A.; Badawy, A.; Ferrara, E. Red Bots Do It Better: Comparative Analysis of Social Bot Partisan Behavior. In Proceedings of the Companion Proceedings of The World Wide Web Conference, Association for Computing Machinery, San Francisco, CA, USA, 13–17 May 2019.

- WHO. Archived: WHO Timeline—COVID-19; World Health Organisation: Geneva, Switzerland, 2020.

- Cao, Q.; Yang, X.; Yu, J.; Palow, C. Uncovering Large Groups of Active Malicious Accounts in Online Social Networks. In Proceedings of the ACM SIGSAC Conference on Computer and Communications Security, Scottsdale, AZ, USA, 3–7 November 2014.

- Wang, G.; Mohanlal, M.; Wilson, C.; Wang, X.; Metzger, M.; Zheng, H.; Zhao, B.Y. Social Turing Tests: Crowdsourcing Sybil Detection. Available online: https://arxiv.org/pdf/1205.3856.pdf (accessed on 10 September 2020).

- Badawy, A.; Lerman, K.; Ferrara, E. Who Falls for Online Political Manipulation? In Proceedings of the Companion Proceedings of The 2019 World Wide Web Conference, Association for Computing Machinery, San Francisco, CA, USA, 13–17 May 2019.

- Ferrara, E. Disinformation and Social Bot Operations in the Run Up to the 2017 French Presidential Election. Available online: https://arxiv.org/ftp/arxiv/papers/1707/1707.00086.pdf (accessed on 10 September 2020).

- Luceri, L.; Deb, A.; Giordano, S.; Ferrara, E. Evolution of bot and human behavior during elections. First Monday 2019, 24.

- Shao, C.; Ciampaglia, G.L.; Varol, O.; Yang, K.-C.; Flammini, A.; Menczer, F. The spread of low-credibility content by social bots. Nat. Commun. 2018, 9, 1–9.

- Subrahmanian, V.S.; Azaria, A.; Durst, S.; Kagan, V.; Galstyan, A.; Lerman, K.; Zhu, L.; Ferrara, E.; Flammini, A.; Menczer, F. The DARPA Twitter Bot Challenge. Computer 2016, 49, 38–46.

- Davis, C.A.; Varol, O.; Ferrara, E.; Flammini, A.; Menczer, F. BotOrNot: A System to Evaluate Social Bots. Available online: https://arxiv.org/pdf/1602.00975.pdf (accessed on 10 September 2020).

- Yang, K.; Varol, O.; Davis, C.A.; Ferrara, E.; Flammini, A.; Menczer, F. Arming the public with artificial intelligence to counter social bots. Hum. Behav. Emerg. Technol. 2019, 1, 48–61.

- Wang, Y.; Rao, Y.; Zhan, X.; Chen, H.; Luo, M.; Yin, J. Sentiment and emotion classification over noisy labels. Knowl. Based Syst. 2016, 111, 207–216.

- Munezero, M.; Montero, C.S.; Sutinen, E.; Pajunen, J. Are They Different? Affect, Feeling, Emotion, Sentiment, and Opinion Detection in Text. IEEE Trans. Affect. Comput. 2014, 5, 101–111.

- Li, Z.; Fan, Y.; Jiang, B.; Lei, T.; Liu, W. A survey on sentiment analysis and opinion mining for social multimedia. Multimedia Tools Appl. 2018, 78, 6939–6967.

- Amalarethinam, D.G.; Nirmal, V.J. Sentiment and Emotion Analysis for Context Sensitive Information Retrieval of Social Networking Sites: A Survey. Int. J. Comput. Appl. 2014, 100, 47–58.

- Pennebaker, J.W.; Booth, R.J.; Francis, M.E. Linguistic Inquiry and Word Count; Erlbaum Publishers: Mahwah, NJ, USA, 2001.

- Pennebaker, J.W.; Boyd, R.L.; Jordan, K.; Blackburn, K. The Development and Psychometric Properties of LIWC2015; University of Texas: Austin, TX, USA, 2015.

- Cohn, M.A.; Mehl, M.R.; Pennebaker, J.W. Linguistic Markers of Psychological Change Surrounding September 11, 2001. Psychol. Sci. 2004, 15, 687–693.

- Peña, J.; Pan, W. Words of advice: Exposure to website model pictures and online persuasive messages affects the linguistic content and style of Women’s weight-related social support messages. Comput. Hum. Behav. 2016, 63, 208–217.

- Chen, R.; Sakamoto, Y. Feelings and Perspective Matter: Sharing of Crisis Information in Social Media. In Proceedings of the 47th Hawaii International Conference on System Sciences, Institute of Electrical and Electronics Engineers, Waikoloa, HI, USA, 6–9 January 2014.

- Godbole, N.; Srinivasaiah, M.; Skiena, S. Large-Scale Sentiment Analysis for News and Blogs. In Proceedings of the International Conference on Weblogs and Social Media, Boulder, CO, USA, 26–28 March 2007.

- Blei, D.M. Probabilistic topic models. Commun. ACM 2012, 55, 77–84.

- Wesslen, R. Computer-Assisted Text Analysis for Social Science: Topic Models and Beyond. Available online: https://arxiv.org/pdf/1803.11045.pdf (accessed on 10 September 2020).

- Roberts, M.E.; Tingley, D.; Stewart, B.M.; Airoldi, E.M. The structural topic model and applied social science. In Proceedings of the Advances in Neural Information Processing Systems Workshop on Topic Models: Computation, Application, and Evaluation, Harrah’s Lake Tahoe, Stateline, NV, USA, 10 December 2013.

- Blei, D.M.; Ng, A.Y.; Jordan, M.I. Latent Dirichlet allocation. J. Mach. Learn. Res. 2003, 3, 993–1022.

- Roberts, M.E.; Stewart, B.M.; Airoldi, E. A Model of Text for Experimentation in the Social Sciences. J. Am. Stat. Assoc. 2016, 111, 988–1003.

- Li, M.; Luo, Z. The ‘bad women drivers’ myth: The overrepresentation of female drivers and gender bias in China’s media. Inf. Commun. Soc. 2020, 23, 776–793.

- Grimmer, J.; Stewart, B.M. Text as Data: The Promise and Pitfalls of Automatic Content Analysis Methods for Political Texts. Polit. Anal. 2013, 21, 267–297.

- Roberts, M.E.; Stewart, B.M.; Tingley, D. stm: An R Package for Structural Topic Models. J. Stat. Softw. 2019, 91, 1–40.

- Edwards, C.; Edwards, A.P.; Spence, P.R.; Shelton, A.K. Is that a bot running the social media feed? Testing the differences in perceptions of communication quality for a human agent and a bot agent on Twitter. Comput. Hum. Behav. 2014, 33, 372–376.

- Cheng, C.; Luo, Y.; Yu, C. Dynamic mechanism of social bots interfering with public opinion in network. Phys. A Stat. Mech. Appl. 2020, 551, 124163.

- Grimme, C.; Preuss, M.; Adam, L.; Trautmann, H. Social Bots: Human-Like by Means of Human Control? Big Data 2017, 5, 279–293.

- Kahan, D.M.; Jamieson, K.H.; Landrum, A.; Winneg, K. Culturally antagonistic memes and the Zika virus: An experimental test. J. Risk Res. 2016, 20, 1–40.

- Allem, J.-P.; Ferrara, E. Could Social Bots Pose a Threat to Public Health? Am. J. Public Health 2018, 108, 1005–1006.

- Sutton, J. Health Communication Trolls and Bots Versus Public Health Agencies’ Trusted Voices. Am. J. Public Health 2018, 108, 1281–1282.

- Jamison, A.M.; Broniatowski, D.A.; Quinn, S.C. Malicious Actors on Twitter: A Guide for Public Health Researchers. Am. J. Public Health 2019, 109, 688–692.

- Allem, J.-P.; Ferrara, E.; Uppu, S.P.; Cruz, T.B.; Unger, J.B. E-Cigarette Surveillance With Social Media Data: Social Bots, Emerging Topics, and Trends. JMIR Public Health Surveill. 2017, 3, e98.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).