Abstract

Accurate spatiotemporal alignment of multi-view video streams is essential for a wide range of dynamic-scene applications such as multi-view 3D reconstruction, pose estimation, and scene understanding. However, synchronizing multiple cameras remains a significant challenge, especially in heterogeneous setups combining professional- and consumer-grade devices, visible and infrared sensors, or systems with and without audio, where common hardware synchronization capabilities are often unavailable. This limitation is particularly evident in real-world environments, where controlled capture conditions are not feasible. In this work, we present a low-cost, general-purpose synchronization method that achieves millisecond-level temporal alignment across diverse camera systems while supporting both visible (RGB) and infrared (IR) modalities. The proposed solution employs a custom-built LED Clock that encodes time through red and infrared LEDs, allowing visual decoding of the exposure window (start and end times) from recorded frames for millisecond-level synchronization. We benchmark our method against hardware synchronization and achieve a residual error of 1.34 ms RMSE across multiple recordings. In further experiments, our method outperforms light-, audio-, and timecode-based synchronization approaches and directly improves downstream computer vision tasks, including multi-view pose estimation and 3D reconstruction. Finally, we validate the system in large-scale surgical recordings involving over 25 heterogeneous cameras spanning both IR and RGB modalities. This solution simplifies and streamlines the synchronization pipeline and expands access to advanced vision-based sensing in unconstrained environments, including industrial and clinical applications.

1. Introduction

Temporal synchronization of multi-view video streams is essential for a wide range of computer vision tasks, including 3D reconstruction [1,2,3], pose estimation [4,5,6], and scene understanding [7,8,9,10]. The exact tolerance to synchronization errors depends heavily on the application. Semantic scene analysis tasks, such as surgical phase recognition, activity classification, or object detection, tend to aggregate information over extended temporal windows, making them relatively robust to minor temporal misalignments. In contrast, geometry-driven applications like multi-view 3D reconstruction and motion capture require that corresponding frames represent the same physical moment. In these cases, even single-digit millisecond offsets can cause significant reprojection errors, blurry artifacts in reconstructions, or inaccurate motion trajectories.

In laboratory environments, specialized cameras with dedicated interfaces for hardware-based synchronization [11,12,13] can be used to ensure that the shutters are perfectly synchronized. However, such solutions are costly, require complex wired setups, and greatly restrict the range of compatible devices. In contrast, real-world deployments often involve camera arrays that integrate a heterogeneous mix of consumer and professional devices, many of which lack hardware synchronization capabilities.

When hardware synchronization is not available, temporal alignment is often approximated by recording unsynchronized videos and then, during post-processing, estimating time offsets and pairing each frame from one camera with the closest frame from another stream.

In practice, audio-based methods, such as clapping or other transient sound cues, remain common due to their simplicity and widespread availability of built-in microphones [14,15,16]. However, these methods are sensitive to environmental noise and cannot be used with devices lacking audio capture. Moreover, they constrain the spatial arrangement of the cameras, as all cameras must be within audible range and preferably positioned at similar distances from the sound source; otherwise, the relatively low propagation speed of sound causes arrival-time differences that can compromise synchronization. For example, in a room measuring 10 m across, the sound of a clap will take roughly 30 milliseconds to travel from one side to the other, given the speed of sound in air (≈343 m/s). This delay is already on the order of an entire video frame at 30 fps.
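The arithmetic behind this example can be checked with a short sketch. The function names below are illustrative, and the speed of sound is the approximate value quoted above.

```python
SPEED_OF_SOUND_M_PER_S = 343.0  # approximate speed of sound in air (value from the text)


def arrival_delay_ms(distance_difference_m: float) -> float:
    """Extra travel time in ms for sound covering the given additional distance."""
    return 1000.0 * distance_difference_m / SPEED_OF_SOUND_M_PER_S


def frame_period_ms(fps: float) -> float:
    """Duration of one video frame in ms."""
    return 1000.0 / fps


delay = arrival_delay_ms(10.0)
fraction = delay / frame_period_ms(30.0)
print(f"delay across 10 m: {delay:.1f} ms ({fraction:.2f} frame periods at 30 fps)")
```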

One alternative solution is to use timecodes stored alongside the video [17,18], but this requires external timecode systems or pre-synchronizing the cameras’ internal clocks before recording. More recently, content-based approaches have been proposed that estimate temporal relationships directly from image content, for example by tracking general scene features [19], motion patterns [20], lighting changes [21], or human poses [22] across multiple cameras. While some of these methods achieve millisecond-level synchronization accuracy, they are typically tailored to narrow use cases and rely on specific video content and overlapping fields of view, which limits their applicability in more diverse scenarios. Some systems address this by explicitly embedding visually encoded timecodes into the video [23]. However, existing approaches are constrained by the low and fixed refresh rates of consumer screens and are not suitable for scenarios involving infrared (IR) cameras.

In contrast to hardware synchronization, post hoc alignment methods cannot ensure perfect frame correspondence, since the camera shutters operate independently. The residual error is bounded by half the frame period and thus scales with frame rate. For example, at 30 fps, the maximum misalignment can reach 16.7 ms, which is significant for fast motion. Although higher frame rates reduce this error, they also increase storage, power consumption (especially critical for battery-powered cameras), bandwidth, and processing costs, which can be prohibitive for long video sequences such as four-hour surgical recordings. It is therefore beneficial to estimate time offsets with sub-frame precision, enabling accurate frame interpolation for many downstream tasks.
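The half-frame bound can be illustrated with a small simulation of nearest-frame pairing. This is a sketch under simplifying assumptions (both cameras run at exactly the same frame rate with no drift, and the offset is known); the function name is hypothetical.

```python
def nearest_frame_residuals_ms(fps: float, offset_ms: float, n_frames: int = 100):
    """Residual misalignment when each frame of camera A is paired with the
    temporally closest frame of camera B, whose shutter is shifted by offset_ms."""
    period = 1000.0 / fps
    times_a = [i * period for i in range(n_frames)]
    # Camera B covers the same time span, with one extra frame on each side.
    times_b = [offset_ms + i * period for i in range(-1, n_frames + 1)]
    return [min(abs(tb - ta) for tb in times_b) for ta in times_a]


period_ms = 1000.0 / 30.0
worst = max(nearest_frame_residuals_ms(30.0, period_ms / 2))
print(f"worst-case residual at 30 fps: {worst:.1f} ms")
```

The worst case occurs when the shutters are offset by exactly half a frame period; any other offset yields a smaller residual after pairing.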

To address this gap, we propose a low-cost, portable, and camera-agnostic method for achieving millisecond-level temporal alignment across heterogeneous camera systems, ranging from inexpensive action cameras to high-end optical tracking systems. The proposed solution is designed to be scalable, supporting configurations ranging from as few as two cameras to several dozen, and remains robust in real-world scenarios.

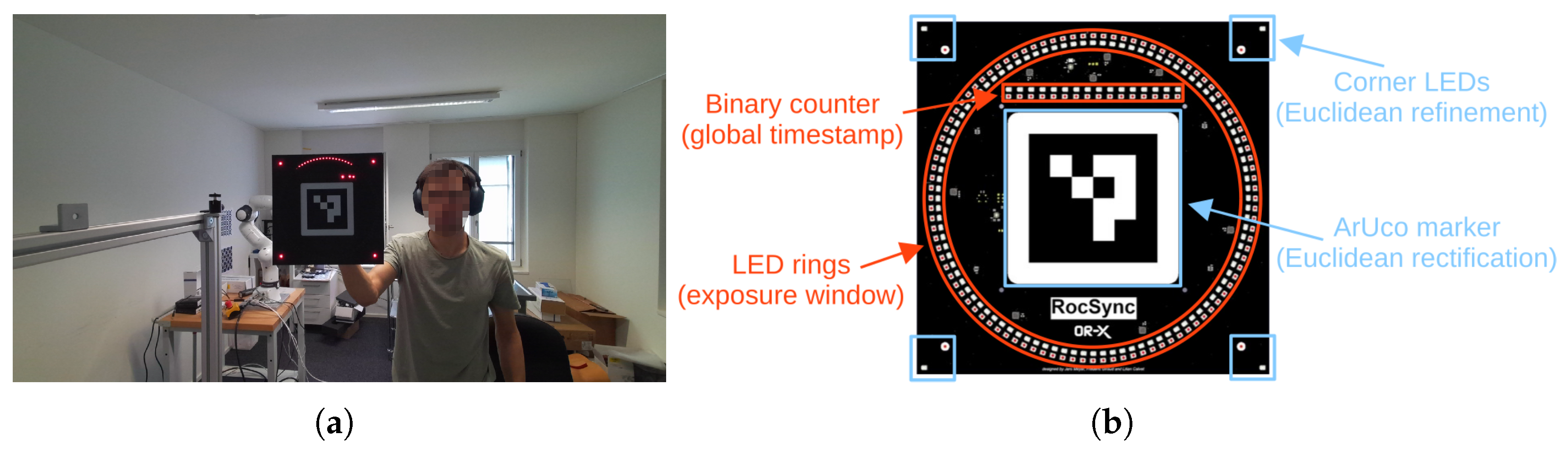

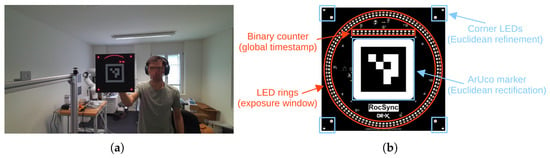

Central to our approach is a custom LED Clock, a device that visually encodes timestamps using LEDs (see Figure 1a). An operator sequentially positions the LED Clock in front of each camera. Timestamps are encoded via two complementary LED components (Figure 1b): a binary counter providing a global timestamp with 100 ms resolution, and a circular arrangement of LEDs illuminated sequentially at 1 ms intervals. Because camera sensors integrate light over a non-zero exposure time, the circular sequence appears in the image as an elliptical arc, whose start and end points indicate the beginning and end of the exposure window. For accurate spatial decoding, an ArUco marker [24] of known geometry is included at the center, enabling detection and homographic rectification of the captured LED components (i.e., binary counter and elliptical arc). Combining the binary timestamp with the arc-based exposure estimate yields a millisecond-accurate global timestamp for the exposure window. This process is repeated for at least two frames per video, enabling estimation of both offset and drift for each camera and allowing precise temporal alignment of the entire recordings. In practice, the LED Clock is shown at the beginning and end of a recording to minimize extrapolation errors. Importantly, cameras do not need overlapping fields of view or simultaneous visibility of the LED Clock. Each camera can be calibrated independently by sequentially presenting the LED Clock, enabling synchronization even across spatially separated or non-overlapping camera setups.

Figure 1.

Our custom LED Clock. (a) Example of the device in use. (b) Rendering of the final PCB design with annotated features (red features encode the timestamp and blue features are used for detection & Euclidean rectification). The exposure window of the camera is visible in (a) as a red elliptical arc, which can be decoded with an accuracy of about 1 ms to enable sub-frame temporal synchronization.

This approach allows multiple video streams to be synchronized with millisecond-level accuracy, regardless of the cameras’ native synchronization capabilities and scene content. Combined with established frame interpolation techniques [25,26], it opens up the possibility of synthesizing temporally aligned frames across all viewpoints, facilitating high-quality 3D reconstruction of dynamic scenes using unsynchronized consumer-grade cameras.

Our contributions are as follows: (1) We present a novel method for synchronizing heterogeneous camera systems comprising a combination of different infrared and RGB(-D) cameras. (2) We thoroughly evaluate our approach through a series of experiments conducted in both controlled laboratory settings and in real-world scenarios involving up to 25 cameras. (3) We demonstrate the importance and benefits of sub-frame temporal synchronization. (4) We contribute to the scientific community by open-sourcing all software and hardware designs developed in this work.

2. Related Work

Video synchronization methods can be broadly categorized into three groups: hardware-based synchronization, timecode-based synchronization, and content-based synchronization.

2.1. Hardware-Based Synchronization

Hardware-based synchronization uses an external electrical signal to trigger the camera shutters simultaneously across multiple devices. This ensures that all cameras capture frames at precisely the same moment. Typically, one camera or a dedicated device acts as the master and generates the synchronization signal, which is then distributed to all other cameras over dedicated cables. The signal distribution can follow either a star or daisy-chain topology. An example of a device that supports hardware synchronization is the Azure Kinect DK [11], which is widely used in scientific applications [6,14,15,27].

There have been approaches to replace dedicated synchronization cables with software-based triggering mechanisms guided by network time synchronization protocols over standard Ethernet [28,29]. Similarly, wireless-aware time synchronization protocols over Wi-Fi have been proposed, eliminating the need for physical cabling while still achieving microsecond-level accuracy [30].

While hardware-based synchronization can achieve perfectly synchronized frames with microsecond-level accuracy, it is inherently limited to devices that support such methods, and interoperability between different camera systems is often not possible. Moreover, most setups require wired connections, which makes them unsuitable for mobile or wearable applications (e.g., head-mounted or vehicle-mounted cameras) and complicates installation, especially when deploying large-scale systems with dozens of cameras.

2.2. Timecode-Based Synchronization

In timecode-based synchronization, each camera independently records timestamp metadata either at the start of the capture or on a per-frame basis. These timestamps can subsequently be used to establish a temporal correspondence across multiple recordings. The timecodes may be derived from an internal real-time clock that has been synchronized prior to capture, or supplied by an external timecode generator, typically via an audio channel.

Common methods for synchronizing the internal real-time clock include network time protocols (e.g., NTP, PTP), GPS time, and dynamic QR codes (as implemented by GoPro [17]). The official GoPro documentation states a synchronization accuracy of <50 ms when using their dynamic QR codes [17]. Each camera then independently timestamps recorded videos using its internal clock. However, the shutters themselves are not synchronized across cameras. As a result, residual misalignment of up to half a frame can remain even when pairing the closest timecodes between two video streams. Furthermore, since timecodes are typically stored with frame-level precision, sub-frame exposure timing is unknown, preventing interpolation between consecutive frames to achieve improved alignment.

Although these methods are easier to deploy than hardware synchronization, and some clock-synchronization mechanisms such as PTP or GPS time require no additional user intervention, they are generally much less precise because the internal clocks drift over time. GoPro [17] reports that their cameras develop a noticeable time offset as a result of drift 60–90 min after synchronization. Timecode-based synchronization is widely used in professional video production, where small offsets of a few frames are often acceptable. Scientific multi-view applications, however, often require higher temporal accuracy, and such inaccuracies are generally unacceptable. Nevertheless, timecode-based synchronization is frequently employed in dataset acquisition, especially in setups involving action cameras [18].

2.3. Content-Based Synchronization

Content-based synchronization methods operate as post-processing techniques that analyze the actual image and/or audio content to establish temporal alignment between streams. This approach offers greater flexibility, as it does not require specialized cameras or specific setup steps prior to recording, since synchronization is performed offline after the recordings are complete. Synchronization cues can arise from naturally occurring content in the scene, or from deliberately introduced markers such as flashes, claps, or visual patterns. Similar to timecode-based synchronization, these methods do not synchronize the actual camera shutters. However, some content-based approaches provide timestamps with sub-frame precision [20,31], enabling interpolation between frames to achieve improved alignment. Content-based methods can be further divided into several subcategories:

Audio-based synchronization. One of the earliest and most straightforward methods, audio-based synchronization, leverages the audio track captured by cameras with a built-in microphone. Temporal alignment is then established by cross-correlating these signals or detecting and matching distinct events and peaks [32]. Such cues are often intentionally introduced at the beginning of a recording by clapping or producing other sharp sounds. This allows video streams to be synchronized entirely independently of the visual content, making audio-based synchronization particularly robust in visually challenging environments such as cluttered scenes with repetitive textures, strong motion blur, or low-light conditions.

Although overlapping fields of view are not required, cameras must still be in close proximity to capture the same audio signals. Because sound propagates relatively slowly, each camera should also be positioned at roughly the same distance from the source; even a 5 m difference introduces a discrepancy of about 15 ms in time of arrival. This approach therefore severely restricts the camera geometry and is not suitable for particularly noisy environments or outdoor settings where audio signals are weak or heavily distorted. In addition, since audio and video are recorded, processed, and encoded independently, there can be an inherent offset between the two, so even if the audio tracks of two cameras are perfectly synchronized, their corresponding video streams may still exhibit temporal misalignment. While audio is typically recorded at much higher sampling rates than video, enabling timestamping with sub-frame precision, this does not necessarily translate into accurate video alignment due to the aforementioned limitations. Despite these limitations, the ease of use of audio-based synchronization has led to its adoption in many applications [14,15,16].

Visual feature correspondences. This approach uses moving image features, such as key points, edges, or textures, to find correspondences between multiple video streams that share the same visual content [19,20,33]. While these methods are straightforward to use, their performance depends heavily on the content of the scene, and they require overlapping camera views. There have also been approaches to combine audio and visual features for increased robustness [34].

Visual features can also include abrupt changes in lighting, such as a camera flash or the switching on or off of room lights, which create distinctive changes observable across multiple camera views. Furthermore, sub-frame synchronization accuracy can be achieved by exploiting rolling shutter artifacts present in many CMOS cameras [21]. This approach relies on the assumption that the lighting change is captured simultaneously by all cameras, thus requiring them to be positioned within the same indoor environment. However, in our experiments, we observed that many lights do not switch on instantaneously, and in large environments such as operating rooms, the ceiling illumination often consists of multiple physical light sources that do not activate at exactly the same time, making it difficult to detect a distinct peak.

Pose matching. In videos featuring human subjects, synchronization can be achieved by analyzing and matching human pose and movements across multiple camera views [22,31]. This approach is fully automated and does not require any additional synchronization steps for recordings that already include moving humans. However, it requires overlapping fields of view and comparable zoom levels across cameras to ensure that the subject remains fully visible in all views.

Joint geometric calibration and temporal synchronization. External calibration and temporal synchronization can be performed in one step by recording a moving checkerboard and jointly optimizing the camera extrinsics along with the temporal offset [27,35]. While this approach can achieve accurate synchronization under ideal conditions, it suffers from several limitations: (i) it is operator-dependent, since the accuracy of temporal synchronization is sensitive to the checkerboard motion, and ambiguous checkerboard trajectories can lead to degenerate solutions in the joint optimization, (ii) it is computationally expensive, (iii) it relies on overlapping fields of view, and (iv) it degrades in performance when cameras have substantially different zoom levels (pattern too small or too large in one camera) [36].

Visual timestamp encoding. Some approaches introduce dedicated hardware or visual signals to encode global timestamps directly into video frames. This can be achieved using dynamic QR codes, moving objects, blinking patterns, or LED arrays, which are presented to all cameras and decoded during post-processing [23,37]. The decoded timestamps serve as precise anchor points for synchronizing camera streams to a global timeline. These methods have the advantage of not relying on overlapping camera views and functioning independently of scene content. However, this category of methods remains relatively unexplored, lacks standardization, and is not yet widely adopted.

2.4. Summary

While both hardware-based and content-based synchronization techniques are widely used, each comes with inherent limitations. Hardware-based synchronization requires specialized equipment and complex setups, while content-based methods generally offer lower accuracy or only work for specific use cases and completely lack support for infrared cameras. Additionally, content-based methods often restrict the camera setup, as they require overlapping fields of view.

Our method aims to bridge this gap by introducing a general-purpose LED Clock that uses visible and infrared LEDs to encode global timestamps with millisecond-level accuracy. Unlike prior solutions, it supports all RGB and infrared cameras, including 3D optical tracking systems, without requiring shared fields of view or special hardware integration. In addition, it provides millisecond-level synchronization to enable sub-frame interpolation.

3. Method

In this section, we first formalize the video synchronization problem. We then describe the key principles underlying our proposed approach.

3.1. Problem Formulation

Multi-view video synchronization can be formulated as the problem of computing global timestamps for every frame in each video stream. We model the temporal behavior of a camera as a linear relationship between the local clock and some global reference clock, characterized by an initial offset and a constant drift factor. The initial offset arises because the recording starts at an arbitrary time offset relative to the global reference clock. In addition, each stream exhibits a constant drift component arising from small frequency deviations in the cameras’ internal oscillators. These variations cause the clocks to run at slightly different rates, which under the assumption of constant drift results in a temporal error that accumulates linearly over time. Any nonlinear effects, such as those induced by temperature fluctuations, are considered negligible for our target application. We therefore model the transformation from local to global time as follows:

$$t_{i,j} = \alpha_j \, \tau_{i,j} + \beta_j \tag{1}$$

where $t_{i,j}$ is the global timestamp of the i-th frame from camera j, $\tau_{i,j}$ is the local timestamp, $\alpha_j$ represents the clock drift of camera j, and $\beta_j$ denotes its initial time offset. We formulate temporal synchronization of camera j as the problem of estimating $\alpha_j$ and $\beta_j$ from two or more measured samples $(\tau_{i,j}, \hat{t}_{i,j})$, where each sample represents a measurement $\hat{t}_{i,j}$ of the global clock at a specific local timestamp $\tau_{i,j}$. We formulate this as a linear least-squares problem that minimizes the error between the predicted and observed global timestamps:

$$(\alpha_j^{*}, \beta_j^{*}) = \underset{\alpha_j,\, \beta_j}{\arg\min} \sum_{i} \left( \alpha_j \, \tau_{i,j} + \beta_j - \hat{t}_{i,j} \right)^{2} \tag{2}$$

which can be efficiently solved using the well-known closed-form solution [38] or any more advanced robust linear regression method [39]. If only a single sample is available, the system can still be solved by setting $\alpha_j = 1$, which assumes that the camera’s local clock has no drift relative to the global clock.
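As an illustration, the closed-form least-squares fit of the linear clock model (global time = drift × local time + offset) can be sketched as follows. The function name and the sample layout are assumptions made for this example, not part of the released software; the single-sample branch implements the zero-drift fallback described above.

```python
from typing import List, Tuple


def fit_clock(samples: List[Tuple[float, float]]) -> Tuple[float, float]:
    """Fit t_global = drift * t_local + offset by ordinary least squares.

    samples: (t_local_ms, t_global_ms) pairs for one camera.
    Returns (drift, offset_ms).
    """
    if not samples:
        raise ValueError("at least one sample is required")
    if len(samples) == 1:
        # Single sample: assume the local clock has no drift (drift = 1).
        t_local, t_global = samples[0]
        return 1.0, t_global - t_local

    n = len(samples)
    mean_local = sum(t_l for t_l, _ in samples) / n
    mean_global = sum(t_g for _, t_g in samples) / n
    var_local = sum((t_l - mean_local) ** 2 for t_l, _ in samples)
    if var_local == 0.0:
        raise ValueError("samples must span more than one local timestamp")
    cov = sum((t_l - mean_local) * (t_g - mean_global) for t_l, t_g in samples)

    drift = cov / var_local
    offset = mean_global - drift * mean_local
    return drift, offset


# Hypothetical example: clock shown at the start and after 10 min of recording.
drift, offset = fit_clock([(0.0, 1234.5), (600_000.0, 601_246.5)])
print(drift, offset)
```

Samples that are far apart in time constrain the drift much better than samples clustered at one end of the recording, since a fixed decoding error is divided by a longer baseline.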

3.2. Proposed Method

Our method introduces a custom LED Clock equipped with an array of LEDs that serves as a global reference clock (see Figure 1). This device continuously updates its LED pattern to visually encode the current timestamp with millisecond precision. These timestamps can then be extracted from video frames in which the LED Clock device is visible.

Our LED pattern is divided into two subsets (see Figure 1b). The first subset consists of 100 LEDs arranged in a circle, with exactly one LED illuminated at a time. This active LED advances one position every millisecond, completing a full rotation in 100 ms. Because camera shutters are not instantaneous, light is integrated over the exposure period (typically a few milliseconds), causing several adjacent LEDs to appear illuminated in a single frame. The first and last LEDs in this arc correspond to the start and end of the exposure window, respectively, enabling precise determination of both timestamps (see Figure 2c). The step duration of 1 ms was chosen to provide millisecond-level accuracy, while the use of 100 LEDs ensures correct operation for exposure times of up to 100 ms. Together, these design choices ensure compatibility with a wide range of cameras, supporting frame rates from 10 to 1000 fps. Since the LED ring wraps around every 100 ms, a second subset of LEDs is arranged in a linear row to serve as a binary counter. This counter increments with each full rotation of the ring, keeping track of the number of complete 100 ms revolutions. Together, the ring and binary counter LEDs uniquely encode the current timestamp with millisecond precision.
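The encoding scheme can be summarized in a few lines. The sketch below assumes zero-based LED indices, a counter that starts at zero, and an end timestamp taken at the step of the last illuminated LED; these conventions are assumptions of this example, and the firmware may differ in such details.

```python
RING_LEDS = 100                     # LEDs on the ring
STEP_MS = 1                         # the active LED advances once per millisecond
ROTATION_MS = RING_LEDS * STEP_MS   # one full rotation takes 100 ms


def encode(t_ms: int):
    """Return (binary counter value, index of the active ring LED) at time t_ms."""
    counter, within_rotation = divmod(t_ms, ROTATION_MS)
    return counter, within_rotation // STEP_MS


def decode_exposure(counter: int, first_led: int, last_led: int):
    """Return (start_ms, end_ms) of the exposure from a decoded frame."""
    if not 0 <= first_led <= last_led < RING_LEDS:
        raise ValueError("arc must be a single sector that does not wrap around")
    base = counter * ROTATION_MS
    return base + first_led * STEP_MS, base + last_led * STEP_MS


print(encode(1240))                  # counter and ring index at t = 1240 ms
print(decode_exposure(12, 40, 57))   # counter 12, arc from LED 40 to LED 57
```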

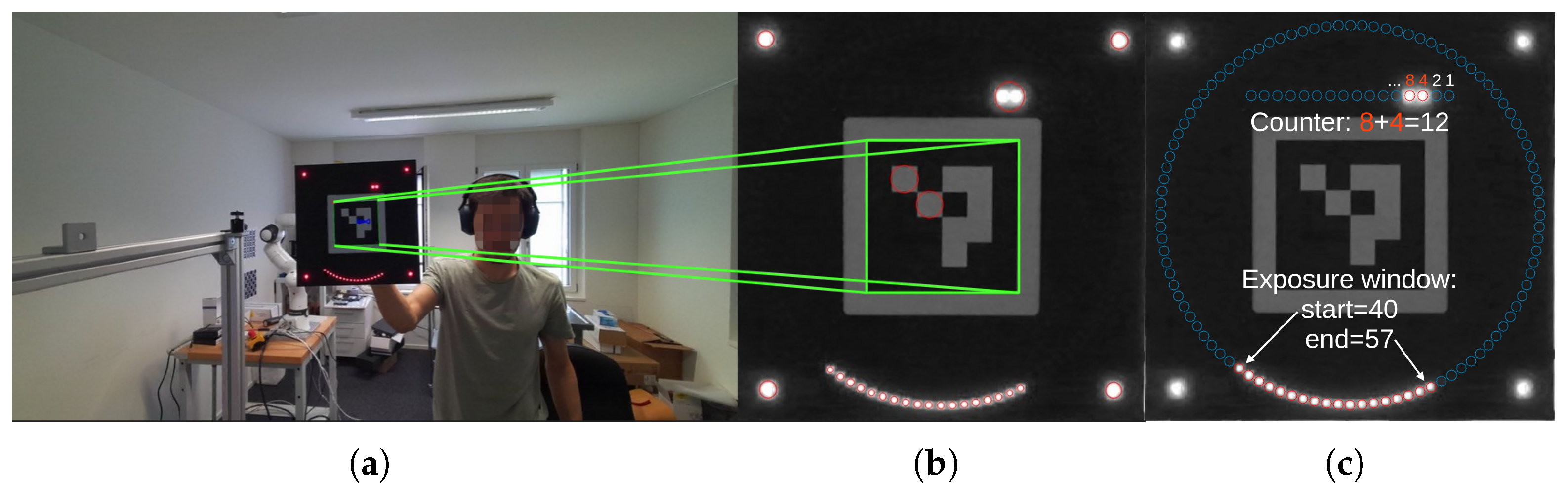

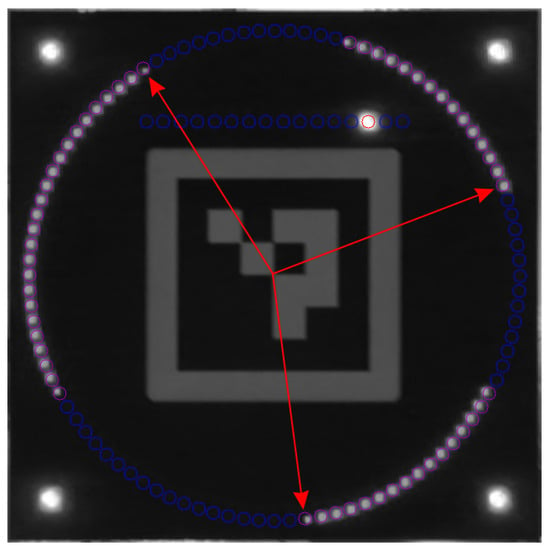

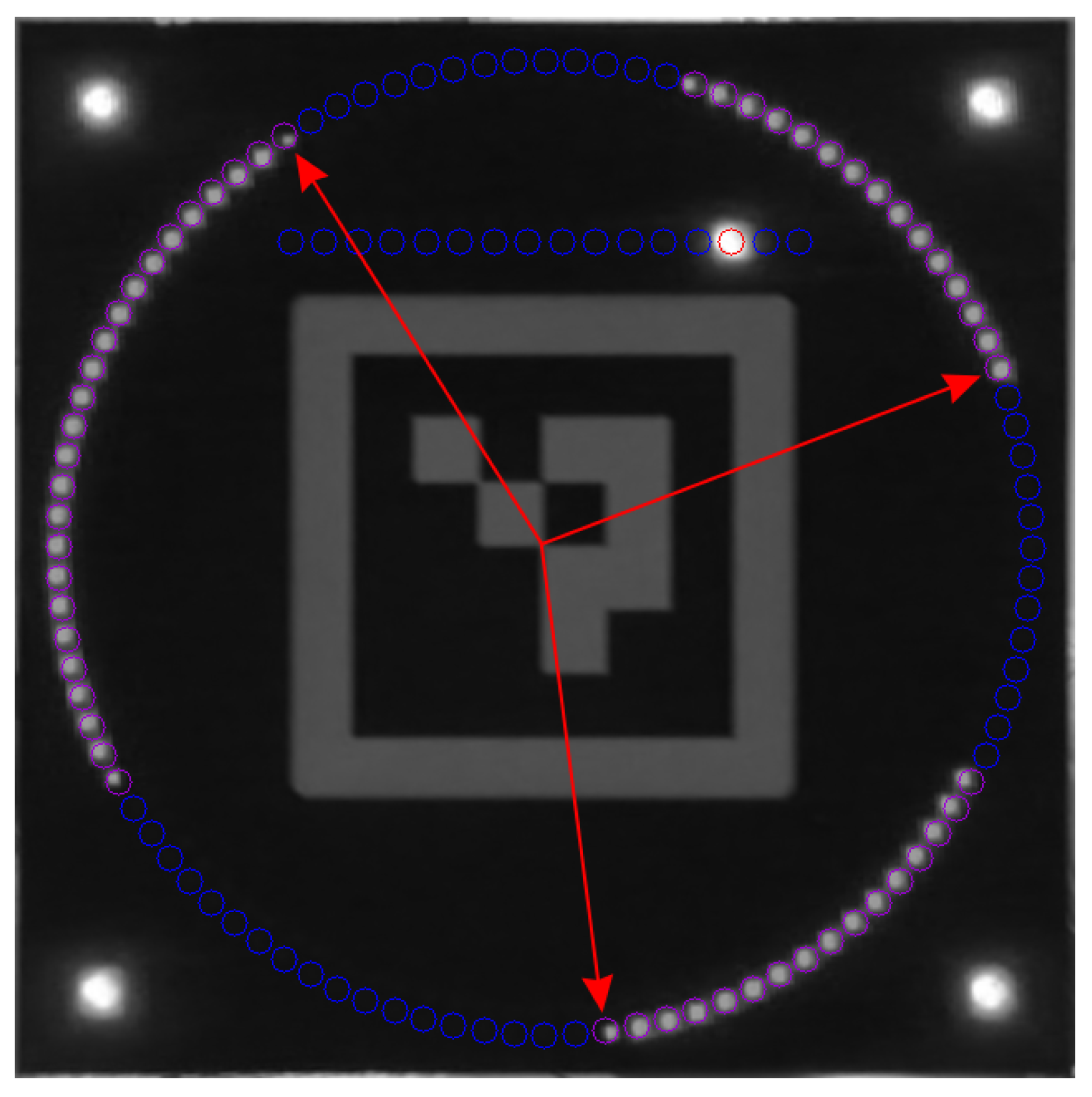

Figure 2.

Our computer vision pipeline: (a) raw image with ArUco detection, (b) coarse homography reprojection using the ArUco marker with detected blobs shown in red circles, and (c) decoded LEDs after refined homography reprojection using the corner blobs from the previous step. All LED positions are marked with blue circles, while illuminated LEDs are highlighted in red. In this example, the binary counter shows 12 and the illuminated ring segment indicates that the exposure was taken from LED 40 to LED 57. Combining this information, we know that the exact exposure of this frame occurred from 1240 ms (12 × 100 ms + 40 ms) to 1257 ms (12 × 100 ms + 57 ms).

Each frame that captures the clock device can be decoded into a pair of timestamps $(\hat{t}^{\,\mathrm{start}}_{i,j}, \hat{t}^{\,\mathrm{end}}_{i,j})$, representing the start and end of the exposure of the i-th frame from camera j. We assume that the start of the exposure is always aligned with the regular frame interval, while automatic exposure control only affects the end. Consequently, $\hat{t}^{\,\mathrm{start}}_{i,j}$ is used as the measured global timestamp when solving Equation (2). By briefly showing the clock device to each camera, we obtain a set of samples

$$\mathcal{S}_j = \left\{ \left( \tau_{i,j},\ \hat{t}^{\,\mathrm{start}}_{i,j} \right) \right\}_{i}$$

pairing the local timestamp $\tau_{i,j}$ of each decoded frame with its measured exposure start, which are used to estimate the drift and offset parameters of camera j, as described in Section 3.1. In practice, the LED Clock is recorded at both the beginning and the end of each capture session, establishing two temporal reference points that minimize estimation error and account for potential clock drift over long recordings.

4. Implementation

Our method consists of two main components: the LED Clock device and a processing pipeline for decoding the global timestamps from video frames and estimating the offset and drift for each video stream.

4.1. Hardware

The custom clock device is based on a PCB featuring 100 red and IR LEDs arranged in a circle, complemented by 16 additional red and IR LEDs serving as a binary counter (see Figure 1b). This design enables the encoding of unique timestamps with millisecond precision for up to 109 min, a limit that can be easily extended by adding more LEDs to the binary counter, depending on the application requirements.
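The relation between counter width and unambiguous recording time follows directly from the 100 ms rotation period. The helper names below are illustrative.

```python
import math

ROTATION_MS = 100  # the counter increments once per full ring rotation


def max_duration_min(counter_bits: int) -> float:
    """Longest time span (minutes) that can be encoded without the counter wrapping."""
    return (2 ** counter_bits) * ROTATION_MS / 60_000


def counter_bits_needed(duration_min: float) -> int:
    """Smallest counter width that covers a recording of the given length."""
    rotations = math.ceil(duration_min * 60_000 / ROTATION_MS)
    return max(1, (rotations - 1).bit_length())


print(f"16 bits cover {max_duration_min(16):.1f} min")
print(f"a 240 min recording needs {counter_bits_needed(240)} bits")
```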

The PCB dimensions were chosen to ensure sufficient spacing between LEDs, minimizing light interference from neighboring LEDs, which could otherwise introduce decoding errors, particularly when the device is viewed from a far distance or captured in low-resolution video. At the same time, the size is small enough to be fully captured by cameras with very narrow fields of view, such as zoom cameras focused on a specific point of interest. The resulting size ensures that the clock is compatible with a wide range of camera fields of view and operator distances.

It features a central ArUco marker [24] for robust detection in cluttered environments and initial homography rectification of the imaged PCB. The marker is complemented by four corner LEDs that serve as precise, well-spaced point correspondences to refine the homography rectification provided by the corners of the ArUco marker. One corner includes an additional IR LED to resolve rotation ambiguity in infrared videos where the ArUco marker is not visible.

The entire system is controlled by a 32-bit RISC-V microcontroller with an external temperature-compensated crystal oscillator (TCXO) for precise timekeeping and is powered by a rechargeable battery, providing several hours of independent operation per charge.

The PCB was designed using the open-source EDA software KiCad (version 8.0, https://kicad.org, accessed on 27 November 2025), while auxiliary mechanical components were modeled in Autodesk Fusion 360 (version 2.0, Autodesk Inc., San Francisco, CA, USA) and subsequently 3D printed. To maximize contrast between illuminated and non-illuminated LEDs under diverse lighting conditions, the PCB was wrapped in a thin layer of matte black film, which reduces reflections in both the visible and infrared spectrum, effectively improving LED visibility. This approach proved effective even in the presence of strong active infrared emission from a surgical IR tracking camera. Component selection was optimized for manufacturing by the PCB fabrication service JLCPCB (https://jlcpcb.com, accessed on 27 November 2025).

The hardware design prioritizes cost-effectiveness and accessibility. The final device can be manufactured and assembled using standard PCB fabrication services accessible to individual consumers, with an estimated cost of approximately US$20 per unit.

4.2. Software

The software component consists of a Python (version 3.10) module that reads a video, analyzes each frame, and outputs the estimated offset and drift values for the entire video. Using these measurements, global timestamps can then be recomputed for every frame using Equation (1). The following steps describe our computer vision pipeline (see Figure 2 for a visualization of the main steps, from left to right):

1. Find ArUco marker: Detect the central ArUco marker to determine the approximate position and orientation.

2. Coarse reprojection: Use the detected marker’s corners to perform a coarse planar homography rectification of the imaged clock.

3. Locate corner LEDs: Identify the corner LEDs in the coarsely rectified image.

4. Accurate reprojection: Use the extracted corner LEDs to refine the homography rectification for improved accuracy. While ArUco marker detection is generally robust, the detected corner positions are not always pixel-perfect, making this refinement necessary.

5. Decode LEDs: Decode the circle and binary counter LEDs by thresholding their respective areas to obtain exact timestamps for the start and end of the exposure.

6. Timestamp fitting: Perform robust linear regression on all extracted timestamps to reject outliers and estimate global timestamps for all frames (solving Equation (2)).
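The homography used in the reprojection steps can be estimated from four point correspondences, such as the four corner LEDs. The following dependency-free sketch solves the standard 8 × 8 linear system directly; in practice a library routine (for example OpenCV's `cv2.getPerspectiveTransform`) would typically be used instead. The coordinates in the example are made up for illustration.

```python
def solve_linear(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [list(row) + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("degenerate point configuration")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        s = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - s) / m[r][r]
    return x


def homography_from_4_points(src, dst):
    """3x3 homography (normalized so h33 = 1) mapping each src[k] to dst[k]."""
    a, b = [], []
    for (x, y), (u, v) in zip(src, dst):
        a.append([x, y, 1, 0, 0, 0, -u * x, -u * y])
        b.append(u)
        a.append([0, 0, 0, x, y, 1, -v * x, -v * y])
        b.append(v)
    h = solve_linear(a, b)
    return [h[0:3], h[3:6], [h[6], h[7], 1.0]]


def project(h, point):
    """Apply homography h to a 2D point."""
    x, y = point
    w = h[2][0] * x + h[2][1] * y + h[2][2]
    return ((h[0][0] * x + h[0][1] * y + h[0][2]) / w,
            (h[1][0] * x + h[1][1] * y + h[1][2]) / w)


# Hypothetical board-plane corner LED positions (mm) and their detected pixel positions.
board = [(0.0, 0.0), (100.0, 0.0), (100.0, 100.0), (0.0, 100.0)]
image = [(200.0, 150.0), (420.0, 170.0), (400.0, 380.0), (190.0, 360.0)]
H = homography_from_4_points(board, image)
print(project(H, (50.0, 50.0)))  # where the board center appears in the image
```

With the board-to-image mapping available, every LED position from the known PCB layout can be projected into the frame and sampled there.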

To ensure robustness, the pipeline incorporates specific rejection criteria at multiple levels:

In step (1), a frame is rejected if the detected ArUco marker occupies less than 0.2% of the image area. This condition indicates that the LED Clock was held too far from the camera, resulting in insufficient resolution to reliably distinguish adjacent LEDs and therefore leading to unreliable decoding.
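A minimal version of this size check computes the marker's pixel area from its four detected corners with the shoelace formula. The function names and the example resolution are assumptions of this sketch.

```python
MIN_AREA_FRACTION = 0.002  # 0.2% of the image area, as stated above


def polygon_area(corners) -> float:
    """Area of a simple polygon given as ordered (x, y) vertices (shoelace formula)."""
    twice_area = 0.0
    n = len(corners)
    for k in range(n):
        x0, y0 = corners[k]
        x1, y1 = corners[(k + 1) % n]
        twice_area += x0 * y1 - x1 * y0
    return abs(twice_area) / 2.0


def marker_large_enough(corners, image_width: int, image_height: int,
                        min_fraction: float = MIN_AREA_FRACTION) -> bool:
    """True if the marker covers at least min_fraction of the image area."""
    return polygon_area(corners) / (image_width * image_height) >= min_fraction


# In a 1920 x 1080 frame the threshold corresponds to roughly 4147 square pixels.
small = [(0, 0), (60, 0), (60, 60), (0, 60)]   # 3600 px^2
large = [(0, 0), (70, 0), (70, 70), (0, 70)]   # 4900 px^2
print(marker_large_enough(small, 1920, 1080), marker_large_enough(large, 1920, 1080))
```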

In step (3), a frame is rejected if the corner LEDs in the coarse reprojection deviate from their expected positions by more than a specified threshold.

In step (5), dynamic local thresholding is used to handle reflections and uneven illumination. Specifically, for each time-encoding LED we compute the average pixel intensity within a circular region centered at the LED’s expected location, which is obtained by projecting the known LED layout of the clock into the image using the planar homography estimated in step (4). We also compute the average intensity within an identically sized circular region placed immediately adjacent to the LED, where no emission is present, to estimate the local background illumination. The difference between these two measurements is then used as input to Otsu’s thresholding algorithm [40], which separates illuminated from non-illuminated LEDs, providing robust and accurate decoding across a wide range of lighting conditions. Additionally, a frame is rejected if the ring does not display a single illuminated sector (e.g., a strong glare or reflection that causes some LEDs to be mistakenly interpreted as illuminated) or if the sector crosses the counter boundary, as this indicates the counter changed during the exposure, resulting in an ambiguous reading which cannot be decoded correctly.
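The decoding logic of this step can be sketched as follows, assuming that the mean intensities of the LED regions and their adjacent background regions have already been sampled. The Otsu implementation below works directly on the list of contrast values rather than on a histogram, and all names are illustrative. Note that Otsu's method always produces two classes, so this sketch presumes that at least one LED is actually lit.

```python
def otsu_threshold(values):
    """Threshold that maximizes the between-class variance of the two groups."""
    ordered = sorted(values)
    n = len(ordered)
    best_threshold, best_score = None, -1.0
    for k in range(1, n):
        if ordered[k] == ordered[k - 1]:
            continue  # no valid split between equal values
        mean_low = sum(ordered[:k]) / k
        mean_high = sum(ordered[k:]) / (n - k)
        score = (k / n) * ((n - k) / n) * (mean_low - mean_high) ** 2
        if score > best_score:
            best_score = score
            best_threshold = (ordered[k - 1] + ordered[k]) / 2.0
    return best_threshold


def decode_ring(led_means, background_means):
    """Return (first_led, last_led) of the illuminated sector, or None to reject."""
    contrast = [led - bg for led, bg in zip(led_means, background_means)]
    threshold = otsu_threshold(contrast)
    if threshold is None:
        return None  # all contrasts identical, nothing to separate
    lit = [c > threshold for c in contrast]
    n = len(lit)
    # Rising edges counted circularly (lit[-1] precedes lit[0]).
    starts = [k for k in range(n) if lit[k] and not lit[k - 1]]
    if len(starts) != 1:
        return None  # zero or several sectors, e.g. caused by glare
    first = starts[0]
    last = first + sum(lit) - 1
    if last >= n:
        return None  # sector crosses the counter boundary, reading is ambiguous
    return first, last


# Synthetic ring with uneven background illumination and LEDs 40 to 57 lit.
background = [30.0 + k for k in range(100)]
leds = [bg + (150.0 if 40 <= k <= 57 else 2.0) for k, bg in enumerate(background)]
print(decode_ring(leds, background))
```

Because the threshold is applied to the LED-minus-background difference, the brightness gradient across the synthetic ring does not affect the result.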

In step (6), robust RANSAC regression [41] is applied to combine the independent timestamps from all decoded frames by fitting a linear function and computing the global offset and drift of the video. This approach effectively discards outlier frames where the clock was decoded incorrectly, producing timestamps that do not conform to the assumed linear model. RANSAC was chosen over other robust linear regression methods because it explicitly excludes outliers when estimating model parameters, rather than merely down-weighting them.
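The following is a simplified, self-contained RANSAC line fit for illustration. The inlier tolerance, iteration count, and function names are assumptions of this sketch rather than the settings of the actual pipeline, which could equally rely on an existing library implementation.

```python
import random


def least_squares_line(samples):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(samples)
    mean_x = sum(x for x, _ in samples) / n
    mean_y = sum(y for _, y in samples) / n
    var_x = sum((x - mean_x) ** 2 for x, _ in samples)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in samples)
    slope = cov / var_x
    return slope, mean_y - slope * mean_x


def ransac_clock_fit(samples, tol_ms=2.0, iterations=200, seed=0):
    """Estimate (drift, offset) from (t_local_ms, t_global_ms) samples.

    Repeatedly fits a line through two random samples, keeps the largest
    consensus set within tol_ms, and refits on that set only.
    """
    rng = random.Random(seed)
    best_inliers = []
    for _ in range(iterations):
        (x0, y0), (x1, y1) = rng.sample(samples, 2)
        if x0 == x1:
            continue  # cannot define a line from identical local timestamps
        drift = (y1 - y0) / (x1 - x0)
        offset = y0 - drift * x0
        inliers = [(x, y) for x, y in samples
                   if abs(drift * x + offset - y) <= tol_ms]
        if len(inliers) > len(best_inliers):
            best_inliers = inliers
    if len(best_inliers) < 2 or len({x for x, _ in best_inliers}) < 2:
        raise ValueError("no consistent linear model found")
    return least_squares_line(best_inliers), best_inliers
```

A frame whose binary counter was misread is typically off by a multiple of 100 ms, far outside any plausible tolerance, so such a frame is excluded from the final fit.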

Together, these precautions ensure reliable performance even under suboptimal conditions, such as poor lighting, motion, or the board being viewed from a distance or at an angle.

It is important to note that our current decoding pipeline assumes a global-shutter imaging model. When using rolling-shutter cameras, image rows are exposed sequentially, which introduces a small, systematic temporal offset that depends on the vertical position and apparent height of the LED Clock in the image (see Figure A1). Importantly, our method does not rely on a single observation: the global clock mapping is estimated from several dozen independently decoded frames. This temporal redundancy significantly mitigates the effect of rolling-shutter bias, as confirmed by our experimental results.

5. Experiments

To evaluate the accuracy and robustness of our method, we designed a comprehensive testing framework that compares it against existing synchronization techniques across various camera configurations and combinations, both in laboratory and real-world scenarios. For each configuration, we designed specific experiments and selected an appropriate quantitative metric based on the capabilities and limitations of the cameras involved.

In our first experiment, we used two Kinect cameras with hardware synchronization as the ground-truth reference. This setup allows us to compute the exact residual synchronization error in the time domain.

In our second experiment, we test the wide applicability of our method by comparing it with existing synchronization methods for consumer-grade cameras. Since GoPro cameras lack support for hardware synchronization to provide a reliable ground-truth reference, we instead performed an external calibration for every synchronization method using a fast-moving checkerboard. The resulting reprojection error was then used as a practical metric for evaluating temporal alignment, as it is minimized when the video streams are perfectly synchronized, thus providing a direct and quantitative measure of the temporal alignment achieved by each approach. Additionally, we used the sub-frame accurate timestamps provided by our method to interpolate synchronized 2D positions of the checkerboard instead of simply using the closest frame for every video stream, further minimizing the reprojection error.

Then, in our third experiment, we evaluated the accuracy of our method across two different modalities, namely RGB video cameras and a professional-grade IR optical tracking system. This setup enabled us to assess the robustness and generalizability of our approach when applied to heterogeneous camera systems.

In the fourth and fifth experiments, we demonstrated the importance of sub-frame-accurate synchronization for multi-view computer vision tasks. Specifically, we synchronized a multi-view recording with our method and compared the results of 3D pose estimation and 3D reconstruction when using sub-frame interpolation versus nearest-frame matching.

Finally, in the sixth experiment, we tested robustness outside controlled laboratory conditions through a case study in large-scale surgical data collection involving 25 cameras. The data collection featured a highly heterogeneous camera array, comprising devices from various manufacturers and price ranges, with differing settings such as field of view, frame rate, shutter speed, and sensor type, demonstrating the broad applicability of our method.

Together, these six experiments assess the accuracy and versatility of our method across diverse laboratory and real-world scenarios.

5.1. Experiment 1: Validation Against Hardware Synchronization

In this experiment, we evaluate the absolute synchronization error of our method by comparing its output against a ground-truth reference. To obtain the ground truth, we leveraged the hardware synchronization capabilities of the Azure Kinect DK (Microsoft Corporation, Redmond, WA, USA), assuming it provides perfect synchronization. The recording procedure was designed to closely match real-world multi-view data collections. We applied our method to recompute the timestamps for both video streams separately and then calculated the root mean square error (RMSE) between them across all frames. This error should ideally be zero because the shutters are perfectly synchronized. However, it is important to note that the Microsoft Azure Kinect DK uses an OV12A10 RGB sensor with a rolling shutter, meaning that different image rows are exposed sequentially in time. Consequently, the LED pattern on the clock may differ slightly between frames (see the Results paragraph for details). Together with the relatively low frame rate of 30 fps, this setup constitutes a challenging scenario for hardware-synchronization-free methods in terms of synchronization accuracy.

Experimental setup. Two Kinect cameras were placed next to each other, facing opposite directions, and connected using a cable to synchronize their shutter. We then used the k4arecorder utility (Azure Kinect SDK version 1.4.2, https://github.com/microsoft/Azure-Kinect-Sensor-SDK, accessed on 27 November 2025) on a central laptop to record from both cameras simultaneously. The cameras were set to a resolution of pixels at 30 fps with a fixed exposure time of 8.33 ms.

The recording followed a protocol representative of real-world, non-laboratory conditions, similar to those in large-scale data collections involving many cameras (e.g., the 25-camera setup used in Experiment 6), where not all cameras share overlapping visual content. We first showed the clock to the first camera for approximately 5 s, then waited 2 min, and subsequently showed it to the second camera for 5 s. The 2-min delay simulates the scenario of synchronizing more than two cameras, assuming that one full cycle of showing the clock to every camera takes approximately 2 min. The clock was positioned approximately 150 cm from the camera and centered in the field of view. After the initial synchronization cycle, the actual procedure of interest was simulated by waiting for 5 min before performing a second identical synchronization cycle. This allowed for a more accurate measurement of the drift over time. This process was repeated five times, resulting in five independent 14-min recordings from two cameras.

Evaluation score. The 10 videos were processed independently using our method to recompute global timestamps for every frame. Finally, for every video pair, we computed the root mean square error (RMSE) between the recomputed timestamps. The RMSE is defined as:

$$\mathrm{RMSE} = \sqrt{\frac{1}{N}\sum_{i=1}^{N}\left(t_i^{(1)} - t_i^{(2)}\right)^2}$$

where $N$ is the total number of frames, and $t_i^{(1)}$ and $t_i^{(2)}$ represent the recomputed timestamps of the $i$-th frame in the first and second video streams, respectively.
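As a small illustration of this score, the following sketch computes the RMSE between two equally long lists of recomputed timestamps. The function name is hypothetical, and timestamps are assumed to be in milliseconds.

```python
import math


def timestamp_rmse(ts_first, ts_second):
    """RMSE between per-frame timestamps of two hardware-synchronized streams."""
    if len(ts_first) != len(ts_second) or not ts_first:
        raise ValueError("both streams need the same non-zero number of frames")
    squared = sum((a - b) ** 2 for a, b in zip(ts_first, ts_second))
    return math.sqrt(squared / len(ts_first))
```

With perfectly synchronized shutters and error-free decoding, the two lists would be identical and the function would return zero.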

Results. RMSE values over the five runs are reported in Table 1, with an average RMSE of 1.34 ms, indicating strong agreement with the ground-truth reference. This error is significantly lower than the duration of a single frame at 30 fps (33.3 ms), demonstrating that our method achieves meaningful sub-frame accuracy. We attribute the residual error primarily to the rolling shutter of the Kinect’s RGB sensor. Unlike a global shutter that exposes all pixels simultaneously, the rolling shutter captures each row sequentially over a short interval. This causes slight temporal offsets across the frame, meaning that the effective exposure time for a given pixel depends on its vertical position. Therefore, the timestamp encoded by the clock appears slightly shifted depending on the clock’s position within the frame. Additionally, since the LEDs are arranged in a ring and therefore at different vertical positions, the decoded timestamp can vary slightly based on which specific LED was illuminated during exposure, for example, whether the observed arc is predominantly horizontal or vertical. This is further supported by the slight variations in exposure times shown in Figure A1. To reduce this artifact, the clock can be held at a greater distance from the camera, effectively decreasing the vertical pixel distance between the highest and lowest LED.
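The dependence on vertical position can be made explicit with a simple linear rolling-shutter model. This is a sketch under the assumption that rows are read out at a constant rate; the symbols $t_0$ (exposure start of the first row), $H$ (image height in rows), and $T_{\mathrm{scan}}$ (total scan-out time of the sensor) are introduced here for illustration and are not quantities measured in this work.

```latex
\[
t_{\mathrm{start}}(r) = t_0 + \frac{r}{H}\,T_{\mathrm{scan}},
\qquad
\Delta t = t_{\mathrm{start}}(r_b) - t_{\mathrm{start}}(r_a)
         = \frac{r_b - r_a}{H}\,T_{\mathrm{scan}}
\]
```

Under this model, two LEDs imaged at rows $r_a$ and $r_b$ are sampled $\Delta t$ apart, so reducing the vertical extent of the clock in the image reduces the spread of decoded timestamps proportionally.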

Table 1.

Root mean square error (RMSE) in milliseconds between the two video streams.

5.2. Experiment 2: GoPro to GoPro

In this experiment, we compare our method to existing synchronization approaches for aligning two GoPro video streams. Since GoPro cameras do not offer built-in hardware synchronization that can serve as a ground-truth reference, we evaluate performance indirectly by performing stereo external calibration based on a moving checkerboard and reporting the resulting reprojection error. The underlying intuition is that when video streams are accurately synchronized, the reprojection error is minimized, thus providing a practical and quantitative measure of temporal alignment. We deliberately maintained the checkerboard in continuous rapid circular motion to amplify the reprojection error caused by temporal misalignment, making the results easier to interpret. We compare the following methods:

1. RocSync (our method);
2. Global illumination-based synchronization (toggling room lights);
3. Audio-based synchronization (clapping) using AudioAlign [42];
4. Timecode-based synchronization (GoPro Timecode Sync using QR code).

For global illumination-based synchronization, we implemented a simple script that estimates the temporal offset by cross-correlating the gradient of average global illumination between the two cameras. Audio-based synchronization was performed with the open-source software AudioAlign [42] by applying FP-EP followed by cross-correlation. Like most battery-powered cameras, GoPros include an internal real-time clock that maintains wall time. This clock is then used to generate per-frame timestamps with frame-level precision, which are encoded in the SMPTE timecode format. These timestamps can be used to identify the corresponding frames in multiple video streams. The GoPro Labs Firmware (https://gopro.com/en/us/info/gopro-labs, accessed on 27 November 2025) enables fast and accurate timecode synchronization using QR codes displayed on a smartphone.
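The illumination-based baseline can be sketched as follows. This is an illustrative reimplementation of the idea rather than the script used in the experiment: it assumes a per-frame mean brightness has already been extracted for both videos at the same frame rate, and the function names and the `max_lag` search window are hypothetical.

```python
def gradient(signal):
    """Frame-to-frame change of the average brightness."""
    return [b - a for a, b in zip(signal, signal[1:])]


def best_lag(ref, other, max_lag):
    """Lag in frames that maximizes sum(ref[i] * other[i + lag])."""
    best, best_score = 0, float("-inf")
    for lag in range(-max_lag, max_lag + 1):
        score = 0.0
        for i, r in enumerate(ref):
            j = i + lag
            if 0 <= j < len(other):
                score += r * other[j]
        if score > best_score:
            best, best_score = lag, score
    return best


def illumination_offset(brightness_ref, brightness_other, max_lag=120):
    """Frame offset of `other` relative to `ref` from light on/off events."""
    return best_lag(gradient(brightness_ref), gradient(brightness_other), max_lag)
```

Correlating the gradient instead of the raw brightness emphasizes the sharp on/off transitions and suppresses the constant brightness level of each camera. The resulting offset is an integer number of frames, which is why this baseline is frame-accurate at best.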

Experimental setup. We placed two GoPro cameras (GoPro Hero 12 and GoPro Hero 10) approximately 1 m apart to capture the same scene. Both cameras were running the GoPro Labs Firmware and they were configured to record at a resolution of with 60 fps and a fixed exposure time of 1/480 s. The internal real-time clocks of the cameras were synchronized immediately prior to recording using an animated QR code displayed on a phone, a feature of the GoPro Labs Firmware.

The recording was then started manually on both cameras, after which we performed the calibration procedures required for each synchronization method. Specifically, we clapped, turned the room light off and on, and finally showed our LED Clock to each camera for 5 s. After completing these initial calibration steps, a 3D-printed precision checkerboard was continuously moved along a circular trajectory for 30 s, making sure that it remained visible in both camera views. Lastly, the initial calibration steps were repeated once more.

The Hero 12 was selected as the reference camera, and the Hero 10 was aligned to it using the different synchronization methods. For each method, the videos were synchronized independently, and the same set of 100 consecutive frames was then used for stereo external calibration with the stereoCalibrate function from the OpenCV library (version 4.12.0). In addition, all original videos were downsampled to 30 fps (half the native frame rate) using ffmpeg (version 7.1.2), and the entire process was repeated on these recordings.

Evaluation score. The synchronization accuracy of each method is evaluated using the mean reprojection error (MRE) of all checkerboard corners in the 100 consecutive frames after stereo calibration. For two cameras, a fully symmetric MRE can be defined using both forward and inverse projection. Let $\pi_L$ and $\pi_R$ denote the projection functions of the left camera and right camera, respectively. The MRE is written as:

$$\mathrm{MRE} = \frac{1}{2NM}\sum_{i=1}^{N}\sum_{j=1}^{M}\left(\left\| x_{ij}^{L} - \pi_L\!\left(R_i X_j + t_i\right)\right\|_2 + \left\| x_{ij}^{R} - \pi_R\!\left(R\left(R_i X_j + t_i\right) + t\right)\right\|_2\right)$$

where $N$ is the number of image pairs (views), $M$ is the number of checkerboard points, $x_{ij}^{L}$ and $x_{ij}^{R}$ are the observed image points in the left and right images, $X_j$ is the 3D position of the $j$-th checkerboard point, $R_i$ and $t_i$ are the pose (rotation and translation) of the checkerboard in the left camera frame, and $R$ and $t$ are the rotation and translation from the left camera to the right one. This symmetric formulation measures the reprojection error in both directions, providing an assessment of calibration and temporal alignment.

Results. Table 2 reports the mean reprojection error across five runs, comparing different synchronization methods at 30 fps and 60 fps. At 30 fps, our method with interpolation achieves the lowest errors overall, consistently outperforming existing approaches such as light- or audio-based synchronization, and far surpassing timecode alignment. Without interpolation, our method performs similarly to light-based synchronization, as both provide frame-accurate offset estimation. At 60 fps, the margin between our interpolated method and the closest competing approaches narrows, since the maximum error of frame-accurate synchronization is inherently bounded by half a frame interval. With interpolation, our method achieves nearly the same error at 30 fps as at 60 fps, clearly demonstrating its sub-frame accuracy.
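The half-frame bound mentioned above can be made concrete with a short calculation. For a frame rate $f$, nearest-frame matching pairs each frame with one that is at most half a frame interval away:

```latex
\[
|\Delta t|_{\max} = \frac{1}{2f}:
\qquad
f = 30\ \mathrm{fps} \;\Rightarrow\; |\Delta t|_{\max} \approx 16.7\ \mathrm{ms},
\qquad
f = 60\ \mathrm{fps} \;\Rightarrow\; |\Delta t|_{\max} \approx 8.3\ \mathrm{ms}
\]
```

Doubling the frame rate therefore halves the worst-case misalignment of frame-accurate methods, which is consistent with the narrower margin observed at 60 fps.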

Table 2.

Mean reprojection error (MRE, in pixels) of stereo external calibration across 5 runs. Values are shown as 30 fps/60 fps. Lower values indicate better temporal alignment.

It is important to note that this experiment represents an ideal scenario for light-based synchronization, with a single ceiling-mounted lamp that turns on and off nearly instantaneously. In many real-world settings and larger rooms, illumination often comes from multiple independent lamps and is neither synchronized nor uniform.

Sound-based synchronization exhibits a noticeable error, which we suspect arises from the fact that video and audio streams are encoded separately and only later combined in a container. Differences in audio/image pipeline latency can introduce timing offsets that vary across camera models. Because the error exceeds a single frame interval, sub-frame interpolation does not consistently improve the result.

5.3. Experiment 3: IR Pose-Tracking to RGB Camera

One of the advantages of our approach lies in its ability to synchronize heterogeneous camera systems, including those that rely on infrared imaging. To evaluate this capability, we tested the combination of a consumer action camera (GoPro Hero 12) and a high-end IR marker tracking system. The pose-tracking modality was represented by the Atracsys fusionTrack 500 (Atracsys LLC, Puidoux, Switzerland), a stereo infrared tracking system used for high-precision 3D localization of infrared-sensitive markers in surgical applications. To our knowledge, no existing hardware synchronization method supports this camera setup, so we use the reprojection error as a means to validate the proposed synchronization method.

Experimental setup. Both cameras were mounted on the same tripod. The GoPro camera was set to record at a resolution of with 60 fps. Similar to the previous experiment, a 3D-printed multimodal checkerboard with embedded infrared fiducials was moved through the scene with rapid linear motions visible to both cameras. Since the fusionTrack system records 3D marker and fiducial coordinates instead of 2D images, we developed a dedicated pipeline for detecting and decoding our LED Clock in these recordings (see Appendix A). After synchronizing the recordings using our method, we performed stereo extrinsic calibration with the multimodal checkerboard following the approach of Hein et al. [27]. The experiment was repeated three times in total.

Evaluation score. We use the MRE again. This time, it evaluates how accurately the corners of a moving 3D checkerboard rigidly attached to an IR marker are reprojected onto their corresponding 2D detections in the RGB images. For each synchronized pair, the 3D points $X_j$ correspond to the checkerboard corners expressed in the marker reference frame. Using the pose of the IR marker with respect to the IR camera $(R_i, t_i)$, these points are first expressed in the IR camera frame, then transformed into the RGB camera frame using the calibrated extrinsic parameters $(R, t)$, and finally projected into the RGB image through the pinhole model $\pi$. The MRE is computed as follows:

$$\mathrm{MRE} = \frac{1}{NM}\sum_{i=1}^{N}\sum_{j=1}^{M}\left\| x_{ij} - \pi\!\left(R\left(R_i X_j + t_i\right) + t\right)\right\|_2$$

where $N$ is the number of synchronized IR–RGB pairs, $M$ is the number of checkerboard corners per view, $x_{ij}$ are the 2D detections in the RGB camera (camera 2), $X_j$ are the 3D coordinates of the checkerboard corners expressed in the marker reference frame, $R_i$ and $t_i$ represent the rotation and translation of the marker with respect to the IR camera (camera 1) for view $i$, estimated from the IR stereo tracking system, $R$ and $t$ are the rotation and translation from the IR to the RGB camera, and $\pi$ denotes the projection function of the RGB camera.

This formulation allows evaluating the overall alignment accuracy between both sensing modalities by directly comparing the projected positions of the 3D checkerboard corners to their detected 2D image locations in the RGB frames.
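The transformation chain described above (marker frame to IR camera frame to RGB camera frame to image) can be sketched in a few lines. This is a simplified illustration that assumes an ideal pinhole camera without lens distortion; the function names and the `(fx, fy, cx, cy)` intrinsics tuple are hypothetical conventions chosen for this example.

```python
import math


def transform(R, t, p):
    """Apply the rigid transform p' = R p + t (R as 3x3 nested lists)."""
    return tuple(sum(R[r][c] * p[c] for c in range(3)) + t[r] for r in range(3))


def project(intrinsics, p):
    """Ideal pinhole projection; intrinsics = (fx, fy, cx, cy)."""
    fx, fy, cx, cy = intrinsics
    return (fx * p[0] / p[2] + cx, fy * p[1] / p[2] + cy)


def mean_reprojection_error(views, corners_marker, R_ir_to_rgb, t_ir_to_rgb,
                            intrinsics):
    """views: list of (R_i, t_i, detections), detections aligned with corners."""
    total, count = 0.0, 0
    for R_i, t_i, detections in views:
        for corner, observed in zip(corners_marker, detections):
            p_ir = transform(R_i, t_i, corner)                  # marker -> IR
            p_rgb = transform(R_ir_to_rgb, t_ir_to_rgb, p_ir)   # IR -> RGB
            u, v = project(intrinsics, p_rgb)                   # RGB -> pixels
            total += math.hypot(u - observed[0], v - observed[1])
            count += 1
    return total / count
```

Any temporal misalignment between the IR pose $(R_i, t_i)$ and the RGB detections of a moving checkerboard shows up directly as an increase in this average pixel distance.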

Results. Table 3 reports the mean reprojection error (MRE) across three runs. While no direct comparison to other synchronization methods is possible in this heterogeneous setup, the observed error of 1.81 px at 4K resolution is consistent with previously reported results for the same configuration (the same checkerboard size and geometry, as well as comparable checkerboard-to-camera distances) obtained using joint optimization of extrinsic parameters and time offset [6]. This agreement indicates high synchronization accuracy.

Table 3.

Resulting mean reprojection error (MRE, in pixels) of stereo external calibration across 3 runs when synchronizing GoPro and Atracsys fusionTrack 500 using our method. Lower values indicate better temporal alignment.

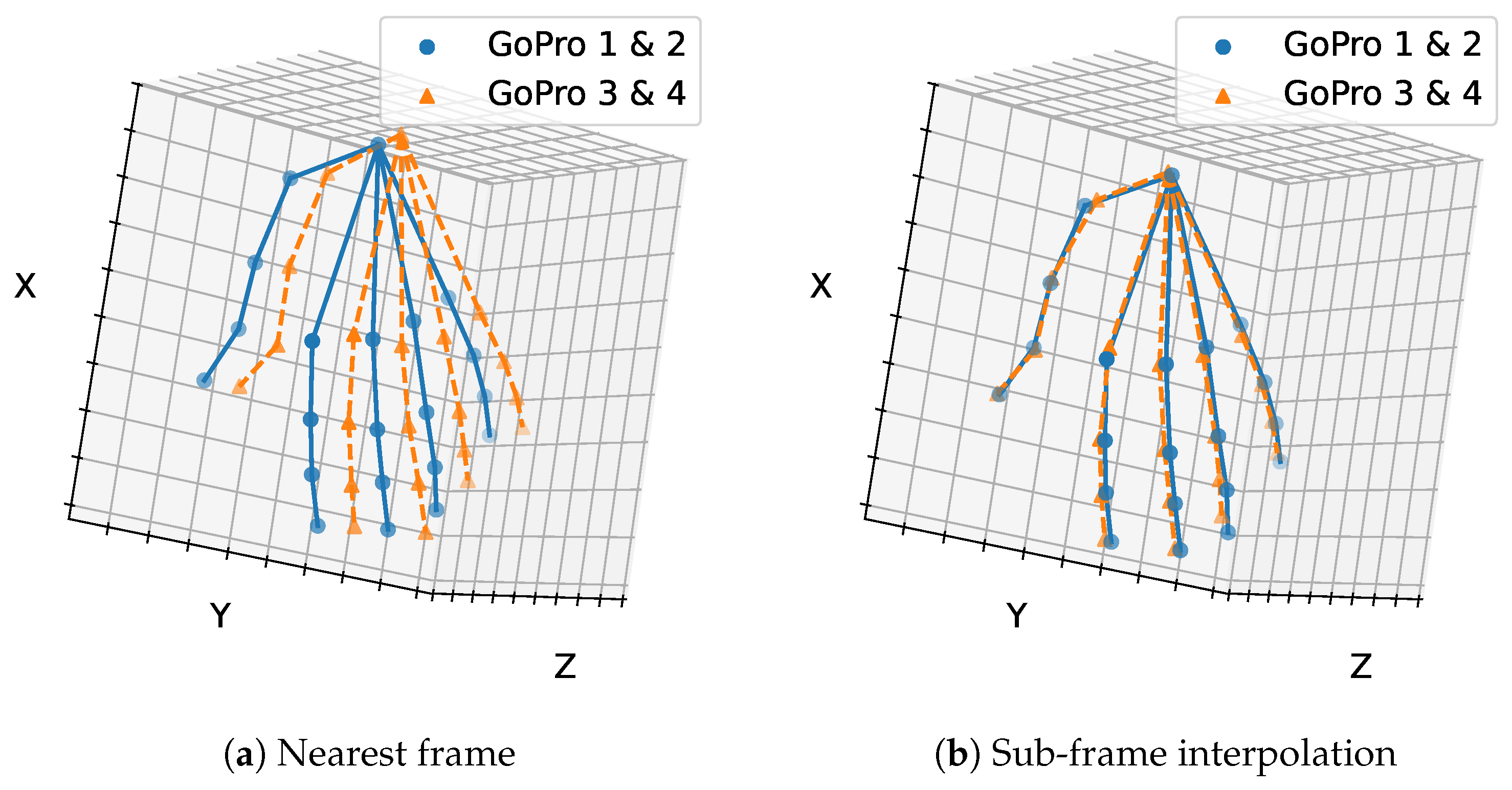

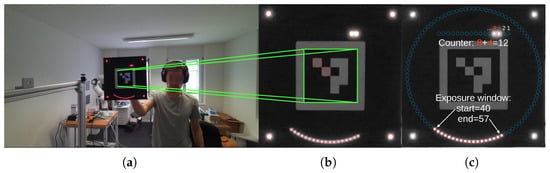

5.4. Experiment 4: 3D Pose Estimation

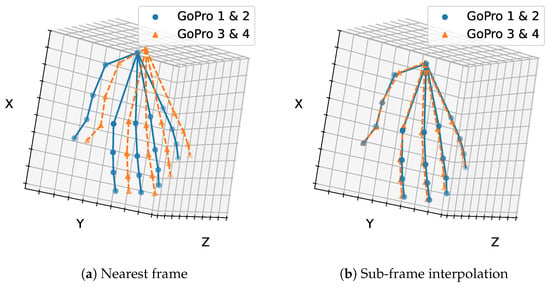

In this experiment, we evaluate the applicability of our method to a common real-world downstream task: multi-view 3D human hand pose estimation. We quantitatively assess performance by comparing triangulation error when using interpolation with sub-frame timestamps versus the nearest-frame strategy. Additionally, side-by-side visualizations of the triangulated geometries qualitatively demonstrate fewer abnormalities when using sub-frame interpolation.

Experimental setup. We employed four GoPro Hero 12 cameras arranged in a square with approximately 30 cm spacing between adjacent units. All cameras recorded at 4K resolution and 30 fps with a shutter speed of 1/240. The captured sequence depicts a simulated surgical scenario in which a surgeon manipulates a saw bone.

Synchronization using our method was performed in two rounds following the same procedure as in previous experiments. For each view, 2D hand poses were manually annotated by an experienced annotator. Six distinct moments (denoted to ) with significant hand motion, where one hand was fully visible, were selected, and 3D hand joints were triangulated twice per moment: first by using the nearest frame from each view, and second by interpolating the 2D coordinates between the two nearest frames in each view using the sub-frame accurate timestamps obtained through our method.
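The interpolation of 2D coordinates can be sketched as follows. The example assumes linear motion between consecutive frames and one global timestamp per frame (for instance, the exposure midpoint); the function name and data layout are hypothetical.

```python
from bisect import bisect_right


def interpolate_keypoints(timestamps, keypoints, query_time):
    """Linearly interpolate per-frame 2D keypoints at a global query time.

    timestamps: sorted global timestamps, one per frame
    keypoints:  per frame, a list of (x, y) tuples in a fixed joint order
    """
    if not timestamps[0] <= query_time <= timestamps[-1]:
        raise ValueError("query time lies outside the recording")
    hi = bisect_right(timestamps, query_time)
    if hi == len(timestamps):  # query coincides with the last frame
        return list(keypoints[-1])
    lo = hi - 1
    t0, t1 = timestamps[lo], timestamps[hi]
    w = (query_time - t0) / (t1 - t0)
    return [((1 - w) * x0 + w * x1, (1 - w) * y0 + w * y1)
            for (x0, y0), (x1, y1) in zip(keypoints[lo], keypoints[hi])]
```

Evaluating this function at the same global query time in every view yields 2D observations that refer to a common instant, which can then be passed to a standard triangulation routine.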

In a second step, the four viewpoints were partitioned into two sets (GoPro 1 & 2 and GoPro 3 & 4), and moment was triangulated separately for each set using only two cameras (again with and without interpolation). The resulting 3D poses were then compared to assess the discrepancy between two independent 3D pose estimations, namely from the two camera subsets, corresponding to the same moment .

Results. For all six moments (–), we evaluated the triangulation error of the 3D hand joints in terms of the mean reprojection error. The results are reported in Table 4. We obtain a mean triangulation error of 4.4 px when using interpolation, compared to 8.8 px when using the nearest-frame synchronization strategy. These results highlight the clear benefit of sub-frame-accurate synchronization.

Table 4.

Mean triangulation error (pixels) of 3D hand joints triangulated using four calibrated GoPros across six selected moments (–) where the full hand is visible and in motion. Results are reported for both nearest-frame synchronization and sub-frame synchronization, demonstrating the benefit of sub-frame-accurate synchronization.

In the partitioned sparse-view scenario, we evaluated the discrepancy between the two resulting 3D poses. As shown in Figure 3, sub-frame synchronization reduced the mean Euclidean distance between poses from 26.07 mm without interpolation to 7.52 mm with interpolation, confirming its clear benefit.

Figure 3.

Mean Euclidean distance between two independent 3D hand pose reconstructions obtained from different camera subsets (GoPros 1 & 2 and 3 & 4) at , (a) with and (b) without sub-frame synchronization. Sub-frame synchronization substantially reduces the discrepancy between reconstructions, highlighting its importance for accurate 3D hand pose estimation.

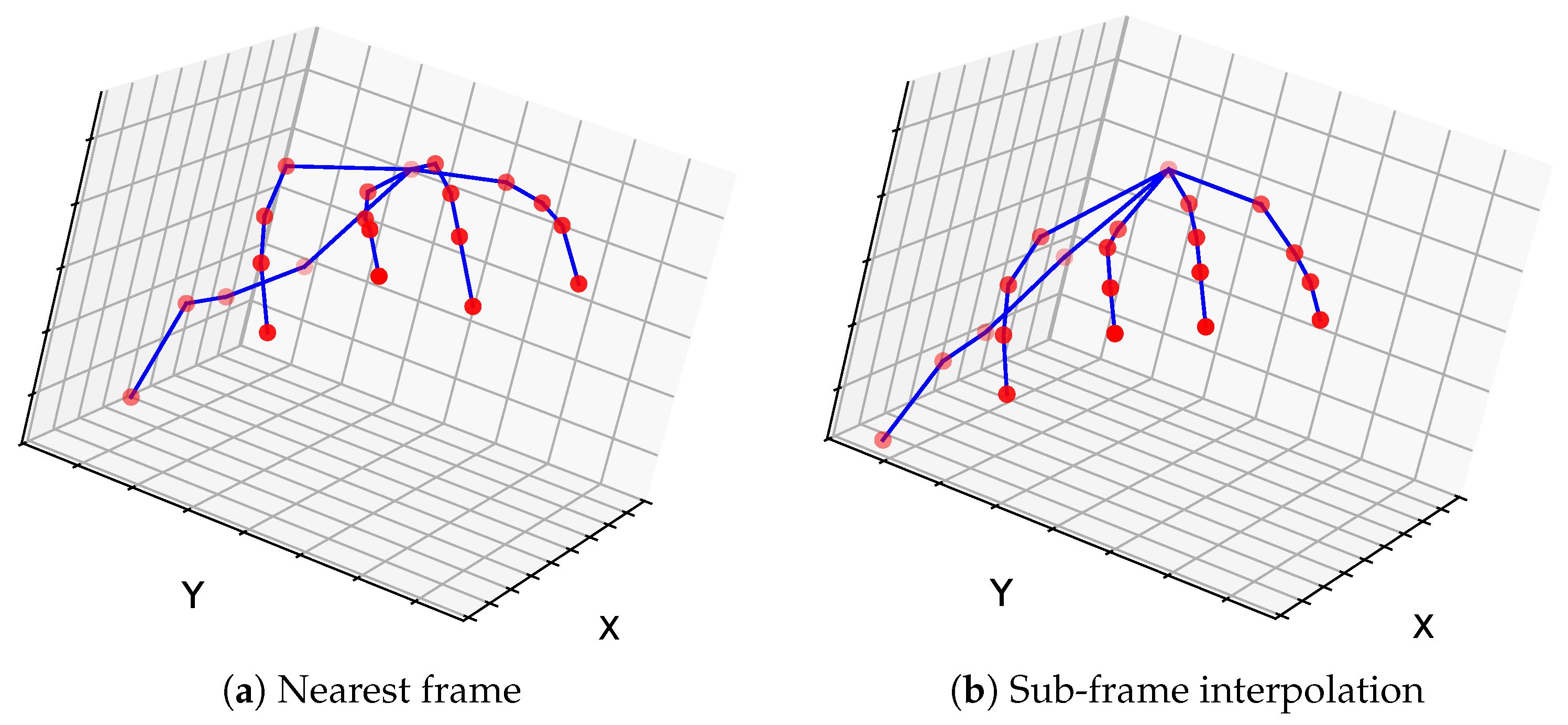

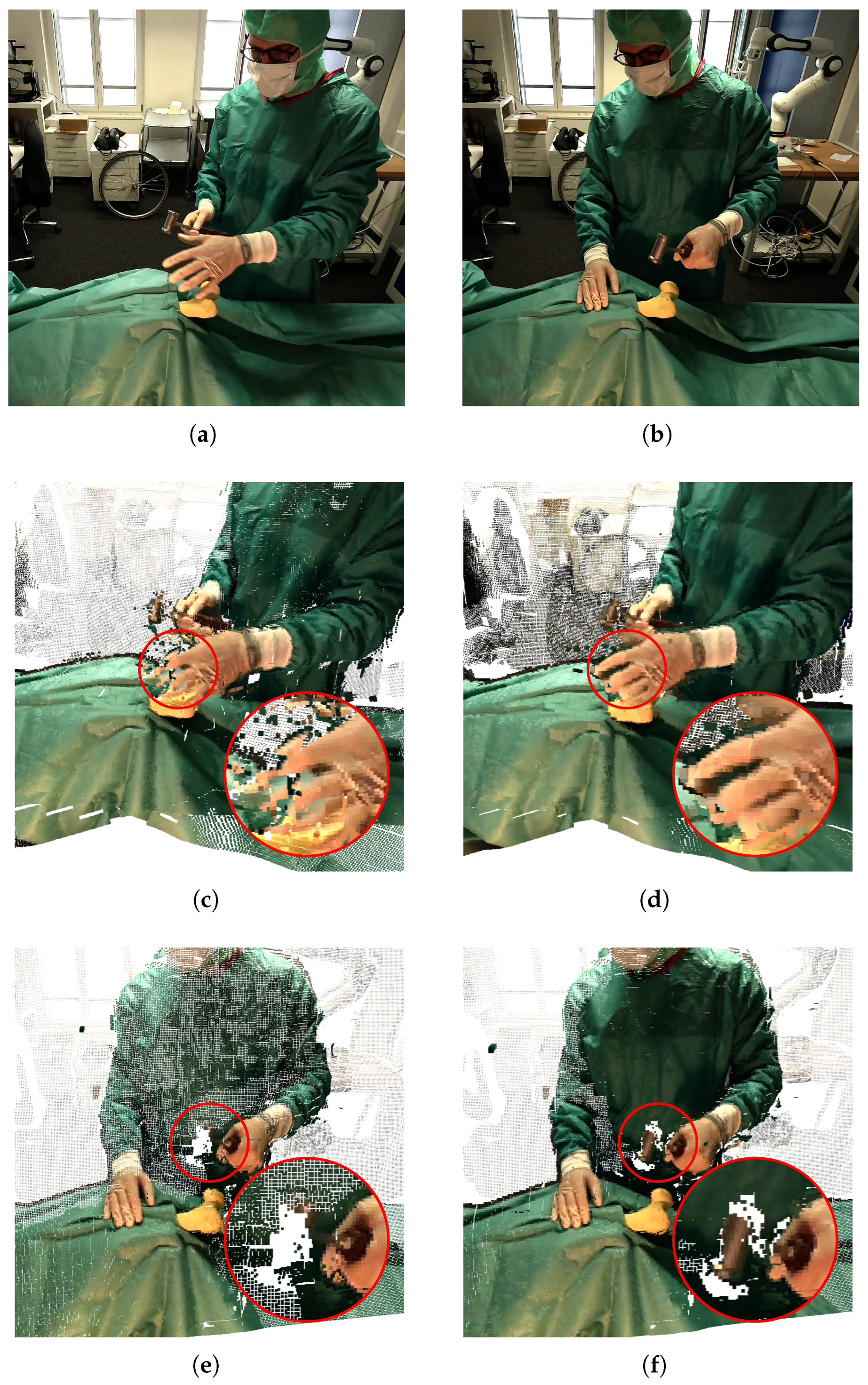

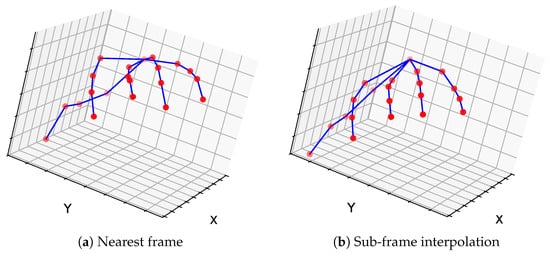

Finally, we present a qualitative comparison of nearest-frame synchronization (Figure 4a) and sub-frame synchronization (Figure 4b) for the scene shown at the top of Figure 5a. We observe a clear discrepancy in hand shape under the nearest-frame strategy, whereas sub-frame synchronization produces a reconstruction consistent with the actual hand shape.

Figure 4.

Qualitative comparison of 3D hand reconstructions obtained (a) with nearest-frame synchronization and (b) with sub-frame synchronization (triangulation from GoPro 3 and 4), for the scene shown in Figure 5a (left hand). The nearest-frame approach produces noticeable discrepancies in hand shape, whereas sub-frame synchronization yields a reconstruction consistent with the true hand configuration.

Figure 5.

Comparison of 3D reconstruction results without and with sub-frame synchronization. Top: reference real images corresponding to the reconstructions shown in the middle panels (a) and the bottom panels (b), respectively. Middle: the left hand reconstructed with the nearest-frame synchronization strategy shows large artifacts (c), whereas it is successfully reconstructed with the proposed sub-frame synchronization (d). Bottom: the nearest-frame strategy fails to reconstruct the hammer (e), which is mostly recovered using sub-frame interpolation (f) (see image center). Panels (c,d) use footage from GoPro 1, GoPro 3, and GoPro 4; panels (e,f) use GoPro 2 and GoPro 3.

5.5. Experiment 5: 3D Reconstruction

In this experiment, we visually demonstrate the benefits of sub-frame-accurate synchronization for multi-view 3D reconstruction. We combined our synchronization method with a state-of-the-art video interpolation technique to generate temporally aligned frames across all viewpoints, thereby significantly enhancing the quality of 3D reconstruction of dynamic scenes, particularly at lower frame rates.

Experimental setup. We reused the recordings from Experiment 4 and selected two moments with significant motion. The method of Jin et al. [26] was applied to generate temporally aligned frames across all viewpoints. MASt3R [43] was then applied to both the interpolated and non-interpolated frames. The reconstructions are shown in Figure 5.

Results. For both selected moments, the interpolated frames produce noticeably higher-quality reconstructions with fewer artifacts compared to the nearest-frame strategy. We conclude that sub-frame interpolation using our synchronization method provides a practical and effective alternative to hardware synchronization for scenes involving fast-moving objects.

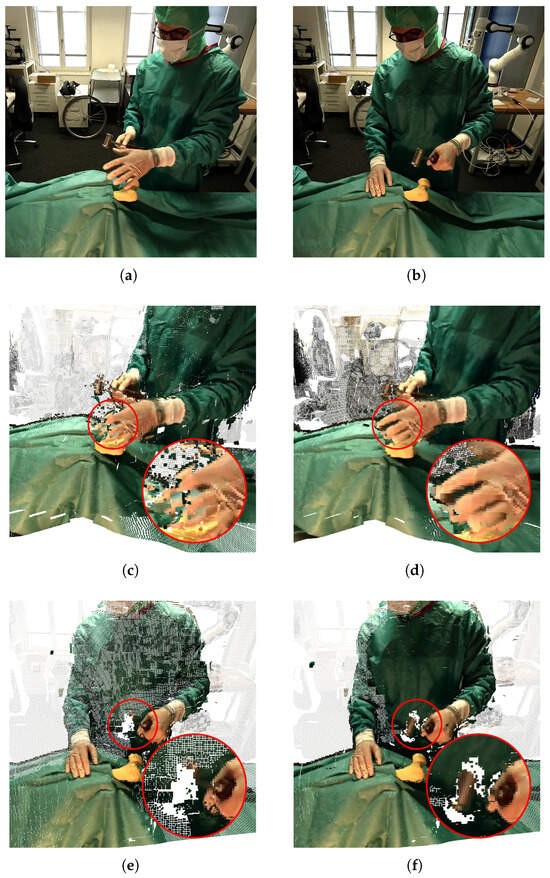

5.6. Large-Scale Data Collections

In addition to controlled experiments, our method has been successfully deployed in multiple surgical data collections, recording several hours of surgery footage using more than 25 cameras. These collections featured a highly heterogeneous camera array, including near-field and far-field GoPro cameras operating in linear lens mode, head-mounted Aria glasses capturing a wide-angle view, Canon CR-N300 cameras capturing close-up views, different iPhone models, and a high-end infrared surgical navigation system (Atracsys fusionTrack 500) for tool tracking. The devices exhibited significant variations in shutter speeds, frame rates, sensor types, and price points.

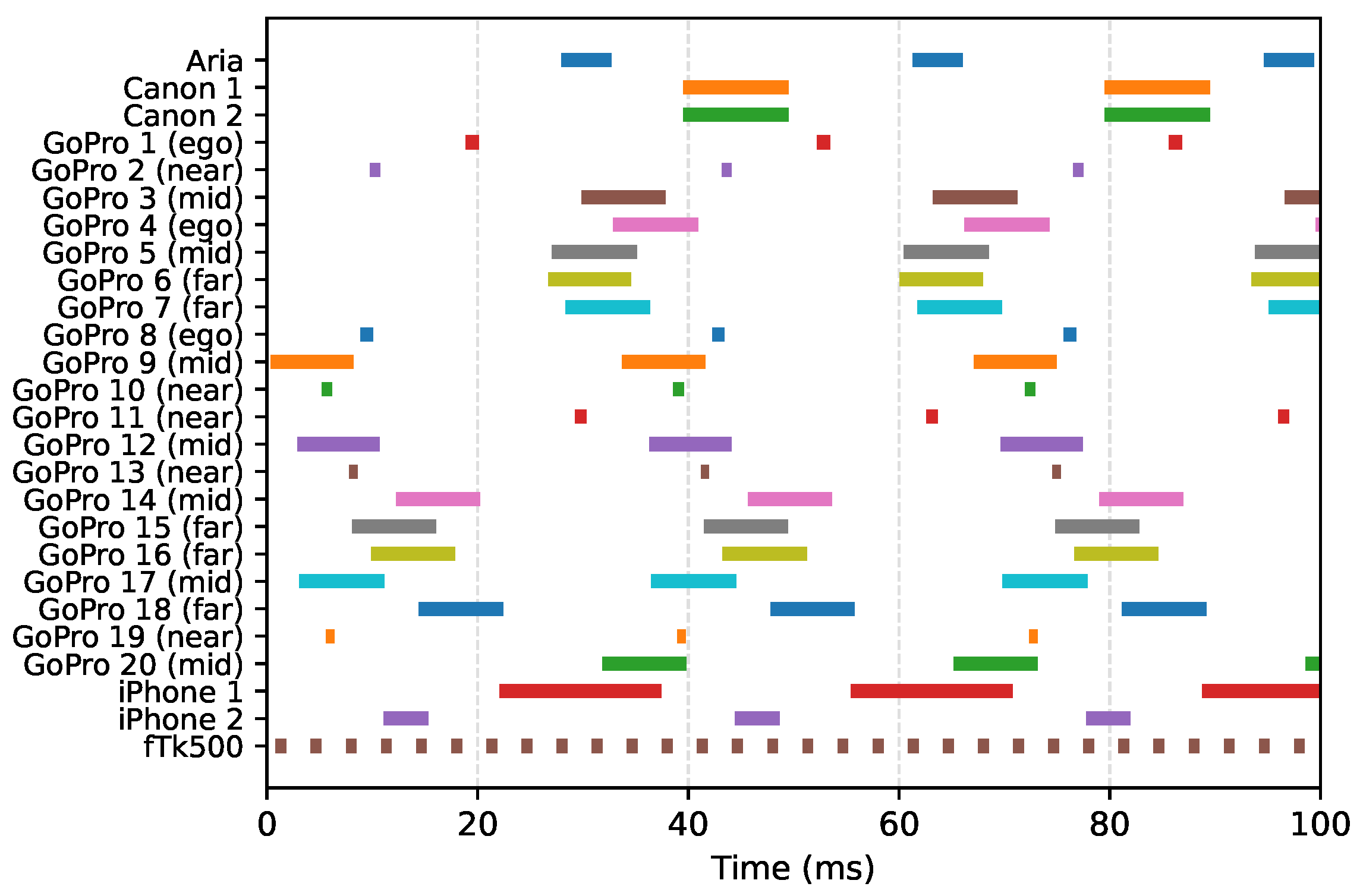

This diversity posed considerable synchronization challenges that are not addressed by existing methods. The successful synchronization of all video streams highlights the flexibility and robustness of our approach in complex, real-world environments. Synchronization was manually confirmed by visually comparing frames with significant motion across all cameras.

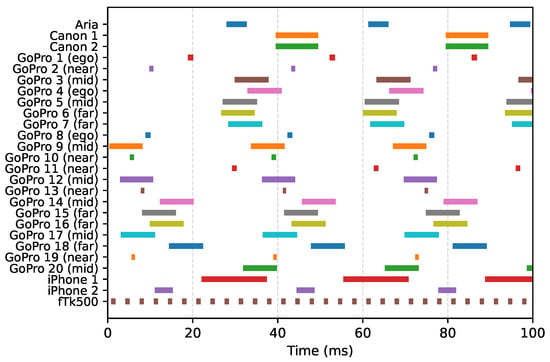

Figure 6 illustrates the relative exposure times of 25 unsynchronized cameras used simultaneously in a single recording. Our method accurately determines the exact start and end of each exposure. Note that the exposure durations differ across cameras, as their configurations were adjusted according to the amount of light within each field of view. Specifically, the Near and Ego groups have considerably shorter exposure times.

Figure 6.

Measured frame timing across 25 unsynchronized cameras. Individual cameras are colored for visual clarity. The variation in bar lengths indicates that cameras use different exposure settings. Exposure windows for near-field and egocentric cameras are markedly shorter than those of the far-field cameras due to the high intensity of the surgical lamp. The Atracsys fusionTrack 500 (fTk500), a stereo infrared camera, operates at a much higher frame rate than the RGB cameras.

To validate our synchronization method, each video was visually inspected at a minimum of two checkpoints. We extracted frames from the aligned video streams and checked for light switch events, where the surgical lamp or the room light was turned off and on at the beginning and end of each recording.

In cases where a camera stopped recording early (e.g., egocentric cameras worn by surgeons leaving the operating room), the second light-switch event was not available. In those situations, we relied on distinct, fast movements or other visible markers to verify the synchronization at the second checkpoint. Synchronization between the IR and RGB streams was validated separately in Experiment 3.

Out of 73 total recordings, only one instance failed synchronization. This was associated with the Aria glasses worn by the lead surgeon. The failure was not due to our method, but rather to frame drops in the recordings from the Aria glasses.

6. Conclusions

We have presented a novel tandem hardware and software solution for millisecond-accurate temporal multi-view video synchronization that is compatible with a wide range of camera systems and has been successfully validated in a broad range of scenarios.

Through extensive experiments, we have demonstrated that for synchronizing two GoPro action cameras, our method outperforms the light-, audio-, and timecode-based approaches we evaluated. Moreover, for systems including infrared cameras, our approach remains effective.

We have also demonstrated that sub-frame-accurate synchronization can be achieved without hardware synchronization and can enhance the performance of many downstream computer vision tasks through frame interpolation. This allows operation at lower frame rates while still recovering precise information, reducing storage and computational requirements and enabling more efficient large-scale data collection and analysis.

Finally, our method has been successfully tested outside of the lab with 25+ cameras, demonstrating its robustness and real-world applicability. Together, these results confirm that our approach provides accurate, reliable, and broadly applicable sub-frame synchronization across diverse camera systems and practical scenarios.

We acknowledge that our approach introduces a dedicated hardware component and requires a short manual calibration step, which limits applicability in fully automated or unattended deployments. This represents a trade-off between operational convenience and universality. In practice, calibration is lightweight and performed only at the beginning and end of a recording, typically taking only a few seconds per camera, which we found acceptable even in large-scale setups.

7. Future Work

In this work, we evaluated our method using cameras operating at up to 60 fps. An interesting direction for future research is to extend the evaluation to high-speed cameras (1000+ fps), where temporal precision becomes even more critical for frame-perfect synchronization. While we have demonstrated promising performance compared to widely used content-independent methods, a comparison against more recent content-based or hybrid synchronization approaches in specific use cases would provide valuable additional insights, even though their performance does not necessarily generalize across different scenarios. Additionally, it would be worthwhile to explore whether the computer vision pipeline can be enhanced to mitigate rolling-shutter artifacts by introducing a scan-out time parameter in our model, alongside offset and drift. Another promising direction is the investigation of real-time processing methods to enable synchronization of live video streams, rather than performing offline synchronization during post-production.

Author Contributions

Conceptualization, L.C.; methodology, J.M. and L.C.; hardware, J.M. and F.G.; software, J.M.; investigation, J.M., L.C. and J.W.; writing—original draft preparation, J.M.; writing—review and editing, L.C.; supervision, L.C.; project administration, M.P. and P.F.; funding acquisition, P.F. All authors have read and agreed to the published version of the manuscript.

Funding

This work has been supported by the OR-X—a Swiss national research infrastructure for translational surgery—and received associated funding by the University of Zurich and University Hospital Balgrist, as well as the Innosuisse Flagship project PROFICIENCY No. PFFS-21-19.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

All source code used in this study is openly available in a public GitHub repository at https://github.com/jaromeyer/RocSync (accessed on 27 November 2025). The version used for the experiments corresponds to commit ‘5d82ddc’. The datasets generated and analyzed during the current study are available from the authors on reasonable request.

Acknowledgments

The authors would like to thank Alan Magdaleno and Max Krähenmann for their help in evaluating the experiments. We also thank Sergey Prokudin and Jonas Hein for sharing data for the large-scale data collections. During the preparation of this manuscript, the authors used ChatGPT (version GPT-4o, OpenAI, https://chatgpt.com, accessed on 27 November 2025) for the purposes of correcting grammar and improving text flow. The authors have reviewed and edited the output and take full responsibility for the content of this publication.

Conflicts of Interest

The authors declare no conflicts of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript; or in the decision to publish the results.

Appendix A. fusionTrack Pipeline

The Atracsys fusionTrack 500 system required a modified processing pipeline compared to the one presented in Section 4.2. Unlike standard cameras that output images, this system records sequences of 3D positions and orientations corresponding to detected fiducials and markers. Here, a fiducial refers to a single reflective sphere or infrared LED, while a marker denotes a predefined geometric arrangement composed of three or more fiducials. In our setup, the corner LEDs of our LED clock were registered as a marker. The fusionTrack system thus directly provides both the 3D position and orientation of this marker. The timestamp-encoding LEDs (i.e., counter and ring) are detected as individual fiducials, and the 3D positions of these fiducials are stored accordingly.

1. Detect marker frames: Identify all frames in which the RocSync marker is successfully detected.

2. Transform fiducials to the local frame: Transform all detected fiducials into the local coordinate frame defined by the marker.

3. Filter fiducials: Retain only fiducials that lie close to the plane of the LED clock device.

4. Decode LEDs: For each LED, check whether a nearby fiducial was detected; if so, interpret that LED as illuminated.

5. Estimate offset and drift: Apply RANSAC-based linear regression to the decoded timestamps across multiple frames to compute a global offset and drift.
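
The steps above can be illustrated with a short sketch. It is not the RocSync implementation: the function names, the LED layout coordinates, the tolerances (`plane_tol`, `match_radius`, `inlier_tol`), and the pose convention (a rotation `R` and translation `t` that map marker coordinates into tracker coordinates) are assumptions made for illustration. Step 1 is assumed to have happened already, so only frames with a valid marker pose are passed in, and the conversion from the set of lit LEDs to a timestamp is omitted because it depends on the device's encoding.

```python
import math
import random


def to_local(point, rotation, translation):
    """Step 2: map a tracker-frame point into the marker frame, p_local = R^T (p - t)."""
    d = [point[i] - translation[i] for i in range(3)]
    # Multiplying by R^T: column c of R is dotted with the shifted point.
    return tuple(sum(rotation[r][c] * d[r] for r in range(3)) for c in range(3))


def decode_leds(fiducials, rotation, translation, led_layout,
                plane_tol=5.0, match_radius=3.0):
    """Steps 3 and 4: return the names of the LEDs considered lit in one frame.

    led_layout maps a (hypothetical) LED name to its (x, y) position in the
    marker frame; all lengths share one unit, e.g. millimetres.
    """
    local = [to_local(p, rotation, translation) for p in fiducials]
    # Step 3: keep only fiducials close to the device plane (z = 0 locally).
    in_plane = [p for p in local if abs(p[2]) <= plane_tol]
    lit = set()
    # Step 4: an LED counts as lit if any in-plane fiducial lies near it.
    for name, (x, y) in led_layout.items():
        if any(math.hypot(p[0] - x, p[1] - y) <= match_radius for p in in_plane):
            lit.add(name)
    return lit


def ransac_line(xs, ys, n_iter=200, inlier_tol=1.0, seed=0):
    """Step 5: fit ys = drift * xs + offset with RANSAC.

    xs are device timestamps and ys the decoded LED Clock timestamps.
    Returns (drift, offset, inlier_indices); the final parameters come from
    an ordinary least-squares fit on the largest inlier set found.
    """
    rng = random.Random(seed)
    n = len(xs)
    best = []
    for _ in range(n_iter):
        i, j = rng.sample(range(n), 2)
        if xs[i] == xs[j]:
            continue  # a vertical line cannot be written as y = a * x + b
        slope = (ys[j] - ys[i]) / (xs[j] - xs[i])
        intercept = ys[i] - slope * xs[i]
        inliers = [k for k in range(n)
                   if abs(ys[k] - (slope * xs[k] + intercept)) <= inlier_tol]
        if len(inliers) > len(best):
            best = inliers
    if len(best) < 2:
        raise ValueError("no valid line model found")
    # Least-squares refinement on the consensus set.
    mx = sum(xs[k] for k in best) / len(best)
    my = sum(ys[k] for k in best) / len(best)
    sxx = sum((xs[k] - mx) ** 2 for k in best)
    sxy = sum((xs[k] - mx) * (ys[k] - my) for k in best)
    drift = sxy / sxx
    offset = my - drift * mx
    return drift, offset, best
```

In this sketch the slope of the fitted line plays the role of the drift (the clock-rate ratio between the device and the LED Clock) and the intercept plays the role of the global offset. Frames whose decoded timestamps deviate from the consensus line by more than `inlier_tol`, for example because of a misdetected fiducial, are excluded before the final fit.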

Appendix B. Supplementary Figures

Figure A1.

Three consecutive frames overlaid to demonstrate rolling shutter artifacts (from Experiment 1 with Azure Kinect DK). The beginnings of the three illuminated sectors (marked by the arrows) are unevenly spaced, and the sectors themselves differ in length.

References

1. Mildenhall, B.; Srinivasan, P.P.; Tancik, M.; Barron, J.T.; Ramamoorthi, R.; Ng, R. NeRF: Representing scenes as neural radiance fields for view synthesis. Commun. ACM 2021, 65, 99–106.

2. Kerbl, B.; Kopanas, G.; Leimkuehler, T.; Drettakis, G. 3D Gaussian Splatting for Real-Time Radiance Field Rendering. ACM Trans. Graph. 2023, 42, 139.

3. Wang, F.; Zhu, Q.; Chang, D.; Gao, Q.; Han, J.; Zhang, T.; Hartley, R.; Pollefeys, M. Learning-based Multi-View Stereo: A Survey. arXiv 2024, arXiv:2408.15235.

4. Mitra, R.; Gundavarapu, N.B.; Sharma, A.; Jain, A. Multiview-Consistent Semi-Supervised Learning for 3D Human Pose Estimation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; IEEE: New York, NY, USA, 2020; pp. 6906–6915.

5. Zhang, Y.; Wang, C.; Wang, X.; Liu, W.; Zeng, W. VoxelTrack: Multi-Person 3D Human Pose Estimation and Tracking in the Wild. IEEE Trans. Pattern Anal. Mach. Intell. 2023, 45, 2613–2626.

6. Hein, J.; Cavalcanti, N.; Suter, D.; Zingg, L.; Carrillo, F.; Calvet, L.; Farshad, M.; Navab, N.; Pollefeys, M.; Fürnstahl, P. Next-generation surgical navigation: Marker-less multi-view 6DoF pose estimation of surgical instruments. Med. Image Anal. 2025, 103, 103613.

7. Grauman, K.; Westbury, A.; Byrne, E.; Cartillier, V.; Chavis, Z.; Furnari, A.; Girdhar, R.; Hamburger, J.; Jiang, H.; Kukreja, D.; et al. Ego4D: Around the World in 3,000 Hours of Egocentric Video. IEEE Trans. Pattern Anal. Mach. Intell. 2025, 47, 9468–9509.

8. Song, Y.; Byrne, E.; Nagarajan, T.; Wang, H.; Martin, M.; Torresani, L. Ego4D goal-step: Toward hierarchical understanding of procedural activities. In Proceedings of the 37th International Conference on Neural Information Processing Systems, New Orleans, LA, USA, 10–16 December 2023; Curran Associates Inc.: Red Hook, NY, USA, 2023; pp. 38863–38886.

9. Rodin, I.; Furnari, A.; Min, K.; Tripathi, S.; Farinella, G.M. Action Scene Graphs for Long-Form Understanding of Egocentric Videos. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 16–22 June 2024; IEEE: New York, NY, USA, 2024; pp. 18622–18632.

10. Li, D.; Feng, J.; Chen, J.; Dong, W.; Li, G.; Shi, G.; Jiao, L. EgoSplat: Open-Vocabulary Egocentric Scene Understanding with Language Embedded 3D Gaussian Splatting. arXiv 2025, arXiv:2503.11345.

11. Synchronizing Multiple Azure Kinect DK Devices. Available online: https://learn.microsoft.com/en-us/azure/kinect-dk/multi-camera-sync (accessed on 27 July 2025).

12. Basler ace2 Camera Series. Available online: https://www.baslerweb.com/en-us/cameras/ace2/ (accessed on 5 September 2025).

13. Emergent Vision Technologies HR-Series 10GigE Cameras. Available online: https://emergentvisiontec.com/products/hr-10gige-cameras-rdma-area-scan/ (accessed on 5 September 2025).

14. Özsoy, E.; Pellegrini, C.; Czempiel, T.; Tristram, F.; Yuan, K.; Bani-Harouni, D.; Eck, U.; Busam, B.; Keicher, M.; Navab, N. MM-OR: A Large Multimodal Operating Room Dataset for Semantic Understanding of High Intensity Surgical Environments. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 11–15 June 2025; IEEE: New York, NY, USA, 2025.

15. Özsoy, E.; Mamur, A.; Tristram, F.; Pellegrini, C.; Wysocki, M.; Busam, B.; Navab, N. EgoExOR: An Ego-Exo-Centric Operating Room Dataset for Surgical Activity Understanding. arXiv 2025, arXiv:2505.24287.

16. Hollidt, D.; Streli, P.; Jiang, J.; Haghighi, Y.; Qian, C.; Liu, X.; Holz, C. EgoSim: An Egocentric Multi-view Simulator and Real Dataset for Body-worn Cameras during Motion and Activity. In Proceedings of the 38th International Conference on Neural Information Processing Systems, Vancouver, BC, Canada, 10–15 December 2024; Curran Associates Inc.: Red Hook, NY, USA, 2024; pp. 106607–106627.

17. Timecode Sync (HERO12 Black). Available online: https://community.gopro.com/s/article/HERO12-Black-Timecode-Sync?language=en_US (accessed on 5 August 2025).

18. Li, T.; Slavcheva, M.; Zollhoefer, M.; Green, S.; Lassner, C.; Kim, C.; Schmidt, T.; Lovegrove, S.; Goesele, M.; Newcombe, R.; et al. Neural 3D Video Synthesis from Multi-view Video. arXiv 2022, arXiv:2103.02597.

19. Shin, Y.; Molybog, I. Beyond Audio and Pose: A General-Purpose Framework for Video Synchronization. arXiv 2025, arXiv:2506.15937.

20. Elhayek, A.; Stoll, C.; Kim, K.I.; Seidel, H.P.; Theobalt, C. Feature-Based Multi-video Synchronization with Subframe Accuracy. In Proceedings of the Joint 34th DAGM and 36th OAGM Symposium, Graz, Austria, 28–31 August 2012; Springer: Berlin/Heidelberg, Germany, 2012; pp. 266–275.

21. Šmíd, M.; Matas, J. Rolling Shutter Camera Synchronization with Sub-millisecond Accuracy. In Proceedings of the 12th International Conference on Computer Vision Theory and Applications, Porto, Portugal, 27 February–1 March 2017; pp. 607–614.

22. Wu, X.; Wu, Z.; Zhang, Y.; Ju, L.; Wang, S. Multi-Video Temporal Synchronization by Matching Pose Features of Shared Moving Subjects. In Proceedings of the IEEE/CVF International Conference on Computer Vision Workshop, Seoul, Republic of Korea, 27–28 October 2019; IEEE: New York, NY, USA, 2019; pp. 2729–2738.

23. Zhou, X.; Dai, Y.; Qin, H.; Qiu, S.; Liu, X.; Dai, Y.; Li, J.; Yang, T. Subframe-Level Synchronization in Multi-Camera System Using Time-Calibrated Video. Sensors 2024, 24, 6975.

24. Garrido-Jurado, S.; Muñoz-Salinas, R.; Madrid-Cuevas, F.; Marín-Jiménez, M. Automatic generation and detection of highly reliable fiducial markers under occlusion. Pattern Recognit. 2014, 47, 2280–2292.

25. Kye, D.; Roh, C.; Ko, S.; Eom, C.; Oh, J. AceVFI: A Comprehensive Survey of Advances in Video Frame Interpolation. arXiv 2025, arXiv:2506.01061.

26. Jin, X.; Wu, L.; Chen, J.; Cho, I.; Hahm, C.H. Unified Arbitrary-Time Video Frame Interpolation and Prediction. In Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing, Hyderabad, India, 6–11 April 2025; IEEE: New York, NY, USA, 2025; pp. 1–5.

27. Hein, J.; Giraud, F.; Calvet, L.; Schwarz, A.; Cavalcanti, N.A.; Prokudin, S.; Farshad, M.; Tang, S.; Pollefeys, M.; Carrillo, F.; et al. Creating a Digital Twin of Spinal Surgery: A Proof of Concept. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 17–21 June 2024; IEEE: New York, NY, USA, 2024; pp. 2355–2364.

28. Noda, A.; Tabata, S.; Ishikawa, M.; Yamakawa, Y. Synchronized High-Speed Vision Sensor Network for Expansion of Field of View. Sensors 2018, 18, 1276.

29. Lin, C.H.; Wolf, W.H. A case study in clock synchronization for distributed camera systems. In Proceedings of the SPIE Conference on Embedded Processors for Multimedia and Communications II, San Jose, CA, USA, 17–18 January 2005; pp. 164–174.

30. Chen, P.; Yang, Z. Understanding PTP Performance in Today’s Wi-Fi Networks. IEEE/ACM Trans. Netw. 2023, 31, 3037–3050.

31. Liu, Y.; Ai, H.; Xing, J.; Li, X.; Wang, X.; Tao, P. Advancing video synchronization with fractional frame analysis: Introducing a novel dataset and model. In Proceedings of the 38th AAAI Conference on Artificial Intelligence, Vancouver, BC, Canada, 20–27 February 2024; pp. 3828–3836.

32. Shrestha, P.; Barbieri, M.; Weda, H. Synchronization of multi-camera video recordings based on audio. In Proceedings of the 15th ACM International Conference on Multimedia, Augsburg, Germany, 25–29 September 2007; Association for Computing Machinery: New York, NY, USA, 2007; pp. 545–548.

33. Imre, E.; Guillemaut, J.Y.; Hilton, A. Through-the-Lens Multi-camera Synchronisation and Frame-Drop Detection for 3D Reconstruction. In Proceedings of the Second International Conference on 3D Imaging, Modeling, Processing, Visualization & Transmission, Zurich, Switzerland, 13–15 October 2012; IEEE Computer Society: Washington, DC, USA, 2012; pp. 395–402.

34. Shrestha, P.; Barbieri, M.; Weda, H.; Sekulovski, D. Synchronization of Multiple Camera Videos Using Audio-Visual Features. IEEE Trans. Multimed. 2010, 12, 79–92.

35. Albl, C.; Kukelova, Z.; Fitzgibbon, A.; Heller, J.; Smid, M.; Pajdla, T. On the Two-View Geometry of Unsynchronized Cameras. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; IEEE: New York, NY, USA, 2017; pp. 5593–5602.

36. Flückiger, T.; Hein, J.; Fischer, V.; Fürnstahl, P.; Calvet, L. Automatic calibration of a multi-camera system with limited overlapping fields of view for 3D surgical scene reconstruction. Int. J. Comput. Assist. Radiol. Surg. 2025.

37. Grauman, K.; Westbury, A.; Torresani, L.; Kitani, K.; Malik, J.; Afouras, T.; Ashutosh, K.; Baiyya, V.; Bansal, S.; Boote, B.; et al. Ego-Exo4D: Understanding Skilled Human Activity from First- and Third-Person Perspectives. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 17–21 June 2024; IEEE: New York, NY, USA, 2024; pp. 19383–19400.

38. Golub, G.H.; Van Loan, C.F. Matrix Computations, 4th ed.; Johns Hopkins University Press: Baltimore, MD, USA, 2013.

39. Huber, P.J. Robust Estimation of a Location Parameter. Ann. Math. Stat. 1964, 35, 73–101.

40. Otsu, N. A Threshold Selection Method from Gray-Level Histograms. IEEE Trans. Syst. Man Cybern. 1979, 9, 62–66.

41. Fischler, M.A.; Bolles, R.C. Random sample consensus: A paradigm for model fitting with applications to image analysis and automated cartography. Commun. ACM 1981, 24, 381–395.

42. Guggenberger, M.; Lux, M.; Böszörmenyi, L. AudioAlign—Synchronization of A/V-Streams Based on Audio Data. In Proceedings of the IEEE International Symposium on Multimedia, Irvine, CA, USA, 10–12 December 2012; IEEE: New York, NY, USA, 2012; pp. 382–383.

43. Leroy, V.; Cabon, Y.; Revaud, J. Grounding Image Matching in 3D with MASt3R. In Proceedings of the European Conference on Computer Vision, Milan, Italy, 29 September–4 October 2024; Springer: Cham, Switzerland, 2024; pp. 71–91.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2026 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license.